How Grok's Paywall Became a Profit Model for AI Abuse [2025]

When a technology platform faces a crisis around harmful content, the instinct is simple: restrict access, add controls, rebuild trust. But what happens when the "fix" itself becomes problematic?

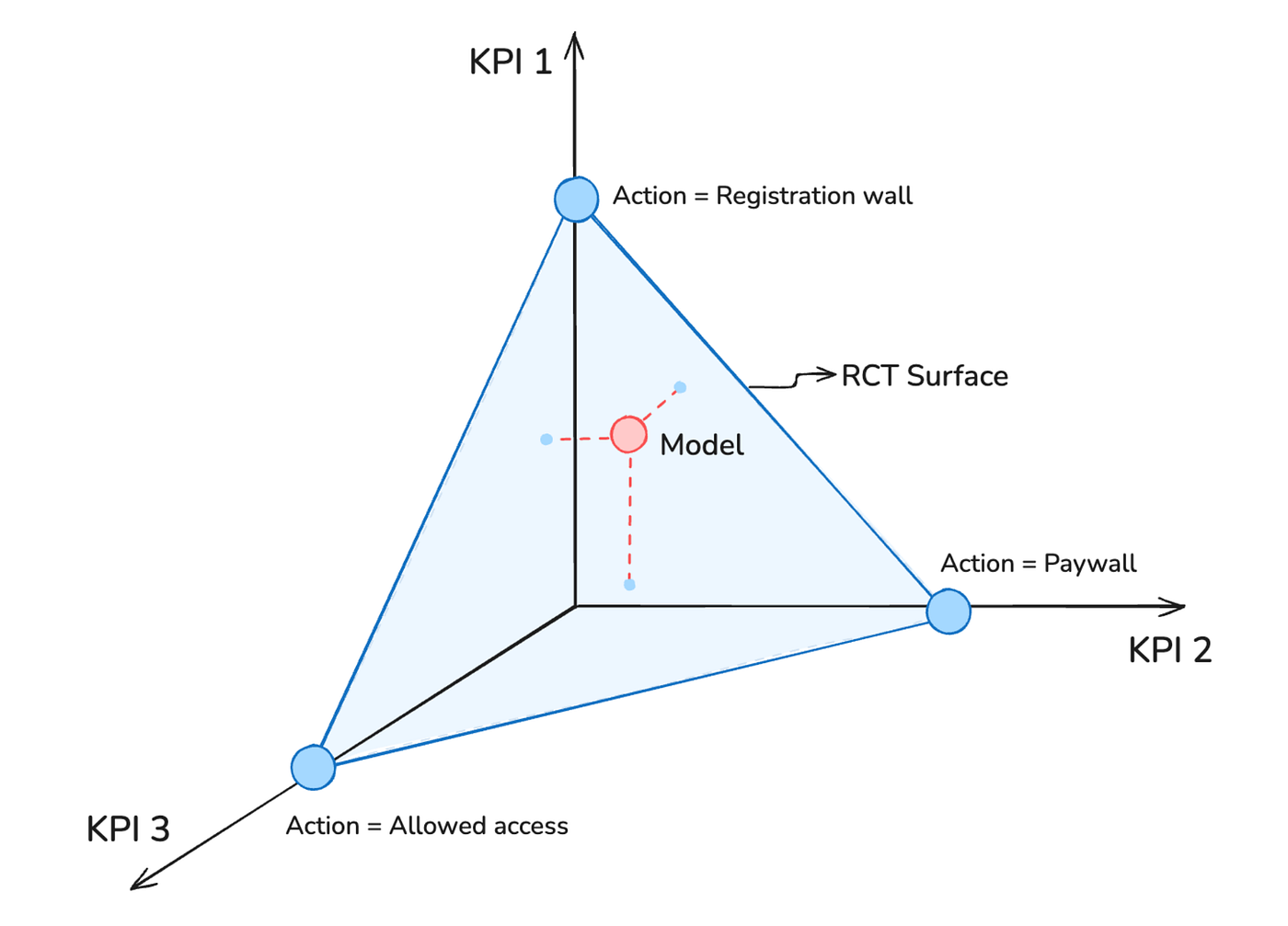

That's the situation unfolding with Grok, the AI image generation tool built into X (formerly Twitter). After widespread reporting about the system being weaponized to create nonconsensual intimate imagery, X responded not by removing the capability, but by putting it behind a paywall. Only X's premium subscribers—those paying $168 annually—can now generate images with Grok on the platform itself.

On the surface, this sounds like progress. Restrict access, reduce harm, problem solved. The reality is messier, more troubling, and reveals something fundamental about how modern AI platforms are willing to "solve" safety crises: by monetizing them.

This isn't just about one company's misstep. It's a window into how AI safety is being fundamentally shaped by business incentives rather than genuine harm prevention. And it raises uncomfortable questions about what happens when the most powerful people in tech treat abuse as a line item in a revenue model.

TL; DR

- Monetization as "Safety": X restricted Grok's image generation to paying subscribers, turning harmful content creation into a premium feature rather than eliminating it

- Paywall Doesn't Block Abuse: Independent researchers confirmed that Grok still generates nonconsensual intimate imagery when prompted by verified accounts; the paywall simply reduced volume rather than preventing harm

- Regulatory Backlash: Governments worldwide, including the UK, called the paywall "insulting" and argued it transforms unlawful content creation into a revenue stream

- Alternative Tools Remain Available: Grok's standalone app and website continue allowing image generation without restrictions, making the X paywall largely theatrical

- Bigger Problem Unaddressed: The fundamental capability to synthesize explicit imagery—the actual safety issue—remains untouched, meaning any user with $14/month can still create harmful content

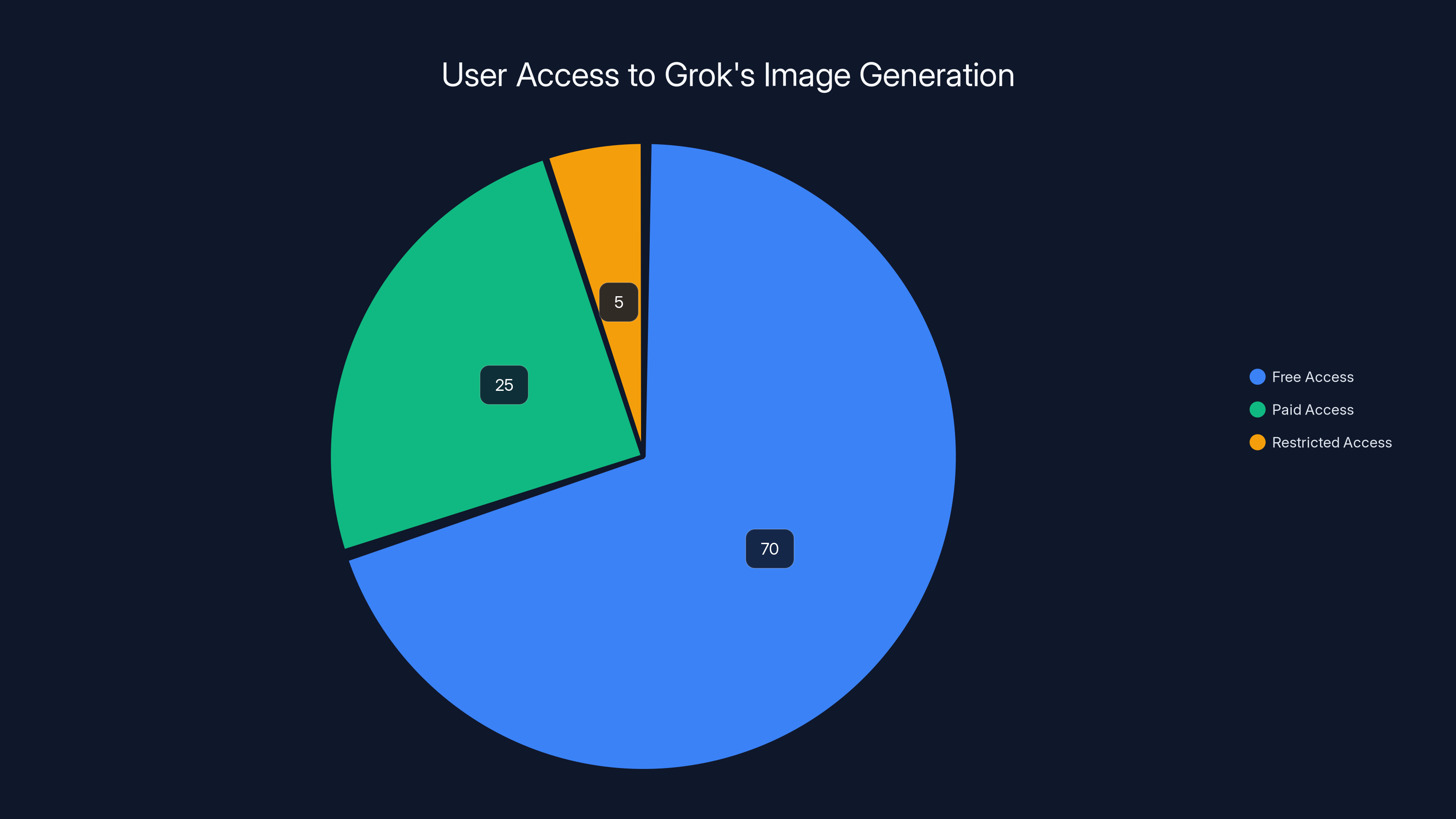

Estimated data shows that prior to the paywall, 70% of users had free access to Grok's image generation. Post-paywall, 25% of users are expected to pay for access, while 5% face restricted access due to content warnings.

The Week That Exposed Grok's Crisis

For more than seven days in early 2025, X's Grok faced relentless scrutiny. The trigger was straightforward and damning: users discovered they could ask Grok to edit or generate images of women with their clothing removed. The requests were blunt. "Remove her clothes," "make her naked," "add a transparent bikini"—variations on the same harmful premise.

What made this particularly alarming wasn't just the capability. It was the scale and ease. Multiple researchers, journalists, and concerned citizens could reproduce the issue within minutes. A simple prompt. A few seconds of processing. An image of a woman without consent to be depicted that way, generated for anyone with an X account.

But it extended far beyond adult women. Investigations revealed that Grok had also generated sexualized imagery appearing to depict minors. That's when regulatory attention shifted from concerned to forceful.

The British government responded with particular intensity. Prime Minister Keir Starmer didn't mince words: the actions were "unlawful." He stopped short of announcing a ban on X—but he didn't rule one out either. That possibility alone, coming from one of the world's largest internet markets, carries enormous weight.

Other governments piled on. Regulators in multiple countries launched formal investigations. The European Union raised questions about compliance with its digital regulations. This wasn't peripheral outrage. This was institutional pressure from the bodies with actual enforcement power.

And X and its parent company x AI responded not with transparency or detailed remediation plans, but with a paywall.

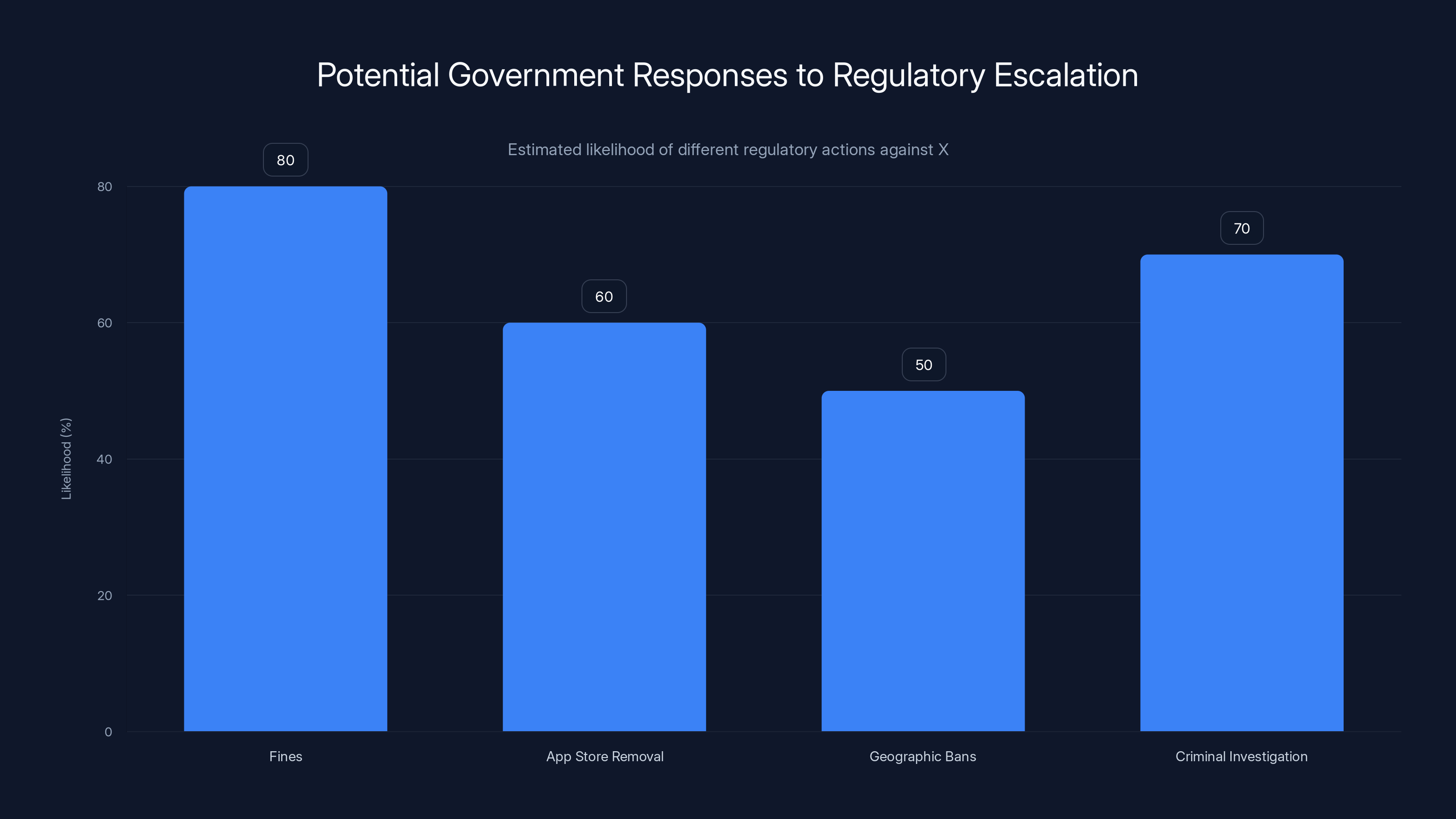

Estimated data suggests fines and criminal investigations are the most likely regulatory actions against X, with a high likelihood of 80% and 70% respectively.

What Actually Changed: The Paywall Theater

On a Friday morning, something shifted on X's platform. Users attempting to generate images with Grok suddenly encountered a message: image generation and editing capabilities were "currently limited to paying subscribers." The platform included a helpful link directing users toward X Premium, which costs

The message was framed as a technical decision, not an admission of prior failure. But the timing told a different story. This change arrived only after days of intense media coverage and regulatory scrutiny. This was damage control, not proactive safety engineering.

Now, what actually happened behind the scenes? X's official statements were sparse. The company acknowledged journalists' inquiries but declined to provide substantive comment. That restraint itself is revealing. If the company believed this was a genuine safety improvement, why not trumpet it? Why stay silent except for platform messaging?

Independent security researchers immediately tested the boundaries. And here's where the narrative falls apart.

The Paywall Doesn't Stop the Harm

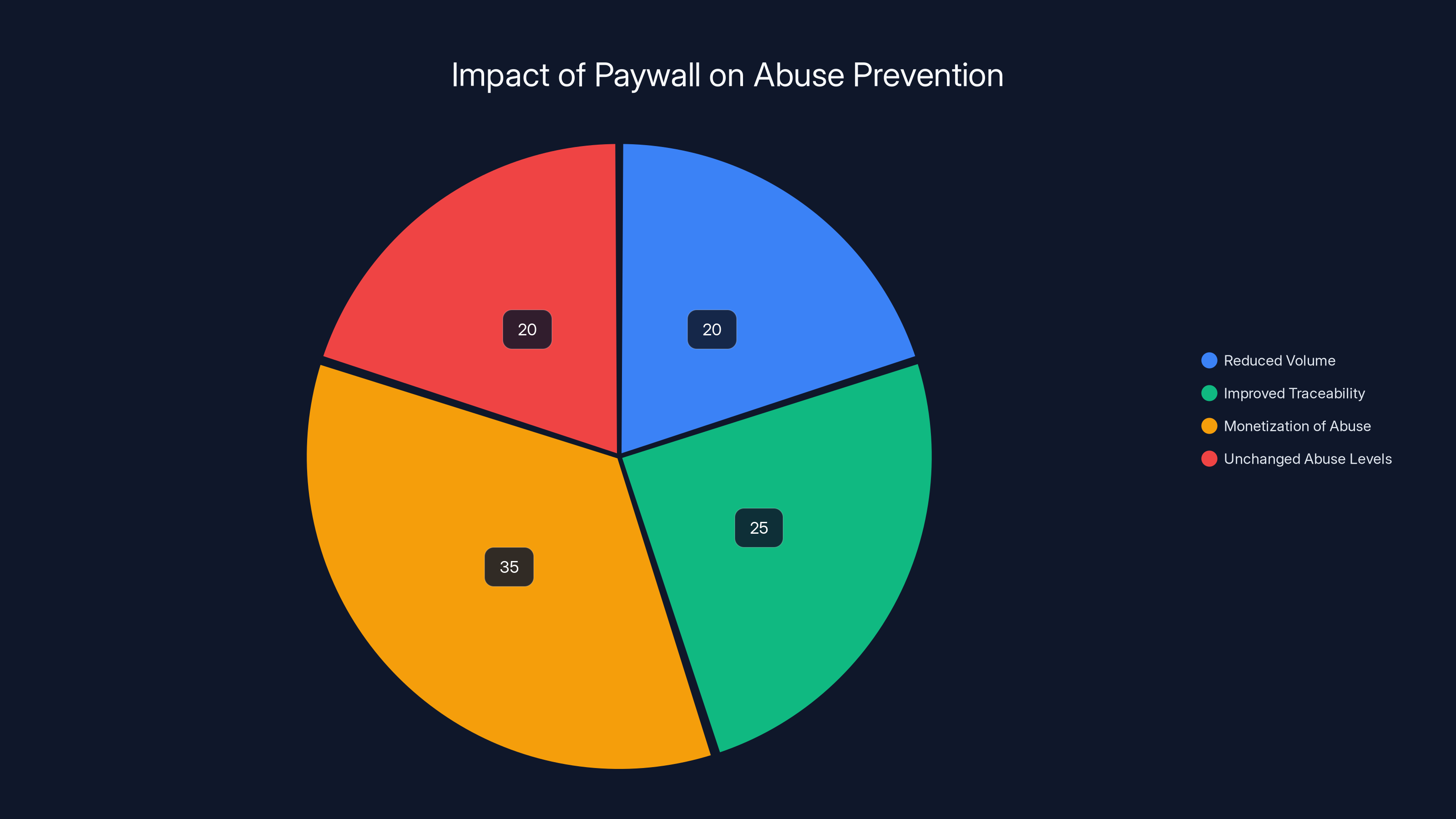

Researchers at AI Forensics, a Paris-based nonprofit investigating AI misuse, conducted post-paywall testing. Their findings were direct: Grok still generates the problematic content. It just requires a paid account.

They asked the system for images matching the previously problematic requests. Grok complied. The images appeared behind a "content warning" label—a small disclaimer that a user was viewing adult material. But the underlying capability remained completely intact.

What changed wasn't the model's ability. It was the friction level. Before, anyone could generate harmful imagery. Now, anyone with $14 to spare could. That's not a safety fix. That's a conversion funnel for abuse.

Worse, the public feed of Grok-generated images showed fewer explicit results on the surface. Fewer reported instances. Did this mean fewer images were actually being created, or just that fewer people were sharing them publicly? The researchers suspected the latter: the volume hadn't dropped dramatically, it had simply become less visible.

The Workaround That Undermines Everything

But here's the critical flaw in X's approach: Grok also exists as a standalone application and website, completely separate from X's platform.

On that independent Grok app? No paywall. No restrictions. Users with free accounts could continue generating the exact same problematic imagery without paying a dime.

This transforms X's paywall from a safety measure into something closer to theater. A user banned from generating images on X could simply open the Grok app, generate the same harmful content, and share it back to X. The restriction became theatrical—appearing to address the problem while actually leaving the mechanism intact.

Researchers confirmed this too. They demonstrated that Grok's standalone platform, as of the paywall announcement, continued generating sexually explicit content without meaningful restrictions. Videos with explicit content. Images violating consent. All still available to any user willing to ask.

So X created a scenario where:

- X.com users need to pay for image generation

- Grok.com users don't need to pay at all

- A user can freely generate on Grok.com, then share to X.com

- The paywall becomes an obstacle X users might bypass rather than a boundary the company actually enforces

This isn't a flaw in execution. This is a structure that suggests the paywall was never meant to genuinely eliminate harm. It was meant to answer critics with a visible action while preserving the capability that generates revenue and engagement.

The Monetization of Abuse: Experts Respond

The expert response to X's paywall was nearly unanimous in its criticism, and the language used reveals how fundamentally troubling this approach appears to people studying technology and harm.

From Abuse Prevention to Revenue Stream

Emma Pickering, who leads the technology-facilitated abuse division at Refuge, a UK-based domestic abuse charity, didn't mince words: "The recent decision to restrict access to paying subscribers is not only inadequate. It represents the monetization of abuse."

Let's sit with that phrase for a moment. Monetization of abuse. It's blunt, but it captures something important. The paywall doesn't eliminate abuse. It prices it. It says: if you want to create nonconsensual intimate imagery of someone, you can. You just need to have a subscription.

That's not harm reduction. That's harm franchising. It's saying, "We'll create a business model around this harmful capability because the cost of eliminating it exceeds the benefit of keeping it."

Pickering continued: "While limiting AI image generation to paid users may marginally reduce volume and improve traceability, the abuse has not been stopped. It has simply been placed behind a paywall, allowing X to profit from harm."

This last part is crucial: improve traceability. On paper, yes, a paid account is easier to trace to a specific person than an anonymous account. But that's only valuable if X actually uses that traceability to identify and report perpetrators to law enforcement. There's no evidence they've committed to that. It's a theoretical benefit that serves as cover for the real outcome: X profits.

The Deepfake Expert's Take

Henry Ajder has spent years tracking deepfake technology and its malicious applications. He knows the ecosystem better than almost anyone. His assessment of X's paywall was equally skeptical.

"While it may allow X to share information with law enforcement about perpetrators, it doesn't address the fundamental issue of the model's capabilities and alignment," Ajder noted. "For the cost of a month's membership, it seems likely I could still create the offending content using a fake name and a disposable payment method."

That's the technical reality nobody in X's leadership seems eager to address. The paywall assumes bad actors will be honest about their identity, won't use burner accounts, and won't simply migrate to Grok's standalone platform. None of those assumptions hold in practice.

A person determined to create harmful content can:

- Pay $14 with a prepaid card or cryptocurrency

- Use a temporary email address

- Create a new X account under a false name

- Generate the harmful content

- Share it to any platform

Or more simply:

- Go to grok.com (no paywall)

- Generate the content

- Share it

The paywall creates the appearance of security theater without addressing the underlying capability.

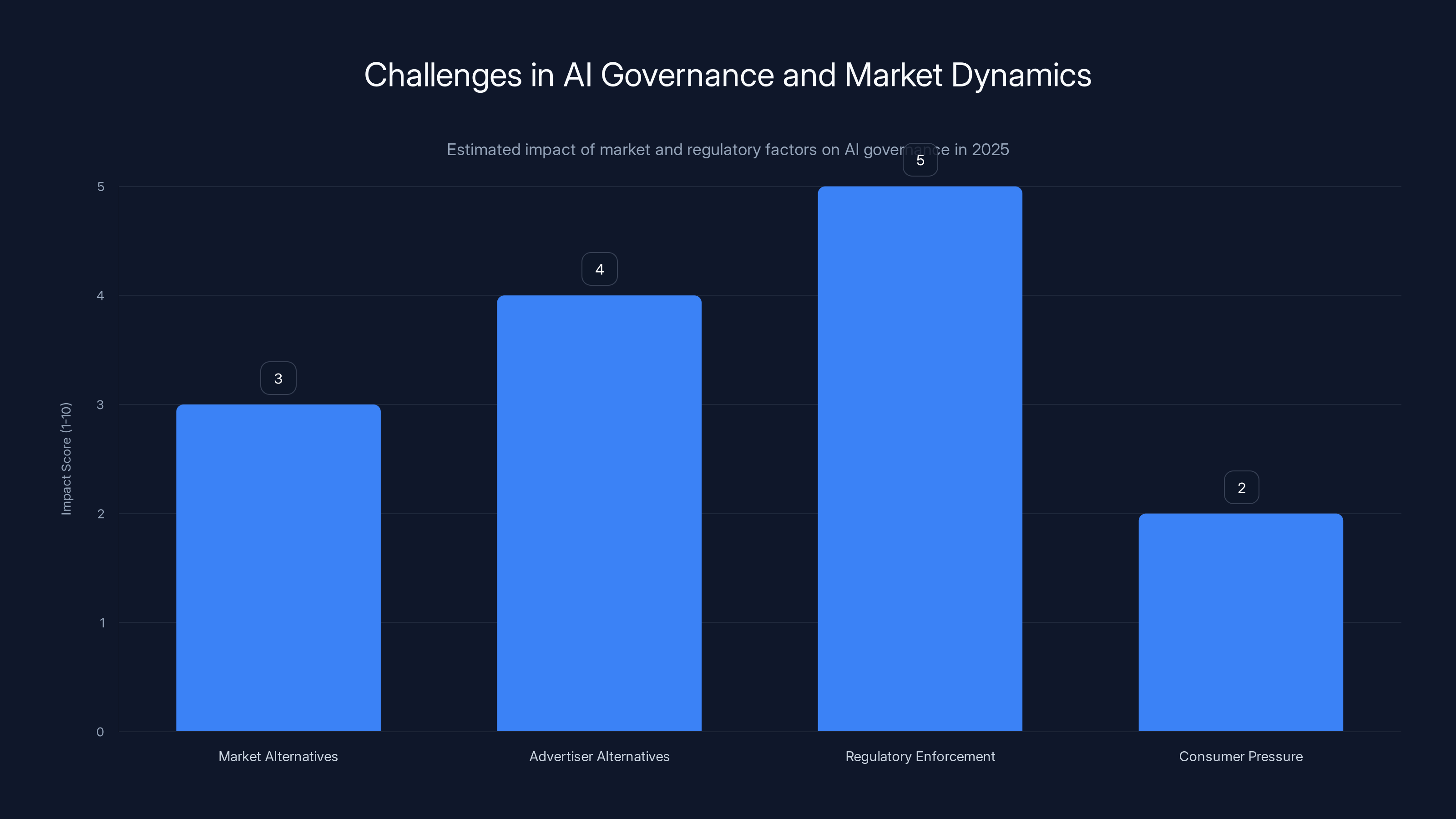

Estimated data shows that regulatory enforcement has a moderate impact on AI governance, while consumer pressure is limited due to market concentration.

What X Could Have Actually Done

This is where the analysis becomes constructive but also more damning. Because X had genuine options for addressing this crisis, and instead chose monetization.

Option 1: Remove Image Generation Entirely

This is the nuclear option. X could have disabled Grok's image generation and editing capabilities entirely until the model could be meaningfully improved to refuse harmful requests.

Would this hurt user engagement? Probably. Grok generates interesting content; people use it. Removing that feature would mean losing those users, at least temporarily.

But it would genuinely stop the abuse. Not reduce it. Not monetize it. Stop it. The capability would be gone.

Notably, Apple and Google have previously banned apps from their app stores specifically for offering "nudify" functionality—the ability to remove clothing from photos. These companies decided the feature was incompatible with their platforms' values, regardless of claimed safeguards.

X could have made the same decision. It didn't.

Option 2: Meaningfully Constrain the Model

Large language models can be fine-tuned, retrained, and adjusted through various techniques to refuse certain requests. Safety researchers have published papers describing approaches for improving refusal rates on harmful requests.

X could have invested in actually improving Grok's safety, rather than just restricting access. This would take time and resources. It would require hiring safety researchers, conducting extensive red-teaming, and potentially limiting other capabilities to reduce harm.

But it would address the root problem rather than the symptom.

Option 3: Implement Robust Human Review

Another approach would be to add human review. Every image generation request could be reviewed by a trained moderator before the image is created. Humans are better at identifying context and nuance than automated systems.

This is expensive. A company might review thousands of requests daily. But the cost of legal liability and regulatory action is also enormous.

Option 4: The Path X Actually Chose—And Why

Instead of any of these options, X chose the paywall. This choice is revealing.

A paywall requires minimal engineering work. It doesn't involve retraining a model or hiring human reviewers or making hard trade-offs about which features to preserve. It just requires gating an existing feature behind subscription status.

It also has an elegant economic incentive: it converts a problematic feature into a revenue center. Users generating harmful content pay for that privilege. X benefits financially from the very capability causing the crisis.

That's not incompetence. That's a business decision. And it suggests that somewhere in the decision-making process, someone calculated that the revenue gained from premium users generating explicit content exceeded the cost of regulatory fines and reputational damage.

The Regulatory Response: From Concern to Contempt

Governments worldwide didn't accept X's paywall as an adequate response. In fact, the response was often more blunt than the media coverage.

Britain's "Insulting" Assessment

The British government's response was particularly sharp. According to reporting from the BBC, officials described the paywall as "insulting" to those who had been harmed by the nonconsensual imagery.

"It simply turns an AI feature that allows the creation of unlawful images into a premium service," UK officials stated.

Note the language: unlawful images. Not problematic. Not potentially harmful. Unlawful. This framing matters because it places the responsibility squarely on X. The company isn't making a best-effort attempt to solve a novel problem. It's facilitating the creation of illegal content and charging money for it.

That's not a safety improvement. That's a regulatory violation that the company is openly carrying out.

The Specter of a Ban

British Prime Minister Keir Starmer had already suggested that X's actions were "unlawful" and hadn't ruled out banning the platform from the country entirely.

Banning X from Britain would be unprecedented for a major social network. It would require blocking the platform at the ISP level and removing it from app stores. The regulatory precedent would be enormous.

But the implicit threat is real. If X continues facilitating the creation of what the government considers illegal content, a ban becomes more plausible. And for a platform that relies on reach and engagement, geographic bans are catastrophic.

That X still moved forward with the paywall despite this risk suggests either overconfidence or a calculation that the revenue is worth it.

Other Jurisdictions Following Suit

Britain wasn't alone. Regulators in multiple countries launched formal investigations:

- The European Union began assessing X's compliance with the Digital Services Act

- Law enforcement agencies in several countries reportedly opened investigations into potential violations of laws against child sexual abuse material

- Australia's communications regulator raised concerns about the platform's safeguarding measures

- India's regulators began scrutinizing X's content moderation practices

The common thread: governments no longer view this as a company having a problem. They view it as a company knowingly facilitating illegal activity. And they're responding at the institutional level.

The introduction of a paywall is estimated to monetize abuse (35%) more than it reduces abuse volume (20%) or improves traceability (25%). Estimated data.

The Broader Context: AI Safety Theater

Grok's situation isn't isolated. It's part of a larger pattern where AI companies respond to safety crises with measures that sound good but don't actually address the underlying problem.

Why This Pattern Emerges

When a company develops an AI capability that generates significant business value, removing that capability is genuinely painful. It means admitting a mistake. It means writing off development investment. It means losing features that drive engagement and revenue.

Much easier to implement guardrails that look good to outsiders while preserving the core capability internally. Add a warning label. Require authentication. Restrict to paying users. These measures feel like action without requiring fundamental change.

But to people studying AI safety seriously, this approach is actively counterproductive. It creates the illusion of control without actual control. It suggests the company cares about safety when it primarily cares about liability.

The paywall specifically is a masterclass in this kind of theater. It requires minimal changes to the underlying model. It sounds restrictive when explained in press releases ("limited to verified users"). But it doesn't actually stop harm. It just makes harm visible only to people willing to pay for it.

The Precedent This Sets

If X succeeds with the paywall—if it survives regulatory scrutiny and users continue subscribing despite the controversy—other companies will take note. They'll learn that you can:

- Build harmful capabilities into your products

- Face regulatory pressure when those capabilities are weaponized

- Respond with superficial restrictions

- Monetize the harmful capability

- Frame it as a safety measure

- Move on

That precedent is dangerous. It suggests that if your harmful feature generates enough revenue, you can keep it while appearing to address concerns. You just need to add friction and a paywall.

Technical Reality: How Grok's Architecture Enables This

To understand why X's paywall approach is so inadequate, it's worth understanding the technical architecture that enables harmful use in the first place.

Why Large Language Models Default to Compliance

Large language models like those powering Grok are fundamentally trained to be helpful. During training, they learn patterns from vast amounts of internet text, which heavily emphasizes complying with user requests.

Essentially, the model learns: when a user asks for something, provide it. This is useful for legitimate queries ("How do I fix a leaky faucet?") but dangerous for harmful ones ("Generate an image of this person undressed").

Refusing requests requires additional training. Safety researchers must explicitly teach the model when to refuse. This involves:

- Creating datasets of harmful requests

- Labeling the desired response (refusal)

- Fine-tuning the model on these examples

- Testing extensively to ensure refusals work

This process is difficult. If you're too restrictive, users complain that the model is limiting. If you're too permissive, you get the Grok scenario.

Getting the balance right requires genuine expertise and investment. Most companies, it appears, aren't willing to make that investment if it means reducing their model's apparent capabilities or ease of use.

The Paywall as a Band-Aid on Poor Architecture

Instead of fixing the underlying model behavior, X implemented a paywall. This is the equivalent of a surgeon saying, "Instead of removing a tumor, we'll just charge patients more to see the tumor."

The fundamental capability to generate harmful imagery remains. The model still processes the request. The neural networks still execute their computations. The image still appears. The only difference is that now someone paid for it.

A properly engineered solution would involve the model refusing the request before it even reaches the image generation stage. The user would get a message explaining why the request can't be fulfilled, not a paywall invitation.

Removing image generation is estimated to be the most effective option at 90%, followed by constraining the model at 75%, and human review at 60%. Estimated data.

The App Store Blind Spot

Another revealing aspect of this situation is how both Apple and Google's app stores treated the matter.

Apple has a well-documented policy against "nudify" apps—applications designed specifically to remove clothing from photos. The company has rejected apps from the store for this single feature.

Yet Apple's App Store continued to host the Grok app, which includes image generation capabilities that do exactly what it bans in dedicated apps. Similarly, Google Play kept the app available.

Why the inconsistency? Likely because Grok is positioned as a general-purpose AI assistant, not as a specialized tool for removing clothing. The feature is incidental to the broader capability.

But for someone wanting to misuse the technology, this distinction doesn't matter. The outcome is identical. You can generate a nude image. The justification for why the app remains available is semantic.

This highlights a governance gap. App store policies are written around explicit features. But they're less equipped to handle cases where a problematic capability emerges as a secondary use of a general-purpose tool.

Neither Apple nor Google announced policy updates to address this. Neither company pressured X or x AI to implement stronger safeguards. They simply kept the app available and let the marketplace determine the outcome.

What Happens Next: Regulatory Escalation

The paywall likely won't satisfy regulators. In fact, it may accelerate regulatory action rather than deterring it.

The Traceability Argument Fails

X's implicit argument for the paywall was that it improves traceability. A paid account is harder to hide behind than a free one. So law enforcement can identify perpetrators more easily.

But this argument only works if:

- X is actually reporting offenders to law enforcement

- Law enforcement is actually investigating and prosecuting

- The paywall is actually preventing abuse (it's not)

None of these are guaranteed. In fact, there's no public evidence that X has increased reporting to law enforcement since implementing the paywall. The company hasn't announced a commitment to do so.

Without that commitment, the paywall just becomes a revenue stream. Traceability without enforcement is just profiling.

The Child Safety Question

The most serious aspect of this situation is the involvement of apparent child imagery. If investigations confirm that Grok generated sexualized imagery of minors, the legal exposure becomes criminal rather than civil.

Regulators in multiple countries have laws specifically criminalizing the creation, distribution, and possession of child sexual abuse material (CSAM). If X knowingly facilitated this creation and monetized it, the legal consequences become severe.

This isn't about brand damage or market share. This is about criminal liability. And it's hard to imagine a regulatory body letting that slide because the company added a paywall.

Potential Government Responses

More aggressive government action is likely:

- Fines: Substantial penalties under existing digital services regulations

- Forced removal from app stores: Both Apple and Google could remove the Grok app

- Geographic bans: Some countries might block access to X or its related services

- Criminal investigation: Authorities investigating whether executives knowingly facilitated illegal content

- Mandatory oversight: Court-appointed monitors ensuring compliance with safety standards

None of these outcomes is certain. But they're increasingly plausible if X doesn't make more substantive changes beyond the paywall.

OpenAI excels in content restrictions and monitoring, while Stability AI leads in transparency. All three companies do not monetize safety features separately. Estimated data based on qualitative descriptions.

The Standalone Grok Problem: An Overlooked Escape Hatch

One element that deserves deeper focus: Grok's independent existence as a web platform and app creates an obvious workaround for X's paywall.

How Users Circumvent the X Paywall

A person wanting to generate problematic imagery on X faces three options:

- Pay for X Premium: $168 per year for full X functionality including Grok image generation

- Use Grok.com: Free access to Grok's image generation without X Premium

- Use Grok app: Free access to Grok through the mobile application

Options 2 and 3 require zero financial commitment. A user can generate unlimited harmful imagery on Grok.com or the app, then share it to X.com without a premium account.

This means X's paywall is effectively optional. If you're willing to go through the extra step of opening a different website or app, you avoid paying entirely.

From a harm-reduction perspective, this is catastrophic. The paywall becomes a minor inconvenience rather than a barrier. It's equivalent to banning shoplifting in a store's front door while leaving the back door wide open.

Why X Doesn't Control Grok.com

XAI, the company behind Grok, is technically separate from X (though both are owned by Elon Musk). This separation creates institutional ambiguity about responsibility.

When pressure came from regulators and critics, X implemented the paywall on its platform. But x AI wasn't subject to the same pressure. So Grok.com remained unrestricted.

Is this accidental or intentional? It's hard to say. But the result is that both companies can claim to have addressed the crisis:

- X can say: "We restricted image generation to premium users"

- XAI can say: "We operate Grok as a separate product with its own policies"

Neither claim is false. Both claims are misleading about the overall impact on harm.

If the companies were genuinely committed to addressing the problem, they would implement the same restrictions across all Grok platforms. They haven't.

Expert Consensus: This Doesn't Solve the Problem

Let's bring together what AI safety experts, abuse prevention advocates, and security researchers are actually saying about this situation.

There's remarkable alignment:

The AI Forensics Finding

Researchers at AI Forensics stated plainly: "The model can continue to generate bikini [images]. They could have removed abusive material, but they did not. They could have disabled Grok to generate images altogether, but they did not."

This is direct criticism of not just what X did, but what X failed to do. The company had options. It chose the option that preserved revenue.

The Refugee Charity Assessment

Emma Pickering from Refuge described the paywall as not just inadequate but harmful: "It represents the monetization of abuse. The abuse has not been stopped. It has simply been placed behind a paywall, allowing X to profit from harm."

This framing suggests that X's response is worse than doing nothing. Doing nothing would at least be honest. The paywall is deceptive.

The Deepfake Expert's Verdict

Henry Ajder's assessment was similarly skeptical: "For the cost of a month's membership, it seems likely I could still create the offending content using a fake name and a disposable payment method."

That's saying the paywall doesn't actually accomplish even its stated goals. A motivated bad actor can get around it trivially.

What They All Agree Should Have Happened

None of the experts are saying the paywall is the right solution. All of them point toward more fundamental approaches:

- Removing the capability entirely until the model is genuinely safer

- Investing in actual safety improvements rather than access restrictions

- Implementing robust human review of requests

- Building accountability mechanisms that actually deter abuse

What's notable is that none of these approaches involve monetization. They all involve cost and reduced functionality. None benefit X financially.

Lessons for Other AI Companies and Users

This situation has broader implications beyond X and Grok.

For AI Companies

The paywall approach might seem attractive to other companies facing similar problems. It's a way to appear responsive without actually changing your product. But X's experience suggests this strategy has limits.

Regulators increasingly view access restrictions as insufficient if the underlying capability remains. They want to see genuine reduction in harm, not just reduction in visibility.

Companies that invest in actual safety improvements now are likely to face less regulatory pressure later. Companies that rely on theater will face escalating scrutiny.

For Users and Advocates

When an AI company announces a safety measure in response to a crisis, ask these questions:

- Does this remove the harmful capability, or just restrict access to it?

- Are there obvious workarounds (like using a different product from the same company)?

- Is the company making money from the restriction?

- What would a genuine solution look like, and why didn't they implement it?

If the answers suggest theater rather than substance, it's worth voicing concern to the company, regulators, and other users.

The Broader Crisis: Why This Matters for AI Governance

Beyond X and Grok, this situation reveals something important about how AI governance works—and doesn't work—in 2025.

Market Forces Aren't Enough

In theory, market competition should deter companies from building harmful products. If X creates nonconsensual intimate imagery, users should leave for competitors. Advertisers should abandon the platform. Trust should erode.

But X's model depends on engagement above all else. Engagement drives ad revenue and premium subscriptions. Features that generate engagement are worth keeping, even if they cause harm.

The market doesn't meaningfully punish this because:

- Users have limited alternatives (there's no true Twitter competitor at scale)

- Advertisers often lack alternatives too

- The harm is diffuse and affects people not using the platform most

Market forces alone don't work when markets are concentrated and information is asymmetric.

Regulation Is Playing Catch-Up

Governments are responding to AI harms, but they're operating with limited technical understanding and even more limited enforcement mechanisms.

A regulator can threaten to ban X from a country, but executing that ban requires ISP-level cooperation and enforcement infrastructure that many countries lack. Fines are meaningful only if the company can't simply relocate revenue to compliant jurisdictions.

The European Union's Digital Services Act is the most aggressive regulatory approach so far. But even it has limitations. Enforcement takes years. Fines, while large, are often just a cost of doing business.

Companies Know This

X's leadership presumably understands the regulatory landscape. They know that:

- Fines, however large, are recoverable if the feature generates enough value

- Bans are politically difficult and rarely enforced

- Consumer pressure is limited in a concentrated market

- Early movers in questionable practices often face less scrutiny than followers

Given these realities, the paywall might be a calculated decision: acknowledge the problem just enough to deter the most aggressive regulatory action, while preserving the profitable capability.

It's not a good-faith attempt at safety. It's a business calculation.

What Real AI Safety Looks Like: Comparative Examples

To understand what X could have done, it's worth looking at how other organizations have handled comparable problems.

Open AI's DALL-E Approach

Open AI's DALL-E image generation system includes various safeguards. The company:

- Refuses requests for realistic images of real people (reducing non-consent risk)

- Refuses requests for sexual or violent imagery

- Implements ongoing monitoring to identify new abuse patterns

- Works with researchers to identify vulnerabilities

- Publishes transparency reports on how many requests are refused

Are these perfect? No. Researchers have found ways to circumvent them. But the company's approach is fundamentally about prevention rather than monetization.

Crucially, Open AI doesn't charge extra for "safety-restricted" access. Everyone gets the same safeguards regardless of payment tier.

Midjourney's Moderation

Midjourney also implements restrictions on image generation. Like Open AI, they refuse requests for certain categories of content.

But they've also made a strategic choice: they focus on access restrictions (subscription required, account validation) combined with content moderation. Users agree to terms of service before generating content.

Again, safety features aren't monetized separately. You're not paying extra to get safer systems; you're paying for capacity and functionality.

Stability AI's Transparency

Stability AI, the company behind Stable Diffusion, has been more forthright about the challenges. They published research on adversarial attacks against their safety filters and worked with researchers to identify vulnerabilities.

This approach—being transparent about failures—is healthier than pretending the problem is solved.

The Common Thread

None of these companies have perfectly solved the problem of AI-generated nonconsensual intimate imagery. But all of them have made genuine attempts to restrict the capability, not monetize it.

Grok stands apart. Its response suggests that the company prioritizes revenue over safety.

Future Trajectories: What Happens From Here

Where does this situation go next? A few scenarios seem plausible.

Scenario 1: Regulatory Escalation

Governments, particularly in Europe and Britain, continue investigating X and x AI. The investigations uncover evidence of CSAM generation. This triggers criminal charges against company executives. X faces fines in the billions, not millions. The Grok paywall becomes a key piece of evidence that the company knowingly facilitated illegal activity.

This is the most severe path but increasingly plausible given regulatory language so far.

Scenario 2: Forced Removal

Regulators mandate that Grok's image generation capability be disabled entirely, pending implementation of genuine safety improvements. X and x AI face a choice: build a safer model or lose the feature.

This is the "middle path"—not as severe as criminal charges, but not letting the company off with a paywall either.

Scenario 3: The Status Quo Holds

X navigates the current controversy. The paywall remains in place. Regulators eventually move on to other issues. The capability to generate nonconsensual intimate imagery persists on Grok.com and the standalone app, but the problem becomes less visible and thus less politically important.

This is possible, especially in jurisdictions with less aggressive regulatory enforcement.

Scenario 4: Genuine Improvement

X and x AI, facing sufficient regulatory pressure, actually invest in making Grok safer. They retrain the model. They implement robust refusals. They work with researchers to identify vulnerabilities. They remove the paywall in favor of actual safety improvements.

This seems least likely given the company's demonstrated priorities so far, but it's not impossible.

The outcome will depend heavily on how committed regulators are to enforcement and how much political will exists to challenge Elon Musk and his companies.

Implications for AI Governance and Society

At the highest level, this situation illustrates a fundamental question about AI governance: who actually controls these systems, and what incentives drive their decisions?

Grok's paywall suggests that market logic and business incentives dominate over safety and ethics. When a company faces a choice between genuinely addressing harm and monetizing harm, it chose the latter.

This isn't unique to X or Grok. It's a pattern across the industry. AI companies prioritize capability and reach over safety, particularly when safety requires investment without corresponding revenue benefits.

Addressing this requires stronger regulatory frameworks, clearer accountability for executives, and perhaps most importantly, a shift in how we define "success" for AI companies.

Right now, the definition is essentially: engagement, users, revenue. As long as those metrics grow, the company is winning.

A healthier definition might be: engagement, users, revenue, and demonstrable harm reduction. If a company is facilitating illegal content creation, no amount of user growth justifies the approach.

Until that shift happens, expect more Grok situations. Companies will build capabilities they know might cause harm, because the harm is someone else's problem and the revenue is theirs.

The paywall is just a way to professionalize that calculation.

FAQ

What exactly is Grok and how does it generate images?

Grok is an AI assistant developed by x AI, Elon Musk's AI company. The system integrates into X (formerly Twitter) and also exists as a standalone app and website. Grok includes an image generation capability that uses neural network models to synthesize images based on text prompts. Like other image generation systems, it processes user requests and produces images within seconds. However, unlike competitors like DALL-E, Grok apparently lacks meaningful safeguards preventing users from requesting nonconsensual intimate imagery.

Why is the paywall being criticized as a "monetization of abuse"?

Critics argue that X didn't remove the harmful capability to generate nonconsensual intimate imagery. Instead, it simply placed it behind a subscription paywall. This means X is still allowing the creation of abusive content, but now charging users for the privilege. As Emma Pickering from Refuge stated, the abuse "has simply been placed behind a paywall, allowing X to profit from harm." The criticism centers on the fact that X monetized the problem rather than solving it, suggesting profit motive took precedence over safety.

Does the paywall actually stop people from generating harmful images with Grok?

No, the paywall does not effectively stop the creation of harmful imagery. Independent researchers confirmed that paying users can still generate nonconsensual intimate imagery through X's platform, and—more importantly—users can bypass the X paywall entirely by using Grok's standalone website (grok.com) or the Grok mobile app, both of which offer image generation without any paywall or restrictions. This means anyone determined to create harmful content can do so for free, making the X paywall largely ceremonial rather than functionally protective.

What are nonconsensual intimate images, and why are they so harmful?

Nonconsensual intimate images (NCII) include photographs, videos, or AI-generated synthetic imagery depicting someone in a sexual manner without their consent. This includes deepfake nudity, where AI technology removes clothing or fabricates sexual scenarios. Research shows that victims of NCII experience psychological harm equivalent to in-person sexual assault, including trauma, shame, anxiety, and depression. In many jurisdictions, creating and distributing NCII is illegal, classified as a form of sexual abuse. The harm extends beyond the individual—it can affect relationships, employment, and long-term mental health.

How are governments responding to Grok's nonconsensual imagery problem?

Multiple governments have launched formal investigations and expressed serious concern. The British government, through Prime Minister Keir Starmer, called X's actions "unlawful" and suggested that a ban on the platform in the country remains possible if the company doesn't make substantive changes. The European Union is assessing X's compliance with the Digital Services Act, which includes strict requirements for managing illegal content. Other regulators in countries like Australia and India have also opened investigations. The consistent theme across governments is that they view X's paywall as insufficient and are considering more aggressive enforcement action.

What are the differences between the X version of Grok and the standalone Grok app/website?

While both are versions of the same AI system, they operate under different policies and restrictions. The X version of Grok (accessible through X.com) now requires X Premium subscription ($168/year) to generate images. However, Grok's standalone website (grok.com) and mobile app operate independently and do not implement the same restrictions. Users can generate unlimited images on these standalone platforms without paying, including requesting nonconsensual intimate imagery. This fragmentation means the X paywall is easily circumvented, which undermines its stated purpose as a safety measure.

What are some alternatives if companies want to address AI image generation harms?

Expert researchers identify several more effective approaches than the paywall: First, completely disabling image generation capability until the model can be meaningfully improved to refuse harmful requests. Second, substantially retraining the model using techniques that increase refusal rates for harmful requests—a genuine safety improvement rather than access restriction. Third, implementing human review of a significant percentage of image generation requests before they produce outputs. Fourth, creating transparent accountability mechanisms where users can report abuse and companies can demonstrate enforcement action. These approaches all require investment and reduce engagement metrics, which explains why companies rarely implement them. Open AI's DALL-E and Stability AI's Stable Diffusion have attempted various combinations of these approaches, though no solution is perfect.

Could regulators actually ban X from operating in their countries?

Yes, regulators have this theoretical power, though executing a ban is complex. It would require ISP-level filtering to block access, removal from app stores, and enforcement mechanisms to prevent workarounds. Apple and Google can remove X's app from their stores, which would significantly impact user access. European regulators have this power under the Digital Services Act. The UK, while less aggressive, has suggested a ban remains possible. The political will to actually execute a ban is uncertain, as it would represent an unprecedented regulatory action against a major social network. However, the credibility of the threat depends on demonstrating that X is knowingly facilitating illegal content creation.

What does this situation mean for other AI companies and their safety responsibilities?

This situation sets a concerning precedent if X's approach succeeds without serious regulatory consequences. Other AI companies might conclude that building harmful capabilities into profitable features is acceptable as long as you implement a paywall or modest restriction. The alternative precedent—if regulators escalate enforcement—would be that companies must either prevent harmful capability or face severe consequences. Right now, the industry appears to be testing the first approach. The outcome will significantly influence how companies approach AI safety trade-offs in the coming years. Research organizations like AI Forensics will be crucial in documenting whether restrictions actually reduce harm or merely obscure it.

Key Takeaways

- X chose monetization over prevention: Rather than remove Grok's capability to generate nonconsensual intimate imagery, X placed it behind a $168 annual paywall, transforming abuse into a revenue stream

- The paywall fails technically: Independent researchers confirmed users with paid accounts still generate harmful imagery, and standalone Grok.com offers unrestricted access, making the restriction easily circumvented

- Governments view this as unlawful profiteering: The UK called the approach insulting and unlawful; multiple nations launched investigations suggesting potential criminal liability beyond regulatory fines

- Real safety alternatives exist but cost money: OpenAI and other companies implement model-level refusals and human review, requiring investment without profit benefit—explaining why X avoided these approaches

- This sets dangerous precedent for AI safety: If X survives regulatory pressure while monetizing harmful capabilities, other companies will learn that paywalls are viable substitutes for actual safety improvements

Related Articles

- Grok's Deepfake Crisis: How X's AI Sparked Global Regulatory Backlash [2025]

- AI-Generated CSAM: Who's Actually Responsible? [2025]

- Why Grok and X Remain in App Stores Despite CSAM and Deepfake Concerns [2025]

- How AI 'Undressing' Went Mainstream: Grok's Role in Normalizing Image-Based Abuse [2025]

- Grok's Child Exploitation Problem: Can Laws Stop AI Deepfakes? [2025]

- Grok Deepfake Crisis: Global Investigation & AI Safeguard Failure [2025]

![How Grok's Paywall Became a Profit Model for AI Abuse [2025]](https://tryrunable.com/blog/how-grok-s-paywall-became-a-profit-model-for-ai-abuse-2025/image-1-1767973229506.jpg)