How People Use ChatGPT: OpenAI's First User Study Analysis [2025]

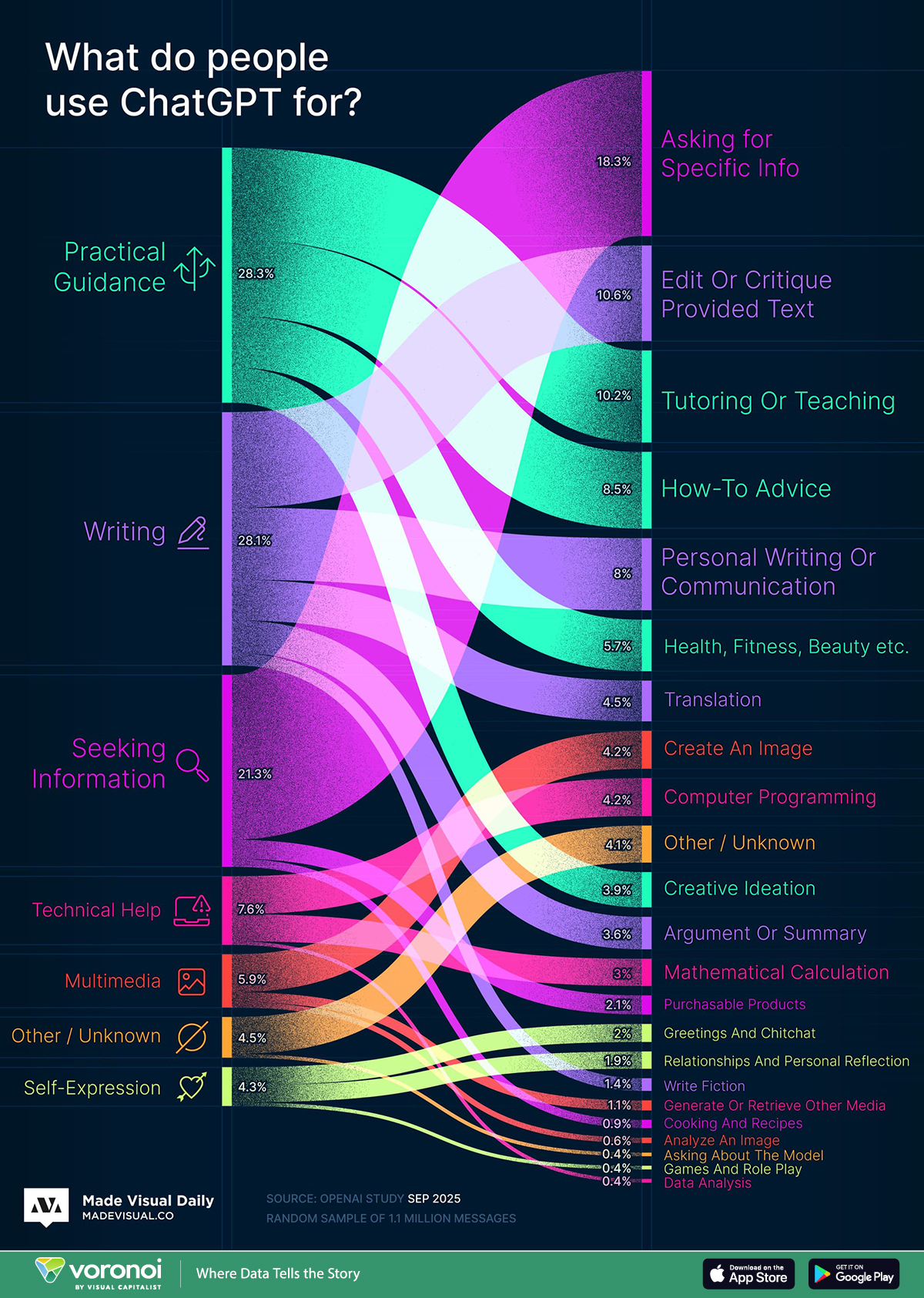

TL;DR

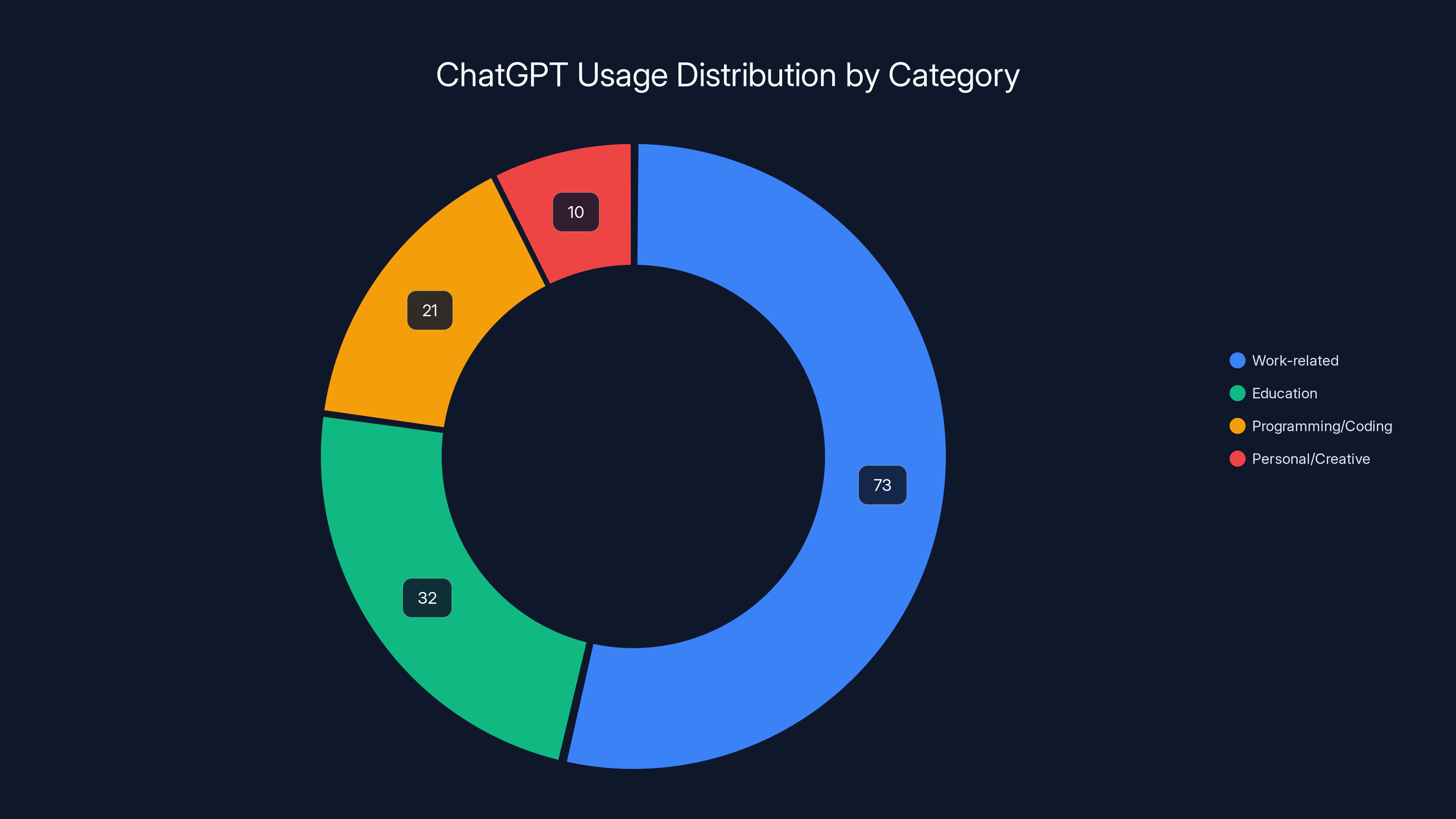

- Roughly 73% of ChatGPT usage is work-related, with drafting, summarization, and coding dominating professional workflows.

- Education emerges as the second-largest use case, with students and educators using the tool for explanations, practice problems, and lesson preparation.

- Technical users dominate adoption, with programming and coding accounting for 21% of all interactions, revealing AI's strongest foothold in technical problem-solving.

- Generational divides exist but are narrowing, as mid-career professionals increasingly embed ChatGPT into daily workflows rather than treating it as experimental.

- The productivity narrative is replacing the novelty narrative, shifting ChatGPT from curiosity-driven exploration to measurable efficiency gains in routine tasks.

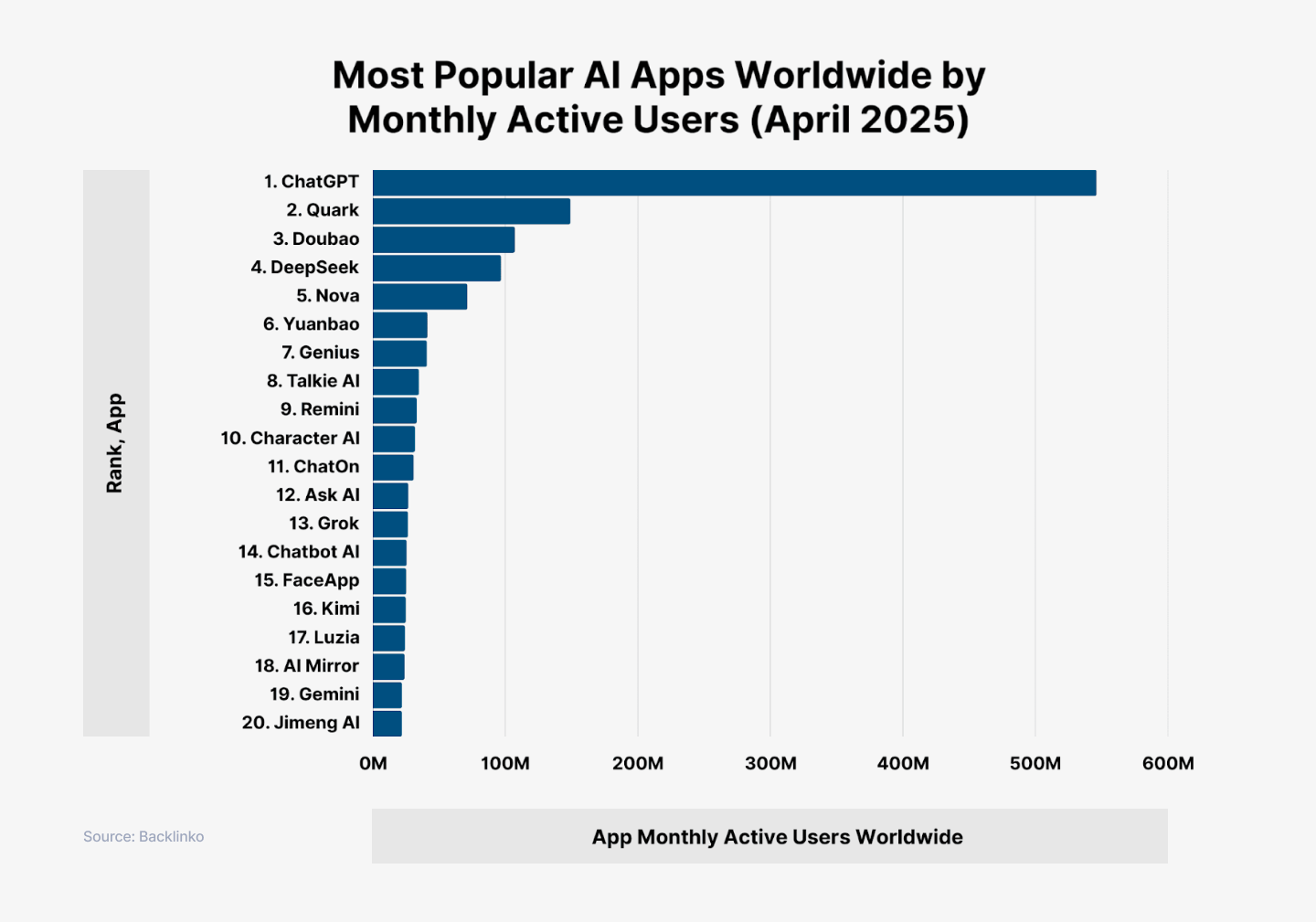

OpenAI's study reveals that 73% of ChatGPT usage is work-related, highlighting its role as a productivity tool. Personal and creative uses are less common, contradicting early narratives.

Introduction: When AI Moves From Hype to Habit

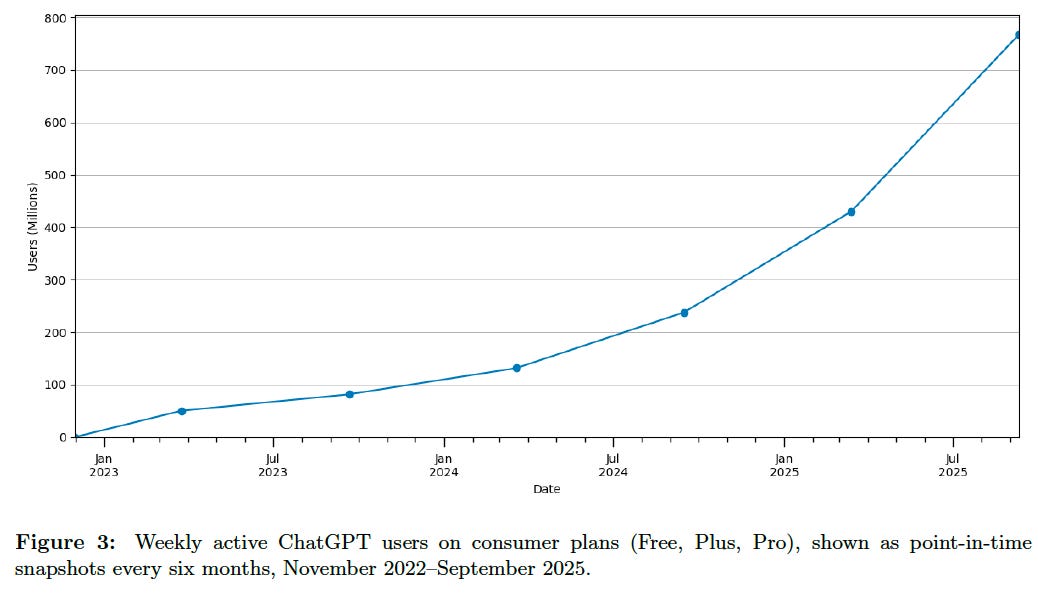

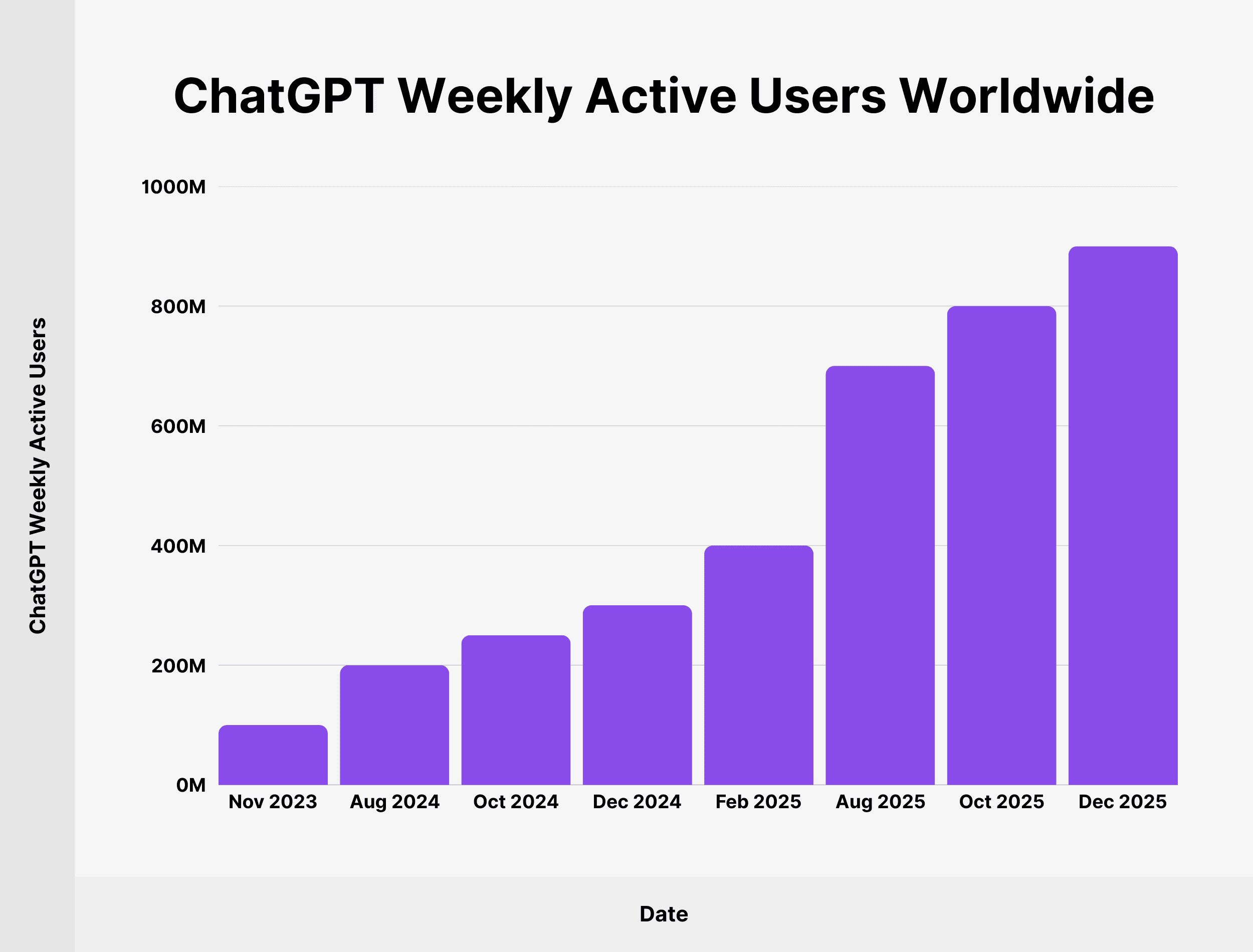

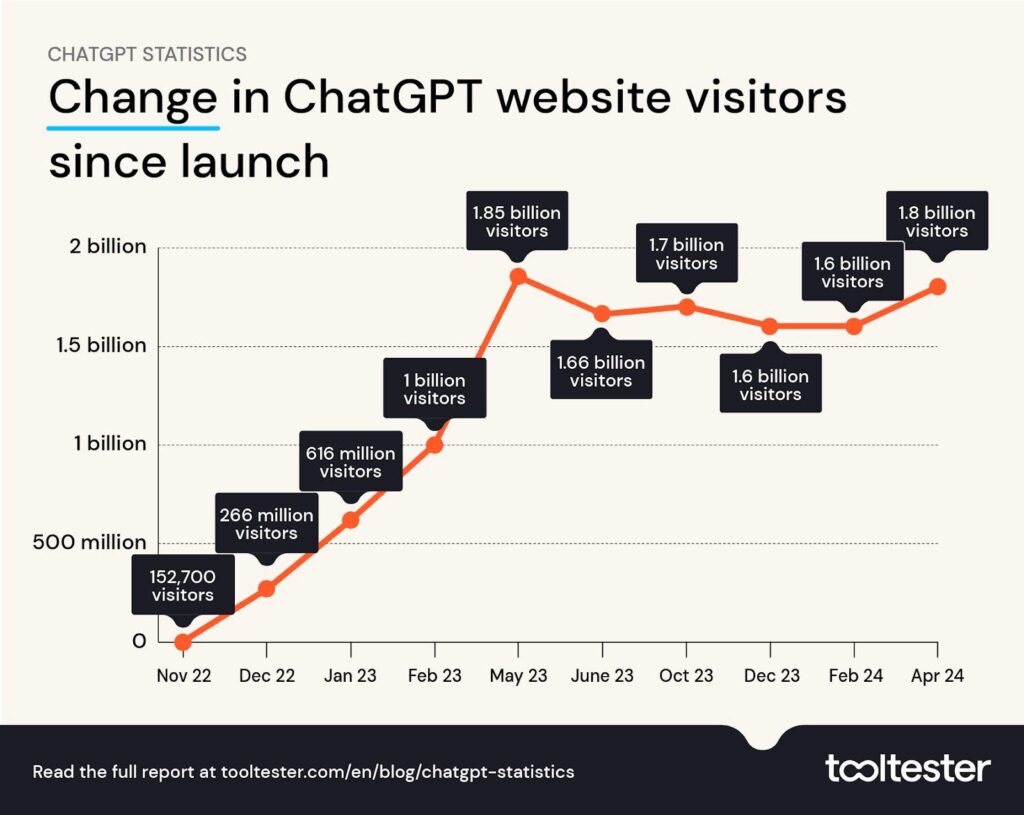

Last September, something important happened in the AI world, but it didn't involve flashy announcements or viral benchmarks. OpenAI released the first comprehensive research paper examining how real people actually use ChatGPT. Not what they could do with it. Not what marketing departments think they should do. What they're actually doing.

This matters because for the past two years, ChatGPT has been surrounded by hype. Some people predicted mass unemployment. Others claimed it would revolutionize everything overnight. Pundits wrote ten thousand think pieces about the future of work. But actual data on how millions of people interact with the tool daily? That was missing.

Until now.

The paper, "How People Use ChatGPT," combines survey data from thousands of users, anonymized usage statistics, and interviews with educators, professionals, and enterprises. The researchers are economists and social scientists, not salespeople. What they found contradicts some assumptions and validates others in surprising ways.

Here's what stands out: ChatGPT isn't replacing jobs. It's reshaping how people do them. It's not a leisure tool. It's a productivity instrument. It's not equally adopted across all professions. Technical fields are eating it first. And most importantly, usage patterns are stabilizing from "let me play with this" into "this is how we work now."

For anyone trying to understand where AI actually fits into business and education right now, this research is your map. And it's more nuanced than the headlines suggest.

Let's break down what the data actually reveals, what it means for different industries, and how adoption is likely to evolve from here.

Understanding OpenAI's Research Methodology

Before we dive into the findings, it's worth understanding how OpenAI gathered this data. The methodology matters because it shapes what we can and can't conclude.

The research combined three primary data sources. First, they surveyed thousands of ChatGPT users directly, asking about frequency of use, type of tasks, industry, and perceived impact. Second, they analyzed anonymized usage logs from millions of interactions, tracking patterns without identifying individuals. Third, they conducted interviews with specific user segments: educators, enterprise customers, and professionals in different fields.

This mixed-methods approach is solid. Surveys capture subjective experiences ("Do you find this useful?"). Usage logs capture objective behavior ("How many people actually use this feature?"). Interviews provide context and nuance that numbers alone can't reveal.

But there are limitations worth acknowledging upfront.

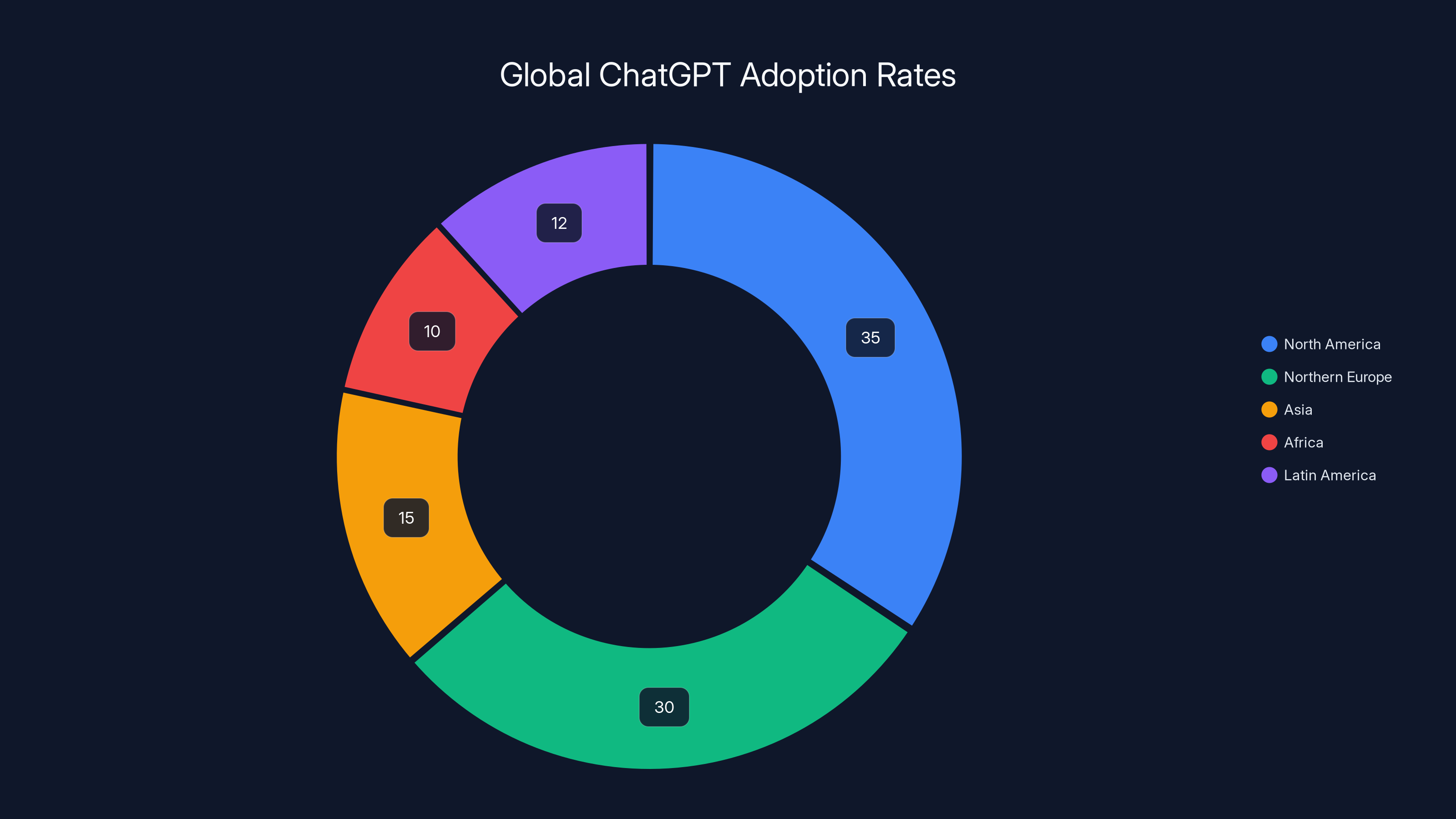

The data skews heavily toward North America and Europe. Users in Asia, Africa, Latin America, and the Middle East are underrepresented, which means adoption patterns in those regions might differ significantly from what the paper shows. Additionally, this is a snapshot from a relatively early stage of AI adoption. Sustained usage over years might differ from initial adoption patterns. Some features have changed since the data was collected, so specific feature usage numbers may shift.

The paper is also based on ChatGPT users specifically, not non-users. That means it can't tell us why people don't use ChatGPT, only how those who do are using it.

With those caveats in mind, the data still represents the most comprehensive public look at real-world ChatGPT usage to date.

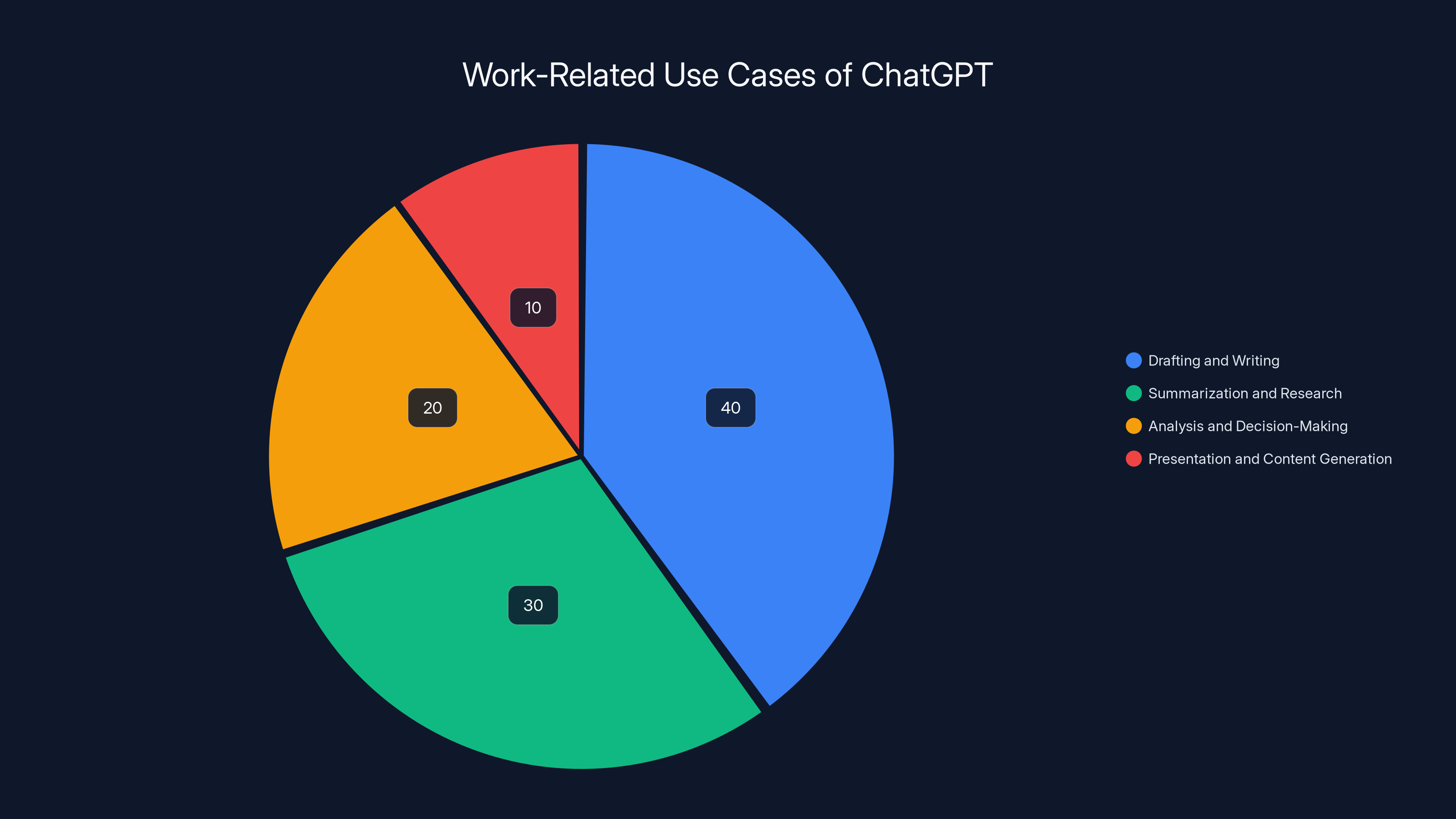

Drafting and Writing is the most common professional use of ChatGPT, accounting for 40% of work-related interactions. Estimated data.

The Work-First Revolution: Professional Adoption at 73%

The single most striking finding from the research is that approximately three-quarters of all ChatGPT interactions are work-related. Not leisure. Not entertainment. Work.

This fundamentally reframes the entire narrative around the tool. ChatGPT isn't replacing game nights or entertainment streaming. It's reshaping how people spend 8-10 hours of their day.

Within professional contexts, the breakdown reveals distinct patterns:

Drafting and Writing represents the largest share of work-related use. Professionals use ChatGPT to draft emails, reports, proposals, blog posts, technical documentation, and business communications. The tool handles the cognitive load of getting initial thoughts onto a page, which humans then refine with their judgment and domain expertise.

I've watched this in practice. A marketing manager who used to spend 90 minutes on an email might now spend 20 minutes: 5 minutes prompting ChatGPT and 15 minutes editing and personalizing the output. The time savings compound across dozens of emails per week.

Summarization and Research is the second primary work use case. Professionals feed the tool long documents, meeting transcripts, articles, or reports and ask it to extract key points, identify action items, or synthesize information. For executives drowning in reading material, this is genuinely transformative. A 40-page market analysis becomes a 3-minute summary.

Analysis and Decision-Making encompasses using ChatGPT as a sounding board. A consultant might use it to brainstorm approaches to a client problem. A manager might use it to think through the pros and cons of a strategic decision. Here, the tool isn't making the decision. It's forcing the human to articulate their thinking and challenging assumptions.

Presentation and Content Generation includes professionals using ChatGPT to outline presentations, draft slide content, and structure reports. The tool doesn't create publication-ready content, but it dramatically accelerates the structure and first draft.

Code Generation and Debugging deserves its own section (which it gets below), but it's worth noting here that programming is woven throughout this professional use. Software developers aren't the only technical professionals; data analysts, financial engineers, and scientists also use it extensively for technical work.

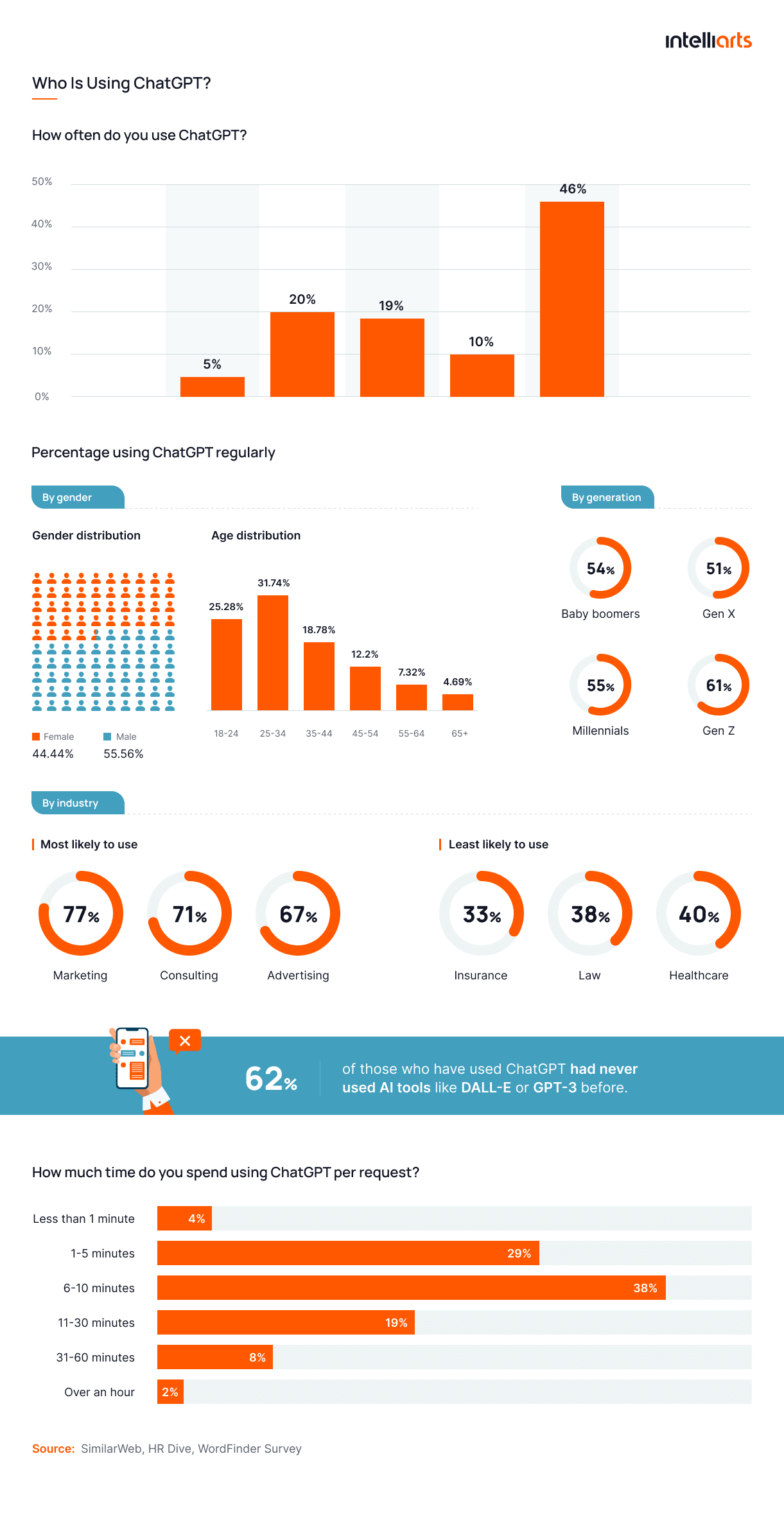

The professional adoption pattern also reveals important demographic insights. Younger professionals (25-35 years old) use ChatGPT more frequently and in more diverse ways than older cohorts. But the gap is narrowing quickly. Mid-career professionals (35-50 years old) are adopting rapidly, suggesting this isn't just a generational novelty but an actual tool migration.

Industry-specific adoption also matters. Technology companies have near-universal adoption among developers and analysts. Consulting firms use it extensively for client work. Financial services firms integrate it into analysis and documentation workflows. Even more conservative industries like legal and healthcare are experimenting, though at a slower pace due to regulatory concerns.

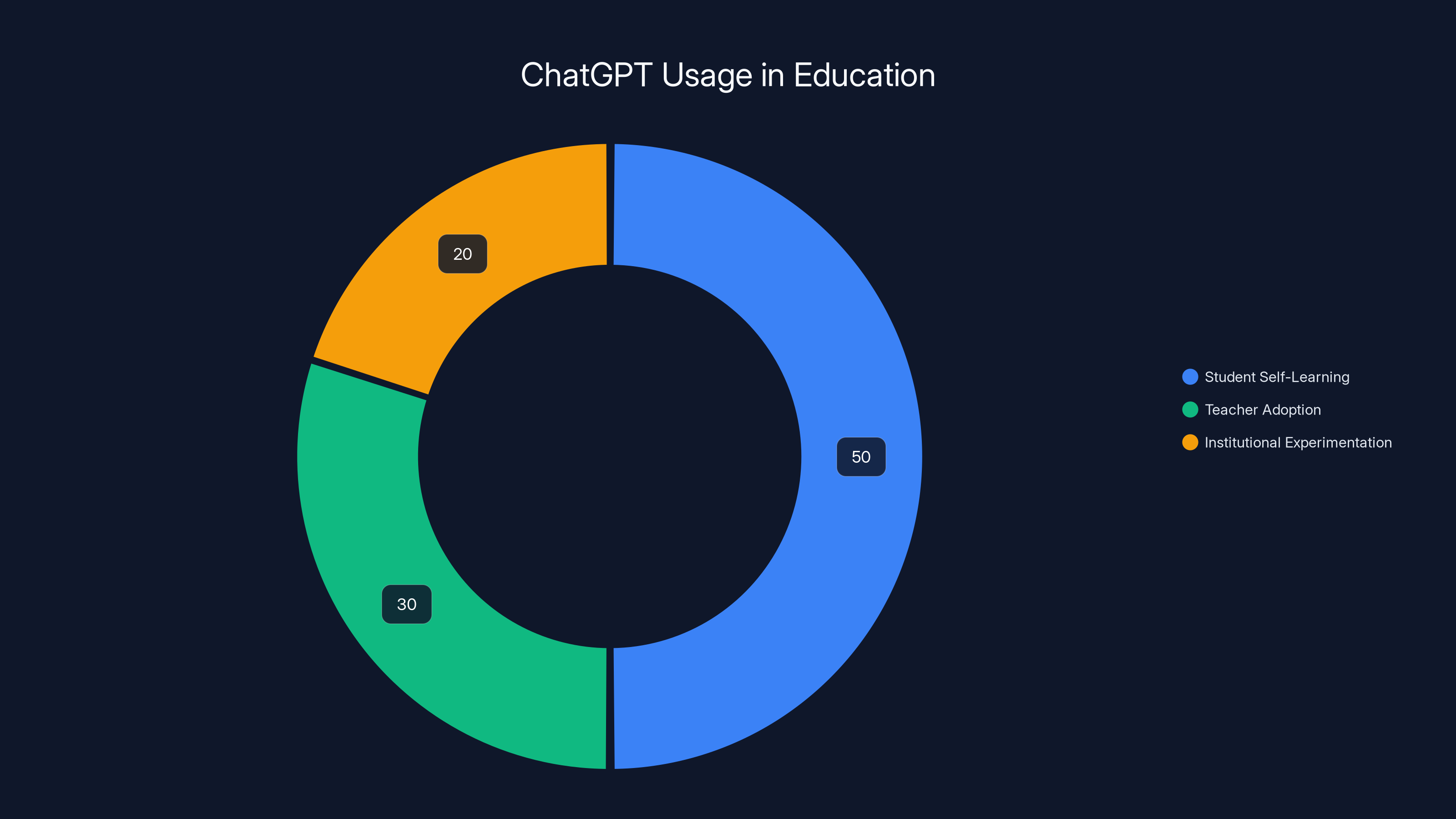

Education as the Proving Ground: 32% of Usage

Education represents the second-largest category of ChatGPT usage, but describing it as second place understates its importance. Education isn't just another use case. It's the frontier where AI's role in society is being actively negotiated, tested, and debated.

The educational adoption breaks down into three primary segments.

Student Self-Learning is the most common pattern. Students use ChatGPT to understand difficult concepts, generate practice problems, get explanations of complex topics, and yes, sometimes to help with homework completion. The tool has become a 24/7 tutor that never gets tired or frustrated.

What's interesting here is that the research shows students who use ChatGPT as a learning tool (testing themselves, using it to verify understanding) see learning benefits. Students who use it as a shortcut to completion without thinking see learning suffer. The tool's impact depends entirely on how it's used.

Teacher Adoption is more recent but accelerating. Teachers use ChatGPT to draft lesson plans, create quiz questions, generate multiple examples of concepts, personalize learning materials for different student needs, and provide feedback at scale. A teacher who can generate 20 different explanations of photosynthesis tailored to different learning styles can teach more effectively than one creating all materials manually.

The research shows that teachers appreciate ChatGPT most for freeing them from routine content generation, allowing more time for genuine human interaction with students. This contradicts the "AI will replace teachers" narrative. Instead, it's "AI will handle routine tasks so teachers can do what only humans can do."

Institutional Experimentation is happening rapidly across schools and universities. Institutions are developing AI literacy curricula, creating policies about when students can and can't use ChatGPT, and integrating it into learning platforms. Some schools prohibit it. Others require it. Most are figuring it out in real time.

The scale of educational adoption is remarkable. In surveys, 40-60% of students in developed countries report using ChatGPT at least occasionally. The tool isn't in every classroom yet, but it's spreading faster than most educational technology in history.

One key finding: students and teachers who receive training on effective ChatGPT use see better outcomes than those who get access without guidance. The tool itself is neutral. The pedagogy around it matters.

Programming and Technical Work: The 21% That Changes Everything

When you look at the category breakdown, programming and coding account for 21% of all ChatGPT usage. That might not sound enormous until you realize what it means: approximately one in five interactions involves someone asking an AI to help them write, debug, or understand code.

The impact on software development is hard to overstate.

Developers use ChatGPT for several distinct tasks. Code generation is the most obvious: describing what you want and getting back working code. A developer might ask for a function that validates email addresses, and ChatGPT produces a usable draft in seconds, which the developer still needs to review and test. This replaces what used to be 5-10 minutes of manual typing and syntax remembering.
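To make the email example concrete, here is a minimal sketch of the kind of function such a prompt might return. The function name and the pattern are assumptions chosen for illustration. The regex is deliberately simple and is not a full RFC 5322 validator, which is exactly the sort of trade-off a developer has to evaluate before shipping generated code.

```python
import re

# Deliberately simple pattern (an assumption for illustration): exactly one "@",
# no whitespace, and at least one dot somewhere in the domain part.
_EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def is_plausible_email(address: str) -> bool:
    """Return True if `address` has the rough shape of an email address."""
    return _EMAIL_PATTERN.fullmatch(address) is not None


if __name__ == "__main__":
    for candidate in ["ana@example.com", "no-at-sign.example.com", "a@b"]:
        print(candidate, is_plausible_email(candidate))
```

A shape check like this catches obvious typos but cannot confirm that a mailbox exists. Real sign-up flows usually pair it with a confirmation email.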

Debugging is often more valuable. A developer pastes error messages and code, and ChatGPT offers hypotheses about what's wrong. It won't always be right, but it often cuts debugging time in half by suggesting approaches a human developer would eventually think of anyway.

Documentation and explanation is underrated. New developers joining a project can ask ChatGPT to explain what code does. Internal documentation is often sparse. The tool fills that gap instantly.

Learning and exploration happens when developers use ChatGPT to understand frameworks, libraries, or languages they're new to. Instead of digging through documentation or Stack Overflow threads, they ask the AI to explain a concept.

Refactoring and optimization involves asking ChatGPT to improve code performance, readability, or security. "Here's my function; can you make this cleaner?" The tool often suggests legitimate improvements.

What's important to understand is that ChatGPT isn't replacing developers. It's making developers more productive. A developer who spent 8 hours per day writing code might now spend 8 hours per day solving problems, architecting systems, and making decisions. The tool handles routine coding tasks, freeing humans for judgment-intensive work.

The research shows that developers with strong fundamentals benefit most from ChatGPT. Developers trying to use it as a substitute for understanding don't get as much value because they can't evaluate whether the code is actually correct.

For enterprises, this has massive implications. Teams can ship features faster, maintain code better, and onboard junior developers more quickly. The risk is that junior developers might rely on the tool so much they don't develop deep technical skills. That's a real concern that responsible development organizations are actively addressing.

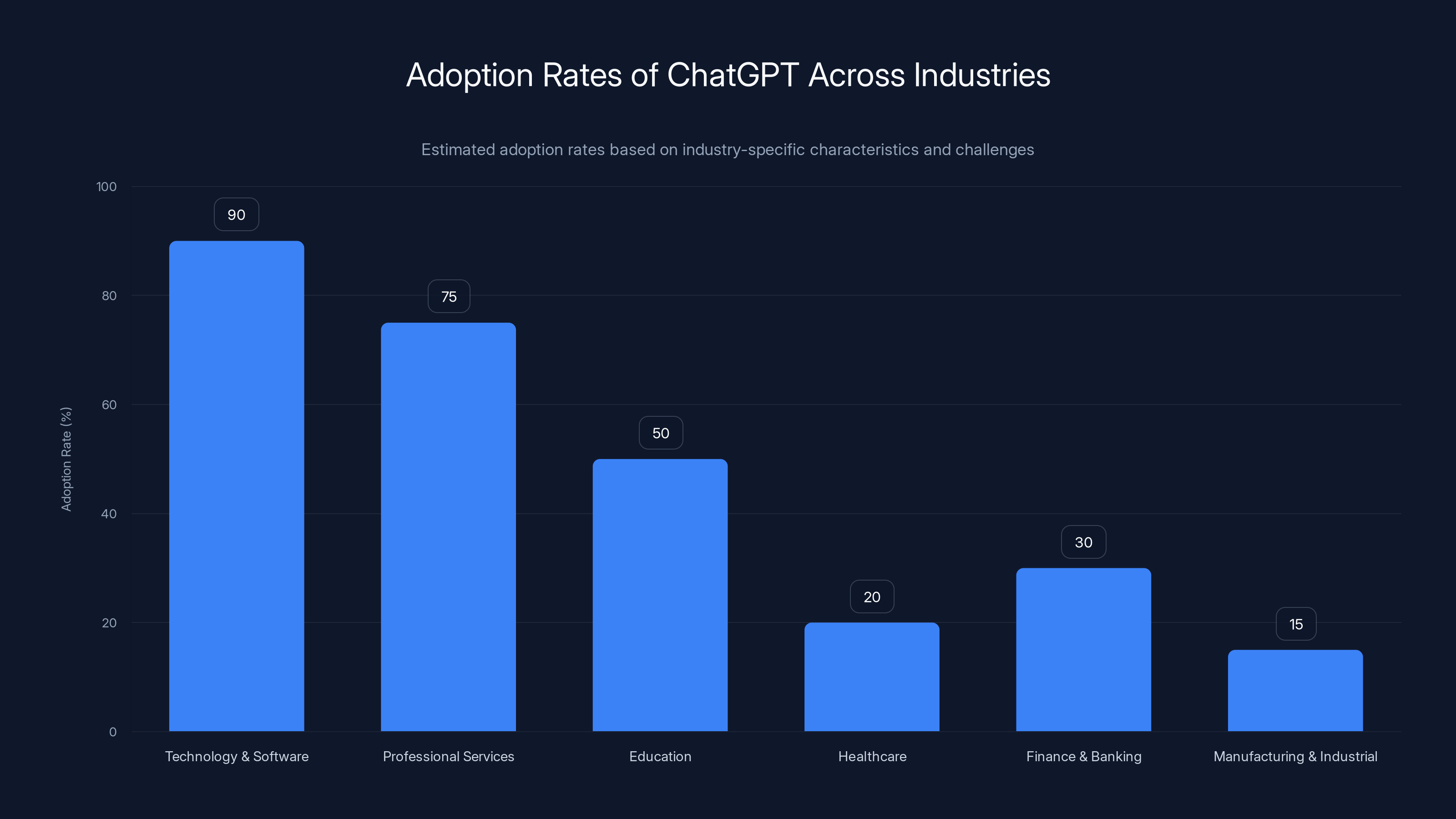

Technology and Software Development leads in ChatGPT adoption with nearly universal use, while Manufacturing and Industrial sectors show the lowest adoption rates. Estimated data.

Creative and Personal Uses: The Smaller Than Expected Category

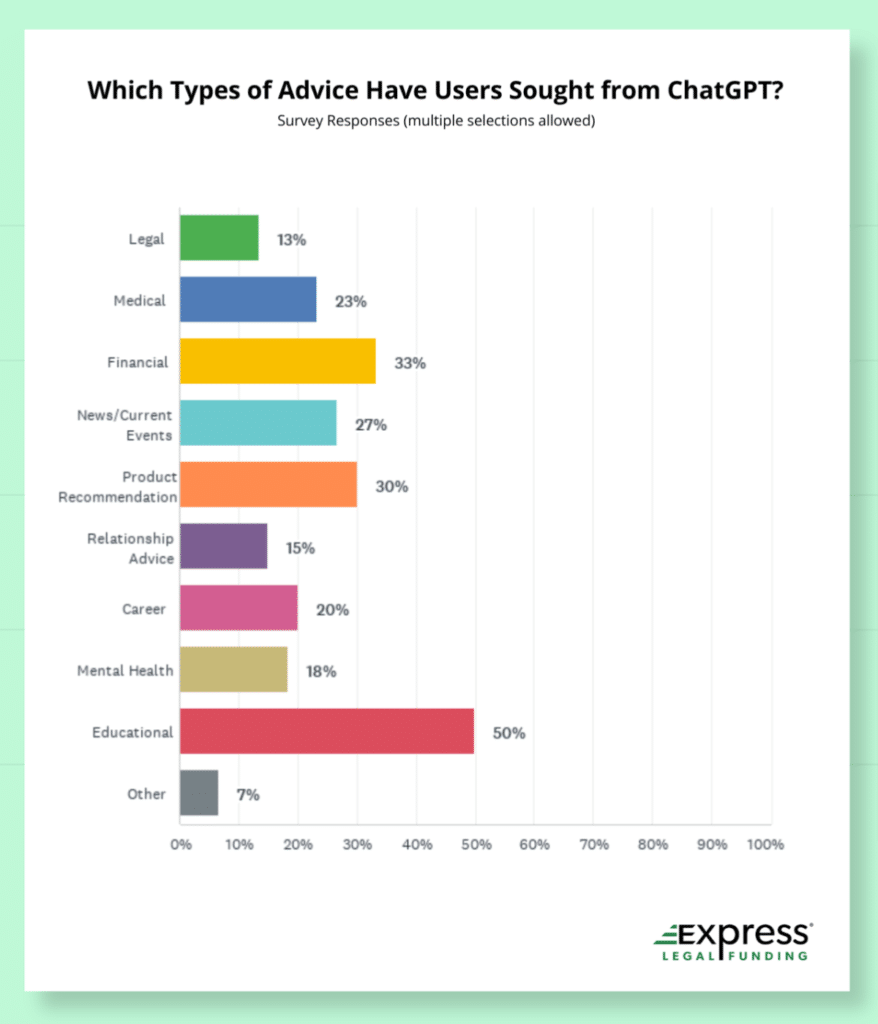

One of the more counterintuitive findings from the research is how relatively small the "creative and personal" use category is. Despite significant media coverage of ChatGPT generating stories, poetry, artwork, and personal advice, these uses represent only about 8-10% of total usage.

This finding challenges the narrative that AI will become a personal creative partner for everyone. For most people, that's not happening yet, if at all.

When personal use does occur, it breaks down into predictable patterns:

Brainstorming and ideation for personal projects: someone might ask ChatGPT for dinner recipe ideas, vacation planning advice, or home improvement suggestions. The tool generates options quickly, and humans select what appeals.

Creative writing for hobbies: amateur fiction writers use ChatGPT to overcome writer's block, generate story ideas, or get feedback on writing. These users typically treat it as an editing partner, not as a primary author.

Self-help and life advice: some users treat ChatGPT like a therapist or life coach. Psychologists disagree about whether this is helpful, or potentially harmful if it replaces actual professional help.

Entertainment in the form of games, creative writing prompts, and social experiments. Some people just like talking to the AI to see what it says.

What the research reveals is that ChatGPT is not yet a mass-market entertainment platform like streaming services or social media. It hasn't fundamentally changed leisure time. It's optimized for productivity, not entertainment, and that's where real adoption has happened.

This might change as the interface improves, as users become more sophisticated in prompting, and as the tool's creative capabilities expand. But for now, the creative revolution is smaller than the headlines suggested.

Industry-by-Industry Breakdown: Where Adoption Thrives

The research breaks down adoption across specific sectors, revealing important patterns about where ChatGPT creates value and where it still struggles.

Technology and Software Development shows the highest adoption rates. Nearly every developer uses it at least occasionally, and many use it multiple times per day. The tool maps perfectly to the work of writing, testing, and debugging code. Adoption is so complete that IT managers now plan around ChatGPT being available to their teams.

Professional Services (consulting, law, accounting, financial advisory) shows the second-highest adoption. These industries are document-heavy and analysis-heavy, which are ChatGPT's strengths. Consultants use it for drafting client recommendations. Lawyers use it for legal research and contract analysis (with appropriate caution and fact-checking). Accountants use it for financial analysis and reporting.

Education shows adoption around 40-60% of educators and students, with this figure increasing monthly. The delay compared to technology and consulting is partly due to institutional policies (some schools prohibit it, others allow it) and partly due to more conservative adoption cycles in education.

Healthcare shows lower adoption rates (15-25%) despite clear potential. Doctors and healthcare administrators are cautiously experimenting, but regulatory concerns, liability worries, and the high stakes of medical errors make deployment slower. However, the research suggests this will change. The first healthcare organizations to figure out safe, effective integration will gain competitive advantages.

Finance and Banking shows moderate adoption (20-40%) with a strong growth trajectory. Regulatory requirements mean deployment is methodical, but banks are actively developing ChatGPT use cases for customer service, internal analysis, and risk assessment.

Manufacturing and Industrial shows lower adoption (10-20%), reflecting the fact that these industries are less document-heavy and text-based than others. But adoption is accelerating as companies find uses for ChatGPT in quality control documentation, process optimization, and supply chain analysis.

Government and Public Sector shows lower adoption (15-25%), partly due to security and confidentiality concerns, partly due to slower procurement cycles. But pilot programs are expanding rapidly.

The pattern across all industries is clear: adoption is highest where the work is primarily knowledge work, document-based, or code-based. It's slower where the work is physical, regulatory-constrained, or requires irreplaceable human judgment.

Frequency Patterns: Casual Users vs. Power Users

Not all ChatGPT users are created equal. The research reveals dramatically different usage patterns across the user base, from casual explorers to daily power users.

Casual Users (30-40% of registered users) log in infrequently, maybe once or twice per month. They might try it out, ask a few questions, and then forget about it. These users report being less confident about the tool's capabilities and limitations. They might not understand how to write effective prompts. For them, ChatGPT is something interesting they've heard about but haven't integrated into their workflow.

Regular Users (30-40% of registered users) use ChatGPT weekly or a few times per week. They've identified a few specific use cases where it's useful and return for those tasks. A person who uses it once per week for email drafting is a regular user. They've identified value and integrated it into specific workflows, but it's not a daily habit.

Power Users (20-30% of registered users) use ChatGPT multiple times per day. Often, these are people in knowledge work or technical roles. A developer might use it dozens of times per day. A consultant might use it 5-10 times. These users have highly optimized prompting skills and have deeply integrated the tool into their work.
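The three tiers above can be expressed as a small classifier, which is handy if you want to segment your own team's usage the same way. This is a sketch under stated assumptions: the numeric cutoffs (4 and 60 interactions per 30 days) are my own reading of "weekly" and "multiple times per day", not thresholds from the paper, and the function names are hypothetical.

```python
from collections import Counter

# Assumed cutoffs, not figures from the research:
# about weekly or more counts as "regular", about twice a day or more as "power".
REGULAR_THRESHOLD = 4
POWER_THRESHOLD = 60


def usage_tier(interactions_per_30_days: int) -> str:
    """Map a 30-day interaction count to casual / regular / power."""
    if interactions_per_30_days < 0:
        raise ValueError("interaction count cannot be negative")
    if interactions_per_30_days >= POWER_THRESHOLD:
        return "power"
    if interactions_per_30_days >= REGULAR_THRESHOLD:
        return "regular"
    return "casual"


def tier_shares(counts: list[int]) -> dict[str, float]:
    """Return the fraction of users that falls into each tier."""
    if not counts:
        raise ValueError("need at least one user")
    tiers = Counter(usage_tier(count) for count in counts)
    return {tier: tiers[tier] / len(counts) for tier in ("casual", "regular", "power")}


if __name__ == "__main__":
    # Five hypothetical users and their 30-day interaction counts.
    print(tier_shares([1, 2, 5, 12, 90]))
```

Someone who uses the tool once a day lands in "regular" under these cutoffs. The article's tiers leave that middle ground undefined, so adjust the thresholds to whatever boundary makes sense for your data.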

The research shows that frequency correlates with technical confidence. Developers and technical professionals become heavy users faster than non-technical professionals. This makes sense: they understand systems, they're comfortable with iteration and debugging, and they quickly learn how to get good outputs.

Interestingly, the frequency also correlates with satisfaction. People who use ChatGPT daily report much higher satisfaction than casual users. This isn't because the tool is different for different people. It's because people who use it daily have figured out how to use it effectively. They've overcome the learning curve.

One important note: the research also captures abandonment. Some people try ChatGPT, don't immediately see value, and stop using it. The abandonment rate was highest among users who tried it once, couldn't get good results, and never refined their approach. This suggests that education about how to effectively use the tool is crucial for sustained adoption.

Adoption of ChatGPT is highest in North America and Northern Europe, with rates between 30-40%. In contrast, Asia, Africa, and Latin America see lower adoption rates, reflecting economic and technological divides. (Estimated data)

The Generational Divide That's Closing Rapidly

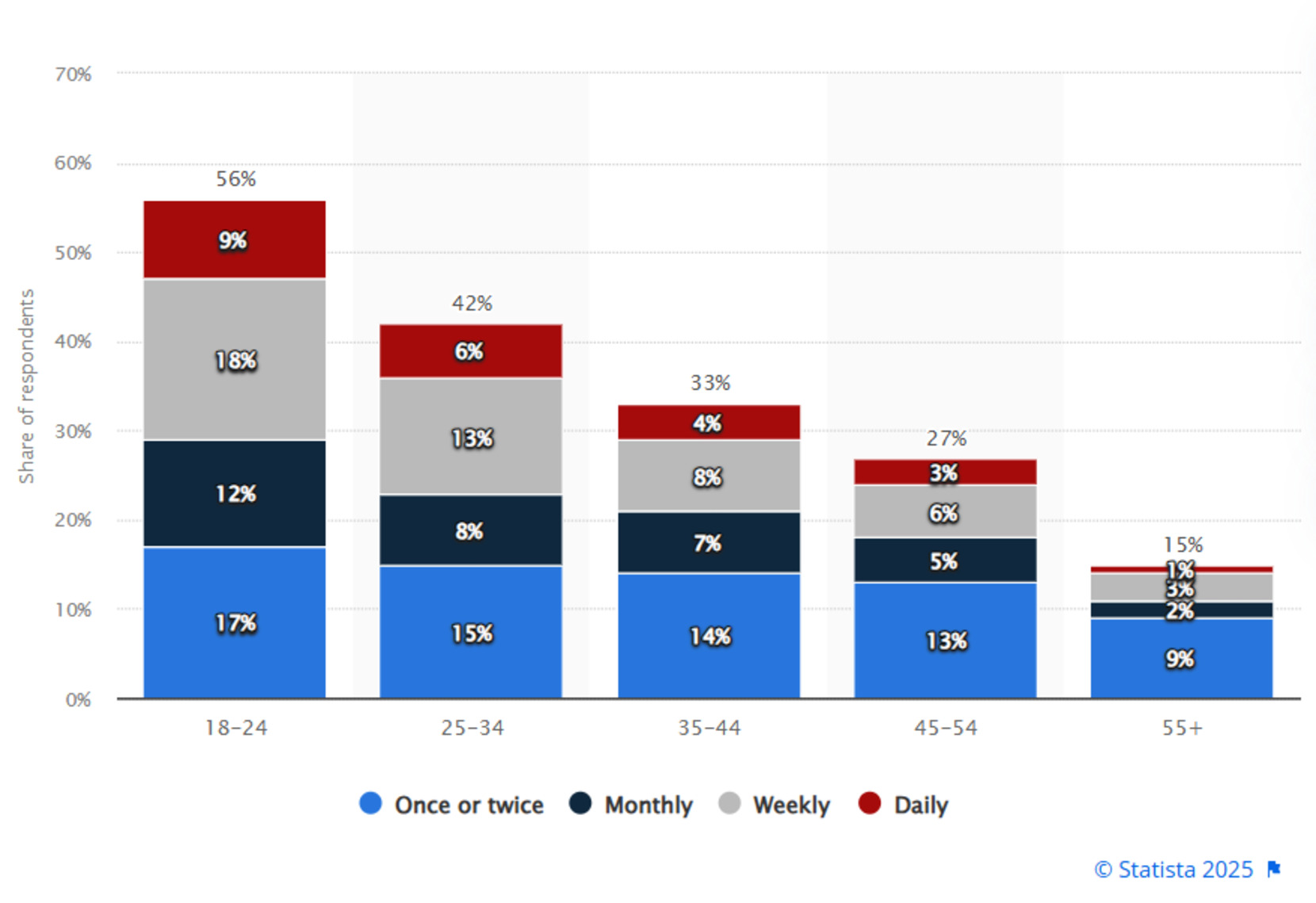

Young people use ChatGPT more than older people. That's expected and true. But the research reveals something more important: the generational divide is narrowing faster than with previous technologies.

With older technologies like social media, a 10-year age gap created a massive adoption gap. Teenagers adopted TikTok; grandparents didn't. That gap stayed wide. Not so with ChatGPT.

Users aged 18-25 use ChatGPT most intensively, averaging 3-5 interactions per day. For them, it's part of the basic infrastructure of digital life. They're the fastest to adopt new features and most experimental in their usage.

Users aged 25-40 (early career professionals and young parents) show rapid adoption, with many now reporting 1-3 interactions per day. This group has enough technical comfort to use the tool but also specific productivity needs where it provides immediate value. This group is the fastest-growing segment.

Users aged 40-60 (mid-career and established professionals) show the most dramatic growth in the research. Adoption in this group accelerated significantly during the study period. The common story: "I was skeptical, but once I tried it for my actual work, I couldn't imagine going back." Many of these users are now daily users.

Users aged 60+ show lower overall adoption but higher growth rates among those who do adopt. When someone in this age group decides to use ChatGPT, they often become enthusiastic adopters. The barrier seems to be initial skepticism, not inability to use the tool.

The narrowing generational gap has an important implication: ChatGPT won't be a generational technology where young people use it but old people don't. It's more likely to become a general-purpose tool across all age groups, with adoption differences based on profession and personal productivity needs rather than age.

When ChatGPT Doesn't Work: Limitations and Failure Modes

The research doesn't shy away from where ChatGPT fails or underperforms. Understanding these limitations is as important as understanding successes.

Hallucination and Factual Errors remain the most common complaint. Users report that ChatGPT sometimes generates convincing-sounding but completely false information. A lawyer using it for legal research must fact-check everything. A doctor using it for medical information must verify against authoritative sources. A developer using it for code might deploy buggy code if they don't test it. The tool's confidence is not a reliable signal of accuracy.

Context Limitations affect long conversations. ChatGPT has a context window (it can only "remember" so much conversation history). For very long projects or detailed workflows, the tool loses track of earlier information. Users report that they have to repeatedly re-establish context, which adds friction.
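To see why early details drop out, consider a toy model of a fixed-size context window. This is an illustration only, not a description of how ChatGPT actually manages conversation history. The `trim_history` helper is hypothetical, and whitespace word counts stand in for real tokenization, which works differently.

```python
def trim_history(messages: list[str], budget: int) -> list[str]:
    """Keep the most recent messages whose combined size fits the budget."""
    kept: list[str] = []
    used = 0
    # Walk backwards from the newest message and stop once the budget is full.
    for message in reversed(messages):
        cost = len(message.split())  # crude stand-in for a real token count
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


if __name__ == "__main__":
    history = [
        "project brief with ten requirements listed here",
        "first draft please",
        "make it shorter",
    ]
    # With a small budget, the original brief is the first thing to go.
    print(trim_history(history, budget=6))
```

In this model the oldest message is always the first casualty, which matches the user experience of having to restate the original brief late in a long session.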

Specialized Knowledge Gaps emerge in domain-specific work. ChatGPT's training data has gaps in specialized fields. Ask it about rare medical conditions, niche academic fields, or highly specialized technical work, and it often generates plausible-sounding but wrong answers.

Reasoning Limitations appear in complex multi-step problems. ChatGPT struggles with some types of logical reasoning, mathematical proofs, and very novel problem-solving. It can help with familiar problem types but often fails on genuinely new problems.

Real-time Information Gaps limit utility for tasks requiring current information. ChatGPT's knowledge has a cutoff date. For tasks that depend on events after that date, its built-in knowledge is of no use.

Creative Originality Limits constrain truly novel creative work. The tool generates new combinations of existing patterns in its training data. It doesn't innovate in the way a truly creative human does. For initial brainstorming, it's useful. For original creative work, it's less helpful.

Understanding these limitations helps explain adoption patterns. Adoption is highest in domains where these limitations matter least (routine code generation, standard business writing) and lowest where they matter most (medical diagnosis, cutting-edge research, creative innovation).

Organizational Adoption: From Chaos to Policy

Early ChatGPT adoption in organizations was ad hoc. Individual employees signed up and used it in their workflows, often without formal policies or approval. This created security, liability, and intellectual property concerns.

The research documents a clear transition happening now: from "employees are secretly using ChatGPT" to "we need organizational policies about ChatGPT."

Organizations are moving through several stages:

Stage 1: Prohibition (now mostly abandoned). Some companies tried banning ChatGPT entirely. Banks prohibited it for security reasons. Legal firms worried about confidentiality. This stage is rapidly ending because the tool provides too much value to suppress, and suppression doesn't work (employees use it anyway, just without oversight).

Stage 2: Uncertainty (current state for many organizations). Companies allow ChatGPT use but haven't established clear policies. HR doesn't know the rules. Legal is nervous but hasn't provided guidance. IT hasn't decided whether to block it. This creates liability but also reflects genuine uncertainty about how to integrate the tool responsibly.

Stage 3: Cautious Integration (increasingly common in leading organizations). Companies develop explicit policies: which data can be fed to ChatGPT (nothing confidential or proprietary), which departments can use it for what purposes, how to document use, how to handle outputs. Training is provided on responsible use. Gradually, ChatGPT becomes part of the official workflow.

Stage 4: Optimization (still rare, but growing). Leading organizations integrate ChatGPT into official tools and workflows. APIs are used to integrate it into internal systems. Custom models are fine-tuned on company-specific data. ChatGPT becomes infrastructure, not an optional tool.

The research shows that organizations in Stage 3 and beyond see better adoption, higher employee satisfaction, and better risk management than those still in Stage 1 or 2.

Regulated industries face specific challenges. Healthcare organizations struggle with HIPAA compliance (patient privacy laws). Legal firms struggle with attorney-client privilege and conflict checking. Financial firms struggle with regulatory documentation. These industries are moving more slowly, but they're moving.

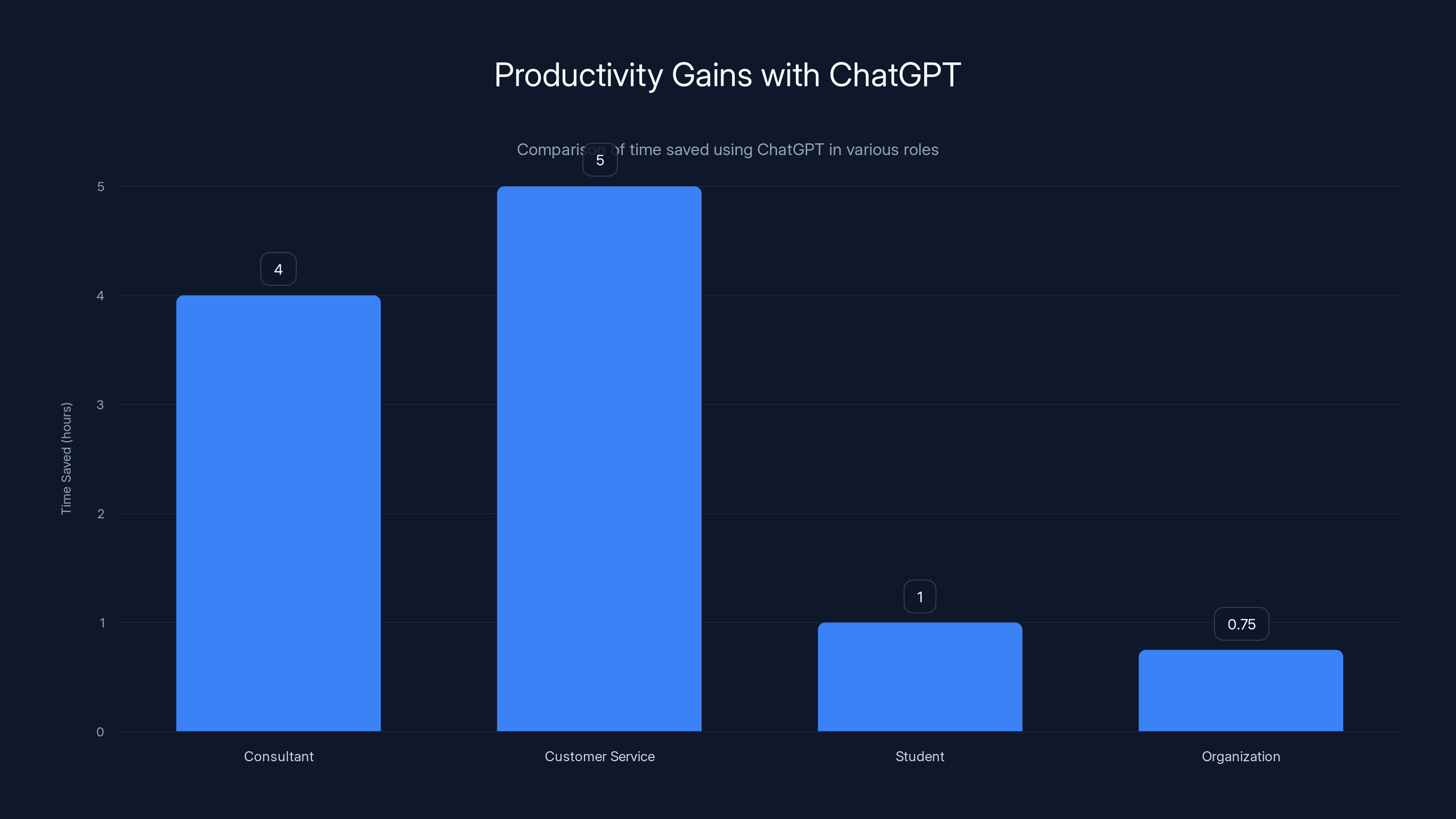

ChatGPT significantly reduces task time across various roles, with consultants saving 4 hours per report and customer service reducing response drafting time by 5x. Estimated data.

The Productivity Narrative: From Toy to Tool

One significant finding deserves its own section: how the narrative around ChatGPT has shifted from "this is a cool AI toy" to "this is a productivity tool."

Early media coverage focused on what ChatGPT could do that seemed magical. It could write essays. It could generate poetry. It could pass exams. These generated excitement and concern in equal measure.

The research shows a very different actual adoption narrative. Users adopted ChatGPT not because it could do amazing creative things, but because it could save 30 minutes per day on routine work.

A consultant adopts ChatGPT because it cuts report writing time from 6 hours to 2 hours. A customer service manager adopts it because it can draft responses to common questions 5x faster than humans. A student adopts it because it can explain concepts in a way that clicks for them.

The productivity gains are often modest: 30 minutes here, an hour there. But they compound. An organization with 100 employees each saving 45 minutes per day recovers about 375 work hours per week, or roughly 1,500 hours per month.

The ROI math is straightforward. That's why adoption is accelerating in organizations where the business case was made early.
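The arithmetic behind that claim can be checked in a few lines. Working through the 100-employee, 45-minutes-a-day example with a five-day week gives about 375 hours per week, or roughly 1,500 hours over four weeks. The function name and the five-day, four-week defaults are assumptions for illustration.

```python
def hours_recovered_per_week(
    employees: int,
    minutes_saved_per_day: float,
    workdays_per_week: int = 5,  # assumed standard work week
) -> float:
    """Total hours saved across the organization in one week."""
    return employees * minutes_saved_per_day * workdays_per_week / 60


if __name__ == "__main__":
    weekly = hours_recovered_per_week(employees=100, minutes_saved_per_day=45)
    monthly = weekly * 4  # assuming a four-week month
    print(f"{weekly:.0f} hours per week, about {monthly:.0f} hours per month")
```

To turn hours into money, multiply by your own fully loaded hourly cost. The result is only as good as the minutes-saved estimate you feed in.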

The shift from "magical AI toy" to "productivity tool" is important because it changes how the tool is evaluated. It's no longer competing against the bar of "can it do something impossible?" It's competing against the bar of "does it save time and money?" For many use cases, the answer is unambiguously yes.

Geographic and Economic Divides in Adoption

The research data skews heavily North American and European. But the limited data available on global adoption reveals important inequalities.

Adoption is highest in wealthy countries with high English proficiency and strong technology infrastructure. In North America and Northern Europe, 30-40% of the population has tried ChatGPT. In Asia, Africa, and Latin America, the percentages are lower, though adoption is accelerating.

Why the divide? Several factors:

Language barriers limit adoption in non-English-speaking countries. ChatGPT works in many languages but performs best in English, where most of its training data originates. Users in countries where English isn't the primary language often get lower-quality outputs.

Internet access and infrastructure matter. ChatGPT requires reliable broadband. Users in areas with unreliable connectivity have worse experiences.

Pricing affects adoption in lower-income countries.

Educational access to the tool varies. In wealthy countries, students in universities and even high schools have access. In developing countries, access is more limited.

Regulatory environment matters. Some countries restrict AI tools or require approvals not yet in place. Others embrace them quickly.

The implication is important: ChatGPT adoption is currently amplifying existing economic and technological divides rather than reducing them. This might change as the tool becomes more localized, as pricing options expand, and as access to internet and education improves.

The Learning Curve: Why Some People Thrive and Others Stall

Not everyone gets value from ChatGPT immediately. The research documents significant variation in user success and satisfaction, and much of it comes down to learning and expectation management.

Users with clear, specific tasks learn quickly. A person who knows exactly what they need ChatGPT to do (write an email, generate code, summarize a document) can learn in one or two attempts. Give it a try, see if the output is useful, refine if needed, keep going. These users often become daily users within a week.

Users with vague expectations struggle longer. Someone who thinks "I'll use AI to be more creative" without a specific creative task in mind will have a harder time. They'll try it, get outputs that are okay but not amazing, and lose interest. The tool needs specific targets to show its value.

Users who understand iteration and refinement succeed much faster. People in fields like software development, writing, and consulting understand that first drafts are rough. They expect to iterate. They prompt, look at output, refine the prompt, iterate. These users quickly develop mental models of how the tool works. Non-technical users might not understand iteration and give up after the first output.

Users with high technical confidence learn the tool's capabilities faster. They're comfortable experimenting, making mistakes, debugging. Less technical users sometimes hesitate to try new approaches.

The research suggests that organizations that provide training on effective ChatGPT use see much faster adoption and higher satisfaction than those that just hand the tool to people. Training doesn't need to be long. Even 30-60 minutes on "here's what this tool is good at, here's how to write effective prompts, here's how to evaluate outputs" dramatically improves success rates.

Student self-learning accounts for the largest portion of ChatGPT usage in education, highlighting its role as a personal tutor. Teacher adoption and institutional experimentation are also significant, showing diverse applications of AI in education. Estimated data.

Ethical Concerns and Trust Issues

The research captures important concerns about ChatGPT's reliability, honesty, and potential misuse. These aren't small issues. They affect adoption trajectory and organizational policies.

Hallucinations and false information create trust problems. A user who asks ChatGPT a question and gets a confident-sounding wrong answer may never notice the error. This is why adoption in high-stakes fields (medicine, law, finance) is more cautious. In these fields, every output requires verification against authoritative sources.

Bias and fairness concerns show up in the research. ChatGPT's training data reflects historical biases. Outputs sometimes perpetuate stereotypes or favor certain groups. Users in sensitive contexts (hiring, lending, diagnosis) are appropriately worried about this.

Intellectual property concerns emerge around training data. What if the training data included copyrighted content? What if outputs resemble training data? These are unsettled legal questions, and organizations are appropriately cautious.

Privacy concerns about feeding sensitive data to a third-party service are legitimate. A healthcare organization that feeds patient information to ChatGPT, even in anonymized form, takes on legal and compliance risk.

Concentration of power among a few AI companies troubles many observers. ChatGPT, Claude, and a handful of others are becoming crucial infrastructure. Depending on a single company's decisions about moderation, availability, and pricing creates vulnerability.

The environmental impact of large AI models is non-trivial. Training them is energy-intensive, and serving ChatGPT requests at very large scale consumes significant electricity. That matters to some users.

These concerns are legitimate, not frivolous. The research shows that users in regulated industries and users with strong ethical commitments are more cautious about ChatGPT adoption than others. This might slow adoption in some sectors, but it also reflects appropriate risk management.
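One common first step toward managing the privacy concern above is to mask obvious identifiers before any text leaves the organization. The sketch below is an assumption-laden illustration, not a compliance tool: the `redact` helper and both patterns are hypothetical, deliberately crude, and will miss names, addresses, and record numbers while sometimes over-matching (a date such as 2025-09-15 looks like a phone number to this pattern).

```python
import re

# Crude illustrative patterns (assumptions). Real de-identification needs
# far more than regular expressions and should be reviewed by specialists.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like digit runs with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text


if __name__ == "__main__":
    print(redact("Contact jane.doe@example.com or 555-123-4567 today."))
```

Masking like this reduces accidental exposure but does not make data anonymous, which is why the regulated industries described above pair it with policy and human review.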

Integration with Existing Tools and Workflows

ChatGPT doesn't exist in isolation. Real adoption happens when it integrates with existing tools and workflows that professionals already use daily.

The research documents patterns of integration:

Browser-based workflows are the most common. A person working in Word, Google Docs, or email opens ChatGPT in a separate tab or window, copies text over, gets output, and pastes it back. This is high-friction, but it works.

Native integrations are expanding. Microsoft has built Copilot, which runs on OpenAI models, into Office. Google is building its own Gemini assistant into Workspace. Slack supports ChatGPT-based apps and bots. These integrations reduce friction significantly: you stay in your normal tool, and the AI is available without context-switching.

API integrations allow organizations to build the models behind ChatGPT into custom internal tools. A customer service platform can use them to auto-draft responses. A code editor can use them for inline code suggestions. These integrations drive the deepest adoption.

Workflow automation platforms like Zapier are adding ChatGPT integration, allowing organizations to build automation that uses ChatGPT as a component. "When a customer emails, ChatGPT drafts a response, a human reviews it, and then it sends automatically." This kind of integration multiplies productivity gains.

The research shows that ease of integration is a huge adoption factor. Tools that make ChatGPT easy to use within existing workflows see much higher adoption than those that require switching context or cumbersome copy-paste workflows.
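The "draft, human review, send" pattern described above can be sketched as a small pipeline. This is a minimal sketch under stated assumptions: `build_messages`, `handle_email`, and `Outcome` are hypothetical names, the system prompt is invented for illustration, and the model name `gpt-4o-mini` is just one possible choice. `openai_complete` uses the official `openai` Python SDK's Chat Completions call and needs the package installed and an `OPENAI_API_KEY` set. Everything else runs without network access.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Message = Dict[str, str]


def build_messages(customer_email: str) -> List[Message]:
    """Wrap the customer's email in a chat-style request (prompt is illustrative)."""
    return [
        {"role": "system", "content": "Draft a polite, concise support reply."},
        {"role": "user", "content": customer_email},
    ]


def openai_complete(messages: List[Message]) -> str:
    """Send the messages through the OpenAI SDK and return the reply text."""
    from openai import OpenAI  # imported lazily so the rest runs without the SDK

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(model="gpt-4o-mini", messages=messages)
    return response.choices[0].message.content or ""


@dataclass
class Outcome:
    draft: str
    sent: bool


def handle_email(
    customer_email: str,
    complete: Callable[[List[Message]], str],
    approve: Callable[[str], bool],
    send: Callable[[str], None],
) -> Outcome:
    """Draft a reply, then send it only if the human reviewer approves."""
    draft = complete(build_messages(customer_email))
    if approve(draft):
        send(draft)
        return Outcome(draft, True)
    return Outcome(draft, False)  # held back for manual handling


if __name__ == "__main__":
    outbox: List[str] = []
    # A canned completion and an always-yes reviewer stand in for real ones.
    result = handle_email(
        "Where is my order?",
        complete=lambda messages: "Thanks for reaching out. We are checking on it.",
        approve=lambda draft: True,
        send=outbox.append,
    )
    print(result.sent, outbox)
```

Passing `complete`, `approve`, and `send` in as functions keeps the human review step explicit and makes it easy to swap `openai_complete` in for the canned completion once credentials are available.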

Future Trends: What Comes Next

The research paper concludes with observations about likely future trajectories. These aren't predictions; they're informed observations about direction.

Specialization and fine-tuning will accelerate. Generic ChatGPT will remain useful, but versions trained on specific domains will be more valuable. A model fine-tuned on medical data and medical literature could give medical professionals more useful answers than generic ChatGPT. A version fine-tuned on legal documents would be more useful to lawyers. We'll see organizations building custom AI models trained on their own data.

Multimodal integration will expand. ChatGPT already accepts images and voice alongside text, but the workflows described here are mostly text-based. Future versions will process images, video, audio, code, and data more seamlessly. A user will be able to feed in a video of a manufacturing process and ask the AI to identify inefficiencies, or feed in financial documents and ask it to extract and analyze key metrics across dozens of documents.

Real-time data access will reduce current limitations. A model's knowledge cutoff limits its usefulness for current events, stock prices, and news analysis unless it can search the web. As live data access becomes standard, these tools will become more useful for time-sensitive decision-making.

Regulatory frameworks will develop. Right now, few countries have clear, settled rules for how AI tools like ChatGPT should be used and governed. The EU's AI Act entered into force in 2024, with its obligations phasing in over several years. The US is developing guidelines. As regulations clarify, adoption will accelerate in regulated industries because organizations will understand the rules.

Enterprise integration will deepen. Rather than individuals using ChatGPT, entire organizations will have internal ChatGPT-like systems trained on internal data, hosted on internal infrastructure, governed by internal policies. This will accelerate adoption in large organizations concerned about data security and confidentiality.

Cost and pricing models will evolve. As competition increases and the market matures, prices will likely decrease. We'll see different pricing models: usage-based (per-token) API pricing, subscription pricing, and hybrid models. This will increase accessibility.

Human-AI collaboration patterns will mature. We're still learning how to effectively combine human judgment with AI capabilities. As we learn, workflows will become more sophisticated. "AI generates options, human selects and refines" will become a standard working pattern across many fields.

Key Implications for Different Audiences

The research has specific implications for different audiences. Let me spell them out.

For individuals: The question is no longer "should I use ChatGPT?" For anyone in knowledge work, it's "how do I use ChatGPT effectively?" The tool is here and it's productive. The competitive advantage goes to people who master it early. Invest 5-10 hours in learning how to use it well. Identify one specific task in your work that consumes significant time. Use ChatGPT to automate or accelerate that task. Iterate and improve. Then move to the next task.

For teams and organizations: The transition from individual experimentation to organizational policy is critical. Teams that develop clear policies about ChatGPT use, provide training, and integrate it into workflows will pull ahead of those that prohibit it or leave it to individual whims. The ROI case is strong enough that doing nothing is actually leaving money on the table.

For educators: ChatGPT is coming to your classrooms whether you like it or not. The choice isn't to ban it but to teach students how to use it responsibly. Integrate it into assignments. Teach students to use it as a research tool, learning tool, and writing assistant. Teach them to verify outputs. Teach them to understand its limitations. The institutions that do this best will graduate students best prepared for knowledge work.

For policymakers: Regulation is necessary but must be nuanced. Banning AI tools would leave your jurisdiction at a disadvantage. Allowing rampant use without oversight creates risks. The middle path is developing clear frameworks that enable responsible innovation while protecting against genuine harms. The key is moving quickly—regulatory lag in this space is costly.

For businesses: The first-mover advantage in your industry will go to organizations that figure out ChatGPT implementation early. The second-mover advantage is learning from first movers and improving. Organizations moving slowly will need to catch up later, at higher cost. The time to experiment and learn is now.

Comparing ChatGPT to Alternative AI Tools

While the OpenAI research focuses specifically on ChatGPT, it's worth noting that the broader AI tool ecosystem is expanding rapidly. Other large language models are emerging, each with different strengths.

Claude, made by Anthropic, emphasizes safety and accuracy. It's often preferred for tasks requiring careful analysis. Google's Gemini has integration advantages with Google's ecosystem. Mistral and other open-source models offer alternatives with different trade-offs around cost, control, and customization.

For teams looking at the broader automation picture, platforms like Runable provide integrated AI capabilities for creating presentations, documents, reports, and automating workflows—often without requiring specialized prompting expertise. Zapier and Make.com allow organizations to chain AI operations together into more complex automation.

The key takeaway: ChatGPT is not the only AI tool. It's the most famous and in many ways the most capable. But as the market matures, organizations will choose tools that best fit their specific needs, integrations, and risk profile.

The Bottom Line: AI Is Embedded Now

The most important implication from OpenAI's research is probably the simplest: ChatGPT is no longer a curiosity or experiment. It's embedded in how millions of people work, learn, and create.

The adoption trajectory isn't slowing. It's accelerating. The generational divide isn't hardening. It's closing. The ROI case isn't theoretical. It's measured in hours saved per day, per person, per team.

What started as a surprising AI chatbot has become operational infrastructure for knowledge work.

The question facing individuals, organizations, and societies isn't "will ChatGPT change how we work?" It already has, and it continues to. The question is "how do we manage this change responsibly while capturing its benefits?"

For individuals, the answer is experimentation and learning. Find your use case. Practice. Improve. Build ChatGPT skills now while you have time. In five years, ChatGPT fluency will be as expected as email fluency is today.

For organizations, the answer is developing policies and workflows that enable responsible adoption. Prohibition doesn't work. Chaos creates risk. A deliberate, thoughtful approach to integration creates competitive advantage.

For regulators and policymakers, the answer is crafting frameworks that enable beneficial uses while constraining harms. This is genuinely difficult because the technology is advancing faster than policy can. But the cost of inaction is higher than the cost of getting some rules wrong and fixing them.

The research from OpenAI fundamentally shifts the conversation from "is this real?" to "what comes next?" We know the tool works. We know people want to use it. We know it provides genuine value. The remaining questions are about scaling, integrating, securing, and governing AI tools in ways that serve people and institutions well.

That's the work ahead. The OpenAI research is the map.

FAQ

What does OpenAI's study reveal about ChatGPT's primary use cases?

OpenAI's research shows that approximately 73% of ChatGPT usage is work-related, with the largest shares going to professional document drafting, summarization, and analysis. Education represents the second-largest category at 32% of usage, followed by programming and coding at 21%. Personal and creative uses make up a surprisingly small portion of overall usage, contradicting early media narratives about ChatGPT as an entertainment or creative tool. The research reveals that ChatGPT is fundamentally a productivity tool, not a leisure tool, with adoption highest where the work involves routine cognitive tasks like drafting, summarizing, and code generation.

How does ChatGPT adoption vary across different industries?

Adoption is highest in technology and software development, where nearly every developer uses it regularly. Professional services like consulting, law, and accounting show the second-highest adoption rates, as these industries are document-heavy and analysis-intensive. Education shows rapid adoption (40-60%) but varies by institution and policy. Healthcare, finance, and government show lower adoption (15-40%), largely due to regulatory concerns and security requirements, though adoption is accelerating in these sectors as organizations develop safe implementation frameworks. Manufacturing and less knowledge-intensive industries show the slowest adoption. The pattern is clear: adoption is highest where work is knowledge-based and text-intensive, and lowest where work is physical or requires irreplaceable human judgment in high-stakes contexts.

What are the main limitations identified in ChatGPT's capabilities according to the research?

The research documents several significant limitations that users encounter. Hallucination and factual errors remain the most common complaint, where ChatGPT generates confident-sounding but completely false information. Context limitations affect long conversations where the tool loses track of earlier information. Specialized knowledge gaps emerge in domain-specific work, particularly in medicine, law, and cutting-edge research. The tool struggles with certain types of complex reasoning and mathematical proofs. Real-time information gaps limit utility for tasks requiring current data, as ChatGPT's knowledge has a cutoff date. These limitations explain why adoption is more cautious in high-stakes regulated industries where errors carry significant consequences.

How do different user segments approach ChatGPT learning and adoption differently?

The research reveals significant variation in learning curves and user success. Users with clear, specific tasks learn quickly and become daily users within a week. Users with vague expectations struggle longer and often abandon the tool. Users who understand iteration and refinement, a habit common in software development and writing, succeed much faster than less technical users. Technical professionals who are confident experimenting with new tools develop mental models of ChatGPT's capabilities faster than non-technical users. Organizations that provide even minimal training (30-60 minutes) see dramatically higher adoption rates and user satisfaction than those that simply provide access without guidance, suggesting that learning approach significantly impacts success.

What organizational policies and governance approaches are emerging around ChatGPT use?

Organizations are transitioning from prohibition or uncertainty to cautious integration and eventual optimization. Leading organizations establish explicit policies defining what data can be fed to ChatGPT (avoiding confidential or proprietary information), which departments can use it for specific purposes, and how to document use. Training on responsible use is increasingly provided. Some organizations are integrating ChatGPT into official workflows and tools via APIs. Industries with regulatory requirements (healthcare, finance, legal) are moving more slowly but establishing governance frameworks that enable use while managing risk. The research shows organizations in advanced integration stages see better adoption, higher employee satisfaction, and better risk management than those still prohibiting or ignoring the tool.

How will ChatGPT usage likely evolve in the next 1-3 years based on the research findings?

The research suggests several likely trajectories. Specialization and fine-tuning will accelerate, with organizations creating domain-specific models trained on industry data. Multimodal integration will expand to handle images, video, audio, and code more seamlessly. Real-time data access will overcome knowledge cutoff limitations. Regulatory frameworks are developing in the EU, US, and elsewhere, which will accelerate adoption in regulated industries once rules clarify. Enterprise integration will deepen, with organizations building internal AI systems trained on proprietary data. Pricing and competition will likely decrease costs and expand accessibility. Human-AI collaboration patterns will mature as organizations learn more effective ways to combine AI capabilities with human judgment. The overall trajectory points toward deeper organizational embedding and more specialized, governance-conscious deployment rather than explosive growth of new use cases.

What concerns about bias, fairness, and ethics does the research address?

The research documents legitimate concerns about hallucinations creating trust problems, bias in training data perpetuating stereotypes, intellectual property questions about training data sources, privacy concerns about feeding sensitive data to third-party services, concentration of power among a few AI companies, and environmental impact of training large models. These concerns create appropriate caution in regulated industries and among ethically-conscious adopters. The research shows that users in high-stakes fields (medicine, law, finance) are more cautious about adoption and implement stronger verification processes. Rather than viewing these concerns as obstacles, responsible organizations address them through policy, governance, and human oversight to enable beneficial use while constraining risks. The research suggests that acknowledging and addressing these concerns is necessary for sustainable long-term adoption.

How does the generational divide in ChatGPT adoption compare to other technology adoptions?

Unlike previous technologies where generational divides remained wide and persistent, ChatGPT shows a narrowing generational divide. Users aged 18-25 use it most intensively (3-5 interactions daily), but users aged 40-60 are adopting rapidly and increasingly becoming daily users once they overcome initial skepticism. Adoption in users aged 60+ is lower overall but shows strong growth among those who do adopt. The research shows this gap is closing much faster than with technologies like social media, where generational divides remained large. The narrowing is likely because ChatGPT's utility is tied to professional productivity rather than social or entertainment factors, which motivates adoption across age groups. This suggests ChatGPT will become a general-purpose tool across all age groups rather than remaining a generational technology.

What geographic and economic disparities in ChatGPT adoption does the research reveal?

The research documents that adoption is highest in wealthy countries with high English proficiency and strong technology infrastructure (North America, Northern Europe). Several factors create these disparities: language barriers, as ChatGPT performs best in English; infrastructure limitations in regions with unreliable internet; pricing barriers, where subscription costs are more significant relative to income in lower-income countries; educational access differences; and regulatory environment variations. The research suggests ChatGPT adoption is currently amplifying existing technological and economic divides rather than reducing them, though this might change as localization improves, pricing options expand, and access increases. This disparity highlights that addressing global equity in AI access requires deliberate effort in language support, pricing models, and infrastructure development.

The Road Ahead: Integration and Maturation

OpenAI's research marks a turning point. We've moved from "is ChatGPT real and useful?" to "how do we responsibly deploy and govern this technology?" That's progress. It means the conversation is maturing.

For you, reading this now, the practical implication is straightforward: if you haven't yet integrated ChatGPT into your work, you're increasingly an outlier. Not because it's trendy. Because it works. Because it saves time. Because it compounds.

The next phase of adoption isn't about novelty. It's about competence. It's about the organizations and individuals who figure out how to use these tools effectively pulling ahead of those who don't.

The research gives us the map. Now comes the execution.

Last updated: September 2025. This article synthesizes findings from OpenAI's "How People Use ChatGPT" research study alongside additional industry research on AI adoption patterns.

Key Takeaways

- 73% of ChatGPT usage is work-related, with professional drafting, summarization, and coding dominating adoption.

- Education is the second-largest use category, with students and teachers leveraging the tool for learning and lesson preparation.

- Technical professionals and developers lead adoption, establishing ChatGPT as a productivity tool rather than a novelty.

- Organizational policies are transitioning from prohibition to cautious integration, with governance frameworks enabling responsible use.

- Generational divides in adoption are narrowing faster than historical technology adoption patterns across all age groups.
