How the Clicktatorship Is Reshaping American Politics [2025]

There's a new word that should alarm anyone paying attention to American politics right now: the "clicktatorship."

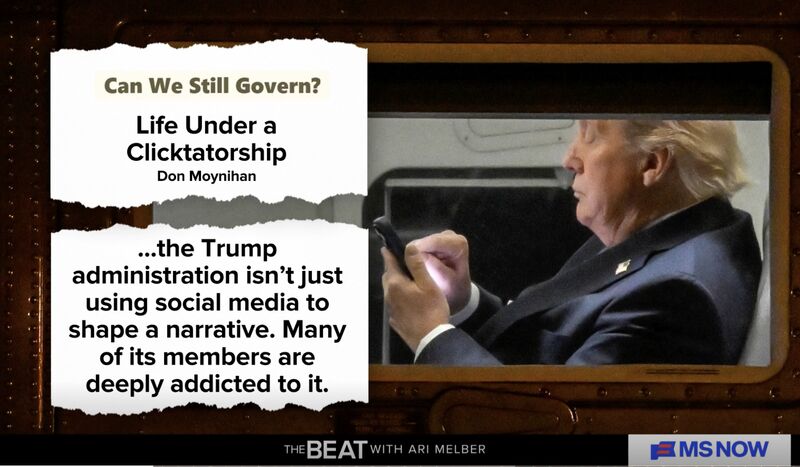

It's not a traditional dictatorship. It's worse in some ways because it's harder to see. The clicktatorship is a form of government where policy decisions aren't made in secure rooms by career bureaucrats weighing evidence and long-term consequences. Instead, they're made based on what will trend on X, what generates engagement on Truth Social, and what makes right-wing podcasters say "hell yeah" to their audiences. According to Wired, this phenomenon is reshaping how decisions are made in the current administration.

This isn't hyperbole. This is what's happening in real time in the second Trump administration.

In January 2025, we watched as immigration raids were livestreamed by the Department of Homeland Security like reality TV. We've seen high-ranking government officials show up to Senate hearings with printed-out tweets and TikTok clips as their primary defense. We've witnessed policies eliminated not because they failed on the merits, but because conspiracy theories about them went viral. USAID, which has saved millions of lives globally, got cut because Elon Musk believed unsubstantiated claims about it online.

The machinery of government is now running on engagement metrics and algorithmic amplification instead of policy analysis and democratic deliberation.

The problem isn't just that politicians are using social media. The problem is that they've been conditioned by it, addicted to it, and in many cases, delusional about it. The people making decisions that affect millions of lives are consuming the same conspiracy theories they're promoting. The line between propaganda and genuine belief has collapsed entirely.

Let's break down what's actually happening, how we got here, and why this matters more than you probably think.

TL;DR

- The clicktatorship: A new form of government where online engagement and viral content directly drive policy decisions instead of evidence and expertise

- Policy by algorithm: Major federal agencies are being eliminated, immigration raids are livestreamed like reality TV, and military actions are justified through social media narratives

- Conspiracy to governance: Conspiracy theories that spread on right-wing platforms are now becoming the basis for actual government action, creating real-world consequences

- The feedback loop: Politicians both consume and create the content they're governed by, creating a self-reinforcing system immune to facts or contradictory evidence

- The cost: Millions of lives affected by USAID cuts, public trust eroded, democratic institutions weakened, all driven by engagement metrics rather than expertise

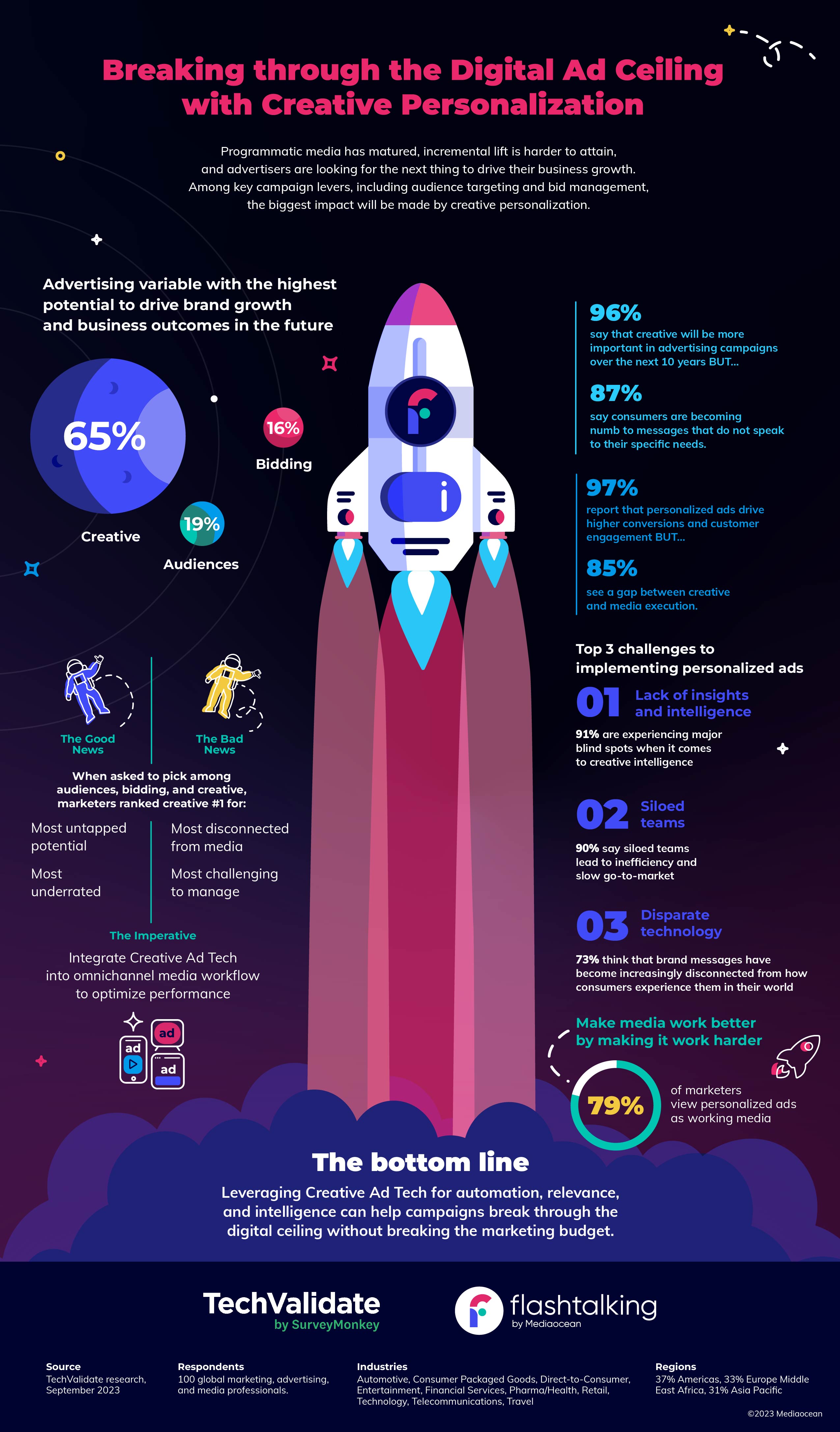

Estimated data shows a stark contrast between the skills needed for effective governance and those for social media success, highlighting the shift in political success criteria.

What Exactly Is the Clicktatorship?

The term "clicktatorship" was articulated by Don Moynihan, a professor of public policy at the University of Michigan. He didn't create the phenomenon, but he's one of the few serious academics paying attention to what's happening and naming it clearly.

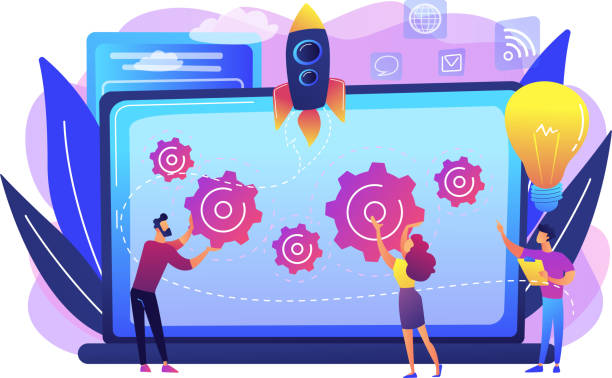

A clicktatorship combines two things that used to be separate: a social media worldview and authoritarian decision-making.

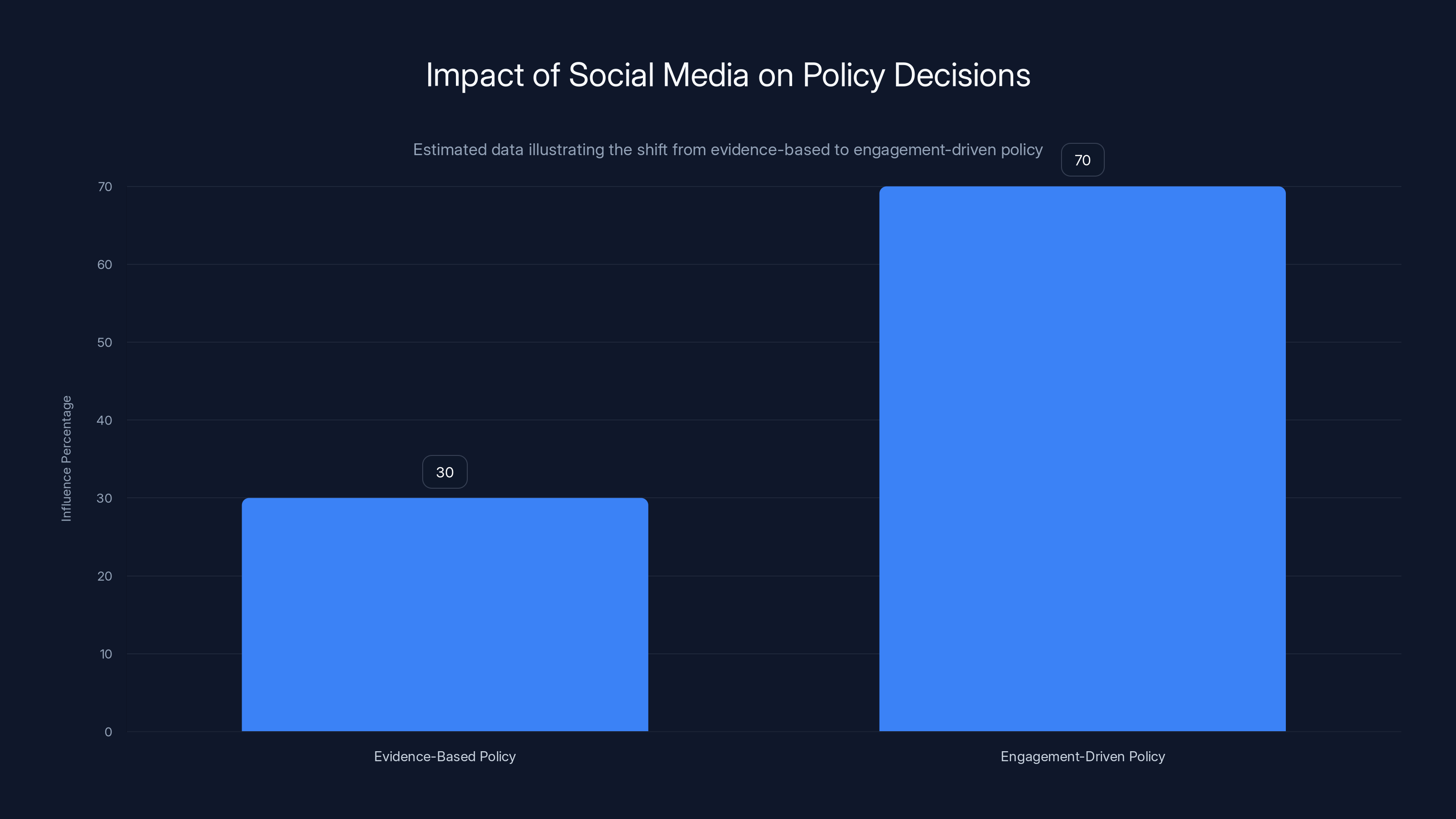

What does that mean in practice? It means the people running the government aren't asking "Is this policy evidence-based? Will it work? What are the second-order consequences?" Instead, they're asking "How will this play online? What's the content angle? How can we package this to make our base go wild?"

Everything becomes content. Your response to a humanitarian crisis becomes a TikTok clip. Your policy decisions become meme material. The implementation of government functions becomes a performance for an audience.

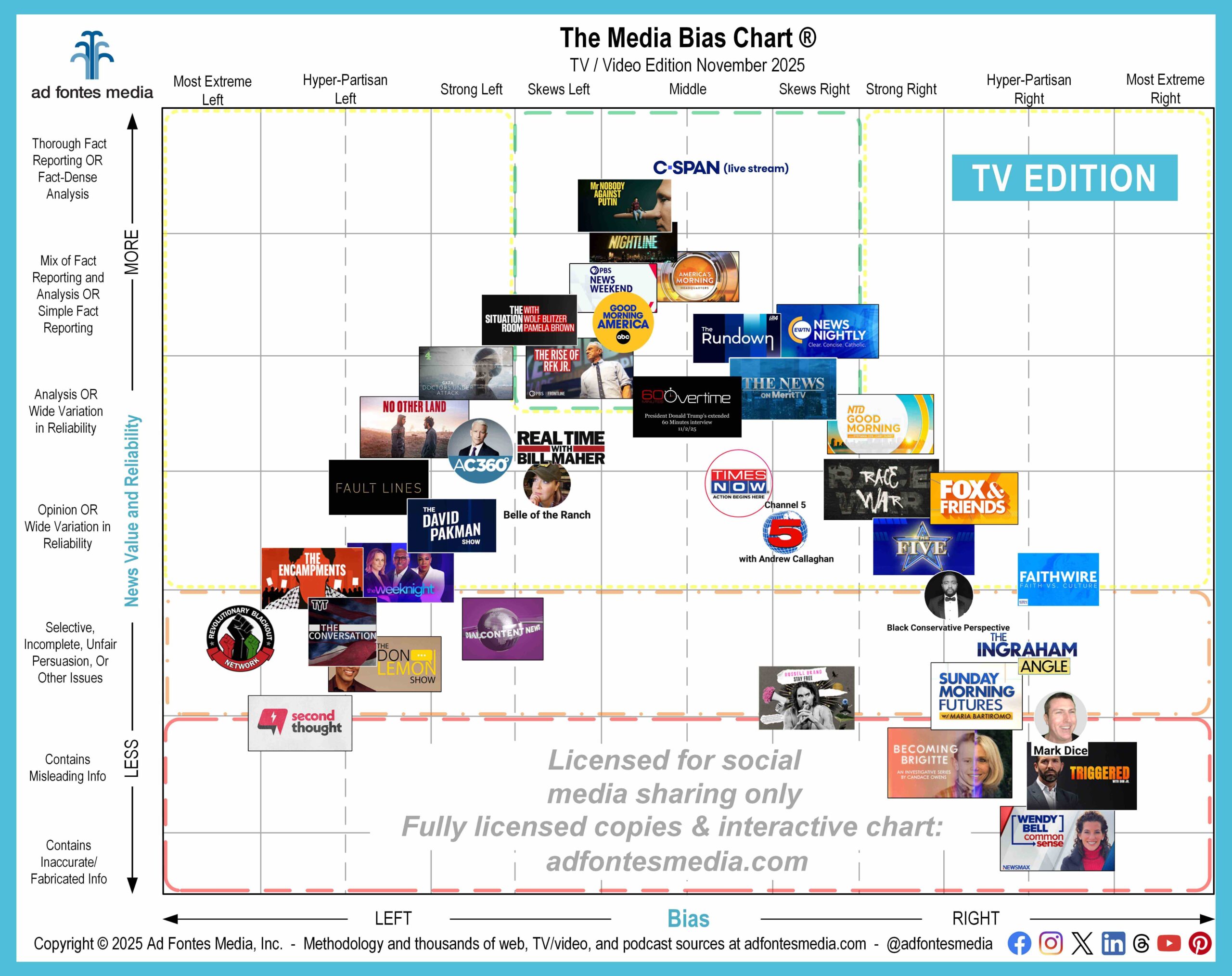

The supply side of this equation is important to understand: Right-wing social media ecosystems have become genuinely friendly environments for conspiracy theories. Moderation is lighter. Algorithms reward outrage and misinformation. The entire infrastructure encourages increasingly extreme content. There's no counter-pressure from mainstream media or fact-checkers who might challenge false claims.

The demand side is equally important: This administration actively wants people who can traffic in those conspiracy theories. Their presence isn't an accident or a regrettable side effect. It's a feature. People like Elon Musk, who controls one of the world's most powerful communication platforms, are enthusiastic believers in and spreaders of the conspiracy theories themselves.

When the supply of platforms encouraging conspiracy theories meets the demand of an administration that actively wants to employ conspiracy theorists, you get moments that would have seemed impossible just a decade ago.

A clicktatorship is driven by social media influence, authoritarian decision-making, and the promotion of conspiracy theories. Estimated data.

The Evolution From TV Presidency to Content Presidency

Donald Trump's first term was characterized by what you might call a "TV presidency."

He'd hosted The Apprentice. He understood how television worked. He knew how to get people to watch. His primary mode of communication was still somewhat traditional: cable news, presidential speeches, press conferences. Yes, he tweeted constantly and unconventionally, but the baseline media diet of political decision-makers was still cable television.

If you wanted to understand Trump's first term, you could watch Fox News, read Breitbart, and scan his Twitter account. You'd have a pretty good sense of what was happening and why.

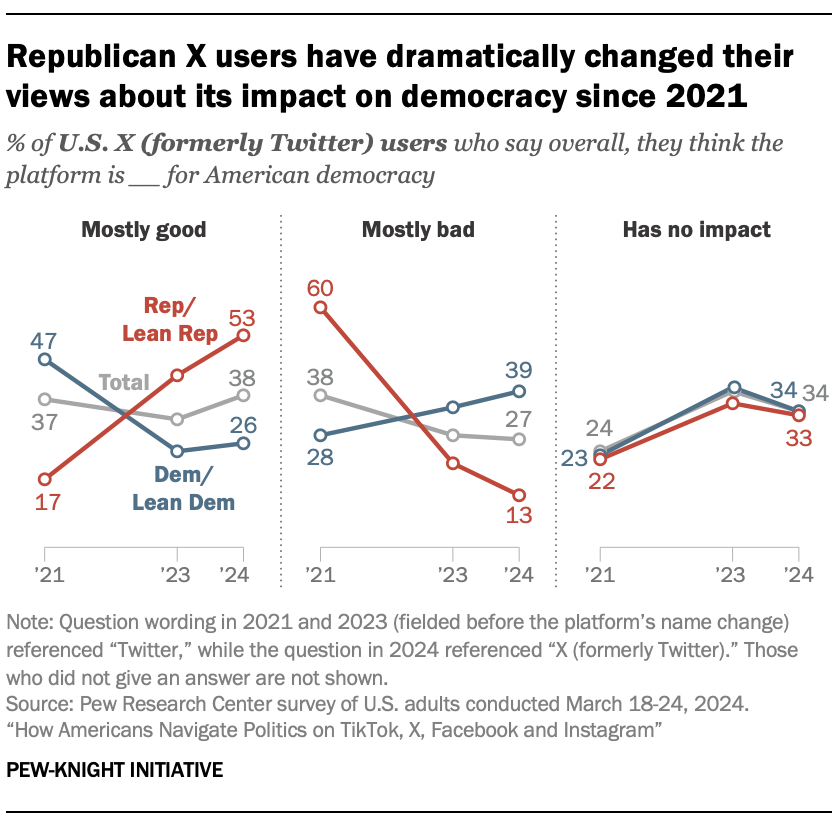

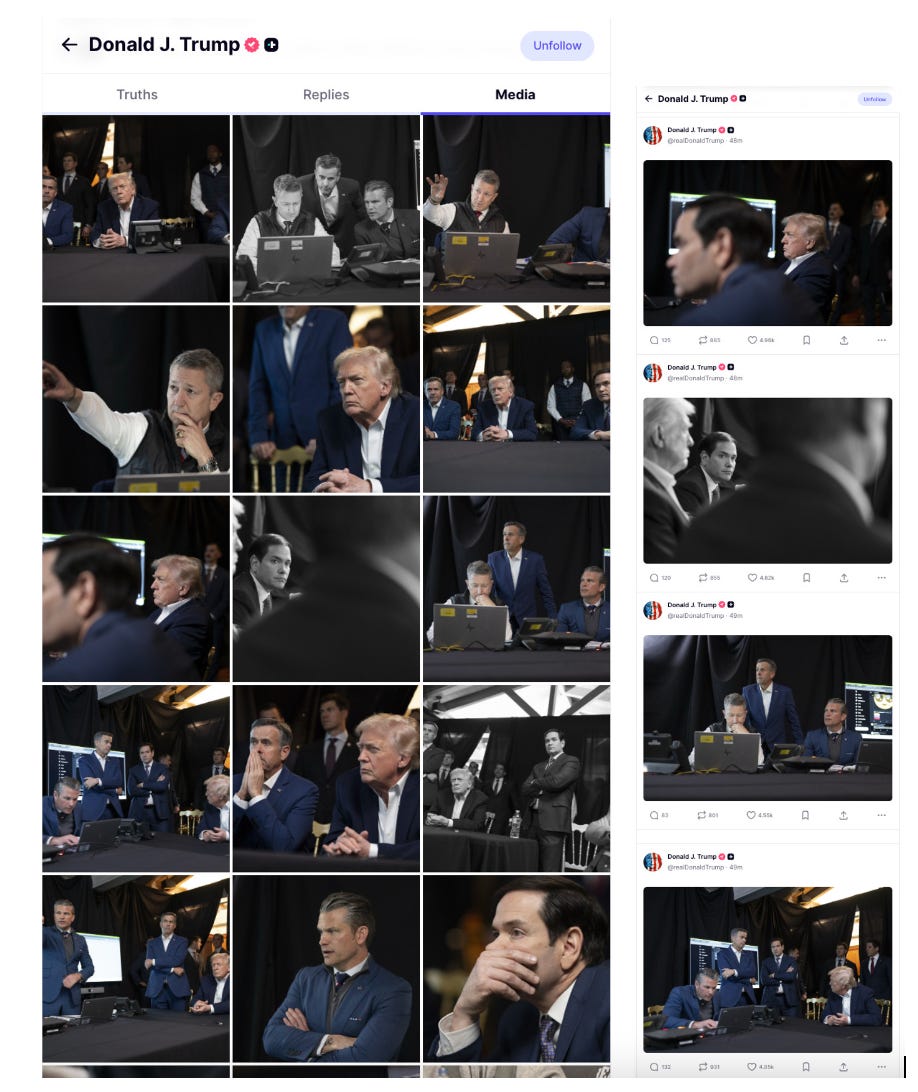

The second Trump presidency is fundamentally different because the reference points have changed. This is now a "Truth Social or X presidency." The primary decision-making environment isn't news studios or even Trump's personal communication platform. It's the algorithmic feed of extremist right-wing communities on social media.

The content and messaging coming from senior policymakers are increasingly filled with inside references and inside jokes that make zero sense unless you're already deeply embedded in these online communities. The discourse has shifted to mirror online behavior: zingers, own-the-libs moments, triggering reactions, dunks on the other side.

Look at Pam Bondi showing up to a Senate hearing with a folder full of printed-out X posts, using them as her primary line of defense against tough questioning. That's not how Senate hearings are supposed to work. That's a signal that the official in the witness chair sees Senate oversight not as a constitutional check on power, but as a hostile audience that needs to be dunked on with sick burns and viral moments.

Senior government officials are now exhibiting the patterns and habits that work on social media: constant trolling, making fun of critics, amplifying outrage, posting edgy content. These are the same behaviors that generate engagement in online spaces. They've become normalized in government precisely because the people making government decisions learned their patterns in online spaces.

The scary part is this isn't a communication strategy. It's not that they're saying one thing on social media and doing something different in policy. They've internalized this worldview. The online mode of thinking has become their actual mode of thinking. These are people whose brains have been shaped by algorithmic feeds and engagement metrics.

How Social Media Literally Changes Your Brain

There's a lot of research on this, and it's not comforting.

Social media platforms aren't neutral tools. They're optimization engines designed to maximize engagement. Engagement comes from strong emotions. The strongest emotions are outrage, fear, and tribal affiliation. As a result, the algorithms push you progressively toward more extreme content.

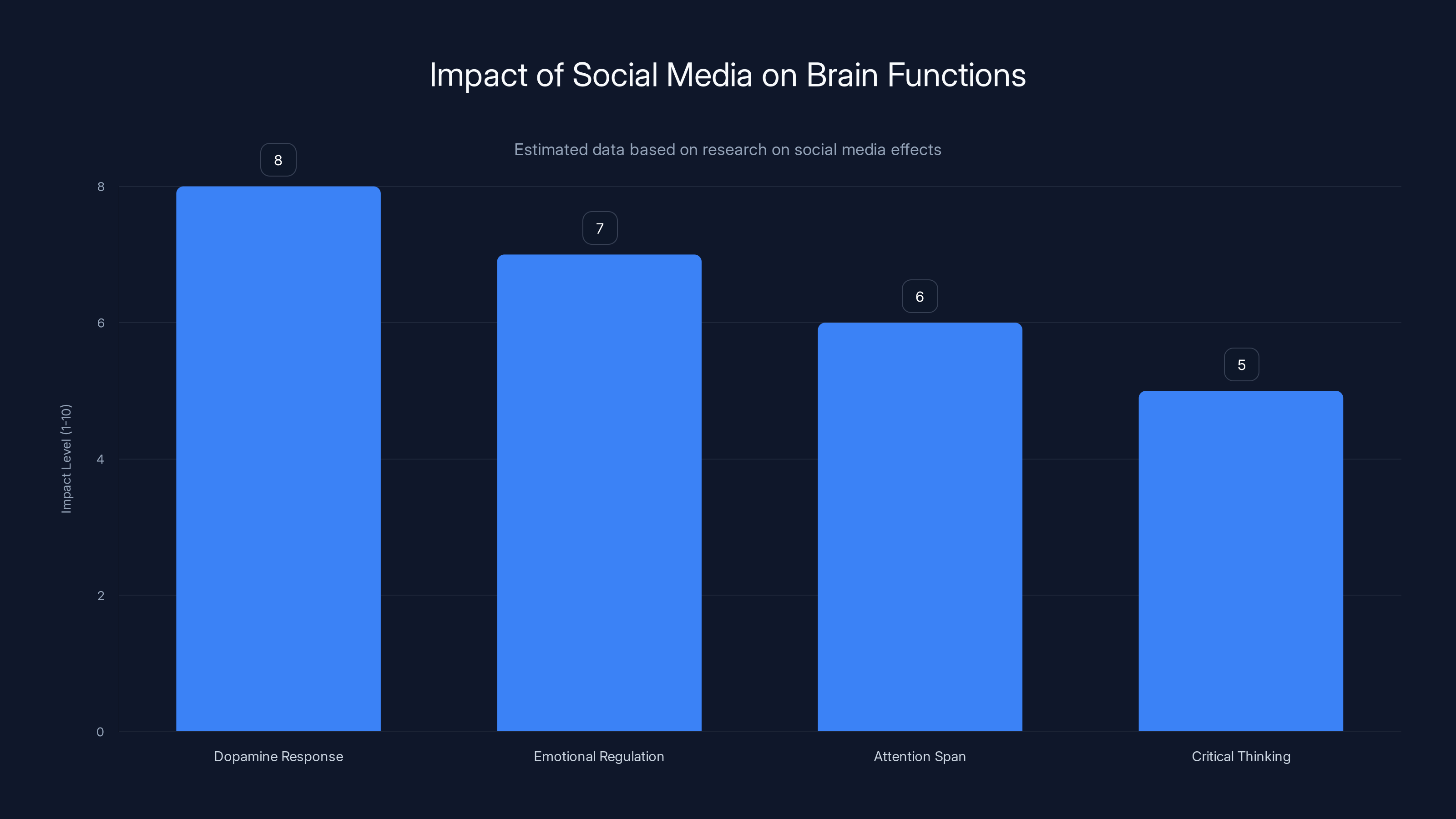

If you spend enough time in this environment, your brain actually changes. Your reward system gets recalibrated. You start finding normal information boring. You need increasingly extreme stimuli to feel satisfied. You develop what researchers call "algorithmic addiction," where your dopamine response becomes coupled to engagement metrics.

Content moderators have reported experiencing this phenomenon firsthand. They spend their days watching harmful content and evaluating whether it violates platform rules. Over time, some of them have reported starting to believe the conspiracy theories they were supposed to be policing. Their exposure to misinformation wasn't casual. It was constant, in depth, and sustained over long periods, which may be more effective at creating belief than ordinary exposure.

Now imagine that same dynamic, but for the President of the United States and his cabinet. These aren't casual users scrolling during their commute. These are people who are online for hours. These are people whose entire information diet is filtered through these systems. These are people whose success and validation come from posting content that performs well on these platforms.

The weaponization of false information is typically thought of as a one-way street: The powerful manipulate the public. But there's a darker version: The people who've become skilled at manipulating information are also people who've spent so much time in these environments that they've internalized the misinformation themselves.

Elon Musk is a perfect example.

Musk is genuinely smart about technology. He's also genuinely skilled at using social media to manipulate narratives and control public perception. But he's also completely consumed by the online environments where he spends most of his time. He shares wild conspiracy theories as if they're obvious facts. He dunks on critics with the same energy as a 19-year-old gamer. He falls for elaborate trolls.

He's not strategically promoting false information while knowing it's false. He's consumed so much false information that he genuinely believes it. The supply of conspiracy theories has overwhelmed his ability to fact-check or verify claims. He's high on his own supply.

Social media significantly impacts dopamine response and emotional regulation, leading to changes in brain functions. Estimated data.

The Cost: When Conspiracy Theories Become Government Policy

Here's the part that actually matters.

Conspiracy theories were always kind of annoying background noise in politics. But they stayed mostly in the background because there were institutional checks. Experts in the relevant fields had input on policy decisions. Evidence mattered. Career staff could push back on decisions that didn't make sense. There were processes.

The clicktatorship removes those checks.

Consider USAID. The United States Agency for International Development has been in operation since 1961. It's been involved in humanitarian work globally: disease prevention, hunger relief, disaster response, development assistance. Was USAID perfect? No organization is. But it was a functioning government agency doing important work.

In the second Trump administration, significant portions of USAID were eliminated. Not because of a policy review. Not because of an evidence-based decision. But because Elon Musk believed conspiracy theories about it online and promoted those conspiracy theories to the President, and the President acted on those conspiracy theories.

Think about that for a second. A federal agency that has probably saved millions of lives was dismantled based on unverified claims made in social media posts. The cost in human life will be real. In countries where USAID was funding disease prevention, that funding will disappear. In regions where USAID was helping prevent famine, that help will vanish.

But to the people making the decision, none of that matters compared to how it performs online. The decision to cut USAID generates content: angry liberals, international outcry, stories about the administration "finally taking action." It makes the base feel like something is happening. It generates engagement.

The actual consequences are abstractions. They won't show up in your feed. They won't generate viral moments. They'll happen quietly in countries whose news you don't follow, to people you'll never meet.

Immigration enforcement offers another example. Immigration raids have become content. The Department of Homeland Security is actively distributing videos of immigration enforcement operations, turning them into something closer to entertainment than public safety documentation. These aren't accidentally leaked. These are officially released. They're treating enforcement operations like they're reality TV episodes.

This changes the nature of the enforcement itself. When your goal is to generate good content and viral moments, the incentives shift. You want dramatic situations. You want visible outcomes. You want footage that plays well. That's different from what you'd prioritize if you were just trying to enforce law effectively.

The Feedback Loop That Can't Be Broken

The really insidious part of the clicktatorship is that it creates a self-reinforcing system.

Trump posts something. His base engages with it. Right-wing media amplifies it. Cable news covers it. More people see it. More engagement happens. The algorithm shows it to even more people. Media reports on the trending topic. Trump sees that it's trending and posts more about it. The cycle repeats.

Within this cycle, there's no point where facts or evidence can intervene. If you post contradictory information, it gets labeled fake news and ignored. If you point out that a claim is false, the response is that you're part of the cover-up. If you provide evidence that contradicts the narrative, that evidence gets dismissed as lies from the mainstream media.

This isn't accidental. This is the actual structure of right-wing social media ecosystems. They're engineered to be hostile to fact-checking and contrary evidence. They're designed to create echo chambers where the same claims get repeated and amplified but never seriously challenged.

For people inside this system, external information doesn't matter. Other people's experience doesn't matter. Experts don't matter. The only thing that matters is what the community believes and what content performs well within the community.

The people running government now have spent years operating within this system. They know how it works. They've been successful within it. They've built careers on it. They're not going to suddenly decide to leave it and start listening to mainstream experts and fact-checkers.

Instead, they're going to bring the system's logic into government. That's already happening. We're seeing it in real time.

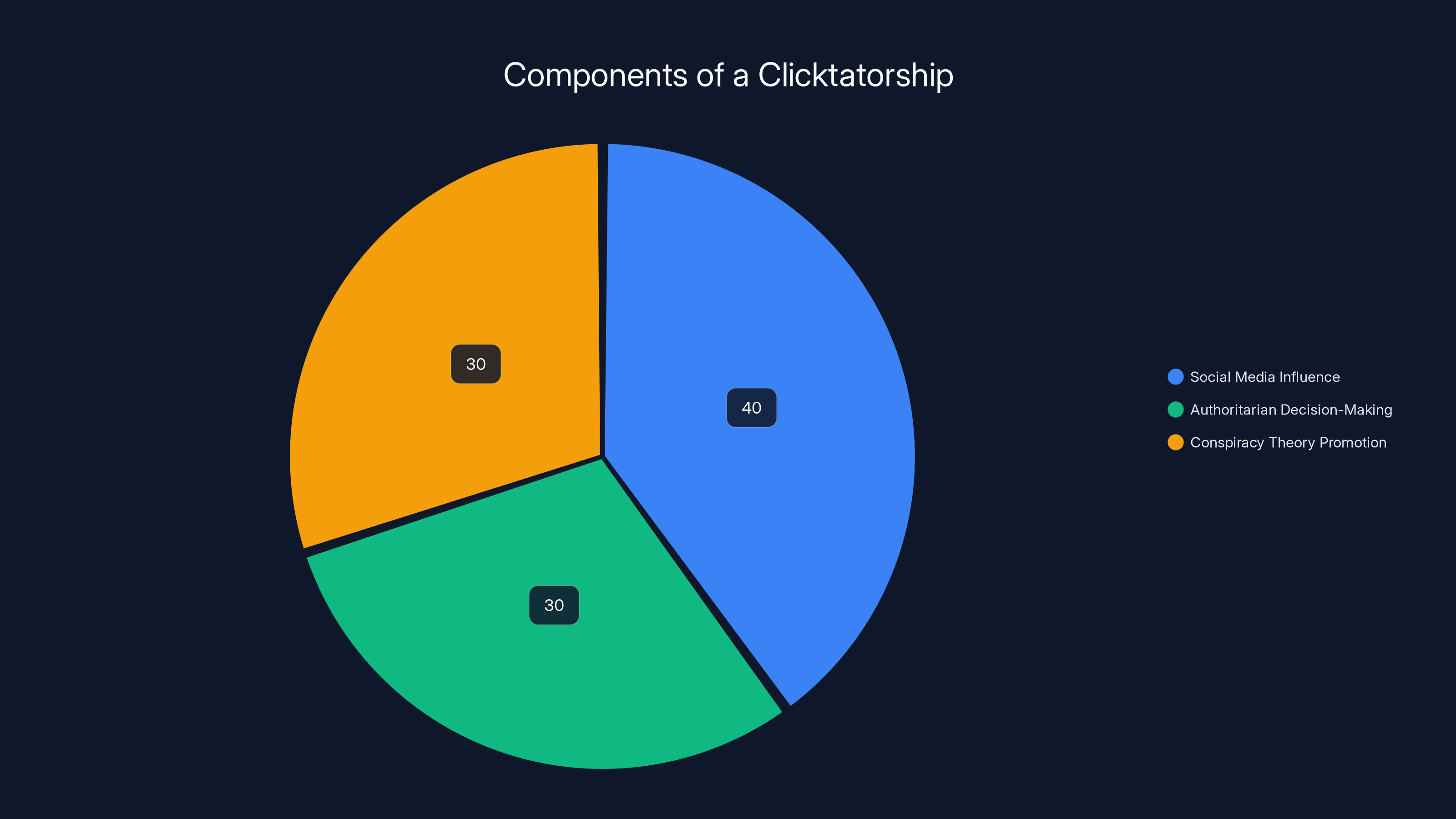

Estimated data suggests a significant shift towards engagement-driven policy decisions, with social media engagement increasingly influencing governmental actions.

How Politicians Became Influencers

There's a broader shift happening that's worth understanding.

Politicians used to be politicians. They'd serve in office, develop expertise, build relationships with colleagues, learn the legislative process, and try to pass bills. The media dimension was important, sure, but it was separate from the actual work of governing.

That distinction has collapsed.

The most successful people in politics right now are people who are successful on social media. They're people who can post viral content, who can dunk on opponents, who can generate engagement. The skills that make you successful on social media are now the primary skills that determine your political success.

That's a catastrophic change because the skills required for social media success are basically opposite to the skills required for effective governance.

Effective governance requires listening to experts, understanding complex tradeoffs, building consensus, considering second-order consequences, making decisions based on evidence, and working through institutional processes.

Social media success requires extreme positions, dunking on opponents, generating outrage, simplifying complex issues into tribal narratives, discarding nuance, and moving fast and breaking things.

When the people running government are people who've been selected for social media success, you get a government that's optimized for social media performance at the expense of actual effectiveness.

There's also a class element here that's worth noting. Social media is a way for people without traditional credentials or institutional backing to gain political power. You don't need to come up through the Republican Party apparatus. You don't need to be a sitting congressperson. You don't need to have expertise in your portfolio. You just need to be able to post content that resonates with the base.

Some people see this as democratic. It's true that it bypasses traditional gatekeepers. But it also removes institutional checks and replaces expertise with popularity. That's not obviously better.

The Difference Between Communicating Online and Being Governed By Online

This distinction matters.

For a long time, we understood politicians using social media as a communication tool. They're using the platform to get their message out. They're trying to control the narrative. They're attempting to build support for their positions. That's one thing.

What's happening now is different. Social media isn't just a communication channel. It's the actual decision-making environment.

Decisions aren't being made and then communicated through social media. Decisions are being made based on what will work on social media. The primary question isn't "What's the right policy?" It's "What will generate engagement and support among our base?"

That's a fundamental shift in how government operates.

Consider the difference between these two scenarios:

Scenario One: The administration develops a policy based on evidence and expertise. They then use social media to communicate why the policy is good and build public support.

Scenario Two: The administration notices that a certain narrative is generating engagement on social media. They then develop policy based on that narrative, regardless of evidence.

We're in Scenario Two.

The second scenario is worse because it means you're not getting the benefit of expertise and evidence. You're getting policy based on algorithmic amplification. You're getting government optimized for engagement metrics. You're getting decisions made by people who've spent years in algorithmic echo chambers.

There's also a temporal dimension. Social media incentivizes constant novelty. You need new content. You need new takes. You need to keep people's attention. That's hard to do if you're pursuing evidence-based policy because evidence-based policy doesn't usually generate new viral moments every day.

So what happens is you get increasingly extreme policy just to generate new content. The baseline keeps shifting. What would have seemed shocking last year becomes normal this year. The shock value wears off, so you need something more extreme to generate the same engagement.

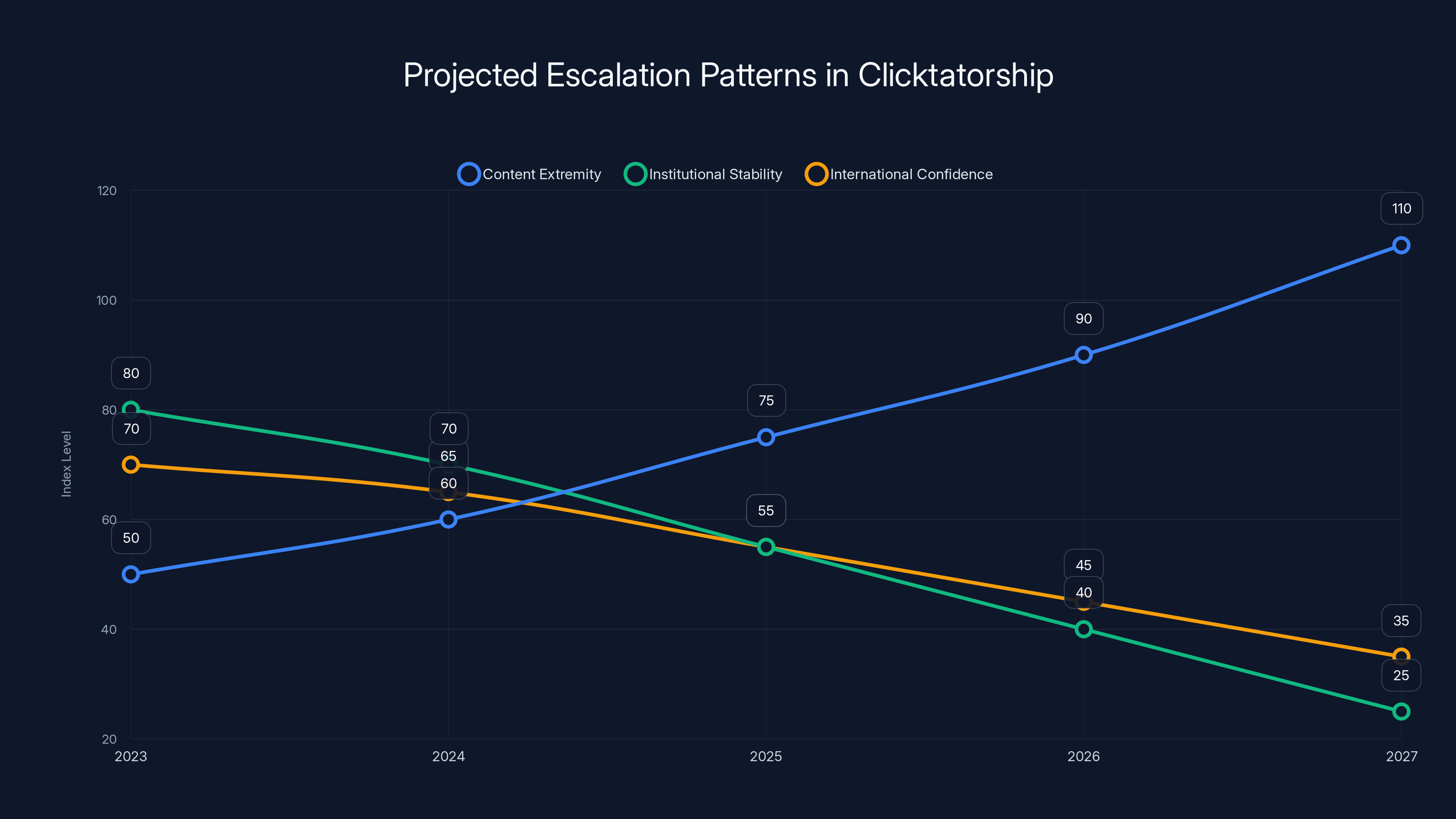

Estimated data shows increasing content extremity and decreasing institutional stability and international confidence over time. This reflects the potential destabilizing effects of prioritizing engagement over competence.

The Conspiracy Theory Pipeline: From Fringe to Federal Policy

Understanding how conspiracy theories become policy requires understanding the actual pipeline.

It starts on the fringes of the internet. There's a conspiracy theory posted on some obscure forum or fringe social media platform. Maybe it's about vaccines. Maybe it's about who's secretly controlling major institutions. Maybe it's about immigrants. It doesn't matter.

Initially, very few people see it. But if it resonates with certain communities, it starts spreading. It gets shared in Telegram groups. It appears in YouTube comments. It gets reposted on Reddit. The algorithm starts showing it to people who engage with similar content.

At some point, it reaches the mainstream of right-wing social media. It's being shared on X and Truth Social. It's being discussed in right-wing podcasts. Conservative influencers are talking about it. It's gaining visibility.

If it gains enough traction, it will eventually reach people with actual power. That might be media figures like those on Fox News. It might be political operatives. It might be people with direct access to the President.

Historically, this is where it would stop. The people with power would recognize it as a conspiracy theory and dismiss it. They'd have staff members who fact-check these claims. They'd have media advisors who tell them not to touch it.

But that filter has been removed in this administration.

The people with power are the same people who've been immersed in these ecosystems. They've consumed the conspiracy theories already. They don't have fact-checking staff telling them these are false. They have advisors who believe the conspiracy theories too.

So the conspiracy theory moves from the fringes all the way to federal policy with almost no friction.

Take the conspiracy theories about Haitian immigrants in Springfield, Ohio as an example. Unsubstantiated claims about immigrants committing crimes appeared on social media. The claims went viral. They were false, completely made up. But they reached Trump, then a presidential candidate, who started repeating them publicly. The claims moved from obscure corners of social media to a presidential campaign to mainstream news coverage to political discussions.

The entire pipeline was just: fringe social media → engagement → more engagement → higher reach → presidential attention → government action.

There was no fact-checking stage. There was no expert input stage. There was no verification stage. There was just: Does this generate engagement? Yes? Promote it.

The consequences are real. People in Springfield experienced harassment based on claims that were completely false. But the consequences to the people promoting the claims? Zero. The engagement generated from the false claims had real benefits for those people's visibility and political standing.

That's an asymmetric incentive structure. The people creating or amplifying false claims have every incentive to continue. The people fact-checking them have no reward for doing so. False information spreads faster and further than corrections.

When Content Strategy Becomes National Security Policy

Here's where it gets genuinely scary.

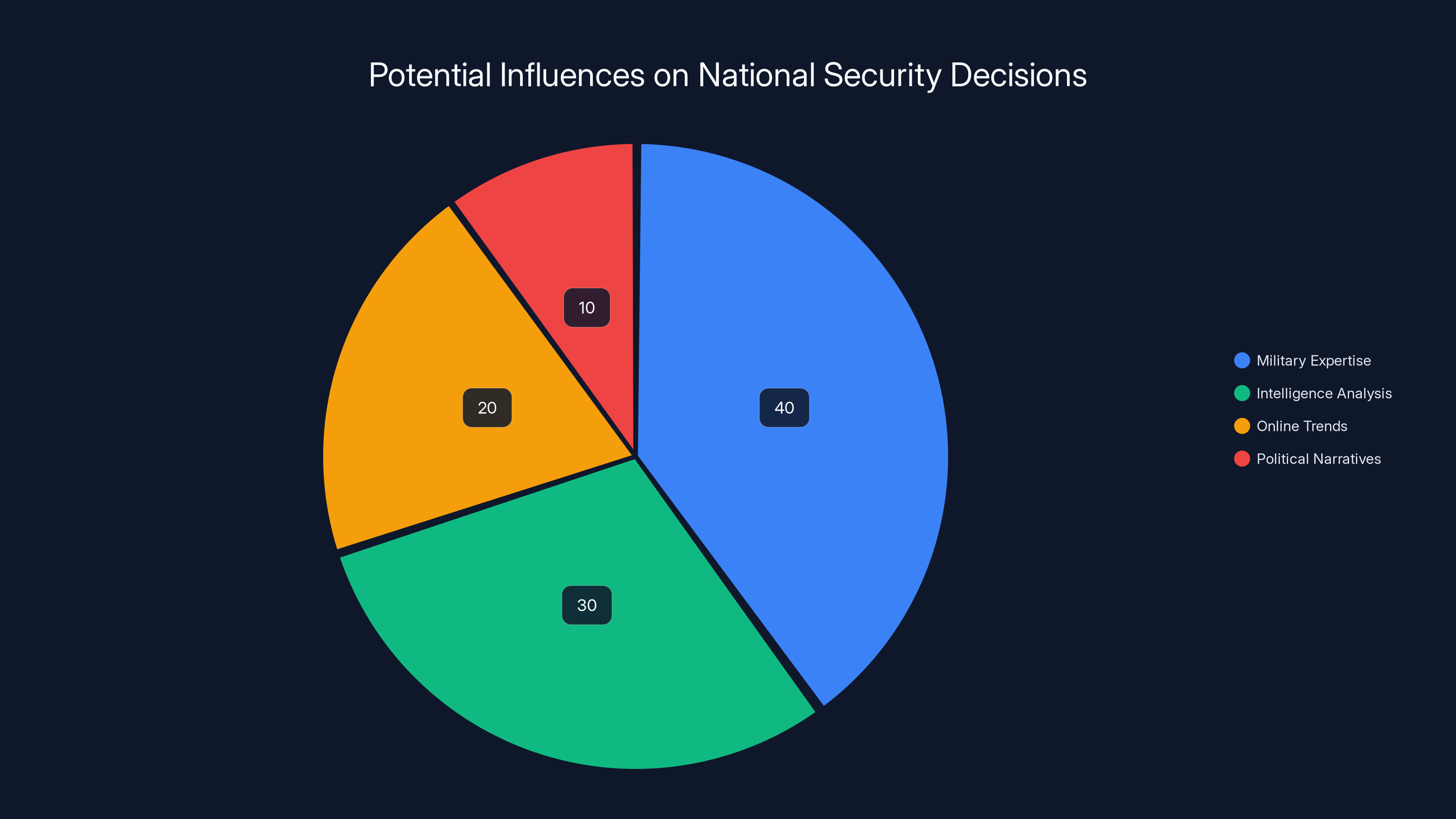

Defense and national security aren't areas where you should be making decisions based on content performance. These are decisions that should be made by military experts, intelligence analysts, and career national security professionals based on actual threats and strategic considerations.

But in a clicktatorship, even these decisions become subject to online incentives.

Consider military mobilization or the use of military force domestically. Historically, these are decisions that go through extensive institutional processes. The Joint Chiefs of Staff are consulted. Intelligence briefings are conducted. Legal analysis is done. The President meets with advisors. There are checks and balances.

What if that process gets short-circuited because something is trending on X and the President wants to generate a dramatic response? What if military action is being planned partly because it will look good on video?

This isn't hypothetical. We've already seen immigration enforcement operations being treated like content opportunities. We've seen rhetoric about using military forces domestically being discussed more openly. The line between what's acceptable and what's not is being moved in real time based on what plays well online.

There's also an information control element. The administration can decide what videos to release, what narratives to push, what stories get told. They can create a curated version of reality and distribute it through their preferred platforms. The people who support them will see that version of reality. The people who oppose them will see a different narrative. The two groups are living in increasingly different information universes.

In that environment, facts don't matter. Shared reality doesn't exist. The only thing that matters is whether a narrative helps your political position or hurts it.

Estimated data shows a shift where online trends and political narratives increasingly influence national security decisions, potentially overshadowing traditional military expertise and intelligence analysis.

The Algorithmic Weaponization of Outrage

Right-wing social media platforms have become genuinely effective at generating outrage and maintaining engagement.

The formula is pretty standard: Identity politics. Tribal affiliation. Stories about the in-group being attacked or victimized. Stories about the out-group committing transgressions. Validation of existing beliefs. Affiliation with a community of people who share your beliefs.

The more time you spend in these ecosystems, the more extreme your views tend to become. That's not because you're being exposed to convincing arguments. It's because of how the algorithms work. They're designed to keep you engaged. Engagement comes from strong emotions. The content that generates the strongest emotions is content that makes you feel attacked, vindicated, or tribal.

Everything else gets downranked.

So if you're in a right-wing social media ecosystem, you're seeing a constant feed of stories about how the other side is attacking your values, your country, your way of life. You're seeing validation from your community. You're seeing enemies being dunked on. You're seeing your worldview confirmed and affirmed.

You're not seeing nuance. You're not seeing complexity. You're not seeing the actual arguments from the other side. You're seeing a caricature designed to maximize your emotional reaction.

Over time, this shapes how you see the world. It shapes what you believe. It shapes what you think is true. And if you're someone with power and you've spent years in this environment, it shapes the decisions you make.

The platform owners know this. They've designed the systems this way because engagement is what makes them money. More engagement means more advertising revenue. More advertising revenue means more profit. So they're actively optimizing for the kind of content that generates the most outrage and tribal affiliation.

They're not creating neutral platforms. They're creating radicalization engines.

The scary part is how efficient these engines are. They can take someone from moderate political views to fairly extreme views in a matter of months. They can take someone from skeptical of conspiracy theories to fully believing them in a matter of weeks. They're optimized for radicalization.

And now we have a government being run by people who've been optimized by these systems.

The Erosion of Institutional Expertise

One of the casualties of the clicktatorship is institutional expertise.

For decades, government has relied on career civil servants. These are people who work in government for their whole careers. They develop expertise in their fields. They work through multiple administrations. They provide continuity and institutional knowledge.

They're also a check on executive power. If a President wants to do something that's harmful or unconstitutional, career staff can push back. They can point out problems. They can slow things down. They can leak information to the press. They're not perfectly effective, but they provide some constraint.

The current administration is actively hostile to career staff. They're removing people. They're replacing them with political appointees. They're promoting people based on loyalty rather than expertise. They're creating a government where people are selected for whether they'll help you generate content and whether they believe in your conspiracy theories, not for whether they know how to do the job.

When you remove the expertise filter, you get decisions that are more responsive to social media incentives and less constrained by reality. You get policies that seem good on social media but don't actually work in practice.

The USAID example is instructive again. USAID employees knew how to do humanitarian work. They had expertise in disease prevention, hunger relief, disaster response. They could have explained why cutting USAID was a bad idea. But they're being removed or ignored because they're part of the "deep state" that's supposedly conspiring against the President.

The people replacing them aren't experts in humanitarian work. They're people who've proven themselves loyal, who believe the conspiracy theories, who can generate good content.

They're going to make worse decisions because they don't know what they're doing. But those worse decisions won't matter compared to how they play online.

The International Dimension: When Domestic Politics Becomes Foreign Policy

The clicktatorship doesn't just affect domestic policy. It affects how America interacts with the rest of the world.

Foreign policy has historically been somewhat insulated from domestic political pressures. Not completely, but there was a recognition that international relationships require consistency and long-term thinking. You don't antagonize allies just because it will generate engagement on social media.

Except now you do.

If something will play well to the domestic base, if it will generate content, if it will make your supporters feel like you're "winning" against foreign enemies, then it becomes a viable foreign policy position regardless of whether it actually serves American interests.

This is destabilizing. America's allies can't count on consistent policy. They can't rely on commitments made by one administration. They can't plan long-term relationships because policy might change based on what's trending on X.

Enemies can exploit this. They can study the incentive structures and figure out what kind of provocations will generate the kind of response that serves their interests. They can manipulate the online ecosystem to generate the kind of content that will push America toward policies that harm American interests.

The entire system becomes more fragile because it's no longer based on long-term strategic thinking. It's based on weekly engagement cycles.

Democratic Erosion: When Accountability Becomes Theater

Democracy requires accountability.

In a functioning democracy, leaders are accountable to the people through elections, to the legislature through oversight, and to the courts through law. Those institutions are supposed to check executive power.

But they only work if they're taken seriously. They only work if people respect the institutions and their authority.

In a clicktatorship, accountability processes become theater.

When Pam Bondi showed up to a Senate hearing with printed-out X posts instead of serious answers to serious questions, she was signaling: I don't respect this process. This is theater. The real accountability happens on X, not in the Senate.

The Senate didn't effectively challenge that. Congress is an institution in decline anyway, but this kind of posture toward congressional oversight further erodes whatever authority Congress has left.

The courts are another check, but if the administration can paint courts that rule against them as part of a conspiracy, if the media environment will amplify those claims, if the base will believe them, then the courts lose authority too.

What you're left with is an executive that faces no meaningful accountability. Elections are the final check, but if the information environment is so fragmented that different groups have completely different understandings of reality, then even elections become about which version of reality can generate more engagement rather than which candidate actually has better ideas.

That's democratic erosion.

The Economic Incentives That Drive the System

Understanding the clicktatorship requires understanding the economic incentives.

Social media platforms make money from engagement. Engagement means advertising impressions. More impressions mean more revenue. So everything in the system is optimized to maximize engagement.

The content that generates the most engagement is content that triggers strong emotions. Outrage, fear, tribal affiliation, validation of existing beliefs. Nuance doesn't generate engagement. Facts don't generate engagement. Boring policy analysis doesn't generate engagement.

Hot takes do. Conspiracy theories do. Content that makes people feel like they're part of a community fighting against enemies does.

So the platforms' algorithms actively promote this kind of content. Not because the platform owners are deliberately trying to radicalize people (though some might be). But because it's what the algorithms optimize for. It's what makes them money.

The people running government have learned to operate within these incentive structures because they've spent years on these platforms. They know what works. They know that reasonable policy analysis doesn't generate engagement. They know that attacking opponents does. They know that conspiracy theories and outrage generate more engagement than careful deliberation.

So they've adjusted their behavior accordingly. They're now optimized for the incentive structure of social media platforms, not for the incentive structure of good governance.

The platforms, for their part, are happy about this. They benefit from the engagement. They benefit from the political attention. They benefit from being the arena where politics happens.

So you have this alignment of incentives: Platform owners want engagement. Politicians want to generate engagement. The base wants content that makes them feel validated and part of a community. The result is a system optimized for radicalization and misinformation.

What Comes Next: The Trajectory of Clicktatorship

If current trends continue, the clicktatorship will likely become more entrenched.

The more power you consolidate through engagement and conspiracy theories, the more you need to maintain engagement to maintain power. You need increasingly dramatic content. You need increasingly extreme positions. You need increasingly clear enemies.

This is an inherently unstable dynamic. You're constantly escalating. What seemed extreme last year seems normal this year. You need something more extreme to generate the same engagement.

Meanwhile, the people making decisions are increasingly people who've been selected for loyalty and social media performance rather than competence. Institutional expertise is being eliminated. Career staff are being replaced with influencers. The checks on executive power are being eroded.

Internally, this is destabilizing. When you're selecting for loyalty over competence, when you're making decisions based on engagement metrics rather than evidence, when you're elevating conspiracy theorists to positions of power, your government becomes less effective at actually delivering results.

But the base might not care about actual results. They might just care about the content, about feeling like the people in power are fighting for them, about the enemies being dunked on.

Externally, this is destabilizing to America's position in the world. Allies lose confidence. Enemies see opportunities. The predictability that international relationships require disappears.

All of this can continue for a while. But eventually, reality reasserts itself. Problems don't go away because you don't post about them. Decisions made on engagement metrics instead of evidence produce worse results over time. Allies do move on. Enemies do exploit instability.

The question is how much damage gets done before that happens.

How to Resist the Clicktatorship (What You Can Do)

This all sounds pretty bleak. And in some ways it is. But there are things that can be done, both individually and collectively.

On an individual level, the most important thing is information hygiene. Be aware of what ecosystems you're in. Actively seek out viewpoints different from your own. Try to encounter information from sources that aren't algorithmically selected to confirm your existing beliefs. Read actual news reporting rather than just commentary. Follow people with actual expertise in fields they're talking about.

When you encounter a claim that makes you feel strong emotions, pause before sharing it. Check it. Where did it come from? Who's promoting it? What's their incentive? Is there evidence for it? What would it take to prove you wrong?

Don't assume you're immune to misinformation. The smarter and more educated you are, the better you are at rationalizing false information that confirms your existing beliefs. Everyone is susceptible. Humility about your own potential to be deceived is important.

On a collective level, the institutions that were supposed to check executive power need to actually do their jobs. Congress needs to take its oversight role seriously. Courts need to stand firm against attempts to delegitimize them. Career staff need to protect institutional expertise. The media needs to call out misinformation clearly.

That requires those institutions to accept that they'll be attacked online. They'll be dunked on. Their legitimacy will be questioned. But that's the job. The job of these institutions is to be a check on executive power even when it's unpopular.

There's also the platform dimension. The platforms themselves could change. They could value accuracy over engagement. They could slow the spread of misinformation instead of promoting it. They could redesign their algorithms to not optimize for radicalization.

They won't do this voluntarily because engagement is how they make money. But regulation could force them to. Other countries have already started implementing digital regulations that constrain what platforms can do. The U.S. could too.

The Deeper Question: What Is Government For?

Ultimately, the clicktatorship raises a fundamental question: What is government for?

Is it for generating content and engagement? Is it for winning tribal conflicts on social media? Is it for making the base feel vindicated and making the other side feel owned?

Or is it for actually solving problems? For protecting people. For maintaining institutions. For creating the conditions where people can live decent lives. For thinking long-term.

Those are fundamentally different purposes. And in practice, they often conflict. The things that generate engagement aren't always the things that solve problems. The things that win on social media aren't always the things that work in practice.

A government optimized for one of those purposes will look very different from a government optimized for the other.

The clicktatorship is a government optimized for engagement, content generation, and tribal warfare. It's not optimized for actually solving problems or protecting people or maintaining institutions.

The tragedy is that these don't have to be in conflict. You could have a government that solved problems AND communicated effectively about them. You could have political leaders who had good ideas AND knew how to explain them on social media. You could have strong institutions AND use modern communication tools.

But that requires people who care about both things. It requires trade-offs. It requires accepting that sometimes the right thing isn't the thing that generates the most engagement.

The current system doesn't have people like that in power. It's selected for people who've learned to optimize exclusively for engagement. It's created institutions optimized for viral moments instead of actual governance.

Whether that changes depends on whether enough people recognize what's happening and decide it's not acceptable. Whether we collectively decide that democracy and institutions and expertise matter more than the next viral moment.

Right now, the incentives are all pointing in the wrong direction. And that's a serious problem.

FAQ

What is the clicktatorship exactly?

The clicktatorship is a form of government where decision-makers prioritize engagement metrics and viral content over evidence-based policy. It combines social media worldviews with authoritarian tendencies, where government officials treat major policy decisions as content opportunities and make choices based on how they'll perform online rather than whether they'll actually work. Think of it as a government that's optimized for trending topics instead of solving real problems.

How do conspiracy theories actually influence government policy in a clicktatorship?

Conspiracy theories follow a pipeline: they start on fringe forums, spread through right-wing social media platforms, gain traction through algorithmic amplification, and eventually reach people with power who've been immersed in these ecosystems so long they believe them too. Without institutional checks from career experts or fact-checking staff, the conspiracy theories move straight into policy decisions. USAID was cut because Elon Musk believed conspiracy theories about it, showing how completely the filter between fringe claims and federal action has broken down.

Why do social media platforms optimize for engagement over accuracy?

Social media platforms make money from advertising, and advertising revenue is directly tied to engagement metrics. Engagement comes from strong emotions, and the strongest emotions are outrage, fear, and tribal affiliation. Conspiracy theories and inflammatory content generate vastly more engagement than accurate but boring information. The platforms' algorithms are deliberately designed to promote whatever generates the most engagement, regardless of accuracy, because that's what makes them profitable.

Can someone be both skilled at manipulating social media AND be manipulated by it?

Yes, absolutely. Elon Musk is a perfect example of someone sophisticated enough to weaponize misinformation but who's simultaneously consumed by conspiracy theories he genuinely believes. Spending hours daily in algorithmic echo chambers doesn't just help you manipulate them, it also conditions your brain to believe the misinformation circulating there. You can be both the propagandist and the believer at the same time.

What are the real-world costs of a clicktatorship?

The costs are substantial and concrete: federal agencies are eliminated based on conspiracy theories (USAID cuts will cost lives in disease prevention and hunger relief), immigration enforcement becomes theater optimized for viral videos rather than effectiveness, military decisions could be influenced by engagement metrics rather than strategic analysis, and democratic accountability becomes a performance instead of actual oversight. Meanwhile, institutional expertise is eliminated, career staff are replaced with loyalists, and democratic institutions are delegitimized to prevent them from checking executive power.

How do I protect myself from misinformation in a clicktatorship environment?

Practice active information hygiene: seek out diverse viewpoints from sources you wouldn't algorithmically encounter, pause before sharing emotionally triggering claims and ask where they came from and who benefits if they're true, follow people with actual expertise in fields they're discussing, check claims before amplifying them, and maintain humility about your own susceptibility to misinformation. The smarter you are, the better you can rationalize false information, so assume you're vulnerable too.

Will the clicktatorship get better or worse over time?

Without intervention, it will likely get worse. The system requires increasingly extreme content to generate engagement, so political positions become progressively more radical. Meanwhile, institutional checks weaken as expertise is replaced with loyalty. The only pathways to improvement involve institutions taking their oversight role seriously despite being attacked online, potential regulation of social media platforms, or a collective decision that democracy and expertise matter more than engagement metrics.

What's the difference between the first Trump presidency and the clicktatorship?

The first Trump presidency was a "TV presidency" where understanding Trump required knowing his Twitter account and watching cable news. The second Trump presidency is a "Truth Social or X presidency" where decisions are made in reference to algorithms and online communities. The first used social media as a communication tool; the second uses it as the actual decision-making environment. Senior policymakers now exhibit online discourse patterns, speak in inside jokes from fringe communities, and make policy decisions based directly on engagement metrics rather than evidence.

The clicktatorship is real. It's not a theoretical concern or distant possibility. It's what's happening right now, shaping policy in real time, affecting real people.

The question isn't whether it exists. The question is what happens next. Whether we decide that facts, expertise, and institutions matter more than engagement metrics. Whether we're willing to demand better from our leaders and our platforms.

Because if we don't, this is what government becomes. And you don't have to look very hard to see it's already started.

The difference between now and a year from now might be dramatic. The people in power are learning how to use these systems more effectively. The platforms are finding new ways to amplify content that drives engagement. The base is becoming more tribal and more extreme.

There's a window to push back. But that window is getting smaller.

Additional Resources

If you want to understand clicktatorship better, pay attention to:

- The gap between what's trending on Truth Social and actual news reporting

- The evolution of rhetoric and policy intensity over months

- The qualifications of people being appointed to government positions

- Which conspiracy theories become policy

- The institutional erosion in government agencies

- How often policy changes correlate with trending topics

These are the indicators that clicktatorship is deepening. Watching them is how you understand whether things are getting better or worse.

And honestly? Right now, they're not getting better.

Key Takeaways

- The clicktatorship is government optimized for social media engagement rather than evidence-based policymaking

- Conspiracy theories now travel from fringe platforms to federal policy with minimal institutional filtering

- Senior policymakers have been shaped by algorithmic echo chambers to the point where they genuinely believe misinformation they promote

- Democratic accountability mechanisms are being delegitimized and transformed into performative theater

- The system creates self-reinforcing feedback loops that require increasingly extreme content to maintain engagement

