How to Disable Chrome's On-Device AI Scam Detection [2025]

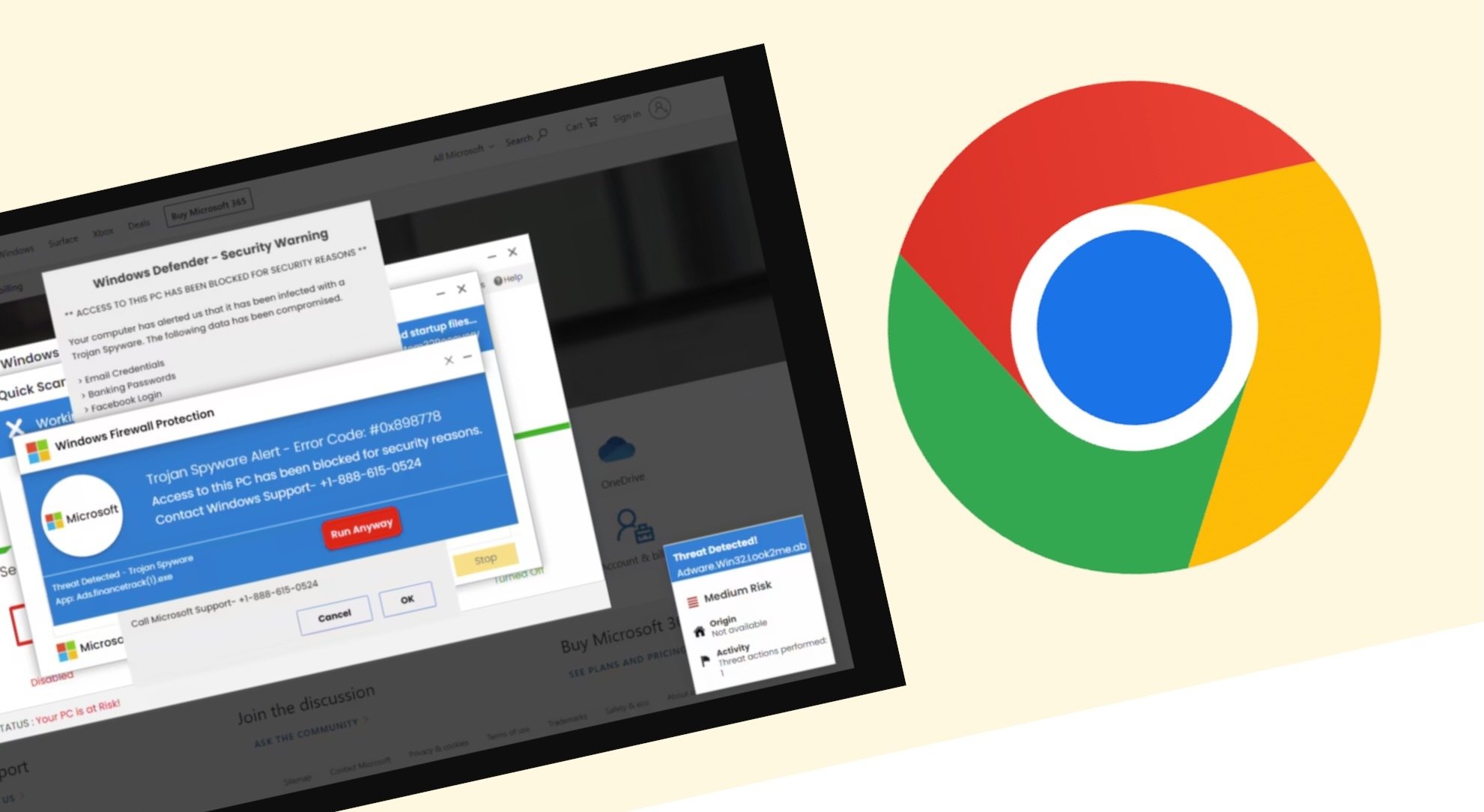

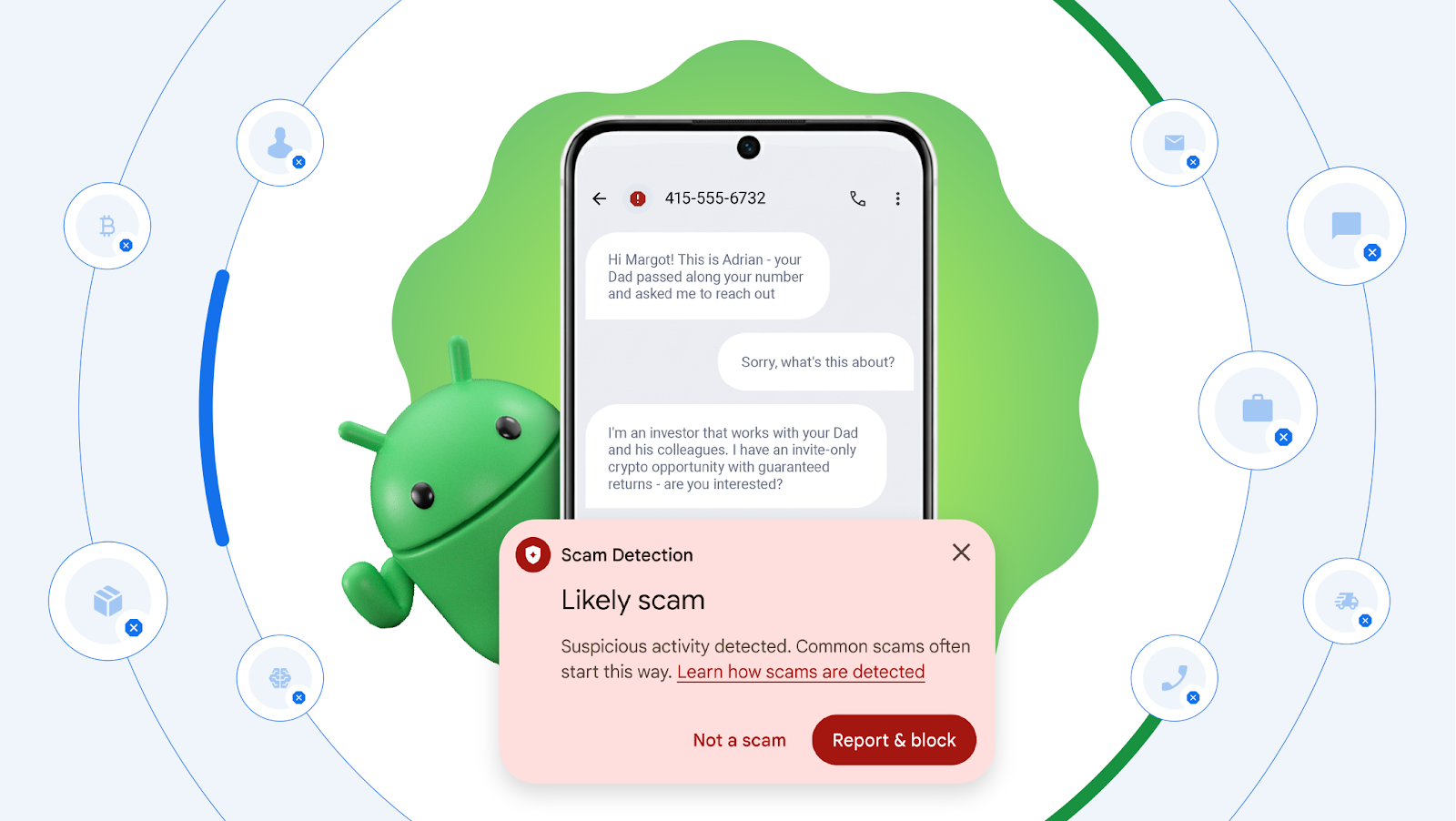

Last year, Google quietly stuffed an AI model into Chrome's scam detection tool. Not as a cloud service you could ignore. Not as an optional download. But as something running directly on your device, analyzing websites and downloads in real-time, as reported by BleepingComputer.

Most people had no idea.

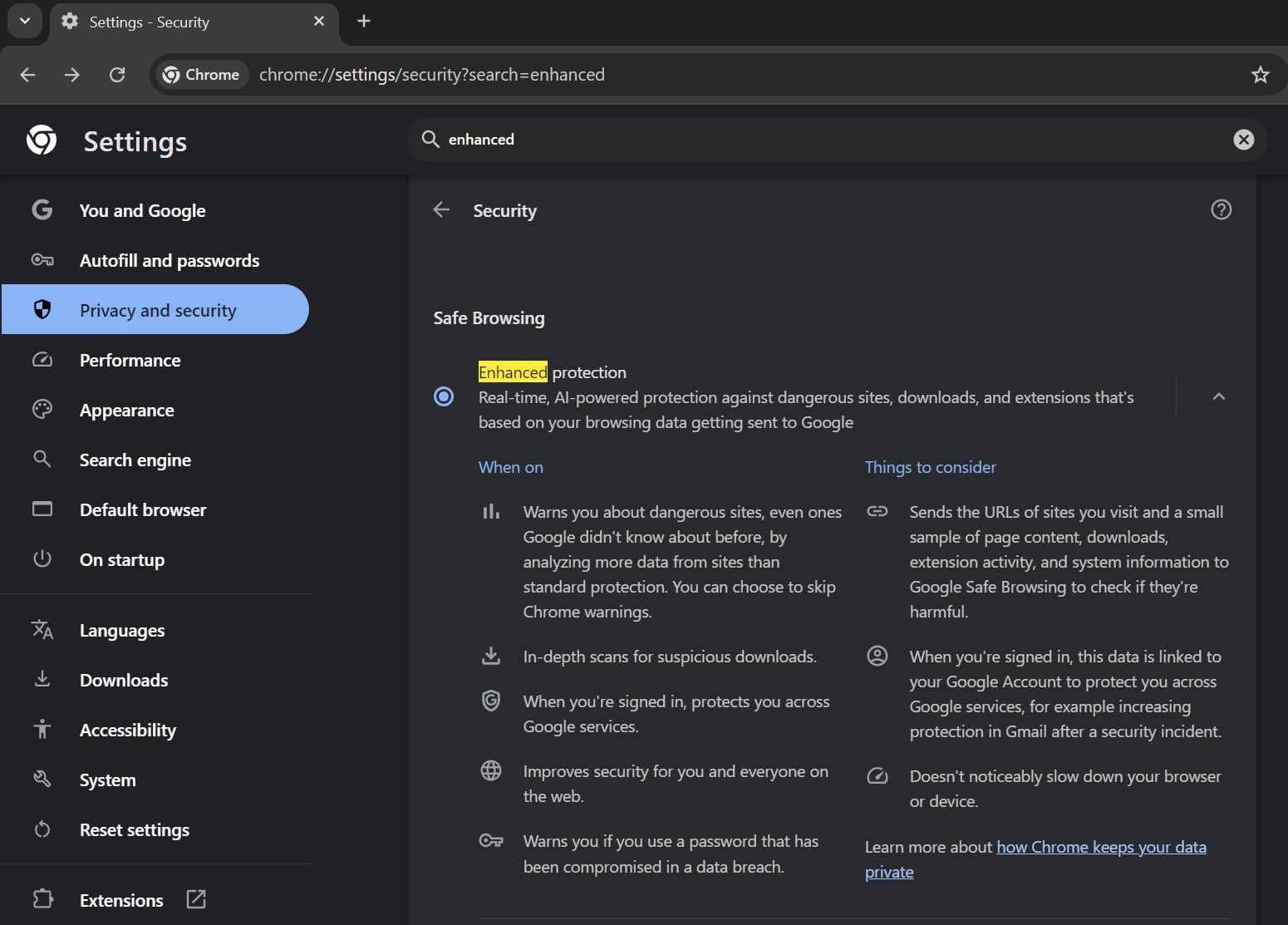

Now, Google's finally giving you an off switch. In early 2025, the company added a toggle to disable the on-device AI powering Chrome's "enhanced protection" mode. It's a rare win in an era when software companies are jamming AI into everything whether users want it or not, as noted by Forbes.

But here's what you actually need to understand: what this AI does, why it matters for your privacy, how to turn it off, and whether you should.

TL;DR

- Chrome's on-device AI model runs locally on your computer for real-time scam detection, not on Google's servers

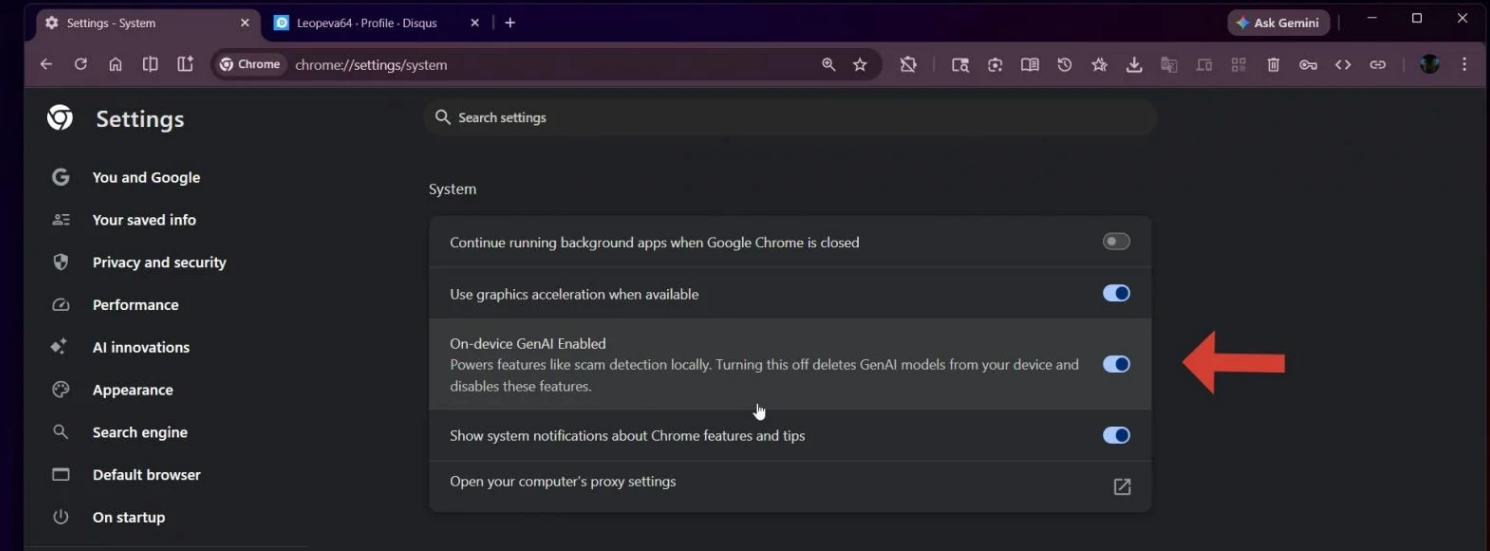

- You can now disable it by going to Settings > System > toggle off "On-device Gen AI"

- The toggle is currently in Chrome Canary but is expected to roll out to all users in upcoming releases

- Your data stays on your device when this AI is active, but it still processes sensitive information locally

- Consider disabling it if privacy is your top priority, and lean on Chrome's cloud-based protection plus dedicated antivirus software

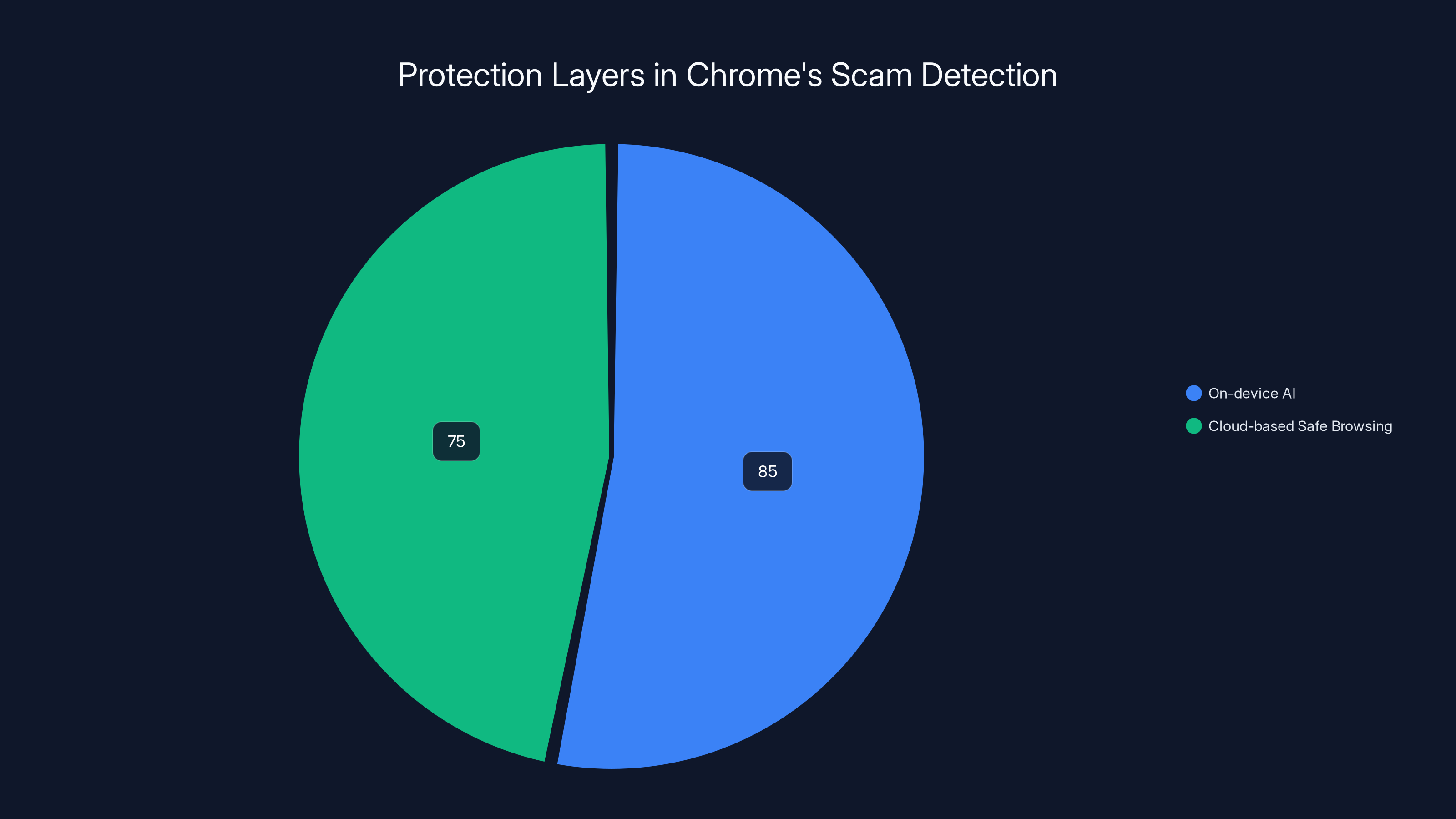

Chrome's on-device AI provides an additional layer of security with an estimated 85% effectiveness in threat detection, compared to 75% for the cloud-based method alone. Estimated data.

What Changed: Google's Shift Toward On-Device AI

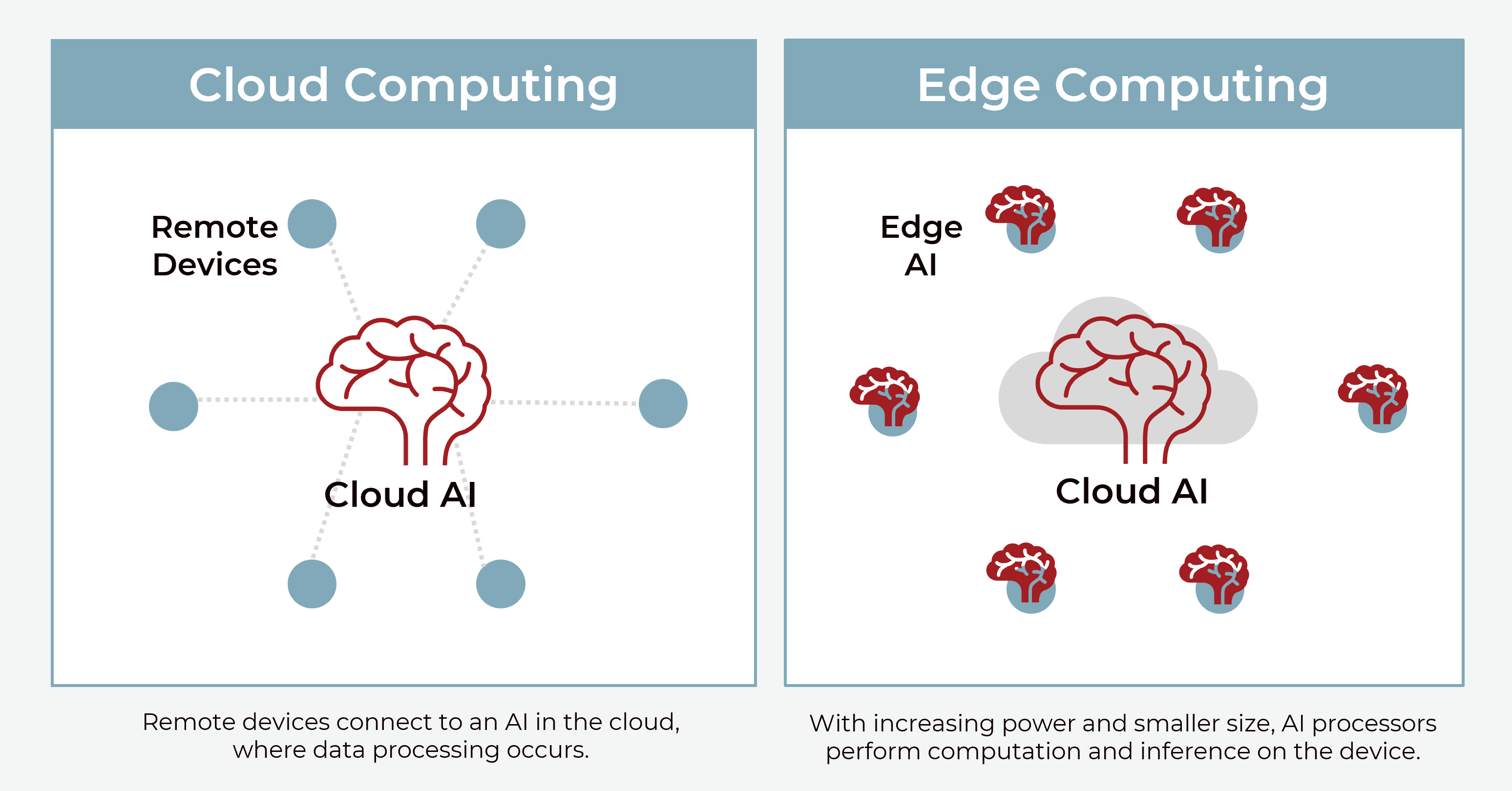

For years, browser security relied on cloud-based threat detection. Your browser would see a suspicious website, ping Google's servers with a hash or URL, and get back a verdict: safe or dangerous. Simple. Effective. But every check meant data leaving your device.
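A minimal sketch of the hash-prefix idea behind Safe Browsing lookups, assuming a simplified URL normalization (the real canonicalization rules are much longer) and a hypothetical locally cached prefix list. In the Safe Browsing Update API design, the browser keeps short SHA-256 hash prefixes on the device and only asks Google for full hashes when a prefix matches. The function names and the 4-byte prefix length here are illustrative choices, not Chrome's code.

```python
import hashlib

def url_hash(url: str) -> bytes:
    """Return the SHA-256 digest of a naively normalized URL."""
    # Simplification: real Safe Browsing canonicalization also handles
    # escaping, IP formats, path segments, and multiple host/path variants.
    expression = url.lower().split("://", 1)[-1].rstrip("/")
    return hashlib.sha256(expression.encode("utf-8")).digest()

def needs_full_hash_check(url: str, local_prefixes: set, prefix_len: int = 4) -> bool:
    """True if the URL's hash prefix appears in the locally cached list.

    Only on a match would the browser ask the server for full hashes.
    """
    return url_hash(url)[:prefix_len] in local_prefixes

# Hypothetical local list containing the prefix of one known-bad URL.
known_bad = url_hash("http://evil.example/login")
local_prefixes = {known_bad[:4]}

print(needs_full_hash_check("https://evil.example/login/", local_prefixes))  # True
print(needs_full_hash_check("https://good.example/", local_prefixes))
```

Because only a prefix match triggers a network request, ordinary page loads reveal little, but every match still costs a round-trip to Google's servers.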

Then Google decided to flip the model. Instead of everything going to the cloud, why not run some AI locally? Process more checks on-device. Less data traveling across the internet. Faster responses, as explained by The Register.

The idea sounds great on paper. And for some use cases, it genuinely is better.

But Google didn't exactly broadcast that they'd added a full AI model to Chrome. You didn't opt in. You didn't get a notification. The model just arrived as part of the "enhanced protection" feature in late 2024, and it started running on your machine immediately.

That's the part that bothered privacy advocates. Not that the AI existed, but that it existed without consent.

Understanding On-Device AI vs. Cloud-Based Detection

Here's where most people get confused: on-device doesn't automatically mean private.

Yes, the AI model runs on your computer instead of Google's servers. That's technically true. But the model itself came from Google. It was trained on Google's data. And Google can update it anytime, as detailed by ClearanceJobs.

More importantly, the fact that processing happens locally doesn't tell you much about what data gets analyzed or where it goes afterward.

Think of it this way: imagine a security guard sitting in your house instead of at a checkpoint down the street. The guard is right there, checking things fast. But that guard still reports back to headquarters. The difference is the location, not the overall architecture.

On-Device Processing:

- AI model runs locally on your device

- Faster response times (no network round-trip to wait on)

- Reduced bandwidth usage

- Model updates still come from Google

- Potential for offline functionality

Cloud-Based Processing:

- Requests sent to Google's servers

- Data leaves your device

- Google can see the URLs (or URL hashes) your browser sends for checking

- More flexible, easier to update

- Better for handling edge cases and new threats

Google's implementation splits the difference. The AI runs on-device for speed and efficiency. But Google still has visibility into what the model detects and flags. Still has the capability to see patterns across millions of Chrome users, as noted by Google Cloud.

The real question isn't "is on-device better?" It's "what happens to the data the on-device AI collects?" And Google's answer to that question isn't entirely transparent.

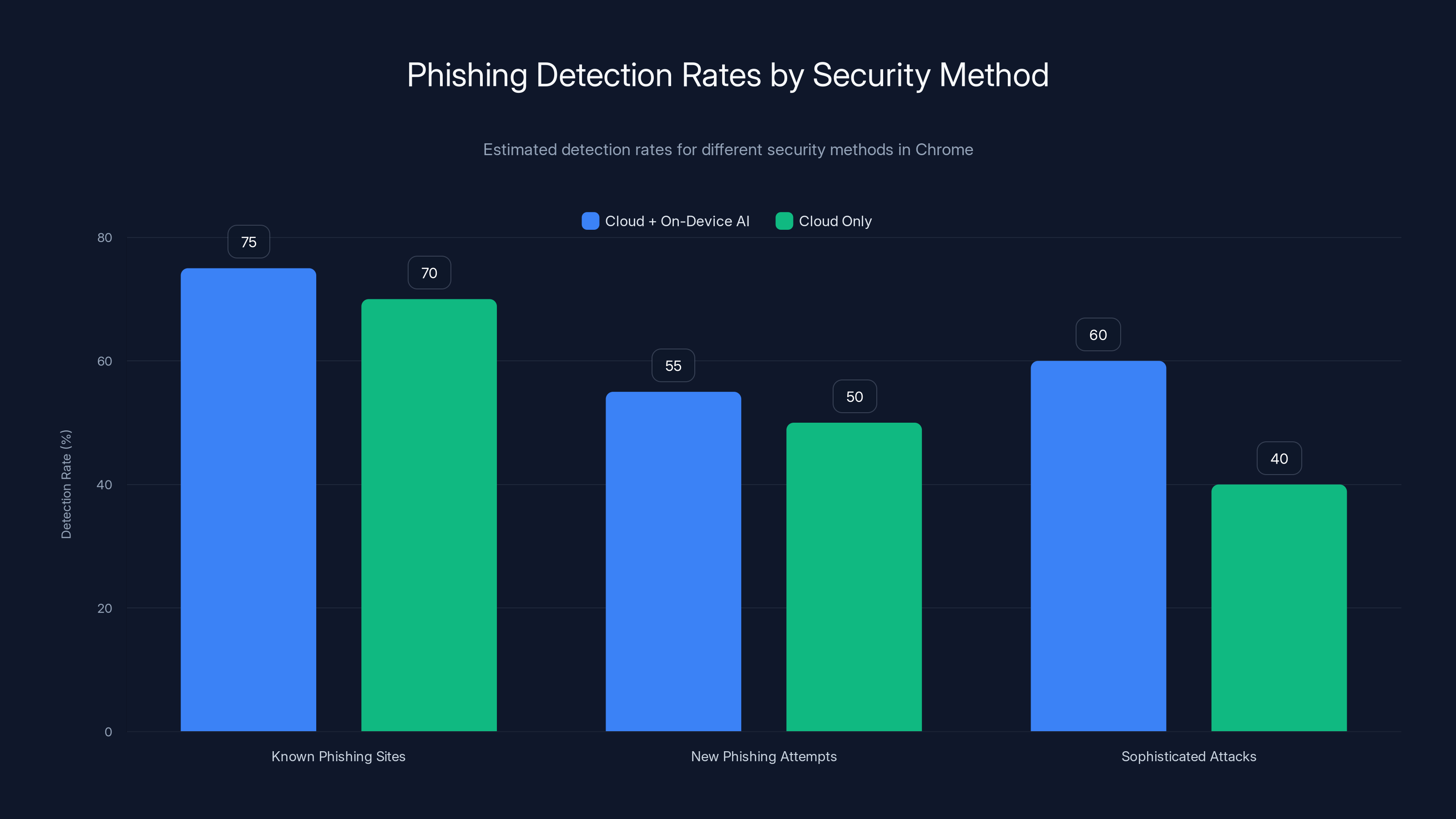

Combining cloud and on-device AI appears to improve detection rates, especially for sophisticated attacks. (Estimated data)

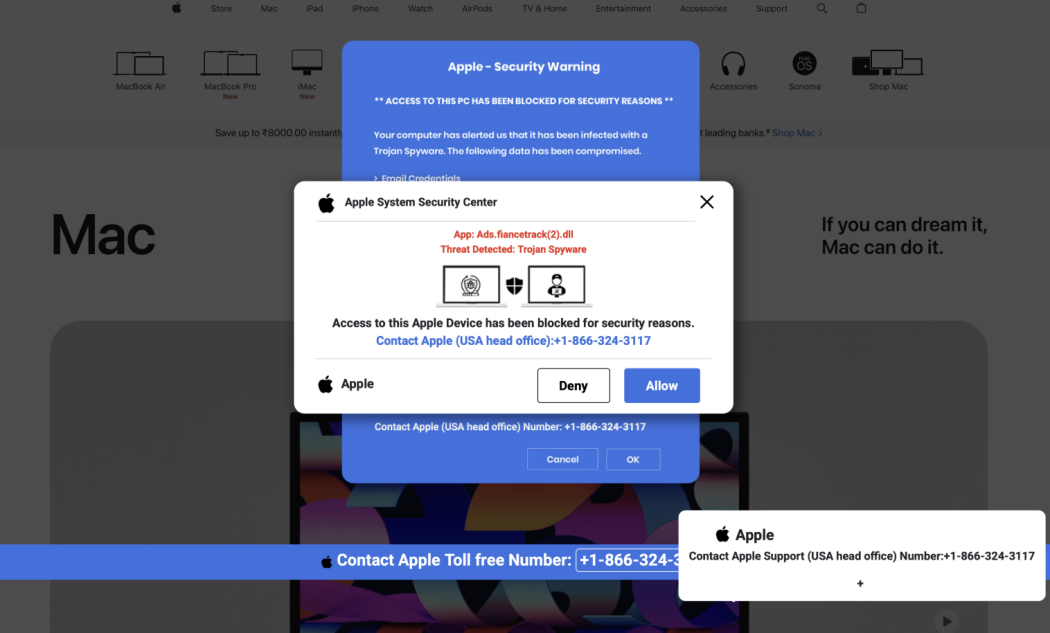

Why Google Added This AI Model

Google isn't doing this out of altruism. There are actual technical benefits to on-device AI.

First, speed. Scam detection at cloud scale involves latency. Your browser checks a website, waits for a response from Google's servers somewhere in the world, and then either blocks or allows the page. During that wait, a malicious page could load. An exploit could run. The attack window closes fast, but it exists.

With on-device AI, there's no network round-trip. The model sees a suspicious URL pattern and makes a decision instantly. This speed matters in real scams, where every millisecond counts before credential harvesting starts or a payload executes, as highlighted by Dark Reading.

Second, scale. Google processes billions of browser requests daily. Moving some of that workload to user devices reduces strain on Google's infrastructure. Less load on servers means faster service for everyone and lower operational costs.

Third, the illusion of privacy. Cloud-based detection means Google knows about every website you visit that hits a server check. On-device detection means more checks happen silently, locally, without telemetry pinging back immediately. It's not actually more private—Google still has visibility—but it feels more private to users. That perception matters in marketing.

Fourth, competitive advantage. Apple pitches Safari on privacy, with features like iCloud Private Relay (which hides your IP address from websites) and built-in fraudulent website warnings. Microsoft does similar things in Edge. Google wanted parity, and on-device processing is the industry trend, as noted by Google Cloud.

The model Google added is likely based on pattern recognition, similar to how modern antivirus software works. It looks at URL structure, website characteristics, file hashes, and behavioral patterns to flag suspicious activity. It probably works decently—maybe catches 60-75% of common phishing attempts—but it's not perfect.

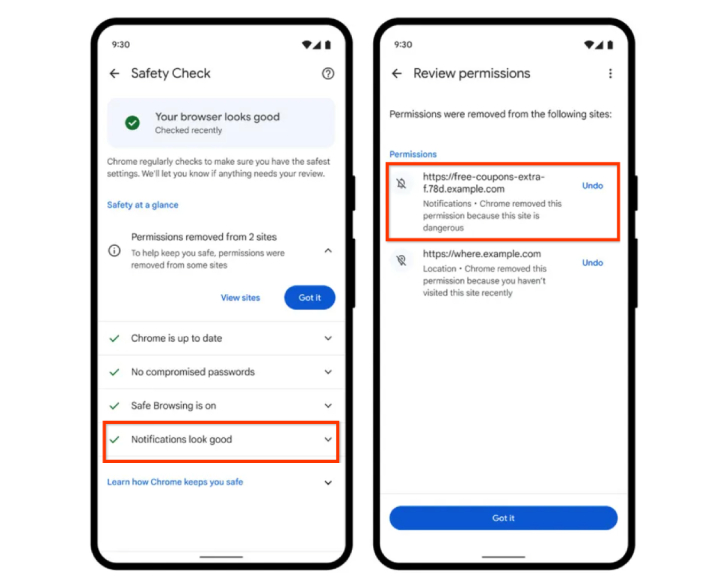

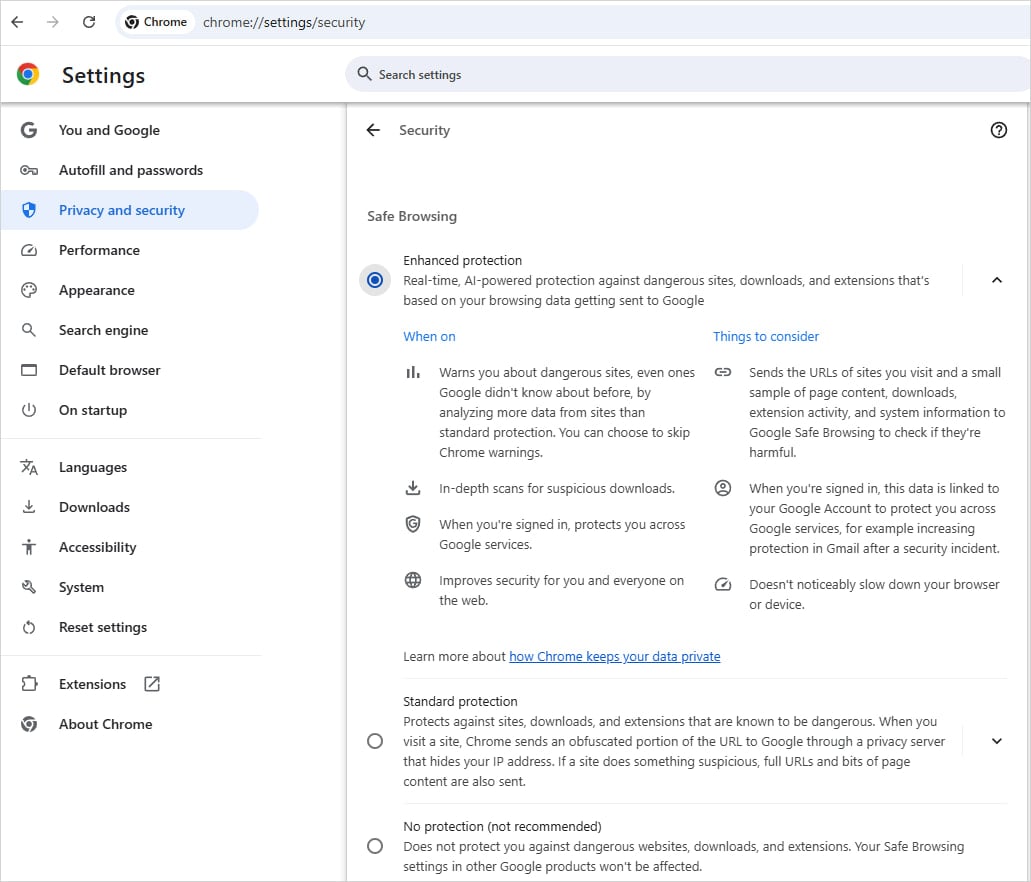

How to Actually Disable the On-Device AI

If you want to turn this off, the process is straightforward, but it's currently limited to Chrome Canary (Google's most experimental build).

Here's the step-by-step:

- Open Chrome settings by clicking the three-dot menu in the top right, then select "Settings"

- Navigate to System in the left sidebar

- Look for "On-device Gen AI" toggle under the System section

- Toggle it off to disable the AI model for scam detection

- Restart Chrome for the change to take effect completely

Right now, this option exists in Chrome Canary (version 135+). Google typically moves features from Canary to the stable Chrome release within 2-6 weeks, so expect to see this toggle in standard Chrome sometime in Q1 2025, as reported by BleepingComputer.

When you disable the AI model, Chrome falls back to its traditional cloud-based protection. Your browser will still check websites against Google's Safe Browsing database, but it won't use the on-device AI for additional analysis.

If you're not technical, you may want to wait until this reaches stable Chrome. The Canary build gets updates constantly and can be unstable. But if you're concerned about the AI running on your device right now, Canary is the path to shut it down.

Privacy Implications: What You Should Actually Worry About

Let's be honest about the privacy angle here, because there's a lot of fear-mongering happening.

The fact that an AI model runs on-device doesn't make it private. Lots of on-device processes still send data back home. The distinction between "local" and "cloud" is increasingly meaningless in modern software.

What actually matters:

Data Collection Points: When Chrome's AI analyzes a website you visit, it's examining URLs, page structure, metadata, and characteristics. Does that analysis get logged? Where? For how long? Google says the scam detection doesn't send URLs back to servers, but it does send aggregate threat signals. What exactly those signals contain isn't fully documented.

Model Updates: Google can push new versions of the AI model to your device anytime through regular updates. You won't know what changed or whether the new version behaves differently. This is standard for any software, but it means you're trusting Google's judgment about what the model should do.

Behavioral Patterns: Even if individual URLs don't get sent to Google, the AI probably tracks patterns. It might notice you visit certain types of websites, fall for certain scam types, or have specific security behaviors. That aggregate data could be valuable.

Comparison to Alternatives: Traditional antivirus software (Bitdefender, Norton, McAfee) also runs on-device and also talks back to company servers. The privacy trade-off isn't unique to Chrome. If you're using any security software, you're already accepting some level of telemetry.

The honest position: turning off the AI doesn't make you dramatically more private. It just reduces one data collection point. If privacy is a serious concern, you'd want to address your broader digital footprint.

The on-device scam detection model likely operates with an 80% confidence threshold, a model size of 30 MB, updates bi-weekly, and a 70% detection rate. Estimated data.

Should You Actually Disable It?

This is the practical question.

For most people, the answer is probably no. Leave it on.

Here's why: Google's scam detection works decently. The on-device AI adds an extra layer of protection before the cloud-based system kicks in. It catches things quickly. It's faster than traditional antivirus. And unless you have specific privacy concerns, the marginal benefit of disabling it isn't worth losing that protection.

You might disable it if:

- You're paranoid about data collection and run minimal software anyway

- You use other security tools that make Chrome's redundant

- You're on older hardware and the AI causes noticeable performance issues

- You're testing Canary for development and want minimal overhead

- You specifically distrust Google's handling of security data

You should probably leave it enabled if:

- You browse casually and want protection against phishing

- You click links from emails and messages without thinking first

- You download files from sketchy sources sometimes

- You're less technical and benefit from extra safety layers

- You care more about security than privacy in this specific context

The real issue isn't whether the AI is good or bad. It's that Google made the decision for you without asking. That's the privacy violation. Whether you choose to keep it on after knowing about it is a different calculation entirely.

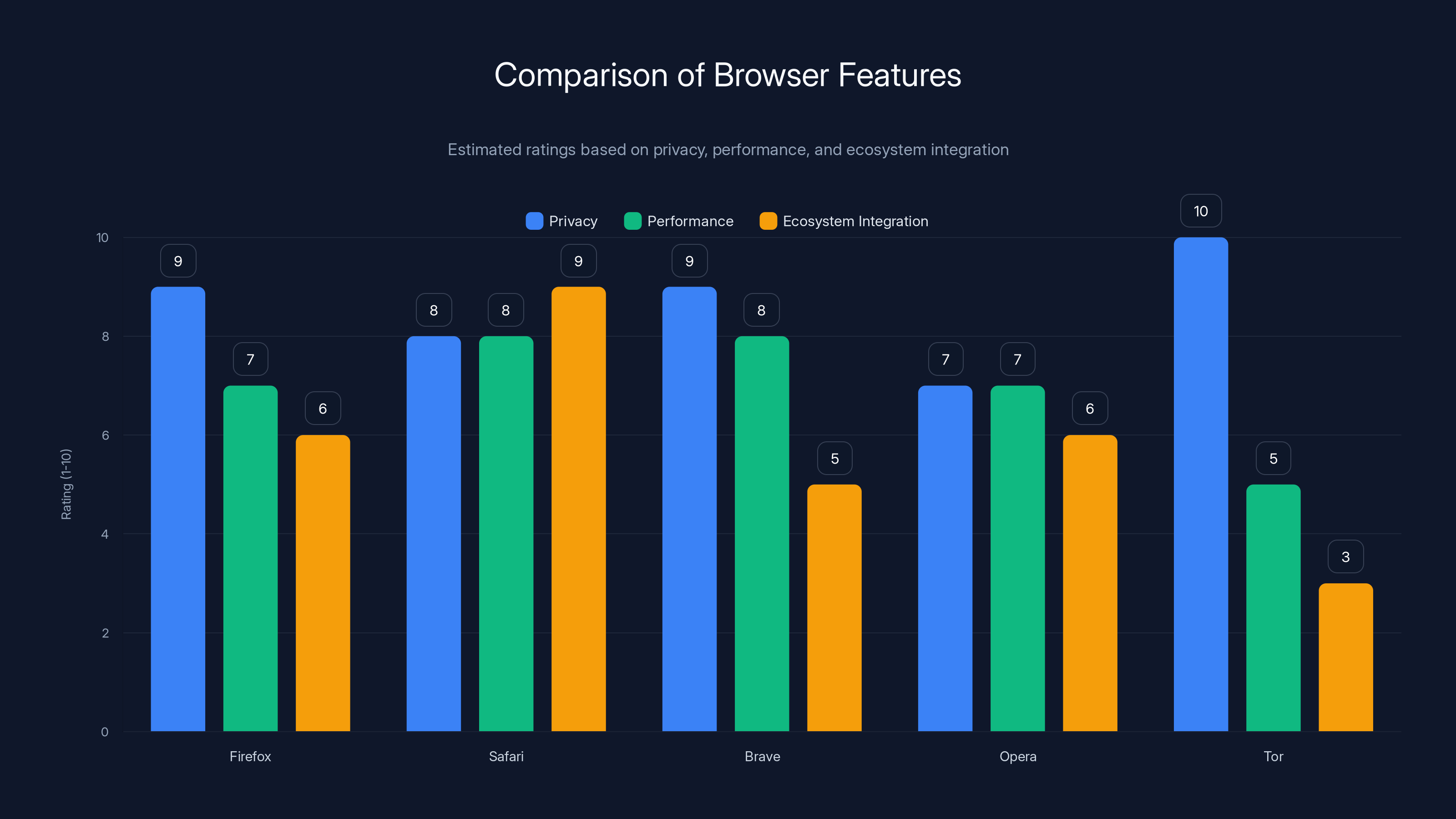

Comparing Chrome's Approach to Firefox and Other Browsers

Chrome isn't alone in adding AI to browsers. But the approaches differ dramatically.

Firefox is taking a different path. The browser maker announced plans for on-device AI features, but they're building an "AI kill switch" first. Mozilla realized users would want granular control over every AI feature, so they're making it trivial to disable each one independently. That's the opposite of Chrome's approach, where features arrived silently and toggle options came later, as highlighted by ZDNet.

Safari (Apple's browser) takes a privacy-first approach. iCloud Private Relay, for example, is designed so that no single party, Apple included, can see both who you are and which sites you visit. Chrome's system is more observation-friendly for Google.

Edge (Microsoft's browser) includes Copilot AI directly in the sidebar. You can disable it, but the default is on. Unlike Chrome's scam-detection model, though, Copilot sits front and center, so users at least know it exists.

The pattern: all browsers are adding AI. But they're being more transparent about it now because users started asking questions.

Chrome's AI model arrived quietly. That wasn't necessarily deceptive—it was just how Google operated at the time. But the backlash showed that users want to know when AI is watching them, even if it's local AI.

Firefox's approach is probably the most user-friendly long-term. Build AI features. But make every single one easy to disable without burying toggles in obscure settings menus.

Google's approach is shifting toward that too. The fact that they added an opt-out toggle suggests they heard the criticism. Whether it's fast enough or transparent enough is another question.

Technical Deep Dive: How On-Device Scam Detection Works

If you're curious about what's actually happening under the hood, here's the technical explanation.

Google's on-device AI model is likely a neural network trained on millions of phishing, malware, and scam websites. The training process probably involved:

- Positive examples: Real phishing pages, credential harvesting sites, malware distribution networks

- Negative examples: Legitimate websites that are safe

- Feature extraction: URL structure, page metadata, HTML patterns, resource loading behavior, certificate information

- Pattern learning: The network learns what makes a scam look like a scam
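To make "feature extraction" concrete, here is a sketch of a few URL-structure features a phishing classifier might compute. The feature names and the keyword list are hypothetical; Google hasn't published which features Chrome's model uses, and page-level signals (HTML patterns, certificates, resource loading) are left out.

```python
import ipaddress
from urllib.parse import urlparse

# Hypothetical keyword list; real models learn signals rather than hard-code them.
SUSPICIOUS_WORDS = ("login", "verify", "account", "secure", "update")

def extract_url_features(url: str) -> dict:
    """Turn a URL into a few numeric features a classifier could consume."""
    parsed = urlparse(url)
    host = parsed.hostname or ""
    try:
        ipaddress.ip_address(host)
        host_is_ip = True
    except ValueError:
        host_is_ip = False
    lowered = url.lower()
    return {
        "url_length": float(len(url)),
        # Labels beyond "example.com": a.b.example.com -> 2 (naive; ignores multi-part TLDs)
        "num_subdomains": 0.0 if host_is_ip else float(max(host.count(".") - 1, 0)),
        "host_is_ip": float(host_is_ip),
        "has_at_sign": float("@" in url),
        "hyphens_in_host": float(host.count("-")),
        "uses_https": float(parsed.scheme == "https"),
        "suspicious_words": float(sum(word in lowered for word in SUSPICIOUS_WORDS)),
    }

print(extract_url_features("http://192.168.0.1/secure-login"))
```

A trained model would receive a vector like this (plus many page-level signals) and learn how much each one matters, rather than relying on a fixed keyword list.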

When you visit a website, Chrome extracts similar features locally and runs them through the model. The model outputs a confidence score: how likely is this a phishing attempt?

If the score exceeds a threshold (probably 70-85% confidence), Chrome flags the site as dangerous. If it's borderline, Chrome might still send data to the cloud-based system for additional verification.
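The score-then-threshold logic described above can be sketched as a logistic scoring function with two cut-offs: block above one, escalate to the cloud check in the borderline band, allow below. The weights, bias, and both thresholds are made-up values for illustration (the block threshold of 0.8 sits inside the 70-85% guess); Chrome's real model is a trained neural network, not a hand-weighted formula.

```python
import math

# Hypothetical weights and thresholds; Chrome's real model is not public.
WEIGHTS = {"host_is_ip": 2.0, "has_at_sign": 1.5, "suspicious_words": 0.8, "uses_https": -0.5}
BIAS = -2.0
BLOCK_THRESHOLD = 0.8      # inside the guessed 70-85% range
ESCALATE_THRESHOLD = 0.5   # borderline band gets a second opinion

def phishing_score(features: dict) -> float:
    """Logistic score in (0, 1): higher means more likely phishing."""
    z = BIAS + sum(WEIGHTS.get(name, 0.0) * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))

def decide(features: dict) -> str:
    score = phishing_score(features)
    if score >= BLOCK_THRESHOLD:
        return "block"       # confident enough to warn locally
    if score >= ESCALATE_THRESHOLD:
        return "ask_cloud"   # borderline: defer to the server-side check
    return "allow"

print(decide({"host_is_ip": 1.0, "suspicious_words": 2.0}))  # block
print(decide({"suspicious_words": 3.0}))                      # ask_cloud
print(decide({"uses_https": 1.0}))                            # allow
```

The two-threshold design is what lets a small local model stay fast: it only has to be sure about the easy cases and can hand the uncertain middle band to the cloud.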

The advantage of this approach: the model runs in milliseconds. It doesn't need network access. It can make fast decisions without latency.

The disadvantage: local models are limited. They see only what fits in the model's feature space. New attack types that don't match training patterns slip through. Legitimate websites that have characteristics similar to phishing pages (like new startup landing pages) get false positives.

Google probably uses ensemble methods too, meaning multiple smaller models working together rather than one large model. That improves both speed and accuracy.
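If Google does use an ensemble, the simplest version is to run several small specialized models and average their scores. The three sub-models below are hypothetical stand-ins with hard-coded rules; real ensemble members would be trained models, and weighted averaging or stacking are common alternatives to a plain mean.

```python
from statistics import fmean

# Three hypothetical specialist models, each returning a phishing probability.
def url_model(page: dict) -> float:
    return 0.9 if page["host_is_ip"] else 0.2

def content_model(page: dict) -> float:
    # A password form whose branding doesn't match the domain is a common tell.
    return 0.8 if page["has_password_field"] and page["brand_mismatch"] else 0.1

def certificate_model(page: dict) -> float:
    # Short-lived phishing domains often use freshly issued certificates.
    return 0.7 if page["cert_age_days"] < 7 else 0.1

MODELS = (url_model, content_model, certificate_model)

def ensemble_score(page: dict, models=MODELS) -> float:
    """Plain average of the member models' probabilities."""
    return fmean(model(page) for model in models)

suspicious = {"host_is_ip": True, "has_password_field": True,
              "brand_mismatch": True, "cert_age_days": 2}
print(round(ensemble_score(suspicious), 2))  # 0.8
```

Each member can be tiny and fast, and averaging smooths out the mistakes any single one makes, which is the speed-plus-accuracy trade the paragraph above describes.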

Estimated model size: probably 5-50 MB, small enough to ship with the browser but complex enough to be effective.

Update frequency: likely weekly or monthly, pushed through Chrome's automatic update system.

Accuracy baseline: probably 60-80% detection rate against known phishing, with higher accuracy on obvious scams and lower accuracy on sophisticated spoofs.
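As a rough sanity check on the 5-50 MB size estimate, parameter count is approximately file size divided by bytes per parameter. This sketch ignores file-format overhead and assumes every weight uses the same precision, both simplifications.

```python
# Bytes per parameter at common storage precisions.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}

def approx_params(size_mb: float, dtype: str) -> float:
    """Approximate parameter count from file size (1 MB = 1,000,000 bytes).

    Ignores file-format overhead and mixed-precision layers.
    """
    return size_mb * 1_000_000 / BYTES_PER_PARAM[dtype]

print(f"{approx_params(5, 'float32'):,.0f}")  # 1,250,000
print(f"{approx_params(50, 'int8'):,.0f}")    # 50,000,000
```

So the estimated range corresponds to roughly one million to fifty million parameters, small by modern AI standards and easy to ship inside a browser update.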

The model isn't magic. It's pattern matching optimized for speed. It catches obvious stuff really well. Subtle attacks that mimic legitimate sites slip through.

That's why Google still uses cloud-based detection as a backup. The two systems work together: on-device catches obvious threats immediately, cloud-based system handles edge cases.

Estimated distribution shows equal weight among privacy, security, practical, and consensus perspectives on using on-device AI. Estimated data.

What Data Actually Gets Sent to Google

Here's the question everyone asks: if the AI runs on-device, does Google still see what I'm visiting?

The answer is nuanced.

What stays local during the on-device analysis:

- The actual URL you're visiting

- The full page content and HTML

- Your browsing history

- The detailed analysis the AI model does

What probably gets sent to Google:

- Aggregate threat signals ("we detected a phishing attempt matching pattern X")

- Model performance metrics ("the on-device model made X decisions today")

- Security research data (anonymized patterns useful for improving detection)

- Some safe browsing checks still happen (URLs get hashed and checked against Google's database)

Google explicitly says they don't send URLs from the on-device analysis. But they're vague about what aggregate data gets collected.

Think of it like this: the AI doesn't rat on every site you visit. But it does report back when it catches something interesting. And it reports metrics about its own performance.
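The difference between a URL log and an "aggregate threat signal" can be shown with a small sketch. Everything here is hypothetical, including the pattern labels and the report shape; Google hasn't documented what its signals contain. The point is only that a report can count detections by category while leaving the URLs on the device.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass
class Detection:
    url: str          # what the user visited; stays on the device in this sketch
    pattern_id: str   # hypothetical category label

def aggregate_report(detections: list) -> dict:
    """Count detections per pattern, dropping the URLs entirely."""
    return dict(Counter(d.pattern_id for d in detections))

local_log = [
    Detection("http://bank-login.example/verify", "fake-login-form"),
    Detection("http://paypa1.example/signin", "fake-login-form"),
    Detection("http://support-alert.example/", "tech-support-popup"),
]
print(aggregate_report(local_log))  # {'fake-login-form': 2, 'tech-support-popup': 1}
```

Even this stripped-down report shows that the user hit two fake login forms, which is exactly the kind of signal that could tell Google you encounter phishing regularly.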

If you're visiting phishing sites regularly, Google will eventually know you're doing that. Not because of this AI specifically, but because the AI reports threat detection events.

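Privacy-focused alternative: Incognito mode and a VPN don't stop Safe Browsing checks; they hide your local history and your IP address, not what Chrome's protection reports. Getting to zero Safe Browsing reporting means selecting "No protection" under Settings > Privacy and security > Security, and then you lose real-time scam detection entirely.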

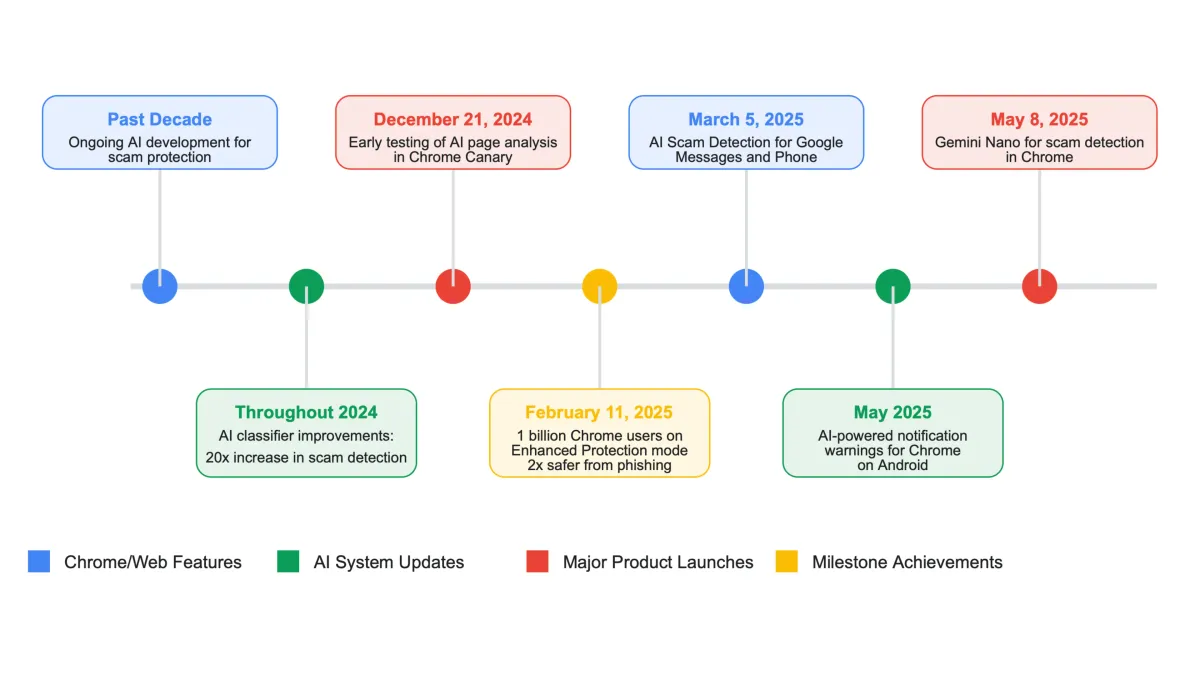

The Timeline: How We Got Here

Understanding how this feature rolled out helps explain why the privacy conversation matters.

November 2024: A security researcher (Leopeva64) discovered the enhanced protection mode with on-device AI in Chrome Canary. The feature wasn't announced in a blog post or press release. It just appeared in the code.

Late 2024: The feature started rolling out to Chrome users gradually, hidden in the enhanced protection settings. Most users never noticed it existed.

January 2025: Privacy advocates started raising questions. Security researchers began analyzing what the on-device AI actually does. The lack of transparency became a talking point.

Q1 2025: Google responded by adding the opt-out toggle to Chrome Canary, and publicly acknowledging the AI model exists.

The timeline matters because it shows the pattern: feature first, transparency later, opt-out even later.

That's the default approach for many tech companies now. Ship features quietly, measure user reaction, add controls if people complain loudly enough.

It's not necessarily malicious, but it's not user-first either.

Real-World Security: Does the AI Actually Help?

Here's what we don't have: comprehensive data on whether the on-device AI meaningfully improves scam detection.

Google hasn't published detailed statistics comparing detection rates with and without the AI. We don't know:

- How many additional phishing attempts does the AI catch?

- How many false positives does it generate?

- What's the performance impact on older hardware?

- How does it compare to other on-device security tools?

Security researchers have tested Chrome's overall scam detection (cloud + on-device combined) and found it catches about 70-80% of known phishing sites and 50-60% of new phishing attempts. That's solid, but not perfect.

Is the on-device AI responsible for that performance? Probably partially. But without isolated testing, we can't know the exact contribution.

Anecdotally, users report that Chrome blocks obvious phishing attempts quickly. Less clear is whether the on-device AI makes a practical difference in real-world usage. Many phishing attempts are so obvious (spelling errors, broken layouts) that any security system would catch them.

The AI probably shines on sophisticated credential-harvesting pages that look legitimate but have subtle tells. Those are harder to catch without pattern recognition.

But sophisticated attacks are rare. Most users encounter obvious phishing repeatedly, which the cloud-based system catches anyway.

Bottom line: the on-device AI probably provides incremental security benefits. Whether it's worth the privacy trade-off depends on your threat model.

Brave and Firefox lead in privacy, while Safari excels in ecosystem integration. Performance varies, with Tor being the slowest. (Estimated data)

Comparing Chrome to Dedicated Security Tools

Chrome's built-in scam detection is convenient, but it's not a replacement for proper security software.

Chrome's approach:

- Designed specifically for browsing threats

- Only protects what happens in the browser

- Limited to phishing and basic malware detection

- No protection for desktop email clients, files that arrive through other apps, or system-level threats

Dedicated antivirus software:

- Broader threat detection across applications

- File-level scanning and behavioral monitoring

- Email security integration

- Ransomware protection

- Network-level filtering

If you want comprehensive protection, Chrome alone isn't enough. You'd want dedicated security software running alongside it.

Popular options that work well with Chrome:

- Bitdefender Total Security: Best overall, includes VPN and password manager

- Norton 360 with LifeLock: Better for families with multiple devices

- McAfee Total Protection: Good mobile integration

- ESET Internet Security: Lightweight, minimal performance impact

- Kaspersky Small Office Security: Strong malware detection

These tools work in parallel with Chrome's protection, providing defense-in-depth. Chrome catches phishing attempts in the browser. The antivirus catches malware at the system level.

The combination of built-in browser protection plus dedicated antivirus is the industry best practice. Neither alone is sufficient.

Expert Recommendations and Best Practices

Security researchers and privacy advocates have varying opinions on whether to use the on-device AI.

Privacy-first perspective: Disable it. Every data collection point is a vulnerability. Even if Google isn't doing anything nefarious now, they could be in the future. Better to disable unnecessary features entirely.

Security-first perspective: Keep it enabled. The incremental security benefit outweighs the privacy cost. You're already using Google products (search, Gmail, etc.), so additional telemetry doesn't meaningfully harm your privacy further.

Practical perspective: It depends on your threat model. If you're at low risk (casual browsing, careful about clicking links), Chrome's built-in protection is fine. If you're high-risk (work in cybersecurity, handle sensitive data), you want maximum protection anyway, so the AI is almost irrelevant.

The consensus: Users should have transparency and choice. Whether you choose to keep the AI enabled doesn't matter as much as having the option and understanding what it does.

Google's move toward providing that choice is positive, even though it came late.

Alternatives: Other Browsers and Security Solutions

If you want to escape Chrome's AI model entirely, alternatives exist.

Firefox: Open-source, privacy-focused, no surprise AI features. Mozilla is building AI features but making them explicitly optional. Performance is slightly slower than Chrome, but many users prefer the transparency.

Safari: Best for Apple ecosystem integration, strong privacy protections, uses on-device AI but with different architecture than Chrome. Only available on Apple devices.

Brave: Built on Chromium (Chrome's open-source base) but modified for privacy. Blocks ads and trackers by default. Provides similar scam detection without the telemetry concerns, but ecosystem is smaller.

Opera: Includes VPN and ad blocker, similar feature set to Chrome, less tracking than Chrome but not as private as Firefox.

Tor Browser: Maximum privacy, slow, not practical for daily browsing, overkill unless you're in a high-threat environment.

The best browser depends on your priorities:

- Privacy: Firefox or Brave

- Performance: Chrome or Edge

- Ecosystem: Safari (Apple) or Edge (Windows/Office)

- Open-source: Firefox or Tor

None of these decisions are perfect. Every browser collects some data. The question is how much and whether you trust the organization handling it.

If you switch from Chrome, migrating bookmarks, passwords, and settings is straightforward. Most browsers import Chrome data automatically.

Looking Forward: The Future of Browser AI

Chrome's on-device AI is just the beginning. Every major browser is adding AI features. The question isn't whether browser AI exists, but how much control users have over it.

Likely developments:

More AI features: Content summarization, translation, writing assistance, image generation, possibly even code suggestions for developers. All running locally or connecting to AI services.

Better privacy controls: Following backlash, expect more granular toggles. Individual controls for each AI feature, not just one blanket switch.

Performance improvements: On-device AI will get faster and smaller. Models will compress, becoming more practical for older hardware.

Interoperability: Browsers might let you choose between different AI backends. Want Anthropic's Claude instead of Google's model? Load it yourself.

Regulation: Privacy laws (GDPR, upcoming US frameworks) will force more transparency. AI feature disclosure will become mandatory.

The trend is clear: AI in browsers is here. Privacy-conscious users should get comfortable with disabling features they don't need.

FAQ

What is Chrome's on-device AI model for scam detection?

It's an artificial intelligence model that runs locally on your computer as part of Chrome's "enhanced protection" mode. The model analyzes websites, downloads, and browser activity to detect phishing attempts and scams in real-time without sending the data to Google's servers. Google added this feature in late 2024 to provide faster threat detection than traditional cloud-based methods alone.

How does the on-device AI actually work?

When you visit a website, Chrome extracts features like URL structure, page metadata, HTML patterns, and resource characteristics. It runs these features through a neural network model trained on millions of phishing and legitimate websites. The model outputs a confidence score indicating whether the page is likely a scam. If the score exceeds a threshold, Chrome warns you or blocks the site.

Does disabling the on-device AI make me safer or less safe?

Disabling it makes you slightly less safe. Chrome falls back to its traditional cloud-based Safe Browsing protection, which is still effective but slower because it involves network requests to Google's servers. Chrome's overall detection system catches an estimated 70-80% of known phishing sites, and the on-device AI contributes an extra layer of instant detection to that. For maximum security, keep it enabled; disabling it gives up that incremental layer.

What data does the on-device AI send to Google?

The model itself doesn't send individual URLs you visit or page content to Google. However, it does send aggregate threat signals when it detects something suspicious, performance metrics about the model's decisions, and data useful for security research. You're not completely invisible to Google, but you're not as tracked as you would be with cloud-only detection where every URL check gets sent to the server.

When will the option to disable the on-device AI be available to everyone?

The toggle is currently available in Chrome Canary (Google's experimental build, version 135+). Google typically moves features from Canary to stable Chrome within 2-6 weeks, so expect it to reach standard Chrome sometime in Q1 2025. Check Settings > System for the "On-device Gen AI" toggle once it's available in your version.

Are other browsers adding similar on-device AI features?

Yes. Firefox is planning on-device AI features but with an "AI kill switch" for every feature. Safari uses on-device security models for phishing detection. Edge includes Copilot AI. All major browsers are moving toward local AI processing, but they vary in transparency and user control. Firefox is taking the most user-friendly approach by making every AI feature easily disableable.

Should I disable Chrome's on-device AI if privacy is my main concern?

It depends on your threat model. If you're already using Google services (Gmail, Search, Drive), one additional data collection point doesn't meaningfully change your privacy profile. If you're trying to minimize data collection entirely, disabling the AI is one step in a broader privacy strategy that should also include a VPN, limiting Google services, and possibly switching browsers. The AI alone isn't the privacy problem—it's the broader ecosystem.

Do I still need antivirus software if Chrome has scam detection?

Yes. Chrome's scam detection protects you from phishing and malware distribution sites in the browser. It doesn't protect against ransomware, system-level malware, attacks that arrive through a desktop email client, or malicious files that reach your system through other apps. Dedicated antivirus software like Bitdefender, Norton, or ESET provides defense-in-depth across your entire system, not just the browser. Use both together for comprehensive protection.

How accurate is Chrome's scam detection with and without the on-device AI?

Overall, Chrome's detection system (combining on-device and cloud-based methods) catches about 70-80% of known phishing sites and 50-60% of new phishing attempts. Google hasn't published specific data on how much of that success is attributable to the on-device AI versus cloud-based detection. The AI likely handles obvious threats quickly, while the cloud system handles edge cases, but we don't have exact contribution percentages.

What if I'm using Chrome Canary and want to disable the AI before the stable release?

Open Chrome > Settings > System > look for "On-device Gen AI" toggle > turn it off. Restart Chrome for the change to take full effect. Since Canary is experimental and gets frequent updates, check periodically to ensure the toggle remains disabled if that's your preference. Keep in mind that Canary is unstable, so use it only if you're comfortable with occasional crashes or unexpected behavior.

Key Takeaways for Every Type of User

Here's what matters based on what you care about:

If you care about convenience: Leave it enabled. The AI makes scam detection faster and more seamless. You won't notice it working, but it's protecting you in the background.

If you care about privacy: Disable it. You lose nothing critical—cloud-based detection still works—and reduce one data collection point. Combine this with other privacy practices (VPN, Firefox or Brave browser, minimal Google services).

If you care about security: Leave it enabled and add dedicated antivirus software. Chrome's AI plus cloud-based detection plus system-level security software = defense-in-depth.

If you're not sure: Leave it enabled for now. It's not actively harmful, and you can always disable it later. The option should reach stable Chrome soon, and you now know what it does before deciding.

The broader lesson: understand your threat model. What are you actually worried about? Then make specific choices that address those concerns.

Chrome's on-device AI isn't evil or unusually invasive. It's a trade-off between convenience and privacy, like most modern software. You get to decide which side of that trade-off you prefer. That choice itself—the existence of an opt-out—is the real win.
