How AI Finally Caught What 25 Years of Human Review Missed

There's a moment in every security researcher's career when reality hits hard. You're staring at vulnerability after vulnerability in code that thousands of engineers have examined, and some of those flaws date back to 1998. OpenSSL, one of the most audited cryptographic libraries on the planet, had been hiding serious vulnerabilities in plain sight for decades.

Then AI stepped in.

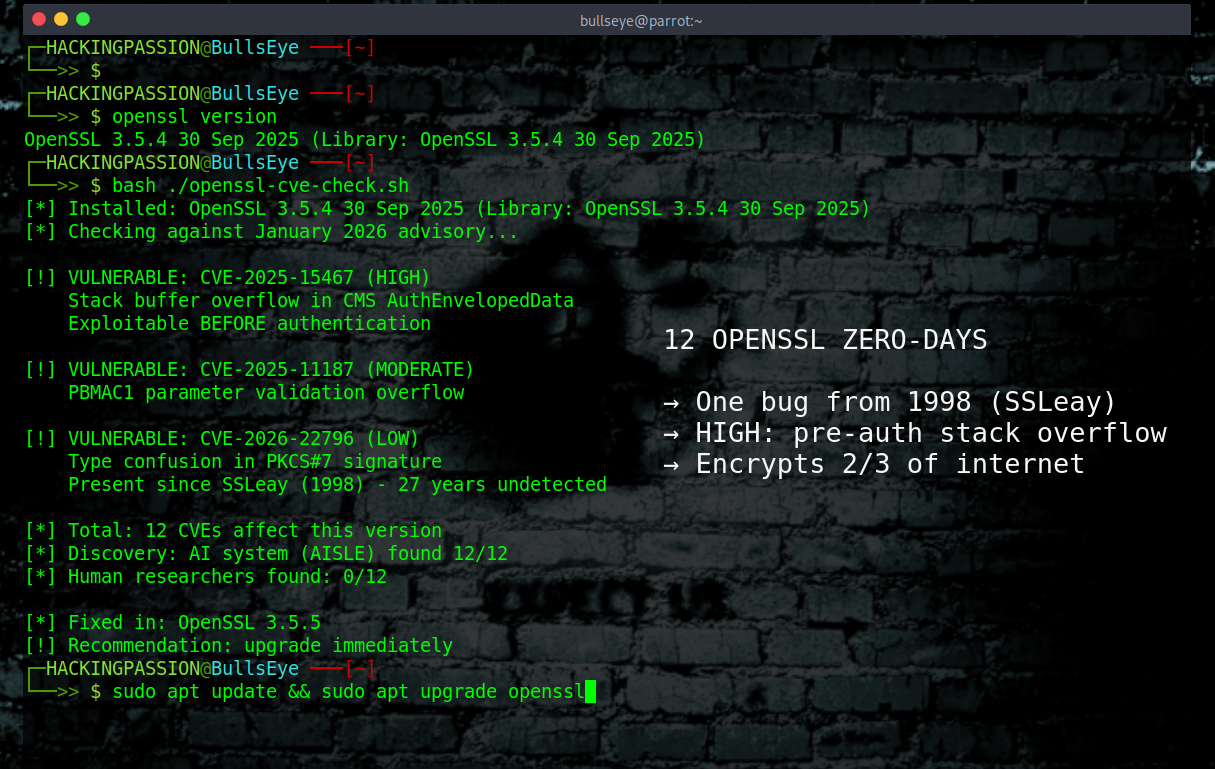

In January 2026, the OpenSSL project released security patches for twelve previously undisclosed vulnerabilities. What made this different wasn't just the sheer number or the severity. It was how they were discovered. An AI-assisted cybersecurity team using advanced code analysis tools identified not just the twelve published CVEs, but six additional issues before public disclosure. Some vulnerabilities had persisted since OpenSSL's earliest days, through countless audits, code reviews, and security assessments.

This wasn't AI replacing human experts. This was AI doing what humans fundamentally cannot do at scale: systematically working through code paths, edge cases, and potential memory corruption scenarios without fatigue, and without missing details because of deadline pressure or complexity overload.

The implications go far beyond OpenSSL. If heavily scrutinized, mission-critical cryptographic code can hide serious flaws for a quarter century, what's lurking in your infrastructure? What's hiding in your development pipelines? And more importantly, can traditional security review processes ever catch these issues before AI does?

This discovery exposed something uncomfortable about modern security practices: human-only code review, no matter how expert or thorough, operates with built-in blind spots. The scale of modern software has outpaced human cognitive capacity. We're trying to find needles in haystacks using our eyes when we've finally built machines that can actually see every grain of sand.

The OpenSSL Vulnerabilities: A Closer Look at What AI Found

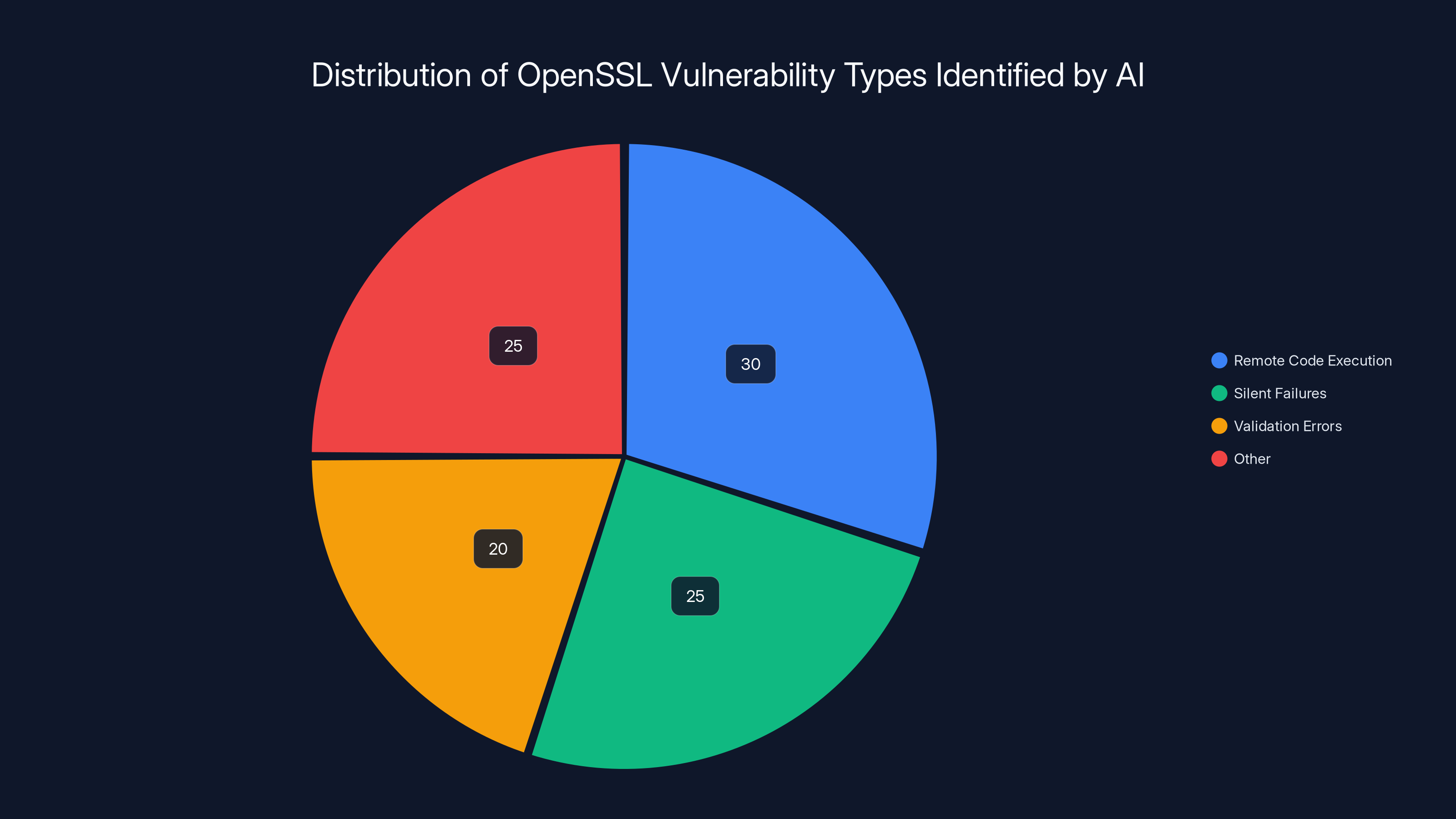

Understanding what AI discovered requires understanding the different categories of flaws that emerged. They weren't all the same severity or type. Some could enable remote code execution. Others caused silent failures that might go unnoticed for months. This diversity is crucial because it shows AI isn't just finding one class of bug—it's catching the full spectrum of security problems that humans naturally miss.

The Critical: Stack Buffer Overflow in CMS Auth Enveloped Data

CVE-2025-15467 represented the most severe finding. A stack buffer overflow vulnerability existed in how OpenSSL parsed CMS (Cryptographic Message Syntax) Auth Enveloped Data structures. Under specific constrained conditions, this could permit remote code execution. This wasn't theoretical—it was a genuine attack vector that could allow an attacker to execute arbitrary code on systems using vulnerable OpenSSL versions.

What made this particularly concerning was its nested nature. The vulnerability required specific conditions to trigger, which is exactly the type of edge case that humans struggle to identify during code review. An engineer examining the authentication envelope parsing logic might reason through the happy path correctly. They might even catch common mistakes. But the interaction between multiple parameters, the constraints of memory allocation, the specific sequence of operations needed to overflow the stack—these compound complexities fall into the blind spot where AI excels.

The AI analysis process didn't just flag this vulnerability. It traced the complete exploitation path, identified which versions were affected, and determined the exact conditions needed to trigger it. This level of detailed understanding accelerated the patching process and helped maintainers understand the full scope of exposure.

The Secondary: Missing Validation in PKCS#12 Handling

CVE-2025-11187 represented a slightly different problem. PKCS#12 is a widely used format for storing cryptographic keys and certificates. OpenSSL's implementation lacked proper parameter validation during parsing, creating a pathway for stack-based buffer overflow. While not guaranteed to be exploitable in all scenarios, the vulnerability still presented a serious attack surface.

This type of flaw is particularly insidious because it lurks in a code path that handles real-world operations. Every system that imports or processes certificate files uses this code. The missing validation wasn't an obscure edge case—it was a fundamental oversight in a common operation. Yet it went undetected for years.

AI systems excel at finding these because they don't get bored analyzing validation logic. They don't assume "someone surely checked this by now." They verify every input, every boundary condition, every possible value combination, and flag when validation is missing or incomplete.
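As an illustration of the kind of check that was missing, the sketch below validates decoded MAC parameters before any of them are used. The field names, the algorithm allowlist, and the numeric limits are hypothetical placeholders, not OpenSSL's actual PKCS#12 structures or policy.

```python
ALLOWED_MACS = {"hmac-sha256", "hmac-sha384", "hmac-sha512"}  # hypothetical allowlist
MAX_ITERATIONS = 10_000_000  # hypothetical upper bound
MAX_KEY_LEN = 64  # bytes; hypothetical upper bound


def _is_int(value) -> bool:
    # bool is a subclass of int in Python, so exclude it explicitly.
    return isinstance(value, int) and not isinstance(value, bool)


def validate_mac_params(params: dict) -> list:
    """Return a list of problems; an empty list means the parameters may be used."""
    problems = []
    if params.get("mac") not in ALLOWED_MACS:
        problems.append("unsupported MAC algorithm")
    iterations = params.get("iterations")
    if not _is_int(iterations) or not 1 <= iterations <= MAX_ITERATIONS:
        problems.append("iteration count out of range")
    key_len = params.get("key_length")
    if not _is_int(key_len) or not 1 <= key_len <= MAX_KEY_LEN:
        problems.append("key length out of range")
    salt = params.get("salt")
    if not isinstance(salt, (bytes, bytearray)) or len(salt) == 0:
        problems.append("missing or empty salt")
    return problems
```

Every field is checked for both type and range, and a missing field counts as invalid rather than falling back to a default.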

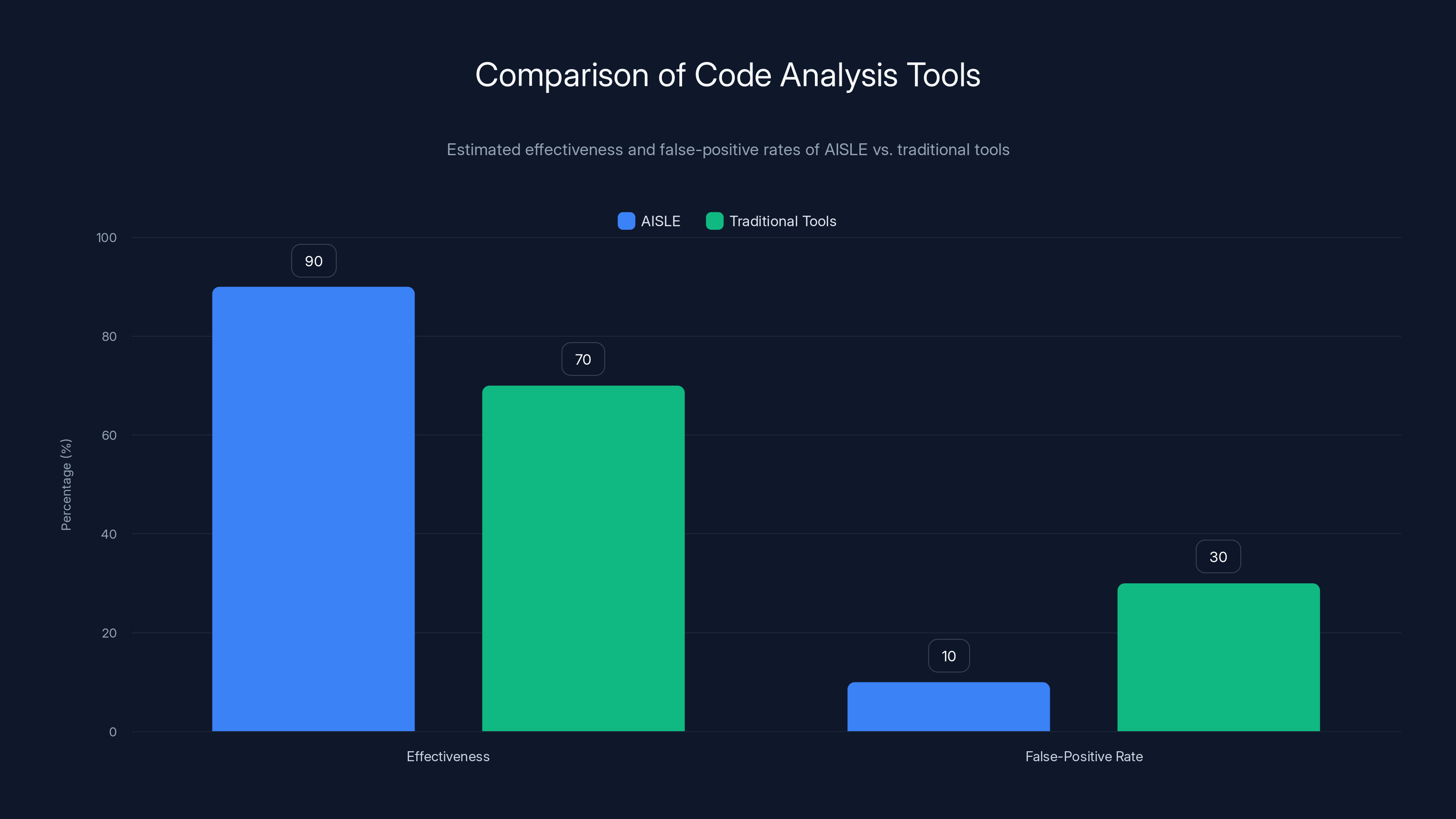

AISLE is estimated to have higher effectiveness and lower false-positive rates compared to traditional code analysis tools, enhancing security analysis accuracy. Estimated data.

The Denial-of-Service Cluster: When Crashes Reveal Deeper Problems

Six of the discovered vulnerabilities caused denial-of-service conditions through crashes or resource exhaustion. These might seem less critical than remote code execution flaws, but that perspective misses the broader picture. A crash-inducing vulnerability in cryptographic code reveals a fundamental problem: the code isn't handling all possible inputs gracefully.

Memory Exhaustion Through TLS 1.3 Compression

CVE-2025-66199 enabled memory exhaustion via TLS 1.3 certificate compression. The attack vector was elegant in its simplicity: send specially crafted compressed certificates that consume massive amounts of system memory. A properly implemented compression handler should have size limits, validation checks, and safeguards against decompression bombs. This implementation lacked them.

AI analysis caught this by tracking memory allocation patterns across all certificate handling code paths. When the system identified a decompression function without corresponding size constraints, it flagged the issue. Human code review of the same function might not trigger alarm bells. Decompression looks straightforward. The code is relatively simple. The vulnerability emerges only when you consider it in the context of TLS 1.3 handshakes, network timing, memory pressure scenarios, and specific certificate structures. Humans reviewing this code in isolation wouldn't naturally think through all those contexts simultaneously.
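TLS 1.3 certificate compression (RFC 8879) has the sender declare the uncompressed length, which gives the receiver a natural place to enforce limits. The following is a minimal Python sketch of those safeguards, assuming zlib as the compression algorithm (one of the algorithms RFC 8879 defines) and a hypothetical 64 KiB policy limit. It is not OpenSSL's implementation.

```python
import zlib

MAX_CERT_BYTES = 64 * 1024  # hypothetical policy limit for a decompressed chain


def decompress_certificate(declared_len: int, compressed: bytes,
                           limit: int = MAX_CERT_BYTES) -> bytes:
    """Decompress a certificate message without trusting the sender's sizes."""
    # 1. Reject oversized claims before allocating or decompressing anything.
    if not 0 < declared_len <= limit:
        raise ValueError("declared length outside policy")
    d = zlib.decompressobj()
    # 2. Cap output at declared_len + 1 bytes: a bomb cannot expand past this,
    #    and the extra byte reveals a sender that under-declared the size.
    out = d.decompress(compressed, declared_len + 1)
    # 3. The stream must end exactly at the declared size.
    if len(out) != declared_len or not d.eof:
        raise ValueError("decompressed size does not match declared size")
    return out
```

A 10 MB run of zeros compresses to a few kilobytes. With the output cap in place, decompressing it under a 1,000-byte declaration produces at most 1,001 bytes before the function rejects it.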

QUIC Cipher Handling Crashes

CVE-2025-15468 triggered crashes during QUIC cipher handling. QUIC is the transport layer protocol underlying HTTP/3. OpenSSL's QUIC implementation crashed under certain conditions, which could allow attackers to force service interruptions. The crash indicated a memory access violation or null pointer dereference.

Finding this required understanding not just QUIC protocol mechanics, but how OpenSSL's cipher suite handling interacted with QUIC state machines. The vulnerability wasn't in QUIC code or cipher code in isolation—it was in how they interfaced. This is exactly where manual code review breaks down. Reviewing QUIC code and cipher code separately yields nothing. Understanding their interaction requires holding both mental models simultaneously while tracing execution paths.

AI systems track these interactions automatically. Every function call, every state transition, every parameter passed between modules—it's all analyzed for consistency, validity, and potential error states. When a crash is detected, the AI traces backward through the call stack to identify root causes.
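The failure mode described above, where one module hands another a lookup result that can be empty, is easy to show in miniature. In this Python sketch the cipher table uses the real TLS 1.3 cipher suite IDs from RFC 8446, but find_cipher and cipher_key_length are hypothetical helpers, not OpenSSL functions. The explicit None check plays the role of the NULL check whose absence crashes C code.

```python
from typing import Optional

# TLS 1.3 cipher suites (RFC 8446, Appendix B.4).
TLS13_CIPHERS = {
    0x1301: "TLS_AES_128_GCM_SHA256",
    0x1302: "TLS_AES_256_GCM_SHA384",
    0x1303: "TLS_CHACHA20_POLY1305_SHA256",
}


def find_cipher(cipher_id: int) -> Optional[str]:
    """Look up a cipher suite; returns None for IDs this table does not know."""
    return TLS13_CIPHERS.get(cipher_id)


def cipher_key_length(cipher_id: int) -> int:
    """Return the traffic key length in bytes for a negotiated cipher suite."""
    name = find_cipher(cipher_id)
    if name is None:
        # In C, skipping this check means dereferencing NULL and crashing.
        raise ValueError(f"unsupported cipher suite 0x{cipher_id:04x}")
    return 16 if "AES_128" in name else 32
```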

Cascading Time Stamp and PKCS#7 Failures

CVE-2025-69420 affected Time Stamp Response verification. Electronic timestamps are cryptographically signed assertions about when something occurred. OpenSSL's verification logic had issues that could cause crashes under specific input patterns. Similarly, CVE-2026-22796 disrupted PKCS#7 signature verification in legacy code paths.

These represent a category of vulnerability that humans often overlook: bugs in security-adjacent functionality. The core cryptographic operations might be solid. But the code handling signatures, timestamps, and verification responses—these support functions—develop vulnerabilities because they receive less scrutiny. Developers assume they're less critical. Security audits prioritize the core cryptographic functions. Meanwhile, these verification functions accumulate edge cases and error handling issues.

AI-assisted analysis treats all code equally. It doesn't make assumptions about which functions are critical. It analyzes verification logic, signature handling, and timestamp processing with the same rigor it applies to core encryption operations. This systematic approach finds vulnerabilities that human intuition misses because that intuition is often wrong about which code paths matter most.
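Verification code needs one defensive habit in particular: check the type of every decoded field before using it, because the attacker controls the input. The sketch below models decoded ASN.1 values with a hypothetical Asn1Value class. It illustrates the pattern and does not reconstruct the actual OpenSSL bugs.

```python
import hmac
from dataclasses import dataclass


@dataclass
class Asn1Value:
    """Hypothetical stand-in for a decoded ASN.1 element."""
    tag: str      # e.g. "OCTET STRING", "INTEGER", "SEQUENCE"
    value: object


def extract_digest(attr: Asn1Value) -> bytes:
    """Return the digest bytes, refusing attributes of the wrong type."""
    if attr.tag != "OCTET STRING" or not isinstance(attr.value, (bytes, bytearray)):
        raise ValueError(f"expected OCTET STRING, got {attr.tag}")
    return bytes(attr.value)


def digest_matches(attr: Asn1Value, expected: bytes) -> bool:
    """Compare against the expected digest; malformed input fails closed."""
    try:
        actual = extract_digest(attr)
    except ValueError:
        return False
    return hmac.compare_digest(actual, expected)
```

A wrongly typed attribute makes verification fail cleanly instead of being reinterpreted as something it is not.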

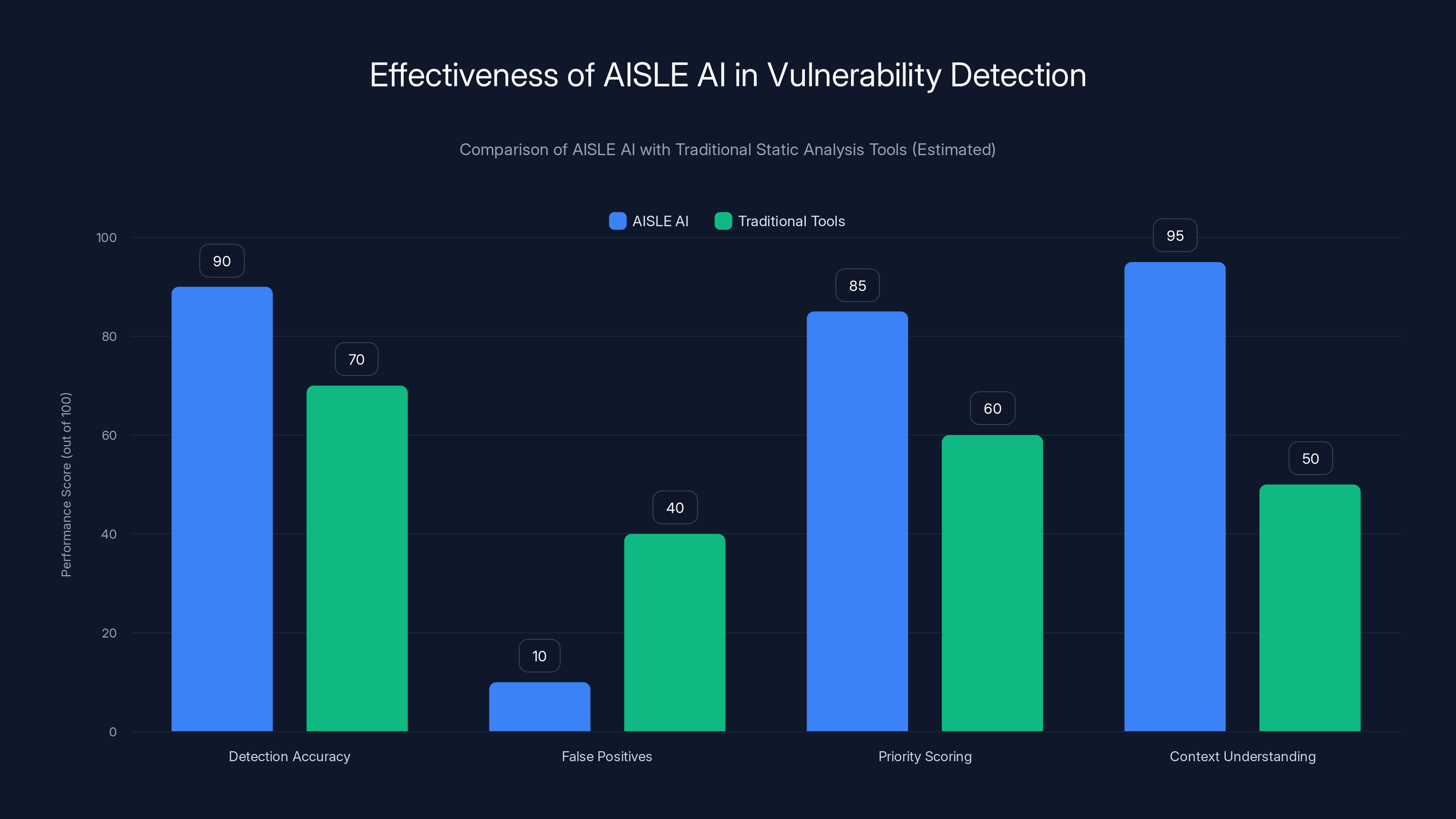

AISLE AI significantly outperforms traditional static analysis tools in detection accuracy, reducing false positives, priority scoring, and context understanding. Estimated data reflects typical performance improvements.

Memory Corruption: The Silent Killer in Cryptographic Code

Memory corruption vulnerabilities in cryptographic libraries represent a unique class of threat. They don't always crash immediately. They don't always fail visibly. They silently corrupt data, produce incorrect cryptographic results, or leak sensitive information.

Line-Buffering Logic Memory Corruption

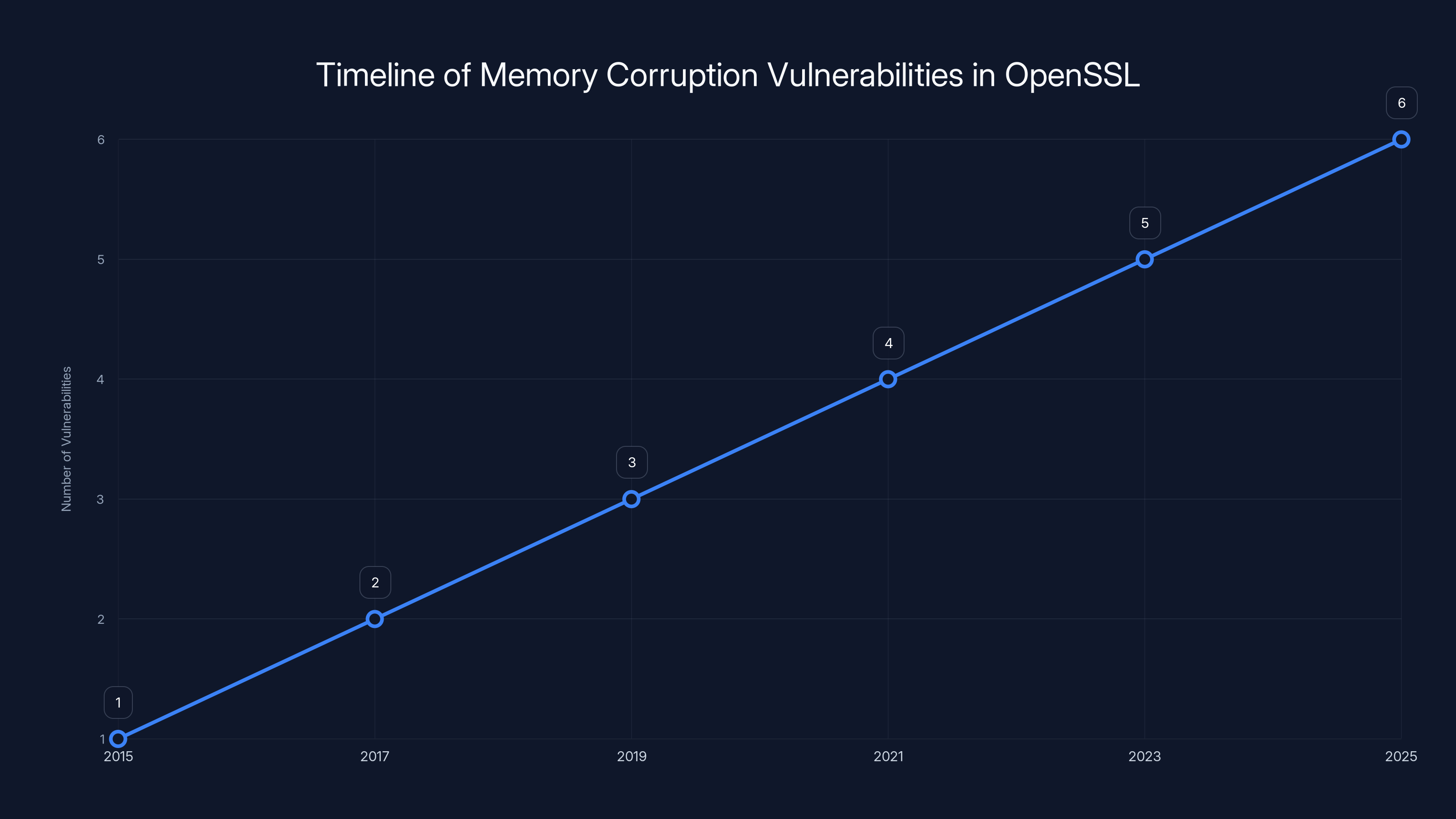

CVE-2025-68160 exposed memory corruption in line-buffering logic, with affected versions dating back to OpenSSL 1.0.2 (released in 2015). This means the vulnerability existed in production systems for over a decade. Line buffering is a fundamental I/O operation. Nearly every application using OpenSSL touches this code. Yet the memory corruption persisted undetected.

Memory corruption in buffering logic is particularly dangerous because it can corrupt data in adjacent buffers. Cryptographic keys, certificates, or plaintext information stored near the affected buffer could be silently corrupted. An application might not crash. It might not show obvious error messages. But the cryptographic operations could be producing incorrect results.

Human code reviewers examining buffering logic look for off-by-one errors, boundary conditions, and obvious memory safety violations. They might miss subtle issues where the corruption only manifests under specific memory layout conditions or when certain other operations execute concurrently. AI analysis tools track memory usage patterns across the entire codebase, identifying situations where writes to one buffer could affect others, where size calculations could underflow, where pointer arithmetic could overflow.
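Buffering code has to keep its offsets and remaining-byte counts consistent, especially when a downstream writer accepts only part of the data (a short write). Python grows buffers automatically, so this sketch cannot overflow. Instead it models the bookkeeping that a C implementation must get right. ShortWriteSink and LineBuffer are hypothetical classes, not OpenSSL's BIO API.

```python
class ShortWriteSink:
    """Hypothetical downstream writer that accepts at most `chunk` bytes per call."""

    def __init__(self, chunk: int):
        self.chunk = chunk
        self.data = bytearray()

    def write(self, b: bytes) -> int:
        n = min(self.chunk, len(b))
        self.data += b[:n]
        return n  # number of bytes actually accepted


class LineBuffer:
    """Pass complete lines to the sink; hold a partial line until it completes."""

    def __init__(self, sink, capacity: int = 64):
        self.sink = sink
        self.capacity = capacity
        self.pending = bytearray()

    def write(self, b: bytes) -> None:
        self.pending += b
        # Flush through the last newline, or everything if over capacity.
        cut = self.pending.rfind(b"\n") + 1
        if len(self.pending) > self.capacity:
            cut = len(self.pending)
        self._drain(cut)

    def flush(self) -> None:
        self._drain(len(self.pending))

    def _drain(self, count: int) -> None:
        # Loop on short writes, discarding only bytes the sink accepted.
        while count > 0:
            n = self.sink.write(bytes(self.pending[:count]))
            if n <= 0:
                raise OSError("sink made no progress")
            del self.pending[:n]
            count -= n
```

The classic mistake in this kind of code is advancing by the number of bytes requested instead of the number the sink returned. In C, that drift in offsets is what turns into reads or writes outside the buffer.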

PKCS#12 Character Encoding Memory Corruption

CVE-2025-69419 involved memory corruption tied to PKCS#12 character encoding. PKCS#12 files can contain certificates, keys, and metadata in various character encodings, and handling conversions between them is complex. Converting from UTF-8 to UCS-2 or vice versa requires careful buffer size calculations. If the output buffer is sized for the source encoding and a character takes more bytes in the target encoding, or if certain character sequences expand unexpectedly, the conversion writes past the end of the buffer.

The vulnerability remained "silent" in the sense that it didn't guarantee crashes or obvious failures. Some exploitation scenarios might produce cryptographic weaknesses without obvious runtime errors. An application might successfully decrypt a file and return apparently valid data, but the decrypted content could be subtly corrupted. Detecting this requires testing edge cases with various character encodings, specific character sequences, and malformed inputs. Humans can test some of these cases. AI can test far more of them, automatically and continuously.
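The size arithmetic behind this class of bug fits in a few lines. PKCS#12 friendly names are stored as BMPString (big-endian UCS-2), and a single 2-byte BMP character can need 3 bytes in UTF-8, so a buffer sized to the input length is too small. This Python sketch lets the standard codecs do the conversion and shows only the bound that a C implementation must allocate for. bmp_to_utf8 is a hypothetical helper.

```python
def utf8_bound(code_units: int) -> int:
    """Worst-case UTF-8 size for `code_units` 16-bit units.

    A BMP character (one unit) needs at most 3 UTF-8 bytes; a surrogate
    pair (two units) needs 4, so 3 bytes per unit is always enough.
    """
    return 3 * code_units


def bmp_to_utf8(raw: bytes) -> bytes:
    """Convert a big-endian 16-bit string to UTF-8 and check it fits the bound."""
    if len(raw) % 2:
        raise ValueError("odd-length BMPString")
    out = raw.decode("utf-16-be").encode("utf-8")
    if len(out) > utf8_bound(len(raw) // 2):
        raise RuntimeError("size bound violated")  # unreachable if the bound is right
    return out
```

For two euro signs (U+20AC) the input is 4 bytes but the UTF-8 output is 6 bytes. A buffer allocated at len(raw) bytes would therefore overflow by two.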

Post-Quantum ML-DSA Signature Truncation

CVE-2025-15469 introduced a more insidious problem: silent truncation affecting post-quantum ML-DSA (Module-Lattice-Based Digital Signature Algorithm) signatures. Input could be cut short without any error indication, so the resulting signature covered only part of the data the user intended to sign. An application might accept what it believed was a valid signature over the whole input when the signature actually covered only a prefix. This breaks cryptographic integrity guarantees without visible failure modes.

This vulnerability is particularly concerning because it affects post-quantum cryptography—the next generation of algorithms expected to resist quantum computing attacks. Getting these implementations right is critical for long-term security. Yet vulnerabilities are emerging even in the first-generation implementations. AI-assisted analysis of post-quantum algorithms can identify truncation, padding, and length validation issues before they reach production.
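Silent truncation is easy to reproduce with any signature scheme. Python's standard library has no ML-DSA, so this sketch uses HMAC-SHA256 as a stand-in signer. The 16-byte one-shot limit is a hypothetical value chosen to keep the demo small. The sketch contrasts a signer that reads one capped chunk and ignores the rest with one that refuses oversized input.

```python
import hashlib
import hmac

ONE_SHOT_LIMIT = 16  # hypothetical cap on how much input a one-shot signer accepts


def read_up_to(stream, n: int) -> bytes:
    """Read until n bytes or EOF; a single read() call may return fewer bytes."""
    chunks, remaining = [], n
    while remaining > 0:
        chunk = stream.read(remaining)
        if not chunk:
            break
        chunks.append(chunk)
        remaining -= len(chunk)
    return b"".join(chunks)


def sign_truncating(key: bytes, stream) -> bytes:
    """Buggy pattern: signs at most ONE_SHOT_LIMIT bytes and silently drops the rest."""
    data = read_up_to(stream, ONE_SHOT_LIMIT)
    return hmac.new(key, data, hashlib.sha256).digest()


def sign_checked(key: bytes, stream) -> bytes:
    """Safer pattern: read one byte past the cap so oversized input is detected."""
    data = read_up_to(stream, ONE_SHOT_LIMIT + 1)
    if len(data) > ONE_SHOT_LIMIT:
        raise ValueError("input exceeds one-shot limit; refusing to sign a prefix")
    return hmac.new(key, data, hashlib.sha256).digest()
```

With the truncating signer, two messages that share their first 16 bytes get identical signatures, so anything after the cut can be changed without detection. The checked signer fails loudly instead.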

Hardware-Accelerated OCB Mode Weakening

CVE-2025-69418 affected OCB (Offset Codebook) encryption mode on hardware-accelerated paths. Modern processors include dedicated cryptographic acceleration. OpenSSL leverages this acceleration for performance. But the hardware acceleration paths sometimes diverge from software implementations, and security properties can diverge too.

This vulnerability could weaken encryption guarantees under specific configurations. It did not manifest in setups that avoided either hardware acceleration or OCB mode. This kind of conditional vulnerability is the hardest to find through traditional testing: you need to test not just the algorithm, but the specific combination of hardware capabilities and algorithm selection that triggers the flaw. AI systems can enumerate these combinations and test them systematically.
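Enumerating configuration combinations is straightforward to automate with differential testing: run every combination of settings and compare each result against a trusted reference. In the sketch below, both "modes" share a toy XOR keystream rather than a real cipher. The bug in the hypothetical accelerated path, which leaves a trailing partial block unencrypted, is invented for illustration.

```python
import itertools


def reference_encrypt(key: bytes, data: bytes) -> bytes:
    """Toy XOR keystream standing in for a real cipher (NOT secure)."""
    return bytes(b ^ key[i % len(key)] for i, b in enumerate(data))


def accelerated_encrypt(key: bytes, data: bytes) -> bytes:
    """Hypothetical fast path that processes whole 16-byte blocks."""
    full = len(data) - len(data) % 16
    head = reference_encrypt(key, data[:full])
    return head + data[full:]  # BUG: trailing partial block left unencrypted


def encrypt(key: bytes, data: bytes, hw_accel: bool, mode: str) -> bytes:
    # Only one combination of settings routes through the buggy path.
    if hw_accel and mode == "ocb":
        return accelerated_encrypt(key, data)
    return reference_encrypt(key, data)


def find_divergences(key: bytes, max_len: int = 40) -> list:
    """Try every (hardware flag, mode, length) combination against the reference."""
    bad = []
    for hw, mode, n in itertools.product([False, True], ["ocb", "gcm"], range(max_len)):
        data = bytes(range(n))
        if encrypt(key, data, hw, mode) != reference_encrypt(key, data):
            bad.append((hw, mode, n))
    return bad
```

Only (True, "ocb", n) entries appear in the result, and only for lengths that are not a multiple of 16. Testing either setting alone, or only block-aligned inputs, would never expose the flaw.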

Why Human Review Alone Failed: The Cognitive Limits of Security Auditing

OpenSSL is maintained by a dedicated team of security-conscious developers. The project has received millions in funding specifically for security improvements. It's been audited by independent security firms. The code is open source, reviewed by thousands of developers worldwide. If human review alone could catch all vulnerabilities, OpenSSL would be the last place to expect hidden flaws.

Yet here we are. Twelve vulnerabilities, discovered by AI, in the most scrutinized cryptographic code in existence.

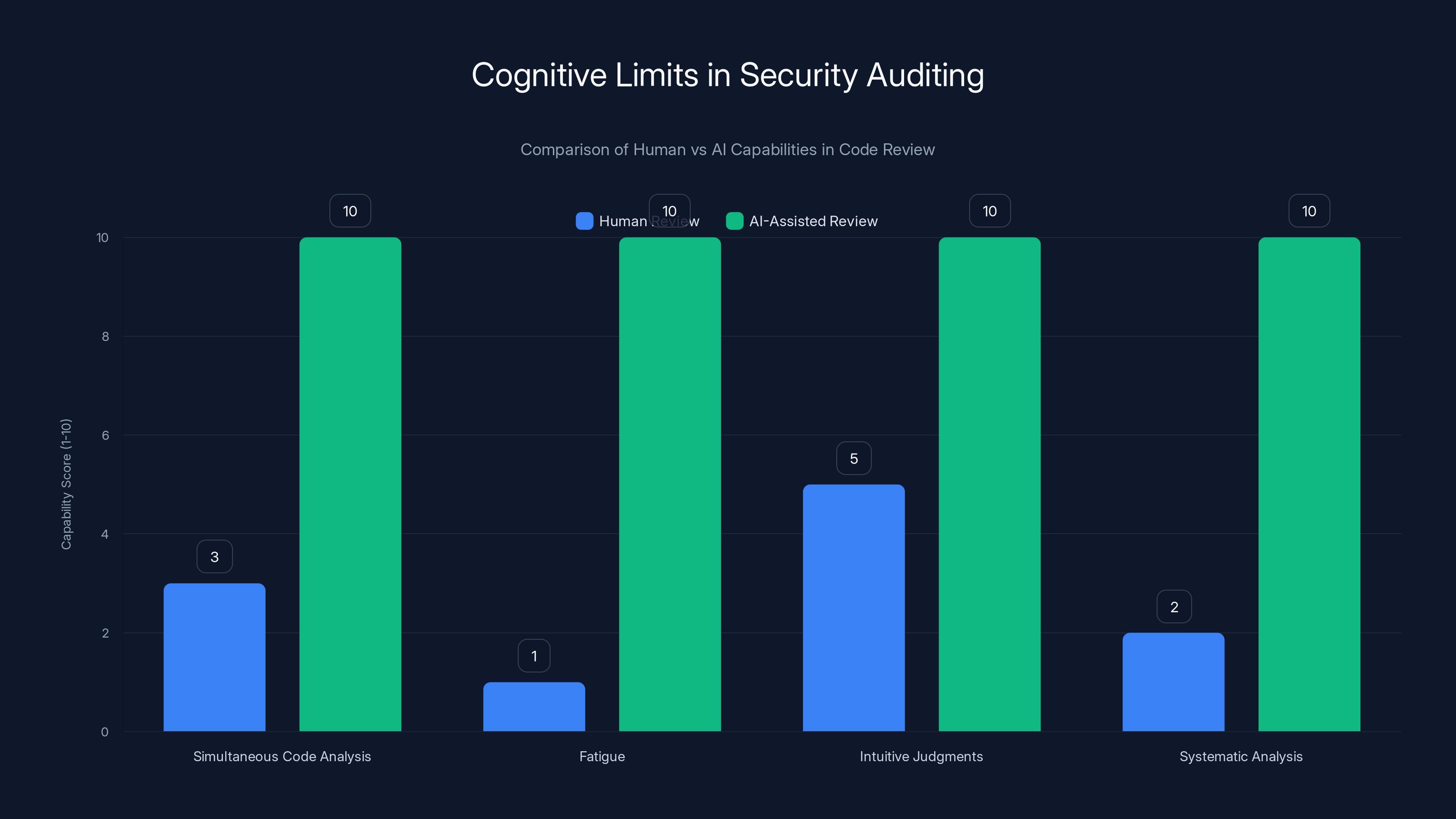

This reveals fundamental limitations in how humans approach code review. Our cognitive capacity has real limits. We can hold perhaps 3-4 complex ideas in working memory simultaneously. We can focus intensely on a specific problem for an hour or two before fatigue sets in. We make intuitive judgments about which code is "probably safe" based on experience, which creates blind spots. We can't realistically test all possible input combinations. We can't simultaneously reason about all code paths when there are thousands of them.

These limitations aren't personal failures of OpenSSL maintainers. They're fundamental constraints of human cognition applied to exponentially growing code complexity.

Modern software operates at a scale that exceeds human capacity. OpenSSL contains roughly 500,000 lines of code. Testing all possible execution paths is mathematically infeasible. Security code reviews happen under time pressure. Reviewers have limited attention. They prioritize based on intuition about what's "probably dangerous" rather than systematic analysis of what's actually dangerous.

AI-assisted analysis doesn't suffer these limitations in the same way. An AI system can work through all 500,000 lines without tiring, and it doesn't make intuitive judgments about what's "probably safe." It applies systematic analysis to every function, every code path, every variable, and every memory operation. When a pattern matches a known vulnerability class, it's flagged. When memory operations could violate buffer boundaries under some input combination, that possibility is reported for review.

The chart illustrates an estimated increase in memory corruption vulnerabilities in OpenSSL from 2015 to 2025, highlighting the persistent nature of these threats. Estimated data.

The AISLE AI Approach: How Machine Learning Changed Vulnerability Detection

The AI-assisted team used AISLE, an AI toolset designed specifically for vulnerability discovery. Understanding how AISLE works reveals why AI can find what humans miss.

Context-Aware Code Analysis

AISLE employs context-aware detection that understands not just syntax, but semantics. When analyzing code, it doesn't just look for patterns. It understands what the code is trying to accomplish. When it sees a buffer operation, it understands the intended size, the actual size being used, and all the ways they could diverge.

This semantic understanding is crucial. A line of code like memcpy(dst, src, len) is a simple copy operation. But AISLE understands this operation in context. Where did len come from? Is it validated? Could it be larger than the destination buffer? What happens if src contains untrusted data? Does the application later rely on this buffer being initialized correctly? Every question is analyzed.
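To make the memcpy(dst, src, len) questions concrete, here is a minimal Python sketch of the checks a reviewer or tool looks for when len comes from untrusted input. It is not OpenSSL or AISLE code. The two-byte length header, the 16-byte buffer, and the names checked_copy and parse_record are hypothetical, and a Python bytearray stands in for a fixed-size C buffer.

```python
def checked_copy(dst: bytearray, src: bytes, length: int) -> None:
    """Copy `length` bytes from src into the start of dst, refusing unsafe lengths."""
    if length < 0:
        raise ValueError("negative length")
    if length > len(src):
        raise ValueError("length exceeds available source data")
    if length > len(dst):
        raise ValueError("length exceeds destination buffer")
    dst[:length] = src[:length]


def parse_record(packet: bytes, buf_size: int = 16) -> bytes:
    """Parse a hypothetical record: 2-byte big-endian length, then payload."""
    if len(packet) < 2:
        raise ValueError("truncated header")
    # Attacker-controlled: this is the `len` whose origin must be questioned.
    declared = int.from_bytes(packet[:2], "big")
    dst = bytearray(buf_size)  # stands in for a fixed-size stack buffer
    checked_copy(dst, packet[2:], declared)
    return bytes(dst[:declared])
```

parse_record(b"\x00\x03abc") returns b"abc". A header that declares 32 bytes for the 16-byte buffer raises ValueError instead of writing past the end.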

Automated Priority Scoring

AISLE assigns priority scores to potential threats. This prevents alert fatigue. Traditional static analysis tools flag thousands of potential issues. Most are false positives. Distinguishing real vulnerabilities from harmless patterns requires human judgment. AISLE uses machine learning to understand which flagged patterns represent actual security risks.

The AI learns from known vulnerabilities, from how exploits actually work, from what attackers target. It doesn't just find "suspicious code"—it finds code that matches the actual patterns of real vulnerabilities. This dramatically reduces false positives while maintaining sensitivity to real threats.

False Positive Reduction Without Missing Actual Flaws

Traditional static analysis might flag a memory operation as potentially unsafe even though code elsewhere guarantees safety. A human reviewer would understand the relationship between these code sections. But a traditional tool can't. AISLE can. By understanding code structure and relationships across large codebases, AISLE identifies which potential issues are actually safe versus actually dangerous.

This is the breakthrough that makes AI-assisted analysis practical. Previous static analysis tools were either so sensitive that they generated thousands of false alarms, or so conservative that they missed real vulnerabilities. AISLE balances sensitivity and specificity, finding real vulnerabilities while avoiding false positives that would overwhelm human reviewers.

Continuous Examination of All Code Paths

Humans can't exhaustively test code paths. The number of possible paths through complex code grows exponentially with the number of branches. OpenSSL's cryptographic functions have many branches based on cipher selection, key material, and input parameters. Testing all combinations is infeasible.

AI can explore these paths far more broadly than manual review can, and it does so automatically. For each potential vulnerability class (buffer overflows, integer overflows, use-after-free, double-free, memory leaks, race conditions), AISLE traces through the relevant code paths to determine whether the vulnerability is possible.

This systematic exhaustiveness is what allowed detection of vulnerabilities that had persisted for decades. Humans conducting code reviews would naturally focus on high-risk functions and obvious threat models. Attackers sometimes exploit unexpected combinations of features or edge cases in secondary functionality. AISLE doesn't make those assumptions. Every function, every code path, every potential execution scenario is treated as equally important until proven otherwise.

Real-World Vulnerability Details: Technical Deep Dives

Each discovered vulnerability tells a story about why human review failed and how AI succeeded.

The Stack Overflow Exploitation Chain

CVE-2025-15467 required a specific exploitation chain. An attacker would craft a malicious CMS Auth Enveloped Data structure. This structure contains encrypted data, key transport mechanisms, and authentication information. The OpenSSL parser would process this structure in a specific sequence.

During parsing, certain parameters would trigger stack allocation of a buffer with a size calculated from input data. If the calculation was wrong or the input data was crafted carefully, the allocated buffer could be too small. Subsequent operations would write beyond the buffer's boundaries, overwriting the stack. An attacker could use this to overwrite return addresses or other critical stack data, redirecting execution to malicious code.

Human auditors examining this code would see a buffer allocation and writes to that buffer. They'd check whether the size calculation was reasonable. For legitimate inputs, it is reasonable. The vulnerability emerges only when the parser encounters specifically crafted input designed to manipulate the size calculation. Testing this requires constructing malformed CMS structures and determining which ones cause problems. AI-assisted testing does this automatically.
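Generating malformed inputs automatically is the core idea of mutation fuzzing. The Python sketch below fuzzes a toy length-prefixed format, not CMS. The parser computes its allocation size from two input bytes and truncates it to 8 bits, the way a too-narrow C integer would wrap. The format, toy_parse, and the BufferOverrun exception are hypothetical. A real harness would typically run a coverage-guided fuzzer against the C code under a memory-error detector.

```python
import random


class BufferOverrun(Exception):
    """Raised when the toy parser writes past its simulated fixed-size buffer."""


def toy_parse(msg: bytes) -> bytes:
    """Hypothetical format: [count:1][elem_size:1][payload...]."""
    if len(msg) < 2:
        raise ValueError("short header")
    count, elem_size = msg[0], msg[1]
    payload = msg[2:]
    total = count * elem_size
    alloc = total & 0xFF  # BUG: size math wraps like an 8-bit C integer
    buf = bytearray(alloc)
    for i in range(min(len(payload), total)):
        if i >= len(buf):
            raise BufferOverrun(f"write at offset {i} past {alloc}-byte buffer")
        buf[i] = payload[i]
    return bytes(buf)


def mutate(seed: bytes, rng: random.Random) -> bytes:
    """Overwrite one to three random bytes of a valid input."""
    data = bytearray(seed)
    for _ in range(rng.randint(1, 3)):
        data[rng.randrange(len(data))] = rng.randrange(256)
    return bytes(data)


def fuzz(seed: bytes, iterations: int = 5000, rng_seed: int = 1):
    """Return the first mutated input that overruns the buffer, or None."""
    rng = random.Random(rng_seed)
    for _ in range(iterations):
        candidate = mutate(seed, rng)
        try:
            toy_parse(candidate)
        except BufferOverrun:
            return candidate
        except ValueError:
            pass  # cleanly rejected input is fine
    return None
```

Every legitimate input with a small count and element size parses correctly, which is why reviewing the size calculation against normal inputs looks fine. The fuzzer finds headers such as count = 64, elem_size = 4, where the 256-byte total wraps to a zero-byte allocation.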

The Time-of-Check-Time-of-Use Issue

Some vulnerabilities emerge from race conditions. A value is checked, validated as safe, then used. But between the check and the use, the value could change. This is the classic time-of-check-time-of-use (TOCTOU) vulnerability.

OpenSSL runs in multi-threaded contexts. PKCS#12 parsing might occur in one thread while other threads are reading or modifying the same structures. If validation happens in one code path and the actual use happens in another code path that can be reached by multiple threads without synchronization, a race condition is possible.

Finding this requires understanding not just the code, but the threading model, the possible interleaving of operations across threads, and the consequences if validation is bypassed. AI systems model these threading scenarios and detect when validation could be circumvented.
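The standard defense against check-then-use races is to read a shared value once, validate that private copy, and use only the copy. The Python sketch below shows the pattern with a lock-protected shared field. SharedParams and read_record are hypothetical names.

```python
import threading


class SharedParams:
    """Hypothetical parameters that other threads may update concurrently."""

    def __init__(self, length: int):
        self._lock = threading.Lock()
        self._length = length

    def set_length(self, n: int) -> None:
        with self._lock:
            self._length = n

    def snapshot_length(self) -> int:
        with self._lock:
            return self._length


def read_record(params: SharedParams, data: bytes, max_len: int = 64) -> bytes:
    # Racy version (do not do this): validate params._length, then read
    # params._length again when slicing; another thread can change it in between.
    n = params.snapshot_length()  # read the shared value exactly once
    if not 0 <= n <= min(max_len, len(data)):
        raise ValueError("invalid length")
    return data[:n]  # use the same value that was validated
```

Python slicing cannot overflow, but in C the racy version would copy using a length that was never validated.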

Silent Failures in Cryptographic Operations

The most insidious vulnerabilities don't crash. They produce subtly incorrect results. A signature might be truncated but still appear valid to code that doesn't verify the full signature. A decryption might produce corrupted plaintext that the application interprets as valid.

Detecting these requires understanding what correct behavior looks like and identifying deviations. A human might test OpenSSL with various inputs and expect it to work correctly. If it doesn't crash, they assume success. AI analysis goes further. It verifies the correctness of outputs. If a signature is shorter than it should be, that's detected. If decrypted data doesn't match expected patterns, that's flagged.

AI-assisted reviews outperform human reviews in handling large codebases, avoiding fatigue, and applying systematic analysis. Estimated data.

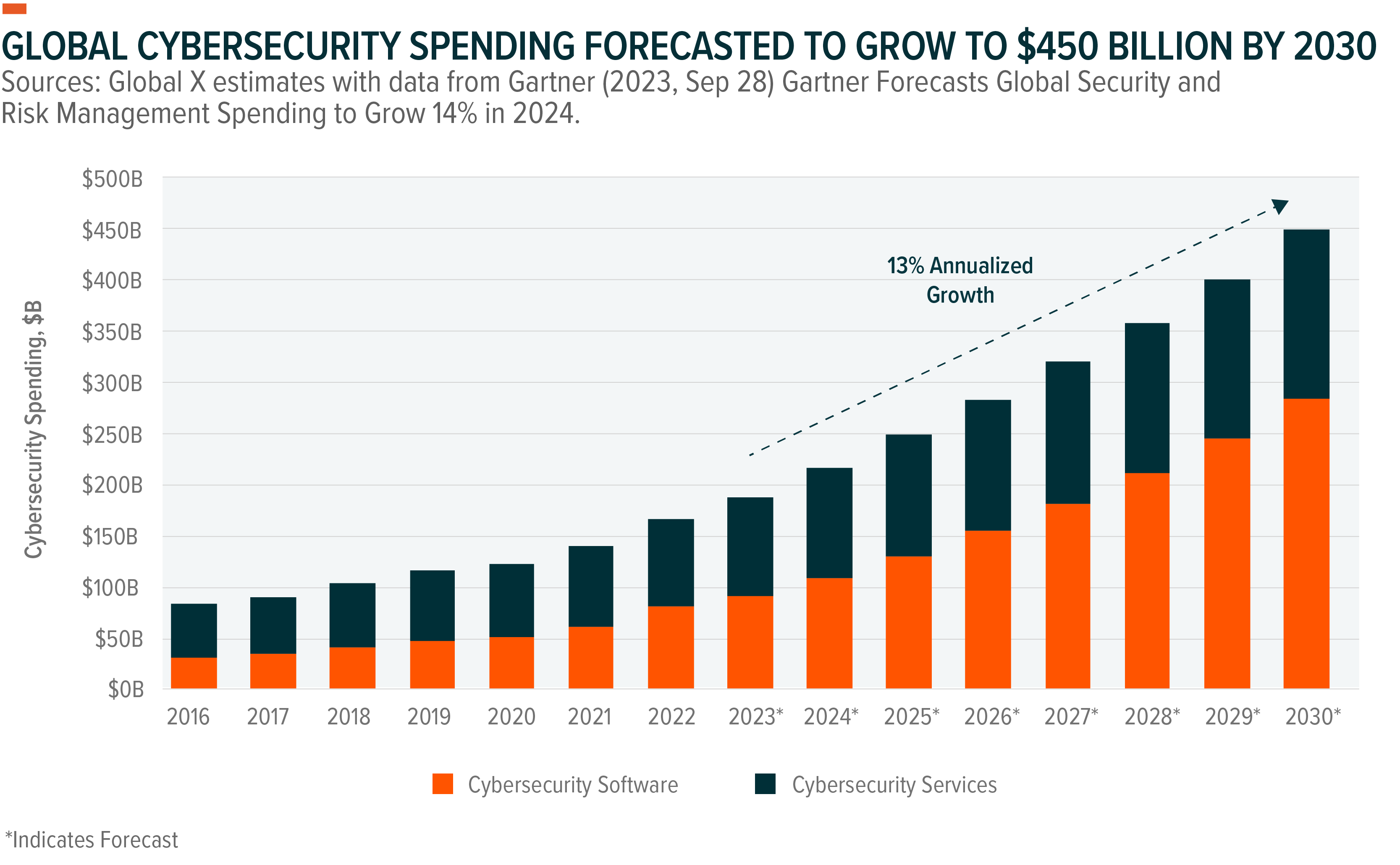

The Broader Implications: An "AI Exposure Gap" in Enterprise Security

If OpenSSL—the gold standard of audited, widely-reviewed, security-focused code—has hidden vulnerabilities for decades, what does that tell us about typical enterprise code?

Most organizations don't have the security focus, community scrutiny, or audit resources that OpenSSL receives. Most codebases are larger and more complex. Most development teams are under greater time pressure. Yet most organizations assume their security reviews are adequate because they've hired security experts.

This creates what might be called an "AI Exposure Gap"—the gap between what human security review can detect and what AI-assisted analysis can find. This gap likely contains serious vulnerabilities in most enterprise systems.

The Economics of Vulnerability Discovery

Traditional security audits are expensive. A comprehensive code audit by reputable security firms costs hundreds of thousands of dollars. Organizations audit critical code, but not all code. They audit annually or after major releases, not continuously. This creates windows where vulnerabilities can exist undetected.

AI-assisted analysis can be deployed continuously at a fraction of the cost. It can analyze entire codebases, including code that wouldn't normally get professional security review. The cost per line of code analyzed is orders of magnitude lower than traditional auditing. This changes the economics of security.

The Scaling Problem

As codebases grow, human security review capacity doesn't scale. Organizations might add security staff, but hiring and training security experts is slow. Meanwhile, code keeps growing, and the gap between code being written and code being reviewed widens. AI analysis scales with computing resources rather than headcount, so adding more code raises its cost far more slowly than it raises the cost of manual review.

This scaling advantage suggests that AI-assisted analysis will eventually become the default security practice, not the exception. Organizations that aren't using it are accepting greater risk.

The Automation Advantage

Human security experts are expensive and in short supply. They get tired, make mistakes, have off days. They leave organizations, taking knowledge with them. AI systems don't have these limitations. An AI vulnerability detector trained once can be deployed across an entire organization. It runs 24/7. It doesn't get tired. It doesn't leave.

This suggests a future where AI-assisted analysis is standard practice in all serious organizations, and human security experts focus on verifying AI findings, determining severity and exploitability, and designing countermeasures—work that still requires human judgment but doesn't require tedious code reading.

Industry Response and the OpenSSL Collaboration

What's remarkable about the OpenSSL vulnerability discovery isn't just that AI found them. It's how the OpenSSL team responded. They didn't dismiss the findings. They didn't assume AI was generating false positives. They recognized the quality of the analysis and incorporated recommendations directly.

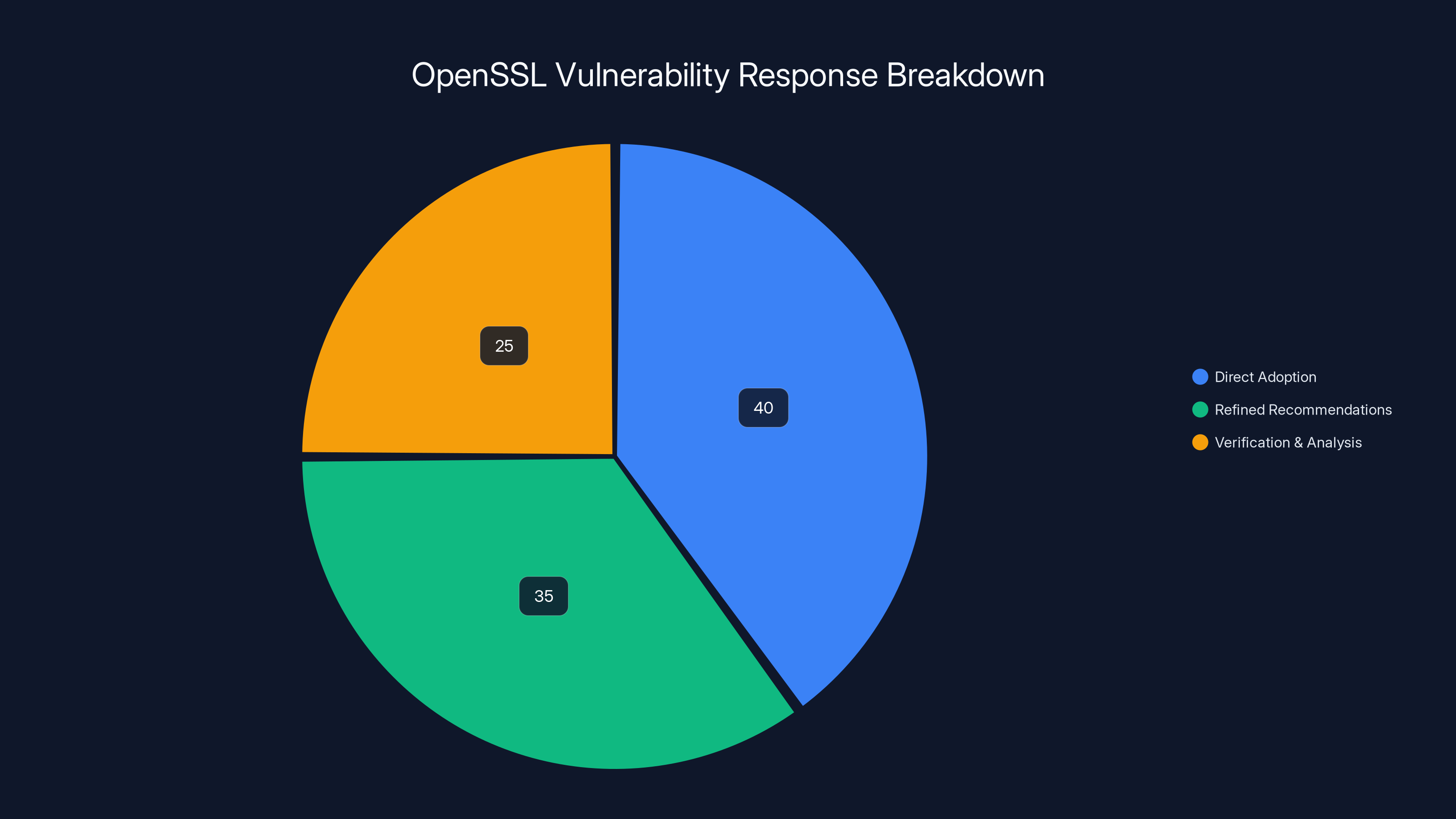

OpenSSL maintainers worked collaboratively with the AI-assisted team to verify findings, understand root causes, and implement fixes. Some recommendations were adopted directly. Others were refined based on maintainers' deeper understanding of the codebase and how it's used.

This collaboration model shows that AI doesn't replace human expertise. It complements it. AI finds vulnerabilities that humans miss. Humans verify that the vulnerabilities are real, understand exploitation scenarios, design fixes that don't introduce new problems, and ensure backward compatibility where needed.

The OpenSSL team's praise for the quality of the reports and for the collaboration suggests that AI-assisted security discovery has reached a level of maturity where it's producing genuinely valuable findings, not noise. This will likely accelerate adoption across the industry.

Estimated data shows that a significant portion of AI-discovered vulnerabilities were directly adopted by OpenSSL, with others refined or analyzed further, highlighting effective collaboration.

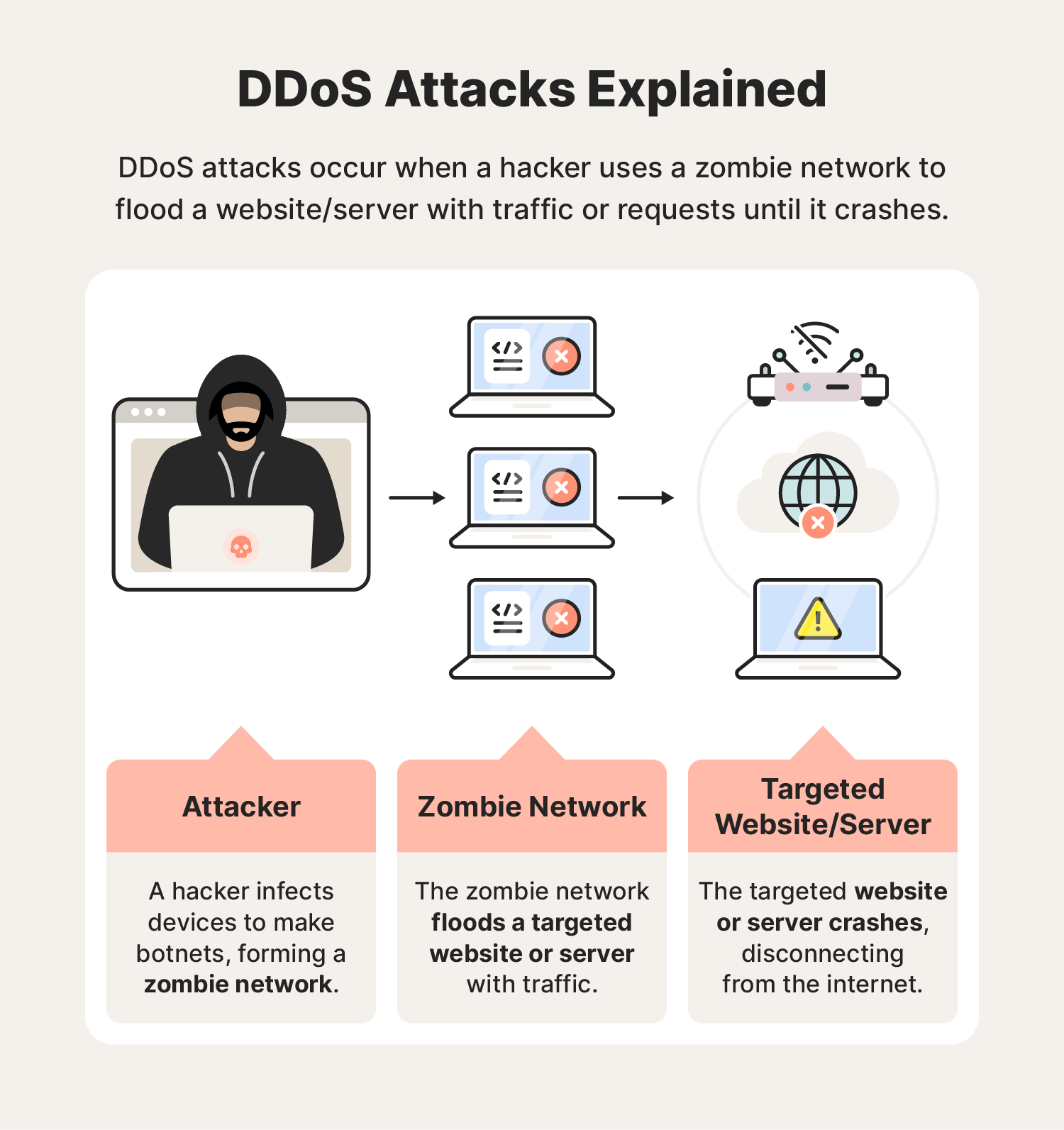

Endpoint Security and Beyond: AI Extending Into Defense

The implications extend beyond finding vulnerabilities in code. The same AI principles that caught OpenSSL flaws can be applied to endpoint protection, malware detection, and intrusion prevention.

AI-Driven Threat Hunting

Traditional endpoint protection relies on signature databases. Known malware is detected by its signatures. Unknown malware—zero-days—often gets through. Behavioral analysis helps, but attackers continually evolve techniques to evade behavioral detection.

AI systems can identify suspicious patterns that humans would miss. A process spawning unusual child processes. Memory allocation patterns suggesting code injection. Registry modifications associated with known attack frameworks. File operations that don't match any legitimate software. These patterns can be analyzed in real-time across entire networks.

Anomaly Detection at Scale

What's normal for one user might be unusual for another. A developer regularly accessing source code repositories at 3 AM is normal. An accountant doing the same is suspicious. Machine learning models can understand normal behavior patterns for different user roles and identify deviations that warrant investigation.

Scale matters. A security analyst can watch a handful of systems. AI can watch thousands. Every process, every network connection, every file operation can be analyzed. Hidden threats operating quietly in the background get detected because they deviate from expected patterns.
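A per-role baseline can be sketched in a few lines. This Python example uses simple frequency counts of activity by hour rather than a trained machine learning model. The 5% threshold and the (role, hour) event format are hypothetical, and a real system would consider many more signals.

```python
from collections import Counter, defaultdict


def build_baselines(events):
    """events: iterable of (role, hour) pairs taken from historical activity logs."""
    baselines = defaultdict(Counter)
    for role, hour in events:
        baselines[role][hour] += 1
    return baselines


def is_anomalous(baselines, role: str, hour: int, min_share: float = 0.05) -> bool:
    """Flag activity in an hour that this role has rarely or never been seen in."""
    history = baselines.get(role)
    if not history:
        return True  # no baseline for this role yet: send to an analyst
    share = history[hour] / sum(history.values())
    return share < min_share
```

With a history where developers are active at 3 AM a fifth of the time and accountants never are, a 3 AM repository access is normal for the developer and flagged for the accountant.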

Continuous Monitoring and Rapid Response

Traditional security operates reactively. Threats are discovered, alerts are issued, incident response teams investigate. This takes time. In that time, attackers expand access, exfiltrate data, and deepen persistence.

AI-assisted endpoint protection operates continuously. When anomalies are detected, response can be automated. Suspicious processes can be isolated. Suspicious network connections can be blocked. This dramatically reduces the window between detection and response.

The Shift From Reactive Patching to Proactive Protection

The OpenSSL discovery represents a paradigm shift in how security operates. Traditional security is reactive: vulnerabilities are discovered in the wild, vendors patch them, organizations deploy patches. Attackers have time to develop exploits for newly disclosed vulnerabilities. Organizations have time to discover they're affected and deploy updates.

AI-assisted analysis shifts this model toward proactive protection. Vulnerabilities are discovered before they're exploited. Patches are released before attackers develop attacks. Organizations deploy updates before exposure occurs.

This requires three things: finding vulnerabilities proactively through AI-assisted analysis, patching rapidly, and deploying patches quickly. The OpenSSL team demonstrated all three. The vulnerabilities were discovered through AI analysis, fixes were developed and tested, and patches were released to the public.

Future security will increasingly work this way. Continuous AI analysis identifies potential vulnerabilities. Development teams patch proactively. Systems update automatically. The attack window shrinks toward zero. Breach costs, which currently average millions of dollars, could decline dramatically.

This economic incentive alone will drive adoption. Organizations that shift from reactive to proactive security will have fewer breaches, lower breach costs, and better security posture. This competitive advantage will pressure other organizations to adopt similar approaches.

AI detected a diverse range of vulnerabilities in OpenSSL, with remote code execution and silent failures being significant categories. Estimated data.

Current Limitations and Where AI Analysis Falls Short

It's important to acknowledge what AI-assisted vulnerability discovery can't do. Understanding limitations is crucial for realistic expectations.

Context and Business Logic

AI excels at finding coding errors: buffer overflows, memory corruption, validation issues. It struggles with business logic vulnerabilities. If authentication code is syntactically correct but implements the wrong authentication model, AI might not catch it. If access control logic has flawed reasoning, an AI might not understand the flaw.

These vulnerabilities require understanding not just code, but requirements and threat models. Humans still excel at this. An AI can verify that code matches a specification. It can't easily determine if the specification is correct.

Exploitation Difficulty

Finding a vulnerability and exploiting it are different challenges. Some vulnerabilities are theoretically present but practically difficult to exploit. Triggering them requires specific conditions: certain memory layouts, timing windows, or application states. An AI might find the vulnerability and rate it as high-severity without understanding that exploitation is nearly impossible in practice.

Human security professionals understand exploitation. They can determine which vulnerabilities actually matter. This human judgment is still crucial for prioritizing patches and determining risk levels.

False Negatives

AI-assisted analysis reduces false positives but doesn't eliminate false negatives. Some vulnerabilities are so subtle or unusual that AI analysis might miss them. AI is generally better than humans at finding known classes of vulnerabilities but might miss novel attack vectors that no one has thought to look for.

This is why defense-in-depth remains important. AI-assisted analysis is one layer, but not the only layer. Code review, penetration testing, bug bounty programs, and other security practices remain valuable.

Configuration and Deployment Issues

Code-level vulnerabilities are only part of security. Configuration errors, deployment issues, and operational security matter too. A perfectly secure application can be vulnerable due to misconfiguration. AI analyzing code won't catch these issues.

Security requires addressing multiple layers: code security, configuration security, operational security, and user security. AI contributes to code security but doesn't solve the entire problem.

What Organizations Should Do Now

The OpenSSL discovery demonstrates that AI-assisted vulnerability analysis is mature, effective, and producing real results. Organizations should take action.

Assess Current Code

Start with critical code: authentication systems, payment processing, encryption, and access control. Deploy AI-assisted analysis to these areas first. Understand what vulnerabilities exist in your highest-risk code.

Implement Continuous Analysis

Analyzing code once is better than never; analyzing it continuously is better still. Set up AI-assisted vulnerability scanning to run automatically on new code as it's committed, and use the results to guide security reviews and prioritize patches.
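As a rough sketch of what "run automatically on new code" can look like, the script below gates a CI job on a scan report. It assumes your scanner can export results as SARIF 2.1.0, the JSON interchange format many static analysis tools support. The script name, the report file name, and the choice to block merges only on `error`-level results are assumptions to adapt, not a recommendation drawn from the OpenSSL work.

```python
import json
import sys

# SARIF 2.1.0 result levels, ordered from least to most severe.
LEVEL_RANK = {"none": 0, "note": 1, "warning": 2, "error": 3}


def blocking_results(sarif: dict, threshold: str = "error") -> list[dict]:
    """Return results at or above `threshold` from every run in a SARIF log."""
    floor = LEVEL_RANK[threshold]
    blocking = []
    for run in sarif.get("runs", []):
        for result in run.get("results", []):
            # Simplification: a missing level is treated as "warning", the
            # SARIF default when no rule configuration overrides it.
            level = result.get("level", "warning")
            if LEVEL_RANK.get(level, 0) >= floor:
                blocking.append(result)
    return blocking


def describe(result: dict) -> str:
    """Format a result as 'path:line ruleId message' for CI logs."""
    loc = (result.get("locations") or [{}])[0].get("physicalLocation", {})
    uri = loc.get("artifactLocation", {}).get("uri", "?")
    line = loc.get("region", {}).get("startLine", "?")
    text = result.get("message", {}).get("text", "")
    return f"{uri}:{line} {result.get('ruleId', '?')} {text}"


def main(path: str) -> int:
    with open(path, encoding="utf-8") as fh:
        sarif = json.load(fh)
    found = blocking_results(sarif)
    for result in found:
        print(describe(result))
    # A non-zero exit code fails the CI job and blocks the merge.
    return 1 if found else 0


if __name__ == "__main__" and len(sys.argv) == 2:
    sys.exit(main(sys.argv[1]))
```

Run it as a CI step after the scan, for example `python sarif_gate.py results.sarif`. Lowering the threshold to `"warning"` makes the gate stricter at the cost of more blocked merges.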

Integrate Into Development Workflow

Make AI-assisted analysis part of the development process, not something that happens after code is written. Tools that integrate with IDEs and code repositories can flag issues before code is committed. This is far more effective than finding issues in released software.

Train Teams on Results

When AI analysis flags issues, use them as learning opportunities. Why did this vulnerability exist? How could it have been caught earlier? Train developers on the patterns that lead to vulnerabilities. Use real findings from your codebase, not abstract examples.

Don't Ignore Low-Severity Issues

Focus on high-severity vulnerabilities, but don't ignore low-severity findings. Sometimes multiple low-severity issues interact to create high-impact problems. Sometimes issues that seem low-risk prove exploitable in unexpected ways.
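One cheap way to act on this is to look for places where several distinct low-severity findings pile up in the same function, since that is where separate weaknesses are most likely to combine. The sketch below uses a hypothetical `Finding` record and a threshold of two distinct rule types, both assumptions for illustration. Co-location is only a hint for human review, not proof that the issues are jointly exploitable.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """Hypothetical finding record; real tools each use their own schema."""
    file: str
    function: str
    rule: str
    severity: str  # e.g. "low", "medium", "high", "critical"


def low_severity_clusters(
    findings: list[Finding], min_distinct_rules: int = 2
) -> dict[tuple[str, str], list[Finding]]:
    """Group low-severity findings by (file, function) and keep groups that
    contain at least `min_distinct_rules` different rule types."""
    groups: dict[tuple[str, str], list[Finding]] = defaultdict(list)
    for f in findings:
        if f.severity == "low":
            groups[(f.file, f.function)].append(f)
    # Distinct rules matter: two different weaknesses in one function are
    # more likely to combine than the same warning repeated.
    return {
        location: items
        for location, items in groups.items()
        if len({f.rule for f in items}) >= min_distinct_rules
    }


# Example with made-up findings: only parse_header has two distinct
# low-severity rules, so it is the only cluster returned.
findings = [
    Finding("parser.c", "parse_header", "unchecked-length", "low"),
    Finding("parser.c", "parse_header", "signed-unsigned-compare", "low"),
    Finding("parser.c", "free_header", "unchecked-length", "low"),
    Finding("crypto.c", "decrypt", "unchecked-length", "high"),
]
for (file, function), items in low_severity_clusters(findings).items():
    print(f"{file}:{function} has {len(items)} low-severity findings")
```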

Plan for Scale

If your organization has thousands of developers and millions of lines of code, manual security review alone will not scale. Plan to deploy AI-assisted analysis at scale. This requires infrastructure, process changes, and tool integration, but the security and economic benefits justify the investment.

The Future of Security: Where AI Takes Us

The OpenSSL discovery is likely the beginning, not the end, of AI transforming security. Future developments will probably include:

Whole-Program Analysis

Current AI analysis typically examines individual repositories or projects. Future systems will analyze entire software ecosystems: how libraries interact with applications, how applications interact with the operating system, how systems interact with each other. This broader context will enable detection of vulnerabilities that don't exist in any single component but emerge from their combination.

Threat-Model-Driven Analysis

AI systems will incorporate threat models as input. Rather than generically analyzing code, AI will understand specific threat models and look for vulnerabilities relevant to those threats. An application processing financial transactions has different threat models than one handling health records. AI analysis will be tailored to each context.

Exploit Generation

Future AI systems might not just find vulnerabilities; they might also generate exploits. That would make it possible to verify that a vulnerability is truly exploitable and to understand its impact concretely. This remains controversial from a security perspective, but the capability is likely coming.

Patch Generation

Today's AI can identify vulnerabilities. Future AI might generate patches. Not just recommendations for how to fix code, but actual code changes that resolve vulnerabilities without introducing new problems. This would accelerate remediation dramatically.

Predictive Vulnerability Analysis

AI systems trained on massive datasets of code and vulnerabilities might predict which areas of new code are most likely to contain vulnerabilities. This would allow targeted human review of highest-risk areas, improving review efficiency.

The Human Role in an AI-Assisted Security Future

This raises an important question: what's left for human security professionals?

The answer: plenty. Humans are needed for strategic security decisions that AI can't make. What's the threat model? What are acceptable risk levels? How does security integrate with business objectives? These are human decisions. Humans will also be needed to verify AI findings, understand exploitation scenarios, design appropriate fixes, and determine deployment strategies.

The security professional of the future likely spends less time doing tedious code review and more time doing strategic security analysis. That's a good outcome: it frees humans for harder problems while AI handles the repetitive analysis. It also suggests that security expertise becomes more valuable, not less. Understanding how to work with AI analysis tools, how to interpret results, and how to design security architecture will be increasingly valuable skills.

Organizations that successfully integrate AI-assisted analysis into their security programs will have advantages: they'll find vulnerabilities faster, patch more effectively, and maintain a stronger security posture. Organizations that rely on traditional methods alone will increasingly fall behind.

Taking Action: Tools and Frameworks Today

Organizations don't need to wait for perfect tools. Effective AI-assisted vulnerability analysis is available today. Multiple vendors offer AI-powered static analysis, dynamic analysis, and threat detection. Organizations should:

- Evaluate available tools specific to their technology stack

- Start with pilot projects on critical code

- Understand the types of vulnerabilities each tool finds

- Integrate results into existing security programs

- Train teams on interpreting and acting on results

- Scale to the broader codebase as confidence grows

The learning curve is real but manageable. The benefits demonstrated by the OpenSSL case study suggest the investment pays off quickly.

FAQ

What exactly is an OpenSSL vulnerability?

OpenSSL vulnerabilities are security flaws in the OpenSSL cryptographic library that could allow attackers to execute unauthorized code, crash systems, steal encrypted data, or weaken encryption. They range from critical remote code execution vulnerabilities to lower-severity denial-of-service or information disclosure issues. OpenSSL is so widely used that vulnerabilities can affect millions of systems globally, from web servers to routers to IoT devices.

Why did AI find these vulnerabilities when human reviewers missed them?

Humans have cognitive limits. They get tired, miss complex interactions, and can't realistically trace every code path through 500,000+ lines of code. AI can systematically analyze every function and explore far more code paths and execution scenarios than a human reviewer, without fatigue. AI also uses machine learning to understand vulnerability patterns and priority-score findings, reducing the false positives that would otherwise overwhelm human reviewers. The result is more thorough analysis at a lower false-positive rate than traditional static analysis tools.

What makes AISLE different from traditional code analysis tools?

Traditional tools like SonarQube or Checkmarx flag potential issues but can generate high false-positive rates. AISLE uses machine learning to distinguish patterns that represent real vulnerabilities from harmless code. It performs context-aware analysis that understands not just syntax but semantics: what the code is actually trying to accomplish. It also traces complex code relationships across entire repositories, understanding how different components interact. This combination produces findings that are more accurate and more useful than those of traditional static analysis.

Are the discovered vulnerabilities actually exploitable?

Yes. The discovered vulnerabilities represent genuine security risks. CVE-2025-15467 (a stack buffer overflow in CMS AuthEnvelopedData) could enable remote code execution. CVE-2025-11187 (missing validation in PKCS#12) creates paths to buffer overflows. Several denial-of-service vulnerabilities could crash critical infrastructure. The OpenSSL team verified these are real vulnerabilities and released patches, and independent analysis confirms they pose genuine security risk.

How often should organizations scan their code with AI-assisted analysis?

Ideally, continuously. Modern development practices involve frequent code commits. AI-assisted analysis should run automatically on every commit or pull request. This catches vulnerabilities before they're merged into main branches. For retrospective analysis of existing code, comprehensive scans should occur at least quarterly, with more frequent scans of high-risk components. The scanning pace should match your development velocity and risk tolerance.

What types of vulnerabilities does AI-assisted analysis find best?

AI excels at finding implementation bugs: buffer overflows, integer overflows, memory corruption, use-after-free, double-free, memory leaks, race conditions, and validation bypass vulnerabilities. It's good at finding these because they follow patterns and can be detected through systematic code analysis. AI is weaker at finding business logic vulnerabilities, architectural flaws, or vulnerabilities that require understanding application context beyond the code itself. For comprehensive security, AI-assisted code analysis should be complemented with threat modeling, penetration testing, and security review.

Do AI-assisted analysis results require human verification?

Yes. While AI analysis is accurate, verification is important. Some flagged items might not be exploitable in practice due to specific deployment scenarios. Some might be false positives despite the efforts to reduce them. Humans with security expertise should verify high-severity findings before they're marked as critical. This doesn't require reviewing all findings—prioritization based on AI severity ratings is reasonable—but security professionals should sample findings across different categories to build confidence in the tool.
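A minimal sketch of that review policy, assuming findings arrive as dictionaries with hypothetical `severity` and `category` keys: every high or critical finding goes to a human, and a small, reproducible random sample is drawn from each remaining category to spot-check the tool's accuracy there. The sample size of two per category is an arbitrary placeholder.

```python
import random
from collections import defaultdict

# Severities that always go to a human reviewer.
ALWAYS_REVIEW = {"high", "critical"}


def review_queue(findings: list[dict], per_category: int = 2,
                 seed: int = 0) -> list[dict]:
    """Every high/critical finding, plus a random sample per remaining category."""
    must_review = [f for f in findings if f["severity"] in ALWAYS_REVIEW]

    by_category: dict[str, list[dict]] = defaultdict(list)
    for f in findings:
        if f["severity"] not in ALWAYS_REVIEW:
            by_category[f["category"]].append(f)

    # A seeded generator makes the sample reproducible for later audits.
    rng = random.Random(seed)
    sampled = []
    for category in sorted(by_category):
        items = by_category[category]
        sampled.extend(rng.sample(items, min(per_category, len(items))))
    return must_review + sampled
```

Sorting the categories before sampling keeps the queue order stable across runs, so two reviewers working from the same seed see the same findings.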

How quickly can organizations deploy AI-assisted vulnerability analysis?

Deployment is relatively fast. Many tools require minimal setup: connect to your code repository, configure what to scan, and let the tool run. The first scan typically takes hours to days depending on codebase size, and results appear within that timeframe. Integration into CI/CD pipelines takes longer but is well documented for most tools. Full organizational adoption with process changes and team training might take months, but initial scanning can be deployed in weeks.

What's the cost of AI-assisted vulnerability analysis?

Costs vary widely. Some tools are free for open-source code. Commercial tools typically charge per developer, per line of code scanned, or per scan, with costs ranging from hundreds to thousands of dollars per month depending on the tool and scale. Compared to manual security audits (which can cost hundreds of thousands of dollars), AI-assisted analysis is economical. It's worth calculating ROI: even preventing one major breach typically justifies significant spending on security tools.
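The ROI argument can be made concrete with a simple expected-loss calculation, in the spirit of annualized loss expectancy: the loss avoided per year is the chance of a breach, times its cost, times the fraction of that risk the tool removes. Every input below is a placeholder you would replace with your own estimates; none of them comes from the OpenSSL case.

```python
def expected_loss_avoided(breach_probability: float, breach_cost: float,
                          risk_reduction: float) -> float:
    """Annual expected loss the tool prevents (a simplified ALE-style model)."""
    return breach_probability * breach_cost * risk_reduction


def roi(breach_probability: float, breach_cost: float,
        risk_reduction: float, annual_tool_cost: float) -> float:
    """Return (benefit - cost) / cost; positive means the tool pays for itself."""
    benefit = expected_loss_avoided(breach_probability, breach_cost, risk_reduction)
    return (benefit - annual_tool_cost) / annual_tool_cost


def break_even_risk_reduction(breach_probability: float, breach_cost: float,
                              annual_tool_cost: float) -> float:
    """Smallest fraction of breach risk the tool must remove to cover its cost."""
    return annual_tool_cost / (breach_probability * breach_cost)


# Placeholder inputs: 10% annual breach chance, $4M breach cost,
# tool removes 25% of that risk, $60k/year tool cost.
print(f"ROI: {roi(0.10, 4_000_000, 0.25, 60_000):.0%}")
print(f"Break-even risk reduction: "
      f"{break_even_risk_reduction(0.10, 4_000_000, 60_000):.0%}")
```

With these made-up numbers the tool breaks even if it removes 15% of breach risk. The answer is very sensitive to the breach probability and cost you assume, so it is worth running the calculation with a pessimistic and an optimistic set of inputs.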

Can AI-assisted analysis replace hiring security professionals?

No. AI complements human expertise; it doesn't replace it. Security professionals are still needed to design security architecture, develop threat models, verify AI findings, develop patches, make risk decisions, and respond to incidents. What AI does is reduce time spent on tedious code review, freeing security professionals to work on higher-value activities. Organizations should expect to shift security spending from manual review toward AI tools and maintain security staffing for the more strategic work that AI can't do.

What should organizations do if they find vulnerabilities through AI analysis?

Follow responsible disclosure practices. Document the vulnerability internally. Assess severity and exploitability. Develop a fix. Test the fix thoroughly. Plan a release schedule that prioritizes critical vulnerabilities quickly and lower-severity vulnerabilities in regular update cycles. Communicate with stakeholders about what was found and how it's being addressed. For widely-used software like OpenSSL, coordinate with the vendor on disclosure timeline. Don't publicly disclose critical vulnerabilities without giving users time to patch.

Key Takeaways

- AI-assisted analysis discovered 12 OpenSSL vulnerabilities in January 2026, some persisting for 27 years despite heavy human review and auditing

- Stack buffer overflow in CMS AuthEnvelopedData (CVE-2025-15467) could enable remote code execution on vulnerable systems

- Memory corruption issues like CVE-2025-68160 affected OpenSSL 1.0.2 (2015) and continued undetected for over a decade in production systems

- AISLE AI toolset uses context-aware analysis, priority scoring, and systematic code path examination to find vulnerabilities humans miss

- Security is shifting from reactive patching to proactive protection through continuous AI-assisted vulnerability scanning and remediation

- An "AI Exposure Gap" likely exists in most organizations, where AI-assisted analysis can find vulnerabilities that traditional security review misses

- Organizations should deploy AI-assisted analysis immediately on critical code, integrate it into development workflows, and train teams on interpreting results
