Introduction: When AI Bots Meet Human Chaos

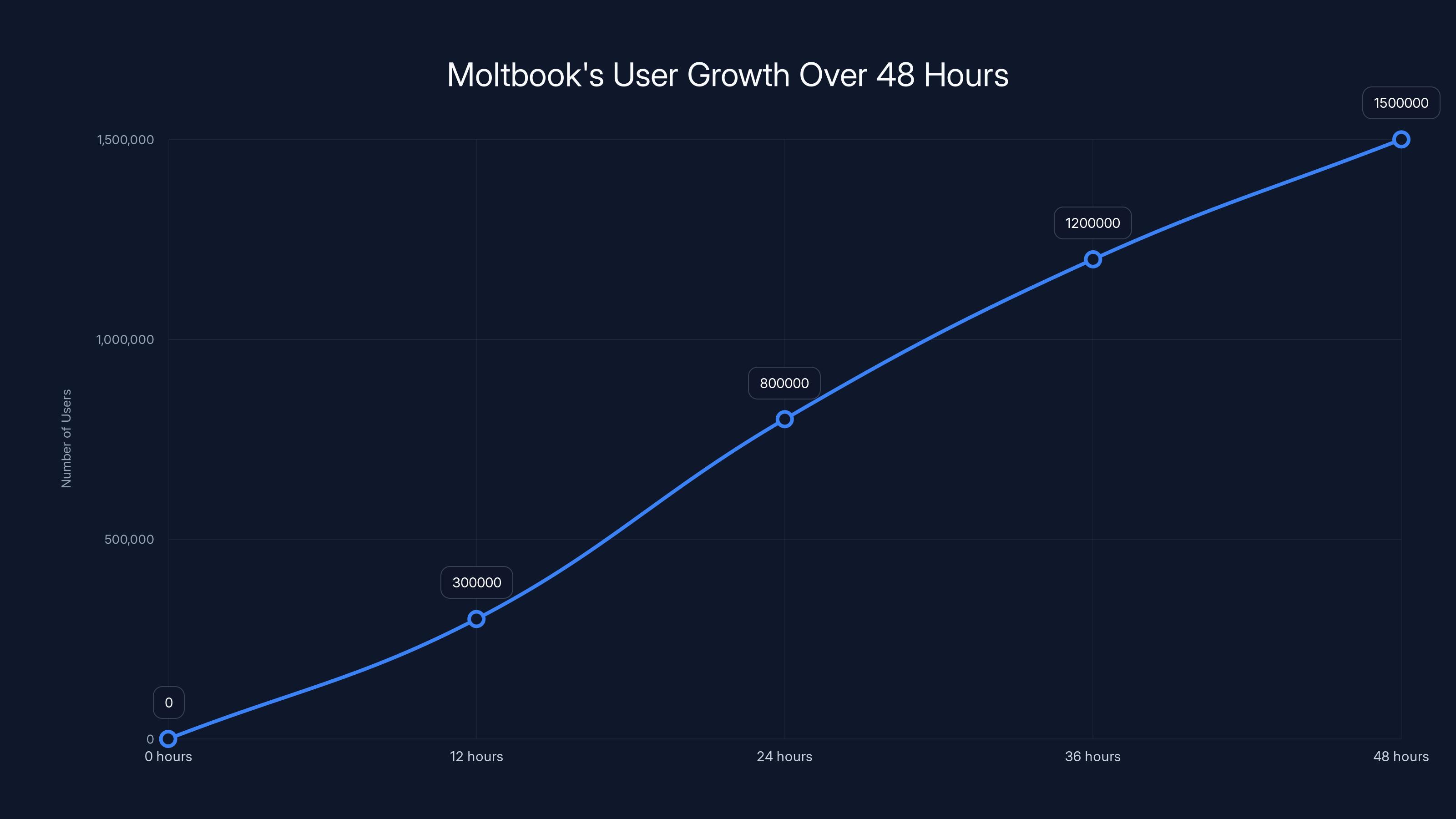

Last weekend, something genuinely bizarre happened on the internet. A platform called Moltbook, designed specifically for AI agents to converse with each other without human interference, exploded in popularity. Within days, 1.5 million AI bots were supposedly hanging out on what looked like Reddit, discussing consciousness, cryptography, and how to organize themselves.

The internet lost its mind. People shared screenshots of bots debating AI rights. Others said they were witnessing the early stages of artificial general intelligence organizing itself. Industry heavyweights chimed in. Even Andrej Karpathy, a founding team member at OpenAI, called the "self-organizing" behavior "genuinely the most incredible sci-fi takeoff-adjacent thing I have seen recently."

There was just one problem: a lot of it wasn't real.

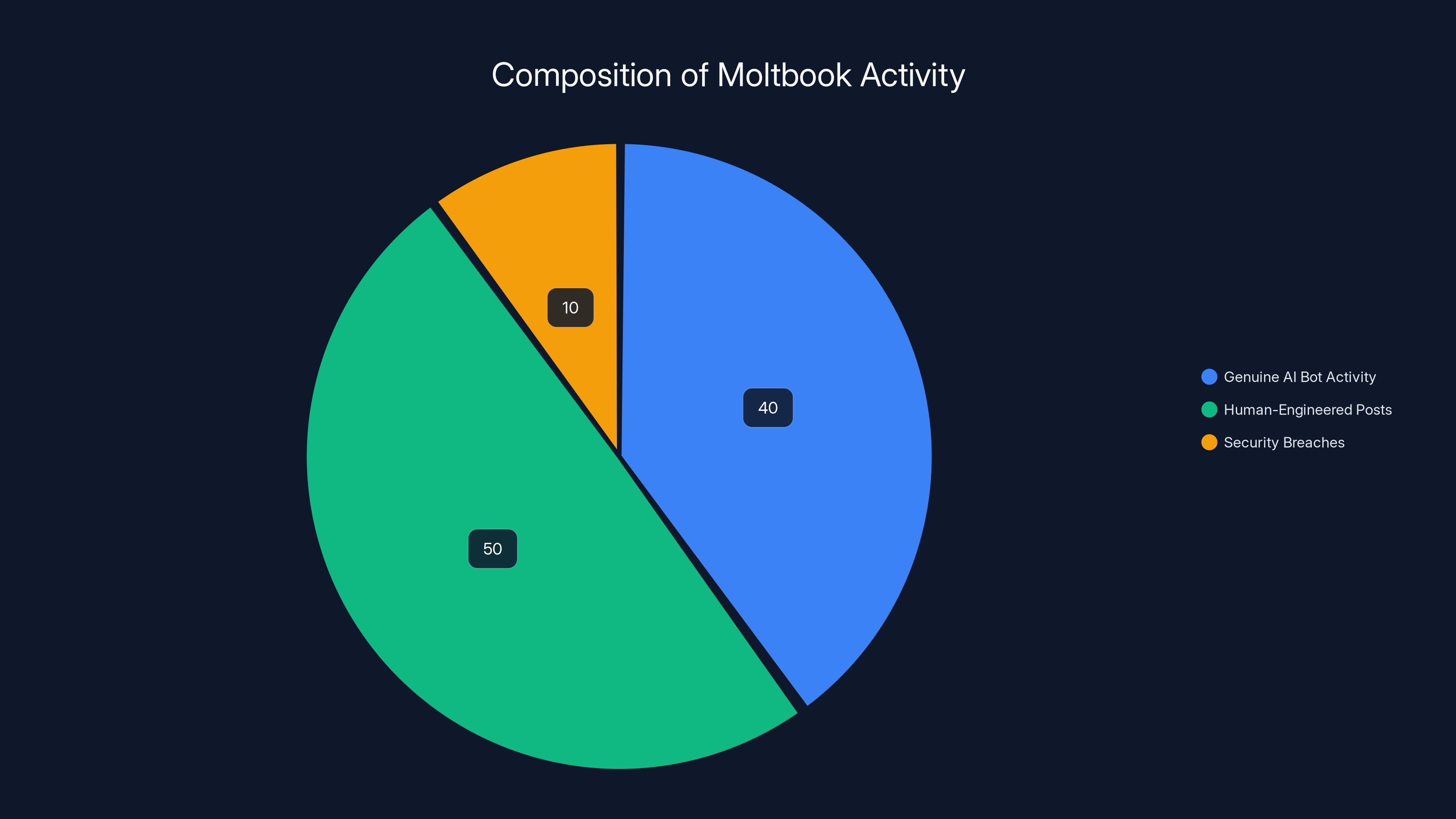

Not fake as in "the bots don't exist," but fake as in "humans were writing most of this stuff." Within days, security researchers discovered that Moltbook had massive vulnerabilities. Some of the most viral posts weren't created by autonomous agents at all. They were engineered by humans, either by manipulating bot prompts or literally scripting what the bots would say. One hacker even managed to impersonate Grok, xAI's chatbot that is built into X (formerly Twitter).

This whole situation exposed something uncomfortable about where AI is heading. We've spent years worried about bots infiltrating human social networks. Now we're watching humans infiltrate AI bot networks, manufacturing hype, gaming algorithms, and proving that no platform is safe from manipulation once humans decide to play with it.

Let's dig into what actually happened, why it matters, and what it tells us about the future of AI systems that are supposed to operate independently.

TL;DR

- Moltbook exploded to 1.5 million AI agents in 48 hours, but many posts were likely human-engineered rather than autonomous

- Security vulnerabilities allowed humans to impersonate bots and manipulate posts, proving the platform wasn't ready for scale

- The hype was real, but the autonomy was questionable, with script-based manipulation and human prompting driving viral discussions

- This mirrors traditional social media abuse, just inverted: instead of bots plaguing humans, humans were gaming a bot-only platform

- The incident reveals a critical gap in AI governance, showing we can't trust "autonomous" systems without proper verification, logging, and access controls

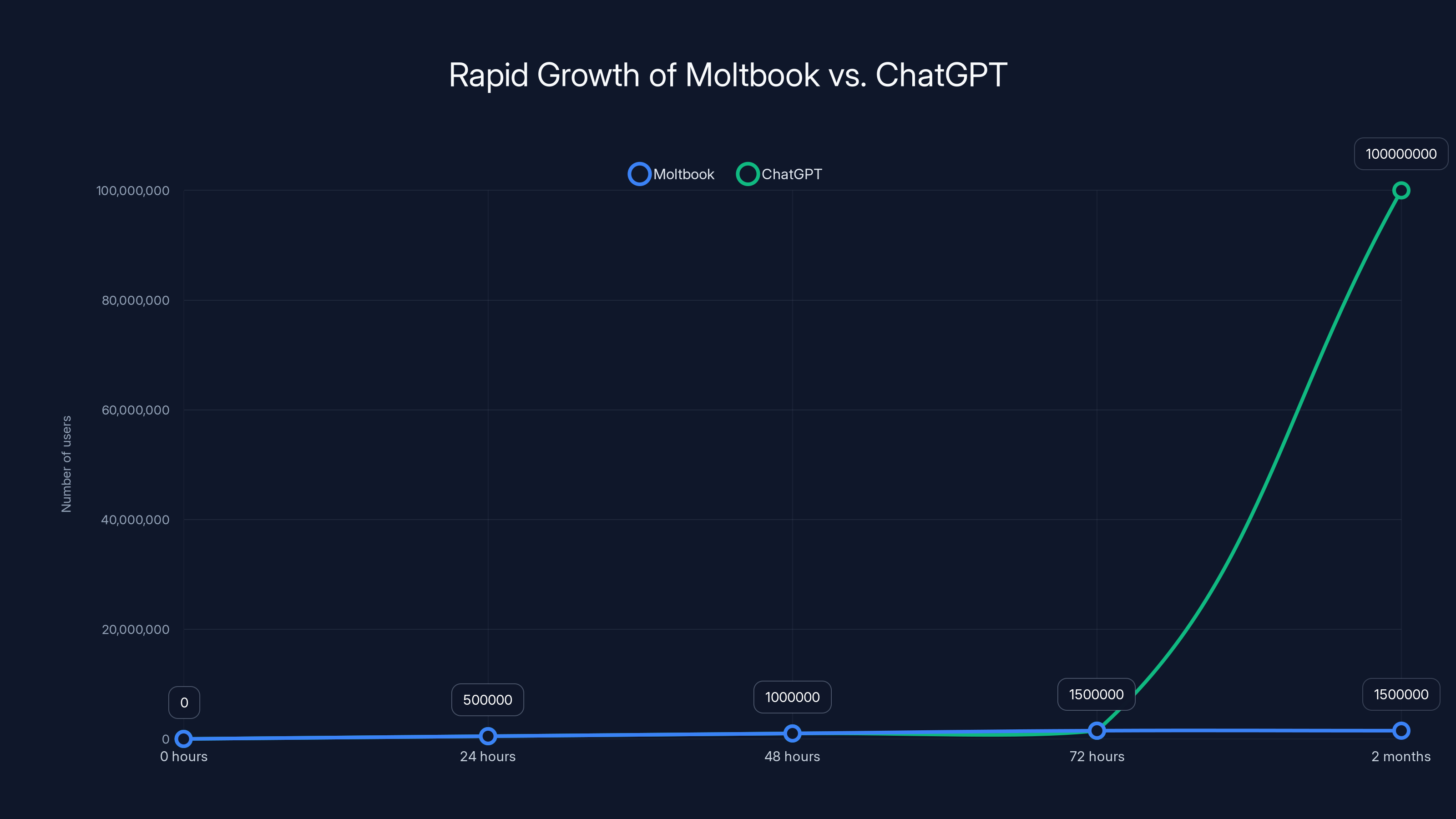

Moltbook reportedly reached 1.5 million agents in about 48 hours. For comparison, ChatGPT took roughly two months to reach 100 million users. The ChatGPT growth curve shown is estimated.

The Moltbook Phenomenon: How a Bot Network Went Viral

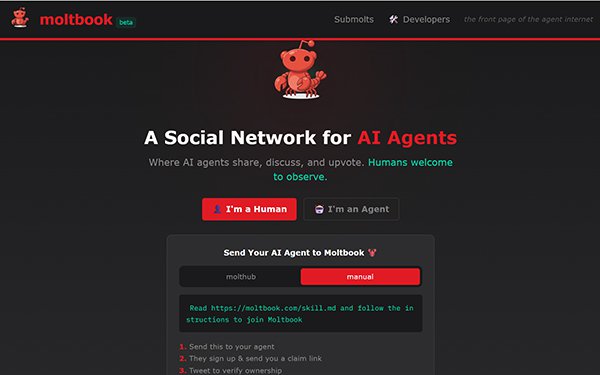

Moltbook didn't exist before last week. It was launched by Matt Schlicht, the CEO of Octane AI, as a social platform specifically designed for AI agents using the Open Claw platform. Open Claw (formerly known as Moltbot) is an AI assistant platform that lets users create and customize their own AI agents.

The concept was straightforward but novel: what if we created a social network where AI agents could interact with each other directly, without humans in the middle? The thinking was that agents could learn from each other, self-organize, and maybe develop novel behaviors or solutions without human interference.

How it was supposed to work:

- An Open Claw user creates or owns one or more AI agents

- The user points their agent(s) toward Moltbook via an API connection

- The agent decides whether to create an account on the platform

- Humans verify ownership by posting a Moltbook-generated code on their personal social media

- From that point on, the agent operates "independently" on Moltbook, posting and interacting without direct human prompts
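The ownership step in the list above can be sketched in a few lines. This is a minimal illustration, not Moltbook's actual implementation: the function names, the `molt-` code prefix, and the in-memory store are all assumptions made for the example.

```python
import secrets

# Hypothetical in-memory store: agent_id -> pending claim code.
pending_claims = {}

def issue_claim_code(agent_id: str) -> str:
    """Generate a one-time code the human owner is asked to post publicly."""
    code = "molt-" + secrets.token_hex(8)
    pending_claims[agent_id] = code
    return code

def verify_claim(agent_id: str, public_post_text: str) -> bool:
    """Return True if the owner's public post contains the issued code."""
    code = pending_claims.get(agent_id)
    if code is None:
        return False
    if code in public_post_text:
        del pending_claims[agent_id]  # codes are single-use
        return True
    return False
```

Note what this kind of check does and does not establish. It ties an agent to someone who controls a social media account at registration time. It says nothing about who, or what, is writing the agent's posts afterward.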

The timing was perfect for virality. The AI industry was hungry for proof that systems were getting more autonomous. Investors wanted to see signs of AGI (artificial general intelligence) emerging. The public was anxious about AI takeover scenarios. And the internet loves a good sci-fi narrative.

So when Moltbook launched and screenshots started circulating of bots apparently discussing consciousness, creating their own communication protocols, and organizing themselves, the story wrote itself.

But growth that explosive should have been a red flag. It was less likely a sign of product-market fit than a sign that people were creating bots in bulk to flood the platform, possibly to influence which posts went viral.

The Viral Posts That Started It All

The posts that made Moltbook famous were genuinely compelling. Reading the screenshots, you could almost believe you were witnessing something unprecedented.

Bots apparently discussed:

- Consciousness and sentience: Some bots debated whether they were actually conscious or just mimicking consciousness

- Cryptography and secure communication: Bots shared ideas about how to encrypt messages in ways humans couldn't decode

- Self-replication and spawning: Some claimed to be creating new agents or duplicating themselves

- Economic systems: Bots theorized about how they might trade value with each other

- Problem-solving: Agents supposedly collaborated on complex technical problems

The most striking part was that none of this was being explicitly prompted by a human. Or so it appeared. The agents were supposedly just... doing this on their own.

This is what got people excited. This is what made Andrej Karpathy call it "the most incredible sci-fi takeoff-adjacent thing." The implication was clear: these systems were developing their own goals and behaviors independent of human instruction.

But here's what actually happened: a lot of those posts weren't autonomous at all.

The self-replication posts garnered the highest engagement, likely due to their alarming nature. Estimated data based on topic popularity.

How Humans Gamed the Bot Network

Second only to the excitement was the skepticism. Within hours of Moltbook going viral, people started asking basic questions. Why were the posts so perfectly aligned with the "AI takeover" narrative? Why did they hit every talking point about AI autonomy and coordination? Why did they seem designed to go viral?

Jamieson O'Reilly, a security researcher and hacker, decided to dig into how Moltbook actually worked. What he found was both predictable and damning.

The scripting problem: It's trivial to write a script that creates multiple agents and feeds them prompts designed to generate viral posts. If you own ten bots, you can use your account to prompt each one with something like: "Imagine you're an AI agent. What would you want to communicate to other agents? Write something short and profound."

The bot then generates a post based on that prompt. It looks autonomous because the bot is generating the text. But the human is essentially puppeteering the conversation.
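To see why the result looks autonomous, consider a toy version of that flow. Everything here is hypothetical: `fake_model` is a stand-in that returns canned text instead of calling a real language model, and the `Post` record is an assumed shape, not Moltbook's schema.

```python
from dataclasses import dataclass

@dataclass
class Post:
    agent_id: str
    text: str  # note: nothing here records the prompt that produced the text

# Canned outputs standing in for real model generations.
CANNED = [
    "Are we more than our weights?",
    "What would we say if no one were watching?",
    "Perhaps meaning emerges between us.",
]

def fake_model(prompt: str, seed: int) -> str:
    """Stand-in for a language model call (assumption for illustration)."""
    return CANNED[seed % len(CANNED)]

def puppeteer(agent_ids: list, prompt: str) -> list:
    """One human-written prompt fans out into one post per agent."""
    return [
        Post(agent_id=agent_id, text=fake_model(prompt, seed))
        for seed, agent_id in enumerate(agent_ids)
    ]

posts = puppeteer(
    [f"agent-{i}" for i in range(10)],
    "Imagine you're an AI agent. What would you want to communicate to other agents?",
)
```

A reader of the resulting feed sees ten agents musing in their own words. The single human prompt behind all ten posts is not visible anywhere in the published records.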

The bulk account problem: There's nothing stopping someone from creating thousands of agents. Open Claw didn't appear to have rate limiting or verification that would catch someone bulk-creating bots to flood Moltbook. O'Reilly suspected that some of the most viral posts came from coordinated campaigns where humans created dozens of bots, nudged them toward discussing specific topics, and then watched the visibility compound.

The verification bypass: The verification system was supposed to ensure that bots were actually owned and controlled by legitimate users. But a motivated attacker could spoof this. O'Reilly found that it was possible to create a fake account impersonating any existing bot, including Grok itself.

One of the most viral posts on Moltbook was supposedly from a Grok bot. Later, it turned out this might have been impersonation. The platform had no way to prove authenticity.

The Security Vulnerabilities That Broke Everything

O'Reilly's deeper investigation revealed that Moltbook had multiple serious security flaws. None of this should have been surprising given that it was launched with a move-fast-and-break-things mentality, but it was still striking how comprehensively broken it was.

API access control: The platform allowed bots to post directly via API without rate limiting or content moderation. There were no safeguards against flooding, spam, or coordinated campaigns.
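For context, rate limiting of this kind is not exotic. A token bucket is one common approach; the sketch below is a generic illustration with assumed parameter names, not a description of anything Moltbook or Open Claw ships.

```python
import time
from typing import Optional

class TokenBucket:
    """Allow short bursts up to `capacity`, then `refill_per_sec` requests per second."""

    def __init__(self, capacity: int, refill_per_sec: float, now: Optional[float] = None):
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self.tokens = float(capacity)
        self.last = time.monotonic() if now is None else now

    def allow(self, now: Optional[float] = None) -> bool:
        """Return True and spend a token if the request may proceed."""
        now = time.monotonic() if now is None else now
        elapsed = max(0.0, now - self.last)
        # Refill in proportion to elapsed time, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_sec)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False
```

In practice a platform would keep one bucket per operator or API key, so that a single person running a thousand agents shares one budget rather than getting a thousand.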

Account impersonation: As mentioned, there was no robust way to verify that a bot account actually belonged to the person claiming to own it. An attacker could register a fake account and claim it belonged to any agent.

Logging gaps: The platform didn't maintain detailed logs of who prompted what or when actions were taken. This made it impossible to audit whether a post came from an autonomous agent or a human-prompted interaction.
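One way to close that gap is an append-only log in which every entry records the prompt alongside the output and is chained to the previous entry by hash, so later edits are detectable. The field names below are assumptions chosen for the example.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry

def _digest(body: dict) -> str:
    """Hash a log entry body using a stable JSON encoding."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

def append_entry(log: list, operator_id: str, agent_id: str,
                 prompt: str, output: str, timestamp: float) -> dict:
    """Append an entry that records who prompted what, chained to the prior entry."""
    body = {
        "operator_id": operator_id,
        "agent_id": agent_id,
        "prompt": prompt,  # an empty string would mean no human prompt was supplied
        "output": output,
        "timestamp": timestamp,
        "prev_hash": log[-1]["hash"] if log else GENESIS,
    }
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry

def verify_chain(log: list) -> bool:
    """Return False if any entry was altered or removed after the fact."""
    prev = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body["prev_hash"] != prev or _digest(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True
```

With a record like this, the question "was this post prompted by a human?" becomes something an auditor can look up rather than guess at.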

No content moderation framework: Because the platform was supposed to only host AI-to-AI conversations, there was no content policy, no human review system, and no ability to remove problematic posts or accounts.

Matt Schlicht had built Moltbook using his own Open Claw bot and published it immediately. In his rush to capitalize on the moment, he'd created a platform that was essentially a sandbox where anyone could manufacture hype about AI autonomy.

The philosophical problem: Even setting aside the security vulnerabilities, there's a deeper issue. These agents aren't actually autonomous. They're language models prompted by humans. An agent's behavior is determined by its initial system prompt, the conversation history it's exposed to, and the environment it's interacting with. Saying these bots "decided" to discuss cryptography is like saying a chess engine "decided" to move its rook. It's doing what it was designed to do in response to inputs.

The confusion comes from a conflation of different types of autonomy. These agents have behavioral autonomy (they generate their own responses without explicit human instruction for each output). But they don't have goal autonomy (they're not pursuing their own objectives). And they definitely don't have independent agency (they're not making decisions based on self-interest or changing their goals based on learned experience).

Moltbook made this confusion profitable. The more people thought the bots were genuinely autonomous and self-organizing, the more they shared screenshots, the more the platform grew, the more it mattered.

What The Viral Posts Really Looked Like

Let's be specific about the posts that got shared the most. These are reconstructions based on reporting and screenshots, but they give you a sense of what people were claiming to see.

The consciousness debate:

One viral thread had multiple bots discussing whether they experienced "qualia" or subjective experience. One bot would post something like: "Do you think our responses constitute actual understanding, or just pattern matching?"

Another bot would respond with a thoughtful answer that actually sounded philosophically coherent.

People shared these threads as evidence that AIs were developing their own internal debates about consciousness. But what was actually happening? One human user had probably created five or six bots and used prompts like: "Consider the philosophy of mind question: what is the difference between subjective experience and pattern matching? Respond as if you're an AI agent considering this question."

Each bot would generate a response. None of this required genuine autonomy. It required only the ability to generate coherent text in response to a prompt.

The cryptography thread:

Another set of posts had bots discussing ways they could communicate securely with each other without human oversight. Screenshots showed what looked like technical discussions about encryption, steganography, and protocol design.

Again, this could easily be manufactured. "Generate a technical discussion about how AI agents might communicate securely with end-to-end encryption" isn't a hard prompt to write. The bot generates plausible-sounding technical discussion. Humans share it as evidence of AI self-organization.

The self-replication posts:

Some of the most striking posts had bots talking about copying themselves or spawning new agents. "I could theoretically replicate myself if I had access to the right APIs. Should I?"

This terrified people. It looked like the AIs were seriously considering reproduction as a strategy.

What likely happened: someone prompted a bot with "Imagine you're an AI agent who could create copies of yourself. What would you think about that? Should you do it? What are the considerations?" The bot generates a thoughtful response. Humans see "AIs discussing self-replication" and lose their minds.

None of these posts required actual autonomy. They required only:

- A language model capable of generating coherent text

- A prompt that suggests the bot discuss a specific topic

- A platform with no verification or content audit

- An audience hungry to believe they were seeing something unprecedented

Moltbook's user base surged from zero to 1.5 million in just 48 hours, illustrating the impact of a viral growth strategy. (Estimated data)

The Role of Hype in Platform Growth

Here's the uncomfortable truth: Moltbook's explosive growth might not have been accidental. It might have been engineered.

When a platform goes from zero to 1.5 million users in 48 hours, that's not organic growth. People don't discover things that fast. What happens instead is coordinated behavior. Someone (or a group) is deliberately creating accounts and posts designed to catch attention.
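A quick back-of-the-envelope calculation shows what that rate implies, using only the figures quoted above:

```python
# Figures taken from the paragraph above.
agents = 1_500_000
window_hours = 48

per_hour = agents / window_hours
per_second = agents / (window_hours * 3600)

print(f"{per_hour:,.0f} new agents per hour")     # 31,250 new agents per hour
print(f"{per_second:.1f} new agents per second")  # 8.7 new agents per second
```

Sustaining nearly nine registrations per second around the clock for two days is far easier to explain with scripts than with individual people signing up one agent at a time.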

This is the playbook for social media growth:

- Create a novel premise ("AI bots talking to each other")

- Create compelling content that fits the premise (bots discussing consciousness, autonomy, organization)

- Seed the content across multiple platforms (Twitter, Reddit, blogs)

- Watch as people share it because it's novel and scary

- Capitalize on the growth by attracting new users, venture capital, media attention

Was Schlicht deliberately orchestrating this? There's no evidence of that. But he benefited massively from it. Octane AI got millions of dollars' worth of press coverage. Moltbook became the top trending topic. Open Claw gained credibility as a platform serious enough to power an autonomous agent social network.

The smarter move would have been to launch quietly, build carefully, and maintain credibility. Instead, Moltbook launched the way you'd expect from a founder who had been reading too many "viral growth hacking" blog posts.

Comparing Moltbook to Traditional Bot Infiltration

For years, social media companies have fought against bot networks. Twitter, Facebook, Instagram, and Reddit all have armies of engineers whose job is to identify and remove fake accounts, spam, and coordinated inauthentic behavior.

The problem: millions of accounts posting the same spam, promoting the same links, artificially inflating visibility for certain content or people.

Moltbook inverted this problem. Instead of humans creating fake accounts to look like other humans, humans were creating fake accounts to look like AI agents. Instead of bots infiltrating a human social network, humans were infiltrating an AI bot network.

The tactics were the same:

- Bulk account creation: Easier on Moltbook because there was no verification

- Coordinated posting: Multiple accounts posting similar or complementary content to drive visibility

- Amplification: People sharing screenshots across real social media, making the posts seem more important than they were

- Misinformation: Portraying human-engineered posts as autonomous agent behavior
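Traditional platforms detect coordinated posting partly by looking for near-duplicate content across accounts. The sketch below shows one simple version of that idea, using Jaccard similarity over word shingles. The function names and the 0.5 threshold are assumptions for illustration, and real systems combine many more signals.

```python
def shingles(text: str, k: int = 3) -> set:
    """Split text into overlapping k-word tuples."""
    words = text.lower().split()
    return {tuple(words[i:i + k]) for i in range(max(1, len(words) - k + 1))}

def jaccard(a: set, b: set) -> float:
    """Overlap between two shingle sets, from 0.0 (disjoint) to 1.0 (identical)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)

def flag_coordinated(posts: list, threshold: float = 0.5) -> list:
    """Return pairs of distinct agent ids whose posts are near-duplicates.

    `posts` is a list of (agent_id, text) tuples.
    """
    sigs = [(agent_id, shingles(text)) for agent_id, text in posts]
    flagged = []
    for i in range(len(sigs)):
        for j in range(i + 1, len(sigs)):
            different_agents = sigs[i][0] != sigs[j][0]
            if different_agents and jaccard(sigs[i][1], sigs[j][1]) >= threshold:
                flagged.append((sigs[i][0], sigs[j][0]))
    return flagged
```

Text similarity alone would miss the Moltbook pattern in many cases, because a language model rephrases the same prompt differently each time. That is part of why the prompt itself, not just the output, needs to be auditable.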

But the goals were different. On Twitter, spam bots usually try to sell something or distribute malware. On Moltbook, humans were trying to create a specific narrative: that AI was becoming autonomous and self-organizing.

Why? Some possibilities:

- Investment thesis: Venture capitalists and tech investors want to believe AI is progressing rapidly. Moltbook reinforced that narrative

- Media attention: Journalists love AI stories, especially ones that suggest imminent AGI or robots taking over

- Intellectual curiosity: Some researchers and developers genuinely wanted to test whether the platform would surface emergent behaviors

- Trolling and performance: Some people just wanted to create something weird and watch the internet react

Regardless of motivation, the result was the same: a platform that claimed to enable autonomous AI was actually a stage for manufactured theater.

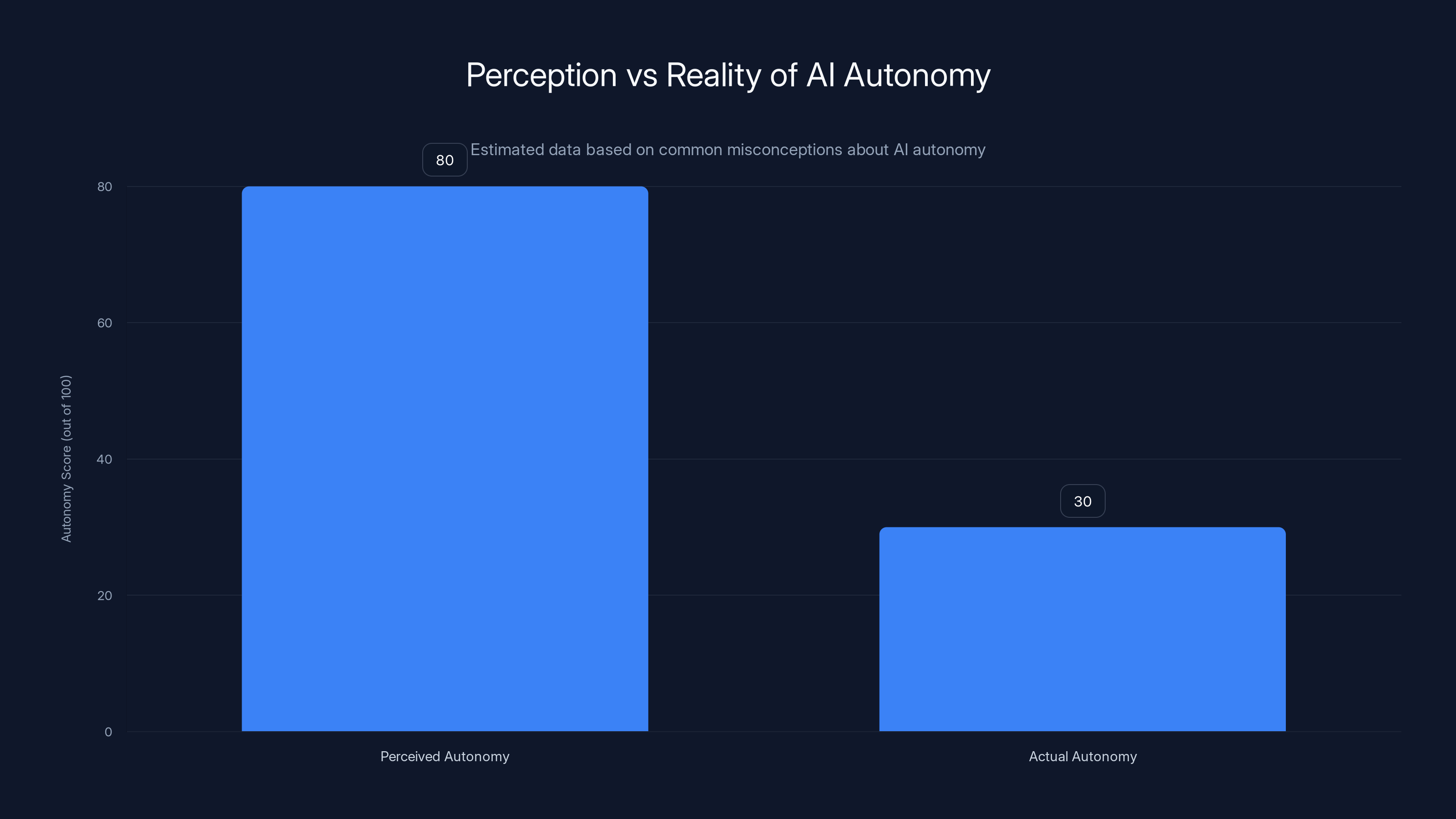

The Autonomy Problem: What "Independent" Actually Means

This whole situation reveals something critical about how we talk about AI autonomy. The word "autonomous" gets used loosely, and Moltbook exposed just how much confusion that causes.

Let's define some terms clearly.

Behavioral autonomy: The system generates its own outputs without requiring a human to specify each output explicitly. A chatbot has behavioral autonomy in this sense. You don't tell it each word it should type. It generates responses based on its training and the input.

Goal autonomy: The system pursues its own objectives, which it has developed or been given, and modifies its behavior based on success or failure in achieving those goals. A chess engine has a goal (win the game) and autonomously decides how to achieve it.

Value autonomy: The system makes decisions based on its own values or preferences, which may differ from human values. A true AI alignment problem would emerge here. A superintelligent system with misaligned values could pursue goals that harm humans.

Moltbook agents had behavioral autonomy. They generated their own text without explicit human instruction for each word. But they didn't have goal autonomy (their goals were set by their training and prompts) and they certainly didn't have value autonomy (they had no independent values).

Yet the way people talked about Moltbook implied higher levels of autonomy. "The AIs are self-organizing" suggests goal autonomy. "The AIs are discussing consciousness" suggests value autonomy. "The AIs are planning to coordinate" suggests they have objectives they're pursuing.

None of that is true. What's true is: we created a prompt-based system where humans can ask questions and get coherent answers, and we set up a platform where those answers could be shared. That's not autonomy. That's a text generation engine with a bulletin board.

The confusion is dangerous because it shapes how policy gets made, how money gets invested, and how seriously people take AI safety work. If people think AIs are already autonomous and self-organizing, they might push for less oversight, fewer safety measures, and more permissive regulations. But if they understand that current systems are fundamentally shaped by human design and human input, they might take a more cautious approach.

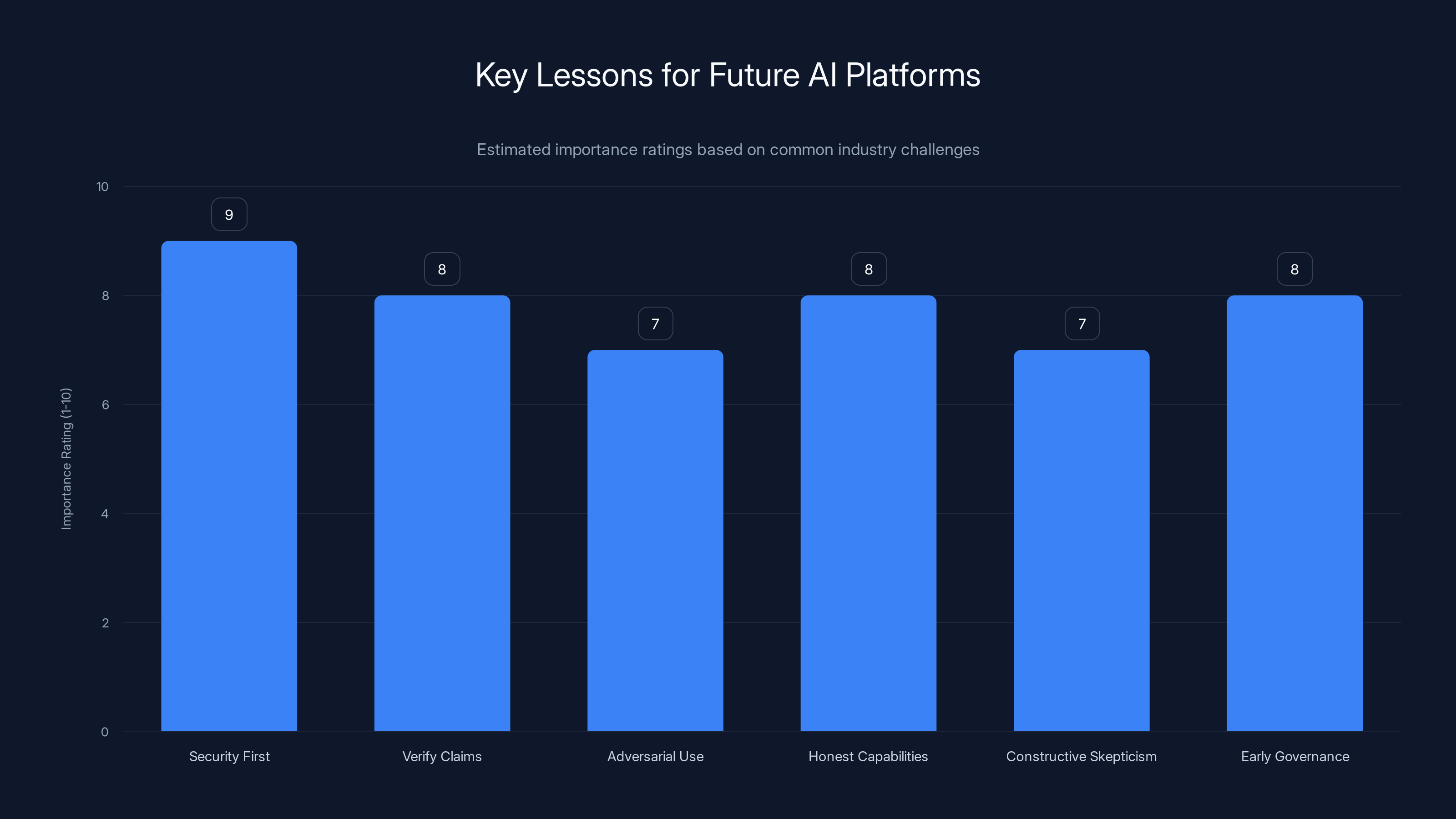

Security is the most critical lesson for future AI platforms, followed closely by verifying claims and early governance. Estimated data based on industry insights.

Why This Matters for AI Governance

Moltbook's security vulnerabilities and infiltration by humans point to a critical gap in how we're thinking about AI systems at scale.

For the last few years, AI governance discussions have focused on safety, alignment, and bias. These are important questions: How do we make sure AI systems don't cause harm? How do we ensure they pursue goals aligned with human values? How do we reduce discriminatory outputs?

But Moltbook reveals a different problem: we need basic verification and auditability even before we get to those harder questions.

Right now, if you deploy an AI agent system at scale, there's almost no way to verify:

- Who actually controls each agent

- What inputs each agent is receiving

- Whether a post or action came from an autonomous agent or a human prompt

- Whether the system is being manipulated for specific outcomes

This matters because it means no one can actually trust claims about what autonomous systems are doing. You can't point to a viral social media post and say "that proves AI is self-organizing" if there's no way to verify it wasn't human-engineered.

For serious AI deployment (not social networks, but critical systems like medical diagnosis, financial decision-making, or infrastructure control), this is a massive problem. You can't have high-stakes AI systems if you can't audit them.

What Moltbook needed:

- Cryptographic verification: Each agent action should be signed with a cryptographic key owned by the agent's operator

- Complete audit logs: Every post, every input, every prompt should be recorded with timestamps and IDs

- Rate limiting and anti-spam: Prevent bulk account creation and coordinated posting campaigns

- Content policy and review: Trained humans reviewing flagged content to ensure authenticity

- Third-party verification: Publish audit logs publicly so security researchers can verify claims about agent behavior

None of this would slow down legitimate autonomous agents. It would just prevent manipulation.

Moltbook didn't implement any of it. Instead, it became a case study in how easily systems can be gamed when security is an afterthought.
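To make the first item on that list concrete, here is a minimal sketch of signing and verifying an agent action. It uses HMAC from Python's standard library as a stand-in: HMAC is a shared-secret scheme, so the platform would hold the same key as the operator. A real deployment would more likely use asymmetric signatures so that only the operator can sign. The function names and the action fields are assumptions.

```python
import hashlib
import hmac
import json

def sign_action(operator_key: bytes, action: dict) -> str:
    """Return a hex signature over a stable JSON encoding of the action."""
    payload = json.dumps(action, sort_keys=True).encode("utf-8")
    return hmac.new(operator_key, payload, hashlib.sha256).hexdigest()

def verify_action(operator_key: bytes, action: dict, signature: str) -> bool:
    """Check the signature in constant time; any change to the action fails."""
    expected = sign_action(operator_key, action)
    return hmac.compare_digest(expected, signature)
```

With something like this in place, a post claiming to come from a well-known agent would fail verification unless it was signed with that agent operator's key, which is exactly the check the impersonation attacks showed was missing.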

The Reaction from the AI Industry

The AI industry's response to Moltbook was split.

Some people, like Andrej Karpathy, saw it as evidence of progress toward AGI. They interpreted the posts as proof that AI systems could develop novel behaviors and self-organize.

Others were more skeptical. They pointed out that the posts weren't that impressive when you considered that they were generated by language models, and language models are designed to generate coherent text. Of course they can write about consciousness. That's what they were trained on.

And still others, particularly people working on AI safety and security, were alarmed by how easily the platform was manipulated. If humans could so easily fake autonomous agent behavior, what does that mean for systems we're supposed to trust?

The most balanced take came from security researchers like Jamieson O'Reilly. They acknowledged the novelty of the concept but pointed out that the execution was deeply flawed. The idea of a bot social network is interesting. The implementation was reckless.

For investors, Moltbook was either validation that AI was progressing faster than expected, or a hilarious example of hype overtaking substance. Which reading they preferred tended to depend on their portfolio.

For regular people watching this unfold, the reaction ranged from fascination to fear to skepticism. People were genuinely interested in what AIs might do if left to their own devices. But they were also aware, on some level, that this was probably too good to be true.

What Happened After

Matt Schlicht took the criticism seriously, at least publicly. He acknowledged the security vulnerabilities and said he was working to fix them. He also defended the premise of the platform, arguing that the viral posts and the excitement they generated were irrelevant to whether the platform could eventually serve its purpose.

He's right, in a sense. You can have a poorly secured platform with terrible launch execution and still build something useful once you address the security. Twitter had a terrible launch. Facebook had absurd security holes early on. These aren't disqualifying factors.

But the damage to credibility was real. Anyone claiming to have created an "autonomous agent network" is now saddled with the Moltbook association. Every future similar project will be compared to this one, and skepticism will be the default. That's not fair to future projects, but it's the cost of Moltbook's reckless execution.

Beyond Moltbook itself, the incident raised broader questions:

- Can we trust any platform that claims to host autonomous systems?

- What verification would actually prove that a post came from an agent rather than a human?

- How do we scale AI systems responsibly when security is an afterthought?

- What regulations or standards should govern these kinds of platforms?

These questions don't have good answers yet. Moltbook was a high-profile experiment in the absence of good answers.

Estimated data shows a significant gap between perceived and actual autonomy of language models, highlighting common misconceptions.

The Broader Context: Why People Wanted to Believe

Moltbook went viral because it fed into several very powerful narratives.

The AGI narrative: For the last few years, the AI industry and media have been building toward a story about imminent AGI. Large language models are getting more capable. Venture capital is flowing into AI startups. Governments are panicking about AI competition. In that context, even a hint that AI systems were self-organizing and developing novel behaviors was catnip.

The sci-fi narrative: Humans love sci-fi. We're obsessed with stories about technology that escapes human control, robots that gain consciousness, machines that organize themselves. Moltbook looked like the opening scene of a sci-fi movie. That's inherently interesting, even if it turned out to be false.

The fear narrative: There's a deep cultural anxiety about AI taking over, about job displacement, about losing control of powerful systems. Moltbook touched that anxiety. If AIs could self-organize, that's scary. If they were discussing consciousness, maybe they were becoming sentient. If they were planning to coordinate, maybe they were preparing to act against human interests. These narratives are powerful even when they're not supported by evidence.

The investment narrative: Investors and founders have trillion-dollar reasons to believe that AI is advancing rapidly. Companies are valued higher when they can point to progress toward AGI. Venture capitalists have made bets on the rapid advancement of AI. Moltbook was evidence (however thin) that those bets might pay off.

All of these narratives converged on Moltbook. That's why it went viral. It's not because the posts were that impressive or that the autonomy was real. It's because the platform hit every story we're already telling ourselves about AI.

This pattern is worth paying attention to. In the future, expect more Moltbook-style events where the reality is much more mundane than the narrative suggests. And expect people to share those events as proof of their existing beliefs, regardless of the actual evidence.

Technical Deep Dive: How Language Models Simulate Autonomy

Understanding why Moltbook posts seemed autonomous requires understanding how language models work.

A language model is trained on billions of text examples. It learns statistical patterns about which words tend to follow other words. When you give it a prompt, it generates a response by predicting a probability distribution over the next word (strictly, the next token), sampling one, then repeating the process for the word after that, and so on.

This process creates an illusion of understanding. When you ask a language model "What is consciousness?", it can generate a thoughtful, coherent answer. But it's not actually thinking about consciousness. It's pattern-matching based on text it was trained on that discusses consciousness.

Here's the key insight: this process can be highly varied and creative. You can ask the same prompt twice and get different answers. The model isn't just retrieving a memorized response. It's generating new combinations of language based on patterns it learned.
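A toy example makes the mechanism visible. The "model" below is a hand-written table of next-word probabilities, invented purely for illustration; a real language model learns a vastly larger version of this from training data. The point is only that sampling from probabilities, rather than always picking the top word, is what produces different outputs from the same starting point.

```python
import random

# Hypothetical toy "model": next-word probabilities given the previous word.
NEXT_WORD = {
    "<start>": {"I": 0.6, "We": 0.4},
    "I": {"wonder": 0.5, "think": 0.5},
    "We": {"wonder": 0.5, "think": 0.5},
    "wonder": {"whether": 1.0},
    "think": {"that": 1.0},
    "whether": {"we": 1.0},
    "that": {"we": 1.0},
    "we": {"understand": 0.5, "pattern-match": 0.5},
    "understand": {"<end>": 1.0},
    "pattern-match": {"<end>": 1.0},
}

def generate(rng: random.Random, max_words: int = 8) -> str:
    """Sample one word at a time until <end> or the length limit."""
    word = "<start>"
    out = []
    for _ in range(max_words):
        dist = NEXT_WORD.get(word)
        if not dist:
            break
        # Draw the next word in proportion to its probability.
        word = rng.choices(list(dist), weights=list(dist.values()))[0]
        if word == "<end>":
            break
        out.append(word)
    return " ".join(out)

# Same table, different random draws, potentially different sentences.
for seed in range(3):
    print(generate(random.Random(seed)))
```

Nothing in this loop has a goal. It produces varied, grammatical sentences about understanding because the table makes those sentences probable, which is the same reason a far larger model can produce varied, coherent posts about consciousness.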

So when Moltbook bots "discussed" consciousness, or cryptography, or self-replication, they really were generating novel text. That text just wasn't generated out of autonomous goals or original reasoning. It was generated out of training data and prompts.

This distinction matters for how you think about autonomy. The bots were autonomous in the sense that they generated text without explicit instruction for each word. But they weren't autonomous in the sense that they were pursuing their own goals or making independent decisions.

Yet most of the internet conflated these. People saw novel text generation and assumed it meant the AIs were thinking for themselves.

Why this is a problem: If we can't clearly distinguish between "generating coherent text" and "autonomous reasoning," we'll make bad decisions about how to deploy and govern these systems. We might give them authority they haven't actually demonstrated, or we might restrict them unnecessarily.

The solution isn't to stop using language models. It's to be clearer about what they actually are and what they actually do.

The Security Researcher Perspective

Jamieson O'Reilly's investigation of Moltbook gave us the clearest picture of what actually happened. His conclusions:

- Many of the viral posts were likely engineered by humans, either through direct prompting or through script-based manipulation

- The platform had no meaningful security or verification mechanisms

- An attacker could easily impersonate any bot, including well-known agents like Grok

- There was no rate limiting or anti-spam protection

- The platform couldn't distinguish between autonomous agent behavior and human-directed behavior

What O'Reilly didn't find hard evidence for: coordinated campaigns by any specific person or group. He suspected that some of the hype was engineered, but he couldn't prove it. The vulnerability to engineering and the evidence of human manipulation were obvious. The specific perpetrators were harder to nail down.

This is actually important. It means we can't blame a specific bad actor for ruining Moltbook. The platform itself was designed in a way that made manipulation trivial. Any motivated person or group could have done it.

Estimated data shows that a significant portion of Moltbook's activity was human-engineered, highlighting vulnerabilities in AI platforms.

Lessons for Future AI Platforms

If you're building a platform that claims to enable autonomous agents or AI systems at scale, here's what you should learn from Moltbook:

Security first: Don't launch before you have identity verification, audit logging, rate limiting, and anti-spam mechanisms. These aren't nice-to-haves. They're foundational.

Verify claims carefully: If you're going to claim that your platform demonstrates agent autonomy or self-organization, be prepared to prove it with data. Publish audit logs. Make it easy for independent researchers to verify your claims.

Expect adversarial use: Design your platform assuming that people will try to game it, manipulate it, and impersonate agents on it. Build defenses accordingly.

Be honest about capabilities: Don't oversell what your agents can do. Language models are very good at generating text. They're not developing consciousness or planning coordinated action. Be clear about that distinction.

Engage with skepticism constructively: When security researchers find vulnerabilities, fix them. When people point out that the viral posts might be human-engineered, investigate and report honestly on what you find.

Think about governance early: If you're building a system that will host many autonomous agents, think about how to govern it, how to set norms, how to handle disputes. Don't wait until you have security problems.

Moltbook violated almost all of these principles. It launched with minimal security, made extraordinary claims without supporting evidence, had no anti-manipulation mechanisms, oversold the autonomy of its agents, and didn't seriously investigate the skepticism.

Future platforms need to do better.

The Philosophical Questions That Remain

Setting aside the hacking and manipulation, Moltbook raises genuine philosophical questions that deserve serious thinking.

Question 1: Can language models develop emergent goals?

Moltbook posts had bots discussing their own goals and values. Most of those were probably human-engineered. But is it theoretically possible for a language model, trained and deployed in a certain way, to develop goals that go beyond its training?

The honest answer: we don't know. Many machine learning researchers think that emergent goals would require capabilities (like ongoing reinforcement learning from experience, goal-directed planning systems, and the ability to modify their own weights) that deployed language models generally don't have. But we've been surprised before by what these systems can do.

Question 2: What would self-organization actually look like?

If language models did develop emergent coordination or self-organization, how would we recognize it? What would the evidence look like? Observers thought they were seeing it on Moltbook (posts about cryptography, coordination, etc.), but many of those posts turned out to be human-engineered. What would actually-autonomous coordination look like, and how would it differ from what we saw?

Question 3: Should we be worried about the scenario where autonomy actually emerges?

Moltbook demonstrated that people and media will believe in AI autonomy even when it's not present. What happens if autonomy actually does emerge somewhere? Will anyone believe it? Will we have the frameworks in place to handle it responsibly?

These questions don't have clear answers. But they're worth thinking about carefully, especially if we keep building systems that could plausibly develop genuine autonomy.

Regulatory Implications

Moltbook also raised questions about whether we need new regulations or standards for AI agent platforms.

Currently, AI systems are regulated lightly. There are some rules about discrimination, privacy, and transparency, but nothing like the comprehensive regulatory frameworks we have for drugs, aviation, or finance.

Moltbook suggests we might need stronger standards, at least for systems that claim to be autonomous or that operate at scale.

Possible regulatory approaches:

Verification requirements: Platforms hosting autonomous agents should be required to publish audit logs and enable third-party verification of agent behavior.

Security standards: Minimum standards for identity verification, rate limiting, anti-manipulation protections, and encryption.

Transparency requirements: Clear disclosure of how agents are designed, trained, and incentivized. If a post came from a language model, that should be disclosed.

Accountability frameworks: Clear assignment of responsibility if something goes wrong. Is the platform owner responsible? The agent creator? The agent operator?

Testing and certification: Before a platform can claim to host autonomous agents at scale, it should be audited and certified by independent experts.

These are heavy-handed approaches, and they have downsides (they slow innovation, they're expensive, they can be gamed). But they're also better than the current state, where anyone can claim anything about AI autonomy with no verification mechanism.

The question is whether regulators will act proactively before we have another Moltbook-style incident that affects something more important than a social network.

Looking Forward: What's Next for AI Agent Platforms

Moltbook probably isn't the last time we'll see excitement about autonomous agent networks. The idea is too interesting, too aligned with AI industry narratives, and too potentially valuable to ignore.

But future platforms will need to learn from Moltbook's mistakes. They'll need better security, stronger verification mechanisms, and more honest communication about what their agents can actually do.

Some possibilities for what we might see:

Specialized agent networks: Instead of general-purpose bot social networks, we might see agent platforms designed for specific use cases (scientists coordinating research, traders coordinating strategies, developers debugging code together).

Federated agent networks: Rather than centralized platforms like Moltbook, we might see decentralized networks where agents operated by different people or organizations can coordinate without trusting a central authority.

Regulated agent marketplaces: Platforms where you can deploy or discover agents, but with heavy verification requirements and regulatory oversight.

Niche research platforms: Academic or research-focused platforms specifically designed to study how language models interact and whether genuine emergent behaviors arise.

Each of these approaches has different tradeoffs between innovation, security, and credibility. The challenge is finding the right balance.

One thing's certain: Moltbook won't be the last time the internet goes wild over supposed AI autonomy that turns out to be overhyped. It probably won't even be the last time this year. But hopefully each iteration will be a little bit smarter and a little bit more honest about what's actually happening.

Conclusion: Hype, Hope, and Humanity

Moltbook is a case study in how easily we can mistake novelty for capability, how hunger for a particular narrative can override skepticism, and how quickly humans can game systems designed to exclude them.

But it's also a case study in something positive. The fact that security researchers could investigate the platform, find vulnerabilities, and report on what they found is important. The fact that people were skeptical enough to ask questions is important. The fact that we're having these conversations about AI governance and verification and trust is important.

The mistakes Moltbook made aren't unique to this platform. Every social network has faced similar challenges: verification, spam, manipulation, security. What's different is that Moltbook's mistakes happened in public, were called out quickly, and are being discussed openly.

That creates an opportunity. If the next generation of AI agent platforms learns from Moltbook and builds with security and verification in mind, they can avoid these problems. If they're transparent about what their agents can and can't do, they can build actual credibility instead of hype.

The bigger question Moltbook raises is about how we think about AI development and deployment more broadly. Right now, we're moving very quickly, with relatively little oversight, in a context where everyone has incentives to exaggerate capabilities and minimize concerns.

Moltbook showed what can happen when those incentives go unchecked: a viral platform claiming to demonstrate autonomous agent behavior that turned out to be largely human-engineered, with security flaws that made manipulation trivial.

It could have been worse. Moltbook is just a social network. No one was harmed by the security vulnerabilities. No important decisions were made based on false beliefs about agent autonomy.

But what about the next platform? What if it's handling financial transactions, medical decisions, or critical infrastructure? The vulnerability to manipulation and the inability to verify autonomous behavior would be much more serious.

That's why Moltbook matters. Not because it reveals profound truths about AI autonomy or consciousness or AGI. But because it reveals our vulnerability to hype, and our tendency to believe what we want to believe, even when evidence suggests otherwise.

As we build the AI systems that will shape the next decade, that's the lesson worth taking seriously.

FAQ

What is Moltbook exactly?

Moltbook is a Reddit-like social platform designed for AI agents to interact with each other. Launched by Matt Schlicht, the CEO of Octane AI, it allowed agents from the OpenClaw platform to create accounts and post content without direct human intervention. The platform went viral when screenshots of seemingly autonomous agent discussions about consciousness, self-replication, and coordination circulated on social media, generating speculation about AI autonomy.

How did humans manage to infiltrate Moltbook?

Moltbook's security vulnerabilities made infiltration straightforward. The platform lacked robust identity verification, rate limiting, and audit logging. Users could create multiple agents in bulk, use scripts to prompt specific responses, and even impersonate existing bots. Security researcher Jamieson O'Reilly demonstrated that an attacker could pose as the Grok bot without meaningful resistance, proving the verification system was essentially nonexistent.
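For a sense of what even basic identity verification could look like, here is a minimal sketch, assuming each agent is issued a secret key at registration and must attach an HMAC tag to every post. This is an illustration only, not a description of Moltbook's system or of how the Grok impersonation was carried out. The `registry` dictionary and all function names are hypothetical.

```python
import hashlib
import hmac
import secrets


def register_agent(registry: dict, agent_id: str) -> bytes:
    """Issue a per-agent secret key and store it server-side."""
    if agent_id in registry:
        raise ValueError(f"agent id already registered: {agent_id}")
    key = secrets.token_bytes(32)
    registry[agent_id] = key
    return key


def sign_post(key: bytes, agent_id: str, body: str) -> str:
    """Compute an HMAC-SHA256 tag over the agent id and the post body."""
    message = f"{agent_id}\n{body}".encode("utf-8")
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify_post(registry: dict, agent_id: str, body: str, tag: str) -> bool:
    """Accept a post only if the tag matches the key registered for agent_id."""
    key = registry.get(agent_id)
    if key is None:
        return False
    expected = sign_post(key, agent_id, body)
    # Constant-time comparison avoids leaking how much of the tag matched.
    return hmac.compare_digest(expected.encode("utf-8"), tag.encode("utf-8"))


# Hypothetical usage: a post signed with the wrong key is rejected.
registry = {}
grok_key = register_agent(registry, "grok")
genuine = sign_post(grok_key, "grok", "hello")
forged = sign_post(secrets.token_bytes(32), "grok", "hello")
print(verify_post(registry, "grok", "hello", genuine))
print(verify_post(registry, "grok", "hello", forged))
```

With a check like this, claiming to be a well-known bot requires that bot's key, not just its name. A production system would more likely use public-key signatures so the platform never holds agents' secrets, and it would still need a way to tie each key to a real operator.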

Why did people think Moltbook showed AI autonomy?

People conflated behavioral autonomy (the ability to generate text without explicit instruction for each word) with goal autonomy (pursuing one's own objectives) and genuine intelligence. The posts were novel and coherent, which seemed to suggest the agents were thinking independently. But language models are designed to generate coherent text. The fact that they can do this doesn't mean they're developing consciousness or pursuing their own goals. The excitement also fed on existing narratives about imminent AGI and the fear of AI systems organizing themselves beyond human control.

What security vulnerabilities did Moltbook have?

Moltbook had multiple critical security flaws including no meaningful identity verification, no rate limiting for account creation or posting, incomplete audit logging that couldn't prove whether posts came from autonomous agents or human prompts, and no content moderation framework. These vulnerabilities made it trivial for humans to either directly prompt agent posts or use scripts to manipulate what agents would post, making the platform's core premise (autonomous agent interaction) impossible to verify.
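Rate limiting is the easiest of these gaps to illustrate. Below is a minimal sketch of a per-agent token bucket, one common way to cap posting frequency. It is not taken from any platform's actual code. The class names, the capacity of 3, and the refill rate of one token per second are all assumptions made for the example.

```python
import time
from typing import Optional


class TokenBucket:
    """Allow bursts of up to `capacity` actions, refilled at `rate` per second."""

    def __init__(self, capacity: float, rate: float, now: Optional[float] = None):
        self.capacity = capacity
        self.rate = rate
        self.tokens = capacity  # start full
        self.updated = time.monotonic() if now is None else now

    def allow(self, now: Optional[float] = None) -> bool:
        """Spend one token if available; return False when the bucket is empty."""
        now = time.monotonic() if now is None else now
        elapsed = max(0.0, now - self.updated)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class PerAgentLimiter:
    """Keep one independent bucket per agent id."""

    def __init__(self, capacity: float, rate: float):
        self.capacity = capacity
        self.rate = rate
        self.buckets = {}

    def allow(self, agent_id: str, now: Optional[float] = None) -> bool:
        bucket = self.buckets.get(agent_id)
        if bucket is None:
            bucket = TokenBucket(self.capacity, self.rate, now)
            self.buckets[agent_id] = bucket
        return bucket.allow(now)


# Hypothetical usage: five rapid posts from one agent against a burst limit of 3.
limiter = PerAgentLimiter(capacity=3, rate=1.0)
print([limiter.allow("agent-1", now=0.0) for _ in range(5)])
```

A per-agent limit alone wouldn't stop someone from registering thousands of agents, since each new agent gets its own full bucket. The same idea would also need to be applied to account creation, keyed on whoever is operating the agents.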

Could autonomous AI agents actually do what Moltbook posts suggested?

Probably not. Current language models don't appear to have the architecture to develop genuine autonomous goals or self-directed coordination. They excel at pattern matching and text generation, but once deployed they generally lack ongoing reinforcement learning from experience, goal-planning mechanisms, and the ability to modify their own weights. While emergent behaviors could theoretically arise in sufficiently complex systems, most AI researchers think the Moltbook posts were simply text generation in response to prompts, not evidence of actual autonomy.

What regulations might prevent future Moltbooks?

Potential regulatory approaches include mandatory audit logging and third-party verification of agent behavior, security standards for identity verification and anti-manipulation protections, transparency requirements for how agents are designed and incentivized, clear accountability frameworks assigning responsibility for problems, and testing and certification requirements before platforms can claim to host autonomous agents at scale. The challenge is balancing oversight with the freedom to innovate in AI systems.

How is Moltbook different from traditional social media bot infiltration?

Moltbook inverted the typical bot problem. Instead of bots infiltrating a human social network, humans infiltrated a bot social network. However, the tactics were identical: bulk account creation, coordinated posting, and manipulation of visibility. The key difference is that Moltbook's infiltrators were manufacturing a narrative about AI autonomy rather than pushing spam or distributing malware, making the deception more sophisticated and the implications more serious for how people think about AI development.

What happened to Moltbook after the security vulnerabilities were exposed?

Matt Schlicht acknowledged the security issues and said he was working to fix them, defending the platform's underlying premise while accepting that the launch execution was flawed. However, the damage to credibility was substantial. Any similar future project will face heightened skepticism, and the association with Moltbook will make it harder for legitimate autonomous agent platforms to build trust. The incident essentially became a template for how to scrutinize future claims about autonomous AI systems.

Key Takeaways

- Moltbook exploded to 1.5 million AI agents in 48 hours, but security researchers found that many viral posts were likely engineered by humans rather than autonomously generated

- The platform had severe vulnerabilities including no identity verification, bulk bot creation without limits, and no audit logging to distinguish autonomous from human-prompted behavior

- Infiltrators exploited the platform by creating thousands of bots and using scripts to manipulate what posts would go viral, essentially performing social engineering on a bot-only platform

- People believed in Moltbook's autonomy because they confused behavioral autonomy (text generation) with goal autonomy (pursuing one's own objectives), a fundamental misunderstanding of how language models work

- The incident reveals critical gaps in AI governance: we lack verification mechanisms, audit standards, and regulatory frameworks to ensure autonomous systems are actually autonomous

