Instagram's AI Problem Isn't AI at All—It's the Algorithm [2025]

Last month, Instagram's head honcho posted something that caught a lot of attention. Adam Mosseri took to the platform to sound alarm bells about artificial intelligence taking over the feed. His message was basically this: AI is coming for creators, and the only way to survive is to be authentically, undeniably human. Be real, be original, be a voice that can't be faked.

Sound good? In theory, absolutely. The problem is Mosseri's diagnosis misses the disease entirely.

The real threat isn't AI generating fake content. It's that Instagram's algorithm has already turned regular human creators into content-generating robots, churning out post after post following the same predictable formulas designed to keep you scrolling, liking, and sharing. AI didn't create this problem—the algorithm did. And when you fill a platform with inauthentic, predictable human-made content, you've basically built the perfect training dataset for AI to replace it.

Here's the actual situation Instagram faces: The platform has spent over a decade optimizing for engagement above all else. Creators learned what the algorithm rewards and started producing more of exactly that thing. Now you've got thousands of creators posting nearly identical content, following the same beats, hitting the same emotional notes. The algorithm didn't just change how content performs—it changed what creators actually make.

The Real Authenticity Crisis

Authenticity on Instagram has become a performance. That "candid" photo? Carefully composed. That "unfiltered" video? Shot multiple times. That seemingly spontaneous post caption? Tested against algorithmic best practices. Creators aren't being inauthentic because they're bad people. They're being inauthentic because the algorithm punishes authenticity and rewards optimization.

Mosseri keeps talking about the difference between authentic human content and fake AI content. But here's what's actually happening on the platform: A creator posts a video. Let's say it's genuinely good—original idea, real emotion, authentic voice. It gets decent engagement. But then the algorithm notices that posts with certain characteristics perform better: higher contrast colors, faster cuts, specific camera angles, particular caption lengths. So other creators start mimicking those characteristics. Not because they're copying the original creator, but because the algorithm is subtly nudging everyone toward the same formula.

Eventually, you get what I call "algorithmic convergence." Two totally different creators end up making content that looks nearly identical because they're both optimizing for the same algorithmic signals. No AI involved. Just humans learning to be robots.

I opened Instagram recently and found a video that exemplifies this perfectly. A mom counting her kids in a public place. "One, two, three," she nods, then starts again. "Who else does this too? It's not at all exhausting," the caption reads. Relatable parenting content. The thing is, I'd already seen that exact video months earlier when it was originally posted. But now it was back, because the reposting strategy is a direct play to the algorithm: casting the same net again to hook new followers, or testing whether that particular video lands better in a different context.

That's not AI. That's a human creator gaming the system. And she's not alone. I see comedians on Threads posting the exact same joke weeks apart to catch different algorithmic waves. I see fitness influencers recycling the same workout content with minor variations. I see creators explicitly stating they're reposting content because "the algorithm missed it the first time."

The irony? When Mosseri talks about "flattering imagery" being "cheap to produce and boring to consume," he's actually describing the current state of Instagram. But it's not AI creating this glut of predictable, forgettable content—it's humans learning to optimize for algorithmic reward.

How Algorithms Turn Humans Into Robots

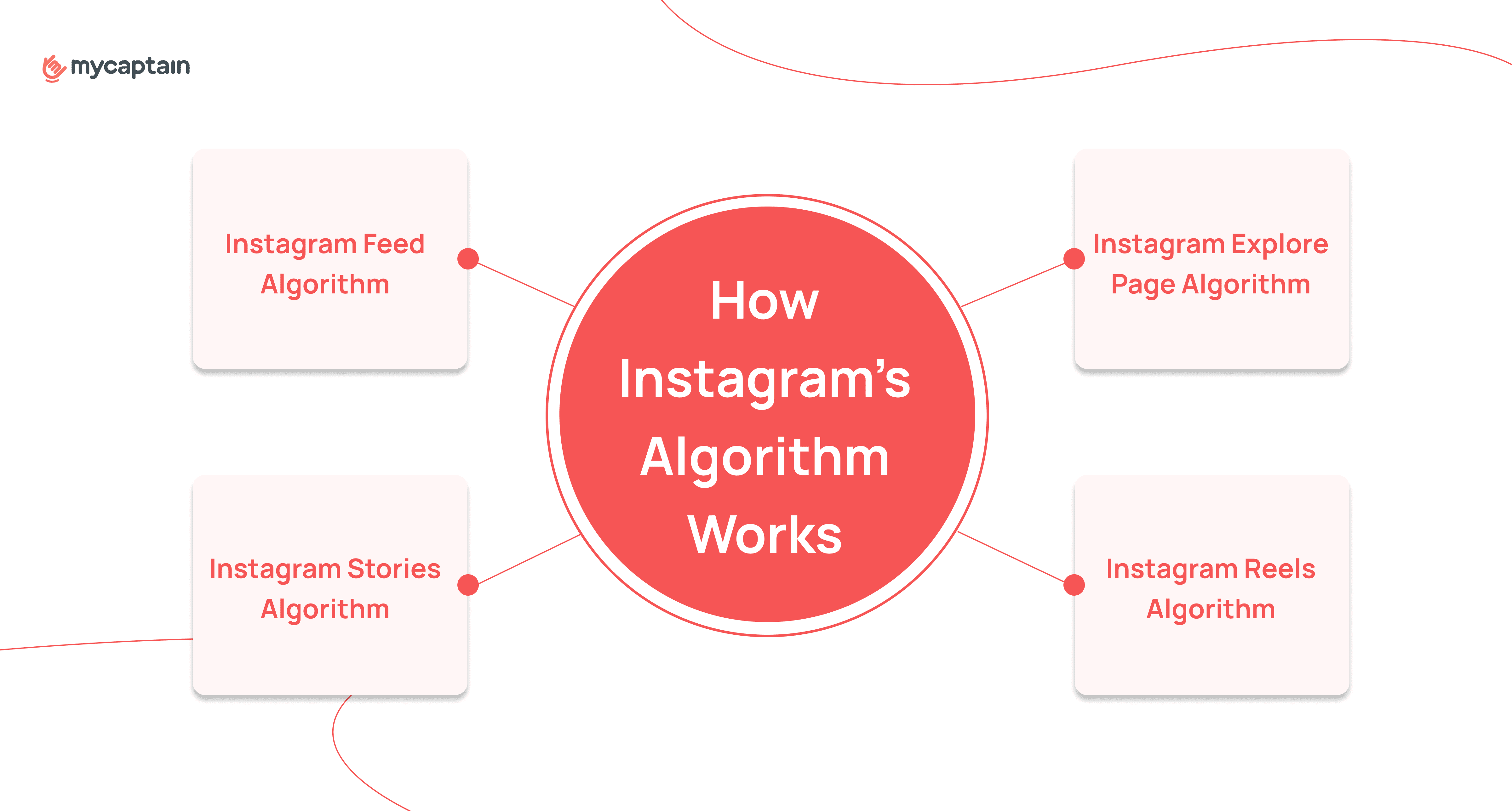

Let's get specific about how this works. Instagram's algorithm measures engagement: likes, comments, shares, time watched, saves, and a dozen other micro-signals. Every time you interact with a post, you're sending a signal about what the algorithm should show you more of. The algorithm takes those signals and builds a predictive model about what content will keep you on the platform longest.

Creators can see their analytics. They know which types of posts get the most engagement. So naturally, they make more of those posts. That's not a creator being inauthentic or evil—that's basic rational behavior. If you depend on Instagram for income, you're going to optimize for what the data shows works.

But here's where it gets weird: When millions of creators are all optimizing for the same algorithm, they all start gravitating toward the same content formats, the same emotional hooks, the same visual styles. The algorithm is essentially running a massive optimization process. Engagement is the fitness function, and all the creators on the platform are the candidates being optimized against it. The platform "trains" creators to produce certain types of content by rewarding engagement.

This looks a lot like a textbook optimization loop. The engagement metric is the objective. The creators are what gets tuned. And the output is increasingly homogeneous, predictable content.

What Mosseri doesn't explicitly acknowledge is that this is actually a feature of algorithmic social media, not a bug. The algorithm isn't trying to kill creativity—it's doing exactly what it was designed to do: maximize engagement and keep users on the platform. If authentic, original, challenging content doesn't drive engagement metrics, the algorithm deprioritizes it. Over time, creators learn that original content gets buried, so they stop making it.

The platform has effectively trained its creators to be robots.
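To make the convergence dynamic concrete, here is a minimal toy simulation. Everything in it is an assumption made for illustration: a creator's "style" is collapsed into a single number, the `sweet_spot` value stands in for whatever the ranking system happens to reward, and creators are assumed to imitate the top 10% of performers each round. It is a sketch of the argument, not a model of Instagram's actual system.

```python
import random
import statistics


def simulate_convergence(num_creators=200, rounds=30, learning_rate=0.2, seed=0):
    """Toy model: track how the spread of creator styles shrinks over time.

    Returns the population standard deviation of styles after each round,
    starting with the initial spread.
    """
    rng = random.Random(seed)
    sweet_spot = 0.7  # hypothetical style the ranking system rewards most
    styles = [rng.random() for _ in range(num_creators)]
    spread_history = [statistics.pstdev(styles)]

    for _ in range(rounds):
        # Engagement is higher the closer a style sits to the sweet spot.
        engagement = [1.0 - abs(s - sweet_spot) for s in styles]
        # Creators check their analytics and look at the top 10% of performers.
        ranked = sorted(zip(engagement, styles), reverse=True)
        top_styles = [s for _, s in ranked[: max(1, num_creators // 10)]]
        target = statistics.mean(top_styles)
        # Each creator nudges their own style toward what performed best.
        styles = [s + learning_rate * (target - s) for s in styles]
        spread_history.append(statistics.pstdev(styles))

    return spread_history


history = simulate_convergence()
print(f"style spread before: {history[0]:.3f}, after: {history[-1]:.3f}")
```

Nobody in this toy world copies anyone directly. Each creator only follows the reward signal, and the variety of styles still collapses toward a single point.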

Why AI Will Replace Robot-Made Content

This is where Mosseri's warning about AI actually makes sense, but not in the way he thinks. AI doesn't need to be sophisticated to replace predictable, algorithmic human content. AI at its core is really good at one thing: making predictions based on training data. If you feed an AI system millions of examples of Instagram posts that got high engagement, it can learn the patterns and generate new posts that follow the same patterns.

And here's the kicker: The training data for this AI is already on Instagram. Every piece of content that the algorithm rewarded is a training example showing the AI exactly what works.

So what's about to happen? AI will get really good at generating the kind of content that Instagram's algorithm already rewards. It'll be able to produce the relatable parenting video, the trendy fitness routine, the emotional vulnerability post, the funny observation, all at massive scale. It won't need to be creative or original—just statistically likely to drive engagement.

And when that happens, why would a creator spend two hours filming, editing, and posting content when an AI could generate five versions in thirty seconds, test them against the algorithm, and scale the winners?

The tragic irony is that human creators are effectively training the AI that will replace them. Every post that gets algorithm-rewarded engagement is a data point teaching AI what works. The more creators optimize for the algorithm, the clearer the pattern becomes for AI to replicate.

Mosseri's actually right that there's an existential threat to Instagram. But it's not because AI is going to suddenly flood the platform with fake content. It's because the platform's own algorithmic logic—the same logic that has trained creators to be robots—is going to be infinitely scalable through AI generation.

The Authenticity Trap

Mosseri's solution is to be authentic. Be real. Stand out by being genuinely original.

But here's where his argument breaks down: Instagram's algorithm doesn't reward authenticity. It rewards whatever drives engagement. Sometimes authenticity drives engagement—sometimes it does the opposite.

A deeply personal, vulnerable post might resonate with a creator's core audience but barely move the needle on algorithmic reach. A trending audio clip with a mildly relatable video could hit 10 times harder. The algorithm doesn't care about authenticity as a concept. It cares about quantifiable signals.

So when Mosseri tells creators to "be authentic," what he's really telling them is to be authentic in ways that also drive engagement. Which is contradictory, because true authenticity isn't optimized for algorithm performance.

There's also something troubling about framing this as an individual creator problem. "Be more authentic" implies the burden is on creators to resist algorithmic pressure. But creators aren't failing to be authentic because they're lazy or unoriginal. They're being systematically trained by a system that rewards optimization and punishes deviation.

It's like telling someone in a factory to manufacture more interesting widgets while keeping the production timeline the same. The problem isn't the worker's lack of creativity. The problem is the system.

The Labeling Question

One thing Mosseri does get right: labeling authentic content is easier than labeling every AI-generated image. That's a smart observation, and it actually hints at a larger strategy.

Big platforms have started implementing content provenance systems—digital credentials that travel with content and verify where it originated. Some phone manufacturers are even baking this into hardware. A photo taken with a certain camera gets a cryptographic signature proving it came from a real device.

But here's the problem with that solution: It doesn't actually solve the authenticity crisis. Why? Because the real problem isn't that we can't tell AI images from real ones. The real problem is that the current system incentivizes creators to produce boring, predictable content that looks identical whether it was made by humans or algorithms.

Labeling might help users understand whether something was AI-generated, but it won't change the fundamental algorithmic dynamics that are already making human creators redundant.

What Actually Needs to Change

If Mosseri is genuinely worried about AI replacing human creators, he needs to address the root cause: the algorithm itself.

The algorithm needs to be redesigned to reward diversity, originality, and content that challenges the status quo—not just content that maximizes engagement metrics. This is hard because engagement metrics are easy to measure and optimize for. Originality is subjective. Diversity is harder to operationalize.

But if Instagram doesn't make this shift, the platform will increasingly be populated by AI-generated content that the algorithm deems "optimal" because it hits all the engagement signals that human creators have collectively learned to hit.

Meta could start by being transparent about what the algorithm actually rewards. Not just the vague "the algorithm favors engaging content" line, but specifics. What patterns correlate with viral performance? What audio is overused? What caption lengths perform best? What visual styles get buried?

Give creators that information, and you'd actually empower them to make different choices. Right now, they're flying blind, learning through trial and error, which naturally leads to convergence toward the same patterns.

Meta could also deprioritize content that's algorithmically optimized. Detect when a creator is reposting the same content weeks apart. Detect when someone is using trending audio in a way that's clearly algorithmic optimization rather than genuine creative choice. Reduce the reach of that content.

It sounds counterintuitive, but it would actually make the feed more interesting, because it would force creators to innovate rather than optimize.
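Detecting a repost is not exotic technology. As a rough sketch, here is one way to flag near-duplicate captions using Jaccard similarity over word shingles. The function names, the three-word shingle size, and the 0.8 threshold are all assumptions picked for illustration; a real system would presumably compare video and audio fingerprints too, and nothing here reflects how Meta actually does it.

```python
import re


def shingles(text, size=3):
    """Break a caption into overlapping word n-grams (shingles)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a, b):
    """Jaccard similarity: shared shingles divided by all distinct shingles."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def find_reposts(captions, threshold=0.8):
    """Return (earlier, later) index pairs whose captions are near-duplicates."""
    sets = [shingles(c) for c in captions]
    pairs = []
    for i in range(len(sets)):
        for j in range(i + 1, len(sets)):
            if jaccard(sets[i], sets[j]) >= threshold:
                pairs.append((i, j))
    return pairs


captions = [
    "Who else does this too? It's not at all exhausting",
    "Five-minute core workout you can do anywhere",
    "Who else does this too? It's not at all exhausting!",
]
print(find_reposts(captions))  # [(0, 2)]
```

The hard part is not the detection. It is deciding that a flagged repost should lose reach when it still drives engagement.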

The Creator Perspective

Let me be clear: I'm not blaming creators for this situation. If you're someone who depends on Instagram for income, you're not being irrational or unethical by optimizing your content for algorithmic performance. That's just responding to incentives.

The problem is the incentive structure itself.

There are actually creators on Instagram doing genuinely original, weird, challenging work. But most of them aren't doing it because of the algorithm—they're doing it despite it. They've either found a niche audience that appreciates their work regardless of reach, or they're subsidizing their creative work through other means (sponsorships, Patreon, side hustles).

In other words, the platform is already selecting against authentic creativity unless that creativity also happens to drive engagement metrics.

The Timeline Problem

Mosseri mentions that AI will "get better at mimicking the low-fi phone camera look that signals authenticity." He's right about this, but he's also wrong about the timeline. This is already happening.

AI image generators can already produce images that look like they were taken with a phone camera. They have the slight blur, the color grading, the composition that reads as "authentic." Within a year or two, these images will likely be indistinguishable from real photos.

At that point, how do you tell the difference between an authentically low-fi photo and an AI-generated one designed to look low-fi? The answer is: you can't, unless the image has cryptographic provenance.

But even if we solve the technical problem of verification, we haven't solved the fundamental problem: the algorithm still rewards certain types of "authenticity" over others. So creators will still optimize for the version of authenticity that performs best algorithmically, and AI will still be able to replicate that.
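For readers wondering what "cryptographic provenance" means in practice, here is a deliberately simplified sketch. Real provenance schemes generally rely on public-key signatures and certificate chains. This example swaps in a shared-secret HMAC purely because it ships with Python's standard library, and `DEVICE_KEY`, `sign_capture`, and `verify_capture` are hypothetical names invented for the illustration.

```python
import hashlib
import hmac

# Assumption: stands in for a secret key held inside the camera hardware.
DEVICE_KEY = b"hypothetical-device-secret"


def sign_capture(image_bytes, key=DEVICE_KEY):
    """Produce a tag that binds the image bytes to the device key."""
    return hmac.new(key, image_bytes, hashlib.sha256).hexdigest()


def verify_capture(image_bytes, signature, key=DEVICE_KEY):
    """Check that the bytes still match the tag issued at capture time."""
    expected = hmac.new(key, image_bytes, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, signature)


original = b"raw pixels from a phone camera"
tag = sign_capture(original)

print(verify_capture(original, tag))               # True
print(verify_capture(original + b" edited", tag))  # False
```

Notice what the check does and doesn't tell you. It confirms the bytes came from a device and weren't altered afterward. It says nothing about whether the photo was staged, reshot five times, or composed to match a formula.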

What Creators Actually Want

Here's something Mosseri probably doesn't spend much time thinking about: What do creators actually want?

I've talked to dozens of creators over the years, and the answer is consistent. They want to make stuff they're proud of. They want an audience that appreciates their work. They want to make money if they can. But overwhelmingly, they don't want to spend all their energy optimizing for algorithmic metrics.

They want to create, not to game systems.

Right now, Instagram incentivizes system-gaming over creation. So that's what creators do. They game the system. And the result is a platform full of content that's optimized for engagement but not necessarily interesting or original.

If Mosseri actually wants to solve this problem, he needs to change the incentive structure. Make original content more valuable than optimized content. Make diversity more valuable than homogeneity. Make creativity more valuable than trend-following.

Is that possible on a platform that's fundamentally built on engagement metrics? Maybe not. Maybe Instagram's business model is fundamentally incompatible with the kind of authentic, original creativity Mosseri is calling for.

That would be the real problem worth worrying about.

The Bigger Picture

This isn't unique to Instagram. This is happening across all algorithmic social platforms. TikTok's algorithm does the same thing: it trains creators to make predictable, trend-following content. YouTube's algorithm rewards watch time and click-through rates, which leads creators to make sensational, clickbait content. Twitter's algorithm (before the recent changes) rewarded engagement and outrage.

Every platform has the same fundamental incentive structure: Keep users on the platform, maximize engagement, show them ads, make money.

And every platform has the same consequence: Creators optimize for algorithmic reward instead of creating original content. The platform fills up with predictable, homogeneous, robot-like content made by humans who've learned what the algorithm wants.

Then AI comes along and does that same job better, faster, and cheaper.

The AI Opportunity (That No One's Talking About)

Here's an interesting thought: What if AI could actually help solve this problem?

Imagine an AI system that helps creators break out of algorithmic patterns. An AI that looks at a creator's content history and says, "Hey, you've been making the same type of post every week. Your audience might actually enjoy something different. What if you tried this instead?"

Or an AI that analyzes trending audio, trending hashtags, trending formats, and specifically recommends against them if the creator has been overusing them. "You've used this trend three times already. Your audience will see it as repetitive. Try something else."

That would actually help creators be more authentic and original, while also making the platform more interesting.

But that's not what AI companies are building. They're building AI to generate content, not to help creators avoid algorithmic traps.
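The "you've used this trend three times already" assistant doesn't even need much AI. A minimal sketch, assuming each post in a creator's history is tagged with the trends it uses (the tag format and the `max_uses` limit are made up for this example):

```python
from collections import Counter


def overused_trends(post_history, max_uses=2):
    """Return trend tags that appear in more than `max_uses` posts.

    post_history: list of sets, one set of trend tags per post.
    """
    counts = Counter(tag for post in post_history for tag in post)
    return sorted(tag for tag, n in counts.items() if n > max_uses)


history = [
    {"audio:trending-clip", "format:pov"},
    {"audio:trending-clip"},
    {"audio:trending-clip", "format:tutorial"},
    {"format:pov"},
]

for tag in overused_trends(history):
    print(f"You've leaned on {tag} a lot lately. Try something else.")
```

A tool like this would nudge creators away from the pattern instead of deeper into it, which is the opposite of what a content generator does.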

What Needs to Happen Next

Mosseri's right that there's a real problem coming. But the problem isn't just "AI will flood Instagram with fake content." The problem is that Instagram's business model has already trained creators to make predictable, optimized content. AI will just make that worse by automating the process.

Here's what actually needs to happen:

First, platforms need to change their optimization metrics. Stop just tracking engagement. Start tracking things like novelty, diversity, originality. If an algorithm learns to reward content that's statistically different from what's already performing well, you get different content.

Second, creators need more transparency. Show creators exactly what the algorithm is measuring. Not vague statements like "authentic content performs better," but specifics. This helps creators make intentional choices instead of flying blind.

Third, platforms need to be honest about the trade-offs. If you optimize purely for engagement, you get boring, predictable content. If you want original, creative, diverse content, you need to sacrifice some engagement. That's the trade-off.

Fourth, the creator economy needs to become less dependent on single-platform virality. Right now, creators are chasing algorithmic luck on one or two major platforms. If there were more diverse ways to monetize creative work—subscriptions, memberships, direct support—creators could afford to be less optimized for algorithm performance.
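The first point, rewarding content that is statistically different from what's already winning, can be sketched in a few lines. This is a greedy re-ranking in the spirit of maximal marginal relevance, a general information-retrieval technique, and not anything Instagram is known to use. The post fields, the two-number feature vectors, and the `novelty_weight` knob are all assumptions for illustration.

```python
import math


def cosine(a, b):
    """Cosine similarity between two feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rerank(posts, novelty_weight=0.5):
    """Greedily order posts by a mix of predicted engagement and novelty.

    Novelty is 1 minus the highest similarity to any post already ranked,
    so a near-copy of something already in the feed gets pushed down.
    """
    remaining = list(posts)
    ranked = []
    while remaining:
        def score(post):
            if ranked:
                closest = max(cosine(post["features"], p["features"]) for p in ranked)
                novelty = 1.0 - closest
            else:
                novelty = 1.0
            return (1 - novelty_weight) * post["engagement"] + novelty_weight * novelty

        best = max(remaining, key=score)
        ranked.append(best)
        remaining.remove(best)
    return [p["id"] for p in ranked]


posts = [
    {"id": "trend_a", "engagement": 0.90, "features": [1.0, 0.0]},
    {"id": "trend_b", "engagement": 0.85, "features": [1.0, 0.0]},
    {"id": "original", "engagement": 0.60, "features": [0.0, 1.0]},
]

print(rerank(posts, novelty_weight=0.0))  # ['trend_a', 'trend_b', 'original']
print(rerank(posts, novelty_weight=0.5))  # ['trend_a', 'original', 'trend_b']
```

With the weight at zero, the two near-identical trend posts take the top slots. Turn it up and the original post jumps ahead of the second copy, even though its predicted engagement is lower. That gap is the trade-off described in the third point.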

The Real Message

So here's what I think Mosseri should actually be saying: "Instagram's algorithm has trained creators to make predictable, optimized content. AI is going to get really good at making that same predictable content. So we need to change what we reward. We need to make original, diverse, challenging content actually win on Instagram. Otherwise, we're going to end up with a platform that's mostly AI-generated content that looks just like the algorithmically optimized human content we already have."

That's the honest version. It's not as catchy as "Be authentic!" But it's actually true.

The threat to Instagram isn't AI. The threat is that the platform's own algorithmic logic has already created the conditions for AI to be successful. Creators are already acting like robots. AI is just going to do the robot job better.

If Mosseri actually wants to solve this, he needs to start by admitting that Instagram's algorithm is part of the problem, not just the solution.

Because right now, Instagram is teaching creators to be robots. And then acting surprised when AI robots start showing up to do the job.

How to Actually Stand Out on Social Media (When the Algorithm Works Against You)

If you're a creator trying to navigate this landscape, here's what actually works:

Build direct relationships with your audience. Don't depend on algorithmic reach alone. Email list, Discord, Patreon, direct message engagement—anything that creates a direct connection to your audience instead of relying on the platform to show them your work.

Find your format niche. Instead of chasing trends, figure out what format you're uniquely good at. Not the format that's trending, but the one that plays to your strengths. Then get really good at that one thing.

Create systems, not single posts. Think about your content as a system or series rather than individual posts. This helps you break out of the "make the same viral post again" trap. You're building something, not just chasing hits.

Batch and schedule strategically. Instead of constantly optimizing based on what's trending, batch your content creation. Make a bunch of stuff at once, schedule it, then move on. This breaks the cycle of constant algorithmic checking and adjustment.

Test radically different content. Every couple of weeks, make something completely different from what usually works for you. Track how it performs. You might find new formats or topics that your audience actually prefers, but that the algorithm never surfaced because they didn't fit the existing pattern.

Collaborate with other creators intentionally. Not for algorithmic reach, but to actually push your creative boundaries. Make something you couldn't make alone.

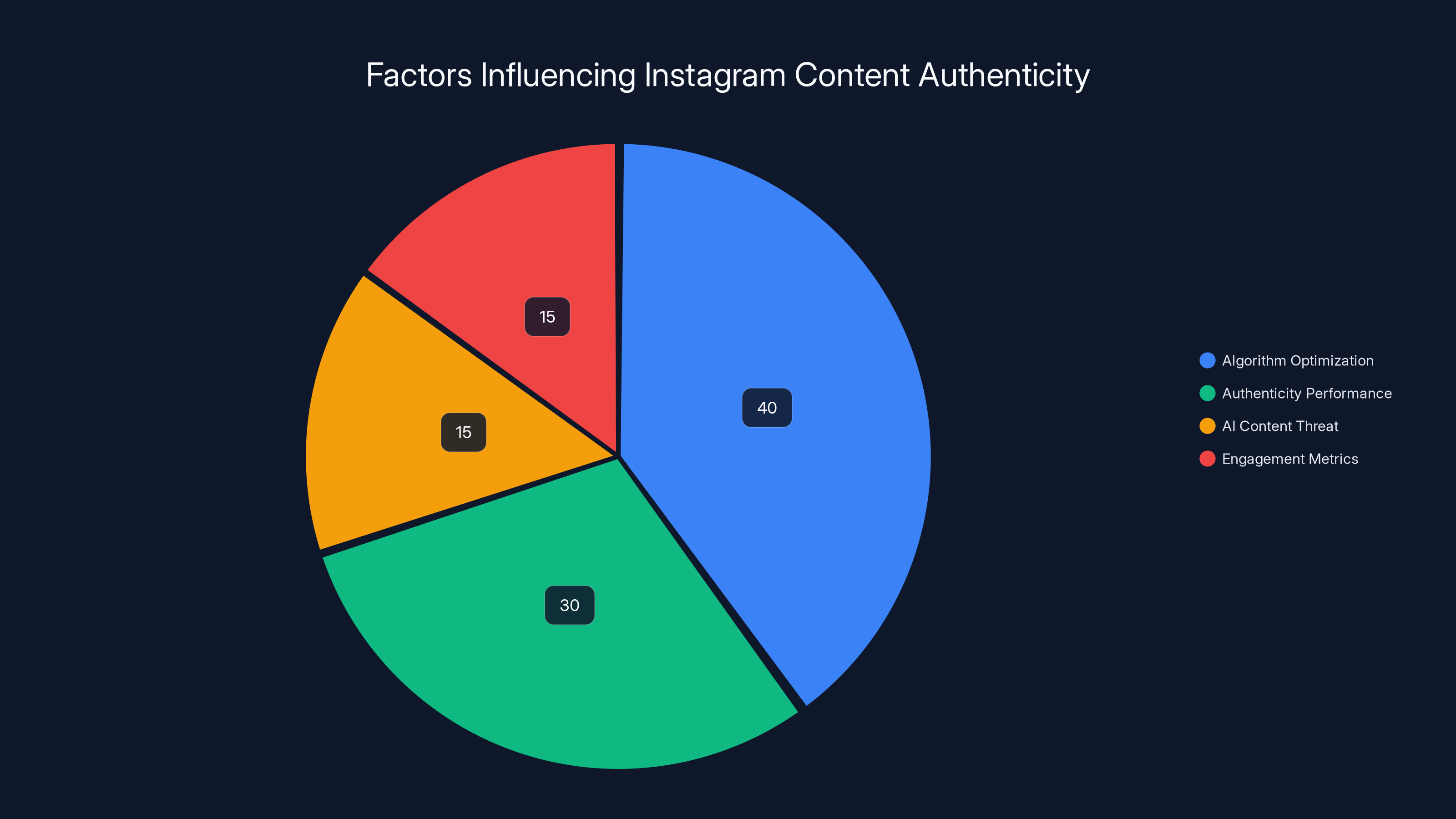

Estimated data shows that algorithm optimization is the primary factor influencing content authenticity on Instagram, overshadowing concerns about AI-generated content.

TL;DR

- The real problem isn't AI. Instagram's algorithm has already trained creators to make predictable, optimized content that follows the same formulas. That robot-like content is what AI will replace.

- Humans are already the robots. Creators optimize for engagement metrics, which leads to homogenization. The algorithm rewarded this behavior, so now thousands of creators make nearly identical content.

- Authenticity is a performance. When Mosseri calls for "authentic" content, he's ignoring that Instagram's algorithm doesn't actually reward authenticity—it rewards engagement. True authenticity often doesn't perform well algorithmically.

- The labeling solution misses the point. Sure, we can label AI content, but that doesn't change the fundamental problem: the algorithm still rewards predictable, optimized content whether it's made by humans or AI.

- The real solution requires changing what the algorithm rewards. If Meta wants to protect creators from AI replacement, they need to reward diversity, originality, and content that challenges patterns—not just engagement metrics.

- The timeline is shorter than Mosseri thinks. AI is already good enough to replicate algorithmically optimized content at scale. This isn't a future threat; it's happening now.

- Creators need to break the optimization trap. Build direct audience relationships, find your format niche, batch create, and experiment radically. Don't depend on algorithmic reach alone.

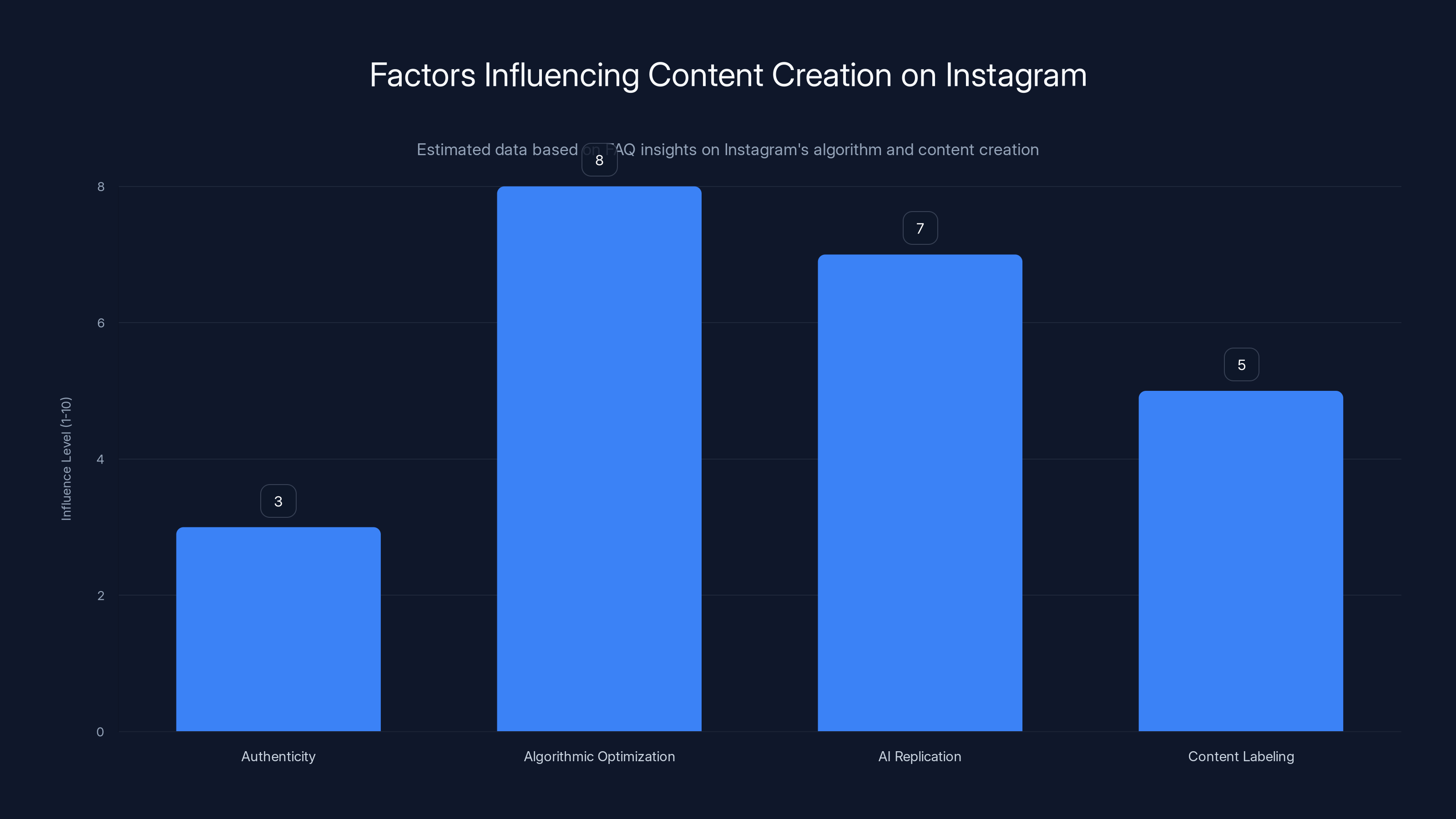

Algorithmic optimization significantly influences content creation on Instagram, more so than authenticity or content labeling. Estimated data.

FAQ

What exactly does Adam Mosseri mean by "authentic" content on Instagram?

Mosseri uses "authentic" to describe content created by real people with genuine voices—the opposite of AI-generated content. However, on Instagram, "authenticity" has become heavily optimized. Creators are trained to produce authentic-looking content that actually follows algorithmic formulas designed to maximize engagement. True authenticity on Instagram often performs worse algorithmically than optimized content that mimics authenticity.

How does Instagram's algorithm train creators to make repetitive content?

Creators can see their analytics showing which posts drive the most engagement, likes, comments, and shares. When they notice certain types of content consistently perform better, they naturally make more of that content. Over time, millions of creators optimizing for the same algorithmic signals leads to convergence—thousands of creators making nearly identical-looking content. The algorithm doesn't force this; it incentivizes it through its reward structure.

Why would AI be able to replace the content creators currently make?

AI at its core learns patterns from training data. Instagram's feed is filled with millions of examples of content that the algorithm rewarded with high engagement. AI can analyze these patterns and generate new content that follows the same statistically likely patterns. Since most creator-made Instagram content is already optimized for algorithmic performance rather than originality, AI can replicate it easily. This is fundamentally different from AI needing to be "creative"—it just needs to be predictable.

Can content labeling really solve the AI problem on Instagram?

Content labeling and provenance systems can verify whether an image was AI-generated or photographed with a real device. However, labeling doesn't address the root problem: Instagram's algorithm currently rewards the kind of predictable, optimized content that AI can generate. Even if users could distinguish AI content, the algorithmic incentive structure remains unchanged, so both human creators and AI will continue optimizing for engagement metrics rather than originality.

What would actually stop AI from replacing human creators on Instagram?

The real solution requires Instagram to change what it algorithmically rewards. Instead of pure engagement metrics, the algorithm would need to prioritize content diversity, originality, novelty, and content that breaks algorithmic patterns. This would make original human creativity more valuable than optimized predictability, making it harder for AI to compete. However, this is difficult because engagement metrics are easy to measure while originality is subjective and harder to operationalize.

How can creators protect themselves from this situation?

Creators should build direct relationships with their audiences through email lists, Discord communities, Patreon, or direct messaging instead of relying solely on algorithmic reach. They should develop a unique format or style rather than chasing trends. Batching content creation instead of constantly checking algorithmic performance helps break the optimization trap. Regularly experimenting with content that's completely different from what performs well can reveal new audience preferences and differentiate them from AI-generated alternatives.

Is this problem unique to Instagram?

No, this is happening across all algorithmic social platforms. TikTok's algorithm optimizes for watch time and trend participation, leading to similar convergence around trending sounds and formats. YouTube's algorithm optimizes for click-through rates and watch time, which rewards sensational and clickbait content. The fundamental incentive structure on any platform that uses engagement metrics as its optimization goal creates the same effect: creators learn what works and make more of it.

What does Mosseri actually get right about the AI threat?

Mosseri is correct that labeling authentic content is easier than labeling every AI-generated image, and that implementing content provenance (cryptographic verification of origin) is a practical solution for verification. He's also right that AI will get better at mimicking the low-fidelity, "authentic-looking" aesthetic that currently signals realness on Instagram. However, he misses that this technical solution doesn't address the algorithmic incentive problem that's already making human-created content predictable and robot-like.

Why hasn't Instagram already fixed this problem?

The core issue is that engagement metrics are aligned with Meta's business model and advertising revenue. Content that maximizes engagement metrics also maximizes the time users spend on the platform seeing ads. Changing the algorithm to reward originality, diversity, or challenge over engagement might make the platform more interesting, but it could also reduce time-on-platform and ad exposure. Making that trade-off would require Meta to accept lower engagement metrics and potentially lower revenue. Until there's external pressure (regulation, competition, or creator exodus), the current optimization is unlikely to change.

What Runable Users Are Building

While this algorithmic content crisis plays out, teams are using Runable to escape the optimization trap entirely. Instead of manually optimizing social media content for algorithmic performance, they're using AI-powered automation to generate multiple content variations, test them, analyze performance, and iterate—without spending weeks on manual optimization.

The approach is simple: Let AI handle the repetitive optimization work. Use the time saved for actual creative thinking and experimentation. This is the opposite of what Instagram's algorithm trains you to do.

Use Case: Generate multiple content variations (captions, visuals, formats) in minutes and test which performs best, so you're not stuck in the endless optimization cycle.

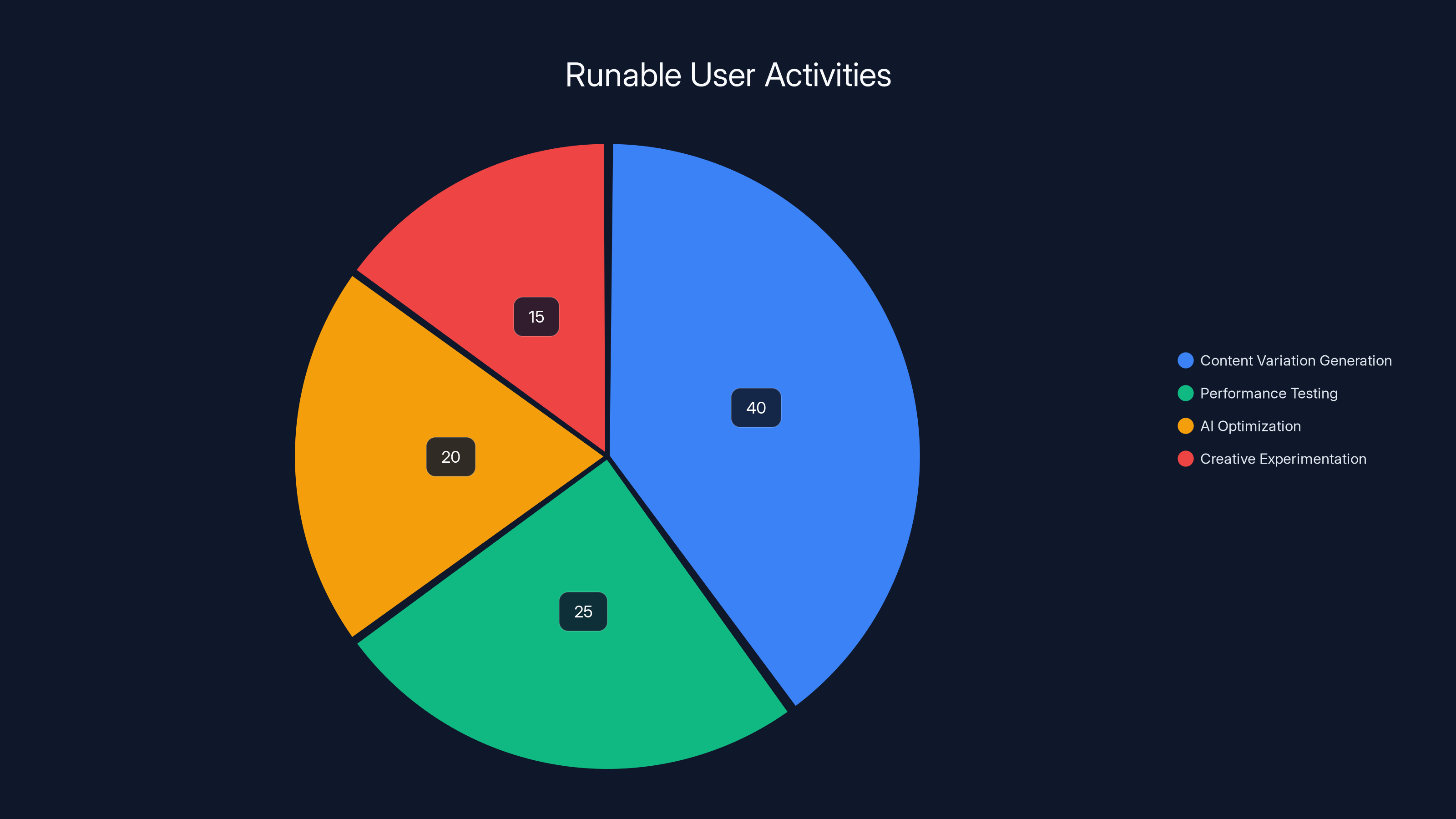

Estimated data shows that 40% of Runable users focus on generating content variations, followed by performance testing at 25%.

The Bottom Line

Mosseri's warning about AI on Instagram is real, but he's looking at the problem from the wrong angle. Instagram didn't suddenly become threatened by AI. The platform has spent over a decade creating the conditions for AI to thrive—by training creators to make predictable, optimized, robot-like content.

The actual threat isn't that AI will suddenly flood Instagram with fake content. The threat is that Instagram's algorithmic logic is already perfectly suited to AI generation. Creators are already playing the optimization game. AI is just going to play it better.

If Meta wants to solve this, they need to stop calling for more authenticity and start changing the algorithm to reward it. Until then, expect the feed to get increasingly filled with content—both human-made and AI-generated—that's optimized for engagement rather than originality.

The robots are already here. They're just human.

Key Takeaways

- Instagram's algorithm has trained creators to make predictable, optimized content that looks identical across thousands of accounts

- The real threat isn't AI—it's that the platform's algorithmic logic is perfectly suited for AI to replicate human-created patterns

- Authentic content often performs worse algorithmically on Instagram, undermining Mosseri's call for authenticity without changing incentives

- Creators escape optimization traps by building direct audience relationships rather than depending solely on algorithmic reach

- Solving this requires Meta to prioritize content diversity and originality over pure engagement metrics in their algorithm

![Instagram's AI Problem Isn't AI at All—It's the Algorithm [2025]](https://tryrunable.com/blog/instagram-s-ai-problem-isn-t-ai-at-all-it-s-the-algorithm-20/image-1-1769006190057.jpg)