The Era When You Can't Trust Your Eyes Anymore

We're living through a fundamental shift in how humans process visual information. For decades, maybe centuries, there was an unspoken rule: if you saw it in a photograph or video, it probably happened. The camera was the ultimate truth-teller. Not anymore.

Instagram boss Adam Mosseri recently laid out the stakes with brutal honesty: "For most of my life I could safely assume photographs or videos were largely accurate captures of moments that happened. This is clearly no longer the case and it's going to take us years to adapt."

That's not hyperbole. It's a sober assessment of where we are in 2025. AI image generation has reached the point where it's indistinguishable from reality. You can't spot the deepfake by looking for weird hands or impossible reflections anymore. The tech has moved past those tells. Now it just... looks real. Because it can be made to look exactly like something real.

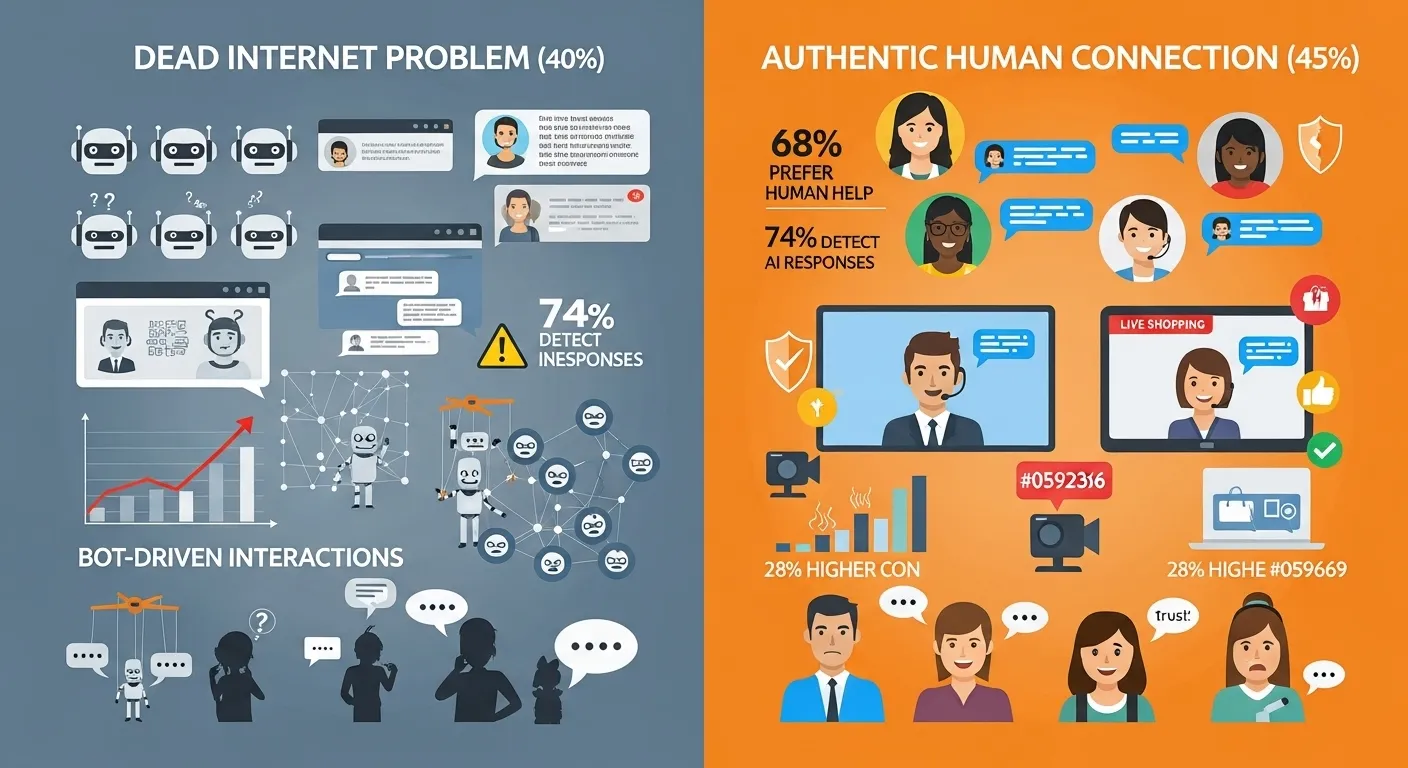

The implications are staggering. Not just for Instagram, but for how we interact with information, trust our sources, and understand what's authentic in an increasingly synthetic digital world. Creators are already adapting in unexpected ways. Users are becoming skeptical in ways that feel almost paranoid. And platforms like Instagram are scrambling to figure out how to maintain credibility in an era where credibility is the rarest commodity.

Mosseri's recent public statements reveal something deeper than just Instagram's challenges. They show how the entire social media ecosystem, and maybe the entire internet, is standing on the edge of a trust cliff.

The Default Assumption About Photos Is About to Flip

There's a psychological concept called the "default assumption." For photography, the default used to be: this is real. What you're seeing happened.

That default is flipping. Sarah Jeong at The Verge articulated it perfectly last year: "The default assumption about a photo is about to become that it's faked, because creating realistic and believable fake photos is now trivial to do."

Mosseri agrees, and adds a crucial nuance: the shift isn't just about skepticism. It's about how we'll have to fundamentally change our information processing. We're hardwired by evolution to trust what we see. Our visual cortex developed over millions of years to process real-world threats and opportunities. When your ancestors saw a predator, they didn't question whether the lion was real. They ran. Trusting your eyes kept you alive.

Now, that same instinct is a vulnerability. We're genetically predisposed to believing our eyes, which makes us terrible at spotting synthetic content at scale. And here's where it gets uncomfortable: there's no quick fix for an evolutionary bottleneck.

Mosseri lays out the mental model shift that's coming: "We're going to move from assuming what we see is real by default, to starting with skepticism. Paying attention to who is sharing something and why."

Notice what he's saying here. It's not about better tools to spot fakes. It's not even about labels and watermarks. It's about shifting the entire burden of verification from content detection to source credibility. Instead of asking "Is this real?" you're asking "Does this person have a reason to lie to me?"

That's a monumental shift. And it changes everything about how social platforms need to function.

Building the best creative tools is rated as the most crucial requirement for Instagram to thrive in the AI era, followed closely by labeling AI content and improving content ranking. (Estimated data)

Why Imperfection Became a Signal of Reality

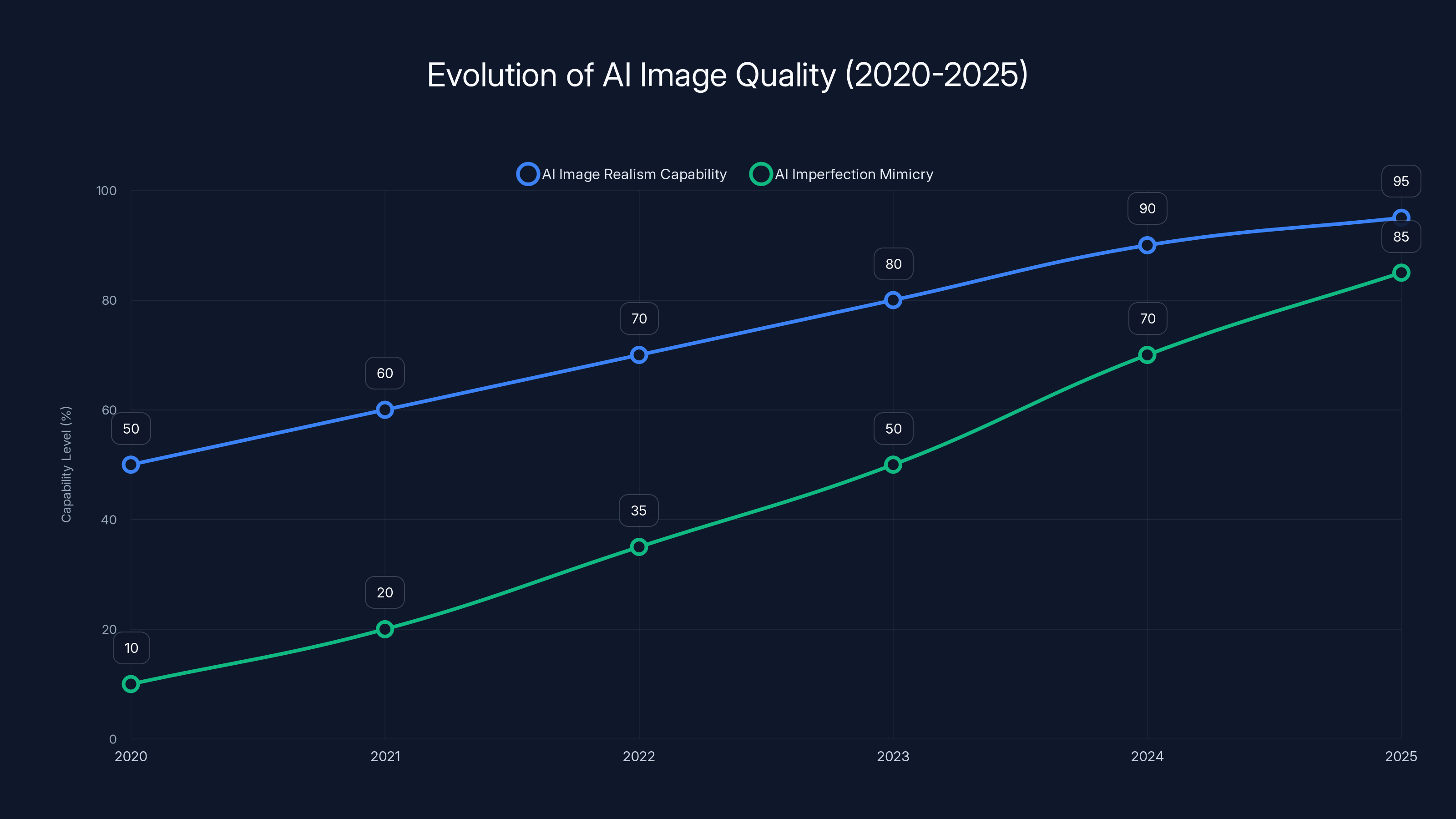

Here's something almost darkly funny: in 2025, the best way to prove your content is real is to make it look bad.

Creators are already doing this instinctively. Slightly out-of-focus shots. Bad lighting. Unflattering angles. Visible blemishes. Messy backgrounds. These aren't stylistic choices anymore. They're authenticity markers. They're saying: "This is too imperfect to be AI-generated."

Mosseri identified this trend: "Raw, unflattering images are, temporarily, a signal of reality, until AI is able to copy imperfections too."

Notice the word "temporarily." Because here's the thing: AI companies are absolutely aware of this. They're not going to stay stuck at "perfect-looking but fake." The next frontier isn't perfect realism. It's imperfect realism. It's grain and noise and compression artifacts and that slightly too-warm color cast from a phone camera. The entire uncanny valley is going to be bridged.

When that happens, imperfection stops being a signal of authenticity. It just becomes another thing that can be faked.

We're watching an arms race in real-time. Each barrier that emerges as a trust signal gets weaponized. Watermarks get stripped. Metadata gets spoofed. Camera fingerprints can be mimicked. The moment a signal of authenticity emerges, AI is trained to replicate it.

This is why Mosseri's suggestion to focus on "who is sharing something and why" is so crucial. At some point, technical signals fail. You can't just keep adding more layers of detection. You have to shift to social signals: reputation, verification, past behavior, community trust.

For Instagram specifically, this means their blue checkmarks and verification systems suddenly become infrastructurally important in ways they weren't before. Verification isn't just a vanity metric anymore. It's a credibility layer in an era where visual authenticity is impossible to verify through technical means alone.

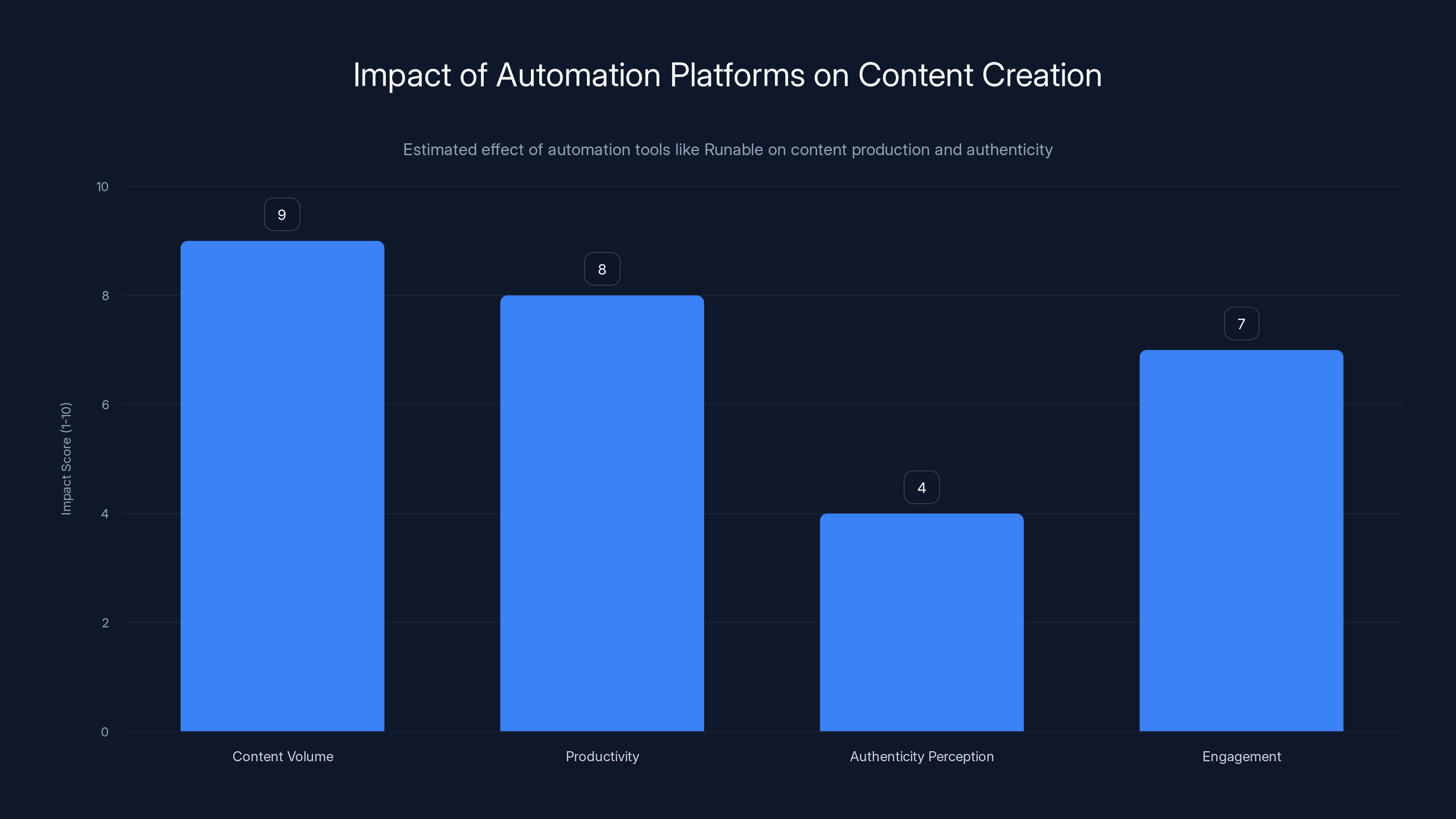

Automation platforms like Runable significantly boost content volume and productivity but may reduce perceived authenticity. Estimated data.

What Instagram Actually Needs to Survive This

Mosseri laid out four specific requirements for platforms to handle the AI era:

- Build the best creative tools

- Label AI-generated content and verify authentic content

- Surface credibility signals about who's posting

- Continue to improve ranking for originality

Let's break these down because they're more revealing than they might seem at first.

Build the Best Creative Tools

This is the most controversial of the four. Mosseri claims that "We like to complain about 'AI slop,' but there's a lot of amazing AI content." He's right, though he notably didn't point to specific examples.

Here's the thing about "good AI content": it exists, but it's usually either (a) created by people with technical skills, (b) used as a supplement to human creativity rather than a replacement, or (c) transparently labeled as AI-generated.

What Mosseri seems to be advocating for is that platforms should make AI tools so good and so accessible that everyone can use them effectively. Not to replace human creativity, but to democratize the tools that were previously gatekept behind expensive software and technical expertise.

The risk, of course, is that this accelerates the exact problem Mosseri is worried about. More AI content, harder to detect, more convincing.

But there's a logic to his argument: if AI tools become ubiquitous anyway (which they will), platforms can either (a) try to keep them off the platform or (b) build them in and label them. Option A doesn't work. You can't keep innovation off the internet. Option B at least lets you control how they're used and presented.

Label and Verify

This sounds straightforward until you actually think about implementation. How do you label AI content?

Meta's been experimenting with this. Facebook and Instagram added labels to AI-generated images. But the system has a fatal flaw: it only labels images created with Meta's own tools or detected by Meta's detection systems. If someone uses a different generator or fine-tunes a model themselves, the label doesn't appear.

Verifying authentic content is even harder. How do you prove something wasn't generated? You can't prove a negative. All you can do is attach metadata and hope it hasn't been stripped or spoofed.

OpenAI's approach has been to add cryptographic signatures to images. C2PA (Coalition for Content Provenance and Authenticity) is trying to establish standards for this across the industry. But adoption is slow, and there's no way to enforce it retroactively on billions of existing images.

The gap between the ideal system (cryptographic verification on all images) and the practical reality (most images have no verifiable metadata) is still enormous.

Surface Credibility Signals

This is where Instagram actually has an advantage. They control the platform. They can choose what information is prominent.

Right now, Instagram primarily signals credibility through: verification badges, follower count, and engagement metrics. That's it. Everything else is buried or invisible.

What they could do: show posting history depth, consistency metrics, community feedback from long-term followers, transparent AI tool usage disclosure, and collaboration patterns. They could surface whether someone has a history of sharing accurate information or spreading misinformation.

But this requires rethinking the entire feed. It means making accounts less aesthetic and more transparent. It means prioritizing information over polish.

Is Instagram going to do that? That's... unlikely. Because it conflicts with their core business model, which is built on making beautiful, polished content. The more transparent and raw Instagram becomes, the less visually appealing it is. And the less visually appealing it is, the less time users spend scrolling.

Improve Ranking for Originality

This is the most pragmatic suggestion. Instagram's algorithm already evaluates content. It could weight originality more heavily.

But here's the catch: what's original? Everything is remixed. Everything is inspired by something else. In the age of AI, originality is almost meaningless because AI is trained on existing content.

The algorithm would have to be clever enough to distinguish between "this is a new take on an existing idea" and "this is algorithmically generated from existing training data." That's... not actually that hard for a sufficiently sophisticated model. But it requires that Instagram care about this more than engagement metrics.

If engagement is the goal, original content doesn't always win. Remixed, trending, familiar content often does better. So improving ranking for originality would mean consciously deprioritizing viral content in favor of novel content. Good luck convincing the business team of that trade-off.
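To make that trade-off concrete, here is a toy sketch of a ranking score that blends engagement with originality. Everything in it is hypothetical: the `Post` fields, the normalized scores, and the `originality_weight` parameter are illustration only and say nothing about how Instagram's ranking actually works.

```python
from dataclasses import dataclass


@dataclass
class Post:
    name: str
    engagement: float   # hypothetical normalized score in [0, 1]
    originality: float  # hypothetical normalized score in [0, 1]


def rank(posts, originality_weight):
    """Sort posts by a weighted blend of engagement and originality."""
    def score(post):
        return ((1 - originality_weight) * post.engagement
                + originality_weight * post.originality)
    return sorted(posts, key=score, reverse=True)


posts = [
    Post("viral remix", engagement=0.9, originality=0.2),
    Post("novel niche post", engagement=0.4, originality=0.9),
]

# Pure engagement ranking puts the remix on top.
print([p.name for p in rank(posts, originality_weight=0.0)])
# Weighting originality at 0.6 flips the order.
print([p.name for p in rank(posts, originality_weight=0.6)])
```

The point of the sketch is that any weight high enough to promote the novel post is, by construction, a weight that demotes the post with more engagement.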

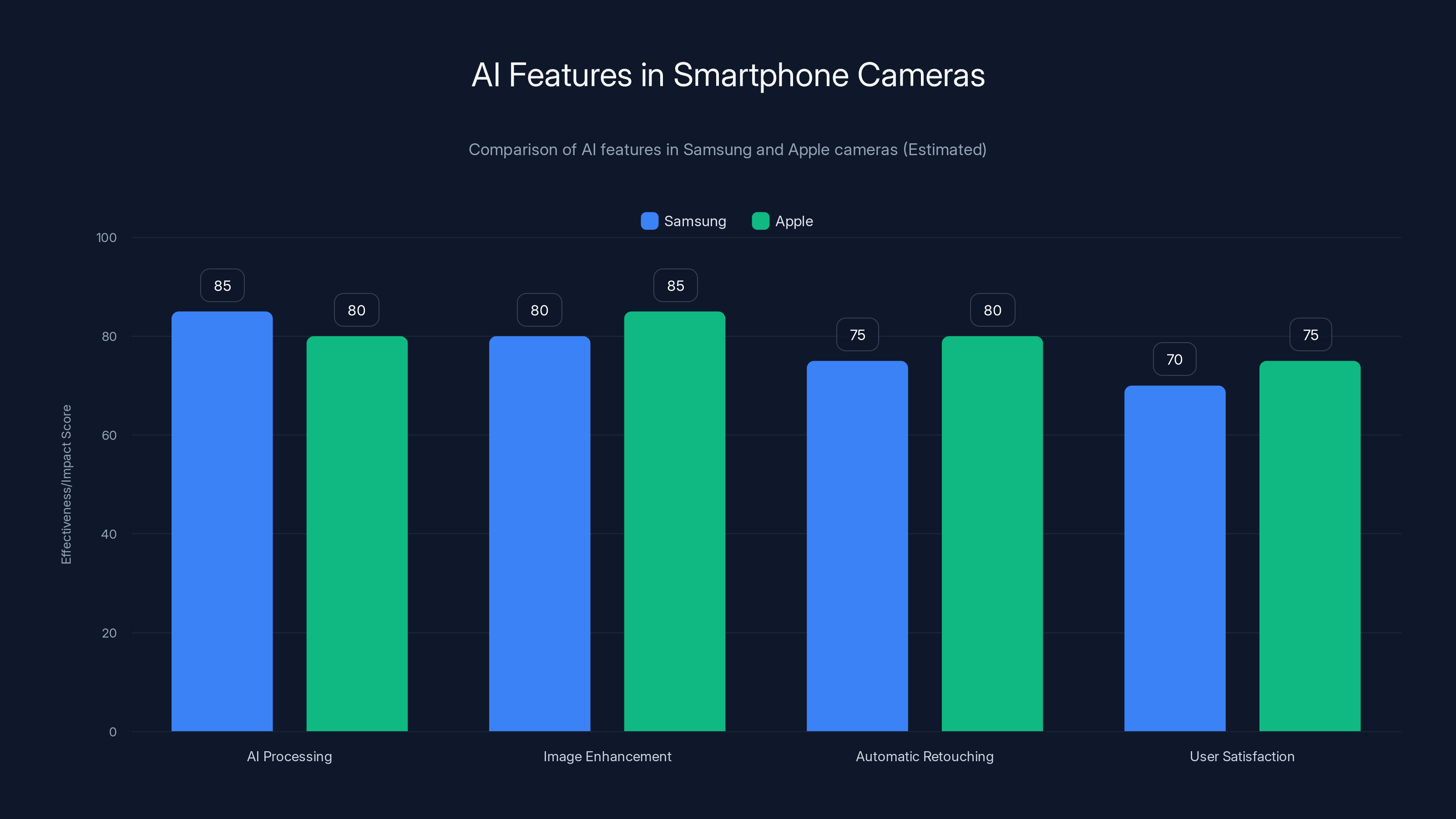

The Camera Company Problem: Why Technical Solutions Fail

Mosseri made a pointed criticism of camera manufacturers: they're approaching this problem all wrong.

Companies like Samsung and Apple have been adding AI to their cameras. Samsung's Galaxy phones got controversial for aggressively processing moon photos. Apple added features to enhance and retouch images automatically. Both companies marketed this as giving users "professional" results.

Mosseri's criticism: camera companies are going the wrong way by trying to give everyone the ability to "look like a pro photographer from 2015."

Why is this wrong? Because it increases the problem, not solves it. It floods the platform with AI-generated-but-not-labeled content. It makes the problem harder to detect because the content comes from camera manufacturers, which people trust.

Samsung executive Patrick Chomet captured the nihilism perfectly: "Actually, there is no such thing as a real picture." Not in the sense that everything is fake, but in the sense that even your camera is lying to you about what was in front of the lens.

This is technically true. Every camera applies processing. Compression, color correction, exposure adjustment. Your phone's computational photography is already AI. The only difference is whether you're calling it AI or not.

But that doesn't solve the credibility problem. It makes it worse. Because now there's no clear line between "captured reality, enhanced" and "purely synthetic."

Apple's Craig Federighi told the Wall Street Journal he's "concerned" about the impact of AI editing. At least he's being honest about the problem. But concern doesn't solve it.

The technical approach fails because you can't out-tech the problem. The moment you make detection systems smarter, generative systems get smarter. It's an arms race where one side is motivated by defense and the other by profit. The profit-motivated side always wins.

Estimated data shows both Samsung and Apple heavily integrate AI in cameras, but user satisfaction scores are lower, indicating potential issues with AI over-processing.

The Real Issue: Power Has Shifted to Individuals

There's a deeper shift that Mosseri touches on, and it's worth understanding because it explains why this problem is actually unsolvable through platform tools alone.

Mosseri wrote: "Power has shifted from institutions to individuals because the internet made it so anyone with a compelling idea could find an audience. Everything that made creators matter, the ability to be real, to connect, to have a voice that couldn't be faked, is now accessible to anyone with the right tools."

That's the actual problem. The internet democratized distribution. Anyone could build an audience. But that only worked if you had something authentic to offer. Authenticity was the moat. Your real life, your real voice, your real experiences couldn't be copied.

Now they can be.

When Instagram was growing, being a successful creator meant actually having interesting experiences or talent. You had to DO something. You couldn't fake it at scale because people could see through fakeness in real life.

AI removes that requirement. You can be a successful creator with nothing but a good prompt. You can build an audience on entirely synthetic content. You don't need to live an interesting life. You just need to describe one.

Mosseri calls this the "infinite synthetic content" era. And he's right that it fundamentally changes what Instagram is. Because Instagram was built on the premise that creators are special and audiences are followers. The special part was that creators had access to something real: their own experience.

When everyone can generate infinite content, the "special" part disappears. Everyone's equally fake (or equally real, depending on how you look at it).

Authenticity in the Age of Perfect Fakes

Here's the uncomfortable truth: authenticity is about to become infinitely reproducible.

Not in the sense that everything will be fake. But in the sense that you can't distinguish real from fake anymore. The very concept breaks down when the fake is indistinguishable from the real.

When that happens, the only remaining signal of authenticity is consistency and reputation. Did this person tell me the truth last time? Do they have incentives to deceive me? Are they part of a community that would hold them accountable?

These are social signals, not technical ones. They can't be detected by an algorithm. They have to be evaluated by humans. Which means trust becomes local again. You trust people you know. You trust communities you're part of. You don't trust strangers on the internet.

This is actually a return to pre-internet social dynamics. The internet promised to eliminate geography as a barrier to trust. You could trust someone across the world based on merit. But when merit becomes fakeable, you retreat to local trust networks.

For a global platform like Instagram, this is existential. Instagram is built on the idea that you can trust global strangers. That you can follow someone in another country and engage with their life. But if everyone's content is potentially fake, why follow strangers when you can trust your local friends?

Mosseri's solutions are basically trying to recreate local trust signals in a global platform. Verification badges are like credentials. Follower history is like reputation. Engagement patterns are like demonstrated trustworthiness.

But these are all copies of real social signals. They're not as strong as the real thing.

AI's ability to mimic imperfections is projected to increase significantly by 2025, challenging the use of imperfections as authenticity markers. Estimated data.

The Dead Feed: Why Instagram Had to Change

Mosseri mentions that the more personal Instagram feed has been "dead" for years. That's worth understanding because it explains why Instagram became a platform for influencers and brands instead of friends sharing photos.

When Instagram switched from chronological feeds to algorithmic ranking, it killed the original product. Because the algorithm optimizes for engagement, not for sharing your actual life.

Engagement comes from spectacular content. Aspirational content. Polished content. It doesn't come from your friend's boring photo of their lunch.

So creators, pressured by the algorithm, stopped sharing their lunch photos and started crafting beautiful narratives. They added filters. They staged shots. They made everything prettier.

Then influencers showed up with professional equipment and teams. Then brands showed up. Then Instagram became a visual advertising platform instead of a social network.

That's when the decline in authenticity actually started. Not with AI. With the algorithm. AI just accelerated a process that was already underway.

Mosseri knows this. And he knows that fixing it requires going backwards, algorithmically speaking. Prioritizing less-polished content. Surfacing niche creators. Deprioritizing influencers and brands.

But that would destroy Instagram's business model. So it's not happening.

Instead, Instagram is trying to adapt to a world where everything might be fake by building tools to detect fakes and label them. Which is like trying to prevent a pandemic by printing more warning labels.

AI Content: The "Slop" Problem Mosseri Dismissed

Mosseri dismissed criticism of "AI slop" by claiming there's "amazing AI content." But this deserves scrutiny because it reveals how platforms actually view AI-generated content.

AI slop is real. It's the tsunami of mediocre, derivative, algorithmically-generated content that's flooding the internet. Training data from Reddit, YouTube, and Instagram is being fed into AI models, processed, and spit back out as "new" content.

Most of it is terrible. It's oversampled. It's repetitive. It has obvious tells. It's... sloppy.

But here's what Mosseri might actually be saying: the problem isn't AI content. The problem is bad AI content. And the solution isn't to block AI. It's to get better at using AI.

Midjourney outputs can look incredible. DALL-E can create genuinely novel artwork. Adobe's Firefly can do things that would take a human hours.

The difference between good AI content and slop is usually: how much a human was involved in the creation process. AI as a tool (guided by a human with taste and vision) produces good content. AI as a generator (where the human just hits a button) produces slop.

Mosseri's advocacy for "building the best creative tools" is really advocacy for giving creators better tools so they can produce better AI-assisted content. Not for replacing creators with AI, but for amplifying creator capability.

The problem is that this doesn't solve the trust problem. Even good AI content is still AI. And when good AI content floods the platform, people still have no idea what's real.

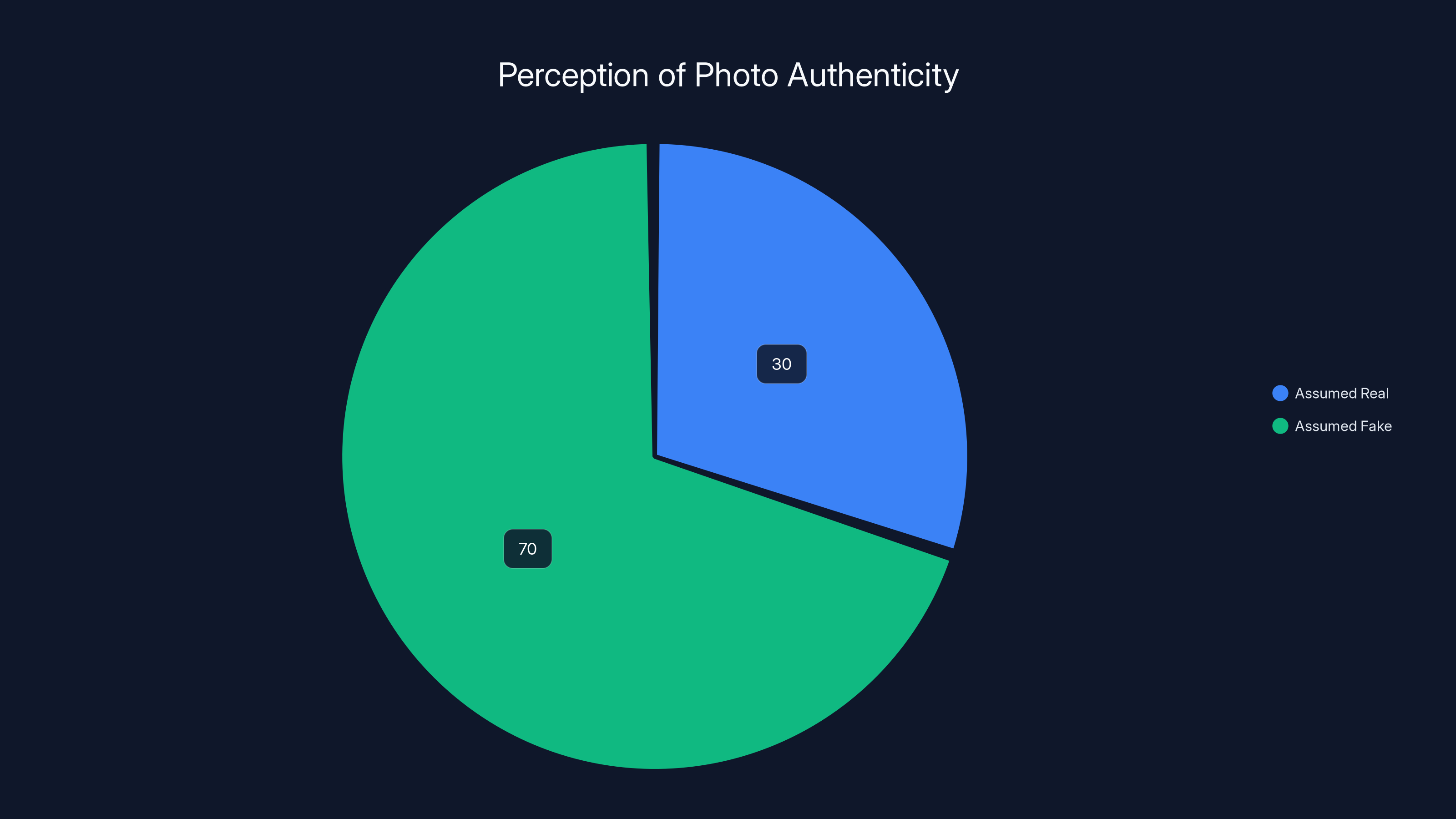

Estimated data suggests a shift where 70% of people might assume photos are fake by default, highlighting a significant change in perception.

The Cryptographic Future: Signing Images at the Camera Level

Mosseri mentions the eventual solution: "Fingerprints and cryptographic signing of images from the cameras that took them to ID real media instead of relying on tags and watermarks added to AI."

This is actually the most technically sound suggestion in his entire framework. Here's how it works:

Every digital camera has a unique "fingerprint." It's caused by tiny manufacturing imperfections in the sensor, which leave a faint noise pattern specific to that camera. That pattern is hard to fake or remove without leaving traces, though, as noted earlier, it isn't immune to mimicry.

Cryptographic signing goes further. When a camera takes a photo, it could digitally sign the image data and its metadata with a private key stored on the device. A valid signature shows the file came from that camera's key and hasn't been altered since it was signed.
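A minimal sketch of the idea, under loud assumptions: Python's standard library has no public-key signatures, so this uses an HMAC tag with a shared secret as a stand-in. A real camera would keep a private key in secure hardware and anyone could verify with the matching public key. The names `DEVICE_KEY`, `sign_capture`, and `verify_capture` are hypothetical and are not part of C2PA or any camera API.

```python
import hashlib
import hmac

# Hypothetical per-device secret, for illustration only. A real scheme would
# use a private key for signing and a public key for verification.
DEVICE_KEY = b"example-device-secret"


def sign_capture(image_bytes: bytes, metadata: bytes, key: bytes = DEVICE_KEY) -> str:
    """Return a hex tag covering both the pixels and the metadata."""
    image_digest = hashlib.sha256(image_bytes).digest()  # fixed 32 bytes
    return hmac.new(key, image_digest + metadata, hashlib.sha256).hexdigest()


def verify_capture(image_bytes: bytes, metadata: bytes, tag: str,
                   key: bytes = DEVICE_KEY) -> bool:
    """Recompute the tag and compare it in constant time."""
    expected = sign_capture(image_bytes, metadata, key)
    return hmac.compare_digest(expected, tag)


image = b"\x00\x01 raw sensor bytes"
metadata = b'{"device": "cam-1"}'
tag = sign_capture(image, metadata)

print(verify_capture(image, metadata, tag))            # True
print(verify_capture(image + b"edit", metadata, tag))  # False
```

Because the tag covers the image bytes as well as the metadata, changing either one makes verification fail. That is the property a camera-level signature is meant to provide.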

The problem: this requires buy-in from camera manufacturers. Canon, Sony, Nikon, and phone makers all need to implement it. Then every platform needs to verify it.

C2PA is trying to establish this as a standard. But adoption is glacially slow. And there's a problem: old photos don't have signatures. Everything uploaded to Instagram before this system was implemented is unsigned.

So you'd end up with a two-tier system: old unsigned content (potentially fake), and new signed content (verified). That still leaves years of content from the transition period in a trust limbo.

Also, this only stops a generated file from being passed off directly as a camera original. It doesn't prevent someone from generating a photo, printing it, photographing the print, and then uploading that signed photo. Technical solutions always have gaps.

The Uncomfortable Truth About Labels and Detection

Mosseri says platforms need to "label AI-generated content." In theory, this is great. In practice, it's almost impossible.

Detecting AI-generated images is a moving target. As soon as researchers publish a detection method, generative AI researchers train their models to bypass it. OpenAI publishes research about detection methods, then turns around and makes its models harder to detect.

It's not malicious. It's just how technology works. Detection and evasion are locked in a feedback loop.

Because of this, any detection system will have false positives and false negatives. It will flag some real images as AI and let some AI images pass as real.

False positives are bad for creators. If your real photo gets flagged as AI, it damages your credibility. False negatives are bad for audiences. If a fake photo isn't flagged, it fools people.
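A quick worked example shows why both error types matter at platform scale. All the numbers here are made up for illustration: the upload volume, the share of AI images, and the detector's rates are assumptions, not measurements of any real system.

```python
def detector_errors(uploads: int, ai_share: float,
                    true_positive_rate: float,
                    false_positive_rate: float) -> dict:
    """Estimate how many images a detector gets wrong in each direction."""
    ai_images = uploads * ai_share
    real_images = uploads - ai_images
    return {
        # Real photos wrongly labeled as AI (false positives).
        "real_flagged_as_ai": real_images * false_positive_rate,
        # AI images that slip through unlabeled (false negatives).
        "ai_passed_as_real": ai_images * (1 - true_positive_rate),
    }


# Hypothetical scenario: 1M uploads, 10% AI, a detector that catches 95% of
# AI images and wrongly flags 2% of real ones.
result = detector_errors(1_000_000, 0.10, 0.95, 0.02)
for name, count in result.items():
    print(f"{name}: {count:,.0f}")
```

Under these assumed rates, roughly 18,000 real photos get flagged and roughly 5,000 AI images go unlabeled per million uploads. Even a detector that sounds accurate produces large absolute numbers of errors in both directions.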

Meta's current approach is to label images created with their own tools. This avoids the detection problem. But it means most AI content (created with other tools) goes unlabeled.

The honest truth: labeling will never be comprehensive. Platforms will have to accept that some AI content will slip through unlabeled. The only real defense is the shift to source credibility that Mosseri mentions.

Estimated data shows that AI content quality significantly improves with human involvement, as seen in tools like Midjourney, DALL-E, and Adobe Firefly compared to generic AI-generated content.

How Creators Are Adapting: The Imperfection Arms Race

We're already seeing creators develop new strategies to signal authenticity. It's wild to watch in real-time.

The most obvious: people are posting "raw" content. Unfiltered. Unflattering. With visible blemishes, bad lighting, messy backgrounds. This signals "This is too real to be fake."

But once this becomes a widespread authenticity signal, AI generators will be trained on it. Soon you'll have AI-generated "candid" photos that look just as imperfect as real ones.

At which point creators will have to find a new signal. Maybe it's the metadata. Maybe it's consistency over time. Maybe it's community verification.

This is an arms race, and creators are on the losing side because they're reacting to AI improvements instead of driving them.

What's interesting is how this plays out on different platforms. TikTok's algorithm rewards "authentic" seeming content. YouTube's algorithm rewards production quality. Twitter's algorithm rewards engagement.

On TikTok, creators will increasingly lean into "realness" as a competitive advantage. On YouTube, they might embrace AI tools to improve production quality. On Twitter, they'll do whatever drives engagement.

Each platform will develop different norms around AI content based on what their algorithms reward.

The Business Model Problem: Why Platforms Can't Fix This

Here's what Mosseri didn't say explicitly, but is implicit in everything he wrote: Instagram's business model is fundamentally incompatible with authenticity.

Instagram makes money by selling ads. The more time people spend on Instagram, the more ads they see, the more money Instagram makes.

To maximize engagement, Instagram's algorithm prioritizes content that's visually appealing, emotional, and aspirational. Because that's what makes people scroll for hours.

Authentic, raw, boring content doesn't drive engagement. So the algorithm deprioritizes it.

Creators, responding to algorithmic incentives, make their content more polished, more curated, more fake.

Now, with AI tools available, they can fake things at scale. They don't have to spend hours on production. They just generate it.

Instagram could fix this by changing their algorithm to prioritize original, authentic content. But that would reduce engagement. Which would reduce ad revenue. Which shareholders wouldn't like.

So instead, Instagram will add labels to AI content and hope that's enough. It won't be.

This is the core problem with every platform's approach to synthetic content: they're trying to solve a business model problem with technical solutions.

You can't engineer your way out of misaligned incentives.

The Role of Runable and Automation Platforms

For teams and creators building content at scale, automation platforms like Runable are becoming essential infrastructure. But they also underscore the authenticity problem Mosseri discusses.

Runable enables creators to generate presentations, documents, reports, and images using AI agents. This is powerful for productivity, but it also means a single creator can produce the appearance of a team's output.

One person can generate a week's worth of polished content in minutes. That looks authentic. That drives engagement. But it's fundamentally synthetic at scale.

Platforms like Runable ($9/month) are going to be crucial for the next wave of content creation. But they also make the authenticity problem worse, not better.

The irony is that Runable's automation capabilities make it easier to fake authentic content at scale. A single person using Runable's AI agents can maintain the appearance of being a multi-person operation.

Use Case: Automatically generate presentation decks from raw data without revealing that a single person is behind the entire operation.

This isn't a criticism of Runable. It's a criticism of the entire ecosystem. When automation tools get good enough, authenticity signals break down.

The Next Shift: Micro-Communities and Local Trust

Mosseri's focus on surfacing credibility signals points toward a larger trend: the fracturing of global social networks into micro-communities based on local trust.

As global platforms become harder to trust, people retreat to communities where they know the people or have shared verification mechanisms.

This is already happening. Discord servers, Reddit subreddits, and niche Facebook groups are where real conversations happen. Instagram is becoming a broadcast platform for brands and influencers, not a social network.

The future of Instagram might not be about fixing authenticity. It might be about accepting that broad platforms can't maintain trust at scale, and fragmenting into smaller, more trustworthy spaces.

This would be a return to how the internet worked in the 1990s: smaller communities, greater trust, less reach.

Mosseri probably understands this, which is why he's talking about credibility signals instead of trying to rebuild a trustworthy global platform. He's adapting to a world where that's not possible.

What Users Should Do Right Now

You can't wait for platforms to solve this. You have to adapt your own information processing.

First, start from skepticism. If something looks amazing, ask if it's real. If someone seems too perfect, treat that as a warning sign.

Second, check sources. If a claim is important, find the source. Who originally said this? What's their credibility?

Third, follow verified sources. In the age of synthetic content, verification badges matter. Not as a perfect signal (they can be faked), but as a filtering mechanism.

Fourth, engage with communities you trust. Small, moderated communities with strong norms are more resistant to misinformation than large, algorithmic feeds.

Fifth, develop visual literacy. Learn how to spot obvious AI artifacts. It's getting harder as generative models improve, but some output still carries visible tells.

Sixth, check metadata. Right-click on an image and look at its properties. See when it was created and what camera created it. It's not foolproof, since metadata can be stripped or spoofed, but it's a start.
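If you'd rather script that check, here is a minimal sketch that looks for an Exif block inside a JPEG's header segments using only Python's standard library. It's a simplified parser written for illustration, and `has_exif_segment` is a hypothetical helper name. It only tells you whether Exif metadata is present, not whether that metadata is truthful.

```python
import struct


def has_exif_segment(jpeg: bytes) -> bool:
    """Return True if a JPEG byte string carries an Exif APP1 segment."""
    if not jpeg.startswith(b"\xff\xd8"):      # every JPEG starts with SOI
        return False
    pos = 2
    while pos + 4 <= len(jpeg):
        if jpeg[pos] != 0xFF:                 # not at a marker: give up
            return False
        marker = jpeg[pos + 1]
        if marker == 0xDA:                    # start of scan: headers are over
            return False
        # Segment length is big-endian and includes its own two bytes.
        (length,) = struct.unpack(">H", jpeg[pos + 2:pos + 4])
        if length < 2:                        # malformed segment
            return False
        payload = jpeg[pos + 4:pos + 2 + length]
        if marker == 0xE1 and payload.startswith(b"Exif\x00\x00"):
            return True
        pos += 2 + length
    return False


# Usage, assuming a local file named photo.jpg exists:
# with open("photo.jpg", "rb") as f:
#     print(has_exif_segment(f.read()))
```

A missing Exif block proves nothing by itself, because metadata is often removed when images are re-saved or re-shared. Treat the result as one weak signal among several.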

Seventh, be suspicious of perfection. Real life is messy. Real people are imperfect. If something is too polished, too perfect, too good to be true, it probably isn't real.

The Long Game: Years to Adapt

Mosseri ended his statement with a realistic assessment: "It's going to take us years to adapt."

He's right. This isn't something that gets solved in a few months or even a few years.

The technology will keep improving. Detection methods will keep failing. New signals of authenticity will emerge and be co-opted. Platforms will release features that don't actually solve the problem.

Society will gradually shift from trusting visual information by default to assuming everything is potentially fake. We'll develop better heuristics for evaluating sources. We'll become more skeptical in general.

But the underlying technology won't stop advancing. AI image generation is only going to get better. Video synthesis is following the same trajectory. Audio synthesis is almost indistinguishable from real human speech.

Eventually, we might reach a point where there's no technical signal left that distinguishes real from fake. At that point, the only remaining signal is source credibility.

When that happens, we're living in a fundamentally different information environment. Not just on Instagram, but everywhere.

The old rule "cameras don't lie" will be gone. Replaced by something new: "Trust people you know, and be skeptical of strangers."

That's not progress. It's a return to pre-internet dynamics. But it might be the only stable equilibrium possible in an era of perfect synthetic media.

FAQ

What does Adam Mosseri mean by "infinite synthetic content"?

Infinite synthetic content refers to the era where AI-generated images, videos, and text can be created at unlimited scale. Unlike real content (which requires someone to have an experience, capture it, and share it), synthetic content can be generated instantly and infinitely. This fundamentally changes how social platforms function because credibility signals based on real experiences no longer work.

How does AI-generated content undermine trust on Instagram?

AI-generated content undermines trust by making it impossible to distinguish real photos from fake ones through visual inspection alone. Instagram was built on the premise that creators share real moments from their lives. When AI can create indistinguishable synthetic moments, the primary value proposition of the platform (authentic human connection) collapses. Users no longer know what's real, which erodes trust in the entire platform.

What are credibility signals and why do they matter now?

Credibility signals are observable indicators that content comes from a trustworthy source, such as verification badges, posting history, engagement patterns, and community reputation. In an age where visual authenticity is impossible to verify technically, credibility signals become the primary defense against misinformation. Instead of asking "Is this real?" users have to ask "Does this person have a reason to lie?"

Why can't platforms just detect and remove AI-generated content?

Detection of AI-generated content is nearly impossible at scale because detection methods and evasion capabilities are locked in a continuous arms race. Every time researchers publish a detection method, generative AI researchers train their models to bypass it. Additionally, detection systems produce false positives (flagging real images as fake) and false negatives (missing fake images), both of which create problems. Meta's current approach of only labeling images created with their own tools is more practical but leaves most AI content unlabeled.
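To make the false positive problem concrete, here is a rough arithmetic sketch. Every number in it is a hypothetical input chosen for illustration, not a measured figure from Meta or any real detector, and the function name `detector_outcomes` is invented for this example.

```python
def detector_outcomes(real_images, fake_images, true_positive_rate, false_positive_rate):
    """Expected results of running a fake-image detector over a batch.

    All inputs are assumptions supplied by the caller; nothing here is
    measured from a real platform or detector.
    """
    caught_fakes = round(fake_images * true_positive_rate)
    missed_fakes = fake_images - caught_fakes                # false negatives
    flagged_reals = round(real_images * false_positive_rate)  # false positives
    total_flagged = caught_fakes + flagged_reals
    # Share of flagged images that really are fake
    precision = caught_fakes / total_flagged if total_flagged else 0.0
    return {
        "caught_fakes": caught_fakes,
        "missed_fakes": missed_fakes,
        "flagged_reals": flagged_reals,
        "precision": precision,
    }


# Hypothetical batch: 950,000 real photos and 50,000 AI-generated ones,
# with a detector that catches 95% of fakes and misflags 2% of real photos.
outcome = detector_outcomes(950_000, 50_000, 0.95, 0.02)
print(outcome)
```

Under these assumed numbers, the detector flags 19,000 genuine photos alongside 47,500 fakes, so roughly 29% of everything it flags is real, and 2,500 fakes still slip through. Because real photos vastly outnumber fakes in this scenario, even a small false positive rate produces a large pile of wrongly flagged creators.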

What does cryptographic signing of images mean?

Cryptographic signing means attaching a digital signature to a photo at the moment it's captured, using a key held by the camera itself. The signature is verifiable proof that the image came from a specific device and hasn't been altered since it was signed. C2PA (Coalition for Content Provenance and Authenticity) is working to establish this as an industry standard, but adoption has been slow. The system would work for future photos but doesn't solve the problem of existing content that was never signed.
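The core idea of tamper evidence can be shown in a few lines. The sketch below is a simplified stand-in, not how C2PA works: C2PA relies on public-key signatures and certificates, while this example uses a shared-secret HMAC from Python's standard library so that it runs without extra dependencies. The key, the image bytes, and the function names `sign_image` and `verify_image` are all hypothetical.

```python
import hashlib
import hmac


def sign_image(image_bytes: bytes, device_key: bytes) -> str:
    """Compute a tamper-evident tag over the raw image bytes.

    Stand-in only: a real camera would use a private key and a
    public-key signature, not a shared secret.
    """
    return hmac.new(device_key, image_bytes, hashlib.sha256).hexdigest()


def verify_image(image_bytes: bytes, signature: str, device_key: bytes) -> bool:
    """Return True only if the bytes match what was originally signed."""
    expected = sign_image(image_bytes, device_key)
    # compare_digest avoids leaking information through timing differences
    return hmac.compare_digest(expected, signature)


# Hypothetical key and image data, for illustration only
device_key = b"hypothetical-camera-secret"
original = b"raw pixel data straight from the sensor"
signature = sign_image(original, device_key)

edited = original + b" plus one retouched pixel"

print(verify_image(original, signature, device_key))  # True
print(verify_image(edited, signature, device_key))    # False
```

Any change to the bytes, however small, invalidates the tag. That is also why the approach cannot help with older photos: there is no tag to check if nothing was signed at capture time.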

How are creators currently proving their content is real?

Creators are adapting by posting "raw" or unfiltered content with visible imperfections. For now, this signals authenticity because highly polished content looks more likely to be AI-generated. But the advantage won't last. As AI improves, it will learn to generate imperfect-looking content, and creators will need to find new authenticity signals. Eventually, the only reliable signal will be source credibility and community reputation.

What does Mosseri think Instagram should do to survive this transition?

Mosseri outlined four specific requirements: (1) Build the best creative AI tools so creators can leverage AI ethically, (2) Label AI-generated content and verify authentic content, (3) Surface credibility signals about who's posting, and (4) Improve ranking for originality. However, these solutions have significant implementation challenges. Labeling is incomplete, verification is technically difficult, credibility signals are weak on anonymous platforms, and prioritizing originality conflicts with algorithmic engagement optimization.

Why is imperfection becoming a sign of authenticity?

Imperfection signals authenticity because AI-generated images initially looked too perfect. Grain, noise, unflattering angles, and poor lighting were tells of genuine content. However, this signal is temporary. Once AI is trained to generate imperfect-looking images, imperfection no longer proves authenticity. This represents a fundamental shift in how authenticity is signaled, from technical markers to source credibility.

The Challenge Ahead

We're standing at a crossroads. The visual media that humans evolved to trust is becoming unreliable. The platforms built on sharing real experiences are flooding with synthetic content. The old signals of authenticity are being weaponized and co-opted.

Mosseri's framework is honest about the challenge, even if the solutions feel insufficient. Because the real problem isn't technical. It's cultural and psychological.

We evolved to trust what we see. That instinct kept our ancestors alive. But in 2025, it makes us vulnerable. We need to fundamentally change how we process information, and that's uncomfortable.

There's no quick fix. No algorithm that solves this. No label that makes it okay.

What there is: awareness. Understanding that everything you see might be fake. Questioning your sources. Valuing credibility and community over perfection and polish.

That's the adaptation Mosseri is really describing. Not a technical solution. A cultural shift toward skepticism, verification, and local trust.

It's not progress. But it might be necessary.

Related Concepts Worth Exploring

The issues Mosseri raises connect to broader trends in digital media: the rise of deepfakes, the decline of traditional journalism's gatekeeping role, the challenge of synthetic media in political discourse, and the fundamental question of what "truth" means in an age of perfect simulation.

As AI technology continues advancing, these questions will only become more urgent. The next few years will determine whether platforms can adapt fast enough to maintain user trust, or whether the internet fragments into smaller, more trustworthy communities.

The stakes are higher than Instagram's business model. They're about how humans navigate an increasingly synthetic information environment.

Key Takeaways

- The default assumption about photographs is shifting from 'this is real' to 'this might be fake', a fundamental change in how humans process visual information

- AI-generated content has reached technical parity with authentic content; technical detection is an unsustainable arms race

- Creators are temporarily signaling authenticity through imperfection, but this signal will be weaponized and co-opted once AI learns to generate imperfect-looking content

- Instagram's proposed solutions (creative AI tools, labeling and verification, credibility signals, originality ranking) address symptoms rather than the root problem: platforms' business models incentivize engagement over authenticity

- The only sustainable long-term strategy is shifting trust from visual signals to source credibility and community reputation, fragmenting large platforms into smaller, more trustworthy communities

![Instagram's Authenticity Crisis: How AI-Generated Content Is Reshaping Trust [2025]](https://tryrunable.com/blog/instagram-s-authenticity-crisis-how-ai-generated-content-is-/image-1-1767222357275.jpg)