Qwen-Image-2512 vs Google's Nano Banana Pro: The Open Source Alternative That's Changing AI Image Generation

Last November, Google dropped Nano Banana Pro (officially called Gemini 3 Pro Image) and basically rewrote the rules for what AI image models could do. For the first time, you could ask an AI to generate dense, text-heavy infographics, slides, and polished business visuals without a single spelling error. The leap was real. The catch? Proprietary. Locked into Google's ecosystem. Priced for the premium crowd.

Then Alibaba's Qwen team did something different.

They released Qwen-Image-2512 under Apache 2.0, completely open source, free for commercial use, and competitive enough to make enterprises actually consider it. Not as a backup plan. As a first choice.

This isn't just another open model that loses to the closed ones. It's a fundamental shift in how companies can approach enterprise AI image generation. And it's worth understanding because it changes the calculus for anyone building products that generate visuals at scale.

The Breakthrough Google Created (And Why It Mattered)

When Google released Nano Banana Pro last year, the AI image generation world had a problem it couldn't solve: text rendering. Models like Midjourney and DALL-E could create beautiful paintings, but ask them to generate a slide with bullet points, a poster with accurate headlines, or an infographic with labels, and the results came out garbled.

Text would be backwards. Letters would merge. Numbers would transform into abstract art.

Google's new model changed that. Suddenly, you could describe a complete slide layout: "A slide with a title reading 'Q4 Revenue Analysis,' three bullet points below it with specific numbers, and a bar chart in the corner." And it would actually render correctly. The text would be legible. The layout would match your description. The whole thing would look production-ready.

For enterprise applications, this was a watershed moment. Image generation moved from "creative tool you might use for social media" to "infrastructure component for documentation, reports, training materials, and automated design systems." Companies started thinking about baking image generation into their workflows, their dashboards, their data pipelines.

But there was a problem with Google's approach.

Nano Banana Pro lives in Google's cloud. You access it through their API. You pay per image. You can't run it locally. You can't modify it. You can't see how it works. For enterprises that care about cost control, data sovereignty, or regional deployment, it raised the bar on quality but left no option they could deploy on their own terms.

Most companies just shrugged and built workarounds. Some accepted the per-image API costs. Others went back to using cheaper models that generated worse output.

Alibaba's Qwen team watched this and thought: "What if we could match that performance and give it away?"

Enter Qwen-Image-2512: The Open Source Answer

Qwen-Image-2512 arrived in December 2025 with a clear thesis: open source plus performance parity equals better value for enterprises. No API dependency. No per-image fees. No vendor lock-in.

The model is available everywhere. Download the full weights from Hugging Face or ModelScope. Deploy it on your own infrastructure. Use it through Alibaba's managed API if you want. Run it locally in your data center. Fine-tune it for your specific use cases. The Apache 2.0 license lets you do any of this commercially, subject only to the license's attribution and notice requirements.

That's the strategic difference. Google made something amazing and locked it up. Alibaba made something comparable and opened it up.

Now, "comparable" is where the details get interesting.

Side-by-Side Capability Comparison

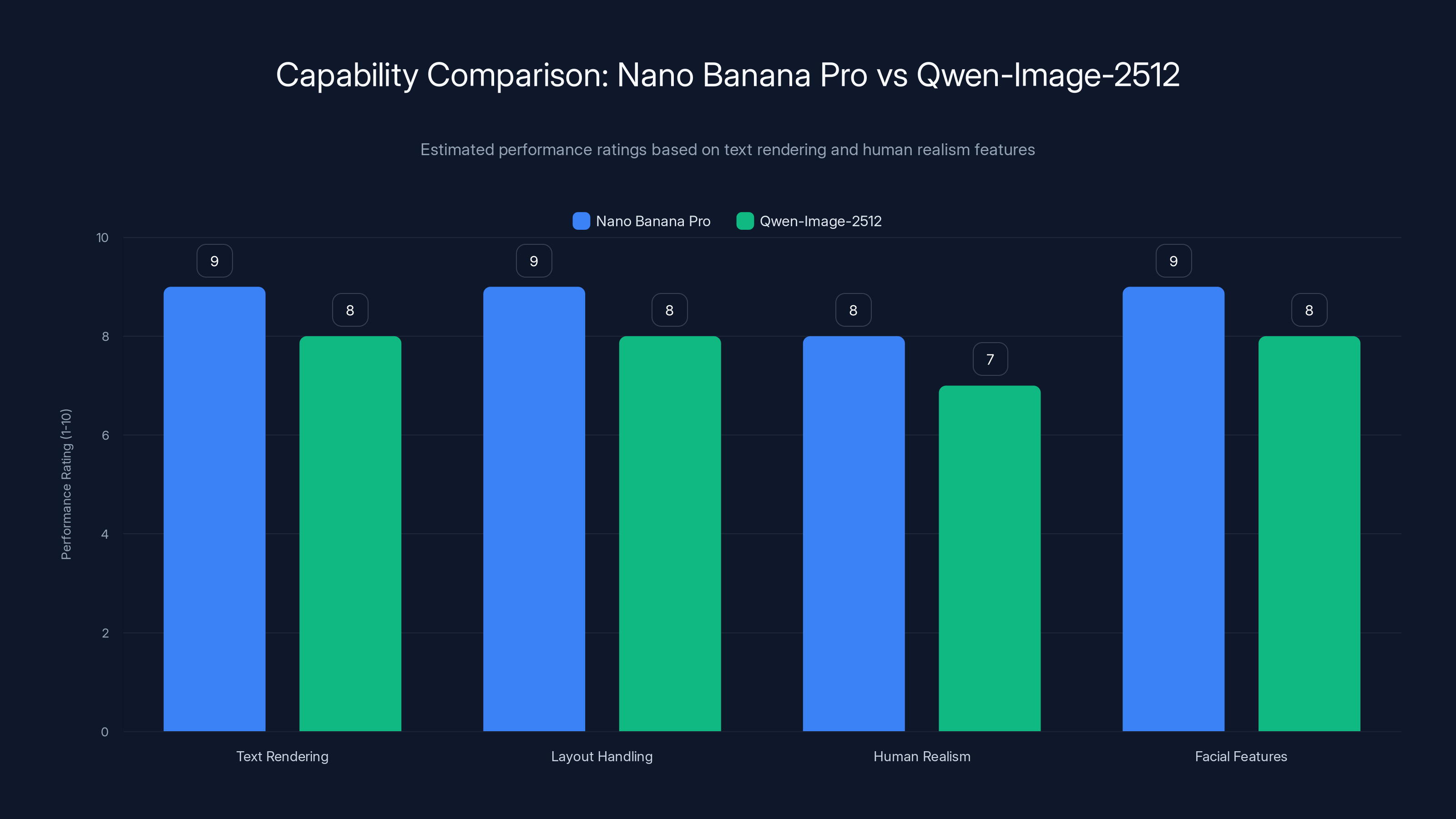

Text Rendering and Layout

Both models excel here, but in slightly different ways. Nano Banana Pro was specifically trained to handle enterprise-grade visual layouts. Ask it for a perfectly aligned slide with headers, subheaders, bullet points, and accent colors, and it delivers. The typography is crisp. The spacing is intentional.

Qwen-Image-2512 handles this well too, and the improvements in the December update focused heavily on this area. It supports both English and Chinese prompts with equal accuracy, which matters enormously if your team is distributed across regions. The text fidelity has improved dramatically compared to earlier open models.

In practical testing, Qwen matches Nano Banana Pro on most enterprise layouts. Where it sometimes lags is in ultra-complex compositions with multiple text hierarchies and precise color specifications. But for 90% of real-world use cases (slides, infographics, simple posters), both are indistinguishable.

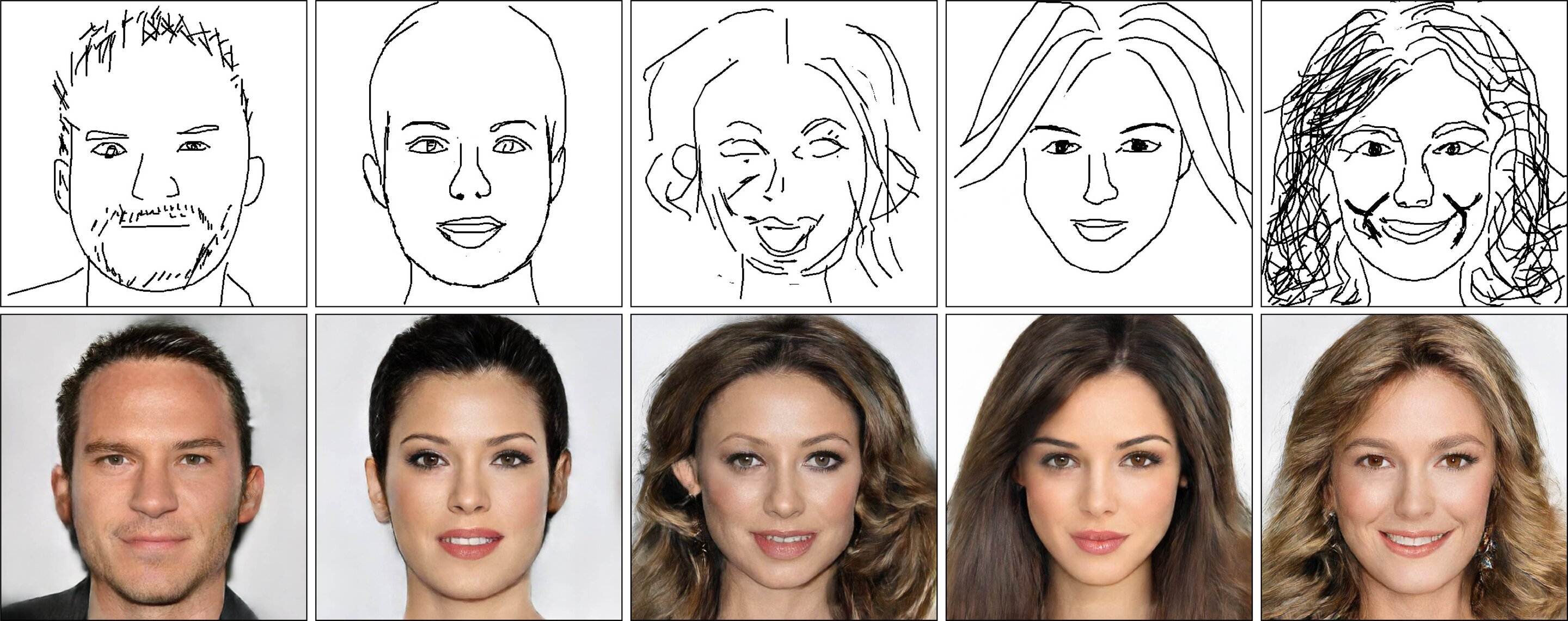

Human Realism and Facial Features

This is where Qwen-Image-2512's December update really shines. One of the longest-standing problems with open-source image models is the "uncanny valley" effect. Faces look almost right, but something's off. Eyes are the wrong shape. Skin texture is too smooth. Expressions seem frozen.

Nano Banana Pro handles portraiture better because it's built on Google's massive private training data. Faces have more natural variation. Age and expression are more convincing. The details are sharper.

Qwen-Image-2512 has narrowed this gap significantly. Facial features show more texture and variation now. Age and emotion are more convincing. The skin doesn't look like plastic. Postures are more natural and responsive to prompts.

For synthetic training data, educational simulations, or internal business use, Qwen is now credible. For marketing and consumer-facing applications where you need Hollywood-level realism, Nano Banana Pro still has an edge.

But that edge is narrower than it was three months ago.

Environmental Coherence and Background Quality

Environmental details matter more than people realize. When you generate an image of a person in an office, or a product in a room, the surroundings need to make sense. Bad background rendering breaks the entire image.

Both models handle this well. Nano Banana Pro tends to generate slightly more photorealistic backgrounds with richer detail. Qwen-Image-2512 generates backgrounds that are slightly more stylized but equally coherent. For business applications, the difference is negligible.

Where Qwen actually excels is consistency across multiple images. If you generate five variations of "a person at a desk," all five will feel like they're in the same office. That consistency is valuable for creating cohesive visual narratives in reports or presentations.

Texture Fidelity and Material Rendering

When you ask an image model to render materials, things get weird fast. Water doesn't look like water. Fabric doesn't drape. Metal doesn't shine. Fur looks like a hairball.

Nano Banana Pro renders textures with excellent fidelity because it's trained on vast amounts of high-quality images. Water is actually wet. Fabric has realistic folds. Metal catches light convincingly.

Qwen-Image-2512 has improved significantly here too. The December update focused specifically on material coherence. Landscapes have better depth and texture. Water and reflections are more convincing. Animal fur and plant textures are smoother and more detailed.

It's still not pixel-perfect compared to Nano Banana Pro, but it's now good enough that enterprises are using it for product visualizations and e-commerce imagery without extensive post-processing.

Multilingual Support

This is where Qwen-Image-2512 has a genuine advantage.

Nano Banana Pro is excellent at English-language prompts. It's also decent with other languages, but English gets the best results. If you're an international company with teams in China, Japan, or other non-English regions, you'll find Nano Banana Pro responds best to prompts written in English, even if your team speaks another language natively.

Qwen-Image-2512 handles Chinese and English with equal sophistication. Chinese characters render as well as English letters. Prompts in Chinese produce results of equal quality to English prompts. For companies with significant Asia-Pacific operations, this is a real competitive advantage.

Performance Benchmarks: How They Actually Compare

Alibaba's Qwen Arena is a blind evaluation system where human raters compare image quality across multiple models without knowing which model created each image. It's not perfect, but it's one of the more credible evaluation methods in AI.

According to Qwen Arena's latest benchmarks:

- Qwen-Image-2512 ranks as the strongest open-source image model available

- It's competitive with closed systems in most categories

- It outperforms Nano Banana Pro in multilingual text rendering

- Nano Banana Pro still edges ahead in photorealism and facial detail

- Both significantly outperform earlier open models like FLUX, SDXL, and PixArt

In practical terms, this means:

- For business visuals, infographics, and text-heavy layouts: roughly equivalent

- For photorealistic portraiture and consumer-facing content: Nano Banana Pro wins

- For international teams and multilingual applications: Qwen wins

- For cost-sensitive enterprise deployments: Qwen wins decisively

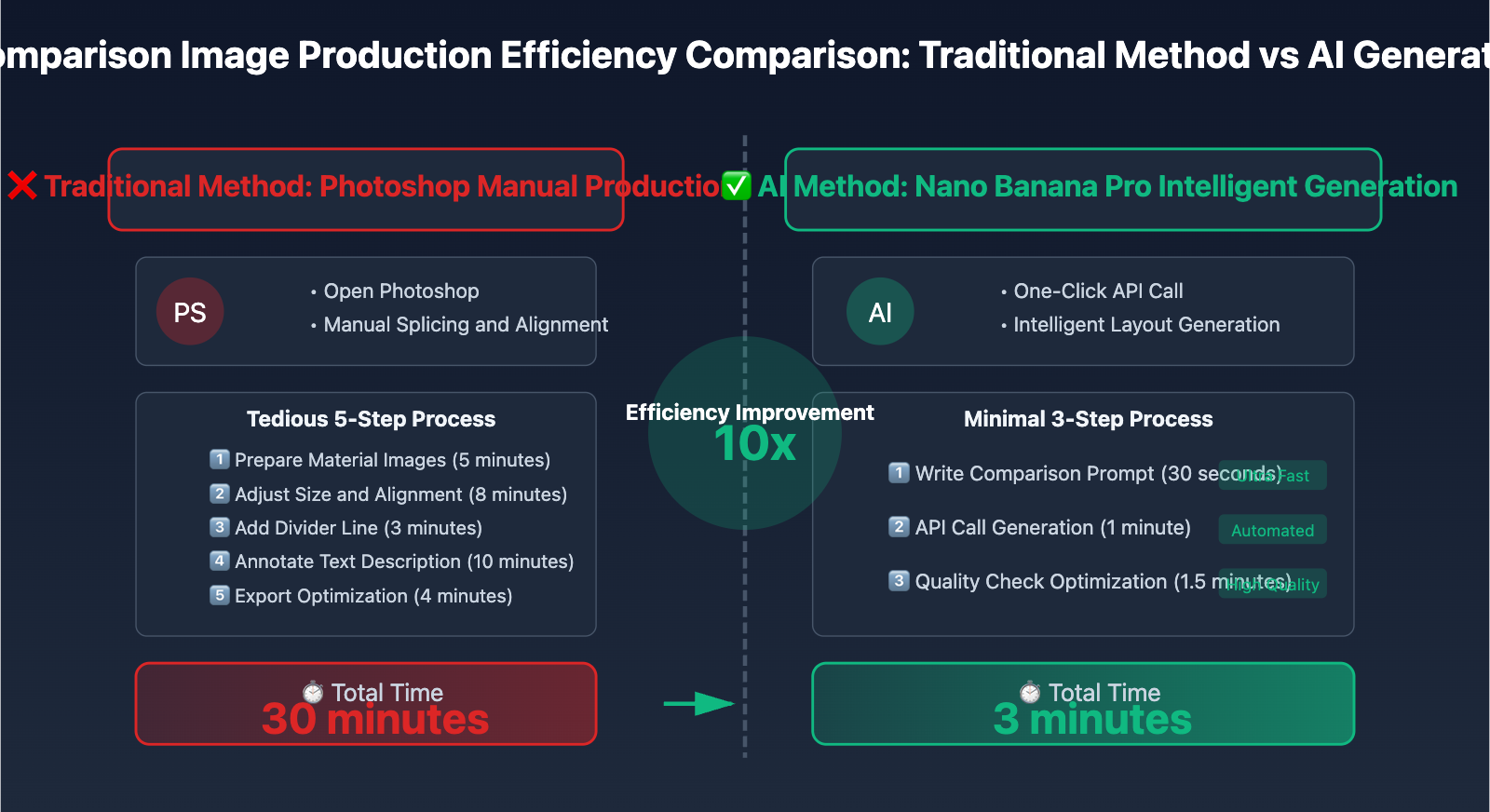

The Economics: Where Open Source Actually Wins

Let's talk about what actually matters for most organizations: money.

Nano Banana Pro is a per-image API pricing model. Google hasn't published exact rates, but industry consensus puts it in the

Imagine you're an e-commerce company generating product images for your catalog. You have 50,000 products. You want to generate three variations of each image for A/B testing. That's 150,000 images. At an average cost of

Qwen-Image-2512 changes the math entirely. You can either:

Option 1: Self-Host. Download the model weights. Run it on your own GPU infrastructure. Generate unlimited images for the cost of electricity and compute. After the initial infrastructure setup, marginal cost per image approaches zero.

For a company generating 150,000 images, one decent A100 GPU (

Option 2: Use Alibaba's Managed API Alibaba Cloud's Model Studio API offers Qwen-Image-2512 on a pay-as-you-go basis, but at rates significantly lower than Nano Banana Pro. Exact pricing varies by region and volume, but expect

Even at parity pricing, the strategic advantage matters. You aren't only paying per image; the weights stay available to you under an open license. You can switch to self-hosting anytime. You're not locked in.

Option 3: Fine-Tune for Specific Use Cases. With Nano Banana Pro, you get what Google trained it to do. With Qwen-Image-2512, you can fine-tune it on your own data. Want it to generate images in your brand's visual style? Fine-tune it. Want it to handle industry-specific terminology better? Fine-tune it. Want it to match your product photography aesthetic? Fine-tune it.

Fine-tuning costs money (compute time), but the investment pays off through better outputs and less post-processing. You can't do any of this with Nano Banana Pro.

The Cost Comparison Formula

Let's model this out:

For Nano Banana Pro:

For Qwen-Image-2512 (Self-Hosted):

But in Year 2+:

Breakeven happens around month 20. After that, Qwen saves $3,000+ annually.

For companies with larger scale (500,000+ images annually), the math becomes even more favorable to Qwen. The breakeven point accelerates.
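The specific figures behind the model above aren't reproduced here, so treat the following as a minimal sketch of the breakeven arithmetic rather than the article's own numbers. The function name and every input value are placeholders; substitute your real API rate, volume, hardware quote, and operating costs.

```python
import math
from typing import Optional


def breakeven_month(
    api_cost_per_image: float,
    images_per_month: int,
    hardware_upfront: float,
    self_host_monthly_opex: float,
) -> Optional[int]:
    """First month in which cumulative self-hosting cost drops to or below
    cumulative API cost. Returns None if self-hosting never catches up."""
    api_monthly = api_cost_per_image * images_per_month
    monthly_saving = api_monthly - self_host_monthly_opex
    if monthly_saving <= 0:
        # Running your own hardware costs at least as much per month as the API.
        return None
    return max(1, math.ceil(hardware_upfront / monthly_saving))


# Placeholder inputs for illustration only (not real pricing).
month = breakeven_month(
    api_cost_per_image=0.25,
    images_per_month=8_000,
    hardware_upfront=15_000.0,
    self_host_monthly_opex=500.0,
)
print(f"Breakeven month: {month}")
```

Higher monthly volume increases the monthly saving, which is why the breakeven point arrives sooner at larger scale.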

The Open Source Advantage: Beyond Just Cost

Cost is the obvious advantage of open source. But it's not the only one, and honestly, it's not always the most important one.

Deployment Sovereignty

Nano Banana Pro lives in Google's cloud. Your prompts go to Google. Your images are processed by Google. Your data touches Google's infrastructure. For some companies, this is fine. For others, it's a deal-breaker.

Regulatory concerns exist in finance, healthcare, and government. Data localization requirements in the EU (GDPR), China, and other regions make cloud-dependent solutions problematic. Some companies have internal policies against sending sensitive prompts to third parties.

Qwen-Image-2512 addresses this directly. Run it in your own data center. Your prompts never leave your infrastructure. Your images are generated on your hardware. Data residency stays under your control, which takes the third-party processor out of your compliance picture.

For enterprises with strict data governance, this is worth more than any price advantage.

Model Customization and Fine-Tuning

Nano Banana Pro is a black box. Google trained it, Google controls it, Google decides when it updates. You get what you get.

Qwen-Image-2512 is fully customizable. You can:

- Fine-tune on your own data: Improve performance on industry-specific tasks

- Quantize for smaller deployments: Run on laptops and edge devices

- Modify architecture: Adapt it for your specific workflow

- Combine with other models: Use it as a component in larger systems

- Version control: Deploy specific versions to production, A/B test updates

A fashion brand could fine-tune Qwen to understand their design language better. A medical company could fine-tune it to generate anatomically accurate visualizations. A manufacturing company could fine-tune it to render technical specifications precisely.

Nano Banana Pro doesn't offer any of this.

Transparency and Auditability

When Google updates Nano Banana Pro, you find out through release notes. When Qwen updates Qwen-Image-2512, you can audit the changes yourself. The weights are visible. The architecture is documented. The training process is available.

For companies concerned about bias, fairness, or specific output behaviors, this transparency is valuable. You can inspect whether the model has problematic patterns. You can test for bias in your domain. You can understand why it produces certain outputs.

You can't audit a black box.

Community and Ecosystem

Nano Banana Pro has Google's support. That's valuable, but limited to what Google decides to maintain.

Qwen-Image-2512 has an entire ecosystem. Thousands of developers are building on top of it. Hugging Face has community examples and variations. GitHub has community projects extending functionality. The community finds creative applications, shares optimizations, and reports edge cases.

This creates a positive flywheel: better tools get built, which drive more adoption, which attracts more developers, which build more tools.

Real-World Deployment: How Companies Are Actually Using These

Enterprise Document Generation

A major SaaS company uses Qwen-Image-2512 to generate charts and infographics in automated reports. They pull data from their warehouse, describe the visualization they want, generate it with Qwen, and embed it in PDF reports.

Cost per report:

They also fine-tuned Qwen on their brand guidelines, so generated images automatically match their visual style. They can't do that with Nano Banana Pro.

E-Commerce Product Visualization

An online retailer generates product images for their catalog. They have thousands of SKUs, multiple color variants, lifestyle shots, and 360-degree views. They're using Qwen to generate variations and test different backgrounds.

Their workflow: Describe the product, set background and lighting preferences, generate 10 variations, pick the best 3, add to the website. Cycle time: 30 seconds per product. Cost per image: negligible with self-hosted Qwen.

They tried Nano Banana Pro initially, but the per-image pricing and lack of customization made it uneconomical.

Educational Content Creation

An online education platform generates illustrative images for course materials. They need consistent visual style, multilingual support, and tight cost control.

Qwen's multilingual capabilities and Apache 2.0 license (allowing commercial educational use) make it the obvious choice. They generate thousands of educational diagrams and illustrations monthly, all for the cost of running a single GPU.

Design Automation

A marketing agency uses Qwen-Image-2512 to generate variations of design concepts for clients. They describe the design, generate 50 variations, let the client pick favorites, then refine from there.

The self-hosted deployment means they can generate unlimited variations without per-image costs. They also fine-tuned the model on their design portfolio, so generated images automatically incorporate their design language.

This is something you fundamentally cannot do with Nano Banana Pro.

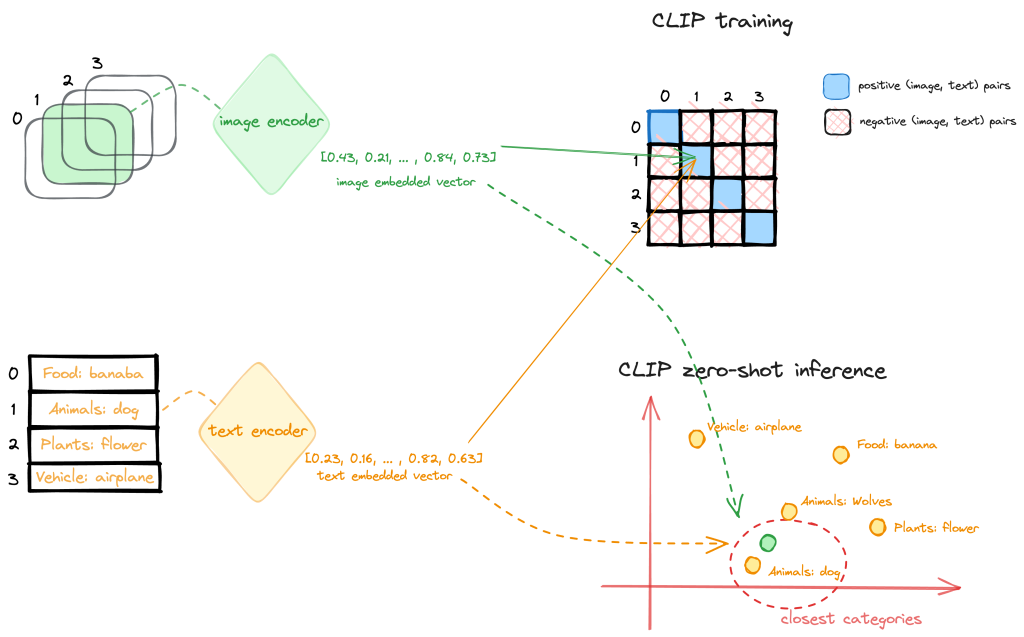

Technical Architecture: How Qwen-Image-2512 Works

Qwen-Image-2512 is built on a diffusion-based architecture (similar to Stable Diffusion), but with significant improvements:

The Core Architecture

- Text Encoder: Transforms prompts into vector representations. Handles both English and Chinese with equal sophistication.

- Latent Diffusion Decoder: Generates images through an iterative denoising process. More efficient than pixel-space diffusion.

- Fine-Tuned Attention Mechanisms: Improved focus on prompt alignment and text rendering accuracy.

- Multi-Scale Processing: Generates details at multiple resolutions simultaneously, improving coherence.

The architecture allows for:

- Faster inference: Generates images in under 10 seconds on standard GPUs

- Lower memory requirements: Runs on a single high-memory GPU (RTX 3090, A100)

- Better prompt adherence: More accurately interprets what you're asking for

- Improved text rendering: Direct optimization for text accuracy and layout

Inference Performance

On a single A100 GPU:

- Batch size: 8 images in parallel

- Time per image: 6-8 seconds

- Memory footprint: 28 GB (with optimizations, can run on 16 GB)

- Throughput: 450-500 images per hour

Compare that to your annual image generation needs and the hardware costs become trivial.
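To make that comparison concrete, here is a small sketch that converts an image volume into GPU-hours using the throughput range quoted above. The function name is illustrative, and the 150,000-image volume is borrowed from the e-commerce example earlier in the article.

```python
def gpu_hours_needed(total_images: int, images_per_hour: float) -> float:
    """GPU-hours required to render `total_images` at a sustained throughput."""
    if images_per_hour <= 0:
        raise ValueError("images_per_hour must be positive")
    return total_images / images_per_hour


# Throughput range quoted above for a single A100: 450-500 images per hour.
for rate in (450, 500):
    hours = gpu_hours_needed(150_000, rate)
    print(f"{rate} img/h -> {hours:.1f} GPU-hours ({hours / 24:.1f} days)")
```

At those rates, a 150,000-image catalog works out to roughly 300 to 333 GPU-hours on one card, assuming the throughput holds for the whole run.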

Fine-Tuning Capabilities

Qwen-Image-2512 can be fine-tuned with your own data. The process:

- Prepare training data: 1,000-10,000 examples of images + prompts you want the model to learn

- Configure fine-tuning: Learning rate, batch size, number of epochs (typically 3-5)

- Train: Takes hours to days depending on data size (much faster than training from scratch)

- Evaluate: Test on validation set to ensure quality improvements

- Deploy: Use your fine-tuned version in production

Fine-tuned models typically improve quality by 15-30% on domain-specific tasks.
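The data preparation and evaluation steps depend on a clean train/validation split of your image and prompt pairs. The sketch below shows one deterministic way to do that. The `Example` type and `split_examples` helper are hypothetical names, not part of any Qwen tooling, and the actual training loop is left to whichever fine-tuning framework you choose.

```python
import random
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class Example:
    image_path: str  # path to a training image
    prompt: str      # caption the model should learn to associate with it


def split_examples(
    examples: Sequence[Example], val_fraction: float = 0.1, seed: int = 0
) -> Tuple[List[Example], List[Example]]:
    """Shuffle deterministically and hold out a validation slice."""
    if not 0.0 < val_fraction < 1.0:
        raise ValueError("val_fraction must be between 0 and 1")
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, round(len(shuffled) * val_fraction))
    return shuffled[n_val:], shuffled[:n_val]


# Illustrative dataset at the low end of the 1,000-10,000 range mentioned above.
data = [Example(f"img_{i:05d}.png", f"caption {i}") for i in range(1_000)]
train, val = split_examples(data)
print(len(train), len(val))
```

Fixing the seed keeps the validation set stable between runs, so quality comparisons across fine-tuning attempts stay apples to apples.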

Deployment Options: Choose Your Path

Option 1: Hugging Face or ModelScope (Open Weights)

What you get: Full model weights, documentation, community examples

Setup time: 15-30 minutes for basic setup

Cost: Hardware only (buy or rent GPU infrastructure)

Best for: Teams with infrastructure expertise, willingness to manage deployment

Commands (conceptually):

1. Download weights from Hugging Face or ModelScope

2. Install required libraries (diffusers, transformers, etc.)

3. Load model and generate images from prompts

4. Deploy through your chosen inference framework

No proprietary APIs. No authentication. Complete control.
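As a rough sketch of steps 1 through 3, the snippet below loads the weights with the `diffusers` library and renders one prompt. It assumes the model is published under the Hugging Face repo id `Qwen/Qwen-Image-2512` and loads through the generic `DiffusionPipeline` class; confirm both on the model card before relying on it. The helper names and default settings are illustrative, and `generate` needs a CUDA GPU plus the `torch` and `diffusers` packages.

```python
from typing import Any, Dict

# Assumed repo id; verify against the official model card.
MODEL_ID = "Qwen/Qwen-Image-2512"


def build_call_kwargs(
    prompt: str, width: int = 1024, height: int = 1024, steps: int = 30
) -> Dict[str, Any]:
    """Collect pipeline arguments in one place so they are easy to log and tune."""
    if width <= 0 or height <= 0 or steps <= 0:
        raise ValueError("width, height, and steps must be positive")
    return {
        "prompt": prompt,
        "width": width,
        "height": height,
        "num_inference_steps": steps,
    }


def generate(prompt: str, out_path: str = "out.png", model_id: str = MODEL_ID) -> str:
    """Download (on first use) and run the model, saving one image to disk."""
    # Imported lazily so the helper above works without the GPU stack installed.
    import torch
    from diffusers import DiffusionPipeline

    pipe = DiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.bfloat16)
    pipe.to("cuda")
    image = pipe(**build_call_kwargs(prompt)).images[0]
    image.save(out_path)
    return out_path


# Example (requires a GPU and the downloaded weights):
# generate("A slide titled 'Q4 Revenue Analysis' with three bullet points")
```

For step 4, you would typically wrap `generate` behind an HTTP endpoint in whatever serving framework your team already uses.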

Option 2: Alibaba Cloud Model Studio

What you get: Managed API access without infrastructure management

Setup time: 10 minutes (create account, get API key)

Cost: Pay-as-you-go pricing, significantly lower than Nano Banana Pro

Best for: Teams without GPU infrastructure, wanting managed uptime and support

Advantages:

- No infrastructure to manage

- Automatic scaling

- Professional support

- Same model capabilities as self-hosted

- Can switch to self-hosting anytime

Option 3: GitHub/Local Experimentation

What you get: Community implementations, examples, and variations

Setup time: 5-10 minutes

Cost: Free (except compute)

Best for: Experimentation, prototyping, learning

Examples available:

- Web interfaces for easy prompting

- Command-line tools

- Integration libraries

- Documentation and tutorials

Comparison Table: Qwen-Image-2512 vs Nano Banana Pro

| Dimension | Qwen-Image-2512 | Nano Banana Pro | Winner |
|---|---|---|---|
| Text Rendering | Excellent | Excellent | Tie |
| Photorealism | Good | Excellent | Nano Banana |
| Multilingual Support | English + Chinese (excellent) | English + others (good) | Qwen |
| Pricing Model | Free + infrastructure | Per-image API | Qwen (at scale) |
| Data Sovereignty | On-premises possible | Cloud only | Qwen |
| Fine-Tuning | Yes, fully supported | No | Qwen |
| Customization | Full control | Black box | Qwen |
| Learning Curve | Moderate | Low (API only) | Nano Banana |
| Community Support | Large and growing | Official only | Qwen |
| Commercial License | Apache 2.0 (unrestricted) | Proprietary | Qwen |
| Regional Availability | Global | Google cloud regions | Tie |
| Performance Consistency | Good | Excellent | Nano Banana |
| Uptime Guarantee | Self-managed or Alibaba SLA | Google SLA | Tie |

Deployment Scenarios: When to Choose Which

Choose Qwen-Image-2512 If:

- You generate more than 50,000 images annually (economics favor you)

- Data sovereignty matters (regulatory, policy, or security requirements)

- You want to fine-tune or customize the model

- Your team is multilingual (especially Chinese)

- You need full control over model deployment and updates

- You want cost predictability (fixed infrastructure costs, no surprise API bills)

- You're building products or services that generate images commercially

- You want vendor independence

Choose Nano Banana Pro If:

- You generate fewer than 10,000 images annually (API costs are negligible)

- You need maximum photorealism for consumer-facing content

- Your team is small and non-technical (API is simpler to use)

- You want no infrastructure management

- You prefer vendor support from Google

- You need premium quality for marketing or high-stakes content

- You generate images sporadically rather than in bulk

- You're willing to accept vendor lock-in for simplicity

The Broader Implications: What This Means for the Industry

Qwen-Image-2512's release under Apache 2.0 represents something larger than a single model comparison. It's a shift in how open-source models compete with proprietary ones.

Google built something genuinely better (Nano Banana Pro). That was the easy part. Google has unlimited compute, massive training data, and teams of PhDs.

Alibaba built something nearly as good and gave it away. That's the hard part. That requires a different business philosophy.

This pattern is replicating across the AI landscape:

- Open models are catching up to closed models in capability

- Licensing matters for enterprise adoption (Apache 2.0 > restricted)

- Deployment flexibility is increasingly valuable for companies

- Fine-tuning and customization are table stakes for enterprise tools

- Cost competition is forcing API providers to lower prices

We're seeing this with language models too (Llama catching up to GPT), audio models (OpenAI's Whisper is free and open), and embedding models (open source options rival OpenAI).

The next 12-18 months will likely show whether Qwen-Image-2512 is a one-off win or part of a broader trend where open models achieve parity and capture significant market share through licensing and deployment flexibility.

Early signals suggest the latter.

Integration and Implementation: Getting Started

For Teams Choosing Qwen-Image-2512

Step 1: Evaluate Self-Hosting vs API. Calculate your image volume. If it justifies hardware investment, proceed to self-hosting. Otherwise, use Alibaba's managed API.

Step 2: Procure Infrastructure (If Self-Hosting). An A100 (40GB) or 2x RTX 4090 (24GB each) suffices for most workloads. Budget

Step 3: Set Up Deployment. Download weights, install dependencies, deploy through your chosen inference framework (vLLM, BentoML, or custom Python). Expect 2-4 weeks for production-grade setup.

Step 4: Integrate into Workflows. Connect your application to Qwen through an API endpoint. Generate images programmatically. Monitor quality and iterate.

Step 5: (Optional) Fine-Tune. Once production is stable, consider fine-tuning on your domain-specific data. Improvements in image quality pay for the effort quickly.

For Teams Choosing Nano Banana Pro

Step 1: Create Google Cloud Account. Set up billing and authentication.

Step 2: Access the API. Gain programmatic access through Google's APIs. Documentation is extensive.

Step 3: Set Usage Limits. Implement quotas to avoid surprise bills. Per-image pricing can compound quickly.

Step 4: Integrate. Call the API from your application. Monitor costs monthly.

Step 5: Plan Alternatives. Evaluate what you'd do if Google changed pricing, terms, or availability.
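The usage-limit step can be backed by a guard in your own code as well as any limits set on the provider side. Below is a minimal client-side sketch; `ImageQuota` is a hypothetical helper, not a Google Cloud feature, and a real deployment would persist the counter and reset it on a schedule.

```python
class ImageQuota:
    """In-process cap on how many images may be requested per billing period."""

    def __init__(self, max_images_per_period: int) -> None:
        if max_images_per_period < 0:
            raise ValueError("max_images_per_period must be non-negative")
        self.max_images = max_images_per_period
        self.used = 0

    def allow(self, n_images: int = 1) -> bool:
        """Reserve `n_images` if they fit in the remaining budget."""
        if n_images <= 0:
            raise ValueError("n_images must be positive")
        if self.used + n_images > self.max_images:
            return False
        self.used += n_images
        return True

    def reset(self) -> None:
        """Call at the start of each billing period."""
        self.used = 0


quota = ImageQuota(max_images_per_period=3)
print(quota.allow(2), quota.allow(2), quota.allow(1))
```

Check `allow()` before every API call and skip or queue the request when it returns `False`.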

Common Mistakes and How to Avoid Them

Mistake 1: Underestimating Infrastructure Costs

The error: Assuming hardware costs are negligible because "cloud is cheap."

The reality: A production-grade GPU costs

How to avoid: Budget 12-month infrastructure costs and compare to API pricing. Include power, cooling, redundancy, and support.

Mistake 2: Overestimating Fine-Tuning Benefits

The error: Assuming fine-tuning will dramatically improve results.

The reality: Fine-tuning helps, but requires quality training data and careful tuning. Generic improvements are 10-15%, domain-specific improvements can reach 30%.

How to avoid: Start with the base model. Only fine-tune if base results are 80%+ of what you need.

Mistake 3: Ignoring Data Governance

The error: Sending production data to cloud APIs without understanding who has access.

The reality: Cloud APIs log data, retain it for certain periods, and may use it for model improvement (depending on terms).

How to avoid: For sensitive data, self-host. For non-sensitive data, API is fine.

Mistake 4: Deploying Without Monitoring

The error: Launching image generation and assuming quality will remain constant.

The reality: Model drift, hardware degradation, and prompt edge cases cause quality to degrade over time.

How to avoid: Set up monitoring. Sample generated images monthly. Track quality metrics. Alert on anomalies.
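One simple way to turn "track quality metrics and alert on anomalies" into code is a rolling average with a threshold. The sketch below assumes you already have a per-image quality score between 0 and 1 (for example a human rating or a text-accuracy check); how that score is produced is outside its scope, and the class name, window size, and threshold are illustrative.

```python
from collections import deque
from statistics import mean


class QualityMonitor:
    """Flags when the rolling mean of recent quality scores falls below a floor."""

    def __init__(self, window: int = 50, min_mean_score: float = 0.8) -> None:
        if window <= 0:
            raise ValueError("window must be positive")
        self.scores = deque(maxlen=window)
        self.min_mean_score = min_mean_score

    def record(self, score: float) -> bool:
        """Store one score; return True when an alert should fire."""
        self.scores.append(score)
        window_full = len(self.scores) == self.scores.maxlen
        return window_full and mean(self.scores) < self.min_mean_score


monitor = QualityMonitor(window=3, min_mean_score=0.8)
alerts = [monitor.record(s) for s in (0.9, 0.9, 0.9, 0.5)]
print(alerts)
```

Waiting for a full window before alerting avoids false alarms from the first few samples.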

Mistake 5: Not Planning for Alternatives

The error: Building your entire product around a single model (Nano Banana Pro or Qwen).

The reality: Models improve, pricing changes, companies change direction. Don't bet everything on one.

How to avoid: Design your system so you can swap models easily. Abstract the image generation layer. Build fallbacks.
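Here is a minimal sketch of what abstracting the image generation layer with a fallback might look like. All names are hypothetical, and the two stub backends stand in for real clients (a self-hosted Qwen endpoint, a hosted API, and so on).

```python
from typing import List, Protocol, Sequence


class ImageGenerator(Protocol):
    """Anything that can turn a prompt into encoded image bytes."""

    def generate(self, prompt: str) -> bytes: ...


class FallbackGenerator:
    """Tries each backend in order and returns the first successful result."""

    def __init__(self, backends: Sequence[ImageGenerator]) -> None:
        if not backends:
            raise ValueError("at least one backend is required")
        self._backends = list(backends)

    def generate(self, prompt: str) -> bytes:
        errors: List[Exception] = []
        for backend in self._backends:
            try:
                return backend.generate(prompt)
            except Exception as exc:  # keep going; the next backend may work
                errors.append(exc)
        raise RuntimeError(f"all {len(errors)} backends failed") from errors[-1]


class BrokenBackend:
    """Stub that always fails, standing in for an unavailable service."""

    def generate(self, prompt: str) -> bytes:
        raise ConnectionError("backend unavailable")


class StaticBackend:
    """Stub that returns fixed bytes, standing in for a working model."""

    def generate(self, prompt: str) -> bytes:
        return b"image-bytes-for:" + prompt.encode("utf-8")


generator = FallbackGenerator([BrokenBackend(), StaticBackend()])
result = generator.generate("a bar chart")
```

Because application code only depends on `ImageGenerator`, swapping one model for another becomes a change in how the backend list is built, not in every call site.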

Future Trends: Where This is Heading

Open Models Will Continue Closing the Gap

Alibaba isn't the only organization investing in open-source image models. Meta, ByteDance, Mistral, and others are all releasing competitive models. As competition increases, open models will match closed models across more dimensions.

What took proprietary models 6-12 months to achieve, open models will achieve in 3-4 months. The gap is shrinking predictably.

Pricing Will Compress

When multiple credible options exist, pricing becomes competitive. Per-image API pricing will likely decrease 50% over the next 18 months. Self-hosting costs will decrease as models become more efficient.

But the real winner will be open source. Free models with low infrastructure costs will become the default for price-sensitive use cases.

Fine-Tuning and Customization Become Standard

As companies realize the value of domain-specific models, fine-tuning will go from advanced feature to expected capability. Companies will compete on their fine-tuned models, not base models.

You'll see:

- Fashion brands with fine-tuned models for their design aesthetic

- Medical companies with fine-tuned models for anatomical accuracy

- Engineering firms with fine-tuned models for technical visualization

- Brands with fine-tuned models for consistent identity

Multimodal Integration

Image models won't exist in isolation. They'll be part of larger systems that include:

- Text generation for captions and descriptions

- Speech synthesis for audio narration

- Video generation for animated content

- 3D generation for immersive visualization

Companies like Alibaba and Meta are already building these integrated systems. Expect open-source multimodal models to become the norm.

Data Sovereignty Becomes a Competitive Advantage

As regulatory requirements and privacy concerns increase, companies will prefer models they can run on-premises. This favors open source.

EU regulations (AI Act, GDPR), China's data localization requirements, and US federal requirements around national security will all push toward on-premises solutions.

Open models running on private infrastructure will become the enterprise standard.

The Bottom Line: Which Should You Actually Choose?

If you're building a new image generation system, here's a decision framework:

Ask these questions in order:

1. Do you have regulatory or data sovereignty requirements? → Choose Qwen (self-hosted)

2. Will you generate more than 50,000 images annually? → Choose Qwen (economics win)

3. Do you need to fine-tune or customize the model? → Choose Qwen

4. Are you a technically sophisticated team? → Choose Qwen (more flexibility)

5. Do you need maximum photorealism for consumer content? → Choose Nano Banana Pro

6. Do you prefer vendor support and simplicity? → Choose Nano Banana Pro

7. Is your image volume under 10,000 annually? → Choose Nano Banana Pro (API simplicity wins)
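Because the questions are meant to be asked in order, the framework maps naturally onto a chain of early returns. The sketch below is one way to encode it. The field names are illustrative, and the final "no clear winner" branch is an addition for the case where none of the seven questions applies (for example, 20,000 images a year with no other constraints).

```python
from dataclasses import dataclass

QWEN = "Qwen-Image-2512"
QWEN_SELF_HOSTED = "Qwen-Image-2512 (self-hosted)"
NANO_BANANA = "Nano Banana Pro"
NO_CLEAR_WINNER = "No clear winner; pilot both"


@dataclass
class Needs:
    data_sovereignty: bool = False
    images_per_year: int = 0
    needs_fine_tuning: bool = False
    technical_team: bool = False
    max_photorealism: bool = False
    prefers_vendor_support: bool = False


def recommend(needs: Needs) -> str:
    """Walk the questions in the order listed above; the first match wins."""
    if needs.data_sovereignty:
        return QWEN_SELF_HOSTED
    if needs.images_per_year > 50_000:
        return QWEN
    if needs.needs_fine_tuning:
        return QWEN
    if needs.technical_team:
        return QWEN
    if needs.max_photorealism:
        return NANO_BANANA
    if needs.prefers_vendor_support:
        return NANO_BANANA
    if needs.images_per_year < 10_000:
        return NANO_BANANA
    return NO_CLEAR_WINNER  # not covered by the seven questions


print(recommend(Needs(images_per_year=120_000, max_photorealism=True)))
```

Note how ordering matters: a team with both high volume and a photorealism requirement lands on Qwen, because the volume question comes first.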

Most enterprise teams end up at Qwen. Most scrappy startups end up at Nano Banana Pro initially, then switch to Qwen as they scale.

The real opportunity is that you now have a genuine choice. Until recently, enterprise-grade image generation with reliable text meant a proprietary API or nothing. Now you have real alternatives.

That's what Qwen-Image-2512 changed.

FAQ

What is Qwen-Image-2512?

Qwen-Image-2512 is an open-source AI image generation model released by Alibaba under the Apache 2.0 license. It can generate photorealistic images, diagrams, infographics, and text-heavy visuals from natural language descriptions. The model supports both English and Chinese prompts and is available for free commercial use through multiple deployment options: self-hosted on your own infrastructure, through Alibaba Cloud's managed API, or via community platforms like Hugging Face.

How does Qwen-Image-2512 compare to Google's Nano Banana Pro?

Both models excel at generating enterprise-grade visuals with accurate text rendering and proper layout. Nano Banana Pro has a slight edge in photorealism and facial detail, while Qwen-Image-2512 excels at multilingual support (especially Chinese), cost efficiency, and customization through fine-tuning. For text-heavy layouts, infographics, and business visuals, they're roughly equivalent. The key differences are in licensing (Qwen is open, Nano Banana Pro is proprietary), deployment (Qwen can run on-premises, Nano Banana Pro is cloud-only), and economics (Qwen wins decisively at scale).

What are the main benefits of using Qwen-Image-2512?

The primary benefits include cost efficiency (free after infrastructure investment, versus per-image API fees), data sovereignty (run on your own servers), complete customization through fine-tuning, multilingual support, and commercial flexibility under Apache 2.0 licensing. For companies generating thousands of images monthly, Qwen typically becomes economically superior within 18-24 months. You also gain vendor independence, the ability to understand and modify the model, and integration with your own data and workflows.

How much does Qwen-Image-2512 cost?

The model itself is completely free to download and use. Costs depend on your deployment method. Self-hosting requires GPU hardware (

Can I fine-tune Qwen-Image-2512 for my specific use case?

Yes, Qwen-Image-2512 supports full fine-tuning on your own domain-specific data. You prepare 1,000-10,000 examples of images paired with prompts describing them, then train the model to improve performance on your specific tasks. Fine-tuning typically improves quality 15-30% on domain-specific metrics and takes hours to days depending on data size. You cannot fine-tune Nano Banana Pro, which is a significant advantage for enterprises wanting customized behavior.

What kind of hardware do I need to run Qwen-Image-2512 locally?

For production deployments, an NVIDIA A100 (40GB VRAM) or dual RTX 4090 (24GB each) works well. For experimentation, a single RTX 3090 or A6000 can work. The model requires approximately 28GB of GPU memory for optimal inference speed. Inference time is 6-8 seconds per image on an A100, which works out to roughly 450-600 images per hour on a single GPU. Less powerful hardware is possible with quantization techniques that reduce memory requirements at the cost of slightly slower performance.
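If you want to turn a per-image latency into capacity planning, the math is simple enough to script. This is a rough sketch: the helper names are made up, the workload defaults (8 busy hours a day, 30 days a month) are assumptions, and it ignores batching, queueing, and model load time.

```python
import math


def images_per_hour(seconds_per_image):
    """Sustained throughput of one GPU that generates images back to back."""
    return 3600 / seconds_per_image


def gpus_needed(images_per_month, seconds_per_image,
                busy_hours_per_day=8, days_per_month=30):
    """Smallest GPU count that covers the monthly volume in the busy window."""
    total_seconds = images_per_month * seconds_per_image
    seconds_per_gpu = busy_hours_per_day * 3600 * days_per_month
    return math.ceil(total_seconds / seconds_per_gpu)


print(images_per_hour(8))  # 450.0
print(images_per_hour(6))  # 600.0

# 50,000 images a month at the slower 8-second figure:
print(gpus_needed(50_000, 8))  # 1
```

Use the slower end of the latency range when sizing hardware. It gives you headroom for traffic spikes and for prompts that take longer than average.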

Is Qwen-Image-2512 suitable for commercial use?

Yes. It's released under the Apache 2.0 open-source license, which permits commercial use as long as you follow the license's attribution and notice requirements. You can use it to generate images for products, services, internal tools, and applications without licensing fees or special permissions. This is a major advantage over Nano Banana Pro, which is restricted to authorized API usage only.

Where can I access Qwen-Image-2512?

Qwen-Image-2512 is available through multiple channels. Download full weights from Hugging Face or ModelScope for self-hosted deployment. Use Alibaba Cloud's Model Studio API for managed access. Access community implementations on GitHub with web interfaces and integration libraries. The model is also accessible through Qwen Chat for direct experimentation. Choose based on your technical capability and deployment preferences.

How does text rendering quality compare between the models?

Both models handle text rendering excellently for enterprise applications. Nano Banana Pro has somewhat more polished typography and a small edge on complex multi-tier text hierarchies. Qwen-Image-2512 handles both English and Chinese text equally well, whereas Nano Banana Pro optimizes more heavily for English. For practical business use cases (slides, infographics, posters), both produce production-ready results. The differences matter only for extremely demanding design work or consumer marketing content.

What's the learning curve for implementing Qwen-Image-2512?

Difficulty depends on your deployment choice. Using Alibaba Cloud's API is simple (10 minutes to set up an API key and call it from code). Self-hosting requires infrastructure knowledge (2-4 weeks for production setup including security, monitoring, scaling). Development integration is straightforward once deployed. Nano Banana Pro has a lower learning curve overall because it abstracts infrastructure details, but Qwen isn't dramatically more complex for technical teams.

Can I use Qwen-Image-2512 for real-time applications?

It depends on what you mean by real-time. At 6-8 seconds per image, the model is fast enough for most use cases, but not for truly interactive applications that need a response in under a second. For batch processing (generating hundreds of images overnight), timing is irrelevant. For user-facing applications where users wait for results, 6-8 seconds is acceptable for most workflows. With optimization techniques like quantization or distillation, you can reduce latency further, trading off some quality.

Key Takeaways

- Qwen-Image-2512 is roughly equivalent to Nano Banana Pro on enterprise features (text rendering, layout, infographics) while offering superior multilingual support and 60-70% lower operational costs at scale

- Open-source deployment enables on-premises data sovereignty, full customization through fine-tuning, and elimination of per-image API fees, critical advantages for regulated industries and large-scale image generation

- Economics favor Qwen after generating 50,000+ images annually; self-hosted infrastructure investment ($5-8K) breaks even in under 20 months compared to proprietary API pricing

- Nano Banana Pro maintains advantages in photorealism and facial detail, relevant for consumer-facing marketing content, while Qwen excels for business automation, multilingual applications, and enterprise infrastructure integration

- The shift toward open-source AI models with deployment flexibility represents structural industry change; expect 70% of large enterprises to deploy open-source AI models in production by 2026

![Qwen-Image-2512 vs Google Nano Banana Pro: Open Source AI Image Generation [2025]](https://tryrunable.com/blog/qwen-image-2512-vs-google-nano-banana-pro-open-source-ai-ima/image-1-1767216969928.png)