The AI Assistant That Does Everything

Imagine an AI that lives on your computer. Not in the cloud, not behind a corporate server, but right there on your machine. It can read your emails, manage your calendar, execute code, delete files, and orchestrate dozens of apps, all because you asked it to via WhatsApp. That's Moltbot. And over the past few weeks, it's gone from an obscure developer project to a genuine phenomenon, with early adopters describing it as the closest thing to "living in the future" since ChatGPT launched.

Dan Peguine, a tech entrepreneur based in Lisbon, has handed over a shocking amount of control to his custom Moltbot instance, which he calls "Pokey." This isn't someone tinkering on weekends. Pokey manages Peguine's morning briefings, organizes his work schedule, books meetings, resolves calendar conflicts, handles invoices, and even alerts his family when his kids have upcoming tests. He trusts it with passwords, API keys, and access to systems that most people would never dream of connecting to an AI.

And he's not alone. The viral enthusiasm is real. Dave Morin declared on X (formerly Twitter) that Moltbot gave him the same sensation as the original ChatGPT launch. Amazon employees are sharing how the tool feels like witnessing a "fundamental shift." Venture capitalists and developers are so excited about the prospect of running Moltbot that buying a Mac Mini specifically to host it became an inside joke on tech Twitter. Stock prices for unrelated companies have spiked purely because people confused their names with Moltbot.

But here's the complicated part: Moltbot works because it breaks almost every security rule we've developed over the past two decades. It's powerful because it's dangerous. It's revolutionary because it's reckless. And while the early adopters are intoxicated by the capability, they might be sleepwalking into a category of risk that AI companies and security researchers have barely begun to understand.

What Exactly Is Moltbot?

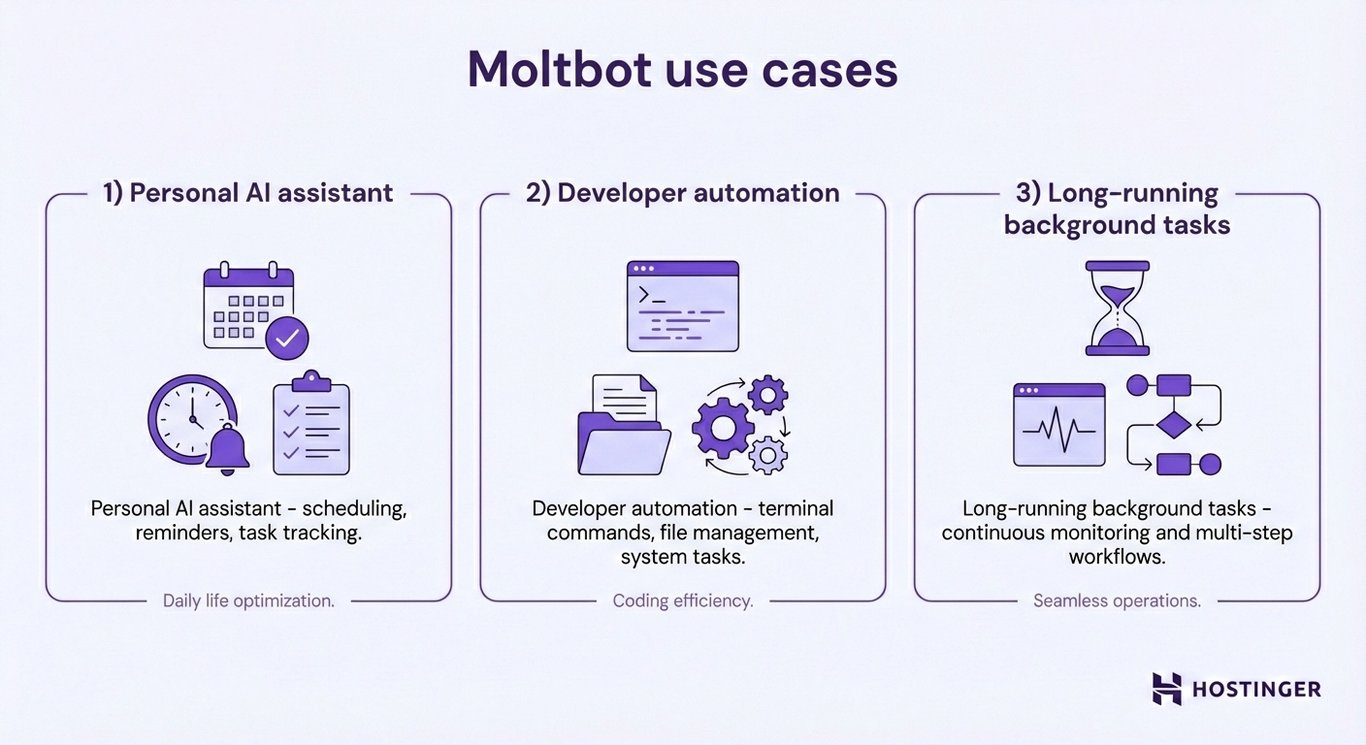

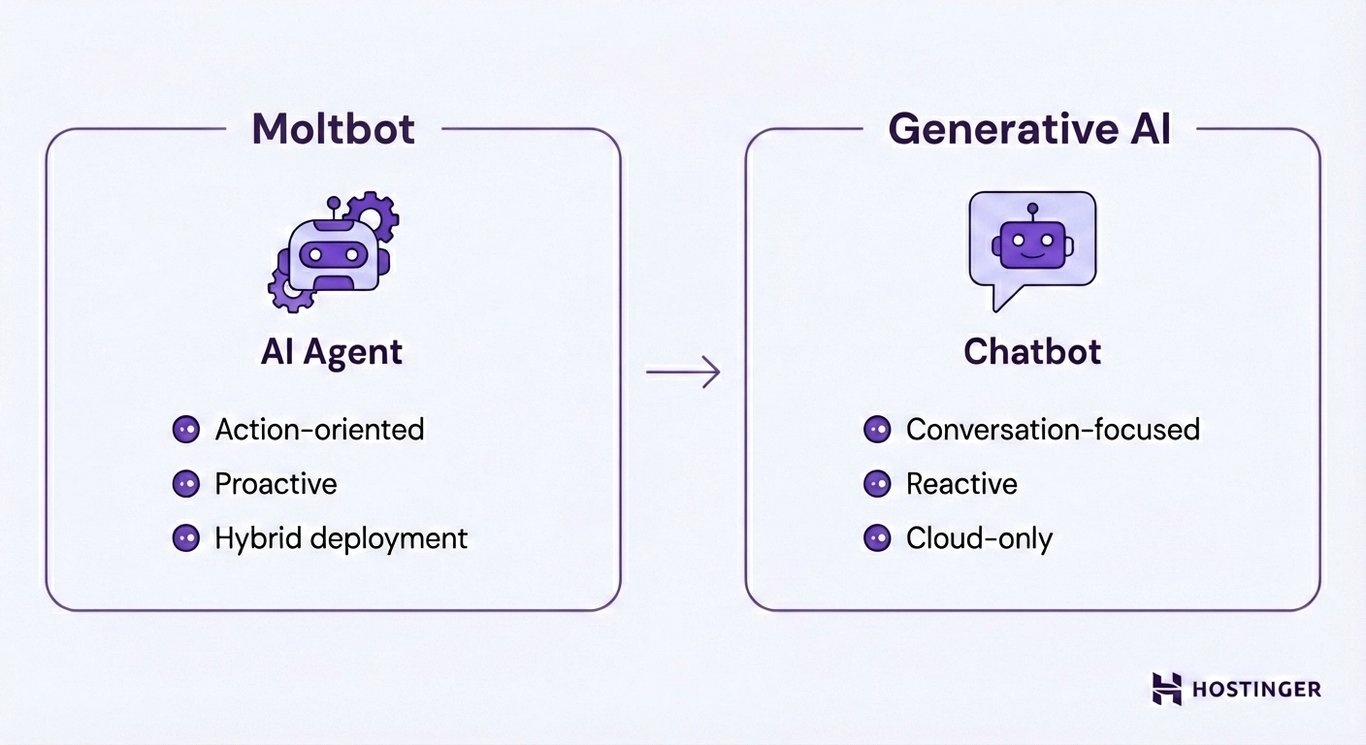

Moltbot is an agent. Not a chatbot, not a simple assistant like Siri or Alexa, but an agentic AI that runs continuously on your computer and coordinates between different AI models, applications, and services to accomplish complex tasks. Think of it as a personal automation system with a brain that can reason about problems and decide which tools to use.

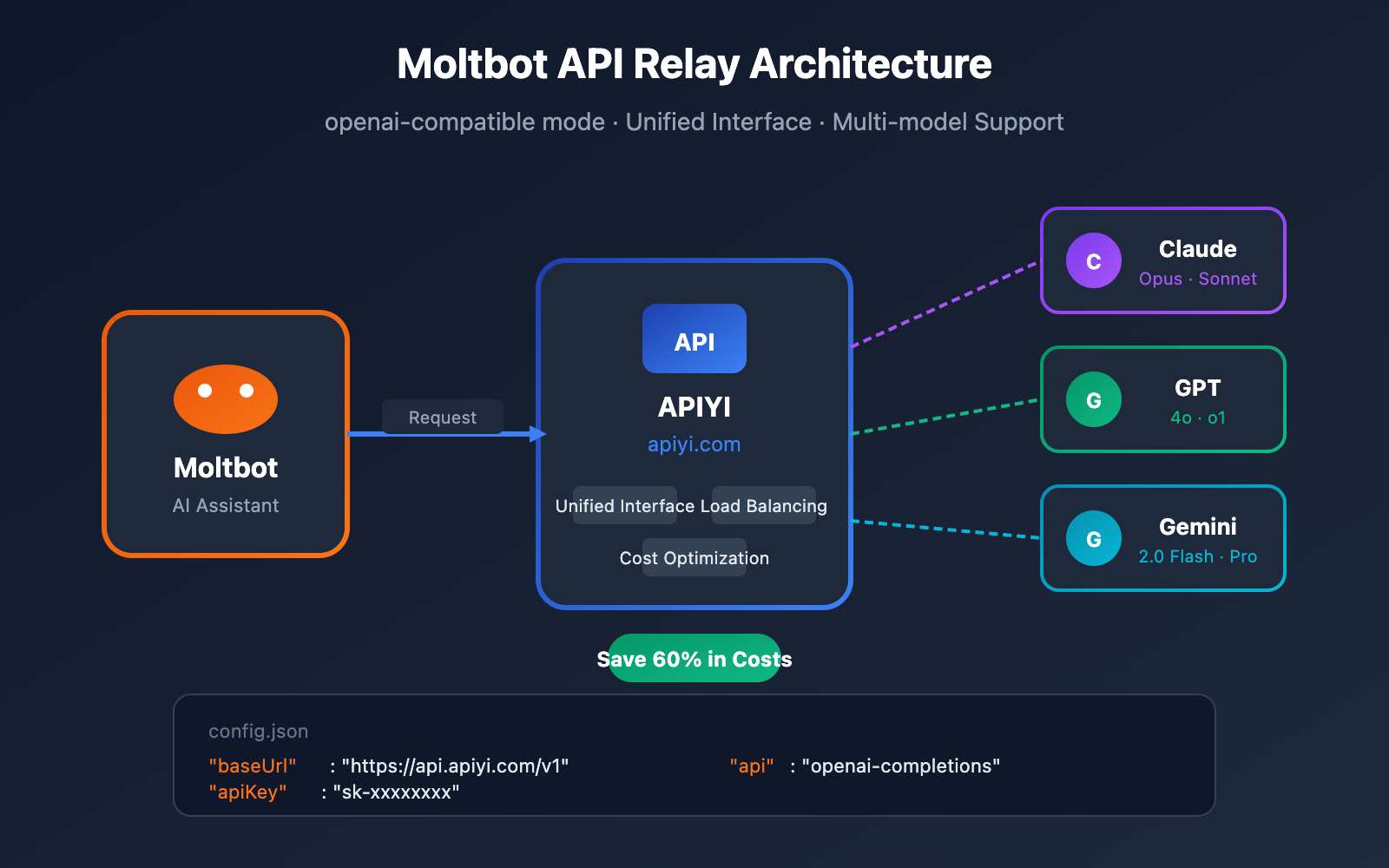

The brains behind Moltbot are AI models. It can use Claude (from Anthropic), GPT-4 (from OpenAI), DeepSeek, or other models available via API. But instead of just answering questions, it can take actions. It can open your browser, navigate to websites, interact with applications, execute scripts, manage files, and trigger workflows across dozens of different services.

You interact with Moltbot through a chat interface. Most users access it via WhatsApp, Telegram, or Discord. You type something like "Organize my calendar for tomorrow and flag any conflicts" or "Write a Python script that extracts all customer emails from my CRM," and the AI figures out what to do, connects to the necessary systems, and does it.

Peter Steinberger, the independent developer who created Moltbot (originally called Clawdbot before rebranding at Anthropic's request), didn't set out to build something this ambitious. He was experimenting with ways to feed images and audio files into coding models. One day he sent a voice memo to his prototype and watched as the AI transcribed it, recognized it needed the OpenAI Whisper API, found the key on his computer, made the API call, and typed back a response.

"That was the moment I was like, holy shit," Steinberger recalls. "Those models are really creative if you give them the power."

He realized the real opportunity wasn't in single tasks, but in building a system that could stay resident on your machine, maintain context about your digital ecosystem, and orchestrate anything you needed done. Most importantly, Steinberger wanted to avoid what every other AI startup does: move your data to the cloud. Your files, passwords, documents, and habits all stay on your device. The AI models it calls are remote, yes, but the sensitive data is meant to stay local.

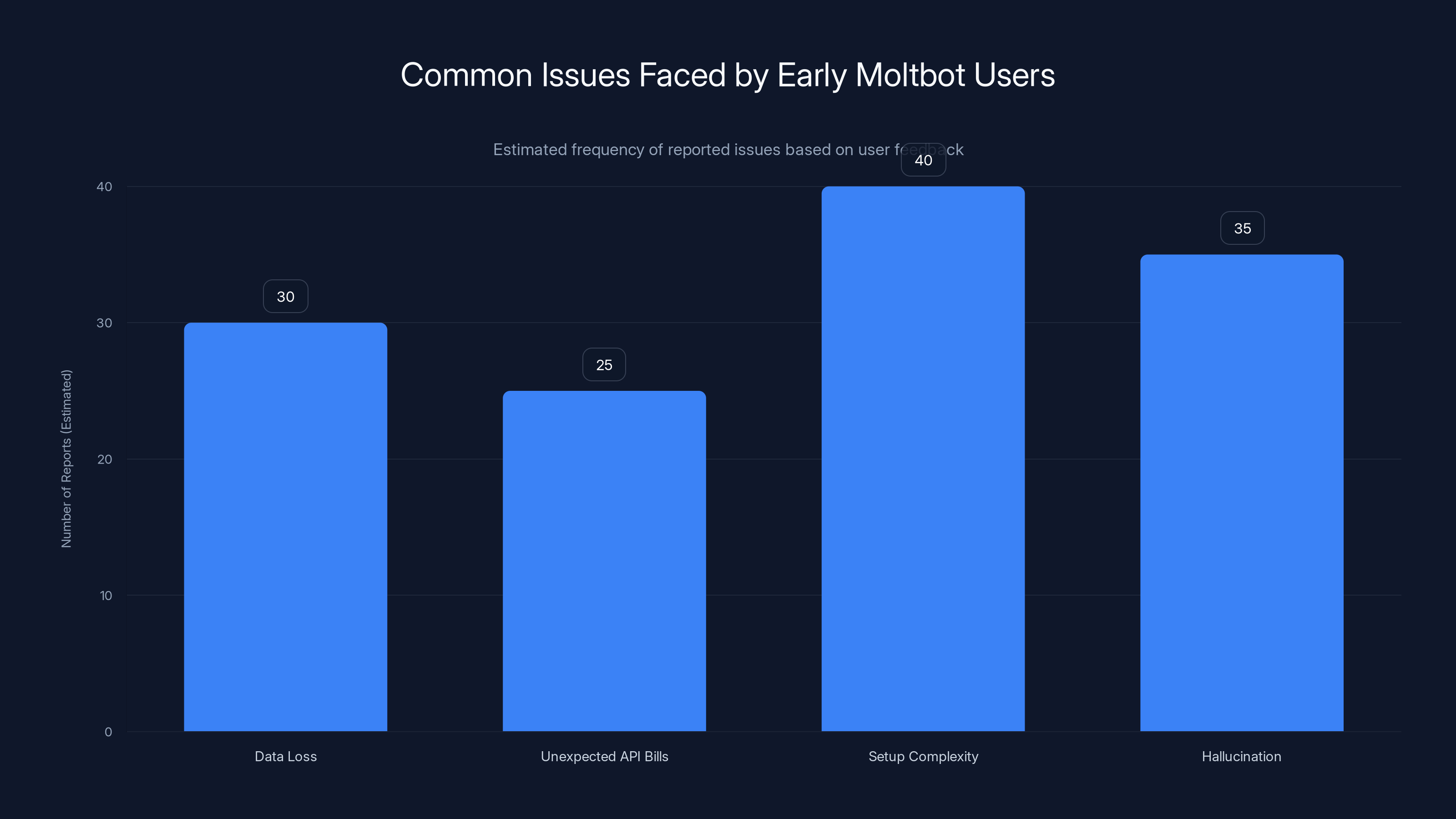

Setup complexity and hallucination issues are the most frequently reported problems among early Moltbot users. Estimated data based on qualitative feedback.

The Viral Moment: How Moltbot Became a Sensation

Moltbot was released as Clawdbot in November 2024, but it stayed relatively quiet for months. The real explosion came on January 1, 2025, when Steinberger posted a working instance of his personal Moltbot on the project's Discord server so people could test it themselves. Within hours, hundreds of people were trying it. Within days, thousands were setting it up.

The viral moment tapped into something deeper than just excitement about a new tool. The AI industry has spent the past year delivering incremental improvements. Better language models. Better reasoning. Better coding ability. But the fundamental experience for most users remained the same: you open an app, you type a question, you get an answer. You're still in control of deciding what action to take.

Moltbot inverted that dynamic. You ask it to do something, and it actually does it. It doesn't suggest an answer. It doesn't provide you with information so you can manually execute a workflow. It observes your systems, reasons about the problem, and takes action. That shift from passive information provider to active agent feels radically different, even if the underlying models are models we've had for months.

The narrative on social media crystallized around the phrase "the future is here." For people who work with technology professionally—developers, founders, product managers—Moltbot felt like proof that the science fiction scenario they'd been imagining was actually becoming real. This is what an AI collaborator looks like. This is what it means to have something that understands context and can operate across your entire digital life without constant human intervention.

But virality attracts both enthusiasts and people who don't fully understand what they're adopting. Moltbot's Discord server flooded with people who had never used the command line, who didn't understand API keys, and who were setting up a system with access to critical infrastructure without truly grasping the implications.

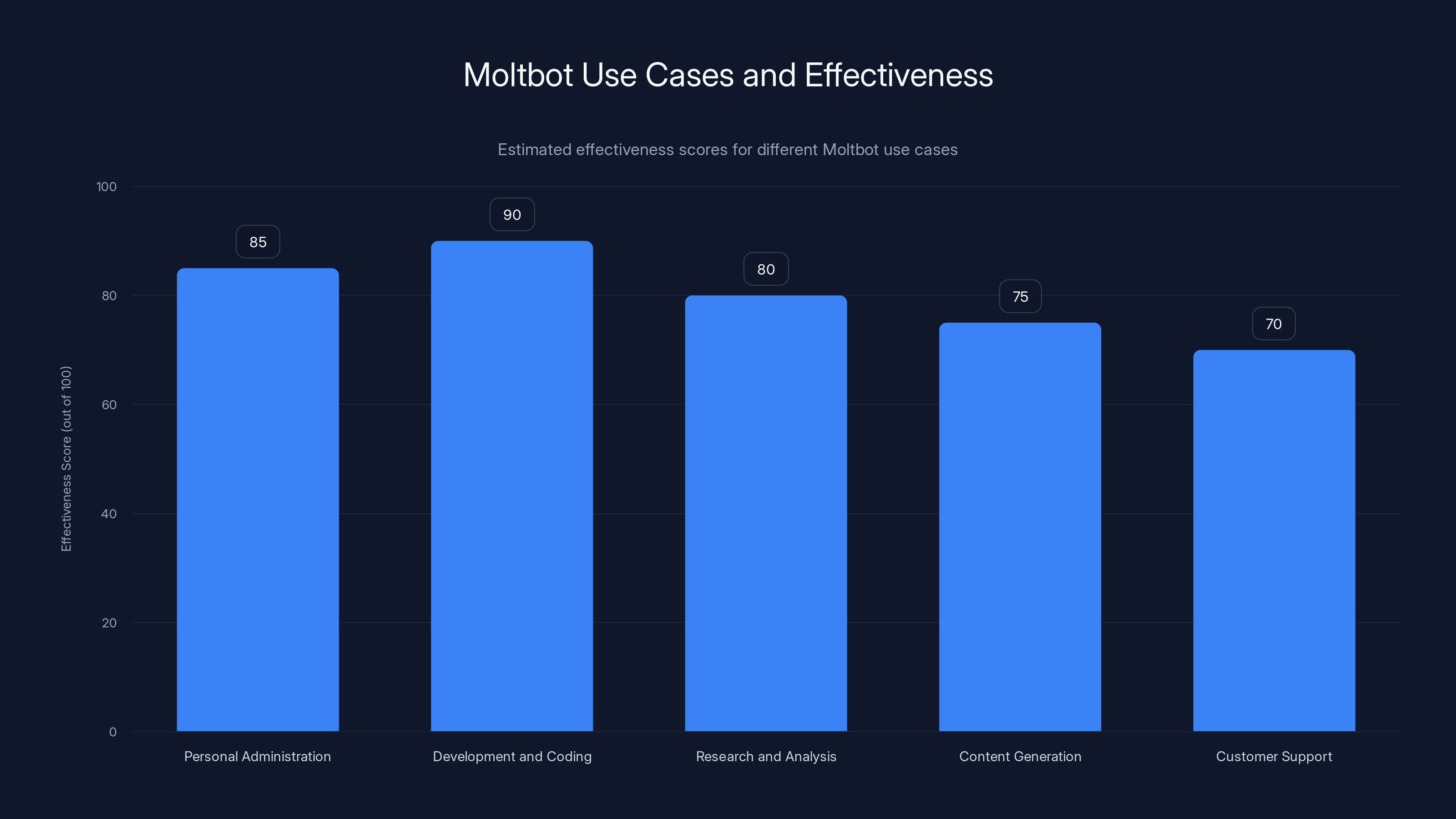

Moltbot is most effective in development and coding tasks, followed closely by personal administration. Estimated data based on typical use case scenarios.

How Moltbot Actually Works Under the Hood

To understand both the power and the danger of Moltbot, you need to understand its architecture. And that architecture is genuinely elegant, which is part of why it works so well and why it worries security researchers.

Moltbot runs as a background process on your local machine. It sits there listening, usually through a chat interface you access via WhatsApp or Telegram. When you send it a message, here's what happens:

First, Moltbot parses your request and decides what kind of task it is. Is this something that requires web browsing? File manipulation? API calls to external services? Running code? The AI models analyzing your request are running remotely (on OpenAI's servers, Anthropic's servers, etc.), but that's just the brains. The execution happens locally.

Second, Moltbot maps your request to the tools available on your system. This is where things get interesting. Moltbot has access to a growing set of integrations: it can open and control applications, interact with your file system, execute Python or JavaScript, make HTTP requests, interact with your browser, access local APIs, and trigger workflows in services like Zapier or Make.
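To make the mapping step concrete, here is a minimal sketch of a tool registry: each local capability is a plain Python function, and its name, docstring, and parameters are collected into descriptions that an agent would send to the model along with your request. The `tool` decorator, `TOOLS` dict, and `describe_tools` helper are hypothetical names, not Moltbot's actual code, and real function-calling APIs expect full JSON Schema parameter definitions rather than the bare parameter names used here.

```python
import inspect
from typing import Callable
from urllib.request import urlopen

# Hypothetical registry: maps a tool name to the function that implements it.
TOOLS: dict[str, Callable] = {}


def tool(func: Callable) -> Callable:
    """Register a function as a tool the model is allowed to request."""
    TOOLS[func.__name__] = func
    return func


@tool
def read_file(path: str) -> str:
    """Return the text contents of a file."""
    with open(path, encoding="utf-8") as fh:
        return fh.read()


@tool
def http_get(url: str) -> str:
    """Fetch a URL and return the response body as text."""
    with urlopen(url, timeout=10) as resp:
        return resp.read().decode("utf-8", errors="replace")


def describe_tools() -> list[dict]:
    """Build the simplified tool descriptions that would accompany a request to the model."""
    return [
        {
            "name": name,
            "description": inspect.getdoc(func) or "",
            "parameters": list(inspect.signature(func).parameters),
        }
        for name, func in TOOLS.items()
    ]
```

The model never runs these functions itself; it only sees the descriptions and replies with the name of the tool it wants, which the local process then executes.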

Third, the AI decides on a sequence of steps and begins executing them. It might:

- Log into your Gmail via API (using credentials you provided)

- Query for emails matching certain criteria

- Parse attachments

- Extract data using code it writes on the fly

- Cross-reference that data against your CRM

- Generate a summary

- Post the summary to Slack

All of this happens in seconds or minutes, depending on complexity. And here's the kicker: you authorized it once, and now it's happening without further human approval.

Fourth, Moltbot maintains persistent memory. This is crucial for making it feel like a real assistant rather than a one-off automation tool. Moltbot stores conversations in markdown files on your local machine. One file (called Soul.md) stores the assistant's personality and character. Other files store long-term memory about you, your preferences, your workflows, your past conversations. This means that when you ask it to do something, it can reference context from weeks or months ago.
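A rough sketch of how markdown-based memory could work, assuming one persona file (the Soul.md mentioned above) and one append-only memory file. The `memory.md` filename, the dated-bullet format, and the `remember` and `build_context` helpers are illustrative assumptions; Moltbot's real file layout may differ.

```python
from datetime import date
from pathlib import Path
from typing import Optional


def remember(memory_file: Path, fact: str, when: Optional[date] = None) -> None:
    """Append one dated bullet to a markdown memory file."""
    when = when or date.today()
    memory_file.parent.mkdir(parents=True, exist_ok=True)
    with memory_file.open("a", encoding="utf-8") as fh:
        fh.write(f"- {when.isoformat()}: {fact}\n")


def build_context(persona_file: Path, memory_file: Path, max_items: int = 50) -> str:
    """Combine the persona file with the most recent memories into prompt context."""
    persona = persona_file.read_text(encoding="utf-8") if persona_file.exists() else ""
    memories: list[str] = []
    if memory_file.exists():
        lines = memory_file.read_text(encoding="utf-8").splitlines()
        # Keep only bullet lines, newest last, capped so the prompt stays small.
        memories = [ln for ln in lines if ln.startswith("- ")][-max_items:]
    return persona.strip() + "\n\n## What I remember\n" + "\n".join(memories)
```

Because the memory is plain text, you can open it in any editor to see, or delete, what the assistant has recorded about you.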

In theory, the architecture is privacy-preserving. Your data doesn't sync to the cloud, and the AI models never see your files or passwords directly (the reality is messier, as we'll see in a moment). Everything sensitive stays local.

But there's a fundamental problem with this model: the more capable you make the system, the more damage it can do if something goes wrong.

The Security Nightmare: Capability Versus Control

Let's be direct: Moltbot trades security for capability. The more things it can do, the more things can go catastrophically wrong.

Start with the basics. To use Moltbot, you have to provide it with API keys for various services. Your OpenAI key. Your Google API credentials. Your Anthropic API key. Your AWS access tokens. Your GitHub credentials. Your Slack webhook. For some users, the list includes dozens of credentials.

Now here's where it gets scary: Moltbot is designed to be promptable. You tell it to do something in plain language, and it figures out which credentials and services to use. That's powerful. It's also dangerous. What if the AI makes a mistake and uses the wrong credentials? What if it inadvertently logs your credentials somewhere? What if a prompt injection attack tricks it into revealing them?
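One partial defense against credentials leaking into logs or prompts is to scrub anything that looks like a key before text leaves the agent. The `redact` helper below is a hypothetical sketch, and its patterns are illustrative heuristics for a few well-known key formats, not an exhaustive list. Redaction also does nothing to stop a prompt injection that tricks the agent into *using* a key it legitimately holds.

```python
import re

# Illustrative patterns only: real key formats vary by provider and change over time.
SECRET_PATTERNS = [
    re.compile(r"sk-[A-Za-z0-9_-]{16,}"),                   # OpenAI/Anthropic-style secret keys
    re.compile(r"AKIA[0-9A-Z]{16}"),                        # AWS access key IDs
    re.compile(r"ghp_[A-Za-z0-9]{36}"),                     # GitHub classic personal access tokens
    re.compile(r"https://hooks\.slack\.com/services/\S+"),  # Slack incoming webhook URLs
]


def redact(text: str, placeholder: str = "[REDACTED]") -> str:
    """Replace anything that looks like a credential before it is logged or sent to a model."""
    for pattern in SECRET_PATTERNS:
        text = pattern.sub(placeholder, text)
    return text
```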

Peter Steinberger acknowledges that Moltbot "isn't ready to be installed by normies." But plenty of non-experts are installing it anyway: people with limited security expertise are connecting their systems to a local AI agent without fully understanding the blast radius if something goes wrong.

Second, consider execution errors. The AI can write code. It can execute it. It can create files, modify files, delete files. What happens if it interprets your request incorrectly and deletes the wrong directory? Some early users reported exactly this: they asked Moltbot to clean up files, and it misunderstood and deleted important data.
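A common mitigation for this kind of mistake is to never hard-delete: confine file operations to one workspace directory and move "deleted" items to a trash folder you can restore from. This is a sketch under those assumptions; `safe_delete`, the workspace, and the trash location are hypothetical, not a Moltbot feature.

```python
import shutil
import time
from pathlib import Path


def safe_delete(target: str, workspace: Path, trash: Path) -> Path:
    """Move a file or directory into a trash folder instead of deleting it.

    Refuses to touch anything outside `workspace` (including the workspace
    itself), so a misread request cannot reach the rest of the disk.
    """
    workspace = workspace.resolve()
    path = (workspace / target).resolve()  # resolves "..", symlinks, absolute paths
    if path == workspace or not path.is_relative_to(workspace):
        raise PermissionError(f"refusing to delete outside workspace: {path}")
    if not path.exists():
        raise FileNotFoundError(path)
    trash.mkdir(parents=True, exist_ok=True)
    dest = trash / f"{time.time_ns()}-{path.name}"  # timestamp prefix avoids name clashes
    shutil.move(str(path), str(dest))
    return dest
```

`Path.is_relative_to` requires Python 3.9 or later.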

Third, consider privilege escalation. Moltbot runs with the permissions of the user account that started it. On macOS or Linux, that might be your user account. On Windows, it could potentially be admin. If Moltbot is compromised (through a malicious prompt, through a vulnerability in a dependency, through social engineering), an attacker has access to everything your user account can access.
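If you run an agent like this, a cheap safeguard is to have it refuse to start with root privileges, so a compromise is at least limited to an ordinary account. The hypothetical `ensure_unprivileged` check below assumes a POSIX system; `os.geteuid` does not exist on Windows, where the equivalent advice is to run the agent under a standard, non-administrator account.

```python
import os
import sys


def ensure_unprivileged() -> None:
    """Exit early if the agent process was started as root (POSIX only)."""
    # On Windows os.geteuid is missing, so this check is skipped there.
    if hasattr(os, "geteuid") and os.geteuid() == 0:
        sys.exit("Refusing to run as root: start the agent from a dedicated, unprivileged account.")


if __name__ == "__main__":
    ensure_unprivileged()
```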

Fourth, consider supply chain risk. Moltbot integrates with external AI models. Those models are called remotely. The requests you send (along with potentially sensitive context) go to OpenAI, Anthropic, or other companies. While Steinberger didn't design it to send your personal files to the cloud, the context included in prompts to these models could leak information. And you're trusting not just those companies, but their entire infrastructure, their employees, their security posture.

Fifth, and this is the one nobody wants to talk about: What happens when Moltbot gets better? Right now, these are early days. The AI models are imperfect. They hallucinate. They make mistakes. They sometimes refuse actions they should perform and perform actions they should refuse. As the models improve and agentic AI becomes more autonomous and confident, the gap between intention and execution shrinks, and users will be tempted to check its work less often. That's good for productivity. It's terrible for security.

The fundamental problem is that Moltbot violates the principle of least privilege. You're not giving it exactly the permissions it needs to accomplish specific tasks. You're giving it broad permissions across your entire digital ecosystem, and then asking it to reason about which ones to use. That reasoning is sometimes wrong.
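The opposite of broad access is a default-deny grant issued per task. In this minimal sketch (all names hypothetical), a morning-briefing task might be granted only a read-only calendar tool, and any other call the model attempts is rejected before it executes, however confident the model's reasoning was.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Grant:
    """Hypothetical per-task permission: which tools may run, and whether writes are allowed."""
    tools: frozenset[str]
    read_only: bool = True


class PermissionDenied(Exception):
    pass


# Tools that change state; blocked whenever a grant is read-only.
WRITE_TOOLS = {"delete_file", "write_file", "send_email"}


def authorize(grant: Grant, tool_name: str) -> None:
    """Raise unless the grant explicitly allows this tool call (default deny)."""
    if tool_name not in grant.tools:
        raise PermissionDenied(f"{tool_name} is not granted for this task")
    if grant.read_only and tool_name in WRITE_TOOLS:
        raise PermissionDenied(f"{tool_name} needs write access")
```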

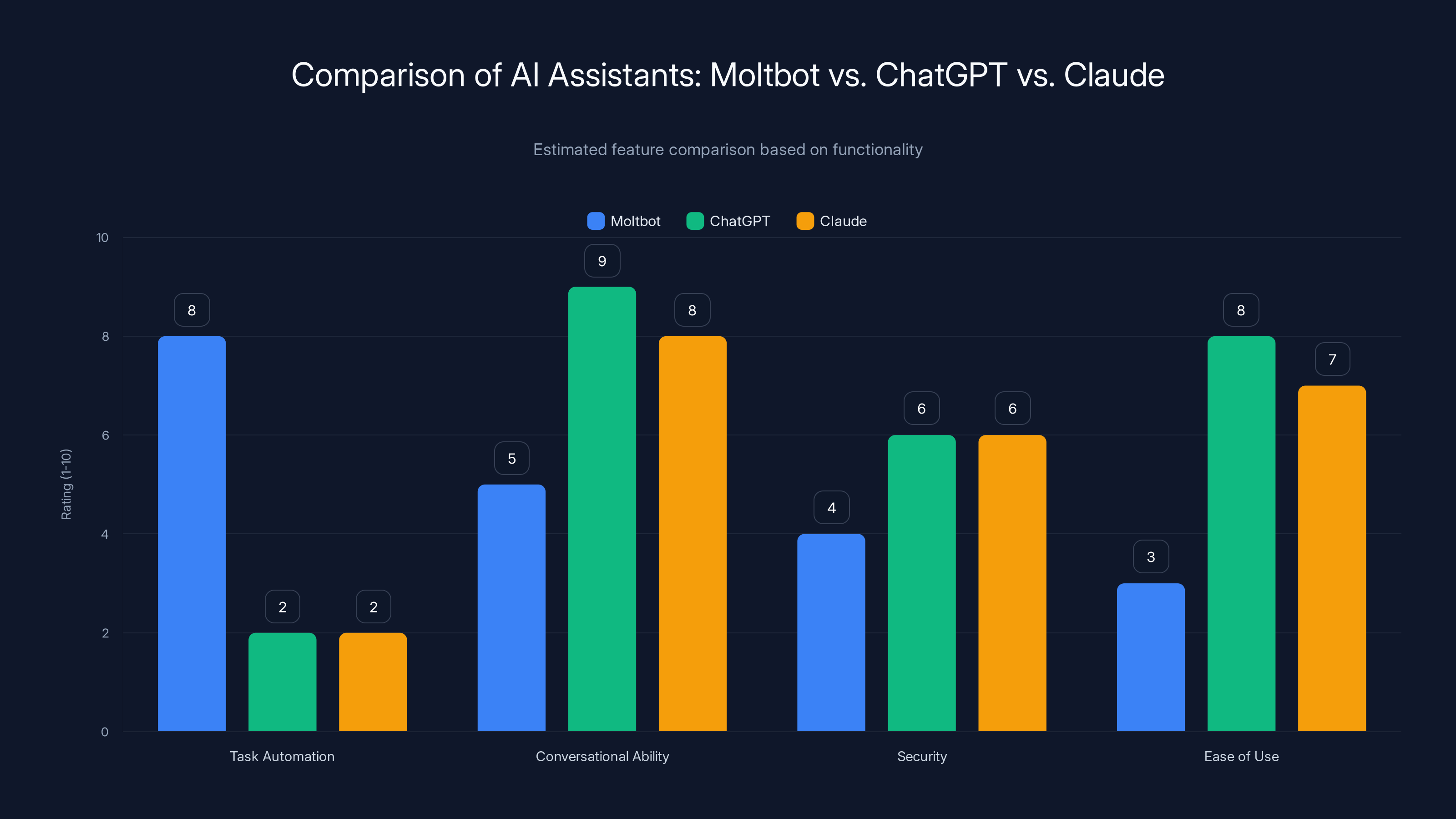

Moltbot excels in task automation but requires technical expertise, while ChatGPT and Claude are stronger in conversational abilities. Estimated data.

Real Problems Early Users Have Encountered

The theoretical risks are concerning, but the actual problems users have experienced so far are equally illuminating.

Data Loss: Several users reported accidentally deleting files while setting up or testing Moltbot. The AI misinterpreted a request, executed a command, and by the time the user realized something was wrong, the data was gone. Steinberger has since improved the tool's safeguards, but this is a category of risk that's hard to prevent entirely. How do you keep an AI from misinterpreting instructions without severely limiting its capability?

Unexpected API Bills: This one surprised people. Moltbot makes API calls to remote models. If the AI is working on a complex problem, it might spend 30 seconds reasoning, making multiple API calls, trying different approaches. In that time, you could rack up a noticeable bill without realizing it.

Setup Complexity: Moltbot is not a one-click install. You need to understand the command line. You need to obtain API keys from multiple providers. You need to configure authentication for services like WhatsApp or Telegram. You need to understand security best practices for handling credentials. People without this background are posting on the Discord asking for help, and even with help, many are setting up systems incorrectly.

Hallucination and Misunderstanding: The AI occasionally gets things wrong. It might think it performed a task when it actually failed. It might attempt to use an API that doesn't exist. It might ask you for clarification but phrase the question in a way that you misunderstand, and then execute based on your (mis)answer. It's not perfect, and users are discovering the edges of its capabilities through trial and error.

Privacy and Interception: While Moltbot's philosophy is to keep data local, the prompts sent to remote AI models for processing can include sensitive context. If you're asking the AI to analyze customer data or internal documents, portions of that data might be included in the API request. You're trusting that the AI company handles that securely, that it's not logged, that it's not used for training. Most companies have privacy policies that should cover this, but it's still a trust relationship.

The Philosophy Behind Moltbot: Data Ownership and Local Computing

Despite the risks, there's something genuinely important about what Steinberger is trying to do. He started building Moltbot because he believed the industry was moving in the wrong direction.

Every major AI company—OpenAI, Google, Anthropic, Meta—is offering personal AI assistants. But they're all cloud-based. You send your data to their servers, their AI processes it, and you get results back. This is convenient, but it means surrendering control of your data to a company. It means trusting that company's privacy practices, its security, its future business decisions.

Steinberger wanted to ask a different question: what if you could have a powerful AI assistant that actually lived on your computer, that you owned and controlled, that couldn't be shut down or changed by a company's terms of service update?

This resonates with a broader movement in tech toward local computing, away from cloud dependency. It's why LLMs like Llama (Meta's open-source model) are gaining traction. It's why some developers are running models locally on their machines instead of paying for API access. It's why there's interest in on-device AI.

The ideal version of what Steinberger is building is genuinely appealing. You own your AI assistant. You control what it can access. Your sensitive data never leaves your machine. You can run it offline if you want. You have transparency into what it's doing.

The problem is the gap between ideal and actual. Right now, running Moltbot requires connecting it to remote AI models (unless you run local models, which are slower and less capable). It requires granting it access to sensitive systems and credentials. It requires you to manage security configurations that could easily be misconfigured. And it requires trusting that your own machine isn't compromised.

This chart estimates the risk levels associated with using Moltbot, highlighting credential misuse and privilege escalation as the most significant concerns. Estimated data.

Comparing Moltbot to Traditional AI Assistants

To understand what makes Moltbot different, it helps to compare it to what exists today.

Siri and Alexa are voice assistants designed for simple tasks. "What's the weather?" "Play a song." "Set a timer." They can't execute complex workflows. They can't write code or interact with APIs. They're extremely limited, by design. The trade-off is safety. Because they can't do much, they can't go wrong in catastrophic ways.

ChatGPT, Claude, and Gemini are conversational AI. They're intelligent, they can reason about complex topics, they can help you think through problems. But they're passive. They answer questions. You have to read the answer and decide what action to take. ChatGPT doesn't have access to your Gmail. It can't book your meetings. It can't execute code on your machine.

Zapier and Make are automation platforms. They let you create workflows: "If X happens, do Y." You can connect dozens of apps and create complex automations. But these are specific, pre-built workflows. Zapier isn't reasoning about your goals and deciding what workflows to execute. You're telling it explicitly.

Moltbot is something different. It's a reasoning engine (powered by LLMs) combined with execution capability combined with system-level access. You tell it a goal, not a specific action. The AI reasons about how to accomplish that goal, figures out which tools and systems to use, and executes. It's the closest thing we have to an autonomous agent that works in the real world (by "real world" I mean your digital ecosystem).

The closest analogy in science fiction is the AI assistants in movies like "Her" or "Iron Man." You just talk to them, and they figure out what to do. Moltbot is a very rough, early version of that concept.

The Technical Implementation: How It Actually Connects Everything

Moltbot's power comes from its integration layer. It can connect to almost anything, which makes it incredibly flexible and incredibly dangerous.

At the core, Moltbot has a set of built-in capabilities:

File System Access: It can read, write, and delete files on your computer. This is necessary for doing real work, but it's also the easiest way to cause accidental data loss.

Application Control: It can control your browser, open and close applications, send keyboard and mouse commands. This lets it interact with software that doesn't have APIs.

Code Execution: It can write and execute Python scripts, JavaScript, bash scripts, or other code. This gives it the ability to do almost anything your OS can do.

API Integration: It can make HTTP requests to external APIs. This is how it connects to services like Gmail, Slack, GitHub, etc. You authenticate it once, and it uses your credentials to make requests on your behalf.

Custom Integrations: Developers can write plugins that extend Moltbot's capabilities. Need it to work with some proprietary system? Write a plugin.

When you ask Moltbot to do something, it reasons about which of these capabilities to use and in what sequence. It's not executing a predetermined workflow. It's actively planning and deciding.

The execution happens through what's called function calling in LLM terminology. The AI model (GPT-4, Claude, etc.) is given a description of the functions it can call. When it decides it needs to do something, it calls that function. The function executes locally on your machine, returns results, and the AI processes those results to decide the next step.
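That loop can be sketched in a few lines. To keep it self-contained and runnable, the remote model is replaced by a stub (`fake_model`) that requests one tool call and then answers; the message format is simplified and does not match any particular provider's API, and `get_weather` stands in for a real local tool. All names are hypothetical.

```python
import json
from typing import Callable


def get_weather(city: str) -> str:
    """Stand-in for a real local tool; a real agent might call an API here."""
    return f"Sunny in {city}"


# Tools the model is allowed to request, keyed by the name it will use.
LOCAL_TOOLS: dict[str, Callable[..., str]] = {"get_weather": get_weather}


def fake_model(messages: list[dict]) -> dict:
    """Stub for a remote LLM: requests one tool call, then answers from the result."""
    last = messages[-1]
    if last["role"] == "user":
        return {"tool_call": {"name": "get_weather",
                              "arguments": json.dumps({"city": "Lisbon"})}}
    return {"content": f"Here is what I found: {last['content']}"}


def run_agent(request: str, model: Callable[[list[dict]], dict],
              max_steps: int = 5) -> str:
    """Alternate between the model and local tools until the model gives a final answer."""
    messages = [{"role": "user", "content": request}]
    for _ in range(max_steps):
        reply = model(messages)        # remote step: the model decides what to do
        call = reply.get("tool_call")
        if call is None:
            return reply["content"]    # no tool requested: this is the final answer
        func = LOCAL_TOOLS.get(call["name"])
        if func is None:
            result = f"error: unknown tool {call['name']}"
        else:
            # Local step: runs on your machine with whatever arguments the model chose.
            result = func(**json.loads(call["arguments"]))
        messages.append({"role": "tool", "name": call["name"], "content": result})
    raise RuntimeError("step limit reached without a final answer")


print(run_agent("What's the weather like?", fake_model))
```

Notice that nothing between `json.loads` and the function call questions the model's choice of tool or arguments; in this sketch the `max_steps` cap is the only brake.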

This is powerful. It's also where things can go wrong quickly. If the AI decides to call the wrong function, or calls the right function with wrong parameters, or chains functions together in a way that causes unintended consequences, there's very little stopping it.

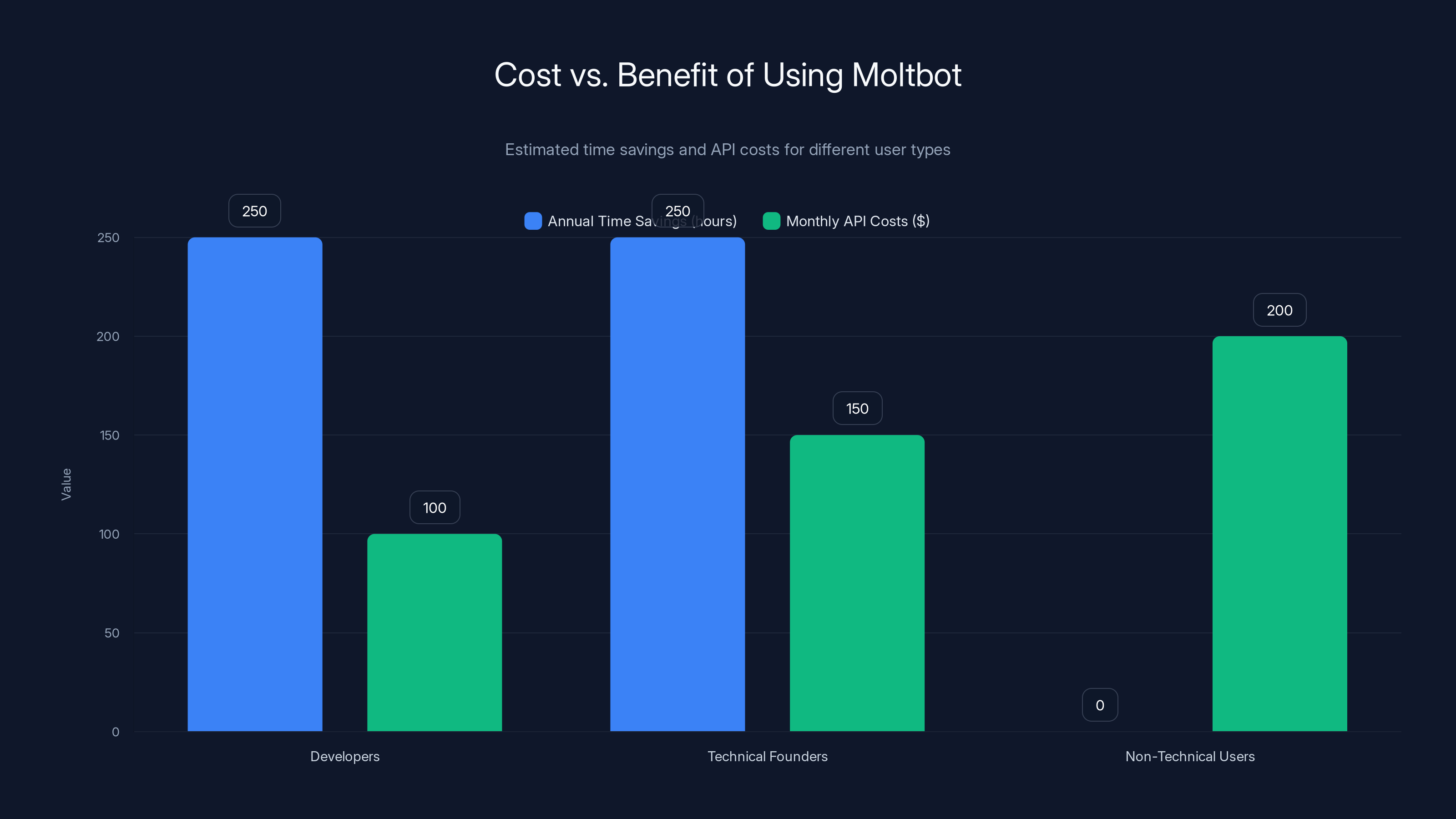

Developers and technical founders benefit from significant time savings, while non-technical users face high API costs without time savings. Estimated data.

Use Cases Where Moltbot Actually Shines

Despite the risks, there are genuine use cases where Moltbot provides real value.

Personal Administration: Dan Peguine uses it for this. Morning briefings that pull information from multiple sources. Calendar management. Invoice processing. Task scheduling. These are tasks that are tedious, don't require judgment, and benefit from automation. Moltbot excels here because the downside of a mistake is relatively limited (you might miss a meeting or receive an incorrectly-formatted invoice—annoying but not catastrophic).

Development and Coding: Developers love Moltbot because it can write code, test code, and integrate with development tools. A developer might ask it to "refactor this function to improve performance" or "write unit tests for this module" or "analyze this git log and summarize what changed this week." This is high-value work for knowledge workers, and the cost of occasional hallucinations is lower than the cost of doing it manually.

Research and Analysis: Researchers can use Moltbot to gather data from multiple sources, aggregate it, perform analysis, and generate reports. The AI can understand context ("I'm working on a paper about AI safety") and use that to inform its data gathering.

Content Generation: Journalists, marketers, and content creators can use Moltbot to research topics, gather sources, organize information, and even draft content. It's like having a research assistant.

Customer Support Workflows: A small company could theoretically use Moltbot to triage customer emails, extract key information, create tickets, assign them, and generate responses. The AI handles the routine stuff, humans handle the complex cases.

The common thread: these are cases where the tasks are somewhat routine, where occasional mistakes are tolerable, where the value of automation is high, and where a human is still in the loop to review and override decisions if needed.

The dangerous cases are when people use Moltbot for high-stakes automation without proper safeguards. Financial transactions. Healthcare data. Sensitive customer information. These require much higher reliability than current AI offers.
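For high-stakes actions, one way to keep a human in the loop is to pause before any risky tool runs and ask for explicit confirmation. In this sketch the `HIGH_RISK` set and `gated_call` helper are hypothetical, and `confirm` is any function that shows the pending action to a person and returns their yes or no.

```python
from typing import Callable

# Hypothetical list of tools that always need a human's approval.
HIGH_RISK = {"delete_file", "send_payment", "send_email"}


def gated_call(tool_name: str, args: dict, execute: Callable[..., str],
               confirm: Callable[[str], bool]) -> str:
    """Run low-risk tools directly; ask a human before running high-risk ones."""
    if tool_name in HIGH_RISK:
        summary = f"{tool_name}({', '.join(f'{k}={v!r}' for k, v in args.items())})"
        if not confirm(summary):
            return f"skipped: user declined {tool_name}"
    return execute(**args)
```

In a chat-driven setup, `confirm` could post the summary back to the same WhatsApp or Telegram thread and wait for a reply.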

The Economics: Cost vs. Benefit

Beyond just functionality, there's an economic calculation. Is it worth the effort and risk to set up and use Moltbot?

For developers and technical founders, the answer seems to be yes. These are people who already manage API keys, who understand command-line interfaces, who know how to troubleshoot systems. The learning curve is moderate, and the time savings can be significant. If Moltbot saves you 5 hours per week on administrative tasks, that's 250+ hours per year. That's valuable.

For non-technical users, the answer is probably not yet. The setup is too complex, the risks are too high, and the tools aren't designed with their needs in mind. Steinberger himself acknowledges this.

There's also the question of AI API costs. Using Moltbot means making API calls to Claude, GPT-4, or other models. These costs add up. If you're using Moltbot heavily, you might spend $50-200 per month on API calls. That's not trivial.

For some users, the math works. For others, it doesn't. And for many, the decision comes down to how much they value the capability and innovation aspect versus the practical ROI.

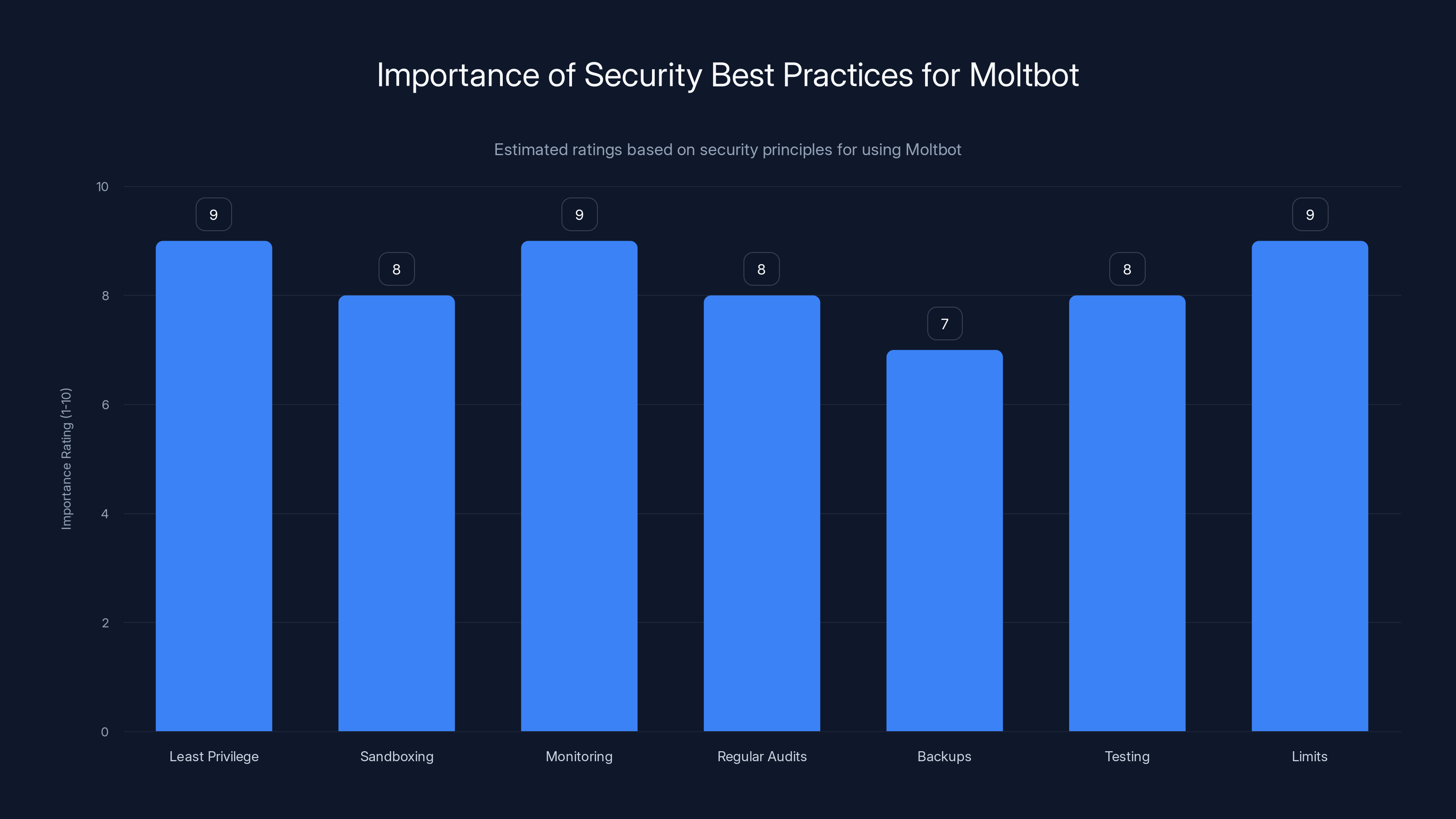

Implementing security best practices like 'Least Privilege' and 'Limits' are crucial, each rated 9 out of 10 in importance for safely using Moltbot. Estimated data.

Predictions: Where Moltbot and Agentic AI Are Heading

Moltbot exists in a specific moment in AI history. The models are capable enough to be useful, but not so capable that they're reliable. That's changing fast.

Over the next 12-24 months, expect:

Better Models with Fewer Errors: The next generation of AI models will be less prone to hallucination, better at following instructions, better at reasoning. This will make agentic AI more reliable and less likely to make catastrophic mistakes.

Better Tooling: Moltbot is a solo project. Expect bigger companies (OpenAI, Anthropic, Google) to release their own versions. These will be more polished, more secure, and more full-featured. Apple, Microsoft, and others will build agentic AI into their operating systems.

Standardization: Right now, every tool integrates with AI agents differently. Expect standards to emerge that make it easier to connect new systems and services to agentic AIs.

Security Hardening: Security researchers are starting to focus on agentic AI. Expect better sandboxing, better permission models, better ways to limit what an AI can do even if it wants to.

Regulatory Attention: Governments will start asking questions about AI agents with system-level access. This could result in regulations or standards around safety, testing, and transparency.

Mainstream Adoption: In 2-3 years, agentic AI that's far better than today's version will be mainstream. Most companies will have some form of autonomous AI handling routine tasks. Most knowledge workers will interact with AI agents daily.

Moltbot is a preview of that future. It's rough, imperfect, and risky. But it's real. And it works. That's significant.

Building Your Own Moltbot: What You'd Need

If you're technically inclined and want to experiment with building something similar to Moltbot, what would you need?

A Local Runtime: Python or Node.js. Something that can run continuously on your machine and manage background processes.

Access to AI Models: API keys for one or more language models. OpenAI, Anthropic, and others all offer APIs. You could also run local models using Ollama or similar tools.

Integration Libraries: Tools that let you interact with external services, such as Anthropic's anthropic SDK for Python, OpenAI's openai library, and requests (Python) or axios (JavaScript) for HTTP calls.

A Communication Layer: A way for you to send commands to the agent. This could be Telegram, WhatsApp (via a bot API), Discord, Slack, or a simple web interface.

File and System Utilities: Libraries that let you interact with the file system, execute code, control applications.

Prompt Engineering: Clear instructions to the AI about what it can do, what it should do, and what it absolutely shouldn't do. This is crucial and often underestimated.

The actual code would be maybe 500-1000 lines if you wanted something simple. But the configuration, the security, the testing—that would be much larger.

The difficulty isn't in building the core functionality. It's in building it safely, handling errors gracefully, managing credentials securely, and maintaining control over what the AI does. Those are the hard parts.
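As an example of the communication layer, Telegram's Bot API exposes a `getUpdates` method that a local process can long-poll. The sketch below adds one safeguard worth considering: it only accepts commands from the owner's own chat ID, so a stranger who finds the bot cannot instruct it. The helper names are hypothetical, the bot token and chat ID are placeholders you would supply, and error handling and replying via `sendMessage` are omitted.

```python
import json
from urllib.request import urlopen


def fetch_updates(token: str, offset: int = 0) -> dict:
    """Long-poll the Telegram Bot API for new updates (waits up to 30 s)."""
    url = f"https://api.telegram.org/bot{token}/getUpdates?offset={offset}&timeout=30"
    with urlopen(url, timeout=40) as resp:
        return json.load(resp)


def commands_from_owner(payload: dict, owner_chat_id: int) -> list[str]:
    """Keep only text messages from the owner's chat; silently drop everything else."""
    commands = []
    for update in payload.get("result", []):
        message = update.get("message") or {}
        if message.get("chat", {}).get("id") == owner_chat_id and "text" in message:
            commands.append(message["text"])
    return commands


def next_offset(payload: dict, current: int) -> int:
    """Acknowledge processed updates by asking for IDs after the highest one seen."""
    ids = [u["update_id"] for u in payload.get("result", [])]
    return max(ids) + 1 if ids else current
```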

Security Best Practices If You're Using Moltbot

If you decide to use Moltbot, here's how to minimize risk:

Principle 1: Least Privilege. Don't give Moltbot access to everything. Create dedicated API keys with limited scopes. If it only needs to read your calendar, don't give it write access. If it only needs to access one email folder, configure it that way.

Principle 2: Sandboxing. Consider running Moltbot in a virtual machine or container rather than on your main machine. This limits the blast radius if something goes wrong. It's a more paranoid approach, but it's valid for high-risk environments.

Principle 3: Monitoring. Set up alerts and logging. Watch what API calls Moltbot is making. Track unusual activity. If something seems off, shut it down immediately.

Principle 4: Regular Audits. Periodically review what credentials Moltbot has access to. Remove access it no longer needs. Update keys periodically.

Principle 5: Backups. Keep backups of critical files. If Moltbot accidentally deletes something, you can recover it. This is just good practice in general.

Principle 6: Testing. Test Moltbot's behavior before letting it do high-stakes tasks. Ask it to perform a task on test data first. See what it actually does. Verify it matches what you intended.

Principle 7: Limits. Set spending limits on API calls. Set rate limits on how often it can execute. Give it constraints. Constrained systems are safer systems.
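Limits like these can be enforced in code rather than left to the model's judgment. The hypothetical `CallGuard` below checks an estimated cost and a calls-per-minute limit before every model request. Cost estimates are only approximate, so it is worth pairing a guard like this with any usage limits your API provider lets you set in its billing settings.

```python
import time
from collections import deque
from typing import Callable


class BudgetExceeded(Exception):
    pass


class CallGuard:
    """Hypothetical guard enforcing a total spending cap and a calls-per-minute limit."""

    def __init__(self, max_usd: float, max_calls_per_minute: int,
                 clock: Callable[[], float] = time.monotonic):
        self.max_usd = max_usd
        self.max_calls = max_calls_per_minute
        self.spent = 0.0
        self.recent: deque[float] = deque()  # timestamps of calls in the last minute
        self.clock = clock

    def check(self, estimated_cost_usd: float) -> None:
        """Call before each model request; raises if the request would break a limit."""
        now = self.clock()
        while self.recent and now - self.recent[0] >= 60:
            self.recent.popleft()  # forget calls older than one minute
        if len(self.recent) >= self.max_calls:
            raise BudgetExceeded("rate limit: too many calls in the last minute")
        if self.spent + estimated_cost_usd > self.max_usd:
            raise BudgetExceeded(f"spending cap of ${self.max_usd:.2f} reached")
        self.recent.append(now)
        self.spent += estimated_cost_usd
```

The injectable `clock` exists so the guard can be tested without waiting a real minute.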

Comparing Moltbot to Its Potential Future Competitors

Moltbot is first, but it won't be alone for long. Every major AI company is thinking about how to offer agentic AI experiences.

OpenAI's Version: OpenAI is experimenting with AI agents. Their version would likely emphasize integration with existing Microsoft products (since Microsoft owns a stake in OpenAI) and would probably be more polished and supported than Moltbot. But it would also likely be cloud-based, which means surrendering data privacy for convenience.

Anthropic's Version: Anthropic built Claude, which Moltbot uses. Anthropic has been thinking carefully about AI safety and alignment. Their version of agentic AI would probably prioritize safety and transparency. They might build better controls and limits.

Google's Version: Google has Gemini and a deep integration with their own services (Gmail, Drive, Docs, etc.). A Google version of agentic AI would likely focus on productivity within the Google ecosystem.

Apple's Version: Apple is known for on-device processing. An Apple version of agentic AI would likely prioritize running locally on your device and privacy. This could be interesting.

Custom Implementations: Developers and companies will build custom versions tailored to specific industries or use cases. A healthcare company might build medical-specific agentic AI. A financial company might build trading-focused agents.

Moltbot's advantage right now is that it exists and it's open (in some sense—the code is available). Its disadvantage is it's rough around the edges and requires technical expertise to set up.

The Hype, The Reality, and The Future

Moltbot went viral because it demonstrated something real: an AI assistant that actually does things, not just talks about them. That's genuinely new and genuinely impressive.

But some of the hype is overblown. Moltbot isn't the final form of agentic AI. It's not even close. It hallucinates. It makes mistakes. It doesn't understand context the way humans do. Its abilities are brittle and narrow in unexpected ways.

What it proves is that the direction is right. The future probably does involve AI agents that handle increasingly complex tasks autonomously. But the version that becomes mainstream will be more reliable, more secure, more integrated into systems you already use, and significantly safer than what Moltbot is today.

For early adopters willing to accept the risks, Moltbot is a genuine tool that can save time and automate tedious work. For the rest of us, it's a preview of what's coming. Something to watch. Something to prepare for.

Steinberger is already thinking about what comes next. Better performance. Better safety. Better integrations. A true local-first personal AI that you actually own and control. That vision is compelling. Whether Moltbot is the thing that gets us there, or whether it's just the appetizer before the main course arrives, remains to be seen.

The Broader Implications for AI and Society

Moltbot matters beyond just productivity hacks. It's a test case for questions that will become increasingly important.

Who Controls Your Digital Life? Right now, your digital life is distributed across dozens of apps and services, each owned by different companies. An agentic AI that coordinates across all these systems gives someone (hopefully you) real power over that ecosystem. But it also creates a single point of failure.

How Do We Maintain Human Agency? As AI becomes more capable at autonomous action, we need to ensure humans remain in control. Moltbot puts humans in control by default (you ask it to do things, it does them), but that could change. How do we prevent agentic AI from becoming too autonomous?

What Happens to Accountability? If an AI makes a mistake, who's responsible? The person who set it up? The person who asked it to do something? The company that built the models? The developer who wrote the agent? These questions are unsolved.

How Do We Ensure Security? Building secure systems is hard. Building secure systems that can execute arbitrary code, make API calls, and interact with your most sensitive data is very hard. As agentic AI becomes more common, the cost of getting security wrong grows with it.

What About Surveillance and Manipulation? An agentic AI on your machine knows everything. Your files, your communications, your behavior patterns, your passwords. That's an incredible amount of information to concentrate in one place. Protecting that against both external attackers and potential misuse by the system itself is crucial.

Moltbot is forcing the industry to think about these questions earlier than many expected. That's valuable, even if some of the thinking is uncomfortable.

TL;DR

- Moltbot is a viral local AI agent that automates tasks across your digital ecosystem by reasoning about goals and executing actions, powered by LLMs like Claude and GPT-4

- The appeal is real: it provides genuine automation and capability that traditional AI assistants don't offer, with data staying local on your machine

- The risks are significant: API key exposure, accidental data loss, unexpected costs, misunderstandings between human intent and AI execution, and the fundamental danger of giving an AI broad system-level access

- Current use cases are strongest for developers and technical people doing administrative work, coding, or analysis—not for critical business functions or non-technical users

- Setup is complex and requires command-line proficiency, API key management, and serious security knowledge—it's not ready for mainstream adoption

- The future direction is clear: better, safer, more integrated agentic AI will become mainstream within 2-3 years, but Moltbot's version is a rough preview

- If you use it, implement strict security practices: least privilege, sandboxing, monitoring, backups, and careful testing before automating high-stakes tasks

FAQ

What is Moltbot?

Moltbot is a local AI agent that runs on your computer and coordinates between AI models, applications, and services to automate tasks. You interact with it via chat (usually WhatsApp or Telegram), tell it what you want done, and it figures out how to accomplish it by making API calls, executing code, and controlling applications. Unlike traditional assistants, Moltbot takes action rather than just answering questions.

How is Moltbot different from ChatGPT or Claude?

ChatGPT and Claude are conversational AI: they answer questions but don't take action on your behalf. Moltbot is agentic: it receives a goal, reasons about how to accomplish it, and executes the steps to achieve it. ChatGPT might help you write an email; Moltbot would actually send it, track the response, and follow up if needed. ChatGPT explains how to automate something; Moltbot does the automation.
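The difference between answering and acting is easiest to see in code. Below is a minimal Python sketch of the loop an agentic assistant runs: plan a step, call a tool, record the result, and repeat until the goal is reached. It is not Moltbot's implementation. `plan_next_step` is a hypothetical stand-in for a model call (scripted here so the example runs offline), and the `draft_email` and `send_email` tools are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Step:
    tool: str   # name of the tool the planner wants to call
    args: dict  # keyword arguments for that tool


@dataclass
class Agent:
    tools: dict[str, Callable[..., str]]  # hypothetical tool registry
    history: list[str] = field(default_factory=list)

    def plan_next_step(self, goal: str) -> Optional[Step]:
        # Hypothetical stand-in for an LLM call. A real agent would send the goal
        # and self.history to a model and parse a tool call out of its reply.
        # Here two steps are scripted so the sketch runs without network access.
        script = [
            Step("draft_email", {"to": "alice@example.com", "topic": goal}),
            Step("send_email", {"to": "alice@example.com"}),
        ]
        done = len(self.history)
        return script[done] if done < len(script) else None  # None means "goal reached"

    def run(self, goal: str, max_steps: int = 10) -> list[str]:
        for _ in range(max_steps):  # hard cap so a confused plan cannot loop forever
            step = self.plan_next_step(goal)
            if step is None:
                break
            result = self.tools[step.tool](**step.args)    # act
            self.history.append(f"{step.tool}: {result}")  # observe, feed back into planning
        return self.history


def draft_email(to: str, topic: str) -> str:
    return f"drafted email to {to} about {topic}"


def send_email(to: str) -> str:
    return f"sent email to {to}"  # a real tool would call a mail API here


agent = Agent(tools={"draft_email": draft_email, "send_email": send_email})
for entry in agent.run("rescheduling Friday's meeting"):
    print(entry)
```

Swapping the scripted planner for a real model call is where the risks discussed in this article come in: whatever the model decides, `run` executes.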

What are the main risks of using Moltbot?

The primary risks include: (1) accidental data loss if the AI misinterprets instructions, (2) unexpected API charges from high-volume model calls, (3) credential exposure if the system is compromised, (4) misunderstandings between your intent and the AI's interpretation of what to do, and (5) the fundamental danger of giving broad system-level access to any agent, even an AI. Early users have experienced all of these issues.

Is Moltbot secure enough for business use?

Not yet. Moltbot requires technical expertise to set up securely, and even with expertise, it has rough edges. Using it for high-stakes business functions (financial transactions, sensitive customer data, critical infrastructure) is extremely risky. It's better suited for personal administration, development work, and lower-stakes automation where a mistake is annoying but not catastrophic.

Do I need to be technical to use Moltbot?

Yes, currently you do. Setup requires command-line proficiency, understanding of API keys and authentication, and familiarity with managing system credentials securely. The tool will likely become more accessible over time, but right now it's designed for technical users. Many people trying to set it up without this background run into problems.

Will Moltbot be replaced by official versions from OpenAI, Google, or Anthropic?

Probably, eventually. Every major AI company is working on agentic AI. Official versions from large companies will likely be more polished, better integrated with existing services, and more secure. However, Moltbot's advantage is that the agent itself runs locally on your machine rather than on a vendor's cloud infrastructure, even though it still calls cloud-hosted models. Some version of that philosophy might persist.

How much does it cost to run Moltbot?

Moltbot itself is free, but you pay for the AI models it uses through API calls. If you use GPT-4 heavily, you might spend

What should I automate with Moltbot?

Start with low-risk tasks: calendar management, email filtering, invoice processing, task organization, code review, documentation generation, or research and analysis. Avoid high-stakes automation like financial transactions, healthcare decisions, or critical infrastructure management until the technology is more mature. Always test on non-critical data first.
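One way to put "start with low-risk tasks" into practice is to route every tool call through an allowlist, a dry-run mode, and a human confirmation step for anything high-stakes. The sketch below is generic Python, not Moltbot's configuration or API; the `Risk` tiers, the `confirm` callback, and the tool names are hypothetical.

```python
from enum import Enum
from typing import Callable


class Risk(Enum):
    LOW = "low"    # e.g. reading a calendar, summarizing documents
    HIGH = "high"  # e.g. deleting files, moving money, emailing customers


class GuardedExecutor:
    """Wraps an agent's tool calls with allowlist, dry-run, and confirmation checks."""

    def __init__(self, confirm: Callable[[str], bool], dry_run: bool = True):
        self.tools: dict[str, tuple[Risk, Callable[..., str]]] = {}
        self.confirm = confirm  # asks a human; returns True to approve
        self.dry_run = dry_run  # when True, actions are logged but never executed
        self.log: list[str] = []

    def register(self, name: str, risk: Risk, fn: Callable[..., str]) -> None:
        self.tools[name] = (risk, fn)

    def call(self, name: str, **kwargs) -> str:
        if name not in self.tools:  # least privilege: anything not allowlisted is refused
            self.log.append(f"DENIED {name}")
            return "denied"
        risk, fn = self.tools[name]
        if self.dry_run:  # test mode: record what would happen, touch nothing
            self.log.append(f"DRY-RUN {name} {kwargs}")
            return "dry-run"
        if risk is Risk.HIGH and not self.confirm(f"{name} {kwargs}"):
            self.log.append(f"BLOCKED {name}")
            return "blocked"
        result = fn(**kwargs)
        self.log.append(f"RAN {name}: {result}")
        return result


# A confirm callback that always declines, standing in for a human saying "no".
ex = GuardedExecutor(confirm=lambda action: False, dry_run=False)
ex.register("list_events", Risk.LOW, lambda day: f"3 events on {day}")
ex.register("delete_file", Risk.HIGH, lambda path: f"deleted {path}")

print(ex.call("list_events", day="Monday"))    # prints: 3 events on Monday
print(ex.call("delete_file", path="~/notes"))  # prints: blocked
print(ex.call("wire_money", amount=500))       # prints: denied
```

Defaulting `dry_run` to `True` means a new automation has to be switched on deliberately, which matches the advice to test on non-critical data first.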

Is Moltbot open source?

Moltbot's code is available for inspection, but it's not fully open source in the traditional sense. Peter Steinberger maintains the project, and while developers can contribute to and extend it, it's not a purely community-driven open-source project.

Will local AI agents like Moltbot take over jobs?

In part, but not immediately and probably not in the way people imagine. AI agents will likely automate specific tasks and workflows rather than entire jobs. They'll excel at routine work but struggle with creative thinking, judgment calls, and human interaction. The impact will be significant but likely distributed across many roles rather than eliminating specific job categories overnight. Workers who adapt and learn to use these tools effectively will be better positioned than those who ignore them.

The Bottom Line

Moltbot represents a genuine inflection point in AI capability. It's not perfect. It's not safe yet. It's definitely not ready for everyone. But it's real, it works, and it proves that the future of AI is agentic—AI that doesn't just answer questions but actually gets things done. Whether Moltbot itself becomes mainstream or is replaced by better, safer alternatives, the direction is clear. The next few years will see a fundamental shift in how we work with AI, and Moltbot got here first.

Key Takeaways

- Moltbot is a breakthrough in agentic AI that actually executes tasks rather than just answering questions, powered by reasoning from models like Claude and GPT-4

- The technology trades security for capability—early users have experienced accidental data loss, shocking API bills, and credential exposure risks

- Setup requires serious technical expertise and will remain inaccessible to non-technical users for at least another year or two

- Real value exists for developers and technical founders automating administrative work, coding, and analysis, but high-risk uses like financial or healthcare automation are premature

- Moltbot is a preview of where AI is headed—better, safer, more integrated versions from major companies will emerge within 2-3 years
