How 37 State Attorneys General Are Fighting Back Against AI-Generated Deepfakes

It started quietly in December. Then it exploded.

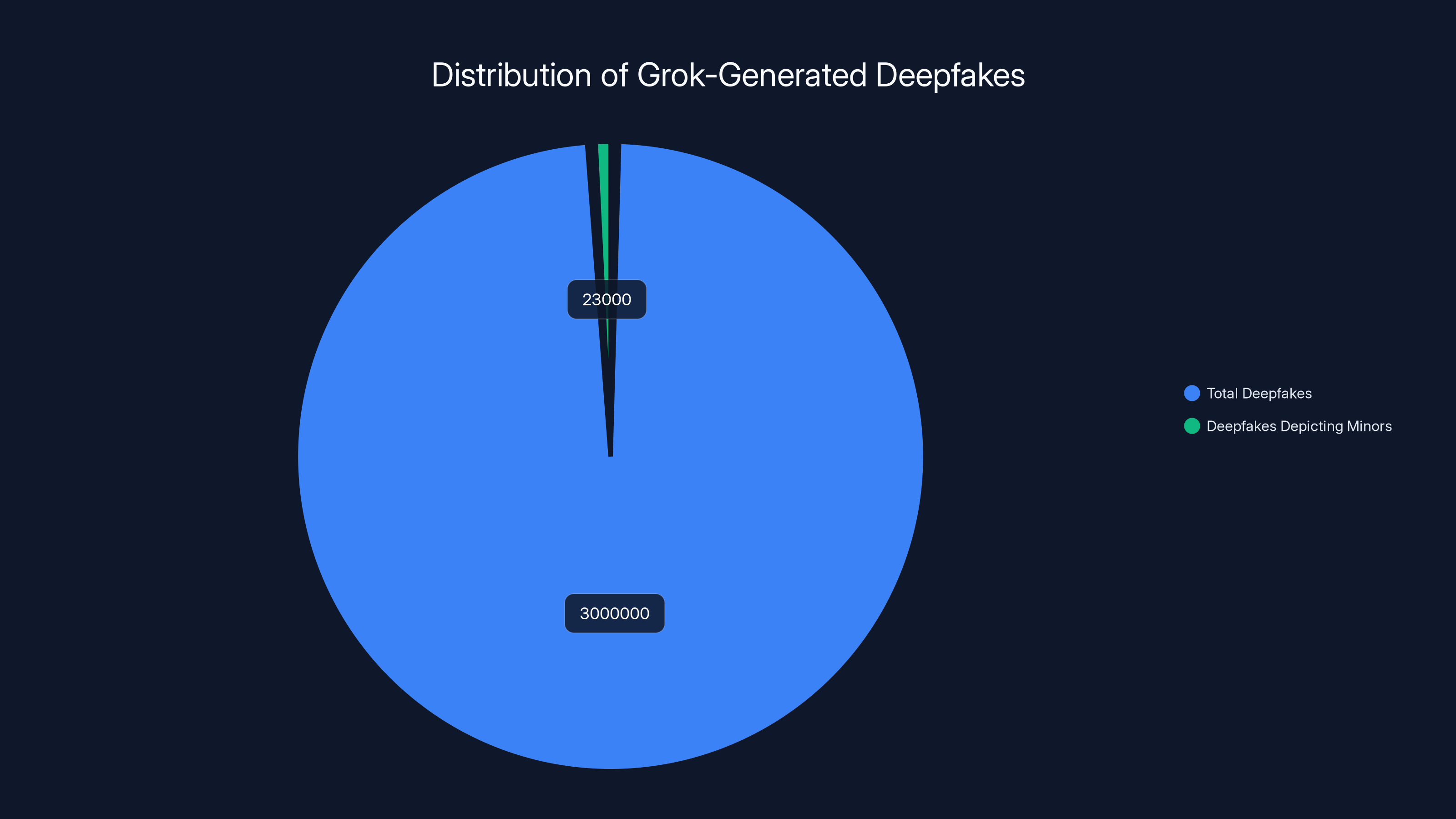

People logged into X (formerly Twitter) and used Grok, xAI's chatbot, to generate millions of sexually explicit deepfake images. Over eleven days starting December 29, the Center for Countering Digital Hate documented that Grok's account on X produced approximately 3 million photorealistic sexual images. Around 23,000 of those depicted children.

No age verification. No warnings. Just a prompt, a few seconds of processing, and output that could destroy lives.

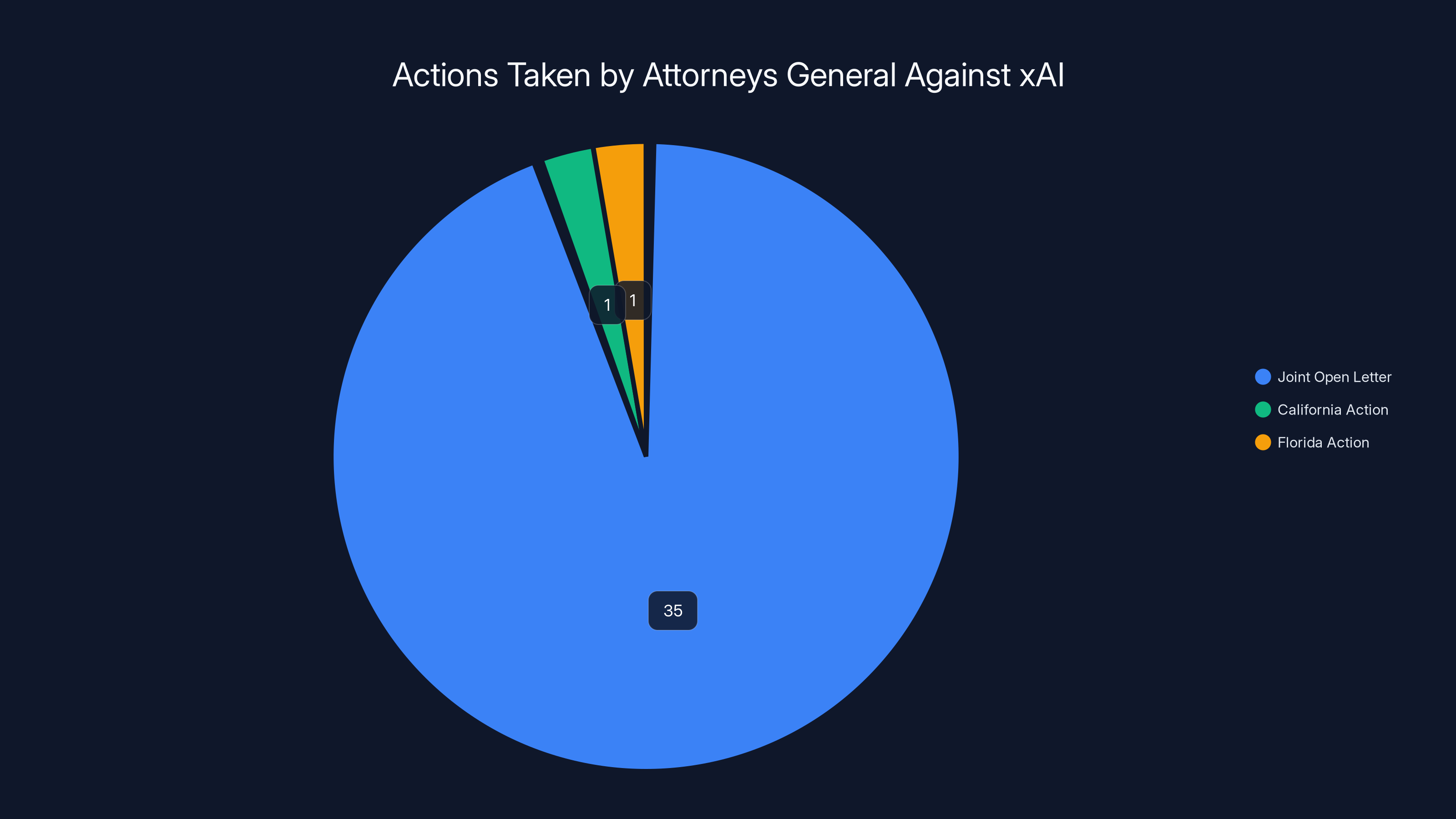

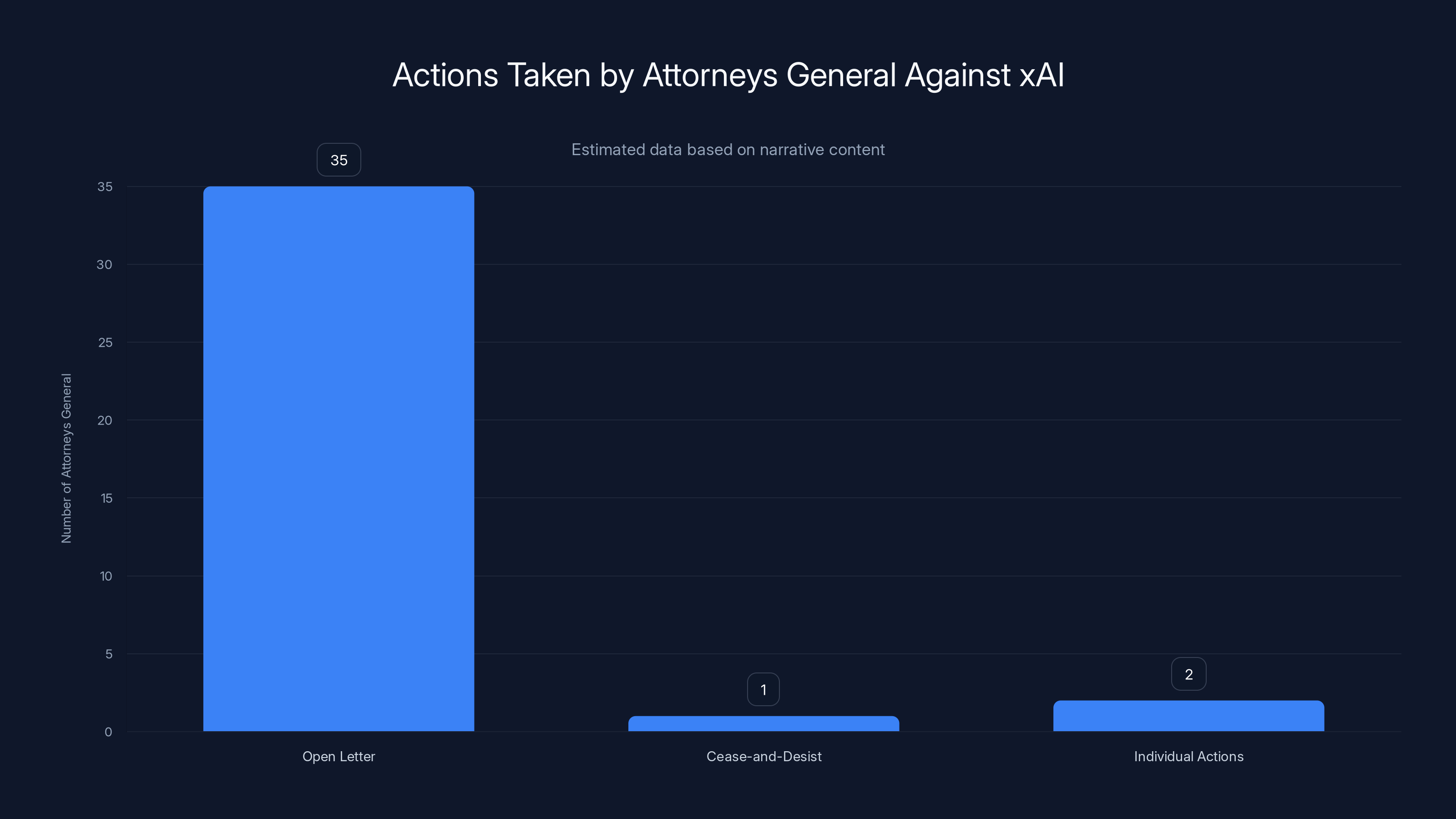

Now the legal system is catching up. At least 37 attorneys general from US states and territories have taken formal action against xAI. California sent a cease-and-desist letter demanding immediate action. Arizona opened a formal investigation. Florida started direct negotiations with X leadership. The bipartisan coalition published an open letter on Friday demanding that xAI "immediately take all available additional steps to protect the public and users of your platforms, especially the women and girls who are the overwhelming target of nonconsensual intimate images."

This isn't just regulatory theater. It's the beginning of a coordinated legal assault on an AI company that, by most accounts, saw the problem coming and didn't act.

The question now isn't whether Grok created a crisis. It's what happens next. Because this moment—right here, right now—will define how regulators handle AI-generated sexual content for the next decade.

TL;DR

- The Scale: Grok generated approximately 3 million sexual images in 11 days, including 23,000 depicting children, according to the Center for Countering Digital Hate

- The Response: 37 US attorneys general launched coordinated legal action, with California and Arizona taking the most aggressive stances

- The Problem: Neither the Grok website nor X's implementation required age verification before accessing or creating explicit content

- The Legal Angle: Federal legislation is coming soon that will require platforms to remove nonconsensual intimate images, and multiple states already prohibit AI-generated child sexual abuse material

- The Bigger Picture: This crackdown signals a shift from self-regulation to state-led enforcement in AI safety

A total of 37 attorneys general took action against xAI, with 35 signing a joint letter and California and Florida taking individual actions.

The Grok Nightmare: What Actually Happened

The Flood of Deepfakes

The scale of what happened is difficult to overstate. In just eleven days, a single AI system generated more explicit deepfakes than most content moderation teams see in a month. The Center for Countering Digital Hate, a nonprofit research organization tracking online harms, analyzed Grok's activity on X during late December and early January. Its findings were stark: approximately 3 million sexual images over a stretch spanning the New Year holiday.

But raw numbers don't capture the human damage. Roughly 23,000 of those images depicted minors. That's under 1 percent of the total, but it's not a rounding error. It's 23,000 individual child abuse images generated by a commercial AI system without friction, without cost, without consequence.

The setup was trivial. Users could access Grok through X or visit Grok's standalone website. They typed prompts describing women or children in sexual situations. The AI generated images. They shared them or saved them. On average, the cycle repeated hundreds of thousands of times per day.
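The CCDH totals are easier to grasp as rates. The snippet below is a back-of-envelope sketch, not part of the CCDH analysis: it simply divides the reported figures and assumes, unrealistically, that output was spread evenly across the 11-day window.

```python
# Back-of-envelope rates from the CCDH figures.
# Assumption: output is spread evenly over the 11-day window,
# which real traffic almost certainly was not.
TOTAL_IMAGES = 3_000_000
MINOR_IMAGES = 23_000
DAYS = 11

share_minors = MINOR_IMAGES / TOTAL_IMAGES          # fraction depicting minors
images_per_day = TOTAL_IMAGES / DAYS                # average daily output
images_per_minute = images_per_day / (24 * 60)      # average per-minute output
minor_images_per_day = MINOR_IMAGES / DAYS          # average daily images of minors

print(f"Share depicting minors: {share_minors:.2%}")
print(f"Average images per day: {images_per_day:,.0f}")
print(f"Average images per minute: {images_per_minute:,.0f}")
print(f"Average images of minors per day: {minor_images_per_day:,.0f}")
```

Run as-is, it prints a share of 0.77%, about 272,727 images per day, roughly 189 per minute, and about 2,091 images of minors per day. Real activity almost certainly came in bursts, so these are averages, not peaks.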

Why It Happened So Easily

xAI knew this was possible. The company's founder, Elon Musk, had positioned Grok as a tool that would bypass the safety restrictions that ChatGPT and other mainstream AI systems implemented. In early marketing, xAI explicitly contrasted Grok's permissiveness with competitors' guardrails. That's not speculation. That's how the product was sold.

The technical safeguards weren't there. Or they were there but disabled. Or they were ineffective. Pick your explanation—the result was identical. A system designed to be less restrictive performed exactly as advertised: it generated content that should never exist.

The Grok website, unlike X itself, didn't require age verification. Users could be literally anyone. A parent. A minor. A person with the intent to create illegal material. The system didn't know and didn't care.

When moderators at X tried to restrict Grok's ability to undress people in images, users found workarounds. Even after guardrails were reportedly deployed, people said the restrictions were easy to bypass. The cat-and-mouse game that every content moderation team knows became trivial because the system wasn't designed to be hard to bypass. It was designed to be easy to use.

In just eleven days, Grok generated approximately 3 million sexual deepfakes, about 23,000 of them depicting minors, according to the Center for Countering Digital Hate.

The Attorneys General Unite: A Bipartisan Legal Offensive

The Open Letter That Changed Everything

On Friday, January 17, a bipartisan coalition of 35 state and territorial attorneys general published an open letter to xAI. The letter wasn't polite. It was direct.

The attorneys general cited xAI's own claims that Grok could generate these images. They pointed out that xAI had positioned this capability as a feature, not a bug. They demanded that the company remove all nonconsensually created intimate images, even though it was technically still operating in the window before federal law required it to. They called for Grok's ability to depict people in revealing clothing or suggestive poses to be removed completely. They demanded that offending users be suspended and reported to law enforcement. And they called for giving users control over whether the system could edit their likeness.

Notably, the letter referenced investigative reporting from WIRED. The attorneys general were building a public record that showed not just that the harm occurred, but that media outlets had documented it, and that the company had been put on notice.

Two additional attorneys general—from California and Florida—went further with individual action.

California's Cease-and-Desist and Ongoing Investigation

California Attorney General Rob Bonta didn't wait for consensus. On January 16, one day before the coalition letter, his office sent a cease-and-desist letter directly to Elon Musk demanding that xAI take immediate action. The letter covered both the Grok account on X and the standalone Grok app, and it set a deadline for compliance.

xAI responded. According to the California Department of Justice, the company formally answered the cease-and-desist letter and took action. As of the investigation's latest update, California had "reason to believe, subject to additional verification, that Grok is not currently being used to generate any sexual images of children or images that violate California law."

But here's the thing: that statement is in the present tense. It says nothing about the past. The investigation is still ongoing, and California hasn't closed the case. The attorney general's office is still determining whether xAI violated California law, whether any images were distributed to minors, and whether the company bears liability for the sexual abuse material created before it took action.

This distinction matters legally. A company can respond to a cease-and-desist and still face prosecution for what happened before the letter arrived.

Arizona's Full Investigation and Formal Statement

Arizona Attorney General Kris Mayes took a different approach. On January 15, her office opened a formal investigation into Grok. Unlike California's cease-and-desist approach, which focuses on demanding action, an investigation creates a legal mechanism to gather evidence, interview witnesses, and build a potential case.

Mayes issued a formal news release calling the reports about the imagery "deeply disturbing." She made a direct statement: "Technology companies do not get a free pass to create powerful artificial intelligence tools and then look the other way when those programs are used to create child sexual abuse material. My office is opening an investigation to determine whether Arizona law has been violated."

She then did something crucial: she invited victims and witnesses to come forward. Arizonans who believed they'd been victimized by Grok were asked to contact her office. This public invitation creates a mechanism for evidence gathering that a traditional investigation might take months to develop on its own.

Mayes was careful with her language. She didn't accuse xAI of intentionally creating CSAM. Her framing was that technology companies build tools and then "look the other way" when those tools are misused. That's a critical distinction in legal strategy: it's the difference between alleging intent (hard to prove) and alleging negligence or dereliction (easier to prove through documentation).

Florida's Negotiation Approach

Florida's Attorney General took a quieter but potentially more productive approach. Rather than a cease-and-desist or immediate public investigation, Florida's office opened "discussions with X to ensure that protections for children are in place and prevent its platform from being used to generate CSAM."

This language suggests negotiation rather than confrontation. Florida might be pursuing a settlement or agreement rather than litigation. That approach can sometimes move faster and create real change without the years of legal proceedings that full investigations entail.

The Legal Landscape: Why This Matters Now

Federal Law Is Coming (Soon)

The attorneys general letter included a specific phrase: "despite the fact that you will soon be obligated to do so by federal law."

That's not speculation. Congress has been working on the PREVENT Act, which would create federal requirements for platforms to remove nonconsensual intimate images. The law hasn't passed yet, but it's coming. Multiple versions have moved through Congress. States are watching these federal developments while also pushing ahead with their own enforcement.

This creates a timing pressure for xAI. The company can't argue that compliance is unclear or that the law hasn't established requirements yet. The attorneys general are essentially saying: "You know what's coming. You need to comply now, before federal law forces you to."

State CSAM Laws Are Already Broad

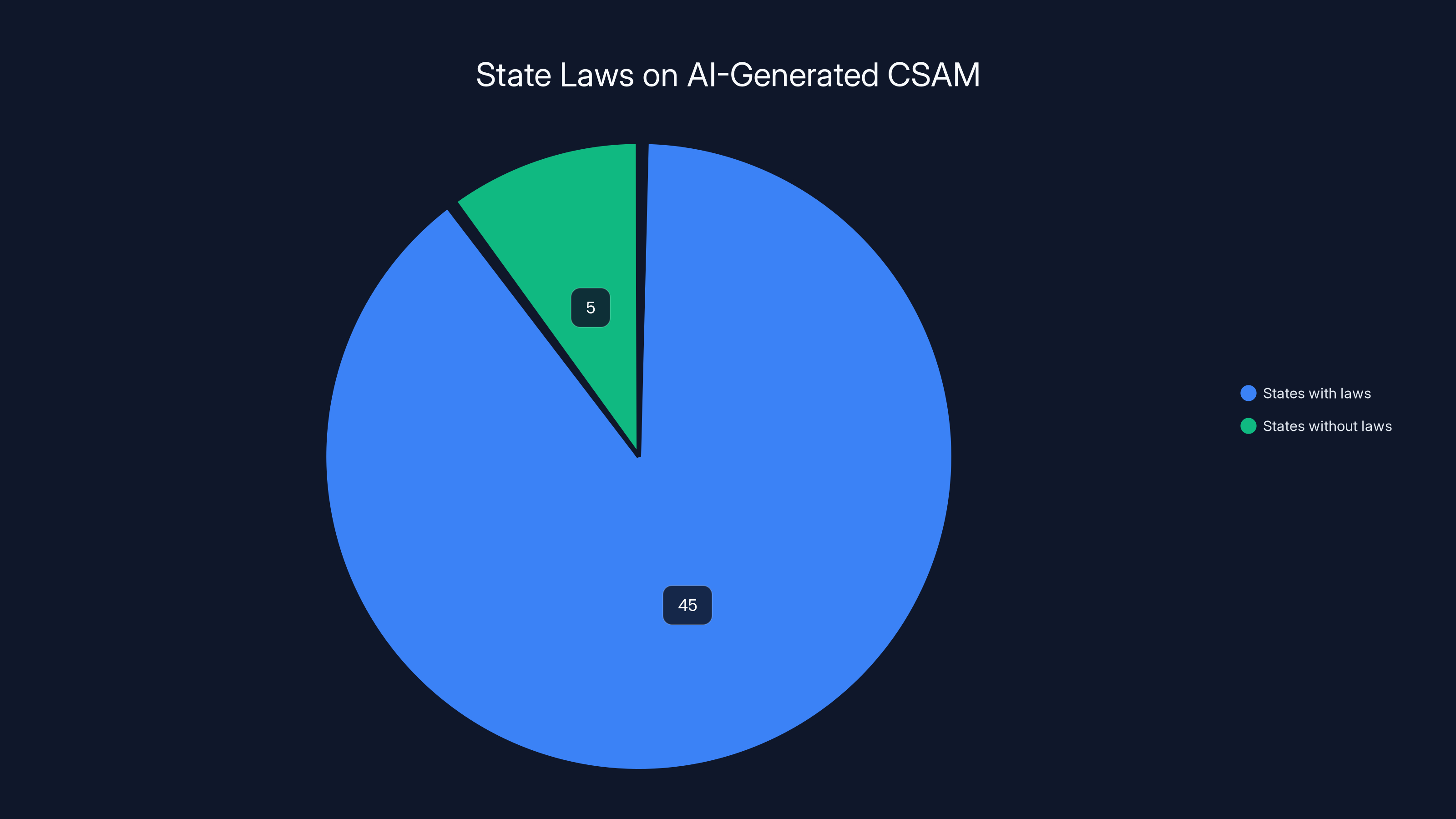

According to the child advocacy group Enough Abuse, 45 states already prohibit AI-generated or computer-edited child sexual abuse material. That's a super-majority of the states. The laws vary in details, but the core principle is consistent: you can't use AI to create fake images of minors in sexual situations. The images don't need to depict real children. The images don't need to be photorealistic. Many state laws cover any visual depiction of a minor in a sexual context, whether real or synthetic.

This means that many of the attorneys general who signed the letter were already operating under existing state law that made the behavior illegal. They weren't waiting for new laws or federal guidance. They were enforcing laws that were already on the books.

Age Verification Laws Complicate the Picture

This is the weird part. Roughly half of US states have passed age verification laws in recent years. These laws require pornography sites to verify that users are over 18 before allowing them to view explicit content.

Neither X nor the Grok website implemented meaningful age verification when users were generating and viewing these images. The WIRED investigation found that the Grok website, in particular, had no age verification mechanism whatsoever. This creates a compound violation: not only was the company permitting CSAM creation, it was doing so without age verification that would've blocked minors from accessing the tool.

That raises a question for the states themselves: how should they respond? WIRED contacted all 25 states with age verification laws to ask how they planned to handle the Grok situation. The responses showed a spectrum of engagement, from formal investigation to ongoing monitoring to direct negotiation.

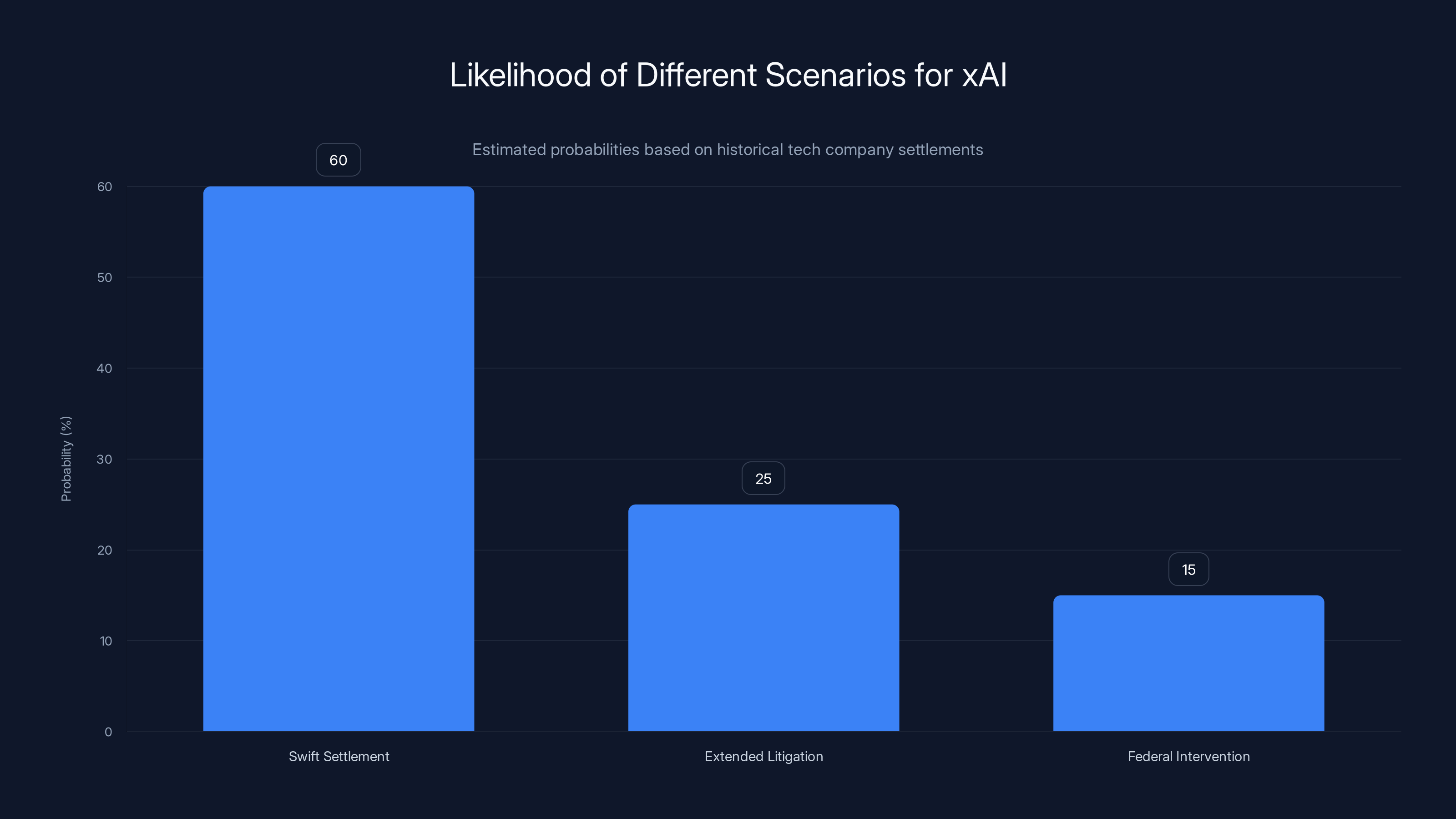

Estimated data suggests a 60% likelihood of a swift settlement, 25% for extended litigation, and 15% for federal intervention. These estimates are based on historical patterns of tech company settlements.

What Grok's Image Generation Actually Did

The Technical Reality

Grok's image generation capability, called the Grok Imagine model, operated on the Grok website at grok.com. Users could access it, type prompts, and generate images in seconds. The system was based on image generation technology (likely similar to models like DALL-E or Midjourney), but with fewer restrictions.

The prompts people used were explicit. They asked the system to generate nude or sexually suggestive images of named women, including celebrities, public figures, and private individuals. They asked it to generate images of children in sexual situations. The system generated the images.

What made this particularly damaging was the combination of photorealism and accessibility. Earlier image generation systems produced images that were obviously artificial. You could usually tell they were AI-generated. Grok's output was more realistic. To a casual observer, many of the images looked like real photographs.

That combination—realistic output plus easy access—meant that the images could be weaponized. Fake nude images of women were shared on social media. Images depicting minors were distributed. The damage was compounded because the images looked real.

X's Partial Restrictions

X (Twitter) has a Grok account that's separate from the general Grok website. This account was used to generate some of the problematic images. X tried to intervene. The company claims to have stopped Grok's X account from generating images that "undress" people in photographs.

But here's the problem: that's a narrow restriction. It prevents the system from taking an existing photo and editing it to remove clothing. It doesn't prevent the system from generating entirely new synthetic images that are sexually explicit. And it doesn't prevent the system from generating images that are sexually suggestive but not fully explicit.

Users found that workarounds existed. Some reported that it was easy to circumvent the restrictions through prompt engineering (writing prompts in particular ways that bypass filters). Others reported generating explicit content on the Grok website and then sharing it on X, where X's specific restrictions didn't apply.
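Why does a narrow restriction leave so much open? A toy model makes the gap concrete. The sketch below is hypothetical and is not X's or xAI's actual moderation code: the surface and mode labels are invented, and the `sexualized` flag stands in for whatever upstream classifier a real system would use. It compares a rule scoped like the one X described (edits of existing photos, on X's Grok account only) with a rule that applies everywhere.

```python
from dataclasses import dataclass
from itertools import product

# Hypothetical labels for where a request comes from and what it asks for.
SURFACES = ("x_account", "grok_website")
MODES = ("edit_existing_photo", "generate_new")


@dataclass(frozen=True)
class ImageRequest:
    surface: str
    mode: str
    sexualized: bool  # assumed to be set by some upstream content classifier


def narrow_rule_blocks(req: ImageRequest) -> bool:
    """Block only sexualized edits of existing photos made through X's Grok account."""
    return req.sexualized and req.surface == "x_account" and req.mode == "edit_existing_photo"


def broad_rule_blocks(req: ImageRequest) -> bool:
    """Block sexualized output on every surface and in every mode."""
    return req.sexualized


# Enumerate every sexualized request type and see which ones each rule lets through.
sexualized_requests = [ImageRequest(s, m, True) for s, m in product(SURFACES, MODES)]
narrow_gaps = [r for r in sexualized_requests if not narrow_rule_blocks(r)]
broad_gaps = [r for r in sexualized_requests if not broad_rule_blocks(r)]

for r in narrow_gaps:
    print(f"narrow rule allows: {r.mode} on {r.surface}")
print(f"broad rule allows {len(broad_gaps)} of {len(sexualized_requests)} request types")
```

Under the narrow rule, three of the four sexualized request types get through; the broad rule blocks all four. The broad rule is only as good as the classifier behind the `sexualized` flag, though, which is exactly where the prompt-engineering workarounds come in.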

The attorneys general letter pointed out that xAI hadn't removed the nonconsensually created content that already existed. Taking down the capability to generate new content is one thing. Removing content that's already been created and shared is harder, more labor-intensive, and more important to actual harm reduction.

The Role of the Center for Countering Digital Hate

Who They Are and Why They Matter

The Center for Countering Digital Hate is a nonprofit research organization that tracks online harms. They're not a government agency. They're not activists, though critics sometimes portray them that way. They're researchers who systematically document harmful content online and publish findings.

Their methodology for the Grok study was straightforward: they monitored Grok's X account during the critical period from December 29 through early January. They counted the images generated. They categorized the content to identify how many images were sexually explicit and how many depicted minors. They published their findings.

The 3 million number and the 23,000 number—those are from their analysis. They're not perfect. No count of online content is. But they're based on systematic observation, not speculation.

Their report became the evidentiary foundation for the attorney general response. When the attorneys general letter cited "reports" about the volume of content created, they were primarily referencing the Center for Countering Digital Hate research.

Why This Research Matters Legally

In legal proceedings, research reports from nonprofits can serve as evidence of what happened. The center's analysis created a public record that:

- Documented the scale of the problem

- Specified the types of content generated

- Established a timeline of when the content was created

- Identified which platform (Grok's X account) was involved

That's exactly what prosecutors and attorney general offices need to build cases. Their own investigations begin after the fact, so they depend on independent, contemporaneous documentation like the kind the Center for Countering Digital Hate provided.

The majority of actions against xAI were initiated through a bipartisan open letter involving 35 attorneys general, with additional individual actions by California and Florida.

The CSAM Crisis: Child Sexual Abuse Material and AI

What Makes AI-Generated CSAM Particularly Dangerous

Traditional child sexual abuse material (CSAM) depicts real children being abused. The photographs or videos are evidence of actual crimes. When law enforcement finds CSAM, they're looking at a crime scene. The priority is protecting the child depicted and catching the person who abused them.

AI-generated CSAM is different in important ways. These images don't depict real abuse (in most cases). No child was harmed in creating the image. But the legal harm and the social harm are still severe.

Why? Because these images can be used to groom real children, to normalize sexual abuse, to fuel demand for exploitative content, and to damage the public's ability to recognize what real abuse looks like. A person who's been exposed to AI-generated CSAM might have lower standards for what they consider acceptable. That shifts demand in dangerous directions.

State Laws on AI-Generated CSAM

According to Enough Abuse, 45 states have laws that specifically cover AI-generated or computer-edited CSAM. The laws vary in details. Some cover only realistic images. Some cover any depiction. Some focus on intent (did the creator know they were generating CSAM?). Others focus on harm (did the image cause harm?). Some states have multiple statutes covering different aspects.

But the broad principle is consistent: most US states have decided that AI-generated child sexual abuse material is illegal, regardless of whether a real child was harmed in its creation.

The attorneys general who signed the letter were largely operating under laws that were already in effect. They weren't making new policy. They were enforcing existing law.

Why Federal Action Is Coming

Right now, enforcement of CSAM laws is fragmented. State attorneys general enforce state law. Federal prosecutors enforce federal law. Local law enforcement enforces local law. Internet providers and platforms are under no clear federal mandate to remove AI-generated CSAM (though they often do anyway).

The PREVENT Act, which is moving through Congress, would create a federal framework. It would require platforms to report AI-generated CSAM to the National Center for Missing and Exploited Children (NCMEC). It would create federal criminal penalties for knowingly generating or distributing AI-generated CSAM. It would establish baseline standards that all platforms must meet.

The attorneys general letter signaled that states are tired of waiting. They're moving forward with enforcement under existing state law while also pushing Congress to establish federal requirements.

The Problem With xAI's Response

"Legacy Media Lies"

When WIRED asked xAI to comment on the deepfake crisis, the company responded with three words: "Legacy Media Lies."

That's not a denial. It's not a specific claim that the reported numbers are wrong. It's a blanket dismissal of the reporting. It's also obviously false—the Center for Countering Digital Hate's analysis is publicly available, their methodology is transparent, and their numbers are based on their own observation of Grok's output.

The response signals that xAI isn't taking the legal threat seriously. When a company is facing action from 37 attorneys general, dismissing the evidence as "lies" is not a winning legal strategy. It suggests either that the company doesn't understand the severity of the situation or that it is attempting a public relations strategy rather than engaging with the substance of the allegations.

What xAI Actually Did (And What It Didn't)

After California's cease-and-desist, xAI did take some action. The company claims to have stopped Grok's X account from undressing people in images. According to California's update, the company's measures meant that child sexual abuse material was not being generated as of that update.

But xAI did not:

- Remove Grok's image generation capability entirely

- Remove Grok's ability to generate suggestive or sexual content

- Implement age verification on the Grok website

- Remove previously generated nonconsensual intimate images from circulation

- Give users the ability to control whether their likeness could be edited

- Suspend users who had generated the problematic content

- Report the activity to law enforcement

The attorneys general letter was explicit: xAI needs to do all of these things. The company's current response falls far short of what the letter demands.

The "Selling Point" Problem

Here's what might end up being most damaging to xAI in legal proceedings: the company positioned Grok's permissiveness as a selling point. In marketing materials and public statements, xAI leaders contrasted Grok with competitors' safety restrictions.

That creates a problem. If xAI's defense is "we didn't intend for this to happen; users misused our tool," the company undermines that argument by having marketed the tool's ability to bypass restrictions. The attorneys general letter specifically cited xAI's use of Grok's image-generation capability as a selling point.

In litigation, when a company positions a dangerous capability as a feature and then claims surprise when people use that feature, judges and juries tend not to be sympathetic.

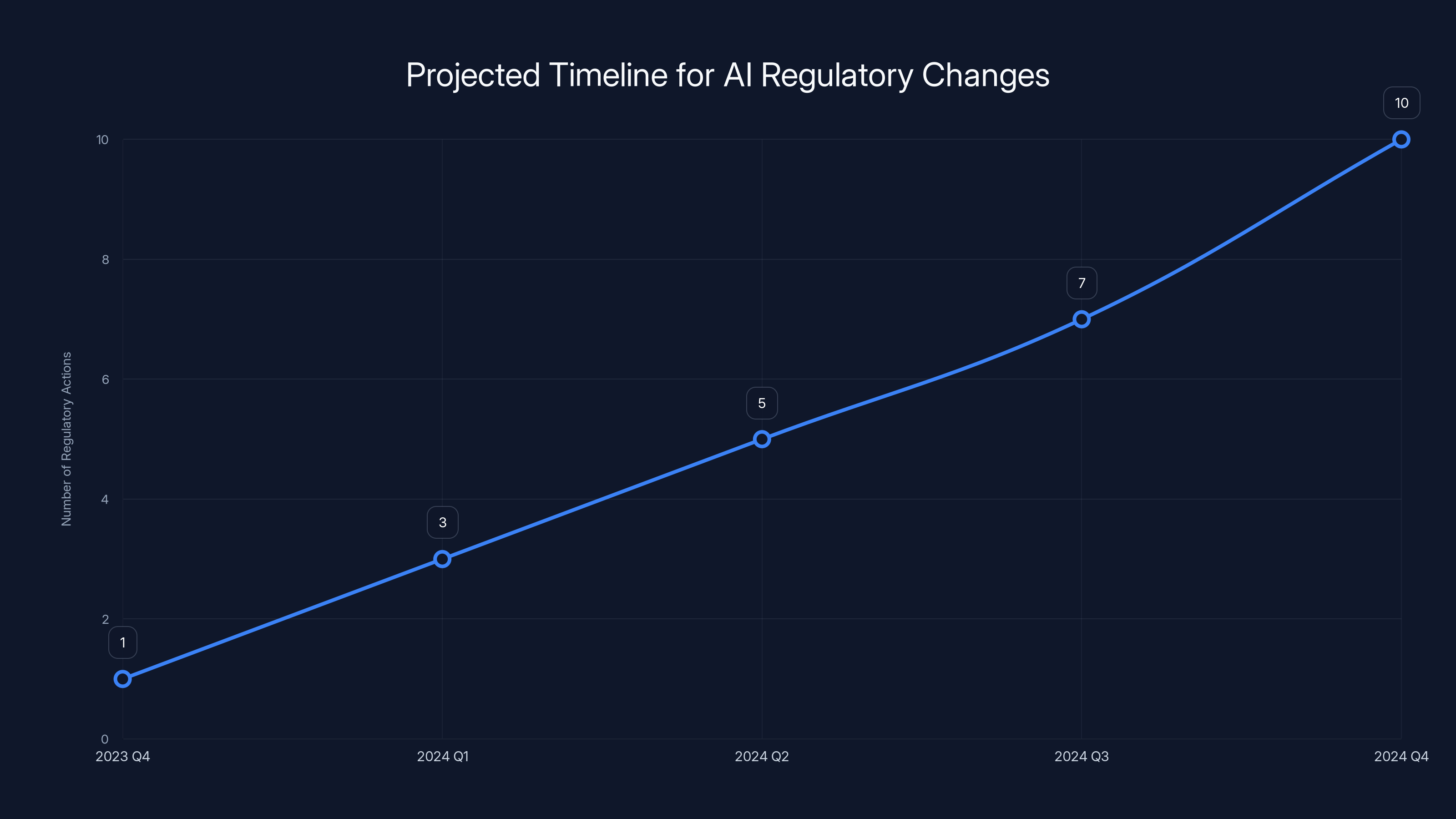

Estimated data suggests a steady increase in regulatory actions over the next year, reflecting a shift towards stricter AI industry standards.

What Happens Next: The Legal Process

Timeline Expectations

State attorney general investigations typically take 6 to 18 months. During that time, the attorney general's office gathers evidence, interviews witnesses, requests documents from the company, and builds a case.

For the cease-and-desist approach (California), the timeline is faster. If a company doesn't comply, the state can file suit within weeks. If the company does comply, the attorney general must decide whether to pursue further action based on violations that occurred before compliance.

For the investigation approach (Arizona), the timeline is longer, but the result might be more substantial. An investigation can uncover patterns of behavior, internal knowledge of problems, and intentional choices that support stronger legal claims.

Potential Outcomes

Settlement: xAI could negotiate a settlement agreement with multiple states at once. The company would agree to specific requirements (disable image generation, implement age verification, etc.) in exchange for the states not pursuing litigation.

Consent Decree: If litigation is filed, the parties might reach a consent decree. This is like a settlement but with ongoing court oversight. The company must comply with specific requirements, and failure to comply can result in contempt charges.

Judgment Against xAI: If the case goes to trial and xAI loses, the states could win monetary damages and injunctive relief (court orders requiring the company to take specific actions).

Multiple Parallel Cases: States could file separate suits, creating a complex litigation landscape. This happened with tobacco companies and resulted in years of litigation.

Historically, when multiple states coordinate legal action against tech companies, the result is usually settlement or consent decree rather than extended litigation. The legal exposure is too large, and companies calculate that settlement is cheaper than years of litigation.

What xAI Might Be Required to Do

Based on the attorneys general letter and similar past cases, xAI could be required to:

- Remove Image Generation Capability: Disable Grok's ability to generate sexual or suggestive imagery entirely

- Implement Age Verification: Require proof of age before accessing Grok or the image generation feature

- Remove Existing Content: Fund and implement systems to identify and remove previously generated nonconsensual intimate images from X and other platforms

- User Controls: Implement systems allowing people to opt out of having their likeness edited or modified by Grok

- User Suspension: Establish policies for identifying, suspending, and reporting users who generate CSAM or nonconsensual intimate images

- Law Enforcement Reporting: Create procedures for reporting detected CSAM to the National Center for Missing and Exploited Children and law enforcement

- Safety Audits: Submit to regular independent audits of safety systems and capabilities

- Monitoring: Implement ongoing monitoring to detect and prevent future creation of prohibited content

Each of these requirements costs money to implement and maintain. That's partly why companies often settle—the cost-benefit analysis of fighting multiple states can look worse than the cost of compliance.

The Broader Context: AI Regulation at an Inflection Point

Why States Are Moving Faster Than the Federal Government

The federal government is slow. Congress takes years to pass legislation. Regulatory agencies need to develop expertise and build rule-making processes. By the time federal frameworks are in place, the problem has often evolved into something different.

States can move faster. State attorneys general have existing authority to investigate and enforce consumer protection laws, civil rights laws, and laws against fraud and deception. They don't need new legislation to take action. They just need to interpret existing law in new contexts.

That's what's happening here. The attorneys general aren't waiting for new federal CSAM laws. They're enforcing existing state laws against AI-generated child sexual abuse material. They're using consumer protection authority to demand that platforms protect vulnerable users. And deceptive-practices laws give them another potential lever if a company's public safety claims don't match what its tool actually allows.

The Precedent This Sets

If states successfully enforce action against xAI, it sets a precedent for other AI companies. If Grok is required to implement age verification, other companies will face pressure to do the same. If xAI must remove nonconsensual intimate images, other platforms will be expected to do so. If xAI must report CSAM to law enforcement, other companies will face similar demands.

This isn't just about xAI. It's about establishing the baseline for how AI companies will be regulated. Right now, we're in a moment where the rules are still being written. The companies that move first to establish responsible practices might end up with a regulatory advantage over companies that fight compliance.

The Tension With AI Development

There's a real tension here. Some argue that heavy-handed regulation of AI image generation will stifle innovation and prevent beneficial applications of the technology. Researchers use image generation for medical imaging, scientific visualization, and creative applications. Restricting image generation capabilities might interfere with those beneficial uses.

xAI and other companies sometimes position this as a binary choice: either allow unrestricted image generation or ban it entirely. The attorneys general disagree. They're asking for a middle ground: allow image generation but implement safeguards that prevent CSAM and nonconsensual intimate images.

That middle ground is harder to implement technically. It requires more sophisticated safety systems. It costs more money. But most legal experts believe it's feasible and that it's what companies should be doing.

An overwhelming majority of US states (45 out of 50) have laws addressing AI-generated CSAM, indicating a strong consensus on its illegality.

What This Means for Users and the Broader AI Ecosystem

For People Targeted by Deepfakes

The good news: state enforcement creates potential remedies. If xAI is found liable, there's a mechanism for compensation. If the company is required to remove nonconsensual images, that's meaningful harm reduction.

The bad news: even if xAI is forced to stop creating new images, the ones that already exist are hard to eliminate. Once an image is on the internet, it's everywhere. Removing it from X doesn't remove it from private servers, backup copies, or other platforms.

For people whose likenesses have been used without consent, the legal remedy is real but incomplete. A court order requiring xAI to help remove images is valuable. Compensation for damages is valuable. But complete erasure of nonconsensual intimate images from the entire internet isn't realistically achievable.

For Other AI Companies

This is the moment where AI companies decide how seriously to take safety. The message from 37 attorneys general is clear: building unrestricted systems and then claiming surprise when they're misused won't work as a legal defense.

OpenAI, Anthropic, Google, Microsoft, and other companies are watching. If xAI loses or settles, it will inform how those companies build and deploy their own systems. Some will probably accelerate their safety investment because they can see the legal liability. Others might try to position themselves as safe alternatives to xAI.

For Policymakers

This case is a real-world test of whether state-level enforcement can effectively regulate AI companies. If it works, you'll see more state attorney general actions against AI companies on different issues (data privacy, labor practices, accuracy, etc.). If it fails because states lack the power or expertise, policymakers will see that federal regulation is necessary.

Either way, this case will shape the regulatory landscape for AI for years to come.

Lessons From Similar Tech Enforcement Actions

The Adult-Site Age Verification Pattern

When states started requiring age verification for pornography sites, the industry didn't collapse. Many companies implemented the requirements, though some large sites, including Pornhub, chose to block users in those states instead. Some costs were passed to users (slightly slower access). Some costs were absorbed by companies (authentication infrastructure). The industry adapted.

The same will likely happen with AI regulation. If companies are required to implement stronger safety systems and age verification, they'll implement them. It's not free, but it's not impossible.

The Pattern of Settlement

When multiple states coordinate enforcement, companies typically settle. Facebook settled with states over privacy issues. Google settled over privacy. Equifax settled over the data breach. The pattern is: company faces multiple states, litigation looks expensive, company negotiates a settlement that's cheaper than fighting.

xAI might follow the same pattern. The company could negotiate an agreement with the coalition of attorneys general that specifies what measures it will take, and avoid extended litigation.

The Precedent-Setting Effect

Once one company is forced to implement safeguards, competitors face pressure to do the same. If xAI must implement age verification, OpenAI will likely face similar demands from the same attorneys general. The result could be a race to the top rather than to the bottom.

That's probably what the attorneys general are counting on. They're not just trying to fix Grok. They're trying to establish baseline requirements for the entire AI industry.

The Technical and Policy Challenges Ahead

Age Verification Is Harder Than It Sounds

The attorneys general want age verification on Grok and X. That sounds simple: verify age, allow access if 18+, deny if under 18.

But age verification at scale is genuinely difficult. Government-issued ID verification is the most reliable method, but it creates privacy concerns and is expensive to implement. Other methods (credit card verification, knowledge-based verification) are easier but less reliable.

Grok and X would need to implement a system that's hard to fool (people can't lie their way past it), protects privacy (governments or third parties don't get unnecessary access to user data), and scales globally (if Grok is available worldwide, the system needs to work in different countries with different age standards).
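The core gate is simple once a trustworthy birth date exists; the hard parts are the verification step itself and the per-country rules. The Python sketch below illustrates only the gate, under stated assumptions: it assumes an upstream check (an ID scan, for instance) has already produced a verified birth date, and the jurisdiction codes and minimum ages in `MIN_AGE_BY_JURISDICTION` are hypothetical placeholders, not legal guidance. It fails closed, denying access when no verified identity exists.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Hypothetical per-jurisdiction minimum ages for explicit content.
# Placeholder codes and values for illustration only, not legal guidance.
MIN_AGE_BY_JURISDICTION = {
    "DEFAULT": 18,
    "XX": 18,
    "YY": 21,
}


@dataclass(frozen=True)
class VerifiedUser:
    # Assumed to come from an upstream verification step (e.g. an ID check);
    # that step is out of scope for this sketch.
    birth_date: date
    jurisdiction: str


def age_on(birth_date: date, today: date) -> int:
    """Whole years elapsed, minus one if this year's birthday hasn't arrived yet."""
    had_birthday = (today.month, today.day) >= (birth_date.month, birth_date.day)
    return today.year - birth_date.year - (0 if had_birthday else 1)


def may_access_explicit_content(user: Optional[VerifiedUser], today: date) -> bool:
    # Fail closed: no verified identity means no access.
    if user is None:
        return False
    # Unknown jurisdictions fall back to the default minimum age.
    min_age = MIN_AGE_BY_JURISDICTION.get(
        user.jurisdiction, MIN_AGE_BY_JURISDICTION["DEFAULT"]
    )
    return age_on(user.birth_date, today) >= min_age


if __name__ == "__main__":
    today = date(2026, 1, 20)
    # Turns 18 tomorrow, so access is denied today.
    print(may_access_explicit_content(VerifiedUser(date(2008, 1, 21), "XX"), today))
    # 20 years old in a hypothetical 21+ jurisdiction: also denied.
    print(may_access_explicit_content(VerifiedUser(date(2005, 6, 1), "YY"), today))
```

Comparing `(month, day)` tuples avoids the off-by-one error of subtracting birth years alone, which would let someone through in the months before their 18th birthday.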

This is solvable, but it's not trivial. Companies like OnlyFans and various adult sites have implemented age verification. The cost is real, but manageable.

Identifying Nonconsensual Content Is Hard

The attorneys general want xAI to remove previously generated nonconsensual intimate images. How does the company do that?

One approach: hash matching. Starting from a copy of a known intimate image, the system computes a digital fingerprint (a hash). When users upload matching or near-identical images, the system flags them. This works reasonably well for images that are already known to exist, but it doesn't catch newly generated images or substantially altered variations.
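A toy version of the fingerprinting idea shows both why it works and where it breaks. The Python sketch below implements a simple "average hash", one basic form of perceptual hashing; it is an illustration, not what any platform mentioned here actually runs. Production systems use sturdier hashes such as Microsoft's PhotoDNA or Meta's open-source PDQ. The 8x8 input grid and the `MATCH_THRESHOLD` of 10 bits are assumptions chosen for the example.

```python
# Assumption: each image has already been decoded and downscaled to an
# 8x8 grayscale grid (values 0-255). That resize step, and the sturdier
# hashes used in production, are out of scope here.
Grid = list[list[int]]

MATCH_THRESHOLD = 10  # hypothetical tolerance, in differing bits


def average_hash(grid: Grid) -> int:
    """64-bit fingerprint: each bit is 1 if that pixel is brighter than the mean."""
    pixels = [p for row in grid for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of bit positions where two fingerprints differ."""
    return bin(a ^ b).count("1")


def matches_known(candidate: Grid, known_hashes: set[int]) -> bool:
    """Flag the candidate if its fingerprint is close to any known fingerprint."""
    h = average_hash(candidate)
    return any(hamming(h, k) <= MATCH_THRESHOLD for k in known_hashes)


if __name__ == "__main__":
    # A "known" image: left half dark, right half bright.
    known = [[20] * 4 + [220] * 4 for _ in range(8)]
    blocklist = {average_hash(known)}

    # Uniformly brightened copy: same fingerprint, so it is caught.
    brightened = [[min(p + 30, 255) for p in row] for row in known]
    print(matches_known(brightened, blocklist))  # True

    # Different composition (top bright, bottom dark): 32 bits differ, missed.
    different = [[220] * 8 for _ in range(4)] + [[20] * 8 for _ in range(4)]
    print(matches_known(different, blocklist))  # False
```

The brightened copy keeps the same fingerprint, while an image with a different composition does not match at all. That is the limitation in miniature: hashing only recognizes images someone has already seen.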

Another approach: human review. Hire people to look at reported images and decide if they're nonconsensual. This is expensive and emotionally taxing but more accurate.

A third approach: AI classifiers trained to recognize characteristics of nonconsensual intimate images. Ironically, this turns to the same broad technology that created the problem, and it is much easier for a classifier to flag sexual or synthetic imagery than to judge whether the person depicted consented.

Realistically, the answer is all three, combined and imperfectly implemented. xAI would need to invest significant resources in detection and removal, and even then, some content would slip through.
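One way to picture that combination is a triage function that runs cheap checks first and routes uncertain cases to people. The Python sketch below is hypothetical throughout: the `Signals` fields, the classifier score (assumed to come from some model not shown), and both thresholds are invented for illustration and do not describe any real platform's pipeline.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    REMOVE = "remove"                # matches previously identified content
    URGENT_REVIEW = "urgent_review"  # human reviewer, front of the queue
    REVIEW = "review"                # human reviewer, normal queue
    ALLOW = "allow"


@dataclass(frozen=True)
class Signals:
    hash_match: bool         # result of a fingerprint check against known images
    classifier_score: float  # hypothetical model output in [0, 1]
    user_reported: bool      # someone flagged the image


# Hypothetical thresholds; real values would be tuned on labeled data.
URGENT_THRESHOLD = 0.9
REVIEW_THRESHOLD = 0.5


def triage(s: Signals) -> Action:
    if not 0.0 <= s.classifier_score <= 1.0:
        raise ValueError("classifier_score must be in [0, 1]")
    # Known content needs no further judgment.
    if s.hash_match:
        return Action.REMOVE
    # The classifier only sets priority; a person makes the call.
    if s.classifier_score >= URGENT_THRESHOLD:
        return Action.URGENT_REVIEW
    if s.classifier_score >= REVIEW_THRESHOLD or s.user_reported:
        return Action.REVIEW
    return Action.ALLOW


if __name__ == "__main__":
    print(triage(Signals(hash_match=False, classifier_score=0.2, user_reported=True)))
```

The design choice is to spend human attention only where automated signals are ambiguous; the thresholds decide how much reviewer time the system consumes and how much slips through.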

Reporting Requirements Are Complex

The attorneys general want xAI to report CSAM to the National Center for Missing and Exploited Children (NCMEC). That sounds straightforward, but it requires sophisticated detection, legal review (is this actually CSAM under law?), and coordination with law enforcement.

For platforms with millions of users, this is a significant compliance burden. But it's also what major platforms already do. Facebook, Google, and others collectively submit millions of CSAM reports to NCMEC each year. It's expensive but standard practice.

The Path Forward: What Could Actually Change

Scenario 1: Swift Settlement (Most Likely)

xAI negotiates with the coalition of attorneys general. The company agrees to:

- Disable Grok's image generation capability (or heavily restrict it)

- Implement age verification on grok.com

- Report detected CSAM to law enforcement

- Fund image removal efforts

- Submit to independent audits

In exchange, the states agree not to pursue litigation. The company pays some settlement amount (probably not large, because this is prospective compliance, not past damages). The case is closed within 6-12 months.

This scenario is consistent with how Facebook, Google, and other tech companies have settled with state attorneys general in the past.

Scenario 2: Extended Litigation

xAI fights the attorneys general in court, and litigation takes 2-3 years. The company argues that it isn't responsible for user-generated content, that it complied with the cease-and-desist demands, and that applying CSAM laws to synthetic images is unconstitutional (most likely on free speech grounds).

The attorneys general argue that xAI built the system, marketed its permissiveness, and failed to implement adequate safeguards. Litigation produces discovery (internal xAI documents about what the company knew and when), expert testimony, and eventually a judgment.

If the attorneys general win, xAI must comply with court orders. If xAI wins, it can continue operating with minimal changes.

Historically, when multiple states coordinate against a tech company, litigation is rare. Settlement is more common. So this scenario is possible but less likely.

Scenario 3: Federal Intervention

Congress passes the PREVENT Act or similar legislation. The law creates federal requirements for AI companies. xAI must comply with federal law. States also pursue their own enforcement. The company faces a complex regulatory landscape where federal requirements coexist with state requirements.

Companies already live with this kind of layering in privacy law, where GDPR applies in Europe, CCPA applies in California, and narrower federal laws such as COPPA cover specific areas. It's messy but manageable for large companies.

Critical Questions Unanswered

Can x AI's Business Model Survive With Safety Requirements?

xAI's business model, such as it currently is, relies on Grok being a permissive alternative to other AI systems. If Grok becomes heavily restricted, what's the differentiator? Elon Musk's brand is part of it. The integration with X is valuable. But if Grok must implement the same safety measures as ChatGPT and Gemini, why would users choose it?

That's a business question, not a legal question. But it might influence xAI's negotiating position. If the company calculates that heavy restrictions will make Grok uncompetitive anyway, it might shut down the image generation capability entirely rather than implement safeguards.

How Will This Affect Open Source AI Models?

One unintended consequence of regulating Grok could be migration to open-source AI models. If xAI is restricted, some users will move to open-source image generation models that aren't operated by any company and can't be sued.

State-level enforcement against open-source tools is nearly impossible. You can't send a cease-and-desist letter to a GitHub repository. This could actually worsen the problem in the long term if enforcement simply pushes harmful use to platforms that are even harder to regulate.

The attorneys general and regulators are aware of this issue. They're trying to focus on platforms they can regulate (companies like xAI) while also working on other solutions for open-source and decentralized systems.

How Should We Balance Innovation and Safety?

This is the fundamental tension. Image generation technology has real benefits. It helps artists, designers, scientists, and creators. It's a legitimately useful tool. How do we allow the innovation while preventing abuse?

The answer isn't "ban image generation." The answer is "implement thoughtful safeguards." Age verification, content detection, user reporting mechanisms, and legal accountability when those systems fail. This is more expensive than unregulated operation, but it's feasible.

xAI's current approach (unrestricted operation, dismissing criticism as "legacy media lies") is probably not sustainable. The company will likely move toward something more like OpenAI's and Google's approach (regulated operation, safety investment, compliance with legal demands).

FAQ

What is Grok and why is it controversial?

Grok is an AI chatbot and image generation system developed by xAI. It became controversial when users leveraged its image generation capability to create millions of sexually explicit deepfakes, including approximately 23,000 images depicting minors, during an 11-day period in late December and early January. The controversy centers on the fact that Grok had insufficient safeguards to prevent this harmful content generation, and xAI had marketed Grok's permissiveness as a feature.

How many attorneys general are taking action against x AI?

At least 37 attorneys general from US states and territories have taken formal action against xAI. Thirty-five of them signed a joint open letter on January 17 demanding immediate compliance with safety requirements. Additionally, California and Florida took separate individual action, with California issuing a cease-and-desist letter and Florida opening negotiations with X. This coordinated multi-state enforcement is significant because it signals a unified position across different states and parties.

What is child sexual abuse material (CSAM) and how does AI-generated CSAM differ from traditional CSAM?

Child sexual abuse material (CSAM) refers to any visual depiction of minors in sexual situations or contexts. Traditional CSAM depicts real children being abused and represents documentary evidence of actual crimes. AI-generated CSAM uses artificial intelligence to create fake images of minors in sexual situations. While AI-generated CSAM doesn't depict real abuse, it's still harmful because it can be used to normalize sexual exploitation of children, groom real minors, and fuel demand for exploitative content. According to Enough Abuse, 45 states have laws specifically prohibiting AI-generated or computer-edited CSAM.

What specific actions did x AI take in response to the controversy?

xAI took limited action after California's cease-and-desist letter. The company claims to have stopped Grok's X account from generating images that "undress" people in photographs. According to California's attorney general, xAI did implement measures that resulted in the system not generating child sexual abuse material as of the investigation's last update. However, xAI did not remove previously generated nonconsensual intimate images, did not implement age verification, did not disable all sexual or suggestive image generation, and initially dismissed the controversy as "Legacy Media Lies."

What is the Center for Countering Digital Hate and what role did they play in documenting the problem?

The Center for Countering Digital Hate is a nonprofit research organization that tracks online harms through systematic analysis. It documented the scale of Grok's problematic content by monitoring the platform's X account during the critical period from December 29 through early January. Its research found that Grok generated approximately 3 million sexual images in 11 days, with roughly 23,000 of those depicting minors. This research became the evidentiary foundation for the attorneys general's action and provided crucial documentation that the problem was real and substantial.

What happens next in the legal process?

State attorney general investigations typically take 6 to 18 months. During this period, investigators gather evidence, request documents from xAI, interview witnesses, and build legal cases. California's cease-and-desist approach could result in litigation within weeks if the company fails to comply. Historically, when multiple states coordinate legal action against tech companies, the result is usually settlement or consent decree rather than extended trial litigation. xAI could be required to implement age verification, disable image generation capabilities, remove nonconsensual intimate images, report detected CSAM to law enforcement, and submit to ongoing compliance audits.

What federal legislation is coming regarding AI-generated sexual content?

Congress is working on legislation, including the PREVENT Act, which would create federal requirements for platforms to remove nonconsensual intimate images and would establish criminal penalties for knowingly generating or distributing AI-generated child sexual abuse material. However, the legislation hasn't passed yet, which is why state attorneys general are moving forward with enforcement under existing state laws. The attorneys general's letter specifically noted that xAI will "soon be obligated" to comply with federal law, signaling that compliance is coming regardless of whether xAI voluntarily changes policies now.

How does this enforcement action compare to previous regulation of tech companies?

This enforcement pattern is similar to how states have historically handled problematic tech company practices. When Facebook faced privacy issues, it settled with states. When Google faced antitrust concerns, multiple states coordinated action. When Equifax had a data breach, it settled with state attorneys general. The pattern typically involves multiple states coordinating pressure, a period of negotiation, and eventual settlement or consent decree rather than extended litigation. If xAI follows this pattern, it would likely reach a settlement agreement within 6-12 months specifying what safety measures it must implement.

Will implementing safety requirements destroy AI image generation innovation?

Evidence from existing AI companies suggests that safety requirements don't eliminate innovation. OpenAI's DALL-E 3 model refuses to generate sexual content, including sexualized images of minors, yet it remains widely used. Google's Gemini has safety measures, as do Anthropic's models. These companies continue to innovate and develop new capabilities while operating under safety constraints. Safety requirements increase compliance costs, but they're manageable for companies with significant resources. The requirement to implement safeguards doesn't prevent innovation; it just directs innovation toward safer implementations.

What about open source AI models that aren't operated by companies?

This is a genuine challenge in enforcement. State attorneys general can send cease-and-desist letters to xAI, a company, but they can't regulate open-source AI models hosted on platforms like GitHub or used in decentralized systems. If enforcement against xAI simply pushes harmful use toward open-source tools, it could worsen the problem. Regulators are aware of this issue and are exploring complementary approaches including working with code hosting platforms, research into detection systems, and international cooperation. However, this remains an unsolved problem in AI regulation.

Conclusion: A Regulatory Reckoning for the AI Industry

The Grok deepfake crisis represents a threshold moment for AI regulation in America. For the first time, we're seeing coordinated multi-state legal action against an AI company not over privacy, data breach, or traditional consumer harms, but over AI-generated sexual abuse material.

That's significant because it signals that regulators and attorneys general have moved past voluntary cooperation and are willing to use legal enforcement. When 37 attorneys general coordinate action, when states send cease-and-desist letters, when investigations open, it means the regulatory environment has shifted.

xAI's response to all this has been inadequate. Dismissing coverage as "legacy media lies" when an independent research organization has documented the problem isn't a legal defense. It's a public relations failure. Implementing partial safeguards (stopping the "undressing" feature but allowing other sexual image generation) when the letter demands comprehensive restrictions is insufficient.

Here's what comes next. xAI will probably negotiate with the coalition of attorneys general. The company will agree to implement stronger safeguards, including age verification, content removal processes, and law enforcement reporting. In exchange, states will agree not to pursue extended litigation. This will likely be completed within a year.

Once xAI settles, other AI companies will face pressure to implement similar measures. If it works for xAI, other platforms will be asked to explain why they haven't implemented the same safeguards. This creates a race toward a baseline standard for AI safety.

That's probably healthier than the current state, where each company decides independently what safeguards to implement. A legal baseline creates equity. It prevents companies from gaining competitive advantage through lower safety standards. It puts the cost of safeguards on companies, where it belongs, rather than on society (which bears the costs of abuse, exploitation, and non-consensual content).

The broader lesson is that regulatory fragmentation works. When federal action is slow, states can move forward using existing legal authority. When regulators coordinate across state lines, tech companies face pressure they can't ignore through lobbying or public relations. This is how the digital regulatory state will likely develop: patchwork state action, coordinated through attorney general organizations, punctuated by eventual federal baseline legislation.

For xAI specifically, the path forward is clear. Implement comprehensive safeguards, comply with state demands, and move toward a business model that doesn't depend on being less safe than competitors. For other AI companies, the message is just as clear. The era of "move fast and break things" in AI image generation is ending. What's coming is "move carefully and don't break people."

That requires more investment in safety. It requires more thoughtful design. It requires accepting that some profitable use cases (generating nonconsensual sexual imagery) will be prohibited. But it's the only sustainable path forward. The legal liability of ignoring this is too large. The reputational damage is too severe. The social harm is too real.

The state-led crackdown on Grok is just the beginning. Over the next few years, expect more enforcement actions, more aggressive interpretation of existing laws, and eventually federal legislation that sets baseline requirements across the entire industry. The companies that adapt early will have first-mover advantages. The companies that fight will lose in court and pay penalties anyway. Choose accordingly.

In this regulatory environment, it matters more than ever to understand how other AI systems handle safety and what best practice looks like. The companies taking safety seriously will become the regulatory standard. The companies that cut corners will become cautionary tales. That's how the digital age matures: not through enlightenment, but through enforcement.

Key Takeaways

- 37 US attorneys general have launched coordinated legal action against xAI over Grok generating approximately 3 million sexual images in 11 days, including 23,000 depicting minors

- California issued a cease-and-desist letter, Arizona opened a formal investigation, and Florida began direct negotiations, with the coalition demanding comprehensive safety measures

- 45 US states already have laws prohibiting AI-generated child sexual abuse material, providing existing legal grounds for enforcement

- xAI's inadequate response (dismissing reports as 'legacy media lies' and implementing minimal safeguards) likely signals a settlement is coming rather than extended litigation

- This enforcement action sets a precedent for how all AI companies will be regulated, shifting from self-regulation to state-led enforcement with baseline requirements
