New York's Push to Regulate AI: Two Bills That Could Change Everything

New York is about to become ground zero for AI regulation in America. The state legislature is moving forward with two separate bills that tackle different aspects of the AI boom, and together they represent one of the most aggressive regulatory approaches we've seen from any state. One bill targets the spread of AI-generated misinformation in news, while the other takes aim at the data center buildout powering the boom.

If you've been paying attention to the AI industry, you know that moves in New York often ripple across the country. California pioneered tech regulation with privacy laws. Massachusetts set standards for autonomous vehicles. Now New York's doubling down on AI, and what happens here could influence how every other state approaches the technology.

The timing matters too. We're at a critical inflection point. AI companies are pouring resources into data centers at an unprecedented scale. The electricity demands alone are reshaping power grids across the country. Meanwhile, AI-generated content is flooding the internet, and news organizations are struggling to determine what's real and what's machine-made. These bills are essentially New York saying: "Hey, we need to pump the brakes and think about this carefully."

What's interesting is that these bills aren't coming from some anti-tech crusade. They're driven by real consequences that New Yorkers are already experiencing. Electricity rates are climbing. Data center developers are snapping up property. And newsrooms are under pressure to cut costs by replacing journalists with AI tools. These bills represent a practical response to tangible problems, not ideological opposition to technology itself.

Let's break down what these bills actually do, what the implications are, and what the broader conversation tells us about the future of AI regulation in America.

TL;DR

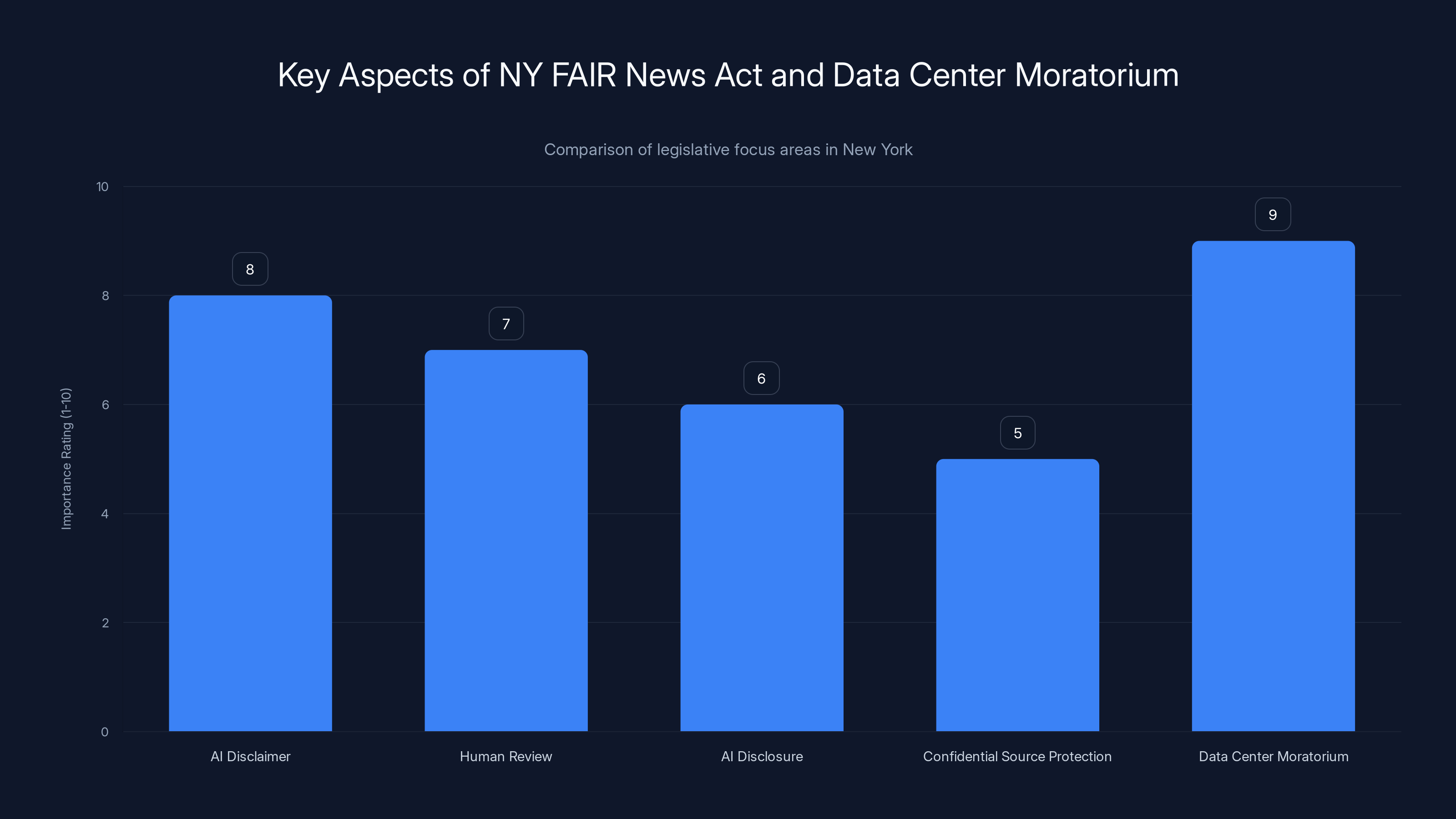

- New York FAIR News Act requires AI-generated news content to be labeled and reviewed by humans before publication

- Data Center Moratorium Bill (S9144) pauses new data center permits for at least three years due to power grid strain

- Grid capacity crisis: Requests for large-load grid connections have tripled in one year, with at least 10 gigawatts of additional demand expected within five years

- Editorial accountability: News organizations must disclose AI usage to employees and protect confidential sources

- Broader implications: These bills signal aggressive state-level AI regulation that could spread to other states

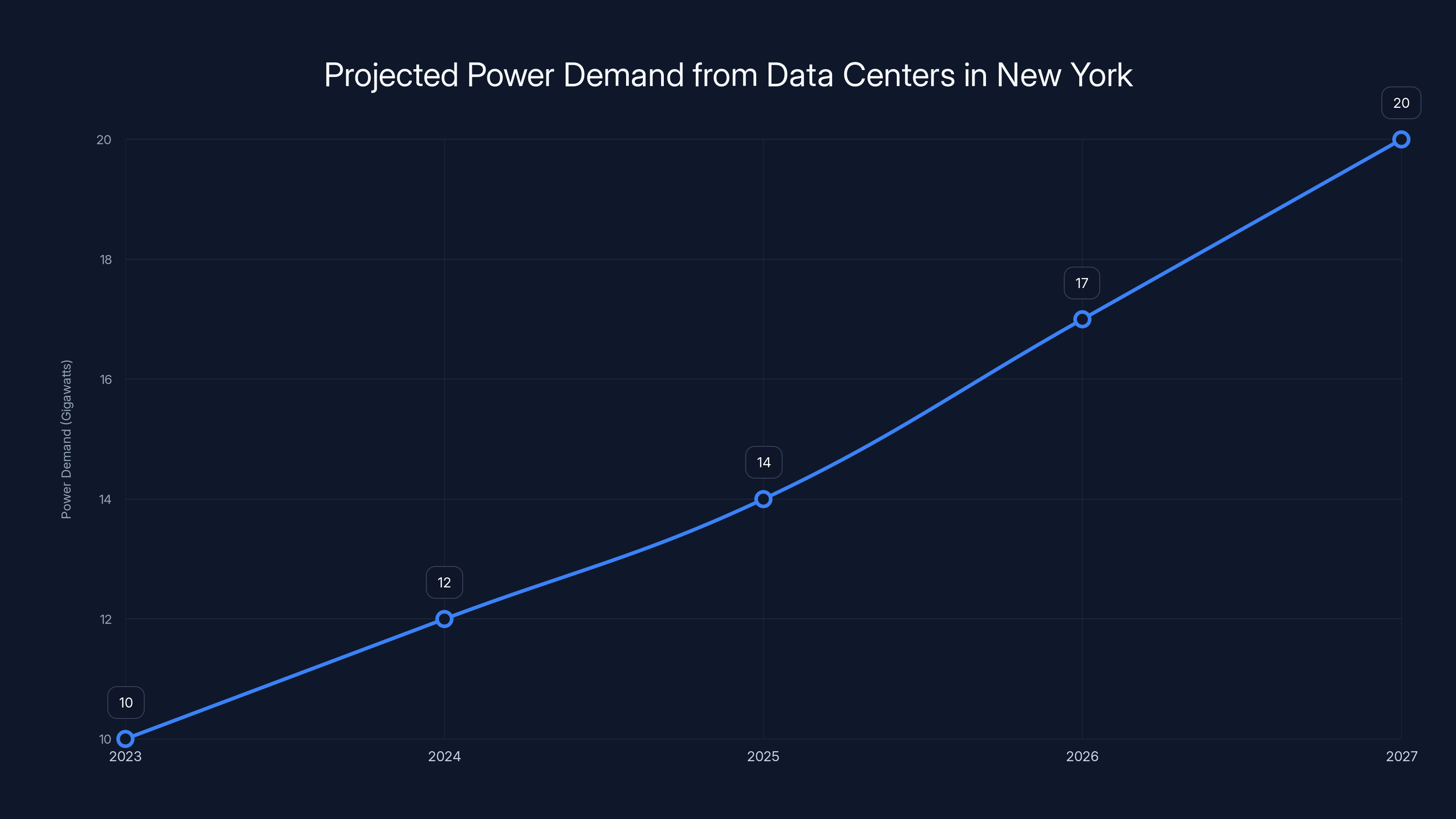

Projected data suggests a significant increase in power demand due to data centers, reaching 20 gigawatts by 2027. Estimated data based on current trends.

The NY FAIR News Act: Labeling AI-Generated Content

The New York Fundamental Artificial Intelligence Requirements in News Act—mercifully shortened to the NY FAIR News Act—is one of the first serious legislative attempts to address AI-generated misinformation in the news industry.

Here's what it requires: any news content that is "substantially composed, authored, or created through the use of generative artificial intelligence" must carry a disclaimer. This sounds straightforward, but the implications are significant. News organizations would need to implement processes to identify where AI was used, track it, and make it visible to readers. That's not trivial.

But the bill goes further. Before any AI-generated news content gets published, it has to be reviewed and approved by a human with "editorial control." This is where things get interesting. It's essentially saying that you can't just push a button and let AI write your news story without human oversight. Someone has to read it, verify it, and sign off on it.

The bill also requires transparency within newsrooms. Organizations have to disclose to their employees how and when AI is being used in the creation of content. This addresses a real concern: journalists discovering that their jobs are being replaced by AI without warning. It forces management to be transparent about automation and gives workers visibility into how the technology affects their roles.

Additionally, the bill includes safeguards for confidential information. News organizations have to prevent confidential information—especially information about sources—from being accessed or processed by AI systems. This is crucial for investigative journalism. If an AI system could access your confidential source database or internal notes about sources, the entire structure of investigative reporting breaks down.

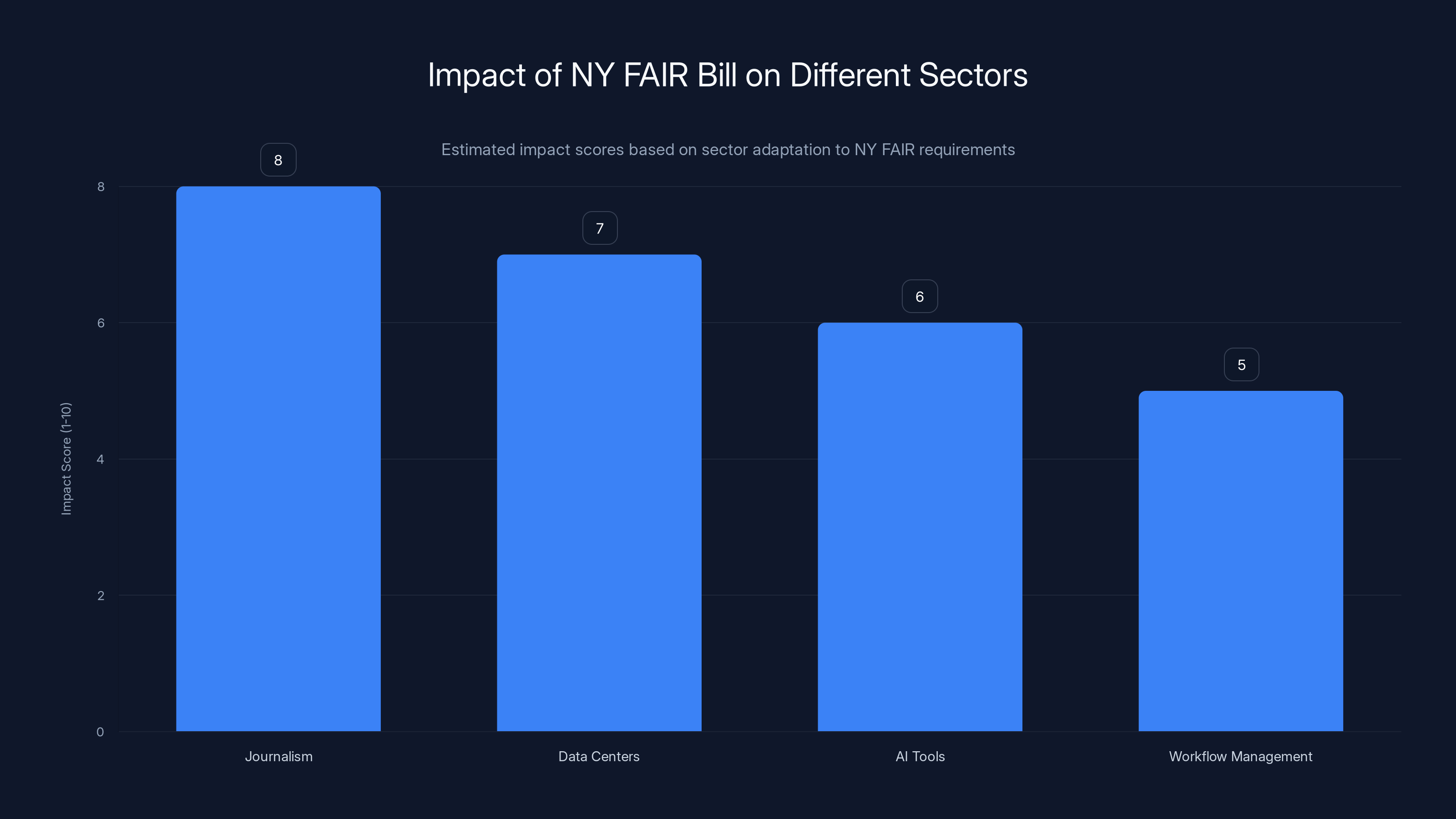

The real-world impact here is interesting. Major news organizations have already been experimenting with AI for years. The Associated Press uses AI to write earnings reports. Some outlets use AI to generate headlines or suggest story angles. What this bill does is force those experiments into the light. It makes the invisible visible.

There's also a subtler point: the bill doesn't ban AI in news. It just requires transparency and human review. This is important because it's not a sledgehammer approach. It's saying "use AI if you want, but be honest about it and maintain human editorial control." That's a more nuanced regulation than "ban AI in news," which would be technically impractical anyway.

But here's the catch. "Editorial control" is vague. What does that actually mean? If an editor glances at AI-generated content for five seconds before publishing, does that count as editorial control? Or does it require substantial revision and fact-checking? The devil's in the details, and those details will probably get hashed out in further amendments or legal challenges.

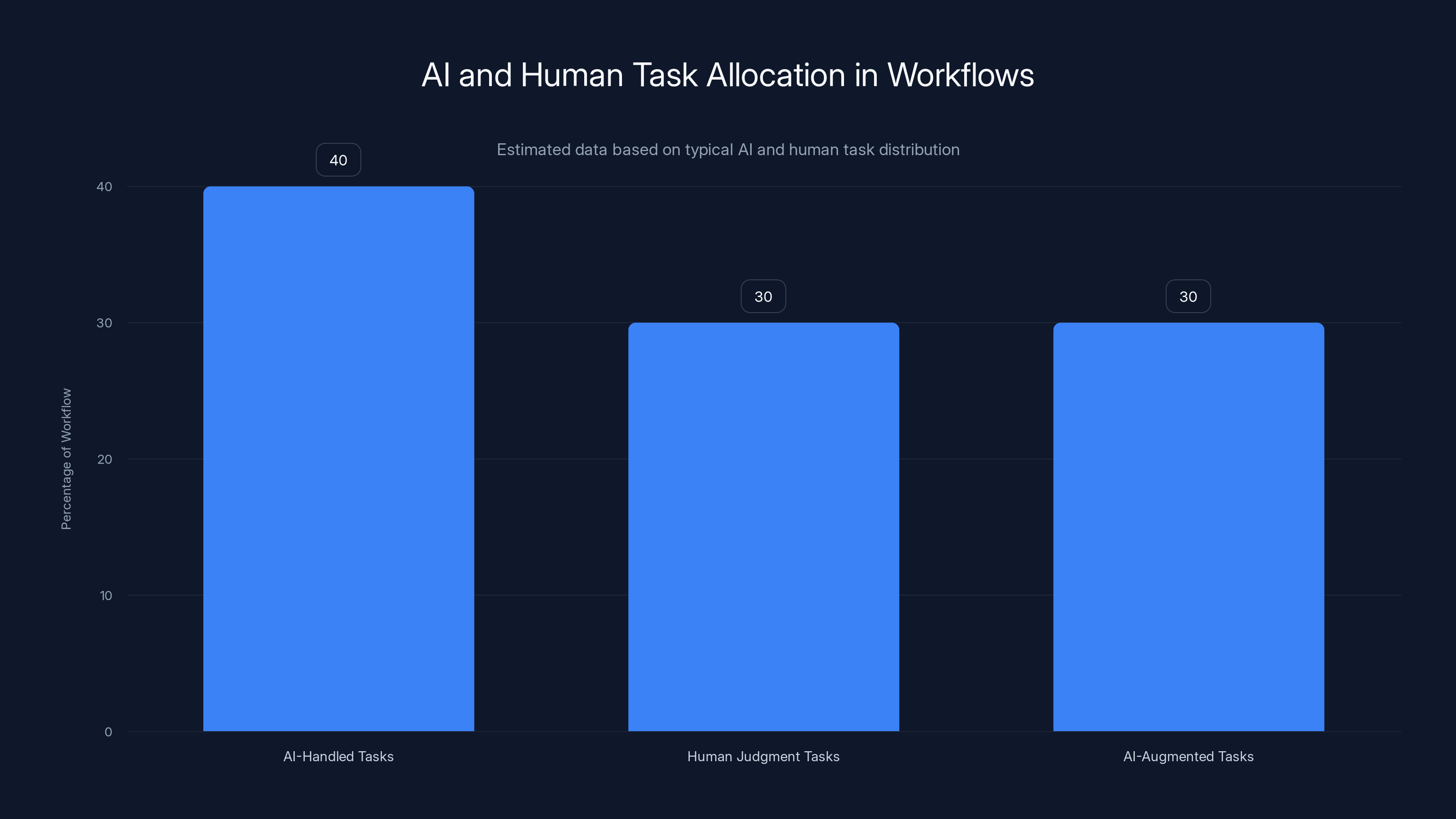

Estimated data shows a balanced approach where AI handles repetitive tasks, humans focus on judgment, and AI augments human capabilities.

Data Center Moratorium: The Infrastructure Bottleneck

While the NY FAIR News Act targets content, bill S9144 targets something equally important: the physical infrastructure that powers AI. It imposes a moratorium on new data center permits for at least three years.

Why? Because the power grid is getting crushed.

Data centers are energy-hungry beasts. Training AI models, running inference on large language models, and storing massive amounts of data require enormous amounts of electricity. New York's power infrastructure wasn't built for this kind of demand surge.

Let's look at the numbers. National Grid New York reports that requests for "large load" connections have tripled in just one year. Not doubled. Tripled. And they're projecting at least 10 gigawatts of additional demand in the next five years. For context, that's roughly the power consumption of 10 million homes.
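The homes comparison is a back-of-the-envelope unit conversion, and it is easy to check. The sketch below assumes the 10 GW figure can be treated as a continuous load and that an average home uses somewhere around 7,000 to 10,500 kWh per year; those household figures are illustrative assumptions, not numbers from the bill or from National Grid.

```python
# Back-of-the-envelope check: how many average homes equal a given continuous load?
# ASSUMPTION: the household usage figures below are illustrative, not from the article.
HOURS_PER_YEAR = 8760


def homes_equivalent(load_gw: float, kwh_per_home_per_year: float = 10_500) -> float:
    """Return how many average homes a continuous load of `load_gw` gigawatts matches."""
    avg_home_kw = kwh_per_home_per_year / HOURS_PER_YEAR  # average draw of one home, in kW
    load_kw = load_gw * 1_000_000  # 1 GW = 1,000,000 kW
    return load_kw / avg_home_kw


if __name__ == "__main__":
    # Compare a lower-usage home with a higher-usage one.
    for usage in (7_000, 10_500):
        millions = homes_equivalent(10, usage) / 1e6
        print(f"{usage:,} kWh/yr per home -> about {millions:.1f} million homes")
```

Under these assumptions the answer lands between roughly 8 and 13 million homes, so "roughly 10 million" holds up as an order-of-magnitude figure. Keep in mind that peak demand and average consumption are not the same thing, so this is a rough comparison rather than a precise one.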

Here's the problem: New York's grid is already straining. The state just approved a 9 percent rate increase for Con Edison customers over the next three years. Electric bills are climbing across the country, partly because data centers are putting pressure on power infrastructure. And New York residents are getting hit with the costs.

The state already has over 130 data centers operating. The moratorium would effectively pause growth on new data center construction, giving the grid time to upgrade and the state time to plan better infrastructure.

Now, this is where things get complicated. Data center companies, cloud providers, and AI companies obviously hate this idea. They want to build. They want to expand. A three-year moratorium essentially pauses their growth plans in the state.

But from New York's perspective, it makes sense. You can't let one industry's demand dictate power policy. You have to plan the infrastructure first, then allow growth that fits within that infrastructure. Otherwise, you end up with rolling blackouts, skyrocketing electricity costs for residents, and a broken grid.

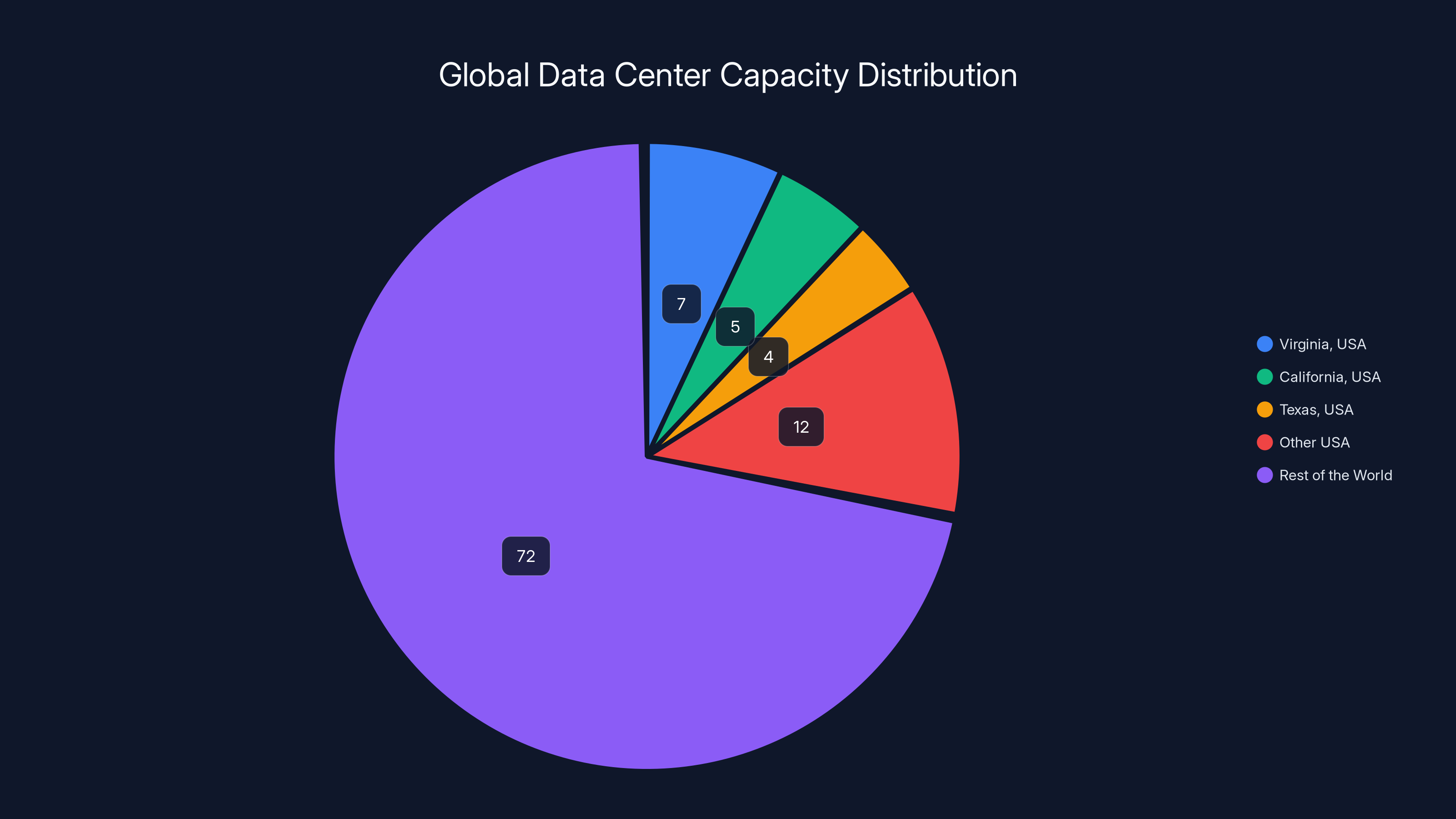

The practical effect of this bill would be significant. Companies would shift their data center construction to other states. Virginia, Texas, Ohio, and other states with cheaper power and less regulatory oversight would become even more attractive for new data centers. This is a form of regulatory arbitrage: if one state makes it hard to build, companies go somewhere else.

What's interesting is that this bill is explicitly about resident protection, not anti-AI sentiment. The framing is "rising electric and gas rates for residential, commercial, and industrial customers." It's about keeping power affordable for regular New Yorkers while the tech industry scales up.

The Broader Regulatory Landscape: Why These Bills Matter

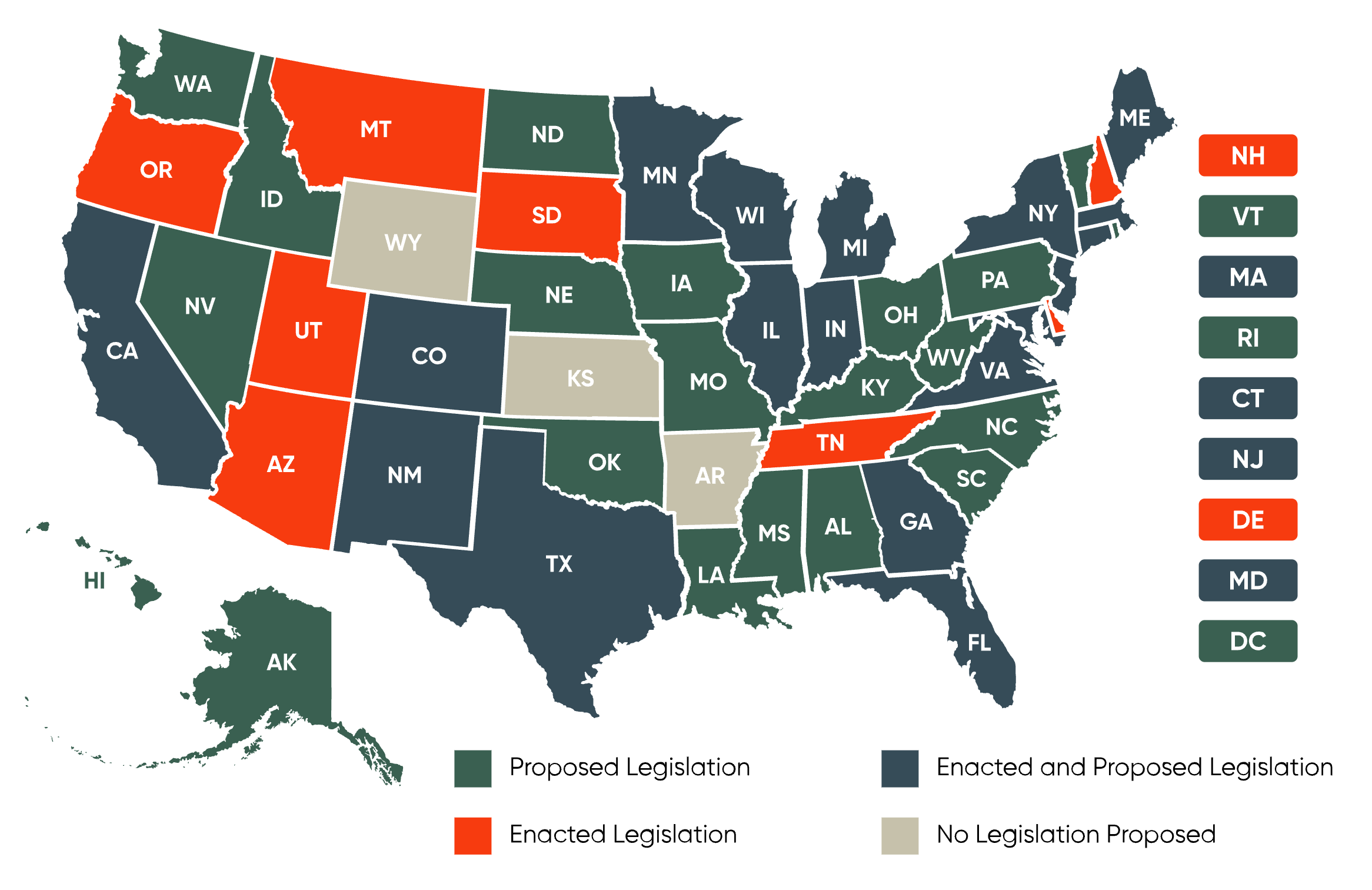

These aren't isolated moves. They're part of a broader shift toward AI regulation at the state level in America.

We've already seen California pass the California AI Transparency Act (SB 942), which requires large generative AI providers to offer free AI-detection tools and to embed disclosures in AI-generated content. The EU passed the AI Act, which is far more comprehensive and already shaping how global companies operate. And now New York is joining the club with targeted, sector-specific regulation.

What makes New York's approach interesting is that it's pragmatic. It's not trying to regulate AI in general. It's targeting specific problems: misinformation in news and infrastructure strain. It's saying "we're not against AI, but we need rules around how it's used in these specific contexts."

This creates a patchwork of regulations that companies have to navigate. If you're a news organization operating in New York, you need to comply with NY FAIR. If you're a data center operator, you need to work within the moratorium. If you're operating nationally, you might have to implement systems that work across all state regulations.

That's actually good for regulation in the long term. When different states experiment with different approaches, you get to see what works and what doesn't. California's been the testing ground for privacy regulations for years, and their approach influenced regulations everywhere else. New York could end up being the testing ground for AI governance.

But there's also a risk. If regulation becomes too fragmented, it creates compliance nightmares for companies and may actually stifle innovation. A startup developing AI tools for news organizations shouldn't have to build separate compliance systems for New York, California, Massachusetts, and whatever other states pass AI bills.

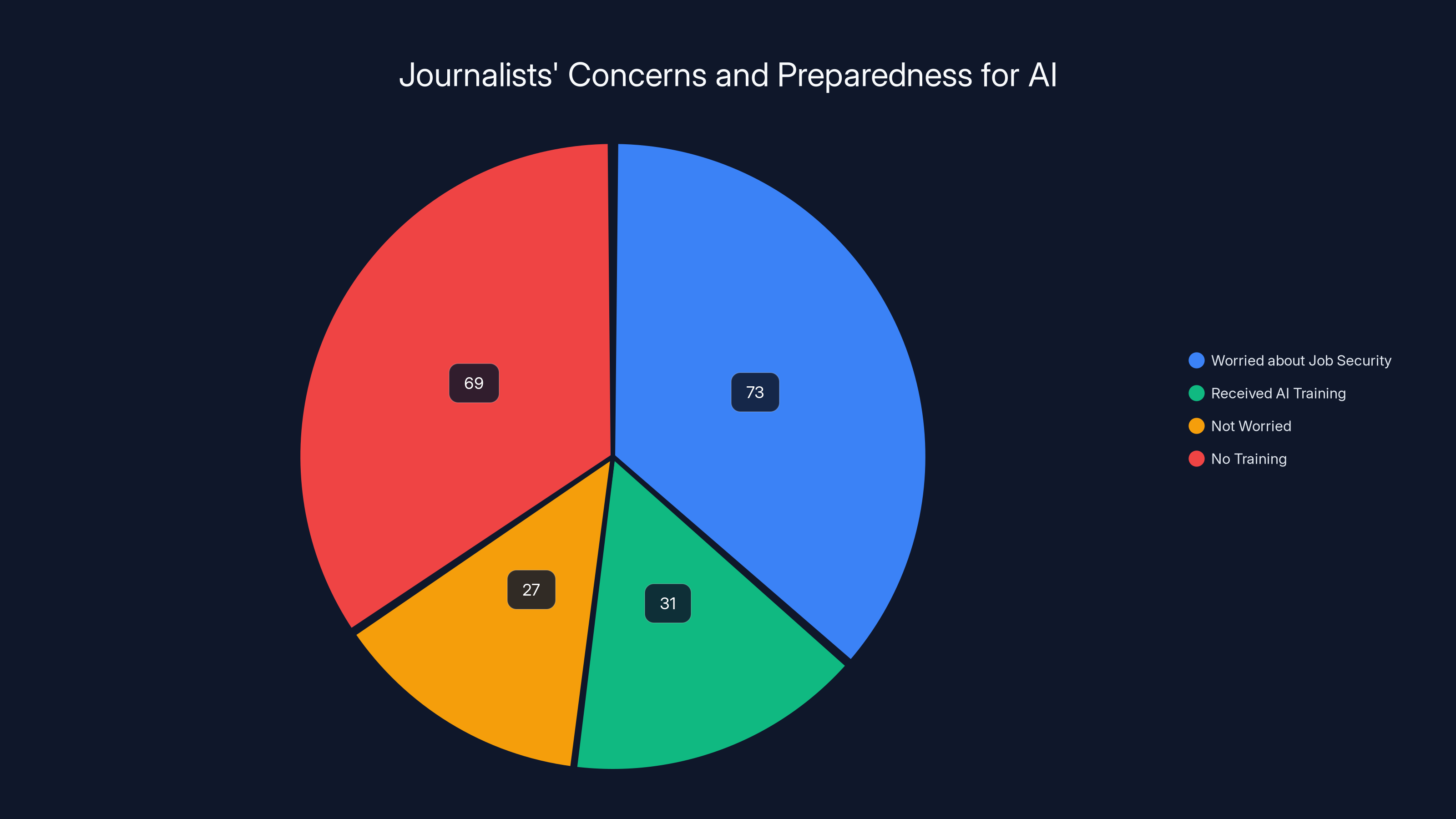

A significant 73% of journalists are worried about job security due to AI, yet only 31% have received training on AI tools. This highlights a gap between concern and preparedness.

The News Industry Response: Labor Concerns

News organizations are most directly affected by the NY FAIR News Act, and much of the debate around it is about jobs.

The NY FAIR News Act represents a threat to the cost-cutting strategies many outlets have embraced. Over the past fifteen years, newsroom sizes have shrunk dramatically. In 2008, there were about 51,000 newspaper journalists in America. By 2023, that number had dropped to around 28,000, a decline of roughly 45 percent. That's a devastating loss of jobs and expertise.

AI offers a way to continue producing content with fewer journalists. Some outlets have already started replacing entry-level reporters with AI tools. The NY FAIR Act forces transparency around this, which could create political pressure not to use AI so aggressively.

From a labor perspective, that's good. Journalists get to know if their jobs are at risk. They can organize, negotiate, or transition to new roles. From a business perspective, it complicates cost reduction strategies.

But here's the nuance: the bill doesn't ban AI. It just requires transparency and human review. Smart news organizations could actually use AI to augment their journalists, not replace them. AI could handle routine tasks like data entry, headline suggestions, or transcription, freeing up journalists to do what they do best: investigate, interview, and analyze.

The real issue is that many news organizations haven't thought that carefully about implementation. They just see AI as a way to cut costs. The NY FAIR Act forces them to think more seriously about how they integrate AI into their operations.

Data Center Economics: The Cost of Growth

The data center moratorium brings up interesting questions about economic development and infrastructure planning.

Data centers are supposed to be good for local economies. They bring jobs, investment, and tax revenue. A single large data center can support hundreds of construction jobs, though its permanent staff is usually much smaller, and it can contribute millions in taxes. Communities have historically competed to attract data centers.

But the hidden cost is infrastructure strain. Power companies have to invest billions in upgrading the grid to support new data centers. Those costs get passed on to regular residents through higher electricity bills. So in effect, residential customers can end up subsidizing the infrastructure for tech companies.

New York's moratorium is a way of saying: "Wait. Let's make sure our infrastructure is actually ready before we add more demand." It's a version of regulated growth rather than uncontrolled growth.

The economic impact could be substantial. Data center construction in New York represents billions in investment. Pausing that has ripple effects. Construction jobs get delayed. Equipment suppliers see reduced demand. Real estate developers lose opportunities.

On the flip side, the moratorium gives New York time to upgrade its power infrastructure. It allows the state to invest in grid modernization, renewable energy, and distributed generation. It creates a chance to plan intelligently rather than react to crisis.

The NY FAIR News Act emphasizes AI transparency and human oversight, while the data center moratorium focuses on infrastructure sustainability. Estimated data reflects key focus areas.

Technical Challenges: How Would NY FAIR Actually Work?

On paper, the NY FAIR News Act sounds reasonable. Label AI-generated content. Have a human review it. Disclose to employees. Protect sources. Simple.

In practice, implementation gets messy.

First, there's the identification problem. What counts as "substantially composed" by AI? If a journalist writes a story and uses AI to generate a headline, does the entire article get labeled? If an editor uses AI for spell-check and grammar suggestions, does that trigger the labeling requirement? What if AI was used just to organize research or suggest a story structure?

You need clear thresholds. Is it 50 percent AI content? 25 percent? 10 percent? The bill doesn't specify, which means interpretations could vary, and legal challenges would likely follow.
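To see why an unspecified threshold matters, here is a minimal sketch. It assumes, hypothetically, that a newsroom could measure what share of a story's published text came from a generative AI tool; the bill defines no such metric, and measuring it reliably is its own hard problem. The story names and word counts are invented. The same three stories get labeled differently depending on which cutoff a regulator or court ends up choosing.

```python
from dataclasses import dataclass


@dataclass
class Story:
    slug: str
    ai_words: int     # words in the published text that came from an AI tool (hypothetical metric)
    total_words: int

    @property
    def ai_share(self) -> float:
        return self.ai_words / self.total_words if self.total_words else 0.0


def needs_label(story: Story, threshold: float) -> bool:
    """Hypothetical reading of 'substantially composed': label when AI share >= threshold."""
    return story.ai_share >= threshold


stories = [
    Story("earnings-recap", ai_words=540, total_words=600),   # 90% AI-written
    Story("council-vote", ai_words=180, total_words=900),     # 20% AI-written
    Story("feature-profile", ai_words=12, total_words=2400),  # only the headline came from AI
]

for threshold in (0.50, 0.25, 0.10):
    labeled = [s.slug for s in stories if needs_label(s, threshold)]
    print(f"threshold {threshold:.0%}: label {labeled}")
```

The council story flips from unlabeled to labeled as the cutoff drops from 25 to 10 percent, while the feature with an AI-written headline is never labeled under a share-based rule at all, even though an "any AI use" reading of the bill would catch it.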

Second, there's the human review requirement. Who counts as a human with "editorial control"? A junior editor? A senior reporter? A copy editor? Someone who's never seen the story before? Someone who wrote the story? The specifics matter because they determine how labor-intensive the process becomes.

Third, there's the source protection problem. How do you prevent AI systems from accessing confidential information about sources without making the AI system useless? If you're using AI to help organize and analyze articles, but you exclude confidential source information, you're working with incomplete data. The AI becomes less useful.
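One way a newsroom might approach the source-protection requirement is to strip confidential fields from a record before it ever reaches an AI tool. The sketch below is hypothetical: the record layout, the field names, and the use of an explicit allowlist are assumptions for illustration, not anything the bill prescribes. It also shows the trade-off described above, since the AI tool sees less of the record.

```python
from typing import Any

# Hypothetical allowlist: only these fields may ever be sent to an AI tool.
# An allowlist (rather than a blocklist) fails safe: a newly added field,
# such as a source's phone number, is withheld until someone approves it.
AI_SAFE_FIELDS = {"slug", "headline", "body", "published_quotes"}


def prepare_for_ai(record: dict[str, Any]) -> dict[str, Any]:
    """Return a copy of `record` containing only allowlisted fields."""
    return {key: value for key, value in record.items() if key in AI_SAFE_FIELDS}


draft = {
    "slug": "water-contract-probe",
    "headline": "City awarded water contract without bidding",
    "body": "Records show the contract was signed in March...",
    "published_quotes": ['"We followed the rules," a city spokesperson said.'],
    "source_identities": ["Employee A (procurement office)"],
    "reporter_notes": "Met source in person; do not email.",
}

safe = prepare_for_ai(draft)
print(sorted(safe))                    # fields the AI tool would see
print(sorted(set(draft) - set(safe)))  # fields withheld from the AI tool
```

An allowlist like this only protects structured fields. A confidential detail pasted into the body text would still pass through, which is part of why the source-protection requirement is harder to enforce than it sounds.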

Fourth, there's the definitional issue around "news." Is opinion journalism covered? Commentary? Satire? Analysis? The bill specifies "news," but that term is increasingly blurry.

These aren't deal-breakers. They're implementation details that would need to be worked out through regulation, legal precedent, and industry standard-setting. But they highlight the complexity of translating a well-intentioned bill into practical rules that businesses can actually follow.

The Power Grid Upgrade Reality: What Comes After the Moratorium?

The data center moratorium buys time, but the real question is what happens during those three years.

New York needs to significantly upgrade its power infrastructure. This requires investment, planning, and coordination between utilities, the state government, and private developers.

Some of that's already happening. New York has been investing in renewable energy, particularly wind power. The state has committed to 70 percent renewable electricity by 2030 and 100 percent zero-emission electricity by 2040. That's going to be crucial for powering data centers sustainably.

But renewables alone won't solve the problem. The state also needs better grid management technology. Smart grids, demand response programs, and battery storage systems can help distribute power more efficiently and smooth out demand peaks.

There's also the question of whether data centers themselves need to change. Could they operate more efficiently? Could they use more renewable energy directly? Could they implement demand-side management to shift usage away from peak hours?
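Demand-side management, one of the options raised above, can be illustrated with a toy model. The sketch assumes a hypothetical data center with a block of deferrable batch work (for example, non-urgent model training) that can run in any hour; the hourly demand figures and block sizes are invented for illustration. It compares spreading that work evenly across the day with greedily placing each one-hour chunk into whichever hour currently has the lowest grid load.

```python
# Toy demand-side management: schedule deferrable data center load into low-demand hours.
# ASSUMPTIONS: hourly grid demand values are made up; each deferrable block is 50 MW for 1 hour.


def schedule_flexible(grid_mw: list[float], blocks: int, block_mw: float) -> list[float]:
    """Place `blocks` one-hour chunks of `block_mw` into the currently least-loaded hour."""
    load = list(grid_mw)
    for _ in range(blocks):
        hour = min(range(len(load)), key=lambda h: load[h])  # least-loaded hour right now
        load[hour] += block_mw
    return load


# Hypothetical 6-hour slice of regional demand in MW (evening peak at hour 3).
grid = [900.0, 850.0, 950.0, 1200.0, 1100.0, 800.0]
blocks, block_mw = 6, 50.0

# Baseline: the same work spread evenly across every hour, including the peak.
naive = [mw + blocks * block_mw / len(grid) for mw in grid]

shifted = schedule_flexible(grid, blocks, block_mw)
print("peak with even spread:", max(naive))
print("peak with shifting:   ", max(shifted))
```

Both schedules deliver the same total energy, but the shifted one leaves the grid's peak where it already was instead of raising it. Real grid operators and data centers use far more sophisticated tools, and not all data center work is deferrable (serving live users usually isn't), so treat this only as an illustration of the principle.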

These are the kinds of questions that should be addressed during the moratorium period. If New York comes out of the moratorium with a clearer plan for sustainable data center growth, then it will have been time well spent. If it just lifts the moratorium and lets things go back to business as usual, then it won't have accomplished much.

The NY FAIR bill significantly impacts journalism and data centers, requiring adaptation to transparency and efficiency standards. Estimated data.

Comparing New York's Approach to Other States and Regions

How does New York's approach compare to what other places are doing?

California's been more focused on broad AI transparency and accountability. The California AI Transparency Act (SB 942) requires large generative AI providers to offer AI-detection tools and to embed disclosures in AI-generated content. It applies across industries rather than targeting specific sectors. California's also pushing for AI safety standards and worker protections.

Europe's AI Act is far more comprehensive and technically sophisticated. It categorizes AI systems by risk level and applies different rules to high-risk systems. It's a more systematic approach, but it's also more complex and harder to implement.

New York's approach is targeted and pragmatic. It's not trying to regulate all AI. It's addressing specific problems in specific sectors. This might be more politically feasible and easier to implement than broader regulations.

Massachusetts has focused on autonomous vehicles, creating regulations for testing and deployment. Texas and other conservative-leaning states have generally preferred lighter-touch approaches to AI regulation.

What we're seeing is a genuine regulatory experiment. Different jurisdictions are trying different approaches. Over time, we'll learn which approaches work, which create unintended consequences, and which get widely adopted.

New York's positioning itself as an AI regulation leader, similar to how California became the privacy regulation leader. That comes with benefits (influence over national standards) and costs (businesses might relocate to less regulated states).

The Economics of AI-Generated News: Is It Actually Worth It?

Underlying all this is an economic question: is AI-generated news actually cost-effective and good for news organizations?

The theory is straightforward. Automated news reduces labor costs. You don't need as many journalists if you can generate content with AI. This is particularly attractive for routine news like earnings reports, weather summaries, or sports recaps where the format is repetitive.

The Associated Press has reported good results with their AI earnings reporting. They generate thousands of reports annually, which would require hundreds of journalists to produce manually. The AI lets them cover more companies and provide more comprehensive financial news.

But there are downsides. AI-generated news has errors. It lacks context and nuance. It can't investigate or interview. It can't make editorial judgments about what's important. For anything beyond routine reporting, human journalists are still essential.

Moreover, if every news organization uses AI in the same way, you get homogenized news. Everyone's running the same AI on the same data, producing similar stories. That reduces the diversity of perspectives and coverage that a healthy news ecosystem needs.

From a consumer perspective, there's also a trust issue. Some studies suggest that readers trust human-authored content more than AI-generated content, even when the AI content is accurate. Transparency about AI authorship is important, but it might also reduce reader trust.

So the economic case for AI in news isn't as clear-cut as it initially seems. It works well for specific applications, but not as a general replacement for journalism.

Virginia hosts approximately 7% of global data center capacity, leading the USA. New York's moratorium could shift more capacity to Virginia, increasing regional concentration. (Estimated data)

Automation and Workflow Optimization in News Operations

Interestingly, this brings up a broader point about automation in professional workflows.

There's a distinction between automation that replaces workers and automation that augments them. AI can handle routine, repetitive tasks like data processing, transcription, initial research, or formatting. That frees up journalists to focus on more interesting, higher-value work like investigation, analysis, and storytelling.

Tools that assist without replacing are probably where the most value lies. A journalist using AI to transcribe interviews faster, organize research automatically, or get headline suggestions isn't being replaced. They're being made more productive.

This is why context matters in the NY FAIR Act. If it pushes news organizations toward "AI-assisted journalism" rather than "AI-replacement journalism," it could actually be good for both journalists and news quality.

The bill's requirement for human editorial control points in this direction. You can't just publish AI content. Someone has to review it. That requirement, if implemented thoughtfully, could actually encourage a healthier relationship between journalists and AI tools.

But it requires intentional implementation. News organizations have to think about how to structure workflows so that AI augments rather than replaces. That's harder than just cutting staff and hoping AI can handle the work.

What Happens Next: The Path Forward

Neither of these bills has passed yet. They're in the legislature. The actual outcome depends on legislative processes, political will, and industry lobbying.

Data center companies will definitely lobby against the moratorium. They'll argue it's anti-business, that it will drive investment to other states, that it will harm economic development. Those arguments have real weight. Economic development is politically popular, and states compete for data center investment.

News organizations and tech companies will lobby against or seek to modify NY FAIR. They'll argue it's too vague, too burdensome, that it will stifle innovation. They'll try to get exemptions or narrower definitions. Some of those arguments are reasonable.

What's likely is that if these bills pass, they'll be modified from their current forms. Thresholds will be defined. Exemptions will be carved out. Compliance pathways will be clarified. But the core ideas—transparency about AI in news and managed growth of data center capacity—are likely to stick around in some form.

If they pass in New York, expect similar bills in other states. California, Massachusetts, and others will probably follow. You could end up with a patchwork of state-level AI regulations that companies have to navigate.

That's actually valuable long-term. A patchwork of state regulations is suboptimal, but it's better than complete regulatory chaos. Eventually, regulations converge or the federal government steps in with national standards. We've seen this pattern with privacy regulations, environmental regulations, and labor standards.

Longer-Term Implications: The AI Regulation Trend

These bills are part of a larger trend toward AI governance. Around the world, governments are waking up to the fact that AI is powerful enough to warrant regulation.

The question isn't whether AI will be regulated. It's what form that regulation will take. Overly restrictive regulation could stifle innovation. Too-light regulation could allow harmful practices. The challenge is finding the balance.

New York's approach is interesting because it's targeted rather than broad. It doesn't try to regulate all AI applications. It addresses specific harms: misinformation in news and grid strain from data centers. This targeted approach is probably more sustainable than attempting to regulate all AI at once.

What's also important is that these regulations are starting to happen at the state level before major federal regulation. This gives states a chance to experiment and shape regulation before it gets locked in at the national level. It's messy, but it's also how American federalism works.

The risk is that if too many states regulate differently, you get fragmentation that makes compliance impossible. Companies would end up having to maintain separate systems for different states. That's expensive and inefficient.

The opportunity is that state-level experimentation can show what works. If New York's approach to news labeling works well, other states might adopt it. If it creates problems, states can learn from New York's mistakes. Over time, best practices emerge.

The Sustainability Angle: AI, Data Centers, and Climate

Hidden in the data center moratorium is a climate story.

Data centers consume enormous amounts of electricity. As AI scales, that consumption is growing rapidly. Some projections suggest AI could account for 5 to 10 percent of global electricity consumption within the next decade, though estimates vary widely.

If that electricity comes from fossil fuels, it's a climate problem. If it comes from renewables, the impact is much lower. New York's moratorium effectively forces the state to plan for sustainable energy infrastructure before expanding data center capacity.

This is good climate policy, even if it's framed as power grid management. By limiting data center growth to what the grid can sustainably support, New York reduces the risk that fossil-fuel plants end up filling the gap when data center demand peaks.

Other states and countries are starting to think about this too. Some data center operators are committing to renewable energy. Cloud providers are talking about carbon-neutral operations. But these are commitments, not regulations. A three-year moratorium gives New York time to actually build the renewable infrastructure to support data center growth.

This could become a template. "Pause growth in energy-intensive industries until you can support it with renewable energy." It's a pragmatic approach to climate policy that also addresses immediate infrastructure concerns.

Industry Perspectives: What Tech Companies Are Saying

Big tech companies have been cautiously quiet about these bills, but industry associations are definitely paying attention.

The data center industry argues that these moratoriums are short-sighted. Data centers bring jobs, tax revenue, and economic development. A moratorium might feel good politically, but it has real economic costs.

They also argue that data centers are getting more efficient. Modern data centers use less power per unit of computing than older facilities. Blanket moratoriums ignore these efficiency improvements.

On the news side, platforms like Google and Meta have complex interests. They don't want to be seen as replacing journalists, but they also benefit from AI-generated content and automation. They'll probably stay on the sidelines and let news organizations, which the bill actually covers, deal with the regulatory fallout.

AI startups and open-source projects would likely oppose broad AI regulation but might support targeted approaches like NY FAIR, since it applies mainly to news organizations, not AI developers.

The politics here are complicated because different parts of the tech industry have different interests. There's no unified "tech industry" position. There's cloud providers, AI companies, platforms, news companies, and infrastructure developers, all with different stakes.

The Broader Question: Can Regulation Keep Pace with Technology?

One of the fundamental questions underlying all this is whether regulation can actually keep pace with technology.

AI is moving fast. Companies are deploying new models, new applications, new use cases constantly. By the time a bill becomes law, the technology landscape has often changed significantly.

There's a structural tension here. Regulation requires a slow, deliberate process. Companies need to weigh in. Legislators need to debate. Lawyers need to review. By the time a regulation is finalized, it might be addressing yesterday's concerns with yesterday's understanding of the technology.

But completely unregulated technology development creates different problems. Companies will optimize for profit, which doesn't always align with public interest. Without guardrails, you get harmful applications, labor displacement, and environmental damage that doesn't get addressed until after the harm is done.

The answer probably isn't perfect regulation that keeps pace with technology. That's impossible. The answer is frameworks that are flexible enough to adapt as technology changes while still providing clear rules.

New York's targeted approach actually works better in this regard than broader regulation. "Label AI-generated news and require human review" is a rule that could stay workable for years, even as AI gets more sophisticated. "Pause data center construction until infrastructure is ready" is a principle that applies regardless of what new data center technologies emerge.

Compare that to a broad rule like "AI systems must be transparent about their reasoning." That sounds good but becomes impossible to implement as models get more complex. How do you make a 500-billion-parameter language model transparent? That's a technical problem that might not have a good answer.

So targeted regulation on specific harms, applied through flexible mechanisms, is probably more sustainable than trying to regulate AI in general.

FAQ

What is the NY FAIR News Act?

The New York Fundamental Artificial Intelligence Requirements in News Act (NY FAIR News Act) is proposed legislation that requires news content substantially created by AI to carry a disclaimer and be reviewed by a human with editorial control before publication. It also mandates that news organizations disclose AI usage to employees and implement safeguards to protect confidential sources from being accessed by AI systems. The bill aims to address the spread of AI-generated misinformation in journalism while maintaining transparency about how AI is used in news production.

How would the NY FAIR News Act be enforced?

The bill's current language doesn't spell out detailed enforcement mechanisms, so these would likely need to be defined in regulations or through legal precedent. Plausibly, the New York Attorney General's office would play a role. News organizations would likely need to keep documentation of AI usage, human review processes, and disclosure statements, and violations could result in fines or legal action. In practice, enforcement would depend heavily on how the state defines key terms like "substantially composed" and "editorial control."

What is the data center moratorium bill (S9144)?

Bill S9144 imposes a moratorium on issuing permits for new data centers in New York for at least three years. The moratorium is intended to give the state time to upgrade its power infrastructure before allowing new data center development. The bill cites rising electricity rates for residents and commercial customers, increased power demand from existing data centers, and the need for sustainable infrastructure planning. After the three-year period, the state would presumably allow data center development to resume, assuming infrastructure upgrades have been completed.

Why is the data center moratorium necessary?

New York's power grid is experiencing unprecedented demand from data centers. Requests for large power connections have tripled in one year, and utilities project 10 gigawatts of additional demand over the next five years. This growing demand strains the grid and pushes up electricity rates for residential and commercial customers. The state has already approved a 9 percent rate increase for Con Edison customers over three years. The moratorium provides time to upgrade infrastructure and plan for sustainable energy sources before allowing further data center expansion. Without the moratorium, the state risks grid instability and further rate increases for regular residents.

What are the economic implications of these bills?

The data center moratorium could reduce investment in New York, potentially redirecting data center construction to states with less regulation. That would affect construction jobs, real estate development, and tax revenue. On the other hand, preventing grid strain protects existing residents and businesses from higher electricity costs. The NY FAIR News Act could increase compliance costs for news organizations that use AI extensively, but it could also help preserve journalism jobs by making AI replacement more difficult and more visible. Both bills involve trade-offs between economic development and managing the externalities of rapid tech growth.

Could these bills become models for other states?

Highly likely. New York and California have historically been bellwethers for state-level regulation. If these bills pass and are effective, other states will probably follow with similar legislation. This could lead to a patchwork of different state AI regulations, or it could lead to broader consensus on best practices that eventually influences federal regulation. The bills' targeted approach to specific problems, rather than attempting to regulate all AI broadly, makes them more likely to be adopted by other states. However, different states might modify the approach based on their specific circumstances and priorities.

What happens if companies don't comply with these regulations?

For the NY FAIR News Act, non-compliance could result in enforcement action from the New York Attorney General, including cease-and-desist orders, fines, or civil litigation. News organizations could face legal liability if they publish AI-generated content without proper disclosure or human review. For the data center moratorium, companies would be unable to obtain permits for new facilities, effectively stopping new construction. Data center operators might challenge the moratorium in court, arguing it violates property rights or interstate commerce provisions. Both bills would likely face legal challenges, and courts would ultimately determine how strictly they're enforced.

How do these bills compare to AI regulation in other countries?

New York's approach is more targeted and sector-specific than the EU's comprehensive AI Act, which applies risk-based rules to AI systems broadly across industries. New York's bills also avoid the prescriptive technical standards that the EU requires. Compared to California's AI Transparency Act, New York's approach focuses on specific harms rather than general disclosure requirements. The UK and other countries have preferred light-touch regulation with industry self-governance, while New York is moving toward more active government oversight. The bills represent a middle ground between Europe's comprehensive regulation and the lighter approaches taken in most of the United States.

Could AI help news organizations comply with the NY FAIR News Act?

Actually, yes. News organizations could use AI tools to help document and track AI usage in their workflows, flagging content that requires special disclosure or review. However, this creates a somewhat circular situation: using AI to track AI usage. The deeper issue is that AI could help with compliance infrastructure but can't reduce the underlying requirement for human editorial review. Organizations might use AI to streamline content creation and then require human approval, but they still need to hire or designate humans with editorial authority to review content before publication. The bill doesn't eliminate the need for human editorial staff.

What would happen to data center jobs under the moratorium?

Under a three-year moratorium, data center construction jobs in New York would effectively pause. Workers would either relocate to other states with data center projects or transition to other industries. However, the moratorium could create opportunities in other sectors: grid infrastructure upgrades, renewable energy installation, and data center efficiency improvements would likely accelerate as the state prepares for post-moratorium growth. The transition period would be difficult for workers already established in the data center industry, but longer-term, the diversified economic activity might create more stable employment than continued rapid data center growth.

Automation and AI in Your Workflow

While New York debates how to regulate AI in news and infrastructure, organizations of all sizes are figuring out how to integrate AI responsibly into their operations.

The lessons from these bills apply beyond just news and data centers. Whether you're building software, creating content, managing operations, or running a business, you're probably wondering about AI's role in your workflow.

The key insight from NY FAIR is that transparency and human oversight actually work together with effective automation. It's not "AI replaces humans" or "no AI at all." It's "AI handles what it's good at, humans handle what requires judgment, and we're transparent about the combination."

For any organization deploying AI, consider: What tasks are genuinely better handled by AI? What tasks absolutely require human judgment? Where can you use AI to augment human capabilities rather than replace them? Building this clarity prevents the worst outcomes while capturing the efficiency benefits AI offers.

Tools that help you automate workflows, generate content, and streamline processes can be valuable—if implemented thoughtfully. That's where platforms like Runable come in. Runable offers AI-powered automation for creating presentations, documents, reports, images, videos, and slides starting at $9/month. It's designed to help teams automate repetitive workflow tasks while maintaining human oversight and control over final outputs.

The point is that good AI implementation isn't about cutting people. It's about making people more productive and freeing them from tedious work. News organizations that use AI to let journalists focus on investigation rather than routine reporting tend to be happier with the outcome. Teams that use automation to handle data entry and formatting spend more time on creative work.

But that requires intentional implementation. It requires thinking about your workflow, identifying where AI genuinely helps, and ensuring humans remain in control of important decisions.

New York's bills, whatever their flaws, point toward this. Transparency about AI usage. Human review of AI outputs. Safeguards for sensitive information. These aren't anti-innovation. They're pro-responsibility.

As you think about adopting AI and automation in your organization, use NY FAIR as a checklist: Are you being transparent about where you're using AI? Is there human review of AI outputs? Are you protecting sensitive information? Are you being thoughtful about how automation affects your team?

If you're working through these questions, Runable's platform provides a framework for automating content and reports while maintaining clear human control points. The goal is productivity, not replacement. Accountability, not opacity.


What This Means for You

If you're in journalism, take NY FAIR seriously. Start understanding how your organization uses AI today. Document it. Make sure your workflow can accommodate the transparency and review requirements the bill mandates. If you haven't thought about this yet, now's the time.

If you're in data centers or infrastructure, the proposed three-year moratorium would change your growth plans in New York. Consider the pause an opportunity to invest in efficiency and renewable energy sources. Companies that prove they can grow responsibly will have an easier path forward.

If you're building or using AI tools, these bills are a reminder that regulation is coming. Not everywhere yet, but in major markets. Building responsibility into your products now—transparency, human oversight, data protection—positions you well for whatever regulations emerge.

And if you're managing any kind of workflow or team that uses AI, the principles underlying these bills apply: Be clear about where you're using AI. Maintain human control over important decisions. Protect sensitive information. Be transparent with stakeholders.

New York's bills might feel like they're just about news and data centers. But they're really about how we integrate powerful technology into society responsibly. That's a question every organization needs to answer.

Key Takeaways

- NY FAIR News Act requires labels on AI-generated news, human editorial review, newsroom transparency, and source protection safeguards

- Data center moratorium bill (S9144) would pause new permits for at least 3 years due to power grid strain and rising electricity costs affecting residents

- Requests for large power connections have tripled in one year, and utilities project 10 gigawatts of additional demand over the next five years

- Implementation challenges include defining 'substantially composed' by AI, establishing editorial control standards, and protecting confidential information

- New York's targeted regulatory approach positions it as an AI governance leader, likely influencing regulation in other states
