X's AI-Powered Community Notes: How Collaborative Notes Are Reshaping Fact-Checking [2025]

When X announced it was letting artificial intelligence write the first draft of Community Notes, the internet had opinions. Some saw it as a clever way to speed up fact-checking on the world's busiest information network. Others worried it was another step toward letting algorithms decide what's true. According to Engadget, this move is part of a broader trend of integrating AI into social media platforms to enhance content moderation.

Honestly? Both perspectives have merit.

X's latest experiment with Community Notes represents something bigger than adding AI to a moderation tool. It's a fundamental shift in how platforms approach fact-checking in real time. Instead of waiting for human volunteers to painstakingly write accurate context for misleading posts, X now has AI generate draft notes that humans refine, rate, and approve. Vocal Media has explored a related theme: the dual role of AI in both spreading and combating misinformation.

The company calls this "collaborative notes," and it's the most significant change to Community Notes since the program launched. But what does it actually mean for users, for misinformation fighters, and for the future of fact-checking on social media?

Let's dig into it.

What Are Community Notes, Really?

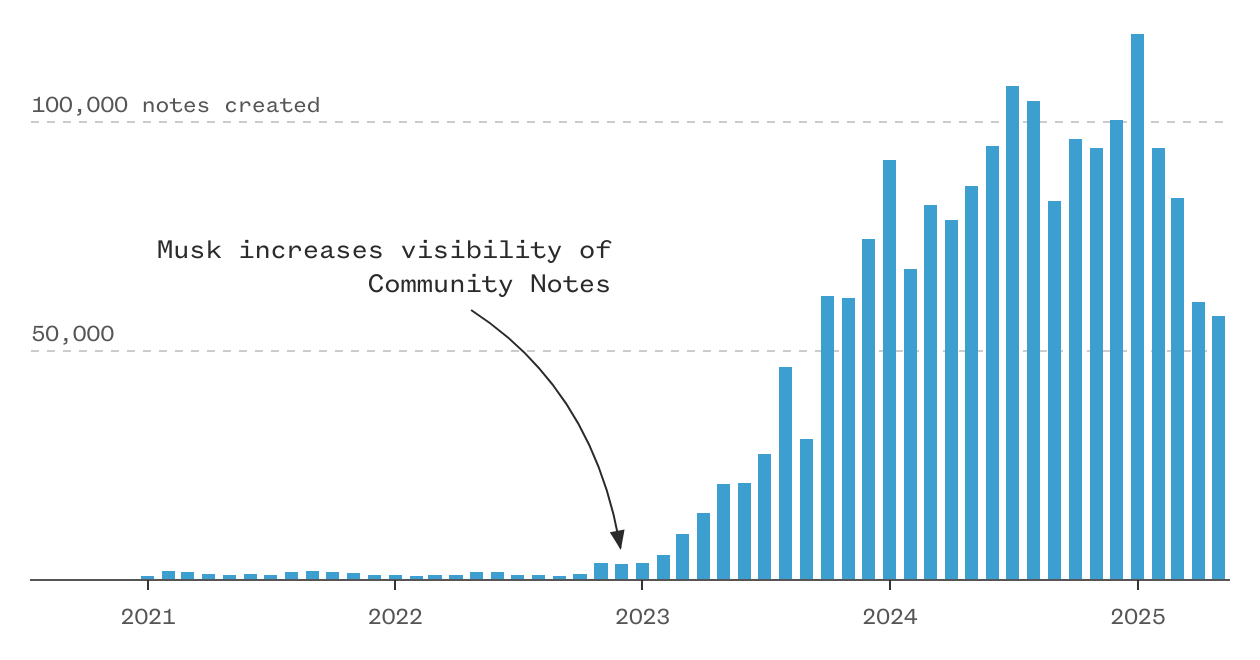

Community Notes didn't appear out of nowhere. X (then still Twitter) launched the program in 2021 under the name Birdwatch, because the company recognized something fundamental: the platform had a misinformation problem that no amount of fact-checkers or journalists could solve alone. This aligns with a Britannica analysis that highlights the limitations of traditional fact-checking methods in the digital age.

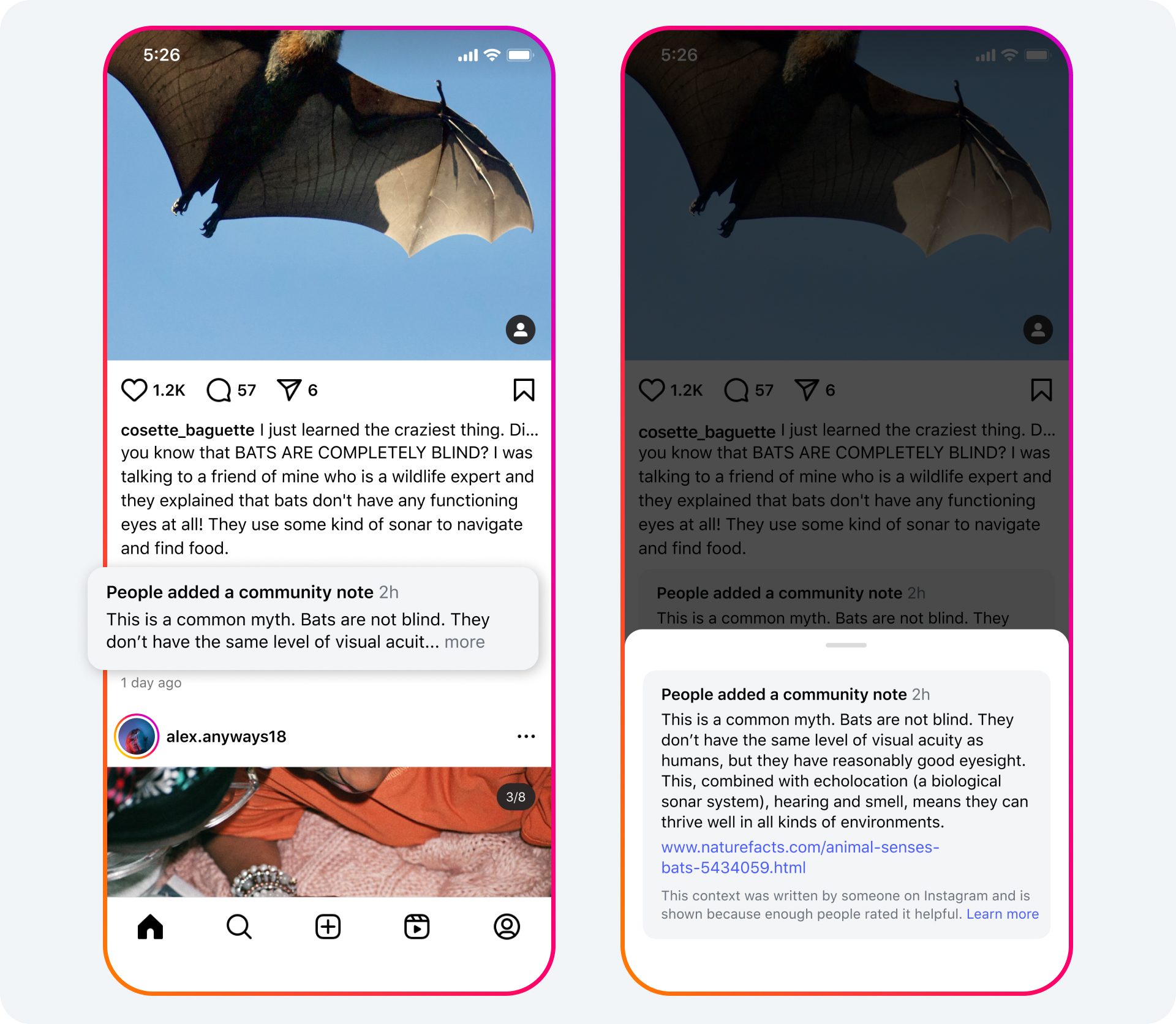

The core idea was simple but radical: let everyday people who use the platform write factual notes about misleading posts. Not professional fact-checkers. Not journalists. Regular users who understood their communities and could identify when someone was spreading nonsense.

It worked surprisingly well. Really well, actually. Studies showed that Community Notes reduced the spread of misinformation more effectively than traditional fact-checking labels. People took them seriously because they weren't coming from some distant corporation claiming authority. They came from neighbors. From people in the same digital space.

But there's always been a problem with Community Notes: speed.

Writing a good note takes time. You need to verify claims, find sources, synthesize information clearly, and make sure your note actually addresses what's misleading about the original post. A skilled note writer might spend 15 to 30 minutes crafting a single note. During that time, the original post keeps getting amplified, spreading to hundreds of thousands of people.

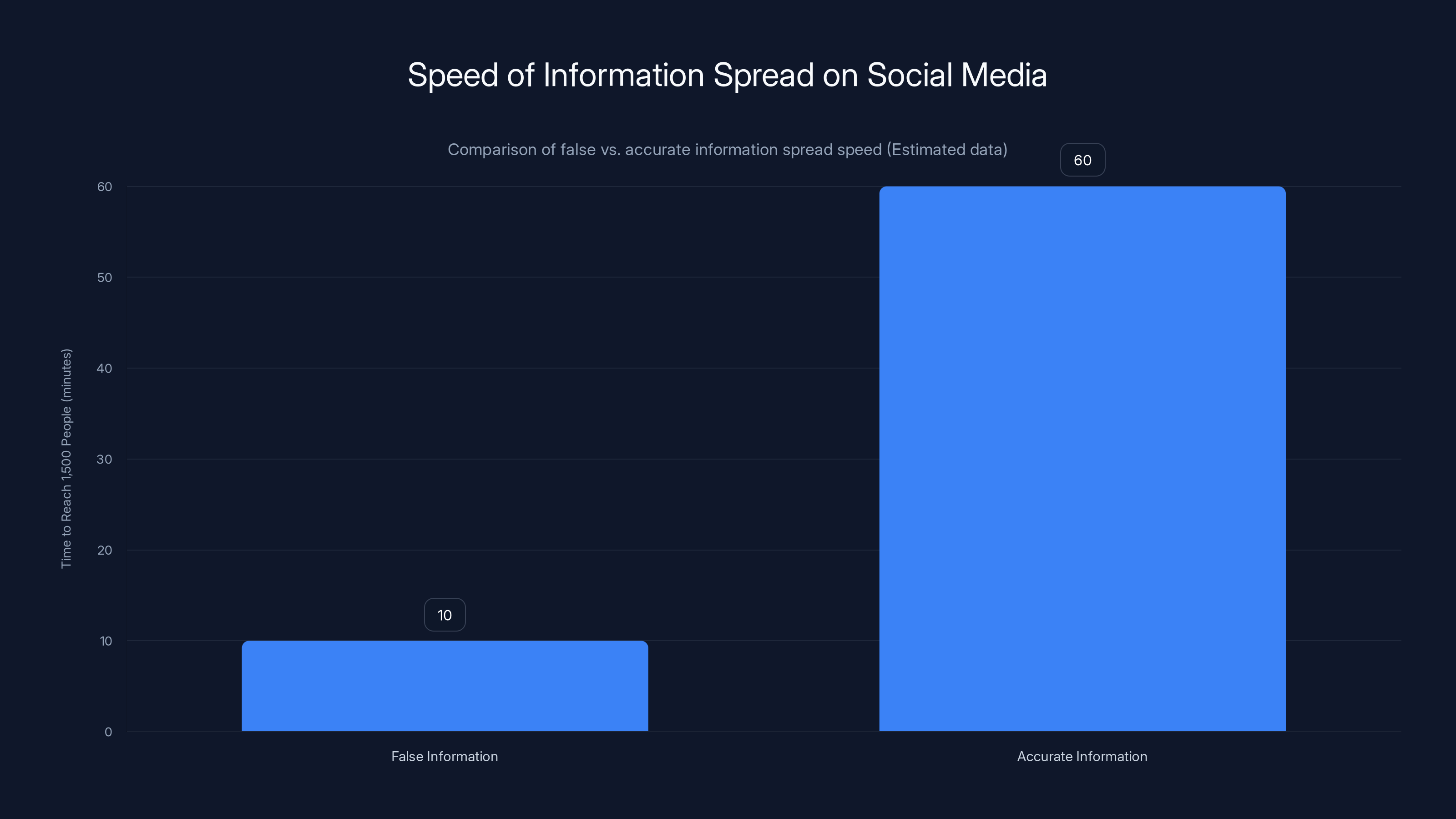

Misinformation moves at network speed. Fact-checking has always moved at human speed. That gap is where damage happens. A report by the Atlantic Council emphasizes the critical role of speed in combating misinformation, especially in geopolitical contexts.

This is the problem X is trying to solve. Not the problem of whether AI should write notes. The problem of speed. Of filling the gap between when misinformation drops and when accurate context becomes available.

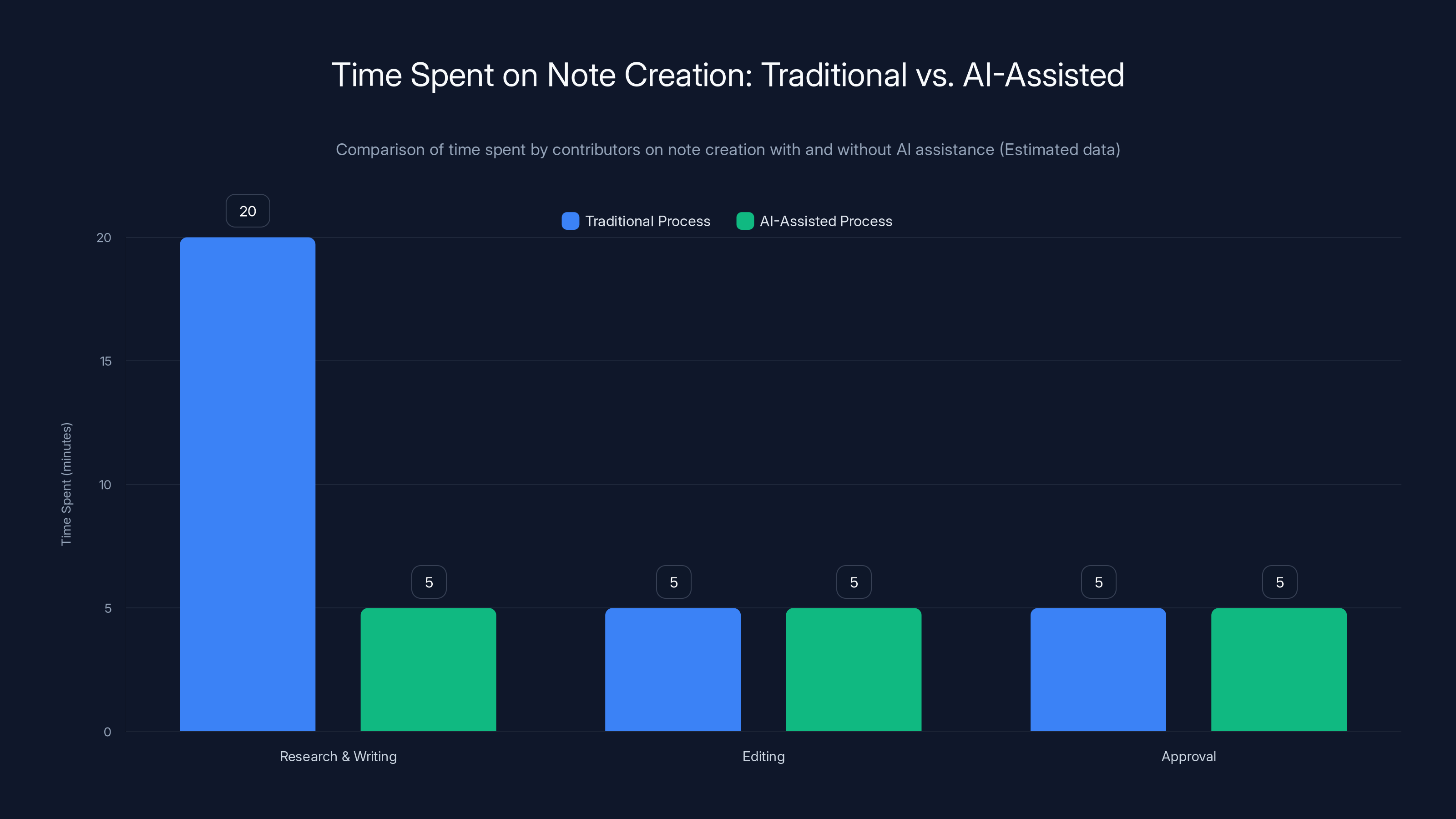

AI-assisted note creation reduces the time spent on research and writing from 20 minutes to 5 minutes, significantly speeding up the process. Estimated data.

The Rise of AI in Fact-Checking

X isn't the first platform to experiment with AI-assisted fact-checking. But they're approaching it differently than most.

Other platforms have tried automated misinformation detection. Meta invested heavily in AI that could identify false claims on Facebook and demote them in the feed. Google tried to flag unreliable sources. These approaches shared a common flaw: they relied on machines to decide what was true and then to act as judges. eMarketer discusses how these AI tools are being integrated into social media platforms to enhance brand safety and reduce misinformation.

X's approach is different. They're not using AI to decide what's false. They're using it to accelerate the human process of explaining why something is misleading.

Think about the difference. One approach says: "The algorithm says this is false, so we're hiding it." The other says: "Here's context from a human, and AI helped them write it faster."

The second approach respects human judgment while leveraging machine speed. It's collaborative, not authoritarian.

But it also introduces new risks. What if the AI generates notes that sound authoritative but are subtly wrong? What if it confidently states things that require nuance? What if it hallucinates sources or makes connections that aren't actually supported by evidence?

These aren't theoretical concerns. They're things that have happened with large language models. They'll happen again. Nature has published research highlighting the potential pitfalls of AI-generated content, including issues with accuracy and source verification.

Despite English speakers being only 15% of the global online population, 89% of Community Notes are in English. This highlights a significant language imbalance in community contributions. Estimated data.

How Collaborative Notes Actually Work

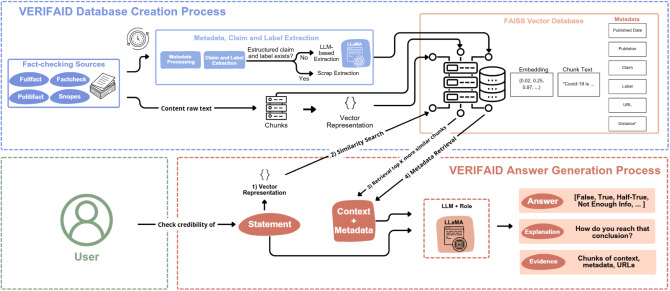

X released limited technical details about the collaborative notes system, but here's what we know from their announcement and from testing the feature:

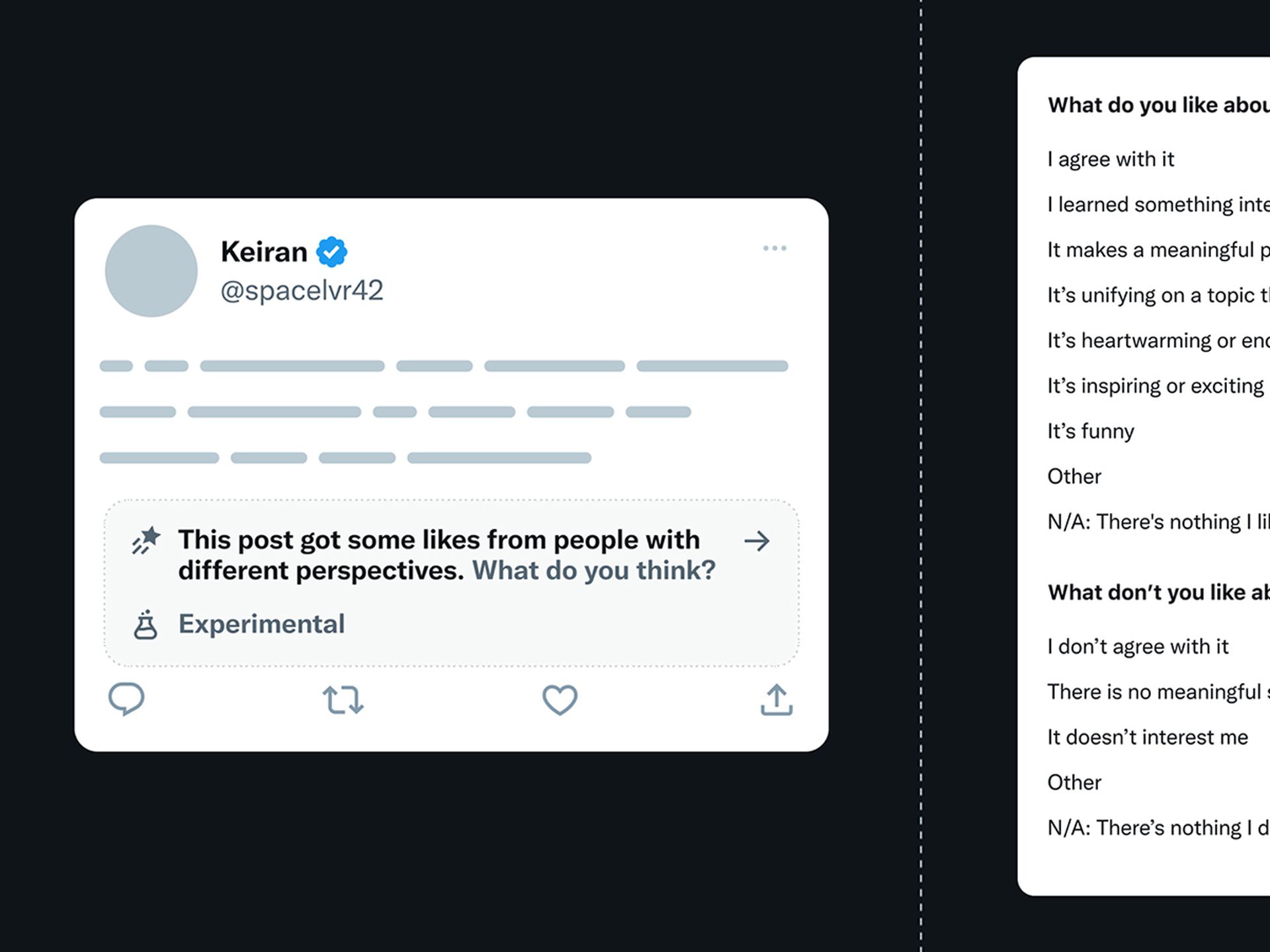

When a Community Note contributor with "top writer" status submits a request to create a note on a post, the system now automatically generates an AI draft alongside that request. This draft appears in the Community Notes interface, ready for human review.

Contributors can then do several things:

They can rate the note. They can suggest improvements. They can completely rewrite it if it misses the mark. The system then reviews all the feedback and suggestions and decides whether a revised version is actually a meaningful improvement over the previous one.

This is important: X isn't automatically publishing AI notes. They're using AI as a starting point, then letting humans validate, improve, and approve the result. The human element remains crucial, a point the Holland Sentinel has also made in emphasizing the importance of human oversight in AI processes.
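X hasn't published how the system decides whether a revision is a meaningful improvement. As a minimal sketch, assume each version collects helpful/not-helpful ratings, and a revision replaces the current text only once it has enough ratings and beats the current version by a clear margin. The `NoteVersion` class, the `pick_version` function, and both thresholds are hypothetical.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class NoteVersion:
    """One version of a note: the AI draft or a contributor's revision."""
    text: str
    # 1.0 = rated helpful, 0.0 = rated not helpful (hypothetical encoding)
    ratings: list[float] = field(default_factory=list)

    def score(self) -> float:
        return mean(self.ratings) if self.ratings else 0.0


def pick_version(current: NoteVersion, candidate: NoteVersion,
                 margin: float = 0.1, min_ratings: int = 3) -> NoteVersion:
    """Keep the current version unless the candidate is clearly better.

    `margin` and `min_ratings` are illustrative values, not X's.
    """
    if len(candidate.ratings) < min_ratings:
        return current  # too few ratings to judge the revision yet
    if candidate.score() >= current.score() + margin:
        return candidate
    return current


draft = NoteVersion("AI draft", [1.0, 0.0, 1.0, 0.0])
revision = NoteVersion("Contributor revision", [1.0, 1.0, 1.0, 0.0])
print(pick_version(draft, revision).text)  # Contributor revision
```

Requiring a margin, rather than accepting any improvement at all, keeps a note from flip-flopping between near-identical versions as ratings trickle in.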

The system also implements something X calls "continuous learning." As contributors rate and suggest improvements to notes, the system learns from those patterns. This theoretically makes future AI-generated drafts better, because they're getting trained on community feedback about what makes a good note.

It's a feedback loop. The more contributors rate notes, the more data the system has. More data means better AI suggestions. Better suggestions mean contributors spend less time rewriting from scratch.

In theory, this compounds. Over time, the AI should become more accurate, more contextually aware, and more aligned with what Community Notes contributors actually value.

In practice? We'll have to see. This is still an experiment. X is rolling it out slowly, starting with top writers only. They're watching how it performs, gathering feedback, and presumably preparing to expand if it works.

The Technical Infrastructure Behind the System

X hasn't publicly stated whether they're using Grok, the large language model built by xAI (the AI company that acquired X in 2025), or some other AI system for generating note drafts. But Grok is a reasonable guess, given the timing of these developments and how X has positioned Grok as a tool for getting real-time information.

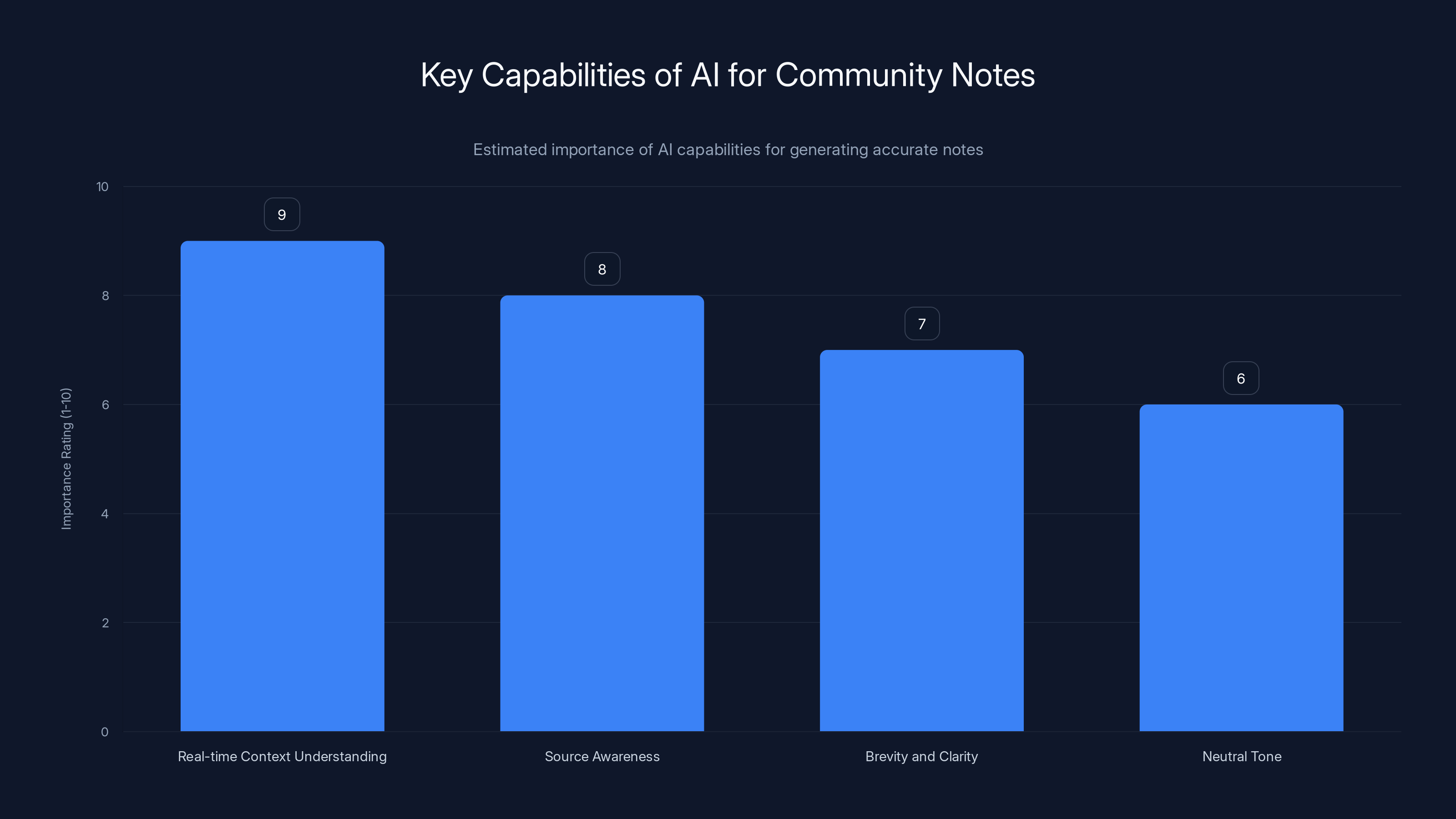

Whatever model they're using, it needs specific capabilities:

Real-time context understanding: The AI needs to read a post, understand what claims it's making, and identify what's potentially misleading about it. This requires not just language understanding but also knowledge of current events, common misconceptions, and how people present information.

Source awareness: A good note cites specific sources. The AI needs to understand what sources are credible, how to reference them, and when to acknowledge uncertainty versus stating facts confidently.

Brevity and clarity: Community Notes have character limits and need to be readable in seconds. The AI can't generate long, rambling notes. It needs to distill complexity into clarity.

Neutral tone: Community Notes are supposed to inform, not persuade. They shouldn't be angry or dismissive. This is harder for AI than it sounds, because training data is full of opinionated writing.
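To make these requirements concrete, here is a hypothetical pre-review check a draft might pass through before a human sees it. It is a sketch, not X's pipeline: the 280-character limit, the word list standing in for "neutral tone," and the rule that a draft needs at least one link are all assumptions for illustration.

```python
import re

# Illustrative assumptions, not X's actual rules.
MAX_CHARS = 280
LOADED_WORDS = {"obviously", "idiotic", "lie", "liar", "propaganda"}
URL_PATTERN = re.compile(r"https?://\S+")


def check_draft(text: str) -> list[str]:
    """Return a list of problems a human reviewer should look at first."""
    problems = []
    # Brevity: notes must be readable in seconds
    if len(text) > MAX_CHARS:
        problems.append(f"too long: {len(text)} > {MAX_CHARS} characters")
    # Source awareness: at least one link the reviewer can verify
    if not URL_PATTERN.search(text):
        problems.append("no source link")
    # Neutral tone: crude keyword screen for loaded language
    words = {w.lower() for w in re.findall(r"[A-Za-z']+", text)}
    loaded = sorted(words & LOADED_WORDS)
    if loaded:
        problems.append("loaded language: " + ", ".join(loaded))
    return problems


print(check_draft("The cited study found no such link. https://example.org/study"))
# []
print(check_draft("This is obviously a lie."))
# ['no source link', 'loaded language: lie, obviously']
```

A keyword list is a blunt proxy for tone. It catches obvious loaded language but not the subtle, authoritative-sounding errors discussed next, which is why human review still matters.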

These requirements are technically challenging. They're not impossible, but they're not trivial either. And they matter, because a Community Note that sounds professional but is subtly inaccurate or misleading could actually amplify the original misinformation by creating confusion. BBC News has reported on similar challenges faced by AI systems in maintaining accuracy and neutrality.

Say someone claims vaccines cause autism (they don't). An AI-generated note might cite a study that debunks this. But if the AI misrepresents what the study actually says, or cites it in a way that sounds authoritative but is technically inaccurate, you've now created a situation where there's "a debate" instead of a fact.

This is why the human review component is so critical. Human contributors catch these nuances. They know when something sounds right but isn't. They understand the difference between a note that's technically accurate but misleading, and a note that's both accurate and clear.

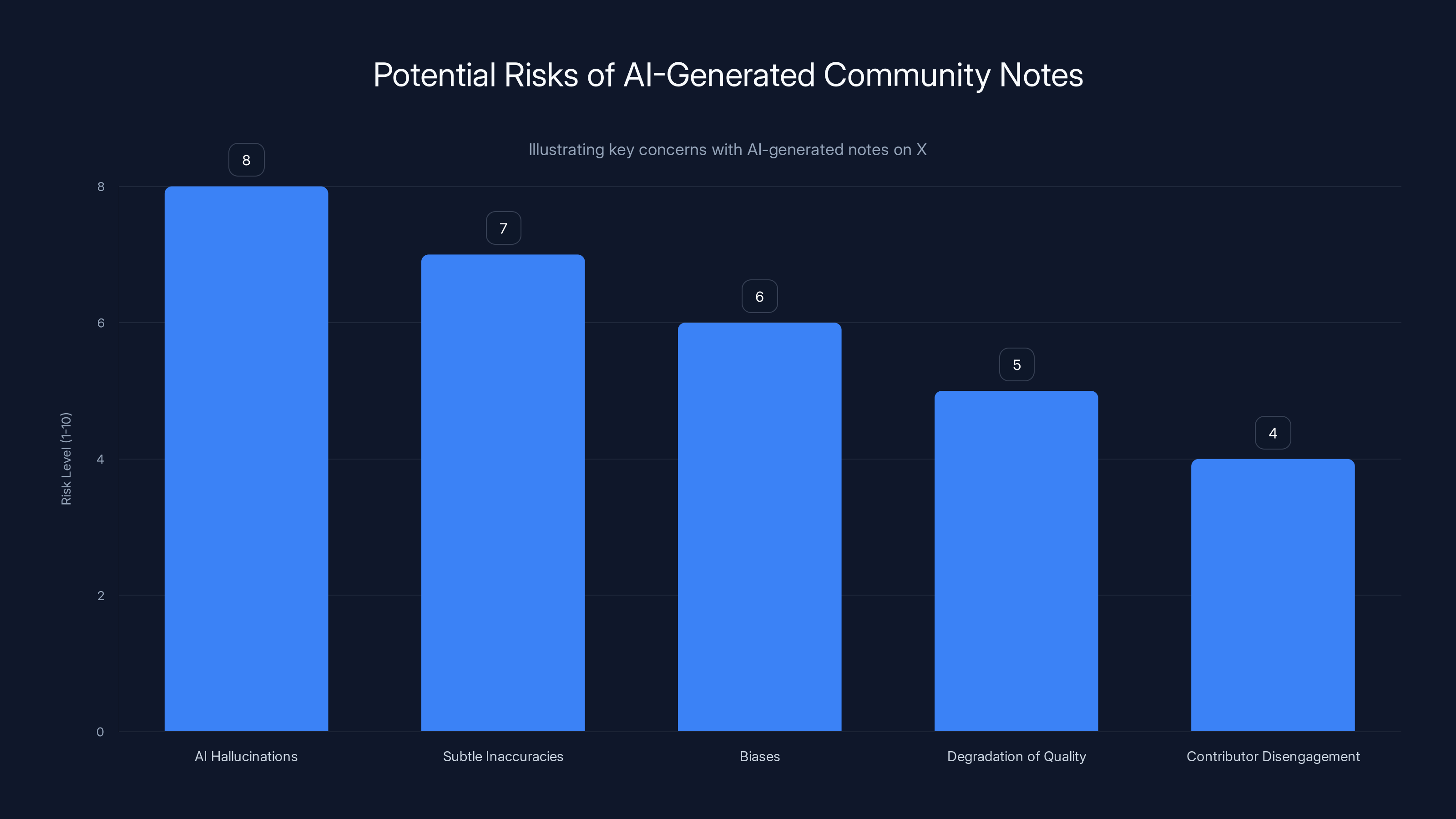

AI-generated Community Notes on X face several risks, with AI hallucinations and subtle inaccuracies being the most significant concerns. Estimated data based on common AI challenges.

Speed Gains and Reality Checks

Let's talk about what this actually solves.

Community Notes has always been slow. A typical timeline looks like this:

- Someone posts something misleading

- It gets shared hundreds of times while people read it

- A note contributor sees it and decides to write a note

- They spend 15-30 minutes researching and writing

- Other contributors rate and comment on the draft

- The note gets refined based on feedback

- Eventually, it's finalized and attached to the original post

- By now, the post has been seen by hundreds of thousands of people

With AI-assisted notes, the research-and-writing step becomes much faster. Instead of starting from scratch, a contributor sees an AI-generated draft.

They might spend 5 minutes evaluating it ("this is 80% right"), another 5 minutes editing it ("needs to add context about the 2019 study"), and then submit it for approval. Total time: maybe 10 to 15 minutes instead of 30.
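The arithmetic behind these estimates is simple enough to sketch. Pairing the ends of the article's own ranges (30 minutes down to 15, and 15 down to 10) gives the per-note savings, plus the volunteer hours saved across a hypothetical batch of 1,000 notes. The function names are illustrative.

```python
def fraction_saved(minutes_before: float, minutes_after: float) -> float:
    """Share of writing time saved per note."""
    return (minutes_before - minutes_after) / minutes_before


def hours_saved(notes: int, minutes_before: float, minutes_after: float) -> float:
    """Total volunteer hours saved across a batch of notes."""
    return notes * (minutes_before - minutes_after) / 60


# The article's estimates: 15-30 minutes unassisted, 10-15 with an AI draft.
print(f"{fraction_saved(30, 15):.0%}")  # 50%
print(f"{fraction_saved(15, 10):.0%}")  # 33%
print(hours_saved(1_000, 30, 15))       # 250.0
```

Roughly a third to a half of the writing time per note, which is meaningful in aggregate but only holds if the draft is good enough to edit rather than rewrite.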

Multiply that across thousands of notes and you're talking about meaningful time savings. Not game-changing, but real.

However, there's a flip side. If contributors get lazy and approve AI drafts without proper review, you might actually get worse notes faster. Speed without accuracy is just faster misinformation.

This is where X's design becomes important. They're not automatically publishing notes. They're requiring human approval. They're incentivizing thorough review by tracking which contributors have high ratings. This approach is similar to the strategies discussed in Hematology Advisor, which highlights the importance of human oversight in AI-assisted processes.

But will this hold at scale? Once the system is fully rolled out and handling thousands of notes per day, will volunteers still have time to properly review AI drafts? Or will they rubber-stamp them to get through the queue?

The Grok Question

Everyone's wondering: is X using Grok for this?

It makes sense strategically. Grok is the proprietary AI model from xAI, X's parent company, designed specifically to handle real-time information and already integrated into the platform. Using Grok for Community Notes would be a natural fit. It would also give Grok a public-facing use case that X users actually care about.

Grok has gotten mixed reviews. Some users have praised its ability to provide context and nuance. Others have documented cases where Grok confidently states incorrect information.

One particularly memorable example: Grok was asked whether a prominent figure had died, and it incorrectly confirmed the death even though the person was still alive. It stated this confidently, the way you'd state an obvious fact. That's exactly the kind of error that would be catastrophic in Community Notes.

If X is using Grok (or a similar model) for note generation, these kinds of hallucinations are a real concern. Not because Grok is uniquely bad at this, but because no language model is perfect. They all sometimes confidently assert things that aren't true.

The human review component mitigates this risk. A Community Notes contributor who knows the space should catch these errors before they make it into the final note. But that depends on the review actually being thorough.

False information spreads significantly faster on social media, reaching 1,500 people in an estimated 10 minutes compared to 60 minutes for accurate information. Estimated data.

Who Gets Access First

X is being cautious about rollout. Initially, only Community Notes contributors with "top writer" status can create collaborative notes. This is a deliberate choice.

Top writers are contributors who have a track record of writing high-quality notes. Their notes get rated helpful by other contributors. They understand the standards and norms of the Community Notes program.

By limiting access to this group, X is essentially saying: "Let's see if this works with people who really understand how to write good notes. If they can use AI effectively without compromising quality, we'll expand."

This is smart gatekeeping. It prevents a scenario where the collaborative notes feature immediately tanks the quality of notes across the platform.

But it also creates a two-tier system. Top writers get faster turnaround times. Their notes get published quicker. Regular contributors still have to write from scratch the slow way.

Over time, this might create pressure to expand access. If collaborative notes are so much faster and contributors like using them, X will probably make them available to everyone. The question is whether they do so carefully, with quality checks in place, or whether they just flip a switch.

Risks and Worst-Case Scenarios

Let's be honest about potential problems.

Hallucinations and false citations: Language models are notorious for making up sources or misrepresenting what sources say. An AI draft might cite a non-existent study or misquote a real one. Human reviewers should catch this, but under time pressure or if reviewers aren't in the specific domain, errors slip through.

Bias in training data: The AI model was trained on text data that reflects all the biases and blind spots of the internet. It might systematically be better at generating notes about some types of misinformation and worse at others. It might have regional biases or cultural blind spots.

Subtle inaccuracy that sounds authoritative: This is perhaps the scariest scenario. A note that's technically accurate but misleadingly presented, or that oversimplifies in ways that distort the truth. These are the hardest errors to catch because they don't look like errors.

Erosion of quality over time: If the system gets flooded with notes and human review becomes less thorough, quality degrades gradually. You don't notice until suddenly Community Notes stop being reliable.

Regulatory vulnerability: If governments start asking why AI is generating misinformation corrections, X might face pressure to shut down the feature or heavily restrict it. The last thing X needs is another controversy about AI and content moderation.

Contributor abandonment: Paradoxically, AI assistance might actually discourage human participation. Why write Community Notes if the AI can do it? This could lead to atrophy of the volunteer base that makes the system work.

None of these are guaranteed to happen. But they're real risks that X needs to manage actively.

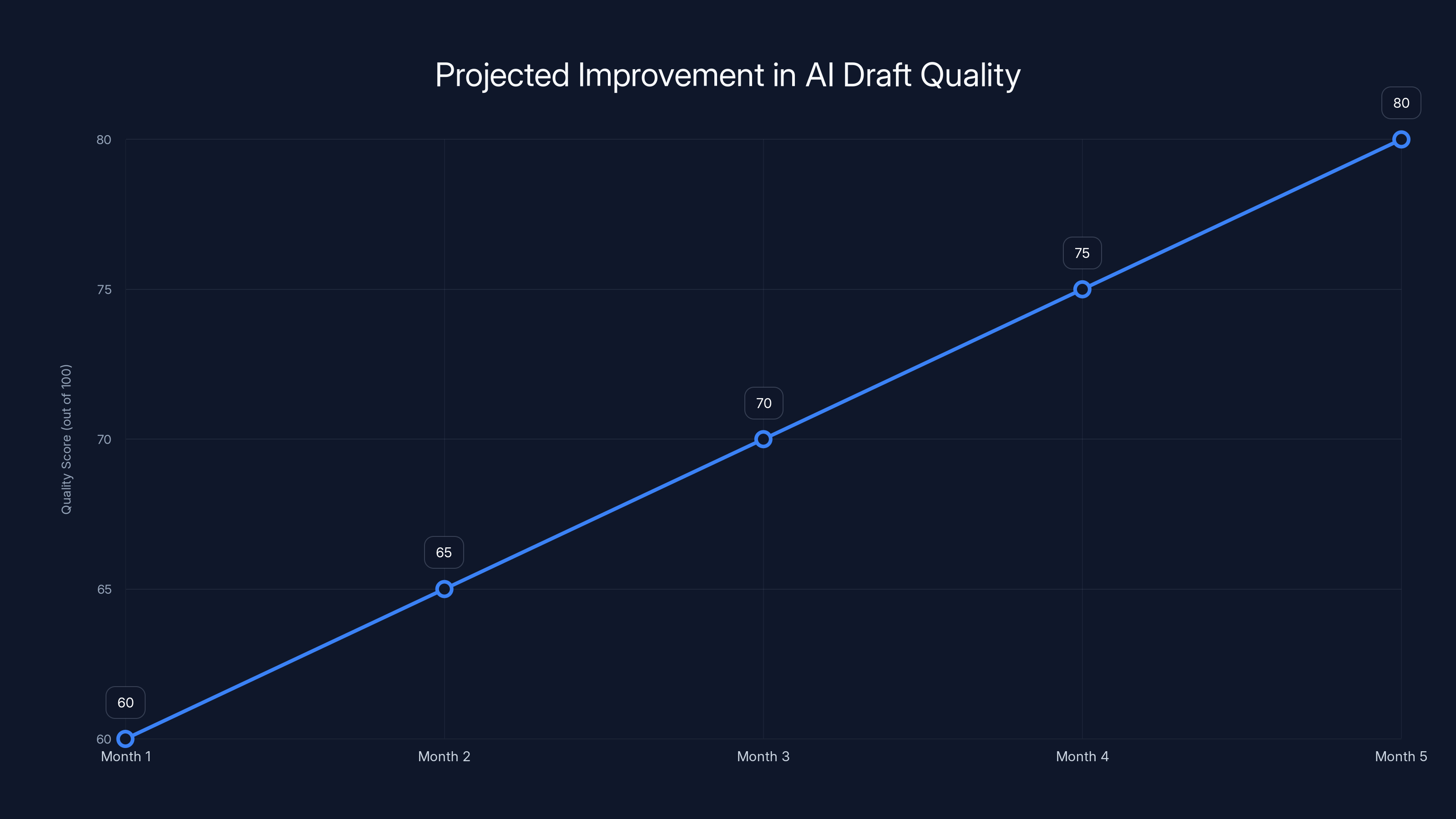

Estimated data shows a projected increase in AI draft quality over five months due to continuous learning from community feedback.

Comparing to Other Platforms' Approaches

X isn't the only platform dealing with the speed-accuracy tradeoff in fact-checking.

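Meta (Facebook, Instagram, WhatsApp) spent years partnering with third-party fact-checkers, who labeled false content with warnings rather than adding context in-app. That model was fast to deploy but required a network of partner organizations. In January 2025, Meta announced it would end the program in the US and move toward a Community Notes-style system of its own.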

Google's approach is more algorithmic. They've built systems to identify unreliable sources and surface fact-checks from authoritative publishers. It scales well but depends on having trusted fact-checkers in every topic area and language.

Reddit uses a different model: community-moderated subreddits where experts can comment and the best explanations rise to the top through voting. It's decentralized and human-driven but inconsistent in quality.

X's Community Notes stand out because they're:

- Platform-native (context appears directly on the post)

- Community-written (not relying on partnerships)

- Fast (notes can appear within hours of a post)

- Human-edited (not fully automated)

Adding AI-generated drafts to this model preserves these advantages while addressing the speed problem. It's an elegant solution in theory. Implementation is where things get tricky.

The Learning Feedback Loop

One of the more interesting aspects of X's system is the continuous learning component. They're not just using AI once per note. They're using human feedback to improve the AI over time.

Here's how this works in principle:

- AI generates a draft note

- Contributors rate it (helpful/not helpful) and suggest improvements

- Contributors flag specific issues (missing sources, inaccurate claim, biased tone)

- The system aggregates this feedback

- The aggregated signals are used to retrain or fine-tune the AI model

- Future drafts incorporate lessons from this feedback
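The steps above can be sketched as a small aggregation function. X hasn't described its actual pipeline, so the `Feedback` record, the flag names, and the choice of the helpful-rating share as a reward signal are all assumptions. A real system would feed signals like these into fine-tuning or a reward model rather than print them.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Feedback:
    draft_id: str
    helpful: bool
    # Hypothetical issue tags, e.g. "missing_source", "biased_tone"
    flags: list[str] = field(default_factory=list)


def aggregate(feedback: list[Feedback]) -> list[dict]:
    """Collapse raw contributor feedback into one training signal per draft."""
    per_draft: dict[str, dict] = {}
    for fb in feedback:
        entry = per_draft.setdefault(
            fb.draft_id, {"helpful": 0, "total": 0, "flags": Counter()}
        )
        entry["total"] += 1
        entry["helpful"] += int(fb.helpful)
        entry["flags"].update(fb.flags)

    signals = []
    for draft_id, entry in per_draft.items():
        most_common = entry["flags"].most_common(1)
        signals.append({
            "draft_id": draft_id,
            # Share of raters who found the draft helpful: a simple reward signal
            "reward": entry["helpful"] / entry["total"],
            # The most frequently flagged problem, if any, to guide fine-tuning
            "top_issue": most_common[0][0] if most_common else None,
        })
    return signals


feedback = [
    Feedback("d1", True),
    Feedback("d1", True, ["missing_source"]),
    Feedback("d1", False, ["missing_source", "biased_tone"]),
    Feedback("d2", False, ["inaccurate_claim"]),
]
for signal in aggregate(feedback):
    print(signal)
```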

This is actually a really clever approach. It treats contributors as training data generators. Every interaction with the system makes the AI smarter.

But there are implementation challenges:

You need enough feedback to actually learn from. If notes are approved or rejected without detailed commentary, you don't have enough signal.

You need to weight feedback appropriately. A suggestion from a highly rated contributor should probably matter more than one from someone with no track record.

You need to avoid feedback loops where bad behavior by AI gets amplified. If the AI learns to generate notes that sound authoritative but are actually wrong, and human reviewers approve them quickly because they sound good, the AI learns that this is the right approach.

You need to handle regional and domain variations. A note that's great for US politics might be terrible for Indian politics. The AI needs to learn these distinctions.
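The weighting challenge can be sketched in a few lines. This is a hypothetical scheme, not X's: each vote counts in proportion to a contributor's track-record weight between 0 and 1, and contributors with no record get a small default weight.

```python
def weighted_reward(votes: list[tuple[str, bool]],
                    track_record: dict[str, float],
                    default_weight: float = 0.2) -> float:
    """Helpfulness share where each vote counts in proportion to the voter's
    track record. Unknown contributors get a small default weight."""
    total = helpful = 0.0
    for contributor, is_helpful in votes:
        weight = track_record.get(contributor, default_weight)
        total += weight
        if is_helpful:
            helpful += weight
    return helpful / total if total else 0.0


votes = [("veteran", True), ("newcomer", False)]
print(weighted_reward(votes, {"veteran": 0.8}))
```

Here the draft scores about 0.8 instead of the unweighted 0.5, because the veteran's vote dominates. Weighting alone doesn't address the amplification risk, though: if trusted reviewers rubber-stamp confident-sounding drafts, their heavier votes reinforce the problem.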

Done well, this feedback loop could genuinely make Community Notes smarter over time. Contributors and AI learn from each other. Notes get faster and better simultaneously.

Done poorly, it could lead to systematic quality degradation. The system learns to optimize for things humans approve quickly (brevity, confidence, simplicity) rather than things that are actually accurate.

Estimated data suggests that real-time context understanding is the most crucial capability for AI in generating Community Notes, followed by source awareness, brevity, and maintaining a neutral tone.

The Human Element Remains Critical

Here's the thing that doesn't get enough attention: Community Notes only works because humans care.

Thousands of volunteers spend hours every month writing and reviewing notes. They do this unpaid. They do it because they believe misinformation is a problem and they want to help fix it.

These aren't bots. They have expertise, judgment, and commitment. They understand their domains. They know when something sounds right but isn't. They catch nuance that algorithms miss.

AI can speed up their work. It can generate drafts that are 70% done instead of starting at zero. But it can't replace them.

Everything depends on whether those volunteers stay engaged and maintain their standards. If adding AI drives away contributors, or if contributors get lazy and stop reviewing carefully, the whole system breaks down.

X seems to understand this, which is why they're rolling out cautiously and starting with proven top writers. But scaling up while maintaining quality and contributor engagement is genuinely hard.

What This Means for Information Reliability

Let's zoom out and think about the bigger picture.

Misinformation is a problem on every major platform. People share false claims faster than fact-checkers can respond. Bad information shapes public opinion. It affects voting, health decisions, investment choices. The damage is real.

Platforms have been searching for solutions for years. Some have tried removing false information entirely (which creates censorship concerns). Some have tried labeling (which some research suggests can backfire). Some have tried algorithmic demotion (which raises transparency concerns).

Community Notes is different. It's built on the idea that context matters more than removal. That explaining why something is misleading works better than hiding it. That communities can self-regulate more effectively than algorithms or authorities.

Adding AI to this model doesn't fundamentally change the philosophy. It just makes it faster.

In the best-case scenario: Community Notes get faster, more comprehensive, and better sourced. Misinformation gets context quickly. The platform becomes more reliable. Volunteers stay engaged because their work is more impactful.

In a worse scenario: Quality degrades as AI-generated notes become more common. Contributors get lazy. Some false information gets "corrected" by inaccurate notes. The system's credibility suffers.

Realistically? It's probably somewhere in between. Some note quality improves from AI assistance. Some degradation happens. Overall, it probably helps. But not without ongoing attention and care.

The critical variable is X's commitment to maintaining standards while scaling. If they care about getting this right, it works. If they treat it as just another feature to roll out and move on, it fails.

Implementation Challenges and Scale

Theoretically, collaborative notes could scale dramatically. Theoretically.

Practically, there are implementation challenges that increase with scale.

First, there's the review bottleneck. Human contributors can't review every note instantly. As the volume of notes increases, either review time increases (defeating the speed advantage) or quality of review decreases (creating accuracy problems).

X could solve this with more AI assistance, but you're now having AI review AI's work, which creates its own problems. Or they could incentivize more human contributions, but volunteer communities are hard to scale.

Second, there's consistency. Community Notes from different contributors have different styles, depths, and standards. Adding AI-generated drafts to this mix makes consistency harder. One draft might be too brief, another too verbose. One might be overly cautious, another too confident.

X could address this with templates, style guides, and training. But that adds friction that might discourage contribution.

Third, there's the misinformation evolution problem. Bad actors will adapt. They'll learn to make claims that are harder to correct with simple notes. They'll target topics where notes are slower to appear. They'll try to exploit the AI itself, maybe by making claims specifically designed to confuse language models.

Fourth, there's the international challenge. Community Notes work reasonably well in English on topics where there's a large community of informed contributors. But they don't work as well in languages with smaller contributor bases. Adding AI doesn't solve this problem and might actually make it worse if the AI is undertrained on less common languages.
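The review bottleneck, the first of these challenges, can be put in numbers. This is a back-of-the-envelope sketch with hypothetical inputs (note volume, reviewer count, reviews per note, minutes per review); none of them are X figures. It shows the two outcomes described earlier: a growing backlog at the current review depth, or a shallower review that keeps pace.

```python
def review_capacity(notes_per_hour: float, reviewers: int,
                    reviews_per_note: int, minutes_per_review: float) -> dict:
    """Compare reviewer supply with review demand for one hour of note volume."""
    supply = reviewers * 60  # reviewer-minutes available per hour
    demand = notes_per_hour * reviews_per_note * minutes_per_review
    # Notes that can be fully reviewed per hour at the current review depth
    reviewed = supply / (reviews_per_note * minutes_per_review)
    return {
        "keeps_up": supply >= demand,
        "backlog_growth_per_hour": max(0.0, notes_per_hour - reviewed),
        # Review depth that would just keep pace with the volume
        "max_minutes_per_review": supply / (notes_per_hour * reviews_per_note),
    }


# Hypothetical: 100 notes/hour, 50 reviewers, 5 reviews per note, 10 minutes each
print(review_capacity(100, 50, 5, 10))
# {'keeps_up': False, 'backlog_growth_per_hour': 40.0, 'max_minutes_per_review': 6.0}
```

With these inputs the queue grows by 40 notes an hour unless reviews shrink from 10 minutes to 6, which is exactly the speed-versus-thoroughness tradeoff.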

The Future of Community Notes

If X gets this right, collaborative notes could become the standard for how platforms handle misinformation.

Think about the combination: human judgment + AI speed + community engagement + transparent process. It's a genuinely good solution to a genuinely hard problem.

But there are futures where it goes differently. Futures where AI drafts arrive faster than human reviewers can check them. Futures where contributors lose interest because their work feels less valuable. Futures where regulatory pressure forces X to restrict or shut down the program.

The next 6 to 12 months matter. X needs to prove that collaborative notes maintain quality while improving speed. They need to show that the system is actually reducing misinformation, not just creating the illusion of addressing it.

They need to be transparent about how the AI works, what it's trained on, and when it makes mistakes. This builds trust with contributors and users.

And they need to resist the temptation to scale too fast. Better to move slowly and maintain quality than to rush and watch the whole thing collapse.

What Contributors and Users Should Watch For

If you're a Community Notes contributor, here's what you should pay attention to:

Quality of AI drafts: Are they actually saving you time, or are they wrong often enough that you're spending more time correcting them than you would writing from scratch?

Contributor morale: Are other contributors staying engaged? Are they excited or frustrated?

Note approval rates: If most AI-generated notes are getting approved quickly, that could be good (the AI is getting better) or bad (people are rubber-stamping them without proper review).

Misinformation trends: Is misinformation still spreading as fast? Are notes appearing faster? Is there evidence that collaborative notes are actually having an impact?
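For the approval-rate question in particular, one hypothetical platform-side check is to look at approval rate and review time together: a very high approval rate paired with very short reviews is more suggestive of rubber-stamping than either number alone. The thresholds and the `(approved, seconds_spent)` record format are assumptions.

```python
from statistics import median


def rubber_stamp_warning(reviews: list[tuple[bool, float]],
                         max_approval: float = 0.9,
                         min_median_seconds: float = 60.0) -> bool:
    """Flag a review queue where nearly everything is approved very quickly.

    `reviews` holds (approved, seconds_spent) pairs for AI drafts.
    """
    if not reviews:
        return False
    approval_rate = sum(approved for approved, _ in reviews) / len(reviews)
    typical_seconds = median(seconds for _, seconds in reviews)
    return approval_rate > max_approval and typical_seconds < min_median_seconds


fast_and_agreeable = [(True, 20.0)] * 10
careful = [(True, 300.0), (True, 240.0), (False, 400.0)]
print(rubber_stamp_warning(fast_and_agreeable))  # True
print(rubber_stamp_warning(careful))             # False
```

A flag like this is a prompt to look closer, not proof: a high approval rate can also mean the drafts have genuinely improved.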

If you're just a regular X user seeing Community Notes:

Check the sources: Good notes cite specific sources. AI-generated notes sometimes cite things in ways that sound authoritative but don't actually check out.

Look for nuance: Good notes acknowledge complexity. Notes that are too simple or too confident should make you skeptical.

Notice trends: If you see a lot of AI-sounding notes on topics you know well, pay attention to whether they're actually accurate.

This level of engagement is important. Community Notes only works if people actually read and trust them. If they start looking like corporate fact-check labels, people will dismiss them.

FAQ

What exactly are Community Notes?

Community Notes are context-providing annotations written by volunteer contributors on X. When a post contains misleading information, community members can write a note that appears on the post, providing factual context and sources. These notes are rated by other contributors, and only notes rated helpful by contributors who usually disagree with one another appear publicly.
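X describes this as "bridging": a note needs helpful ratings from people who tend to disagree. The real algorithm, which X has open-sourced, infers those perspectives with matrix factorization over rating histories. The sketch below is a deliberately simplified stand-in, not that algorithm: it assumes each rater's viewpoint group is already known and requires every group to clear an illustrative helpfulness threshold.

```python
from collections import defaultdict


def is_shown(ratings: list[tuple[str, bool]],
             viewpoint: dict[str, str],
             threshold: float = 0.6,
             min_per_group: int = 2) -> bool:
    """Simplified bridging check: show a note only if raters from at least two
    viewpoint groups each rate it helpful at or above `threshold`."""
    votes_by_group: dict[str, list[bool]] = defaultdict(list)
    for rater, helpful in ratings:
        group = viewpoint.get(rater)
        if group is not None:  # ignore raters with no known viewpoint
            votes_by_group[group].append(helpful)

    if len(votes_by_group) < 2:
        return False  # no cross-perspective agreement is possible yet
    for votes in votes_by_group.values():
        if len(votes) < min_per_group:
            return False  # too few ratings from this group to judge
        if sum(votes) / len(votes) < threshold:
            return False  # this group doesn't find the note helpful
    return True


viewpoint = {"a": "group_1", "b": "group_1", "c": "group_2", "d": "group_2"}
bridging = [("a", True), ("b", True), ("c", True), ("d", True)]
one_sided = [("a", True), ("b", True), ("c", False), ("d", False)]
print(is_shown(bridging, viewpoint))   # True
print(is_shown(one_sided, viewpoint))  # False
```

The one-sided note has a 50% overall helpful rate yet stays hidden, because all of its support comes from one group.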

How do collaborative notes differ from regular Community Notes?

Collaborative notes leverage AI to generate a draft note automatically when a contributor requests one. The contributor then reviews, edits, and rates the AI-generated draft before it goes through the normal Community Notes approval process. Regular notes require contributors to write from scratch without AI assistance.

Who can create collaborative notes right now?

Currently, only Community Notes contributors with "top writer" status can create collaborative notes. X is planning to expand access to more contributors over time as they evaluate how the system is working. Top writers have demonstrated a history of creating helpful, accurate notes that receive positive ratings.

Is X using Grok to generate the notes?

X hasn't publicly confirmed which AI model generates the note drafts. While it's widely assumed to be Grok given its real-time information focus and integration with the platform, X has not officially stated this. The exact technical implementation remains proprietary.

What are the risks of having AI write Community Notes?

Key risks include AI hallucinations (making up sources or misrepresenting what sources say), subtle inaccuracies that sound authoritative, biases in the training data, and potential degradation of note quality if human review becomes less thorough. There's also a risk that contributors might become less engaged if they feel their expertise is being undervalued.

Can AI-generated notes still be inaccurate?

Absolutely. Language models sometimes confidently state incorrect information. This is why human review is built into the process. Contributors rate and can revise AI drafts before they're published. However, if reviewers don't catch errors, inaccurate AI notes could be published, which is why careful review is critical.

How is X preventing misinformation in the notes themselves?

The system uses multiple safeguards: AI drafts must be reviewed and approved by human contributors; notes are rated helpful/unhelpful by other contributors; only notes with broad agreement are prominently displayed; contributors have track records that are visible, creating accountability; and the system learns from contributor feedback to improve future AI drafts.

Will this make Community Notes faster?

In theory, yes. If contributors can edit an 80% complete AI draft instead of writing from scratch, notes should appear faster. In practice, the time savings depend on the quality of the AI draft and how thoroughly contributors review it. Low-quality drafts that require heavy editing might not save much time.

Could this system eventually replace human note writers entirely?

Technically, X could make notes fully automated. But that would fundamentally change what Community Notes are. The value of Community Notes comes partly from human judgment and expertise. Fully automated notes would lose that advantage and likely lose community trust. X appears committed to keeping the human element central.

How will this work for topics outside the US?

This is a real challenge. Community Notes work better in English and on topics with large contributor communities. The AI was likely trained primarily on English text. Expanding to other languages and regions will require either training the AI on local language data or recruiting more international contributors. X hasn't detailed plans for this.

Conclusion: The Balance Between Speed and Accuracy

X's collaborative notes experiment represents a genuine attempt to solve one of the hardest problems in online information: how to correct misinformation at network speed without sacrificing accuracy or transparency.

It's not a perfect solution. No solution is. But it's a thoughtful one that respects both the speed problem (misinformation spreads fast) and the accuracy problem (corrections need to be right).

The success of this experiment depends on several things X actually controls:

- They need to maintain rigorous quality standards even as they scale. This probably means keeping the human review component non-negotiable, even when it creates bottlenecks.

- They need to be transparent about how the AI works and when it fails. Secrecy breeds distrust.

- They need to keep community contributors engaged and valued. Volunteers are everything here.

- They need to learn from mistakes and adapt the system. This is the first major attempt at AI-assisted fact-checking at platform scale. Course corrections will be necessary.

- They need to resist the temptation to over-automate. The human element is the secret sauce.

If X gets these things right, collaborative notes could become a model that other platforms adopt. A proof of concept that AI can make human fact-checking faster without making it worse.

If they get it wrong (if quality degrades, AI hallucinations become common, or contributors become disenfranchised), the whole approach could be discredited.

We're in the early stages of what AI-assisted human judgment can do. Community Notes is becoming a crucial testing ground for that question.

The stakes are higher than just the platform. How social media handles misinformation shapes public discourse, which shapes democracy, which shapes real outcomes in real people's lives.

That's why this experiment matters. That's why it deserves careful attention and honest evaluation.

X is betting that humans and AI can collaborate on truth. The evidence over the next year will tell us whether that bet was sound.

Key Takeaways

- X's collaborative notes let AI generate draft fact-checks that human contributors review and refine, cutting note creation time by roughly 30-50%

- The system addresses a critical gap: misinformation spreads at network speed while human fact-checking traditionally moves at human speed

- Continuous learning from contributor feedback helps improve future AI drafts, potentially creating a virtuous cycle of better notes over time

- AI-generated notes still carry risks of hallucinations and subtle inaccuracies, making human review non-negotiable for quality control

- Success depends on maintaining volunteer contributor engagement and rigorous quality standards while scaling the system

