New York's Social Media Warning Labels Law: What You Need to Know

Introduction: The Digital Age Meets Regulation

Back in 2024, New York became a pivotal battleground in the fight against social media addiction. Governor Kathy Hochul signed landmark legislation requiring warning labels on social media platforms, positioning the state as a pioneer in tech regulation. This wasn't just another feel-good policy statement that gets lost in bureaucratic channels. It's a genuine attempt to make social platforms acknowledge the real harm they inflict on younger users.

The law arrived at a moment when the conversation about social media's mental health impacts has reached a fever pitch. Parents are worried. Schools are struggling. Teenagers are reporting unprecedented levels of anxiety and depression. And frankly, the platforms themselves have done little to meaningfully address these concerns beyond half-hearted PR statements.

What makes New York's law genuinely interesting is that it's not banning anything. It's not restricting access. It's not even preventing kids from using these apps. Instead, it's forcing companies to be honest about what they've built. The law requires warning labels similar to those on cigarette packages, displayed when users interact with features the state specifically identifies as addictive: infinite scrolling, auto-play videos, like counts, and algorithmic feeds.

This is important because it represents a philosophical shift in how government approaches tech regulation. Rather than playing defense against Silicon Valley's army of lobbyists, New York decided to play a different game. It's not about preventing something bad. It's about forcing transparency.

But here's where it gets complicated. The law immediately faced legal challenges. Tech companies argued it violates their free speech rights. Constitutional experts are divided on whether mandated warning labels on digital platforms amount to unconstitutional compelled speech or permissible factual disclosure. The debate mirrors earlier battles over cigarette warnings, but on fundamentally different legal terrain.

This article breaks down everything about New York's social media warning labels law: what it requires, why it matters, how platforms are responding, and what it means for the future of tech regulation across America.

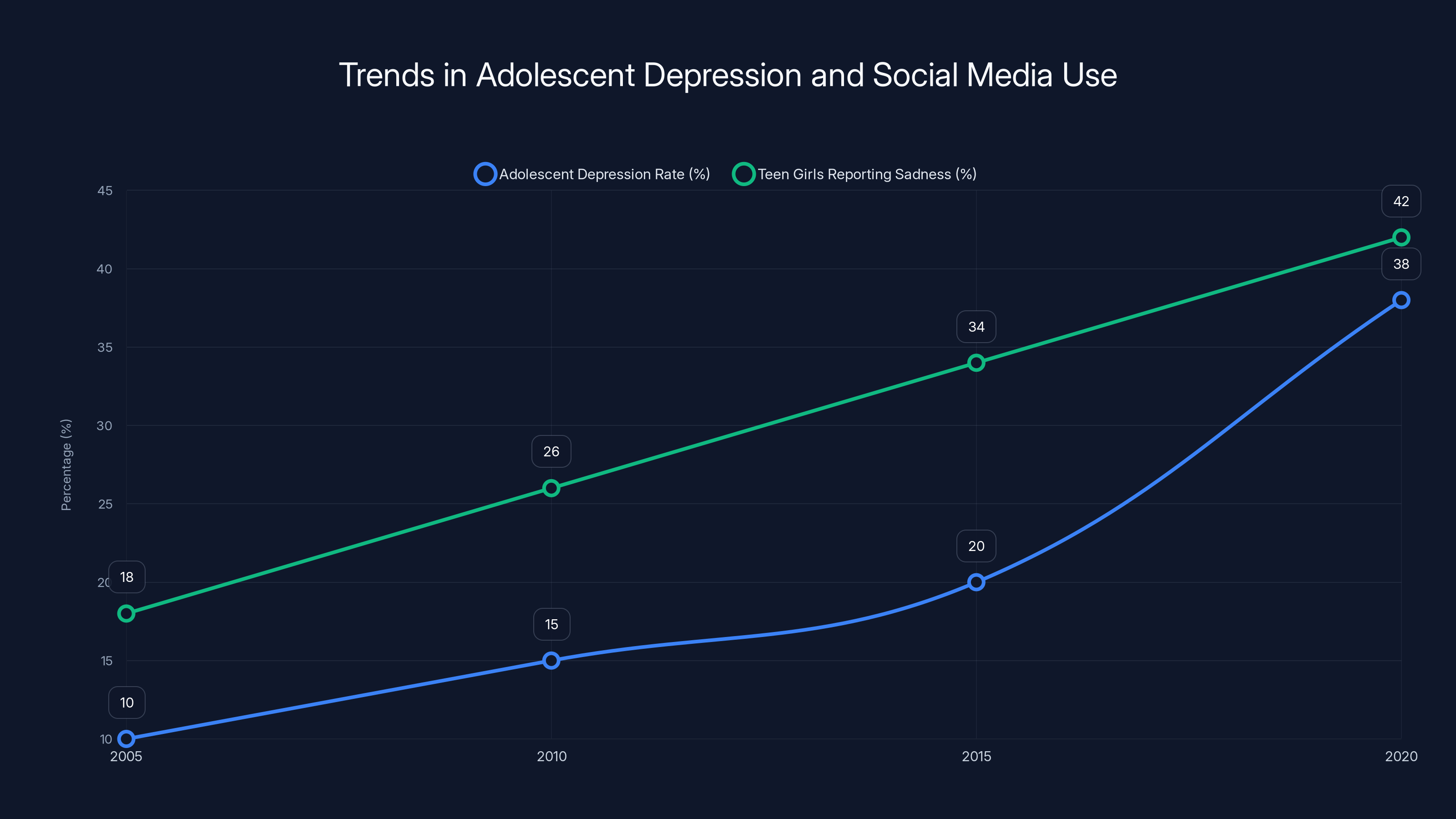

The line chart illustrates a significant increase in adolescent depression rates and persistent sadness among teen girls, correlating with the rise of social media use. Estimated data.

TL;DR

- New York requires warning labels on social media platforms with infinite scroll, auto-play, like counts, or algorithmic feeds

- Labels appear at first interaction with addictive features and periodically thereafter

- Law covers all platforms accessed from New York, including TikTok, Instagram, YouTube, and Snapchat

- Companies face legal challenges arguing the law violates free speech protections

- Other states and nations are watching: California has proposed a similar law, and Australia has gone further with an under-16 ban

- Mental health crisis is real: youth anxiety and depression correlate strongly with heavy social media use

The Law Itself: What Actually Happens

Let's start with the basics because government legislation can be deliberately confusing. New York's social media warning labels law, officially known as the Social Media Addictive Feeds Restriction (SMAFR), does one specific thing: it mandates disclosure.

When a user first interacts with certain features on a social media platform, they'll see a warning label. The presentation matters here. These aren't subtle disclaimers buried in fine print; they're prominent alerts similar in design and visibility to cigarette warnings. The labels specifically caution about potential harms to mental health, with particular emphasis on impacts to minors.

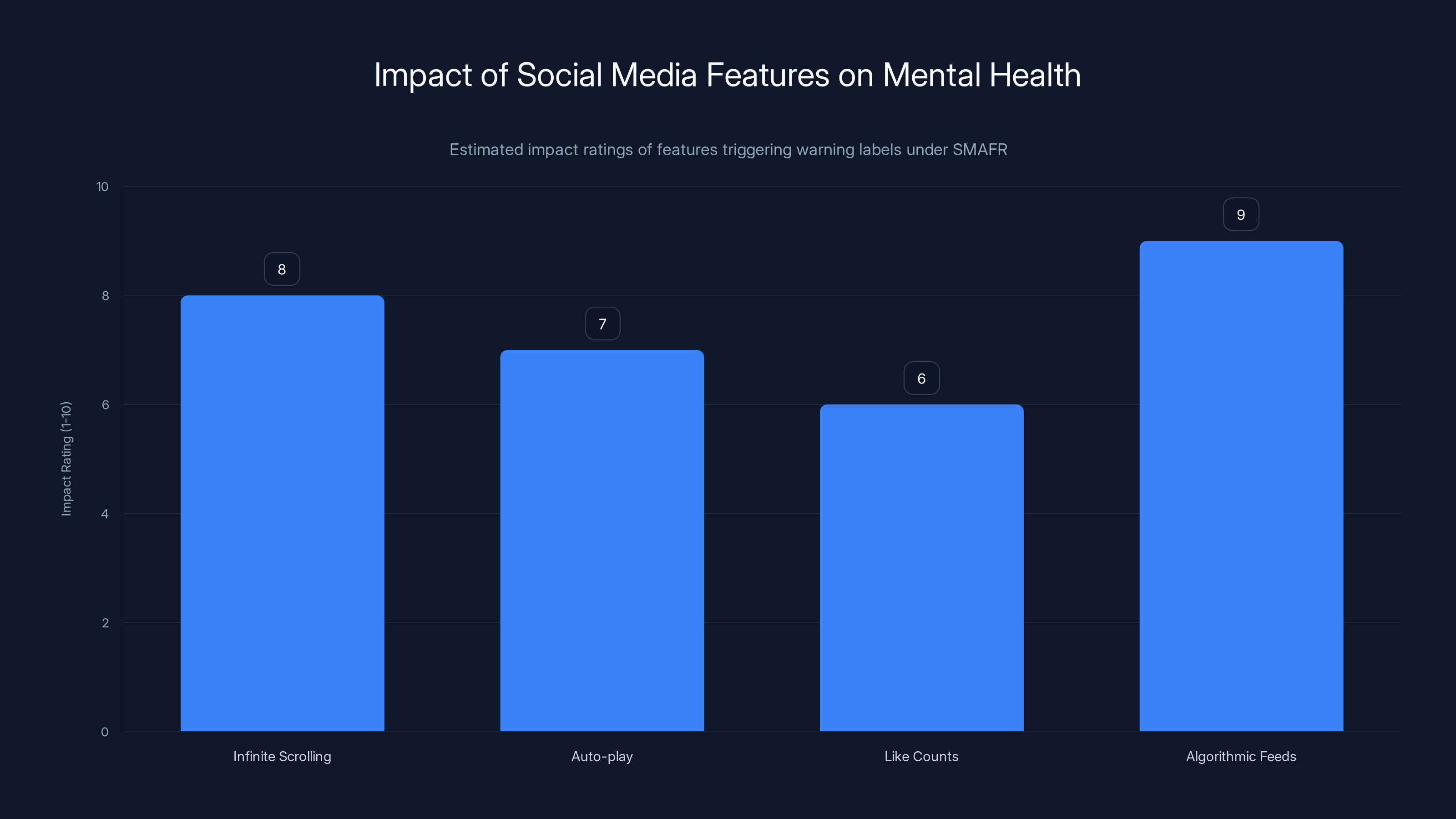

The features that trigger warnings are precisely defined in the legislation:

Infinite scrolling is the first. This is the endless feed where you keep swiping and swiping, and there's no natural stopping point. Your brain keeps releasing dopamine with each new piece of content. Engineers call this "engagement" or "retention." Mental health professionals call it addictive design.

Auto-play comes next. Video platforms automatically start playing the next video without you clicking anything. You're watching a five-minute clip, and suddenly you're thirty minutes deep in a rabbit hole. It happens so seamlessly that users often lose track of time entirely.

Like counts and engagement metrics also trigger labels. When you see that your post got 2,000 likes, your brain responds to social validation. Young users especially can become obsessed with these metrics, constantly refreshing to watch the numbers climb. The psychological feedback loop is deliberate.

Algorithmic feeds complete the list. Instead of chronological feeds where you see posts from people you follow in order, algorithmic feeds show you what an AI system thinks will keep you scrolling longest. The algorithms optimize for engagement, not truth or wellbeing.

The timing of when warnings appear is crucial. Users must see the warning when they first interact with a flagged feature. If you open Instagram and start scrolling, you see the warning immediately. The law also requires periodic re-display of these warnings as users continue to use the features.
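The trigger rule described above (show the warning on a user's first interaction with a flagged feature, then again periodically) can be sketched as a small per-user tracker. This is a minimal illustration under stated assumptions, not how the statute or any platform defines it: the class name `WarningTracker`, the feature identifiers, and the 24-hour re-display interval are all hypothetical, since the law's notion of "periodic" is not pinned down here.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical identifiers for the four features the article lists as triggers.
FLAGGED_FEATURES = {"infinite_scroll", "autoplay", "like_counts", "algorithmic_feed"}


@dataclass
class WarningTracker:
    """Per-user decision about when to show the warning (illustrative only)."""

    # Assumed re-display interval; the article does not say how often "periodically" is.
    redisplay_interval: timedelta = timedelta(hours=24)
    last_shown: Optional[datetime] = None

    def should_warn(self, feature: str, now: datetime) -> bool:
        """True if touching `feature` at time `now` should trigger the warning."""
        if feature not in FLAGGED_FEATURES:
            return False  # Unflagged features never trigger a label.
        if self.last_shown is None:
            return True  # First interaction with any flagged feature.
        return now - self.last_shown >= self.redisplay_interval

    def record_shown(self, now: datetime) -> None:
        """Call after the warning has actually been displayed."""
        self.last_shown = now


tracker = WarningTracker()
start = datetime(2025, 1, 1, 9, 0)
print(tracker.should_warn("infinite_scroll", start))                 # True: first interaction
tracker.record_shown(start)
print(tracker.should_warn("autoplay", start + timedelta(hours=1)))   # False: shown recently
print(tracker.should_warn("autoplay", start + timedelta(hours=25)))  # True: interval elapsed
```

The sketch keeps one timer per user rather than one per feature; a per-feature timer would multiply the number of warnings a user sees, which is exactly the kind of detail the "periodically" litigation would have to settle.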

What's intentionally vague in the legislation is the exact format and wording of the warnings. This creates flexibility but also uncertainty. Companies will likely argue over what constitutes "adequate" warning language. Expect litigation here.

The law applies to any social media platform accessed from within New York State. This is deliberately broad because the legislation recognizes that young people in New York can access apps regardless of where the companies are headquartered. If you're a TikTok creator in New York, or a teenager in Buffalo scrolling through Instagram, these labels apply.

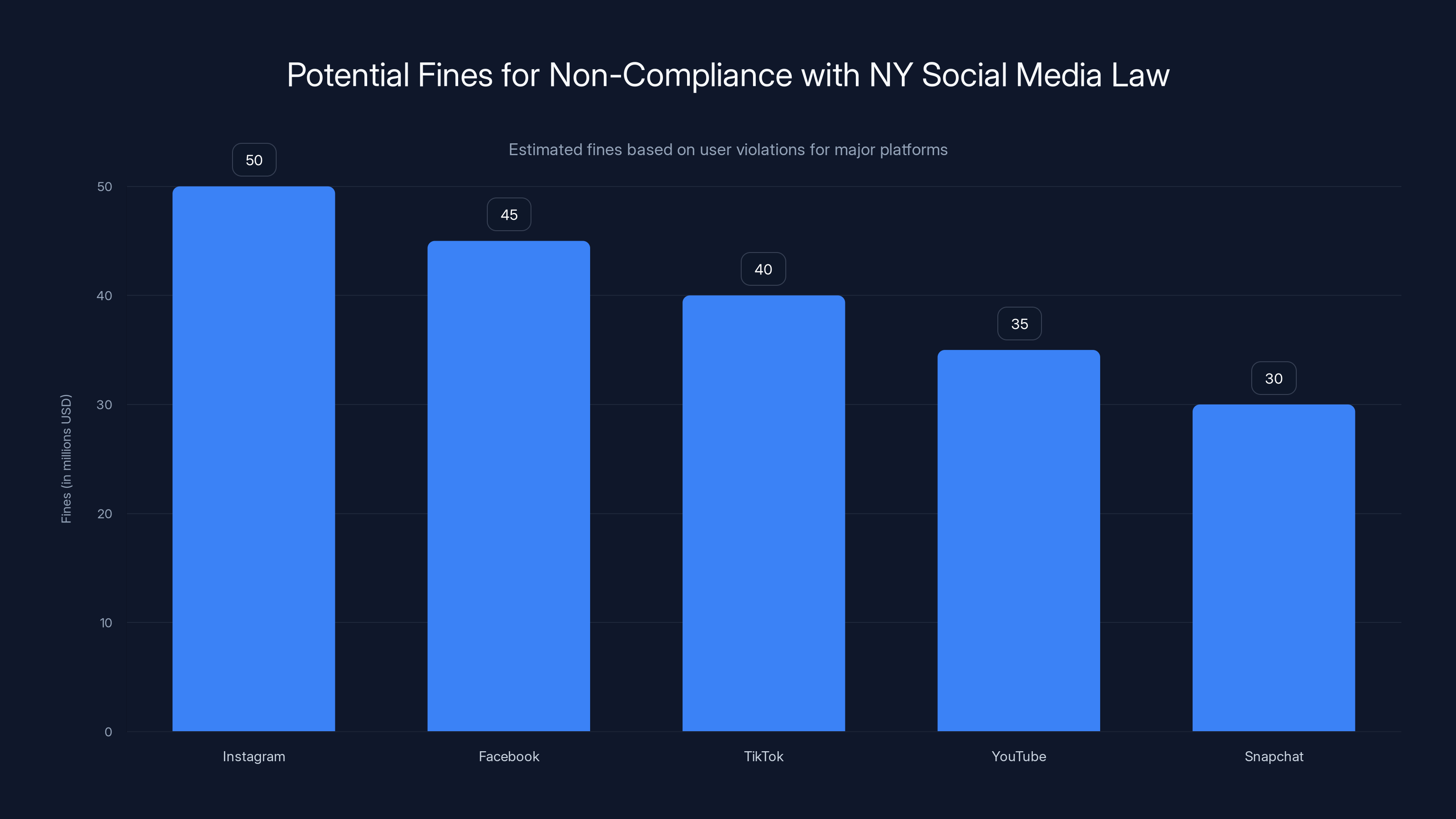

Non-compliance carries significant penalties. Violations can result in fines ranging from

But here's the practical question everyone's asking: Will companies actually comply, or will they fight it in court first? The answer appears to be "yes to both."

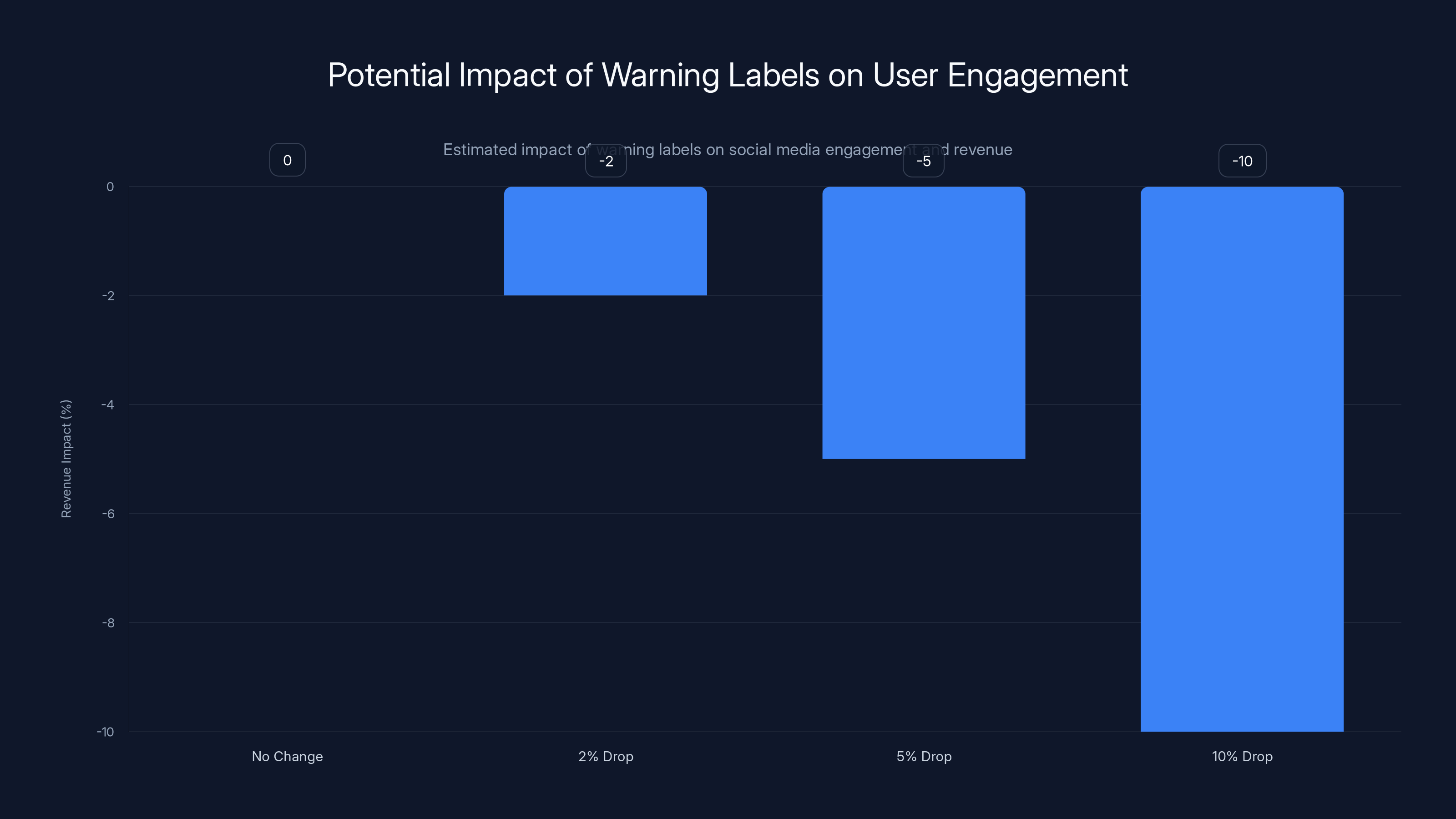

Estimated data shows that even a 2% drop in engagement due to warning labels could lead to a significant revenue impact for social media platforms. Larger drops could severely affect advertising revenue.

Why This Law Exists: The Mental Health Crisis

To understand why New York took this step, you need to grasp the scope of the problem. The mental health impacts of social media on young people aren't theoretical anymore. They're documented, measured, and deeply troubling.

The U.S. Surgeon General has been sounding alarms for years. Official reports now identify social media as a significant risk factor for youth anxiety, depression, and disordered eating. Studies show correlations between increased social media use and worsening mental health outcomes, particularly among teenagers.

Let's look at some actual numbers. Rates of adolescent depression increased by approximately 38% between 2005 and 2017, with steeper increases during peak social media adoption years. According to the CDC's Youth Risk Behavior Survey, the share of teen girls reporting persistent feelings of sadness or hopelessness rose from 36% in 2011 to 57% in 2021; across all high school students, it rose from 28% to 42%. These aren't small fluctuations. This is a genuine public health crisis.

The mechanism is well-understood by now. Social media platforms employ what researchers call "persuasive design" or, more bluntly, "addiction design." Every feature mentioned in the warning labels law was explicitly engineered to maximize time spent on platform.

Infinite scrolling doesn't exist because it's better for users. It exists because stopping the scroll requires intentional action. Your natural stopping points get eliminated. Engineers use the term "stopping cue" to describe natural moments when a user might exit an app. Social media removes these. There's always one more video. Always one more post.
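The difference between a feed with a stopping cue and an infinite one is easy to see in code. In this minimal sketch, `fetch_page` is a hypothetical stand-in for a platform's content source: the finite feed ends with an explicit "caught up" marker, while the infinite feed never runs out, so the decision to stop is always left to the user.

```python
from itertools import count, islice
from typing import Iterator


def fetch_page(page: int, size: int = 3) -> list[str]:
    """Hypothetical content source returning `size` placeholder post IDs."""
    return [f"post-{page * size + i}" for i in range(size)]


def finite_feed(followed_posts: list[str]) -> Iterator[str]:
    """Feed of followed accounts that ends with an explicit stopping cue."""
    yield from followed_posts
    yield "You're all caught up"  # The stopping cue: the feed visibly ends here.


def infinite_feed() -> Iterator[str]:
    """Infinite scroll: keeps fetching pages, so the generator never ends on its own."""
    for page in count():
        yield from fetch_page(page)


print(list(finite_feed(["a", "b"])))     # ['a', 'b', "You're all caught up"]
print(list(islice(infinite_feed(), 5)))  # ['post-0', 'post-1', 'post-2', 'post-3', 'post-4']
```

Calling `list()` on `infinite_feed()` would never return; only the caller's `islice`, standing in for the user's own willpower, decides where the feed stops.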

Algorithmic feeds don't exist to show you the content you actually want. They exist because algorithms that optimize for engagement consistently outperform chronological feeds in terms of time spent. An algorithm might show you outrageous political content, conspiracy theories, or triggering comparisons to other people's curated lives, because these generate strong emotional reactions that keep you scrolling.

Like counts and engagement metrics exploit social comparison and validation-seeking behavior that's particularly intense during adolescence. The teenage brain is literally rewired to care intensely about peer status. Social media weaponizes this developmental reality.

Former Facebook product manager Frances Haugen became famous for whistleblowing about these exact issues. Internal company research showed that Facebook knew Instagram was harming teenage girls' body image and mental health. The company had conducted studies showing the negative impacts. And they chose to prioritize growth metrics over user wellbeing.

This isn't speculation or conjecture. This is documented fact from internal company communications.

Parents have been frustrated by the lack of meaningful change. Schools have reported increased classroom disruption due to smartphone distraction. Mental health professionals have witnessed a clear correlation between their patient caseloads and smartphone adoption timelines.

New York's legislature essentially said: We can't wait for voluntary industry reform. We can't trust these companies to self-regulate. We need to force transparency about what we know these platforms do.

The Legal Arguments: Why Companies Are Fighting Back

Here's where it gets interesting legally. The platforms didn't roll over. They immediately challenged the law, and their arguments are genuinely sophisticated.

The central claim: warning labels on social media violate the First Amendment. Companies argue that the requirement to display warnings constitutes compelled speech, which is legally protected against government intrusion.

The Supreme Court has consistently held that the government cannot force private entities to communicate messages they don't endorse. This principle has been applied to everything from state license-plate mottos to school flag-salute requirements to, in one case, mandatory fees that funded generic mushroom advertising.

Social media companies argue that their platforms are forms of expression. The feed itself, the algorithm, the design—all of it constitutes protected speech. Forcing them to add warnings is like forcing a newspaper to display messages the newspaper's editor disagrees with.

It's not a frivolous argument. It has real constitutional grounding.

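But here's the counterargument, and it's equally compelling: Warning labels aren't about the platforms' speech. They're about disclosure of facts about what the platforms do. Restaurants must disclose calorie counts. Financial products must disclose interest rates. Tobacco products must disclose health risks. These requirements generally survive because they compel factual disclosures rather than ideological messages; since Zauderer v. Office of Disciplinary Counsel (1985), courts have reviewed required disclosures of "purely factual and uncontroversial information" in commercial speech under a more lenient standard.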

Proponents of the law argue that warning labels about addictive features are factual statements, not opinion or ideology. The features do exist. Scientific evidence does show correlations with mental health harms. The warning isn't forcing the platform to say anything political or controversial—just to acknowledge reality.

The litigation is ongoing, and the outcome genuinely matters. If courts rule that the law violates the First Amendment, it could establish a precedent making similar laws across other states vulnerable to the same challenges.

Conversely, if courts uphold the law, it opens the door to much more aggressive regulation of tech platforms. Other states will undoubtedly copy the model.

Some legal scholars suggest a middle ground: the law might stand a better chance if its required message were confined to narrow, factual statements and companies had more flexibility in how they demonstrate compliance. How rigid New York's requirements turn out to be will depend on how the format and wording questions are resolved.

One more wrinkle: jurisdiction. How does a state law apply to global platforms? TikTok is owned by Chinese parent company ByteDance. Does New York have regulatory authority over a foreign company? This raises complex questions of jurisdiction and extraterritoriality that courts haven't fully resolved in the digital age.

Global Perspective: What Other Countries Are Doing

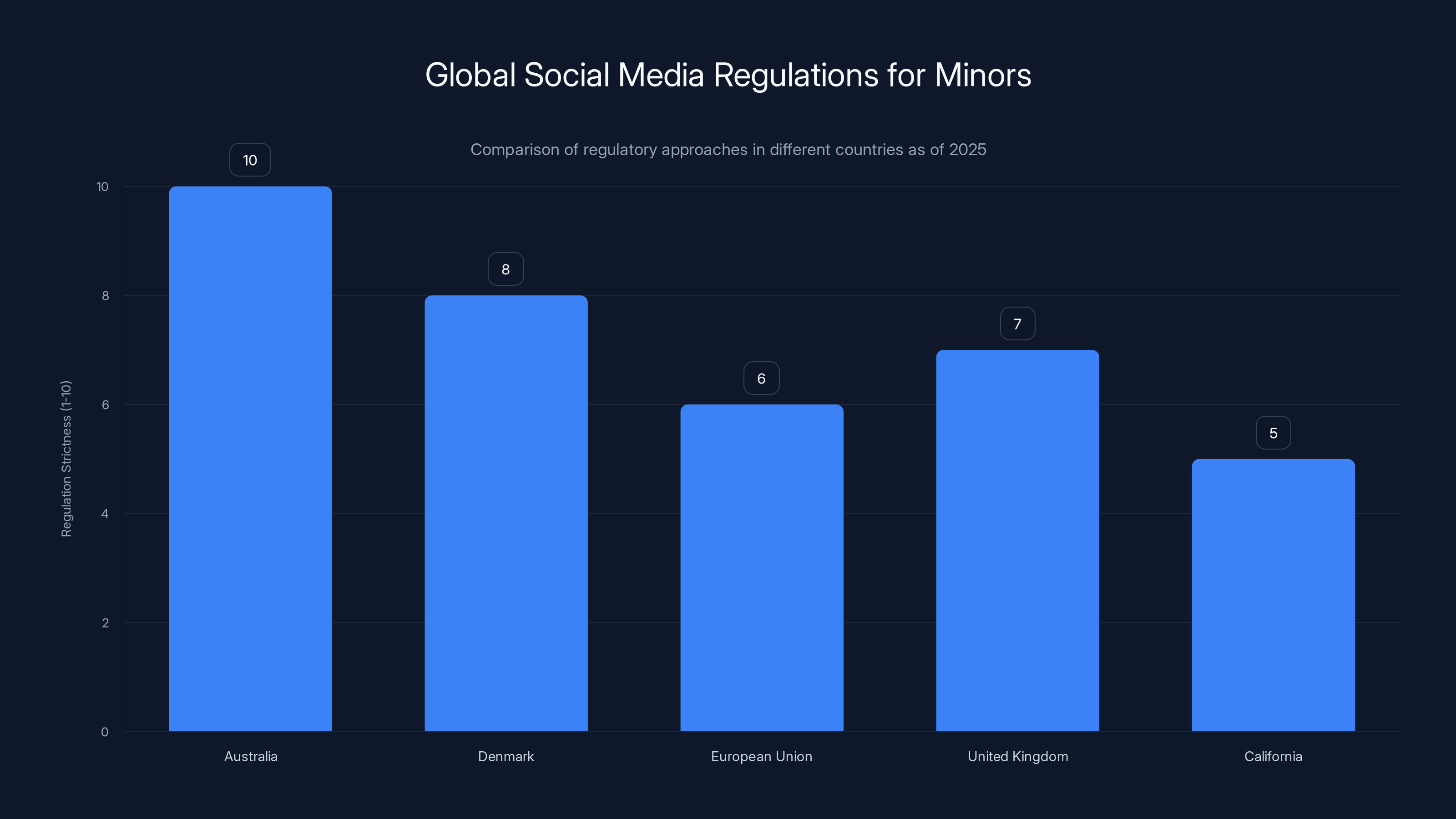

New York isn't operating in isolation. The world is waking up to social media's harms, and regulations are accelerating.

Australia moved first with truly aggressive action. In late 2024, Australia passed legislation banning social media for children under 16. Not warning labels. Not restrictions. Complete bans. The law represents the hardest possible line on youth social media access.

Australia's approach is fascinating because it reflects genuine frustration with industry intransigence. The government essentially said: You've had years to self-regulate. You've failed. Now we're making the decision for young people.

Denmark followed Australia's lead, with its own restrictions on social media for minors. The European Union is also developing comprehensive digital regulations, though more focused on data privacy and algorithmic transparency than age-based restrictions.

The United Kingdom's Online Safety Act 2023 includes provisions, being phased in by the regulator Ofcom, that require age checks and restrict algorithmic feeds for minors. The approach is lighter than Australia's but more comprehensive than New York's warning labels.

California has proposed legislation similar to New York's, though as of 2025, it hasn't yet become law. The state has been more focused on data privacy through laws like CCPA and CPRA.

What's notable about this global pattern is that many major democracies are moving toward stronger tech regulation. This isn't a partisan issue. It's a cross-party recognition that social media platforms have created genuine public health problems that markets alone won't solve.

The platforms argue they're complying with local regulations in each jurisdiction. They're building age gates in some countries. They've added screen time warnings in some regions. But critics argue these are performative compliance measures that don't address the core issue: the business model depends on maximizing engagement, regardless of harm.

Estimated fines for non-compliance with New York's social media warning labels law could reach millions per platform, highlighting the significant financial risk. Estimated data.

How Platforms Are Responding (So Far)

Meta, TikTok, Snap, and YouTube initially challenged the law rather than preparing for compliance. This is typical corporate strategy: fight in court while hedging with limited technical preparations.

Meta's position centers on the free speech argument. The company has been vocal that warning labels constitute compelled speech that infringes on its editorial independence. It has also argued that the law's definitions are vague and could sweep in features that aren't actually problematic.

TikTok has taken a similar legal stance while quietly noting that the app's algorithm differs fundamentally from how Meta describes its own. TikTok claims its recommendations are designed for content discovery rather than maximizing engagement, though researchers and critics dispute this characterization.

Snap has been somewhat quieter about the legal fight, possibly because Snapchat already includes various safety features and doesn't emphasize like counts as heavily as Instagram or TikTok.

YouTube faces a particular challenge because infinite scrolling and auto-play are core features of the platform. If the law is upheld, YouTube would need to either modify these features or display warnings constantly.

None of the major platforms have announced plans to remove the flagged features. Instead, they're betting on legal victory. This creates an interesting dynamic: if they lose in court, they will have to implement the technical changes quickly to avoid massive fines.

Some smaller platforms and alternative social networks have taken a different approach. Apps like BeReal, Discord (in certain modes), and newer platforms built with mental health considerations have preemptively dropped or limited some of these features. They see regulatory compliance as a competitive advantage, a way to differentiate themselves as the "ethical" alternative.

This creates interesting market dynamics. If warning labels become mandatory, companies that have already designed away from addictive features gain credibility and user trust. They can market themselves as having "already solved" what the law is trying to address.

What the Warning Labels Will Actually Look Like

This is where technical implementation gets crucial. The law doesn't prescribe exact label design, which creates both opportunity and regulatory uncertainty.

Based on precedent from cigarette warnings, we can expect prominent disclaimers, potentially with visual elements like warning symbols. They'll likely need to be displayed in a way that's impossible to miss or easily dismiss.

One likely scenario: when you first open TikTok or Instagram or YouTube, before you can start scrolling or watching videos, you'll see a full-screen or modal warning explaining that the platform uses features designed to be addictive and that research shows correlations with mental health harms.

The warning might include language like:

"This application contains features, including infinite scrolling and algorithmic recommendations, that are designed to be addictive. Research indicates these features may be harmful to your mental health. If you are a minor, please be aware of the potential risks. Parents and guardians can help monitor healthy usage patterns."

Then you click "I understand" or similar to proceed. And then, according to the law, you see the warning again periodically.

This raises interesting questions about what "periodically" means. Daily? Weekly? Each session? Companies will argue for the minimum, legislators will argue for more frequent. Expect this to be litigated.

Companies might also try creative compliance approaches. What if Instagram removes like counts (or makes them optional) but keeps algorithmic feeds? Does that reduce the scope of required warnings? What if they add a "chronological feed" option alongside the algorithmic one? Can users opt out of algorithmic recommendations?
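The creative-compliance questions above boil down to a set calculation: which flagged features remain active after a platform's design changes and a user's opt-outs. A minimal sketch, assuming warnings are scoped per feature (the article does not say the law works that way); `Feature` and `required_warnings` are hypothetical names.

```python
from enum import Enum
from typing import Optional


class Feature(Enum):
    INFINITE_SCROLL = "infinite scrolling"
    AUTOPLAY = "auto-play"
    LIKE_COUNTS = "like counts"
    ALGORITHMIC_FEED = "algorithmic feed"
    DIRECT_MESSAGES = "direct messages"  # Example of a feature the law does not flag.


# The four triggers named in the article.
FLAGGED = frozenset({
    Feature.INFINITE_SCROLL,
    Feature.AUTOPLAY,
    Feature.LIKE_COUNTS,
    Feature.ALGORITHMIC_FEED,
})


def required_warnings(active: set[Feature], opted_out: Optional[set[Feature]] = None) -> set[Feature]:
    """Flagged features still active for this user, assuming per-feature scoping."""
    opted_out = opted_out or set()
    return (active - opted_out) & FLAGGED


# A hypothetical platform that hides like counts but keeps its other features.
active = {Feature.INFINITE_SCROLL, Feature.ALGORITHMIC_FEED, Feature.DIRECT_MESSAGES}
print(sorted(f.value for f in required_warnings(active)))
# ['algorithmic feed', 'infinite scrolling']
print(sorted(f.value for f in required_warnings(active, {Feature.ALGORITHMIC_FEED})))
# ['infinite scrolling']
```

Under this model, hiding like counts or offering a chronological opt-out shrinks the warning set but never empties it while infinite scrolling stays on, which is why the "shifts liability" question below matters.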

These aren't trivial questions. They get at whether the law actually changes behavior or just shifts liability.

The Precedent: Tobacco Warnings and Beyond

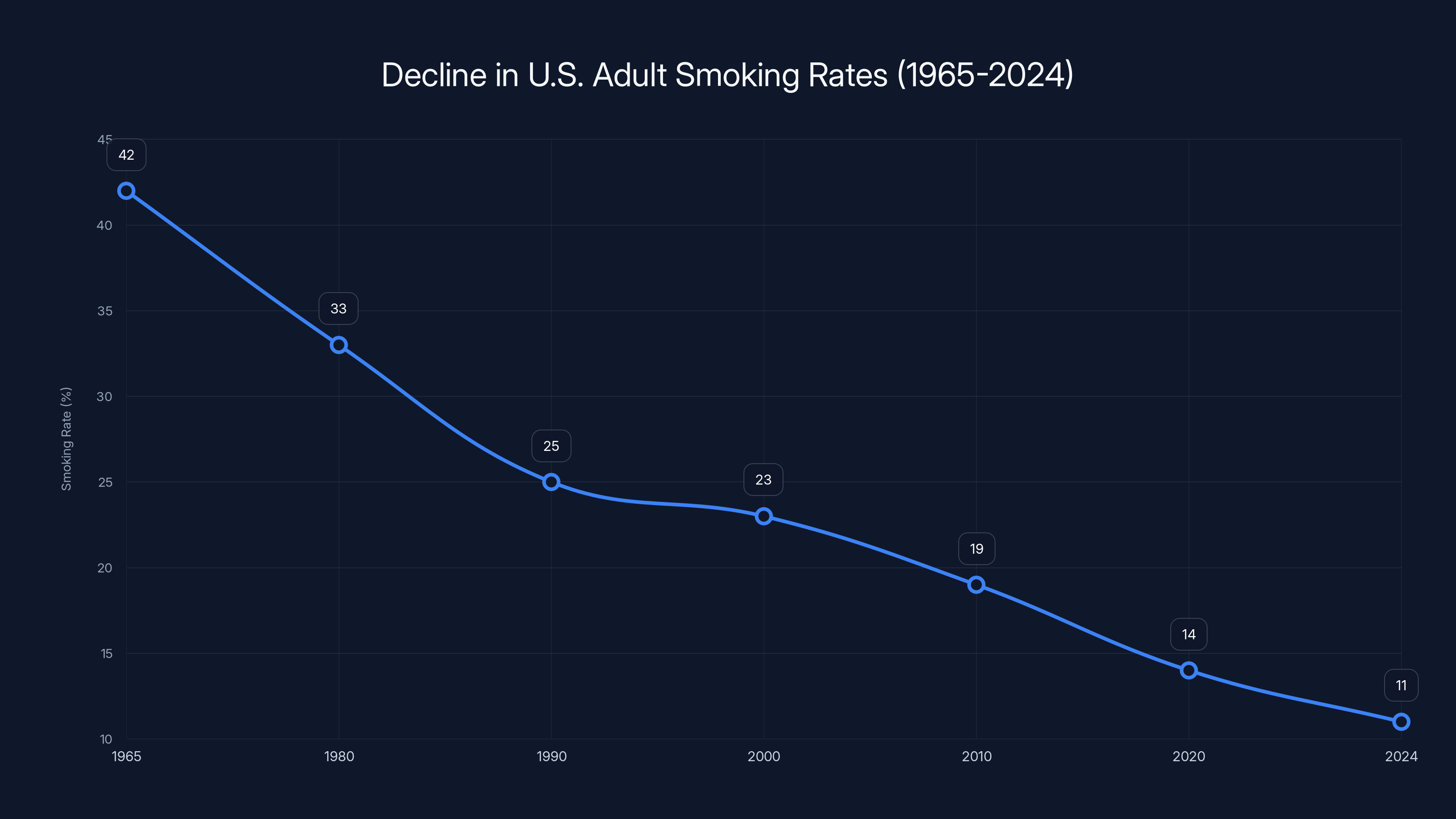

The model for all this is cigarette warnings. Starting in 1966, the U.S. required health warnings on cigarette packages. In 1984, Congress replaced the original warning with a set of four rotating, more specific and more prominent warnings.

Did cigarette warnings eliminate smoking? No. But they did increase awareness of risks, support quit attempts, and shift social norms around tobacco use. Smoking rates in the U.S. have declined from about 42% of adults in 1965 to roughly 11% in 2024.
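The two figures in that sentence are worth separating, because percentage-point and relative changes are easy to conflate. Using the approximate rates quoted above:

```latex
\Delta_{\text{pp}} = 42\% - 11\% = 31 \text{ percentage points},
\qquad
\Delta_{\text{rel}} = \frac{42 - 11}{42} \approx 0.738 \approx 74\%.
```

In other words, the adult smoking rate fell by about three-quarters relative to its 1965 level.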

Warnings are part of that decline, though not the only factor. Regulation, taxation, smoke-free policies, and cultural shifts all contributed.

The question for social media is whether similar warnings will change behavior. Some research suggests yes. Studies on warning labels for sugary beverages showed modest reductions in consumption. Warnings on alcohol products have some documented effect on consumer perception.

But social media is different. Cigarettes are voluntary purchases. You can choose not to buy them. Social media is often integral to teenage social life. Not using Instagram means social isolation from peers in many school environments.

This asymmetry matters. Warnings might inform users about risks, but they won't necessarily enable them to avoid the platforms. That gap is one reason other jurisdictions are turning to more aggressive, age-based restrictions.

Estimated data suggests algorithmic feeds have the highest potential impact on mental health, followed closely by infinite scrolling. Estimated data.

Economic and Business Model Implications

Here's what keeps tech executives awake at night: what if warnings actually work?

Social media platforms generate revenue through advertising. Advertising works because the platforms have massive engaged user bases spending hours on the apps. If warnings reduce usage, especially among younger demographics, it directly impacts advertising revenue.

Meta's quarterly reports show that engagement metrics (daily active users, time spent) directly correlate with advertising pricing. If engagement drops 10%, you're looking at significant revenue impact.

Now, will New York warning labels cause 10% engagement drops? Probably not. Will they cause any measurable change? Maybe. The economic stakes are massive, which is why companies are fighting this legally.
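The revenue logic can be written as a back-of-the-envelope model. This sketch assumes ad revenue scales linearly with engagement through an elasticity parameter; the function name, the default elasticity of 1.0, and the revenue baseline of 100 are illustrative placeholders, not Meta's numbers or any reported data.

```python
def revenue_impact(baseline_revenue: float, engagement_drop: float, elasticity: float = 1.0) -> float:
    """Revenue lost for a fractional engagement drop under a linear model.

    Assumption: a 1% drop in engagement cuts ad revenue by `elasticity` percent.
    Real ad pricing is not this simple; this only shows the shape of the argument.
    """
    if not 0.0 <= engagement_drop <= 1.0:
        raise ValueError("engagement_drop must be a fraction between 0 and 1")
    return baseline_revenue * engagement_drop * elasticity


# Revenue in arbitrary units (100 = today's baseline), not a real company's figures.
for drop in (0.02, 0.10):
    print(f"{drop:.0%} drop -> {revenue_impact(100.0, drop):.1f} units lost")
# 2% drop -> 2.0 units lost
# 10% drop -> 10.0 units lost
```

The 2% and 10% inputs mirror the drops discussed in this article; an elasticity above 1 would capture the argument that advertisers pay a premium for highly engaged audiences, so losses would compound.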

There's also a cascading concern for platforms. If New York wins this battle, other states will follow. California, Illinois, and New Jersey have already considered similar legislation. Within a few years, platforms could face a patchwork of state-by-state warning label requirements, each with different specifications.

This compliance complexity is itself a cost. Building systems to detect geographic location and display state-specific warnings isn't free. Modifying platforms to account for different regulatory regimes requires engineering resources.

Some analysts suggest this could actually benefit larger platforms with bigger compliance budgets while harming smaller competitors who can't afford to customize systems for each state.

Youth Behavior and Real-World Impact

So what actually happens when kids see these warnings? That's the empirical question nobody can fully answer yet.

Behavioral economics and psychology suggest a few scenarios:

Scenario 1: Minimal Impact. Warning labels become background noise. Users see them, acknowledge them, and continue as before. This is the platforms' hope and probably their prediction of what happens.

Scenario 2: Modest Behavior Change. Some users, especially those already concerned about social media's impact, use the warnings as confirmation to reduce usage. Some parents use the warnings as conversation starters with their kids. Aggregate usage drops slightly but measurably.

Scenario 3: Significant Behavior Change. The warnings shift social norms. Using heavily addictive social media becomes seen as less desirable, similar to how smoking shifted from normal to stigmatized. This would require the warnings to operate at a cultural rather than individual level.

Research on warning labels suggests Scenario 2 is most likely. Not no impact, but not transformative impact either.

What might actually drive bigger behavior changes: if the warnings prompt platforms to modify their addictive features. A platform that removes infinite scrolling or replaces algorithmic feeds with chronological ones would see significant usage changes.

But here's the catch: platforms don't want to do this voluntarily because engagement metrics drive revenue. They'll do it only if forced by regulation.

So the law's real impact might not be the labels themselves, but the threat of regulation prompting platforms to finally engineer for user wellbeing rather than engagement maximization.

What Happens If Other States Pass Similar Laws

Imagine a scenario where California, Texas, Florida, and ten other states all pass social media warning label laws. They don't all use identical language, but they cover the same core features.

Now every social platform needs to navigate this landscape. Some companies might choose to comply everywhere with the strictest standard, effectively letting the most demanding state set the national baseline. Others might build state-specific implementations.
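The "comply everywhere with the strictest standard" strategy amounts to merging every state's rules into one policy. A minimal sketch, assuming each state's rule can be reduced to a set of covered features and a maximum gap between warnings; `StateRule`, `strictest`, and the two example rules are hypothetical, not real statutes.

```python
from dataclasses import dataclass
from datetime import timedelta


@dataclass(frozen=True)
class StateRule:
    """Hypothetical reduction of one state's law to two parameters."""
    covered_features: frozenset[str]
    max_interval: timedelta  # Longest allowed gap between repeat warnings.


def strictest(rules: list[StateRule]) -> StateRule:
    """Warn on every feature any state covers, at the shortest interval any state allows."""
    if not rules:
        raise ValueError("need at least one state rule")
    features = frozenset().union(*(r.covered_features for r in rules))
    interval = min(r.max_interval for r in rules)
    return StateRule(features, interval)


# Two made-up state rules, not real statutes.
state_a = StateRule(frozenset({"infinite_scroll", "autoplay"}), timedelta(days=1))
state_b = StateRule(frozenset({"like_counts"}), timedelta(days=7))
merged = strictest([state_a, state_b])
print(sorted(merged.covered_features), merged.max_interval)
# ['autoplay', 'infinite_scroll', 'like_counts'] 1 day, 0:00:00
```

The merge takes the union of features and the minimum interval, so a single national policy inherits the toughest element of each state's law, which is both why it is simple to run and why platforms might prefer one federal standard.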

The practical effect is that state-by-state regulation becomes increasingly expensive and complex. At some point, platforms might actually lobby Congress for federal legislation, reasoning that a single federal standard is better than managing fifty different state regimes.

This has happened before. Companies in regulated industries often prefer federal regulation to state-by-state patchworks. The EU also demonstrated how a single comprehensive regulation can be simpler for compliance than managing multiple country-specific rules.

Federal regulation could either strengthen or weaken protections depending on political dynamics. A Democratic-controlled Congress might strengthen safeguards; a Republican-controlled one might weaken them. The ground is shifting, but the direction depends on political factors.

Australia leads with the strictest regulations, banning social media for children under 16. Other regions like Denmark and the UK are also implementing significant measures. Estimated data.

Alternatives to Warning Labels

Some policy experts argue that warning labels alone don't go far enough. Alternative approaches include:

Algorithmic transparency requirements: Forcing platforms to explain how their algorithms work and allowing users to opt out. This addresses the core problem directly rather than just warning about it.

Engagement metrics restrictions: Banning like counts, view counts, and other metrics that gamify social interaction. This would require design changes rather than just disclosure.

Time management tools: Requiring platforms to include robust tools for monitoring and limiting usage. Many platforms already have these, but they're often buried in settings and easy to disable.

Age verification: Requiring proof of age before accessing platforms. More invasive than warnings but more effective at keeping minors off systems designed to addict them.

Chronological feeds by default: Requiring algorithmic feeds to be opt-in rather than default. Users would see posts from accounts they follow in chronological order unless they explicitly chose algorithmic sorting.

Business model restrictions: Banning business models based on engagement maximization. This would fundamentally change how platforms operate.
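The "chronological feeds by default" proposal is, at the code level, mostly a change of sort key. This sketch assumes each post carries a timestamp and a predicted-engagement score from some ranking model (`predicted_engagement` is a hypothetical field), and switches to engagement ranking only when the user opts in.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Post:
    author: str
    posted_at: datetime
    predicted_engagement: float  # Hypothetical model score; higher means "stickier".


def build_feed(posts: list[Post], algorithmic_opt_in: bool = False) -> list[Post]:
    """Newest-first by default; engagement-ranked only if the user opts in."""
    if algorithmic_opt_in:
        return sorted(posts, key=lambda p: p.predicted_engagement, reverse=True)
    return sorted(posts, key=lambda p: p.posted_at, reverse=True)


posts = [
    Post("friend", datetime(2025, 1, 2, 9), 0.1),
    Post("outrage_account", datetime(2025, 1, 1, 9), 0.9),
]
print([p.author for p in build_feed(posts)])                           # ['friend', 'outrage_account']
print([p.author for p in build_feed(posts, algorithmic_opt_in=True)])  # ['outrage_account', 'friend']
```

The posts are identical in both cases; only the default ordering changes, which is why advocates see opt-in ranking as a design change rather than a content restriction.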

Each approach has trade-offs. Warning labels are least restrictive but potentially least effective. Age-based access bans are most restrictive but raise fairness and equity questions.

New York chose the least restrictive approach, possibly because it's the most defensible legally. More aggressive approaches face stronger First Amendment and other constitutional challenges.

Implementation Timeline and Enforcement

The law takes effect at a date specified in the legislation, with enforcement provisions outlining how the state will monitor compliance.

Initially, enforcement will likely rely on user reports. Someone sees a platform not displaying warnings and files a complaint with the New York Attorney General's office, which then investigates whether the platform is in compliance.

For a large platform with millions of New York users, perfect compliance is nearly impossible. Some users will inevitably experience glitches or failures to see warnings. The question becomes: what level of non-compliance triggers enforcement action?

Platforms will argue for a "safe harbor" provision: if they make good-faith efforts to comply and display warnings to 95% or 99% of users, technical glitches shouldn't trigger massive penalties.

The state will likely agree to some reasonable threshold rather than enforcing strict perfection. But the exact threshold will probably be negotiated through litigation if platforms challenge it.

One interesting wrinkle: how does New York detect whether warnings are being displayed? The state could hire auditors to create accounts and manually check. But at scale, this requires automated monitoring or self-reporting by platforms.

Most regulatory regimes rely on a combination of self-reporting and audits. Companies report their compliance metrics, and regulators verify through spot checks.
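Combining spot-check audits with a safe-harbor threshold can be sketched as a small statistics problem. Everything here is an assumption for illustration: the 99% threshold (one of the figures floated above), auditing a random sample of test accounts, and the use of a Wilson score lower bound so that a small, lucky sample does not overstate compliance.

```python
import math


def wilson_lower_bound(successes: int, n: int, z: float = 1.96) -> float:
    """Lower end of the Wilson score interval for a binomial proportion (z=1.96 ~ 95%)."""
    if n <= 0:
        return 0.0
    p = successes / n
    denom = 1 + z**2 / n
    centre = p + z**2 / (2 * n)
    margin = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2))
    return (centre - margin) / denom


def meets_safe_harbor(warned: int, audited: int, threshold: float = 0.99) -> bool:
    """True if the audit supports (at ~95% confidence) a display rate >= threshold."""
    return wilson_lower_bound(warned, audited) >= threshold


# Hypothetical audits: the same 99.5% observed rate on two sample sizes.
print(meets_safe_harbor(995, 1_000))     # False: too few accounts to be confident
print(meets_safe_harbor(9_950, 10_000))  # True
```

Judging on the raw sample rate would pass both audits; asking for a confidence bound instead means a regulator needs a larger sample before granting safe harbor, which is one concrete way the "reasonable threshold" negotiation could play out.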

Financial Impact Calculations

Let's do some math on potential financial exposure for platforms.

The law allows fines of

If a platform fails to show warnings to all users for a full day, that's potentially 15 million violations at

Now, platforms won't accumulate that exact level of liability. Systems will fail occasionally, but they'll remediate quickly. But the theoretical maximum exposure is enormous.

In practice, enforcement will likely result in much smaller penalties unless there's evidence of deliberate non-compliance. But even

For context, Meta's quarterly revenue in 2024 exceeded

The financial calculus for platforms becomes: spend tens of millions on compliance engineering versus risk potentially larger fines plus reputational damage. Compliance starts looking economically rational.
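The exposure arithmetic above is a straight multiplication, and it can be written so that the per-violation fine stays a parameter. This sketch assumes each user who misses a warning on a given day counts as one violation (the 15 million figure comes from the text; how the statute actually counts violations is not stated here), and the fine of 1.0 in the example is a placeholder unit, not the law's amount.

```python
def max_exposure(affected_users: int, days: int, fine_per_violation: float) -> float:
    """Theoretical maximum fines, assuming one violation per affected user per day."""
    if min(affected_users, days, fine_per_violation) < 0:
        raise ValueError("inputs must be non-negative")
    return affected_users * days * fine_per_violation


# fine_per_violation=1.0 is a placeholder unit: scale the result by the real fine.
print(max_exposure(15_000_000, 1, 1.0))  # 15000000.0
```

Because exposure is linear in every input, each dollar of per-violation fine adds $15 million of theoretical exposure per day of a full lapse.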

Smoking rates in the U.S. have significantly declined from 42% in 1965 to an estimated 11% in 2024, influenced by warnings, regulations, and cultural shifts.

Technical Implementation Challenges

Building warning label systems sounds simple until you think about the technical realities.

How does a platform know whether a user is in New York? IP geolocation? Unreliable when users connect through a VPN. Device location data? Requires location permissions. Account registration address? Users can lie.

Platforms will likely use a combination: IP geolocation as the primary signal, with user-provided location information for refinement. There will be false positives (people outside New York seeing warnings) and false negatives (New Yorkers not seeing them).
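That combination of signals could look like the sketch below. It assumes hypothetical inputs (a state inferred from IP geolocation, a flag marking known VPN traffic, and a self-reported state); none of these names correspond to a real geolocation API. Following the approach described above, IP is the primary signal and the self-reported state refines it, and a `conservative` mode shows the trade-off between false positives and false negatives.

```python
from typing import Optional


def resolve_state(ip_state: Optional[str], ip_is_vpn: bool, reported_state: Optional[str]) -> Optional[str]:
    """IP geolocation first; fall back to the self-reported state if IP is missing or a VPN."""
    if ip_state is not None and not ip_is_vpn:
        return ip_state
    return reported_state


def applies_ny_warning(ip_state: Optional[str], ip_is_vpn: bool,
                       reported_state: Optional[str], conservative: bool = False) -> bool:
    """Whether New York's warning rules should apply to this session.

    conservative=True warns if *any* signal says New York, trading more false
    positives (non-New Yorkers warned) for fewer false negatives.
    """
    if conservative:
        return "NY" in (ip_state, reported_state)
    return resolve_state(ip_state, ip_is_vpn, reported_state) == "NY"


print(applies_ny_warning("NY", False, None))                     # True
print(applies_ny_warning("NJ", True, "NY"))                      # True: VPN, so trust the profile
print(applies_ny_warning("NJ", False, "NY"))                     # False: IP wins
print(applies_ny_warning("NJ", False, "NY", conservative=True))  # True
```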

The warnings need to be displayed in a way users can't easily bypass. A simple "click OK" button isn't enough because users would just click through without reading. But make it too intrusive and platforms claim user experience suffers.
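One possible middle ground between a click-through button and an intrusive wall is to keep the "I understand" button disabled until the warning has been on screen for a minimum time. This is a hypothetical design, not something the law or any platform is described as using; the 5-second minimum and the `WarningModal` class are assumptions.

```python
import time
from typing import Callable, Optional


class WarningModal:
    """Warning dialog whose acknowledge button unlocks after a minimum dwell time."""

    def __init__(self, min_display_seconds: float = 5.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.min_display_seconds = min_display_seconds  # Assumed value, not from the law.
        self._clock = clock  # Injectable so the timing logic can be tested.
        self._shown_at: Optional[float] = None

    def show(self) -> None:
        self._shown_at = self._clock()

    def can_acknowledge(self) -> bool:
        if self._shown_at is None:
            return False  # A warning that was never displayed can't be acknowledged.
        return self._clock() - self._shown_at >= self.min_display_seconds


fake_now = [100.0]  # Fake clock for the demo, in seconds.
modal = WarningModal(clock=lambda: fake_now[0])
modal.show()
print(modal.can_acknowledge())  # False: just appeared
fake_now[0] += 5.0
print(modal.can_acknowledge())  # True: minimum dwell time reached
```

Using `time.monotonic` by default avoids surprises if the device's wall clock changes while the warning is open.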

Displaying warnings periodically adds another layer. Defining "periodic" is ambiguous. If it means once per session, that's manageable. If it means every hour of usage, that's intrusive. The actual litigation over this detail could be extensive.
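How much the meaning of "periodic" matters is easy to quantify. This sketch counts how many warnings one hypothetical user would see in a day under three readings of the word: once per session, once per day, or once per hour of cumulative usage. The policy names and the session pattern are invented for illustration.

```python
def warnings_per_day(session_minutes: list[int], policy: str) -> int:
    """Count warning impressions for one day of sessions under a re-display policy.

    Policies (hypothetical readings of "periodically"):
      "per_session"    - one warning at the start of every session
      "daily"          - one warning per day, at first use
      "per_usage_hour" - one at first use, then one per further hour of cumulative use
    """
    if any(m <= 0 for m in session_minutes):
        raise ValueError("session lengths must be positive minutes")
    if not session_minutes:
        return 0
    total = sum(session_minutes)
    if policy == "per_session":
        return len(session_minutes)
    if policy == "daily":
        return 1
    if policy == "per_usage_hour":
        # Warnings fire at minute 0, 60, 120, ... of usage, while the user is still active.
        return 1 + (total - 1) // 60
    raise ValueError(f"unknown policy: {policy}")


sessions = [10, 25, 5, 40, 70, 15]  # A made-up day: 165 minutes over six sessions.
for policy in ("per_session", "daily", "per_usage_hour"):
    print(policy, warnings_per_day(sessions, policy))
# per_session 6
# daily 1
# per_usage_hour 3
```

For the same user, the reading chosen changes the count sixfold, which is why platforms and legislators can be expected to fight over this single word.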

Multilingual considerations matter too. New York has large populations of non-English speakers. Warnings need to be clear in Spanish, Mandarin, and other languages.
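A common pattern for multilingual text is locale fallback: try the user's full locale tag, then its base language, then a default. The sketch assumes a hypothetical in-memory catalog; the English string shortens the sample warning's first sentence, the Spanish string is an illustrative translation rather than mandated wording, and a real deployment would add Chinese and other languages.

```python
WARNING_TEXT = {
    "en": "This application contains features that are designed to be addictive.",
    "es": "Esta aplicación contiene funciones diseñadas para ser adictivas.",
}
DEFAULT_LANG = "en"


def warning_for_locale(locale_tag: str) -> str:
    """Return warning text for a tag like 'es-US' or 'es_MX', falling back to English."""
    tag = locale_tag.replace("_", "-").lower()
    for candidate in (tag, tag.split("-")[0]):  # Full tag first, then base language.
        if candidate in WARNING_TEXT:
            return WARNING_TEXT[candidate]
    return WARNING_TEXT[DEFAULT_LANG]


print(warning_for_locale("es-US"))  # Spanish text
print(warning_for_locale("fr-FR"))  # No French entry yet, so English
```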

For younger users, even more clarity is needed. The warnings need to be comprehensible to a 13-year-old, not just adults.

The Precedent This Sets for Tech Regulation

Beyond social media, this law establishes a model for regulating addictive technology generally.

Video games with loot boxes and engagement mechanics could face similar requirements. Streaming services could require warnings about binge-watching. Email systems could require warnings about notification addiction.

Once the principle is established that government can require warnings about addictive digital features, the scope can expand indefinitely.

This could be good or bad depending on your perspective. Consumer protection advocates see it as overdue accountability for addictive design. Tech industry advocates see it as overreach into private business models.

International precedent matters too. If multiple countries independently conclude that similar regulations are necessary, it strengthens the case that this is genuine policy innovation rather than anti-tech bias.

What Users and Parents Should Do Now

For people actually using these platforms, what does the warning label law mean practically?

If you're a parent: use the warnings as conversation starters. When your kid sees a warning about addictive features, ask them what it means. Why would the government require these warnings? What do they think the risks are? This turns a regulatory requirement into a teaching moment.

If you're a young person: take the warnings seriously. They exist because there's genuine evidence of harm. That doesn't mean you can't use social media, but it means being intentional about how you use it.

For everyone: consider the platforms you're using and what features actually serve you versus what's purely addictive. Do you need infinite scrolling, or would you prefer a chronological feed? Do you care about like counts, or would you prefer to hide them? Many platforms now let you customize these things. Use those options.

Start tracking your own screen time. Most phones have built-in tools for this. See what you're actually doing. The data often surprises people.

Consider deleting apps from your phone and accessing platforms through browsers instead. This friction reduces mindless usage without eliminating necessary access.

Looking Forward: 2025 and Beyond

As of 2025, the New York law is in effect, but the legal battles are far from over. Appeals could take years, and other states are watching to see whether courts uphold it.

Meanwhile, the conversation around social media's societal impact continues to evolve. There's growing bipartisan recognition that something needs to change. Democrats tend to emphasize mental health harms to minors. Republicans tend to emphasize algorithmic bias and censorship concerns. But both sides increasingly agree that the current regulatory vacuum is untenable.

Technologically, we might see platforms preemptively modifying features to reduce regulatory exposure. Some companies might invest in genuine wellness-focused design rather than engagement maximization.

Newer platforms continue to emerge with different models: chronological feeds, no like counts, privacy-focused design. It is not yet clear whether these can genuinely compete with the established giants or will remain niche.

International regulation will likely accelerate. The EU's Digital Services Act, Australia's under-16 social media ban, and similar measures elsewhere will create cascading pressure for change.

The deeper question: can you create a social platform that genuinely serves human connection rather than engineering addiction? Or is the business model fundamentally incompatible with user wellbeing? That's the real issue beneath all the warning labels.

Conclusion: A Turning Point in Tech Regulation

New York's social media warning labels law represents a watershed moment: the point at which government decided it could no longer wait for voluntary industry reform. The consequences ripple far beyond New York.

The law probably won't end social media addiction; historically, warning labels have had modest effects. But the law serves several purposes at once. It informs users about risks. It signals to platforms that regulation is coming. It establishes legal precedent that government can require disclosure about digital harms. It emboldens other states to consider their own legislation.

The platforms will fight it in court and may well prevail, at least initially. But even the litigation is instructive: courts will have to grapple with fundamental questions about free speech, digital regulation, and government power in the internet age.

What happens next depends on several factors: court rulings, other state actions, global regulatory trends, and, perhaps most importantly, continued public pressure demanding that tech companies finally prioritize user wellbeing over engagement metrics.

The warning labels themselves might not change much. But they signal that the era of tech companies operating without accountability is ending. What replaces it is still taking shape, and it is worth watching closely.

FAQ

What exactly does New York's social media warning labels law require?

The law mandates that social media platforms display prominent warning labels when users interact with features identified as addictive: infinite scrolling, auto-play videos, like counts, and algorithmic feeds. These warnings must appear when users first engage with these features and periodically thereafter. The warnings specifically caution about potential mental health harms, particularly to minors. The law applies to any platform accessed from within New York State.

Which social media platforms are affected by this law?

Virtually all major social media platforms with the specified features are covered, including Meta's Instagram and Facebook, TikTok, YouTube, Snapchat, and others. Any platform offering infinite scrolling, auto-play, like counts, or algorithmic recommendations must comply. Smaller or alternative platforms without these features may not be covered. Because the law applies based on features rather than specific companies, its scope is potentially broad.

What happens if social media companies don't comply with the law?

Non-compliance can result in fines of up to $1,000 per violation per user.

Are there legal challenges to this law, and could it be overturned?

Yes. Multiple platforms have challenged the law on First Amendment grounds, arguing that mandatory warning labels are compelled speech that violates free speech protections. The legal battle centers on whether the warnings are purely factual disclosures of the kind the government may require of businesses, or compelled statements of opinion that trigger stricter constitutional scrutiny. Courts will ultimately decide whether the law survives, and appeals could carry the case to higher courts. The outcome could set precedent for similar laws nationwide.

How does this law compare to what other countries are doing about social media harms?

New York's warning-label approach is relatively modest compared to some international efforts. Australia has implemented an age-based ban on social media for users under 16. Denmark is pursuing similar age restrictions. The European Union has adopted the Digital Services Act, which focuses on transparency and algorithmic accountability. The United Kingdom is implementing its Online Safety Act. New York chose the least restrictive approach, relying on disclosure rather than access restrictions, likely because disclosure is easier to defend in court.

Will these warning labels actually change how people use social media?

Historically, warning labels have had modest effects on behavior. Research on cigarette warnings shows they inform users but don't eliminate consumption. For social media, warnings might prompt some users, particularly those already concerned about their usage, to spend less time on platforms. However, warnings alone probably won't produce dramatic behavior changes, because social media is woven into teenage social life in a way that makes opting out socially costly. The larger impact might come if warnings push platforms to redesign addictive features, which could drive bigger behavioral shifts.

What can parents and teenagers do in response to this law?

Parents should use the warning labels as conversation starters with their teenagers about the designed addictiveness of social media and research showing correlations with mental health harms. Teenagers should consider customizing their app settings to hide like counts or use chronological feeds where available. Both should track actual screen time and consider deliberate limits. More substantively, parents can implement parental controls, set family phone-free times, and maintain open conversations about healthy social media usage. Individual behavior changes might matter more than legal requirements.

Could this law be applied to other technology besides social media?

Yes, the legal precedent established could potentially extend to other digital products with addictive features. Video games with engagement mechanics, streaming services designed for binge-watching, notification systems engineered for addiction, and other digital products could theoretically face similar warning requirements. This creates both opportunity and concern depending on perspective. Consumer advocates see broader regulation of addictive digital design as necessary. Tech industry advocates worry about increasingly extensive government intrusion into product design and business models.

What is the timeline for when these warning labels must appear?

The law sets an effective date by which platforms must comply, and enforcement begins on that date. From then on, platforms are expected to display warnings as soon as users first interact with flagged features. However, ongoing litigation could delay or alter implementation. Companies have argued for transition periods to build compliant systems. The practical timeline will depend on court rulings and on enforcement decisions by the New York Attorney General's office.

Related Reading

For readers interested in exploring adjacent topics, consider investigating: digital addiction science and psychology, international approaches to tech regulation, platform design ethics, youth mental health epidemiology, the business economics of engagement-driven models, and First Amendment jurisprudence related to commercial speech and disclosure requirements.

Key Takeaways

- New York requires warning labels on social media platforms with infinite scroll, auto-play, like counts, and algorithmic feeds, a historic regulatory move

- Labels must display when users first interact with addictive features and periodically thereafter, with fines up to $1,000 per violation per user

- Tech companies are challenging the law on First Amendment grounds, arguing warnings constitute compelled speech rather than factual disclosure

- The youth mental health crisis correlates with social media adoption, with the share of U.S. high school students reporting persistent sadness or hopelessness rising from 28% (2011) to 42% (2021)

- Australia has implemented an under-16 ban, Denmark is pursuing age limits, and the EU and UK are enforcing broader platform regulations, reflecting a global shift toward stricter tech oversight

- Warning labels have historically had modest behavioral effects, but they may prompt platforms to redesign addictive features or motivate other states to pass similar laws
