Nvidia's AI Empire: The Complete 2025 Investment Landscape

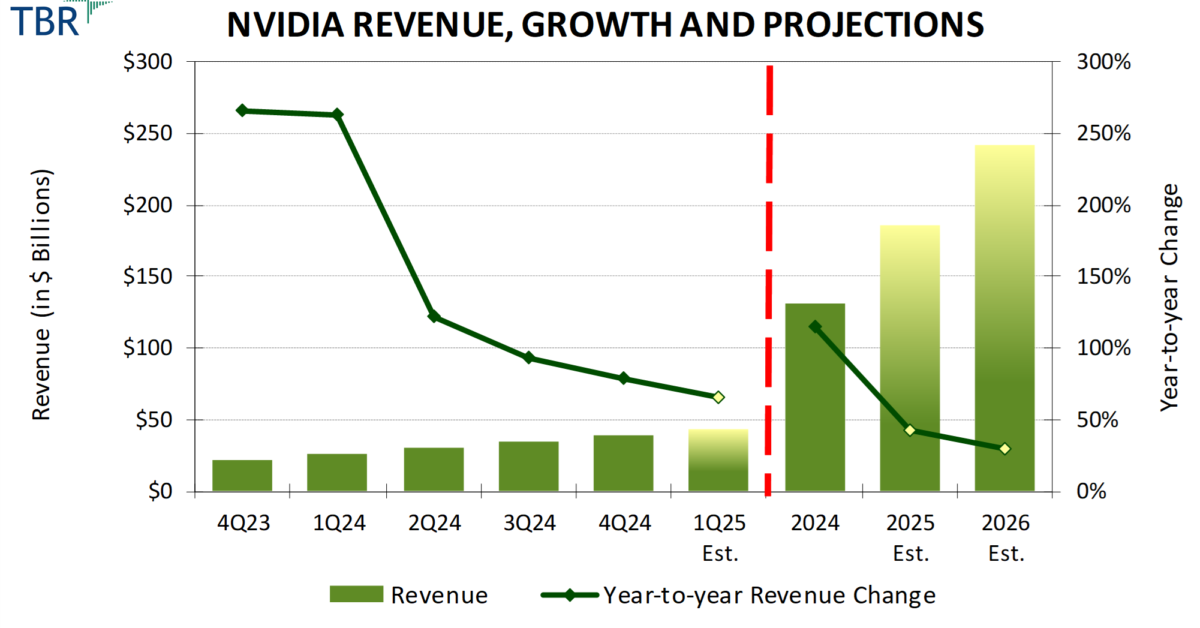

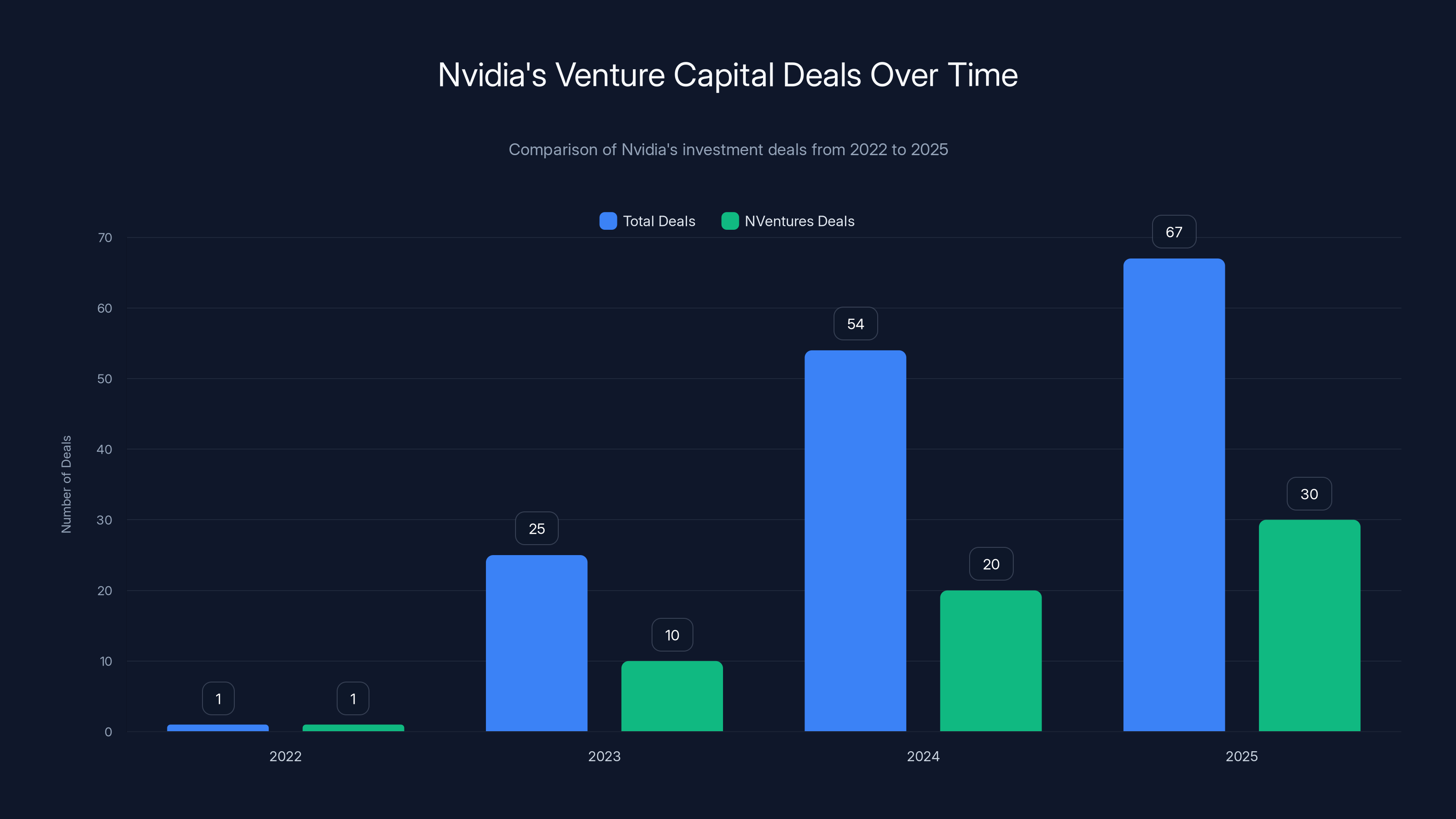

Nvidia has fundamentally transformed from a GPU manufacturer into a venture capital powerhouse, reshaping the artificial intelligence ecosystem through strategic investments. Over the past three years, since ChatGPT's breakthrough launch, the company has accelerated its corporate investment velocity to unprecedented levels. In 2025 alone, Nvidia participated in nearly 67 venture capital deals, up from 54 transactions in 2024, an increase of roughly 24%. This growth in investment activity reveals a sophisticated strategy: rather than simply selling GPUs to AI companies, Nvidia is actively shaping the future direction of artificial intelligence development itself.

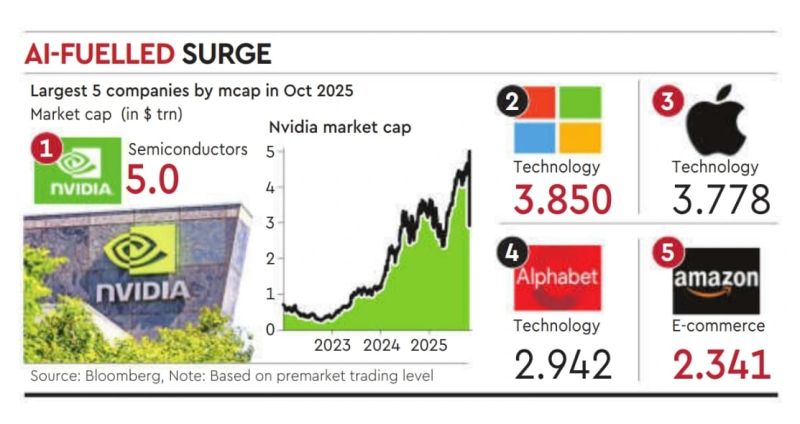

The numbers tell a compelling story about Nvidia's market dominance and financial capacity. The company's market capitalization has soared to approximately $4.6 trillion, fueled by insatiable demand for its high-performance GPUs that power everything from large language models to enterprise AI applications. This astronomical valuation has created a war chest that Nvidia leverages not just through its formal corporate venture capital fund, NVentures, but also through direct strategic investments across hundreds of emerging AI companies. The distinction is important: while NVentures completed 30 deals in 2025 compared to just one in 2022, the broader Nvidia investment ecosystem extends far beyond the formal VC fund into a complex web of strategic partnerships and minority stakes.

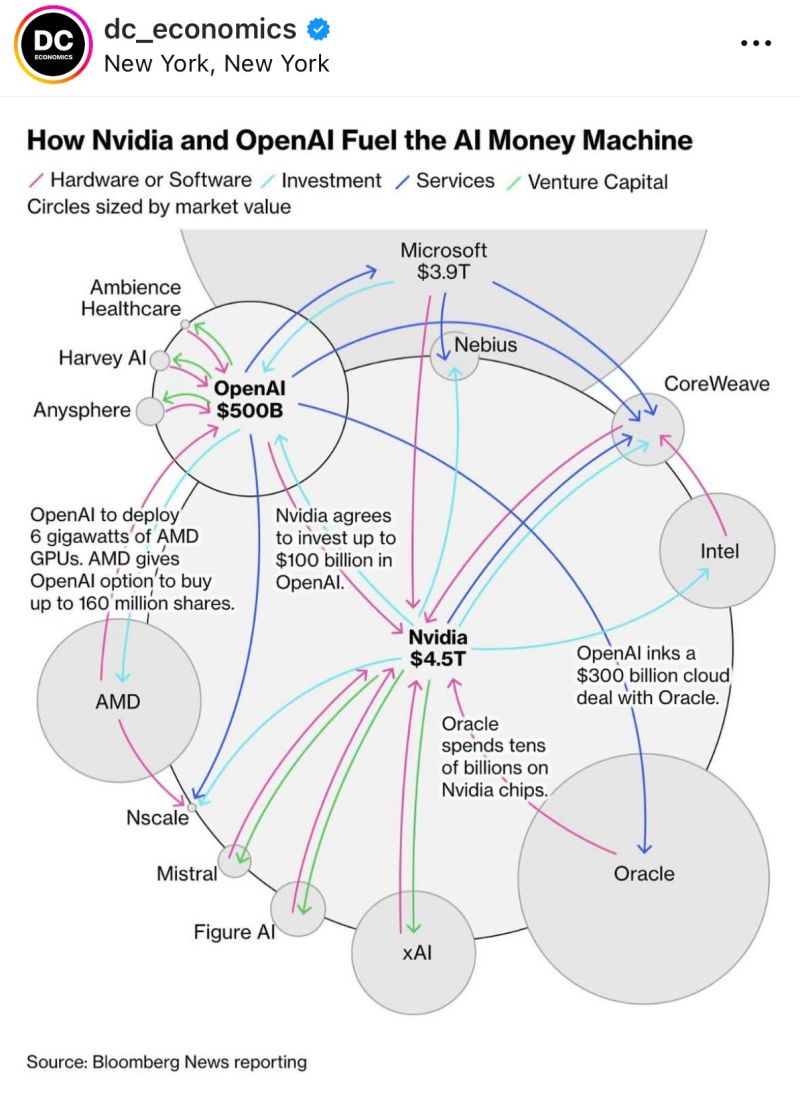

What makes Nvidia's investment approach particularly intriguing is the underlying economic incentive structure. Every AI startup Nvidia backs faces enormous computational demands. Training large language models requires thousands of GPUs, often Nvidia's H100 or more recent Blackwell chips. By investing in these companies early, Nvidia essentially creates locked-in customers for its hardware infrastructure. This strategy accomplishes multiple objectives simultaneously: diversifying Nvidia's revenue beyond direct hardware sales, ensuring that emerging AI leaders depend on Nvidia infrastructure, and building switching costs that make it difficult for competitors to displace Nvidia's GPUs from the enterprise stack.

The company publicly frames these investments as efforts to expand the AI ecosystem by backing "game changers and market makers." This framing, while strategically useful, contains substantial truth. The startups Nvidia backs span the entire AI value chain, from foundation-model developers like OpenAI and Anthropic, to specialized tools like Cursor (AI-powered code assistants), to infrastructure plays like Crusoe Energy (AI data center optimization). This portfolio diversity suggests Nvidia understands that its long-term dominance depends not on any single AI application winning, but on Nvidia's infrastructure becoming essential regardless of which AI startups ultimately succeed.

The Scale of Nvidia's Investment Commitment

The sheer magnitude of Nvidia's investment commitments in 2025 represents a stunning shift in corporate strategy. The company has moved beyond traditional venture investing into territory more resembling strategic acquisitions and infrastructure partnerships. Consider that Nvidia committed up to

These aren't traditional venture capital checks. They're structural agreements that bind AI startups to Nvidia's infrastructure ecosystem while creating massive revenue opportunities for the chip giant. A $100 billion commitment to OpenAI translates into years of chip purchases, support services, and infrastructure buildout. These arrangements reflect a fundamental understanding that the real value in AI isn't in the software but in the computational infrastructure required to train and deploy models at scale.

The investment pace itself reveals Nvidia's resource allocation priorities. Participating in 67 deals in a single year requires substantial capital but also significant internal resources—technical due diligence teams, strategy personnel, and ongoing portfolio management. This investment intensity demonstrates that Nvidia views venture investing not as a side business but as core to its long-term competitive strategy. The company is essentially hedging against being disrupted while simultaneously ensuring that whichever AI startup wins the market, they'll need Nvidia's chips to do it.

The Billion-Dollar Roundtable: Nvidia's Most Significant AI Bets

Nvidia's largest investment rounds tell a clear story about where the company believes the AI future lies: in foundational models, developer tools, and specialized AI infrastructure. These aren't seed-stage speculative bets but massive commitments to already-proven AI companies with established market positions.

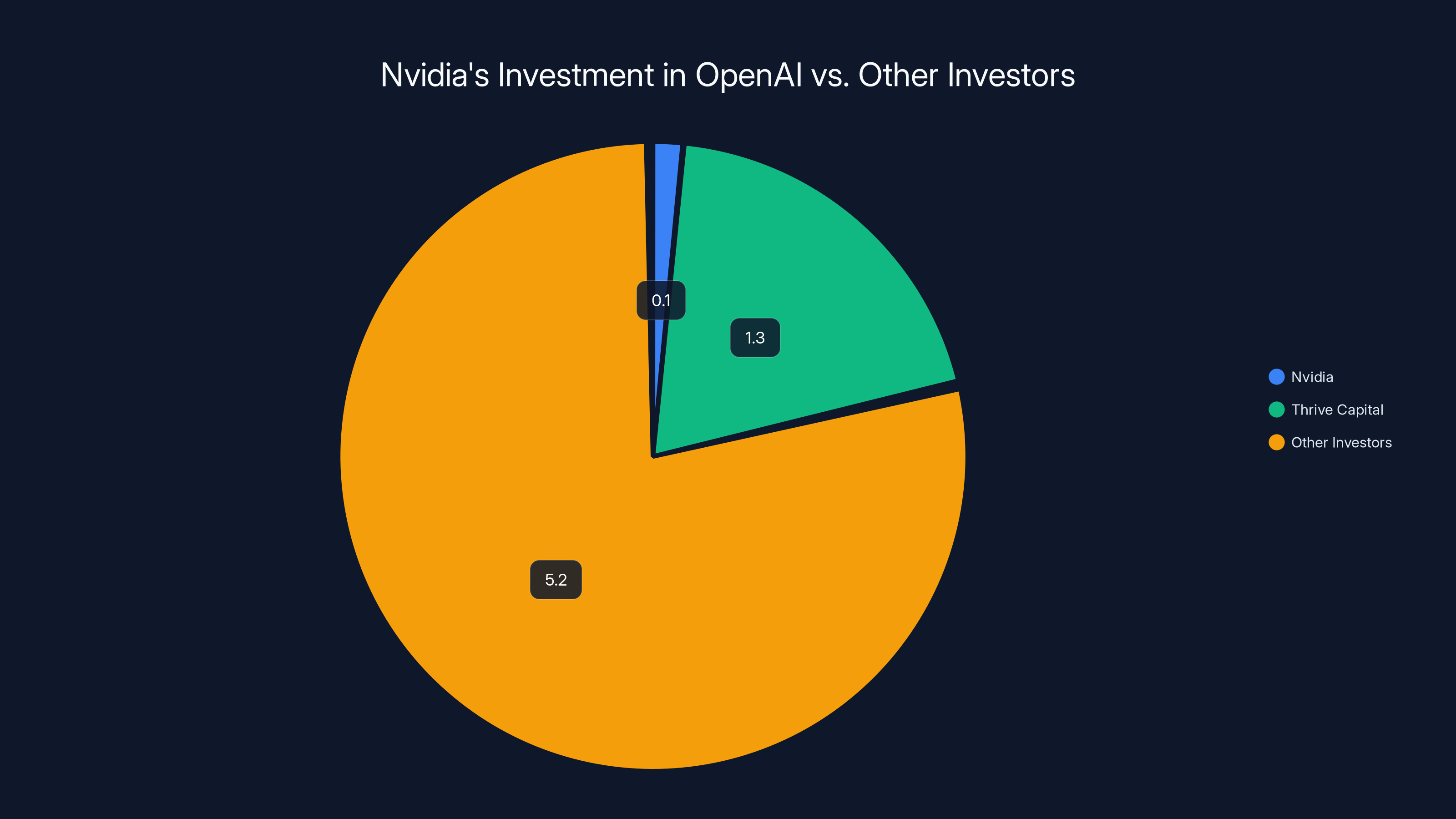

OpenAI: The ChatGPT Monopoly Investment

OpenAI represents Nvidia's highest-profile investment and arguably the most strategically important. In October 2024, Nvidia wrote a

What's particularly revealing is the subsequent announcement that Nvidia would invest up to $100 billion in OpenAI over time, structured as a multi-year strategic partnership. This arrangement goes far beyond traditional venture investing. Nvidia essentially committed to funding OpenAI's infrastructure buildout, ensuring that as OpenAI scales to millions of users and conducts increasingly sophisticated training runs, it will purchase Nvidia's most advanced and expensive GPUs. The economics are compelling for both parties: OpenAI gets favorable access to cutting-edge hardware, and Nvidia locks in a massive customer with predictable, recurring hardware purchases.

However, financial disclosures revealed important caveats. Nvidia later stated in quarterly filings that "there is no assurance that any investment will be completed on expected terms, if at all." This language suggests that the $100 billion commitment is conditional on various factors, such as OpenAI's financial performance, GPU availability, competitive dynamics, and regulatory approval. It's a reminder that even the largest tech partnerships include exit ramps and contingencies.

The strategic logic here is almost too obvious: OpenAI dominates the consumer AI market with ChatGPT, which has over 200 million users and generates billions in potential revenue. By backing OpenAI, Nvidia ensures that its infrastructure remains essential to the company's operations and growth. Every new model OpenAI trains, every API request ChatGPT processes, and every enterprise customer OpenAI brings on board drives incremental GPU demand.

Anthropic: The $10 Billion Microsoft-Nvidia Bet

Anthropic represents a different type of strategic investment. In November 2025, Nvidia committed up to

As part of the deal, Anthropic committed to spending $30 billion on Microsoft Azure compute capacity while also agreeing to purchase Nvidia's future Grace Blackwell and Vera Rubin systems. This circular arrangement—where a startup receives funding from major tech companies while simultaneously committing to purchase those companies' services and hardware—illustrates how venture capital has evolved in the age of expensive AI infrastructure. Traditional venture investing assumes founders use investor capital to build products. Modern AI investing involves investors essentially funding their own future customers.

Anthropic's Claude model has become increasingly competitive with OpenAI's offerings, particularly for specialized tasks requiring careful reasoning and reduced hallucinations. By backing Anthropic, Nvidia ensures that regardless of whether Claude or ChatGPT ultimately dominates, Nvidia's infrastructure will be essential to both companies. This diversified bet reduces Nvidia's risk while simultaneously ensuring the company benefits from AI's growth regardless of which specific models or companies win market share.

The $10 billion commitment also signals Nvidia's confidence in Anthropic's long-term viability. Anthropic was founded in 2021 by former OpenAI leadership including Dario Amodei and Daniela Amodei, bringing proven AI research expertise to a startup that was roughly four years old when it raised this funding. For Nvidia to commit such substantial capital suggests the company believes Anthropic has a legitimate chance of becoming a foundational AI company comparable to OpenAI.

Cursor: The Code Generation Tool Unicorn

In November 2025, Nvidia made a strategic investment in Cursor, a startup developing AI-powered code assistant tools, as part of a

Cursor's success reflects a crucial insight about the AI economy: the most valuable AI companies aren't necessarily those building foundational models but those building specialized tools that help developers be more productive with AI. Cursor specifically targets the massive developer population worldwide, offering GitHub Copilot-like functionality with improved performance and user experience. By backing Cursor, Nvidia taps into a market that's potentially larger than the foundational model market itself: virtually every software company in the world needs developers, and those developers increasingly use AI coding assistants.

What's particularly notable is that Nvidia participated in this round alongside Google, demonstrating that the company sees code assistance as a critical part of the AI infrastructure stack. As developers increasingly use AI to write code, they'll rely on GPU-intensive training and inference for those tools. Cursor's success thus drives demand for Nvidia infrastructure in a different way than direct large language model applications.

xAI: Nvidia Bets on Elon Musk's AI Company

In December 2024, Nvidia participated in Elon Musk's xAI funding round, investing in a

This investment is particularly revealing about Nvidia's strategy. xAI, though led by a celebrity entrepreneur, was still an unproven company compared to OpenAI or Anthropic. Yet Nvidia backed it anyway. Why? The answer lies in Nvidia's diversification strategy. By spreading investments across multiple AI startups pursuing different approaches to building powerful AI systems, Nvidia ensures that regardless of which company ultimately succeeds, the winner will depend on Nvidia infrastructure. If xAI's approach to building AI systems proves superior, Nvidia wants to benefit from that success. If xAI falters, Nvidia has many other bets in its portfolio.

This portfolio approach also reflects a hedge against regulatory risk. If governments eventually decide to restrict OpenAI or Anthropic due to concerns about AI safety or market concentration, Nvidia has alternatives ready. By backing multiple large language model companies, Nvidia reduces its dependence on any single player in the AI market.

Mistral AI: The European Language Model Champion

Mistral AI represents Nvidia's bet on a European-based AI company challenging the American dominance of large language models. In September 2025, Nvidia invested in Mistral's Series C round, which raised €1.7 billion (approximately

Mistral's significance lies in its challenge to the US-dominated AI landscape. While OpenAI and Anthropic are American companies, Mistral proves that Europe can build competitive large language models using Nvidia infrastructure. This has important geopolitical implications. As Europe increasingly seeks technological independence from American companies, Mistral becomes strategically important not just to Nvidia but to European governments hoping to maintain AI sovereignty. By backing Mistral, Nvidia ensures its infrastructure remains essential even if Europe develops its own AI champions.

Mistral's efficient approach to language model training—using techniques like mixture-of-experts to reduce computational requirements—also demonstrates innovation in how AI systems consume GPU resources. This feedback loop between Nvidia and startups like Mistral leads to better hardware optimization, as Nvidia's chip design team learns from how startups use their GPUs.

Reflection AI: Competing with DeepSeek

In October 2025, Nvidia invested in Reflection AI as part of a

This investment reveals something crucial about Nvidia's strategy: the company isn't just investing in AI winners, it's investing in companies that Nvidia believes should win based on strategic considerations. DeepSeek's success with less-expensive models potentially threatens Nvidia's long-term GPU demand by reducing the computational resources needed to train competitive models. By backing Reflection AI as a "US-based competitor," Nvidia supports a company that, if successful, would build models using traditional approaches requiring maximum GPU resources.

The timing of this investment, as DeepSeek gained significant market share in 2025, suggests Nvidia was actively concerned about the Chinese company's approach reducing GPU demand. By backing Reflection AI with substantial capital, Nvidia essentially hedges against DeepSeek winning the efficiency race.

Thinking Machines Lab: Funding Mira Murati's New Venture

When Mira Murati, former CTO of OpenAI, announced Thinking Machines Lab in 2025, Nvidia was among the investors backing her

Thinking Machines Lab demonstrates how Nvidia captures value across different AI approaches and leadership teams. Rather than betting exclusively on OpenAI, Nvidia also backed a company founded by a former OpenAI executive potentially pursuing a different approach to AI development. This diversification approach, backing multiple teams that trace back to the same organization, is increasingly common in venture capital and particularly prevalent in AI investing.

The substantial seed valuation also illustrates the current AI market dynamics where leadership experience and track record matter more than proven revenue or users. Murati's reputation as a world-class AI executive apparently justified a $12 billion valuation before the company had shipped a product or acquired customers.

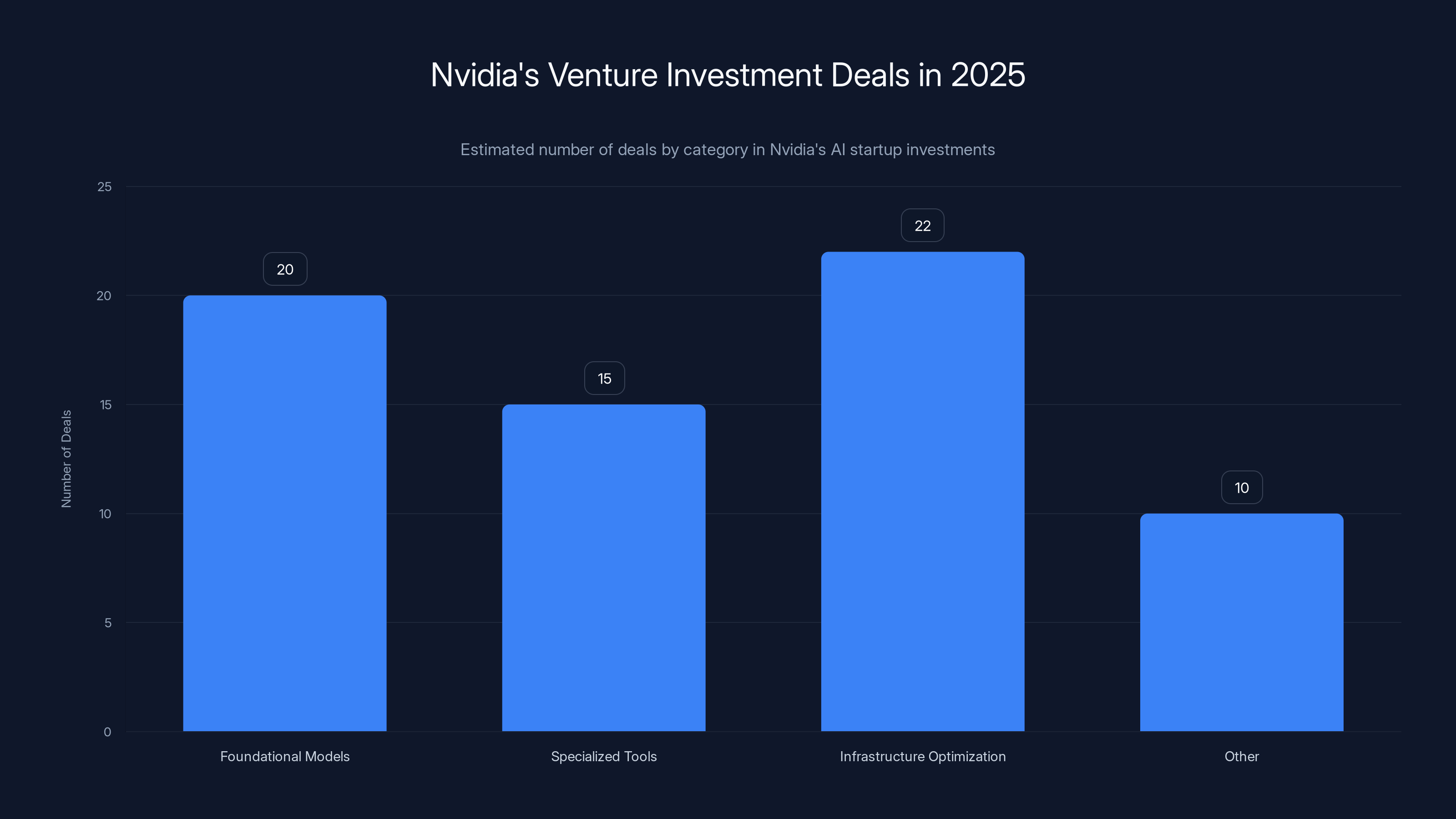

In 2025, Nvidia participated in 67 deals, focusing heavily on foundational models and infrastructure optimization. (Estimated data)

The Complete Portfolio: Understanding Nvidia's AI Ecosystem Strategy

While the billion-dollar-round companies capture headlines, Nvidia's complete investment portfolio tells a more nuanced story about the company's competitive strategy. The 67 deals Nvidia participated in during 2025 span nearly every category in the AI technology stack.

Infrastructure and Compute Layer

Beyond direct model investments, Nvidia backed several companies focused on the computational infrastructure required to train and deploy AI systems. Crusoe Energy received Nvidia investment as part of a

Crusoe's business model is particularly interesting from Nvidia's perspective. The company helps customers run more efficient data centers, which means they can train larger models or serve more users with the same GPU budget. This seems counterintuitive for a GPU manufacturer—shouldn't Nvidia want customers to use more GPUs? But Crusoe actually drives GPU demand by making AI infrastructure economically viable in previously uneconomical locations and at previously uneconomical scales.

Investments in infrastructure partners like Crusoe demonstrate that Nvidia understands the complete value chain required to make AI practically deployable. Simply selling GPUs isn't sufficient; customers need partners who can help them solve power, cooling, networking, and operational challenges that come with deploying thousands of expensive chips.

Developer Tools and Applications

Nvidia's investment in Cursor reflects its recognition that the developer tool layer represents enormous value creation opportunities. As AI becomes increasingly central to software development, tools that help developers use AI more effectively become critical infrastructure. Cursor's success, growing from a small startup to a $29 billion valuation in under two years, validates this thesis.

Nvidia's investments in this layer extend beyond Cursor. The company backed multiple companies building specialized AI applications for specific industries, from healthcare AI startups to legal research tools. This diversified application layer approach ensures Nvidia captures value not just from foundational model training but from all the ways those models get deployed in production systems.

Alternative Model Architectures

Mistral and Reflection AI both pursue approaches to building large language models that differ from the dense transformer design that OpenAI's GPT models popularized. By backing companies exploring different technical approaches, Nvidia hedges against the risk that today's dominant design ultimately proves suboptimal. If, for example, a startup shows that sparse mixture-of-experts models can match dense-model performance with 50% fewer GPUs, Nvidia wants to participate in that success rather than be disrupted by it.

This technical diversification is crucial because Nvidia's long-term dominance depends on remaining essential regardless of which specific AI technical approaches ultimately dominate. By backing companies pursuing multiple technical paths, Nvidia ensures that virtually any path to powerful AI requires Nvidia infrastructure.

Specialized AI Infrastructure

Several investments target specific technical problems in scaling AI. Companies working on distributed training, inference optimization, model compression, and other specialized challenges receive Nvidia backing. These companies typically don't compete with Nvidia but rather complement the chip giant by solving problems that make Nvidia GPUs more valuable and more economically viable.

Nvidia's

The Economic Model: How Nvidia Profits from Venture Investments

Nvidia's investment strategy isn't primarily about financial returns in the traditional venture capital sense. While the company certainly benefits when startups appreciate in value and Nvidia can liquidate positions, the real value comes from locking in hardware customers and shaping the AI ecosystem.

Guaranteed Hardware Purchases

Many of Nvidia's largest investments include commitments from portfolio companies to purchase Nvidia hardware. Anthropic's $30 billion Azure commitment implicitly includes Nvidia GPU purchases. xAI's agreement to purchase Nvidia hardware for AI training creates predictable revenue. These arrangements transform venture investments into hardware pre-sales, guaranteeing that Nvidia recovers capital while the startup is still private and before questions about profitability arise.

From a financial engineering perspective, this is elegant. Nvidia provides capital at favorable terms (as a venture investor accepting dilution rather than demanding revenue-sharing agreements), and the startup repays that investment through hardware purchases that carry high margins. Everyone wins: Nvidia captures recurring revenue, the startup gets the capital it needs at reasonable terms, and Nvidia's balance sheet benefits from investment gains when companies eventually go public or achieve strategic liquidity events.

Switching Costs and Lock-In

Startups that build their AI infrastructure around Nvidia GPUs face enormous switching costs. Moving from Nvidia's CUDA programming environment to a competitor's programming model requires retraining engineers, rewriting code, and managing performance migration risks. By investing early in startups, Nvidia ensures that when those startups scale to billions in valuation and revenue, they're deeply committed to Nvidia's ecosystem.

This lock-in effect compounds over time. A startup that started using Nvidia GPUs in early 2023 has spent two years building expertise, optimizing code, and training teams. By 2025, the cost of switching to AMD's MI300 chips or emerging competitors would be enormous, even if competitors offered better price-to-performance. In that situation, the switching cost can far exceed any potential hardware savings.
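The trade-off between hardware savings and migration cost can be made concrete with a simple break-even calculation. The sketch below is illustrative only: the `SwitchingScenario` fields and every dollar figure in it are hypothetical placeholders chosen for the example, not numbers reported for Nvidia, AMD, or any startup.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SwitchingScenario:
    """Hypothetical inputs; none of these values come from the article."""
    annual_gpu_spend: float          # current yearly hardware spend, USD
    competitor_discount: float       # fractional saving on rival hardware, e.g. 0.20
    migration_cost: float            # one-off cost of rewriting and revalidating code, USD
    annual_productivity_loss: float  # recurring cost of a less familiar toolchain, USD


def annual_net_saving(s: SwitchingScenario) -> float:
    """Yearly hardware saving minus the recurring cost of the new toolchain."""
    return s.annual_gpu_spend * s.competitor_discount - s.annual_productivity_loss


def break_even_years(s: SwitchingScenario) -> Optional[float]:
    """Years until cumulative net savings repay the migration cost.

    Returns None when switching never pays for itself.
    """
    net = annual_net_saving(s)
    if net <= 0:
        return None
    return s.migration_cost / net


# Made-up example: cheaper chips, but an expensive migration.
pays_back_slowly = SwitchingScenario(50e6, 0.20, 30e6, 4e6)
# Made-up example: the discount is eaten entirely by lost productivity.
never_pays_back = SwitchingScenario(50e6, 0.10, 30e6, 6e6)

for name, s in [("slow payback", pays_back_slowly), ("no payback", never_pays_back)]:
    years = break_even_years(s)
    print(name, "->", "never" if years is None else f"{years:.1f} years")
```

With these invented inputs, a 20% hardware discount takes about five years to repay the migration, and a 10% discount never does. The point is the shape of the calculation rather than the specific values: the longer a team has invested in one ecosystem, the larger the one-off and recurring costs on the other side of the ledger.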

Market Shaping and Competitive Dynamics

By backing multiple competitors in the same space (OpenAI, Anthropic, xAI, Mistral), Nvidia ensures that regardless of which company ultimately dominates, the winner will depend on Nvidia infrastructure. This diversified approach also prevents any single startup from achieving too much leverage over Nvidia. If Nvidia backed exclusively OpenAI and OpenAI became dominant, that would give OpenAI significant negotiating power when buying hardware. By backing multiple large language model companies, Nvidia maintains negotiating leverage with each.

This strategy also has important competitive implications. Nvidia's backing validates startups and helps them raise capital at premium valuations. A startup that announces Nvidia investment receives a significant credibility boost in fundraising and customer conversations. This is particularly important early in AI's history when companies are trying to convince enterprises that AI solutions are worth investing in. Nvidia's backing essentially signals: "We, the leading GPU maker, believe this company will be important," which helps startups recruit customers and teams.

Portfolio Insurance

Nvidia's diverse investment portfolio serves as insurance against technological disruption. If DeepSeek's efficiency approach ultimately prevails and future models require 50% fewer GPUs, Nvidia's backing of Reflection AI hedges that risk. If transformer models prove suboptimal and attention-free approaches emerge, Nvidia's backing of various research teams ensures the company participates in that transition. By spreading capital across many approaches, Nvidia reduces the risk that any single technological disruption undermines its business.

Traditional GPU companies might have concentrated investment in OpenAI and called it a day. Nvidia's broader strategy recognizes that AI is still in early days, technology is evolving rapidly, and the companies that dominate in 2030 might use fundamentally different approaches than those dominant in 2025.

The NVentures Factor: Nvidia's Formal Venture Capital Fund

While much of Nvidia's investment activity flows through direct corporate investments, the company also operates NVentures, a formal corporate venture capital fund that has dramatically accelerated its pace. In 2022, NVentures completed just one deal. By 2025, the fund participated in 30 deals annually.

Fund Size and Capital Allocation

NVentures operates with substantial capital, though Nvidia hasn't disclosed exact fund size. Based on the number and size of deals, the fund likely manages multiple billions in capital. This scale allows NVentures to lead or co-lead significant rounds, giving the fund substantial influence over investment terms and governance.

The acceleration from one deal annually to 30 demonstrates a strategic shift. Nvidia clearly identified venture investing as crucial to its long-term competitive strategy and increased capital allocation accordingly. This shift occurred in parallel with Nvidia's extraordinary stock price appreciation and market cap growth, allowing the company to fund venture investments from operating cash flow without requiring additional debt or equity financing.
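To put the growth figures quoted in this article on a common footing, here is a minimal arithmetic sketch. It uses only the deal counts stated in the text (54 and 67 total deals in 2024 and 2025, and 1 and 30 NVentures deals in 2022 and 2025). The helper names `pct_change` and `cagr` are illustrative, and treating 2022 to 2025 as three annual periods is an assumption.

```python
def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100.0


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate, expressed as a percentage."""
    return ((end / start) ** (1.0 / years) - 1.0) * 100.0


# Deal counts as quoted in the article.
total_deals_2024, total_deals_2025 = 54, 67
nventures_2022, nventures_2025 = 1, 30

total_growth = pct_change(total_deals_2024, total_deals_2025)
# Assumption: 2022 -> 2025 is treated as three annual growth periods.
nventures_cagr = cagr(nventures_2022, nventures_2025, years=3)

print(f"Total deals, 2024 to 2025: {total_growth:+.1f}%")
print(f"NVentures deals, 2022 to 2025: {nventures_cagr:.0f}% compound annual growth")
```

On these inputs, total deal count grew by roughly 24% year over year, while NVentures deal count roughly tripled each year from a very small base. A growth rate computed from a starting value of one deal is extremely sensitive to that starting point, so it is better read as a sign of a strategic shift than as a trend to extrapolate.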

NVentures Investment Thesis

NVentures explicitly targets companies that represent "game changers and market makers" in AI. This language suggests the fund looks for companies that might reshape the entire market rather than serving niche use cases. This thesis explains why so many NVentures investments involve massive rounds at premium valuations—the fund is looking for companies with potential to reach enormous scale.

The fund also prioritizes companies using Nvidia infrastructure. While the fund likely invests in some companies using other chips, the emphasis on Nvidia ecosystem compatibility ensures that NVentures investments drive incremental GPU demand. This alignment between investment thesis and hardware business creates a virtuous cycle: successful investments require Nvidia GPUs, which drives Nvidia hardware revenue, which funds additional venture investments.

Geographic and Technical Diversity

NVentures investments span multiple geographies, from US-based companies like OpenAI and Cursor to European companies like Mistral. This geographic diversification serves strategic purposes: it allows Nvidia to build relationships with international tech ecosystems, hedge geopolitical risks, and support the emergence of global AI champions that remain dependent on Nvidia infrastructure.

Technically, NVentures backs companies pursuing diverse approaches to AI, from dense transformer language models to sparse mixture-of-experts architectures to alternative model types still in research stages. This technical diversification ensures Nvidia captures value from AI innovation regardless of which technical approaches ultimately dominate.

Nvidia's investment activity has surged, with total deals increasing from 1 in 2022 to 67 in 2025, showcasing its strategic expansion in the AI sector. Estimated data for 2023.

Competitive Landscape: Who Else Is Making Mega-Investments in AI Startups?

While Nvidia's investment pace has accelerated dramatically, other major tech companies and investors are also backing AI startups at scale. Understanding these competitive investments reveals how Nvidia's strategy fits into the broader AI ecosystem.

Microsoft's Strategic AI Investments

Microsoft has pursued a somewhat different strategy than Nvidia, focusing on a smaller number of larger, more strategic investments rather than diversified bets across dozens of companies. Microsoft's

Microsoft's approach differs from Nvidia's in important ways. While Nvidia benefits when any AI startup succeeds because they'll likely use Nvidia GPUs, Microsoft's benefits depend more on specific companies winning. If OpenAI or Anthropic dominates enterprise AI, Microsoft benefits from integrating those models into Office, Azure, and other products. But Microsoft has less direct revenue from competitors' success.

This difference in incentive structures explains why Nvidia can diversify investments across many competitors while Microsoft concentrates on fewer strategic bets. Nvidia wins when the market grows regardless of specific winners; Microsoft wins when specific companies align with its cloud and productivity vision.

Google and Meta Investments

Google has made AI investments but typically focuses on internal research and development rather than external venture capital. GV (formerly Google Ventures), the venture arm of Google's parent company Alphabet, invests in startups, but Google's strategy emphasizes vertical integration: building AI capabilities internally rather than relying on external startups.

Meta similarly emphasizes internal AI research, though the company has made some external investments. Meta's approach reflects concern that depending on external startups for crucial AI technology creates competitive vulnerability. Google and Meta have the internal resources to build many AI systems themselves, so external venture investments serve strategic and defensive purposes rather than filling core technological gaps.

In contrast, Nvidia's core business is hardware manufacturing, not AI model development. This makes external venture investments particularly important for Nvidia because the company can't easily build OpenAI-equivalent capabilities internally. Hardware companies need software companies to use their products, making venture investment in software startups strategically essential.

Saudi Arabia's PIF and Other Sovereign Wealth Funds

Sovereign wealth funds including Saudi Arabia's Public Investment Fund have made substantial AI investments, often at larger check sizes than traditional venture capital. These institutions provide patient capital unconstrained by typical venture fund economics, allowing startups to raise larger rounds at valuations that traditional VCs might consider too expensive.

Sovereign wealth fund investments have become increasingly common in AI, reflecting the geopolitical importance of AI capabilities and the willingness of wealthy nations to invest in companies that enhance their technological capabilities. These investments sometimes come with expectations that portfolio companies prioritize relationships with the investing nation, creating geopolitical complexity absent from purely financial investments.

Traditional Venture Capital's AI Bets

Traditional venture capital firms including Sequoia, Andreessen Horowitz, and Accel have all made substantial AI investments. These firms often lead or co-lead the same rounds Nvidia participates in, meaning Nvidia and traditional VCs are frequently aligned in backing the same startups. This co-investment pattern suggests broad agreement across sophisticated investors about which AI companies are most promising.

However, traditional VCs and Nvidia have different incentive structures. VCs benefit financially when portfolio companies appreciate and achieve liquidity events through IPO or acquisition. Nvidia benefits from portfolio company success but receives much of its actual profit from hardware sales, not from investment returns. This difference means Nvidia might tolerate longer paths to profitability or accept lower equity percentages if it captures sufficient hardware revenue.

Risk Factors and Challenges in Nvidia's Venture Strategy

While Nvidia's investment strategy is sophisticated and well-executed, several risks and challenges could undermine its long-term success.

Antitrust Scrutiny and Regulatory Risk

Nvidia's dominant market position and deep involvement in funding and shaping the AI ecosystem creates antitrust risk. Regulators might eventually question whether Nvidia's hardware dominance combined with venture investments and strategic partnerships creates unfair competitive advantages. If a startup funded by Nvidia receives preferential access to chips or technical support unavailable to competitors, that could raise regulatory concerns.

The US Federal Trade Commission and international regulators have shown increasing interest in AI competitive dynamics. Nvidia's coordinated investments and partnerships could attract regulatory scrutiny, particularly if competitors argue they're locked out of critical resources. The "no assurance" language in the company's quarterly filings about the OpenAI investment suggests Nvidia is aware of this uncertainty.

Technology Disruption

Nvidia's entire strategy depends on GPUs remaining essential for AI training and deployment. If novel architectures emerge that don't rely on GPU acceleration—such as photonic computing, quantum approaches, or yet-undiscovered technologies—Nvidia's competitive moat weakens. By backing startups exploring alternative approaches, Nvidia hedges this risk, but the hedge isn't perfect. If a company backed by a competitor discovers a GPU-free approach to AI, Nvidia loses its advantage regardless of other portfolio companies' success.

The recent emergence of DeepSeek's efficient models also demonstrates that GPU requirements could decline if AI research focuses on efficiency rather than raw scale. While Reflection AI represents Nvidia's hedge against this outcome, there's no guarantee that the Nvidia-backed company will win the efficiency race.

Concentration Risk

Nvidia's largest investments are concentrated in a small number of companies. OpenAI, Anthropic, and xAI alone account for over $100 billion in total commitments. If one of these companies faces catastrophic failure, whether through technological obsolescence, regulatory action, or competitive displacement, a large part of Nvidia's strategy is undermined. While portfolio diversification provides some protection, the concentration of capital in top bets creates exposure to idiosyncratic risk.

Startup Execution Risk

Venture investments depend on founding teams successfully executing against enormous technical challenges. Large language models remain difficult and expensive to train. Many startups might fail not because their approach is wrong but because they run out of capital, fail to attract talent, or face unforeseen technical obstacles. Nvidia's investment doesn't guarantee success; it merely increases the probability.

Startups also face market risk. Even if technology works perfectly, customers might not adopt it at scale, or the market might mature differently than expected. OpenAI's ChatGPT clearly succeeded beyond even optimistic expectations, but not all AI startups will replicate that success.

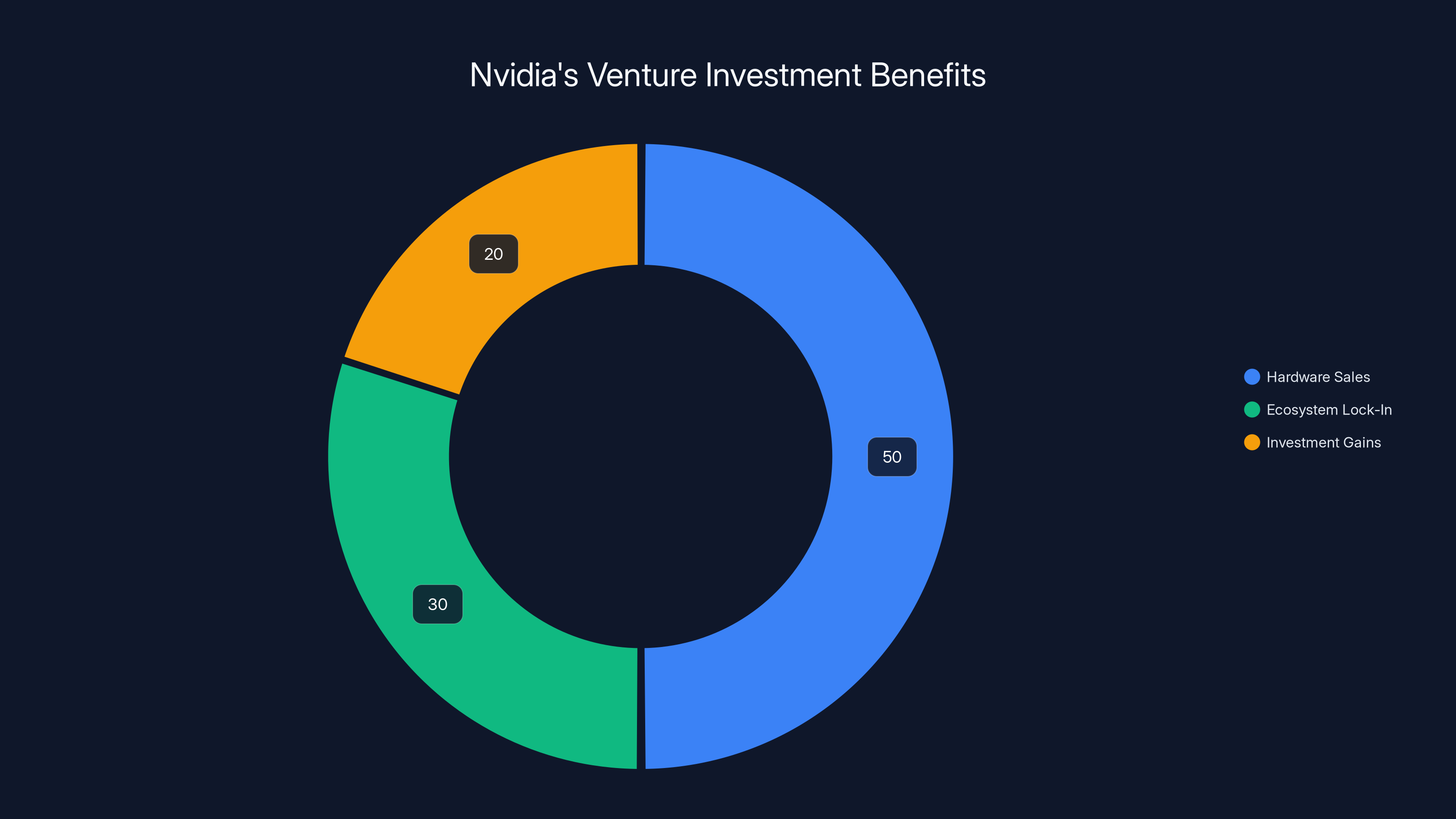

Nvidia's venture investments primarily benefit from hardware sales (50%) and ecosystem lock-in (30%), with investment gains contributing 20%. Estimated data.

Investor and Enterprise Implications

Nvidia's venture strategy has important implications for investors evaluating the company and for enterprises planning AI infrastructure investments.

For Nvidia Shareholders

Nvidia's venture investments provide upside through potential portfolio appreciation while securing revenue through hardware sales and infrastructure commitments. A diversified portfolio approach reduces single-company risk while ensuring that successful startups drive GPU demand. Investors should recognize that Nvidia's stock performance depends not just on hardware margins but on whether portfolio companies successfully scale and achieve adoption.

The risk is that venture investments don't generate sufficient returns to justify capital allocation. Nvidia might have been better served deploying capital through share buybacks if venture returns underperform. However, the strategic value of ensuring GPU demand likely justifies some performance drag in traditional venture returns.

For Enterprise Customers

Enterprises building AI systems should recognize that Nvidia's investment strategy creates lock-in effects. Startups backed by Nvidia benefit from capital, credibility, and prioritized access to hardware. This might make Nvidia-backed startups more likely to succeed, which could influence purchasing decisions. Enterprises seeking to maintain independence from Nvidia might deliberately choose solutions from companies without strong Nvidia backing.

However, the practical reality is that most AI companies require Nvidia infrastructure, regardless of whether they're Nvidia-backed. The market has voted with its purchasing decisions, and Nvidia's dominance reflects genuine technical superiority, not just venture investments. Enterprises should evaluate AI solutions based on capabilities and cost rather than Nvidia backing status.

For Other Venture Capitalists

Nvidia's investment success validates the venture capital thesis that AI represents extraordinary growth opportunity. Nvidia's participation in major rounds signals that even mature, publicly traded tech companies see venture-scale opportunities in AI. This validation encourages other investors to allocate capital to AI at scale.

However, Nvidia's advantages—massive capital, strategic alignment with GPU demand, and ability to provide hardware at preferential terms—aren't easily replicated by traditional VCs. Venture firms backing AI startups compete with Nvidia for the best deals and founding teams. Some traditional VCs might specialize in AI applications or infrastructure that doesn't directly compete with Nvidia-backed companies.

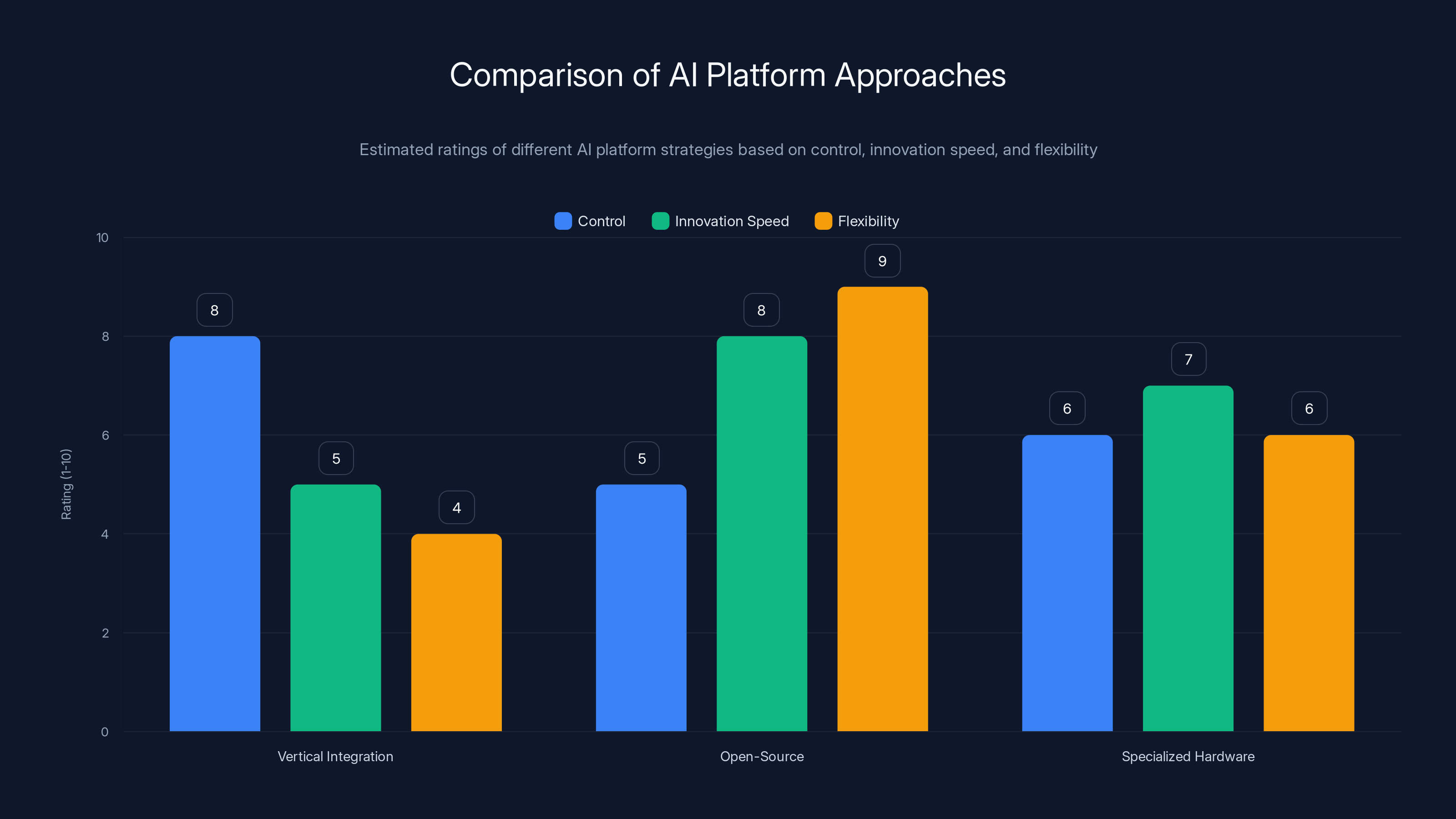

Alternative Platforms and Ecosystem Approaches

While Nvidia's strategy of backing an ecosystem of AI companies represents one approach to dominating the AI future, alternative models exist and deserve consideration.

Internal Development and Vertical Integration

Google and Meta represent the vertical integration alternative. Rather than backing external startups, these companies build AI capabilities internally. This approach provides complete control over technology direction and customer relationships but requires massive internal research teams and accepts slower innovation cycles typical of large organizations.

For enterprises, vertical integration means relying on fewer external partners. Google Cloud offers AI services built internally; enterprises using those services depend on Google's engineering rather than startup innovations. This approach provides stability but potentially sacrifices innovation speed.

Open-Source and Community-Driven Development

Meta's release of LLaMA and subsequent open-source models represents an alternative to closed-source commercial models. Open-source AI allows distributed development by multiple organizations, potentially accelerating innovation. This approach differs fundamentally from Nvidia's venture-backed model.

For enterprises, open-source models reduce dependency on any single vendor but require internal expertise to fine-tune and maintain models. The tradeoff is between flexibility (choosing any model, modifying as needed) and convenience (buying a finished product from a vendor).

Specialized AI Infrastructure Providers

Companies like Cerebras, SambaNova, and others pursue specialized hardware approaches optimized for specific AI workloads. Rather than generalizing like Nvidia, these companies build chips optimized for language models, recommendation systems, or other specific applications. For enterprises with specialized requirements, these alternatives might offer better price-to-performance than Nvidia's generalist approach.

However, Nvidia's advantage lies partly in software ecosystem maturity. CUDA, Nvidia's programming environment, benefits from years of development and massive community adoption. Competing platforms face a chicken-and-egg problem: they need software developers to adopt their platform to build applications, but developers need applications to justify learning the platform.

Integrated End-to-End Solutions

For teams seeking comprehensive AI automation and content generation capabilities without building complex infrastructure from scratch, platforms like Runable provide compelling alternatives to the startup-venture approach. Runable delivers AI agents for document generation, slide creation, and workflow automation at $9/month, enabling teams to leverage AI-powered productivity tools without managing custom infrastructure or depending on multiple specialized startups.

Rather than juggling investments across Cursor for code generation, separate document-generation startups, and presentation tools, teams can consolidate their AI automation on Runable's unified platform. This approach suits enterprises and teams that want productivity benefits of AI without deploying custom language models or managing complex vendor relationships.

The ecosystem approach Nvidia pursues optimizes for maximum flexibility and cutting-edge capabilities—enterprises get best-in-class solutions for each need. The integrated approach that platforms like Runable represent optimizes for simplicity, cost-effectiveness, and ease of deployment. The right choice depends on organizational requirements: large enterprises with sophisticated needs benefit from best-of-breed solutions, while smaller teams often prefer integrated platforms.

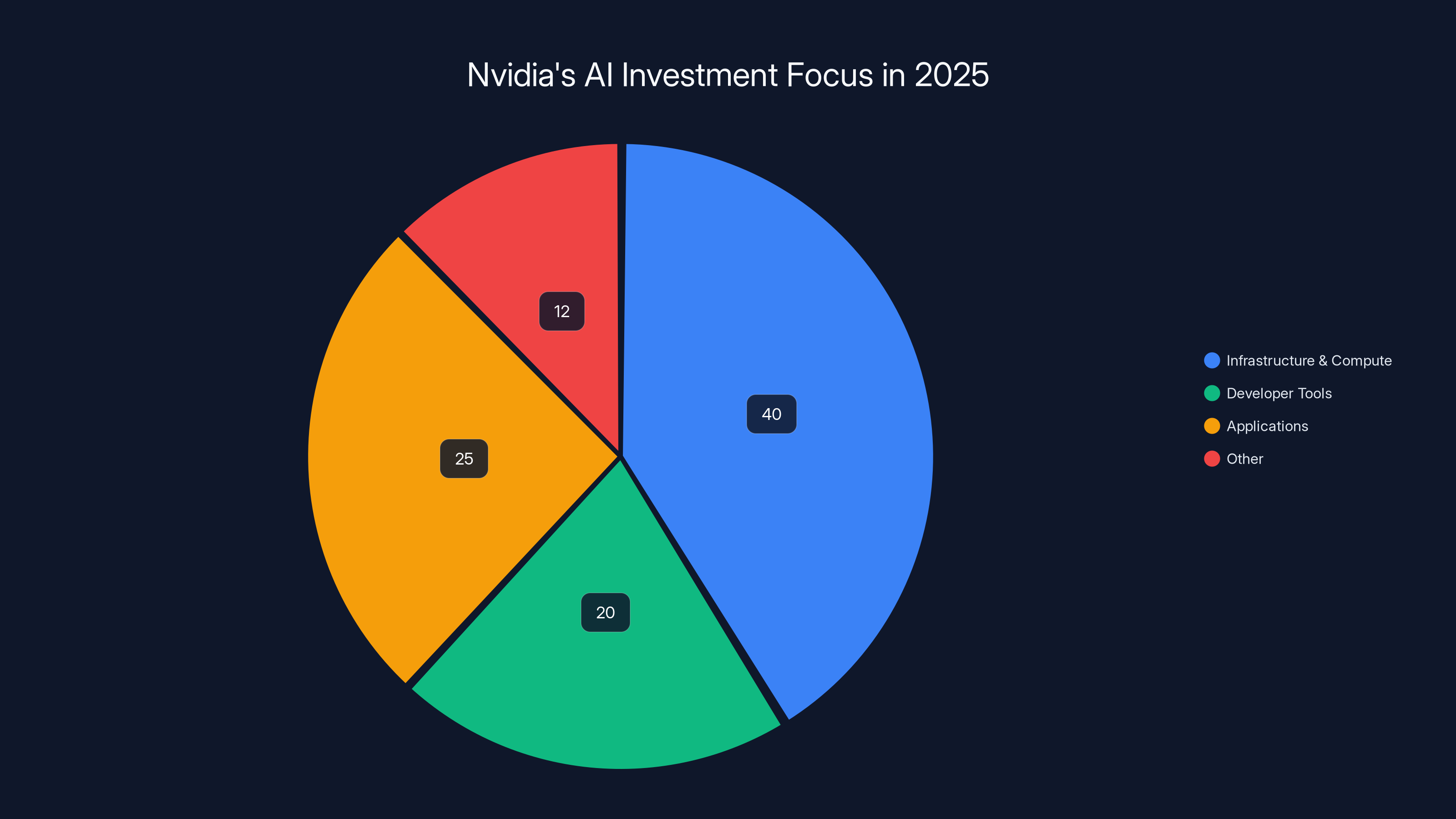

Nvidia's 2025 AI investment strategy shows a strong focus on infrastructure and compute, comprising 40% of their portfolio, highlighting their commitment to supporting the foundational layers of AI technology. (Estimated data)

The Future of AI Investing and Nvidia's Evolution

Looking ahead, Nvidia's investment strategy will likely continue to evolve as the AI landscape matures.

Consolidation and Later-Stage Focus

As AI startups mature and face pressure to demonstrate profitability, Nvidia will likely shift investment capital from early-stage diversified bets toward later-stage investments in companies showing clear paths to sustainability. Rather than backing 30 companies annually at seed and Series A stages, Nvidia might concentrate on Series C and later rounds for companies that have already validated their business models.

This shift would reflect maturation of the AI market. Early-stage startups require exploration because nobody knows which approaches will ultimately win. Once winners emerge, investment capital concentrates behind proven capabilities.

Infrastructure and Efficiency Emphasis

As raw scale becomes less differentiating (with multiple companies achieving large language models), competitive advantage shifts toward efficiency. Nvidia's investments in companies like Crusoe Energy and backing of efficient model companies like Mistral suggest this shift is already underway. Future AI companies will compete not just on capability but on computational efficiency, latency, and cost.

Nvidia's hardware innovation must keep pace with software efficiency innovations. If startups discover ways to achieve OpenAI-equivalent capabilities with 50% fewer GPUs, Nvidia's addressable market shrinks despite continued growth. Nvidia's venture strategy helps the company stay aware of efficiency trends and maintain relevance regardless of technical direction.
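The arithmetic behind this point can be sketched in a few lines. This is a toy model rather than Nvidia data: it assumes total GPU demand is simply the number of AI workloads multiplied by the GPUs each workload needs, and the function names and all figures are hypothetical.

```python
def gpu_demand(workloads: int, gpus_per_workload: float) -> float:
    """Total GPUs needed: workload count times GPUs required per workload."""
    return workloads * gpus_per_workload


def breakeven_workload_growth(efficiency_gain: float) -> float:
    """Multiple by which workloads must grow to keep GPU demand flat
    after GPUs-per-workload falls by `efficiency_gain` (a fraction in [0, 1))."""
    if not 0 <= efficiency_gain < 1:
        raise ValueError("efficiency_gain must be in [0, 1)")
    return 1 / (1 - efficiency_gain)


# Hypothetical baseline: 100 workloads at 1,000 GPUs each.
baseline = gpu_demand(100, 1_000)
# Same workloads, each needing 50% fewer GPUs.
after_efficiency = gpu_demand(100, 500)
# Workloads grow 60%, but each still needs 50% fewer GPUs.
after_growth = gpu_demand(160, 500)

print(baseline, after_efficiency, after_growth)
print(breakeven_workload_growth(0.5))
```

Under these assumptions, a 50% efficiency gain means the number of workloads has to double just to hold GPU demand constant, so even 60% growth in workloads leaves total demand below the baseline.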

Geopolitical Complexity

AI has become geopolitically significant, with governments viewing AI leadership as crucial to national competitiveness. Nvidia's international investments, particularly in European companies like Mistral, create geopolitical complexity. Governments might eventually restrict where Nvidia can invest or mandate that critical AI capabilities remain under domestic control.

Future Nvidia venture strategy must navigate geopolitical constraints while maintaining global AI ecosystem presence. The company might establish region-specific funds or accept limitations on certain investments to maintain government relationships and ensure market access globally.

Key Takeaways: Understanding Nvidia's AI Investment Empire

Nvidia's transformation into a venture capital powerhouse represents a strategic evolution driven by the company's extraordinary profitability and the recognition that AI's future success depends on a healthy ecosystem of startups building applications, infrastructure, and developer tools on top of Nvidia hardware.

The company's 67 deals in 2025, ranging from billion-dollar commitments to OpenAI and Anthropic down to smaller strategic bets on specialized startups, accomplish multiple objectives simultaneously. Nvidia secures future GPU customers through hardware-buying commitments, reduces technological disruption risk through portfolio diversification, shapes competitive dynamics by backing multiple AI approaches, and gains intelligence about emerging technologies through board seats and ongoing operational involvement.

For investors evaluating Nvidia, recognizing the strategic value of venture investments beyond traditional financial returns is crucial. Nvidia doesn't need impressive venture returns; it needs portfolio companies to succeed and purchase Nvidia hardware. This difference in motivation explains why Nvidia can accept lower equity stakes in major rounds and commit to long-term partnerships rather than pursuing exit scenarios.

For enterprises building AI systems, Nvidia's investment landscape matters less than underlying capabilities and cost economics. The fact that Anthropic is backed by Nvidia doesn't make Claude better or worse than competing models. However, enterprises should understand that startups with Nvidia backing potentially have advantages in securing compute resources and scaling infrastructure, which could influence long-term reliability and capability development.

The broader lesson is that AI's future depends on healthy competition across multiple dimensions: foundational model companies (OpenAI, Anthropic, xAI, Mistral), developer tools (Cursor), specialized applications, and infrastructure optimization (Crusoe, others). By backing this full ecosystem, Nvidia ensures its dominance regardless of specific market winners while contributing to AI innovation and adoption.

As AI matures from experimental technology to essential infrastructure, Nvidia's role will likely evolve from GPU supplier to infrastructure strategist, actively shaping which companies and approaches prevail. This evolution represents not a departure from Nvidia's core mission but a natural expansion of how the company maintains dominance in a rapidly transforming technology landscape.

Different AI platform strategies offer varying levels of control, innovation speed, and flexibility. Vertical integration provides high control but lower innovation speed, while open-source models excel in flexibility. (Estimated data)

FAQ

What is Nvidia's venture investment strategy?

Nvidia's venture strategy involves backing AI startups across the entire value chain, from foundational models (OpenAI, Anthropic) to specialized tools (Cursor) to infrastructure optimization (Crusoe). The company participated in 67 deals in 2025, committing up to $100 billion to select companies. The strategy accomplishes multiple goals: securing hardware customers, reducing technology disruption risk, and shaping the AI ecosystem to remain dependent on Nvidia's GPU infrastructure.

How does Nvidia benefit from backing AI startups?

Nvidia profits from venture investments through multiple mechanisms. Portfolio companies typically commit to purchasing Nvidia hardware, effectively converting venture investments into hardware pre-sales. Backed startups also become locked into Nvidia's CUDA ecosystem, creating switching costs that prevent customers from migrating to competitors. Additionally, by backing multiple competitors in the same space, Nvidia ensures profitability regardless of which specific company wins market share—the winner will likely depend on Nvidia infrastructure.

Why did Nvidia commit $100 billion to OpenAI?

Nvidia's $100 billion commitment to OpenAI, structured as a multi-year strategic partnership rather than a traditional venture investment, serves multiple purposes. It secures OpenAI as a major GPU customer (every new model OpenAI trains requires thousands of Nvidia chips), provides favorable access to cutting-edge hardware, and positions Nvidia as essential infrastructure to the dominant large language model company. The arrangement benefits both parties: OpenAI gets reliable access to chips at favorable terms, and Nvidia locks in recurring hardware revenue from a company with substantial growth potential.

What is NVentures and how does it differ from Nvidia's direct investments?

NVentures is Nvidia's formal corporate venture capital fund, which completed just 1 deal in 2022 but 30 deals in 2025. NVentures operates separately from Nvidia's direct corporate investments, though both serve similar strategic purposes. The distinction allows Nvidia to pursue both opportunistic direct investments and systematic venture fund investments through NVentures. The fund explicitly targets "game changers and market makers" in AI, focusing on companies with potential to reshape entire markets rather than serve niche use cases.

How does Nvidia's investment in multiple AI companies reduce risk?

By backing OpenAI, Anthropic, xAI, Mistral, and other large language model companies simultaneously, Nvidia ensures profitability regardless of which company ultimately dominates. If OpenAI captures 80% of the market, Nvidia benefits from OpenAI's success. If Anthropic or xAI unexpectedly becomes dominant, Nvidia still captures value through its investments and hardware sales. This portfolio approach, similar to traditional venture capital diversification, distributes risk across many bets rather than concentrating capital in a single outcome. Nvidia also hedges against technology disruption by backing companies pursuing diverse technical approaches.

What is the difference between Nvidia's strategy and Microsoft's AI investment approach?

Nvidia's strategy emphasizes diversified bets across many startups because the company benefits when any AI startup succeeds (they'll purchase Nvidia hardware). Microsoft concentrates on fewer, larger strategic investments (OpenAI, Anthropic) because Microsoft benefits most when specific companies aligned with Microsoft's cloud and productivity vision succeed. This difference in incentive structures explains why Nvidia can be agnostic about which specific company wins, while Microsoft has stronger preferences. Nvidia's approach spreads risk across many companies; Microsoft's approach concentrates capital for maximum influence over fewer strategic partners.

How do Nvidia's investments in infrastructure companies like Crusoe benefit the GPU manufacturer?

Crusoe Energy optimizes AI data center efficiency by leveraging stranded energy resources, which makes AI infrastructure economically viable at new scales and locations. This seems counterintuitive—shouldn't Nvidia want customers to use more GPUs?—but infrastructure optimization actually drives Nvidia GPU demand. By making AI deployments more economical, Crusoe enables companies to build larger-scale AI systems that consume more GPUs overall. Additionally, Crusoe's optimization insights help Nvidia understand how startups use its chips, informing future hardware design that better matches real-world needs.

What are the risks to Nvidia's venture investment strategy?

Several risks threaten Nvidia's approach. Antitrust regulators might question whether Nvidia's dominance combined with venture investments creates unfair competitive advantages. Technology disruption could render GPUs less central to AI (through photonic computing, quantum approaches, or other novel architectures). Concentration risk exists because Nvidia's largest commitments go to a small number of companies; catastrophic failure at OpenAI or Anthropic would substantially undermine the strategy. Startup execution risk means many portfolio companies might fail despite Nvidia investment. Finally, geopolitical complexity could restrict where Nvidia can invest or mandate that critical AI capabilities remain under domestic control.

How does Nvidia's venture strategy compare to alternatives like open-source development or vertical integration?

Nvidia's venture approach optimizes for flexibility and cutting-edge innovation by backing many external companies pursuing different technical approaches. Google and Meta pursue vertical integration, building most AI capabilities internally; this provides complete control but moves more slowly and requires massive internal research teams. Open-source approaches (like Meta's LLaMA) enable distributed development by many organizations. Integrated platforms like Runable optimize for simplicity and cost-effectiveness by consolidating multiple AI capabilities. The right approach depends on organizational requirements: large enterprises with sophisticated needs benefit from Nvidia's best-of-breed ecosystem; smaller teams often prefer integrated solutions.

Will Nvidia continue increasing venture investments at the current pace?

While Nvidia will likely continue substantial venture activity, the pace may moderate as the AI market matures. Early-stage rapid diversification (backing 30 new companies annually) makes sense when nobody knows which approaches will win. As winners emerge, Nvidia will probably shift toward later-stage investments in companies already demonstrating traction. The company will also likely have to navigate increasing geopolitical complexity and potential antitrust scrutiny, which could constrain investment freedom. However, the strategic value of maintaining an AI ecosystem dependent on Nvidia hardware ensures venture investments remain core to corporate strategy.

How should enterprises evaluate AI vendors in light of Nvidia's investment strategy?

Enterprises should evaluate AI solutions based primarily on capabilities, cost, and reliability rather than Nvidia backing status. The fact that Anthropic or Cursor received Nvidia investment doesn't automatically make their products superior to non-Nvidia-backed alternatives. However, startups with Nvidia backing might have advantages in securing compute resources, attracting talent, and scaling infrastructure. Enterprises concerned about vendor dependency might prefer solutions not backed by Nvidia or other dominant infrastructure providers. Ultimately, the best choice depends on specific requirements: whether solutions address your use case, whether pricing aligns with budget, and whether vendors provide reliable ongoing support.

Conclusion: Nvidia's Venture Empire and the Future of AI

Nvidia's transformation from a hardware company into a venture capital powerhouse represents one of the most significant strategic pivots in tech industry history. With 67 deals in 2025, commitments exceeding $100 billion to select companies, and a formal venture fund dramatically accelerating its pace, Nvidia has become the central figure shaping which AI startups succeed and how the AI ecosystem develops globally.

The genius of Nvidia's strategy lies in its circular economic model. By investing in startups, Nvidia ensures they become dependent on Nvidia hardware for training and deployment. By backing multiple competitors, Nvidia ensures profitability regardless of specific market winners. By participating in diverse technical approaches, Nvidia hedges against technology disruption. By supporting infrastructure optimization companies, Nvidia makes its own products more economically viable at larger scales.

This strategy doesn't depend on Nvidia's venture investments generating outsized financial returns, though they certainly help. The real value comes from securing future GPU customers, understanding emerging technology trends through board participation, and ensuring Nvidia's infrastructure remains essential as AI reshapes computing. Every dollar Nvidia invests in a startup effectively pre-sells future GPU capacity while reducing the risk that Nvidia becomes disrupted by novel AI architectures or computing approaches.

For enterprises, understanding Nvidia's investment landscape provides important context but shouldn't dominate technology purchasing decisions. The best AI solution remains the one that solves your specific problem most effectively at the lowest cost, regardless of Nvidia backing. However, enterprises should recognize that startups with Nvidia support might have structural advantages in securing compute resources and scaling, which could influence long-term reliability.

For investors, Nvidia's venture strategy validates the extraordinary value creation potential in AI while demonstrating how dominant infrastructure providers use strategic investment to maintain competitive moats. As AI continues maturing from experimental technology to essential infrastructure, Nvidia's role will likely evolve from pure hardware supplier toward ecosystem architect, actively shaping technology direction through strategic capital allocation.

The next several years will reveal whether Nvidia's strategy succeeds at insulating the company from disruption. If GPU requirements decrease significantly due to novel AI approaches, all of Nvidia's venture investments won't prevent competitive displacement. If foundational models become commoditized and profits migrate to applications, Nvidia's hardware margins might compress despite portfolio company success. However, betting against Nvidia's ability to adapt seems historically unwise given the company's track record of maintaining dominance through rapid technology evolution.

Ultimately, Nvidia's commitments of more than $100 billion to AI startups represent not just a financial bet but a fundamental commitment to shaping the AI future. The company understands that controlling the infrastructure upon which innovation happens provides more durable competitive advantage than controlling any individual application or capability. By backing the full ecosystem of AI companies (models, tools, infrastructure, applications), Nvidia ensures that regardless of how AI develops, the company remains essential to the companies and enterprises building and deploying AI systems worldwide.
