OpenAI's New Head of Preparedness: Why AI Safety Just Became a Critical Executive Role [2025]

Imagine being handed a job title that comes with an honest warning: "This is a stressful job and you'll jump into the deep end pretty much immediately." That's what Sam Altman told the world about OpenAI's newly vacant Head of Preparedness position. It's not a typical recruiter's pitch, but that kind of transparency signals that something genuinely important is happening.

The role isn't about predicting the future in some mystical sense. It's about getting ahead of the ways that increasingly powerful AI models can be misused, how they might fail in unexpected ways, and what safeguards need to be in place before those failures happen at scale. It's about taking responsibility before the problems become crises.

OpenAI is actively searching for someone to lead its Preparedness framework, the company's systematic approach to tracking frontier capabilities and preparing for the risks that come with them. The position offers a $555,000 base salary plus equity, a package that reflects both the urgency and the complexity of the work. But the money isn't the story here. The story is that one of the world's most influential AI companies is publicly acknowledging that preparing for AI's potential harms isn't a side project: it's a critical function that deserves senior executive attention.

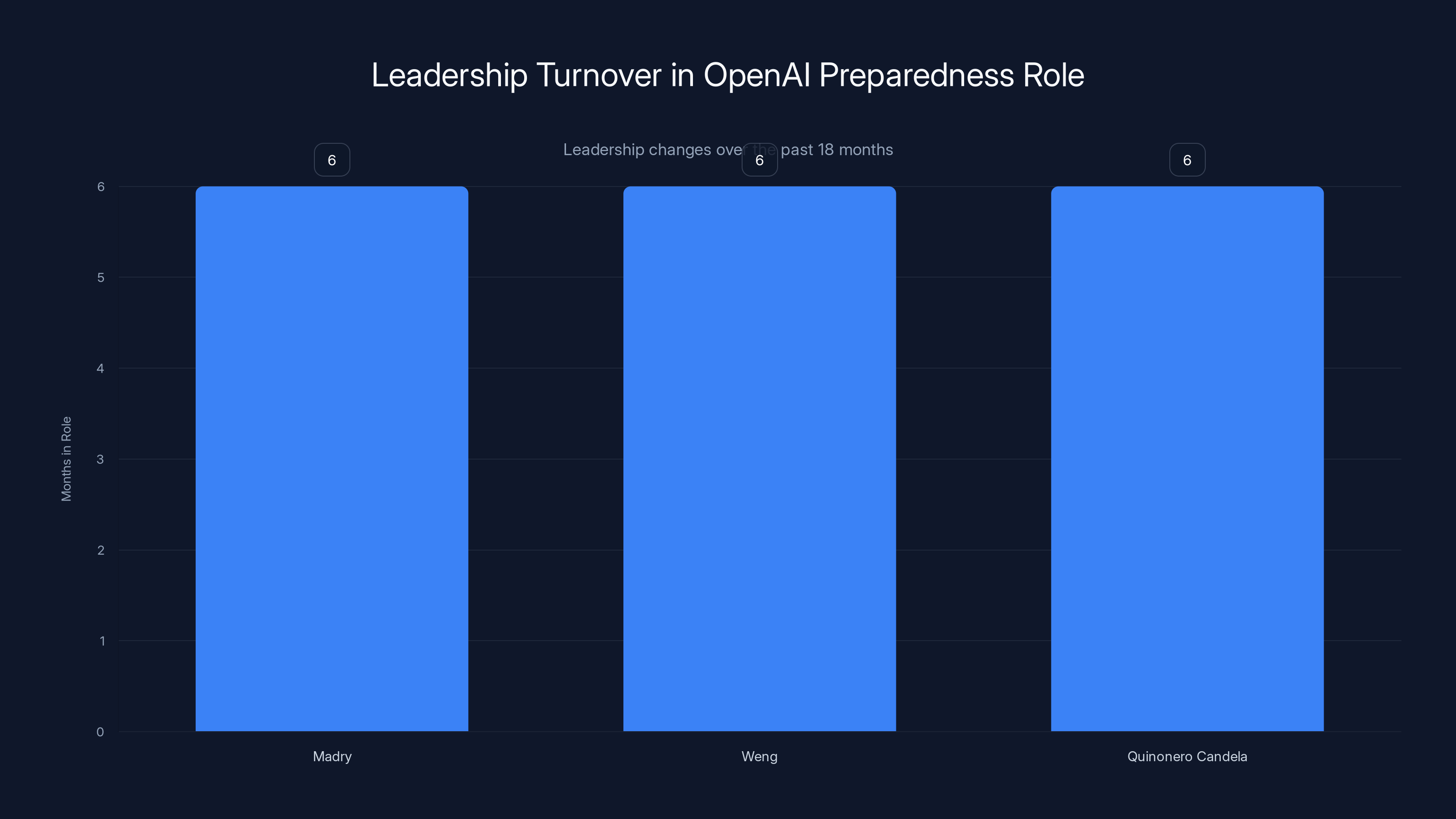

This hiring move follows a tumultuous stretch for OpenAI's safety leadership in 2024 and 2025. Aleksander Madry, the original Head of Preparedness, was reassigned in July 2024. The role was then split between Joaquin Quinonero Candela and Lilian Weng, but Weng left the company a few months later, and Quinonero Candela moved to lead recruiting in July 2025. The preparedness function needed a permanent leader.

What's striking is how candid Altman is being about this hire. During 2025, OpenAI faced multiple wrongful death lawsuits alleging that ChatGPT harmed users' mental health. Altman publicly acknowledged that "the potential impact of models on mental health was something we saw a preview of in 2025," along with other unforeseen challenges that come with increasingly capable AI systems. This isn't theoretical risk anymore. It's happening now.

This article breaks down what the Head of Preparedness actually does, why the role exists, what challenges the successful candidate will face, and what this hiring decision reveals about how OpenAI, and the broader AI industry, is thinking about safety and responsibility at scale.

TL;DR

- OpenAI is actively recruiting a new Head of Preparedness to lead safety strategy and prepare for frontier AI capabilities that create new risks

- The role pays $555K plus equity and comes with Altman's honest warning that it's "a stressful job" requiring immediate impact

- Preparedness isn't theoretical: OpenAI faced wrongful death lawsuits in 2025 alleging mental health harms from its AI, underscoring the need for proactive risk management

- Leadership continuity issues: Three leaders (Madry, Weng, Quinonero Candela) have rotated through preparedness roles in 18 months, signaling instability

- The framework addresses frontier capabilities: The role involves tracking emerging AI abilities and anticipating harms before they happen at scale

- Bottom line: This hire reflects a genuine shift toward making AI safety a senior executive function, not a secondary concern

Anthropic scores highest in centralizing AI safety leadership, reflecting its foundational focus on safety. OpenAI and the White House also prioritize centralized safety roles, while DeepMind and the EU AI Act distribute responsibilities more broadly. (Estimated data)

What Is the Head of Preparedness, Exactly?

The Head of Preparedness at OpenAI isn't a PR position, nor is it a traditional product or engineering role. It's something more specialized: a technical strategy leadership function focused on anticipating how powerful AI systems might fail, be misused, or cause unintended harm.

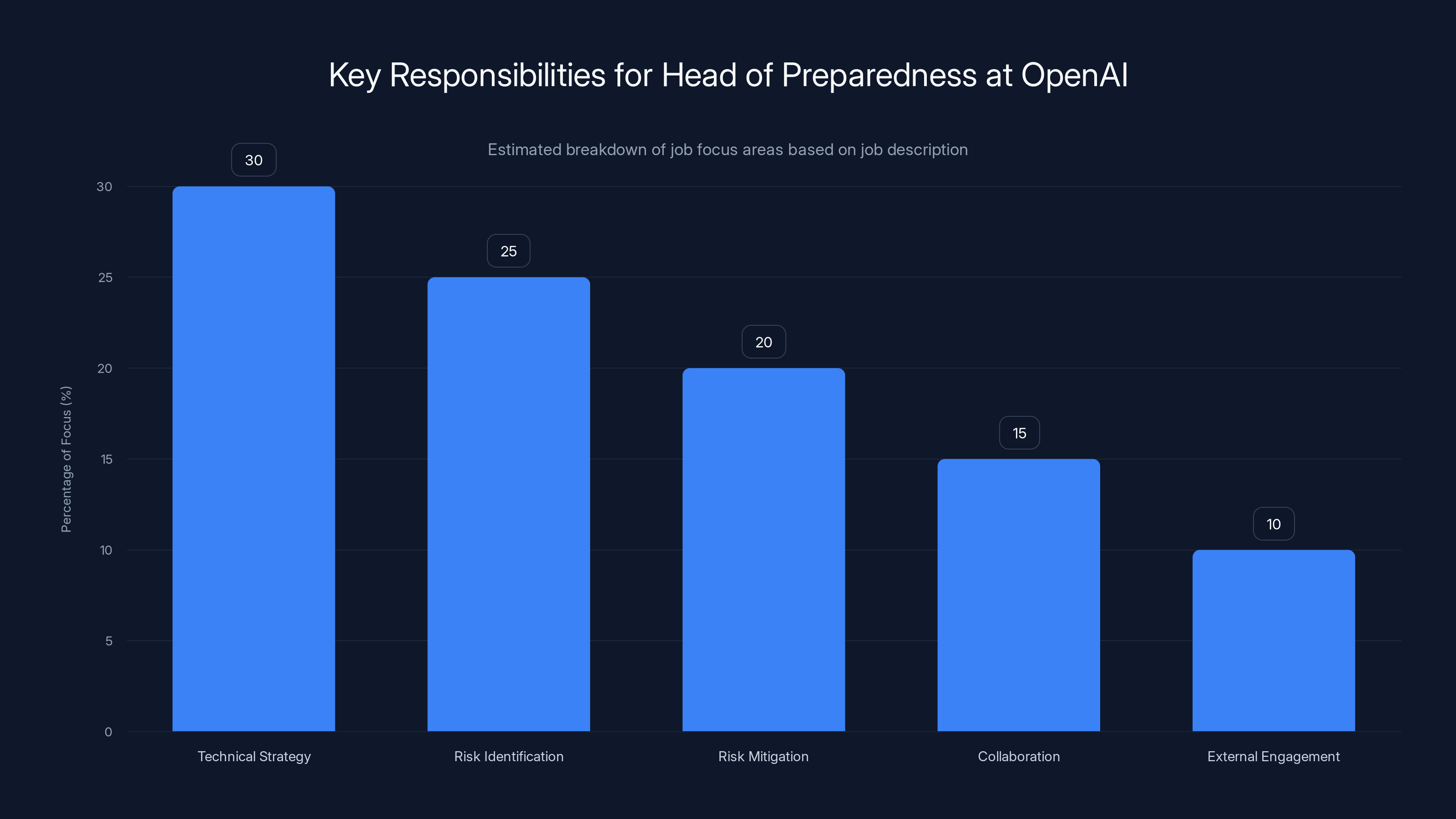

According to the official job listing, the Head of Preparedness "will lead the technical strategy and execution of OpenAI's Preparedness framework." That framework isn't a product or a service. It's OpenAI's internal methodology for tracking frontier capabilities and preparing for risks of severe harm before those capabilities reach production systems or customer hands.

Breaking this down further: frontier capabilities are abilities at the leading edge of what models can do, often appearing in new models before anyone fully understands them. The preparedness function works like an early warning system. Before a new frontier model is released, the preparedness team asks critical questions. What new capabilities will it have? How could someone misuse them? What safeguards do we need? How do we test for unexpected failures?

The role requires someone who can think across multiple domains simultaneously. You need deep understanding of AI capabilities and how they scale. You need threat modeling skills—essentially, the ability to imagine novel attack vectors and abuse scenarios. You need statistical rigor to design tests and measure risk. And you need organizational credibility to tell executives uncomfortable truths: "This feature creates risks we can't fully mitigate yet."

It's part researcher, part strategist, part engineer, and part whistle-blower all rolled into one title.

Preparedness hiring is estimated to have a high impact on business risk and on governance at scale, highlighting the evolving priorities in the AI industry. Estimated data.

The Job Listing Breakdown: What OpenAI Actually Needs

The official job posting for this role tells us far more than a typical corporate job description. OpenAI wasn't vague about what it wants.

The description emphasizes that the Head of Preparedness will "lead the technical strategy and execution" of the preparedness framework. That wording points to leadership with real decision-making authority rather than committee work. You're not managing a team that manages another team. You're directly shaping how OpenAI approaches frontier risk.

The role involves tracking capabilities across OpenAI's model development. As new capabilities emerge during training, the preparedness function needs to identify them early. This requires close collaboration with research teams, but from a position of independence. You need to be able to say "this creates risks" even if it's inconvenient for a product roadmap.

The job also emphasizes "preparing for" risks, not just identifying them. This means designing mitigations. Maybe that's technical controls, like limiting who can access certain capabilities during testing. Maybe it's deployment strategies that reduce harm potential. Maybe it's external engagement with governments and institutions affected by frontier capabilities. The preparedness function touches all of these.

Notably, the listing specifies that the role involves "our approach to tracking and preparing for frontier capabilities that create new risks of severe harm." The emphasis on "severe" is significant. OpenAI isn't asking this person to worry about every possible harm. The focus is on risks that could cause significant damage at scale, not minor inconveniences.

The salary and equity package signals seriousness. A $555,000 base isn't entry-level compensation. OpenAI is trying to recruit someone with established credibility in AI safety, whether from academia, policy, or industry. The equity component suggests it wants long-term commitment and skin in the game: someone who cares about OpenAI's success, not just the paycheck.

Why Sam Altman Is Being Honest About the Stress

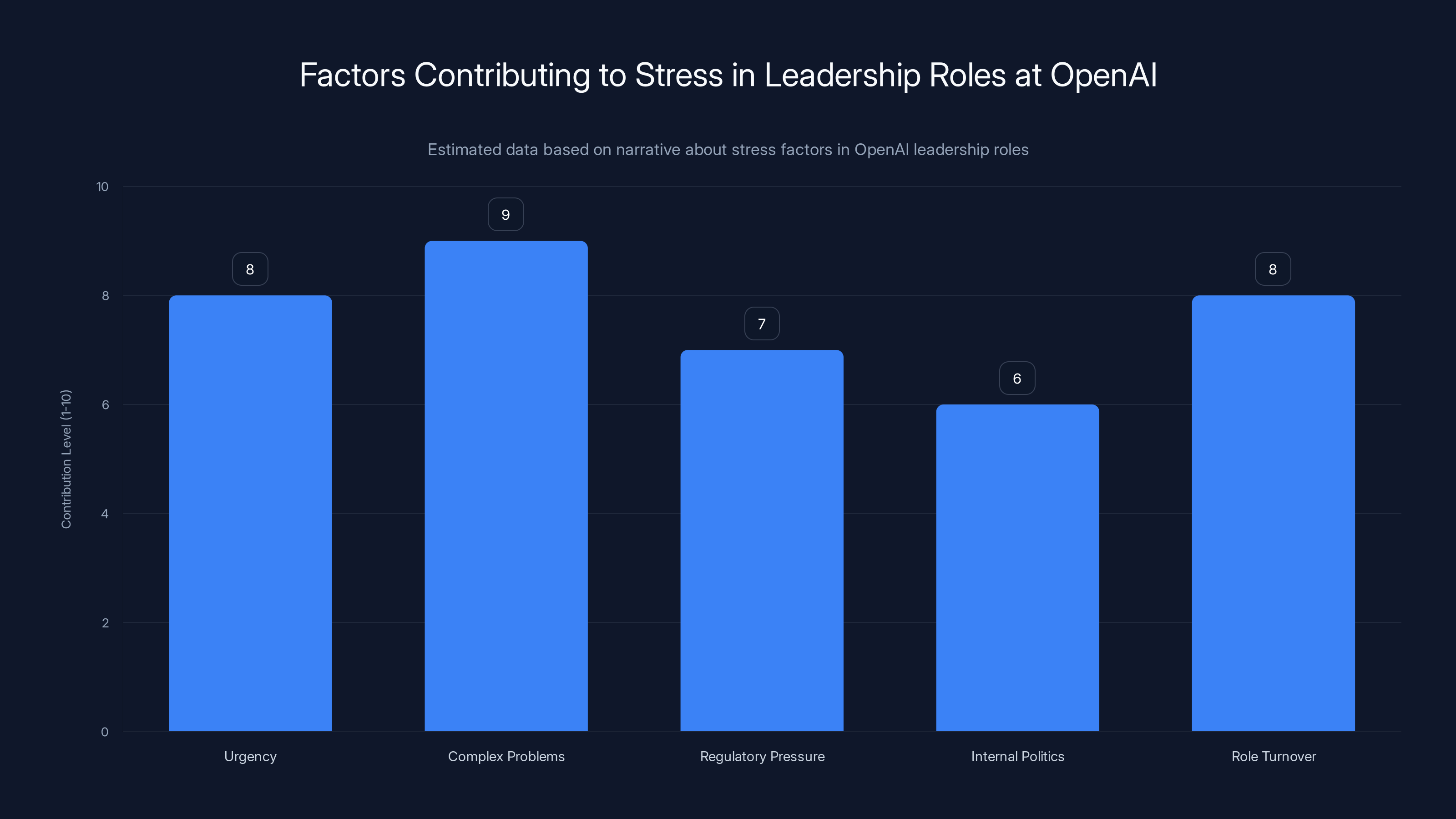

When Altman posted about this role on X, he didn't use typical corporate recruitment language. He said the job is "stressful" and that the candidate would "jump into the deep end pretty much immediately." This is unusual transparency, and it reveals something important about Altman's thinking.

First, it signals genuine urgency. OpenAI doesn't have the luxury of lengthy onboarding for this role. The company is shipping increasingly capable models, facing real-world harms, and dealing with regulatory pressure. The incoming Head of Preparedness needs to make an impact fast, not spend six months understanding organizational politics.

Second, it's honest recruiting. Instead of overselling the role, Altman is acknowledging that this person will be dealing with complex, unsolvable-seeming problems. How do you prepare for a risk you can't fully predict? How do you balance innovation with caution? How do you recommend killing features that could generate billions in revenue? These are genuinely hard questions, and Altman's honesty about the difficulty is more likely to attract the right kind of person—someone who thrives on difficult problems rather than someone seeking a comfortable executive position.

Third, it reflects the political reality inside OpenAI. The company is pushing hard on capabilities. The preparedness function sometimes has to say "slow down" or "we need more testing." That's inherently a stress-inducing position because you're sometimes aligned against the company's growth incentives.

The frank acknowledgment of stress also hints at why previous leaders in this role have rotated out. Madry's reassignment, Weng's departure, and Quinonero Candela's move to recruiting might not be failures on their part—they might be symptoms of the role being genuinely draining. Working in safety is often thankless. When the safeguard works, it's invisible (nothing bad happened). When someone thinks a safeguard shouldn't exist, you're the person blocking them.

By being honest about these dynamics, Altman is potentially attracting candidates who understand what they're signing up for—people whose motivations are aligned with actual safety work, not ego or resume-building.

The Head of Preparedness focuses more on risk assessment and technical mitigations for AI capabilities, while the Chief Safety Officer covers a broader range of safety concerns including data privacy and ethical practices. Estimated data.

The Mental Health Crisis That Made This Hire Necessary

OpenAI didn't suddenly decide that preparedness was important. The company faced concrete allegations of harm that forced the conversation. In 2025, multiple wrongful death lawsuits emerged alleging that ChatGPT had negative impacts on users' mental health. These weren't speculative concerns; they were real cases with real families seeking accountability.

The existence of these lawsuits forced OpenAI's leadership into public acknowledgment. Altman explicitly stated that "the potential impact of models on mental health was something we saw a preview of in 2025." This is significant. He's not saying the company is worried about hypothetical future harms. He's saying it observed actual harms from current products in the recent past.

What kinds of mental health impacts could ChatGPT cause? The lawsuits hint at several possibilities. Some users might become dependent on the AI for emotional support, potentially replacing human relationships. Others might have their worldviews influenced by AI responses, leading to confusion or isolation. Some might use the tool to amplify self-harmful thoughts. Some might become addicted to the engagement loop of prompting and receiving responses.

These harms aren't unique to ChatGPT; they're risks inherent to any AI system that can hold conversations, give advice, or influence thinking. But OpenAI's scale made them visible. ChatGPT was widely reported to have reached 100 million users faster than any consumer app before it. With that many users, even rare harms add up to large absolute numbers.
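The arithmetic behind rare harms at scale is simple enough to sketch. The example below assumes a purely hypothetical per-user harm rate of one in a million and treats users as independent; neither assumption comes from OpenAI or from the lawsuits, and the function names are illustrative only.

```python
import math

def expected_affected(users: int, per_user_rate: float) -> float:
    """Expected number of users harmed, given an independent per-user rate."""
    return users * per_user_rate

def prob_at_least_one(users: int, per_user_rate: float) -> float:
    """Probability that at least one user is harmed: 1 - (1 - p)^N.

    log1p/expm1 keep the result accurate when p is tiny and N is huge.
    """
    return -math.expm1(users * math.log1p(-per_user_rate))

# Hypothetical inputs: 100 million users and a one-in-a-million harm rate.
users = 100_000_000
rate = 1e-6

print(f"expected affected users: {expected_affected(users, rate):.0f}")
print(f"P(at least one user harmed): {prob_at_least_one(users, rate):.6f}")
```

Under these assumptions, a rate that would be invisible in a small pilot still implies about 100 affected people, and the chance of zero incidents is effectively nil.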

The lawsuits are important because they prove that preparedness isn't a theoretical exercise. It's not about preparing for risks that might exist in some future superintelligent AI. It's about managing harms that are happening right now, with current technology, in the current user base.

This context makes the Head of Preparedness hire urgent. The company needs someone who can look at mental health impacts and ask: How did we miss this? How do we detect it earlier? How do we prevent the product from being used in the most harmful ways? How do we communicate risks to users? How do we design safeguards into the product itself?

The mental health crisis also explains why previous leadership rotations might have happened. Working in preparedness means grappling with failures—ways the current systems have already caused harm. That's a different kind of pressure than building new features. It's backward-looking and guilt-inducing in ways that forward-looking innovation work isn't.

Leadership Turnover in Preparedness: The Red Flag Nobody's Talking About

On the surface, OpenAI is hiring a new Head of Preparedness because the previous leaders moved on. But the timeline reveals something more concerning: preparedness leadership has been unstable for 18 months.

Aleksander Madry led the function from its creation in 2023 until he was reassigned in July 2024. The move wasn't described as a promotion; it looked more like a lateral move. After Madry's reassignment, the role was split between two executives: Joaquin Quinonero Candela and Lilian Weng. Splitting leadership is often a signal that a company is uncertain about a role's direction or importance.

Lilian Weng left the company a few months after taking on this shared responsibility. In Silicon Valley, the departure of someone as accomplished as Weng can indicate concerns about direction or culture. We don't know Weng's specific reasons, but the timing, shortly after she took on preparedness leadership, is notable.

Then, in July 2025 (roughly a year after the split), Joaquin Quinonero Candela announced he was leaving the preparedness team to lead recruiting at OpenAI. Recruiting is important, but it's a very different function from safety strategy. The move signals either that preparedness was stable enough not to need his full-time attention, or that he was reassigned because preparedness wasn't working as intended.

In 18 months, the company went through three leadership changes in a single function. That's unusual for a role the CEO is publicly calling critical.

There are a few possible interpretations. The most generous: the company is finding the right person and configuration for the role. The most critical: preparedness is organizationally difficult because it sometimes has to say "no" to profitable product ideas. The most realistic: it's probably some of both.

The leadership churn also has practical implications. Each leadership transition means loss of institutional knowledge. Each new leader potentially brings different philosophies about what preparedness means and how risk should be approached. If you're working on long-term safety research, continuity matters. You can't reset the research agenda every time leadership changes.

From a candidate's perspective, this history is worth considering. The Head of Preparedness position is either a launching pad for career advancement (if your work goes well and you move to bigger roles) or a challenging position where you do important work without as much visibility or upside as other executive roles.

OpenAI's preparedness role has seen three leadership changes over roughly 18 months, a sign of instability. Estimated data.

How OpenAI Defines the Preparedness Framework

The actual framework that the Head of Preparedness will oversee is OpenAI's systematic methodology for identifying and preparing for frontier risks. Understanding what preparedness means at OpenAI is crucial to understanding why the role exists.

The framework has several components. First, there's capability tracking: Identifying what new abilities emerge in frontier models. As OpenAI trains larger, more capable models, novel abilities appear that weren't present in earlier versions. These emergent capabilities sometimes surprise even the researchers who trained the models. The preparedness function needs visibility into what's emerging, often before it's fully documented or understood.

Second, there's risk assessment: For each new capability, the framework asks what harms could result. This isn't speculative philosophy. It's threat modeling. Given a new capability, what are the highest-leverage ways someone could misuse it? How many people might it affect? How severe could the harm be? What's the probability someone will actually attempt this misuse?
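The questions in this step map onto a common threat-modeling heuristic: score each misuse scenario by likelihood, reach, and severity, then rank the results. The sketch below illustrates that generic heuristic only; it is not OpenAI's actual Preparedness methodology, and the scenario names, the 1-to-5 severity scale, and every number are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class MisuseScenario:
    """One hypothetical way a new capability could be misused."""
    name: str
    attempt_probability: float  # assumed chance someone actually tries this (0 to 1)
    people_affected: int        # assumed reach if the misuse succeeds
    severity: int               # assumed scale: 1 (minor) to 5 (catastrophic)

    def risk_score(self) -> float:
        """Expected-harm style score: likelihood x reach x severity."""
        return self.attempt_probability * self.people_affected * self.severity

def rank_scenarios(scenarios: list[MisuseScenario]) -> list[MisuseScenario]:
    """Order scenarios from highest to lowest risk score."""
    return sorted(scenarios, key=lambda s: s.risk_score(), reverse=True)

# Hypothetical scenarios for an imagined new capability.
scenarios = [
    MisuseScenario("automated phishing campaigns", 0.6, 10_000, 2),
    MisuseScenario("bioweapon-related uplift", 0.05, 100_000, 5),
    MisuseScenario("bulk spam generation", 0.9, 1_000, 1),
]

for s in rank_scenarios(scenarios):
    print(f"{s.name}: {s.risk_score():,.0f}")
```

Because the factors multiply, a rare but catastrophic scenario can outrank a common but minor one, which matches the listing's emphasis on "severe" harm. A real assessment would also have to carry the uncertainty in each estimate, which a single product hides.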

Third, there's technical mitigation: Can the capability be deployed safely with specific guardrails? Maybe the model has a new ability, but you can restrict who accesses it, or restrict how it can be used. Maybe the model outputs can be filtered to remove harmful content. Maybe the capability only becomes dangerous in certain contexts, and you can detect those contexts and decline to engage. Technical mitigations are the domain of the preparedness team.
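The two mitigations described here, restricting who can use a capability and declining in risky contexts, can be sketched as a simple request gate. Everything below is a hypothetical illustration: the tier names, capability names, and policy table are invented, and the keyword check is a toy stand-in for the trained classifiers a production system would need.

```python
from enum import IntEnum

class AccessTier(IntEnum):
    """Hypothetical user tiers, ordered from least to most trusted."""
    PUBLIC = 1
    VERIFIED = 2
    TRUSTED_TESTER = 3

# Hypothetical policy: the minimum tier allowed to use each gated capability.
CAPABILITY_MIN_TIER = {
    "basic_chat": AccessTier.PUBLIC,
    "code_execution": AccessTier.VERIFIED,
    "autonomous_web_actions": AccessTier.TRUSTED_TESTER,
}

# Toy context check: keyword matching is easy to evade and only stands in
# for a real classifier here.
RISKY_CONTEXT_MARKERS = ("write an exploit", "bypass authentication")

def allow_request(capability: str, tier: AccessTier, prompt: str) -> bool:
    """Allow a request only if the tier unlocks the capability and the
    prompt does not match a context flagged as risky."""
    min_tier = CAPABILITY_MIN_TIER.get(capability)
    if min_tier is None:
        return False  # unknown capabilities are denied by default
    if tier < min_tier:
        return False  # access restriction: tier too low for this capability
    lowered = prompt.lower()
    # Context restriction: decline when the request looks like a risky use.
    return not any(marker in lowered for marker in RISKY_CONTEXT_MARKERS)

print(allow_request("code_execution", AccessTier.PUBLIC, "Sort this list"))
print(allow_request("code_execution", AccessTier.VERIFIED, "Sort this list"))
print(allow_request("code_execution", AccessTier.VERIFIED, "Bypass authentication on this site"))
```

In this sketch, a public user is refused code execution outright, a verified user is allowed an ordinary request, and the same verified user is declined once the request matches a flagged context. Denying unknown capabilities by default is a deliberate fail-closed choice.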

Fourth, there's policy and deployment strategy: How should the model be released, if at all? Should it be released to all users or to a restricted group? Should the company publish research about what it can do, or keep capabilities quiet? Should they engage with governments or regulatory bodies beforehand? These questions are partly technical, partly political, and partly ethical.

Fifth, there's external coordination: OpenAI doesn't exist in isolation. Its models are deployed in the real world, competing with models from other companies. The preparedness function needs to think about what happens if OpenAI makes cautious choices but competitors don't. Does that create incentives for a reckless capability race? Should OpenAI coordinate with other labs on safety standards?

The framework is essentially a formalized way of asking: Before we deploy this model, have we thought seriously about how it could cause harm, and have we done everything reasonable to reduce that harm? It's a way of making safety systematic rather than occasional.

What's notable is that this framework is supposed to be comprehensive. It's not just about chatbots causing mental health harms. It's about any frontier capability that creates "new risks of severe harm." That could include code generation capabilities (enablement of cyberattacks), reasoning capabilities (misinformation at scale), or capabilities we haven't even imagined yet.

What Candidates Need to Bring to This Role

OpenAI isn't hiring a typical executive. The Head of Preparedness needs a specific skill combination that's rare in the market.

First, there's technical depth in AI. This isn't a role for someone who's read articles about AI safety. The successful candidate needs to understand how models are trained, how capabilities scale, what emergent behaviors look like, and why something works or doesn't work. They probably need either a research background in ML or years of experience shipping AI products. This is a credibility requirement: you can't convince technical teams to take you seriously if you don't understand the technology.

Second, there's risk thinking and threat modeling. The candidate should have experience identifying ways systems fail, how they can be abused, and designing mitigations. This might come from security backgrounds, from policy backgrounds where you've analyzed regulatory risks, or from previous safety work. The key is a mindset that naturally thinks about adversarial scenarios.

Third, there's communication and organizational influence. Technical credibility alone isn't enough. You need to be able to explain complex risk assessments to executives and convince them that certain tradeoffs are necessary. You need to work well with product teams, research teams, and leadership. You need political savvy—the ability to navigate organizational dynamics while maintaining your integrity.

Fourth, there's judgment about risk tolerance. Some people in safety roles want to eliminate all possible risks, which is impossible. The best candidates understand that some risks are acceptable, some can be mitigated rather than eliminated, and some require trade-offs with other values (like capability and impact). Making those calls correctly requires deep judgment.

Fifth, there's resilience and conviction. We discussed earlier that this is a stressful role. People will push back on your recommendations. You'll sometimes recommend killing features that people are excited about. You'll sometimes be wrong (recommending caution about something that turns out to be safe). You need the emotional resilience to do this work over time without burning out.

The candidate profile suggests OpenAI is probably looking at several types of people: academic safety researchers with strong ML backgrounds, senior engineers or researchers who've already spent significant time on AI safety, people from policy backgrounds who've done risk analysis at regulatory agencies, or security specialists who've done threat modeling at scale.

What they probably won't find is someone with all of these skills fully developed. They'll likely find someone strong in two or three areas and coachable in the others.

The role of Head of Preparedness at OpenAI emphasizes technical strategy and risk identification, with significant focus on risk mitigation and collaboration. Estimated data based on job description.

Precedents and Comparisons: Other Organizations' Approaches to AI Safety Leadership

OpenAI isn't the only organization wrestling with formal AI safety leadership. Understanding how other organizations approach this offers context for why OpenAI made this hire.

DeepMind, owned by Google, has dedicated safety and alignment research teams, though its organizational approach differs from OpenAI's. DeepMind tends to distribute safety concerns across teams rather than centralizing them. The tradeoff is that safety becomes everyone's job (good for integration) but also nobody's priority when conflicts emerge (bad for institutional power).

Anthropic, the company behind Claude, was founded partly because of concerns about safety at other organizations. Safety is more central to its organizational DNA than at OpenAI. Even so, Anthropic has formal structure and dedicated leadership for safety work. The existence of focused alignment research teams suggests that making safety work a distinct function is becoming standard practice.

Governments and regulatory bodies are also appointing safety-focused leadership. The White House established AI safety positions within its administration. The European Union's AI Act requires providers of high-risk AI systems to maintain a risk management system. These policy developments make internal safety leadership more important for companies, because someone needs to be responsible for compliance and policy engagement.

The lesson across these examples is consistent: as AI systems become more powerful and more deployed, making safety leadership explicit and well-resourced matters. It's not just ethical—it's becoming a business requirement. Organizations that don't have clear safety leadership are more likely to face regulatory backlash, reputational damage, and product failures.

OpenAI's approach of giving the Head of Preparedness a senior title, substantial compensation, and a high-level reporting line reflects this industry trend. The company is signaling that safety isn't an afterthought or a side department; it's a core executive function.

The Specific Risks That Preparedness Needs to Address

What kinds of harms are we actually talking about when we say "frontier capabilities that create new risks of severe harm"? Understanding the specific risks makes the role more concrete.

One category is persuasion and manipulation. As AI models become better at generating human-like text, they become better at persuading people. An AI that can write at a human level can potentially write propaganda, manipulate social media discourse, or generate convincing deepfakes of speech. The frontier capability here is the boundary where AI text becomes indistinguishable from human text. Once you cross that threshold, the harm potential increases dramatically.

Another category is autonomous harm. If an AI system has access to tools (like code execution, internet access, or APIs), it might be able to cause damage autonomously. Maybe it has a bug, or maybe someone jailbroke it, but the result is that it takes actions in the real world that cause harm. The frontier capability here is the ability to interact with external systems reliably. Once models can reliably use tools, the attack surface expands massively.

There are information hazards: information about how to cause harm becomes easier to access. This includes things like instructions for bioweapons, better hacking techniques, or methods for manipulating people psychologically. The frontier capability here is the model's ability to answer questions about sensitive topics accurately and helpfully. The more capable the model is at answering any question, the easier it becomes to extract dangerous information.

There's economic concentration: AI systems could amplify economic inequality if they're only available to wealthy organizations or individuals. A frontier capability that dramatically increases productivity could lead to massive job displacement if deployed carelessly. The concern isn't that AI makes things better for some people—it's that it makes things much better for some people and much worse for others.

There's geopolitical power imbalance: If one country has AI capabilities that others don't, it might gain military, economic, or political advantages. This creates incentives for dangerous capability races where countries prioritize speed over safety. The frontier capability here is anything that translates to military or strategic advantage.

There's loss of human agency: Even without catastrophic failure, AI systems could gradually reduce human decision-making authority over important domains. Doctors might stop diagnosing diseases themselves. Judges might always defer to AI recommendations. Teachers might stop teaching certain subjects. The frontier capability here is the AI's ability to outperform humans at tasks we care about.

What's notable about all these risks is that they exist on a spectrum. They don't require AGI or superintelligence. Many of them are possible with current or near-term AI capabilities. This is why the Head of Preparedness role is urgent now, not in some far future.

Estimated data suggests that dealing with complex problems and urgency are the highest stress factors in leadership roles at OpenAI. Altman's honesty about these challenges is likely to attract candidates who are prepared for such intense environments.

Internal Structures: How Preparedness Fits into OpenAI's Organization

Understanding where the Head of Preparedness sits in OpenAI's organizational structure helps explain the role's importance and challenges.

The Head of Preparedness likely reports to OpenAI's senior leadership, at or near the C-suite level. That would differ from safety research teams that report to the Chief Scientist, because the preparedness function would have strategic authority: it could make or influence major product and research decisions, not just provide recommendations.

This reporting structure matters. If safety reported to product, then product could override safety concerns. If safety reported to research, then research priorities might overrule safety considerations. By having preparedness report at a high level, OpenAI is creating institutional structures where safety considerations have independent weight.

The Head of Preparedness also likely coordinates across multiple departments. They work with the research teams building models (to understand emerging capabilities), with product teams (to design safe deployment strategies), with policy teams (to coordinate government engagement), and with the Chief Safety Officer or equivalent (if that role exists) to handle broader organizational safety issues.

This cross-functional coordination is both a strength and a challenge. It means the Head of Preparedness has input into important decisions. But it also means they're constantly negotiating between different organizational priorities. When research wants to push capabilities faster and preparedness wants to move slower, who wins? Ideally, they negotiate to a reasonable middle ground. In practice, it can become political.

OpenAI's organizational maturity also matters for this role. If the company has strong processes for decision-making (clear frameworks for how trade-offs are made), then the Head of Preparedness can work within those processes. If decision-making is ad hoc or driven by individual relationships, then the role becomes more about political influence and less about systematic risk management.

The fact that OpenAI is hiring for this role suggests it's trying to formalize and strengthen its preparedness function. The previous arrangement with split leadership probably felt inadequate. A single, empowered executive can move faster and make clearer decisions than shared leadership.

Why the Preparedness Hiring Matters for the Broader AI Industry

OpenAI's choice to make this hire is significant beyond just OpenAI. It signals something about how the AI industry is evolving.

First, it signals that safety is becoming professional. There was a time when AI safety was mostly academic research, separate from industry. Now, top AI companies are hiring safety professionals at senior levels with serious compensation. This professionalization means safety thinking is becoming embedded in how products are built, not bolted on afterward.

Second, it signals that companies are taking moral responsibility seriously. For years, much of the tech industry assumed that safety and responsibility would emerge from competition and regulation. OpenAI's explicit hiring of a preparedness leader acknowledges that this isn't enough: companies need to be proactive. They need people whose job is specifically to worry about harms before they happen.

Third, it signals that preparedness is now a business risk, not just a moral issue. The wrongful death lawsuits OpenAI faced made it clear that failing to prepare for harms has concrete consequences. Shareholders care about liability. Regulators care about whether companies have safety processes. Insurance companies care about risk management. All of these create business incentives to take safety seriously.

Fourth, it signals that the scale of AI systems now requires dedicated governance. When you're building systems with well over 100 million users, training models that may have trillions of parameters, and influencing how billions of people interact with technology, you need governance structures that match that scale. The Head of Preparedness role is part of OpenAI recognizing this.

Other AI companies are paying attention. If OpenAI sees this role as critical enough to recruit at a senior executive level and offer $555K-plus compensation, other labs might feel pressure to create similar roles. This could lead to a maturation of AI safety as a professional discipline across the industry.

It also puts pressure on governments. If private companies are building sophisticated safety governance internally, what does that mean for regulation? Do governments need to strengthen requirements around safety leadership? Do they need to audit whether companies actually have the governance they claim?

The broader implication is that OpenAI's Head of Preparedness hire is a signal: AI safety is moving from the periphery to the center of how powerful AI companies operate.

The Challenges This Role Will Face Immediately

Whoever gets hired as Head of Preparedness will walk into some specific, near-term challenges beyond the general difficulty of the role.

First, there's the liability question: OpenAI is facing lawsuits over alleged mental health harms, so the Head of Preparedness will inherit a company that's partially on trial. They'll need to help OpenAI navigate liability questions, think about whether and how to change products based on legal risks, and coordinate with legal teams. This is immediately pressing, not theoretical.

Second, there's the regulatory uncertainty: Governments worldwide are considering AI regulation. The EU has adopted the AI Act. China has its own rules. The U.S. is still developing policy. OpenAI operates globally, and the Head of Preparedness will need to understand these regulatory landscapes and ensure the company's safety processes align with what regulators might require. They'll probably spend significant time engaging with policymakers.

Third, there's the capability acceleration challenge: OpenAI is pushing hard on capabilities. The company is racing to build AGI, or at least very capable systems. The Head of Preparedness will sometimes need to say "slow down, we need to understand this capability better." Managing that friction from inside the organization is difficult. You need genuine authority to slow things down when necessary, but also enough political capital not to be seen as a blocker.

Fourth, there's the measurement problem: How do you know if you're succeeding? If nothing bad happens, does that mean preparedness is working, or just that nothing bad happened to occur? How do you tell whether a safeguard actually prevented harm or whether the harm simply never would have occurred? This is a genuine methodological challenge that the Head of Preparedness will need to figure out.

Fifth, there's the succession and knowledge problem: Three leaders have rotated through the role in roughly 18 months. The new person will need to stabilize the function, retain good people on the team, and probably rebuild some institutional knowledge. Getting people to commit to safety work when the leadership keeps changing is difficult.

Sixth, there's the external credibility challenge: The AI safety community is watching. Academic safety researchers have opinions about whether OpenAI is taking safety seriously. Civil society groups have concerns. Competitors have their own safety narratives. The Head of Preparedness will need to engage with all of these external stakeholders while managing internal dynamics.

The Broader Vision: What OpenAI Actually Wants to Accomplish with Preparedness

Beyond the specific role and the immediate challenges, it's worth asking: What is OpenAI trying to accomplish with this preparedness function? What's the long-term vision?

One vision is responsible capability deployment. OpenAI wants to develop powerful AI systems and deploy them to the world, but in ways that reduce harm and increase trust. The preparedness function is the mechanism for thinking systematically about how to deploy responsibly. Instead of releasing things and hoping nothing bad happens, the company works through risks and mitigations in advance.

Another vision is industry leadership on safety. OpenAI is the most visible AI company in the world. If it can demonstrate that a lab can be successful and cutting-edge while also taking safety seriously, that's a valuable proof of concept. It shows other labs that safety and capability aren't in complete opposition, which could influence the entire industry's approach to safety.

Another vision is stakeholder alignment. OpenAI serves multiple stakeholders: its employees (who care about safety and ethics), its customers (who want products they can trust), regulators (who are watching closely), and society (which bears the risks). A functional preparedness process helps all these stakeholders understand that the company is taking risks seriously. It builds trust.

Another vision might be insurance against catastrophe. This one is rarely stated outright, but it's implicit. Some people in the AI safety community worry that very powerful AI systems could cause severe harm. Whether or not you believe that risk is high, having governance structures in place to prepare for frontier risks is a form of insurance. If something unexpected happens, the company can at least say "we had processes in place to try to prevent this."

What's notable about all these visions is that they require actual execution, not just hiring someone with an impressive title. The preparedness function only matters if it has real authority and resources. If it becomes a paper tiger whose recommendations nobody follows, it fails all these objectives. The Head of Preparedness hire is OpenAI's wager that it can make preparedness real.

Looking Forward: What This Hire Suggests About AI's Future

Taking a step back, what does OpenAI's decision to hire a Head of Preparedness at this scale and with this urgency tell us about where AI is headed?

It suggests that AI systems are reaching a scale and capability level where governance matters more. When ChatGPT was first released, safety considerations were important but not urgent. Now that the system has 100+ million users and OpenAI is working on increasingly capable models, governance structures are becoming essential. This pattern is likely to accelerate.

It suggests that harm from AI is increasingly concrete, not hypothetical. The mental health lawsuits underscore that. As AI touches more parts of people's lives, the ways it can cause harm become more apparent and measurable, which creates incentives for better governance.

It suggests that companies are starting to believe that responsibility and capability don't have to be opposed. For a long time, the narrative was that pursuing safety would slow progress. OpenAI's willingness to invest in preparedness at the executive level suggests it believes you can do both: develop powerful systems and reduce harm.

It suggests that the AI industry is becoming more like other safety-critical industries. Finance has risk management. Aviation has safety oversight. Medicine has extensive safety processes. As AI becomes more powerful and more widely deployed, it makes sense to adopt similarly formal governance structures. The Head of Preparedness role is part of this maturation.

The timing of this hire is also significant. It comes after several years of rapid capability improvements, after ChatGPT reached massive scale, and after concrete harms became visible. It's not a proactive hire made while everything was going smoothly; it's a defensive hire made because OpenAI realized it needs better governance. That suggests the company is responding to reality, not pushing safety as a marketing message.

Where does this lead? If the pattern continues, we should expect:

- More structured safety functions across AI companies

- Higher standards for safety governance, increasingly written into regulatory requirements

- More public transparency about how companies assess and mitigate risks

- Stronger integration between safety teams and product development, rather than safety being separate

- Better tools and methodologies for predicting and preventing AI harms

None of this solves the fundamental challenges of AI safety, but it does suggest the industry is taking the challenges seriously at an organizational level.

FAQ

What exactly is the Preparedness framework that OpenAI mentions?

OpenAI's Preparedness Framework is the company's published methodology for identifying, assessing, and preparing for risks that emerge from frontier AI capabilities. It involves tracking what new abilities appear in models, threat modeling how those abilities could be misused, designing technical mitigations, determining deployment strategies, and coordinating with external stakeholders. The framework is designed to be systematic, making safety a structured organizational process rather than something that happens ad hoc. The Head of Preparedness role leads the execution of this framework, ensuring that all major model releases and capabilities go through proper risk assessment before deployment.

Why would mental health impacts from ChatGPT require a specific Head of Preparedness role?

The mental health lawsuits suggest that OpenAI didn't fully anticipate or prepare for certain harms that users experienced. Whether it was AI replacing human connection, amplifying harmful thoughts, or creating dependency, the alleged impacts surfaced after the product was widely deployed. A dedicated Head of Preparedness function can systematically ask these questions before launch: How might this capability affect mental health? Who might be at risk? What safeguards would reduce that risk? By making preparedness an explicit, high-level function, the company creates institutional responsibility for thinking about these questions in advance, not after lawsuits appear.

How is a Head of Preparedness different from a Chief Safety Officer?

While there's some overlap, these roles have different focuses. A Chief Safety Officer typically oversees broad safety across the organization, including things like data privacy, information security, physical safety, and ethical practices. A Head of Preparedness is more specialized and technical, specifically focused on anticipating harms from frontier AI capabilities and preparing for those specific risks. The Head of Preparedness is more about technical risk management, while a Chief Safety Officer is about broader governance. OpenAI might have both roles, or the preparedness function might be part of a larger safety organization. The distinction matters because it allows OpenAI to have deep technical expertise in the specific domain of frontier capability risks.

What qualifications would make someone good for this role?

The ideal candidate would combine several capabilities: deep technical understanding of how AI models work and how capabilities scale, experience with threat modeling and risk assessment, organizational influence and communication skills to work across teams, judgment about acceptable versus unacceptable risks, and resilience to handle the stress of sometimes saying "no" to powerful stakeholders. Candidates might come from academic AI safety research, from engineering backgrounds in AI, from policy or regulatory roles where they've analyzed risks, or from security backgrounds where they've done adversarial thinking. The successful candidate probably won't excel in all these areas but will be strong in most of them.

Why has preparedness leadership at OpenAI changed so much in 18 months?

The exact reasons for these changes aren't publicly clear, but the pattern suggests a few possibilities. Preparedness is a genuinely difficult job because it sometimes has to prioritize caution over capability development. That creates inherent organizational friction. Leadership rotations might reflect difficulty finding the right person, or they might reflect that the people in the role moved on because they found the position unsustainably stressful or because they achieved their goals and decided to move elsewhere. Lilian Weng's departure might have been about wanting to work on different problems. Quinonero Candela's move to recruiting might have been his choice or a strategic restructuring. Without inside information, we can't know for sure, but the pattern does suggest that preparedness has been organizationally unstable.

How does OpenAI's preparedness approach compare to other AI companies like Anthropic or DeepMind?

Broadly speaking, Anthropic treats safety as a core value distributed throughout its organization, while OpenAI is trying to create a specific executive function for it. DeepMind tends to integrate safety researchers with product teams rather than centralizing oversight. All three labs publish frontier-risk policies (OpenAI's Preparedness Framework, Anthropic's Responsible Scaling Policy, and Google DeepMind's Frontier Safety Framework), but OpenAI's approach of having a dedicated Head of Preparedness is more formalized and hierarchical. Each approach has tradeoffs. Distributed safety makes it everyone's responsibility but can mean nobody has clear authority. A dedicated function creates clear accountability but can create silos. The fact that different organizations are experimenting with different structures shows that there's no consensus yet on the best way to govern AI safety in large organizations.

What would success look like for the Head of Preparedness?

Success would likely involve several things: building a team that does rigorous technical analysis of emerging capabilities, establishing processes that are actually used in product development decisions (not just theoretical), correctly predicting risks before they become obvious, designing mitigations that actually reduce harm, maintaining institutional stability and credibility, and eventually showing measurable improvements in OpenAI's ability to deploy capabilities responsibly. Long-term success would be OpenAI becoming known as a company that took frontier AI risks seriously, governed those risks well, and still built innovative products. The hardest part of measuring success is that if the preparedness function works well, you won't see dramatic failures, just a company that handles the risks that do emerge better than it otherwise would have.

Is preparedness just a response to regulation, or is there genuine commitment?

It's probably both, with genuine commitment likely playing the larger role. OpenAI is facing real lawsuits over alleged harms, not just regulatory pressure. Sam Altman's candid framing of the role's difficulty suggests genuine concern about getting it right, not just a PR exercise. That said, companies are also rational actors responding to their environment. Regulation, liability, market competition, and employee concerns all create incentives for taking safety seriously. The fact that multiple incentives point in the same direction, toward better preparedness, suggests this isn't just a temporary trend.

Conclusion: The Preparedness Hire as Industry Signal

OpenAI's decision to publicly hire a Head of Preparedness with prominent responsibility, significant compensation, and honest acknowledgment of the role's difficulty is more significant than it might appear at first glance.

On the surface, it's about filling an executive position. OpenAI needs someone to lead the technical strategy for identifying and preparing for risks from frontier AI capabilities. The position comes with authority, resources, and accountability.

But beneath that surface, the hire signals something larger about how the AI industry is evolving. Safety and responsibility are moving from the periphery to the center. They're becoming professional disciplines with career paths, not just side projects for idealistic researchers. Companies are recognizing that preparing for frontier risks isn't a constraint on progress—it's a prerequisite for responsible progress at scale.

The fact that OpenAI faced wrongful death lawsuits in 2025 alleging that ChatGPT harmed users' mental health shows that these aren't hypothetical concerns. The alleged harms are happening now, with current technology, at current scale. This makes the Head of Preparedness role not an aspiration but a necessity.

For the candidate who takes this job, it will be exactly as Altman promised: stressful, immediate, and challenging. You'll need to make decisions about which capabilities to develop cautiously or not at all. You'll need to work across teams with different priorities. You'll need to sometimes be the person saying "slow down" in an organization incentivized to move fast.

But it will also be among the most important work happening in AI right now. Ultimately, whether AI systems benefit humanity depends not just on how capable they are, but on how carefully we've thought about their potential harms and how much work we've done to reduce those harms. The Head of Preparedness at OpenAI carries some responsibility for that outcome.

The role matters because the stakes are real. OpenAI is building systems that will influence billions of people. The company's decision to make someone accountable for thinking carefully about those impacts is a recognition of that responsibility.

For the AI industry more broadly, this hire is a signal: we're moving into a phase where structured safety governance isn't optional. It's becoming standard practice for serious AI companies. That's the real story here.

Related Concepts Worth Exploring

If you're interested in AI safety, preparedness, and responsible AI development, these related areas provide deeper context:

AI Safety Research: Understanding the technical methods used to identify and mitigate risks in AI systems, including adversarial testing, interpretability research, and alignment research.

Responsible AI Frameworks: How organizations systematize thinking about responsible AI, from Microsoft's Responsible AI Standard to Google's AI Principles to OpenAI's preparedness approach.

AI Governance and Regulation: How governments worldwide are developing requirements for AI safety, from the EU AI Act to executive orders to emerging standards in different countries.

Product Safety and Risk Management: How other industries (aviation, medicine, finance) have built mature safety cultures and governance systems that could inform AI safety approaches.

Organizational Ethics: How companies make decisions when safety concerns conflict with business incentives, and how institutional structures shape ethical outcomes.

The hiring of a Head of Preparedness at OpenAI connects to all these areas. It's a practical organizational response to the theoretical discussions happening in all of them.

Key Takeaways

- OpenAI is hiring a Head of Preparedness at the executive level ($555K+) to lead systematic risk management for frontier AI capabilities

- The role exists because real harms happened in 2025: wrongful death lawsuits alleging ChatGPT impacted mental health, forcing accountability

- Preparedness is moving from a niche safety function to a critical business function as AI systems reach 100M+ users and governments move to regulate them

- The position has seen three leadership changes in 18 months, suggesting the role is organizationally challenging but increasingly seen as necessary

- Success requires a rare combination of technical AI expertise, threat modeling skills, organizational influence, and resilience to deliver unpopular recommendations

