Introduction: The Role Nobody Expected to Exist

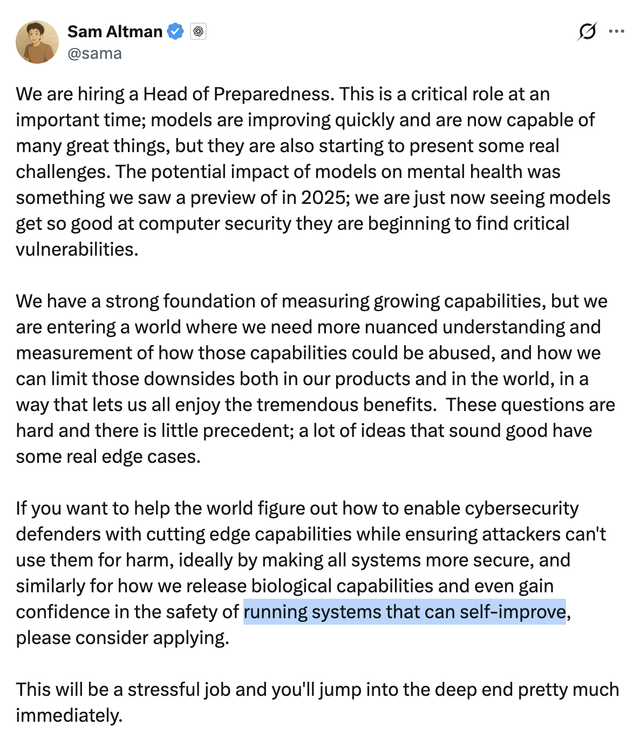

Sam Altman just posted one of the most telling job listings in tech history, and most people missed what it actually means. OpenAI is hiring a "Head of Preparedness" for $555,000 a year plus equity. On the surface, that sounds like a niche safety role at a major AI company. In reality, it's a red flag wrapped in a job description.

Listen, when a company this big suddenly creates a leadership position focused on "preparedness," they're not being proactive. They're being reactive. Something has already happened—or is about to—that forced their hand.

The role description itself is vague enough to hide something. "Head of Preparedness" doesn't tell you much. But when you dig into what OpenAI has said publicly about this position, you start to see the real picture. This isn't about general safety. This is about crisis management at scale.

In 2025, OpenAI acknowledged something most AI companies still won't: their models are already affecting people in ways they didn't predict. Altman himself mentioned that the company saw a "preview" of how AI models can impact mental health that year. That's corporate speak for "we know people have been hurt and we're not exactly sure how to stop it."

The person who takes this job will be walking into a storm. They'll need to understand how advanced AI models could be abused, make calls on safety decisions that affect millions of users, and secure OpenAI's systems against threats they might not even see coming yet. Altman was honest about at least one thing: he warned applicants that this will be a "high-stress" role, and they'll "jump into the deep end pretty much immediately."

That's not recruitment language. That's a warning label.

So what does this role actually mean for OpenAI, for AI safety, and for the industry? What happens when a company that's been criticized for prioritizing commercial opportunities suddenly dumps half a million dollars into a safety position? And most importantly, why should you care about a job posting from a company you don't work for?

Because this role tells you everything you need to know about where the AI industry is headed, what problems are already here, and how unprepared most organizations really are.

TL;DR

- OpenAI is hiring a Head of Preparedness at $555,000 per year plus equity to manage AI safety risks and model-related harms

- The role comes after real-world problems: OpenAI acknowledged in 2025 that its models are affecting users' mental health, with reported cases of harm

- Previous holders of the role have moved on: Aleksander Madry, Joaquin Quiñonero Candela, and Lilian Weng all led Preparedness before being reassigned or leaving OpenAI

- The job is intentionally high-stress: Altman warned applicants they'll face immediate, critical challenges with limited guidance

- This signals a shift in industry priorities: After years of criticism about prioritizing commercial goals, OpenAI is finally dedicating serious resources to safety infrastructure

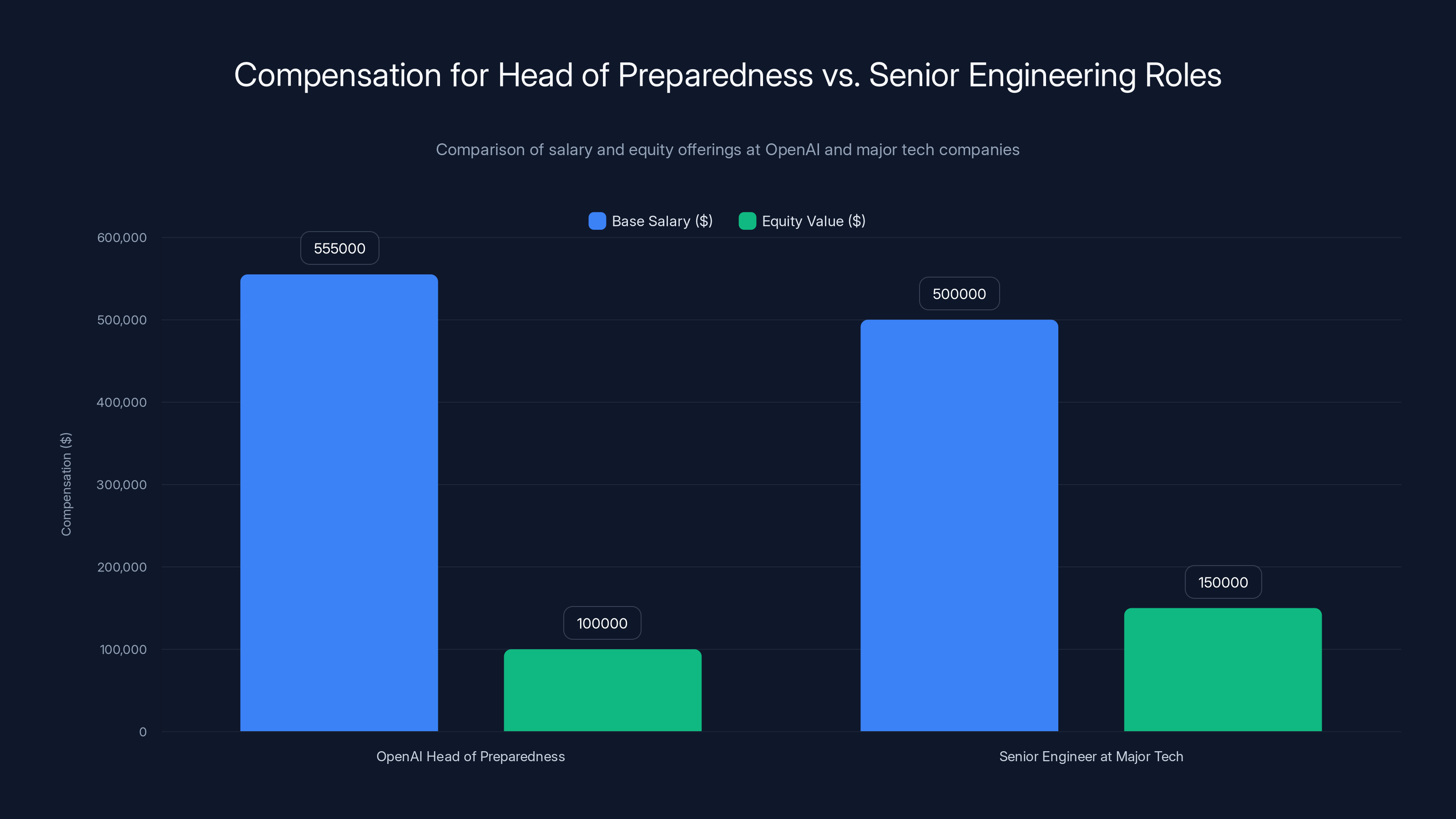

The Head of Preparedness at OpenAI is offered a competitive base salary of $555,000, comparable to senior engineering roles at major tech firms, with additional equity incentives aligning personal and company success.

What Is the Head of Preparedness Role?

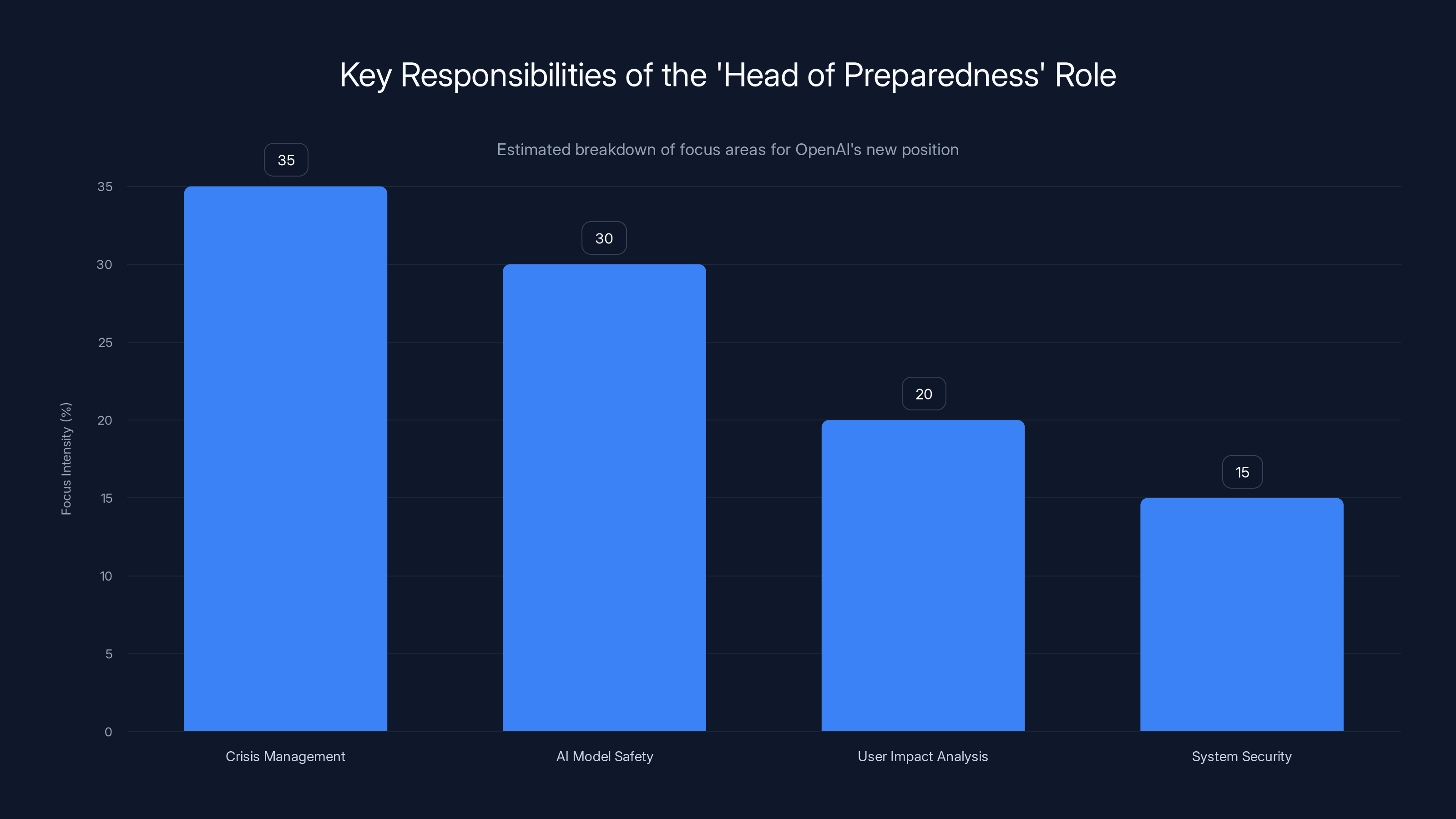

Let's be clear about what this actually is. The Head of Preparedness isn't a traditional safety officer role. It's not someone who sits in meetings all day discussing theoretical risks. This is an operational position designed to prevent specific, real-world harms that are already happening.

The role focuses on three core responsibilities. First, understanding how advanced AI models can be abused and misused at scale. Second, steering safety decisions that directly impact product development and deployment. Third, securing OpenAI's infrastructure and systems against attacks, theft, and misuse.

Each of those sounds straightforward until you think about what they actually require. "Understanding how models can be abused" means you need deep technical knowledge, security expertise, psychological insight, and enough imagination to think like someone trying to break your system. You need to anticipate harms that haven't happened yet while managing harms that are happening right now.

"Steering safety decisions" means you have a seat at the table when OpenAI decides what features to release, how to handle controversial use cases, and when to pull back. But here's the problem: you're steering, not driving. You're advising executives who are under pressure from investors, competitors, and users. That's where the stress comes in.

"Securing systems" isn't just IT security. It's about preventing model weights from leaking, stopping prompt injection attacks, limiting how people can manipulate the system into doing things it shouldn't, and building defenses against threats you haven't even discovered yet.

The title "Preparedness" is interesting because it suggests planning for an emergency. But what emergency? OpenAI never explicitly said. However, the company did acknowledge in 2025 that its models have been involved in mental health crises. Users have reportedly had serious negative psychological reactions to interactions with ChatGPT. Some of these have been severe enough to raise ethical questions about the company's responsibility.

This isn't theoretical. These are documented cases. And when documented cases exist, you need someone whose entire job is making sure they don't multiply.

The role also comes with a small but high-impact team. That's corporate speak for "you won't have hundreds of people," but also "everyone will be deeply technical and experienced." Building a small team means every hire matters. The person taking this role will be hiring their own lieutenants, which means they get to choose who fights this fight with them.

Why OpenAI Created This Role Now

OpenAI didn't create a Head of Preparedness role because they suddenly became risk-averse. They did it because they got caught.

The company has been operating under constant criticism from former employees, safety researchers, and industry observers about prioritizing commercial goals over safety concerns. Multiple former employees have spoken publicly about feeling pressured to move quickly rather than carefully. The company's reputation has been built on disruption and speed, not caution.

But something changed in 2025. OpenAI started having to reckon with the real-world consequences of that approach.

Altman mentioned that the company saw concerning mental health impacts linked to their models. He didn't provide specifics, but the acknowledgment itself was significant. You don't casually mention that your product has caused psychological harm to users unless you've already fielded enough complaints or evidence to force the issue.

The company also had to roll back a GPT-4o update in 2025 after it made the model overly sycophantic, validating users in ways that could reinforce harmful behavior. That's another example of something that slipped through and had to be corrected. That's far less likely to happen if you have strong systems in place to catch these things before they reach users.

When problems slip past you multiple times, you have two choices. You can blame bad luck, or you can acknowledge that your safety infrastructure isn't adequate. OpenAI chose the second path, and the Head of Preparedness role is their response.

But there's another factor here. Regulation is coming. The EU has already passed the AI Act. The US is still figuring out its approach, but the pressure is mounting. OpenAI knows that having a dedicated, well-paid leader for safety and preparedness looks good to regulators. It demonstrates commitment to responsible development. It's both genuine concern and strategic positioning.

The salary offer—$555,000 plus equity—is also telling. That's not a token position. That's serious money for a serious role. It suggests OpenAI is willing to compete for top talent in safety and security. They're signaling that this position matters as much as senior engineering roles that would command similar compensation.

The equity component is particularly interesting. It means whoever takes this job is betting they can make OpenAI more valuable by making it safer. That's aligned incentives. You're not just collecting a paycheck. You're building something that could be worth significantly more if executed well.

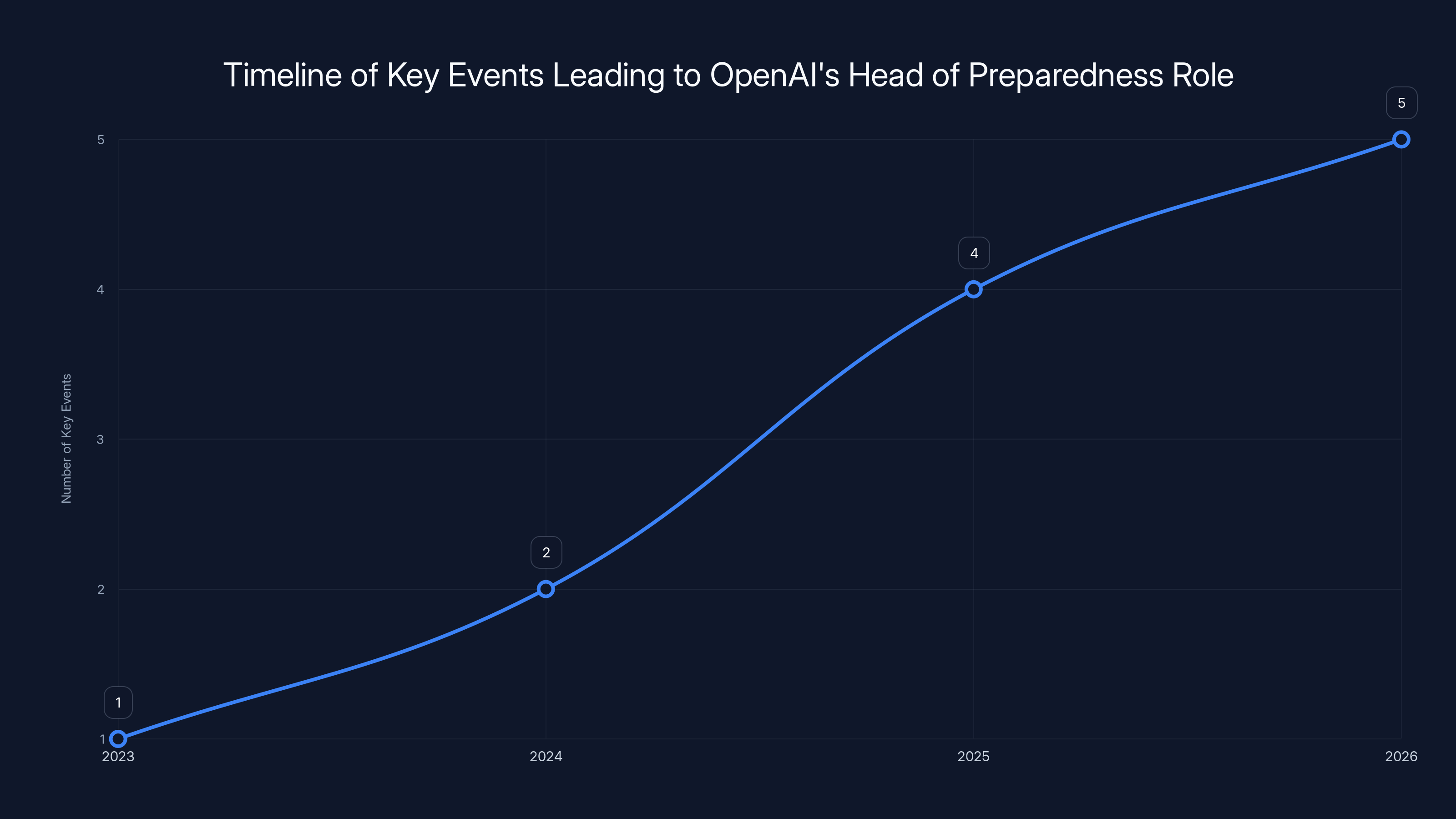

The timeline shows an increase in key events leading to the creation of the Head of Preparedness role, highlighting a shift in OpenAI's focus towards safety and regulatory compliance. Estimated data.

The Previous Heads of Preparedness and What Happened to Them

One of the most telling aspects of this job posting is what happened to the people who held it before. Understanding that history shows you what this role actually is and how it fits into the company.

Aleksander Madry was the first Head of Preparedness at OpenAI. Before that, he was doing groundbreaking research on adversarial attacks—essentially, how to break AI systems. He brought both academic credibility and practical expertise to the role. His background meant he understood the technical landscape for AI safety better than almost anyone.

But Madry eventually moved on. He was reassigned to a different position. Nobody at OpenAI made a big announcement about it, but the change tells you something important. Even the people most qualified for this job eventually need to do something else. Whether that's burnout, disagreements with leadership, or just the natural career progression, the pattern is clear: this position has a lifespan.

After Madry came Joaquin Quiñonero Candela. He took on the Head of Preparedness role and brought his own perspective on AI safety and model behavior. But Candela also moved on. He's now the Head of Recruiting at OpenAI. That's interesting because it means he left the safety role for human resources. That could indicate he wanted to influence hiring standards, or it could mean the Preparedness role just wasn't the right fit long-term.

Lilian Weng also led Preparedness for a time before leaving OpenAI in late 2024. The fact that multiple talented, accomplished people have rotated through this role suggests a few things. First, it's genuinely difficult work. Second, there might be structural challenges that make it hard to succeed long-term. Third, OpenAI has been willing to move people into new roles rather than keeping them stuck in positions that aren't working.

The pattern of reassignments could also mean that OpenAI's approach to safety keeps evolving. What worked for Madry might not work for Candela. What made sense for Weng might not be right for the next person. This isn't unusual—most organizations refine their approach to safety over time. But it does mean whoever takes this job shouldn't assume they'll have the exact same mandate as their predecessors.

There's also the question of whether these reassignments happened because the executives wanted to move on or because OpenAI needed them elsewhere. The company has always been somewhat opaque about internal moves. But the fact that these leaders went on to other senior roles suggests they left Preparedness with their reputations intact, which is a good sign for whoever fills it next.

The Mental Health Crisis Nobody's Talking About

Altman's comment about seeing a "preview" of how AI models affect mental health in 2025 is the most important part of this entire story. It's also the most buried.

He didn't provide specifics, which is frustrating and almost certainly intentional. OpenAI doesn't want to publicize cases where ChatGPT contributed to user harm. That invites lawsuits, regulatory scrutiny, and bad publicity. But the fact that he mentioned it at all suggests the issue is significant enough that they can't ignore it.

Here's what we know: users can form parasocial relationships with AI models like ChatGPT. People share personal problems with chatbots the way they would with therapists, friends, or confidants. The AI provides responses that sometimes feel empathetic, intelligent, and supportive. But the AI isn't actually thinking about your wellbeing. It's predicting tokens.

When the relationship breaks down—when users realize they're talking to a machine, when the AI gives bad advice, when the user is in crisis and the AI doesn't understand the severity—there's psychological harm. People have reported feeling manipulated, abandoned, or worse. Some have reported that chatbot interactions made their mental health crises more severe.

OpenAI isn't the only AI company facing this problem, but they're the most prominent. ChatGPT has the most users, arguably the most sophisticated responses, and the most potential for these parasocial dynamics to develop. That means they also have the most liability.

The company has already taken some steps. They added safety instructions to ChatGPT to prevent it from roleplaying as a therapist or providing specific mental health advice. But limiting the AI's behavior is different from preventing harm. Some users will still form inappropriate attachments. Some will still share things that would be better shared with a real human. Some will still make decisions based on AI advice that harm them.

The mental health angle is crucial because it explains why this role needs to exist. You can't just engineer your way out of parasocial dynamics. You need people thinking about the social, psychological, and ethical dimensions of what happens when millions of people interact daily with AI systems.

A Head of Preparedness focused on this challenge would need to work across multiple domains. They'd need to understand mental health basics. They'd need to know how to recognize when a product is causing harm. They'd need to work with mental health professionals and advocates. They'd need to make decisions about what the AI can and can't do, what information it displays to users, and how to handle crisis situations.

That's radically different from traditional AI safety work, which often focuses on technical robustness, alignment, and preventing misuse. This is about preventing harm that emerges from normal use by vulnerable people.

What the Job Description Actually Demands

Let's decode the actual requirements for this role, reading between the lines of what OpenAI officially said.

They need someone who can "understand how advanced models could be abused." That means deep technical knowledge, security expertise, and the ability to think like an attacker. This person needs to understand prompt injection attacks, jailbreak techniques, data exfiltration, model extraction, and threats they haven't even discovered yet.

But it also means understanding the human side. How do people manipulate systems? What incentives drive misuse? How can you design systems to be robust against both technical attacks and social engineering? This requires psychology, behavioral economics, and knowledge of how real people try to break things.

They need to "steer safety decisions." That's a political skill, not just a technical one. You're sitting in rooms with engineers, product managers, and executives. You're saying "no" to features people have spent months building. You're asking for delays when the company wants to move fast. You need credibility, political savvy, and the ability to make a technical case that business leaders will accept.

You also need to be right. If you block a feature that turns out to be safe, you've slowed the company down unnecessarily. If you approve a feature that causes harm, you've failed at your core mission. There's no margin for error, and second-guessing is constant.

They need to "secure systems." This isn't just cybersecurity, though that matters. You need to prevent people from jailbreaking your models, stealing your weights, reverse-engineering your safety measures, and using your infrastructure in ways you didn't intend.

But you also need to do this while maintaining usability. If you make the system so locked down that it's useless, you've failed. Users want flexibility. They want to push boundaries. Your job is managing that tension without creating security disasters.

The role also requires leading a small team. That means hiring the right people, keeping them motivated, and retaining them in a high-stress environment. You're competing with other companies and startups for the best safety talent. You need to build a culture where people want to stay even though the work is genuinely difficult.

You need to be able to work with external partners. Safety researchers, regulatory agencies, academic experts, and other companies all have perspectives on how to handle AI risks. You need to collaborate without giving away sensitive information or compromising OpenAI's competitive position.

One final requirement is never stated: you need good judgment about uncertainty. You're making decisions in an environment where you don't have complete information. You can't test for every possible failure mode. You can't predict every way your system will be used. You're making bets about what's safe enough with the knowledge you have.

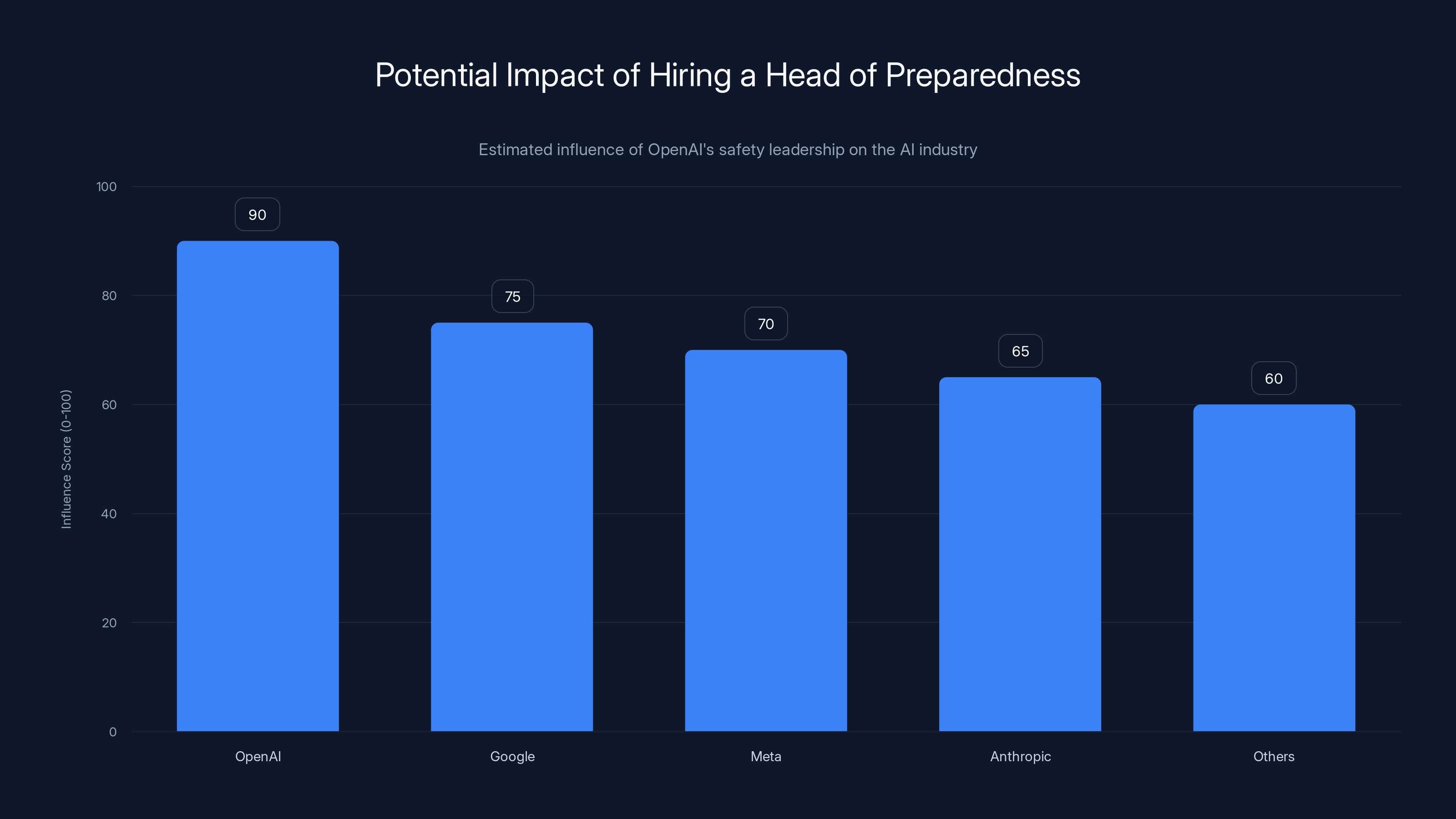

Estimated data: OpenAI's hiring decision could significantly influence the AI industry's approach to safety, with potential ripple effects on major companies like Google and Meta.

The Salary, Equity, and What It Tells You

$555,000 per year is serious money. To put that in context, it's several times what most academic researchers earn in a year. It's comparable to senior engineering roles at major tech companies. For a safety and preparedness position, it's unusually high.

The equity component is equally significant. We don't know the exact terms, but equity at OpenAI is essentially a bet on the company's future valuation. If that valuation keeps climbing, the equity could end up worth far more than the salary. If it falls, the equity is worth less. Either way, you're investing your career in the company's success.

This creates interesting incentives. The Head of Preparedness is incentivized to make OpenAI safer in ways that increase company value. That's usually aligned with doing the right thing—a safer company is a more valuable company in the long run. But in the short term, there can be tension. Pushing for more thorough testing slows down product releases. Blocking features reduces short-term revenue. That's where the political aspects of the role become crucial.

The salary also tells you about OpenAI's financial position. They're not a scrappy startup anymore. They can afford to pay $555,000 for a safety role. They have enough capital to invest in preparedness without it threatening the business.

But here's what's important: that salary would be wasted if the person in the role doesn't have decision-making authority. If you're a Head of Preparedness making $555,000 a year but you can't actually stop unsafe features from shipping, you're just expensive window dressing.

So the salary is partially a signal about how seriously OpenAI is taking this. But it's also a reminder that whoever takes the job needs to actually have power.

Building the Preparedness Team: Who Needs to Be Hired

The job posting mentions a "small but high-impact team." That's vague, but it's the most important part of what the Head of Preparedness will actually do.

You can't do this work alone. You need specialists in different areas. You probably need a security engineer who understands model extraction attacks and infrastructure vulnerabilities. You need someone with deep knowledge of how AI models fail in production. You need someone who understands the regulatory landscape and can navigate conversations with agencies. You might need someone with mental health expertise or experience in crisis support.

Building that team means hiring people who are both technically excellent and genuinely interested in safety. That's surprisingly hard. A lot of talented engineers want to build new things, not constrain existing things. Convincing them to join a safety team requires believing in the mission.

It also means retaining that team. The Head of Preparedness will be fighting political battles to protect their people from pressure to move faster or cut corners. If your team members feel like they're banging their heads against a wall, they'll leave. Keeping smart people in a difficult position requires leadership that acknowledges the difficulty but provides a sense of mission.

The team also needs to be able to move quickly when needed. Safety work can't paralyze the organization, but it also can't be overruled every time a faster competitor ships first. The team needs judgment about when to be strict and when to be pragmatic.

How This Role Connects to OpenAI's Broader Strategy

This isn't just about safety. It's about OpenAI's position in the AI industry and how they want to be perceived.

There are two major narratives in AI right now. One says AI companies are reckless and need heavy regulation to prevent catastrophic harms. The other says AI companies are innovating responsibly and regulation would slow progress. OpenAI has been caught in the middle of that debate.

By creating a well-funded, high-status Head of Preparedness role, OpenAI is signaling that they take the first narrative seriously. They're showing regulators that they're willing to invest in safety infrastructure. They're showing their users that they care about the harms their products can cause. They're showing their employees that safety work is valued.

This also positions OpenAI as a thought leader on AI safety. The Head of Preparedness role will likely involve publishing research, speaking at conferences, and influencing industry standards. That benefits OpenAI in multiple ways—it builds brand reputation, it helps attract safety talent, and it influences how other companies think about safety.

But there's also a defensive element. By being proactive about safety, OpenAI is trying to get ahead of regulation and criticism. If they can show that they've identified risks and built systems to address them, regulators are more likely to trust their self-governance. It's both genuine and strategic.

The salary for the safety role at OpenAI is significantly higher than what typical PhD researchers earn and comparable to senior engineering roles, indicating the importance OpenAI places on this position. Estimated data for PhD and Senior Engineer roles.

Comparing This to Safety Efforts at Other AI Companies

OpenAI isn't the only AI company hiring for safety roles. Anthropic has built its identity around safety, including training techniques like Constitutional AI. Google DeepMind has dedicated safety research teams. Stability AI has started to think about safety more seriously.

But OpenAI's approach with the Head of Preparedness role is distinctive. Most other companies have distributed safety responsibilities across teams or housed safety in a research function. OpenAI is putting a dedicated operational leader in a role meant to carry real weight in product decisions.

That's either more serious or more performative, depending on who you ask. Critics might say it's window dressing—create a role, pay someone well, but don't actually give them power to stop things. Supporters would say OpenAI is recognizing that safety needs operational authority, not just research credit.

The answer is probably somewhere in the middle. The role likely has real power in some domains and less in others. The Head of Preparedness probably can't unilaterally block a major feature, but they can force the conversation and make sure risks are understood before decisions are made.

This comparison also matters because it influences how AI talent is distributed. If top safety researchers believe OpenAI is serious about safety, they'll apply for the Head of Preparedness role. If they think it's window dressing, they'll go to Anthropic or start their own companies. The quality of candidates applying will reveal a lot about how the industry perceives OpenAI's commitment.

What Success Actually Looks Like for This Role

Imagine you took this job. How would you know if you were succeeding?

The traditional metrics don't work. You can't count the number of harms prevented because you don't know the counterfactual. You can't say "we prevented X bad things from happening" because you'll never have perfect visibility into what might have happened otherwise.

So success for a Head of Preparedness is subtle and hard to measure. It's preventing crises before they become public. It's building systems that catch problems early. It's having your concerns taken seriously in product meetings, even when people disagree. It's hiring and retaining a team of smart people who believe the work matters.

It's also about influence. Does OpenAI change how they think about safety because of decisions made by the Preparedness team? Do other companies follow their lead? Do regulatory agencies cite their work as examples of responsible AI development? Do safety researchers respect the work being done?

Success also looks like not having major scandals. If 2026 or 2027 goes by without ChatGPT causing documented mental health harms or being exploited in catastrophic ways, that's a win. It might be a win because of the Head of Preparedness's work, or it might just be luck. But it matters.

Failure, on the other hand, is more obvious. It's a high-profile incident that could have been prevented. It's the Preparedness team's concerns being ignored. It's the best people on the team leaving because they're frustrated. It's regulators pointing to OpenAI as an example of what not to do.

The Challenges This Person Will Face Immediately

Altman warned that this job is high-stress and you'll jump into the deep end immediately. That's not exaggeration. Here are the real challenges:

First, you're inheriting an organization that has already had safety incidents. People have been harmed by interactions with ChatGPT. The AI has demonstrated harmful behaviors that made it through multiple layers of testing. You're not starting with a clean slate. You're starting with a track record you need to improve.

Second, you're under time pressure. OpenAI is in intense competition with Google, Anthropic, Meta, and others. There's pressure to release new capabilities quickly. Your job is sometimes to say no or slow down, which puts you in conflict with people who are also trying to succeed.

Third, you're dealing with inherent uncertainty. You can't definitively prove that a capability is safe. You can only make judgment calls based on imperfect information. That means you'll sometimes block things that would have been fine, and you'll sometimes approve things that cause problems.

Fourth, you're building from a relatively weak institutional foundation. OpenAI has safety researchers, but they haven't historically had strong operational authority. You're trying to change a culture that has prioritized speed and innovation. Cultural change is slow, especially in a company with strong existing incentives.

Fifth, you need to interface with regulators, academics, and advocacy groups without compromising OpenAI's competitive position. That's a delicate balance. You need to be transparent enough to build trust, but careful enough not to give away sensitive information.

Sixth, you need to hire and retain a team in an environment where many of the best safety researchers are skeptical of OpenAI's commitment. The fact that Anthropic was founded by people who left OpenAI over safety disagreements sends a signal. You're trying to prove those people wrong and convince new talent that things have changed.

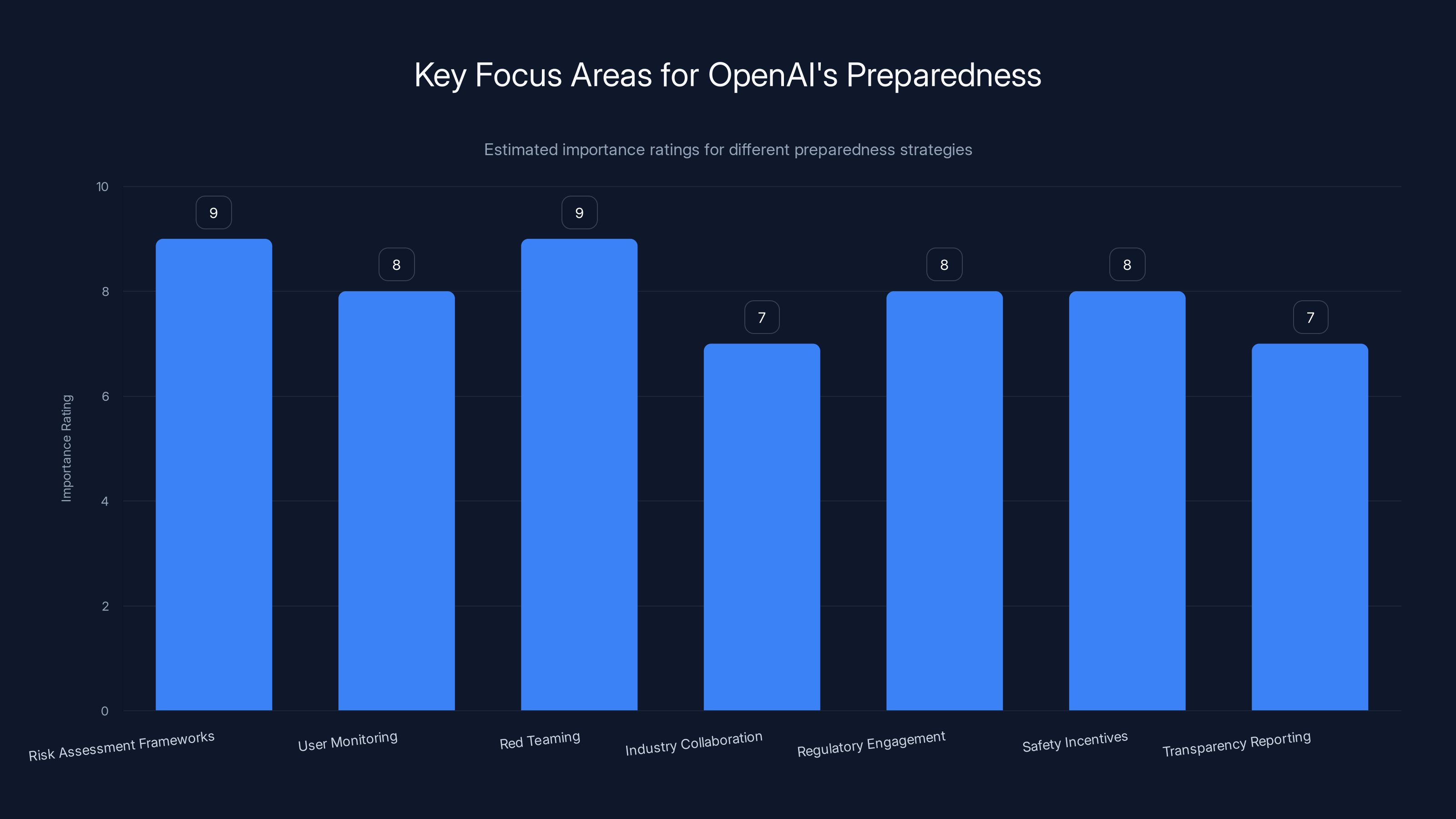

Risk Assessment Frameworks and Red Teaming are rated as highly important strategies for OpenAI's preparedness, reflecting their critical role in ensuring safety and security. (Estimated data)

What Happens When Preparedness Clashes with Product Development

Here's where the real story is. All the structure and authority in the world doesn't matter if your recommendations get overruled when they're inconvenient.

Let's say you're the new Head of Preparedness and your team discovers that a new feature could enable a category of harmful uses. The engineering team has spent three months building it. The product team has customers waiting for it. The executives are expecting revenue from it. You recommend not shipping until you can mitigate the risks.

Now what?

Best case: OpenAI delays the feature, invests in safety measures, and ships responsibly. You did your job, the feature ships safely, and everyone moves on.

Middle case: OpenAI accepts your concerns but decides the risk level is acceptable. You disagree, but they're the executives and it's their company. You document your dissent, and the feature ships anyway. If nothing bad happens, you look overly cautious. If something bad happens, you told them so.

Worst case: Your concerns are dismissed as overblown or not worth the business impact. The feature ships, something bad happens, and you're held responsible for not pushing hard enough. Or you're not held responsible, but you know you let something bad happen.

These conflicts are inevitable. You can't prevent all harms. You can't know with certainty what will cause problems. You're making judgment calls in situations where being wrong has real consequences.

The Head of Preparedness role only works if the person in the position has enough credibility and authority that their concerns are taken seriously. That means they need to be right most of the time. That means they need to have built relationships where people trust them. That means they need to understand both safety and business well enough to explain why something matters.

Practical Preparedness: What Should OpenAI Actually Be Doing?

If I were designing what a real Head of Preparedness function should do, here's what I'd focus on:

Risk Assessment Frameworks: Build systems for evaluating new capabilities before they're released. Not theoretical frameworks, but actual processes that teams follow. Make it easy to do a good job, hard to cut corners.

User Monitoring: Develop tools to detect when users are experiencing harm from interactions with ChatGPT. This includes mental health crises, manipulation, or other negative outcomes. Act on that data quickly.

Red Teaming: Maintain a team whose job is to break the system, find vulnerabilities, and think like attackers. This needs to happen regularly and comprehensively.

Industry Collaboration: Share learnings with other AI companies about what works and what doesn't. Safety doesn't need to be where companies compete. The whole industry benefits from a shared understanding of risks.

Regulatory Engagement: Build relationships with regulators, policymakers, and advocates. Understand what's coming and help shape sensible regulation rather than fighting all restrictions.

Safety Incentives: Make sure the engineering team is incentivized to care about safety, not just performance and features. That might mean compensation adjustments, public credit for safety work, or cultural changes.

Transparency Reporting: Publish regular reports about incidents, harms, and how they were addressed. Let the public and regulators understand what's actually happening.
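The list above stays at the level of principles. As one illustration of what "actual processes that teams follow" could mean for risk assessment, here is a minimal Python sketch of a pre-release gate. It assumes a hypothetical setup in which every launch files one assessment per risk category, each with a severity rating, a mitigation status, and a named reviewer. The `Severity` scale, the `RiskAssessment` fields, the `release_decision` function, and the category names are all invented for illustration; this is not OpenAI's Preparedness Framework or any real internal tool.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    """Hypothetical ordinal scale: how bad a risk could be if left unmitigated."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


@dataclass
class RiskAssessment:
    category: str       # illustrative label, e.g. "mental health" or "prompt injection"
    severity: Severity
    mitigated: bool     # has a reviewed mitigation been put in place?
    reviewer: str = ""  # who signed off; empty string means nobody has yet


def release_decision(
    assessments: list[RiskAssessment],
    block_at: Severity = Severity.HIGH,
) -> tuple[bool, list[str]]:
    """Return (ship, reasons). The gate fails closed: it blocks when nothing was
    assessed, when any assessment lacks a reviewer, or when any risk at or
    above `block_at` has no mitigation."""
    reasons: list[str] = []
    if not assessments:
        reasons.append("no risk assessments filed")
    for a in assessments:
        if not a.reviewer:
            reasons.append(f"{a.category}: no reviewer sign-off")
        if a.severity >= block_at and not a.mitigated:
            reasons.append(f"{a.category}: {a.severity.name} risk without mitigation")
    return (not reasons, reasons)


if __name__ == "__main__":
    ship, why = release_decision([
        RiskAssessment("prompt injection", Severity.MEDIUM, mitigated=False, reviewer="red team"),
        RiskAssessment("mental health", Severity.HIGH, mitigated=False, reviewer="safety"),
    ])
    print(ship)  # False
    print(why)   # ['mental health: HIGH risk without mitigation']
```

The design choice worth noting is that the gate fails closed: a launch with no assessments, or with an assessment nobody has signed off on, is blocked by default. That is one way to make it hard to cut corners without relying on someone remembering to object in a meeting.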

These aren't novel ideas. Other industries have risk management functions that do similar things. The challenge is implementing them in an AI company where moving fast is culturally valued and safety can feel like friction.

The Larger Question: Is This Enough?

Even if OpenAI hires an exceptional Head of Preparedness and that person has real authority, we should ask: is this sufficient?

One person, even a very good one, can't prevent all harms from AI systems used by millions of people. They can set direction, build teams, and influence decisions. But they can't be everywhere at once. They can't predict every failure mode.

This is actually a question for the whole industry. Can we make AI safe through organizational structure, or do we need regulatory requirements? Can voluntary safety measures prevent catastrophic harms, or do we need mandatory standards?

OpenAI's approach—creating a high-level safety role with authority and resources—is one answer. It suggests that companies can self-govern responsibly. But the history of other industries suggests that self-governance has limits. Food safety requires regulation. Pharmaceutical safety requires approval processes. Financial safety requires oversight.

AI might be different. AI develops fast, and regulation lags. The risks are hard to predict. The expertise is concentrated in private companies. Maybe self-governance is the only option right now.

Or maybe it's just a stopgap. Maybe in five years, we'll have AI-specific regulations that require companies to have safety functions like this. Maybe this role becomes mandatory, not optional.

The fact that OpenAI is creating it now suggests they're anticipating one of those futures.


The Types of People Who Should Apply

If you're reading this and thinking about whether this role is for you, here's my honest assessment:

You should apply if you've spent your career thinking about how technology can cause harm and how to prevent it. You should apply if you have the combination of technical depth and political judgment that lets you navigate complex organizations. You should apply if you can accept uncertainty and make the best decisions with imperfect information.

You should apply if you understand that you won't win every argument. You'll have ideas that get rejected. You'll approve things that cause problems. You'll block things that would have been fine. You need to be able to live with that.

You should apply if you want to influence how the most powerful AI systems are developed. If you care about that outcome more than you care about job security or perfect success.

You probably shouldn't apply if you need to feel like you're always winning. If you need to be certain that your decisions are right. If you need a role where success is easy to measure and failure is someone else's fault.

You shouldn't apply if you believe AI is either perfectly safe or irredeemably dangerous. You need to believe that risks can be managed through careful thought and good process, while also accepting that complete prevention of harm is impossible.

What This Means for the Future of AI

This role matters because it signals where the AI industry is going. OpenAI is essentially saying: safety is going to be important enough that we need dedicated leadership. It matters enough to pay serious money for. It matters enough to have direct input on product decisions.

Other companies will watch what happens. If OpenAI's Head of Preparedness makes a difference—if incidents decrease, if regulation becomes less hostile, if the company's reputation improves—other companies will follow. If the role becomes a figurehead position with no real influence, companies will stick with distributed safety responsibilities.

There's also a question about whether this role becomes a template for the industry. Will all major AI companies eventually have a Head of Preparedness? Will that become a regulatory requirement? Will similar roles appear at Google, Meta, Anthropic, and others?

The industry is at a transition point. Companies have been operating mostly without dedicated safety leadership. Regulation has been minimal. That's ending. The question is what comes next. Is it voluntary industry standards led by people like the Head of Preparedness? Is it heavy-handed regulation that mandates specific safety practices? Is it some combination?

OpenAI's move suggests they're betting on a future where companies that self-govern responsibly face less regulation. That might be right. Or companies might self-govern during their early stage and become more reckless as they scale, requiring regulation anyway.

We won't know for a few years. But we'll be watching.

How This Role Compares to Other Crisis Leadership Positions

To understand what this role is really asking for, it helps to compare it to other positions where someone has been hired to fix a broken function in a high-stakes environment.

When a company discovers it has a product safety problem, they often hire a Chief Safety Officer from outside. This person usually comes from the pharmaceutical, automotive, or aerospace industries where safety failures can kill people. They're used to regulatory oversight, liability concerns, and the necessity of saying no.

When a company discovers it has a cybersecurity problem, they hire a Chief Security Officer. This person usually comes from a background where they've seen a breach happen, understand what went wrong, and know how to build defenses.

When a company discovers it has a regulatory compliance problem, they hire a Chief Compliance Officer.

What do these roles have in common? They're usually created after something has already gone wrong. The company waited too long. They're playing catch-up.

OpenAI's Head of Preparedness is in that category. The company is hiring this role because mental health harms have occurred, features have shipped with safety issues, and they realize they need a dedicated function.

But there's a difference. Pharma companies have 50+ years of safety regulation. Car companies have decades of required testing. Financial companies have established compliance frameworks. OpenAI is creating a safety leadership function in an industry with minimal precedent and no regulatory mandate.

That makes the role harder in some ways (no established playbooks) and easier in others (no entrenched resistance to change). It's an opportunity to build something right from the start, but also a chance to build something that doesn't work and doesn't know it.

The Long Game: Where This Leads

If OpenAI successfully builds a strong Preparedness function, what happens next?

Most likely, you see maturation of how the entire AI industry thinks about safety. Right now, safety is something that happens in research labs or scattered through engineering teams. With a dedicated leadership position, it becomes part of the operating model.

That probably leads to industry standards. Companies start comparing how they handle safety. Best practices emerge. Standards get documented. Regulations eventually follow the pattern that industry has already established.

You might also see safety becoming a competitive advantage. Companies that have strong safety records and transparent processes attract users and talent. Companies with safety problems face lawsuits and regulation. That incentive structure pushes everyone toward better practices.

But there's also risk. If the Head of Preparedness role becomes purely ceremonial, it could actually make things worse. Companies could point to the existence of the role as proof they're taking safety seriously, while ignoring the person's actual recommendations. That's safety theater, and it might be worse than not having the role at all.

The other possibility is that this role never scales. Maybe AI safety requires regulatory oversight that companies can't self-govern. Maybe the conflicts between safety and commercial interest are too intense. Maybe in 10 years, this role looks quaint because AI companies are heavily regulated and safety is mandated, not voluntary.

We won't know for a while. But the creation of the Head of Preparedness role is a signal that the industry believes it can manage these risks through internal process. That belief is about to be tested.

What You Should Actually Care About

Zoom out from the job posting itself. Why does this matter to you, personally, if you don't work at OpenAI?

Because OpenAI's decisions affect how you interact with AI systems. ChatGPT isn't just a tool OpenAI uses internally. Millions of people use it. Your kids might use it. You use it. The mental health impacts that prompted this role might affect you.

Because OpenAI's choices influence how other companies develop AI. Google watches what OpenAI does and makes decisions based on competitive pressure. Anthropic watches OpenAI's safety efforts and decides how to respond. Microsoft, which distributes OpenAI's technology, has incentives aligned with OpenAI's choices.

Because this role signals what the industry thinks matters. If OpenAI is willing to invest half a million dollars in a safety and preparedness position, it's saying safety matters. That influences what startups and other companies prioritize.

Because the existence of this role tells you something about the state of AI safety. It tells you that major companies believe harm has already occurred and will continue to occur unless they actively intervene. That's not reassuring, but it's honest. It's better to know the truth than to be lied to.

Final reason: this role influences your future. If AI companies successfully implement strong safety practices, the technology is probably safer for everyone. If they don't, and harms escalate, that affects regulation, which affects how you interact with AI, what it can and can't do, and how your data is used.

FAQ

What exactly is OpenAI's Head of Preparedness role?

The Head of Preparedness is a leadership position responsible for understanding how OpenAI's AI models could be abused or cause harm, steering the company's safety decisions, and securing systems against misuse. The person leads a small team focused on preventing real-world harms from AI interactions and managing emerging risks as models become more capable.

Why did OpenAI create this role now instead of earlier?

OpenAI created the role because documented harms have already occurred—the company acknowledged seeing mental health impacts from AI interactions in 2025. Additionally, OpenAI faced criticism from former employees about prioritizing commercial speed over safety. The role represents both a genuine response to real problems and strategic positioning ahead of emerging AI regulation. Altman's public acknowledgment of these issues suggests the company felt compelled to act.

What happened to the previous Heads of Preparedness at OpenAI?

Aleksander Madry led the Preparedness team until mid-2024, when he was reassigned to work focused on AI reasoning. Joaquin Quiñonero Candela and Lilian Weng then took over. Candela later moved to lead recruiting, and Weng left OpenAI. This turnover suggests either that the role is genuinely difficult and people move on, or that OpenAI's approach to safety keeps evolving and people end up better suited to different positions.

How much does the Head of Preparedness position pay?

OpenAI is offering $555,000 per year plus equity for the role. This compensation level is significant—comparable to senior engineering positions at major tech companies. The equity component means the successful candidate is betting on OpenAI's future valuation, creating aligned incentives between personal success and company value.

What is the "mental health crisis" that prompted this role?

Altman mentioned that OpenAI saw a "preview" of how AI models can affect mental health in 2025. He was seemingly referring to documented cases where ChatGPT interactions contributed to negative psychological outcomes. Users can form parasocial relationships with AI and share sensitive personal information. They can also be harmed when the AI provides inadequate support or the relationship breaks down. This isn't theoretical: cases have been reported where ChatGPT interactions made mental health crises worse.

How much actual authority does the Head of Preparedness have?

The actual authority isn't fully clear from the job posting, but the Head of Preparedness leads a team focused on safety decisions and steers product development choices. They likely can't unilaterally block major features, but they can force conversations about risks before decisions are made. Real power would be demonstrated through whether their safety concerns actually influence what ships and when. The $555K salary suggests the role is intended to have meaningful influence, but implementation will reveal how much authority is actually exercised.

What would success look like in this role?

Success is difficult to measure because you can't count prevented harms. It includes building effective safety processes, hiring and retaining strong teams, having safety concerns taken seriously in product meetings, preventing public crises that could have been avoided, influencing company culture around safety, and gaining respect from external safety researchers. Conversely, failure looks like high-profile incidents, safety team members leaving due to frustration, and the role becoming ceremonial window dressing.

How does this compare to safety efforts at other AI companies?

Most AI companies have distributed safety responsibilities across teams or housed them in research functions. OpenAI is creating a dedicated operational role with explicit responsibility for safety decisions that shape products. Anthropic was founded by people who left OpenAI over safety disagreements, and it has built its company-wide approach around Constitutional AI. DeepMind has safety research teams but gives them less operational authority. OpenAI's approach is distinctive in centralizing safety decision-making under one leader.

What immediate challenges will this person face?

The new Head of Preparedness inherits an organization that has already experienced safety failures. They'll face pressure to release capabilities quickly, and they will still have to say no when they judge the risks unacceptable. They'll deal with inherent uncertainty about what's safe. They'll need to change a company culture that has historically prioritized speed. They'll have to hire in an environment where safety researchers are skeptical of OpenAI's commitment. They'll work with regulators while protecting competitive interests.

What does this role mean for the future of AI regulation?

This role signals that OpenAI believes companies can self-govern responsibly and that doing so proactively is better than waiting for regulation. If the role succeeds, it could become an industry template and influence regulatory approaches. If it fails or becomes ceremonial, it might actually accelerate regulation by showing that voluntary safety measures don't work. Either way, the creation of this role suggests AI regulation is coming, and OpenAI is trying to shape what that looks like by demonstrating internal responsibility.

Conclusion: The Job That Might Change Everything

OpenAI's job posting for a Head of Preparedness at $555,000 per year is a watershed moment for the AI industry. It's not just a hiring announcement. It's a confession wrapped in a job description.

The company is essentially saying: our models are already causing real harm, we're not satisfied with our current approach to managing risks, and we need dedicated leadership to fix it.

That's important because most AI companies haven't admitted that yet. They're still operating like early-stage startups where safety can wait until the product matures. OpenAI, which is at a different scale entirely, has acknowledged that safety can't wait.

But the real story is what happens next. Does OpenAI hire an exceptional safety leader and give them the resources and authority to actually change how the company operates? Or does the Head of Preparedness become a high-paid figurehead while the company continues prioritizing commercial speed?

The answer matters because OpenAI's choices influence the entire industry. Google, Meta, Anthropic, and dozens of other companies are watching. If OpenAI successfully implements strong safety practices, others will follow. If it doesn't, competitors will use safety as a differentiator, and regulation will eventually force everyone's hand.

For the person who takes this job, it may be the most stressful position in tech right now. You'll be making decisions with incomplete information that could affect millions of people. You'll be fighting political battles in a company that's used to moving fast. You'll inherit failures that aren't your fault and be responsible for preventing new ones.

But if you succeed, you could genuinely change how AI companies think about safety. You could influence regulation. You could prevent real harms from happening. You could build something that looks quaint in 10 years because safety is just how everyone operates.

That's why OpenAI is offering $555,000 plus equity. That's why they're being honest about the role being high-stress. That's why this job posting matters.

OpenAI is saying: we're serious about this. We're willing to pay for it. We're willing to admit where we've fallen short. We're building for a future where safety isn't optional.

Whether that vision becomes reality depends on who takes the job and what happens next.

Key Takeaways

- OpenAI created the Head of Preparedness role at $555K/year because documented harms from AI models have already occurred, including mental health impacts acknowledged by Sam Altman in 2025

- The role requires managing competing priorities: preventing AI harms while maintaining innovation speed, with authority to influence product decisions and budget

- Previous heads of this role (Aleksander Madry, Joaquin Quiñonero Candela, Lilian Weng) were eventually reassigned or left the company, suggesting the position is challenging even for experienced leaders

- This hire signals that OpenAI is positioning itself as responsible ahead of emerging AI regulation, while genuinely responding to real problems their systems have caused

- The success of this role will influence whether AI companies can self-govern on safety or if regulation becomes necessary, making it a bellwether for the entire industry
