Satya Nadella's AI Scratchpad: Why 2026 Changes Everything

When Microsoft's CEO decides to start blogging, you pay attention. Not because every CEO blog deserves your time, but because Satya Nadella doesn't do this lightly. The guy has been running one of the world's most complex software companies. If he's carving out time to write about AI on his new "sn scratchpad" blog, something's shifted in how he thinks about the industry.

The first post landed in late 2025, and it's refreshingly candid. Nadella basically says the entire AI industry is arguing about the wrong things. Everyone's fighting over "AI slop versus sophistication," but that's missing the point entirely. He wants to move past the anxiety about AI-generated mediocrity and focus on something bigger: how AI becomes the cognitive amplifier that fundamentally changes how humans work, create, and think.

This isn't just tech CEO posturing. It's a signal that Microsoft has come to terms with a hard truth. The AI race isn't about making better models anymore. It's about building systems that actually work in the real world, systems that humans will actually use, and systems that matter morally and environmentally. And that's a very different game.

Let's break down what Nadella's actually saying, why he's saying it now, and what it means for everyone betting their business on AI in 2026.

TL;DR

- Nadella's main argument: The AI industry needs to stop debating "slop vs sophistication" and focus on practical cognitive amplifier tools

- The timing matters: With new Microsoft leadership in place, Nadella can focus on longer-term AI vision and strategic messaging

- Microsoft's actual bet: AI agents (not better models) that integrate into Copilot, Teams, and Office, replacing traditional software interfaces

- The reality gap: Current Copilot promises don't match what actually works, but Nadella sees 2026 as the year this changes

- Bottom line: 2026 is make-or-break for AI agents—the difference between hype and something enterprises actually depend on

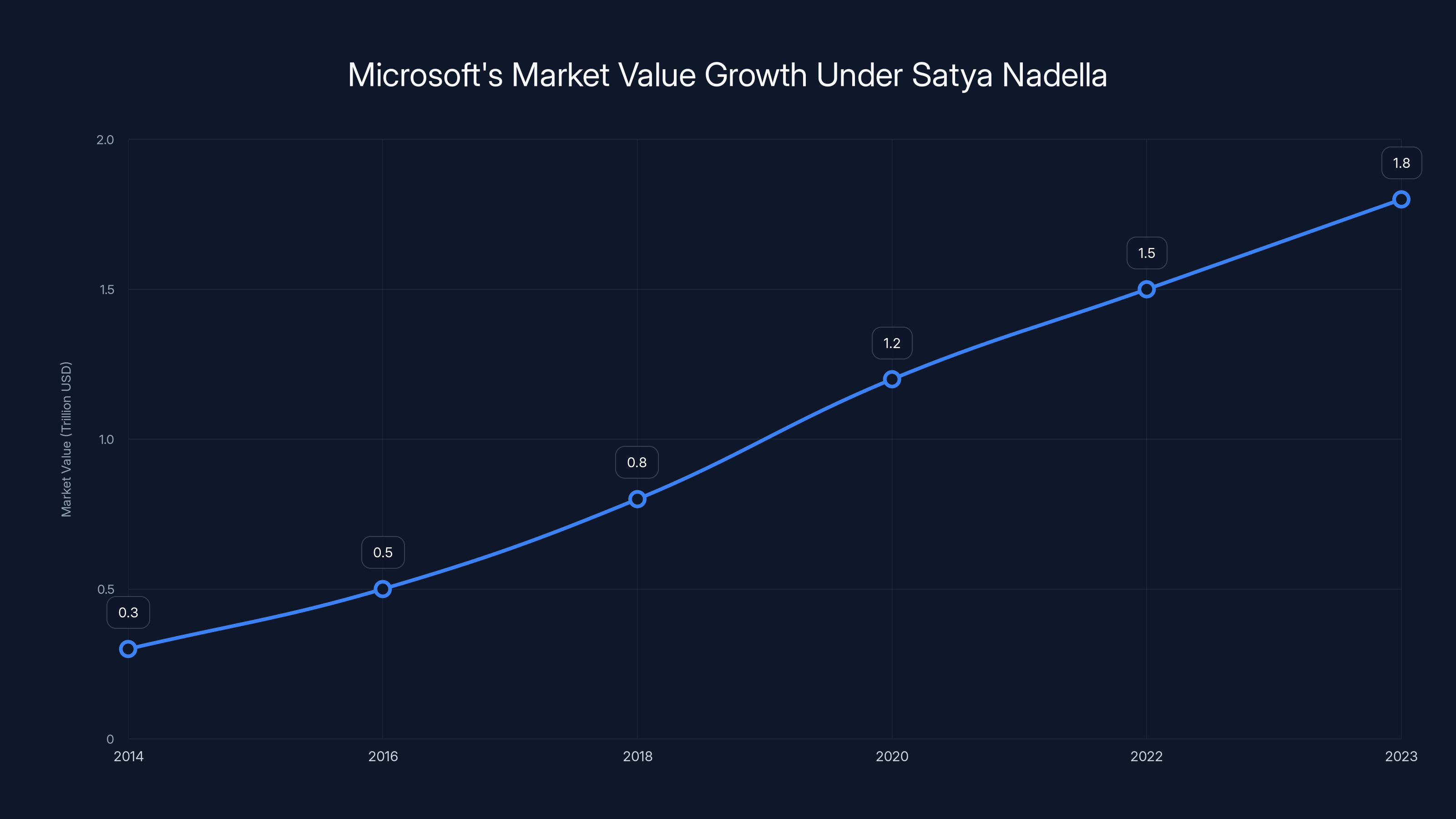

Under Satya Nadella's leadership, Microsoft's market value increased by over $1.5 trillion from 2014 to 2023, showcasing a significant transformation into a cloud-first, AI-forward company. (Estimated data)

The CEO Who Got Quiet, Then Loud Again

It's worth remembering where Nadella was six months ago. Copilot was supposed to be everywhere by now. Enterprises were supposed to be restructuring entire workflows around AI. The average knowledge worker was supposed to be spending their day talking to intelligent agents instead of clicking through menus.

None of that happened.

Instead, we got a year of fractured promises. Copilot features shipped half-baked. Integration with Microsoft 365 was clunky. AI model wars between OpenAI, Anthropic, and Google dominated headlines instead of practical advances. And creatives got legitimately angry about seeing their art styles cloned by AI models.

Then something happened. Microsoft's board made internal changes. New leadership took over specific business units, and suddenly Nadella had breathing room. He wasn't tied up in day-to-day firefighting anymore.

That's when he started blogging.

The blog isn't a vanity project. It's a reset. Nadella's essentially saying: "Stop looking at the noise. Here's what actually matters."

A significant gap exists between the 60% of executives concerned about AI ethics and the 25% with governance frameworks. Addressing this gap is crucial to avoid future legal and regulatory challenges.

The "Slop vs Sophistication" Problem He's Trying to Solve

Let's talk about what "AI slop" really means, because Nadella's dismissing it as the wrong debate.

AI slop is real. It's the mediocre marketing copy generated by ChatGPT. It's the middling stock photos generated by Midjourney. It's the bland LinkedIn posts that everyone now associates with "somebody let their AI write this." It's the subtle awkwardness in tone that screams "machine-generated."

People got mad about AI slop for good reasons. Creatives saw their livelihoods threatened by tools that could copy their styles. Writers watched their craft get reduced to statistical averaging. Musicians found their voices cloned without permission. The anxiety was justified.

But here's what Nadella's actually saying: that debate is missing the bigger picture.

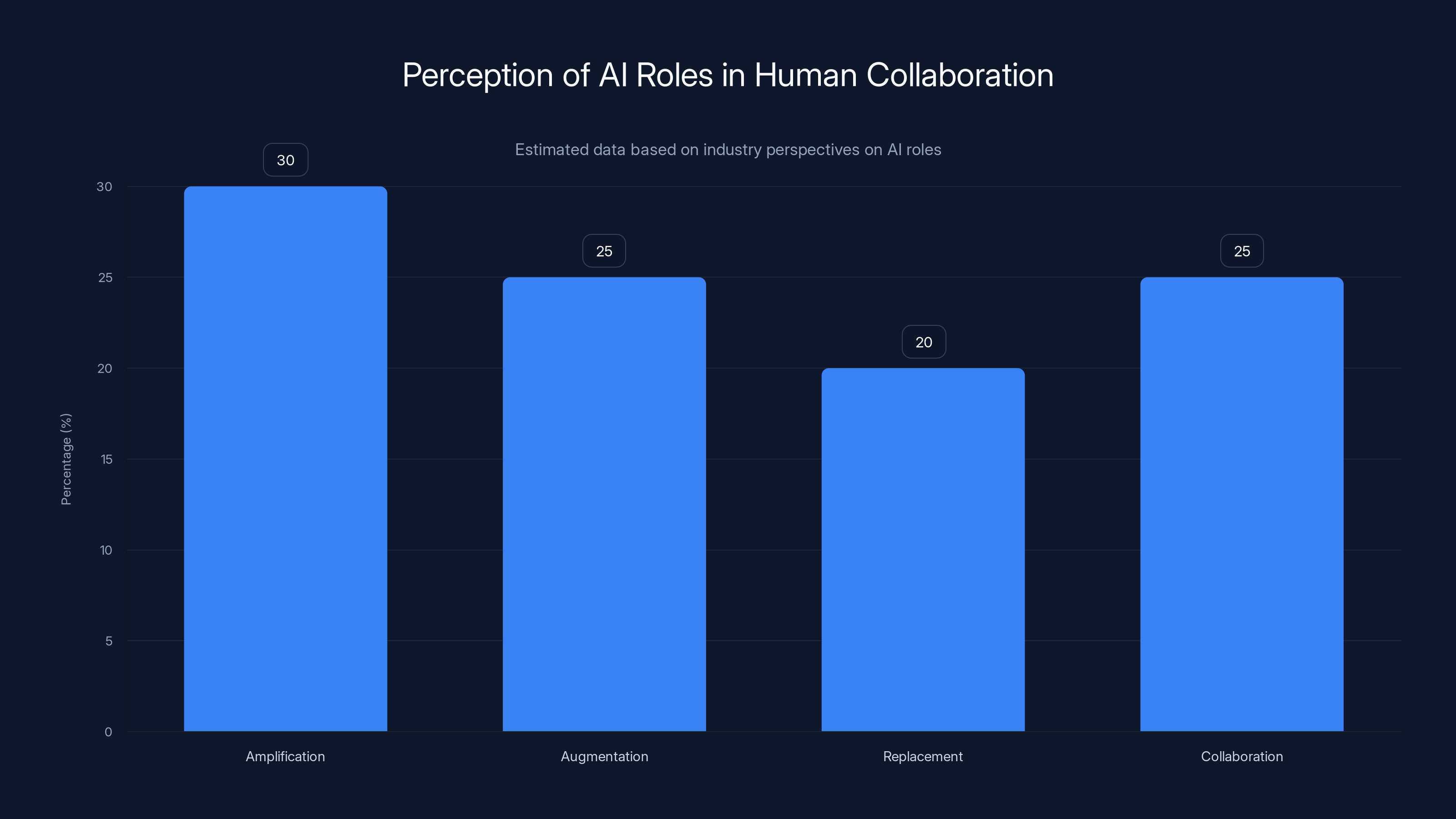

He argues the real issue isn't whether AI output is polished or mediocre. It's whether AI becomes a tool that amplifies human cognition or replaces it. The difference is philosophical, but it's everything.

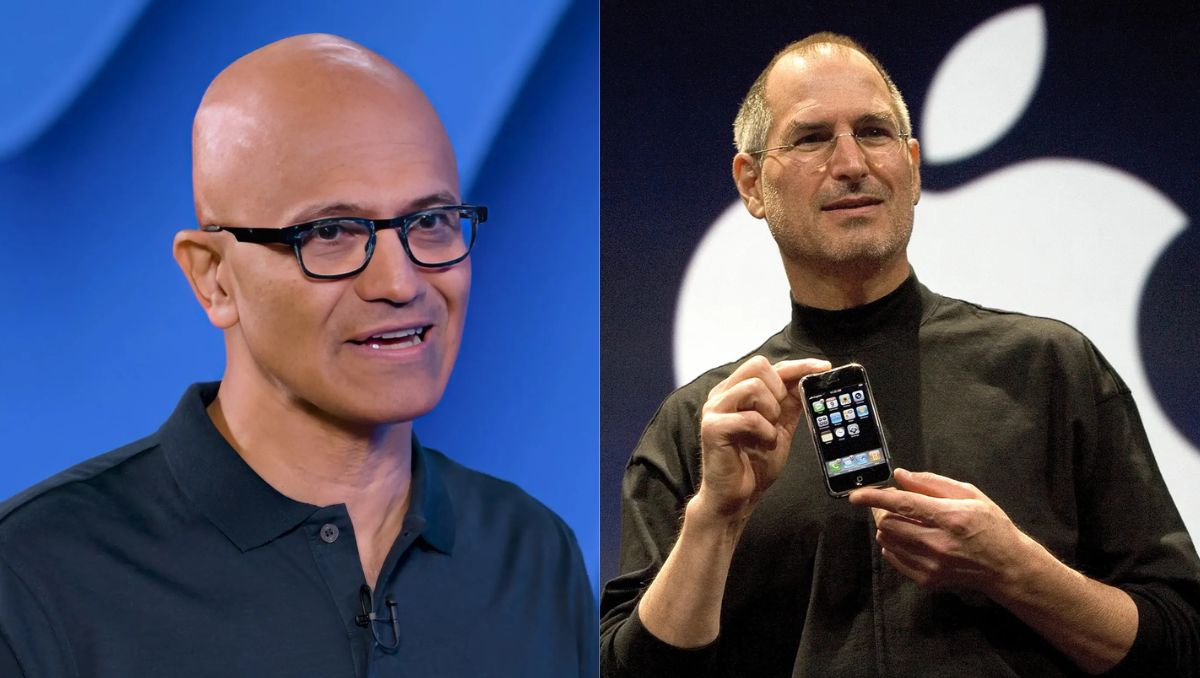

Think about how Steve Jobs described computers in the 1980s: "bicycles for the mind." A bicycle doesn't replace the rider's effort; it amplifies it. You can go farther, faster, with less effort. But you still have to steer. You still have to pedal. The bicycle makes you more capable.

That's what Nadella wants AI to be. Not replacement. Amplification.

The problem is, most AI right now is being built and sold as replacement. "Let AI write your emails. Let AI code your app. Let AI design your graphics." That's the value proposition everyone's pushing. And yeah, some of that output is slop. But more importantly, it treats the human as optional.

Nadella's saying Microsoft doesn't want to build optional humans. It wants to build tools that make humans more powerful.

Why The Industry Got The Theory of Mind Wrong

Here's the part of Nadella's blog post that's actually radical: he talks about needing a new "theory of the mind" for the AI era.

That phrase is doing a lot of work. Let's unpack it.

For decades, we've thought about computers in one way: they're tools. You open them. You input commands. You get output. The human is always the agent. The computer is always the passive instrument. This works great for spreadsheets, word processors, and email.

But AI agents don't work that way. They're supposed to reason independently, make decisions, and take actions without being explicitly told. They're supposed to develop a kind of "mind," even if it's not conscious.

So the question becomes: if humans are equipped with cognitive amplifier tools that have their own kind of intelligence, how do we think about the relationship? Are we collaborating with the tool, or are we delegating to it? Are we amplified, or are we augmented away?

That's not a technical question. That's a philosophical one. And Nadella's saying the industry doesn't have consensus on it yet.

Microsoft certainly doesn't. Right now, Copilot is positioned as both: sometimes a tool that amplifies you, sometimes a replacement that takes tasks off your plate. When it works, users love it. When it doesn't work (which is often), users feel bamboozled.

The theory of mind problem explains why. Everyone's building AI agents without agreement on what role they should play in human life. Should a Copilot agent in your calendar write your meeting summaries, or should it just suggest summaries for you to refine? Should it take meetings on your behalf, or should it prep you before meetings you take?

These sound like small differences. They're not. They define whether users feel empowered or replaced.

Nadella's blog post is basically saying: Microsoft needs to figure this out, the industry needs to figure this out, and we need to do it before shipping another generation of half-baked AI products.

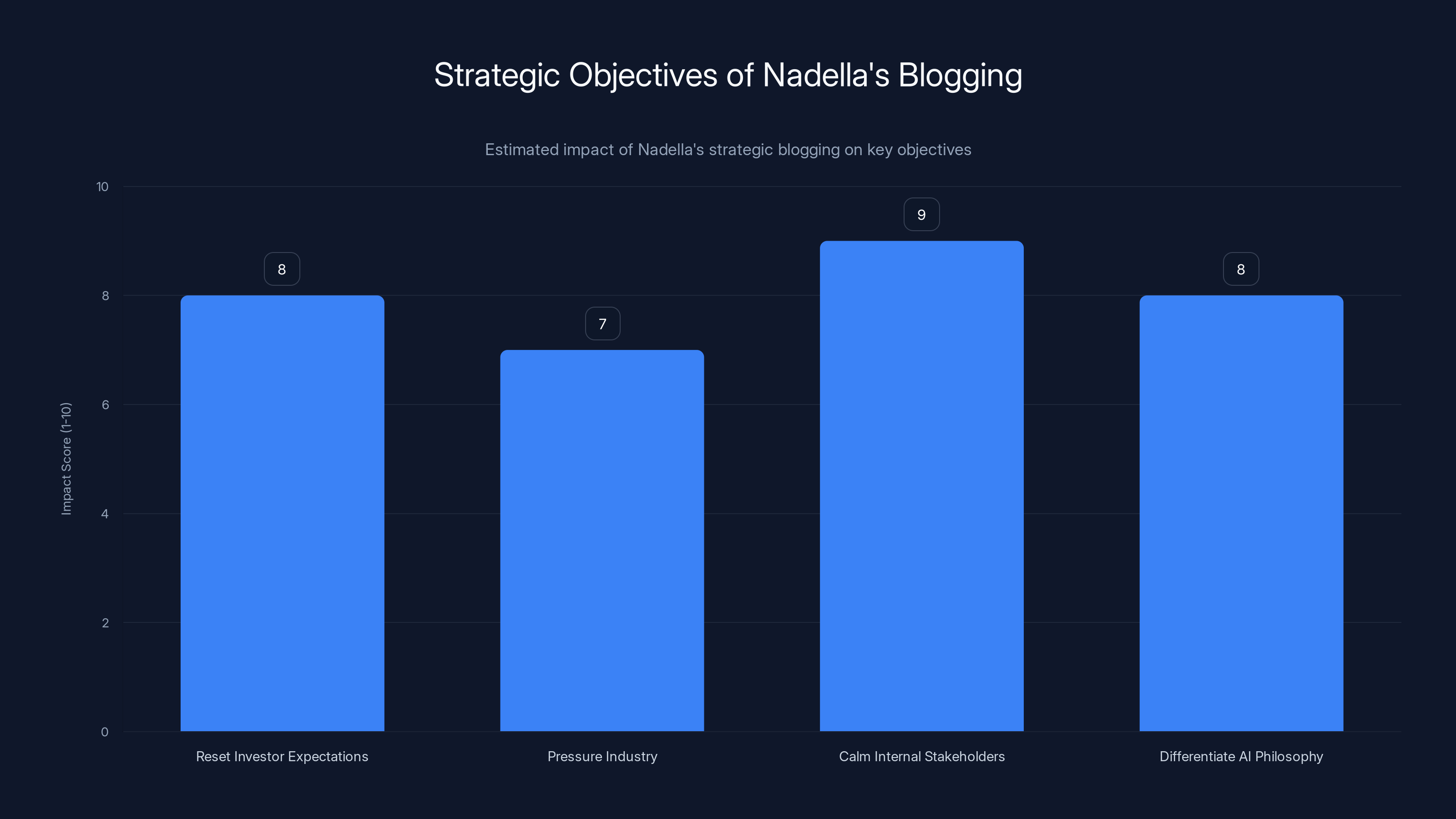

Nadella's blog aims to strategically reset expectations, pressure the industry, calm stakeholders, and differentiate Microsoft's AI approach. Estimated data.

The Real Microsoft Bet: AI Agents, Not Better Models

There's a joke in Silicon Valley right now: "Everyone's racing to build the best LLM, but nobody's building the best agent."

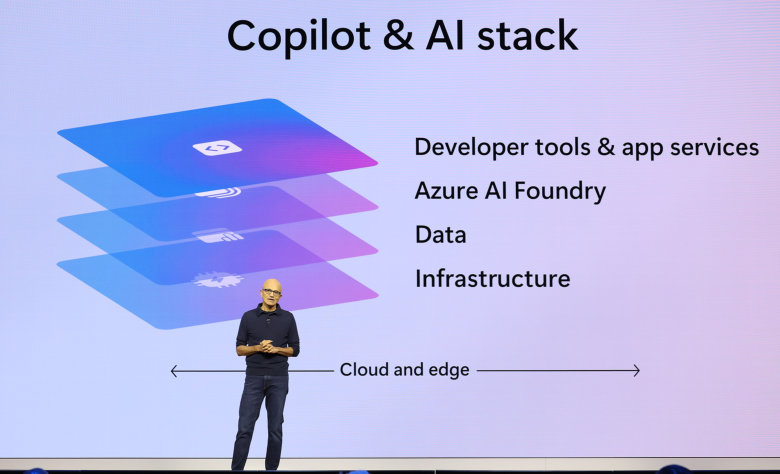

Nadella's trying to invert that. He's not saying Microsoft is giving up on models. It's not. But he's saying the model wars aren't the bottleneck anymore.

The bottleneck is agents.

Think about it practically. An LLM like GPT-4 or Claude can answer questions, draft emails, explain concepts. But it can't do much in your actual work environment without a human running it through a web interface.

An AI agent is different. It's a system that lives in your tools (Outlook, Teams, Excel), understands your context, watches what you're doing, and takes actions. It's proactive, not reactive. It's integrated, not external.

Microsoft's betting that this is what enterprises actually need. Not a better chatbot. A smarter coworker that lives in the tools everyone already uses.

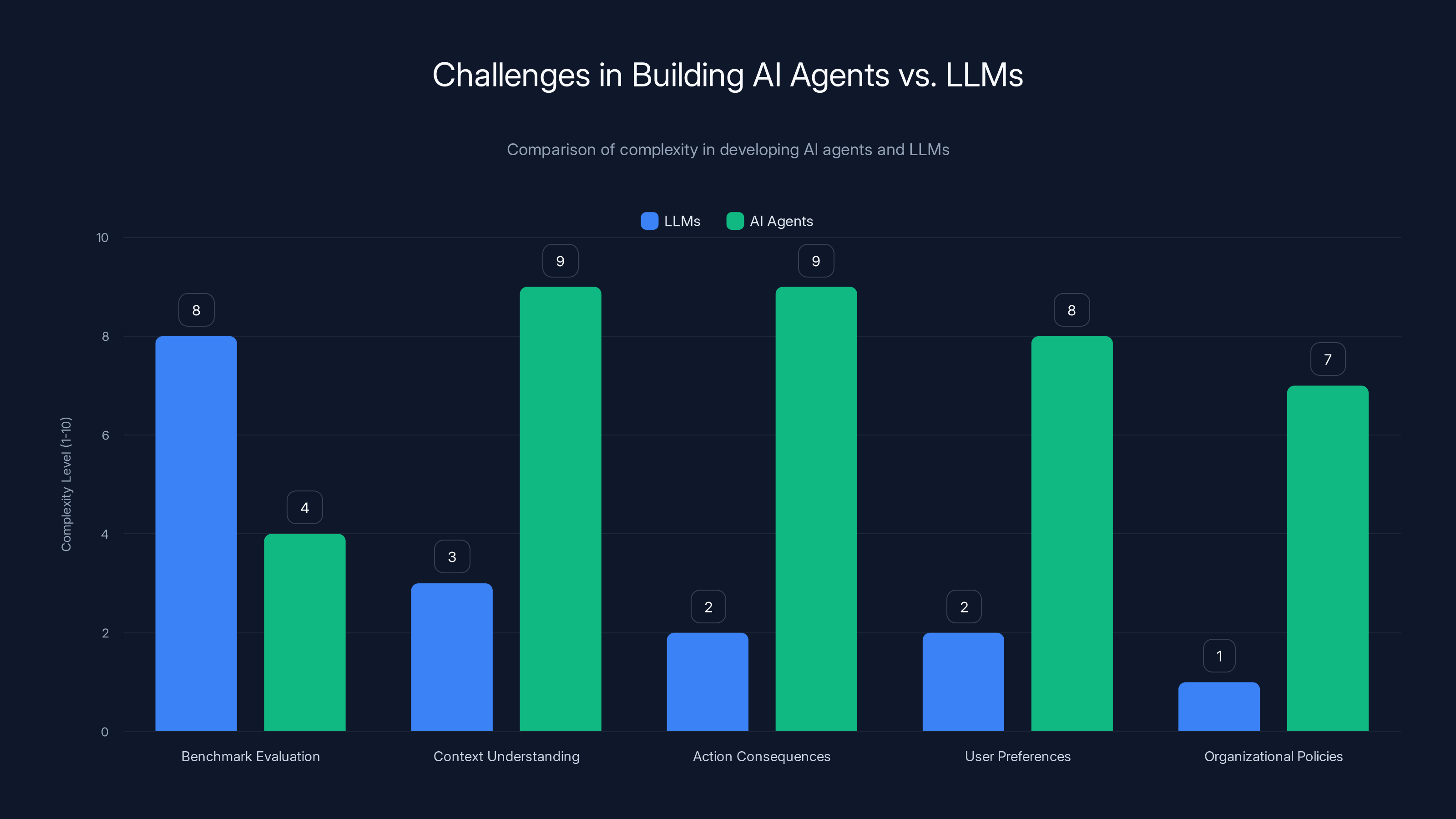

The problem is, building that is far harder than building a good LLM.

An LLM can be evaluated on benchmarks. It either answers questions correctly or it doesn't. An agent has to navigate complexity: permissions, context, consequences, edge cases, user preferences, organizational policies. An agent that "acts" without understanding consequences is just expensive automation that breaks things.

That's why Nadella wrote this blog post when he did. Microsoft has spent 2025 building agents that barely work. But now the company is closer to building agents that actually do what they're supposed to do. 2026 is make-or-break for that bet.

The Gap Between Vision and Reality (And Why 2026 Matters)

Nadella's blog post is careful here. He doesn't pretend Copilot is working great right now. He basically admits it's not.

That's worth noting. The CEO of a company Microsoft's size doesn't casually admit that flagship products are falling short. But he does, because he's trying to reset expectations.

The vision is clear: "We will evolve from models to systems when it comes to deploying AI for real world impact." Translation: individual AI models aren't enough. You need systems that orchestrate models, handle context, manage consequences, and integrate with actual workflows.

The reality is messier. Copilot in Office falls back to generic suggestions. Copilot in Teams sometimes fails to understand conversation context. When you ask Copilot to take an action (like scheduling a meeting or drafting a proposal), it often generates something close but not quite right.

Users then have two choices: trust the output anyway (which leads to "slop"), or spend time fixing it (which defeats the purpose of automation).

Nadella's saying 2026 is when this dynamic changes. Not because models get smarter, but because systems get better at understanding what you actually need.

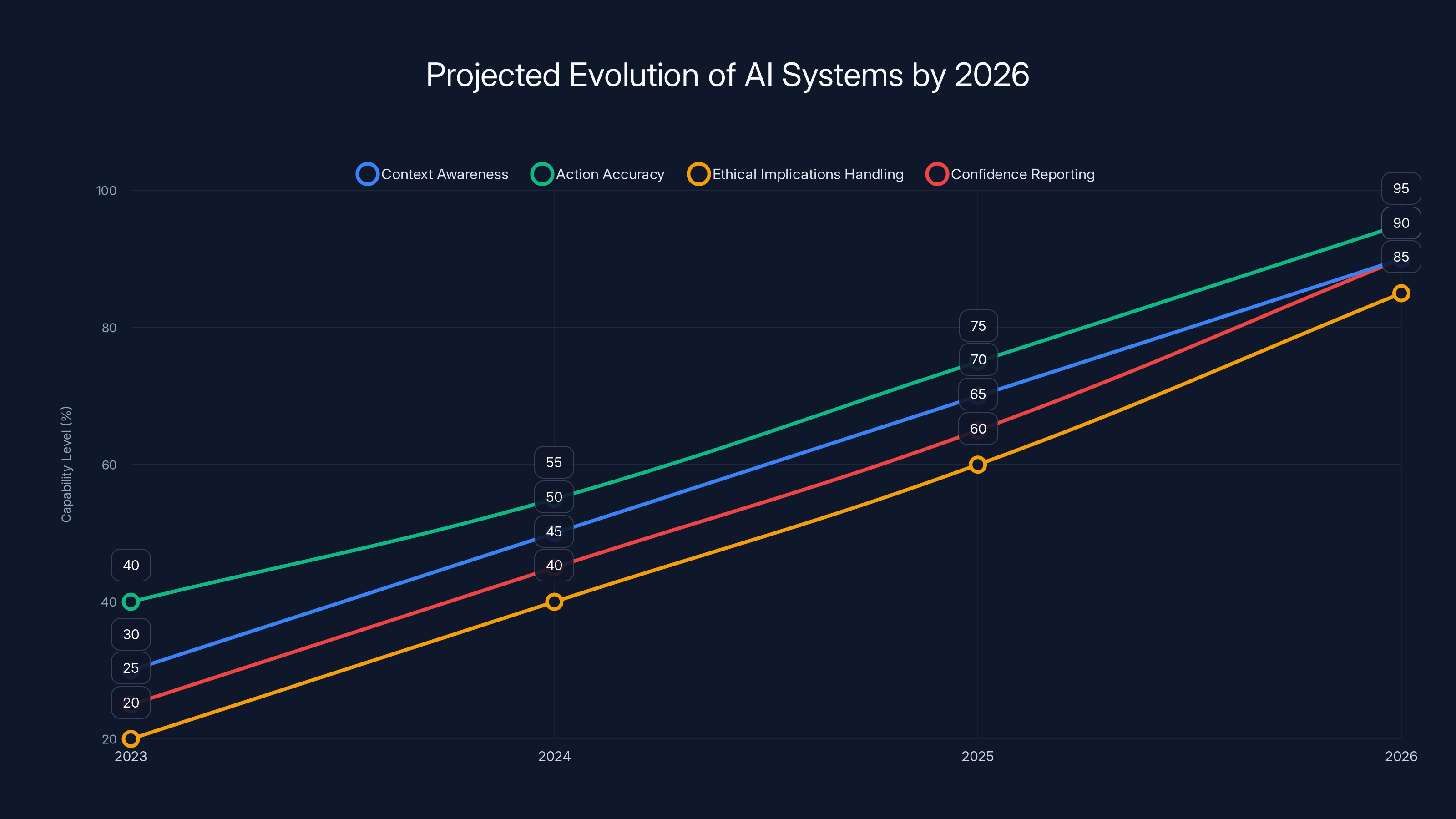

That means better context awareness. It means agents that know when to act and when to ask for clarification. It means systems that understand organizational constraints and ethical implications of their actions. It means honestly reporting confidence levels instead of overconfidently committing to actions that might fail.

In other words, it means treating AI agents like they're supposed to be partners, not oracles.
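To make "know when to act and when to ask" a little more concrete, here is a minimal Python sketch of one possible gating rule. It illustrates the idea under stated assumptions and is not how Copilot works: the `ProposedAction` type, the `decide` function, the model-reported confidence score, and the 0.8 and 0.3 thresholds are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ACT = "act"        # confident and low-risk: go ahead
    ASK = "ask"        # unsure or risky: ask the human first
    REPORT = "report"  # too uncertain to propose anything useful


@dataclass
class ProposedAction:
    description: str
    confidence: float  # hypothetical model-reported confidence in [0, 1]
    reversible: bool   # can the action be undone cheaply?


def decide(action: ProposedAction,
           act_threshold: float = 0.8,
           floor: float = 0.3) -> Decision:
    """Hypothetical policy: act only when confident AND the action is reversible."""
    if not 0.0 <= action.confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    if action.confidence < floor:
        # Admit uncertainty honestly instead of guessing.
        return Decision.REPORT
    if action.confidence >= act_threshold and action.reversible:
        return Decision.ACT
    # Middling confidence, or an irreversible action: ask for clarification.
    return Decision.ASK


if __name__ == "__main__":
    examples = [
        ProposedAction("Draft a reply to Dana", 0.92, reversible=True),
        ProposedAction("Send the contract to the client", 0.95, reversible=False),
        ProposedAction("Reschedule the team sync", 0.55, reversible=True),
        ProposedAction("Summarize a meeting with no transcript", 0.10, reversible=True),
    ]
    for a in examples:
        print(f"{decide(a).value:>6}: {a.description}")
```

One design choice worth noting: reversibility outranks confidence here, so even a highly confident attempt to send a contract gets routed back to the human. That's the partner-not-oracle idea written as a rule.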

The company that pulls this off first wins the AI race. Not because they have the best model, but because they have the best system.

Perceptions of AI roles vary, with amplification and collaboration the most favored. (Estimated data)

The Socio-Technical Issue Nadella's Really Talking About

There's a sentence in Nadella's blog that deserves more attention than it got:

"The choices we make about where we apply our scarce energy, compute, and talent resources will matter. This is the socio-technical issue we need to build consensus around."

That's not a CEO talking. That's an engineer who's become a philosopher.

What he's really saying is this: AI requires more energy, compute, and talent than any previous technology. Those are finite resources. If you're going to use them, you need to be intentional about what problems you're solving.

Right now, AI is being applied to everything: marketing copy, social media, customer service, coding, design, legal contracts, medical diagnosis. Some of these are legitimate uses. Some are just... because we can.

The "slop" problem isn't a technology problem. It's a choice problem. We're using expensive, resource-intensive AI systems to generate low-value outputs because it's profitable, not because it's important.

Nadella's essentially saying: Microsoft won't win the AI race if it keeps treating AI as a generic amplifier for any task. It wins if it treats AI as a scarce resource that should be deployed strategically for high-impact problems.

That means saying "no" to some use cases. It means building agents that focus on genuinely important workflows (diagnostic medicine, scientific research, engineering, strategic analysis) rather than trying to automate everything.

It also means thinking about environmental impact. The energy cost of training and running models at scale is real. Anthropic and others are starting to calculate this. Nadella's saying Microsoft needs to be honest about it too.

That's a radical position for a CEO to take in public. It limits market opportunity. But it's also the position you take if you actually want to build something that lasts instead of something that generates quarterly revenue and then becomes obsolete.

Nadella's Implicit Critique of the Model Wars

You have to read between the lines here, but Nadella's basically saying the AI industry is obsessed with the wrong competition.

Everyone's comparing GPT-4 vs Claude vs Gemini on benchmarks. Who reasons better? Who codes better? Who's more creative? It's a real competition, and the benchmarks matter.

But Nadella's saying: that's not how enterprises choose tools. Enterprises choose based on integration, reliability, trustworthiness, and whether the tool actually solves their problem. A slightly better model doesn't matter if it doesn't integrate with the tools your team actually uses.

Microsoft has an unfair advantage here. Microsoft 365 runs enterprise workflows. Windows runs enterprise desktops. Azure runs enterprise infrastructure. If Microsoft can get agents working in these environments, competitors with better models won't matter.

That's why Nadella's optimistic about 2026. Not because OpenAI's models will get better (they will), but because Microsoft will have agents actually deployed and working in millions of enterprise workflows. Network effects. Switching costs. Defensibility.

The model war is important, but it's not the war that decides who wins AI in the enterprise. The system war is. And Nadella thinks Microsoft can win that one.

AI agents require higher complexity management in context understanding and action consequences compared to LLMs, which are primarily evaluated on benchmark performance. Estimated data.

Why Blogging Now? The Timing Is Strategic

Some people saw Nadella starting a blog and thought: "Oh, he's trying to be Elon Musk or something. Just sharing thoughts."

That's not it. The timing is strategic.

Microsoft just restructured its leadership. New blood in key positions. Nadella has moved from day-to-day operations into pure strategy. He's not defending quarterly results anymore. He's setting direction.

When a CEO writes a blog post that explicitly says "we need to move past these arguments" and "we need to build consensus around this," it's not personal thoughts. It's strategic narrative.

Nadella's trying to do several things at once:

- Reset investor expectations: AI isn't going to drive huge revenue growth in 2026. It's going to be investment-heavy, integration-heavy, and uncertain. Prepare for that.

- Pressure the industry: The "slop" debate is bad for everyone. It makes consumers fear AI instead of using it. Nadella's saying: let's collectively move past it and build something actually useful.

- Calm internal stakeholders: Microsoft has spent billions on its OpenAI partnership. Copilot is struggling. Product teams are frustrated. The blog post says: we understand the problems, we have a plan, we're not panicking.

- Differentiate Microsoft's AI philosophy: Meta is going all-in on raw model capability. Google is pushing Gemini everywhere. Nadella's saying Microsoft is thinking about AI differently: as a system problem, not a model problem. As a human amplifier, not a human replacement.

That's genuinely different from how competitors are positioning AI. And it's smart positioning, because the market will eventually realize that better models don't automatically mean better products. Systems matter. Integration matters. Actual utility matters.

The 2026 Prediction: Make or Break for AI Agents

Nadella's bold claim is that 2026 is a "pivotal year" for AI. Not another incremental year. A pivot point.

He's right that 2025 didn't deliver what everyone expected. Copilot didn't transform enterprise workflows. AI agents didn't become standard. The industry spent the year shipping incremental improvements and getting caught up in model wars instead of system building.

But he's also predicting that 2026 will be different. Why? A few reasons:

First, the infrastructure is more mature. Azure can now run AI workloads at enterprise scale. The API ecosystems are established. The integration tooling exists. It's not world-class yet, but it's good enough to build real systems.

Second, the business case is clearer. We've spent 18 months learning what AI can and can't do. Enterprises now know: it's not a replacement for knowledge workers, but it's a force multiplier for routine cognitive work. That's a valuable use case. It's just not as revolutionary as the 2024 hype cycle suggested.

Third, the competitive landscape has stabilized. OpenAI arguably has the best models, Anthropic the most reliable, and Google the most integrated. That competition is ongoing, but the initial shock is over. Now it's about building systems on top of capable models, not waiting for even more capable ones.

So Nadella's pivot from models to systems makes sense. It's the right move at the right time. Companies that ship functioning AI agents in 2026 will have enormous competitive advantages in 2027.

Companies that are still arguing about model superiority in 2026 will be behind.

By 2026, AI systems are projected to significantly improve in context awareness, action accuracy, ethical implications handling, and confidence reporting. Estimated data.

What This Means For Developers and Creators

If you're building products, this matters. A lot.

Nadella's essentially saying: the competitive advantage in AI isn't going to be in model capability anymore. It's going to be in system design, integration, and real-world reliability.

That means:

For developers: The skill that matters most isn't fine-tuning models or understanding transformer architecture. It's building systems that use models safely and effectively. Prompt engineering is going to matter less than system architecture. Agent reliability is going to matter more than model capabilities.

For creatives: The anxiety about AI replacing your work is missing the point. AI replaces generic work, not creative work. What matters is whether you use AI as an amplifier (AI helps you do your best work faster) or you get replaced by it (your employer uses AI to replace you with cheaper work). That choice is being made right now, in 2026. It's not predetermined.

For enterprises: The companies making the biggest AI bets right now aren't waiting for perfect models. They're building imperfect systems that actually work in their workflows. They're betting that a mediocre system integrated with their tools beats a perfect model running in isolation.

That's the insight Nadella's sharing. It's not flashy. It's not a technical breakthrough. But it's strategically important. And it changes how you should be thinking about AI in 2026.

The Unspoken Tension: Microsoft's OpenAI Partnership vs Its Own AI Vision

Here's something Nadella didn't explicitly say in the blog, but it's important to understand:

Microsoft has a structural conflict of interest. It owns a significant stake in OpenAI and has invested billions of dollars in that partnership. OpenAI's philosophy is: build the most capable models possible.

But Nadella's blog post is basically saying: the most capable models aren't the most important thing.

That's not a contradiction. Microsoft can have both: OpenAI builds the best models, Microsoft builds the best systems on top of them. That's actually a clean division of labor.

But it's also a source of tension. If OpenAI decides to build agents directly, it competes with Microsoft's agent strategy. If Microsoft decides to build its own models, it reduces the strategic importance of the OpenAI partnership.

Nadella's not saying they're building their own models. But he's not ruling it out either.

This matters because it suggests Microsoft is hedging its bets. It has bet on OpenAI, but it's also building systems that would work with any capable model. That's smart strategy. It reduces dependence on OpenAI's decisions while still benefiting from OpenAI's capabilities.

Why The Societal Impact Question Matters More Than You Think

Nadella's blog post keeps coming back to this: "We will evolve from models to systems when it comes to deploying AI for real world impact."

And then: "These systems will have to take into consideration the societal impact they have on people and the planet."

That's not a CEO platitude. That's a warning.

Here's why: AI at scale has real societal impact. When you deploy an AI agent in enterprise workflows, it affects hiring decisions, customer service quality, job security, creativity, and fairness. You can't pretend these are someone else's problem anymore.

The companies that start taking this seriously now will have enormous advantages in 2026 and beyond. They'll build systems that people trust. They'll avoid the backlash that's coming when more people understand what AI does.

Meta's facing regulatory scrutiny for AI social media. Google's facing lawsuits about AI training data. OpenAI's facing criticism about AI in education. The societal impact question isn't hypothetical anymore. It's real, it's urgent, and it's coming for every company deploying AI at scale.

Nadella's saying: let's not pretend this is someone else's problem. Let's build it into our systems from the start.

That's actually defensible. A company that says "we thought about these issues and here's how we addressed them" will win more trust than a company that says "we didn't think about it" or "it's not our problem."

The Road From Blog Post to Product: What Actually Changes in 2026

Nadella's blog post is strategic messaging. But strategic messaging only matters if it translates into actual products and systems.

So what should we actually expect from Microsoft in 2026 based on what he wrote?

Better Copilot agents in Microsoft 365: Copilot should get smarter about context. It should understand your calendar, your email, your working style, and suggest actions with much higher accuracy. It should also know when to ask for clarification instead of guessing.

AI agents in Teams that actually work: Right now, Copilot in Teams is a chatbot. By 2026, it should be an agent that can actually help run meetings, follow up on action items, and prepare participants. It should follow the meeting, understand context, and take on work that a human used to do.

Better integration with enterprise systems: Copilot should connect to your CRM, your ERP, your analytics platform. It should work across your entire software stack, not just Microsoft products. That's ambitious, but it's the system thinking Nadella's describing.

Clearer boundaries on what AI should and shouldn't do: Microsoft should be explicit about where agents have autonomy and where they need human approval. That's the theory of mind work Nadella mentioned.

Honest measurement of impact: Microsoft should report how much time Copilot actually saves users, how many errors it makes, what it's good at, what it's bad at. Right now, those metrics are opaque. 2026 should be the year that changes.

That's a pretty concrete roadmap. It's not about inventing new technology. It's about shipping the technology that already exists in a way that actually works.
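The "clearer boundaries" item in that roadmap is the easiest one to picture as an explicit policy. The Python sketch below is hypothetical throughout: the action names, the three autonomy levels, and the `autonomy_for` and `may_execute` helpers are illustrative assumptions, not a Microsoft API.

```python
from enum import Enum


class Autonomy(Enum):
    AUTONOMOUS = "autonomous"          # agent may act on its own
    NEEDS_APPROVAL = "needs_approval"  # a human must sign off first
    FORBIDDEN = "forbidden"            # agent must never do this


# Hypothetical, organization-defined policy. Action names are illustrative.
POLICY: dict[str, Autonomy] = {
    "summarize_meeting": Autonomy.AUTONOMOUS,
    "draft_email": Autonomy.AUTONOMOUS,
    "send_email_external": Autonomy.NEEDS_APPROVAL,
    "schedule_meeting": Autonomy.NEEDS_APPROVAL,
    "approve_expense": Autonomy.FORBIDDEN,
}


def autonomy_for(action: str) -> Autonomy:
    """Look up an action; anything not explicitly listed requires human approval."""
    return POLICY.get(action, Autonomy.NEEDS_APPROVAL)


def may_execute(action: str, human_approved: bool = False) -> bool:
    """Return True only if the policy allows the agent to perform the action now."""
    level = autonomy_for(action)
    if level is Autonomy.FORBIDDEN:
        return False  # even human approval can't unlock a forbidden action
    if level is Autonomy.NEEDS_APPROVAL:
        return human_approved
    return True
```

The important choice is the fallback: any action the policy doesn't list defaults to needing human approval, so a newly added capability never quietly gains autonomy.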

Comparing Microsoft's Vision to Competitors' Bets

It's worth stepping back and seeing how Nadella's vision compares to what other companies are betting on.

Meta is betting on frontier models and open-source distribution. The idea: give everyone access to powerful models, let them build what they want, let open innovation happen. It's democratic. It's also chaotic and harder to monetize.

Google is betting on integration within search and workplace. Gemini should be everywhere you use Google products. It's a similar strategy to Microsoft's, but Google has weaker enterprise tools, so the advantage is smaller.

Anthropic is betting on safety and reliability. Build the most trustworthy AI possible, and sell to enterprises that care about safety more than raw capability. It's a narrower market, but it's defensible.

OpenAI is betting on frontier capabilities and consumer adoption. GPT should be so good that it becomes the default interface for intelligent reasoning. It's ambitious, but it requires network effects and consumer trust.

Nadella's vision is system integration: better integrations + good-enough models + deep enterprise embedding = defensible advantage.

It's not the sexiest strategy. But it might be the most resilient. Because enterprises won't switch if the switching cost is too high. And the switching cost for enterprise software is astronomical.

The Question Nadella's Blog Doesn't Answer

There's one thing Nadella doesn't address in his blog post, and it's worth noting:

What if 2026 isn't the pivotal year? What if agents still don't work well enough? What if enterprises decide AI isn't worth the complexity? What if the backlash against AI labor replacement gets too strong?

Nadella doesn't have a Plan B. Or if he does, he's not sharing it.

That's actually honest. Sometimes you put all your chips on a vision and you live or die by it. Microsoft has done this before: bet on cloud computing, bet on mobile, bet on gaming. Sometimes it worked, sometimes it didn't.

This is the biggest bet Microsoft's made since the pivot to cloud. And Nadella's confident enough to bet his public reputation on it.

That's either visionary or delusional. We'll find out in 2026.

What This Means for Your Team and Your Choices in 2026

If you're in tech, or you're building products, or you're choosing tools for your team, here's what Nadella's blog post is telling you:

Don't wait for perfect AI. It's not coming. Work with what you have. Build systems around imperfect AI. The competitive advantage goes to people who ship agents that work in real workflows, not to those who wait for better models.

Integrate, don't isolate. Standalone AI tools are going away. Integrated AI that works within your tools is the future. Choose vendors that can integrate with your entire stack.

Think about impact, not just capability. The AI tool that saves you the most time isn't necessarily the most powerful. It's the one designed for your specific workflow. Capability matters less than integration and reliability.

Prepare for agents, not chatbots. The next wave of AI tools won't be things you talk to. They'll be things that work autonomously within your systems. That's a different skillset to learn and manage.

Plan for 2026 transition. If you're not thinking about how AI agents will change your workflows in 2026, you're behind. Start experimenting now. Start understanding how agents could help your team. Start building institutional knowledge.

Nadella's blog post is a roadmap for companies that are paying attention. The companies that move first will have massive advantages. The companies that wait will be playing catch-up.

FAQ

What is Satya Nadella's "sn scratchpad" blog?

The "sn scratchpad" is a new blog started by Microsoft CEO Satya Nadella where he shares personal thoughts on AI, technology, and strategic direction. The name is a nod to his initials ("sn"), and the first post focuses on the future of AI agents in 2026. It's not a corporate blog. It's Nadella's personal platform for setting long-term strategy and shifting the industry narrative.

Why is Nadella dismissing the "AI slop" debate?

Nadella argues that focusing on whether AI-generated content is mediocre (slop) versus high-quality (sophisticated) misses the bigger point: whether AI becomes a tool that amplifies human capability or replaces human workers. He wants the industry to move past the anxiety about AI quality and focus on building systems that make humans more capable. The slop debate is a distraction from the more important question of how AI should be deployed.

What does Nadella mean by "cognitive amplifier tools"?

A cognitive amplifier is an AI system that enhances human thinking and decision-making without replacing it. Just as a bicycle amplifies human effort, a cognitive amplifier makes humans more capable at knowledge work, creativity, and problem-solving. It's the opposite of automation (replacing humans) and different from simple tools (which you control explicitly). Cognitive amplifiers are supposed to be proactive partners, not passive instruments.

How is Microsoft's vision different from competitors like OpenAI or Meta?

Microsoft (under Nadella's vision) is focused on building integrated AI systems that work within enterprise tools and workflows, rather than just building the most capable models. OpenAI prioritizes frontier model capabilities. Meta prioritizes open-source model distribution. Google prioritizes integration within search and workplace. Nadella's approach is distinctly about system integration and enterprise embedding, which gives Microsoft structural advantages in the enterprise market but requires flawless execution.

What does Nadella mean by needing a new "theory of the mind"?

Nadella is saying the AI industry needs philosophical consensus on how humans should interact with intelligent systems. Should AI agents make decisions autonomously, or should they require human approval? Should they replace tasks, or amplify human capability? Should they have transparency, or just deliver results? These aren't technical questions. They're philosophical questions about the relationship between humans and AI. The industry hasn't agreed on answers yet, and building AI systems without consensus is causing confusion and backlash.

Why does Nadella emphasize the societal and environmental impact of AI?

AI systems consume massive amounts of energy, compute resources, and talent. These are finite resources. If you're going to use them, Nadella argues, you need to be intentional about what problems you're solving. Deploying AI to generate low-value marketing content is a waste of scarce resources. Companies that think about societal impact and environmental consequences from the start will build more defensible systems and face less regulatory risk. It's both an ethical position and a strategic one.

How realistic is Nadella's prediction that 2026 is a "pivotal year" for AI?

It's ambitious but defensible. Nadella's not predicting AI will be perfect by 2026. He's predicting that the gap between vision and reality will start to close, that agents will become more functional, and that enterprise adoption will become real rather than experimental. The infrastructure exists, the business case is clear, and the competitive landscape has stabilized. Whether Microsoft actually executes on this vision is another question, but 2026 is plausible as a year where AI agents move from promising to practical.

The Moment Where Nadella Pivots From Vision to Execution

Nadella's blog post is the moment where Microsoft publicly pivots from competing on models to competing on systems. It's the moment where the CEO says: "We've spent enough time arguing about whether AI is good or bad. Let's build something useful."

That's actually a significant shift. For 18 months, Microsoft has been competing in the model wars: chasing benchmark results, promoting Copilot's capabilities, and racing to add AI to every product. That was the right move for 2024 and early 2025. But it's not a winning long-term strategy.

Nadella's recognizing that. He's essentially saying: "We're good at systems. We're good at enterprise integration. We're good at long-term infrastructure bets. That's where we should compete."

That's a more mature strategy. And if Microsoft executes on it, the company that wrote the playbook on enterprise software integration might do it again with AI.

The companies to watch in 2026 are the ones that move beyond the hype and start shipping systems that actually work. Microsoft's signaling that it's one of those companies. Whether that signal translates into reality is the question 2026 will answer.

Key Takeaways

- Nadella's core argument: The AI industry is debating the wrong thing (slop vs sophistication) instead of focusing on building practical cognitive amplifier systems.

- Microsoft is pivoting from competing on model capabilities to competing on system integration—a strategic shift that plays to the company's strengths in enterprise software.

- 2026 is positioned as a 'pivotal year' not because AI models will be perfect, but because integrated AI agents should finally deliver practical value in real workflows.

- The societal and environmental constraints of AI (energy, compute, talent) are real limitations that should decide where AI gets deployed, rather than marketing considerations.

- Companies that ship functioning AI agents in 2026 will have massive competitive advantages through network effects and switching costs; the model wars are no longer the deciding factor.

