How Streaming Platforms Shape the Content You Watch: The Wrecking Crew Story

There's a moment that happens in most filmmaking conversations that nobody likes to talk about. A director sits across from a studio executive, shows them what they've created, and gets the feedback: "Can you tone this down?" It's happened in Hollywood for decades. But when Amazon Prime Video decided to greenlight "The Wrecking Crew", that conversation played out in full public view.

The film, a gritty action-thriller set against the backdrop of organized crime and street violence, was initially envisioned by its creative team as a hard-R rated experience. The kind of movie that doesn't flinch when depicting brutal consequences. But somewhere between the director's initial cut and what arrived on streaming, the violence got dialed back. Not eliminated, but softened. Edited. Made more palatable for the streaming age.

This isn't just a behind-the-scenes anecdote about one movie. It's a window into how streaming platforms like Amazon are quietly reshaping cinema itself. When a studio can reach 150 million Prime members worldwide, suddenly content decisions become about far more than artistic vision. They become about algorithmic recommendation systems, advertiser-friendly content ratings, and the risk calculus of potentially alienating any segment of your massive audience.

The Wrecking Crew story reveals something uncomfortable about the streaming era: the platforms with the biggest reach are also the ones making the most conservative creative choices. And creators are catching on. Directors, writers, and cinematographers are starting to ask a difficult question: are we telling the stories we want to tell, or the stories that fit within Amazon's, Netflix's, or Disney's content guidelines?

Let's dig into what happened with The Wrecking Crew, why it matters for the future of cinema, and what this means for anyone who cares about artistic freedom in the streaming age.

TL;DR

- Creative Compromise: Amazon Prime Video asked for the violence in The Wrecking Crew to be toned down, and several brutal scenes were removed or softened

- Streaming Platform Power: Major platforms now have editorial control that rivals traditional studios, shaping content at a fundamental level

- Audience Size Trade-off: Reaching 150+ million potential viewers requires content that doesn't offend any significant demographic

- Director's Intent vs. Platform Policy: Filmmakers face pressure to self-censor rather than risk algorithmic demotion or content restrictions

- Industry Precedent: This pattern is becoming standard across major streaming platforms, not an exception

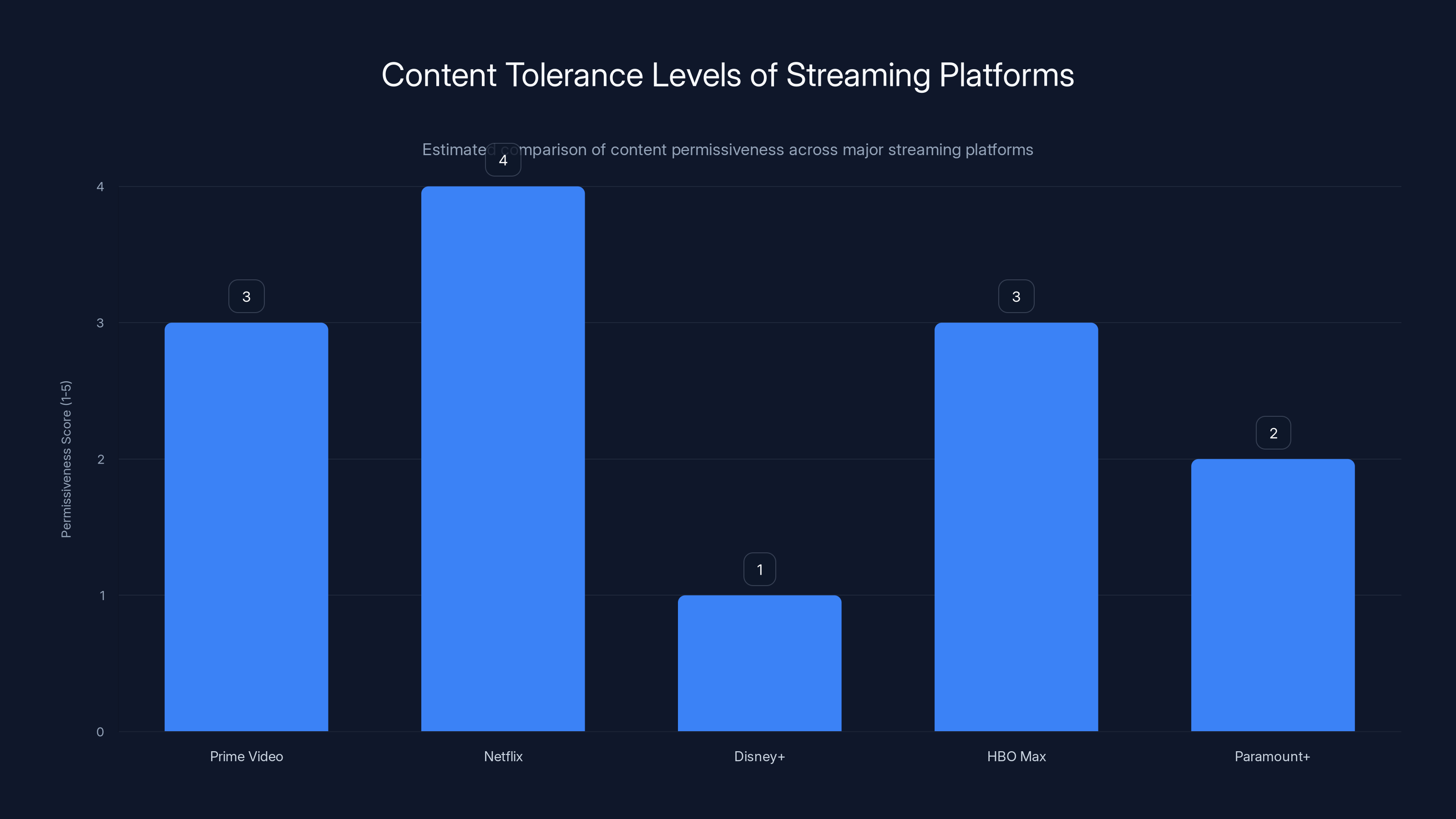

Estimated data suggests Netflix is the most permissive with mature content, while Disney+ is the most restrictive. Prime Video and HBO Max are moderately permissive, with Paramount+ varying by category.

The Original Vision: What The Wrecking Crew Was Supposed to Be

When the creative team first conceptualized The Wrecking Crew, they were thinking noir. Think gritty, visceral, consequences-matter filmmaking. The kind of movie where violence isn't glorified but isn't sanitized either. Characters bleed. They break. They don't get back up immediately.

The script itself painted a world of organized crime networks, territorial disputes, and survival-of-the-fittest street dynamics. In that world, the violence wouldn't be cartoonish or exaggerated. It would be brutal and efficient. The kind of sequences that make audiences shift uncomfortably in their seats because they're reminded that real violence doesn't look like comic book action. It's messy, fast, and leaves permanent damage.

The original director's cut included several sequences that would have pushed the movie toward that hard-R territory. These weren't gratuitous moments designed to shock for shock's sake. They were integrated into the story, consequences of the world these characters inhabited. A punishment beating that showed actual damage. An execution scene with unflinching clarity. A car crash where physics actually mattered instead of everyone walking away with theatrical scratches.

Directors know the rules. They understand that streaming platforms have brand standards and audience comfort thresholds. But many also believe that tempering violence to the point of unreality undermines the emotional stakes of their storytelling. If a character can walk away from an encounter that should have killed them, why should we care about the danger they're in?

This creative philosophy doesn't require gore for gore's sake. It requires honesty. And that's where the tension emerged between the filmmakers and Amazon.

Why Amazon Asked for Changes: The Business Logic Behind Content Moderation

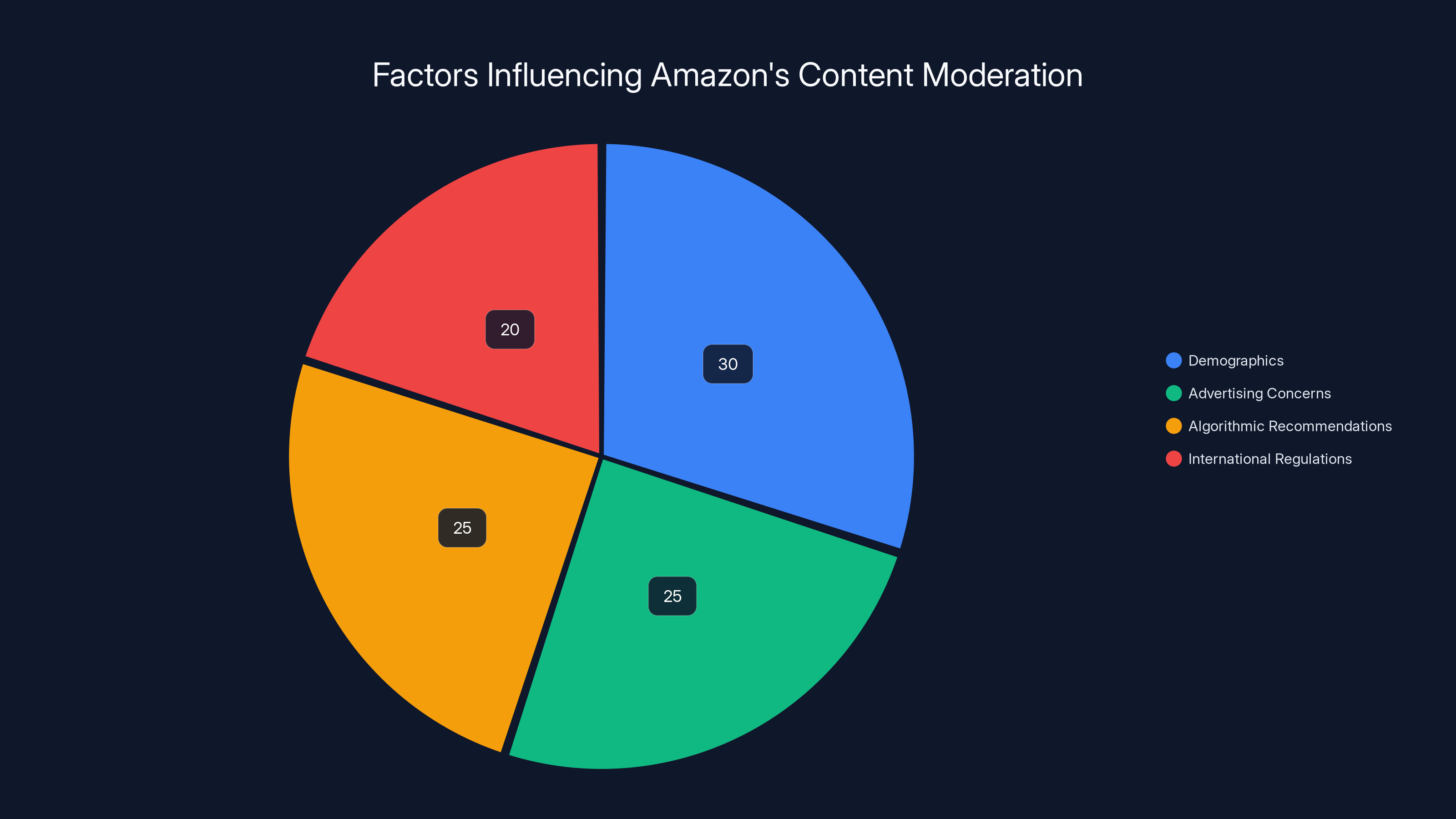

Understanding why Amazon asked for changes requires understanding how modern streaming economics work. It's not arbitrary. It's algorithmic. It's demographic. It's about risk management.

When you operate a streaming service with 150+ million global subscribers across dozens of countries, you're not optimizing for one audience. You're trying to serve markets with wildly different cultural comfort levels around violence. What's acceptable in Germany might be rejected in the United States. What plays in urban markets might alienate suburban audiences.

There's also the advertising question. Many streaming platforms are moving toward ad-supported tiers. Those advertising partners have brand safety guidelines. They don't want their luxury car ads appearing before graphic violence. So the platform has a financial incentive to maintain content that doesn't trigger advertiser concerns.

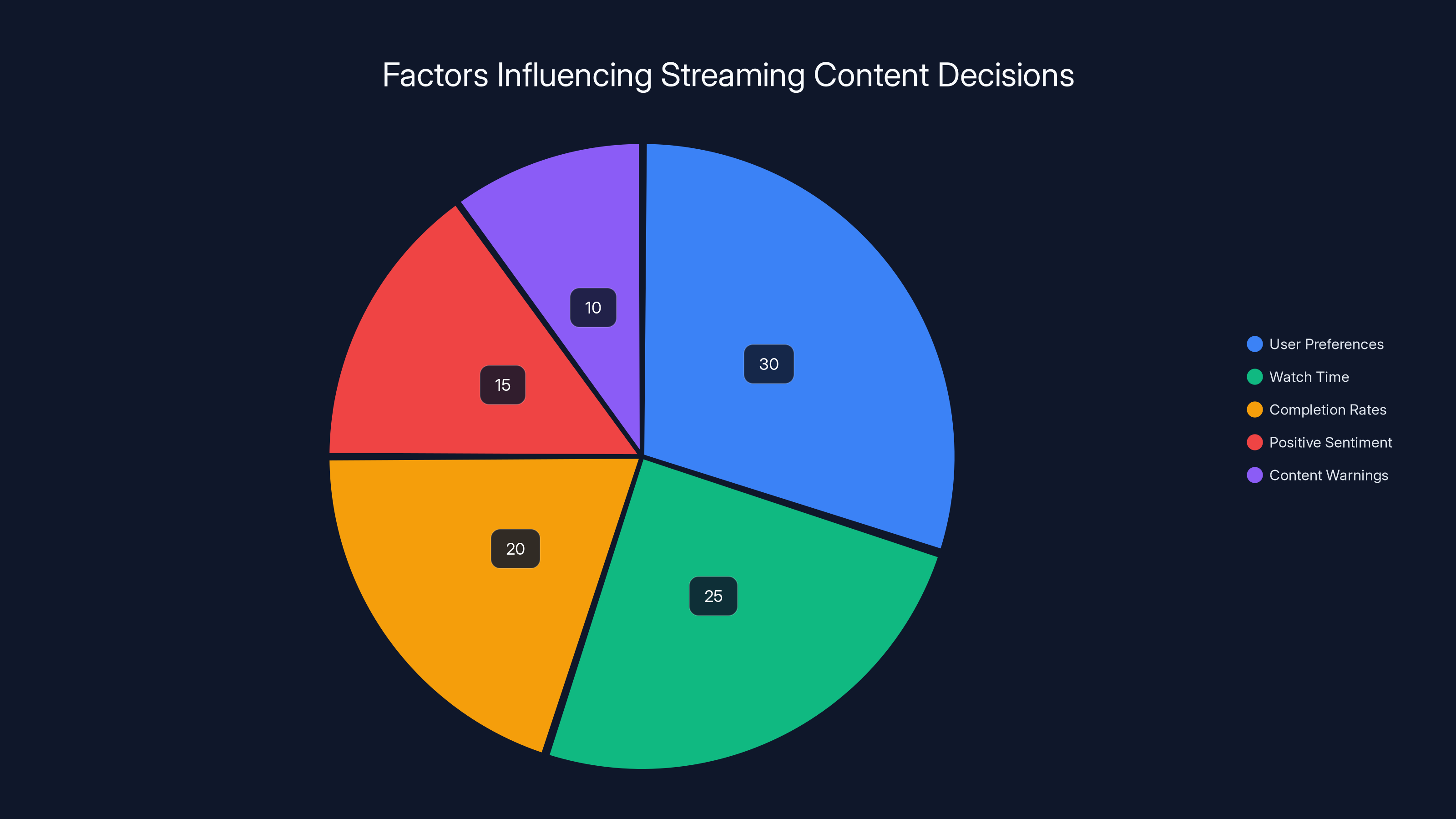

Beyond that is the algorithmic recommendation engine. Amazon's content discovery system works partly on user ratings and engagement metrics. Content that receives lower ratings from certain demographic groups gets deprioritized in recommendations to other users. A violent scene that triggers negative reviews from parents or older audiences could suppress the film's visibility across the entire platform.

There's also the international distribution problem. A film approved for streaming in the United States might face regulatory restrictions in other markets. Rather than create different cuts for different regions (expensive and complicated), platforms often choose a middle path: a version that works in most markets simultaneously.

Amazon's position in asking for changes wasn't personal. It was portfolio optimization. The Wrecking Crew was one film among thousands. If toning down violence meant broader appeal, better algorithmic performance, and fewer regulatory complications, the math was simple.

What the filmmakers had to weigh was whether the compromises were acceptable or if they fundamentally altered the story they wanted to tell. That's where the real creative tension lives.

Demographics and advertising concerns are major factors in Amazon's content moderation strategy, each accounting for around 25-30% of the decision-making process. Estimated data.

The Specific Scenes That Got Edited: What Changed and Why It Matters

While the full details of every modification remain proprietary, the filmmakers have publicly discussed certain scenes where Amazon requested changes. These examples reveal the pattern of how streaming platforms edit content.

One early sequence involved a character being beaten by multiple assailants. The original version showed the full aftermath: broken ribs that made breathing visible, a swollen face that actually impaired vision. The edited version reduced the visual impact. You still knew the character was hurt, but you didn't see the specific damage. The scene became more about tension and less about consequence.

Another modification involved a shooting. The original had a moment of graphic realism. The edited version obscures the impact, cuts away slightly earlier, relies more on sound than image. Both versions communicate that someone died. One makes the audience confront the reality of that death. The other allows plausible deniability, a slight remove from the moment.

A third example was a car crash. Originally designed to show momentum and physics, the edited version became more stylized. Less splatter, more suggestion. Less detailed, more Hollywood.

These aren't huge changes individually. But cumulatively, they shift the film's tone. They move it from "consequences feel real" to "consequences are implied." They make it safer for broader audiences and less likely to trigger content warnings or negative reviews from demographics that don't prefer violent content.

Why does this matter beyond film criticism? Because it sets a precedent. When filmmakers know that certain depictions will require negotiation with platform executives, they begin self-censoring before they even shoot. Directors think twice about scenes they know will be problematic. Writers trim dialogue that might trigger algorithms. The platform's preferences become baked into the creative process itself.

Over time, this creates an entire generation of streaming-native films that are designed from inception to meet platform standards rather than artistic vision. That's not inherently bad, but it's a fundamental shift in how creative decisions get made.

The Ratings Game: How Streaming Platforms Define "Acceptable" Content

Most people understand film ratings. G, PG, PG-13, R, NC-17. The Motion Picture Association has maintained these standards for decades. They're imperfect but broadly understood.

Streaming platforms operate differently. They apply their own content rating systems, and those tiers can include material beyond what an NC-17 rating covers. Prime Video uses a multi-tiered system ranging from General Audiences to Unrated content. But those ratings don't tell the full story.

Within each rating tier, platforms maintain their own guidelines. Some content might technically qualify as R-rated but still get flagged for platform modification because it violates internal brand standards. A film with an R rating from the MPA might still be considered "too violent" for Prime's default recommendation algorithm.

This creates a gap between official ratings and what the platform will actually promote. A film might be watchable at an R rating, but if the platform doesn't actively recommend it, its reach suffers dramatically. The algorithm becomes the real censor.

Different streaming platforms have different tolerance levels. Netflix tends to be slightly more permissive with mature content. Disney+ is extremely restrictive. HBO Max occupies middle ground. Paramount+ varies by content category.

Creators learn these differences quickly. If you're making a thriller for HBO, you know you have more latitude than if you're making it for Disney. If it's for Amazon, you're somewhere in between but leaning toward caution.

The rating system itself becomes part of the creative negotiation. A filmmaker might argue: "This comparable R-rated film on Netflix has similar content. Why can't we do the same?" The platform's response is usually: "Different decision, different time, different metrics." There's no transparency, no public standards document, just internal guidelines that shift based on market research and advertiser pressure.

Global Content Standards: Why One Version Doesn't Fit All Markets

One reason Amazon asks for modifications is that a single global version needs to work across multiple regulatory environments. This is genuinely complicated.

Germany has strict rules about depicting violence. Some content that's fine in the United States triggers regulatory review in German markets. France has different standards. The United Kingdom's BBFC applies different criteria than the MPA in the United States.

Instead of creating region-specific cuts (which is expensive and logistically challenging), platforms often create a "master cut" that's conservative enough to work in most markets. The Wrecking Crew's modifications likely reflect this compromise approach.

There's also the China question, though it likely doesn't apply to The Wrecking Crew. For many streaming productions, especially those partly financed by international partners, there's pressure to make content that's at least theoretically distributable in Chinese markets, even if the platform never actually releases it there. This creates self-censorship baked into the production itself.

Streaming platforms argue this is simply pragmatism. You make one version that works globally rather than multiple versions that only work in specific regions. From a business standpoint, that makes sense. From a creative standpoint, it means creative compromise is baked into every decision.

Estimated data suggests that 40% of directors accept platform constraints as creative parameters, while 25% negotiate changes, and 15% resist changes. A mixed approach is taken by 20%.

The Director's Perspective: Artistic Vision vs. Platform Demands

Talking to filmmakers about platform interference reveals genuine frustration. Not anger necessarily, but a kind of resigned pragmatism that's becoming standard in the industry.

Directors understand they're not working in a vacuum. They know going in that streaming platforms have different standards than theatrical releases. Some accept that as a constraint and work within it creatively. Others resent it as a fundamental limitation on storytelling.

One director of a major streaming thriller described the process this way: you finish your cut, you're proud of it, then notes come back. Not suggestions. Notes. "Remove this scene." "Reduce blood in this shot." "The implication is fine, we just can't show the actual moment." And now you're in a negotiation where your vision is being edited by committee.

The tricky part is that these aren't unreasonable people making arbitrary demands. The platform executives often have data. "We tested this scene with focus groups and 47% of viewers under 35 marked it as too graphic." Suddenly your artistic choice isn't just subjective. It's measurable, quantifiable, and optimizable.

Some directors choose to fight these battles. They argue for their vision, make a case for why certain scenes are narratively necessary, negotiate compromises on specific elements while holding the line on others. Others decide upfront that they'll work within the platform's constraints as a creative parameter, like budget or runtime.

What's changed in recent years is that more creators are choosing the latter approach not because they want to, but because they have to. If you want your film to reach 150 million potential viewers through Prime, you accept certain constraints. If you want to maintain your artistic vision perfectly intact, you're likely looking at much smaller distribution, lower budgets, and niche audiences.

It's not censorship in the traditional sense. No government is forcing changes. But it's a form of de facto content control that's arguably more powerful than government oversight because it's so normalized and commercialized.

How Streaming Platforms Make Content Decisions: The Algorithm Factor

Most people understand that streaming platforms use algorithms to recommend content. What's less understood is how content modification and platform policy feed back into those algorithms.

Here's a simplified version of how it works: A film gets uploaded to the platform with metadata tags indicating its content warnings, violence level, maturity rating, and genre classifications. The algorithm learns which user segments engage with what content.

If a film contains extreme violence, the algorithm learns it's less likely to be recommended to users flagged as "family-oriented" or "sensitive to mature content." That's not necessarily wrong. But it creates an incentive for the platform to modify content in ways that expand the potential audience. A film with less graphic violence can be recommended to more user segments.
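To make that incentive concrete, here is a minimal sketch of the reach arithmetic. Everything in it is an assumption for illustration: the segment names, the audience percentages, the 0-5 violence scale, and the `eligible_share` helper are all hypothetical, and nothing here describes how Amazon's recommendation system actually works.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    name: str
    share_pct: int       # made-up percentage of the subscriber base
    max_violence: int    # highest violence level (0-5) this segment is shown


@dataclass(frozen=True)
class Film:
    title: str
    violence_level: int  # hypothetical scale: 0 = none, 5 = graphic


# Illustrative segments only; real platforms do not publish these figures.
SEGMENTS = [
    Segment("family-oriented", 30, 2),
    Segment("general", 45, 3),
    Segment("mature-content fans", 25, 5),
]


def eligible_share(film: Film, segments=SEGMENTS) -> int:
    """Percentage of subscribers the film could be recommended to."""
    return sum(s.share_pct for s in segments
               if film.violence_level <= s.max_violence)


original = Film("Director's cut", violence_level=4)
edited = Film("Streaming cut", violence_level=3)

print(eligible_share(original))  # 25
print(eligible_share(edited))    # 70
```

With these invented numbers, dropping one step on the violence scale nearly triples the recommendable audience. The real figures are unknown, but the shape of the incentive is the same.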

From a business perspective, this is perfectly rational. More potential viewers means better metrics for the platform. But from a creative perspective, it means there's built-in pressure to make content that plays well with the widest possible audience.

Platforms claim they're simply respecting user preferences. And they are. But they're also optimizing for watch time, completion rates, and positive sentiment. A film that people start but abandon because it's too violent counts as a failure in those metrics. A film that makes people uncomfortable but engages them fully counts as a success.

So platforms have a mathematical incentive to edit content in ways that maximize completion rates while maintaining the dramatic impact. It's not conscious censorship. It's optimization. And that might be worse because it's so impersonal and data-driven.

The Precedent This Sets: What The Wrecking Crew Means for Future Filmmaking

One film getting edited for streaming might seem minor. But it's part of a larger pattern that's fundamentally reshaping cinema.

When filmmakers see that The Wrecking Crew was edited for platform compliance, they take notes. They think about their next project differently. A pitch to Amazon Prime gets crafted with their content standards in mind. A script gets written slightly less graphically because you know what needs to be negotiated.

This is self-censorship, but it's not coerced censorship. It's voluntary adaptation to market conditions. It's no different than how a screenwriter might adjust dialogue to avoid slang that won't translate internationally, or how a director might choose camera angles that won't trigger motion sickness in 3D. It's creative problem-solving within constraints.

But constraints shape art. They always do. And when those constraints come from a handful of massive platforms rather than from technical limitations or audience preferences organically expressed, you get homogenization.

Five years from now, we might look back at 2024-2025 as the moment when streaming platforms definitively became the primary gatekeepers of cinematic content. Not just distributors, but editors. Not just platforms, but creative decision-makers.

And that's not inherently evil. But it's worth acknowledging. Platforms make decisions based on metrics and risk, not primarily on artistic merit. When those become the dominant creative forces in the industry, the output reflects that prioritization.

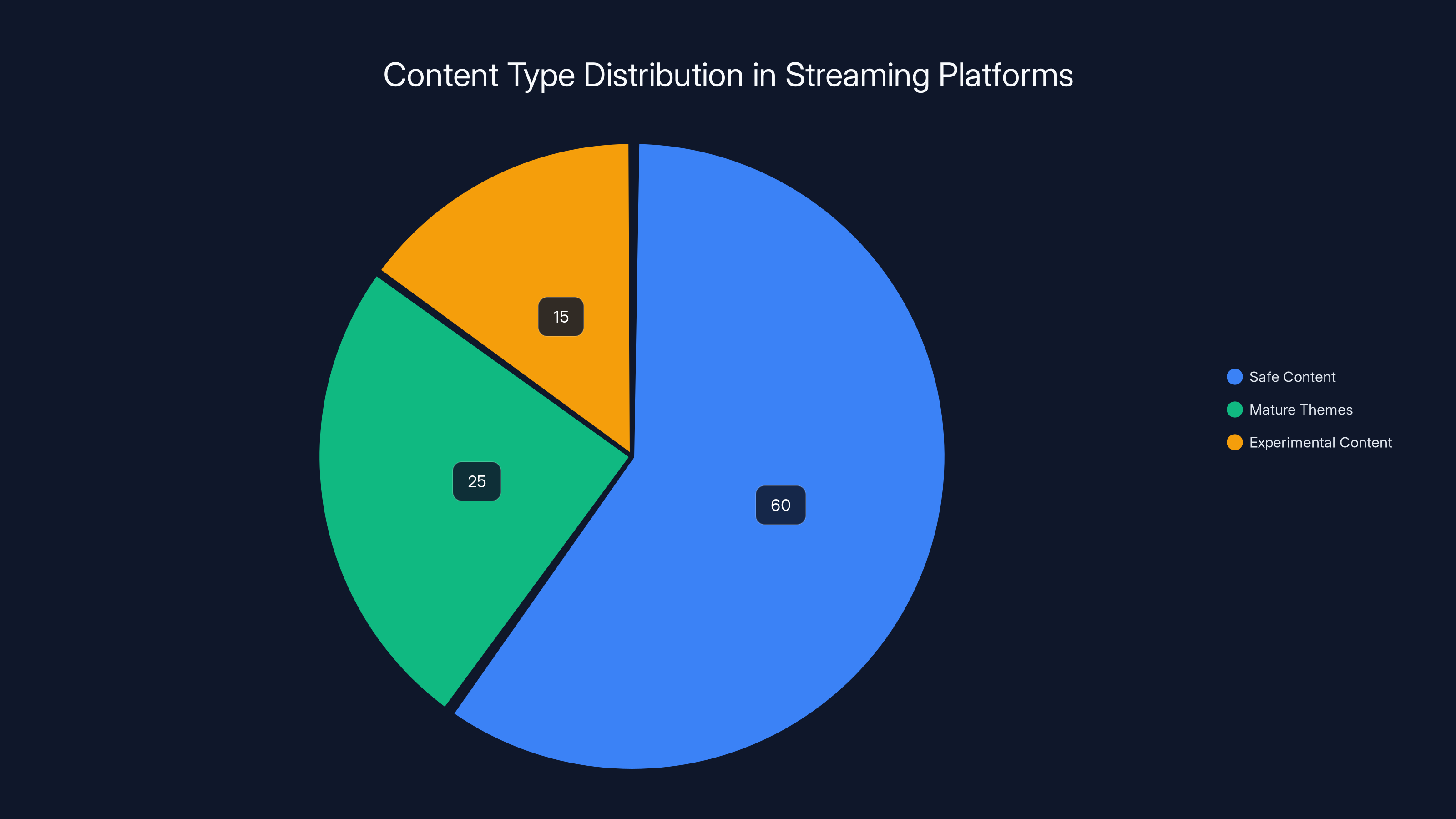

Estimated data shows a dominance of 'safe content' (60%) in streaming platforms, driven by market incentives and algorithmic pressures, with mature themes and experimental content making up 25% and 15% respectively.

Comparative Analysis: How Different Platforms Handle Similar Content

To understand Amazon's decisions with The Wrecking Crew, it's useful to compare how other platforms handle comparable content.

Netflix original films, such as "John Wick"-adjacent action properties and crime thrillers, tend to have more latitude with violence. HBO Max explicitly positions itself as more permissive with mature content, inheriting that positioning from HBO's decades-old brand identity.

Paramount+ sits in interesting middle ground because it owns both its own content pipeline and distributes films from Paramount Pictures, which has different standards for theatrical versus streaming releases.

The variations reveal something important: these aren't universal standards. They're corporate choices. Amazon could choose to be more permissive with violence. They choose not to, likely based on audience research and advertiser pressure.

Compare this to international streamers. SkyShowtime in Europe tends to be more permissive with mature content. Rakuten Viki handles Asian content with different standards than Western platforms. Regional streamers have more latitude because they're not trying to serve as many diverse markets simultaneously.

This comparative analysis matters because it proves that platform decisions about content aren't inevitable. They're choices. Amazon could position itself as the "filmmaker's platform" with more permissive content standards. They choose not to because their market research suggests that broader audiences and advertiser partnership are more valuable.

That's a business decision, perfectly legitimate. But it's worth seeing it as a choice rather than accepting it as how things have to be.

The Economics of Content Modification: Who Pays the Price?

Editing a completed film costs money. New VFX work, sound design adjustments, color correction, re-editing. When platforms ask for changes, they usually pay for them or demand the production cover costs.

But there's a hidden economic cost that's rarely discussed: opportunity cost. The filmmaker's time spent modifying footage is time not spent on other creative projects. The repeated viewing and evaluation of modified scenes is time away from other work. The emotional toll of compromising your vision is real, even if it's not quantifiable.

For smaller productions, this matters significantly. An independent filmmaker with a

There's also a career consequence. Filmmakers talk to each other. Word gets around about which platforms are reasonable to work with and which ones make excessive demands. Over time, this shapes which creatives choose to work with which platforms, which shapes the content available on each platform.

There's also the question of financial incentives for modification. If a film gets better algorithmic positioning because it has less graphic content, does the platform profit from that? Arguably, yes. The filmmaker accepts a creative compromise, the platform reaps the algorithmic benefits. The economics aren't zero-sum, but they're not perfectly aligned either.

The Cultural Question: What Gets Lost When Violence Gets Softened?

Beyond economics and business logic, there's a genuine artistic question: what do we lose when violence is softened for platform consumption?

Darwin Corey, writing about art in constrained environments, argues that limitations force interesting creative solutions. A budget limit pushes you toward creativity. A runtime limit forces clarity. But those are limitations that affect execution, not limitations that affect truthfulness.

When a platform asks you to edit violence, it's asking you to change not how you tell a story but what story you're telling. That's a different kind of constraint.

A film about organized crime where violence has no real consequences becomes a different story. It becomes less tragic, less consequential, less true to the world being depicted. You can still tell a good story within that constraint, but it's not the same story.

Some might argue that's fine. Not every story needs graphic violence. True. But the decision should be artistic, not commercial. The filmmaker should choose to imply rather than show because it serves the story. Not because the platform's algorithm will rank it higher.

Historically, filmmakers have worked around censorship by implying things rather than showing them. That's created some genuinely brilliant cinema. But there's a difference between choosing to imply something because it's more powerful and being forced to imply something because a platform demands it.

The cultural consequence is that an entire generation of filmmakers is learning to create within Amazon's constraints as a baseline expectation. Not as an interesting creative challenge, but as the normal way films are made. That shapes what stories get told and how they get told.

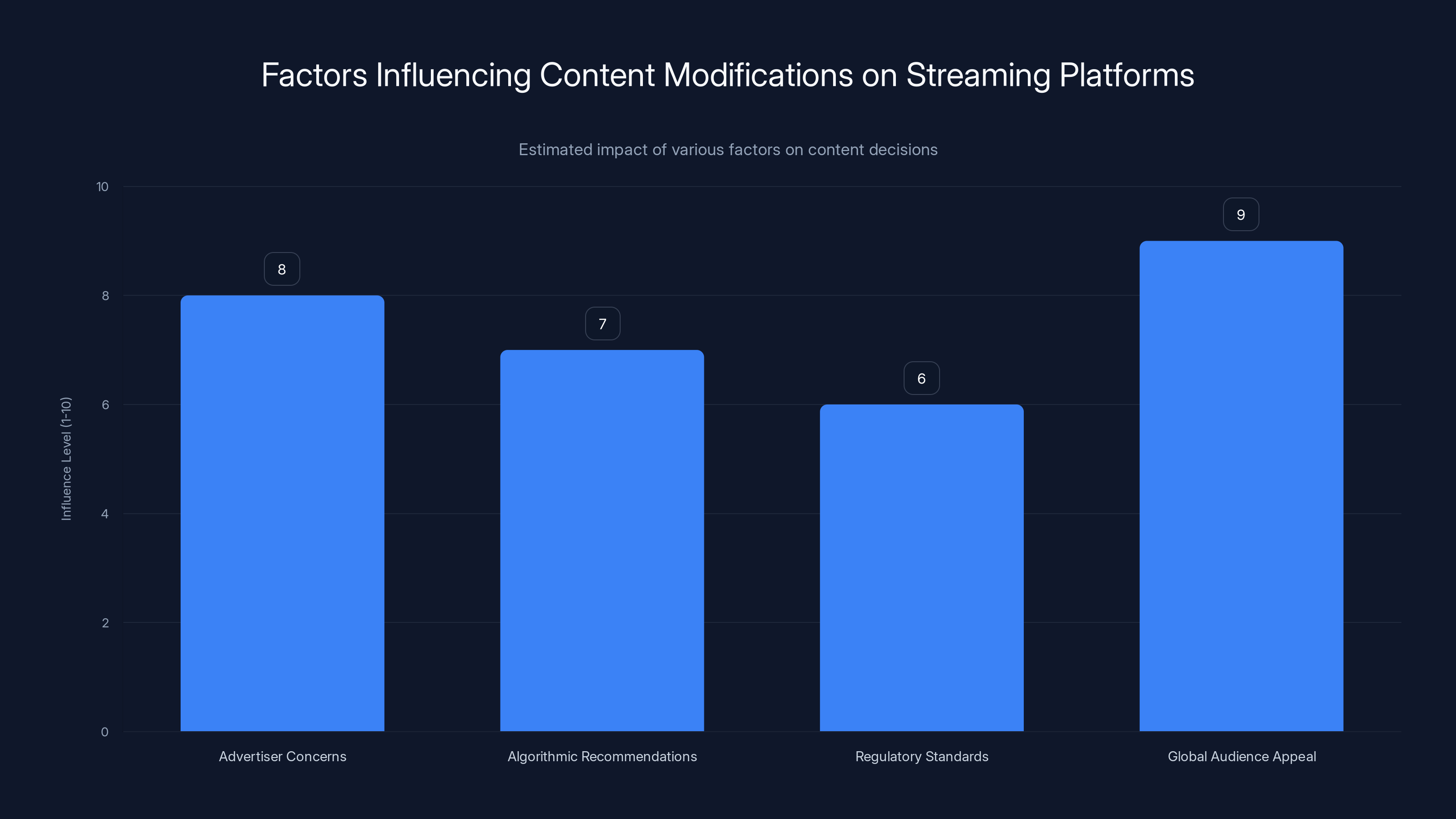

Streaming platforms prioritize global audience appeal and advertiser concerns when modifying content, with estimated influence levels shown.

Industry Response: How Filmmakers Are Adapting

Craft professionals are adapting in various ways to platform content requirements.

Some are explicit about their adaptation. A screenwriter will draft a script knowing that certain scenes will need modification and will write them in ways that gracefully accommodate cutting. It's like writing with the editing process baked in.

Others are pushing back subtly. They're shooting multiple versions of contentious scenes, knowing that some will be cut but giving the platform the option while maintaining their own cut for other purposes. Still others are increasingly looking toward theatrical releases or film festivals as venues for their uncompromised vision, accepting streaming as secondary distribution.

And some creators are simply deciding to work less with streaming platforms and more with traditional studios, which ironically sometimes have more permissive content policies because theatrical audiences expect different standards than streaming audiences.

There's also growing conversation in filmmaker communities about transparency. Some argue that platforms should be required to clearly label modified content, indicating what was changed and why. This would at least make the compromise visible to audiences and creators.

So far, platforms have resisted this transparency. Their argument is that it's no different than how theatrical distributors would edit films. But the scale is different. A theatrical distributor might edit a film for one region. A streaming platform is editing for a potential audience of 150 million viewers across dozens of markets at once.

What The Wrecking Crew Reveals About Creative Power in the Streaming Era

At its core, the story of The Wrecking Crew and Amazon's content demands reveals something fundamental about power in modern entertainment.

For most of cinema history, studios held content approval power. But studios were distributed, regionalized, competing. A filmmaker unhappy with one studio could pitch to another. There was competitive pressure to let creatives do interesting work.

Now, a handful of platforms control access to the largest audiences: Netflix, Amazon Prime, Disney+, and a couple of others. If you want your film to reach hundreds of millions of people, you work with one of them. And they all have content standards. Work with all of them and you're constrained by the most restrictive standards among them.

This isn't a conspiracy. No one is sitting around plotting to control creative output. It's emergent from the economics of global streaming distribution. But the practical effect is real: a small number of companies now have editorial control over a massive percentage of filmed entertainment.

The Wrecking Crew situation is notable partly because it was public. Most of these negotiations happen privately. You'll never know how many scripts were never greenlit because they couldn't meet platform standards. How many completed films sit in vaults because they exceeded content tolerances. How many filmmakers accepted modifications without publicly discussing it.

What we see with The Wrecking Crew is just the visible tip of a much larger iceberg.

The Uncut Potential: Imagining What The Wrecking Crew Could Have Been

Without access to the uncut footage, we can't know exactly how much more violent the original version was. But based on filmmaker commentary and industry understanding, we can speculate about what the film might have been.

A version where physical consequences felt real. Where a beating didn't just result in bruises but in actual impairment. Where injuries accumulated realistically rather than being forgotten by the next scene. Where death was treated as something consequential and final rather than something the plot moved past quickly.

These aren't gratuitous embellishments. They're consistency. They're a world where actions have proportional consequences.

An uncompromised Wrecking Crew might have been darker, less comfortable to watch, and more challenging emotionally. It might have also been more powerful. The edited version is probably perfectly entertaining. But entertainment and artistic impact aren't always the same thing.

This imagined version matters because it highlights what's lost. Not just the violence itself, but the tone and worldview it communicates. A world where bad decisions have bad outcomes feels different than a world where consequences are implied but not shown.

There's a counterfactual history of cinema that would be interesting to examine. What if streaming platforms with their content standards had existed during the 1970s? How would that have shaped the New Hollywood era? Would we have gotten Taxi Driver? Would we have gotten Apocalypse Now?

We can't know. But it's worth asking because it highlights how platform standards aren't neutral. They actively shape what kinds of stories get made.

Estimated data shows that user preferences and watch time are the most influential factors in streaming content decisions, guiding how content is edited and recommended.

The Broader Implications: How This Affects All Streaming Content

The Wrecking Crew isn't unique. It's exemplary. Similar conversations are happening with other productions right now.

This creates a gravitational pull toward content that plays it safe. Studios notice that films with less mature content perform better algorithmically. So they greenlight fewer projects with mature themes. Screenwriters notice that certain subjects are harder to sell. So they write fewer scripts about those subjects.

Over time, this selects for certain kinds of stories and against others. Not through explicit censorship, but through market incentives and algorithmic pressure.

The most insidious aspect is how invisible it is. Audiences don't know that a script was modified before shooting. They don't know that a scene was shot multiple ways and the stronger version was removed. They just see the final product and judge it on its own merits, unaware of the invisible constraints that shaped it.

Curiously, this might create opportunities for platforms willing to position themselves differently. A streaming service that explicitly supported uncompromised creative vision and marketed itself as "filmmaker-friendly" could differentiate itself. But that requires accepting lower algorithmic performance, smaller audiences, and advertiser resistance. It's a harder business model.

So instead, we'll likely see increasing convergence toward similar content standards across platforms. Not because they're copying each other, but because the same market forces apply to all of them.

The Consumer Perspective: Does the Audience Know or Care?

Here's the uncomfortable truth: most viewers probably won't care that The Wrecking Crew was edited for content. They'll watch it, they'll either enjoy it or they won't, and they'll move on. The creative compromise is invisible to them.

Some might argue that's fine. If audiences aren't harmed by the modification, does it matter that it happened? This is where art and commerce diverge in interesting ways.

From a consumer perspective, getting good entertainment is what matters. If the edited version is still entertaining, mission accomplished.

From an artistic perspective, whether the modification happened matters because it affects the integrity of the creative work, even if audiences can't directly perceive the difference.

These aren't really opposing views. They just prioritize different things. A casual consumer prioritizes entertainment. A cinema enthusiast prioritizes artistic integrity. Both are valid preferences.

What matters is that audiences have the choice to know about modifications if they care about that. Transparency allows people to make informed choices about what they're watching and why.

Interestingly, some audiences actively prefer the uncompromised version even if they don't consciously know that's what they prefer. There's research suggesting that audiences respond emotionally to content that feels authentic and consistent within its own world. A film where violence has real consequences often feels more authentic than one where it doesn't, even if audiences can't articulate why.

The Platform Defense: Why Amazon's Decisions Make Business Sense

To be fair to Amazon and similar platforms, their content decisions aren't irrational or malicious. There's legitimate business logic.

Reaching 150 million potential viewers globally requires content that doesn't trigger regulatory issues in major markets. It requires content that advertiser partners are comfortable with. It requires content that performs well with algorithmic recommendations. These aren't unreasonable considerations.

Platforms argue they're respecting audience preferences. If most viewers prefer content without extreme violence, why shouldn't they edit accordingly? That's a valid question.

They also argue they're enabling content that wouldn't otherwise exist. A filmmaker with a $50 million budget from a streaming platform has more resources than they might otherwise have had. If that requires accepting some content modifications, isn't that a reasonable trade?

Platforms would also note that they're not requiring anything different from what theatrical distributors require. Theatrical releases get edited all the time. The difference is scale, not principle.

These are genuine arguments. The question isn't whether platforms have a right to make these decisions. They clearly do. The question is whether the cumulative effect of those decisions, across multiple platforms with overlapping audiences, creates an environment where certain kinds of stories become increasingly difficult to tell.

That's a harder question to answer because it's systemic rather than individual. One platform's decision is reasonable. Multiple platforms making similar decisions in parallel, even without explicit coordination, creates a different dynamic.

Looking Forward: The Future of Creative Freedom in Streaming

Where does this go from here? Several possibilities seem likely.

First, filmmakers will increasingly develop separate versions of projects from the start. An uncompromised director's cut for festivals and cinephile audiences, and an edited version for streaming platforms. This already happens, but it'll become more systematic.

Second, there will likely be increased demand for transparency. Audiences and creators will start insisting that platforms clearly label and explain modifications. Some filmmakers might demand credits stating that content was edited from the original vision.

Third, we might see the emergence of smaller streaming platforms positioning themselves as more filmmaker-friendly alternatives. If a creator wants their uncompromised vision distributed, they might go to a smaller platform with lower reach but higher creative freedom.

Fourth, there could be regulatory development. As governments worldwide grapple with streaming platform power, there might be rules requiring greater transparency about content modification or limiting platforms' ability to make unilateral editorial decisions.

Finally, the market itself might eventually correct. If streaming platforms become too conservative with content and traditional theatrical releases maintain more creative freedom, audiences and creators might migrate back to theatrical distribution for premium content, while streaming becomes known for safer, more family-friendly programming.

None of these outcomes are inevitable. But they represent how the creative industry might adapt to and resist the platform consolidation that's happening now.

The Bigger Picture: What Platform Power Means for Culture

Zooming out from The Wrecking Crew and looking at the broader landscape, something significant is happening to how culture gets created and distributed.

For decades, movies and television operated under different content standards. Movies could be edgier because they had age-gated theatrical distribution. Television was broadcast into homes and had to be more family-friendly.

Streaming collapsed this distinction. A film distributed via streaming reaches the same viewers as a television series. So platforms apply television-adjacent standards to films.

This isn't necessarily bad. It's just different. A generation that grows up watching streaming content will have a different relationship to violence, language, and mature themes than previous generations because the content they consume will have different standards.

There's also a democratization aspect. Streaming platforms have lower barriers to entry than theatrical distribution. More creators can get their work to audiences. But that democratization comes with standardization. The platform's content requirements apply equally to everyone. You lose the ability to differentiate through being edgier than competitors because the platform doesn't allow it.

Culturally, this means we're entering an era where a smaller number of companies exert more influence over what stories get told and how. They're not directing what gets made, but they're setting parameters within which creation happens.

Some of the most consequential art in history happened when creators pushed against boundaries. Challenged what was acceptable. Forced audiences to confront things they'd prefer to ignore.

When boundaries are set by algorithms and advertiser preferences rather than by societal values, something shifts. You're not pushing against culture. You're pushing against a platform's risk profile.

FAQ

What exactly did Amazon ask The Wrecking Crew filmmakers to change?

While specific details remain proprietary, filmmakers publicly discussed that Amazon requested modifications to scenes depicting violence. This included toning down the visual impact of physical violence, reducing graphic imagery in death scenes, and obscuring moments of injury impact. The changes weren't cuts but modifications that reduced the visceral reality of depicted violence while maintaining narrative integrity.

Why would streaming platforms care about violence levels if audiences don't?

Streaming platforms care about violence levels for multiple reasons. Advertiser partners want content-safe programming environments. Algorithmic recommendation systems perform better with broader audience appeal. International regulatory environments have different standards, and creating one global version is simpler than regional cuts. The cumulative effect means platforms prefer content that appeals to the widest possible audience without triggering content warnings or advertiser concerns.

How does this compare to how traditional studios handled content?

Traditional studios also edited films, particularly for theatrical distribution. However, theatrical releases target regional audiences with known viewing standards. Streaming platforms simultaneously serve global audiences with vastly different cultural preferences. This creates pressure for more conservative standards globally rather than allowing regional variation. Additionally, streaming's algorithmic recommendation systems create financial incentives for modified content that didn't exist in traditional distribution.

Could filmmakers fight back against platform content requirements?

Filmmakers can negotiate with platforms, and some do. However, if a filmmaker refuses platform requirements, they risk losing financing, distribution reach, and guaranteed audience access. Independent filmmakers have more leverage than those dependent on platform financing. The real issue is that the largest audiences and budgets are controlled by platforms, creating an environment where refusing their requirements is economically difficult for most creators.

Does film quality suffer from platform content modifications?

That's debatable. Some argue that constraints force creative problem-solving that improves storytelling. Others argue that removing realistic consequences undermines emotional authenticity. The same edit can be viewed as improving pacing or reducing impact depending on perspective. What's not debatable is that modifications reflect platform preferences rather than pure artistic choice.

Will this trend continue, or are platforms likely to become more permissive?

Market forces currently favor more conservative content standards. As long as broader audiences and advertiser partnerships are more valuable than niche audiences, platforms will tend toward conservative editing. However, if smaller platforms successfully position themselves as filmmaker-friendly alternatives, or if regulatory pressure increases transparency, incentives could shift. For now, expect continued conservative standards across major platforms.

How can audiences find uncompromised versions of films?

Uncompromised versions typically appear in limited theatrical releases, film festival screenings, or special editions released after streaming distribution. These versions are usually harder to access than platform versions, which is often a deliberate distribution choice. Some filmmakers release director's cut editions years after the initial platform release. Following filmmakers' social media and interviews sometimes reveals when uncompromised versions are available.

What's the difference between legitimate editing and censorship?

Editorial decisions made for artistic reasons are legitimate creative choices. Content modifications required by external parties against the creator's wishes could be considered censorship, though not in the legal sense. The distinction matters because it determines whether modifications serve the story or serve external parties. Most platform modifications serve the latter while claiming the former.

Could regulations force platforms to be more transparent about content modification?

Regulations could require transparency about what content has been modified from the original submission. The European Union is developing regulatory frameworks that might address this. Transparency alone wouldn't prevent modifications but would at least inform audiences and creators about what's changed. This would resemble how film ratings work, where transparency about content is expected even when distributions vary.

What should filmmakers do when facing platform content requirements?

Filmmakers should understand platform standards upfront rather than discovering them late in production. Some may be able to negotiate specific modifications rather than accept blanket requirements. Others may choose to shoot multiple versions in anticipation of modifications. Ideally, creative teams would engage with platform partners early to understand which elements are negotiable. In the worst case, filmmakers might choose to pursue theatrical or festival distribution for their uncompromised vision, accepting smaller initial audiences.

Conclusion: The Cost of Accessibility

The story of Amazon Prime Video requesting modifications to The Wrecking Crew is ultimately a story about trade-offs. Reach versus integrity. Audience size versus artistic vision. Accessibility versus authenticity.

No one is wrong in this equation. Amazon isn't wrong for wanting content that appeals to broad audiences and doesn't trigger regulatory issues. Filmmakers aren't wrong for wanting their vision realized without compromise. Audiences aren't wrong for wanting content that aligns with their preferences.

The challenge is that these preferences don't always align, and someone has to decide what gets made. Currently, that someone is the platform that controls distribution to the largest audience.

What's changed isn't that content gets edited. It's always been edited. What's changed is who does the editing. For most of cinema history, filmmakers and studios controlled this process internally. Now, platforms that prioritize algorithmic performance and advertiser relationships hold significant editorial power.

The Wrecking Crew situation will likely remain a minor example in film history. The movie will be watched, probably enjoyed by its audience, and eventually forgotten by everyone except cinema enthusiasts. But it serves as a visible reminder of invisible forces reshaping how stories get told.

As audiences and creators, we should probably know this is happening. We should understand that the content we watch has already been shaped by platform requirements. We should appreciate that someone, somewhere, had to compromise their vision to reach us.

And we should probably ask ourselves whether we want platforms to have this much editorial power. Because once you've accepted that creative decisions should serve algorithmic performance, it's hard to go back to the idea that they should serve truth.

The edited version of The Wrecking Crew is probably perfectly fine. Entertainment-wise, audiences probably won't notice the difference. But the difference is there. And differences add up. Over time, when hundreds of creative decisions are shaped by platform requirements, the culture that emerges from those decisions is different.

Not worse necessarily, but different. More conservative, more appealing to broader audiences, less likely to challenge or provoke. A culture shaped by what works well algorithmically rather than what resonates emotionally.

That's not a judgment. That's just what happens when a small number of companies control distribution for the largest audiences. The invisible hand of the market makes editorial decisions, and we all adjust accordingly.

So the next time you watch a streaming film and notice a scene that seems to cut away just before something significant happens, remember The Wrecking Crew. Remember that you're watching not just a filmmaker's vision, but a collaborative compromise between creative intent and platform requirements. That knowledge doesn't change what you're watching, but it might change how you think about what you're watching.

And that, ultimately, is what matters. Not whether violence gets softened or scenes get cut, but whether we understand that these decisions are being made and who's making them. Visibility doesn't solve the problem, but it's the first step toward informed choices about the media we consume and the culture we support.

Key Takeaways

- Amazon Prime Video requested violence modifications to The Wrecking Crew, removing or softening brutal scenes to broaden audience appeal

- Streaming platforms now function as editorial gatekeepers, shaping creative decisions through algorithmic requirements and advertiser pressure

- Multiple platforms making similar conservative content choices creates de facto standardization of what stories can be told

- Filmmakers increasingly self-censor during production, anticipating platform requirements rather than fighting them after completion

- Content modifications are invisible to audiences but cumulatively reshape how violence, consequences, and mature themes are depicted in cinema
