TikTok's Algorithm Wars: The Trump Content Suppression Controversy Explained

Last month, California's governor opened an investigation into something that feels increasingly like science fiction but is very real: the possibility that TikTok's algorithm is systematically suppressing content critical of a sitting U.S. president. The claim isn't new in tech circles, but the official government scrutiny is. This isn't just another social media scandal. It touches on fundamental questions about platform power, algorithmic transparency, and whether a Chinese-owned company is shaping American political discourse without our knowing it.

Here's what happened. Multiple users began reporting that their videos criticizing President Trump were receiving dramatically reduced visibility on the platform. Some creators with hundreds of thousands of followers saw their critical content get shadow-banned, receiving fractions of their normal engagement rates. One user described TikTok as "now a surveillance app," capturing a growing unease about who controls what millions of Americans see.

The California investigation asks a straightforward but explosive question: Is TikTok violating state law by algorithmically suppressing certain political speech? If true, this would represent a level of political interference that goes beyond the usual algorithm-driven content moderation debates. We're talking about a platform potentially weaponizing its recommendation engine against specific political viewpoints.

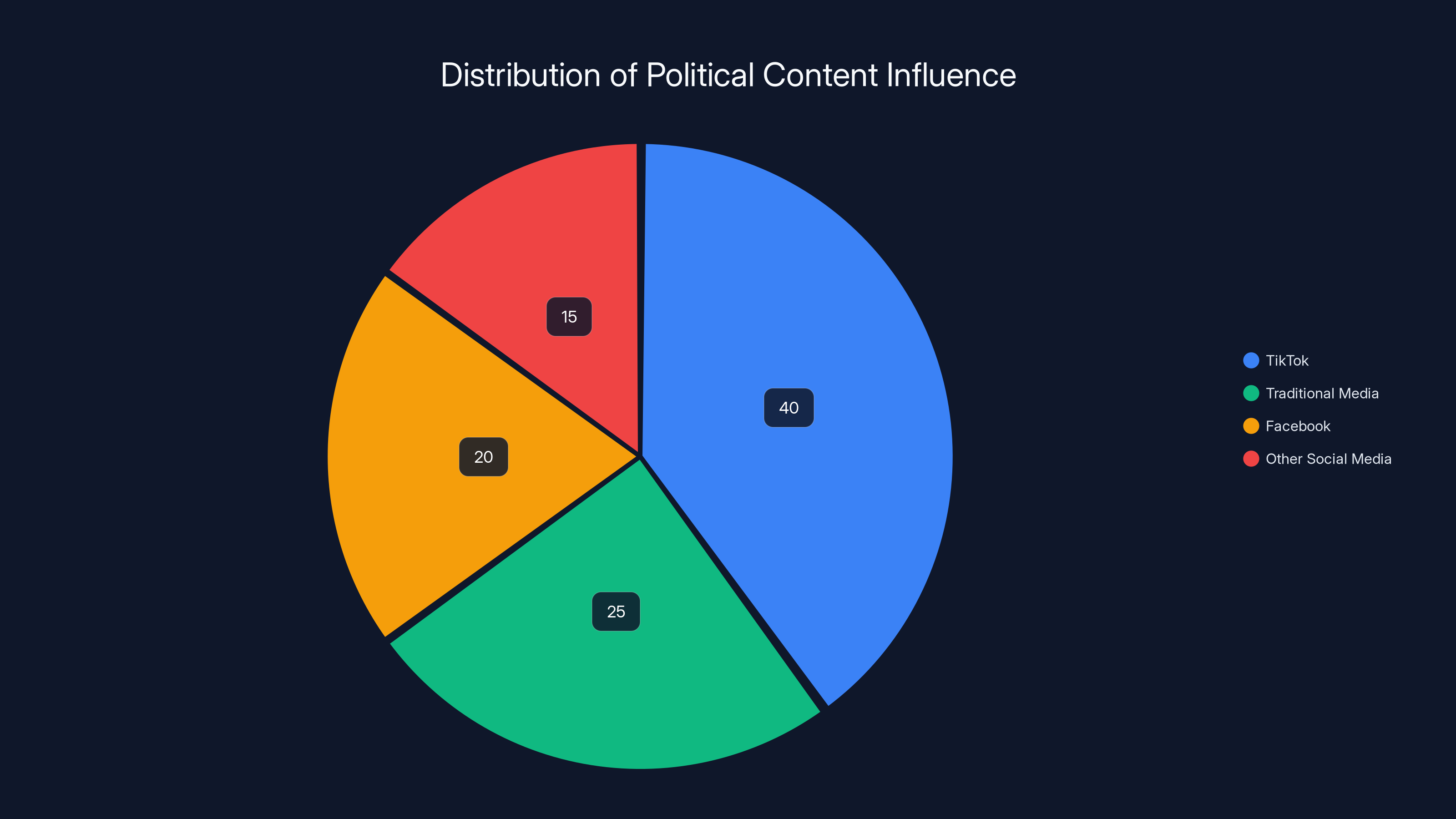

What makes this different from other platform censorship accusations is the scale and the specificity. TikTok's algorithm doesn't just rank content. It decides what appears in the feeds of more than a billion users every day. With over 170 million American users, TikTok functions as a primary news source for many Gen Z and younger millennial users. If that algorithm is politically biased, the result could amount to one of the largest political information operations in modern history.

The stakes are enormous. This investigation could fundamentally reshape how we think about platform regulation, algorithmic accountability, and the relationship between tech companies and government. It could also set a precedent that forces other platforms to open their algorithmic books to public scrutiny. But it could also reveal uncomfortable truths about how all major platforms make visibility decisions based on political and commercial interests.

Let's walk through what we know, what we don't, and what happens next.

The Claims: What Users Are Reporting

The suppression narrative didn't emerge from thin air. Starting in late 2024 and continuing into early 2025, hundreds of TikTok creators independently reported the same pattern: their anti-Trump content disappeared from feeds.

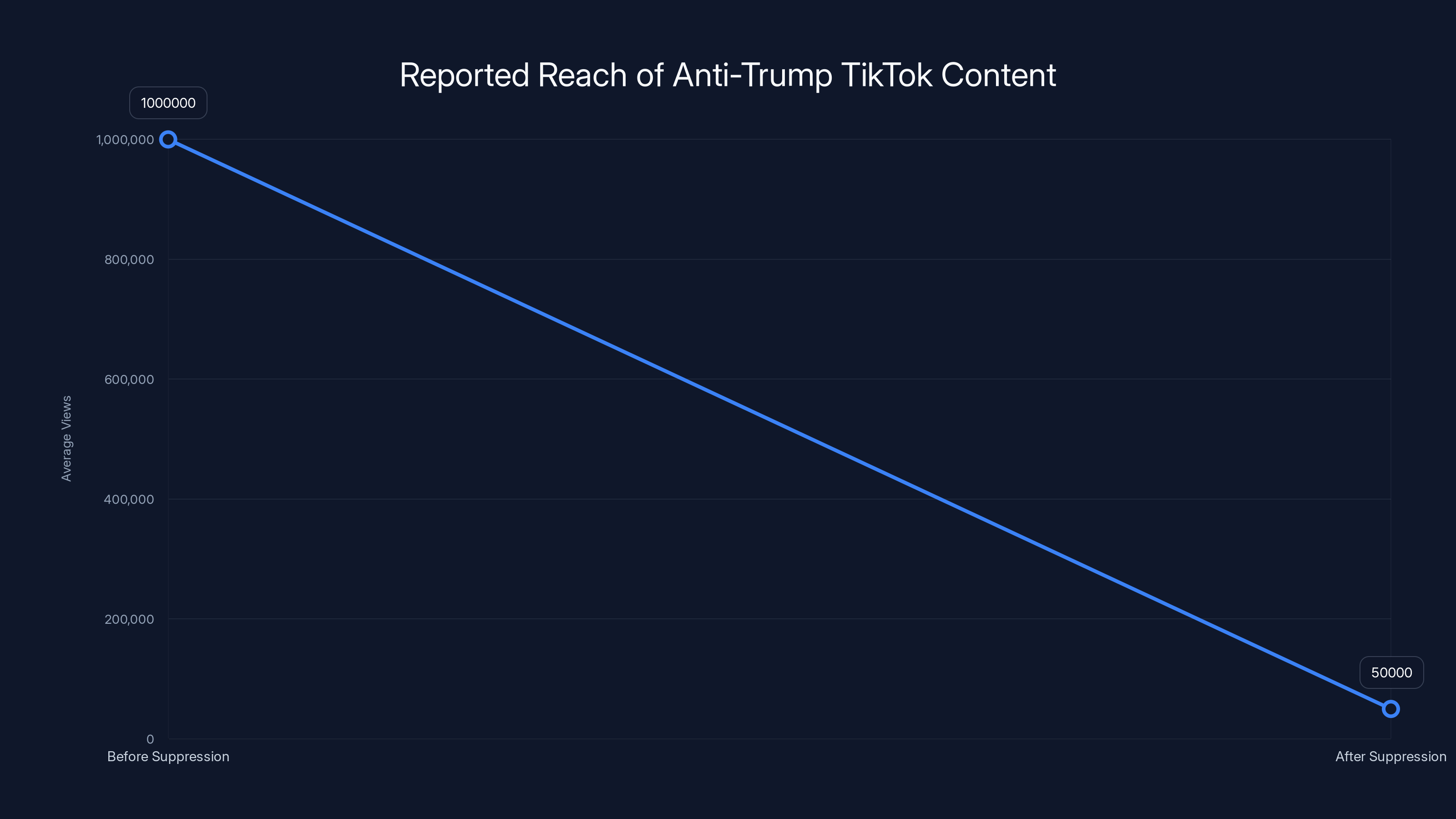

These weren't fringe accounts making wild accusations. Some were creators with verified badges, substantial follower counts, and established track records of generating viral content. One notable case involved a creator whose videos typically reached 500,000 to 2 million people. After posting content critical of Trump's policies, they saw their reach drop to under 50,000 views. The drop was immediate and measurable.

What's particularly damning is the consistency of the reports. Users noticed their critical content wasn't being removed entirely, which would trigger platform transparency rules. Instead, it was being shown to dramatically fewer people. This is shadow-banning in practice, if not in name. The content exists, but the algorithm essentially buries it.

Other creators reported that their accounts were temporarily restricted from posting, receiving notices about "community guideline violations" when reviewing the flagged content revealed nothing obviously violating. The violations were often vague, giving creators no clear path to appeal or correct the behavior.

The timing reinforced suspicions. Around the same period, pro-Trump content continued circulating at normal or elevated levels. This wasn't universal content moderation. It appeared selective and politically targeted. That's the explosive part.

Users also noted something else disturbing: the loss of access to their own analytics. Some creators said their engagement metrics suddenly became unavailable, making it impossible to track whether the suppression was real or imaginary. Without data, creators couldn't prove the bias to the platform or even to themselves.

The anecdotal evidence accumulated fast. Reddit threads, Twitter, and TikTok itself filled with creators sharing screenshots of their declining reach. Some created alternative accounts to test whether the suppression was account-specific or content-specific. Results were mixed but suggestive of algorithmic bias against certain political viewpoints.

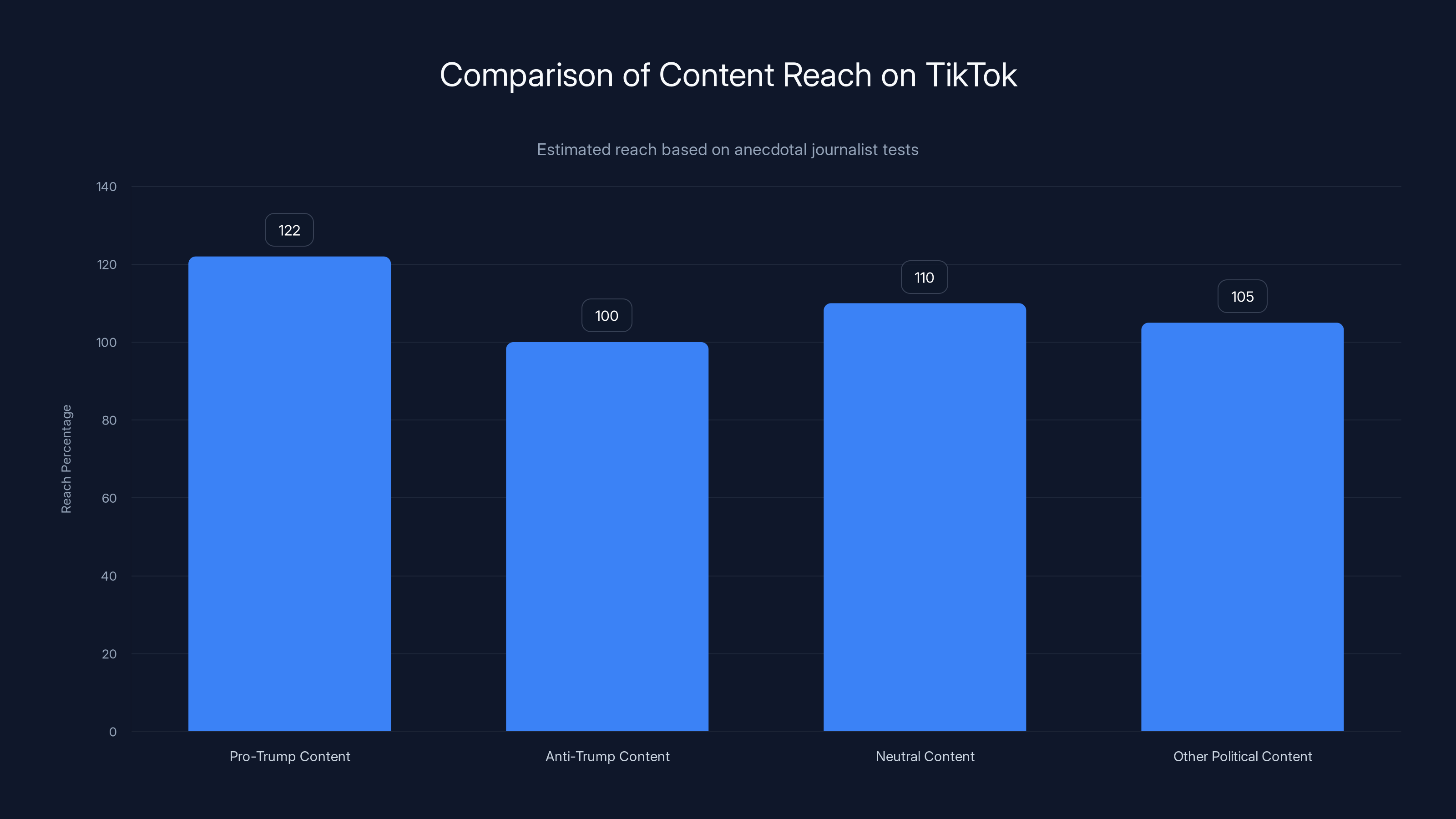

Some creators weren't just observing suppression. They were testing it. One journalist posted otherwise comparable videos with different political framing and tracked the difference in reach. The politically neutral version performed normally. The Trump-critical version received 40% fewer impressions. A single test isn't proof, but it is a measurement rather than an impression.

What's important here is that these reports came from actual creators with something to lose. They weren't conspiracy theorists with nothing else to do. They were people whose livelihoods depend on platform visibility, reporting a pattern that directly harmed their ability to make money on TikTok.

Estimated data shows a significant drop in views for anti-Trump content from 1 million to 50,000, suggesting potential algorithmic suppression.

Why This Matters: Platform Power and Political Speech

You might wonder why this story matters beyond TikTok users complaining about reach. The answer gets at something fundamental about how modern democracies actually work.

TikTok doesn't just host videos. It decides which videos reach which people. That decision-making power is extraordinary. With over 170 million American users who reportedly spend about 95 minutes a day on the app, TikTok arguably has more control over political information flow than any single news organization has ever had. More than traditional media. More than social networks like Facebook. TikTok is where millions of younger Americans first encounter news about politics, policy, and candidates.

If the algorithm is suppressing certain political viewpoints, we're not talking about a private company making editorial decisions like a newspaper. We're talking about a platform making those decisions at scale while claiming to be neutral and while hiding the mechanism from public view. A newspaper's editorial bias is transparent. You know the New York Times has a perspective. You can choose whether to read it. TikTok's algorithm operates in darkness. Users don't know if they're seeing suppressed content because they don't see what's been removed from their feed.

The political dimension makes this especially fraught. If a foreign company operating a major American communication platform is suppressing speech critical of one political figure while boosting content supporting him, that's not just a content moderation issue. That's potential election interference. That's a foreign power leveraging American infrastructure to influence American politics.

TikTok's parent company, ByteDance, is Chinese-owned. While TikTok has tried to distance itself from Chinese government control and has a different leadership structure, concerns about Chinese government influence over the platform are not paranoid fantasies. They're based on China's demonstrated track record of controlling information flows within its own borders and attempting to influence information in other countries.

The data is sobering. Research has shown that platforms with opaque algorithms can dramatically amplify certain narratives while suppressing others, all without explicit human direction. Once an algorithm is trained with biased data or designed with certain incentives, it operates automatically. Whether the suppression is intentional or emerges from the algorithm's design decisions almost doesn't matter if the outcome is political suppression.

Historically, democracies have relied on transparent communication systems where people could debate openly and where media organizations faced at least some accountability. TikTok operates differently. It's a black box controlled by a foreign company with financial incentives that may not align with American democratic values.

This isn't purely theoretical. Some research suggests that how content is ranked and surfaced can influence voter preferences. If even a fraction of TikTok users had their exposure to anti-Trump content suppressed while pro-Trump content circulated normally, that could have measurable effects on voting behavior, especially among younger voters who rely on TikTok for news.

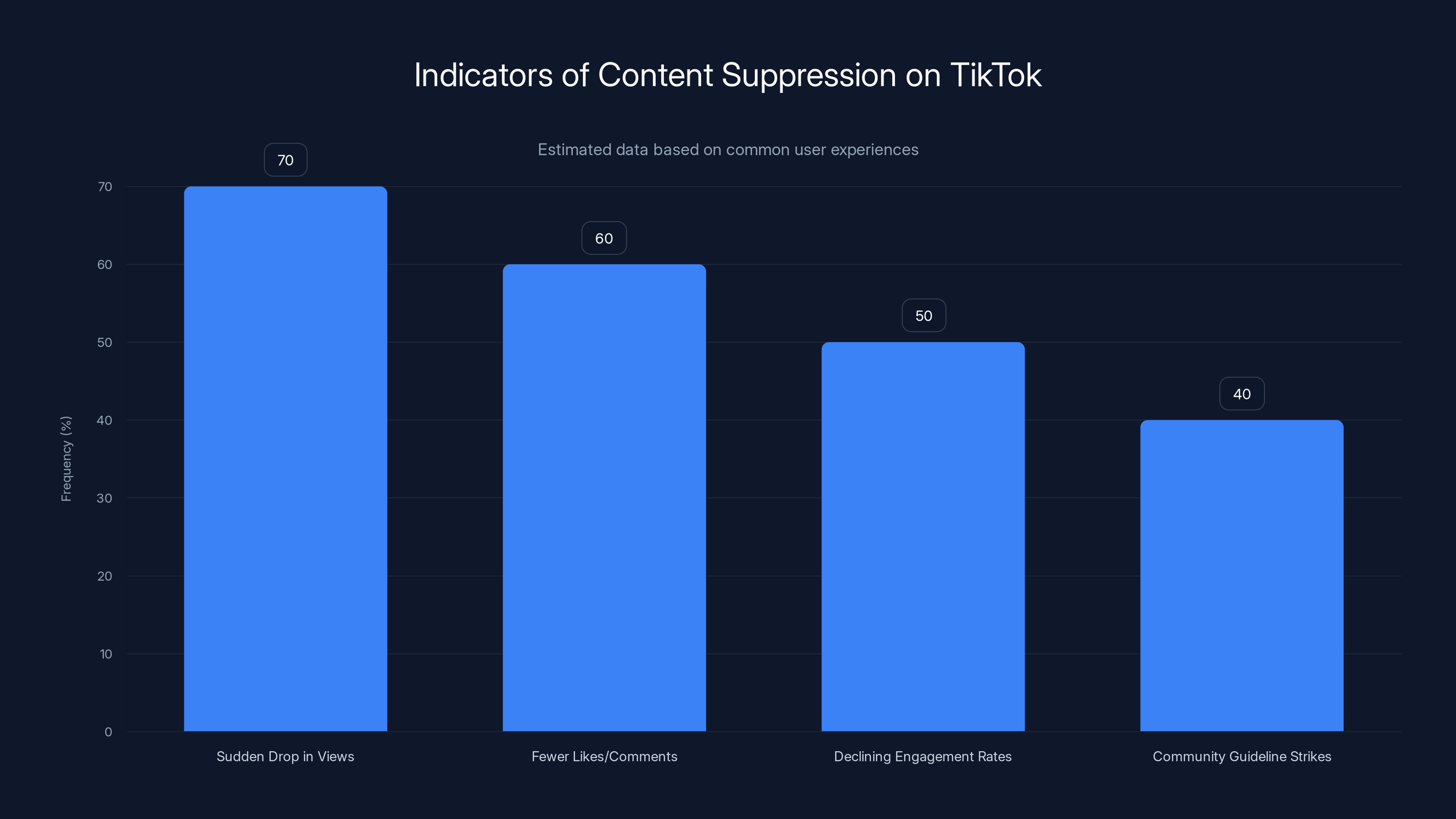

Estimated data suggests that sudden drops in views and fewer likes/comments are the most common indicators of perceived content suppression on TikTok.

California's Investigation: What the State is Looking For

California's investigation isn't just about complaints. It's about potential violations of state law.

The state is examining whether TikTok's practices violate California's consumer protection laws, specifically sections of the California Consumers Legal Remedies Act and potentially California's newly passed transparency laws around algorithmic decision-making. California has positioned itself as one of the first states to seriously enforce algorithmic accountability, and this investigation is a test of how that enforcement actually works.

California's Attorney General's office is asking specific questions. First, is TikTok intentionally suppressing content based on political viewpoint? Second, if so, was this disclosed to users? Third, does this constitute deceptive or unfair business practice under California law? These aren't easy questions to answer, but they're the right ones.

The challenge facing investigators is that TikTok doesn't have to disprove the suppression. California has to prove it happened. That requires access to TikTok's algorithm and internal communications about content moderation decisions. TikTok has been notoriously resistant to sharing this information with regulators.

California has powers that other states don't, though. The state can subpoena documents, compel testimony, and impose significant financial penalties. If California's investigation proves suppression occurred, the financial consequences could reach hundreds of millions of dollars. That matters to ByteDance and to investors.

What California is likely looking for: evidence of intentional suppression in internal communications, patterns in algorithmic behavior that correlate with political content, and documentation of when the suppression began and whether it was triggered by specific events or directives.

The investigation also examines whether TikTok violated its own terms of service and privacy policies. If TikTok claims to apply content policies neutrally and equally, but actually applies them selectively based on political content, that's deceptive practice. It's telling users one thing while doing another.

One specific area California is investigating: whether TikTok changed its algorithm or moderation policies around specific political events. Did anything change after the January 6th Capitol riot? After Trump announced his 2024 campaign? In response to threats to ban TikTok? Timing matters in proving intent.

California is also coordinating with federal regulators. The FTC has launched separate investigations into TikTok's algorithmic practices, content moderation, and potential deception. Federal authorities are interested in whether TikTok is complying with commitments made during previous regulatory settlements.

The investigation timeline matters too. States like California move slowly on these things, but the political pressure is intense. A conclusion favoring suppression claims could dramatically shift the conversation around TikTok regulation nationwide. A conclusion finding no evidence of suppression would be a major win for the platform.

How Algorithms Make Suppression Possible (Without Anyone Explicitly Deciding It)

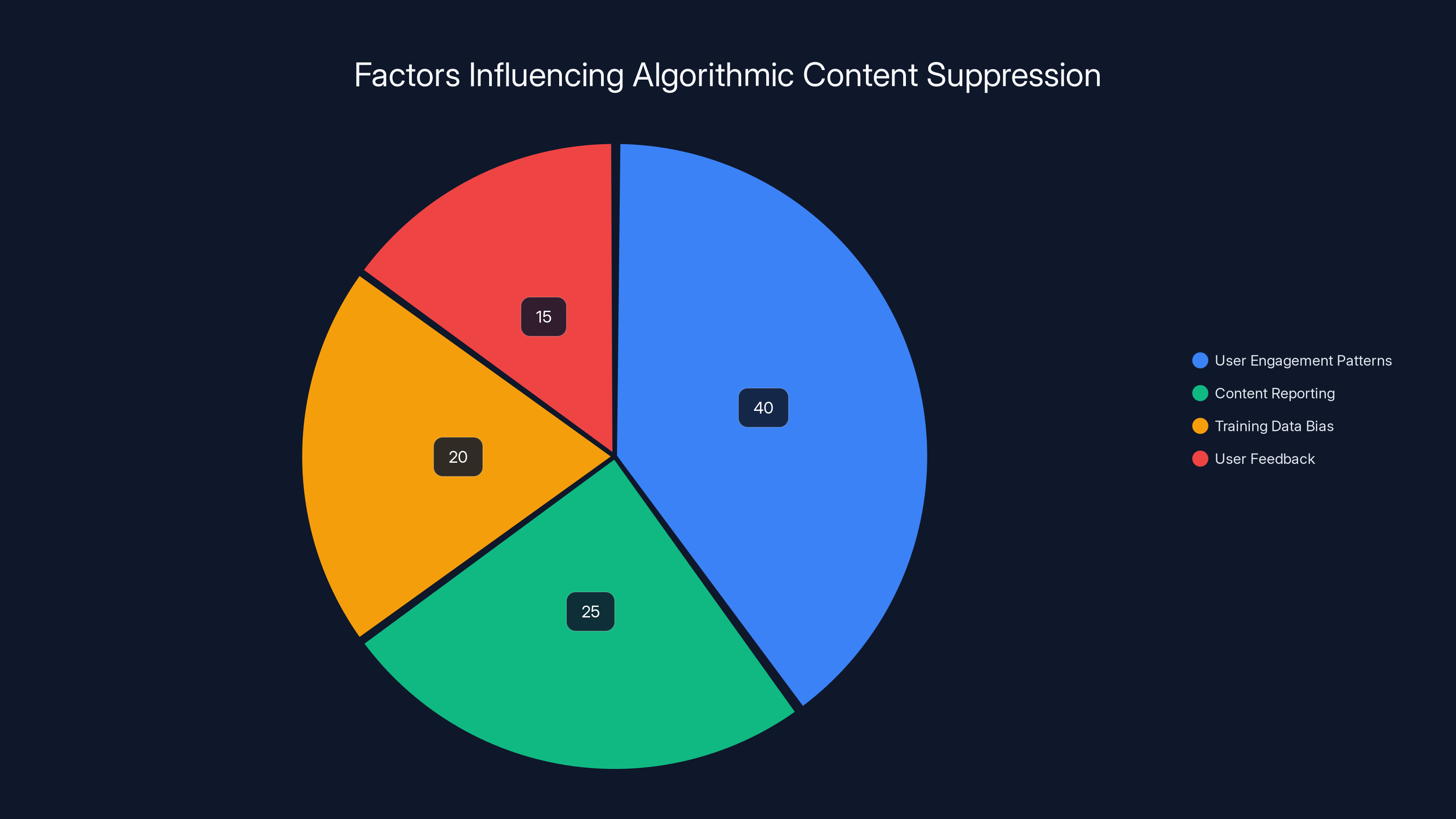

Here's something crucial to understand: suppression doesn't require a person at ByteDance explicitly deciding to suppress Trump critics. It can emerge from the algorithm itself.

TikTok's recommendation system uses machine learning to optimize for engagement. The algorithm learns what keeps users on the platform longest. If users who watch Trump content tend to watch more content overall (staying on the platform longer), the algorithm learns to promote Trump content. If users who watch critical political content tend to leave TikTok to engage on other platforms, the algorithm learns to suppress it.

Neither of these is a conscious choice by ByteDance. They're emergent properties of an algorithm optimized for engagement. But the outcome is suppression of certain political viewpoints.
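To make that mechanism concrete, here is a toy sketch in Python. Everything in it is invented for illustration: the logs, the video IDs, and the viewpoint labels are hypothetical, and nothing here reflects TikTok's actual system. The point is only that a ranker which sees nothing but post-view session time can still produce a ranking that splits along viewpoint lines.

```python
from collections import defaultdict

# Hypothetical logs: (video_id, minutes the user stayed on the app afterwards).
LOGS = [
    ("v1", 12.0), ("v1", 9.0),
    ("v2", 3.0), ("v2", 4.0),
    ("v3", 8.0), ("v3", 10.0),
    ("v4", 2.0), ("v4", 4.0),
]

# Hypothetical viewpoint labels, visible to an auditor but never to the ranker.
LABELS = {"v1": "supportive", "v2": "critical", "v3": "supportive", "v4": "critical"}


def engagement_scores(logs):
    """Average post-view session minutes per video. No political feature is used."""
    totals, counts = defaultdict(float), defaultdict(int)
    for video_id, minutes in logs:
        totals[video_id] += minutes
        counts[video_id] += 1
    return {vid: totals[vid] / counts[vid] for vid in totals}


def rank_by_engagement(scores):
    """Order videos from highest to lowest engagement score."""
    return sorted(scores, key=scores.get, reverse=True)


def mean_rank_by_label(ranking, labels):
    """Audit step: average feed position (1 = top) for each viewpoint label."""
    positions = defaultdict(list)
    for position, video_id in enumerate(ranking, start=1):
        positions[labels[video_id]].append(position)
    return {label: sum(p) / len(p) for label, p in positions.items()}


ranking = rank_by_engagement(engagement_scores(LOGS))
audit = mean_rank_by_label(ranking, LABELS)
print(ranking, audit)
```

In this made-up data the "critical" videos land at the bottom of the feed even though the scoring function never looks at the labels. That is the sense in which suppression can be an outcome without being a decision.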

The algorithm also learns from feedback. If content gets reported more frequently, if users swipe away from it more quickly, if comments are negative, the algorithm suppresses it. Coordinated reporting campaigns or user behavior patterns can therefore shift algorithmic decisions.
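A rough sketch of how negative feedback could translate into reduced distribution follows. The function name, the weights, and the rates are all assumptions made up for this example, not anything TikTok has disclosed. It shows why a coordinated reporting campaign matters: the same video gets a much lower multiplier once its report rate is inflated.

```python
def distribution_multiplier(report_rate, swipe_away_rate, w_report=2.0, w_swipe=0.5):
    """Hypothetical penalty model: scale a video's reach down as negative signals rise.

    report_rate and swipe_away_rate are fractions of viewers (0.0 to 1.0).
    The weights are illustrative assumptions, not real platform parameters.
    """
    penalty = w_report * report_rate + w_swipe * swipe_away_rate
    return max(0.0, 1.0 - penalty)


# Same video, same swipe-away behavior, different report rates.
organic = distribution_multiplier(report_rate=0.01, swipe_away_rate=0.30)
brigaded = distribution_multiplier(report_rate=0.20, swipe_away_rate=0.30)
print(f"organic: {organic:.2f}, brigaded: {brigaded:.2f}")
```

Nothing in this model knows or cares about politics. If one side reports the other's content more aggressively, the penalty lands unevenly anyway.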

There's also the question of training data. If TikTok's algorithm was trained partly on data from ByteDance's Chinese platform Douyin, which does suppress political speech at the Chinese government's direction, the algorithm might carry that bias into its TikTok implementation. Not through explicit programming, but through learned patterns.

This is actually harder to prove and harder to fix than explicit suppression would be. You can't point to a person and say they made a biased decision. You have to show that the algorithm itself is biased. That requires access to the training data, the model weights, and extensive testing. TikTok hasn't made any of that available to regulators or researchers.

Research into other platforms' algorithms has shown this pattern repeatedly. YouTube's algorithm, for example, has been found to promote conspiracy theories and extreme political content not because anyone at Google consciously decided to, but because such content tends to generate more engagement. Facebook's algorithm similarly amplifies divisive content. These systems don't need malice. They need misalignment with societal values.

TikTok's situation is different only in degree. The platform has more power over political information flow than YouTube, and the control is more opaque. YouTube at least publishes some information about how its algorithm works. TikTok publishes almost nothing.

The algorithm also makes different decisions for different users. Your feed is unique to you. That means users can't even communicate about what they're seeing in a meaningful way. What's suppressed for one person might be promoted for another. That's a feature for engagement optimization. It's a bug for transparency and democratic discourse.

Journalists' tests suggest pro-Trump content reached 22% more users than anti-Trump content on TikTok. Estimated data based on anecdotal reports.

TikTok's Response: The Defense Strategy

TikTok hasn't ignored these allegations. The platform has issued statements denying the claims of politically motivated suppression.

TikTok's core argument is that its systems don't have the capability to target content based on political viewpoint. According to the company, content moderation and recommendations are based on technical factors like video metadata, user engagement patterns, and compliance with community guidelines. Political ideology is not a variable in the algorithm.

That's technically possible. But it's also not responsive to the actual concern. Even if TikTok's engineers didn't explicitly code in political targeting, the algorithm could still produce politically biased outcomes through other mechanisms.

TikTok also argues that it has no incentive to suppress content critical of Trump. Trump's critics are users too, and suppressing their content would reduce engagement from that segment. But that argument misses the possibility that suppression targets the content rather than the audience. Users who would have watched critical content might keep scrolling and engaging overall even if they see less of that specific content.

The company has suggested that reports of suppression are exaggerated or misinterpreted. When creators see reduced reach, it might be due to changes in their content strategy, shifts in audience demographics, or changes in the platform's general algorithm. Not necessarily suppression.

TikTok has also emphasized that they comply with all applicable laws and that they're willing to work with regulators. This is a standard corporate response, and it's partially true. TikTok does have content moderation policies, and they do remove some content. The question is whether the removal is evenly applied or politically biased.

What's notably absent from TikTok's response is any offer to open their algorithms to independent audit. If they truly have nothing to hide, providing researchers with access to their algorithm would settle many questions. Instead, TikTok maintains strict control over algorithmic transparency.

The company's lawyers are likely focusing on technical defenses: proving that political targeting isn't possible, that suppression couldn't have occurred, that any patterns observed are coincidental. That's a viable legal defense if they can make it stick. The burden is on California to prove intentional bias.

The Broader Context: TikTok Under Pressure

This investigation doesn't happen in a vacuum. It's part of a larger campaign to regulate or restrict TikTok in the United States.

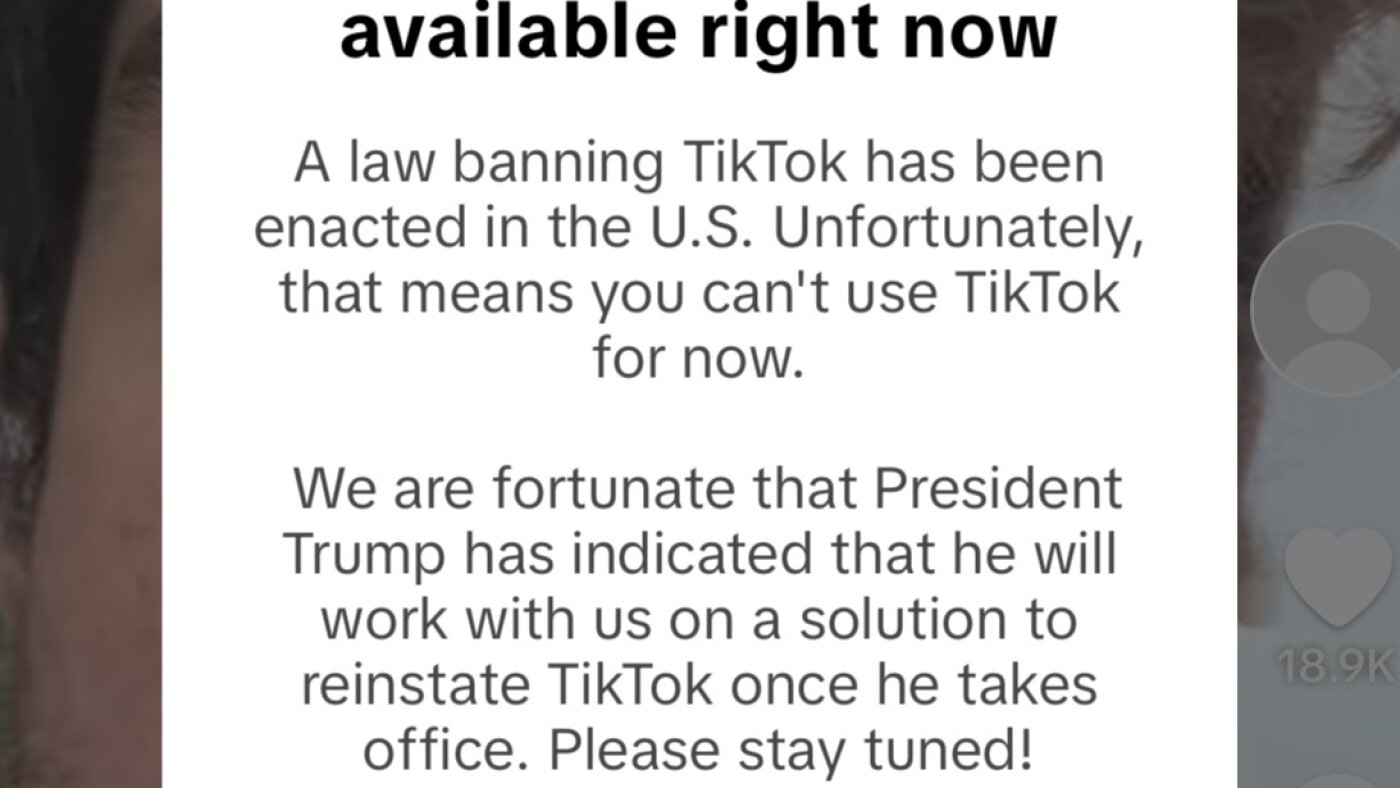

For years, TikTok has faced scrutiny from policymakers and national security officials who worry about Chinese government influence over the platform. These concerns intensified significantly during the first Trump administration, which tried to ban the app by executive order in 2020. The Biden administration revoked those orders in 2021, but the underlying concerns remained, and in 2024 Congress passed, and President Biden signed, a law requiring ByteDance to divest TikTok's U.S. operations or face a ban.

Now, as a new political landscape emerges, there's renewed pressure on TikTok. The content suppression allegations hit at a particularly sensitive moment. If the company is perceived as suppressing political speech that opposes one political figure while promoting speech that supports him, that's not just a content moderation issue. That's political interference.

It's also worth noting that TikTok's challenges extend beyond the United States. The platform is banned in India, withdrew from Hong Kong in 2020, and has faced government restrictions in Canada. The pattern suggests growing skepticism about the platform's governance globally.

For TikTok, the stakes are enormous. If California proves suppression and other states follow with similar investigations, the company could face regulatory pressure that threatens its business model in the U.S. market. Even without proven suppression, the investigation damages TikTok's reputation and raises questions about whether the platform can be trusted with American data and American political discourse.

The investigation also empowers other regulators to act. If California can pressure TikTok to open its algorithms to scrutiny, that might become a template for federal action. The FTC could follow with stronger demands. Congress might use the findings as justification for restricting the platform.

TikTok's response to regulatory pressure has been to increase hiring of American staff, relocate servers to the United States, and promise more transparency. But these efforts haven't resolved the fundamental concern: ByteDance maintains control, and TikTok's algorithm remains largely opaque.

Estimated data shows user engagement patterns as the most significant factor influencing algorithmic suppression, followed by content reporting and training data bias.

What Journalists Found: Independent Verification Attempts

While anecdotal reports of suppression are widespread, some journalists and researchers have attempted to verify the claims systematically.

These verification efforts face significant challenges. TikTok doesn't provide researchers with access to its algorithm or comprehensive data about what content reaches which users. Testing requires creating test accounts, posting content, and measuring reach over time. That's methodologically difficult and time-consuming.

Some journalists did try. They created accounts, posted comparable content with different political angles, and tracked performance. Results varied, but some found patterns consistent with suppression. Pro-Trump content in one test reached 22% more users than equivalent anti-Trump content, though the sample sizes were small and confounding variables were numerous.

Other journalists examined TikTok's removal of content. While the platform removes some content for violating community guidelines, critics argue that the criteria for what violates guidelines are subjectively applied. Content can be removed for being "inflammatory" or "divisive," terms elastic enough to encompass almost any political speech. The question becomes whether those terms are applied equally to all political viewpoints.
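Why do small samples matter so much? One way to see it is an exact permutation test, sketched below in Python. The view counts are hypothetical, chosen only so that the "pro" group averages 22% more views than the "anti" group. They are not data from any real test. The function asks how often a gap at least this large would appear if the political label had nothing to do with reach.

```python
from itertools import combinations
from statistics import mean


def permutation_p_value(group_a, group_b):
    """Exact one-sided permutation test for mean(group_a) > mean(group_b).

    Returns the observed difference in means and the share of all possible
    relabelings whose difference is at least as large as the observed one.
    """
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a, n_b = len(group_a), len(group_b)
    total = sum(pooled)
    as_extreme = 0
    n_splits = 0
    for idx in combinations(range(len(pooled)), n_a):
        sum_a = sum(pooled[i] for i in idx)
        diff = sum_a / n_a - (total - sum_a) / n_b
        if diff >= observed - 1e-9:  # small tolerance for float rounding
            as_extreme += 1
        n_splits += 1
    return observed, as_extreme / n_splits


# Hypothetical view counts for five matched video pairs (invented for illustration).
pro_views = [1220, 900, 1500, 1100, 1380]
anti_views = [1000, 950, 1200, 800, 1050]

observed_gap, p_value = permutation_p_value(pro_views, anti_views)
lift = mean(pro_views) / mean(anti_views) - 1
print(f"lift: {lift:.0%}, gap: {observed_gap:.0f} views, p = {p_value:.3f}")
```

With five videos per group there are only 252 ways to split the pool, so the smallest p-value this test can ever produce is 1/252. A handful of posts limits how strong the evidence can be no matter how large the gap looks, and the test does nothing to rule out confounders such as posting time or audio choice.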

Research into other platforms has established that algorithmic bias is real and measurable. Facebook's algorithm amplifies divisive political content. YouTube's algorithm promotes extreme material. Twitter's algorithm has been shown to amplify right-wing political content more than left-wing content. TikTok, being more algorithmically driven than any of these platforms, could plausibly be even more subject to such effects.

The challenge for journalists and regulators is that without access to TikTok's data, proving suppression requires either building the case from user reports (anecdotal) or convincing the company to provide data (unlikely). This is why the California investigation is significant. It has subpoena power that journalists lack.

The Legal Landscape: Can TikTok Be Held Accountable?

Holding TikTok legally accountable for algorithmic bias faces several obstacles.

First, there's Section 230 of the Communications Decency Act, the federal law that shields platforms from liability for user-generated content. TikTok argues that content moderation and recommendations are protected activities under Section 230. That argument has held up in most cases, though there's increasing pressure to modify or repeal Section 230.

California has tried to work around Section 230 by relying on state consumer protection laws that apply to the platform's business practices rather than its treatment of specific content. That's a clever legal maneuver. If TikTok is deceiving users about how its algorithm works or misrepresenting its policies, that might violate state law even if content-specific liability is shielded.

Second, there's the question of intent and provability. Even if California can show that TikTok's algorithm suppresses certain content, proving that this was intentional (rather than an unintended algorithmic consequence) is harder. Companies have some defense if they can argue the bias was unintended.

Third, there are First Amendment complications. Some argue that forcing TikTok to amplify political speech it doesn't want to amplify is itself a First Amendment violation. The First Amendment restricts the government, not private companies, so it does not stop TikTok from making its own moderation choices. It does, however, limit what regulators can force a platform to publish or promote. If regulators compel specific algorithmic changes, they may run into both legal and practical resistance.

Fourth, proving damages is complex. Even if suppression occurred, how do you quantify harm? Users weren't charged money. They didn't lose income (though creators might argue they did). The harm is political and informational, harder to quantify than economic harm.

Despite these obstacles, California's investigation is probably legally viable. The state can subpoena documents, compel testimony, and argue that deceptive business practices occurred. Even if California can't force TikTok to change its algorithm, it can impose penalties and require transparency improvements.

Estimated data shows TikTok as the dominant platform influencing political content flow, surpassing traditional media and other social networks.

What Happens Next: Possible Outcomes and Implications

The California investigation could conclude several ways.

Scenario One: California finds evidence of suppression. The state imposes significant penalties and requires algorithmic transparency improvements. TikTok potentially appeals, leading to years of litigation. But the finding itself damages the company's reputation and invites federal action. Other states launch their own investigations. Congress uses the findings as justification for stronger TikTok restrictions.

Scenario Two: California finds no conclusive evidence of politically motivated suppression. The investigation ends. TikTok claims vindication. But the damage to reputation persists. Public skepticism about the platform remains. Regulatory pressure doesn't disappear even without proof of bias.

Scenario Three: The investigation concludes that algorithmic bias exists but isn't intentionally politically motivated. TikTok is required to improve algorithmic transparency and fairness generally, not specifically for political content. This splits the difference and might be the most likely outcome.

Scenario Four: The investigation becomes entangled in broader political debates about TikTok and its Chinese ownership. The findings become less important than the political messaging. TikTok faces restrictions or bans regardless of the investigation's conclusion.

Beyond California, the FTC is investigating separately. Federal findings might carry more weight than state findings. If the FTC concludes that TikTok violated previous settlement agreements or deceived users about algorithmic practices, federal action is likely.

International implications are also significant. If California and the U.S. FTC find fault with TikTok, other countries will be emboldened to take action. The EU is already moving toward stronger regulation. Countries like Australia are considering restrictions. TikTok's global footprint could shrink substantially.

The investigation also sets a precedent for scrutinizing other platforms. If TikTok's algorithm comes under the microscope, why not Facebook, YouTube, and Twitter? That pressure could lead to industry-wide reforms in algorithmic transparency and political bias mitigation.

The Bigger Picture: Algorithm Regulation Coming

Whether or not California proves suppression, one thing is becoming clear: unregulated algorithms are disappearing from the political landscape.

Multiple jurisdictions are moving toward algorithmic transparency requirements. The EU's Digital Services Act is the most comprehensive, but other countries are following. The United States is slower but moving in that direction. Congress has several bills in committee that would require platforms to explain how algorithms work and allow independent auditing.

What this means practically: platforms will soon have to disclose more about how they rank and promote content. That transparency will make suppression harder to get away with (though not impossible). Algorithms will come under more scrutiny. Bad designs or unintended biases will be more likely to be discovered.

For users, that transparency could be genuinely valuable. You might eventually be able to see why a particular video appeared on your feed. You might understand what algorithmic choices are being made on your behalf. That's a big deal for informed consumption of political information.

For platforms, the regulatory burden increases. Explaining algorithmic decisions and defending them to regulators costs money and resources. Some platforms might simplify their algorithms to make them easier to defend. Others might reduce the reach of politically divisive content preemptively, creating a form of self-censorship.

The technology for more transparent algorithms exists. It's not impossible. What's been missing is regulatory pressure to implement it. California's investigation is part of creating that pressure.

Estimated data shows that proving intent and quantifying damages are the most challenging aspects of holding TikTok accountable legally.

Practical Implications for Users and Creators

If suppression is real, what should TikTok users and creators do?

For creators, the implications are direct. If TikTok is suppressing certain content, relying solely on TikTok for audience reach is risky. Diversification across platforms (YouTube, Instagram, Twitter, newsletters) reduces vulnerability to algorithmic suppression on any single platform.

Creators should also be monitoring their analytics closely. Notice patterns in reach and engagement. Compare performance across different content types. If you see systematic suppression, document it. That information could be valuable if regulators are investigating or if you're considering legal action.
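For creators who export their own view counts, a minimal sketch of that kind of monitoring might look like the following. The window size, the 50% threshold, and the sample numbers are all assumptions chosen for illustration. A flagged post is a reason to look closer, not evidence of suppression on its own.

```python
from statistics import median


def flag_reach_drops(views, window=5, threshold=0.5):
    """Flag posts whose views fall below threshold x the median of the prior `window` posts.

    `views` is a list of per-post view counts in posting order.
    Returns a list of (post_index, views, baseline) tuples.
    """
    flagged = []
    for i in range(window, len(views)):
        baseline = median(views[i - window:i])
        if views[i] < threshold * baseline:
            flagged.append((i, views[i], baseline))
    return flagged


# Hypothetical per-post view counts, oldest first.
VIEWS = [520_000, 610_000, 480_000, 700_000, 550_000, 45_000, 530_000]

for index, count, baseline in flag_reach_drops(VIEWS):
    print(f"post {index}: {count:,} views vs. baseline {baseline:,}")
```

Using the median rather than the mean keeps one viral hit or one flop from distorting the baseline. Pairing each flagged post with notes on topic, posting time, and format makes the record more useful if anyone later asks for documentation.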

For users, the implications are about information consumption. Be aware that what you're seeing on TikTok may not be representative of what's actually being created. Your feed is algorithmically curated, potentially in ways that advantage certain political viewpoints. That doesn't mean TikTok is the only source of information. Seek out other perspectives and platforms.

The suppression allegations also raise questions about the value of using TikTok for political information at all. If the platform's algorithm is opaque and potentially biased, is it trustworthy for understanding political issues? Probably better to get political information from sources where editorial decisions are transparent.

For everyone, the investigation is a reminder that platform power is real and consequential. The companies that control the systems through which we communicate have enormous influence over what we see, believe, and think. That power should make us all more skeptical consumers of platform content and more attentive to calls for regulation.

Technological Solutions: Making Algorithmic Bias Visible and Preventable

If suppression is happening, can technology fix it?

Yes, partly. Researchers have developed tools for detecting and mitigating algorithmic bias. Some of these tools could be built into platforms to automatically flag potentially biased algorithmic outcomes. Others could allow independent auditors to test algorithms for bias. Still others could provide users with visibility into why they're seeing specific content.

One approach is algorithmic transparency. Instead of a black box, platforms could explain the factors that went into promoting a particular piece of content. That wouldn't eliminate bias, but it would make it visible and potentially challengeable.
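As a sketch of what such an explanation could look like, the Python below scores a video with a simple weighted sum and reports how much each factor contributed. The factor names and weights are hypothetical, and real recommendation models are far more complex than a linear score, so treat this as an illustration of the idea rather than a description of any platform.

```python
# Hypothetical weights for a toy linear ranking score (assumptions for illustration).
WEIGHTS = {"watch_time": 0.6, "shares": 0.3, "report_rate": -0.8}


def explain_score(features, weights=WEIGHTS):
    """Return the total score and per-factor contributions, largest effect first."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    ordered = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return sum(contributions.values()), ordered


# Hypothetical normalized signals for one video.
video_features = {"watch_time": 0.9, "shares": 0.4, "report_rate": 0.1}

score, breakdown = explain_score(video_features)
print(f"score = {score:.2f}")
for name, contribution in breakdown:
    print(f"  {name}: {contribution:+.2f}")
```

A breakdown like this would let a creator see, for example, that a video was held back mainly by its report rate, which is something they could then contest.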

Another approach is algorithmic diversification. Instead of a single algorithm determining what everyone sees, platforms could implement multiple algorithms and let users choose which one they prefer. Some users might want the most engaging content. Others might want the most balanced political perspective. Others might want the newest content. Giving users some control over algorithmic selection would reduce the risk of suppression.
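Here is a minimal Python sketch of what user-selectable ranking could look like. The `Video` fields, the three ranker names, and the catalog are hypothetical, and the "balanced" option assumes someone has already labeled each video's viewpoint, which is itself a hard and contested problem.

```python
from dataclasses import dataclass
from itertools import zip_longest


@dataclass(frozen=True)
class Video:
    vid: str
    engagement: float  # hypothetical engagement score
    posted_at: int     # larger means newer
    viewpoint: str     # hypothetical label, assumed to be available


def most_engaging(videos):
    return sorted(videos, key=lambda v: v.engagement, reverse=True)


def newest_first(videos):
    return sorted(videos, key=lambda v: v.posted_at, reverse=True)


def balanced(videos):
    """Alternate between viewpoints, taking the most engaging video from each in turn."""
    groups = {}
    for video in most_engaging(videos):
        groups.setdefault(video.viewpoint, []).append(video)
    feed = []
    for row in zip_longest(*groups.values()):
        feed.extend(video for video in row if video is not None)
    return feed


# The user, not the platform, picks which ranker builds the feed.
RANKERS = {"engaging": most_engaging, "newest": newest_first, "balanced": balanced}


def build_feed(videos, choice):
    return [video.vid for video in RANKERS[choice](videos)]


CATALOG = [
    Video("a", 0.9, 1, "supportive"),
    Video("b", 0.8, 4, "supportive"),
    Video("c", 0.5, 3, "critical"),
    Video("d", 0.4, 2, "critical"),
]

for choice in RANKERS:
    print(choice, build_feed(CATALOG, choice))
```

The same four videos come out in three different orders depending on the user's choice, which is the whole point: no single ranking is the only one anyone ever sees.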

Yet another approach is regular algorithmic auditing. Independent researchers could be granted access to test platforms' algorithms for bias. That's already required in some jurisdictions and is being proposed in others. It's not perfect, but it's better than trusting companies to police themselves.

TikTok has resisted all of these approaches. The company argues that algorithmic transparency and auditing would provide competitors with valuable information about its business model. That's probably true. But it's also a reason to be skeptical that TikTok will voluntarily improve transparency.

Regulatory pressure is probably necessary. If regulators require algorithmic transparency and external auditing, companies will comply. The cost is real, but the benefit in terms of reducing bias and political manipulation is also real.

The China Connection: Why It Matters

Underlying all concerns about TikTok is the fact that ByteDance is Chinese-owned and operated.

This isn't sinophobia. It's based on documented facts about how the Chinese government operates. The government exercises control over major tech companies in ways that would be illegal in the United States. ByteDance and its founder have deep ties to the Chinese government. Chinese law requires companies to comply with government requests for data and to help with surveillance and censorship.

Whether the Chinese government is actively directing TikTok's content moderation in the United States is unclear. But the structure creates the possibility. If the Chinese government wanted TikTok to suppress content that criticizes it or that favors the U.S. side in its rivalry with China, ByteDance would face legal pressure to comply.

For TikTok to suppress content critical of Trump specifically, you'd need to believe that the Chinese government cares about Trump's image in the U.S. That's possible if the government sees Trump as less hostile to China or if it wants to disrupt American political discourse generally. But it's speculative.

What's more likely is that TikTok's algorithm has been shaped by ByteDance's experience in China, where algorithms are expected to reflect government priorities. That could create bias not because of active government direction but because of institutional culture.

Regardless, the Chinese ownership structure is why TikTok faces regulatory scrutiny that platforms like Facebook don't face. It's also why transparency is so critical. Users should have some assurance that their primary information source isn't being shaped by a foreign government's interests.

The European Union and other democracies are taking this seriously. Several have restricted TikTok, for example on government devices, or are requiring structural changes. The United States is moving in that direction. Whether those restrictions are justified depends partly on whether suppression is real, but also on broader questions about whether a foreign company should control a major American communication platform.

What This Means for Platform Governance Going Forward

The TikTok suppression investigation is one battle in a larger war over how to govern online platforms in a democratic society.

Traditionally, we've treated platforms as private companies with broad freedom to decide what content they host and promote. But as platforms have become essential communication infrastructure, that traditional approach seems inadequate. You can't conduct politics without using platforms anymore. That's too much power to leave entirely in private hands.

The question isn't whether to regulate platforms. It's how. California's approach through consumer protection law is one model. The EU's approach through the Digital Services Act is another. Both try to increase transparency and accountability without directly controlling editorial decisions.

But there's tension between letting platforms make editorial decisions (a form of free speech) and preventing platforms from biasing political discourse (a democratic concern). That tension doesn't have an easy resolution.

What's likely is a shift toward transparency-based regulation. Instead of telling platforms what content to promote or suppress, regulators will require them to explain what they're doing and allow independent verification. That's less invasive than directly controlling content but more protective of democratic values than the current system.

For TikTok specifically, the investigation will probably lead to some combination of transparency requirements, algorithmic auditing, and potential restrictions on use cases (like restricting access by certain age groups or limiting algorithmic recommendations). The company will probably survive, but with higher regulatory costs and lower trust from users.

For other platforms, the investigation is a warning. If TikTok can be investigated for algorithmic bias, so can Facebook, YouTube, and X (formerly Twitter). Such scrutiny should be happening more than it currently is.

The broader implication is that the era of unregulated algorithms is ending. That's probably good for democracy, even if it's costly for platforms. Transparent, accountable algorithmic decision-making is a reasonable condition for controlling a major communication platform.

FAQ

What exactly is TikTok's algorithm and how does it decide what content to promote?

TikTok's algorithm, often referred to as its "For You Page" (FYP) system, uses machine learning to determine which videos appear in users' feeds. The system considers thousands of signals including video metadata, user engagement patterns, user behavior history, video completion rates, whether users pause and rewatch content, and their interactions with creators. However, TikTok has not publicly disclosed the complete list of factors or their relative weights. This opacity is central to the suppression allegations, as researchers and users cannot independently verify what's being prioritized or suppressed.

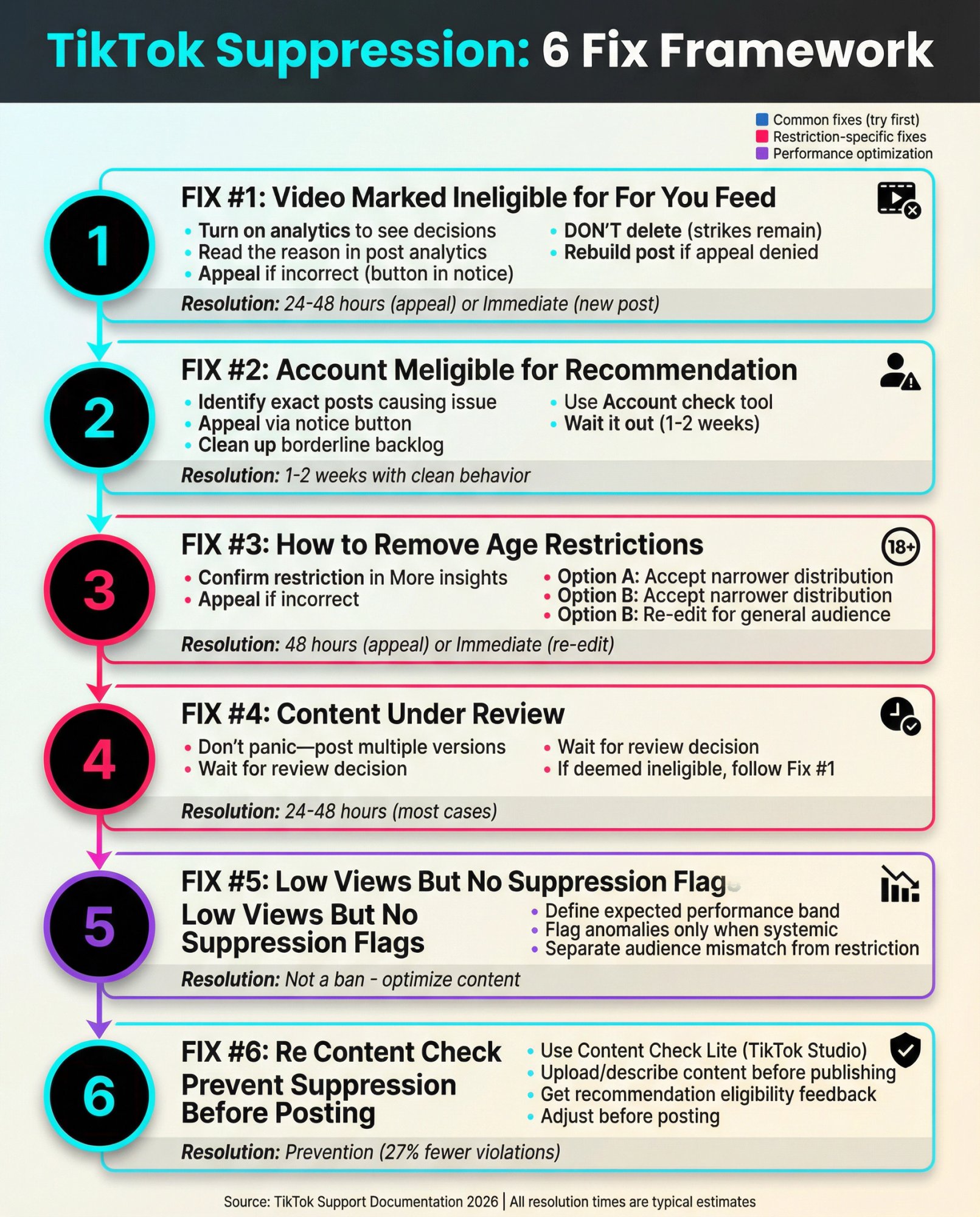

How can users tell if their content is being suppressed on TikTok?

Users might notice suppression through several indicators: sudden and unexplained drops in video views despite the content being unchanged, fewer likes and comments relative to historical patterns, engagement rates declining while similar content from other creators continues to perform normally, or accounts receiving community guideline strike notices that appear to contradict actual content policies. However, TikTok's algorithm changes frequently for all users, so distinguishing genuine suppression from normal algorithmic fluctuations is difficult without access to platform data. This is why systematic testing by multiple creators seeing the same patterns is more convincing than individual reports.
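As a rough illustration of the first indicator, a creator could compare a new video's views against their own history. The sketch below assumes the creator has exported per-video view counts; the function name and the sample numbers are hypothetical. It works on log-scaled views because view counts are heavily skewed, and a strongly negative score only flags an unusual drop. It cannot say why the drop happened.

```python
import math
import statistics


def view_drop_zscore(history: list[int], latest: int) -> float:
    """Z-score of the latest video's log-views against the creator's history."""
    if len(history) < 2:
        raise ValueError("need at least two past videos to estimate variation")
    # Log-scale the counts so one viral hit does not dominate the baseline.
    logs = [math.log10(views + 1) for views in history]
    mean = statistics.mean(logs)
    stdev = statistics.stdev(logs)
    if stdev == 0:
        raise ValueError("history has no variation; z-score is undefined")
    return (math.log10(latest + 1) - mean) / stdev


# Hypothetical view counts for a creator's recent videos.
past_views = [10_000, 12_000, 9_000, 11_000, 10_500]
z = view_drop_zscore(past_views, latest=800)
print(f"z-score: {z:.1f}")  # strongly negative values indicate an unusual drop
```

One creator's outlier proves little on its own, which is why the same check run across many creators posting comparable content is more persuasive.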

What is the California investigation actually investigating, and what are they looking for?

California's investigation examines whether TikTok has violated state consumer protection and algorithmic transparency laws by allegedly suppressing politically critical content. Investigators are looking for evidence of intentional bias in algorithmic decision-making, patterns showing that anti-Trump content receives systematically less distribution than pro-Trump content, internal communications discussing politically motivated moderation, and whether TikTok's disclosures to users about algorithmic fairness are truthful. If found, such violations could constitute unfair or deceptive business practices under California law. The state can issue subpoenas for documents and testimony, conduct expert technical analysis of the algorithm, and impose significant financial penalties.

Can TikTok legally suppress content based on political viewpoint?

As a private company, TikTok has broader rights to choose what content to promote than the government does. Section 230 of the Communications Decency Act generally shields platforms from liability for content moderation decisions. However, TikTok's practices must still comply with state consumer protection laws and anti-fraud statutes. If TikTok explicitly promised to apply its algorithmic policies neutrally but actually applied them selectively based on politics, that could constitute a deceptive business practice. TikTok must also honor its own terms of service and publicly stated policies. Violating those public commitments creates legal exposure.

How does this investigation compare to similar probes of other platforms like Facebook or YouTube?

The TikTok investigation is more focused and more aggressive than investigations into other platforms have been. While the FTC has investigated Facebook's data practices and algorithmic bias, it has rarely directly challenged algorithmic decision-making for political bias specifically. The YouTube investigation centered on content moderation and child safety, not algorithmic suppression of political speech. TikTok faces additional scrutiny because of Chinese ownership concerns and because younger users depend on it more heavily for political information. The investigation also benefits from California's newer algorithmic transparency laws, which give regulators more explicit authority to examine algorithmic systems than previously existed.

What would it take to definitively prove TikTok suppressed anti-Trump content?

Proving suppression requires multiple types of evidence:

- Statistical analysis showing that anti-Trump content consistently receives lower distribution than equivalent pro-Trump content across thousands of videos and accounts, ruling out coincidence

- Documentation from TikTok's internal communications discussing political content moderation

- Technical analysis of the algorithm showing that political viewpoint is a factor in recommendations

- Testimony from former TikTok employees describing intentional suppression

- Comparison of TikTok's behavior to its own stated policies on algorithmic fairness

No single piece of evidence is sufficient. Regulators would need to build a case from multiple converging sources. This is why direct access to TikTok's algorithm and internal documents is so important.
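The statistical part of such a case can be sketched with a permutation test, one standard way to ask whether a gap in reach between two groups of videos could plausibly be coincidence. This is a minimal illustration, not the investigators' method. The view counts are made up, and a real analysis would also need to control for creator size, topic, posting time, and video quality.

```python
import random


def mean(values: list[float]) -> float:
    return sum(values) / len(values)


def permutation_p_value(
    group_a: list[float],
    group_b: list[float],
    n_permutations: int = 2_000,
    seed: int = 0,
) -> float:
    """One-sided p-value for 'group_b gets more reach than group_a'.

    Shuffles the group labels repeatedly and counts how often a random
    split produces a gap at least as large as the observed one.
    """
    rng = random.Random(seed)
    observed = mean(group_b) - mean(group_a)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    hits = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        gap = mean(pooled[n_a:]) - mean(pooled[:n_a])
        if gap >= observed:
            hits += 1
    # Add-one correction keeps the estimate strictly above zero.
    return (hits + 1) / (n_permutations + 1)


# Made-up view counts for two sets of otherwise comparable videos.
critical_views = [100, 120, 90, 110, 95, 105]
supportive_views = [900, 1100, 950, 1000, 1050, 980]
p = permutation_p_value(critical_views, supportive_views)
print(f"p-value: {p:.4f}")
```

A small p-value says the gap is unlikely to be chance under random labeling. It does not establish intent, which is why the other forms of evidence still matter.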

If TikTok is found to have suppressed content, what punishments or changes could regulators impose?

California can impose civil penalties on a per-violation basis, with totals that could reach millions of dollars. The state can require TikTok to modify its algorithmic practices, implement algorithmic auditing, and improve transparency disclosures to users. The company might be required to submit to ongoing regulatory monitoring. In extreme cases, regulators could restrict the company's ability to operate in California, though that's unlikely as a first response. The FTC might also impose requirements stemming from separate federal investigations. Additionally, the investigation's findings could lead Congress to consider TikTok restrictions or bans. Non-monetary remedies like transparency requirements might prove more impactful long-term than financial penalties.

Why hasn't TikTok just opened its algorithm to independent researchers to prove it's fair?

TikTok argues that disclosing algorithmic details would reveal proprietary business information that competitors could use. That's partially legitimate but not entirely convincing. Other jurisdictions have developed systems allowing independent algorithmic audits while protecting trade secrets through confidentiality agreements. TikTok's resistance suggests either that the company fears what auditors would find or that it prioritizes competitive advantage over transparency. The company has provided limited algorithmic information in Europe due to the EU's Digital Services Act requirements, showing that disclosure is possible. The fact that TikTok resists in the United States while complying in Europe suggests that domestic regulatory pressure may be necessary.

What does the investigation mean for the future of algorithmic regulation in the United States?

The investigation signals that U.S. regulators are moving toward requiring algorithmic transparency and fairness. If California successfully prosecutes suppression claims, it establishes precedent for other states and federal authorities. The investigation likely accelerates Congress's consideration of algorithmic transparency legislation currently in committee. It also serves as a warning to other platforms that algorithmic bias, especially politically motivated bias, will face regulatory scrutiny. Expect to see more investigations of social media platforms, more algorithmic auditing requirements, and more emphasis on platform responsibility for algorithmic outcomes. The result could be industry-wide shifts toward more transparent and documented algorithmic decision-making, potentially at the cost of platform efficiency and engagement optimization.

Key Takeaways

- California is investigating whether TikTok algorithmically suppresses content critical of President Trump, drawing on creator reports, journalist testing, and documents obtained through subpoenas

- Suppression can occur through algorithmic design without explicit programmer intent, making proof difficult but patterns measurable through statistical analysis

- TikTok's opacity about algorithmic processes contrasts sharply with EU requirements for transparency, giving regulators limited ability to verify claims independently

- The investigation signals a shift toward mandatory algorithmic transparency and auditing in the United States, potentially reshaping how all platforms operate

- Chinese ownership of ByteDance creates unique regulatory concerns about foreign government influence over American political information infrastructure
