Roblox Mandatory Age Verification: Complete Guide for Parents and Users [2025]

Roblox just made a massive shift in how it handles user safety. Starting this year, every single person on the platform, regardless of where they live, needs to complete an age verification process before they can access chat features. No exceptions, and no way to skip the check.

If you've got kids on Roblox, or you're a user yourself, this changes how the platform works. Period.

The rollout came after months of legal pressure, lawsuits, and investigations into how Roblox handled child safety. Multiple state attorneys general came after the company hard, citing serious issues: grooming, explicit content exposure, predatory behavior. The company finally said enough and decided to implement a real verification system instead of just asking kids to type in their birth year during signup.

Here's what's happening, why it matters, and what you actually need to know about it.

TL;DR

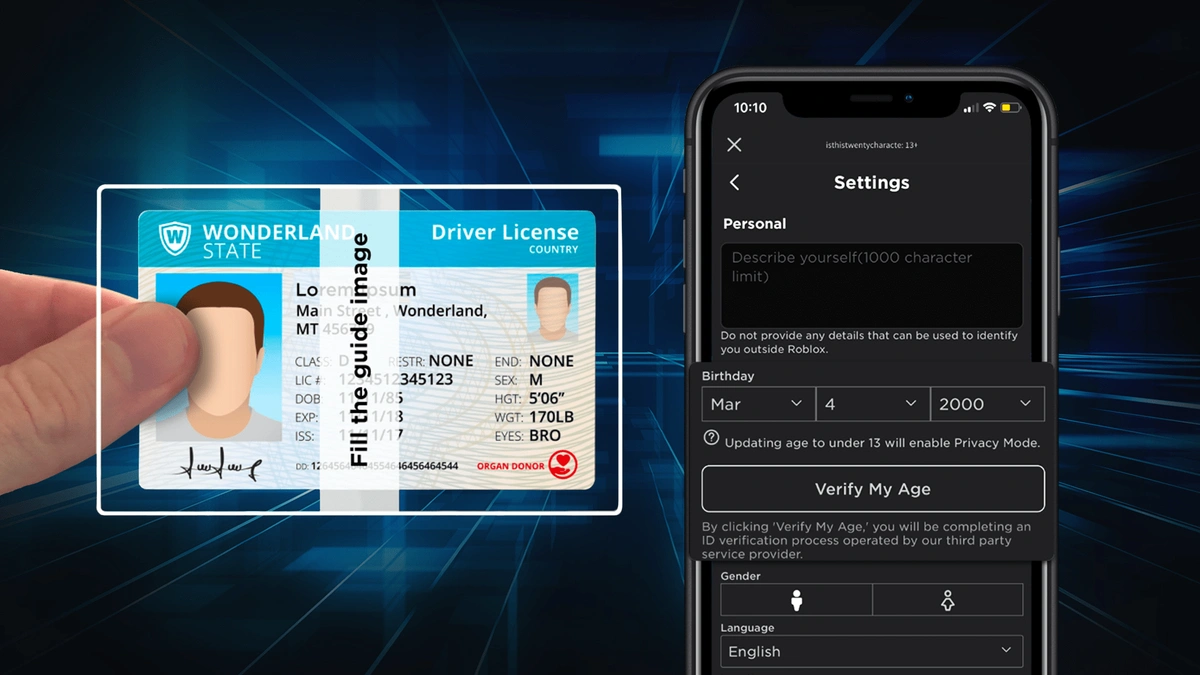

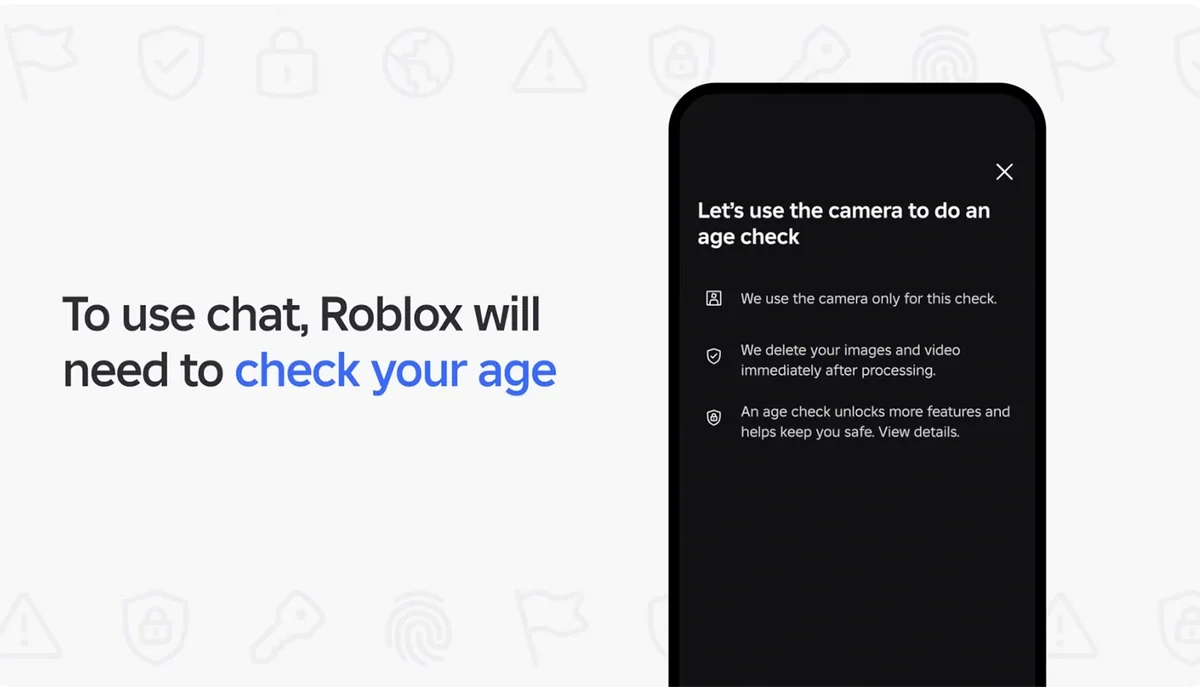

- Mandatory for chat access: Roblox now requires age verification (facial or ID) before anyone can use chat features globally.

- Facial verification is primary: Users upload a selfie for instant age verification; the company then deletes the image after processing.

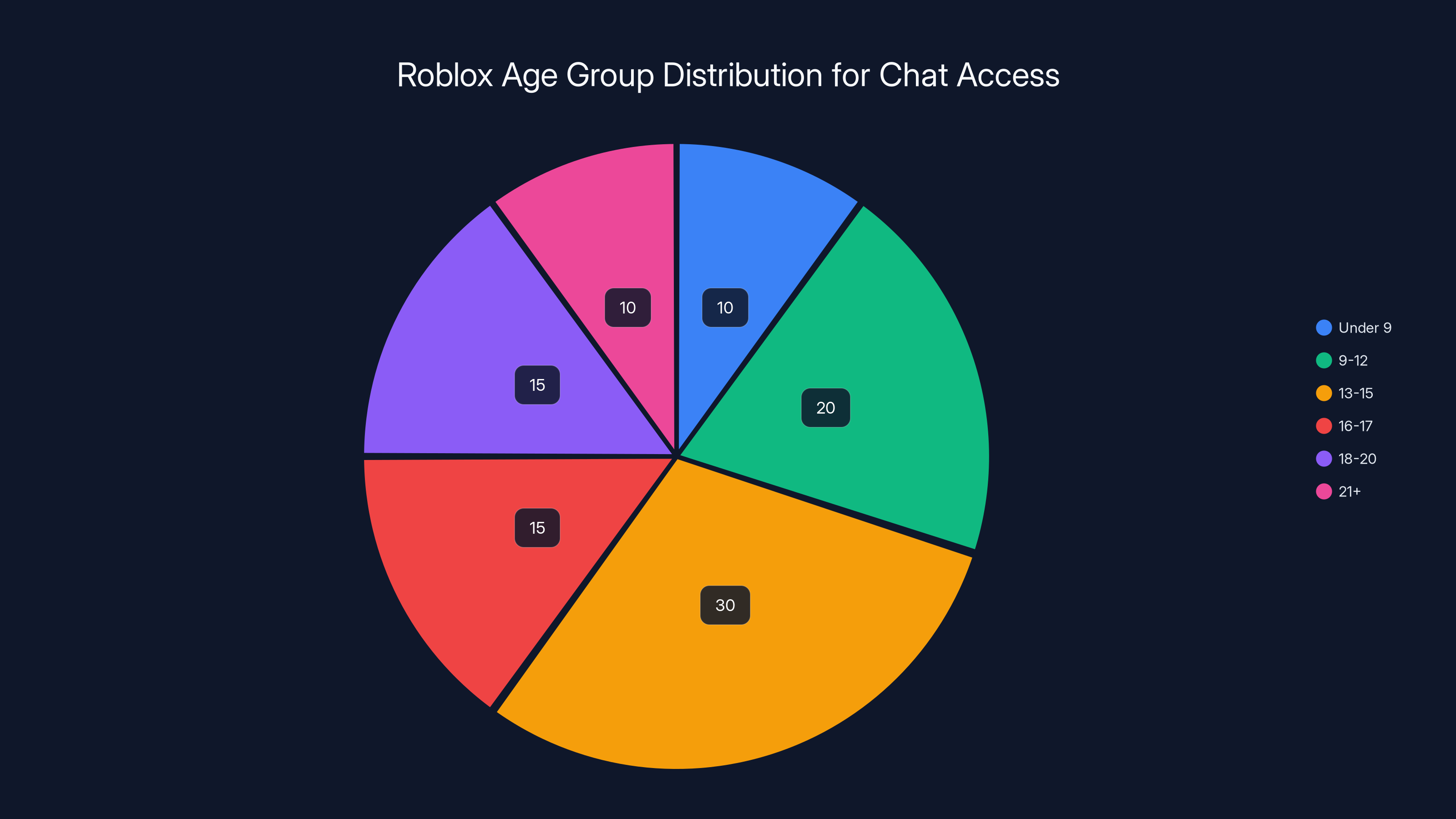

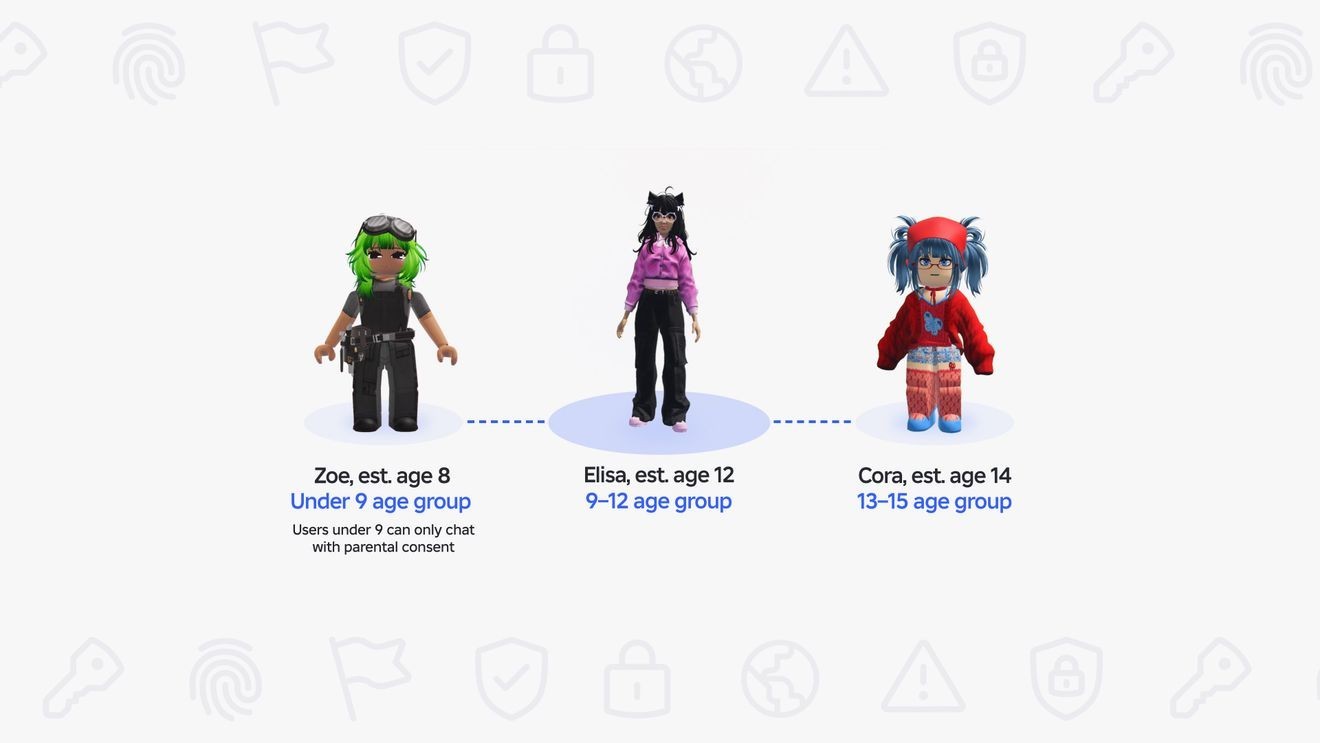

- Age-based chat groups: Six tiers exist (under 9, 9-12, 13-15, 16-17, 18-20, 21+), and users can only chat with their own group and the groups directly adjacent to it.

- Third-party processing: Persona, a verification vendor, handles the facial recognition and deletes all data after completion.

- Legal pressure drove this: Lawsuits from Texas, Louisiana, and other states forced Roblox to strengthen child safety measures.

- Bottom line: This is a real step forward for child protection, though privacy concerns remain for facial data collection.

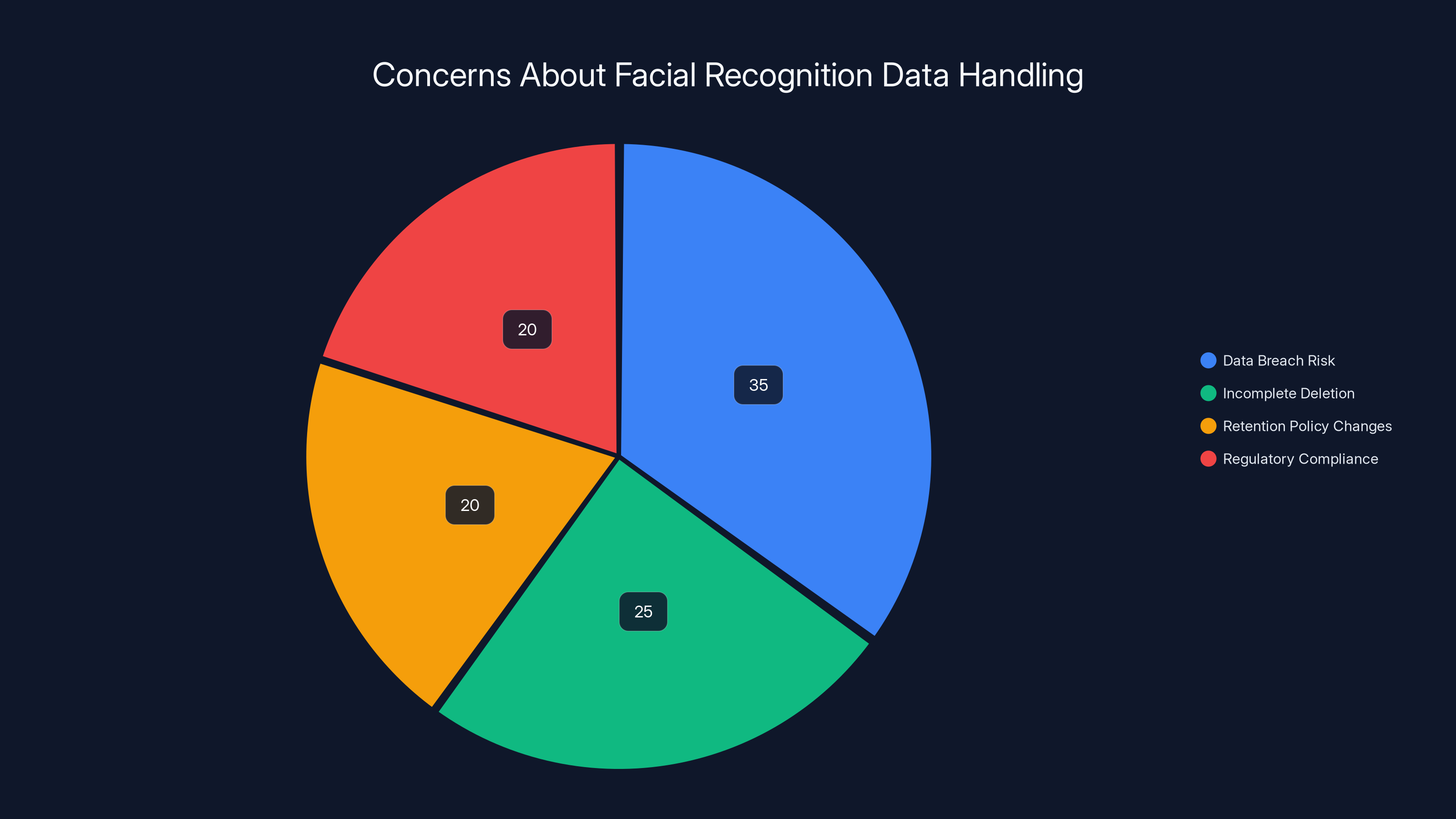

Estimated data shows that data breach risk is the top concern (35%), followed by incomplete deletion (25%), retention policy changes (20%), and regulatory compliance (20%).

Why Roblox Finally Took Action on Age Verification

Roblox didn't wake up one morning and decide this was a good idea. The company was forced into it.

In 2024 and into 2025, multiple lawsuits piled up. The attorneys general of Texas and Louisiana led the charge, filing official complaints that claimed Roblox was exposing minors to serious dangers. We're not talking about minor inconveniences here. The allegations included grooming, explicit content, and predatory behavior from other users.

The core problem was obvious: Roblox's age verification system was basically a joke. When you signed up, you just typed your birth year. Anyone could claim to be any age. A 45-year-old could say they're 12. A kid could pretend to be 18. Nobody knew who was actually talking to whom, which created a perfect storm for predators.

Roblox tried to argue that it had safety measures in place. Moderation, reporting systems, community standards. But the legal arguments were stronger. The company's own internal research (eventually discovered during litigation) showed that kids were being exposed to dangerous content and interactions. That's not a feature, that's a catastrophic oversight.

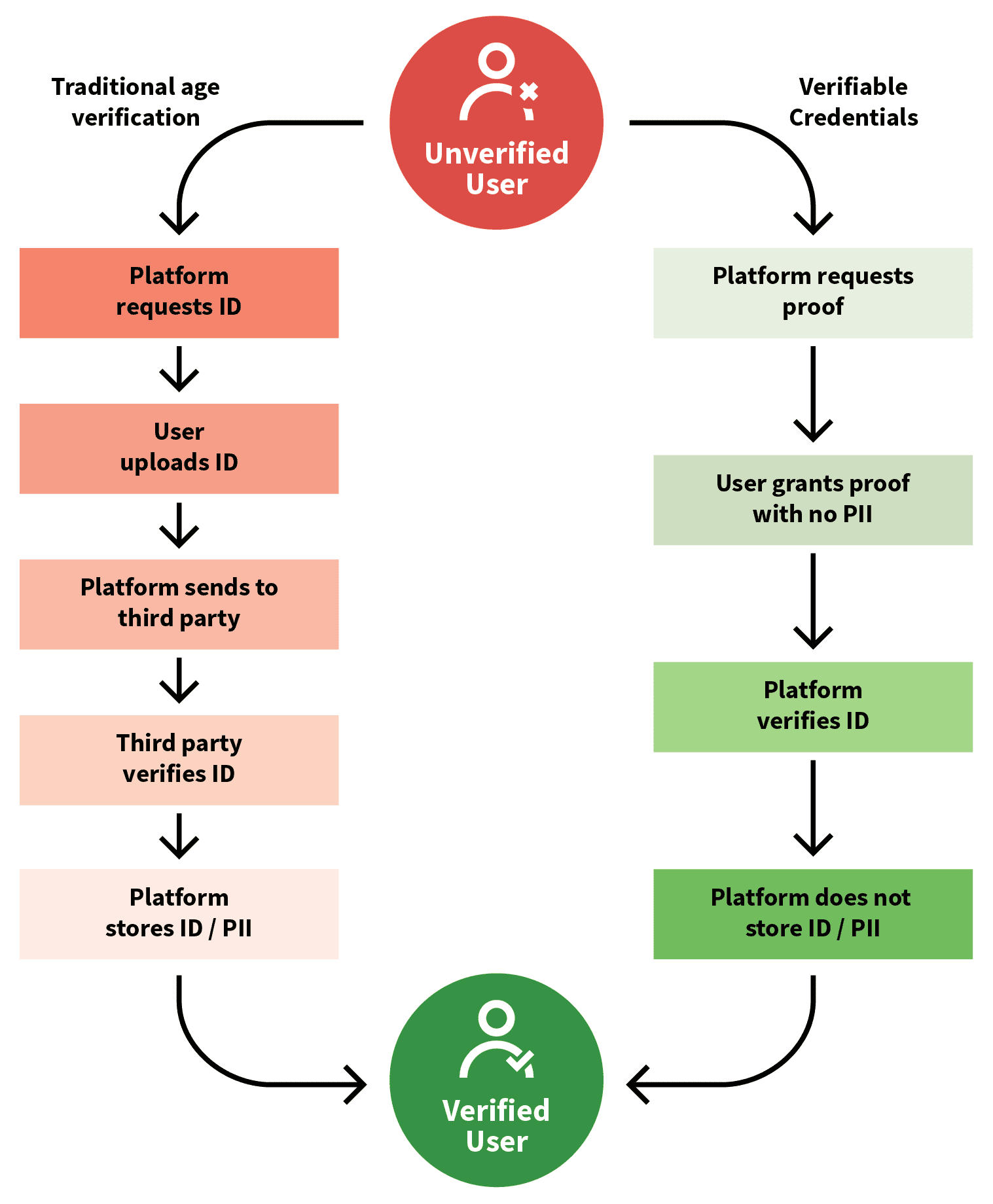

So Roblox did what any company under serious legal threat does: it invested in real verification. The company partnered with Persona, a third-party age verification vendor that uses facial age estimation technology. Suddenly, instead of typing a birth year, users have to show that they are roughly the age they claim to be.

Was this the only solution? Not necessarily. But it was the most visible, most concrete response the company could offer to regulators and worried parents.

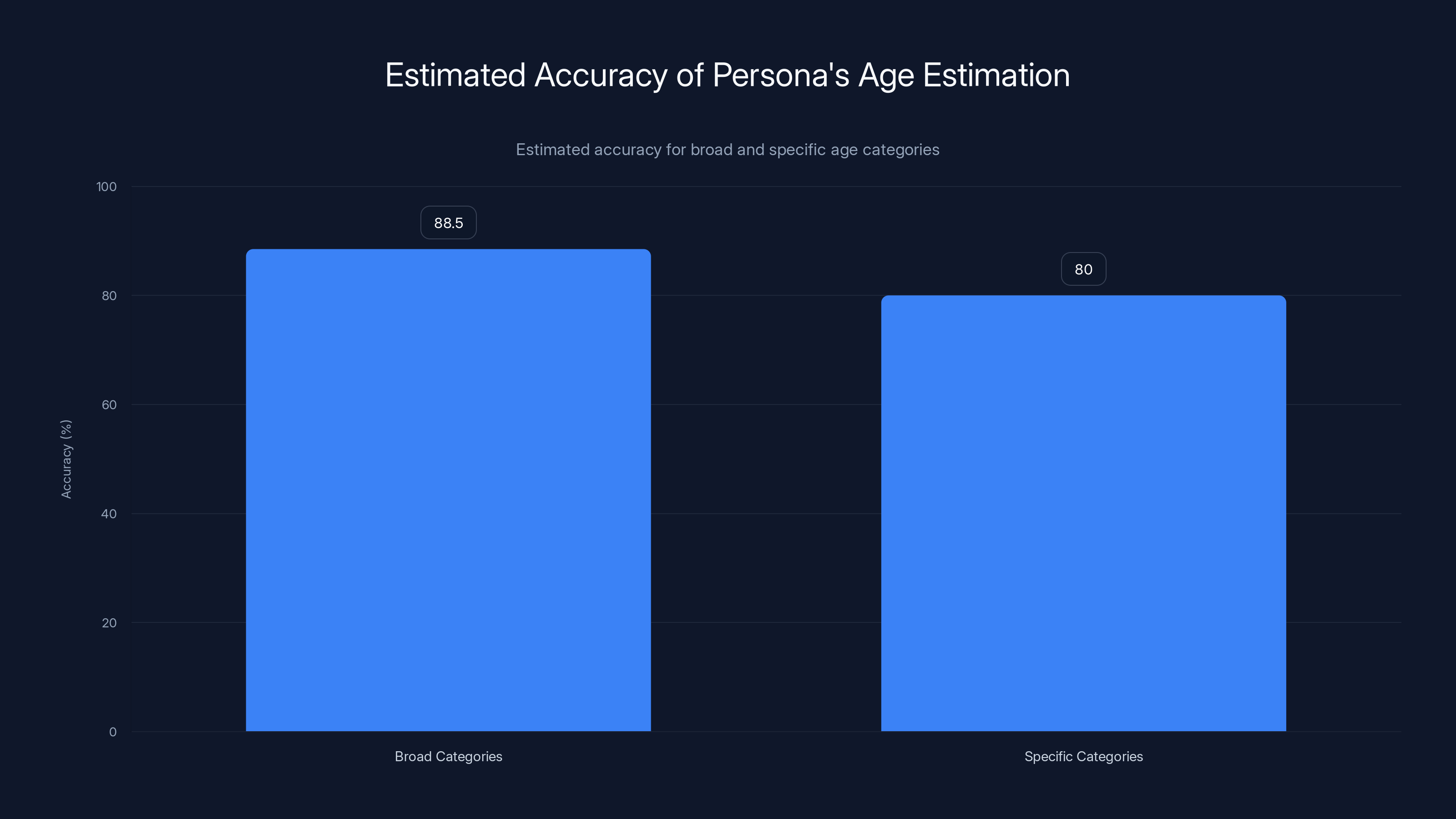

Estimated data suggests Persona's system has higher accuracy for broad age categories (85-92%) compared to specific categories (75-85%).

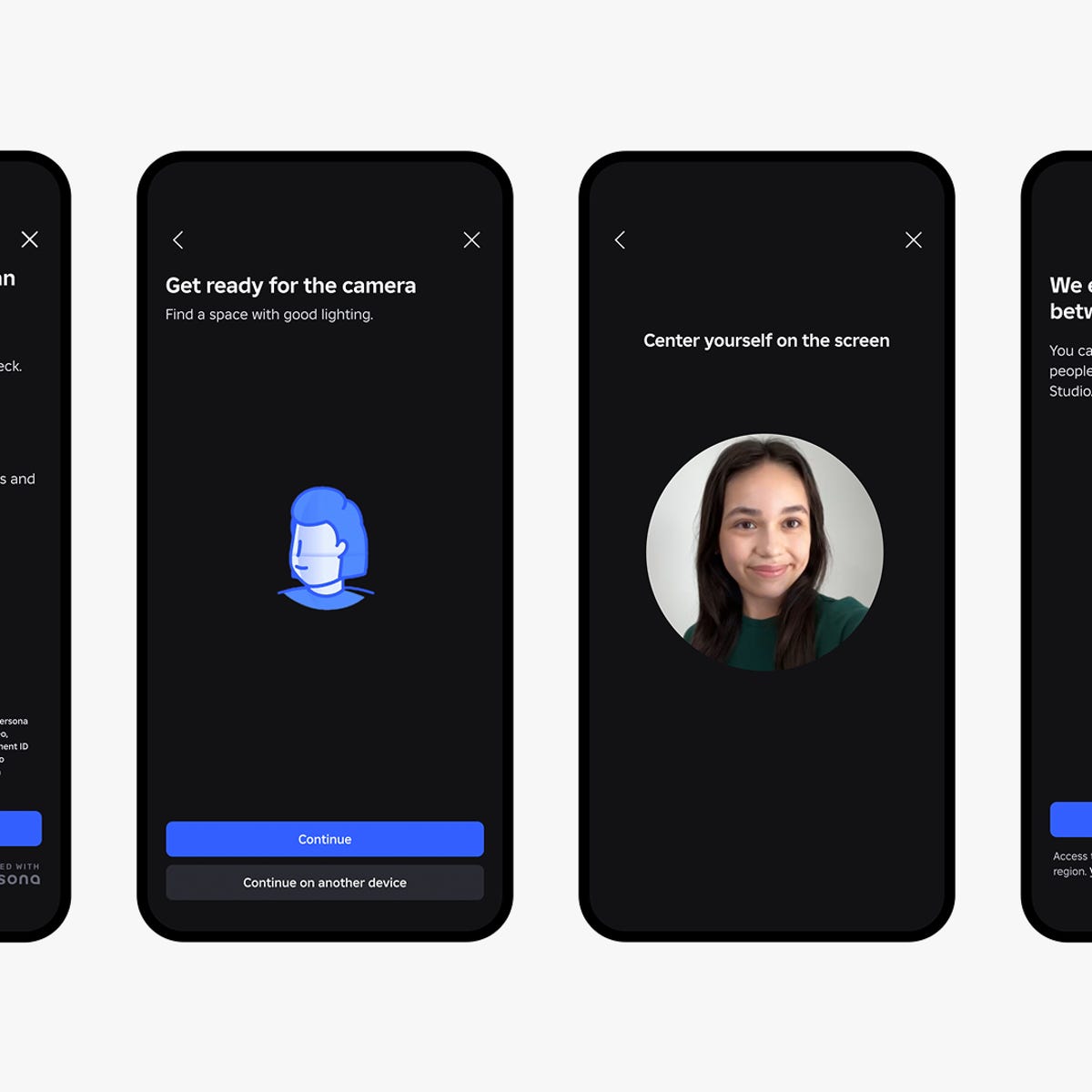

How the Age Verification Process Actually Works

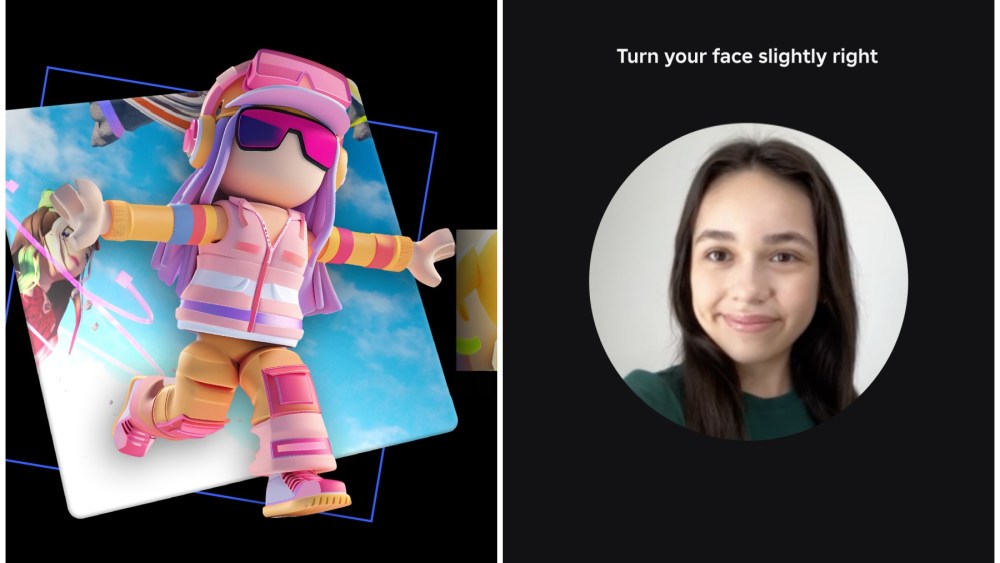

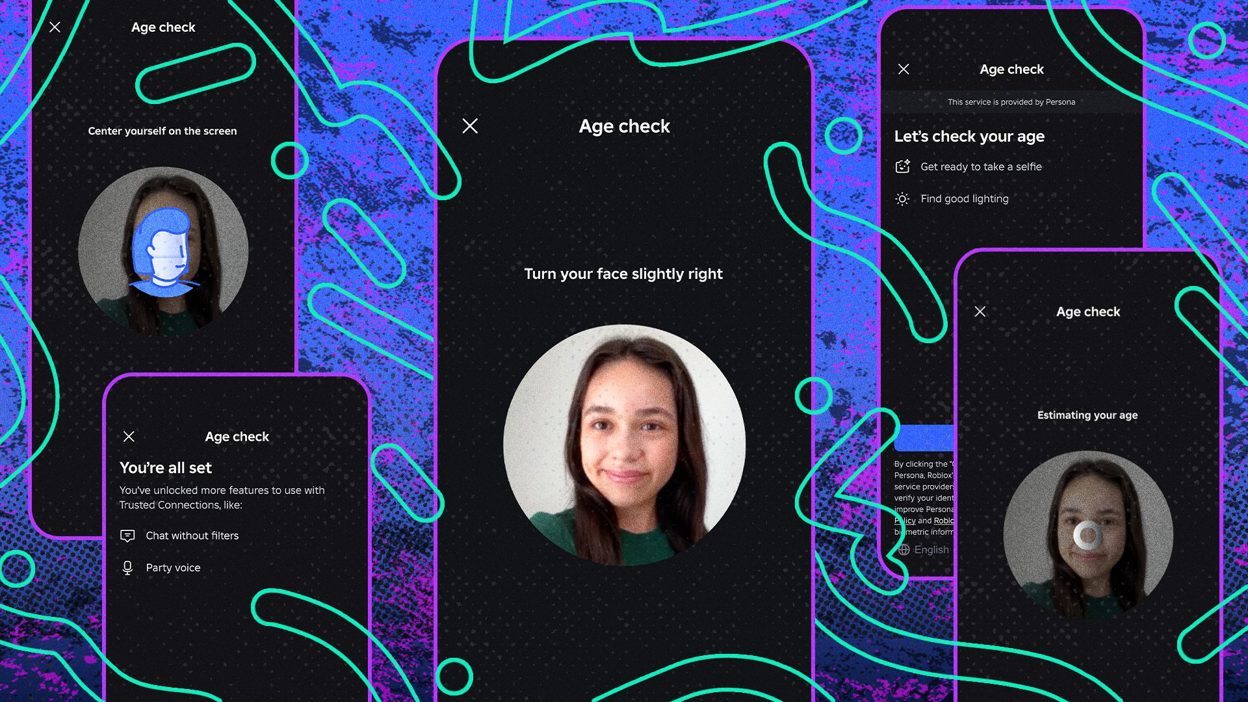

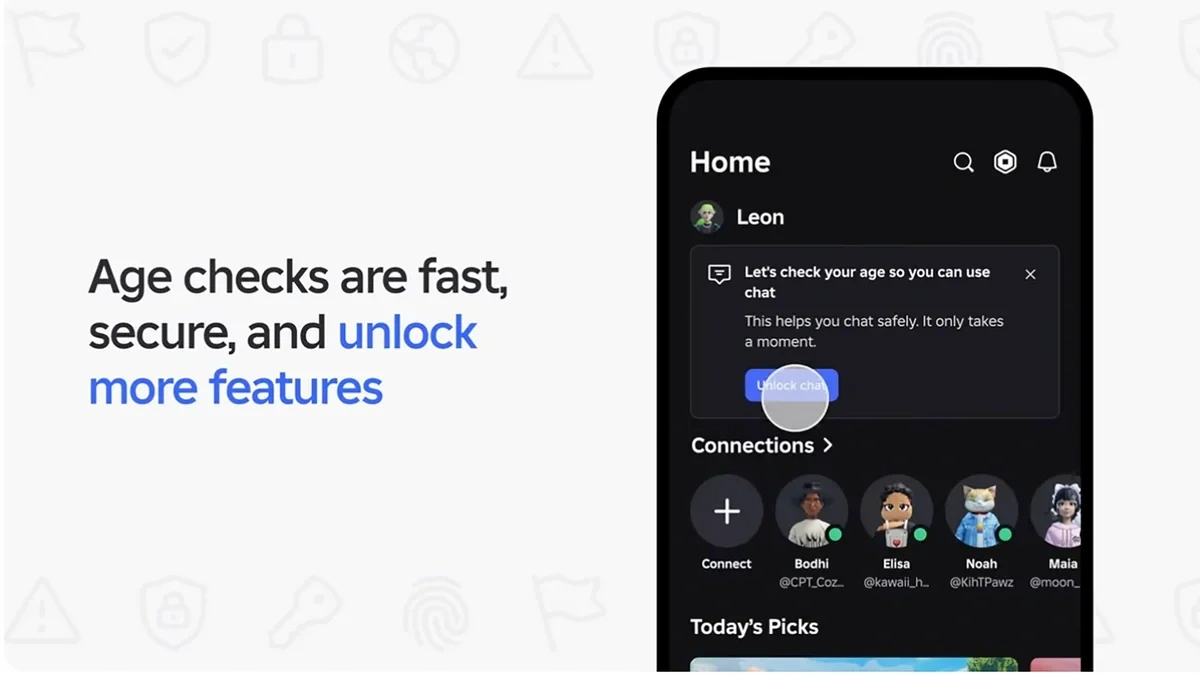

The mechanics are straightforward, which is exactly why this matters.

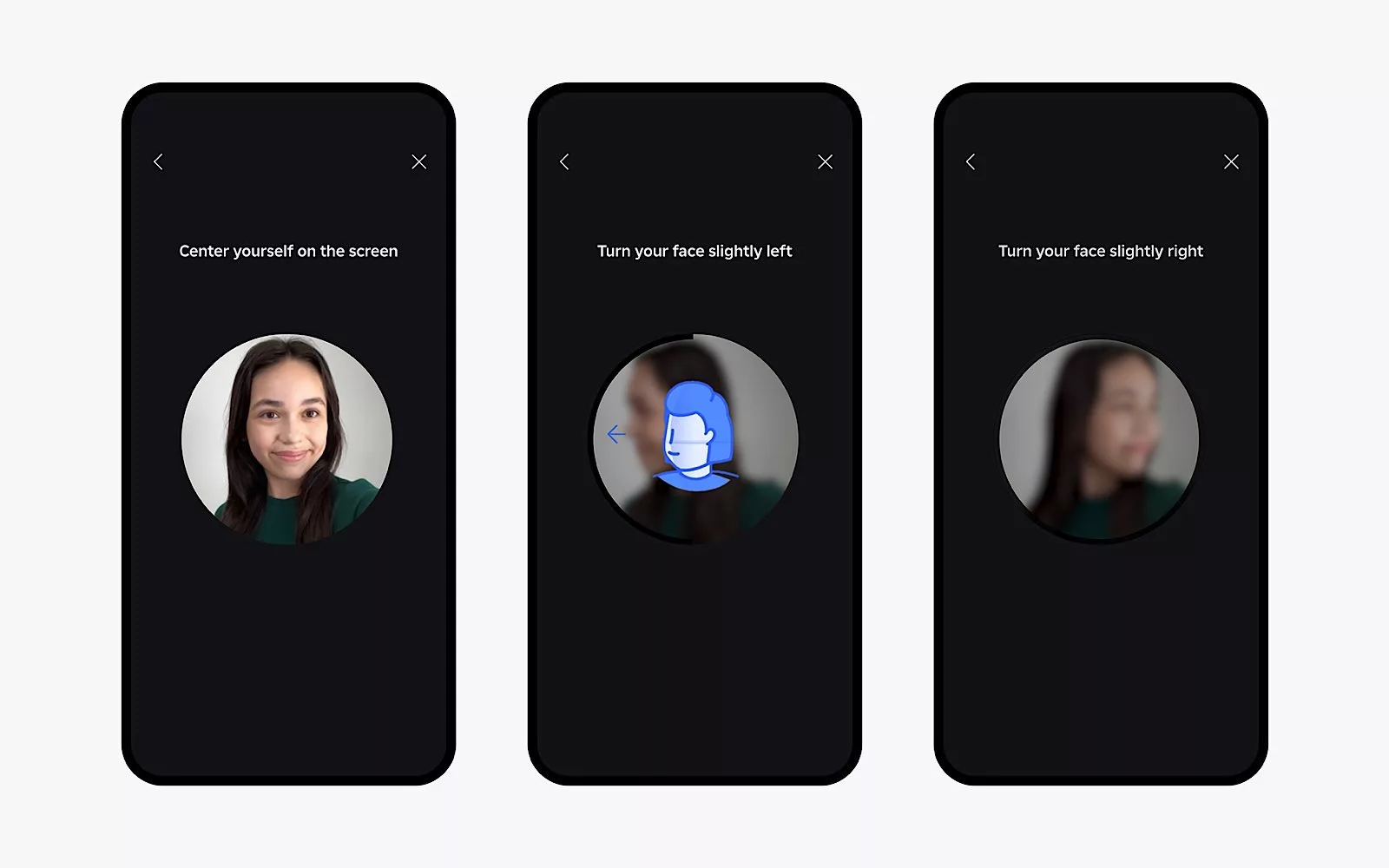

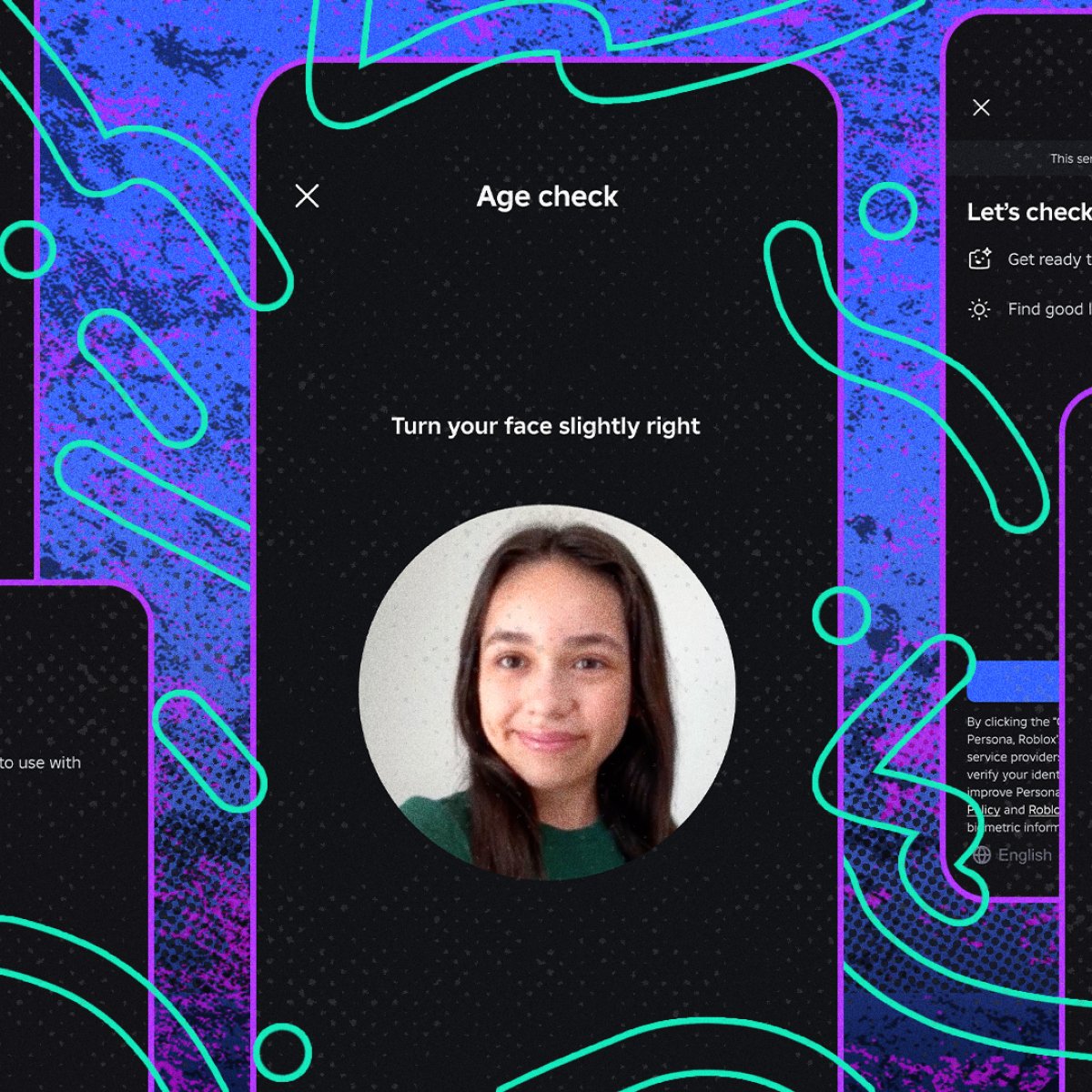

When a user wants to access chat (or any other age-gated feature), they get prompted to complete an age check. They open the Roblox app, grant camera access, and follow on-screen instructions. The process typically takes two to three minutes.

Here's the step-by-step:

- Initiate verification: User taps on chat or encounters an age-gated feature.

- Camera permission: The app requests access to your device's camera.

- Follow prompts: Roblox displays real-time instructions on screen.

- Capture selfie: You take a selfie following the guidance (sometimes multiple angles, sometimes just one).

- Submission: The image gets sent to Persona's servers.

- Processing: Persona's AI analyzes the facial features and estimates age.

- Result: Roblox gets a response indicating age category (under 9, 9-12, etc.).

- Image deletion: Both Persona and Roblox delete the facial data after processing.
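The steps above can be sketched in Python. This is not Roblox's or Persona's code: `estimate_age` is a hypothetical stand-in for the vendor's model, `run_age_check` is an illustrative name, and the bracket boundaries are taken from the six tiers this guide describes. What the sketch shows is the shape of the exchange: an image goes in, only an age category comes back, and the image buffer is wiped before the function returns.

```python
from typing import Callable, List, Tuple

# The six age categories described in this guide: (label, lower bound, upper bound),
# lower bound inclusive, upper bound exclusive, in years.
BRACKETS: List[Tuple[str, float, float]] = [
    ("under 9", 0, 9),
    ("9-12", 9, 13),
    ("13-15", 13, 16),
    ("16-17", 16, 18),
    ("18-20", 18, 21),
    ("21+", 21, float("inf")),
]


def to_bracket(age: float) -> str:
    """Map an estimated age in years to one of the six category labels."""
    for label, low, high in BRACKETS:
        if low <= age < high:
            return label
    raise ValueError(f"age out of range: {age}")


def run_age_check(selfie: bytearray, estimate_age: Callable[[bytes], float]) -> str:
    """Hypothetical flow: estimate an age, keep only the category, wipe the image.

    `estimate_age` stands in for the vendor's age-estimation model; it is an
    assumption for illustration, not a real Persona or Roblox API.
    """
    try:
        category = to_bracket(estimate_age(bytes(selfie)))
    finally:
        # Overwrite the caller's buffer. Python cannot guarantee that no other copy
        # survives (bytes(selfie) above makes one), so real deletion guarantees come
        # from storage and retention policies, not from this step.
        selfie[:] = bytes(len(selfie))
    # Only the category is returned; the platform never receives the image itself.
    return category
```

For example, calling `run_age_check` on a buffer with a stub estimator that returns 14.2 yields `"13-15"` and leaves the buffer zeroed.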

The whole thing is automated. In the standard facial flow, no human looks at your selfie, and Roblox doesn't store it. An algorithm analyzes your facial features and returns an age estimate.

Users 13 and older have an alternative: they can skip the facial verification and verify through ID instead. That means uploading a photo of a driver's license or passport. Roblox says this option exists for users who prefer not to use facial recognition, though it adds an extra step and requires more personal information.

If someone thinks the age check got it wrong, they can appeal. Roblox will ask them to try again with facial verification, or they can escalate to manual review with ID verification. There's also a parental control option where parents can manually adjust their child's age in the system.

The key insight here is that this isn't theater. It's an actual technical system designed to verify age, not just collect data.

The Age-Segmented Chat System: Who Can Talk to Whom

Once a user completes age verification, they don't get full access to everyone on Roblox. That would defeat the whole purpose.

Instead, Roblox created six age categories, and users can only chat with people in their own group plus the groups directly adjacent to theirs. Think of it like age rings: you can talk to people in your ring and the rings next to it, but not across the whole platform.

The six categories are:

- Under 9: Chat is off by default; with parental consent, can chat with under 9 and 9-12 groups only.

- 9-12: Can chat with under 9, 9-12, and 13-15 groups.

- 13-15: Can chat with 9-12, 13-15, and 16-17 groups.

- 16-17: Can chat with 13-15, 16-17, and 18-20 groups.

- 18-20: Can chat with 16-17, 18-20, and 21+ groups.

- 21+: Can chat with 18-20 and 21+ groups.
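The adjacency rule is simple enough to express as a lookup on an ordered list. A minimal sketch, assuming the rule is exactly "same or neighboring category" as listed above and that under-9 chat needs a parent to enable it; `can_chat` and its parameters are illustrative names, not part of any Roblox API, and any exceptions Roblox grants are not modeled.

```python
# The six age categories described above, ordered from youngest to oldest.
CATEGORIES = ["under 9", "9-12", "13-15", "16-17", "18-20", "21+"]


def can_chat(a: str, b: str, under9_chat_enabled: bool = False) -> bool:
    """Return True if users in categories a and b may chat under the adjacency rule.

    Assumptions for this sketch: chat is allowed only within the same or a
    neighboring category, and any conversation involving an under-9 user also
    requires that a parent has enabled chat for that child.
    """
    i, j = CATEGORIES.index(a), CATEGORIES.index(b)  # raises ValueError for unknown labels
    if "under 9" in (a, b) and not under9_chat_enabled:
        return False
    return abs(i - j) <= 1
```

Looping `can_chat` over every pair of categories reproduces the list above, and it is a quick way to confirm the rule is symmetric: if A can chat with B, B can chat with A.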

This design is clever because it reduces mixing of vastly different age groups without completely isolating everyone. A 14-year-old can still talk to a 12-year-old (common scenario: older sibling), but a 14-year-old can't directly message a 25-year-old.

For children under 9, chat is actually disabled by default. Parents have to explicitly opt in to allow chat, and even then, kids in this age group can only talk to others in the under 9 and 9-12 categories.

Roblox also built in continuous monitoring. The company says it's "leveraging multiple signals" to watch user behavior and flag when someone seems significantly older or younger than their verified age. If behavior patterns don't match the age verification, Roblox can ask users to re-verify. So if a verified 16-year-old suddenly starts chatting like they're 30, the system might catch that and require a new verification.

Is this perfect? No. Behavioral analysis has false positives and false negatives. But it's a meaningful layer beyond just taking the facial verification at face value.
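One way such a re-verification trigger could work is a simple strike counter. This is a hypothetical sketch: the behavioral age estimate is assumed to come from some upstream model, and the `margin` and `strikes_needed` values are invented for illustration. Requiring several consecutive out-of-range signals is one straightforward way to accept a few missed cases in exchange for fewer false alarms, which is exactly the trade-off described above.

```python
from dataclasses import dataclass

# Verified category bounds in years (lower inclusive, upper exclusive).
BOUNDS = {
    "under 9": (0, 9),
    "9-12": (9, 13),
    "13-15": (13, 16),
    "16-17": (16, 18),
    "18-20": (18, 21),
    "21+": (21, float("inf")),
}


@dataclass
class ReverifyMonitor:
    """Hypothetical monitor that flags an account for re-verification when a
    behavioral age signal keeps landing well outside its verified category.

    `margin` (years) and `strikes_needed` are illustrative assumptions, not
    Roblox's actual parameters.
    """

    verified: str
    margin: float = 3.0
    strikes_needed: int = 3
    strikes: int = 0

    def observe(self, behavioral_age: float) -> bool:
        """Record one behavioral age estimate; return True if re-verification is due."""
        low, high = BOUNDS[self.verified]
        outside = behavioral_age < low - self.margin or behavioral_age >= high + self.margin
        # Only consecutive out-of-range signals count; an in-range signal resets the streak.
        self.strikes = self.strikes + 1 if outside else 0
        return self.strikes >= self.strikes_needed
```

With these defaults, a verified 16-17 account is flagged only after three behavioral estimates in a row of 21 or older (or under 13).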

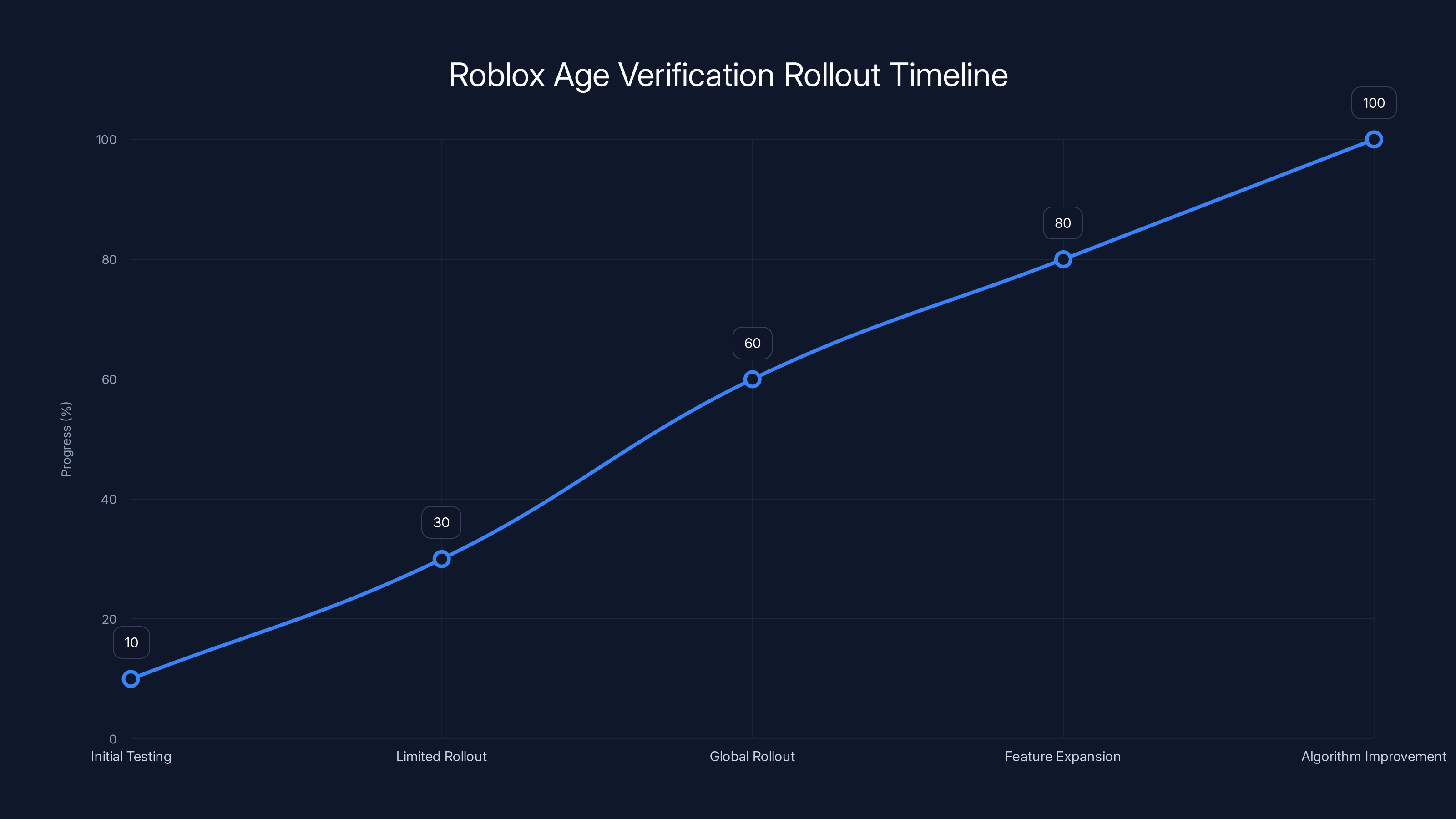

The estimated timeline shows a phased rollout approach, starting with initial testing and gradually expanding to global rollout and feature enhancements.

Privacy Concerns: The Facial Recognition Elephant in the Room

Here's where this gets complicated.

Roblox and Persona both say they delete facial images after processing. Roblox doesn't store your selfie. Persona doesn't store it either. In theory, no facial database exists. The verification is one-time and anonymous.

But here's what people actually worry about: what if there's a breach? What if Persona's systems get hacked before the data deletes? What if there's a retention policy that's different from what's stated? What if the deletion is delayed or incomplete?

These aren't paranoid concerns. They're legitimate questions based on actual history. Companies get breached. Deleted data sometimes isn't actually deleted. Retention policies get extended. Regulatory bodies have fined companies for facial data misuse before.

The European Union's GDPR places strict limits on processing biometric data, generally requiring explicit consent and a legitimate purpose. Because Roblox serves users in the EU, it has to comply with GDPR for those users. That adds legal constraints that genuinely protect them, but it doesn't make the questions above any less valid.

Persona is a real company with a real business model focused on age and identity verification. It's not some random startup. The company has been through security audits and operates in multiple regulated markets. That's worth noting, though it's not a guarantee.

The privacy calculus here is: facial data collection for age verification is better than the previous system (no real verification) in terms of safety, but worse in terms of privacy. Every user who verifies with a selfie is now sending biometric data to a third-party vendor, even if that data gets deleted afterward.

For parents, the question becomes: is the safety improvement worth the privacy trade-off? For kids, it's more coercive. They don't really have a choice—if they want to chat, they verify.

One more thing: Roblox says Persona deletes the facial data after processing, but Roblox also says it "continuously evaluates user behavior" to check age accuracy. That behavioral data remains. So while the facial image is deleted, behavioral metadata and verification timestamps are not. That's not necessarily bad, but it's important context.

The Legal Landscape: Why This Matters for Child Safety

Roblox didn't implement age verification because it was fun or innovative. It did it because not doing so was becoming legally indefensible.

The Texas and Louisiana attorney general lawsuits centered on a straightforward claim: Roblox knowingly exposed children to dangerous content and predatory behavior because its safety systems were inadequate. The complaints pointed to internal communications that, they alleged, showed Roblox executives understood the problems.

These aren't private lawsuits filed by individual users. They're actions brought by state attorneys general, each state's chief law enforcement officer. That's different. That's existential-threat territory.

Other states were watching too. New York, California, and others had either begun or were considering similar actions. The regulatory pressure was mounting globally: regulators in the UK, Canada, and the EU were all examining child safety on gaming platforms.

For Roblox, the calculation was brutal: either implement real verification now, or face escalating fines, possible app store removal, or even structural restrictions on how the company operates. Age verification was the cheapest, fastest way to show regulators that the company was taking action.

This also sets a precedent for other platforms. TikTok is facing similar pressure. Discord has similar risks. YouTube Kids was criticized for inadequate age verification. Once one major platform implements real age verification, regulators can point to it and ask why everyone else doesn't.

The legal framework here is important: most child safety regulations focus on "reasonable measures." What's reasonable? That's often determined by what's technically feasible and what competitors do. By implementing facial verification, Roblox just raised the bar for what "reasonable" means.

Smaller platforms will find it harder to cite cost or complexity as an excuse. Roblox proved it's doable at scale. That's why this matters beyond just Roblox.

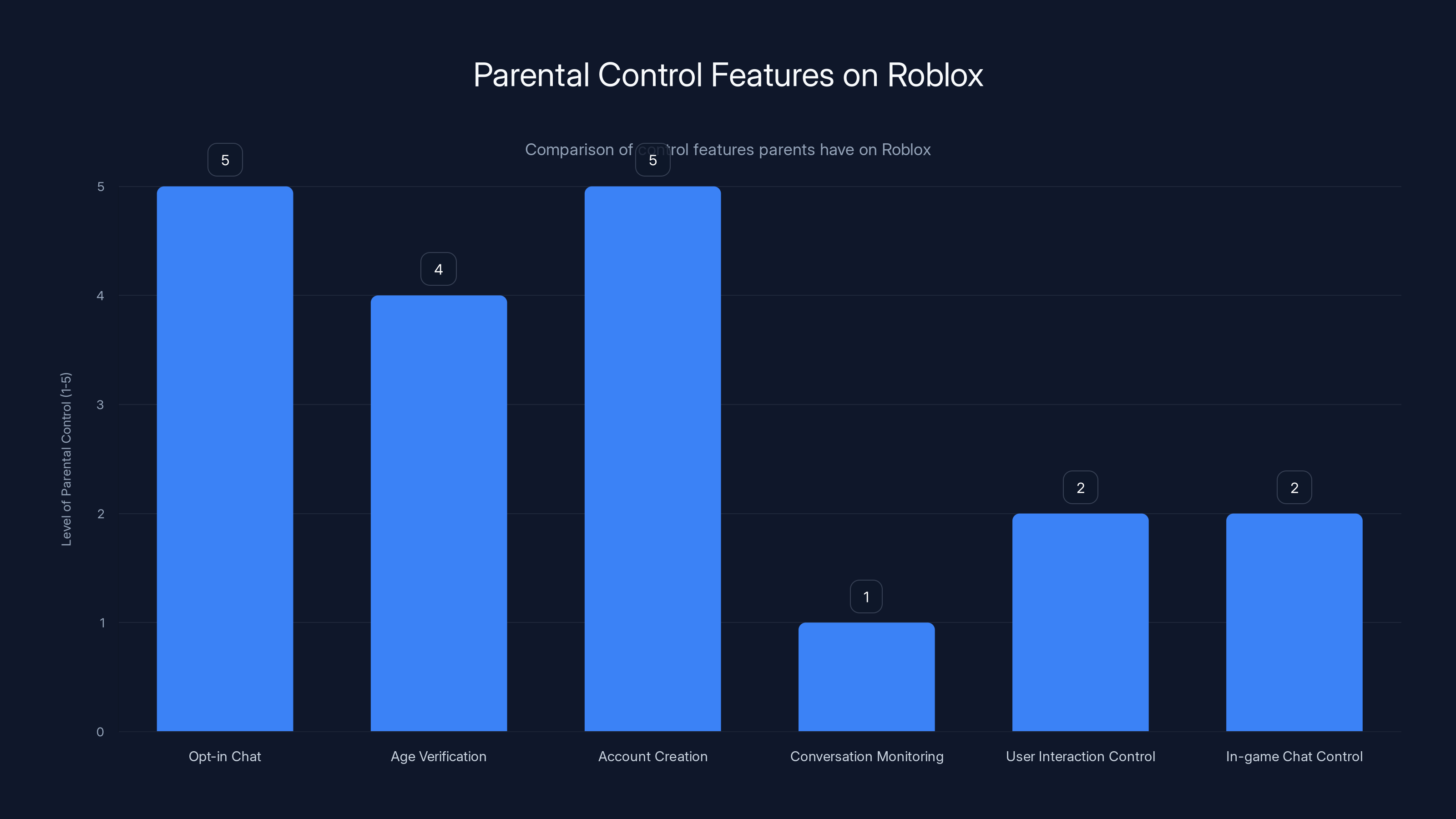

Parents have the most control over opt-in chat and account creation, but limited control over conversation monitoring and in-game chat features. Estimated data based on feature descriptions.

Impact on Young Users: What Actually Changes

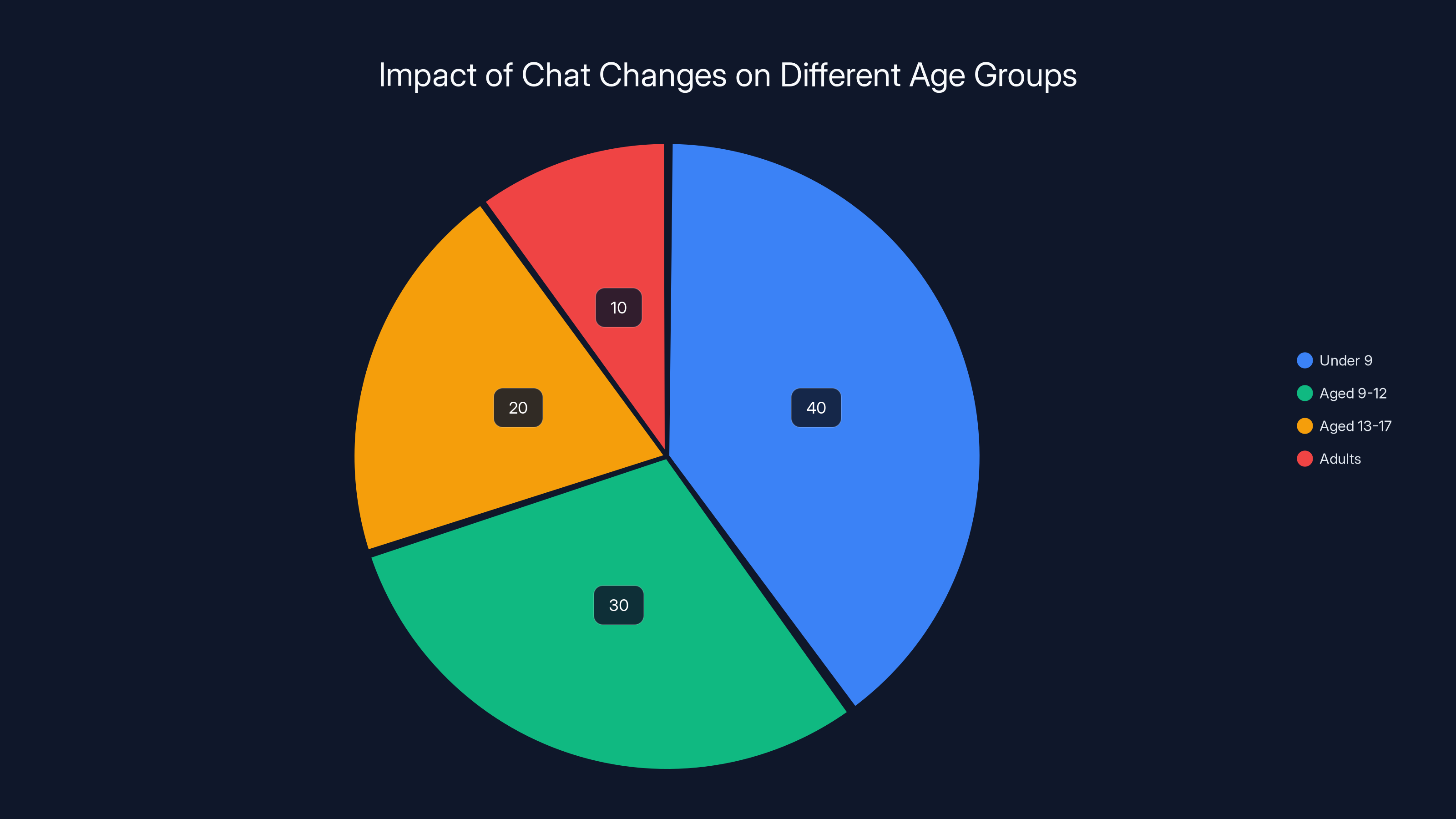

For kids under 9, the impact is significant.

Chat is now disabled by default. Parents have to actively opt in. That means most young children won't be able to chat at all unless parents specifically enable it. For children who do get chat access, it's segmented to only under 9 and 9-12 age groups.

Previously? Kids could chat with anyone. There were moderation systems in place, but segmentation didn't exist.

For kids aged 9-12, the change is moderate. They can still chat with kids around their age and with 13-15-year-olds, but they can't chat with older teens or adults, even if they were previously connected. This breaks some legitimate interactions (older sibling accounts, for example), but it also prevents age-inappropriate connections.

For teens aged 13-17, the impact is minimal in practical terms. They can still chat with peers and adjacent age groups. But every account now carries an estimated, verified age, so predators trying to pose as teens can't deceive them as easily.

For adults, honestly, the impact is almost none. The age verification is slightly annoying, but it takes two minutes.

The real impact is behavioral. Kids now know that someone verified their age. They might be more careful about what they discuss, more aware that they're not actually anonymous. That's not necessarily bad—it's a friction point that might prevent some risky behaviors.

Parents should be aware: verification isn't parental control in the traditional sense. Parents can't see who their kids chat with. They're enabling chat and trusting the age segmentation to work correctly. Beyond the parental controls for chat and age settings, there are no conversation logs and no monitoring.

So verification is about protecting kids from predators trying to misrepresent their age. It's not about parents monitoring their children's conversations.

Technical Implementation: How Persona's Facial Recognition Works

Persona's system is interesting because it's solving a specific problem: age verification through facial analysis, not identification.

This is a crucial distinction that most people miss. Facial identification is asking, "Who is this person?" Facial age estimation is asking, "How old does this person look?"

They're different algorithms with different accuracy profiles.

Here's roughly how it works:

Step 1: Image capture and preprocessing. The user takes a selfie. Persona's system analyzes image quality, lighting, angle, occlusion (glasses, hats, etc.). If quality is too low, it asks for a retake.

Step 2: Facial feature extraction. The algorithm maps facial landmarks. Things like the spacing between eyes, jawline geometry, skin texture, wrinkle patterns, facial symmetry. These features correlate with age.

Step 3: Age estimation model. A machine learning model (likely trained on thousands of images with verified ages) predicts age range. The model outputs confidence scores for each age bracket.

Step 4: Decision logic. Persona likely uses threshold rules. For example: if confidence for "under 13" is >80%, the user gets placed in that category. If confidence is between 60-80% and ambiguous, the system might ask for a retake or escalate to manual review.

Step 5: Response and deletion. Persona returns the age category to Roblox. The facial image is then deleted from Persona's servers (in principle immediately; the exact window isn't spelled out publicly).
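Step 4's threshold logic might look something like the following. The 80% and 60% cut-offs come from the example in Step 4; everything else, including the `decide` function, the score format, and routing the lowest-confidence cases to manual review, is an assumption for illustration rather than Persona's actual rule.

```python
from typing import Dict, Optional, Tuple


def decide(scores: Dict[str, float], accept_at: float = 0.80,
           retake_at: float = 0.60) -> Tuple[str, Optional[str]]:
    """Hypothetical threshold rule over per-category confidence scores.

    - top score >= accept_at: accept that category
    - retake_at <= top score < accept_at: ambiguous, ask for a retake
    - below retake_at: escalate to manual review (an assumption for this sketch)
    """
    total = sum(scores.values())
    if total <= 0:
        return ("manual_review", None)
    # Normalize so the scores behave like probabilities even if they don't sum to 1.
    best = max(scores, key=scores.get)
    confidence = scores[best] / total
    if confidence >= accept_at:
        return ("accept", best)
    if confidence >= retake_at:
        return ("retake", None)
    return ("manual_review", None)
```

Normalizing first means the rule still behaves sensibly if a model's raw outputs don't sum exactly to 1.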

Accuracy is probably in the 85-92% range for broad age categories (under 13 vs. 13+). For Roblox's six categories, accuracy is likely lower, maybe 75-85%. That's why appeals exist and why behavioral monitoring happens.
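Why would finer categories be less accurate? A short calculation makes the intuition concrete. Assume, purely for illustration, that the estimator is unbiased with a Gaussian error of 2 years (an invented figure, not Persona's), and take a user who is 14.5 years old. The broad question (13 or older?) only goes wrong if the estimate drops below 13; the narrow one (13-15?) also goes wrong if the estimate reaches 16.

```python
import math


def normal_cdf(x: float, mean: float, sd: float) -> float:
    """Cumulative probability of a normal distribution, computed via the error function."""
    return 0.5 * (1.0 + math.erf((x - mean) / (sd * math.sqrt(2.0))))


def p_estimate_in(low: float, high: float, true_age: float, sd: float) -> float:
    """Probability that an unbiased estimate with Gaussian error lands in [low, high)."""
    return normal_cdf(high, true_age, sd) - normal_cdf(low, true_age, sd)


# Illustrative assumptions: a 14.5-year-old user and a 2-year error standard deviation.
true_age, sd = 14.5, 2.0
broad = p_estimate_in(13, math.inf, true_age, sd)   # right on "13+" vs "under 13"
narrow = p_estimate_in(13, 16, true_age, sd)        # right on the "13-15" category
print(f"broad: {broad:.2f}, narrow: {narrow:.2f}")
```

Under these assumptions the broad split comes out at about 0.77 and the 13-15 category at about 0.55. The absolute numbers depend entirely on the assumed error and on how close the true age sits to a boundary (users far from any boundary fare much better), but the ordering holds: every extra boundary is another place to be wrong.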

The system can be fooled. Someone with unusually youthful appearance might be placed in a lower age group. Someone who looks older might be placed in a higher group. Makeup, filters, and certain lighting can throw off the algorithm.

But, and this is important, the purpose isn't perfect accuracy. It's creating enough friction that casual age misrepresentation becomes harder. A 30-year-old predator trying to pose as a 14-year-old has to do one of the following:

- Actually look like a 14-year-old (most don't).

- Use heavy makeup and tricks (which can work but requires effort).

- Deliberately degrade image quality with bad lighting or angles (which typically triggers a retake).

That friction reduces the volume of casual predatory behavior.

Estimated data shows that the majority of Roblox users fall within the 13-15 age group, with significant portions in the 9-12 and 16-17 groups as well.

Comparison to Other Platforms' Age Verification Approaches

Roblox isn't the first platform to implement mandatory age verification, so there's context.

Discord has optional age verification for some features. It's not mandatory. If you want to access NSFW channels, Discord verifies age via phone number. That's weaker than facial verification but lower friction.

TikTok uses a combination of phone verification, ID verification, and behavioral analysis. It doesn't rely on facial recognition alone. The company has been criticized for allowing underage users to circumvent age gates.

YouTube Kids uses parental controls and app-level restrictions rather than user verification. Google controls the environment, not user identity verification.

Fortnite (Epic Games) doesn't require age verification for chat. It uses moderation and parental controls instead. Epic has resisted implementing mandatory verification, arguing it's unnecessarily invasive.

Minecraft (Microsoft) relies on parental controls in the Microsoft account system. No mandatory verification for chat.

Which approach is most effective? It depends on what problem you're solving.

Facial verification (Roblox's new approach) is the most technically sophisticated and creates the most friction. It's also the hardest to circumvent, but the most privacy-invasive if done incorrectly.

Phone verification (Discord) is moderate friction and moderate effectiveness. Most people have unique phone numbers, so it prevents casual multi-accounting.

Behavioral analysis (various platforms) is low friction but requires massive data collection and machine learning. It can work but isn't foolproof.

Parental controls (YouTube Kids, Minecraft) are high friction for parents but low friction for kids (assuming they don't know the workarounds).

Roblox chose the option that creates the most friction for users but the highest accuracy for age verification. That's because the legal and reputational stakes were so high that half-measures weren't acceptable.

Potential Workarounds and How They Might Fail

People are already asking: can you circumvent this?

The honest answer: maybe, but it's getting harder.

Traditional workarounds that might have worked:

Fake ID uploads: Users could theoretically photoshop an ID for the ID verification option. Persona likely has anti-spoofing measures now (checking for obvious edits, verifying holograms, running ID authenticity checks). It's not impossible but it's harder.

Using someone else's face: A 30-year-old could theoretically use a photo of a 12-year-old. But Persona's system has liveness detection, meaning it verifies the photo is actually being taken in real-time, not uploaded. The system might require blinking, head movement, or other live detection checks. That prevents simple photo spoofing.
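Challenge-response is one generic way liveness checks work, sketched below. The system picks a random sequence of actions only at verification time, so a photo or pre-recorded clip can't contain the right responses. How Persona actually implements liveness isn't described here; the action names, the 10-second window, and the `observed` list (which assumes some video-analysis step has already labeled what the person did) are all hypothetical.

```python
import secrets
import time
from typing import List, Optional

# Hypothetical set of prompts an app could show during a live selfie.
ACTIONS = ["blink", "turn_left", "turn_right", "smile", "nod"]


def issue_challenge(n: int = 3) -> dict:
    """Pick an unpredictable sequence of actions plus a one-time nonce."""
    return {
        "nonce": secrets.token_hex(16),
        "actions": [secrets.choice(ACTIONS) for _ in range(n)],
        "issued_at": time.monotonic(),
    }


def check_response(challenge: dict, nonce: str, observed: List[str],
                   max_seconds: float = 10.0, now: Optional[float] = None) -> bool:
    """Accept only a fresh response, for this nonce, whose actions match in order.

    `observed` is assumed to come from a separate (hypothetical) video-analysis
    step that labels what the person actually did on camera.
    """
    now = time.monotonic() if now is None else now
    fresh = (now - challenge["issued_at"]) <= max_seconds
    same_session = secrets.compare_digest(nonce, challenge["nonce"])
    return fresh and same_session and observed == challenge["actions"]
```

The nonce ties a response to one session, and the time window stops an attacker from taking the challenge away and preparing a matching fake at leisure.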

VPN and regional changes: You could theoretically try to appear to be in a different region where the rules differ. But Roblox knows your account's creation date, history, and behavioral patterns. A new account claiming to be an adult but exhibiting child-like behavior could get flagged.

Account hacking: Someone could hack an adult's account and use it to chat with kids. That's possible but it's a different problem (account security, not age verification). Roblox's behavioral monitoring might catch sudden changes in chat patterns.

Repeated attempts and fresh accounts: Someone could keep retrying with different angles, lighting, or new accounts until they get the age category they want. But after a few failures, the system probably requires manual review or ID verification. There's likely a limit to retry attempts.
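A cap on selfie attempts, as speculated above, could be as simple as a per-account counter that reroutes the user once the cap is hit. The limit of three and the route names are assumptions for illustration; Roblox hasn't published its actual retry policy. A counter keyed on the account alone doesn't stop someone from opening new accounts, which is where the behavioral signals discussed earlier come in.

```python
from dataclasses import dataclass


@dataclass
class AttemptTracker:
    """Hypothetical per-account cap on facial verification attempts.

    `max_face_attempts` is an illustrative assumption, not Roblox's real limit.
    """

    max_face_attempts: int = 3
    attempts: int = 0

    def next_route(self) -> str:
        """Return the route the user's next verification attempt must take."""
        if self.attempts >= self.max_face_attempts:
            # Cap reached: no more selfie retries with a different angle or lighting;
            # fall back to ID upload or manual review instead.
            return "id_or_manual_review"
        self.attempts += 1
        return "face"
```

Calling `next_route()` four times on a fresh tracker yields three facial attempts followed by the ID or manual-review route.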

None of these workarounds are impossible, but all of them are harder than the previous system (just typing a birth year). That's the point. The goal isn't to make circumvention literally impossible—it's to raise the difficulty enough that casual circumvention drops significantly.

So will some predators still get through? Probably. But the volume will drop. The barrier to entry is higher now.

Estimated data shows that the most significant impact of chat changes is on users under 9, with minimal impact on adults.

What Parents Need to Know About the New System

If you have kids on Roblox, this changes how you approach the platform.

First, understand what you now have control over:

- Opt-in chat: For kids under 9, you're deciding whether they can chat at all. That's explicit parental control. Use it. Most security experts recommend not enabling chat for very young children.

- Age verification oversight: You can see your child's verified age category in parental controls. You can adjust it manually if you think the verification was wrong.

- Account creation: You still create and manage the account, at least in principle. You can choose not to enable chat, not to complete verification, or to limit it.

What you don't have control over:

- Conversation monitoring: Roblox won't show you what your kid chats about. You can't see conversations, log them, or audit them.

- Who they talk to: You can't whitelist or blacklist specific users. The platform handles segmentation automatically.

- Chat features in games: Some Roblox games have in-game chat separate from the platform chat. Those might not go through age verification. Check individual game settings.

Best practices for parents:

Communicate about verification: Explain to your child why age verification exists. Don't present it as a punishment. Frame it as a safety measure.

Use parental controls fully: Set the chat options to match your comfort level. "Friends only" is more restrictive than "everyone in age group." Start restrictive and relax it as your child matures and demonstrates responsibility.

Monitor play time and friends: You should still know which games your kid plays and which friends they interact with, even without seeing exact conversations.

Report suspicious behavior: If your child mentions strange interactions or uncomfortable conversations, report them to Roblox immediately. User reports are one of the most effective ways to catch people evading the age system.

Keep conversations open: Ask your kid regularly about their Roblox experience. Who do they chat with? What do they talk about? Are they ever uncomfortable?

The new verification system isn't a substitute for parental involvement. It's an additional layer of protection that hopefully reduces the worst cases.

The Industry Reaction and What It Means for Gaming

Roblox's implementation has been watched closely by other platforms.

The gaming industry is generally split into two camps:

Camp 1: The safety-first advocates believe Roblox made the right move and other platforms should follow. Industry voices pushing for this include child safety organizations, some regulators, and competitor platforms that want to avoid the same lawsuits.

Camp 2: The privacy advocates argue that facial verification is overkill and that less invasive approaches work. This camp includes privacy organizations, some tech companies, and user advocacy groups.

Most major platforms are watching legal developments closely. If Roblox's implementation successfully reduces incidents without major security breaches, other platforms will probably adopt similar systems. If there are breaches or if false positives create problems, platforms will move more slowly.

The precedent this sets is important. Regulators will now point to Roblox as an example of what's technically feasible. The phrase "industry best practices" will start including facial age verification. That means:

- Smaller platforms will face pressure to implement similar systems or explain why they don't.

- Platforms that don't implement verification will face higher legal risk.

- Age verification becomes a competitive feature, not just a regulatory requirement.

- The cost of entry for platforms serving young users goes up.

This likely accelerates consolidation in the gaming industry. Large players like Roblox and Epic Games can afford sophisticated verification systems. Smaller indie platforms cannot. So we'll probably see smaller platforms either getting acquired by larger ones, shut down, or forced to implement less sophisticated (and potentially less effective) systems.

Implementation Rollout and Timeline

Roblox didn't flip a switch and make age verification mandatory globally overnight.

The company tested the system in a few specific markets first. Limited rollout in select countries allowed Roblox to:

- Identify technical issues before global rollout.

- Test the user experience and identify friction points.

- Monitor for unexpected problems or security issues.

- Adjust policies based on regulatory feedback.

- Build operational capacity to handle support tickets.

After the test phase proved successful (or at least acceptable), Roblox rolled out globally. Not all features immediately. Chat was first. Other age-gated content likely follows.

The timeline matters because it signals to regulators that Roblox is being deliberate, not reckless. It also gives platforms like Discord, Fortnite, and others time to react and plan their own implementations.

For Roblox, the gradual rollout also reduces the support burden. A global rollout of mandatory facial verification hitting 680 million users simultaneously could overwhelm both the verification pipeline and customer support. A phased rollout lets the company scale support as it goes.

Looking forward, Roblox will likely:

- Expand to more features: Age verification for avatar customization, in-game purchases, Discord integration, etc.

- Improve the algorithm: Refine facial age estimation based on real-world accuracy data.

- Add more signals: Expand behavioral analysis to catch misclassifications and people evading the system.

- Update policies: Respond to regulatory feedback and incorporate new requirements.

- Broaden the requirement: Eventually make verification mandatory for every account, not just for chat access.

The first year of implementation will be critical. Success means fewer incidents, no major breaches, user acceptance. Failure means legal problems, regulatory intervention, and pressure to change course.

Effectiveness Questions: Does This Actually Work?

Here's the uncomfortable truth: age verification is good at reducing one specific problem (adults misrepresenting their age), but it's not a complete solution to child safety.

It does help with:

- Preventing casual predatory behavior: A 40-year-old who can't pretend to be a teenager is less likely to attempt grooming.

- Creating friction for bad actors: Even sophisticated predators have to work harder.

- Limiting legal liability: Roblox can now argue it implemented reasonable measures.

- Reducing volume: Much of the predatory behavior at scale likely comes from low-effort actors.

It doesn't help with:

- Sophisticated predators: Someone determined to circumvent the system with technology or stolen identity documents might still manage it.

- Behavioral red flags: Age verification doesn't catch predatory behavior patterns, only age misrepresentation.

- In-game predation: If someone is actually the age they claim but still behaves predatorily, the system doesn't catch them.

- Off-platform grooming: Once someone connects with a kid on Roblox, they can move the conversation to Discord or other platforms where these protections don't apply.

- Non-predatory harm: Bullying, harassment, exposure to inappropriate content—age verification doesn't stop these.

So: is it effective? Yes, partially. Will it solve child safety completely? No. It's one tool in a larger toolkit that should include moderation, behavioral analysis, parental controls, and user reporting.

Roblox is positioning it as a major win, and in isolation, it is. But it's not "the" solution. It's "a" solution.

The most honest assessment is probably this: age verification reduces the baseline risk significantly but creates a new frontier where more sophisticated actors might operate. It's a step forward, not a finish line.

Privacy Implications and Data Handling

Roblox and Persona say facial data deletes immediately after processing, but let's dig into what that actually means.

"Deletion" in tech often means several things:

- Cryptographic erasure: The key needed to decrypt the data is deleted, making the data unrecoverable (encrypted copies may still physically exist, but they can't be read).

- Secure deletion: Data is overwritten multiple times using secure deletion protocols.

- Immediate deletion: Data deletes as soon as processing completes.

- Delayed deletion: Data deletes after 24-72 hours (gives time for backups and edge cases).
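Cryptographic erasure, the first method above, is easy to demonstrate. In this toy sketch each record gets its own random key, and "deleting" a record means discarding only that key. It uses a one-time pad (XOR with random bytes the same length as the data) so it needs nothing beyond Python's standard library; production systems would typically use a standard cipher such as AES with keys held in a key-management service. `ShreddableStore` is a hypothetical name, and nothing here reflects how Persona actually stores data.

```python
import secrets
from typing import Dict


def xor_bytes(data: bytes, key: bytes) -> bytes:
    """XOR data with an equal-length key (one-time-pad encryption and decryption)."""
    return bytes(d ^ k for d, k in zip(data, key))


class ShreddableStore:
    """Toy cryptographic-erasure store (hypothetical, for illustration only)."""

    def __init__(self) -> None:
        self.ciphertexts: Dict[str, bytes] = {}  # could be replicated or backed up
        self.keys: Dict[str, bytes] = {}         # one random key per record

    def put(self, record_id: str, data: bytes) -> None:
        key = secrets.token_bytes(len(data))
        self.keys[record_id] = key
        self.ciphertexts[record_id] = xor_bytes(data, key)

    def get(self, record_id: str) -> bytes:
        return xor_bytes(self.ciphertexts[record_id], self.keys[record_id])

    def shred(self, record_id: str) -> None:
        # Drop only the key. The ciphertext (and any backup of it) may survive, but
        # without the key it is indistinguishable from random bytes. Note that `del`
        # removes the reference; it does not securely wipe memory in Python.
        del self.keys[record_id]
```

The catch mirrors the caveat in the list above: erasure is only as good as the key handling. If a key was ever copied into a log or backup, shredding one copy doesn't make the data unrecoverable.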

Roblox hasn't specified which method Persona uses. Persona's privacy documentation says data deletes "as soon as possible" after verification. That's vague enough to cover several deletion methods.

The real privacy concern isn't the facial image itself—it's the metadata:

- Verification timestamp: When you verified your age.

- Device information: Device ID, IP address, device type used for verification.

- Retry patterns: How many attempts you needed.

- Behavioral data: Patterns of re-verification or behavioral changes that triggered re-verification.

- Account linkage: Verification tied to your Roblox account.

This metadata stays. It gets correlated with chat behavior, gameplay, purchase history, friends list, etc. Over time, Roblox builds a very detailed profile of who you are, including an age estimate derived from your biometric data.

That data is incredibly valuable. Advertisers pay a lot for demographic information, especially for users under 18 (even though they're not supposed to target them directly).

Will Roblox sell this data? Probably not directly. But third-party analysis, internal use for algorithm optimization, government data requests—those are all possible.

The GDPR restricts Roblox's use of this data. If a user is in the EU, Roblox has specific legal obligations about retention, sharing, and usage. If a user is in California (CCPA), there are different but similar obligations. But in many countries, there's no comparable regulation.

For users in less regulated jurisdictions, the privacy implications are real and meaningful.

Future of Age Verification: Where This Is Heading

Roblox's implementation is probably not the final form of age verification in gaming.

Here are likely next steps:

Decentralized verification: In a few years, we'll probably see third-party verification services that work across platforms. Instead of each platform running its own verification, users verify once with a trusted service (government, financial institution, etc.) and reuse that verification across multiple platforms. This reduces privacy concerns because no single platform has the facial data.
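At its simplest, verify-once-reuse-everywhere means the trusted service hands the user a signed token that states only an age category and an expiry, and each platform checks the signature instead of scanning a face again. The sketch below is hypothetical: it uses an HMAC with a shared secret so it runs on Python's standard library alone, whereas a real cross-platform scheme would more likely use public-key signatures so platforms can verify tokens without holding the issuer's secret. The key, claim names, and one-day lifetime are all invented for illustration.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

# Hypothetical shared secret between the verification service and a platform.
ISSUER_KEY = b"demo-only-secret"


def _sign(body: str) -> str:
    return hmac.new(ISSUER_KEY, body.encode(), hashlib.sha256).hexdigest()


def issue_token(age_bracket: str, ttl_seconds: int = 86400, now: Optional[float] = None) -> str:
    """Issuer side: encode only the age category and an expiry, then sign them."""
    now = time.time() if now is None else now
    claims = {"age_bracket": age_bracket, "exp": int(now + ttl_seconds)}
    body = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode()).decode()
    return f"{body}.{_sign(body)}"


def verify_token(token: str, now: Optional[float] = None) -> Optional[str]:
    """Platform side: return the age category if the token is authentic and unexpired."""
    now = time.time() if now is None else now
    body, sep, sig = token.rpartition(".")
    if not sep or not hmac.compare_digest(sig.encode(), _sign(body).encode()):
        return None  # malformed or tampered token
    claims = json.loads(base64.urlsafe_b64decode(body.encode()))
    if claims["exp"] < now:
        return None  # expired: the user must re-verify with the issuer
    return claims["age_bracket"]
```

The token carries no name, face, or birth date, so a platform that receives it learns only the category it needs for chat rules.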

Liveness detection improvements: Current liveness detection can be fooled with sophisticated video playback. Future systems will probably require multiple verification methods (facial + voice + behavioral patterns) to confirm liveness.

AI-resistant approaches: As deepfakes improve, facial recognition becomes less trustworthy. Age verification might shift to blockchain-based systems, government-issued digital IDs, or hybrid approaches that combine multiple signals.

Mandatory industry standards: Regulators will probably mandate that platforms use certified age verification services that meet specific accuracy and privacy standards. This could reduce fragmentation but also increase costs.

Age verification for all online services: Gaming might just be the beginning. Banking apps, social media, news sites: any service that minors use might eventually require age verification. That's good for safety but concerning for privacy and surveillance.

Privacy-preserving alternatives: Expect more work on zero-knowledge proofs, which let you prove you meet an age threshold without revealing your identity or facial data. These exist in research but aren't mainstream yet.

Roblox's current system is fairly advanced for 2025, but in 10 years it will probably look primitive compared to what we'll have developed by then.

Comparing This to Other Regulatory Approaches

Age verification isn't the only way to solve child safety problems.

European approach: The UK Online Safety Act (passed in 2023 as the Online Safety Bill) and the EU Digital Services Act focus more on platform responsibility for content moderation, algorithmic transparency, and user reporting mechanisms. Age checks are one requirement among many rather than the centerpiece; the emphasis is on what platforms do with that knowledge.

US approach: No comprehensive federal law yet. Individual states and the FTC focus on specific harms (dark patterns, targeted advertising to minors, data collection practices). Age verification is only one tool.

Chinese approach: Strict real-name registration requirements, combined with heavy content moderation and behavioral monitoring. More Big Brother-style, but arguably more effective at preventing harm.

Australian approach: Balanced combination of age verification, parental involvement, and industry codes of conduct with government enforcement.

Roblox's choice reflects the pressure it faced in the US: make age verification the primary mechanism, then build platform safeguards such as age-based chat groups on top of it. Whether that's sufficient is an open question.

International regulators are watching to see whether age verification alone solves the problem or whether platforms need to implement additional measures.

FAQ

What is the Roblox age verification requirement?

Roblox now requires all users globally to complete age verification before accessing chat features. The verification uses facial recognition technology (provided by third-party vendor Persona), though users 13+ can alternatively verify using ID. Roblox deletes facial images after processing, though metadata about verification remains.

How does the Roblox facial age verification process work?

Users grant camera access in the Roblox app and follow on-screen prompts to take a selfie. Persona's AI analyzes facial features to estimate age category (under 9, 9-12, 13-15, 16-17, 18-20, or 21+), then Roblox receives the age result. The facial image is deleted after processing, and users are assigned to an age-based chat group accordingly.
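To make that data flow concrete, here is a minimal Python sketch of what a platform might keep from a selfie check. It assumes the vendor's model returns a single estimated age in years; `estimate_age`, `tier_for_age`, `verify_selfie`, and `VerificationResult` are hypothetical names, not Persona's or Roblox's actual API, and real age-estimation systems also handle confidence margins that this sketch ignores.

```python
import bisect
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable

# Tier labels from this article, youngest to oldest.
TIER_LABELS = ["under 9", "9-12", "13-15", "16-17", "18-20", "21+"]
# Lower age bound (in years) of every tier after the first.
TIER_BOUNDS = [9, 13, 16, 18, 21]


def tier_for_age(age: float) -> str:
    """Map an estimated age in years to one of the six tiers."""
    # bisect_right counts how many lower bounds the age has reached,
    # which is exactly the index of its tier in TIER_LABELS.
    return TIER_LABELS[bisect.bisect_right(TIER_BOUNDS, age)]


@dataclass(frozen=True)
class VerificationResult:
    """What the platform keeps: the tier and a timestamp, not the image."""
    tier: str
    verified_at: datetime


def verify_selfie(image: bytes,
                  estimate_age: Callable[[bytes], float]) -> VerificationResult:
    """Hypothetical flow: estimate an age from the selfie, keep only the tier.

    `estimate_age` stands in for the vendor's model (an assumption,
    not Persona's real API).
    """
    age = estimate_age(image)
    # The result carries no image data; persisting or deleting the original
    # bytes is up to the caller (and, in practice, the vendor).
    return VerificationResult(tier=tier_for_age(age),
                              verified_at=datetime.now(timezone.utc))


if __name__ == "__main__":
    # A stub estimator that always returns 14.2 years.
    result = verify_selfie(b"<selfie bytes>", estimate_age=lambda img: 14.2)
    print(result.tier)  # 13-15
```

Returning only the tier and a timestamp reflects the data-minimization idea described in this answer: chat permissions never need the image itself. Code like this doesn't prove deletion, though; that still depends on the vendor's retention practices.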

Who is required to complete age verification on Roblox?

All Roblox users who want to access chat features must complete age verification, regardless of their account age or location. However, verification is technically optional: users can still play Roblox games without verifying; they just can't use chat until they complete the age check.

What age groups can chat with each other on Roblox?

Roblox created six age categories, and users can chat with people in their own group plus directly adjacent groups. For example, users aged 13-15 can chat with 9-12, 13-15, and 16-17 age groups, but not with under 9 or 18+. Chat is disabled by default for children under 9 unless parents explicitly opt in.
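The grouping rule in this answer is simple enough to express directly. The Python sketch below assumes the six tiers are ordered youngest to oldest and that "adjacent" means exactly one step away in that order; the names (`AgeGroup`, `User`, `parent_enabled_chat`, `can_chat`, `reachable_groups`) are illustrative, not Roblox's real implementation, which isn't public.

```python
from dataclasses import dataclass
from enum import IntEnum


class AgeGroup(IntEnum):
    """The six age tiers, ordered youngest to oldest."""
    UNDER_9 = 0
    AGE_9_12 = 1
    AGE_13_15 = 2
    AGE_16_17 = 3
    AGE_18_20 = 4
    AGE_21_PLUS = 5


@dataclass
class User:
    group: AgeGroup
    # Hypothetical flag: a parent has explicitly turned chat on for an under-9 account.
    parent_enabled_chat: bool = False


def chat_enabled(user: User) -> bool:
    """Chat is off by default for under-9 users unless a parent opts in."""
    return user.group != AgeGroup.UNDER_9 or user.parent_enabled_chat


def can_chat(a: User, b: User) -> bool:
    """Two users may chat if both have chat enabled and their tiers are
    the same or directly adjacent (at most one step apart)."""
    if not (chat_enabled(a) and chat_enabled(b)):
        return False
    return abs(a.group - b.group) <= 1


def reachable_groups(group: AgeGroup) -> list[AgeGroup]:
    """All tiers a verified user in `group` could chat with."""
    return [g for g in AgeGroup if abs(g - group) <= 1]


if __name__ == "__main__":
    print([g.name for g in reachable_groups(AgeGroup.AGE_13_15)])
    # ['AGE_9_12', 'AGE_13_15', 'AGE_16_17']
    print(can_chat(User(AgeGroup.AGE_13_15), User(AgeGroup.AGE_18_20)))  # False
    print(can_chat(User(AgeGroup.UNDER_9), User(AgeGroup.AGE_9_12)))  # False
    print(can_chat(User(AgeGroup.UNDER_9, parent_enabled_chat=True),
                   User(AgeGroup.AGE_9_12)))  # True
```

Because 18-20 and 21+ are separate tiers, a 13-15 user is at least two steps away from both, which is why nobody 18 or older is reachable from that group.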

Is facial verification the only way to verify your age on Roblox?

For users 13 and older, Roblox offers ID verification as an alternative to facial recognition. This option lets users upload a photo of a driver's license or passport instead. Users under 13 cannot use ID verification; they must use facial verification or have a parent manually adjust their age in parental controls.

What happens if the age verification gets your age wrong?

If you believe the age check estimated your age incorrectly, you can appeal the decision. Roblox lets users retry the facial verification process, escalate to ID verification for manual review, or ask a parent to adjust their verified age through parental controls. You can make multiple attempts and appeals before any restrictions apply.

Will Roblox use my facial data for anything else besides age verification?

Roblox and Persona both state that facial images are deleted after age verification is complete and are not used for identification, advertising, or other purposes. However, metadata about verification (timestamps, retry patterns, behavioral signals) remains in Roblox's systems and may be used for fraud detection or continuous age accuracy monitoring.

Why did Roblox implement mandatory age verification now?

Roblox faced lawsuits from multiple state attorneys general (Texas, Louisiana, and others) alleging the platform exposed children to grooming, predatory behavior, and explicit content due to inadequate age verification. Mandatory age verification was implemented to address legal liability, meet regulatory requirements, and strengthen child safety by making age misrepresentation more difficult.

Does Roblox let parents see their child's conversations?

No. Age verification doesn't include conversation monitoring or parental oversight of chats. Parents can enable or disable chat access and adjust their child's age category, but Roblox doesn't provide tools to view, monitor, or log your child's actual conversations. Parents must rely on open communication and reporting suspicious behavior.

What other platforms have implemented similar age verification systems?

Most major gaming platforms use age-related safety measures, though few use mandatory facial verification like Roblox. Discord uses phone verification for NSFW channel access. YouTube Kids uses app-level parental controls. Fortnite uses moderation and reporting. Roblox's facial verification approach is among the most technically sophisticated mandatory systems currently implemented on mainstream gaming platforms.

Key Takeaways

Roblox's mandatory age verification represents a significant shift in how major platforms approach child safety. Here's what actually matters:

For parents: You now have more control over whether your young children can chat on Roblox, but you don't have visibility into their conversations. The age segmentation should reduce contact between children and adults, but it's not foolproof. Stay involved and talk to your kids about their online interactions.

For users: If you want to access chat, you'll need to complete age verification. The process takes a few minutes, and you can use facial recognition or ID verification depending on your age and preferences. Try it before assuming it won't work; if the result is wrong, you can retry or appeal.

For the industry: This sets a new expectation. Other platforms will face pressure to implement similar systems or explain why they don't. The regulatory bar for child safety just got higher everywhere. Smaller platforms that can't afford sophisticated verification systems will struggle.

For society: We're trading some privacy (facial data collection) for improved safety (reduced age misrepresentation). Whether that trade-off is worth it depends on how effective the system actually is at preventing harm. Early signs are positive, but long-term effectiveness remains uncertain.

Roblox didn't invent age verification. But it did implement it at scale, globally, on a platform with an enormous number of young users. That will shape how we think about online child safety going forward.
