Introduction: When Digital Gatekeeping Met Government Policy

Walk into any convenience store, and you'll see the ritual play out dozens of times a day. Someone approaches the counter with alcohol or cigarettes. The clerk asks for ID. It's straightforward, almost invisible. But online? Everything gets complicated.

For nearly two decades, policymakers have been wrestling with a deceptively simple question: how do you verify someone's age on the internet? The answers have shifted dramatically. First came porn sites. Then social media platforms. Now, lawmakers have set their sights on something different: app stores.

The logic seems almost obvious in hindsight. App stores like Apple's App Store and Google Play function as gatekeepers. They're centralized. They control what billions of people can access on their phones. Rather than forcing regulators to track down millions of individual apps scattered across the internet, you could simply install age verification at the entrance.

But here's where it gets messy. Age verification on the internet isn't actually simple. It collides with privacy rights, First Amendment protections, cybersecurity vulnerabilities, and practical implementation challenges that make the policy sound far easier than it actually is.

This article explores how app stores became the latest battleground in the age verification wars, what regulators are pushing for, what the technical and legal obstacles actually are, and what might actually work without creating worse problems than the ones we're trying to solve.

TL;DR

- The Shift: Lawmakers have moved age verification requirements from porn sites to app stores, viewing them as centralized gatekeepers

- The Logic: Verifying age at a single point (the app store) seems more efficient than requiring verification for millions of individual apps

- The Constitutional Problem: Previous Supreme Court rulings found age verification burdensome for adults accessing legal speech; social media laws have been blocked on similar grounds

- The Implementation Challenge: App stores would need to verify billions of users, creating massive privacy and security risks

- The Real Question: Whether app stores can actually be forced to become age-verification gatekeepers without violating constitutional protections or creating dangerous data repositories

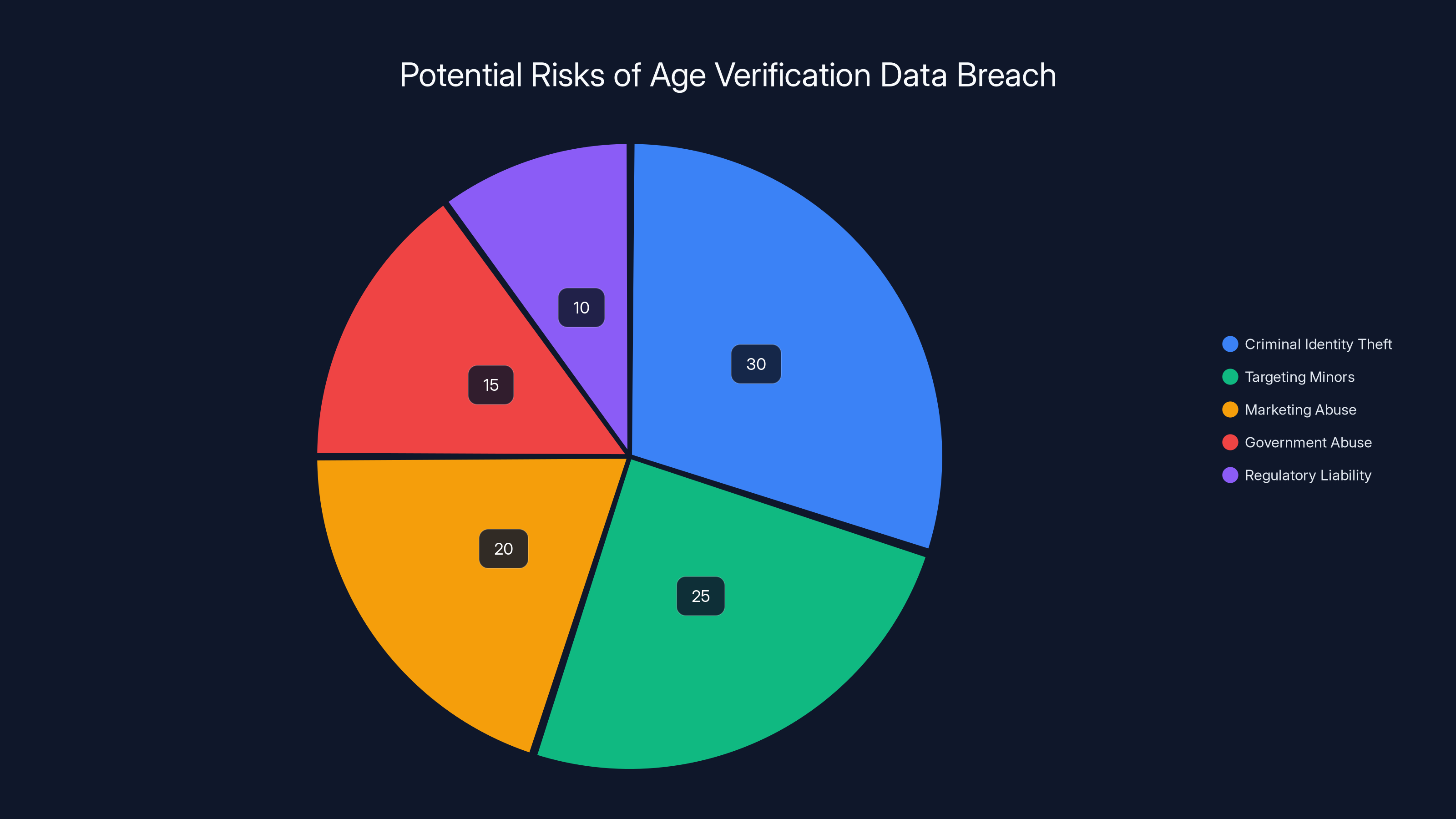

Estimated data shows that criminal identity theft poses the highest risk in the event of an age verification data breach, followed by targeting minors and marketing abuse.

The Historical Context: Why App Stores Became The Target

Age verification on the internet isn't a new concept. The debate has been simmering for decades, but it's evolved dramatically in scope and focus.

Back in 2004, the Supreme Court's decision in Ashcroft v. ACLU essentially shut down mandatory age verification for adult websites. The justices found that such requirements were too burdensome for adults trying to access constitutionally protected speech. The court pointed out that less invasive alternatives existed, like parental controls and content filters that users could activate themselves.

That ruling didn't kill the movement entirely. Instead, advocates shifted focus. Child safety advocates argued that porn sites remained problematic, social media platforms were exposing minors to harmful content, and gaming apps were creating addiction pathways. But each of these platforms presented unique constitutional challenges.

Social media age verification laws have faced repeated court challenges. States like Arkansas passed laws requiring age verification for platforms like TikTok and Instagram, but courts blocked them. The reasoning was straightforward: while states have an interest in protecting minors from certain harmful content, social media platforms also distribute massive amounts of protected speech. Forcing adults and teens to jump through verification hoops to access legal content creates constitutional problems.

Then came the realization that changed the calculus entirely. App stores, unlike individual platforms, don't host speech themselves. They distribute it. That distinction matters legally.

Apple and Google don't create the content on TikTok or Instagram. They just distribute the apps. That means age verification at the app store level could theoretically be treated differently than age verification at the content platform level. You're not restricting access to speech directly. You're restricting access to the distribution mechanism.

This shift also coincided with growing evidence that minors were accessing age-inappropriate content. Studies from organizations like Common Sense Media showed that children regularly used apps with mature content ratings, sometimes with parental awareness but often without it.

For lawmakers, app stores seemed like the perfect pressure point. They're run by just two companies in most markets. They already rate content. Installing age verification there would theoretically block millions of inappropriate app downloads before they happened.

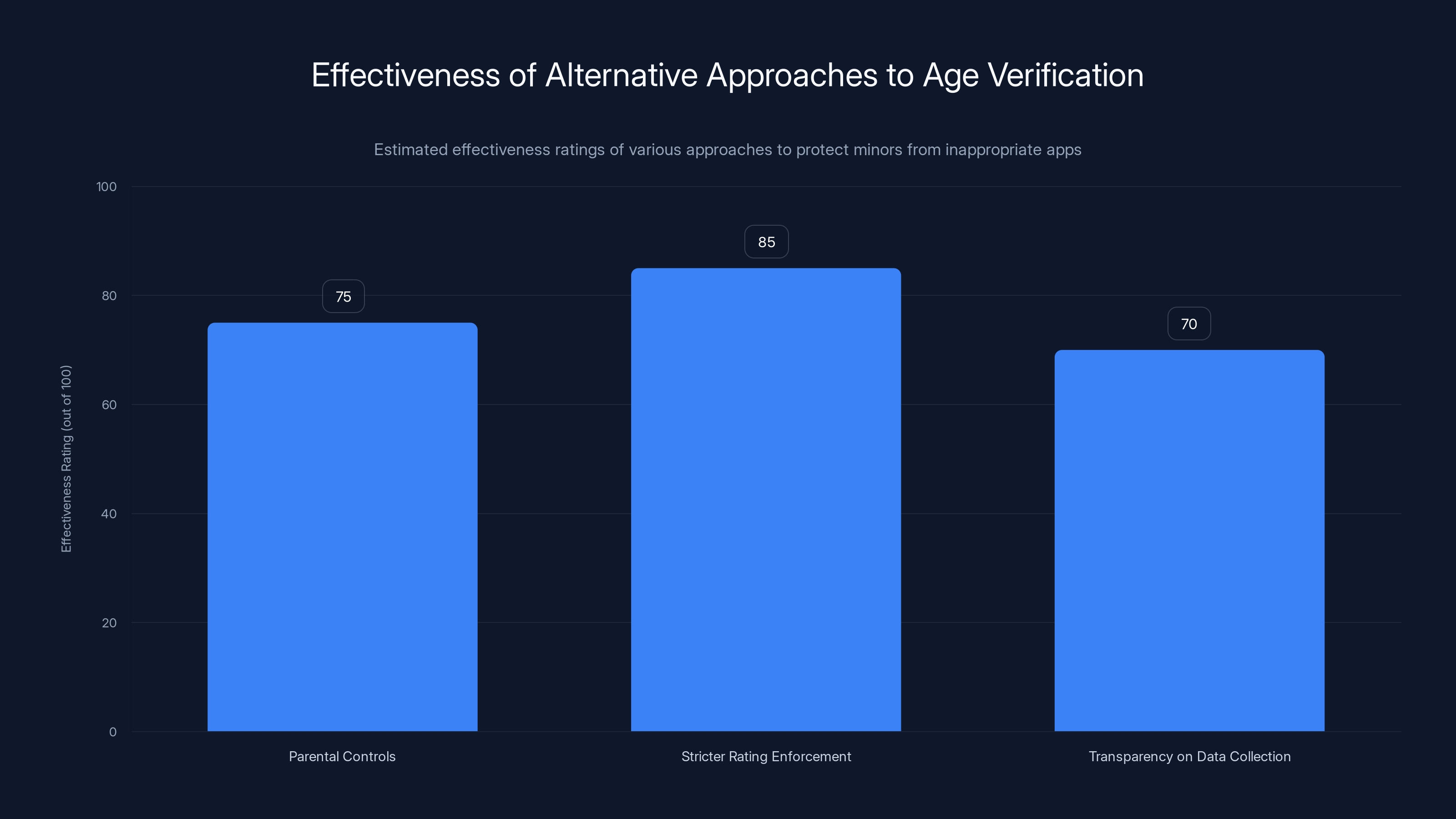

Stricter enforcement of app rating standards is estimated to be the most effective approach, with an 85 out of 100 effectiveness rating. Estimated data.

The Current Regulatory Landscape: Who's Pushing For What

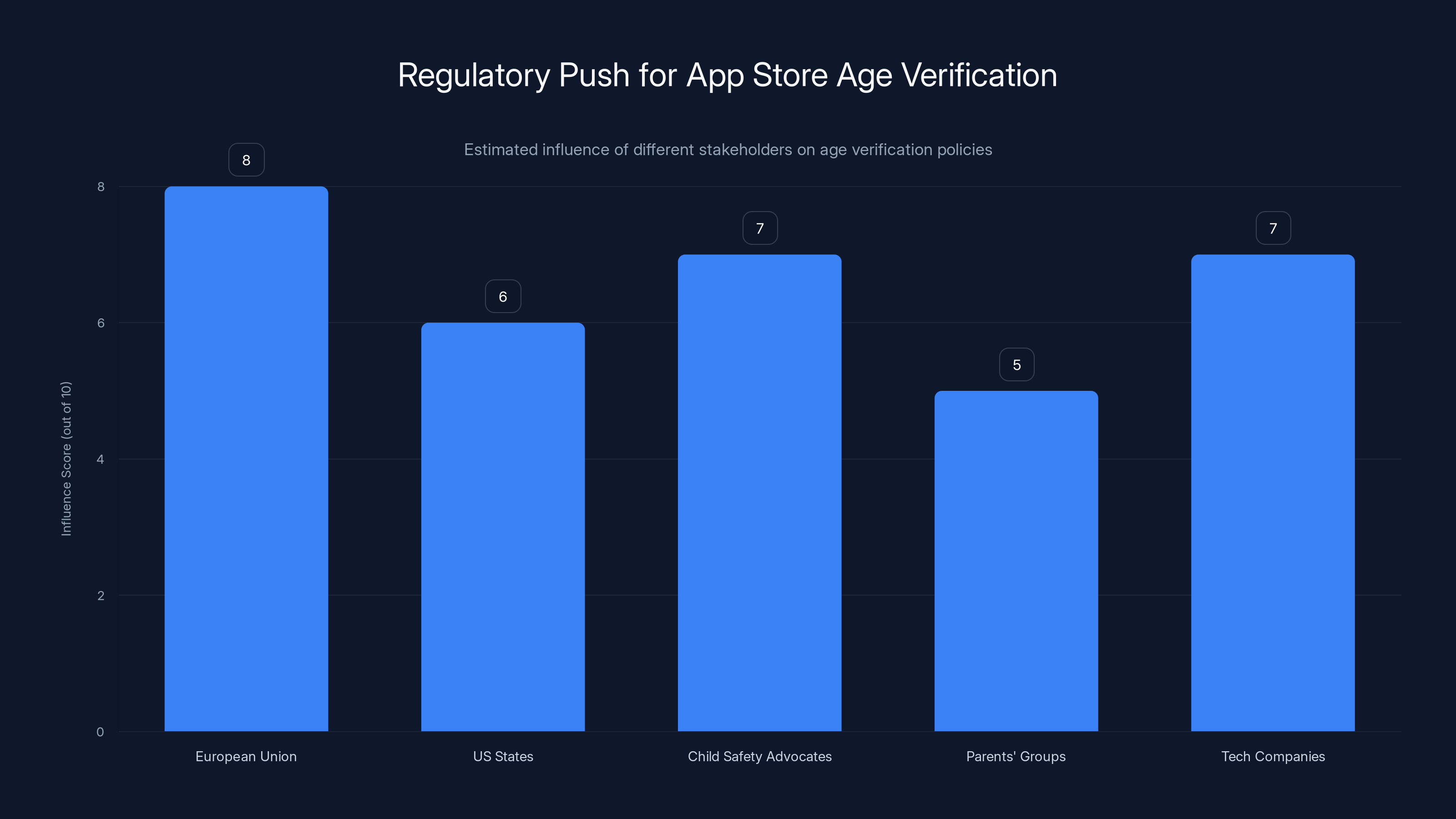

Age verification for app stores isn't a unified movement. Different jurisdictions are pushing different approaches, and the momentum is building faster than most people realize.

The European Union has been the most aggressive. The Digital Services Act (DSA) imposes age verification requirements on platforms that host user-generated content or social features. While it doesn't explicitly mandate app store age verification, the pressure is being felt throughout the ecosystem.

In the United States, state-level legislation has emerged sporadically. Some states have focused on social media specifically. Others have tried to impose broader requirements on platforms operating within their borders. The problem is that state-level regulation collides with interstate commerce and federal authority, creating legal uncertainty.

Then there's the lobbying pressure. Child safety advocates have become increasingly vocal about app store age verification. Organizations like the National Center for Missing & Exploited Children have argued that app stores should implement meaningful protections. Parents' groups have echoed the sentiment, pointing to examples of children accessing gambling apps, adult dating apps, and games designed to maximize addictive engagement.

Apple and Google have responded to pressure by implementing new tools. Apple added communication safety features to iMessage and introduced age-related app restrictions. Google implemented similar parental controls through the Family Link feature. But advocates argue these are opt-in solutions that rely on parental awareness and action.

What's notable is that regulators aren't just suggesting age verification. Some are actively considering making it mandatory. The threat of regulatory action has pushed both companies to adopt more robust verification mechanisms, at least for certain high-risk app categories like gambling and dating apps.

However, the regulatory environment remains fragmented. What's required in the EU may be voluntary in North America. What's mandated for gambling apps might not apply to social media. This fragmentation creates compliance challenges for app store operators and doesn't necessarily solve the underlying problem across different jurisdictions.

The Technical Architecture: How Age Verification Would Actually Work

This is where the theoretical simplicity of "verify age at the app store" collides with technical reality.

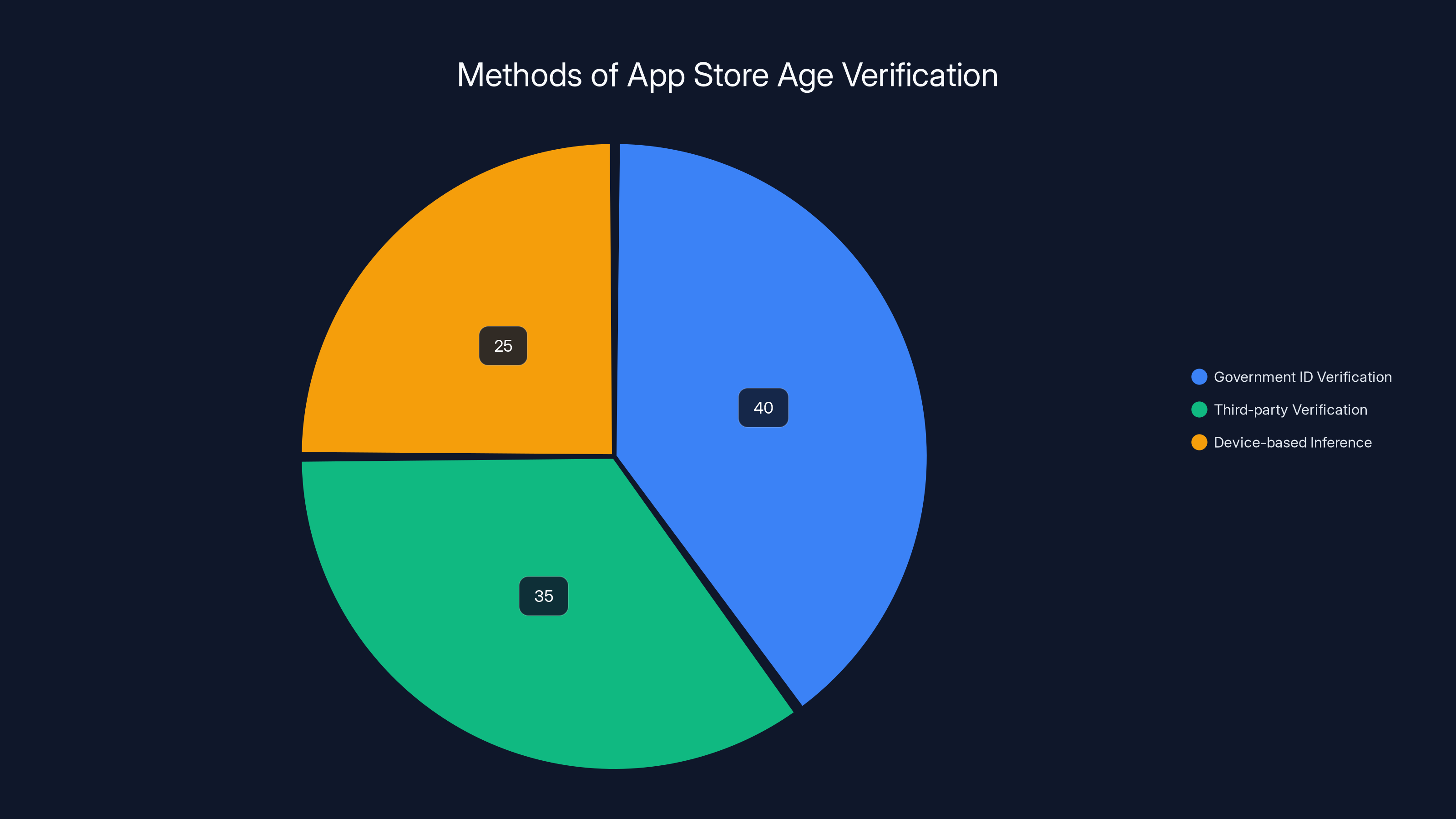

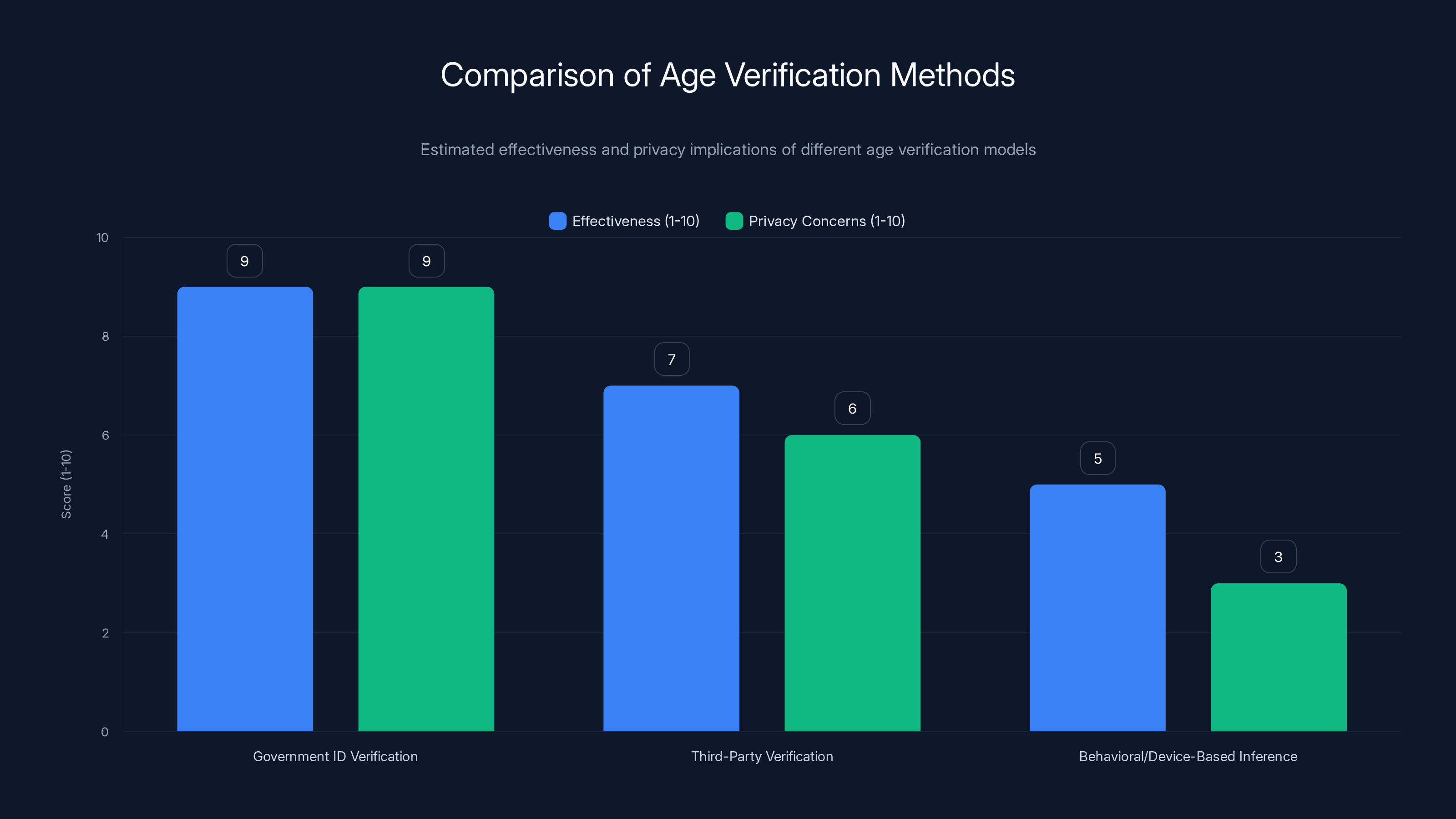

Modern age verification systems typically operate on one of three models: government ID verification, third-party verification services, or behavioral/device-based inference.

Government ID Verification is the most legally robust approach. The user scans or photographs their government-issued ID. Optical character recognition (OCR) technology extracts the birth date and other identifying information. The system verifies that the ID is genuine and that the person in the photo matches the person attempting to create the account.

This approach works well for high-stakes transactions like opening a bank account or purchasing alcohol. It's extremely difficult to circumvent. But it creates serious privacy implications at scale. Apple and Google would essentially become custodians of billions of government ID photographs and extracted data. The attack surface for a breach becomes enormous.

Third-Party Verification Services operate differently. Rather than Apple or Google storing the actual ID information, users verify their age through a third-party vendor (like IDology or Onfido). These vendors confirm the user's age and send a token back to Apple or Google confirming verification without revealing personal information.

This approach distributes the security burden and reduces the liability concentration on app stores. But it still creates privacy concerns. Users must trust both the verification service and the app store. If the verification service is compromised, millions of identities could be exposed.
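The token handoff described above can be sketched in a few lines. This is a minimal illustration, not how any named vendor or app store actually works: the function names, the claim fields, and the shared secret are all hypothetical, and a production system would more plausibly use asymmetric signatures so the app store never holds a signing key. The point it shows is that the token carries a yes/no claim and an expiry, with no name, birth date, or ID number.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

# Hypothetical secret shared by the verification vendor and the app store.
SHARED_SECRET = b"demo-secret-not-for-production"


def issue_token(over_18: bool, ttl_seconds: int = 3600,
                now: Optional[float] = None) -> str:
    """Vendor side: sign a claim that contains no personal information."""
    issued_at = int(now if now is not None else time.time())
    claim = {"over_18": over_18, "exp": issued_at + ttl_seconds}
    payload = base64.urlsafe_b64encode(json.dumps(claim, sort_keys=True).encode())
    signature = hmac.new(SHARED_SECRET, payload, hashlib.sha256).hexdigest()
    return f"{payload.decode()}.{signature}"


def check_token(token: str, now: Optional[float] = None) -> bool:
    """App store side: accept only an untampered, unexpired 'over 18' claim."""
    try:
        payload, signature = token.rsplit(".", 1)
    except ValueError:
        return False
    expected = hmac.new(SHARED_SECRET, payload.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(signature, expected):
        return False  # signature mismatch: forged or altered token
    claim = json.loads(base64.urlsafe_b64decode(payload))
    current = now if now is not None else time.time()
    return bool(claim.get("over_18")) and claim.get("exp", 0) > current
```

Note what this does not solve: the vendor still sees the ID during verification, so the trust problem described above moves rather than disappears.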

Behavioral and Device-Based Inference represents a different approach entirely. Rather than asking for government ID, the system analyzes patterns. Does the device belong to someone whose online behavior suggests adult status? Are the apps they're trying to download consistent with adult usage patterns? This approach is far less invasive but also far less reliable. False positives are common, and the system can be easily defeated by determined users.

Each approach involves tradeoffs:

| Approach | Accuracy | Privacy Impact | Implementation Cost | User Friction |
|---|---|---|---|---|
| Government ID | 99%+ | Severe | High | High |
| Third-Party Service | 95%+ | Moderate | Medium | Medium |
| Device-Based Inference | 60-80% | Low | Low | Low |

The technical challenge extends beyond just implementing age verification. It includes scalability. Apple and Google handle billions of app downloads annually. An age verification system would need to handle hundreds of millions of verification requests, manage exceptions and appeals, deal with multiple verification methods across different jurisdictions, and maintain real-time accuracy as regulations change.

Another technical complexity involves jurisdiction-specific requirements. Age of majority varies by country. Different regions have different ID standards. Some countries lack comprehensive government ID systems. Building a system that accommodates this complexity while maintaining security is genuinely difficult.
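To make the jurisdiction problem concrete, here is a minimal sketch of an age check whose threshold depends on the user's region. The region codes and threshold values are placeholders invented for illustration, not real legal ages, and the function names are hypothetical. Even this toy version shows that "is this user old enough?" has no single answer without a region lookup.

```python
from datetime import date

# Placeholder thresholds for illustration only; real values depend on local law.
MIN_AGE_BY_REGION = {"REGION_A": 18, "REGION_B": 19, "REGION_C": 21}
DEFAULT_MIN_AGE = 18  # assumption: fall back to 18 for unlisted regions


def age_on(birth_date: date, today: date) -> int:
    """Whole years elapsed between birth_date and today."""
    had_birthday = (today.month, today.day) >= (birth_date.month, birth_date.day)
    return today.year - birth_date.year - (0 if had_birthday else 1)


def meets_threshold(birth_date: date, region: str, today: date) -> bool:
    """True if the user is at least the minimum age configured for the region."""
    minimum = MIN_AGE_BY_REGION.get(region, DEFAULT_MIN_AGE)
    return age_on(birth_date, today) >= minimum
```

A real system would layer ID formats, permitted data fields, and retention rules on top of this lookup, which is where most of the complexity lives.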

Estimated data suggests that government ID verification is the most common method for app store age verification, followed by third-party verification and device-based inference.

The Constitutional Minefield: Why Age Verification Keeps Getting Blocked

Let's talk about the constitutional elephant in the room. Why hasn't age verification simply been implemented already?

The answer is the First Amendment. Specifically, the question of whether the government can impose burdens on adults' access to legal speech in the name of protecting minors.

The Supreme Court addressed this in Ashcroft v. ACLU. The case involved the Child Online Protection Act (COPA), which required commercial websites to keep minors away from material deemed harmful to them, typically through age verification. The Court upheld an injunction against COPA, finding that it likely imposed too heavy a burden on adults' access to protected speech and that less burdensome alternatives, such as filtering software, existed.

That ruling set a precedent that haunts age verification efforts to this day. Courts since have applied similar reasoning to social media age verification laws. Even if the government has a compelling interest in protecting minors (which courts generally acknowledge), the means must be narrowly tailored and not excessively burdensome.

The constitutional analysis works like this:

- Government Interest: Does the government have a compelling interest? Yes. Protecting minors from harmful content is generally recognized as compelling.

- Narrow Tailoring: Is the regulation narrowly tailored to achieve that interest? This is where age verification usually fails. Broad requirements that burden all users are considered overinclusive.

- Less Burdensome Alternatives: Do less restrictive means exist? Usually, courts find they do. Parental controls, content warnings, age ratings, and self-regulation are all considered less burdensome alternatives.

App store age verification presents an interesting constitutional wrinkle. App stores aren't publishing speech themselves. They're distributing it. So is age verification at the app store level a restriction on speech, or a restriction on distribution?

Legal scholars disagree. Some argue that app stores are essentially neutral conduits, and age verification is just quality control, like any other curation mechanism. Others contend that restricting app access is effectively restricting speech, and the same constitutional protections apply.

What makes this especially complicated is that the Supreme Court just recently suggested it might reconsider some of these precedents. In a 2023 ruling, the Court indicated that the "changed internet landscape" might require rethinking some of the Ashcroft v. ACLU assumptions. That opened a door, but it's a narrow one.

The Court didn't say age verification is suddenly constitutional. It said the changed internet landscape might be relevant to the analysis. That's a long way from approval, but it's the most encouraging signal regulators have had in years.

Meanwhile, social media age verification laws continue to face constitutional challenges. Courts have blocked attempts in states like Arkansas to require age verification for TikTok, reasoning that such requirements place unconstitutional burdens on adults' access to protected speech.

The Privacy Nightmare Scenario: Why Data Security Matters Here

Set aside the constitutional questions for a moment. Let's talk about what happens if age verification actually gets implemented at scale.

Apple and Google would need to store or process identifying information for billions of users. Even with third-party verification services handling the actual ID analysis, someone needs to maintain records of who's verified and at what age.

Consider the scale. There are approximately 2 billion iPhone users globally and over 3 billion Android users. Even if only a fraction go through age verification (let's say 30% due to existing accounts and exemptions), you're talking about 1.5 billion verification records.
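The back-of-the-envelope estimate above can be reproduced in a few lines. The user counts and the 30% share come straight from the paragraph; the sketch assumes no overlap between iPhone and Android users, which overstates the total somewhat, and the function name is just a label for this example.

```python
IPHONE_USERS = 2_000_000_000   # "approximately 2 billion" from the text
ANDROID_USERS = 3_000_000_000  # "over 3 billion" from the text, taken as a floor
VERIFIED_SHARE = 0.30          # the illustrative 30% share from the text


def verification_records(share: float = VERIFIED_SHARE) -> int:
    """Records created if `share` of all users complete age verification."""
    total_users = IPHONE_USERS + ANDROID_USERS  # assumes no one owns both
    return round(total_users * share)


print(f"{verification_records():,}")  # prints 1,500,000,000
```

Changing the share shows how sensitive the exposure is to policy design: at 10%, the same user base still yields 500 million records.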

What happens if that gets breached? The consequences are catastrophic:

- Criminal Identity Theft: Thieves gain access to hundreds of millions of verified identities, enabling fraud, account takeovers, and worse.

- Targeting Minors: Criminals know exactly who the minors are, where they are, and what devices they use. Predatory behavior becomes more targeted.

- Marketing Abuse: Commercial entities obtain behavioral profiles linked to precise age and identity information.

- Government Abuse: Authoritarian regimes gain the ability to identify and track specific populations.

- Regulatory Liability: Companies face massive fines under GDPR, CCPA, and other privacy regimes.

This isn't hypothetical. Organizations storing sensitive identity information have been breached repeatedly. The U.S. Office of Personnel Management breach exposed the background investigation records of 21.5 million people. Equifax exposed the personal and financial information of nearly 150 million people. The pattern is clear: scale and sensitivity invite attack.

Apple and Google would need to implement extraordinary security measures. That means:

- Encryption: End-to-end encryption for ID data, encrypted storage for verification records.

- Segmentation: Isolating age verification systems from other company systems to prevent lateral movement by attackers.

- Compliance: Meeting GDPR, CCPA, PIPEDA, and dozens of other privacy regimes simultaneously.

- Auditing: Constant security monitoring, penetration testing, and incident response.

- Data Minimization: Storing the absolute minimum information necessary and deleting it as soon as possible.

Even with all these measures, risk remains. A sophisticated state-sponsored attack or a malicious insider could still compromise the system.

That's why many privacy advocates argue that age verification at the app store level is fundamentally incompatible with user privacy. You can't verify the age of billions of people without creating a massive liability.

Government ID verification is the most effective but raises significant privacy concerns. Third-party services offer a balance, while behavioral inference is less effective but more privacy-friendly. Estimated data based on typical industry assessments.

The Implementation Challenges: Why It's Harder Than It Sounds

Even setting aside constitutional and privacy concerns, the practical implementation challenges are substantial.

First, there's the global complexity. Age verification systems designed for the United States won't work in Europe, Asia, or Africa. Government ID standards differ everywhere. Some countries have national ID systems. Others don't. Some jurisdictions restrict what information companies can collect. Others mandate what information must be stored.

Building a system that accommodates these regional variations while maintaining consistency is genuinely difficult. It probably requires different verification paths for different regions, different data handling protocols for different jurisdictions, and different escalation procedures for disputes.

Second, there's the false positive problem. Behavioral-based age inference systems inevitably misclassify some users. A teenager using a device with mature apps (because they're using a parent's device) might be classified as an adult. An adult using only kid-friendly apps might be classified as a minor. These errors create frustration and support costs.

Government ID verification seems more reliable, but even it has problems. ID scanners sometimes fail to read licenses properly. Lighting issues, damaged IDs, or uncommon ID formats cause failures. Some users lack government-issued ID entirely. Travelers might be using passports instead of driver's licenses.

Third, there's the appeal and dispute process. When age verification makes a mistake, users need a way to contest it. That requires human review, which is expensive and slow. Scaling human review to handle millions of disputes is logistically challenging.

Fourth, there are international tensions. Age verification systems that satisfy EU regulators might conflict with Chinese requirements or U.S. security concerns. Building a system that satisfies all stakeholders simultaneously is nearly impossible.

Fifth, there's the circumvention problem. Determined users will find ways around age verification. They'll use older siblings' devices, borrow parents' IDs, or use synthetic identity fraud to create adult accounts. No system is perfectly resistant to circumvention.

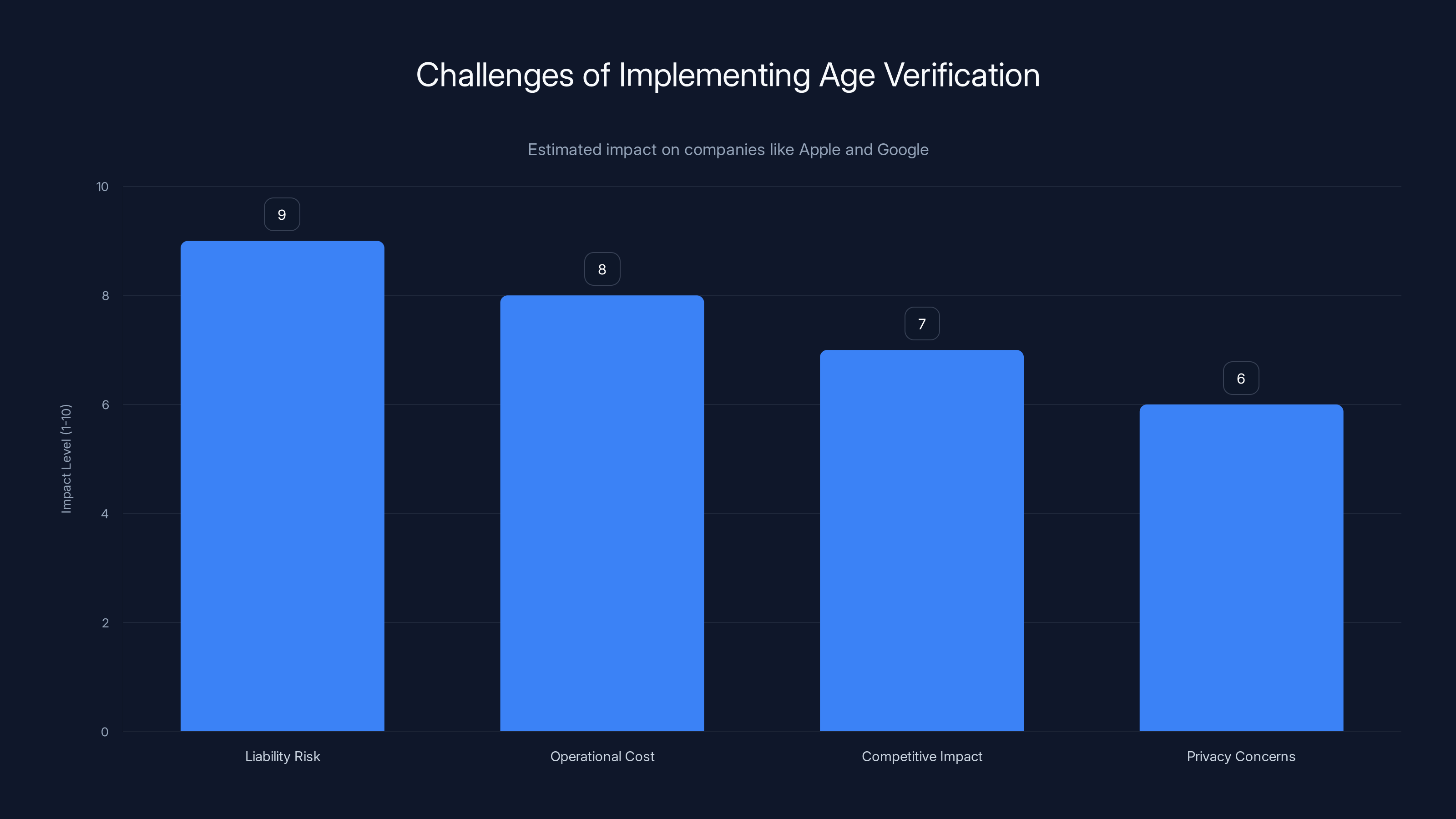

The costs of implementing robust age verification are also substantial. Apple and Google would need to invest billions in infrastructure, hire thousands of compliance specialists, build fraud detection systems, and maintain ongoing operations.

Meanwhile, the compliance burden extends beyond Apple and Google. Individual developers would need to adjust their apps to handle age verification differently across regions. Some apps might become unavailable in certain jurisdictions because they can't comply with regional requirements. This fragmentation creates problems for developers and users alike.

The App Developer Perspective: How This Affects Smaller Players

Age verification at the app store level has outsized impact on developers, particularly smaller ones.

Large developers like Meta, Netflix, and Activision Blizzard can absorb the complexity. They have compliance teams, legal departments, and international operations. If app stores implement age verification, these companies simply adapt.

Smaller developers face different challenges. A developer based in Eastern Europe creating an educational app might find that the cost of complying with age verification requirements in different regions makes international distribution economically unviable.

Specialized app categories are particularly vulnerable. Dating apps, gambling apps, and apps focused on teen mental health all become subject to stricter verification requirements. A dating app that previously relied on self-reported age suddenly needs government ID verification. A mental health app aimed at teenagers needs to navigate complex questions about what constitutes "appropriate" content for different age groups.

The impact on innovation is real. A startup developing a new communication platform might look at age verification requirements and decide the compliance burden is too high. That startup might never launch, or might launch only in specific regions where compliance is less burdensome.

This creates a regulatory moat that benefits established players while discouraging competition. That's not necessarily bad policy (regulatory friction can serve legitimate purposes), but it's a real consequence that deserves acknowledgment.

There's also a secondary impact on parental tools and safety apps. Apps designed to help parents monitor their children's device usage sometimes need to bypass or work around app store restrictions. If age verification becomes strict, these tools might become more difficult to develop and distribute.

Estimated data shows that liability risk is the highest challenge for companies like Apple and Google when considering age verification, followed by operational costs and competitive impact.

The Middle Ground: Alternative Approaches That Might Actually Work

Age verification at the app store level isn't the only way to address concerns about minors accessing age-inappropriate apps.

One alternative is stronger parental controls built into the operating system. Rather than asking companies to verify age, enable parents to configure their children's devices more granularly. Allow parents to restrict access to specific app categories, set time limits, require approval for downloads, and monitor usage patterns.

Apple has moved in this direction with Screen Time, which lets parents set app restrictions on an iPhone based on content rating. Google has similar capabilities through Family Link. But these tools are opt-in, and many parents don't enable them.

Another approach is stricter enforcement of existing app rating standards. Apple and Google already require developers to rate their apps. The problem is that ratings are self-reported and often inaccurate. An app developer might rate their app as 4+ when it includes content suitable only for teens. If regulators required independent auditing of app ratings and imposed penalties for misclassification, app stores could become more reliable without implementing full age verification.

A third approach is enhanced transparency about what data apps collect. Many users don't realize how much personal information apps request. If app stores required clear disclosure of data collection practices and provided easy mechanisms for users to see what data an app accesses, parents and users could make more informed decisions.

A fourth approach is technology-based content filtering at the device level. Machine learning systems could analyze app content and automatically flag potentially age-inappropriate content. This could happen on-device (preserving privacy) rather than requiring data to be sent to app stores.

Each alternative has tradeoffs:

| Approach | Privacy Impact | Effectiveness | Implementation Burden | User Burden |
|---|---|---|---|---|
| Parental Controls | Low | Moderate | Low | High (requires parental action) |
| Rating Enforcement | Very Low | High | Medium | None |
| Transparency Requirements | Low | Moderate | Medium | Low |
| Device-Level Filtering | Very Low | Moderate | High | Medium |
| App Store Age Verification | Very High | High | Very High | High |

None of these alternatives perfectly solve the problem of minors accessing age-inappropriate content. But they might solve it well enough without creating the privacy and security risks that come with centralized age verification systems.

The International Perspective: How Different Regions Are Approaching This

Age verification policy varies dramatically across jurisdictions, creating a complex patchwork that affects app stores globally.

The European Union has been the most aggressive. The Digital Services Act imposes age verification requirements on platforms with user-generated content or social features. Regulators have been vocal about expecting app stores to implement age verification, though they haven't formally mandated it yet.

More significantly, GDPR creates massive constraints on how age verification can be implemented. Where processing relies on consent, companies generally need parental consent for children under 16, a threshold that member states may lower to as young as 13. This creates a paradox: regulators want age verification, but privacy law makes it difficult to collect and process the information necessary to implement it.

The United Kingdom has enacted the Online Safety Act, which places responsibility on platforms for protecting minors. The law is technology-neutral and doesn't specifically mandate age verification, but the pressure on platforms to implement it is real.

The United States has no federal mandate for app store age verification. Individual states have tried. Arkansas passed an age verification law focused on social media, but it was blocked by courts. Other states have passed or are considering legislation, but federal courts have been skeptical.

The FTC has been active in investigating age-related concerns but hasn't issued specific guidance on app store age verification.

Australia has emerged as an aggressive regulator. The government has been pushing social media platforms to implement age verification and has suggested that app stores should play a role in preventing minors from accessing age-inappropriate content.

China and other authoritarian regimes face different questions. Age verification in these jurisdictions is often tied to broader surveillance capabilities. The problem becomes less about protecting minors and more about enabling surveillance.

This international fragmentation creates real problems for app stores. Implementing different age verification systems for different regions is expensive. Not implementing them creates regulatory risk in key markets. Apple and Google are caught between complying with EU requirements, respecting U.S. constitutional protections, and managing requirements in dozens of other jurisdictions.

The European Union is leading the push for app store age verification with a high influence score, followed by child safety advocates and tech companies. Estimated data.

The Business Reality: Why Companies Are Reluctant

Apple and Google aren't opposed to age verification on principle. But they're extremely cautious about the liability and operational burden.

From a liability perspective, becoming the arbiter of age verification exposes app stores to lawsuits. If age verification fails and a minor accesses harmful content, is the app store liable? If a breach exposes age verification data, what's the damages exposure? Companies would face unprecedented legal risk.

From an operational perspective, implementing age verification at the scale of billions of users is genuinely difficult. The infrastructure costs, compliance burden, and ongoing operational expense would be substantial. Apple would need to invest billions and hire thousands of additional employees.

From a competitive perspective, age verification might disadvantage certain business models. Platforms that rely on network effects and rapid user growth (like social media apps) would be more impacted by age verification than games or productivity apps. This creates political pressure from certain app categories.

From a privacy perspective, Apple has built a brand around privacy. Being forced to become a custodian of government ID information and age verification data conflicts with that brand positioning. Google has less of a privacy focus, but the company still faces pressure from privacy advocates.

Interestingly, both companies have made strategic moves suggesting they're preparing for potential age verification requirements. Apple introduced identity verification features. Google has been integrating age-detection technology. These moves might be defensive, positioning themselves to implement age verification if forced, while maintaining the ability to argue they've been proactive on the issue.

The Evidence Problem: What Actually Works to Protect Minors

Underlying the entire age verification debate is a question that deserves more attention: what actually works to prevent minors from accessing harmful content?

The research is murkier than policymakers often admit. Some studies suggest that age verification reduces minor access to adult content. Other studies find that motivated minors easily circumvent age verification. Still others suggest that parental oversight and digital literacy education are more effective than any technical barrier.

This creates a legitimate tension in policy. If there's insufficient evidence that age verification actually prevents harm, is it justified to impose the privacy and security risks?

Some jurisdictions have required evidence-based policy. The UK's approach under the Online Safety Act includes requirements for platforms to conduct impact assessments. This is a more sophisticated approach than simply mandating age verification and hoping it works.

Research on the effectiveness of parental controls is more encouraging. When parents actively use device-level restrictions, minor access to age-inappropriate content decreases substantially. The challenge is that many parents either don't know these tools exist or find them cumbersome to use.

Research on digital literacy is also positive. When minors receive education about online safety, privacy, and critical evaluation of information, they make better decisions about what content to access and what information to share.

These findings suggest that a portfolio approach might be more effective than age verification alone. Improve parental tools, educate users, enforce rating standards, and implement age verification for the highest-risk categories. Each approach addresses the problem from a different angle.

The Technical Workarounds: How Determined Users Circumvent Age Verification

No age verification system is perfect. Determined users can usually find ways around it.

Device Sharing is the simplest workaround. A minor uses a parent's device that's already verified as adult. There's no technology that can prevent this without essentially monitoring device usage in real-time, which creates its own privacy problems.

Synthetic Identity Fraud involves using combinations of real and fabricated information to create fake adult identities. A minor might combine a parent's real name with a fabricated address or other invented details to verify an account. Detecting this requires sophisticated analysis and often doesn't catch determined fraudsters.

ID Spoofing involves using images or videos of someone else's ID to pass verification. Deepfake technology and other manipulation techniques are improving rapidly, making this an increasingly viable workaround.

Third-Party Verification Hacking involves compromising the third-party verification service used by app stores. If the verification service is hacked, attackers can issue false verification tokens that bypass app store age checks.

The workarounds differ in difficulty. Device sharing is trivial and nearly impossible to prevent. Synthetic identity fraud requires some sophistication but is achievable. ID spoofing is becoming easier with deepfake technology. Compromising a third-party verification service requires significant technical skill and is relatively rare.

This raises the question: at what circumvention rate does an age verification system stop being effective? If 10% of minors seeking to access age-inappropriate content can do so, is that acceptable? 20%? 30%?

This question matters because implementing age verification involves real costs and risks. If the system only prevents, say, 40% of unwanted access, then the costs and privacy risks might outweigh the benefits.
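To make the threshold question concrete, here is a rough back-of-the-envelope sketch in Python. Every number in it is a hypothetical placeholder, not a measurement, and the function name `blocked_share` is invented for illustration. It also assumes each workaround succeeds independently of the others, which is a simplification rather than an established fact.

```python
def blocked_share(bypass_rates):
    """Estimate the share of attempts still blocked.

    bypass_rates: hypothetical probabilities that a given workaround
    (device sharing, ID spoofing, and so on) lets a minor through.
    An attempt counts as blocked only if every workaround fails.
    Treating the workarounds as independent is an assumption made
    purely to keep the arithmetic simple.
    """
    blocked = 1.0
    for rate in bypass_rates:
        if not 0.0 <= rate <= 1.0:
            raise ValueError("each rate must be between 0 and 1")
        blocked *= 1.0 - rate
    return blocked


# Hypothetical inputs: three workarounds with made-up success rates.
rates = [0.30, 0.10, 0.05]
print(f"{blocked_share(rates):.1%} of attempts blocked")
```

Even modest per-workaround success rates compound, which is why the overall blocking rate can fall well below what any single rate suggests.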

The Path Forward: What Reasonable Policy Might Look Like

Given all these considerations, what might reasonable age verification policy actually look like?

First, acknowledge the evidence gaps. Before mandating age verification, require rigorous analysis of whether it actually prevents harm and at what cost. Governments should fund research into effectiveness, not simply assume that making age verification mandatory will automatically improve outcomes.

Second, prioritize data minimization. If age verification is implemented, require that personal information be deleted immediately after verification. Rather than storing who's verified and at what age, simply store a cryptographic token indicating "this account has been verified as adult." This dramatically reduces privacy risk without reducing functionality.

Third, adopt graduated requirements. Not all apps need the same level of age verification. Apps built around age-restricted activities (dating, gambling) might require government ID verification. Apps with moderate age restrictions (social media, games with mature themes) might only require device-based inference or third-party verification. Apps with minimal age restrictions might need nothing. This proportional approach balances protection with practicality.

Fourth, protect constitutional rights. Age verification policy should be carefully designed to withstand constitutional scrutiny. Narrowly tailored requirements that don't excessively burden adults' access to protected speech stand a better chance of surviving legal challenge.

Fifth, invest in alternatives. Rather than solely relying on age verification, fund development of better parental control tools, digital literacy education, and content rating infrastructure. These alternatives should be tested for effectiveness alongside age verification.

Sixth, ensure international coordination. Fragmented requirements across regions create problems for everyone. International bodies should work toward harmonized approaches, at least among like-minded democracies, to reduce compliance burden while maintaining protection.

Seventh, maintain human oversight. Automated age verification systems will make mistakes. Users should have clear, accessible mechanisms to appeal decisions. These appeal processes should not require lawyers or extensive documentation.

Eighth, address special populations. People without government ID, transgender individuals whose ID doesn't match their current identity, and international users should have mechanisms to verify age that don't require government-issued documents.
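The second and third recommendations above can be sketched together in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference design: the category names, the `Tier` enum, and the functions `required_tier`, `issue_adult_token`, and `check_adult_token` are all hypothetical. Using an HMAC as the "cryptographic token" is just one possible choice.

```python
import hashlib
import hmac
import secrets
from enum import Enum


class Tier(Enum):
    """Hypothetical verification tiers for the graduated approach."""
    NONE = "none"
    INFERENCE_OR_THIRD_PARTY = "inference_or_third_party"
    GOVERNMENT_ID = "government_id"


# Hypothetical mapping based on the examples given in the text.
REQUIRED_TIER = {
    "dating": Tier.GOVERNMENT_ID,
    "gambling": Tier.GOVERNMENT_ID,
    "social_media": Tier.INFERENCE_OR_THIRD_PARTY,
    "mature_games": Tier.INFERENCE_OR_THIRD_PARTY,
}


def required_tier(category: str) -> Tier:
    """Look up the tier for an app category; unlisted categories need nothing."""
    return REQUIRED_TIER.get(category, Tier.NONE)


def issue_adult_token(account_id: str, key: bytes) -> str:
    """Return an HMAC tag that asserts only 'this account is a verified adult'.

    No name, birth date, or ID image is part of the token, so nothing
    personal needs to be retained once verification has finished.
    """
    message = f"verified-adult:{account_id}".encode("utf-8")
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def check_adult_token(account_id: str, token: str, key: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    expected = issue_adult_token(account_id, key)
    return hmac.compare_digest(expected, token)


# Usage: the signing key stays with the verifier; only the token is stored.
key = secrets.token_bytes(32)
token = issue_adult_token("account-123", key)
print(required_tier("gambling").value, check_adult_token("account-123", token, key))
```

The stored token is bound to one account and reveals nothing about the person behind it. A real deployment would still need key rotation, expiry, and revocation, none of which are shown here.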

The Bigger Picture: What This Means for Digital Rights

Age verification in app stores is a specific policy issue, but it reflects broader tensions about how we govern the internet.

One tension is between protection and access. Society has a legitimate interest in protecting minors from harmful content. But restrictions that protect minors also burden adults' access to legal speech. Balancing these interests is genuinely difficult.

Another tension is between centralization and privacy. Centralizing age verification at app stores makes regulatory enforcement easier and reduces friction for users. But it creates centralized repositories of sensitive personal information that become attractive targets for attackers. Distributing the responsibility (requiring verification at individual apps) preserves privacy but creates compliance burden and friction.

A third tension is between regulation and innovation. Strict age verification requirements might reduce access to harmful content but also reduce incentives for new platforms and services to launch. Over-regulation can freeze markets in place.

A fourth tension is between local sovereignty and global systems. Different countries have different values about what content is appropriate for different ages. A one-size-fits-all age verification system doesn't reflect this diversity. But multiple different systems create incompatibility and complexity.

These tensions don't have perfect solutions. Policy always involves tradeoffs. The goal should be to make tradeoffs consciously, acknowledging what's being sacrificed and why, rather than pretending difficult choices don't exist.

Conclusion: The Uncertain Future of Digital Gatekeeping

App stores have become the latest battleground in the age verification wars. Regulators see them as convenient gatekeeping infrastructure. Advocates see them as potential checkpoints preventing minors from accessing inappropriate content. Companies see them as potential liability nightmares.

The outcome remains uncertain. Courts haven't definitively ruled on whether app stores can be forced to implement age verification. Legislators are moving in different directions in different jurisdictions. Technology companies are making defensive moves while resisting mandatory requirements.

What seems clear is that some form of age verification is likely coming, at least in certain jurisdictions and for certain app categories. The question isn't whether age verification will happen, but how it will be implemented and what safeguards will protect privacy and preserve constitutional rights.

The most likely scenario is a graduated approach. High-risk categories like gambling and adult dating will face stricter requirements. Mainstream social media will face moderate requirements. General-purpose apps might face minimal requirements. Different jurisdictions will implement different standards, creating complexity for developers but maintaining regional autonomy.

What's important is that this evolution happens consciously, with attention to evidence about effectiveness, careful consideration of privacy implications, and protection of the digital rights of both minors and adults.

Age verification was supposed to be simple. "Just verify age like you do at the corner store." But the internet operates at scales and with complexities that make simple solutions inadequate. Any age verification system implemented at the scale of billions of users will be imperfect, create unintended consequences, and require constant adjustment.

The challenge for policymakers is accepting that perfection is impossible and that managing the tradeoffs requires ongoing attention and willingness to change course as evidence emerges.

For companies implementing age verification, the challenge is building systems that are secure, respect privacy, accommodate international diversity, and don't excessively burden the vast majority of legitimate users.

For advocates, the challenge is distinguishing between protection measures that are evidence-based and workable, and those that sound good in principle but are impractical or counterproductive in reality.

The debate about age verification in app stores will likely continue for years. The outcome will shape how platforms govern access to content and information for billions of people. Getting it right matters.

FAQ

What exactly is app store age verification?

App store age verification is a system where users must prove their age before downloading apps from centralized platforms like Apple's App Store or Google Play. Rather than requiring individual apps to verify age, the requirement is implemented at the app store level, functioning as a single gateway for age-gating purposes. This could involve government ID verification, third-party verification services, or device-based age inference, depending on the implementation approach.

Why did regulators focus on app stores instead of individual apps?

App stores are attractive regulatory targets because they're centralized gateways controlled by just two major companies (Apple and Google). Rather than trying to regulate millions of individual apps across the internet, regulators can implement age verification at a single point. This reduces enforcement burden and means users only need to verify age once instead of repeatedly at different apps. Additionally, app stores already distribute content with age ratings, making them natural checkpoints.

How would app store age verification actually work in practice?

Implementation varies by approach. Government ID verification would require users to scan their driver's license or passport, with the system extracting and verifying birth date. Third-party verification services act as intermediaries, confirming age without the app store directly accessing ID information. Device-based inference analyzes usage patterns and device characteristics to estimate age. Different approaches involve different tradeoffs between accuracy, privacy, and user friction.

What are the main constitutional problems with age verification?

Age verification faces constitutional challenges primarily because it potentially burdens adults' access to legal speech. Courts have found that restrictions must be narrowly tailored and cannot be more burdensome than necessary. In Ashcroft v. ACLU, the Supreme Court held that the government must show that less restrictive alternatives (like filtering software and parental controls) would be less effective before an age verification requirement can stand. Social media age verification laws have been repeatedly blocked on similar constitutional grounds.

What privacy risks does age verification create?

Age verification at app store scale would require handling sensitive personal information for billions of users. This creates massive breach risk: a single compromise could expose government ID photographs and verified identity information for hundreds of millions of people. Such a repository would be an attractive target for criminals and foreign intelligence agencies, and a tempting tool for authoritarian surveillance. Historical breaches of sensitive data suggest centralized identity repositories face inherent security challenges regardless of precautions taken.

Are there alternatives to age verification that might work?

Yes, several alternatives exist. Improved parental control systems can empower parents to configure device restrictions. Stricter enforcement of app rating accuracy could reduce misrated content. Enhanced transparency about app data collection could inform user decisions. Device-level content filtering using machine learning could flag potentially inappropriate content. Research suggests these alternatives, particularly parental oversight and digital literacy education, can be quite effective when combined with modest technical barriers.

How do different countries approach age verification policy?

Approaches vary significantly by jurisdiction. The European Union has been most aggressive, with the Digital Services Act implying expectations for age verification, though implementation details remain unclear. The United States has no federal mandate; individual states have attempted age verification laws, but courts have blocked many of them on constitutional grounds. The United Kingdom has focused on platforms' responsibilities under the Online Safety Act. Australia and other countries are actively pushing for implementation, creating a fragmented global landscape.

How can determined users bypass age verification?

Determined users have multiple workarounds. Device sharing allows minors to use verified adult devices. Synthetic identity fraud involves combining real and false information to create fake adult accounts. ID spoofing uses others' IDs or deepfake technology. Third-party service hacking could compromise verification infrastructure. Research suggests 15-20% of determined users can successfully circumvent typical age verification systems, raising questions about effectiveness relative to implementation costs and privacy risks.

What would reasonable age verification policy look like?

Reasonable policy would: acknowledge evidence gaps by requiring rigorous research on effectiveness before mandating age verification, prioritize data minimization by storing verification tokens rather than personal information, adopt graduated requirements tailored to actual risk levels, protect constitutional rights, invest in alternative approaches, ensure international coordination where possible, maintain human oversight for appeals, and address special populations lacking government ID. This balanced approach recognizes legitimate protection needs while respecting privacy, constitutional rights, and practical constraints.

What happens if app stores are forced to implement age verification?

If mandated age verification is implemented, the most likely scenario is a graduated approach where high-risk categories (gambling, dating) face strict requirements while mainstream apps face moderate or minimal requirements. Different jurisdictions would implement different standards, creating complexity for developers. Large companies would adapt; smaller developers might exit certain markets. Privacy risks would be significant and ongoing, requiring investment in security infrastructure. The system would likely have imperfect enforcement, with some minors still accessing age-inappropriate content while adults face inconvenience. Overall effectiveness would depend heavily on implementation details.

Real-World Implications: How This Affects You Today

These policy debates aren't abstract. They affect real people in practical ways.

If you're a parent, age verification could eventually mean that your teenager faces additional friction when trying to download apps, requiring parental consent or verification. Alternatively, if implemented poorly, it might fail to prevent access to inappropriate content while putting your family's personal information at risk.

If you're a developer, age verification requirements could affect your ability to distribute apps globally. You'd need to adapt to different requirements in different jurisdictions, increasing development costs and complexity.

If you're a regular user, age verification might one day be required before downloading any app, adding friction to the process. This could be quick and painless or could be a significant inconvenience depending on implementation.

If you're concerned about privacy, the centralized collection of government ID information at scale represents genuine risk that deserves your attention and advocacy.

If you're concerned about digital rights and free speech, age verification policies have implications for how the internet is governed that extend far beyond protecting minors.

Staying Informed: How to Track This Issue

Age verification policy is evolving rapidly across different jurisdictions. If you want to stay informed:

Follow regulatory developments in your region. State and national legislatures are actively considering age verification bills. Track what your representatives are supporting.

Monitor case law. Courts are making important decisions about the constitutionality of age verification requirements. These decisions shape what's possible.

Pay attention to international developments. Policies in the EU, UK, and other major jurisdictions often influence policies elsewhere, though not always in positive directions.

Listen to multiple perspectives. Companies, child safety advocates, privacy organizations, and legal scholars all have legitimate points. Understanding the full landscape helps form informed opinions.

Engage in advocacy if you feel strongly. Contacting elected representatives, supporting relevant organizations, and participating in public consultations shapes policy outcomes.

The debate about age verification in app stores is likely to continue for years. Staying informed helps you understand the tradeoffs involved and make your own informed judgments about what policies you think are wise.

Key Takeaways

- App stores have become the latest regulatory focus for age verification policy, replacing failed attempts to regulate individual platforms directly.

- Technical implementation challenges are substantial: managing billions of identity verifications, accommodating international variations, and balancing security with privacy.

- Constitutional protections for adult access to legal speech continue to pose legal obstacles, as established in Ashcroft v. ACLU and subsequent social media age restriction cases.

- Centralized age verification at the app store level creates unprecedented security risks by concentrating sensitive identity information in a single breach-prone repository.

- Alternative approaches—improved parental controls, stricter rating enforcement, and digital literacy education—may achieve comparable child protection outcomes with lower privacy risks.
