Introduction: The Future of User-Generated Game Content

Roblox just changed the game. Literally.

The platform launched its 4D creation toolset in open beta, and it's the kind of feature that makes you realize how much gaming has evolved. We're not talking about static 3D models anymore. We're talking about objects that move, react, and respond to player input—all generated with AI assistance and zero coding required.

Now, before you get excited about accessing actual fourth-dimensional space, let me be clear: that's not what's happening here. The "4D" terminology refers to three-dimensional objects that behave dynamically in response to game events and player interactions. It's marketing language, sure, but it's also pretty accurate once you understand what the tools actually do.

Here's what makes this genuinely interesting: Roblox has spent years building toward this moment. The platform launched an open-source AI model last year that could generate 3D objects from text prompts. Most people ignored it because the results were limited and the use cases felt niche. But the company kept iterating, kept improving, and now we're seeing the real payoff.

The implications are massive. Imagine a platform where anyone—not just technical creators, but kids with ideas, indie developers, artists—can build interactive game objects without touching a single line of code. No 3D modeling experience required. No game development background necessary. Just describe what you want, and the AI builds it for you.

That's the promise. And based on what's already showing up on the platform, that promise is starting to materialize.

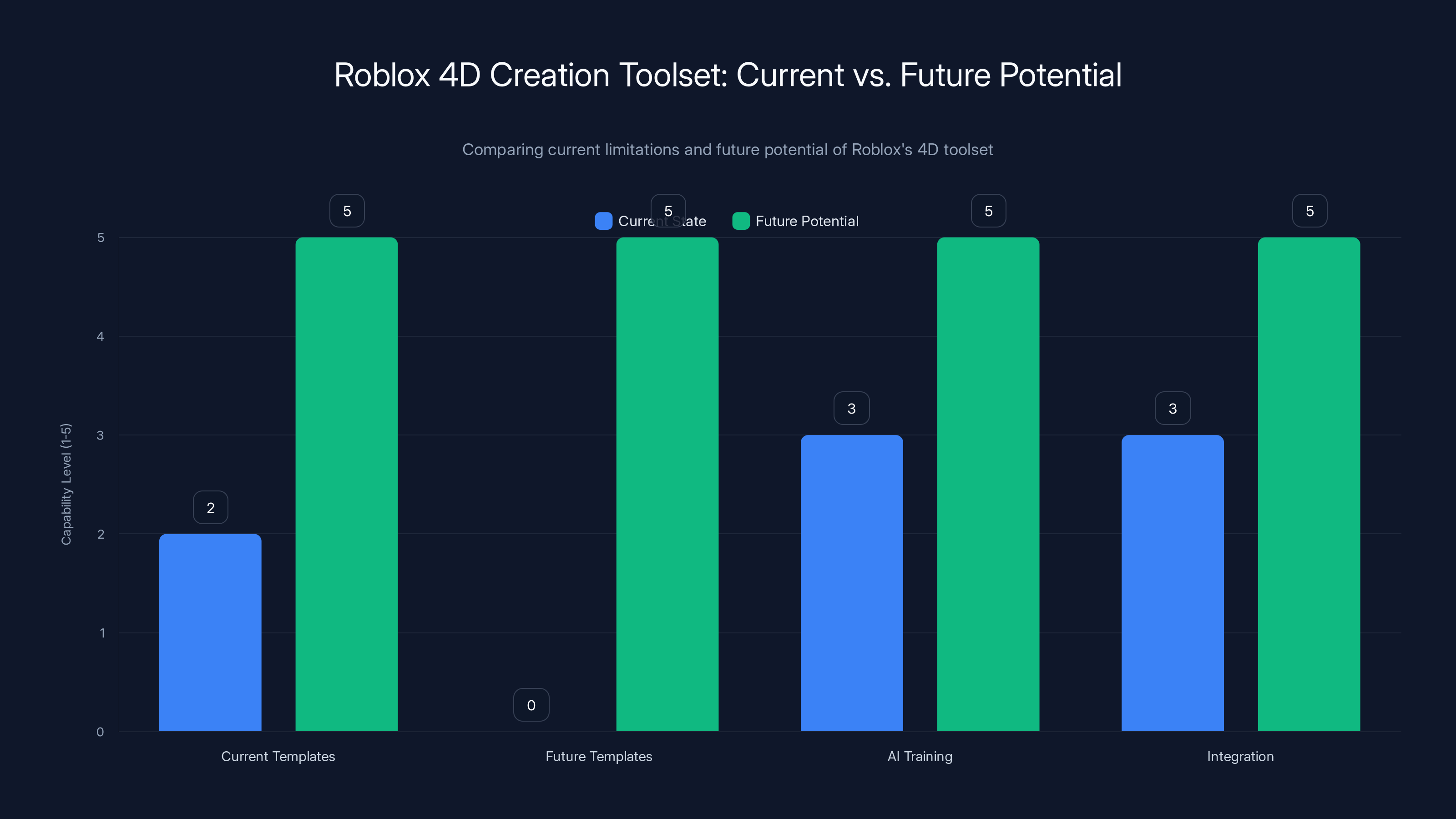

But like all beta tools, there are significant limitations. The current toolset is locked into two templates: cars and solid objects. That's it. You can make driveable vehicles or static sculptures, and while that's genuinely cool, it's hardly the open-ended creative freedom the marketing suggests. Roblox has committed to expanding the templates eventually, but they haven't shared a timeline, which is frustrating if you're waiting for specific features.

The bigger question isn't what you can make right now. It's what becomes possible once those restrictions lift. Because when they do, this moves from "interesting tool" to "game-changer for user-generated content."

Let's dive into what's actually happening here, how creators are using it, what the limitations are, and where this is heading.

TL;DR

- What It Is: Roblox's new 4D toolset generates interactive 3D objects that respond to player input and game events

- Current Capabilities: Two templates available—driveable cars with five independent parts and static 3D objects like sculptures

- AI Integration: Uses AI to generate objects from text prompts, handling complex physics and interactivity automatically

- Current Limitation: Only two templates in beta; custom templates coming eventually (no timeline)

- Real-World Impact: Already enabling new game types like Wish Master, which generates vehicles and objects in real-time

- Bottom Line: This is a significant step toward democratizing game object creation, but the beta limitations mean we're seeing only a fraction of the potential

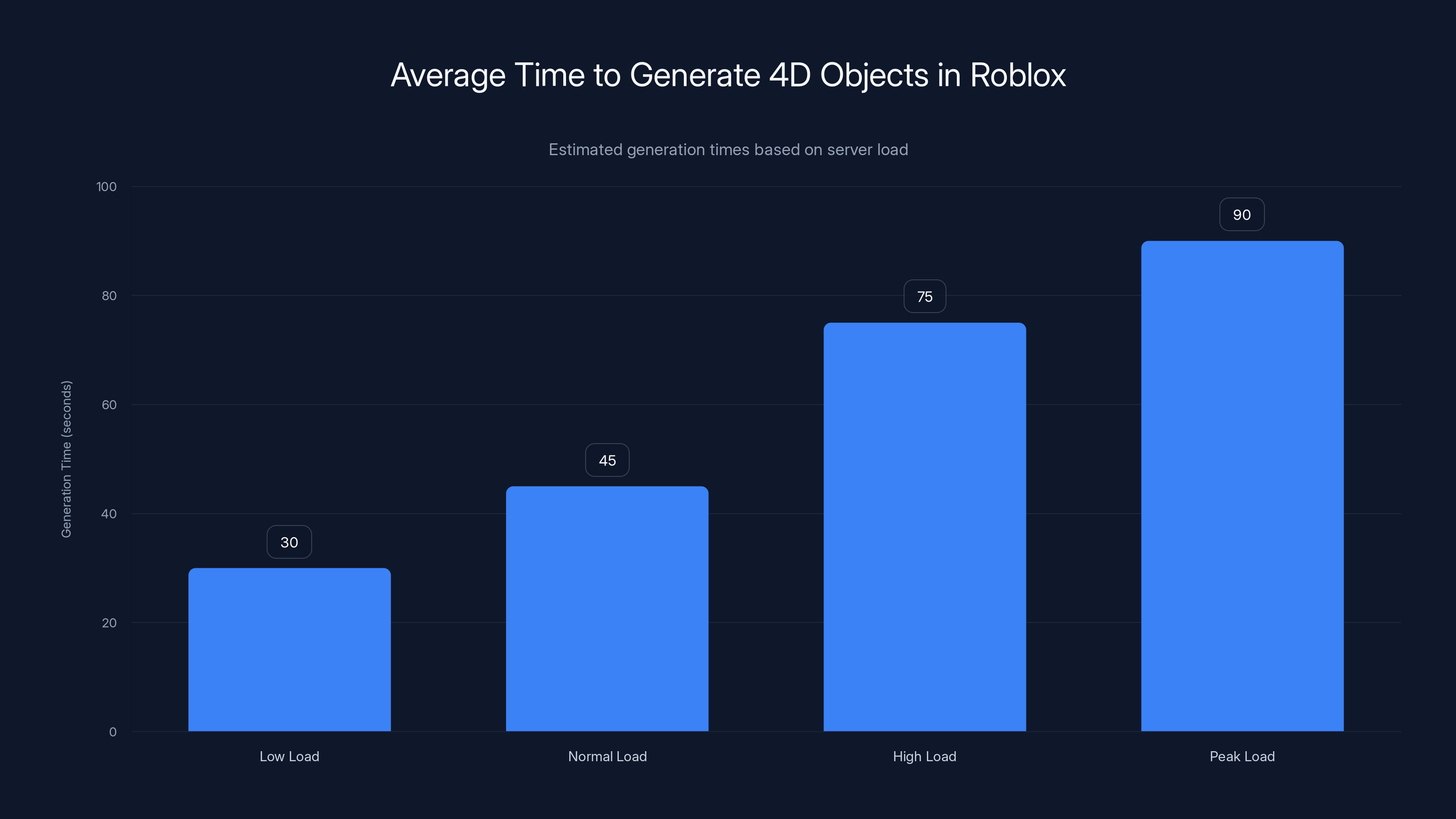

Generation times for 4D objects vary with server load, averaging 45-60 seconds during normal usage. Estimated data.

What Exactly Is This 4D Toolset?

Let's clear up the terminology first, because "4D" is doing a lot of work in the marketing department.

When Roblox talks about "4D creation," they're not opening portals to hyperspace. What they mean is: three-dimensional objects that exist in four-dimensional space (where the fourth dimension is time and interactivity). So basically, 3D objects that change, move, and respond to stuff happening in the game.

It's a bit of a stretch semantically, but it's not entirely wrong either. The objects these tools create aren't static models sitting in your game world looking pretty. They're fully functional, physics-enabled objects that interact with players and the environment.

Roblox accomplished this by training an AI model on massive amounts of 3D asset data. The system learns patterns about how objects are structured, how their parts relate to each other, and how those parts should behave when a player interacts with them. Feed the AI a prompt like "create a driveable off-road truck," and it doesn't just generate a model. It generates a complete mechanical system with wheels that spin independently, a chassis that responds to physics, and suspension that actually works.

The technical achievement here is real. Building interactive 3D objects traditionally requires expertise in multiple domains: 3D modeling, rigging, physics simulation, and scripting. You need someone who understands how to construct the geometry, how to define movement constraints, and how to write the code that makes it all work together.

Roblox's AI abstracts away most of that complexity. You describe what you want in plain English, and the system handles the technical implementation. It's the kind of tool that should have existed years ago.

The catch? It only works within defined templates right now. Those templates are carefully designed so the AI has guardrails. The car template, for instance, defines a specific structure: a chassis, wheels, suspension, a steering mechanism, and body panels. The AI fills in the details within that framework, but it can't invent a completely new structure.

The solid objects template is even more constrained. You get to generate abstract sculptures or boxes, but the tool won't generate a functioning robotic arm or a complex mechanical device. It's all about geometry, not mechanical behavior.

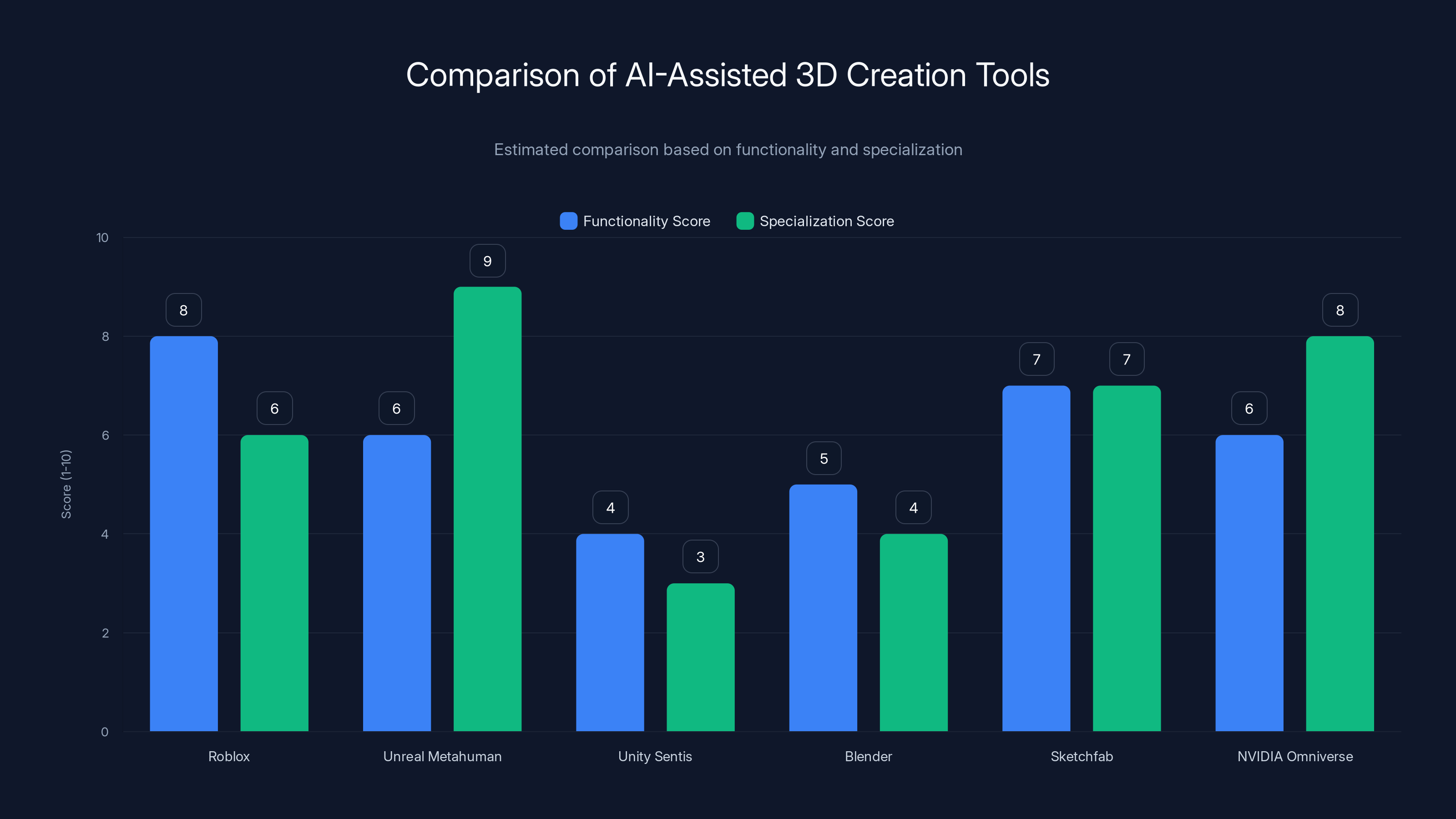

Roblox scores high on functionality due to its integrated system, while Unreal Metahuman excels in specialization. Estimated data based on tool descriptions.

How the AI Generation Actually Works: Behind the Curtain

Here's where it gets technical, and honestly, pretty clever.

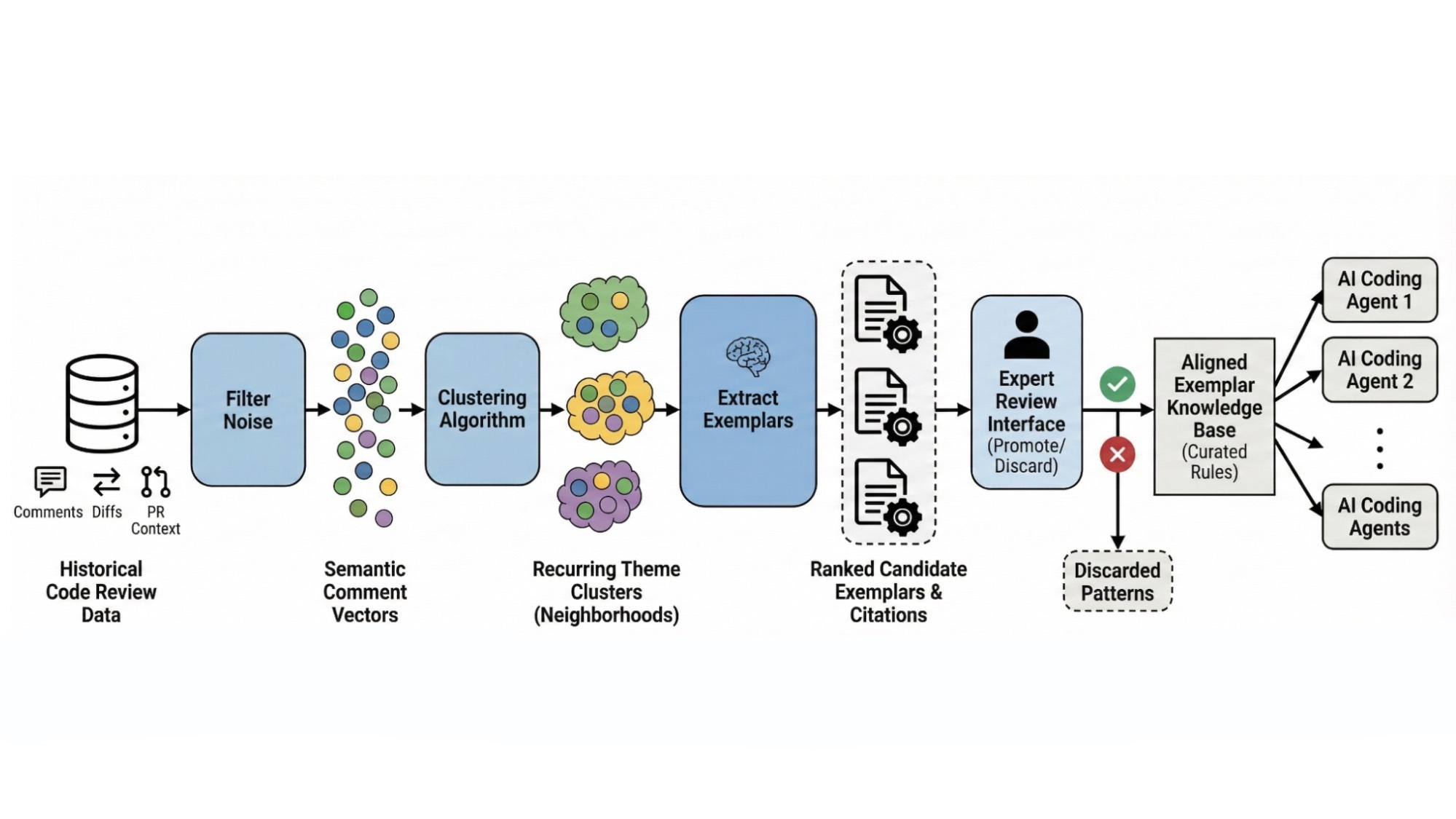

Roblox didn't build this from scratch. The foundation was laid last year when they released an open-source AI model trained on 3D assets. That model learned to understand the relationship between text descriptions and 3D geometry. But generating static geometry is only half the battle.

For the 4D tools, they added a layer of physics simulation and behavioral scripting on top. The AI doesn't just generate the shape of a car. It needs to understand that wheels should be circular, that they should attach to an axle, that the axle should rotate when the car moves, and that the rotation should respond to player input in predictable ways.

They did this by training the model on examples of functioning objects. The system learned patterns: wheels are round and mounted on axles. Doors are flat rectangles attached with hinges. Suspension systems connect wheels to the chassis with springs that compress and extend. When you feed it a prompt, it reconstructs these patterns to build something new.

The generation process works in stages. First, the AI parses your text input and builds a semantic understanding of what you're asking for. "Driveable sports car" means something specific: lower profile, likely faster acceleration, sharper handling. The AI doesn't know "sports car" in the way you do, but it's learned the features that typically correlate with that description.

Second, it generates the base geometry. The shape, proportions, and overall structure. This uses what's called a diffusion model, which starts with random noise and gradually refines it based on the input prompt until it converges on a reasonable 3D model.

Third, it applies physics constraints and behavioral rules. This is the critical piece. The system needs to assign properties to each part: which parts are rigid, which can move, what triggers movement, how much force can be applied. This is where the "4D" part actually happens. The object isn't just a shape anymore. It's a system.

Finally, it generates or validates the interaction model. How will players control this object? What input maps to what action? For cars, this means steering, acceleration, and braking. For other objects, it might mean different interactions entirely.
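The four stages can be sketched as a toy pipeline. This is a minimal illustration, not Roblox's implementation. Every function name here (`parse_prompt`, `generate_geometry`, `assign_physics`, `build_controls`) is hypothetical. Keyword matching stands in for the language understanding in stage one, and a lookup table stands in for the diffusion model in stage two.

```python
from dataclasses import dataclass, field

# Hypothetical stand-ins for the four stages; none of these names come from Roblox.


@dataclass
class GeneratedObject:
    prompt: str
    traits: dict = field(default_factory=dict)
    geometry: dict = field(default_factory=dict)
    physics: dict = field(default_factory=dict)
    controls: dict = field(default_factory=dict)


def parse_prompt(prompt: str) -> dict:
    """Stage 1: turn words in the prompt into traits (keyword lookup, not a language model)."""
    traits = {"profile": "standard", "handling": "normal"}
    text = prompt.lower()
    if "sports" in text:
        traits.update(profile="low", handling="sharp")
    if "off-road" in text:
        traits.update(profile="high", handling="loose")
    return traits


def generate_geometry(traits: dict) -> dict:
    """Stage 2: choose proportions (a real system would run a generative model here)."""
    ride_height = {"low": 0.3, "standard": 0.5, "high": 0.9}[traits["profile"]]
    return {"chassis": {"ride_height_m": ride_height}, "wheels": 4}


def assign_physics(geometry: dict) -> dict:
    """Stage 3: mark which parts are rigid and which can move, and how."""
    physics = {"chassis": {"movable": False}}
    for i in range(geometry["wheels"]):
        physics[f"wheel_{i}"] = {"movable": True, "joint": "axle"}
    return physics


def build_controls(traits: dict) -> dict:
    """Stage 4: map player inputs to actions; sharper handling steers harder."""
    steer_gain = 1.5 if traits["handling"] == "sharp" else 1.0
    return {"W": "accelerate", "S": "brake",
            "A": ("steer", -steer_gain), "D": ("steer", steer_gain)}


def generate(prompt: str) -> GeneratedObject:
    """Run the stages in order, each one building on the previous stage's output."""
    obj = GeneratedObject(prompt)
    obj.traits = parse_prompt(prompt)
    obj.geometry = generate_geometry(obj.traits)
    obj.physics = assign_physics(obj.geometry)
    obj.controls = build_controls(obj.traits)
    return obj


car = generate("Driveable sports car")
print(car.geometry["chassis"], car.controls["D"])
```

Keeping each stage separate mirrors the point above: the shape and the behavior come from different passes, and the object only becomes a "system" once stages three and four have run.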

All of this happens automatically. You enter a prompt, wait maybe 30-60 seconds depending on server load, and you get back a fully functional object you can place in your game.

The accuracy isn't perfect yet. The system sometimes generates wheels that don't quite align properly, or doors that clip through the chassis. But the base objects are solid, and most problems are cosmetic rather than functional.

The Two Current Templates: Cars and Static Objects

Roblox launched the beta with two templates, and this decision tells you a lot about their thinking.

Cars came first because they're mechanically interesting but structurally simple. A car has a well-defined architecture: a chassis, wheels, suspension, a steering system. These relationships are consistent across nearly every car design. Whether you're generating a sedan or a sports car or an off-road truck, the fundamental structure stays the same. It's complex enough to be impressive, but not so open-ended that the AI gets lost.

The cars generated in this beta are genuinely functional. The wheels spin independently of each other, which means you can actually drive around obstacles. The suspension responds to terrain. The physics feels weighty and real. Some of the cars I tested handled better than professionally modeled cars in other games, which is frankly surprising given how new this technology is.

They gave the system five key parts to work with: the chassis (the main body and frame), the wheels (typically four, but the system can vary this), the suspension (the system connecting wheels to the chassis), the steering mechanism (converting player input to wheel rotation), and the body panels (doors, hood, trunk, bumpers—the aesthetic pieces that make a car look like the specific type of car you asked for).

These parts aren't locked into place. The AI can adjust their proportions, their materials, their appearance. A truck will have a cargo bed and different proportions than a sports car. A racing car will have a lower profile and more aggressive styling. The system learned these correlations and applies them, making each generated car feel intentional rather than random.
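To make the template idea concrete, here is a hypothetical car template in Python. The five-part structure is fixed and only the numbers inside it change. The field names, default values, and keyword adjustments are invented for illustration and do not reflect Roblox's internal format.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class CarSpec:
    # Chassis
    chassis_length_m: float = 4.5
    ride_height_m: float = 0.5
    # Wheels
    wheel_count: int = 4
    wheel_radius_m: float = 0.35
    # Suspension (relative stiffness, 1.0 = baseline)
    suspension_stiffness: float = 1.0
    # Steering
    max_steer_deg: float = 30.0
    # Body panels
    body_panels: tuple = ("doors", "hood", "trunk", "bumpers")


# Style keywords nudge parameters, mimicking the learned correlations described above.
STYLE_ADJUSTMENTS = {
    "sports": {"ride_height_m": 0.3, "suspension_stiffness": 1.6, "max_steer_deg": 35.0},
    "truck": {"chassis_length_m": 5.5, "ride_height_m": 0.8,
              "body_panels": ("doors", "hood", "cargo_bed", "bumpers")},
    "off-road": {"ride_height_m": 0.9, "wheel_radius_m": 0.5, "suspension_stiffness": 0.7},
}


def spec_from_prompt(prompt: str) -> CarSpec:
    """Start from the template defaults and apply every matching style adjustment in order."""
    spec = CarSpec()
    for keyword, changes in STYLE_ADJUSTMENTS.items():
        if keyword in prompt.lower():
            spec = replace(spec, **changes)
    return spec


print(spec_from_prompt("Off-road truck with big tires"))
```

Because later keywords override earlier ones, "off-road truck" keeps the truck's length and cargo bed but takes the off-road ride height. A trained model would presumably blend these correlations more smoothly than a lookup table, but the structure stays fixed either way.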

The second template—static solid objects—is more limited but still useful. This is for sculptures, architectural elements, decorative pieces, and objects that don't need to move. You can ask for "a futuristic obelisk" or "a crystalline formation" or "a stone statue of a knight." The system generates the geometry without worrying about physics or interaction models.

This template exists primarily to show that the technology isn't locked to mechanical objects. It works for pure geometry generation as well. But honestly, this is the template that feels most limited. You're essentially getting a fancy 3D modeler with an AI that can interpret descriptions. It's useful, but it's not revolutionary.

The real potential lies in expanding beyond these two templates. Roblox has publicly stated they're working on allowing creators to build custom templates. Imagine being able to define a template for "humanoid characters," "quadruped animals," "mechanical robots," "sailing boats," or "furniture." Once you create a template, the AI could generate infinite variations within that framework.
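A creator-defined template might pair a fixed list of required parts with allowed ranges for each adjustable parameter, so every variation is new but structurally valid. Roblox has not published what custom templates will look like; the `Template` class and the sailing-boat example below are assumptions, and random sampling stands in for AI generation.

```python
import random
from dataclasses import dataclass


@dataclass
class Template:
    """Hypothetical custom template: fixed parts, bounded parameters."""
    name: str
    required_parts: tuple
    ranges: dict  # parameter name -> (min, max)

    def sample(self, rng: random.Random) -> dict:
        """Draw one variation: all required parts, every parameter inside its range."""
        params = {key: rng.uniform(low, high) for key, (low, high) in self.ranges.items()}
        return {"template": self.name, "parts": list(self.required_parts), "params": params}


sailing_boat = Template(
    name="sailing boat",
    required_parts=("hull", "mast", "sail", "rudder"),
    ranges={"hull_length_m": (3.0, 12.0), "mast_height_m": (4.0, 15.0)},
)

rng = random.Random(7)  # seeded so the variations are reproducible
variations = [sailing_boat.sample(rng) for _ in range(3)]
for v in variations:
    print(v["params"])
```

The design choice is the same trade-off the car template makes: the structure is guaranteed, and only the details vary.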

They haven't given a timeline for custom templates, which is frustrating. The statement is just "eventually." That's typical Roblox fashion, but it also suggests they're being cautious. They probably want to make sure the system is stable and produces reliable results across a broader range of cases before opening up full customization.

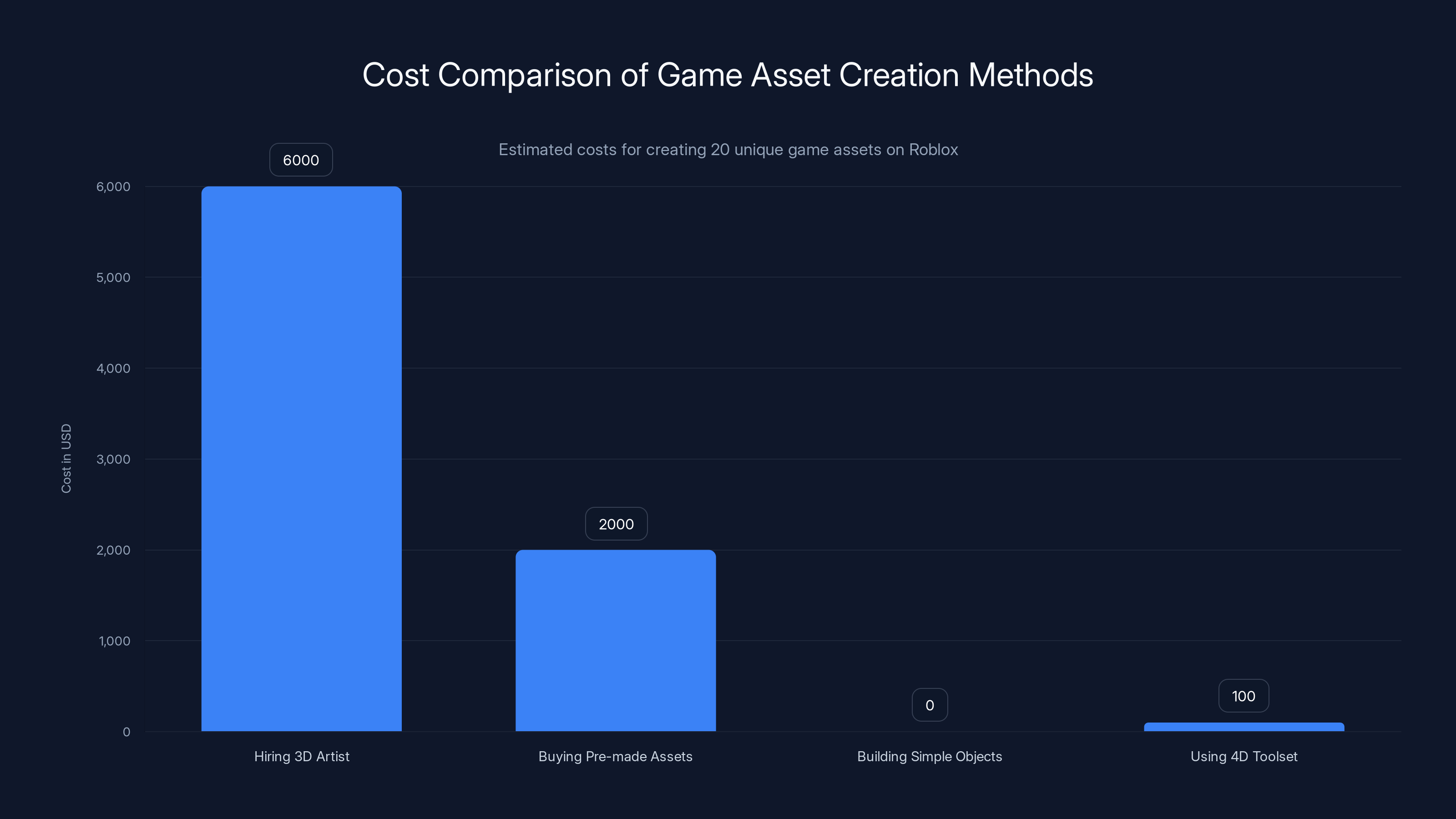

Using the 4D toolset significantly reduces costs for creating game assets, making it a cost-effective option compared to hiring artists or buying pre-made assets. (Estimated data)

Real-World Example: Wish Master and What Creators Are Building

Roblox didn't just launch the tool in a vacuum. They also highlighted an existing game that's using it: Wish Master.

Wish Master is the kind of game that only makes sense with generative AI creation tools. Players jump into the game world and request vehicles. A plane, a car, a bus, a helicopter—whatever they ask for. The game generates it in real-time using the 4D toolset. Then players can drive, fly, or interact with whatever they just created.

It's chaotic and unpredictable and genuinely fun. You never know what you're going to get, and part of the appeal is experimenting with weird requests. "Generate a flying motorcycle." "Create a tank made of glass." "Build me a giant walking robot."

Some requests produce cool, usable objects. Others produce absolute disasters that barely function but are hilarious because they're broken in unexpected ways. That's part of the charm.

Wish Master proves several important things. First, the generation system is fast enough for real-time gameplay. Players don't want to wait five minutes for their car to generate. They want it now. The system delivers. Second, the objects are stable enough that a whole game can be built around them. Third, creators are already thinking creatively about how to use this technology.

What's interesting is what Wish Master doesn't do: it doesn't claim perfection. Some of the generated vehicles are weird. They might have proportions that look off, or wheels that don't align quite right. But they work. They're functional and playable, which is what matters in a game context.

This is actually a really important data point for where this technology is heading. It doesn't need to be perfect. It needs to be good enough. Game developers have worked with imperfect assets forever. A slightly wonky car is still infinitely better than a completely unfinished game world.

Other creators are experimenting too. Some have built racing games where they generate tracks instead of cars. Others have created sandbox games where you generate objects and then arrange them into structures. The constraint of only having two templates hasn't stopped people from being creative. They're working within the constraints in interesting ways.

There's a parallel here to the early days of 3D modeling software. When tools like Blender or 3ds Max first came out, they were powerful but limited compared to what they are today. Creators worked with those constraints and produced amazing things. As the tools improved, the quality of work improved exponentially.

We're at that early stage with the 4D toolset. The constraints are real. But creators are already pushing against them and finding workarounds.

The Physics Engine: Why Objects Actually Feel Right

Here's something that matters more than you might think: the objects Roblox generates actually have believable physics.

This isn't automatic. When you generate a car, the system doesn't just create the shape. It assigns physical properties to each part. The wheels have mass and friction. The suspension has spring constants and damping. The chassis has weight distribution. When you drive that car, it responds to the terrain realistically. Hit a bump too fast and the wheels compress. Turn too sharply and you might flip.

This matters because it makes the objects feel intentional and real. A car that slides around like it's on ice would be useless. A suspension that doesn't compress would feel dead. Getting the physics right is what separates "interesting toy" from "actually useful game asset."
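The suspension behavior described above is classically modeled as a mass on a spring and a damper, where the force on the wheel is force = -k·x - c·v (k is the spring constant, c the damping coefficient). Here is a minimal sketch, assuming one corner of the car is a single mass and ignoring gravity and tire contact; the numbers are illustrative, not values Roblox's engine uses.

```python
def simulate_suspension(compression_m=0.1, mass_kg=300.0, stiffness=30000.0,
                        damping=3000.0, dt=0.001, steps=3000):
    """Return the wheel's displacement (m) at each step after a bump compresses the spring.

    x is the offset from the rest position, so gravity is left out.
    Integrated with semi-implicit Euler (update velocity, then position).
    """
    x, v = -compression_m, 0.0
    history = []
    for _ in range(steps):
        force = -stiffness * x - damping * v  # spring pushes back, damper resists motion
        v += (force / mass_kg) * dt
        x += v * dt
        history.append(x)
    return history


settled = simulate_suspension()
bouncy = simulate_suspension(damping=0.0)
print(round(max(settled), 4), round(settled[-1], 6))
print(round(max(abs(x) for x in bouncy[-1000:]), 3))
```

These defaults give a damping ratio of c / (2·sqrt(k·m)) = 3000 / (2·3000) = 0.5, so the wheel overshoots slightly after the bump and then settles. With `damping=0.0` it keeps bouncing at nearly full amplitude, which is exactly the kind of behavior the physics tuning has to avoid.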

Roblox built this by training the system on real-world physical principles. The AI doesn't just learn from examples. It learns the underlying physics rules that make those examples work. When you ask it to generate a car, it doesn't randomly assign spring constants to the suspension. It uses learned knowledge about how car suspensions should behave.

There are still imperfections, sure. Some generated cars are lighter or heavier than expected. Some suspensions might be stiffer than intended. But these are variations, not broken physics. And frankly, they add to the variety. A car that's lighter and quicker to accelerate is different from one that's heavier and slower, which is interesting from a gameplay perspective.

The physics validation happens in post-generation. After the AI creates the object, it runs a physics simulation to verify the object behaves reasonably. If something is obviously broken—like wheels that can't rotate, or a center of mass that's impossibly high—the system flags it and either fixes it automatically or regenerates the object.
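The validation pass can be sketched as a set of checks run on the generated parts. This assumes a simplified part list and implements the two failure examples mentioned above (wheels that can't rotate, a center of mass that's too high). The `Part` type and the half-track-width threshold are illustrative assumptions, not Roblox's actual rules.

```python
from dataclasses import dataclass


@dataclass
class Part:
    name: str
    mass_kg: float
    height_m: float          # height of this part's own center of mass above the ground
    can_rotate: bool = False
    is_wheel: bool = False


def center_of_mass_height(parts):
    """Mass-weighted average height of all parts."""
    total = sum(p.mass_kg for p in parts)
    return sum(p.mass_kg * p.height_m for p in parts) / total


def validate(parts, track_width_m=1.6):
    """Return a list of problems; an empty list means the object passes."""
    problems = []
    for p in parts:
        if p.is_wheel and not p.can_rotate:
            problems.append(f"{p.name} cannot rotate")
    # Rough rollover heuristic: a center of mass higher than half the
    # track width makes the vehicle easy to tip over in a turn.
    if center_of_mass_height(parts) > track_width_m / 2:
        problems.append("center of mass too high")
    return problems


good = [Part("chassis", 800, 0.5)] + [
    Part(f"wheel_{i}", 20, 0.35, can_rotate=True, is_wheel=True) for i in range(4)
]
bad = [Part("chassis", 800, 2.0), Part("wheel_0", 20, 0.35, is_wheel=True)]
print(validate(good), validate(bad))
```

A non-empty result is what would trigger the automatic fix or the regeneration step.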

This is a critical part of the system that doesn't get enough attention. The AI generation is impressive, but the physics validation is what makes it actually work in a game.

The current Roblox 4D toolset has limitations, such as only two templates and moderate AI training. However, future potential shows significant improvement as more templates and better integration are expected. Estimated data based on narrative.

Current Limitations and Why They Exist

Let's be honest: this is a beta tool, and the limitations are real.

Two templates is not a lot. After you've generated your fifth car or your third sculpture, the novelty wears off. You start wanting more. You want to generate NPCs. You want mechanical enemies. You want environmental objects with interactive elements. You want ships and helicopters and creatures. The tool doesn't do that yet.

Roblox knows this. Their roadmap explicitly mentions expanding the templates. But they're being cautious about rolling out new templates, and there's actually good reasoning behind that caution.

Each new template requires training time and validation. Roblox has to collect or create training data for that object type. They have to build the constraint framework. They have to test extensively to make sure the generated objects are stable and don't have weird edge cases that crash games. A poorly designed template that ships bugs could damage trust in the entire toolset.

So they're going slower than enthusiasts would like, but faster than skeptics expected. That's probably the right call.

Another limitation: the generation isn't infinitely flexible within templates. You can ask for "a sports car" and you'll get something that looks and acts like a sports car. But you can't generate a "sports car with six wheels" or a "sports car that transforms into a boat." The template constrains the output space.

This is actually more a feature than a bug. The constraints ensure stability. An unconstrained generator would produce more variety, sure, but also more broken objects. The constraints make it far more likely that what you generate will work in your game.

The visual quality varies. Most generated cars look good. Some look great. Others look a bit generic or have proportions that feel off. This isn't a massive problem because you can always regenerate, but it does mean you might need to iterate a few times to get exactly what you want.

Speed is generally good, but it depends on server load. During peak hours, you might wait 2-3 minutes for generation. During off-peak hours, it's usually under a minute. This is reasonable for a beta service that's probably not using the most aggressive caching and optimization techniques.

The objects are fully editable after generation. You're not locked into what the AI creates. You can adjust proportions, change colors, modify physics properties, and add custom scripting on top. This is crucial because it means the AI generation is a starting point, not a final product.

The Broader Context: AI in Game Development

Roblox's 4D toolset isn't happening in isolation. It's part of a broader wave of AI tools entering game development.

Game engines like Unreal Engine and Unity are both experimenting with AI-assisted creation tools. Unity has been working on AI-generated environments. Unreal has integrated tools for generative art. Independent studios are using AI for everything from dialogue writing to enemy behavior trees to level design.

But Roblox is approaching this differently. The platform has democratized game creation for over a decade. Game development on Roblox doesn't require expensive software or specialized hardware. Most content is built by teenagers using Roblox's free editor and free scripting language. The 4D toolset continues that democratization. It removes yet another barrier to entry.

This is the context in which the 4D toolset matters most. For a professional game studio, generative AI is a tool that speeds up production. For a platform like Roblox, it's transformative. It takes something that required specialized training and makes it accessible to anyone.

That has huge implications for the quality and quantity of content on the platform. Games that might have never been built because they required complex 3D modeling are now possible. Kids with game ideas but no 3D art skills can now build the games they imagine.

At the same time, this is going to flood the platform with content. A lot of it will be low-quality. But that's fine. Roblox has always prioritized quantity and accessibility over curated excellence. The best creators will rise to the top, and the mediocre stuff will fade away.

There's also a labor angle here that's worth discussing honestly. People whose job is building 3D assets for games are going to see some displacement. Tools like this reduce demand for junior 3D modelers. That's a real impact on real people, and it shouldn't be glossed over.

But it's also a story as old as technology. Every tool that automates something reduces demand for people doing that thing manually. The typewriter displaced stenographers. The camera displaced portrait painters. Photography didn't kill painting. It freed painters to explore more interesting artistic directions.

I expect something similar here. The demand for 3D artists won't disappear. It'll shift. There will still be demand for specialized artists who can create unique, custom work that the AI can't. But the demand for generic asset creation will decrease.

Facial verification is a moderate safety measure, but privacy concerns and platform restrictions present significant challenges. Estimated data.

Child Safety Considerations: The Context Matters

Roblox has been dealing with persistent criticism about child safety. The company has faced lawsuits, regulatory investigations, and has even been banned in some countries due to concerns about protecting minors.

In response, Roblox implemented mandatory facial verification for chat access. The goal was to prevent adults from impersonating children in chat spaces. It's a reasonable safety measure, but according to reports, the implementation hasn't gone smoothly. Some users are having trouble with the facial verification system. Privacy concerns have been raised. Some countries looked at the measure and decided the whole platform needed to be restricted instead.

The timing of the 4D toolset launch is interesting in this context. Roblox is pushing forward with new creator features while also trying to address safety concerns. It's a balancing act.

The 4D toolset itself doesn't inherently raise new safety concerns. It's a tool for creating game objects, not a communication platform. The safety issues on Roblox exist primarily in the social features: chat, private messaging, and user-to-user interactions.

But there's a broader conversation about how platforms that cater to children handle safety. Roblox is trying to balance creator freedom with child protection. The company wants developers and creators to be able to build freely, but also wants to ensure the platform isn't exploited by bad actors.

It's a hard problem with no perfect solution. More restrictions help safety but limit legitimate creation. Fewer restrictions enable more creative freedom but increase risk. Roblox has been criticized for landing on both sides of this equation at different times.

The 4D toolset fits into this equation because it enables more creators to build more content faster. That's exciting from a platform perspective, but it also means more content to moderate and police. Roblox will need to ensure the tool can't be used to create inappropriate content. The fact that they're launching with limited templates suggests they're being thoughtful about this.

The Roadmap: What's Coming Next

Roblox has been relatively transparent about what's coming next with the 4D toolset, even if the timeline is vague.

Top of the list: custom templates. This is the feature that will unlock the real potential of the system. Once creators can define their own templates and constraints, the possibilities expand dramatically. My guess is that it arrives within the next 6-12 months, but Roblox hasn't committed to a timeline.

Also coming: generation from reference images. Roblox mentioned they're developing technology that will let you provide a photo or a sketch of an object, and the system will generate a 3D version that matches the reference. This would be massive for creators who have a visual idea but can't articulate it in words. Instead of describing your dream car, you'd just show it a picture of a car you like, and it would generate something in that style.

Better quality and variety in generated objects. As the AI trains on more data, the system will produce more diverse and higher-quality outputs. This is incremental improvement but also important. The more the system can generate things that feel unique and interesting, the more valuable the tool becomes.

Integration with other Roblox systems. Right now the 4D tools are somewhat siloed. As the platform integrates them more deeply with the environment creation tools, the game design systems, and the monetization features, the ecosystem becomes more powerful.

Expanded hardware support. Currently optimized for PC and cloud-based generation. Mobile optimization is probably on the roadmap. Mobile players represent the majority of Roblox's user base, and if the generation system could work on mobile, that would democratize creation even further.

Roblox is also almost certainly working on better texture generation for the objects. The 3D geometry is great, but texturing and shading are areas where the system could improve. Better-looking surfaces would make generated objects more visually competitive with professionally created assets.

The company is also probably exploring physics-only variations. What if you could generate just the underlying physics system without the visual geometry? This would enable procedural content generation in a different way.

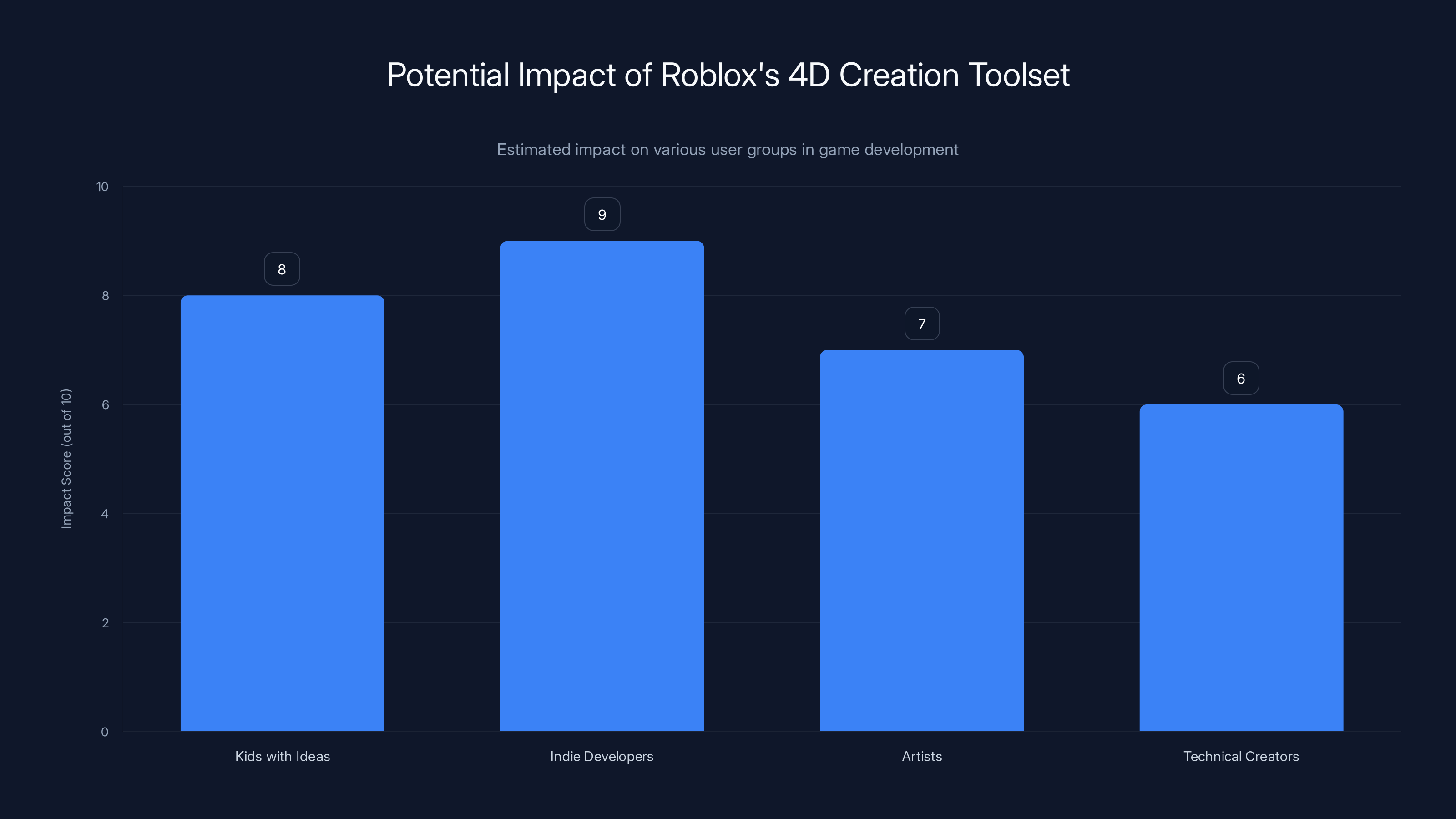

Estimated data shows that Roblox's 4D creation toolset could significantly empower indie developers and kids with ideas, offering high impact scores for these groups.

Comparison: How This Stacks Up Against Other Tools

Roblox isn't the only platform experimenting with AI-assisted 3D creation. How does the 4D toolset compare to what else is out there?

Unreal Metahuman Creator lets you generate human characters quickly. It's impressive, but it's narrowly focused on humanoids. The Roblox approach is broader in scope but less specialized.

Unity Sentis is Unity's neural network inference engine. It lets developers build AI systems into their games, but it's more of an infrastructure tool than a creation tool. Different purpose entirely.

Blender generative features are still experimental. You can use AI within Blender, but it's not as integrated or automatic as Roblox's approach.

Sketchfab AI generation lets you generate 3D models from prompts, similar to Roblox. But Sketchfab's outputs aren't automatically physics-enabled the way Roblox's are.

NVIDIA Omniverse has AI tools for content creation, but it's aimed at professionals and studios, not individual creators on a social platform.

The unique thing about the Roblox approach is the combination of automatic generation, physics simulation, and behavioral scripting all in one integrated system. You don't get a nice 3D model that you then have to rig and script. You get a fully functional game object.

That's a significant advantage for game development specifically. You're not just generating art. You're generating systems.

The limitation is specialization. Roblox's templates are deliberately constrained. The object generators that operate with fewer constraints (like Sketchfab or Midjourney for 3D) produce more variety but require more post-generation work to make them functional in a game.

There's a trade-off between flexibility and functionality, and Roblox has chosen to prioritize functionality. That's the right call for a platform focused on actual game creation rather than art generation.

Implementation Guide: How to Actually Use This

If you're a Roblox creator and want to start using the 4D toolset, here's what you need to know.

First, make sure you have access to the beta. Roblox rolled this out to a limited group initially and is gradually expanding access. If you don't have it yet, you might need to wait a bit longer. The platform usually doesn't make early-access features available to everyone immediately.

Once you have access, you'll find the 4D tools in your creation interface. Navigate to the asset creation section and look for the generative creation options. You'll see the two templates: cars and solid objects.

To generate a car:

- Select the car template from the creation menu.

- Type a description of the car you want. Be specific. "Racing car" is better than "car." "Off-road truck with big tires" is better than "truck."

- Click generate and wait. Times vary, but typically 30-90 seconds.

- Review the result. If you like it, import it into your game. If not, regenerate.

- Once imported, you can edit properties: colors, materials, physics parameters, add custom scripts.

- Test drive it (literally) to make sure it handles the way you expect.
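The review-and-regenerate step is essentially a loop. In this sketch, `generate_car` and `review` are hypothetical stand-ins: a seeded random number generator fakes the variation between generations, and fixed thresholds fake your judgment during review and test drives. The point is the shape of the workflow, including a cap on attempts.

```python
import random


def generate_car(prompt: str, rng: random.Random) -> dict:
    """Hypothetical generation call: each call returns a slightly different car."""
    return {"prompt": prompt,
            "handling": rng.uniform(0.0, 1.0),
            "wheel_alignment_error_deg": rng.uniform(0.0, 5.0)}


def review(car: dict) -> bool:
    """Hypothetical review: accept cars that handle decently with roughly aligned wheels."""
    return car["handling"] >= 0.6 and car["wheel_alignment_error_deg"] <= 2.0


def generate_until_accepted(prompt: str, max_attempts: int = 5, seed: int = 0):
    """Regenerate until a car passes review; return (attempts used, car or None)."""
    rng = random.Random(seed)
    for attempt in range(1, max_attempts + 1):
        car = generate_car(prompt, rng)
        if review(car):
            return attempt, car
    return max_attempts, None  # nothing passed within the cap


attempts, car = generate_until_accepted("Off-road truck with big tires", max_attempts=20)
print(attempts, car)
```

Capping attempts is a design choice: if several generations in a row miss, a more specific prompt is probably a better next step than more regeneration.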

To generate a solid object:

- Select the solid objects template.

- Describe the object. "Futuristic obelisk," "stone dragon," "crystalline tower."

- Generate and wait.

- Import if satisfied, regenerate if not.

- Place it in your game world.

- These don't have interactive behavior, so mostly you're just checking that the geometry looks right.

Some tips from experienced users:

Be descriptive in your prompts. "Sports car" is okay, but "sleek sports car with a low profile, wide stance, and aggressive front grille" will produce better results. The more detail you provide, the better the system understands what you're asking for.

Use style descriptors. "Futuristic car," "vintage car," "brutalist car," "biomechanical car." These influence the aesthetic direction.

Describe the intended purpose. "Off-road truck" will generate something different from "racing truck." "Fire truck" will be different from both. Context matters.
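Those three tips (detail, style, purpose) compose naturally. The helper below is a hypothetical convenience for assembling prompts consistently; the 4D tools simply take a typed description, so you would paste the resulting string into the prompt field.

```python
def join_details(details):
    """Join details as 'a', 'a and b', or 'a, b, and c'."""
    if len(details) <= 2:
        return " and ".join(details)
    return ", ".join(details[:-1]) + ", and " + details[-1]


def build_prompt(subject, style="", purpose="", details=()):
    """Order: style, purpose, subject, then 'with' and any specific details."""
    head = " ".join(part for part in (style, purpose, subject) if part)
    return f"{head} with {join_details(list(details))}" if details else head


print(build_prompt("sports car", style="sleek",
                   details=["a low profile", "a wide stance", "an aggressive front grille"]))
print(build_prompt("truck", purpose="off-road", details=["big tires"]))
```

Putting style and purpose before the subject keeps prompts reading like the examples above ("off-road truck with big tires"), which makes them easier to compare across iterations.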

Generate multiple times. You won't get exactly the same result twice. If the first generation isn't quite right, try again. You might get something better.

Edit after generation. The generated objects are just starting points. You can tweak proportions, change colors, adjust physics, add custom scripting. Don't feel locked into what the AI produces.

Check physics before shipping. Drive around your generated vehicles. Make sure the handling feels right. Adjust suspension and weight properties if needed.

Save iterations. If you generate something cool, save it even if you don't use it immediately. You can reference it later for inspiration.

The Economics: How This Affects Game Development Costs

Let's talk money. How does the 4D toolset affect the economics of game development on Roblox?

Traditionally, if you wanted to include a variety of vehicles or interactive objects in a Roblox game, you had a few options: hire a 3D artist to create custom assets, buy pre-made assets from the Roblox marketplace, or build simple objects using the terrain and mesh tools.

Hiring a 3D artist costs money. A decent freelancer might charge

Buying pre-made assets avoids the hiring cost but gives you less control and variety. Most marketplace assets are used by multiple games, which means your game might not feel unique.

Building simple objects yourself is free but usually produces low-quality results.

With the 4D toolset, you can generate dozens of unique, functional objects for free, at least during the beta (Roblox may eventually charge for high-volume generation). The quality is middle-of-the-road: not as good as a professional artist's work, but well above amateur efforts.

This dramatically changes the economics. Suddenly, it's feasible to build a game with lots of variety without hiring an artist or buying assets. The barrier to entry drops further.

Roblox probably isn't thinking about this as a cost-reduction play immediately. They're thinking about it as a growth play. By making it cheaper and faster to create content, they encourage more creators to build more games, which keeps players engaged longer, which makes Roblox more valuable.

But the real impact is on creator income distribution. Right now, the creators who make the most money are those who understand both game design and technical execution. The 4D toolset shifts the balance slightly toward pure game designers and away from technical specialists.

That's not necessarily bad. It democratizes opportunity. But it does mean the premium for specialized technical skills decreases.

For Roblox as a platform, this is almost certainly positive. More creators building more content faster makes the platform stickier for players.

Safety and Moderation: Preventing Misuse

Here's a question that doesn't get asked enough: can this system be used to create inappropriate content?

Yes, obviously. People can misuse any creative tool to make something inappropriate.

But the constraints matter. Because the system only generates objects within defined templates, it's harder to ask it to generate something that violates policy. You can't use the car template to generate a weapon. You can't use the solid objects template to generate explicit content (at least, not well—the system might refuse or produce something that looks nothing like what you asked for).

Roblox built the constraints partly for technical reasons (keeps generation stable) and partly for safety reasons (limits bad outputs).
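One way to picture "constraint by construction" is a template that exposes only a fixed set of attributes: if there is no slot for a weapon, no prompt can fill one. The sketch below is a hypothetical illustration of that idea. The template names, slot names, and `validate_request` function are invented for this example; Roblox hasn't described its internals, and a real system works from free-text prompts and a learned model rather than a dictionary of slots.

```python
from typing import Dict, FrozenSet, List

# Hypothetical: each template exposes only a fixed set of attributes a prompt can influence.
TEMPLATE_SLOTS: Dict[str, FrozenSet[str]] = {
    "car": frozenset({"body_style", "color", "wheel_count", "suspension"}),
    "solid_object": frozenset({"shape", "material", "color", "scale"}),
}

def validate_request(template: str, attributes: Dict[str, object]) -> List[str]:
    """Return a list of problems; an empty list means the request fits the template."""
    if template not in TEMPLATE_SLOTS:
        return [f"unknown template '{template}'"]
    allowed = TEMPLATE_SLOTS[template]
    return [f"'{key}' is not an attribute of the {template} template"
            for key in sorted(attributes) if key not in allowed]

print(validate_request("car", {"color": "red"}))                       # []
print(validate_request("car", {"color": "red", "mounted_gun": True}))  # rejected slot
```

The design point is that the allow-list lives in the template, so adding a new template is also the moment to decide what it should never produce.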

But here's where the human element comes in. Once an object is generated, it's in the creator's hands. What do they do with it? Do they use it legitimately in a game? Or do they try to manipulate it into something inappropriate?

Roblox has systems for moderating generated content. Generated objects are probably screened when they're created, and games that use them are reviewed when they're published. The company has gotten better at moderation over the years, though it still catches far from every violation.

The risk is lower with the 4D toolset than with unrestricted AI image generation because the constraints are built in. But the risk isn't zero.

Roblox is probably thinking about this carefully. One of the reasons they launched with limited templates is almost certainly because they want to understand how the system gets used before expanding it.

If they see that people are reliably using the tools appropriately, they'll probably expand. If they see widespread misuse, they might lock it down or add additional review requirements.

This is a classic platform moderation challenge: enable creativity while preventing harm. There's no perfect answer.

The Competitive Landscape: Why Roblox Moved Now

Why did Roblox launch this now? Why not wait for more polish?

Competition, probably. Fortnite Creative mode has been a success. Minecraft has massive user-generated content. Discord is becoming a platform for gaming communities. Roblox feels these competitive pressures and needs to keep innovating to stay relevant.

Also, the technology is ready enough. The AI models are good enough. The physics systems are stable enough. Waiting longer provides diminishing returns. It's better to launch with limitations and iterate than to delay indefinitely waiting for perfection.

There's also an opportunity cost to delay. Every month that Roblox doesn't have this feature is a month where creators on competing platforms might be using equivalent tools. If Fortnite had launched AI-assisted object generation first, Roblox would be playing catch-up.

The beta label is strategic too. It manages expectations. If something breaks or the quality isn't perfect, Roblox can say "it's beta." But it also gets the feature out into the world where creators can start using it and providing feedback.

This is standard practice for platforms now: launch features early, get feedback, iterate in public. It's faster than developing in secret and launching a "perfect" product.

Looking Forward: What Comes After 4D

Assuming the 4D toolset is successful and adoption grows, what's next?

Smarter templates that understand more complex concepts. Instead of just "car," you might specify "military vehicle," "luxury car," "utility vehicle," and get appropriately specialized generation.

Neural customization layers. The ability to provide more fine-grained control over generation. "Generate a car with these wheels," "generate a car in this color scheme," "generate a car with this level of damage." More control without losing automation.

Generative level design. Objects are cool, but full environment generation would be transformative. Imagine describing a game level and having the system generate the basic layout and populate it with appropriate objects.

Generative narrative. Game scripts, dialogue, NPC behavior. This is further out and more complex, but Roblox is probably thinking about it.

Integration with voice interfaces. "Roblox, generate me a red sports car." Natural language input that makes the tools even more accessible.

Community-driven templates. User-generated templates that expand the system's capabilities beyond what Roblox officially creates.

Monetization of generated assets. If creators generate something really cool, they might want to sell it to other creators. This could create a marketplace for AI-generated assets within the broader asset marketplace.

Licensing and rights clarity. As AI generation becomes more common, questions about who owns generated content and whether it can be sold become important. Roblox will need to address them.

The long-term vision probably includes an AI-assisted game development platform where you can describe a game and have the system generate basic versions of the mechanics, the assets, the environments. That's a ways off, but the 4D toolset is a step in that direction.

Honest Assessment: Pros and Cons

Let's cut through the hype and be realistic about what this is and isn't.

Pros:

- Dramatically lowers the barrier to entry for game creators. No 3D modeling skills needed.

- Objects are fully functional, not just visually interesting. They work in games immediately.

- Physics simulation is integrated, which makes the objects game-ready.

- The system is fast enough for practical use.

- The generated objects are customizable after generation.

- Free to use (at least in beta).

- Opens possibilities for games that wouldn't have existed otherwise.

Cons:

- Limited to two templates right now. Constraining if you have specific needs.

- Quality is good but not professional-grade. Some generated objects feel generic.

- Customization options are somewhat limited. You can adjust but not completely redesign.

- Still beta software with occasional glitches.

- No custom templates yet.

- Not instant. Each generation takes time, and the wait sometimes breaks creative flow.

- No guarantee of uniqueness. Other creators using similar prompts might generate near-identical objects.

The Bottom Line:

This is a genuine advancement in game development tools. Not revolutionary, but significant. It makes game creation more accessible and faster. It will probably have a noticeable impact on the platform.

But it's not magic. It won't replace skilled artists and designers. It will augment their capabilities and reduce demand for certain types of work. The impact will be felt most by junior-level 3D modelers, but the platform as a whole will probably be healthier and more creative as a result.

If you're a Roblox creator, this should be on your radar. Even if you don't want to use it for everything, it's useful for prototyping, for generating environmental variety, for filling out details quickly.

If you're a player, you might not notice much at first. But over time, you'll probably see more variety in games, more ambitious projects from solo creators, and generally more interesting content.

That's worth something.

FAQ

What does "4D" actually mean in the context of Roblox's toolset?

"4D" refers to three-dimensional objects that behave dynamically across time and respond to player interactions. The fourth dimension is essentially time and interactivity rather than a literal fourth spatial dimension. These objects move, react to physics, and respond to player input—unlike static 3D models that just sit in your game world looking pretty. The terminology is marketing language, but it's reasonably accurate once you understand the functionality.
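To make the "time is the fourth dimension" idea concrete, here is a minimal sketch contrasting a static model with a dynamic object whose state is advanced every tick. It uses simple semi-implicit Euler integration and a flat ground, assumptions chosen for illustration. It is not Roblox's physics engine, which handles this internally, and the class and method names are hypothetical.

```python
from dataclasses import dataclass

GRAVITY = -9.81  # m/s^2 on the vertical axis; SI units chosen for illustration

@dataclass
class StaticModel:
    """A plain 3D model: its position never changes on its own."""
    x: float
    y: float
    z: float

@dataclass
class DynamicObject(StaticModel):
    """The same geometry plus state that evolves over time (the '4th dimension')."""
    vy: float = 0.0

    def apply_impulse(self, dvy: float) -> None:
        """Player input, e.g. a jump or a shove, changes velocity immediately."""
        self.vy += dvy

    def step(self, dt: float, ground_y: float = 0.0) -> None:
        """Advance one tick with semi-implicit Euler: update velocity, then position."""
        self.vy += GRAVITY * dt
        self.y += self.vy * dt
        if self.y < ground_y:  # simple ground collision
            self.y = ground_y
            self.vy = 0.0

crate = DynamicObject(0.0, 10.0, 0.0)
for _ in range(300):  # five seconds at 60 ticks per second
    crate.step(1 / 60)
print(crate.y)  # 0.0: the crate fell and came to rest on the ground
crate.apply_impulse(5.0)  # a player kicks it upward
crate.step(1 / 60)
```

A `StaticModel` would print 10.0 forever; the dynamic version's position is a function of time and input, which is the behavior the "4D" label is pointing at.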

How long does it take to generate a 4D object, and can I use it in my game immediately?

Generation typically takes 30-90 seconds depending on server load, with average times around 45-60 seconds during normal usage. Once generated, objects are immediately usable in your game. You can import them directly into your game world, test them, customize their properties, and deploy them. The system is designed to be fast enough for practical iteration, though during peak hours you might experience slower generation times.

What happens if I don't like the generated object? Can I regenerate it?

Absolutely. You can regenerate as many times as you want until you get something you're happy with. Each generation produces slightly different results even with identical prompts. The system learns from examples rather than following rules, so there's inherent variation. If your first car doesn't look right, generate it again. Most creators go through 2-3 iterations to find something they like. You're not locked into the first result.
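Combining the figures above (45-60 seconds on average per generation, 30-90 seconds across the full range, and 2-3 tries per keeper) gives a rough time budget. This sketch assumes generations run one after another and ignores time spent reviewing, editing, or placing objects; `generation_time_range` is a hypothetical helper, not part of the toolset.

```python
from typing import Tuple

def generation_time_range(num_objects: int,
                          iterations: Tuple[int, int] = (2, 3),
                          seconds_each: Tuple[int, int] = (45, 60)) -> Tuple[int, int]:
    """Return (low, high) wall-clock seconds to end up with num_objects keepers."""
    low = num_objects * iterations[0] * seconds_each[0]
    high = num_objects * iterations[1] * seconds_each[1]
    return low, high

print(generation_time_range(1))                          # (90, 180): 1.5 to 3 minutes
print(generation_time_range(10))                         # (900, 1800): 15 to 30 minutes
print(generation_time_range(10, seconds_each=(30, 90)))  # (600, 2700): full reported range
```

Even at the slow end, a set of ten distinct vehicles fits in well under an hour of generation time.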

Are there limits on how many objects I can generate, or does Roblox charge for this?

In the open beta, the generation appears to be free and unlimited, though Roblox hasn't officially stated quotas or pricing for the final release. Typically, Roblox offers free tiers for creator tools with optional premium features for more advanced options. During the beta period, you should be able to experiment freely without worrying about hitting limits or incurring costs.

Can I edit the generated objects after they're created, or am I stuck with what the AI generates?

Generated objects are fully editable. You can adjust proportions, change colors and materials, modify physics properties like weight and friction, and add custom scripting on top. The AI output is a starting point, not a final product. Many creators use generation as the foundation and then customize extensively to match their vision or game's aesthetic.

Will custom templates be available eventually, or is the platform limited to cars and solid objects permanently?

Roblox has explicitly stated they're developing custom templates, allowing creators to define their own generation frameworks beyond the current car and solid object templates. However, the company hasn't provided a timeline for this feature. Based on Roblox's typical release schedule, expect this within 6-12 months, though this is an estimate rather than a commitment. The current limited templates are partly for technical stability and partly to ensure the system works reliably before expanding.

How do the physics in these generated objects compare to professionally-created assets?

The physics are surprisingly solid for AI-generated objects. The system generates not just geometry but also physical properties like mass distribution, friction coefficients, and suspension characteristics. In testing, many generated cars handle more realistically than expected. That said, they don't always match the precision of professionally-tuned physics. You might need to make adjustments to exact parameters, but the baseline is functional and realistic enough for most game purposes.

What prevents creators from using this tool to generate inappropriate content?

The template constraints build in safety by limiting what can be generated. You can't use the car template to generate weapons, and the solid objects template doesn't easily produce explicit content. Beyond the technical constraints, Roblox's moderation systems review generated content, and games that use it are checked for policy violations when they're published. The constraints reduce risk but don't eliminate it entirely, as with any creative tool.

Is the 4D toolset available on mobile, or just desktop?

Currently, the toolset is optimized for desktop and browser access. Mobile support is not yet available in the open beta. Given that mobile represents the majority of Roblox's user base, mobile optimization is probably on the roadmap, though Roblox hasn't officially announced a mobile release date. The generation process is computationally intensive, which is why desktop optimization came first.

How does the quality of AI-generated objects compare to marketplace assets or custom-commissioned art?

AI-generated objects are middle-ground: better than amateur-created assets, comparable to lower-end marketplace assets, but not quite professional-commission quality. They're perfectly serviceable for games and provide good value because they're free. However, if you want AAA-quality visuals or completely unique specialized designs, you'd still commission professional artists. For developers prototyping games or building on a budget, the quality is quite good. For projects where visual polish is critical, custom art might still be preferable.

Conclusion: The Beginning of Something Bigger

Roblox's 4D creation toolset represents a meaningful shift in how games get built. Not a revolutionary overnight change, but a measurable step toward democratizing game development.

The tool isn't perfect. The current limitations are real. Two templates is restrictive. The quality is good but not professional-grade. Custom templates haven't shipped yet. But the foundation is solid, and the trajectory is clear.

What's most interesting isn't what the tool is right now. It's what it enables going forward. As custom templates arrive, as the AI training improves, as integration with other Roblox systems deepens, this toolset becomes increasingly powerful.

We're in that early stage where people are still figuring out how to use this well. Games like Wish Master are only scratching the surface of what's possible. Give creators time, and they'll figure out clever ways to use these tools that Roblox probably didn't anticipate.

The competitive implications are worth noting too. Roblox needed to move on AI-assisted creation to stay relevant against Fortnite, Minecraft, and other platforms. This move isn't desperate, but it's not optional either. The company is right to invest here.

For creators, the key takeaway is simple: this tool is available, it's worth experimenting with, and it's probably going to become more important over time. Even if you don't want to rely on it exclusively, learning how to use it effectively is a useful skill.

For players, the impact will be gradual but real. More games will be created by solo developers and small teams who previously couldn't afford 3D artists. That probably means more variety, more experimental games, and more content overall. Quality varies in any user-generated content platform, but quantity has a quality of its own.

Roblox has been smart about how it's handled this technology. Limited launch with clear constraints. Integration with existing systems rather than completely replacing them. Focus on practical game development use cases rather than pure art generation. This is thoughtful product design, not desperate feature-throwing.

The company is also being appropriately cautious about expansion. They could have launched with ten templates. Instead, they chose two and committed to expanding carefully. That suggests they're thinking about stability and long-term trust rather than maximum immediate impact.

Long-term, this positions Roblox well for continued growth. Game developers always need tools, and tools that make development faster and cheaper have permanent value. The 4D toolset is that kind of tool.

For the broader industry, this is a signal that AI-assisted content creation isn't a niche experimental feature anymore. It's becoming core infrastructure. Expect every major game platform and development tool to have equivalent features within the next 2-3 years.

The question won't be "does this tool exist?" but "which approach works best?" Roblox has chosen a constrained, template-based approach. Others might try less constrained systems. Over time, we'll learn which approach actually works better in practice.

But for now, Roblox has shipped something genuinely useful that makes game creation more accessible. That's worth celebrating, even if we're realistic about its limitations.

The future of game development isn't AI replacing human creators. It's human creators using AI tools to work faster, try more ideas, and accomplish more ambitious projects than they could alone. The 4D toolset is that future, arriving a little earlier than expected.

It's worth paying attention to.

Key Takeaways

- Roblox's 4D toolset generates fully functional, interactive 3D game objects using AI, dramatically lowering barriers to game creation

- The system combines geometry generation, physics simulation, and behavioral scripting into one integrated workflow—no manual rigging or scripting required

- Current beta limits creators to two templates (cars and solid objects), but Roblox has committed to adding custom templates, which should considerably broaden what creators can build

- Physics simulation is automatically integrated, so generated objects work in games immediately, though tuning properties like suspension and weight can still improve them

- This represents a significant shift toward democratized game development, especially impacting solo creators and small teams who previously couldn't afford 3D artists

![Roblox's 4D Creation Toolset: Everything You Need to Know [2025]](https://tryrunable.com/blog/roblox-s-4d-creation-toolset-everything-you-need-to-know-202/image-1-1770233828437.png)