Roblox's Age Verification System: A Complete Breakdown of What Went Wrong [2025]

Something truly absurd happened on the internet last week. A kid drew a mustache and some stubble on his face, took a selfie, and Roblox's new age verification system instantly classified him as 21+. Another child flashed a photo of Kurt Cobain and got the same result.

This isn't a joke setup. This is what's actually happening inside one of the world's largest gaming platforms, which hosts millions of children.

Roblox, valued at over $15 billion, rushed to implement a mandatory age verification system in response to mounting pressure from lawmakers and lawsuits over child safety. The predator problem had become impossible to ignore. Multiple state attorneys general launched investigations. Lawsuits piled up from Louisiana, Texas, and Kentucky. Florida's AG issued criminal subpoenas. The company's survival felt like it might depend on getting this right.

Instead, they got it catastrophically wrong.

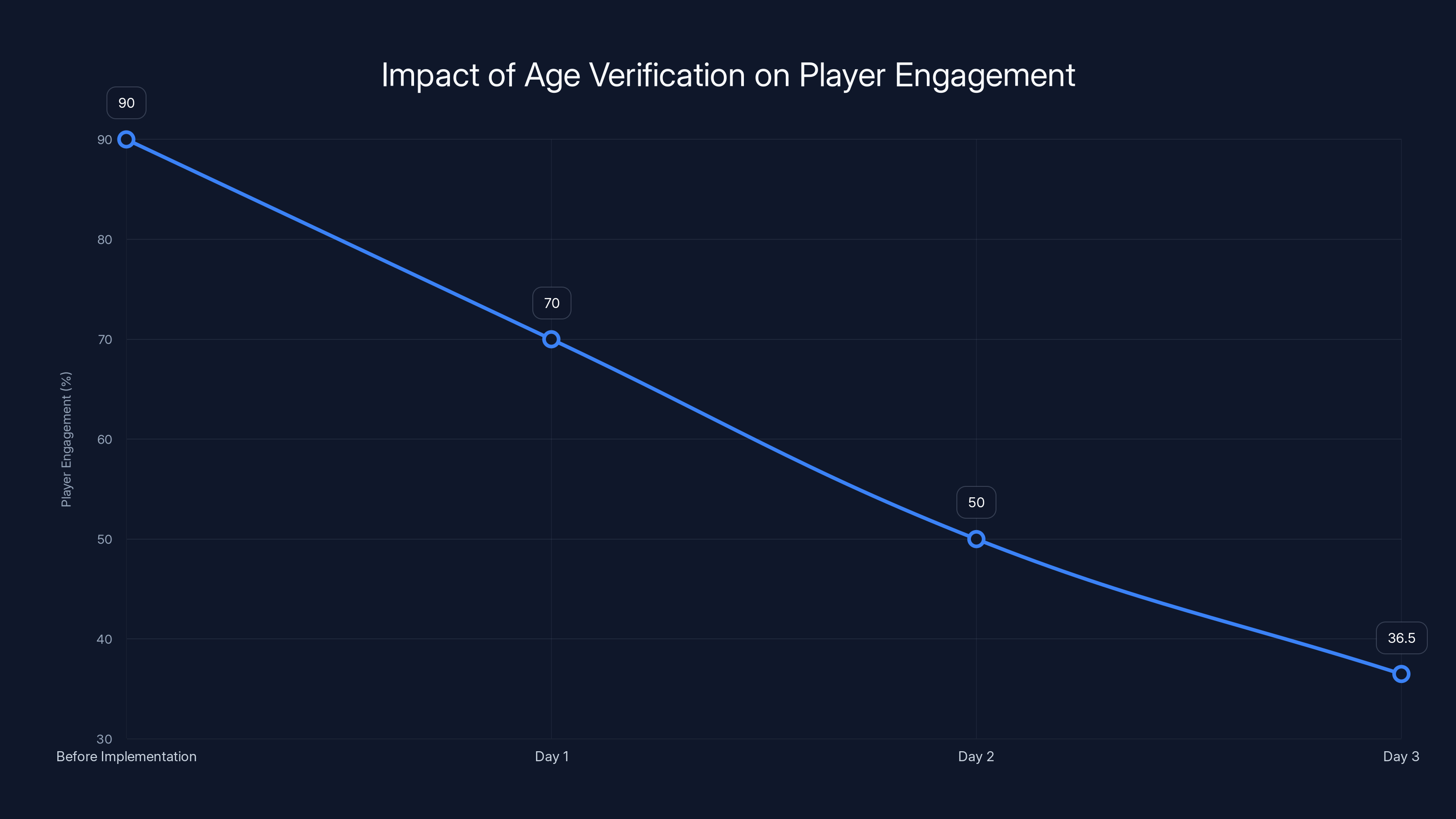

Within 48 hours of making age verification mandatory for anyone using chat, the internet was flooded with reports of the system failing in ways that would be funny if they weren't genuinely dangerous. A 23-year-old was marked as 16-17. An 18-year-old landed in the 13-15 range. Children were gaming the system with hand-drawn facial features. Parents were accidentally locking their kids into the 21+ category. Game developers reported chat usage in their games collapsing from around 90% to 36.5%.

What should have been Roblox's breakthrough moment in child safety became a masterclass in implementation failure.

Let me break down exactly what happened, why it matters, and what this means for the future of online child safety.

The Predator Problem That Forced Roblox's Hand

Roblox didn't build an age verification system because they wanted to. They built it because the alternative was potential platform collapse.

The platform has become a known hunting ground for child predators. That's not hyperbole or exaggeration. Multiple investigative journalists, child safety organizations, and law enforcement agencies have documented patterns of adults grooming children on Roblox. The mechanics are simple: adults create accounts, find young players, build rapport, and then transition conversations to private channels where they attempt to solicit inappropriate content or arrange real-world meetings.

Roblox's chat system made this tragically easy. The platform had minimal content moderation in direct messages. Young players, taught to be trusting and collaborative through years of multiplayer gaming culture, were vulnerable. And predators knew exactly where to find them.

By 2024, the situation had spiraled into something that could no longer be ignored. Lawsuits started arriving from several states at once. Louisiana filed suit. Texas followed. Kentucky did too. The Florida attorney general didn't just threaten an investigation; the office issued criminal subpoenas to Roblox executives, as reported by WIS TV. That's not a warning. That's law enforcement saying "we think crimes are happening here."

For a company that depends on staying attractive to parents and advertisers, this created an existential threat. Roblox couldn't just pay a settlement and move on. The fundamental structure of their platform—how kids connected, how they chatted, how they interacted—had become a liability.

So in January 2025, Roblox announced a solution: mandatory age verification for anyone using the chat feature.

The reasoning made sense on paper. If you could verify that someone is actually 23 years old, and prevent them from chatting with kids in the 13-15 age group, you'd eliminate a huge vector for grooming. Kids would only see chat from age-appropriate players. Adults would be restricted from initiating conversations with minors.
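The gating idea in the paragraph above can be sketched in a few lines. This is a minimal illustration, not Roblox's actual rule: the `AgeGroup` names, the `can_chat` function, and the "at most one band apart" threshold are all assumptions made for the example, and the lowest band is treated as "under 13".

```python
from enum import IntEnum


class AgeGroup(IntEnum):
    """Age bands as listed in the article; only their ordering matters here."""
    UNDER_13 = 0
    AGE_13_15 = 1
    AGE_16_17 = 2
    AGE_18_20 = 3
    AGE_21_PLUS = 4


def can_chat(a: AgeGroup, b: AgeGroup, max_gap: int = 1) -> bool:
    """Hypothetical policy: allow chat only if the bands are at most `max_gap` apart."""
    return abs(int(a) - int(b)) <= max_gap


# A verified 21+ adult is blocked from the 13-15 band,
# while neighbouring teen bands can still talk to each other.
print(can_chat(AgeGroup.AGE_21_PLUS, AgeGroup.AGE_13_15))  # False
print(can_chat(AgeGroup.AGE_13_15, AgeGroup.AGE_16_17))    # True
```

The rule is only as good as the band each user is assigned to, which is where the rest of the story goes wrong.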

It was logical. It was also naive about the technical reality of age verification at scale.

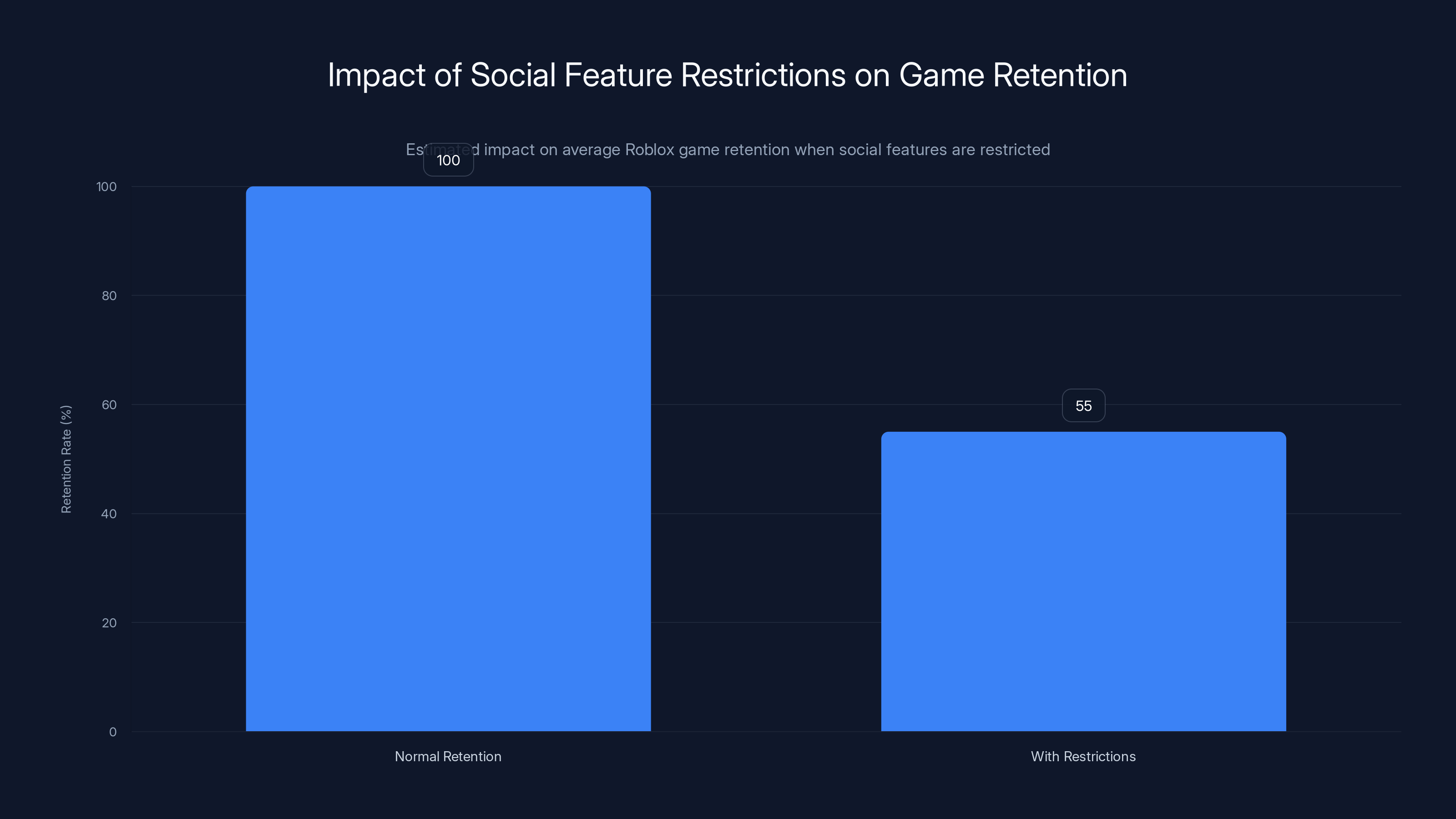

Estimated data shows a significant drop in game retention (40-50%) when social features are restricted, highlighting their critical role in user engagement.

How the Verification System Actually Works (And Why It Fails)

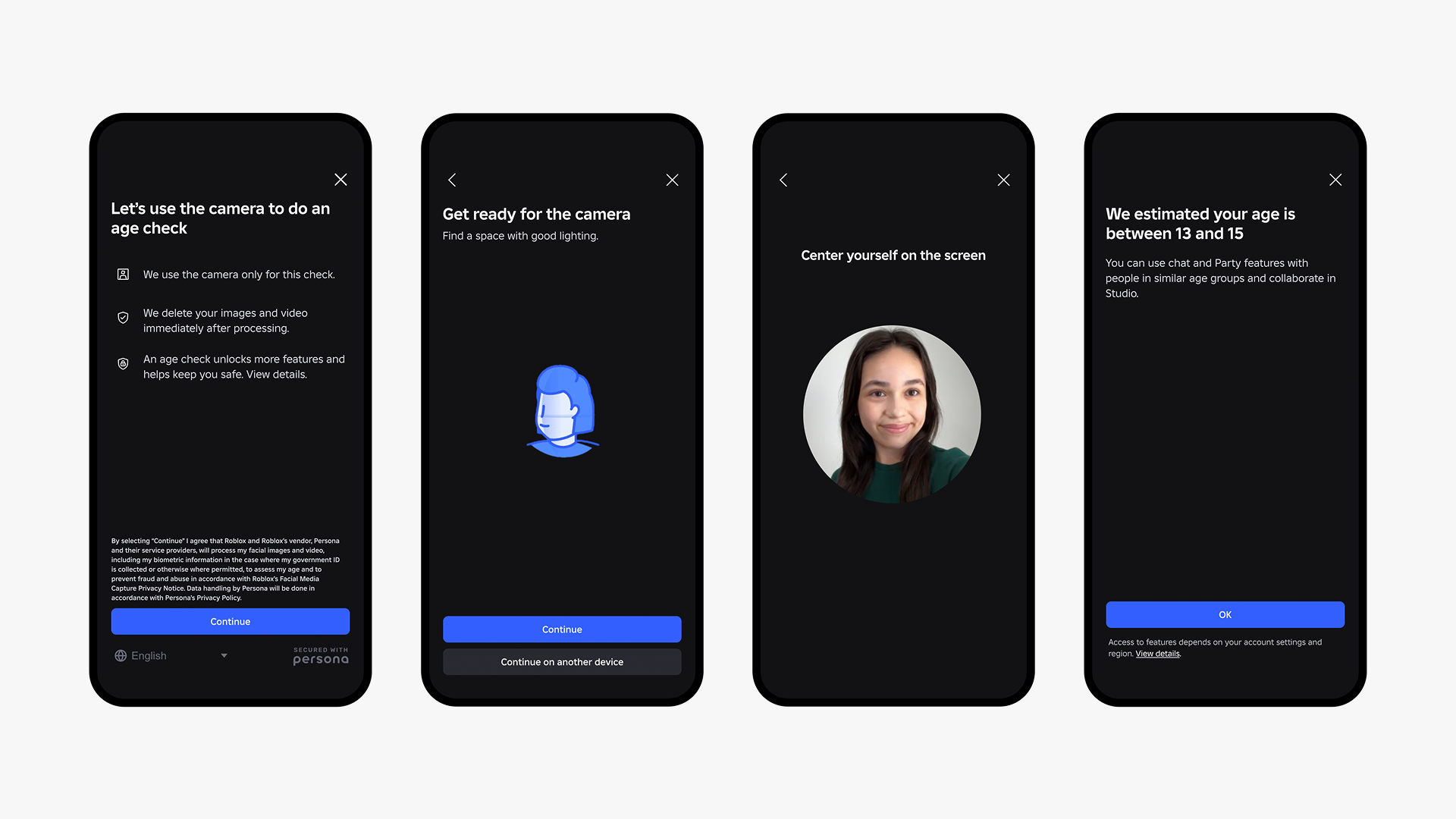

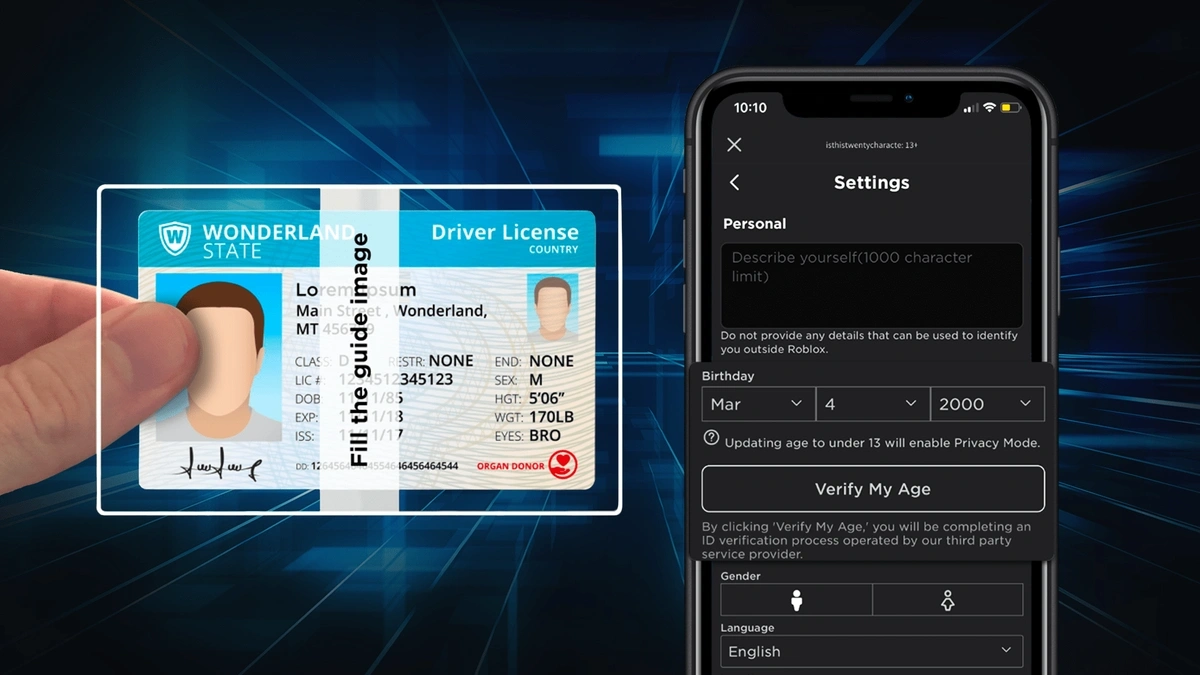

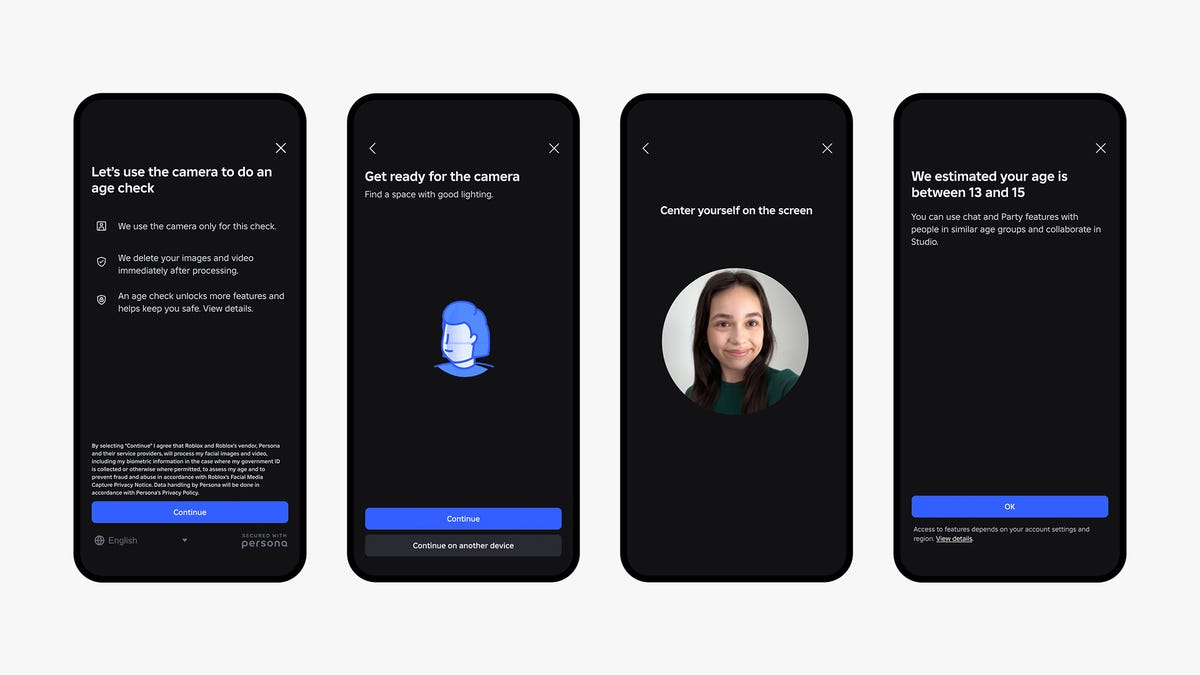

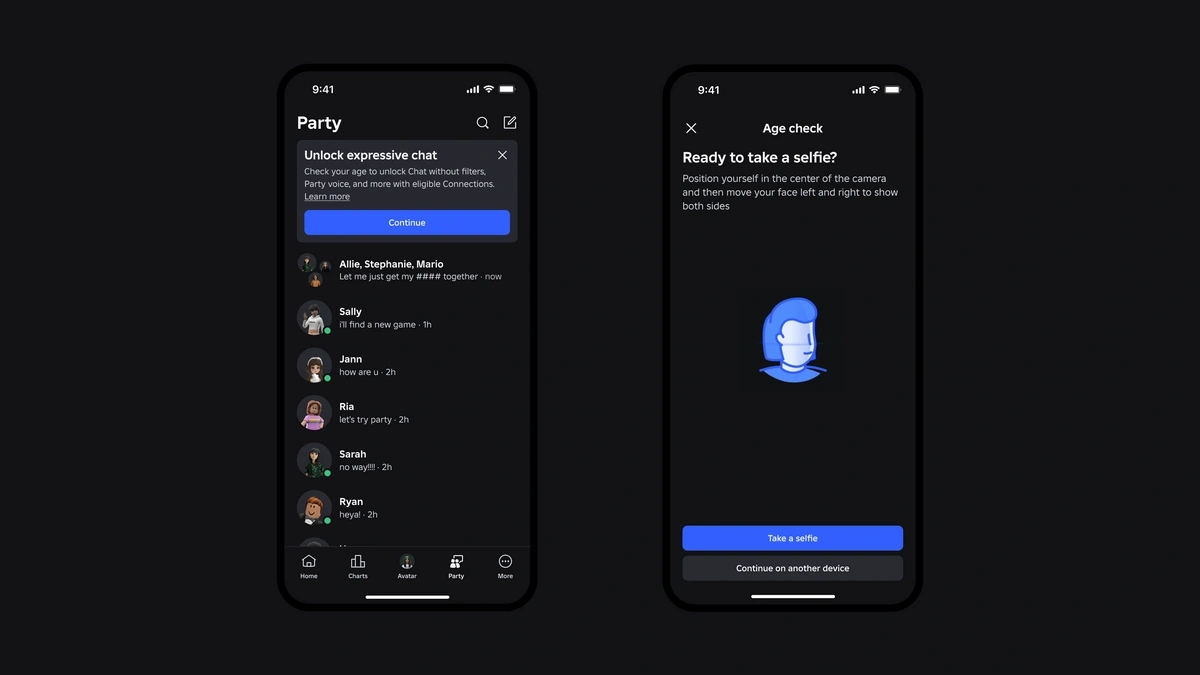

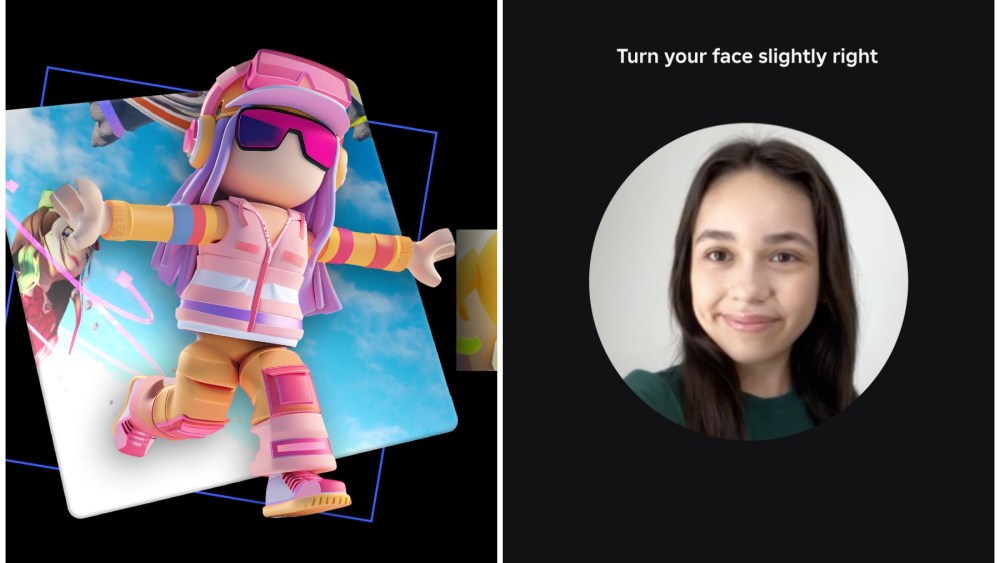

Roblox gave users two options: facial age estimation via selfie, or ID verification for anyone 13+.

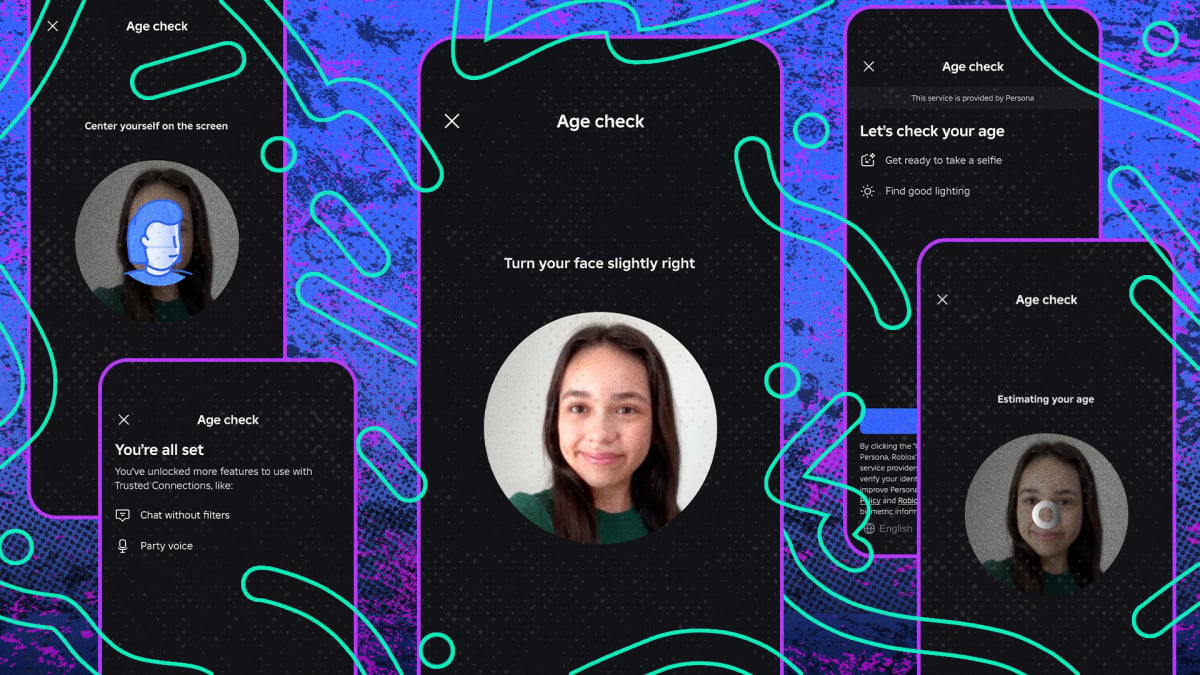

The selfie route is where things got weird immediately. Players take a photo. Roblox's AI analyzes facial features. The algorithm makes a guess: are you under 13? 13-15? 16-17? 18-20? 21+?

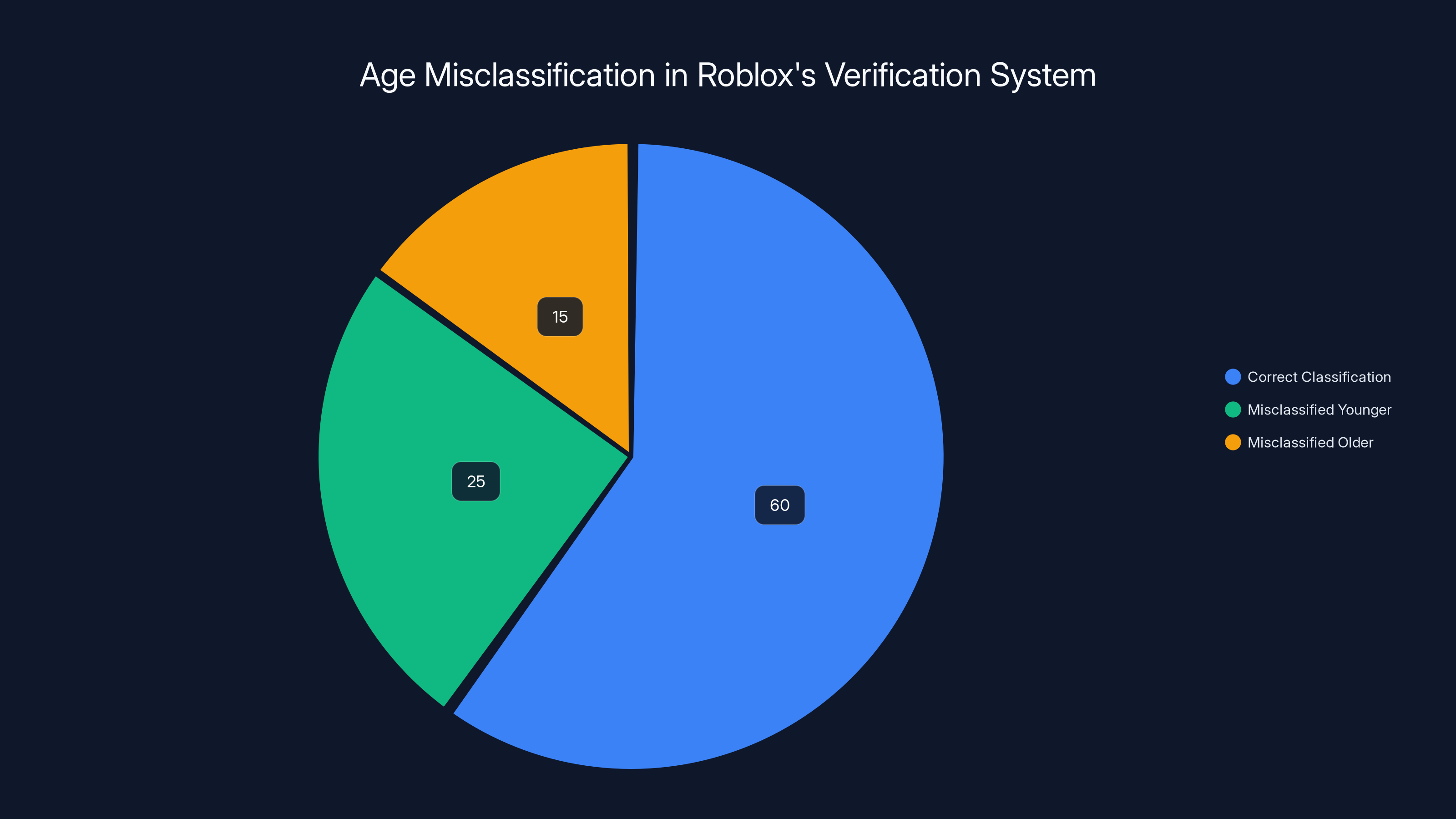

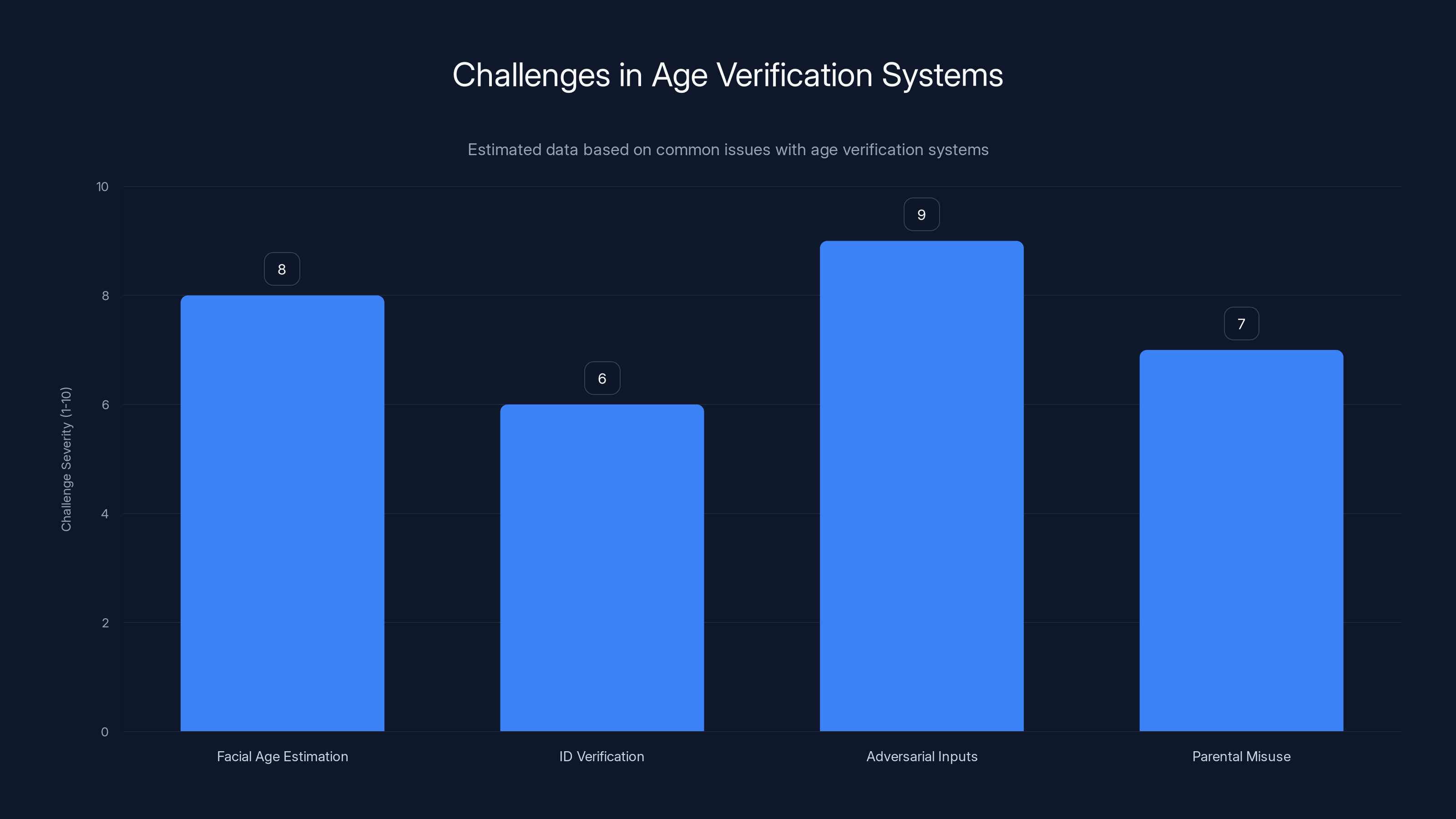

That's already a problem. AI facial age estimation doesn't work with the precision that a system like this requires. These algorithms are trained on specific demographics. They're notoriously bad at accurately estimating age across different ethnicities, with different lighting, with different facial hair, makeup, or expression.
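To see why narrow bands and an imprecise estimator don't mix, here is a rough sketch that lists every band an estimate could fall into for a given error margin. The band edges follow the article's list (with the lowest band read as "under 13"); the `possible_bands` helper and the error margins are illustrative assumptions, not measured figures for Roblox's estimator.

```python
from typing import List

# (inclusive lower edge, exclusive upper edge, label)
BANDS = [
    (0, 13, "under 13"),
    (13, 16, "13-15"),
    (16, 18, "16-17"),
    (18, 21, "18-20"),
    (21, 200, "21+"),
]


def possible_bands(true_age: float, max_error: float) -> List[str]:
    """Bands that an estimate within +/- max_error years of true_age could land in."""
    lo, hi = true_age - max_error, true_age + max_error
    return [label for low, high, label in BANDS if lo < high and hi >= low]


# With a hypothetical 2-year margin, a 17-year-old can land in three bands.
print(possible_bands(17, 2))   # ['13-15', '16-17', '18-20']

# The reported 23-year-old placed in 16-17 needs an error of more than 5 years.
print("16-17" in possible_bands(23, 5))    # False
print("16-17" in possible_bands(23, 5.5))  # True
```

Even a modest margin puts a teenager near a boundary into several bands at once, and the misclassifications reported after launch imply errors well beyond that.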

But here's the critical failure: the system was apparently built with no robustness against adversarial inputs. Stubble drawn on a child's face with a marker wasn't supposed to work. The system should have detected that the drawn-on features didn't match real facial structure. It should have flagged fake facial hair. It should have required the photo to show an actual, live human face, not drawn-on features or a picture of someone else.

Instead, it just looked at the features and said "yep, that's a 21-year-old."

The ID verification path seemed more robust until it didn't. Roblox claims to verify government-issued identification, which should be harder to spoof. Except parents started verifying their children using their own IDs. A 10-year-old with her mother's ID gets classified as an adult. Roblox acknowledged this was happening and said they'd "work on solutions," but solutions weren't there from day one.

The third failure was perhaps the most predictable: nobody seemed to anticipate the behavioral response. When you suddenly restrict people's ability to chat with everyone on a social platform, they stop using chat.

Game developers reported that usage of the chat feature dropped from around 90% to 36.5% overnight. Players complained that their games felt "lifeless" or like "a ghost town." Thousands of negative comments flooded Roblox's developer forum.
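It helps to separate the percentage-point drop from the relative drop when reading those numbers. A quick calculation using only the two reported figures (the function name is just for illustration):

```python
from typing import Tuple


def chat_usage_drop(before_pct: float, after_pct: float) -> Tuple[float, float]:
    """Return (percentage-point drop, relative drop as a fraction of the starting value)."""
    points = before_pct - after_pct
    relative = points / before_pct
    return points, relative


points, relative = chat_usage_drop(90.0, 36.5)
print(f"{points:.1f} points, {relative:.1%} relative")  # 53.5 points, 59.4% relative
```

In other words, roughly six in ten of the players who previously used chat stopped doing so, if the reported figures are taken at face value.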

Why? Because most of those chats were innocuous. Kids coordinating on puzzles. Players saying "nice build!" to each other. Social interactions that had nothing to do with safety but everything to do with the fun of multiplayer gaming.

By trying to solve a predator problem, Roblox broke the core social mechanic that made their platform appealing.

Estimated data suggests that 40% of users experience misclassification, either being placed in a younger or older age group than their actual age.

The Technical Failures Nobody Should Be Surprised By

If you've been following AI and content moderation for the past decade, Roblox's failures fit a predictable pattern.

Automated age verification has never worked reliably at consumer scale. We've watched this movie before. Content moderation systems misidentify political content as hate speech, then swing the other direction and miss obvious violations. Facial recognition struggles with basic demographics, let alone age estimation.

The difference is that most platforms iterate in private, fix problems quietly, and launch improved versions. Roblox launched a mandatory system affecting 80 million users and apparently expected it to work perfectly on day one.

That suggests a few things went wrong:

- Testing was limited. You can't discover these failure modes without testing across diverse faces, lighting conditions, expressions, and yes, silly things like drawn-on facial hair. Finding them means either extensive pre-launch testing or launching and learning. Roblox chose the latter.

- The pressure to ship was immense. With lawsuits coming from multiple states and criminal subpoenas being issued, Roblox's executives probably felt like they were choosing between "imperfect system now" and "platform getting shut down." They chose imperfect system now.

- The verification methods were inherently flawed. Asking a 13-year-old to take a selfie for age verification assumes that people on either side of an age boundary look distinctly different. They don't. The gap between a 17-year-old and an 18-year-old can be a single year of development, and plenty of 16-year-olds look older than plenty of 18-year-olds. That's not a solvable problem with AI. It's a problem with the approach itself.

Why This Matters Beyond Roblox

Roblox's failure is important because it's a real-world test of something that other platforms are also trying to do.

Discord has age verification in testing. YouTube needs better age-gating for content. TikTok faces constant pressure to verify ages. As regulatory pressure increases globally, every platform with young users will eventually face this same choice: implement age verification or face government action.

Roblox's catastrophe demonstrates that rushing implementation doesn't work. But it also demonstrates something more dangerous: that the regulatory pressure is so intense that companies will ship broken systems anyway.

When you're facing criminal subpoenas and lawsuits from multiple states, you don't have the luxury of "let's wait until the technology is ready." You have to do something now. That's the regulatory trap: the pressure for action is greater than the technology's readiness.

That's not Roblox-specific. That's an emerging problem for the entire internet.

Estimated data shows a dramatic drop in player engagement from 90% to 36.5% within three days of implementing the age verification system, highlighting the system's failure.

The Developer Backlash and What It Reveals

The response from game developers on Roblox was almost uniformly negative.

Thousands of comments on the developer forum ranged from frustrated to furious. Some demanded the entire update be reversed. Others showed graphs demonstrating the collapse in player engagement. Developers reported that games that were previously profitable based on player time and engagement had become unviable.

Here's what that tells you: the developers understood something that Roblox's executives apparently didn't. The platform's value comes from social connection. Remove that, and you don't have a game platform anymore. You have a single-player experience. And nobody needs Roblox for single-player experiences when Minecraft, Fortnite, and hundreds of other options exist.

Roblox tried to solve a safety problem without understanding that the safety problem and the engagement problem are intertwined. The chat system that enables grooming is the same chat system that enables coordination, friendship, and community.

You can't remove one without removing the other.

Developers aren't heartless. They understand the safety problem. What they're saying is that a system that breaks the platform to solve the problem might be worse than the problem itself.

That's worth considering seriously.

The Child Safety Paradox

This is where things get genuinely difficult because there isn't a clean answer.

Roblox has a real predator problem. That's documented. That's serious. Children have been harmed. That's not acceptable. Something has to change.

But every solution to that problem has a cost. Age verification introduces friction that drives away users. Content moderation can be both over-aggressive (false positives) and under-aggressive (false negatives). Restricting communication prevents grooming but also prevents normal social interaction.

The question isn't "how do we eliminate the predator problem?" Because that's impossible. The question is "what's the right balance between safety and usability?"

Roblox's rollout suggests they believe the answer is "maximum safety, no matter the cost." But that belief can't be tested against actual results because the implementation was too broken to measure.

When you have a 23-year-old being classified as 16-17, you're not actually improving child safety. You're creating a system so confused that it can't accomplish either goal: protecting children OR enabling normal interaction.

Facial age estimation and adversarial inputs pose the greatest challenges in age verification systems, highlighting their vulnerability to inaccuracies and misuse. (Estimated data)

What Roblox Should Have Done (And What Others Should Learn)

This is the part where I get to be a Monday morning quarterback, but there are genuine lessons here.

Phase 1: Limited rollout. Announce the system. Test it with opt-in volunteers. Spend 30-60 days finding and fixing major failure modes. Yes, this means sitting with a known problem longer. But shipping a broken solution makes everything worse.

Phase 2: Staged rollout. Don't make it mandatory immediately. Let developers opt-in to the age-gated chat feature. Let users opt-in to verification. Build social proof that it works before you force it on everyone.

Phase 3: Multiple verification methods. Don't rely solely on AI face verification. Require phone verification. Use third-party age verification providers (they exist, they're imperfect but better than internal AI systems). Make ID verification actually work (which requires human review at scale, which is expensive, but worth it).

Phase 4: Graduated consequences. You don't go from "chat works for everyone" to "chat is completely locked down." You could restrict what age groups can chat with each other without completely disabling the feature. You could require adult accounts to have real-name verification and phone numbers. You could require players to play together (be in the same game) before they can start a private chat.
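The graduated approach in Phase 4 could be sketched as a small decision function. Everything here is hypothetical: the `Player` fields, the order of the checks, and the one-band gap are assumptions chosen to mirror the three ideas in the paragraph above, not a description of any real Roblox API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Player:
    age_band: int                      # index into ordered age bands, 0 = youngest
    is_adult: bool
    phone_verified: bool = False
    id_verified: bool = False
    current_game: Optional[str] = None  # hypothetical identifier of the game session


def private_chat_decision(sender: Player, recipient: Player, max_gap: int = 1) -> str:
    """Apply the three graduated checks in order and explain the first one that fails."""
    if sender.is_adult and not (sender.phone_verified and sender.id_verified):
        return "deny: adult account not fully verified"
    if abs(sender.age_band - recipient.age_band) > max_gap:
        return "deny: age bands too far apart"
    if sender.current_game is None or sender.current_game != recipient.current_game:
        return "deny: players must share a game first"
    return "allow"


teen_a = Player(age_band=1, is_adult=False, current_game="obby-42")
teen_b = Player(age_band=2, is_adult=False, current_game="obby-42")
print(private_chat_decision(teen_a, teen_b))  # allow
```

Returning a reason rather than a bare boolean is a deliberate choice in this sketch: it lets the platform tell users why chat is unavailable instead of silently turning games into ghost towns.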

These approaches require more work. They require more investment. They require more patience. They're less sexy than "we deployed AI-powered age verification." But they're more likely to actually work.

The Predator Problem Remains Unsolved

Here's the uncomfortable truth: Roblox's failed rollout didn't actually address the predator problem at all.

The system can't reliably distinguish adults from older teenagers. So predators who look young or who know how to present differently can still access age groups they shouldn't. Meanwhile, legitimate 18-to-21-year-olds are being restricted from playing with friends.

The predator problem is still there. It's just been joined by a broken solution problem.

Solving the predator problem actually requires human moderation. It requires reviewing reported conversations. It requires AI systems trained to detect grooming patterns, not just age estimation. It requires things that don't scale as easily as automated age verification.

It also requires accepting that no system will be perfect. Some predators will get through. Some false positives will happen. The goal isn't eliminating risk entirely. It's reducing it to acceptable levels while maintaining a functional platform.

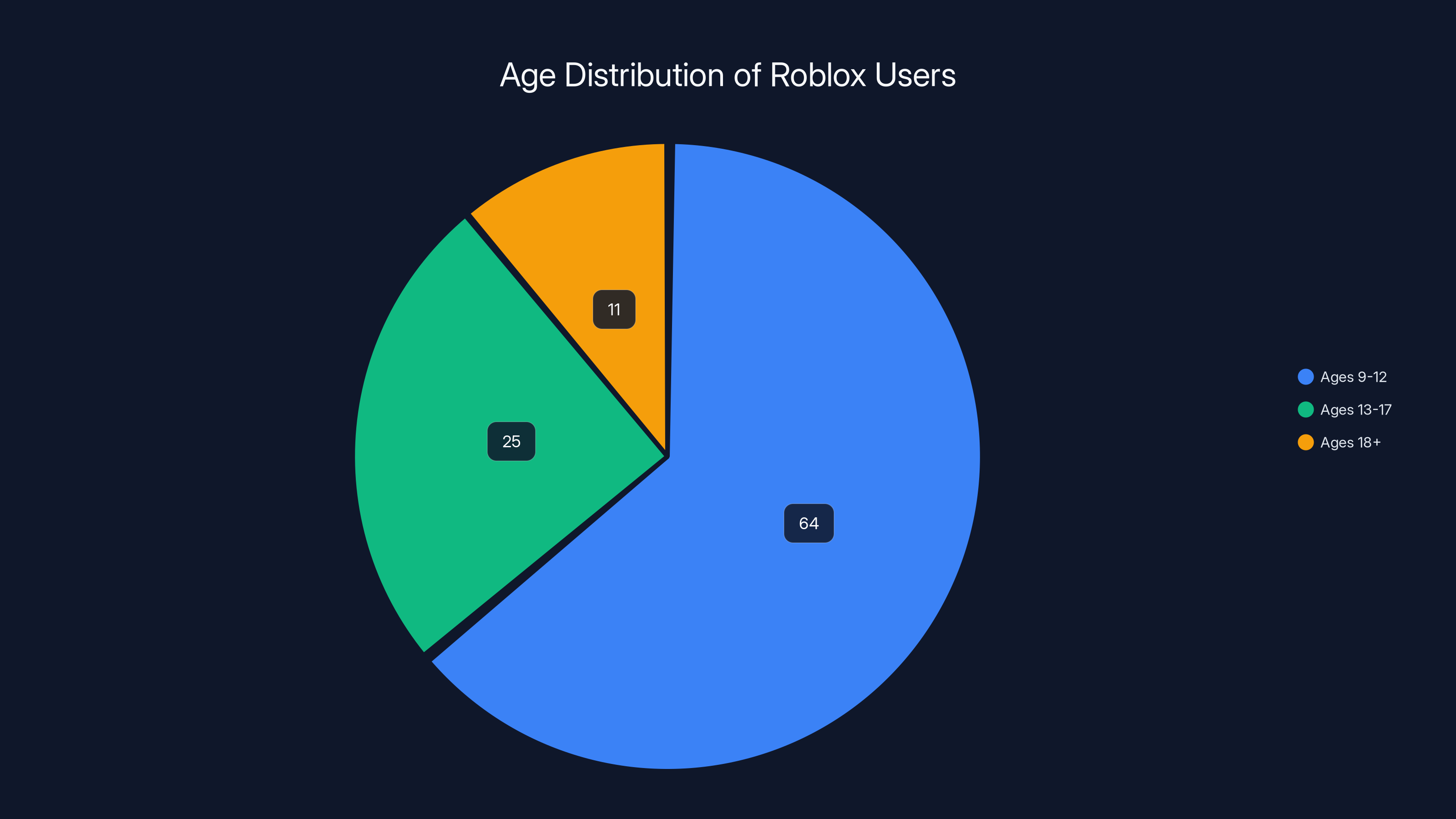

Approximately 64% of Roblox's 80 million monthly active users are aged 9-12, highlighting the platform's popularity among young children. (Estimated data)

What Happens Next

Roblox is in a bind. They can't undo the rollout without looking like they don't care about child safety. They can't keep the current system without watching the platform gradually empty out. They have to improve it.

That's expensive. It's probably going to take months. It might require partnerships with third-party age verification companies (which adds costs). It might require hiring more human moderators (which also adds costs).

The lawyers are probably advising them that the safest move is to keep pushing forward, to refine the system, to show they're serious about the problem. And they might be right legally, even if they're wrong practically.

Meanwhile, developers are making decisions. Some are exploring other platforms. Some are reducing their investment in Roblox titles. Some are experimenting with single-player mechanics that don't depend on chat.

Those decisions compound over time. If the best developers leave, Roblox loses its competitive advantage. If engagement stays depressed, younger audiences start exploring alternatives. If the platform becomes less vibrant, it becomes less attractive to new users.

That's the actual risk here. Not that the system is broken. But that a broken system, if left unfixed too long, can degrade a platform irreversibly.

The Broader Implications for Internet Safety Regulation

Roblox's failure is important because it's probably going to be the template for what happens when regulation meets technological reality.

Governments don't know how to regulate platforms they don't understand. So they demand specific things: age verification, content filters, parental controls. Companies, under pressure, implement these things even when they don't work well.

The result is security theater. Systems that feel like they're improving safety without actually doing so.

Some countries are starting to learn this lesson. The UK's Online Safety Act, for instance, focuses more on requiring companies to take reasonable steps toward safety rather than mandating specific technological solutions. The EU's Digital Services Act similarly focuses on obligations and processes rather than prescribing exact tools.

The US regulatory approach is still more focused on demanding specific solutions. That's why Roblox felt pressured to implement age verification specifically. It's what lawmakers kept asking for.

The problem is that age verification, as a solution to the predator problem, is both harder than it sounds and less effective than it seems.

Real Talk About What Roblox Actually Needs to Do

If I were advising Roblox's leadership right now, here's what I'd tell them:

First, admit the rollout was premature. Not apologetically, but factually. "We moved faster than the technology would support. Here's what we're doing to fix it."

Second, separate the goals. Child safety isn't just about age verification. It's about:

- Detecting grooming patterns

- Rapid response to reported abuse

- Making it harder for predators to operate

- Educating young users about safety

- Working with law enforcement

Age verification is one tool among many. It's not the solution.

Third, upgrade the verification to actually work. Phone verification plus ID verification, with human review for edge cases. Yes, that's expensive. You need expensive. You're fighting for your platform's survival.

Fourth, relax the age restrictions. Let 18-year-olds chat with 16-year-olds who are verified friends. Let people in different age groups chat if they're in the same game. The goal is preventing predators from accessing minors, not segregating all teenagers from each other.

Fifth, invest heavily in grooming detection. Train your moderation team to recognize patterns. Build AI systems specifically designed to catch inappropriate conversations, not just estimate age.

Sixth, maintain transparency. Share what you're finding with researchers, with other platforms, with policy makers. Be part of solving this industry-wide problem, not just your company's problem.
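The third recommendation, combining phone and ID verification with human review for edge cases, could look roughly like the sketch below. The function name, the returned strings, and the one-band disagreement threshold are assumptions for illustration. The interesting case is the one described earlier: a parent's ID paired with a child's face, where the two signals disagree sharply.

```python
from typing import Optional


def resolve_verification(
    id_band: Optional[int],
    selfie_band: Optional[int],
    phone_verified: bool,
    max_disagreement: int = 1,
) -> str:
    """Combine independent signals; escalate to a human when they conflict.

    Bands are indices into an ordered list of age groups (0 = youngest).
    """
    if id_band is None or not phone_verified:
        return "needs_more_verification"
    if selfie_band is not None and abs(id_band - selfie_band) > max_disagreement:
        # For example, the ID says 21+ but the face estimate says under 13.
        return "human_review"
    return f"accept_band_{id_band}"


print(resolve_verification(id_band=4, selfie_band=0, phone_verified=True))  # human_review
print(resolve_verification(id_band=2, selfie_band=2, phone_verified=True))  # accept_band_2
```

No single signal is trusted on its own here; the face estimate is used only as a cross-check on the ID, which is one way to catch the mismatch automatically before it reaches a reviewer.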

Will any of that happen? Honestly, I don't know. Corporate pressure, legal risk, and technical complexity all pull in different directions.

But I know what happens if Roblox doesn't fix this: the platform gradually becomes less fun to use, developers gradually leave, young users gradually migrate to alternatives, and in five years Roblox looks very different.

Or it becomes a case study in how not to implement safety systems. Either way, the window to fix this gracefully is closing.

The Bigger Picture: Platform Design and Child Safety

This situation didn't happen in a vacuum. It's the result of specific design choices that Roblox made years ago.

Platforms built around social connection (which is what makes them engaging to younger users) are inherently vulnerable to predation. It's not unique to Roblox. Discord, Minecraft multiplayer servers, online games in general, they all face the same problem.

The counterintuitive lesson is that the most engaging platforms for young people are often the most risky. That's not an accident of implementation. It's a structural trade-off.

Disney has a much easier time controlling safety on its platforms because it controls the experience tightly. Roblox is popular precisely because it gives users freedom and social tools. That same freedom is what enables predators.

This is why there's no perfect solution. You can make the platform safer by making it more restricted, but then it becomes less engaging and loses its competitive advantage. You can make it more engaging by giving users freedom, but then it becomes less safe.

The answer isn't to eliminate the trade-off. It's to manage it thoughtfully. That means:

- Accepting that some risk is inherent

- Investing continuously in detection and response

- Being transparent about limitations

- Evolving systems based on what you learn

- Collaborating with other platforms on shared problems

Roblox's failure wasn't in trying. It was in assuming that a single technical solution could eliminate the risk entirely.

Where We Go From Here

Roblox will eventually fix the age verification system. The technology will improve. The false positives will decrease. The bugs will get patched.

But the deeper lesson won't go away: when you're pressured to solve a hard problem quickly, you tend to ship solutions that are worse than the problem you're trying to solve.

That's not unique to Roblox. It's a pattern we'll see repeatedly as regulation increases pressure on platforms to address child safety, data privacy, misinformation, and other hard problems.

The companies that navigate this successfully will be the ones that can:

- Say no when the solution isn't ready

- Invest in the expensive infrastructure that actually works

- Be transparent about limitations and trade-offs

- Iterate based on real-world feedback rather than assuming tech will solve everything

Roblox had a chance to do that. The jury's still out on whether they'll learn the lesson.

What's certain is that 80 million users, most of them children, are waiting for them to get it right. And a bunch of predators are probably laughing at how long that's taking.

FAQ

What is Roblox's age verification system?

Roblox introduced a mandatory age verification system in January 2025 that requires players to verify their age before accessing the chat feature. Users can either submit a selfie for AI-based facial age estimation or upload a government ID. The system classifies users into age groups (under 13, 13-15, 16-17, 18-20, or 21+) and restricts chat interactions based on age proximity.

How does the age verification system work?

The system uses two verification methods. The first is AI-powered facial age estimation where players take a selfie and the algorithm analyzes facial features to estimate age. The second is government ID verification for users 13 and older. After verification, the system restricts which age groups can chat with each other, attempting to prevent adults from communicating with minors.

What are the main problems with Roblox's age verification system?

The system has multiple critical failures. It misclassifies adults as younger than they are, placing 23-year-olds in the 16-17 category and 18-year-olds in the 13-15 range. Children can spoof the facial age estimation by drawing facial features with markers or showing celebrity photos. Parents accidentally verify their children with their own IDs, resulting in kids being placed in the 21+ category. These failures suggest the AI wasn't adequately tested before the mandatory rollout.

Why did Roblox implement age verification?

Roblox faced mounting pressure from law enforcement and lawmakers over documented predator activity on the platform. Multiple states, including Louisiana, Texas, and Kentucky, filed lawsuits. Florida's attorney general issued criminal subpoenas. The company needed to demonstrate commitment to child safety to avoid potential shutdown or regulatory action, making age verification a necessary response regardless of technological readiness.

How has the age verification system affected gameplay?

The mandatory age verification has severely impacted platform engagement. Reports show that chat feature usage dropped from approximately 90% to 36.5% among players. Game developers reported that their games felt "lifeless" and like "ghost towns" as players avoided the friction of verification or felt restricted by age-based chat limitations. Thousands of developers posted complaints in the official forum demanding the update be reversed.

What should Roblox do to fix the age verification system?

Roblox should implement a phased approach: conduct extended testing with opt-in volunteers, use staged rollout rather than immediate mandates, employ multiple verification methods including phone verification and human review, relax age group restrictions to allow verified friends across age groups, and invest heavily in grooming detection rather than relying solely on age estimation. The platform should prioritize accuracy and usability together rather than treating them as competing goals.

How does this reflect broader challenges in platform safety?

Roblox's failure illustrates a fundamental tension between regulatory pressure and technological capability. Governments demand specific solutions (age verification) without understanding their limitations. Companies implement these solutions under legal pressure even when they're not ready, resulting in systems that don't effectively solve the problem and damage user experience. This pattern will likely repeat across all platforms hosting young users as regulation increases globally.

What is the predator problem on Roblox?

Multiple investigations have documented that Roblox's chat system enables child predators to identify, initiate contact with, and groom young players. Adults create accounts, build relationships with minors, and transition conversations to private channels where they solicit inappropriate content or arrange meetings. The platform's minimal chat moderation and young user base created an environment where this activity went largely undetected.

Can age verification actually prevent child predation?

Age verification alone cannot prevent predation. While it can reduce exposure between age groups, sophisticated predators can spoof age verification or operate within age-appropriate groups. Effective protection requires human moderation trained to detect grooming patterns, rapid response protocols, law enforcement collaboration, and user education about safety. Age verification is one tool among many, not a complete solution.

What do game developers think about the age verification system?

Developers are overwhelmingly negative about the implementation. They understand the safety problem but argue that a broken system that kills engagement damages the platform more than the original problem. Thousands of forum posts show developers considering moving to other platforms or redesigning games to work without social chat features, representing a potential exodus of content creators that could irreversibly harm Roblox's competitive position.

Key Takeaways

- Roblox's age verification system fails to reliably classify users, misidentifying adults as teens and teens as children within 48 hours of mandatory rollout

- Children bypass the facial age estimation using simple adversarial inputs like hand-drawn facial features, highlighting inadequate testing before launch

- Chat feature engagement collapsed from 90% to 36.5% due to verification friction and age-based restrictions, turning game worlds into 'ghost towns'

- The system exemplifies regulatory pressure forcing companies to ship incomplete solutions before technology is ready, creating worse outcomes than the original problem

- Real predator protection requires human moderation and grooming detection systems, not just age verification, which remains ineffective as a standalone solution
