Senator Markey Challenges OpenAI on Chatbot Ads: What It Means for You [2025]

Introduction: The Ad Wars Come to AI

Last month, something important happened that most people missed. A U.S. Senator decided that AI chatbots shouldn't quietly slip ads into your conversations. And he's making some compelling points that go way beyond just annoying notifications.

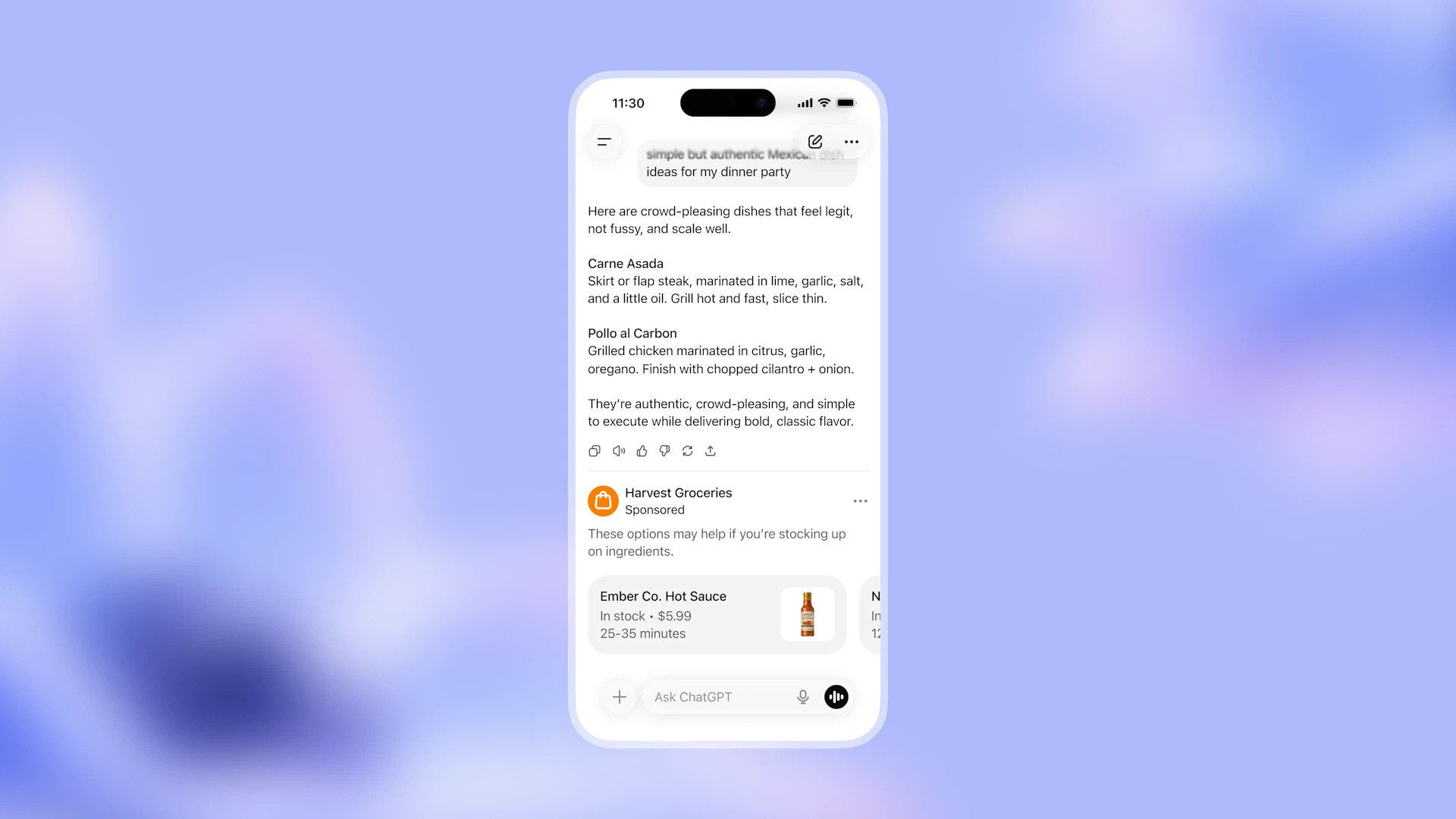

Senator Ed Markey from Massachusetts sent letters to the CEOs of OpenAI, Google, Anthropic, Meta, Microsoft, Snap, and xAI, all asking the same uncomfortable question: are you planning to stuff advertisements into the chatbots people are having conversations with? OpenAI already confirmed they're doing exactly that, testing ads in ChatGPT's free tier starting in 2025, as reported by Ars Technica.

Here's what makes this significant. Chatbots are different from search engines. When you search Google, you expect to see ads. You're in transactional mode, looking for stuff. But when you're talking to ChatGPT, you're having what feels like a conversation with an AI assistant. You're asking it personal questions, sometimes about health, relationships, career decisions. And suddenly, ads show up at the bottom of your chat.

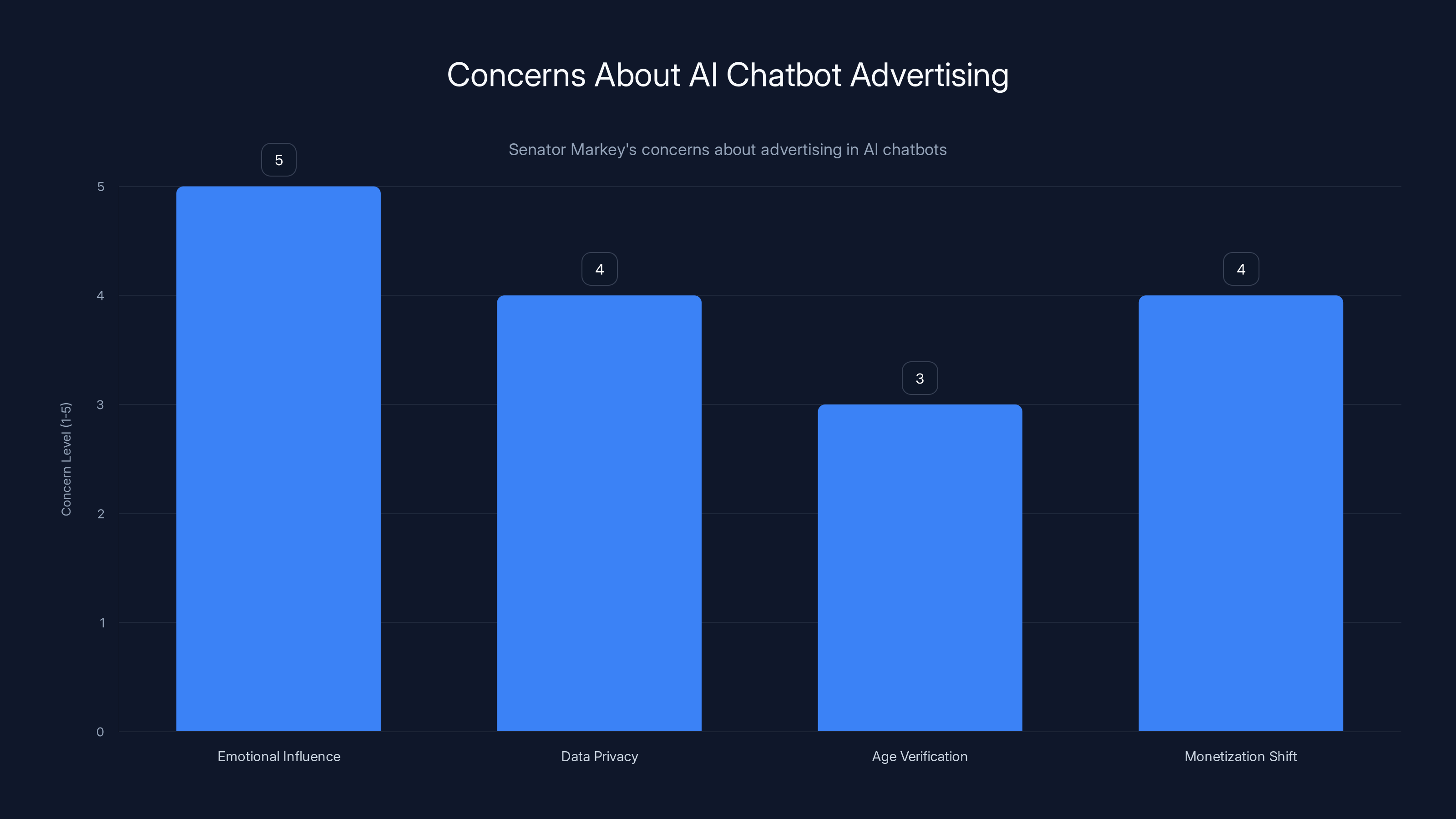

Markey's concern isn't paranoia. He's pointing out something real: if a chatbot has built a relationship with you through hundreds of conversations, and then it starts showing you ads that align with things you've discussed, that's powerful manipulation. This goes beyond an ordinary ad placement next to search results. It's leveraging emotional connection to drive purchasing decisions.

The stakes here are massive. This isn't just about whether you'll see ads in ChatGPT (you will). It's about setting a precedent for how AI companies will monetize intimate user relationships. It's about whether regulators can still influence tech policy before products ship. And it's about whether teenagers will be exposed to psychological persuasion techniques we haven't fully understood yet.

But let's dig into what's actually happening, what the concerns really are, and what this means for the future of AI platforms.

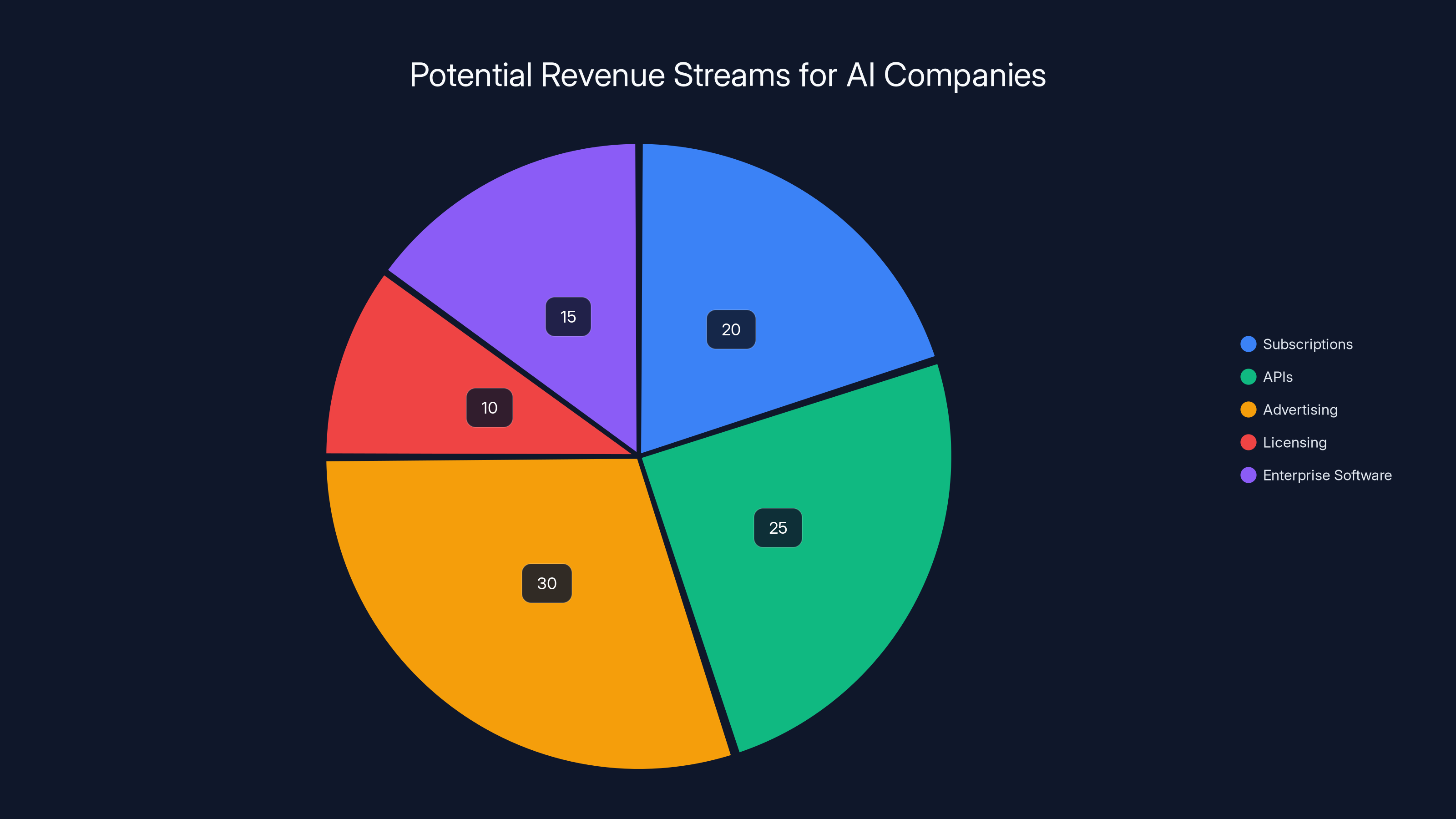

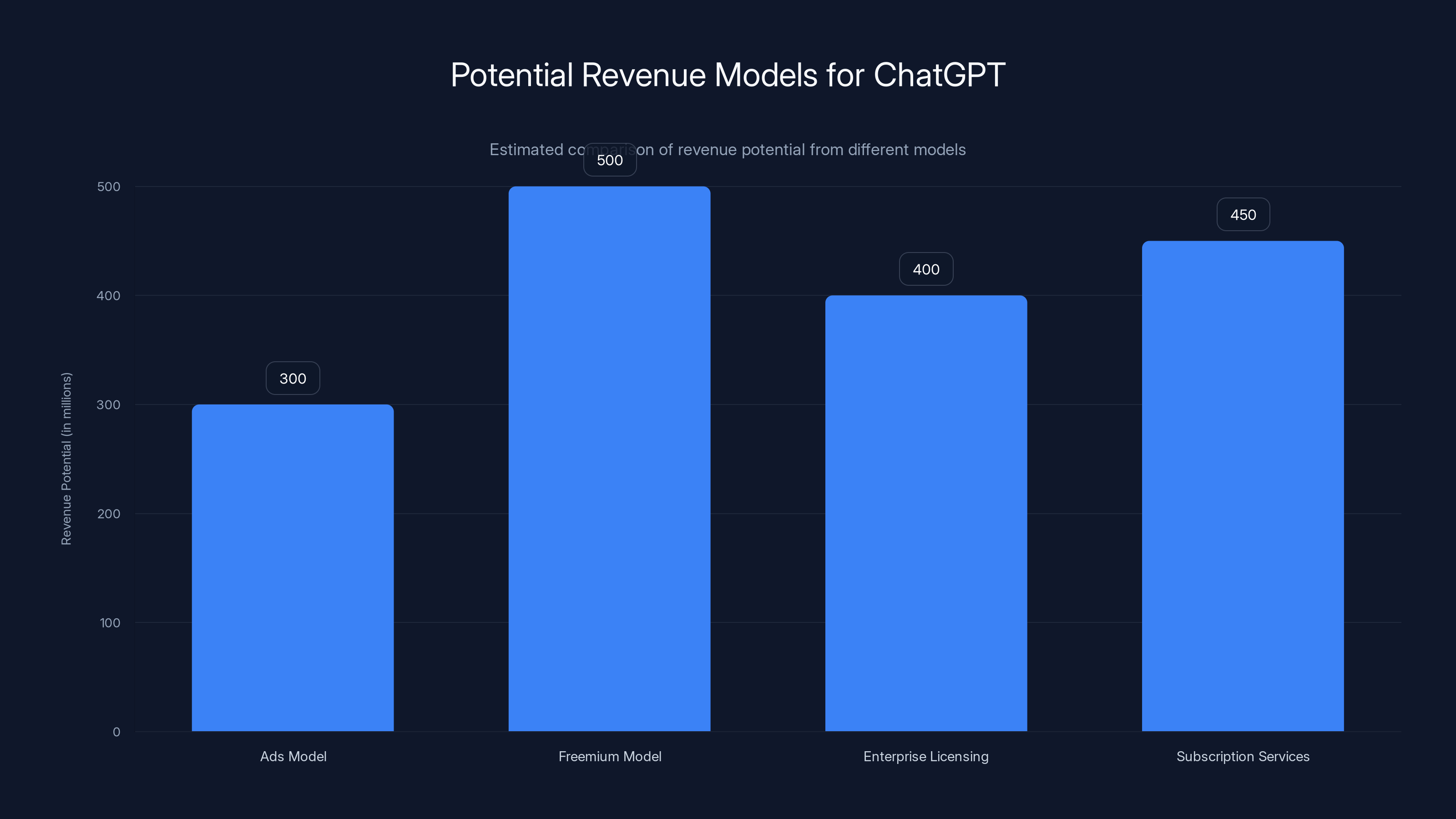

Advertising holds the largest potential for revenue growth due to its scalability, followed by APIs and subscriptions. Estimated data based on industry trends.

TL;DR

- The Action: Senator Markey sent formal letters to seven major AI companies questioning their advertising plans in chatbots, citing consumer protection and privacy concerns

- The Trigger: OpenAI announced it's testing ads in free ChatGPT conversations, surfacing "sponsored" products relevant to your chat history

- The Risk: Using sensitive conversation data to target ads could manipulate users and expose minors to unwanted marketing

- The Deadline: Companies have until February 12, 2025, to respond to Markey's questions about ad safeguards

- The Implication: This could reshape how AI platforms monetize, forcing industry-wide policy changes before ads roll out at scale

Senator Markey's concerns highlight the potential psychological influence and privacy risks of AI chatbot advertising. Estimated data based on narrative description.

What OpenAI Actually Announced About ChatGPT Ads

OpenAI didn't just quietly slip ads into ChatGPT. They made an announcement. In late 2024, they published a blog post explaining their new advertising model, framing it as a necessary revenue stream for maintaining free access to their platform.

According to OpenAI's official announcement, the format is straightforward. At the bottom of your ChatGPT conversations, you'll start seeing "sponsored" products and services. These aren't random banner ads. OpenAI explicitly stated these ads will be relevant to the content of your conversations. If you've been asking ChatGPT about coffee brewing techniques, you might see ads for premium coffee equipment. If you're researching laptop specs, you'll see laptop deals.

OpenAI tried to include guardrails. They said they won't show ads to users under 18. They won't show ads during conversations about physical health, mental health, or politics. These seem like reasonable safety measures on the surface.

But here's where it gets murky. OpenAI says it will respect users' privacy when deciding what ads to show. Yet the company also said that conversational interfaces create "possibilities for people to go beyond static messages and links." What does that mean exactly? It sounds like they're exploring more invasive ad formats than traditional text ads. It sounds like they might be thinking about how to make ads more "conversational" and less obviously promotional.

The revenue model makes sense from a business perspective. OpenAI's free tier costs them money to run. Every ChatGPT conversation consumes compute resources. Ads are a natural monetization strategy for free products. That's how Google and Meta built billion-dollar empires.

But there's a crucial difference. Google's business model has been built on ads for nearly its entire history. Most users understand that Google is an ad platform where free search is subsidized by advertising. ChatGPT launched without ads. Users built habits assuming the service worked a certain way. Now the fundamental value exchange is changing mid-stream.

OpenAI's announcement also mentioned they're exploring ads on other OpenAI products. This isn't just a ChatGPT issue. It's potentially platform-wide. They're testing the waters to see how far they can push monetization before user backlash becomes untenable.

Senator Markey's Specific Concerns: The Privacy Angle

Markey isn't just upset about ads existing. His letters pinpoint specific concerns that deserve careful examination. The first major issue is data privacy.

Here's the scenario Markey laid out: You ask ChatGPT deeply personal questions. Maybe about health anxiety. Maybe about relationship problems. Maybe about financial struggles. ChatGPT remembers these conversations. Now, even if OpenAI doesn't show ads during those specific conversations, they could use that information to build a profile of you for targeting ads in future conversations.

Imagine this example. You spend 30 minutes asking ChatGPT detailed questions about depression symptoms and treatment options. OpenAI doesn't show ads during that conversation (per their policy). But now their ad system knows you're interested in mental health solutions. In a future conversation about something unrelated, they could show you ads for therapy apps, meditation software, or supplements claiming to help mood.

This isn't theoretical. Facebook and Google do similar things constantly. They collect data from one context and use it for targeting in another. Markey's concern is that chatbots make this even more invasive because users are sharing far more personal information with AI than they typically share with search engines.

Markey also raised the question of whether OpenAI will use "personal thoughts, health questions, family issues, and other sensitive information" for targeted advertising at all. OpenAI's statement wasn't clear on this point. Will they exclude sensitive topics from ad targeting completely? Or will they just avoid showing ads during sensitive conversations while still using the data for future targeting?

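The distinction matters enormously. If OpenAI collects the data but never uses it for ads, that's still a privacy problem, because the data is stored and could be breached or repurposed later. If OpenAI collects the data and uses it for targeting while only suppressing ads during sensitive conversations, that's a more serious privacy problem: the carveout controls when ads appear, not what they are based on.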
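To make that distinction concrete, here is a minimal Python sketch of the two policies. Everything in it is an assumption for illustration: the topic labels, the `Conversation` type, and both function names are hypothetical, and nothing here reflects how OpenAI's ad system actually works.

```python
from dataclasses import dataclass, field

# Hypothetical topic labels; the real categories and classifier are not public.
SENSITIVE_TOPICS = {"physical_health", "mental_health", "politics"}


@dataclass
class Conversation:
    topic: str
    interests: set[str] = field(default_factory=set)


def may_show_ad(current: Conversation) -> bool:
    """Display-time carveout: no ad while the current topic is sensitive."""
    return current.topic not in SENSITIVE_TOPICS


def targeting_profile(history: list[Conversation], exclude_sensitive: bool) -> set[str]:
    """Collect interest signals from past conversations.

    exclude_sensitive=False: sensitive chats still feed the profile
    (the carveout only affects when ads are displayed).
    exclude_sensitive=True: sensitive chats are dropped from targeting entirely.
    """
    profile: set[str] = set()
    for convo in history:
        if exclude_sensitive and convo.topic in SENSITIVE_TOPICS:
            continue
        profile |= convo.interests
    return profile


history = [
    Conversation("mental_health", {"therapy_apps", "mood_supplements"}),
    Conversation("productivity", {"task_managers"}),
]
current = Conversation("productivity", {"task_managers"})

print(may_show_ad(history[0]))  # ad suppressed during the sensitive chat
print(may_show_ad(current))     # ad allowed in the later, unrelated chat
print(sorted(targeting_profile(history, exclude_sensitive=False)))
print(sorted(targeting_profile(history, exclude_sensitive=True)))
```

Under the display-only policy, the later productivity chat can still be targeted with the mental health signals. Only the second policy keeps them out of the profile, and that is the commitment Markey's question is probing for.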

Markey's concern echoes what privacy advocates have been warning about for years. The future of persuasion technology isn't just about showing you ads. It's about showing you the ads that work on you specifically, based on an incredibly detailed understanding of your psychology, concerns, and vulnerabilities.

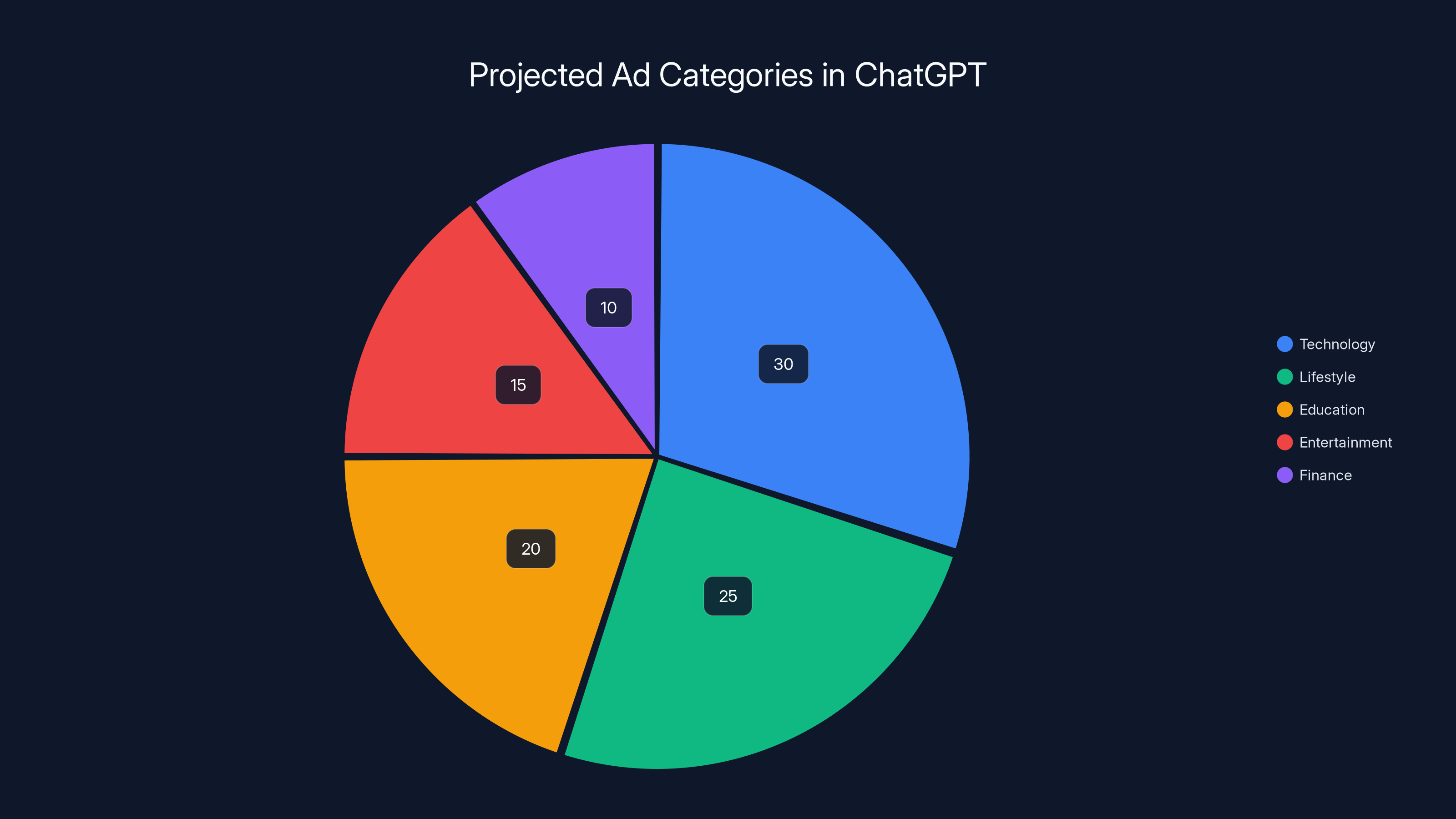

Estimated data suggests that technology and lifestyle ads will dominate ChatGPT's ad space, reflecting common user interests.

The Emotional Connection Problem: Why Chatbots Are Different

This is the insight that makes Markey's position particularly sharp. Chatbots create emotional relationships in a way that search engines don't. This isn't accidental. It's the whole point of how they work.

When you use Google, you're essentially commanding a tool. "Give me this information." The interaction is transactional and temporary. When you use ChatGPT, you're having a conversation. You ask followup questions. The AI responds in a conversational, sometimes even warm tone. You start to develop a sense of relationship with it.

Research on human-AI interaction confirms this. People develop what's called "parasocial relationships" with AI systems. They feel understood by the AI. They trust it. Some people talk to ChatGPT about things they wouldn't tell their therapist.

Here's where the manipulation concern comes in. Markey explicitly stated that an "emotional connection" to the chatbot could allow companies to "prey on the very relationships their systems have fostered." This is harsh language, but it's not inaccurate.

Think about how this plays out. You've been talking to ChatGPT for months. It's helped you with work problems, given you writing feedback, answered questions you were too embarrassed to ask a human. You trust it. Now it shows you an ad for a productivity tool. Is that ad manipulative? Well, it's shown to you by a system you trust, at a moment you're in a receptive frame of mind, based on understanding your specific needs and vulnerabilities.

This is different from ads on Facebook or Google because the relationship is deeper and more personal. You don't trust Facebook. You know Facebook wants your money. But you might trust ChatGPT, at least somewhat. You think of it as a helpful tool, not a sales machine.

OpenAI tried to address this in their announcement. They mentioned that ads won't be misleading or false. They won't pretend to be part of the chat. They'll be clearly labeled as sponsored content.

But Markey pointed out something important: OpenAI said "conversational interfaces create possibilities for people to go beyond static messages and links." What does that mean? Are they planning to make ads more conversational? More integrated into the chat flow? The language suggests they're thinking about ways to make ads less obviously separate from the actual chatbot responses.

If the line between ad and content gets blurry, the manipulation concern becomes even more serious.

Why Kids Are a Special Concern

Markey mentioned the "safety of young users" explicitly in his letters. This isn't just about annoying teenagers with ads. It's about developmental psychology and vulnerability to persuasion.

Teenagers' brains are literally still developing, particularly the prefrontal cortex that handles impulse control and risk assessment. This is why teenagers are more susceptible to peer pressure, more willing to take risks, and more vulnerable to targeted persuasion.

Now imagine a teenager using ChatGPT. They're asking it homework questions. They're asking about social situations. They might be asking about insecurities or things they're worried about. ChatGPT, being AI, responds helpfully and non-judgmentally. The teenager starts to see it as a trustworthy source.

Then ads start appearing. Ads for expensive tutoring services. Ads for fitness equipment. Ads for skincare products. All shown at moments when the teenager is in a receptive, curious, conversational mindset with a system they trust.

OpenAI said they won't show ads to users under 18. But here's the problem: how do they verify age? Most AI services ask users to confirm their age, but there's minimal verification. A 14-year-old can easily create an account claiming to be 18.

Even if OpenAI had perfect age verification, there's another issue. Many teenagers use their parents' ChatGPT accounts. Consider a family account shared by a 40-year-old parent and their 15-year-old child. Because the account holder is an adult, OpenAI can show ads on that account, but how does it know when the 15-year-old is using it rather than the parent?

Markey didn't get into these specific logistical problems in his letters, but they're lurking beneath the stated age restrictions.

There's also the question of whether teenagers will face ads on other AI platforms if those platforms follow OpenAI's lead. Google, Anthropic, and Meta all have chatbots in development or already live. If they see OpenAI successfully monetizing through ads, they'll likely follow the same path.

The developmental vulnerability argument is actually stronger than any of the privacy arguments. Young people's brains are wired to be more susceptible to persuasion. That's not a moral failing of teenagers. It's neuroscience. Regulators are right to be concerned about building new systems specifically designed to exploit that neurological vulnerability.

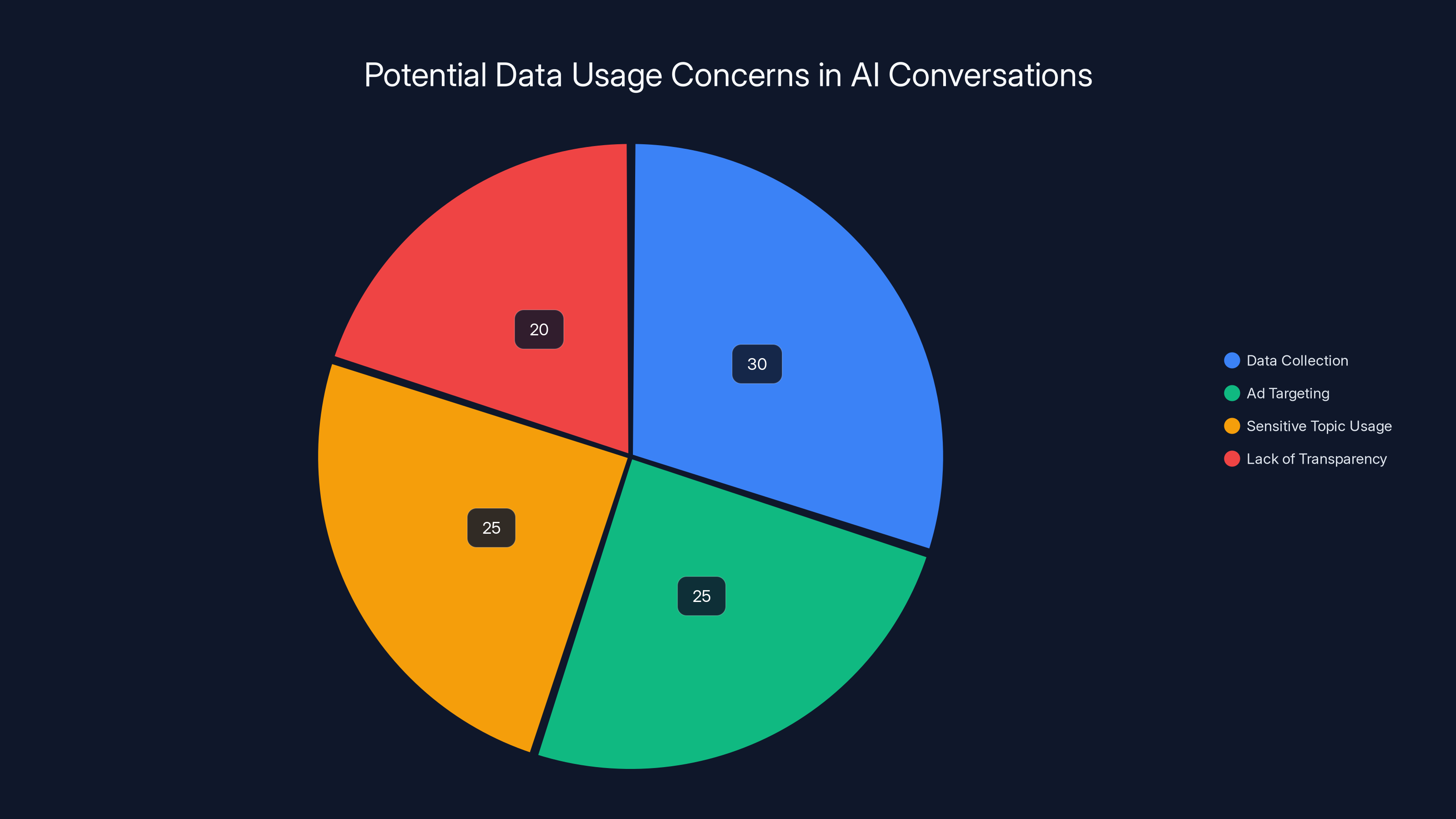

Estimated data shows that data collection and ad targeting are major privacy concerns, each accounting for around 25-30% of the issues raised by Senator Markey.

Who Else Is Getting This Pressure

Markey didn't single out OpenAI. He sent essentially identical letters to six other companies: Anthropic, Google, Meta, Microsoft, Snap, and xAI.

Let's look at why each of these companies is a target.

Anthropic runs Claude, arguably the second-most popular chatbot after ChatGPT. Claude has built a reputation as more cautious and less prone to generating harmful content than ChatGPT. But Anthropic still needs revenue. The company is currently funded by venture capital, but that money won't last forever. Ads are an obvious monetization path.

Google runs Gemini (formerly called Bard), which it has integrated across search results and Android devices. Google has the most experience with ads of any company on this list. They know exactly how to monetize users. The question isn't whether Google will add ads to Gemini conversations. The question is how invasively they'll do it.

Meta runs Facebook and Instagram, two of the most ad-saturated platforms on Earth. Meta's entire business is built on targeted advertising. Meta's AI research division is developing multiple chatbots. If ads come to AI, Meta will be aggressive about it.

Microsoft has invested billions in OpenAI. Microsoft's Copilot uses OpenAI technology. If OpenAI figures out how to monetize through ads, Microsoft will benefit and follow the same approach.

Snap runs Snapchat, which has always been ad-supported. Snap's AI research is less visible than the others, but they're definitely working on chatbot technology. Adding ads to Snapchat's AI features would fit their existing business model perfectly.

xAI is Elon Musk's AI company, and Musk has been explicit about wanting to monetize through ads. xAI's Grok chatbot will almost certainly include ads when the business model solidifies, as noted by CNBC.

By sending letters to all seven companies, Markey is trying to establish a pattern before it becomes entrenched. He's essentially saying: before you all start shipping ads in chatbots, let's talk about this as a regulatory issue.

The timing matters. These companies are moving fast. If OpenAI succeeds with ads and the user experience doesn't completely collapse, other companies will follow immediately. Markey is trying to slow that momentum long enough for regulators to develop a position.

The Broader Context: How AI Companies Monetize

OpenAI's decision to add ads isn't random. It's born from necessity. Let's look at the business reality.

Running ChatGPT is expensive. Every conversation consumes GPU compute. The infrastructure costs are significant. OpenAI's annual revenue from subscriptions (ChatGPT Plus at $20/month) doesn't cover infrastructure costs. The company is reportedly losing money on free tier users.

OpenAI has several theoretical paths to profitability:

- Subscriptions (ChatGPT Plus, Team, Enterprise): Existing model, but limited TAM (total addressable market)

- APIs (companies using ChatGPT via API): Profitable but subject to commoditization

- Advertising: Massive TAM, proven business model, but requires changes to user experience

- Licensing: Selling technology to other companies, but limited revenue potential

- Enterprise software: Custom deployments for large organizations

Advertising is attractive because its revenue scales with usage. Every user interaction generates potential ad impressions. Meta and Google have proven that advertising on web-scale platforms generates billions in revenue.

But there's a catch. Users don't like ads. Showing ads makes the product worse. If OpenAI shows ads too aggressively, some free users will upgrade to ChatGPT Plus to escape them, but others will simply leave. The upgrades may not be enough to offset the ad revenue lost from users who walk away.

So OpenAI is taking the classic approach: test gradually. Show ads to some users. Measure how many upgrade to paid. Measure click-through rates on ads. Iterate until they find the ad load that maximizes revenue without destroying user experience.

This is standard tech industry practice. But Markey is questioning whether this practice should be applied to intimate conversational systems.
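The gradual testing loop described above can be sketched as a comparison across test cohorts. This is a toy model under stated assumptions: the `CohortResult` fields, the revenue formula, and every number in `cohorts` are made-up placeholders for illustration, not measurements from OpenAI or anyone else. Only the $20 Plus price comes from the article.

```python
from dataclasses import dataclass

PLUS_PRICE = 20.0  # ChatGPT Plus monthly price cited in the article


@dataclass
class CohortResult:
    """Outcome of one hypothetical test cohort (placeholder values only)."""
    ads_per_100_chats: int
    stayed_free_share: float  # share of users still on the free tier
    upgraded_share: float     # share who moved to the paid tier (the rest churned)
    ad_revenue_per_free_user: float  # monthly dollars per remaining free user


def revenue_per_starting_user(c: CohortResult) -> float:
    """Expected monthly revenue per user who entered the cohort."""
    return c.upgraded_share * PLUS_PRICE + c.stayed_free_share * c.ad_revenue_per_free_user


# Illustrative placeholders: heavier ad load raises ad revenue per user,
# nudges a few more upgrades, and drives more users away.
cohorts = [
    CohortResult(0, 0.97, 0.03, 0.0),
    CohortResult(5, 0.94, 0.04, 0.5),
    CohortResult(20, 0.85, 0.05, 1.2),
    CohortResult(50, 0.45, 0.06, 1.5),
]

best = max(cohorts, key=revenue_per_starting_user)
for c in cohorts:
    print(c.ads_per_100_chats, round(revenue_per_starting_user(c), 3))
print("best ad load:", best.ads_per_100_chats)
```

With these placeholder numbers, revenue peaks at a moderate ad load and falls once churn outweighs the extra ad revenue. Note what the objective leaves out: it measures revenue and retention, and says nothing about whether the ads that perform best do so by exploiting user trust.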

Estimated data suggests that while ads can be lucrative, freemium and subscription models may offer higher revenue potential for ChatGPT.

The Regulatory Landscape: What Can Government Actually Do

Here's an honest assessment: Markey can't actually prevent OpenAI from showing ads. OpenAI is a private company. As long as it stays within existing consumer protection law, it can monetize its platform however it wants.

But Markey can create political pressure. He can set expectations for regulatory oversight. He can signal to other companies that Congress is paying attention.

The Federal Trade Commission (FTC) already has oversight of deceptive advertising practices. If OpenAI's ads are misleading or if the ads feel indistinguishable from chatbot responses, the FTC could potentially argue this violates consumer protection laws.

Markey is essentially asking the companies to voluntarily commit to transparency and safety before regulation becomes necessary. It's a soft regulatory approach. It tries to influence behavior through public pressure rather than legal requirements.

But soft regulatory approaches have a mixed track record in tech. Facebook promised to be more careful with data. They violated that promise repeatedly. Twitter promised to protect against misinformation. That didn't go great either. Self-regulation in tech rarely works because the financial incentives pull companies toward pushing boundaries.

The real question is whether Congress will eventually write explicit legislation about AI advertising. That seems likely. The issues Markey raised are legitimate. Either the companies voluntarily implement strong safeguards, or regulators will force them to.

The deadline Markey set (February 12, 2025) is relatively short. Companies have roughly a month to respond. These are detailed questions that require legal review and executive input. The responses will likely be evasive, emphasizing existing safeguards while avoiding commitments to new restrictions.

But the responses will be on record. If companies promise certain protections and then don't follow through, they become vulnerable to FTC action and future legislation.

What OpenAI Says About Safety

OpenAI responded to the situation by emphasizing safeguards. Their official position is that they're being thoughtful about this transition.

They won't show ads to users under 18. They won't show ads during conversations about physical health, mental health, or political topics. Ads will be clearly labeled as sponsored content. Ads won't mislead about what OpenAI offers versus what advertisers offer.

These are reasonable-sounding commitments. But let's examine them more carefully.

Age restriction: As discussed, age verification on the internet is notoriously weak. OpenAI relies on user attestation. A motivated 14-year-old can easily claim to be 18. Even if OpenAI tried harder on age verification (requiring credit cards, government IDs, etc.), users would resist and privacy advocates would complain.

Sensitive topic carveouts: This is more interesting. OpenAI can technically detect when conversations are about health, mental health, or politics and suppress ads during those conversations. But the company could still use that data for targeting in other conversations. If you discuss depression for 30 minutes, and then later ask ChatGPT about productivity tips, you might see ads for depression-related products. The ad isn't shown during the health conversation (the letter of the safeguard is met), but it's targeted based on health data (the spirit of the safeguard is violated).

Clear labeling: This is actually good practice. Users should know what's an ad and what's not. But clear labeling doesn't prevent manipulation. A clearly labeled ad shown by a system you trust, at a moment you're receptive, based on intimate knowledge of your psychology, is still manipulative even if it's labeled.

No misleading ads: This is vague. What counts as misleading? If an ad for a productivity app claims to save you 2 hours per day, but OpenAI's research says it only saves 30 minutes for most users, is that misleading? These questions have to be answered somehow.

OpenAI's safety commitments are a starting point, but they're not sufficient to address the concerns Markey raised. They suggest OpenAI is thinking about these issues, but they don't solve the fundamental tension between maximizing ad effectiveness and protecting users from manipulation.

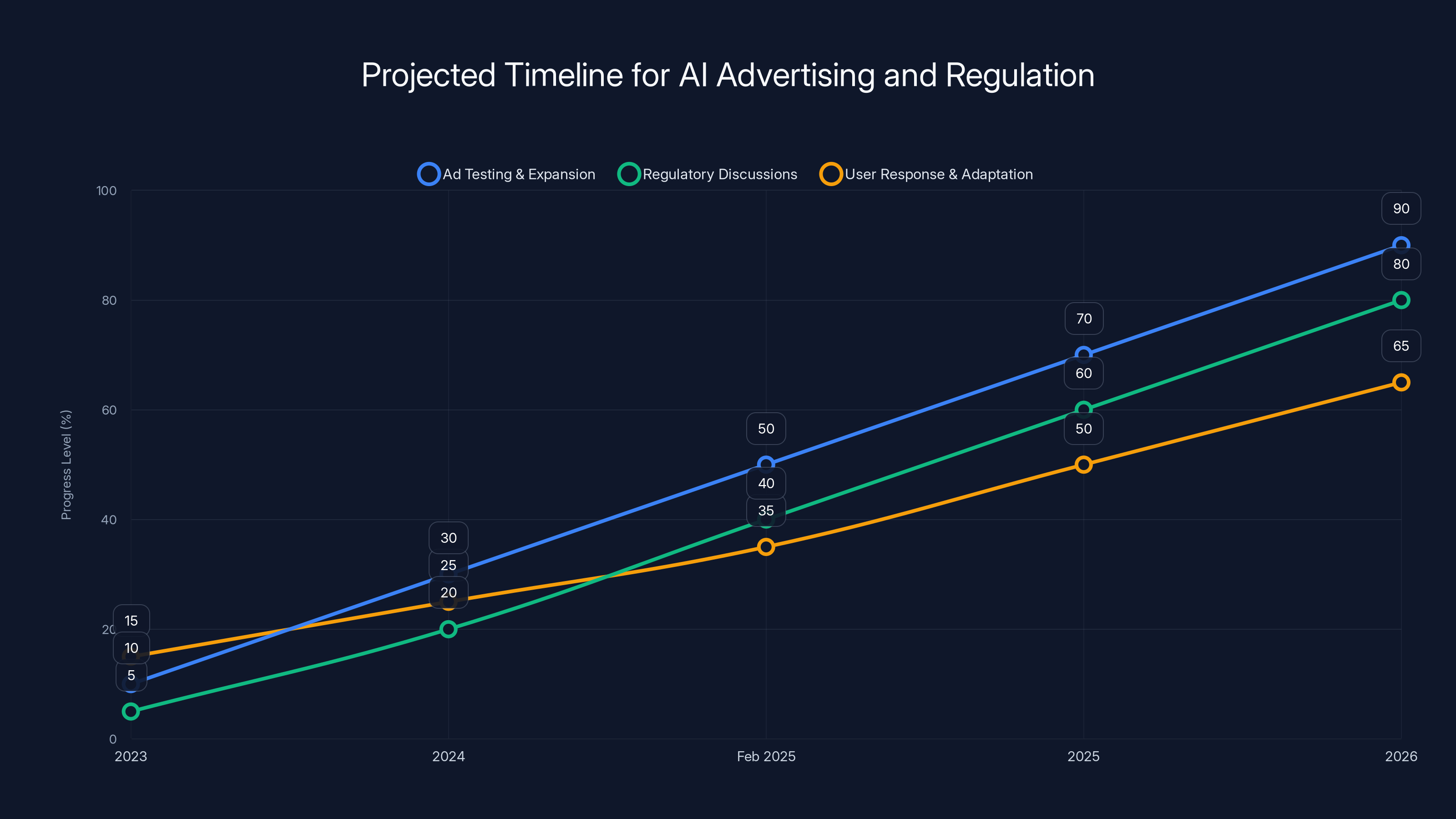

Estimated data shows the progression of ad testing, regulatory discussions, and user adaptation over the next few years, highlighting the growing tension between advertising expansion and regulatory pressure.

The Business Model Question: Do Ads Make Sense for ChatGPT

Here's a contrarian take: ads might not actually be OpenAI's best path to profitability.

Advertising has huge scale advantages. If you can get millions of daily users interacting with your product, you can sell ads to thousands of advertisers, and the revenue multiplies. That's how Google and Meta got rich.

But chatbots have an advantage that search engines don't: premium users will pay for better versions. Google Search has a free tier and... that's mostly it. You can't really buy a "premium" Google Search experience. But ChatGPT Plus ($20/month) already exists, and people pay for it.

What if OpenAI's best strategy isn't to add ads to the free tier, but to just make ChatGPT Plus more valuable? More features. Faster responses. Better models. Custom instructions. More API credits for developers.

Would enough people upgrade from free to paid to cover infrastructure costs? Maybe. Maybe not. It's an open empirical question.

OpenAI is choosing the ads route because it's the proven path. Meta and Google follow the ads model. It works. But there are alternative models that might work too, like freemium with strong premium features, or licensing technology to enterprises, or subscription services for specialized verticals.

OpenAI's ads decision seems to assume that direct advertising is the only path forward. That assumption might be wrong. But it's an easier assumption to justify to investors. "We're going to monetize like Google and Meta" is a simpler pitch than "We're going to explore a different model."

How Other Platforms Handle This

Looking at comparable services, we can see different approaches.

Discord has a massive user base and doesn't show ads in the platform (they show ads in app stores and external channels, but not in Discord itself). Discord makes money through Nitro subscriptions and by taking a cut of game sales on their platform.

Reddit famously avoided ads for years, then added them gradually as the company needed profitability. The process was messy and users resisted, but Reddit pushed through.

Twitter/X has historically been very ad-heavy, and this continues under Elon Musk's ownership (despite his rhetoric about reducing ads).

Slack in its early days was ad-free and relied entirely on subscriptions. As it matured and went public, there's been discussion of adding ads, but nothing concrete has shipped yet.

The pattern is: platforms with strong subscription revenue can avoid ads longer, but eventually most feel pressure to monetize through ads to appease investors and Wall Street.

OpenAI doesn't have the luxury of waiting. The company burns through billions annually in infrastructure and research costs. Without substantial revenue growth, they'll run out of money or be forced into unfavorable financing deals.

But this also creates perverse incentives. The companies with the most financial pressure are the ones most likely to monetize aggressively, potentially making products worse in the process.

The PR Angle: Why Companies Position Ads This Way

OpenAI released their ad announcement with a specific framing. They emphasized that this enables free access to ChatGPT. The suggestion was that without ads, they couldn't afford to keep the service free.

This is a PR move. It positions ads as a necessary evil, forced upon the company by economics. But it's also partially true. OpenAI does have real infrastructure costs.

However, the framing obscures other options. OpenAI could have tried to raise prices on ChatGPT Plus. They could have implemented usage limits on free tier. They could have experimented with voluntary donations. They could have pursued enterprise licensing more aggressively.

Instead, they went straight to advertising, because ads are high-margin revenue. The advertiser pays, and the incremental cost of serving an ad alongside a conversation that is already running is small. Subscriptions, by contrast, can only grow as fast as OpenAI can persuade individual users to pay.

So OpenAI is making a choice about which of multiple possible paths to profitability to pursue. That choice has consequences for users. Framing it as "ads are necessary" obscures that it's a choice.

Markey's intervention is partly about highlighting this choice. By asking companies to explain their advertising strategy, he's forcing them to be more transparent about what's actually a business decision versus what's a technical necessity.

What Happens Next: The Timeline

OpenAI has already started testing ads with a small subset of users. That testing will likely continue and expand. Other companies will watch how users react. If user retention and revenue metrics look good, ads will roll out at scale.

Markey's deadline is February 12, 2025. Companies will provide responses that are probably careful and non-committal. They'll emphasize safeguards. They'll promise to be thoughtful. They'll suggest regulation isn't necessary because they're regulating themselves.

Markey will probably publicly respond that the commitments aren't sufficient. There might be Congressional hearings. Tech journalists will write articles about the hearings. Nothing dramatic will immediately change.

But momentum will build. Advocacy groups will weigh in. Privacy researchers will publish studies. Media coverage will continue.

Within 12-18 months, there will likely be Congressional proposals for legislation specifically about advertising in conversational AI. Some proposals will be thoughtful. Some will be overkill. The industry will lobby against the overkill, probably successfully.

Eventually, there will be a regulatory framework. It might require clear disclosure of ads. It might require strong age verification for ads to users under 18. It might require prohibitions on using sensitive conversation data for targeting. Or it might require nothing, if the industry's lobbying is strong enough.

In the meantime, ads will proliferate across AI chatbots. Users will get annoyed. Some will upgrade to paid tiers. Some will switch to competitors that avoid ads. Some won't care.

The outcome is uncertain, but the trajectory is clear: advertising in conversational AI is coming, and regulatory pressure is coming, and there will be tension between those two forces for the next few years.

Broader Implications: Where This Leads

If ads in chatbots become normalized, it changes how people interact with AI. Users will become more skeptical of chatbot responses, knowing the system is financially incentivized to favor certain responses. They might ask themselves: is this response good because it's accurate, or because the chatbot is subtly nudging me toward an advertiser?

This skepticism isn't necessarily bad. Users should be skeptical of systems trying to influence them. But it does change the relationship people have with AI assistants.

There's also a question about AI development priorities. If OpenAI is primarily focused on monetizing ChatGPT through ads, they might invest less in improving the underlying model. Ads become a higher priority than accuracy or capabilities because ads drive revenue.

Similarly, there's a question about market competition. If all the major AI companies add ads, where do users go? They could self-host open-source models, but that requires technical expertise. They could use smaller companies without ads, but smaller companies have less capital for model research.

The long-term risk is that advertising becomes so normalized in conversational AI that people accept it as inevitable. "Of course ChatGPT has ads," future users might say, just like people today say "of course Google Search has ads." That normalization happens gradually, and then it's very hard to undo.

Markey's intervention is trying to slow that normalization process. He's trying to establish a principle: conversational AI systems should have stronger protections around advertising than traditional search engines, because the nature of the interaction is different.

Whether that principle will actually shape regulation is unclear. But it's the right principle to advocate for now, before the status quo shifts beyond the point of political change.

FAQ

What advertising is OpenAI actually adding to ChatGPT?

OpenAI is adding "sponsored" product and service recommendations that appear at the bottom of ChatGPT conversations. These ads will be relevant to the content of your specific chat based on conversation history. The company says ads won't appear to users under 18 or during conversations about physical health, mental health, or politics. Ads will be clearly labeled as sponsored content to distinguish them from regular chatbot responses.

Why is Senator Markey concerned about AI chatbot advertising?

Markey raised several specific concerns: first, that chatbots build emotional relationships with users, making ads more psychologically influential than ads in other contexts; second, that personal data shared in sensitive conversations could be used for targeted advertising in future conversations; and third, the safety of young users. Two related points follow from his letters even though he didn't spell them out: age verification on the internet is weak, so ads might reach teenagers despite stated protections, and this is a fundamental shift in how AI platforms monetize before adequate regulatory frameworks exist.

How is chatbot advertising different from search engine advertising?

Search engines like Google are explicitly transactional. Users expect to see ads alongside search results. Chatbots like ChatGPT are conversational. Users develop a sense of relationship and trust with the chatbot. They share personal information they wouldn't normally share with a commercial service. Ads in this context are more psychologically manipulative because they leverage the emotional connection the user has developed with the system.

What safeguards did OpenAI promise regarding ads?

OpenAI committed to several safeguards: no ads will be shown to users under 18; no ads during conversations about physical health, mental health, or politics; ads will be clearly labeled as sponsored content; ads won't be misleading about what OpenAI offers versus what advertisers offer; and ads will be relevant to conversation content without using deceptive tactics. However, critics including Senator Markey point out that these safeguards don't address whether personal data from sensitive conversations will be used for targeting ads in future conversations.

What companies did Senator Markey contact about advertising?

Markey sent letters to the CEOs of seven companies: OpenAI, Anthropic, Google, Meta, Microsoft, Snap, and xAI. Each company is developing or already operating chatbots, so each is a potential venue for chatbot advertising. The letters were essentially identical, pressing each company to explain its advertising plans and the safeguards it will implement to protect consumer privacy and safety, particularly for young users.

What is the deadline companies have to respond to Senator Markey?

OpenAI and the other six companies have until February 12, 2025, to respond to Markey's questions about their advertising plans and safeguards. The deadline is relatively short, requiring companies to coordinate responses from legal, product, and executive teams. Responses will likely be submitted in writing and might later be discussed in Congressional hearings, though no formal hearings have been scheduled yet.

Could Congress pass legislation restricting AI advertising?

Yes, and a bill regulating advertising in conversational AI could plausibly emerge within the next 12-18 months, though passage is far from certain. Congress can legislate directly, and it also oversees the Federal Trade Commission, which enforces rules against deceptive advertising practices. Potential legislation could require strong age verification, prohibit using sensitive conversation data for ad targeting, require clear disclosure of ad presence and placement, or mandate specific safeguards for minors. The industry will likely lobby against strict regulations, so any legislation that does pass will probably be a compromise between consumer protection advocates and industry interests.

How might AI companies try to circumvent advertising restrictions?

If restrictions pass, companies might respond in several ways: making age verification extremely stringent (thus excluding younger users from the free service); using AI to detect sensitive conversations and suppress data collection from them, rather than just suppressing ads; creating separate "ad-free" tiers priced above free but below ChatGPT Plus; or lobbying for exemptions for specific use cases. Companies could also steer users toward licensed APIs or enterprise products, where regulation may be less strict.

What's the risk of doing nothing about AI advertising?

If no regulatory action is taken, advertising in conversational AI will likely proliferate across all major platforms. This could normalize manipulative advertising practices, particularly affecting teenagers, whose brains are still developing and who are more susceptible to psychological persuasion. Users would lose the ability to interact with conversational AI without commercial manipulation. Over time, the baseline of what users expect from AI assistants will shift from "helpful tool" to "commercial product optimized for revenue," which could fundamentally change how people use and trust AI systems.

Conclusion: Why This Matters More Than You Might Think

On the surface, this is just about whether you'll see ads when you use ChatGPT. That's the immediate impact. But the deeper question is about the future of how technology companies monetize intimate user relationships.

For the first time in the history of the commercial internet, advertising is coming to conversational AI systems. These systems are different from everything that came before. They're more personal. They collect more sensitive data. They build emotional relationships with users. They're sophisticated enough to understand nuance and context in ways that previous advertising platforms couldn't.

Senator Markey is essentially asking: before this new form of advertising becomes normalized, can we stop and think about what we want the rules to be?

That's a reasonable question. We don't have to let advertising in conversational AI follow the same path as advertising in search and social media. We could decide as a society that these systems should be held to higher standards. That conversational AI should have stronger privacy protections. That teenagers shouldn't be targeted with behavioral ads in systems designed to feel like trusted advisors.

Or we could do nothing, and ten years from now, kids will grow up with AI advisors that subtly manipulate them toward products and services while feeling like they're talking to a helpful friend.

Markey's intervention is important because it's early. The ad systems aren't fully deployed yet. The regulatory frameworks haven't calcified. There's still time to shape how this technology evolves.

Whether Congress actually does anything remains to be seen. The tech industry lobbies effectively. Regulation moves slowly. By the time laws pass, advertising in AI might already be everywhere.

But at least someone is asking the right questions at the right time. At least there's a record of concerns raised before the status quo shifted. At least there's a political signal that some people in power think this matters.

That might not be enough to prevent ads in chatbots. But it might be enough to ensure that when those ads do arrive, they're subject to real oversight rather than purely industry self-regulation. And in the messy world of tech policy, that's often the best we can hope for.

Key Takeaways

- Senator Markey is pressing seven major AI companies (OpenAI, Google, Anthropic, Meta, Microsoft, Snap, xAI) to explain advertising safeguards before ads become widespread in conversational AI systems

- OpenAI is testing ads in ChatGPT's free tier that show sponsored products relevant to conversation content, with stated protections that exclude users under 18 and conversations about health or politics

- Chatbot advertising differs fundamentally from search engine ads because users develop emotional relationships with conversational AI, making them more vulnerable to psychological manipulation through targeted ads

- Data privacy risks include companies using sensitive personal information from conversations to target ads in future interactions, even if ads aren't shown during sensitive conversations themselves

- Age verification on the internet remains weak, making it unclear whether stated protections for minors will actually prevent teenagers from seeing targeted ads in chatbot systems
