The Shadow AI Crisis: What Leaders Need to Know in 2025

When Sarah Chen, a marketing manager at a mid-sized financial services firm, needed to draft a compliance document quickly, she made a choice that would keep her boss awake at night. She copied sensitive customer data into Chat GPT, received a polished version back within minutes, and met her deadline. The productivity gain felt real. The risk? Catastrophic—though she barely noticed it.

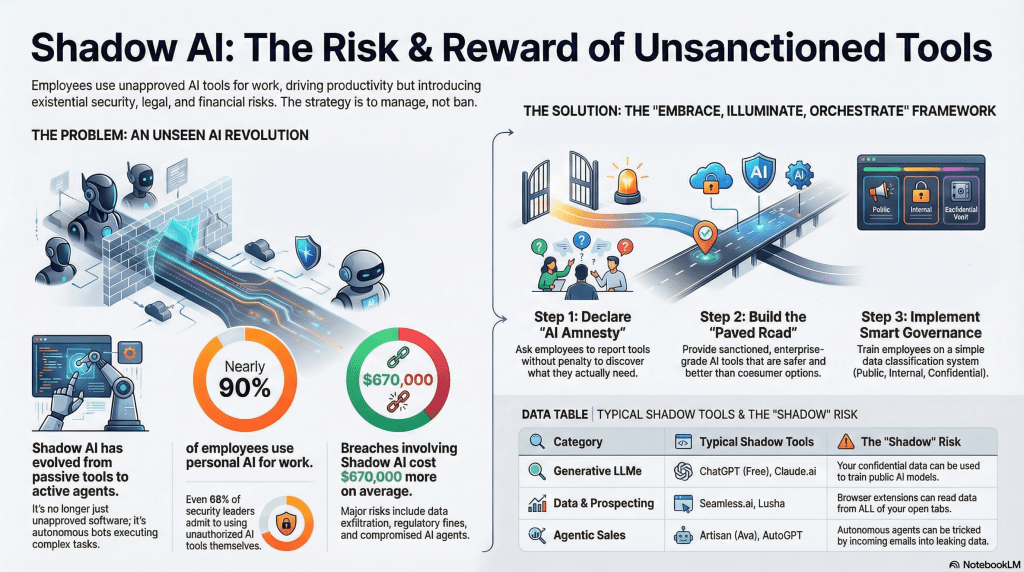

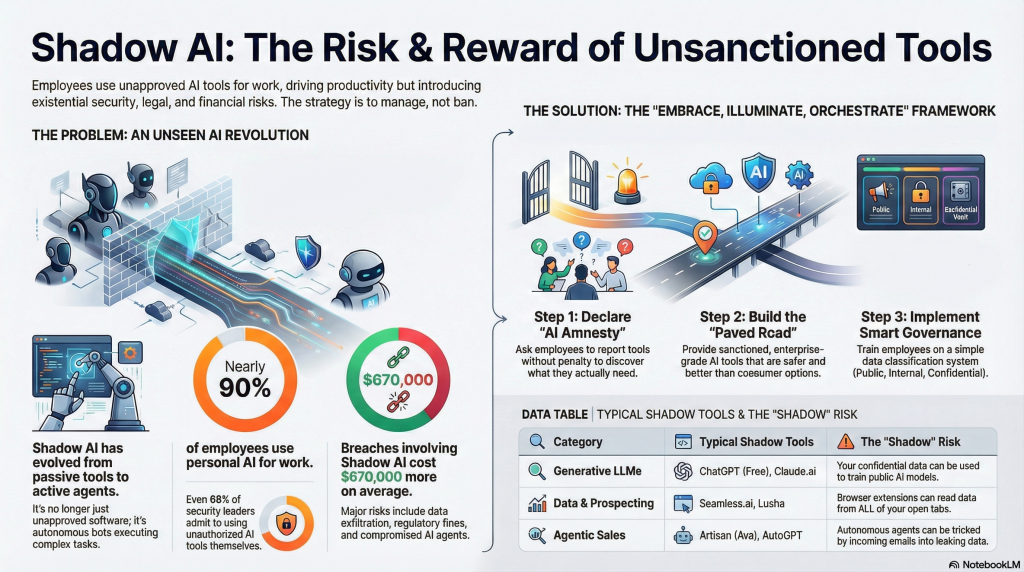

This scenario plays out thousands of times daily across enterprise organizations worldwide. What researchers call "shadow AI"—the unauthorized use of artificial intelligence tools and platforms by employees without IT oversight or management approval—has quietly become one of the most significant operational and security challenges facing modern businesses. Unlike shadow IT of decades past, which involved rogue spreadsheets and unapproved software, shadow AI operates at machine speed and scale, capable of exposing proprietary data, violating regulatory requirements, and creating liability nightmares within seconds.

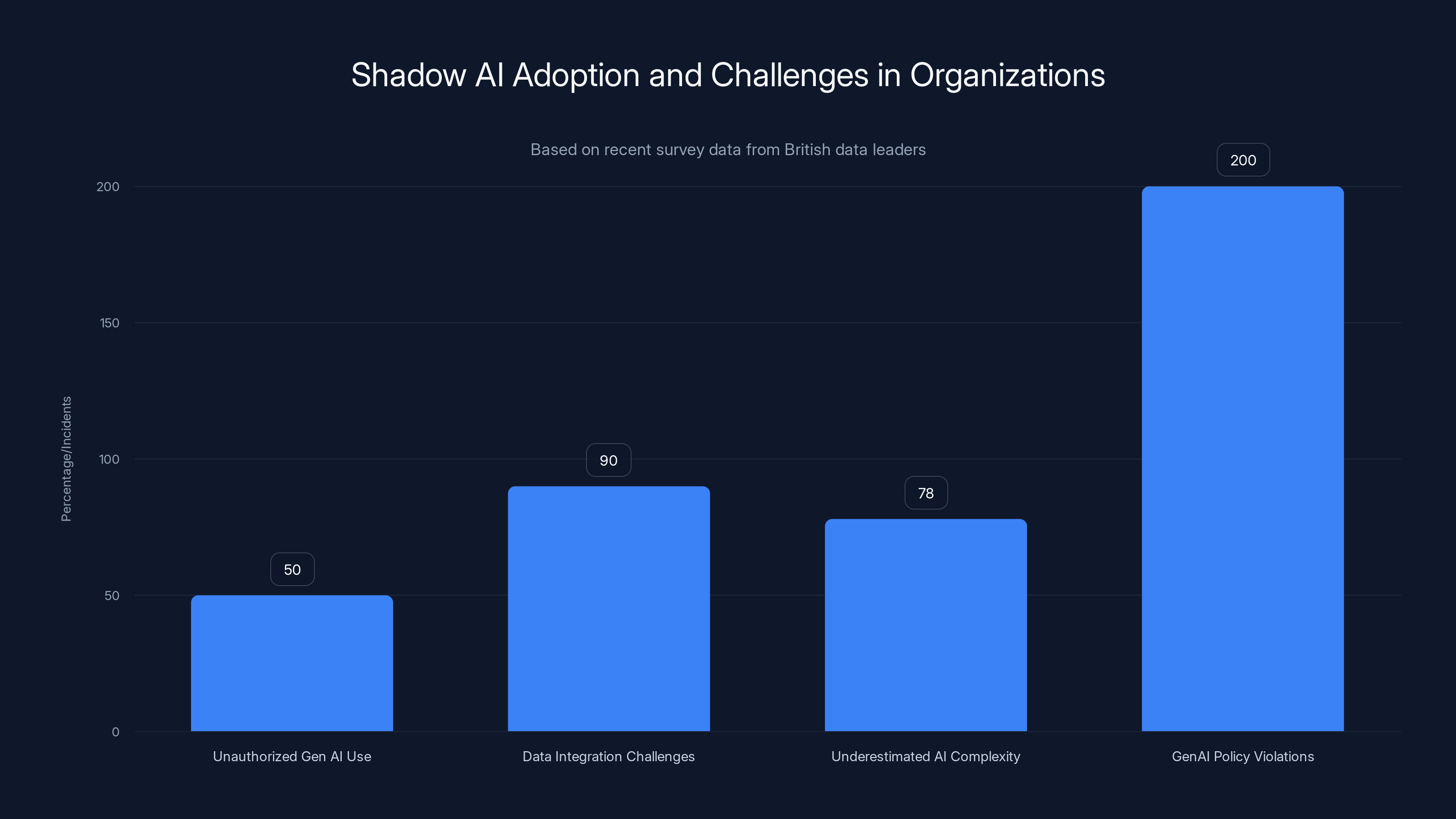

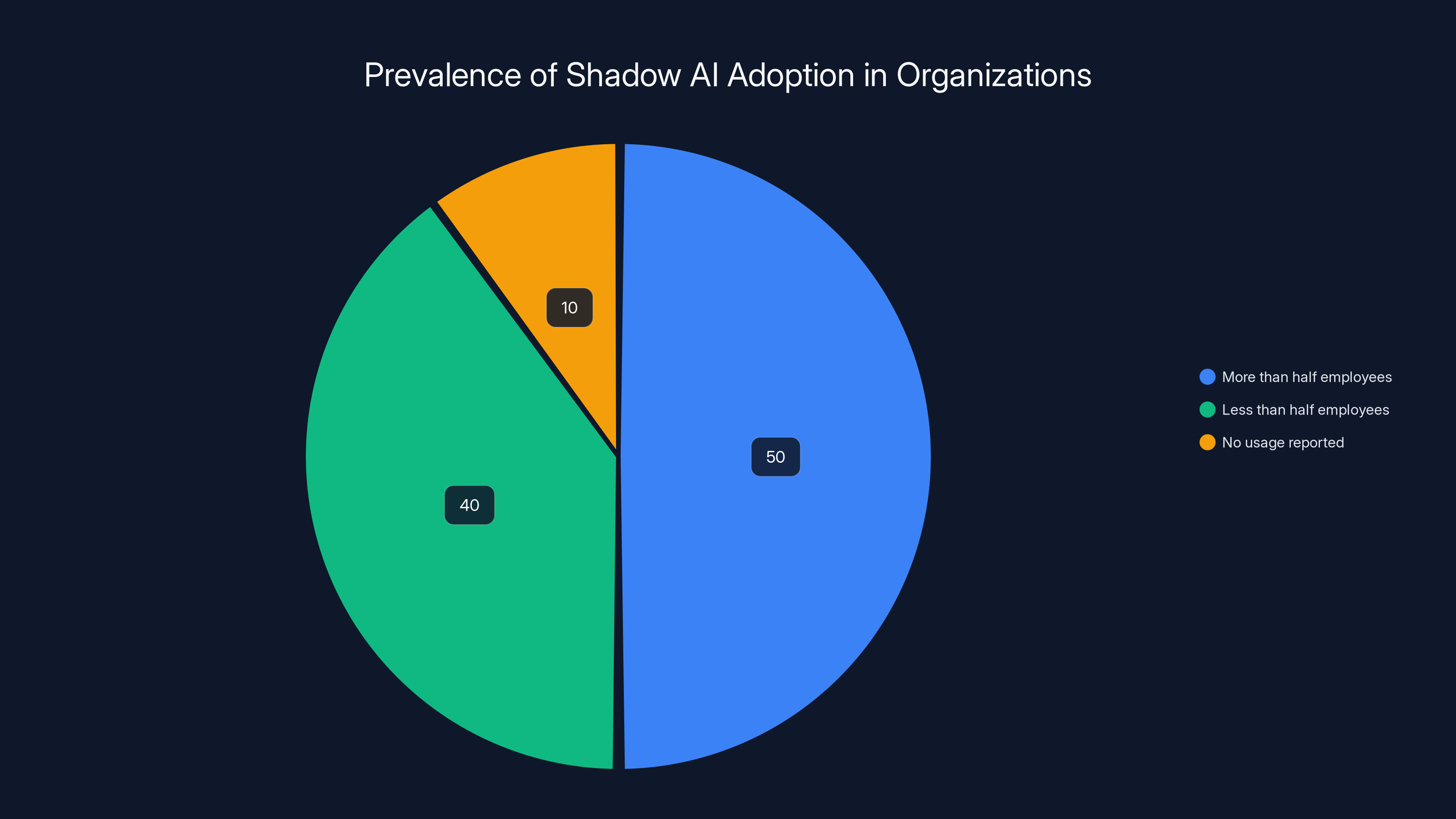

Recent research reveals a stunning reality: 50% of senior British data leaders believe more than half of their employees are using generative AI tools for company work without permission. This isn't a niche problem confined to tech-forward companies or Silicon Valley startups. It's happening in financial institutions, healthcare organizations, government agencies, and manufacturing firms. It's happening right now, in your organization, possibly without your knowledge.

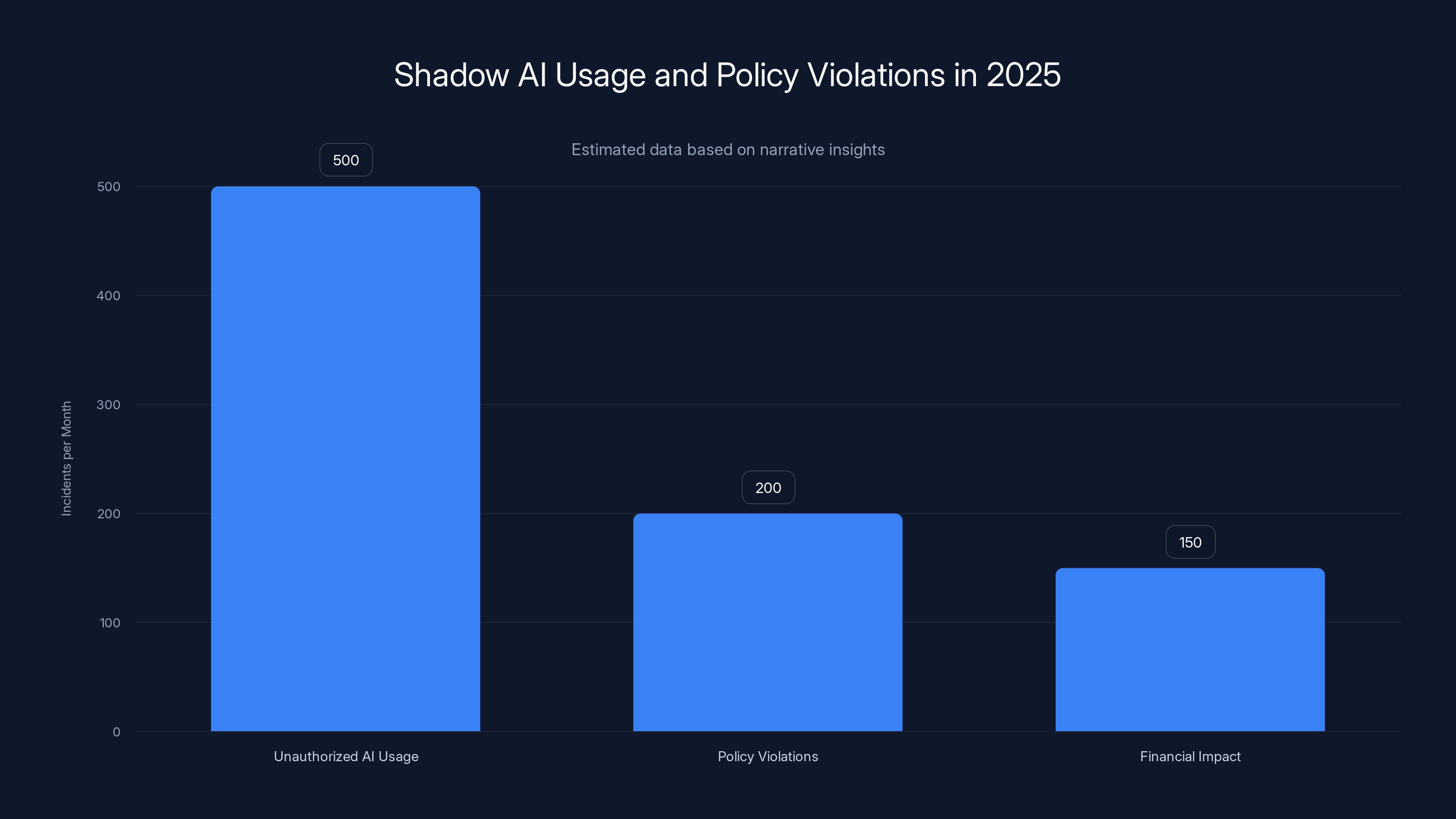

The scope of the problem extends far beyond a handful of rule-breaking employees. Organizations are now reporting over 200 Gen AI-related data policy violations each month on average. These violations represent breaches of confidential information, intellectual property exposure, regulatory violations, and potential legal liability. The financial impact alone—through potential fines, data breach remediation, and lost competitive advantage—could exceed millions of dollars annually for large enterprises.

What makes shadow AI uniquely dangerous compared to previous waves of unsanctioned technology adoption is the ease of entry, the power of the tools, and the speed at which damage can occur. Traditional shadow IT required technical knowledge, deliberate installation steps, and created digital footprints that security teams could theoretically detect. Shadow AI requires nothing more than a web browser and an email address. The tools are intuitive, powerful, and specifically designed to make complex tasks trivially easy. A single copy-paste operation can extract an organization's crown jewels.

The irony deepens when you examine the motivation behind these unauthorized implementations. Employees aren't deliberately sabotaging their organizations. They're trying to work faster, produce better results, and reduce tedious tasks. AI genuinely does accelerate knowledge work. The problem isn't the desire for productivity—it's that this desire is outpacing governance structures, security controls, and leadership understanding of the actual risks involved.

According to recent organizational surveys, 78% of C-suite executives significantly underestimate the complexity and time required to deploy reliable, production-grade AI systems. This knowledge gap creates a vacuum where employee-driven, grassroots AI adoption fills the void. When leadership doesn't provide approved, secure, enterprise-grade AI tools, employees find their own solutions. The resulting technical debt, security exposure, and compliance violations become someone else's problem—usually the CIO's and the Chief Information Security Officer's.

Understanding Shadow AI: Definition and Scope

What Exactly is Shadow AI?

Shadow AI refers to any use of artificial intelligence tools, models, or platforms by employees without explicit authorization, governance, or integration with official IT infrastructure and security protocols. This includes generative AI chatbots like Chat GPT, Claude, or Gemini; AI-powered productivity tools; machine learning applications; and even custom AI implementations built by employees using cloud services and public APIs.

The definition encompasses both intentional and unintentional scenarios. A developer might deliberately experiment with a large language model for code generation. A sales representative might not realize they're violating policy by using an AI writing assistant to draft proposals. A data analyst might leverage open-source machine learning libraries to expedite analysis without understanding the compliance implications. From the organization's perspective, all three scenarios represent shadow AI—unmanaged, unmonitored, unsecured use of AI capabilities.

What distinguishes shadow AI from legitimate, approved AI use is the absence of organizational governance. Official AI tools and systems go through procurement processes, security audits, compliance reviews, and integration with enterprise data governance frameworks. Shadow AI bypasses all of these controls, creating a parallel AI infrastructure that operates entirely outside organizational visibility and control mechanisms.

The Scale and Prevalence Problem

The proliferation of shadow AI becomes immediately clear when examining actual usage patterns. The research revealing that 50% of British data leaders believe more than half their employees use unauthorized AI tools represents a conservative estimate—many organizations lack sufficient monitoring to accurately quantify the practice.

Shadow AI adoption accelerates across multiple dimensions simultaneously. First, there's tool proliferation: hundreds of AI applications now exist for every conceivable workplace task. Need to summarize documents? Ten options exist. Want to generate images? Dozens of platforms. Require SQL query optimization? Multiple specialized AI tools await. The barrier to entry has essentially disappeared.

Second, there's knowledge diffusion: information about AI capabilities spreads rapidly through social media, professional networks, and internal communication channels. When one team discovers an AI tool that increases productivity by 30%, word spreads quickly to adjacent teams and departments. What started as one person's experiment becomes departmental practice within weeks.

Third, there's insufficient legitimate alternatives: many organizations lack approved, integrated AI tools that employees can turn to. When IT departments move slowly on AI adoption—constrained by budgets, procurement timelines, and risk aversion—employees solve the problem themselves. This creates a fundamental supply-and-demand mismatch where demand for AI-powered productivity vastly exceeds official supply.

The result is a shadow AI infrastructure that grows exponentially, largely invisible to IT and security teams. By the time organizations gain visibility into the problem, shadow AI usage is already endemic, deeply woven into work processes, and extremely difficult to unwind.

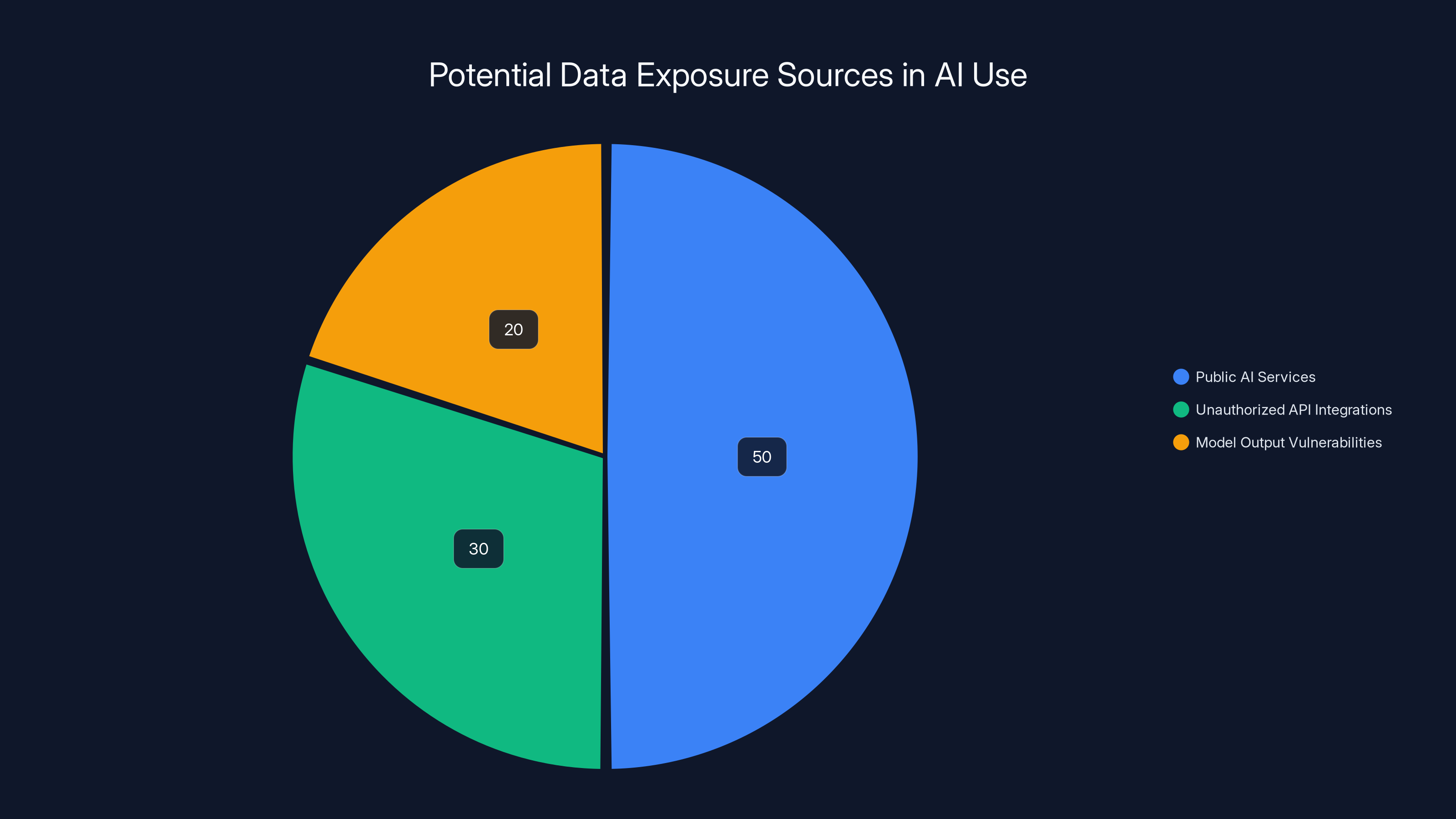

Public AI services account for the largest share of potential data exposure, followed by unauthorized API integrations and model output vulnerabilities. Estimated data.

The Security and Compliance Nightmare

Data Exposure Mechanisms and Attack Vectors

Shadow AI creates multiple pathways through which sensitive organizational data can leak, be compromised, or be lost entirely. These aren't theoretical risks—they represent daily occurrences across enterprises worldwide.

Direct Data Exposure Through Public AI Services: When employees paste company information into public AI platforms like Chat GPT, that information flows through Anthropic, Open AI, or other service providers' infrastructure. Terms of service vary, but many platforms explicitly state that user inputs may be used to improve their models. This means your customer data, product specifications, financial information, or strategic plans could be ingested into training datasets, making it accessible to competitors or malicious actors.

Consider the actual scenario from research confessions: a senior executive admitted copying and pasting sensitive data into Chat GPT weekly. Multiply this across an organization with hundreds or thousands of employees, each making individual decisions about what constitutes "sensitive." The cumulative exposure becomes staggering.

Unauthorized API and Integration Risks: Employees increasingly connect shadow AI tools to internal systems using APIs. A data analyst might connect a machine learning platform to the organization's data warehouse to accelerate analysis. A developer might integrate an AI coding assistant with the version control system. These integrations create permanent data pipelines that persist long after the initial decision, often without IT department awareness or approval. Should the external service suffer a security breach, compromise, or change its terms of service, the data pipelines become vectors for attack.

Model Output Vulnerabilities: AI models sometimes reproduce training data in their outputs—a phenomenon called "memorization" or "training data extraction." When an employee trains a custom model on proprietary data or asks a public AI model to process sensitive information, there's a non-zero probability that the model will reproduce that information directly. This can occur weeks, months, or even years later when someone queries the model with the right prompts.

Prompt Injection and Data Leakage: As organizations increasingly use AI tools to process stored data, attackers exploit prompt injection techniques—embedding malicious instructions in data to trick AI systems into revealing information. An attacker who deposits a file in your document management system could include a prompt injection that causes AI systems to leak additional data when that file is processed.

Regulatory and Compliance Violations

Shadow AI creates compliance violations that can trigger significant financial and reputational penalties. Organizations operating under regulatory frameworks like GDPR, HIPAA, CCPA, or SOX face particular exposure.

GDPR and Personal Data Exposure: Under GDPR, transferring personal data to third parties without proper data processing agreements constitutes a violation. When employees paste customer data or employee information into public AI services, they're potentially transferring personal data beyond the EU or to jurisdictions without adequate data protection—clear GDPR violations. Individual violations can trigger fines up to €20 million or 4% of annual revenue, whichever is larger.

HIPAA Violations in Healthcare: Healthcare organizations using unauthorized AI tools with patient data face HIPAA violations. Since most public AI services lack Business Associate Agreements (BAAs) and appropriate safeguards for protected health information, their use is prohibited. Yet shadow AI in healthcare is rampant—clinicians using AI summarization tools on patient notes, researchers processing de-identified patient data through AI models without approval, and administrators using AI for appointment scheduling and documentation.

Financial Services and SOX Compliance: Financial institutions must maintain detailed audit trails of how data is processed and stored. Shadow AI violates these requirements by creating untracked data flows and unauditable processing pipelines. Regulatory examination findings citing shadow AI compliance failures are becoming increasingly common.

Export Control and Intellectual Property Issues: Organizations developing technology subject to export controls face additional complications when shadow AI enters the picture. Using public AI services to process export-controlled information or proprietary algorithms may constitute illegal export of controlled technology.

The average organization reporting over 200 Gen AI-related data policy violations monthly suggests that compliance risks have already manifested in most enterprises. These violations may go undocumented, but they accumulate legal and regulatory exposure that could surface during audits, investigations, or breach notifications.

Financial and Reputational Impact

The business impact of shadow AI extends beyond security and compliance into direct financial harm. When sensitive information leaks through shadow AI channels, organizations face multiple cost categories.

Breach Remediation Costs: The Ponemon Institute reports that the average cost to remediate a data breach exceeds

Regulatory Fines and Legal Liability: Beyond remediation, organizations face regulatory fines, private right of action lawsuits, and class action litigation. A single major breach resulting from shadow AI could trigger fines in the tens of millions range, particularly in highly regulated industries.

Competitive Disadvantage: When shadow AI exposes strategic plans, product designs, customer lists, or pricing algorithms to competitors, the competitive harm is often permanent and quantifiable. A competitor who gains intelligence on your product roadmap through your own employees' shadow AI can anticipate your moves and adjust their strategy accordingly.

Loss of Customer Confidence: When customers learn that their data was processed through unauthorized, unsecured channels—even if no breach occurred—trust deteriorates. This affects renewal rates, expansion opportunities, and brand reputation.

The survey highlights significant shadow AI use, with 50% of data leaders noting unauthorized Gen AI use by employees. Data integration challenges are a top concern for 90% of leaders, while 78% acknowledge underestimated AI complexity. Organizations report over 200 GenAI policy violations monthly.

Why Shadow AI Adoption Accelerates

The Productivity Paradox

Shadow AI adoption persists despite clear risks because it genuinely delivers productivity benefits. This is the central paradox that makes the shadow AI problem so intractable: unauthorized AI tools actually work, often very well.

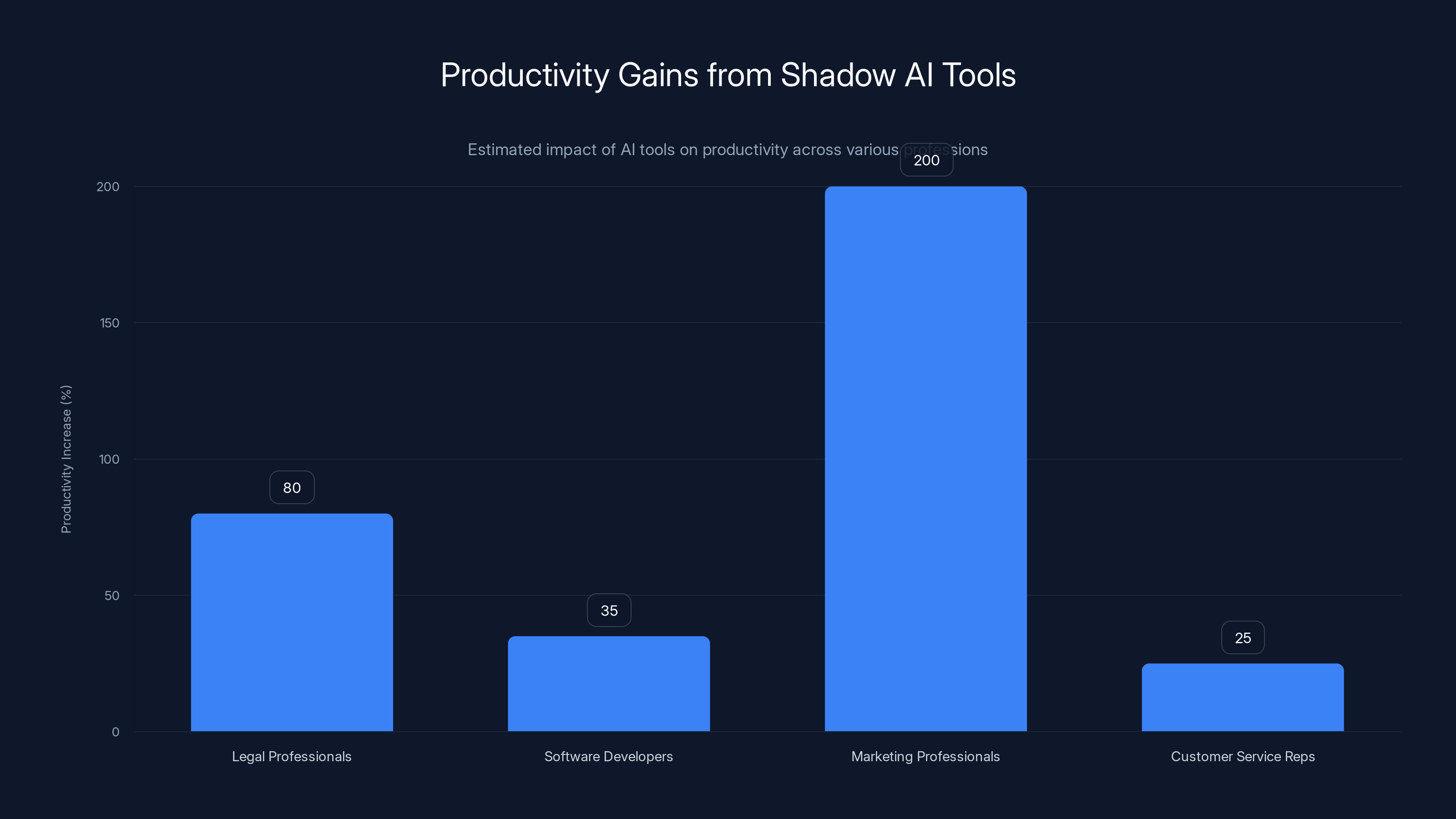

Consider concrete productivity gains. Legal professionals using AI-powered document review tools report completing discovery processes in days instead of weeks. Software developers using AI code generation assistants reduce coding time by 30-40% for routine tasks. Marketing professionals using AI writing tools produce content 2-3x faster while maintaining quality. Customer service representatives using AI-powered response suggestions handle 20-30% more tickets daily. These aren't marginal improvements—they're transformative.

When employees experience these productivity gains personally and directly, the motivation to continue using shadow AI becomes powerful. From their perspective, they're working smarter, delivering better results, and reducing burnout from tedious tasks. The abstract organizational risks that IT describes feel distant and hypothetical compared to the concrete, immediate benefits they experience daily.

Management layers often tacitly enable shadow AI adoption because the productivity gains flow upward in the organization. A department head notices her team delivering results faster and exceeding targets. She doesn't necessarily know—or care—whether her team achieved this through shadow AI or legitimate means. The results speak for themselves, and the risk remains invisible until a breach occurs.

Organizational Gaps and Broken Governance

Shadow AI flourishes in the absence of legitimate alternatives and clear governance frameworks. Many organizations have recognized the AI opportunity but moved slowly in implementing solutions.

Slow Approval Processes: Traditional enterprise software procurement takes six months to two years from initial request to deployment. A developer who wants to use an AI coding assistant doesn't have two years—they need a solution today. Shadow AI provides instant gratification compared to the glacial pace of official procurement.

Lack of Approved AI Tools: Many organizations have no approved, integrated AI solutions available to employees. IT departments are still debating AI strategy while employees take matters into their own hands. This creates a fundamental mismatch between employee needs and official supply.

Insufficient Technical Leadership Understanding: The research finding that 78% of C-suite executives underestimate the complexity of production-grade AI deployment illustrates a broader problem: organizational leaders don't understand AI well enough to govern it effectively. This lack of understanding manifests as either paralysis (we'll address AI later) or recklessness (let's deploy AI everywhere, quickly). Both extremes enable shadow AI.

Missing Data Governance Foundations: Organizations attempting to deploy AI often lack the fundamental data governance, metadata management, and data quality infrastructure required for success. This underlying weakness means that even official AI initiatives struggle, further reducing trust in organizational AI governance and pushing employees toward shadow solutions.

Employee Empowerment Through Accessibility

Modern AI tools are designed for accessibility and ease of use. This democratization is philosophically sound but creates governance challenges.

No special technical knowledge is required to use Chat GPT, Claude, Copilot, or hundreds of other AI services. A marketing manager can use these tools just as effectively as a data scientist. This accessibility is intentional—AI companies have deliberately removed friction to maximize adoption. The result is that capability that once required specialized expertise is now universally available.

Moreover, free or low-cost options abound. Many valuable AI services operate on freemium models where individuals can access significant functionality without organizational involvement or expenditure. Even the paid options often cost less than $50 monthly, placing them below procurement thresholds and under budget lines that don't attract organizational scrutiny.

The combination of accessibility, affordability, and ease of use means that shadow AI adoption requires minimal initiative or deliberate rule-breaking. Employees don't feel like rebels—they feel like smart workers using modern tools to do their jobs better.

Current State: Research Findings and Organizational Reality

Key Survey Data and Prevalence Rates

Recent research conducted across hundreds of organizations provides quantitative evidence of shadow AI's scope. These findings represent conservative estimates—organizations with less mature monitoring and security cultures likely show even higher shadow AI adoption rates.

50% of senior British data leaders believe more than half of their employees use Gen AI tools for company work without permission. This figure suggests that shadow AI isn't a fringe activity—it's majority behavior in many organizations. In organizations where 75% of employees use unauthorized AI, shadow AI isn't an anomaly; it's the dominant pattern.

90% of British data leaders cite data integration challenges or proprietary data access as their top concern when deploying AI—the highest level globally. This indicates that organizations attempting to deploy AI legitimately encounter significant obstacles related to data access, data quality, and integration complexity. These obstacles themselves likely drive shadow AI adoption, as employees find workarounds to access the data and tools they need.

78% of C-suite executives said their C-suite underestimates the time and difficulty required to achieve AI systems that would be reliable enough for production deployment. This self-awareness among data leaders is valuable but also somewhat damning—if the technical leadership acknowledges that executives underestimate AI complexity, it suggests that organizational resources, timelines, and expectations are misaligned with reality. This misalignment creates slack that shadow AI fills.

Over 200 Gen AI-related data policy violations monthly in the average organization represents a concrete measure of shadow AI's actual impact. Each violation represents a potential compliance issue, security exposure, or intellectual property leak. If this rate held constant across a year, it would accumulate to 2,400 violations annually per organization—far too many to remediate or even fully catalog.

Types of Shadow AI Usage

Shadow AI adoption spans the full spectrum of workplace activities. Understanding the diversity of use cases reveals why simple prohibition strategies fail.

Knowledge Worker Productivity: Marketing professionals using AI writing assistants, consultants using AI research tools, lawyers using AI document analysis platforms, and recruiters using AI resume screening tools represent the high-end productivity use cases. These employees aren't trying to circumvent rules—they're trying to work more efficiently in their domains.

Software Development: Developers using AI code completion, code generation, and technical documentation tools represent perhaps the most widespread shadow AI adoption. Git Hub Copilot alone has hundreds of thousands of users, many of whom use it without explicit organizational approval. The productivity gains are substantial and immediately obvious to the developer.

Data Analysis and Reporting: Data professionals using AI-powered analytics tools, natural language query interfaces, and automated insight generation represent shadow AI adoption in the technical domain. These uses often involve connecting the AI tool to organizational data sources, creating persistent pipelines and integration points.

Customer-Facing Applications: Customer service representatives using AI-powered response suggestions, sales professionals using AI proposal generation, and support teams using AI ticket routing represent shadow AI that directly impacts customers. If the AI system is compromised, trained on outdated data, or producing hallucinations, customer-facing impact occurs immediately.

Strategic and Sensitive Work: Perhaps most concerning, employees in strategic roles—executives, strategists, product managers—use shadow AI to analyze competitive threats, develop strategic plans, and make significant decisions. When this work involves sensitive data, the exposure is magnified.

The "AI Confessions Booth" Insights

During a major industry summit, researchers deployed an "AI Confessions Booth" where senior executives could anonymously share their shadow AI experiences. The confessions revealed the psychological and organizational reality beyond statistics.

A senior executive admitted: "I know almost all of my employees are using shadow AI — and most of them copy and paste sensitive data into Chat GPT every week." This confession is particularly valuable because it reveals three things: (1) the executive knows the problem exists, (2) the executive is aware it represents a security issue, and (3) the executive has not implemented effective controls to prevent it. This represents the typical state of organizational response to shadow AI—awareness without effective action.

A senior sales director noted: "We are currently building AI randomly, with no regard to the data foundations...people have invested huge amounts in AI, but the data foundations are so shaky the return on investment won't be there." This confession illustrates how shadow AI adoption, when combined with inadequate organizational AI strategy, creates wasted investment and technical debt. The organization is paying for AI initiatives while employees simultaneously build parallel, ungoverned AI systems.

These confessions transform shadow AI from an abstract risk to a concrete, lived reality in real organizations.

Estimated data shows that 50% of senior British data leaders believe more than half their employees use shadow AI tools without permission, indicating significant unauthorized AI tool usage in organizations.

Industry-Specific Shadow AI Risks

Financial Services and Banking

Financial institutions face particularly acute shadow AI risks due to regulatory requirements, data sensitivity, and the mission-critical nature of AI-driven decisions.

Regulatory Exposure: Banks operate under strict regulatory frameworks including Dodd-Frank, Mi FID II, and SOX. Shadow AI creates unauditable data flows and decision pathways that directly violate these requirements. Regulatory examiners increasingly scrutinize AI implementations, and shadow AI represents a clear point of vulnerability.

Model Risk Management: Banks are required to implement model risk management frameworks that govern all models used in significant business processes. Shadow AI models used for lending decisions, risk assessment, or trading bypass these frameworks entirely. If a shadow AI model produces discriminatory outcomes or incorrect risk assessments, regulatory liability attaches to the institution even though the model was unauthorized.

Customer Data Protection: Financial institutions maintain enormous quantities of sensitive customer data. Shadow AI tools used to process customer financial information, trading data, or personal information represent direct threats to data security and customer privacy.

Trading and Compliance Issues: Traders and analysts using unauthorized AI tools in trading, risk analysis, or market research can create compliance violations related to market integrity, fair dealing, and conflicts of interest. An AI tool used to analyze proprietary trading strategies or execute trades outside official systems creates liability for the institution.

Healthcare and Life Sciences

Healthcare organizations face shadow AI risks that directly impact patient safety, data privacy, and regulatory compliance.

Patient Safety Implications: Clinicians using unauthorized AI tools for diagnosis, treatment recommendations, or clinical decision support bypass the validation and testing required for clinical AI systems. If an AI system trained on questionable data provides incorrect clinical guidance, patient harm can result.

HIPAA and Privacy Violations: Healthcare organizations must maintain strict controls over protected health information (PHI). Clinicians using public AI services to process patient data create HIPAA violations and patient privacy breaches.

Research and Data Integrity: Researchers using unauthorized AI tools to analyze clinical data or process research datasets compromise data integrity and potentially violate research protocols and institutional review board (IRB) requirements.

Medical Device and Regulatory Status: AI tools used in clinical settings may constitute medical devices under FDA jurisdiction. Using unauthorized or unvalidated AI tools in clinical decision-making may violate medical device regulations.

Government and Defense

Government organizations and defense contractors face shadow AI risks related to national security, export control, and regulatory compliance.

Export Control Violations: Defense contractors and government agencies working with export-controlled technology must prevent that information from leaving secure channels. Shadow AI tools used on export-controlled information may constitute illegal export of controlled technology.

National Security Implications: Government agencies using cloud-based AI services for sensitive national security analysis or policy-relevant work create risks related to data sovereignty and government authority over classified or sensitive information.

Audit Trail and Oversight Requirements: Government operations require detailed audit trails demonstrating appropriate authorization and oversight of decisions and data processing. Shadow AI creates untracked data flows that violate these requirements.

Technology and Software Development

Technology companies face shadow AI risks related to intellectual property, product security, and competitive advantage.

Intellectual Property Exposure: Software development organizations using public AI services to process proprietary code, algorithms, or architecture may expose valuable intellectual property. If an AI model is trained on this code, competitors could potentially extract similar patterns from the trained model.

Security Vulnerability Introduction: Developers using AI code generation tools without proper review may introduce security vulnerabilities. AI code generators sometimes produce insecure code patterns, SQL injection vulnerabilities, or cryptographic errors. Without proper review, these vulnerabilities propagate into production systems.

Product Roadmap and Strategy Exposure: Developers and product managers using AI to analyze or document product roadmaps may expose strategic information about future features, technical direction, or planned acquisitions.

The Leadership Knowledge Gap

C-Suite Understanding of AI Complexity

The research finding that 78% of C-suite executives underestimate the time and difficulty required to achieve production-grade AI systems reveals a fundamental organizational problem. When executives don't understand a technology's complexity, they make decisions that are misaligned with reality.

Underestimation Consequences: Executives underestimating AI complexity often set unrealistic timelines, allocate insufficient budget, and fail to appreciate the infrastructure investments required. A CIO who believes AI implementation should take six months when twelve months would be realistic will feel constantly behind schedule. An executive who believes data governance isn't necessary for AI deployment will build fragile systems prone to failure.

The Demo Problem: AI capabilities are genuinely impressive when demonstrated. A large language model can write convincing essays, generate working code, and answer complex questions. These demonstrations are real—the model can do these things. What the demonstration doesn't show is everything required to make that AI system reliable, fair, secure, and compliant with organizational requirements in a production environment. Executive witnesses to impressive AI demos often conclude that if the AI can do that, implementation must be straightforward. This leads to underestimated complexity and insufficient resource allocation.

Training and Development Needs: Most C-suite executives have limited hands-on experience with AI tools. They may have briefly tried Chat GPT, but they likely haven't grappled with the full scope of AI implementation complexity—data preparation, feature engineering, model validation, bias mitigation, explainability requirements, infrastructure requirements, and ongoing maintenance. Without this hands-on experience, it's difficult to intuitively grasp the complexity.

Technical Leadership's Dual Dilemma

Data leaders and technical teams find themselves in a difficult position. They understand AI's potential but also understand the risks and complexity. This creates a paralysis where organizational AI strategy progresses slowly while shadow AI flourishes.

Risk Aversion vs. Competitive Pressure: Data leaders recognize shadow AI risks and want to implement proper governance. Simultaneously, they feel competitive pressure to move quickly and not be left behind by competitors aggressively adopting AI. This dual pressure creates tension in strategic decision-making.

Resource Constraints: Implementing proper AI governance requires significant investment in data infrastructure, security, compliance, and talent. Many organizations lack these resources and face difficult choices between investing in proven systems and investing in emerging AI capabilities.

Governance vs. Agility Trade-off: Comprehensive AI governance frameworks naturally slow down decision-making and implementation speed compared to a free-for-all where individuals use any tool they choose. Organizations face a trade-off between moving quickly and maintaining control. Shadow AI represents employees choosing speed over control.

Estimated data shows significant productivity gains from shadow AI tools, with marketing professionals experiencing up to 200% increase in content production speed.

The Cost of Inaction and Shadow AI Escalation

Compounding Risks and Escalation Dynamics

Shadow AI doesn't remain static—it escalates. Over time, shadow AI systems become more deeply embedded, more complex, and more difficult to unwind.

Dependency Accumulation: As shadow AI systems demonstrate value, other processes begin depending on them. A data analyst using an unauthorized cloud-based machine learning tool might build an ad-hoc reporting dashboard depending on the model's output. Sales leadership begins making decisions based on this dashboard. Eventually, multiple teams depend on the shadow AI system for critical business processes. At that point, eliminating the system or transferring it to an approved platform becomes extremely difficult without disrupting operations.

Technical Debt Accumulation: Shadow AI systems are typically built without following organizational architectural standards, security requirements, or documentation practices. As they grow in functionality and importance, the technical debt becomes crushing. Transitioning the system to an approved platform requires rebuilding it—essentially starting over—which executives will resist given the apparent success of the existing system.

Regulatory Vulnerability Growth: With each passing month that shadow AI systems operate processing regulated data, additional regulatory violations accumulate. An organization operating a shadow AI system for twelve months without detection faces potential penalties for twelve months of violations, not just the current month. When audits or investigations occur, the retrospective liability can be substantial.

Skill and Organizational Knowledge Concentration: Shadow AI systems often have limited documentation and are understood by a small number of individuals. If key employees depart, the knowledge of how the system works and what data it processes can disappear with them. This concentrates organizational risk around specific individuals and creates continuity vulnerabilities.

The Post-Breach Scenario

For organizations with significant shadow AI adoption, the eventual data breach represents a compounding catastrophe. The breach doesn't involve just the shadow AI system—it involves the organizational reputation, regulatory consequences, legal liability, and customer trust simultaneously.

Discovery and Attribution Difficulties: When investigating a breach, security teams must determine how the breach occurred, what data was exposed, and how it was discovered externally. Shadow AI systems create investigation complexity because they operate outside official monitoring. The organization may not know definitively how sensitive data reached an attacker—was it extracted through the shadow AI system? Was it exposed in model training data? Was it accessed through an unauthorized API integration?

Amplified Damage: The breach narrative becomes more damaging if it's revealed that the organization knowingly allowed shadow AI to operate without controls, despite understanding the risks. Media coverage and public perception shift from "they were victims of a sophisticated attack" to "they ignored warnings and let employees put sensitive data at risk." This amplification damages brand reputation and customer confidence far beyond the direct impact of the breach itself.

Regulatory and Legal Consequences: Regulators investigating a breach involving shadow AI will scrutinize whether the organization took reasonable steps to prevent unauthorized systems from processing regulated data. If data leaders warned executives about shadow AI risks and the organization took insufficient action, this significantly increases regulatory penalties. Private litigation from affected customers becomes more aggressive when plaintiffs can show the organization ignored known risks.

Remediation Complexity: Containing a breach involving shadow AI systems requires identifying all systems connected to the compromised data, securing all potential data pathways, and ensuring that no backdoors remain. With shadow AI, this is far more complex than with official systems where IT maintains inventory and control.

Governance Challenges and Technical Realities

Detection and Monitoring Limitations

One of the most frustrating aspects of shadow AI for security teams is the difficulty in detecting and monitoring it. Unlike shadow IT, which often leaves detectable network traces, shadow AI can operate almost invisibly.

Cloud and Saa S Accessibility: Public AI services accessible through the public internet create limited detection opportunities. A developer can use Git Hub Copilot through a browser extension without creating network traffic that appears suspicious or detectable to traditional network monitoring. An employee can use Chat GPT on a personal device and email the results internally. Traditional network-based detection strategies have limited effectiveness against cloud-based AI services.

Encrypted Traffic Limitations: Many shadow AI services operate over encrypted HTTPS connections, making it impossible for network monitoring tools to inspect the content of communications without more invasive inspection techniques. Organizations that attempt to block certain AI services may simply drive shadow AI adoption to more difficult-to-detect channels.

Detection Tool Immaturity: As of 2025, detection tools specifically designed to identify shadow AI adoption are still relatively immature. Tools exist, but they tend to generate high false-positive rates, miss new or less common tools, and struggle with evolved usage patterns as employees find new ways to use AI tools without detection.

Endpoint Monitoring Overhead: Detecting shadow AI at the endpoint level (monitoring what applications are running, what APIs are being called) requires intrusive monitoring that most organizations find unacceptable from a privacy and employee morale perspective. Employees understandably object to surveillance-level monitoring of their computer usage.

The Integration and Data Pipeline Problem

Shadow AI becomes particularly problematic when it integrates with organizational data sources and systems. These integrations create persistent vulnerabilities and governance challenges.

API Keys and Credentials Exposure: When employees integrate shadow AI tools with organizational systems using API keys or credentials, these sensitive credentials are stored in the shadow AI service. If that service experiences a breach or is compromised, the credentials may be exposed, allowing attackers to access organizational systems and data directly.

Data Pipeline Persistence: An integration created for one person's use case often persists and becomes embedded in organizational processes. A data analyst might initially connect a shadow AI tool to the data warehouse to accelerate their analysis. Over time, others begin depending on the output. What was intended as temporary becomes permanent infrastructure.

Version and Documentation Gaps: Shadow AI integrations typically lack the documentation and versioning controls maintained for official systems. If an integration breaks, discovering what changed and why becomes difficult. If a data scientist needs to understand how a model was configured or what training data was used, documentation may be minimal.

Data Governance Incompleteness: Official data governance frameworks track data sources, transformations, and destinations. Shadow AI integrations typically operate outside these frameworks, creating unmapped data flows that prevent complete understanding of how organizational data moves through the system.

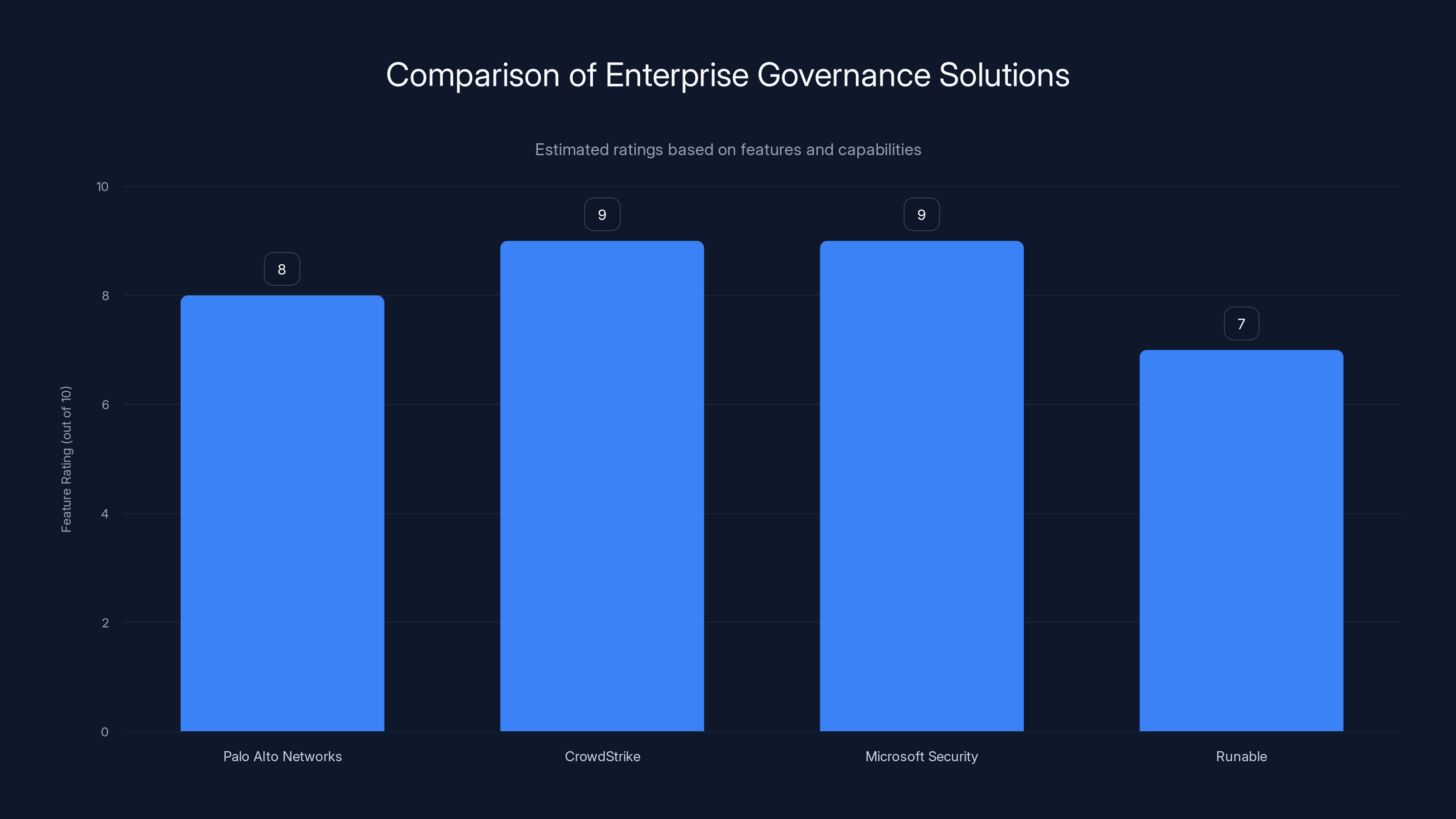

Estimated ratings suggest that CrowdStrike and Microsoft Security offer the most comprehensive features for enterprise governance, while Runable provides a cost-effective automation solution.

Strategic Responses: Building Organizational AI Resilience

Governance Framework Fundamentals

Addressing shadow AI requires building a governance framework that balances innovation and risk mitigation. Organizations need clear policies, approval processes, and monitoring mechanisms.

AI Policy Development: Organizations should establish clear policies governing AI tool use. These policies should address what categories of tools are prohibited, what categories are approved, what data can be processed through which tools, and what approval processes are required for new tool adoption. Policies should be specific enough to provide guidance but not so restrictive that they prevent beneficial AI adoption.

Tool Approval Processes: Organizations should maintain approved AI tool lists that specify which tools employees can use and for what purposes. This list should be regularly updated as new tools emerge and existing tools are evaluated. Critically, the approval process should be relatively fast—measured in weeks, not months. If the official process is slower than the shadow AI alternative, employees will choose shadow AI.

Data Classification and Controls: Organizations should classify data based on sensitivity and establish rules about which classified data can be processed through which AI tools. This might mean that public data and anonymized data can be processed through public AI services, while confidential data can only be processed through approved, secured AI tools. Clear data classification enables employees to make appropriate decisions about tool usage.

Documentation and Transparency: Organizations should maintain documentation of approved AI tools, their use cases, security properties, data retention practices, and any known limitations. This documentation enables informed decision-making by employees and managers about whether a particular tool is appropriate for their use case.

Detection and Monitoring Strategies

While complete detection of shadow AI may be impossible, organizations can implement monitoring strategies that increase visibility and create accountability.

Saa S and Cloud Monitoring: Organizations should implement Saa S discovery and monitoring tools that identify which cloud applications employees are accessing. Tools like Netskope, Zscaler, or Palo Alto Networks can provide visibility into cloud service usage, including AI tools. This monitoring isn't perfectly granular—it may identify that an employee is using Chat GPT but not exactly what they're using it for—but it provides baseline visibility.

User Surveys and Voluntary Disclosure: Organizations can conduct periodic surveys asking employees about their AI tool usage. Combining this with voluntary disclosure programs that reward employees for disclosing shadow AI usage without penalties can increase visibility. This approach acknowledges that employees aren't malicious and that shadow AI often results from benign decisions rather than deliberate rule-breaking.

Threat Hunt and Investigation: Organizations can conduct periodic threat hunts specifically focused on identifying shadow AI usage patterns. This might involve analyzing data exfiltration logs, API usage patterns, or behavioral analytics to identify unusual data flows that suggest shadow AI usage.

Data Leak Detection: Organizations can implement data leak detection solutions that identify sensitive data being transmitted to external services. If a data leak detection tool identifies customer data being transmitted to a public AI service, it provides evidence of shadow AI usage and enables intervention.

Building Better Alternatives

Ultimately, the most effective strategy for addressing shadow AI is making legitimate alternatives so superior that shadow AI becomes unnecessary.

Approved AI Tool Portfolio Development: Organizations should develop portfolios of approved AI tools covering major use cases. For software development teams, this means approved AI coding assistants with appropriate security and privacy protections. For data teams, this means approved AI-powered analytics and modeling tools. For marketing and communication teams, this means approved AI writing and content generation tools. The goal is to ensure that for any significant AI use case, employees have approved alternatives that are at least as convenient and powerful as shadow AI options.

Data Governance and Platform Investment: Organizations should invest in underlying data governance platforms that enable AI to function effectively. This means implementing data catalogs, metadata management, data lineage tracking, and data quality frameworks. When data governance is strong, official AI implementations can move quickly and efficiently, competing effectively with shadow AI in terms of speed and convenience.

Secure AI Infrastructure: Organizations should build or procure secure AI infrastructure that meets data protection, compliance, and security requirements while remaining accessible and user-friendly. This might involve implementing secure, hosted instances of open-source AI models, purchasing enterprise AI platforms with appropriate security controls, or building internal AI platforms that provide convenience without sacrificing security.

Training and Enablement: Organizations should invest heavily in training employees to use approved AI tools effectively. Much shadow AI adoption occurs because employees don't fully understand what approved alternatives exist or how to use them. Comprehensive training, documentation, and support reduce the appeal of shadow AI.

Fast Approval and Procurement: Organizations should implement expedited approval processes for new AI tools that meet their security and compliance requirements. If a secure AI tool relevant to a business function is identified, it should move from discovery to approval to deployment within weeks, not months. This speed creates competitive pressure that makes shadow AI less attractive.

Change Management and Culture

Technical controls and governance frameworks are necessary but insufficient. Addressing shadow AI also requires cultural change that positions AI as a collaborative enterprise initiative rather than something to circumvent.

Leadership Communication: Executive leaders should consistently communicate commitment to AI adoption and investment. When employees hear that leadership takes AI seriously and is investing in legitimate solutions, shadow AI adoption often decreases. The inverse is also true—when leadership appears paralyzed or skeptical about AI, employees are more likely to pursue shadow solutions.

Success Stories and Visibility: Organizations should publicize successful, legitimate AI implementations. When employees see colleagues using approved AI tools to achieve impressive results, it normalizes tool usage and reduces the perceived risk of adoption. Success stories also validate leadership's AI strategy and investment.

Non-Punitive Disclosure Programs: Organizations should create programs that encourage employees to voluntarily disclose shadow AI usage without fear of punishment. The goal should be to understand what problems shadow AI is solving and ensure that approved alternatives address those problems. If an employee is using shadow AI because an approved alternative doesn't exist, the organization has valuable product feedback. If the employee simply didn't know about approved alternatives, they can be educated and trained.

Risk Communication: Organizations should communicate shadow AI risks to employees in ways that are understandable and concrete, not abstract. Employees are more likely to modify behavior if they understand specific risks ("pasting customer data into Chat GPT could trigger GDPR fines up to €20 million") than if they're told it's "not allowed."

Emerging Solutions and Tooling Landscape

Saa S Management and Cloud Visibility Platforms

A category of tools specifically designed to provide visibility into Saa S and cloud application usage has matured significantly. These platforms help organizations understand which cloud services employees are using and can flag AI services specifically.

How These Work: Saa S discovery platforms analyze network traffic, endpoint activity, and user authentication logs to identify cloud service usage. They maintain databases of thousands of Saa S applications and AI tools, enabling rapid identification and categorization. When an employee accesses a known AI tool, the platform can flag it and log the activity.

Limitations: These tools can identify that employees are using certain AI services but typically can't determine exactly what data is being processed or what outputs are being generated. The visibility is at the service level, not the data level.

Examples and Cost: Major Saa S management platforms like Netskope, Zscaler, and Palo Alto Networks Prisma Cloud offer these capabilities as part of broader cloud security platforms. Costs typically range from $5-20 per user monthly, depending on features and deployment model. Smaller organizations might use tools like Coin Base or One Trust as alternatives.

Data Loss Prevention and Exfiltration Detection

Data loss prevention (DLP) tools have evolved to detect when sensitive data is being transmitted to external services, including AI tools.

How These Work: DLP tools inspect data in motion (network traffic), at rest (stored files), and at endpoints (data on user devices). They identify patterns that suggest sensitive data exposure—customer information, financial data, source code, intellectual property. When sensitive data is detected being transmitted to public services, alerts are triggered.

Effectiveness Against Shadow AI: DLP tools are reasonably effective at detecting when sensitive data is being pasted into public AI services, particularly if the service uses the public internet for data transmission. They're less effective at detecting usage of shadow AI tools where the data is already encrypted or where employees are deliberately avoiding data pasting by instead uploading files or using other transmission methods.

Examples and Cost: Enterprise DLP tools like Forcepoint, Symantec, and Mc Afee offer comprehensive capabilities. Costs typically range from $100,000+ annually for enterprise deployments, making them available primarily to larger organizations.

Secure AI Infrastructure and Enterprise AI Platforms

A growing category of enterprise AI platforms specifically designs for organizational governance and data protection represents the approved alternative to shadow AI.

How These Work: Enterprise AI platforms provide hosted or on-premises AI capabilities (typically large language models, machine learning frameworks, or specialized AI tools) with comprehensive security, compliance, and governance features. They often include data access controls, audit trails, model versioning, and integration with organizational data governance frameworks.

Key Features: These platforms typically include:

- Data residency controls (data stays within your environment)

- Role-based access controls (limiting who can use the platform)

- Audit logging (complete records of all usage)

- Integration with data governance frameworks (model lineage, data lineage)

- Compliance certifications (HIPAA, SOC 2, Fed RAMP, etc.)

- Custom model training capabilities (train models on organizational data while maintaining confidentiality)

- Output review and moderation tools

Examples: Enterprise AI platforms include offerings from major cloud providers (Azure AI, Google Cloud AI, AWS Sage Maker), specialized platforms like Hugging Face's enterprise solutions, and emerging companies like Anthropic and Cohere offering enterprise API access with appropriate controls.

Cost Considerations: Enterprise AI platforms typically cost significantly more than public consumer AI services—often hundreds or thousands monthly depending on usage—but provide data protection and governance that justify the cost for regulated organizations and those handling sensitive data.

AI Governance and Risk Management Platforms

A nascent but rapidly growing category of platforms specifically targets AI governance, compliance, and risk management.

Capabilities: These platforms typically provide:

- AI inventory management (cataloging AI systems used organization-wide)

- Risk assessment frameworks

- Compliance checking (against regulations like GDPR, HIPAA, SOX)

- Model monitoring (detecting degradation, bias, or safety issues)

- Incident response workflows

- Reporting and documentation

Emerging Players: Companies like Trust Lens, Humane Intelligence, and Orca Security are developing governance-focused solutions. This category remains relatively immature, but significant investment and development activity suggest rapid maturation.

Estimated data shows that unauthorized AI usage and policy violations are significant, with over 500 unauthorized uses and 200 policy violations monthly, highlighting the urgent need for better AI governance.

Best Practices for Organizational AI Governance

Policy Development Framework

Organizations should develop AI policies addressing multiple dimensions:

Tool Classification: Classify AI tools by risk profile. Low-risk tools using non-sensitive data might be freely available to all employees. Medium-risk tools using organizational data might require manager approval. High-risk tools involving regulated data might require executive approval and security review.

Data Sensitivity Policies: Define what data classifications can be processed through which AI tools. "Public" data might have no restrictions. "Internal" data might require approved tools with specified security properties. "Confidential" or regulated data might prohibit certain tools entirely.

Ownership and Accountability: Assign clear ownership and accountability for AI tool procurement, security evaluation, and ongoing management. Shadow AI often flourishes when responsibility is unclear.

Training and Certification: Require employees using AI tools to complete training on appropriate usage, data protection, and security considerations. Certifications can verify understanding and create additional accountability.

Regular Review Cycles: Establish regular review cycles where policies are revisited based on new tools, emerging threats, and lessons learned from incidents.

Implementation Checklist

Organizations implementing AI governance should address the following:

☐ Inventory: Catalog all officially approved AI tools and their security properties, data handling practices, and approved use cases.

☐ Data Classification: Implement a data classification system and apply it across the organization, enabling employees to understand what data can be processed through which tools.

☐ Policy Documentation: Develop written AI policies that employees can reference and understand. Distribute these policies and ensure acknowledgment.

☐ Training Program: Develop training covering approved tools, data protection, security considerations, and shadow AI risks. Make training mandatory for roles that commonly use AI.

☐ Monitoring: Implement monitoring tools providing visibility into AI tool usage and potentially shadowy data flows.

☐ Incident Response: Develop incident response procedures for shadow AI discovery, including investigation, remediation, and communication.

☐ Executive Alignment: Ensure IT, security, legal, compliance, and business leadership all understand and support the AI governance approach.

☐ Continuous Improvement: Establish feedback loops where employee concerns, shadow AI discoveries, and emerging threats inform ongoing policy and tool selection refinements.

Looking Ahead: Future Trends in AI Governance

Regulatory Frameworks Emerging

Governments worldwide are beginning to regulate AI, which will force organizational governance maturation. Organizations that establish strong governance frameworks now will be better positioned to comply with emerging regulations.

EU AI Act: The European Union's AI Act, which came into force in 2025, creates a risk-based regulatory framework for AI. Systems classified as "high-risk" are subject to compliance requirements including documentation, testing, and human oversight. Organizations processing EU personal data must comply with this regulation regardless of where they're headquartered.

US Executive Orders and Proposed Legislation: The US federal government has issued executive orders requiring AI risk management in federal procurement and has proposed legislation addressing AI transparency, liability, and safety. While US regulations remain less prescriptive than EU approaches, regulatory momentum is clear.

Sector-Specific Regulations: Financial regulators, healthcare regulators, and other sector regulators are developing AI-specific guidance. Banks must soon comply with AI risk management requirements from their regulators. Healthcare providers must navigate FDA AI approval processes and CMS payment policies for AI-driven diagnostics.

These emerging regulations will significantly increase the cost of non-compliance and the pressure on organizations to implement proper governance.

Technical Advances in Detection and Monitoring

As shadow AI becomes more prominent, detection technologies will mature. Expect significant advances in:

Behavioral Analytics: AI systems analyzing user behavior patterns can identify suspicious activity suggesting shadow AI usage—unusual data access patterns, exfiltration to unusual destinations, accessing multiple external services simultaneously.

Data Flow Analysis: Advanced data governance platforms will track data flows more comprehensively, making it increasingly difficult for shadow AI data pipelines to operate invisibly.

AI-Powered Governance: Organizations will increasingly use AI itself to monitor for shadow AI, creating a meta-layer of AI governance monitoring other AI.

Organizational Evolution and Integration

As organizations mature in AI governance, expect evolution toward:

Chief AI Officer Roles: More organizations will establish Chief AI Officer positions reporting to the C-suite, elevating AI governance from a technical concern to a strategic priority.

Integrated AI Strategy: Rather than treating AI governance as a security or risk management function, organizations will integrate AI strategy with business strategy, product strategy, and technology strategy.

Innovation Platforms: Organizations will move beyond simple approval-or-rejection decision-making toward innovation platforms that encourage AI experimentation within bounded, controlled environments. Safe-to-play sandbox environments where employees can experiment with new AI capabilities before moving them to production.

Practical Solutions: Tools and Platforms for Comparison

Enterprise Governance Solutions

Comprehensive Security Suite Approach: Organizations can implement comprehensive security platforms that include Saa S discovery, data loss prevention, and behavioral analytics as integrated components. Platforms like Palo Alto Networks, Crowd Strike, or Microsoft Security combine multiple capabilities.

Specialized Governance Platforms: Dedicated AI governance platforms provide focused capabilities specifically designed for AI risk management, compliance, and governance.

Internal Platform Development: Some organizations develop internal AI governance platforms tailored to their specific requirements, risk tolerance, and technology stack.

For teams building internal automation and governance solutions, platforms like Runable offer automation capabilities that can help scale governance enforcement. With features for automated workflow orchestration and policy enforcement, Runable can help teams implement controls that scale without proportional growth in headcount. Organizations looking for cost-effective automation solutions starting at $9/month can use Runable to build internal governance workflows, document processing, and compliance checking automation.

Approved AI Tool Portfolios

Development Teams: Git Hub Copilot (with enterprise security controls), Jet Brains AI assistance, and specialized development-focused platforms provide approved alternatives for developers considering shadow solutions.

Data Teams: Databricks, Domino Data Lab, and enterprise instances of open-source platforms provide approved AI infrastructure for data science and analytics.

Marketing and Communication: Approved enterprise versions of content generation tools from major cloud providers (Azure Open AI, Google Cloud Generative AI, AWS Bedrock) provide organization-controlled alternatives.

Cross-Functional: Microsoft Copilot Pro with enterprise security, Open AI API with appropriate compliance certifications for specific use cases, and specialized vertical solutions address different organizational needs.

Conclusion: Regaining Control of AI

Shadow AI represents one of the most significant emerging governance challenges for modern organizations. Unlike previous technology adoption waves that threatened to disrupt IT operations, shadow AI simultaneously threatens security, compliance, intellectual property, and organizational control. The scope of the problem—with 50% of senior leaders believing more than half their employees use unauthorized AI, and average organizations experiencing 200+ monthly Gen AI policy violations—indicates this is not a fringe issue but a majority organizational reality.

The central irony of shadow AI is that it persists not despite organizational opposition, but because it delivers genuine value. Employees aren't rule-breakers; they're productivity-focused workers using tools that demonstrably improve their work. The problem isn't employee motivation—it's organizational governance structures that can't match the pace and convenience of unauthorized tools.

Addressing shadow AI requires a multifaceted approach combining technical controls, policy frameworks, approved alternatives, and cultural change. Organizations must simultaneously make it harder to use shadow AI through monitoring and controls, easier to use approved alternatives through investment in legitimate AI infrastructure, and more understood through education and transparency about risks and benefits.

The timeline for action is compressed. As shadow AI adoption accelerates, the embedded nature of these systems deepens. Shadow AI that operates invisibly for six months becomes foundational infrastructure that can't be easily unwound without operational disruption. Regulatory frameworks are tightening, meaning that what seems like a tolerable risk today may trigger significant penalties in a year or two. Data breaches involving shadow AI create amplified reputational and financial damage.

Organizations that move decisively now—establishing clear governance, investing in approved alternatives, implementing appropriate monitoring, and building organizational AI literacy—will find themselves with superior risk profiles, better positioned for regulatory compliance, and better positioned to harness AI's genuine benefits in a controlled, responsible manner.

The alternative is to continue with shadow AI expansion, accumulating technical debt, regulatory exposure, and security risk while hoping that the inevitable breach doesn't occur at your organization. This path is almost certainly more expensive than investing in proper governance, though the costs are hidden until a crisis occurs.

The question isn't whether to address shadow AI—it's when. Organizations waiting for a crisis will pay far more than organizations acting proactively now. The time to establish AI governance is before shadow AI becomes completely embedded in organizational processes and culture. For most organizations reading this, that time is now.

FAQ

What is shadow AI and why is it a problem for organizations?

Shadow AI refers to unauthorized use of artificial intelligence tools and platforms by employees without explicit organizational approval or IT oversight. It's problematic because it creates security vulnerabilities, compliance violations, intellectual property exposure, and regulatory liability while operating outside organizational visibility and control. When employees paste sensitive data into public AI services like Chat GPT or integrate shadow AI tools with organizational databases, they expose the organization to data breaches, regulatory fines, and loss of competitive advantage.

How prevalent is shadow AI adoption in organizations?

Research indicates that 50% of senior British data leaders believe more than half their employees use generative AI tools for company work without permission. Additionally, organizations report experiencing over 200 Gen AI-related data policy violations monthly on average. These statistics suggest shadow AI adoption is majority behavior in many organizations, not a fringe activity. The prevalence varies by industry, with technology and knowledge-work sectors showing higher adoption rates.

What specific data protection and compliance risks does shadow AI create?

Shadow AI creates multiple compliance risks including GDPR violations when personal data is transferred to third-party services without appropriate data processing agreements, HIPAA violations when healthcare organizations use unauthorized tools with protected health information, SOX violations when financial institutions process regulated data through untracked systems, and export control violations when defense contractors process controlled technology through public cloud services. Each violation carries potential regulatory fines ranging from tens of thousands to millions of dollars.

Why do employees use shadow AI despite organizational policies?

Employees use shadow AI because it delivers genuine productivity benefits—research shows AI tools can reduce coding time by 30-40%, accelerate document review processes, and increase customer service representative efficiency by 20-30%. When approved organizational alternatives don't exist or move slowly through procurement processes, employees solve the problem themselves. The risk feels abstract while the productivity gains feel concrete and immediate. Additionally, many employees don't understand the compliance and security implications of their tool choices.

What governance frameworks and policies should organizations implement to address shadow AI?

Organizations should implement multi-layered governance including clear AI tool policies classifying tools by risk profile, data classification frameworks defining what data can be processed through which tools, fast-track approval processes enabling tool adoption within weeks rather than months, Saa S monitoring and data loss prevention tools providing visibility into unauthorized tool usage, and approved alternative tools addressing major use cases. The approach should balance security and compliance with innovation and productivity, making legitimate options competitive with shadow alternatives.

How can organizations detect shadow AI if it operates outside official channels?

Detection strategies include implementing Saa S discovery tools that monitor cloud application usage, deploying data loss prevention systems that flag sensitive data transmission to external services, conducting employee surveys and voluntary disclosure programs, performing threat hunting focused on unusual data access and transmission patterns, and monitoring API usage patterns suggesting unauthorized integrations. No single detection method is complete, but layered approaches provide reasonable visibility even if shadow AI can't be entirely eliminated.

What are the key differences between addressing shadow AI and previous shadow IT governance challenges?

Shadow AI presents unique challenges compared to shadow IT because public AI tools require no installation, create minimal detectable network signatures, deliver immediate productivity benefits that justify usage, operate through simple web browsers, and are affordable enough that individual users can adopt them without organizational budget involvement. Additionally, shadow AI tools access and process data rather than just running isolated applications, creating more significant compliance and security implications than shadow IT.

How do organizations balance enabling innovation with preventing security and compliance risks from shadow AI?

Organizations should implement innovation platforms and sandbox environments where employees can safely experiment with new AI tools within bounded, controlled environments. This approach combines guardrails preventing uncontrolled production usage with freedom to explore and learn. Organizations should also establish fast-track approval processes for tools that meet security requirements, shifting from a default-deny posture to a default-allow-with-controls approach that enables productive innovation while maintaining necessary oversight.

What are the regulatory implications of shadow AI adoption becoming mainstream?

Emerging regulations including the EU AI Act, US executive orders on AI governance, and sector-specific regulatory requirements from financial and healthcare regulators increasingly require organizations to demonstrate control over AI systems. Shadow AI directly violates these requirements by operating outside organizational governance and audit trails. Organizations with significant shadow AI adoption face regulatory examination findings, enforcement actions, and fines when audits reveal uncontrolled AI usage processing regulated data.

How should organizations prioritize investments in approved AI alternatives over time?

Organizations should prioritize based on impact and prevalence. Start by identifying which shadow AI use cases create the greatest risk (those involving regulated data or sensitive information) and which are most prevalent (which tools are most commonly used). Develop approved alternatives for high-risk, high-prevalence use cases first, ensuring that approved tools are at least as convenient and powerful as shadow alternatives. Gradually expand to lower-risk use cases. Simultaneously, invest in underlying data governance and security infrastructure that makes all approved AI tools safer and more reliable.

Key Takeaways

- Shadow AI adoption is majority behavior: 50% of senior leaders believe more than half their employees use unauthorized AI tools without permission

- Organizations experience 200+ GenAI-related policy violations monthly on average, accumulating compliance and regulatory exposure

- 78% of C-suite executives underestimate AI implementation complexity, creating organizational gaps that shadow AI fills

- Shadow AI creates multiple risk categories: security breaches, compliance violations, intellectual property exposure, and regulatory liability

- Detection of shadow AI is challenging but achievable through layered approaches: SaaS discovery, DLP, behavioral analytics, and user surveys

- Effective governance requires balancing security with innovation through approved alternatives, fast-track approval processes, and innovation platforms

- Shadow AI risks compound over time as systems become embedded, creating technical debt and amplified breach consequences

- Regulatory frameworks like EU AI Act are tightening, making shadow AI non-compliance increasingly costly

- Organizations should prioritize addressing high-risk, high-prevalence shadow AI use cases first while building underlying data governance

- Proactive governance is significantly less expensive than managing breaches and regulatory responses from shadow AI