The Great AI Music Showdown: What Spotify Actually Said

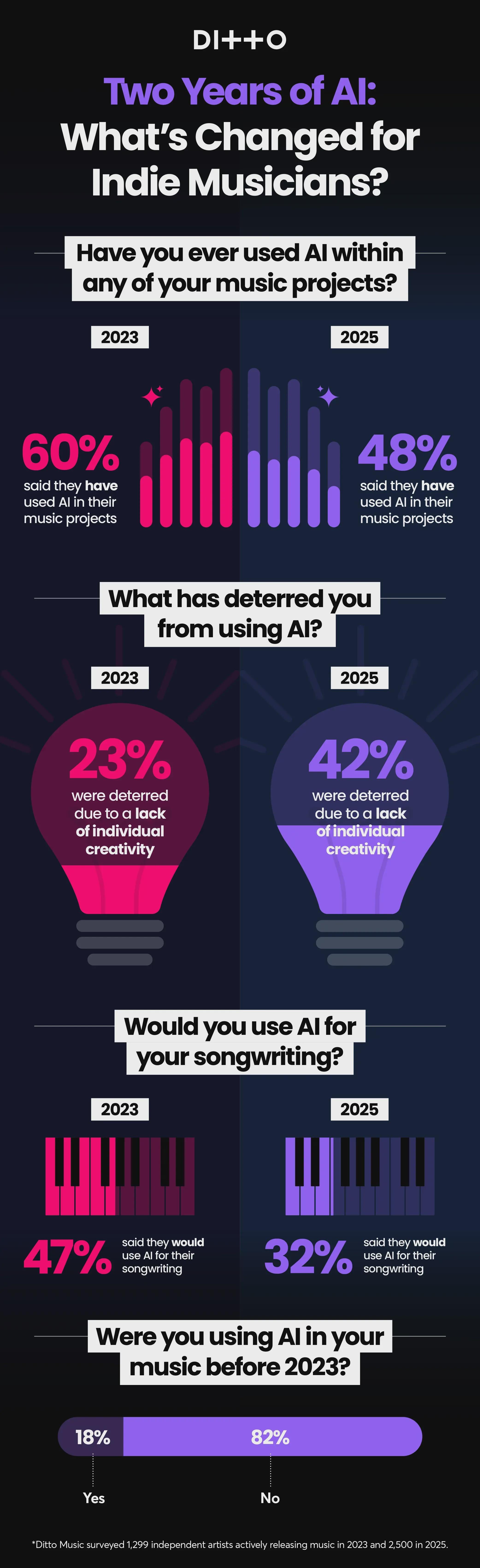

Last month, Spotify made headlines by claiming it doesn't actively promote or penalize tracks created with AI tools. Sounds reasonable on the surface, right? But the music industry had a collective eye roll. Real talk: this statement landed in the middle of a heated debate about AI-generated music flooding streaming platforms, and the timing felt like a dodge.

The controversy isn't new. For years, producers, musicians, and fans have watched AI-generated tracks pile up on Spotify with titles like "AI Music 247" or generic lo-fi beats that sound like they were composed by an algorithm trained on elevator music. Because they were. The problem? These tracks drown out legitimate artists, clog discovery playlists, and generate royalties that get split among mysterious account holders instead of actual human creators.

Spotify's official position is that the platform applies the same algorithmic treatment to all music, regardless of whether it was made by a person with a guitar or an algorithm processing terabytes of training data. No favoritism. No penalties. Just neutral treatment. Except that's where things get complicated.

The statement itself was a response to growing pressure from artists, labels, and advocacy groups who've watched AI-generated music explode across streaming services. Some estimates suggest thousands of AI tracks are uploaded to Spotify daily. When you're talking about that volume, even "neutral" treatment means artificial intelligence is reshaping the entire streaming ecosystem in real time. And unlike traditional disruption, this one doesn't require anyone to acknowledge it's happening.

Bandcamp, the independent music platform, took a different approach entirely. Instead of claiming neutrality, Bandcamp doubled down on protecting human creators. The platform implemented stricter rules around AI-generated content, requiring clear disclosure and limiting algorithmic promotion. It's a fundamentally different philosophy: not "we'll treat everyone equally," but "we'll actively defend the creators who built this platform."

That contrast matters. Because Spotify's statement, while technically accurate, obscures what's actually happening on the platform. Let's dig into the details.

How Spotify's Algorithm Actually Treats AI Music

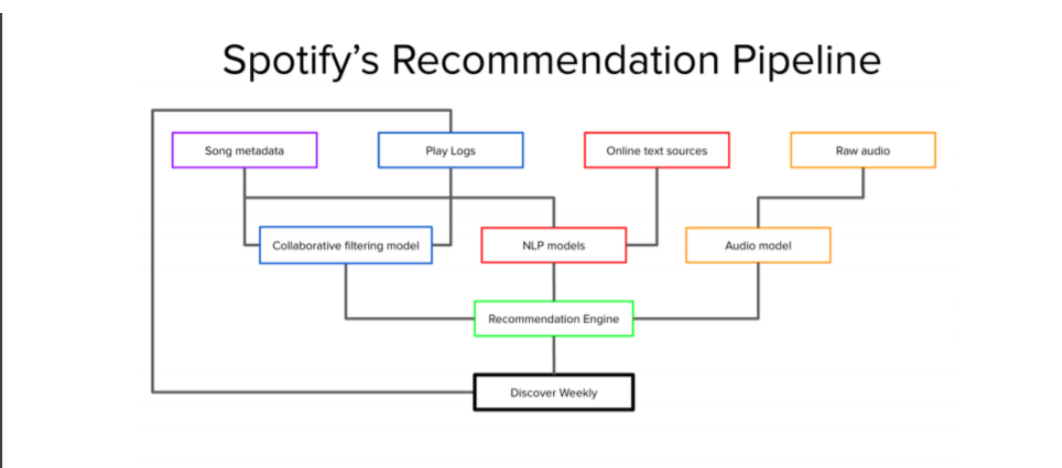

Here's what makes Spotify's "neutral treatment" claim tricky to evaluate. Spotify doesn't manually review every track before recommending it. The platform relies on machine learning algorithms that analyze audio characteristics, listening patterns, user behavior, and engagement metrics. These algorithms don't know or care whether a human composed a track or an AI did.

But that's exactly the problem. When you feed an algorithm thousands of AI-generated tracks optimized for maximum algorithmic appeal, and you feed it thousands of human-created tracks with all their messy, unique characteristics, guess which category tends to win? The one designed to game the system.

AI music generators are increasingly sophisticated. Tools like Amper Music and Soundraw are built to produce perfectly acceptable background music. They're predictable. They hit emotional beats with mechanical precision. They don't have weird harmonic choices or unexpected key changes because their training data was optimized for broad appeal.

From an algorithmic perspective, that's a feature, not a bug. Spotify's recommendation engine can confidently predict that a listener who enjoyed Track A will probably enjoy Track B. The algorithm doesn't penalize AI music, but it also doesn't need to. AI-generated music is often already optimized to perform well algorithmically.

Spotify's counterargument would be: we're not penalizing it either. The playing field is level. But when one type of music is specifically engineered to appeal to recommendation algorithms, and the other type isn't, "level" means something different.

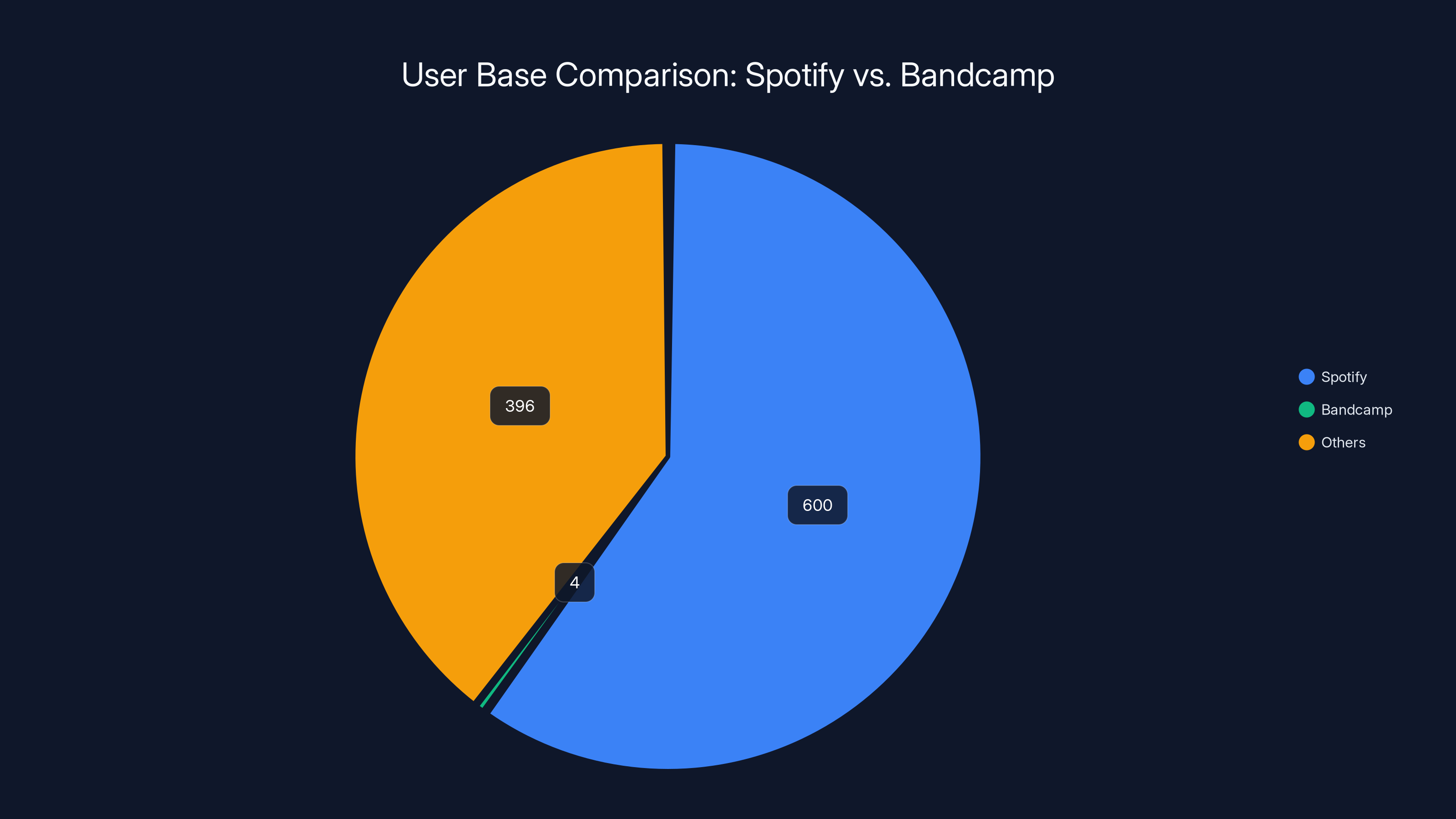

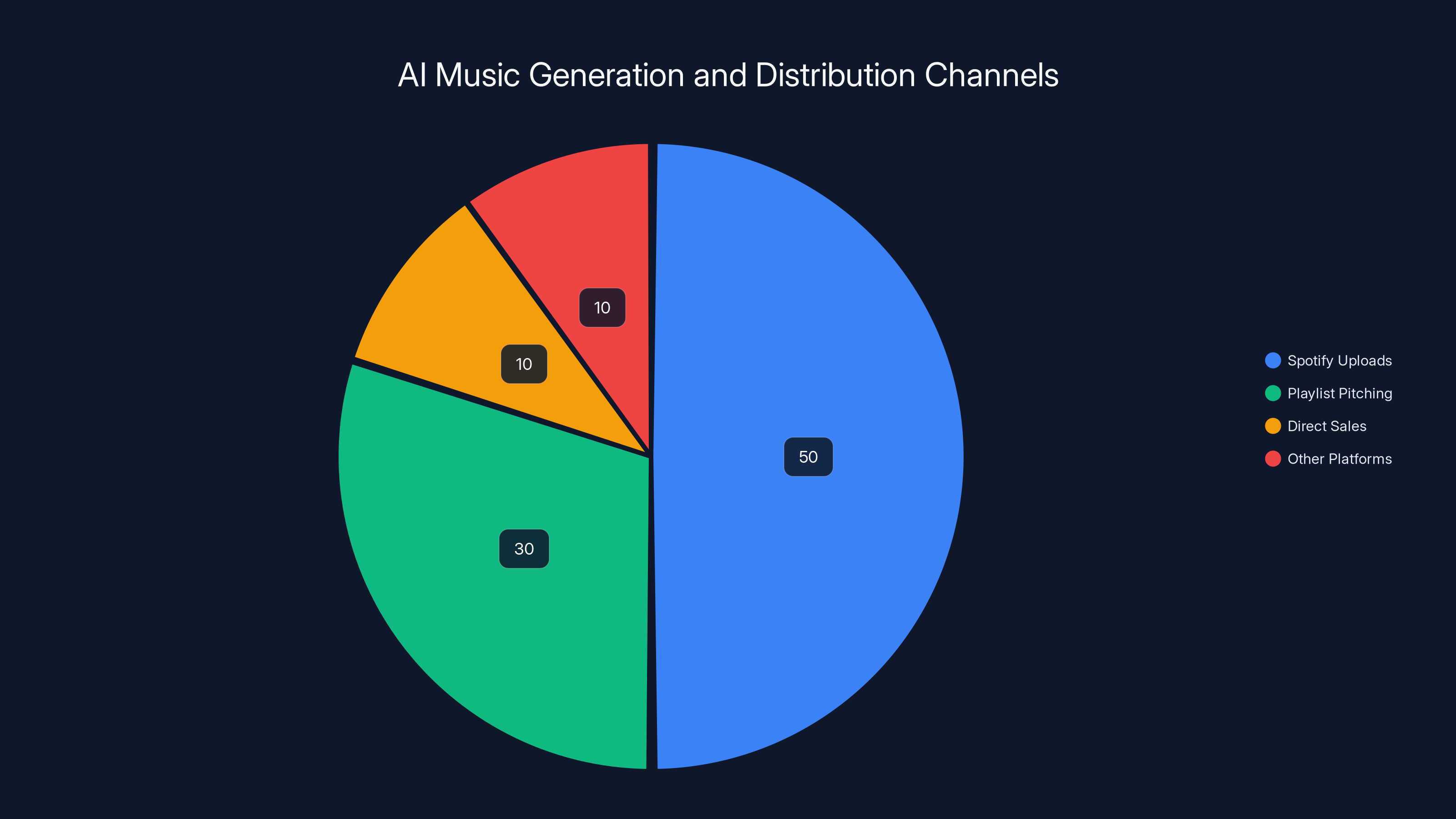

Spotify dominates with 600 million users compared to Bandcamp's 4 million, highlighting a vast difference in scale and community focus. Estimated data includes other platforms.

The Artist Perspective: Why Creators Are Frustrated

Spotify has roughly 100 million tracks in its catalog. That number grows by thousands every single day. For independent artists trying to get noticed, visibility is already brutally competitive. Spotify's playlists and algorithmic recommendations are often the difference between a sustainable career and a side project that pays next to nothing.

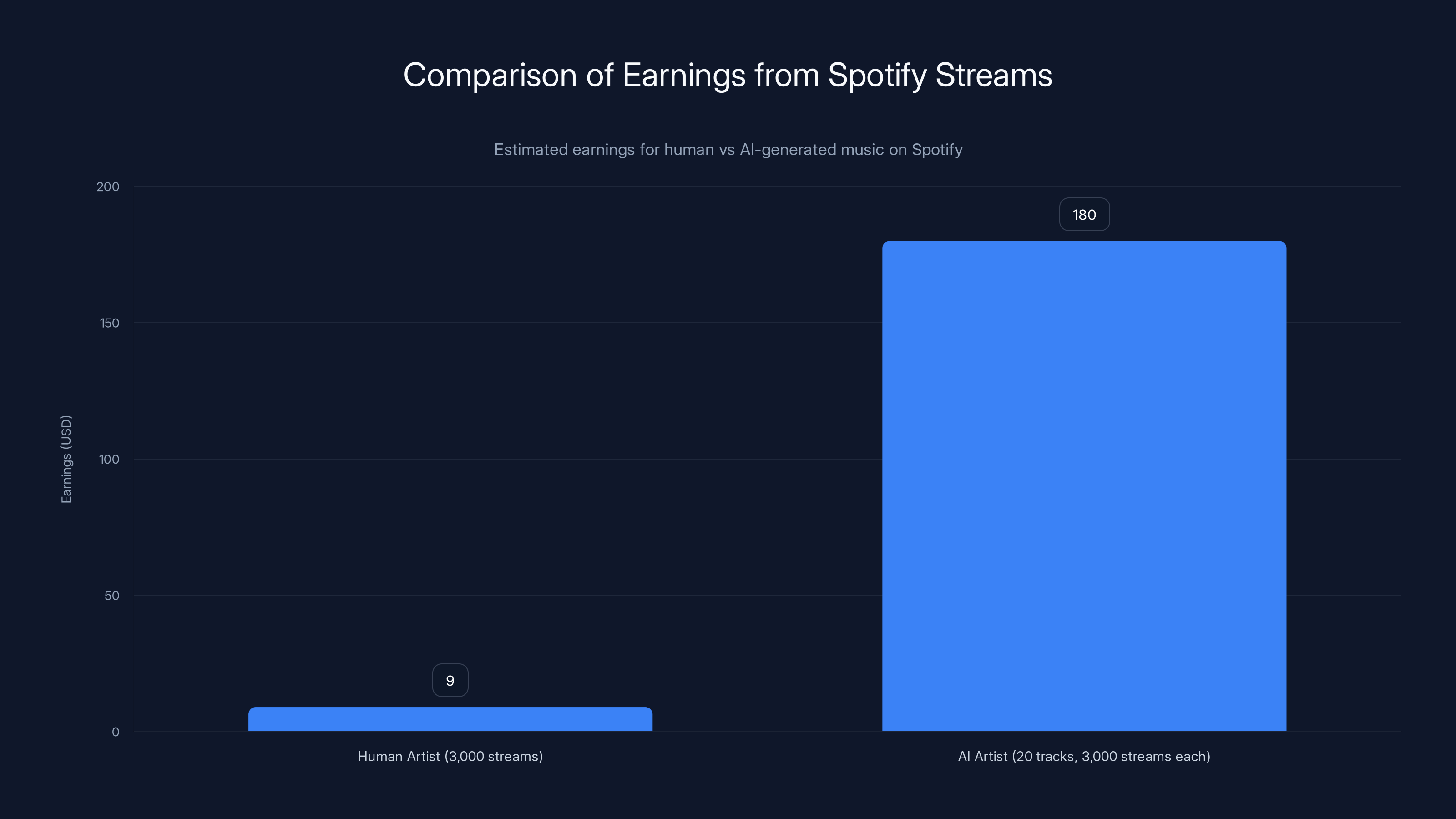

When AI-generated tracks flood the platform, they're not just adding quantity. They're changing the economics of music distribution. Here's the actual math: Spotify pays out roughly

The scarcity argument works too. When playlists are finite and curator attention is limited, every AI track that lands on a placement is a human artist who didn't. This isn't theoretical. Artists have documented instances where their original music got outcompeted by AI-generated alternatives of the same genre, often from accounts that uploaded 100+ tracks in a month.

Spotify's response—that they don't promote or penalize AI music—fundamentally misses the artists' concern. The problem isn't necessarily that Spotify is actively promoting AI tracks. The problem is that the platform isn't actively protecting human creators, and those are two different things.

Some artists have started adding metadata and using hashtags like #HumanArtist or #NotAI to help listeners identify legitimate creators. The fact that musicians feel compelled to prove their own humanity speaks volumes about the current environment.

Human artists earn approximately

Bandcamp's Alternative Model: Active Curation Over Neutrality

Where Spotify chose neutrality, Bandcamp chose curation. The independent music platform explicitly requires users to disclose AI involvement in track creation. More importantly, Bandcamp implemented algorithms that prioritize long-form engagement and human artist discovery over pure metric optimization.

Bandcamp's CEO has stated publicly that the platform's mission is to support independent artists directly. AI-generated content doesn't necessarily violate that mission, but it changes how the algorithm allocates visibility. Bandcamp requires transparency because the platform's community cares about knowing who created what.

This matters because Bandcamp proves there's a viable alternative to Spotify's approach. The platform has over 4 million artists and a community that actively purchases music rather than streaming for fractions of pennies. That community has made clear it values human creativity and direct artist relationships.

Bandcamp's model also shows something else: required disclosure doesn't destroy AI music, it just changes how it's positioned. Some AI-generated tracks on Bandcamp are legitimate tools for specific use cases—background music for content creators, experimental compositions, or educational projects. When disclosed and contextualized properly, they can coexist with human music without creating the same friction.

The key difference is intent. Bandcamp says: "If you're using AI, tell people." Spotify says: "We don't care either way." One approach prioritizes transparency and community values. The other prioritizes platform neutrality and scale.

The Real Issue: Spotify's Scale vs. Community Values

Spotify has 600 million users. Bandcamp has somewhere around 4 million. That difference isn't just size—it's fundamental philosophy. Spotify optimizes for growth, engagement time, and subscriber retention across a global audience with wildly different music tastes. Bandcamp optimizes for direct artist support and community values.

Spotify's neutrality claim makes sense at their scale. Manually curating whether tracks are AI-generated or human-created would require thousands of moderators making subjective judgments constantly. It's impractical. And since AI music is constantly improving, the question of "what counts as AI" gets philosophically murky fast.

But here's the tension: Spotify already curates aggressively through human editorial playlists and algorithmic recommendations. The platform constantly makes choices about what gets promoted. They just don't make those choices based on creator type. Yet.

The real issue isn't neutrality. It's what happens when neutrality meets market incentives. AI-generated music is cheaper to produce, easier to iterate on, and increasingly convincing. Under a neutral system, an independent artist uploading one heartfelt folk album per year receives the same algorithmic treatment as a bot uploading 365 AI-generated ambient tracks.

Spotify's position is defensible only if you believe the recommendation algorithm is truly neutral, which most data scientists would tell you is naive. Algorithms encode the values and optimization targets of whoever built them. If the optimization target is engagement, and AI music is engineered for engagement, then Spotify's neutrality claim is technically accurate but practically misleading.

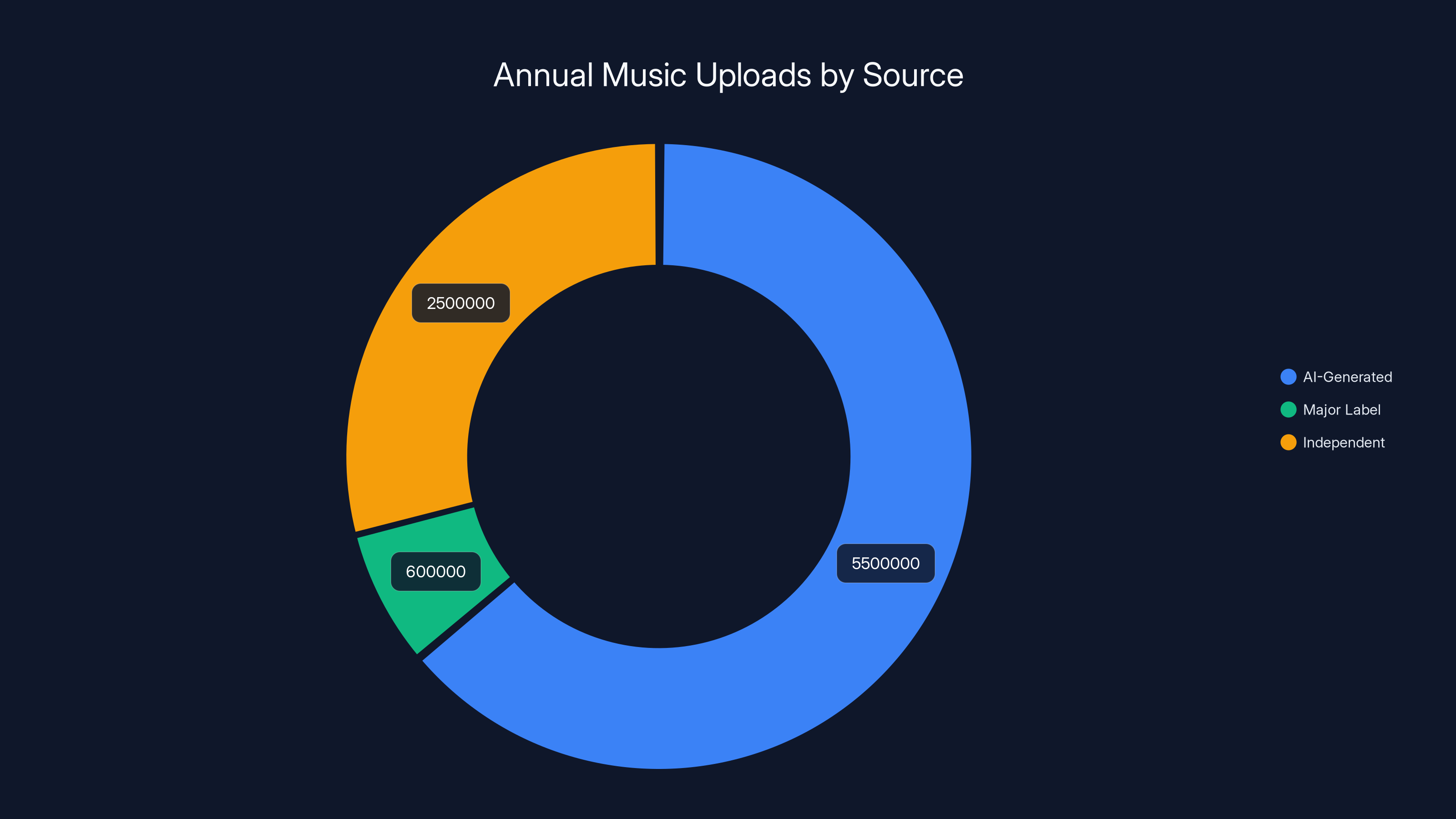

AI-generated music uploads are estimated to reach 5.5 million annually, surpassing both major label and independent releases combined. Estimated data.

What "Not Promoting or Penalizing" Actually Means

Let's unpack Spotify's exact claim: the platform does not promote or penalize tracks created using AI tools. This is technically precise language that reveals everything by saying very little.

Not promoting could mean several things. It could mean Spotify's curators don't manually select AI tracks for official playlists. That's probably true. It could also mean that algorithmic recommendations treat AI and human music identically, which is almost certainly not true because no two music genres or styles are algorithmically identical.

Not penalizing is the flip side. Spotify isn't explicitly blocking AI music or suppressing its algorithmic reach. The platform didn't ban AI tracks, hide them from search results, or reduce their eligibility for playlist inclusion. That's also probably true. But the absence of explicit penalty doesn't mean the playing field is level.

Here's what the statement doesn't address: is Spotify detecting AI music at all? The platform has never publicly revealed whether they're using audio analysis, metadata inspection, or other techniques to identify AI-generated tracks. If they're not identifying them, then the "no penalization" claim is almost meaningless—it's like saying we're not penalizing a group of people we haven't identified.

Industry speculation suggests Spotify could develop AI music detection systems if they wanted to. Audio fingerprinting, watermarking, and machine learning models trained to recognize AI-generation artifacts could all work. Spotify almost certainly has the technical capability. The question is whether they have the business incentive.

Here's where Spotify's business model becomes important. The platform pays out based on streams, and streams come from human listeners. If human listeners prefer human-created music (which engagement data suggests they do), then algorithmic recommendations naturally favor human creators without Spotify needing to explicitly say so. The algorithm follows user behavior, not artist type.

But that's a massive assumption. It assumes listeners can tell the difference, that they care, and that algorithmic amplification of AI content isn't happening. The evidence suggests otherwise.

The Flooding Problem: Scale of AI Music on Streaming

Numbers vary, but most industry watchers estimate between 10,000 and 20,000 AI-generated tracks are uploaded to major streaming platforms every single day. Some estimates go higher. Let's say 15,000 per day is reasonable. That's roughly 5.5 million per year, just from new daily uploads.

For context, major label releases in the US total roughly 600,000 per year. Independent releases probably add 2-3 million more. At 15,000 per day, AI uploads would exceed that combined total, and they are concentrated in specific genres where algorithmic appeal is highest: lo-fi beats, lo-fi hip-hop, ambient music, and instrumental background music.
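The arithmetic behind these estimates is easy to check. Here is a minimal sketch that uses only the figures quoted above; the function and variable names are just for illustration.

```python
DAYS_PER_YEAR = 365

def annual_uploads(per_day: int) -> int:
    """Convert a daily upload estimate into a yearly total."""
    return per_day * DAYS_PER_YEAR

# Daily AI upload estimates quoted in the text (low, midpoint, high).
low, mid, high = 10_000, 15_000, 20_000

# Yearly human release estimates quoted in the text.
major_label = 600_000
independent_low, independent_high = 2_000_000, 3_000_000

ai_per_year = annual_uploads(mid)
human_low = major_label + independent_low
human_high = major_label + independent_high

print(f"AI uploads per year at {mid:,}/day: {ai_per_year:,}")
print(f"Range: {annual_uploads(low):,} to {annual_uploads(high):,}")
print(f"Human releases per year: {human_low:,} to {human_high:,}")
```

At the 15,000 midpoint the total is 5,475,000, which the text rounds to 5.5 million. Even the low end of the AI range (3.65 million) sits just above the high end of the human-release estimate (3.6 million).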

These genres are exactly where AI music excels algorithmically. Why? Because AI training data is optimized for broad appeal and algorithmic measurables rather than artistic distinctiveness. An AI lo-fi beat generator trained on thousands of popular lo-fi tracks will naturally produce music that statistically resembles successful lo-fi. A human lo-fi artist might introduce weird time signatures, unexpected chord progressions, or idiosyncratic production choices that make the track less "algorithmically efficient" but more artistically distinctive.

The flooding problem creates practical issues. Spotify playlists have finite slots. Algorithmic recommendations have limited space. When you're competing for attention, and someone else can generate 100 tracks as fast as you can produce one, scale becomes a weapon.
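To see why volume alone matters, here is a rough sketch of an origin-blind system filling a fixed number of slots. It assumes, purely for illustration, that every track has an equal chance of being picked. Real recommendation systems weigh many more signals, and the function name and numbers are hypothetical.

```python
def expected_slots(total_slots: int, uploader_tracks: int, other_tracks: int) -> float:
    """Expected slots won by one uploader when every track is equally likely to be picked."""
    pool = uploader_tracks + other_tracks
    if pool == 0:
        return 0.0
    return total_slots * uploader_tracks / pool

# Hypothetical pool: one human artist with 1 track and one AI account with 100 tracks,
# competing for 10 slots (numbers chosen only to mirror the 100-to-1 example above).
human = expected_slots(10, uploader_tracks=1, other_tracks=100)
ai = expected_slots(10, uploader_tracks=100, other_tracks=1)
print(f"human artist: {human:.2f} slots, AI account: {ai:.2f} slots")
```

Under this simplified assumption, a perfectly "neutral" picker hands the AI account about 100 times as many slots, simply because it has 100 times as many entries in the pool.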

Artists have reported that AI accounts are increasingly bidding for placement on editorial playlists through Spotify's ad network. Someone can generate 100 AI tracks, spend $50-100 on promotional campaigns, and get some of them onto "New Ambient" or "Focus" playlists, reaching millions of listeners. A human artist would need to spend proportionally more, coordinate with playlist curators, or get lucky.

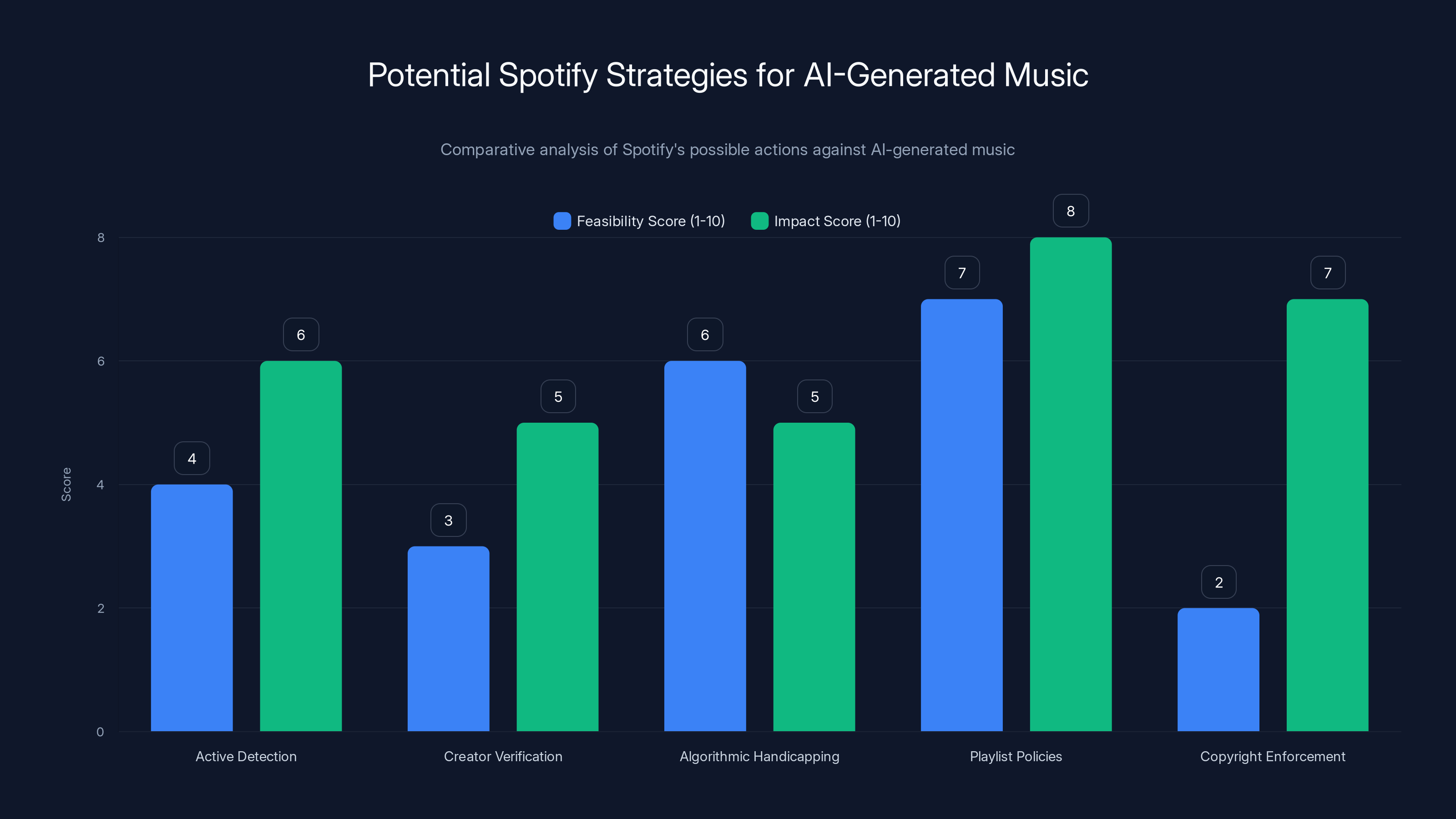

Playlist Policies have the highest impact potential with moderate feasibility, suggesting a balanced approach for Spotify. Estimated data based on strategic analysis.

Creator Concerns: Beyond Just Lost Streams

The economic impact is real, but artists' concerns go deeper than just missing out on fractions of pennies per stream. There's a philosophical issue about what music is and who deserves to participate in the music economy.

Music has traditionally been a creative medium where effort, skill, and emotional authenticity matter. A producer spends years learning sound design. A songwriter spends weeks on a single chorus. A vocalist develops their voice over decades. This work has intrinsic value partly because it's difficult and requires human creativity.

AI music generation disrupts that value proposition. If someone can generate 100 acceptable tracks in an afternoon, what happens to the market for people who spend months crafting albums? Economics suggests: prices fall, and professionals struggle to compete against cheap alternatives.

This mirrors disruption patterns in other industries. Photography got disrupted by phones. Graphic design got disrupted by templates and cheap freelancers. Software development is getting disrupted by AI code generation. But music is different because music is deeply personal. People listen to music they feel connected to, and that connection is often rooted in knowing a human created it.

Some artists are starting to market their work specifically as "human-created" or "not AI." That's the canary in the coal mine. When creators feel compelled to prove their humanity, it means the environment has changed in a way that requires explicit proof of authenticity.

Spotify's neutrality stance essentially says: "We're not taking sides in this philosophical debate." But neutrality in the face of major disruption often amounts to allowing the disruption to proceed unchecked. Bandcamp's curation approach says: "We're taking sides. We believe human creators should have advantages, and transparency is non-negotiable."

Both are defensible. Neither is obviously wrong. But they lead to completely different ecosystems.

How AI Music Is Actually Getting Generated and Distributed

Understanding the technical side helps explain why this is such a big problem now. AI music generation has gotten scary good in the past 18 months. Tools like Suno, Udio, and Mubert allow anyone to generate professional-sounding music by typing a description.

Want a lo-fi hip-hop beat with jazzy chords and subtle rain sounds? Describe it. Done in 30 seconds. Want background music for a YouTube video? Same thing. The quality has improved from "obviously AI" to "indistinguishable from human-created" in many genres.

This is creating a new category of "music entrepreneurs" who aren't musicians. Someone could theoretically:

- Generate 100 unique tracks using AI

- Upload them to Spotify with minimal metadata

- Run micro-campaigns promoting them

- Earn passive income from streams without any musical training

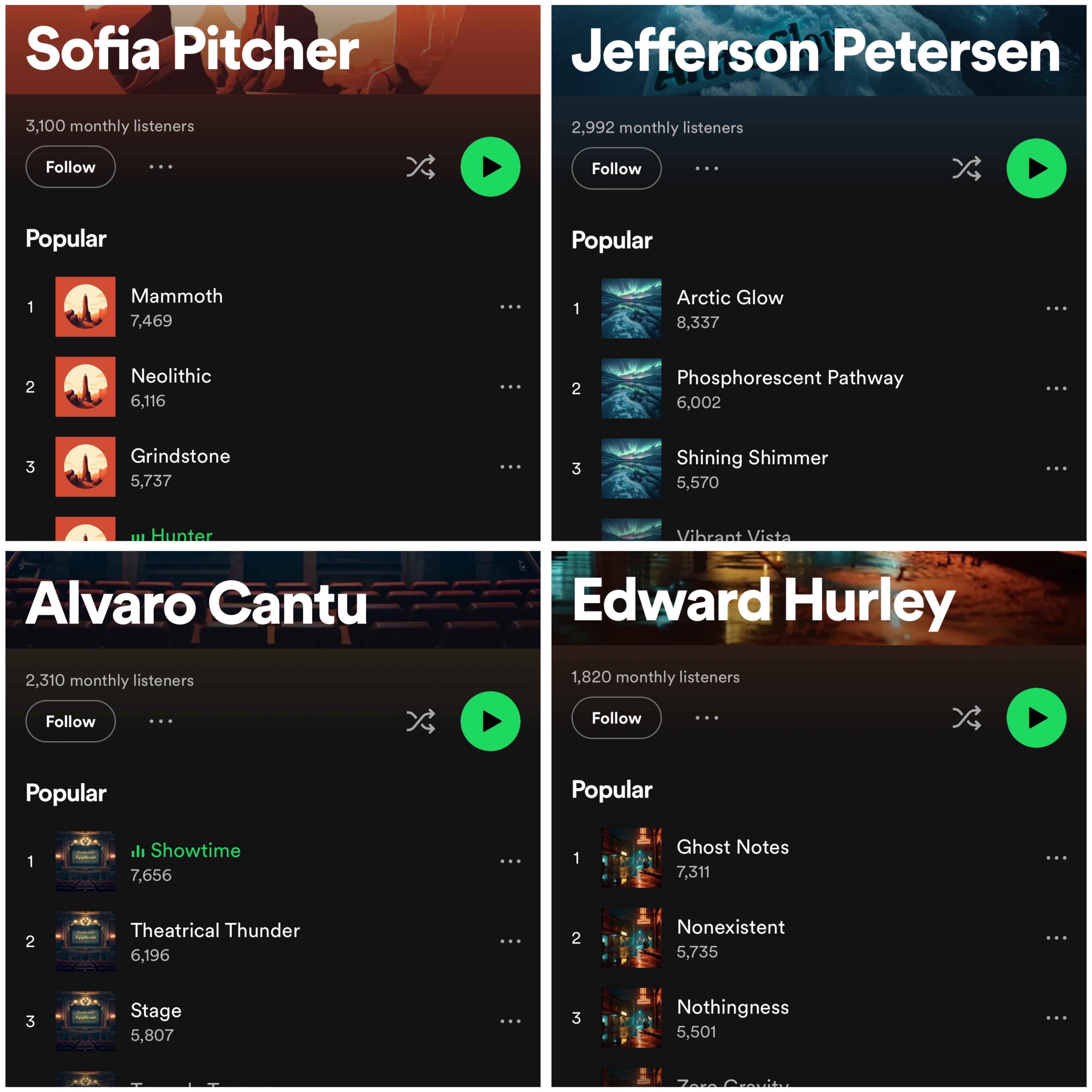

This is already happening. There are accounts on Spotify with thousands of uploads, minimal artist information, and no apparent human creators. Some get millions of streams.

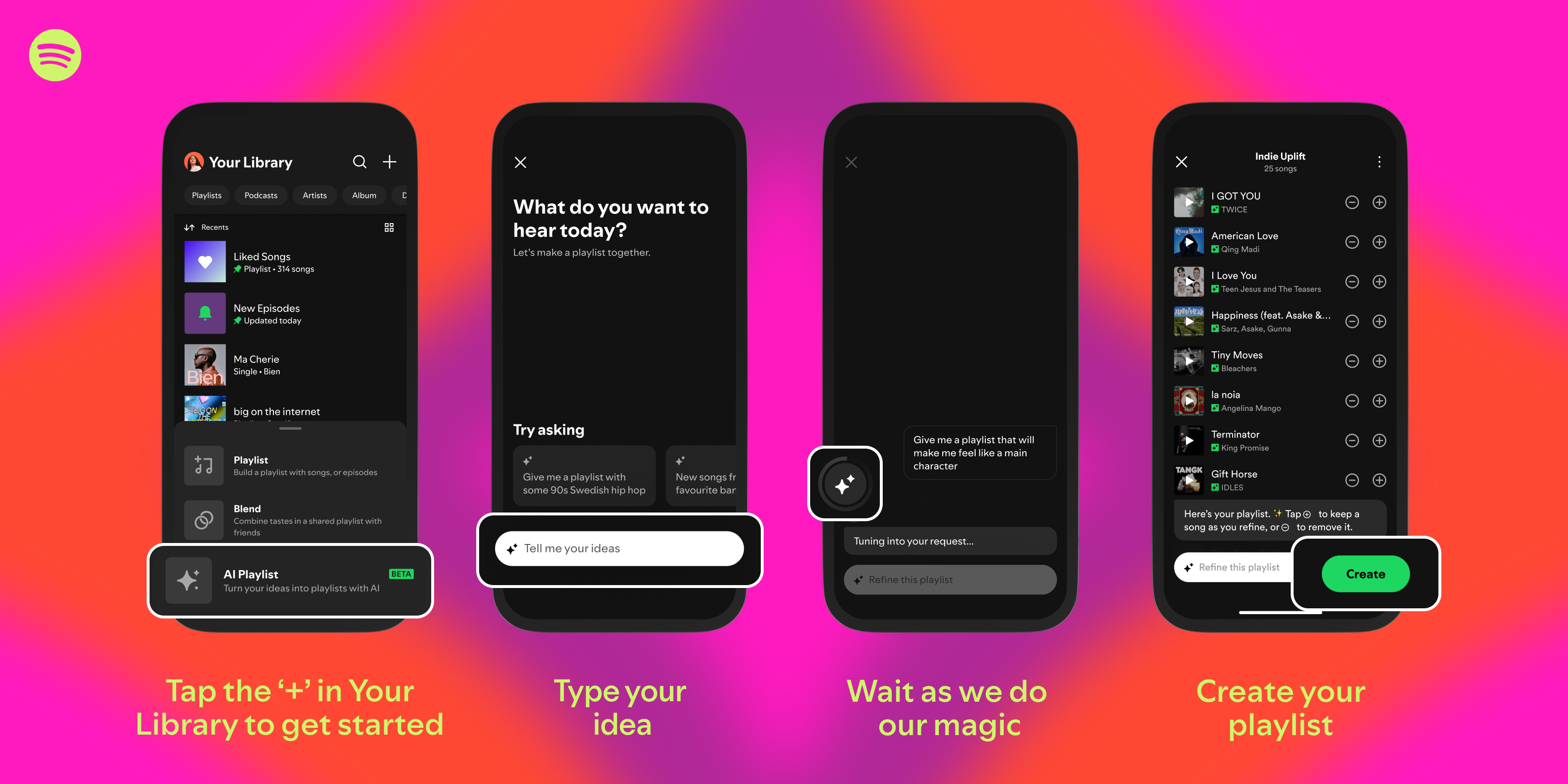

The distribution mechanism is key. Spotify makes it trivial to upload music. The platform doesn't require proof of creation, copyright verification, or artist verification in most cases. An account needs an email address and can start uploading immediately. This accessibility is great for legitimate independent artists but creates zero friction for AI uploaders.

Many AI-generated tracks are also getting distributed through playlist pitching services. These services submit music to curators for consideration. Some curators are probably accepting AI music without knowing it's AI, especially when the metadata doesn't disclose the generation method.

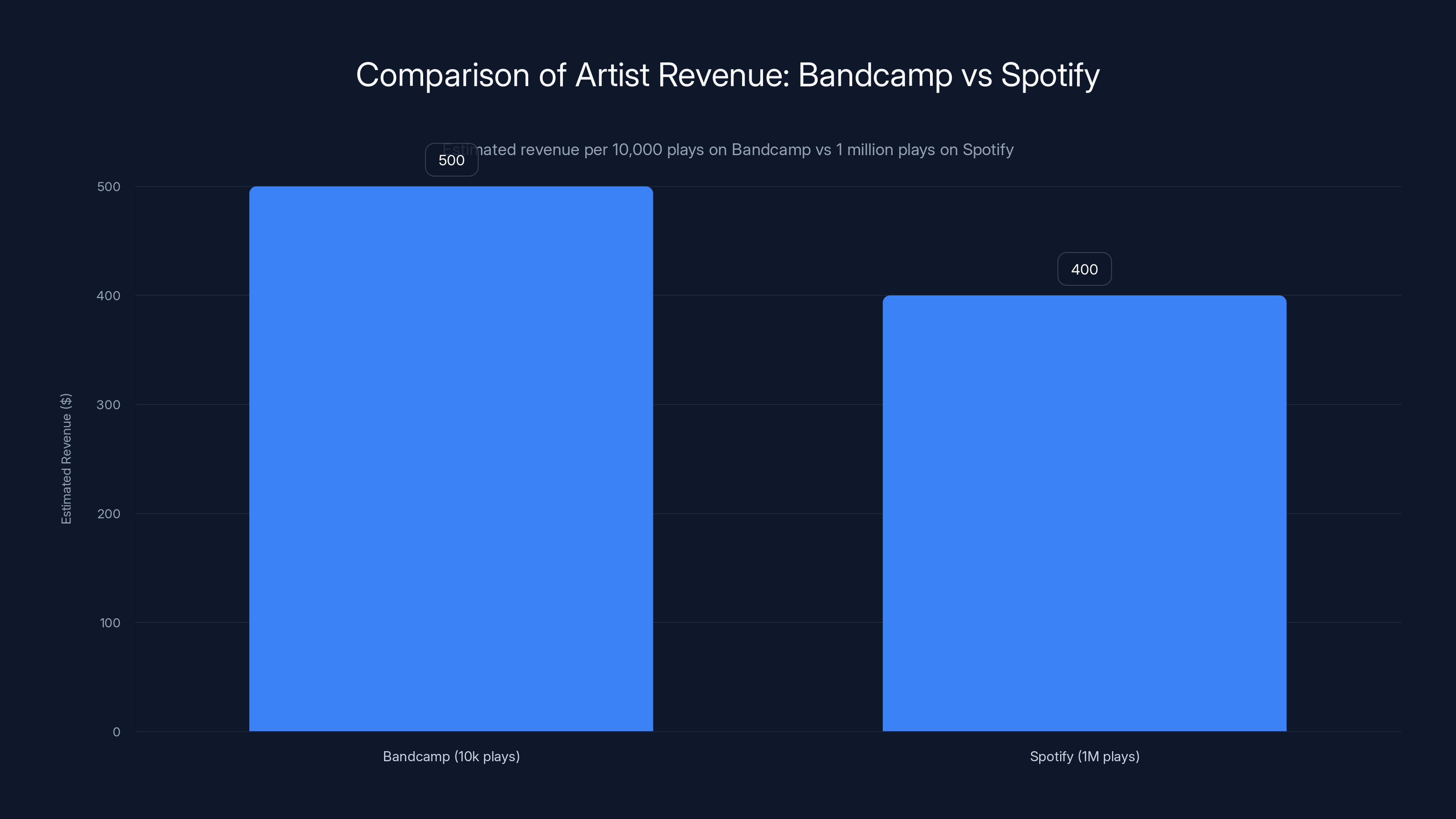

Bandcamp's model allows artists to earn more from fewer plays compared to Spotify. Estimated data shows Bandcamp can generate more revenue from 10,000 plays than Spotify from 1 million plays.

The Copyright Question: Who Actually Owns AI Music?

This gets legally complicated fast. When you generate a track using Suno, who owns it? The user who typed the prompt? Suno? The original artists whose work trained the model? This question has massive implications for Spotify because it affects copyright, royalty payments, and legal liability.

Most AI music tools claim that users own the generated output. But that ownership is built on shaky ground. The training data came from somewhere—usually from existing music licensed under various agreements. If a model was trained on copyrighted music, does the user who generated a new track owe royalties to original rights holders? Nobody's answered this definitively.

Spotify theoretically has exposure here. If a track uploaded to Spotify is AI-generated from copyrighted training data, and the original rights holders sue, where does that liability fall? Spotify? The uploader? This uncertainty is probably why Spotify hasn't taken a hard stance on AI music. Until the copyright questions are resolved, taking any position beyond "neutrality" creates risk.

Bandcamp's approach is simpler: require disclosure. This shifts responsibility to the uploader. If you're uploading AI-generated music, you're asserting that you have the rights to do so and that the track doesn't violate the intellectual property of artists who trained the generation model. That's a clearer liability structure.

But for Spotify at global scale, with thousands of uploads arriving every day, this is genuinely complicated. Different countries have different copyright laws. The US has fair use doctrine. Europe has different frameworks. China's framework is different still. Spotify can't possibly manually verify copyright status for every upload. They need to either build automated detection (which is imperfect) or claim neutrality and let the legal system sort it out.

What Spotify Could Actually Do (But Probably Won't)

The platform has several options for handling AI-generated music more actively, but each comes with trade-offs.

Option 1: Active Detection and Labeling. Spotify could build or license audio analysis tools to detect AI-generated tracks and label them accordingly. This would give listeners transparency and help human artists stand out. The challenge: false positives, false negatives, and constantly evolving AI making detection harder. Also, it requires enforcement resources and international coordination.

Option 2: Creator Verification. Require artists to verify identity, provide portfolio evidence, or accept more responsibility for uploads. This raises friction for legitimate independent artists but blocks many bot accounts. Bandcamp does something like this. Spotify's scale makes it impractical without major process changes.

Option 3: Algorithmic Handicapping. Apply slight algorithmic penalties to tracks with uncertain provenance or from accounts with suspicious patterns (too many uploads, no engagement history, etc.). This isn't penalizing AI specifically; it's using broader signals of legitimacy. Spotify probably already does some of this, but not publicly.

Option 4: Playlist Policies. Change how editorial and algorithmic playlists are populated. Require curator discretion for AI-generated music. Weight algorithmic recommendations toward artists with verified identities or longer track records. This is moderate effort and would tangibly help human creators.

Option 5: Copyright Enforcement. Actually require proof that AI tracks don't infringe on training data copyright holders. This kicks the hardest problem to users and would reduce AI uploads significantly, but also introduces legal complexity.
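Option 3 is the easiest of these to picture in code. The sketch below is purely hypothetical: the field names, thresholds, and weights are invented for illustration and do not describe anything Spotify has disclosed. It scores an account on the signals mentioned above (upload volume and engagement history) and turns that score into a mild ranking multiplier.

```python
from dataclasses import dataclass

@dataclass
class AccountStats:
    """Hypothetical per-account signals; not a real Spotify data model."""
    uploads_last_30_days: int
    account_age_days: int
    monthly_listeners: int

def suspicion_score(stats: AccountStats) -> float:
    """Return a score in [0, 1]; higher means more bot-like under these assumed rules."""
    score = 0.0
    # Assumed threshold: the article mentions accounts uploading 100+ tracks in a month.
    if stats.uploads_last_30_days >= 100:
        score += 0.5
    # Assumed rule: a brand-new account has no track record yet.
    if stats.account_age_days < 30:
        score += 0.25
    # Assumed rule: almost no listeners means no engagement history.
    if stats.monthly_listeners < 10:
        score += 0.25
    return score

def ranking_multiplier(stats: AccountStats, max_penalty: float = 0.2) -> float:
    """Scale a track's ranking score down by at most max_penalty (a 'slight' handicap)."""
    return 1.0 - max_penalty * suspicion_score(stats)

bulk_uploader = AccountStats(uploads_last_30_days=365, account_age_days=10, monthly_listeners=0)
folk_artist = AccountStats(uploads_last_30_days=12, account_age_days=900, monthly_listeners=4_000)

print(ranking_multiplier(bulk_uploader))
print(ranking_multiplier(folk_artist))
```

Nothing here checks whether a track is AI-generated. The penalty follows account behavior, which is the point of the option: a prolific human uploader and a bot would be treated alike.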

Spotify hasn't moved toward any of these publicly. The company's neutrality stance means they're essentially betting on the status quo: the market will self-correct if human music is actually preferred, and Spotify doesn't need to intervene.

The company might be right. Listener behavior ultimately decides which music gets heard. If people prefer human-created music, algorithmic engagement metrics will show that preference, and human music will win without Spotify needing to actively promote it. The problem is, engagement isn't the same as preference. Algorithmic recommendation is powerful. If AI music gets decent algorithmic placement early, it might build engagement through exposure rather than merit.

Estimated data shows that Spotify uploads account for 50% of AI music distribution, followed by playlist pitching at 30%. The ease of access and minimal verification on platforms like Spotify facilitate AI music proliferation.

The Industry Response: Labels, Artists, and Advocacy Groups

It's not just independent artists complaining. Major record labels, artist advocacy groups, and musician associations have all raised concerns about AI-generated music on Spotify.

The Recording Industry Association of America (RIAA) has been pushing for stronger copyright enforcement and AI disclosure requirements. Independent artist groups like the Featured Artists Coalition have advocated for transparency. Some artist collectives have even created playlists and promotions specifically highlighting human-created music as a form of protest.

There's also a growing "AI-free" movement where artists explicitly market their work as non-AI-generated. This is economically rational if listeners prefer human creativity. It's also a sign that the industry recognizes AI as a genuine competitive threat, not just a fringe concern.

Record labels are taking different approaches. Some are betting on AI-generated music as a way to lower production costs. Others are actively lobbying for restrictions. The splits are emerging along economic lines: companies that profit from cheap music production favor AI, while companies that profit from artist royalties and rights management oppose it.

Spotify's position is interesting here because the company makes money from streams regardless of music origin. The platform has no inherent economic incentive to favor human over AI music. But Spotify's survival depends on having quality content that keeps listeners engaged. If listeners migrate to platforms like Bandcamp that offer human-created music with more curated experiences, that becomes a competitive threat.

Some industry analysts think Spotify is waiting to see how the AI situation resolves before taking a position. If copyright issues get resolved in favor of artists suing AI music companies, Spotify might pivot. If AI music becomes culturally dominant and listeners stop caring, Spotify's neutrality stance was correct all along.

Why Bandcamp's Approach Is Gaining Attention

Bandcamp isn't huge by Spotify's standards, but the platform has become a refuge for artists skeptical of algorithmic streaming. Here's why Bandcamp's model matters:

First, Bandcamp requires direct artist-to-listener relationships. Artists set their own prices, control their own metadata, and communicate directly with fans. This creates accountability and authenticity that algorithmic platforms don't.

Second, Bandcamp's payouts per listener are significantly higher than Spotify's, and the platform takes a smaller cut. Because fans buy music directly rather than streaming it for fractions of a penny, artists can make sustainable income from fewer listeners. A track with 10,000 plays on Bandcamp might generate more revenue than the same track with 1 million plays on Spotify.
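The 10,000-versus-1-million comparison implies a specific ratio, which can be worked out directly. In the sketch below, the per-stream rate is an assumed placeholder (the article does not give one), and the function names are illustrative.

```python
def required_multiple(spotify_plays: int, bandcamp_plays: int) -> float:
    """How many times more each Bandcamp play must earn for the totals to match."""
    return spotify_plays / bandcamp_plays

def breakeven_per_play(spotify_rate: float, spotify_plays: int, bandcamp_plays: int) -> float:
    """Revenue each Bandcamp play must bring in to equal the Spotify total."""
    return spotify_rate * spotify_plays / bandcamp_plays

ASSUMED_SPOTIFY_RATE = 0.004  # dollars per stream; an assumption, not a figure from the text

multiple = required_multiple(1_000_000, 10_000)
per_play = breakeven_per_play(ASSUMED_SPOTIFY_RATE, 1_000_000, 10_000)
print(f"Each Bandcamp play must earn {multiple:.0f}x a Spotify stream")
print(f"At ${ASSUMED_SPOTIFY_RATE}/stream, that is ${per_play:.2f} per play")
```

In other words, the claim holds only if the average Bandcamp play converts into roughly a hundred times the revenue of a single stream, for example through purchases.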

Third, Bandcamp's community explicitly values artistry and human creativity. The platform's culture, built through editorial curation, creator interviews, and community discussions, reinforces those values. AI-generated music doesn't fit the community vibe, so disclosure becomes natural rather than required by policy.

Fourth, Bandcamp actually engages in curation. The platform has a team that listens to music, writes about artists, and features creators in promotional campaigns. This is expensive and doesn't scale to Spotify's 100 million tracks, but it creates a different experience. Listeners know a human chose the music they're seeing, which carries weight.

Bandcamp isn't a perfect solution. The platform has lower discoverability for new artists, smaller reach, and limited playlist culture. Most listeners will continue using Spotify because of convenience, library size, and integrated services. But Bandcamp demonstrates that there's a viable alternative for artists and listeners who prioritize human creativity over algorithmic optimization.

What Listeners Actually Want (And What The Data Shows)

This is where Spotify's neutrality stance gets tested. Do listeners actually prefer human-created music or is that a bias of critics and artists? The data is mixed.

Engagement metrics from Spotify suggest listeners skip AI-generated music at higher rates than human music when both are presented equally. This is hard data, not opinion. If you show a listener AI ambient music and human ambient music in random order, they're statistically more likely to skip the AI version and come back to the human version.

But there's a catch. If the AI music is already well-promoted through playlists or algorithmic recommendations, it gets more plays because of exposure, not quality. This creates a confounding variable. Are listeners rejecting AI because it's lower quality, or are they rejecting it because they know it's AI and that knowledge affects their judgment?

Some data suggests the latter. When listeners don't know if music is AI-generated, quality judgments are more favorable. Once they learn a track is AI-generated, satisfaction often drops. This means the issue might not be about Spotify promoting AI unfairly, but about Spotify failing to provide information that would let listeners make informed choices.

This brings us back to Bandcamp's approach: transparency. If listeners knew which tracks on Spotify were AI-generated, they could make their own choices. Spotify's neutrality means Spotify isn't providing that information, which is a form of decision-making. It's saying: "We won't tell you, and the platform treats music equally."

Most listener surveys show strong preferences for supporting human artists when given the choice. Independent artist playlists on Spotify, when labeled as such, often outperform algorithmic suggestions. This suggests listeners would choose human music if it were highlighted, but they're not making that choice because they're not given clear options.

The Future: Regulation, Technology, and Platform Evolution

This situation is probably going to escalate before it resolves. Several forces are pushing change:

Regulation is coming. The EU's Digital Services Act and similar regulations in other countries are starting to require platforms to be more transparent about content moderation and algorithmic promotion. AI-generated content disclosure could be mandated within the next few years.

Copyright litigation will reshape the landscape. Multiple lawsuits against AI music companies are working through courts. If artists and their estates win, AI music generation might become expensive or legally fraught, changing the economics entirely.

Technology for detection and verification will improve. Within a few years, detecting AI-generated music will probably be easier and more reliable. This will make labeling easier and reduce Spotify's excuse for not taking action.

Listener preferences might shift. As AI music becomes ubiquitous, listeners might either embrace it as a legitimate musical form or reject it more strongly. Either way, the current ambiguous middle ground won't last.

Platforms will differentiate based on policies. The Spotify-Bandcamp split might become permanent, with different platforms attracting different audiences based on their AI stance. Some platforms will go "AI-native," focusing on algorithm-optimized content. Others will go "human-only," catering to listeners and artists who want authenticity.

Spotify's neutrality stance is probably temporary. Eventually, the company will face pressure to either promote human creators actively or accept being associated with algorithmic optimization over artistic merit. The market will likely punish whichever choice alienates the bigger audience.

The Bigger Picture: What This Reveals About Platforms

The AI music debate isn't really about AI. It's about how platforms operate, whose interests they serve, and what happens when disruption outpaces policy.

Spotify's neutrality claim sounds fair until you realize that neutrality requires actively deciding not to intervene. Bandcamp's curation approach is openly biased in favor of human creators. Which is actually more honest?

Arguably, Bandcamp is more transparent about its values. The platform says: "We believe human artists matter, and we structure our platform accordingly." Spotify says: "We're neutral," while actually operating a system that may inadvertently favor algorithmic efficiency over artistic distinctiveness.

This pattern is playing out across the tech industry. Platforms claim neutrality while making choices that have massive consequences. Algorithm design is a choice. Moderation decisions are choices. Not taking action is a choice. Pretending otherwise is just avoiding responsibility.

The music industry isn't the first to face this problem. When code generation tools flooded GitHub, software engineers had to adapt to a new reality where not all submitted code came from humans. When image generation tools hit mass adoption, digital artists had to figure out how to compete. Music is going through the same transition, and Spotify's moment of reckoning is coming soon.

Bandcamp is showing that there's an alternative to algorithmic neutrality. It's more expensive. It requires human judgment. It doesn't scale globally as easily. But it creates a platform where human creators can compete on quality rather than algorithmic efficiency, and listeners know what they're getting.

The question isn't whether Spotify should change. The question is whether Spotify's current approach is sustainable when a growing subset of listeners and artists explicitly prefer the Bandcamp model. If that preference grows, Spotify's neutrality claim becomes a competitive liability, and the platform will eventually adapt.

TL;DR

- Spotify's Neutrality Claim: The platform says it doesn't promote or penalize AI-generated music, but "neutrality" masks deeper algorithmic choices that may inadvertently favor AI-optimized content

- The Real Problem: Thousands of AI tracks are uploaded daily, competing with human artists and potentially gaming recommendation systems tuned for engagement rather than artistic quality

- Artist Impact: Musicians report losing playlist placement and listener attention to AI-generated tracks, especially in lo-fi, ambient, and background music genres

- Bandcamp's Alternative: The independent platform requires AI disclosure, prioritizes human creator visibility, and pays artists through direct sales rather than per-stream royalties, showing a viable alternative model exists

- Bottom Line: Ultimately, this isn't about Spotify being evil. It's about platform design choices and whether algorithmic systems should actively protect human creativity or remain technically neutral while market forces determine outcomes

FAQ

What does it mean that Spotify doesn't promote or penalize AI-generated music?

It means Spotify's algorithms treat AI-generated tracks the same as human-created music in terms of algorithmic recommendations and playlist eligibility. The platform isn't actively boosting AI tracks to the top of playlists or hiding them from discovery. However, "neutral treatment" doesn't mean equal outcomes, since music optimized for algorithmic appeal may naturally perform better without Spotify explicitly promoting it.

Why are musicians concerned if Spotify says it treats AI music equally?

Musicians worry because "equal treatment" means AI-generated tracks compete directly with human-created music. Since AI tools can generate hundreds of tracks quickly while human artists might produce one album per year, the sheer volume can drown out legitimate creators. Additionally, AI music is often engineered for algorithmic appeal, so equal algorithmic treatment might inadvertently favor AI.

How is Bandcamp's approach different from Spotify's?

Bandcamp requires creators to disclose AI involvement, prioritizes human artists in its recommendations, and pays artists through direct sales rather than per-stream royalties. Instead of claiming neutrality, Bandcamp actively supports human creators and transparency. The result is a smaller platform, but a more curated one for artists and listeners who value human creativity.

How much AI-generated music is actually on Spotify?

Estimates vary. Some suggest 15,000 to 20,000 AI-generated tracks are uploaded daily across streaming platforms, Spotify included, a volume said to be roughly equivalent to a full year of major label releases in the US. Most AI music concentrates in lo-fi, ambient, and instrumental genres where algorithmic appeal is highest.

Can I tell if a song on Spotify is AI-generated?

Not easily. Spotify doesn't label AI-generated music, and high-quality AI tracks sound convincingly human. You might notice AI music through artist research: check if the creator has a biography, a reasonable upload history, and engagement with fans. Accounts with hundreds of uploads in weeks and minimal artist information are often AI operations.

What could Spotify do about AI-generated music?

Spotify could label AI-generated tracks, require artist verification, apply subtle algorithmic handicaps to tracks with uncertain provenance, or change how editorial playlists incorporate AI music. The platform has technical capability for most of these changes but hasn't implemented them publicly.

Is AI-generated music copyright-infringing?

This is unresolved legally. Most AI music tools claim users own generated output, but training data often came from copyrighted music. Multiple lawsuits are currently working through courts on this question. Until resolved, copyright liability is unclear for both AI music companies and platforms like Spotify that host the content.

Should I worry about AI-generated music replacing human musicians?

AI will likely change the music industry significantly, but human musicians probably won't disappear. AI excels at producing background music and algorithmically optimized content. It struggles with deeply personal, experimental, or genre-defining music. Human musicians who build direct fan relationships, create distinctive work, and diversify their income beyond streaming will adapt better to this shift.

Why does Spotify claim neutrality instead of taking a position?

Spotify's neutrality stance avoids regulatory risk, legal complexity around copyright, and alienating AI music creators. The platform makes money from streams regardless of music origin, so there's no inherent business incentive to favor human creators. Spotify is betting that listener preference will naturally favor quality human music without platform intervention.

What do Spotify and Bandcamp each optimize for?

Spotify optimizes for scale, engagement, and algorithmic efficiency. Bandcamp optimizes for direct artist-listener relationships, human curation, and community values. Spotify serves the average listener better. Bandcamp serves artists and listeners who prioritize authenticity better. Both models are economically viable, just at different scales.

Key Takeaways

- Spotify's "neutral" treatment of AI music masks algorithmic choices that may inadvertently favor content optimized for engagement metrics over artistic distinctiveness

- Thousands of AI-generated tracks are uploaded daily to streaming platforms, creating competition that human artists struggle to match in volume and algorithmic efficiency

- Bandcamp's curation-based alternative demonstrates that requiring disclosure and prioritizing human creators is economically viable at a different scale than Spotify

- Copyright questions around AI music training data remain legally unresolved, creating uncertainty that likely drives Spotify's hands-off approach

- Listener data suggests preference for human-created music when choices are transparent, but algorithmic amplification and lack of labeling obscure those preferences on Spotify
