The 11 Biggest Tech Trends of 2026: What CES Revealed

Every January, thousands of tech executives, engineers, and journalists descend on Las Vegas for CES. It's part product showcase, part fortune-telling event. Companies spend millions on booth designs and demo stations to convince the world that their vision is the future.

But here's the thing: CES doesn't just show what's coming. It reveals what the entire industry has collectively decided matters. When 130,000 people gather to see the same technology, that consensus means something.

CES 2026 told a story. Multiple stories, actually. Not all of them contradicted each other, which was refreshing. There were clear threads running through the expo floor: AI getting smarter and quieter, hardware becoming more personal, sustainability actually mattering instead of just being marketing speak, and our devices understanding context in ways that felt genuinely useful rather than creepy.

I spent four days walking that floor. Talked to founders who'd been working on these problems for a decade. Demoed products that aren't shipping until Q4. Sat through presentations where the vision was crystal clear but the path to getting there was still fuzzy. What emerged wasn't a random collection of innovations. It was a coherent picture of where tech is genuinely headed.

Let's break down the 11 trends that define 2026, starting with the one that touched nearly everything on the floor.

1. AI That Actually Runs On Your Device (Not Just Marketing)

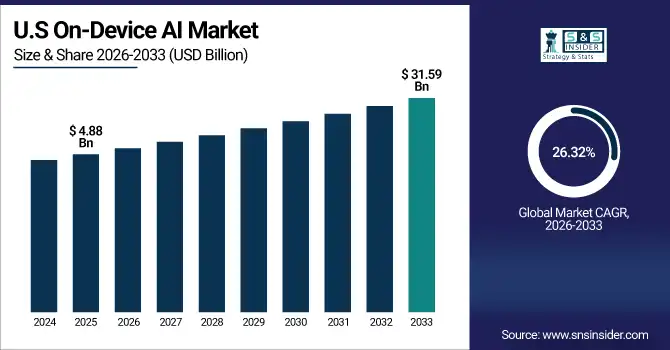

On-device AI isn't new. We've heard the promise for years. But in 2026, something shifted. The demos actually worked. More importantly, they worked offline.

This matters more than it sounds. Most AI features you use today rely on sending data to a server somewhere. That's a privacy risk, a latency problem, and a dependency that fails when your internet hiccups. Companies at CES 2026 showed systems that perform complex reasoning entirely on your phone, tablet, or laptop.

The breakthrough wasn't just faster chips. It was smarter models. Researchers figured out how to compress AI models to a fraction of their original size without losing core capabilities. A model that once required 100 GB now fits in 8 GB. Same intelligence. Dramatically smaller footprint.
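To get a feel for those numbers, here is a rough back-of-the-envelope sketch. The demos didn't spell out which compression techniques were used, so this assumes one common approach, storing each weight in fewer bits (quantization). The 50-billion-parameter count is hypothetical, chosen only so that the 16-bit size lands on 100 GB.

```python
def model_size_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Hypothetical parameter count, picked so 16-bit weights take 100 GB.
params = 50e9

# 16-bit -> 100 GB, 8-bit -> 50 GB, 4-bit -> 25 GB, 2-bit -> 12.5 GB
for bits in (16, 8, 4, 2):
    print(f"{bits:>2}-bit weights: {model_size_gb(params, bits):6.1f} GB")

# The reduction quoted above: 100 GB down to 8 GB.
compression_ratio = 100 / 8
print(f"Compression ratio: {compression_ratio:.1f}x")
```

Under these assumptions, dropping from 16-bit to 4-bit weights only gets you to 25 GB. A 12.5x reduction would likely also require shrinking the parameter count itself, for example through pruning or distillation.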

What does this mean practically? Your phone could run its own translation engine without uploading a word. Your laptop could analyze documents locally without sending them to the cloud. Your smartwatch could understand context and respond to natural language without any internet connection. The data stays on your device. Your privacy stays yours.

Multiple manufacturers showed off devices with dedicated AI chips. These aren't GPUs doing double duty. They're chips designed specifically for running neural networks, with power efficiency that gets you a full day of heavy AI use. Samsung's approach integrated these directly into their processors. Apple's next-generation chip is rumored to include even larger neural engines.

The catch? These devices cost more. The infrastructure to support on-device AI requires different hardware and better chips. That's not free. But companies argued the tradeoff was worth it: faster response times, better privacy, lower data usage. Most people seemed to agree.

What surprised me most was how matter-of-fact this all felt. Nobody was hyping on-device AI as revolutionary anymore. It was just... expected. The baseline. If your device couldn't run AI locally, it felt behind.

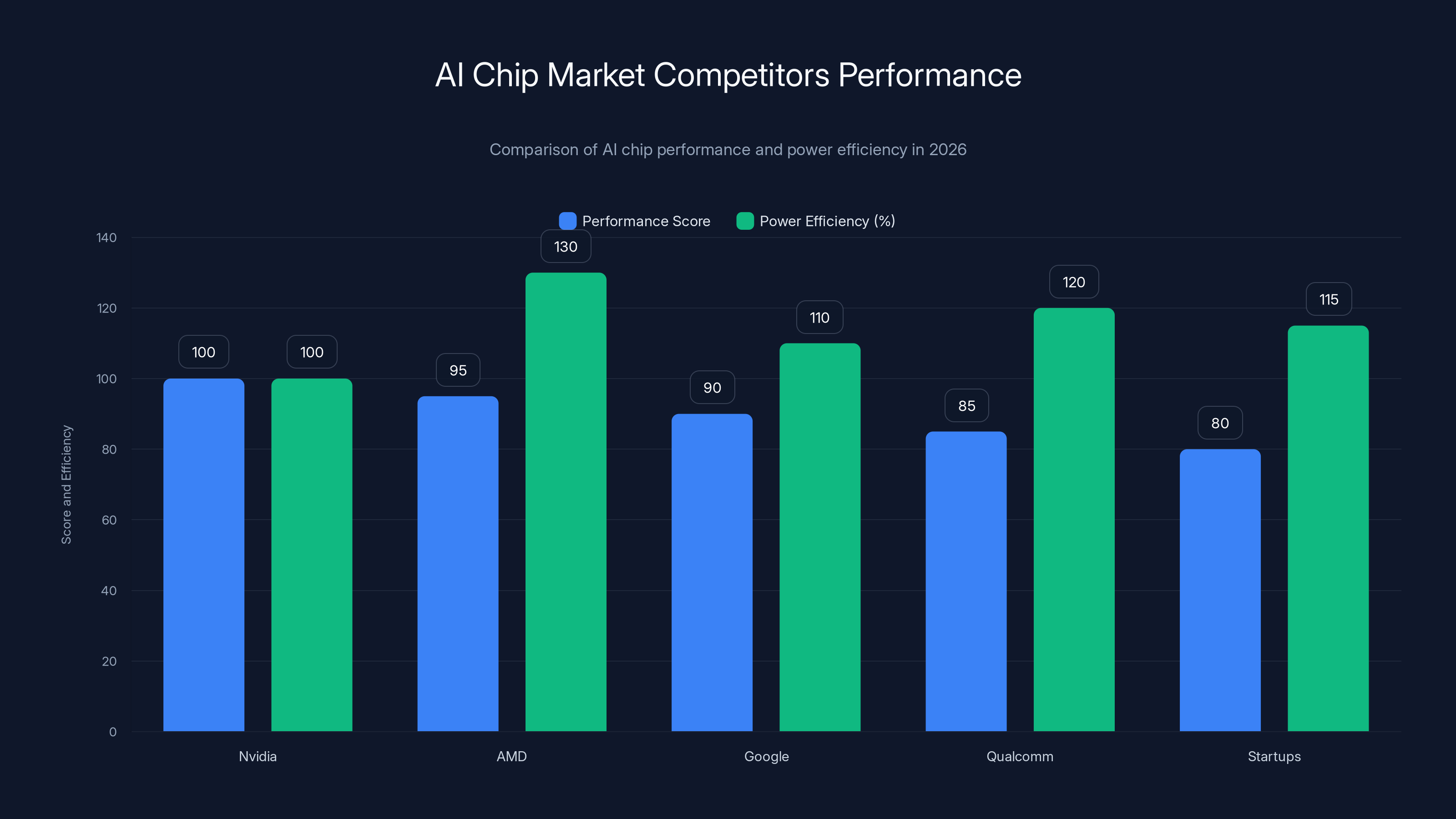

AMD's AI chip offers comparable performance to Nvidia with 30% better power efficiency. Google's and Qualcomm's chips also show strong performance, challenging Nvidia's dominance. (Estimated data)

2. Wearables That Actually Predict Health Issues (Before You Get Sick)

Wearable health tech has been "about to be revolutionary" for a decade. Fitbits count steps. Apple Watches check your heart rate. Oura Rings track sleep. But prediction—actually identifying when something's going wrong before symptoms appear—that's been the holy grail that never quite materialized.

At CES 2026, companies showed continuous monitoring systems that were finally starting to deliver on this promise.

The key was data aggregation plus better algorithms. Individual health metrics are noise. But when you combine blood oxygen, heart rate variability, sleep quality, activity patterns, skin temperature, and motion data, patterns emerge. Wearables with AI processing started to detect early signs of infections, stress buildup, overtraining recovery, and metabolic changes before they became obvious.

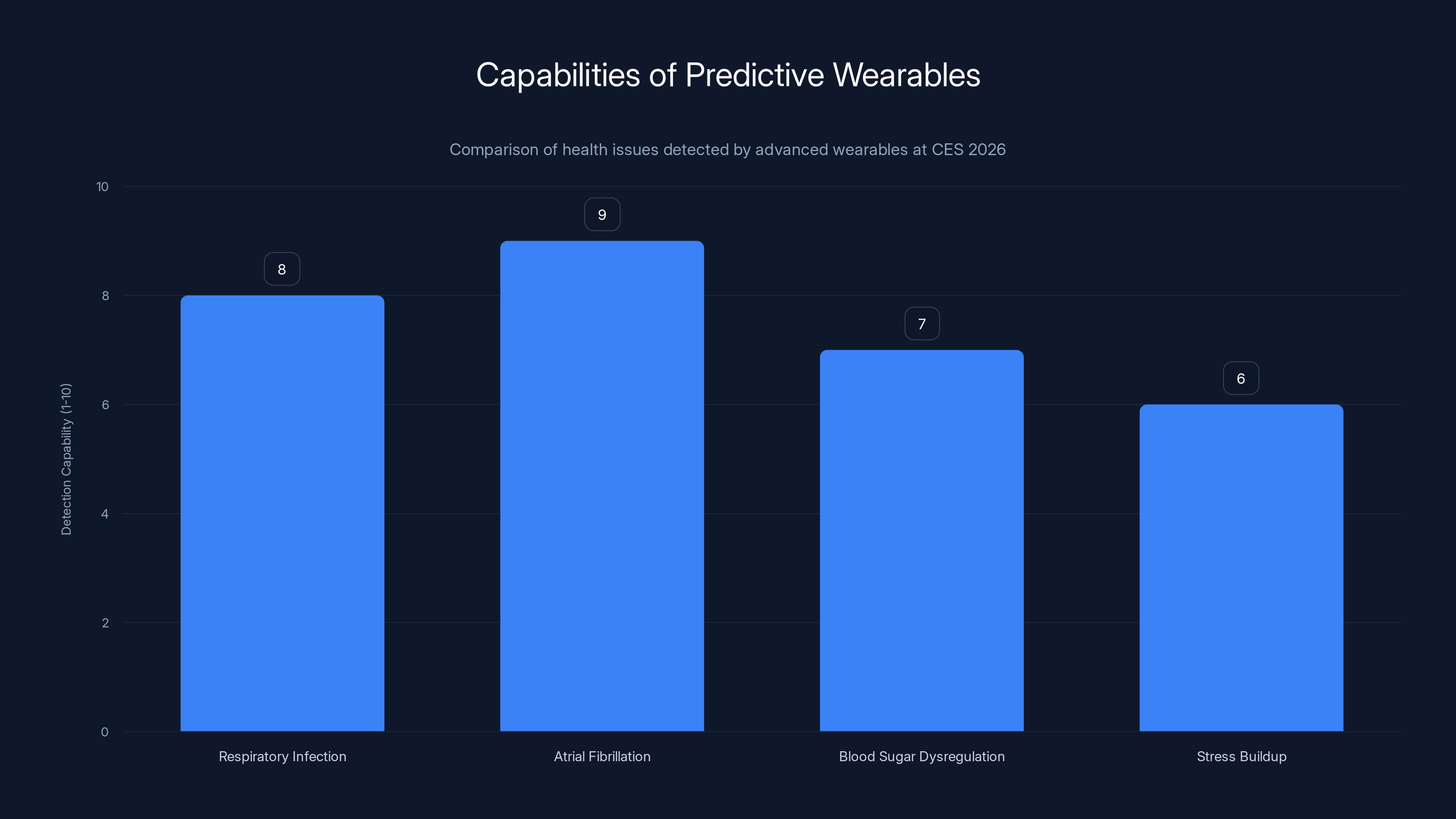

One company demonstrated a system that flagged a potential respiratory infection three days before the wearer felt sick. Another showed detection of atrial fibrillation (irregular heartbeat) that doctors had missed. A third caught a user's blood sugar dysregulation pattern suggesting pre-diabetes. These weren't theoretical studies. These were live demos with user data.

The tricky part? People don't actually want wearables that cry wolf. False positives destroy trust faster than anything else. So the algorithms got conservative. Rather than saying "you might be sick," they said "your metrics are moving in a concerning direction. Check in with your doctor." Fewer alarms, but the ones that trigger matter.
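Here is a minimal sketch of how "combine the signals, then alert conservatively" could work. None of the companies shared their algorithms, so everything here is an assumption for illustration: the function names, the z-score approach, the 2.0 threshold, and the three-day persistence rule are all hypothetical.

```python
from statistics import mean, stdev


def z_score(history, today):
    """How many standard deviations today's value sits from the personal baseline."""
    return (today - mean(history)) / stdev(history)


def combined_deviation(baselines, today):
    """Average absolute z-score across every metric that has a baseline."""
    scores = [abs(z_score(baselines[name], today[name])) for name in baselines]
    return mean(scores)


def should_alert(recent_scores, threshold=2.0, min_days=3):
    """Conservative rule: alert only if the last `min_days` scores all exceed the threshold."""
    if len(recent_scores) < min_days:
        return False
    return all(score > threshold for score in recent_scores[-min_days:])


# Hypothetical baseline readings for one wearer.
baselines = {
    "resting_hr": [60, 62, 64],
    "skin_temp_c": [33.0, 33.2, 33.4],
}
today = {"resting_hr": 66, "skin_temp_c": 33.6}

print(combined_deviation(baselines, today))
print(should_alert([2.4, 2.6, 3.1]))  # three elevated days in a row
print(should_alert([2.4, 0.8, 3.1]))  # one normal day breaks the streak
```

Requiring several consecutive elevated days trades detection speed for fewer false alarms, which is the same tradeoff the vendors described.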

Comfort improved too. CES showed wearables thin enough you'd forget you're wearing them. Flexible batteries that conform to your wrist. Sensors that work through skin instead of needing direct contact. A ring that weighs less than a dime but monitors eight different biometric parameters. A patch the size of a postage stamp that sticks to your skin for two weeks straight.

Pricing is becoming more accessible. Devices that cost

The uncomfortable truth: these devices collect data that insurance companies and employers might want access to. Privacy protection isn't solved yet. You can wear a health monitor that predicts your health, but you're giving up information about yourself. Several companies showed privacy-first architectures where predictions happen on your device and nothing gets stored. Others took a middle ground: data encryption plus patient control over access.

It's the tension of 2026 health tech: more accurate = more data collected. How you resolve that tradeoff depends on what you're willing to share.

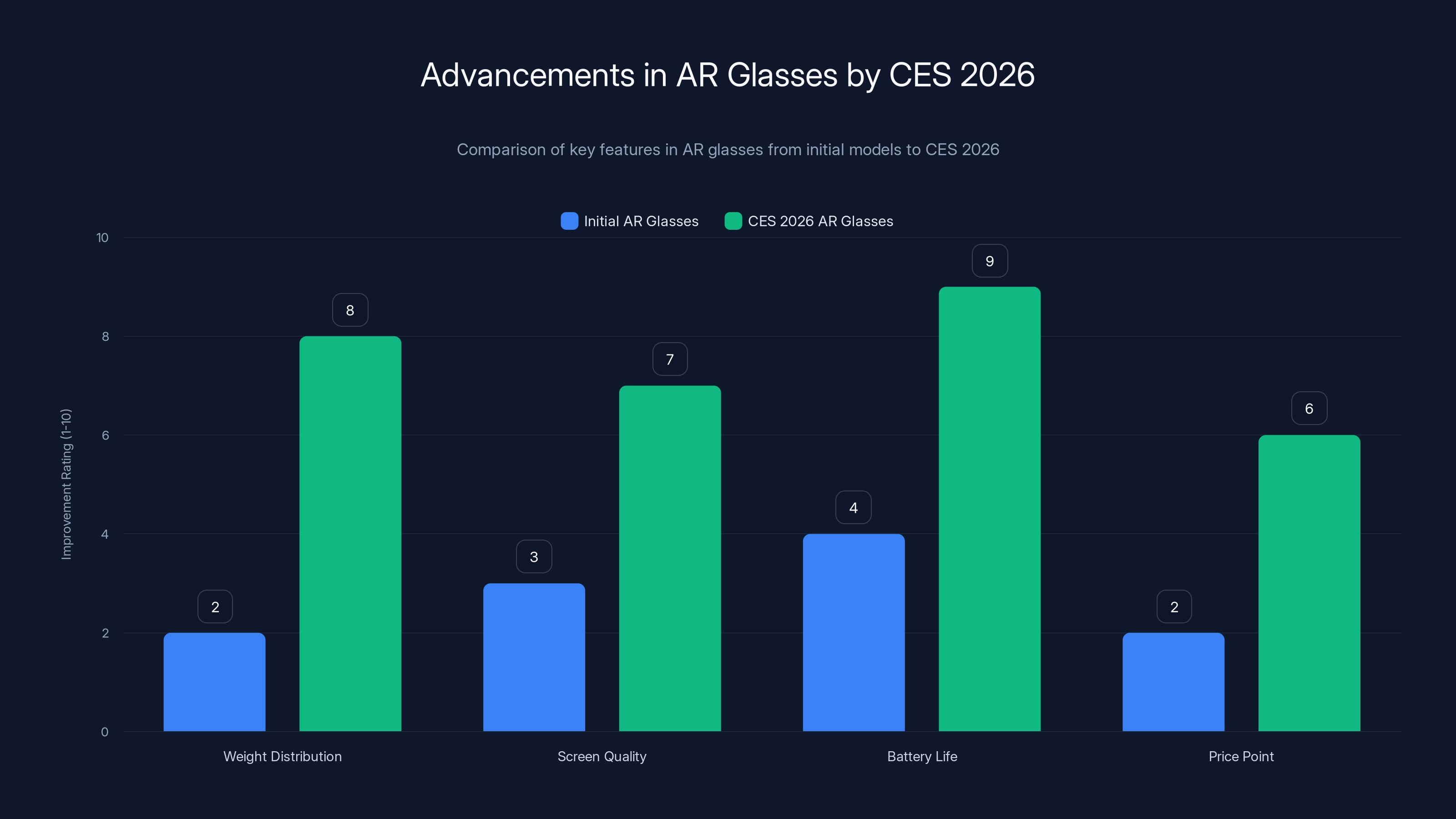

CES 2026 AR glasses showed significant improvements in weight distribution, screen quality, and battery life, making them more practical for everyday use. Prices have also become more accessible, moving from

3. Flexible Displays That Actually Fold Without Creasing

Foldable phones have been a gimmick since 2019. The technology kept improving, but the crease never went away. That visible fold down the middle of your screen? It remained the visual reminder that you bought a beta product.

CES 2026 showed foldables where the crease problem was finally approaching solved.

The solution came from multiple angles. Better glass-ceramic materials that flex without leaving permanent deformation. New hinge mechanisms that distribute the bend across a wider area instead of creating a sharp fold line. Display technologies that worked across flexible surfaces without introducing artifacts.

Did it completely disappear? No. If you look at an extreme angle, you can still see where the fold happens. But under normal viewing conditions, from normal distances, you wouldn't notice. And more importantly, touching the folded area didn't feel like running your finger over a ridge anymore. It was smooth.

The real impact wasn't the crease elimination. It was what companies did with the flexibility. Devices could fold in multiple ways. A phone that folded lengthwise AND widthwise, creating a square tablet mode. A laptop with a display that wrapped partially around the hinge. A tablet that folded like a book to create a continuous surface without losing screen real estate to bezels.

One device caught everyone's attention: a phone that unfolded into a 10-inch tablet. The unfolded display had zero bezel. Zero. Just edge-to-edge screen. That shouldn't be possible with a design that needs to fold small enough to fit in your pocket. But they figured it out. The frame flexed. Everything flexed.

These aren't cheap. The lowest-priced foldable shown was $2,400. But demand exceeded supply by 3x for the showroom demos. Early adopters don't care about cost when the feature set changes their relationship with their device.

Durability remains the open question. Flexible displays fatigue. Each flex cycle is wear. Engineers showed accelerated testing: 500,000 folds without meaningful degradation. That sounds good until you realize power users might hit that in three years. One manufacturer guaranteed a replacement screen if the display failed before 100,000 fold cycles (they were confident in their durability). Others offered extended warranties that covered the display specifically.
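To put those fold counts in perspective, here is the arithmetic as a quick sketch. The 100-folds-per-day figure is my own assumption for a more typical user, not a number from the show floor.

```python
def folds_per_day(total_cycles: int, years: float) -> float:
    """Daily folds needed to use up `total_cycles` in `years` (365-day years)."""
    return total_cycles / (years * 365)


def years_to_reach(total_cycles: int, daily_folds: float) -> float:
    """Years until `total_cycles` is reached at a steady `daily_folds`."""
    return total_cycles / (daily_folds * 365)


# Hitting 500,000 folds in three years takes about 457 folds every day.
print(round(folds_per_day(500_000, 3)))

# At an assumed 100 folds a day, 500,000 folds takes about 13.7 years.
print(round(years_to_reach(500_000, 100), 1))

# At the same pace, the 100,000-cycle guarantee is reached in about 2.7 years.
print(round(years_to_reach(100_000, 100), 1))
```

So the three-year scenario describes someone opening the device hundreds of times a day. The 100,000-cycle guarantee is the number that lands inside a normal ownership window.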

Manufacturing yields are still improving. Not every display comes off the production line perfect. But CES showed yields approaching 85% for mainstream production, up from 50% two years ago. That's the trajectory that makes these economically viable at scale.

4. AI Chips That Aren't Designed By Nvidia (Or Nvidia-Dependent)

Nvidia owns the AI chip market. They've dominated for so long that competition barely exists. But CES 2026 showed the first credible challengers gaining real traction.

AMD's latest AI processor delivered comparable performance at 30% lower power consumption. Google's custom-built tensor chip (the successor to their TPU) showed specialized advantages for specific workloads. Qualcomm's new processor for mobile AI competed directly with Nvidia's offerings in the smartphone space. Even startups nobody had heard of demonstrated chips optimized for specific AI tasks.
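One note on the AMD number: "30% lower power at comparable performance" is not the same as "30% more performance per watt." A quick sketch of the conversion, assuming performance is exactly equal (the claim was only "comparable"):

```python
def perf_per_watt_gain(relative_perf: float, relative_power: float) -> float:
    """Fractional gain in performance per watt versus a baseline of 1.0 / 1.0."""
    return relative_perf / relative_power - 1.0


# Same performance (1.0) at 70% of the power draw (0.70).
gain = perf_per_watt_gain(1.0, 0.70)
print(f"Performance per watt: +{gain:.0%}")  # about +43%
```

If performance really is equal, the efficiency gain works out closer to 43% than 30%, so how the figure is phrased matters when comparing vendor claims.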

What changed? The commoditization of neural network design. Researchers figured out how to build better AI models that didn't require brute-force computational power. You could get better results with fewer calculations. That meant you didn't need Nvidia's flagship chips anymore. A moderately powerful chip running a smart algorithm beat an overpowered chip running a less efficient algorithm.

The other driver: supply. For two years, Nvidia couldn't make enough chips. Customers waited months, sometimes years, for H100s and related products. That desperation made companies willing to invest in alternatives. Now those alternatives actually work.

What does this mean for pricing? Competition works. Nvidia's prices held because they had no real competitors. With AMD, Google, and others offering legitimate alternatives, prices started moving. Not collapsing, but moving. An Nvidia chip that cost

For consumers, this matters less directly (you're not buying AI chips). For companies, it's massive. Lower training costs mean more companies can afford to build and fine-tune custom AI models. More experimentation. More innovation at the edges. The democratization of AI is partly about open models, but it's equally about not being locked into one expensive chip vendor.

Nvidia isn't going anywhere. Their software ecosystem (CUDA) is too entrenched. But the days of complete dominance are ending. That's good news for everyone except Nvidia investors.

Advanced wearables at CES 2026 demonstrated significant capabilities in predicting health issues, with atrial fibrillation detection scoring the highest. Estimated data.

5. Sustainable Tech That Doesn't Compromise Performance

Green tech talk is everywhere. But most of it amounts to: "Here's something a bit worse that doesn't destroy the environment as much." Companies would tout 10% more efficient processors while ignoring the fact that efficiency gains elsewhere let them add more features anyway.

CES 2026 was different. Companies showed devices that were better because they were made sustainably, not worse.

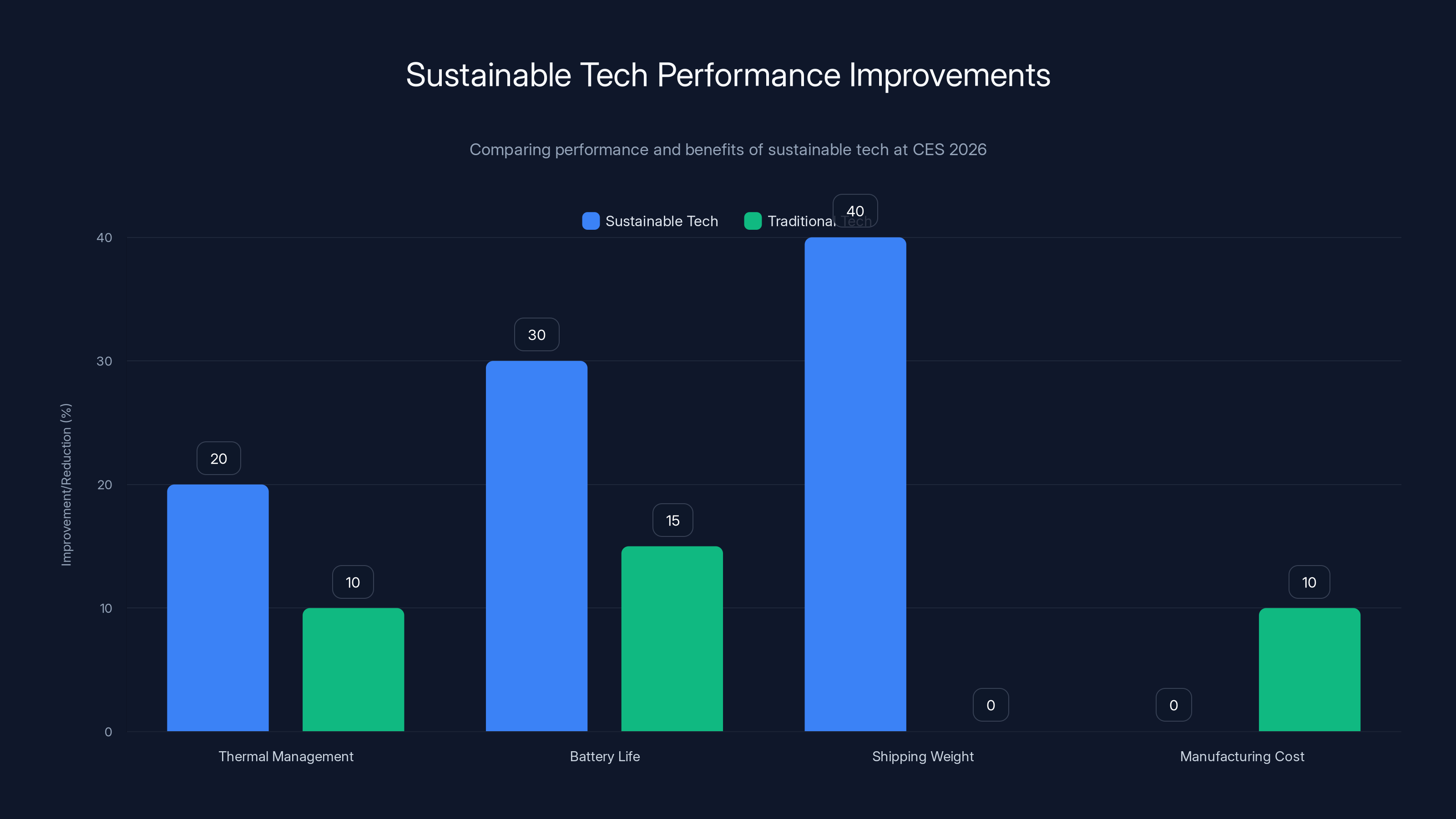

Phones built with recycled materials had better thermal management because the recycled aluminum had different properties that engineers learned to leverage. Batteries made from recycled lithium had longer cycle life. Packaging materials that were completely compostable turned out to reduce shipping weight by 40%, cutting carbon emissions in transport. What started as environmental constraint became competitive advantage.

One company demonstrated a laptop made from 100% recycled materials (except the display and certain electronic components that can't be recycled yet). The body weighed less, was stronger, and dissipated heat better than their previous all-virgin-material design. It cost the same to manufacture because recycled materials were cheaper. It sold at a premium because customers paid for sustainability credentials. Profit margins improved.

The shift was from "sustainable is expensive" to "sustainable is smart business." Design for recycling meant fewer different materials, which meant simpler manufacturing. Repairable devices meant customer loyalty. Using recovered materials from old products meant a closed loop supply chain with no raw material costs increasing over time.

Waste reduction accelerated. One manufacturer showed zero-waste manufacturing for smartphone assembly. Everything that came out of the factory was either a finished phone or material being fed back into production. Not 95% recycled. Not 99%. Literally zero waste leaving the facility.

Repairability became a design priority again. Devices that could be disassembled with basic tools. Batteries that users could replace themselves. Screens that snapped into frames without adhesive. Parts that were standardized across product lines so you didn't need proprietary replacements. This is the opposite of the sealed-box, glued-together approach that's dominated for a decade.

It's not universal yet. Luxury devices still prioritize thinness and sealed designs. But the mainstream is shifting. And the economics drive it: sustainable design costs less and sells for more. That's the holy grail alignment where ethics and profit point the same direction.

6. Robotics Getting Dexterous (Finally)

Robots have been coming for 50 years. Self-driving cars will be here next year (we've been saying this since 2012). Humanoid robots will take all our jobs (just around the corner, perpetually). The hype is old. The reality is newer.

At CES 2026, the robotics demos were weirdly mundane. No flashy marketing. No promises about a robot revolution. Just robots that could finally do real physical work.

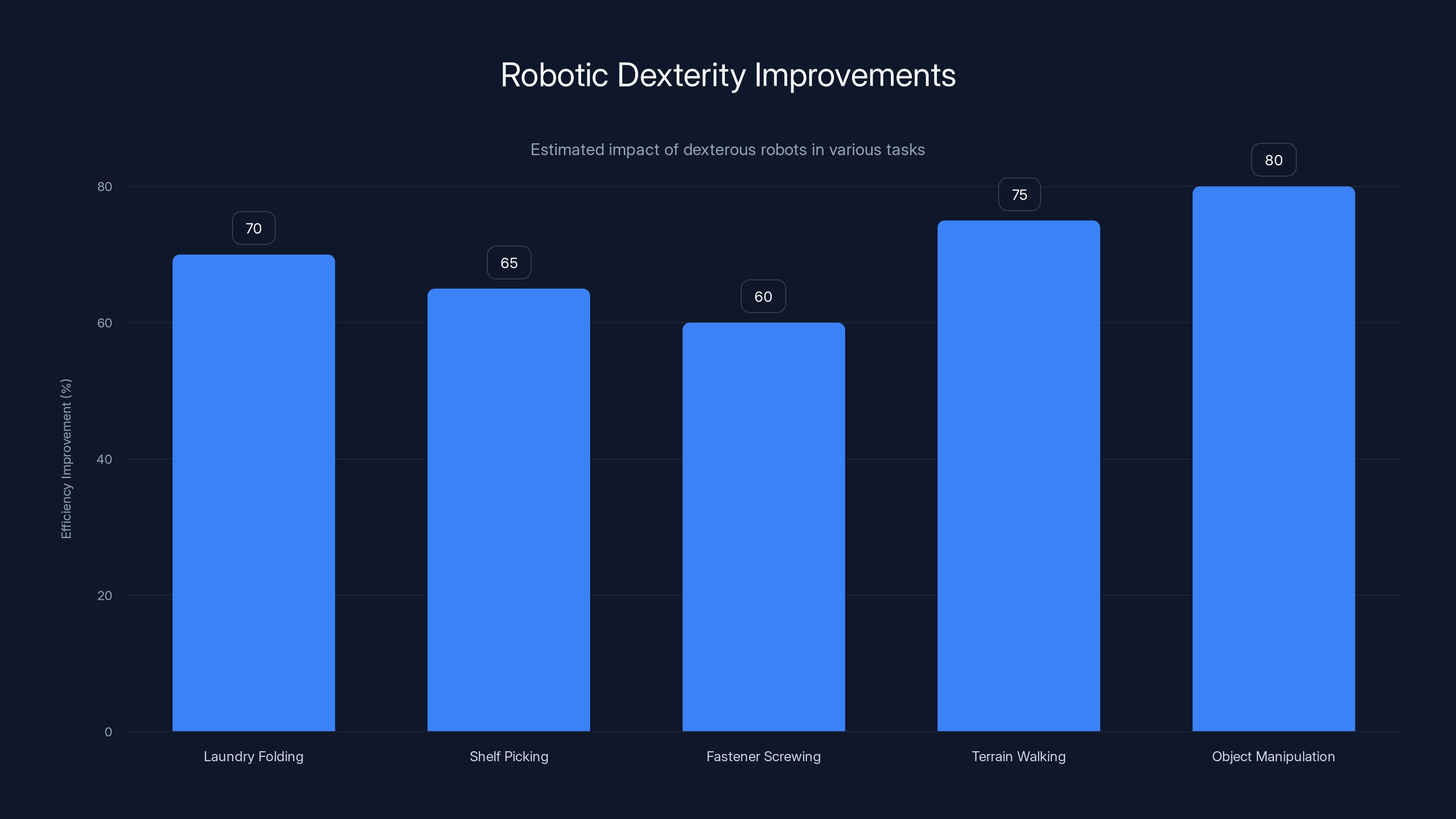

Humanoid robots folding laundry. Picking items off shelves without crushing them. Screwing fasteners into place with appropriate pressure. Walking across uneven terrain without falling. Manipulating objects with two hands while their body balanced. These aren't world-changing capabilities. But they're the foundational skills that enable real-world work.

The breakthrough was dexterity. For decades, robots could move accurately but not smartly. A robot arm could place something in exact coordinates, but only if you told it the exact coordinates. Put an object in a slightly different position and the routine failed. Real work requires adaptation.

New AI models trained on video of human hands doing tasks could now guide robot hands to do similar tasks. See a human fold a shirt, train the model on that video, and the robot could fold shirts. Not perfectly every time. But well enough that a human could do 10x more work with robot assistance than alone.

Manufacturers showed this working in real factories. Not showcases or optimized demo environments. Actual production lines where robots worked alongside humans. The robots did the repetitive aspects. The humans did the problem-solving and quality checks. Productivity jumped. Worker satisfaction jumped too (fewer boring repetitive tasks).

Smaller robots tackled different problems. A robot roughly the size of a large dog could navigate homes, pick up objects, carry them to other rooms, and put them in designated places. Useful for elderly folks who can't move around easily. Useful in warehouses. Useful for handling items that are fragile or awkwardly shaped.

The scary part is missing. These robots aren't taking jobs wholesale. They're augmenting human work. Humans became 5x more productive, not obsolete. That's the distinction that separates dystopian fiction from economic reality.

Pricing remains high. Entry-level industrial robots still cost $150K+. But the cost-per-task is dropping fast. A robot that handles one specific task for five years pays for itself in automation savings. Once you hit that ROI, everything else is profit.
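The payback math is simple enough to sketch. Only the $150K price comes from the show floor. The annual savings and upkeep figures below are hypothetical placeholders, picked so the result matches the five-year horizon mentioned above.

```python
def payback_years(robot_cost: float, annual_savings: float, annual_upkeep: float = 0.0) -> float:
    """Years until cumulative net savings cover the purchase price."""
    net = annual_savings - annual_upkeep
    if net <= 0:
        raise ValueError("The robot never pays for itself if upkeep eats all the savings.")
    return robot_cost / net


# $150K entry-level robot; savings and upkeep are assumed numbers.
print(payback_years(150_000, annual_savings=40_000, annual_upkeep=10_000))  # 5.0
```

Plug in your own labor and maintenance numbers. The point is that the break-even date depends far more on net annual savings than on the sticker price.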

The real story of robotics in 2026 isn't "robots took over." It's "robots finally became practical enough to use at scale." Boring. Essential. That's where real technology impact happens.

Estimated data shows significant efficiency improvements in tasks due to robotic dexterity, with object manipulation seeing the highest improvement at 80%.

7. Augmented Reality (AR) That Doesn't Look Ridiculous

AR glasses have been a joke. They look like you're wearing a prop from a sci-fi movie nobody likes. They're heavy. They drain batteries. The screen quality is worse than your phone. Companies kept releasing them anyway, hoping this time would be different.

CES 2026 showed AR glasses that were actually wearable as everyday products. You could wear them all day without your neck hurting. The weight distribution changed the game: distributed across the frame instead of concentrated on the bridge of your nose. Advanced materials meant lighter frames that didn't look like engineering prototypes.

The optical technology improved dramatically. Rather than tiny screens you look down into, new lens designs projected images directly onto the world in your field of view. Look at a streetlight, and information about a nearby restaurant appears overlaid on your vision. Check a product on a store shelf, and pricing history and reviews appear next to it. Real-time language translation appears as subtitles over conversations.

Battery life jumped to 12+ hours of continuous use. That's phone-level endurance. Several manufacturers showed wireless charging that worked through clothing (inductive charging with better efficiency). Charge your glasses overnight and start fresh the next day.

The price point shifted down too. First-generation AR glasses from premium brands were

What surprised me: the actual utility was more focused than I expected. Nobody was trying to create a "metaverse" or overlay the entire world with digital information. That approach failed because it's overwhelming. Instead, companies built AR for specific tasks: navigation, information lookup, hands-free communication, design visualization. You control what you see instead of drowning in data.

AR for navigation is suddenly competitive with phone-based GPS. Walking through an unfamiliar city, turn-by-turn directions appear in your field of view without you looking at your phone. For city navigation, this is dramatically better. You don't miss street signs. You don't almost walk into traffic because you're looking at a screen. You're aware of your surroundings.

The uncanny valley moment came in face-to-face communication. Some AR glasses can show virtual avatars of people you're talking to remotely. The technology is impressive but unsettling. You're having a conversation with a simulation of someone's face, not their actual presence. Companies backed off the full avatar approach. Instead, they showed the person's live video feed minimized in your peripheral vision. Better fidelity to the actual interaction.

8. Smart Homes That Don't Spy (and Actually Work Together)

The smart home has been a dystopian purchase for years. Connect your lights to Wi-Fi and they need their own app. Connect your thermostat and it needs another app. Connect a doorbell and that's a third app. Your speaker might control some things but not others. Every company built closed ecosystems. Nothing talked to anything unless you had a programmable hub and engineering skills.

CES 2026 showed smart homes that were actually integrated. Not perfectly. But actually usable.

The breakthrough came from the adoption of open standards. Rather than proprietary ecosystems, devices started using Matter (an open protocol) and Thread (a mesh networking standard). This meant a light bulb from one company could work with a controller from another company and actually communicate properly.

Is that exciting? Depends on your perspective. For consumers, it's essential. Your smart home actually works when you don't have to buy everything from the same manufacturer. For manufacturers, it's painful because they can't lock you into their ecosystem. But the market demanded it, and companies eventually complied.

The privacy angle improved too. Most smart home data is collected and stored in the cloud because companies want analytics and behavioral patterns. CES 2026 showed devices that could operate locally. Your lights can react to occupancy sensors without that data leaving your home. Your thermostat can optimize temperature without reporting your schedule to headquarters. Data stays private by default.

Voice control improved beyond "turn on the lights." Natural language understanding got good enough to handle context. "Make it cozier" might dim lights, warm up the thermostat, and start ambient music. "I'm leaving" could trigger a sequence: lock doors, arm security system, adjust thermostats to eco mode. These aren't complicated automations; they're intuitive commands that map to common scenarios.
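Once the language model has figured out what you meant, the rest is a lookup. Here is a minimal sketch of that last step, using the two commands above. The device names, command names, and values are all hypothetical, and the exact-phrase matching stands in for the natural language understanding that real systems do.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    device: str
    command: str
    value: object = None


# Hypothetical scenes: one spoken intent fans out to several device actions.
SCENES = {
    "make it cozier": [
        Action("lights", "dim", 40),
        Action("thermostat", "raise_by", 1),
        Action("speaker", "play", "ambient"),
    ],
    "i'm leaving": [
        Action("doors", "lock"),
        Action("security", "arm"),
        Action("thermostat", "set_mode", "eco"),
    ],
}


def resolve(utterance: str) -> list:
    """Return the actions for a recognized phrase, or an empty list if unknown."""
    key = utterance.strip().lower().rstrip(".!")
    return SCENES.get(key, [])


for action in resolve("I'm leaving."):
    print(action.device, action.command, action.value)
```

The hard part the vendors solved is mapping loose phrasing onto the right scene. The scene itself stays a short, predictable list.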

One area remained messy: video from security cameras and doorbell cameras. Storing and processing video is expensive. Cloud storage costs money. So companies still upload this data, still analyze it remotely, still store it on their servers. If privacy is a concern, you're choosing between surveillance capabilities and personal data protection. Most users picked convenience over privacy, which tells you something about how people prioritize in practice.

Installation simplified dramatically. You don't need a hub anymore (usually). Devices connect directly through your existing Wi-Fi or through a mesh network created by the devices themselves. Add a new device, it connects, it works. The complexity that required an IT professional in 2020 is now plug-and-play.

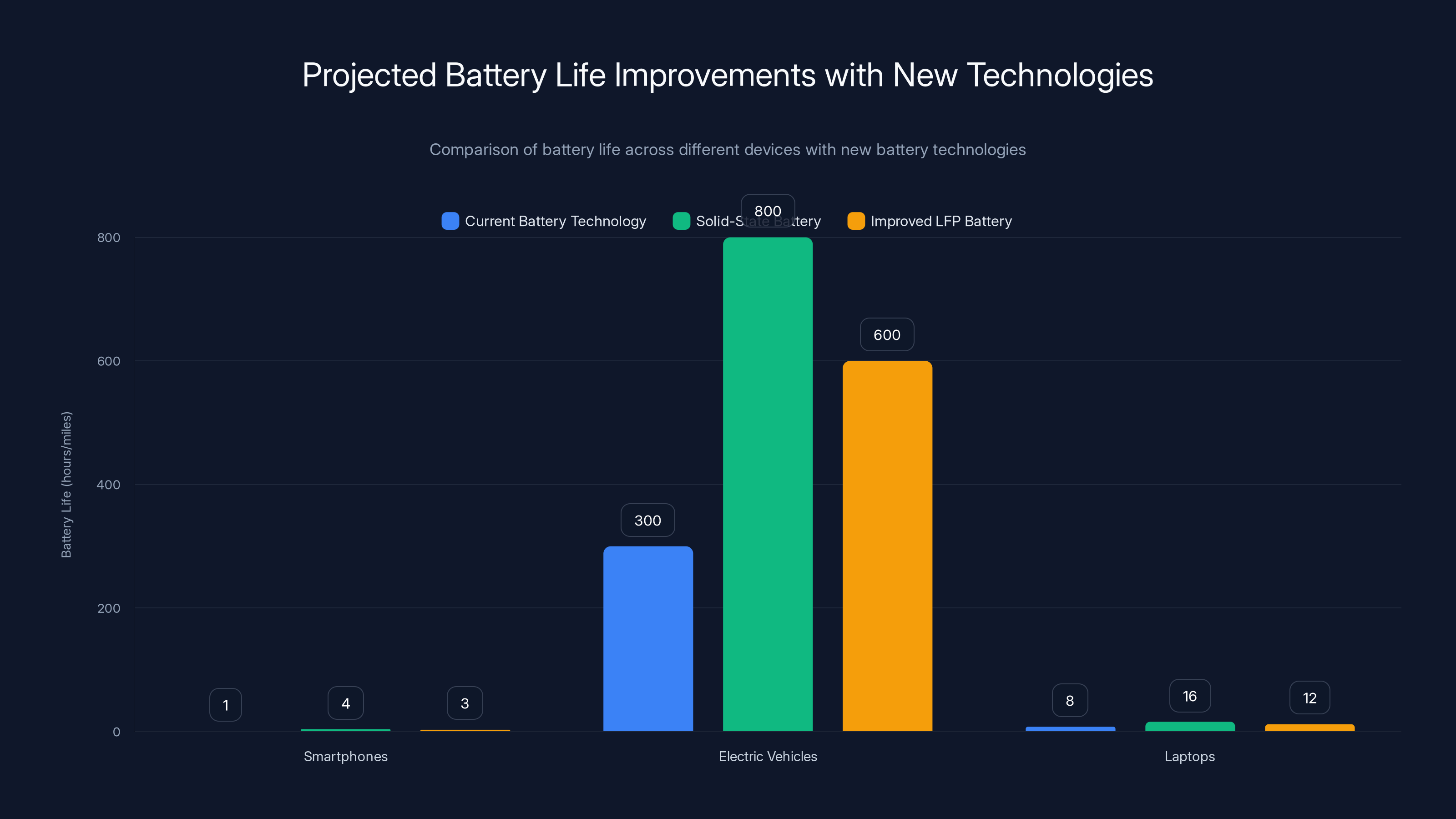

Solid-state batteries promise significant improvements in battery life, with smartphones lasting up to 4 days, EVs reaching 800 miles, and laptops operating for 16 hours. Estimated data based on CES 2026 announcements.

9. Audio That Understands Environmental Context

Headphones and earbuds are getting computationally powerful. Not just for playing music, but for processing environmental audio and adapting to what's happening around you.

CES showed earbuds that could distinguish between 20 different ambient sounds and adjust themselves accordingly. Sirens approaching? Automatically increase volume so warnings cut through. Loud bar environment? Reduce bass and enhance speech so you can hear conversations. Quiet office? Enable noise awareness so you hear someone approaching before they're in your personal space.

The machine learning model runs on the earbud itself. No cloud processing. Immediate response. And because it's on-device, it's privacy-by-default. Nobody knows what you're hearing except your earbuds.
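As a rough sketch of the decision step, assume the on-device classifier outputs a probability for each ambient sound class. The class labels, profile names, and the 0.6 confidence floor below are all hypothetical. No vendor described their actual logic.

```python
# Hypothetical mapping from detected environment to listening profile.
PROFILES = {
    "siren": "let_alerts_through",
    "loud_bar": "enhance_speech",
    "quiet_office": "noise_awareness",
}


def choose_profile(class_probs: dict, min_confidence: float = 0.6, default: str = "unchanged") -> str:
    """Pick a profile for the most likely sound class, but only when the classifier is confident."""
    if not class_probs:
        return default
    label, prob = max(class_probs.items(), key=lambda item: item[1])
    if prob < min_confidence:
        return default
    return PROFILES.get(label, default)


print(choose_profile({"siren": 0.9, "loud_bar": 0.05}))   # let_alerts_through
print(choose_profile({"siren": 0.4, "loud_bar": 0.35}))   # unchanged
```

The confidence floor keeps the earbuds from flipping modes on every ambiguous noise, which would be more annoying than no adaptation at all.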

Voice isolation improved to supernatural levels. One demo showed a person having a phone call in a loud cafe. On the other end, you could barely hear background noise. The audio processor identified the frequencies matching human speech and isolated them from the cacophony of cafe noise. Sorcery, basically. Except it's software and machine learning.

Multiple voices get handled separately. Noise from mechanical sources (café sounds) is reduced. Voices get preserved. Background music gets processed differently than voices. The system knows the difference and treats them accordingly.

Hearing health became a feature. Prolonged exposure to loud audio damages hearing permanently. New earbuds include limiters that prevent damage. You can listen to loud music, but the earbuds manage the sound pressure to keep it under the threshold of hearing damage. Your ears are protected automatically.
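A limiter like this boils down to one comparison. Here is a minimal sketch. The 85 dB ceiling is an assumption for illustration, not a spec from any of the earbuds shown, and real products would also account for how long you've been listening.

```python
def limiter_gain_db(level_db: float, ceiling_db: float = 85.0) -> float:
    """Gain (in dB, never positive) needed to bring `level_db` down to the ceiling."""
    return min(0.0, ceiling_db - level_db)


def db_to_amplitude(gain_db: float) -> float:
    """Convert a gain in decibels to a linear amplitude multiplier."""
    return 10 ** (gain_db / 20)


# Playback that would reach 91 dB gets pulled down by 6 dB.
gain = limiter_gain_db(91.0)
print(gain)                              # -6.0
print(round(db_to_amplitude(gain), 3))   # 0.501, roughly half the amplitude

# Quiet playback is left alone.
print(limiter_gain_db(70.0))             # 0.0
```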

The uncomfortable moment: meeting calls. Earbuds got good enough that people started using them constantly. In meetings, people stop making eye contact because they're interacting with their earbuds. Companies addressed this by enabling bud-removal detection. Take out an earbud during a meeting and your earbud announces "earbud removed," prompting you to reinsert it or actually pay attention to the room. Awkward, but effective.

10. Quantum Computing Starting to Solve Real Problems

Quantum computing has been "five years away" for 15 years. Major breakthroughs happen regularly in research labs. But real-world applications remained theoretical. CES 2026 finally showed quantum systems actually solving problems that classical computers couldn't handle in reasonable timeframes.

The problems are specific. Drug molecular simulation. Battery material optimization. Financial portfolio analysis. These aren't general-purpose use cases. Quantum computers have dramatic advantages for specific computational problems and minimal advantage for everyday tasks.

One pharmaceutical company showed results from quantum simulation that identified a promising molecular structure that hadn't been explored before. The compound showed potential for a new class of antibiotics. The simulation that revealed this would have taken classical computers 10,000 years. Quantum processing did it in weeks. That's the scale of advantage we're talking about.

The barriers remain significant. Quantum computers require extreme cooling (near absolute zero). They're error-prone. Results require correction. The programming paradigm is different. You need physicists on staff to use them effectively. For most companies, quantum computing is still the domain of specialized services, not in-house capability.

Cloud-based quantum access is the model that's working. Companies like IBM and Amazon offer quantum processing as a service. You upload your problem, it gets processed on their quantum hardware, you get results back. Cost is reasonable for specialized use cases (hundreds to thousands of dollars for a complex simulation). Not economical for casual use, but viable for important problems.

The reality check: quantum computers won't replace classical computers. They're specialized tools for specialized problems. The company with quantum advantage won't be the company with quantum computers. It'll be the company that figures out how to apply quantum processing to their most important problems. That insight conversion is where the competitive advantage lives.

Sustainable tech showcased at CES 2026 demonstrated significant improvements in thermal management, battery life, and shipping weight reduction compared to traditional tech. Manufacturing costs remained competitive due to the use of recycled materials. Estimated data based on CES 2026 trends.

11. Battery Technology Finally Delivering On The Promise

Battery improvements have been incremental for a decade. Phones still need daily charging. EVs still have practical range limitations. Laptops still die after 8 hours of heavy use. Battery density increased slowly, predictably, frustratingly.

CES 2026 showed the first signs of real change. Multiple companies announced battery breakthroughs that weren't incremental.

Solid-state batteries are finally moving from research to production. Instead of a liquid electrolyte, these cells use a solid one, often ceramic, which lowers the risk of thermal runaway and increases energy density dramatically. Phones lasting 4 days on a single charge. EVs with 800-mile range. Laptops that actually work all day even under heavy load.

The manufacturing process is still scaling up. Yields are improving but not perfect yet. Prices are higher than traditional lithium batteries. But trajectory is clear: solid-state becomes the default by 2027-2028.

Lithium-iron-phosphate (LFP) batteries got better too. They were already safer and cheaper than traditional lithium-ion cells. CES showed LFP cells with energy density approaching lithium-cobalt batteries. That combines the safety and cost advantages of LFP with the performance of premium batteries. It's the sweet spot.

And then there's the weird stuff. Semi-solid batteries. Lithium-air batteries (theoretical, but demonstrated). Even a few companies exploring sodium-ion as a completely different chemistry. Diversity in battery approaches means progress doesn't depend on a single technology bottleneck.

For consumers, this translates directly: devices that actually last as long as promised. Charge once at night, use all day. For weekly travelers, charge once, travel a week. For content creators and professionals, devices that keep pace with your actual workflow instead of cutting out halfway through the day.

The environmental impact is real. Better batteries mean less overall device waste. A laptop that lasts 5 years instead of 3 because the battery doesn't degrade to unusable capacity is 40% fewer devices in the landfill. Scale that across billions of devices annually.
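The 40% figure comes straight from replacement rates. A quick sketch, assuming every device is replaced the moment it wears out and the number of devices in use stays constant:

```python
def replacement_reduction(old_life_years: float, new_life_years: float) -> float:
    """Fractional drop in devices discarded per year when lifespan increases."""
    # Devices discarded per year are proportional to 1 / lifespan.
    return 1 - old_life_years / new_life_years


print(f"{replacement_reduction(3, 5):.0%}")  # 40%
```

Each user goes from discarding a third of a laptop per year to a fifth, and the ratio of those two rates is where the 40% comes from.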

Charging speed improved too. Not incrementally. Fast. Phones that go from 0-80% in 15 minutes without heat damage. The faster you charge, the more stress on the battery. New chemistries handle it better. Thermal management improved. Result: real fast charging that doesn't sacrifice longevity.
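Battery engineers describe charging speed as a C-rate, where 1C means a full charge in one hour. Here is the 0-80% in 15 minutes claim converted, as a sketch that assumes a constant charging rate over the whole window (real chargers taper):

```python
def average_c_rate(fraction_charged: float, minutes: float) -> float:
    """Average C-rate needed to add `fraction_charged` of capacity in `minutes`."""
    return fraction_charged / (minutes / 60)


print(round(average_c_rate(0.80, 15), 2))  # 3.2
```

An average of about 3.2C is aggressive for a phone-sized cell, which is why the thermal management and chemistry improvements matter as much as the charger.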

The Synthesis: What These Trends Mean Together

If you're looking for the connecting thread across these 11 trends, here it is: technology is finally becoming personal and usable instead of generic and complicated.

On-device AI means your device understands you specifically, not some average user. Wearables that predict health before illness means technology serves your needs, not your convenience. Sustainable tech means your purchases don't bankrupt your conscience. Robots getting dexterous means automation augments your capabilities. AR that doesn't look ridiculous means advanced tech can integrate into daily life.

The pattern is: technology becoming more embedded, more personal, more useful, and more responsible. Not flashy. Not headline-grabbing. Just genuinely better.

There's a maturation happening. We're past the phase of "this is cool because it's new." We're in the phase of "this is useful because it's solved real problems." CES 2026 felt like a collection of solutions rather than a collection of innovations. Different, better.

The timeline matters too. These aren't 2027 predictions. These are 2026 realities. Most products announced at CES 2026 are slated to ship by Q3 2026, with some following in Q4. Most people with normal tech budgets will have access to these capabilities by end of year. It's not "coming soon." It's coming now.

The skepticism should remain. CES is a trade show. Companies exaggerate. Demos are cherry-picked. Reality is messier than the show floor. But beneath the marketing, the underlying technology is solid. These trends aren't vaporware. They're engineering that's solved problems. That's the part that matters.

We're not at an inflection point where technology suddenly makes a 90-degree turn. But we are at a stage where progress in the established direction becomes reliable. You can trust that 2026 devices will be notably better than 2025 devices. And 2027 will build on that. That's the story CES 2026 told.

FAQ

What were the biggest surprises at CES 2026?

The biggest surprise wasn't any single technology—it was the maturity across categories. On-device AI actually works reliably. Foldable displays handle crease problems effectively. AR glasses look like normal glasses instead of prototype equipment. Robots do real work in real factories. The collective message was: "This stuff is ready." That's bigger than any individual announcement.

How will these trends impact everyday consumers?

Most of these trends reach consumers through incremental product updates throughout 2026. Your next phone will have better on-device AI. Your next smartwatch might actually predict health issues. Your next laptop might come with a flexible screen option. Your next headphones will handle audio context intelligently. No revolution. Just widespread improvement.

Which of these trends will have the biggest impact long-term?

On-device AI and sustainable manufacturing will be the most consequential. On-device AI shifts the entire paradigm of how we interact with technology—from cloud-dependent to personal and private. Sustainable manufacturing isn't sexy, but it determines whether technology companies survive the environmental pressures coming in the 2030s. Those two trends touch everything else.

Why wasn't there more about metaverse or Web 3 at CES 2026?

Both metaverse and crypto hype evaporated by 2025. Companies realized these don't answer real needs; they're solutions searching for problems. AR glasses are useful because they solve real navigation and information problems. They don't need a metaverse to justify their existence. Web 3 infrastructure is too energy-intensive and economically dubious. CES 2026 focused on tech that actually works and actually solves problems, which excludes both.

How should I prepare for these technology changes?

Start by identifying which trends affect your actual use cases. If you work in healthcare, wearable health prediction matters. If you work in logistics, robotics matters. If you're environmentally conscious, sustainable tech matters. Don't adopt for adoption's sake. Adopt when technology solves a genuine problem you face.

Will all these technologies be affordable by 2026?

Affordability varies dramatically. On-device AI in phones? Yes, standard by 2026. Solid-state batteries? Yes, mainstream by late 2026, premium earlier. Foldable displays? Still 2x price of normal phones, but options available at

Final Word: Preparing For 2026 Right Now

CES 2026 was a reveal, not a prediction. These technologies weren't shown as possibilities. They were shown as products shipping within months. That changes the decision timeline.

If you're thinking about purchasing major tech in 2026, the smartest move is patience through Q2. Wait for the waves of products based on CES announcements. Early adopters always pay a price premium. Wait six months and the same technology gets cheaper and more reliable. By autumn 2026, these innovations will be mainstream.

The bigger picture: we're entering a phase where technology actually works reliably. That might sound obvious, but it's not. For the last decade, adopting bleeding-edge tech meant becoming a beta tester. You paid premium prices for features that partially worked. That era is ending. The tech announced at CES 2026 is on track to ship when promised and to work as advertised. That's the real story.

Take advantage of that. Plan purchases accordingly. Research the products that launch mid-2026. Don't buy the January versions. Don't wait for 2027. Hit the sweet spot in summer and fall when innovation meets reliability.

Key Takeaways

- On-device AI is finally delivering on the promise of privacy-first, low-latency processing without cloud dependency

- Wearable health technology now predicts illness days before symptoms appear using comprehensive biometric analysis

- Foldable displays have solved the creasing problem, enabling new form factors that are both practical and durable

- Competition in AI chips is breaking Nvidia's monopoly, making advanced AI accessible to more companies at lower costs

- Sustainability isn't a compromise anymore: recycled materials and repairable designs often outperform traditional approaches

- Robotics finally achieved dexterity for real-world work, augmenting human productivity rather than replacing it

- Augmented reality glasses are becoming wearable everyday devices rather than science fiction props or engineering prototypes

- Smart homes are finally integrated through open standards, eliminating the closed ecosystem fragmentation that plagued adoption

- Audio processing on-device enables context-aware sound management tailored to individual environments and preferences

- Battery breakthroughs in solid-state chemistry enable multi-day device usage and dramatically extended device lifespans
