The Uncomfortable Truth About AI at Work

You're sitting at your desk. A tough problem lands in your lap. Your instinct used to be: grab coffee, sketch some ideas, talk it through with someone. Now? You open ChatGPT, paste the problem, and wait thirty seconds for a solution.

It works. It saves time. And that's exactly what worries people who've been thinking deeply about how we work. According to Harvard Business Review, AI doesn't necessarily reduce work; it often intensifies it by creating new tasks and expectations.

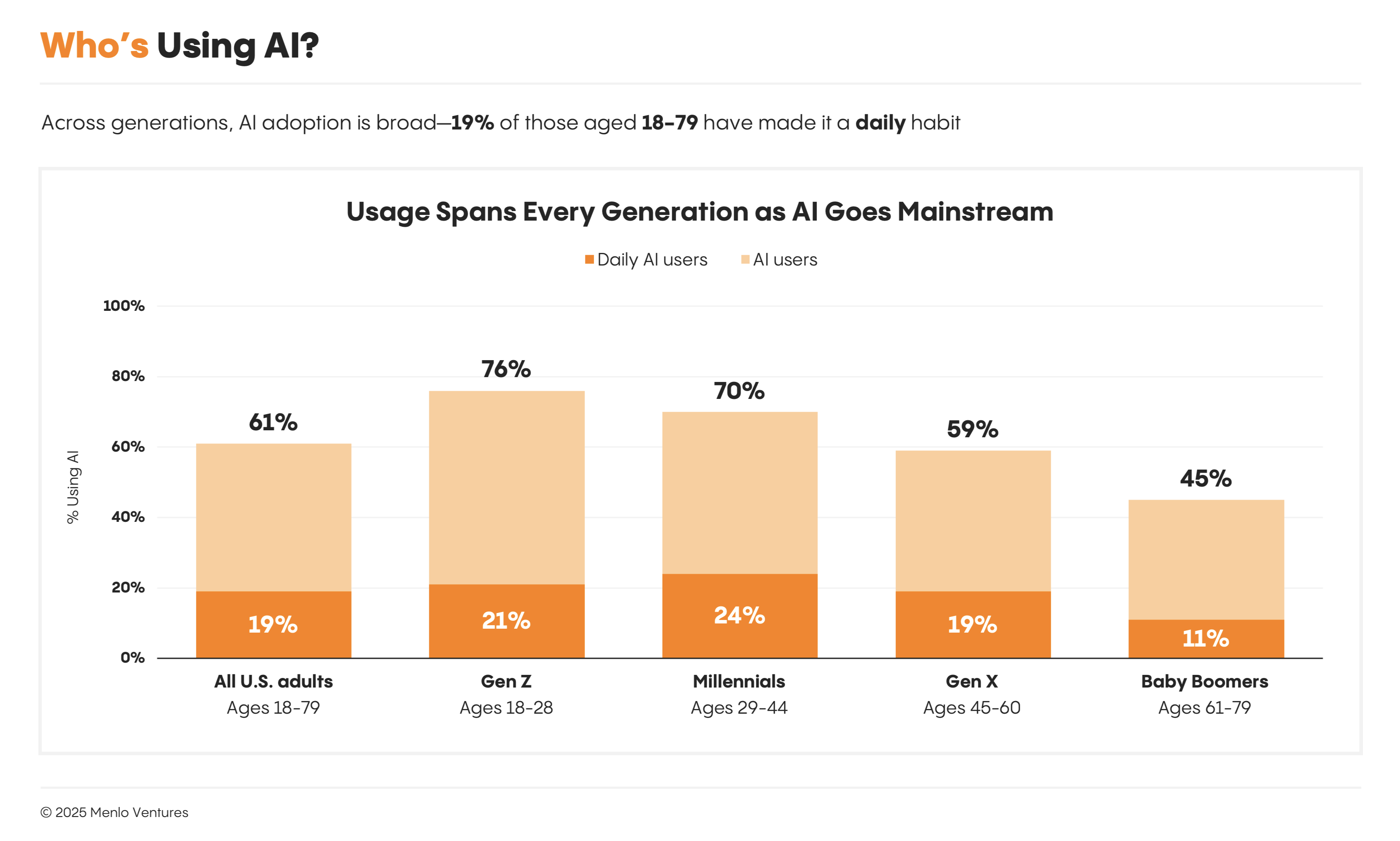

We're in the middle of something nobody quite knows how to talk about. AI isn't just changing what we do at work—it's changing how we think. And not always in ways we notice until it's too late.

The question isn't whether AI makes us more productive. It probably does, at least in the short term. The real question is more subtle and harder to measure: what happens to your brain when you stop wrestling with difficult problems? What skills atrophy when you hand thinking over to machines?

This isn't pessimism. It's something worth examining carefully.

Why Struggle Actually Made Us Better

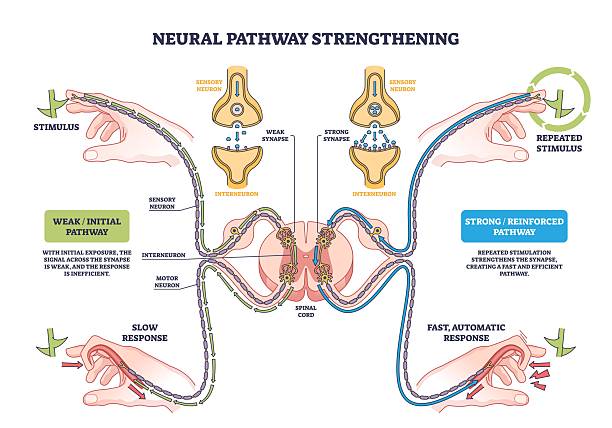

There's real science behind this, and it's uncomfortable for anyone who thinks AI is purely beneficial. A recent study published in Nature highlights the importance of struggle in learning and cognitive development.

When you struggle with a hard problem (when you're stuck, frustrated, trying different angles), your brain is doing something specific. It's building neural pathways. Creating connections between concepts. Developing what researchers call "transfer of learning": the ability to take something you learned in one context and apply it somewhere completely different.

This doesn't happen when someone (or something) just hands you the answer.

Consider what happens in your brain when you solve a math problem yourself versus when someone explains the solution. The self-solving version produces deeper understanding. Your brain was forced to try multiple approaches, fail, recalibrate, try again. That repetitive cycle of hypothesis and feedback is what locks knowledge into long-term memory.

Now imagine that happening across every problem you encounter at work. The spreadsheet design challenge. The customer objection you need to overcome. The code architecture decision. The presentation structure. The negotiation strategy.

When AI handles the cognitive load, you're outsourcing the exact mechanism that builds expertise. Dr. Bjorn Mercer, AI researcher and academic, has written extensively about how expert knowledge isn't just information—it's built through repeated, deliberate practice over years. The person who becomes brilliant at something doesn't get there by asking someone for answers repeatedly. They get there by struggling, failing, learning from failure, and trying again.

Yet this is precisely what AI optimizes away.

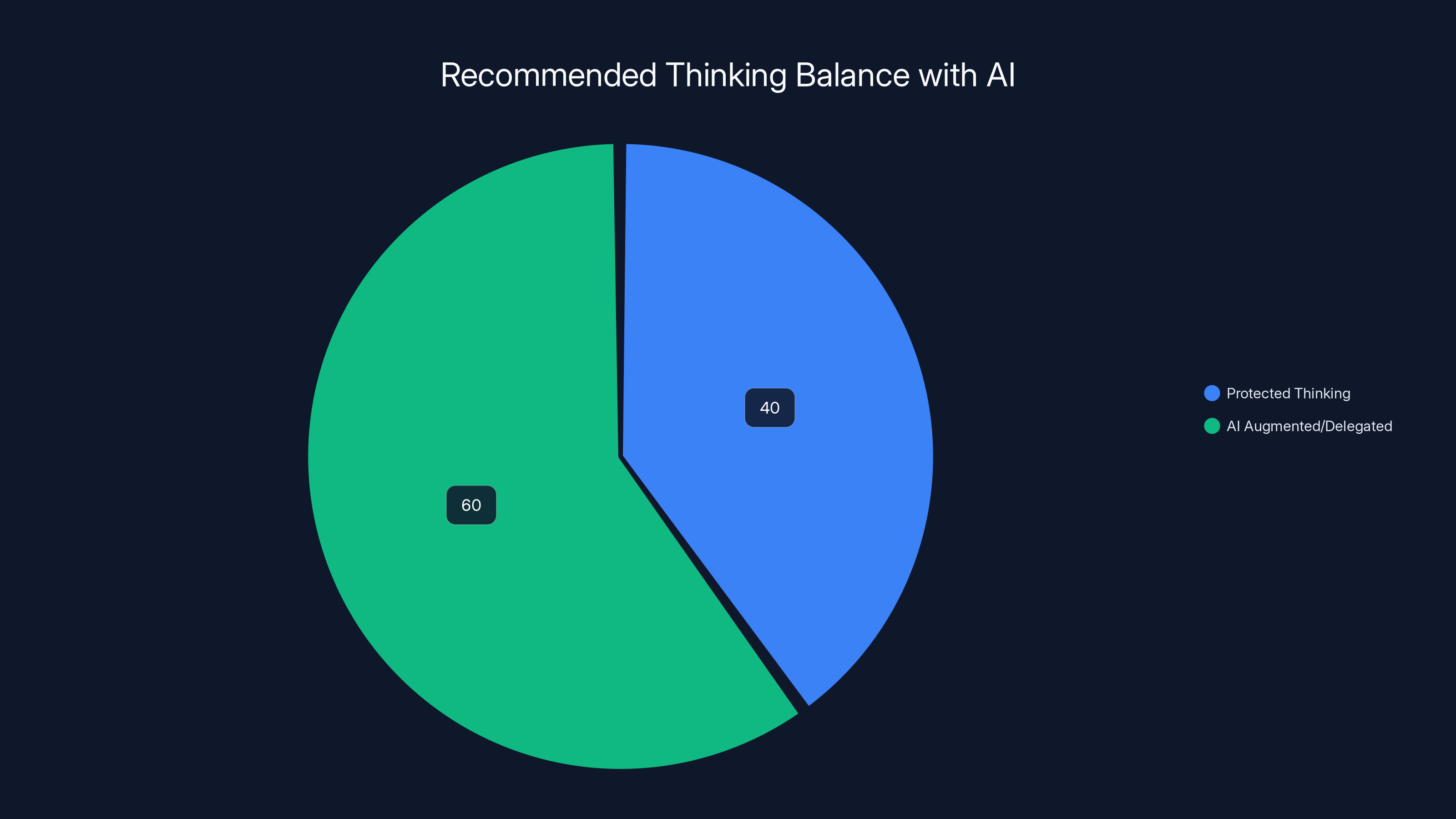

To maintain cognitive skills, it's recommended to perform 40% of thinking tasks independently and 60% with AI augmentation. Estimated data based on suggested practices.

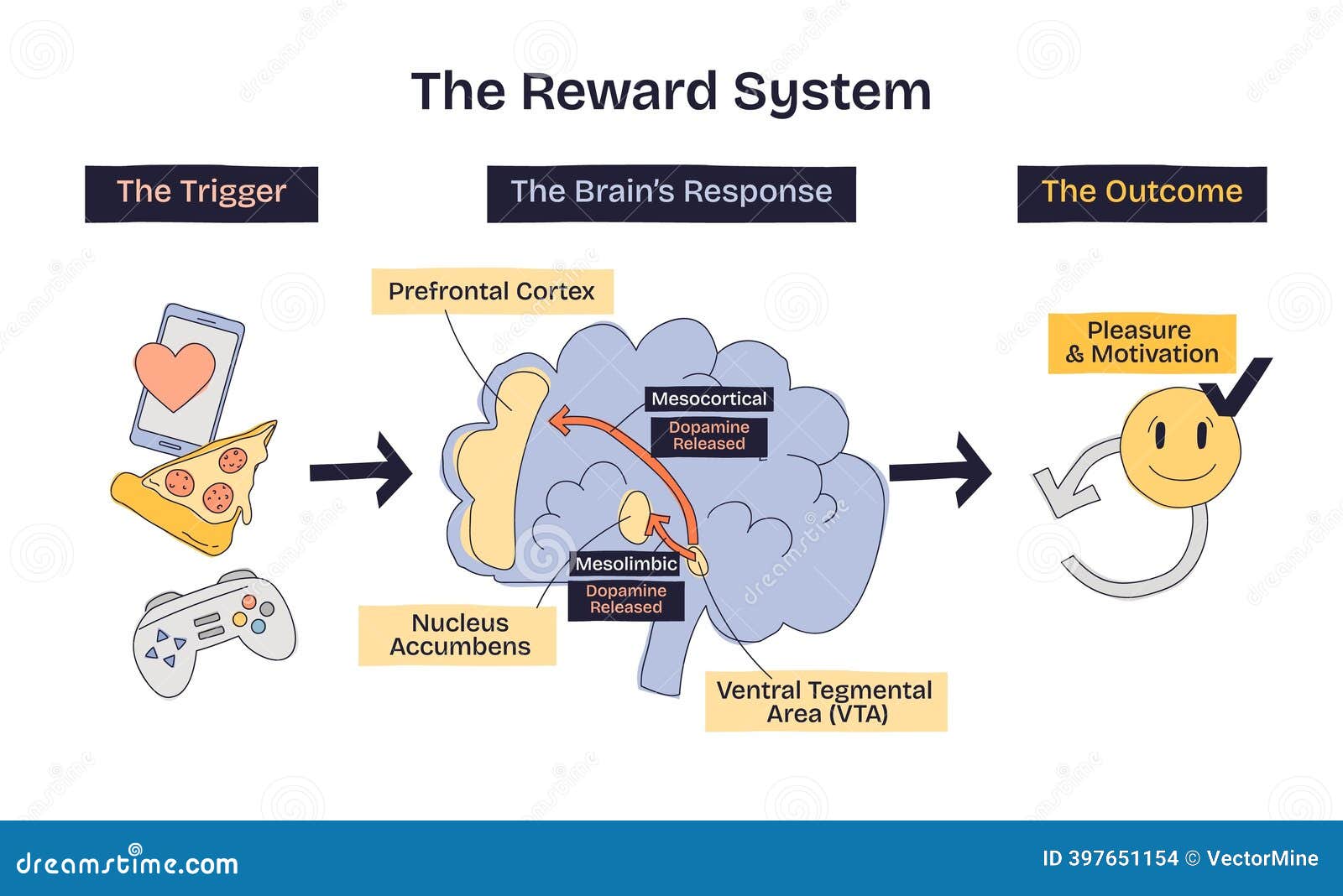

The Dopamine Trap

There's another mechanism at play here that's worth understanding.

Your brain loves immediate wins. The dopamine hit when you solve something quickly is real. It feels good. Productive. You can check it off your list. However, research suggests that this immediate gratification can lead to long-term cognitive decline.

AI gives you that hit almost instantly. Problem → Solution → Done. Your nervous system doesn't know the difference between solving something yourself and outsourcing the solution. The reward is the same.

But here's the catch: the reward system is actually measuring the wrong thing. What feels productive in the moment (getting the task done) isn't the same as what produces long-term capability (understanding how to solve it).

This is similar to why cramming for an exam feels productive while you're doing it, but students who cram forget everything two weeks later. The brain is optimized for immediate payoff, not learning. And AI is perfectly designed to exploit that.

This is sometimes called "productivity theater." You look busy, you complete tasks, your metrics go up. But your actual competence is stagnating. You're getting faster at delegating thinking, not faster at thinking itself.

The insidious part? You don't notice it until you're in a situation where AI isn't available. Then you realize you've lost ground.

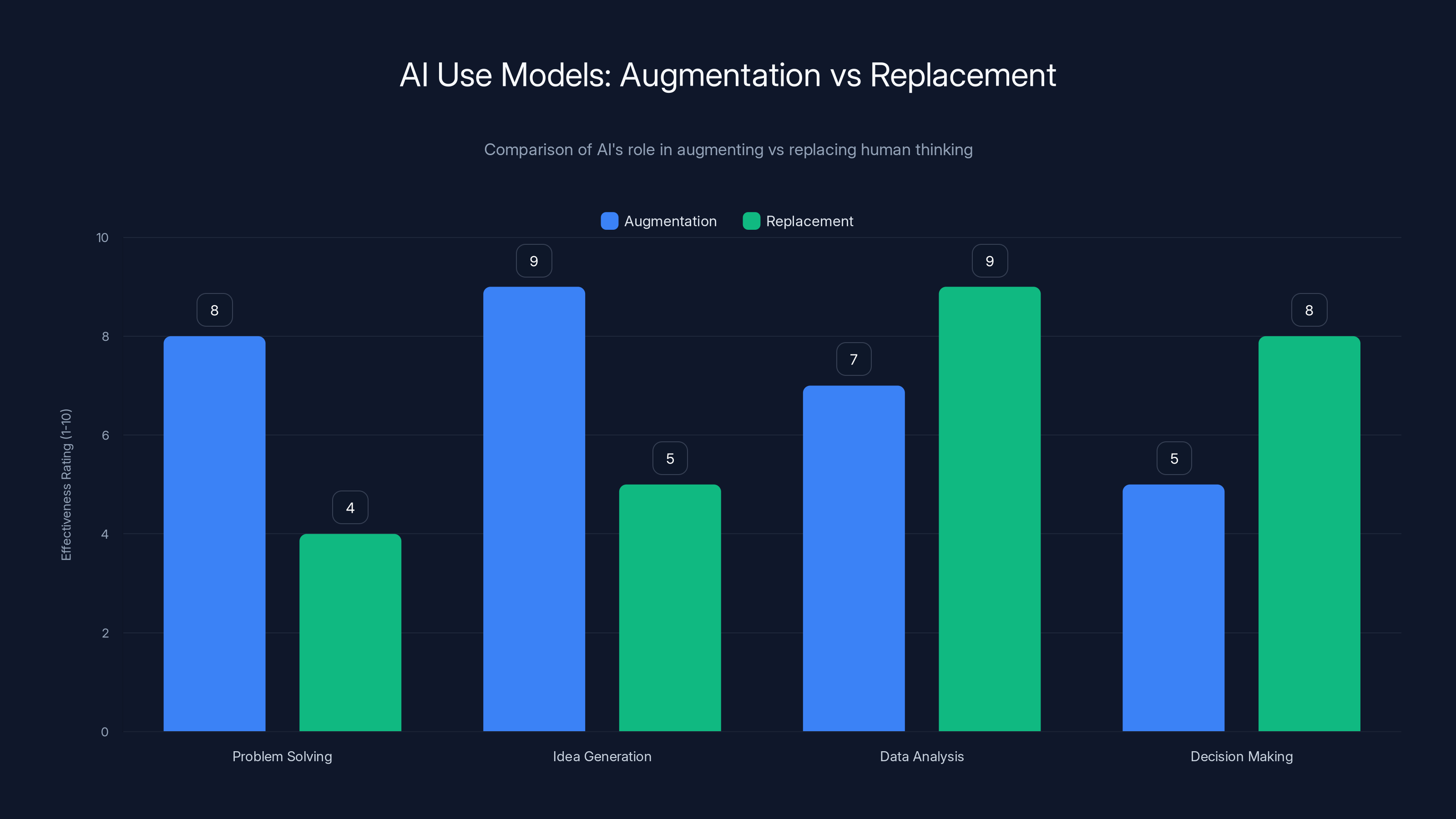

AI is more effective at augmenting human thinking in areas like problem solving and idea generation, while it tends to replace human effort in data analysis and decision making. Estimated data.

Professional Identity and the Problem-Solver Paradox

Let's get personal for a moment.

Your value at work is built on the problems you solve. The deals you close. The bugs you fix. The writing you produce. The strategies you develop. This is who you are professionally.

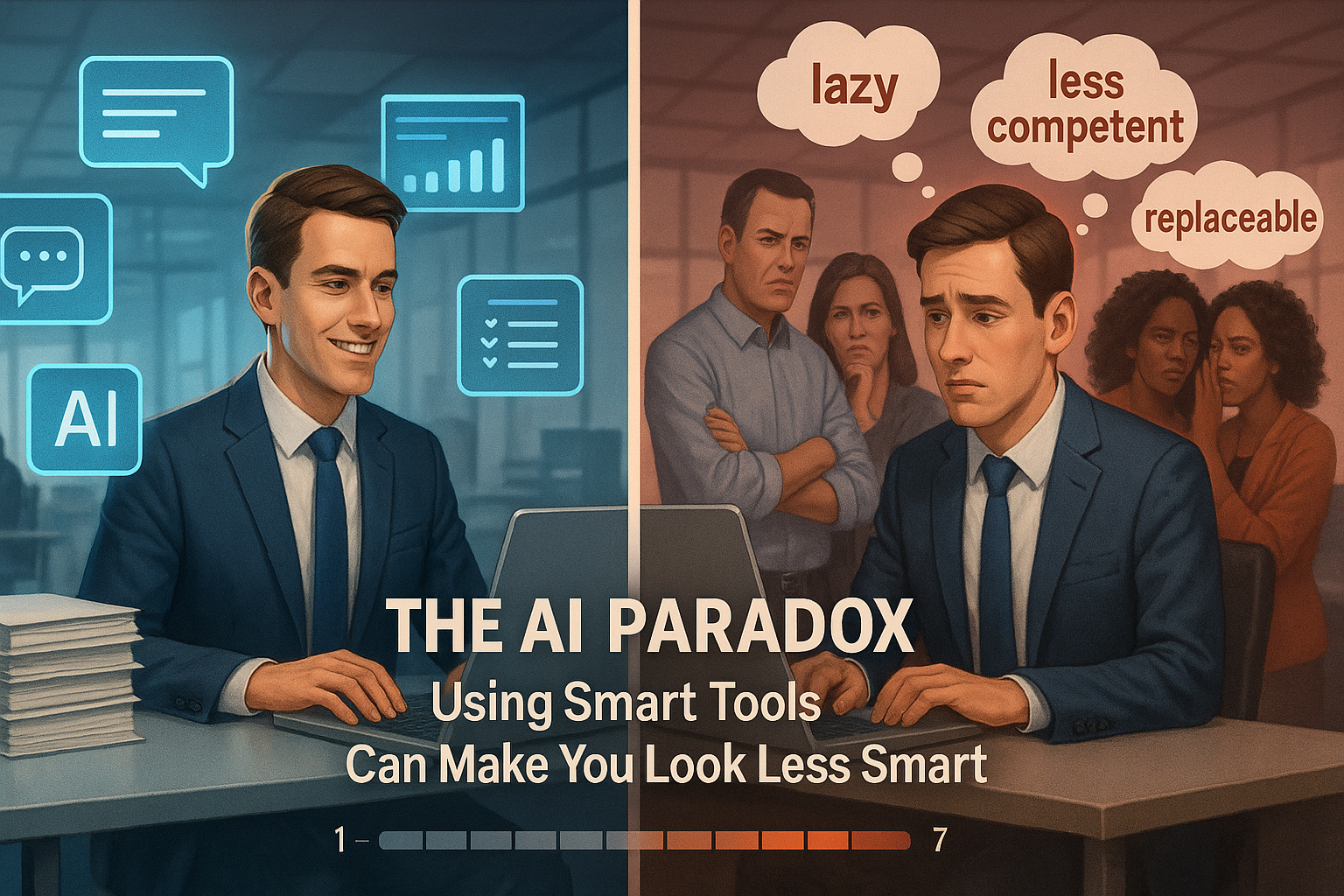

But what happens to that identity when the problems are being solved by AI, and you're mostly just reviewing and approving?

You become a filter, not a creator. A reviewer, not a builder. That's a fundamentally different—and less satisfying—role, even if the output looks the same.

People don't derive meaning from approving work. They derive meaning from doing it. There's research on this. The "flow state" that psychologist Mihaly Csikszentmihalyi documented happens when challenge matches skill. When you're working at the edge of your abilities, focused completely on the task.

You don't get flow from watching someone else work. You don't get it from reviewing AI output and clicking "approve."

Over months and years, this compounds. You lose confidence in your own abilities. Imposter syndrome, ironically, can get worse even as you use AI to produce more. You know, at some level, that you didn't actually solve these problems. Someone, or rather something, did.

There's also a subtler issue: judgment. Good decision-making comes from pattern recognition. You've seen hundreds of situations, and you've internalized the patterns. When you offload the thinking to AI, you're also offloading the pattern-building.

Then, when you need to make a judgment call that AI didn't surface—the edge case, the unusual situation—you're worse at it because you haven't been training that muscle.

The Collective Knowledge Problem

Here's something that doesn't get discussed much: what happens to organizational knowledge when the people aren't thinking?

In a healthy organization, junior employees learn by watching senior ones think. They see how problems get framed, what questions get asked, why certain decisions get made. It's apprenticeship without the formal structure. A Harvard Business Review article discusses how AI can disrupt traditional learning pathways within organizations.

When the senior person asks ChatGPT instead of thinking out loud, the junior person learns nothing. There's no modeling of problem-solving. No exposure to the decision-making process. Just a solution appearing.

Repeat this across an entire organization, and you've got a knowledge transfer crisis that won't show up in metrics for years.

The experienced person who could have trained the team has been replaced by AI. The junior people have no role models for how to approach hard problems. When the experienced people leave, institutional knowledge walks out the door with them—because nothing was ever transferred.

Companies that still have deep expertise usually got it the hard way: people struggling with problems, learning from mistakes, and passing that wisdom forward. You can't compress that into a prompt.

Yet many organizations are optimizing for speed right now. Ship faster. Use AI to accelerate. Worry about knowledge transfer later.

Later arrives sooner than expected.

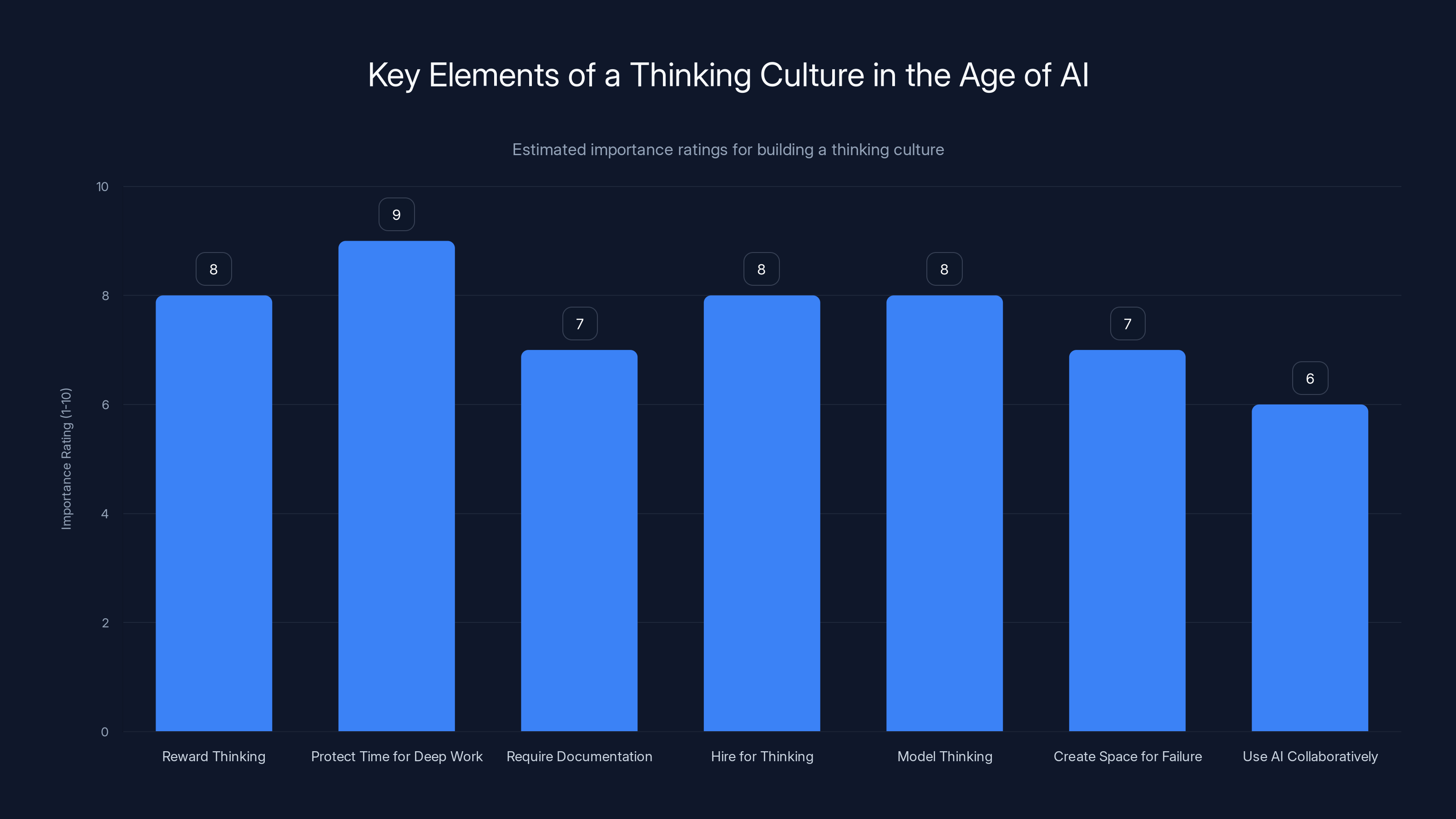

Protecting time for deep work is rated as the most important element in fostering a thinking culture, followed closely by rewarding thinking and hiring for thinking capacity. Estimated data.

Where AI Actually Adds Value Without Stealing Your Brain

Before this sounds entirely like doom-and-gloom: AI isn't inherently bad for thinking. It depends on how you use it.

The key distinction is whether AI is augmenting your thinking or replacing it.

Augmentation looks like this: You've been working on a problem for an hour. You've exhausted your obvious approaches. You're stuck. You ask AI for different angles, new frameworks, perspectives you hadn't considered. Then you go back to thinking. This forced you to go deeper. The AI didn't solve it; it pushed your thinking further.

Replacement looks like this: Problem appears. AI solves it. You move on. No thinking required.

Augmentation still has the struggle component. You've built the foundation before asking for help. Replacement skips that step entirely.

This distinction matters enormously.

Similarly, AI excels at certain types of thinking: brainstorming ideas without judgment, exploring edge cases, finding patterns in data you couldn't manually analyze, generating variations on a theme you've already conceived of.

What AI is worse at: understanding context, reading between the lines, knowing what actually matters in a specific situation, making values-based decisions, innovating in fundamentally new directions.

Yet we're increasingly using AI for all of these, indiscriminately.

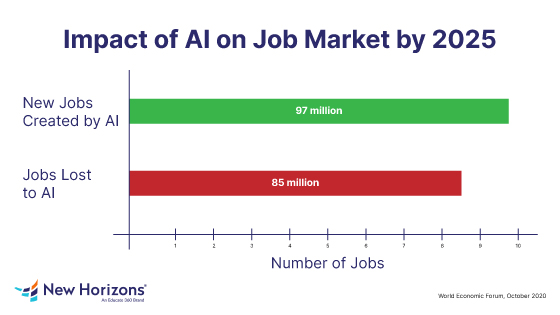

The smartest approach? Use AI for the thinking you're not good at (data analysis, variation generation), but protect the thinking you need to stay good at (judgment, framing, strategy). This aligns with insights from Exploding Topics on how AI is replacing jobs.

Most people do the opposite.

The Interview Problem: What Happens When It Matters

Here's a practical example that cuts to the core issue.

You're interviewing for a promotion or a new role. The interviewer asks you to walk through how you'd approach a complex problem. You're prepared. You've used AI to think through scenarios, to explore approaches, to generate ideas.

But something's off. When the interviewer probes deeper—asks you to explain your reasoning, to defend a decision, to adapt to new information—you hesitate. Because you didn't actually do that thinking. AI did.

You can sense the interviewer noticing.

This moment is telling. It reveals what we've lost. The ability to think on your feet. To adapt reasoning in real-time. To stand behind a decision because you understand it deeply, not because an AI generated it.

These skills are increasingly valuable because they're increasingly rare. As more people outsource thinking, the people who've protected their own cognitive work become more differentiated.

Paradoxically, using AI to accelerate might actually reduce your long-term career capital.

This is especially true in leadership. A manager who can't think through problems independently becomes a bottleneck. A strategic leader who's offloaded strategy to AI isn't leading anymore; they're approving.

Companies know this, even if they don't always admit it. That's why senior roles still require demonstrations of thinking. Why interviews get harder as you go up. Why the "thinking jobs" still can't be fully automated despite everyone's predictions.

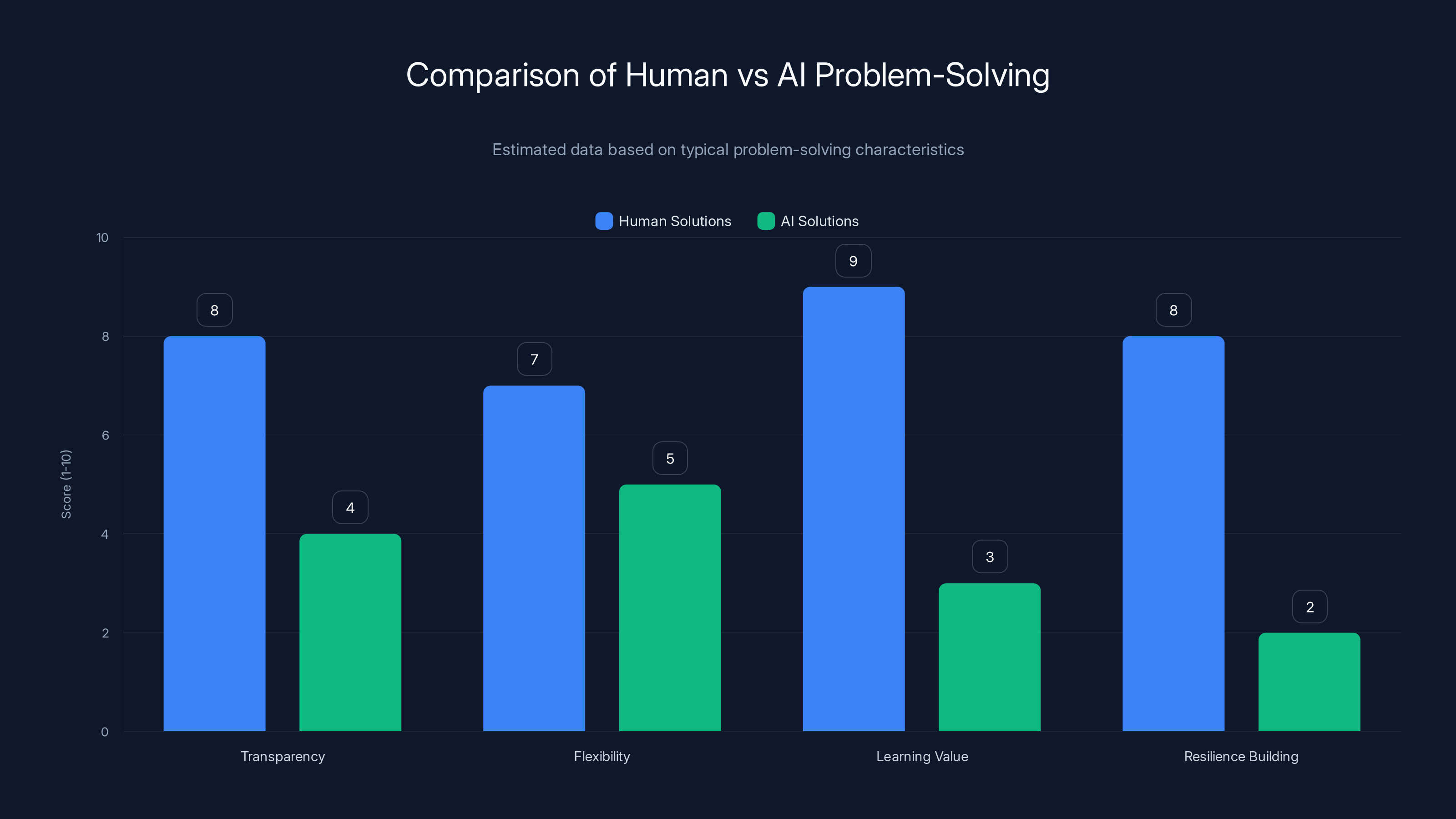

Human solutions score higher in transparency, learning value, and resilience building compared to AI solutions, which are often seen as more polished but less transparent. Estimated data.

The Skill Decay Curve

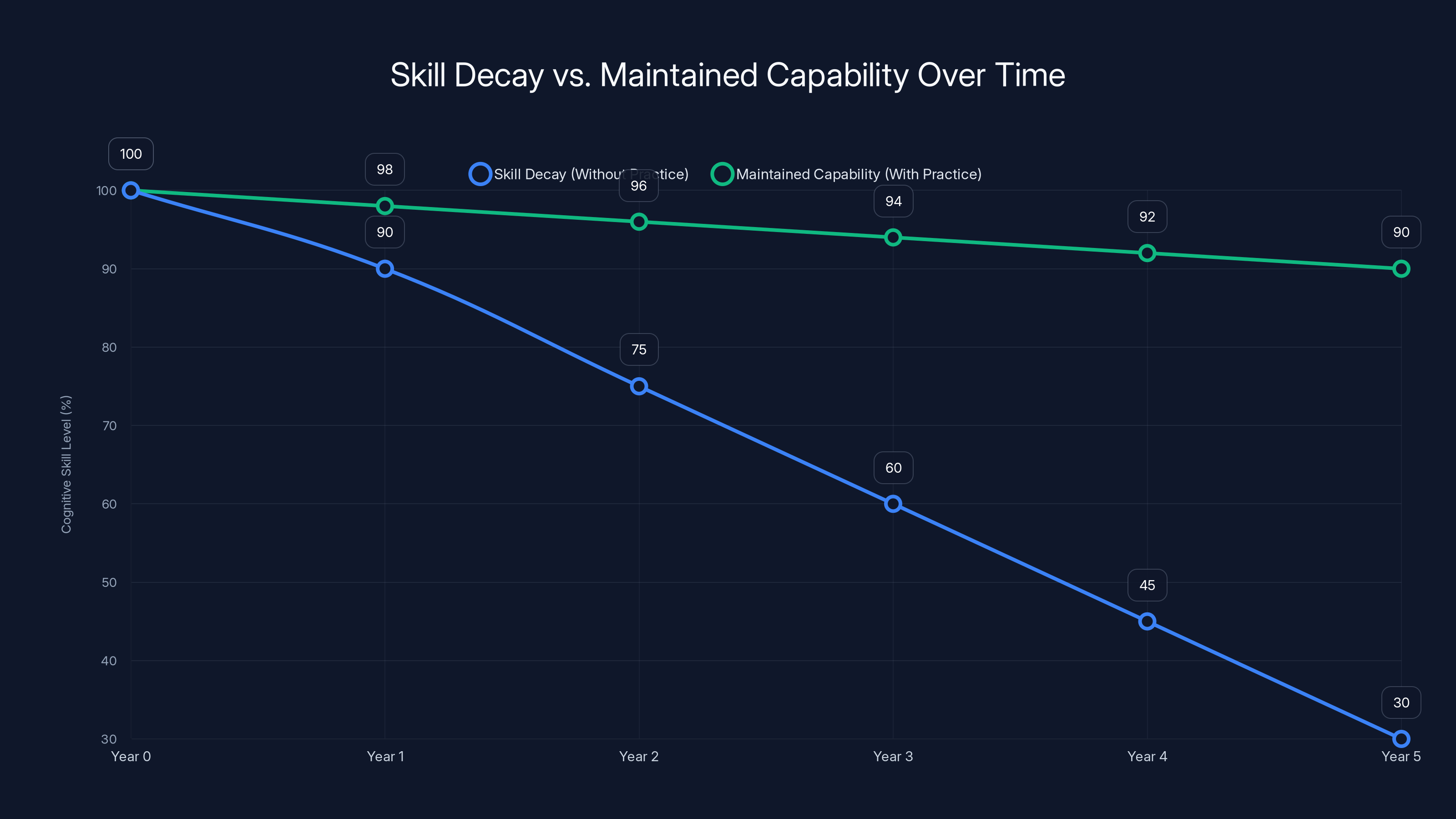

Let's talk about something uncomfortable: how fast you lose skills you're not using.

There's a well-researched phenomenon in cognitive psychology called "skill decay." When you stop practicing something, the neural pathways that support it atrophy. The knowledge doesn't disappear, but your access to it slows down. Your ability to apply it in new contexts deteriorates. Your confidence erodes.

The decay is actually faster than you'd expect. Research suggests that meaningful decline can happen in just weeks if you're not practicing.

Now apply this to complex professional thinking. If you spend six months delegating your problem-solving to AI, then suddenly need to make an important decision on your own, you'll be rustier than you think. More than just out of practice—your brain's infrastructure for that kind of thinking has started to degrade.

This is why athletes keep training even when they're not competing. Why musicians practice scales even when they're composing. Why writers write every day even if they're not on deadline.

The skill needs constant use to maintain.

For knowledge workers, the equivalent of "practice" is thinking through problems, wrestling with challenges, making decisions without immediate external input. When AI absorbs all of that, the practice stops.

The decay begins.

Then there's the compounding effect. As your skills decay, you become less confident in your own thinking. So you reach for AI more. Which accelerates the decay. Which pushes you further toward automation.

It's a downward spiral that feels like progress because you're completing tasks faster.

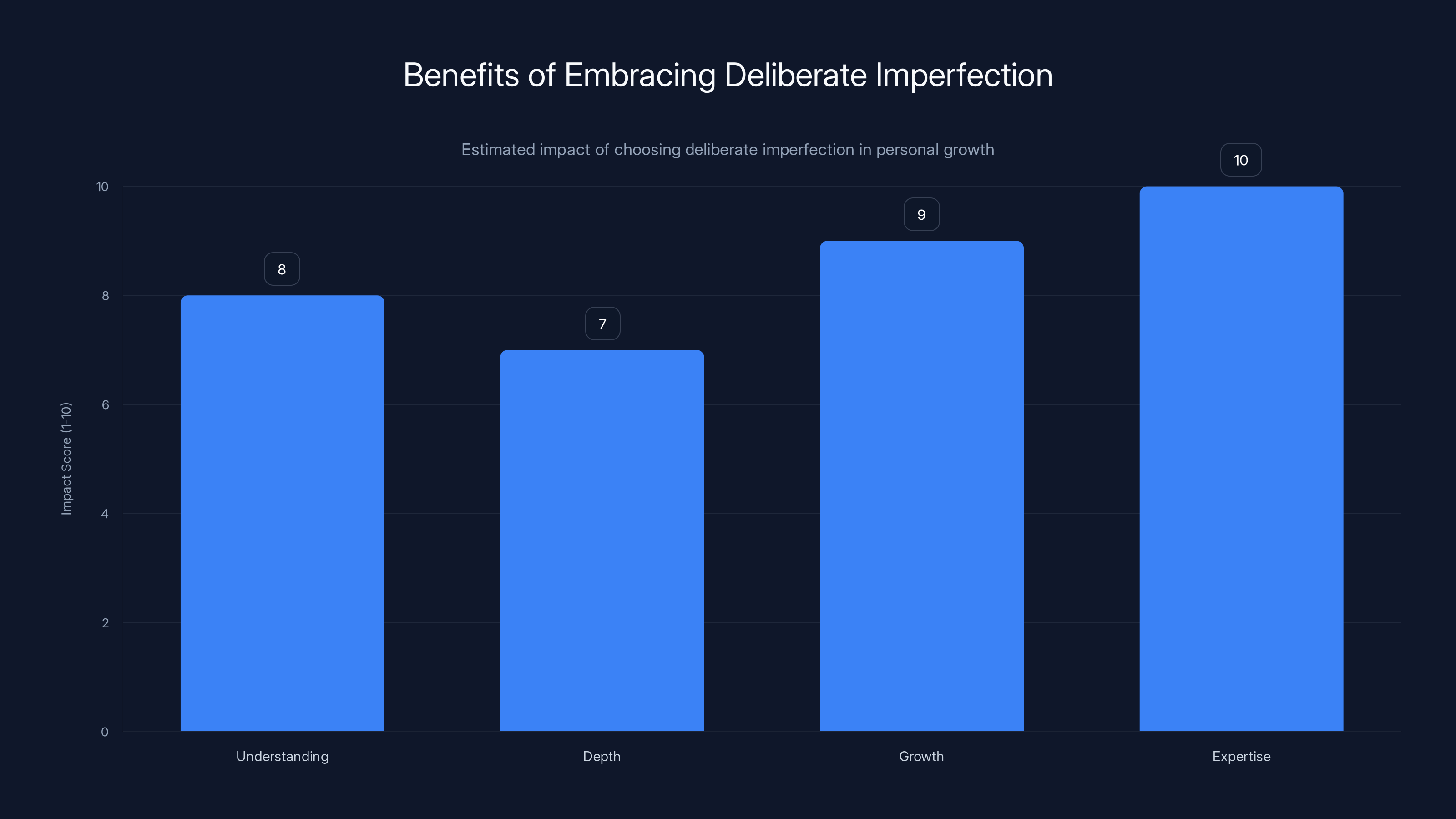

What Imperfect Human Solutions Actually Teach Us

There's something valuable in the imperfection of human thinking that AI advocates rarely mention.

When a human solves a problem, they don't usually find the optimal solution. They find a solution, often with trade-offs they're aware of, sometimes with constraints they've chosen to accept. The solution is good enough.

More importantly: they understand the reasoning. Not someone else's reasoning. Theirs.

When they explain that solution to someone else, they can articulate why they made trade-offs. Why they chose this approach over that one. What assumptions they're operating under. That explanation is incredibly valuable. It's how teams learn. How organizations build judgment.

AI solutions, by contrast, are presented as complete. Polished. Optimal-looking. But the reasoning is often opaque. "Here's the answer" without "here's why I approached it this way."

Human imperfection isn't a bug. It's a feature. The fact that humans leave thinking visible—showing their work, articulating their assumptions, explaining their trade-offs—is what makes human thinking valuable for teaching and learning.

AI obscures this. The answers appear with no reasoning trail. Even if they're better answers, they're worse for learning.

There's also something about struggling with an imperfect solution that builds resilience. You learn that problems rarely have perfect answers. That you have to make choices. That good-enough solutions, chosen consciously, are often better than optimal solutions you don't understand.

This is wisdom. AI skips over it.

Estimated data shows cognitive skill decay without deliberate practice compared to maintained capability with regular cognitive engagement.

The Case for Deliberate Cognitive Friction

This might sound counterintuitive, but what work really needs isn't less friction. It's friction in the right places.

Friction—the resistance you feel when doing hard thinking—is actually a feature, not a bug. It's the sensation of learning. Your brain working at capacity. New neural pathways forming.

Smooth, frictionless work feels good in the moment. But it's often the work you forget. The learning that doesn't stick. The expertise that doesn't develop.

The most valuable work is usually the opposite. It's the project that frustrated you. The problem that took weeks. The decision that you had to defend repeatedly because you weren't entirely certain. These are the experiences you remember. That shaped how you think.

AI removes friction entirely. Problem → Solution → Friction-free completion.

But organizations and individuals that move too quickly toward frictionless work are betting on something risky: that they won't need deep thinking in the future. That the ability to delegate thinking will be permanently available. That the problems won't change in ways that require human expertise.

Historically, that's a bad bet.

The smarter move might be to embrace some friction deliberately. To work on problems the hard way sometimes. To think before delegating thinking. To preserve the struggle as a way of maintaining capability.

This isn't nostalgia for the pre-AI days. It's a recognition that the muscles you don't use atrophy, and you might need them later.

How Organizations Are Losing Institutional Memory

Zoom out from individual thinking to organizational thinking, and the problem gets bigger.

Institutional memory—the collective knowledge and experience of an organization—is built through repeated challenges, mistakes, and adaptations over time. A company develops wisdom about how to do things well not because someone documented it, but because people struggled, figured it out, and then taught others.

When you introduce AI-assisted work at scale, you compress this learning process. Problems get solved faster. But the learning process is skipped. The wisdom never gets transferred.

This happened with other technologies. Companies that adopted email too early and stopped using phone calls lost something: the patterns of thinking that emerged from real-time conversation. The nuance of tone, the back-and-forth negotiation, the interpersonal learning.

It felt like progress. Efficiency gains. But something was lost.

AI is orders of magnitude bigger than email. It's not just changing communication; it's changing thinking itself.

A company that outsources all of its strategic thinking to AI consultants and AI tools might move faster initially. But it also stops developing internal strategic capability. When the AI is unavailable or gives bad advice, the company has no immune system. No internal wisdom to fall back on.

The organization becomes dependent on the tool. That's different from improved.

This is already happening. Companies that integrated AI early are running into problems: overreliance, atrophy of internal expertise, inability to operate when the tools malfunction or aren't appropriate.

The solution isn't to abandon AI. It's to be intentional about what thinking you keep internal and what you delegate.

Choosing deliberate imperfection enhances understanding, depth, growth, and expertise significantly. Estimated data.

The Future of Work Without Deep Thinking

Let's imagine where this goes if the current trend continues unchecked.

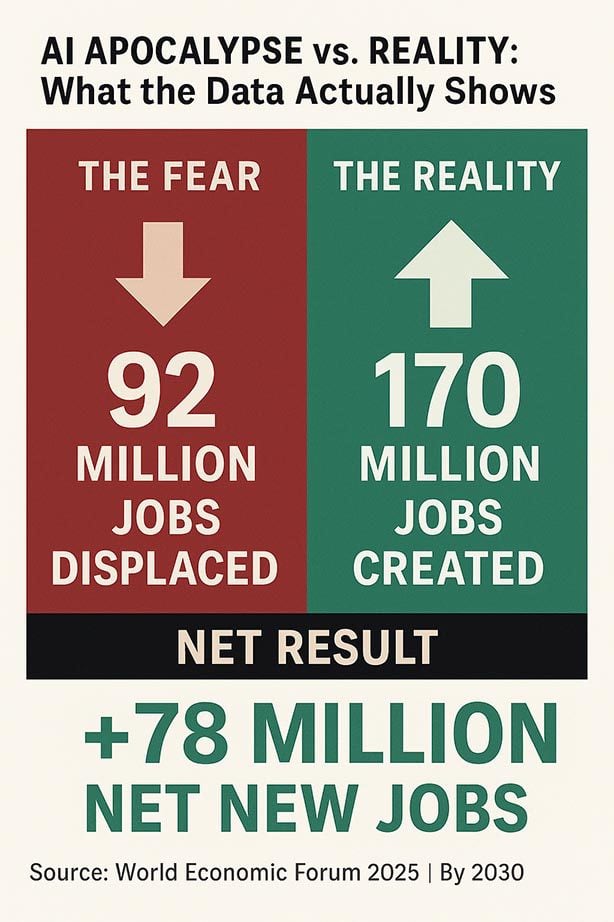

In five years, most routine thinking is automated. Problems get solved by AI. Humans review and approve. The pace of decision-making accelerates. Quarterly results improve. Metrics look great.

But somewhere in the organization, something has been lost. The ability to think. The confidence to make decisions without AI validation. The judgment that comes from wrestling with difficult problems.

Then something happens that AI wasn't trained on. A market shift. A novel problem. A situation that requires adaptive thinking. And the organization discovers it doesn't have the capability to handle it. The people can't, because they haven't been thinking. The AI can't either: it wasn't trained on the situation, so it hallucinates.

The organization is left with fast processes that can't adapt. That's not resilience; that's brittleness.

Companies that prosper long-term aren't the ones that optimize for speed. They're the ones that maintain adaptive capacity. The ability to think in new ways when the environment changes.

That capacity requires humans who think. Regularly. Deliberately. Even when it would be faster to delegate.

The organizations that preserve this—that protect thinking time, that value judgment, that see struggle as investment rather than inefficiency—will be the ones that outmaneuver the purely optimized competitors.

The bet is on human cognition as a sustainable competitive advantage.

Right now, the momentum is all in the other direction. But the psychology and neuroscience of learning are universal. The human brain doesn't change because we have better tools. It still gets better through struggle and practice, not through outsourcing.

The question is whether we'll remember that before we've atrophied our own thinking away.

What Leaders Should Actually Be Doing

If you're responsible for teams or organizations, this matters directly to your strategic thinking.

The first move is to stop treating AI as a direct replacement for thinking. It's not. It's a tool that can augment thinking if used correctly, and it can replace thinking if used carelessly.

There's a huge difference, and it compounds over years.

The second move is to be intentional about what thinking you want your team doing. Not everything should be optimized for speed. Some things should be done the hard way. Some problems should be wrestled with before AI is consulted. Some decisions should be defended by humans who actually understand the reasoning.

This isn't about being a Luddite. It's about being strategic about where you invest thinking capacity.

The third move is to protect apprenticeship and knowledge transfer. Make space for junior people to watch senior people think. To see the decision-making process, not just the decisions. This is how judgment develops. And it can't happen if the senior people are entirely AI-assisted.

The fourth move is to measure what actually matters. Not velocity or output, but quality of thinking, depth of understanding, and long-term capability. These metrics are harder to track, but they're what actually predicts resilience.

The final move is to keep thinking yourself. Don't outsource your own cognition entirely. Even if AI could handle everything in your role, you wouldn't be able to lead effectively without doing some of the thinking. Leaders need to know what's hard about the work. They need to struggle sometimes. That's where judgment comes from.

This is the opposite of the efficiency mindset. It's the resilience mindset. And it's increasingly rare.

Individual Skills Worth Protecting

At the personal level, certain thinking skills are worth protecting because they're becoming scarce.

Strategic thinking: The ability to see patterns across time, to predict how changes in one domain affect others, to build mental models of complex systems. This is getting rarer because it's hard and slow. But it's increasingly valuable.

Judgment under uncertainty: Making decisions without complete information, accepting that you might be wrong, and proceeding anyway. AI is terrible at this. Humans can do it. But you need practice, and you lose the skill if you don't use it.

Communication of reasoning: Explaining not just what you think, but why. This is how you teach others. How you convince skeptics. How you build trust. AI can generate words, but it can't articulate genuine human reasoning because it doesn't have any.

Emotional and social reasoning: Reading people, understanding motivation, navigating interpersonal dynamics. Still mostly human territory, and worth maintaining.

Creative problem-solving: Not brainstorming (AI is fine at that), but the ability to see connections between unrelated domains and apply them in novel ways. This requires deep knowledge in multiple areas, which requires actual learning and thinking.

Ethical reasoning: Deciding what's right when the answer isn't obvious. AI can generate plausible-sounding ethics, but actual moral judgment requires consciousness (or at least, something that AI doesn't have yet). This is irreducibly human.

These skills are worth investing in precisely because they're becoming scarce. They're the insurance policy against over-reliance on tools.

Work on them deliberately. Practice them even when you could delegate. The effort compounds.

The Honest Balance: Where AI Wins and Where It Doesn't

Let's be fair about this. AI has genuine advantages, and pretending otherwise is naive.

AI excels at:

- Processing and analyzing enormous amounts of data faster than humans can

- Generating multiple options or approaches quickly

- Spotting patterns in data that would be impossible to see manually

- Automating routine, repetitive cognitive tasks (content generation, code boilerplate, standard processes)

- Providing a starting point that humans can refine

- Working 24/7 without cognitive fatigue

- Removing tedium from work that's necessary but not intellectually interesting

These are real gains. They're not negligible.

But AI is worse at:

- Understanding context, nuance, and what actually matters in a specific situation

- Making values-based decisions or ethical judgments

- Building genuine expertise through learning over time

- Adapting to truly novel situations

- Explaining reasoning in a way that teaches others

- Catching its own errors (it's overconfident)

- Knowing what it doesn't know

- Creating work that's truly original or innovative

The smart move is to be clear about which category your problem falls into, and choose accordingly.

Most of the damage from AI is being done by people using it for the second category. Asking AI to make strategic decisions. Using it to avoid thinking about genuinely novel problems. Letting it become the default for everything.

If you reversed that pattern—using AI for the first category and protecting human thinking for the second—you'd get most of the benefits with way fewer downsides.

But that requires discipline. And it's slower than just asking ChatGPT.

Building a Thinking Culture in the Age of AI

If you want to build a team or organization that actually thinks—that stays competitive long-term—you need to create conditions that protect and encourage thinking.

This means:

Reward thinking, not just output. Don't just measure how much got done. Measure how well people understand what they're doing. Ask people to explain their reasoning. Make judgment visible and valuable.

Protect time for deep work. This is obvious and almost universally ignored. Meetings expand to fill the day. Slack notifications interrupt constantly. Then you add "AI to make things faster" and wonder why people aren't thinking anymore. You've designed thinking out of the job. Reverse it. Protect blocks of time where the only thing happening is thinking.

Require documentation of reasoning. When a decision gets made, capture not just the what but the why. The assumptions, the trade-offs considered, the reasoning that led here. This creates a trail of thinking for future people to learn from.

Hire for thinking, not efficiency. During interviews, pay attention to how people think through problems in real-time. Can they explain their reasoning? Do they consider trade-offs? Can they adapt when you push back? These signal thinking capacity, not just intelligence.

Model thinking yourself. If you're a leader, think out loud. Show your work. Explain your decision-making. Let people see the process, not just the result. This is how judgment gets transferred.

Create space for failure and iteration. Deep thinking sometimes produces wrong answers. That's okay. It's part of learning. If every mistake is punished, people stop thinking and start copying. If iteration is expected, people take intellectual risks.

Use AI as a thought partner, not a replacement. When using AI, do it collaboratively with your team. Explain what you're asking it and why. Critique its output together. Show people how to use it as augmentation.

None of this is revolutionary. It's just creating conditions where thinking can happen. It requires defending against the momentum toward pure efficiency.

But it's the difference between organizations that stay capable and ones that become dependent on tools.

The Personal Philosophy of Deliberate Imperfection

Here's something worth considering at a personal level: maybe imperfection isn't something to eliminate. Maybe it's something to embrace.

Your imperfect solution, arrived at through struggle, is worth more than a perfect solution handed to you. Not because it's better (it might not be). But because it's yours. You understand it. You can defend it. You can adapt it. You learned from building it.

When you accept the slow, imperfect way of doing something, you're choosing depth over speed. Understanding over optimization. Growth over output.

This sounds inefficient. And it is, in the moment. But compounded over years, it produces genuine expertise. The kind of deep knowledge that can't be replicated by tools.

And deep knowledge is increasingly valuable as tools become commoditized.

The choice to do something the hard way (to think instead of delegating thinking, to struggle instead of optimizing, to learn slowly instead of moving fast) is a career strategy. Not a time management failure.

People who make that choice, consistently, end up with capabilities that tools can't touch. That's their insurance policy. Their competitive advantage. Their protection against obsolescence.

You don't have to choose this for everything. But choosing it for some things, deliberately and regularly, is the difference between staying capable and becoming a spectator in your own career.

Conclusion: Thinking as a Commitment

We're at a moment in work history where it's possible to optimize thinking away entirely. To let AI handle most cognition and spend your time on management and approval.

It's tempting. The short-term gains are real.

But the cost is real too. It's paid in atrophied skills. Lost expertise. Organizations that can move fast but can't adapt. People who've lost confidence in their own thinking.

The uncomfortable truth that hardly anyone wants to admit is that struggle is valuable. That imperfect human solutions, arrived at through wrestling with problems, create learning. That effort builds competence in a way that outsourced solutions can't.

AI is a tool. A powerful one. Use it. But be intentional about where. Protect the thinking that builds your capabilities. Maintain the struggle that creates expertise. Remember that the problems you solve yourself, imperfectly, make you better at solving problems.

Because eventually, there will be a problem that AI wasn't trained on. A situation that requires adaptation. A moment where you need judgment you haven't been practicing.

You want to have that muscle when you need it.

The choice is yours: optimize for speed now, or protect capability for later.

Most people will choose speed. That's why the people who choose differently—who protect thinking, who embrace struggle, who stay cognitively engaged—will be increasingly valuable.

The question isn't whether AI is good or bad. It's how you'll use it. And whether you have the discipline to keep thinking, even when you don't have to.

TL;DR

- The learning paradox: Struggle and imperfection build expertise; outsourcing thinking saves time but atrophies skills

- Cognitive costs: When you stop wrestling with problems, your problem-solving capacity can decline meaningfully within weeks

- Organizational risk: Teams that delegate all thinking lose institutional memory and become dependent on tools for capabilities they no longer possess

- Skills worth protecting: Strategic thinking, judgment under uncertainty, communication of reasoning, emotional and social reasoning, creative problem-solving, and ethical reasoning are becoming scarcer and more valuable

- The balance: Use AI for data processing, option generation, and tedium removal. Protect human thinking for strategy, novel problems, judgment calls, and anything that teaches your brain

- Bottom line: Moving fast with AI feels productive but can reduce long-term career capital. The people who deliberately preserve their own thinking will have the adaptive advantage.

FAQ

Does using AI make you less intelligent over time?

Not intelligence itself, but specific cognitive capabilities that come from practice. Research suggests that when you stop using skills like calculation, writing, or decision-making, the neural pathways supporting them can weaken meaningfully within weeks. This is called "skill decay." Using AI for everything accelerates this process. You'll maintain raw intelligence, but your ability to apply it decreases.

How can I tell if I'm augmenting thinking with AI or replacing it?

The key difference is struggle. If you spent meaningful time thinking about a problem before asking AI for help, that's augmentation. The AI is now pushing you further. If your first instinct is to ask AI without attempting the problem yourself, that's replacement. Augmentation leaves your thinking muscle engaged. Replacement atrophies it.

What's the real risk if organizations become too dependent on AI for thinking?

The risk is brittleness. AI is trained on historical data and patterns. When something genuinely novel happens (a market shift, a crisis, a problem with no precedent), AI may hallucinate or fail. If your organization has stopped developing internal problem-solving capacity, you have no backup. You can't think your way out because you've trained people to wait for AI. That's when dependencies become vulnerabilities.

How much thinking should I protect from AI to maintain my skills?

A practical approach: 40% of your thinking output done the hard way, 60% augmented or delegated. This preserves enough capability to stay sharp while gaining most of the efficiency benefits. The exact ratio depends on your role. Leadership and knowledge work benefit more from protected thinking than routine work. Start with one major problem per week done without AI—that's enough to maintain your core capabilities.

Why is it so hard to have this conversation at work?

Because speed is measurable and rewarded. The cost of atrophied thinking is invisible until it matters. Also, admitting that you should slow down runs counter to the entire optimization narrative around AI. It sounds like you're rejecting progress. You're not. You're being strategic about where optimization helps and where it hurts. That's not a popular position in fast-moving environments.

Can organizations actually afford to protect thinking time with AI moving this fast?

They're actually taking the bigger risk by not protecting it. Companies that moved fastest toward AI in 2023-2024 are now finding that they've lost internal capability. Problems that used to be handled by experienced people now stall if the tool fails. They've gained speed on routine work but lost adaptive capacity on novel work. The organizations with the best outcomes protected some thinking capacity deliberately. It felt slower then. It looks smarter now.

What if my entire industry is already AI-automated for my job?

Then you have two paths: become an expert at prompting and reviewing AI output (which still requires judgment), or develop capabilities that AI can't touch (strategic thinking, relationship management, ethical decision-making). Most smart people do both. They use AI for what it's good at while building deep expertise in what it's not good at. That combination is currently the highest-value skill set.

Is the answer just to reject AI entirely?

No. That's overshooting in the other direction. AI genuinely does some things better, faster, and cheaper than humans. The answer is discernment. Use it for what it's actually good at (data processing, option generation, tedium removal) and protect human thinking for what humans are actually good at (judgment, strategy, adaptation, teaching). The both/and approach is harder than pure automation or pure resistance, but it's where the real advantage is.

What skills become obsolete if AI does more thinking?

Not obsolete, but dramatically less valuable: basic calculation, routine writing, standard problem-solving, information retrieval, and pattern-matching in well-defined domains. These were the foundation of knowledge work for decades. As AI dominates them, they become commoditized. The thinking skills that remain valuable are the ones AI struggles with: novel problem-solving, judgment calls, understanding context and nuance, ethical reasoning, and building genuine expertise. Learning to do these better is the current insurance policy against obsolescence.

How do I maintain my thinking skills if I'm already deep into AI-assisted work?

Deliberate practice on problems you could delegate but choose not to. Spend 30-60 minutes daily on "thinking work" without AI: sketching problems, building models, explaining reasoning to skeptics, making decisions without tool assistance. This is similar to how musicians maintain technique through scales even when not actively performing. It's boring and feels inefficient, but it's what prevents skill decay. Also, explain your reasoning out loud. Teaching others forces deeper thinking than just using AI and moving on.

Does this apply to technical work differently than other fields?

Slightly, because technical skills have objective verification. You can't fake knowing how to debug code. But the dynamic is the same: if you never debug without AI assistance, your ability to debug complex problems atrophies. If you never write code without generation tools, your ability to architect solutions from scratch declines. The most valuable technical people right now are the ones who understand the fundamentals deeply enough to know when AI is wrong. That depth comes from doing the work themselves, not just reviewing AI output.

Key Takeaways

- Struggle and imperfection are how expertise develops. When AI removes struggle, it removes learning.

- Skill decay happens surprisingly fast—professional thinking abilities decline measurably within weeks without practice.

- Using AI for everything converts you from a creator to an approver. That's a different, less satisfying professional identity.

- Organizations that automate all thinking lose institutional memory. Junior employees no longer have senior people modeling problem-solving for them.

- The smartest approach: use AI for data processing and option generation, but protect human thinking for strategy, judgment, and anything that teaches your brain.

- The people who deliberately preserve their own thinking will have an adaptive advantage over those who've fully outsourced cognition.
