Is AI Adoption at Work Actually Flatlining? What the Data Really Shows

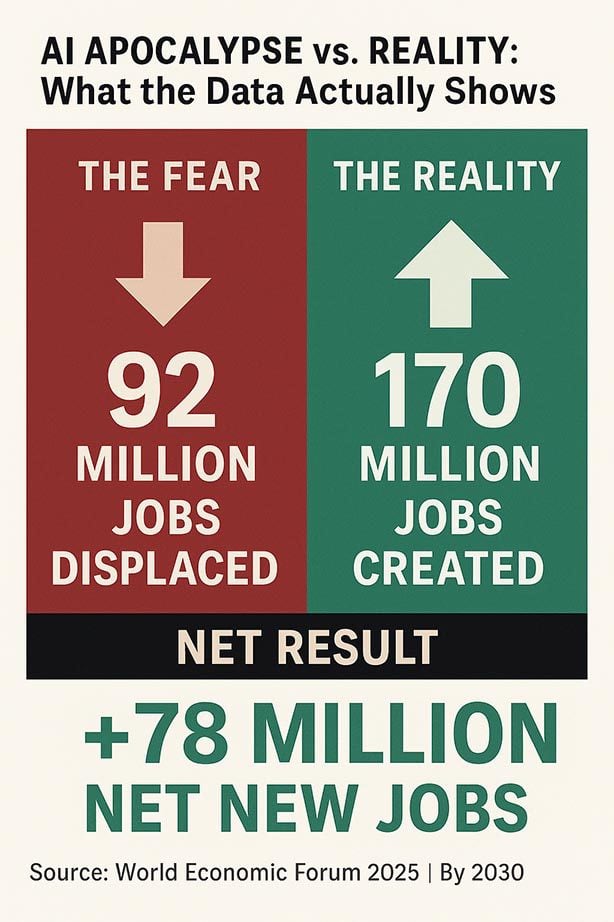

Something strange happened. We went from "AI will transform everything" to "wait, nobody's actually using it?" in less than a year.

I know because I watched it happen. One minute, every company was rushing to deploy AI. The next, reports started trickling in that adoption was slower than expected. Now we're hearing the word that nobody wants to say out loud: flatlining.

But here's the thing—the story is way more nuanced than just "AI adoption is dead." The reality is messier, more interesting, and honestly, more revealing about how companies actually implement technology. This isn't a post-mortem on AI at work. It's a reality check on what's actually happening in offices and how the gap between hype and reality is forcing teams to rethink their entire approach.

Let me break down what the latest research actually shows, what it means for your organization, and more importantly, what's missing from the conversation everyone's having right now.

TL;DR

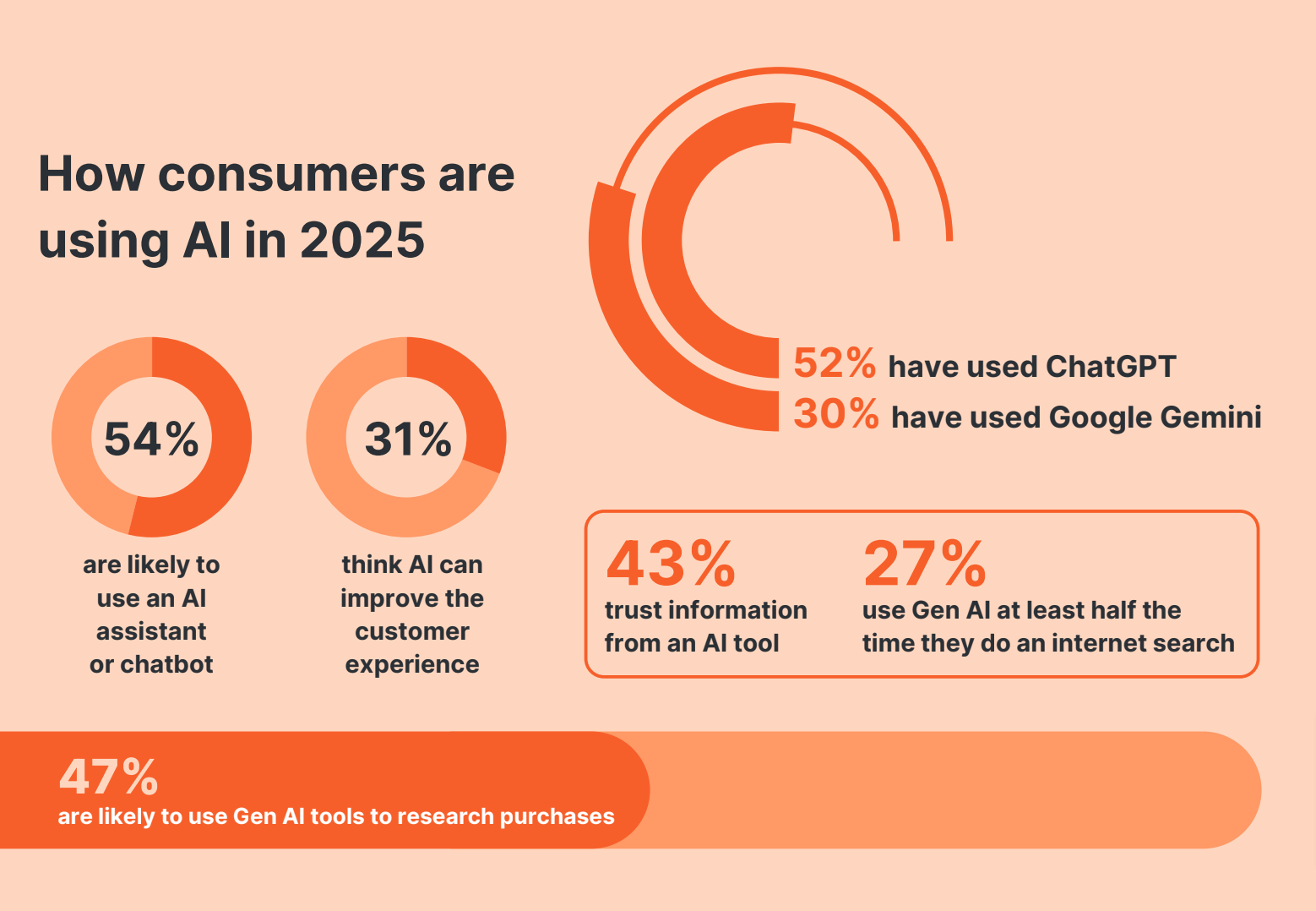

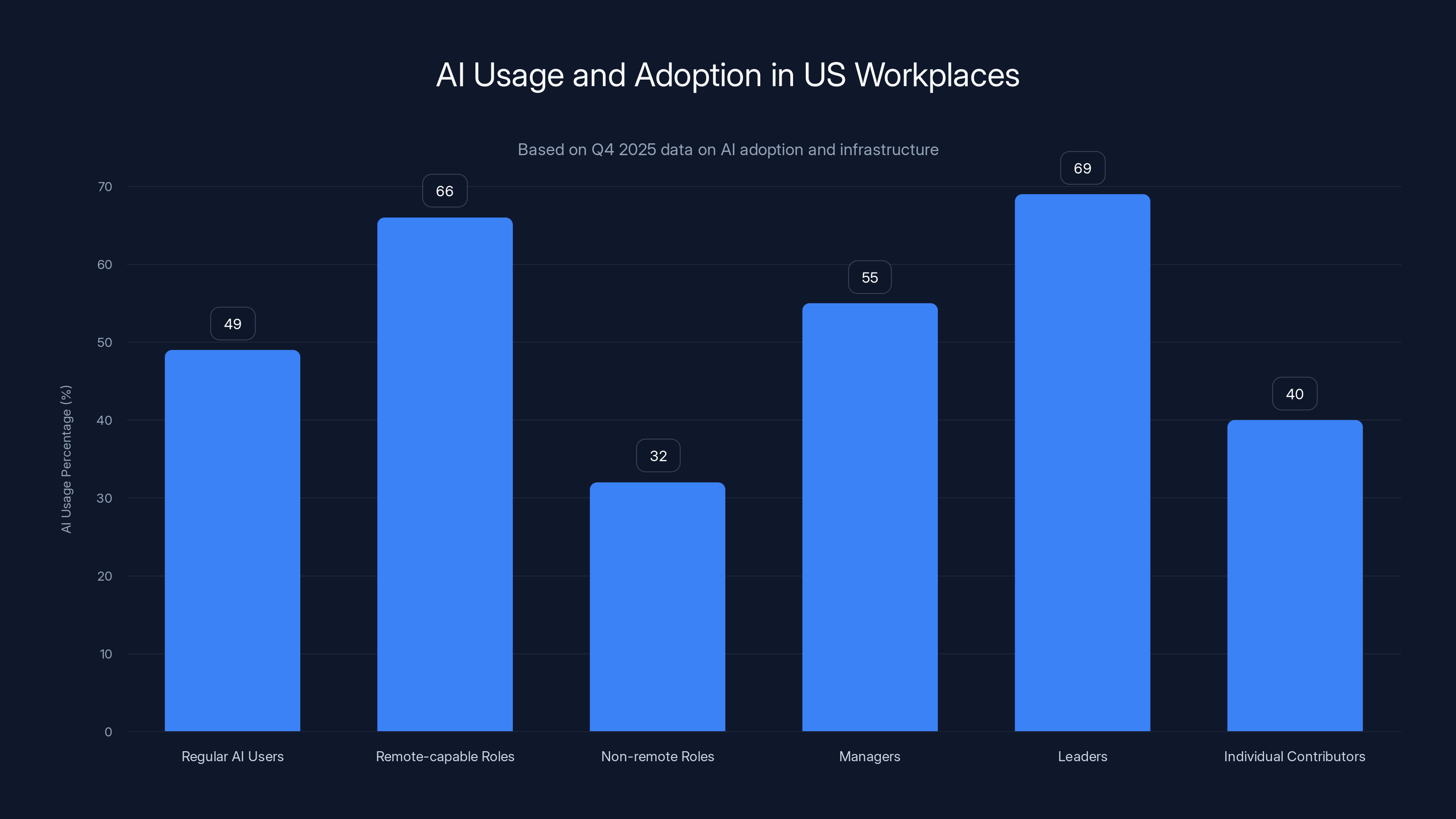

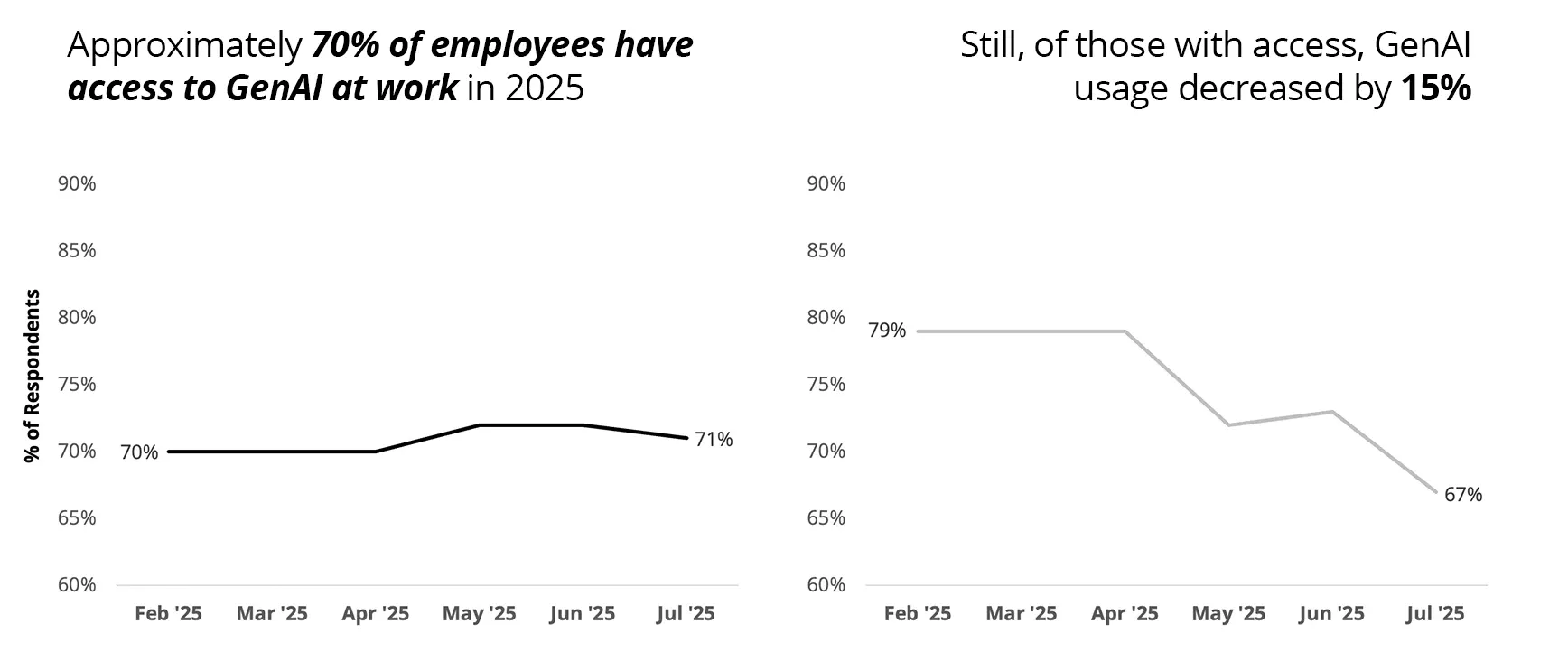

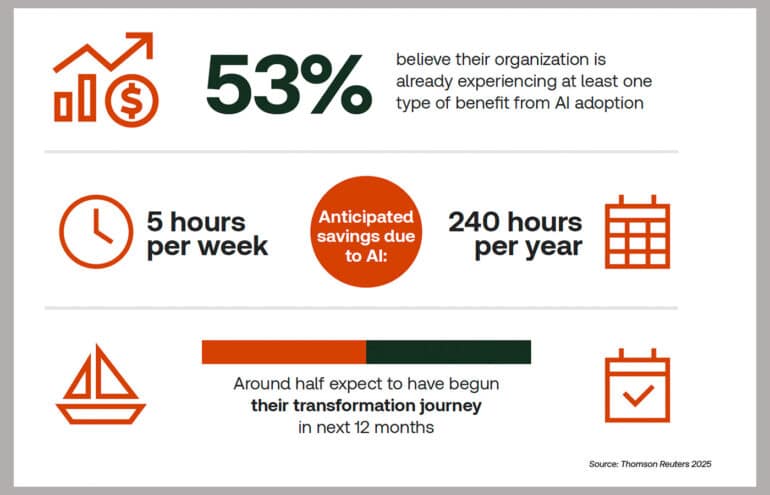

- AI user growth is slowing: 49% of US workers say they never use AI in their role, and daily AI usage rose just 2 percentage points quarter-over-quarter in Q4 2025

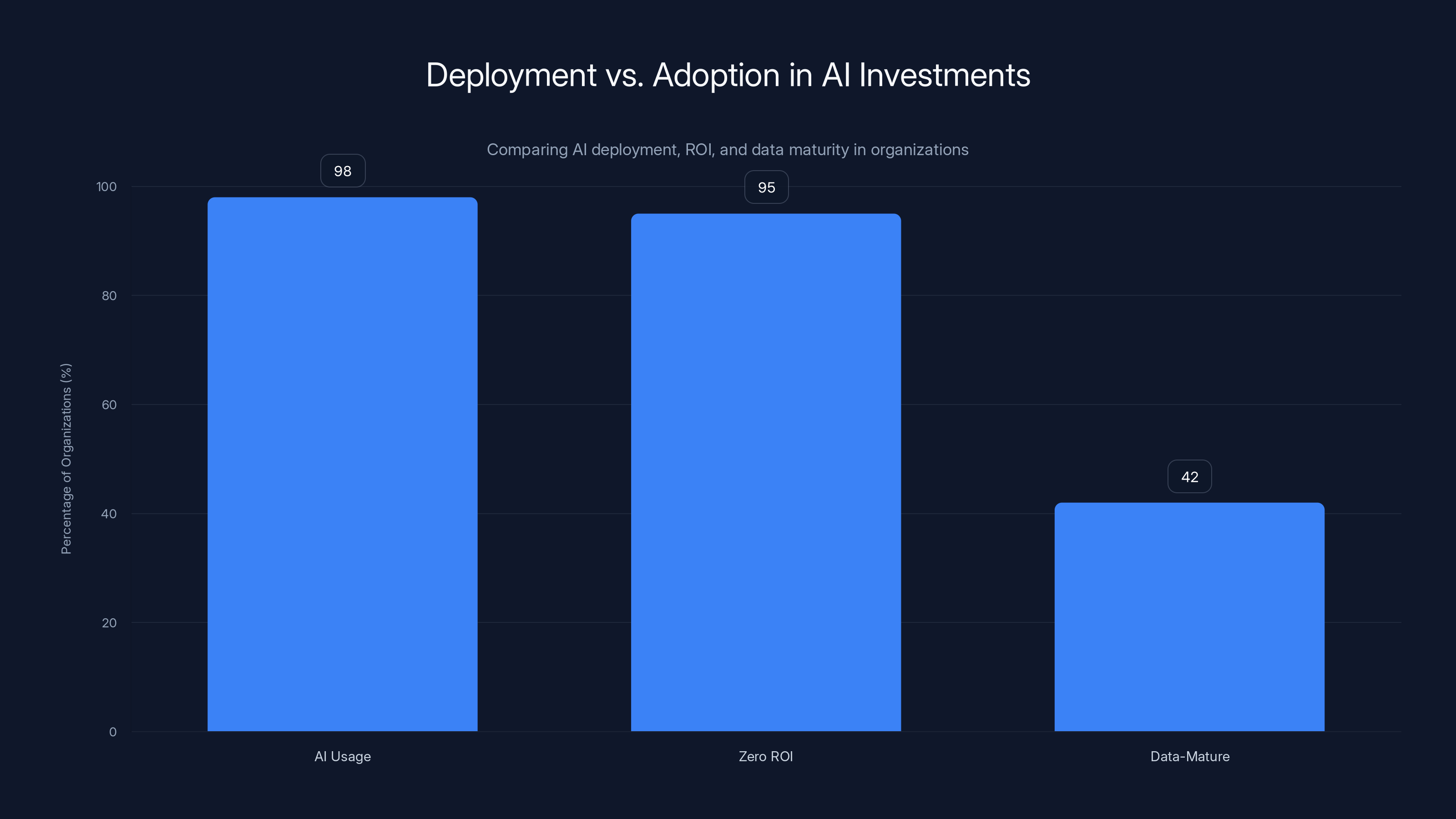

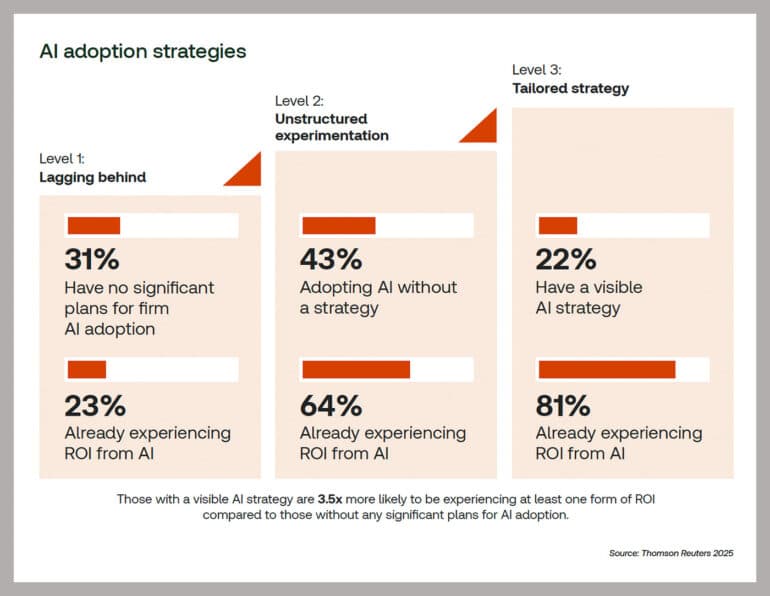

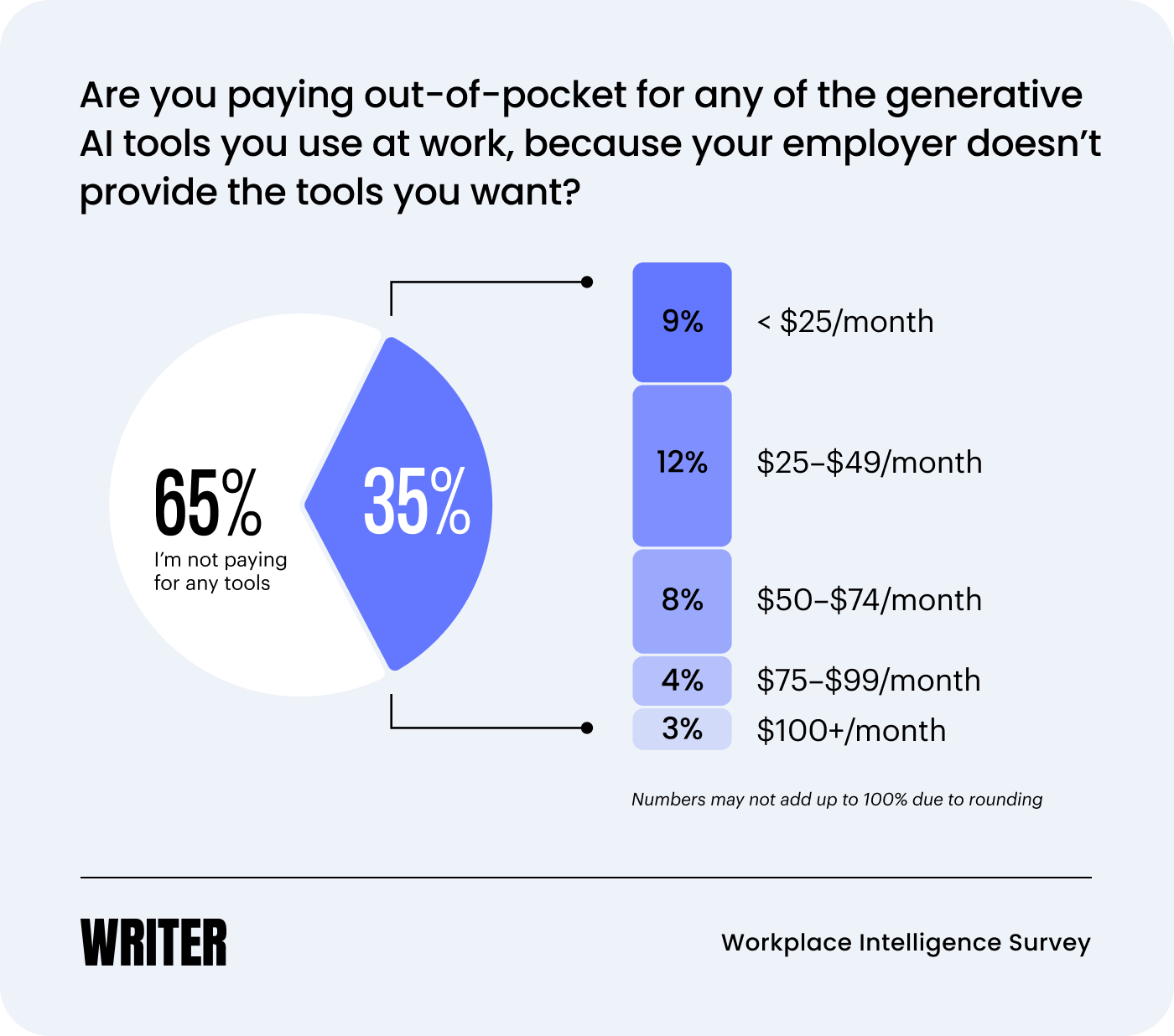

- Deployment doesn't equal adoption: 98% of companies are piloting or exploring AI, but 95% report zero ROI due to infrastructure gaps

- The experience divide is real: Remote-capable roles use AI at 66%, but non-remote roles lag at just 32%

- Data infrastructure is the bottleneck: Only 42% of organizations consider themselves "data-mature" despite data quality being the #1 success factor

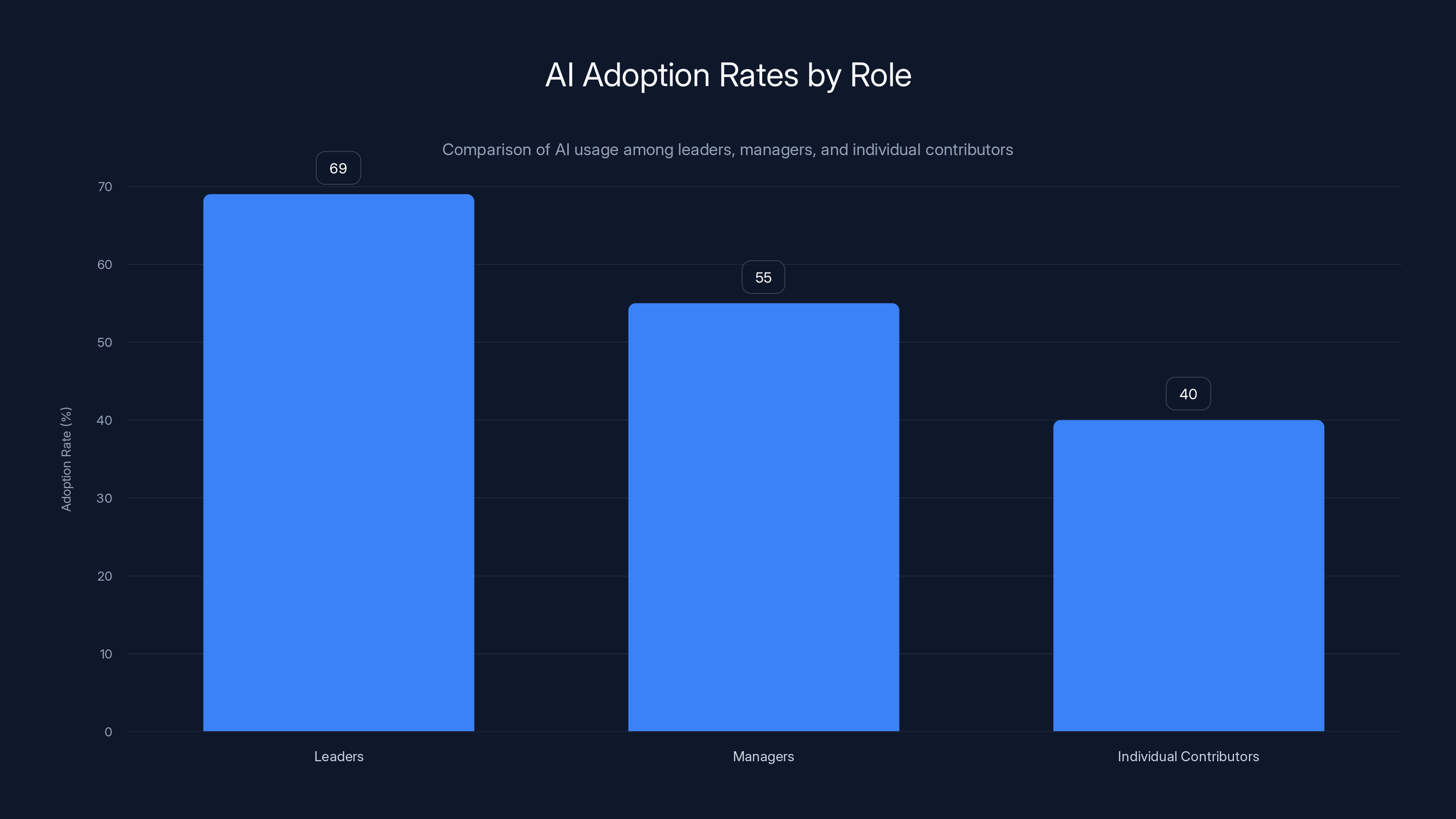

- Leadership bias exists: Managers (55%) and leaders (69%) use AI far more than individual contributors (40%)

- The next wave requires foundation-building: Real adoption comes from clear use cases and trusted data, not just deploying tools

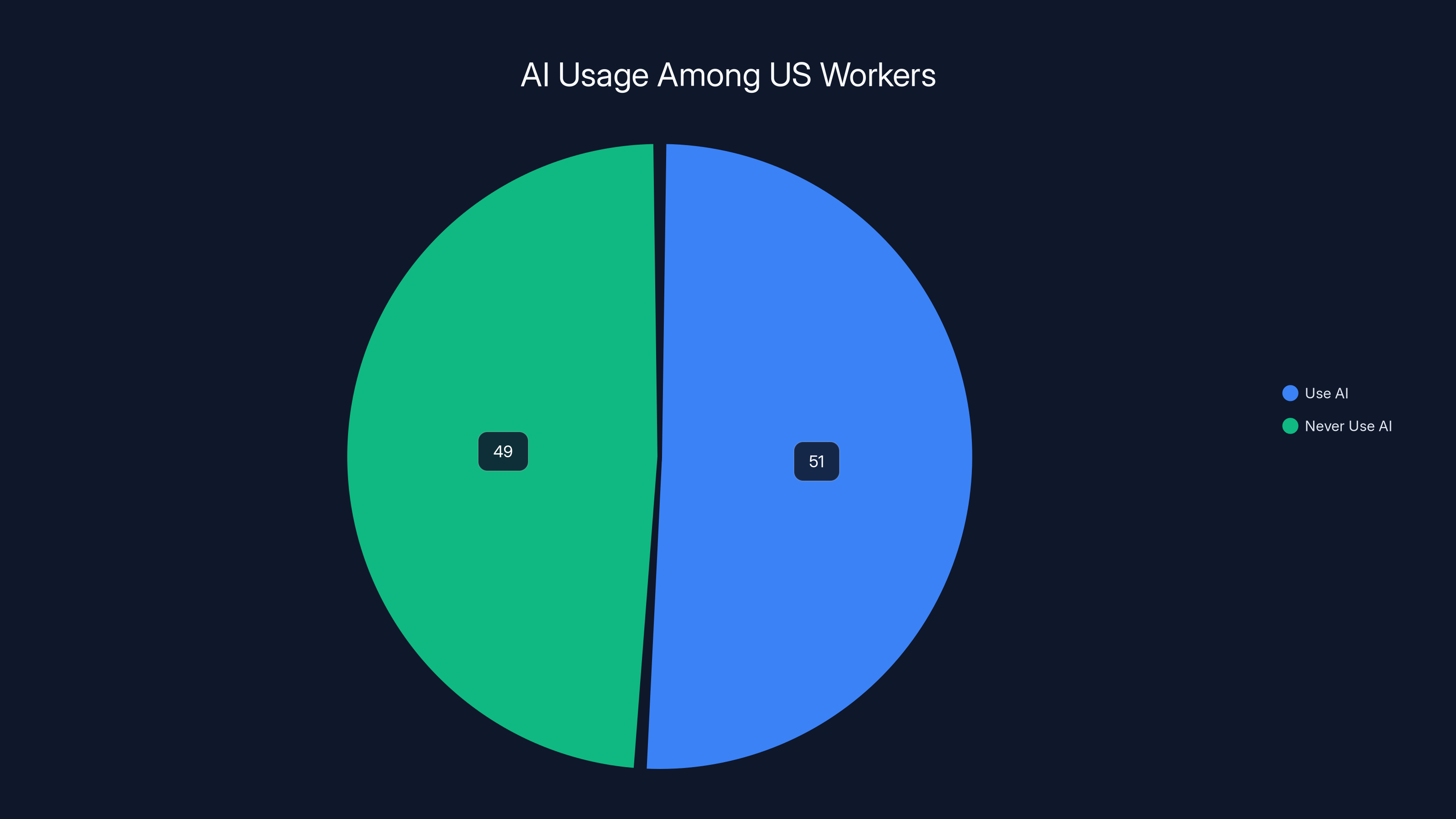

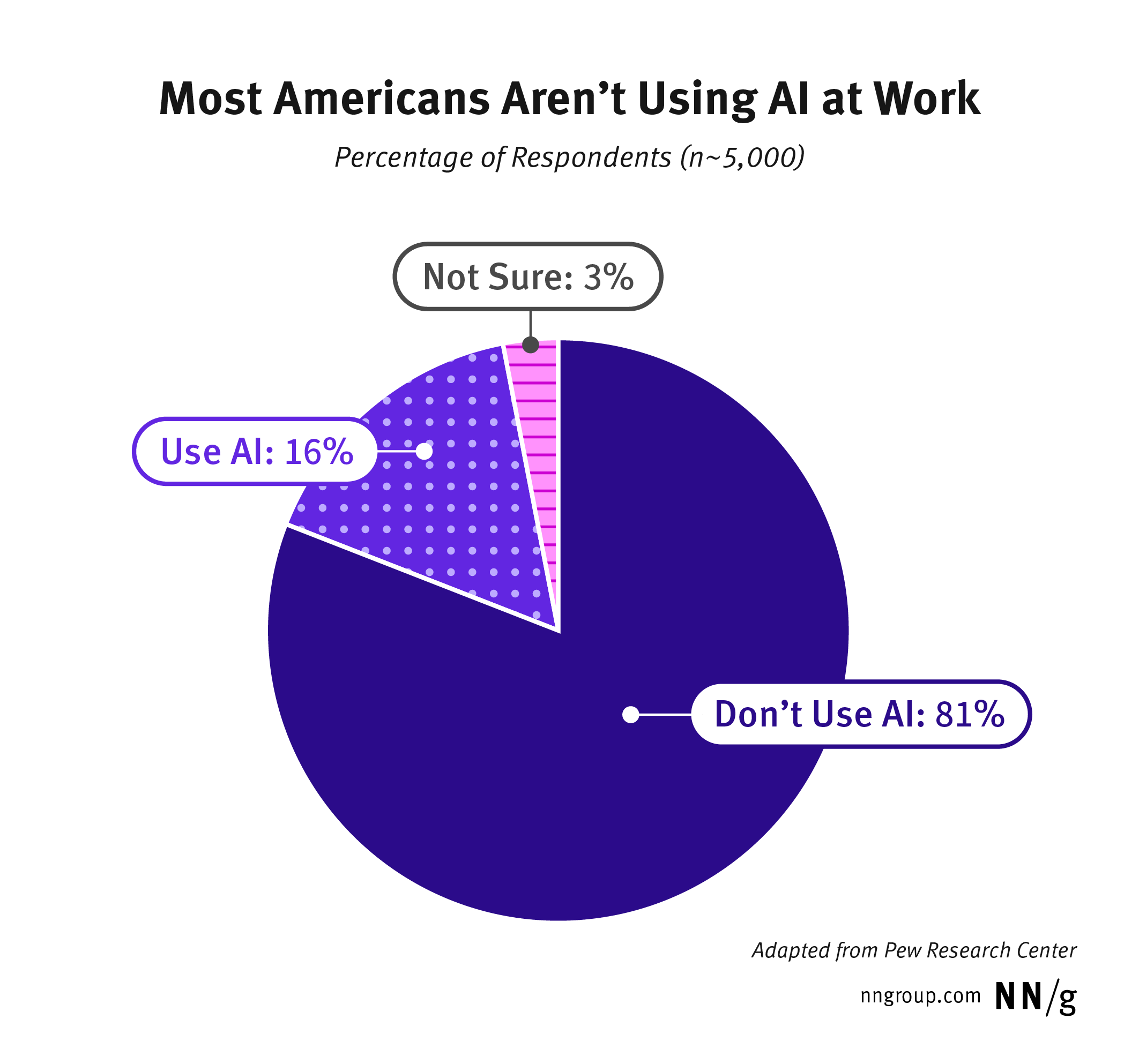

Approximately 51% of US workers use AI in some capacity, while 49% report never using it, indicating an adoption plateau.

The Surprising Truth About AI Usage Numbers

When Gallup released their latest research, the headlines grabbed attention for the wrong reasons. "AI adoption flatlining!" they shouted. But if you actually read past the headline, the picture becomes far more complicated and, oddly, more encouraging.

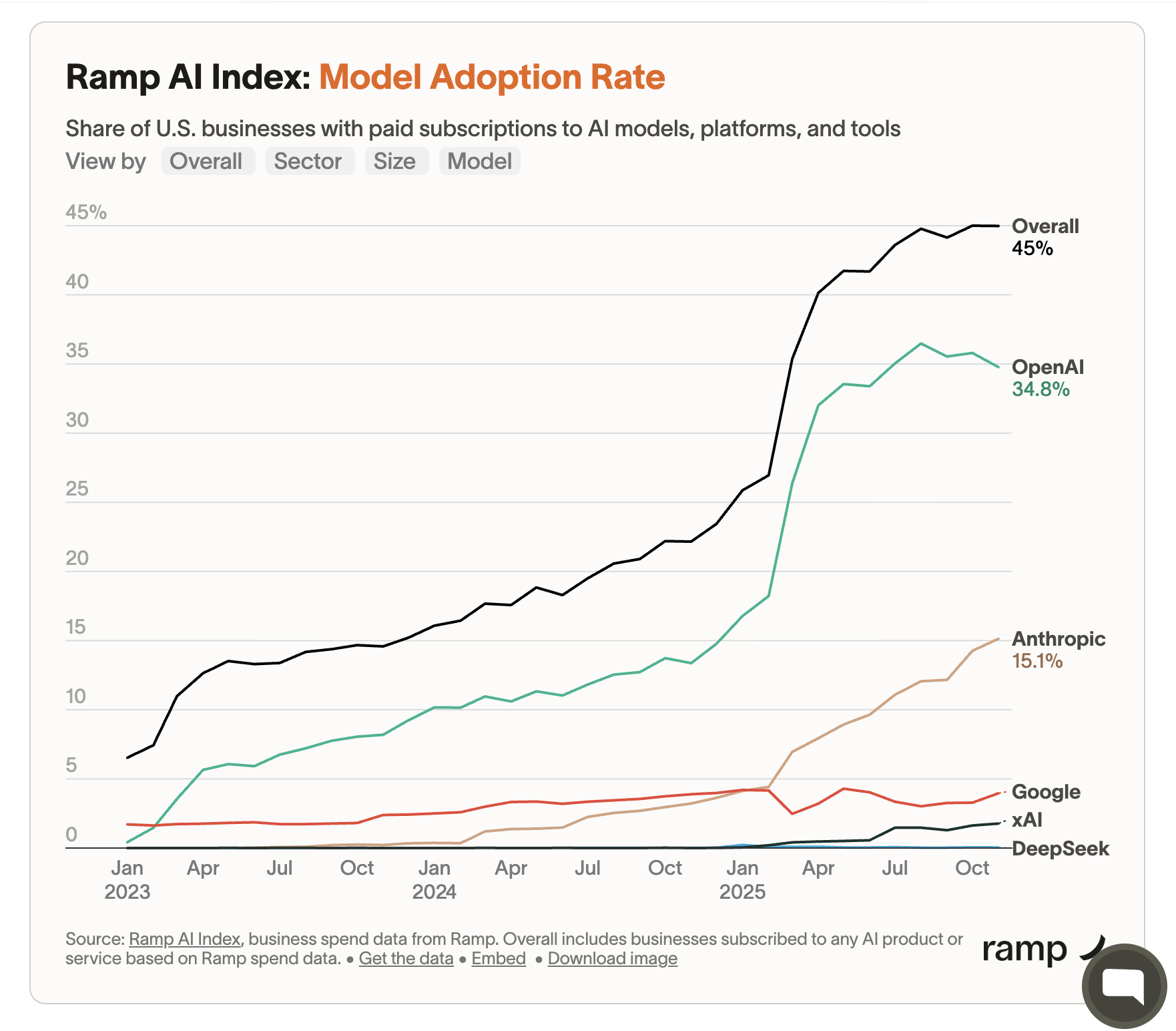

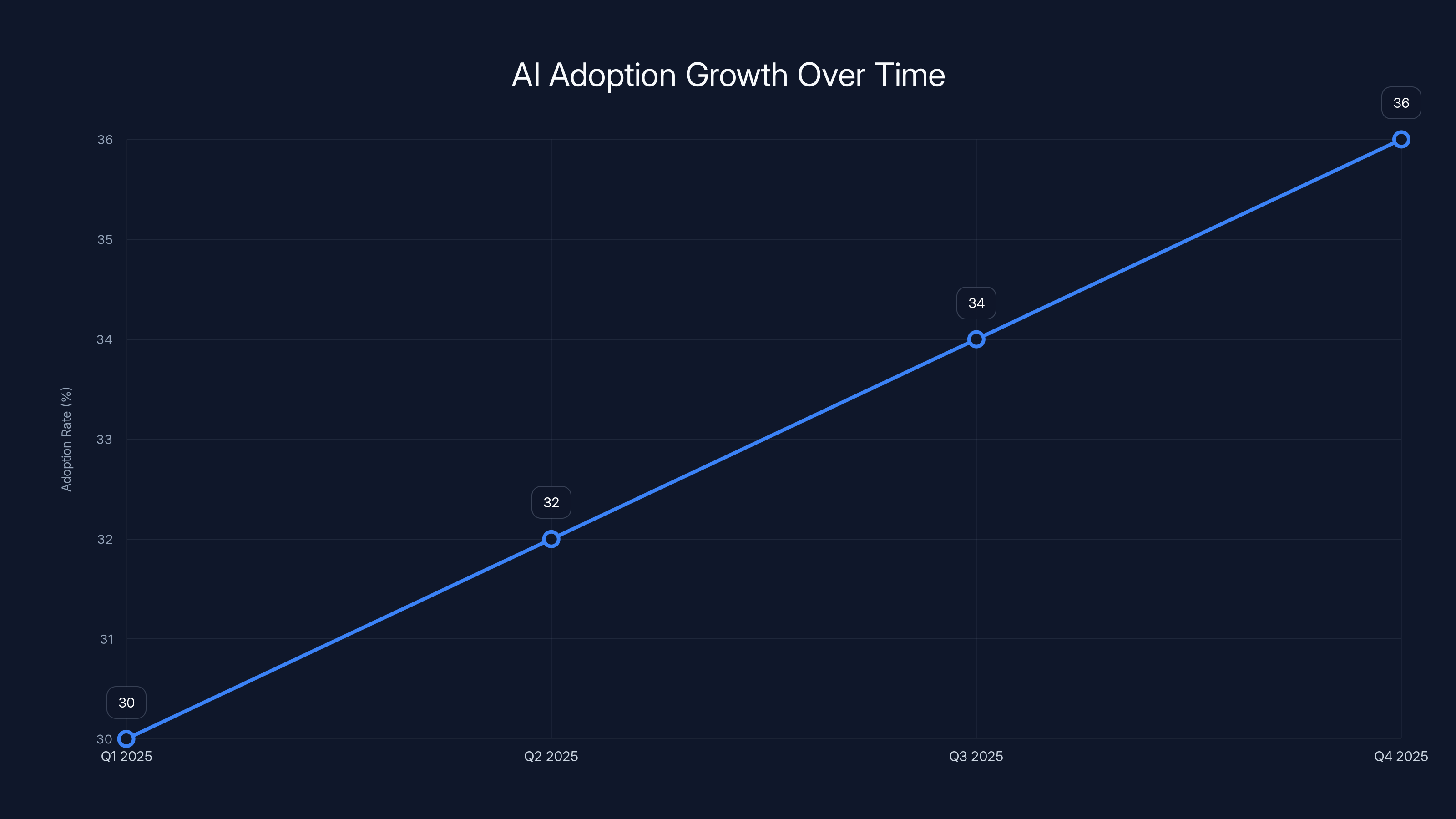

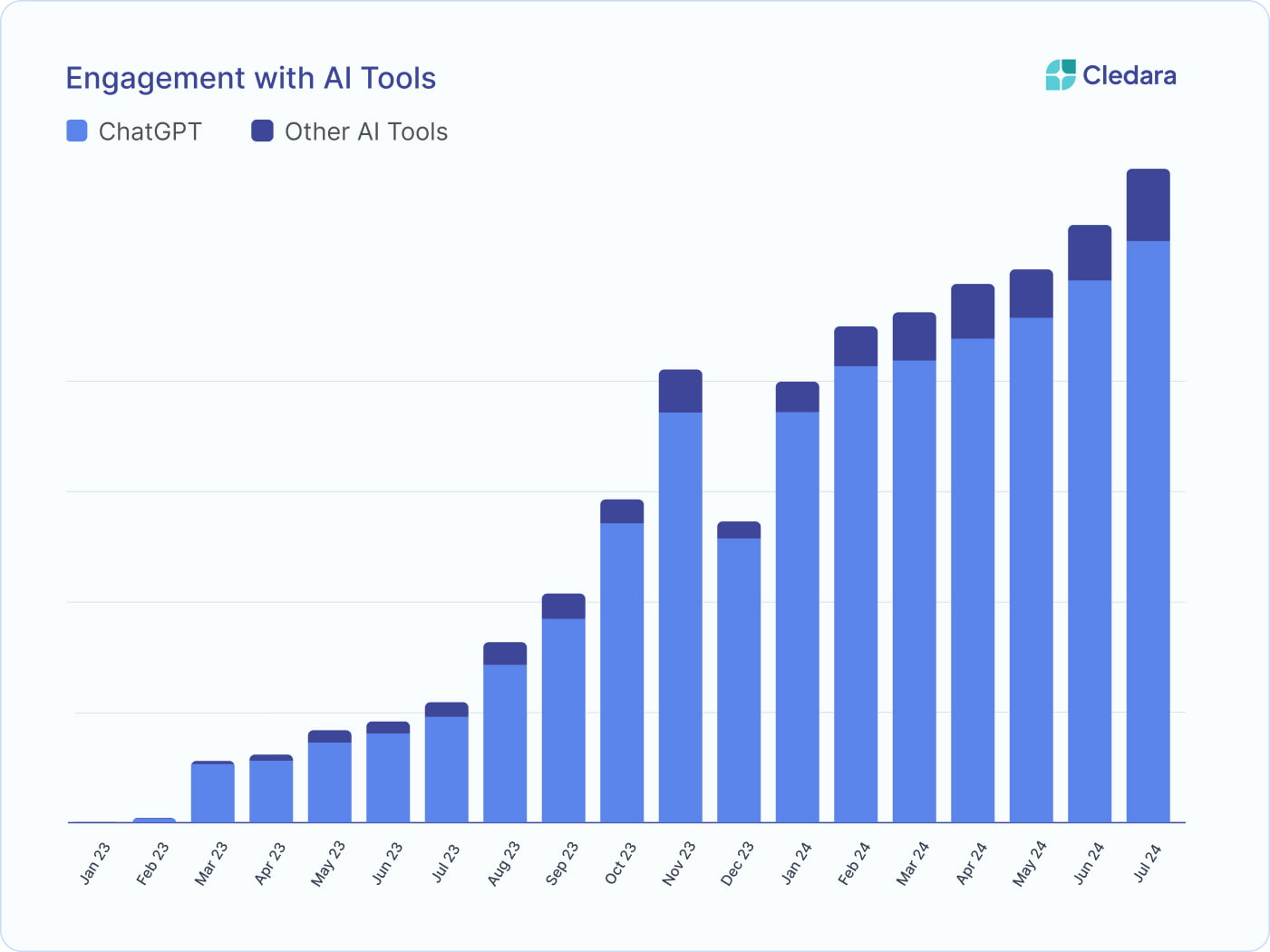

The data shows that daily AI use increased by just 2 percentage points in Q4 2025 compared to the previous quarter. Frequent AI use climbed 3 percentage points. Those aren't typos. We're talking about single-digit growth from what was supposed to be the most revolutionary technology since the internet.

What really jumped out at me was this: the total number of AI users remained completely flat. Not down. Not up. Just... stuck. Meanwhile, 49% of US workers say they never use AI in their role at all. That's almost half the workforce completely absent from the AI conversation.

Now, before you assume AI is dying, understand what's actually happening here. That flat number doesn't mean AI is failing. It means we've hit an interesting inflection point. The early adopters—the folks who were excited about AI—are using it more frequently now. But we're not seeing new waves of workers jumping in. It's more depth with existing users, not breadth with new ones.

This matters because it tells us something important: the low-hanging fruit has been picked. Everyone who was immediately ready to use AI has already started. The next phase requires something different.

Who's Actually Using AI Right Now

The adoption patterns reveal clear winners and losers in this AI wave. And honestly, the disparity is striking.

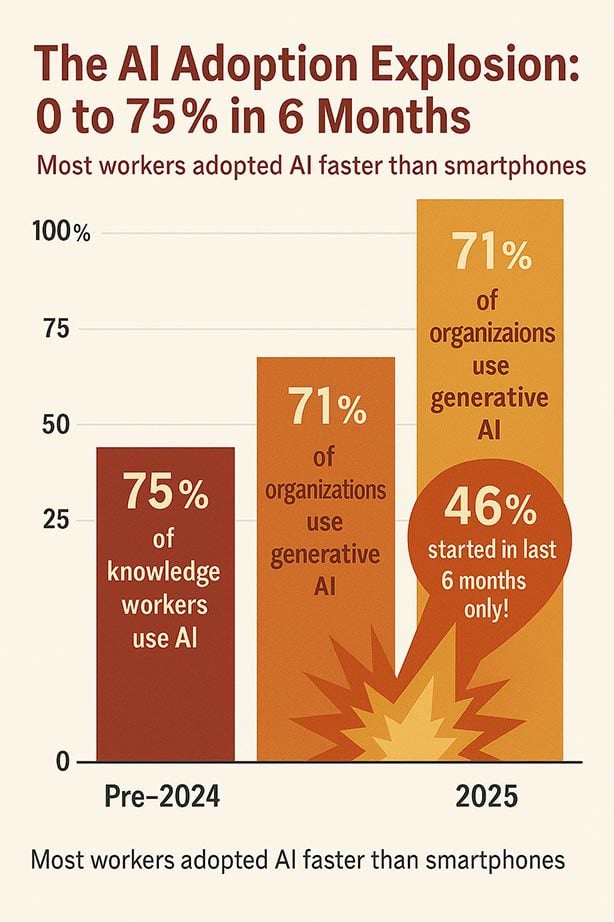

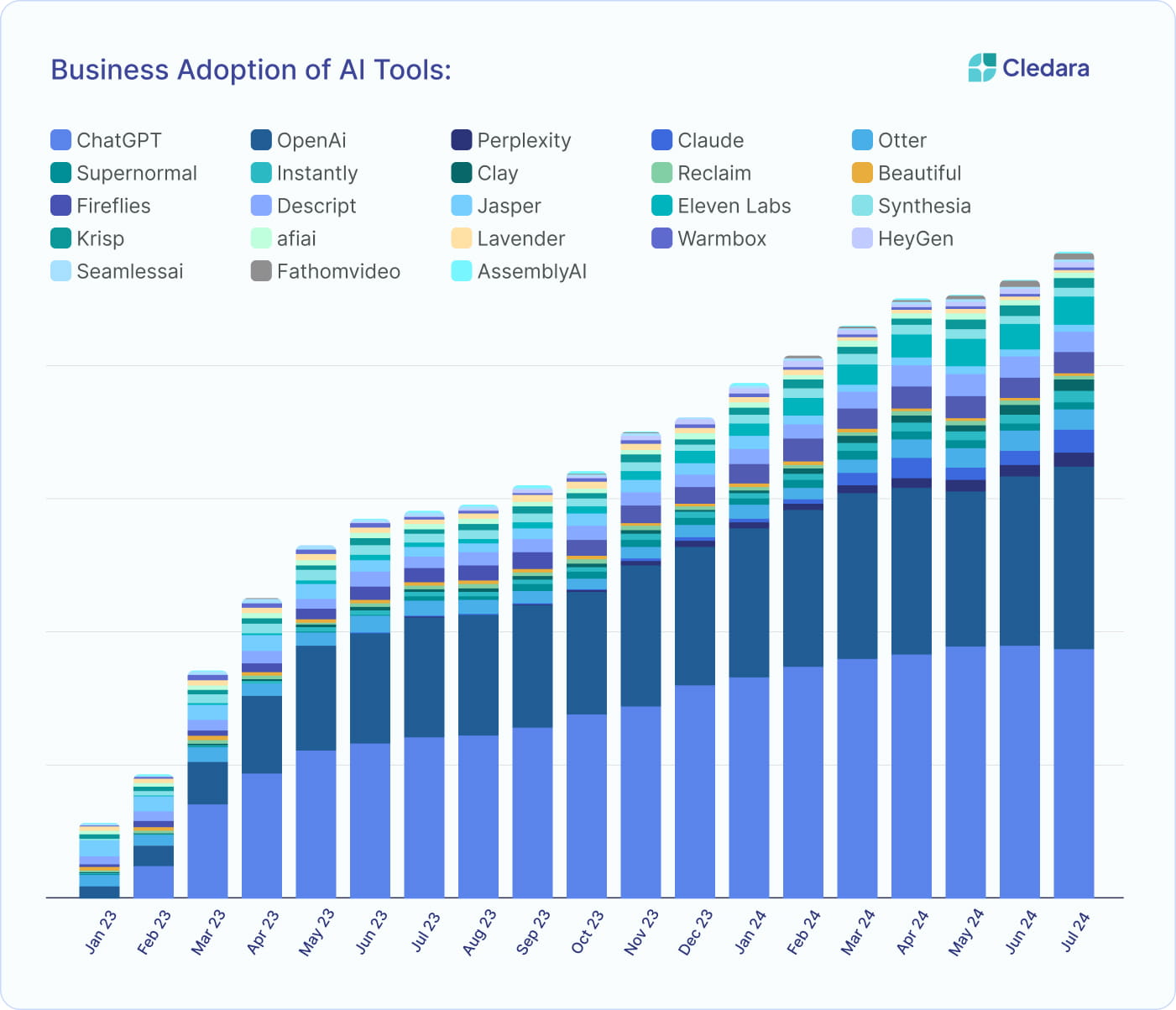

Remote-capable roles absolutely dominate. These are your desk jobs, your knowledge workers, your folks who can sit down with a laptop and interact with AI tools. Adoption in this category jumped from 28% in 2023 to 66% in 2025. That's substantial growth in just two years. These workers—designers, writers, analysts, developers—have embraced AI because it directly solves their problems.

Non-remote-capable roles? They're stuck at 32%. Think construction workers, nurses, retail staff, manufacturing jobs. There's a reason. Most AI tools were built for desk workers. They require a computer, internet, and specific job functions that can be digitized. If your work involves physical presence or hands-on action, AI adoption is significantly harder to implement.

According to the research, that gap amounts to roughly two years of adoption lag. If the pattern continues, non-remote roles will eventually approach parity with remote ones, but that requires purpose-built solutions that don't yet exist in many industries.

The hierarchy also matters. Leaders use AI at 69%, managers at 55%, and individual contributors at just 40%. This is where things get messy. Part of this makes sense—leaders have more flexibility to experiment with new tools. But there's also a concerning pattern here: the people actually doing the work are the ones least likely to be using AI.

That's a problem. When decision-makers use a tool more than frontline workers, you often get bad implementations. You get tools that solve executive problems rather than worker problems. And that's exactly what we're seeing in many organizations.

The Sector Divide That Nobody Talks About

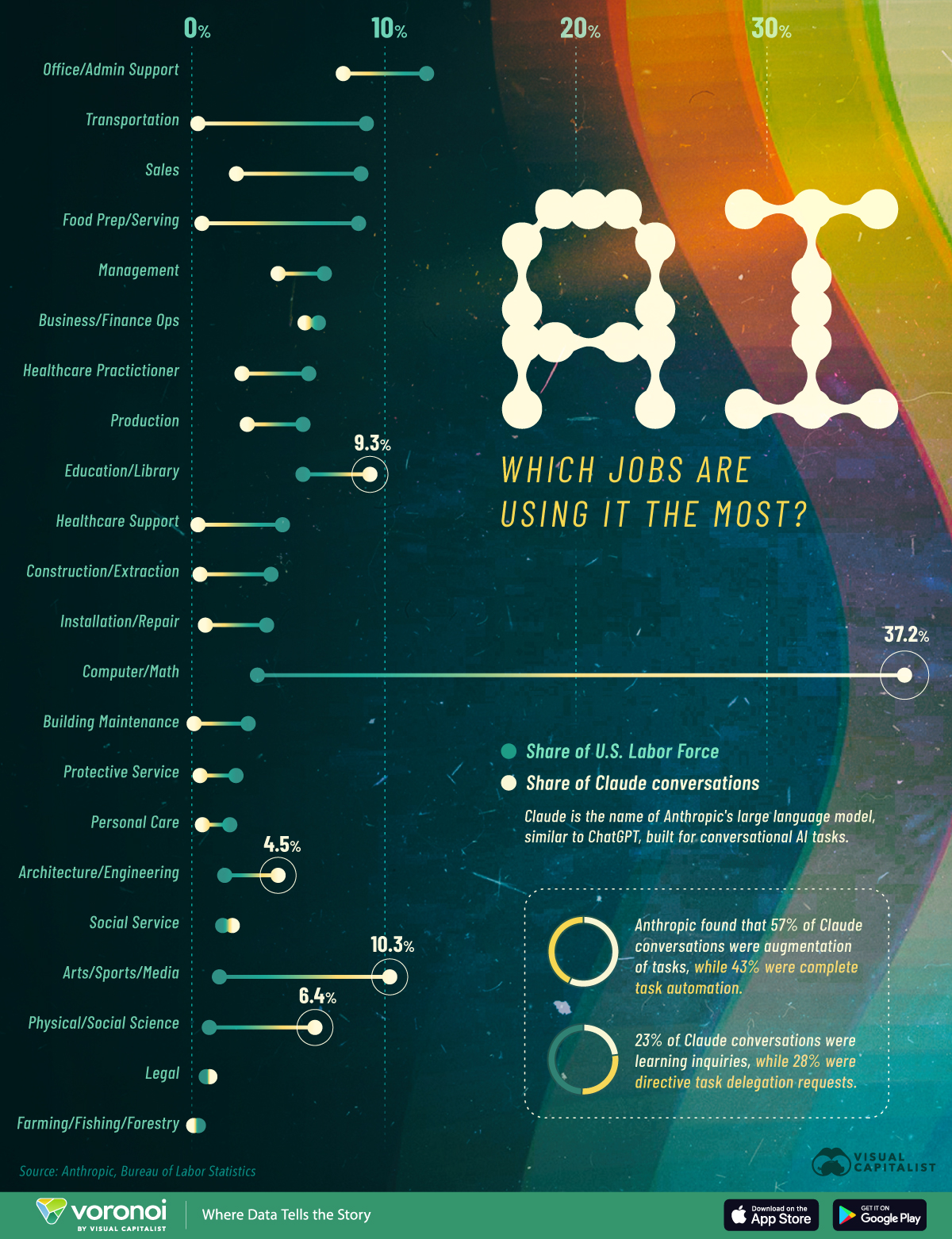

AI adoption isn't randomly distributed. It's heavily concentrated in specific sectors that had the right conditions to adopt it quickly.

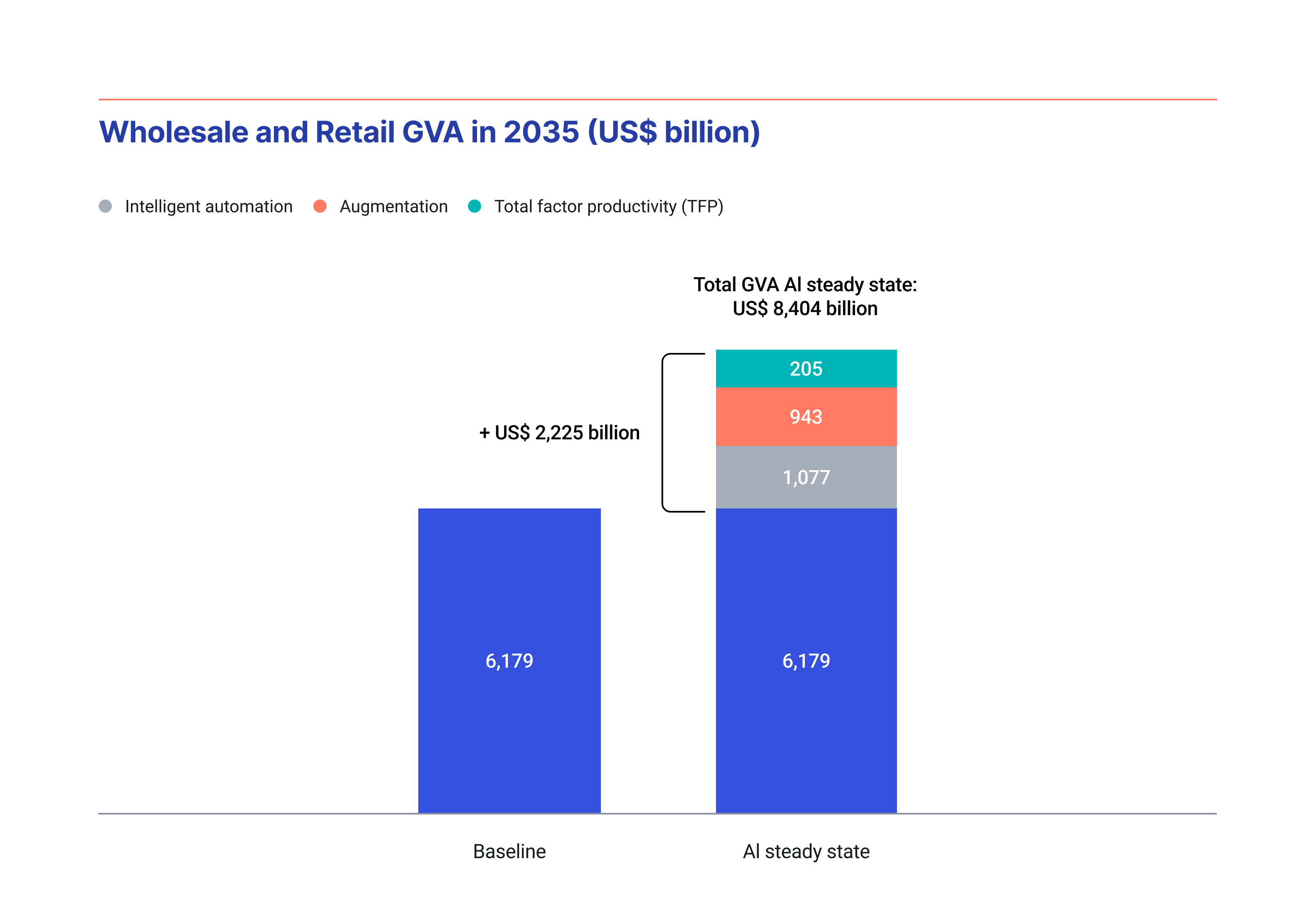

Technology, finance, and professional services lead the pack. These sectors have something in common: they already had strong data infrastructure, tech-savvy workforces, and clear use cases for AI. Adding AI to a financial modeling tool? Makes immediate sense. Using AI to optimize code? Obviously valuable. These industries didn't need to figure out why to use AI. They just needed to figure out how.

Education—colleges, universities, and K-12 schools—shows strong adoption too. This surprised some people, but it shouldn't. Teachers have always been early adopters of productivity tech. AI for grading, generating lesson plans, personalizing tutoring? These are problems educators immediately understood.

But look at other sectors and the adoption story changes dramatically. Manufacturing, healthcare, retail—these struggle with AI integration not because the technology isn't applicable, but because the use cases are harder to identify and implement. You can't just bolt AI onto a nurse's workflow. You have to rethink the entire workflow.

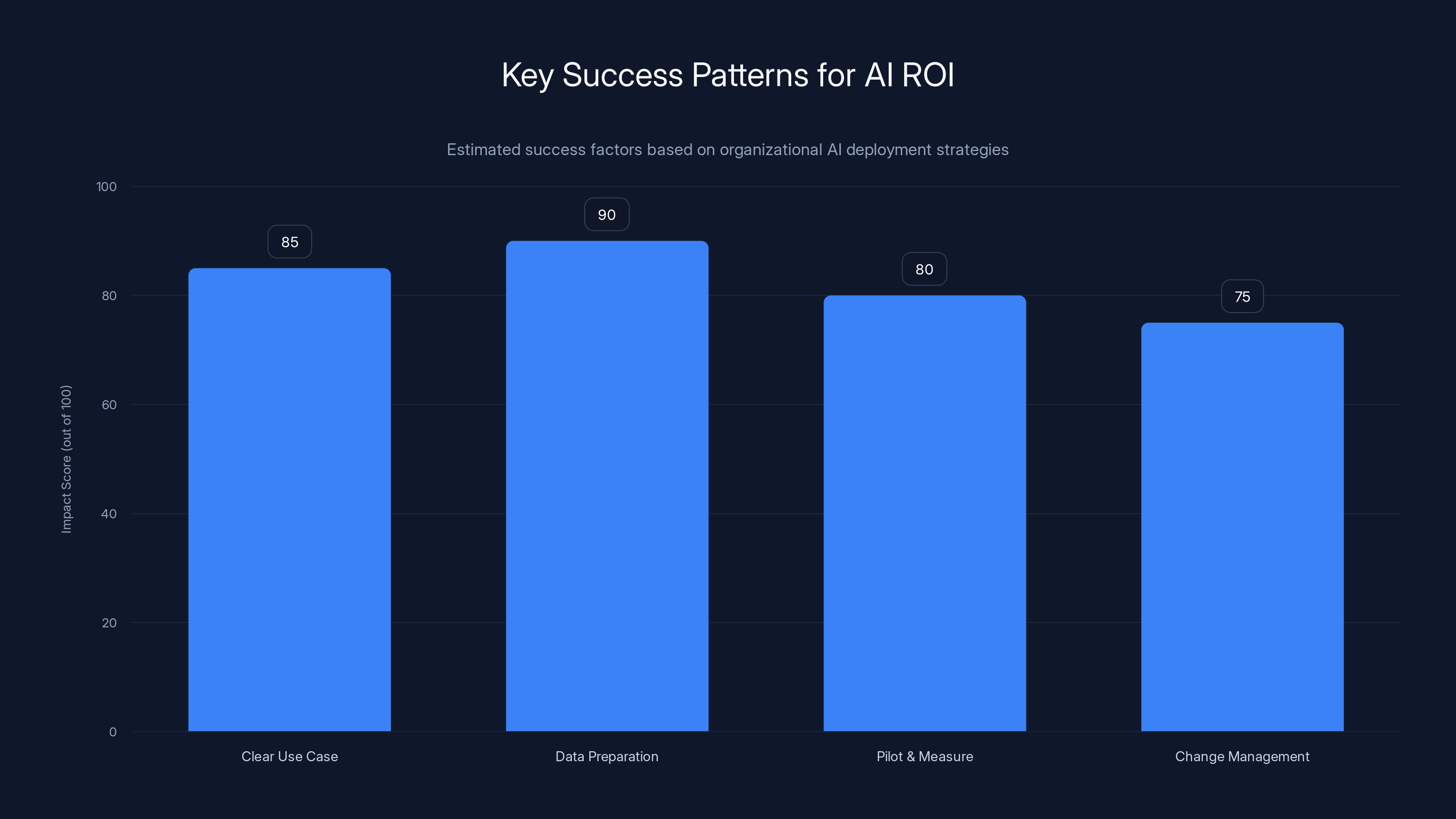

Organizations that see AI ROI often excel in clear use case definition, data preparation, piloting and measuring, and change management. Estimated data based on common success patterns.

The Deployment vs. Adoption Gap That's Breaking Everything

Here's where things get really interesting. And honestly, pretty damning for how companies approach technology investments.

98% of companies are using, piloting, or exploring AI. Nearly all of them. That's massive adoption of AI as a concept. But deployment ≠ adoption. Just because something is deployed doesn't mean people use it.

The kicker? 95% of organizations reported zero return on investment from their generative AI investments. That's not "lower than expected." That's not "we're learning." That's zero. Not one dollar of value delivered.

Why? The research from Hitachi Vantara points to one culprit: infrastructure gaps. Companies are buying AI tools and throwing them at problems without fixing the underlying data infrastructure that makes AI actually work.

Think about it this way. AI is only as good as the data feeding it. Garbage data in, garbage answers out. But most companies haven't invested in data quality, data governance, or data infrastructure. They've invested in AI tools. It's like buying a Ferrari when you haven't built roads yet.

The numbers back this up. Only 42% of organizations consider themselves "data-mature," meaning they have reliable, well-governed data infrastructure. Yet the same organizations identified data quality as the top driver of AI success. So they know what the problem is, they know they haven't solved it, and they keep deploying AI anyway and wondering why it doesn't work.

This is the implementation gap nobody's talking about. And it explains the "flatlining" narrative better than anything else.

Why Infrastructure Is Where AI Dies

Let me give you a concrete example of how this plays out in the real world.

A typical scenario: Company has a customer database with information scattered across 15 different systems. Some data is duplicated. Some contradicts itself. Some hasn't been updated since 2019. They deploy an AI tool to "analyze customer behavior" and expect insights. The AI ingests garbage data and produces garbage conclusions.

Executive asks why the $200K AI investment isn't delivering ROI. The AI team blames the business for unclear requirements. The business blames the AI team for overpromising. Meanwhile, the real problem—the data infrastructure—remains unfixed.

The next AI tool arrives. Same story. Different expense.

This isn't a theoretical problem. It's the pattern Hitachi Vantara's CEO Sheila Rohra pointed to when she said: "AI succeeds when the data behind it is trusted, well-governed and resilient." That's not marketing speak. That's the actual lesson from companies that got AI ROI versus those that didn't.

Companies that saw actual AI returns invested in data infrastructure first. They cleaned up their data. They established data governance. They made data accessible. Then they deployed AI.

Companies that deployed AI first and hoped for the best? They're in the 95% with zero ROI.

The Trust and Governance Problem

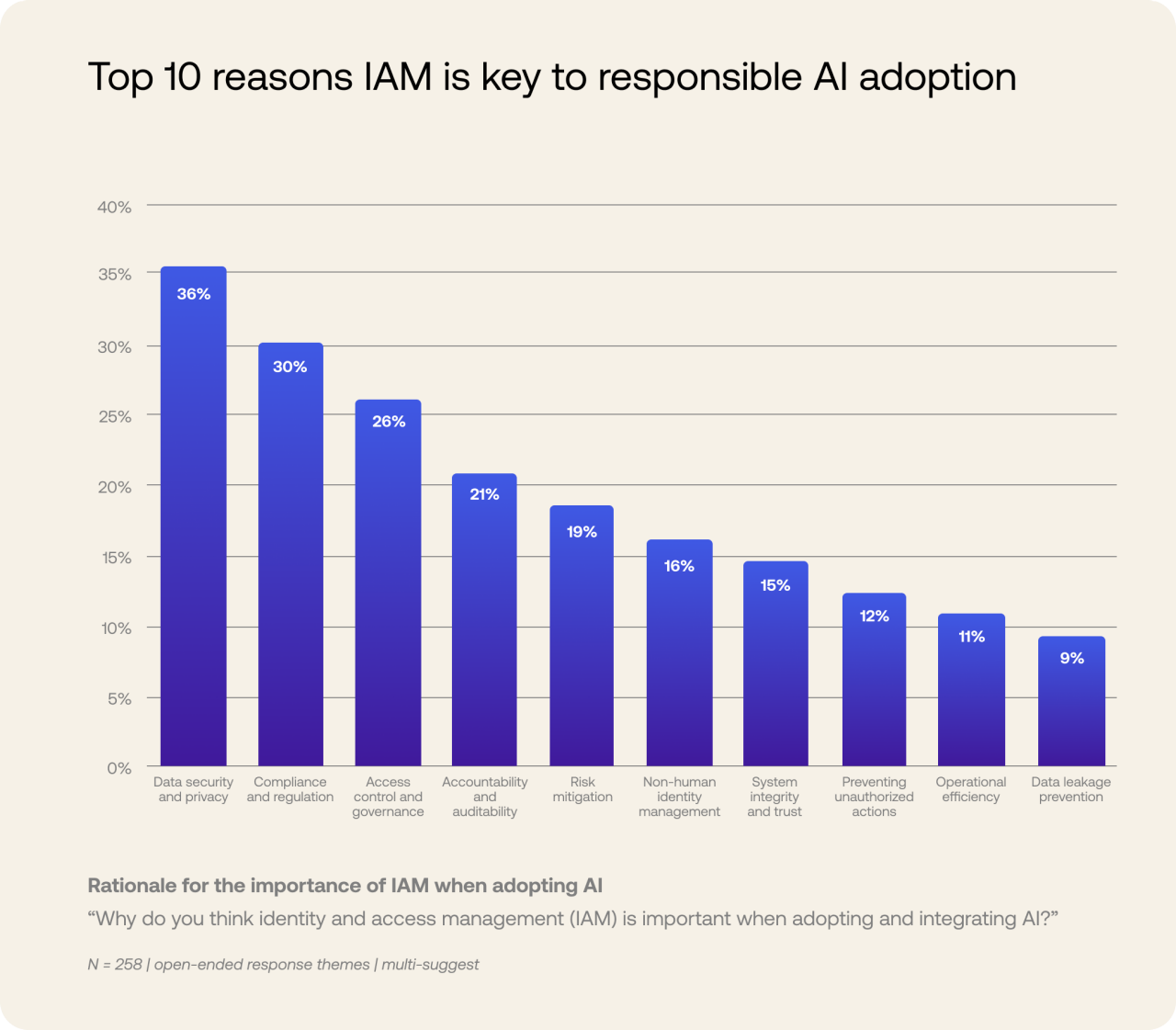

There's another layer to why AI deployment isn't translating to adoption, and it's less talked about than infrastructure but equally important: people don't trust the outputs.

When Hitachi Vantara surveyed organizations about barriers to AI adoption, they found that trust, security, and governance were critical concerns. Employees worry about AI breaches. They worry about unreliable outputs. They worry about regulatory risks.

These aren't paranoid concerns. These are legitimate problems. An AI tool that hallucinates data is useless. An AI tool that creates compliance risks is dangerous. An AI tool that might leak customer data is a liability.

But here's what's interesting: many organizations haven't built the governance frameworks to address these concerns. So workers, quite rationally, don't use the AI tools. They stick with the processes they trust.

This creates a perception problem. The organization thinks the AI adoption is failing. The workers think the organization is being reckless. Both are somewhat right.

Why Managers Use AI More Than Individual Contributors

There's a curious pattern in the adoption data that deserves deeper examination: leadership and management tiers are ahead of individual contributors.

69% of leaders actively use AI in their work. 55% of managers do. But only 40% of individual contributors use it. Those gaps matter. A lot.

On the surface, this makes intuitive sense. Managers have more discretion. They're less bound by specific job requirements. They can experiment. They have budgets to buy tools. So of course they adopt faster.

But dig deeper and you see something more concerning: this adoption pattern often leads to bad AI implementations. When managers use AI to "optimize" processes designed by workers they don't fully understand, the results can be comical or damaging. You get AI-generated requirements that miss real-world constraints. You get automated workflows that skip important steps.

The real value of AI adoption comes when workers closest to the actual problem use it. A developer knows exactly why they need AI for code review. A designer knows where AI can help concept generation. A financial analyst knows where AI can speed up data preparation.

When adoption flows from the bottom up (workers discovering use cases) rather than the top down (executives mandating AI adoption), the ROI is dramatically higher. But our current adoption patterns have reversed this.

The Unexamined Cost of Manager-Led Adoption

There's a hidden cost to having managers use AI more than workers: resentment and resistance.

Workers notice when management adopts a tool that was supposed to "help" them but was never designed with their input. They notice when executives make decisions about their tools without understanding their work. Over time, this creates adoption resistance that's hard to overcome.

I've talked to teams where management mandated AI tools that workers then actively avoided. Not because the tools were bad, but because workers felt like the implementation was something being done to them rather than for them.

Reverse the dynamic. Let workers discover how AI can help their specific challenges. Let them try tools. Let them decide what works. Then management supports and scales the winners. Adoption rates skyrocket, and more importantly, ROI actually materializes.

Despite 98% of companies exploring AI, 95% report zero ROI due to infrastructure gaps, with only 42% considering themselves data-mature.

The Data Maturity Crisis That's Holding Everything Back

Let's talk about the real problem underlying the adoption plateau: most organizations are data-immature and don't know how to fix it.

The research is clear on this. The 95% of organizations reporting zero AI ROI point to infrastructure gaps as the primary reason. Infrastructure here isn't a code word for "bad servers." It means data infrastructure: the pipes, processes, and governance that move data around and keep it trustworthy.

Yet only 42% of organizations rate themselves as "data-mature." That's a massive gap. You have the vast majority of companies trying to do AI with infrastructure that's fundamentally inadequate for the task.

What does "data-mature" actually mean? It means:

- Data is accessible: You can find the data you need without spending weeks with database administrators.

- Data is trustworthy: You know the data is accurate, up-to-date, and hasn't been corrupted or duplicated.

- Data is governed: You have clear policies about who can access what, how it's used, and what it's used for.

- Data is integrated: Different systems talk to each other. Data doesn't live in silos.

- Data quality is monitored: You catch data problems before AI systems ingest them.

Most organizations have none of this. They have databases from different eras running different technologies. They have data that gets copied and pasted between systems, creating inconsistencies. They have limited visibility into who's using data for what.

Then they deploy an AI tool and expect magic.
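To make "data quality is monitored" less abstract, here is a minimal sketch of the kind of check that could run before any AI system ingests customer data. It flags the three problems described earlier: duplicates, contradictions, and stale records. The record layout, field names, and the one-year staleness window are all assumptions for illustration, not a reference to any specific product.

```python
from collections import defaultdict
from datetime import date

# Hypothetical customer records pulled from several systems.
# The field names (system, customer_id, email, updated) are illustrative.
RECORDS = [
    {"system": "crm", "customer_id": "C1", "email": "ana@example.com", "updated": date(2025, 6, 1)},
    {"system": "billing", "customer_id": "C1", "email": "ana@old-mail.example", "updated": date(2019, 3, 12)},
    {"system": "crm", "customer_id": "C2", "email": "raj@example.com", "updated": date(2025, 8, 20)},
    {"system": "crm", "customer_id": "C2", "email": "raj@example.com", "updated": date(2025, 8, 20)},
]


def audit_records(records, today, max_age_days=365):
    """Return customer IDs with duplicate, conflicting, or stale records."""
    by_customer = defaultdict(list)
    for rec in records:
        by_customer[rec["customer_id"]].append(rec)

    findings = {"duplicates": [], "conflicts": [], "stale": []}
    for customer_id, recs in by_customer.items():
        # Duplicate: one system holds more than one row for the same customer.
        systems = [r["system"] for r in recs]
        if len(systems) != len(set(systems)):
            findings["duplicates"].append(customer_id)
        # Conflict: the copies disagree about the customer's email.
        if len({r["email"] for r in recs}) > 1:
            findings["conflicts"].append(customer_id)
        # Stale: at least one copy is older than the allowed window.
        if any((today - r["updated"]).days > max_age_days for r in recs):
            findings["stale"].append(customer_id)
    return findings


print(audit_records(RECORDS, today=date(2025, 12, 31)))
```

In practice a check like this would run on a schedule and alert a data team, but even a small script makes the state of the data visible before an AI tool is pointed at it.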

How to Actually Assess Your Data Maturity

If you're sitting in an organization wondering whether you're data-mature, here are some questions to ask:

Can you identify the source of truth for customer information? Not "where is customer data stored," but "which system has the authoritative version of a customer record?" Most companies can't answer this cleanly. They have customer data in CRM, billing system, data warehouse, and analytics platform, all slightly different.

How long does it take to get a clean dataset for analysis? If it's more than a few hours, you're not data-mature. Most organizations spend days having data conversations before analysis even begins.

Do you have data lineage documentation? Can you trace where a number came from, what transformations it went through, and why a calculation changed? Most can't. They know data has changed, but not why.

Are data quality problems caught automatically? Or do humans discover them weeks later when reports look wrong? Mature organizations catch quality issues immediately and alert data teams.

Do you have data governance policies that are actually enforced? Or do you have a data governance document gathering dust while people work around the policies?

If you're answering "no" or "kind of" to most of these, you're in the 58% of organizations that aren't data-mature. And you're probably also in the 95% getting zero ROI from AI.

The good news? Data maturity is fixable. It just requires prioritization and investment that many organizations haven't made yet.
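If you want to turn those five questions into something you can track over time, a rough scoring sketch might look like this. The question keys paraphrase the checklist above; the weights ("yes" = 1, "kind of" = 0.5, "no" = 0) and the 0.8 cutoff are my own assumptions, not part of the research.

```python
# The five checklist questions, paraphrased as short keys.
QUESTIONS = [
    "single_source_of_truth",
    "clean_dataset_within_hours",
    "lineage_documented",
    "quality_issues_caught_automatically",
    "governance_enforced",
]

# Assumed weights: a "kind of" answer earns half credit.
ANSWER_SCORES = {"yes": 1.0, "kind of": 0.5, "no": 0.0}


def maturity_score(answers):
    """Return a score between 0 and 1 from a dict of question -> answer."""
    missing = [q for q in QUESTIONS if q not in answers]
    if missing:
        raise ValueError(f"Unanswered questions: {missing}")
    total = sum(ANSWER_SCORES[answers[q]] for q in QUESTIONS)
    return total / len(QUESTIONS)


def is_data_mature(answers, threshold=0.8):
    """Hypothetical cutoff: a score at or above the threshold counts as mature."""
    return maturity_score(answers) >= threshold


example = {
    "single_source_of_truth": "yes",
    "clean_dataset_within_hours": "kind of",
    "lineage_documented": "no",
    "quality_issues_caught_automatically": "no",
    "governance_enforced": "kind of",
}
print(maturity_score(example), is_data_mature(example))
```

The number itself matters less than rerunning the same assessment every quarter and watching whether it moves.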

The Sector-Specific Reality of AI Adoption

AI isn't being adopted evenly across industries, and that's no accident. Some sectors are naturally positioned for AI success. Others are fighting uphill battles.

Technology and Professional Services (Leading)

These sectors are ahead because they already had the prerequisites. Strong data infrastructure. Technical talent. Clear ROI metrics. When a tech company deploys AI for code optimization or security testing, the value is immediate and measurable.

They also didn't have to convince workers that AI was valuable. Developers understand that AI can help them write better code. Security engineers understand that AI can help spot vulnerabilities. The use case is obvious, the benefit is clear, and adoption follows.

Finance (Accelerating)

Finance is particularly interesting because AI adoption unlocked use cases that weren't possible before. Portfolio analysis, risk modeling, fraud detection, customer insights—all areas where AI provides measurable value.

The finance sector also benefits from existing data infrastructure. Banks have been investing in data technology for years. When they deploy AI, they're doing it on a solid foundation.

Education (Surprising Success)

Higher education and K-12 adoption surprised many observers. But it makes sense. Teachers understand that AI can help with administrative tasks, personalized learning, and assessment. The use cases are obvious once you think about them.

Plus, educators are used to experimenting with new tools. They're inherently curious about technology. If ChatGPT can help with lesson planning, why wouldn't they use it?

The challenge in education is different from other sectors: regulation and institutional caution. Many schools and universities are struggling with policies around AI, academic integrity, and appropriate use. The technology adoption is easy. The institutional adoption is hard.

Manufacturing, Healthcare, Retail (Lagging)

These sectors are behind not because workers are less intelligent or resistant to change. They're behind because the use cases are harder to identify and the implementations are more complex.

You can bolt ChatGPT onto an administrative process. Reimagining a manufacturing workflow around AI is harder. It requires understanding production constraints, safety requirements, supply chain dynamics, and human factors that most AI tools weren't built to address.

Healthcare is instructive here. The sector desperately needs AI. Patient volumes are crushing existing systems. Administrative burden is overwhelming. But healthcare implementations require regulatory clearance, clinical validation, and human oversight in ways that a financial modeling tool doesn't.

That complexity isn't a flaw. It's a feature. Healthcare is being cautious because the stakes are high. But caution means slower adoption.

AI adoption growth has slowed, with daily use rising only 2 percentage points in Q4 2025, indicating a shift from new users to deeper usage by existing users. Estimated data.

Why the "Flatlining" Narrative is Misleading

Let's zoom out from data points and discuss narrative. The "AI adoption is flatlining" story is technically true but deeply misleading. It's like saying "car sales flatlined" when what actually happened was the market shifted from new car sales to used car sales and subscription models.

The adoption isn't actually flatlining. The growth pattern is changing. And honestly, that change is healthier than pure exponential growth would be.

Here's what's actually happening: The low-friction adoption phase has ended. Anyone who could immediately benefit from basic AI tools (ChatGPT, Copilot) has already adopted. Adding more of those users becomes harder because the remaining population either can't use AI (non-remote roles, certain industries) or doesn't see immediate value (and they're often right to be skeptical given the ROI track record).

But simultaneously, depth of adoption among existing users is increasing. People aren't just trying ChatGPT once. They're building it into workflows. They're experimenting with more advanced use cases. They're learning what works and what doesn't.

That's not flatlining. That's a transition from breadth to depth, and it's a completely normal technology adoption curve. The hockey stick gets steeper, then it flattens. When it flattens, it doesn't mean growth stopped. It means you're hitting saturation in that market segment and need to move to the next segment.

The next segment is the 49% of workers who say they never use AI. But those workers are in harder-to-reach sectors and job categories. They'll adopt when the tools and use cases are specifically built for them.

What Comes After the Plateau

If you plot technology adoption over time, you see a pattern: early growth, plateau, then renewed growth from a different starting point as the technology evolves.

PC adoption plateaued. Then cloud adoption restarted growth. Mobile adoption plateaued. Then AI restarted it. This current AI plateau isn't an ending. It's an inflection point.

The next wave of growth comes from:

- Vertical AI tools built for specific industries: Generic AI can't unlock healthcare adoption. Industry-specific AI tools can.

- Better infrastructure and governance: Once 50%+ of organizations achieve data maturity, AI ROI will improve dramatically, and adoption will accelerate.

- Clearer use cases and proven playbooks: Right now, many organizations are experimenting. Once best practices crystallize, adoption becomes easier.

- Regulatory clarity: In sectors like healthcare, financial services, and law, regulation is still settling. Once it does, adoption will accelerate.

- Workforce training and development: Skills are a bottleneck. As more people understand how to use AI effectively, adoption will increase.

So the plateau is actually a healthy correction. It's weeding out companies deploying AI without purpose and rewarding companies doing the foundational work to make AI actually succeed.

The ROI Crisis and What Actually Drives Success

Here's the uncomfortable truth: the 95% of organizations reporting zero AI ROI aren't necessarily failures. Many are making an investment that hasn't matured yet.

But here's the other uncomfortable truth: many of them will never see ROI because they're approaching this wrong.

The organizations that are seeing AI ROI share certain characteristics. Let me spell them out because if you understand these, you can avoid the zero-ROI club.

Success Pattern #1: Clear Use Case Definition

They don't deploy AI and hope. They identify a specific problem—"our customer support response time is 4 hours"—then find an AI solution for that problem. If AI can reduce it to 2 hours and the value of that improvement exceeds the cost, they deploy.

Failing organizations approach it backwards. They acquire an AI platform and then try to figure out what to do with it. That backwards approach almost always fails because you're hunting for problems to fit your solution rather than hunting for solutions to fit your problems.
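The "value exceeds cost" test can be written down as a back-of-the-envelope calculation. This is a sketch under stated assumptions: the ticket volume, the dollar value placed on each hour of faster response, and the monthly tool cost are hypothetical inputs that you would replace with your own figures. Only the 4-hour and 2-hour response times come from the example above.

```python
def use_case_net_value(baseline_hours, target_hours, tickets_per_month,
                       value_per_hour_faster, monthly_cost):
    """Monthly value of the improvement minus the monthly cost of the tool.

    value_per_hour_faster is an assumed dollar value the business places on
    each hour shaved off the response time of a single ticket.
    """
    hours_gained = (baseline_hours - target_hours) * tickets_per_month
    return hours_gained * value_per_hour_faster - monthly_cost


def should_deploy(**inputs):
    """Deploy only if the improvement is worth more than it costs."""
    return use_case_net_value(**inputs) > 0


# Hypothetical inputs: 1,000 tickets a month, $5 per hour of faster response,
# and a tool that costs $8,000 a month.
inputs = dict(
    baseline_hours=4,
    target_hours=2,
    tickets_per_month=1000,
    value_per_hour_faster=5.0,
    monthly_cost=8000.0,
)
print(use_case_net_value(**inputs), should_deploy(**inputs))
```

The point isn't precision. It's that the problem, the target, and the cost are all written down before anyone buys a platform.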

Success Pattern #2: Data Preparation Comes First

Before deploying AI, they clean and organize their data. They establish data governance. They create data pipelines. This takes time and money. It feels like overhead before getting to the "real" AI work.

But it's not overhead. It's the foundation. Organizations that skip this phase are the ones in the 95% with zero ROI.

Success Pattern #3: Pilot and Measure Ruthlessly

They don't roll out across the organization. They pilot with one team or one process. They measure the impact meticulously. They establish baselines before AI and track metrics after. They know exactly whether the investment is working.

Failing organizations deploy broadly without measurement. Then six months later, they can't tell if the AI is actually delivering value or just creating a new cost line.

Success Pattern #4: Change Management and Training

They invest in helping people understand how to use AI tools. They create playbooks. They establish best practices. They build organizational muscle memory around AI usage.

Failing organizations assume people will figure it out. Then they're puzzled why adoption is low and ROI is zero.

Success Pattern #5: Realistic Expectations

They expect incremental improvements, not transformation. They understand that AI is a tool, not a solution unto itself. They know that AI will handle 60% of a task and humans will handle 40%.

Failing organizations buy the narrative that AI will revolutionize everything. They expect 10x improvements. When they get 20% improvements (which are still valuable), they consider it a failure.

AI usage varies significantly across different roles and work settings, with leadership and remote-capable roles showing higher adoption rates.

Building AI Adoption the Right Way

If the adoption plateau and zero-ROI crisis sound depressing, here's the encouraging part: it's completely fixable. You don't need revolutionary change. You need structural change.

Step 1: Audit Your Data Infrastructure

Before you buy another AI tool, understand what you're actually working with. Where does your data live? How clean is it? Who owns it? Who can access it?

This audit isn't fun. It usually reveals problems that were conveniently ignored because they weren't causing immediate crisis. But ignoring them means ignoring future ROI.

A good data audit takes 2-4 weeks for a medium-sized organization. It involves your data, IT, and business teams. The output is a clear picture of your data maturity.

Step 2: Create a Data Improvement Roadmap

Based on your audit, create a 12-24 month roadmap to improve data maturity. This typically includes:

- Data integration: Connecting siloed systems so data flows properly.

- Data quality: Establishing processes to catch and fix bad data.

- Data governance: Creating policies and tools for managing data access and usage.

- Data documentation: Building data lineage and metadata so people understand what data means.

This is infrastructure investment. It doesn't directly create value. It enables value creation from AI and analytics.

Step 3: Identify Clear AI Use Cases

Once you have a data roadmap, identify specific problems AI can solve. Not "use AI to improve efficiency"—that's too vague. Think "use AI to reduce customer support response time from 4 hours to 2 hours," or "use AI to identify at-risk customers before they churn."

Find 3-5 high-impact, medium-difficulty use cases. High-impact so the ROI justifies the effort. Medium-difficulty so they're achievable without months of work.
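One simple way to apply the "high-impact, medium-difficulty" filter is to score each candidate and shortlist from there. The sketch below assumes a 1-to-5 scale scored by your own team; the scale, the thresholds, and the sample candidates other than the two use cases named above are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    impact: int      # 1 (low) to 5 (high), scored by the team
    difficulty: int  # 1 (easy) to 5 (hard), scored by the team


def shortlist(candidates, min_impact=4, difficulty_range=(2, 3), limit=5):
    """Keep high-impact, medium-difficulty candidates, best first."""
    low, high = difficulty_range
    eligible = [
        c for c in candidates
        if c.impact >= min_impact and low <= c.difficulty <= high
    ]
    # Highest impact first; among equals, prefer the easier one.
    eligible.sort(key=lambda c: (-c.impact, c.difficulty))
    return eligible[:limit]


candidates = [
    UseCase("Cut support response time from 4 hours to 2 hours", impact=5, difficulty=3),
    UseCase("Identify at-risk customers before they churn", impact=4, difficulty=2),
    UseCase("Rebuild every workflow around AI", impact=5, difficulty=5),
    UseCase("Auto-tag internal wiki pages", impact=2, difficulty=1),
]

for case in shortlist(candidates):
    print(case.name)
```

The scores are subjective, and that's fine. The value is in forcing the conversation about which problems are worth the effort.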

Step 4: Pilot and Measure

Choose one use case. Build a pilot. Establish metrics before you deploy. Measure rigorously after. Be honest about results. If it's working, expand. If it's not, kill it and try the next one.

This discipline seems obvious, but most organizations don't do it. They deploy broadly, then spend months arguing about whether it's working.
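The "establish metrics before, measure after" discipline can be as simple as comparing a baseline sample with a pilot sample against a threshold you commit to in advance. This is a minimal sketch: the 10% minimum improvement and the sample response times (in minutes) are assumptions, and a real pilot would also want enough data to rule out noise.

```python
from statistics import mean


def pilot_verdict(baseline, pilot, min_improvement=0.10, lower_is_better=True):
    """Compare average metric values before and during a pilot.

    Returns the averages, the relative improvement, and an expand/kill call
    based on a threshold that should be agreed before the pilot starts.
    """
    base_avg = mean(baseline)
    pilot_avg = mean(pilot)
    if base_avg == 0:
        raise ValueError("Baseline average is zero; relative change is undefined")
    if lower_is_better:
        improvement = (base_avg - pilot_avg) / base_avg
    else:
        improvement = (pilot_avg - base_avg) / base_avg
    decision = "expand" if improvement >= min_improvement else "kill"
    return {
        "baseline": base_avg,
        "pilot": pilot_avg,
        "improvement": improvement,
        "decision": decision,
    }


# Hypothetical weekly average support response times, in minutes.
baseline_minutes = [240, 250, 230, 240]
pilot_minutes = [120, 130, 110, 120]
print(pilot_verdict(baseline_minutes, pilot_minutes))
```

Committing to the threshold up front is what prevents the months of arguing afterward.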

Step 5: Build a Center of Excellence

Once you have working pilots, create a small team whose job is to:

- Share learnings across the organization

- Build playbooks and best practices

- Help other teams adopt successful approaches

- Train people in AI usage

- Advocate for the infrastructure investments required

This team is often just 3-5 people, but they're crucial to preventing the "islands of AI" problem where individual teams use AI effectively but the organization doesn't learn from it.

Remote vs. Non-Remote Work Dynamics

The split in adoption between remote-capable and non-remote-capable roles is one of the most interesting findings, and it deserves deeper analysis because it reveals something about how technology adoption actually works.

Remote-capable roles sit at 66% AI adoption. Non-remote roles sit at 32%. That's not a small gap. That's a fundamental difference in how technology is adopted across job categories.

Part of this is obvious. Remote-capable roles (knowledge work, mostly) can naturally incorporate AI tools. You're already sitting at a computer. Adding ChatGPT to your daily work is frictionless.

But there's something deeper happening. Remote-capable roles are, on average, more job-flexible. You can try new tools without disrupting core work. Non-remote roles often can't do that. If you're a nurse, you're busy with patients. You don't have time to experiment with AI tools.

There's also a selection bias. Remote-capable roles tend to attract people who are more comfortable with technology. They're self-selected for adaptability. Non-remote roles include a broader spectrum.

But here's what's interesting: the two-year lag suggests that non-remote adoption isn't impossible. It's just slower. As AI tools specifically designed for non-remote work improve, adoption will climb.

The Opportunity in Non-Remote Roles

There's a massive opportunity here for companies building vertical AI solutions. Generic AI tools don't work well for non-remote roles. But AI tools specifically built for nurses, retail workers, construction teams, and manufacturing floor operators could unlock a wave of adoption.

Yet most venture capital funding flows to horizontal tools (the Perplexitys and ChatGPTs) rather than vertical tools. That's the opposite of where the real adoption opportunity is.

The companies that win the next phase of AI adoption will be those building for non-remote work. They'll face harder technical challenges. But they'll capture markets that are currently underserved.

Leaders have the highest AI adoption rate at 69%, followed by managers at 55%, and individual contributors at 40%. This highlights a significant gap in AI usage across different organizational levels.

The Leadership Adoption Paradox

I keep coming back to this: leaders and managers use AI significantly more than individual contributors. And while there are obvious reasons for this, there are also subtle problems embedded in this pattern.

When leadership uses AI more than workers, it creates an incentive misalignment. Leaders might optimize for metrics they care about without fully understanding frontline impact. They might deploy tools that make reporting easier for managers but harder for workers.

I've seen this play out repeatedly. A company deploys AI for "better resource allocation," which managers love because it makes their dashboards cleaner. But it also creates unpredictable scheduling for frontline workers. Metrics improve. Worker satisfaction drops. Turnover increases.

From an adoption standpoint, this is a huge problem. Workers notice that AI is used for oversight and optimization, not for helping them. They become resistant to AI.

The solution isn't to stop management from using AI. It's to ensure that workers also benefit from AI deployment. If you're using AI for resource optimization, also use AI for something that helps workers. Better task routing, automated busywork, or decision support.

This requires intentional design. Most organizations don't do it because it requires coordinating benefits across different groups. But it's worth doing because it creates buy-in rather than resistance.

What Companies Are Actually Succeeding With AI

Instead of talking about abstract success patterns, let me describe what I've seen companies actually do successfully.

Company A: Financial Services Firm

They wanted to reduce time spent on regulatory reporting. They spent six months building data infrastructure that consolidated compliance data from 12 different systems. They spent another two months identifying exactly how much manual work compliance reporting required.

Then they deployed AI to automate documentation and flag anomalies. They didn't expect AI to replace people. They expected it to reduce manual drudgery from 40 hours per week to 15 hours per week per person.

Result: compliance reporting time dropped 60%. Staff now spends more time on actual compliance analysis and less on admin. ROI was achieved in month 4 and has compounded.

Key success factors: Clear measurement, realistic expectations, human-in-the-loop design.
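To sanity-check Company A's numbers, here is a minimal Python sketch of the arithmetic. It uses only figures from the case above (40 hours, 15 hours, 60%); the function names are illustrative, not from any tool the company used.

```python
def percent_reduction(before_hours: float, after_hours: float) -> float:
    """Return the percentage drop going from before_hours to after_hours."""
    if before_hours <= 0:
        raise ValueError("before_hours must be positive")
    return (before_hours - after_hours) / before_hours * 100


def hours_after_reduction(before_hours: float, reduction_pct: float) -> float:
    """Return the hours left after cutting before_hours by reduction_pct percent."""
    return before_hours * (100 - reduction_pct) / 100


# The stated target: 40 hours per week down to 15 hours per week.
target_reduction = percent_reduction(40, 15)

# The reported result: a 60% drop from the same 40-hour baseline.
reported_hours = hours_after_reduction(40, 60)

print(f"Target reduction: {target_reduction:.1f}%")
print(f"Hours per week at a 60% drop: {reported_hours:.1f}")
```

The target works out to a 62.5% reduction, and the reported 60% drop leaves about 16 hours per week, so the result landed close to the goal rather than exactly on it. That is the kind of honest before-and-after comparison "clear measurement" implies.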

Company B: Healthcare Provider

They wanted to improve patient scheduling efficiency. Instead of deploying an AI scheduling system broadly, they piloted with one clinic. They measured no-show rates, patient satisfaction, and staff burden.

The first version of the AI system was terrible. It scheduled patients based on availability without considering clinical complexity. Patients got frustrated. Doctors got frustrated.

They went back to the drawing board. They built AI that understood not just availability but also complexity, provider preferences, and patient context. The second version worked.

Result: Better schedules, fewer no-shows, higher patient satisfaction. ROI achieved in month 6.

Key success factors: Willingness to fail, iteration mindset, including end-users in design.

Company C: Retail Organization

They wanted to use AI to improve inventory management. They deployed a tool that predicted demand better than their existing system. This was technically successful—the AI worked.

But store managers hated it because it created inventory patterns they couldn't intuitively understand. They started ignoring the AI recommendations and doing their own thing.

Result: The AI tool became expensive shelfware.

They eventually fixed this by adding visualization and explanation to the AI system. Managers could see why the AI made a recommendation. They started trusting it.

Key success factor: AI explainability matters for adoption, not just for accuracy.

The Real Barriers to the Next Wave of Adoption

We've talked about infrastructure and use cases. But there are other barriers that are less discussed but equally important.

Barrier 1: Skills Gap

Using AI effectively requires skills most organizations don't have. Not coding skills necessarily, but skills like:

- Prompt engineering: Getting good output from language models requires understanding how they work.

- Data interpretation: Understanding what AI outputs actually mean and where they might be wrong.

- Critical evaluation: Knowing when to trust AI and when to override it.

Most organizations haven't developed these skills in their workforce. Until they do, adoption will remain limited.

Barrier 2: Regulatory Uncertainty

In regulated industries (finance, healthcare, law), regulatory frameworks for AI are still settling. Companies are cautious because they don't know what compliance will look like.

This is especially true for generative AI. The regulatory landscape is changing rapidly. Until it stabilizes, adoption will be slower in regulated sectors.

Barrier 3: Change Management Resistance

Some adoption resistance isn't irrational. It's workers protecting their value. If your job is reviewing documents and AI can do that now, of course you're skeptical about AI.

Companies that succeed address this head-on. They retrain people. They move them to higher-value work. They don't just eliminate jobs.

Barrier 4: Budget Constraints

Infrastructure investment costs money. AI tools cost money. Training costs money. Many organizations look at the upfront investment and choose not to proceed.

This is rational in the short term. But it means missing the window where AI adoption is relatively easy.

The Data Maturity Framework You Actually Need

If you're trying to figure out where your organization stands on data maturity, here's a practical framework that goes beyond the abstract "42% are data-mature" statistic.

Level 1: Data Chaos

Your organization is here if:

- Data lives in multiple systems with no clear integration

- Nobody knows what the source of truth is for any data

- Data quality is constantly problematic

- Governance exists on paper but not in practice

If you're at Level 1, don't deploy AI. You're guaranteed to waste money. Invest in data infrastructure first.

Level 2: Data Awareness

Your organization is here if:

- You acknowledge data problems but haven't fully fixed them

- Some data integration exists, but it's incomplete

- Data quality is improving but still inconsistent

- Governance policies are being implemented

Level 2 is where many organizations currently sit. You can pilot AI here, but expect limited ROI until you move to Level 3.

Level 3: Data Maturity

Your organization is here if:

- Data is well-integrated across systems

- Data quality is high and monitored automatically

- Clear governance exists and is enforced

- Most data is accessible to those who need it

- Data lineage is documented

Level 3 is where AI actually works. If you deploy AI at Level 3, you can expect meaningful ROI.

Level 4: Data-Driven AI

Your organization is here if you've reached Level 3 and built AI capabilities on top:

- Multiple AI models running in production

- AI insights are regularly used for decision-making

- AI ROI is well-understood and tracked

- Continuous improvement loops are in place

Most organizations taking AI seriously are shooting for Level 3. Level 4 is where true competitive advantage emerges.
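If it helps to make the four levels concrete, the checklist can be turned into a small self-assessment. This is a rough Python sketch, not a standard scoring model: the `DataProfile` fields, the simplified Level 2 and Level 4 checks, and the reading of "multiple models" as two or more are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class DataProfile:
    """Hypothetical self-assessment answers; field names are illustrative."""
    integrated_across_systems: bool = False
    quality_monitored_automatically: bool = False
    governance_enforced: bool = False
    accessible_to_those_who_need_it: bool = False
    lineage_documented: bool = False
    partial_integration: bool = False
    governance_being_implemented: bool = False
    models_in_production: int = 0
    ai_roi_tracked: bool = False


def maturity_level(p: DataProfile) -> int:
    """Map a profile to Level 1-4 using a simplified reading of the framework."""
    meets_level_3 = all([
        p.integrated_across_systems,
        p.quality_monitored_automatically,
        p.governance_enforced,
        p.accessible_to_those_who_need_it,
        p.lineage_documented,
    ])
    if meets_level_3:
        # Level 4 builds on Level 3: assumed here to mean 2+ models and tracked ROI.
        if p.models_in_production >= 2 and p.ai_roi_tracked:
            return 4
        return 3
    # Level 2: problems acknowledged and partly addressed.
    if p.partial_integration or p.governance_being_implemented:
        return 2
    return 1


RECOMMENDATION = {
    1: "Don't deploy AI yet. Invest in data infrastructure first.",
    2: "Pilot AI, but expect limited ROI until you reach Level 3.",
    3: "Deploy AI; meaningful ROI is a realistic expectation.",
    4: "Keep the improvement loops running; this is where advantage emerges.",
}

example = DataProfile(partial_integration=True, governance_being_implemented=True)
level = maturity_level(example)
print(f"Level {level}: {RECOMMENDATION[level]}")
```

The deliberate design choice is that Level 3 requires every criterion to be true. One missing piece, such as undocumented lineage, keeps you at Level 2, which matches the framework's point that AI only works reliably once the whole foundation is in place.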

The Future of AI Adoption: Beyond the Plateau

So what happens next? The plateau was never the ending. It's an inflection point. Here's what I expect to happen over the next 18-24 months:

Prediction 1: Infrastructure Investment Accelerates

Once organizations realize that the 95% zero-ROI rate stems from infrastructure gaps, spending on data infrastructure will increase. Not as dramatically as AI spending did, but meaningfully.

This won't be exciting technology investment. It won't generate press releases. But it'll be where real value creation happens.

Prediction 2: Vertical AI Tools Emerge as Winners

Generic AI tools are good for early adopters. Vertical tools built for specific industries will drive the next wave of adoption.

We'll see AI tools specifically designed for healthcare workflows, manufacturing processes, retail operations, and legal work. These tools will understand domain-specific constraints in ways that horizontal tools can't.

Prediction 3: AI Becomes More Embedded, Less Visible

Right now, AI is this separate tool you interact with. In the future, AI will be embedded into existing tools. It'll be a feature of your CRM, your analytics platform, your project management tool. Less visible, more useful.

Adoption will increase because people won't think of it as "using AI." They'll just think of it as using their existing tools better.

Prediction 4: Skills and Training Become Critical Differentiators

Companies that invest heavily in training people to use AI effectively will see dramatically better ROI. This will create a talent advantage that's harder to overcome than buying the same tools your competitor uses.

Prediction 5: Governance Becomes a Competitive Advantage

As regulatory frameworks settle and risks from bad AI implementations become clearer, companies with strong AI governance will be seen as safer partners. Governance will shift from cost center to advantage.


Conclusion: The Plateau Is Actually Progress

If you're reading headlines about AI adoption flatlining and feeling concerned, I hope this changes your perspective. The plateau isn't a failure. It's a reset. It's the market correcting from hype to reality.

The organizations that are going to win in the next phase of AI adoption aren't the ones who deployed AI fastest. They're the ones who are doing the unglamorous work of building data infrastructure, defining clear use cases, and training teams properly.

They're the ones learning from the 95% that got zero ROI and deliberately avoiding those mistakes.

They're the ones building bottom-up adoption rather than top-down mandates.

They're the ones treating AI as a tool to solve specific problems rather than a solution unto itself.

The adoption plateau isn't the end of the AI story. It's the end of the "let's try AI and see what happens" phase. What comes next is the "let's build AI the right way" phase.

And honestly? That's when things get interesting.

FAQ

What does "AI adoption flatlining" actually mean?

It means that the percentage of workers using AI regularly has stopped growing significantly. Daily AI usage increased only 2 percentage points in Q4 2025, and the total number of AI users remained flat. However, this doesn't mean AI is dying—it means the early adopters have already embraced it, and adoption is now transitioning from breadth (more new users) to depth (existing users using AI more frequently and in more sophisticated ways).

Why are 95% of organizations reporting zero ROI from AI investments?

Most organizations are deploying AI tools without fixing their underlying data infrastructure. AI implementations fail when data quality is poor, data governance is absent, and data accessibility is limited. These infrastructure gaps make even sophisticated AI tools produce unreliable results. Organizations that achieve positive ROI invest in data infrastructure first, then deploy AI on top of a solid foundation.

What's the difference between data maturity and just having data?

Having data and being data-mature are completely different. Having data means your data exists somewhere. Being data-mature means your data is trustworthy, accessible, well-governed, and integrated across systems. Only 42% of organizations are truly data-mature, which explains why so many AI deployments fail. Data maturity is achievable but requires intentional investment in infrastructure, governance, and processes.

Why do leaders use AI more than individual contributors if individual contributors could benefit more?

Leaders have more discretion to experiment with new tools and budgets to purchase them. Individual contributors are often bound by specific job requirements and may not have the autonomy to try new tools. This top-down adoption pattern often leads to misaligned implementations. The most successful organizations reverse this by empowering individual contributors to discover AI use cases for their specific work, then scaling the winners across the organization.

How long does it take to move from data chaos to data maturity?

For a medium-sized organization (500-2,000 employees), the typical timeline is 12-24 months. This includes auditing current data infrastructure (2-4 weeks), creating improvement roadmaps (4-8 weeks), implementing integration and governance (6-18 months), and establishing monitoring and processes (ongoing). Smaller organizations can move faster. Larger enterprises may take 24-36 months. The key is consistent prioritization and budget allocation throughout the process.

What should we do if we've already deployed AI without good data infrastructure?

Don't panic. You're in the majority. Start by auditing your data infrastructure to understand current gaps. Create a data improvement roadmap and begin addressing the highest-impact problems first. Simultaneously, evaluate your current AI deployments and measure their actual ROI honestly. This often reveals that you're getting some value in specific areas even if overall ROI is low. Focus on the areas working and plan how to expand them once data infrastructure improves. Consider hiring or training a small team to become your internal AI and data expertise center to prevent future misaligned deployments.

Is the adoption plateau temporary or permanent?

It's temporary. The plateau represents saturation of the early adopter segment and a transition from horizontal tools (ChatGPT, Copilot) to vertical tools built for specific industries and roles. As vertical AI tools improve, as regulatory frameworks stabilize, as data infrastructure matures, and as workforce skills improve, adoption will accelerate again. The next wave will be slower but deeper than the initial hype wave.

Which industries should prioritize AI adoption right now?

Technology, finance, and professional services have the infrastructure and clear use cases for immediate ROI. Healthcare and education should focus on vertical AI solutions built for their specific workflows. Manufacturing, retail, and other non-remote-heavy sectors should wait for or invest in vertical solutions designed for their unique constraints. This doesn't mean non-leading sectors should ignore AI. It means they should be thoughtful about finding use cases where generic horizontal tools actually apply rather than forcing a fit.

Key Takeaways

- AI adoption has plateaued with only 2-3% quarterly growth in usage, but this represents a healthy transition from breadth to depth rather than failure

- 95% of organizations achieve zero AI ROI due to poor data infrastructure, not tool quality—data maturity is the missing prerequisite for success

- Only 42% of organizations are data-mature despite identifying data quality as the #1 AI success factor, creating a critical implementation gap

- Remote-capable roles (66% adoption) far outpace non-remote roles (32%), representing a two-year adoption lag with opportunity for vertical AI solutions

- Leadership uses AI significantly more than individual contributors, creating misaligned implementations that don't serve frontline workers effectively

- The next AI adoption wave will be driven by vertical tools built for specific industries, regulatory clarity, improved infrastructure, and better workforce training
