Linus Torvalds' AI Coding Confession: Why Pragmatism Beats Hype

When the creator of Linux admits to using AI for coding, people lose their minds. But Linus Torvalds isn't the poster child for AI adoption you might think.

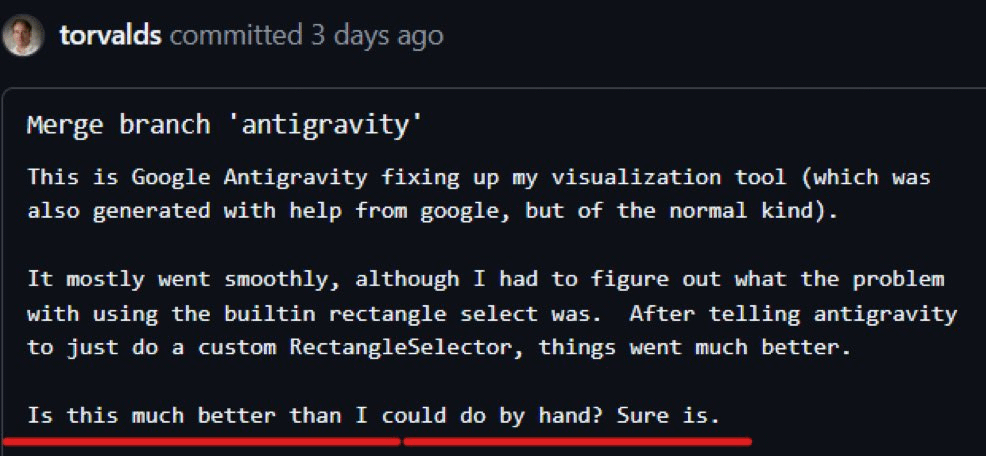

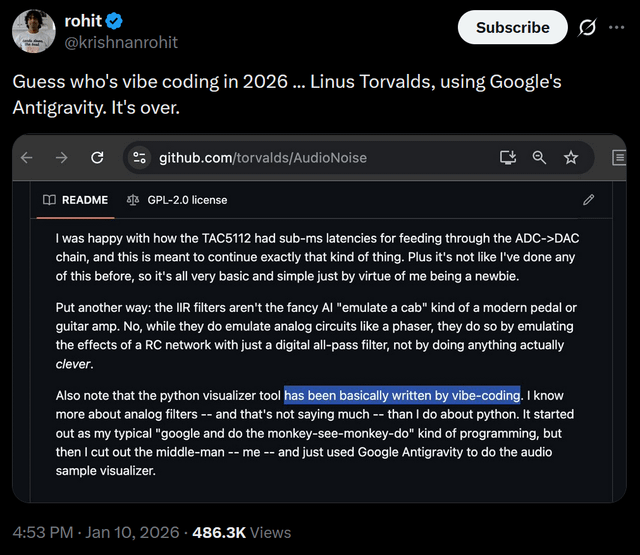

Last year, Torvalds quietly mentioned he'd used AI for a personal hobby project called Audio Noise, a silly guitar pedal effect generator. Specifically, it helped with Python visualization code—something outside his wheelhouse. The internet erupted with hot takes: "Even the skeptic uses AI!" "The hype is validated!" "Resistance is futile!" According to Phoronix, this project was purely for personal interest.

Except that's not what happened.

Torvalds has spent years criticizing AI hype as marketing theater disconnected from reality. His recent admission doesn't contradict that. It actually proves his point harder than any lecture could. As noted by ZDNet, Torvalds has always been skeptical of AI's broader claims.

Here's what actually happened: A legendary engineer, during a holiday break, used an AI tool as a learning shortcut for unfamiliar code in a personal project with zero production consequences. He's been saying this exact use case is fine for years. The only news is that people finally paid attention.

This situation reveals something crucial about where AI actually belongs in software development and where it absolutely doesn't. It's not about whether AI coding works. It's about understanding the gap between "works for learning" and "works for critical systems." That gap is massive, and most of the AI hype completely ignores it.

Let's dig into what Torvalds actually said, why it matters, and what it tells us about the future of AI in programming.

TL;DR

- Torvalds used AI for learning: AI helped with Python visualization in a hobby project, not core logic or production systems

- It's not a validation of AI coding: He's been consistent for years that AI is fine for learning, experimental code, and non-critical tasks

- The hype misses the point: Most AI discussions ignore the critical difference between hobby projects and infrastructure like Linux

- Real divide is about trust: AI-generated code can catch bugs and accelerate learning, but shouldn't replace human verification for systems people depend on

- Pragmatism over ideology: Torvalds isn't anti-AI—he's anti-bullshit, which is why he uses it for what it's good at and rejects it where it isn't

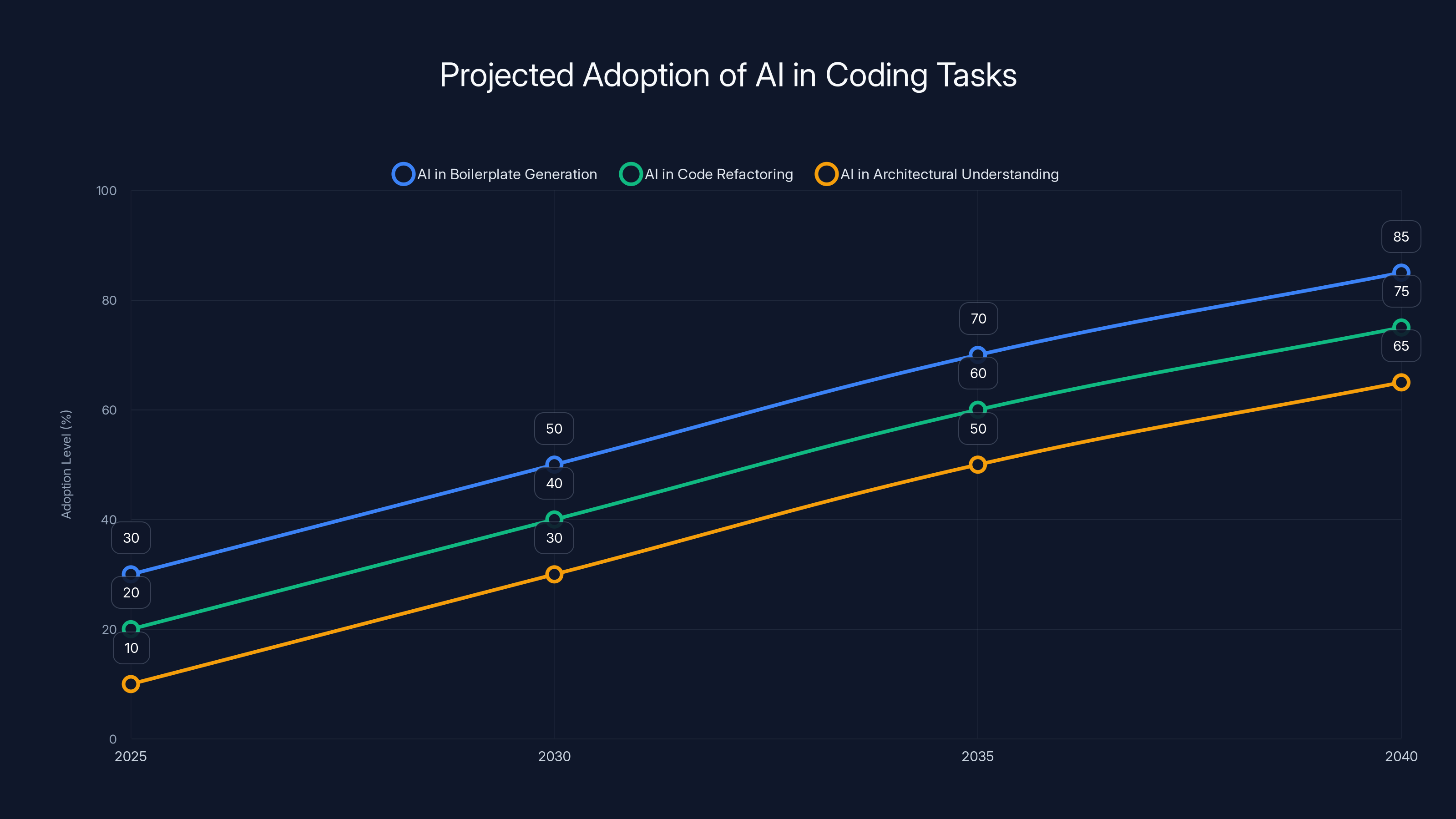

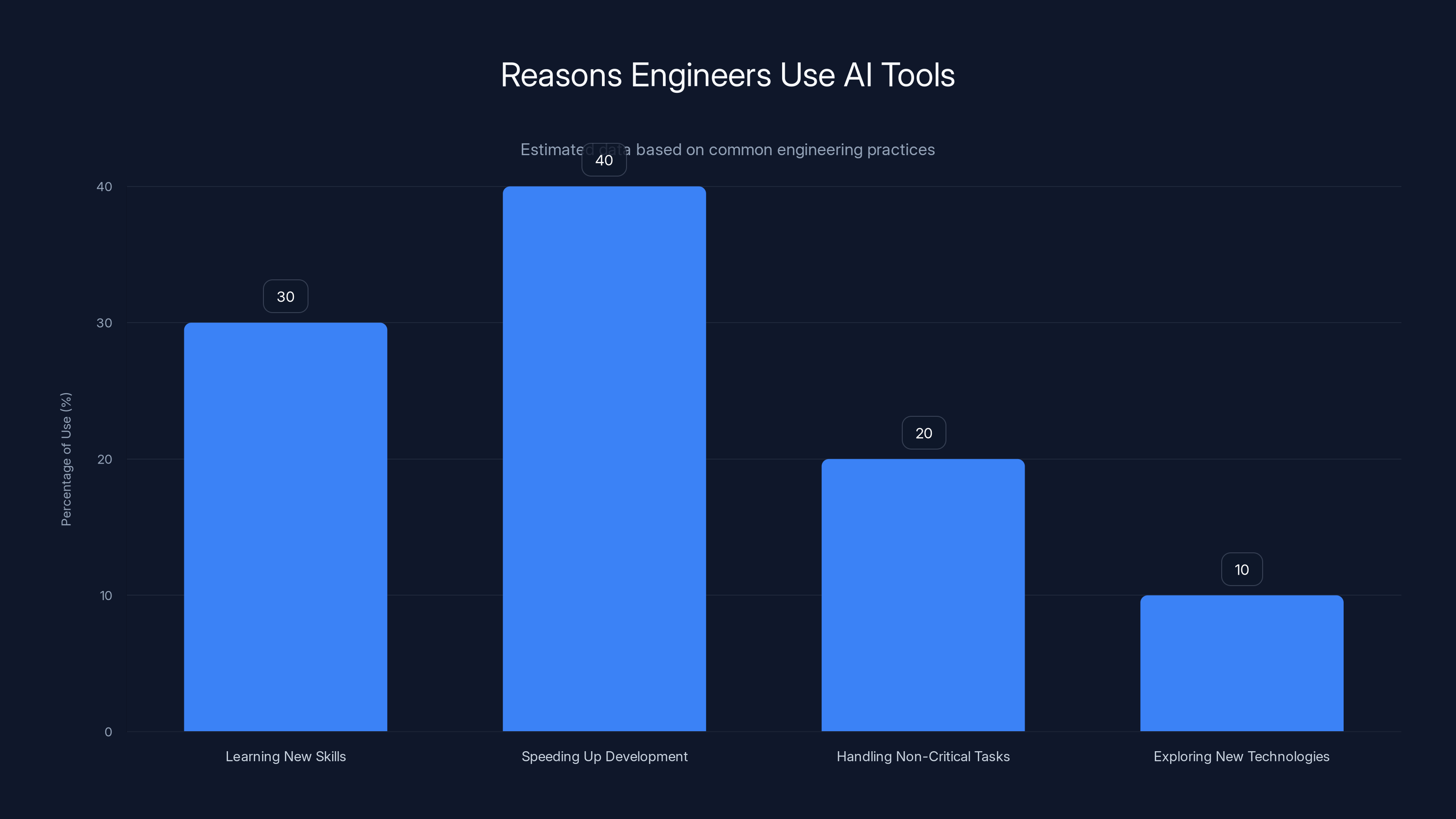

Estimated data suggests gradual adoption of AI in coding tasks, with significant improvements expected by 2040. AI is projected to enhance productivity rather than replace developers.

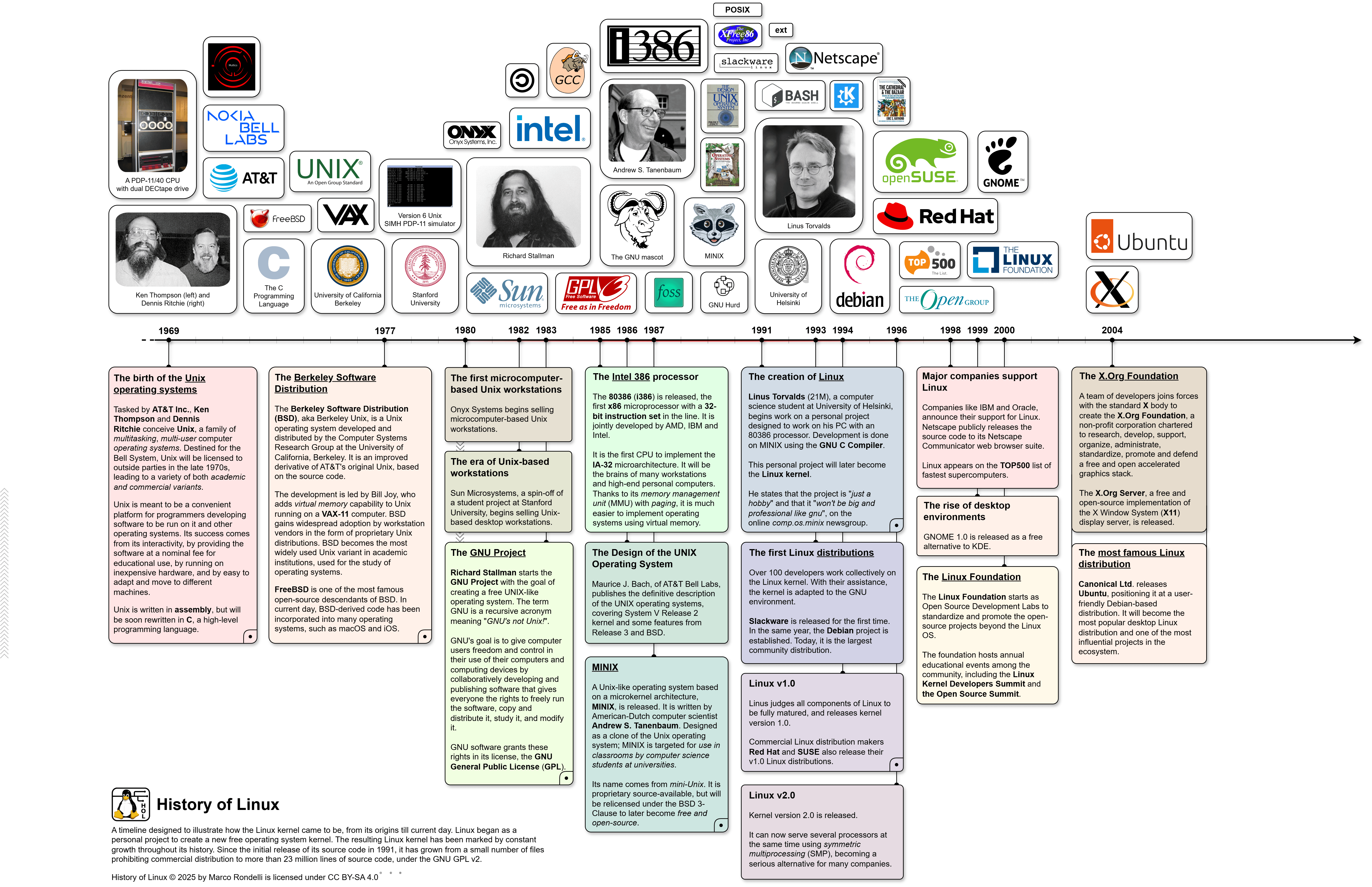

Who Is Linus Torvalds, and Why Should We Listen?

If you're not familiar with Linus Torvalds, here's the TL;DR: He's the engineer who created Linux in 1991 and has been maintaining it for over three decades. Linux now powers everything from your Android phone to the servers running the internet to the systems controlling spacecraft. As reported by CNET, Linux's influence is vast and critical.

Torvalds isn't a casual observer. He's dealt with tens of millions of lines of code, thousands of contributors, and decisions that affect systems running on millions of machines worldwide. His skepticism about AI isn't ideological; it's earned through decades of seeing engineering realities that marketing glosses over.

His approach to technology has always been pragmatic. He cares about what works, what's useful, and what's overhyped nonsense. He's famously direct about dismissing ideas that don't hold up to scrutiny. He's also vocally critical of the hype machine that builds up products faster than they deliver real value.

This matters because when Torvalds says something, he's not performing for venture capital or marketing teams. He's speaking from a position of "I've been doing this longer than most people have been alive, and here's what I think." That's different from influencers, tech journalists, or AI evangelists trying to shape opinion.

So when he said he used AI for a personal project, people assumed it meant he'd changed his mind about AI in software development. He hadn't. But understanding why requires looking at exactly what he did and didn't do.

The Audio Noise Project: A Hobby That Started a Thousand Hot Takes

The code Torvalds used AI on lives in a repository called Audio Noise. What is it? Exactly what he called it: a silly guitar pedal project. It generates random digital audio effects. This project was highlighted by Ars Technica as a non-critical, personal endeavor.

This isn't a core system. It's not infrastructure. It's not something deployed to millions of machines or used by critical applications. It's a personal project, the kind of thing an engineer tinkers with during downtime because it's interesting or fun.

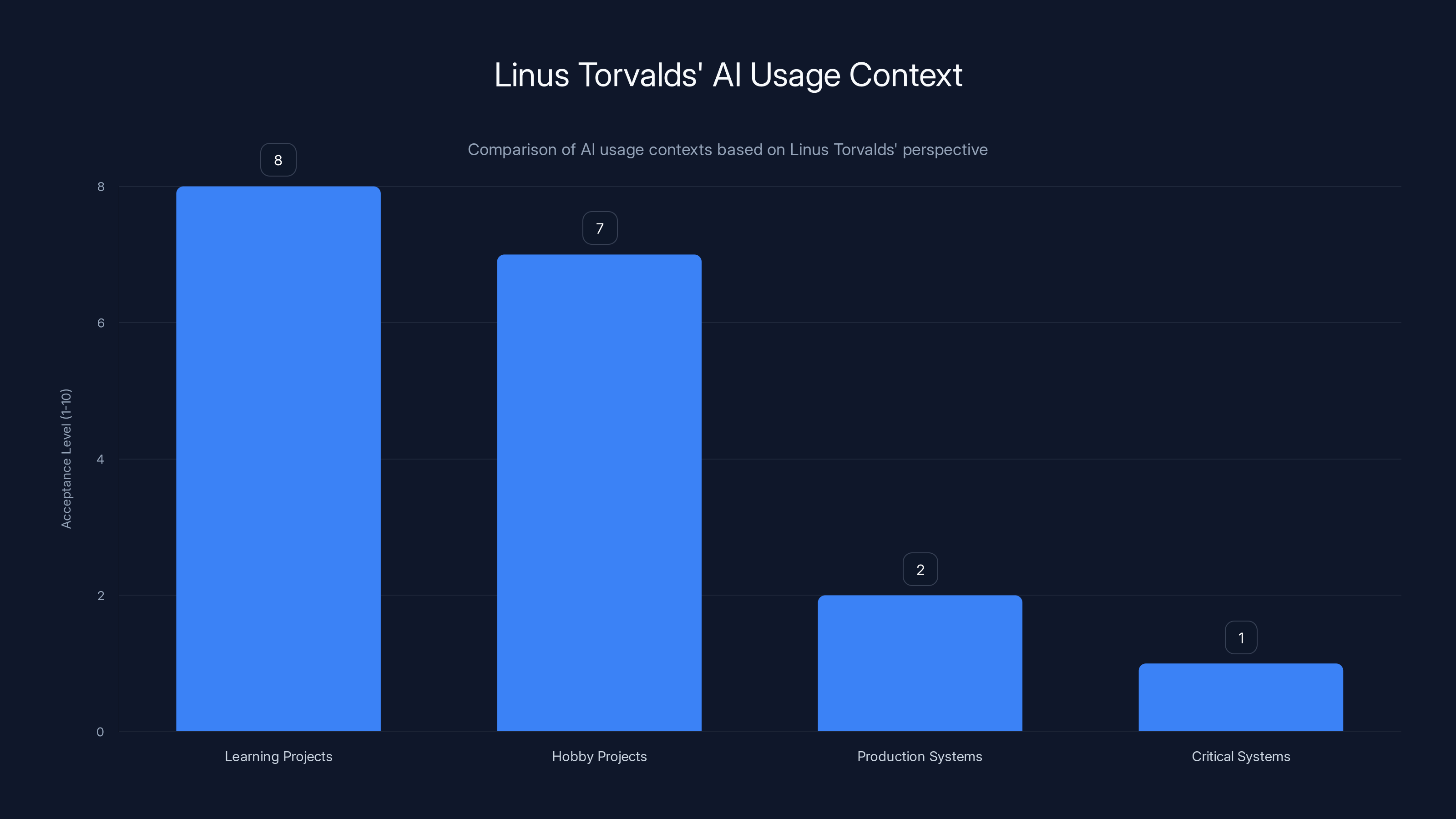

Torvalds himself has been clear about this distinction. He's said that using AI for code is "okay" if you're not building anything important. This is exactly that scenario. It's a hobby project with zero production consequences.

But here's where it gets interesting: The specific part where he used AI was the Python visualization component. Why does that matter? Because Python wasn't his comfort zone. He knows C and systems-level languages deeply. He knows Linux kernel development inside out. Python visualization APIs? Not his specialty.

In the past, he said he'd just grab code snippets from Stack Overflow or forum threads, modify them until they worked, and move on. That's a common pattern among experienced engineers—you don't know everything, so you find examples and adapt them. It's fast, it works, and for non-critical code, it's practical.

This time, instead of Stack Overflow, he used an AI tool. The tool functioned as a learning shortcut, not a code generator for critical logic. It was helping him with something he didn't know well, in a hobby project, during a holiday break.

Think about that carefully. This isn't a revelation that AI is ready to replace software engineers. It's an engineer using a tool for exactly the same reason he'd use Stack Overflow: "I need to visualize some data, I don't know the best way to do this in Python, let me get some help."

The difference is the tool was AI instead of search results. The principle is identical.

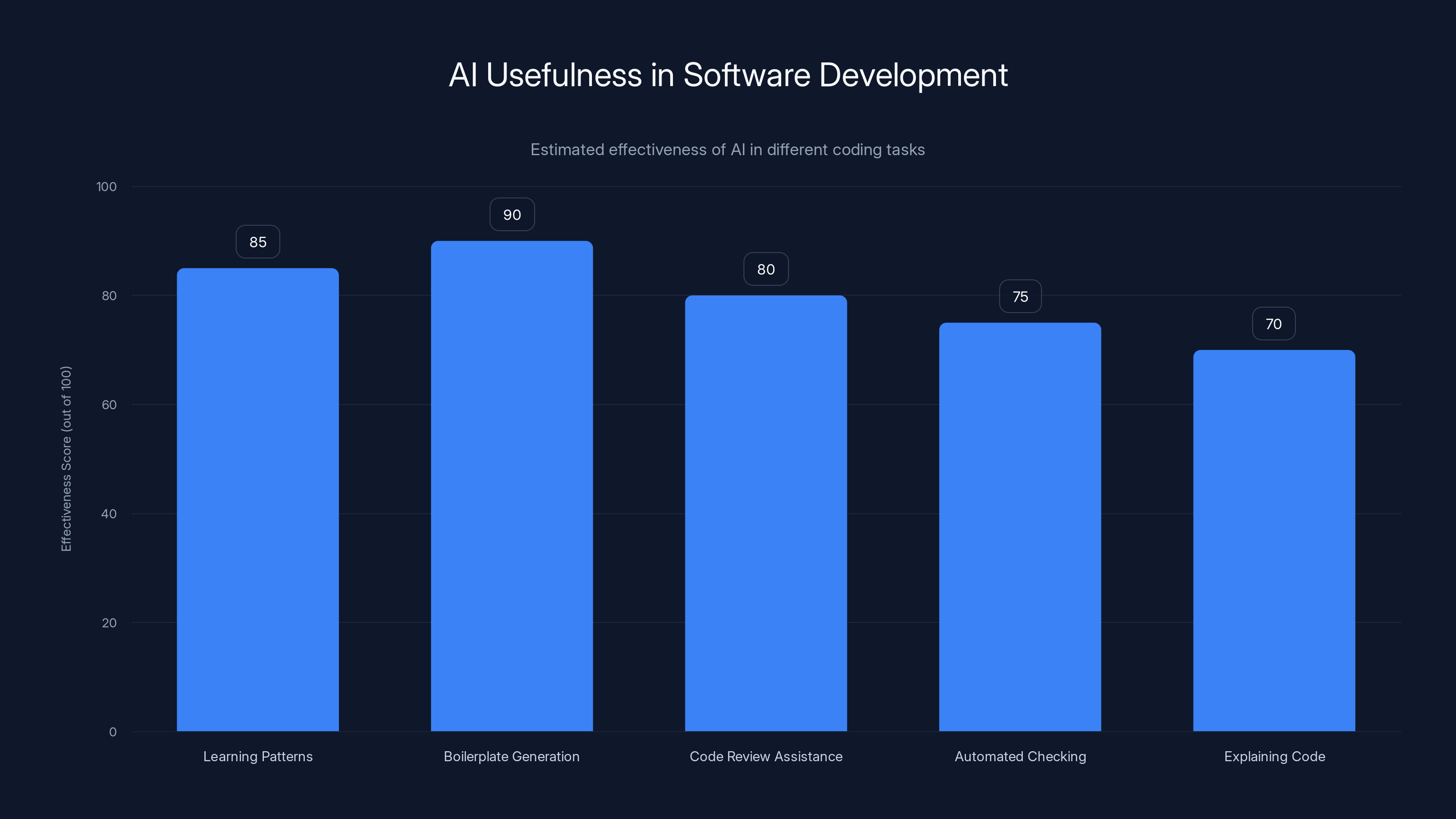

AI is most effective in generating boilerplate code and learning unfamiliar patterns, with scores of 90 and 85 respectively. Estimated data.

What Torvalds Actually Said About AI (Spoiler: Nothing New)

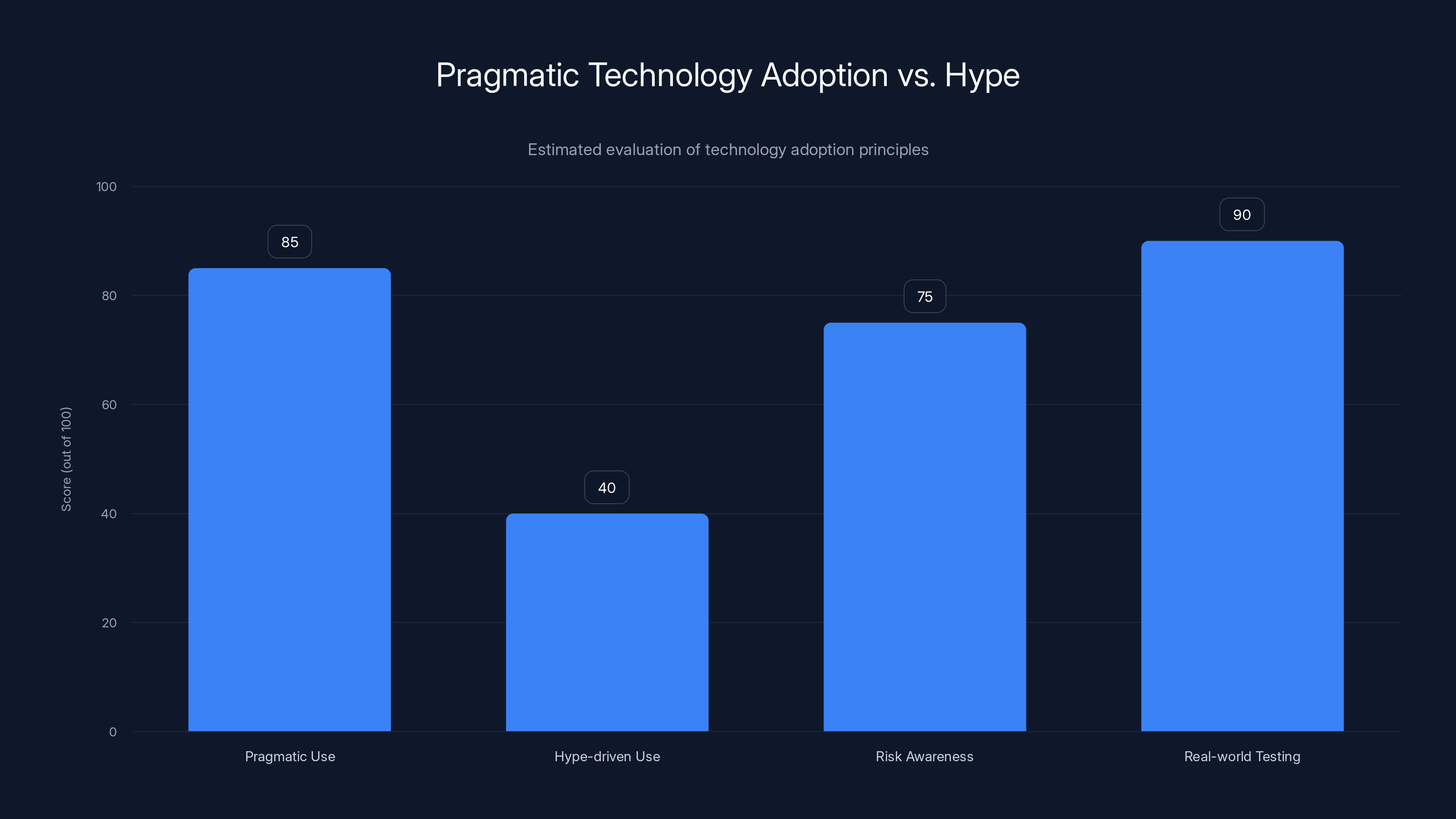

Torvalds has been consistent about AI for years. He's not anti-AI in principle. He's anti-hype, anti-bullshit, and skeptical of claims that don't match reality.

In previous statements, he's outlined where he thinks AI has genuine value: learning, assistance with unfamiliar patterns, automated patch checking, code review. These are all support tools, not core development. This perspective is supported by 36Kr, which highlights his pragmatic use of AI.

He's also been clear about where AI shouldn't be trusted: critical systems, security-sensitive code, anything where a failure has real consequences. This isn't controversial—it's obvious if you think about it for five seconds.

The broader point he's made repeatedly is about the AI industry's marketing problem. Much of the AI discussion, according to Torvalds, is driven by hype and financial incentives rather than technical reality. Companies want to convince you that AI will replace developers, automate coding entirely, revolutionize everything. The incentives are huge—venture capital, stock valuations, first-mover advantage.

But reality is slower and messier. AI is useful for specific things. It's not useful for other things. The companies selling AI want you to focus on the useful parts and ignore the limitations. Torvalds refuses to participate in that game.

He's also noted something interesting about the relationship between AI systems and human knowledge. He wrote that he feels "great" about his code being ingested by large language models. Not because it means AI will replace human engineers, but because it democratizes knowledge and tools. Open source did that in the 1990s. AI, properly deployed, could do something similar—help small teams compete with bigger companies by giving them better tools.

But that's different from saying AI should write critical code autonomously. It's saying AI should augment human capabilities. That nuance is lost in most of the discourse.

The Real Divide: Learning vs. Production, Hobby vs. Infrastructure

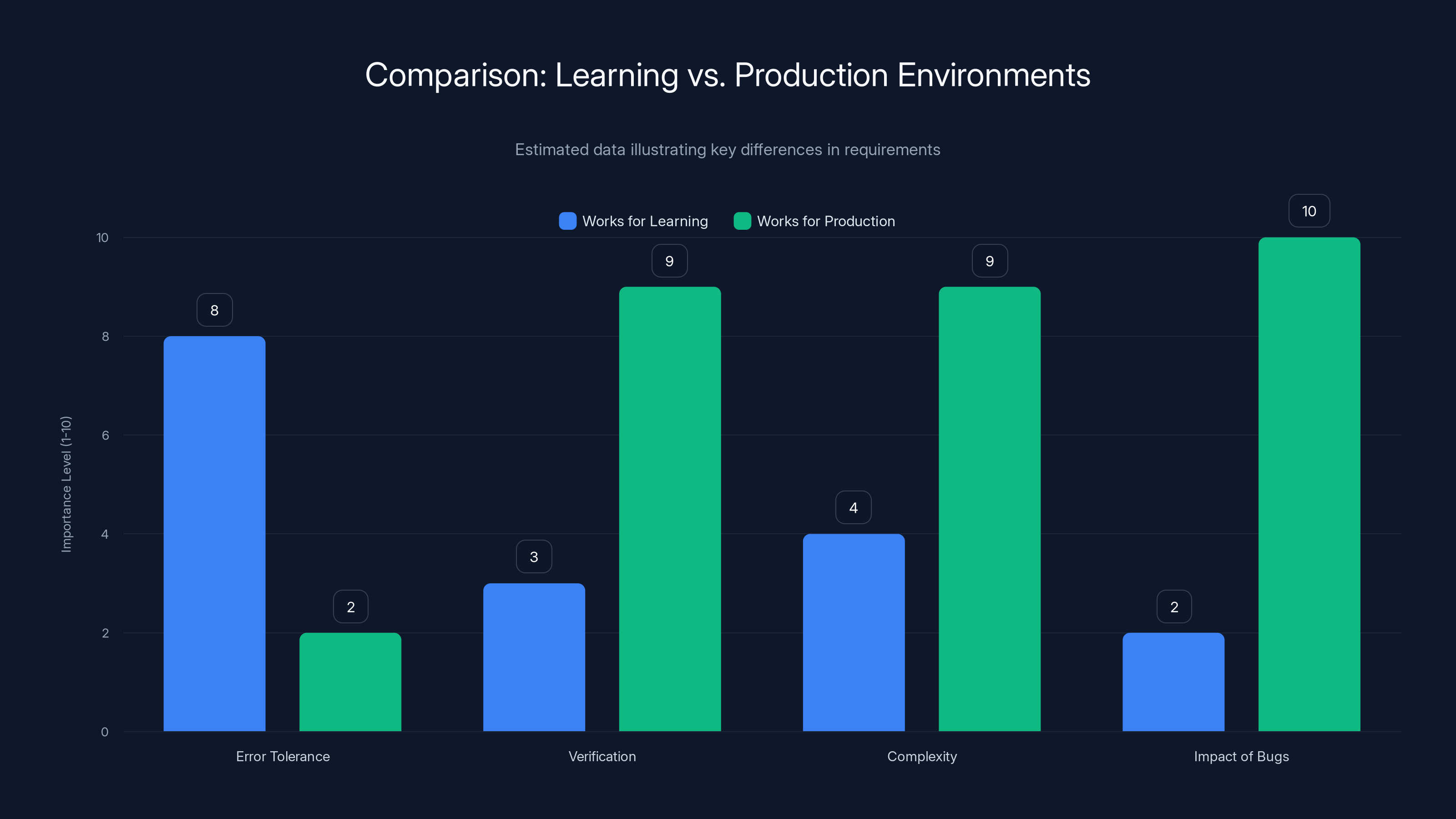

Here's the crucial distinction that most AI coverage misses: There's a massive gap between "AI is helpful for learning and experimentation" and "AI-generated code is trustworthy for production systems."

Torvalds' Audio Noise project sits firmly on the learning and experimentation side. There are no stakes. If the visualization code has a bug, nobody cares. It's a personal project. Maybe he fixes it, maybe he doesn't. It doesn't matter.

Compare that to Linux. Every line matters. Millions of machines run Linux. Failures have real consequences—services go down, security vulnerabilities open doors for attackers, bugs in critical paths affect countless applications. The stakes are incomprehensibly higher.

For a hobby project, AI can suggest approaches, generate boilerplate, help you learn a library you've never used. You can experiment. You can test. You can iterate. If something doesn't work perfectly, that's fine. You're learning.

For production infrastructure, every suggestion needs human verification. Every change needs to be understood. You need to know exactly why the code does what it does, because you might need to maintain it, debug it, or fix it in a critical situation at 3 AM.

AI systems are probabilistic. They make educated guesses based on patterns. Sometimes those guesses are perfect. Sometimes they're confidently wrong. For a visualization library, a wrong guess is a learning opportunity. For kernel code, a wrong guess can become a security vulnerability.

Torvalds' stance reflects this reality. AI is fine for learning. AI is not fine for critical systems. The fact that he used it for the former doesn't mean he'd approve of it for the latter.

But here's where the hype distorts things: The AI industry wants you to think every problem is a learning problem that can be automated. Then they can sell you software that "generates code" autonomously. The reality is that different contexts have different requirements. Understanding which is which is the actual skill.

The Developer Community Reaction: Belief and Fear

The developer community's response to Torvalds' admission reveals something interesting about how we think about AI.

Some developers panicked. If even the skeptic uses AI, doesn't that mean AI is taking over? The fear is real: Will AI make software engineers obsolete? Will companies prefer cheaper AI-generated code to hiring developers? If AI can write code, what's the future of the job?

Other developers were more measured. They recognized what actually happened: An experienced engineer used a tool for a specific, appropriate use case. It's the same way experienced engineers use libraries, frameworks, and Stack Overflow. Nothing revolutionary, just practical tooling.

The panic, though, reveals an insecurity. It suggests that if AI can write any code at all, it must eventually replace all code writing. That's like saying if calculators can do arithmetic, mathematicians must be obsolete. The logic doesn't follow.

What actually matters is: What does the AI do well? Where is it useful? Where does it fail or create risk? These are engineering questions, not philosophical ones.

On the flip side, some developers are enthusiastically adopting AI for coding assistance. They're finding it genuinely useful for certain tasks: boilerplate code, documentation, refactoring suggestions, explanations of unfamiliar libraries. These are legitimate uses.

The danger is when enthusiasm becomes replacement. When developers stop verifying AI suggestions because they trust the tool. When code review becomes less rigorous because "the AI probably got it right." When critical systems are built with less scrutiny because AI accelerated the development.

That's where Torvalds' pragmatism becomes crucial. He's saying: Use AI where it helps. Don't use it where it creates risk. Understand the difference. Be honest about what the tool can and can't do.

For most working developers, the truth is somewhere in the middle. AI is useful. But it's a tool, not a replacement for judgment, verification, and understanding.

Linus Torvalds accepts AI usage for learning and hobby projects but remains cautious about its use in production and critical systems. (Estimated data)

How AI Helps (And Where the Help Actually Matters)

Torvalds mentioned specific ways AI has been useful to him. It's worth looking at these because they're honest about where AI adds value.

Learning unfamiliar patterns: When you need to work with code or libraries outside your expertise, AI can accelerate the learning process. Instead of searching for documentation, examples, and forum posts, you can ask questions and get immediate, personalized explanations. This is genuinely useful. It's like having a knowledgeable colleague available instantly.

Boilerplate and scaffolding: A lot of code is repetitive. Configuration files, template patterns, standard implementations. AI is great at generating these because they're highly predictable. You provide the parameters, the tool generates the structure, you customize it. This saves time and is low-risk because boilerplate is easy to verify.

Code review assistance: AI can analyze code and flag potential issues—performance problems, security concerns, patterns that might cause bugs. Torvalds mentioned that AI systems have caught problems he missed. This is valuable because it's additive. The AI doesn't replace human review, it enhances it. The human still decides whether the flagged issue is real.

Automated checking: AI can run automated analysis on patches before they go into review. Does this follow the project's style? Does this introduce obvious problems? For a project as large as Linux, where patches arrive in very high volume, this kind of automated triage is genuinely useful. It catches obvious issues before humans even look.

Explaining code: Sometimes you inherit code written by someone else, or you're looking at a codebase section you're not familiar with. AI can explain what it does, why it might be structured that way, and what the implications are. Again, this accelerates learning and understanding.

Notice the pattern: All of these are support and augmentation, not replacement. The human engineer is still making decisions. The human engineer still understands the code. The human engineer is still responsible.
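To make the automated checking idea concrete, here is a minimal sketch of patch triage in Python. It is not the Linux kernel's actual tooling, and it uses simple rules rather than AI: the function name `triage_patch`, the 100-character limit, and the two checks are all assumptions chosen for illustration. What it shows is the shape of the workflow: the tool flags, and a human decides.

```python
def triage_patch(patch_text, max_line_length=100):
    """Return (line_number, message) pairs for added lines that look suspicious.

    Hypothetical rules for illustration only; a real project defines its own.
    """
    findings = []
    for number, line in enumerate(patch_text.splitlines(), start=1):
        # Only inspect lines the patch adds; skip the "+++ b/file" header.
        if not line.startswith("+") or line.startswith("+++"):
            continue
        added = line[1:]
        if added != added.rstrip():
            findings.append((number, "trailing whitespace"))
        if len(added) > max_line_length:
            findings.append((number, f"line longer than {max_line_length} characters"))
    return findings


example_patch = "\n".join([
    "--- a/demo.c",
    "+++ b/demo.c",
    "@@ -1,2 +1,3 @@",
    " int main(void) {",
    "+    return 0;   ",
    " }",
])

for number, message in triage_patch(example_patch):
    # The output is a prompt for a reviewer, not a verdict.
    print(f"patch line {number}: {message} (needs human confirmation)")
```

The design choice worth noticing is that the function only returns findings. It never rejects or rewrites the patch, which keeps the human in the decision seat.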

Where AI struggles is in understanding larger context. It doesn't know the project's constraints, the performance requirements, the architectural decisions that led to the current structure. It doesn't know what might break downstream if you make a particular change. It doesn't understand the tradeoffs involved in different approaches.

These contextual, judgment-based decisions require human expertise. That's not something AI has replaced. That's something AI can't replace, at least not yet, and maybe not ever.

The Difference Between "Works for Learning" and "Works for Production"

Let's be concrete about why this distinction matters.

Suppose you're building a hobby project visualizing audio data in Python. You write a function that takes audio samples and returns a visualization. The function needs to:

- Read audio data

- Process it into frequencies

- Generate visualization coordinates

- Return the result

AI can help you write this. It can suggest approaches, generate code, help you understand the libraries involved. If the output is slightly wrong, or if there's a subtle bug in the visualization, you'll notice when you test it. You'll fix it. You'll learn something about Python visualization APIs. Great. That's learning.
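The four steps above can be sketched in a few lines of Python. This is not Torvalds' code or anything from the Audio Noise repository. It is a hobby-grade illustration that assumes a synthesized sine tone in place of real audio input and uses a naive discrete Fourier transform from the standard library instead of an optimized FFT. All function names and parameters are hypothetical.

```python
import cmath
import math


def read_samples(n_samples=40, sample_rate=800, tone_hz=100.0):
    """Stand-in for reading audio: synthesize a pure sine tone (assumed input)."""
    return [math.sin(2 * math.pi * tone_hz * i / sample_rate) for i in range(n_samples)]


def to_frequencies(samples):
    """Naive O(n^2) discrete Fourier transform; magnitudes for bins 0..n/2."""
    n = len(samples)
    magnitudes = []
    for k in range(n // 2 + 1):
        acc = sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        magnitudes.append(abs(acc) / n)
    return magnitudes


def to_plot_coordinates(magnitudes, sample_rate, n_samples):
    """Pair each bin with its frequency in Hz, giving (x, y) points to plot."""
    return [(k * sample_rate / n_samples, mag) for k, mag in enumerate(magnitudes)]


def visualize(samples, sample_rate):
    """Run the full pipeline and return the plot coordinates."""
    return to_plot_coordinates(to_frequencies(samples), sample_rate, len(samples))


samples = read_samples()
points = visualize(samples, sample_rate=800)
peak_hz, peak_mag = max(points, key=lambda p: p[1])
print(f"strongest component near {peak_hz:.1f} Hz")
```

If the peak lands at the wrong frequency, you see it the moment you plot the points. That fast feedback loop is what makes this kind of code a reasonable place to lean on an AI suggestion.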

Now suppose you're working on the Linux kernel scheduler. The scheduler decides which process runs on which CPU, when processes sleep, how resources are allocated. It's thousands of lines of complex code. A bug here doesn't affect a hobby project. It affects:

- System responsiveness

- Power consumption

- Real-time applications

- Security isolation between processes

- Performance on everything running on the system

If AI suggests a change to the scheduler, you can't just "see if it works." You need to:

- Understand exactly why the change is correct

- Understand the performance implications

- Understand the edge cases it might affect

- Test it on multiple hardware configurations

- Consider interactions with other subsystems

- Get peer review from kernel experts

- Understand what happens if it's wrong

This is why Torvalds doesn't trust AI-generated code for critical systems. It's not ideology. It's engineering. The stakes are different. The verification requirements are different. The acceptable risk level is different.

Most of the AI hype conflates these two scenarios. It presents evidence that AI works (true, for simple tasks), then implies therefore AI should be trusted everywhere (false, for critical systems). Torvalds refuses that conflation.

Where AI Fails: The Confidence Problem

One of the biggest risks with AI-generated code is that AI doesn't know what it doesn't know.

Large language models generate text that's grammatically coherent and contextually plausible. They're pattern matching systems that have learned statistical relationships in their training data. They're remarkably good at making educated guesses.

But they can't distinguish between "this is definitely correct" and "this looks plausible but might be wrong." They're equally confident in both cases. They'll generate code that looks correct, works for common cases, but fails in edge cases. They'll suggest approaches that seem smart but have hidden performance problems. They'll generate security-vulnerable patterns because those patterns appear in training data.

Worse, they'll explain themselves confidently. The generated code comes with an explanation that sounds authoritative. A developer skimming the code might trust it because the explanation is plausible. But the explanation was generated by the same probabilistic system that generated the code. If the code is wrong, the explanation is probably also wrong, but in an equally plausible way.

This is why code review is crucial. An experienced engineer reading the code can spot when something is wrong, even if the AI's explanation sounds reasonable. But that only works if the engineer actually reads and understands the code. If the engineer trusts the AI and skips careful review, the failures slip through.
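A small Python illustration of "works for common cases, fails in edge cases" follows. Both functions are invented for this example and neither comes from any AI tool or from Torvalds. The first is the kind of code that looks obviously right in a quick skim. The second is what it might look like after a reviewer asks about silent and empty input.

```python
def normalize_plausible(samples):
    """Looks reasonable: scale samples so the loudest one has magnitude 1.0."""
    peak = max(abs(s) for s in samples)
    return [s / peak for s in samples]


def normalize_reviewed(samples):
    """Same idea, with the edge cases a reviewer would ask about handled."""
    if not samples:
        return []
    peak = max(abs(s) for s in samples)
    if peak == 0:
        return list(samples)  # silence stays silence
    return [s / peak for s in samples]


def survives(func, samples):
    """Report whether func handles the input without raising."""
    try:
        func(samples)
        return True
    except (ZeroDivisionError, ValueError):
        return False


cases = {"typical": [0.5, -0.25], "silence": [0.0, 0.0], "empty": []}
for name, samples in cases.items():
    print(name, survives(normalize_plausible, samples), survives(normalize_reviewed, samples))
```

The plausible version passes the typical case, which is exactly why a skim would approve it. It takes someone thinking about the inputs, not the explanation, to find the other two.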

Torvalds has seen this in action. When AI catches problems in patches, sometimes the AI is right. Sometimes the AI is wrong, and the human needs to verify. When the AI works, it's genuinely helpful. But the human is still the decision maker.

For a hobby project, this risk is acceptable. You'll discover bugs through testing and just fix them. For production systems, the risk is unacceptable. You need to understand every line.

Estimated data shows that production environments require higher verification and have a greater impact of bugs, while learning environments tolerate more errors.

The Marketing vs. Reality Problem

Torvalds' fundamental criticism of AI hype is about marketing disconnected from engineering reality.

The AI industry has massive financial incentives to convince people that AI will replace coders. If AI can fully replace developers, the addressable market is the entire software development workforce globally. That's a trillion-dollar opportunity. If AI can only help developers be slightly more productive, the market is smaller, but probably more realistic.

Guess which narrative the industry pushes.

Companies selling AI coding tools talk about "autonomously generating code," "freeing developers from boilerplate," "dramatically accelerating development." Some of these claims are true for specific, narrow use cases. But they're presented as general truths, when they're actually not.

The AI industry also benefits from vague language. "AI-generated code" sounds bad (why would you trust AI code?). "AI-assisted code" sounds better (AI helps you write better code). Both refer to the same tool. The difference is marketing framing.

Torvalds gets frustrated with this gap between what's real and what's marketed. He sees the incentive structures driving the hype. He sees credulous journalists amplifying the claims. He sees developers and managers making decisions based on marketing narratives rather than engineering reality.

His response is blunt honesty: AI is useful for some things. It's not useful for other things. Pretending otherwise is dishonest.

This stance is increasingly valuable as AI moves from startup hype into mainstream business decision-making. Organizations need to understand where AI actually helps and where it creates risk. Torvalds' pragmatism provides that grounding.

What Changed (Spoiler: Nothing)

Here's the thing: Torvalds using AI for a hobby project doesn't represent a change in his thinking. He's been saying for years that AI is useful for learning. This is just an example of him doing exactly what he's been saying is reasonable.

The "change" narrative comes from people who misunderstood his previous position. They thought he was opposed to all AI in coding. He wasn't. He was opposed to using AI in ways that create risk or depend on flawed assumptions about what AI can do.

Using AI to help with visualization code in a hobby project? That matches his stated position perfectly. It's appropriate use of the technology for an appropriate context.

If Torvalds had announced he was integrating AI code generation into the Linux kernel development process, that would be a change. It would also contradict everything he's said about the technology. But that's not what happened.

What actually happened is he did something mundane (used a tool for a specific task), and the internet interpreted it as profound (the skeptic converts to AI). The mundane reality is more interesting, because it reveals what pragmatism actually looks like.

The Bigger Picture: AI's Place in Software Development

If we zoom out from Torvalds and Audio Noise, what does this moment tell us about AI in software development?

First, AI is becoming a normal tool. It's not revolutionary anymore. It's a utility that exists, and people use it where it's useful, some heavily and some hardly at all. This is healthy. It means we're moving past hype into maturity.

Second, context matters enormously. Using AI for a hobby project is completely different from using it for production systems. Understanding the difference is what separates competent engineers from people who make expensive mistakes.

Third, trust is the core issue. You can trust AI to generate boilerplate or learning code. You can't trust AI to generate critical code without verification. Pretending otherwise is dangerous.

Fourth, the gap between what AI can do and what it should do is crucial. Just because AI can generate code doesn't mean you should use it everywhere. Just because AI is useful for some tasks doesn't mean it's useful for all tasks.

Fifth, marketing will continue to blur these lines. Companies selling AI have incentives to overstate capabilities and understate risks. Developers need to maintain healthy skepticism and do their own due diligence.

Looking forward, AI will probably become more useful for coding tasks. It might get better at understanding code context. It might become better at avoiding common mistakes. But it's probably not going to become "good enough to replace human engineers making critical decisions." And that's fine. That's not a failure of AI. That's a realistic boundary between where machines are good and where humans are necessary.

Estimated data suggests pragmatic technology adoption scores higher in real-world testing and risk awareness compared to hype-driven use.

What This Means for Developers Right Now

If you're a developer trying to figure out whether to use AI tools, Torvalds' example is instructive.

For learning: Use AI. It's great for accelerating your understanding of unfamiliar code, libraries, or patterns. Ask questions. Get explanations. Experiment. This is exactly what AI is good at.

For boilerplate and scaffolding: Use AI. It's genuinely useful for generating structure that you'll customize anyway. Save your mental energy for the parts that matter.

For code review assistance: Use AI. Let it flag potential issues. But don't skip human review. Use the AI suggestions as prompts for deeper investigation, not as verdicts.

For critical logic: Don't trust AI. Write it yourself. Have experienced people review it. Understand every line. If you use AI to help you understand something, fine. But the final code should reflect your understanding and judgment.

For experimental and hobby projects: Use AI liberally. These are low-stakes environments where you can experiment and learn.

The pattern is: Use AI where it reduces boring work without creating risk. Don't use AI where it creates risk of misunderstanding or bugs in critical code.
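For teams that want to write this pattern down, one option is a small lookup table. The sketch below is only one way to encode the guidance in this section. The category names, the `Guidance` type, and the wording of each step are assumptions made for illustration, not anything Torvalds has published.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Guidance:
    lean_on_ai: bool   # whether AI output is an acceptable starting point
    human_step: str    # what the engineer still has to do


# Hypothetical categories mirroring the list in this section.
POLICY = {
    "learning": Guidance(True, "ask questions, experiment, cross-check explanations"),
    "boilerplate": Guidance(True, "read and customize the generated structure"),
    "code_review": Guidance(True, "treat flags as prompts and keep human review"),
    "hobby": Guidance(True, "test it and fix what breaks"),
    "critical_logic": Guidance(False, "write it yourself and get expert review"),
}


def guidance_for(task):
    """Look up guidance; unknown categories get the most cautious entry."""
    return POLICY.get(task, POLICY["critical_logic"])


for task in ("learning", "critical_logic", "something_new"):
    g = guidance_for(task)
    print(f"{task}: lean on AI = {g.lean_on_ai}; human step: {g.human_step}")
```

Defaulting unknown categories to the strictest entry reflects the article's argument: when you are unsure which side of the line a task falls on, treat it as critical.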

This is what Torvalds does. It's not anti-AI. It's pro-reality.

The Future of AI in Coding: Not What the Hype Suggests

If we believe Torvalds and other experienced engineers who've thought seriously about this, the future of AI in coding is probably less revolutionary than the hype suggests.

AI will likely become better at specific tasks. It'll get better at generating boilerplate, explaining patterns, suggesting refactoring, flagging issues. It might even get better at understanding architectural constraints and producing code that fits the system.

But it's probably not going to become good enough that companies eliminate developer teams. The market probably won't work that way. Businesses will use AI to make developers more productive, not to replace them. Developers who can effectively use AI tools will be more valuable than developers who can't.

The timeline is also important. We're not in 2030 or 2040 yet. We're in 2025. The technology is early, useful for specific things, not yet useful for many others. The industry is in the "this is interesting, let's figure out what it's good for" phase, not the "this has completely transformed everything" phase.

Torvalds' position—pragmatic, skeptical of hype, interested in usefulness—is probably closer to how most developers will feel in a few years. The excitement will cool. The technology will find its actual level. Engineers will use it where it works and ignore it where it doesn't.

Lessons From Torvalds' Pragmatism

What Torvalds models, and what's valuable to learn from, is an approach to technology based on reality rather than narrative.

Question the hype: When someone tells you a technology will revolutionize everything, ask probing questions. What specifically can it do? Where does it struggle? What are the risks? What incentives does the person promoting it have?

Understand your context: What's true for a hobby project isn't true for production systems. What works for boilerplate code doesn't work for critical logic. Understanding the difference is crucial.

Test on reality: Try the tool. See what it's actually good at. See where it fails. Make your own judgment rather than accepting someone else's narrative.

Stay skeptical of trust: Tools are useful when they enhance human capability and judgment. Tools are dangerous when people trust them blindly. Keep that boundary clear.

Admit trade-offs: Nothing is purely good or purely bad. AI has real benefits for specific things. It also has real limitations for other things. Both can be true.

Maintain responsibility: Even if a tool helps you, you're responsible for understanding the code, for verifying it works, for maintaining it. You can't outsource judgment.

These aren't specifically about AI. They're about how to think about any technology. They're how you avoid both dismissing useful tools and becoming a victim of hype.

Engineers often use AI tools for learning new skills and speeding up development, particularly in non-critical tasks. Estimated data.

The Irony of the AI Skeptic Who Uses AI

There's an interesting irony here. Torvalds is famous as an AI skeptic. The industry interpreted his Audio Noise admission as validation of AI. But it's not. It's actually a perfect demonstration of his original position.

His position, paraphrased: AI is useful for learning and support tasks. Don't use it for critical code.

Then he used AI for a learning support task in non-critical code.

That's not a change in position. That's consistency.

The irony is that his skepticism might be more valuable now than it was when people dismissed it as "just an old engineer afraid of change." Now that AI is everywhere and hype is even more intense, skepticism grounded in engineering reality is a useful counterweight.

Torvalds isn't trying to stop progress. He's trying to separate progress from marketing. That's a public service.

Why This Moment Matters

Torvalds' Audio Noise admission mattered, but not for the reasons most people thought.

It mattered because it provided a data point showing what pragmatic AI adoption actually looks like, as opposed to what hype suggests.

It mattered because it demonstrated that being skeptical of hype doesn't mean rejecting useful tools.

It mattered because it modeled how to think about AI: understanding context, being honest about limitations, using it where it helps, not using it where it doesn't.

It mattered because in an industry increasingly drowning in hype and financial incentives, Torvalds is one of the few people with enough credibility to call bullshit and have people listen.

And it mattered because developers making real decisions about whether to use AI tools, how far to trust them, and where the boundaries are, can look at what Torvalds actually did versus what he said, find them consistent, and get some grounding in reality.

The story isn't "AI skeptic converts." The story is "pragmatic engineer uses tool for appropriate purpose, consistent with what he's been saying all along." That's less exciting. It's also more useful.

Moving Forward: What Developers Should Do

If you're trying to figure out your own relationship with AI coding tools, Torvalds' example and philosophy point toward a few concrete things:

- Experiment: Try AI tools on non-critical projects. Learn what they're good at. Find the areas where they save you time and mental energy.

- Verify everything: Even if you trust a tool, verify the output. Read the code. Understand what it does. If you can't understand it, don't use it.

- Maintain responsibility: Whatever tools you use, you're responsible for the code. Tools can help you, but they can't replace your judgment.

- Be honest about risk: Don't use AI for critical code if you're using it as a substitute for understanding. Using it to help you understand is different.

- Watch for overconfidence: The biggest risk is trusting AI suggestions because they sound plausible. Stay skeptical.

- Evaluate against context: A tool that's great for hobby projects might be inappropriate for production systems. Context determines appropriateness.

These aren't revolutionary ideas. They're just careful engineering. But they're ideas that a lot of AI hype implicitly rejects. Torvalds is arguing for returning to them.

The Conversation Ahead

As AI becomes more integrated into development workflows, the conversation is going to shift.

It's already shifting, actually. We're past the "will AI replace developers?" phase. Most people recognize that's not happening in the near term, if ever. We're now in the "how do we use AI effectively and responsibly?" phase.

That's a conversation Torvalds' pragmatism is well-suited for. He's not interested in either extreme: not "AI is amazing, use it for everything," and not "AI is scary, avoid it entirely." He's interested in "here's what this tool actually does, here's where it helps, here's where it creates risk, use it accordingly."

That's the conversation that matters. Not because it's exciting. Because it's practical.

FAQ

What did Linus Torvalds actually use AI for?

Torvalds used AI assistance (specifically Google Antigravity, an AI code tool) to help generate Python visualization code for a personal hobby project called Audio Noise, which generates random guitar pedal effects. He used it for the visualization component specifically because Python visualization APIs were outside his comfort zone, not for core logic or anything production-critical.

Why is this significant if Torvalds has been saying AI is okay for learning?

It's significant because Torvalds has been consistent—he's always said AI is fine for learning and non-critical code. His actual use matched what he's been saying all along. The significance isn't that his position changed; it's that people finally paid attention to his nuanced take instead of oversimplifying it as "Torvalds opposes all AI."

Does Torvalds' use of AI mean it's safe for production systems?

No. Torvalds was explicit that he uses AI for learning and hobby projects with zero production consequences. He's repeatedly said AI shouldn't be trusted for critical systems like Linux without extensive human verification. The context of the Audio Noise project—non-critical, personal, low-stakes—is crucial to understanding why it's appropriate there but wouldn't be appropriate for infrastructure code.

What's the difference between using AI for learning versus production code?

For learning code in hobby projects, bugs are acceptable because they're opportunities to understand how code works. You can experiment and iterate without consequences. For production code, every line matters because failures affect real systems and real people. You need to understand exactly why the code is correct, understand edge cases, and have confidence that it works under all conditions. AI can help with the former; it can't replace human verification for the latter.

Has Torvalds changed his mind about AI in software development?

No. Torvalds' position has been consistent for years: AI is useful for learning, code review assistance, and support tasks. AI is not appropriate for critical systems without extensive verification. His Audio Noise project perfectly fits the "learning and support" category, making his use of AI consistent with everything he's previously stated about appropriate uses of the technology.

Why does Torvalds emphasize the hype problem with AI?

Torvalds sees a gap between what AI can actually do and what the AI industry claims it can do. He's critical of marketing-driven narratives that overstate capabilities and understate limitations, especially when those narratives influence how developers and organizations make decisions. His concern is that hype disconnected from engineering reality leads to inappropriate technology adoption and increased risk.

What can developers learn from Torvalds' approach to AI?

Developers can learn to evaluate tools based on actual capabilities and real context rather than hype. Torvalds models pragmatism: use AI where it reduces boring work and creates no risk, maintain healthy skepticism, understand limitations, and don't use tools as substitutes for judgment and understanding. His approach is about matching the tool to the task appropriately.

Is AI replacing software engineers?

Not yet, and probably not soon. Torvalds and most experienced engineers believe AI will make developers more productive and handle specific tasks, but won't eliminate the need for human judgment, understanding, and responsibility in software development. AI is becoming a tool developers use, not a replacement for developers themselves.

Where should developers use AI coding tools today?

According to Torvalds' pragmatic framework: use AI for boilerplate code, learning unfamiliar patterns, generating scaffolding, code review assistance, and experimentation. Don't use AI as a substitute for understanding critical code. Don't skip verification and code review. Use AI where it enhances your productivity without creating risk from misunderstanding.

What's the future of AI in software development?

Based on Torvalds' analysis, AI will probably become more useful for specific tasks but won't become universally trusted for all code. The technology will mature, find its actual level of usefulness, and be integrated into developer workflows where it makes sense. Marketing hype will eventually cool, and the industry will settle on a realistic understanding of what AI can and can't do effectively.

Conclusion: Pragmatism Over Hype

Linus Torvalds' admission that he used AI for a hobby project is fascinating not because it represents a revolution in his thinking, but because it perfectly demonstrates what pragmatic technology adoption actually looks like.

He didn't change his fundamental position. He applied his existing principles to a specific situation. AI was useful for learning Python visualization APIs in a low-stakes project. So he used it. Exactly as he's been saying is appropriate for years.

The broader lesson here extends far beyond Torvalds or even AI coding tools. It's about how to evaluate technology in a world drowning in marketing hype and financial incentives to overstate capabilities.

Ask what the tool actually does. Understand what it's good at and what it struggles with. Test it yourself. Be honest about risks. Use it where it helps. Don't use it where it creates problems. Maintain responsibility and understanding regardless of what tools you use.

These principles are almost boring in their straightforwardness. They're also increasingly rare in an industry where hype and venture capital often override engineering judgment.

Torvalds has spent decades modeling this kind of pragmatism. He doesn't do it to be contrarian or to seem smarter than others. He does it because building systems that work, at scale, for millions of people, requires grounding in reality rather than narrative.

As AI becomes more integrated into software development, that pragmatism isn't going away. It's becoming more valuable. Not because Torvalds is always right about everything, but because someone consistently questioning hype and pushing for honest evaluation of what technology can actually do is a necessary counterweight to the forces pushing everything toward "AI will transform everything."

The transformation is probably more modest than the hype suggests. The usefulness is probably more constrained than marketing claims. The role AI plays is probably more as a helpful tool for specific tasks than as the revolutionary force reshaping the entire industry.

And that's probably fine. Not everything needs to be revolutionary. Some things just need to work better than they did before. Sometimes the best story isn't about dramatic change but about incremental improvement and mature judgment.

Torvalds' Audio Noise project demonstrates exactly that. An experienced engineer using a tool because it was useful, in a context where it was appropriate, for exactly the reason he's been saying is legitimate for years. It's boring. It's pragmatic. It's real.

And in a world of hype, that might be the most refreshing thing of all.

Key Takeaways

- Torvalds used AI for learning Python visualization in a hobby project, perfectly consistent with his years of saying AI is appropriate for learning, not production systems

- The critical divide is context: learning projects have no stakes, production systems have massive stakes requiring human verification and understanding

- AI excels at boilerplate, scaffolding, and learning assistance; it fails as a replacement for judgment in critical infrastructure code

- Marketing hype conflates 'AI works for specific tasks' with 'AI should be trusted everywhere,' treating very different risk categories as if they were the same

- Pragmatic AI adoption means using it where it reduces boring work without creating risk, maintaining skepticism about confidence claims, and keeping human judgment as the final authority

