Virginia Teen Safety Law: What's Happening & Why It Matters

January 1st, 2025 marked a turning point for social media regulation in America. Virginia quietly launched one of the strictest age-verification laws for minors, and the internet promptly lost its mind. Not in a "finally someone's protecting kids" way, but in an "oh no, this creates massive privacy problems" way.

The law sounds simple on the surface: teens can't access social media unless they're verified as older than 14. But dig into the actual mechanics, and you'll find something far messier. Tech companies have to collect personal data to verify age. Parents gain unprecedented control over teen accounts. Digital rights advocates are warning this could normalize mass surveillance of young people.

Here's the thing: this law didn't appear out of nowhere. It's part of a wave of legislation sweeping across America, all claiming to protect kids from social media addiction, predatory behavior, and mental health damage. But each law creates the exact privacy problems legislators claim to be solving.

We're not here to tell you whether the law is good or bad. We're here to break down exactly what it does, why experts are furious about it, and what's coming next as courts dismantle pieces of it.

This matters whether you're a Virginia parent, a teen, someone building social media platforms, or just someone who cares about where privacy rights are headed in 2025.

TL;DR

- Virginia's law requires age verification for teens under 14, forcing social media platforms to collect and store identifying information

- Privacy advocates warn the law normalizes mass data collection on minors, creating honeypots for hacking and government surveillance

- Parental control provisions give parents sweeping power to monitor and restrict teen accounts, raising concerns about surveillance of minors by guardians

- Legal challenges are already underway, with free speech and constitutional privacy concerns likely to strike down portions of the law

- Other states are watching, meaning what happens in Virginia will shape teen privacy nationwide in 2025-2026

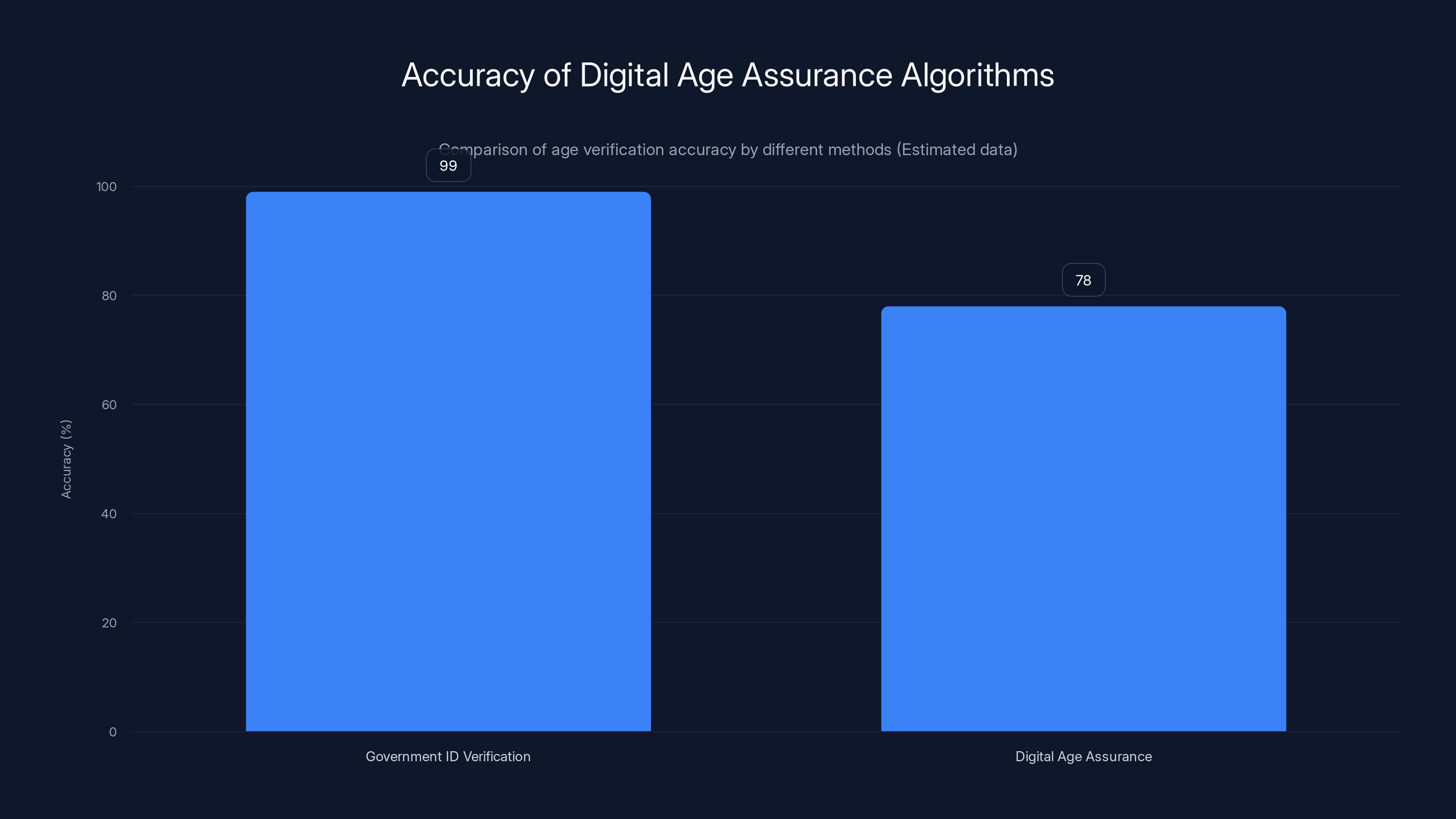

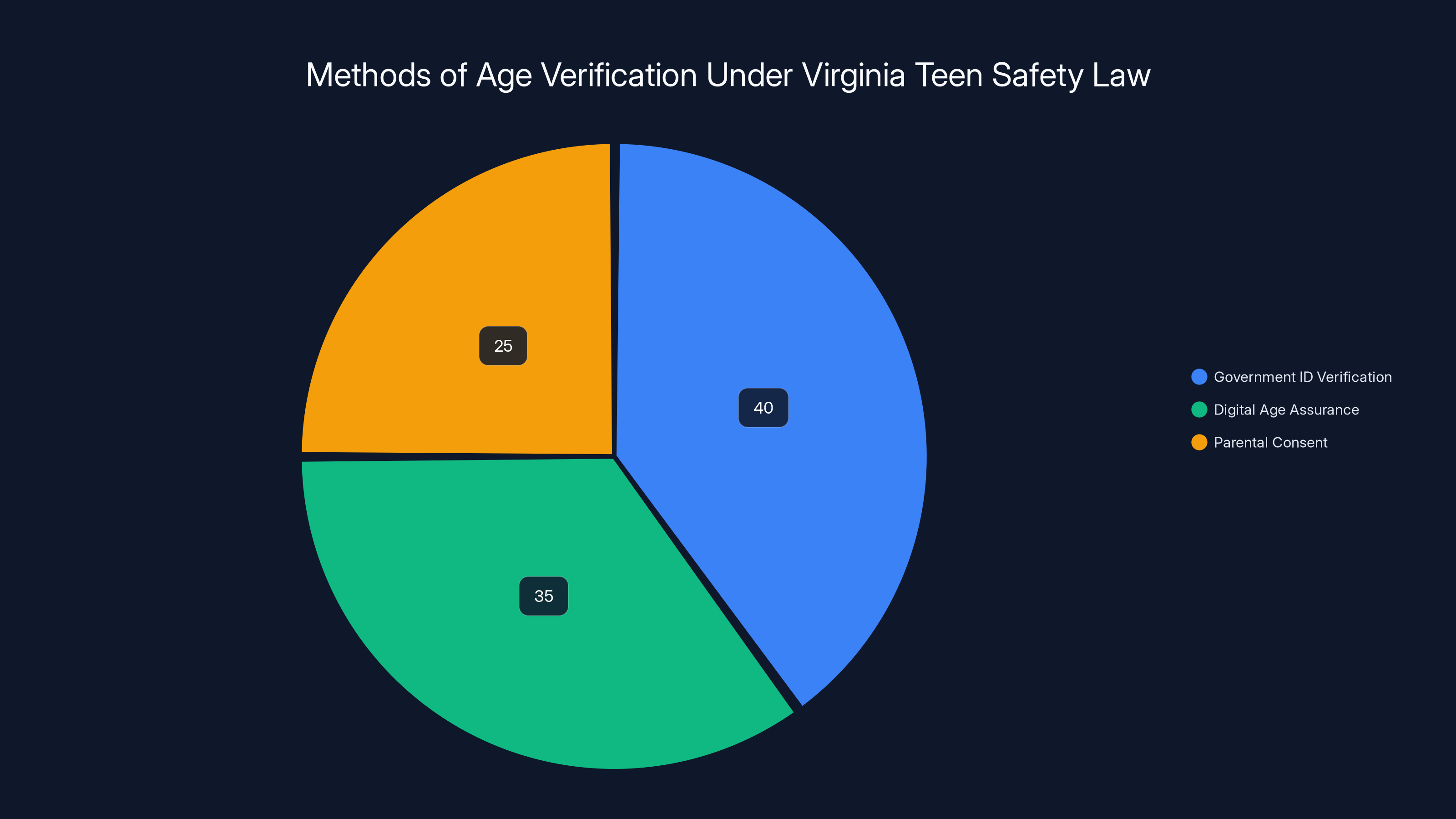

Government ID verification offers near-perfect accuracy, while digital age assurance methods have an estimated accuracy of 70-85%, highlighting potential misidentification risks. Estimated data.

What Exactly Is the Virginia Teen Safety Law?

The law is formally called the Protecting Virginians Online from Predatory and Addictive Content bill. It's a comprehensive attempt to regulate how social media companies interact with minors in Virginia.

At its core, the law establishes strict rules around who can access what, and what data gets collected. For teens under 14, social media platforms must verify their age before allowing access. For teens 14-17, there are additional restrictions on algorithmic recommendations, notifications, and other engagement features designed to drive usage.

But here's where it gets complicated. Age verification doesn't work without collecting personal data. The law requires one of three methods: government ID verification, digital age assurance (analyzing user behavior patterns), or parental consent with ID verification.

Government ID is the most direct: give us your driver's license number or passport. Digital age assurance is fuzzier: platforms analyze how you use the account and guess your age based on activity patterns. Parental consent means a parent provides their ID to verify they're allowing their kid on the platform.

Each method has privacy implications that keep security researchers up at night.

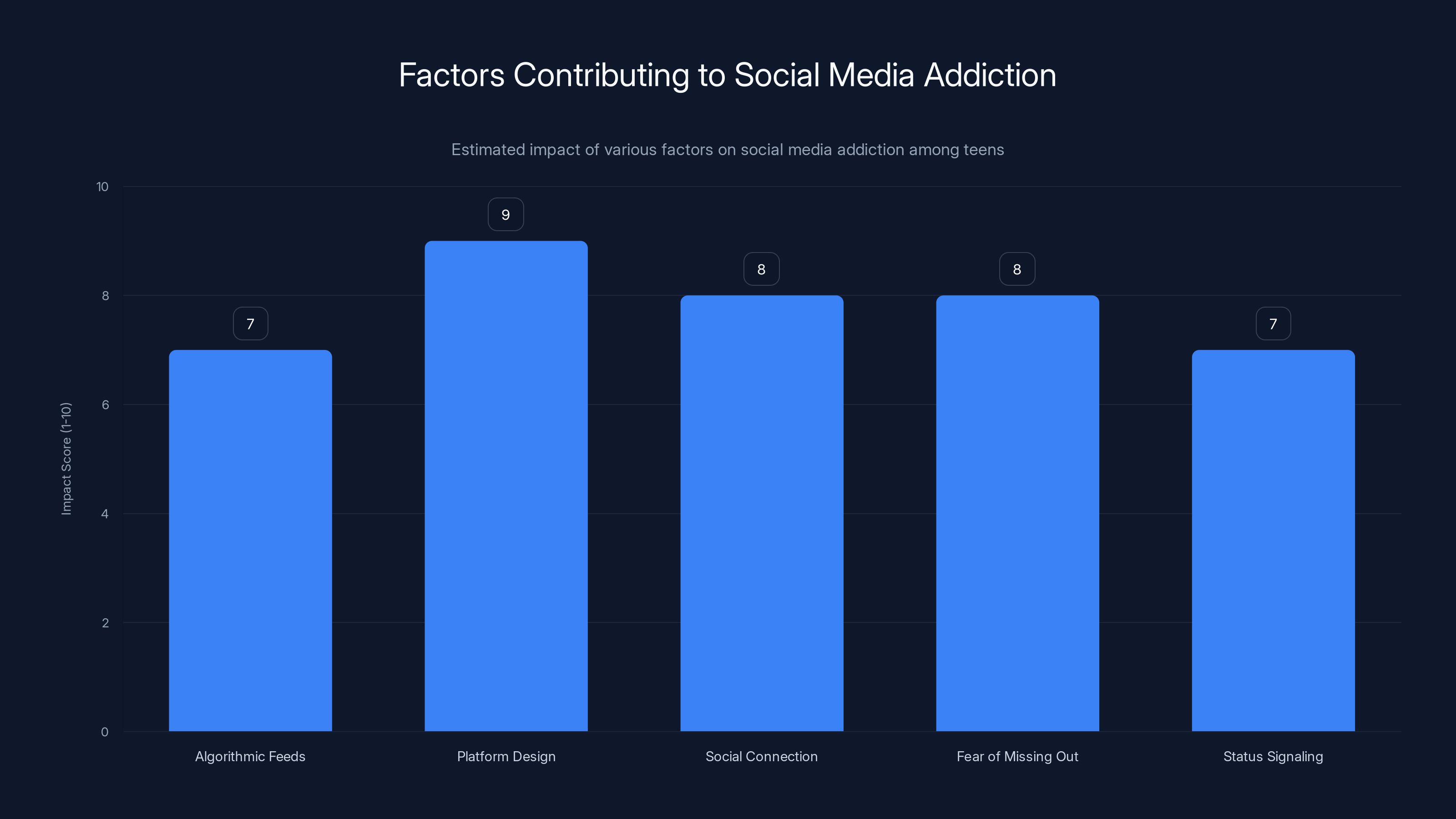

Platform design and social connection are major drivers of social media addiction, more so than algorithmic feeds. Estimated data based on typical factors influencing teen behavior.

The Age Verification Problem: Why It's a Privacy Nightmare

Age verification sounds reasonable until you think about the logistics. Platforms need a way to know how old you are without just asking "are you 18?" and accepting a yes.

Government ID verification is the gold standard. It's hard to fake. But it requires users to upload photos of their ID, and driver's licenses, passports, and state IDs contain way more information than just age. Address. Full name. Identifying characteristics. License number. Expiration date.

Social media platforms aren't banks. Their security track records are mixed at best. In 2023, TikTok suffered a data breach exposing millions of user records. Instagram has had multiple security failures. Even platforms with strong track records face constant hacking attempts. Now imagine if every teenage user in Virginia has to upload their government ID to access TikTok.

You've just created a centralized database of teen identification documents. If hackers breach it, they don't just get account passwords. They get photo IDs for thousands of minors.

Digital age assurance is supposed to be the privacy-friendly alternative. The theory is elegant: analyze how the user behaves, what they search for, what they click on, and estimate their age without collecting ID. Companies like Yoti and Yodlee have spent years developing algorithms that claim to do this with decent accuracy.

But here's the catch: to analyze behavior patterns accurately, platforms need to track your activity in detailed ways they normally wouldn't. Every click, every pause, every search, every time you delete something. That granular tracking is potentially more invasive than just asking for your ID once.

And the accuracy isn't perfect. Digital age assurance typically works with 70-85% accuracy. That means a significant percentage of users get misidentified. A 16-year-old might get flagged as under 14. A 13-year-old might pass through as 18. Now you have a system creating false positives and false negatives, which means either legitimate teen access gets blocked, or underage users bypass protections entirely.
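To put the 70-85% range in concrete terms, here is a minimal arithmetic sketch. The population of 100,000 accounts is an assumed, illustrative figure, not a number from the law or from any platform, and `expected_misclassified` is a hypothetical helper name.

```python
def expected_misclassified(users: int, accuracy: float) -> int:
    """Expected number of accounts whose age is estimated incorrectly."""
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be between 0 and 1")
    return round(users * (1.0 - accuracy))


# Assumed, illustrative population of teen accounts.
population = 100_000

# The two ends of the accuracy range quoted above.
for accuracy in (0.70, 0.85):
    wrong = expected_misclassified(population, accuracy)
    print(f"accuracy {accuracy:.0%}: about {wrong:,} accounts misclassified")
```

Even at the top of the range, roughly one account in seven ends up on the wrong side of the age line. The sketch does not separate wrongly blocked teens from underage users who slip through.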

Parental consent with ID verification creates a third problem. Parents have to give their identification to allow their teen on social media. This centralizes family data in platform databases. It also creates a perverse incentive structure: parents who want to control their teen's account are incentivized to maintain active digital monitoring relationships with platforms, which now have financial reasons to keep those accounts engaged and active.

Parental Control Features: Surveillance or Protection?

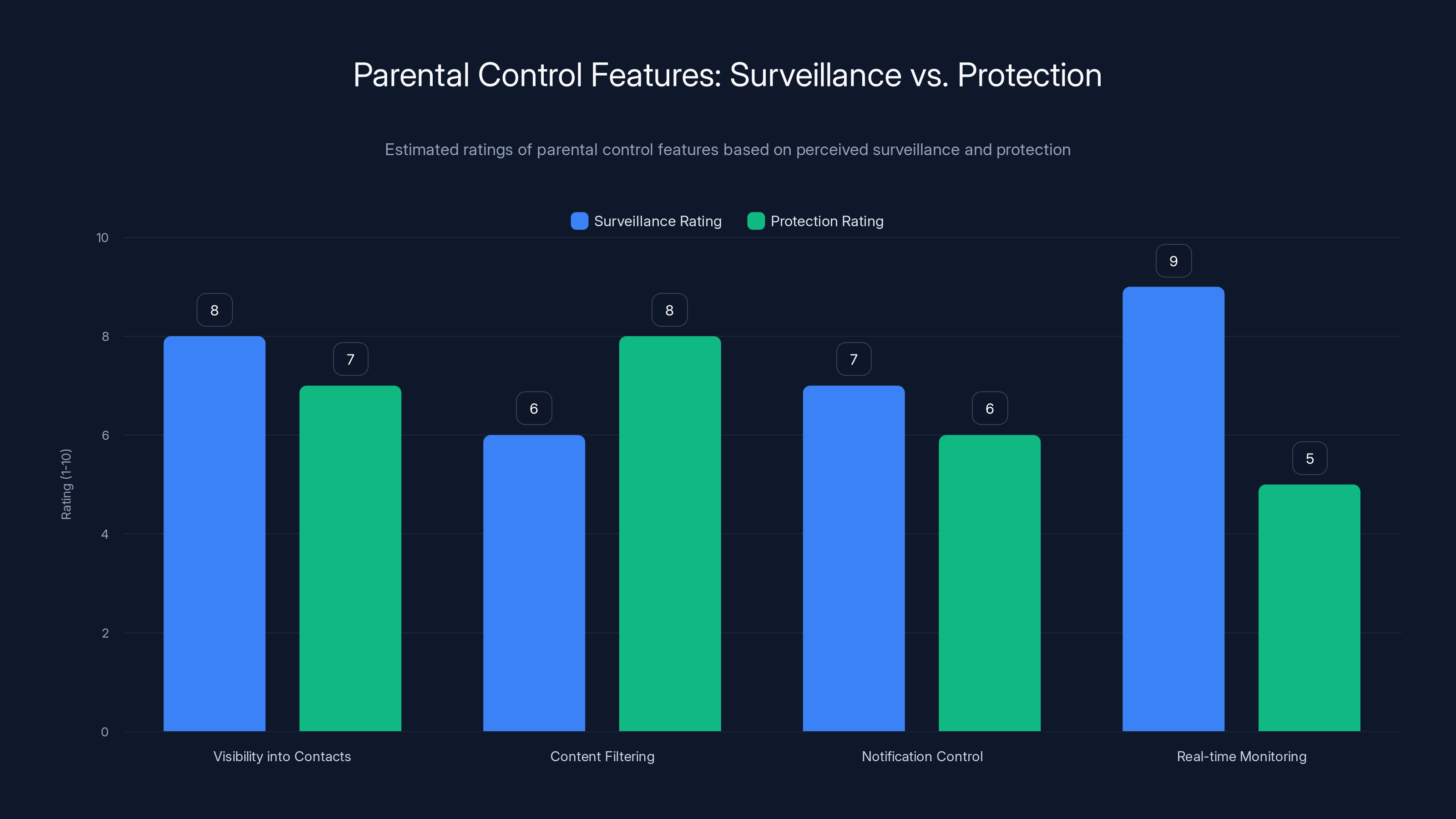

The law doesn't just restrict teen access. It also gives parents powerful monitoring tools.

Parents can now see their teen's followers, the accounts their teen follows, and the direct messages their teen receives. They can restrict what content their teen can see based on algorithmic filtering. They can disable notifications and features designed to drive engagement.

On paper, this sounds great. Parents get visibility into who their teen is talking to, helping prevent predatory behavior and unwanted contact.

In practice, it's surveillance architecture. A parent can now monitor everything their teenager does on social media in real-time. Every follow. Every like. Every message. This isn't parental oversight. This is 24/7 monitoring of a minor's digital life.

And it normalizes something concerning: the idea that comprehensive monitoring of minors is acceptable. A 16-year-old's right to privacy from their parents is limited, sure. But requiring all social media platforms to provide surveillance tools makes it standard, not exceptional.

Further, the law doesn't require parental monitoring to be transparent to the teen. A parent can enable monitoring without telling their kid they're watching. This creates an asymmetric power dynamic where a teenager doesn't even know they're under surveillance.

There's also a question of data security. Platforms now hold sensitive information about parent-child relationships. Who sees that data? Can law enforcement access it? Can the platform use it for marketing? The law is vague on these points.

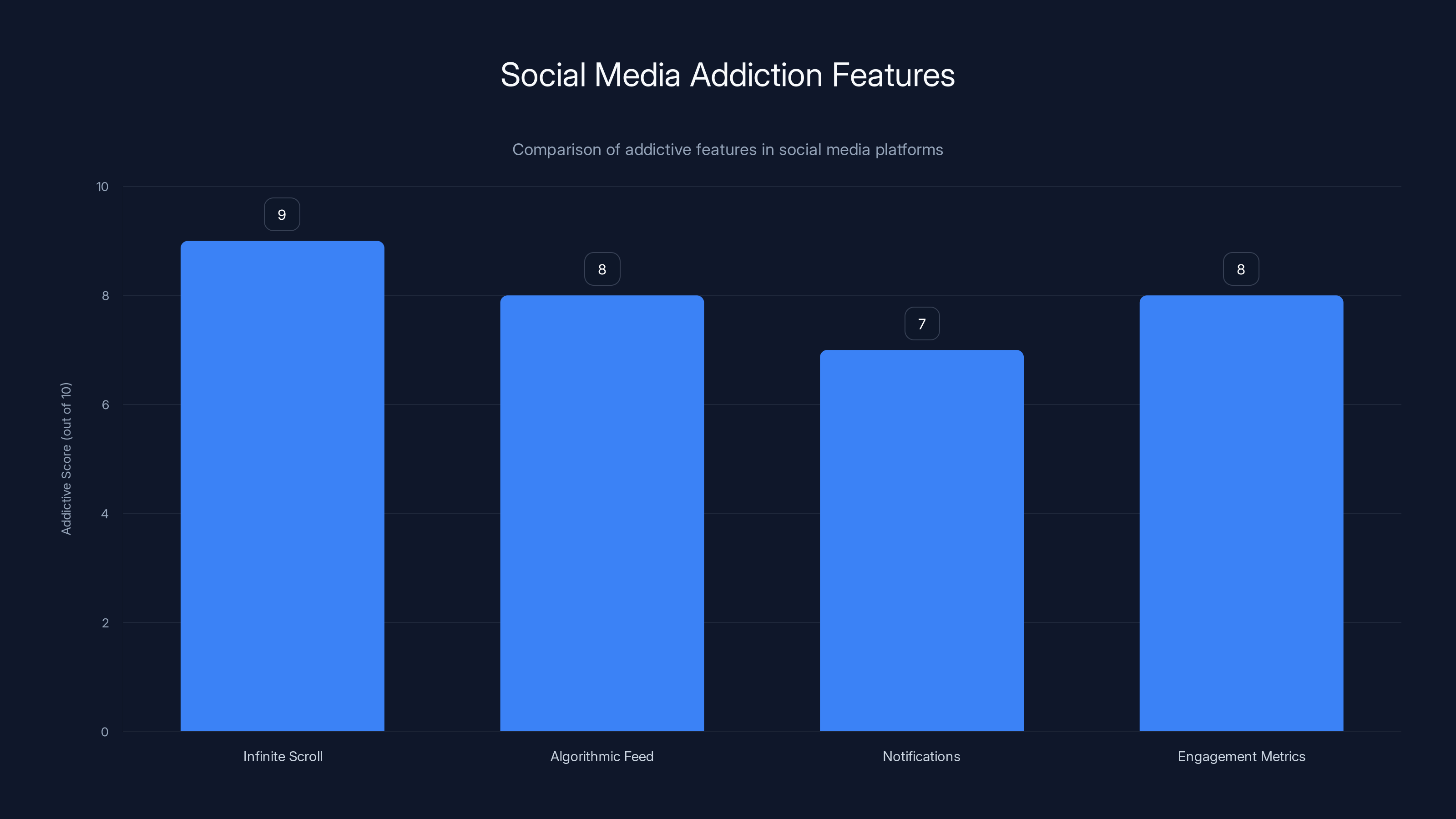

Estimated data shows that features like infinite scroll and algorithmic feeds are highly addictive, contributing to prolonged platform use across all ages.

The Data Collection Honeypot: Creating Targets for Hackers

Here's what keeps privacy researchers awake at night:

Virginia just mandated that social media platforms collect and store identifying information on every teenage user in the state. That's millions of data points. Names. Addresses. Photos of government-issued IDs. Family relationship data (parent info). Behavioral tracking data.

All in one place.

All required to be stored by companies that face constant security challenges.

Historically, the biggest breaches in America have targeted exactly this kind of data. In 2017, Equifax exposed personal information on 147 million people. In 2019, a data broker exposed 4 million teenagers' data through misconfigured databases. In 2020, Twitter suffered a breach exposing user phone numbers and emails.

Now imagine a breach targeting the age-verification data that Virginia's law requires. Hackers get access to physical identifying information of thousands of minors. They get photos of IDs. They get family relationship data. They get behavioral tracking information.

That data has value. To identity thieves. To scammers targeting minors. To law enforcement agencies seeking location or activity data (even without proper warrants, according to privacy advocates).

The law requires platforms to keep this data "secure," but doesn't define what that means. Does TikTok need military-grade encryption? Can they store IDs in plain text? Can they use a third-party verification service and transfer unencrypted data to them?
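One way to see what a stricter definition of "secure" could look like is data minimization: check the ID once, then keep only the yes/no result. The sketch below is an assumption for illustration only. The law as described here does not prescribe it, and `AgeAttestation` and `attest` are hypothetical names, not any platform's real API.

```python
import hashlib
import hmac
import secrets
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class AgeAttestation:
    """The stored record: no name, address, ID number, or birth date."""
    account_ref: str         # keyed hash of the account ID, not the ID itself
    meets_minimum_age: bool  # the only age fact that is retained
    checked_on: date


def attest(account_id: str, birth_date: date, minimum_age: int,
           key: bytes, today: date) -> AgeAttestation:
    # Whole years elapsed; subtract one if this year's birthday has not happened yet.
    had_birthday = (today.month, today.day) >= (birth_date.month, birth_date.day)
    years = today.year - birth_date.year - (0 if had_birthday else 1)
    # HMAC-SHA256 gives a stable reference without storing the raw account ID.
    ref = hmac.new(key, account_id.encode("utf-8"), hashlib.sha256).hexdigest()
    # birth_date is used for the check and then discarded, never stored.
    return AgeAttestation(ref, years >= minimum_age, today)


key = secrets.token_bytes(32)  # the secret key would live outside the database
record = attest("user-123", date(2012, 6, 1), 14, key, date(2025, 1, 1))
print(record.meets_minimum_age)
```

A breach of records shaped like this would expose a boolean and a date, not a photo ID. Whether a design like this would satisfy the law is exactly the question the vague wording leaves open.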

The ambiguity is a feature, not a bug. It means platforms have wiggle room on security standards, which means compliance costs stay lower, which means the law can claim success while actual security remains questionable.

How Social Media Platforms Are Responding

Major platforms haven't been silent. They've filed legal challenges, issued public statements, and begun implementing (or pseudo-implementing) compliance measures.

TikTok and Meta have both stated the law creates practical impossibilities for their business models. TikTok's core value proposition is algorithmic recommendations. Restrict that for teens 14-17, and you're fundamentally changing how their product works. Meta's Instagram and Facebook operate on the same premise.

Most platforms are treating age verification as a game. TikTok's implementation asks users to confirm their age through a consent screen. Not verification. Confirmation. They ask you to self-report your age, which defeats the entire purpose of the law.

Twitter (now X) has resisted even that, arguing the law is unconstitutional.

Smaller platforms are simply blocking Virginia. Some dating apps and niche social networks have geofenced Virginia entirely, refusing to serve the state rather than implement expensive verification systems.

The legal community is divided on whether platforms' resistance is justified or self-serving. Privacy advocates say the law creates unconstitutional data collection. Platform defenders say the law is unworkable as written. Regulators argue everyone's being dramatic.

Meanwhile, teens are finding workarounds. VPNs mask their location. Friends' accounts can be shared. Verification is being spoofed by using digital age assurance platforms that are themselves not verified.

The law is creating a cat-and-mouse game where compliance is hard, evasion is easy, and the actual security benefits are unclear.

The Virginia Teen Safety Law mandates three methods for age verification, with government ID verification being the most direct and digital age assurance being the least intrusive. Estimated data.

Constitutional Challenges: Free Speech and Privacy Rights

Multiple lawsuits are already challenging the Virginia law on constitutional grounds.

The primary argument is free speech. Restricting what content teenagers can see is a form of speech restriction. The First Amendment protects a broad right to access information. Requiring age verification to access certain platforms could violate that right.

The law restricts algorithmic recommendations for teens 14-17. Algorithms are a form of speech—they're editorial decisions by platforms about what to show you. Restricting algorithmic speech could violate the First Amendment, especially if the restriction is content-based rather than age-based.

There's also a constitutional privacy angle. The Fourth Amendment protects against unreasonable searches and seizures. Does requiring mandatory data collection on minors violate that? Legal scholars are split. Some argue data collection itself isn't a "seizure." Others argue collecting ID information from minors without specific justification is an unreasonable privacy intrusion.

The Fifth Amendment's due process clause is another angle. Does the law deprive minors of liberty interests (access to social media) without due process? That's a weaker argument, but it's being made.

The strongest argument is likely the First Amendment challenge. Courts have consistently struck down laws that restrict minors' access to speech, even when those laws claim to protect minors. The rationale is that minors have First Amendment rights too, and restrictions must pass strict scrutiny (be narrowly tailored to serve compelling state interests).

Is age verification narrowly tailored? Probably not. It collects massive amounts of data that aren't necessary to achieve the goal of preventing very young teens from accessing social media.

Courts have also noted that age verification isn't foolproof, so it might not even accomplish the law's stated goals. If the law doesn't meaningfully achieve its objectives, it fails the constitutional test.

Expect at least one of these constitutional challenges to succeed. The timeline is unclear—could be late 2025, could be 2026. But the law as written is likely to be partially struck down.

The Addiction Problem: Does Age Verification Actually Solve It?

Let's step back and ask the obvious question: does this law actually prevent social media addiction in teens?

The answer is almost certainly no.

The law assumes that keeping very young teens off social media will reduce addiction. But it doesn't address the core problem: platforms are designed to be addictive, regardless of age. A 15-year-old on TikTok is still being hit with the same addictive algorithmic recommendations as a 20-year-old.

For teens 14-17, the law restricts algorithmic recommendations. So instead of the platform's algorithm deciding what to show you, what happens? You have to manually scroll and search. That's actually less addictive, not more. The endless algorithmic feed is what drives addiction. Remove that, and engagement drops.

But the law allows platforms to show you algorithmic feeds if you have parental consent. So a teen can just ask their parent to enable algorithmic recommendations, and the restriction is meaningless.
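The loophole is easier to see written out as a rule. This is a minimal sketch of the feed rules as this article summarizes them, not the statutory text. The function name is hypothetical, and the under-14 branch is an assumption, since the article describes access itself as gated at that age.

```python
def algorithmic_feed_enabled(age: int, parental_consent: bool) -> bool:
    """Feed rule as summarized in this article (not statutory text)."""
    if age >= 18:
        return True              # adults are not restricted
    if 14 <= age <= 17:
        return parental_consent  # restricted unless a parent opts in
    return False                 # assumption: under 14, access itself is gated


print(algorithmic_feed_enabled(16, parental_consent=False))  # False
print(algorithmic_feed_enabled(16, parental_consent=True))   # True
```

One boolean separates the restricted experience from the unrestricted one, which is why the restriction carries so little weight in practice.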

Further, the law doesn't address the actual drivers of teen social media use: social connection, fear of missing out, status signaling, entertainment. All of those exist regardless of algorithmic restrictions.

Research on social media addiction suggests the problem isn't the algorithm. It's the platform design itself. The notifications. The notification badges. The way platforms are built to keep you coming back. The social dynamics that make leaving the platform feel socially isolating.

Age verification does nothing to address these design choices.

If Virginia were serious about reducing teen social media addiction, the law would mandate design changes: no infinite scroll, no notifications, no engagement metrics, no algorithmic feeds. Instead, Virginia banned young teens from accessing platforms entirely and restricted algorithmic features for older teens.

The first approach (banning young teens) is what Germany tried to do with its NetzDG law. Teen social media usage didn't decrease. Teens just used VPNs and parent accounts.

The law might reduce very young teens' access to platforms (though workarounds are trivial). But for 14-17 year olds, and for the stated goal of reducing addiction, the law is unlikely to have measurable impact.

Estimated data shows that while features like visibility into contacts and real-time monitoring are rated high for surveillance, they also have significant protection ratings, highlighting the dual nature of these tools.

How Other States Are Watching Virginia's Experiment

Virginia isn't alone. At least 12 other states have similar laws in various stages of implementation or legal challenge.

Arkansas, Florida, and Utah all passed versions of the Virginia law in late 2024. Texas has a more restrictive version pending. New York just introduced a bill. California is considering its own approach.

Everyone's watching how the Virginia law plays out legally and practically. If courts strike it down, other states will either amend their versions or abandon them. If it survives legal challenges, expect a wave of similar laws across America.

The international angle matters too. The EU's Digital Services Act requires similar age verification and parental notification. European tech companies are already implementing these features. If Virginia's law survives, American platforms will likely adopt the same features nationwide, making the Virginia approach the de facto standard.

That's the real concern for privacy advocates. One state's experiment becomes national policy without federal oversight or congressional debate.

Government Surveillance Concerns: What Happens to the Data?

Here's the scenario privacy advocates are warning about:

A social media platform collects age verification data for all Virginia teens: ID photos, behavioral patterns, location data (inferred from IP addresses and GPS), follower networks, message content.

Law enforcement requests that data for an investigation. No warrant. Or with a warrant, but for something broadly defined.

Now the government has detailed information about a teen's digital life, social network, and location patterns. All collected through a law ostensibly designed to protect teens from addiction.

The law doesn't prevent this. It doesn't require platforms to resist government requests. It doesn't mandate encryption or legal barriers to access.

In fact, the law creates a perverse incentive: platforms have collected all this data. If they resist government requests, they look complicit in protecting crime. If they comply, they've done their duty under the law.

This isn't hypothetical. Law enforcement already requests social media data constantly. In 2023, Facebook received 164,000 government requests for user data. If that data is now more granular and includes age verification information, the requests will target it.

A teen posting about substance use at a party could find themselves on law enforcement radar. A trans teen accessing resources could be identified by their real name to authorities in states with anti-trans laws.

The law transforms age verification from a platform feature into a government surveillance architecture. That's the actual privacy risk.

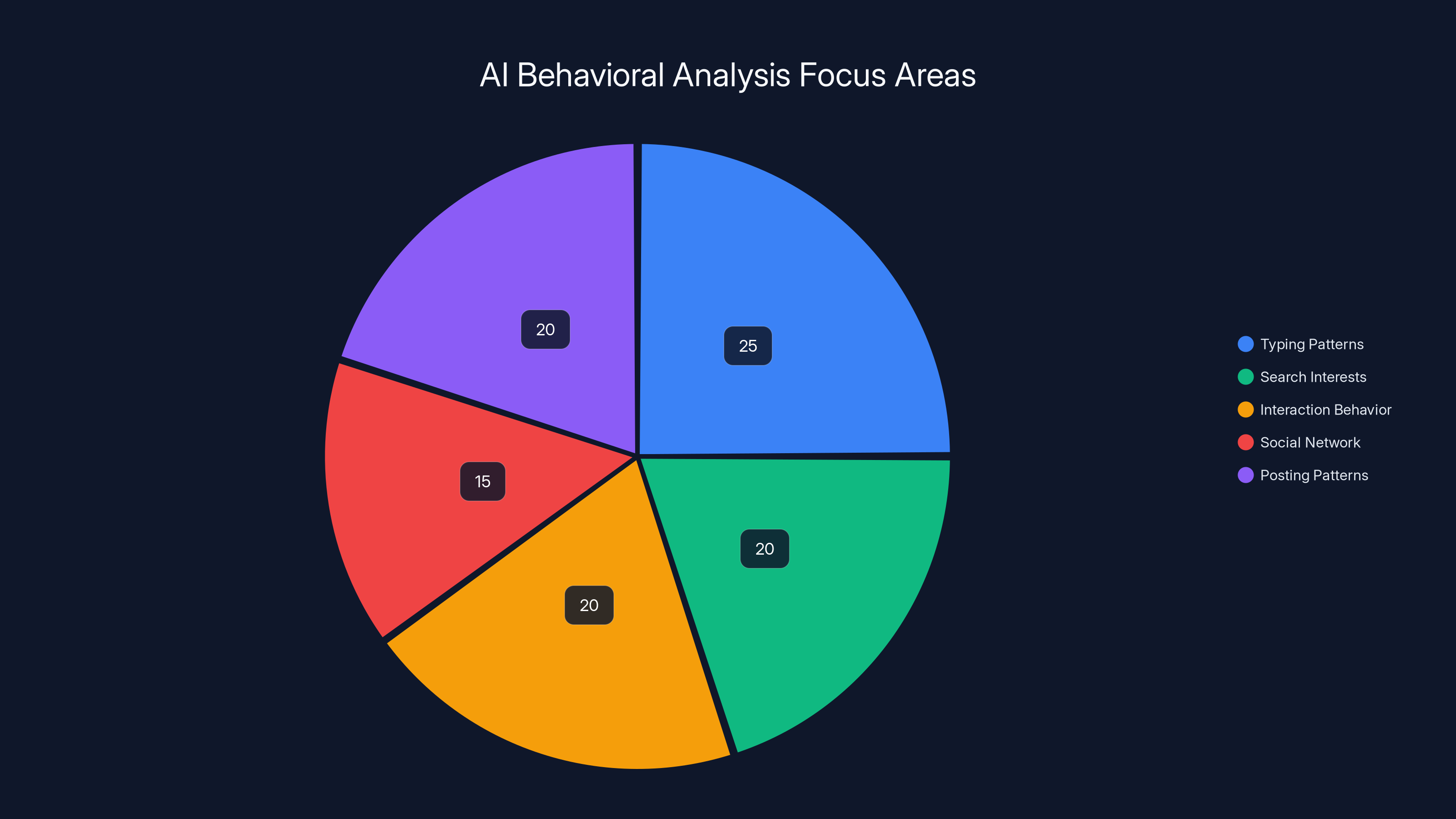

Estimated data suggests that AI systems for age verification focus equally on typing patterns, search interests, and interaction behavior, with slightly less emphasis on social networks and posting patterns.

The Role of AI in Age Verification and Behavioral Analysis

This is where things get technically weird and potentially more invasive.

Digital age assurance companies use AI to analyze behavioral patterns and estimate age. These systems look at:

- How you type (typing speed, vocabulary, grammar patterns)

- What you search for (age-appropriate interests)

- How you interact (click patterns, dwell time, scrolling speed)

- Your social network (who you follow, who follows you)

- Your posting patterns (frequency, time of day, content themes)

AI models trained on millions of users can identify patterns that correlate with age. A 12-year-old types differently than a 20-year-old. Uses different slang. Searches for different things.

But here's the catch: these models are also collecting massive amounts of behavioral data to feed the AI. Every keystroke is being analyzed. Every click is being logged. Every dwell time is being measured.

To estimate age with 80% accuracy, you need to track behavior at a granularity that's more invasive than just asking for an ID.
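To make that granularity concrete, here is a hypothetical sketch of what a few seconds of logged behavior might look like, using the signal categories listed earlier. The record layout, field names, and sample values are all assumptions for illustration, not any vendor's real schema.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class BehaviorEvent:
    signal: str        # which signal category the event feeds
    timestamp_ms: int  # when it happened, relative to session start
    detail: str        # the raw value captured


# Hypothetical log covering a few seconds of one session.
session = [
    BehaviorEvent("typing", 0, "key interval 142 ms"),
    BehaviorEvent("typing", 142, "key interval 118 ms"),
    BehaviorEvent("search", 900, "query text"),
    BehaviorEvent("interaction", 1500, "dwell 2.3 s on post"),
    BehaviorEvent("interaction", 3800, "scroll speed sample"),
    BehaviorEvent("social_graph", 4200, "followed an account"),
]


def events_per_signal(events: list[BehaviorEvent]) -> Counter:
    """Count how many logged events feed each signal category."""
    return Counter(event.signal for event in events)


print(events_per_signal(session))
```

Six records for under five seconds of activity, each tied to an account. An ID check happens once, but this kind of logging has to keep running to keep the estimate current.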

And AI models can be fooled. A young person can change their typing patterns. Someone can use a VPN and pretend to be in a different region (which changes what they search for). The AI is playing a game against humans trying to evade it.

Further, these AI systems have bias. Models trained predominantly on English speakers from the US might misidentify teens from different linguistic backgrounds. Neurodivergent users (with ADHD, autism, dyslexia) have different typing and browsing patterns that might be misidentified by age AI.

The law creates an incentive for platforms to deploy increasingly sophisticated behavioral tracking AI. All in the name of age verification. But the surveillance apparatus is the real goal.

What Teens, Parents, and Companies Should Know Right Now

If you're in Virginia, here's what's actually happening on the ground:

For Teens: The law is technically in effect, but compliance is spotty. Most platforms are using self-attestation (you confirm your age) rather than real verification. You can still access social media. If you want extra privacy, a VPN masks your Virginia location. Tech-savvy teens are already bypassing the restrictions entirely.

For Parents: You have access to parental monitoring tools on platforms that implement them. But those tools work both ways—platforms now know you're monitoring your teen, which changes how they interact with your account. And you're trusting platforms with your ID information, which is a security risk.

For Platforms: Compliance is expensive and imperfect. Most major platforms are challenging the law legally. Some are implementing half-measures (like self-attestation) that technically comply while not meaningfully verifying age. Smaller platforms are blocking Virginia entirely.

For Privacy Advocates: The law is creating exactly what they warned against: centralized databases of teen identification information, behavioral tracking infrastructure, and government surveillance architecture. The addiction problem it claims to solve will likely remain unsolved.

The Broader Landscape: How Virginia Fits Into Global Tech Regulation

Virginia's law is part of a global trend. Governments worldwide are trying to regulate tech companies through legal mandates.

The EU's Digital Services Act (DSA) requires age verification for minors across the EU. The UK's Online Safety Act includes similar provisions. Australia's Online Privacy Code is stricter. China requires real-name registration for all users.

The common thread: governments are using child protection as the rationale to collect more data on their citizens and assert more control over platform design.

It's not a conspiracy. It's a pattern. Each regulation claims its restrictions are necessary for child safety. Each one collects more data and concentrates more power with governments and platforms.

Virginia's law is just the latest iteration.

What's interesting is how quickly these approaches are exported. If Virginia's approach works (survives legal challenges), expect the EU to strengthen the DSA. If it fails legally, the EU might pivot away from age verification entirely.

The real test is not whether the law protects teens from addiction. It's whether courts will accept that government-mandated data collection and behavioral surveillance are justified by the goal of protecting minors.

History suggests courts won't. But 2025 is different from previous eras. Courts are less protective of privacy rights than they were in the 1990s and 2000s. Surveillance is normalized. Teen privacy is seen as less important than public safety.

So Virginia's law might survive even if it doesn't accomplish its goals, simply because courts have shifted their views on surveillance and privacy.

What Comes Next: 2025 and Beyond

Expect several developments in the coming months:

Legal challenges will proceed. At least three lawsuits challenging Virginia's law are in federal court. Decisions could come by mid-2025 or later. Partial injunctions are possible, blocking specific provisions while letting others stand.

Platforms will continue strategic non-compliance. Most major platforms will keep using self-attestation rather than real verification. It satisfies the letter of the law while not meaningfully implementing it. Virginia's Attorney General could pursue enforcement, but that would be expensive and time-consuming.

Other states will follow suit. Arkansas, Florida, and Utah will implement their own versions in 2025. Texas might pass its own bill. The multi-state approach creates a patchwork of different rules that are operationally expensive for platforms but individually harder to challenge legally.

The tech industry will adapt. Platforms will develop age verification infrastructure (either in-house or through third-party vendors). This infrastructure will get better and cheaper. By 2026, age verification might be standard across all platforms, not just in Virginia.

International coordination will increase. The EU and US will likely coordinate on age verification standards. What works in the EU will inform what happens in America. The result could be a de facto global standard for age verification, even without formal international agreement.

Privacy concerns will persist. No matter what courts decide about the law's constitutionality, the privacy risks will remain. Centralized databases of teen identification data are inherent to age verification. That's the real legacy of Virginia's experiment.

The Addiction Question: Are We Solving the Right Problem?

Here's the uncomfortable truth about the entire debate:

Maybe the problem isn't age. Maybe it's the platforms themselves.

TikTok is addictive not because young teens use it, but because it's designed to be addictive. The algorithm. The notifications. The engagement metrics. The infinite scroll. These features are engineered to maximize time on platform, regardless of the user's age.

A 30-year-old on TikTok for 6 hours a day is just as addicted as a 15-year-old. But we don't regulate adult social media use. We only regulate teen use.

Why? Because we tell ourselves it's about protecting children. But really it's about giving governments and parents leverage to monitor and control teen behavior.

If Virginia were genuinely concerned about social media addiction, the law would ban infinite scroll for everyone. It would require chronological feeds instead of algorithmic feeds. It would disable notifications. It would hide engagement metrics.

Instead, Virginia restricted access and algorithmic recommendations only for minors. The adult experience remains unchanged. The platforms remain unchanged. Only the surveillance infrastructure expanded.

That tells you what the law is really about.

FAQ

What is Virginia's teen safety law?

Virginia's Protecting Virginians Online from Predatory and Addictive Content law, effective January 1, 2025, requires age verification for teens under 14 to access social media and restricts algorithmic recommendations for teens aged 14-17. The law also grants parents extensive monitoring and control capabilities over teen accounts, claiming to address social media addiction and protect minors from harmful content.

How does age verification work under the law?

Platforms can verify age using three methods: government ID verification (upload driver's license or passport), digital age assurance (AI analyzes behavioral patterns to estimate age), or parental consent with ID verification (parent provides their identification to authorize minor access). Each method has significant privacy implications, from data breach risks to behavioral surveillance.

What are the privacy concerns with the law?

The law creates centralized databases of teen identification information, including ID photos, behavioral data, and family relationship information. These databases become targets for hackers and potentially accessible to law enforcement without proper warrants. The behavioral tracking required for age assurance is more granular than traditional identity verification, normalizing invasive surveillance of minors.

Why are tech companies challenging the law?

Major platforms argue the law is operationally impossible, violates First Amendment rights to access information, and creates unconstitutional privacy intrusions. Some platforms claim age verification is technically unreliable, requiring massive data collection for imperfect results. Companies also worry about liability if age verification data is breached and used by bad actors targeting minors.

Will the law actually reduce social media addiction?

Privacy experts and researchers doubt the law will meaningfully reduce addiction because it doesn't address the core design features that drive compulsive use. Algorithmic feeds, notifications, and engagement metrics remain intact for most users. Teens can request parental consent to access algorithmic features, and VPNs or shared accounts allow easy workarounds.

What are other states doing?

At least 12 states including Arkansas, Florida, Utah, and Texas have passed similar laws or have pending legislation requiring age verification for minors on social media. If Virginia's law survives legal challenges, expect rapid adoption across other states, creating a patchwork of inconsistent requirements that effectively becomes a national standard.

What happens to age verification data after collection?

The law requires platforms to keep data "secure" but doesn't define security standards or restrict government access. Law enforcement can potentially request age verification data for investigations. The law doesn't mandate encryption, warrant requirements, or teen notification if data is accessed by authorities, creating significant surveillance risks.

How can teens and parents protect their privacy?

Technically savvy teens can use VPNs to mask their Virginia location and bypass age restrictions. Parents concerned about privacy can avoid self-identifying through parental monitoring tools and educate teens about data minimization. However, these are workarounds rather than solutions to the law's underlying privacy architecture.

Are platforms complying with the law?

Major platforms are using self-attestation (users confirm their age through a checkbox) rather than genuine verification. This technically complies with the law while not meaningfully verifying age. Some smaller platforms blocked Virginia entirely. Real compliance through government ID or behavioral AI would be more expensive and contentious legally.

When will courts decide if the law is constitutional?

Multiple lawsuits challenging Virginia's law on First Amendment and privacy grounds are proceeding through federal court. Decisions could come by mid-2025 or later. Legal experts expect courts to strike down some provisions, particularly those restricting algorithmic speech and creating unreasonable data collection.

Conclusion: What This Means for Your Digital Life in 2025

Virginia's teen safety law represents an inflection point in how America regulates technology and privacy. On the surface, it's about protecting kids from social media addiction. Underneath, it's about establishing infrastructure for data collection, behavioral surveillance, and government oversight of teen digital life.

The law probably won't achieve its stated goal of reducing addiction. Social media platforms will remain designed to maximize engagement. Teens will find workarounds. Compliance will remain spotty and inconsistent.

But the law will create something durable: a template for age verification infrastructure that can be scaled across states. A precedent showing that government-mandated data collection on minors is acceptable if framed as protection. A surveillance architecture that will outlast the specific provisions courts strike down.

For parents, the law offers tools to monitor teens while simultaneously creating risks from centralized data collection. The tradeoff might not be worth it. For teens, the law is mostly irrelevant thanks to easy workarounds and platform non-compliance. For platforms, the law is an expensive nuisance that they're trying to minimize.

The real question is constitutional. Will courts accept that protecting children from addiction justifies creating surveillance databases? Or will they recognize that the law conflates child protection with government monitoring, and therefore violates constitutional privacy and free speech rights?

The answer will define how tech regulation works for the next decade.

What's certain: Virginia's experiment is being watched by legislatures worldwide. If it survives legal challenges, expect your state to pass something similar. If it fails legally, expect regulators to get more creative and to write laws that are harder to challenge. Either way, teen privacy in America is about to change significantly.

The only question is whether the change will happen through transparent legal debate or through the steady accumulation of surveillance infrastructure justified by incremental claims about child protection. So far, the trend suggests the latter.

Key Takeaways

- Virginia's law requires age verification for teens under 14 on social media, effective January 1, 2025, creating centralized databases of teen identification data and behavioral information

- Three age verification methods (government ID, digital age assurance, parental consent) all create significant privacy risks, from data breach targets to invasive behavioral surveillance

- Parental monitoring tools normalize 24/7 surveillance of teen digital activity, raising questions about consent and privacy expectations between minors and guardians

- Legal challenges on First Amendment and constitutional privacy grounds are likely to succeed, with courts expected to strike down portions of the law by late 2025 or 2026

- The law likely won't reduce social media addiction because it doesn't address the core design features (infinite scroll, notifications, algorithmic feeds) that drive compulsive use

- At least 12 other states are implementing similar laws, making Virginia's legal challenges and practical outcomes a template for national tech regulation in 2025-2026

![Virginia Teen Safety Law: Privacy Concerns & Legal Battles [2025]](https://tryrunable.com/blog/virginia-teen-safety-law-privacy-concerns-legal-battles-2025/image-1-1767805782385.jpg)