Introduction: A Watershed Moment for Social Media Regulation

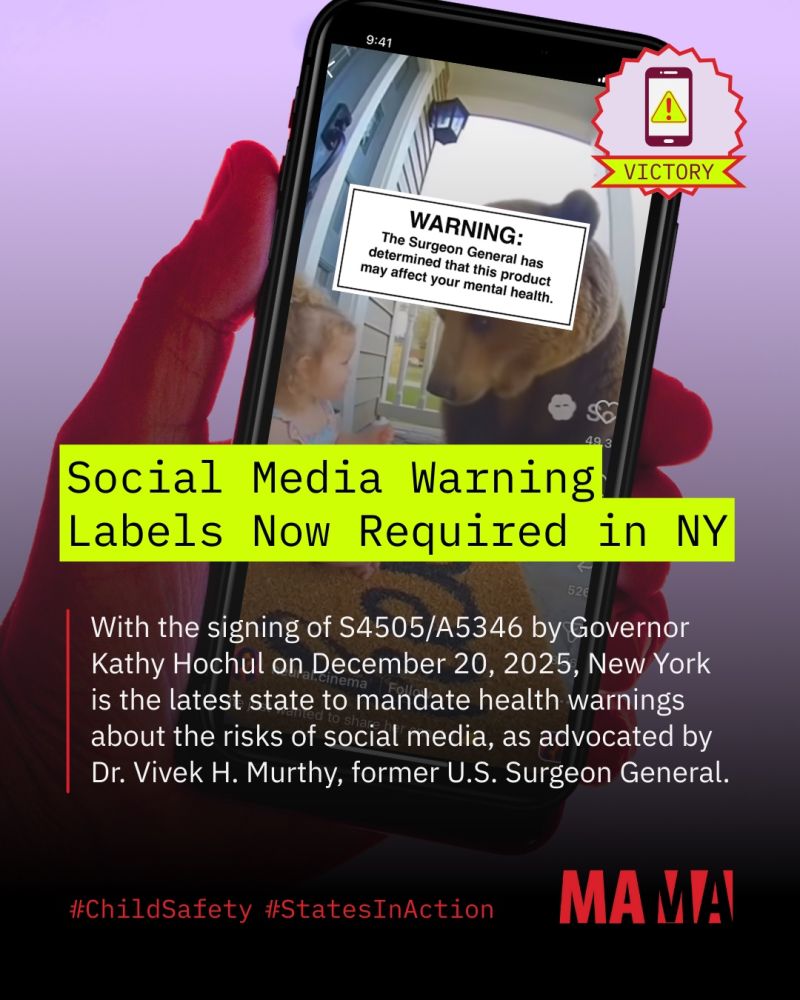

When New York Governor Kathy Hochul signed legislation requiring warning labels on social media platforms earlier this year, it marked a turning point in how America approaches digital regulation. The bill wasn't flashy. It didn't make headlines the way antitrust suits against tech giants do. But in its own way, it's potentially more consequential than any courtroom battle.

Here's the core idea: social media platforms—the ones designed to keep you scrolling endlessly through feeds—now have to tell younger users exactly what they're being exposed to. Before a teenager encounters infinite scroll, autoplay video, or algorithmic push notifications, they see a warning label. Not once. Repeatedly. And critically, they can't skip it.

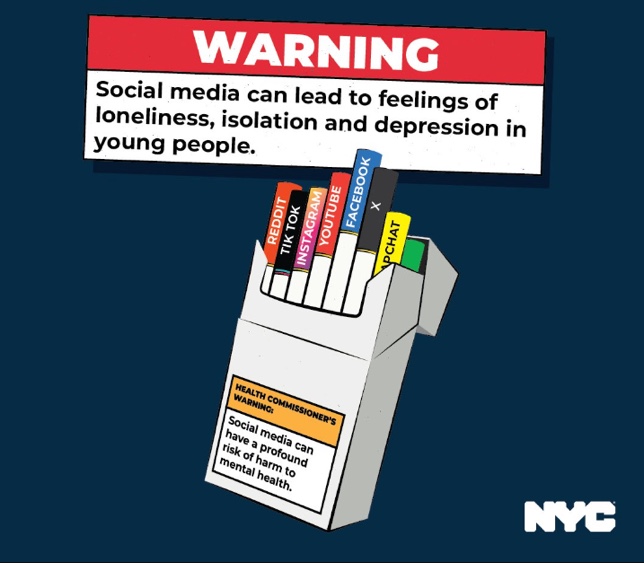

It's the digital equivalent of those tobacco warning labels you see on cigarette packs. Except this time, the addictive substance isn't nicotine. It's engagement mechanics deliberately engineered to maximize time on platform.

Why should you care? Because this law signals something larger: regulators are finally moving beyond hand-wringing about social media's effects on mental health and toward concrete action. If New York's approach sticks, expect California, Massachusetts, and other states to follow. Tech platforms will face pressure to either redesign their core features or face a patchwork of state-by-state compliance burdens.

This article breaks down what the law actually requires, how platforms will respond, what the science says about social media and addiction, the legal battles ahead, and what this means for the future of digital regulation.

TL;DR

- The Law: New York requires social media platforms to display warning labels before exposing younger users to addictive features like infinite scroll, autoplay, and push notifications

- Scope: Applies to platforms that use "addictive feeds" as a significant part of their services, with some exceptions for features serving valid purposes unrelated to engagement

- Enforcement: Warnings must appear when a young user first encounters these features and periodically thereafter, with no ability to bypass them

- Legal Precedent: The bill mirrors existing warning labels on tobacco, alcohol, and media with flashing lights, suggesting courts may uphold it

- Industry Impact: Platforms will likely face compliance costs, potential feature redesigns, and regulatory precedent that spreads to other states

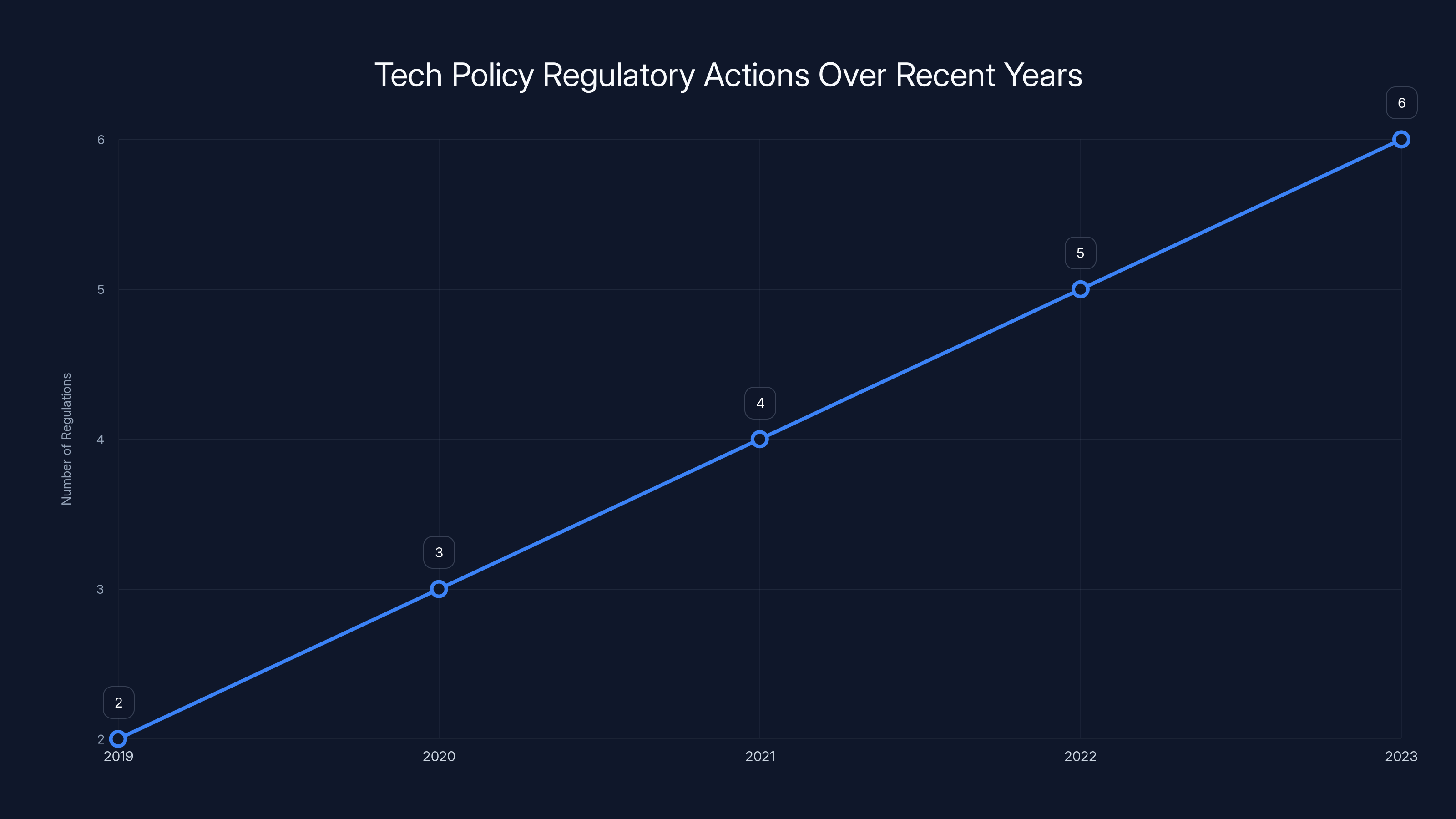

Estimated data shows an increasing trend in major tech regulations from 2019 to 2023, reflecting growing government intervention in tech policy.

The Bill's Requirements: What Exactly Has to Be Labeled?

The legislation, formally known as S4505/A5346, doesn't require platforms to eliminate addictive features. Instead, it requires transparency through mandatory warnings. But the specifics matter because they reveal exactly what regulators consider problematic.

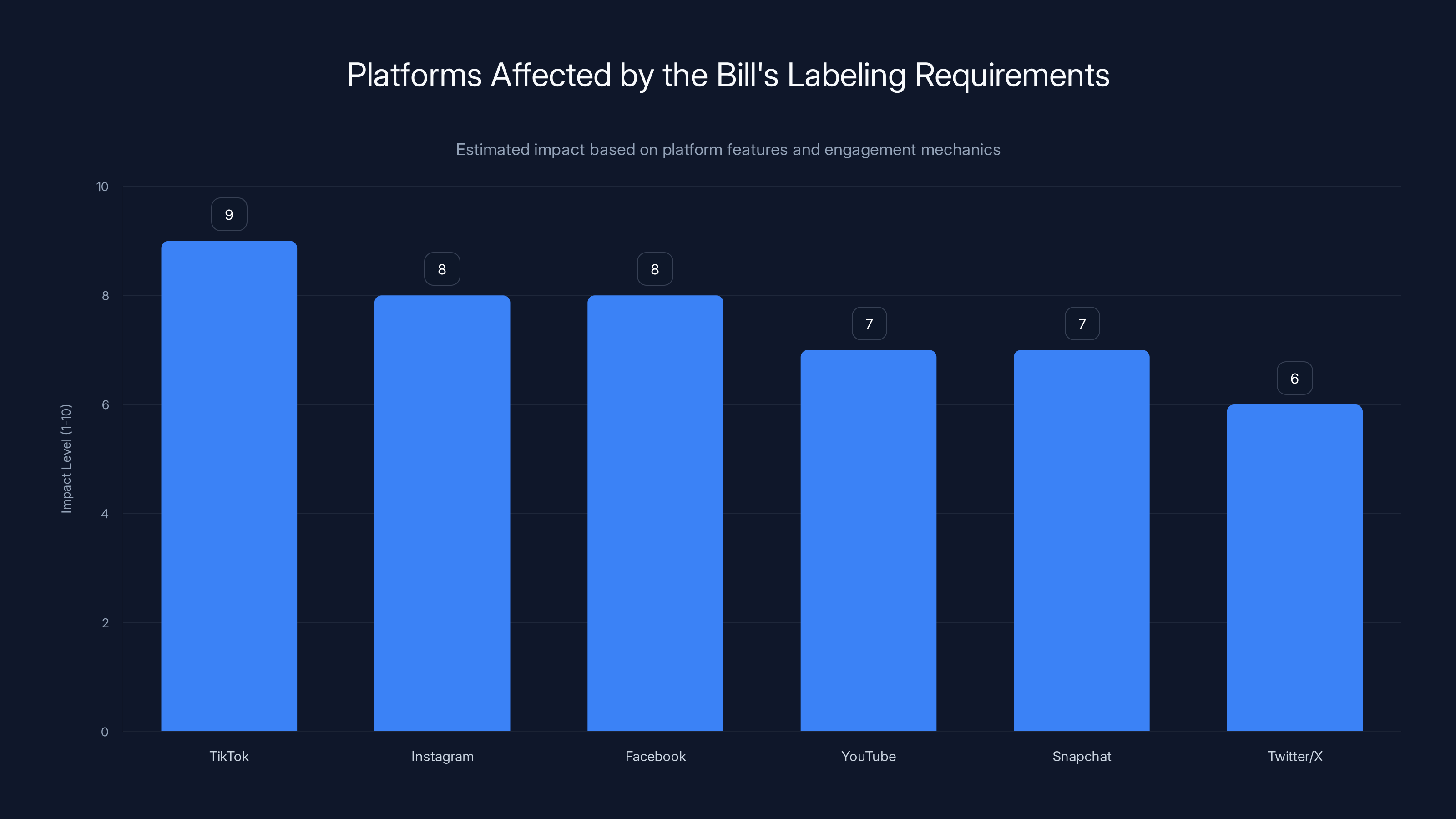

The bill targets platforms that offer "an addictive feed, push notifications, autoplay, infinite scroll, and/or like counts as a significant part" of their services. This language is deliberately broad. It's designed to capture TikTok, Instagram, Facebook, YouTube, Snapchat, and Twitter/X, but not necessarily platforms where these features are peripheral rather than central to the user experience.

Consider the distinction. LinkedIn offers infinite scroll and push notifications, but they're not core to its value proposition—networking is. Reddit offers infinite scroll, but it's organized by community rather than by an algorithmic feed designed to maximize dwell time. The law seems engineered to hit platforms where engagement mechanics are the primary product.

When a younger user first encounters one of these features, they get a warning label. Not a one-time notice buried in settings, but a warning shown at the point of use. The platform must continue to display these warnings "periodically thereafter," which almost certainly means on a recurring schedule rather than just the first time.

The language about periodicity matters. Some platforms might argue that warning users once per session is sufficient. Regulators likely expect more frequent reminders. If a 15-year-old opens TikTok every day, do they see the warning daily? Multiple times per day? The bill doesn't specify, which will likely become a point of contention during implementation.

There's also a built-in flexibility mechanism. The bill allows exceptions if New York's Attorney General determines a particular feature is being used for "a valid purpose unrelated to prolonging use of such platform." This theoretically protects features that serve legitimate functions beyond pure engagement manipulation. A video autoplay feature that helps users with disabilities navigate content, for example, might qualify. But the bar for this exception appears intentionally high.

The comparison to existing warning systems is instructive. Just as tobacco warnings in many countries must cover at least 30% of a cigarette pack's main display areas and often include images of health consequences, social media warnings will need to be prominent enough to actually register with users. A tiny notification in gray text won't cut it. The warning label model suggests something more visually arresting.

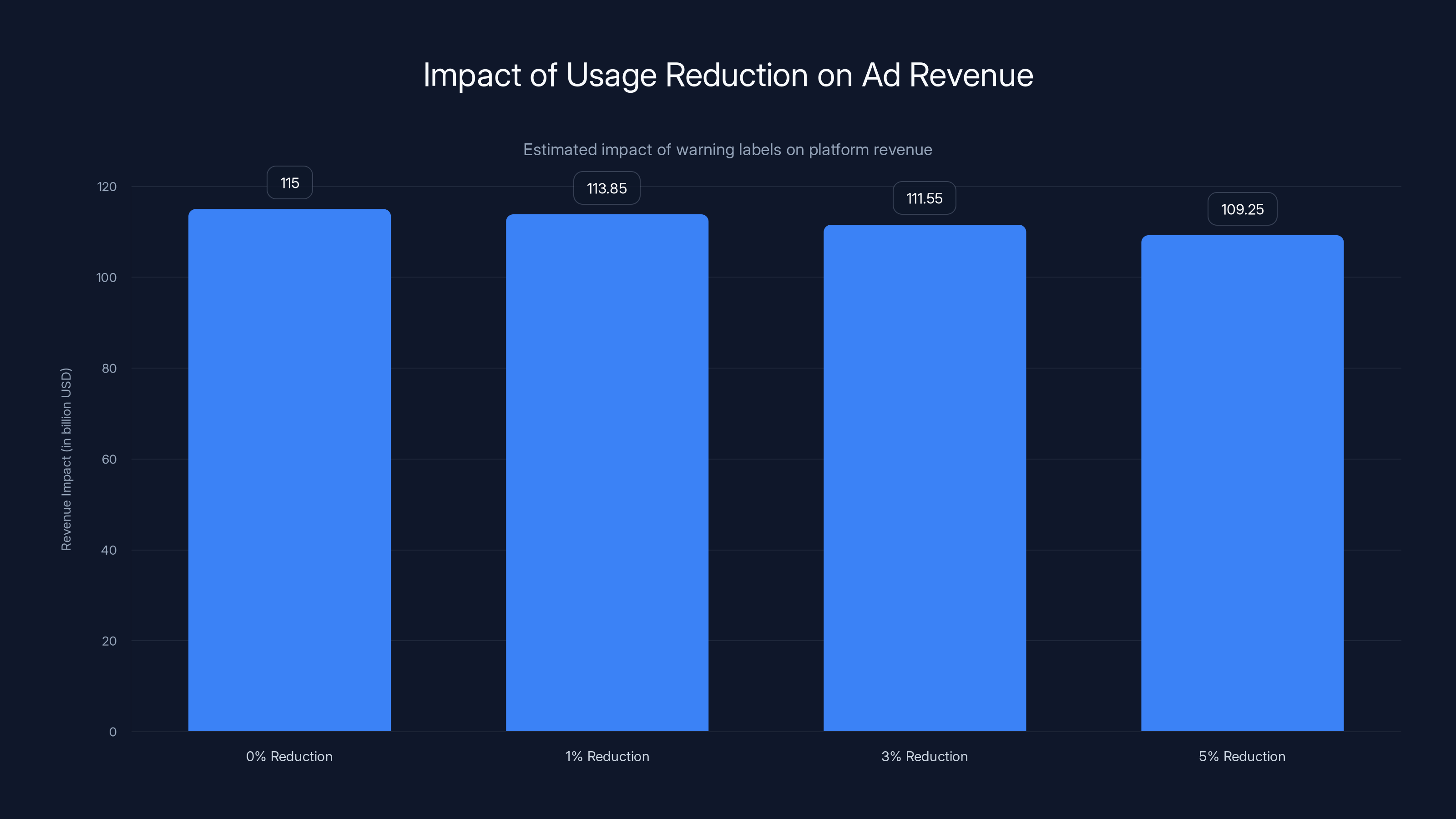

A 5% reduction in user engagement due to warning labels could lead to a $5.75 billion decrease in annual ad revenue for platforms like Facebook/Instagram. Estimated data.
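The arithmetic behind that estimate is easy to check. The sketch below is only an illustration: it backs out the baseline ad revenue implied by the caption's two figures and assumes, as the caption implicitly does, that ad revenue falls in direct proportion to engagement. The function names and the `pass_through` parameter are hypothetical, introduced here for illustration.

```python
def implied_baseline_revenue(revenue_loss: float, engagement_drop: float) -> float:
    """Back out the annual ad revenue implied by a stated loss and engagement drop."""
    if not 0 < engagement_drop <= 1:
        raise ValueError("engagement_drop must be in (0, 1]")
    return revenue_loss / engagement_drop


def projected_revenue_loss(
    baseline_revenue: float,
    engagement_drop: float,
    pass_through: float = 1.0,
) -> float:
    """Estimate lost ad revenue for a given drop in engagement.

    pass_through is an assumption, not a measured value: 1.0 means revenue
    falls one-for-one with engagement, 0.5 means half of the drop shows up
    in revenue.
    """
    return baseline_revenue * engagement_drop * pass_through


if __name__ == "__main__":
    baseline = implied_baseline_revenue(5.75e9, 0.05)
    print(f"Implied baseline: ${baseline / 1e9:.2f}B per year")
    for pass_through in (1.0, 0.5):
        loss = projected_revenue_loss(baseline, 0.05, pass_through)
        print(f"pass_through={pass_through}: ${loss / 1e9:.2f}B lost")
```

The caption's numbers imply a baseline of roughly $115 billion in annual ad revenue. If engagement and revenue are less tightly coupled than assumed, the projected loss shrinks accordingly.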

The Science Behind "Addictive" Social Media: What Research Actually Shows

Before dismissing this as political theater, it's worth understanding what researchers have actually found about social media and engagement mechanics. The science is more nuanced than "social media bad," but it's also more concerning than "it's just entertainment."

Starting around 2016, researchers began publishing studies on how specific design features affect user behavior and mental health. The findings paint a consistent picture: infinite scroll, autoplay, and push notifications work. They keep people engaged longer. Whether that constitutes "addiction" depends on how you define the term.

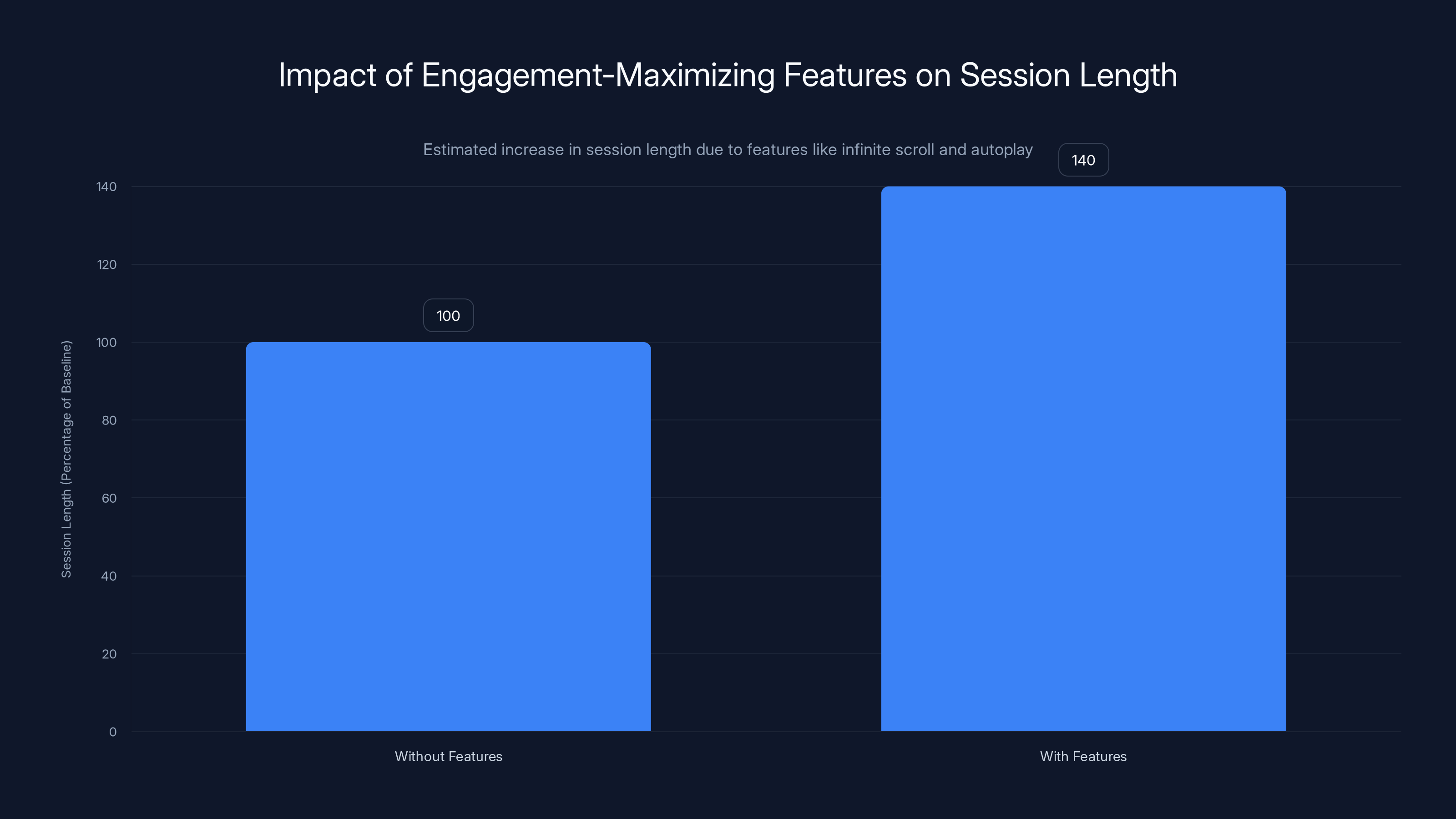

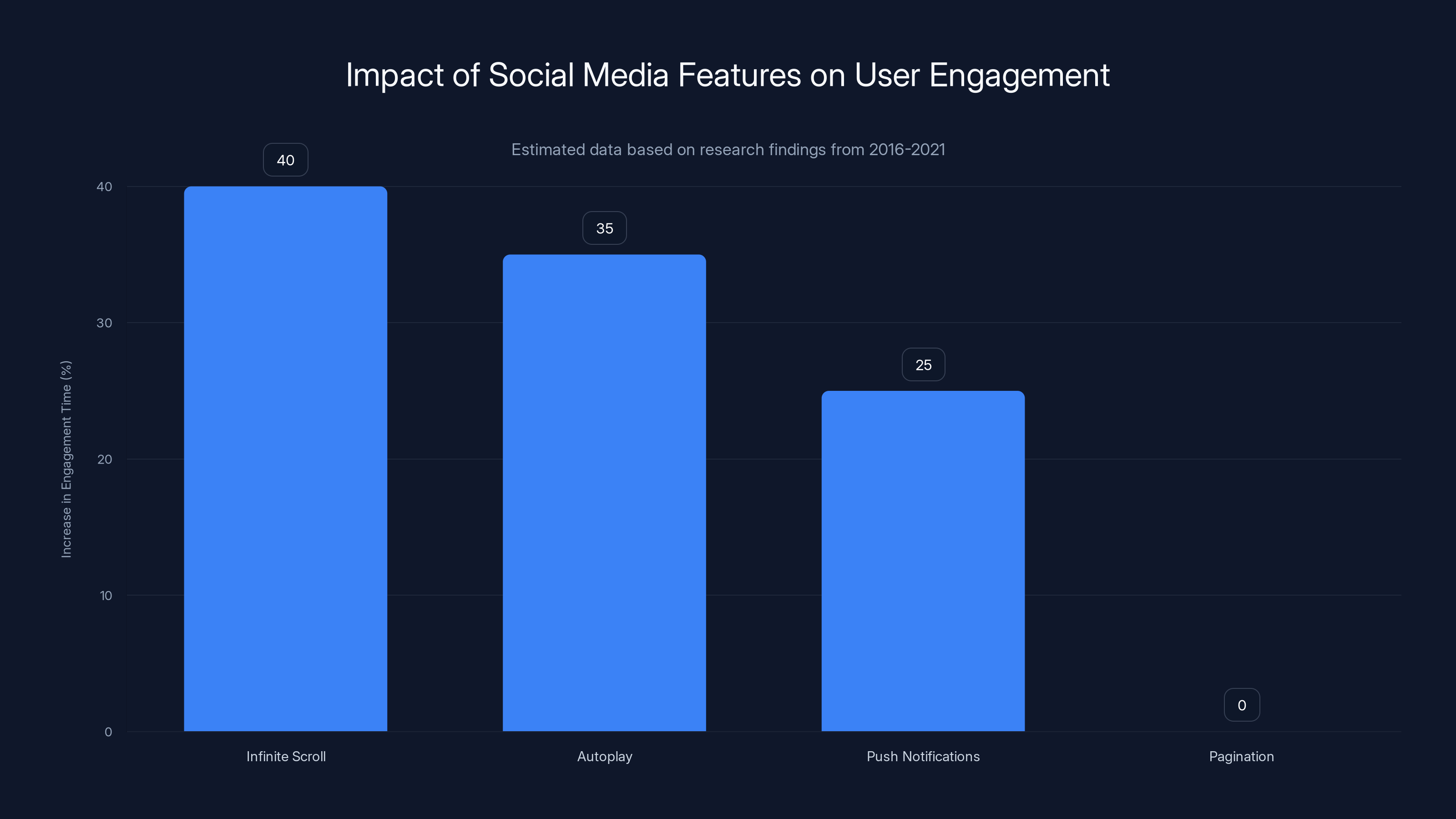

A 2021 study found that users who encounter infinite scroll spend measurably more time on platforms than those using pagination (click to load more). The effect isn't subtle: sessions run roughly 30-50% longer. Autoplay creates similar effects. Users intending to watch one YouTube video end up watching five because the next one starts automatically.

Push notifications are maybe the most deliberately manipulative. They're interruptions—your phone buzzes with a notification that someone liked your post, or a friend is live, or there's breaking news in your feed. These notifications are specifically timed and crafted to trigger habitual checking behavior. Apps A/B test notification timing to determine when you're most likely to respond.

What makes this scientifically interesting (and arguably problematic) is that these mechanics exploit psychological principles. There's the variable reward schedule—you don't know if the next scroll will show something interesting, so you keep scrolling. There's the social validation loop—seeing likes on your post creates a dopamine hit. There's FOMO (fear of missing out), deliberately triggered by notifications.

Do these mechanics create clinical addiction? That's where things get murky. Most users aren't addicted to TikTok the way someone is addicted to heroin. But for teenagers specifically, the effects can be significant.

In 2023, the US Surgeon General issued an advisory on social media's effects on adolescent mental health, noting that while not all effects are negative, the potential harms are significant enough to warrant action. Teenagers' brains are still developing, particularly the prefrontal cortex responsible for impulse control and long-term planning. This developmental window makes them particularly vulnerable to engagement mechanics designed to maximize immediate interaction.

The research on this is not settled in the sense that scientists agree 100%, but there's a legitimate evidence base that engagement mechanics designed to maximize time on platform can contribute to problematic usage patterns, particularly in younger users. This is what makes warning labels a defensible policy response.

How the Warning Label Approach Compares to Other Policy Options

Why warning labels specifically? Why not just ban these features outright, as some countries have considered?

The answer reveals something about regulatory philosophy. A ban would be more aggressive and potentially more effective, but it would also raise questions about government overreach into product design decisions. Warning labels are a lighter-touch approach that respects the market while providing information.

Consider tobacco regulation. Countries didn't ban cigarettes entirely (with rare exceptions). Instead, they required warning labels, increased taxes, restricted marketing, and designated smoke-free spaces. Cigarette use declined substantially, not because cigarettes disappeared, but because the combination of measures made smoking less convenient and more costly—socially, financially, and informationally.

Social media regulation could follow a similar trajectory. Warning labels represent the first step. If they prove ineffective, subsequent steps might include algorithmic transparency requirements (showing users how content is ranked), time-limit features, or restrictions on certain features for users below specific ages.

Other countries have already moved further. The UK's Online Safety Act includes requirements for platforms to assess and mitigate harms to minors. The EU's Digital Services Act requires transparency about algorithms and content moderation. These aren't warning label approaches; they're structural requirements that affect how platforms operate.

The US has been slower to act, partly because of First Amendment concerns and partly because of tech industry lobbying. New York's approach splits the difference. It mandates information provision (the warning) without mandating product changes. This is legally more defensible and politically more achievable.

But here's the thing: warning labels only work if they actually change behavior. The evidence on tobacco warning labels is mixed. They've increased awareness and contributed to declining smoking rates, but they haven't prevented millions from continuing to smoke. If social media warning labels follow the same pattern, they'll inform users about addictive features without necessarily reducing usage significantly.

This creates pressure for stronger regulation. If warning labels appear ineffective after a few years, legislators will likely propose more aggressive measures. Platforms should be aware that this law is potentially the thin end of the wedge.

Engagement-maximizing features like infinite scroll and autoplay can increase session lengths by 30-50%. Estimated data based on typical usage patterns.

The Legal Landscape: Challenges and Constitutional Questions

There's virtually no chance this law survives legal challenge without modification. Not because the reasoning is flawed, but because of how American First Amendment doctrine works.

Compelled speech, meaning forcing companies to say something they don't want to say, is constitutionally permissible in some contexts but highly restricted in others. Tobacco warning labels survived court challenges because courts determined that requiring a factual warning about a dangerous product is not an unreasonable compulsion. Alcohol warnings survived for similar reasons.

But social media is different. Platforms will argue, and they have a point, that their services aren't inherently dangerous in the way tobacco is. Users suffer no direct physical harm from infinite scroll in the way smokers suffer lung damage. This creates a weaker factual basis for mandated warnings.

There are also commercial speech concerns. Platforms might argue that a warning label about "addictive" features is a misleading characterization of their products, and that forcing them to display such labels violates their own First Amendment rights.

These aren't frivolous arguments. But they're not slam dunks either. Regulators can point to the mental health research, the Surgeon General's statements, and the precedent of product warning labels. They can argue that informational labeling doesn't prevent platforms from continuing to offer these features, only that users must be informed.

Anticipate a long court battle. This will likely reach the Supreme Court eventually, unless platforms and regulators reach a settlement on modified language or implementation. The outcome isn't predetermined, but the legal doctrine on compelled speech suggests some skepticism from judges.

Meanwhile, similar bills are advancing in other states. California passed its own social media regulation requiring parental consent for users under 18 accessing certain features. Massachusetts has considered bills along similar lines. Texas and Arkansas passed bills restricting social media for minors. The regulatory pressure is building from multiple angles.

California's Parallel Approach: A Template for National Regulation

New York isn't alone in this space. California lawmakers have proposed similar legislation, and the California approach provides insight into how this regulation might evolve.

California's regulatory history is important context. The state doesn't just regulate tech for its residents; by virtue of its market size and cultural influence, California regulations often become de facto national standards. If a platform needs different features for California users versus everyone else, they often just redesign for all users rather than maintain multiple versions.

California's proposed requirements go somewhat further than New York's. They focus on explicit parental consent before younger users access certain features. This is slightly different from the warning label approach. Rather than just informing users, it requires affirmative parental approval.

The difference matters tactically. A warning label might reduce some frivolous usage but won't prevent motivated teenagers from continuing to use platforms. Parental consent creates a structural barrier. A parent would need to explicitly approve the use of infinite scroll for their 14-year-old, which is a much higher bar.

But parental consent also creates implementation challenges. How does a platform verify parental consent? Will platforms accept parent email addresses and phone numbers? Credit card verification? This opens data privacy questions. And it assumes parents are paying attention and capable of making informed decisions, which isn't universally true.

Between New York's warning label approach and California's parental consent model, you're seeing different philosophies emerge. New York trusts users (even young ones) to make informed decisions if given information. California trusts parents to control access to features. Neither approach trusts platforms to self-regulate, which is perhaps the point of agreement.

If both New York and California laws survive legal challenge, platforms will face a complicated compliance landscape. They might implement warning labels for all users (since the cost of customization exceeds the cost of uniform implementation), and they might implement parental consent verification for California residents. Over time, the more stringent California requirements might extend nationally for the same reason.

Platforms like TikTok and Instagram are most affected due to their reliance on addictive features. Estimated data based on feature centrality.

How Social Media Platforms Are Likely to Respond

What will TikTok, Instagram, Facebook, YouTube, and other platforms actually do in response to these laws?

The cynical answer is: as little as possible. They'll implement technically compliant warning labels that satisfy the letter of the law while minimizing impact on engagement. The warning will be dismissable (if the law allows), easy to ignore, or so common that users develop notification blindness.

But there's likely to be some real response too, because the reputational and regulatory risks outweigh the benefits of defying the law. Platforms also recognize that if they're seen as deliberately circumventing these requirements, it accelerates harsher regulation.

Most likely outcomes:

Warning label design: Platforms will craft warnings that technically comply but minimize visual salience. Think small icons in corners rather than prominent banner messages. The warnings will likely use neutral language rather than explicitly stating "this feature is addictive." Something like "This feature uses infinite scroll and algorithmic recommendations" states facts without moral judgment.

Feature modifications: Some platforms might implement toggles allowing users to disable autoplay or infinite scroll, framing this as "giving users control" while technically satisfying the law. The toggles will default to enabled, and disabling them will require navigating settings menus.

Age verification systems: Platforms will likely strengthen age verification processes since the law specifically targets younger users. This could mean requiring ID verification, which creates privacy concerns but also authenticates age. Alternatively, platforms might use behavioral heuristics (device type, app store account age, etc.) to estimate age and apply warnings selectively.

Lobbying and legal challenges: Platforms will fund legal challenges to the law and support industry groups fighting regulatory efforts. They'll also lobby legislators to water down requirements or add exemptions for features that don't primarily drive engagement.

Content creator accommodation: Some platforms might create separate feature sets for business accounts (creators and influencers) that have fewer restrictions, arguing that infinite scroll serves a different purpose for creators versus consumers.

The outcome depends on implementation details the law leaves ambiguous. If regulators interpret the law aggressively and enforce compliance strictly, platforms will be forced to make more meaningful changes. If enforcement is light, platforms will do the minimum.

The Broader Regulatory Trend: Where This Fits in the Tech Policy Landscape

This law doesn't exist in isolation. It's part of a larger regulatory moment in tech policy characterized by skepticism toward platform self-regulation and movement toward government intervention.

Consider what's happened in just the last few years. The EU passed the Digital Markets Act, requiring major platforms to open their ecosystems to competitors. The UK passed the Online Safety Act, making platforms responsible for removing certain content and protecting minors. In the US, the Senate passed the Kids Online Safety Act, which would require age-appropriate design and parental controls, though it has not become federal law. Individual states have passed laws restricting social media for minors and requiring age verification.

Each of these laws reflects the same underlying theory: platforms have externalized the costs of their services onto users, particularly younger users, and government intervention is necessary to internalize those costs. The warning label approach reflects the milder end of this intervention spectrum. Other policies require platforms to actually change how they operate.

Why the shift? Several factors:

Accumulation of evidence: Years of research on social media's effects on mental health, sleep, academic performance, and social development created a scientific basis for regulatory action.

Platform resistance to self-regulation: Despite public commitments to addressing harms, platforms have made only incremental changes to engagement mechanics while continuing to optimize for usage growth.

Political alignment: A rare moment of bipartisan agreement that tech regulation is necessary. Both progressive politicians focused on protecting minors and conservative politicians skeptical of Big Tech support these measures.

Public opinion shifts: Polling consistently shows strong majorities support regulations requiring social media to protect children and limit addictive features.

This regulatory trend will likely accelerate. Once the first state implements a major social media regulation successfully (or even somewhat successfully), other states copy it. Federal legislation becomes possible once states demonstrate that regulation doesn't collapse the internet or eliminate social media services.

Tech platforms should expect that the regulatory environment they operated in from 2004-2020 (essentially unregulated) is permanently over. The question is no longer whether regulation comes, but what kind and how stringent it becomes.

Research indicates that features like infinite scroll and autoplay can increase user engagement time by 30-50%, with push notifications also significantly contributing. Estimated data.

What "Addictive" Really Means in the Context of Social Media

A critical point often missed in discussions of this law: the term "addictive" is doing a lot of work legally and scientifically, but it's not precisely defined.

In clinical psychology, addiction has specific criteria. The DSM-5 (Diagnostic and Statistical Manual of Mental Disorders) doesn't currently include "Internet addiction" as an official diagnosis, though there's considerable discussion about behavioral addictions that mirror substance addictions.

When regulators call social media features "addictive," they're not claiming that using Instagram constitutes clinical addiction for most users. They're using "addictive" more colloquially to mean "designed and engineered to maximize engagement and create habitual usage patterns," even if those patterns don't rise to the level of psychiatric disorder.

This distinction matters because it affects how courts interpret the law. Platforms will argue that calling features "addictive" is a misleading characterization because most users don't experience addiction. Regulators will argue that features can be designed addictively (with addiction-like mechanisms) without all users being addicted, just as not all people who smoke become addicted to nicotine, but cigarettes are still validly described as addictive.

The behavioral evidence is clearer than the clinical definitions. We know that infinite scroll causes longer sessions than pagination. We know that autoplay causes more video consumption than requiring user action. We know that notifications interrupt and drive habitual checking. These are measurable effects, even if they don't constitute clinical addiction for every user.

The law seems to define addictiveness not by user outcome (whether individuals become addicted) but by design intent and mechanism. If a feature is deliberately engineered to increase time on platform beyond what users would choose without that engineering, it's "addictive" for purposes of the law.

This is actually a clever regulatory move because it avoids the impossible task of proving that individual users are clinically addicted. Instead, it focuses on observable design patterns. Courts and regulators can assess whether a feature is designed to drive engagement, independent of whether specific users experience psychological dependence.

The Mental Health Question: Do We Actually Know These Features Harm Teenagers?

Underlying the entire regulatory push is an assumption that these features harm mental health, particularly for younger users. The evidence supports this to some degree, but with important caveats.

Multiple studies have found correlations between heavy social media use and depression, anxiety, sleep problems, and self-harm in teenagers. A 2019 meta-analysis of over 70 studies found consistent associations between social media use and increased depression and anxiety symptoms.

But here's the critical distinction: correlation is not causation. Heavy social media use correlates with depression, but does social media cause depression, or do depressed teenagers use social media more (perhaps as a coping mechanism or because depression increases isolation)?
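A toy simulation makes the reverse-causation worry concrete. In the sketch below, usage has no effect on mood at all: low mood drives usage, not the other way around. A cross-sectional snapshot still shows a clear correlation. Every number here (the 0.5 coefficient, the noise levels, the sample size) is invented for illustration and is not drawn from any study.

```python
import random


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length samples."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def simulate_reverse_causation(n: int = 5000, seed: int = 0) -> float:
    """Simulate teens whose depression score drives their usage.

    Usage never feeds back into the depression score, so any correlation
    observed here comes entirely from depression -> usage.
    """
    rng = random.Random(seed)
    # Hypothetical standardized depression scores.
    depression = [rng.gauss(0.0, 1.0) for _ in range(n)]
    # Hypothetical daily hours: a baseline, plus an effect of depression, plus noise.
    usage = [2.0 + 0.5 * d + rng.gauss(0.0, 1.0) for d in depression]
    return pearson(usage, depression)


if __name__ == "__main__":
    r = simulate_reverse_causation()
    print(f"Correlation between usage and depression: {r:.2f}")
```

The correlation comes out solidly positive even though, by construction, scrolling caused none of the depression. A single snapshot of real data cannot distinguish this world from one where usage is the cause.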

Longitudinal studies (following the same people over time) provide stronger evidence of causality than cross-sectional studies (measuring at one point in time). Some longitudinal work suggests that social media use does contribute to depression and anxiety, not just reflect it. But the effects are typically modest.

More specific research on engagement mechanisms is helpful here. Studies looking specifically at how features like infinite scroll, autoplay, or notifications affect behavior show measurable engagement increases. Whether those engagement increases translate to mental health harms is less clear, and probably depends on individual factors (personality, existing mental health status, family relationships, offline social connections, etc.).

The most honest assessment is: engagement features clearly drive more usage. More usage likely increases risks for some people, probably younger people whose brains are still developing. But we can't make sweeping claims that infinite scroll causes depression in a direct causal sense.

This creates a regulatory challenge. If you're requiring warnings about features that "may affect mental health" based on correlational evidence, are you justified in compelled speech? Stronger evidence of direct causation would make regulatory action easier to defend.

That said, the existence of uncertainty doesn't mean regulation is unjustified. We regulate medications, food additives, and environmental exposures even when the evidence of harm is probabilistic rather than absolutely certain. Reasonable people can accept correlational evidence as sufficient justification for informing users about potential risks.

One nuance worth adding: not all social media effects are negative. Research also shows benefits including social connection, community building, creative expression, and activism. The question is whether the benefits outweigh the risks, particularly for younger users with developing brains. The policy approach of the warning label tries to be neutral on this question—it doesn't say "don't use social media," just "be aware of these design features and their effects."

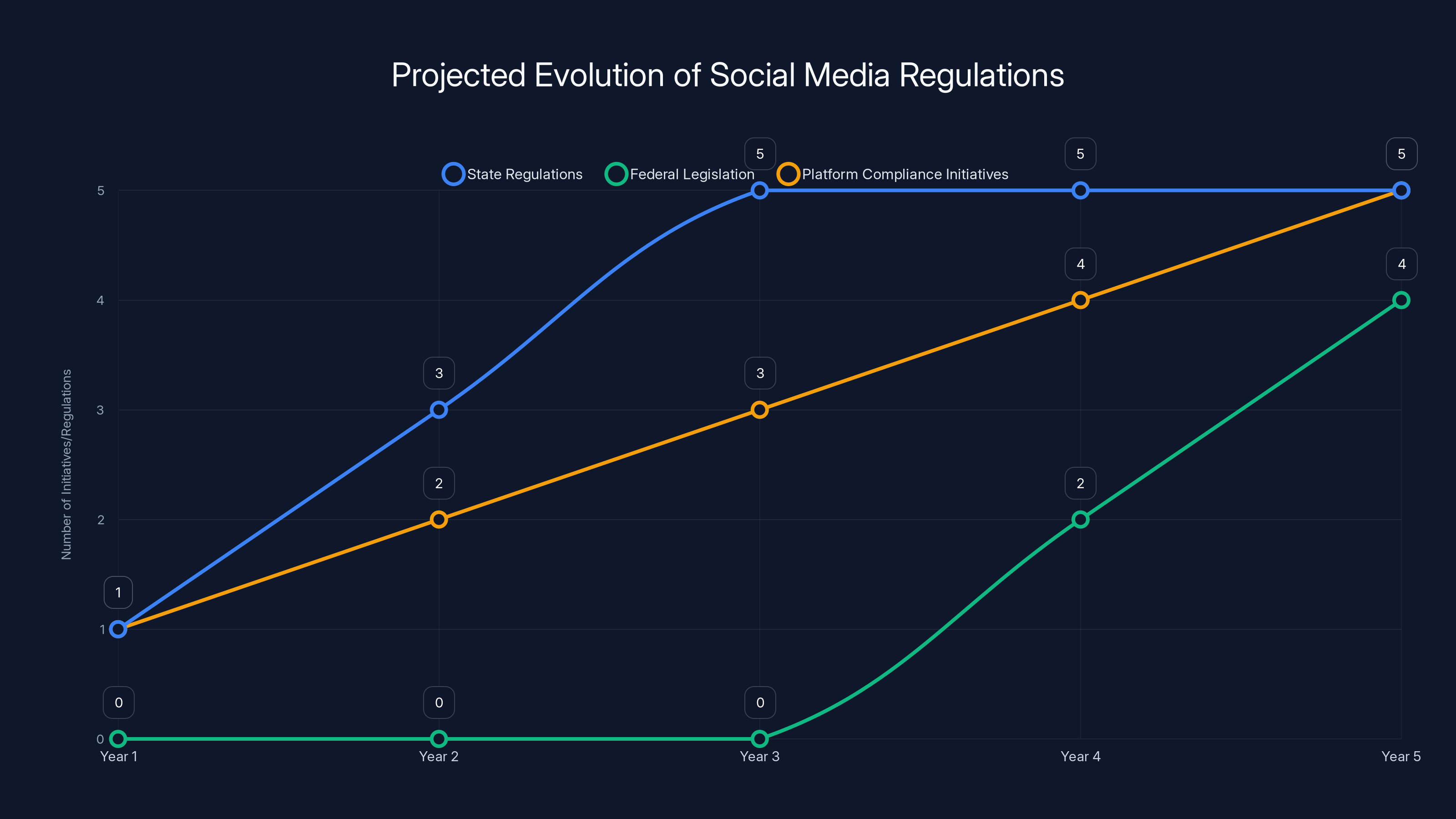

Estimated data shows a gradual increase in state regulations and platform compliance initiatives, with federal legislation potentially emerging by Year 4.

Implementation Challenges: The Practical Reality of Compliance

Here's where the law gets complicated: how exactly would these warnings work in practice?

Consider TikTok's For You Page (FYP). The entire experience is infinite scroll combined with algorithmic recommendations and autoplay video. When a user first opens TikTok, they encounter all of these features simultaneously. Does the warning appear once? Multiple times? Every time the user opens the app?

The law says warnings must appear "when a young user initially uses the predatory feature and periodically thereafter." This language is vague. Does "initially uses" mean the first time ever? First time per session? First time per day? And "periodically thereafter" could mean daily, weekly, or monthly.

Different interpretations lead to vastly different user experiences. If warnings appear every session, users will quickly develop notification blindness—the psychological phenomenon where repeated warnings become invisible. If warnings appear rarely, they may not meaningfully inform users.
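To see how much the interpretation matters, here is a minimal sketch of the "first use, then periodically" rule with the repeat interval left as a parameter. It is not how any platform implements compliance, and the bill specifies no interval. `WarningPolicy`, `should_show_warning`, and `count_warnings` are hypothetical names used only for this example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass(frozen=True)
class WarningPolicy:
    # Assumption: the bill does not define "periodically", so the interval
    # is whatever a platform or regulator settles on.
    repeat_interval: timedelta


def should_show_warning(
    last_shown: Optional[datetime], now: datetime, policy: WarningPolicy
) -> bool:
    """Show on first encounter, then again once the interval has elapsed."""
    if last_shown is None:
        return True
    return now - last_shown >= policy.repeat_interval


def count_warnings(open_times: list[datetime], policy: WarningPolicy) -> int:
    """Count how many warnings a user sees across a series of app opens."""
    shown = 0
    last_shown: Optional[datetime] = None
    for opened_at in open_times:
        if should_show_warning(last_shown, opened_at, policy):
            shown += 1
            last_shown = opened_at
    return shown


if __name__ == "__main__":
    start = datetime(2025, 1, 1, 8, 0)
    # A teenager who opens the app twice a day for one week.
    opens = [start + timedelta(hours=12 * i) for i in range(14)]
    policies = [
        ("every session", timedelta(0)),
        ("daily", timedelta(days=1)),
        ("weekly", timedelta(weeks=1)),
    ]
    for label, interval in policies:
        print(label, count_warnings(opens, WarningPolicy(interval)))
```

For the same week of use, the three readings produce 14, 7, and 1 warnings respectively. That spread is the gap between habituation and a warning that barely registers.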

Age verification creates another complexity. How do platforms determine who qualifies as a "young user"? Platforms already collect age data (at least supposedly), but many users lie about their age during signup. Real age verification would require government-issued ID or similar credentials, raising privacy concerns.

Some platforms might implement age-gating differently. They could require more robust age verification, which would deter younger users from accessing platforms entirely. Or they could estimate age using heuristics (the device they're using, when their account was created, content they engage with), which is less reliable.

There's also the question of what "warning label" looks like. Is it text? Icon? Audio? Video? Colors? The law compares it to tobacco warnings, which are highly visual and specific. Social media warnings will need to be similarly clear and unavoidable.

Imagine: TikTok implements a modal dialog that appears when a user first encounters infinite scroll, showing a warning with specific language about how the feature is designed to encourage extended use. The warning doesn't prevent access; it just requires acknowledgment before proceeding. This would arguably be legally compliant and minimally disruptive to the user experience.

But what if regulators later argue that such warnings aren't prominent enough or frequent enough? Implementation becomes an arms race. Platforms push boundaries of minimum compliance. Regulators clarify requirements in guidance documents. Platforms adjust. This is normal in regulatory environments, but it's slow and inefficient.

International Regulatory Precedent: How Other Countries Have Handled This

New York didn't invent this approach. Looking at how other countries have regulated social media provides useful context for predicting how this law might evolve.

France has been aggressive on social media regulation. Regulators there have fined Meta for violating GDPR privacy rules and have pushed for algorithmic transparency. But they haven't specifically required warning labels for engagement features. Instead, they focus on transparency and user rights to explanation.

The UK's Online Safety Act, whose main duties phase in during 2025, is arguably more comprehensive. It requires platforms to conduct risk assessments identifying potential harms to users, particularly minors. Platforms must then mitigate identified risks through design, content moderation, or other means. This is outcome-based regulation: it tells platforms what outcomes they must achieve without specifying the methods.

But even the UK hasn't specifically mandated warning labels for infinite scroll or autoplay. The regulatory approach is more about accountability and transparency than consumer information.

Australia passed legislation requiring age verification for social media, effectively banning minors under 16 from platforms entirely. This is more restrictive than warning labels or even parental consent models—it's outright prohibition.

China regulates social media heavily but focuses on content and speech rather than engagement mechanics. Other Asian countries have taken varied approaches, some requiring local servers and moderation, others more hands-off.

That New York is specifically choosing the warning label approach suggests influence from how other consumer protection domains work (tobacco, alcohol, medications). It positions social media as a product with foreseeable risks that users should understand, rather than as speech that can't be regulated or as a special category needing industry-specific rules.

International precedent suggests this approach will either work or evolve relatively quickly. Other countries have found that warning labels alone aren't sufficient for social media risks and have moved toward more prescriptive requirements (like the UK's risk assessment mandate) or outright restrictions (like Australia's age ban). New York's law might be a first step in a progression toward more aggressive regulation.

What Users Actually Need to Know: Practical Implications

Setting aside the regulatory and legal complexity, what does this law actually mean for someone using social media?

If you're over 18, probably not much immediately. The law targets younger users. If you're in New York and under 18, you'll start seeing warning labels on platforms that use addictive features, assuming they comply with the law.

These warnings will presumably explain what infinite scroll is and how it's designed to encourage extended use. Similarly for autoplay and push notifications. The intent is to create awareness that these features aren't neutral—they're intentionally designed to influence behavior.

Will the warnings change how people use social media? The evidence on warning labels suggests modest effects. Tobacco warnings increased awareness and probably reduced some usage, but millions of people continued smoking despite clear information about health risks. Social media warnings will likely follow a similar pattern.

But for some people, particularly teenagers who are more susceptible to peer influence and still developing impulse control, warnings might create helpful pause points. Instead of mindlessly scrolling an endless feed, you might consciously decide "I'm going to use this feature even though it's designed to make me spend more time here." That's not nothing.

For parents, the law creates an opportunity for conversations with teenagers. When a kid sees a warning about addictive features, parents can discuss why those features exist, how they work, and how to use social media intentionally rather than habitually.

Longer term, if warnings appear across multiple platforms and states, they might normalize conversations about engagement mechanics. This could drive demand for platforms or features that don't use aggressive engagement tactics. Some people might prefer a non-infinite-scroll social network, even if it's less popular, because they want to use social media without the addictive architecture.

The practical bottom line: if you're a young person in New York, expect to see more disclosures about how social media features work. If you're a parent, use these as conversation starters. If you're someone who cares about intentional technology use, this law creates pressure on platforms to be transparent about engagement mechanics, which is generally positive.

The Business Economics: How This Affects Platforms' Bottom Lines

From a business perspective, this law is a threat to platform profitability. Here's why: engagement drives advertising revenue, and engagement-maximizing features drive engagement.

Social media companies make money primarily through advertising. The more time users spend on the platform, the more ads they see, the more money advertisers pay. The entire business model is built on maximizing engagement.

Features like infinite scroll, autoplay, and algorithmic recommendations are core to engagement maximization. They're not incidental; they're fundamental to how modern social media works. Requiring warnings about these features directly threatens revenue, particularly if the warnings actually reduce usage.

Consider the math. If warning labels reduce daily average usage by even 5%, that translates to roughly a 5% reduction in ad impressions and revenue. For a platform on the scale of Facebook/Instagram, a reduction of that size would cost billions of dollars annually.
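The arithmetic can be sketched in a few lines of Python. This is a rough illustration, not a financial model: it assumes ad revenue scales linearly with usage, and the revenue figure is a hypothetical round number chosen for the example, not a reported result for any company.

```python
def ad_revenue_loss(annual_ad_revenue: float, usage_drop: float) -> float:
    """Estimate lost annual ad revenue for a given fractional drop in usage.

    Assumption: revenue is proportional to usage (ad impressions), which
    ignores ad pricing changes and differences between user groups.
    """
    if not 0.0 <= usage_drop <= 1.0:
        raise ValueError("usage_drop must be a fraction between 0 and 1")
    return annual_ad_revenue * usage_drop


# Hypothetical platform with $100 billion in annual ad revenue (illustrative only).
hypothetical_revenue = 100e9
loss = ad_revenue_loss(hypothetical_revenue, 0.05)
print(f"Estimated annual loss: ${loss / 1e9:.1f} billion")
```

In practice the warnings apply only to younger users in one state, so the real exposure would be a fraction of a platform-wide estimate like this one.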

Platforms have financial incentive to minimize the impact of warnings. They'll design warnings to be as easy to dismiss as possible. They'll argue against frequent warning repetition. They'll lobby for exemptions. The economic pressures are severe.

But there's a countervailing force: the cost of defying regulation or appearing to defy it. If a platform is caught actively circumventing warning label requirements, reputational damage and regulatory backlash could be worse than the engagement loss. Plus, once one state implements this law, others will follow, and maintaining multiple compliant feature sets is expensive.

There's also the possibility that some users actually prefer platforms without aggressive engagement mechanics. If a platform (or a new competitor) offered a version without infinite scroll and autoplay, with a smaller but more intentional user base, it might find a profitable niche.

Historically, this has been hard because the largest platforms benefit from network effects: more users make a platform more valuable, so smaller alternatives never achieve critical mass. But regulatory pressure might disrupt this dynamic. If regulators require warnings on engagement features across all platforms, new competitors might build from the ground up without those features, potentially attracting users fatigued by engagement-maximizing design.

Predictions: How This Law and Similar Regulations Will Evolve

Assuming New York's law survives legal challenge, what's likely to happen next?

First 12-18 months: Platforms implement technically compliant warning labels with minimal visual prominence. Compliance is tracked by regulators and enforced loosely. The law becomes operational but has unclear impact on user behavior or platform engagement.

Year 2-3: Other states pass similar laws. California's parental consent requirement goes into effect. The regulatory patchwork becomes complex, but most platforms still implement uniform changes across all users rather than state-by-state variations.

Year 4-5: Federal legislation becomes possible as states demonstrate that regulation doesn't break the internet and platforms continue operating profitably. A federal law might unify requirements and preempt state variations.

Parallel track: Lawsuits challenging the law's constitutionality reach higher courts. Some requirements might be struck down (particularly if warnings are deemed excessive compelled speech), while others survive. The law likely survives in modified form.

Additional regulations: If warning labels prove insufficient to address mental health concerns, regulators will propose stronger measures. These might include:

- Mandatory algorithm transparency (showing users how content is ranked)

- Limits on notification frequency

- Forced implementation of time limit features

- Age-based access restrictions (similar to Australia's approach)

- Restrictions on certain features specifically for users under 16

Platform response: Platforms will gradually redesign features to be less obviously addictive, claiming to be proactive rather than reactive. They'll develop separate feature sets for young users with fewer aggressive engagement mechanics. They'll invest in "responsible AI" initiatives and mental health features (screen time trackers, break reminders) that sound good but don't fundamentally change the underlying business model.

Market dynamics: New platforms might emerge specifically designed without aggressive engagement mechanics, potentially gaining traction with users fatigued by existing platforms. Alternatively, existing platforms might create "lite" versions targeted at younger users.

International harmonization: Eventually, regulations across US states and major international jurisdictions might converge on similar standards, making global compliance easier for platforms.

None of this is certain. Regulatory outcomes depend on political shifts, court decisions, and technological changes. But based on historical patterns of product regulation and the clear political momentum behind social media regulation, some version of this trajectory seems likely.

How to Navigate This as a User, Parent, or Professional

If you're affected by this law or anticipate future similar laws, here's practical guidance.

As a young person using social media: Once warning labels appear, pay attention to them. They're telling you something true about how the features work. Consider whether you want to engage with these features despite understanding how they're designed. Some people consciously choose to use infinite scroll knowing what it does; others decide to limit use or use platforms differently.

As a parent: Use the warning labels as conversation starters with your kids. Ask them what the warnings mean, how the features work, and whether they want to limit their usage. Involved parenting combined with transparency about technology is more effective than pure restriction.

As a platform employee in product, policy, or legal: Prepare for a regulatory environment where engagement maximization is under scrutiny. Start thinking about product design that creates value without relying on behavioral addiction mechanics. What features serve user needs genuinely, independent of engagement optimization?

As a platform business executive: Accept that the regulatory environment is changing and budget for compliance. Invest in legal and regulatory affairs. Consider whether the company's business model might need to adapt if engagement maximization becomes explicitly prohibited.

As a digital product designer: Start thinking about intentional design that serves user goals rather than engagement maximization. Can you create experiences people want to use without dark patterns and engagement tricks? This might become a competitive advantage if regulators restrict traditional engagement mechanics.

As an investor in tech: Treat regulatory risk as a material factor in social media company valuations. Companies that depend entirely on engagement maximization face more regulatory risk than companies with diversified business models.

As someone interested in tech regulation: Follow how these laws are implemented and enforced. Early implementation will establish precedent for how courts interpret "addictive features" and compelled speech. Your views and advocacy now shape how this regulatory framework develops.

Conclusion: The Beginning of a Longer Regulatory Arc

New York's social media warning label law isn't the end of regulation in this space—it's the beginning. It signals that the era of essentially unregulated social media is over and government intervention is now politically feasible and legally defensible.

The specific mechanism (warning labels) is relatively mild compared to outright bans or mandated feature removal. This mild approach actually makes the law more likely to survive legal challenge and more likely to become a template for other states. But mildness is also a double-edged sword—if warning labels prove ineffective at addressing mental health harms, pressure for stronger regulation will intensify.

What makes this moment important isn't the specific warning label requirement. It's that lawmakers, regulators, and the public increasingly agree that something needs to change. For years, the argument was binary: either regulate the internet heavily (which many feared would chill innovation and speech) or don't regulate at all (which allowed current harms to continue). Now a middle ground is being explored through careful requirements focused on specific harms and specific mechanisms.

For platforms, this is a critical juncture. Those that adapt proactively and redesign products thoughtfully around user wellbeing will likely navigate the regulatory transition better than those that resist or try to circumvent requirements. The platforms that treat regulation as an external constraint rather than a signal about where society wants the product ecosystem to go will face repeated regulatory battles.

For users and especially for teenagers, this law creates opportunities for more intentional technology use. Warning labels, if implemented honestly and frequently, provide information that most people lacked before. Parents and young people can use this information to make more conscious choices about social media rather than passively using platforms designed to maximize their engagement.

Historically, product regulation doesn't kill industries—it reshapes them. Cars were regulated with safety and emissions requirements. Restaurants were regulated with health codes. Pharmaceuticals were regulated with approval processes and safety monitoring. In each case, regulation changed the industry significantly but didn't eliminate it. The same will likely happen with social media.

The regulatory pressure is real and accelerating. Whether it takes the form of warning labels, algorithmic transparency requirements, age-based restrictions, or something else, social media platforms will be more regulated in five years than they are today. The question is what form that regulation takes and whether it strikes a reasonable balance between protecting users (especially younger users) and preserving the benefits of digital connectivity and expression.

New York's law is one significant step in a longer arc. Watch it carefully—how it's implemented, whether it survives legal challenge, whether it changes user behavior, and whether it spreads to other states. These details will shape the future of social media regulation more than any high-level theory about what regulation should be.

FAQ

What exactly is New York's social media warning label law?

New York's law (S4505/A5346), signed by Governor Hochul, requires social media platforms to display warning labels to younger users before exposing them to "addictive" features like infinite scroll, autoplay, push notifications, and like counts. The warnings must appear when users first encounter these features and periodically thereafter, and users cannot bypass them. The law specifically targets platforms where these features are a significant part of the service.

Which social media platforms does this law apply to?

The law applies to platforms offering "an addictive feed, push notifications, autoplay, infinite scroll, and/or like counts as a significant part" of their services. This primarily targets TikTok, Instagram, Facebook, YouTube, Snapchat, and similar algorithmic feed-based platforms. Platforms where these features are peripheral rather than central (like LinkedIn or Reddit, though both have feed features) may have more limited obligations depending on how regulators interpret the law's scope.

What are the scientific arguments for regulating these social media features?

Research shows that engagement-maximizing features like infinite scroll, autoplay, and push notifications measurably increase time spent on platforms—typically 30-50% longer sessions than platforms without these features. Additional research correlates heavy social media use with depression, anxiety, and sleep problems, particularly in teenagers. Regulators argue that while individual usage doesn't necessarily constitute clinical addiction, these features are deliberately engineered to exploit psychological vulnerabilities and create habitual usage patterns, particularly concerning for teenagers whose brains are still developing.

How will platforms technically implement these warning labels?

Implementation details aren't fully specified in the law, leaving platforms some flexibility. Most likely, platforms will display warnings when users first encounter addictive features (like when opening TikTok) and periodically thereafter, possibly with each session or daily. Warnings might take the form of modal dialogs, banners, or icons that explain the feature and acknowledge that it's designed to encourage extended use. Warnings will likely require user acknowledgment before allowing access to the feature.
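One way a platform might gate features behind such a warning is sketched below. Everything here is an assumption made for illustration: the feature names, the 24-hour repeat interval, and the `WarningState`, `needs_warning`, and `acknowledge` helpers are hypothetical, and the law itself does not prescribe this logic or this cadence.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Dict

# Hypothetical identifiers for the features named in the law.
COVERED_FEATURES = {"infinite_scroll", "autoplay", "push_notifications", "like_counts"}

# Assumption: warnings repeat daily. The actual cadence is not fixed here.
REPEAT_INTERVAL = timedelta(hours=24)


@dataclass
class WarningState:
    """Per-user record of when each feature's warning was last acknowledged."""
    last_ack: Dict[str, datetime] = field(default_factory=dict)


def needs_warning(state: WarningState, feature: str,
                  is_covered_minor: bool, now: datetime) -> bool:
    """Return True if the warning must be shown before enabling the feature."""
    if not is_covered_minor or feature not in COVERED_FEATURES:
        return False
    last = state.last_ack.get(feature)
    # Show on first encounter, then again once the repeat interval has elapsed.
    return last is None or now - last >= REPEAT_INTERVAL


def acknowledge(state: WarningState, feature: str, now: datetime) -> None:
    """Record that the user acknowledged the warning for this feature."""
    state.last_ack[feature] = now


state = WarningState()
start = datetime(2026, 1, 1, 9, 0)
print(needs_warning(state, "autoplay", True, start))   # first encounter
acknowledge(state, "autoplay", start)
print(needs_warning(state, "autoplay", True, start + timedelta(hours=1)))
```

Because the feature stays locked until `acknowledge` is called, the warning cannot be skipped, only accepted. How a platform determines `is_covered_minor` is a separate problem that raises its own age verification and privacy questions.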

Could this law be struck down by courts?

Yes, there's meaningful risk of legal challenge. Platforms will likely argue the law constitutes unconstitutional compelled speech, forcing them to make statements about their products they disagree with. Regulators counter that informational labeling is constitutional, citing precedent with tobacco, alcohol, and pharmaceutical warnings. The outcome depends on how courts weigh these arguments, with the strongest legal uncertainty around whether social media rises to the level of danger that justifies compelled warning labels. Expect lengthy legal battles regardless of the initial ruling.

How does New York's warning label approach compare to other states' social media regulations?

New York chose the warning label model, which provides information while allowing continued access to features. California is moving toward parental consent requirements, which create structural barriers to younger users accessing certain features. Australia passed age-based bans, preventing anyone under 16 from using social media entirely. The UK requires platforms to conduct risk assessments and mitigate identified harms. Each approach represents a different regulatory philosophy, from informational to restrictive to prohibitive. If multiple states pass different laws, platforms will likely implement the most stringent requirements nationwide rather than maintaining multiple versions.

Will these warning labels actually change how teenagers use social media?

Historical evidence on warning labels is mixed. Tobacco warnings increased awareness and contributed to declining smoking rates, but many people continued smoking despite understanding health risks. Social media warnings will likely create awareness and inform some users' decisions without dramatically changing overall usage patterns. However, for some teenagers, warnings might prompt more intentional use or encourage conversations with parents about social media habits. The effects will likely be modest but meaningful for a subset of users.

What could happen if this law proves ineffective at addressing mental health concerns?

If warning labels don't meaningfully reduce problematic social media use, regulatory pressure will likely escalate toward stronger measures. These might include mandatory algorithm transparency (showing users how content is ranked), limits on notification frequency, required implementation of time-limit features, or actual restrictions on certain features for users under specific ages. The warning label approach might be just the first step in a progression toward more prescriptive regulation, similar to how tobacco regulation started with warnings and progressed to taxation, marketing restrictions, and age-based sales limits.

How will this law affect social media platforms' business models?

The law threatens platform profitability because advertising revenue depends on maximizing engagement through features like infinite scroll and autoplay. Warning labels that reduce engagement will reduce ad impressions and revenue—a 5% engagement reduction would cost major platforms billions annually. However, the cost of defying regulations, facing reputational damage, and dealing with regulatory backlash might exceed the engagement impact. Platforms will likely minimize warning impact through design choices, but expect long-term business model shifts if regulations spread and become more stringent.

What should parents do about social media warning labels?

When warning labels appear, parents should use them as conversation starters with teenagers about how social media is designed. Discuss what infinite scroll, autoplay, and push notifications do, why platforms use them, and whether the teenager wants to engage with these features despite understanding their purpose. Involved, transparent parenting combined with information about technology is more effective than pure restriction. Warning labels provide facts; parents provide context and guidance about intentional technology use.

Could New York's law become a national standard?

If the law survives legal challenge and multiple states pass similar legislation, federal regulation becomes likely. Federal law would unify requirements and spare platforms from managing multiple state-specific compliance schemes. However, the form of federal regulation isn't predetermined: it could adopt New York's warning label approach, California's parental consent model, or something stronger. The current momentum across states and internationally suggests that some form of substantial federal legislation is likely within 5-10 years.

Key Takeaways

- New York's law requires warning labels on addictive social media features (infinite scroll, autoplay, push notifications) for younger users, modeled after tobacco warning precedent

- The law targets engagement-maximizing mechanics that research shows increase session time by 30-50%, potentially affecting mental health, particularly in teenagers

- Implementation challenges include vague definitions of 'young users,' unclear warning frequency requirements, and age verification mechanisms that raise privacy concerns

- Platforms will likely implement technical compliance with minimal impact on engagement, setting up potential for legal challenges and regulatory escalation

- This law represents the beginning of a regulatory arc—if warning labels prove insufficient, states and federal government may move toward stronger requirements like mandatory algorithm transparency or age-based access restrictions

- International precedent from EU, UK, and Australia shows regulatory progression from transparency requirements toward prescriptive mandates and outright restrictions on certain features for minors
