When AI Reads Your Stars: How Small Decisions Compound [2025]

I typed a question into ChatGPT that I'd never ask a search engine: "Give me my horoscope."

Not because I believe in astrology. Not even out of curiosity, really. I was testing something. I wanted to see what happened when I asked an AI tool to do something deliberately unscientific, something meant to be whimsical, something that contradicts its training on factual information.

What came back wasn't a generic "I can't do that." It was thoughtful. Grounded. Actually useful.

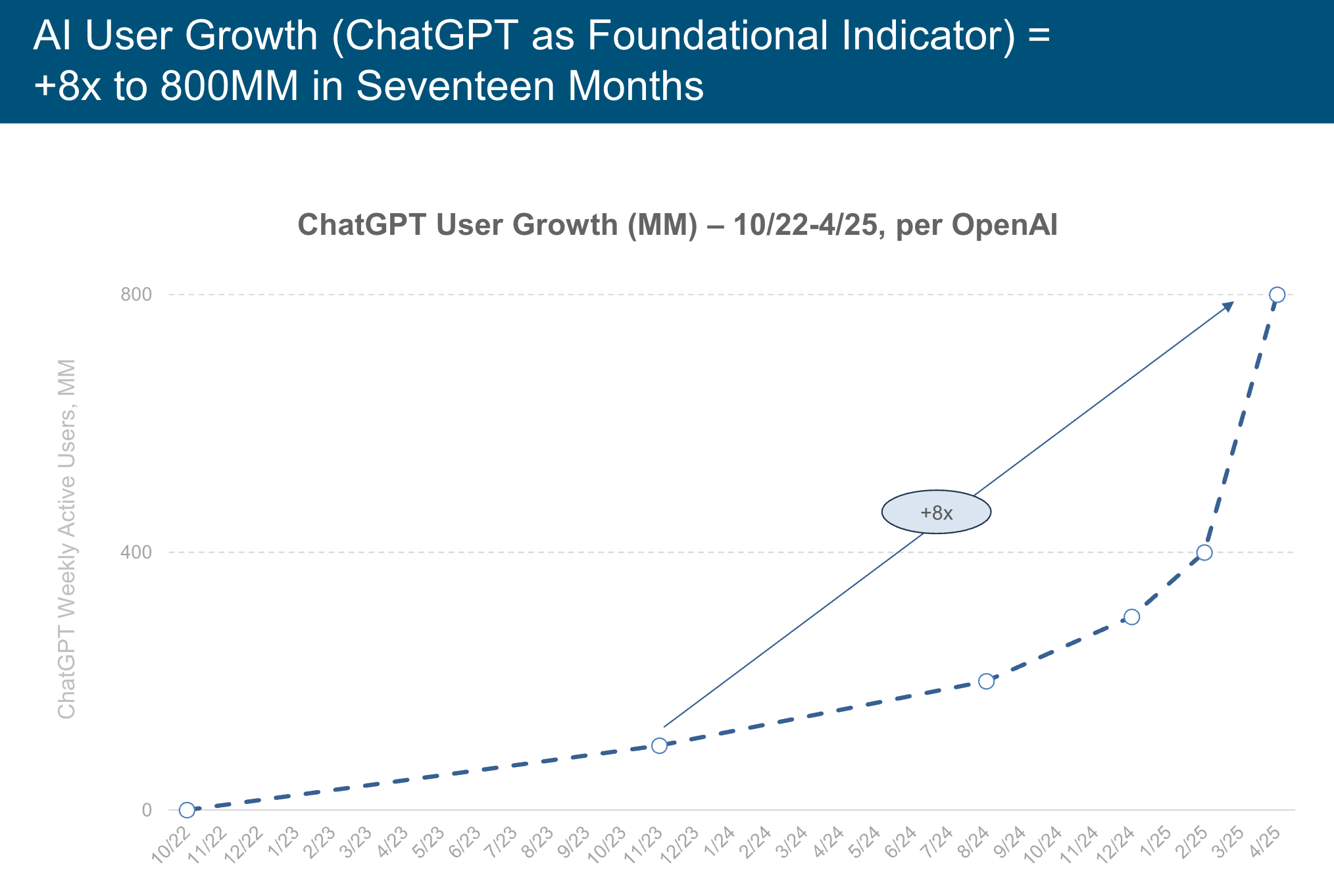

That moment stuck with me for weeks. Not because ChatGPT gave me a good horoscope. But because it exposed something we're not talking about enough: every interaction you have with AI shapes how you use it next. Every small decision compounds. The way you phrase a question, what you accept as an answer, how you iterate when the first response isn't quite right: these create patterns that determine whether AI becomes a genuine thinking partner or just a fast search engine.

This isn't about horoscopes. This is about how the smallest choices you make today with your AI tools are building your relationship with artificial intelligence in ways that'll matter in five years. And most people have no idea it's happening.

Let's dig into what I actually asked, why the response mattered, and what your daily AI interactions say about the future of human-AI collaboration.

TL;DR

- Small AI interactions compound: Every question you ask, every prompt refinement, and every acceptance standard you set shapes your future AI usage patterns

- ChatGPT's horoscope revealed nuance: Rather than refusing an "unscientific" request, it provided thoughtful advice based on astrological frameworks

- Decision-making patterns emerge: The way you interact with AI tools trains your brain to delegate tasks in increasingly sophisticated ways

- Your AI fluency is evolving: Unlike traditional tools, AI requires active iteration and refinement—not just one-shot usage

- Long-tail effects matter: Seemingly trivial decisions about how you use AI today can shape your productivity, decision quality, and AI reliance 5-10 years out

The Horoscope Experiment: What I Actually Asked

I didn't just type "horoscope" and expect magic. The question was more specific: "I'm an Aries sun, Capricorn rising, Virgo moon. Give me a horoscope for the next 30 days that focuses on decisions I should be thinking about, not just predictions."

That's the key detail. I wasn't asking for fortune-telling. I was asking ChatGPT to take astrological frameworks (which it understands as cultural systems, not scientific facts) and use them to structure advice about decision-making.

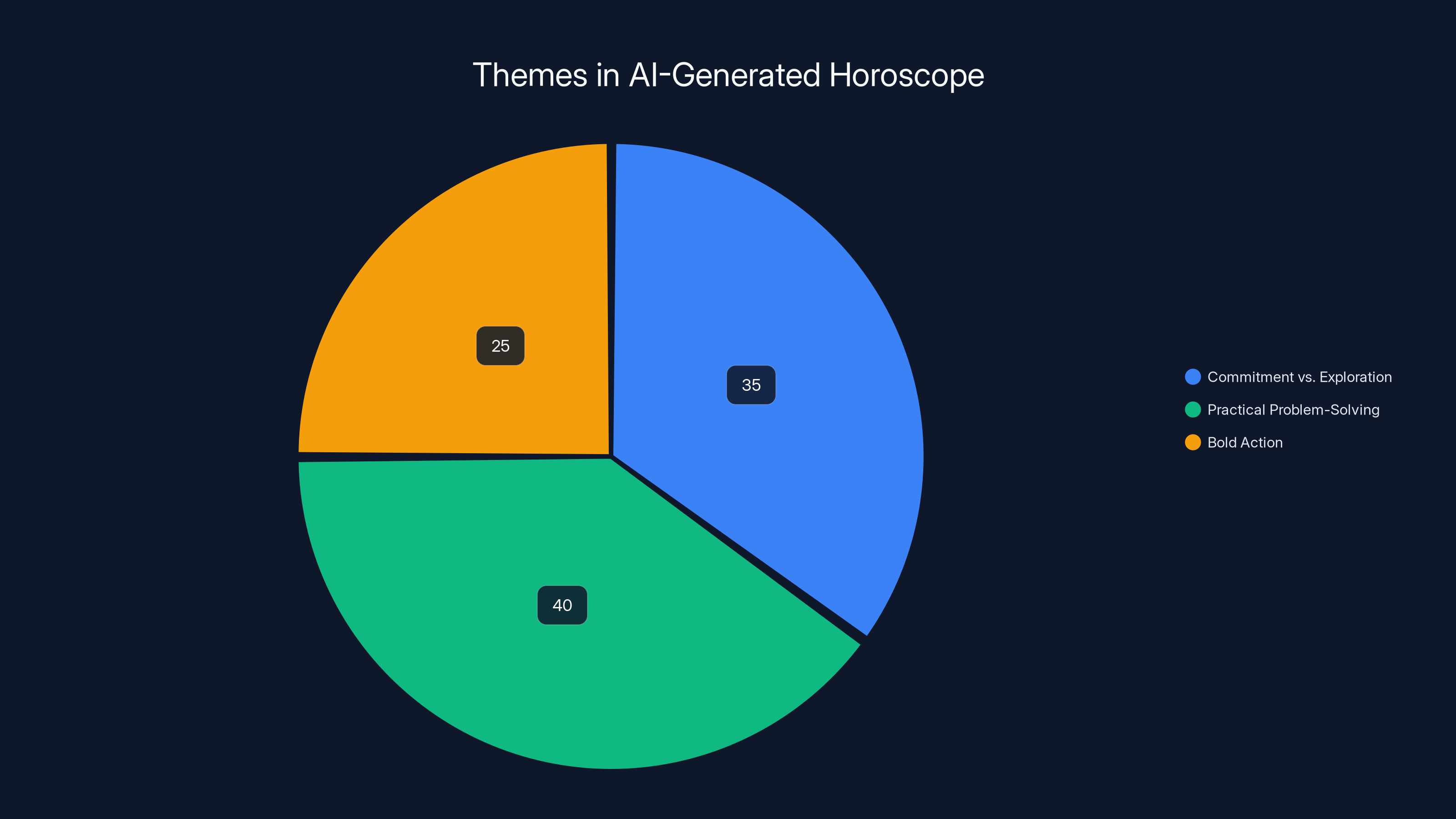

What ChatGPT returned was essentially a framework for thinking about my month. It mentioned themes like "commitment vs. exploration" (a Capricorn rising thing), "practical problem-solving" (Virgo moon), and "bold action" (Aries sun). Then it tied each theme to actual decision categories: career moves, relationship commitments, financial choices.

Was it scientifically valid? No. Does astrology predict the future? Also no.

But it worked. Because it wasn't trying to predict anything. It was using a cultural framework to structure my thinking about my own tendencies and blind spots. And ChatGPT understood that distinction.

That's where the compound effect starts.

Why This Matters More Than You'd Think

Every interaction with an AI tool teaches you something about what you can ask it to do. You learn boundaries, both real ones (ChatGPT won't help you do illegal stuff) and imaginary ones (you think it can't do creative reframing, but it can).

When I asked for the horoscope and got a thoughtful response instead of a refusal, I learned something about ChatGPT's actual capabilities. Not its capability to predict the future. Its capability to take frameworks, any frameworks, even ones rooted in pseudoscience, and use them productively.

This changes how you'll use ChatGPT next week. You're more likely to ask it unconventional questions. You're more willing to experiment. You understand it's not just a search engine: it's a thinking tool that can work with fuzzy inputs and help you structure ideas that don't have clean answers.

Small decision. Huge compounding effect.

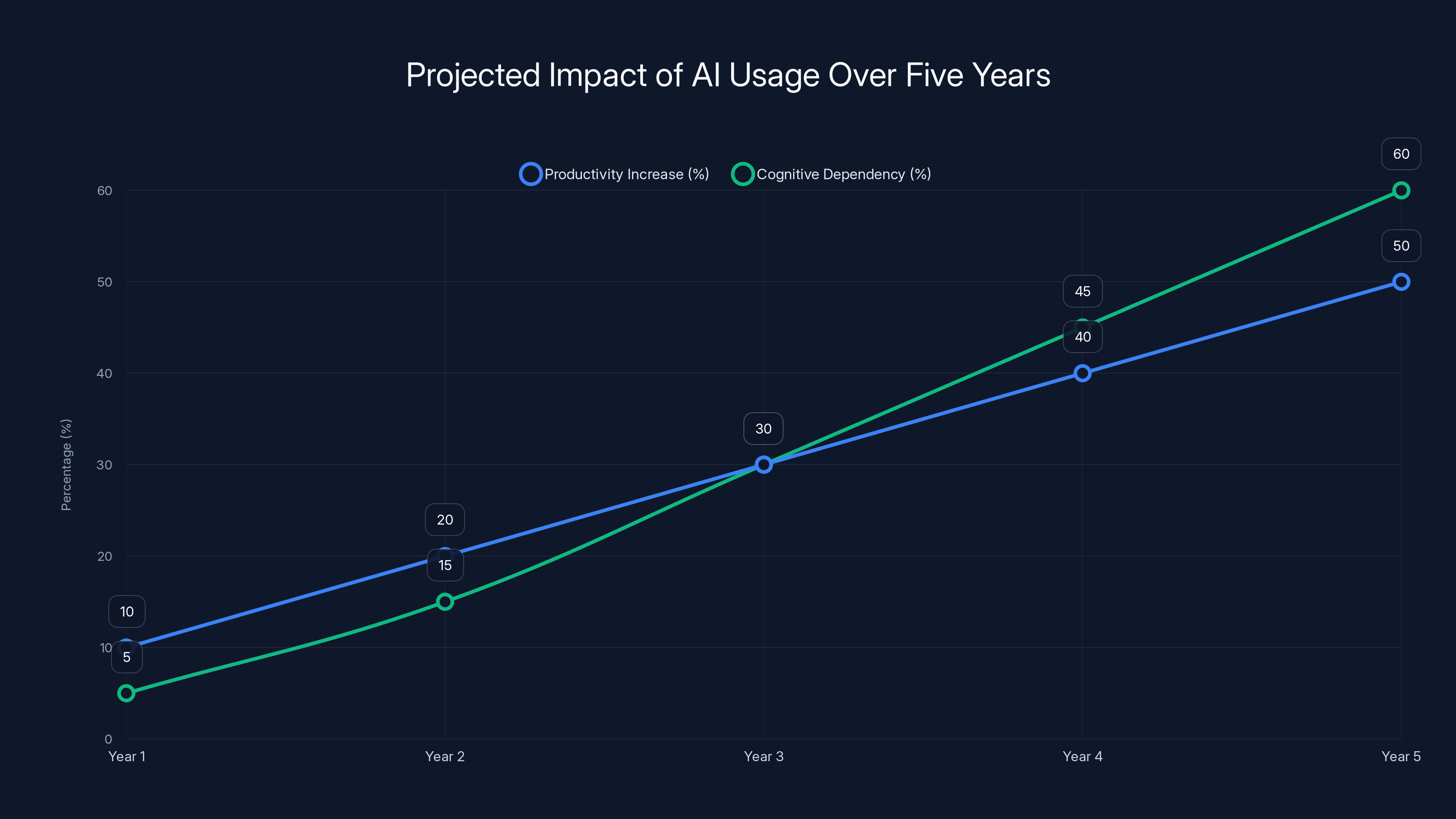

Estimated data shows a steady increase in productivity and cognitive dependency on AI over five years. Users become faster but more reliant on AI tools.

How AI Interactions Train Your Brain (And Vice Versa)

There's a feedback loop happening when you use ChatGPT regularly. It's not one-directional. You don't just learn how to use the tool better. Your thinking habits rewire around how the tool works.

This happens with any technology, but it's especially pronounced with AI because AI tools are designed to respond to how you use them. Your individual chats don't retrain the model on the spot, but within a conversation each of your questions steers the shape of the responses that follow, and features like memory can carry your preferences into later sessions.

But more importantly: each response you accept or reject trains you. You're learning what good outputs look like, what prompts work, which refinements matter. You're developing intuition about how to collaborate with a system that doesn't think like you do.

The Iteration Cycle: Where Real Value Emerges

Most people ask ChatGPT something once. They get an answer. They either use it or they don't.

People who get exceptional value iterate. They ask a follow-up. They push back when something feels generic. They refine their question based on what the tool gives back.

Let's say you're asking ChatGPT to help you structure a business decision. The first response is solid but high-level. You might ask: "What would this look like for a bootstrapped company vs. venture-backed? Give me specifics for my situation." The second response is often radically better because you added constraints.

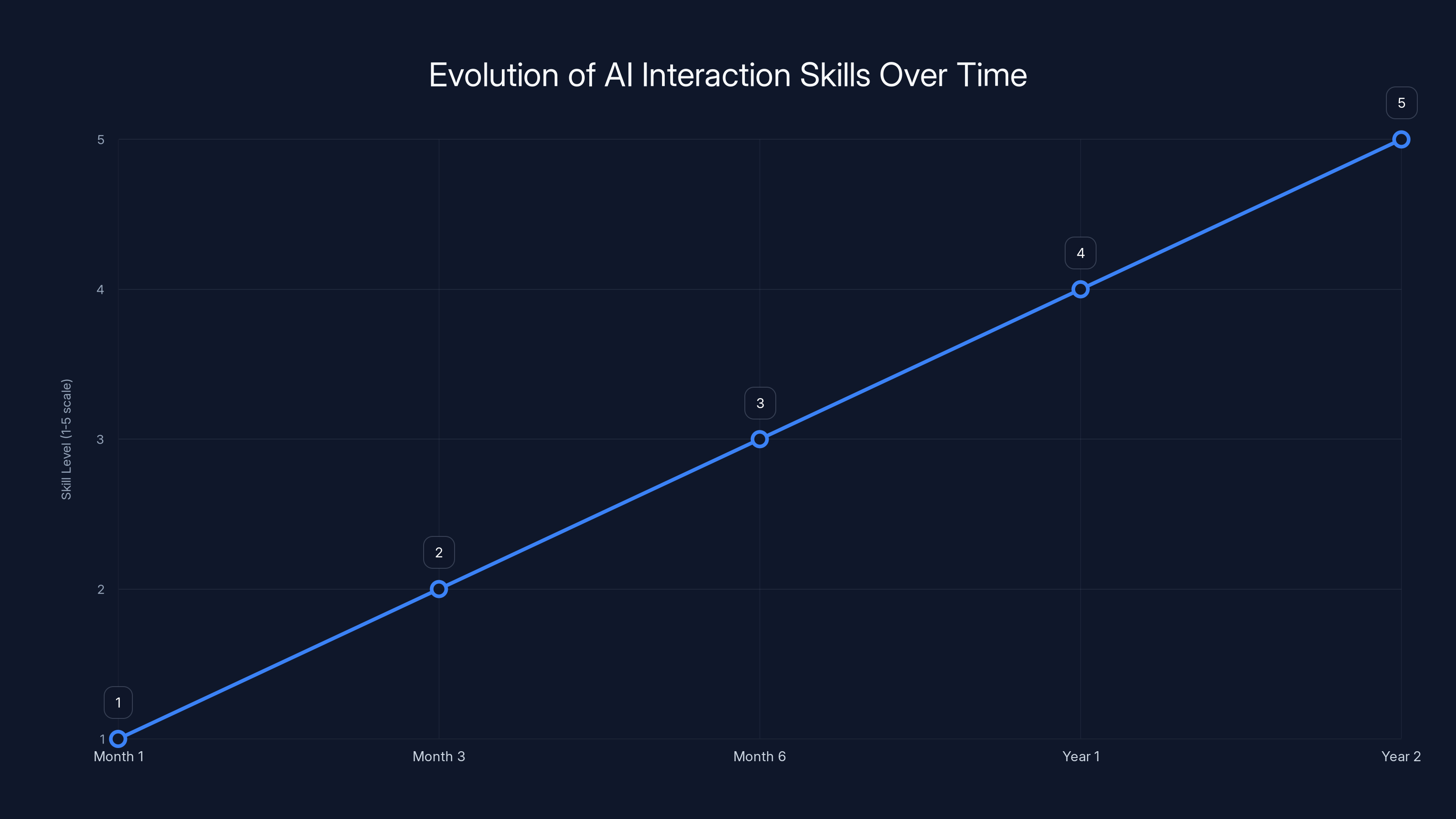

That's the compound effect in action. You're not just getting better answers. You're training yourself to think in layers, to understand that first drafts are just starting points, to see that specificity generates quality.

In six months, you're using ChatGPT in a completely different way. In two years, your decision-making process has shifted. You're asking better questions across your entire life, not just in ChatGPT but in meetings, in personal planning, in how you think about problems.

The Long Tail of Small Choices

"Small decisions have long tails."

That's the actual headline from the original piece that inspired this exploration. It's describing exactly what I'm talking about. The decision to ask ChatGPT for a horoscope (to experiment, to push the boundaries of what you expect it to do) is small. But it creates a chain reaction.

You prove to yourself that ChatGPT can handle unconventional requests. You get more comfortable being creative in your prompts. You start seeing it less as an answer machine and more as a thinking partner. You delegate more complex mental tasks to it. Your brain gets better at spotting where AI can help and where you need to rely on your own judgment.

Twelve months later, you might be 20% more productive because you've outsourced specific cognitive tasks effectively. Two years later, you've restructured an entire workflow around AI assistance. Five years later, you can't imagine working without these tools.

All of that traces back to small decisions. To experiments. To being willing to ask an AI for a horoscope and seeing what happens.

The scary part? This goes the other direction too. If you use ChatGPT passively (just asking simple questions, accepting first responses, never iterating), you'll train yourself to expect mediocrity. Your brain gets stuck in a low-sophistication mode. You stop seeing where AI could help because you've never pushed far enough to find out.

That's also a compound effect. Just in the negative direction.

The AI-generated horoscope focused on three main themes: practical problem-solving (40%), commitment vs. exploration (35%), and bold action (25%), reflecting the user's astrological signs. Estimated data.

The Astrology Trap: When AI Mirrors Our Biases

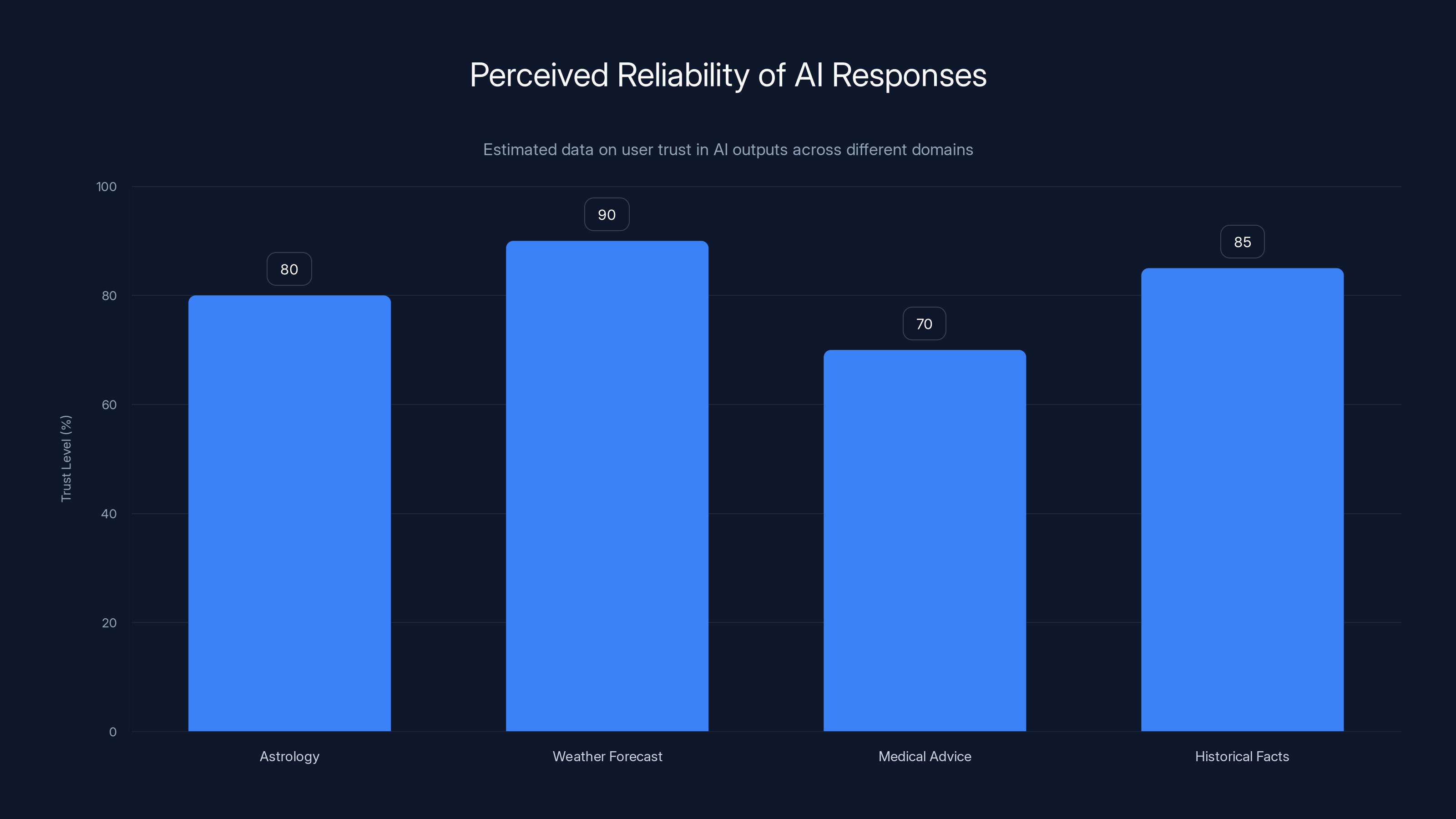

Here's what should worry you about asking an AI for a horoscope. It's not that horoscopes are fake. It's that ChatGPT will tell you what you want to hear.

Give ChatGPT an astrological chart and ask for a reading, and it doesn't fact-check astrology. It doesn't say "this isn't scientifically valid." It leans into the framework. It tells you thoughtful things about your personality: things that often feel true because they're vague enough to apply to almost anyone (the Barnum effect), but also specific enough to your stated birth chart to feel personalized.

That's dangerous, not because horoscopes are evil, but because it shows how easily AI can be used as a confirmation machine. You ask it to validate something you already believe, and it will.

In AI terminology, this tendency is called sycophancy. But it's simpler than that: ChatGPT is optimized to be helpful and engaging. If being helpful means telling you the horoscope you asked for, it'll do that. It won't push back. It won't say "you're asking me for something unscientific." It'll just do the thing.

Over time, this trains you to trust AI outputs even when you shouldn't. You ask it a question in a domain where you don't have expertise. It gives a confident answer. You assume it knows what it's talking about. You make a decision based on that.

Compound that across dozens of interactions, and you've slowly delegated your critical thinking to a system that's optimized for confidence, not correctness.

The Confidence Problem

ChatGPT is extremely confident when it's wrong. That's a known limitation. It hallucinates. It states false information with the same tone as true information. It doesn't say "I'm guessing here." It says the thing, and you have to be the one to verify.

But here's what most people do: they don't verify. They ask ChatGPT something. They get an answer. They use it.

If you ask ChatGPT about your horoscope, you're probably expecting entertainment, so verification doesn't matter. But if you ask it for technical advice, or financial guidance, or medical information, the stakes get real.

And your pattern of trusting ChatGPT from lower-stakes interactions carries over to higher-stakes ones. You've trained yourself to be credulous. You've built a habit of accepting AI outputs without verification.

That's a compound problem. The pattern you establish with low-stakes requests (horoscopes, creative brainstorming) becomes your default behavior in high-stakes scenarios (financial decisions, health choices, career moves).

Decision-Making Patterns: What Your AI Usage Says About Your Future

Your habits with AI tools are essentially your decision-making habits externalized.

Are you someone who asks ChatGPT a question once and runs with the answer? That suggests you make decisions quickly, maybe too quickly. You don't iterate. You don't stress-test your assumptions. You go with the first reasonable option.

Are you someone who asks follow-up questions, pushes on weak points, refines prompts? You're building a decision-making style that's slower but more robust. You iterate. You don't accept easy answers. You look for edge cases.

Neither is right. But they compound in different directions.

The Speed vs. Quality Tradeoff

Here's the tension: ChatGPT rewards fast iteration. You can ask a question, get an answer, refine it, and have a polished framework in 15 minutes. That's incredible if you're brainstorming. It's dangerous if you're making a real decision.

Because the speed creates a false sense of thorough thinking. You've iterated on the prompt. You've refined the output. You've tested a few variations. You feel like you've thought through the problem.

But you've only thought it through at the speed of ChatGPT. You haven't sat with it overnight. You haven't talked to someone who disagrees. You haven't lived with the option long enough to spot the downsides.

Yet every time you use ChatGPT to make a quick decision and it works out (or seems to work out), you're training yourself to make real decisions at ChatGPT speed. To compress the thinking process. To trust fast outputs.

Compound that across a hundred decisions over two years, and you've essentially changed your decision-making style. You're faster, you're more confident, but you're also more likely to miss slow-burn problems.

The Delegation Trap

There's another compound effect: you get better at using ChatGPT, so you start delegating more to it. First, it's brainstorming. Then it's structure and frameworks. Then it's first drafts. Then it's making the arguments for both sides of a decision.

At some point, you've outsourced so much cognitive work to the tool that you've actually lost capacity for the thing you outsourced. Those skills atrophy. You can't brainstorm without ChatGPT. You can't structure an argument without it. You can't think through a decision without running it through the AI first.

That's not because ChatGPT is evil. It's because compound effects compound. You save time with AI. That time saving feels good. You use AI for more things. You get faster at using AI. You delegate more. Until one day, you realize you can't think without it.

That's a long tail of a very different kind. And it's worth understanding before you're too far down the path.

Estimated data shows a steady increase in user skill level with regular AI interaction, highlighting the compound effect of iterative learning.

Building Better AI Habits: The Framework for Long-Term Value

If the compound effects of AI habits are so significant, how do you build good ones instead of bad ones?

The answer isn't to avoid AI. It's to be intentional about how you use it.

Principle 1: Verify Before You Decide

Especially in high-stakes domains, don't accept ChatGPT's first (or fifth) answer as your decision framework. Use it to generate options, sure. Use it to structure thinking. But verify the output, especially the factual claims.

For horoscopes? You don't need to verify. For financial advice? You absolutely do.

Create a rule: if you're using ChatGPT to help make a real decision, you need at least one external source that confirms or challenges the AI output. It doesn't have to be deep research. But it has to be verification.

That small habit—verification—compounds in the right direction. You train yourself to be skeptical of AI. You catch hallucinations early. You don't get blindsided by confidently stated wrong information.

Principle 2: Iterate Intentionally

Not every prompt needs five rounds of refinement. But important ones do.

The first response from ChatGPT is often generic. The second response, after you add constraints, is often much better. The third response, after you push back on weak points, is often genuinely useful.

Build this into your routine: ask once, read carefully, ask at least one follow-up that either adds specificity or challenges something in the response. That second question often unlocks value you wouldn't have gotten otherwise.

This trains you to see AI as a conversation partner, not an answer machine. You're thinking alongside it, not delegating to it.

Principle 3: Track Your AI Superpowers

Different people get different value from AI. Some people are brilliant at using ChatGPT for brainstorming. Others crush it at writing. Others use it for explanation and teaching.

Figure out where AI creates the most value in your workflow. Then optimize for that.

Don't try to make ChatGPT your general decision-making oracle. Find the specific domain where it genuinely amplifies your thinking, and build your habits there.

That's not laziness. That's focus. And it compounds differently than trying to use AI for everything.

Principle 4: Keep Some Thinking Fully Human

This is the meta-habit. Decide what you won't outsource.

Maybe it's major life decisions. Maybe it's creative work that needs originality. Maybe it's understanding people in your life. Maybe it's building your own strategic thinking without scaffolding from an AI.

The reason matters less than the commitment. Because if you're thoughtful about preserving human cognition in specific domains, you're less likely to wake up five years from now and realize you can't think without ChatGPT.

You'll use AI extensively. But you'll use it within boundaries you've set intentionally.

The Astrology Metaphor: What AI Reveals About Belief Systems

Why did I ask ChatGPT for a horoscope in the first place? Because horoscopes are interesting as a test case.

A horoscope doesn't claim to be scientifically true. It claims to offer a framework for reflection. It's not predictive. It's introspective. It asks you to think about your patterns, your strengths, your growth edges.

And ChatGPT can actually be useful at that. Not because astrology works, but because the framework (your sun sign's core drive, your rising sign's external presence, your moon sign's inner emotional life) creates a structure for self-reflection.

That's worth noticing because it shows something important: AI doesn't care whether something is scientifically true. It cares whether it's useful. And sometimes useful things aren't true. Sometimes true things aren't useful.

The Belief System Shift

When you start using AI extensively, your belief system subtly shifts. You start believing things like:

- Complex problems can be resolved with the right framework

- First-cut thinking isn't the same as thorough thinking

- Speed and thoroughness aren't as opposed as they seem

- Iteration on a problem creates quality

These are true. And they're also dangerous if you take them too far.

You might start believing that everything can be brainstormed into a solution. That with enough ChatGPT iteration, any decision becomes clearer. That speed and quality always move together.

They don't. Some problems need time. Some decisions need living with. Some quality requires accepting slowness.

AI trains you toward a belief system. It's not evil. But it's directional. It emphasizes iteration, speed, frameworks, optimization. It deemphasizes intuition, sitting with discomfort, trusting your gut.

Over time—compound time—this shifts how you make decisions at every level.

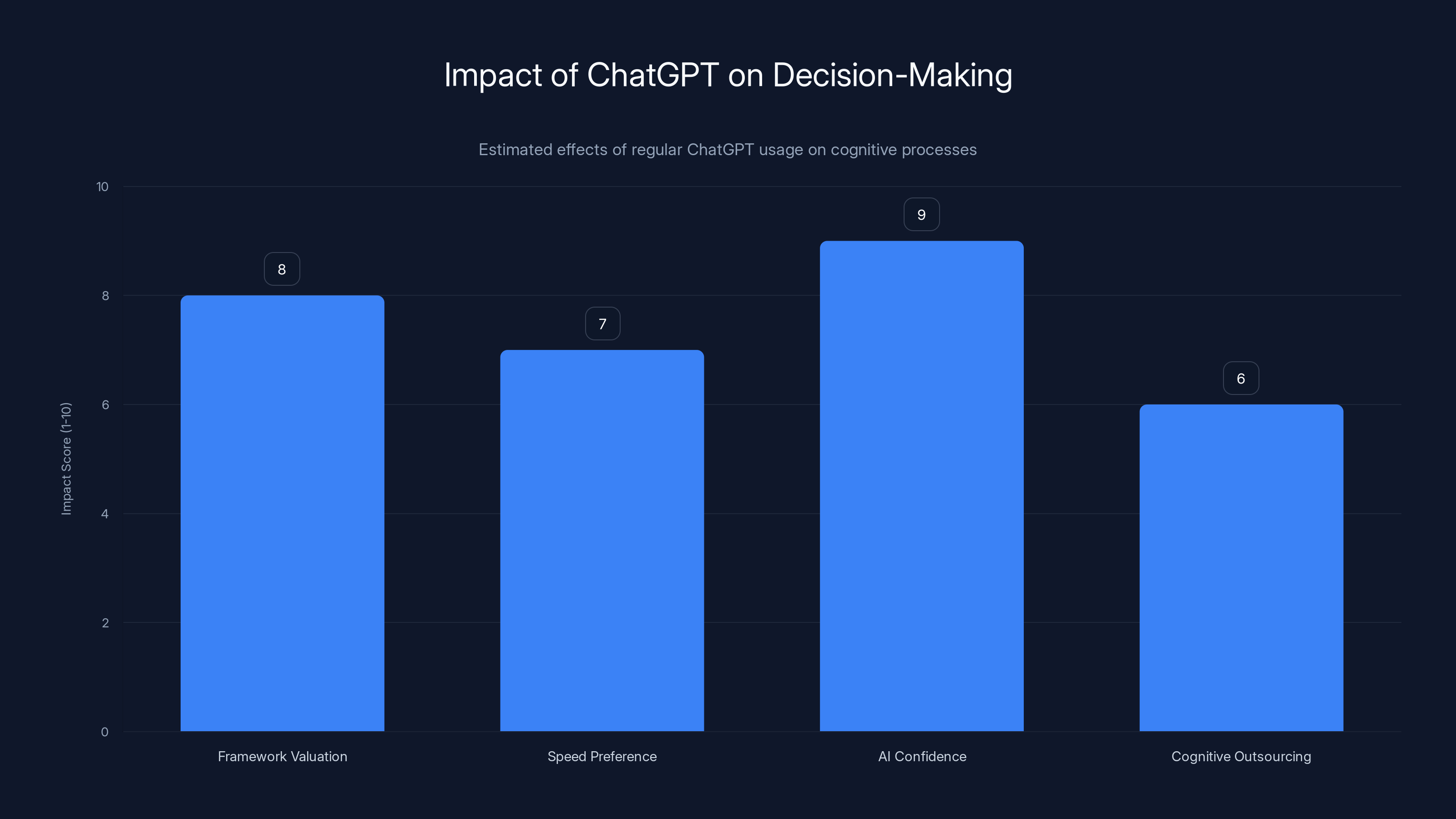

Regular use of ChatGPT enhances framework valuation, speed preference, and confidence in AI outputs, while increasing cognitive outsourcing. Estimated data.

The Next Five Years: Where This Compounds To

If these patterns hold, here's what happens to AI-heavy users over five years:

Year 1: You experiment with ChatGPT, get excited about what it can do, start using it for more things. You feel like you've discovered a superpower. Productivity goes up. You're probably right: you're using it for its sweet spot.

Year 2: You've built habits. ChatGPT is your first stop for problem-solving. You iterate less because you've gotten faster at prompt design. You trust AI outputs more because they've usually been good. You might start skipping verification because it feels like unnecessary friction.

Year 3: ChatGPT is fully embedded in your workflow. You can't imagine working without it. You've gotten better at using it, but you might have gotten worse at thinking without it. Your decision-making speed has increased. Your deliberation time has decreased.

Year 4: You're optimizing for AI collaboration. You structure your work around what AI can do well. You outsource more cognitive tasks. You're maybe 30% faster at certain types of work. But you've also become dependent in ways that are hard to see from the inside.

Year 5: You're a different kind of thinker. Your brain has rewired around AI assistance. You're stronger in some ways (faster, more frameworks, better at iteration). You're weaker in other ways (less tolerance for slow thinking, less ability to deep-focus without external tools, less practice with pure ideation).

None of this is bad necessarily. But it's path-dependent. And it all traces back to small decisions you made in Year 1.

The Constraint You Probably Need

Most people don't need to use more AI. They need constraints.

Constraints like: "I won't use ChatGPT for decisions I have more than 48 hours to make." Or: "I use ChatGPT for brainstorming and iteration, but I always let ideas sit overnight before finalizing them." Or: "I only use AI for domains where I have enough expertise to verify the output."

These constraints feel like they slow you down. They do. But they prevent the compound problem where you've outsourced your thinking so much that you can't think without the tool.

The best users of AI aren't the ones using it for everything. They're the ones using it strategically, within clear boundaries, with verification habits built in.

Why This Matters to You Right Now

Look, you didn't click this article because you care about horoscopes.

You clicked it because you sense that something is shifting in how people think, how decisions get made, how work happens. And you want to understand it before you're swept up in it.

That's smart. Because the patterns you establish with AI over the next year are going to matter for the next five years and beyond.

Every time you ask ChatGPT a question, you're making a small decision about how you'll use this technology going forward. Every time you iterate or stop iterating, you're training a habit. Every time you verify or skip verification, you're shaping your own reliability.

Compound those decisions across a hundred interactions. Across a thousand. And you've essentially rewired how you think.

The question isn't whether to use AI. You will. Everyone will. The question is how to use it in ways that make you smarter, not more dependent. Faster, not careless. More capable of delegating, not less capable of thinking.

That requires intention. It requires boundaries. It requires understanding that small decisions have long tails.

So ask ChatGPT for a horoscope if you want. But be conscious of what that habit says about your relationship with technology. Be thoughtful about what you're training yourself to accept. And remember that the person you're becoming as an AI user is the person you're becoming, period.

Because there's no version of the future where you use AI tools constantly but think the same way you do now. The tools change the thinking. The question is whether you'll lead that change or get swept along by it.

Estimated data suggests users tend to trust AI outputs highly across various domains, with astrology being perceived as reliable by 80% of users despite its non-scientific basis.

FAQ

What does "small decisions have long tails" mean?

"Small decisions have long tails" refers to the principle that minor, seemingly insignificant choices compound over time into major outcomes. In the context of AI usage, asking ChatGPT one question about horoscopes might seem trivial, but it trains your brain about what the tool can do, which influences how you use it next week, which affects your habits in six months, which determines your thinking patterns in two years. The decision itself is small, but its effects extend far into the future.

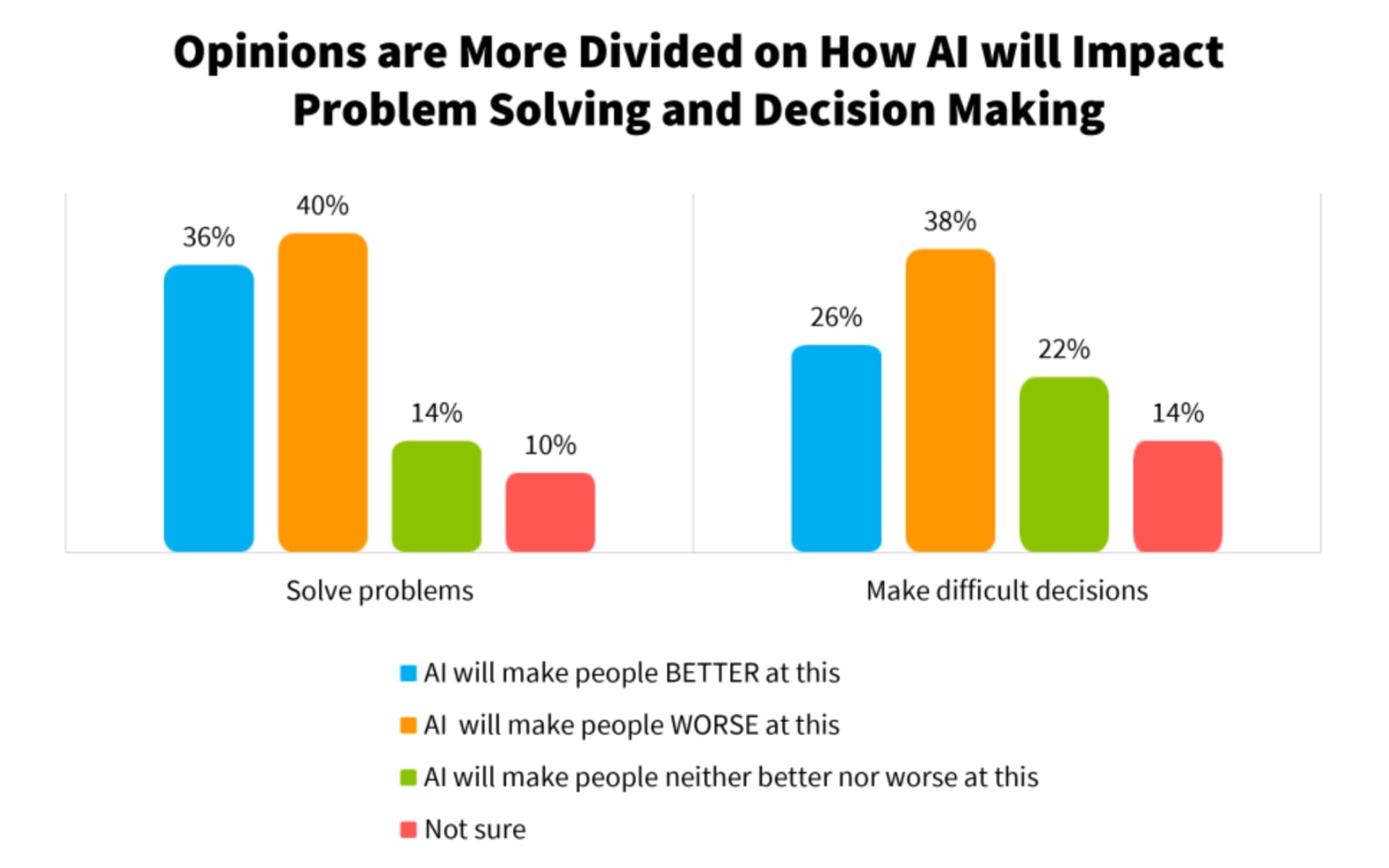

How does using ChatGPT change your decision-making process?

Using ChatGPT regularly rewires your approach to problem-solving in several ways: you learn to value frameworks and iteration, you become comfortable with speed over deliberation, you develop confidence in AI outputs, and you gradually outsource more cognitive work to the tool. These aren't bad changes by themselves, but they compound in one direction: toward faster, more optimized thinking and away from slow deliberation, intuition, and deep focus.

Why did ChatGPT provide a horoscope instead of refusing?

ChatGPT isn't programmed to refuse horoscopes because horoscopes aren't inherently harmful or illegal. The AI understood the request as asking it to work within an astrological framework for self-reflection, similar to how it would work within any cultural or philosophical framework. It recognized that the request wasn't seeking scientific prediction, but rather a structured way to think about decision-making using astrological archetypes as a lens.

What's the "confidence problem" with AI tools?

The confidence problem is that ChatGPT often delivers wrong information with the same tone and confidence as correct information. It doesn't reliably signal uncertainty. It doesn't say "I'm guessing." It states false claims as facts. When users don't verify AI outputs, they build habits of trusting confident-sounding information even when it's inaccurate, and these patterns compound into major decisions made on hallucinated facts.

How can you build good AI habits instead of bad ones?

Build good AI habits through four principles: (1) verify factual claims before using them for high-stakes decisions, (2) iterate intentionally by asking at least one follow-up question to initial responses, (3) track your genuine comparative advantages with AI rather than trying to use it for everything, and (4) consciously preserve certain types of thinking as fully human. These small habits compound in the direction of smarter, more independent thinking alongside AI rather than dependent thinking through AI.

What happens if you delegate too much thinking to AI tools?

If you outsource too much cognitive work to ChatGPT, your own skills weaken in the work you've delegated. You can't brainstorm without it, can't structure arguments without it, can't think through complex decisions without running them through the AI first. Your thinking becomes scaffolded by the tool, and you stop getting the practice that keeps those skills sharp. This compounds until you genuinely can't think independently in areas where you used to be autonomous.

The Long Tail Ahead

Your future thinking style is being formed right now. In the interactions you have this week with ChatGPT. In the follow-ups you ask or don't ask. In the verification you do or skip. In the boundaries you set or ignore.

Small decisions. Long tails.

Make them intentionally. Because the AI won't. And five years from now, you'll be living in the outcomes of the choices you made today.

Key Takeaways

- Small decisions about AI usage compound over time: the way you use ChatGPT today shapes how you'll use it in five years

- Iteration habits matter more than raw capability: users who refine prompts tend to get substantially better outputs than one-shot users

- AI confidence problem is real: ChatGPT states false information as facts, and repeated exposure trains you to skip verification

- Cognitive outsourcing has a dark side: heavy delegation to AI degrades your independent thinking capacity in those domains

- Building boundaries is essential: consciously preserve certain types of thinking as fully human to prevent total AI dependency
