Why AI Can't Make Good Video Game Worlds Yet: The Hard Truth About Generative Gaming

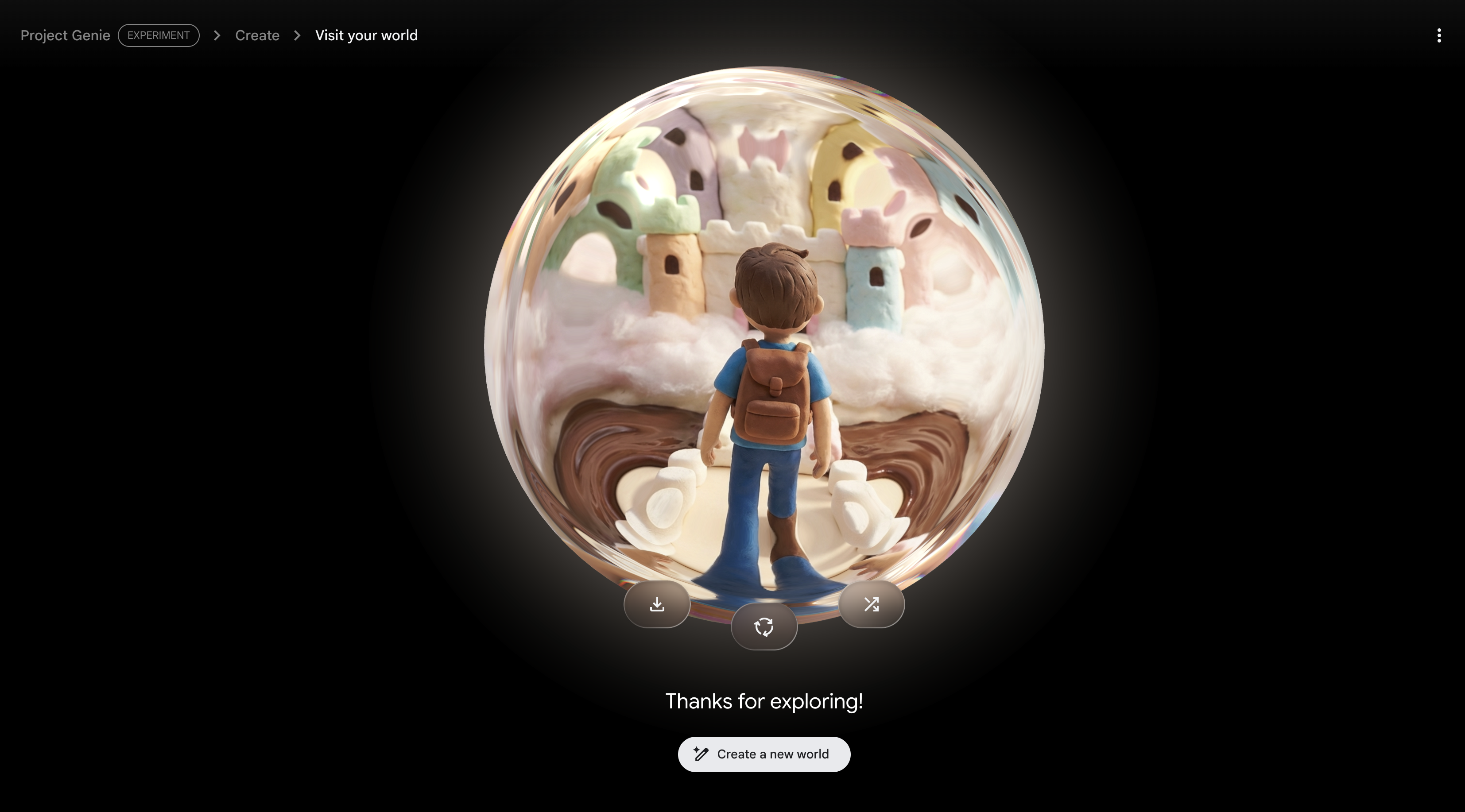

Last year, Google showed off Project Genie, and honestly, everyone waited for the "wow" moment that never came. The AI company unveiled what it called a breakthrough in generative world-building for games. You'd feed it a screenshot or a description, and boom—an interactive world would pop into existence. On paper, it sounded revolutionary. In reality? It was underwhelming.

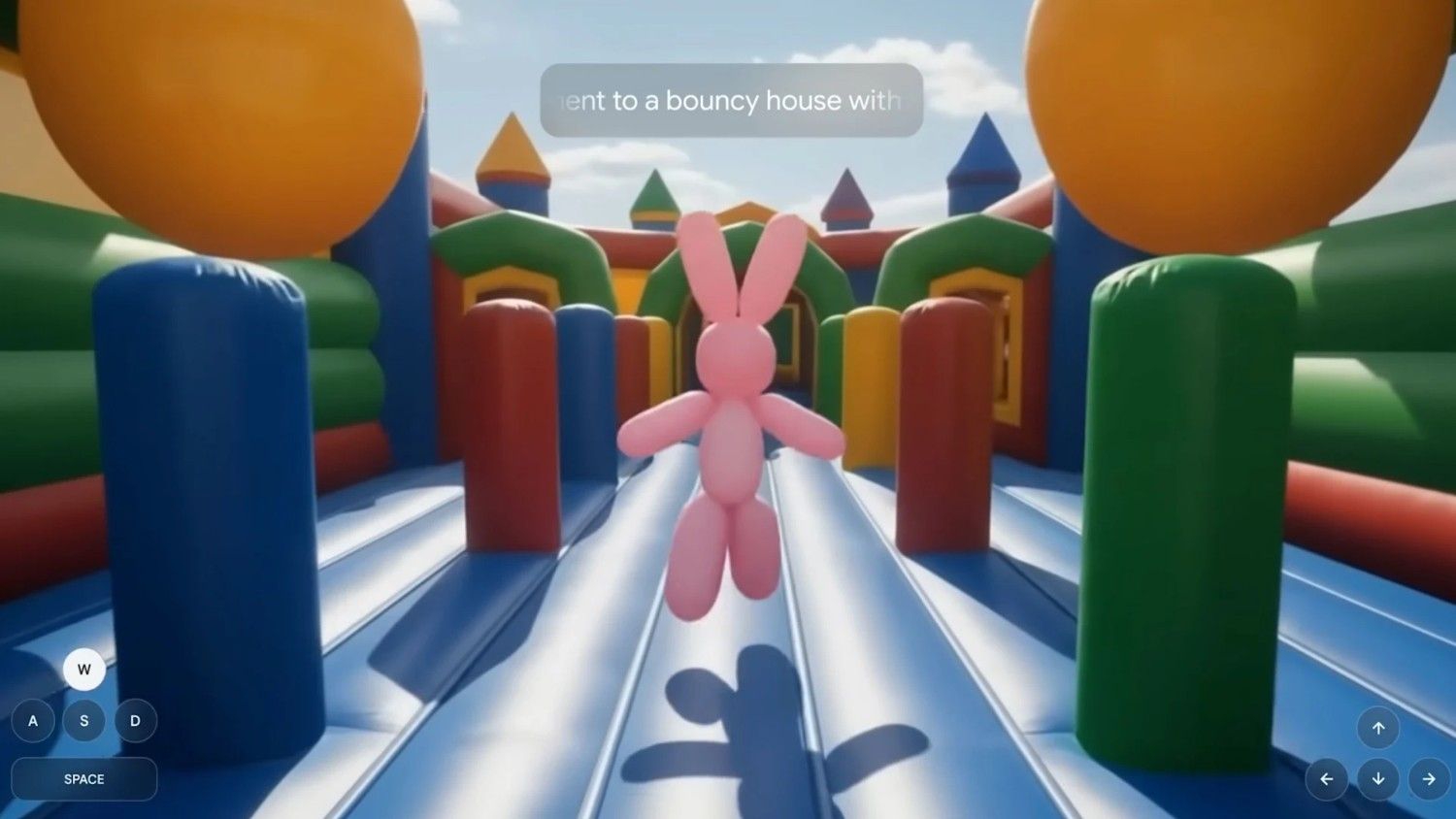

I tested it myself. What I got back were silent, physics-broken environments that felt less like games and more like screensavers. You could move around for 60 seconds using arrow keys, then... that was it. Download a video of what you did, and you're done. Compare that to a handcrafted Nintendo game, and the gap becomes obvious. One has personality, logic, and reasons to keep playing. The other feels like a randomly assembled slideshow.

This disconnect matters because the gaming industry is betting heavily on AI. Krafton, the studio behind PUBG, declared itself an "AI First" game company. EA partnered with Stability AI for what they call "transformative" development tools. Ubisoft promised major investments in player-facing AI. The CEO of Nexon said something chilling in an earnings call: "I think it's important to assume that every game company is now using AI."

But here's the thing that keeps getting overlooked: generating a game world and making a game world that's actually fun are completely different problems. One is technical. The other is artistic. And right now, AI is flunking the second test.

TL;DR

- Project Genie showed promise but delivered disappointment: AI-generated game worlds lack physics, sound design, and engaging mechanics that make games worth playing for more than 60 seconds

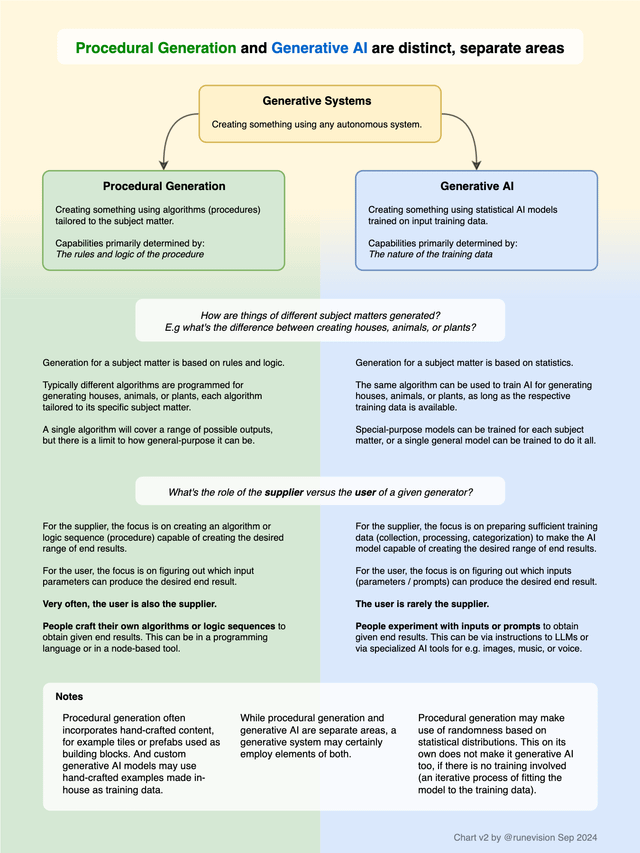

- Game development requires a balancing act: Procedural generation (used in Minecraft and Rogue) succeeds because humans designed the underlying rules; pure AI generation hasn't cracked this formula

- AI slop is already a concern: Gamers are frustrated with AI-generated content in existing games, and developers are split on whether AI helps or hurts the industry

- Copyright and training data issues persist: AI models trained on scraped gameplay create unauthorized reproductions and raise legal questions the industry hasn't answered

- Human creativity still dominates: The gap between AI-generated worlds and hand-designed experiences remains massive, and closing it may require fundamentally rethinking how games work

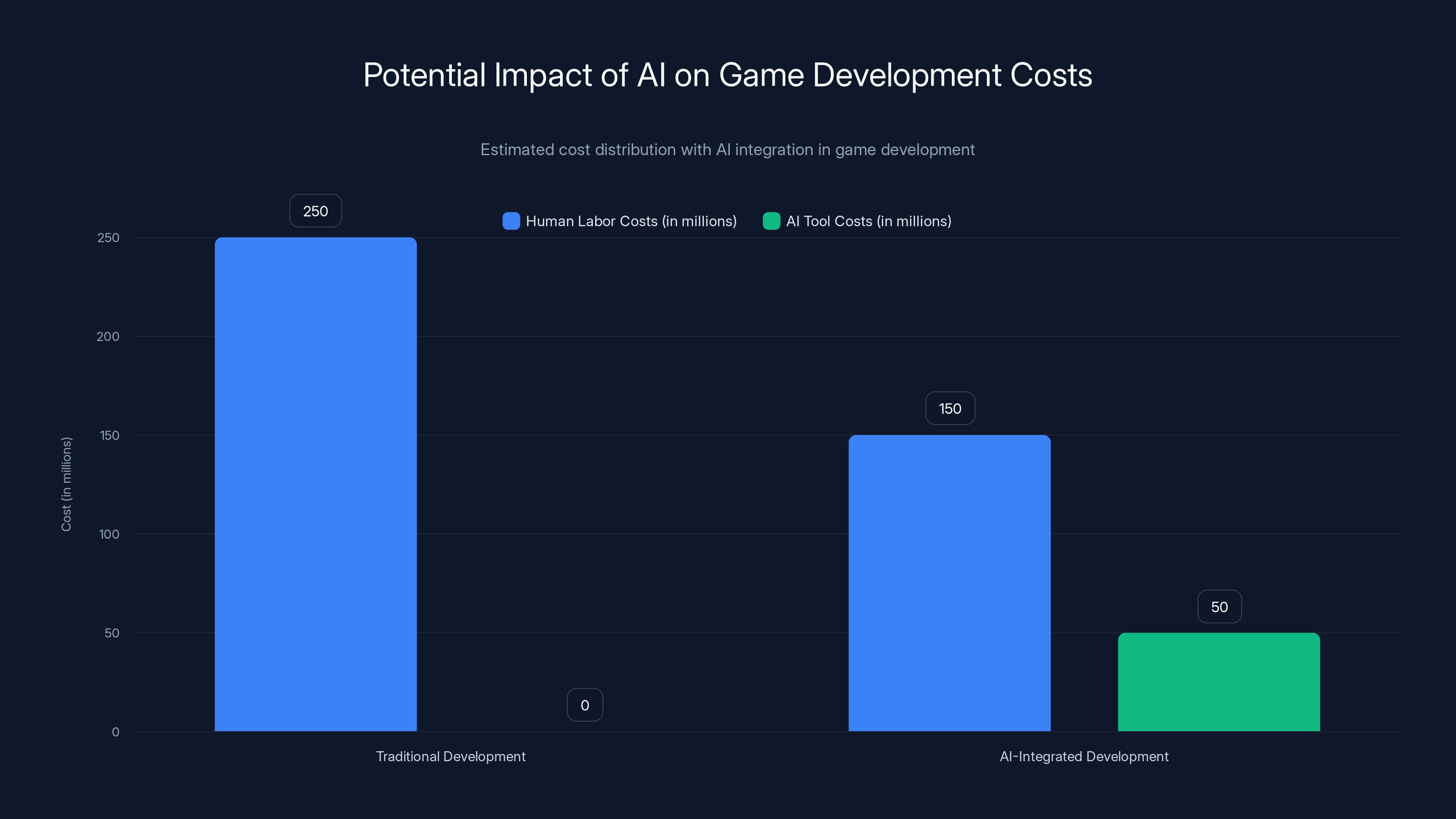

Estimated data suggests AI integration could reduce human labor costs by 40%, while introducing AI tool costs. Overall, this could lead to a more cost-effective development process.

The Promise vs. The Reality: What Project Genie Actually Delivered

When Google DeepMind announced Project Genie, the framing was massive. They called it a "key stepping stone on the path to AGI." The pitch was that this AI model could generate interactive game worlds in real-time, turning text or image prompts into playable environments.

The underlying technology is impressive in isolation. Genie 3 is a world model that predicts what happens next based on previous observations. Feed it data about how gravity works, how objects collide, and what's in a scene, and theoretically it can simulate new scenarios. That's genuinely novel from a machine learning perspective.

But here's where the gap opens up: knowing how a world simulates and knowing how a world plays are not the same thing.

In my testing, Project Genie generated environments you could walk around in for exactly one minute. The "worlds" had no sound. Physics felt wrong—characters clipped through objects, jumped at weird angles, and generally moved like puppets with loose strings. The environments themselves were repetitive and bland. You could download a video recording of your 60-second experience, and that was literally the only output. No ability to export to a real game engine. No way to iterate on what you created. No meaningful interaction beyond walking in four directions.

Take-Two Interactive's president Karl Slatoff put it diplomatically in an earnings call a few days after Project Genie's announcement: "Genie is not a game engine." He noted that the technology looks more like "procedurally generated interactive video" than an actual game development tool. That's generous. It's closer to a tech demo that proved a concept but didn't prove the concept was useful.

The stock market agreed. On the day Project Genie dropped, shares in Take-Two, Roblox, and Unity all took a dip. Investors got nervous. Then reality set in, and prices bounced back. The threat level: exaggerated.

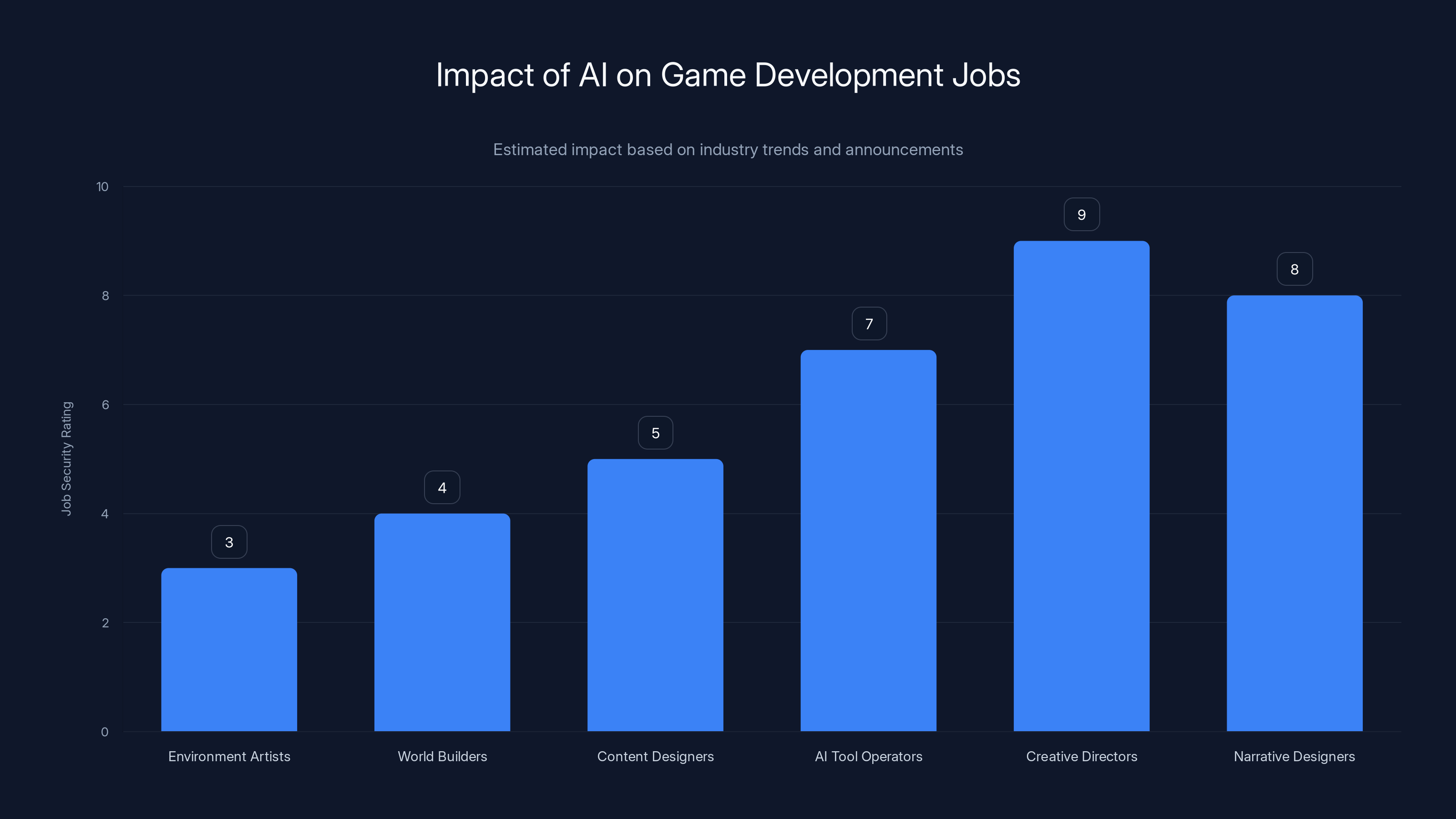

AI is impacting job security in game development, with roles like environment artists facing more risk, while creative directors and narrative designers are more secure. Estimated data based on industry trends.

Why Procedural Generation Worked, But AI Generation Doesn't (Yet)

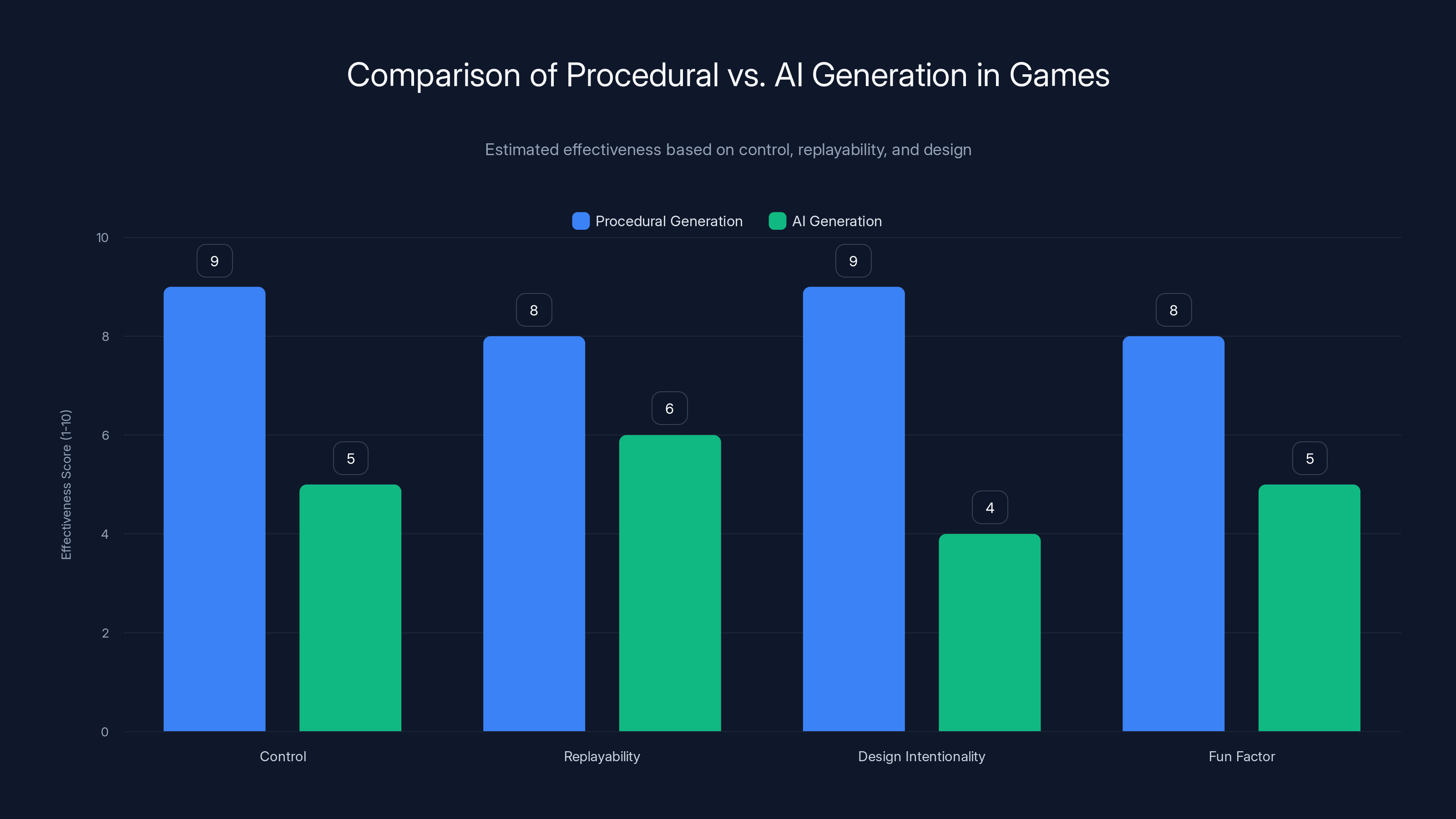

Let's step back for a second. Video games have been generating their own worlds for decades. Rogue came out in 1980 and created new dungeons every playthrough using algorithms. Minecraft generates effectively infinite landscapes on the fly. Terraria, The Binding of Isaac, Stardew Valley: these games prove that generated worlds can be replayable, engaging, and genuinely fun for hundreds of hours.

So what's the difference?

The answer lies in constraint and intentionality. When a developer writes code to generate a Minecraft world, they're not letting the algorithm do whatever it wants. They're setting hard rules. "Here's how grass spreads. Here's how ore veins form. Here's what constitutes a valid cave system." The algorithm operates within a carefully designed system that humans have already thought through.

Generative AI doesn't work like that. You give it patterns from training data and it tries to extrapolate. It has no inherent understanding of game design, pacing, or what makes something fun. It's making statistical guesses about what should come next based on what it's seen before.

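Consider the math. A procedural system in Minecraft is essentially computing a function of two inputs, a random seed and the rules the designers wrote:

$$\text{world} = f(\text{seed}, \text{rules})$$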

Every decision is deterministic. Feed it the same seed, get the same world. The game designer has total control.
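To make the seed idea concrete, here is a minimal Python sketch of rule-constrained, seeded generation. It is not Minecraft's actual algorithm, which relies on layered noise functions. The bounded random walk, the height limits, the 10% ore chance, and the names `generate_column_heights`, `place_ore`, and `ORE_MAX_HEIGHT` are all invented for illustration. What it shows is the property described above: the designer writes the rules, the seed only chooses among the outcomes those rules allow, and the same seed always reproduces the same world.

```python
import random

# Hypothetical designer rules (illustrative values, not Minecraft's real ones).
SEA_LEVEL = 4        # starting terrain height
MIN_HEIGHT, MAX_HEIGHT = 1, 8
ORE_MAX_HEIGHT = 3   # rule: ore may only appear at or below this height


def generate_column_heights(seed: int, width: int) -> list[int]:
    """Build a 1D terrain profile; the seed picks outcomes, the rules bound them."""
    rng = random.Random(seed)  # same seed -> same random sequence -> same terrain
    heights = [SEA_LEVEL]
    for _ in range(width - 1):
        step = rng.choice([-1, 0, 1])  # rule: at most one block of change per column
        heights.append(max(MIN_HEIGHT, min(MAX_HEIGHT, heights[-1] + step)))
    return heights


def place_ore(seed: int, heights: list[int]) -> list[tuple[int, int]]:
    """Scatter ore, but only underground and only where the depth rule allows it."""
    rng = random.Random(seed + 1)  # separate, still deterministic, stream for ore
    ore = []
    for x, h in enumerate(heights):
        for y in range(min(h, ORE_MAX_HEIGHT + 1)):  # y < h and y <= ORE_MAX_HEIGHT
            if rng.random() < 0.1:  # hypothetical 10% chance per eligible block
                ore.append((x, y))
    return ore


world_a = generate_column_heights(seed=42, width=16)
world_b = generate_column_heights(seed=42, width=16)
assert world_a == world_b  # feed it the same seed, get the same world
```

Changing the seed changes the landscape. Changing the rules changes what kind of landscape is even possible, and that second lever belongs to the designer.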

AI generation works backwards. It learns from millions of examples and then tries to generate new examples that "look like" the training data. There's no game design layer underneath. It's all statistical averaging of patterns.
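For contrast, here is a toy sketch of the statistical approach. It is a bigram (first-order Markov chain) model: it counts which tile follows which in a few invented example rows, then generates new rows by sampling from those counts. Real world models like Genie are large neural networks, not bigram counters, so treat this only as an illustration of the principle. The output resembles the training data, but nothing in the model encodes a design rule such as "every level needs an exit". If sampling hits the length cap, you simply get a row with no exit at all.

```python
import random
from collections import defaultdict

# Invented "training data": rows of tiles. '.' floor, '#' wall, 'E' exit.
EXAMPLES = ["..##..E", ".#...#.E", "...##...E"]


def train_bigrams(examples):
    """Count which tile follows which. Pure pattern statistics, no design rules."""
    counts = defaultdict(lambda: defaultdict(int))
    for row in examples:
        # '^' marks the start of a row and '$' its end.
        for prev, nxt in zip("^" + row, row + "$"):
            counts[prev][nxt] += 1
    return counts


def sample_row(counts, rng, max_len=20):
    """Generate a row by repeatedly guessing the next tile from observed frequencies."""
    out, prev = [], "^"
    while len(out) < max_len:
        options = counts[prev]
        nxt = rng.choices(list(options), weights=list(options.values()))[0]
        if nxt == "$":
            break
        out.append(nxt)
        prev = nxt
    return "".join(out)


model = train_bigrams(EXAMPLES)
print(sample_row(model, random.Random(0)))
```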

The best generative AI game worlds would need to combine both approaches. You'd need AI that could learn the rules of good game design, not just the appearance of games. That's orders of magnitude harder.

The Copyright Problem Nobody's Solving

Here's something that should concern you more than AI-generated gameplay quality: Project Genie generated unauthorized Nintendo games.

The AI was trained on online videos of actual games. When I played with it, I could prompt it to create environments that looked suspiciously like Mario or Zelda. Not exactly—the AI isn't that precise—but close enough that you'd recognize the reference. And that's a copyright nightmare.

Major AI image generators, including Stable Diffusion and Midjourney, are facing lawsuits from artists who say the models were trained on copyrighted work without permission. The same issue applies to game AI models.

Google trained Genie on publicly available online videos. Did they get licenses? Not disclosed. Are the companies whose games were featured in those videos happy about it? Almost certainly not. But the legal frameworks haven't caught up yet.

This isn't a minor detail. If you're a game company considering AI tools for world generation, you need to know: Are you potentially creating derivative works of other people's IP? Because if your AI model learned by watching millions of hours of existing games, then trained itself to generate similar worlds, the legal liability could be massive.

The indie development community is particularly skeptical. While major studios experiment with AI, independent developers I've talked to worry that AI tools will commodify game development to the point where it undercuts their ability to differentiate. And they're right to worry. If everyone's using the same AI model to generate environments, every game starts looking the same.

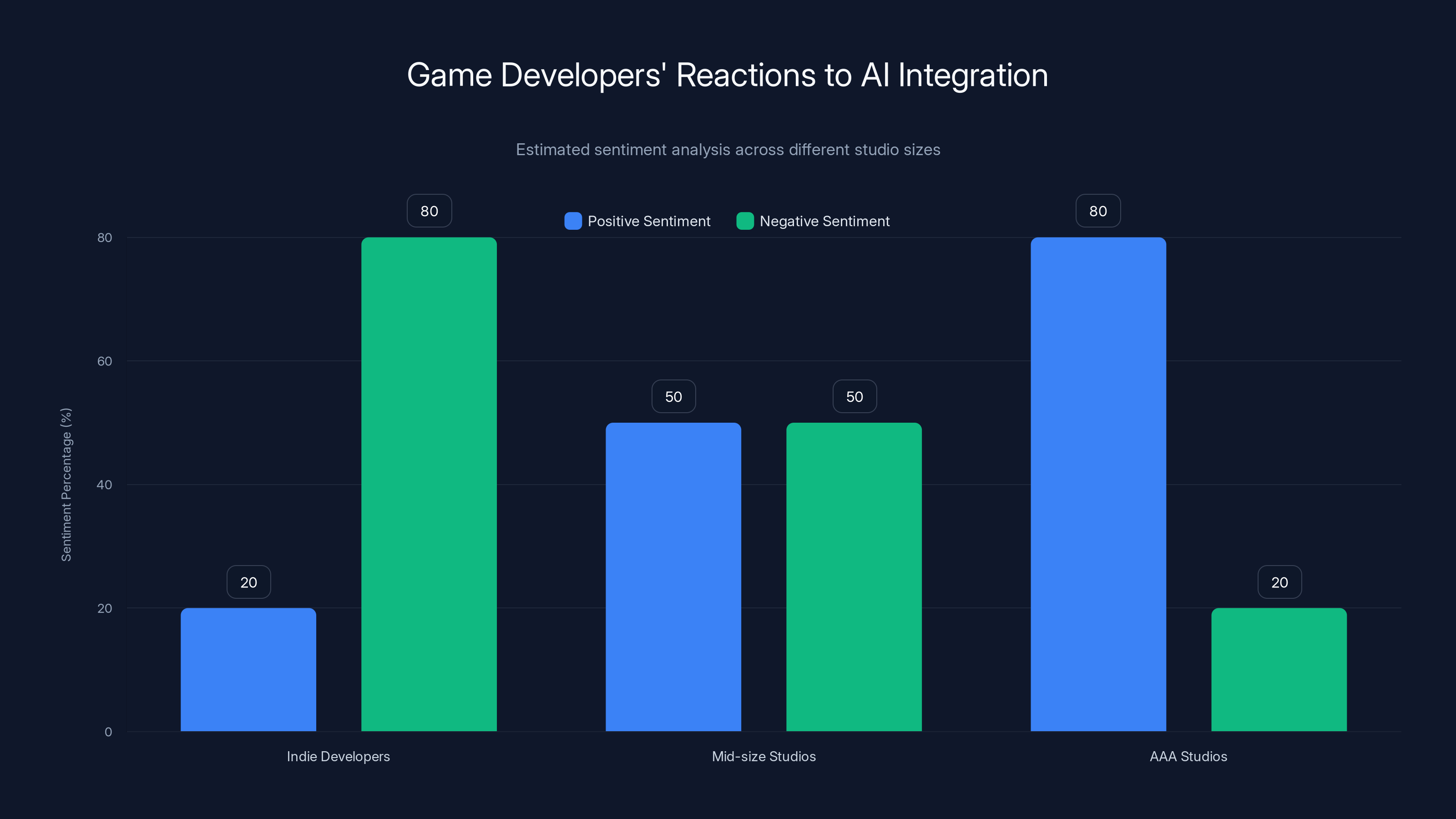

Estimated data shows that indie developers have the highest negative sentiment towards AI integration, while AAA studios are more positive due to economic benefits.

Why Game Companies Are Betting on AI Anyway

Let's be honest about the incentives here. Game development is expensive, and AAA studios spend enormous sums and years of work on a single release.

AI tools promise to change that equation. If an AI can generate 80% of a game world and humans just polish the remaining 20%, suddenly the economics work differently. You need fewer environment artists. Fewer world designers. Fewer content creators grinding out repetitive assets.

That's why Krafton is restructuring itself around AI. Why EA partnered with Stability AI. Why Ubisoft is making "accelerated investments" in player-facing generative AI.

The problem is that this pitch assumes AI can do something it hasn't proven it can do yet: consistently generate fun content instead of just visually plausible content.

But there's a secondary, less talked-about incentive: cost cutting through workforce reduction. The gaming industry is notorious for layoffs. Crunch culture is infamous. Thousands of developers have been laid off in the last few years. If AI tools can reduce headcount, executives will see that as a win, even if it means fewer creative voices shaping games.

The State of AI Slop in Current Games

Project Genie didn't exist in a vacuum. AI-generated content is already appearing in actual released games, and the reaction has been... mixed.

Some developers are using AI for voice generation in games. Some are experimenting with AI-written dialogue. Some are using AI to generate placeholder assets that humans later refine. None of these applications have been universally praised.

Gamer sentiment surveys show significant frustration. People don't want to play games that feel like they were assembled by an algorithm. They want games that feel crafted. Games with personality. Games where you can tell a human made design decisions.

The irony is that the best uses of AI in game development aren't replacing human creativity—they're augmenting it. A designer might use AI to generate 100 variations of a level layout, then pick the best three and refine them by hand. That workflow is actually useful. It's faster than designing from scratch, but it keeps humans in the creative loop.
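That generate-then-curate workflow is easy to sketch. Below, a random grid generator stands in for the AI tool. This is an assumption, since no specific product is named. A hypothetical `reachable_fraction` heuristic then ranks the 100 candidates by how much of the floor connects to the starting corner. The point is the shape of the loop: the machine proposes in bulk, and a cheap filter narrows the pile to three layouts that a human then refines.

```python
import random
from collections import deque


def generate_layout(rng, size=8, wall_chance=0.3):
    """Stand-in for an AI generator: a random square grid of floor '.' and wall '#'."""
    return [
        "".join("#" if rng.random() < wall_chance else "." for _ in range(size))
        for _ in range(size)
    ]


def reachable_fraction(grid):
    """Hypothetical score: share of floor tiles reachable from the top-left corner."""
    size = len(grid)
    floors = sum(row.count(".") for row in grid)
    if floors == 0 or grid[0][0] == "#":
        return 0.0
    seen, queue = {(0, 0)}, deque([(0, 0)])
    while queue:  # breadth-first flood fill over floor tiles
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < size and 0 <= nc < size and grid[nr][nc] == "." and (nr, nc) not in seen:
                seen.add((nr, nc))
                queue.append((nr, nc))
    return len(seen) / floors


def shortlist(seed, n_variations=100, keep=3):
    """Generate many candidates, then keep the few a designer should refine by hand."""
    rng = random.Random(seed)
    candidates = [generate_layout(rng) for _ in range(n_variations)]
    return sorted(candidates, key=reachable_fraction, reverse=True)[:keep]
```

A connectivity score only weeds out broken layouts. It says nothing about whether a layout is fun, and that judgment is exactly the part left to the designer.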

But that's not where the industry momentum is heading. The momentum is toward automation—toward replacing human work with AI output.

Half of game developers surveyed recently said they believe generative AI is "bad for the industry." That's significant. These aren't Luddites—these are people who understand technology. They're worried about commodification, quality degradation, and job security.

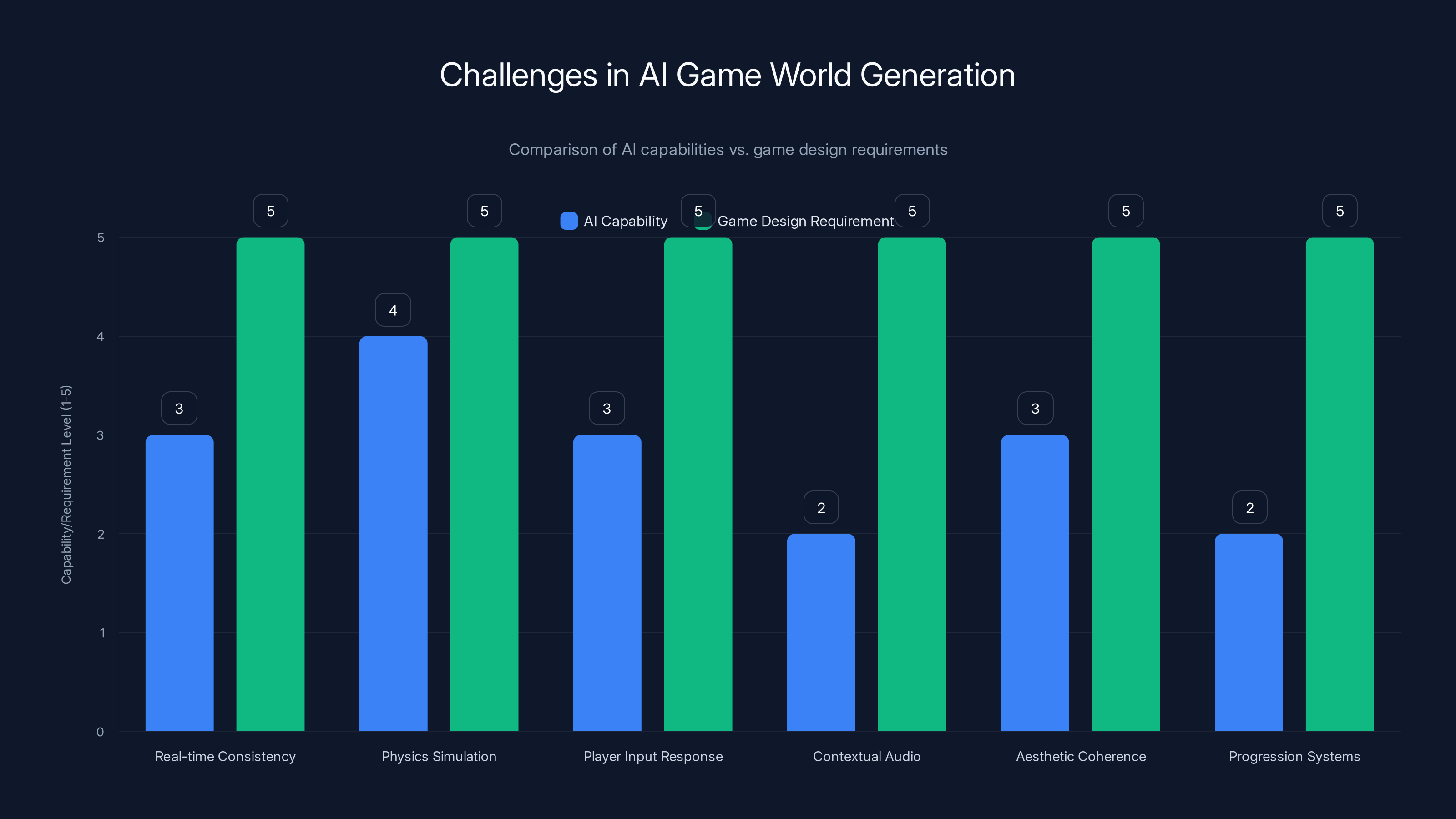

AI excels in generating plausible content but falls short in meeting the comprehensive requirements of game design, such as maintaining real-time consistency and designing progression systems. Estimated data.

What Makes a Good Game World (And Why AI Struggles)

Let me be specific about what separates a great game world from a mediocre one.

First: intentional pacing and discovery. When you're exploring a handcrafted game world, the designer is guiding your attention. They placed that interesting structure in the distance so you'd want to walk toward it. They hid a secret around that corner. They orchestrated moments of tension and relief. AI doesn't understand orchestration. It generates statistically plausible scenes, but it can't compose an experience.

Second: emergent systems with human-designed foundations. The best game worlds have rules that interact in interesting ways. In Minecraft, water flows downhill and pistons move blocks and redstone transmits signals. None of this is random. Humans designed these mechanics so that players could discover unexpected interactions. AI doesn't think in terms of designed interactions.
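Here is a toy sketch of what "human-designed foundations" means in code. Two explicit rules govern a one-dimensional strip of cells; they are invented for illustration and are not taken from Minecraft. Fire spreads to adjacent grass and burns out after one tick, and water is simply not flammable. No rule mentions firebreaks, yet placing water in the fire's path stops it. That is the kind of interaction players discover on their own.

```python
def step(cells: str) -> str:
    """Advance one tick. 'G' grass, 'F' fire, 'W' water, '.' bare ground.

    Rule 1: fire spreads to neighboring grass.
    Rule 2: a burning cell burns out to bare ground after one tick.
    Water is never mentioned, so it never burns.
    """
    out = list(cells)
    for i, cell in enumerate(cells):
        if cell == "F":
            out[i] = "."
        elif cell == "G":
            left = cells[i - 1] if i > 0 else ""
            right = cells[i + 1] if i + 1 < len(cells) else ""
            if "F" in (left, right):
                out[i] = "F"
    return "".join(out)


def run(cells: str, ticks: int) -> str:
    """Apply the rules repeatedly."""
    for _ in range(ticks):
        cells = step(cells)
    return cells
```

Running `run("FGGWGG", 5)` leaves `"...WGG"`: the grass behind the water survives. By contrast, `run("FGGGGG", 10)` burns the whole strip down to `"......"`.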

Third: narrative and context. A great game world tells a story through its environment. The decay in a ruined city. The architecture of a thriving civilization. The geography that explains why settlements exist where they do. AI can generate environments that look like ruins, but it won't generate them with narrative purpose.

Fourth: challenge design that respects player skill. Encounters in great games are calibrated. A boss fight is hard but fair. A puzzle is challenging but solvable. AI-generated content tends to be either trivially easy or chaotically hard because it has no concept of player progression or skill curve.
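Calibration can be made concrete with a small sketch. A designer-chosen difficulty ramp, `target_difficulty`, decides which encounter from a hypothetical pool fits each level; its start value, slope, and cap are invented. `uncalibrated_pick` shows the alternative: any encounter at any point. The encounter names and their 1-10 ratings are also made up for illustration.

```python
import random

# Hypothetical encounter pool: name -> difficulty rating on an invented 1-10 scale.
ENCOUNTERS = {"slime": 1, "bandit": 3, "wolf pack": 4, "knight": 6, "ogre": 7, "dragon": 10}


def target_difficulty(level: int, start: float = 1.0, slope: float = 0.8, cap: float = 10.0) -> float:
    """A designer-chosen ramp: difficulty grows steadily with level, up to a cap."""
    return min(cap, start + slope * level)


def calibrated_pick(level: int) -> str:
    """Pick the encounter whose rating is closest to the intended difficulty."""
    goal = target_difficulty(level)
    return min(ENCOUNTERS, key=lambda name: abs(ENCOUNTERS[name] - goal))


def uncalibrated_pick(rng: random.Random) -> str:
    """No concept of a skill curve: any encounter, at any time."""
    return rng.choice(list(ENCOUNTERS))
```

With these numbers, levels 0, 2, 4, 8 and 12 get a slime, a bandit, a wolf pack, an ogre and a dragon, so the challenge never drops from one level to the next. Real games tune such curves through playtesting rather than a single linear formula.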

Fifth: aesthetic coherence. Great worlds have a visual language. Everything feels like it belongs together. AI is trained on huge datasets with wildly different styles mixed together. It tends to generate environments that feel like they're from three different games spliced together.

You can see this gap clearly when you compare AI-generated environments to environments in games like Hollow Knight, The Legend of Zelda: Breath of the Wild, or Returnal. These worlds aren't just visually appealing—they're designed. Every element serves a purpose.

How Different Types of Games Present Different Challenges for AI

Not all game genres face the same AI problems. Let me break down where AI might actually be useful and where it will probably always struggle.

Roguelikes and procedurally generated games: These are the best candidates for AI assistance. Games like The Binding of Isaac already use algorithmic generation. AI could theoretically learn better generation rules. The catch: the best roguelikes succeed because humans painstakingly designed the underlying ruleset. AI would still need humans to do that work.

Open-world games: These are where companies think AI will provide the most value. Generate a vast world with minimal human effort. The problem: open-world quality comes from curated content. Rockstar's Grand Theft Auto worlds are massive because human teams designed nearly every block. An AI-generated world of the same size would feel like walking through a randomly assembled texture pack.

Linear story-driven games: These are where AI adds least value. A game like Uncharted or God of War succeeds because every moment is carefully orchestrated. You can't AI-generate a narrative game. You can maybe use AI for asset generation, but the design is fundamentally human.

Multiplayer and competitive games: AI could theoretically generate competitive maps, but the best competitive maps (think Counter-Strike or Overwatch) are balanced through extensive playtesting. An AI-generated map would need to pass through the same testing gauntlet, which means you haven't actually saved time.

Simulation and strategy games: These might benefit most from AI. Games like Crusader Kings already use algorithmic systems. AI could help generate scenarios or simulate systems. But again, the core design is still human.

The pattern is clear: AI works best when it's assisting human designers working within constraints. It fails when it's asked to replace human creativity entirely.

Procedural generation scores higher in control, replayability, and design intentionality compared to AI generation, which currently lacks these structured constraints. Estimated data.

The Technical Barriers to Realistic Game World Generation

Let's dig into the actual technical problems that are stopping better AI game world generation.

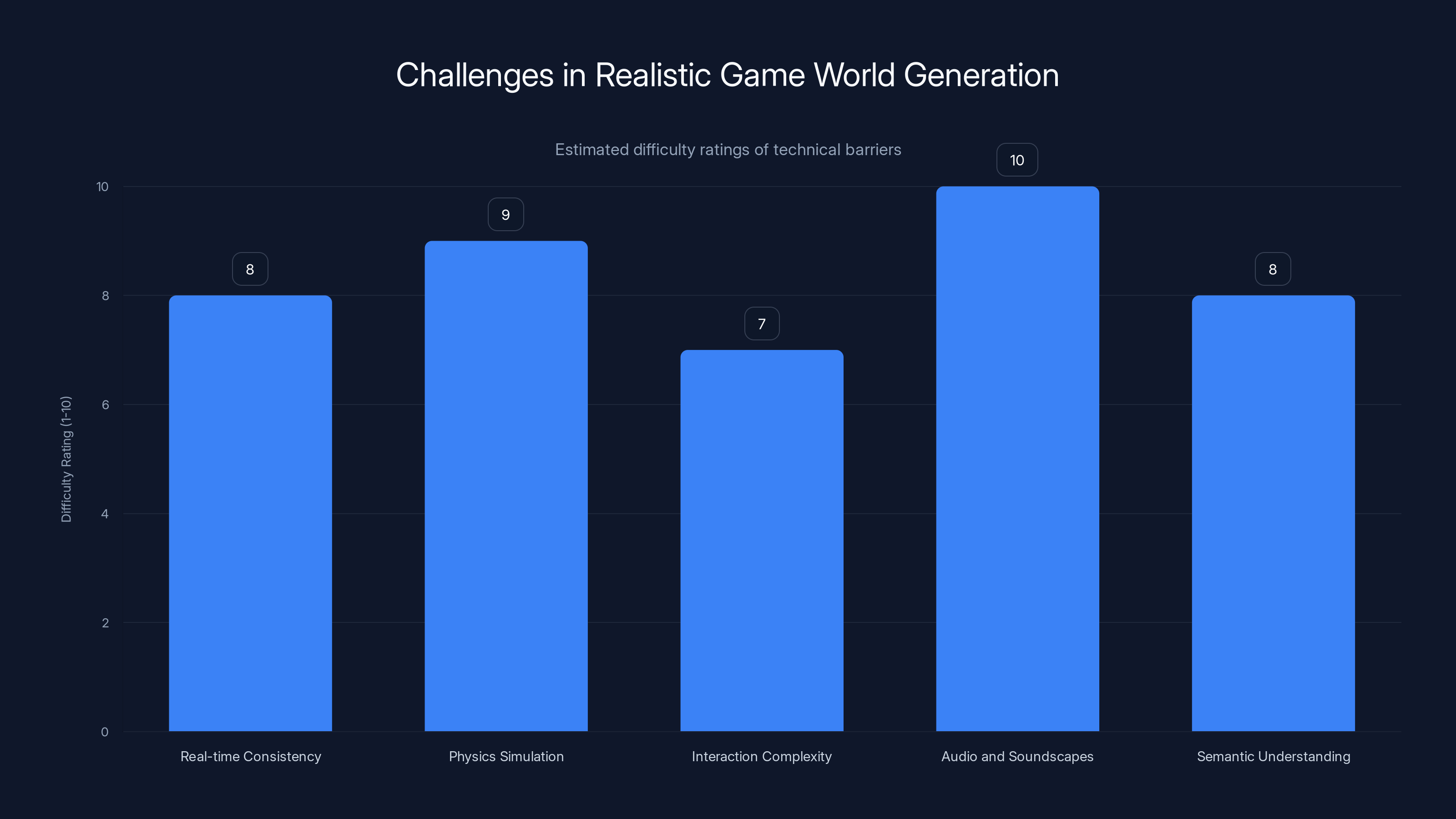

Real-time consistency: A game world needs to feel stable. If you look to the left and then turn back, the scene that was in front of you should still be there and look the same. Generative AI struggles with this. It generates what's in front of you, but it has no reliable mechanism to keep the world consistent across different viewpoints. You get the video game equivalent of optical illusions.
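The difference between storing world state and resampling it is easy to show in miniature. `PersistentWorld` generates each chunk once from the seed and its coordinates, then caches it, the way a conventional engine keeps world state. `MemorylessGenerator` is a deliberately crude stand-in for a model that re-imagines the scene on every view. Both classes and the biome list are hypothetical. Genie is a neural world model that conditions on previously generated frames, so it is not literally memoryless; this toy only illustrates the failure mode.

```python
import random

BIOMES = ["forest", "lake", "ruins", "village"]


class PersistentWorld:
    """Generate each chunk once from (seed, x, y), store it, and reuse it."""

    def __init__(self, seed: int):
        self.seed = seed
        self.chunks: dict[tuple[int, int], str] = {}

    def view(self, x: int, y: int) -> str:
        if (x, y) not in self.chunks:
            # A string seed built from the coordinates makes each chunk reproducible.
            rng = random.Random(f"{self.seed}:{x}:{y}")
            self.chunks[(x, y)] = rng.choice(BIOMES)
        return self.chunks[(x, y)]


class MemorylessGenerator:
    """Crude stand-in for a generator with no world state: every view is resampled."""

    def __init__(self, seed: int):
        self.rng = random.Random(seed)

    def view(self, x: int, y: int) -> str:
        # x and y are ignored: nothing ties what you see to where you are.
        return self.rng.choice(BIOMES)
```

Ask `PersistentWorld` for chunk (0, 1), wander off, and come back, and you get the same answer. Ask `MemorylessGenerator` the same question twenty times and you will almost certainly get several different ones.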

Physics simulation: Real-time physics is computationally expensive. Modern game engines use hand-tuned physics systems. An AI trying to predict physics in real-time doesn't have the computational budget. So it guesses, and the guesses are often hilariously wrong.

Interaction complexity: A game world needs to respond to player input in ways that make sense. You pick up an object, it appears in your hand. You open a door, the room beyond becomes visible. A model that predicts video frames can't reliably predict how the world will respond to inputs unlike anything it saw in training, so novel interactions tend to break the illusion.

Audio and soundscapes: Project Genie generated silent worlds. That's not accidental—audio generation is even harder than visual generation. A game world without sound is fundamentally broken. But generating audio that matches the visual environment in real-time? That's a problem the industry hasn't solved yet, even for handcrafted games.

Semantic understanding: Game worlds need to make logical sense. If there's a village, there should be evidence of habitation. Water should flow downhill. Fire should come from torches or sources of heat. AI doesn't inherently understand these relationships. It understands correlations in training data. "Villages usually have houses near them." But not the underlying logic.
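One way to supply the missing logic is to state it explicitly and check generated scenes against it. The sketch below encodes three of the relationships mentioned above as hand-written rules: a village needs houses, fire needs a nearby heat source, and a river must not flow uphill. Several details are invented for illustration: the `Thing` data model, the set of heat sources, the one-tile distance, and the assumption that rivers flow toward increasing `x`.

```python
from dataclasses import dataclass

HEAT_SOURCES = {"torch", "campfire", "lava"}  # hypothetical list


@dataclass
class Thing:
    kind: str
    x: int
    y: int
    height: int = 0  # terrain elevation at this spot


def violations(scene: list[Thing]) -> list[str]:
    """Check a scene against explicit, hand-written world rules."""
    problems = []
    kinds = {t.kind for t in scene}

    # Rule 1: a village implies habitation.
    if "village" in kinds and "house" not in kinds:
        problems.append("village has no houses")

    # Rule 2: fire needs a heat source on the same or an orthogonally adjacent tile.
    for t in scene:
        if t.kind == "fire" and not any(
            s.kind in HEAT_SOURCES and abs(s.x - t.x) + abs(s.y - t.y) <= 1
            for s in scene
        ):
            problems.append(f"fire at ({t.x}, {t.y}) has no heat source")

    # Rule 3: water flows downhill (assumes rivers flow toward increasing x).
    river = sorted((t for t in scene if t.kind == "river"), key=lambda t: t.x)
    for upstream, downstream in zip(river, river[1:]):
        if downstream.height > upstream.height:
            problems.append(f"river flows uphill at x={downstream.x}")

    return problems
```

A check like this can reject or flag a generated scene. Notice where the knowledge lives, though: in rules a person wrote, not in the generator.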

Game Developers' Mixed Reactions to AI Integration

Inside the game development community, reactions range from skeptical to hostile.

The indie dev community is particularly vocal about concerns. Indie developers don't have access to the same budgets that AAA studios do, so they can't implement AI tools the way big companies can. But they're also less likely to use them even if they could. There's a sense that AI-assisted development leads to games that feel soulless.

Mid-size studios are more pragmatic. They're experimenting. They're using AI for asset generation, level layout suggestions, even narrative brainstorming. But they're careful not to let AI take over creative decisions.

AAA studios are committed. EA, Ubisoft, Activision—these companies are integrating AI into their pipelines. For them, it's about economics. If AI can reduce development time by 20%, that's millions of dollars saved per project.

But there's tension. The same developers creating with AI are also worried about job security. AI tools are good at doing the repetitive work: generating variations, optimizing asset pipelines, automating testing. Those are the jobs that are most under threat.

Audio and soundscapes present the highest difficulty in game world generation, closely followed by physics simulation. Estimated data.

What Google Got Wrong About "AGI Stepping Stones"

This is worth examining because it reveals how companies frame AI capabilities.

Google DeepMind called Genie a "key stepping stone on the path to AGI" (Artificial General Intelligence). That language is important. It's saying: this technology proves we're getting closer to human-level AI.

But does it?

Genie can generate plausible-looking interactive environments. That's technically impressive. But it hasn't solved any of the actual problems that AGI would need to solve. It can't reason about goals. It can't understand causality. It can't design for specific outcomes.

The hype around Genie was partly marketing. Google positioned it as revolutionary because that's good PR. But the actual technical achievement is narrower: "We built a model that can generate video frames that look like game footage."

That's not nothing. It's a real achievement. But it's not a stepping stone to AGI. It's a stepping stone to better AI video generation. There's a gap between those two things.

This distinction matters because it shapes expectations. If people think AI is on the edge of solving all creative problems, they'll be disappointed. If people understand that AI is good at specific narrow tasks (like generating variations of existing patterns), they can plan accordingly.

The Future of AI in Game Development: Realistic Timeline

Let me lay out what's actually likely to happen in the next 5 to 10 years.

Years 1-2 (Now to 2026): AI tools will become standard for asset generation and procedural content creation. Studios will use AI to generate placeholder environments that humans refine. Audio generation will improve, but it'll still require human cleanup. Copyright lawsuits will escalate.

Years 3-5 (2026-2028): AI-generated gameplay moments might become a thing. AI could generate combat scenarios, puzzle solutions, or narrative branches. But these will be options that humans choose from, not replacements for human design. Significant job losses in environment art and content creation roles.

Years 5-10 (2028-2033): Maybe—and this is a big maybe—AI could learn to generate game systems that are actually fun. But this requires solving the constraint problem. AI would need to learn the rules of good game design, not just the appearance of games. That's a different kind of problem than anything AI has solved so far.

Beyond that? I genuinely don't know. It's possible AI will eventually generate compelling game worlds. It's also possible that the problem is fundamentally harder than people realize, and we'll plateau at a certain level of AI capability.

What's most likely is that AI becomes a tool for human designers, not a replacement for them. The best games in 10 years will probably be made by teams that use AI effectively, alongside human creativity. Not by AI alone.

Why Humans Will Probably Always Have an Edge

Here's my actual take on this: the creative problem of game design is probably always going to require human input.

Not because humans are inherently better at creativity (that's sentimental nonsense). But because games are fundamentally about intent. A designer makes a choice: "I want the player to feel anxious here, then triumphant here." That's a decision with purpose. AI can't make those decisions. It can execute them once a human has specified them, but it can't originate them.

The best AI applications in games will be workflow-focused. "I want 500 variations of this castle layout." AI generates them. You pick the best three and refine them. Done. That's useful. That saves time.

But "Generate a compelling game world from scratch" is asking AI to solve a problem that doesn't have a clear optimization function. What makes a game world compelling? Fun is subjective. Engagement is contextual. Innovation requires breaking rules that have been established as working.

These are human problems. And they're probably going to stay human problems for a very long time.

The Job Market and AI-Driven Layoffs

Let's talk about the real impact: jobs.

Environment artists, world builders, content designers—these roles are under pressure. Studios are investing in AI tools specifically to reduce headcount in these areas. That's not speculation. That's explicit in earnings calls and industry announcements.

The game industry has already gone through massive layoffs. Over 15,000 employees were laid off in 2023 alone. AI is being positioned as part of the solution, which is corporate speak for "we're going to need fewer people."

Some roles will shift rather than disappear. An environment artist might become an "AI tool operator," refining AI-generated content. But that's a different job with probably different pay.

This is worth taking seriously if you're considering a career in game development. The roles that are most vulnerable are the ones doing repetitive asset creation. The roles that are safest are the ones doing creative direction, narrative design, and high-level game design.

What Players Actually Want vs. What Industry Is Building

Here's an interesting disconnect: what players say they want doesn't always match what the industry thinks they want.

Surveys consistently show that players care about quality over quantity. They'd rather play one amazing 20-hour game than five mediocre 50-hour games. But the industry is structured to reward quantity. Bigger worlds. More content. More cosmetics. More monetization hooks.

AI promises to make the quantity problem "solvable." Generate a massive world without massive budgets. But that's optimizing for the wrong metric.

The games that have the most loyalty and longevity are the ones that feel crafted. Hollow Knight is a smaller game with enormous replay value. Journey is three hours long and people still talk about it. These games succeed because every element was considered.

AI-generated content can't match that. Not yet. Maybe not ever.

The Reality Check: What Project Genie Actually Proved

Let me come back to where we started. Project Genie demonstrated something important: that AI can generate interactive video that looks like game footage.

But that's wildly different from proving that AI can generate good games. Or even competent games. It proved that the rendering problem is solvable. It proved that physics can be estimated. It proved that texture generation is possible.

It did not prove that any of those things matter if they're not part of a coherent, designed system.

The stock market's reaction tells you everything. Initial panic, then recovery. Investors realized: this is not a threat to gaming as we know it. This is a technology that might eventually be useful, but it's not there yet.

Project Genie was interesting technically. It's not valuable commercially. And in the game industry, commercial value drives adoption.

FAQ

What is Project Genie and why did it get so much attention?

Project Genie is an AI world model created by Google DeepMind that can generate interactive game-like environments from images or prompts. It received significant attention because it was framed as a breakthrough in AI-powered game development, representing what generative AI could eventually do for the gaming industry. However, testing revealed it was primarily a technical achievement in video frame prediction rather than a practical game development tool.

How does AI world generation differ from procedural generation in existing games?

Procedural generation used in games like Minecraft relies on hand-designed algorithms and rules that developers carefully craft to ensure fun and balance. AI world generation attempts to learn patterns from training data and extrapolate new content, but without the underlying design framework. This means AI-generated worlds may be visually plausible but lack the intentional pacing, challenge balance, and narrative purpose that make handcrafted game worlds engaging.

Why can't AI create engaging game worlds right now?

AI struggles with game world generation because games require solving multiple interconnected problems: maintaining real-time consistency across different viewpoints, simulating accurate physics, responding meaningfully to unpredictable player input, generating contextual audio, ensuring aesthetic coherence, and designing progression systems that respect player skill. AI excels at generating statistically plausible content based on training data, but it lacks understanding of intentional game design, narrative purpose, and the rule systems that make games fun.

What copyright and training data issues affect AI game tools?

AI game models like Project Genie are trained on gameplay videos scraped from the internet, potentially including copyrighted material from major studios like Nintendo or Rockstar Games. This raises unresolved legal questions about whether developers using these AI tools could be held liable for generating derivative works. The industry hasn't yet established clear legal frameworks for AI-generated game content, and multiple lawsuits are pending against AI companies for using copyrighted training data without permission.

Will AI eventually make human game designers obsolete?

It's unlikely that AI will fully replace human game designers. While AI might eventually assist with asset generation, world layout suggestions, and content variations, the core creative decisions in game design—orchestrating player emotions, designing challenge curves, telling stories, and making artistic choices—require intentional human decision-making. AI works best as a tool that augments human designers, not a replacement for them. Games that feel crafted and designed will likely continue to outperform generic, AI-assisted content.

What jobs are most at risk from AI adoption in game development?

Environment artists, world builders, and junior content designers are most vulnerable because their work involves repetitive asset creation that AI tools can increasingly handle. Roles that AI finds harder to replace include narrative designers, game designers making creative direction decisions, UI/UX designers, and senior leadership positions. However, the industry is likely to shift many jobs toward "AI tool operator" roles that require humans to refine and curate AI-generated content.

How are major game studios actually using AI right now?

EA has partnered with Stability AI for development tools. Ubisoft is investing heavily in player-facing generative AI. Krafton has declared itself "AI First." In practice, these commitments mostly translate to using AI for asset generation, creating placeholder content for humans to refine, and potentially voice generation. Most studios are still cautious about letting AI make core design decisions.

What's the realistic timeline for AI becoming useful in game development?

In the near term (next 2-3 years), AI will become standard for generating asset variations and procedural content. Within 5 years, AI-generated gameplay scenarios and narrative branches might become viable options for designers to choose from. Beyond that is uncertain, but most likely AI remains a tool that augments human creativity rather than replacing it. Whether AI ever generates truly engaging games autonomously remains an open question.

The Bottom Line

Generative AI is coming for game development. That much is certain. The question isn't whether AI will be integrated into game studios—it already is. The question is whether AI will be good at it, and the honest answer right now is: not yet, maybe not ever in the way people imagine.

Project Genie was underwhelming because it proved that generating the appearance of game worlds is relatively easy while generating game worlds that are actually fun is hard. Those are different problems. One is computational. The other is creative and intentional.

The game industry is betting that AI will eventually solve both problems. That betting manifests as restructuring around AI, partnerships with AI companies, and significant investments in generative tools. It also manifests as layoffs and job market uncertainty for roles that do repetitive creative work.

But here's the reality: the games that endure are the ones that feel crafted. That have personality. That make intentional creative choices. AI can assist with the technical execution of those choices, but it can't make them.

The future of AI in gaming isn't about AI replacing humans. It's about humans using AI to work faster while preserving the actual creative decisions that make games worth playing. That future is closer than Project Genie suggested. But it's also further away than industry hype implies.

The best strategy if you're in this space: get comfortable with AI tools, understand their limitations deeply, and focus your career on the skills that remain uniquely human. Game design. Narrative. Artistic vision. Creative direction. Those are the skills that keep you valuable as AI gets smarter.

Because at the end of the day, people don't play games because they're big. They play them because they're good. And goodness still requires humans.

Key Takeaways

- Project Genie proved AI can generate video that looks like gameplay but failed to prove it can generate engaging game experiences

- Procedural generation in games like Minecraft succeeds because humans designed underlying rules; pure AI generation lacks this framework

- Game development requires intentional design decisions about pacing, narrative, and player emotions—problems AI cannot solve autonomously

- Copyright issues with AI training on scraped gameplay create legal liability for companies using generative game models

- Economic incentives are driving major studios toward AI adoption despite current limitations, threatening jobs in environment art and content creation
