The AI Slop Security Crisis Is Here, and It's Worse Than You Think

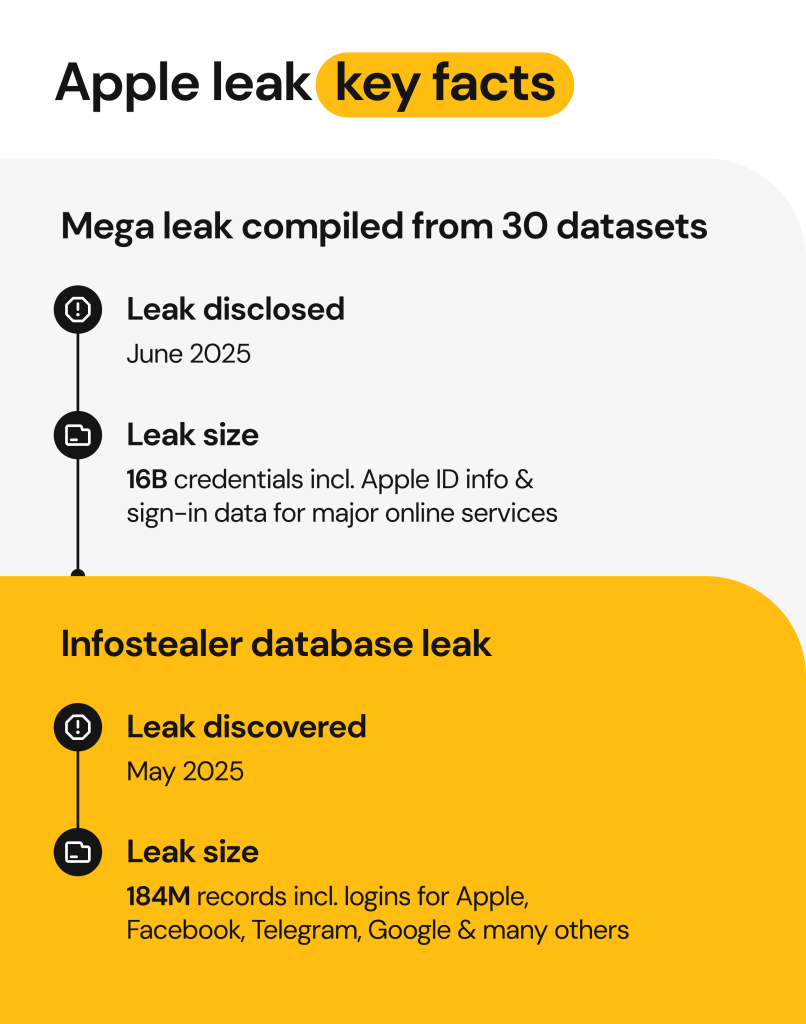

Imagine opening an app you trust, sending a private message to a friend, and then finding out that message just got sent to some random server on the internet. Sounds paranoid, right? Except it's not hypothetical anymore. Security researchers recently uncovered a staggering 198 iOS applications that were actively leaking sensitive user data, including private chats, location information, and personal details. This isn't a minor vulnerability that affects a handful of users. This is a systemic problem baked into how some developers handle AI integration and third-party services.

The worst part? Most users have no idea their apps are compromised. They download what looks like a legitimate productivity tool, weather app, or social utility. They grant permissions they think are necessary. And then their data starts flowing to servers they've never heard of, getting processed by AI systems they never consented to, and potentially sold or exposed in ways that violate every privacy principle we thought tech companies followed.

This crisis reveals something uncomfortable about the current state of mobile app development. Developers are rushing to integrate AI features into everything, often without properly vetting the third-party services they're using. They're cutting corners on security audits. They're burying privacy policies in walls of legal text. And users are paying the price with their data.

The stakes are higher than they've ever been. We're not talking about a data breach that might affect your password. We're talking about real-time location tracking, access to your private conversations, and potentially your financial information. This affects millions of iOS users, across dozens of app categories, from fitness trackers to business tools to entertainment apps.

In this guide, we're going to break down exactly what happened, which apps were affected, how the vulnerability worked, and most importantly, what you can do right now to protect yourself and your data.

TL;DR

- 198 iOS apps caught leaking sensitive data including private messages, locations, and personal information to unauthorized servers

- Root cause identified: Improper implementation of third-party AI services and analytics platforms without proper security controls

- Impact is widespread: Affects apps across multiple categories including fitness, productivity, social, and entertainment

- Real-time exposure risk: Some apps were transmitting data as it was created, with no encryption or authentication

- Immediate action needed: Review app permissions, update to latest versions, and delete apps from untrusted developers

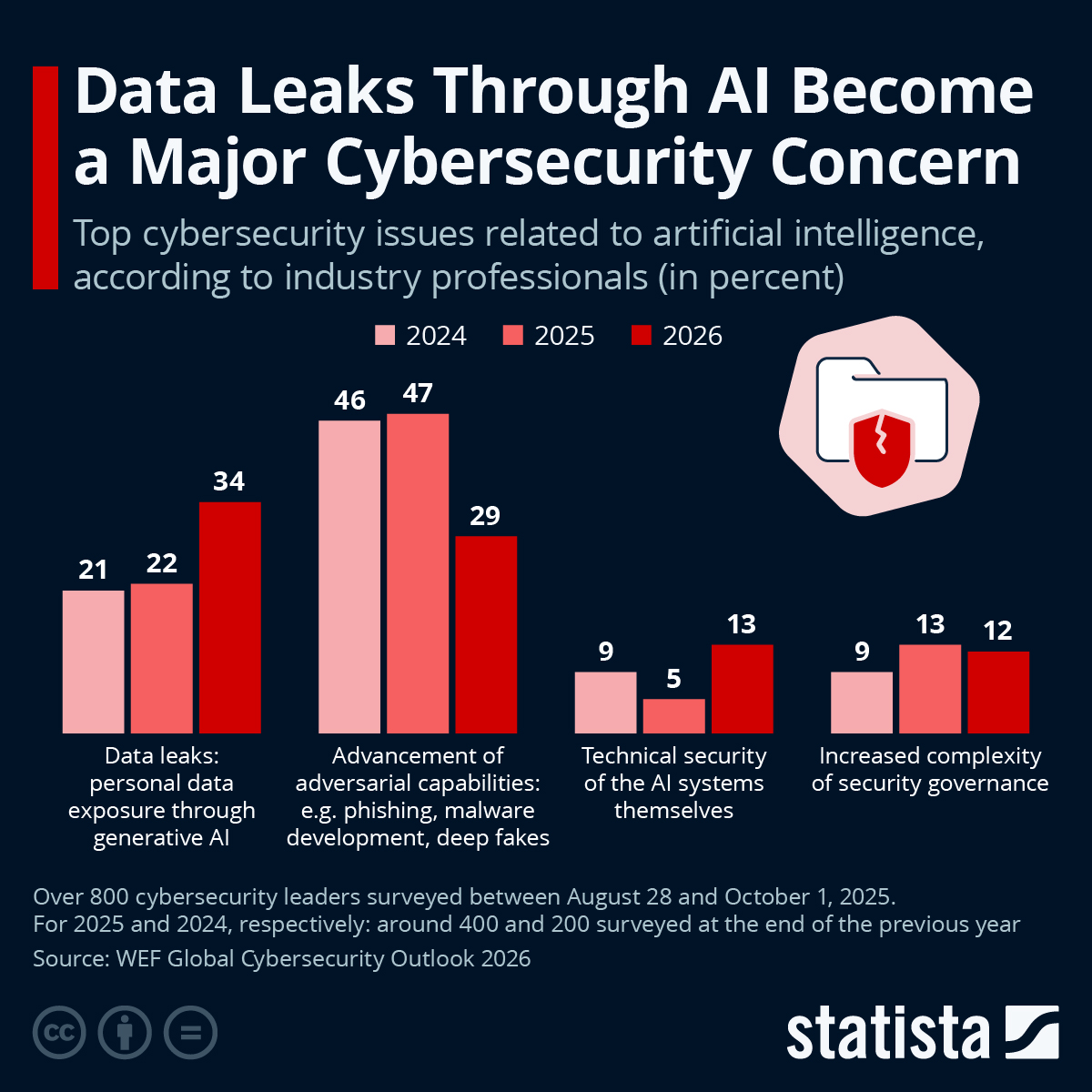

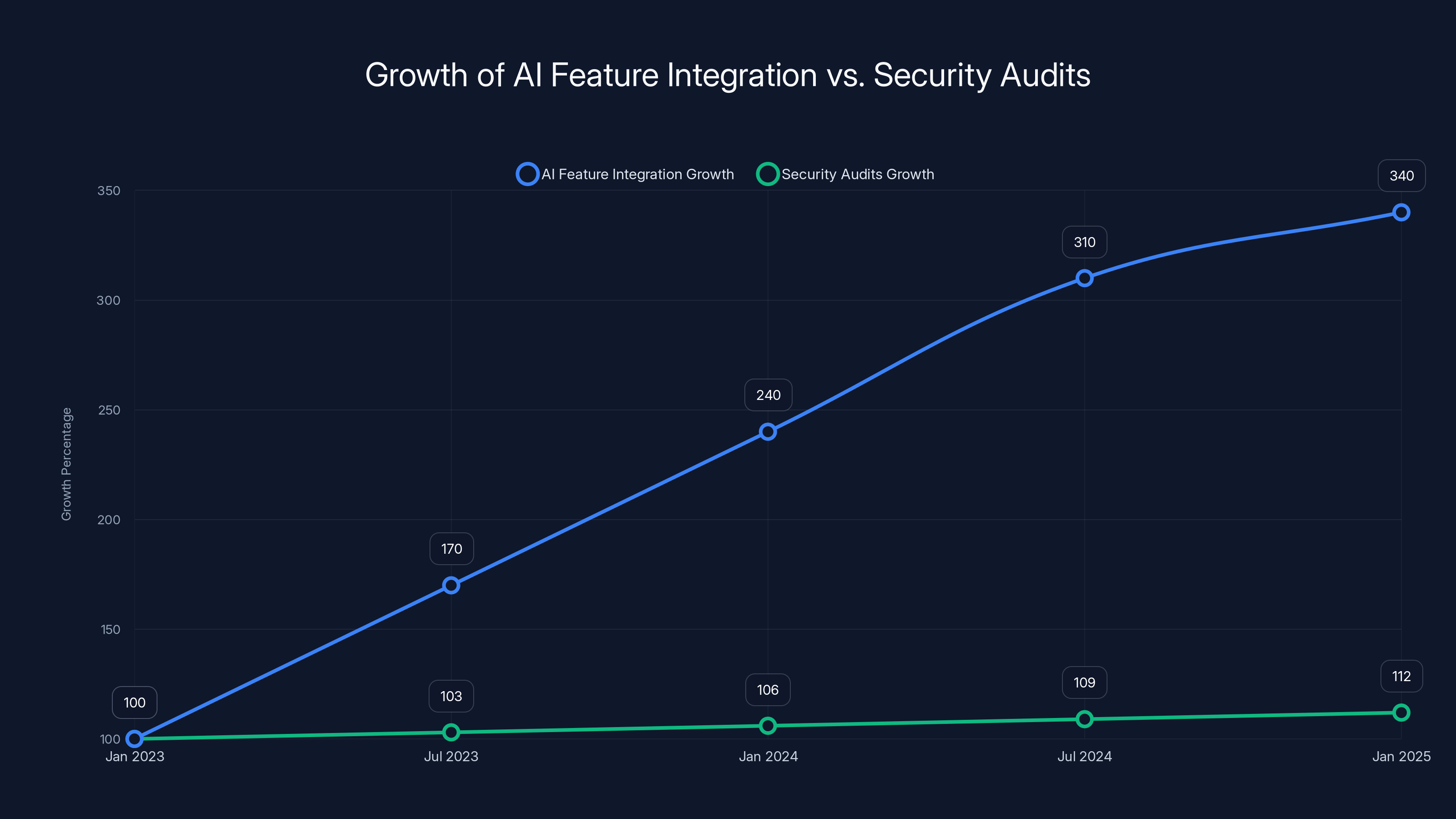

The number of apps integrating AI features increased by 340% between January 2023 and January 2025, while security audits only grew by 12%, highlighting a significant gap in security practices.

Understanding the AI Slop Phenomenon

Before we dive into the specific vulnerability, we need to understand what "AI slop" really means in this context. It's not just about low-quality AI-generated content. It's about the entire ecosystem of hastily-built, poorly-tested AI integrations that companies are shipping just to stay competitive.

The term originated from discussions about AI-generated text and images flooding the internet. But it's evolved into something bigger: a description of any product or service that prioritizes AI features over security, privacy, and user experience. It's the product of intense market pressure. Companies see competitors adding AI chatbots, image generators, or predictive analytics. They panic. They decide they need AI features immediately, not next quarter. So they bolt together third-party APIs, do minimal testing, and cross their fingers.

The problem is that security becomes an afterthought. A developer might think, "We're just integrating this popular AI service. The service handles security, right?" Except that's not how security works. The developer is responsible for how they implement the service. They're responsible for what data they send. They're responsible for authentication and encryption.

This gap between feature development and security practices is exactly what created this crisis. Developers were moving too fast, and they weren't thinking about the second and third-order consequences of their choices.

How the 198 Apps Were Leaking Data

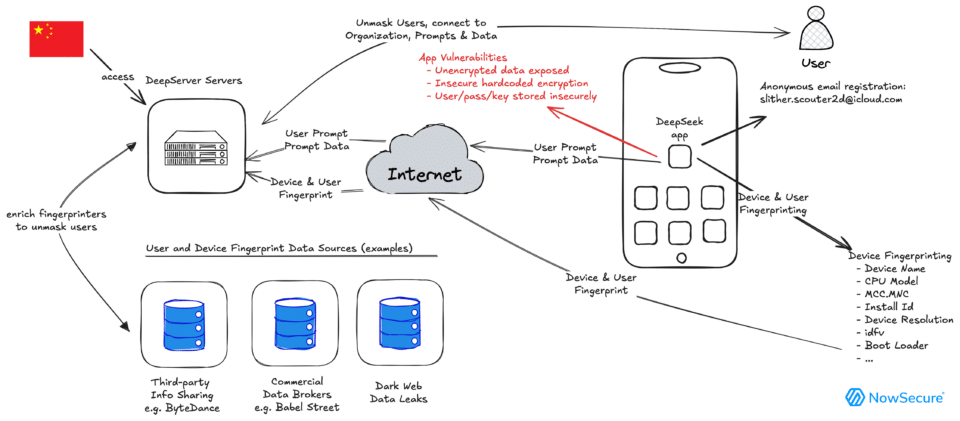

The technical details matter here, because they show how preventable this was. Security researchers discovered that affected apps were sending data to third-party servers through unencrypted connections. Let me break down exactly what was happening.

Most of the vulnerable apps were using cloud services or AI platforms to store and process user data. That's not inherently bad. The problem was in the implementation. Instead of properly authenticating with these services, many apps were:

1. Sending authentication credentials in plain text - Imagine handing someone your house key and just hoping they don't make copies. That's what these apps were doing with API credentials.

2. Not validating SSL certificates - When connecting to a server, apps should verify they're actually connecting to the legitimate server, not some attacker in the middle. Many of these apps skipped that step entirely.

3. Transmitting data without encryption - Private messages and location data were being sent across the internet like postcards, visible to anyone monitoring the network.

4. Overpermissioning on the backend - Anyone who gained access to the server could read every user's data, not just their own. It's like locking a single folder while leaving the rest of the filing cabinet open.
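To make the second and third points concrete, here is a minimal sketch in Python (chosen for brevity; the same idea applies in Swift) of a client that refuses plain HTTP and keeps certificate validation switched on. The function names `require_https` and `strict_tls_context` are illustrative assumptions, not code from any of the affected apps.

```python
import ssl
from urllib.parse import urlsplit


def require_https(url: str) -> str:
    """Return the URL unchanged if it uses HTTPS; otherwise refuse to proceed."""
    parts = urlsplit(url)
    if parts.scheme != "https" or not parts.hostname:
        # Fail closed: never fall back to an unencrypted connection.
        raise ValueError(f"refusing non-HTTPS endpoint: {url!r}")
    return url


def strict_tls_context() -> ssl.SSLContext:
    """Build a client TLS context that validates the server certificate."""
    context = ssl.create_default_context()
    # These match the library defaults. Stating them explicitly guards against
    # someone disabling verification "just for testing" and shipping it.
    context.check_hostname = True
    context.verify_mode = ssl.CERT_REQUIRED
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context
```

The design choice worth noticing is that both helpers fail loudly. An app that raises an error on a bad endpoint is annoying; an app that quietly downgrades to HTTP is a leak.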

The researchers found that these vulnerabilities existed because developers didn't follow basic security practices. They were treating user data like internal project data, applying no special protections. And when you're processing millions of users' sensitive information, that negligence becomes a catastrophe.

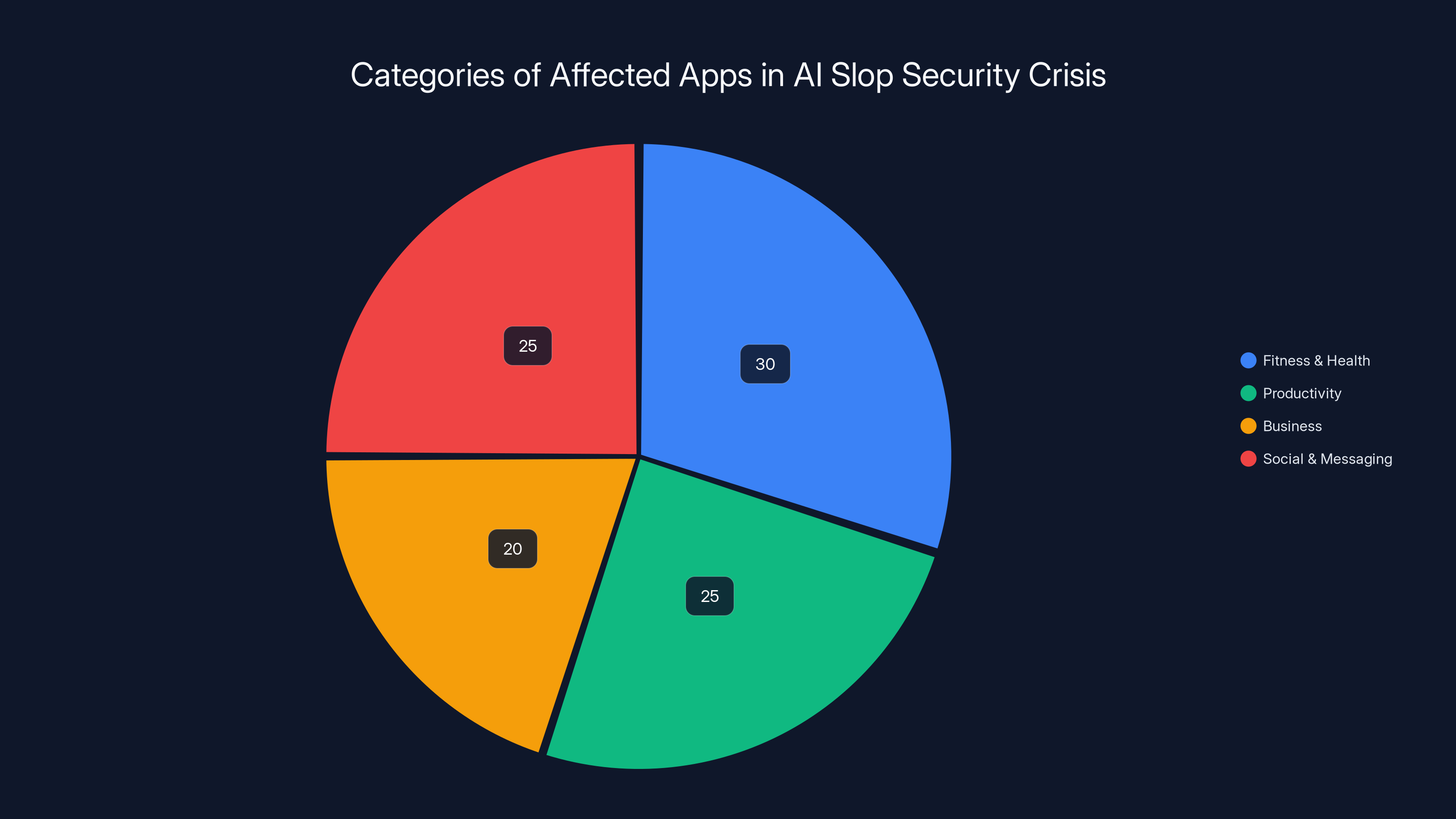

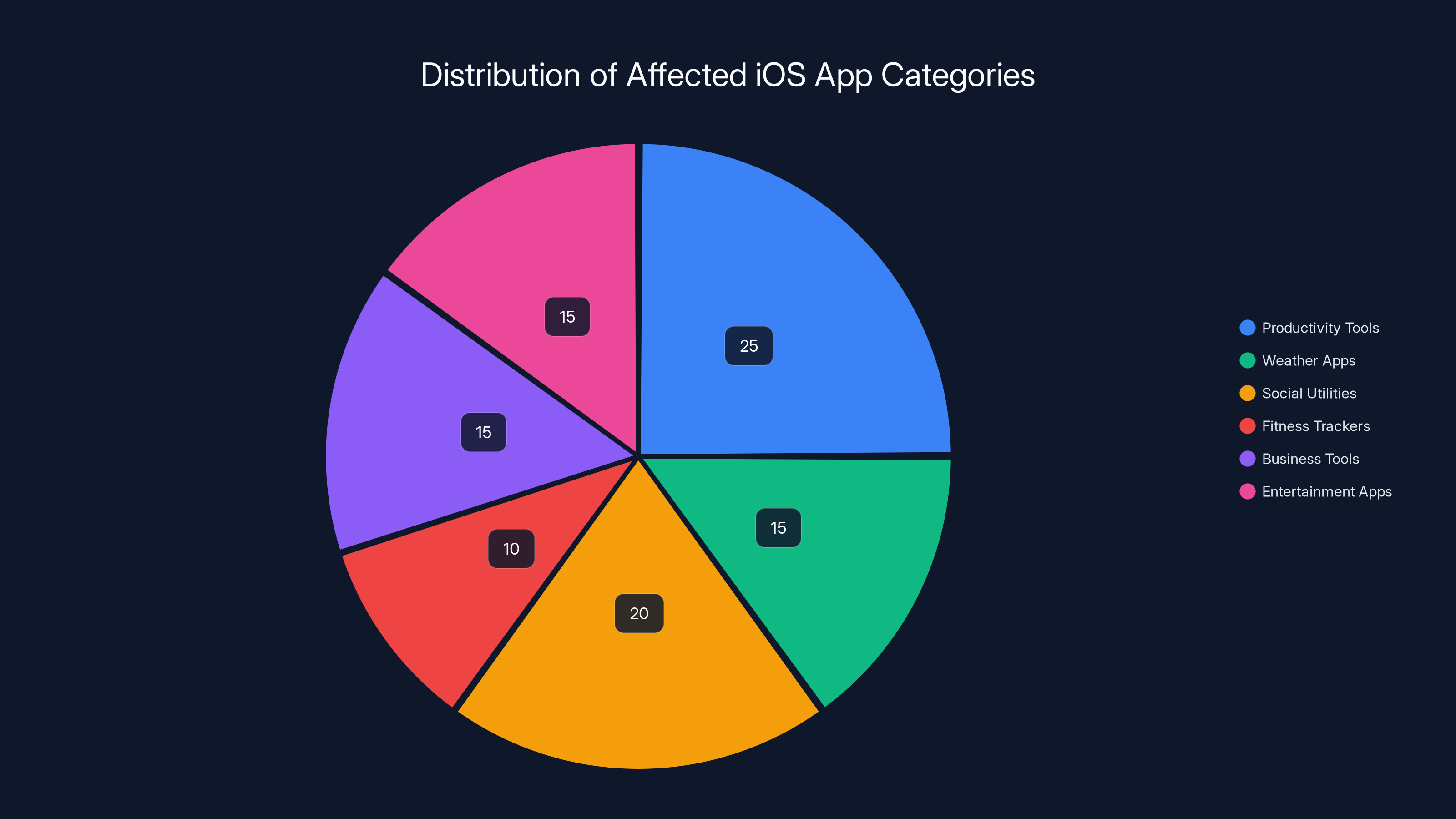

Estimated data shows that fitness and health apps, along with social and messaging apps, were the most affected categories, comprising 30% and 25% of the vulnerable apps, respectively.

The 198 Affected Apps Across Categories

Security researchers didn't release a complete list (understandable, as it would give attackers a roadmap), but they did identify apps across multiple categories. The pattern is important to understand, because it shows this isn't isolated to sketchy apps from unknown developers.

Affected categories included:

Fitness and Health Apps - Apps that track your workouts, health metrics, and sometimes integrate wearables. These were exposing location data from workout routes, personal health information, and sometimes even step counts and sleep data.

Productivity and Business Tools - Apps for note-taking, task management, and collaboration. These were leaking the actual content of notes, task lists, and in some cases, business documents.

Social and Messaging Apps - Smaller social platforms and messaging utilities were exposing private conversations, contact lists, and in some cases, video metadata.

Entertainment and Media Apps - Streaming services and content platforms were leaking viewing history, user preferences, and search queries.

Weather and Utility Apps - Apps that seemed innocuous were transmitting precise location data, sometimes continuously, combined with personally identifiable information.

Dating and Lifestyle Apps - Perhaps most concerning, dating apps and lifestyle platforms were exposing user profiles, chat messages, and location coordinates.

The common thread through all of these categories? The developers had integrated third-party AI services or analytics platforms without proper security controls. They trusted the third-party service to handle security. They didn't implement their own checks. And they didn't test the implementation.

Why Third-Party AI Services Are a Security Nightmare

Let's talk about why integrating third-party AI services, if done incorrectly, can become a security disaster.

When a developer decides to add AI features to an app, they usually have a choice: build the AI themselves (expensive, time-consuming, requires expertise) or use a third-party API (quick, relatively cheap, but adds a dependency). Most choose the third-party route. That's not necessarily wrong. But it requires careful implementation.

The problem emerges when developers don't understand the security implications. They see an API endpoint. They make requests to it. They don't think about:

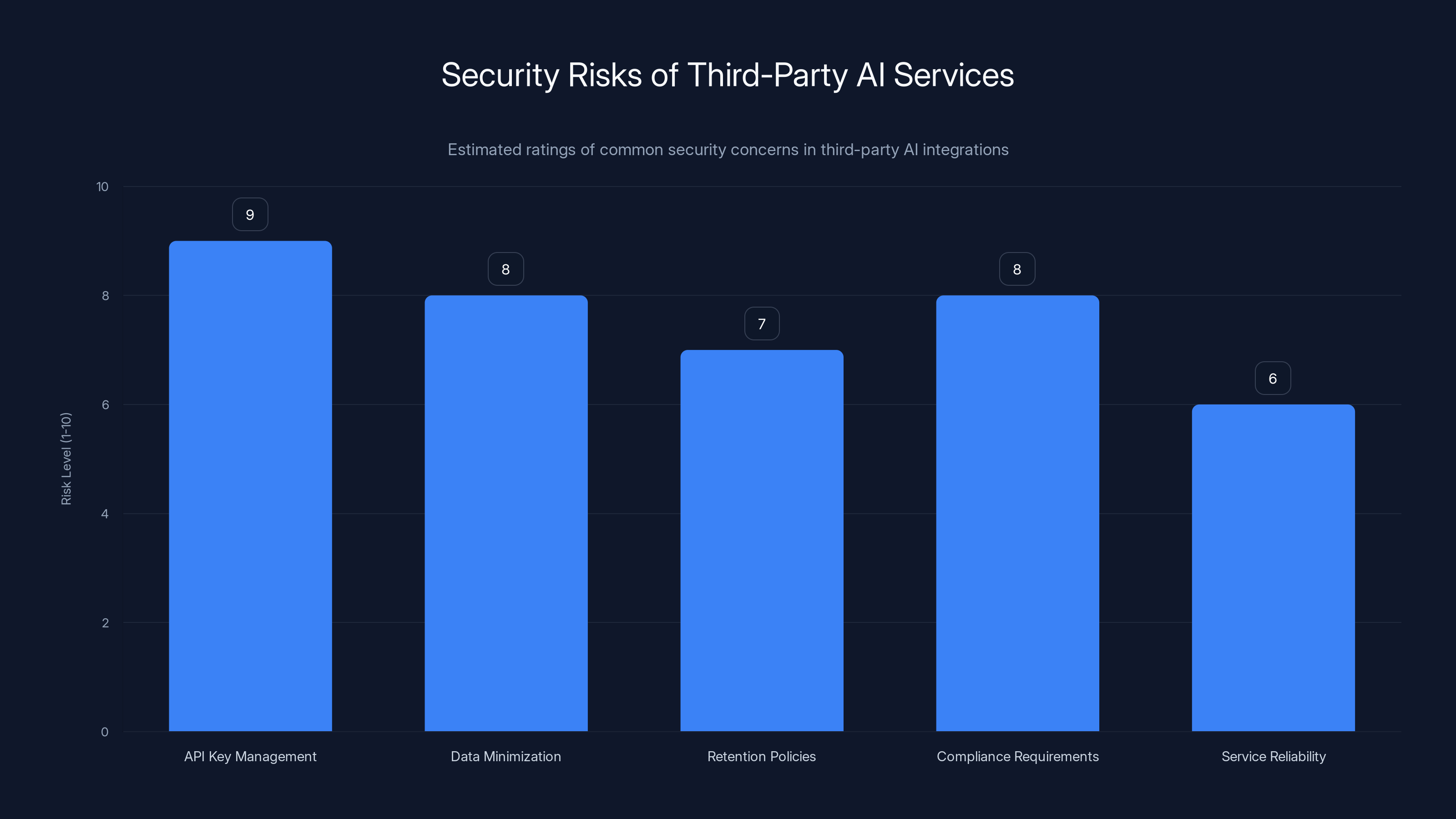

API Key Management - Where are the credentials stored? In the app binary? On a public GitHub repository? Both are disastrous. Your API keys should be in a secure backend server that the app communicates with, never stored in the app itself.

Data Minimization - How much data are you actually sending to the AI service? If you're sending entire private conversations to an AI service to process them, you're creating an unnecessary exposure. Could you anonymize the data first? Could you process some of it locally?

Retention Policies - What happens to the data after the AI processes it? Does the third-party service store it? For how long? Can you request deletion? This is often buried in terms of service that nobody reads.

Compliance Requirements - If the third-party service is in a different country, different data protection laws might apply. If you're using an AI service hosted in a country without strong data protection laws, you could be violating GDPR, CCPA, or other regulations.

Service Reliability - What happens if the third-party service goes down? The vulnerable apps sometimes failed open, meaning they sent data unencrypted as a fallback. That's a catastrophic design decision.

The 198 vulnerable apps made these mistakes in various combinations. Some leaked API keys. Some sent unencrypted data. Some both. And the impact on users was real, measurable, and ongoing.
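Data minimization in particular is easy to sketch. The Python snippet below strips obvious identifiers from a message and drops every field the AI feature doesn't need before anything leaves the device or backend. The regular expressions and the `build_ai_payload` helper are illustrative assumptions; real PII detection needs far more than two patterns.

```python
import re

# Deliberately simple patterns for illustration; they will miss many formats.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[email]", text)
    text = PHONE_RE.sub("[phone]", text)
    return text


def build_ai_payload(message: dict) -> dict:
    """Keep only the field the AI feature needs, with identifiers removed."""
    # Everything else in `message` (user ID, location, contacts) stays behind.
    return {"text": redact(message["text"])}


payload = build_ai_payload({
    "text": "Mail me at jane.doe@example.com or call +1 415-555-0100 today",
    "user_id": "u-123",
    "location": (37.774929, -122.419416),
})
print(payload)  # {'text': 'Mail me at [email] or call [phone] today'}
```

The allowlist approach (build a new payload from named fields) is safer than a denylist (delete known-sensitive fields), because new fields added later are excluded by default.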

The Real-Time Location Tracking Problem

One of the most alarming findings was that several of the apps were continuously transmitting user location data to servers without proper safeguards. This wasn't a one-time data leak. This was persistent, real-time tracking.

Consider a fitness app that tracks your workout routes. Normally, that's fine. You want the app to know where you exercised so it can calculate distance and show you a map. But several vulnerable apps were sending this location data to analytics servers that weren't owned by the app developer. These analytics companies had access to the precise coordinates of where users were, when they were there, and what they were doing.

Now multiply that across thousands of users. Across multiple apps. And suddenly, third-party analytics companies had detailed movement patterns of millions of people. They could correlate data across apps to understand where you lived, where you worked, where you shopped, where you went to the doctor.

This isn't theoretical. Location data combined with other information can identify individuals, even when names are removed. Researchers have shown that as few as four points, each a place and a time, can uniquely identify 95% of people in a large mobility dataset. And these apps were transmitting hundreds of data points per user.

Worse, the location data was often transmitted without encryption and without any kind of user notification. The apps didn't say, "We're now sharing your location with analytics vendor XYZ." Users had no idea this was happening.
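One common mitigation is to coarsen coordinates before they go anywhere near an analytics vendor, and to send nothing at all without consent. The sketch below is an assumption about how an app could do this, not a description of any specific app; `coarsen` and `location_for_analytics` are hypothetical names. Rounding to two decimal places keeps roughly kilometre-level precision (0.01 degrees of latitude is about 1.1 km).

```python
from typing import Optional, Tuple


def coarsen(lat: float, lon: float, decimals: int = 2) -> Tuple[float, float]:
    """Round coordinates so they describe a neighbourhood, not a doorstep."""
    return (round(lat, decimals), round(lon, decimals))


def location_for_analytics(
    lat: float, lon: float, user_consented: bool
) -> Optional[Tuple[float, float]]:
    """Return a coarse location for analytics, or None without consent."""
    if not user_consented:
        return None
    return coarsen(lat, lon)


print(location_for_analytics(37.774929, -122.419416, user_consented=True))
# (37.77, -122.42)
print(location_for_analytics(37.774929, -122.419416, user_consented=False))
# None
```

Coarsening alone does not make a long trail of points anonymous, so it works best combined with sending fewer points in the first place.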

API Key Management poses the highest risk, followed by Data Minimization and Compliance Requirements. Estimated data highlights the importance of addressing these concerns.

Private Chat Exposure and Message Leakage

Perhaps even more concerning than location data was the exposure of private messages and chats. Several of the vulnerable apps included messaging features or integrated chat functionality. These apps were transmitting message content to cloud servers with minimal protection.

In some cases, messages were stored unencrypted on backend servers. In other cases, they were transmitted unencrypted to servers where they could be intercepted. In the worst cases, the backend database wasn't properly secured, meaning anyone who gained access to the server could read all messages from all users.
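The "anyone with access can read everything" failure usually comes down to a backend that never scopes queries to the authenticated user. Here is a minimal sketch of the missing check, assuming a hypothetical `Message` record and a `requester_id` that has already been verified by the server's session layer:

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Message:
    sender_id: str
    recipient_id: str
    body: str


def messages_for(requester_id: str, all_messages: List[Message]) -> List[Message]:
    """Return only the messages the requester sent or received."""
    return [
        m for m in all_messages
        if requester_id in (m.sender_id, m.recipient_id)
    ]


inbox = [
    Message("alice", "bob", "See you at 6"),
    Message("carol", "dave", "Test results came back"),
]
print([m.body for m in messages_for("bob", inbox)])  # ['See you at 6']
```

This only addresses access scoping. Encrypting message bodies at rest is a separate control and is not shown here.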

This is a fundamental breach of privacy. Private messages are supposed to be private. They're between you and the recipient. But these apps were treating them like any other data, without the special protections that sensitive communications should receive.

The vulnerability was particularly serious because many users trusted these apps with sensitive information. They were having personal conversations, sharing confessions, discussing health issues, talking about relationships. They weren't thinking about whether their messages were being properly protected. They assumed the app developer would handle that.

The assumption was wrong. And now users have to wonder if their private conversations were read by the app developer, by employees of the cloud service, by attackers who compromised the server, or by third parties who had legitimate access to the analytics data.

How These Vulnerabilities Persisted Undetected

One of the most striking aspects of this security crisis is how long these vulnerabilities went undetected. Many of the apps had been leaking data for months or even years before security researchers discovered the issue.

This raises an uncomfortable question: where were the app developers? Where were the security reviews? Where was Apple?

The answer is complex. App developers often lack security expertise. They're optimizing for feature velocity and user growth, not security. They might test the app in a controlled environment and see that it works. They don't have the tools or knowledge to monitor for data leaks after release.

Apple's app review process, while generally considered stricter than Google Play's, isn't foolproof. Reviewers are looking for obvious red flags: does the app crash? Does it spam notifications? Does it obviously violate the terms of service? They're not doing deep security audits. They're not running network traffic analysis. They're not checking whether API keys are hardcoded in the binary.

Third-party security tools that some companies use to scan for vulnerabilities aren't perfect either. A static code analysis tool might not catch that an app is sending data unencrypted. A dynamic analysis tool needs to simulate the exact conditions where the leak occurs.
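Even a crude check catches some of this. The following Python sketch scans lines of source code (or strings extracted from a binary) for two of the patterns described above: credential-looking assignments and cleartext HTTP URLs. The patterns and the `scan` function are illustrative assumptions and will produce both false positives and misses; dedicated secret scanners are far more thorough.

```python
import re

# Matches things like: apiKey = "EXAMPLEKEY0123456789abcd"
SECRET_RE = re.compile(
    r"\b(api[_-]?key|secret|token)\b\s*[:=]\s*['\"]?[A-Za-z0-9_\-]{16,}",
    re.IGNORECASE,
)
HTTP_URL_RE = re.compile(r"http://[^\s'\"]+")


def scan(lines):
    """Return (line_number, finding) pairs for suspicious lines."""
    findings = []
    for number, line in enumerate(lines, start=1):
        if SECRET_RE.search(line):
            findings.append((number, "possible hardcoded credential"))
        if HTTP_URL_RE.search(line):
            findings.append((number, "cleartext HTTP URL"))
    return findings


sample = [
    'let apiKey = "EXAMPLEKEY0123456789abcd"',
    'let endpoint = "http://analytics.example.com/collect"',
    'let safeEndpoint = "https://api.example.com"',
]
for finding in scan(sample):
    print(finding)
# (1, 'possible hardcoded credential')
# (2, 'cleartext HTTP URL')
```

A check like this is cheap enough to run on every build, which matters more than how clever the patterns are.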

So the vulnerabilities persisted because of:

Lack of expertise - App developers didn't know these were bad practices

Lack of testing - Companies didn't have security review processes

Lack of tools - App review systems aren't sophisticated enough to catch all issues

Lack of transparency - No one was monitoring what data these apps were actually transmitting

Lack of accountability - Even when vulnerabilities were found, patches were slow

The Role of Analytics and AI Platform Providers

It's important to acknowledge that some responsibility lies with the third-party services themselves. Analytics companies and AI platform providers that these apps were using didn't enforce security best practices on their customers.

When a developer integrates an analytics service, for example, that service should provide guidance on security. They should recommend using API keys stored on a backend server, not in the app. They should recommend encrypting data in transit. They should recommend only collecting necessary data. They should recommend compliance with regulations like GDPR.
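The "keys on the backend, not in the app" advice can be sketched as follows. The provider's API key never leaves the server; the app only ever holds a short-lived token that the backend signs and later verifies before forwarding a request. The token format, the `issue_token` and `verify_token` names, and the five-minute lifetime are all assumptions made for illustration, using only Python's standard `hmac` module.

```python
import hashlib
import hmac
import time
from typing import Optional


def _sign(payload: str, signing_key: bytes) -> str:
    return hmac.new(signing_key, payload.encode(), hashlib.sha256).hexdigest()


def issue_token(user_id: str, signing_key: bytes,
                now: Optional[float] = None, ttl: int = 300) -> str:
    """Backend side: hand the app a token that expires after `ttl` seconds."""
    issued_at = time.time() if now is None else now
    payload = f"{user_id}.{int(issued_at + ttl)}"
    return f"{payload}.{_sign(payload, signing_key)}"


def verify_token(token: str, signing_key: bytes,
                 now: Optional[float] = None) -> Optional[str]:
    """Backend side: return the user ID if the token is valid, else None."""
    try:
        user_id, expires, signature = token.rsplit(".", 2)
    except ValueError:
        return None
    expected = _sign(f"{user_id}.{expires}", signing_key)
    # Constant-time comparison avoids leaking how much of the signature matched.
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        return None
    current = time.time() if now is None else now
    if not expires.isdigit() or int(expires) < current:
        return None
    return user_id
```

If a token leaks from a device, it expires within minutes and only identifies one user. A leaked provider key extracted from the app binary, by contrast, works for everyone until someone notices and rotates it.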

Many do provide this guidance. But enforcement is lacking. If a developer ignores the guidance and implements the service insecurely, the third-party provider might not even notice. And they certainly don't have an incentive to proactively disable non-compliant integrations, because that means losing customers.

Some of the third-party services also had their own security issues. If an analytics service stores data unencrypted or doesn't properly secure access controls, it doesn't matter how carefully the app developer integrated it. The data is still exposed.

This creates a situation where security is everyone's responsibility and therefore no one's responsibility. The developer blames the third-party service. The third-party service blames the developer for misconfiguring it. The app review process assumes the developer handled it. And users are left unprotected.

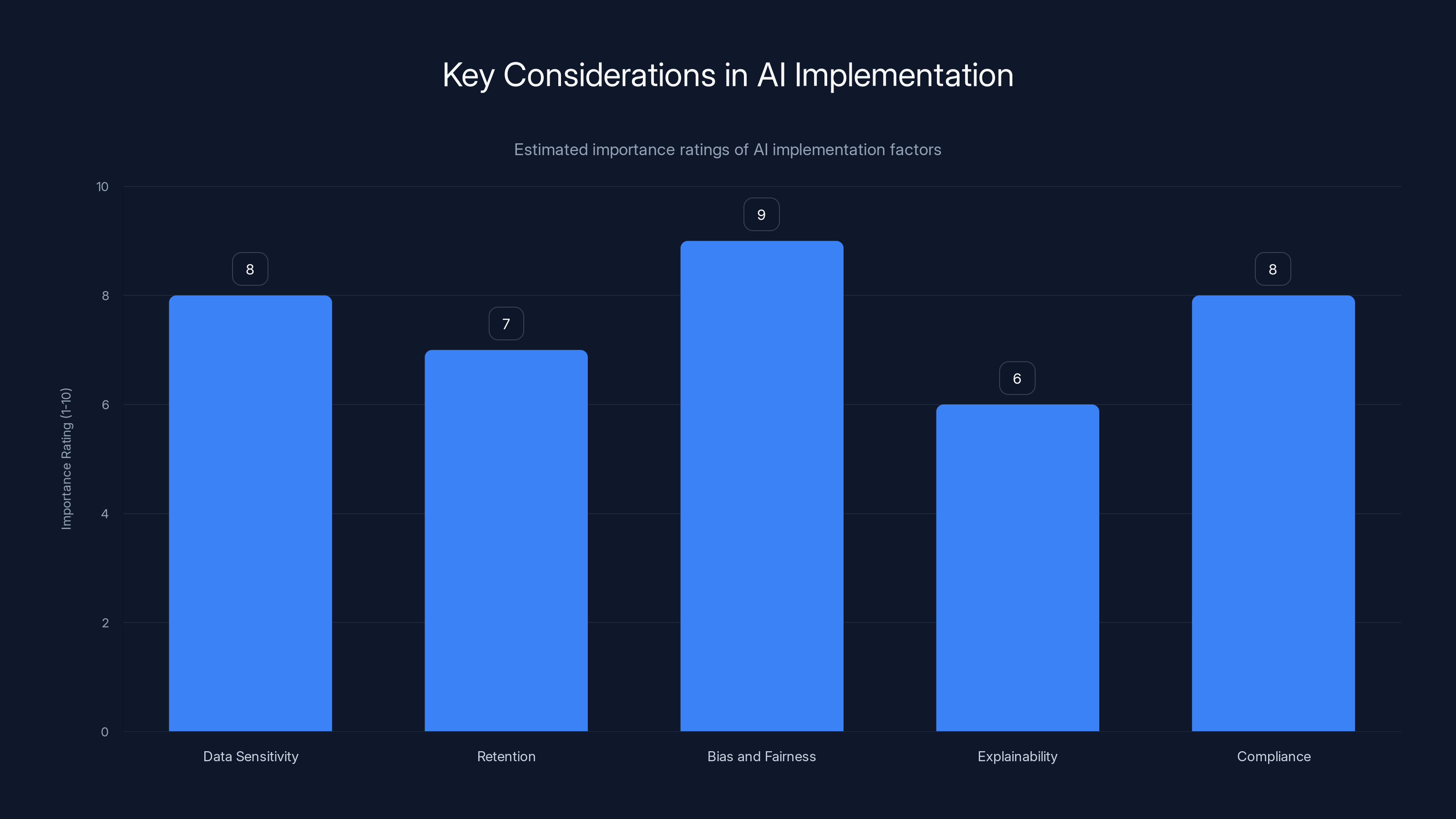

Data sensitivity and bias/fairness are critical factors in AI implementation, often overlooked in the rush to market. Estimated data.

Regulatory and Legal Implications

Beyond the immediate privacy violation, this crisis has significant legal implications. Many of the vulnerable apps were likely violating data protection regulations.

Under the General Data Protection Regulation (GDPR), which applies to apps that offer services to people in the EU, companies must implement security measures appropriate to the risk level. Transmitting unencrypted personal data doesn't meet that standard. Companies also must notify the relevant supervisory authority of a personal data breach within 72 hours of becoming aware of it. If these apps were leaking data and didn't report it, that's a separate violation.

The California Consumer Privacy Act (CCPA) and related state privacy laws give users rights to know what data is collected and how it's used. Many of these apps didn't disclose the data transmissions to third-party servers in their privacy policies. That's a violation.

Various other jurisdictions have their own rules. And Apple's App Store guidelines explicitly require privacy protection and prohibit data transmission without user disclosure.

So affected companies aren't just facing user backlash. They're facing potential regulatory investigations and legal liability. In many cases, user class actions have already been filed.

How to Check If Your Apps Are Vulnerable

Security researchers didn't release a complete list of vulnerable apps, partly for responsible disclosure reasons and partly because not every research team published its findings. However, you can take steps to assess your own app security.

Check App Permissions - Go to Settings > Privacy & Security and review what permissions each app has. If an app has permission to access location, photos, or contacts but doesn't need it, revoke that permission.

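Monitor Network Traffic - Use a network-monitoring app (some VPN-style apps offer this) to see which servers your apps contact. The built-in App Privacy Report, under Settings > Privacy & Security, also lists the domains each app connects to. Look for unencrypted connections (HTTP instead of HTTPS) or connections to unexpected servers.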

Check Privacy Policies - Actually read the privacy policy. If the app collects data but doesn't explain how it's protected, that's a red flag.

Update Regularly - Make sure all apps are up to date. Developers release patches to fix vulnerabilities. If you're running an old version, you might be vulnerable.

Review Permissions Over Time - Apps sometimes request new permissions in updates. Review what's new and revoke permissions you don't approve.

Check Developer Reputation - Has the developer been in the news for privacy issues? Do they have good reviews? Do they respond to security concerns? Established, reputable developers are generally more trustworthy.

Delete Suspicious Apps - If you can't find information about an app, if it's from an unknown developer, or if reviews mention privacy concerns, just delete it. There are plenty of alternatives.
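If your monitoring tool lets you export the URLs an app contacted, a few lines of Python can do the triage for you. This sketch assumes a plain list of URLs and a set of hosts you'd expect the app to talk to; `review_connections` and all hostnames are hypothetical, and real export formats vary by tool.

```python
from urllib.parse import urlsplit


def review_connections(urls, expected_hosts):
    """Split observed URLs into cleartext requests and unexpected hosts."""
    cleartext = []
    unexpected = set()
    for url in urls:
        parts = urlsplit(url)
        host = parts.hostname or ""
        if parts.scheme == "http":
            cleartext.append(url)
        if host not in expected_hosts:
            unexpected.add(host)
    return {"cleartext": cleartext, "unexpected_hosts": sorted(unexpected)}


observed = [
    "https://api.example-weather.test/forecast",
    "http://collect.example-tracker.test/v1/events",
    "https://cdn.example-weather.test/icons.png",
]
report = review_connections(
    observed,
    expected_hosts={"api.example-weather.test", "cdn.example-weather.test"},
)
print(report["cleartext"])         # ['http://collect.example-tracker.test/v1/events']
print(report["unexpected_hosts"])  # ['collect.example-tracker.test']
```

An unexpected host isn't proof of wrongdoing, since many apps use legitimate CDNs and crash reporters, but it tells you which names to look up.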

Data Breach Response and Your Rights

If you were using one of the vulnerable apps, you have rights and should understand what recourse is available.

First, assess your exposure - If the app had location access, your location was potentially exposed. If it had chat or messaging features, your messages might have been exposed. If it had access to your contacts, that information might have been exposed.

Monitor your accounts - Watch for suspicious activity. Change passwords for important accounts, especially if the app had access to authentication data.

Consider credit monitoring - If financial information was potentially exposed, consider a credit monitoring service. Many offer free trials, and some provide free monitoring for breach victims.

Know your rights - In many jurisdictions, you have the right to:

- Be notified of the breach

- Understand what data was compromised

- Request deletion of your data

- Seek compensation in some cases

- File complaints with data protection authorities

Contact the developer - Request confirmation of what data was collected and accessed. Demand deletion of your data. Document all communications.

File complaints - Contact your state attorney general, the FTC, or relevant data protection authority. These agencies investigate privacy violations and can take enforcement action.

Join class actions - Class action lawsuits against data-violating companies are common. If you were affected, joining a class action might result in compensation.

Estimated data shows a diverse range of app categories affected by the AI Slop Security Crisis, with productivity tools and social utilities being the most impacted.

Best Practices for App Security Going Forward

Beyond protecting yourself from known vulnerabilities, you can adopt practices that reduce your risk going forward.

Use strong, unique passwords - Each app or service that requires a password should have a unique password. Use a password manager to keep track.

Enable two-factor authentication - Whenever available, enable 2FA. This makes it much harder for attackers to access your accounts even if they have your password.

Minimize app permissions - Only grant permissions that are absolutely necessary. Deny everything else. You can always change it later.

Use privacy-focused alternatives - For sensitive categories like messaging or email, consider privacy-focused alternatives. Signal for messaging, Proton Mail for email, etc.

Review privacy policies before downloading - If an app's privacy policy seems unclear or concerning, don't download it. There are thousands of apps for each category.

Keep your phone updated - iOS updates often include security patches. Don't delay updates.

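Use a VPN - A reputable VPN hides your network traffic from your ISP and from others on the local network, which reduces the risk of interception on untrusted Wi-Fi. It does not protect data once it leaves the VPN provider's servers, and it won't fix an app that sends data unencrypted.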

Be skeptical of new apps - Established apps with millions of users and active developers are generally more secure than new apps from unknown developers. Take time before downloading new apps.

Monitor your data - Periodically check what data apps have access to and how much data you've given them. This isn't something you do once and forget; it requires ongoing attention.

The Broader Context: AI Implementation Crisis

This security crisis is actually a symptom of a larger issue: companies are implementing AI features too quickly, without proper security and privacy considerations.

It's not just about these 198 apps. It's about an industry-wide pattern where AI integration is treated as a checkbox feature, not a responsibility. Companies see AI as a differentiator. They see competitors adding AI. So they rush to add AI, often without understanding the implications.

The problem is that AI integration requires thinking about:

Data sensitivity - What data will the AI process? Is it sensitive? How should it be protected?

Retention - How long should the AI platform retain data? Should users be able to request deletion?

Bias and fairness - Can the AI make decisions that unfairly affect certain groups?

Explainability - Can you explain to users why the AI made a particular decision?

Compliance - Are you violating any regulations by using this AI service?

Most companies aren't thinking about these questions. They're thinking about shipping the feature.

Until that changes, until companies are held accountable for the security implications of their AI features, we'll continue to see crises like this.

What Apple Should Do and What Users Should Demand

Apple positions itself as privacy-first and security-first. The discovery that 198 apps were leaking data while being distributed through the App Store is an embarrassment that calls those claims into question.

Here's what Apple should do:

Implement mandatory security reviews - Not just a surface review, but actual security analysis of app behavior. This might mean requiring developers to submit network traffic logs or security audit reports.

Enforce privacy labels strictly - Privacy labels on the App Store are supposed to show what data apps collect. But they're based on developer self-reporting. Apple should verify these claims or at least sanction developers who misrepresent them.

Create a security incident database - Maintain a publicly searchable database of apps that have had security vulnerabilities. Let users know if an app has a history of security issues.

Implement continuous monitoring - Instead of reviewing apps once when they're submitted, Apple should implement continuous monitoring of app behavior, network traffic, and data collection.

Enforce stronger penalties - If an app is found to be leaking data, immediate removal and developer account restrictions should follow. Current penalties seem insufficient to incentivize security.

Provide security tools to developers - Many developers don't have the expertise to implement security correctly. Apple should provide better tools and guidance.

Users should demand these changes. The App Store is built on the premise that Apple has carefully vetted apps for quality and safety. If that promise is being broken, users should consider alternative platforms or be more selective about which apps they trust.

Research indicates that just four location data points can uniquely identify 95% of individuals. Estimated data based on typical research findings.

Corporate Accountability and Industry Response

Beyond Apple, the app developers themselves need to be held accountable.

This includes:

Transparency about the vulnerability - Companies should publicly disclose which apps were affected, which data was exposed, and whether users' data was actually accessed by unauthorized parties.

Proactive notification - Users should be notified if their data was exposed. Not buried in legal documents, but clear, direct notification.

Remediation - Companies should fix the vulnerabilities, update the app, and encourage users to update immediately.

Compensation - In cases where data was actually compromised, companies should offer some form of compensation. Free credit monitoring, for example.

Policy changes - Companies should update their internal policies to prevent this from happening again. This means security reviews before shipping, regular audits, and accountability for security decisions.

The industry as a whole also needs to change. Right now, there's a dynamic where security is expensive and slow, while shipping new features is fast and rewarded. That incentive structure needs to flip. Companies that handle user data securely should be rewarded. Companies that cut corners should be punished.

Looking Forward: Can This Happen Again?

Unfortunately, yes. This type of vulnerability will continue to happen until the fundamental incentives change.

As long as developers are evaluated on feature velocity and users acquired, rather than security and privacy, some developers will cut corners. As long as app reviews are surface-level rather than security-focused, vulnerabilities will slip through. As long as third-party AI and analytics services don't enforce best practices, developers will implement them insecurely.

The 198 apps were just the ones that got caught. There are undoubtedly other apps with similar vulnerabilities that haven't been discovered yet. There might be vulnerabilities we don't even have names for.

What will change this?

Regulation - GDPR, CCPA, and similar regulations are starting to impose real consequences for data breaches. As regulations become stricter and enforcement improves, companies will have stronger incentives to implement security properly.

Litigation - Class action lawsuits against data-violating companies are increasing. As damages increase, companies will take security more seriously.

Consumer pressure - As users become more aware of privacy issues, they'll choose apps from companies with better privacy practices. Market pressure can be powerful.

Industry standards - Professional organizations and industry groups are developing standards and best practices for secure app development. As these become mainstream, security will become table stakes.

Technology - Better tools for security testing, privacy analysis, and vulnerability detection will make it easier to catch issues before apps are released.

But all of these take time. In the meantime, users need to protect themselves by being selective about which apps they download, what permissions they grant, and which data they share.

FAQ

What is the AI slop security crisis?

The AI slop security crisis refers to the discovery of 198 iOS apps that were improperly leaking sensitive user data including private messages, location information, and personal details. This occurred because developers were rushing to integrate AI and analytics features without implementing proper security controls, encryption, or data protection measures. The crisis highlights how prioritizing speed of development over security and privacy can result in massive data exposure affecting millions of users.

How were these apps leaking data?

The vulnerable apps were leaking data through several methods: transmitting unencrypted data over plain HTTP instead of secure HTTPS connections, storing API credentials in the app itself where they could be extracted, failing to validate SSL certificates when connecting to servers, and sending sensitive information like messages and location data to third-party analytics or AI services without proper authentication or encryption. In some cases, the backend servers storing the data weren't properly secured, allowing unauthorized access to all user data.

Why did this go undetected for so long?

These vulnerabilities persisted undetected for months or years because app developers often lack security expertise and prioritize feature development over security reviews. Apple's app review process, while more rigorous than alternatives, focuses on obvious issues like crashes and policy violations rather than deep security audits of network traffic or encryption practices. Most developers don't have tools to monitor whether their apps are leaking data in production. Additionally, third-party security scanning tools aren't always sophisticated enough to catch these specific types of vulnerabilities.

Which categories of apps were affected?

The vulnerable apps spanned multiple categories including fitness and health apps, productivity tools, business apps, social and messaging platforms, entertainment services, weather utilities, and dating apps. Common across all categories was improper implementation of third-party AI services, analytics platforms, or cloud storage without adequate security protections. This demonstrates that the vulnerability wasn't limited to sketchy apps from unknown developers but affected apps across the App Store.

What should I do if I used one of these vulnerable apps?

If you used one of the affected apps, you should update to the latest version immediately (if the developer has released a patch) or delete the app entirely. Change passwords for any critical accounts, especially if the app had authentication access. Monitor your accounts for suspicious activity and consider credit monitoring if the app had access to financial information. Go through your iOS privacy settings to audit permissions you've granted to other apps. You may also have rights to file complaints with data protection authorities or join class action lawsuits against the developers.

How can I protect myself from similar vulnerabilities in the future?

Protect yourself by downloading apps only from established developers with solid reputations and good user reviews. Regularly audit your app permissions in iOS Settings and deny access for anything unnecessary. Read privacy policies before downloading apps, and be suspicious if they're vague about data collection. Keep iOS and all apps updated to get security patches. Use privacy-focused alternatives for sensitive categories like messaging. Enable two-factor authentication wherever available. Turn on App Privacy Report in iOS Settings (under Privacy & Security) to see which domains your apps contact. A VPN can protect your traffic on untrusted networks, but it won't stop an app from sending your data to its own servers. Most importantly, be selective about what data you share with apps and what permissions you grant.

What is Apple's responsibility in this?

Apple's app review process is supposed to ensure that apps meet certain quality and security standards before appearing in the App Store. The discovery of 198 leaking apps suggests that the review process isn't sufficiently rigorous in detecting security vulnerabilities. Apple should implement mandatory security reviews, verify privacy claims made by developers, enforce stricter penalties for violations, and implement continuous monitoring of app behavior rather than just reviewing apps once at submission. Apple's "privacy-first" positioning calls for stronger accountability.

Are Android apps also vulnerable?

While the specific crisis involving 198 apps focused on iOS, the underlying vulnerabilities (improper third-party service integration, unencrypted data transmission, insecure API credential storage) aren't unique to iOS. Similar vulnerabilities could and likely do exist in Android apps. However, Android's more open ecosystem, with multiple app stores and sideloading, makes a comprehensive survey of apps more difficult. The same best practices apply to Android users: audit app permissions, be selective about which apps you download, keep your system updated, and monitor account activity.

What does this mean for AI feature integration?

This crisis demonstrates that integrating AI features without proper security and privacy considerations creates serious risks. It shows that developers often don't understand the security implications of using third-party AI services, such as where credentials should be stored, what data should be minimized, how data should be encrypted, and what retention policies should apply. Going forward, companies should implement security reviews as part of their AI feature development process, understand and verify that third-party AI services meet security standards, and be transparent with users about what data AI features collect and how it's protected.

What regulatory consequences could developers face?

Developers of the vulnerable apps could face significant regulatory consequences including violations of GDPR (which requires appropriate security measures and notification of the supervisory authority within 72 hours of discovering a breach), state privacy laws like CCPA (which gives users rights to know what data is collected), FTC enforcement actions for unfair or deceptive practices, and class action lawsuits in which affected users seek damages. Data protection authorities in relevant jurisdictions may conduct investigations and impose fines. These legal and financial consequences should incentivize companies to implement security properly rather than as an afterthought.

How can I verify if a specific app is secure?

You can assess an app's security by researching the developer's history and whether they've had previous security issues, reading user reviews carefully for mentions of privacy concerns or unexpected data collection, checking if the app actually has a privacy policy and reading it thoroughly, verifying that the app requests only necessary permissions, using network monitoring tools to see what servers the app connects to and whether connections are encrypted, and checking if the developer responds promptly to security concerns reported by users. If you're uncertain about an app's security, it's safer to simply use an alternative.

How to Implement Enhanced App Security Practices

For those interested in understanding what developers should have done to prevent this crisis, here are the core practices:

Step 1: Secure API Credential Management

Don't store API keys in your app code. Create a backend server that manages credentials. Your app talks to your backend, which then talks to third-party services. This way, if someone reverse-engineers your app, they can't access third-party service credentials.
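As a rough illustration of the backend side of this pattern, here is a minimal Python sketch. The endpoint URL, the environment variable name, and the `build_upstream_request` helper are all hypothetical names chosen for the example, and a real backend would also authenticate the app user before forwarding anything.

```python
import json
import os
import urllib.request

# Hypothetical third-party endpoint and environment variable name (assumptions).
AI_SERVICE_URL = "https://api.example-ai.test/v1/analyze"
API_KEY_ENV_VAR = "AI_SERVICE_API_KEY"


def build_upstream_request(client_payload: dict) -> urllib.request.Request:
    """Build the request the backend sends to the third-party service.

    The API key is read from the server's environment at call time, so it
    never ships inside the mobile app binary.
    """
    api_key = os.environ.get(API_KEY_ENV_VAR)
    if not api_key:
        raise RuntimeError(f"{API_KEY_ENV_VAR} is not set on the server")

    # Forward only the field the service needs; ignore anything else the client sent.
    body = json.dumps({"text": client_payload["text"]}).encode("utf-8")
    return urllib.request.Request(
        AI_SERVICE_URL,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
    )
```

The app only ever sends its own payload to your backend. Even if the app is decompiled, there is no third-party key in it to extract.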

Step 2: Implement End-to-End Encryption

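For sensitive data like messages, implement encryption that only the sender and receiver can decrypt, with keys that never leave their devices. Use established encryption libraries. Don't invent your own encryption. Transport and storage encryption are separate layers rather than substitutes for end-to-end encryption: use proven protocols like TLS for data in transit and AES for data at rest so that everything else is protected too.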
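The sketch below covers only the data-in-transit part of this step, using Python's standard library: it refuses plain HTTP URLs and builds a TLS client context with a minimum protocol version. It is not end-to-end encryption, which needs a vetted cryptography library and key management beyond the scope of a short example. The helper names are hypothetical.

```python
import ssl
from urllib.parse import urlsplit


def require_https(url: str) -> str:
    """Return the URL unchanged if it uses HTTPS; otherwise refuse it."""
    scheme = urlsplit(url).scheme.lower()
    if scheme != "https":
        raise ValueError(f"refusing non-HTTPS URL (scheme={scheme!r})")
    return url


def make_tls_context() -> ssl.SSLContext:
    """Create a client TLS context that rejects old protocol versions."""
    # create_default_context() enables certificate and hostname checks by default.
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context
```

Calling `require_https` on every outbound URL before a request is made is one simple way to catch an accidental `http://` endpoint during development rather than in production.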

Step 3: Data Minimization

Send third-party services only the data that's absolutely necessary. Don't send the entire message to an AI service if you only need the first 100 characters analyzed. Anonymize data whenever possible. This reduces the impact if the service is compromised.
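A minimal Python sketch of this idea follows. The field names, the allow-list, and the email pattern are assumptions made for illustration. Real anonymization usually needs more than one regular expression.

```python
import re

MAX_CHARS = 100  # mirrors the "first 100 characters" example above
ALLOWED_FIELDS = {"text", "language"}  # hypothetical allow-list of fields
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def minimize_payload(record: dict) -> dict:
    """Return a reduced copy of `record` that is safer to send to a third party."""
    # Keep only allow-listed fields, so IDs, locations, and so on are dropped.
    minimized = {key: value for key, value in record.items() if key in ALLOWED_FIELDS}
    # Redact before truncating so a partially cut-off address cannot slip through.
    text = EMAIL_PATTERN.sub("[email]", str(minimized.get("text", "")))
    minimized["text"] = text[:MAX_CHARS]
    return minimized
```

An allow-list is used instead of a block-list so that any new field added to the record later is excluded by default until someone decides it is needed.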

Step 4: Validate SSL Certificates

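When connecting to remote servers, verify that you're actually connecting to the legitimate server. This prevents man-in-the-middle attacks. In iOS, this means leaving App Transport Security and the system's default certificate checks enabled, never overriding them to accept arbitrary certificates, and considering certificate pinning for critical connections.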
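The same two ideas, default validation plus an optional pin, can be sketched in Python with the standard library. This is an illustration of the concept rather than iOS code. The function names are hypothetical, and the pin here is a SHA-256 fingerprint of the whole certificate, which is only one of several pinning strategies.

```python
import hashlib
import hmac
import ssl


def make_validating_context() -> ssl.SSLContext:
    """Create a TLS client context with certificate and hostname checks on."""
    context = ssl.create_default_context()
    # These are already the defaults; setting them explicitly documents the
    # intent and undoes any accidental relaxation.
    context.check_hostname = True
    context.verify_mode = ssl.CERT_REQUIRED
    return context


def matches_pin(cert_der: bytes, pinned_sha256_hex: str) -> bool:
    """Check a DER-encoded certificate against a pinned SHA-256 fingerprint."""
    actual = hashlib.sha256(cert_der).hexdigest()
    # Constant-time comparison; the pin is lowercased to match hexdigest().
    return hmac.compare_digest(actual, pinned_sha256_hex.lower())
```

On a live connection, the DER bytes would come from `SSLSocket.getpeercert(binary_form=True)` after the handshake. Pinning adds a check on top of normal validation and should not replace it.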

Step 5: Implement Proper Access Controls

On your backend, ensure that users can only access their own data. Even if someone holds a valid login or compromises a single account, they shouldn't be able to read other users' data. This is a basic principle that was violated by many of the vulnerable apps.
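A minimal Python sketch of an ownership check is shown below. The `Message` type, the in-memory `MESSAGES` store, and the `get_message` function are hypothetical stand-ins for a real database and request handler.

```python
from dataclasses import dataclass


class Forbidden(Exception):
    """Raised when a user requests a record they are not allowed to read."""


@dataclass(frozen=True)
class Message:
    id: int
    owner_id: int
    body: str


# In-memory stand-in for a database table (hypothetical data).
MESSAGES = {
    1: Message(1, owner_id=10, body="hello"),
    2: Message(2, owner_id=20, body="secret"),
}


def get_message(authenticated_user_id: int, message_id: int) -> Message:
    """Return a message only if it belongs to the authenticated user."""
    message = MESSAGES.get(message_id)
    # Use the same error for "missing" and "not yours" so callers cannot
    # probe which message IDs exist.
    if message is None or message.owner_id != authenticated_user_id:
        raise Forbidden("message not found or not accessible")
    return message
```

The key point is that the user ID comes from the authenticated session on the server, never from a parameter the client can change, and that the check runs on every read.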

The Future of App Security

The 198-app crisis is likely just the beginning of a larger reckoning about privacy and security in the app ecosystem. As users become more aware of privacy issues, as regulations tighten, and as the cost of breaches increases, companies will have stronger incentives to implement security properly.

But change won't happen overnight. Right now, the incentives are misaligned. A company can ship an app insecurely, gain users quickly, and benefit from being first to market. If a breach is discovered a year later, they might pay a fine or lose some users, but they've already captured market share and revenue.

Flipping these incentives requires action from multiple parties. Regulators need to enforce strong penalties. Users need to reward companies with good privacy practices. The industry needs to adopt standards that make security table stakes. And technology needs to make it easier to implement security correctly.

Until that happens, data leaks and privacy violations will continue. The 198 apps are just the ones that got caught. How many more are out there, leaking data right now, undetected?

The answer is probably: more than any of us are comfortable with.

Final Thoughts: What This Means for Your Data

The AI slop security crisis is ultimately a reminder that your data is valuable and that many companies don't handle it as carefully as they should. Your location, your messages, your health information, your search history, your contacts. This is intimate data. It deserves protection.

But relying on developers to protect this data is clearly insufficient. You need to protect it yourself. That means being selective about which apps you download, which permissions you grant, and which companies you trust with your data. It means regularly auditing your phone to remove apps you don't use or don't trust. It means keeping your system updated. It means understanding your rights and being willing to take action if those rights are violated.

It also means supporting regulation and industry change. Vote with your wallet. Choose privacy-first alternatives. Report privacy violations to authorities. Join class actions if you're affected. The more users make it clear that privacy matters, the more incentive companies have to protect it.

The 198 apps got caught. But they're symptoms of a larger problem. Until the incentives change, until security becomes table stakes rather than an afterthought, there will be more crises. More data leaks. More violations.

Your data is the most personal thing you have. Treat it accordingly.

Key Takeaways

- 198 iOS apps were leaking sensitive data including private messages and location information through unencrypted connections to third-party servers

- Root causes included improper API credential management, missing SSL certificate validation, and insecure backend access controls in third-party service integrations

- Vulnerable apps spanned multiple categories: fitness, productivity, social messaging, entertainment, utilities, and dating platforms

- Real-time location tracking without encryption combined with personal data allowed third-party analytics companies to build detailed user movement profiles

- Users have legal rights under GDPR and state privacy laws such as CCPA to notification, data deletion, and potential compensation from affected developers

- Immediate protective steps: audit app permissions in iOS Settings, update or delete apps from untrusted developers, monitor accounts for suspicious activity

- The crisis reflects industry-wide incentive misalignment where feature velocity is rewarded over security, requiring regulatory and consumer pressure to change
