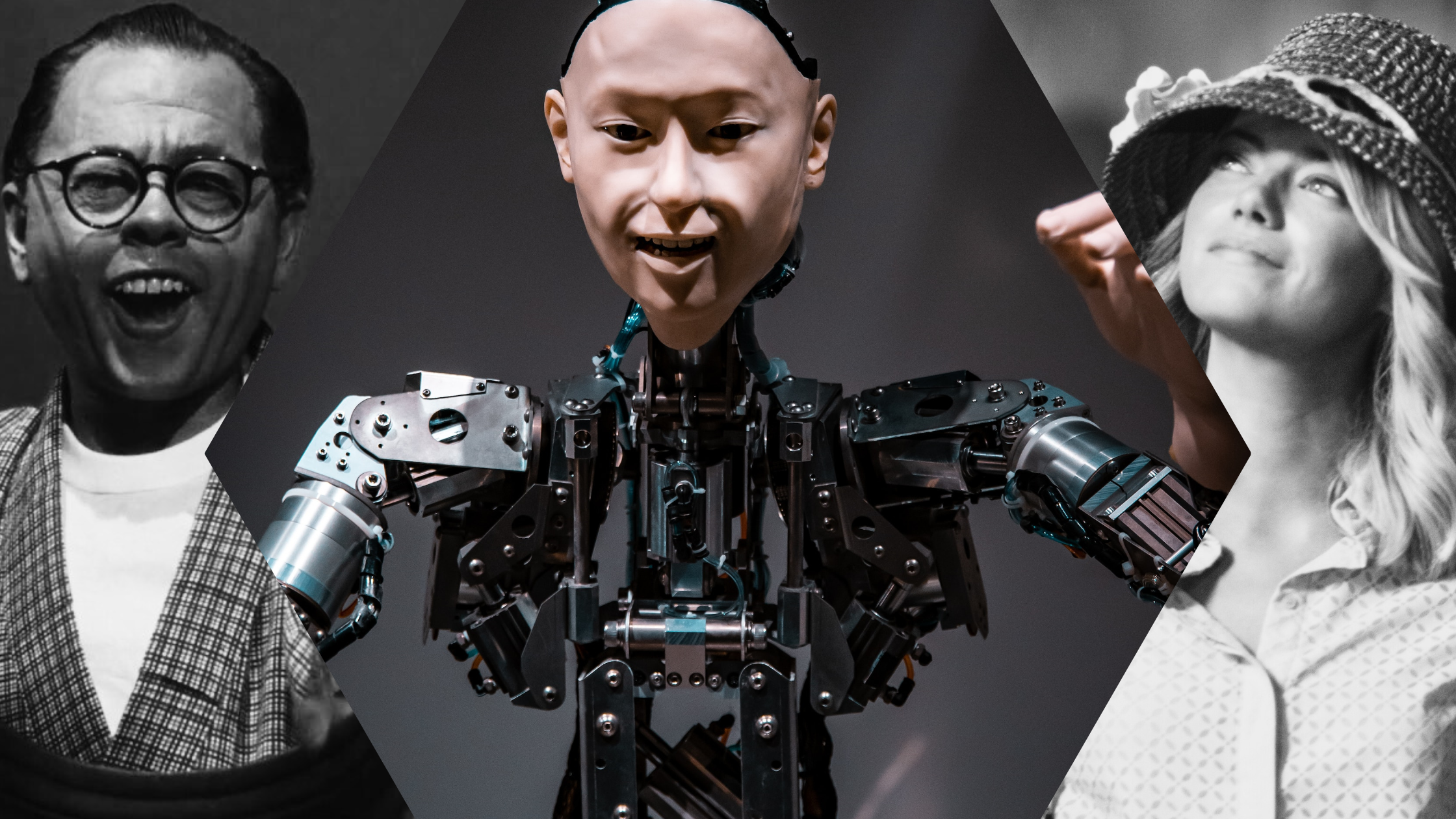

The Rise of AI-Generated Actors: A Watershed Moment in Entertainment

The entertainment industry stands at a critical juncture. For the first time in cinema's 130-year history, the fundamental question of what constitutes an "actor" has become genuinely uncertain. We're witnessing the emergence of synthetic performers, digital humans created entirely through artificial intelligence, whose performances can be difficult to distinguish from those of their human counterparts. This shift represents more than an incremental advance; it challenges the foundation of an industry built on human talent, craftsmanship, and authentic emotional performance.

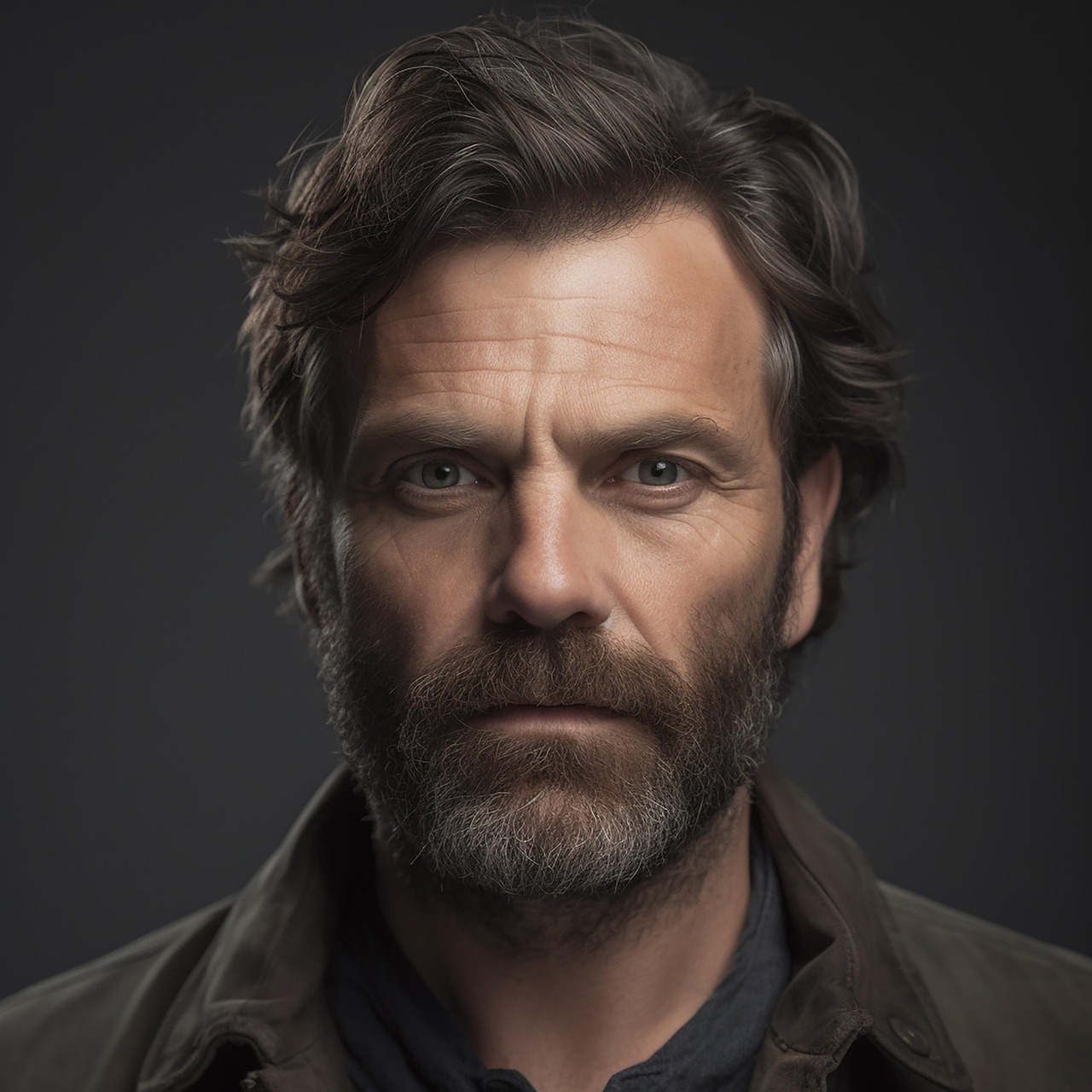

Actors, writers, directors, and industry veterans have begun sounding the alarm about this transformation. Among the most vocal advocates for protecting human actors is Sean Astin, known for roles in film franchises such as The Lord of the Rings and television series such as Stranger Things. Astin's warnings about the "onslaught" of AI actors aren't mere speculation about a distant future; they reflect genuine anxieties about a technology that's advancing faster than the industry can regulate it or respond to it.

What makes this moment unprecedented is the convergence of several technological and economic forces. Artificial intelligence has achieved remarkable capabilities in facial synthesis, voice generation, motion capture interpretation, and emotional expression simulation. Meanwhile, the financial incentives driving studios toward AI are substantial: eliminating pension obligations, avoiding union negotiations, shortening post-production timelines, and scaling content creation far beyond what human casting allows. For major studios, AI actors represent not just innovation but an operational transformation with profound cost implications.

The stakes couldn't be higher for performers. Acting isn't simply about physical appearance or vocal delivery, and it sits at the center of a complex ecosystem of creative professionals: casting directors, acting coaches, stunt coordinators, makeup artists, and dozens of other specialized roles that depend on human talent being cast and employed. When AI replaces actors, the job losses don't stop with them; they cascade through that entire economic system.

Yet the conversation remains deeply nuanced. Technology itself is neutral; the harm comes from implementation without ethical guardrails. Some applications of AI in entertainment, such as de-aging actors, creating convincing historical reconstructions, or generating background characters for expensive productions, offer genuine value. The question isn't whether AI should exist in entertainment; it's how the industry will ensure that this technology serves creative ambition rather than pure cost-cutting at the expense of human livelihoods.

This comprehensive exploration examines the technological landscape of AI actors, the legitimate concerns raised by industry professionals, the economic drivers behind adoption, the legal and ethical frameworks being developed, and practical strategies for ensuring that AI enhances rather than diminishes human creativity in entertainment.

Understanding AI Actor Technology: How Digital Humans Are Created

The Foundation: Deepfakes and Facial Synthesis

At its core, AI actor technology builds on deepfake capabilities that have evolved dramatically since the term emerged in the late 2010s. Early deepfakes were obviously artificial: glitchy, unrealistic, and computationally expensive to produce. Today's synthetic facial expressions, eye movements, and lip-sync are often undetectable to casual viewers and increasingly difficult for trained observers to distinguish from reality.

The technical foundation has historically relied on generative adversarial networks (GANs), a machine learning architecture consisting of two neural networks in competition. One network (the generator) creates synthetic images, while the other (the discriminator) tries to tell them apart from real ones. This adversarial process drives continuous improvement, with each round of training pushing the generator toward more realistic results. Many newer systems supplement or replace GANs with diffusion models and transformer-based architectures, which have pushed fidelity further still.
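To make the adversarial setup concrete, the sketch below computes the binary cross-entropy losses each network tries to minimize, using the non-saturating generator loss from the original GAN formulation. It is a minimal illustration in plain Python that assumes the discriminator's outputs are already probabilities; it trains nothing and is not how any particular production system is implemented.

```python
import math

EPS = 1e-12  # guards against log(0) when a probability is exactly 0 or 1


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy the discriminator minimizes.

    d_real: discriminator's probabilities that real images are real.
    d_fake: discriminator's probabilities that generated images are real.
    """
    real_term = -sum(math.log(p + EPS) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p + EPS) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: low when the discriminator calls fakes real."""
    return -sum(math.log(p + EPS) for p in d_fake) / len(d_fake)


# A confident, correct discriminator has low loss; an undecided one scores log(4).
print(round(discriminator_loss([0.99], [0.01]), 4))
print(round(discriminator_loss([0.5], [0.5]), 4))  # 1.3863
print(round(generator_loss([0.5]), 4))  # 0.6931
```

The two losses pull in opposite directions: every improvement the generator makes raises the discriminator's loss, which is the "competition" the paragraph describes.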

What's particularly significant is that facial synthesis has become far more data-efficient. Studios no longer need extensive footage of source actors; algorithms can generate realistic facial performances by learning from relatively limited reference material. Some systems reportedly need only 30 seconds to a few minutes of video to produce replicas that can perform entirely new scenes with novel dialogue and emotional expressions.

Voice Synthesis and Emotional Expression

Simultaneously, text-to-speech technology has evolved from robotic and obviously synthetic to surprisingly natural and emotionally nuanced. Modern voice synthesis systems don't merely reproduce phonemes mechanically; they learn prosody patterns, emotional inflection, breathing patterns, and the subtle vocal variations that make human speech feel authentic. Leading systems can now generate voice performances that carry emotional weight—conveying anger, sadness, confusion, or joy through vocal quality rather than just word choice.

The emotional expression layer is where AI actor technology becomes genuinely sophisticated. Real acting involves subtle micro-expressions, eye contact variations, breathing patterns, postural shifts, and thousands of micro-movements that collectively communicate emotional authenticity. Contemporary AI systems trained on extensive footage of human performances can now generate these subtleties with remarkable accuracy. An AI actor can convincingly portray complex emotional transitions—moving from skepticism to conviction, or from confidence to doubt—in ways that read as authentic to audiences.

Motion Capture and Body Performance

Body performance is in some respects easier to synthesize than facial performance, but no less crucial. Motion capture technology has existed for decades, but AI has transformed how captured motion is synthesized. Rather than simply recording and replaying human movement, AI systems can generate novel movements that stay consistent with a specific performer's movement vocabulary. A system trained on an actor's physicality can recombine existing patterns into new movements that feel authentic to that performer's style.

This capability is particularly valuable for generating background performers, crowd scenes, or sequences where human stunt performers might face injury risk. The synthesis can generate thousands of unique variations of movement from limited source material, enabling cost-effective scaling of extras and minor roles.
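The idea of many variations from limited source material can be illustrated with a deliberately simple stand-in. The sketch below is an assumption, not how production motion-synthesis systems work (those use learned models): it takes one hypothetical joint-angle curve and produces randomized copies by scaling amplitude and shifting timing, so each crowd member moves slightly differently.

```python
import math
import random


def vary_trajectory(base, n_variants, seed=0, max_scale=0.1, max_shift=2):
    """Make n_variants randomized copies of one joint-angle curve (degrees per frame).

    Each copy gets a random amplitude scale within +/- max_scale and a circular
    time shift of up to max_shift frames. A toy augmentation, not a learned model.
    """
    rng = random.Random(seed)  # seeded so the same crowd can be regenerated
    variants = []
    for _ in range(n_variants):
        scale = 1.0 + rng.uniform(-max_scale, max_scale)
        shift = rng.randint(-max_shift, max_shift)
        rotated = base[-shift:] + base[:-shift]  # circular shift in time
        variants.append([round(angle * scale, 2) for angle in rotated])
    return variants


# One hypothetical arm-swing cycle: 24 frames of a 30-degree sine wave.
base = [30.0 * math.sin(2 * math.pi * t / 24) for t in range(24)]
crowd = vary_trajectory(base, n_variants=500)
print(len(crowd), len(crowd[0]))  # 500 24
```

Seeding the generator is a deliberate choice: a production can regenerate the exact same crowd for a reshoot instead of getting a new random one each time.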

Real-Time Performance Synthesis

One of the most recent breakthroughs involves real-time performance synthesis. Rather than rendering AI-generated performances offline over hours or days, emerging systems can generate synthetic performances in real time, responding to live direction and immediate creative adjustments. This marks a crucial transition: synthetic performers are moving from pre-recorded, pre-programmed assets to responsive, interactive participants in creative processes.

Real-time synthesis enables interactive applications where directors can literally direct AI actors as they would human performers, adjusting emotional intensity, timing, and physicality during production rather than in post-production. This technological capability fundamentally changes the relationship between directors and synthetic talent—making it feel less like post-production manipulation and more like actual performance direction.
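One simple way to picture a live "intensity" control is blendshape (morph-target) interpolation, a common way of parameterizing digital faces. The sketch below assumes a hypothetical rig whose expressions are dictionaries of blendshape weights; it is an illustration of the idea, not a description of how any specific real-time product exposes direction.

```python
def blend_expression(neutral, target, intensity):
    """Interpolate facial blendshape weights from a neutral face toward a target.

    neutral, target: dicts mapping blendshape name -> weight in [0, 1].
    intensity: 0.0 keeps the neutral face; 1.0 reaches the full target expression.
    """
    intensity = max(0.0, min(1.0, intensity))  # clamp the director's "dial"
    names = set(neutral) | set(target)  # shapes missing from a dict count as 0.0
    return {
        name: neutral.get(name, 0.0)
        + intensity * (target.get(name, 0.0) - neutral.get(name, 0.0))
        for name in names
    }


# Hypothetical rig with two shapes; a director asks for "half as joyful".
neutral = {"brow_raise": 0.0, "mouth_smile": 0.1}
joy = {"brow_raise": 0.4, "mouth_smile": 0.9}
half_joy = blend_expression(neutral, joy, 0.5)
print(sorted((k, round(v, 2)) for k, v in half_joy.items()))
# [('brow_raise', 0.2), ('mouth_smile', 0.5)]
```

Because the adjustment is a single cheap calculation per frame, it can be changed between takes or even mid-take, which is what makes "direction" of a synthetic performer feel interactive.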

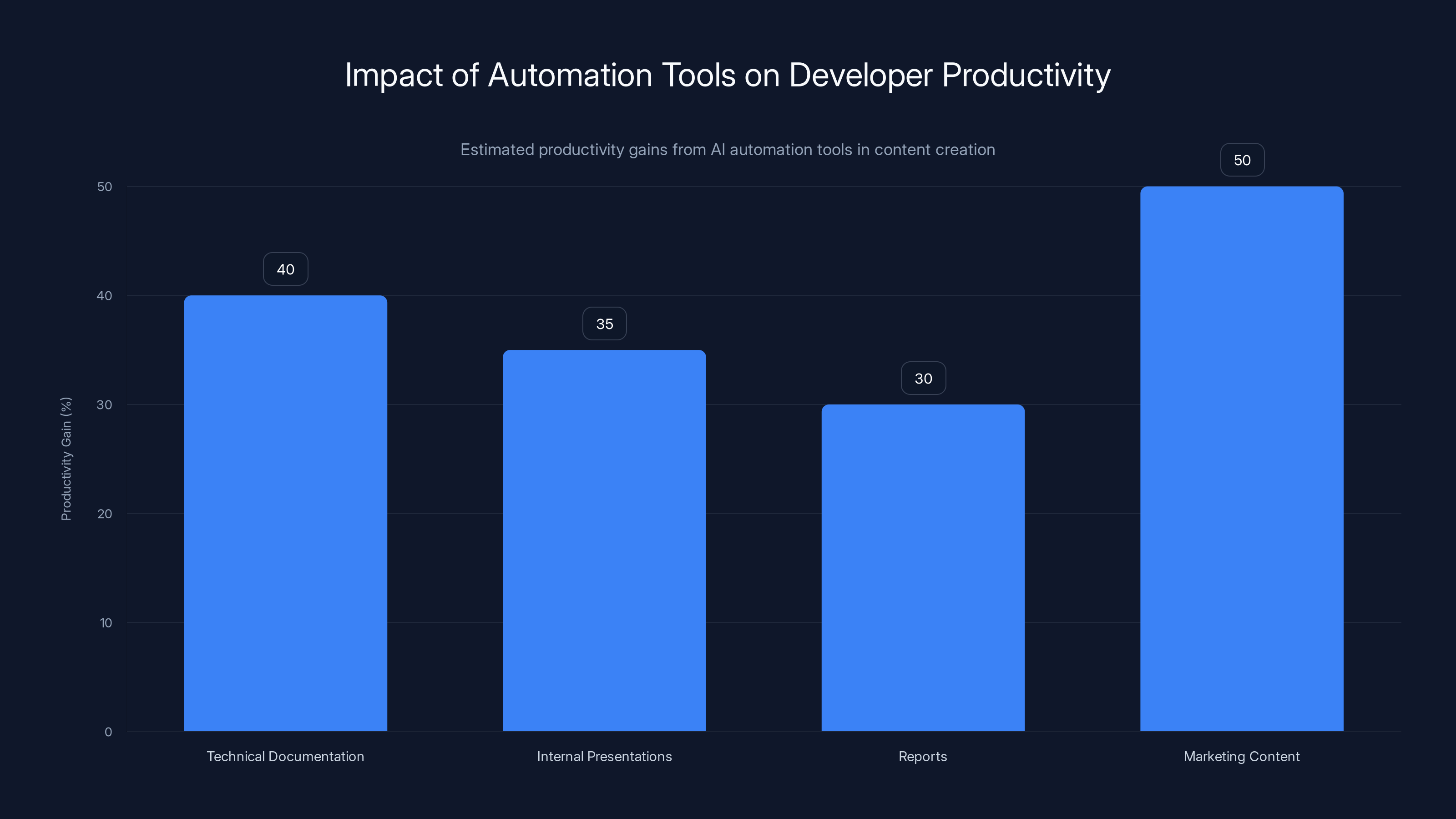

Estimated data shows significant productivity gains across various content types when using AI automation tools, with marketing content seeing the highest improvement.

Industry Concerns: Why Sean Astin and Other Professionals Are Alarmed

The Existential Threat to Performance Work

Sean Astin's warnings about AI actors shouldn't be dismissed as technophobic resistance to innovation. Instead, they reflect legitimate professional concerns grounded in decades of industry experience. Astin understands something that economists sometimes miss: employment in entertainment isn't merely about the top-earning stars. The vast majority of professional actors—character actors, supporting performers, ensemble cast members, and background extras—build sustainable careers by consistently booking work across television, film, and streaming projects.

AI actors pose an existential threat to this working ecosystem. Unlike star power, which remains irreplaceable for marquee roles, background performers, supporting cast, and many character actors are economically fungible from a studio's perspective. An AI system that can generate unlimited variations of background performers, minor characters, or supporting roles eliminates the primary employment pathway for the vast majority of professional actors. These aren't the celebrated names we recognize; they're the people who've invested years in training, networking, and building sustainable careers.

The economic logic is straightforward and terrifying from the actors' perspective: if a studio can generate a crowd scene with 500 unique AI-generated extras in an afternoon for essentially zero labor cost, why would it hire 500 background performers? If an AI system can generate a convincing supporting performance with no pension obligations, union negotiations, or scheduling constraints, why would it cast a human actor? From a purely financial perspective, it wouldn't, unless forced to by contractual requirements or audience demand.

Loss of Creative Control and Ethical Concerns

Beyond employment, Astin and his peers raise essential concerns about creative control and ethical use of performer likenesses. The creation of synthetic replicas raises fundamental questions: Do performers have perpetual control over digital versions of themselves? Can studios create AI performers modeled on recognizable actors without ongoing consent or compensation? What prevents the creation of AI replicas of deceased performers, potentially putting words in their mouths and emotions in their synthesized faces that they never intended to express?

These questions become particularly acute when considering how AI performers might be used in contexts the original actor would never approve. An actor's likeness could be used to generate explicit content, propaganda, or performances radically inconsistent with their public values or artistic choices. A human actor can refuse a role; an AI replica has no agency to decline inappropriate uses.

Devaluation of the Acting Craft

There's also a profound concern about the devaluation of acting as a craft. Professional acting rests on years of training: studying voice, movement, emotional authenticity, script analysis, scene work, and the subtle arts of inhabiting characters and communicating internal states to audiences. The suggestion that this could be replaced by algorithms trained on filmed performances treats acting as a collection of reproducible patterns rather than a genuine creative practice.

Actors worry that normalizing AI performance will diminish the cultural value placed on human acting. If audiences become accustomed to synthetic performances, will they still appreciate the subtle authenticity of human acting? Will investment in acting training, drama schools, and actor development programs decline if the industry perceives diminished demand for human talent? These concerns about cultural devaluation may ultimately prove more significant than direct employment loss.

Regulatory and Contractual Gaps

Crucially, the infrastructure for managing AI actors is still immature. Much union contract language predates AI actors and addresses digital likenesses through vague provisions designed for different contexts. Studios can exploit significant gray areas: What constitutes a "digital likeness"? What rights do performers retain over synthesized versions of themselves? How long do those rights persist after a project concludes? The contractual and regulatory landscape provides minimal protection for performers against misuse of AI replicas.

This regulatory vacuum creates perverse incentives. Without clear guidance on ethical boundaries, some studios may exploit permissive interpretations of existing rules, while performers lack clear protections and recourse mechanisms. The industry is essentially operating on an honor system in a context where economic incentives strongly encourage cutting corners.

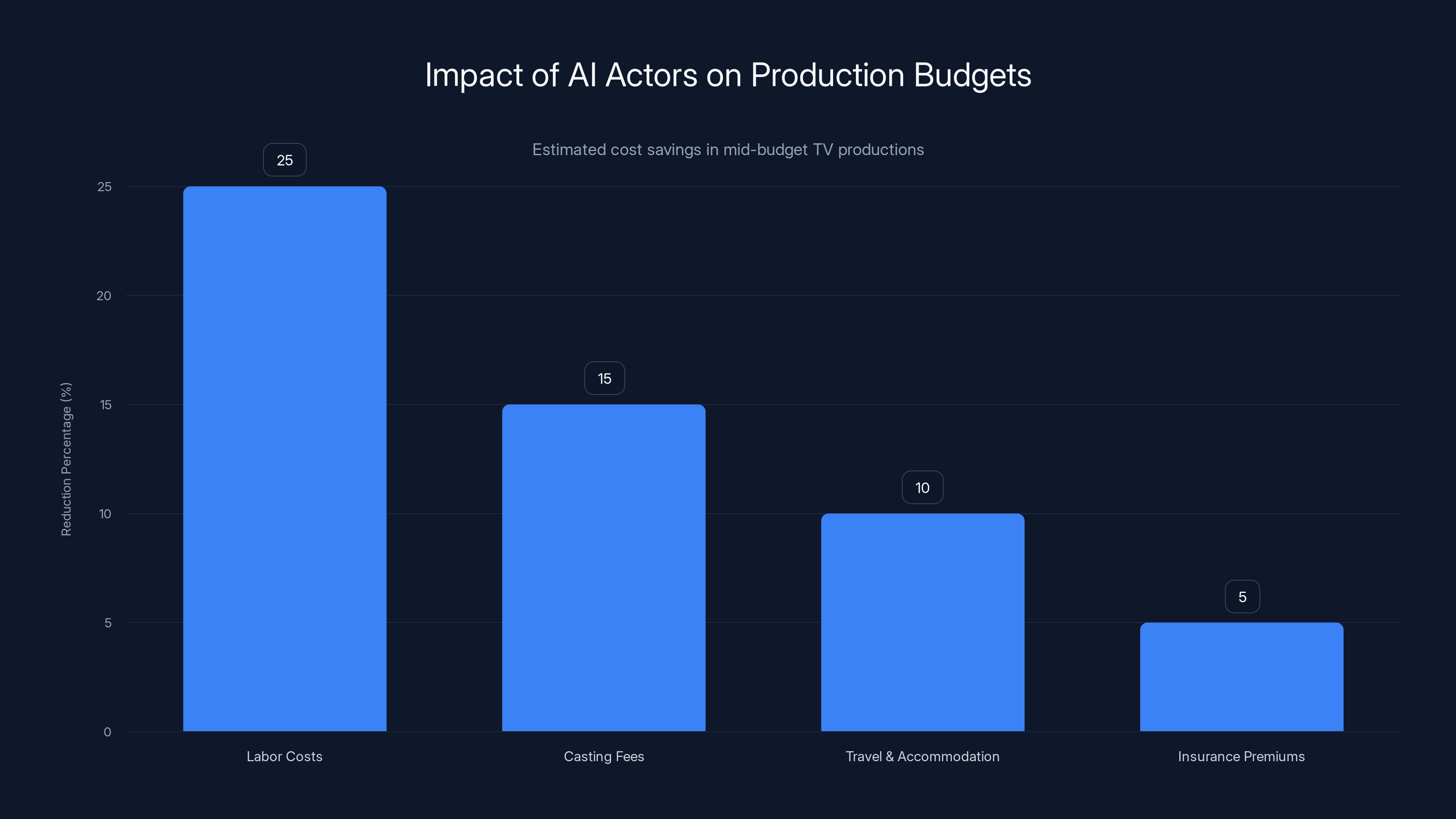

AI actors can significantly reduce labor costs (by 20-30%) as well as related costs, leading to substantial budget reallocation. Estimated data based on industry insights.

The Technology Stack: How Studios Are Implementing AI Actors

Leading AI Actor Platforms and Services

Several companies have emerged as leaders in AI actor generation and synthesis. These aren't obscure startups working in the shadows; they're well-funded ventures backed by major industry players and investment firms. Some platforms focus on complete performance synthesis, while others provide specialized tools for specific aspects of performance generation or enhancement.

The most prominent platforms include systems designed specifically for film and television production. These tools integrate with standard filmmaking workflows, allowing directors and producers to generate AI performances that slot seamlessly into existing post-production pipelines. Some systems offer libraries of pre-trained synthetic performers available for licensing, while others enable studios to create custom AI actors based on specific aesthetic requirements or performer archetypes.

What's particularly striking is how rapidly these technologies have matured. Five years ago, AI-generated performances were obviously synthetic and useful mainly in visual effects contexts. Today, studios are considering AI actors for primary roles in actual productions, not just background or crowd work. The technology has crossed the threshold from novelty to practical production tool remarkably quickly.

Integration Into Production Workflows

Studios are beginning to integrate AI actor systems into standard production pipelines. Rather than requiring specialized knowledge of machine learning, contemporary systems provide user interfaces designed for filmmakers: directors, cinematographers, and editors who understand storytelling and visual language but aren't ML specialists. This democratization of access is crucial, because it means that mid-level productions, not only major studios with dedicated AI research divisions, can license AI actor tools.

The workflow typically involves pre-production planning where directors decide which roles or sequences might use synthetic performers, acquisition of reference footage or training data for specific characters, rendering and synthesis phases where AI systems generate performances, and integration into post-production alongside human-performed footage. The goal is for audiences to be unable to identify where human performances end and synthetic performances begin.

Some productions are experimenting with hybrid approaches: using human actors for principal photography while augmenting performances through AI-enabled color grading, expression adjustment, or digital de-aging. This represents an intermediate adoption pathway—using AI to enhance human performance rather than replace it entirely. However, such hybrid approaches still eliminate traditional post-production roles and reduce the need for expensive reshoots to adjust specific performance elements.

Cost Economics and Adoption Drivers

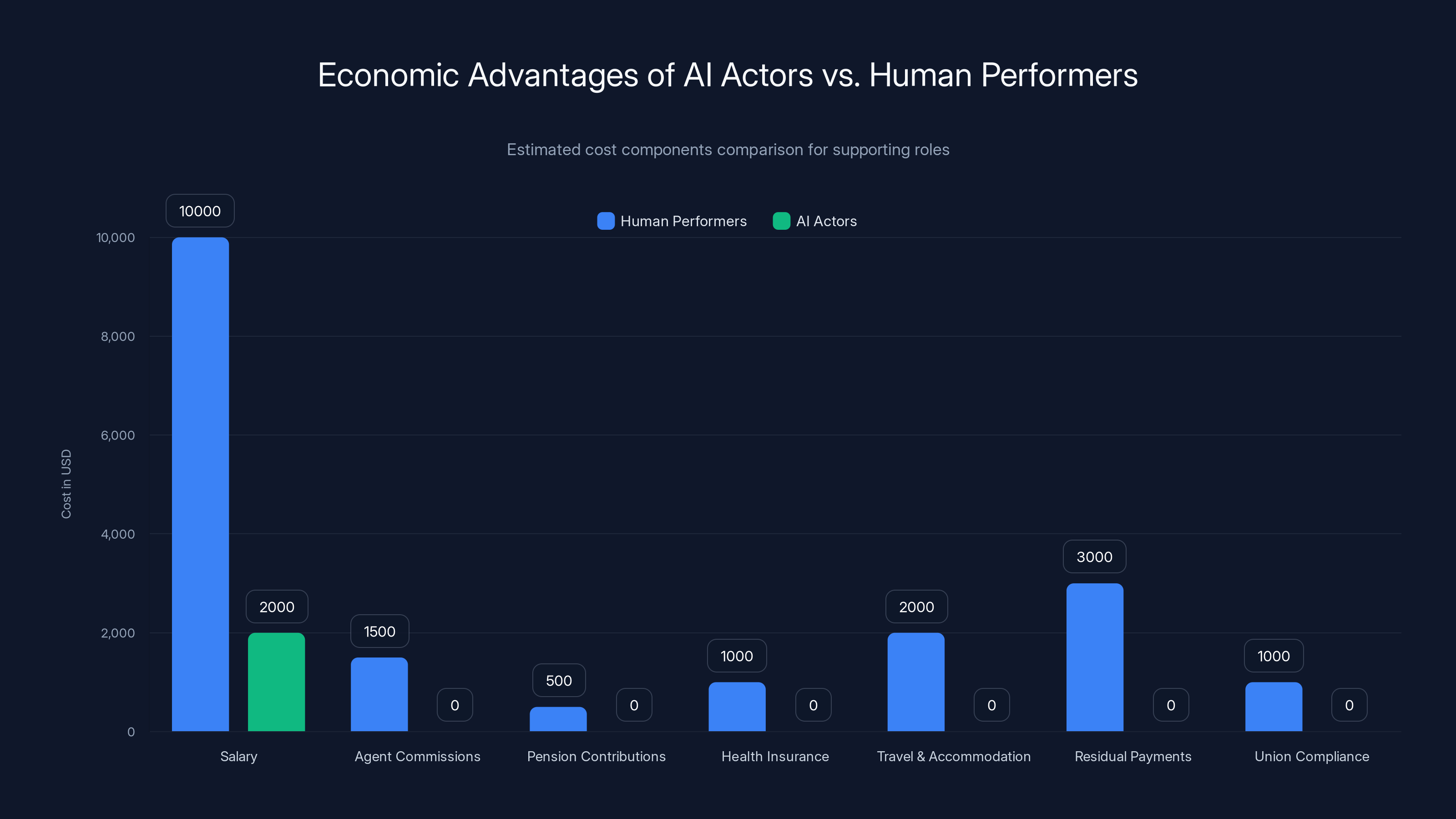

The economic case for AI actors is compelling, which explains rapid industry interest despite ethical concerns. Consider a typical supporting role: hiring a human actor requires casting director fees, agent commissions, salary, travel and accommodation, per diem, insurance, pension contributions, and residual payments if the production is later exploited through streaming, syndication, or international sales. The total cost of employing a supporting actor across multiple production days typically ranges from tens of thousands to hundreds of thousands of dollars, depending on the actor's status and union requirements.

Contrast this with AI synthesis: once systems are trained and integrated into production pipelines, generating a new performance requires computational resources (measured in dollars per scene, not per day), no ongoing obligations, and no residuals. The math is obvious: AI becomes economically dominant once training costs amortize across multiple productions. A studio might spend $500,000 developing a custom AI performer but then generate thousands of performances from that system across years of production.
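The amortization argument reduces to a break-even calculation. In the sketch below, the $500,000 development cost comes from the text; the $50,000 human cost per role (within the "tens of thousands to hundreds of thousands" range above) and the $1,000 of compute per AI-generated role are illustrative assumptions. The function name is hypothetical, and the model deliberately ignores residuals, financing, and maintenance costs.

```python
import math


def break_even_performances(development_cost, human_cost_per_role, ai_cost_per_role):
    """Smallest number of roles after which a custom AI performer is cheaper.

    Solves development_cost + n * ai_cost <= n * human_cost for the integer n.
    """
    saving_per_role = human_cost_per_role - ai_cost_per_role
    if saving_per_role <= 0:
        raise ValueError("AI must be cheaper per role to ever break even")
    return math.ceil(development_cost / saving_per_role)


# Hypothetical figures: $500,000 to build, $50,000 per human role, $1,000 per AI role.
print(break_even_performances(500_000, 50_000, 1_000))  # 11
```

Under these assumptions the system pays for itself after about a dozen roles; every role after that is almost pure savings, which is why the text calls AI "economically dominant" once costs amortize.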

Beyond direct labor cost, AI actors offer schedule flexibility—performances can be generated whenever needed without coordinating actor availability. They eliminate liability concerns—no injuries, scandals, or behavioral issues affect production. They enable easy reshoots without scheduling logistics. For large-budget productions where even day-to-day scheduling of supporting talent creates logistical complexity, AI actors offer genuine operational advantages beyond pure labor cost reduction.

The Legal Landscape: Current Protections and Gaps

Union Contracts and Legacy Protections

Union agreements in entertainment were negotiated long before AI actors existed and contain provisions developed for entirely different technological contexts. SAG-AFTRA (the Screen Actors Guild-American Federation of Television and Radio Artists) contracts include provisions about "digital likenesses," but these were originally conceived for de-aging existing actors in specific sequences, not for creating entirely synthetic performers independent of any human actor.

Traditional contracts grant studios relatively broad rights to use filmed footage in subsequent exhibitions—theatrical releases, television broadcasts, streaming platforms, and so forth. However, these contracts were negotiated when the primary question was geographic and temporal exploitation rights: could a film be shown in additional countries? Could it be broadcast on television years later? The contracts don't clearly address whether studios can synthesize entirely new performances using an actor's digital likeness, or whether actors retain ongoing control over how synthesized versions of themselves appear.

This ambiguity creates genuine disputes. Studios might argue that hiring an actor to perform scenes grants them rights to use that actor's likeness—including synthesized versions—for derivative performances. Actors argue that such rights weren't contemplated in original negotiations and that selling the right to be filmed shouldn't implicitly grant rights to create entirely new synthetic performances never approved by the original actor.

Emerging Legal Frameworks and Legislation

Recognizing these gaps, several jurisdictions have begun developing specific legal frameworks for AI-generated performances. Some legislation focuses on requiring explicit consent and ongoing compensation when performers' likenesses are used to generate synthetic content. Other frameworks emphasize disclosure—audiences should know when they're watching AI-generated performances rather than human actors.

California has led legislative efforts, reflecting its role as the center of the entertainment industry. Recent proposals would strengthen performer rights over digital likenesses, extend residual payment obligations to synthetic performances, and require clear contractual language about AI use. The European Union has taken a broader approach through the AI Act, whose provisions require transparency about synthetic content and establish stronger consent requirements across multiple applications, including entertainment.

However, legal frameworks remain incomplete and inconsistent across jurisdictions. An AI actor trained in California and rendered in London using code developed in Estonia creates jurisdictional complexity. International treaties and standards don't yet exist, and studios working across multiple countries face unclear regulatory terrain. This uncertainty is slowly pushing toward standardization, since industry participants recognize that sustainable operations require clear rules, but the process is glacial relative to the pace of technological change.

Intellectual Property and Rights Management

The intellectual property questions extend beyond performer protections. Who owns an AI actor system trained on footage of multiple performers? Can studios claim ownership of synthetic performers trained on their proprietary footage? Can actors claim ownership of their synthesized likenesses and license them independently to competing studios? These questions have begun reaching courts, but precedent remains limited.

There's also the question of whether AI-generated performers constitute derivative works of source performances, potentially triggering copyright obligations and residual payments, or whether they constitute entirely new works owned by whoever trained the systems. This distinction has profound economic implications—it determines whether actor residuals apply to AI-generated performances, and ultimately whether human performers receive ongoing compensation for performances synthesized from their likenesses years after original production.

International Variations and Forum Shopping

Different countries are approaching AI actor regulation with varying stringency. This creates incentives for forum shopping: studios might structure productions through subsidiaries in jurisdictions with permissive AI actor regulations, then distribute content globally. Without international harmonization, regulatory arbitrage becomes attractive to cost-conscious producers.

Some countries have moved toward strict consent requirements and robust performer protections. Others maintain permissive frameworks emphasizing innovation. The variation creates pressure in one of two directions: harmonization toward stricter standards (if international audiences and creators demand protection) or toward looser standards (if studios successfully migrate to permissive jurisdictions). The current trajectory suggests slow convergence toward a reasonable middle ground, though that convergence lags far behind technological advancement.

AI actors significantly reduce costs associated with hiring human performers by eliminating expenses like salaries, agent commissions, and union compliance. (Estimated data)

The Economics of AI Actors: Budget Impact and Industry Transformation

Production Budget Reallocation

When studios adopt AI actors, the savings cascade through production budgets in multiple ways. Direct labor costs decrease—obviously and substantially. But secondary savings accumulate: casting director fees decline as studios rely less on traditional casting processes; travel and accommodation costs vanish; insurance premiums drop; union pension and health benefits obligations decline; scheduling coordination complexity decreases.

For a mid-budget television production, replacing background performers and supporting roles with AI actors could reduce total labor costs by 20-30%. For effects-heavy productions where large-scale crowd sequences are otherwise expensive to produce, the savings could exceed 50% for those specific sequences. These aren't marginal improvements; they're material changes to production economics.
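A percentage like "20-30%" depends entirely on how labor spending is split and how much of each category is replaced. The sketch below shows that arithmetic with a hypothetical budget split and hypothetical replacement shares; none of these figures come from the source, and the function name is illustrative.

```python
def labor_cost_reduction(labor_by_category, replaced_share):
    """Fraction of total labor cost removed when some categories are partly replaced.

    labor_by_category: category -> labor cost in dollars.
    replaced_share: category -> fraction (0..1) of that category's cost moved to AI.
    """
    total = sum(labor_by_category.values())
    removed = sum(cost * replaced_share.get(category, 0.0)
                  for category, cost in labor_by_category.items())
    return removed / total


# Hypothetical labor split for a mid-budget TV season (dollars).
budget = {"principal_cast": 4_000_000, "supporting_cast": 2_500_000,
          "background": 1_000_000, "other_labor": 2_500_000}
# Hypothetical adoption: most background work and some supporting roles go synthetic.
share = {"supporting_cast": 0.6, "background": 0.9}
print(f"{labor_cost_reduction(budget, share):.0%}")  # 24%
```

Principal cast is untouched in this scenario, yet total labor still falls by roughly a quarter, because background and supporting work together make up a large share of the payroll.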

However, this reallocation doesn't represent pure savings. Studios typically reinvest savings into expanded production—more episodes per season, more ambitious visual effects, longer post-production schedules. The economic pressure isn't necessarily to reduce total spending, but rather to shift spending from human talent toward technology and capital. This distinction is crucial: the industry doesn't necessarily shrink, but its composition changes dramatically.

Market Concentration and Consolidation Incentives

AI actor technology creates incentives toward market concentration. Developing proprietary AI actor systems requires substantial upfront investment in AI research, data acquisition, and system integration. These costs are high, with barriers to entry that smaller production companies cannot reasonably overcome. Consequently, AI actor technology becomes most accessible to major studios with capital for large R&D budgets.

This creates a vicious cycle: major studios invest in AI capability, enabling them to produce content more cost-effectively than smaller competitors. Competitive advantages translate into market share gains. Market concentration increases, giving major studios greater leverage with distributors and consumers. Greater revenue enables larger R&D budgets for the next generation of AI tools. Within a decade, AI actor technology could become a primary competitive moat for major studios, accessible only to those with sufficient capital to amortize massive development costs.

Smaller production companies, independent producers, and emerging creative talent would be disadvantaged—unable to access advanced AI tools and therefore unable to compete on cost with studios leveraging proprietary synthetic performers. This consolidation pressure works against creative diversity and independent filmmaking.

Employment Ecosystem Collapse Risks

The entertainment industry's employment structure is precarious and highly interdependent. Success for struggling actors often comes through background work and supporting roles—these gigs provide steady employment while actors develop their craft and seek larger opportunities. Cinematographers build experience through lower-budget projects. Editors, sound designers, and crew develop expertise across productions of varying scales. The entire ecosystem depends on constant production activity at all budget levels.

If AI actors reduce demand for background performers and supporting actors by even 50%, the employment consequences would be catastrophic for struggling performers. These aren't stars who can transition to different industries; they've invested years in specialized training and built professional networks within entertainment. Widespread displacement would eliminate the career pathways that historically supported people entering the industry.

The concern isn't merely about job loss; it's about ecosystem stability. If the middle layers of employment collapse, the foundation supporting aspiring talent crumbles. The next generation of stars wouldn't have access to the development opportunities and experience that current stars had. Long-term, this could damage creative talent pipelines, reducing the quality and diversity of creative work emerging from entertainment.

Geographic and Sectoral Variations

AI actor adoption won't occur uniformly across entertainment sectors. High-budget studio films will likely adopt AI extensively for background work and crowd sequences where the technology is least likely to be scrutinized. Television production might adopt AI actors more rapidly than theatrical films, where audiences and critics scrutinize performances more intensively. Streaming productions might experiment more aggressively than traditional broadcast, where standards and audience expectations remain more conservative.

Geographically, adoption will likely be fastest where the savings justify capital investment in AI systems and where regulatory frameworks are permissive. High labor costs alone don't guarantee rapid adoption: Hollywood, with strong unions and active state legislation, might actually adopt more slowly than production hubs in Singapore or Eastern Europe that operate under different regulatory environments. This variation creates potential for "AI production havens" emerging in jurisdictions offering both permissive regulations and competitive labor costs.

Creative and Artistic Implications: Beyond Economics

Performance Quality and Emotional Authenticity

Here's a nuanced reality that economic arguments often overlook: the best human performances contain elements that are genuinely difficult to synthesize. An actor can't fully explain why a specific vocal inflection feels right, or why a subtle eye movement at a particular moment communicates something essential. These choices emerge from intuition, training, and ineffable creative instincts that are difficult to program into algorithms.

When synthesizing performances from training data, AI systems learn statistical patterns. They understand that when characters express doubt, certain vocal patterns and expressions tend to appear. But they might miss the specific magic moment where an actor's unique interpretation—their individualized approach to a character—transcends the patterns and reaches audiences in unexpected ways. This is where great acting becomes art rather than craft.
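A deliberately crude frequency model illustrates the point about statistical patterns. The sketch below always picks the expression most often paired with an emotion in its hypothetical training examples, so a rare, surprising choice can never win. Real generative models sample from learned distributions rather than always taking the most common option, so this overstates the effect; the labels and function name are assumptions for illustration.

```python
from collections import Counter


def most_likely_choice(training_examples, emotion):
    """Return the expression most frequently paired with an emotion label.

    A pure frequency model: it can only reproduce what was common in its data.
    """
    counts = Counter(expr for label, expr in training_examples if label == emotion)
    if not counts:
        raise KeyError(f"no examples for {emotion!r}")
    return counts.most_common(1)[0][0]


examples = [
    ("doubt", "furrowed_brow"), ("doubt", "furrowed_brow"),
    ("doubt", "glance_away"),
    ("doubt", "half_smile"),  # the rare, idiosyncratic interpretation
]
print(most_likely_choice(examples, "doubt"))  # furrowed_brow
```

The "half_smile" that a particular actor might bring to a moment of doubt is exactly the kind of choice a pattern-following system is least likely to produce.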

AI actors might be sufficient for many roles, particularly supporting characters with limited development. But for complex protagonists undergoing significant character arcs—roles requiring actors to communicate internal psychological transformation—AI synthesis might produce technically competent performances lacking the ineffable authenticity that distinguishes great acting from adequate acting. Audiences might not consciously recognize the difference, but the impact on emotional resonance could be significant.

Creative Collaboration and Artistic Vision

Directing is fundamentally collaborative. Directors work with actors to discover performances—trying different approaches, building on suggestions, exploring emotional territories that become apparent only through the process of rehearsal and performance. This iterative collaboration generates creative results that neither director nor actor could produce independently.

AI actors, conversely, are tools. They're not collaborators offering unexpected creative insights or bringing their own artistic vision to roles. An AI system does what it's programmed to do, executing with consistency but without genuine creative agency. The direction becomes unilateral rather than collaborative.

This distinction matters artistically. Some of cinema's most celebrated performances emerged through genuine collaboration between directors and actors who challenged each other, explored unexpected directions, and discovered creative possibilities neither anticipated alone. AI actors eliminate that collaborative potential. The creative process becomes more instrumental—implementing the director's vision rather than co-creating it.

Diversity and Representation Concerns

Interestingly, AI actors could either broaden representation or entrench existing biases. If studios train AI actors on diverse source material, they could generate performances representing diverse ethnicities, body types, disabilities, and other characteristics without casting human performers with those characteristics. That could make diverse stories cheaper to depict, but depiction without the participation of performers from those communities is not the same as authentic representation.

Conversely, AI actors could further concentrate opportunities among already-successful performers. Studios might create AI replicas of bankable stars to generate infinite variations of similar talent. The market consolidation pressures discussed earlier would disproportionately disadvantage underrepresented groups who already struggle to break into industry networks and secure representation.

There's also the risk that AI systems trained primarily on existing film and television—which significantly under-represents many communities—would perpetuate historical biases. If AI actors are trained on biased datasets, they would perpetuate and amplify those biases at scale.

Audience Perception and Trust

A final artistic consideration involves audience psychology. Audiences have developed sophisticated capabilities to detect inauthenticity. This isn't about identifying technical flaws; it's about sensing when performers aren't genuinely invested in their characters. Known or suspected AI actors might trigger skepticism in audiences, creating distance between viewers and performances even if the synthetic acting is technically excellent.

Conversely, audiences might become indifferent to synthetic performances, developing new norms in which AI actors are simply accepted. That would represent a fundamental shift in audience expectations and in how performances communicate authenticity. Whether this is positive or negative depends partly on how the technology is presented and integrated into creative work.

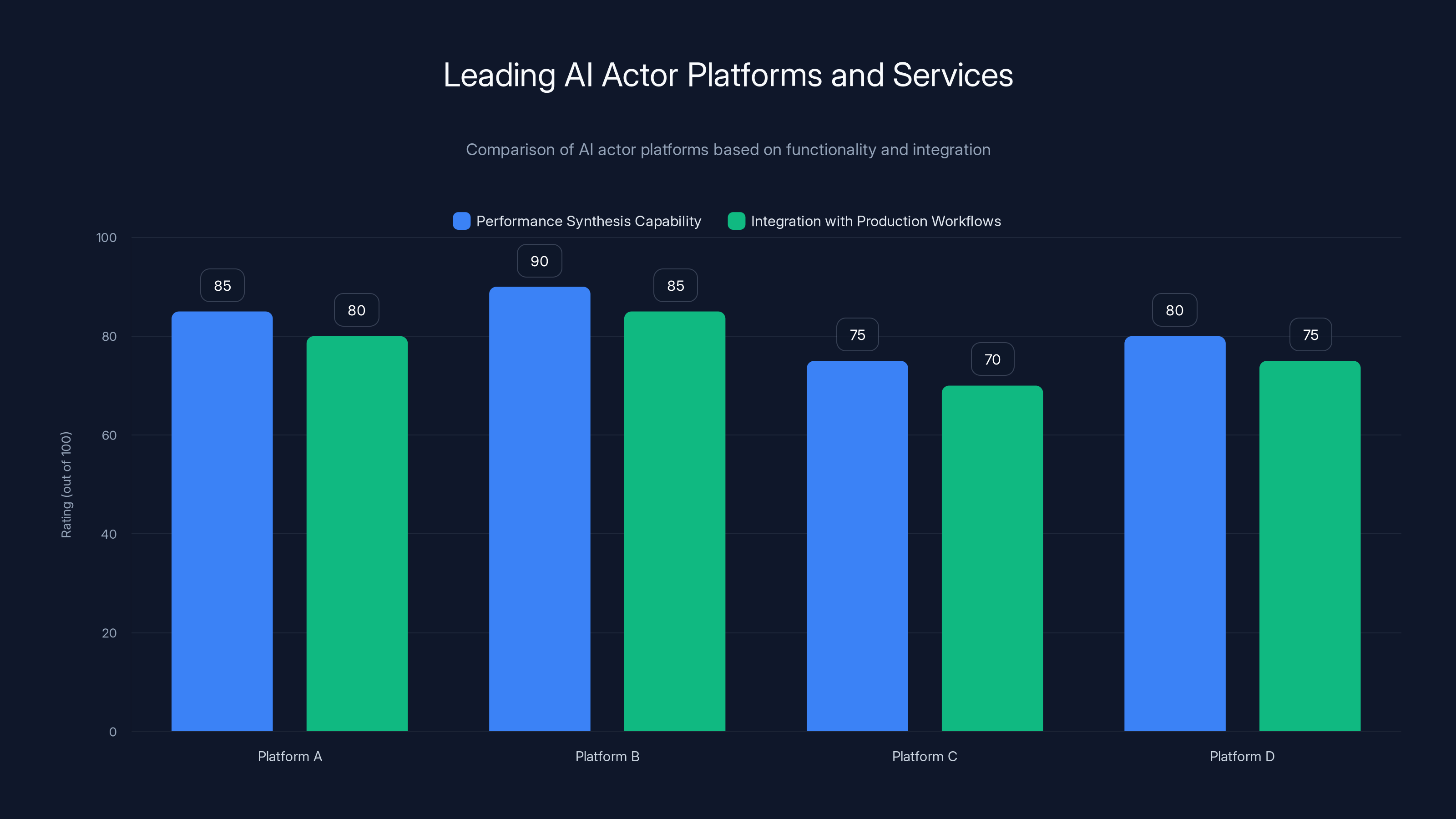

Leading AI actor platforms are rated based on their performance synthesis capabilities and ease of integration into production workflows. Platform B scores highest in both categories, highlighting its advanced features and user-friendly interface. (Estimated data)

Advocacy and Protection Strategies: How Industry Professionals Are Responding

Union Organizing and Contract Negotiations

Actors' unions have begun serious negotiations to establish clearer protections in contracts. The goal is moving beyond vague provisions about digital likenesses toward specific requirements: explicit consent for AI-generated performances, ongoing compensation if likenesses are synthesized, time limits on the use of performer data, and clear delineation of what rights studios acquire when they hire actors.

These negotiations are contentious because studios perceive union demands as threatening to the efficiency gains that make AI adoption attractive. A compromise position being discussed would permit AI actors for background performers and crowd work (where employment is already precarious and episodic) while maintaining protections for speaking roles and featured characters. However, defining the boundary between background and featured work has proven surprisingly complex.

Legislative Advocacy and Policy Development

Performers' organizations have also engaged directly in policy development. Advocacy has focused on three goals: legal requirements for disclosure when synthetic performances are used, residual payment obligations for synthesized performances that use performer likenesses, and registry systems where performers can track unauthorized use of their synthetic replicas.

Some proposals would require studios to obtain explicit written consent before creating AI replicas of performers, with clear compensation structures whenever those replicas are used. Other proposals focus on the right to be forgotten—enabling performers to prevent future synthesis of their likenesses after a specified period. These proposals reflect the principle that performers should maintain meaningful control over their digital representations.

Industry Initiatives and Standards Development

Beyond union and legislative efforts, industry associations have begun developing voluntary standards and best practices. These initiatives recognize that not all regulation can come through law; industry self-regulation, when developed thoughtfully with stakeholder input, can sometimes establish norms more quickly than legislative processes.

Some initiatives focus on transparency standards, requiring clear disclosure in credits when AI actors are used so audiences understand what they're watching. Others emphasize consent frameworks, proposing standard contract language for studios to use when obtaining rights to synthesize actors' likenesses. Still others focus on data governance: protocols for how training data should be acquired, stored, and protected against misuse.

These industry initiatives remain voluntary, which limits their effectiveness. Studios without ethical commitments have little incentive to adopt standards that reduce their competitive advantages. However, the existence of standards creates pressure—consumers and industry partners increasingly demand demonstrable ethical practices, potentially making adoption economically attractive through reputation benefits.

The Case for AI Actors: Legitimate Applications and Creative Possibilities

Safety and Risk Mitigation

Despite legitimate concerns, AI actors do offer real benefits in specific contexts. The least ambiguous application involves dangerous sequences. Stunt work carries serious physical risk: performers suffer injuries, occasionally fatal ones, while performing dangerous scenes. AI-generated performances could eliminate this risk for specified sequences while maintaining a convincing on-screen result.

Consider an action sequence involving an explosion near the performer, a fall from significant height, or another dangerous stunt. Rather than hiring a stunt performer and accepting injury risk, studios could generate an AI-synthesized performance. The synthetic performer experiences no pain, faces no danger, and can be directed toward specific movements. This application has unusually broad support: it preserves actor employment for non-dangerous sequences while eliminating unnecessary injury risk, although it would reduce work for stunt performers themselves.

Beyond physical danger, AI actors could be used for sequences involving exploitation, explicit content, or other morally problematic performances. Rather than asking actors to perform degrading or sexually explicit scenes, studios could use AI-generated performances. This protects performers from harm while enabling storytelling that might otherwise be impossible without risking performer exploitation.

Scale and Efficiency for Specific Contexts

Certain productions inherently require enormous numbers of minor characters or background performers. Historical epics, science fiction world-building, or fantasy productions might need hundreds or thousands of unique background characters. Hiring and coordinating that many human extras is logistically challenging and expensive. AI-generated background performers could dramatically reduce these logistical challenges while enabling richer, more detailed crowd scenes.

This application is particularly valuable for lower-budget productions that can't afford extensive extras work. An independent filmmaker could generate convincing crowd scenes that would otherwise require substantial production budgets. Streaming productions could expand the scale of production design without proportionally increasing labor costs. From a creative perspective, this enables storytelling possibilities that budgets otherwise preclude.

Accessibility and Representation Possibilities

AI actors could enhance casting possibilities for performers with disabilities or other characteristics that studios traditionally found difficult to accommodate. Rather than requiring actors to endure difficult on-set conditions or limiting casting based on physical capabilities, productions could cast performers and use AI to synthesize performances under optimal conditions.

More speculatively, AI could enable representation of historical figures or deceased performers. Documentaries about historical events could feature synthetic performances of central figures, letting audiences see and hear those figures rather than relying on narration or reenactment by contemporary performers. This could enhance educational and historical content.

Creative Exploration and Experimentation

Some directors might use AI actors as tools for creative exploration—generating multiple variations of scenes with different performances, enabling rapid iteration and experimentation. Rather than filming multiple takes with human actors across days, directors could generate dozens of variations in hours, selecting the performance that best serves storytelling. This could accelerate creative processes and enable more ambitious creative iteration.

This application treats AI as a creative tool rather than a replacement for human performers—more akin to digital editing or color correction than to replacing actors. It augments the director's creative capabilities rather than eliminating employment. If structured appropriately, it could enhance rather than diminish human creativity.

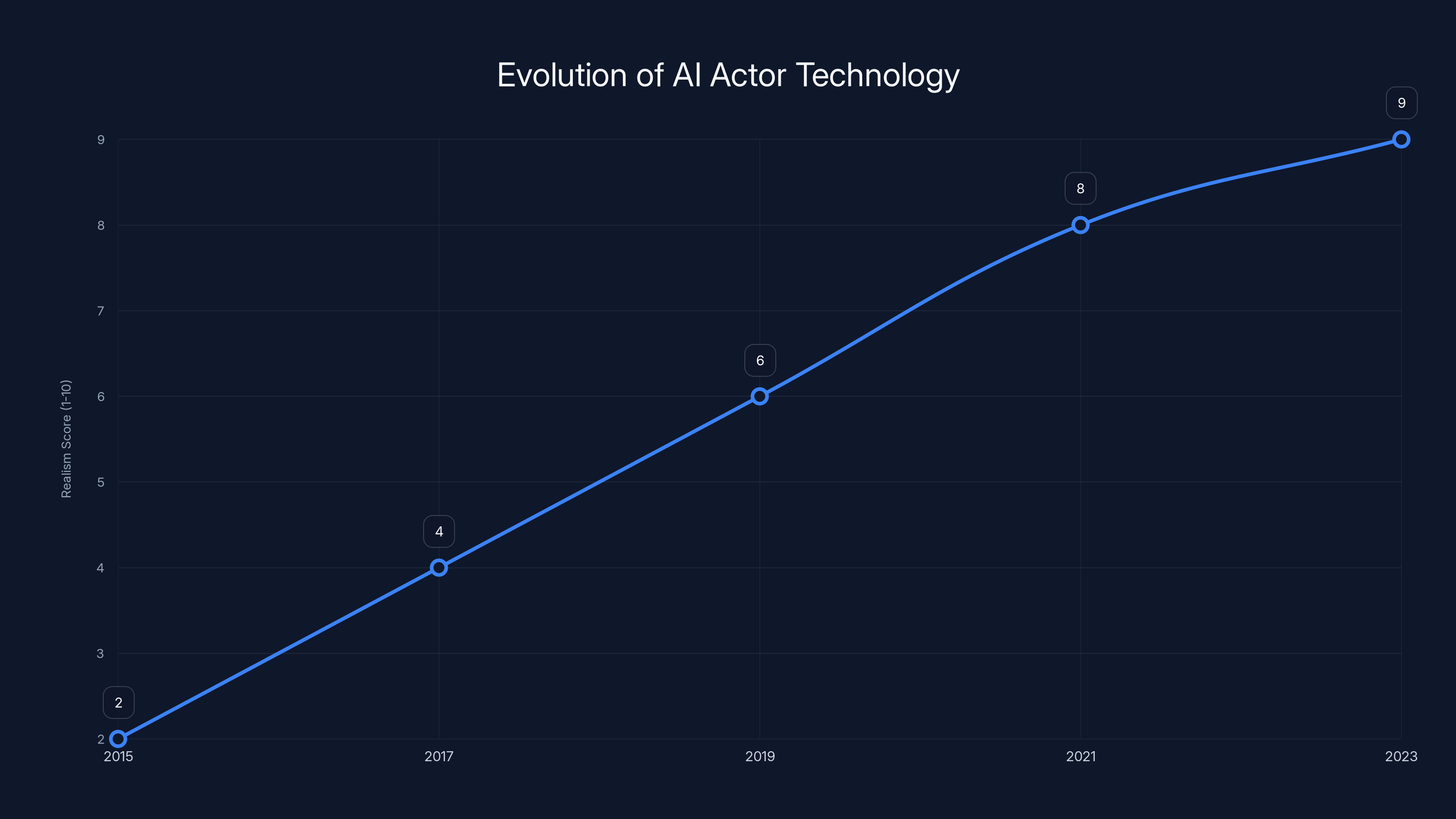

The realism of AI actor technology has improved significantly from 2015 to 2023, with current systems achieving results nearly indistinguishable from real human performances. (Estimated data)

The Path Forward: Balancing Innovation and Protection

Framework Principles for Sustainable Integration

Sustainable integration of AI actors requires establishing some fundamental principles that balance innovation with protection. First: consent and control. Performers should explicitly authorize any use of their likenesses in AI synthesis, with clear understanding of how those likenesses might be used. This goes beyond traditional filming consent—it acknowledges that synthetic performances constitute new creative works requiring new consent.

Second: transparency and disclosure. Audiences should know when they're watching AI-generated performances. This isn't about preventing AI use; it's about honest communication with audiences, enabling informed viewing experiences. Clear credits indicating AI involvement become necessary.

Third: compensation and residuals. If studios profit from synthesized performances using performer likenesses, performers should share in that profit. This might involve upfront fees for consent plus residual payments proportional to usage, similar to traditional residual structures but adapted for synthetic content.
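To make the "upfront fee plus residuals proportional to usage" structure concrete, here is a minimal Python sketch. The `LikenessTerms` class, the choice of minutes of synthesized screen time as the usage unit, and the dollar figures are all hypothetical illustrations, not terms drawn from any actual union contract.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LikenessTerms:
    """Hypothetical terms for one performer's synthesis license (illustrative only)."""
    consent_fee: float          # one-time payment for authorizing synthesis
    residual_per_minute: float  # payment per minute of synthesized screen time


def total_compensation(terms: LikenessTerms, synthesized_minutes: list[float]) -> float:
    """Upfront consent fee plus a residual proportional to total synthesized usage."""
    if any(m < 0 for m in synthesized_minutes):
        raise ValueError("usage cannot be negative")
    usage = sum(synthesized_minutes)
    return terms.consent_fee + terms.residual_per_minute * usage


# Hypothetical figures: three uses of the replica totaling 16 minutes.
terms = LikenessTerms(consent_fee=5000.0, residual_per_minute=200.0)
print(total_compensation(terms, [12.5, 3.0, 0.5]))  # prints 8200.0
```

Minutes of screen time is only one possible usage measure; an actual agreement might instead key residuals to broadcasts, streams, or revenue.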

Fourth: time-limited rights. Rather than granting perpetual rights to use performer likenesses, contracts should establish defined periods after which studios lose synthesis rights. After some period—perhaps 10-20 years—performers or their heirs regain control over their synthetic representations.

Fifth: protected applications. Certain roles—lead protagonists, complex characters requiring substantial creative collaboration—should maintain protections against AI synthesis. Not all work is equal; positions of significant creative visibility and control should remain reserved for human performers.

Differentiated Approaches for Different Applications

Regulation and standards shouldn't treat all AI actor applications identically. Safety-critical applications (stunts, dangerous sequences, protection from exploitation) warrant permissive frameworks emphasizing innovation. General background work and minor characters warrant moderate protections—requiring consent and reasonable compensation but enabling broad adoption. Lead roles and significantly featured characters warrant strict protections, essentially reserving human performers for these positions.

This differentiated approach acknowledges that the stakes differ. A background performer replaced by an AI actor loses a single day of work they might have booked. A principal actor who is replaced loses a substantial role that might have significantly advanced their career. The employment consequences differ in both magnitude and significance.

Differentiated regulation also preserves incentives for human performance in the highest-status positions. If lead roles remain reserved for human actors, the industry maintains meaningful employment pathways for aspiring talent. Studios face strong creative incentives to cast human actors for visible roles—audiences still care about seeing recognizable talent in significant roles, and the artistic benefits of collaboration remain valuable.

Multi-Stakeholder Governance Structures

Sustainable frameworks require participation from multiple stakeholders: performers and their representatives, studio executives and producers, technology companies developing AI systems, audiences and consumer representatives, regulators and policymakers, and creative professionals across disciplines. No single perspective captures all legitimate concerns, and solutions imposing rules without stakeholder consensus generate compliance resistance.

Effective governance structures might include: industry working groups developing best practices; regulatory agencies establishing baseline legal requirements; contractual templates that studios adopt as standards; third-party auditing and certification of ethical practices; and consumer education initiatives helping audiences understand how AI is being used in entertainment.

Such structures work best when they balance stakeholder interests rather than privileging any single group. Performers benefit from protections maintaining employment opportunities. Studios benefit from regulatory clarity enabling efficient operations. Audiences benefit from transparency about what they're watching. Creative professionals benefit from frameworks that neither eliminate their work nor prevent beneficial technology adoption. Consumers benefit from access to diverse, high-quality content produced sustainably.

International Harmonization Efforts

As discussed earlier, variation in regulatory frameworks creates forum-shopping incentives. To prevent regulatory arbitrage, international coordination and harmonization become valuable. This might involve industry associations developing global standards, international treaties establishing baseline legal protections, or multilateral agreements on AI use in entertainment.

Full harmonization is unrealistic—different countries have legitimately different values and priorities. However, basic principles could be harmonized: consent requirements, transparency standards, minimum compensation frameworks, and prohibited applications. Even rough harmonization would dramatically reduce incentives for studios to locate production in permissive jurisdictions specifically to avoid ethical constraints.

The Workforce Transition: Preparing for Inevitable Change

Reskilling and Career Adaptation

If AI actors become prevalent, performance professionals will need alternative career pathways. Some will transition into directing, producing, or other creative roles. Some might specialize in AI system training—using their performance expertise to develop more sophisticated AI actors. Some might focus on voice acting, motion capture, or other specialized roles less vulnerable to replacement.

Proactive industry investment in reskilling programs could ease this transition. Industry associations, studios, and governments might jointly fund training programs enabling mid-career actors to develop skills in adjacent fields. Rather than abandoning performers to unemployment, the industry could invest in enabling transitions to adjacent careers where their expertise and experience remain valuable.

This isn't merely altruistic; it's pragmatic. An industry that abandons its workers faces reputational damage, regulatory scrutiny, and difficulty attracting talent to its remaining positions. Industries that manage change thoughtfully and invest in worker adaptation make their transitions sustainable and maintain their legitimacy.

New Role Creation and Emerging Opportunities

Simultaneously, new roles will emerge that don't currently exist. Voice direction for AI performers becomes important work—professionals who understand both acting and AI systems, able to guide synthetic performances toward emotional authenticity. Motion capture and performance capture specialists will become more valuable as the foundation for AI actor training. Data scientists specializing in entertainment applications will be in demand.

These new roles won't directly replace lost acting work—one voice director won't replace dozens of displaced actors. However, they create some employment opportunities partially offsetting displacement. More importantly, they demonstrate that technology doesn't simply destroy work; it transforms the nature of work. Understanding what new opportunities emerge helps workforce planning and reskilling efforts.

Social Safety Net and Income Support

Ultimately, significant workforce displacement may require broader social responses beyond industry-specific interventions. If entertainment technology eliminates substantial employment, society might need enhanced unemployment benefits, healthcare support, and retraining assistance for displaced workers. This becomes a broader question about how societies respond to technology-driven displacement across industries.

The entertainment industry won't be unique in facing displacement—similar challenges exist across manufacturing, transportation, and service sectors as automation and AI advance. Policymakers are beginning to grapple with universal approaches to technology displacement, potentially including income support programs, healthcare decoupling from employment, or universal basic income proposals. The specific approach entertainment takes will likely follow broader societal frameworks rather than remaining industry-unique.

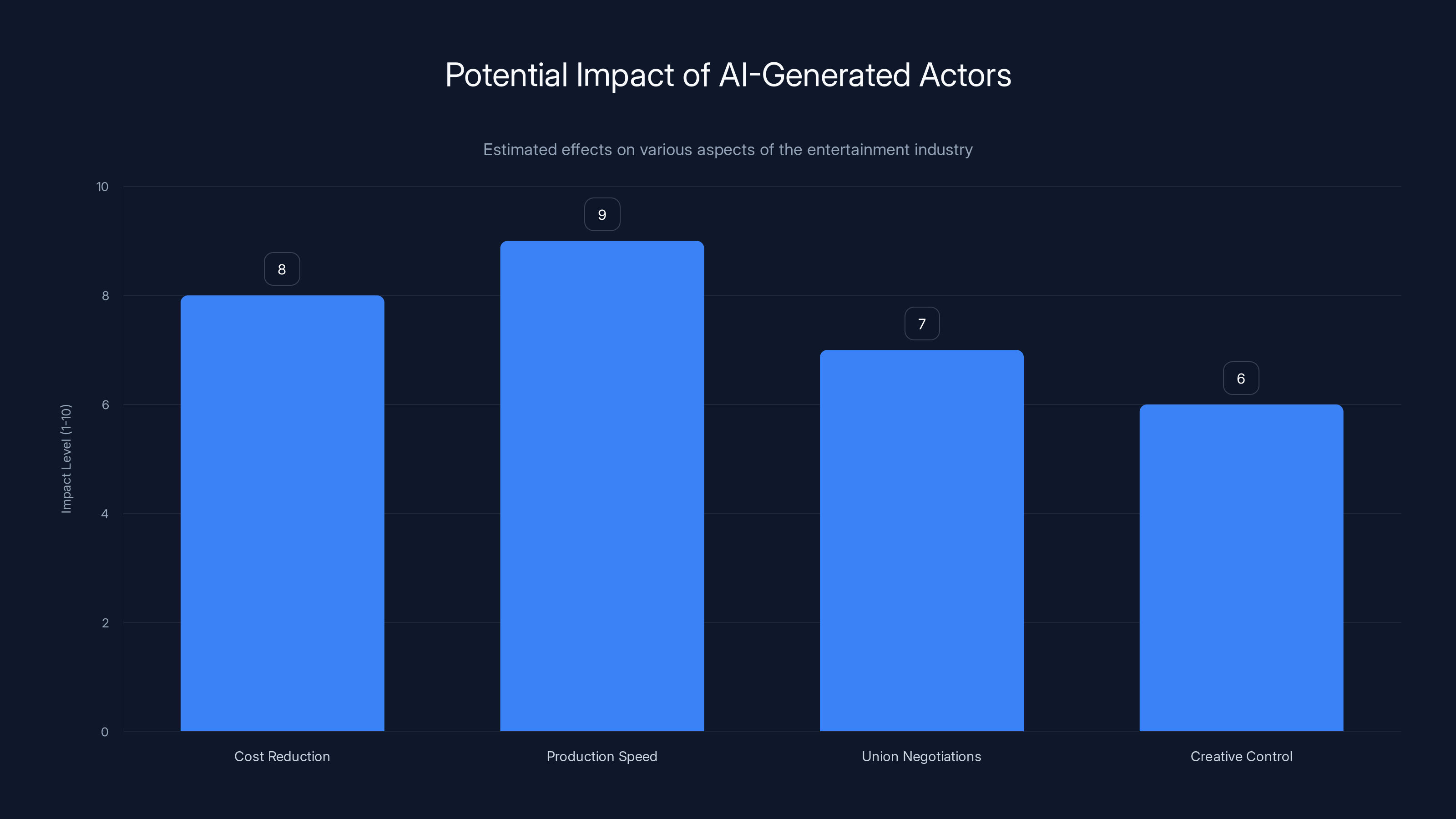

AI-generated actors are expected to significantly reduce costs and increase production speed, while also affecting union negotiations and creative control. (Estimated data)

Looking Ahead: Scenarios and Possibilities for Entertainment's Future

Conservative Scenario: Carefully Regulated Integration

In a conservative scenario, regulations successfully establish strong protections for performers while permitting AI actors in specific applications. Studios use AI primarily for background work, crowd sequences, and dangerous stunts—applications with broad industry and public acceptance. Lead roles and significantly featured characters remain almost exclusively performed by human actors.

Performers benefit from clear contractual protections, meaningful consent requirements, and compensation when their likenesses are synthesized. Union contracts establish industry standards protecting most working actors from AI-driven displacement. New opportunities emerge in voice direction and motion capture specialization. The industry transforms incrementally rather than revolutionarily.

In this scenario, AI enhances creative capabilities and production efficiency while preserving the human-centered core of entertainment. The technology becomes a tool augmenting human creativity rather than replacing human performers. Economic pressures push toward adoption, but regulatory frameworks and audience preferences for human-performed lead roles maintain significant employment for skilled performers.

Aggressive Scenario: Minimal Regulation and Rapid Displacement

Conversely, an aggressive scenario involves minimal regulation as studios successfully resist or circumvent restrictions. AI actors expand into lead roles and featured characters as the technology becomes sufficiently sophisticated. Studios optimize ruthlessly for cost reduction, adopting AI actors wherever possible.

In this scenario, employment for supporting actors and background performers collapses. Major productions shift to a small number of principal human actors in lead roles (preserved because audiences demand recognizable talent) with entirely synthesized supporting casts. Mid-budget productions increasingly use AI actors for cost competitiveness. Independent and lower-budget productions disappear as the cost advantage of major studios becomes insurmountable.

Performers and crew members face widespread displacement without adequate protections or transition support. The cultural value placed on human acting declines as audiences acclimate to synthetic performances. The industry consolidates toward major studios controlling proprietary AI systems, with reduced creative opportunities for emerging talent. Entertainment production becomes increasingly concentrated geographically and organizationally.

Balanced Scenario: Hybrid Human-Synthetic Collaboration

A middle scenario involves successful development of frameworks enabling both human performance and AI synthesis to thrive in different contexts. High-status roles remain performed by humans. Lower-status work increasingly uses AI. Studios develop mixed approaches—using human actors for principal characters with emotional depth while using AI for supporting characters and background work.

New creative possibilities emerge from human-AI collaboration. Directors work with human actors for creative collaboration while using AI to extend performances beyond what human actors could practically perform. Voice actors work with synthesized visual performances. Motion capture specialists enable performers to contribute to AI systems without being replaced by them.

This scenario requires thoughtful regulation establishing clear frameworks while avoiding rigid restrictions that prevent beneficial innovation. It requires industry leadership making ethical choices even when economic incentives point toward aggressive displacement. It requires audience expectations that certain roles should feature human performers while accepting AI in other contexts.

This scenario arguably represents the most sustainable path forward—one that preserves human employment and creativity while enabling technological innovation. Whether the industry chooses this path depends on whether protections are established proactively before displacement becomes severe.

Emerging Tools and Technologies: The Automation Future for Developers

Beyond Performance: Automation in Content Creation

While the discussion of AI actors focuses on entertainment performance, the underlying technologies enabling synthetic performers also represent significant tools for broader content creation and automation. Development teams and creative professionals increasingly seek ways to automate repetitive creative tasks, scale content production, and maintain consistent creative voice across large volumes of output.

Platforms like Runable offer automation capabilities extending beyond performance synthesis into broader content creation. These tools use AI agents to generate documents, presentations, reports, and other structured content—automating the production of assets that currently require human creation time. For development teams creating technical documentation, internal presentations, or reports, automation tools can dramatically reduce the time spent on these essential but non-core creative tasks.

The economics mirror those of AI actors: once AI systems are trained on existing content, generating new variations becomes inexpensive compared to human creation. Teams using such tools report significant productivity gains—developers spending less time on documentation can focus on core development work. Similarly, marketing teams can generate more content variations with smaller teams. The efficiency gains, while not as dramatically visible as displacing actors, are meaningful in professional contexts.

Integration into Developer Workflows

Modern development platforms increasingly incorporate AI-powered automation directly into development workflows. Rather than treating automation as a separate tool, it becomes embedded in the environment where developers already work. This integration reduces friction—developers don't need to learn new tools or adopt new processes; automation features are available within familiar contexts.

For teams building applications, this means CI/CD pipelines that automatically generate documentation from code, testing procedures that synthesize edge cases, or deployment tools that automatically generate release notes from commit messages. These aren't replacements for human judgment—they're tools augmenting human capabilities by handling repetitive, formulaic tasks automatically.
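As a sketch of the release-notes case, the Python function below groups commit subject lines into a Markdown draft. It assumes commits follow a Conventional Commits-style prefix convention (`feat:`, `fix:`, `docs:`); the function name and section titles are hypothetical, and a person would still review the draft before publishing it.

```python
from collections import defaultdict

# Section titles for commit prefixes; assumes a Conventional Commits-style
# convention ("feat: ...", "fix: ..."), which is an illustrative choice.
SECTIONS = {"feat": "Features", "fix": "Bug fixes", "docs": "Documentation"}


def release_notes(subjects: list[str], version: str) -> str:
    """Group commit subject lines by prefix into a Markdown release-notes draft."""
    grouped = defaultdict(list)
    for subject in subjects:
        prefix, sep, rest = subject.partition(":")
        # "feat(api)" -> "feat"; "feat!" (breaking change marker) -> "feat"
        kind = prefix.split("(")[0].strip().rstrip("!").lower()
        if sep and kind in SECTIONS:
            grouped[SECTIONS[kind]].append(rest.strip())
        else:
            grouped["Other changes"].append(subject.strip())
    lines = [f"## {version}"]
    for title in [*SECTIONS.values(), "Other changes"]:
        if grouped[title]:  # skip sections with no commits
            lines.append(f"\n### {title}")
            lines.extend(f"- {item}" for item in grouped[title])
    return "\n".join(lines)


print(release_notes(
    ["feat(api): add export endpoint", "fix: handle empty input", "bump deps"],
    "v1.2.0",
))
```

In a pipeline, the subject lines could come from `git log --pretty=format:%s v1.1.0..HEAD` (the tag names here are placeholders).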

Cost-effectiveness becomes particularly significant for smaller teams lacking resources for dedicated documentation, testing, or reporting roles. An automation tool at $9/month makes sophisticated automation capabilities accessible to startups and small teams that couldn't justify dedicated personnel for these functions. This democratization of capabilities enables smaller teams to scale operations that previously required proportionally larger teams.

The Broader Automation Wave

The rise of AI actors in entertainment reflects a broader wave of AI-driven automation across industries. Entertainment is visible and attention-grabbing, making it a focal point for discussion, but similar displacement and transformation is occurring in customer service (chatbots), software development (code generation), writing (text generation), and countless other fields.

Understanding the entertainment industry's navigation of these issues—including both the risks and opportunities—informs how other industries should approach similar transitions. The regulatory frameworks being developed, the consent requirements being negotiated, the ethical principles being established in entertainment will likely influence broader approaches to AI automation across sectors.

Conclusion: Humanity, Technology, and the Future of Performance

Sean Astin's warning about "an unbelievable moment in the course of human history" isn't hyperbole; it reflects a recognition that the entertainment industry stands at a real inflection point. For the first time, replacing human performers with artificial systems at scale is feasible, both technically and economically. This capability raises profound questions about the future of creative work, employment, artistic authenticity, and cultural values.

The path forward isn't predetermined. The industry could adopt AI actors recklessly, maximizing short-term cost reduction while devastating employment and cultural authenticity. Alternatively, it could develop thoughtful frameworks that enable beneficial innovation while preserving the human creativity and employment that make entertainment meaningful. Or it could land anywhere between these extremes.

What seems clear is that reactive response won't suffice. The technology is advancing rapidly, and the economic incentives driving adoption are powerful. Without proactive development of regulatory frameworks, contractual protections, and ethical standards, the industry will default toward aggressive adoption unconstrained by worker protections or ethical considerations. That default outcome appears to be the least desirable from most perspectives.

Effective response requires action from multiple stakeholders. Unions must negotiate contractual protections before AI adoption becomes widespread—negotiating from strength rather than attempting to establish rules after displacement has already occurred. Regulators must develop clear legal frameworks establishing minimum standards and clarifying performer rights. Industry leaders must make ethical choices that prioritize sustainability over short-term cost reduction. Technology companies must design systems with ethical constraints built in rather than treating ethics as optional add-ons.

Audiences and consumers have roles as well. If audiences prefer human performers in significant roles, that preference constrains studios' incentives to displace actors. Cultural values matter: when audiences value human performance enough to choose it over synthetic alternatives, that preference becomes economically consequential, and industry leaders ultimately respond to what audiences demand.

The stakes extend beyond entertainment industry economics. How the entertainment industry navigates AI actor technology will establish precedent for how other industries address AI-driven displacement. The frameworks developed, the protections established, the ethical principles negotiated in entertainment will influence policy in customer service, software development, creative fields, and beyond. The industry's choices ripple outward.

Ultimately, the question isn't whether AI actors should exist—that ship has sailed; the technology will advance regardless. The question is how the industry will ensure that AI serves creative ambition and enhances rather than diminishes human creativity, employment, and cultural value. That answer will be determined through the choices the industry makes now, in this genuinely unbelievable moment in entertainment's history.

FAQ

What exactly are AI actors, and how are they different from traditional deepfakes?

AI actors are synthetic performers generated through machine learning systems capable of producing realistic performances, including facial expressions, voice, movement, and emotional expression. While early deepfakes focused primarily on facial synthesis for specific individuals, contemporary AI actors are more sophisticated systems that can generate entirely new performances: novel scenes, previously unfilmed dialogue, and original actions. Modern AI actors learn performance patterns from training footage and can generate variations that extend far beyond the source material, making them practical production tools rather than novelty effects.

How do studios currently create AI actors for film and television production?

The process involves several stages: acquiring reference footage of specific performers or archetypes, training machine learning models on that footage to learn performance patterns and characteristics, and then using trained models to generate synthetic performances for new scenes. The workflow integrates into standard production pipelines—directors provide direction about desired emotional beats and actions, AI systems generate performances matching those specifications, and the synthetic footage integrates into post-production alongside human-performed sequences. Real-time synthesis represents the latest advancement, enabling directors to work with AI actors much as they would with human performers, adjusting direction during generation rather than only in post-production.

What are the main economic advantages of using AI actors instead of hiring human performers?

The primary advantage is cost reduction. Hiring supporting actors involves salary payments, agent commissions, pension contributions, health insurance, travel and accommodation, residual payments, and union compliance costs. For a single supporting role across multiple production days, total costs easily reach tens of thousands of dollars or more. Generating synthetic performances via AI systems costs a fraction of human hiring once the systems are developed and trained. Additional advantages include schedule flexibility—performances can be generated whenever needed without coordinating actor availability—and elimination of liability concerns from injuries or behavioral issues. For studios, the economic calculation is straightforward: AI becomes dominant once development costs amortize across sufficient productions.
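The amortization argument reduces to simple break-even arithmetic. If an AI system has a fixed development cost and a small marginal cost per synthesized role, it undercuts human hiring once the accumulated per-role savings exceed the development cost. The Python sketch below finds that point; the function name and every figure in it are hypothetical, not industry data.

```python
from typing import Optional


def break_even_roles(dev_cost: int, ai_marginal: int, human_cost: int) -> Optional[int]:
    """Smallest number of roles n with dev_cost + n * ai_marginal < n * human_cost.

    Returns None if AI is never cheaper (its marginal cost is not below the
    human cost per role). Integer inputs keep the arithmetic exact.
    """
    saving_per_role = human_cost - ai_marginal
    if saving_per_role <= 0:
        return None
    # n * saving > dev_cost  <=>  n > dev_cost / saving; take the next integer.
    return dev_cost // saving_per_role + 1


# Hypothetical: $2M to develop, $5k per synthesized role vs. $45k per human hire.
print(break_even_roles(dev_cost=2_000_000, ai_marginal=5_000, human_cost=45_000))  # prints 51
```

At 50 roles the two options cost the same ($2.25M each), so the 51st role is the first where AI is strictly cheaper; every role after that widens the gap.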

What legal protections currently exist for performers regarding AI actor technology?

Legal protections remain incomplete and evolving. Union contracts contain provisions about "digital likenesses," but these were developed for different technological contexts and contain significant ambiguities about AI-synthesized performances. Some jurisdictions including California have begun developing specific legislation establishing stronger consent requirements, ongoing compensation obligations, and performer rights over digital replicas. However, international variation creates gaps—what's prohibited in one jurisdiction might be permitted in another. The regulatory landscape remains in flux, with industry standards and best practices still being negotiated. This creates a window where studios can operate with relatively minimal constraints, though this window is gradually closing as regulations solidify.

Can AI actors realistically replace human performers in lead roles with complex character arcs?

Currently, AI actors perform best in roles with limited dialogue, straightforward emotional expression, and minimal character development—background performers, supporting characters, and minor roles. For complex protagonists requiring nuanced emotional expression, multifaceted character arcs, and subtle performance choices that communicate internal psychological states, AI synthesis currently produces technically adequate but artistically somewhat flat results. More significantly, the creative collaboration between directors and actors—where actors bring unexpected interpretations and drive creative discovery—cannot yet be replicated by synthetic performers. However, the technology improves rapidly, and it's unclear whether future improvements might sufficiently close this gap. For near-term predictions (5-10 years), human performers likely remain superior for significant lead roles, but longer-term possibilities remain uncertain.

What is being done to protect actors and ensure ethical use of AI performers?

Multiple protective mechanisms are being developed simultaneously. Unions are negotiating stronger contractual language that requires explicit consent for AI synthesis, establishes compensation structures for synthesized performances, and sets time limits on the rights studios acquire. Legislation in various jurisdictions is establishing baseline legal protections: California has led efforts to strengthen performer rights, and the EU's AI Act introduces transparency obligations for AI-generated content that apply to entertainment uses. Industry associations are drafting voluntary best practices emphasizing transparency, consent frameworks, and ethical data governance. Some proposals would establish registries where performers can track use of their likenesses, and others would require clear disclosure in credits when AI actors are used. Together, contractual, legislative, and voluntary standards are gradually establishing guardrails, though implementation remains uneven.

How might AI actors affect diversity and representation in entertainment?

AI actors present both opportunities and risks for diversity. On the positive side, AI systems could generate characters with a wide range of ethnicities, body types, disabilities, and other features, potentially broadening on-screen representation without being bound by historical casting biases. Conversely, AI development could entrench existing biases if training data over-represents already dominant groups. Studios might also preferentially create AI replicas of bankable stars, further concentrating opportunities. In addition, the cost advantages of AI adoption could disproportionately harm underrepresented performers, who already struggle to access industry networks and casting opportunities. Whether AI enhances or diminishes representation depends largely on how systems are developed and deployed: the technology itself is neutral, but development and deployment decisions carry profound implications.

What are some legitimate applications of AI actors where the technology clearly benefits productions without raising major ethical concerns?

Safety is the clearest legitimate application: using AI to generate performances for dangerous stunts removes performers from injury risk while preserving a convincing visual result. Historical documentaries could use AI to recreate performances of historical figures, enhancing educational content. Sequences involving explicit or potentially exploitative content could use AI performers rather than asking human actors to endure potentially harmful situations. Large-scale crowd scenes become feasible for budget-constrained productions when background performers can be synthesized. Background work and minor characters with little development are also appropriate applications, since the employment impact is modest. These applications show that AI actors are not inherently problematic; concerns arise when the technology is deployed to displace primary performers in significant roles, or when it is used without performer consent or appropriate compensation.

How are international differences in AI actor regulation creating challenges for the entertainment industry?

Different jurisdictions are adopting divergent regulatory approaches: some emphasize strict consent requirements and performer protections, while others maintain permissive frameworks that prioritize innovation. This variation creates incentives for "regulatory arbitrage," in which studios structure productions through subsidiaries in permissive jurisdictions to avoid stricter rules elsewhere. A production made with AI actors in a relaxed regulatory environment can still be distributed globally, creating de facto permissive standards even where some markets regulate strictly. Without international harmonization, studios can sidestep the costliest regulations by locating production wherever the rules are most favorable. International coordination efforts are beginning to address the issue. Full harmonization is unlikely, because different countries legitimately hold different values, but agreement on basic principles could substantially reduce arbitrage incentives.

What workforce transition strategies could help performers adapt to increased AI actor adoption?

Proactive transition strategies include reskilling programs that help mid-career performers develop expertise in adjacent fields such as directing, producing, voice direction for AI systems, or motion capture. Industry-sponsored training could ease transitions into filmmaking, screenwriting, or other creative roles where acting expertise transfers. New positions, such as AI performance specialists, voice directors, and motion capture technicians, will emerge and partially offset displacement. Beyond industry-specific strategies, broader social responses might include enhanced unemployment benefits, decoupling healthcare from employment, or universal basic income proposals that address technology-driven displacement across sectors. The most sustainable approaches combine industry investment in worker transition with broader social safety net enhancements, recognizing that entertainment is one of many sectors facing AI-driven employment changes.

What is the broader significance of the AI actors debate beyond the entertainment industry itself?

The entertainment industry's response to AI actors will establish precedent for how other sectors address similar technology-driven disruption. Similar challenges exist in customer service (chatbots replacing service representatives), software development (AI code generation), writing and journalism (text generation systems), and numerous other fields. The regulatory frameworks developed, the ethical principles established, the protections negotiated, and the transition strategies implemented in entertainment will likely influence policymaking across sectors. Additionally, how entertainment handles the cultural question of whether and when to use synthetic performances versus human performances touches on broader questions about cultural values, technological governance, and what aspects of human experience should remain reserved for human participation. The choices the entertainment industry makes ripple outward, shaping how society as a whole approaches AI integration across domains.

Key Takeaways

- AI actor technology has matured from obvious deepfakes to photorealistic synthetic performances capable of generating entirely new scenes with original dialogue and emotional expression

- Economic incentives strongly favor adoption: once systems are trained, generating synthetic performances costs a fraction of hiring human actors and avoids pension obligations and scheduling complexity

- Legal protections for performers remain incomplete and vary dramatically by jurisdiction, creating regulatory gaps and arbitrage incentives for studios

- Some AI actor applications are legitimately beneficial (safety-critical stunts, dangerous sequences, historical recreation) while others pose clear threats to human performer employment

- Effective response requires multi-stakeholder action: union negotiation of protective contracts, regulatory frameworks establishing baseline standards, industry ethical commitments, and audience preference for human performers in significant roles

- The entertainment industry's approach to AI actors will establish precedent for how other sectors address technology-driven employment disruption across customer service, software development, writing, and other fields

- Sustainable integration requires frameworks establishing consent requirements, transparency standards, ongoing compensation for synthesized performances, and time-limited rights over performer likenesses

- Workforce transition strategies including reskilling programs and new role development can partially offset displacement but require broader social safety net enhancements

- The choice between careful regulation that protects human creativity and aggressive displacement for cost reduction is not predetermined; industry leaders and policymakers will determine the path forward
