AI Agents & Collective Intelligence: Transforming Enterprise Collaboration

Picture this: You're a Fortune 500 executive with 30,000 employees spread across continents. You need their insights on a critical business decision. You could send a survey. You could hold town halls. Or you could tap into something far more powerful: the collective intelligence of your entire organization, in real-time, at scale.

For decades, that sounded impossible. Conventional wisdom said you can't have meaningful conversations with more than a handful of people. Too many voices, too much noise, too little time. Someone always feels unheard. The dialogue breaks down. You end up with a lowest-common-denominator decision instead of an optimized solution.

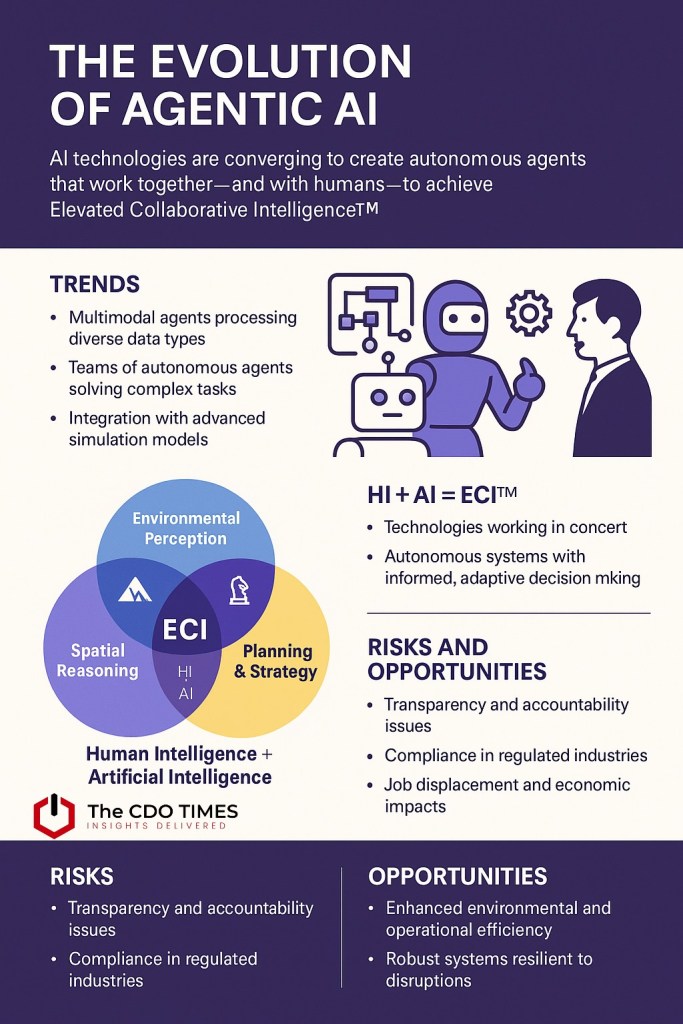

But what if that's no longer true? What if artificial intelligence—specifically, AI agents working in concert—could fundamentally change how large organizations think and decide?

This isn't theoretical. It's happening right now, and the implications for enterprise operations are staggering.

The Fundamental Problem With Scaling Conversations

Let's start with the hard truth: human conversations don't scale. This isn't opinion. It's cognitive science.

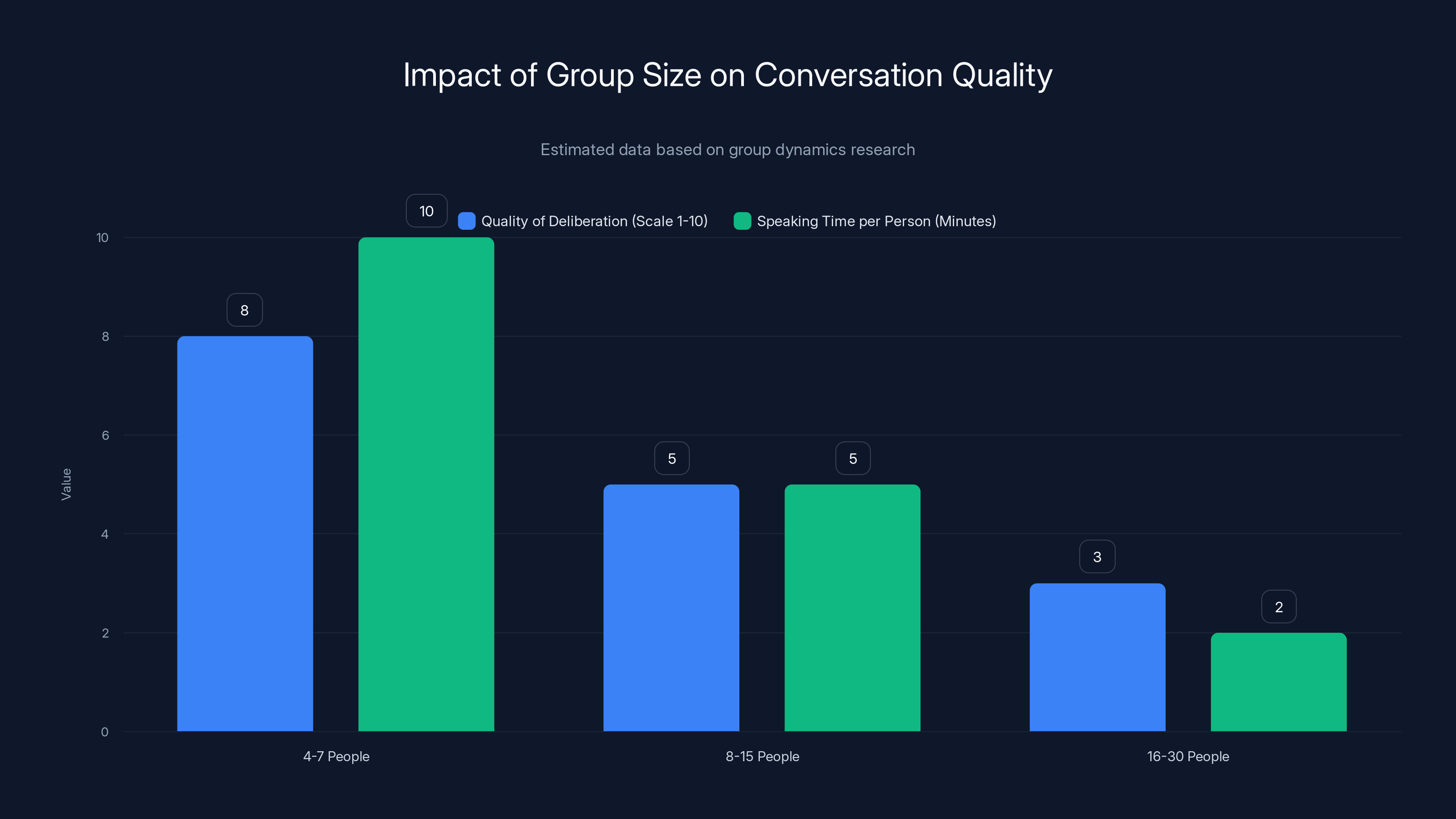

Research in group dynamics shows that the "sweet spot" for productive real-time discussion is between four and seven people. That's when everyone has adequate time to speak, when ideas get proper debate, when reasoning gets articulated. People feel heard. Solutions emerge through genuine deliberation.

What happens when you roughly double the group, to fifteen people? Speaking time per person drops by about half. Wait times increase. Frustration mounts. The quality of deliberation collapses. Add another fifteen people, and you've created a crowd, not a thinking team.
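
The arithmetic behind that drop is easy to check. Here is a minimal sketch, assuming a fixed-length session whose airtime is split perfectly evenly (an idealization; real meetings are far less even). The function name and the 60-minute session are illustrative choices, not figures from any study.

```python
def speaking_minutes_per_person(session_minutes: float, group_size: int) -> float:
    """Even split of airtime; ignores pauses, interruptions, and unequal participation."""
    if group_size < 1:
        raise ValueError("group_size must be at least 1")
    return session_minutes / group_size


# Compare a small group with the larger groups described above.
for size in (5, 7, 15, 30):
    print(size, "people:", round(speaking_minutes_per_person(60, size), 1), "minutes each")
```

Even under this generous assumption, a thirty-person hour leaves each participant about two minutes to speak.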

This is why your company probably uses surveys. You need input from hundreds or thousands of people. You can't have them all in a room debating for six hours. So you resort to polls, questionnaires, voting systems. You aggregate data points. You extract useful signal from the noise.

But here's what gets lost: the person no longer feels like a thoughtful contributor. They're reduced to a data point. There's no give-and-take, no counterargument to respond to, no moment where someone says "Ah, I didn't think of it that way." The collaborative magic of deliberation—where ideas get refined, challenged, and improved through dialogue—simply doesn't exist in a survey.

And the consequences are real. Survey-based decisions tend to be suboptimal. They miss emergent insights that surface only when smart people argue about something. They lack the buy-in that comes from people feeling genuinely heard. They optimize for popularity instead of merit.

So organizations faced a choice: accept the limitations of small-group conversation, or sacrifice conversation quality for scale. Until now, you had to pick one.

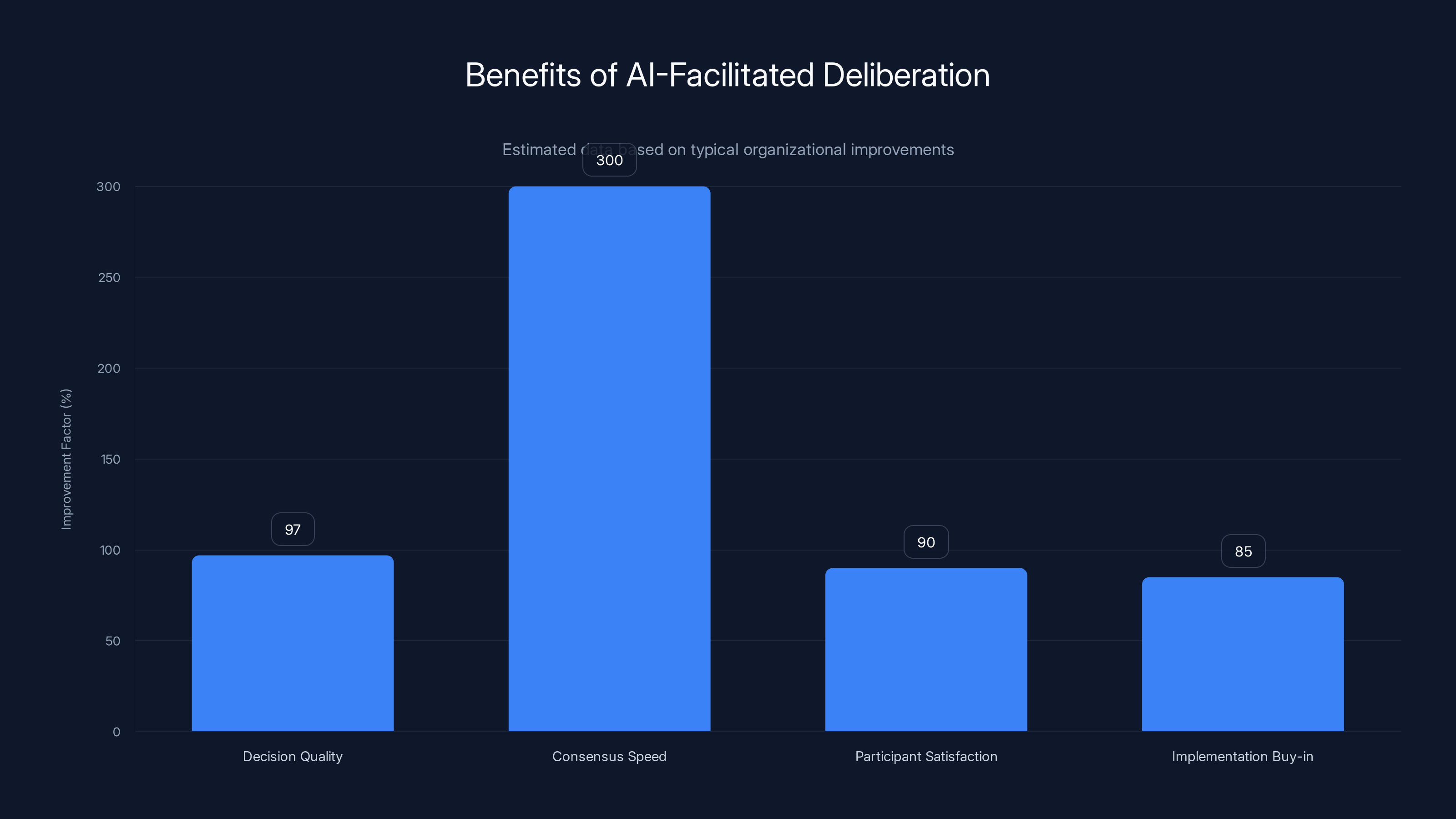

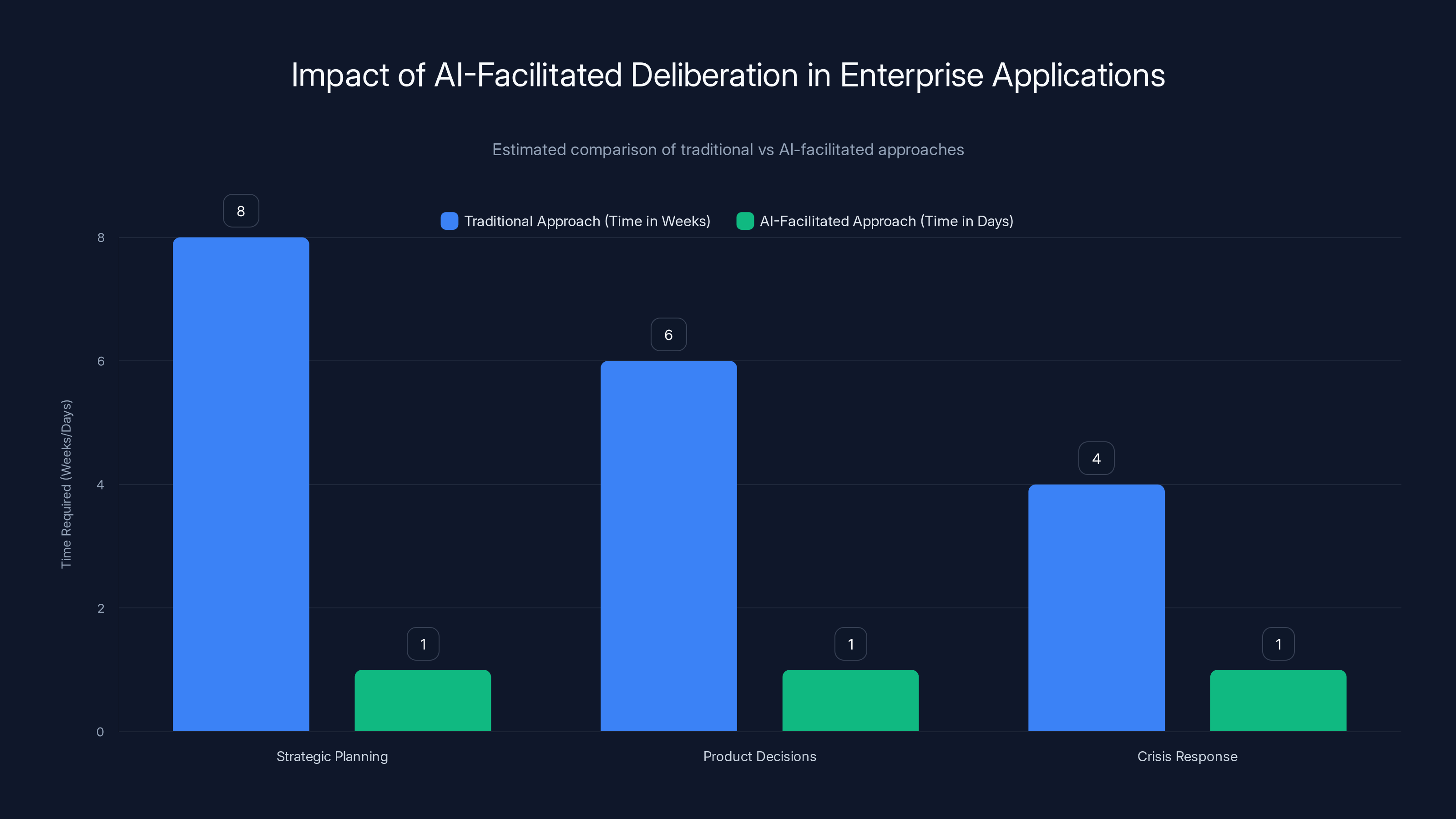

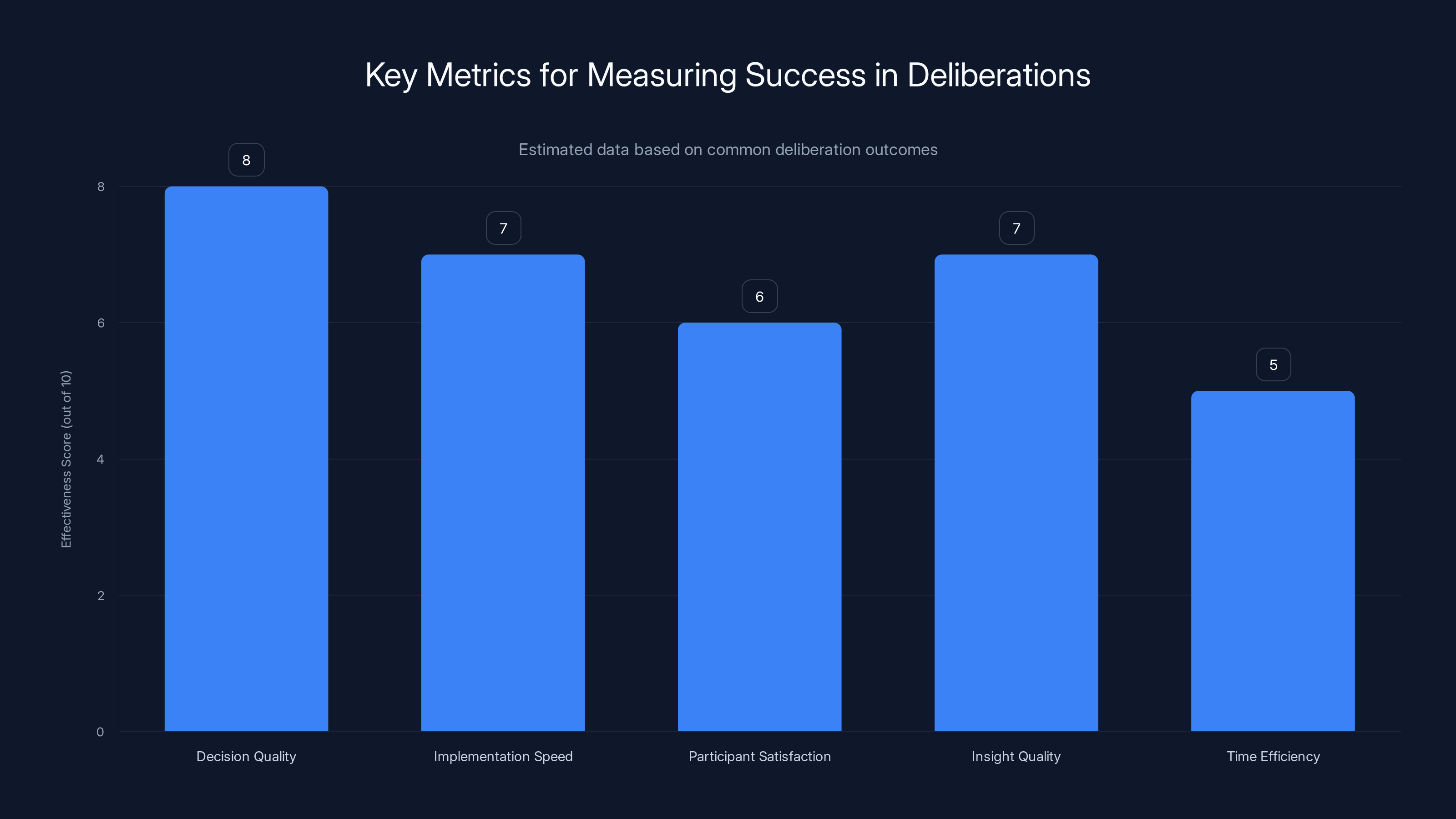

AI-facilitated deliberation significantly enhances decision quality, speeds up consensus-building by 3-5x, and boosts participant satisfaction and implementation buy-in. Estimated data.

Enter Swarm Intelligence and AI Agents

Here's where it gets interesting. A few years ago, researchers started looking at how nature solves this problem.

Consider a flock of birds. Thousands of starlings move in coordinated patterns, shifting and turning in unison without any central command. No bird is "in charge." Yet somehow, local interactions between neighbors create global coherence. Biologists call this swarm intelligence.

Or think about ant colonies. Individual ants have limited intelligence. But the collective system solves complex problems—finding optimal food routes, adapting to environmental changes, organizing labor—through local chemical communication that creates emergent global intelligence.

What if you could apply these principles to human decision-making?

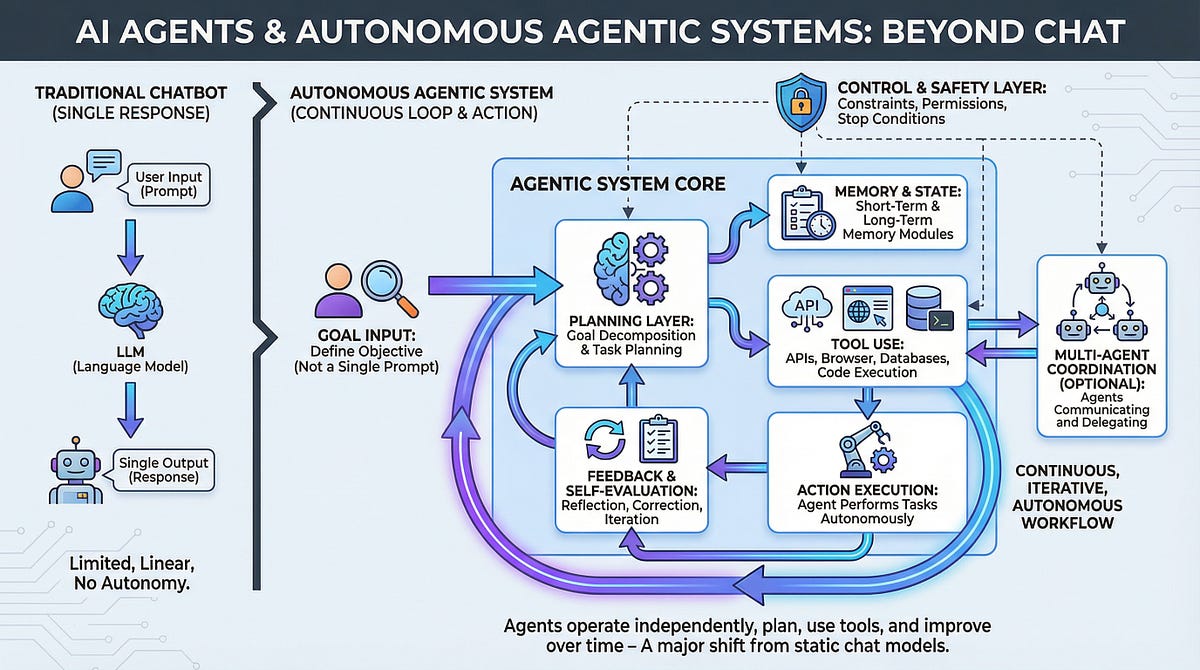

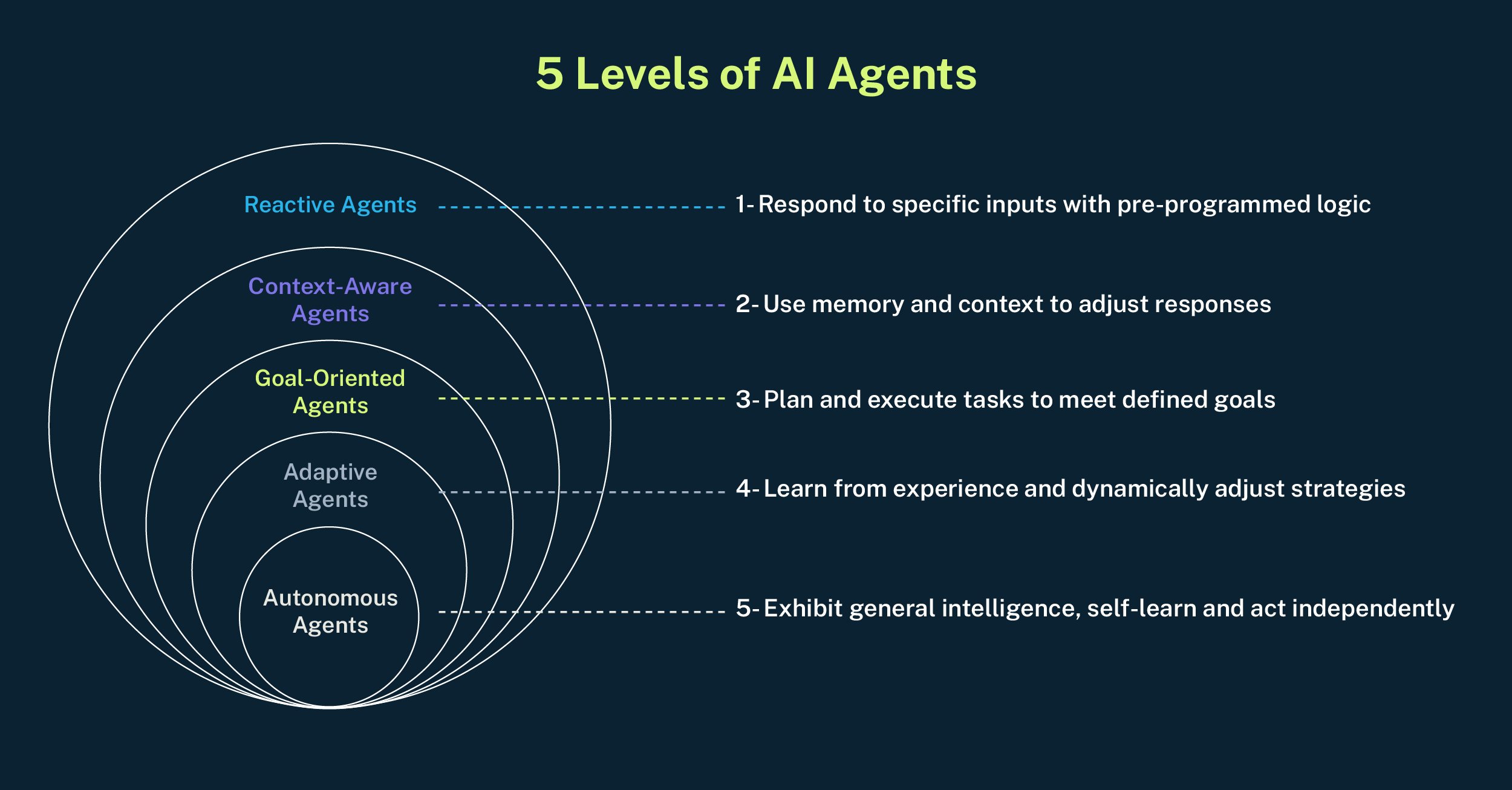

This is where AI agents enter the picture. Not autonomous agents in the sense of "robots taking over." Rather, software entities designed to listen, synthesize, and bridge communication. Think of them as conversational connectors.

The mechanism is elegant. You divide your large group into smaller subgroups. Each small group (four to seven people, the optimal size) gets one AI agent embedded in their conversation. That agent's job is simple: listen carefully to what people are saying, identify key insights and themes, then share those insights with agents in other subgroups.

When an agent from another subgroup shares an insight, the local agent presents it as a new perspective for that group to consider. "Hey, the team across the way just made a point about this. What do you think?" This triggers new discussion, new refinement, new insights that flow back to other groups.

The result: all the subgroups are simultaneously having conversations, yet they're connected through the agent layer. Local discussions remain intimate and high-quality. But globally, the entire population is debating as if they were in one large, miraculously coherent conversation.

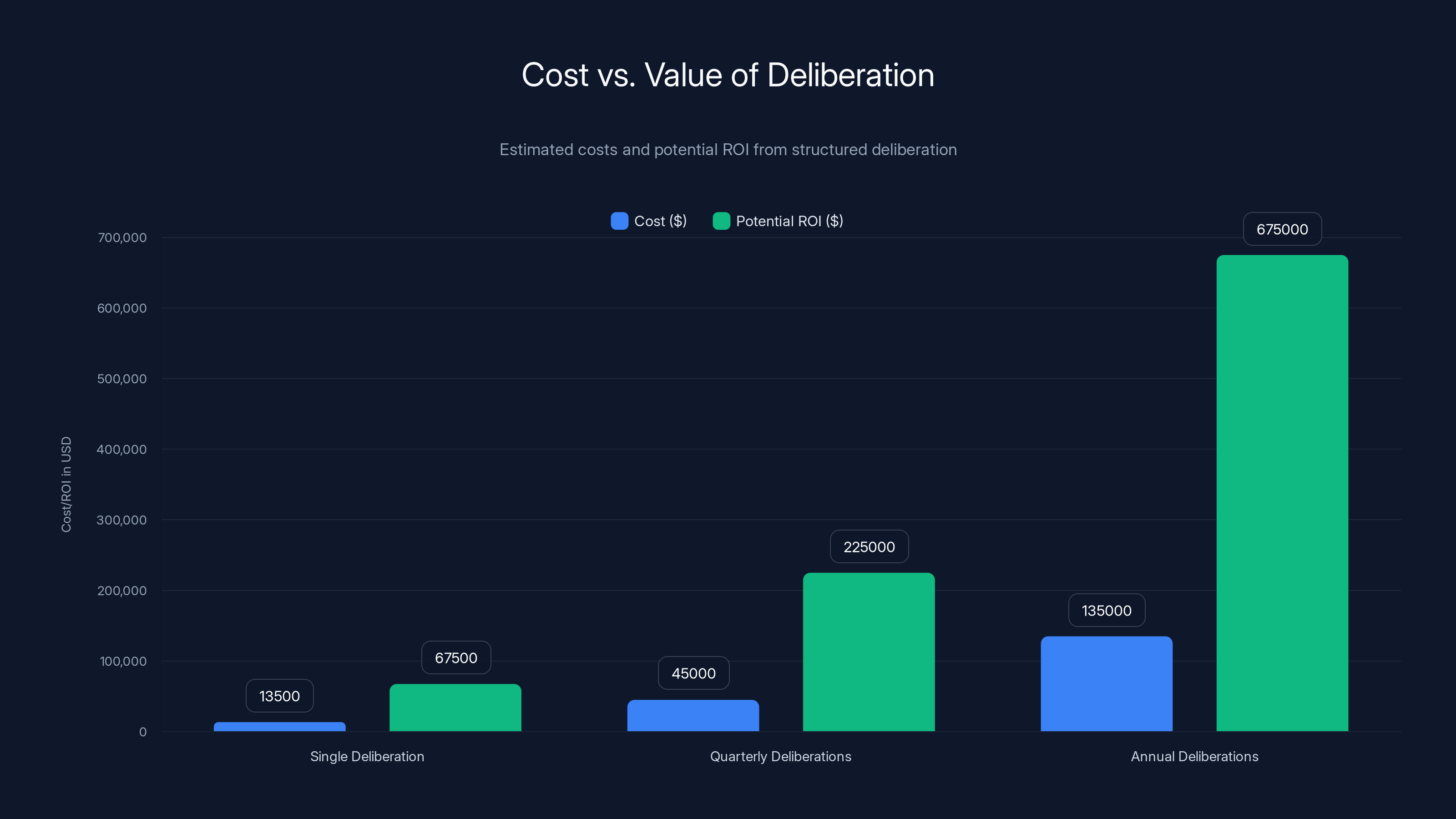

How the Technology Actually Works

Let's get concrete. This isn't magic—it's sophisticated system design.

Suppose you're bringing together 110 people to solve a complex problem. The system does this:

Segmentation: Divide the 110 into groups of 4-5 humans each. You now have somewhere between 22 and 27 subgroups, depending on how many groups of four you allow. Each subgroup needs facilitator capacity, so you assign one AI agent per subgroup.

Local Conversation: The humans in each subgroup engage in real-time discussion via text, voice, or video. They debate. They propose ideas. They ask questions. They challenge each other. Meanwhile, their AI agent is listening, building a real-time understanding of what's emerging in that group.

Agent Synthesis: The AI agent, using large language models and decision-tree analysis, identifies the most important themes, insights, and positions emerging from the conversation. It's not transcribing everything. It's extracting signal: "This subgroup is converging on idea X, but person Y raised concern Z. That's significant."

Cross-Subgroup Bridging: These identified insights get broadcast to agents in other subgroups. An agent might communicate: "In another group, someone pointed out that this approach has legal risks. Here's that risk analysis. How does it affect your thinking?"

Local Response and Iteration: When a subgroup receives outside insight, it's integrated into their local conversation. People respond to it. They either adopt the concern, refute it with their own reasoning, or propose an amendment. This triggers new local insight.

Iterative Refinement: This process repeats in multiple cycles over the course of the discussion. Each cycle adds more perspective, more reasoning, more synthesis. The conversation becomes increasingly coherent and optimized.

Aggregate Output: At the end, the system has access to all the reasoning from all the groups. It can rank options not just by popularity, but by the depth of deliberative support they received. It can identify consensus, identify remaining disagreements, and explain the reasoning on both sides.
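
To make the cycle tangible, here is a toy sketch of one bridging round. It is an illustration under loud assumptions, not the actual system: `summarize` is a stand-in for the language-model synthesis step (it simply picks the most frequent message), and the ring-shaped routing between groups is a hypothetical choice made for simplicity.

```python
from collections import Counter


def summarize(messages: list[str]) -> str:
    """Stand-in for LLM synthesis: return the most frequent message.
    Ties go to the message that appeared first."""
    if not messages:
        raise ValueError("a group needs at least one message to summarize")
    return Counter(messages).most_common(1)[0][0]


def run_round(groups: dict[str, list[str]]) -> dict[str, str]:
    """One bridging cycle: each group's summary is delivered to the next group in a ring.
    Summaries are computed first, so every agent shares what its group said
    before any outside insight arrived in this round."""
    names = list(groups)
    summaries = {name: summarize(groups[name]) for name in names}
    delivered = {}
    for i, name in enumerate(names):
        target = names[(i + 1) % len(names)]
        groups[target].append(summaries[name])  # the local agent injects the outside view
        delivered[target] = summaries[name]
    return delivered


groups = {
    "A": ["pricing risk", "pricing risk", "brand fit"],
    "B": ["legal risk", "brand fit", "legal risk"],
    "C": ["brand fit"],
}
print(run_round(groups))
```

Calling `run_round` repeatedly mimics the iterative refinement step: what a group received in one round can become part of what its agent passes on in the next.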

The technical magic happens in how the AI synthesizes and bridges. Modern large language models are surprisingly good at extracting the essence of a debate. They can identify not just what people think, but why they think it. They can spot when two different groups are making essentially the same point using different language. They can find the core disagreements that deserve elevation to the full group.

So instead of information flowing upward to a decision-maker (the classic hierarchy), it flows laterally across the network of subgroups. The decision-maker sees the result: a fully deliberated, multi-perspective analysis where the reasoning is visible and traceable.

The Super Bowl Experiment: Proof in Practice

Let's look at a real test case, because talking about collective intelligence in the abstract only goes so far. What happens when you actually run this?

A group of researchers brought together 110 random people who had watched the Super Bowl that week. The task: as a distributed group, debate and reach consensus on which advertisement was the most effective, and why.

This might seem light-hearted, but think about it from an enterprise perspective. These 110 people had diverse viewing experiences. They had different perspectives on what "effective" means. They had seen 66 different ads that night. In a traditional setup, you'd survey them and aggregate responses. You'd lose the reasoning. You'd lose the dialogue.

Instead, the 110 were divided into 24 subgroups of 4-5 people each. Each group got one AI agent. The subgroups engaged in real-time discussion about the ads.
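
The split itself is simple arithmetic. A minimal sketch, assuming the only goal is group sizes that differ by at most one person (the function names are illustrative, and the experiment's actual assignment method is not described here):

```python
def subgroup_sizes(n_people: int, n_groups: int) -> list[int]:
    """Split n_people into n_groups whose sizes differ by at most one."""
    if n_groups < 1 or n_people < n_groups:
        raise ValueError("need at least one person per group")
    base, extra = divmod(n_people, n_groups)
    # The first `extra` groups take one additional person.
    return [base + 1] * extra + [base] * (n_groups - extra)


def feasible_group_counts(n_people: int, min_size: int = 4, max_size: int = 5) -> list[int]:
    """Group counts for which every group can hold between min_size and max_size people."""
    return [k for k in range(1, n_people + 1)
            if min_size * k <= n_people <= max_size * k]


sizes = subgroup_sizes(110, 24)
print(sizes.count(5), "groups of 5 and", sizes.count(4), "groups of 4")  # 14 and 10
print(feasible_group_counts(110))  # 22 through 27
```

For 110 people and 24 groups, that works out to 14 groups of five and 10 groups of four.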

What happened was remarkable. In just ten minutes—ten minutes—the group had converged on a clear answer. They identified a Pepsi ad (featuring Coca-Cola's iconic polar bear in a humorous role reversal) as the most effective of the night. This wasn't a narrow vote. The AI system detected this as a statistically significant consensus result.

But here's the part that matters from an enterprise perspective: the system generated not just a winner, but a ranked list of 54 candidate ads, ordered by the strength of deliberative support each received across the full population.

How was this possible in ten minutes? Because the system didn't try to get everyone to directly interact. It kept conversations small and high-quality. But it wove those conversations together through the agent layer, so that insights from one group could immediately inform others.

Consider what this means for your enterprise. If you have 10,000 employees and you want their input on strategic direction, market opportunity, or operational priorities, you don't need them all in a conference room. You don't need weeks of surveys. You set up a structured deliberation with subgroups, agent facilitation, and cross-group synthesis. In a few hours, you have the best thinking of your entire organization, plus clear visibility into where consensus exists and where thoughtful people genuinely disagree.

AI-facilitated deliberation significantly reduces the time required for strategic planning, product decisions, and crisis response, enhancing efficiency and inclusivity. Estimated data.

Collective IQ Amplification: What the Research Shows

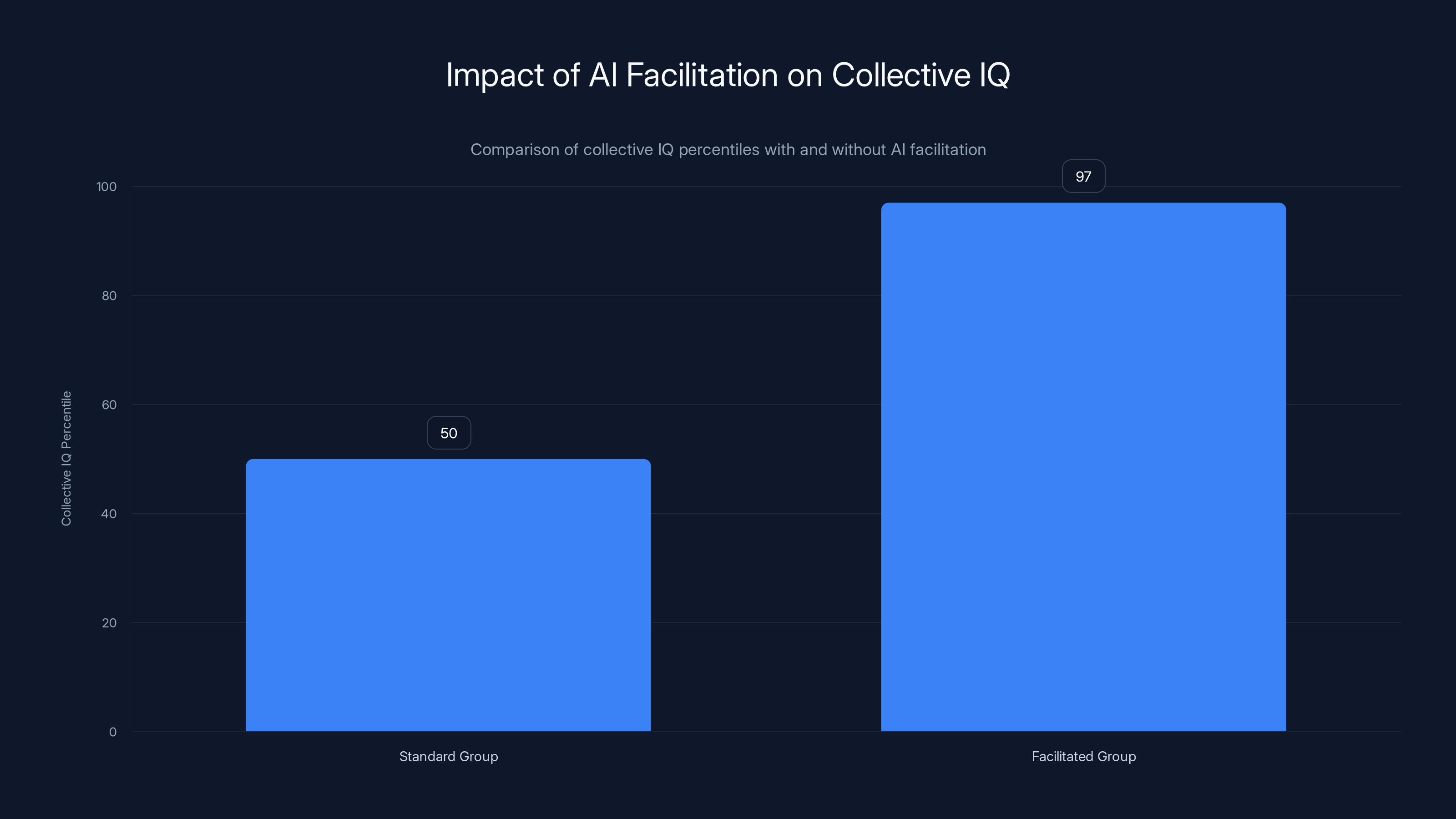

So far, I've described the mechanism and shown an example. But what does the data actually say about performance?

The research is striking. When groups engage in this kind of facilitated deliberation, their collective performance on reasoning tasks doesn't just improve—it improves dramatically.

In one study involving groups organized through AI agent facilitation, the researchers measured collective intelligence on a standard IQ test adapted for groups. The results: the facilitated groups achieved a collective IQ equivalent to the 97th percentile. For context, an IQ of 130 puts you in roughly the top 2%, so the 97th percentile sits just under that mark, at about 128 on the usual scale (mean 100, standard deviation 15). These groups performed as if they were collectively near genius-level in their reasoning capacity.
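
If you want to translate between percentiles and IQ scores yourself, here is a short sketch. It assumes the conventional IQ scale, a normal distribution with mean 100 and standard deviation 15; the study's own scoring method may differ.

```python
from statistics import NormalDist

# Conventional IQ scale: normal distribution, mean 100, standard deviation 15.
IQ_SCALE = NormalDist(mu=100, sigma=15)


def iq_at_percentile(percentile: float) -> float:
    """IQ score below which the given percentage of the population falls."""
    return IQ_SCALE.inv_cdf(percentile / 100)


def percentile_of_iq(iq: float) -> float:
    """Percentage of the population scoring below the given IQ."""
    return 100 * IQ_SCALE.cdf(iq)


print(round(iq_at_percentile(97), 1))   # about 128.2
print(round(percentile_of_iq(130), 1))  # about 97.7
```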

Why? Several factors compound. First, you remove the dominance problem. In traditional large groups, one or two dominant personalities drive the conversation. Introverts get squeezed out. People with good ideas but poor public speaking confidence stay silent. In structured subgroups with agent facilitation, the quiet person's insight gets voiced because the agent is actively listening and drawing people out.

Second, you prevent anchoring bias. If someone makes a strong first statement in a large group, it disproportionately anchors everyone else's thinking. They're responding to that initial claim rather than engaging with the underlying problem. In subgroups, especially when agents are actively synthesizing multiple perspectives, different framings of the same issue can develop in parallel.

Third, you enable cross-pollination of ideas. If Group A develops a novel approach to a problem, and Group B is stuck on a different aspect, the agent layer ensures Group B learns about Group A's approach and can apply it to their own challenge. This is harder to achieve in either all-in-one large groups or completely siloed small groups.

A study conducted with Carnegie Mellon University measured something different: subjective experience. Researchers compared groups using AI-facilitated deliberation to groups using traditional collaboration tools like Microsoft Teams, Google Meet, and Slack.

The results were clear. People in AI-facilitated groups reported:

- Significantly higher sense of being heard and understood

- Greater sense of collaboration and shared purpose

- Higher perceived productivity

- Stronger buy-in to the decisions that emerged

This matters because buy-in predicts implementation success. A team that feels heard is a team that commits. That's not a soft metric—it's predictive of actual organizational outcomes.

Why Existing Collaboration Tools Fall Short

You might be thinking: "Wait, we already have Slack and Microsoft Teams. We have video conferencing. Why do we need something different?"

Good question. The answer lies in how these tools are designed—and more importantly, what they optimize for.

Slack, Teams, and similar platforms optimize for asynchronous, persistent communication. Great for many things. Terrible for real-time deliberation at scale.

Here's why. In Slack, if you want input from a large group, what typically happens? Someone posts a question. Responses trickle in over the course of hours or days. People's attention gets fragmented. By the time person #47 responds, person #5's comment is buried in the backlog and nobody's building on it. The conversation doesn't flow—it pools in eddies.

Video conferencing platforms like Zoom are better for synchronous discussion, but they have the opposite problem. If everyone's on one call, you hit the scaling wall immediately. With more than 8-10 active speakers, it falls apart. So you resort to having someone present while others passively listen. That's not deliberation; that's broadcast.

Certain people dominate. Others disappear. The conversation meanders because there's no structure and no active synthesis of what's emerging.

Slack and Teams were designed to be flexible communication platforms. They're not optimized to solve this specific problem: how do you get the best thinking from a large, distributed group in real-time?

That's the gap that AI-facilitated deliberation fills. It's not replacing your existing tools. It's a specialized layer optimized for one thing: helping large groups think together.

Estimated data suggests that optimal group size for quality deliberation is 4-7 people, with significant drops in speaking time and quality as group size increases.

Enterprise Applications: Where This Creates Real Value

Let's move from theory to practice. Where in your enterprise would this actually matter?

Strategic Planning and Competitive Analysis

Imagine you're kicking off a strategic planning cycle. You want input from senior leadership across business units, but you also want to tap the front-line wisdom of people closest to customers and operations. That could be 200+ people.

Traditional approach: hire a consultant to run a multi-phase process over weeks or months. Expensive. Slow. Loses momentum.

AI-facilitated approach: run structured deliberation sessions where the 200+ people debate key questions simultaneously. In a few hours, you have synthesized input from all levels of the organization. People feel heard. You get better strategy because you've integrated perspective that usually gets filtered out through hierarchy.

Product and Market Decisions

You're deciding whether to enter a new market or kill an underperforming product line. The decision hinges on analyzing risk, opportunity, competitive dynamics, and internal capability.

You could run it through a standard decision process where executive leadership debates and decides. You'll miss valuable input from engineers (who understand technical feasibility constraints), sales (who understand customer reception), customer success (who understand implementation realities), and HR (who understands talent availability).

With AI-facilitated deliberation, you can structure conversations where all these perspectives contribute simultaneously. Engineers in one subgroup can respond to sales concerns. Customer success insights can surface technical risks. This produces better decisions because they're informed by comprehensive perspective.

Crisis Response and Scenario Planning

When things go wrong, you need to act fast but thoughtfully. You have limited time to gather input, but the cost of a bad decision is high.

AI-facilitated deliberation lets you do rapid sense-making. You can bring together 50-100+ people with relevant expertise and have them collectively analyze what happened, what it means, and what to do about it. You're not waiting for written reports to trickle in. You're synthesizing perspective in real-time, which builds shared understanding and faster alignment on action.

Innovation and Ideation

You want to source innovation ideas from across your organization. You could have an innovation challenge where people submit ideas asynchronously. Then what? You have hundreds of submissions and no clear way to synthesize them.

Or you could run AI-facilitated deliberation where groups brainstorm, debate feasibility, assess impact, and collectively identify which ideas deserve investment. The AI layer helps surface connections between ideas from different groups: "Team A's approach to supply chain optimization could enhance Team C's customer delivery model."

Knowledge Capture and Institutional Learning

When an expert is leaving the company, or when you need to document critical institutional knowledge, deliberative sessions where subject matter experts discuss and debate create better documentation than traditional knowledge capture processes. People ask each other questions. They surface assumptions. This generates richer knowledge artifacts.

Implementation: How to Actually Deploy This

So you're convinced this could work in your organization. How do you actually make it happen?

Step 1: Clarify Your Question

Before you bring anyone together, you need a clear question or set of questions you're trying to resolve. This isn't broad brainstorming (though that can work). This is: "What market should we enter?" or "How should we restructure our engineering organization?" or "What are the top three risks to this strategic plan?"

The clearer your question, the more focused and productive the deliberation. Vague questions generate vague insights.

Step 2: Recruit Diverse Participants

The whole value of this approach is that you're tapping diverse perspective. So recruit deliberately for diversity. You want different departments, different levels of seniority, different functional expertise, and different thinking styles.

The typical minimum is 25-30 people. There's no meaningful maximum, but 50-200 is the sweet spot for most organizational questions. Above 200, logistics get complex without adding proportional value.

Step 3: Design Subgroups Strategically

How you divide people into subgroups affects the conversation quality. Avoid homogeneous groups (all engineers with engineers). Mix it. Put someone from marketing with engineers and operations. This forces perspective collision, which is where good thinking emerges.

Also consider: do any individuals need to be in the same subgroup together? Are there key relationships or conflicts that should be separated or aligned? Good facilitation starts with thoughtful group design.
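
One simple way to get mixed groups is to deal people out department by department, like cards. The sketch below is one possible approach rather than a prescribed method. It assumes department is the only attribute you are balancing, and it ignores the relationship constraints mentioned above.

```python
from collections import defaultdict


def mixed_subgroups(people: list[tuple[str, str]], n_groups: int) -> list[list[str]]:
    """people: (name, department) pairs.
    Deal names out round-robin, one department at a time, so each department
    is spread across groups as evenly as possible."""
    if n_groups < 1:
        raise ValueError("n_groups must be at least 1")
    by_dept = defaultdict(list)
    for name, dept in people:
        by_dept[dept].append(name)
    groups = [[] for _ in range(n_groups)]
    slot = 0  # keeps counting across departments so group sizes stay balanced
    for dept in sorted(by_dept):
        for name in by_dept[dept]:
            groups[slot % n_groups].append(name)
            slot += 1
    return groups


people = [
    ("Ana", "engineering"), ("Ben", "engineering"), ("Cy", "engineering"), ("Di", "engineering"),
    ("Eve", "marketing"), ("Fay", "marketing"),
    ("Gus", "operations"), ("Hal", "operations"),
]
for group in mixed_subgroups(people, 2):
    print(group)
```

With two groups, each one ends up with two engineers, one marketer, and one person from operations.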

Step 4: Brief Participants

Don't just dump people into a subgroup and hope they understand what they're doing. Spend 10-15 minutes explaining:

- The purpose of the deliberation

- The question you're trying to answer

- How the agent facilitation works (briefly—you don't need to explain the algorithm)

- The norms of productive discussion

People are more productive when they understand why they're there.

Step 5: Run the Deliberation

A typical session is 60-90 minutes. Your facilitator (human) opens with context and the key question. Subgroups begin discussion. The AI agents are listening and synthesizing in the background. If the conversation feels like it's stalling or going off track, the facilitator or an agent can introduce a new perspective or refocus.

The rhythm matters. You want sustained discussion, but also natural breaks where the system can synthesize across groups.

Step 6: Aggregate and Analyze Results

When the deliberation ends, you don't just have individual responses. You have a record of reasoning, synthesis, and emergence. The system generates:

- Ranked options ordered by deliberative support

- Key reasons for each recommendation

- Dissenting views, clearly articulated

- Consensus areas and genuine disagreement areas

This becomes input to decision-makers.
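
What might that output look like as data? Here is a hypothetical sketch. The `OptionRecord` fields and the ranking rule (more supporting subgroups first, then more distinct reasons) are assumptions chosen for illustration; "deliberative support" could be scored in many other ways.

```python
from dataclasses import dataclass, field


@dataclass
class OptionRecord:
    option: str
    supporting_groups: set[str] = field(default_factory=set)
    reasons: list[str] = field(default_factory=list)
    dissent: list[str] = field(default_factory=list)


def rank_by_support(records: list[OptionRecord]) -> list[OptionRecord]:
    """More supporting subgroups first; ties broken by distinct reasons, then by name."""
    return sorted(
        records,
        key=lambda r: (-len(r.supporting_groups), -len(set(r.reasons)), r.option),
    )


records = [
    OptionRecord("Enter market B", {"g1"}, ["lower entry cost"]),
    OptionRecord(
        "Enter market A",
        {"g1", "g2", "g3"},
        ["existing demand", "partner network"],
        ["legal exposure"],
    ),
]
for r in rank_by_support(records):
    print(r.option, "| groups:", len(r.supporting_groups), "| reasons:", r.reasons, "| dissent:", r.dissent)
```

Keeping dissent on the same record as the option means a decision-maker sees the objection right next to the recommendation it challenges.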

Facilitated groups achieved a collective IQ at the 97th percentile, significantly higher than standard groups. Estimated data based on research context.

The Role of AI Agents: More Facilitator Than Automaton

Let me be clear about what the AI agent is and isn't, because there's a common misconception.

It's not making decisions. It's not optimizing for some pre-programmed outcome. It's not biasing the conversation toward a predetermined answer.

What it is: a very sophisticated listener and synthesizer. It's doing what a brilliant human facilitator does, but at scale and across multiple simultaneous conversations.

A good facilitator does these things:

- Listens carefully to what people are saying

- Identifies patterns and themes emerging in the conversation

- Summarizes back to ensure understanding

- Asks clarifying questions

- Surfaces perspectives that aren't being voiced

- Connects dots between different speakers' comments

- Helps the group notice when they've converged on something

The AI agent does all of these. It's not replacing human judgment. It's augmenting human capacity to do collective thinking.

There's a philosophical point here. We often think of AI as replacing human capability. "AI will take your job." Here, AI is enabling a human capability that previously didn't scale. Instead of replacing deliberation, it's making deliberation possible where it wasn't before.

Overcoming Skepticism and Resistance

When you first propose this to your organization, you'll hit skepticism. People will ask legitimate questions.

"Won't AI just tell people what we want to hear?"

Not if it's designed well. The agent is built to synthesize what's actually being said, not to optimize for a predetermined outcome. Think of it like an audio recording: the recording captures what happened, not what we hoped would happen. The AI is more like a really smart recording that also identifies themes and makes connections.

"This seems like it takes forever."

Actually, it's often faster than alternatives. The Super Bowl experiment took ten minutes to reach consensus on which of 66 ads was most effective. Compare that to how long a traditional decision process would take. Your speed depends on question complexity, not participant count.

"What about people who don't feel comfortable speaking up?"

This is actually where the approach shines. In a traditional large meeting, introverts get crowded out. In a structured subgroup with 4-5 people and an agent actively facilitating, everyone gets voice. The agent can literally ask: "We've heard from A, B, and C. D, what's your perspective on this?"
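
The logic behind that kind of prompt can be very simple. A minimal sketch, assuming the agent keeps a log of who has spoken (the function name and the tie-breaking rule are illustrative, not a description of any real product):

```python
from collections import Counter


def next_to_invite(members: list[str], speakers_so_far: list[str]) -> str:
    """Return the member with the fewest speaking turns.
    Ties go to whoever appears first in `members`."""
    turns = Counter(speakers_so_far)  # members who never spoke count as zero
    return min(members, key=lambda m: turns[m])


members = ["A", "B", "C", "D"]
log = ["A", "B", "A", "C", "B"]
print(next_to_invite(members, log))  # D has not spoken yet
```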

"Will this really produce better decisions?"

It depends on how you measure. If you mean "faster reaching consensus," yes. If you mean "more thoughtfully reasoned," the research says yes. If you mean "the decision I already wanted to make will emerge," then it depends on whether you're right. The whole point is that you don't know the answer in advance.

Decision Quality and Implementation Speed are often the most impactful metrics in measuring the success of deliberations, with scores of 8 and 7 respectively. Estimated data.

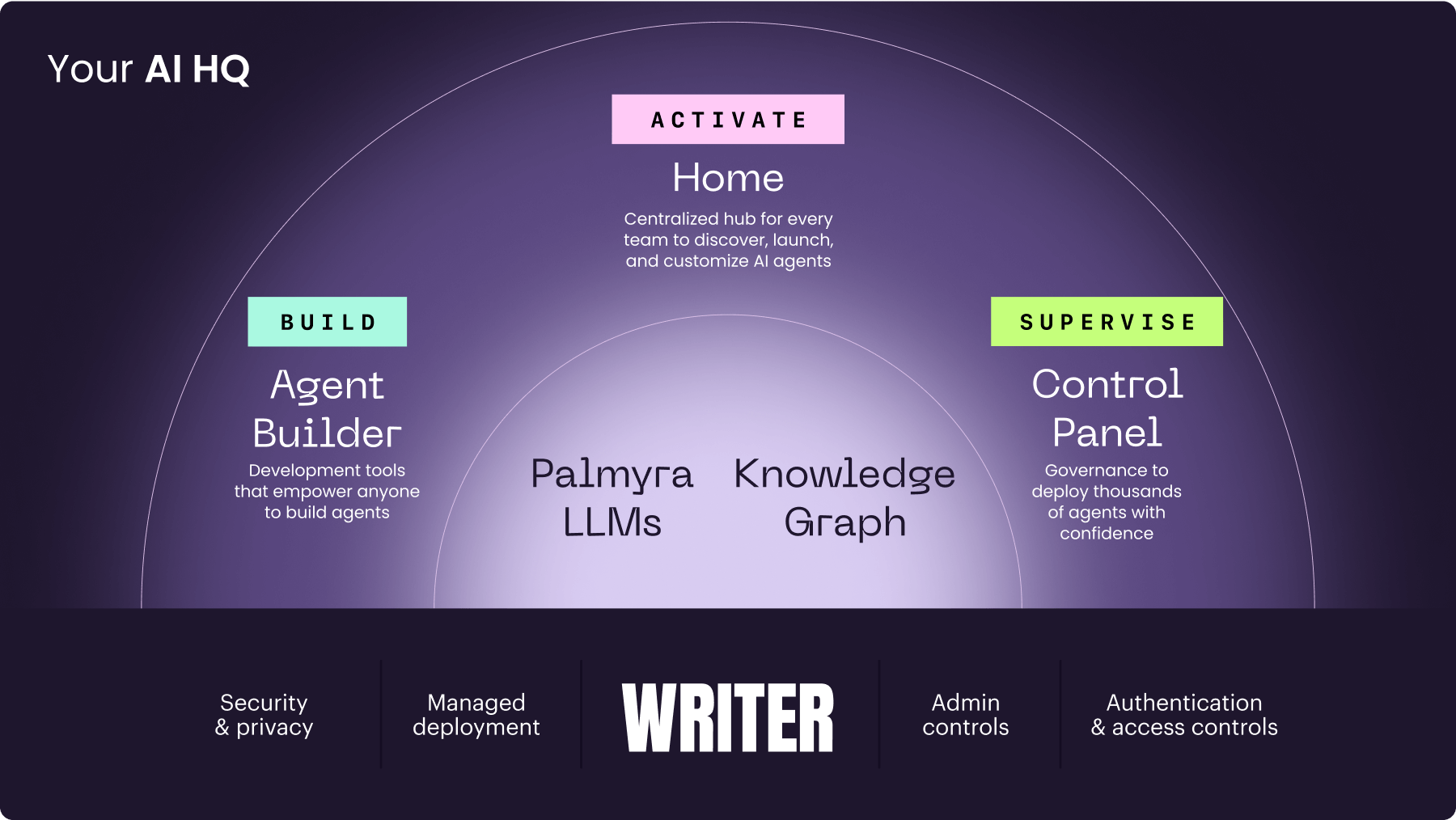

Integration With Your Existing Technology Stack

You already have systems for communication, project management, decision tracking, and documentation. How does AI-facilitated deliberation fit?

Typically, as a layer that sits alongside your existing stack, not replacing it. You'd use it for specific, high-value decisions or planning processes. You'd feed the outputs into your project management system (decisions made), your documentation system (reasoning captured), and your communication systems (decisions broadcast).

The platform integrating this should have API connections to your key systems so data flows smoothly. You don't want to manually export a report from deliberation and manually import it into your planning tool. That friction kills adoption.
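
In practice, "data flows smoothly" usually means the deliberation output is available in a machine-readable form. Here is a hypothetical sketch of such a payload; the field names are invented for illustration and do not correspond to any real platform's schema.

```python
import json


def to_payload(question: str, ranked_options: list[str], dissent: list[str]) -> str:
    """Serialize a deliberation summary as JSON. Field names are illustrative only."""
    return json.dumps(
        {"question": question, "ranked_options": ranked_options, "dissent": dissent},
        indent=2,
    )


print(to_payload(
    "What market should we enter?",
    ["Option A", "Option B"],
    ["Option A underestimates legal risk"],
))
```

A planning or documentation tool could then ingest this directly instead of relying on someone to copy a report by hand.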

You also want integration with however people are already participating. If your organization works in Teams, you want easy Teams integration. If people use Slack, you want the same for Slack. The deliberation shouldn't require a completely separate login and learning curve. It should feel like an extension of how people already work.

Common Failure Modes and How to Avoid Them

Not every deliberation goes perfectly. Here are the failure modes to watch for.

Homogeneous Subgroups

If you accidentally create groups where everyone thinks alike, you get agreement without productive friction. There's no debate because there's no disagreement. The outcome is consensus, but it's shallow consensus. You prevent this through diverse recruitment and thoughtful group design.

Insufficient Briefing

If people don't understand the question or the process, they get frustrated. They're confused about what they're supposed to be doing. Contribution quality drops. Spend the time upfront to explain clearly.

Dominant Personalities in Subgroups

Even in small groups, you can have someone who dominates. The agent should be listening for this and actively working to draw out other voices. If this is a persistent problem with certain individuals, you might need to adjust group composition.

Premature Convergence for the Wrong Reasons

Sometimes groups converge on an answer, but for the wrong reasons. Everyone agrees on Option A, but half the group likes it for reason X and half for reason Y, and they'd actually disagree if they realized that. The agent's job includes identifying this. "Hold on, I'm hearing two different reasons for supporting Option A. Let me surface that for discussion."
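
Here is a rough sketch of how that check could work once statements have been tagged with an option and a reason. The tagging itself would be the hard part and is assumed away here. The function name and the 30% threshold are arbitrary illustrative choices.

```python
from collections import defaultdict


def split_rationales(statements: list[tuple[str, str, str]], min_share: float = 0.3) -> dict[str, list[str]]:
    """statements: (person, option, reason) triples.
    Flag options where at least two different reasons are each held by
    at least min_share of that option's supporters."""
    by_option = defaultdict(lambda: defaultdict(set))
    for person, option, reason in statements:
        by_option[option][reason].add(person)
    flagged = {}
    for option, reasons in by_option.items():
        supporters = set().union(*reasons.values())
        major = sorted(
            reason for reason, people in reasons.items()
            if len(people) / len(supporters) >= min_share
        )
        if len(major) >= 2:
            flagged[option] = major
    return flagged


statements = [
    ("ana", "Option A", "cuts cost"), ("ben", "Option A", "cuts cost"),
    ("cy", "Option A", "faster launch"), ("di", "Option A", "faster launch"),
    ("eve", "Option B", "lower risk"),
]
print(split_rationales(statements))  # Option A is backed for two different reasons
```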

Insufficient Time for Emergence

If you cut the deliberation short before the conversation has naturally deepened, you miss synthesis. There's often a moment where everything clicks and the group suddenly sees something they didn't before. This typically takes time. Plan for it.

Measuring Success: What Actually Matters

You've run a deliberation. Now what? How do you know if it worked?

Decision Quality

The ultimate test is whether the decision made was sound. This is hard to measure in real-time, but you can track it over time. After you've implemented decisions made via AI-facilitated deliberation, do they work out better than decisions made through other processes? Track key metrics and compare.

Implementation Speed

Does buy-in from the deliberation process actually translate to faster implementation? Track time from decision to measurable progress. Organizations often report 2-3x speed improvement because people understand the decision and feel ownership of it.

Participant Satisfaction and Engagement

Did people feel heard? Did they feel like the process was valuable? This matters for future participation and organizational culture. Survey participants after deliberation.

Insight Quality

Did the deliberation surface insights you wouldn't have gotten from surveys or traditional meetings? Sometimes the best measure is qualitative: Did someone point out something important that nobody else had considered?

Time Efficiency

How much human time did the process consume, and did you get proportional value? A two-hour deliberation with 100 people is 200 human-hours of input. Was it worth it? Usually yes, if you're making important decisions. Usually no if you're deciding lunch catering.

The Future: Beyond Business Applications

We've been discussing enterprise use cases, but the implications go broader.

Why are democracies struggling? One core reason: citizens can't have meaningful deliberation at scale. You can't have a town hall with a million people. You're reduced to voting on options decided by someone else. The ability for large groups to actually deliberate—to hear different perspectives, argue about them, refine thinking—has atrophied.

What if AI-facilitated deliberation changed that? What if city councils could structure citizen deliberation where people actually engage with each other's reasoning instead of just voting yes/no on something a consultant proposed?

Similarly, scientific communities struggle with how to integrate knowledge from thousands of experts across disciplines. What if researchers could structure deliberative sessions where they collectively analyzed evidence and identified the most promising research directions?

These applications are further out. But the technology is moving toward them. Enterprise use comes first because businesses have budget and urgency. But the principles apply anywhere humans need to think together at scale.

Making the Shift: Organizational Readiness

Before you implement AI-facilitated deliberation at scale, assess your organization's readiness.

Culture

Does your organization value input from across levels? Or is decision-making highly centralized? This approach works best in cultures that already lean collaborative. If you're a command-and-control hierarchy, you'd be fighting cultural gravity.

Technical Readiness

Can people easily access the platform? Is your IT infrastructure secure enough? Are people comfortable with technology-mediated collaboration? These are practical questions but manageable with good onboarding.

Leadership Buy-In

This is the key variable. If executives are convinced that tapping the organization's collective intelligence matters, they'll resource it. If they see it as a nice-to-have, it'll get squeezed out by firefighting. You need leadership commitment.

Willingness to Actually Use Results

Here's a subtle one: are decision-makers actually prepared to have their minds changed by the output? If you run a deliberation and then disregard the results because you had a different answer in mind, people notice. That destroys trust. Don't do it unless you're genuinely open to the group's reasoning.

The Economics: Cost vs. Value

Let's talk money. Is this worth the investment?

A typical implementation: platform licensing ($500-5,000/month depending on scale), plus facilitation expertise (either hiring someone or training internal staff), plus participant time (your main cost).

A deliberation with 100 people for two hours costs you 200 human-hours of time. At an average fully-loaded cost of

Total: $12,000-15,000 for one deliberation.

When does that make sense? When the decision you're making has material impact on the organization. Strategic decisions, major process changes, product/market direction, organizational restructuring. These are decisions where a 5-10% improvement in quality has huge downstream value.

When doesn't it make sense? Routine operational decisions, tactical planning, anything where the cost of a slightly suboptimal decision is small relative to the deliberation cost.

Most organizations find a steady-state where they run 1-2 major deliberations per quarter. Quarterly strategy reviews, half-yearly strategic planning, major product decisions. That's maybe $60K-180K per year in deliberation costs (including platform), which is reasonable if it improves your strategic decision-making.
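
You can sanity-check those numbers with a few lines of arithmetic. The sketch below uses only figures quoted in this section, plus one labeled assumption: the implied hourly rate treats the $12,000-15,000 per-session total as participant time only.

```python
def implied_hourly_rate(total_cost: float, people: int, hours: float) -> float:
    """Hourly cost per person IF the total covered participant time only (an assumption)."""
    return total_cost / (people * hours)


def annual_cost_range(
    sessions_per_quarter: tuple[int, int],
    cost_per_session: tuple[float, float],
    platform_per_month: tuple[float, float],
) -> tuple[float, float]:
    """Low and high annual cost: four quarters of sessions plus twelve months of licensing."""
    low = 4 * sessions_per_quarter[0] * cost_per_session[0] + 12 * platform_per_month[0]
    high = 4 * sessions_per_quarter[1] * cost_per_session[1] + 12 * platform_per_month[1]
    return low, high


print(implied_hourly_rate(12_000, 100, 2), implied_hourly_rate(15_000, 100, 2))  # 60.0 75.0
print(annual_cost_range((1, 2), (12_000, 15_000), (500, 5_000)))  # (54000, 180000)
```

The computed range of $54,000 to $180,000 per year lines up with the rough $60K-180K estimate.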

Ethical Considerations and Governance

One more thing before we wrap up: ethics.

AI systems can embed biases. If the training data is skewed, your agents might carry that skew into what they surface. If the subgroups are selected poorly, you might miss entire perspectives.

Good governance means:

- Transparency about how the system works. People should understand that agents are synthesizing, not deciding.

- Explicit attention to whose voice might be missing. Did you recruit for diversity? If you're only hearing from senior people, you're missing crucial perspective from newer employees.

- Regular audits of outcomes. Are certain perspectives consistently getting elevated more than others? If so, why?

- Clear boundaries on what gets decided by deliberation. Not every decision benefits from this process. Use it thoughtfully.

- Honest communication about limitations. No process is perfect. Be clear about what this does well and where it might fall short.
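To make the audit idea concrete, here's one simple check you could run after a session: compare each segment's share of elevated insights against its share of participants. This is a minimal sketch under stated assumptions. The segment labels, the function name, and the idea that each elevated insight can be tagged with the segment it came from are all hypothetical, not features of any specific platform.

```python
from collections import Counter

def representation_ratios(participant_segments, elevated_segments):
    """Compare who took part with whose insights were elevated.

    participant_segments: one segment label per participant (hypothetical labels).
    elevated_segments: one segment label per insight that was shared across groups.
    Returns {segment: share of elevated insights / share of participants}.
    A ratio near 1.0 means proportional representation; well above or below
    1.0 is a prompt to ask why, not proof of bias.
    """
    if not participant_segments or not elevated_segments:
        raise ValueError("need at least one participant and one elevated insight")
    participants = Counter(participant_segments)
    elevated = Counter(elevated_segments)
    total_participants = len(participant_segments)
    total_elevated = len(elevated_segments)
    return {
        segment: (elevated[segment] / total_elevated) / (count / total_participants)
        for segment, count in participants.items()
    }

# Illustrative data only: 20 senior and 80 junior participants,
# with 10 elevated insights traced to each segment.
ratios = representation_ratios(
    ["senior"] * 20 + ["junior"] * 80,
    ["senior"] * 10 + ["junior"] * 10,
)
for segment, ratio in ratios.items():
    print(f"{segment}: {ratio:.2f}")
```

In this made-up example, senior voices are elevated at well over their share of the room and junior voices at well under it. That's exactly the kind of pattern an audit should flag for a closer look.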

Done well, AI-facilitated deliberation is a technology that amplifies human intelligence. Done poorly, it's a tool for manipulating consensus. The difference is governance and transparency.

Bringing It All Together: The Vision

Let's zoom out. What's the big picture here?

Traditionally, organizations have made decisions through some combination of: top-down leadership directive (fast but not wise), committee consensus (slow and often politically driven), or broad input like surveys (inclusive but low-quality information).

What if there was a fourth way? What if you could combine the speed of leadership decision-making with the collective wisdom of broad organizational input? What if people felt genuinely heard and genuinely contributed to decisions?

That's what AI-facilitated deliberation at scale offers. It's not a replacement for leadership. Leaders still make final calls. But they make them with far better input. And the organization moves faster because people understand the reasoning and feel ownership.

This is where we're heading: organizations that have learned to think together. Where size is no longer an impediment to collective intelligence. Where you can tap the wisdom of thousands in real time instead of relying on slow asynchronous processes.

It requires technology. It requires thoughtful design. It requires a culture that values input. But the payoff is real: better decisions, faster implementation, more engaged employees, and the emergence of organizational intelligence that's genuinely greater than the sum of its individual parts.

That's not just a productivity improvement. That's a fundamental shift in how organizations operate.

FAQ

What is collective intelligence in the enterprise context?

Collective intelligence refers to the enhanced decision-making capacity that emerges when groups of people think together effectively. In enterprise settings, it means leveraging the combined knowledge, experience, and perspective of your entire organization to solve problems and make decisions that no individual or small leadership team could make as well alone. Rather than reducing employees to survey respondents, collective intelligence treats them as active thinkers who contribute to refined solutions through genuine dialogue.

How do AI agents facilitate large-group deliberation?

AI agents work as conversational connectors in a distributed network of small discussion groups. Each agent listens carefully to its local conversation, identifies emerging insights and themes, and shares those with agents in other groups, who introduce them as new perspectives for their own groups to consider. This creates a web of interconnected conversations where local discussion quality remains high (4-7 people) while the entire population is engaged in one synthesized deliberation. The agents don't decide anything. They bridge communication and synthesize perspectives across parallel conversations.

What are the key benefits of AI-facilitated deliberation?

Organizations using this approach report significantly improved decision quality (better reasoning, fewer blind spots), faster consensus-building (often 3-5x faster than traditional processes), higher participant satisfaction (people feel heard and understood), stronger implementation buy-in, and better utilization of organizational knowledge. Research shows that large groups facilitated this way achieve collective intelligence in the 97th percentile range, meaning their group problem-solving performs at the level of highly gifted individuals. Beyond the metrics, there's a cultural benefit: people feel valued and genuinely part of organizational thinking.

How is this different from using Slack, Teams, or other existing collaboration platforms?

While those platforms are excellent for asynchronous communication and general team collaboration, they're not optimized for real-time deliberation at scale. Slack conversations get buried in backlogs, dispersing attention. Video calls with more than 8-10 active speakers become chaotic. Those tools lack the deliberative structure (small-group dynamics with agent synthesis and cross-group bridging) that enables large populations to think together effectively. AI-facilitated deliberation is a specialized layer designed specifically for this challenge.

What types of decisions work best with AI-facilitated deliberation?

This approach is most valuable for decisions that require diverse perspectives (strategic direction, market entry, organizational restructuring), buy-in from across the organization (major process changes, cultural shifts), integration of expertise from different functions, or time-sensitive synthesis of complex information (crisis response). It's less useful for routine operational decisions or situations where the decision has minimal organizational impact. Most organizations find they use it for roughly one or two major deliberations per quarter.

How do you avoid the risk of AI bias or echo chambers in these deliberations?

Good governance is critical. This includes: transparent communication about how the system works, deliberate recruitment for diversity (different functions, levels, thinking styles) to avoid homogeneous groups, explicit attention to whose voices might be missing, regular audits of outcomes to spot patterns that suggest bias, and clear boundaries on which decisions get decided through deliberation. The goal is ensuring that the system amplifies human intelligence rather than embedding biases. Well-designed subgroup composition and active facilitation of diverse participation are the primary safeguards.

What's the actual ROI of implementing this at scale?

Costs include platform licensing (typically

Can existing teams run their own AI-facilitated deliberations, or do you need external experts?

Most organizations benefit from external facilitation when starting out. Someone experienced with deliberative process design can ensure the question is well-framed, subgroups are thoughtfully composed, and the session is run effectively. However, after running a few sessions, internal teams can often manage the process independently with light platform training. The key is understanding what makes deliberation work (subgroup size, diversity, agent role, synthesis) rather than having special expertise in complex technology.

How does this scale to very large organizations with tens of thousands of employees?

The beauty of the approach is that it actually scales better than traditional large-group processes. Take an organization of 10,000 people: you're not trying to get all of them into one conversation. Instead, you'd divide them into roughly 1,700-2,000 subgroups of 5-6 people each, each with an AI agent. The agents synthesize both locally (within their subgroup) and globally (sharing insights across all groups), creating one coherent deliberation. The time to consensus is determined by the complexity of the question, not the number of participants. Research shows this maintains quality even at very large scales.
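The subgroup count is just headcount divided by group size, rounded up. Here's a small sketch of that arithmetic for a 10,000-person deliberation; the function name is illustrative, and it assumes one agent per subgroup, as described above.

```python
import math

def subgroup_count(participants, group_size):
    """Number of subgroups needed so that no group exceeds group_size."""
    return math.ceil(participants / group_size)

# Groups of 5-6 people for 10,000 participants, one agent per group.
counts = {size: subgroup_count(10_000, size) for size in (5, 6)}
for size, groups in counts.items():
    print(f"groups of {size}: {groups} subgroups (and agents)")
```

For 10,000 people, that works out to between 1,667 and 2,000 subgroups, so the number of agents grows linearly with headcount while each conversation stays small.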

What happens after the deliberation ends? How do decision-makers use the results?

The system generates structured output: ranked options ordered by deliberative support, key reasoning for each position, dissenting views clearly articulated, and visibility into areas of genuine disagreement. Decision-makers then synthesize this input with any additional factors they need to consider (budget constraints, regulatory requirements, etc.) and make final calls. The transparency of reasoning—people can see exactly why the group converged on a particular option—builds confidence in the decision and increases implementation commitment. Importantly, people understand the reasoning even if they might have voted differently personally.

Key Takeaways

- Productive conversations scale only to 4-7 people; surveys sacrifice deliberation quality for breadth

- AI agents facilitate cross-group synthesis, enabling small-group conversation quality at large-group scale

- Groups using AI-facilitated deliberation achieve collective intelligence in the 97th percentile

- Enterprise applications include strategic planning, product decisions, crisis response, and innovation

- ROI typically 5-10x within first year through improved decision quality and faster implementation

- Implementation requires clear questions, diverse recruitment, thoughtful group design, and 60-90 minute facilitated sessions

- Success depends on organizational culture valuing input and leadership commitment to using results
