Who Owns Your Company's AI Layer? Enterprise Architecture Strategy [2025]

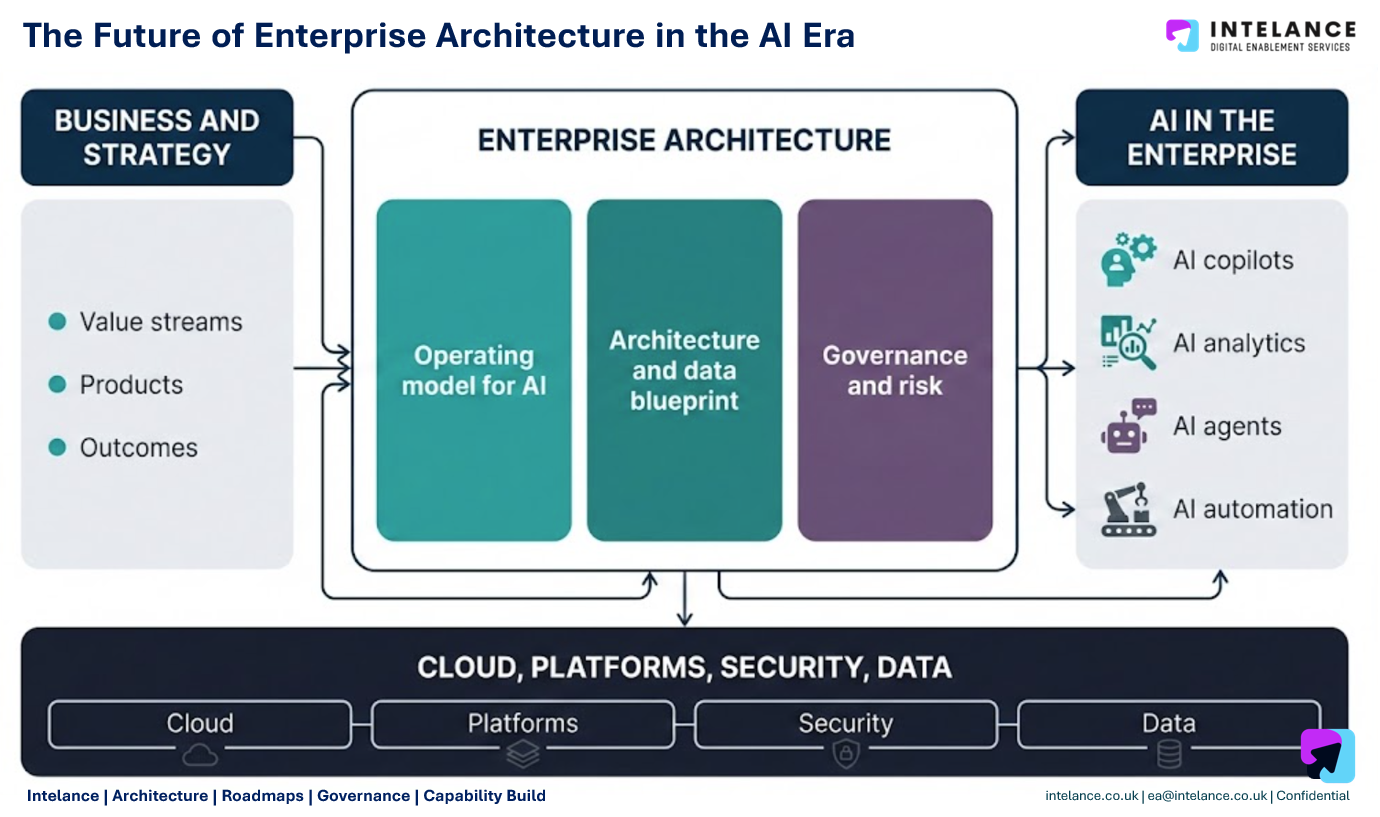

Enterprise AI started with chatbots answering questions. That era is ending. The next wave isn't about answering—it's about doing. It's about systems that actually work across your organization, connecting to internal databases, understanding permissions, and delivering intelligence exactly where employees need it.

But here's the critical question that keeps CIOs up at night: who owns the AI layer that powers all of it?

This isn't theoretical. It's the difference between a

The answer used to be simple: you'd buy an AI tool from a tech giant, bolt it onto your infrastructure, and hope it worked. Microsoft threw Copilot into everything. Google launched Duet and then renamed it and relaunched it. Everyone had an AI answer, but nobody had the right architecture.

Then startups like Glean changed the game. Instead of positioning themselves as a chatbot vendor, they positioned themselves as the infrastructure layer underneath all AI experiences. The work assistant that sits between your employees and your data. The system that understands what you can access, what you should see, and what actually needs to happen next.

This shift matters because it reframes the entire AI conversation. You're not asking "which AI tool should we buy?" anymore. You're asking "who controls the layer that coordinates all AI tools in my organization?"

The stakes are enormous. Enterprise software architecture decisions made right now will lock in your organization's AI capabilities for the next five to ten years. Get it wrong and you're managing chaos: multiple AI systems that don't know about each other, security teams losing sleep over data access, and employees frustrated that AI isn't actually helping them work faster.

Get it right and you unlock something genuinely valuable: AI that understands your business context, respects your security posture, and actually automates the work that slows people down.

This article explores what's really happening in enterprise AI architecture, why the AI layer question is more important than the AI models question, and what you need to know to make the right architectural decision for your organization.

TL; DR

- Enterprise AI is shifting from Q&A chatbots to agentic work assistants that actually execute tasks across your organization

- The AI layer matters more than the LLM because it determines permissions, integrations, context, and what actually gets done

- Three ownership models are emerging: platform consolidation (Microsoft, Google), standalone specialists (Glean, Claude), or internal build (rare, expensive)

- The real competition isn't about AI models anymore, it's about who controls the integration point between employees and your company's data

- Most enterprises will adopt a hybrid approach that combines best-of-breed AI with unified infrastructure, similar to how cloud adoption happened

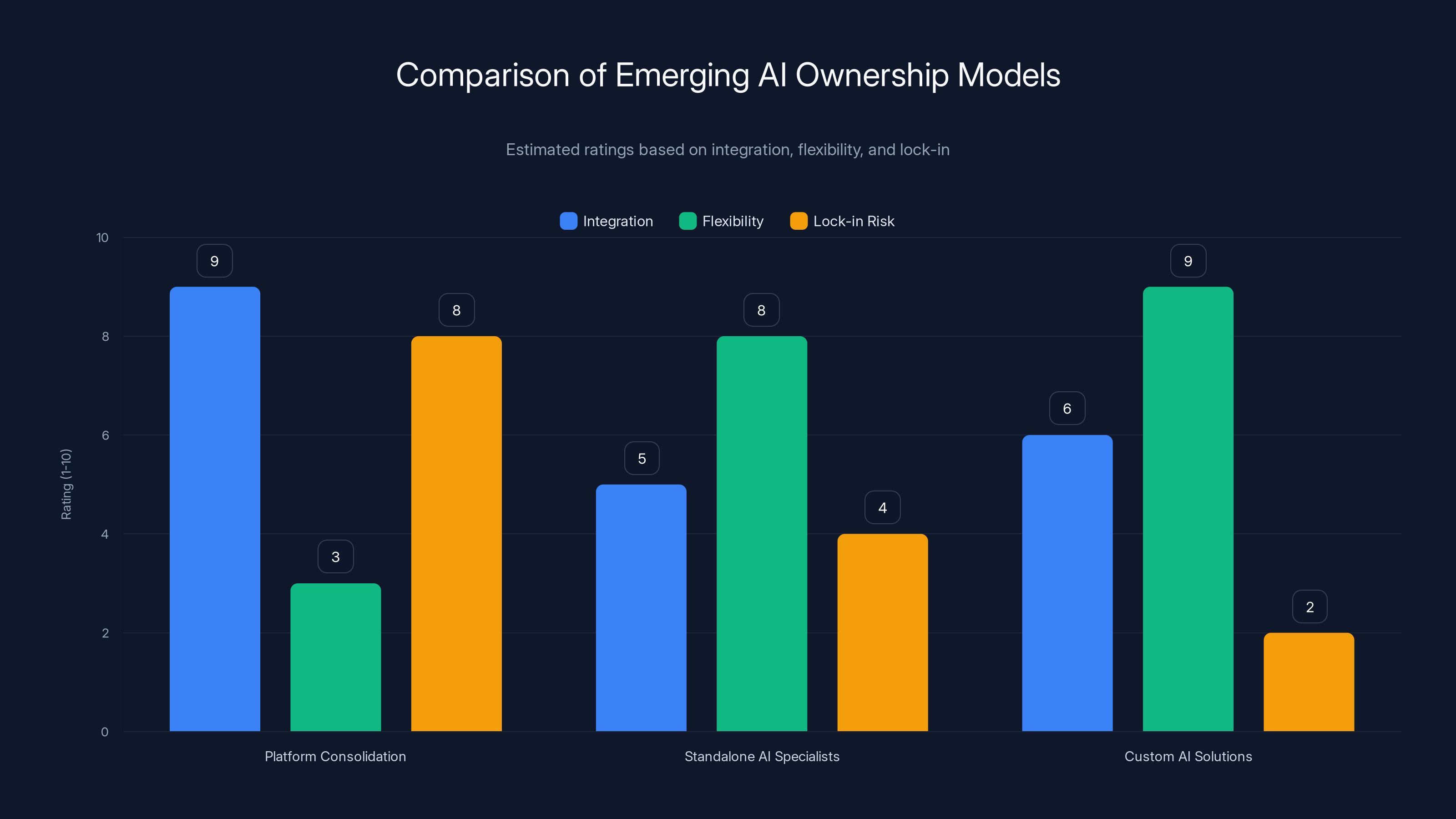

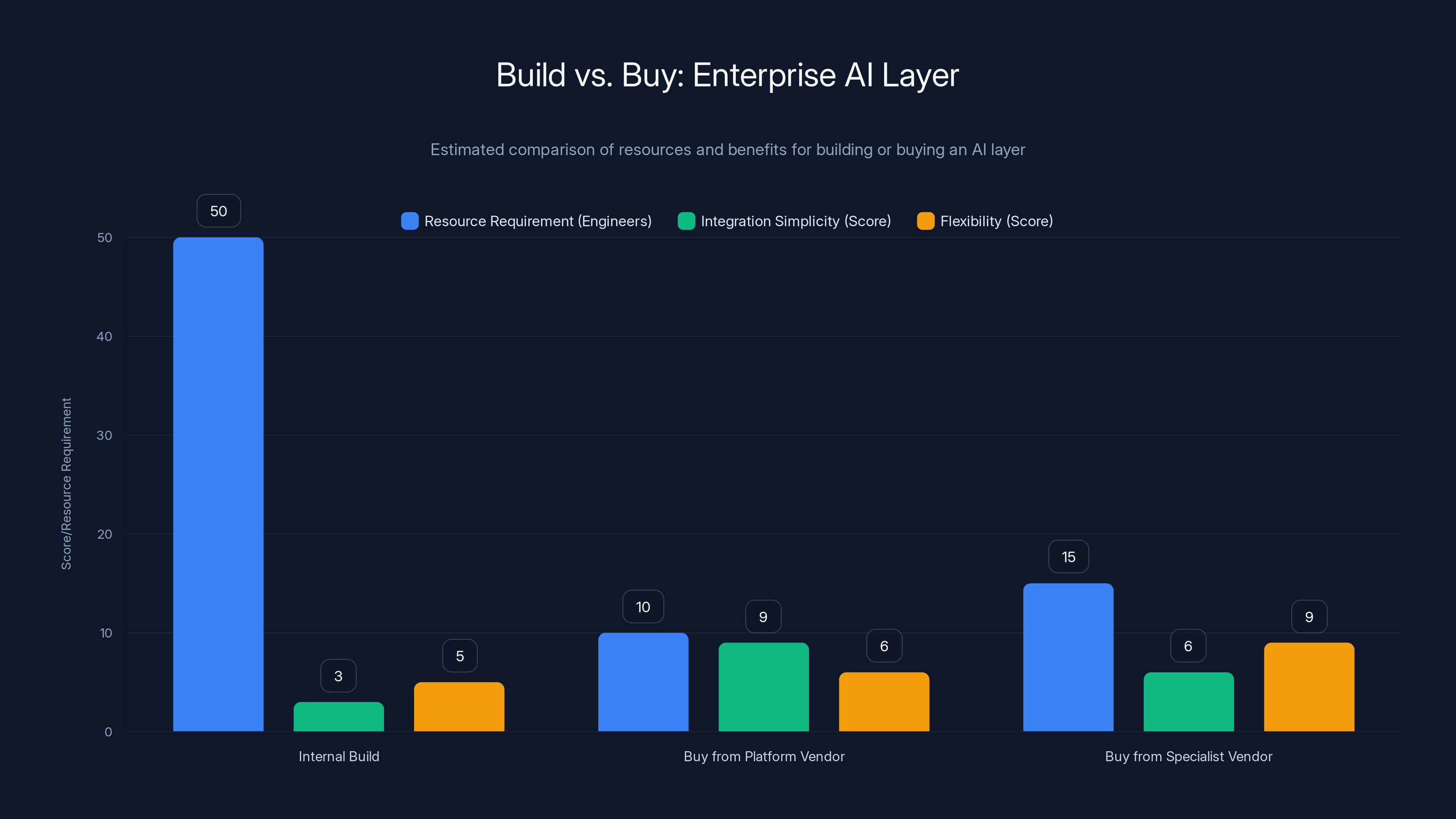

Platform Consolidation offers high integration but at the cost of flexibility and increased lock-in risk. Standalone AI Specialists provide a balance, while Custom AI Solutions offer the most flexibility with minimal lock-in. Estimated data.

The Evolution: From Chatbots to Work Assistants

Let's be honest about where we were 18 months ago. AI in enterprise meant Chat GPT behind a login, or maybe an internal chatbot that answered HR questions. It was helpful. It was also kind of pointless.

You'd ask your enterprise chatbot a question, it would search your knowledge base, and it would give you an answer. Then you'd have to do the work yourself. You'd have to switch to another application, click through three screens, fill in a form, upload a file, and wait for something to happen. The AI saved you maybe 15 seconds on information retrieval. It didn't save you from the actual work.

The inflection point came when people started building AI systems that actually take actions. Not just answer questions. Automate the action. Reserve the meeting room. Update the record. Create the documentation. Submit the request.

This seems obvious in hindsight, but it changed everything about how you need to think about AI architecture.

When AI was just Q&A, it didn't matter much who built it. You could have five different chatbots in different parts of your organization. They'd all be a little redundant, but they wouldn't break anything.

When AI starts doing things, suddenly everything breaks if you don't have coordination. If Chatbot A reserves a meeting room and Chatbot B doesn't know that room is reserved, you've created chaos. If the AI system accessing your customer database doesn't know the same permission rules as your actual database, you're leaking data.

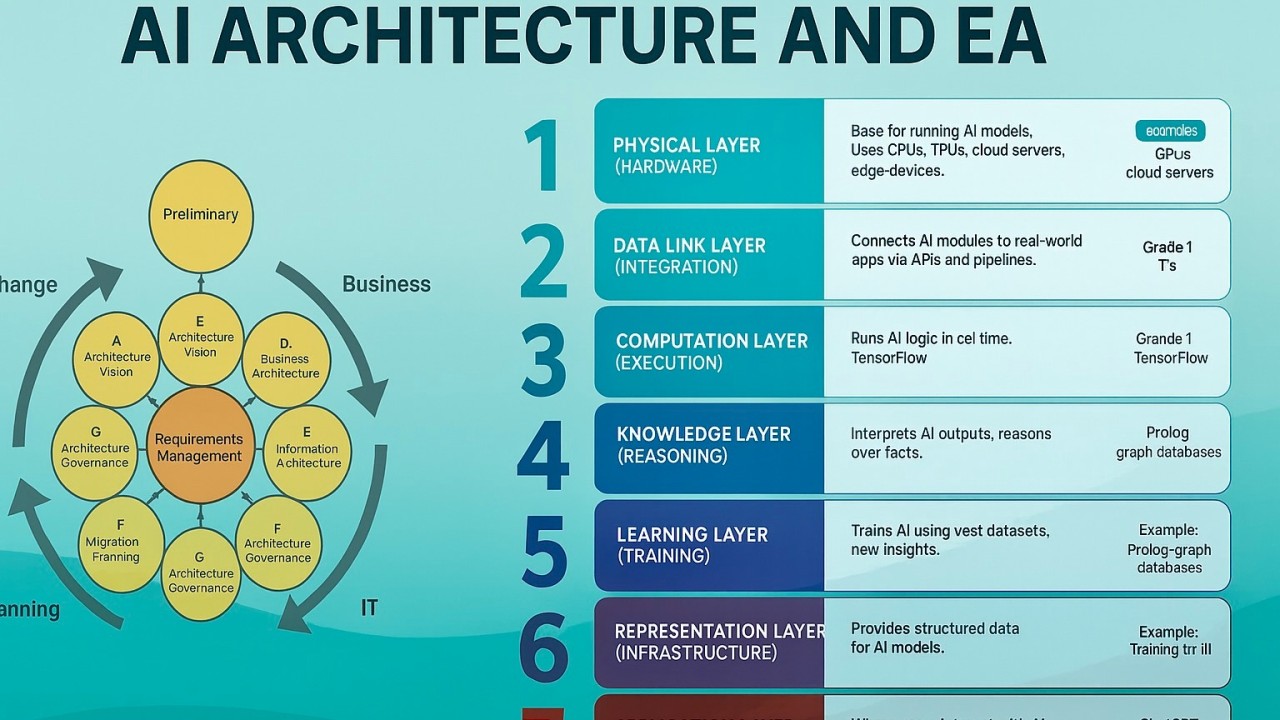

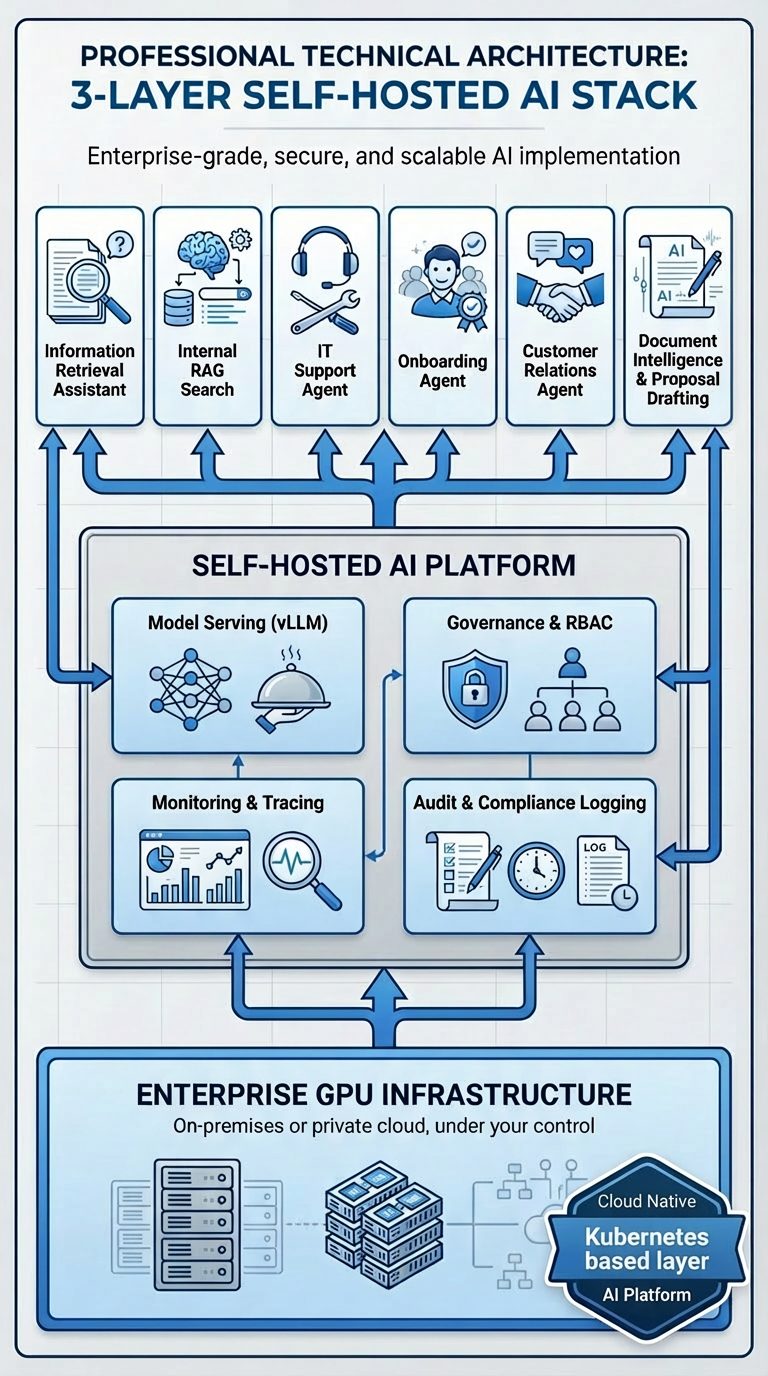

The second you move from Q&A to action, you need infrastructure. You need something that sits underneath all your AI experiences and says: "Here are the actual systems we can connect to. Here's what data you can access. Here's what permission levels apply. Here's what actually happened when you took that action."

That's the AI layer. That's the thing companies are fighting over right now.

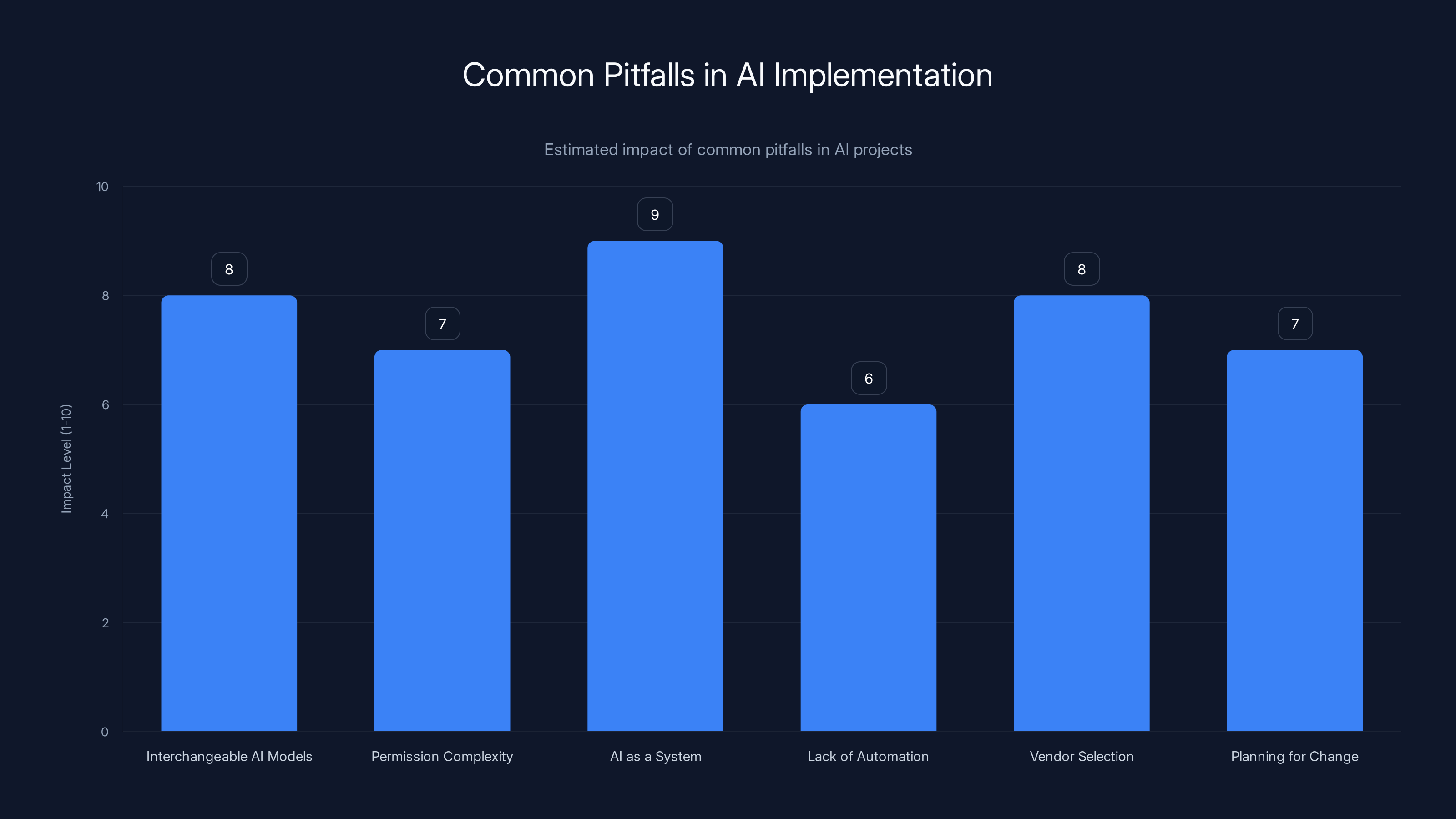

The chart estimates the impact level of common pitfalls in AI implementation. Treating AI as a system and choosing the right vendor are critical, with high impact levels of 9 and 8 respectively. Estimated data.

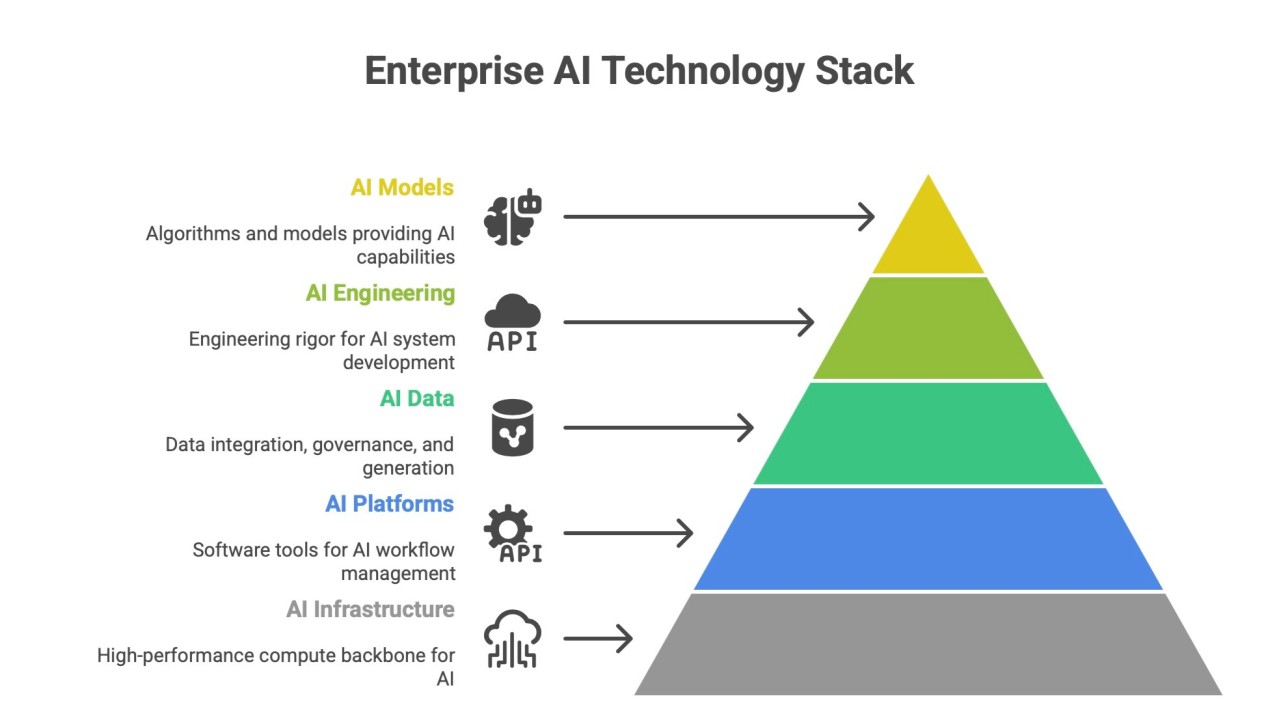

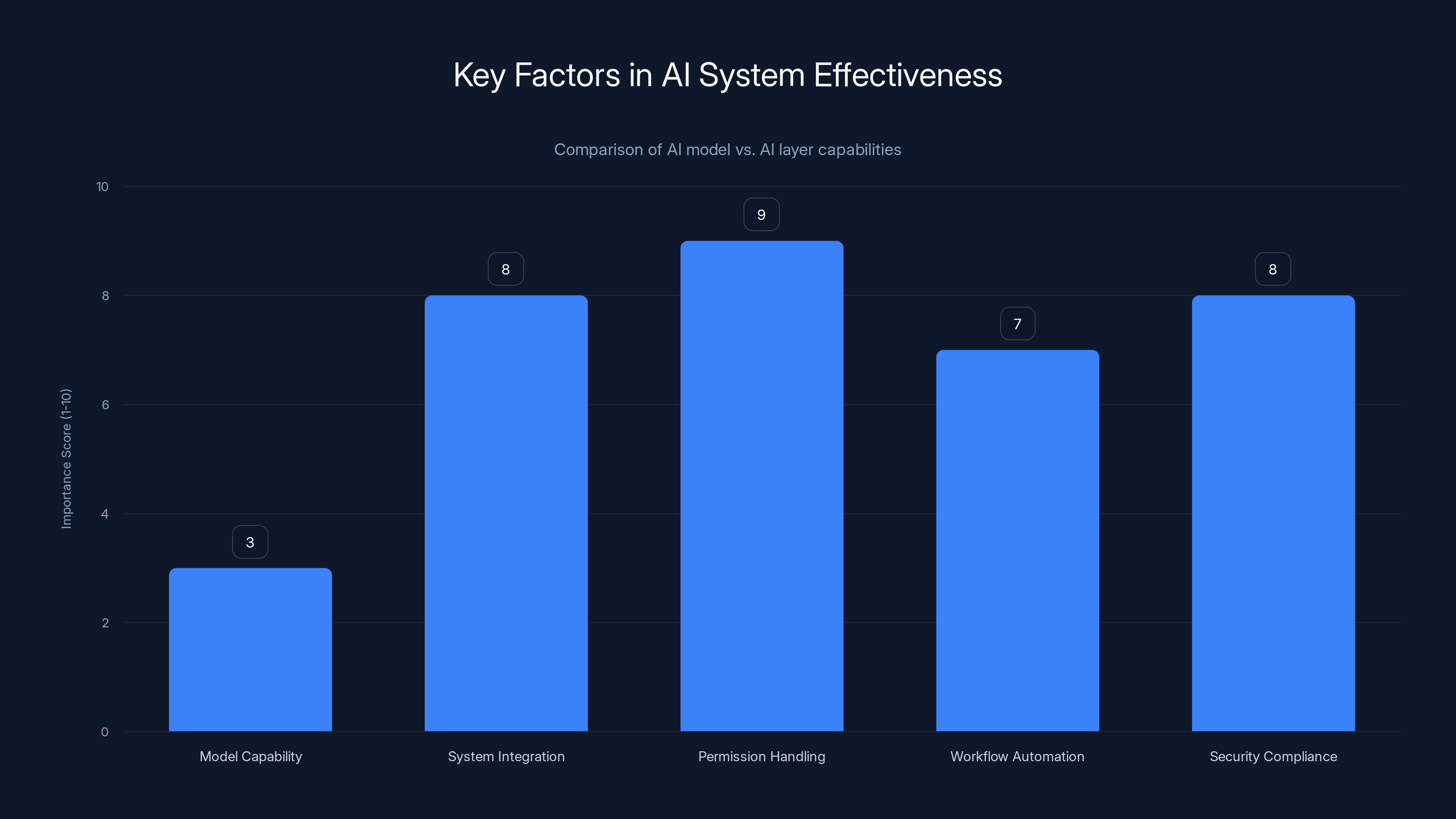

Why the AI Layer Beats the AI Model

Here's a truth that nobody in the AI industry wants to admit: the language model you use matters way less than you think.

GPT-4o is impressive. Claude 3.5 is impressive. Grok has a weird sense of humor. But all of them are roughly in the same capability range for most enterprise tasks. They can answer questions. They can write code. They can summarize documents. The differences between them are real, but marginal.

What actually determines whether an AI system creates value in your organization isn't the model. It's everything else.

It's whether the AI can connect to your actual systems. It's whether it understands your permission structure so it doesn't show someone a document they shouldn't see. It's whether it can write to your database and trigger workflows. It's whether it knows the difference between a legitimate request and someone trying to misuse it.

Think about it this way: you could have the smartest AI model in the world, but if it can't access your customer relationship database, it's useless for your sales team. You could have the best reasoning model ever built, but if it doesn't understand your organization's security policies, you can't actually deploy it.

The AI layer is the thing that makes the model useful. It's the translation layer between "this is a smart AI" and "this AI actually understands our business."

This is why companies like Glean started as search engines. Search is boring. Search isn't a $7 billion company. But search is infrastructure. Search is the thing that lets you query massive amounts of data efficiently and respect permission structures at scale.

Building the AI layer on top of great search infrastructure means you start with permission handling, data understanding, and integration patterns already solved. Then you add the fancy AI on top.

Compare that to what happens when a big tech company tries to bolt AI onto their existing stack. Microsoft built Copilot by taking their existing Office infrastructure and adding an AI model on top. Google tried something similar with Workspace. They had to solve the permission problem, the integration problem, the security problem, and the context problem, but from the opposite direction. They started with the model and tried to retrofit the infrastructure.

Neither approach is wrong, but they have different scaling characteristics. One says, "We have the infrastructure, now add intelligence." The other says, "We have the intelligence, now add infrastructure."

Enterprise customers are discovering that the infrastructure part is actually harder than the intelligence part.

The Three Emerging Ownership Models

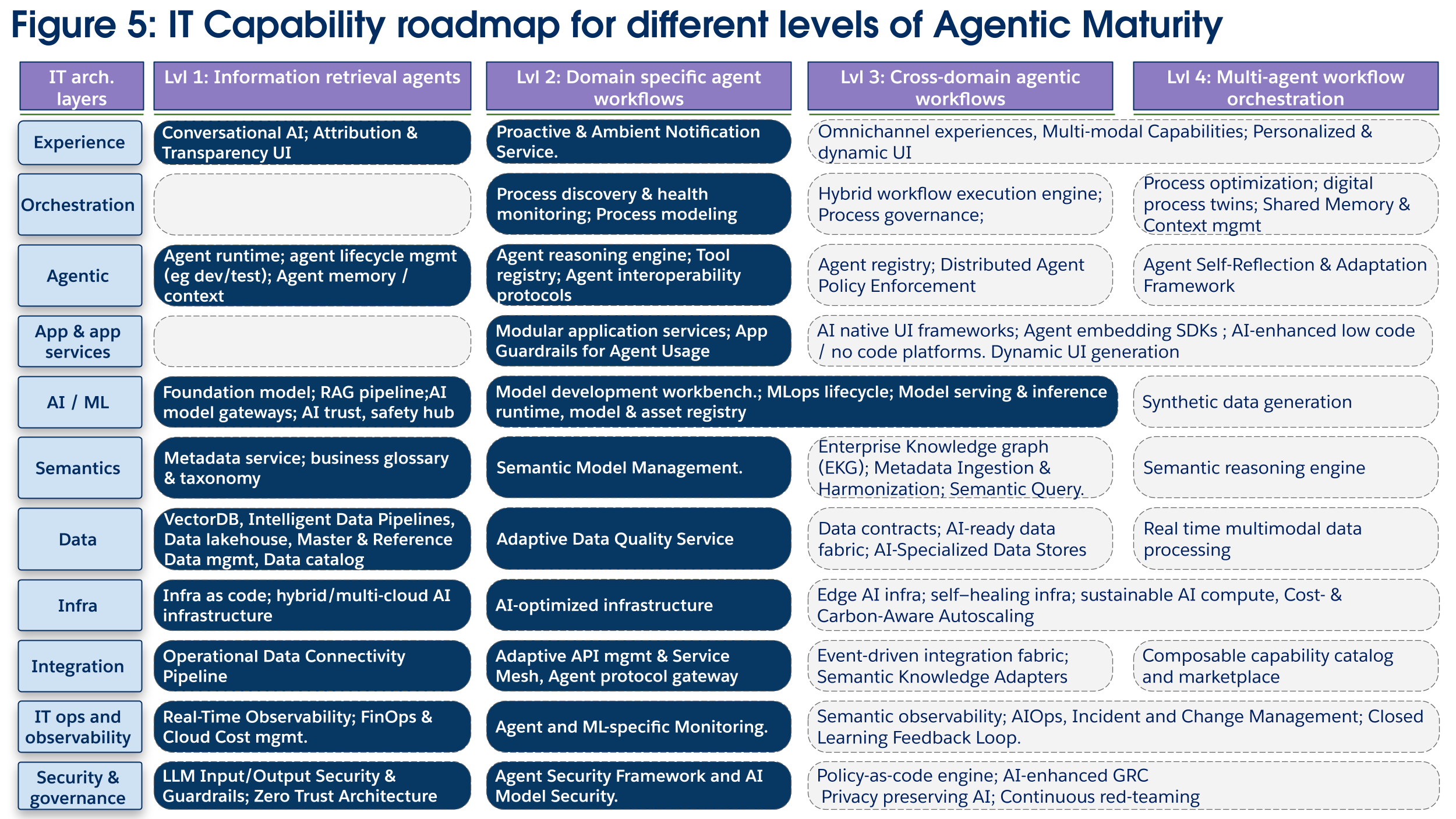

Right now, enterprises are choosing from three fundamentally different approaches to who owns the AI layer.

Model 1: Platform Consolidation

Microsoft and Google and Apple are all trying to convince you that they should own your AI layer because they already own so much of your infrastructure.

Microsoft's play is straightforward: you already have Office, Teams, Share Point, Outlook, and Azure. We'll put Copilot everywhere. It'll understand your Office data, Teams conversations, Share Point documents, and calendar. It'll work across your email and your meetings and your documents.

The advantage is obvious: tight integration with tools you already use every day. No separate dashboard. No API keys to manage. Microsoft controls the whole stack.

The disadvantage is also obvious: you're locked in. Your AI layer works great with Microsoft products and sort of okay with everything else. You want to use it with Salesforce? You can, but it'll be clunky. You want to use it with your custom internal tools? Good luck.

Google's making similar bets with Workspace. Apple is trying with Siri and whatever they're building into their next operating system. Amazon is positioned to own the AI layer in AWS environments.

This model works best for: organizations heavily invested in one platform already. Organizations that value tight integration over flexibility. Organizations that don't have complex custom software. Organizations that are okay with lock-in because the alternative is managing integrations themselves.

It doesn't work for: complex enterprises with heterogeneous systems. Organizations that want to choose best-of-breed AI models. Organizations that need flexibility as the AI landscape evolves.

Model 2: Standalone AI Infrastructure Specialists

Glean is the most obvious player here, but Anthropic's Claude enterprise deployments, custom implementations of open-source models, and a handful of startups are pursuing this model.

The pitch is simple: we don't own your entire stack, but we own the coordination layer. We understand all your systems, we handle permissions correctly, we integrate with whatever you want to use, and we don't care which AI model powers the actual intelligence.

The advantage of this model is flexibility. You can swap AI models. You can add new integrations. You can maintain independence from any single large vendor.

The disadvantage is complexity. You need to evaluate this vendor independently. You need to integrate it. You need to maintain it. You're now dependent on a company that doesn't have the resources of Microsoft or Google.

This model works best for: large enterprises with complex, heterogeneous systems. Organizations that want to maintain independence from platform vendors. Organizations with sophisticated enough IT teams to handle additional integrations. Organizations that want to evaluate multiple AI models and choose what works best.

It doesn't work for: small organizations that want to minimize complexity. Organizations that are already fully committed to one platform. Organizations that don't have the IT sophistication to manage additional infrastructure.

Model 3: Internal Build (The Rare Unicorn)

Some organizations are building this themselves. Google did. Microsoft did (well, they built Copilot but they also have custom stuff). Some really large enterprises are attempting it.

Internal build means you hire Ph D-level engineers, you understand your own data, you understand your own permission structures, and you build the infrastructure to coordinate all your AI systems.

The advantage is ultimate flexibility and control. The disadvantage is that you need 50+ really excellent engineers for 3+ years. You need world-class AI expertise. You need deep knowledge of your own infrastructure. You need to actually compete with teams at Microsoft and Google who are solving these problems full-time.

This model works best for: technology companies with world-class talent. Organizations so unique that no vendor solution could work. Organizations so large that building is cheaper than licensing.

It doesn't work for: everyone else. This is not a realistic option for 99% of enterprises.

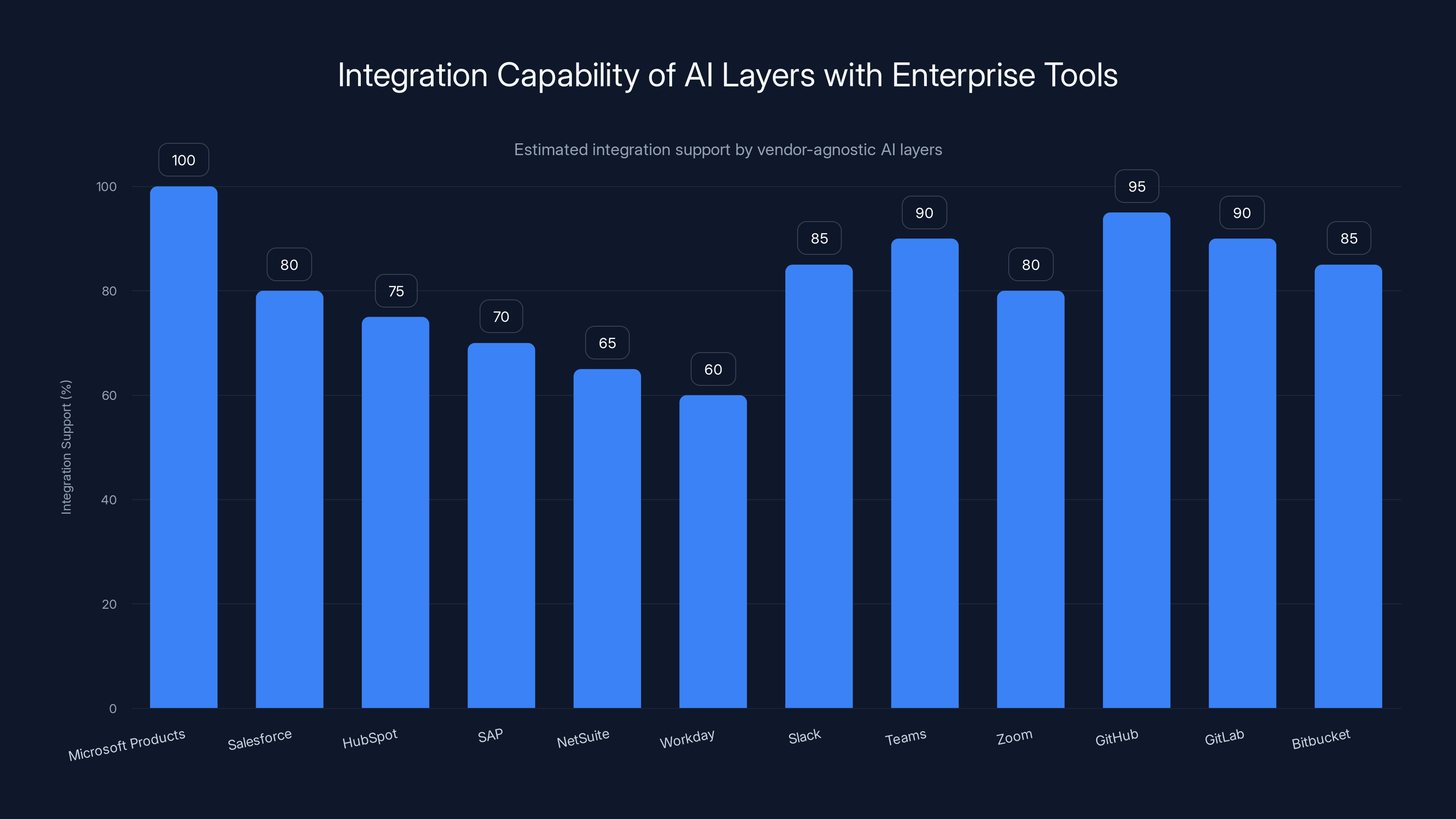

Vendor-agnostic AI layers provide broader integration support across various enterprise tools, making them more valuable in diverse software ecosystems. Estimated data.

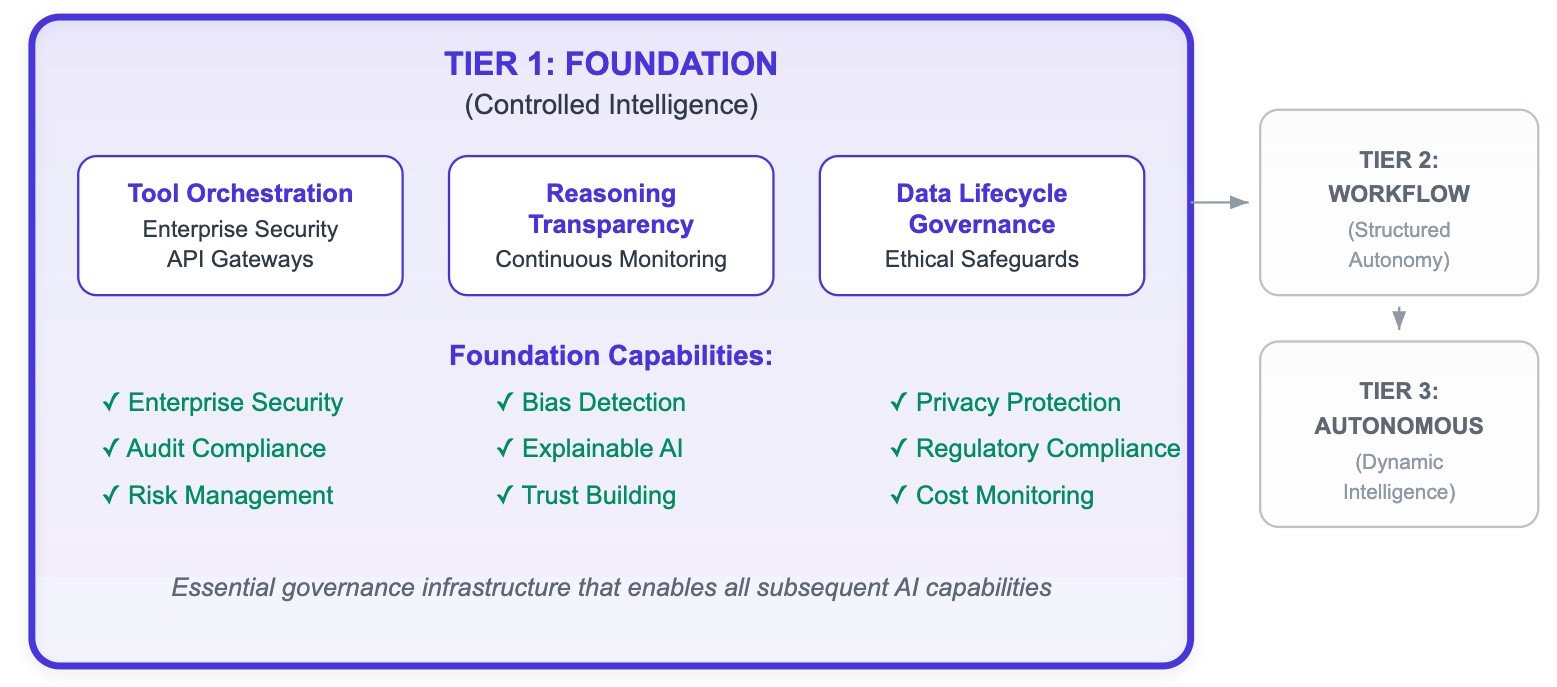

The Security and Governance Complexity

Here's where the AI layer problem gets genuinely hard.

Traditional enterprise software enforced permissions at the application level. You had access to Salesforce or you didn't. You could see a specific account in Salesforce or you couldn't. The permission rules were declarative and relatively static.

AI systems blow this up because they need to reason about permission across heterogeneous systems in real-time.

Say you ask your AI assistant: "Show me all our biggest accounts that are at risk of churn, and tell me what conversations we've had with them in the last 90 days."

To answer that question, the AI needs to:

- Query your CRM to find accounts at risk of churn

- Filter those results to only accounts you have permission to see

- Query your email archive for conversations with those accounts

- Filter those to only conversations you should have access to

- Query your call recording system for calls with those accounts

- Filter those to only calls where you were a participant or have been granted access

- Synthesize all of that information

- Make sure nothing in the synthesis reveals information you shouldn't see

And you need to do all that in less than 5 seconds, with perfect accuracy on the permission parts. One mistake and you've exposed confidential information.

This is not a problem that the AI model solves. This is not a problem that better prompting solves. This is an infrastructure problem, and it's the core of what the AI layer does.

Glean's entire engineering effort is basically solving this problem at scale. How do you understand permission structures across heterogeneous systems? How do you enforce them in real-time without degrading performance? How do you audit and log every access so you can prove you didn't leak data?

Microsoft's entire effort with Copilot is also solving this problem, but within their own systems where permission structures are relatively standard.

The organizations that get this right will have an enormous competitive advantage. They'll be able to use AI in sensitive functions like legal, finance, sales, and HR because they can actually prove they're enforcing permissions correctly.

The organizations that get this wrong will face data leaks, regulatory violations, and internal security breaches that will make everyone distrust AI in the organization.

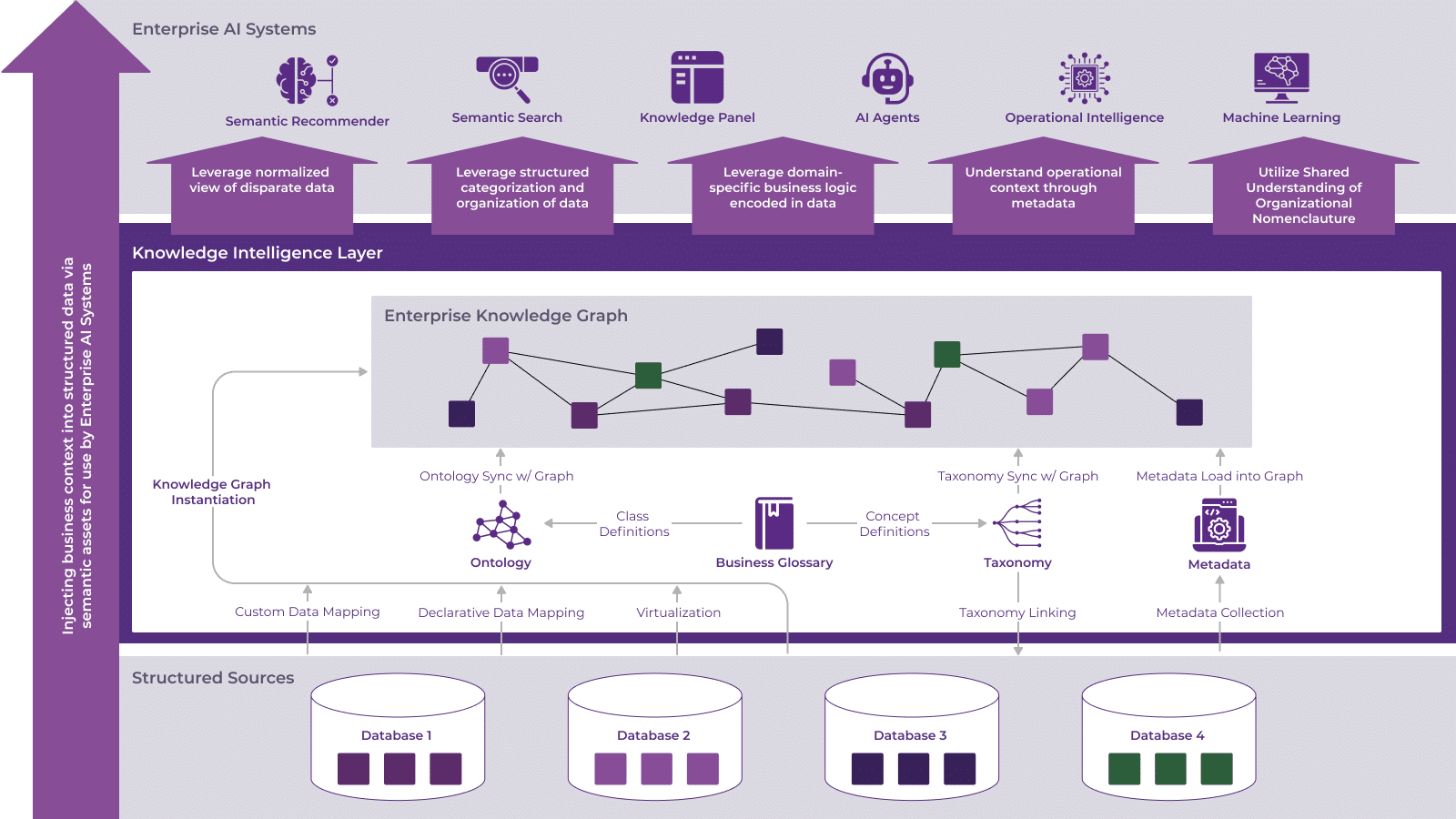

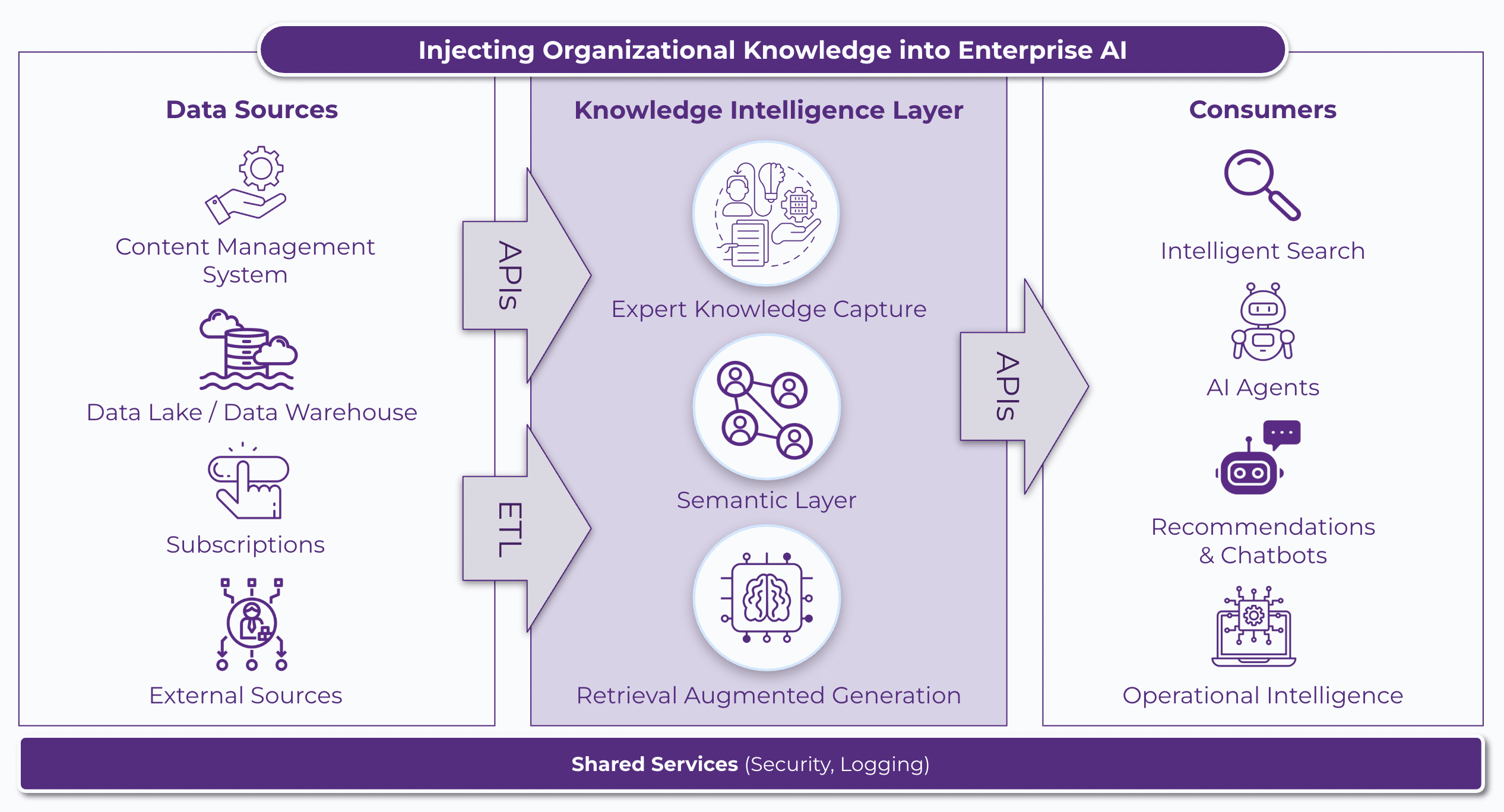

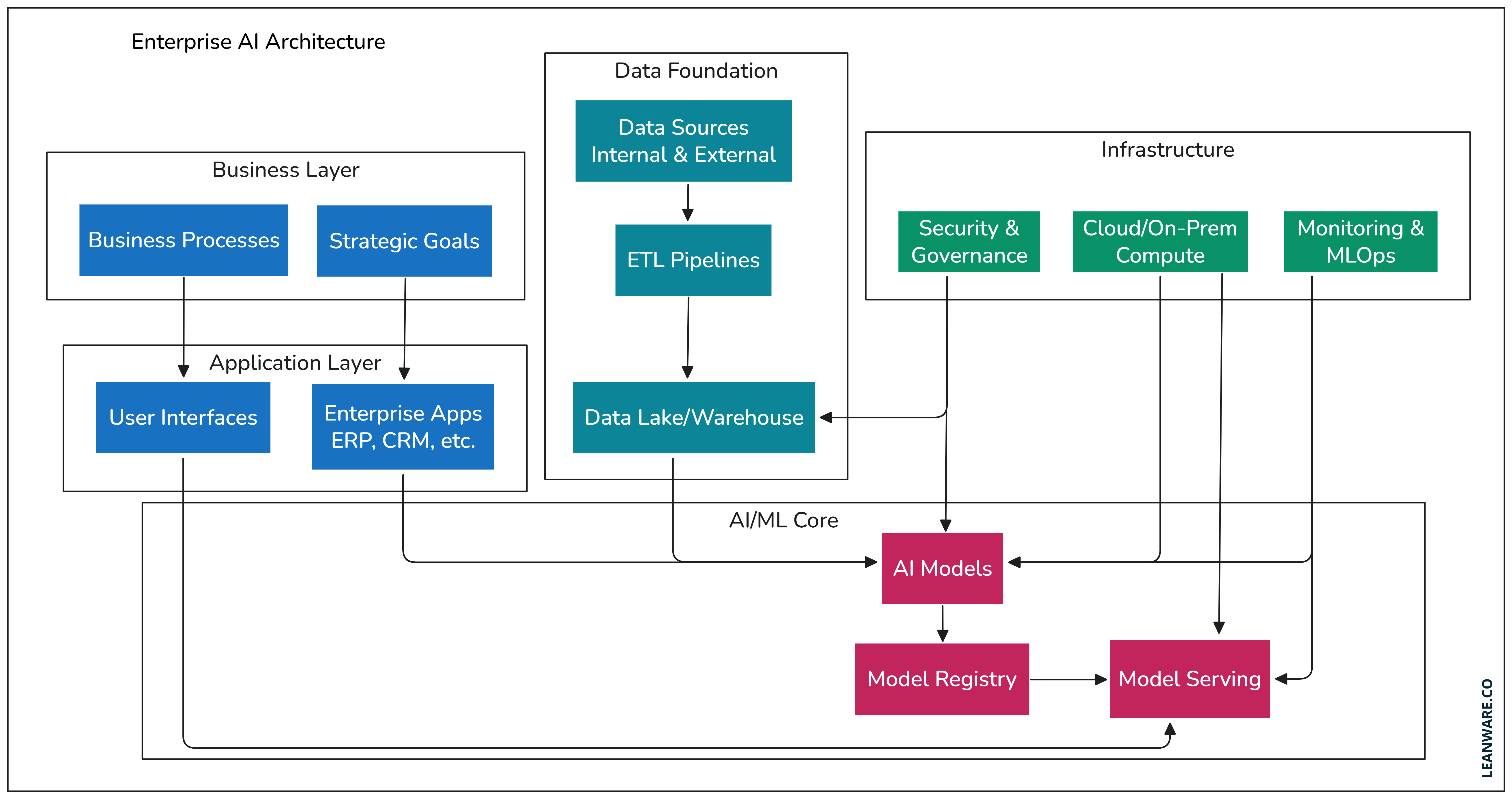

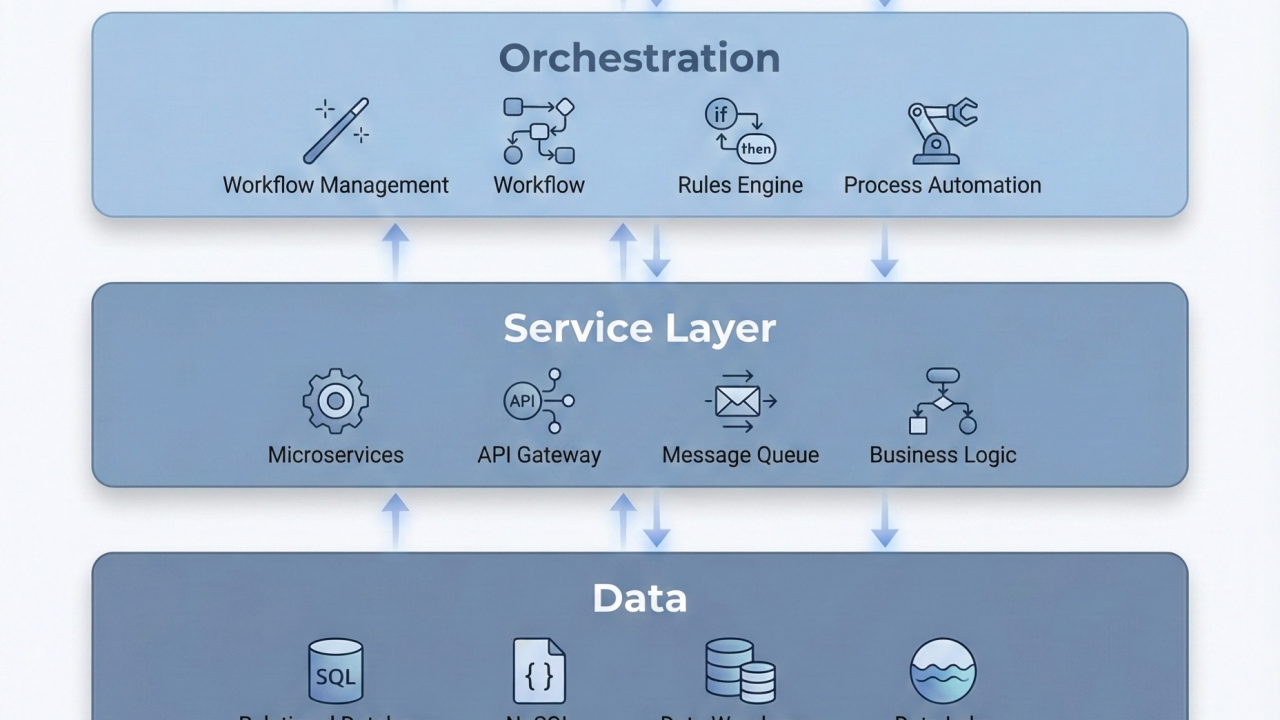

Understanding Enterprise Data Connectivity

The second-hardest problem the AI layer solves is data connectivity and context.

Most enterprises have data in 15+ different systems. CRM data is in Salesforce or Hub Spot. HR data is in Workday or Bamboo HR. Engineering data is in Git Hub or Git Lab. Product usage data is in Amplitude or Mixpanel. Internal documents are in Sharepoint or Google Drive or Notion. Customer support is in Zendesk or Intercom. Finance is in Net Suite or SAP.

That's a conservative list. Most large enterprises have 30+ major data systems.

Building an AI system that understands all of that is the problem.

When the AI layer is good, it can take a simple question like "Which of our biggest customers haven't logged in this month?" and simultaneously query Salesforce for customer data, Amplitude for usage data, and your billing system for revenue data, then synthesize it all into an answer with context.

When the AI layer is bad, the AI answers based on whatever data it's been told about, which is inevitably incomplete, outdated, or wrong.

This is why companies are investing in semantic understanding of their data. The AI layer needs to know that "customer ARR" in your Salesforce system is called "annual contract value" in your finance system and "annual revenue per customer" in your BI tool. It needs to understand these are the same thing.

It needs to know that your customer success team calls churn "customer exit" and your finance team calls it "revenue churn" and your product team calls it "negative NRR." These all mean different things, but the AI layer needs to understand the relationships.

Glean started by building search that understood this kind of semantic meaning. Elasticsearch searches by keyword. Glean's search understands what you mean. That's why they could layer AI on top—the foundation was already handling data understanding.

Most other AI systems are still searching by keyword and hallucinating answers. It's a more fundamental problem than people realize.

Building an AI layer internally requires significant resources (50+ engineers), while buying from a vendor offers varying levels of integration simplicity and flexibility. Estimated data.

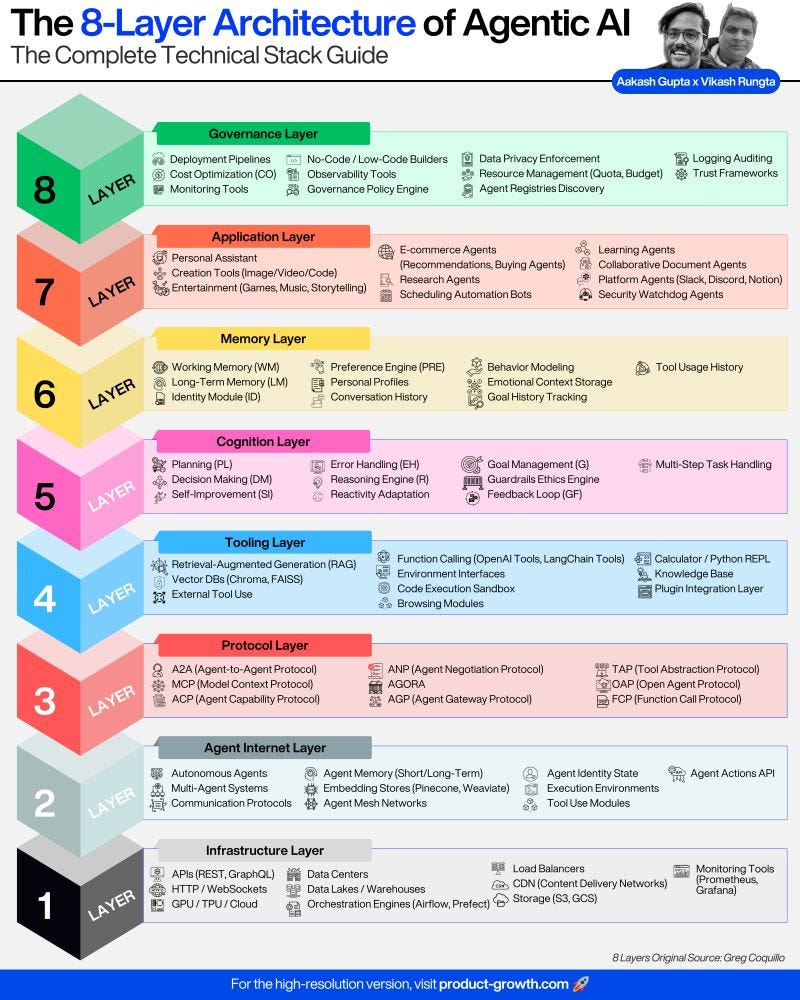

Agent Architecture and Autonomous Action

Here's where things get genuinely weird, because the industry is now focused on "agents," and everyone has different definitions of what an agent is.

A chatbot is reactive. You ask it something, it answers.

An agent is supposed to be proactive. It watches for conditions. It takes action. It coordinates multiple systems. It reasons about what to do next.

But building real agents is significantly harder than building good chatbots.

Take a simple example: "Create a summary of customer feedback from last month and share it with the product team." That sounds simple, but an agent needs to:

- Understand what "customer feedback" means (could be support tickets, feature requests, user research, tweets, reviews, calls)

- Figure out where that data lives

- Query all of those sources

- Filter to last month

- Understand what "share with the product team" means (Slack channel? Email? Document?)

- Synthesize the feedback

- Format it appropriately

- Deliver it

- Confirm it was delivered

- Handle any errors

Right now, most "agents" are really just sophisticated chatbots that can call APIs. They can answer questions and trigger some actions, but they can't really reason about ambiguity or handle complex multi-system tasks.

This is why the most successful autonomous systems in enterprises are narrowly scoped. Like, "every time a support ticket comes in, automatically assign it to the right person and send them a summary." That's not really an agent. That's a well-configured automation.

Real agents would look more like: "Understand what's happening with our churn rate. Investigate why. Identify customers at risk. Alert the right people. Suggest specific interventions. Track which interventions work best. Optimize over time."

We're maybe 20% of the way to building agents that can actually do that.

The AI layer matters here because agent infrastructure is much harder to build than question-answering infrastructure. You need:

- Better error handling

- Better confirmation mechanisms

- Better rollback capabilities

- Better logging and auditability

- Better permission checks before taking action

Microsoft's approach has been to build agents slowly and carefully within their own products. Google's approach has been similar. The startup community has been hyping agents more than building them, which is typical.

But the companies that actually build scalable agent infrastructure first will own the enterprise AI layer for the next decade.

The Role of Context Windows and Real-Time Data

Context window size has gotten a lot of attention lately. You can now give Claude 200,000 tokens of context. GPT-4o can handle similar amounts. That's huge.

But here's the thing: for enterprise applications, context window size matters less than real-time data access.

You could give an AI system your entire customer database as context, but if that database changes between when you load it and when the user uses the system, your context is outdated.

This is why the AI layer needs to do real-time query, not batch context loading.

Glean's model is to query your actual systems and return recent, accurate data. Microsoft's model is to have AI understand your systems and query them in real-time. Both approaches require the AI layer, not just the model.

Large context windows are genuinely useful for things like analyzing historical documents or understanding complex technical specifications. But for day-to-day enterprise work, real-time data access matters more.

This also means that the AI model doesn't need to be as large. A 7B parameter model with real-time data access can outperform a 70B parameter model using stale context.

Enterprises are starting to figure this out, which changes the economics of AI infrastructure. You don't need to pay for expensive large models if you have good data access.

The AI layer's ability to integrate with systems, handle permissions, automate workflows, and comply with security is more critical than the model's standalone capability. Estimated data.

Integration Patterns and API Ecosystems

The future AI layer success depends on how well it integrates with existing software ecosystems.

This is where you're seeing the consolidation play from Microsoft and Google make sense. They have deep integration with their own products. They can make sure Copilot works flawlessly in Office, Teams, and Azure.

But most enterprises use software from 15+ vendors. An AI layer that only works with Microsoft products is missing 80% of where work actually happens.

This is why startups building vendor-agnostic AI layers have an advantage. They have to support Salesforce and Hub Spot and both. They have to work with SAP and Net Suite and Workday. They have to handle Slack and Teams and Zoom. They have to support Git Hub and Git Lab and Bitbucket.

The vendor-agnostic approach is harder, but it's also more valuable. It's why Glean could raise $150 million and why similar infrastructure startups are raising massive rounds.

The API ecosystem also matters because most AI access will flow through APIs, not through UI. A product manager won't be asking their AI assistant in the Copilot UI to give them forecast data. They'll have it embedded in their BI tool's dashboard. A sales rep won't be going to Glean to see account information. They'll have it appear in their CRM.

The AI layer that becomes the de facto standard for AI API access in enterprise will win. It's similar to how Twilio became the de facto standard for SMS APIs, or how Stripe became the de facto standard for payment processing.

Cost and ROI Mathematics

Let's talk about the actual economics because they matter to the decision.

Traditional Saa S costs are predictable. You pay per user, per month.

AI infrastructure costs are weird because they're variable. If employees ask their AI assistant to process a million documents, you're paying for the compute. If they ask it to process a thousand documents, you're paying less.

Most vendors structure this as:

- Base fee for access to the platform

- Per-query or per-token charges for AI operations

- Storage charges for cached data

- Integration charges for connectors to systems

For a company with 500 employees using the AI layer heavily, budget

The ROI calculation is straightforward:

If you have 500 employees and the AI layer saves 2 hours per week for each employee, that's 52,000 hours per year. At a fully-loaded cost of

If the AI infrastructure costs $300K per year, the ROI is 17x. That's phenomenal.

But here's the catch: you need to actually get 2 hours per week of productive time savings from the AI layer. Most early implementations get 0.5 hours per week. Then they plateau.

To get 2 hours per week, you need:

- Deep integration with systems where work actually happens

- Truly autonomous agents that don't require human oversight

- AI that understands your specific business context

- Workflows that are actually automated, not just informed

Number 2 and 3 and 4 require the AI layer to be really good. And being really good at those things is hard, which is why this is where the real competition is happening.

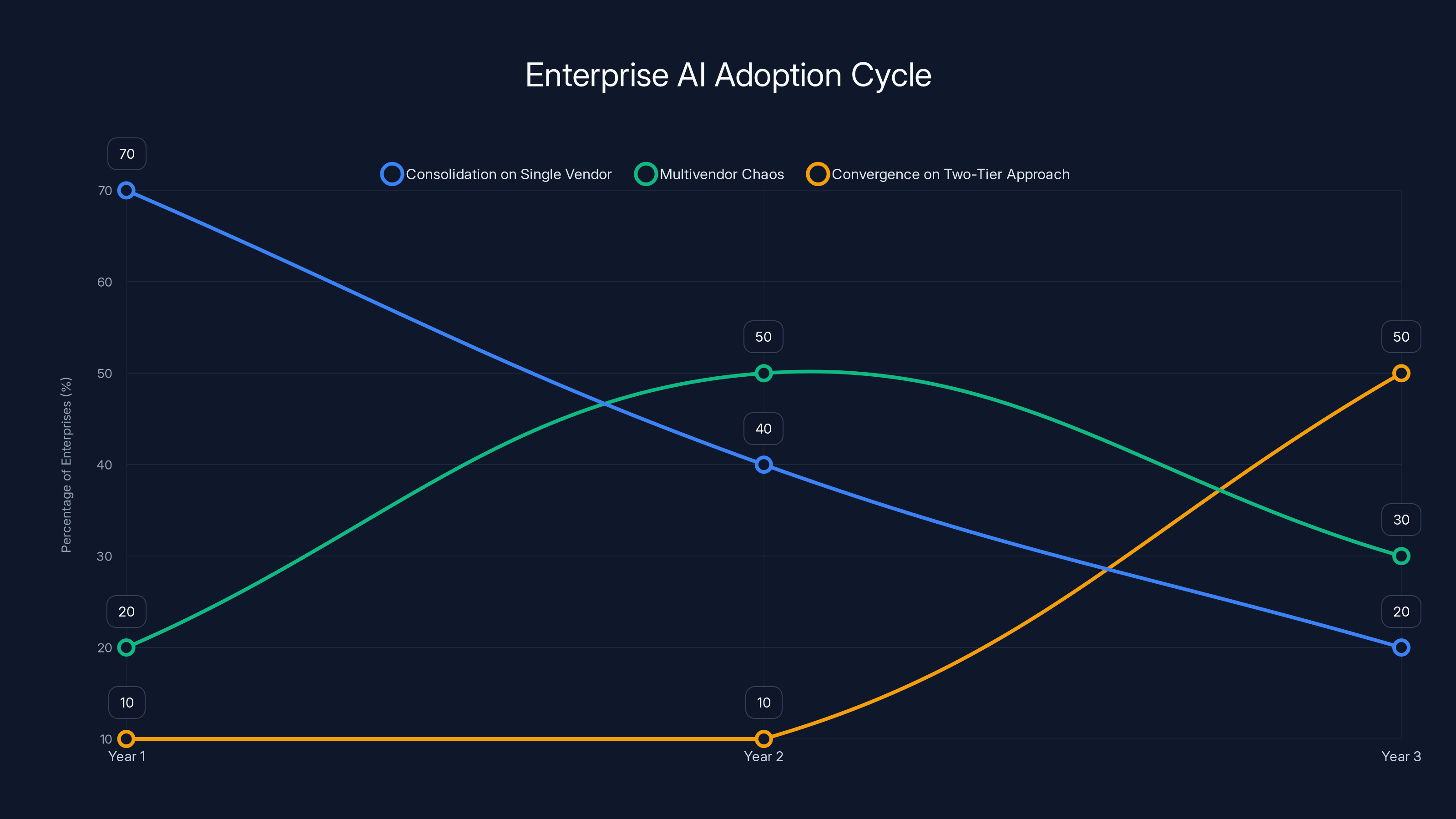

Estimated data shows a shift from single vendor consolidation to a two-tier approach over three years, reflecting a trend towards specialization in enterprise AI adoption.

Decision Framework: Which Model Should You Choose?

Here's the framework I'd use:

If you're deeply embedded in one platform ecosystem (all Microsoft, all Google, all AWS): Consolidation model. Take the integrated solution from your existing vendor. Yes, you lose some flexibility, but you gain seamless integration and you don't have to manage another vendor.

If you have a complex, heterogeneous environment with 20+ significant software systems: Standalone AI layer specialist. You need flexibility more than you need consolidation. Glean or similar infrastructure plays make sense.

If you have less than 5 core business systems and you want to minimize complexity: Probably still consolidation. Glean is built for complexity. If you don't have it, you're overengineering.

If you have unique business models or proprietary systems that competitors don't use: Build internally or augment a vendor solution with custom development. You probably need too much customization for an off-the-shelf solution anyway.

If you're not sure and you want to experiment: Start with your platform vendor's AI layer (Copilot, Duet, etc.). It's free or cheap. See if it creates value. If it doesn't, you haven't invested much. If it does, you've got a foundation to build on.

The mistake most enterprises make is choosing before they understand what they actually need the AI layer to do. They buy a tool first, then try to figure out how to use it.

Reverse that: figure out what you want the AI layer to do. Then choose the vendor that can actually do that.

The Future: Convergence and Specialization

Look at how other infrastructure decisions happened in enterprises.

Cloud adoption looked like consolidation at first (AWS will own everything). Then it became multicloud (we'll use AWS and Azure). Then it became cloud-agnostic (we'll abstract the infrastructure).

AI layer adoption will probably follow a similar pattern.

Year one: companies try to consolidate on one vendor's AI layer. Microsoft tries to own it. Google tries to own it. Some succeed with some customers.

Year two: companies realize no single vendor covers everything. They end up with multiple AI systems. Chaos ensues.

Year three and beyond: companies converge on a two-tier approach. A platform vendor for what they can handle well (Copilot for Office work). A specialist AI layer for everything else. Both feeding into integrated systems.

We're probably in Year 1.5 of this cycle right now.

The vendors who will win are:

- The platform consolidators who are really good at what they do (Microsoft seems to be ahead here)

- The specialist infrastructure vendors who understand enterprise complexity (Glean is the main player, but others will emerge)

- The open-source communities that provide alternatives for organizations that want independence

- The niche specialists who own specific domains (sales AI, engineering AI, HR AI, etc.)

The vendors who will lose are those trying to be everything to everyone with a generic AI assistant. That's too crowded and too competitive. Specificity beats generality.

Implementation: From Strategy to Execution

Assuming you've decided which model makes sense for your organization, here's how to actually implement it.

Phase 1: Audit and Understand (Month 1-2)

Before you can build an AI layer, you need to understand what it needs to do.

- Map all your data systems and what data they contain

- Map all your workflows and where friction points exist

- Interview employees in key roles about what AI would help them most

- Understand your permission structure and security requirements

- Understand your integration capabilities (who can manage APIs, who has access to databases, etc.)

This phase is boring but critical. Most implementations fail because they skip this and jump straight to "let's use AI."

Phase 2: Pilot and Learn (Month 3-6)

Start with a narrow use case. Not "use AI everywhere." Something specific like "use AI to help our customer support team respond faster to tickets."

- Choose your vendor

- Get pilot access

- Get one team to use it heavily

- Measure what happens (do tickets get resolved faster? Are responses better?)

- Learn what worked and what didn't

Most importantly, learn what your people actually need from the AI layer. Theory is wrong. Reality is right.

Phase 3: Scale Thoughtfully (Month 7-12)

Once you've proved the concept with one use case, expand to adjacent teams.

But expand thoughtfully. Each expansion teaches you something about what the AI layer needs to do.

Phase 4: Integrate (Ongoing)

The real work is integrating the AI layer into how work actually happens.

This is not about having a separate "AI tool" that people use. It's about AI being where people work.

In Salesforce, in your BI tool, in Slack, in your internal systems.

This integration work is 60% of the effort and 90% of the value.

Real Challenges and Common Pitfalls

Here's what actually happens in organizations trying to build this:

Pitfall 1: Assuming AI Models Are Interchangeable

Many vendors claim you can swap LLMs easily. This is not true. Once you've built your AI layer on top of one LLM's performance characteristics, switching to another LLM with different speeds, different reasoning abilities, and different ways of handling ambiguity is a major rewrite.

Choose your underlying model carefully. You're probably going to stick with it for years.

Pitfall 2: Underestimating Permission Complexity

Everyone says, "Yeah, we'll respect permissions." Then they actually try to do it and discover that getting permissions right is 40% of the engineering effort.

Budget for this. Don't assume your new AI layer vendor has solved it just because they claim they have. Test it.

Pitfall 3: Treating AI as a Tool Instead of a System

You don't get value from having an AI tool. You get value from having AI integrated into your systems.

If you're asking people to switch to a different tool to use AI, you're doing it wrong.

Pitfall 4: Not Automating Enough

Most enterprise AI deployments provide information retrieval. "Here's the answer to your question." Very few actually automate work.

Information retrieval is valuable but it has a ceiling. Automation has much more upside.

Pitfall 5: Choosing Vendors Based on Model Benchmarks Instead of Real-World Performance

GPT-4 scores well on benchmarks. In practice, Claude often works better for specific enterprise tasks. GPT-4o is faster and cheaper in production.

Benchmarks are not reality. Test on your actual workloads.

Pitfall 6: Failing to Plan for Change

AI is evolving incredibly fast. Models are getting better. New capabilities are emerging every quarter.

Architect your AI layer so you can swap components without rewriting everything. This is the whole point of the standalone specialist approach—modularity and changeability.

Competitive Advantage: Who Gets There First

Here's the thing about getting the AI layer right: first-mover advantage is real but not overwhelming. Second-mover advantage is actually more real.

First mover gets to learn what works and what doesn't. They'll make mistakes. They'll build things they don't need. They'll miss things they should have built.

Second mover watches, learns, and builds the better version.

This is why the consolidation players and the specialist infrastructure players both have a shot. Microsoft was first with Copilot but they're not best. Glean came after but they're arguably better architected for heterogeneous environments.

The real competitive advantage isn't being first. It's being better.

The organizations that will win with enterprise AI are those that:

- Get the infrastructure right (permissions, integrations, real-time data)

- Customize it to their specific business

- Automate, not just inform

- Measure what actually matters (time saved, quality improved, customer satisfaction increased)

- Iterate fast based on data

Getting there first is less important than getting there right.

Looking Forward: The Next Five Years

In five years, we won't call this the "AI layer." We'll just call it infrastructure. Like we don't call databases the "data layer" anymore. It's just infrastructure.

What we'll be arguing about is whether it's Microsoft's infrastructure, Google's infrastructure, Amazon's infrastructure, a specialist vendor's infrastructure, or home-built infrastructure.

My prediction: multicloud, multivendor approaches will dominate. Organizations will use best-of-breed AI models through APIs. Most will have one primary platform vendor for deep integration. Many will have a specialist AI infrastructure vendor for flexibility. Some will have open-source alternatives.

The consolidation narrative will continue but it'll be less dominant than the current hype suggests. There's too much value in flexibility and too much technical complexity in connecting everything.

The organizations winning in five years will be those that figured out their AI layer early and iterated relentlessly. Those that waited for "the winner" to emerge will be behind.

FAQ

What exactly is an enterprise AI layer?

An enterprise AI layer is the infrastructure system that sits between your employees and your company's data, handling permissions, integrations, real-time data access, and coordinating how multiple AI experiences can safely access and act on company information while enforcing security policies. It's the foundation that makes AI actually useful in business context instead of just a general-purpose chatbot.

How is an AI layer different from a chatbot or AI assistant?

A chatbot is a tool you go to when you need an answer. It's reactive. An AI layer is infrastructure that other applications and experiences build on top of. It's proactive, understands permissions, connects to your actual business systems, and enables AI functionality throughout your organization. A chatbot is something you use. An AI layer is something that powers how your organization uses AI.

Why does permission handling matter so much for AI layers?

Because the AI needs to respect the same access rules that your actual systems enforce. If a person can't see customer data in Salesforce, they shouldn't be able to ask the AI for that data. If someone shouldn't have access to confidential financial information, the AI shouldn't reveal it in synthesis or summaries. Getting this wrong risks data leaks, regulatory violations, and breaches of customer privacy. This is an infrastructure problem, not a model problem.

Should my company build an AI layer internally or buy from a vendor?

Internal build makes sense only if you're a technology company with world-class infrastructure engineering talent and the ability to dedicate 50+ engineers for multiple years. For most organizations, buying is better. The choice then becomes: buy from your platform vendor (Microsoft, Google, etc.) for integration simplicity, or buy from a specialist vendor (like Glean) for flexibility and heterogeneous environment support. Most mid-sized to large enterprises benefit from the specialist approach.

How do I know if an AI layer solution is actually handling permissions correctly?

Test it deliberately. Create test users with different permission levels. Try to get the AI to reveal information they shouldn't have access to. Ask it to show information from systems they're not supposed to access. If you find even one permission bypass, the solution isn't ready. Also ask to see audit logs showing every query and what data was accessed. Permission handling needs to be verifiable and auditable.

What's the typical cost and ROI for enterprise AI layer implementations?

Budget

Can I use multiple AI layer vendors or do I need to choose one?

Multiple vendor approaches are becoming more common, but they add complexity. The pattern emerging is: deep integration with one primary vendor (usually your platform vendor) for core workflows, plus specialized tools for specific domains or use cases. But unless you have sophisticated infrastructure teams, simpler is usually better. Choose one vendor, make it work really well, then expand if you need more.

How long does it take to see ROI from an AI layer investment?

Small pilots can show ROI in 2-3 months. Full organization rollout showing meaningful impact usually takes 6-12 months. The pattern is: month 1-2 learning what's possible, month 3-4 first pilots, month 5-8 scaling to adjacent teams, month 9-12 integrating into actual workflows where the real impact happens. Anyone promising faster results is overselling.

Conclusion: The AI Layer Is Your Next Strategic Infrastructure Decision

The AI layer question isn't a technology question. It's a business strategy question.

It's the same decision that enterprises made about database platforms in the 1990s, cloud platforms in the 2010s, and data warehouse architecture in the 2020s. Get it right and you have a foundation for a decade. Get it wrong and you're migrating off it in three years.

The wrong way to think about this: "Which AI model should we use?" The models are commoditizing fast. GPT-4o, Claude, Llama 3.1, Grok, and others are all pretty good. The differences matter less than you think.

The right way to think about it: "How should we architect AI into our organization?" That's the decision that matters. That's what determines whether AI creates massive value or becomes another expensive tool that sits unused.

The three paths are clear: consolidate on your platform vendor, choose a specialist infrastructure vendor, or build it yourself. Most enterprises will choose the specialist path because they have complex environments and value flexibility. Some will choose consolidation because they want simplicity and tight integration. Almost none should choose internal build—that's for companies like Microsoft and Google.

The best time to make this decision was six months ago. The second best time is right now.

Choose based on your actual environment, your actual needs, and your actual capability to manage the solution. Then execute disciplined, measure obsessively, and iterate quickly.

The organizations that do this right will have AI that actually works. That actually saves time. That actually creates value. That actually changes how people work.

Most won't. Most will have "AI" that's more hype than substance. That requires switching to a separate tool. That gives you answers you could have found yourself. That doesn't actually automate anything.

The difference isn't the model. It's the layer.

Get that right and everything else follows.

Key Takeaways

- Enterprise AI is evolving from Q&A chatbots to autonomous work assistants that execute tasks across organizations, requiring sophisticated infrastructure rather than just smart models.

- The AI layer—the infrastructure that sits between employees and company data—matters more than the underlying language model because it handles permissions, integrations, and real-world execution.

- Three ownership models are emerging: platform consolidation (Microsoft, Google), specialist vendors (Glean), and internal build, each with different tradeoffs for flexibility, integration, and complexity.

- Permission handling, real-time data access, and cross-system integration are the hard problems that determine whether AI creates value or just provides information, making infrastructure decisions critical.

- Most enterprises should choose a specialist AI infrastructure vendor if they have complex, heterogeneous environments, or their platform vendor if deeply committed to one ecosystem, with 12-24 month timelines to value being realistic.

Related Articles

- How AI Transforms Startup Economics: Enterprise Agents & Cost Reduction [2025]

- Meridian AI's $17M Raise: Redefining Agentic Financial Modeling [2025]

- Admin Work is Stealing Your Team's Productivity: Can AI Actually Help? [2025]

- OpenAI's Responses API: Agent Skills and Terminal Shell [2025]

- Observational Memory: How AI Agents Cut Costs 10x vs RAG [2025]

- Telegram Blocked in Russia: How Citizens Are Fighting Back With VPNs [2025]

![Who Owns Your Company's AI Layer? Enterprise Architecture Strategy [2025]](https://tryrunable.com/blog/who-owns-your-company-s-ai-layer-enterprise-architecture-str/image-1-1770844022436.jpg)