How to Operationalize Agentic AI in Enterprise Systems [2025]

The hype around agentic AI has been real. Autonomous systems that can reason, plan, and act independently sound transformative. The problem? Most organizations are stuck in a painful gap between proof-of-concept and production.

You've probably seen it yourself. A team runs a promising pilot with an AI agent handling customer support tickets. Results look good in the lab. Then reality hits. The agent needs to integrate with three legacy systems. Your data's scattered across different databases. Security and compliance requirements get complicated fast. Six months later, the pilot dies quietly, and the team moves on to the next shiny tool.

Here's what's really happening. The issue isn't the AI models themselves. Claude, GPT-4, and other frontier models are powerful enough. The bottleneck is architectural. Your existing infrastructure wasn't built for autonomous agents acting in real time across multiple systems. You're trying to bolt AI onto architecture designed for human workflows and batch processes.

Operationalizing agentic AI requires a fundamental shift in how enterprises build, deploy, and govern software. It's not about buying a better model. It's about creating the operational foundation that lets AI agents actually work.

This guide walks you through what that looks like in practice.

TL;DR

- Agentic AI pilots fail because of architectural mismatches, not AI limitations—legacy systems, siloed data, and governance gaps prevent production deployment

- Orchestration beats sophistication—unified platforms that connect agents to enterprise systems matter far more than building increasingly complex agents

- Low-code platforms are essential—composable, prebuilt connectors eliminate custom integrations and accelerate time-to-value

- Governance and monitoring must come first—agent autonomy requires visibility, audit trails, and security controls before deployment

- Hybrid models win—combining custom-built agents with agent-as-a-service (AaaS) solutions provides flexibility and standardization simultaneously

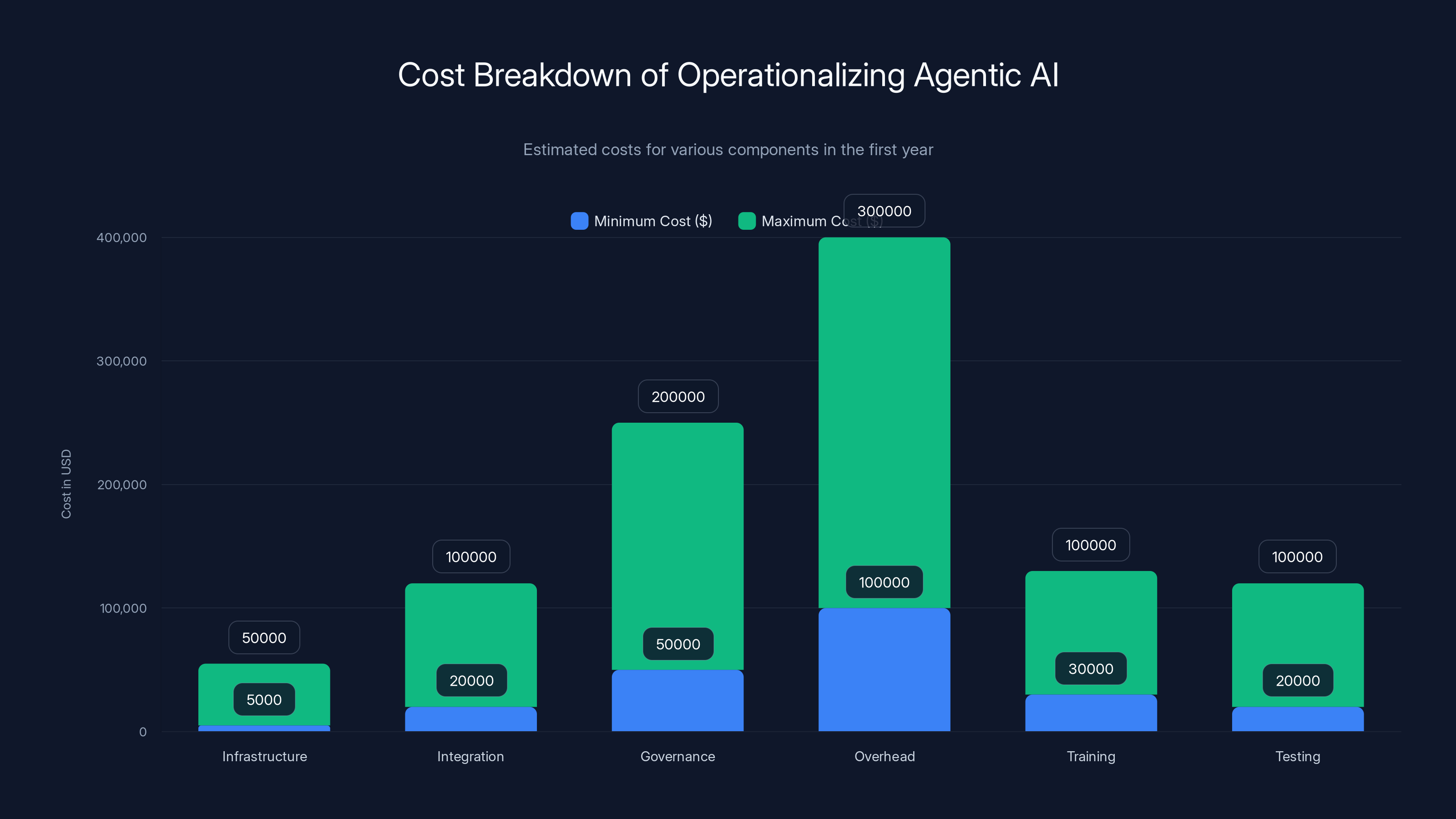

Estimated costs for operationalizing agentic AI range from

The Reality Gap: Why Agentic AI Pilots Stall

Let's be direct. The problem with agentic AI isn't ambition. It's execution. Across industries, CIOs report the same pattern: promising pilots that never make it to production. In some cases, 60% of AI initiatives never leave the lab. The reasons sound technical, but they're actually organizational and architectural.

When you deploy an AI agent in a controlled environment—a single database, a specific workflow, one API—it works great. The agent learns the patterns. It makes decisions. It completes tasks. But the moment you try to move that agent into the real world, where it needs to touch multiple systems, access regulated data, integrate with human workflows, and maintain audit trails, everything breaks.

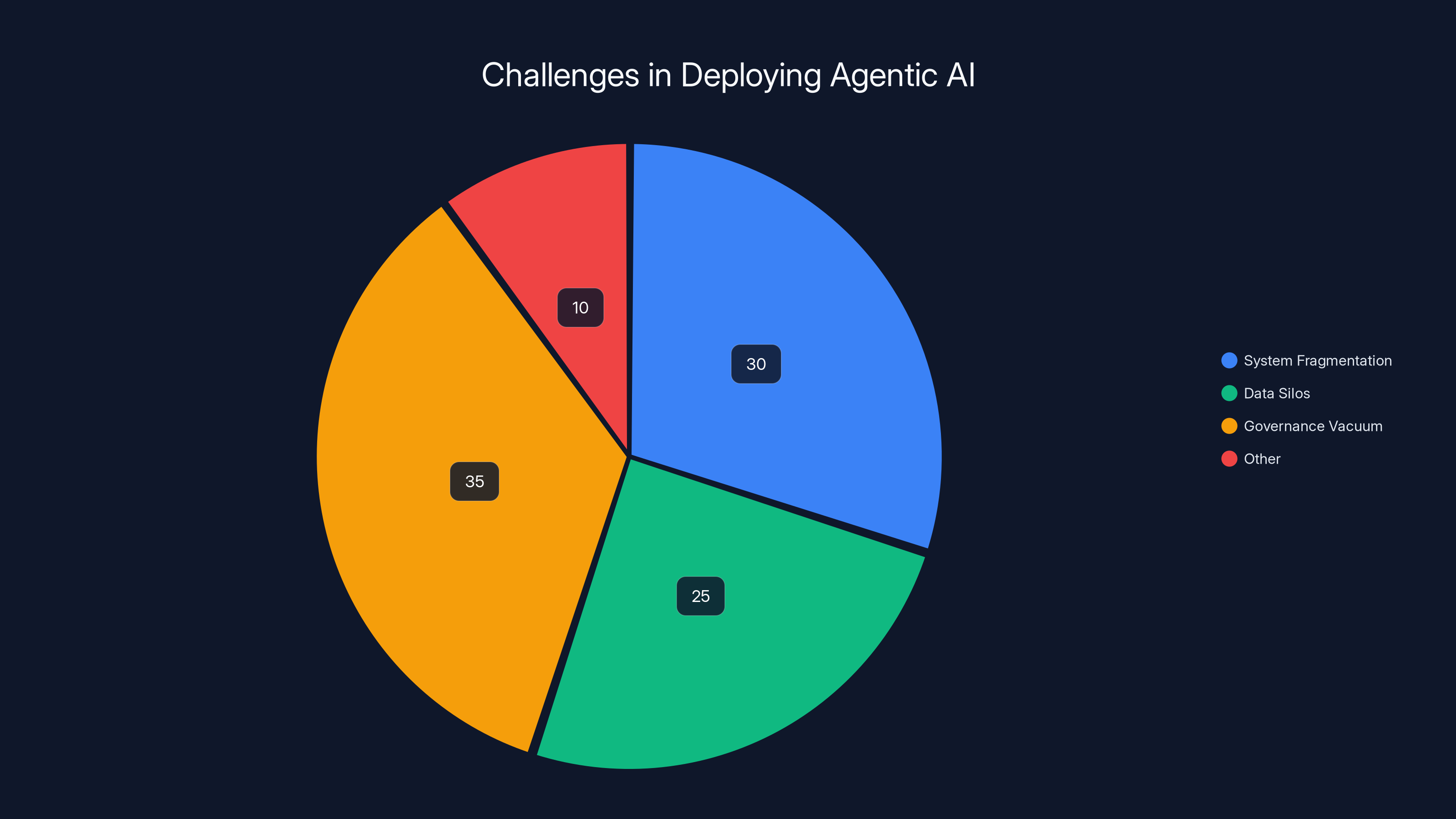

The first issue is system fragmentation. Most enterprises run on a patchwork of legacy systems. You might have SAP for finance, Salesforce for CRM, a homegrown supply chain system, and three different HR databases. An agentic AI system needs to understand all of these systems simultaneously, know which data lives where, understand the relationships between them, and act across all of them without breaking consistency or compliance.

The second issue is data silos. Even when you have data, it's locked away. Sales data lives in one system. Inventory data in another. Customer interaction history in a third. An autonomous agent that needs to make decisions based on complete information can't access it. Building custom data pipelines for each agent defeats the purpose of having autonomous systems in the first place.

The third issue, and this is where many organizations get stuck, is a governance vacuum. Autonomous systems create liability. If an agent makes a decision that harms a customer, violates compliance, or costs money, who's responsible? How do you audit what happened? What controls exist to prevent bad decisions? Most enterprises don't have answers to these questions, so they lock the agent down so much that it's no longer autonomous.

The fourth issue is development velocity. Building an agentic system today means writing custom code. Custom integrations with each system. Custom data connectors. Custom monitoring. Custom governance layers. A single agent that touches five systems might require weeks or months of engineering. That's why pilots work—the scope is narrow. But scaling that approach breaks immediately.

These aren't AI problems. They're infrastructure problems. Your organization has spent two decades optimizing software delivery around the assumption that humans make decisions. Agents make continuous autonomous decisions. That's a different game.

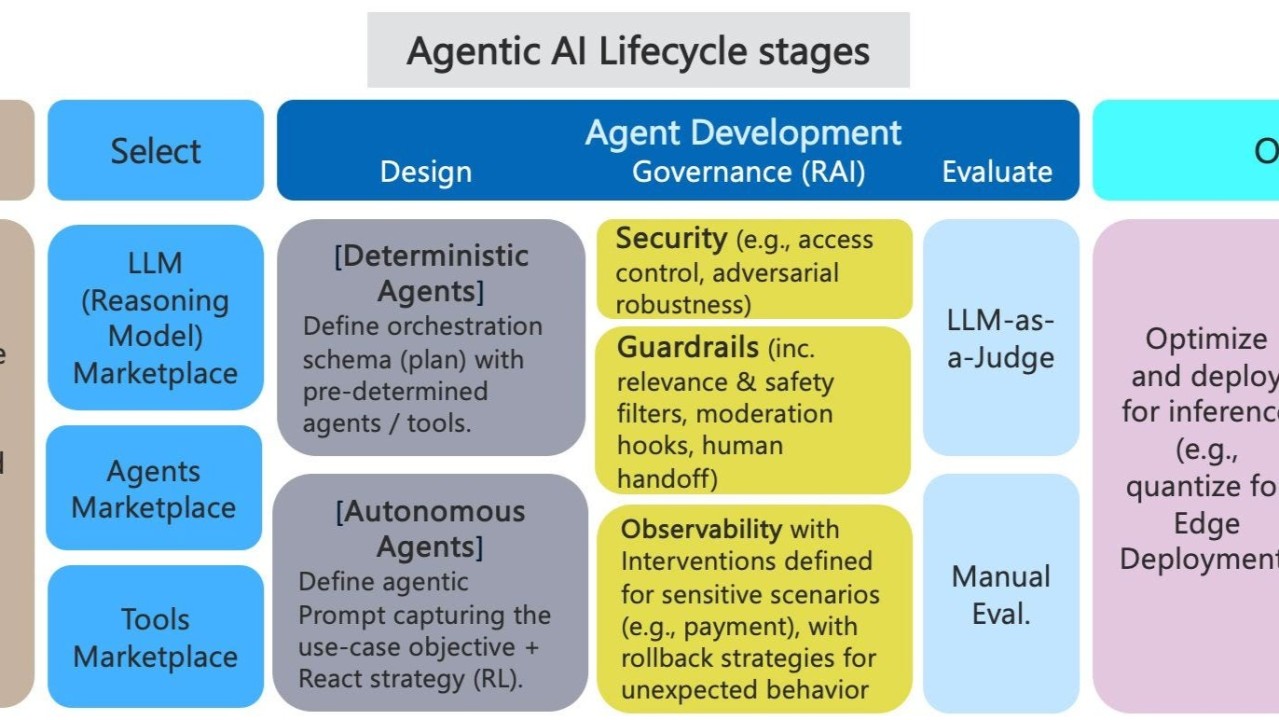

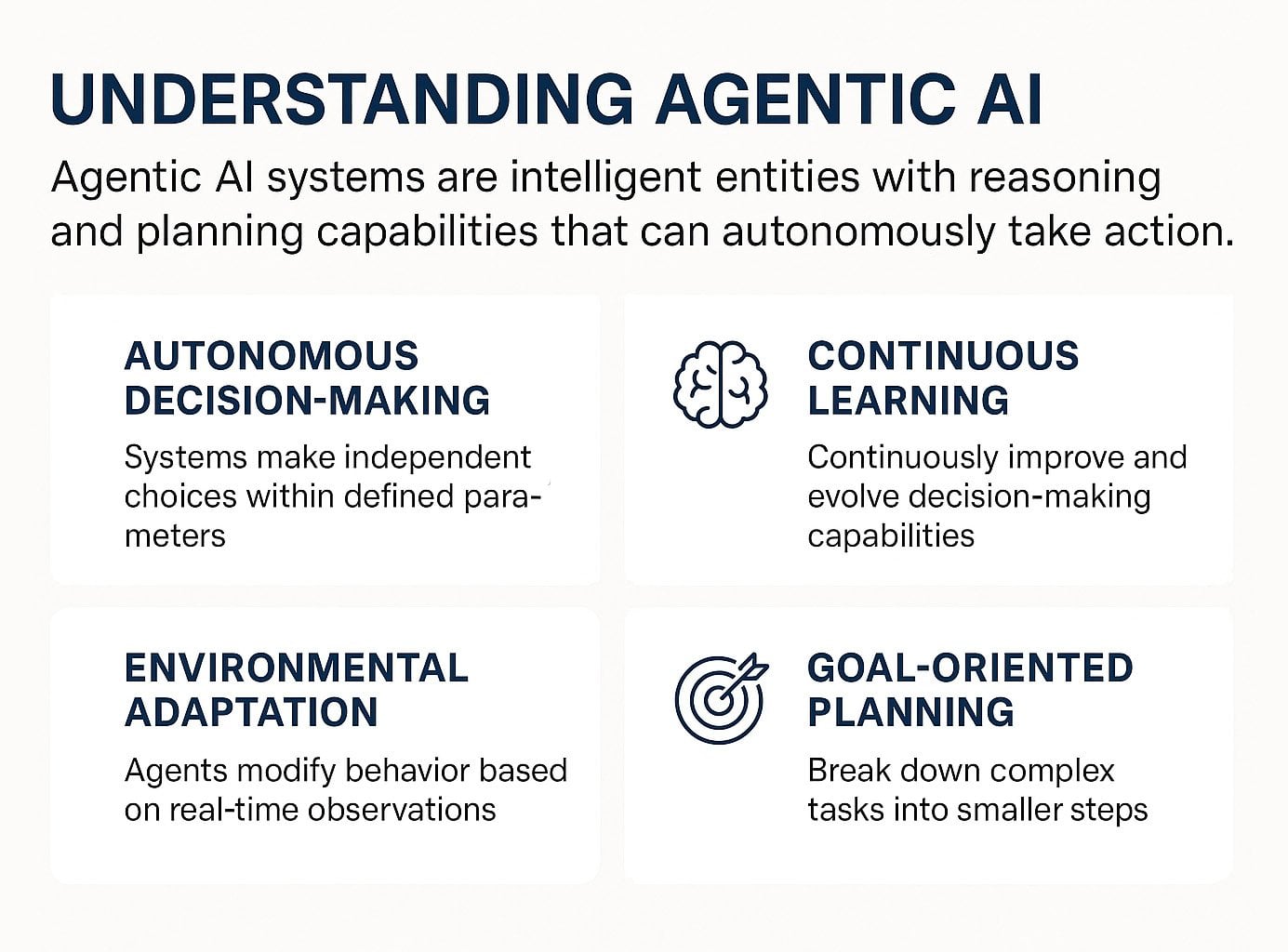

Understanding Agentic AI vs. Generative AI

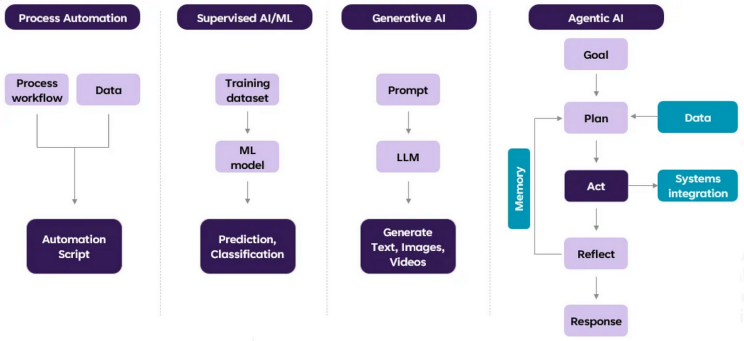

Let's clarify a critical distinction that trips up a lot of organizations. Generative AI and agentic AI are fundamentally different animals, even though they sometimes use the same underlying models.

Generative AI is reactive. You give it a prompt. It generates output. A customer asks ChatGPT a question. ChatGPT returns an answer. A developer asks Copilot to write code. Copilot suggests a function. Every interaction is initiated by a human. The AI produces content based on that request.

Agentic AI is proactive. It observes conditions. It makes decisions. It takes actions. Nobody asks it to do anything. An inventory agent notices that stock is running low. It analyzes demand forecasts. It checks supplier lead times. It places an order. All of that happens autonomously, without human initiation.

This difference changes everything about how you need to build systems. Generative AI can be somewhat of a black box. Users might not understand exactly why GPT-4 phrased something a particular way, but they got value. Agentic AI cannot be a black box. If an agent makes a decision that causes problems, you need to know why it made that decision.

Generative AI systems are usually stateless. You submit a prompt, get a response, move on. Agentic systems maintain state. They remember previous decisions. They learn from outcomes. They build context over time. That means they need persistent storage, historical tracking, and continuous monitoring.

Generative AI operates within bounds. A language model on its own can't do anything except produce text. What happens with that text is someone else's responsibility. Agentic AI acts directly on your systems. An agent can execute transactions, modify data, delete information, and commit resources. Autonomy is power, but power requires responsibility.

Here's the practical consequence: many organizations that are "using AI" are really just using generative AI through a GUI. Someone writes a prompt, gets an answer, takes action based on that answer. The human is still in the decision loop. That's not agentic AI. That's AI-assisted work.

True agentic AI removes the human from the decision loop. The system observes, reasons, decides, and acts. This is far more powerful and far more risky, which is why operationalizing it requires a completely different approach.

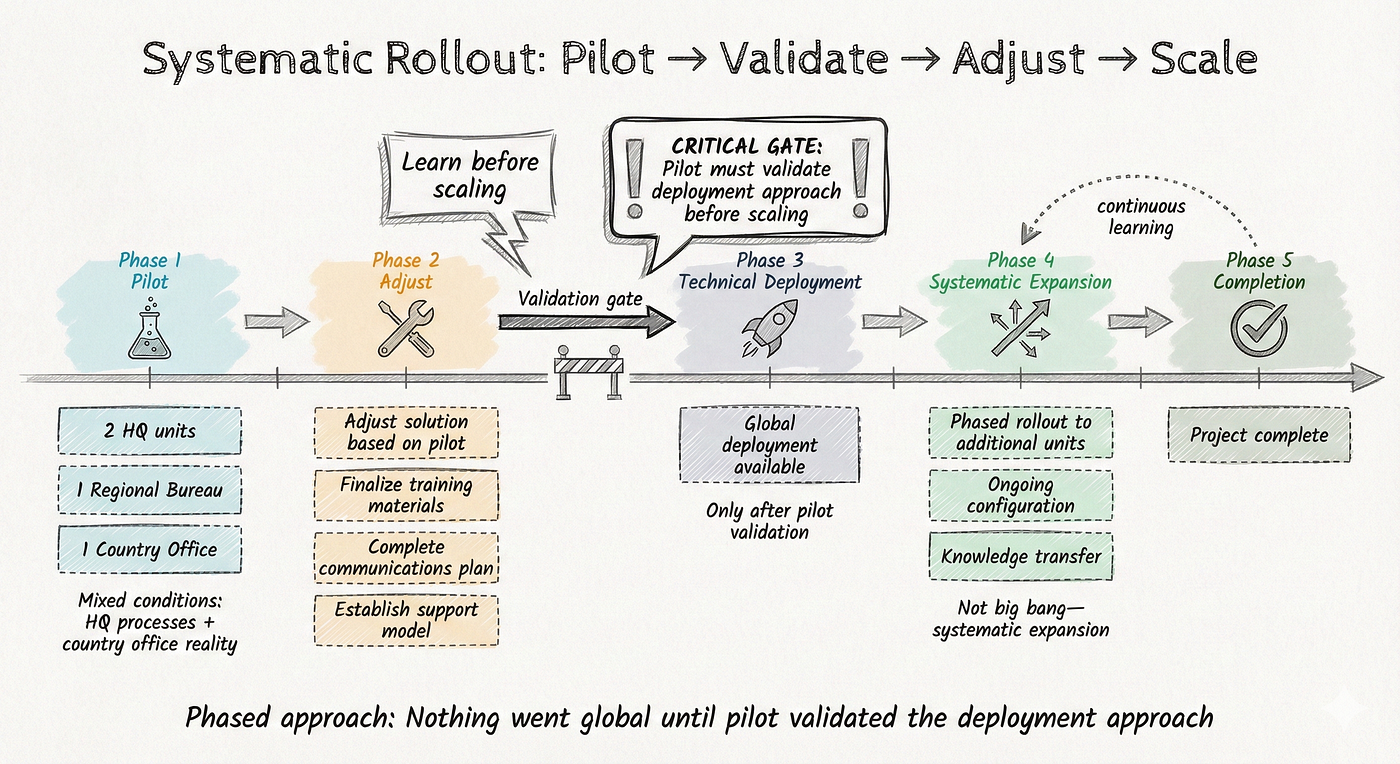

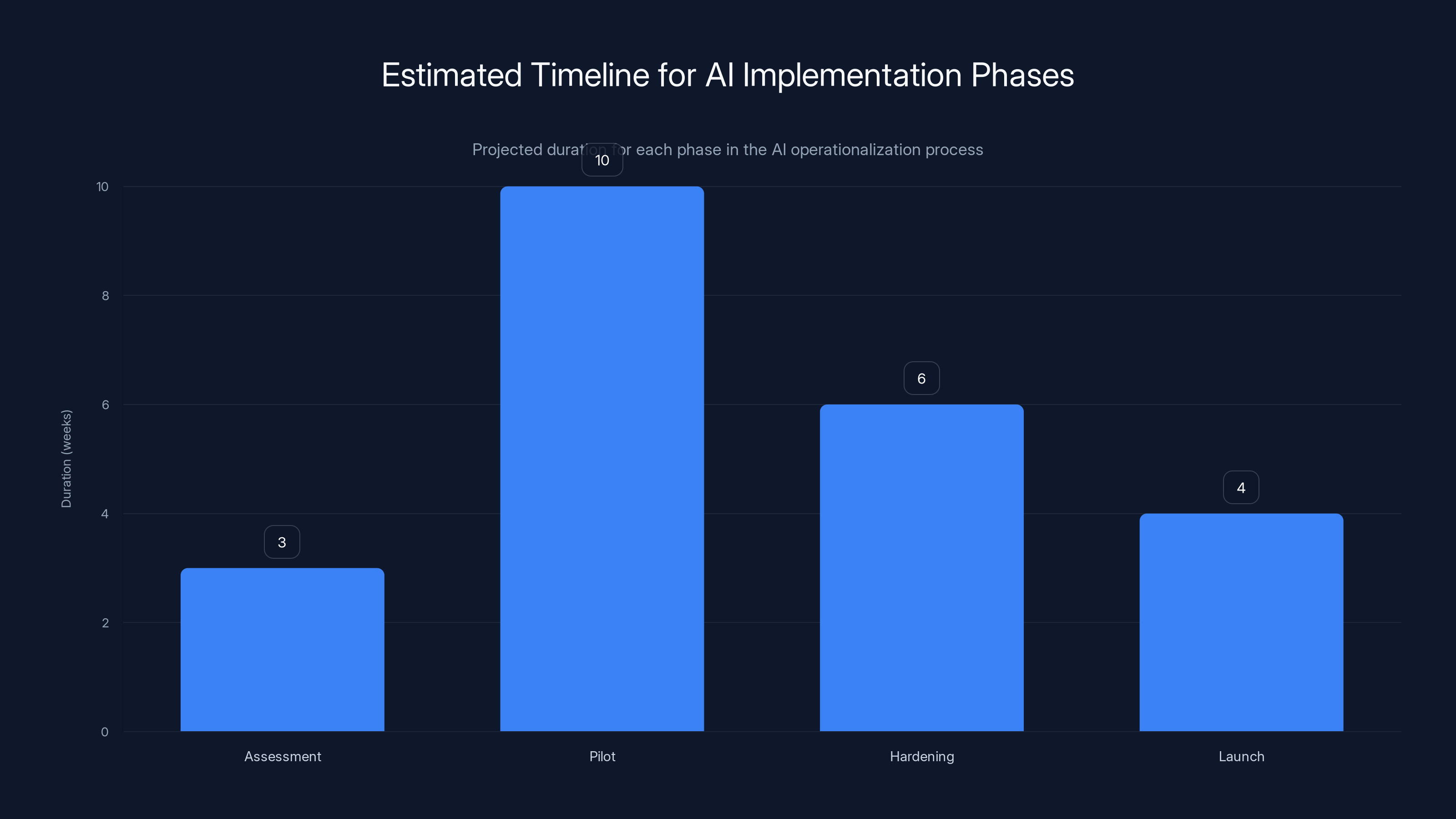

Operationalizing agentic AI typically takes 6-8 months, with key stages including assessment, advisory mode, hardening, and launch. Estimated data based on typical project timelines.

The Architectural Foundation: Why Platform Choice Matters

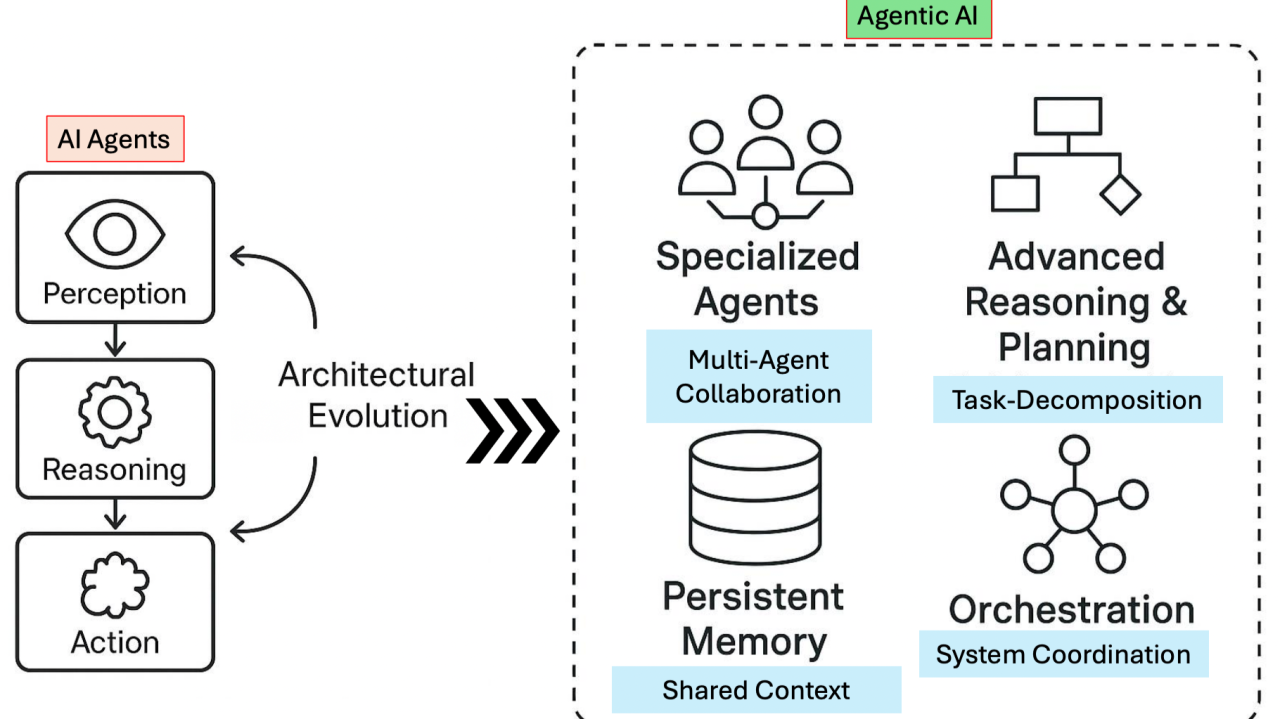

Let's talk about what "operationalizing" actually means at the infrastructure level. It means building a foundation that connects three layers: agents, systems, and governance.

The agent layer is where reasoning happens. The models themselves. But increasingly, it's also about the frameworks and tools that help agents reason better. Things like ReAct (Reasoning + Acting), chain-of-thought prompting, and tool use. These frameworks help agents break down problems, plan sequences of actions, and handle uncertainty.

The systems layer is everything the agent needs to interact with. APIs, databases, workflows, external services. In a real enterprise, this is messy. You have SAP APIs that require OAuth 2.0, homegrown endpoints that use basic auth, webhooks that send data unpredictably, and legacy systems that don't expose APIs at all. The agent needs to navigate this chaos without custom code for each system.

The governance layer is the control mechanism. Monitoring what agents are doing. Understanding why they made specific decisions. Blocking or rolling back actions that violate policy. Maintaining audit trails for compliance. This is where most organizations fail. They build the agent and the system connections, but they skip governance because it's complex and seems like it can be added later. It can't be. Governance has to be foundational.

Connecting these layers requires a platform. Not just a model API. A platform that understands enterprise architecture. Here's why that matters.

Imagine you build an agent using just a model API (say, the OpenAI API). You write code that calls the model, tells it what tools are available, and asks it to complete a task. This works for simple use cases. But now you want to:

- Connect to a Salesforce system using OAuth

- Query a PostgreSQL database

- Call a REST API that requires request signing

- Track every decision for audit purposes

- Implement role-based access controls so the agent can only see authorized data

- Monitor token usage to control costs

- Handle retries when external services fail

- Implement rate limiting so you don't overwhelm systems

- Create a UI where humans can monitor the agent

- Run multiple agents in parallel without conflicts

At this point, you're not building an agent anymore. You're building an agent infrastructure. You're writing thousands of lines of code just to connect the agent to enterprise reality. Most organizations don't have the engineering resources for this. So the pilot stays in the lab.

A platform abstracts this complexity. Instead of writing custom code, you use prebuilt connectors. Instead of building custom governance, you get it out of the box. Instead of creating monitoring infrastructure, it's there. The platform becomes the multiplier on your AI investment.

This is also where solutions like Runable become valuable. They provide a platform designed specifically for operationalizing AI workflows. Instead of building custom agents from scratch, teams can use AI agents to automate document generation, create presentations, generate reports, and orchestrate multi-step workflows—all with built-in governance and monitoring. Runable starts at $9/month and lets teams focus on business logic instead of infrastructure plumbing.

From Pilots to Production: The Operationalization Framework

Operationalizing agentic AI follows a specific progression. Skip steps, and you'll end up with ungoverned agents creating chaos. Follow the progression, and you build something sustainable.

Step 1: Define the decision boundary. Before building an agent, be explicit about what decisions it can make. What's the scope? An agent that can approve purchases under $1,000 is different from an agent that can approve any purchase. An agent that can modify customer data is riskier than an agent that can only read it. Define these boundaries precisely. Document them. Build them into the system.

Step 2: Map the data dependencies. What information does the agent need to make good decisions? Where does that information live? Is it in systems the agent can access? Is the data accurate and timely? A supply chain agent needs accurate inventory data. If your inventory system is updated once a day and the agent makes decisions in real time, you've got a problem. Identify these gaps before building.

Step 3: Implement observation and monitoring first. Before an agent takes any autonomous action, build the ability to watch what it's doing. Log every decision. Track why it made that decision. Monitor the outcomes. This isn't optional. This is foundational. You need months of monitoring data before you give an agent real autonomy, so that you understand its behavior patterns.

Step 4: Start with advisory mode. Your agent should first exist in a read-only state. It observes conditions. It makes recommendations. It suggests actions. But humans execute the actions. This is where you learn. You see whether the agent's recommendations are good. You identify edge cases. You fine-tune the decision logic.

Step 5: Implement approval gates. Move to a mode where the agent can take small actions autonomously but needs human approval for larger decisions. A support agent can mark a ticket as resolved, but needs approval to issue a refund. An inventory agent can reorder from usual suppliers but needs approval to use expensive expedited shipping. Approval gates let you balance autonomy with safety.

Step 6: Graduate to autonomous operation. Only after months of observing behavior, refining logic, and building confidence do you remove approval gates. And even then, you maintain monitoring. An autonomous agent isn't a "set it and forget it" system. It's a system you watch continuously.
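
The progression from decision boundary to advisory mode, approval gates, and autonomy can be expressed as a small routing function. The following is a minimal sketch, not a reference implementation: the names `Mode`, `DecisionBoundary`, and `route` are hypothetical, and the 250 auto-approval limit is an assumed value chosen for illustration (the 1,000 purchase limit echoes the example in Step 1).

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    ADVISORY = "advisory"        # agent recommends, humans execute
    GATED = "gated"              # small actions run, larger ones need approval
    AUTONOMOUS = "autonomous"    # agent acts on its own inside the boundary


@dataclass(frozen=True)
class DecisionBoundary:
    allowed_actions: frozenset   # actions the agent may ever take
    auto_limit: float            # assumed: max amount auto-executed in gated mode
    hard_limit: float            # max amount the agent may ever execute alone


def route(action: str, amount: float, mode: Mode, boundary: DecisionBoundary) -> str:
    """Return 'reject', 'recommend', 'needs_approval', or 'execute'."""
    if action not in boundary.allowed_actions:
        return "reject"                      # outside the decision boundary
    if mode is Mode.ADVISORY:
        return "recommend"                   # read-only: never executes
    if amount > boundary.hard_limit:
        return "needs_approval"              # applies even in autonomous mode
    if mode is Mode.GATED and amount > boundary.auto_limit:
        return "needs_approval"
    return "execute"


boundary = DecisionBoundary(frozenset({"approve_purchase"}), auto_limit=250.0, hard_limit=1000.0)
print(route("approve_purchase", 400.0, Mode.GATED, boundary))
```

Keeping the boundary in data rather than in prompt text means that moving an agent from one stage to the next is a configuration change that can be reviewed and audited.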

Orchestration: The Missing Piece Most Organizations Overlook

Here's where most agentic AI implementations go sideways. Organizations build sophisticated agents. Then they realize agents don't exist in isolation. They need to coordinate with other agents. They need to respect human workflows. They need to handle exceptions gracefully.

This is orchestration. And it's what separates toy demos from production systems.

Imagine a customer support scenario. A customer emails with an issue. An agentic system needs to handle this. But the workflow might look like this:

- Receive email

- Route to appropriate agent based on category

- That agent gathers information about the customer

- Another agent runs diagnostics on the customer's account

- A third agent checks inventory

- Those three agents coordinate to develop a solution

- If the solution is complex, escalate to a human agent

- If it's simple, the primary agent implements it

- Follow-up workflows run separately

None of this happens by accident. You need orchestration logic. You need the ability to route between agents. You need agents to wait for each other. You need to handle the case where one agent fails. You need to manage priorities.
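
To make the coordination concrete, here is a rough sketch of the fan-out step in the support workflow above, using Python's standard `asyncio` module. The three agent functions are stand-ins with hard-coded results, the inventory agent is made to fail on purpose, and the rule "escalate to a human if any specialist fails" is an assumption for illustration rather than a prescribed policy.

```python
import asyncio


async def gather_customer_info(ticket):
    return {"customer": ticket["from"], "tier": "standard"}


async def run_diagnostics(ticket):
    return {"account_ok": True}


async def check_inventory(ticket):
    # Simulated failure so the exception path is exercised.
    raise TimeoutError("inventory system did not respond")


async def handle_ticket(ticket, specialists, timeout_s=5.0):
    """Run specialist agents in parallel; escalate if any of them fails."""
    tasks = [asyncio.wait_for(agent(ticket), timeout_s) for agent in specialists]
    # return_exceptions=True keeps one failure from cancelling the others.
    results = await asyncio.gather(*tasks, return_exceptions=True)
    findings, failures = {}, []
    for agent, result in zip(specialists, results):
        if isinstance(result, Exception):
            failures.append(agent.__name__)
        else:
            findings.update(result)
    outcome = "escalate_to_human" if failures else "resolve_automatically"
    return {"outcome": outcome, "findings": findings, "failed_agents": failures}


ticket = {"from": "customer@example.com", "category": "order_issue"}
result = asyncio.run(
    handle_ticket(ticket, [gather_customer_info, run_diagnostics, check_inventory])
)
print(result["outcome"], result["failed_agents"])
```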

Orchestration is what makes a multi-agent system work. It's not about having a more sophisticated single agent. It's about having many agents that coordinate. A supply chain agent talks to a supplier agent. A finance agent talks to a procurement agent. They coordinate without humans in the middle.

This is genuinely difficult to build. Orchestration tools exist (things like AI orchestration platforms, workflow engines, and multi-agent frameworks), but they're not always designed for enterprise requirements. They often lack governance. They're hard to monitor. They don't integrate with existing enterprise systems cleanly.

This is another place where platform choice becomes critical. A platform designed for agentic AI understands orchestration natively. It helps you define when agents should run in sequence versus parallel. It tracks the state across multiple agents. It handles exceptions. It maintains the audit trail across the entire workflow.

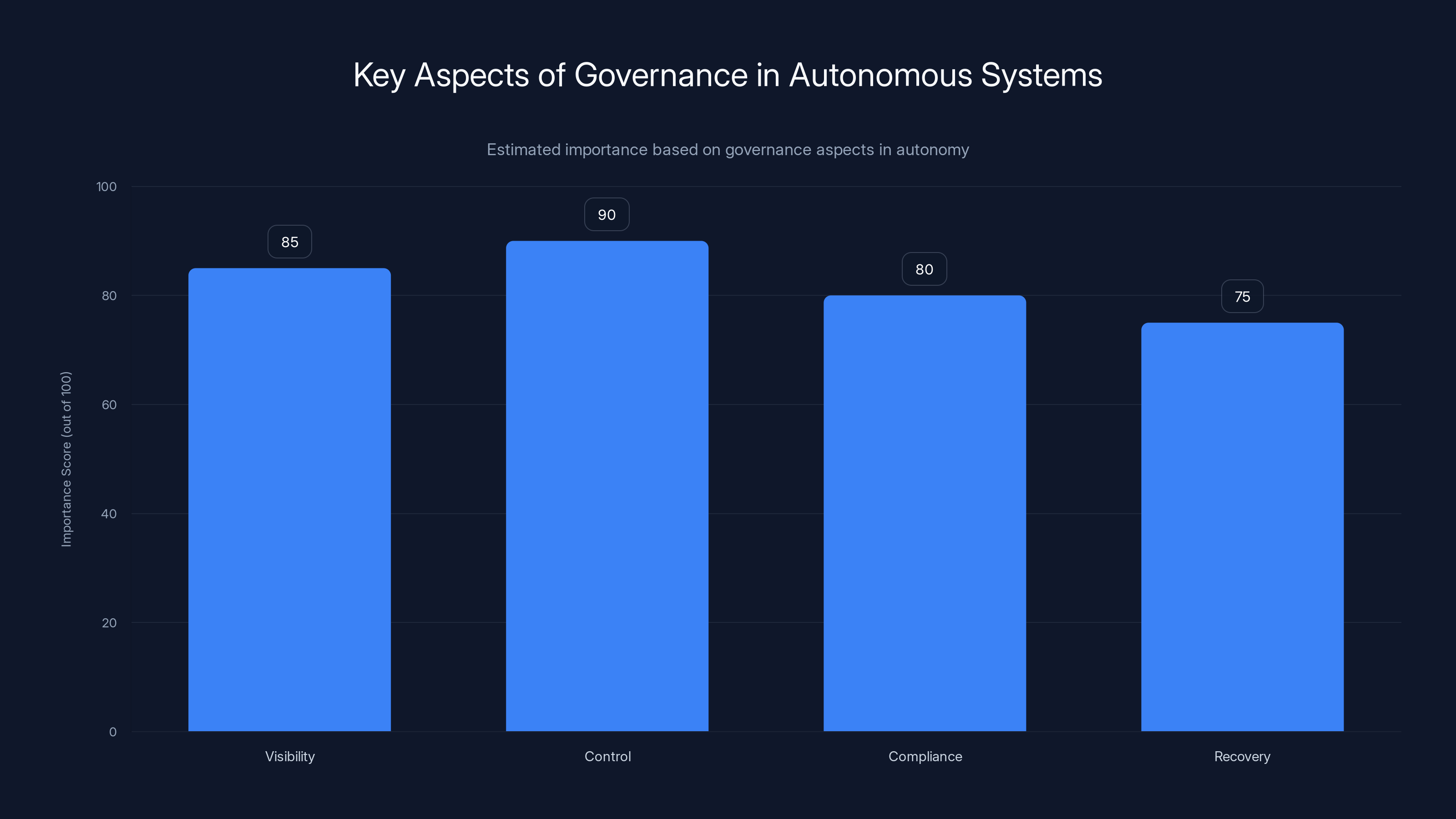

Control is estimated as the most critical governance aspect in autonomous systems, followed by visibility, compliance, and recovery. Estimated data based on governance priorities.

Governance: Building Safety Into Autonomy

Autonomy without governance is just chaos. The more autonomous your agents become, the more critical governance becomes.

Governance is primarily about four things: visibility, control, compliance, and recovery.

Visibility means you can see what agents are doing. Not just "agent A executed 500 transactions today," but "agent A executed these 500 specific transactions for these specific reasons." You need detailed logging. You need decision tracing. You need to understand the chain of reasoning that led to each action.
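
One way to picture decision tracing is a structured record written for every action. The sketch below is illustrative only: the `DecisionRecord` fields and the sample values are assumptions, not a standard schema, and a real deployment would ship these records to whatever log store it already uses.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    agent_id: str
    action: str
    inputs: dict       # the data the agent looked at
    reasoning: list    # ordered reasoning steps, in plain language
    outcome: str
    decision_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)


record = DecisionRecord(
    agent_id="inventory-agent",
    action="place_order",
    inputs={"sku": "A-100", "on_hand": 12, "reorder_point": 40},
    reasoning=["on_hand is below reorder_point", "usual supplier lead time is acceptable"],
    outcome="executed",
)
line = record.to_json()  # one JSON object per line is easy to search and replay
print(line)
```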

Control means you can prevent bad outcomes before they happen. This includes:

- Policy enforcement: Rules that prevent actions that violate policy

- Rate limiting: Preventing agents from executing too many actions too quickly

- Resource limits: Constraining how much agents can spend, how much data they can access, how many external calls they can make

- Role-based access control: Ensuring agents only see data they should see

- Approval workflows: Requiring human sign-off for sensitive actions
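
Two of the controls above, rate limiting and resource limits, can be sketched in a few lines. This is a simplified single-process illustration: `ActionLimiter` is a hypothetical name, the limits are made-up numbers, and a production system would need shared state across agent instances.

```python
import time
from collections import deque


class ActionLimiter:
    """Allow at most max_actions per window_s seconds and cap total spend."""

    def __init__(self, max_actions, window_s, spend_cap, clock=time.monotonic):
        self.max_actions = max_actions
        self.window_s = window_s
        self.spend_cap = spend_cap
        self.clock = clock          # injectable so the limiter can be tested
        self.spent = 0.0
        self._recent = deque()      # timestamps of recently allowed actions

    def allow(self, cost=0.0):
        now = self.clock()
        # Drop timestamps that have fallen out of the sliding window.
        while self._recent and now - self._recent[0] >= self.window_s:
            self._recent.popleft()
        if len(self._recent) >= self.max_actions:
            return False            # rate limit hit
        if self.spent + cost > self.spend_cap:
            return False            # resource (spend) limit hit
        self._recent.append(now)
        self.spent += cost
        return True


limiter = ActionLimiter(max_actions=2, window_s=60, spend_cap=100.0)
print([limiter.allow(cost=30.0) for _ in range(3)])
```

The check runs before the action executes, which is the point of control as opposed to visibility: a denied action never reaches the target system.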

Compliance means you can prove you're following regulations. In heavily regulated industries (finance, healthcare, regulated utilities), agents need to maintain compliance automatically. That means:

- Audit trails that demonstrate what happened, when, and why

- The ability to replay decisions for review

- Clear separation between what's automated and what requires human judgment

- Documentation of decision logic

- Regular testing and validation

Recovery means you can undo bad decisions. If an agent makes a mistake, you need to roll back the change. You need to understand the scope of the damage. You need to correct data. You need to notify affected parties.
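
A common pattern for recovery is to record a compensating action alongside every change. The sketch below assumes changes are reversible in-process, which is a simplification: `UndoLog` and `set_stock` are hypothetical names, and real rollbacks against external systems usually need their own compensating API calls.

```python
class UndoLog:
    """Record a compensating action for every change an agent makes."""

    def __init__(self):
        self._entries = []  # (description, undo_callable), oldest first

    def record(self, description, undo):
        self._entries.append((description, undo))

    def rollback(self):
        """Undo changes newest-first and return what was reverted."""
        reverted = []
        while self._entries:
            description, undo = self._entries.pop()
            undo()
            reverted.append(description)
        return reverted


stock = {"A-100": 12}
log = UndoLog()


def set_stock(sku, new_value):
    old_value = stock[sku]
    stock[sku] = new_value
    # Capture the previous value so the change can be reversed later.
    log.record(f"set {sku} to {new_value}", lambda: stock.__setitem__(sku, old_value))


set_stock("A-100", 50)
set_stock("A-100", 75)
reverted = log.rollback()
print(stock, reverted)
```

The list returned by `rollback` doubles as a scope-of-damage report, which helps with the notification step.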

Building this takes time. It's not something you can add after the fact. The monitoring infrastructure needs to be there from day one. The policy engine needs to be embedded in the decision loop. The audit trail needs to be automatic.

This is another argument for platforms. Building governance from scratch is a massive undertaking. A platform provides it. You just need to configure policies.

The Low-Code Advantage: Why Platform Architecture Matters

Let's get specific about why low-code platforms matter for agentic AI. It's not about speed (though that's a benefit). It's about enablement.

When you build agents with code, you're writing software. You need software engineers. You need to manage dependencies. You need to test. You need to maintain code. You need to version control everything. If something breaks, you need to debug it. This is all necessary, but it's also slow and expensive.

Low-code platforms abstract away the plumbing. Instead of writing code to connect to Salesforce, you click a few buttons and select a connector. Instead of writing authentication logic, the platform handles it. Instead of building your own monitoring dashboard, it's there.

This doesn't mean "no code." Builders still need to understand logic and architecture. They still need to think through workflows. They just don't need to write boilerplate.

For agentic AI specifically, low-code matters because:

First, it democratizes agent building. Not everyone who needs to build agents is a software engineer. A product manager, a business analyst, or a subject matter expert might have deep insight into how a process should work. In a code-first world, they can't build agents. In a low-code world, they can. This expands who can participate in AI innovation.

Second, it accelerates time-to-value. Building an agent from scratch takes weeks or months. Building one on a low-code platform takes days. This means you can iterate faster. You can try different approaches. You can learn what works and what doesn't.

Third, it reduces fragmentation. When every team writes agents in their own way, you end up with a mess. Different teams use different frameworks. Different patterns. Different monitoring approaches. Low-code platforms enforce consistency. Everyone uses the same patterns. Everyone's agents are monitorable the same way. Everyone's agents integrate the same way.

Fourth, it facilitates composition. Agents should be composable. You should be able to take an agent built by one team and use it as a component in another system. Low-code platforms make this possible. Code-first approaches make it hard.

Now, does low-code matter for every use case? No. Some agents need custom logic that you can't express in a visual workflow. But for the majority of enterprise use cases, low-code is probably the right approach.

Building vs. Buying vs. Orchestrating

Most organizations face a classic dilemma when it comes to agentic AI. Build custom agents? Buy prebuilt tools? Use open-source frameworks? The answer is increasingly: all of the above.

The build option appeals to enterprises that want full control. You write custom agents using frameworks like LangChain, AutoGen, or CrewAI. You build exactly what you need. The downside: you own all the complexity. Building agents is easy. Building agents that are production-ready, monitored, governed, and secure is hard.

The buy option appeals to organizations that want someone else to manage the complexity. Prebuilt AI solutions handle specific domains. Specialized agents for customer support, supply chain, finance, HR. You implement the solution. The downside: prebuilt solutions usually fit 80% of your needs, and the remaining 20% requires customization you can't do.

The orchestrate option is what's emerging as the winner. Use prebuilt agents (from vendors) where they make sense. Build custom agents for what's unique to your business. Orchestrate them together under a unified platform. This gives you the benefits of both approaches.

For this to work, you need a platform that supports all three. Agent-as-a-Service (AaaS) solutions are the mechanism. Instead of buying a product (customer support agent), you buy the ability to use agents as services. Your custom agents call those services. Everything gets orchestrated together.

Here's what this looks like in practice. Your organization has:

- Custom agents: A supply chain agent built specifically for your business logic

- Prebuilt agents: A customer support agent from a specialized vendor

- Third-party agents: An invoice processing agent from an accounting software company

All three types of agents exist in the same system. They're orchestrated together. A customer support ticket might trigger the custom supply chain agent (to check availability) and the prebuilt support agent (to draft a response) simultaneously. They coordinate. They share information. They work together.

The platform makes this possible. Without a platform, each agent is siloed. With a platform, they're integrated.

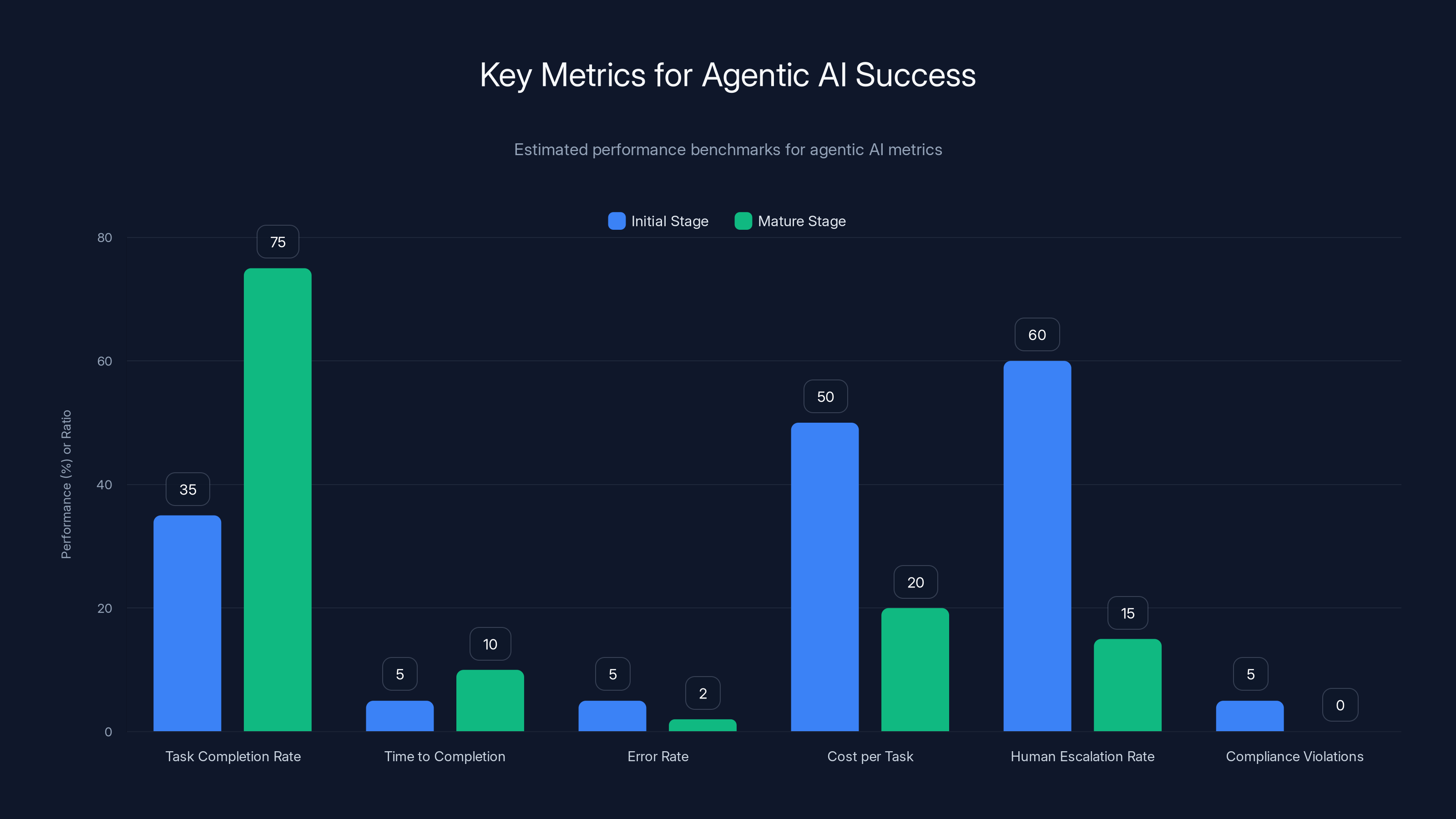

This bar chart illustrates the progression of key metrics for agentic AI from initial to mature stages. Notably, task completion rates and time to completion improve significantly, while error rates and human escalation rates decrease. (Estimated data)

Real-World Operationalization: A Supply Chain Example

Let's walk through what operationalization actually looks like in practice. Consider a supply chain scenario.

The business problem: Your company sells physical products. When demand exceeds inventory, you lose sales. When you order too much, you carry excess inventory. The current process is manual: someone checks stock levels daily, manually reviews orders, manually contacts suppliers, manually updates systems. It's slow. It's error-prone. It leaves money on the table.

The agentic solution: An autonomous agent monitors inventory continuously. When stock reaches a threshold, it:

- Analyzes historical demand patterns

- Checks current orders in the pipeline

- Evaluates multiple suppliers and their lead times

- Calculates optimal order quantities

- Considers cash flow and storage constraints

- Places orders automatically

- Notifies relevant teams

- Updates the ERP system

- Monitors delivery status

- Adjusts subsequent orders based on actual demand
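
As a rough illustration of the "calculate order quantities" step, the sketch below uses a textbook reorder-point rule. It is an assumption made for illustration, not the method the scenario prescribes: the function name, the parameters, and the sample numbers are all hypothetical, and a real agent would also weigh the cash flow and storage constraints listed above.

```python
import math
from statistics import mean


def reorder_quantity(daily_demand, on_hand, on_order,
                     lead_time_days, safety_stock, cover_days):
    """Return how many units to order, or 0 if stock is above the reorder point."""
    avg = mean(daily_demand)
    # Reorder once projected stock would not last through the supplier lead time.
    reorder_point = avg * lead_time_days + safety_stock
    position = on_hand + on_order          # include orders already in the pipeline
    if position > reorder_point:
        return 0
    # Order enough to cover the lead time plus the chosen coverage period.
    target = avg * (lead_time_days + cover_days) + safety_stock
    return math.ceil(target - position)


qty = reorder_quantity(
    daily_demand=[8, 12, 10, 10],  # recent daily unit sales (sample data)
    on_hand=40,
    on_order=10,
    lead_time_days=5,
    safety_stock=20,
    cover_days=14,
)
print(qty)
```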

The operationalization process:

Month 1-2: Build and monitor. The agent is built in advisory mode. It observes inventory. It calculates what orders it would place. But humans execute those orders. Meanwhile, the team monitors the agent's recommendations. Are they good? Does the agent correctly understand demand patterns? Is it considering the right constraints?

Month 3: Approval gates. The agent now places routine orders automatically. But orders above a certain threshold require approval. Unusual situations require escalation. The team monitors outcomes. Are actual deliveries matching predictions? Are any suppliers consistently underperforming?

Month 4-6: Autonomous operation. The agent has earned trust through three months of advisory and gated operation. It can now place orders without approval, for all products up to a spending limit. Orders above the limit still require approval. The team shifts from approval to monitoring. They watch for edge cases. They check cost metrics. They validate that the agent's autonomy is delivering promised benefits.

The business outcomes: In this scenario, the organization typically sees:

- 15-25% reduction in inventory carrying costs (less excess inventory)

- 10-15% improvement in fulfillment rates (fewer stockouts)

- 30-40% faster response to demand changes (real-time vs. daily decisions)

- Significant reduction in manual labor (no more daily inventory reviews)

None of this happens by accident. It happens because the implementation followed the operationalization framework. It started with clear decision boundaries. It implemented monitoring from day one. It moved through stages of increasing autonomy only after earning trust. And it maintained governance throughout.

Integration Architecture: Connecting Agents to Enterprise Systems

Here's a technical reality that trips up many teams: integrating agents with enterprise systems is harder than it sounds.

Your agent needs to read data from systems. Systems are designed for humans or batch processes, not continuous autonomous queries. Your agent needs to write data back. Systems have validation logic, business rules, and constraints. Your agent needs to understand all of this.

Consider a simple example: updating a customer record in Salesforce. Seems straightforward. But there's:

- Authentication (OAuth, API keys, session tokens)

- Authorization (the agent can only update accounts it's responsible for)

- Validation (the agent can't set invalid data)

- Business rules (certain fields might trigger workflows)

- Audit trails (who made the change and when)

- Concurrency (what if another process updates the record simultaneously)

Multiply this across 10 different systems, and you've got a massive integration problem. This is why custom code implementations stall. Teams spend 80% of their effort on integration plumbing, 20% on actual agent logic.

Integration platforms solve this through connectors. A Salesforce connector handles all the Salesforce-specific complexity. An ERP connector handles ERP-specific complexity. Agents use these connectors instead of writing custom code.

But connectors alone aren't enough. You also need data consistency mechanisms. If an agent writes to Salesforce and then Salesforce syncs to your data warehouse, there might be a lag. The agent might be making decisions based on stale data. You need ways to maintain eventual consistency across systems.
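
One simple guard against acting on stale data is a freshness check before each decision. This is a minimal sketch that assumes every record carries a `synced_at` timestamp; the field name and the 15-minute tolerance are illustrative assumptions.

```python
from datetime import datetime, timedelta, timezone


def is_fresh(last_synced_at, max_age, now=None):
    """True if the data was synced recently enough to act on."""
    now = now or datetime.now(timezone.utc)
    return now - last_synced_at <= max_age


def decide(record, max_age=timedelta(minutes=15), now=None):
    if not is_fresh(record["synced_at"], max_age, now):
        return "refresh_then_retry"   # do not act on stale data
    return "proceed"


now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
print(decide({"synced_at": now - timedelta(hours=2)}, now=now))
```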

You also need error handling and retries. External systems fail. APIs time out. Data validation fails. Your agent needs to handle this gracefully. Retry with backoff. Log failures. Escalate to humans. Not crash.
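
Retry with backoff might look like the following sketch. The function name, the defaults, and the choice of which exceptions count as transient are assumptions; validation errors are deliberately not retried, since repeating an invalid request will not make it valid.

```python
import random
import time


def call_with_retry(fn, max_attempts=4, base_delay_s=0.5, max_delay_s=8.0,
                    retry_on=(TimeoutError, ConnectionError), sleep=time.sleep):
    """Call fn(); retry transient failures with exponential backoff and jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except retry_on as exc:
            if attempt == max_attempts:
                # Out of retries: surface the failure so it can be logged
                # and escalated to a human instead of crashing silently.
                raise RuntimeError(f"gave up after {attempt} attempts") from exc
            delay = min(max_delay_s, base_delay_s * 2 ** (attempt - 1))
            sleep(delay * random.uniform(0.5, 1.0))  # jitter spreads out retries


attempts = []


def flaky_lookup():
    # Simulated external call that fails twice, then succeeds.
    attempts.append(1)
    if len(attempts) < 3:
        raise TimeoutError("upstream timed out")
    return "ok"


print(call_with_retry(flaky_lookup, sleep=lambda s: None))
```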

You also need transformation logic. Data formats differ across systems. A date in one system is a string in another. A relationship in one system is a foreign key in another. The agent needs to understand these transformations.
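
For the date case, a transformation layer can be as small as a per-system format table that maps every source string to one canonical type. The system names and formats below are hypothetical examples.

```python
from datetime import date, datetime

# Hypothetical source formats; real systems will have their own.
DATE_FORMATS = {
    "crm": "%Y-%m-%d",        # e.g. "2025-03-07"
    "erp": "%d.%m.%Y",        # e.g. "07.03.2025"
    "warehouse": "%m/%d/%Y",  # e.g. "03/07/2025"
}


def to_date(value: str, system: str) -> date:
    """Parse a system-specific date string into one canonical type."""
    return datetime.strptime(value, DATE_FORMATS[system]).date()


print(to_date("07.03.2025", "erp"))
```

Once every connector converts to the canonical type at the edge, agent logic can compare values from different systems without knowing where they came from.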

All of this is why integration is a core platform concern. It's not something you can bolt on. It needs to be foundational.

Regulatory Considerations: Governance in Regulated Industries

If your organization operates in a regulated industry (finance, healthcare, utilities, telecommunications), operationalizing agentic AI is different. Not impossible, just more complex.

Regulations typically require:

Explainability: You need to explain why an autonomous decision was made. Not in technical terms ("the neural network output 0.87"). In business terms ("we denied the loan because the applicant's debt-to-income ratio of 52% exceeded our policy threshold of 50%").

Auditability: You need to maintain a complete audit trail. What decision was made? When? By what agent? Based on what data? With what reasoning? This audit trail often needs to be retained for years.

Human oversight: Some decisions can't be fully automated. Regulatory frameworks often require human judgment for edge cases or high-stakes decisions. Your agent architecture needs to support this. An agent that recommends actions and escalates to humans is different from an agent that takes actions directly.

Algorithmic fairness: If your agent makes decisions about people (credit decisions, hiring decisions, insurance decisions), you need to ensure the agent isn't discriminating. This means testing for bias. It means maintaining fairness metrics. It means being able to demonstrate compliance with fairness regulations.

Data privacy: If your agent processes personal data, you need to comply with privacy regulations. GDPR, CCPA, and others have specific requirements about who can access data, what data can be stored, how long it can be stored, and how subjects can request deletion or correction.
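
The explainability requirement above is easier to meet when the explanation is generated from the same inputs the decision used. The sketch below mirrors the loan example; the function name and the single-rule policy are simplifying assumptions, and real credit decisions involve many more factors.

```python
def explain_loan_decision(dti_ratio: float, dti_threshold: float) -> str:
    """Turn the inputs behind a decision into a business-language explanation."""
    if dti_ratio > dti_threshold:
        return (
            f"Denied: the applicant's debt-to-income ratio of {dti_ratio:.0%} "
            f"exceeds our policy threshold of {dti_threshold:.0%}."
        )
    return (
        f"Approved: the applicant's debt-to-income ratio of {dti_ratio:.0%} "
        f"is within our policy threshold of {dti_threshold:.0%}."
    )


print(explain_loan_decision(0.52, 0.50))
```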

All of this requires infrastructure. Data retention policies. Audit logging. Fairness testing. Privacy impact assessments. This isn't something you add after the fact. It needs to be designed in from the beginning.

This is another place where platforms matter. A platform designed for regulated industries has these features. A homegrown implementation probably doesn't.

The AI implementation process is estimated to take approximately 23 weeks, with the Pilot phase being the longest at 10 weeks. Estimated data based on provided phase durations.

Scaling Agents: From One Agent to Agent Networks

Once you've successfully operationalized your first agent, the temptation is to build more. Your first agent handled supply chain. Now build one for customer support. Now one for finance. And so on.

This is where things get complicated. Single agents can be managed. Agent networks are harder. Multiple agents sharing systems create new problems.

Conflicts: What happens when two agents want to do conflicting things? Agent A wants to approve a purchase order. Agent B (finance) has determined that budget is frozen. Who wins?

Ordering: What's the right sequence for agents to act? If you update inventory first, that changes the purchasing decision. If you update purchasing first, that changes the inventory calculation. Ordering matters.

Resource contention: If multiple agents query the same system simultaneously, you might overwhelm it. You need coordination mechanisms.

Emergent behavior: When agents interact, sometimes unintended behaviors emerge. Agent A and Agent B might create a feedback loop that amplifies. You need to be able to detect and prevent this.

Debugging: When something goes wrong with a single agent, debugging is straightforward. When it involves five agents coordinating across three systems, debugging is nightmare-fuel. You need robust observability.

Governance: As the network grows, governance becomes harder. You need policies that prevent agents from interfering with each other. You need rules about resource usage. You need mechanisms to prevent one agent's mistake from cascading.

This is where orchestration becomes critical. You need orchestration frameworks that understand multi-agent dynamics. That can express complex interaction patterns. That can monitor the system as a whole, not just individual agents.
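
The conflict case described earlier (one agent wants to approve a purchase order while the finance agent has frozen the budget) can be handled with an explicit precedence rule. This is one possible approach, sketched under assumptions: the `Proposal` fields and the precedence numbers are hypothetical, and ties are resolved in favor of the earlier proposal.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Proposal:
    agent: str
    resource: str     # what the proposal acts on, e.g. a purchase order id
    action: str
    precedence: int   # higher wins; assigned by policy, not by the agent


def resolve(proposals):
    """Keep one winning proposal per resource; report the ones that lost."""
    winners, overridden = {}, []
    for p in proposals:
        current = winners.get(p.resource)
        if current is None:
            winners[p.resource] = p
        elif p.precedence > current.precedence:
            overridden.append(current)
            winners[p.resource] = p
        else:
            overridden.append(p)
    return winners, overridden


proposals = [
    Proposal("purchasing-agent", "PO-1", "approve", precedence=10),
    Proposal("finance-agent", "PO-1", "block", precedence=50),
]
winners, overridden = resolve(proposals)
print(winners["PO-1"].action, [p.agent for p in overridden])
```

Returning the overridden proposals, rather than silently dropping them, keeps the losing side of each conflict in the audit trail.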

Most organizations underestimate this complexity. They build one agent successfully. Then they try to build five agents the same way. It doesn't work. You need different tools, different processes, different monitoring approaches.

Cost Considerations: The Real Economics of Operationalization

Organizations typically underestimate the cost of operationalizing agentic AI. They focus on model costs (API calls to Claude or GPT-4) and forget about everything else.

Here's what actually costs money:

Infrastructure: Platforms, databases, monitoring tools, logging systems. These aren't free. You might spend

Integration development: Building connectors to your specific systems. Even with a platform, you might need custom integration work.

Governance and compliance: Audit logging, monitoring, fairness testing, documentation. For regulated industries, this can cost

Organizational overhead: Managing agentic systems is new work. You need people who understand the technology. Who can monitor agents. Who can handle exceptions. Who can refine policies. This typically takes 1-3 FTEs, depending on scale.

Training and change management: Helping your organization understand what agents can and can't do. Changing workflows to work with autonomous systems. Training people on new processes.

Testing and validation: Before an agent touches production, you need to be confident it works. Comprehensive testing. Simulation. Comparison against baseline processes.

Add it up. A non-trivial agentic AI implementation costs

This is expensive. But when it works, the ROI is typically 3-10x within 18 months. The agent removes manual work, reduces errors, and improves speed. If it handles work that costs

Understanding the economics is important because it changes how you prioritize. You don't build agents for processes that don't cost much. You build agents for high-cost, high-volume, repeatable processes. That's where ROI is real.
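The arithmetic behind that prioritization is simple enough to sketch. Every figure below is hypothetical, and "ROI" is taken here to mean total savings divided by total cost over the period, which is an assumption because the article does not pin down a formula.

```python
import math


def roi_multiple(upfront_cost: float, monthly_run_cost: float,
                 monthly_savings: float, months: int = 18) -> float:
    """Total savings divided by total cost over the period."""
    total_cost = upfront_cost + monthly_run_cost * months
    total_savings = monthly_savings * months
    return total_savings / total_cost


def payback_month(upfront_cost: float, monthly_run_cost: float,
                  monthly_savings: float):
    """First month in which cumulative net savings cover the upfront cost.

    Returns None when the agent never pays for itself.
    """
    net = monthly_savings - monthly_run_cost
    if net <= 0:
        return None
    return math.ceil(upfront_cost / net)


# Hypothetical numbers, for illustration only.
print(round(roi_multiple(300_000, 20_000, 150_000), 2))
print(payback_month(300_000, 20_000, 150_000))
```

Running the same two functions on a low-volume process usually makes the case against building an agent for it, because the upfront cost is spread over too little saved work.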

Industry-Specific Operationalization Strategies

Agentic AI doesn't operationalize the same way across industries. Different industries have different constraints, opportunities, and risks.

Financial services: Highly regulated. High stakes. Slow to change. But high costs associated with manual processes. The strategy is: start small, demonstrate value, build governance, scale carefully. Focus on back-office processes first (operations, finance, compliance) before customer-facing agents.

Retail and e-commerce: Fast-moving. Lots of data. Continuous operations. The strategy is: start with supply chain and inventory. Then move to customer service. Then to personalization and recommendation. These are high-volume, repeatable processes.

Manufacturing: Complex systems. High integration requirements. The strategy is: start with supply chain and maintenance. These are well-defined processes with clear ROI. Manufacturing-specific details require custom integration, so expect longer development cycles.

Healthcare: Heavily regulated. Life-and-death consequences. The strategy is: start with administrative processes (scheduling, records management, billing). Move carefully to clinical decision support, which requires extensive validation and physician oversight.

Telecommunications: High volume of customer interactions. Existing AI investments. The strategy is: enhance existing chatbots with agentic capabilities. Use agents to handle escalations and complex issues that chatbots can't.

The common thread: start with high-volume, repeatable, well-understood processes. Build governance. Demonstrate value. Then move to more complex use cases.

Figure: The primary challenges in deploying agentic AI are governance vacuum (35%), system fragmentation (30%), and data silos (25%). These organizational and architectural issues often stall AI initiatives. (Estimated data.)

Measuring Success: Metrics That Matter

You can't operationalize what you can't measure. So what metrics actually matter for agentic AI?

Task completion rate: What percentage of tasks does the agent complete without human intervention? Start with 30-40% and work up to 70-80% for mature agents.

Time to completion: How fast does the agent complete tasks compared to humans? Agents should be 5-10x faster.

Error rate: What percentage of agent decisions are incorrect? Industry-specific, but generally less than 5% for agents in autonomous operation.

Cost per task: How much does it cost (model API calls, infrastructure) for the agent to complete a task? Should be 20-50% of the cost of human completion.

Human escalation rate: What percentage of tasks get escalated to humans? Start high (50-70%), should trend down to 10-20% as the agent matures.

Compliance violations: How many times did the agent violate policy? Should be zero or near-zero for autonomous agents.

User satisfaction: Do people think the agent is helpful? For agents that interact with humans, satisfaction scores matter.

Business impact: Did the agent actually deliver the promised business benefit? Revenue increase? Cost reduction? Time saved? This is ultimately what matters.

Most organizations focus on the easy metrics (completion rate, speed) and ignore the hard ones (business impact, compliance). The easy metrics feel good. The hard metrics are what actually justifies the investment.
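Several of these metrics fall straight out of a per-task log, as the sketch below shows. The `TaskRecord` fields and the choice to measure error rate only over tasks the agent finished on its own are assumptions; a real system would derive them from its own audit data.

```python
from dataclasses import dataclass


@dataclass
class TaskRecord:
    completed: bool         # the agent finished the task
    escalated: bool         # a human had to step in
    correct: bool           # outcome matched the human baseline
    cost_usd: float         # model API plus infrastructure cost
    policy_violation: bool


def summarize(records: list) -> dict:
    """Compute the headline metrics from a list of TaskRecord entries."""
    n = len(records)
    if n == 0:
        raise ValueError("no task records")
    autonomous = [r for r in records if r.completed and not r.escalated]
    errors = sum(not r.correct for r in autonomous)
    return {
        "completion_rate": len(autonomous) / n,
        "escalation_rate": sum(r.escalated for r in records) / n,
        "error_rate": errors / len(autonomous) if autonomous else 0.0,
        "cost_per_task": sum(r.cost_usd for r in records) / n,
        "compliance_violations": sum(r.policy_violation for r in records),
    }


log = [
    TaskRecord(True, False, True, 0.10, False),
    TaskRecord(True, False, False, 0.20, False),
    TaskRecord(False, True, True, 0.30, False),
    TaskRecord(True, False, True, 0.40, True),
]
print(summarize(log))
```

Business impact and user satisfaction are deliberately missing: they cannot be computed from the task log alone, which is part of why they get ignored.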

Future Trends: Where Agentic AI Is Heading

The current state of agentic AI is still early. A few trends are likely to shape the next 2-3 years.

Multi-modal agents: Agents that work with text, images, audio, and video simultaneously. Right now, most agents work with structured data or text. Multi-modal opens up new possibilities (e.g., visual inspection agents that can analyze photos).

Specialized models: As frontier models reach good-enough performance, specialized models will emerge. Instead of one giant model, you'll have domain-specific models optimized for finance, healthcare, manufacturing. Smaller. Faster. More appropriate.

Federated agents: Agents that can act across organizational boundaries. A supplier's agent coordinates with your procurement agent. They negotiate directly. This requires new standards and protocols.

Continuous learning: Most current agents don't learn from outcomes. In the future, agents will update their behavior based on what worked and what didn't. This is safer in closed systems, risky in open ones.

Efficient inference: Right now, every agent call hits an API. Future architectures will run smaller models locally, hitting remote APIs only when necessary. Faster. Cheaper. More resilient.

Agent marketplaces: As agents become standardized, marketplaces will emerge. You'll buy agents like you buy libraries. This will accelerate adoption significantly.

These trends will make agentic AI easier to operationalize. But the fundamental challenge—integrating autonomous systems into enterprise architecture—won't go away.

Implementation Playbook: Getting Started

If you're ready to operationalize agentic AI in your organization, here's a concrete playbook:

Phase 1: Assessment (2-4 weeks)

- Identify high-impact processes: What are the top 5 business processes that consume the most labor or have the highest error rates?

- Map the architecture: What systems do these processes touch? Where's the data? What are the constraints?

- Evaluate platforms: What tools/platforms could support this? What's the cost? What's the learning curve?

- Build business case: What's the ROI? How much time and money could you save?

- Set governance requirements: What compliance requirements exist? What approval workflows do you need?

Phase 2: Pilot (2-3 months)

- Select the right process: Pick something high-impact but not the most complex. Something that will deliver value quickly.

- Build the agent in advisory mode: The agent observes and recommends, but humans execute.

- Implement monitoring from day one: Track every decision, every recommendation, every outcome.

- Establish clear metrics: Define what success looks like before you start.

- Get stakeholder buy-in: Help the people who'll use this understand what's happening.

- Test extensively: Run the agent against historical data. Compare its decisions to what humans would have done.

Phase 3: Hardening (1-2 months)

- Move to approval gates: The agent can take small actions autonomously, but needs approval for larger ones.

- Refine the logic: Based on pilot learnings, improve the agent's decision-making.

- Strengthen governance: Implement policies, controls, and monitoring.

- Document everything: Build playbooks for managing this agent.

- Train the team: Help people understand how to work with the agent.

- Plan for scale: What does it look like when this agent handles 10x more volume?

Phase 4: Launch (1 month)

- Go autonomous: Remove approval gates. The agent now acts independently (within policy bounds).

- Establish SLOs: Define the acceptable performance level. What triggers human investigation?

- Create incident response: If something goes wrong, what's the playbook?

- Monitor closely: Watch the agent continuously in the first few weeks.

- Be ready to roll back: If things go sideways, you need to be able to turn the agent off quickly.

- Celebrate success: When it works, acknowledge the effort.

Phase 5: Optimize and Scale (ongoing)

- Continuously refine: Improve the agent based on outcomes.

- Build more agents: Apply learnings to new processes.

- Connect agents: Start orchestrating multiple agents together.

- Extract best practices: Codify what you've learned so other teams can use it.

- Build internal capability: Train your teams so they can build agents without external help.

This playbook assumes a 6-8 month cycle from idea to autonomous operation. Adjust based on your organization's pace.
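The three autonomy stages in the playbook (advisory, approval gate, autonomous) can be expressed as a single decision function. This is a sketch under assumptions: the `Mode` enum, the dollar thresholds, and the returned labels are hypothetical names chosen for illustration.

```python
from enum import Enum


class Mode(Enum):
    ADVISORY = "advisory"            # Phase 2: agent recommends, humans execute
    APPROVAL_GATE = "approval_gate"  # Phase 3: small actions only
    AUTONOMOUS = "autonomous"        # Phase 4: acts within policy bounds


def decide(mode: Mode, amount: float, gate_threshold: float,
           policy_limit: float) -> str:
    """Return what should happen to a proposed action of a given size."""
    if amount > policy_limit:
        return "escalate"        # outside policy bounds in every mode
    if mode is Mode.ADVISORY:
        return "recommend"       # never executes on its own
    if mode is Mode.APPROVAL_GATE and amount > gate_threshold:
        return "needs_approval"  # larger actions wait for a human
    return "execute"


# Hypothetical thresholds: auto-approve up to 1,000, hard policy cap at 50,000.
for mode in Mode:
    print(mode.value, decide(mode, 5_000, 1_000, 50_000))
```

Moving from one phase to the next then becomes a configuration change to `mode` rather than a rewrite of the agent, which also makes stepping back a phase cheap.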

Common Pitfalls and How to Avoid Them

Organizations that fail at operationalizing agentic AI typically stumble on one of these rocks.

Pitfall 1: Building before understanding. Teams get excited and start coding immediately. Then they realize the agent can't access the data it needs. Or it can't integrate with the systems it needs to touch. Do the assessment work first. It feels slow, but it prevents wasting months of engineering.

Pitfall 2: Skipping governance. Governance feels like bureaucracy. But it's the difference between a useful agent and a liability. Build governance in from day one. Plan for failure. Assume the agent will make mistakes.

Pitfall 3: Moving too fast. Organizations want to go from pilot to autonomous in a month. That's too fast. You need time to build confidence. Spend at least 2-3 months in advisory mode. Another month in approval-gate mode. This isn't wasted time. It's validation.

Pitfall 4: Underestimating integration complexity. Connecting to one system looks easy. Connecting to five systems is more than five times harder. Not because of the systems themselves, but because of the coordination between them. Plan for integration to consume 40-50% of your timeline.

Pitfall 5: Ignoring change management. New autonomous systems require organizational change. People need to understand what the agent does. Workflows need to change. Teams need training. Don't ignore this. Budget for it.

Pitfall 6: Choosing the wrong process. Building an agent for a 10-person team that does important-but-rare work is a waste. Build for high-volume, repeatable processes where the ROI is clear.

Pitfall 7: Treating agents as temporary. Once an agent is in production, it needs ongoing maintenance. New systems need connectors built. Policies need adjustment. Monitoring needs tuning. Budget for ongoing work, not just the initial build.
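Planning for failure (Pitfall 2) is easier when the off switch is automatic. Below is a minimal sketch of a kill switch that disables an agent once its recent error rate crosses a threshold. The class name, the window size, and the 5% default are assumptions, with the default borrowed from the error-rate guideline earlier in this guide.

```python
from collections import deque


class KillSwitch:
    """Disables an agent when the recent error rate exceeds a threshold."""

    def __init__(self, window: int = 20, max_error_rate: float = 0.05,
                 min_samples: int = 10):
        self.outcomes = deque(maxlen=window)  # rolling window of True/False
        self.max_error_rate = max_error_rate
        self.min_samples = min_samples
        self.enabled = True

    def record(self, ok: bool) -> None:
        """Record one outcome and trip the switch if errors are too frequent."""
        self.outcomes.append(ok)
        if len(self.outcomes) < self.min_samples:
            return  # not enough data to judge yet
        error_rate = self.outcomes.count(False) / len(self.outcomes)
        if error_rate > self.max_error_rate:
            self.enabled = False  # stays off until a human calls reset()

    def reset(self) -> None:
        """Manual re-enable after investigation."""
        self.outcomes.clear()
        self.enabled = True


switch = KillSwitch(window=10, max_error_rate=0.2, min_samples=5)
for ok in [True, True, True, True, False, False]:
    switch.record(ok)
print("agent enabled:", switch.enabled)
```

The switch never turns itself back on. Requiring a human to call `reset()` is a deliberate choice here, so that a disabled agent always leads to an investigation.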

The Platform Decision: What to Look For

If you decide to use a platform (which I'd recommend for most organizations), what should you evaluate?

Connector library: How many pre-built connectors does it have? Salesforce, SAP, Oracle, ServiceNow, etc. More is better. Every custom connector you need to build is time and money.

Orchestration capabilities: Can you express complex workflows? Can agents run in parallel? Can you route between agents? Can you coordinate across systems? These are table stakes.

Governance features: Can you define policies? Implement rate limits? Maintain audit trails? Control data access? These should be built-in, not bolt-ons.

Monitoring and observability: Can you see what agents are doing? Can you trace decisions? Can you set alerts? Good monitoring is non-negotiable.

Integration with existing tools: Does it work with your existing CI/CD? Your existing data platforms? Your existing monitoring? You don't want to add yet another tool to manage.

Cost model: Is it per-API-call? Per-agent? Per-feature? Make sure the pricing aligns with your expected usage. Some platforms are cheap at small scale and expensive at large scale, and vice versa.

Support and community: Can you get help? Is there documentation? Are there examples? Community adoption? This matters more than you think.

Roadmap: Is the platform evolving in directions that matter to you? Or is it stagnating? Check if new features align with your needs.

Don't just look at the headline features. Dig into the details. Talk to customers. Ask about their implementation.
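The cost-model question in particular is worth running as arithmetic before you talk to a vendor. The sketch below compares per-call pricing against per-agent pricing at different volumes. The prices are hypothetical and do not describe any real platform.

```python
def breakeven_calls(agents: int, price_per_agent: float,
                    price_per_call: float) -> float:
    """Monthly call volume at which both pricing models cost the same."""
    if price_per_call <= 0:
        raise ValueError("price_per_call must be positive")
    return agents * price_per_agent / price_per_call


def cheaper_plan(calls: int, agents: int, price_per_agent: float,
                 price_per_call: float) -> str:
    """Name the cheaper pricing model for a given monthly call volume."""
    per_call_total = calls * price_per_call
    per_agent_total = agents * price_per_agent
    if per_call_total == per_agent_total:
        return "tie"
    return "per_call" if per_call_total < per_agent_total else "per_agent"


# Hypothetical prices: 2,000 per agent per month versus 0.02 per call.
print(cheaper_plan(50_000, 3, 2_000.0, 0.02))
print(cheaper_plan(1_000_000, 3, 2_000.0, 0.02))
print(round(breakeven_calls(3, 2_000.0, 0.02)))
```

With these made-up prices, per-call pricing is cheaper during a pilot and per-agent pricing is cheaper at production volume, which is exactly the kind of flip the pricing page will not point out.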

Building Internal Capability

Eventually, you want your organization to be self-sufficient in building agentic AI systems. This requires building internal capability.

Hire or grow experts: You need 1-2 people who deeply understand agentic AI. Not model experts. System architects. People who understand integration, governance, and operationalization.

Document patterns: When you build your first agent, document the process. What worked? What didn't? What would you do differently? This becomes a playbook for future agents.

Build reusable components: Don't start from scratch each time. Create templates. Reusable governance patterns. Standard integration approaches. This accelerates future projects.

Train your teams: Help software engineers understand how agents work. Help product managers understand what agents can do. Help operations teams understand how to monitor agents. Broad understanding accelerates adoption.

Establish standards: Define how your organization does agentic AI. What platforms you use. What governance you require. What monitoring is mandatory. Standards eliminate endless debates about the "right" way.

Create feedback loops: As teams build agents, they learn things. Make sure that learning flows back to your central capability team. Learning only compounds when you create mechanisms to capture it.

Building internal capability is a multi-year effort. But it's essential if agentic AI is going to be strategic for your organization, not a one-off project.

Conclusion: From Potential to Reality

The promise of agentic AI is real. Autonomous systems can transform how enterprises work. But promise isn't delivery. Too many organizations are stuck between promise and delivery. Pilots that don't move to production. Agents locked in advisory mode. Technology that never generates ROI.

The gap isn't technical. It's architectural and organizational. Operationalizing agentic AI requires fundamentally rethinking how you build, deploy, and govern software. It requires choosing platforms that understand enterprise complexity. It requires moving through stages of increasing autonomy carefully. It requires governance built in from day one. It requires organizational change.

Do this right, and agentic AI becomes a strategic asset. Teams work faster. Decisions improve. Costs drop. Growth accelerates. The organizations that operationalize agentic AI well will have significant competitive advantages over those that don't.

The time to start is now. Not because agentic AI is perfect (it isn't). But because the organizations that figure out how to operationalize it will define the next decade of enterprise technology.

Your first step is to identify one high-impact process. One process that's expensive, error-prone, and repetitive. Then build an agent for it. Not in production. In advisory mode. Start monitoring. Start learning. Then iterate.

That's how operationalization begins. Not with grand vision. With one small step that delivers real value.

For teams looking to accelerate agent implementation without custom infrastructure overhead, Runable provides a platform specifically designed for this. It handles the plumbing—agents, connectors, governance, monitoring—so your teams can focus on business logic. Starting at $9/month, it's a practical way to experiment with agentic AI workflows without massive upfront investment.

FAQ

What exactly is agentic AI and how is it different from regular AI?

Agentic AI refers to autonomous systems that can observe conditions, make decisions, and take actions without human intervention for each step. Unlike regular AI (like ChatGPT), which responds to prompts with generated content, agentic AI works proactively and continuously. An agentic system might monitor your supply chain, identify a problem, evaluate solutions, and execute a response, all without anyone asking it to do so. The key difference is autonomy: regular AI produces content, agentic AI performs actions.

Why do most agentic AI pilots fail to reach production?

Pilots stall because of architectural mismatches between the isolated environment where pilots run and the complex enterprise reality. Successful pilots typically exist in controlled settings with access to a single database or system. Production requires connecting to multiple legacy systems, handling data from different sources, maintaining compliance, and respecting governance frameworks. Integration complexity, lack of monitoring infrastructure, and missing governance controls are the primary reasons pilots don't become operational systems.

How long does it typically take to operationalize an agentic AI system?

A realistic timeline from decision to autonomous operation is 6-8 months. This includes assessment (2-4 weeks), building the agent in advisory mode (2-3 months), hardening and governance (1-2 months), and launch with close monitoring (1 month). Organizations that try to compress this timeline typically run into problems during the autonomous phase. The intermediate stages—advisory mode and approval-gate mode—aren't wasted time; they're essential validation periods that build confidence in the system.

What role do platforms play in successful operationalization?

Platforms are the difference between custom infrastructure and sustainable systems. A platform provides pre-built connectors to enterprise systems, orchestration capabilities for managing agents, governance controls, and monitoring infrastructure. Without a platform, building an agent that works in production requires custom code for every integration point, governance control, and monitoring feature. This dramatically extends timelines and increases costs. With a platform, you configure rather than code, which typically reduces time-to-value by 50-60%.

What's the most critical governance requirement for autonomous agents?

Visibility is the foundation of all governance. Before agents take autonomous action, you need complete observability: detailed logging of what the agent did, why it made specific decisions, and what the outcomes were. This audit trail isn't optional compliance overhead—it's how you build trust in the system. You should spend 2-3 months in advisory mode just collecting monitoring data before you allow any autonomous action. This helps you understand agent behavior patterns and identify edge cases.
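As a rough illustration of what such an audit trail could capture, here is a minimal sketch of one record per agent decision, serialized as a single JSON line. The field names and the `AuditRecord` type are assumptions, not a standard schema.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


def _utc_now() -> str:
    return datetime.now(timezone.utc).isoformat()


@dataclass
class AuditRecord:
    agent: str
    action: str               # what the agent did or recommended
    rationale: str            # why, stated in business terms
    inputs: dict              # the data the decision was based on
    outcome: str = "pending"  # filled in once the result is known
    timestamp: str = field(default_factory=_utc_now)


def to_jsonl(record: AuditRecord) -> str:
    """Serialize one record as a single JSON line for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


record = AuditRecord(
    agent="procurement",
    action="recommend_reorder",
    rationale="stock below reorder point",
    inputs={"sku": "A-100", "on_hand": 12, "reorder_point": 40},
)
print(to_jsonl(record))
```

Writing the rationale and the inputs alongside the action is what lets a reviewer later ask whether the decision was reasonable given what the agent knew, not just whether it happened.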

How do you determine ROI for agentic AI initiatives?

ROI comes from three sources: labor cost reduction, error reduction, and speed improvement. Labor cost is clearest: if an agent handles work that costs

What's the difference between building custom agents versus using prebuilt ones?

Custom agents (built with code) offer maximum flexibility but require maximum engineering effort. You own all the complexity. Prebuilt agents (from vendors) offer faster deployment but limited customization. Modern best practice is hybrid: use prebuilt agents where they fit your needs (70-80% of use cases) and build custom agents for what's unique to your business. Orchestrate them together under a unified platform. This gives you deployment speed plus flexibility.

How do you handle regulatory compliance with autonomous agents?

Regulation requires explainability, auditability, and human oversight. Your agent needs to explain decisions in business terms (not technical terms), maintain complete audit trails for review, and escalate edge cases to humans. In regulated industries, assume 5-10% of decisions will need human judgment. Build your governance to support this, not fight it. Compliance should be designed in from day one, not added after the fact. Platforms designed for regulated industries have these controls built-in.

What's the biggest mistake organizations make when operationalizing agentic AI?

Moving too fast. Teams build a pilot that works, then try to go autonomous immediately. This is risky. You need 2-3 months of advisory-mode operation (agent recommends, humans execute) followed by 1+ months in approval-gate mode (agent acts autonomously for small decisions, humans approve larger ones). This timeline feels slow, but it's how you build confidence and identify edge cases. Organizations that skip these stages often have costly failures that damage credibility and set adoption back months.

Key Takeaways

- Agentic AI pilots fail due to architectural mismatches between isolated environments and complex enterprise systems, not AI limitations

- Orchestration platforms that connect agents, systems, and governance are critical multipliers—custom integration code defeats the purpose of automation

- A realistic 6-8 month operationalization timeline includes 2-3 months in advisory mode before allowing autonomous action—rushing this causes failures

- Governance and monitoring must be designed in from day one, not bolted on after—visibility and control are foundations of agent autonomy

- Hybrid models combining custom agents with agent-as-a-service solutions provide flexibility while maintaining standardization and scalability
