AI Coding Tools Work—Here's Why Developers Are Worried [2025]

Two years ago, AI coding assistants felt like a novelty. Today, they're transforming how software gets built.

The shift happened quietly, then suddenly. Tools like Claude Code, Codex, and Cursor went from suggesting single lines to building entire applications from prompts. Some developers now spend their days directing AI agents rather than typing code. OpenAI uses its own Codex to build Codex. The capability is undeniable.

Yet something strange is happening. The more powerful these tools become, the more worried developers get.

It's not that the tools don't work. They do. That's exactly the problem.

When AI coding agents reliably write working code, when they can debug their own mistakes, when they understand your architecture well enough to suggest improvements, a new set of concerns emerges. Developers aren't asking "can these tools code?" anymore. They're asking "what happens when they do the coding for us?"

I spent weeks talking to software engineers across companies ranging from startups to Microsoft. Their responses revealed something the industry hasn't fully grasped: enthusiasm and anxiety coexist. Developers love the speed. They're terrified of the consequences.

This is the story of what's actually happening with AI coding tools right now, beyond the marketing. It's messier than either "AI will replace engineers" or "AI is just autocomplete" headlines suggest. The truth involves difficult tradeoffs nobody advertises.

The Capability Jump Nobody Expected

Not long ago, AI coding tools felt incremental. Better autocomplete. Nice, but not revolutionary.

That changed around mid-2024. The shift accelerated through 2025.

Roland Dreier, a software engineer with extensive Linux kernel contributions, described experiencing a "step-change" in AI capability. Where he once used AI for autocomplete and occasional questions, he now delegates entire debugging sessions. He tells the agent, "this test is failing, debug and fix it," and it works.

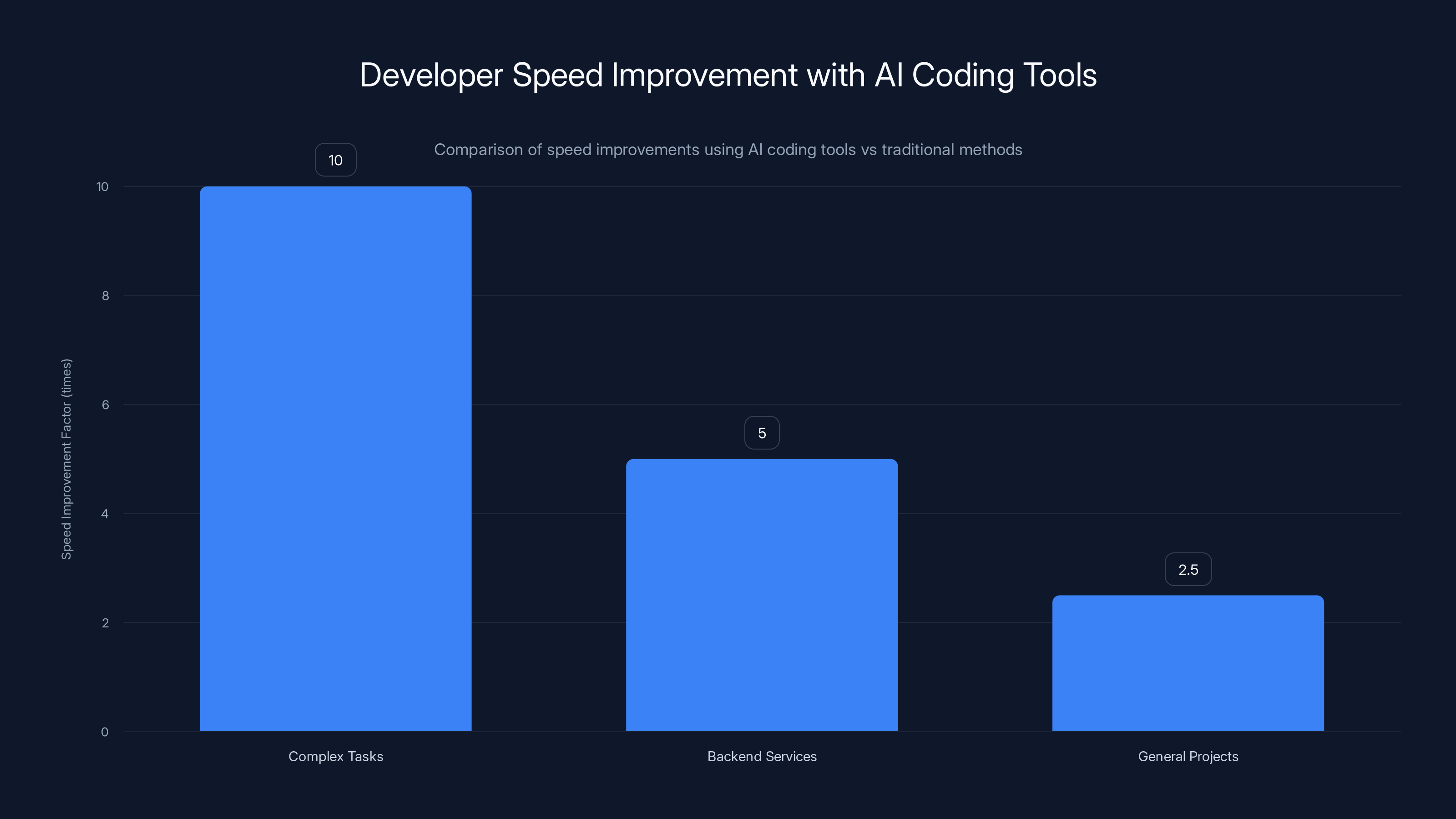

For complex tasks involving backend services in Rust, Terraform configuration, and Svelte frontends, Dreier estimates a 10x speed improvement. That's not marketing. That's an experienced engineer estimating that he now produces roughly the same output in one-tenth the time.

A software architect at a pricing management SaaS company reported something similar. After 30 years of traditional coding, AI tools transformed his work entirely. He delivered a feature in two weeks that he estimated would have taken a year traditionally.

These aren't outliers. Multiple developers reported comparable experiences. The capability is real.

But capability isn't the same as safety. That distinction matters.

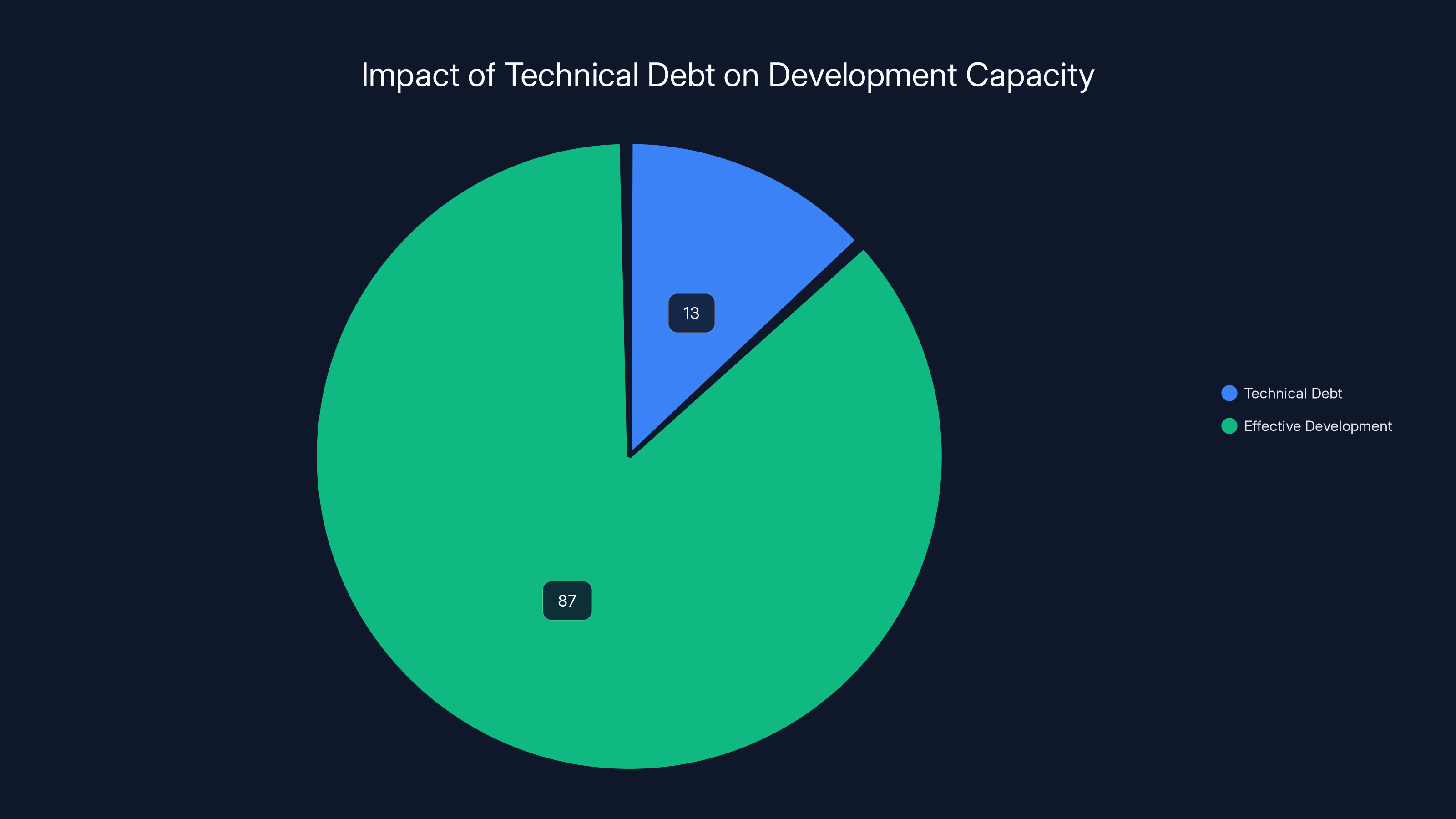

Technical debt consumes approximately 13% of development capacity, equating to about 1.5 months per year per engineer. Estimated data.

The Speed Problem

Faster development seems obviously good. Ship features quicker. Iterate quicker. Get feedback faster.

Except velocity without understanding creates problems.

When an AI agent writes code 10x faster, humans can't review code 10x faster. Our brains don't work that way. Reading code requires understanding architecture, design patterns, and the reasoning behind each decision. You can't scan a 2,000-line module in 30 seconds and genuinely understand it.

Yet that's what's happening. Developers are accepting AI-generated code faster than they can meaningfully review it.

Darren Mart, a senior engineer at Microsoft with 19 years at the company, used Claude to build a Next.js application integrating Azure Functions. The AI model "successfully built roughly 95% of it according to my spec." But Mart remains cautious. He only uses AI for tasks he "already fully understand[s]," because otherwise "there's no way to know if I'm being led down a perilous path and setting myself up for a mountain of future debt."

Mart isn't being paranoid. He's describing a real problem: code that works today but creates problems tomorrow.

Consider what happens when an AI agent chooses between two architectural approaches. Both work. One scales to 100,000 users. The other hits a wall at 10,000. The AI has no way to know which future you're building for. It just picks an approach that satisfies the current spec.

Eighteen months later, you're rewriting everything. That's technical debt.
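As a small-scale illustration (a hypothetical example, not taken from any of the developers quoted here), both functions below satisfy the same spec, "return the email addresses that appear more than once," and give identical answers on a small test list. The first compares every pair of records, so its work grows quadratically; the second makes a single counting pass. Nothing in the spec tells an agent which one the future requires.

```python
from collections import Counter


def duplicate_emails_pairwise(emails: list[str]) -> set[str]:
    """Compare every pair of records: correct, but O(n^2) comparisons."""
    dupes = set()
    for i in range(len(emails)):
        for j in range(i + 1, len(emails)):
            if emails[i] == emails[j]:
                dupes.add(emails[i])
    return dupes


def duplicate_emails_counted(emails: list[str]) -> set[str]:
    """Count occurrences in one pass: O(n)."""
    counts = Counter(emails)
    return {email for email, n in counts.items() if n > 1}


sample = ["a@x.io", "b@x.io", "a@x.io", "c@x.io", "b@x.io"]

# Both satisfy the spec on small inputs...
assert duplicate_emails_pairwise(sample) == duplicate_emails_counted(sample)
# ...but at 100,000 records the pairwise version performs about 5 billion
# comparisons, versus about 50 million at 10,000 records.
```

Both versions pass the same test, which is exactly why a spec-driven agent, or a hurried reviewer, may not notice the difference until the data grows.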

The problem compounds. When developers don't review code carefully, they don't learn how the system works. When something breaks, they're helpless. They can't debug what they don't understand. They ask the AI to fix it. Repeat.

Eventually, your codebase is held together by a chain of dependence on the AI's understanding of it.

The Term That Started All This: "Vibe Coding"

In early 2025, former OpenAI researcher Andrej Karpathy coined the term "vibe coding." It stuck because it perfectly captures something real.

Vibe coding means writing code by chatting with AI without fully understanding the result. You describe what you want. The AI delivers it. You move on. The code works, at least immediately.

This isn't necessarily wrong for throwaway scripts or prototypes. It's extremely useful for spinning up something quickly.

But here's what worries experienced engineers: the line between "prototype" and "production code" gets blurry. Prototypes ship. Prototypes become services. Services become critical infrastructure.

Tim Kellogg, a developer who actively builds with agentic AI, is blunt about traditional syntax coding: "It's over. AI coding tools easily take care of the surface level of detail." He describes building a project and then rebuilding it three times, in less time than it would have taken to type it out manually once, ending up with a cleaner architecture.

Kellogg represents developers who've fully embraced AI direction rather than coding. He's not wrong about what's possible. But he's building in a specific style with specific constraints that don't apply to every project.

Vibe coding works when you understand the domain. It works when the stakes are low. It works when someone senior reviews the output against actual requirements.

It breaks when junior developers use it without supervision. It breaks when nobody's asking hard questions about design. It breaks when speed becomes the only metric that matters.

Developers experience up to 10x speed improvements on complex tasks with AI coding tools, while general projects see a 2.5x improvement. Estimated data based on typical developer reports.

Architectural Decisions Made Without Understanding

Code is instructions. Architecture is constraints.

Good architecture anticipates problems. It builds in room to grow. It doesn't paint you into corners.

AI agents are terrible at anticipating problems they haven't been explicitly told about. They can't ask the right questions because they don't know what questions exist.

An AI agent can choose between three database schema designs. It picks the one that satisfies the current requirements most efficiently. It has no way to know you'll need to support multi-tenancy in six months. It doesn't know you're planning a geographic expansion. It can't predict that performance matters more than flexibility, or vice versa.
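A hypothetical sketch of that schema decision, using Python's built-in `sqlite3` module. Both designs satisfy a single-customer spec; only the second scopes every row to a `tenant_id` from the start. The table and column names are illustrative assumptions, not taken from any project mentioned here.

```python
import sqlite3

# Design A: satisfies today's single-customer spec.
SCHEMA_SINGLE = """
CREATE TABLE invoices (
    id INTEGER PRIMARY KEY,
    customer_email TEXT NOT NULL,
    amount_cents INTEGER NOT NULL
);
"""

# Design B: same data, but every row is scoped to a tenant up front.
SCHEMA_MULTI = """
CREATE TABLE invoices (
    id INTEGER PRIMARY KEY,
    tenant_id INTEGER NOT NULL,
    customer_email TEXT NOT NULL,
    amount_cents INTEGER NOT NULL
);
CREATE INDEX idx_invoices_tenant ON invoices (tenant_id);
"""


def columns(schema: str) -> list[str]:
    """Create the schema in an in-memory database and list the invoice columns."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(schema)
    # PRAGMA table_info returns one row per column; index 1 is the column name.
    cols = [row[1] for row in conn.execute("PRAGMA table_info(invoices)")]
    conn.close()
    return cols


print(columns(SCHEMA_SINGLE))  # ['id', 'customer_email', 'amount_cents']
print(columns(SCHEMA_MULTI))   # ['id', 'tenant_id', 'customer_email', 'amount_cents']
```

With design A, supporting multiple tenants later means altering the table, backfilling existing rows, and auditing every query that must now filter by tenant. Neither design is wrong for today's spec; only knowledge of the roadmap separates them.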

Humans make these mistakes too. The difference is that humans learn from mistakes, talk about mistakes, and gradually build intuition. AI repeats the same type of mistakes identically across projects.

A data scientist working in real estate analytics, who asked to remain anonymous, keeps AI "on a very short leash." He uses GitHub Copilot for line-by-line completions—useful 75% of the time—but never for larger decisions. He doesn't trust AI with architectural choices in code affecting customer data.

That's wisdom earned the hard way. Data scientists know what happens when systems don't handle edge cases correctly. They've seen what happens when assumptions break. They're cautious not because they're paranoid, but because they've been burned before.

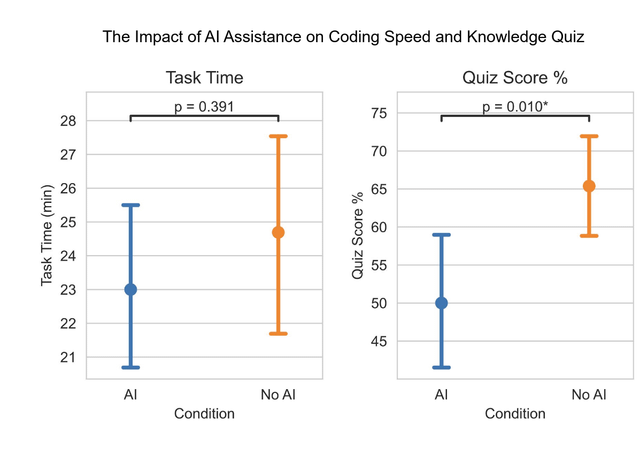

The Skills Problem Nobody Wants to Discuss

Here's what happens when AI writes most of your code: you stop learning how to write code.

Junior developers who rely on AI to build 95% of their projects don't learn software engineering. They learn how to prompt AI. These aren't the same thing.

An experienced engineer can use AI as a multiplier because they already know what good code looks like. They catch when the AI is wrong. They know whether a suggested approach is sound.

A junior without that foundation just trusts the AI. They ship code they don't understand. They can't debug it when it breaks. When something unusual happens, they're lost.

Five years from now, you end up with teams that look competent on the surface but crumble the moment something unexpected happens. That's a massive problem that nobody's addressing.

Companies are hiring AI-era developers who lack the fundamentals. The first time these developers hit a problem the AI can't solve, they'll struggle, because they never had to work through one on their own while learning. They'll be building on a foundation of trust in tools rather than understanding.

This isn't a moral judgment. It's a practical concern. The best developers I know still write code by hand regularly. Not because they're nostalgic. Because understanding architecture requires writing it, testing it, and living with the consequences.

AI skips that last part. You never live with the consequences because someone else handles them later.

When AI Coding Actually Works Well

Let's be clear: AI coding tools aren't useless. They're genuinely powerful for specific things.

Roland Dreier had a five-year-old shell script for copying photos off camera SD cards. It was janky. He kept meaning to rewrite it. Never did, because the effort wasn't worth it.

With AI agents, he spent a few hours building a complete, released package with a text UI, written in Rust with unit tests. "Nothing profound there, but I never would have had the energy to type all that code out by hand," he told me.

That's the winning use case. Clear, well-understood problem. Low risk. High effort with manual coding.

AI excels at:

- Boilerplate generation: Repetitive code that follows predictable patterns

- Glue code: Connecting existing systems without novel logic

- Prototyping: Quick validation of ideas before serious engineering

- Migration tasks: Moving code from one framework to another

- Testing: Writing unit tests for existing code (often better than humans)

- Documentation and comments: Explaining working code

- Performance optimization: Suggesting improvements to existing working code

Where AI struggles:

- Core algorithms: Novel logic requiring creative problem-solving

- Architectural decisions: Choices with long-term implications

- Security-critical code: Authentication, encryption, data protection

- System design: Building systems that handle failure gracefully

- Debugging complex issues: Problems requiring deep domain knowledge

- Code that must scale: Anticipating future growth

The winning strategy isn't "use AI for everything" or "never use AI." It's knowing the difference.


The Productivity Paradox

Developers report massive speed improvements. Shipping faster. Building more. Prototyping quicker.

Yet nobody's shipping higher quality software. In fact, some companies report more bugs, more rewrites, more technical debt.

The paradox resolves when you separate two things:

- Getting code written (AI is great)

- Getting good code shipped (AI creates challenges)

AI made writing code faster. It didn't make shipping good code faster. The review process is actually slower because there's more code to review.

Tim Kellogg can build an application three times over in less time than it takes to build it once manually. That's real. But how many rebuild cycles does a typical project need before shipping?

Most don't need three. Most need one, maybe two refinements. So the comparison is misleading. AI let him explore more options, but most projects don't benefit from that exploration.

For straightforward projects with clear requirements, traditional coding is faster when you count the whole cycle: requirements gathering, development, review, testing, deployment, and maintenance.

AI wins when projects are exploratory, requirements are fuzzy, or velocity on throwaway code matters.

That's a narrower use case than the hype suggests.

The Supervision Problem

Every developer who's enthusiastic about AI coding emphasized the same thing: human supervision is critical.

"I still need to be able to read and review code," Dreier said. "But very little of my typing is actual Rust or whatever language I'm working in."

So supervision is still required. Review is still necessary. It's not "AI builds software." It's "AI builds, humans review."

That's a crucial difference. The promise was "let AI handle coding." The reality is "let AI handle typing, humans handle engineering."

Except humans get tired. Code reviews get sloppy when you're doing fifty a day. You start approving things faster. You stop asking hard questions. You trust the AI because it usually works.

Then one day it doesn't. But by then, the bad code is in production, and you're paying the cost.

Hallucinations Still Happen

AI coding agents confabulate. They suggest APIs that don't exist. They call functions with wrong parameters. They invent library features that aren't real.

The code they write often looks correct. It passes syntax checks. It compiles. But at runtime, it fails.
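In a dynamically typed language the gap is easy to see. The hypothetical snippet below calls `to_upper()`, a plausible-looking method that Python strings do not have (the real one is `upper()`). Python's built-in `compile()` accepts the source without complaint; the error only surfaces when the line actually runs. A static type checker or compiler would catch this particular case in many languages, but the same pattern applies to hallucinated behavior that no compiler can see.

```python
# AI-generated source with a nonexistent method: str has .upper(), not .to_upper().
generated = 'def shout(name):\n    return name.to_upper() + "!"\n'


def run_generated(source: str, func_name: str, arg: str) -> str:
    """Compile and define the generated function, then call it."""
    code = compile(source, "<ai-generated>", "exec")  # syntax check passes
    namespace: dict = {}
    exec(code, namespace)  # defining the function also succeeds
    try:
        # Attribute lookup happens only now, at call time.
        return namespace[func_name](arg)
    except AttributeError as err:
        return f"runtime failure: {err}"


print(run_generated(generated, "shout", "ada"))
```

Swapping in the real method name (`upper`) makes the same call return `"ADA!"`, which is the kind of one-word fix that is trivial once the failure is visible and costly when it hides in a rarely exercised path.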

For straightforward tasks, this is fine. You get an error, fix it, move on.

For complex systems where failures are subtle, it's a nightmare. An AI-generated database query might return wrong results under specific conditions. A caching strategy might create race conditions. A dependency version mismatch might only break in production.
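One classic instance of a query that is wrong only under specific conditions involves SQL's NULL handling. The hypothetical example below, using Python's built-in `sqlite3` module, asks for every order that is not cancelled. The first query looks correct and works on test data without NULLs, but `status != 'cancelled'` evaluates to NULL (not true) when `status` is NULL, so those rows silently disappear. The table and data are illustrative.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT)")
# Order 3 has no status yet (NULL), e.g. a row written by an older code path.
conn.executemany(
    "INSERT INTO orders (id, status) VALUES (?, ?)",
    [(1, "shipped"), (2, "cancelled"), (3, None)],
)

# Looks right, but NULL != 'cancelled' is NULL, so order 3 is silently dropped.
naive = conn.execute(
    "SELECT id FROM orders WHERE status != 'cancelled' ORDER BY id"
).fetchall()

# Explicitly keeps rows whose status is missing.
correct = conn.execute(
    "SELECT id FROM orders WHERE status IS NULL OR status != 'cancelled' ORDER BY id"
).fetchall()

print(naive)    # [(1,)]
print(correct)  # [(1,), (3,)]
```

Nothing errors and nothing crashes; the first query simply returns less data than it should, and only when NULLs are present.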

Every experienced engineer I spoke with mentioned this. The AI is confident and wrong just often enough that you can't relax. You still need to verify everything.

That verification takes time. Sometimes as much time as writing it manually would have taken.

So the speed advantage isn't as dramatic as headlines suggest. You're not saving time on review if you need to be as careful as you would have been anyway.

AI tools have significantly improved development speed, with debugging and backend services seeing a 10x improvement, and full feature development up to 26x faster. Estimated data based on developer reports.

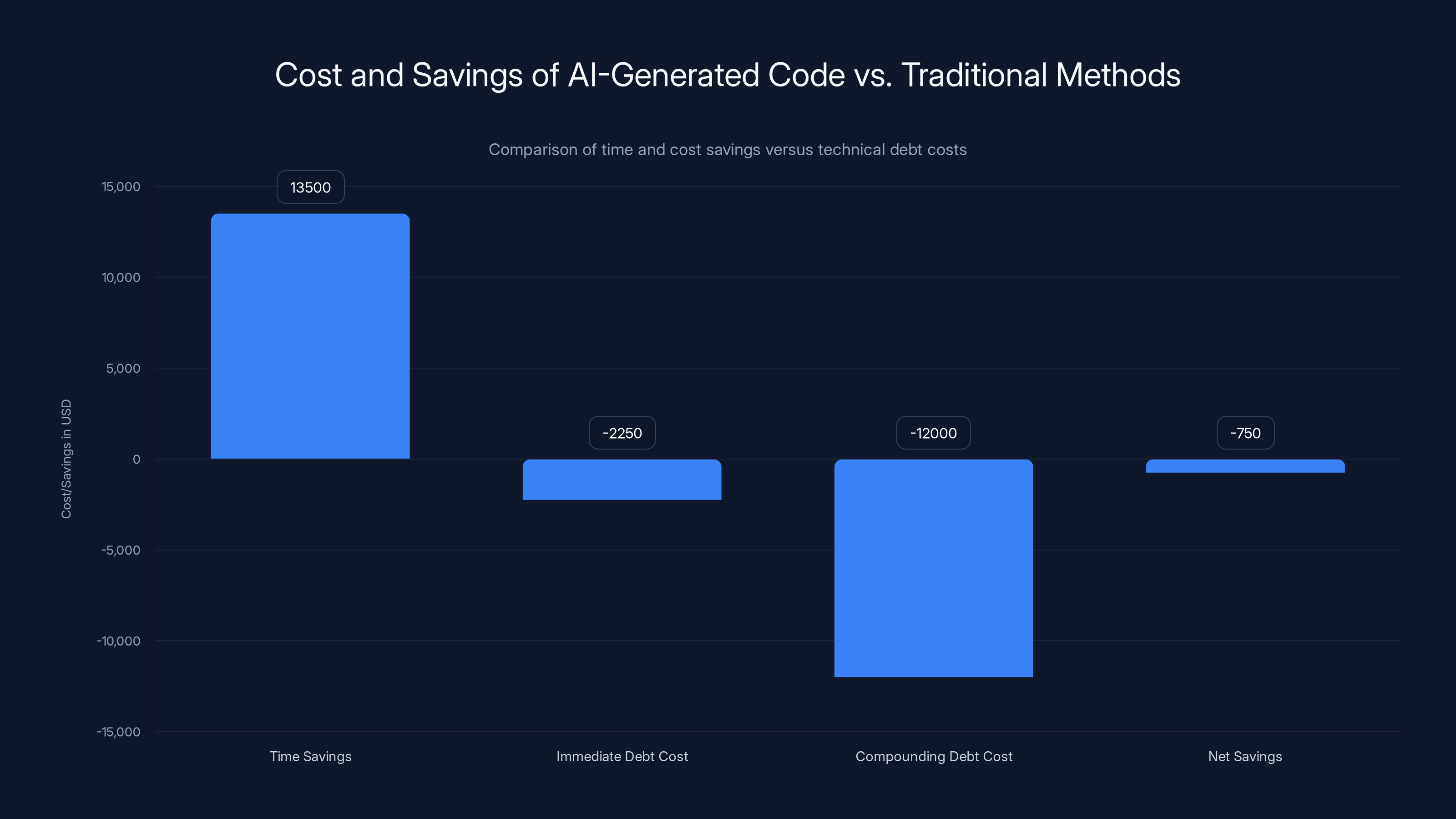

The Economics of AI-Generated Debt

Let's put numbers on this.

Assume an engineer using traditional methods takes 100 hours to build a feature. Using AI coding agents, they take 10 hours.

That's 90 hours saved. At $150 per hour, that's $13,500.

But assume that the AI-generated code has architectural problems that create 15 hours of technical debt: extra complexity that makes future changes harder. This debt compounds.

Every future modification to that feature costs 20% more because the foundation is questionable. Over a five-year product lifecycle with continuous changes, that debt accumulates.

Doing rough math:

- Debt cost: 15 hours × $150 = $2,250 immediate

- Compounding debt: assume 2 hours of extra cost on every future change, 8 major changes per year, 5 years = 80 hours × $150 = $12,000

- Total cost of debt: $14,250

- Net savings: $13,500 - $14,250 = negative $750

You actually lose money.
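The same back-of-the-envelope model, written out so the assumptions are easy to change. Every input is an illustrative assumption from the scenario, not measured data; the rate of $150 per hour is the one that reproduces the $14,250 and negative $750 figures.

```python
def net_savings(
    rate: int = 150,                  # dollars per engineering hour (assumed)
    manual_hours: int = 100,          # feature built by hand
    ai_hours: int = 10,               # same feature built with AI agents
    immediate_debt_hours: int = 15,   # up-front architectural cleanup
    extra_hours_per_change: int = 2,  # added cost of each later change
    changes_per_year: int = 8,
    years: int = 5,
) -> int:
    """Dollars saved by the faster build, minus the dollar cost of the debt it created."""
    upfront_savings = (manual_hours - ai_hours) * rate
    immediate_debt = immediate_debt_hours * rate
    compounding_debt = extra_hours_per_change * changes_per_year * years * rate
    return upfront_savings - (immediate_debt + compounding_debt)


print(net_savings())                          # -750
print(net_savings(extra_hours_per_change=1))  # 5250
```

The result is sensitive to the compounding term: halving the extra cost per change turns a $750 loss into a $5,250 saving, which is why measuring that cost matters more than measuring the initial speedup.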

This is theoretical, but the logic holds. Speed without quality isn't cost savings. It's expense deferral.

Smartly used, AI avoids this. You use AI for low-debt tasks—boilerplate, glue code, testing. You maintain human control over architectural decisions. You review carefully. You measure debt accumulation.

Used carelessly, AI creates a hidden cost structure that doesn't appear in quarterly reports until it's massive.

Security and AI-Generated Code

Security vulnerabilities in AI-generated code are a specific, measurable problem.

AI models trained on public code learn common security mistakes alongside working code. They reproduce those mistakes confidently. An AI might generate a SQL injection vulnerability that looks correct because it's syntactically valid.

An experienced security engineer catches that. A junior who doesn't understand SQL injection approves it. It ships.
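A minimal illustration using Python's built-in `sqlite3` module (the table and inputs are hypothetical). The first function builds the query with string formatting, which is valid SQL for ordinary input but lets a crafted username rewrite the WHERE clause; the second passes the value as a bound parameter, so the driver treats it as data rather than SQL.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, is_admin INTEGER)")
    conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", 0), ("root", 1)])
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Vulnerable: user input is pasted directly into the SQL text.
    query = f"SELECT name FROM users WHERE name = '{name}' ORDER BY name"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Parameterized: the ? placeholder keeps input out of the SQL grammar.
    return conn.execute(
        "SELECT name FROM users WHERE name = ? ORDER BY name", (name,)
    ).fetchall()


conn = make_db()
attack = "nobody' OR '1'='1"
print(find_user_unsafe(conn, attack))  # [('alice',), ('root',)]: every row leaks
print(find_user_safe(conn, attack))    # []
```

Both functions return the same answer for a normal username such as `"alice"`, so a reviewer checking only the happy path would see no difference.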

For features handling customer data, payment processing, or authentication, AI code requires security review from experts. But most companies don't have enough security experts to review all code.

So they don't. They ship code written by AI and reviewed by someone without security expertise. That's a recipe for breaches.

A Stanford study found security vulnerabilities in 26% of Copilot suggestions. That's not acceptable for production code. It's a liability.

Yet companies are shipping it because velocity matters more than security in quarterly planning cycles.

What Happens to Code Ownership?

When an AI agent writes code, who owns it?

Legally, the question is murky. AI training involves questions about copyright on training data. AI-generated code potentially carries licensing obligations. If you use GitHub Copilot trained on open-source code, you might need to include open-source licenses in your proprietary product.

But beyond legal questions, there's an engineering question: if nobody on your team wrote the code and nobody fully understands it, who's responsible when something breaks?

Typically, the person who approved it. But if they didn't truly understand it, that's unfair.

Teams that use AI well keep ownership clear: the person who requested the feature remains responsible for it, even though the AI did the writing. They review it, understand it, own it.

Teams that use AI poorly lose that connection. Code appears, gets reviewed quickly, ships. When it breaks, finger-pointing begins.

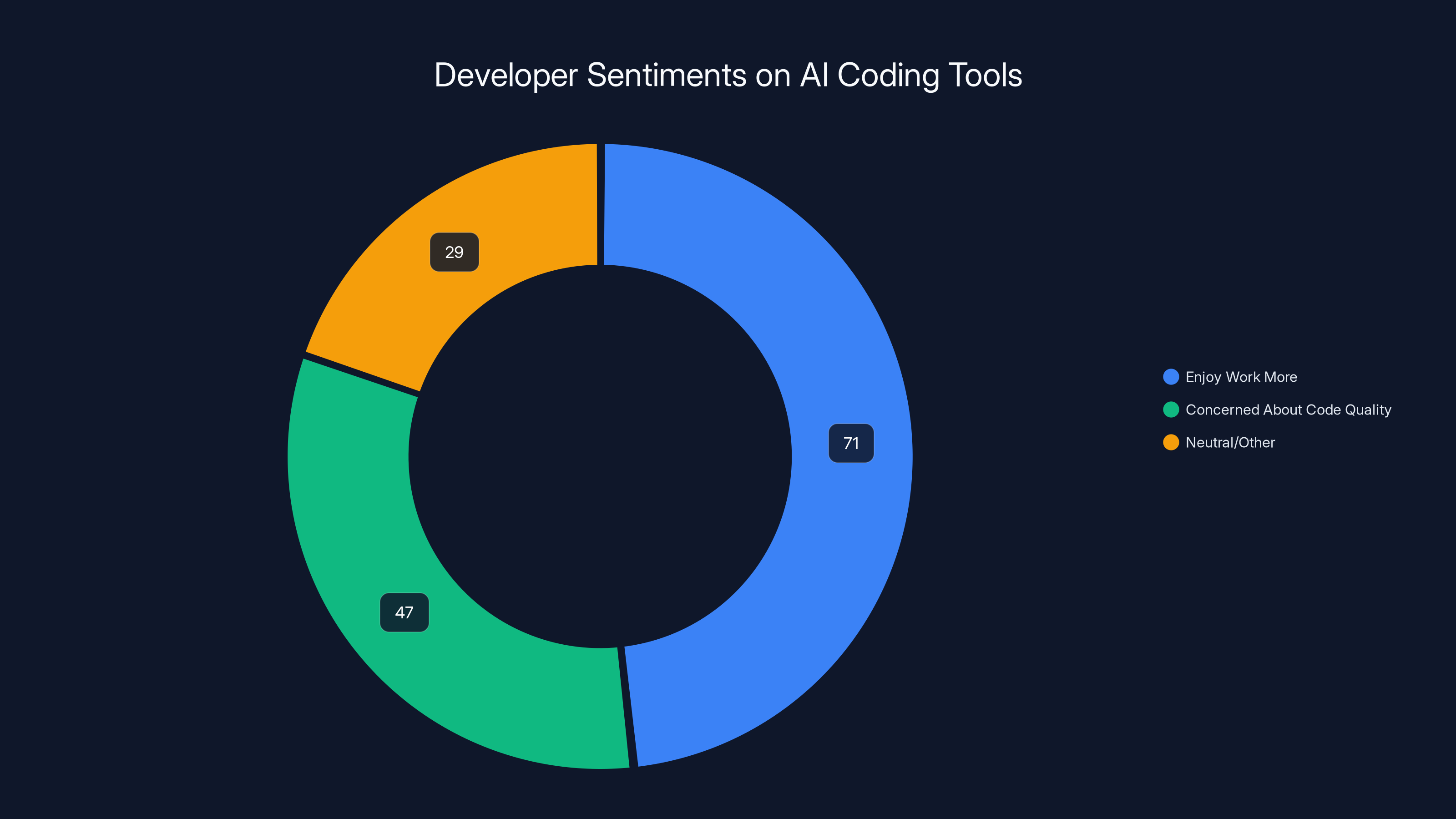

71% of developers enjoy their work more with AI tools, but 47% worry about long-term code quality. Estimated data for neutral/other category.

The Future of Code Review

Code review as an institution is changing.

Traditionally, reviews were about catching bugs and suggesting improvements. An expert reads code and thinks about edge cases.

When AI writes code, reviews become something different. They're more like quality checkpoints. Does this code match the requirements? Does this approach seem right? Can I understand this?

Reviews are less detailed because there's so much volume. They're faster because slowing down would grind development to a halt.

This is untenable for critical code.

The industry needs new tools and processes around review. Automated security scanning that catches entire categories of vulnerabilities. Architectural linting that flags decisions that don't match your patterns. Debt tracking that measures the long-term cost of each decision.
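What architectural linting might look like at its simplest: a hypothetical layering rule, enforced with Python's standard `ast` module, that flags any module in a `handlers` layer importing directly from a `db` layer. The package names and the rule itself are assumptions for illustration; a real team would encode its own patterns.

```python
import ast

# Hypothetical rule: the "handlers" layer must go through "services", never "db".
FORBIDDEN = {"handlers": {"db"}}


def layering_violations(module_name: str, source: str) -> list[str]:
    """Return one message per import that crosses a forbidden layer boundary."""
    layer = module_name.split(".")[0]
    banned = FORBIDDEN.get(layer, set())
    problems = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            targets = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom) and node.module:
            targets = [node.module]  # skips bare relative imports like "from . import x"
        else:
            continue
        for target in targets:
            if target.split(".")[0] in banned:
                problems.append(f"{module_name}:{node.lineno} imports {target}")
    return problems


generated = "import json\nfrom db.queries import fetch_user\n"
print(layering_violations("handlers.profile", generated))
# ['handlers.profile:2 imports db.queries']
```

A check like this runs in milliseconds on every change, so it scales with AI-generated volume in a way human attention does not; it only catches the patterns someone has written down, though.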

Without these tools, code review either becomes a bottleneck that prevents deployment, or becomes a rubber-stamp process that catches nothing.

Companies are currently choosing the rubber-stamp approach. That's the crisis that isn't being talked about loudly enough.

The Developer Experience Paradox

Here's something interesting: developers report enjoying coding more with AI tools.

The software architect at the pricing management SaaS company, who spoke anonymously: "I have way more fun now than I ever did doing traditional coding."

It's not surprising. If you hate writing boilerplate and tedious glue code, removing that is delightful. If you get to spend your time on interesting problems instead of syntax, that's better.

But pleasure isn't the same as skill development or good outcomes.

You can have fun and still be creating problems for the future.

A junior developer might have more fun building features with AI because they're not struggling with syntax. But they're also not learning how to debug, how to think through design, how to handle edge cases.

Fun isn't a reliable metric for whether something is good.

What Replacing Syntax Really Means

Tim Kellogg's statement—"syntax programming is over"—requires interpretation.

What he means is: the act of typing out code in a programming language is no longer the bottleneck for experienced developers. They can think in terms of what they want to build, have AI handle syntax, and move faster.

That's true. It's also not the same as "programming is over" or "we don't need programmers anymore."

We still need people who understand algorithms, data structures, system design, and debugging. We still need people who can ask the right questions about requirements. We still need people who can evaluate whether an AI-generated approach is sound.

What's changing is that mechanical code writing—the part that doesn't require much thought—gets automated.

That's great if it frees humans to do higher-value work. It's a disaster if it makes people think they don't need to understand fundamentals anymore.

The profession isn't ending. It's shifting. Whether that shift is positive depends entirely on how the industry responds.

Building Teams in the AI Era

If you're managing engineers now, you face new questions.

Do you hire for coding speed or thinking depth? AI makes coding fast, so speed matters less. Thinking depth matters more.

Do you expect engineers to be able to write code without AI? Maybe not, but they should understand what good code looks like. They should be able to evaluate AI output.

Do you trust junior engineers to ship AI-generated code? Not without review from someone experienced.

Do you measure productivity in lines of code? God, no. If AI writes more lines and they're worse, you're losing.

The smartest companies are building teams where experienced engineers work with AI-augmented juniors. Seniors understand architecture and make big decisions. Juniors use AI to implement quickly. The combination is powerful.

Weaker approaches: let AI-era juniors build independently (high velocity, low quality), or prevent AI use entirely (low velocity, false confidence in quality).

The winning approach requires discipline, clear processes, and accepting that some projects will be slower because they need more careful engineering.

The Marketing vs. Reality Gap

OpenAI, Anthropic, and others have incentive to oversell what their tools can do.

OpenAI announcing that it uses Codex to build Codex is technically impressive. It's also marketing. Humans still review that code. Humans still make architectural decisions. The news makes it sound more autonomous than it is.

Developers know this. That skepticism is healthy.

David Hagerty, a point-of-sale systems developer, was explicit: "All of the AI companies are hyping up the capabilities so much." He acknowledges LLMs are "revolutionary and will have an immense impact," but won't accept the marketing without evidence.

That's the right stance. Tools are powerful. Marketing around tools is exaggerated. Smart people separate the two.

The danger is when non-technical decision-makers believe the marketing without skepticism. That's when you get teams shipping AI code without proper review, architecting systems by accepting first suggestions, and building technical debt at maximum velocity.

The Path Forward

AI coding tools are here. They're not going away. The capability is real.

The question isn't whether to use them. It's how to use them without destroying code quality.

That requires:

- Clear guidelines for what AI can and cannot do. Use it for boilerplate, testing, glue code. Don't use it for core algorithms, security, or architecture.

- Serious code review with different standards for AI-generated code. More scrutiny on architecture. Security review for sensitive code. Focus on intent, not just syntax.

- Debt tracking as a real metric. Measure the long-term cost of AI decisions, not just short-term speed.

- Skill preservation in teams. Don't let AI-written code keep junior developers from learning fundamentals.

- Honest assessment of whether AI made something actually better or just faster.

Companies doing this well are winning. They ship faster and with fewer problems because they use AI for what it's good at and keep humans in control of what matters.

Companies assuming AI can handle everything are about to pay the cost. In two years, their codebases will be harder to modify, more fragile, and filled with decisions nobody fully understands.

FAQ

What makes AI coding agents different from traditional autocomplete tools?

AI coding agents like Claude Code can work on software projects for hours, writing multiple functions, running tests, debugging failures, and refining code based on feedback. Traditional autocomplete suggests single lines or small code blocks. Agents understand context across entire projects and can make decisions about architecture, whereas autocomplete is stateless.

How much faster can developers work with AI coding tools?

Experienced developers report 10x speed improvements on complex tasks like building backend services with deployment configuration. However, this assumes the AI-generated code is of acceptable quality, supervision is minimal, and architectural decisions don't need revision. For most projects, the improvement is closer to 2x to 3x when accounting for review time and architectural refinement.

What is technical debt, and why do AI tools create more of it?

Technical debt occurs when code that works initially creates problems later because it uses poor design choices. AI creates debt because it optimizes for current requirements without anticipating future needs, and developers often skip careful review of AI-generated code. It compounds because maintaining code you didn't write and don't fully understand costs more.

Can junior developers safely use AI coding tools without supervision?

No. Junior developers lack the experience to evaluate whether AI-generated code is architecturally sound. They can't catch subtle bugs or recognize design decisions that create future problems. AI should be supervised by experienced engineers, especially for production code. Unsupervised AI use by juniors is how technical debt scales rapidly.

What types of code shouldn't be written by AI?

Security-critical code (authentication, encryption, data protection), core algorithms requiring creative problem-solving, architectural decisions with long-term implications, and any code handling customer data should be reviewed by subject matter experts before shipping. AI is confident and wrong just often enough that these areas require human expertise and careful verification.

How do companies measure whether AI made them more productive?

Measure outcomes, not line count. Did features ship faster? Did they cost less? Are they more reliable? Does the codebase remain maintainable? If you're shipping 2x faster but paying for it with 3x the maintenance burden, you're losing money. Real productivity improvement requires both speed and quality metrics.

What happens to code quality as teams adopt AI coding tools?

Code quality depends on processes around AI adoption. Teams with strict review requirements, architectural guidelines, and debt tracking maintain quality. Teams that treat AI as "ship it faster" see quality decline rapidly. The tool doesn't determine quality—how you use it does.

Will traditional programming skills become obsolete?

Understanding architecture, debugging, algorithms, and system design will always matter. What's changing is that mechanical code writing—the part that doesn't require much thought—is being automated. Programmers still need deep technical skills; they just spend less time on typing and more on thinking.

How should managers evaluate developer productivity in the AI era?

Stop using lines of code, velocity in story points, or tickets closed as primary metrics. Focus on code quality metrics (bugs found in production, rewrite frequency, technical debt accumulation), delivery time from requirement to deployment, and long-term maintainability. A developer who ships half as much code, but code that costs 75% less to maintain, is more productive than one who ships fast and creates debt.

What's the real risk of widespread AI coding adoption?

The biggest risk is that teams optimize for velocity without maintaining quality standards. Code compounds. Bad decisions made today cause problems for years. If entire organizations adopt AI without governance, review standards, or debt tracking, you'll see massive technical debt accumulation that eventually paralyzes development. That's a 3-5 year timeline from adoption to crisis.

Conclusion

AI coding tools work. That's the consensus from experienced developers. But working isn't the same as wise.

The technology genuinely increases development speed. The question is whether that speed creates value or just delays problems.

Every developer who praised AI tools also expressed caution. None said "use AI for everything." They said "use AI for the parts that don't require deep thinking, but stay in control of things that do."

That requires discipline. It requires processes. It requires accepting that some projects will be slower because they need careful engineering.

The companies getting this right are distinguishing between two different categories of work: executing known solutions (where AI excels) and creating new solutions (where human thinking is irreplaceable). They're using AI to amplify their best engineers, not replace thinking with speed.

The companies that will struggle are those treating AI as a substitute for engineering judgment. They'll ship fast initially. In two to three years, they'll be dealing with codebases so entangled and poorly architected that any changes become expensive. That's when the real cost of moving fast appears.

Developers aren't worried about AI because they think it doesn't work. They're worried because it works well enough to cause problems without obviously causing them. The code runs. The features ship. The debt accumulates invisibly until it's too late.

If your organization adopts AI coding tools, do it thoughtfully. Establish clear guidelines for what AI can and cannot do. Require serious review of AI-generated code, especially for architectural decisions. Track technical debt as a real metric. Preserve learning for junior developers. Measure actual outcomes, not just speed.

Done right, AI multiplies what good engineering teams can accomplish. Done wrong, it creates a debt-financed velocity illusion that looks great until it doesn't.

The choice is yours. So is the outcome.

Key Takeaways

- Experienced developers report up to 10x speed improvements on complex tasks because AI coding agents handle syntax and boilerplate, not architectural thinking

- Technical debt from AI-generated code compounds invisibly: code that works today can require expensive rewrites tomorrow

- One Stanford study found security vulnerabilities in 26% of Copilot suggestions, making security review non-negotiable

- The skill gap is real: junior developers using AI without understanding fundamentals cannot debug or architect effectively

- Companies winning with AI tools separate execution (where AI excels) from design decisions (where humans must lead)

