Flapping Airplanes and Research-Driven AI: Why Data-Hungry Models Are Becoming Obsolete [2026]

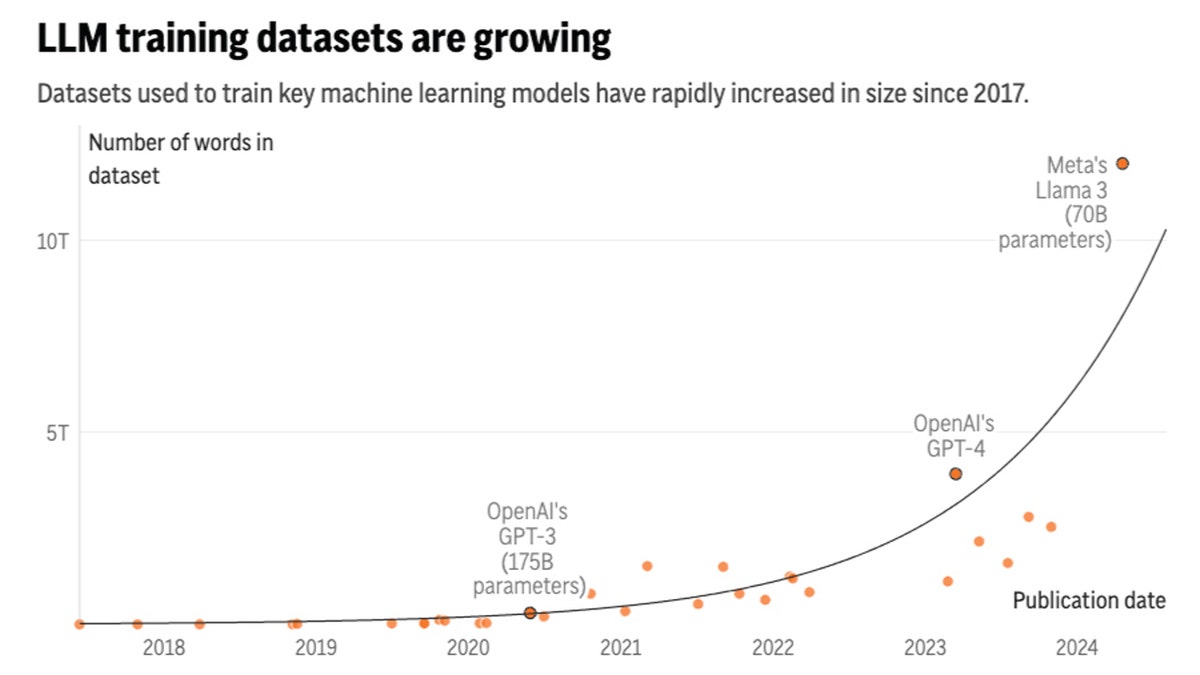

The AI industry has been operating under a single assumption for nearly a decade: bigger equals better. More data, more compute, more parameters. Scale everything, and intelligence will eventually emerge.

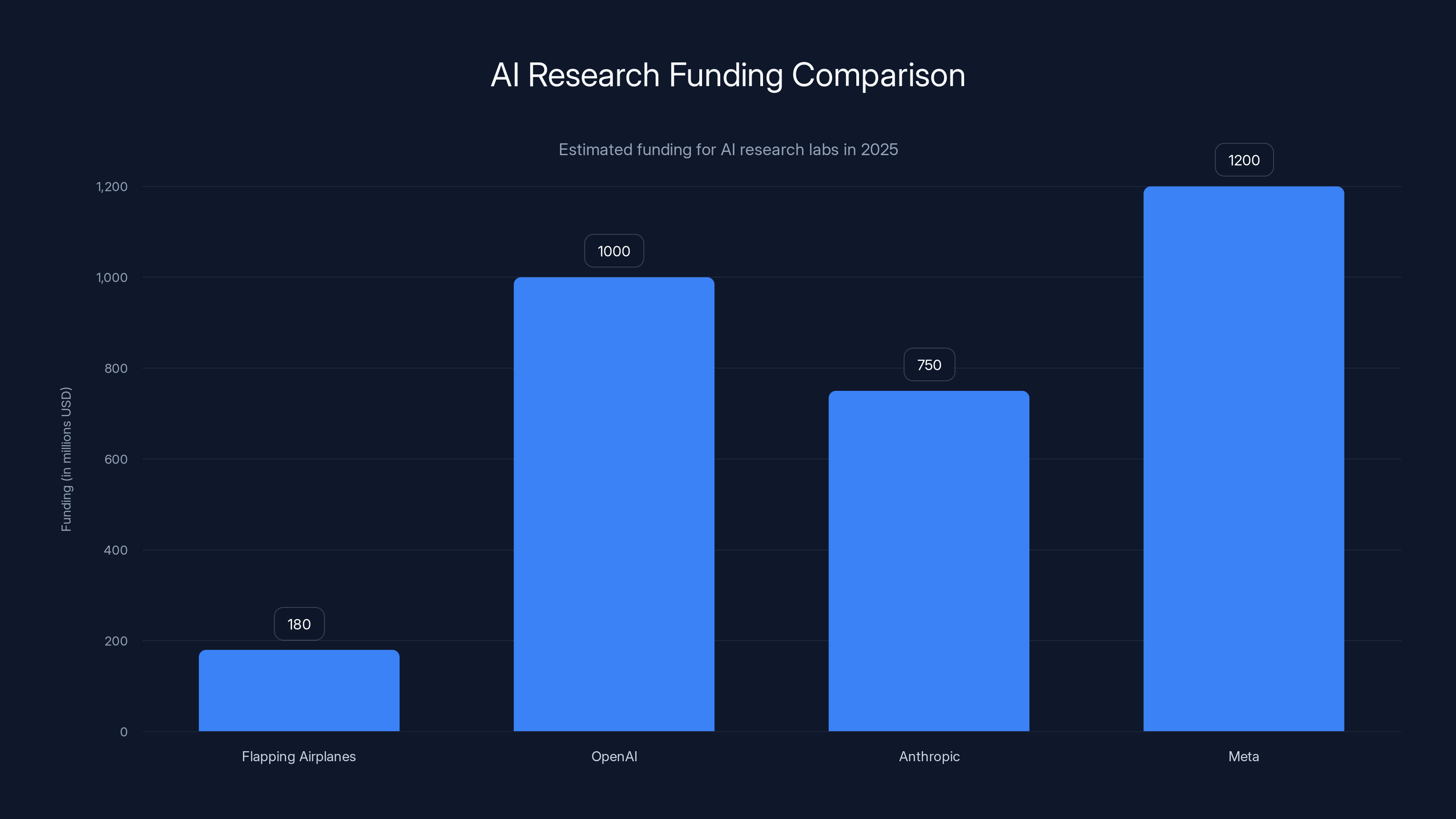

Then Flapping Airplanes showed up with $180 million in funding and a completely different playbook.

On January 29, 2026, a new AI research lab launched with backing from some of the smartest investors in tech: GV (Google Ventures), Sequoia, and Index Ventures. The founding team includes world-class researchers who've spent years questioning whether the current path makes sense. Their mission directly challenges the scaling-first mentality that has defined AI development since the transformer revolution.

This isn't just another AI startup with a clever pitch. This is the first major inflection point in how the industry approaches fundamental AI research. For years, we've watched companies like OpenAI, Anthropic, and Meta pour billions into larger models, assuming that scale alone would eventually lead to artificial general intelligence (AGI). Flapping Airplanes argues we're approaching this problem backwards.

The question they're asking is deceptively simple: what if we've already got enough data? What if the breakthrough isn't more—it's different?

Let me walk you through what Flapping Airplanes actually represents, why it matters, and what this shift means for everyone building AI products over the next five to ten years.

TL;DR

- Flapping Airplanes raised $180M to pursue research-first AI development focused on fundamental breakthroughs rather than scaling existing models

- The scaling paradigm is shifting: The industry is moving from compute-first approaches (short-term wins) to research-first approaches (5-10 year bets)

- Data efficiency is the new frontier: Finding ways to train effective models with less data and compute is becoming the competitive advantage

- Research-driven innovation takes patience: Projects may take years to show results, but the payoff could fundamentally reshape AI architecture

- Bottom line: The next wave of AI advancement won't come from bigger GPUs—it'll come from better science

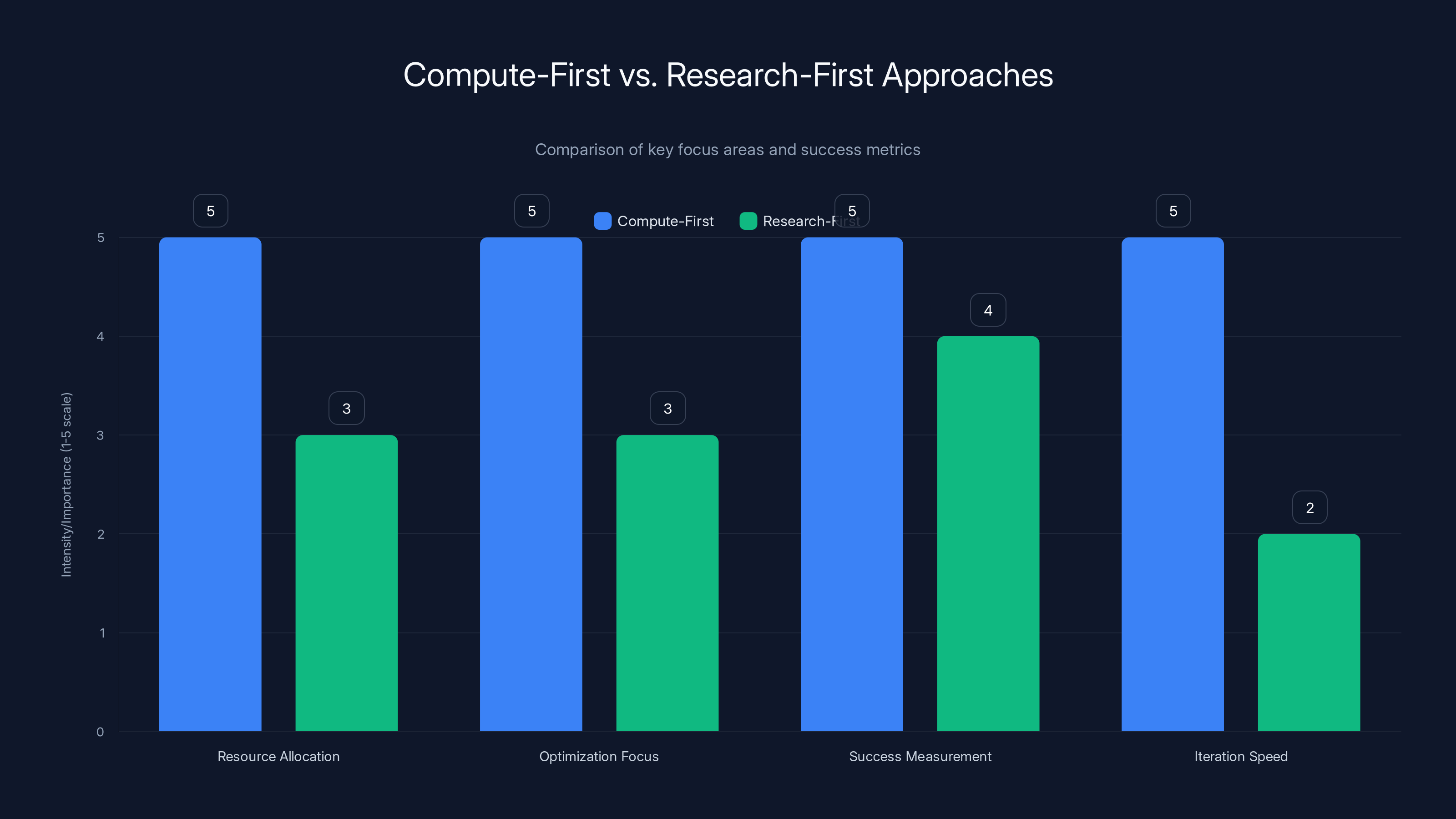

Compute-first organizations prioritize speed and market capture, while research-first organizations focus on depth and discovery. Estimated data based on typical organizational strategies.

The Scaling Paradigm That Built Modern AI

Understanding why Flapping Airplanes matters requires understanding what came before. For the last five years, the AI industry has operated under a clear assumption: if you want more capable models, you need more of everything.

More data. More compute. More parameters. More training time. The formula was straightforward, almost mechanical. And it worked. Spectacularly.

This approach, often called the scaling hypothesis, suggested that intelligence was primarily a function of model size. Researchers published papers showing that larger models performed better on nearly every metric. Performance scaled predictably with compute. The pattern held across different architectures, different domains, different training objectives.
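The "predictable" part is usually expressed as a fitted power law. As a minimal sketch, the function below uses the parametric form from DeepMind's Chinchilla paper (Hoffmann et al., 2022). In that form, predicted loss depends on parameter count N and training tokens D. The constants approximate that paper's published fit and are illustrative only. They say nothing about any specific lab's models.

```python
# Sketch of a Chinchilla-style scaling law: L(N, D) = E + A / N^alpha + B / D^beta.
# Constants approximate the fit reported by Hoffmann et al. (2022); illustrative only.
E, A, B = 1.69, 406.4, 410.7   # irreducible loss and fitted coefficients
ALPHA, BETA = 0.34, 0.28       # exponents for parameters and training tokens

def predicted_loss(n_params: float, n_tokens: float) -> float:
    """Predicted pre-training loss for n_params parameters trained on n_tokens tokens."""
    return E + A / n_params**ALPHA + B / n_tokens**BETA

if __name__ == "__main__":
    # Grow model and data together by 10x per step (about 20 tokens per parameter).
    for n in (1e8, 1e9, 1e10, 1e11):
        d = 20 * n
        print(f"N={n:.0e}  D={d:.0e}  loss={predicted_loss(n, d):.3f}")
```

Each 10x step in both model and data raises training compute by roughly 100x. At every step the predicted loss keeps falling, but each drop is smaller than the one before. That combination of steady improvement and rapidly rising cost is what made scaling both reliable and expensive.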

So companies responded rationally. OpenAI built bigger models. Anthropic built bigger models. Google, Meta, Microsoft, every major player—bigger models. The race wasn't subtle. It was explicit.

This strategy delivered real results. GPT-3 was better than GPT-2. GPT-4 was better than GPT-3. Claude 3 improved on Claude 2. Each generation showed measurable improvements in reasoning, coding, creative writing, and thousands of other tasks. The scaling hypothesis wasn't just a theory—it was a money-printing machine.

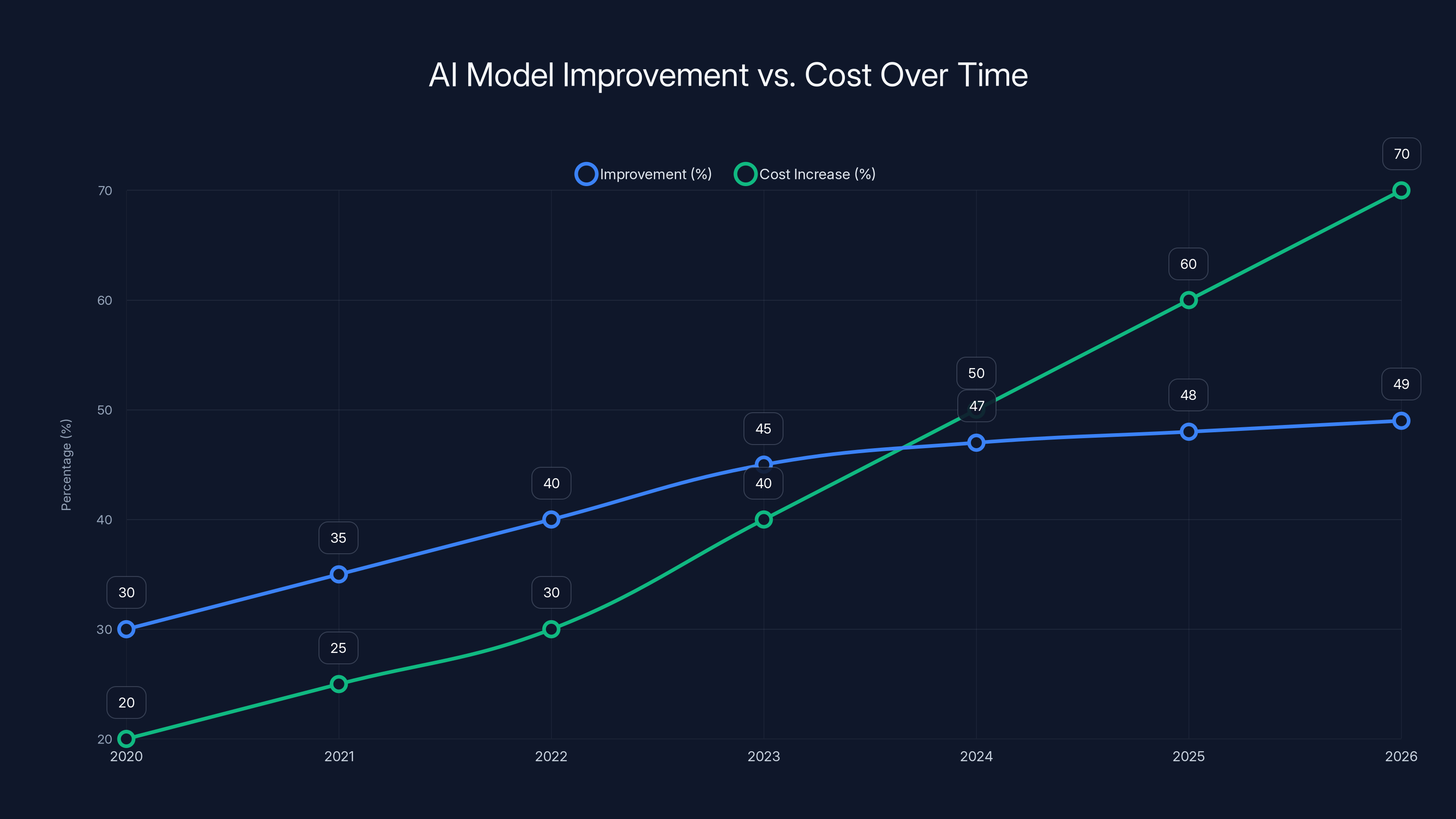

But scaling has costs. The financial costs are obvious: frontier training runs that cost tens to hundreds of millions of dollars. The environmental costs are significant: data centers burning enormous amounts of power. The temporal costs are real too: the gap between research breakthroughs and product deployment stretches longer with each generation.

More subtly, there's a strategic cost. When you're committed to scaling, you're essentially betting everything on the idea that you're on the right path. There's no room for fundamental questions about whether the underlying approach makes sense.

Flapping Airplanes is essentially asking: what if that bet is wrong?

The Research Paradigm: A Different Kind of Bet

Sequoia partner David Cahn's perspective crystallized something that's been building in the research community for a while. There's an alternative to the scaling hypothesis, and it rests on a fundamentally different assumption about how intelligence works.

The research paradigm doesn't deny that scaling matters. Instead, it suggests we're somewhere around two to three major research breakthroughs away from artificial general intelligence. Not through larger models, but through different architectures, novel training methods, or completely new ways of thinking about how systems learn.

If that's true, then the optimal allocation of resources changes dramatically. Instead of concentrating resources on the next generation of the same approach, you'd want to spread bets across many different research directions. Some of those bets would fail. Most would fail, probably. But collectively, they'd expand the search space for what's possible.
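The arithmetic behind spreading bets is simple if you assume the bets are independent. In that case, the chance that at least one succeeds is 1 - (1 - p1)(1 - p2)... across all bets. The sketch below uses hypothetical success probabilities. Real research directions are correlated, and splitting a fixed budget usually lowers each bet's odds. Treat this as intuition, not a model.

```python
# Probability that a portfolio of research bets yields at least one success,
# assuming the bets are independent. Per-bet probabilities are hypothetical.
def p_at_least_one(success_probs):
    """Return P(at least one bet succeeds) for independent bets."""
    p_all_fail = 1.0
    for p in success_probs:
        p_all_fail *= (1.0 - p)
    return 1.0 - p_all_fail

if __name__ == "__main__":
    single = [0.10]          # one concentrated bet with a 10% chance
    spread = [0.10] * 8      # eight independent bets, each with a 10% chance
    print(f"one bet:    {p_at_least_one(single):.2f}")   # 0.10
    print(f"eight bets: {p_at_least_one(spread):.2f}")   # 0.57
```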

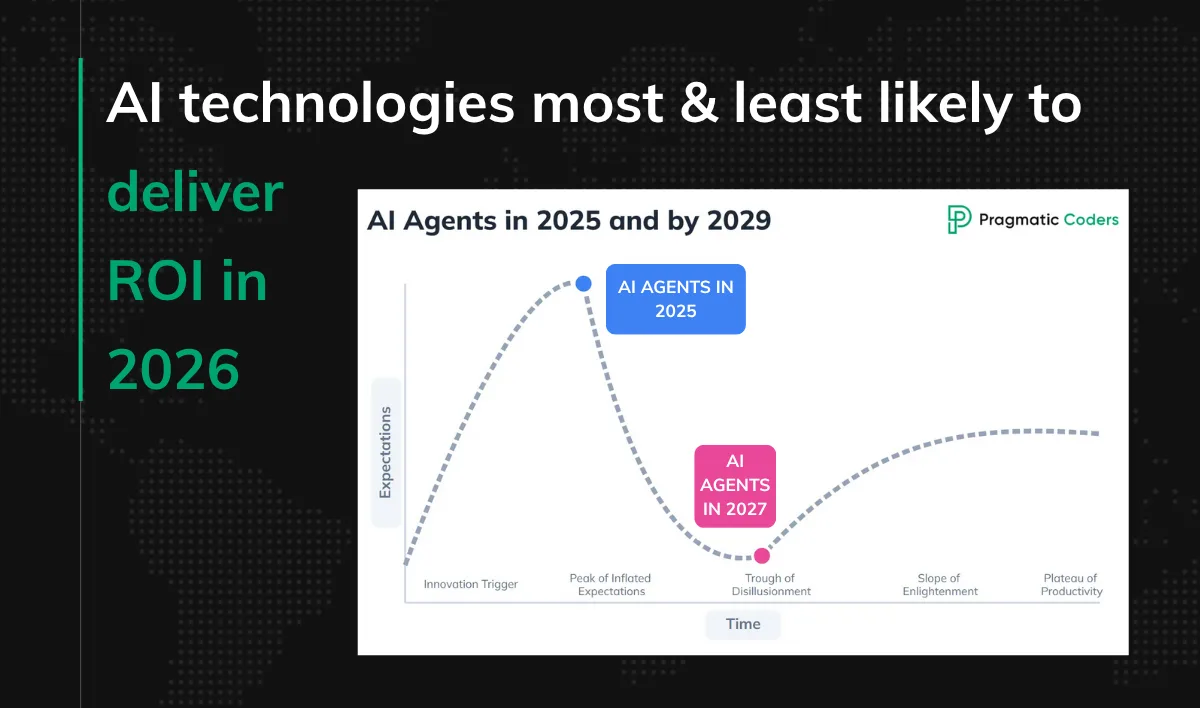

This changes the time horizon too. Scaling produces results quickly—a new model release every year or two. Research doesn't work on that timeline. A genuine breakthrough might take five, seven, even ten years to develop. And that's exactly the bet Flapping Airplanes is making.

The difference between these two approaches isn't academic. It changes everything about how you structure a company, allocate resources, evaluate talent, and measure progress.

A compute-first organization prioritizes short-term wins. You measure success by next quarter's benchmark improvements and next year's product releases. You favor engineering excellence and operational scale. These are genuine strengths—shipping products matters.

A research-first organization prioritizes long-term breakthroughs. You measure success by the scope of problems you can explore and the number of novel approaches you can test simultaneously. You favor theoretical insight and radical experimentation. You're willing to pursue ideas that have a low probability of success but could reshape the entire field.

These aren't just different priorities. They're different organizational cultures. Different hiring profiles. Different incentive structures. Different definitions of success.

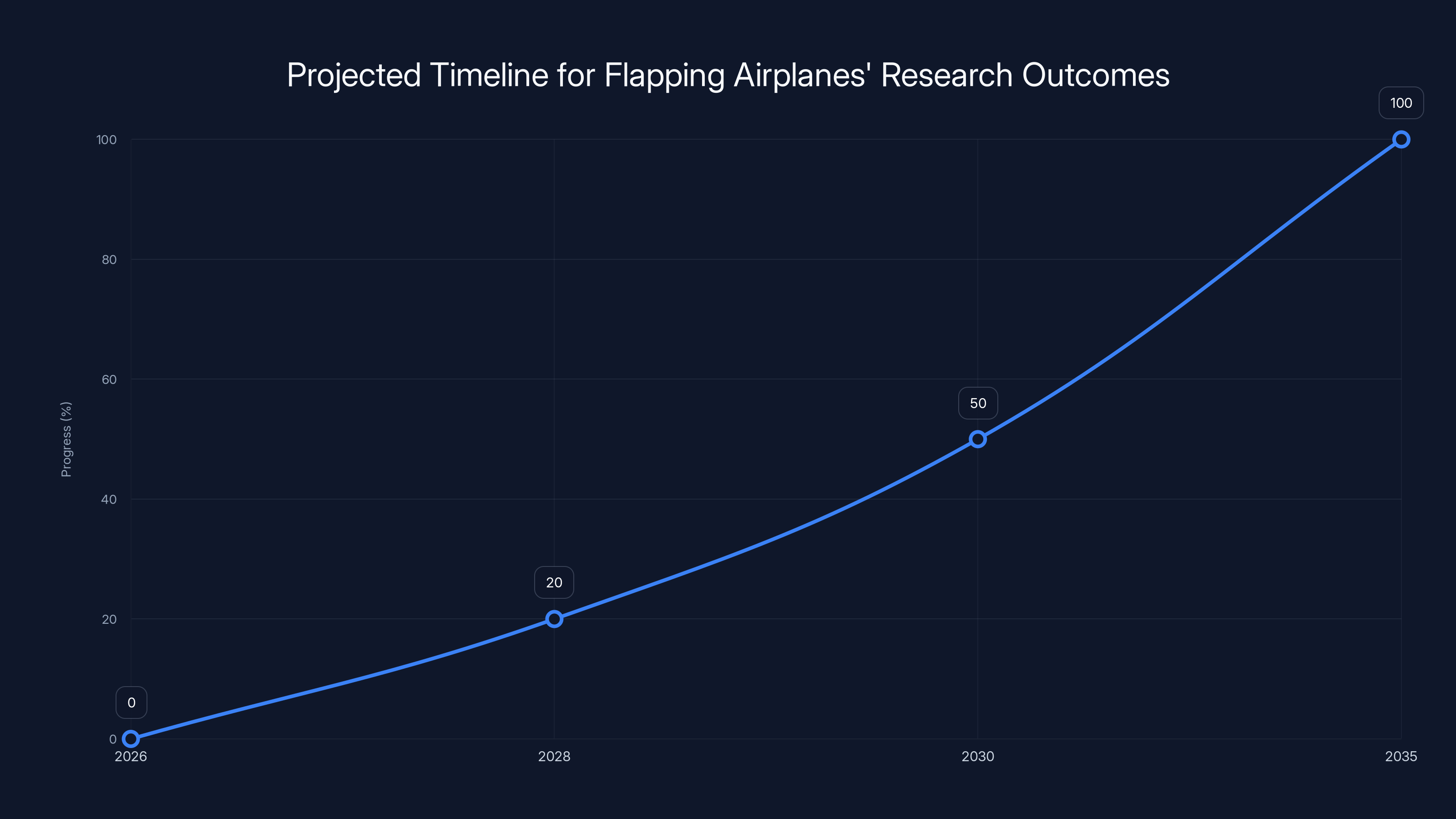

Flapping Airplanes aims to achieve significant AI research milestones over a 5-10 year period, with initial research publications expected within 1-2 years and broader industry adoption by 2035. Estimated data.

What Makes Data Efficiency the Next Frontier

At the core of Flapping Airplanes' mission is a specific focus: finding ways to train effective large language models with significantly less data and compute.

This sounds like a technical problem, but it's actually a profound strategic insight. If you can crack data efficiency—if you can build models that learn faster and retain more from smaller datasets—you've fundamentally altered the economics of AI development.

Currently, training a state-of-the-art model requires trillions of tokens of data. That data needs to be collected, cleaned, stored, and processed, and the supply of high-quality data isn't infinite. We're probably not going to run out in the next year or two, but many researchers expect that simply scaling up data won't work indefinitely.

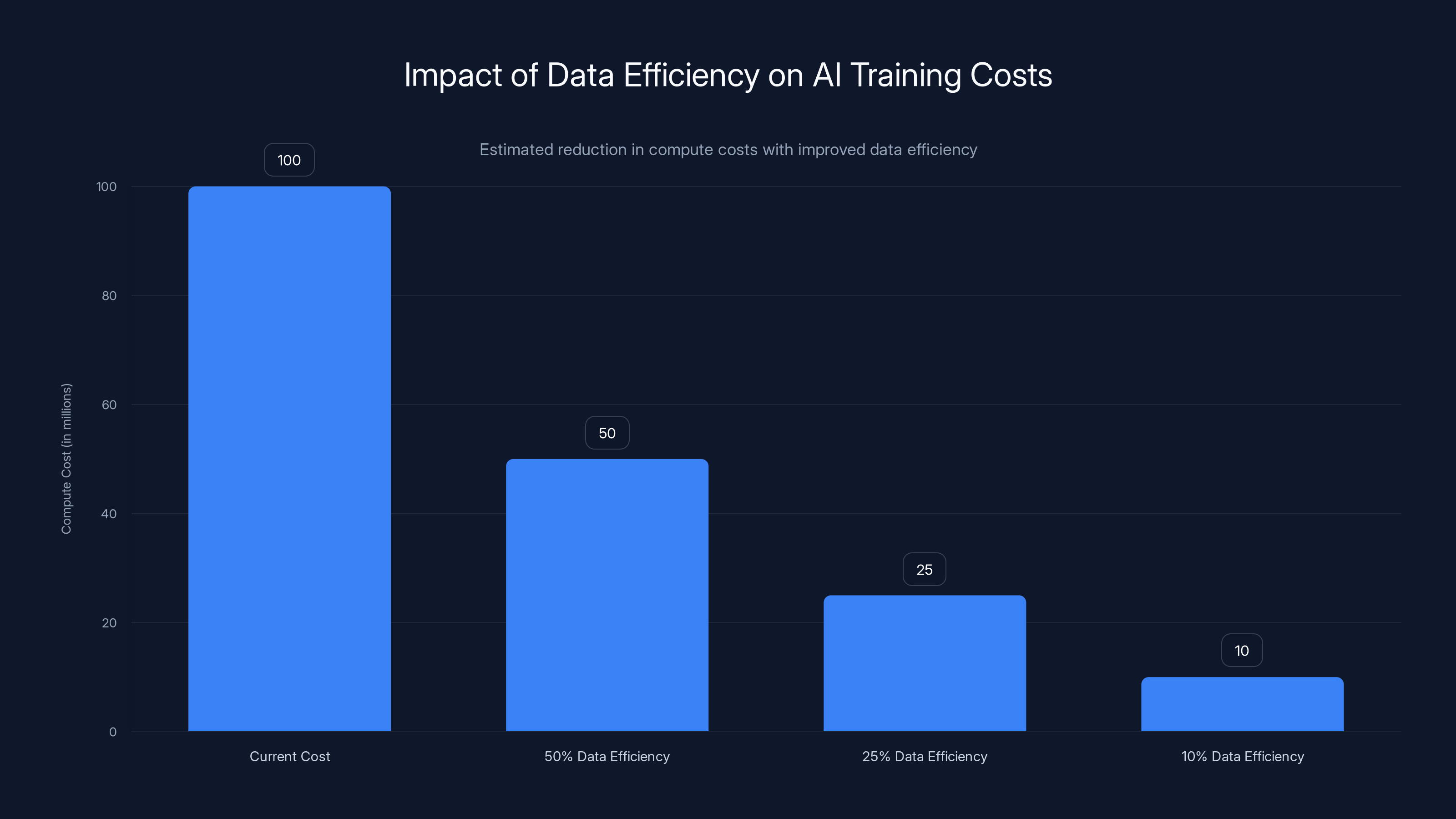

Data efficiency research asks: what if you could do the same thing with half the data? A quarter of the data? What architectural changes, training techniques, or learning paradigms would make that possible?

The potential applications are staggering. You could train models faster. You could train models cheaper. You could train models on smaller, more specialized datasets. You could deploy models to edge devices that currently require cloud infrastructure. You could train on proprietary data without needing massive amounts of it.

From a business perspective, this is genuinely revolutionary. It would democratize AI development. Right now, training frontier models is essentially limited to organizations with access to hundreds of millions or billions in compute budget. If you could achieve similar capability with 10% of the compute, suddenly hundreds of companies could compete.
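A widely used back-of-the-envelope rule says that training a dense transformer costs about 6 FLOPs per parameter per training token (C ≈ 6·N·D). The sketch below applies that rule to show why data efficiency changes the economics. For a fixed model size, compute is linear in tokens, so needing only 10% of the data means roughly 10% of the training compute. The model size, token count, accelerator throughput, and utilization are all hypothetical round numbers.

```python
# Back-of-the-envelope training compute via the common C ≈ 6 * N * D rule
# for dense transformers (forward + backward pass). All inputs are hypothetical.

def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate total training FLOPs for a dense transformer."""
    return 6.0 * n_params * n_tokens

def gpu_days(flops: float, peak_flops_per_s: float, utilization: float) -> float:
    """Convert total FLOPs into accelerator-days at a sustained utilization."""
    seconds = flops / (peak_flops_per_s * utilization)
    return seconds / 86_400

if __name__ == "__main__":
    n = 70e9                     # hypothetical 70B-parameter model
    d_full = 15e12               # hypothetical 15T training tokens
    d_efficient = 0.1 * d_full   # same model, 10% of the data
    peak = 1e15                  # assumed ~1 PFLOP/s peak per accelerator
    mfu = 0.4                    # assumed 40% model FLOPs utilization

    for label, d in (("full data", d_full), ("10% data", d_efficient)):
        c = training_flops(n, d)
        print(f"{label:>9}: {c:.2e} FLOPs, ~{gpu_days(c, peak, mfu):,.0f} GPU-days")
```

The catch lies in the assumption. Today, training the same model on a tenth of the tokens usually produces a worse model. Data-efficiency research is about closing that gap.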

This isn't just theoretical. There's already active research in this space:

- Knowledge distillation: Teaching smaller models to replicate the behavior of larger models, using the larger model's outputs as a richer training signal than raw labels alone

- Few-shot learning: Training models to learn new tasks from only a handful of examples

- Efficient fine-tuning: Adapting pre-trained models to specific tasks without retraining from scratch

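- Mixture of experts: Splitting a model into many specialized sub-networks ("experts") and routing each input to only a few of them, so only a fraction of the parameters run for any given token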

- Curriculum learning: Structuring training data in ways that facilitate learning more efficiently
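Here is the first item made concrete. In the classic formulation (Hinton et al., 2015), a small "student" model is trained to match a larger "teacher" model's output distribution, softened with a temperature T. The pure-Python sketch below computes that soft-target loss for a single example with hypothetical logits. The loss is the KL divergence between the softened distributions, scaled by T². A real setup would usually mix this with the ordinary label loss and compute it inside a training framework.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax, shifted by the max for numerical stability."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions, scaled by
    T^2 so gradient magnitudes stay comparable across temperatures."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    return temperature ** 2 * kl

if __name__ == "__main__":
    teacher = [4.0, 1.5, 0.5]          # hypothetical teacher logits
    close_student = [3.8, 1.4, 0.6]    # student that mostly agrees with the teacher
    far_student = [0.5, 1.0, 4.0]      # student that prefers a different class
    print(distillation_loss(close_student, teacher))  # small
    print(distillation_loss(far_student, teacher))    # much larger
```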

The research community has papers on all these topics. What Flapping Airplanes represents is a major commitment to turning research insights into actual breakthroughs at scale. Not just publishing papers, but building systems that prove these concepts work in the real world.

The Team and the Track Record

Flapping Airplanes hasn't published extensive details about its team, but the founders attracted backing from some of the most selective investors in the world. When GV and Sequoia both write checks for the same AI research lab, that's a strong signal of the founding team's credibility.

Sequoia's decision to back this project is particularly interesting. Sequoia has backed several major AI companies and understands the space deeply. That they're willing to make a major bet on a research-first approach, not just another scaling play, suggests they see something fundamental changing in the market.

The team composition matters too. This isn't a group of engineers who want to build products. This is a research organization. The founding team likely includes PhD-level researchers with publication records: people who've worked in university labs or at research-focused organizations, individuals whose track record is measured by scientific contribution, not commercial product success.

That's a different hiring profile than companies built to ship products quickly. It attracts different talent. It creates different incentive structures. It means the organization is willing to pursue ideas that might not generate revenue for five years, as long as those ideas could be important enough.

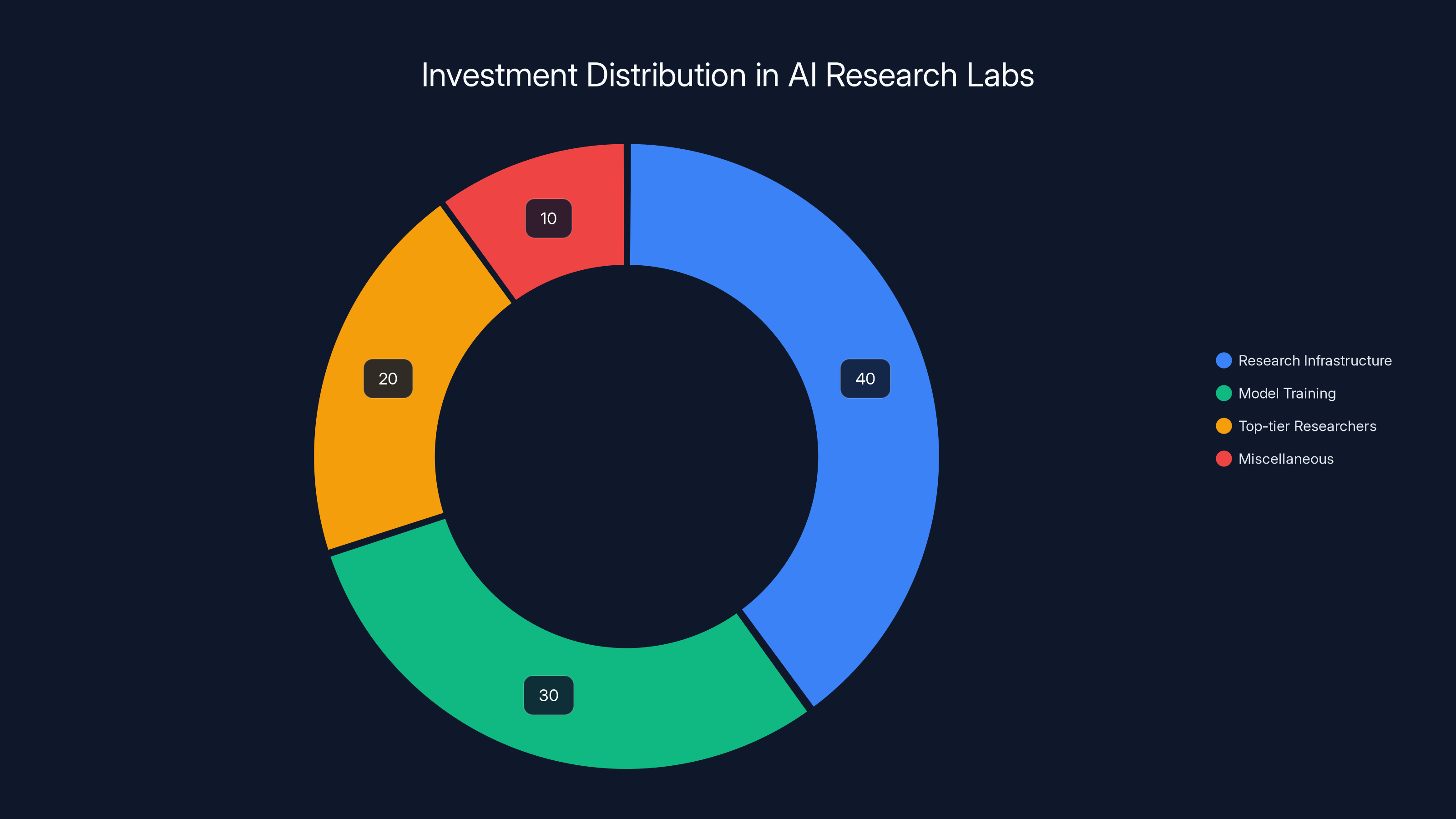

The $180 million in seed funding is generous by any standard, but it's arguably conservative for the scope of what they're attempting. Training large models is expensive. Running research infrastructure is expensive. Paying top-tier researchers is expensive. $180 million gives them enough runway to pursue multiple research directions simultaneously without needing to immediately chase revenue.

The Historical Context: Why This Timing Matters

Flapping Airplanes didn't emerge in a vacuum. It's a response to a specific moment in AI development.

For the last five years, the conversation has been dominated by scaling. Bigger models, more data, more compute. This was genuinely exciting and productive. But by 2025-2026, several things have become clear:

First, scaling continues to work but with diminishing returns. Each new generation of model is more impressive than the last, but the improvement-to-cost ratio is getting worse. GPT-4 was a major leap forward. But how much better is GPT-5? Are we talking 5% improvement or 50%? That difference affects investment calculus completely.

Second, the data scaling ceiling is approaching. It hasn't been reached yet, but it's visible. There's a finite amount of high-quality training data available on the public internet. Companies are now licensing data from publishers and paywalled sources, turning to synthetic data, and running into quality issues. Eventually, you can't just collect more data; you need a fundamentally different approach.

Third, the geopolitical and environmental costs of scaling are becoming harder to ignore. Gigawatt-scale data centers built to train ever-larger models require justification. Concentrating AI development in the hands of a few well-capitalized companies also raises policy questions that governments are starting to ask.

Fourth, and maybe most important, the research community has been quietly building up theoretical foundations for alternative approaches. For years, individual papers have explored different architectures, learning paradigms, and training techniques. No single paper is the breakthrough. But collectively, they suggest there's another path.

Flapping Airplanes is essentially saying: we believe in that other path enough to bet $180 million and years of our careers on it.

Historically, this resembles other moments when foundational technologies shifted direction: the shift from monolithic computing to distributed systems, the rise of non-relational (NoSQL) databases alongside relational ones, and the transition from rule-based AI to machine learning. In each case, a dominant approach worked well, and then research-driven alternatives emerged that eventually changed the entire field.

Estimated data shows that while AI model improvements continue, the cost increase is outpacing the rate of improvement from 2020 to 2026, highlighting diminishing returns.

How the Compute-First vs. Research-First Distinction Actually Works

The conceptual distinction between compute-first and research-first approaches is important, but let's make it concrete.

A compute-first organization looks something like this:

- Start with the best-performing architecture (transformers)

- Allocate maximum resources to training larger versions of that architecture

- Optimize for speed—get to market with new capabilities as fast as possible

- Measure success by benchmark performance and user adoption

- Iterate quickly, release often, capture market share

This is, roughly, OpenAI's playbook: release GPT-3, GPT-4, GPT-4 Turbo, GPT-4 with vision. Each release is better than the last. Each release finds eager customers. The organization is structured to maximize output speed.

A research-first organization works differently:

- Ask fundamental questions about whether current approaches are optimal

- Allocate resources across multiple research directions, most of which might not work

- Optimize for depth—spend years understanding why something works or doesn't

- Measure success by whether you learn something that changes the field

- Iterate slowly, publish carefully, pursue moonshots

This is how Bell Labs operated. How Xerox PARC operated. How university research labs operate. The culture and incentive structures are built around discovery, not deployment.

Neither approach is inherently better. They serve different purposes. Compute-first organizations are phenomenal at building products, shipping features, and capturing market opportunity. Research-first organizations are phenomenal at asking "what if?" and pursuing ideas that might be important but aren't immediately obvious.

The AI industry has been almost entirely compute-first for five years. Flapping Airplanes represents a deliberate swing back toward research-first, with the capital and institutional support to actually execute.

Specific Research Areas Flapping Airplanes Could Pursue

We don't have a detailed roadmap for Flapping Airplanes' research directions, but looking at the broader research landscape, several areas are ripe for breakthrough:

Efficient Attention Mechanisms: Most current transformer models use dense attention, whose cost scales quadratically with sequence length. Suppose you could develop sparse attention mechanisms that retain performance while scaling linearly. You would then cut attention cost by a factor that grows with sequence length, which matters most for long contexts. Research labs are already working on this. Flapping Airplanes could accelerate it.
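One family of sparse-attention approaches restricts each token to a fixed local window of recent positions. This is sliding-window attention, used for example in Longformer. The pure-Python sketch below implements causal windowed attention and counts the query-key scores it computes, so you can compare it against dense causal attention. The window size, sequence length, and inputs are hypothetical. Real implementations run as fused GPU kernels rather than Python loops.

```python
import math

def score_count(n, window):
    """Query-key scores computed by causal attention over n tokens when each
    token sees at most `window` positions (window=n gives dense attention)."""
    return sum(min(i + 1, window) for i in range(n))

def causal_window_attention(queries, keys, values, window):
    """Causal sliding-window attention: position i attends to positions
    max(0, i - window + 1) .. i. Returns (outputs, number_of_scores)."""
    d = len(queries[0])
    outputs, n_scores = [], 0
    for i, q in enumerate(queries):
        positions = range(max(0, i - window + 1), i + 1)
        # Scaled dot-product scores against keys inside the window only.
        scores = [sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(d) for j in positions]
        n_scores += len(scores)
        # Numerically stable softmax over the window.
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        out = [0.0] * len(values[0])
        for w, j in zip(weights, positions):
            for k in range(len(out)):
                out[k] += (w / total) * values[j][k]
        outputs.append(out)
    return outputs, n_scores

if __name__ == "__main__":
    n, w = 4096, 256   # hypothetical sequence length and window size
    print("dense causal scores:", score_count(n, n))
    print("windowed scores:    ", score_count(n, w))
```

For a fixed window w, the score count grows roughly as n·w instead of n²/2. The trade-off is that a token can't attend directly to anything outside its window. Information from further back has to propagate through stacked layers.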

Novel Training Paradigms: What if you could interleave reinforcement learning and supervised learning differently? What if you could use fewer but higher-quality training examples by structuring the curriculum smarter? What if you trained models to reason step-by-step about their reasoning? These aren't theoretical ideas—they're active research areas that could compound into major breakthroughs.
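One simple way to "structure the curriculum" is to rank examples by a difficulty score and train on progressively larger pools, easiest first. The sketch below is a minimal version of that idea. The difficulty proxy (word count) and the staging scheme are assumptions for illustration, not a recommended recipe.

```python
import math

def curriculum_stages(examples, difficulty, n_stages):
    """Yield progressively larger training pools, easiest examples first.
    Stage k (1-based) holds the easiest ceil(len(examples) * k / n_stages) items,
    so the final stage contains the full dataset."""
    ordered = sorted(examples, key=difficulty)   # stable sort keeps ties in input order
    for k in range(1, n_stages + 1):
        cutoff = math.ceil(len(ordered) * k / n_stages)
        yield ordered[:cutoff]

if __name__ == "__main__":
    corpus = ["the cat sat", "a", "dogs bark loudly at night", "birds fly", "she reads"]
    # Hypothetical difficulty proxy: longer sentences count as harder.
    word_count = lambda s: len(s.split())
    for stage, pool in enumerate(curriculum_stages(corpus, word_count, n_stages=3), 1):
        print(stage, pool)
```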

Cross-Modal Learning: Current language models learn from text. But language, images, video, and audio all encode information about the world. Models that learned to think across modalities more efficiently might need less data to achieve equivalent understanding. This is already a research area, but it's underfunded relative to pure language model scaling.

Mechanistic Interpretability: Understanding exactly how models work internally could reveal new architectures or training approaches. Why do some attention heads specialize in specific types of reasoning? What's the computational structure of a model's representation space? These questions might seem academic, but they could lead to models that are designed better from scratch.

Continual Learning: Current models train once, then stop. They don't learn from new data without retraining. Solving continual learning—models that learn and update as they encounter new information—could reduce training data requirements dramatically.
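One well-established family of continual-learning techniques is experience replay. You keep a bounded memory of past examples and mix some of them into each new training batch, so learning from new data doesn't simply overwrite what was learned before. The sketch below maintains that memory with reservoir sampling, which keeps a uniform random sample of everything seen so far in fixed space. The class and method names are hypothetical, and the model update itself is omitted.

```python
import random

class ReplayBuffer:
    """Fixed-capacity memory holding a uniform random sample of every example
    seen so far (reservoir sampling, Algorithm R). Names are illustrative."""

    def __init__(self, capacity: int, seed: int = 0):
        self.capacity = capacity
        self.items = []
        self.seen = 0
        self.rng = random.Random(seed)

    def add(self, example) -> None:
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append(example)
        else:
            # Keep the new example with probability capacity / seen,
            # overwriting a uniformly chosen slot.
            j = self.rng.randrange(self.seen)
            if j < self.capacity:
                self.items[j] = example

    def mixed_batch(self, new_examples, n_replay: int):
        """Combine fresh examples with up to n_replay remembered ones."""
        k = min(n_replay, len(self.items))
        return list(new_examples) + self.rng.sample(self.items, k)

if __name__ == "__main__":
    buf = ReplayBuffer(capacity=100)
    for x in range(10_000):                 # stream of "old task" examples
        buf.add(("old", x))
    batch = buf.mixed_batch([("new", i) for i in range(8)], n_replay=8)
    print(len(buf.items), len(batch))
```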

Each of these is a multi-year research effort. None of them is guaranteed to work. But collectively, they're the kind of bets a research-first organization would make.

The Business Model Question: How Does Research Become Revenue?

Here's the question that follows inevitably: Flapping Airplanes raised $180 million for research. How do they become a sustainable business?

There are several paths:

Licensing breakthrough technology: If they develop genuinely better approaches to model training, they could license those techniques to other AI companies. That's a high-margin, low-deployment-cost revenue stream.

Building products with the efficiency gains: If they crack data efficiency, they could build products that smaller organizations can run locally or on cheaper infrastructure. That opens entirely new markets.

Selling to cloud providers: Cloud companies like AWS, Google Cloud, and Azure need continuous improvements in ML efficiency. If Flapping Airplanes develops better approaches, they could sell them to these giants.

Publishing and influence: This is less obvious but real. Influence and reputation in the AI research community are valuable. Papers, talent, and thought leadership attract acquisition opportunities, partnerships, and follow-on funding.

Building the next generation of AI products: If their research succeeds, they'll understand how to build models more efficiently than anyone else. That's a genuine competitive advantage. They could use it to build the next important AI product.

The point is, the research doesn't need to directly produce revenue. It needs to produce either a capability that's valuable to other organizations, or an insight that leads to valuable products. Flapping Airplanes has multiple paths to that outcome.

Estimated data shows that achieving 10% data efficiency could reduce AI training costs from

Comparison: How This Differs From Existing AI Labs

To understand what makes Flapping Airplanes distinct, it helps to compare against existing organizations in the space.

OpenAI is compute-first through and through. They have a research team, and they publish papers, but their primary focus is building increasingly capable models and turning them into products. GPT-4 is impressive because it's big and powerful, not because it represents a breakthrough in efficiency or novel architecture.

Anthropic is somewhere in between. They've published important research on AI alignment and safety. But they're still primarily focused on building larger models with improved training techniques. They're researching, but in service of building better products.

DeepMind is research-first at heart, but it's owned by Google, which changes the dynamics. Google has enormous compute and talent. DeepMind can research fundamental questions because Google can monetize whatever it discovers across the entire company.

University labs are purely research-focused, but they lack scale and resources. A good university lab might have 20-30 researchers. With $180 million, Flapping Airplanes could plausibly support 100 or more.

Flapping Airplanes sits in a unique position. They have enough capital to be independent. They have enough focus to be genuinely research-oriented. They have enough ambition to pursue questions that could reshape the field.

The closest historical analog might be Bell Labs in the 1950s-1960s: a well-capitalized research organization, insulated enough from product pressure to ask fundamental questions, but focused enough to eventually produce valuable technology.

Why The Industry Needs Both Approaches

Here's something important: the existence of Flapping Airplanes doesn't mean the scaling approach is wrong. Both can coexist and both are valuable.

Compute-first organizations are phenomenal at turning research into products. Without OpenAI shipping GPT-4, millions of people wouldn't have access to powerful language models. Without Anthropic pushing on safety and capability improvements, the field wouldn't be advancing as quickly. Product-focused companies drive adoption, create market incentives for improvement, and actually deliver value to users.

Research-first organizations are essential for asking whether we're going down the right path at all. They explore ideas that don't yet have obvious commercial applications. They challenge assumptions. They pursue moonshots.

Over time, good research-first organizations become compute-first organizations. Or they get acquired by them. Or their research findings get integrated into product organizations. The ecosystem works because there's both momentum (scaling) and exploration (research).

The concern that existed before Flapping Airplanes was that the balance had tilted entirely toward momentum. Everyone was scaling. Nobody was exploring fundamentally different approaches. That imbalance is what Flapping Airplanes is trying to correct.

The Five-to-Ten-Year Horizon: Why This Timeline Matters

Flapping Airplanes is explicitly betting on a five-to-ten-year timeline. This isn't accidental. It's because breakthrough research doesn't work on faster timelines.

Why does fundamental research take so long? Several reasons:

False starts are inevitable: When you're exploring truly novel directions, most attempts won't work out. You pursue an idea for six months, write 50,000 lines of code, run 100 experiments, and discover the approach doesn't scale. Then you try something different. Over a decade, you might have five major directions, three of which fail completely, one that partially works, and one that becomes the breakthrough.

Compound knowledge builds slowly: Each failed experiment teaches you something. Each partial success reveals what's actually important. Over years, you accumulate the understanding that makes the breakthrough possible. The point isn't that you need to log five years of work. It's that insight accumulates at its own pace, and that pace is often measured in years.

Verification takes time: Once you think you have a breakthrough, you need to verify it rigorously. Can you reproduce it? Does it work at scale? Does it generalize to other domains? This verification process is methodical and can't be rushed.

Talent takes time to build: A research organization needs to assemble the right team. That's not just hiring smart people—it's finding people who are excited about the specific research direction, who think deeply about the problems, who can work together effectively. Building that culture takes time.

Market transitions are slow: Even if Flapping Airplanes makes a genuine breakthrough in 2027 or 2028, it'll take years for that breakthrough to propagate through the rest of the industry. Other companies need to understand it, implement it, validate it, build on it. Technology adoption follows S-curves, not hockey sticks.

The five-to-ten-year horizon isn't pessimistic. It's realistic. And it's exactly why Flapping Airplanes needed patient capital from investors who understand research timelines.

Estimated data shows that a significant portion of investment in AI research labs is allocated to research infrastructure and model training, highlighting the high costs associated with these areas.

The Geopolitical Dimension: Why Nations Care About AI Research Strategy

Flapping Airplanes' research-first approach has implications that go beyond technology. It matters geopolitically.

Right now, AI development is concentrated in a few countries, primarily the United States. Within the United States, it's concentrated among a few organizations with massive capital. That concentration creates strategic vulnerabilities and raises governance questions.

If all the world's best AI development happens through pure scaling—spending billions on compute—then dominance in AI becomes a function of computational resources and capital. Countries with the most money and electricity win.

If AI development is driven by fundamental research breakthroughs—novel architectures, new learning paradigms, better efficiency—then the landscape changes. A smaller country or organization with brilliant researchers might make a breakthrough that leapfrogs larger competitors. The game becomes about ideas, not just capital.

This matters to governments. The United States wants to maintain AI leadership. The European Union is concerned about dependency on US AI systems. China is investing heavily in AI research and development. From a strategic perspective, countries need to encourage both compute-scale development and fundamental research.

Flapping Airplanes is backed largely by US investors and presumably composed of American and international researchers. It is one signal that US capital is placing strategic bets on research-first AI development, not just scaling.

What This Means for AI Teams and Organizations

Flapping Airplanes might seem like a distant news story if you're building products with existing AI tools. But it has implications for anyone thinking about AI strategy.

For product-focused organizations: The existence of research-first competitors means you need to stay aware of emerging techniques and approaches that could disrupt your product. You can't assume the current stack remains optimal forever. Invest in understanding new research directions, even if you're optimized for deploying current technology.

For AI researchers: The creation of a major research lab that's genuinely independent and research-focused opens up career opportunities. Research roles at well-funded labs have become rarer as organizations have consolidated. Flapping Airplanes represents a reopening of that space.

For infrastructure providers: If Flapping Airplanes succeeds in developing more efficient training techniques, demand for massive GPU clusters might eventually decline. That's a strategic concern for companies like Lambda Labs or CoreWeave who provide compute infrastructure. But it also creates opportunities for companies that help with distributed, efficient training.

For companies building AI-powered products: Flapping Airplanes' work could eventually make AI capabilities cheaper and more accessible. That's both a threat (more competition) and an opportunity (lower costs). Understanding when and how these transitions happen could be strategically important.

The Meta Question: Is This Actually About AI, or About Business Models?

There's a deeper question lurking underneath all of this: is the scaling vs. research distinction really about what works technically, or is it about business models?

From a technical perspective, both approaches are valid. Scaling works. Research works. The question is what to optimize for.

From a business model perspective, the distinction is more interesting. Scaling creates a first-mover advantage for whoever can move fastest and has the most capital. Compute-first organizations can release new capabilities every year, get customer lock-in, build network effects. It's a winner-take-most dynamic.

Research-first approaches are slower but potentially more disruptive. If you discover something truly novel, you don't just improve incrementally—you potentially reshape the entire field. But that comes with risk. You might spend five years and discover your approach doesn't work.

Flapping Airplanes is essentially betting that the research-first model will prove more valuable long-term, even if it's slower. That's both a technical bet and a business model bet.

Flapping Airplanes received $180 million in funding, challenging the traditional scale-first AI research approach. Estimated data.

Looking Forward: What Success Looks Like

When will we know if Flapping Airplanes succeeded? It depends on the timeframe.

In 1-2 years: We should see published research from the organization. Not necessarily breakthroughs, but serious papers that advance the field's understanding of model efficiency, alternative training paradigms, or novel architectures. If they're not publishing, it's a red flag.

In 3-5 years: They should have demonstrated proof-of-concept for at least one major novel approach. A model trained with 50% less data than comparable systems. A new architecture that scales more efficiently. A training technique that enables something previously impossible. The research should be concrete, reproducible, and impressive.

In 5-10 years: The breakthroughs should be making their way into the broader AI ecosystem. Other organizations should be adopting Flapping Airplanes' techniques. Their research should be shaping how people think about and build AI systems. That's the ultimate measure of research success—not just your own progress, but the field's adoption of your insights.

Failure looks like: five years in, no published research. Seven years in, their work hasn't influenced other organizations. Ten years in, the organization still hasn't produced anything that changed how people build AI systems.

Given the team quality, the funding level, and the investor backing, I'd estimate reasonable probability of at least partial success. Whether they hit the full-scale breakthrough is harder to predict. That's why it's a research bet, not a sure thing.

The Broader Shift In AI Thinking

Flapping Airplanes isn't an anomaly. It's part of a broader shift in how smart people think about AI's future.

For five years, the narrative was "keep scaling, and AGI will follow." That narrative produced real progress. But it also created a kind of inevitability mindset: the idea that the path was already set and you just needed to follow it.

Increasing numbers of researchers are questioning that inevitability. Not because scaling doesn't work, but because they think scaling alone won't get to AGI. They think there are architectural innovations, learning paradigms, or fundamental insights still undiscovered. And they think hunting for those insights is a valuable use of resources.

Flapping Airplanes is the most visible institutional bet on that perspective. But it's not the only bet. Universities are increasing research into efficient learning. Some companies are starting to diversify beyond pure scaling. There's growing interest in mechanistic interpretability, in understanding what models learn and how.

This shift doesn't invalidate the scaling approach. OpenAI, Anthropic, Google, Meta—they should keep building larger models. That's valuable and important. But having some portion of the AI research community focused on different questions is healthy for the field overall.

A Reality Check: What Could Go Wrong

Flapping Airplanes is an exciting project with an impressive team and real capital. But let's acknowledge the ways this could fail:

The scaling hypothesis could be correct: Scaling might continue to work better than any alternative approach for the next decade. If that's true, then research into alternatives is less valuable than pure scaling. Flapping Airplanes would be optimizing for the wrong thing.

Organizational challenges: Research organizations are hard to manage. You need to balance letting brilliant people pursue their ideas with actually making progress on specific problems. You need to maintain institutional momentum without crushing creativity. Many research organizations struggle with this balance once they grow large enough to have real impact.

Market timing: Even if Flapping Airplanes makes genuine breakthroughs, the commercial timing might be wrong. Maybe their breakthroughs matter most in 2035, but they run out of funding in 2032. Timing research breakthroughs to market opportunity is genuinely hard.

Talent retention: Keeping brilliant researchers focused on long-term goals while competitors are hiring them away with equity upside and product impact is a real challenge. Research orgs often lose talent to more commercial opportunities.

Integration difficulty: Maybe their research works in isolation but doesn't actually integrate well with the rest of the AI stack. A brilliant new training technique doesn't matter if it requires rebuilding your entire infrastructure.

These aren't arguments against making the bet. They're reasons why betting on research is riskier than betting on scaling. The payoff, if it works, could be much higher. But the risk of failure is real.

The Competitive Dynamics: What Other Organizations Might Do

Flapping Airplanes' existence might catalyze changes in other organizations.

OpenAI: Might increase its research budget to explore alternatives alongside scaling. They've got the capital. Adding a dedicated research-focused division wouldn't threaten their core product business.

Anthropic: Could double down on research into interpretability and safety, using those insights to inform model development. They're already somewhat research-forward, but could lean harder in that direction.

Google DeepMind: Might use their scale to fund moonshot research programs that compete with Flapping Airplanes in specific domains. They've got the resources to place multiple bets.

New startups: Flapping Airplanes' success (or failure) will influence what types of AI startups get funded. If they succeed, we'll see more research-focused startups. If they fail, funding will consolidate around product-focused companies.

Governments: Might increase funding for university AI research programs, viewing them as a strategic national asset. Countries that can't compete on scaling might compete on fundamental research quality.

The competitive dynamics around AI are starting to shift from "who can scale biggest" to "who can scale AND innovate." Flapping Airplanes is part of that shift.

Lessons for Technical Leaders and Founders

Whether or not you're in AI, there are broader lessons from Flapping Airplanes' approach:

1. Fundamentals matter more than speed when the game is truly long-term: If you're betting on a five-to-ten-year horizon, it's more important to get the fundamentals right than to move fast. Speed matters for building products. Fundamentals matter for building something that lasts.

2. Patient capital is underrated: The ability to make a bet that doesn't pay off for years is genuinely rare. If you can access patient capital, that's a competitive advantage. Venture capital is great for product companies. Patient, research-focused capital is essential for fundamental innovation.

3. Your organizational culture determines what you can accomplish: A research-first organization and a product-first organization with identical budgets will accomplish completely different things. You need to explicitly design your culture to match your goals.

4. Institutional backing from smart people matters: Flapping Airplanes isn't just about the team—it's about the validation and support from investors like Sequoia and Google Ventures. That backing provides legitimacy, access to resources, and a network of smart people thinking about related problems.

5. You can't optimize for everything: Flapping Airplanes is choosing research depth over product speed. That's a real trade-off. There's no way to have both simultaneously. You need to choose what you're optimizing for and build everything else around that choice.

Conclusion: The Next Chapter of AI Development

Flapping Airplanes represents something important: a deliberate institutional bet that the path to advanced AI goes through fundamental research, not just scaling.

For five years, scaling worked so well that it crowded out nearly everything else. The narrative became simple: build bigger models, they'll be smarter. That narrative produced real progress. But it also created a kind of tunnel vision in the research community.

Flapping Airplanes is an attempt to break that tunnel vision. To say: scaling is important and should continue, but let's also place serious bets on fundamentally different approaches. Let's spend five to ten years asking "what if?" instead of just asking "how much bigger?"

Will it work? That's the question. The team looks strong. The funding is real. The investor backing is serious. But research is inherently uncertain. You can increase the probability of success through good execution, but you can't guarantee it.

What matters more than any individual organization is that the field is starting to think about this question again. Researchers are asking whether scaling is sufficient or if we need innovation in architecture and learning paradigms. That conversation is healthy. It's the kind of conversation that leads to genuine breakthroughs.

The next major advance in AI might come from scaling to 10x larger models. Or it might come from a fundamentally different approach to learning that uses 10x less data. Or from something nobody has thought of yet. Flapping Airplanes is betting that there's value in exploring the latter possibilities seriously.

For anyone paying attention to AI's future, this is worth watching. Not because Flapping Airplanes will necessarily win. But because the question they're asking—how do we build better AI through research and innovation, not just resources and compute—is the right question.

The scaling paradigm got us to large language models that can work across multiple languages, write code, reason about complex problems, and assist with creative tasks. That's genuinely impressive. But if there are better approaches, we should find them. And if there aren't, we'll learn that through careful research. Either way, the field benefits from having serious people asking the question.

Flapping Airplanes is that serious question, institutionalized and funded.

FAQ

What exactly is Flapping Airplanes?

Flapping Airplanes is a new AI research lab that launched in January 2026 with $180 million in seed funding from Google Ventures, Sequoia, and Index Ventures. The organization is focused on fundamental AI research with an emphasis on finding more efficient ways to train large language models, rather than purely scaling existing approaches.

What's the difference between the scaling paradigm and the research paradigm?

The scaling paradigm prioritizes larger models and more compute to achieve better performance, focusing on short-term improvements measured in 1-2 years. The research paradigm takes a longer view, betting on fundamental breakthroughs that might take 5-10 years but could reshape how AI systems are built entirely.

Why does data efficiency matter for AI development?

Data efficiency is crucial because the amount of high-quality training data available is finite. If models can achieve comparable performance with less data, training new models becomes cheaper and faster, smaller organizations can afford to develop AI, and the environmental costs of massive training runs go down.

How long will Flapping Airplanes take to show results?

The organization is explicitly betting on a 5-10 year timeline. Within 1-2 years, you should expect published research. Within 3-5 years, concrete proof-of-concepts of novel approaches. Full success would be measured by whether their breakthroughs get adopted across the broader AI industry over the full 5-10 year horizon.

Could Flapping Airplanes' approach fail, and what would that look like?

Yes, research bets fail regularly. Failure would look like either the scaling hypothesis proving correct (scaling is actually the best approach), or the organization struggling with execution, talent retention, or integration challenges. You'd know within 5-7 years if the core research wasn't producing meaningful progress.

How does this affect people building AI products today?

For product-focused organizations, Flapping Airplanes' work could eventually make AI capabilities cheaper and more accessible. Understanding emerging research directions helps you anticipate how the underlying technology landscape might shift, even if changes take years to propagate through the industry.

Why would investors back a research organization instead of a product company?

Patient, research-focused investors like Sequoia and Google Ventures understand that fundamental breakthroughs can create more value long-term than iterative product improvements. A breakthrough in model efficiency could be worth billions. The longer time horizon and research focus are features, not bugs, for investors willing to place decade-long bets.

Is Flapping Airplanes competition for OpenAI and Anthropic?

Not directly. OpenAI and Anthropic focus on building products and scaling models. Flapping Airplanes focuses on research. However, if Flapping Airplanes makes breakthroughs, those breakthroughs could eventually influence how product companies build systems. They're playing different games on partially overlapping fields.

What happens if Flapping Airplanes succeeds in making models more efficient?

If they crack data efficiency, it would reduce the capital barrier to entry for AI model development, potentially democratizing access to model training. It could shift competitive advantage away from pure compute spending toward architectural innovation and research quality. The AI landscape would become less dominated by organizations with unlimited capital.

How does Flapping Airplanes fit into broader AI safety and governance discussions?

More efficient, research-driven AI development could help distribute AI development more broadly rather than concentrating it in the hands of a few well-capitalized organizations. This could improve governance diversity and reduce single points of failure, though efficiency alone doesn't solve safety or alignment questions.

Key Takeaways

- Flapping Airplanes represents a major institutional bet on research-first AI development with $180M in funding from top-tier investors

- The shift from scaling-first to research-first approaches addresses concerns about data efficiency and fundamental breakthroughs needed for AGI

- Research-driven organizations require 5-10 year time horizons and accept that most research directions will fail, unlike product-focused companies

- Data efficiency and novel training paradigms could democratize AI development by reducing capital barriers currently dominated by well-resourced organizations

- Both scaling and research approaches are valuable: scaling delivers products, research asks whether current paths are optimal
