Why Yann LeCun's Exit From Meta Signals a Seismic Shift in AI

When a Turing Award winner walks away from one of the world's largest AI labs, people pay attention. But when that person is Yann LeCun, the legendary AI scientist who founded Meta's AI research laboratory (FAIR) and spent more than a decade at the company, the entire industry stops and listens.

LeCun's departure from Meta in late 2025 to found AMI Labs wasn't just another startup announcement. It represented a fundamental disagreement about the direction of artificial intelligence itself. While the rest of Silicon Valley obsessed over making large language models bigger and more powerful, LeCun quietly began assembling a team to pursue something radically different: world models.

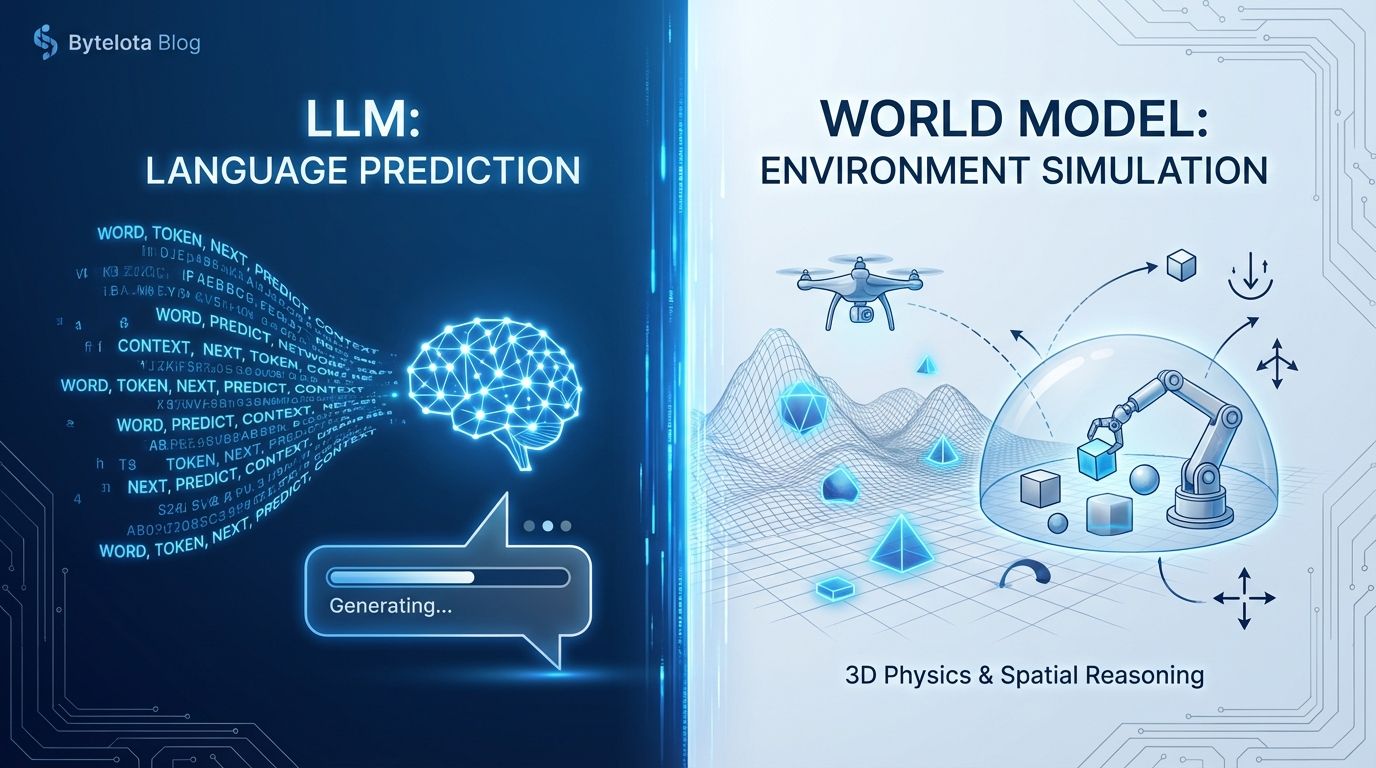

World models represent a completely different approach to building intelligent systems. Instead of predicting the next token in a sequence (what large language models do), world models aim to build AI systems that understand the actual structure and physics of reality. The goal is to predict what happens when you drop an object, how liquids flow, and how objects interact in three-dimensional space. For LeCun, this isn't just an academic curiosity. It's the future of AI.

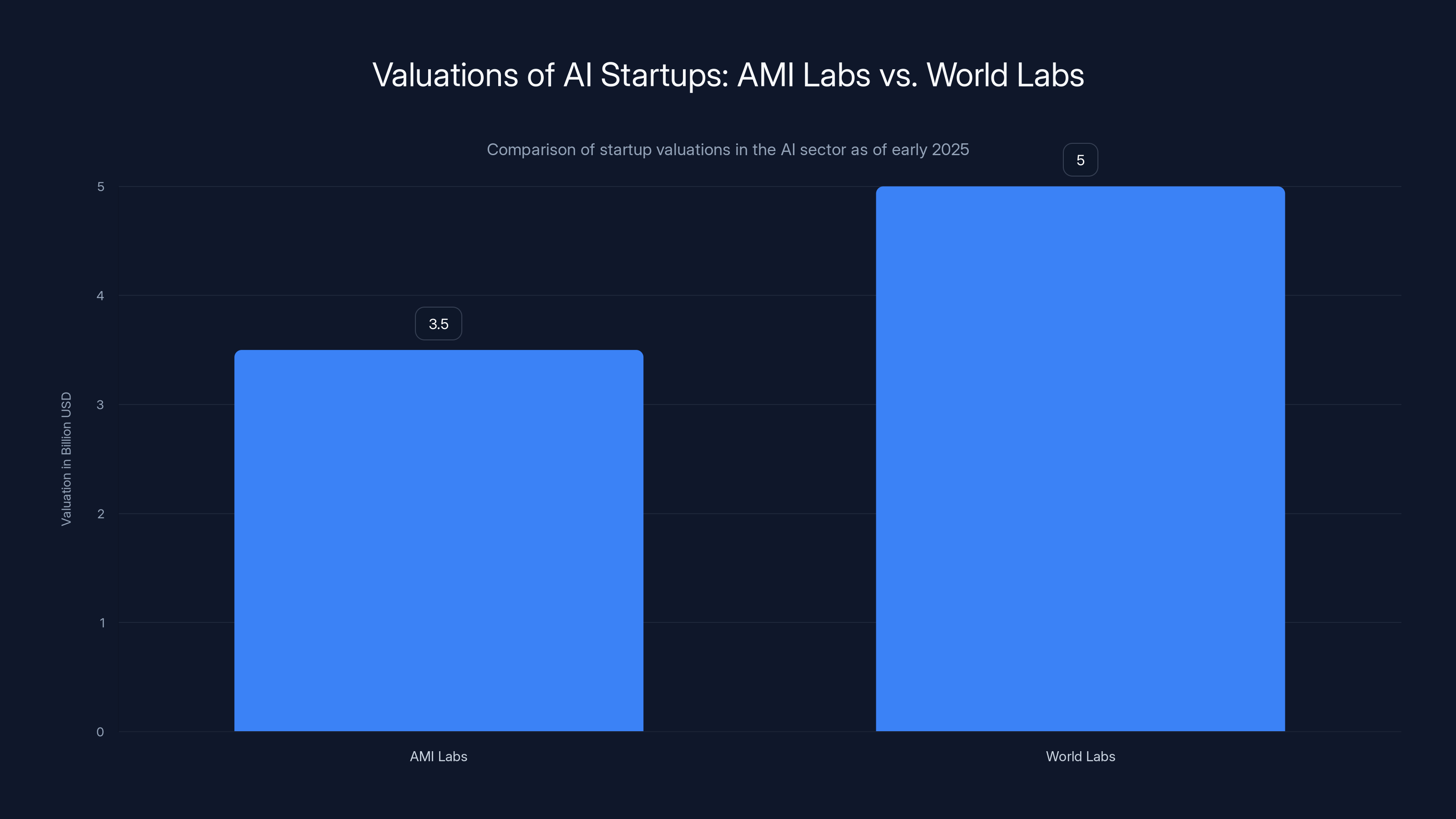

But here's what makes AMI Labs particularly fascinating: it's not just LeCun's personal project. The startup has quietly assembled one of the most impressive teams in AI, including former Meta executives, healthcare AI pioneers, and researchers who've been thinking about world models for years. According to Bloomberg, the company is raising funding at a $3.5 billion valuation, despite not having publicly released a product yet. That kind of capital commitment reveals how seriously investors are taking this bet against large language models.

This article dives deep into who's actually running AMI Labs, what they're building, and why their approach could fundamentally reshape how we think about artificial intelligence over the next five to ten years.

TL;DR

- AMI Labs is Yann Le Cun's new venture focused on building "world models" that help AI systems understand real-world physics and structure, not just predict text

- The team includes Alex LeBrun as CEO (formerly at Nabla), Laurent Solly (ex-Meta VP for Europe), and other FAIR veterans

- The startup is reportedly raising at a $3.5 billion valuation, with interest from top-tier VCs including Cathay Innovation, Greycroft, and Hiro Capital

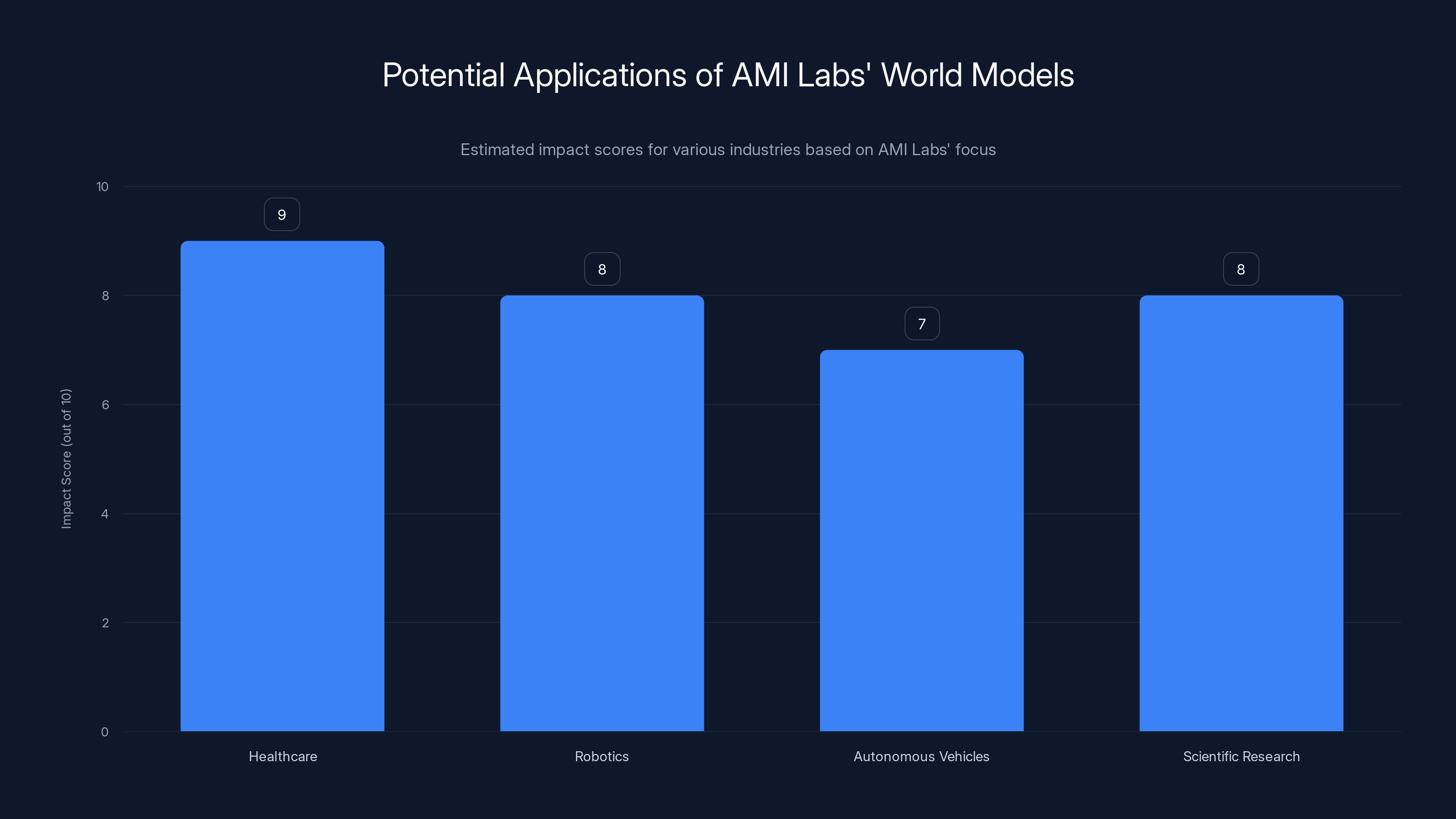

- World models represent a philosophical counter-argument to large language models, with applications in healthcare, robotics, autonomous systems, and scientific research

- The Paris headquarters signals a strategic bet on Europe's AI ambitions, with additional offices in Montreal, New York, and Singapore


Understanding World Models: The Alternative to LLMs

Before you can understand AMI Labs, you need to understand what world models actually are. And to do that, you have to understand what large language models are not.

Large language models like GPT-4 work by predicting the next token (roughly, the next word or word fragment) in a sequence. Trained on billions of examples of human text, they learn statistical patterns about language. But here's the critical limitation, in LeCun's view: they don't have a grounded model of the physical world. They can tell you about physics, but they have no internal representation of containment or gravity that lets them simulate what actually happens when you pour water into a cup.
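
To make "predicting the next word" concrete, here is a deliberately tiny sketch: a bigram counter that predicts the next word purely from how often word pairs appeared in its training text. Real LLMs use large neural networks over subword tokens rather than word counts, so treat this only as an illustration of the prediction objective; the corpus and the function names `train_bigram` and `predict_next` are made up for the example. Notice that the model "knows" that "falls" follows "ball" only as a co-occurrence statistic, with no notion of gravity behind it.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def train_bigram(corpus: List[str]) -> Dict[str, Counter]:
    """For every word, count which words follow it in the training text."""
    follows: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def predict_next(follows: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never followed."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


corpus = [
    "the ball falls to the floor",
    "the ball falls off the table",
    "the cup holds water",
]
model = train_bigram(corpus)
print(predict_next(model, "ball"))  # falls
print(predict_next(model, "cup"))   # holds
```

The prediction is only as good as the co-occurrences it has seen: ask about a word that never had a follower (here, "water") and it has nothing to say.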

World models operate on a fundamentally different principle. They build an internal representation of how the world works. A world model doesn't just predict "the ball falls." It understands why the ball falls, maintains a continuous representation of where that ball is in space, and can predict dozens of future states of that ball based on actual physics.
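
A world model, by contrast, carries an explicit state forward in time. The minimal sketch below assumes the simplest possible case: the state is a ball's height and velocity, and the transition function is hand-written Newtonian physics. In a learned world model, the `step` function would instead be a neural network trained from observations (in LeCun's proposals, one that predicts in an abstract learned representation rather than in raw coordinates or pixels). The names `BallState`, `step`, and `rollout` are hypothetical.

```python
from dataclasses import dataclass
from typing import List

GRAVITY = -9.81  # m/s^2, pointing down


@dataclass(frozen=True)
class BallState:
    height: float    # metres above the floor
    velocity: float  # metres per second, positive is upward


def step(state: BallState, dt: float) -> BallState:
    """Advance the state by dt seconds (semi-implicit Euler); the ball stops at the floor."""
    velocity = state.velocity + GRAVITY * dt
    height = state.height + velocity * dt
    if height <= 0.0:
        return BallState(height=0.0, velocity=0.0)  # no bounce in this toy model
    return BallState(height=height, velocity=velocity)


def rollout(state: BallState, dt: float, n_steps: int) -> List[BallState]:
    """Predict n_steps future states by applying the transition function repeatedly."""
    states = [state]
    for _ in range(n_steps):
        states.append(step(states[-1], dt))
    return states


trajectory = rollout(BallState(height=2.0, velocity=0.0), dt=0.1, n_steps=10)
for i, s in enumerate(trajectory):
    print(f"t={i * 0.1:.1f}s  height={s.height:.2f} m")
```

Because the state persists from one step to the next, the same model can answer questions such as "where will the ball be in half a second?" or "when does it land?" simply by rolling forward.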

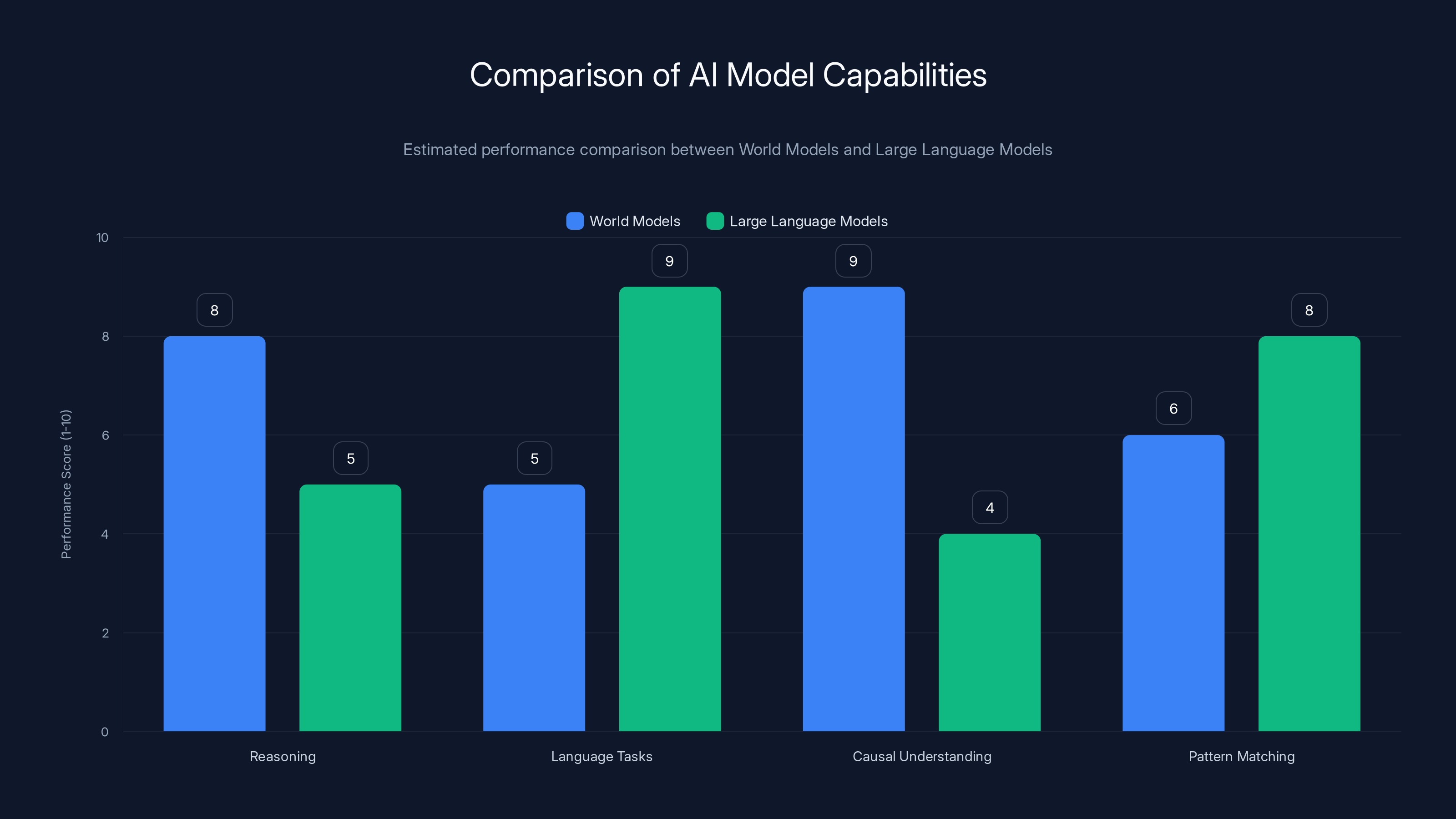

LeCun has been surprisingly vocal about this distinction. He has argued, in talks and in his 2022 position paper "A Path Towards Autonomous Machine Intelligence," that large language models have profound limitations when it comes to reasoning about cause and effect. In his view, they're excellent at pattern matching but poor at capturing the underlying mechanisms that drive real-world phenomena.

This matters because many of the most important AI applications require actual understanding, not just pattern matching. If you're building an autonomous vehicle, you need to predict how pedestrians move in three-dimensional space. Pattern matching isn't good enough. If you're developing medical AI that assists doctors, hallucinations (where the model confidently states false information) can directly harm patients. A system based on understanding actual causal relationships between symptoms and treatments could be fundamentally safer.

World models aim to address this problem by building AI systems that maintain an internal model of reality. They don't just predict the next token. They maintain a persistent, updatable representation of the world that can be queried, manipulated, and reasoned about.

The implications of this distinction are enormous. If world models actually work at scale, they represent a genuine paradigm shift in AI. Not an incremental improvement to existing approaches, but a fundamental rethinking of how we build intelligent systems.

World models excel in reasoning and causal understanding, while large language models perform better in language tasks and pattern matching. Estimated data based on typical model capabilities.

The Leadership Team: Who's Actually Running AMI Labs

When you read headlines about "Yann LeCun's startup," you might assume he's the CEO. But that's where most people get it wrong. LeCun is explicitly the executive chairman of AMI Labs, not the CEO. This distinction matters.

The actual CEO is Alex LeBrun, a serial entrepreneur and AI engineer who previously co-founded and led Nabla, a healthcare AI startup with offices in Paris and New York. LeBrun's background is perfect for this role. He's not just an academic theorist. He's someone who's actually built AI products that needed to work reliably in high-stakes environments where mistakes carry serious consequences.

LeBrun's healthcare background is particularly important to understanding AMI Labs' strategic direction. In healthcare, hallucinations aren't just annoying mistakes. They can be fatal. If an AI system confidently tells a doctor to prescribe a medication that interacts badly with the patient's existing medications, someone could die. This pushes healthcare AI toward systems that actually understand causality and can explain their reasoning, not just generate plausible-sounding text.

LeBrun's move from Nabla to AMI Labs came with an explicit partnership agreement. Nabla's board supported LeBrun's transition from CEO to chief AI scientist and chairman in exchange for privileged access to AMI Labs' world model technology. This reveals something important about how LeCun thinks about this venture: it's not just research for research's sake. The technology needs to work in real applications.

Laurent Solly is another key member of the leadership team. Solly spent years as Meta's vice president for Europe before stepping down in late 2024 to join AMI Labs. His expertise isn't in machine learning. It's in scaling organizations, managing complex stakeholder relationships, and building global companies. His presence suggests that AMI Labs isn't content to be a scrappy research lab. They're building something that's meant to scale internationally.

But here's what's perhaps most interesting: the team has deep roots in Meta's AI Research (FAIR) lab. After LeBrun's earlier startup, Wit.ai, was acquired by Facebook, he worked under LeCun's leadership at FAIR. He knows how LeCun thinks. He understands the research philosophy. And according to Forbes, Meta could well become AMI Labs' first client.

The talent recruitment strategy appears to follow a deliberate pattern. Rather than hiring pure academics, LeCun is assembling people who've spent time in the trenches actually deploying AI. People who understand not just what's theoretically possible, but what's practically necessary.

The Funding Story: Why VCs Are Backing a Stealth Startup at a $3.5 Billion Valuation

Let's talk about the elephant in the room: AMI Labs is reportedly raising at a $3.5 billion valuation without having publicly released a product. At the time of writing, the startup hasn't shipped anything that users can actually try. There's no demo. No beta access. No downloadable software. Yet investors are willing to write enormous checks.

This isn't normal venture capital behavior. Typically, investors want to see proof of concept. They want to see user traction, revenue, or at minimum a compelling product demo. But the venture capitalists pursuing AMI Labs are making a different bet entirely. They're betting on the team, the research direction, and their conviction that world models represent the future of AI.

The funding discussion reportedly includes several top-tier investors. Cathay Innovation has long been interested in frontier AI research. Greycroft is known for early bets on transformative technology companies. Hiro Capital has a track record of backing AI founders. Additionally, several other major VCs are reportedly in talks, including 20VC, Bpifrance (a French government-backed investment vehicle), Daphni, and HV Capital.

The fact that Bpifrance and Daphni are involved is strategically significant. These are French institutional investors. Their participation signals that AMI Labs isn't just a Silicon Valley startup. It's explicitly positioning itself as a European AI company.

Consider what happened with World Labs, AMI Labs' most direct competitor. World Labs was founded by Fei-Fei Li, another legendary AI researcher, who created the ImageNet dataset that jumpstarted the modern deep learning revolution. World Labs released a product called Marble, which generates explorable 3D worlds. Within a relatively short time after its founding, World Labs achieved unicorn status (a valuation of at least $1 billion).

This creates a compelling narrative for AMI Labs' valuation. If world models are indeed the next frontier of AI, and if Fei-Fei Li's company reached

The investment thesis for world models seems to run something like this: "Large language models are hitting diminishing returns. They've become extraordinarily good at some tasks but fundamentally incapable of others. The next wave of AI value will go to companies that solve the reasoning and causality problem. LeCun is arguably the most credible voice in AI arguing that world models are the path forward. He's already proven he can build world-class research labs at Meta. This is where the smart money should go."

Whether that thesis proves correct over the next five to ten years will determine whether AMI Labs becomes a transformative company or a well-funded research project that eventually runs out of runway.

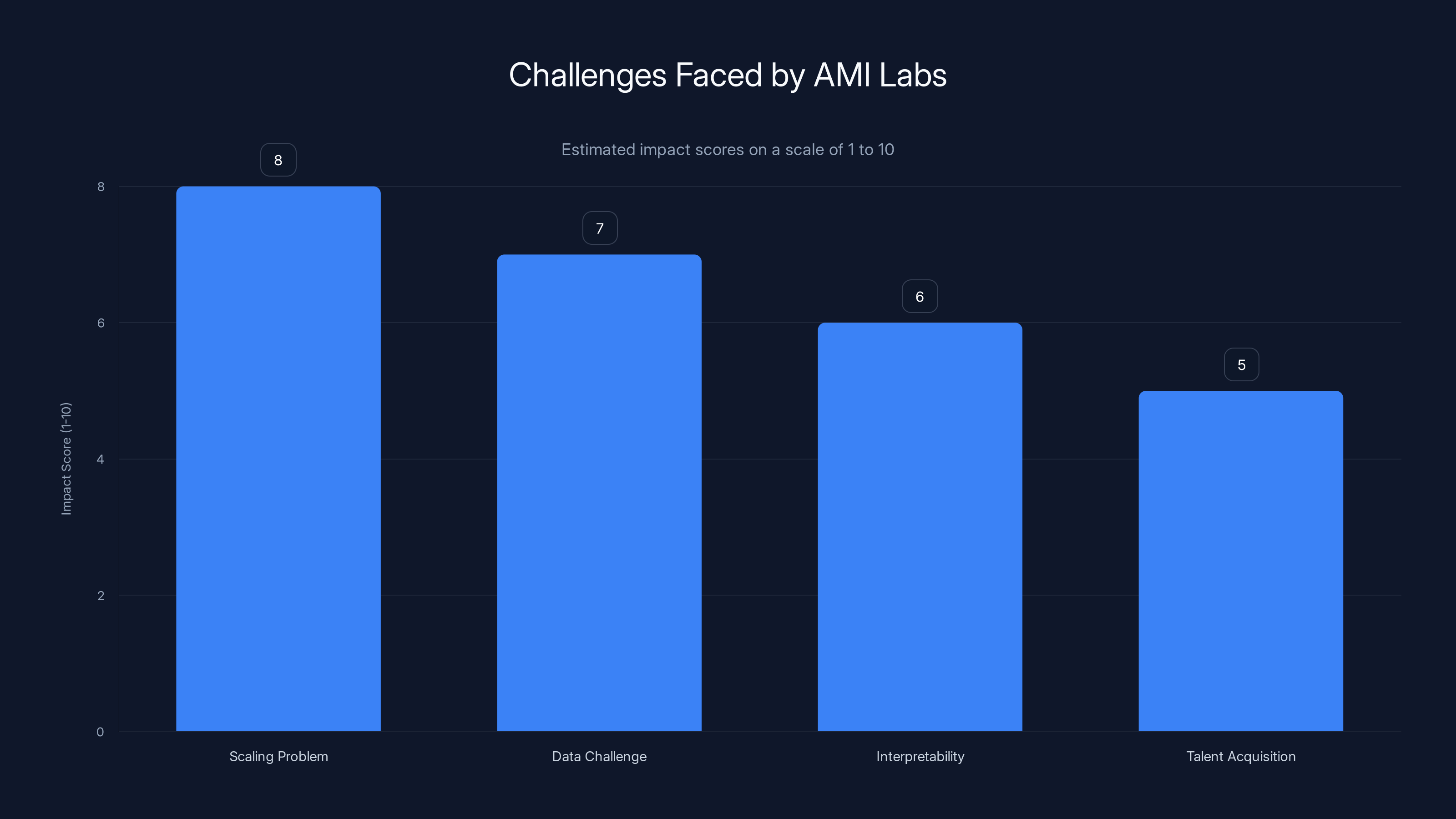

The Scaling Problem poses the highest challenge for AMI Labs, followed by Data and Interpretability issues. Talent Acquisition is also a significant challenge. Estimated data based on typical industry challenges.

Why Paris? The Headquarters Decision and European AI Ambitions

One of the most striking decisions AMI Labs made was choosing Paris as its headquarters. In 2025, when most ambitious AI startups establish themselves in the Bay Area, Singapore, or other traditional tech hubs, LeCun chose France.

This wasn't a random decision. LeCun is French-born. He spent formative years in Paris. And critically, he sees Paris as emerging as a genuine center for AI research and development, not just another provincial tech hub trying to compete with Silicon Valley.

The French government clearly agrees. French President Emmanuel Macron publicly praised LeCun's decision, signaling strong political support for AMI Labs' success. That kind of government backing matters. It can mean favorable tax treatment, subsidized research partnerships, and political capital when recruiting talent.

The Paris headquarters will operate alongside offices in Montreal, New York, and Singapore. Montreal hosts one of the world's leading AI research communities, with deep ties to the University of Montreal and a history of breakthrough research in deep learning. New York is essential because LeCun maintains his professor position at NYU, teaching one class per year and supervising PhD and postdoctoral students. Singapore provides a beachhead into Asian markets and represents the growing importance of AI development in Southeast Asia.

But Paris is the center of gravity. It's the headquarters. It's where strategic decisions get made.

This reveals something important about how LeCun thinks about AMI Labs' future. He's not building another Silicon Valley startup that might sell to Google or Meta in five years. He's building a genuinely global company with European ambitions. That's a different playbook entirely.

Paris isn't a random choice either. The city has become an increasingly serious hub for AI research. Mistral AI, co-founded by former Meta and Google DeepMind researchers, is based in Paris. The European AI landscape has matured dramatically over the past few years. There's been a deliberate, government-backed effort to build European alternatives to U.S.-dominated AI companies. AMI Labs fits neatly into that narrative.

The decision to headquarter in Paris rather than Silicon Valley also signals something about LeCun's philosophy. He's not chasing venture capital hype. He's not trying to maximize short-term networking opportunities. He's building a company aligned with his own values and his vision of where AI research should happen.

Whether this proves strategically sound depends partly on factors beyond LeCun's control. Can Paris attract and retain the world-class engineering talent needed to build competitive AI infrastructure? Can a Paris-based company compete effectively for top researcher attention with offers from Silicon Valley? These questions will be tested over the next several years.

The Vision: What AMI Labs Actually Plans to Build

Okay, so AMI Labs is raising money at a multibillion-dollar valuation to build world models. But what does that actually mean in terms of products and applications? What's the concrete vision?

According to the startup's official communications, AMI Labs is focused on developing world models to "build intelligent systems that understand the real world." That's pretty abstract. Let's translate that into something more concrete.

The company plans to license its technology to industry partners for real-world applications. They're not aiming to build consumer products. Instead, think of AMI Labs as a research company that will eventually become a platform provider. They develop the core world model technology, and then other companies integrate it into their products.

Healthcare is explicitly one focus area. LeBrun, the CEO, has talked openly about wanting to apply world models to healthcare. Why? Because healthcare is where the stakes are highest and where hallucinations are most dangerous. If you build an AI system that understands causal relationships between symptoms, test results, and treatments, you've just created something fundamentally more useful and safer than a large language model trained on medical literature.

Beyond healthcare, world models have obvious applications in robotics. If a robot understands physics intuitively, it can manipulate objects more effectively. It can predict how its actions affect the environment. Self-driving cars are another huge application. Autonomous vehicles need to predict not just where other vehicles are, but how they'll move. A world model that understands traffic dynamics could be profoundly safer than systems based purely on pattern recognition.

Scientific research is yet another domain. Climate modeling, molecular simulation, weather prediction—all of these involve understanding how complex systems evolve over time. World models could revolutionize these fields by enabling researchers to run simulations that capture actual physics rather than just interpolating from historical data.

The company has promised to contribute to building the future of AI "with the global academic research community via open publications and open source." This is significant. LeCun could have structured AMI Labs as a purely closed company, hoarding proprietary research. Instead, he's committing to publishing research findings and releasing open-source code. This approach builds goodwill in the academic community, helps attract top researchers who want their work to have scientific impact, and ensures that AMI Labs benefits from feedback and contributions from outside researchers.

The fundamental claim is that AMI Labs' AI systems will differ from large language models in crucial ways. They'll have persistent memory, meaning they can maintain an understanding of a situation over time. They'll have reasoning and planning capabilities, meaning they can think through multi-step problems. Most critically, they'll be controllable and safe. When your AI system is based on understanding actual causality rather than pattern matching, you can reason about its behavior and constrain it more effectively.
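
Planning with a world model can be sketched in a few lines: imagine the outcome of each candidate action with the model, then choose the action whose predicted outcome is best. The toy below assumes a one-dimensional cart, a known constant-acceleration model, and a small fixed set of candidate accelerations; it uses exhaustive search over that set for clarity. All names (`CartState`, `predict`, `imagine`, `plan`) are hypothetical, and this is not a description of how AMI Labs plans.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class CartState:
    position: float  # metres
    velocity: float  # metres per second


def predict(state: CartState, accel: float, dt: float) -> CartState:
    """Toy world model: apply a constant acceleration for dt seconds."""
    velocity = state.velocity + accel * dt
    return CartState(state.position + velocity * dt, velocity)


def imagine(state: CartState, accel: float, dt: float, horizon: int) -> CartState:
    """Roll the model forward `horizon` steps without acting in the real world."""
    for _ in range(horizon):
        state = predict(state, accel, dt)
    return state


def plan(state: CartState, target: float, candidates: List[float],
         dt: float = 0.1, horizon: int = 10) -> float:
    """Return the candidate acceleration whose imagined end position is closest to target."""
    def cost(accel: float) -> float:
        end = imagine(state, accel, dt, horizon)
        return abs(end.position - target)

    return min(candidates, key=cost)


start = CartState(position=0.0, velocity=0.0)
best = plan(start, target=1.0, candidates=[-2.0, -1.0, 0.0, 1.0, 2.0, 3.0])
print(best)  # 2.0
```

Because the action is chosen by querying the model rather than by recalling similar past examples, changing the target changes the chosen action without any retraining.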

But here's where things get interesting. LeCun has publicly criticized some of Meta's strategic choices. He disagrees with the company's heavy bet on large language models and generative AI. He thinks the industry is missing something important. That's the core philosophical disagreement that led him to leave Meta and start AMI Labs.

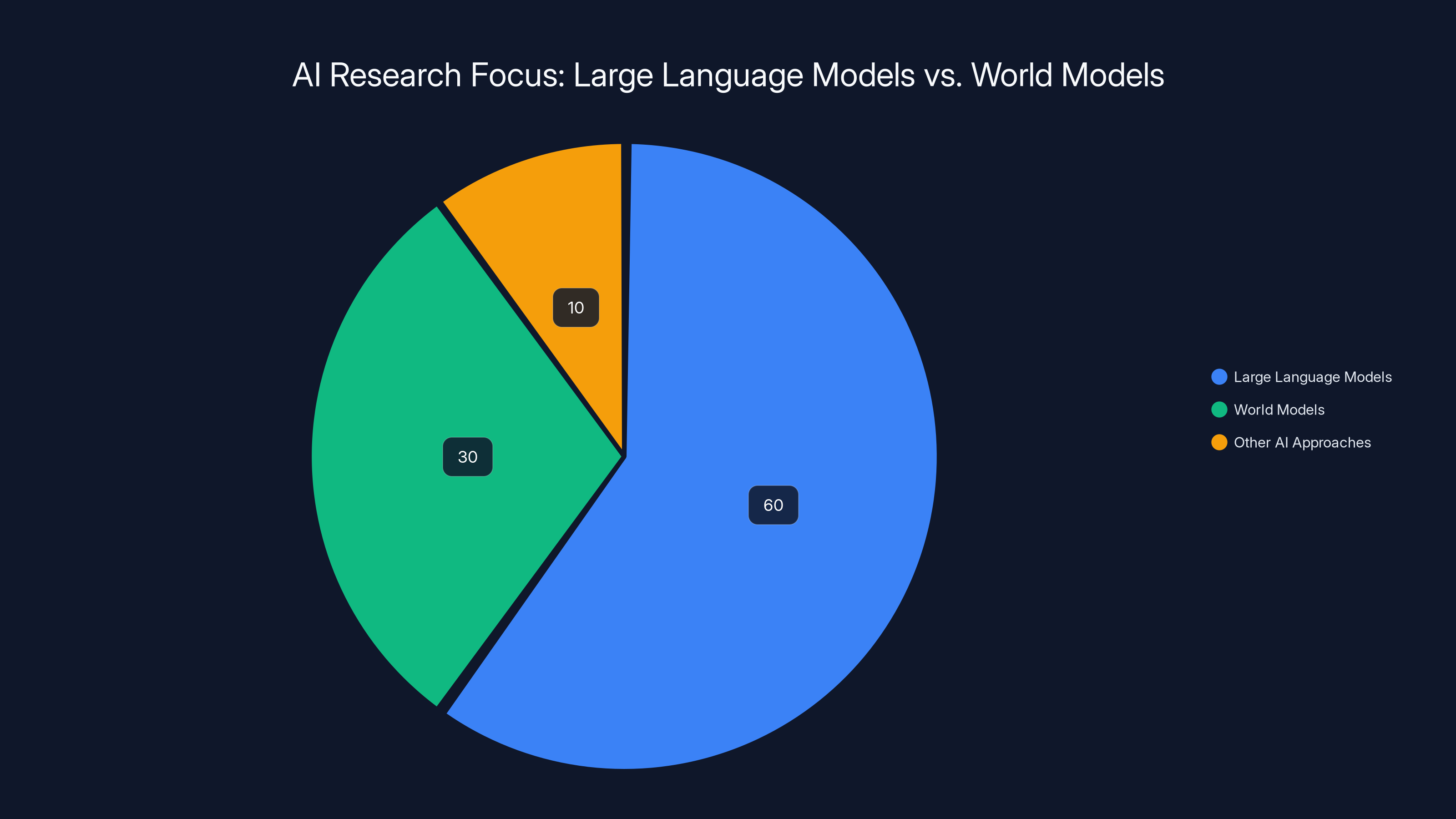

Estimated data suggests a shift in AI research focus, with 30% now exploring world models, a significant increase due to LeCun's influence.

The Philosophical Divide: LeCun's Critique of LLM-Centric AI

To understand why LeCun left Meta, you need to understand his fundamental disagreement with the direction the entire AI industry has taken over the past few years.

Large language models have achieved genuinely remarkable feats. They write coherent essays, debug code, answer complex questions, and can engage in seemingly intelligent conversation. The capabilities are real and impressive. But LeCun sees critical limitations that most people in the industry gloss over.

First, there's the hallucination problem. Large language models confidently state false information. They don't just sometimes make mistakes. They actively fabricate facts, cite non-existent papers, and construct elaborate false narratives. This isn't a minor bug in a system that's otherwise working well. It's a fundamental feature of how these models work. They're trained to predict plausible-sounding text, not to ensure accuracy.

Second, there's the reasoning limitation. Large language models struggle with systematic reasoning about cause and effect. They can describe how Newtonian physics works, but they can't reliably predict what happens when you place objects in a physical space and apply forces to them. This matters for any application where you need genuine reasoning rather than clever pattern matching.

Third, there's the interpretability problem. Large language models are black boxes. You can't easily explain why they generated a particular response. They just did. If you're trying to deploy AI in healthcare, finance, or other high-stakes domains, the inability to explain the system's reasoning is a serious problem.

LeCun's argument is that these aren't temporary limitations that will be solved by making models bigger. These are fundamental limitations of the approach. They're baked into the architecture. You can make a pattern-matching system larger, but it's still fundamentally a pattern-matching system.

World models, by contrast, aim to build genuine understanding of how the world works. They maintain an internal model of reality that can be queried and reasoned about. This approach has its own strengths and weaknesses, but LeCun's bet is that those weaknesses are not hallucination and an inability to reason. They're more about computational efficiency and how you handle truly novel situations.

The tech industry has been remarkably dogmatic about large language models. Everyone's building them. Everyone's betting on them. Everyone's assuming that making them bigger and training them on more data will eventually solve every problem. LeCun thinks this is a dead end, or at least a local optimum that the industry is trapped in.

That conviction is what drove him to leave Meta, raise money at a multibillion-dollar valuation, and build a company explicitly positioned against the LLM-centric approach. He's betting his reputation on the proposition that world models are the more promising research direction.

Isn't it possible he's wrong? Absolutely. Novel research directions often fail. But this is what high-stakes AI research looks like in 2025: not incremental improvements to existing approaches, but fundamental disagreements about which direction is promising.

The Competitive Landscape: AMI Labs vs. World Labs and Others

AMI Labs isn't pioneering world models in isolation. There's a thriving ecosystem of researchers and companies working on these problems. Understanding the competitive landscape helps clarify AMI Labs' positioning.

The most direct competitor is World Labs, founded by Fei-Fei Li. World Labs released Marble, its first product, which generates 3D worlds. When you give Marble a prompt like "a wooden table with books on it," it generates a persistent 3D environment that you can explore and that stays spatially consistent as you move through it.

Marble is impressive from a technical standpoint, but it's also narrow. It's focused on 3D world generation. It's not trying to build a general-purpose world model that understands all aspects of reality. It's not building healthcare applications or autonomous systems. It's generating visually correct 3D environments.

AMI Labs appears to be aiming broader. They're explicitly interested in healthcare applications, robotics, scientific simulation, and general reasoning about how the world works. They're not trying to be the best at generating 3D worlds. They're trying to build foundational technology that can be applied across multiple domains.

Beyond World Labs, there are academic research groups working on related problems. Researchers at MIT, Stanford, Berkeley, and other institutions are exploring world models, causal reasoning, and AI systems that can plan and reason about future states. But most of this is still in the research phase. AMI Labs has resources to move these ideas toward actual products more quickly.

There are also robotics companies and autonomous vehicle companies that are implicitly working on similar problems. A self-driving car needs to understand physics and predict how objects move. But these companies are usually focused on their specific domain. AMI Labs is trying to build general-purpose world model technology that multiple industries can license.

The competitive advantage for AMI Labs comes down to two things. First, LeCun's reputation and credibility in AI research. When he says world models are the future, people listen. Second, the team's ability to assemble world-class talent and resources. With substantial funding and a compelling mission, AMI Labs can attract researchers who might otherwise be scattered across universities and big tech companies.

The broader trend is clear: the AI industry is recognizing that large language models, while powerful, have real limitations. A new wave of research is exploring alternatives and complementary approaches. AMI Labs is well-positioned to lead this wave, but they're definitely not alone in pursuing it.

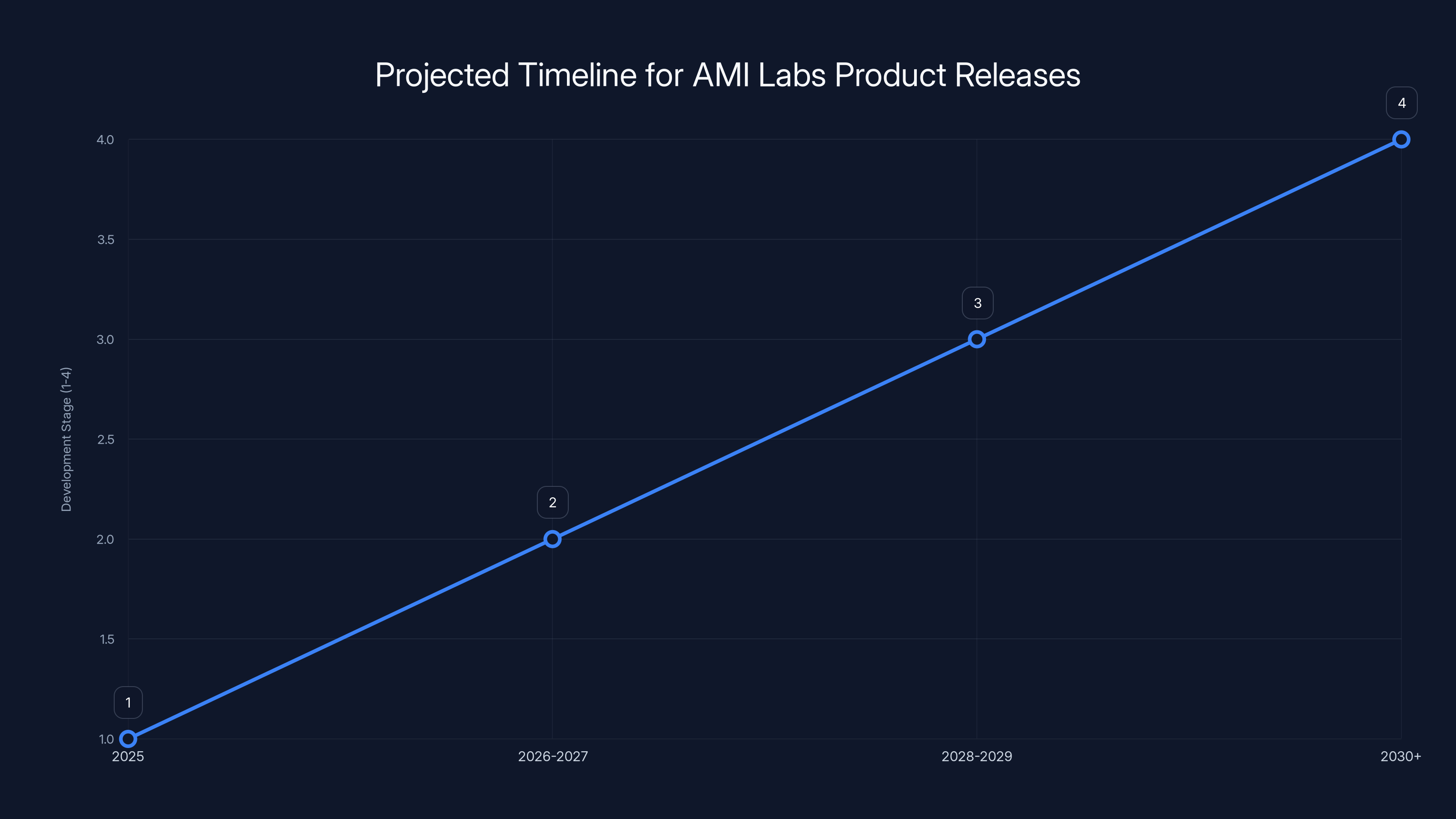

AMI Labs is projected to move from foundational research in 2025 to broader deployment by 2030, assuming steady progress. Estimated data.

Practical Applications: Where World Models Actually Matter

Understanding world models in the abstract is interesting, but the real value emerges when you think about concrete applications. Where does this technology actually make a difference?

Healthcare and Diagnosis

Consider a doctor trying to diagnose a patient with chest pain. The symptom could indicate heart disease, anxiety, muscle strain, or dozens of other conditions. A large language model can tell you all the possibilities. It can cite medical literature. But it can't actually reason about causality in the patient's specific case. A world model trained on medical knowledge could understand the causal relationships. It could model how a patient's age, smoking history, family history, current medications, and test results all interact to produce the observed symptoms. It could then reason through likely diagnoses and predict how different treatments might affect the patient's condition.

This could be fundamentally safer than an LLM, to the extent that it is based on actual causal mechanisms rather than statistical correlations in training data.

Autonomous Vehicles

A self-driving car navigating a busy intersection needs to predict not just where other vehicles are, but how they'll move. Will the car turning left have time to make the turn before oncoming traffic? If a pedestrian steps into the road, will they continue walking or stop? A world model that understands the physics and dynamics of traffic can predict these situations more reliably than a system that only matches patterns from historical driving data.

In edge cases where historical data is sparse, a genuine understanding of physics and human behavior becomes invaluable.
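
The simplest version of the left-turn question is a constant-velocity extrapolation: estimate when the oncoming car reaches the intersection and compare that with how long the turn takes. The sketch below does exactly that, with a hypothetical 2-second safety margin and made-up distances and speeds; `time_to_arrival` and `safe_to_turn` are illustrative names. It is a baseline, not a world model. The argument in this section is that a world model would replace the constant-speed assumption with a prediction of how the other driver is actually likely to behave.

```python
def time_to_arrival(distance_m: float, speed_mps: float) -> float:
    """Seconds until an oncoming car covers distance_m, assuming constant speed."""
    if speed_mps <= 0.0:
        return float("inf")  # stopped or moving away: it never arrives
    return distance_m / speed_mps


def safe_to_turn(oncoming_distance_m: float, oncoming_speed_mps: float,
                 turn_duration_s: float, margin_s: float = 2.0) -> bool:
    """True if the turn, plus a safety margin, finishes before the oncoming car arrives."""
    arrival = time_to_arrival(oncoming_distance_m, oncoming_speed_mps)
    return arrival > turn_duration_s + margin_s


print(safe_to_turn(80.0, 15.0, turn_duration_s=3.0))  # True  (about 5.3 s > 5.0 s)
print(safe_to_turn(50.0, 15.0, turn_duration_s=3.0))  # False (about 3.3 s < 5.0 s)
```

The weak point is visible in the code: everything hinges on `speed_mps` staying constant, which is exactly the assumption that fails when the other driver brakes or accelerates.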

Climate and Weather Modeling

Climate scientists need to simulate how the Earth's systems evolve over decades and centuries. Traditional models are based on physical equations and conservation laws. But there's enormous room for improvement. A world model that learns from observational data how the climate system actually evolves, while respecting the underlying physics, could make more accurate predictions. It could also help scientists understand novel interactions and edge cases.

Robotics and Manipulation

A robot trying to pick up a delicate object needs to understand physics intuitively. How much force will break it? What's the optimal grasping strategy? A robot with a good world model can make better decisions than a robot that's only pattern-matched millions of grasping examples. It can generalize to novel objects and scenarios.

Scientific Discovery

Researchers in fields like materials science, drug discovery, and molecular biology spend enormous time running simulations. A world model that understands chemistry and physics could run more efficient simulations, explore larger design spaces, and help researchers discover materials and drugs that wouldn't have been found through traditional methods.

In all these domains, the critical difference is that world models enable genuine prediction and reasoning about novel situations. An LLM can tell you about traffic dynamics or medical diagnosis. But a world model can predict, simulate, and reason in ways that transfer to new situations.

The Research Roadmap: What's Coming from AMI Labs

We don't have a detailed public roadmap for AMI Labs. The company is still relatively new and hasn't released extensive documentation about their development plans. But we can infer some likely directions based on what LeCun has said publicly and what makes sense given their funding and team.

The immediate focus is likely on foundational research. Building world models that actually work reliably at scale is hard. It's genuinely unsolved. The first phase of AMI Labs' work is probably focused on proving that world models can achieve superhuman performance on reasoning and prediction tasks. That means publishing research papers, building datasets, and developing training methods.

Once foundational research establishes that the approach works, the company needs to move toward practical applications. This probably means partnering with healthcare institutions, robotics companies, or autonomous vehicle developers to test world models in real scenarios. These partnerships will generate feedback about what works and what doesn't.

Eventually, AMI Labs will need to productize their technology. That means creating software libraries, APIs, and frameworks that other companies can use to build world models for their specific domains. Think of it like TensorFlow (Google's deep learning framework) or PyTorch (Facebook's deep learning framework). These are foundational technologies that thousands of companies build on top of.

The open-source commitment is important here. By releasing code and publishing research, AMI Labs builds a community around world models. Other researchers contribute improvements. Other companies deploy the technology. This creates a virtuous cycle where the core technology improves faster than if it were proprietary.

Long-term, if world models prove to be as revolutionary as LeCun believes, AMI Labs could become something like a platform company. They'd provide the foundational world model technology, and then an entire ecosystem of companies would build applications on top. Healthcare companies would integrate world models into diagnostic systems. Robotics companies would use them for manipulation and planning. Autonomous vehicle companies would use them for prediction and reasoning.

But none of that happens if the foundational research doesn't work. That's the critical bet.

Estimated data: AMI Labs' world models are expected to have the highest impact in healthcare, followed closely by scientific research and robotics.

Challenges AMI Labs Will Face

It's easy to get excited about AMI Labs' vision and mission. Yann LeCun is a legendary researcher, the team is strong, and the funding is substantial. But let's be honest about the challenges the company faces.

The Scaling Problem

Building world models that work for toy problems is one thing. Building ones that work for complex, real-world scenarios at scale is something else entirely. Training these models requires enormous computational resources. It requires high-quality training data that captures the real complexity of the world. And it requires novel algorithms that we haven't figured out yet.

Large language models achieved scale through sheer computational resources and large datasets. But that approach might not work as well for world models, which need to capture causal structure, not just statistical patterns.

The Data Challenge

Training a world model requires diverse, high-quality data that captures the relationships between actions and their consequences. For some domains like video of natural scenes, there's an enormous amount of training data available. For other domains like industrial robotics or healthcare, good training data is scarce and expensive to collect.

AMI Labs will need to figure out how to build generalizable world models without perfect data for every scenario. This is genuinely hard.
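
In its simplest form, "data that captures the relationships between actions and their consequences" means logged (state, action, next state) transitions. The hypothetical sketch below fits a one-parameter dynamics model, `next_position = position + gain * push`, by least squares. Real world models are vastly larger and are not fitted this way, but the same constraint shows up here in miniature: if the log never contains a non-zero action, there is nothing to learn the action's effect from. `Transition` and `fit_push_gain` are illustrative names.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Transition:
    position: float       # state before the action
    push: float           # action taken (hypothetical units)
    next_position: float  # observed consequence


def fit_push_gain(data: List[Transition]) -> float:
    """Least-squares fit of next_position = position + gain * push (no intercept term)."""
    numerator = sum(t.push * (t.next_position - t.position) for t in data)
    denominator = sum(t.push ** 2 for t in data)
    if denominator == 0:
        # Only zero actions were logged, so the data says nothing about their effect.
        raise ValueError("need at least one transition with a non-zero action")
    return numerator / denominator


log = [
    Transition(position=0.0, push=1.0, next_position=0.5),
    Transition(position=0.5, push=2.0, next_position=1.5),
    Transition(position=1.5, push=-1.0, next_position=1.0),
]
gain = fit_push_gain(log)
print(gain)              # 0.5
print(1.0 + gain * 4.0)  # predicted next position after a push of 4.0 from 1.0: 3.0
```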

The Interpretability Question

One of the key selling points of world models is that they should be interpretable and explainable. But that's not automatic. A learned world model is still a neural network. It's not inherently more interpretable than a large language model. Making it interpretable requires design decisions that might hurt performance.

Balancing performance and interpretability is an ongoing challenge.

The Talent Acquisition Challenge

The best machine learning researchers have options. They can work at Google, Meta, OpenAI, Anthropic, or any number of well-funded startups. Convincing world-class talent to leave established companies and move to Paris to work on unproven technology is hard. AMI Labs has LeCun's reputation and substantial funding, which helps. But this will be an ongoing challenge.

The Path to Revenue

A large funding round can sustain a company for years. But eventually, AMI Labs needs to generate revenue, for example by licensing its technology to customers who find it valuable. If world models turn out to be interesting research but not particularly useful in practice, the funding dries up. The company needs to find paying customers relatively quickly or justify an extended runway with breakthrough research results.

These are substantial challenges. But they're also exactly the kinds of challenges that leading AI companies successfully navigate. The question is whether AMI Labs can navigate them too.

The Broader AI Industry Implications

Whether AMI Labs succeeds or fails, the company represents something important about the current state of AI research. It signals that the industry is fragmenting into different camps with genuinely different visions of how AI should evolve.

For years, there was rough consensus that deep learning and neural networks were the path forward. But within that consensus, there are now serious disagreements. Should we focus on scaling up large models? Should we explore different architectures? Should we pursue neuro-symbolic approaches that combine learning with reasoning? Should we build systems that understand causality?

AMI Labs is explicitly betting that world models and causal reasoning are more promising than further scaling of language models. If they're right, we could see a wave of new startups and research directions focused on world models over the next few years.

If they're wrong, AMI Labs will be a well-funded research lab that didn't achieve its mission. That's okay. Not every ambitious bet works out. But the attempt itself is valuable. It pushes the field forward by exploring possibilities that otherwise wouldn't get funded.

There's also a geopolitical dimension. AMI Labs is explicitly a European company with a French headquarters. This fits into a broader pattern of European AI ambitions. If world models turn out to be important, Europe gets a leading company early. If large language models remain dominant, Europe still has meaningful research contributions. Either way, it strengthens Europe's position in AI.

For the United States and China, AMI Labs is a reminder that leadership in AI isn't guaranteed to remain in the U.S. Other regions can attract top talent and capital by offering compelling visions and committed resources.

Expert Insights: What AI Researchers Think About World Models

Many leading AI researchers agree that world models are an important research direction. Where they disagree is how important, and how soon the approach will pay off.

LeCun is probably the most bullish. He's consistently argued that world models are essential for building AI systems that can reason about cause and effect. He believes this is necessary for safe, aligned AI. He thinks the industry's obsession with large language models is a mistake.

Other researchers are more cautious. They agree world models are interesting and worth researching. But they're not convinced they'll replace large language models as the dominant paradigm. They think the two approaches might work well together, complementary rather than competitive.

There are also researchers who think world models are theoretically interesting but practically hard to apply. They argue that for many real-world applications, simpler approaches based on large language models plus some additional tools might work fine.

What's clear is that nobody thinks world models are unimportant or not worth researching. The disagreement is about magnitude and timing, not about whether they matter.

Financial Sustainability: Can AMI Labs Make Money?

Here's the uncomfortable question: how does AMI Labs actually become a sustainable business?

One model is licensing. AMI Labs develops world model technology and licenses it to companies that build applications. Hospitals license it for diagnostic systems. Robotics companies license it for manipulation systems. Autonomous vehicle companies license it for prediction systems. This is how ARM makes money: it designs foundational chip technology and licenses it widely.

Another model is building applications directly. AMI Labs develops world-model-based products and sells them to customers. This is more capital-intensive and competitive, but it allows the company to capture more value.

A hybrid model combines research, licensing, and limited applications. The company does frontier research that gets published and open-sourced, raising their profile and credibility. They license technology to strategic partners. And they build a few key applications where they think they have a competitive advantage.

The key question is timing. How long can the company operate on venture funding before it needs to generate revenue? With capital raised at a $3.5 billion valuation, they can probably operate for several years even without significant revenue. But eventually, returns need to materialize.

LeCun has faced this question before. He built FAIR at Meta, which was a research lab that generated enormous quantities of publishable research and open-source code. But it also demonstrated practical applications and contributed to Meta's competitive advantages in AI. LeCun is probably envisioning something similar for AMI Labs: foundational research that establishes the company's credibility and leadership, plus applications that prove the technology's value.

Timeline: When Will We See Actual Products from AMI Labs?

This is the question everyone wants answered. When will AMI Labs release something we can actually use?

Based on what we know about AI research timelines and the company's current stage, a realistic timeline might look like this:

2025: Continued foundational research. Published papers establishing AMI Labs' technical capabilities. Strategic partnerships with universities and other research institutions. Continued talent acquisition.

2026-2027: First application pilots. Partnerships with healthcare institutions or robotics companies to test world models on real problems. Initial product development. More published research.

2028-2029: Limited release of world model software libraries and APIs. Early adopters begin experimenting with incorporating world models into their systems.

2030+: Broader deployment. More companies using AMI Labs' technology. Revenue generation from licensing and partnerships.

This timeline assumes things go well. If technical challenges are harder than expected, everything shifts right. If breakthroughs happen faster than anticipated, things shift left.

The important thing to understand is that AMI Labs is not trying to move fast and break things. They're pursuing frontier research. These efforts typically take years from conception to deployment.

FAQ

What exactly is a world model?

A world model is an AI system that learns to represent the structure and dynamics of the physical world. Instead of just predicting text tokens like a language model, a world model builds an internal representation of how objects move, how forces interact, and how causes produce effects. It can predict what will happen when you perform actions in the world and reason about how to achieve desired outcomes.
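The "predict, then choose" loop described above can be sketched in a few lines of Python. This is an illustrative toy under stated assumptions, not how AMI Labs builds its systems: `predict_next` is a hypothetical hand-written stand-in for a learned world model (a point mass whose action changes its velocity), and the planner simply imagines every short action sequence and keeps the one whose predicted end state is closest to the goal.

```python
from itertools import product
from typing import Sequence, Tuple

State = Tuple[int, int]  # (position, velocity)
ACTIONS = (-1, 0, 1)     # push backward, coast, push forward


def predict_next(state: State, action: int) -> State:
    """Hypothetical stand-in for a learned world model.

    Toy physics: the action changes velocity, then velocity changes position.
    """
    position, velocity = state
    velocity += action
    return position + velocity, velocity


def rollout(state: State, action_seq: Sequence[int]) -> State:
    """Imagine the outcome of a whole action sequence without acting."""
    for action in action_seq:
        state = predict_next(state, action)
    return state


def cost(state: State, goal: int) -> int:
    """Penalize distance from the goal and arriving with leftover speed."""
    position, velocity = state
    return (position - goal) ** 2 + velocity ** 2


def plan(start: State, goal: int, horizon: int = 4) -> Tuple[int, ...]:
    """Try every action sequence in imagination and keep the cheapest one."""
    return min(
        product(ACTIONS, repeat=horizon),
        key=lambda candidate: cost(rollout(start, candidate), goal),
    )


best = plan(start=(0, 0), goal=3)
print(best, rollout((0, 0), best))  # (1, 0, 0, -1) (3, 0)
```

The plan accelerates, coasts, then brakes so the object stops exactly at the goal. Exhaustive search grows exponentially with the horizon (3**horizon sequences here), so it only works for toy problems; practical planners sample candidate sequences or optimize them directly, but the principle is the same: actions are evaluated in imagination before any of them is taken in the world.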

How is AMI Labs' approach different from large language models?

Large language models predict the next most likely token (roughly, a word or word fragment) in a sequence. They're excellent at pattern matching but struggle with reasoning about cause and effect. World models, by contrast, aim to learn internal representations of causal relationships and physics. The goal is to make them better at tasks requiring genuine reasoning, though they're typically not as good at language tasks as specialized language models. AMI Labs believes world models are the more promising direction for building genuinely intelligent AI systems.
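For contrast, "predict the next most likely word" can be illustrated with a bigram counter, one of the simplest statistical language models. It is a hypothetical toy, far simpler than a real LLM (which uses a neural network over tokens), but it shows the core objective: output whatever most often came next in the training text, with no representation of the physics behind it.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Count which word follows which in the training text."""
    words = text.lower().split()
    follows: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def next_word(follows: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the statistically most likely next word, if any was seen."""
    options = follows.get(word.lower())
    if not options:
        return None
    return options.most_common(1)[0][0]


corpus = "the ball falls . the ball bounces . the ball falls ."
model = train_bigrams(corpus)
print(next_word(model, "ball"))  # falls
```

The model answers "falls" only because that word followed "ball" more often in its training text; it has no notion of gravity, which is the gap world models are meant to close.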

Who is Yann Le Cun?

Yann LeCun is a legendary AI researcher who received the 2018 ACM A.M. Turing Award (announced in 2019 and shared with Geoffrey Hinton and Yoshua Bengio) for foundational work on deep learning. He is best known for pioneering convolutional neural networks, which became a cornerstone of modern computer vision. He spent nearly a decade leading Meta's AI Research (FAIR) laboratory before leaving in 2024 to found AMI Labs. He's widely respected in the AI research community for his technical contributions and his willingness to voice contrarian opinions about research directions.

Why did Le Cun leave Meta to start AMI Labs?

LeCun has been publicly critical of the AI industry's heavy focus on large language models, arguing that they're hitting fundamental limitations that won't be solved by scaling. He believes world models represent a more promising research direction. His disagreement with Meta's strategic choices under Mark Zuckerberg's leadership contributed to his decision to leave and pursue world models full-time.

What is Alex LeBrun's role at AMI Labs?

Alex LeBrun is the CEO of AMI Labs. Previously, he co-founded and led Nabla, a healthcare AI startup. He's known for building AI products that actually work reliably in high-stakes environments. His healthcare background is important because healthcare is one of AMI Labs' key application focus areas. While LeCun serves as executive chairman, LeBrun handles day-to-day operations and strategic execution.

When will AMI Labs release a product?

AMI Labs hasn't publicly committed to a specific launch date for products. The company is currently focused on foundational research and establishing partnerships. Based on typical AI research timelines, limited product releases might occur in 2026-2027, with broader deployment in subsequent years. For a company pursuing frontier research, the timeline is measured in years, not months.

How is AMI Labs funded?

AMI Labs raised funding at a $3.5 billion valuation, according to reports. Investors include top-tier VCs like Cathay Innovation and Greycroft, as well as European government-backed vehicles like Bpifrance. The funding amount hasn't been publicly disclosed, but it's likely in the hundreds of millions of dollars range.

Why is AMI Labs headquartered in Paris?

LeCun chose Paris as AMI Labs' headquarters to signal the company's European ambitions and build on Paris's emerging status as an AI research hub. The decision received support from French President Emmanuel Macron and attracted investment from French government-backed funds. While the company has offices in Montreal, New York, and Singapore, Paris is the strategic center.

What are the practical applications for world models?

World models have potential applications in healthcare (diagnostic AI systems), autonomous vehicles (prediction and reasoning about traffic), robotics (manipulation and planning), climate science (simulation and prediction), and scientific research (drug discovery and materials science). Any domain requiring genuine reasoning about how the world works could benefit from world models.

Is AMI Labs a competitor to OpenAI, Anthropic, or other LLM companies?

Not directly. OpenAI and Anthropic are focused on large language models and building general-purpose AI assistants. AMI Labs is pursuing world models, a fundamentally different technology. That said, there's an indirect competitive dynamic. If world models prove to be more useful than LLMs for certain tasks, that could shift investment and talent away from language model companies.

Conclusion: The Bet That Could Reshape AI

AMI Labs represents something rare in the venture capital world: a massive bet on a genuinely novel research direction by one of the most credible researchers in AI. Yann LeCun's decision to leave Meta, raise money at a $3.5 billion valuation, and focus entirely on world models signals that he's serious about this being the right direction for AI.

But here's what's important to understand: this bet could easily turn out wrong. World models might turn out to be interesting research that doesn't scale to practical applications. Large language models might continue dominating despite their limitations. The computational requirements for world models might prove prohibitive. The team might struggle to recruit world-class talent to Paris. Revenue generation might prove harder than anticipated.

Any of these things could happen. Most ambitious AI research bets do fail, at least in their original form. But the attempt itself matters. By funding and pursuing world models seriously, AMI Labs pushes the entire AI field forward. It forces people to think about alternatives to LLM dominance. It attracts research talent to a different problem set. It generates papers and code that other researchers build on.

If AMI Labs succeeds, we might look back at 2024-2025 as the moment when the AI industry's paradigm began to shift. We'd see a new wave of startups and research groups pursuing world models. We'd see applications in healthcare, robotics, and scientific research that simply weren't possible with language models. We'd see AI systems that can reason about cause and effect, maintain persistent memory, and operate reliably in domains where hallucinations are unacceptable.

If AMI Labs fails, it will have still contributed meaningful research that advances our understanding of how to build intelligent systems. It will have created space for alternative approaches to flourish. And it will have demonstrated that even in a capital-intensive field, genuine research bets can still happen.

The next few years will be fascinating. AMI Labs will publish papers that either vindicate Le Cun's vision or reveal fundamental challenges that need to be solved. They'll build partnerships that either demonstrate that world models work in practice or show that the approach has limitations. They'll attract talent that will contribute to a vibrant research community exploring these ideas.

What we can say with certainty is that Yann LeCun's new venture has already changed the conversation about where AI research should go next. And sometimes, shifting the conversation is the most important thing a startup can do.

Related Resources and Further Reading

To stay updated on AMI Labs and world models:

- Follow Yann Le Cun's official social media accounts for research updates and technical insights

- Monitor academic conferences (NeurIPS, ICML, ICLR) for papers from AMI Labs researchers

- Read the MIT Technology Review's coverage of world models and frontier AI research

- Explore papers on world models and causal reasoning from academic research groups at MIT, Stanford, and other institutions

- Track investment announcements from major AI VCs for updates on AMI Labs' funding and partnerships

Key Takeaways

- AMI Labs represents a fundamental bet that world models, not large language models, are the future of AI—a contrarian but credible thesis from Yann LeCun

- The team includes healthcare AI pioneer Alex LeBrun (CEO), Meta veterans, and global leadership in AI research with offices spanning Paris, Montreal, New York, and Singapore

- At a $3.5 billion valuation, AMI Labs shows VCs are serious about funding alternatives to LLM-centric approaches, especially for applications requiring causal reasoning and safety

- World models have concrete applications in healthcare diagnostics, autonomous vehicles, robotics, and scientific simulation, where hallucination-prone LLMs struggle

- The company plans to publish research openly and contribute to the academic community while licensing technology to industry partners, a model that could establish European leadership in AI

