Apple's Secret AI Transformation: Why Siri's Makeover Matters

For years, Siri felt like the neglected sibling in Apple's ecosystem. You'd ask it a question, get a stilted response, and reach for Google instead. But something massive is shifting behind the scenes at Apple Park.

Recent reports suggest Apple is planning a complete overhaul of Siri. We're not talking about incremental improvements here. The company is apparently dumping its aging approach and building something that could actually compete with ChatGPT, Google's Gemini, and Claude. The catch? It's a massive admission that Apple fell behind.

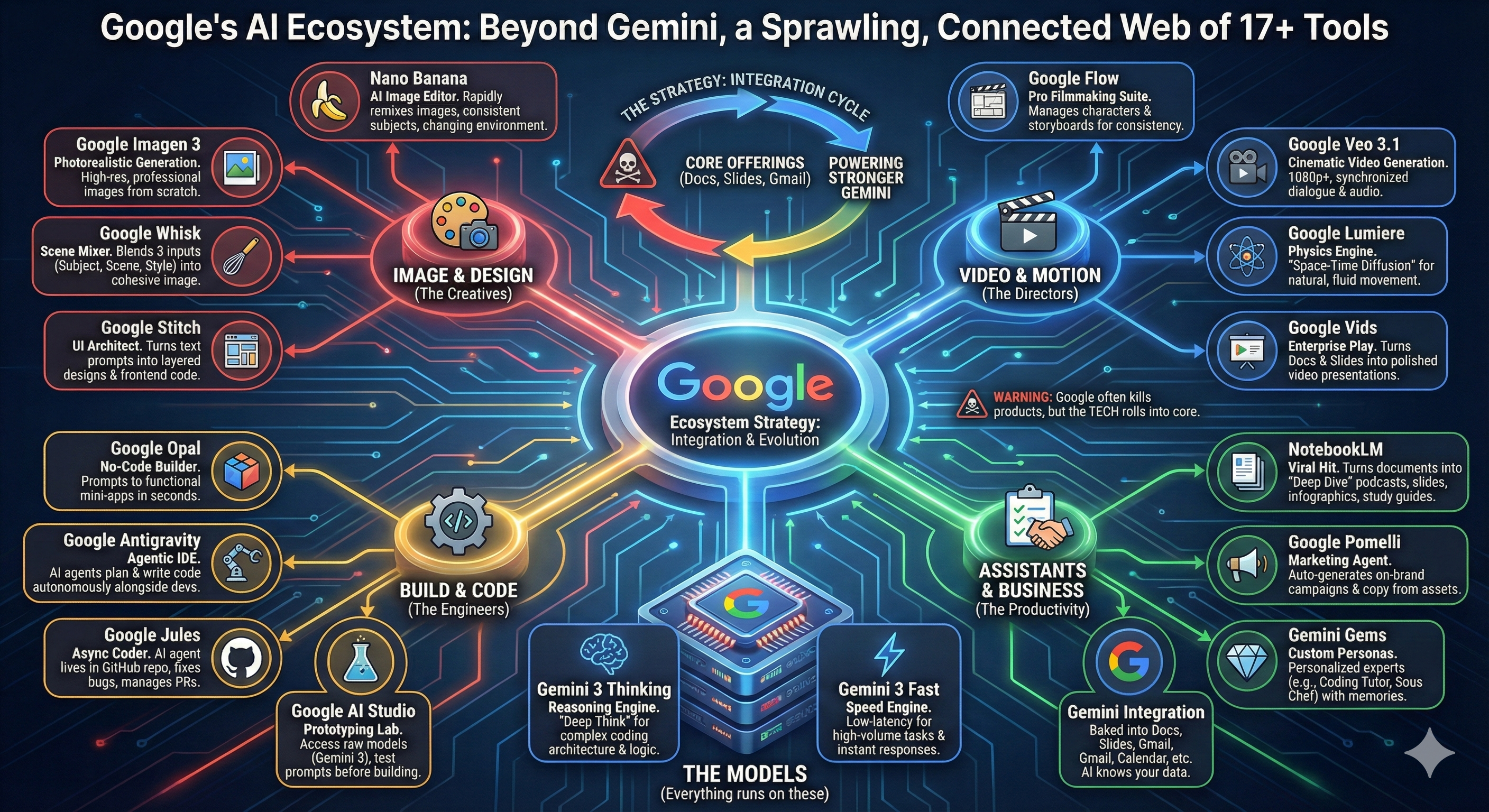

What makes this particularly interesting is that Apple isn't building this from scratch. According to industry insiders, the new Siri will be based on Gemini, Google's AI model. Yes, you read that right. Apple is potentially leveraging Google's technology to power its own AI assistant. This is the kind of pivot that signals real urgency.

For iPhone users, this could be transformative. Imagine asking Siri actual complex questions and getting thoughtful, contextual answers. Imagine it understanding nuance instead of defaulting to a web search. That's the promise here.

But here's what's really important to understand: this isn't just about making Siri smarter. This is Apple recognizing that AI assistants are becoming the primary interface between users and technology. Miss this transition, and you're irrelevant. Get it right, and you own the device experience for billions of people.

In this article, we're diving deep into what's actually happening with Siri, why Apple felt forced to make this dramatic change, how this compares to existing AI solutions, and what it means for the broader AI landscape. Whether you're an Apple user, a developer building for iOS, or just someone tracking AI's evolution, there's a lot to unpack here.

TL;DR

- Complete Siri Redesign Coming: iOS 27 will feature an entirely new Siri powered by Gemini, not Apple's proprietary technology

- Direct ChatGPT Competition: Apple's new AI assistant will position itself as a premium alternative to ChatGPT, Gemini, and Claude

- Integration Advantage: The new Siri will be deeply integrated into iOS, giving it unique capabilities that standalone ChatGPT can't match

- Google Partnership Signal: Using Gemini represents a strategic partnership and acknowledgment that building AI from scratch is no longer feasible

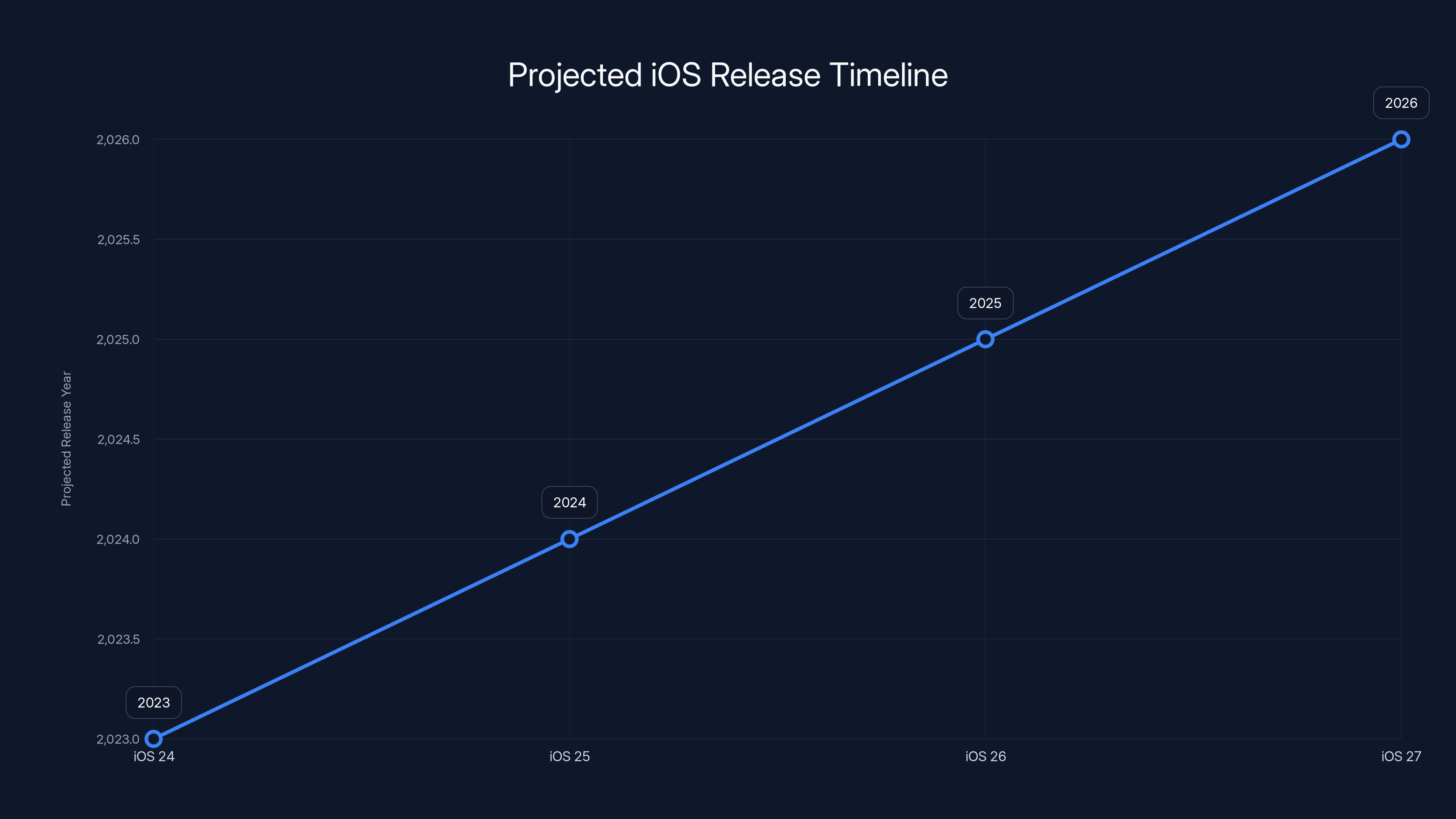

- Timeline: Expect the new Siri to roll out with iOS 27, likely in 2025 or early 2026

Estimated data suggests iOS 27 will likely release in 2025 or early 2026, following Apple's typical 12-18 month announcement-to-release cycle.

The Problem With Old Siri: Why Apple Had To Pivot

Let's be honest about where Siri currently stands. It's broken in ways that matter.

When you ask Siri something complex, it frequently misunderstands context. Ask it to find restaurants near you with "cozy ambiance," and it defaults to a web search. Ask it to compare two things, and it gets confused about what you're actually comparing. These aren't edge cases either. They're the daily reality for millions of users.

The real problem is architectural. Siri was built in an era before large language models became viable. Its underlying structure is brittle. It relies heavily on predefined intents and responses. You give it keywords, it matches against a database, and returns a canned response. When reality doesn't fit neatly into those categories, Siri falls apart.
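To make that brittleness concrete, here is a minimal sketch of keyword-based intent matching. It illustrates the general pattern described above, not Siri's actual implementation; the intent names, keyword sets, and the `match_intent` function are all hypothetical.

```python
# Hypothetical keyword-to-intent table; not Apple's actual intent catalogue.
INTENTS = {
    "set_timer": {"timer", "countdown"},
    "call_contact": {"call", "dial"},
    "find_restaurant": {"restaurant", "restaurants"},
}

FALLBACK = "web_search"


def match_intent(utterance: str) -> str:
    """Return the first intent whose keywords appear in the utterance, else fall back."""
    words = set(utterance.lower().replace("?", "").replace(",", "").split())
    for intent, keywords in INTENTS.items():
        if words & keywords:
            return intent
    return FALLBACK


print(match_intent("Set a timer for ten minutes"))          # set_timer
print(match_intent("Find cozy restaurants near me"))        # find_restaurant
print(match_intent("Which is better, hiking or biking?"))   # web_search
```

Note what happens in the second call: the request matches an intent, but the word "cozy" plays no part in the decision. Anything outside the keyword table is either ignored or pushed to the fallback, which is the failure mode the paragraph above describes.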

Compare this to ChatGPT. You can ask ChatGPT almost anything—complex, ambiguous, context-dependent questions. It reasons through the problem. It understands nuance. It generates novel responses rather than matching patterns.

Apple tried to improve Siri incrementally. Every iOS update would add a few new capabilities. But incremental improvements to a fundamentally limited architecture don't actually solve the problem. It's like trying to make a rotary phone compete with a smartphone by adding more buttons.

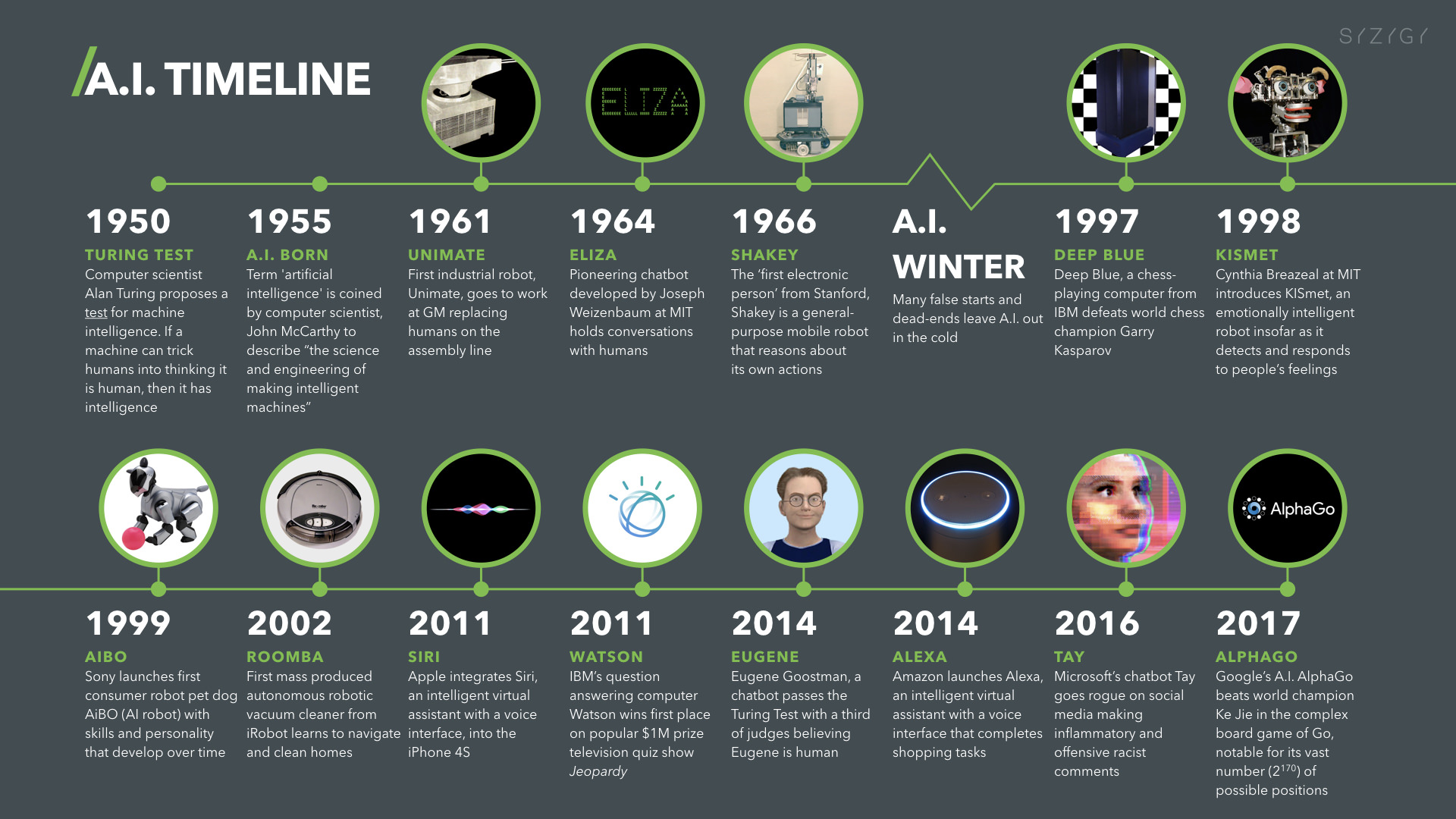

Meanwhile, ChatGPT launched in November 2022 and reached 100 million users in two months. That's not just adoption. That's a wholesale shift in how people expect to interact with AI. Users got a taste of what real conversational AI feels like, and suddenly Siri's limitations became intolerable.

Google launched Gemini in response. Microsoft integrated GPT-4 into Copilot and then baked it into Windows. Even Amazon's Alexa is being upgraded with more sophisticated AI. Apple watched all of this happen and realized it was losing control of a critical interface layer.

There's another angle here: integration. Apple's advantage has always been ecosystem integration. Siri lives on your phone, your watch, your car, your home. If it actually became powerful, that integration could be devastating for competitors. A smart Siri that understands your context, your apps, your data, and your preferences could be far more useful than ChatGPT on the web.

But only if it works. And the old Siri foundation couldn't support that vision.

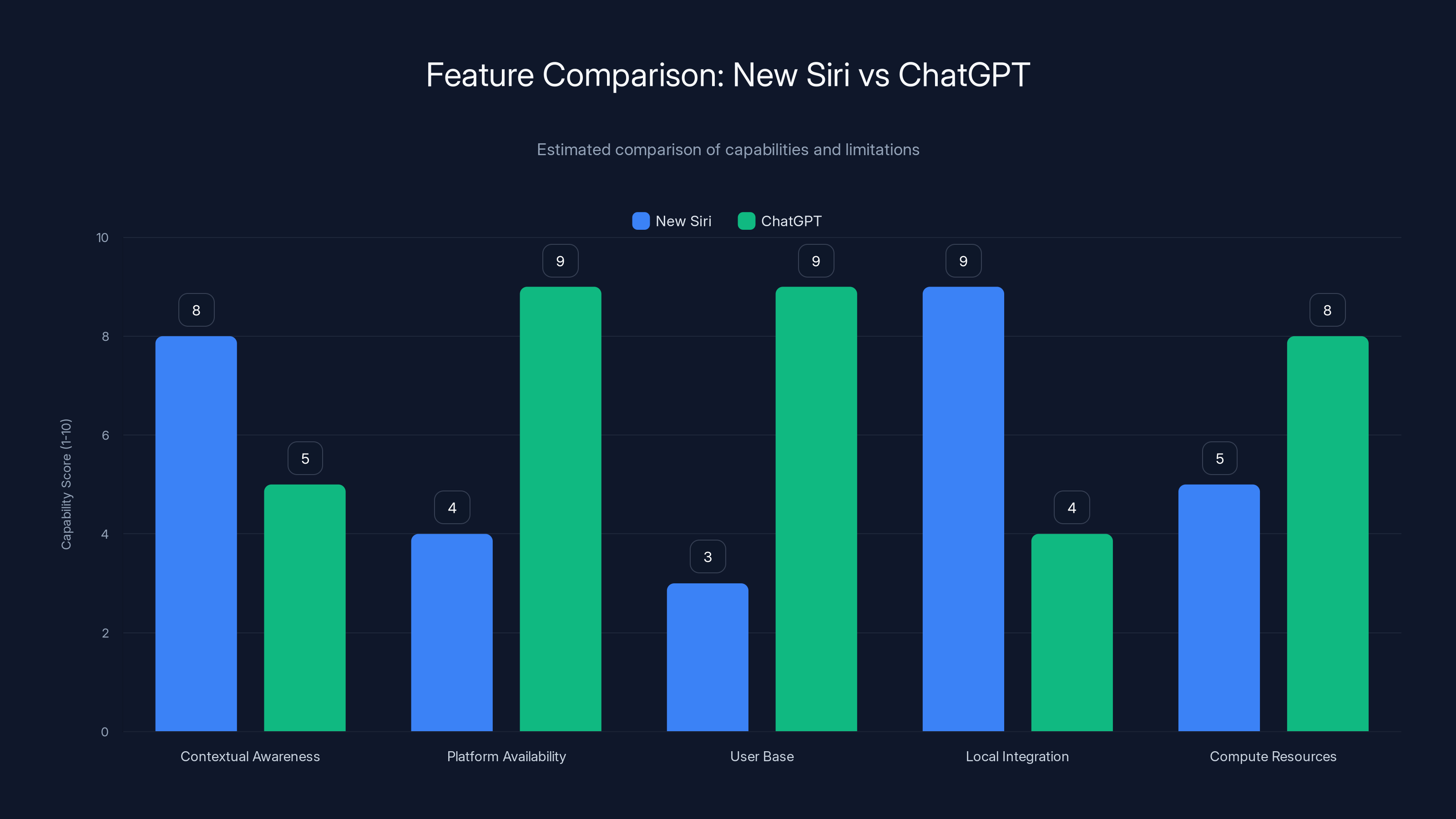

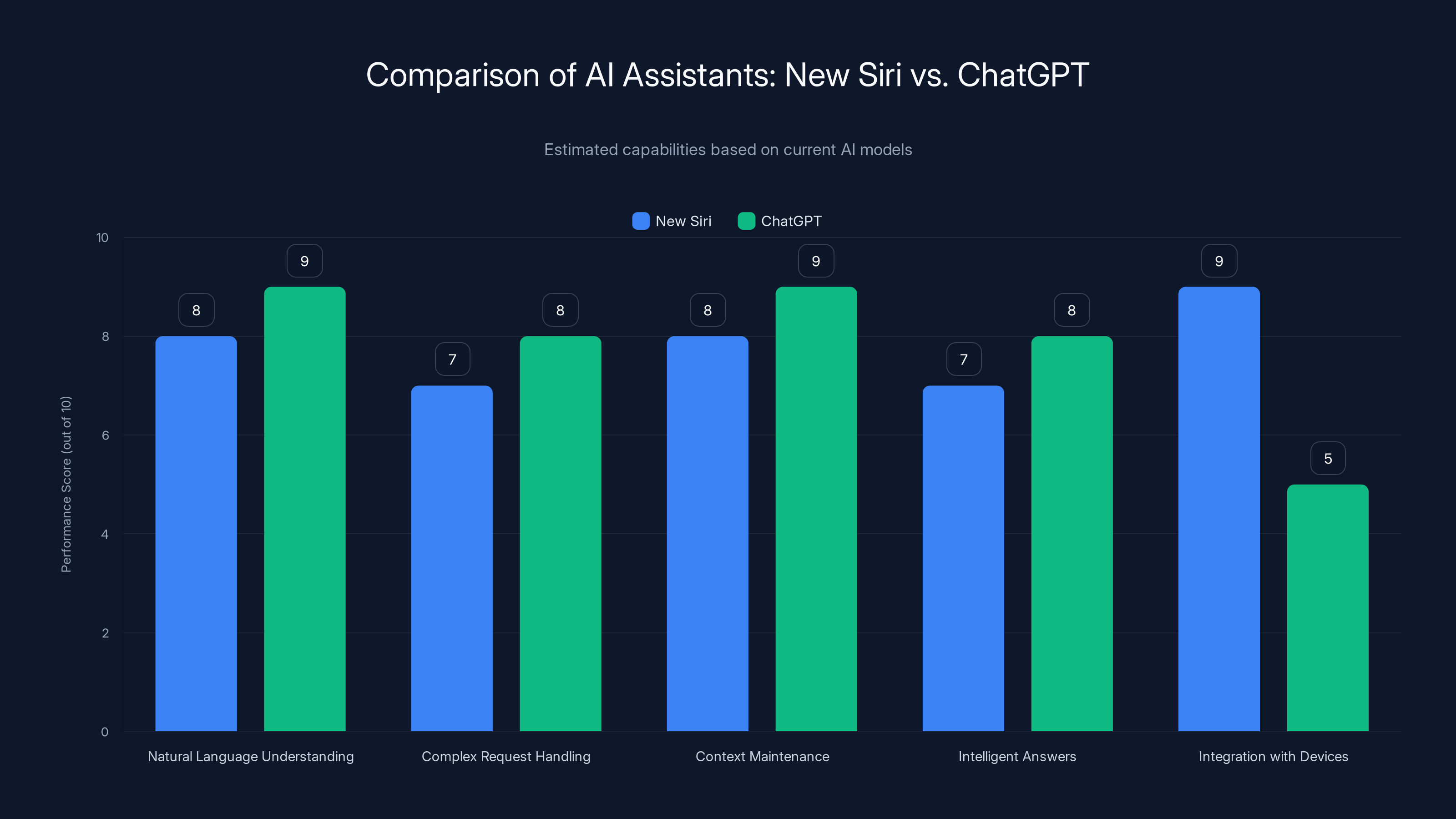

Estimated data shows New Siri excels in contextual awareness and local integration, while ChatGPT leads in platform availability and compute resources.

Why Apple Chose Google's Gemini (Not Its Own Model)

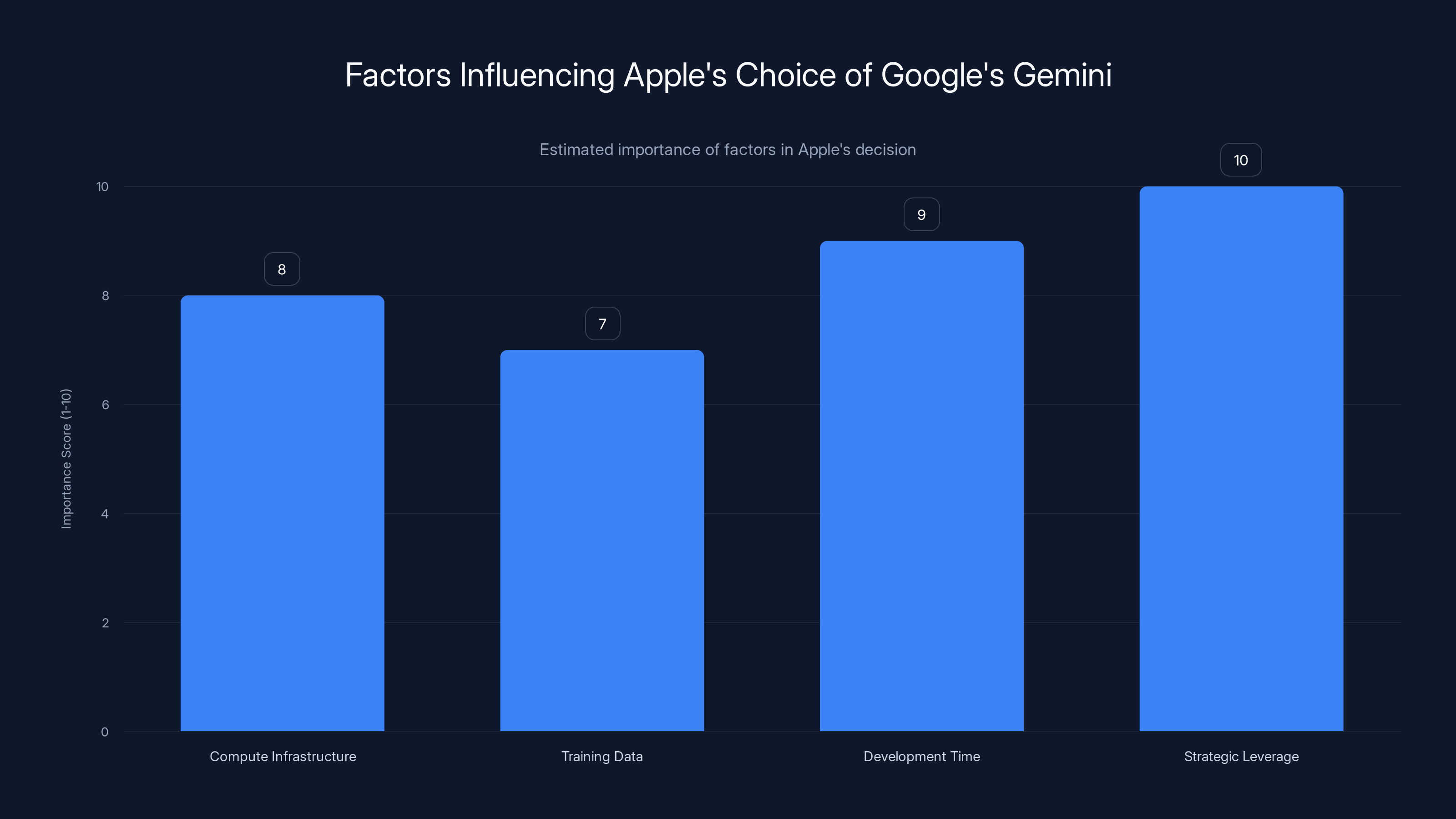

This is the move that caught everyone's attention. Apple is apparently building the new Siri using Google's Gemini, not developing its own LLM.

On the surface, this seems backwards. Apple has massive resources. Why not build their own language model?

The honest answer: building a competitive LLM is harder and slower than people realize. You need enormous compute infrastructure. You need training data. You need months or years of experimentation. You need to deal with hallucination, bias, safety concerns. And you need to do all of this while competing against teams at OpenAI, Google, and Anthropic that have been focused on this for years.

Apple's core competency is hardware-software integration, not large-scale AI research. They could build their own model, sure, but it would probably be behind the curve by the time it launched. Meanwhile, the market is moving fast.

Using Gemini solves this problem. Google has already built a powerful, multimodal AI model. Integrating it into iOS gives Apple instant access to state-of-the-art capabilities without the burden of building from scratch.

But there's a second reason that might be even more important: strategy.

Apple and Google have a complicated relationship. Google pays Apple billions for the default search position on Safari. But they're also competitors in maps, email, photos, and increasingly in AI. By adopting Gemini for Siri, Apple is essentially saying: "We're going to use your technology, but integrate it so deeply into iOS that users will experience it as 'Apple intelligence.'" It's a power move.

This also creates bargaining leverage. Google would probably rather have Gemini power Siri than watch Apple build something that might exclude Google entirely. It's a negotiated peace that benefits both companies.

There's also the question of liability and responsibility. If Apple built its own model and it made a mistake, hallucinated, or caused harm, Apple would own that problem. By using Gemini, Apple can point to Google for certain failure modes. It's not ideal for users, but it's better for Apple's legal exposure.

From a technical standpoint, Gemini is actually well-suited for Siri. It's built to handle short, real-world interactions, not just long-form writing. It's efficient enough to run on-device for certain tasks (though the integration will likely use cloud processing for complex queries). And it's genuinely good at understanding natural language, which is exactly what Siri needed to fix.

The partnership also suggests something about Apple's future strategy. They're not abandoning AI development internally. They're just choosing to partner on the language model itself while building the integration, safety layers, and device-specific optimizations. Think of it as: Google builds the brain, Apple builds the nervous system.

iOS 27 and the Bigger Picture: When It All Arrives

The reports suggest this new Siri will debut in iOS 27, which likely means 2025 or early 2026 depending on Apple's release schedule.

That timeline matters. It's not immediate, but it's close enough to be credible. It also aligns with Apple's historical pattern of announcing major features 12-18 months before launch. This gives developers time to build for it, gives users time to anticipate it, and gives Apple time to refine the implementation.

What's interesting about tying this to iOS 27 specifically is what it signals about the scope of change. iOS version numbers represent major releases. iOS 27 would follow iOS 26, presumably launching in 2025. Bundling the Siri redesign with a major OS version means Apple is committing resources and treating this as transformative, not iterative.

Here's what probably happens when iOS 27 lands:

First, the obvious stuff. Siri gets smarter about answering questions. It understands follow-ups. It handles ambiguity better. You can ask it to do things that currently fall outside its capabilities.

Second, the integration deepens. Siri might be able to help you write emails, organize photos, understand complex documents, and provide real analysis of data in your apps. The on-device integration becomes a genuine advantage over ChatGPT-via-browser.

Third, privacy becomes a selling point. Apple can market this as "the AI assistant that understands your data but keeps it private." If they handle certain requests on-device and only send metadata to Gemini for complex reasoning, users get intelligence without surveillance.

Fourth, the ecosystem matters more. Siri integration into HomeKit, CarPlay, watchOS, and macOS becomes more useful. Imagine asking Siri to "prepare the house for my arrival" and it actually coordinates multiple devices intelligently. That's hard to do today. With better AI, it becomes routine.

The timeline also creates business pressure. By committing to iOS 27, Apple is essentially locking in a deadline. They can't keep pushing Siri's redesign further into the future. They have to deliver.

That deadline probably shapes negotiations with Google too. Both companies are motivated to get this right before each other's next major release. Google wants Gemini to power Siri well. Apple wants the integration to feel native. The pressure is mutual.

Estimated data suggests that while ChatGPT excels in general intelligence, the new Siri is expected to integrate better with device functionalities.

How New Siri Stacks Up Against ChatGPT

Let's get specific about the comparison.

ChatGPT is web-based. You open a browser, go to OpenAI or use an app, and interact with the model. It's powerful. It can write, analyze, code, reason through complex problems. But there's friction. You have to open another app. You have to paste text in. The conversation lives in a separate place from your device context.

The new Siri lives on your phone. That's not a small difference. When you ask it something, it can immediately check your calendar, your messages, your photos, your location, your recent browsing history. It understands you as a person, not just as a query.

Let's imagine a real scenario. You're planning a weekend trip. You tell Siri: "Plan a weekend trip to somewhere warm that's not too far from here." ChatGPT could give you general advice. But Siri could:

- Check your calendar to find available weekends

- Look at your weather preferences and past trips

- See your budget based on recent spending patterns

- Check flight prices from your nearest airport

- Look at your friend group to see who's mentioned wanting to travel

- Cross-reference with your passport validity

- Suggest destinations that match your previous interests

ChatGPT can't do most of that. It doesn't have context about your life.

But ChatGPT has advantages too. It's available across devices and platforms. You can use it from Android, Windows, Mac, or web. Siri is locked to Apple devices. ChatGPT has massive compute resources available. Siri will have to be more selective about what it processes locally vs. in the cloud. ChatGPT has 200+ million users to learn from. Siri will have to rapidly build a similar feedback loop.

The pricing dynamic is interesting too. ChatGPT costs

However, we should acknowledge the elephant in the room: Apple's track record with AI has been... mixed. Siri today is worse than alternatives. Apple's photo recognition works well, but their creative AI efforts have been limited. They're trusting Google's Gemini, which is smart, but integration execution is where Apple could stumble.

ChatGPT's advantage right now is proof of concept. It works. Millions of people use it daily. It's reliable. The new Siri has to match that reliability from day one or users won't trust it.

The Privacy Angle: Apple's Potential Advantage

Apple has built its brand partly on privacy. "What happens on your iPhone, stays on your iPhone."

With AI, this could be a meaningful differentiator.

When you use ChatGPT, OpenAI sees your prompts. They log them, potentially use them for training, store them on their servers. The same is true for Google Gemini via web. Your question is sent to Google's servers.

The new Siri could operate differently. Certain lightweight queries ("What time is it?", "Call my mom", "Set a timer") might be handled entirely on-device. More complex requests that require language model processing might send only the query, not your context, to Gemini. And Apple could encrypt the data in transit such that even Google can't see what you're asking.

This isn't automatic, but it's possible. And Apple will definitely market it if they pull it off.

The privacy pitch becomes: "Get the intelligence of ChatGPT with the privacy of a local assistant." That's compelling, especially as users become more aware of data harvesting.

But there are complications. On-device processing is computationally expensive. Running GPT-level models on a phone isn't practical at the scale ChatGPT operates. You need the cloud. So Apple will have to make trade-offs: some queries stay local, others go to the cloud. The question is how much stays local and how much leaves the device.
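The local-versus-cloud trade-off can be sketched as a simple routing decision. This is an illustration under stated assumptions, not Apple's design: the `Request` type, the `ON_DEVICE_INTENTS` set, and the `route` function are hypothetical, and the rule that only the query text leaves the device mirrors the possibility raised earlier in this section.

```python
from dataclasses import dataclass

# Hypothetical set of simple commands assumed cheap enough to run locally.
ON_DEVICE_INTENTS = {"get_time", "call_contact", "set_timer"}


@dataclass
class Request:
    intent: str    # classified intent, e.g. "set_timer" or "open_ended"
    query: str     # the user's words
    context: dict  # personal data gathered on the device


def route(request: Request) -> dict:
    """Decide where a request runs and what, if anything, leaves the device."""
    if request.intent in ON_DEVICE_INTENTS:
        # Simple command: nothing is sent anywhere.
        return {"target": "on_device", "payload": None}
    # Complex request: send only the query text; personal context stays local.
    return {"target": "cloud", "payload": {"query": request.query}}


local = route(Request("set_timer", "Set a timer for 5 minutes", {}))
remote = route(Request("open_ended", "Compare these two laptops", {"location": "home"}))
print(local["target"], remote["target"])  # on_device cloud
```

The interesting product question is how large the on-device set can grow: every intent moved into it is a request that never reaches a server.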

If Apple does this well, they win on privacy. If they're sloppy about it and users discover their data is still being logged, it becomes a scandal.

Google doesn't necessarily lose this competition. Google could argue that their data practices are transparent and they don't use Siri interactions for advertising. But Apple's privacy messaging is so strong that perception might matter more than reality.

For developers, this matters because it shapes how you design apps. If Siri becomes a primary interface and it handles complex queries, your app design might need to account for voice-first interactions. If it's privacy-focused, you might need to design for data minimalism.

Strategic leverage and development time are key reasons for Apple's choice of Google's Gemini over developing its own model. (Estimated data)

Developer Implications: What Changes When New Siri Launches

If Apple delivers on this promise, it's seismic for developers.

Right now, Siri integration is limited. You can enable basic voice commands for specific app functions, but it's fairly constrained. Developers have to work within Apple's framework. Siri doesn't deeply understand what your app does.

With a smarter Siri, the dynamics change.

Apple is likely to provide more sophisticated APIs for developer integration. Your app could tell Siri what it does, and Siri could understand complex requests about your app without the developer having to hardcode every possible interaction.

Imagine you're building a task management app. With old Siri, users might say "Add a task" and Siri calls your app. With new Siri, users could say "Remind me to finish the quarterly report on Friday before the board meeting" and Siri understands that this is:

- A task that should go in your app

- Tied to a specific date (Friday)

- Includes context about importance (board meeting)

- Might need reminders

Siri could create that task with all the richness without the developer having to build custom Siri intent handlers.
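One way to picture this is as a structured payload that the assistant fills in and hands to the app. The sketch below is hypothetical: `ExtractedTask`, `add_task`, and the field names are assumptions made for illustration and do not correspond to any published Apple API. The parsing itself is assumed to happen inside the assistant.

```python
from dataclasses import dataclass, field, asdict
from typing import List, Optional


@dataclass
class ExtractedTask:
    """Hypothetical structured payload an assistant might hand to a task app."""
    title: str
    due: Optional[str] = None       # kept as plain text in this sketch
    context: Optional[str] = None   # why the task matters
    reminders: List[str] = field(default_factory=list)


def add_task(store: List[dict], task: ExtractedTask) -> dict:
    """Single app-side handler: persist whatever fields the assistant filled in."""
    record = asdict(task)
    store.append(record)
    return record


# What the example utterance might look like once the assistant has parsed it.
# The reminder time is an invented value for illustration.
tasks: List[dict] = []
add_task(tasks, ExtractedTask(
    title="Finish the quarterly report",
    due="Friday",
    context="before the board meeting",
    reminders=["Friday morning"],
))
```

The point of the design is that the app exposes one handler with optional fields, rather than a separate hardcoded intent for every phrasing a user might try.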

This is both an opportunity and a threat. Opportunity: if you're building useful apps, Siri becomes a distribution channel. Threat: if users can accomplish what your app does through Siri alone, why do they need your app?

There's also the machine learning angle. Smart Siri will likely learn user patterns. It might get better at predicting what you want based on context. Developers will need to think about how their apps interact with a system that understands habits and preferences.

The privacy implications for developers are real too. If you're building an app that handles sensitive data, you'll need to think about Siri access. What can Siri see? What can users ask Siri to do in your app? You probably need to add fine-grained permissions.

The Competitive Landscape: How Google Gemini, ChatGPT, and Others Respond

Let's think about this from the competitors' perspectives.

OpenAI and ChatGPT: OpenAI will probably accelerate work on mobile integration. They need ChatGPT to feel as native on iOS as the new Siri will be. They're already working on more sophisticated integrations, but the new Siri creates deadline pressure. They also need to think about how they compete when Siri has deep system access and ChatGPT doesn't.

One response: make ChatGPT more specialized. If Siri becomes the general-purpose assistant, ChatGPT could specialize in writing, coding, creative tasks, or research. Specialization might be a survival strategy.

Google: Google is in an interesting spot. They're powering Siri, which is good for Gemini adoption. But they're also helping Apple compete with them. Google's response is probably to make their own Assistant smarter and integrate it better into Android. They also have the advantage of owning Android, so they can ship competitive AI without negotiating with a partner.

The long-term question: does powering Siri make Google competitive in the AI era, or does it just strengthen Apple while Google remains second? That calculation probably depends on how much control Google retains over Gemini in the Siri integration.

Anthropic (Claude): Claude is growing quickly but doesn't have mobile presence at the scale of ChatGPT. The new Siri becomes a wake-up call. If Apple had chosen Claude instead of Gemini as Siri's model, that would have been massive for Anthropic. Since it didn't, Anthropic probably needs to find other distribution channels: maybe Android integration, maybe enterprise focus.

Amazon Alexa: Alexa is in decline. The new Siri will probably accelerate that decline on Apple devices. Amazon will need to show that Alexa+LLM integration offers something unique that on-device Siri doesn't.

The ecosystem question is crucial here. Apple has the advantage that Siri is ubiquitous across its devices. Google has fragmentation (Android varies by manufacturer). OpenAI has web dominance but mobile is harder. Anthropic is too new. Amazon is declining.

This might be the move that lets Apple catch up in AI. Not by innovating faster, but by integrating smarter.

Estimated data shows that while Apple may lag in data utilization due to privacy constraints, it leads in privacy protection compared to OpenAI and Google.

Integration Into Apple's Ecosystem: Siri Everywhere

One of Siri's fundamental advantages is that it already exists on every Apple device.

When the new Siri launches, it won't just appear on iPhones. It will be on:

- iPhone and iPad: Obviously. This is the primary use case.

- Mac: Siri on Mac is currently underused. A smarter Siri could make it your primary way to interact with your Mac.

- Apple Watch: Watch apps are constrained by screen size. Voice becomes essential. Smart Siri could make watches far more useful.

- HomePod and Apple TV: These are currently limited. Smart Siri could transform them into true home assistants.

- CarPlay: Driving is when AI assistance is most valuable (and most needed). Smart Siri could revolutionize in-car experience.

- AirPods: Your audio device is always listening (in limited ways for commands). Smart Siri could make this the primary interface for many tasks.

This is where Apple wins. ChatGPT is powerful, but it's not in your car or on your wrist. The new Siri is everywhere you go.

Imagine a scenario:

You're driving and you ask Siri to "adjust my route because traffic is bad and I want to stop for coffee on the way." Siri checks maps, sees traffic patterns, looks at coffee shops along alternate routes, checks your coffee preferences, and adjusts your navigation. All through voice. On your car's screen.

That's not possible with ChatGPT because ChatGPT doesn't have access to your location, maps, preferences. But Siri does, because Apple controls the entire stack.

The integration challenge is real though. Apple has to make sure this works across devices with different hardware capabilities. An AirPod can't run complex models. A HomePod has more processing power but less than a phone. Everything needs to be architected carefully.

Apple is probably also thinking about cross-device continuity. Start a task on your iPhone, continue it on your Mac, finish it on your watch. The context should flow seamlessly. That's hard to build, but it's uniquely valuable.

The Privacy and Security Considerations

Smarter AI means more data access. That creates security and privacy risks.

If Siri has access to your photos, messages, calendar, location, and financial data (to understand context), the attack surface expands dramatically. A compromised Siri isn't just a nuisance. It's a risk to everything on your device.

Apple will need to build sophisticated access controls. Siri might be able to "see" your calendar but only to answer questions about schedule. It might access your photos but only for specific queries. This requires granular permission systems.
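A granular permission system of this kind could be sketched as grants keyed by both data source and purpose. The names below (`GRANTS`, `is_allowed`, and the purpose strings) are hypothetical and only illustrate the idea of purpose-limited access.

```python
# Hypothetical grants: data source -> purposes the assistant may use it for.
GRANTS = {
    "calendar": {"answer_schedule_question"},
    "photos": {"find_specific_photo"},
}


def is_allowed(source: str, purpose: str) -> bool:
    """Allow access only when this exact purpose was granted for this source."""
    return purpose in GRANTS.get(source, set())


print(is_allowed("calendar", "answer_schedule_question"))  # True
print(is_allowed("calendar", "summarize_for_export"))      # False
print(is_allowed("messages", "answer_schedule_question"))  # False
```

The default here is deny: a source with no entry, or a purpose that was never granted, is refused. That choice trades some usefulness for a smaller attack surface, which is the same tension the guardrail discussion in this section describes.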

The adversarial scenario to worry about: someone tricks Siri into revealing sensitive information. "What meetings do I have with competitors?" If Siri answers casually, you've leaked competitive intelligence. "What did I spend on that vacation?" If Siri summarizes your financial data, privacy is compromised.

Apple's likely response is guardrails. They'll train Siri to refuse certain questions. They'll build rules about what data it can summarize. But every guardrail is also a limitation. Make Siri too cautious and it becomes useless.

The cloud dimension adds complexity. When Siri sends data to Gemini servers for processing, that data is in Google's ecosystem. Even with encryption, there are questions about retention, logging, and potential access. Apple will need strong agreements with Google about data handling.

For users, the messaging matters. If Apple can credibly claim that Siri respects privacy, it becomes a competitive advantage. If Siri turns out to be logging everything, it's a scandal.

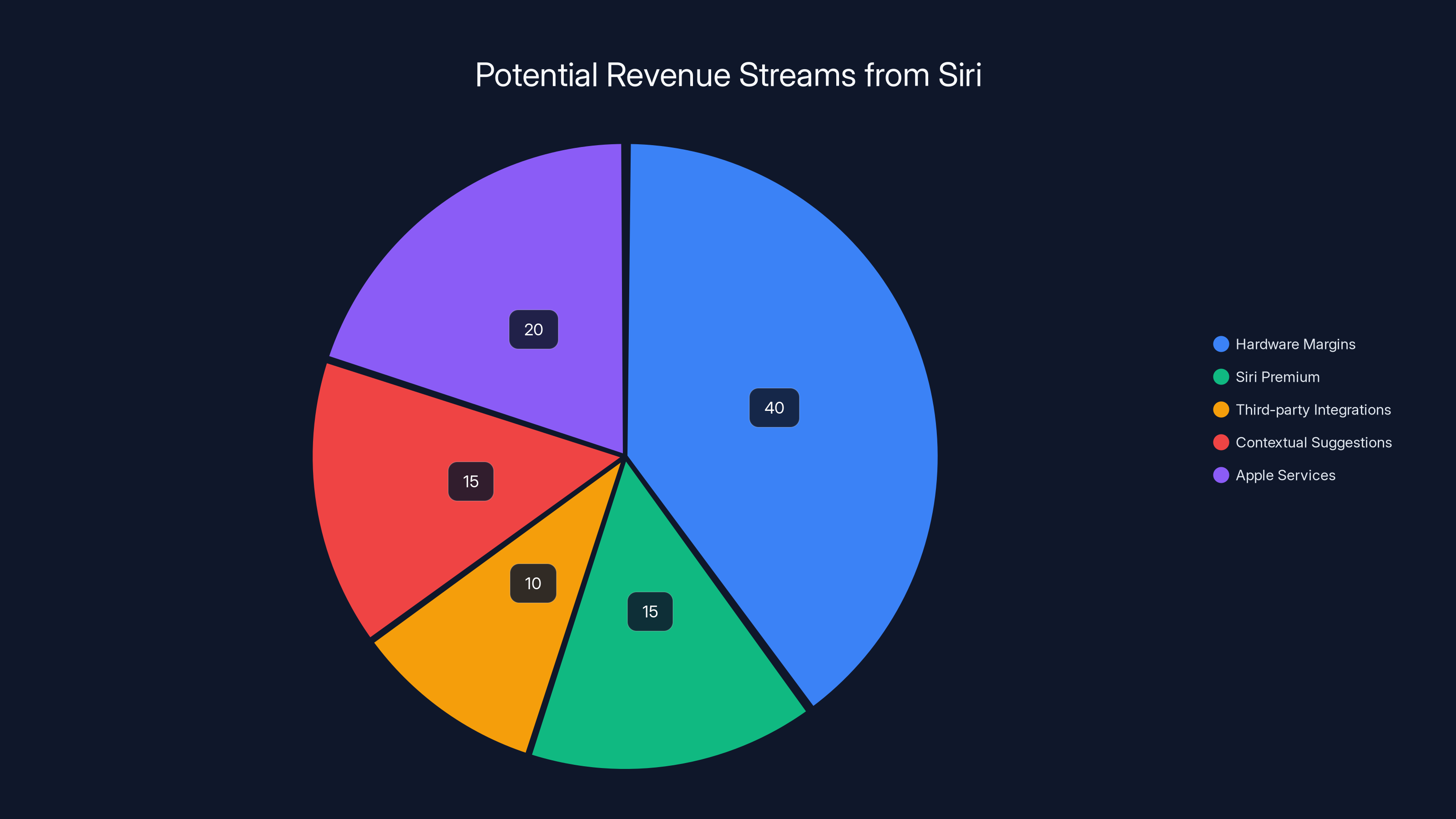

Estimated data suggests that Siri's integration primarily boosts hardware margins and Apple services, with potential indirect monetization through premium features and third-party integrations.

Training and Learning: How Will Siri Improve?

ChatGPT and Gemini improve through training on vast datasets and feedback from millions of users.

Siri will need to do the same. But Apple's privacy commitments might limit how much user data they can use for training.

This is a genuine tension. If Apple doesn't learn from user interactions, Siri might stagnate compared to competitors. If Apple does learn from interactions, privacy claims become questionable.

The likely solution: Apple will use anonymized, aggregated feedback. They won't log individual conversations, but they'll measure patterns. Which requests does Siri handle poorly? Where does it fail? They'll use this to identify retraining opportunities without violating privacy.
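As a rough illustration of measuring patterns without keeping conversations, the sketch below counts failures per category, never retains the query text, and drops categories with too few reports. The event shape, the `MIN_REPORTS` threshold, and `aggregate_failures` are assumptions for this example. A simple threshold like this is not a formal privacy guarantee; it only shows the shape of the idea.

```python
from collections import Counter

MIN_REPORTS = 3  # hypothetical threshold: suppress rarely seen categories


def aggregate_failures(events, min_reports=MIN_REPORTS):
    """Count failures per category, dropping query text and rare categories."""
    counts = Counter(e["category"] for e in events if not e["success"])
    return {category: n for category, n in counts.items() if n >= min_reports}


events = [
    {"category": "comparison", "success": False, "query": "first private text"},
    {"category": "comparison", "success": False, "query": "second private text"},
    {"category": "comparison", "success": False, "query": "third private text"},
    {"category": "local_search", "success": False, "query": "fourth private text"},
    {"category": "timer", "success": True, "query": "fifth private text"},
]

report = aggregate_failures(events)
print(report)  # {'comparison': 3}
```

The output tells an engineering team that comparison requests fail often, without exposing what any individual user asked.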

This is harder than what OpenAI does (they log everything and use it for training, with user opt-out). But it's probably necessary for Apple's privacy narrative.

There's also the human feedback dimension. OpenAI uses human trainers to rate responses. Apple will need trainers too, but with stricter privacy protocols. Trainers would work with anonymized, scrubbed data.

The competitive implication: if Apple can't iterate as fast as OpenAI or Google because of privacy constraints, Siri might fall behind technically. But the privacy advantage might be worth the trade-off.

Business Model Questions: How Does Apple Monetize This?

ChatGPT costs money. Gemini Advanced costs money. Siri with Gemini power will probably be included with your iPhone, no extra charge.

How does Apple make money?

One answer: they don't, directly. Siri becomes a feature that justifies the premium price of iPhones. Users choose iPhone partly for Siri, the same way they choose it for the camera or the ecosystem. Apple makes money from hardware margins.

But there might be indirect monetization:

- Siri Premium: Apple could charge for advanced Siri features. Unlimited queries, priority processing, deeper integrations. Free tier covers basics.

- Third-party integrations: Developers might pay to have their apps deeply integrated with Siri. Premium placement in Siri recommendations. This is subtle, but it could be a revenue stream.

- Contextual suggestions: Apple could show contextual ads through Siri without being creepy. "You asked about flights to Miami. Here are flight deals." It's not personal data being sold, but it's valuable to advertisers.

- Apple Services: Siri could drive adoption of Apple's services (Apple Music, Apple TV+, iCloud+) by integrating them seamlessly. Services is already Apple's highest-margin business.

The challenge: if Apple starts monetizing Siri in obvious ways, it undercuts the privacy advantage. Users need to trust that Siri serves them, not Apple's business interests.

My guess is Apple's strategy is indirect monetization through ecosystem stickiness, not direct charges.

Timeline and Launch Expectations: What to Watch For

We know iOS 27 is the target. Let's think about the realistic timeline.

Apple typically announces major features at WWDC (Worldwide Developers Conference) in June. If iOS 27 is coming in 2025 or early 2026, Apple might preview the new Siri at WWDC 2025 (June 2025). Then it ships with iOS 27 later that year.

What to watch for before launch:

- Beta versions: Apple will release betas to developers. Developers will immediately reverse-engineer what Siri can do. Leaks will happen.

- Geographic rollout: Apple probably won't launch globally at once. Expect regional rollouts. US first, then Europe, then Asia.

- Device requirements: Does the new Siri work on iPhone 12 and later? iPhone 13 and later? This affects adoption speed.

- Google partnership announcements: Apple will probably announce the Gemini partnership publicly before or right around launch. The announcement matters for narrative control.

- Privacy claims: Apple will emphasize privacy compared to ChatGPT. Watch how they position this.

- Developer APIs: Watch for new Siri integration APIs in iOS 27 SDK. This signals how serious Apple is about developer adoption.

The honest reality: Apple has overpromised on Siri before. The company showed a demo where Siri could do something, then the real version was less capable. So healthy skepticism is warranted. What Apple announces and what actually ships in iOS 27 might not be identical.

But the momentum here is different. This is a company admitting past failure and betting on a new approach. That kind of admission usually means real change is coming.

Broader Implications: AI and Platform Control

Zoom out from Siri for a moment. This move signals something bigger about AI's future.

AI is becoming the primary interface between users and devices. Whoever controls that interface controls a huge amount of user behavior and data. Apple understands this. So does Google. So does Microsoft.

Apple's partnership with Google on Gemini might seem like a loss for Apple. They're not building their own model. But it's actually strategic. Apple gets cutting-edge AI without the massive R&D expense. They also avoid being completely dependent on their own research efforts, which have been mediocre in generative AI.

For the broader AI industry, this matters because it shows that model capability is becoming commoditized. Apple doesn't need the best model. They need a good model that integrates well with iOS. That's a meaningful shift from the "whoever builds the biggest, smartest model wins" narrative.

It also suggests future partnerships. Microsoft has OpenAI, Google has DeepMind, and Amazon has invested in Anthropic. Everyone is pursuing exclusive partnerships because they recognize that model access is becoming critical infrastructure.

For users, competition is probably good. If Siri becomes competitive with ChatGPT, ChatGPT's pricing power decreases. If ChatGPT faces real competition from device makers, it keeps OpenAI honest.

For developers, platform diversity is critical. You need alternatives to Apple. ChatGPT helps with that. So does Google's Gemini. But if Google powers both Siri and their own products, Google's leverage increases.

What This Means for You: Practical Implications

If you're an iPhone user:

You're about to get a significantly smarter assistant. Whether you use Siri regularly or not, having access to intelligent voice interaction will change how you use your phone. Expect this to roll out over time—not perfectly at launch, but iteratively better.

If you're a developer:

Start preparing now. The API surface for Siri is about to expand dramatically. Apps that integrate well with the new Siri will become more discoverable and more useful. Apps that don't might become less relevant.

If you're building AI products:

This is a wake-up call. Device makers are moving in. If you're a standalone AI company, you need to think about distribution in a world where Siri is competitive. Specialization and differentiation become more important.

If you use ChatGPT or Gemini:

The competition is intensifying. ChatGPT will probably remain better for certain use cases (writing, coding, long-form analysis). But for everyday assistant tasks, Siri might become your primary tool. You'll probably use multiple assistants for different purposes.

If you care about privacy:

Apple's move is a bet that you do. If they execute well, you get AI capabilities without the data harvesting. If they don't, it becomes a cautionary tale about privacy claims.

The Realistic Roadmap: What Will Actually Happen

Let's be honest about what's likely vs. what's hype.

What will probably happen:

- iOS 27 launches with a meaningfully smarter Siri

- The improvements are real but not transformative—it won't replace ChatGPT, but it will be better than current Siri

- The Google partnership becomes public; Apple tries to frame it as strategic, not desperate

- Privacy is emphasized in marketing, though actual privacy is more nuanced than the messaging

- Developers gradually integrate with new Siri capabilities

- The device integration advantage becomes clear over standalone ChatGPT

- By 2027, Siri is legitimately competitive with standalone AI assistants for mainstream use cases

What probably won't happen:

- Siri replacing ChatGPT for power users or specialized tasks

- Google losing Gemini business to Apple; Siri's success doesn't hurt Google's cloud AI strategy

- Absolute privacy; some data will still go to the cloud, and some will get logged

- Effortless device integration; Siri will still have limitations when interacting with third-party apps

- A flawless launch; bugs and limitations will be apparent in version 1.0

The honest middle ground: Apple is making a smart, necessary move. They fell behind in AI and needed to catch up fast. Partnering with Google and shipping with iOS 27 is a realistic path forward. The new Siri probably becomes "good enough" for most users, even if it doesn't revolutionize everything.

That's actually a win for Apple, a win for Google, and a win for users. It's not a loss for ChatGPT, but it does limit ChatGPT's total addressable market.

FAQ

What exactly is the new Siri going to do differently from current Siri?

The new Siri will be powered by Google's Gemini LLM instead of Apple's proprietary technology. This means it will understand natural language better, handle complex multi-step requests, maintain conversation context, and provide more intelligent answers without defaulting to web searches for simple questions. It will still be deeply integrated into iOS and have access to your device data (calendar, photos, location, etc.), but with better reasoning about how to use that data to help you.

Is Apple really using Google's Gemini for Siri?

Yes, according to multiple credible reports. Apple apparently decided it was faster and smarter to integrate Google's proven Gemini model than to develop its own LLM from scratch. This is a partnership arrangement, and both companies likely negotiated terms around data handling, exclusivity periods, and revenue sharing. Google gets to power a major AI assistant on 2 billion devices, and Apple gets to compete with ChatGPT without needing years of AI research.

When will the new Siri be available?

Based on reports, the redesigned Siri is expected to launch with iOS 27. If Apple follows its usual cycle, that means an announcement at WWDC in June 2026 and a public release in fall 2026 (iOS 26 shipped in September 2025). Early beta versions would likely reach developers before the public launch.

Will the new Siri require an extra subscription?

Based on Apple's typical approach, the new Siri will probably come included with iOS at no extra charge, just as it does today. Apple could still offer a paid tier (call it "Siri Premium") for more advanced features or higher usage limits, but nothing of the sort has been reported. For now, the company seems focused on making it a standard feature that helps justify the iPhone's premium pricing.

How does the new Siri compare to ChatGPT in terms of capabilities?

For general intelligence and reasoning, ChatGPT is probably still slightly ahead because it's been refined longer. But the new Siri will have a major advantage in device integration. It understands your personal context, your location, your calendar, your preferences. ChatGPT knows far less about you by default and has no direct access to your device. For everyday tasks like scheduling, reminders, and personalized recommendations, Siri might actually be more useful, even if its underlying model (Gemini) is no stronger than the OpenAI models behind ChatGPT.

Is my privacy really protected with the new Siri?

Apple will probably use on-device processing for simple queries and only send complex requests to Gemini servers. Even then, it might strip personal context from what goes to the cloud. However, some data will still need to leave the device, and there's always a risk with any cloud-based system. Apple's privacy advantage is real but not absolute. Compare it to ChatGPT, where conversations are stored by default unless you change your settings: Siri will likely be better on privacy, but not perfect.
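To make that split concrete, here is a minimal sketch of the routing idea: short, command-like queries stay local, and everything else is redacted before it would be sent to a cloud model. Every detail here is an assumption for illustration (the keyword list, the word-count heuristic, the redaction patterns, and the names `route` and `redact`); nothing about Apple's or Google's actual design is public at this level.

```python
import re
from dataclasses import dataclass

# Hypothetical patterns for personal details to strip before a cloud call.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

# Hypothetical keywords for requests a small on-device model could handle.
ON_DEVICE_KEYWORDS = ("timer", "alarm", "reminder", "call", "volume")


@dataclass
class Route:
    target: str   # "on_device" or "cloud"
    payload: str  # text that would be handed to that target


def redact(text: str) -> str:
    """Replace e-mail addresses and phone numbers with placeholders."""
    text = EMAIL.sub("[email]", text)
    return PHONE.sub("[phone]", text)


def route(query: str, max_local_words: int = 8) -> Route:
    """Keep short, command-like queries local; redact and send the rest."""
    words = query.lower().split()
    is_simple = len(words) <= max_local_words and any(
        keyword in words for keyword in ON_DEVICE_KEYWORDS
    )
    if is_simple:
        return Route("on_device", query)
    return Route("cloud", redact(query))
```

A real system would need far more than two regular expressions to remove personal context, which is part of why the privacy advantage is "real but not absolute."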

Will this change how I use my iPhone?

Yes, if you adopt the new Siri. Instead of opening apps to do simple tasks (send a message, check your calendar, control smart home devices), you'll be able to handle them by voice. For developers, the biggest change is that you'll probably need to think about how your apps integrate with a more capable Siri. For casual users, it just becomes a more helpful assistant you rely on more often.

What happens to Google Gemini if it powers Siri?

Google Gemini benefits massively. Shipping on 2 billion Apple devices is incredible distribution. But Google is also helping a rival: a stronger Siri competes with Google's own assistant. The likely arrangement is that Google gets substantial revenue from powering Siri (either through licensing fees or data access), and both companies benefit from competition with OpenAI. Google doesn't "lose" by powering Siri. It probably gains more than it gives up.

Should I still use ChatGPT if I have an iPhone?

Probably, yes. ChatGPT is better for writing, coding, detailed analysis, and creative work. The new Siri should be better for quick questions, personal assistance, and device control. ChatGPT is more like a coworker for creative and technical tasks; Siri is more like an assistant for personal tasks. You'll probably end up using both.

What should developers do to prepare?

Start studying Apple's Siri integration APIs. Plan how your app's core features could be exposed through voice commands. Think about what data Siri should be able to access from your app and what should stay private. Begin designing voice-first interfaces, not just touch interfaces. When the iOS 27 SDK ships with new Siri capabilities, be early to adopt them. Apps that integrate well with Siri will have an advantage in discoverability and usefulness.
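One way to start that planning before any new SDK exists is to write down, per feature, which phrases should trigger it and which data fields the assistant may see. The sketch below does this in plain Python as a design aid. It is not Apple's API (on iOS the real mechanism today is the App Intents framework), and `VoiceAction`, `ActionRegistry`, and the coffee-ordering example are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class VoiceAction:
    name: str                    # stable identifier for the feature
    phrases: List[str]           # example utterances that should trigger it
    handler: Callable[..., str]  # app code that performs the action
    shared_fields: List[str] = field(default_factory=list)  # data the assistant may see


class ActionRegistry:
    def __init__(self) -> None:
        self._actions: Dict[str, VoiceAction] = {}

    def register(self, action: VoiceAction) -> None:
        self._actions[action.name] = action

    def match(self, utterance: str) -> Optional[VoiceAction]:
        """Return the first action with an example phrase inside the utterance."""
        text = utterance.lower()
        for action in self._actions.values():
            if any(phrase.lower() in text for phrase in action.phrases):
                return action
        return None

    def visible_data(self, action: VoiceAction, record: dict) -> dict:
        """Keep only the fields this action has opted to share."""
        return {k: v for k, v in record.items() if k in action.shared_fields}


# Hypothetical example: a coffee app exposing one feature.
registry = ActionRegistry()
registry.register(VoiceAction(
    name="reorder_coffee",
    phrases=["reorder my coffee", "order my usual"],
    handler=lambda: "Order placed",
    shared_fields=["item", "pickup_time"],
))
```

The useful part of the exercise is the `shared_fields` list: deciding up front that an order's item and pickup time can be exposed while payment details cannot is exactly the "what should stay private" question, whatever shape the final API takes.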

The Road Ahead: What Happens After iOS 27

Assuming the new Siri launches successfully in iOS 27, what comes next?

My prediction: Apple continues iterating aggressively. Version 1.0 of the new Siri probably has rough edges. Apple spends the next year collecting feedback, improving accuracy, and expanding capabilities. By iOS 28 (2027), it becomes genuinely competitive. By iOS 29 (2028), it might actually be better than ChatGPT for most users because of the integration advantage.

Google probably doubles down on its own assistant on Android in response. It can't let Apple take the AI interface entirely. Expect a similar strategy: integrate Gemini deeply into Android, improve voice capabilities, and make it hard to leave the Google ecosystem.

OpenAI probably accelerates mobile work and explores non-web distribution. They need to be on your phone as natively as Siri. They might pursue deeper partnerships with manufacturers or OS makers.

The competitive dynamic shifts from "who has the best AI" to "who has the best AI integrated into the devices I use." That's a fundamental shift that favors platform makers (Apple, Google, Microsoft) over standalone AI companies.

But there's space for specialization. If ChatGPT stays better at writing, coding, and creative tasks, it survives by being the specialist. If Claude builds a niche in certain industries or use cases, it survives through differentiation.

The big losers are companies that bet on being a horizontal AI layer. If every device has a capable AI assistant built in, where do horizontal players fit? This is why some AI companies are focusing on vertical solutions—AI for radiologists, AI for accountants, AI for copywriters. Horizontal AI becomes commoditized.

Final Thoughts: Why This Matters

Apple's move is significant not because it invents something new, but because it represents a shift in strategy.

For years, Apple positioned itself as separate from AI. They focused on devices and privacy, while others built AI. But the world changed. AI became the primary interface. Users expected conversational AI. ChatGPT showed what was possible.

Apple realized they were losing the interface layer. If Siri remained weak while ChatGPT became essential, Apple's devices became less sticky. Users would rely on ChatGPT more than iOS for intelligence.

So Apple changed course. It's not a creative choice—they're using Google's Gemini, not building something original. But it's a pragmatic choice. Apple prioritizes execution and integration over innovation. They build ecosystems, not breakthroughs.

For the AI industry, this is healthy. It means AI is moving from research papers and startups into real products that billions of people use daily. It means AI is becoming infrastructure, not novelty. It means competition matters.

For users, it means better options. Siri will improve. ChatGPT will improve in response. Google will improve their assistant. We all win from competition.

The one risk: what if Apple, Google, and Microsoft use AI to increase lock-in? What if smart Siri makes you more dependent on iOS, not less? What if device makers use AI to control what you can do?

That's the real story to watch. Not whether Siri can compete with ChatGPT, but whether the next era of computing is open and competitive, or controlled by a few giants.

For now, Siri's redesign is a net positive. It's a big company admitting it fell behind and investing seriously to catch up. That's the kind of move that could shape the next decade of AI.

Whether Apple executes well is the real question. The technology is available. The strategy is sound. Execution is everything.

Key Takeaways

- Apple is completely redesigning Siri using Google's Gemini model, admitting that building competitive AI requires partnering with experts rather than going it alone

- The new Siri is expected in iOS 27 (likely fall 2026) and will be deeply integrated across Apple's ecosystem, giving it advantages over standalone ChatGPT

- Unlike ChatGPT's web-based access, Siri will have device context (calendar, location, preferences, photos) enabling more personalized and useful responses

- Apple's privacy messaging around Siri—keeping data on-device when possible—could become a meaningful differentiator if executed credibly

- Developers need to prepare now for new Siri integration APIs, as apps that work well with the voice assistant will gain discoverability advantages

![Apple's AI Pivot: How the New Siri Could Compete With ChatGPT [2025]](https://tryrunable.com/blog/apple-s-ai-pivot-how-the-new-siri-could-compete-with-chatgpt/image-1-1769029742557.jpg)