Introduction: The Siri Reset That Changes Everything

For over a decade, Siri has been the punchline of Apple's AI ambitions. You'd ask it a simple question, get a nonsensical response, and immediately reach for Google Assistant or Alexa instead. Apple knew this. And according to recent reports, the company is finally doing something about it—in a big way.

Apple is building an entirely new version of Siri that ditches the voice-command gimmick and transforms the assistant into something that actually resembles ChatGPT or Gemini. This isn't a minor update. It's a complete rethinking of what Siri can do, how you interact with it, and what it's capable of understanding. The chatbot version will let you type or speak, dive into complex conversations, and handle nuanced requests that the old Siri would've botched immediately.

The stakes here are massive. Google has Gemini. Microsoft has Copilot. OpenAI has ChatGPT. Samsung has Galaxy AI. Apple's been playing catch-up for years, and this new Siri is the company's most direct assault on the AI assistant market yet. It's called Project Campos internally, and it's supposed to ship this fall as the centerpiece of iOS 18, iPadOS 18, and macOS 15.

But here's what makes this different from the constant stream of Siri rumors: Apple's actually partnering with Google. Yes, that Google. The company Apple has spent years positioning itself against on privacy. The partnership means Siri will get access to Gemini's intelligence, plus Apple's own machine learning magic layered on top. It's a pragmatic move that signals Apple finally understands it can't win this fight alone.

Let's break down what's actually happening, why it matters, and what this means for the future of AI assistants on your iPhone.

TL;DR

- Apple's Siri is getting a complete redesign into a conversational AI chatbot that works through typing or voice, similar to ChatGPT

- Project Campos launches this fall as the headline feature of iOS 18, becoming the "primary new addition" to Apple's operating systems

- Google Gemini powers the core AI, through a multi-year partnership announced earlier in 2025

- The chatbot version significantly surpasses Apple's existing AI personalization features in capability and sophistication

- Users will interact with Siri differently, moving from voice commands to conversational exchanges across iPhone, iPad, and Mac

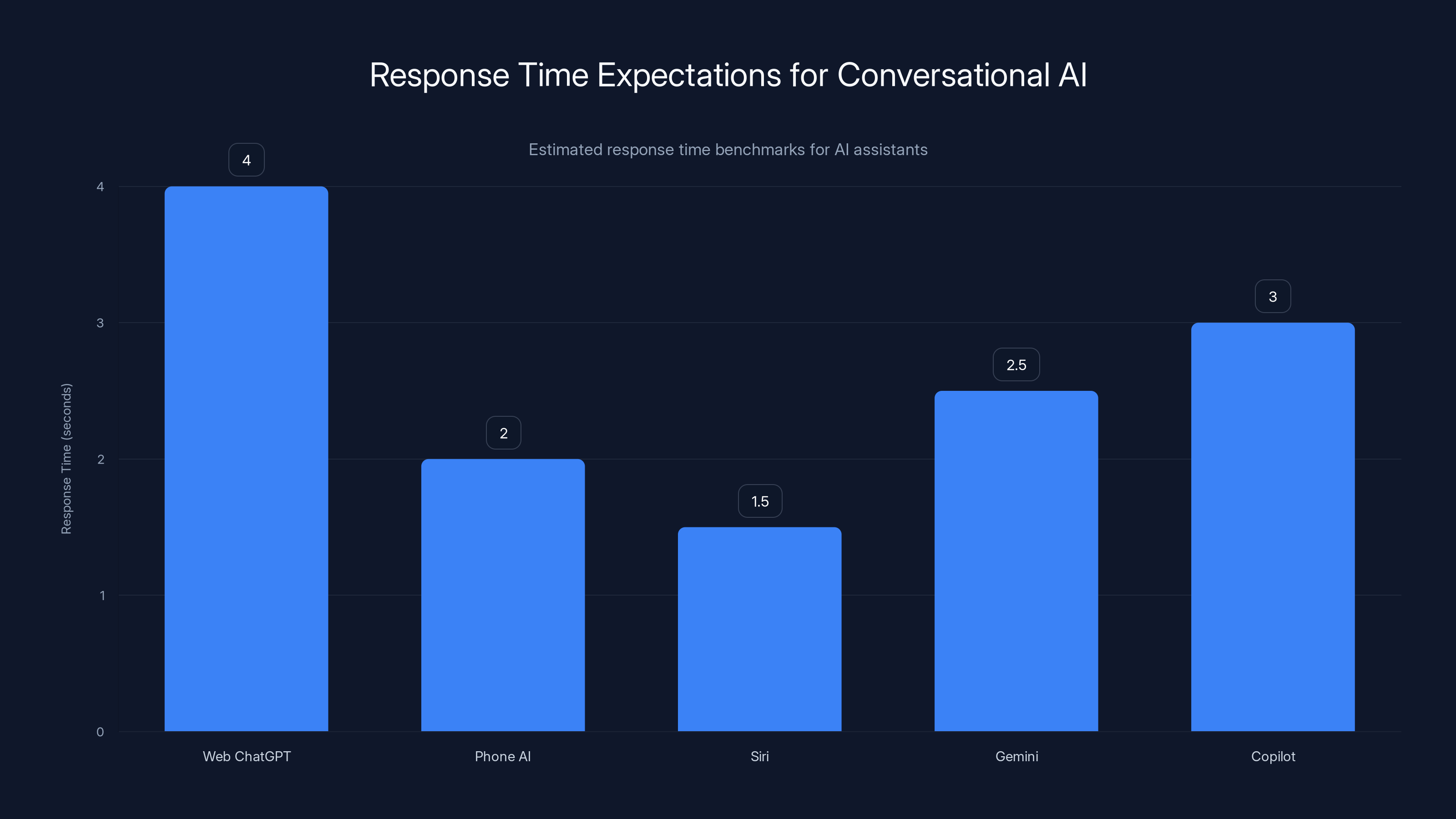

Estimated data shows that AI on phones aims for faster response times compared to web-based AI, with Siri targeting sub-2 second responses.

The Death of Command-Based Voice Assistants

For years, Siri's entire design philosophy centered on voice commands. You'd say "Hey Siri, set a timer" or "Hey Siri, call my mom." It worked for simple, discrete tasks. Everything beyond that? Disaster.

Try asking Siri a compound question like "What restaurants are open near me that serve Italian food and have outdoor seating?" You'd get a deflection to a search result. Ask it to understand context from a previous conversation? Forget about it. Request it to write something creative or work through a complex problem? That's not happening on the old Siri.

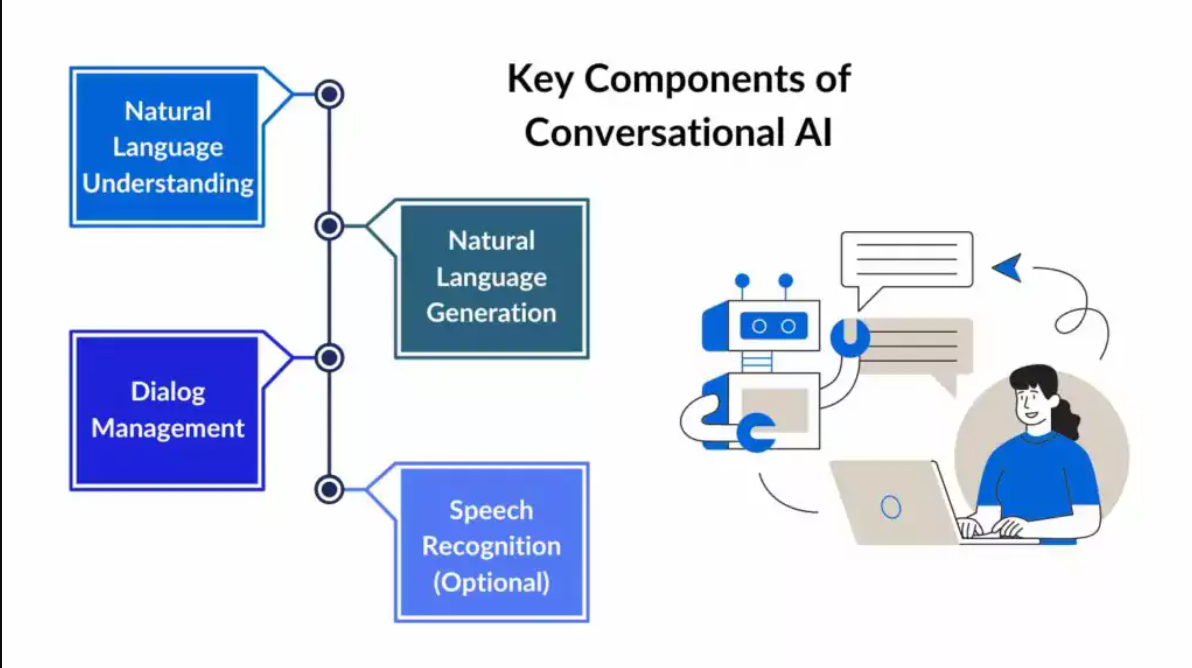

The fundamental issue was architectural. Voice-command systems operate in what's basically a matching game. You say something, the system pattern-matches it to a pre-defined action, and then executes. It's efficient. It's deterministic. It's also brittle as hell. Any variation in phrasing or unexpected request throws it completely off the rails.
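To make the "matching game" concrete, here is a minimal sketch of a pattern-matching command system. The command table and action names are invented for illustration and are not Apple's actual implementation; the point is only that a small change in phrasing falls straight through.

```python
import re

# Hypothetical command table: each pattern maps to a fixed action name.
COMMANDS = [
    (re.compile(r"^set a timer for (\d+) minutes?$"), "start_timer"),
    (re.compile(r"^call (.+)$"), "place_call"),
]

def match_command(utterance):
    """Return (action, argument) for the first matching pattern, else None."""
    text = utterance.lower().strip()
    for pattern, action in COMMANDS:
        m = pattern.match(text)
        if m:
            return action, m.group(1)
    return None  # any unexpected phrasing ends up here
```

"Set a timer for 10 minutes" matches, but "Could you time 10 minutes for me?" returns `None`, which is exactly the brittleness described above.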

Conversational AI works differently. Instead of treating your input as a command to parse, it understands context, nuance, and intent across multiple turns of conversation. You can say "that was wrong" and it knows you're referring to something from three messages ago. You can ask follow-up questions and it maintains the thread. You can be vague, indirect, or even slightly contradictory, and it still figures out what you actually want.

Apple's new Siri represents a fundamental shift in that architecture. Instead of voice commands, it's moving to conversational turns. Instead of button-pushing, it's moving to dialogue. The interface changes from tapping the home button to typing (or still speaking, but in a different way). The experience changes from transactional to generative.

This is why every major tech company is doing this right now. Google shifted Assistant toward conversational modes. Microsoft retired Cortana in favor of Copilot. Samsung's Galaxy AI isn't really about commands anymore. Amazon's Alexa has been adding conversational capabilities for years. The entire paradigm is shifting because conversational AI is just fundamentally more capable.

The irony, of course, is that Apple's been calling Siri "intelligent" for over a decade while everyone knew it wasn't. This redesign is Apple finally admitting that voice commands weren't the future. Conversation is.

Project Campos: The Internal Codename Behind the Biggest Siri Overhaul

Every major Apple project gets a codename. Sometimes they're random. Sometimes they're hints at what's inside. Project Campos isn't particularly revealing—"campos" means fields in Spanish, which doesn't tell us much—but the internal scope of this project signals Apple's commitment level.

This isn't a team of eight engineers working on a side feature. This is a massive undertaking with resources dedicated specifically to overhaul one of Apple's core software experiences. Apple's basically saying: we're willing to reorganize how people interact with their devices.

The timeline is also aggressive. Apple announced this is coming in the fall, which means they're targeting iOS 18, iPadOS 18, and macOS 15 releases. That's roughly a 12-15 month window from initial conception (probably earlier in 2024) to shipping something this complex to hundreds of millions of devices. For context, major generative AI features usually take 18-24 months minimum. Apple's either confident in what they've built, or they've been working on this longer than publicly known.

The early rollout in summer at WWDC is also strategic. Developers need to understand how this works so they can integrate with it. If Siri becomes genuinely powerful, third-party apps will want to hook into it. Apple learned this lesson with Siri's original launch—a powerful assistant needs a robust API ecosystem to actually be useful.

One thing that's interesting about Project Campos is that it's separate from the incremental AI updates coming to Siri in the next few months. Apple's actually releasing two versions: a faster personalization update coming sooner, and then the full Campos chatbot redesign coming in the fall. This two-track approach suggests Apple's balancing the urgency of catching up to competitors with the complexity of shipping something this new.

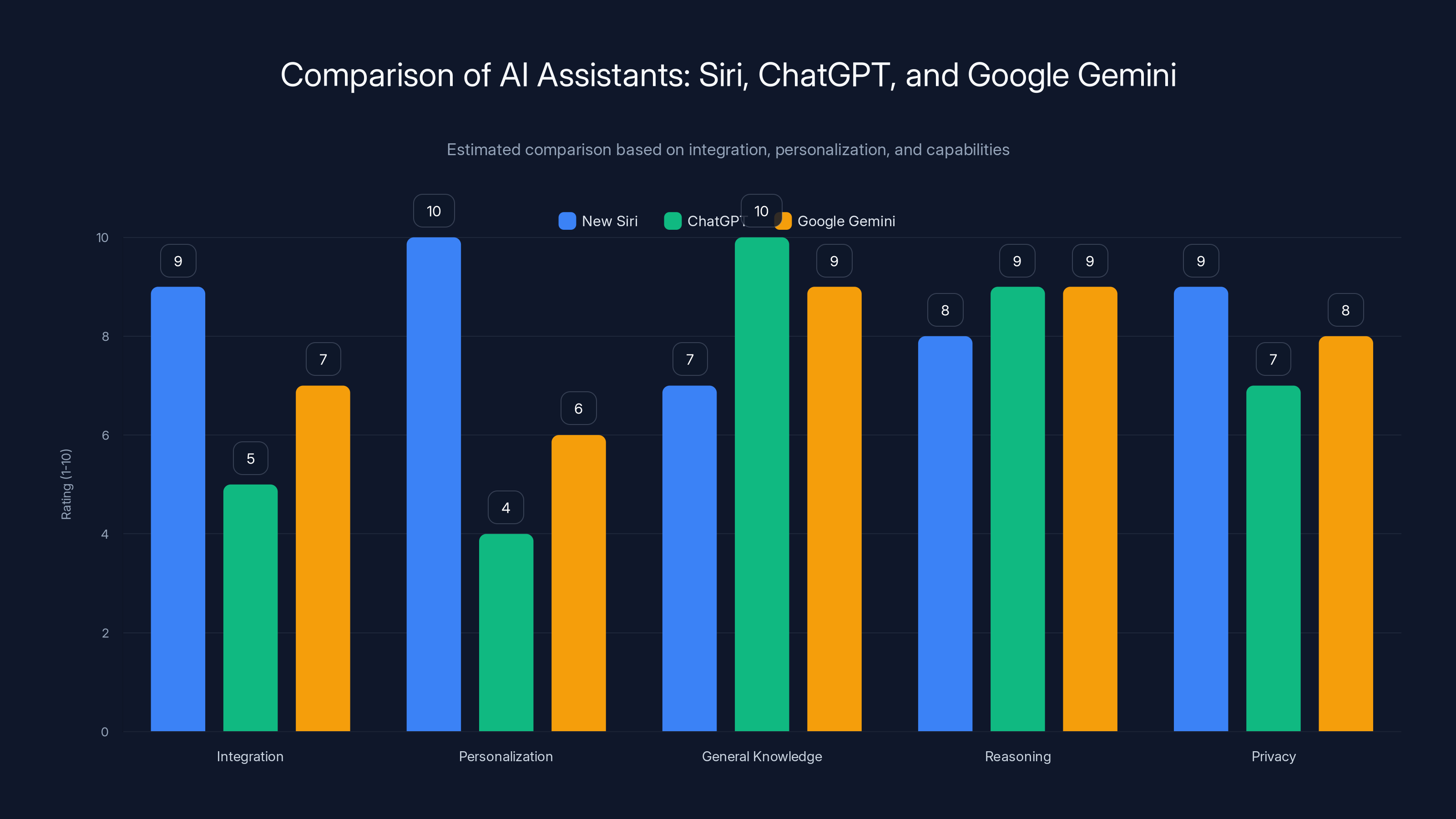

Estimated data shows Siri excels in integration and personalization due to its device integration, while ChatGPT and Google Gemini lead in general knowledge and reasoning.

Google Gemini as the AI Engine: Why Apple Partnered With Its Biggest Rival

This is the real shock of the announcement: Apple partnering with Google for AI. For years, Apple's entire marketing position relied on doing things differently than Google—specifically, doing them privately, on-device, without selling your data. Now, Siri's intelligence is partially dependent on Google's cloud models.

It's a pragmatic decision, not a philosophical one. Apple looked at the AI landscape and realized it couldn't compete with Google's research, training data, and computational resources by going alone. Google's Gemini is genuinely impressive. It's multimodal (text, images, audio). It's fast. It's been trained on a massive corpus of data. More importantly, it's been fine-tuned for conversations in ways Apple's existing AI systems haven't been.

The partnership is multi-year, which suggests long-term commitment. Apple isn't licensing Gemini for a quick fix. It's betting that Google's AI will remain competitive over the next 3-5 years. That's either arrogant or insightful depending on how Gemini evolves.

The interesting part is how Apple positions this to users. The company will likely emphasize that Siri's conversational abilities come from Gemini, but that Siri's personalization, privacy integration, and on-device smarts come from Apple. It's a division of labor: Google handles raw intelligence and conversation; Apple handles context and privacy.

Let's be clear about what this means technically. When you ask Siri a question, your request probably gets encrypted and sent to Apple's servers, where it might get routed to Google's Gemini API for processing. Google sees the request. Google processes it. Google's systems may improve from it, if Google logs requests for training. Apple adds a privacy layer on top, but the fundamental dynamic hasn't changed: your intimate conversations with your AI assistant are being processed by Google.

Apple will justify this as necessary for real intelligence. And honestly, they're probably right. You can't build a genuinely conversational AI system without massive computational resources and training data. Apple has the former (barely) but not the latter. Partnering with Google gives them both.

The broader implication is that device AI is becoming more about orchestration than innovation. Apple's not innovating on the core language model anymore. It's innovating on how that model integrates with your phone, your apps, your contacts, your calendar. That's a shift in where the value lies.

iOS 18 Integration: How the Chatbot Lives on Your Phone

Siri's not getting a new floating button or a redesigned icon. It's getting a completely different interaction model. When iOS 18 ships, you'll have options for how you interact with Siri: you can still speak to it, but you can also type. You can have back-and-forth conversations. You can ask follow-up questions. You can build context across multiple exchanges.

The interface will probably look familiar to anyone who's used ChatGPT, Gemini, or Claude. A message box at the bottom. Your message appears on the right. Siri's response appears on the left. You can scroll up to see conversation history. It's not revolutionary from a UI perspective, but it's revolutionary for Apple.

One thing Apple has always cared about is privacy, and they're going to push this hard as a differentiator. While your conversations with the chatbot version of Siri might include Google (for actual AI processing), Apple's going to tout all the stuff that happens on-device. Calendar analysis, contact detection, app integration—that's all local.

The architecture probably works like this: your request gets analyzed on your phone first. Apple's on-device models identify what type of request this is (personal information query, creative task, general knowledge question, etc.). For personal stuff, it stays local. For general knowledge or complex reasoning, it gets sent to Gemini with heavy anonymization and encryption.

This is more sophisticated than just "send everything to Google." It's a hybrid model that tries to keep sensitive stuff local while getting the benefit of Gemini's intelligence for broader questions.
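The local-first routing described above could be sketched roughly like this. It is a toy under loud assumptions: the keyword list stands in for whatever on-device classifier Apple actually uses, and the `Route` type and destination names are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical keyword list standing in for an on-device classifier.
PERSONAL_HINTS = ("my calendar", "my contacts", "my email", "my location")

@dataclass
class Route:
    destination: str  # "on_device" or "cloud"
    reason: str

def route_request(text):
    """Keep requests that touch personal data local; send the rest to the cloud."""
    lowered = text.lower()
    for hint in PERSONAL_HINTS:
        if hint in lowered:
            return Route("on_device", f"mentions {hint!r}")
    return Route("cloud", "no personal-data hint found")
```

A real classifier would be a learned model rather than a keyword scan, but the shape of the decision (classify first, then pick where the request runs) is the same.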

The integration with iOS will be deep. Siri will have access to your apps, your files, your location, your contacts—everything it already has access to, but now it can actually reason about it and help you with it in conversational ways. You could theoretically ask Siri to "find all emails from my boss from last quarter that mentioned quarterly revenue," and it would actually do that. The old Siri would've opened Mail and hoped for the best.

The Bigger Picture: Apple Playing Catch-Up in AI

Let's be honest about what's happening here: Apple's been losing ground in AI for three years straight. OpenAI launched ChatGPT in November 2022. Google launched Bard (now Gemini) in 2023. Microsoft integrated Copilot everywhere in 2024. Samsung launched Galaxy AI in 2024. Apple? Apple's been talking about on-device AI and saying "we'll do more later."

The problem is that "later" has been arriving at everyone else's products but not Apple's. While other companies shipped AI features that actually impressed people, Apple shipped reminders that text-to-speech can be used to read you your calendar. The gap widened. Developers started building for Gemini and ChatGPT first, with Apple support as an afterthought.

This Siri redesign is Apple's attempt to close that gap. It's not going to close it completely—Google's still going to have more sophisticated AI in more places—but it puts Apple back in the conversation as a company that understands where technology is moving.

The irony is that Apple's strategy of "we'll do AI privately on-device" never actually worked, because private on-device AI is fundamentally limited. You can do classification, recognition, simple pattern matching on-device. But genuine conversational intelligence requires the kind of computational power and training data that only a handful of companies have. Apple's finally admitting this by partnering with Google.

This is actually healthy for the market. It means Apple's being pragmatic instead of dogmatic. It means they're willing to partner when necessary. It means they recognize that "not invented here" is a luxury they can't afford in AI.

What this doesn't mean is that Apple's suddenly going to have the best AI assistant. Google's integrated its AI into search, email, photos, documents, sheets, slides, and a dozen other products. Microsoft's AI is threaded through Office, Windows, Azure, and Edge. Apple's got Siri. One product. One integration point. That's a disadvantage that a good interface and privacy story might partially overcome, but not completely.

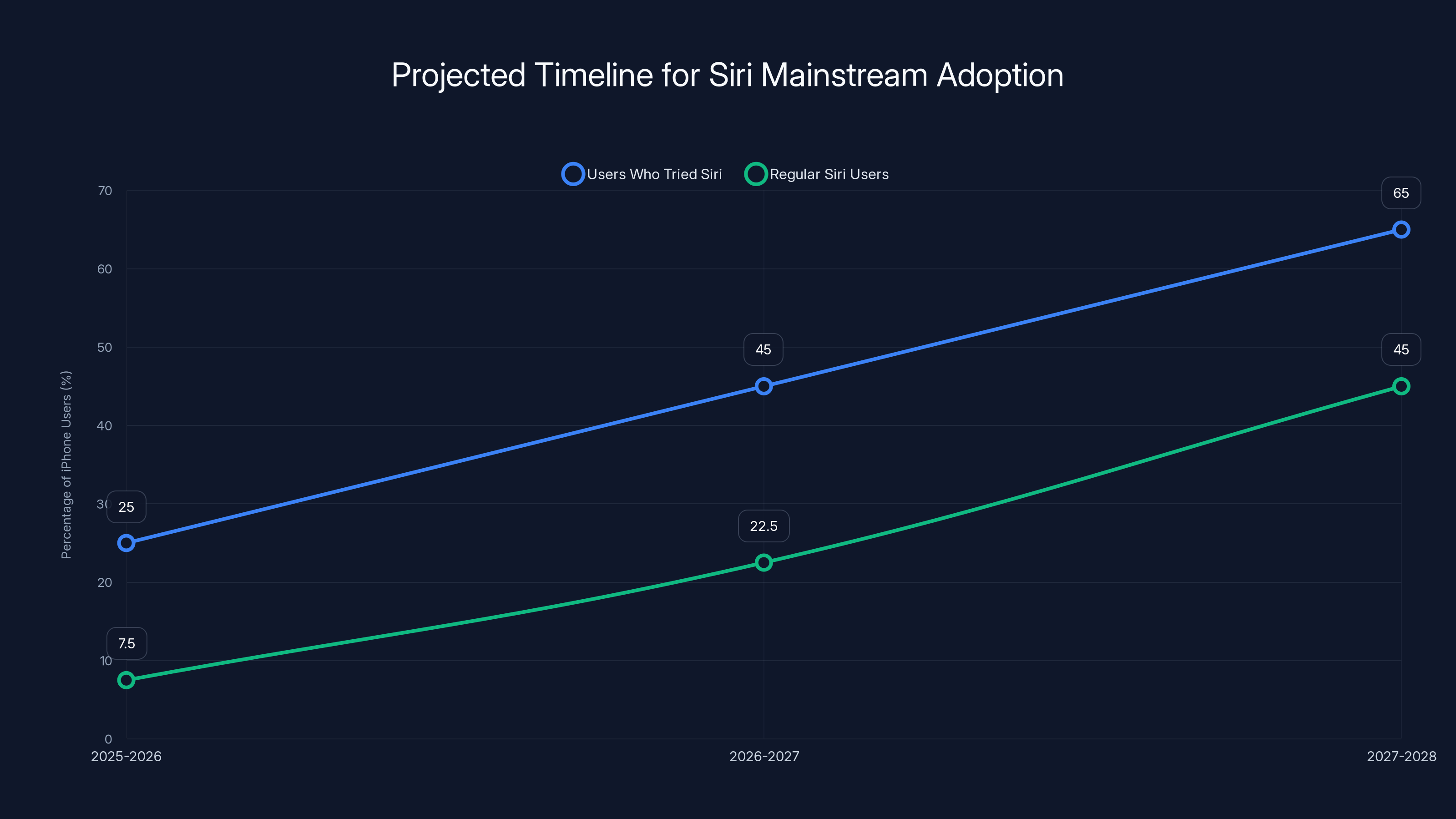

Estimated data shows a gradual increase in Siri adoption, with significant growth in regular users by 2027-2028 as integration and user experience improve.

Conversational Capabilities: What the New Siri Can Actually Do

Here's where the real differences between old and new Siri show up. The old Siri operated in simple declarative sentences: "Set a timer for 10 minutes." "Call my mom." "What's the weather?" Those were commands. They were direct. They worked or they didn't.

The new Siri operates in conversations. You can say something like: "I need to schedule a meeting with everyone from last week's strategy session, but skip John since he's on vacation. Find a time that works for all of them and send a calendar invite." The old Siri would've frozen. The new one will ask clarifying questions if it doesn't understand, cross-reference your calendar, check everyone's availability, draft an invite, and execute the whole thing.

You can follow up: "Actually, move it earlier if possible." And Siri understands you mean the meeting it just scheduled, not some hypothetical other meeting. You can ask: "Who's not able to make that time?" and get a specific answer. You can refine: "What if we pick Tuesday instead?" and Siri recalculates.

This is genuinely different. This is not just "faster Siri." This is Siri that understands context, remembers what you've said, reasons about your requests, and can have back-and-forth dialogue.

Apple's going to lean heavily on privacy when explaining what this can do. You can ask Siri deeply personal questions about your life—financial information, health details, relationship stuff—and it will understand because it has access to your apps. But that same information stays on your device unless you're asking something that requires cloud processing.

The generative capabilities are new territory for Siri. You could ask it to write something for you. Draft an email, compose a social media post, brainstorm ideas for a project. The old Siri couldn't do any of that. The new one can, because Gemini can, and Apple's just exposing that capability through the Siri interface.

But there are still limits. Siri won't be able to install new apps, change system settings without your explicit approval, or access information you haven't given it permission to access. Apple's building in guardrails because they understand that a powerful assistant that could do anything would be a privacy and security nightmare.

Privacy Trade-Offs: The Uncomfortable Reality

Let's address the elephant in the room: using Google's AI means your conversations with Siri aren't purely private anymore. Apple's going to encrypt the connection and anonymize what's possible, but Google sees the request. Google processes it. Google might log it.

Now, Apple will argue (correctly) that you also have privacy controls. You can choose not to use certain Siri features. You can disable the cloud integration. You can use only on-device Siri. But most users won't read those settings. Most users will just start using Siri and assume it's private because it's Apple.

The privacy story here is more nuanced than "Siri is private" or "Siri is not private." It's "Siri is partly private, partly cloud-based, with controls that let you adjust the balance." That's more honest than Apple's been in the past, but it's not as clean as the privacy narrative Apple built its brand on.

This is going to be a major point of criticism. Privacy advocates will point out that an AI assistant with access to your calendar, contacts, email, and location data, powered partially by Google's infrastructure, represents a significant privacy trade-off. Apple will counter that it's optional and that the privacy controls are robust. Both will be somewhat true.

The realistic scenario is that power users will read about the privacy implications and make informed choices. Casual users will just use Siri and not think about it. Apple will probably tout the privacy angle as much as possible while downplaying Google's role. The resulting message will be technically true but missing important nuance.

The Competition: How New Siri Stacks Up Against Google, Microsoft, and Others

Let's be concrete about the landscape. Google Gemini is built into Android and Pixel phones. It's integrated into search, Gmail, Google Docs, Google Photos. It's everywhere, and it's genuinely useful. Microsoft Copilot is integrated into Windows, Edge, Office, Azure. It's less consumer-focused than Google's offering but arguably more powerful in productivity contexts. Samsung Galaxy AI is integrated into Samsung phones and uses a mix of on-device models and cloud AI.

Where does Siri fit? Probably in the middle. More privacy-focused than Google, more integrated into your daily experience than Microsoft (for iPhone users), more genuinely intelligent than Samsung's offering. But less universally useful than Google because it's siloed to Apple devices, and less powerful than Microsoft in productivity contexts because Apple's productivity suite doesn't compete with Office.

The real question isn't whether Siri beats ChatGPT. It doesn't. Siri's an assistant integrated into your phone. ChatGPT is a standalone interface to a powerful language model. Different things. The question is whether new Siri is good enough that iPhone users think "I'll ask Siri" instead of opening ChatGPT.

For many use cases, it probably will be. If you're an iPhone user asking Siri something about your calendar, your contacts, your location, or your personal information, local integration makes Siri actually better than going to ChatGPT. But for general knowledge, research, complex reasoning, or creative tasks, Siri might feel limited because it's tied to Apple's ecosystem.

Apple's betting that local integration plus decent cloud AI is enough. That most people use their AI assistants for personal stuff, not for extended research sessions. That's probably a reasonable bet. Most interactions with voice assistants today are still pretty basic. The new Siri should handle those better than the old Siri while being smoother than switching to another app.

What new Siri probably won't do is convert people who are already happy with ChatGPT or Gemini. But it might prevent people from feeling like they need another app on their iPhone. That's a win for Apple even if it's not a category killer.

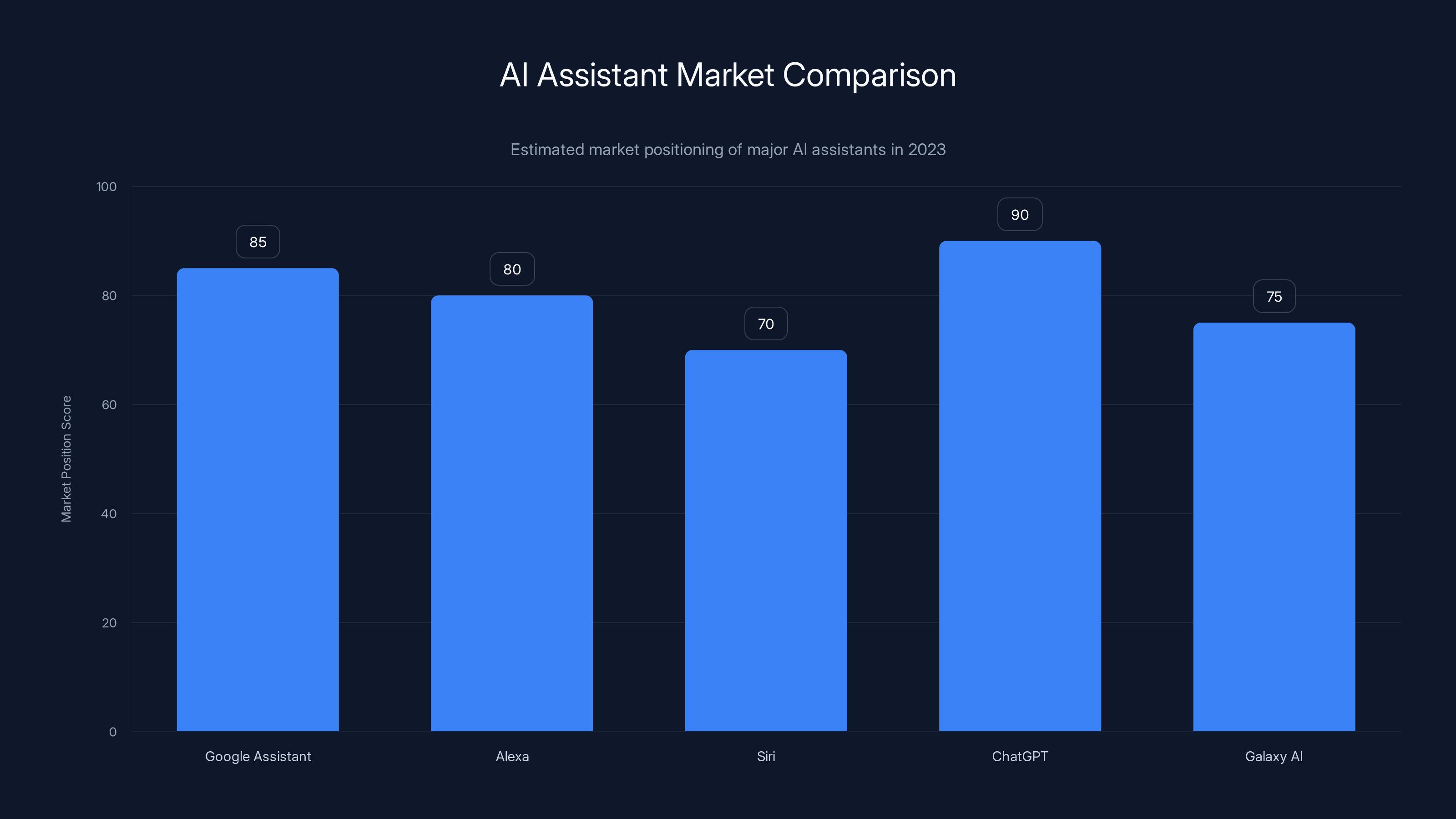

Siri's upcoming transformation aims to boost its market position, currently trailing behind competitors like Google Assistant and ChatGPT. (Estimated data)

Timing and Release: Why Fall 2025 Matters

Apple's announcing this at WWDC in June 2025 and shipping it in September 2025. That's an aggressive timeline, but it's also strategic. By announcing at WWDC, Apple gets developer buy-in early. By shipping in September, it hits the market right before the iPhone 17 launch, where AI is probably going to be the headline story.

Apple's learned over the years that announcing AI features too early just invites negative coverage when the initial version is limited. But announcing too late means competitors ship first. This timing puts new Siri front and center when people are evaluating whether to switch phones or upgrade.

The fall release also means the feature is going to drive massive media attention. "Apple finally builds a real AI assistant" is a legitimate news story. It signals that Apple's serious about AI, not just throwing features at the wall. It gives the company a fighting chance in the narrative about who's winning the AI war.

One thing that's important: Apple's saying this is the "primary new addition" to iOS 18. Not the only addition, but the main one. That means other OS updates will be mostly stability and minor improvements. Apple's put all its chips on this one feature for the fall. If it ships well, iOS 18 is a success. If it's half-baked, iOS 18 is a disappointment.

That's a huge amount of pressure on this team. They have to ship something that actually works, that doesn't embarrass Apple with privacy breaches, and that makes people feel like Siri finally caught up to 2025. That's achievable, but not guaranteed.

Technical Architecture: How Modern Conversational AI Works

If you want to understand what's actually happening under the hood, it's worth knowing how conversational AI systems work at a high level.

First, there's the language model. That's Gemini in this case. It takes your text input, processes it through layers and layers of neural networks, and produces a response. The quality of that response depends entirely on the quality of the model. Gemini's good. Not perfect, but good.

Second, there's the context window. The language model needs to remember what you've said and what it's said so far. That's not magic—it's literally feeding the previous messages back into the model each time. If your conversation is too long, it runs out of context window and forgets earlier parts. Apple will probably limit how far back conversation history goes.
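Here is a minimal sketch of that "feed the history back in, drop what no longer fits" behavior. Counting whitespace-separated words is a crude stand-in for a real tokenizer, and the message format is an assumption made for illustration.

```python
def trim_history(messages, max_tokens):
    """Keep the most recent messages whose combined size fits the budget."""
    kept = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = len(message["text"].split())  # crude stand-in for token counting
        if used + cost > max_tokens:
            break  # everything older than this is "forgotten"
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept
```

Each turn, the assistant would send `trim_history(history, budget)` plus the new message to the model, which is why very long conversations lose their earliest parts.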

Third, there's the grounding layer. This is where Siri gets interesting. Raw language models are great at sounding confident while being wrong. You ask about your calendar, and the model might make something up. Grounding means connecting the language model to actual data (your real calendar, your real contacts, your real location). When Siri talks about your calendar, it's pulling actual data and feeding it to Gemini with instructions like "here's the user's calendar, answer their question about it."
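In practice, grounding often amounts to building the prompt from real records before the model sees the question. The sketch below assumes calendar entries arrive as simple dictionaries with `start` and `title` keys; the function name and prompt wording are made up for illustration.

```python
def build_grounded_prompt(question, events):
    """Embed real calendar entries in the prompt so answers rest on data."""
    lines = ["Answer using only the calendar entries below.", "Calendar:"]
    if events:
        for event in events:
            lines.append(f"- {event['start']} {event['title']}")
    else:
        lines.append("- (no entries)")  # say so explicitly rather than leave a gap
    lines.append(f"Question: {question}")
    return "\n".join(lines)
```

Stating "no entries" outright matters: it gives the model something true to report instead of an empty space to fill with a guess.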

Fourth, there's the action layer. Not everything Siri does is generative. Sometimes it needs to actually do things: send emails, schedule meetings, navigate to a location. This requires integrating with iOS apps and APIs. The language model decides what action to take, then Siri executes it. This is harder than it sounds because you need to make sure the language model doesn't hallucinate about what it can do.
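One common way to keep a model from "hallucinating about what it can do" is to treat its output as a proposal and check it against a fixed registry before anything runs. The registry, handlers, and proposal format below are all hypothetical, a sketch of the idea rather than how Siri works.

```python
def send_email(to, body):
    return f"email to {to}: {body}"

def start_navigation(destination):
    return f"navigating to {destination}"

# Hypothetical registry: action name -> (handler, required argument names).
ACTIONS = {
    "send_email": (send_email, {"to", "body"}),
    "start_navigation": (start_navigation, {"destination"}),
}

def execute(proposal):
    """Run a model-proposed action only if it is registered and well-formed."""
    name = proposal.get("action")
    args = proposal.get("args", {})
    if name not in ACTIONS:
        raise ValueError(f"unknown action: {name}")
    handler, required = ACTIONS[name]
    if set(args) != required:
        raise ValueError(f"bad arguments for {name}: {sorted(args)}")
    return handler(**args)
```

If the model proposes `{"action": "reboot_phone", "args": {}}`, the call fails with a `ValueError` instead of doing something nobody asked for.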

Fifth, there's the privacy layer. This is Apple's contribution. Before your request goes to Google, Apple strips identifying information where possible and encrypts what's left. Google processes it. The response comes back encrypted. This layer isn't perfect (nothing ever is), but it's real. Google doesn't get your raw conversation history.
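"Stripping identifying information" can be pictured as a redaction pass that runs before anything leaves the device. How Apple actually does this isn't public; the two regular expressions below are simplistic assumptions that only catch obvious email addresses and phone numbers.

```python
import re

# Deliberately simple patterns; real redaction needs far broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def redact(text):
    """Replace obvious email addresses and phone numbers with placeholders."""
    text = EMAIL.sub("[email]", text)
    return PHONE.sub("[phone]", text)
```

Names, addresses, and anything implied by context would slip straight past this, which is part of why a layer like this can never be perfect.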

All of this has to happen in a few hundred milliseconds for it to feel natural. That's the real engineering challenge. The language model part is solved. The privacy part is solved. The hard part is making the whole system feel responsive and natural on a mobile device with latency and bandwidth constraints.

Machine Learning on Device: The Part Apple Actually Controls

While Gemini handles the core conversation, Apple's going to use on-device machine learning for everything it can. This is where Apple actually innovates in the Siri redesign.

For example, when you mention someone's name, Apple's on-device models will identify that it's a contact in your phone. When you mention a time, on-device models will parse it into actual calendar dates. When you reference something you've said before, on-device models will maintain conversation context. None of this requires cloud processing.

Apple's been quietly building some genuinely impressive on-device AI over the past few years. The camera system uses on-device ML to recognize objects and scenes. The keyboard uses on-device ML for autocorrect and predictive text. Live Transcription is mostly on-device. Apple's had time to build expertise here.

What's new is applying this expertise to conversational context. Understanding not just what you said, but what you meant. Maintaining conversation state. Integrating with personal data intelligently. These are harder problems than just processing an image or transcribing audio, but they're solvable.

The privacy benefit of on-device ML is real. Your conversation history stays on your phone. Your personal data cross-references stay on your phone. Only the core reasoning and generation parts of the conversation go to Gemini. It's a meaningful privacy win compared to systems that send everything to the cloud.

What Apple's not going to do is have on-device models that are as good as Gemini. That's computationally infeasible. A 70-billion parameter model doesn't fit on a phone. Apple will use 1-5 billion parameter models for local stuff, and those are decent but not great. They're good enough for parsing and context, not good enough for sophisticated reasoning.
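The arithmetic behind "doesn't fit on a phone" is simple enough to check. This sketch counts only the memory needed to hold the weights, ignoring activations and caches, and the bit widths are illustrative assumptions rather than anything Apple has stated.

```python
def weights_gib(parameters, bits_per_weight):
    """Approximate memory needed for model weights alone, in GiB."""
    return parameters * bits_per_weight / 8 / 2**30

big_fp16 = weights_gib(70e9, 16)   # roughly 130 GiB
big_4bit = weights_gib(70e9, 4)    # roughly 33 GiB, even heavily quantized
small_4bit = weights_gib(3e9, 4)   # roughly 1.4 GiB
```

With phone memory measured in single-digit or low double-digit gigabytes, only the small model leaves room for the operating system and your apps.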

This hybrid approach—local for personal data and parsing, cloud for reasoning—is probably the best Apple can do. It's not perfect, but it's better than either all-local (limited) or all-cloud (privacy nightmare).

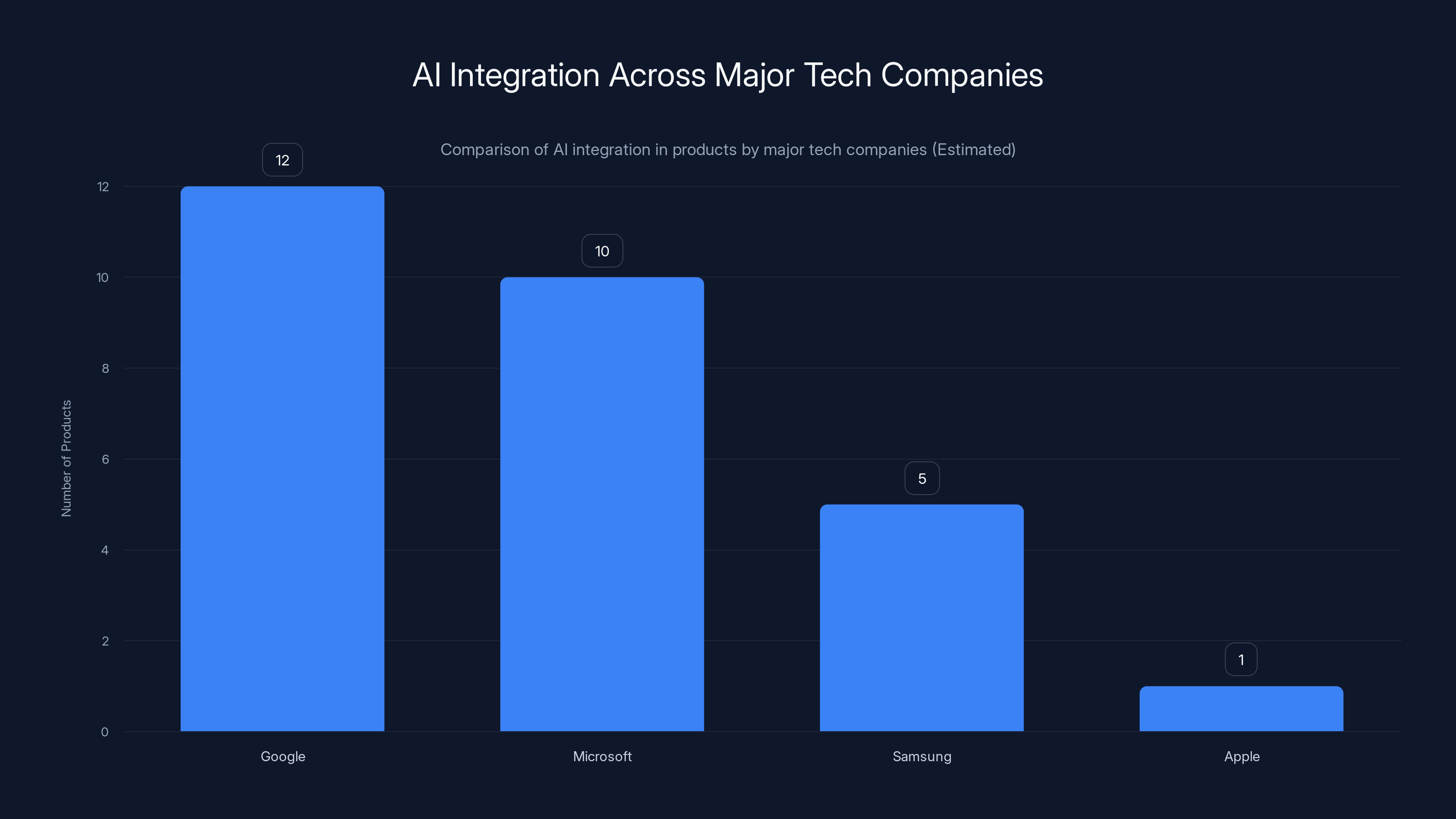

Google leads with AI integrated into 12 products, followed by Microsoft with 10. Apple lags behind with AI in only one product. Estimated data.

Integration With Third-Party Apps and Services

Apple learned a lesson with Siri's original launch: an assistant is only as useful as what it can do. If Siri can't integrate with your apps, it's just a toy. New Siri will have APIs for third-party developers to hook into.

Imagine having Siri integrated with Uber: "Can you get me a ride home?" Siri understands the request, calls the Uber API, shows you available options, gets your confirmation, books the ride. That's way more useful than the current system where you'd open Uber yourself.

Or Siri integrated with restaurant apps: "Find me a Thai restaurant nearby that's open right now and has good reviews." Siri queries multiple restaurant apps, ranks results, shows you options. You pick one, Siri books a reservation.

Or Siri integrated with shopping apps: "Find me that running shoe I saw in a TikTok last month." Siri searches through the store history, finds the product, shows you options to buy. It's way more useful than the current situation where you'd have to remember the store and search manually.

Apple's API documentation for this is going to be crucial. Developers need to understand how to structure their apps so Siri can understand what they can do and execute requests. This is easier said than done. The API has to be flexible enough to handle millions of different apps and use cases, but structured enough that Siri can actually understand what's possible.

The companies that integrate well with new Siri will get massive distribution advantages. Users will prefer asking Siri over opening apps. That's huge. So app developers are going to care a lot about Siri integration.

Apple will probably provide SDKs, documentation, and best practices for this. They'll probably incentivize early adopters with feature releases or App Store prominence. This is how you make a platform useful—you get developers invested in making it work.

The International Dimension: Language Support and Localization

One thing Apple's going to have to figure out is how to make this work in different languages. Gemini supports a lot of languages, which is helpful. But conversational understanding is much harder in languages with different grammar structures, more ambiguity, or less training data.

English is well-represented in training data. Spanish, Mandarin, Hindi, French—all decent. But getting Siri to understand nuanced conversation in every language Apple operates in is going to be challenging. There's probably going to be an initial release in English and a phased rollout to other languages over time.

Localization is also about cultural understanding. The way you ask for something in Japanese is fundamentally different from the way you ask in English. Humor, politeness levels, indirect references—these are all language and culture-specific. Getting a conversational AI to understand all of that is hard.

Apple's probably starting with English, then moving to major European languages, then Asian languages, then everything else. It's not ideal, but it's realistic given the complexity involved.

The international rollout will also depend on regulatory approvals and local partnerships. If Apple needs to partner with local AI companies or data centers in certain countries, that adds time and complexity. For example, in China, Apple might need to use a locally-trained model rather than Gemini due to regulatory requirements.

Siri's Existing Capabilities: What's Moving, What's Staying

Apple's not throwing away everything Siri currently does. Voice commands still work. Quick actions are still available. Setting timers, checking weather, calling people—all of that persists.

What's changing is that you get a new way to access Siri through conversation. And the things Siri can do are expanding because it's now backed by a powerful language model instead of a brittle command-parsing system.

This transition matters because it sets expectations. Long-time Siri users have learned what Siri can and can't do. They've adapted their behavior. With the new chatbot version, some of those limitations go away, but not all. Siri still can't reboot your phone or change system settings without your explicit tap confirmation. It still can't access information you haven't given it permission to access.

Apple's going to need to clearly communicate what's new and what's the same. Otherwise, people will try something the old Siri couldn't do, assume the limitation is gone, and get disappointed when Siri still stops to ask for explicit permission.

The transition is important for another reason: it signals that Apple's not abandoning voice assistants. Voice control is still valuable for accessibility, for quick commands, for situations where you can't look at a screen. The chatbot version is additive, not a replacement. Both interaction models will coexist.

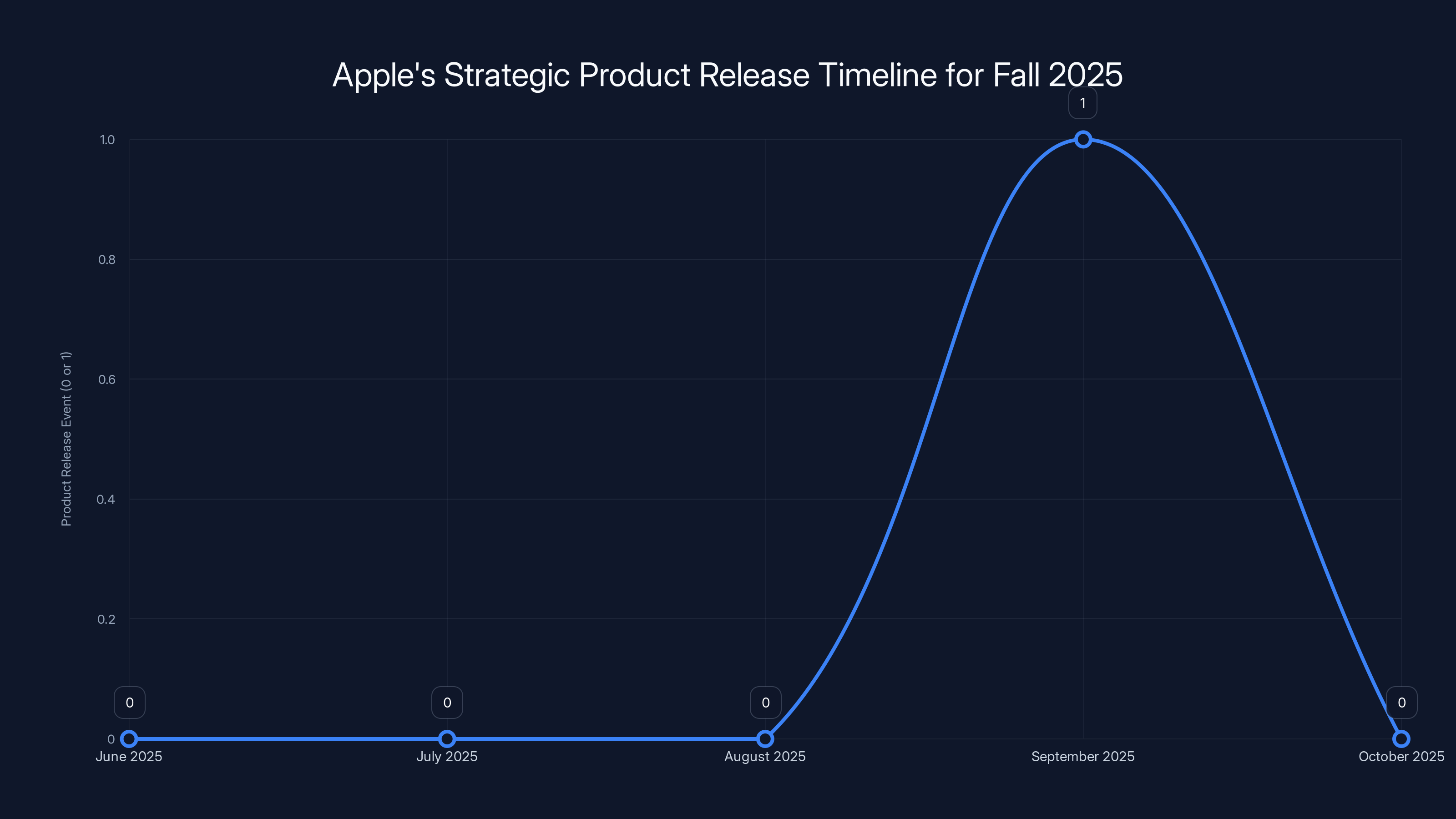

Apple's strategic timeline involves announcing at WWDC in June and shipping in September 2025, aligning with the iPhone 17 launch. Estimated data.

Performance and Latency: The Invisible Engineering Challenge

Here's something people don't think about: making conversational AI feel natural on a phone is incredibly hard from an engineering perspective. In ChatGPT on the web, you might wait 3-5 seconds for a response and it feels fine. You're at a computer. You're patient.

On your phone, anything over 2 seconds feels slow. Users get irritated. If Siri takes 5 seconds to respond to a request, people will switch to opening an app manually because it feels faster.

This creates enormous pressure on Apple to optimize. What can happen locally? What has to go to the cloud? How do you minimize round-trip time? How do you handle network latency? How do you provide feedback while the request is processing?
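To make the local-versus-cloud question concrete, here's a minimal Python sketch of a router that tries a cache first, the device second, and the cloud last. None of this comes from Apple: the request kinds, the `route_request` function, and the three tiers are all assumptions invented for illustration.

```python
from dataclasses import dataclass

# Hypothetical request kinds simple enough to handle on the device itself.
LOCAL_KINDS = {"timer", "alarm", "volume"}


@dataclass
class Route:
    target: str  # "cache", "device", or "cloud"
    reason: str


def route_request(kind: str, text: str, cache: dict) -> Route:
    """Pick the cheapest tier that can answer: cache, then device, then cloud."""
    key = text.strip().lower()
    if key in cache:
        return Route("cache", "identical request answered recently")
    if kind in LOCAL_KINDS:
        return Route("device", "simple command, no network round trip")
    return Route("cloud", "needs the large language model")


# Made-up cache contents and requests, purely for demonstration.
cache = {"what's the weather today": "Sunny, 72F"}
for kind, text in [
    ("weather", "What's the weather today"),
    ("timer", "Set a timer for 10 minutes"),
    ("chat", "Plan a three-day trip to Lisbon"),
]:
    route = route_request(kind, text, cache)
    print(f"{text!r} -> {route.target} ({route.reason})")
```

The ordering is the design choice here: each tier is assumed to be slower than the one before it, so a request only pays for a network round trip when nothing cheaper can answer it.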

Apple probably has benchmarks for this: 90% of requests should return in under 2 seconds. 99% in under 4 seconds. Getting to those numbers requires architecture decisions: caching common responses, doing local analysis first, streaming responses back incrementally, predictively loading data.
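Checking a budget like that is simple arithmetic once you have latency samples. Here's a rough Python sketch using the nearest-rank percentile method. The 2-second and 4-second limits are the guesses from the paragraph above, not published Apple targets, and the sample data is made up.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: smallest sample with at least pct% of values at or below it."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def meets_budget(latencies_s, p90_limit=2.0, p99_limit=4.0):
    """True if the p90 and p99 latencies (in seconds) are both within their limits."""
    return (percentile(latencies_s, 90) <= p90_limit
            and percentile(latencies_s, 99) <= p99_limit)


# Made-up samples: 90 fast responses, 9 slow ones, 1 very slow outlier.
samples = [0.8] * 90 + [3.0] * 9 + [6.0]
print(percentile(samples, 90), percentile(samples, 99), meets_budget(samples))
```

Notice that the single 6-second outlier doesn't break the budget. That's the point of percentile targets: they tolerate a small tail of slow requests while still holding the typical experience to a strict limit.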

Google's dealing with the same problem for Gemini on Android. Microsoft's dealing with it for Copilot on Windows. Everyone's figuring out that conversational AI at scale has latency challenges that aren't obvious until you ship to hundreds of millions of devices.

Apple's got advantages here. They control the entire stack: hardware, OS, network. They can optimize in ways that Google and Microsoft can't. But they also have higher standards because Apple users expect things to "just work." If new Siri feels laggy, people will complain loudly.

Expect some performance-related bugs in the initial release. Expect Apple to push updates that optimize latency. Expect the feature to feel noticeably smoother six months after launch than it does at launch. This is how software gets shipped on schedule and then improved over time.

The Competitive Response: What Google, Microsoft, and Samsung Will Do

Here's where it gets interesting. Apple announcing a new Siri doesn't exist in a vacuum. Google's going to respond with deeper Gemini integration. Microsoft's going to tout Copilot's sophistication. Samsung's going to push Galaxy AI harder.

Google's already integrated Gemini into Android at a deep level. When Google hears that Apple's shipping a Gemini-powered Siri, Google's going to lean harder into Gemini on Android, not because Siri is a threat (it isn't to Google's core search business), but because competitors will make noise about it.

Microsoft's going to focus on productivity. Copilot on Windows and in Office is useful for knowledge workers. Copilot on mobile is... less of a priority. But Microsoft might finally get serious about Windows Phone's successor or Android integration.

Samsung's going to continue iterating on Galaxy AI. Samsung's in an interesting position: they're on Android, so they could just use Google's AI. Instead, they've built their own with partnerships. When they see Apple succeeding with a hybrid approach (on-device + cloud), Samsung might accelerate that strategy.

The real competitive pressure is between Apple and Google, because they both control full stacks and have users across devices. Android users with multiple Google products get a more integrated Google AI experience. iPhone users with multiple Apple products will get a more integrated Siri experience. Both are good pitches.

Microsoft's playing a different game. Copilot is about productivity, not lifestyle integration. The competition there is more about feature parity than philosophical differences. Microsoft's not trying to be the AI assistant on your phone; they're trying to be the AI assistant at your desk.

What you'll probably see is increasing feature parity. Google will make Gemini more conversational on phones. Microsoft will make Copilot more available on phones. Samsung will refine Galaxy AI. Everyone will claim to be "the most advanced AI assistant" while quietly copying each other's features.

The customer benefits from this competition are real, though. Each company raising the bar forces the others to improve. A year ago, voice assistants were jokes. A year from now, they'll be genuinely useful. That's competition working the way it's supposed to.

Developer Perspective: Building for New Siri

If you're an iOS developer, new Siri represents both opportunity and complexity. Opportunity because integrating with Siri means your app gets used more—users will access your features through voice/chat instead of opening your app directly. Complexity because building for Siri requires understanding how Siri interprets requests and how to expose your app's capabilities in a Siri-compatible way.

Apple will probably build on its existing intents frameworks (SiriKit and App Intents), or ship an updated version of them, so apps can define what they can do. A restaurant app would say "I can search restaurants, get recommendations, make reservations." A music app would say "I can play songs, create playlists, recommend music." Siri learns these capabilities and can orchestrate them when users ask.
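Here's a toy Python sketch of that idea, using the restaurant example. It is not Apple's API: `Capability`, `CapabilityRegistry`, and the "TableFinder" app are hypothetical names invented for illustration, and the confirmation flag is an assumption that mirrors the article's point that sensitive actions should wait for your explicit approval.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Capability:
    app: str
    action: str                       # e.g. "search_restaurants"
    handler: Callable[..., str]
    needs_confirmation: bool = False  # sensitive actions wait for explicit approval


class CapabilityRegistry:
    """Toy stand-in for an assistant's catalog of what installed apps can do."""

    def __init__(self) -> None:
        self._caps: Dict[str, Capability] = {}

    def register(self, cap: Capability) -> None:
        self._caps[cap.action] = cap

    def invoke(self, action: str, confirmed: bool = False, **params) -> str:
        cap = self._caps.get(action)
        if cap is None:
            return f"No installed app handles '{action}'."
        if cap.needs_confirmation and not confirmed:
            return f"Confirm: allow {cap.app} to run '{action}'?"
        return cap.handler(**params)


# A hypothetical restaurant app declares two capabilities.
registry = CapabilityRegistry()
registry.register(Capability(
    app="TableFinder",
    action="search_restaurants",
    handler=lambda cuisine: f"Found 3 {cuisine} places nearby",
))
registry.register(Capability(
    app="TableFinder",
    action="make_reservation",
    handler=lambda name, time: f"Booked {name} at {time}",
    needs_confirmation=True,
))

print(registry.invoke("search_restaurants", cuisine="Thai"))
print(registry.invoke("make_reservation", name="Thai Orchid", time="7pm"))
print(registry.invoke("make_reservation", confirmed=True, name="Thai Orchid", time="7pm"))
```

The search runs right away, while the reservation comes back as a confirmation prompt until the user approves it. A real system would also need to map free-form language onto these actions, which is the hard part and is left out here.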

The challenge is that building for Siri requires thinking about your app in a new way. Instead of a visual interface that guides users through steps, you have to define intents and conversational flows. It's a different paradigm. Some app categories (restaurants, transportation, shopping) will find this natural. Others (photography, gaming, note-taking) will struggle to expose their value through conversation.

Apple's going to need strong developer documentation and examples. Developers need to understand best practices. They need code samples. They need to be able to test locally. Apple will provide all of this, but it's a lot of work.

The early adopters—the apps that integrate well with new Siri right at launch—will see distribution gains. Users will discover them through Siri. Other users will see them recommended as Siri learns what app serves which purpose. It's a competitive advantage that incentivizes early investment.

For smaller apps, integrating with Siri might be out of reach. It requires engineering resources to build and maintain. But for established apps, it's probably worth doing. The question is how much developer demand will materialize. If nobody integrates well with Siri, the feature remains limited. If lots of apps integrate, the feature becomes genuinely transformative.

User Privacy and Data Security: The Operational Side

Here's the thing about privacy that nobody talks about: it's not just about how the technology works, it's about operations. Apple is going to have employees, contractors, data center workers, and service vendors who have some level of access to the system. Conversations with Siri might be analyzed by humans for quality assurance. Data might be stored in ways that are technically compliant but philosophically questionable.

Apple claims to be privacy-focused, and they do better than many companies. But perfect privacy doesn't exist. It's only human to build something and realize later that there's a vulnerability or a privacy edge case you didn't think about.

For example: if you ask Siri something deeply personal, and Siri routes it to Gemini (Google's servers), a Google employee might see it during debugging or quality assurance. Is this acceptable? Most people would say no. Apple would argue that safeguards prevent this. But fundamentally, you're trusting both Apple and Google with sensitive information.

The security question is about encryption and data protection. Apple will encrypt in transit (probably with TLS 1.3) and at rest. But encryption doesn't help if a malicious actor gains access to the keys or to the code that manages them. Siri will be a target for security researchers because it has access to so much personal information.

Apple's response to this is likely going to be "we take security seriously" and "report vulnerabilities responsibly." That's standard stuff. But the real question is whether they're doing better than competitors. My guess: slightly. Apple's security track record is pretty good, though not perfect. But Siri's complexity will create surface area for vulnerabilities that a simpler system wouldn't have.

Long-term, this is an operational expense for Apple. They're going to need security teams, infrastructure teams, legal teams, and privacy advocates all focused on making sure Siri doesn't become a liability. It's the price of building something powerful.

The AI Arms Race: Why Siri Matters Beyond Apple

At the highest level, Siri's redesign signals that the AI arms race is here. Every major tech company is betting its future on AI being genuinely useful and integrated into daily life. Apple's redesign of Siri is an admission that voice commands weren't the future. Conversation is.

This matters for the entire industry because it means investment, competition, and innovation are only accelerating. Companies that don't get conversational AI right are going to look outdated. Companies that do are going to have massive advantages in user engagement, data collection, and ecosystem lock-in.

Apple's move also matters because it legitimizes cloud AI integration. Apple's entire brand was built on privacy and on-device processing. For Apple to partner with Google and route conversations through Google's servers signals that even the most privacy-conscious company recognizes cloud AI is necessary for real capability. That's a green light for every other company to be aggressive about cloud AI.

The long-term implication is that your AI assistant is going to know more about you, be more capable, and be more integrated with your devices and apps. That's simultaneously exciting (genuine helpfulness) and concerning (privacy and autonomy). We'll figure out how to live with this tension, but it's real.

For developers, the lesson is: conversational AI is the new platform. If you want to build something that reaches mainstream users, you need to think about how it integrates with Siri, Gemini, and Copilot. Standalone apps are still valuable, but apps that integrate with AI assistants have an advantage.

For users, the lesson is: your AI assistant is about to get a lot better. Siri's going to be genuinely useful for many tasks. But it's also going to have visibility into more of your life. Reading and understanding the privacy settings is going to matter more than it did before.

Potential Issues and Concerns: What Could Go Wrong

Let's be realistic. A project this ambitious has risks. Here are the main ones:

Quality and Reliability: The new Siri needs to work well at launch. If it doesn't, users will just go back to voice commands or to ChatGPT. Apple gets one shot at a first impression. If the feature is half-baked, it'll take years to recover user trust.

Privacy Controversies: Any privacy issue—real or perceived—will be amplified 1000x because Apple built its brand on privacy. A single news story about Siri conversations being mishandled could tank adoption. Apple's going to be extra paranoid about this, which is good.

Integration Complexity: Getting hundreds of thousands of apps to work well with Siri requires a lot of developer effort and a lot of learning on Apple's side. There will be apps that don't integrate well. There will be confusing situations where Siri tries to do something unexpected.

Latency and Performance: If the feature feels slow or unreliable, people won't use it. Apple's got to nail the performance metrics or face criticism for being behind the times.

Google Dependency: If Google changes the Gemini API, removes capabilities, or raises pricing, Apple's stuck renegotiating. Long-term reliance on Google for core functionality is a risk that Apple probably doesn't love but has to accept.

Competitive Acceleration: Announcing a new Siri basically guarantees Google, Microsoft, and Samsung all accelerate their own projects. The competitive pressure will be intense, which is good for users but puts enormous engineering pressure on Apple.

None of these are deal-breakers, but they're all real risks. How Apple manages them will determine whether new Siri is seen as a triumph or a missed opportunity.

Timeline to Mainstream Adoption

Assuming Apple ships the new Siri in September 2025, what's the realistic timeline to mainstream adoption?

Year 1 (2025-2026): Early adopters use it. Developers start integrating. Apple refines based on feedback. By the end of the year, maybe 20-30% of iPhone users have tried it, but only 5-10% use it regularly for non-obvious tasks. The feature gains credibility but isn't yet mainstream.

Year 2 (2026-2027): Third-party app integration improves. Siri becomes more useful because more apps work with it. User reviews improve as the initial bugs get fixed. By the end of the year, maybe 40-50% of users have tried it, 20-25% use it regularly. It starts feeling like a real feature.

Year 3 (2027-2028): Siri becomes the default way many users access certain tasks. Restaurant reservations? Through Siri. Ride booking? Through Siri. Calendar management? Through Siri. Not everyone uses it this way, but a meaningful percentage does. By the end of the year, maybe 60-70% of users have tried it, 40-50% use it regularly.

This timeline assumes Apple doesn't screw up. If the feature is buggy or limited, adoption could be slower. If it's great, adoption could be faster. But realistically, it takes time for users to change behavior and for developers to build integrations.

The important metric isn't the number of people who try it. It's the number of people who change their behavior because of it. That's adoption. And that takes time.

The Long-Term Vision: What Siri Could Become

Five years out, if Apple nails this, what's the vision? A truly personal AI that understands your life, your relationships, your goals, and your constraints. An assistant that can manage your calendar not just by moving things around, but by understanding what matters to you and protecting time for it. An assistant that reviews your emails before you do, flagging the important ones. An assistant that manages your finances, watches your health data, and reminds you of things that matter.

Not in a creepy, "Big Brother" way. In a helpful, "this person understands me and is making my life better" way. It's the difference between an assistant that executes commands and an assistant that anticipates needs.

That's the vision Apple's working toward. And it's genuinely compelling. A Siri that knows you're stressed and suggests breathing exercises. That knows you've been working too hard and suggests taking a break. That knows your partner's birthday is coming up and suggests gift ideas based on their interests.

To get there, Siri needs to understand you. That requires continuous learning. That requires access to your data. And that's where the philosophical tension lies. The most useful version of Siri is also the most privacy-intrusive. Apple's trying to find a middle ground, but it's a hard problem.

The companies that figure this out—that find the balance between capability and privacy—will win the AI era. Apple's betting they're the company that figures it out. Time will tell.

Conclusion: The Siri Moment That Changes Everything

Apple's redesign of Siri represents a turning point. The company that once insisted on command-based voice assistants is now building a conversational AI system. The company that built its brand on on-device processing is now routing conversations through Google's servers. The company that prioritized simplicity is now shipping a complex, multi-layered system that tries to be both powerful and private.

None of this is surprising if you understand where technology is moving. Voice commands were always going to become conversation. On-device AI was always going to run into capability limits. Simplicity was always going to lose to usefulness.

What's interesting is that Apple's willing to make these trade-offs now. That signals genuine commitment to catching up in AI. That signals the company understands that the future of computing is conversational and intelligent.

For users, the immediate impact is that Siri's going to get a lot better. For developers, the opportunity is that Siri's going to become a distribution channel. For Apple, the bet is that integrating these capabilities into the phone will beat standalone chatbot apps.

The real winner here might be Google, which gets Gemini integrated into hundreds of millions of iPhones, meaning Google becomes the AI brain powering Apple's devices. That's a huge win that flies under the radar. But it's also what Apple chose because they recognize that genuine intelligence requires resources they don't have.

Looking forward, expect Siri to be the headline feature for iPhone for the next few years. Expect massive competitive response from Google, Microsoft, and Samsung. Expect rapid iteration as Apple learns what users actually want from conversational AI. Expect some initial disappointment as the feature launches with limitations, followed by steady improvement over time.

But most importantly, expect conversational AI to finally feel native to your device instead of like a separate thing you open an app for. That's the real shift. That's what makes this moment interesting.

FAQ

What exactly is Apple's new Siri?

Apple's new Siri is a conversational AI assistant powered by Google Gemini, designed to work through both typing and voice on iPhones, iPads, and Macs. Unlike the current command-based Siri, the new version engages in dialogue, understands context from previous messages, and can handle complex, multi-step tasks that require reasoning.

When will the new Siri be available?

The new Siri, codenamed Project Campos, is scheduled to launch in fall 2025 with iOS 18, iPadOS 18, and macOS 15. Apple plans to announce it during WWDC in June 2025 before the September release alongside the iPhone 17 launch.

How is the new Siri different from ChatGPT or Google Gemini?

The new Siri is integrated directly into your device with access to your personal data like calendar, contacts, emails, and location. ChatGPT and Google Gemini are standalone applications focused on general knowledge and reasoning. Siri's advantage is knowing your personal context; ChatGPT and Gemini's advantage is more general capabilities. They're complementary tools, not competitors.

Does the new Siri process my conversations on servers?

Yes, some processing happens on Apple's and Google's servers. Apple encrypts your request before sending it to Google's Gemini models for processing. Apple claims to minimize personal data sent to Google and uses on-device processing for your calendar, contacts, and other private information. You have privacy controls to adjust which features use cloud processing, but the chatbot functionality does require some cloud interaction.

Will the old voice commands for Siri still work?

Yes. Apple is keeping voice commands and quick actions alongside the new chatbot version. You can still say "Hey Siri, set a timer" and get instant results. The conversational chatbot is an additional interaction method, not a replacement for voice commands. Both will coexist on your device.

How will third-party apps integrate with new Siri?

Apple will likely provide an intents framework, or an updated version of its existing one, that lets app developers define what their apps can do through Siri. Developers can specify actions like "search restaurants," "book reservations," or "make payments." When users ask Siri to perform these tasks, Siri understands which app to access and orchestrates the request, with your explicit approval for sensitive actions.

What about privacy concerns with Google having access to conversations?

Apple encrypts data in transit and at rest, which limits what Google sees. Most personal data processing happens on your device before anything leaves your phone. However, the requests that do go to Google's servers have to be decrypted there for processing, which represents a privacy trade-off from Apple's previous messaging about on-device processing. You can disable certain features to maintain more privacy, but the core chatbot requires Google integration.

Will new Siri work offline?

Not fully. While some on-device capabilities will work without internet, the conversational chatbot functionality requires cloud processing through Google Gemini. You'll need a network connection for the most powerful features. Quick voice commands and some device controls might work in airplane mode, but the chatbot conversation won't function without internet access.

How accurate will Siri be at understanding what I'm asking?

Accuracy depends on context clarity and task complexity. Siri should handle straightforward requests very well (scheduling meetings, finding restaurants, sending messages). More ambiguous requests will get clarifying questions from Siri, which can then refine the response. Like all language models, it will sometimes misunderstand, but the conversational nature means you can correct it immediately with follow-up messages.

Will this cost extra or is it included with iPhone?

Apple hasn't announced separate pricing. The chatbot Siri will almost certainly be included as part of iOS 18 at no extra cost, just like current Siri features. Apple might bundle premium cloud features into a subscription tier eventually (similar to their approach with other services), but initial availability will be free to all iOS users.

Key Takeaways

- Apple's Project Campos transforms Siri from a voice-command assistant into a conversational chatbot powered by Google Gemini

- New Siri combines on-device processing for personal data with cloud AI, creating a hybrid privacy model that balances capability and security

- iOS 18 launch in September 2025 positions Siri as Apple's answer to ChatGPT and Gemini, with conversational capabilities previously unavailable

- Deep integration with calendar, contacts, and third-party apps makes new Siri contextually aware of your personal life and goals

- Apple's partnership with Google signals that even privacy-focused companies recognize cloud AI is necessary for genuine intelligence and capability
