Apple's AI Wearables Revolution: What We Know About the Trio

Apple isn't usually the first to bet big on emerging hardware categories. But when the company does move, it tends to move decisively—and right now, it's sprinting toward the AI wearables space with three distinct products in active development.

The rumors started quietly. The Information reported that Apple was building an AirTag-sized AI pendant with cameras. Then Bloomberg added the real shock: Apple is developing not just one, but three AI-powered wearables simultaneously, and the timelines are accelerating faster than anyone expected.

Here's what makes this moment critical. The AI hardware space has shifted dramatically in the past eighteen months. Humane's AI Pin didn't revolutionize anything. Meta's Ray-Ban smart glasses found genuine traction. Snap's upcoming Specs generated real interest. And in that gap, Apple watched. Studied. Planned. Now it's moving.

The question isn't whether Apple will release AI wearables—the company clearly will. The real questions are messier: What will these devices actually do? How will they integrate with the iPhone ecosystem? And can Apple avoid the pitfalls that sank earlier attempts?

Let's dig into what we know, what we can infer, and what it all means for the future of wearable computing.

The Three-Device Strategy: Breaking Down the Roadmap

The AI Pendant: Apple's Answer to the Camera Wearable

Let's start with the device that caught everyone's attention: the AI pendant.

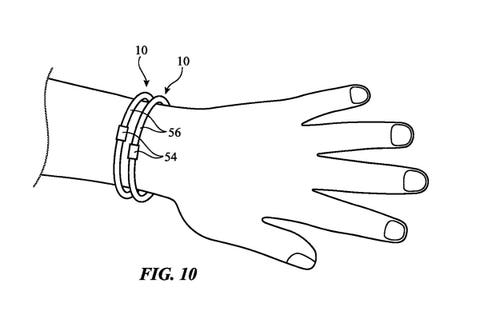

This thing is conceptually simple but functionally complex. Imagine an AirTag. Now bolt on two cameras, one pointing forward and one pointing down. Add AI processing, battery life measured in hours rather than days, and Siri integration. That's roughly what we're looking at.

The form factor matters more than it sounds. A pendant doesn't compete with your phone for attention. It sits there, passively recording what you see, what your hands are doing, what's in front of you. When you ask Siri a question, the AI can reference visual context from those cameras.

"What recipe uses these ingredients?" Point at your fridge. The pendant's camera sees them. Siri processes that input. You get an answer. Compare that to typing on your phone or speaking into the void without context.

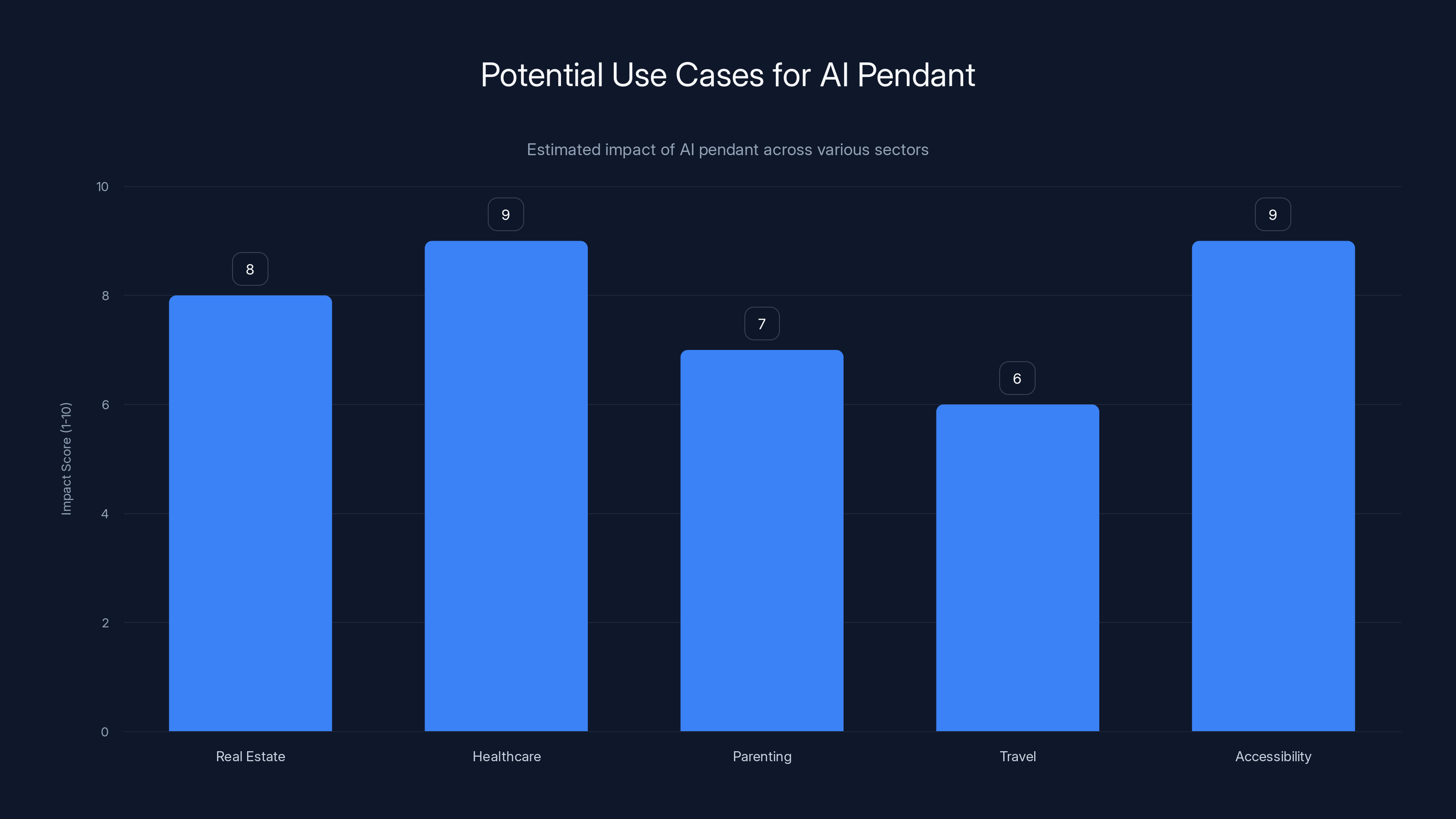

The use cases multiply quickly. Real estate agents could stream property tours. Surgeons could document procedures for training. Parents could keep tabs on what their kids are looking at while playing. Travel guides could provide contextualized information as you walk through a city. Accessibility applications become obvious too: vision impairment compensation, real-time object recognition, spatial awareness assistance.

But here's the catch everyone's dancing around. Cameras worn on your body that constantly record are privacy nightmares. Not in theory—in immediate, practical ways. Recording other people without consent. Recording in bathrooms, locker rooms, doctor's offices. The regulatory minefield hasn't even been fully mapped yet.

Apple's likely approach? Strict geofencing. Limited recording windows. Mandatory indicators that the device is recording. Tight privacy controls baked into the OS rather than bolted on as an afterthought, as has happened at other companies. The company's entire privacy positioning depends on getting this right from day one.

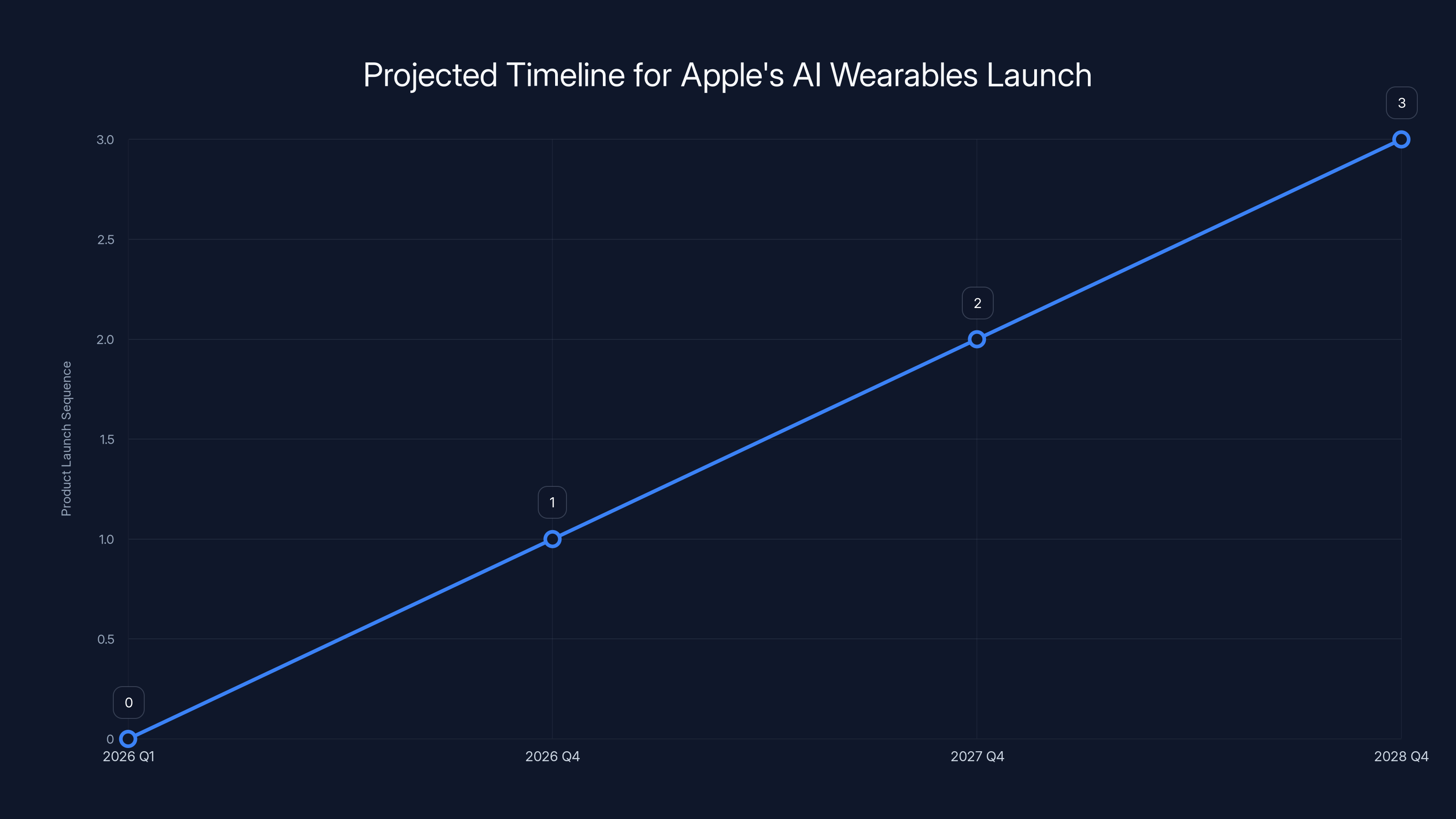

Timeline-wise, expect a release sometime in late 2026 or early 2027. That's aggressive enough to stay competitive but conservative enough to handle regulatory questions. The pendant won't launch without clear FCC approval, probably some form of FTC clearance on the privacy side, and state-level legal clarity in California, New York, and other restrictive jurisdictions.

The Smart Glasses (N50): The Premium Play

Apple's smart glasses carry an internal code name: N50. That's worth noting because it signals a serious stage of development. Code names don't get assigned to vaporware.

The smart glasses strategy differs fundamentally from the pendant. Where the pendant is passive and contextual, smart glasses are active and intentional. You put them on. They're part of your look. They're a statement.

Rumor has it these aren't Google Glass knockoffs. Apple's likely building something closer to premium sunglasses with AR capabilities. Think high-resolution displays embedded in the lenses. Processing power in the frames themselves. A design that doesn't look like a cyborg experiment gone wrong.

The specifications floating around suggest:

- High-resolution microdisplays in both lenses for true binocular vision

- Directional audio that plays sound directly to your ears without blocking ambient noise

- Hand gesture recognition for control without raising your wrist or speaking aloud

- Eye tracking for input and personalization

- Visual AI processing in real time, not relayed to your phone

- All-day battery, or close enough that you don't think about it between morning and night

Apple isn't chasing novelty here. The company is building something you'd actually want to wear, not something that feels like carrying a research prototype.

The real pivot point is integration with existing Apple services. Maps gets AR navigation. Translate gets live language conversion as you watch people speak. FaceTime gets spatial presence where you see the other person in AR positioned in your room. Photos becomes dimensional, not flat. Apple Intelligence gets a visual input channel that makes the whole system smarter and more contextual.

Production is targeted to ramp in December 2026, with consumer availability in 2027, according to Bloomberg's reporting. That's aggressive. It suggests Apple is already past the technical prototype phase and into the manufacturing optimization phase. The company needs confidence in the concept to commit hardware supply chain resources at that scale.

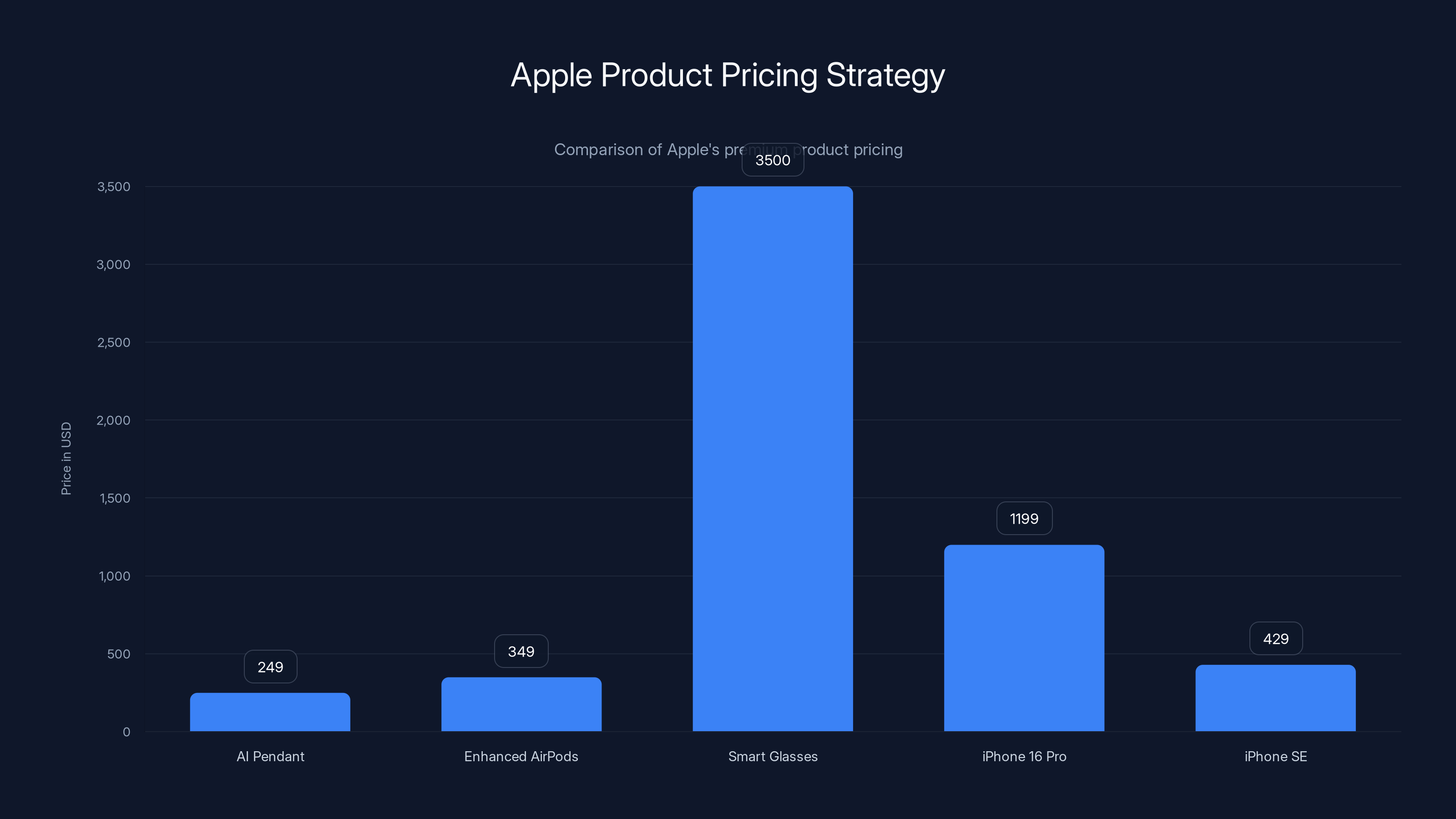

Pricing will be brutal. These will start around

Enhanced AirPods: The Accessibility Angle

The third product in Apple's trio is the most understated but potentially most important: AI-powered AirPods.

Ongoing AirPods development is nothing new. Apple refreshes this product line roughly every 18-24 months. But the "AI capabilities" angle is what shifts them from incremental to transformative.

What does AI actually do in earbuds? Several things:

- Real-time translation: You're talking to someone in Japanese. The AirPods translate immediately into your ear. The other person hears your response translated back. Conversation flows naturally.

- Noise signature analysis: The system learns your hearing profile over weeks of use and adapts audio processing to your individual ears, not just frequency curves.

- On-device voice AI: Siri processes commands locally, not by sending audio to Apple servers. Faster. More private. More responsive.

- Spatial audio generation: Creating directional audio from mono or stereo sources, with full spatial presence.

- Health integration: Hearing loss detection, alerts about sudden loud noises, integration with the Health app's hearing metrics.

- Personalized audio mixing: Blend music with ambient sound, calls, navigation, or health alerts based on context and preference.

The prosthetic angle matters more than fashion tech coverage typically acknowledges. Better hearing aid functionality without the stigma or complexity of traditional hearing aids. Accessibility features that cost

Battery life becomes critical here. If AI processing burns through power faster, these devices become tethered charging accessories. Apple's silicon teams have presumably been optimizing for low-power AI inference, so the AirPods will likely match or slightly exceed current battery life despite the added capability.

Release timing is probably concurrent with the other devices, though AirPods will likely launch first since the form factor is proven. Expect announcement in late 2026, availability in early 2027. Pricing probably stays in the

The AI pendant could significantly impact sectors like healthcare and accessibility, with high potential for real-time applications. (Estimated data)

The Competitive Landscape: Why Apple's Timing Matters

Meta's Smart Glasses Are Already Winning

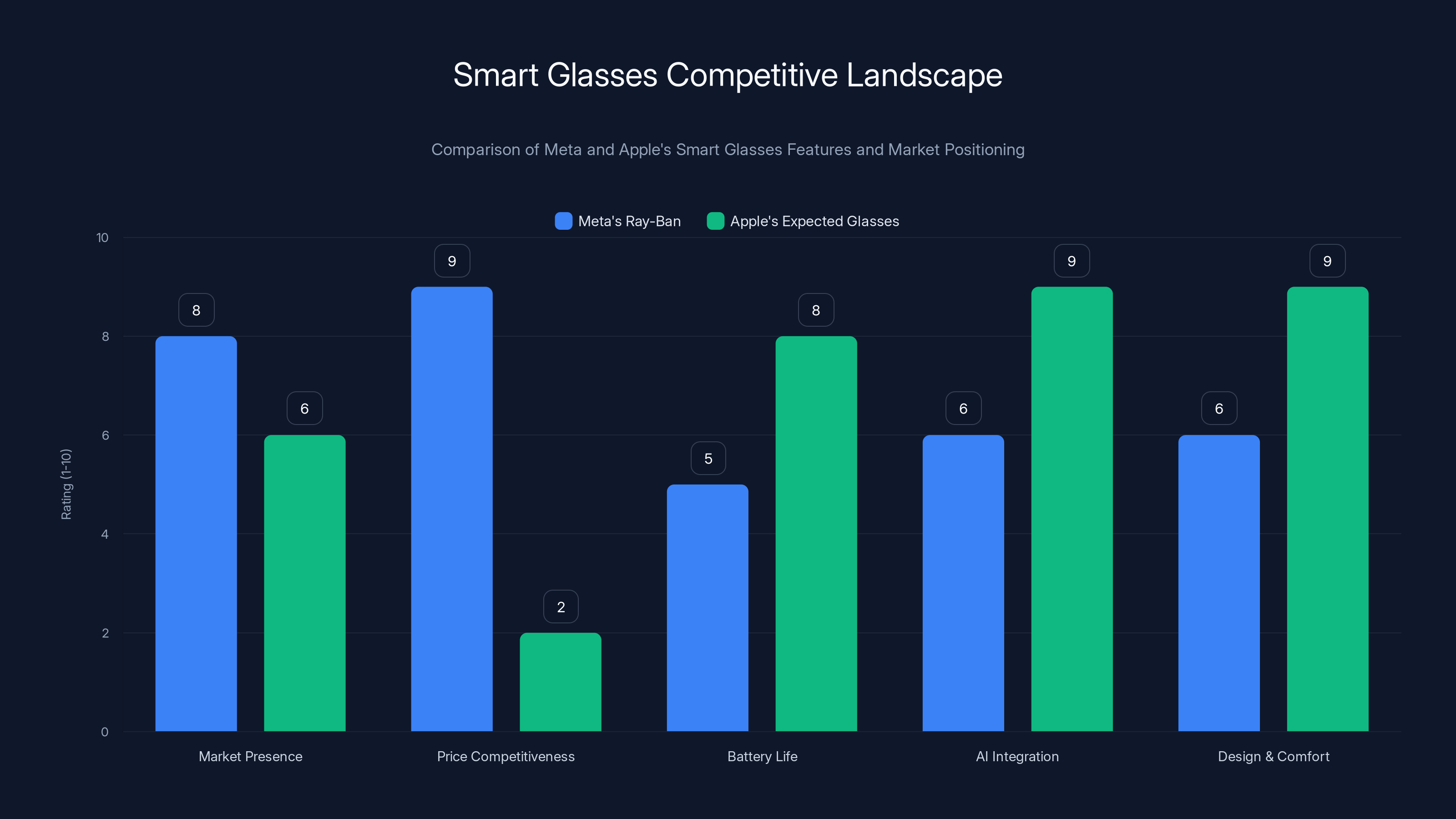

Let's be honest about where the competition stands. Meta's Ray-Ban smart glasses are shipping in volume. They work. People actually wear them. They're not perfect—the cameras drain battery, the AI isn't particularly sophisticated, and the display is essentially notification-based rather than full AR. But they exist in the market right now.

Meta has an eighteen-month lead on Apple. That's significant in hardware. It's enough time to identify failure modes, iterate on design, refine software, build ecosystem partnerships. Meta's also running significantly cheaper—

So why isn't Meta winning decisively? Because the experience is still rough. The glasses feel heavy. Battery life is disappointing. The camera quality is adequate, not exceptional. AI features feel bolted on, not integrated. Meta is winning by showing up first, not by winning on execution.

Apple is entering that gap. The company typically waits until a category is proven but before its definition becomes fixed, then releases something so much better engineered that it becomes the template everyone else copies.

Google Is Remarkably Absent

Here's the weird part: Google basically ghosted the smart glasses category after Google Glass flopped a decade ago. X Development (Alphabet's moonshot division) killed the glasses project. The company's pursued AR through partnerships with others, but it's not building consumer hardware in this space.

Why? Probably because Google understands its competitive advantage lives in software and services, not hardware manufacturing. Selling eyewear requires precision engineering, manufacturing partnerships, supply chains, retail presence, and customer service commitments that Google doesn't want. Google would rather license its AR OS to hardware makers and take 30% of the profit.

That actually helps Apple. It means Apple doesn't face Goliath competition. Just Meta, Snap, and a handful of startups most people haven't heard of.

Microsoft Is Competing in Enterprise

Microsoft's HoloLens is expensive, focused on enterprise, and requires serious technical knowledge to operate effectively. The device starts at $3,500 and goes up from there. Microsoft isn't trying to sell to consumers. The company is selling to manufacturers, military contractors, medical facilities, and large enterprises.

Apple could eventually compete in that space too, but not with these initial products. The first consumer AI wearables need to be accessible, intuitive, and delightful. Enterprise comes later.

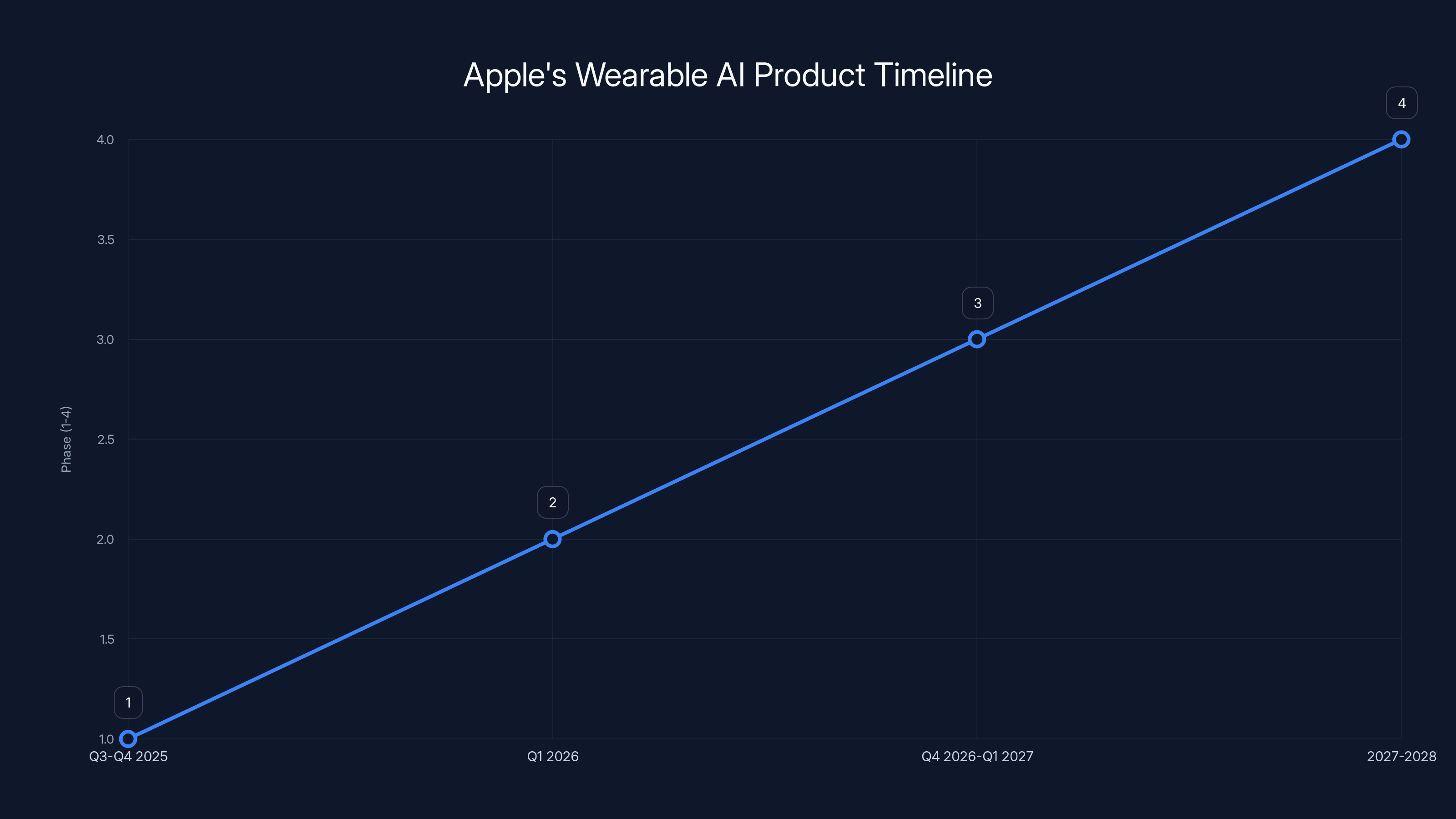

Apple's AI wearables are expected to launch in sequence: AirPods in late 2026, the pendant in late 2027, and smart glasses potentially by late 2028. Estimated data based on typical cycles.

Integration With The iPhone Ecosystem: The Connectivity Layer

Apple's greatest strength in this category is something competitors can't easily replicate: integration with the iPhone.

Imagine this workflow. You're wearing the smart glasses. You're also carrying your iPhone. You're also wearing the enhanced AirPods with translation. And the AI pendant is hanging on your shirt.

These devices don't compete for your attention—they orchestrate it. The smart glasses handle visual AR. The AirPods handle audio and translation. The pendant provides contextual awareness when you need it. Your iPhone is the hub that synchronizes everything.

This is where Siri becomes actually useful instead of a punchline. With contextual awareness from multiple input sources, Siri can make intelligent assumptions. "Who should I call?" The system knows who you were just looking at in photos. Who you've been texting. Who's nearby via Bluetooth. Siri suggests the right person.

"What did I just see that article about?" The pendant's cameras captured it. The glasses didn't display it. But the system cross-references, finds the article on your phone, surfaces it.

Microsoft tried building this kind of ecosystem with Cortana years ago. It never quite worked because Windows devices didn't have enough unified sensing. Apple has every ingredient: tight hardware-software control, consistent operating systems across devices, onboard processing power that's actually sufficient, and users accustomed to paying premium prices for polish.

Privacy is critical here too. All this sensor data—cameras, audio, location, health metrics—stays on-device by default. Syncing to iCloud happens with full encryption. Apple isn't building a data collection apparatus and calling it a feature. The company is building a local AI system that occasionally talks to servers on terms the user controls explicitly.

Compare that to how Google, Meta, and Microsoft typically operate. Those companies' incentives fundamentally point toward data collection. Apple's incentives point toward device efficiency and customer lock-in through experience quality. For a change, those incentives align with user privacy.

Technical Challenges Apple Probably Overcame

Power Management and Battery Life

Wearable AI is a battery problem wearing a hardware costume. Running AI models on-device (actually inferencing in real time, not just offloading to servers) means squeezing an immense amount of computation out of every joule of battery capacity.

Apple's solution: custom silicon designed specifically for this problem. The company's neural processing units (NPUs) in recent chips (A17 Pro, M4, A18) evolved precisely for this use case. Full AI models running locally, burning minimal power, generating minimal heat.

The trade-off is memory. These chips have limited RAM and storage specifically because expanding both drains battery dramatically. So the AI models need to be pruned, quantized, distilled down to their essentials. A full frontier-scale model like GPT-4 won't fit. But a much smaller model fine-tuned for specific tasks? That works.
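To put rough numbers on why quantization matters, here is a back-of-the-envelope sketch. The 3-billion-parameter figure is an assumption chosen for illustration, not a reported spec for any Apple model, and the calculation counts weight storage only (no activations or caches).

```python
def model_size_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Hypothetical model size, assumed purely for illustration.
PARAMS = 3e9

for label, bits in [("fp16", 16), ("int8", 8), ("int4", 4)]:
    # Fewer bits per weight means proportionally less memory to hold the model.
    print(f"{label}: {model_size_gb(PARAMS, bits):.1f} GB")
```

Under these assumptions the same model drops from 6.0 GB at 16-bit precision to 1.5 GB at 4-bit, which is the difference between not fitting at all and plausibly fitting in a memory-constrained wearable.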

Apple's been hiring heavily in model compression and efficient inference. The company's acquired startups working on exactly this problem. This isn't theoretical for Apple—it's operational.

Thermal Design

Processing power generates heat. Wearables can't dissipate heat effectively because they're small and worn against your skin. Get too hot and your glasses become uncomfortable. Your pendant feels like a heating element.

Apple's likely solution: intelligent workload management. Heavy computation happens when the user's idle, results cache locally. Real-time processing happens on low-power models. Offload heavier work to the iPhone when it's nearby. This is transparent to users but keeps thermals in the sweet spot.
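The routing logic described above can be sketched in a few lines. Everything here is hypothetical: the `Route` names, the `DeviceState` fields, and the 38 °C comfort limit are assumptions made for illustration, not anything Apple has described.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    LOW_POWER_ON_DEVICE = "run the small model on the wearable"
    OFFLOAD_TO_PHONE = "send the job to the nearby iPhone"
    RUN_HEAVY_NOW = "run the heavy job locally and cache the result"
    DEFER = "queue the job until the user is idle and the device is cool"


@dataclass
class DeviceState:
    temperature_c: float  # hypothetical surface-temperature reading
    user_idle: bool
    phone_nearby: bool


# Assumed comfort limit for illustration only; not a published figure.
COMFORT_LIMIT_C = 38.0


def route_task(realtime: bool, state: DeviceState) -> Route:
    """Pick where a task runs, following the three rules in the text."""
    if realtime:
        # Real-time work always uses the low-power on-device model.
        return Route.LOW_POWER_ON_DEVICE
    if state.phone_nearby:
        # Heavier work goes to the iPhone whenever it is in range.
        return Route.OFFLOAD_TO_PHONE
    if state.user_idle and state.temperature_c < COMFORT_LIMIT_C:
        # No phone, but the user is idle and the device is cool.
        return Route.RUN_HEAVY_NOW
    return Route.DEFER


print(route_task(False, DeviceState(41.0, user_idle=False, phone_nearby=False)))
```

The point of the sketch is the ordering: latency-sensitive work never waits, and the wearable only takes on heavy work itself as a last resort.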

Microsoft struggled with this on HoloLens. Devices got hot. Users complained. Apple learned from that mistake before shipping anything.

Display Technology

High-resolution displays that fit inside smart glasses don't exist in mass production yet. That's why Apple acquired companies working in micro-OLED and miniaturized display technology. The company's building the displays in-house, probably with suppliers like Sony or Samsung handling components.

The technical challenges are genuine: brightness (needs to work in daylight), field of view (too narrow feels wrong), resolution (low resolution ruins text readability), power consumption (displays are battery killers), and reliability (if the display fails, the whole glasses are useless).

Apple's patience on smart glasses—waiting until now versus launching something half-baked years ago—reflects confidence that display technology is finally good enough. The company doesn't ship products with mediocre core technologies.

Hand Gesture Recognition

Controlling glasses with hand gestures sounds futuristic but is technically complex. You need cameras pointed at your hands, always tracking, with models that understand hundreds of possible gestures, distinguish intentional from incidental movements, and work reliably regardless of lighting, skin tone, or hand size.

Apple's been refining this through its AR development and iPad-based interactions for years. The company's acquired multiple firms working on gesture recognition specifically. By 2026-2027, the technology will be mature enough to integrate into glasses without requiring users to learn an entirely new interaction model.

Meta's smart glasses lead in market presence and price, but Apple's anticipated entry is expected to excel in design, battery life, and AI integration. (Estimated data)

Manufacturing and Supply Chain Implications

The Display Supply Network

Apple is almost certainly partnering with Samsung and Sony for display technology. Both companies have advanced micro-display production. Samsung supplies OLED panels for iPhones. Sony builds high-end sensors for Apple's cameras.

But here's the constraint: micro-OLED production at scale is hard. The yield rates are probably in the 30-40% range right now, not the 80%+ Apple needs. So the company is probably investing in production equipment, working with suppliers to optimize manufacturing, or building its own factory specifically for this.

That investment won't pay off unless Apple's confident it'll ship millions of units. Which suggests the company isn't expecting this to be a niche product. Apple's planning for real volume.
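A quick worked calculation shows why the yield gap matters so much. The one-million-unit volume is an assumption for illustration, the two-displays-per-unit figure follows from the rumored microdisplay in each lens, and the yield percentages are the estimates quoted above.

```python
def panels_to_start(good_units: int, displays_per_unit: int, yield_percent: int) -> int:
    """Panels that must enter production to get enough good ones (rounded up)."""
    if not 0 < yield_percent <= 100:
        raise ValueError("yield_percent must be in (0, 100]")
    needed = good_units * displays_per_unit
    # Integer ceiling division avoids floating-point rounding surprises.
    return -(-needed * 100 // yield_percent)


UNITS = 1_000_000  # hypothetical shipment volume, assumed for illustration

for pct in (35, 80):
    print(f"{pct}% yield: {panels_to_start(UNITS, 2, pct):,} panels started")
```

Under these assumptions, a 35% yield means starting about 5.7 million panels to ship one million pairs of glasses, versus 2.5 million at 80%. More than half the difference is scrap, which is why yield improvement is worth serious capital investment.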

Processing Power and Chip Design

Apple's custom silicon teams are building purpose-built processors for wearables. These chips need to be:

- Tiny (they fit in glasses and earbuds)

- Low power (measured in milliwatts, not watts)

- Fast enough for real-time AI

- Reliable (no throttling or crashing)

- Secure (on-device processing means data doesn't have to be sent to servers)

Apple's probably already built these chips. The company's silicon roadmap extends years into the future. Wearable-specific processors are probably already in test production, ready for scaling.

Supply Chain Security

Apple doesn't want any surprises. The company probably has qualified suppliers for every component, backup suppliers for critical parts, and inventory buffers exceeding normal levels. Wearables with cameras are sensitive products—both technically and politically. Any supply chain failure creates headlines.

Expect Apple to announce supply partnerships before or alongside product announcements. These aren't secret. They're leverage. "We're working with [reputable supplier] to ensure [quality/privacy/reliability]." It's marketing disguised as supply chain transparency.

Regulatory and Privacy Considerations

The Camera Problem

Body-worn cameras recording the world are legally ambiguous in most jurisdictions. California has been moving toward requiring explicit consent before recording. New York's considering similar legislation. The FTC is actively investigating camera-equipped wearables for privacy implications.

Apple's likely strategy: be the privacy leader. Announce strong privacy commitments before regulation forces them. Make the pendant legally compliant in every major market. Probably include hardware switches to disable cameras, not just software toggles. Encrypt all recordings on-device. Never send camera data to Apple servers without explicit user permission. Make privacy so integral to the product that it becomes a selling point.

Compare that to Humane's AI Pin, which faced immediate privacy concerns. Apple learned from those mistakes before shipping.

Biometric Data and Health Information

AirPods with health sensing trigger different regulations. The FDA regulates medical devices. If Apple claims hearing loss detection or hearing aid functionality, the device becomes subject to medical device regulations.

Apple's playing this carefully. The current AirPods Pro have hearing health features, but Apple doesn't market them as hearing aids. The enhanced versions will probably follow the same approach: powerful health features that don't cross the line into making medical claims that trigger device classification.

It's regulatory gamesmanship, but it works. Users get the functionality they want. Apple avoids the certification burden of medical device status.

International Variations

Europe's GDPR makes wearable recording far more complicated. China has different privacy norms. India is developing its own regulations. Apple's almost certainly either designing these products to be globally compliant or planning regional variants. More likely, Apple builds the most restrictive version globally and gains marketing points for privacy leadership.

The timeline predicts Apple's wearable AI products will move from design finalization in late 2025, to announcements in early 2026, availability by late 2026, and market definition by 2027-2028. Estimated data.

Siri's Role: Making These Devices Actually Useful

Siri has been a punchline for years. It's not as good as ChatGPT. It's not as accurate as Google Assistant. It's not as seamless as Alexa in smart homes.

But Siri on these new wearables could actually be differentiated. Here's why:

Siri will have context. It will see what the user sees (from the pendant and glasses). It will hear what the user hears (from the AirPods). It will know the user's location, recent activity, and upcoming calendar. It will understand what app the user just used or what message just arrived.

With that context, Siri can make smarter suggestions and more accurate interpretations of ambiguous commands. "Book that restaurant" becomes unambiguous when Siri knows which restaurant you were just looking at. "Call them back" makes sense when Siri knows who just called.
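As a toy illustration of the idea, here is a sketch of how recent context could turn an ambiguous command into a concrete one. The `Context` fields and the `resolve` function are hypothetical names invented for this example; nothing here reflects how Siri is actually implemented.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Context:
    # Hypothetical signals a wearable might supply; names are illustrative.
    last_caller: Optional[str] = None
    last_viewed_restaurant: Optional[str] = None


def resolve(command: str, ctx: Context) -> str:
    """Replace a vague reference with the most recent matching context item."""
    text = command.lower().strip()
    if text == "call them back":
        if ctx.last_caller:
            return f"call {ctx.last_caller}"
        return "ask: who should I call?"
    if text == "book that restaurant":
        if ctx.last_viewed_restaurant:
            return f"book a table at {ctx.last_viewed_restaurant}"
        return "ask: which restaurant?"
    return "ask: can you be more specific?"


ctx = Context(last_caller="Dana", last_viewed_restaurant="Luigi's")
print(resolve("Call them back", ctx))
print(resolve("Book that restaurant", ctx))
```

Without the context, both commands fall through to a clarifying question. With it, the assistant can act immediately, which is the whole pitch for multi-sensor wearables.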

This is the difference between AI assistants that are broadly capable and AI assistants that are contextually brilliant. Apple's betting on the latter.

Apple's also likely heavily integrating Claude or other advanced language models through on-device APIs, not just relying on Siri's proprietary technology. The company did this quietly with some AI features already. Expect more of it.

Pricing Strategy and Market Positioning

Premium Positioning

Apple isn't competing with Meta's $300 smart glasses. The company's competing with the experience of using the iPhone—and the premium price users accept for that experience.

Expect positioning like this:

- AI Pendant: $299 as an accessory that enhances phone experience

- Enhanced AirPods: $399 as a premium audio product with intelligence

- Smart Glasses: $4,000 as a luxury computing device

These aren't expensive because the components cost that much. They're expensive because Apple controls the market and users accept premium pricing for Apple experiences. It's the same reason an iPhone 16 Pro costs

Ecosystem Lock-in

Apple's pricing strategy includes deliberate lock-in. These products work best together. They work okay with other iPhones. They work poorly or not at all with Android devices.

For wearable AI, that matters less than for phones because your wearable choices don't lock you into a phone ecosystem for a decade. But Apple's betting that a great wearable experience makes people stickier to iPhones anyway. If your glasses work seamlessly with your iPhone, switching to Android involves more friction.

It's not as aggressive as locking users in through proprietary chargers or components. It's lock-in through experience quality and integration depth. And honestly, for users who love the Apple ecosystem, that's probably worth the premium.

Current micro-OLED production yield rates are estimated at 35%, significantly below Apple's target of 80%+. Estimated data.

Real-World Use Cases and Killer Apps

Navigation and Wayfinding

Smart glasses with high-resolution displays absolutely demolish phone-based navigation. You're looking forward, not down. Directions appear in your field of view. You see the world in context while getting instructions. Apple's Maps is finally good enough that this feature would be genuinely useful, not just a novelty.

Walking in an unfamiliar city becomes trivial. The glasses overlay street names, building information, real-time traffic. You never get lost because you're not consulting your phone—the information comes to you.

Real-Time Translation

The AirPods translation feature (hearing someone speak in Japanese and understanding their words instantly in English) sounds like science fiction but is already possible. Apple's translator is already surprisingly good. Make it real-time and contextual, and you've solved language barriers for millions of travelers.

Business implications are huge. International meetings where English isn't everyone's first language become easier. Tourism gets better. Even immigrants navigating unfamiliar countries benefit immediately.

Health and Accessibility

Accessibility is where wearable AI shines.

For vision-impaired users, the pendant's cameras plus Siri's processing could provide verbal descriptions of surroundings. Real-time object recognition. Hazard warnings. Emergency detection. This isn't marketing speak—it's life-changing functionality.

For hearing-impaired users, the AirPods become powerful hearing aids without the stigma. Bone conduction audio on the glasses could provide spatial sound information. Real-time captioning on all audio.

For elderly users, fall detection, medication reminders, and emergency assistance become more sophisticated. The glasses could recognize slip hazards. The pendant could alert caregivers to unusual patterns.

Apple doesn't market this well, but accessibility is one of the company's genuine strong points. Expect the marketing to emphasize health and accessibility heavily, even though lifestyle marketing sells products better.

Work and Productivity

For knowledge workers, smart glasses change how we interact with information.

Engineer on a construction site, hands full. Glasses display relevant blueprints. Pendant documents progress. AirPods provide hands-free communication. All contextual, all visual-first.

Surgeon in an operating room needs information without scrubbing out. Glasses display patient imaging. Real-time updates on vitals. Historical context on previous procedures. Hands-free voice commands.

Salesperson in a meeting needs customer context without checking their phone. Glasses display relevant account information, previous interactions, pricing authority. Pendant documents the meeting visually.

These aren't hypothetical. Meta's already pursuing some of these use cases. Apple's entering the space with better hardware and integration.

Competitive Responses and Market Dynamics

What Meta Will Do

Meta will respond with better displays and smarter AI, probably by 2027 or 2028. The company has the technical capability. It has the supply chain partnerships. It has the financial resources.

Meta's advantage: the company already has users wearing glasses. Incremental improvements to an existing product usually succeed more often than new product categories do. Meta can iterate faster because the manufacturing pipeline is already established.

Apple's advantage: the company has design excellence and the ability to integrate with the iPhone ecosystem in ways Meta simply can't replicate.

What Google Might Do

Google's probably watching Apple more carefully than anyone except Meta. If Apple's strategy succeeds, Google might finally re-enter the smart glasses space. The company has the technology—mostly sitting idle since the Google Glass era ended.

But Google's incentive structure argues against it. Google makes money from search and advertising. Smart glasses that work seamlessly with iPhones don't make money for Google. Smart glasses that work with Android might, but Android lacks the tight integration that makes Apple's approach work.

Expect Google to partner with other manufacturers, license its technology, and take a back-seat approach. That's the pattern Google has followed consistently.

Startup Opportunities

There's massive opportunity in specialized wearable applications. Healthcare startups. Industrial applications. Fitness and sports. Entertainment and gaming.

The companies that will win are the ones that build on top of Apple's ecosystem, not against it. A healthcare startup building cloud-based data processing that works with Apple Health and the new wearables could win huge. A fitness platform that leverages glasses-based coaching could dominate.

Startups that build incompatible wearables will struggle. Markets consolidate around winners. Apple's position is so strong that third-party wearable makers will need genuine differentiation or they'll lose.

Apple's pricing strategy emphasizes premium positioning, with products like Smart Glasses priced significantly higher due to perceived value rather than component cost. Estimated data.

Timeline Predictions and What to Watch

Q3-Q4 2025: The Calm Before the Storm

Expect rumors to quiet down as Apple finalizes designs and begins production scaling. The company typically goes silent 4-6 months before major hardware announcements. If rumors dry up, assume announcements are coming in early 2026.

Watch for supply chain signals: orders to display makers, finalizations of processor volumes, regulatory filings. These are the tell-tale signs of imminent product launches.

Q1 2026: Announcement Phase

Apple probably announces the AI wearables at a special event in spring or at WWDC 2026 (typically held in June, which would push the announcement into Q2). The company needs time to explain the concepts, build developer interest, and start pre-orders.

Expect heavy focus on privacy, integration, and design. Light focus on technical specifications. Apple doesn't market based on specs—it markets based on what you can do.

Q4 2026-Q1 2027: Availability Phase

The AirPods probably ship first (easiest manufacturing, proven product line). The pendant follows. The smart glasses launch last, with possibly a limited initial run.

Prices probably come down quickly on the AirPods (following the typical Apple trajectory). Glasses pricing stays sticky because the product is new and supply-constrained.

2027-2028: Market Definition

By 2027, wearable AI is either a real category or it's fading. Apple's success or failure with these products determines whether competitors double down or give up.

Wearable AI either becomes the next iPhone-sized category: something that didn't exist ten years earlier, represents 15-20% of consumer tech spending, and is considered essential by millions.

Or it becomes like 3D TVs: an interesting experiment that never reached critical mass.

Given Apple's track record, betting on the former seems reasonable.

The Bigger Picture: Computing Interface Evolution

From Phone-First to Context-First

Wearable AI represents a fundamental shift in computing interfaces. For fifteen years, the smartphone has been the primary computing device. You pull it out. You look at the screen. You interact.

Wearable AI inverts this. The computer comes to you. Information appears in your context. You interact through gestures, voice, and attention. The phone becomes a secondary device you use when you need intensive computing, not the primary interface for all information.

This is actually how humans have always worked. You don't keep a notepad in front of your face—you glance at it when needed. Wearable AI mirrors that natural interface pattern.

The Spatial Computing Bet

Apple's been using "spatial computing" as a descriptor for its AR strategy. The company means it literally: computing that happens in physical space, integrated with your surroundings.

Vision Pro is Apple's first spatial computer. It's expensive, requires a room, and isn't practical for daily use. But it proved the technology works.

These new wearables are the practical evolution: spatial computing at consumer prices, wearable form factors, integrated with phones. They're bringing spatial computing out of the lab and into daily life.

If Apple succeeds, spatial computing becomes the way people interface with digital information within five to ten years. That's genuinely transformative.

Privacy as a Competitive Moat

Apple's been building a privacy-first narrative for years. It's been mostly marketing. But with wearables that involve cameras and sensors recording constantly, privacy isn't optional—it's existential.

If Apple executes on privacy commitments while competitors don't, the company wins on trust. And in consumer tech, trust compounds. Users who believe Apple respects their privacy are stickier, more loyal, and more willing to pay premium prices.

This is where Apple's control of the entire stack—hardware, software, services—becomes genuinely advantageous. The company can architect privacy into the system at every level, not just add it on top.

Potential Pitfalls and How Apple Might Avoid Them

The Failure Mode: Product-Market Fit

Wearable AI devices could fail if they don't solve a problem users actually care about. The AI pendant might be interesting, but is it necessary? Would you really wear something around your neck just for contextual camera recording?

Apple's likely to avoid this by launching with features people actually want. Not flashy demos. Useful functionality on day one.

Meta's smart glasses succeeded because they could record video and take pictures—things users already wanted to do. Apple's likely building similar real-world value into these products.

The Failure Mode: Privacy Backlash

If Apple ships camera wearables and then privacy issues emerge, the entire product category suffers. Users stop wearing them. Regulators intervene. The market collapses.

Apple's likely to avoid this through obsessive testing, conservative initial features (limited recording, geofencing, explicit consent), and regulatory compliance. The company learned from others' mistakes.

The Failure Mode: Integration Complexity

If these devices require complex setup or feel disconnected from the iPhone ecosystem, they become enthusiast toys, not consumer products.

Apple's likely to avoid this through excellent software, deep iOS integration, and probably a dedicated app that handles setup and configuration. The company's product design prioritizes simplicity over features—users get easy setup and powerful defaults, not overwhelming options.

The Failure Mode: Battery Life

If the smart glasses need charging every eight hours and the pendant every six hours, they're dead on arrival. Wearable devices must work all day or close to it.

Apple's likely to avoid this through custom silicon optimized for power efficiency and software that schedules workloads to conserve power. The company probably won't ship until battery life hits its targets (likely 12+ hours for glasses, 16+ hours for AirPods, 10+ hours for the pendant).

Developer Implications and Ecosystem Building

New APIs and Frameworks

Apple will release new APIs for developers building on these platforms. Think ARKit for spatial context. Core ML for on-device AI. New sensor frameworks for pendant and glasses cameras. Probably new frameworks for real-time audio processing (translation, noise reduction, spatial audio).

Developers who get in early on these APIs will have an advantage. Apple typically makes early SDK access easy for startups and established app makers.

The App Store for Wearables

Expect a new or expanded App Store category specifically for wearable applications. These won't be phone apps ported down—they'll be purposefully built for small screens, voice input, and quick interactions.

The most successful early apps will probably be:

- Navigation and maps (with wearable-specific features)

- Translation and language tools

- Health and fitness apps

- Communication tools (messaging, calling)

- Accessibility applications

- Work-specific tools (for the professional vertical)

Revenue Opportunities

Subscription services work well on wearables. Continuous services (translation, health monitoring, navigation with real-time updates) make sense as subscriptions.

Most of Apple's wearable ecosystem will eventually move toward services. The hardware is the enabler. The services are the recurring revenue.

Developers who build subscription-first applications for these platforms will likely win bigger in the long run than those building one-time purchase apps.

Long-Term Implications for Computing

Will Phones Become Secondary?

Probably not fully. But wearables might capture 30-40% of daily computing interaction within ten years. Phones become powerful mobile computers you use when you need screen real estate. Wearables become your primary interface for quick information, communication, and context.

It mirrors the shift from desktop to mobile. The old category didn't disappear—it just became secondary.

The Augmented Perception Era

When most people have access to AI-powered glasses with real-time translation, object recognition, and contextual information, human perception changes fundamentally.

We might navigate the world differently. Process information differently. Interact with strangers differently. This is potentially transformative for human behavior, not just technology.

Apple's AI wearables probably represent the first glimpse of what society looks like when AI augmentation is normal, not novel.

TL;DR

- Apple is building three AI wearables simultaneously: an AI pendant with cameras, smart glasses (N50) with high-resolution displays, and enhanced AirPods with on-device AI

- Timeline is aggressive: production starting as early as December 2026, with consumer releases in 2027, suggesting high confidence in the technology

- Positioning is premium: prices ranging from $299 for the pendant and AirPods to $4,000 for the smart glasses

- Integration with iPhone ecosystem is the key differentiator: these aren't standalone devices, they're coordinated components that work better together than competitors' fragmented offerings

- Privacy and regulatory strategy will make or break these products: Apple is probably designing privacy controls more carefully than any competitor, learning from earlier failures in the space

- Real-world applications are genuinely useful: real-time translation, hands-free computing, accessibility features, and spatial information all solve actual problems, not just add novelty

- Bottom line: Apple's entering the wearable AI space with advantages in design, privacy, ecosystem integration, and manufacturing that could make these products category-defining, assuming execution is excellent

FAQ

What is an AI wearable, and how does it differ from current smart devices?

AI wearables are computing devices you wear on your body that process information locally using onboard AI models, rather than relying primarily on smartphone apps. They differ from current smartwatches or earbuds because they're designed with dedicated AI processing as the core function, not as an afterthought. Instead of asking your phone a question via Siri, you ask glasses or a pendant, and the device understands context from its built-in cameras and sensors to provide smarter, more contextual responses.

How will Apple's AI pendant differ from existing wearable cameras?

Apple's pendant distinguishes itself through tight integration with the iPhone ecosystem, sophisticated privacy protections, and AI processing that's specifically optimized for wearable contexts. Unlike existing action cameras or body cams, the pendant will understand what you're looking at and provide contextual intelligence through Siri. Every previous attempt at wearable cameras faced privacy backlash because they seemed invasive. Apple's design likely includes hardware switches, geofencing, and explicit user controls that make privacy the primary feature, not an afterthought.

What is the realistic timeline for when these products will be available?

Based on reporting from reputable technology outlets and Apple's typical product development cycles, expect an announcement in early to mid-2026, with AirPods launching first (late 2026 or early 2027), followed by the pendant, with smart glasses arriving last, potentially in late 2027 or early 2028. Apple often announces new-category products 6-12 months before availability, and actual shipping could slip further if the company encounters supply chain challenges or regulatory complications.

Will these AI wearables work with non-Apple devices like Android phones?

They'll probably work with basic functionality, but the real intelligence and integration will require an iPhone. Apple's strategy has always been to make its ecosystem products work much better together than with competitors' devices. You could probably pair the AirPods with Android, where they'll function as wireless earbuds, but real-time translation and sophisticated Siri integration probably won't work without iOS. This is a deliberate ecosystem lock-in strategy, though not unusually aggressive compared to how Apple has always operated.

What are the main privacy risks with wearable cameras, and how might Apple address them?

The core risk is that body-worn cameras recording everything create serious privacy violations for both the wearer and people around them. They could capture intimate moments, people in bathrooms or changing rooms, confidential workplace information, or medical settings. Apple's likely addressing this through hardware controls (actual switches to physically disable cameras), transparent recording indicators, strict geofencing to disable recording in sensitive locations, local encryption of all footage, and probably proactive compliance with privacy rules before laws require it. The company's bet is that being privacy-first becomes a major marketing advantage.

How will Siri improve on wearables compared to current implementations?

Siri will gain contextual awareness from multiple input sources: cameras on the glasses and pendant, location data, health metrics from the AirPods, iPhone proximity, and user activity patterns. Instead of Siri being a generic voice assistant, it becomes specific to your situation. "What's in front of me?" refers to what the glasses see. "Call them back" makes sense because Siri knows who just contacted you. This contextual intelligence makes Siri actually useful, which it hasn't historically been as a generic voice assistant.

Will these products compete directly with Meta's Ray-Ban glasses, or is Apple targeting a different market?

They're competing in the same space but at different price points and capability levels. Meta's glasses at

What happens to the traditional iPhone if wearables become primary computing interfaces?

The iPhone probably doesn't disappear, but it becomes less central. Similar to how desktops didn't vanish when mobile phones arrived—they just became secondary. Wearables are best for quick interactions: navigation, translation, health monitoring, notifications. When you need to create something complex, edit documents, or do heavy computing, you grab your iPhone. The phone becomes a productivity tool you use when necessary, not the center of your digital life. This is likely Apple's long-term vision anyway.

How much will these devices cost, and is that pricing justified?

Based on Apple's historical pricing and the manufacturing complexity, expect

What should developers know about building apps for these wearables?

Develop specifically for small screens and voice-first interaction rather than porting iPhone apps down. The best wearable applications answer a single question or accomplish a single task in seconds. Build with subscription models in mind: continuous services (translation, monitoring, analysis) work better on wearables than one-time purchases. Optimize ruthlessly for battery life and on-device processing. Assume connectivity is intermittent: the devices will usually be connected but will regularly go offline, so core features should keep working without a network. Get into the SDK early through Apple's developer program and build something genuinely useful rather than just experimenting with the new platform.

Could these products fail like previous wearable attempts (Google Glass, Snap Spectacles)?

Absolutely. Google Glass failed because it wasn't significantly better than smartphones for most use cases, it looked weird, had poor battery life, and raised privacy concerns from day one. Snap Spectacles never gained mainstream adoption. Apple's products could fail for similar reasons: limited real-world usefulness, battery inadequacy, privacy backlash, or simply that people don't want to wear multiple devices. The difference is Apple has enormous resources to iterate if version one doesn't work. Meta can do the same. Most startups can't. Apple's biggest advantage is the ability to live through failure and eventually get it right.

Future Outlook: The Wearable AI Inflection Point

We're probably at an inflection point in personal computing. The smartphone has dominated for fifteen years. But as AI processing becomes more efficient, battery technology improves, and displays become small enough for everyday eyewear, wearable AI becomes genuinely viable.

Apple's timing is deliberate. The company waited until the technology was good enough. Until the problems were solvable. Until competitors proved the market exists. Now Apple's moving decisively.

If these products execute well, you'll see them everywhere within three years. Offices will fill with people wearing glasses and pendants. Airports will have real-time translation happening silently. Healthcare providers will use these tools as standard equipment.

If they fail, Apple will iterate quietly until it gets it right. And if even Apple can't make wearable AI work, the market probably isn't real yet.

Either way, these devices represent the company's biggest bet on a new category since the iPad. That alone makes them worth paying attention to.

For most users, waiting until 2027 and seeing what Apple actually ships makes sense. The early reviews will tell you if the hype matches reality. For developers, getting familiar with Apple's existing frameworks such as ARKit and Core ML and building proof-of-concept applications now gives you a head start when these products actually launch.

The wearable AI era is coming. Apple's probably going to define what it looks like.

Key Takeaways

- Apple is simultaneously developing three AI wearables: a pendant with cameras, smart glasses (N50), and enhanced AirPods with local AI processing

- Production is targeting December 2026 with consumer releases in 2027, signaling strong confidence that the technology is production-ready

- Pricing will be premium: $299 for the pendant and AirPods, $4,000 for the smart glasses, positioning these as luxury-category devices

- Integration with iPhone ecosystem and privacy-first architecture are key differentiators from competitors like Meta and Snap

- Real-world applications include real-time translation, hands-free navigation, accessibility features, and contextual AI assistance via enhanced Siri

- Regulatory compliance and privacy safeguards will determine success—Apple's learning from failures of earlier wearable camera attempts

