Introduction: From Garage Gadget to AI-Powered Home Guardian

Five years after selling Ring to Amazon for over $1 billion, founder Jamie Siminoff found himself burned out—literally and figuratively. By 2023, he'd pushed so hard for so long that stepping away seemed inevitable. He wasn't motivated to start something new because everything he wanted to build belonged on Ring's platform. Then AI changed everything.

When generative AI exploded onto the scene, Siminoff saw something most people missed: an opportunity to transform Ring from a reactive security camera into a proactive intelligent assistant. Then came January 2025, when the devastating Palisades Fires swept through Southern California, destroying the very garage where Ring was born. The tragedy crystallized his vision.

Today, Ring isn't just watching your front door anymore. It's learning your family's patterns, recognizing familiar faces, identifying dangers before they escalate, helping reunite lost pets with their families, and even assisting firefighters during catastrophic disasters. But this transformation comes with real questions about privacy, surveillance, and how much data we're willing to trade for convenience.

This shift represents something larger than one company's evolution. It's a glimpse into how AI is fundamentally reshaping the smart home landscape. Devices that once simply recorded video are becoming decision-making agents that interpret context, predict problems, and take action. The implications are profound—and they're worth understanding now, before these systems become even more embedded in our daily lives.

Siminoff's mantra for this new era is deceptively simple: "Turn AI backwards—it's IA, it's an intelligent assistant." But behind that wordplay lies a radical reimagining of what home security could mean when artificial intelligence gets involved.

TL;DR

- Ring's Strategic Pivot: Founder Jamie Siminoff returned to the company to transform it from a doorbell maker into an AI-powered intelligent assistant for homes, showcasing five major AI features at CES 2026.

- Fire Watch Innovation: AI monitors for smoke, fire, and embers in shared footage during major fire events, inspired by the 2025 Palisades Fires that destroyed Siminoff's original garage.

- Search Party Success: The AI-powered lost pet finder reunites one family per day with their dogs using "facial recognition for dogs" technology.

- Privacy Tradeoffs: Partnerships with Flock Safety and Axon let police request footage from Ring customers, raising concerns about mass surveillance and government overreach.

- Familiar Faces Controversy: Storing the faces of regular visitors (family, babysitters) for facial recognition improves personalization, but it has drawn privacy concerns from the EFF and a U.S. senator.

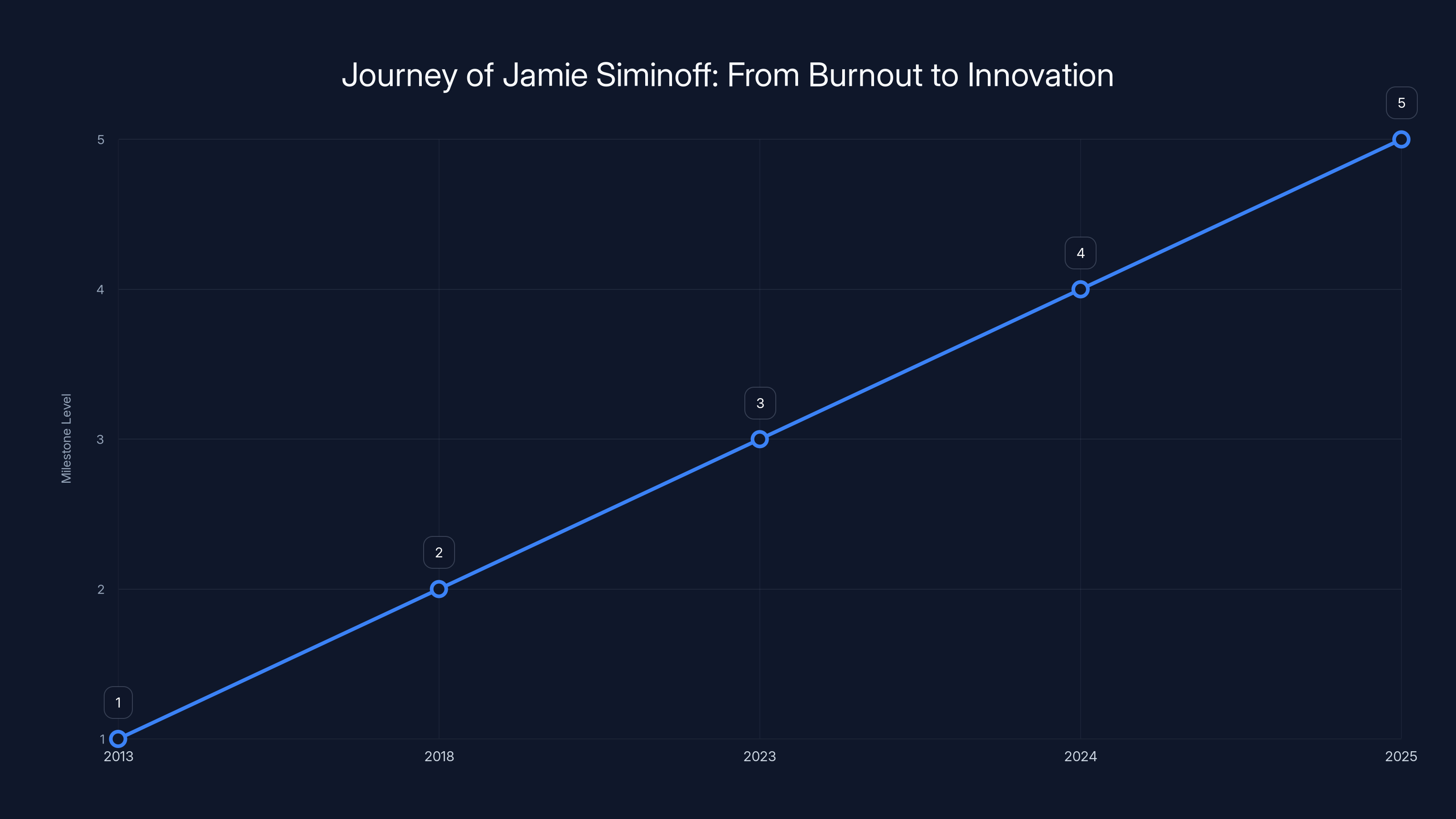

Estimated data shows Jamie Siminoff's career path from founding Ring to returning as Executive Chairman, highlighting key moments of innovation and transformation.

The Return of a Burned-Out Founder

Jamie Siminoff's departure from Ring in 2023 wasn't dramatic. It was the natural endpoint of an entrepreneur who'd already won the biggest game. He'd built something in his garage, scaled it to profitability, sold it to Amazon, and continued pushing for five more years after that. By any reasonable metric, he'd accomplished what most founders only dream about.

But burnout is a specific kind of hell. Siminoff describes it not as depression or dissatisfaction, but as the exhaustion of running at full throttle. He had one speed, maximum, and he'd been at it for over a decade. When he finally left Amazon's fold, the plan was simple: rest, reflect, maybe do something completely different. He'd earned that luxury.

What he didn't anticipate was how quickly AI would make that retirement plan obsolete. When large language models, vision systems, and real-time inference became viable, Siminoff realized the things he was most excited about building all belonged to Ring's ecosystem. The infrastructure was there. The user base was there. The trust was there. Starting from scratch would be reinventing the wheel while watching someone else play on the field.

So he came back. Retirement was clearly never going to stick, and the opportunity to build something fundamentally new on Ring's foundation was irresistible. He returned in 2025, taking an active role in steering the company's transformation.

"AI comes out, and you realize, 'Oh my God, there's so much we could do,'" Siminoff explained in conversations at CES 2026. That realization wasn't abstract. It was specific, actionable, and urgent. The technical capabilities finally existed to build features that Ring customers had implicitly been asking for all along: understand what you're seeing, not just record it.

The return of a burned-out founder is typically a cautionary tale. But in Siminoff's case, it's been a catalyst for genuine innovation. Sometimes the best product decisions come not from someone pushing harder, but from someone who took a break, came back refreshed, and saw the landscape with new eyes.

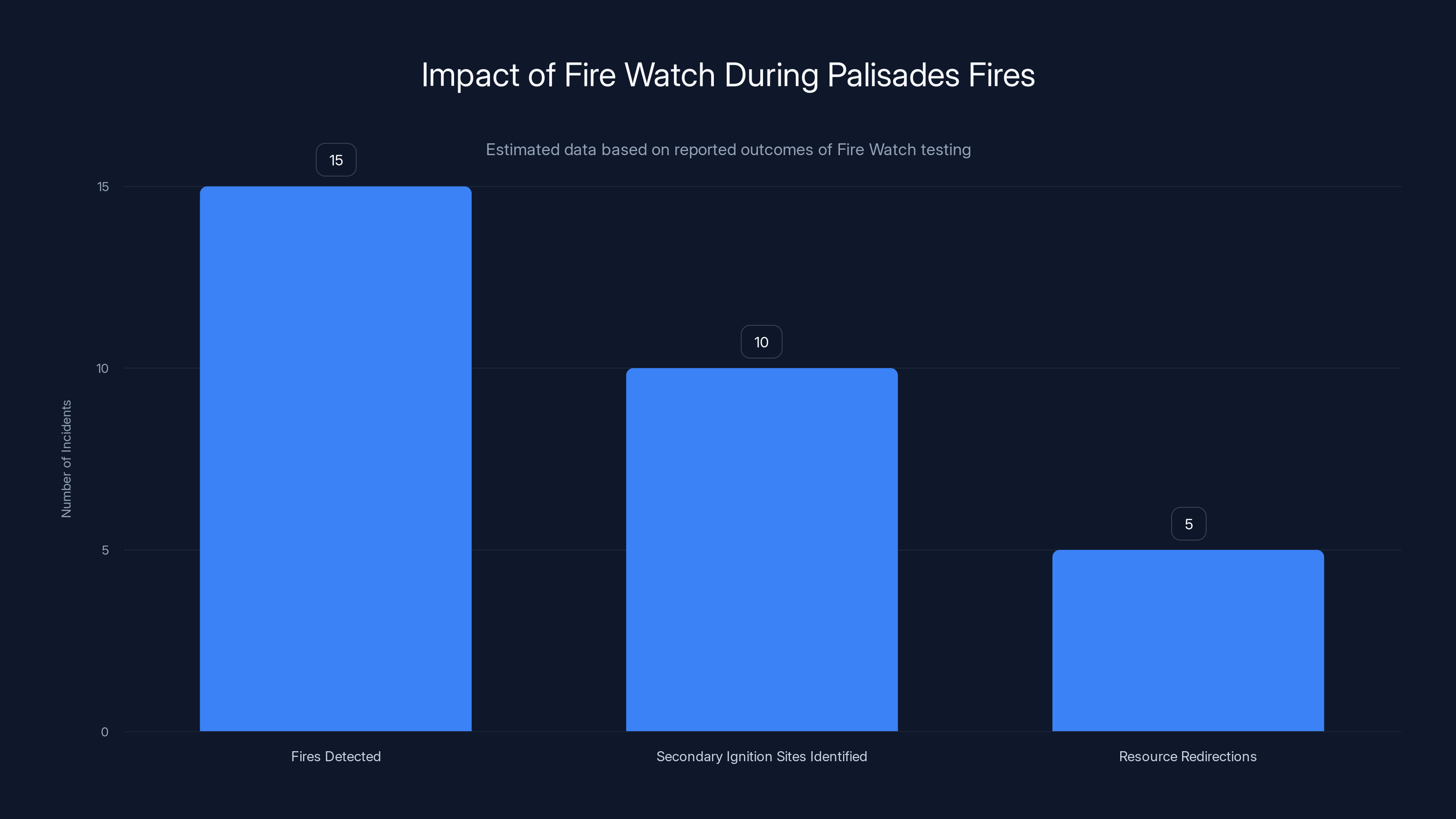

Fire Watch significantly enhanced firefighting efforts by detecting 15 fires, identifying 10 secondary ignition sites, and facilitating 5 resource redirections. Estimated data.

When Fire Becomes the Catalyst: The Palisades Fires and Fire Watch

The Palisades Fire of January 2025 was catastrophic. Burning through Pacific Palisades while the Eaton Fire tore through Altadena, the Southern California fires destroyed thousands of structures, claimed multiple lives, and forced over 100,000 people to evacuate. One of those people was Jamie Siminoff, who watched as flames threatened his neighborhood and ultimately destroyed the garage where Ring was born.

Personal tragedy often crystallizes vision in ways that abstract thinking never can. For Siminoff, watching his home threatened by fire while running a security camera company created a moment of moral clarity. Ring's technology could help. It should help. The question was how to do it at scale without compromising privacy.

Fire Watch is the answer. Built in partnership with Watch Duty, a nonprofit fire monitoring organization, the feature works like this: when a major fire event is detected in a geographic area, Ring can alert customers in that region and ask them to opt in to sharing footage. Unlike traditional surveillance partnerships, this is fully voluntary, fully transparent, and fully directed at a specific public safety crisis.
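The opt-in flow described above can be sketched as a geofence check followed by a consent filter. This is a minimal sketch under stated assumptions: the `Camera` record, its `opted_in` flag, and the fixed alert radius are hypothetical, and neither Ring nor Watch Duty has published how alert areas are actually drawn.

```python
import math
from dataclasses import dataclass
from typing import List

EARTH_RADIUS_KM = 6371.0


@dataclass
class Camera:
    owner_id: str
    lat: float
    lon: float
    opted_in: bool  # hypothetical: owner accepted this fire event's sharing request


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def cameras_to_notify(cameras: List[Camera], fire_lat: float, fire_lon: float,
                      radius_km: float) -> List[Camera]:
    """Everyone inside the alert radius is asked; nobody is enrolled automatically."""
    return [c for c in cameras
            if haversine_km(c.lat, c.lon, fire_lat, fire_lon) <= radius_km]


def cameras_sharing(cameras: List[Camera], fire_lat: float, fire_lon: float,
                    radius_km: float) -> List[Camera]:
    """Only cameras whose owners explicitly opted in contribute footage."""
    return [c for c in cameras_to_notify(cameras, fire_lat, fire_lon, radius_km)
            if c.opted_in]
```

Keeping "who gets asked" separate from "who shares" is the point of the design: being inside the fire zone only triggers a question, never enrollment.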

Once footage is shared, Ring's AI scans it specifically for smoke, fire, embers, and flame. The AI doesn't look at faces. It doesn't identify people. It's purely hunting for fire evidence. Watch Duty uses this aggregated data to create better maps of fire progression, identify problematic areas where firefighting resources need to be deployed, and ultimately save lives.
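A fire-only scan can be sketched as a label whitelist applied to a detector's output, so anything that isn't smoke, fire, embers, or flame is discarded rather than kept. The `Detection` type, the label names, and the 0.6 confidence cutoff are hypothetical. The sketch assumes some upstream vision model has already produced the labels.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical label set mirroring what the article says the scan looks for.
FIRE_LABELS = frozenset({"smoke", "fire", "ember", "flame"})


@dataclass(frozen=True)
class Detection:
    label: str
    confidence: float
    timestamp: float  # seconds since the start of the shared clip


def fire_evidence(detections: List[Detection], min_confidence: float = 0.6) -> List[Detection]:
    """Keep confident fire-related detections; people, cars, etc. are dropped."""
    return [d for d in detections
            if d.label in FIRE_LABELS and d.confidence >= min_confidence]


def first_sighting(detections: List[Detection], min_confidence: float = 0.6) -> Optional[float]:
    """Earliest timestamp with fire evidence, or None if there is none."""
    hits = fire_evidence(detections, min_confidence)
    return min((d.timestamp for d in hits), default=None)
```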

The impact is measurable. During testing, Fire Watch detected fire progression that human observers missed, helped firefighters identify secondary ignition sites, and in at least one case, provided evidence that firefighters used to redirect resources more efficiently. It's a proof point that AI surveillance, when used with explicit consent for a time-limited purpose, can solve genuine problems.

But Fire Watch also reveals something important about AI in security systems: context matters. The same AI video analysis that raises privacy concerns when used for routine monitoring becomes acceptable when it's deployed for disaster response. The same data collection that feels invasive for law enforcement feels necessary during a fire. We don't have a universal rule for when surveillance is okay and when it isn't. Instead, we have a spectrum, and Fire Watch sits on the "clearly justified" end of it.

Siminoff is acutely aware that this goodwill doesn't extend to all of Ring's new features. Fire Watch is the easy sell. Other AI additions are much more complicated.

Search Party: Turning AI Into a Lost Pet Finder

One of Ring's most touching AI features emerged from a simple question: can facial recognition work for dogs? The answer, it turns out, is yes. And the results are extraordinary.

Search Party launched as an experiment. Ring customers can post photos of their lost pets, and other Ring users can opt in to share their camera footage for matching against those photos. The AI doesn't just look for any dog—it looks for that specific dog, matching distinctive markings, body shape, coloring, and behavioral patterns captured in Ring footage.

When there's a match, the system alerts the pet owner. No human moderator. No manual review. Just AI making the connection and helping reunite families with their missing pets.
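Matching one specific dog is commonly framed as comparing embeddings. A vision model turns each image into a vector, and sightings whose vectors are close to the lost pet's vector count as candidate matches. Ring hasn't published its method, so this sketch assumes precomputed embeddings and a hypothetical cosine-similarity threshold of 0.9.

```python
import math
from typing import List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def match_lost_pet(lost_pet_embedding: List[float],
                   sightings: List[Tuple[str, List[float]]],
                   threshold: float = 0.9) -> List[Tuple[str, float]]:
    """Return (camera_id, score) pairs at or above the threshold, best first.

    `sightings` are (camera_id, embedding) pairs from cameras whose owners opted in.
    """
    scored = [(cam, cosine_similarity(lost_pet_embedding, emb)) for cam, emb in sightings]
    matches = [(cam, s) for cam, s in scored if s >= threshold]
    return sorted(matches, key=lambda m: m[1], reverse=True)
```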

Siminoff's original goal for Search Party was modest: find one dog by the end of Q1 2025. As a stretch goal. As something nobody else had ever done. The actual results? One dog per day. Every single day. In the first few months.

That's remarkable both because it works and because it reveals something about the scale at which Ring operates. Ring has tens of millions of cameras deployed across North America. That density of coverage, combined with AI powerful enough to perform individual pet facial recognition, creates a capability that didn't exist before. It's a public good that emerges from private infrastructure.

Search Party works precisely because it's low-friction. Sharing footage to help find a lost pet is a no-brainer for most people. There's minimal privacy concern because the matching is specific and temporary. And the outcomes are measurable and emotionally compelling. One dog per day isn't a statistic—it's a family getting their pet back.

The success of Search Party also demonstrates something crucial about AI adoption: it works best when the problem it solves is universally understood and the solution is transparently implemented. Everyone knows the panic of a lost pet. Everyone understands why you'd want to help find it. Everyone can see exactly how the feature works. There's no mystery, no hidden agenda, no question of what the system is doing with the data.

This creates a blueprint for other AI features. If Ring wants to build trust around facial recognition and behavior analysis, the path forward is clear: solve obvious problems, be transparent about methods, make participation voluntary, and show measurable results. Search Party does all four.

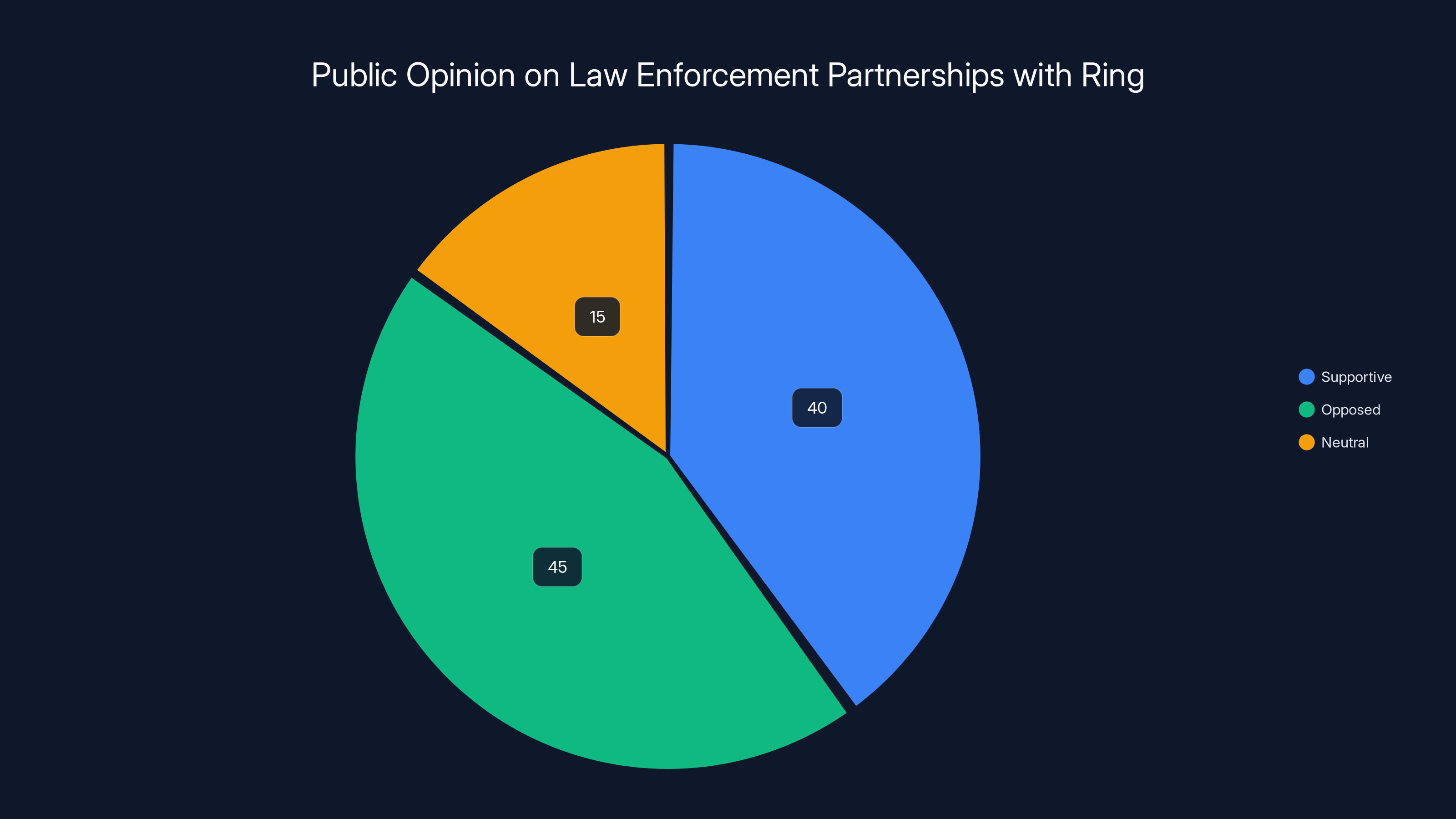

Estimated data suggests a divided public opinion on Ring's partnerships with law enforcement, with a slight majority opposing due to privacy concerns.

Familiar Faces: Convenience Meets Privacy Concerns

Not all of Ring's new AI features generate the same emotional response as Search Party. Familiar Faces, the company's facial recognition feature for identifying regular visitors, has triggered significant pushback from privacy advocates.

Here's how it works: Ring's AI learns to recognize and store the faces of people who regularly visit your home. You can assign names to these faces—"Mom," "Babysitter," "Mail Carrier." Over time, the system builds a visual profile of who belongs in your home's context. When one of these familiar faces appears, you get a notification: "Mom is at the front door." It's convenient. It's personalized. It's also facial recognition.
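Per-home face labelling of this kind can be sketched as a small gallery of named embeddings with a nearest-neighbour lookup. Everything here is hypothetical: the `FaceGallery` class, the Euclidean distance threshold, and the notification text. The sketch assumes embeddings come from some face model and illustrates the general technique, not Ring's implementation.

```python
import math
from typing import Dict, List, Optional


class FaceGallery:
    """Hypothetical per-home store of labelled face embeddings ("Mom", "Babysitter", ...)."""

    def __init__(self, threshold: float = 0.6):
        self.threshold = threshold  # max Euclidean distance that still counts as a match
        self.faces: Dict[str, List[List[float]]] = {}

    def enroll(self, name: str, embedding: List[float]) -> None:
        self.faces.setdefault(name, []).append(embedding)

    def forget(self, name: str) -> None:
        """Deleting a profile removes every stored embedding for that person."""
        self.faces.pop(name, None)

    def identify(self, embedding: List[float]) -> Optional[str]:
        best_name, best_dist = None, math.inf
        for name, samples in self.faces.items():
            for sample in samples:
                dist = math.dist(sample, embedding)
                if dist < best_dist:
                    best_name, best_dist = name, dist
        return best_name if best_dist <= self.threshold else None


def notification(gallery: FaceGallery, embedding: List[float], camera: str) -> str:
    name = gallery.identify(embedding)
    return f"{name} is at the {camera}" if name else f"Someone is at the {camera}"
```

The `forget` method shows what deleting a face profile means at the data level: once the stored embeddings are gone, that person is just "Someone" again.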

The Electronic Frontier Foundation (EFF), a nonprofit digital rights organization, raised serious concerns. A U.S. senator joined the chorus. The core issue: facial recognition technology, even when used in home security contexts, represents a step change in surveillance capability. Once faces are stored and catalogued, they can theoretically be cross-referenced with other databases, shared with law enforcement, or used for purposes the user never anticipated.

Siminoff's defense of Familiar Faces centers on several points. First, the feature is optional. You don't have to enable it. Second, it creates personalization that reduces alert fatigue. If the system knows your mail carrier's face, it won't notify you every time they deliver a package. Third, the faces are stored locally on your Ring devices, not in Amazon's cloud (though Amazon does have access to camera footage for other purposes).

But the privacy concern isn't really about Siminoff's intentions. It's about capability. Once you accept that Ring can store and recognize faces, you've opened a door that's historically been very hard to close. Governments have demanded access to facial recognition databases. Companies have misused facial recognition for surveillance. The technology has disproportionate error rates for people of color and women.

Siminoff's argument—that Familiar Faces makes sense because it personalizes Ring to your home's unique "fingerprint"—is compelling but incomplete. Yes, the feature serves the user. But it also serves Amazon, which owns Ring and could theoretically use the facial data for advertising, security research, or other purposes. Trust has to be earned through transparency, not assumed through intention.

The practical reality is this: Familiar Faces works well and solves a genuine problem. Most users will find value in it. But the fact that it works well and solves a problem doesn't mean the privacy implications disappear. They have to be managed, not dismissed.

Law Enforcement Partnerships: Balancing Security and Surveillance

Ring's relationship with law enforcement has been contentious, complicated, and evolving. In early 2024, after consumer backlash, the company shut down its Request for Assistance program, which had let police departments ask Ring customers for footage through the Neighbors app. The criticism had been harsh: Ring was accused of enabling mass surveillance, cooperating too closely with law enforcement, and putting customers in an uncomfortable position.

Then 2025 happened. Ring announced partnerships with Flock Safety and Axon, companies that specialize in law enforcement technology. These partnerships reintroduced the ability for police to request images and videos from Ring customers. Siminoff defended the decision, but the reversal also demonstrated something important: when it comes to police partnerships, there are no perfect answers.

Here's the structure of the new partnerships: when law enforcement is looking for someone (a robbery suspect, a missing person, a vehicle of interest), they can issue an alert in a geographic area. Ring customers in that area receive a notification asking if they want to share relevant footage. Crucially, if a customer declines, law enforcement never knows who declined. The system is anonymous for non-participants. Only people who choose to share are identified.
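The privacy property described above, where declining leaves no trace, can be sketched as a request object that only ever reports sharers to the agency. The `CommunityRequest` name and fields are hypothetical. The sketch models the behaviour as the article describes it, not any published Ring, Axon, or Flock Safety API.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class CommunityRequest:
    """Hypothetical model of an area-wide footage request from an agency."""
    request_id: str
    area: str
    responses: Dict[str, bool] = field(default_factory=dict)  # customer_id -> shared?

    def respond(self, customer_id: str, share: bool) -> None:
        self.responses[customer_id] = share

    def agency_view(self) -> List[str]:
        """What the agency receives: only customers who chose to share.

        Declines and non-responses are indistinguishable and never reported.
        """
        return sorted(cid for cid, shared in self.responses.items() if shared)
```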

Siminoff points to specific cases to justify the partnerships. The Brown University shooting in December 2025 was solved with the help of surveillance footage, including footage from Ring cameras. If Ring had abandoned police partnerships in response to abstract privacy concerns, law enforcement would have had less evidence to work with. Investigators identified the suspect faster because of the surveillance infrastructure, and the community benefited.

But that's also the trap. Good outcomes don't prove good policy. Lots of things that violate privacy rights have good outcomes. The question isn't whether police should ever have access to surveillance footage. Clearly, they should in emergencies and with proper warrants. The question is whether a system where police can broadcast requests to millions of cameras, and most people will probably share, represents an acceptable level of surveillance infrastructure.

Siminoff's argument is that customer choice makes all the difference. You can opt in or opt out. You're never forced. But the existence of the system creates a default behavior. Most people will share footage if they think it might help catch a criminal. Over time, that default behavior shapes what law enforcement expects to have access to. It normalizes the idea that private surveillance cameras are part of the public safety infrastructure.

The honest answer is that Ring's law enforcement partnerships are neither purely good nor purely bad. They're a tradeoff. The question is whether the public, collectively, thinks that tradeoff is worth making. So far, the answer seems to be "yes, but cautiously."

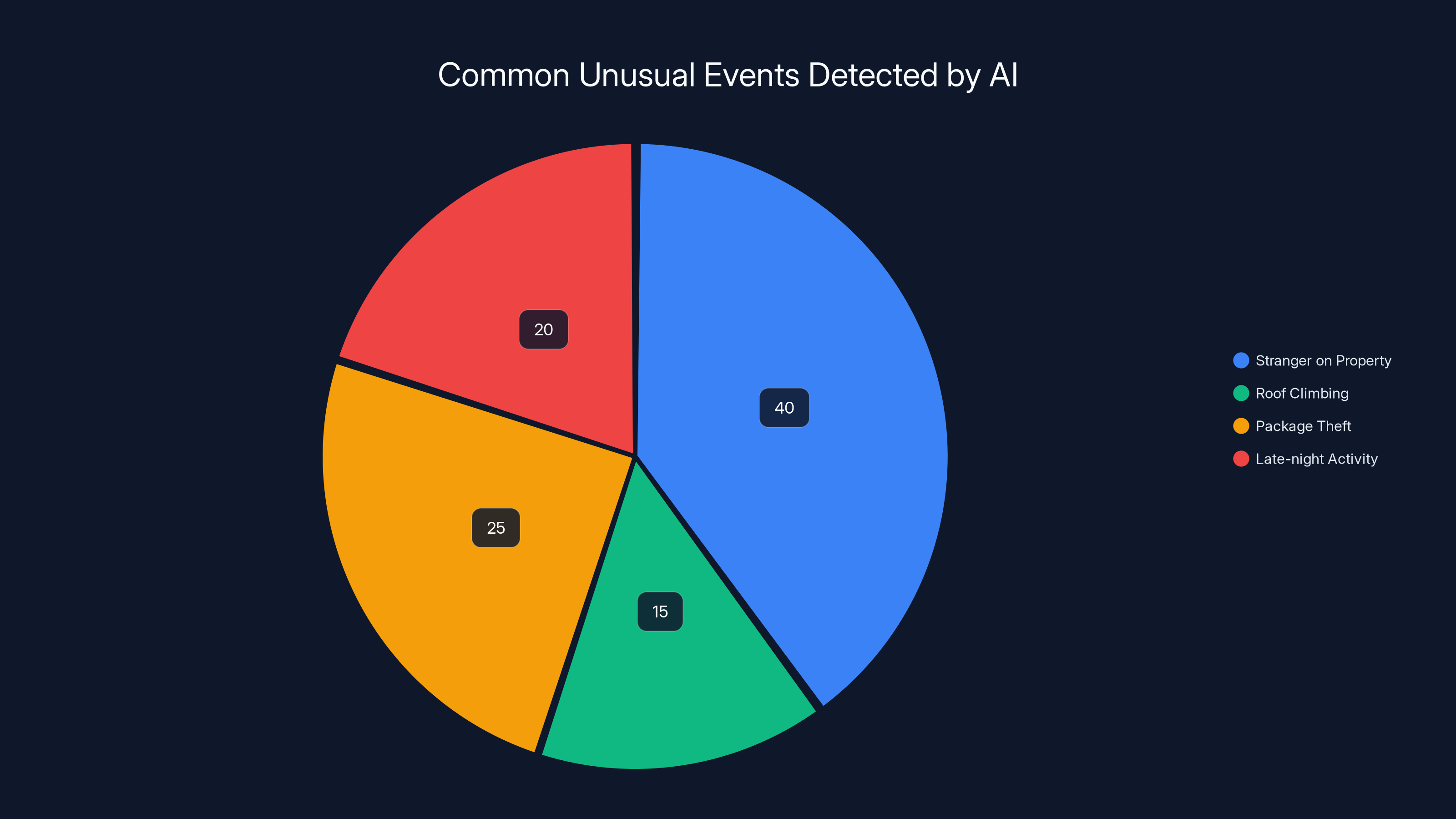

Estimated data suggests that 'Stranger on Property' is the most common unusual event detected by Ring's AI, followed by 'Package Theft' and 'Late-night Activity'.

Conversational AI: Talking to Your Home

One of Ring's quieter but potentially important new features is conversational AI. Instead of just getting notifications about events, you can have a conversation with your Ring system. "What's happening at my front door?" "Did anyone arrive while I was out?" "Show me what happened in the last hour."

For older Ring products, this sounds like a fancy interface improvement. For the newer generation of Ring products with advanced audio and processing capability, it's something more fundamental. It's the beginning of a natural language interface to your home's security infrastructure.

Conversational AI in home security serves several practical purposes. First, it makes video review faster. Instead of scrubbing through footage manually, you can ask questions and have the system pull the relevant clips. Second, it reduces the cognitive load of managing multiple devices. You don't have to remember which app does what or navigate complex menus. You just ask.
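Pulling the relevant clips can be sketched as filtering an event index by time window, event label, and camera, once a question like "Show me what happened in the last hour" has been parsed into those filters. The parsing step is omitted, and the `Event` fields and label names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class Event:
    camera: str
    label: str        # hypothetical labels such as "person", "package", "vehicle"
    start: datetime
    clip_id: str


def find_clips(events: List[Event], now: datetime, within: timedelta,
               label: Optional[str] = None, camera: Optional[str] = None) -> List[str]:
    """Return clip IDs inside the time window that match the optional filters, oldest first."""
    cutoff = now - within
    hits = [e for e in events
            if e.start >= cutoff
            and (label is None or e.label == label)
            and (camera is None or e.camera == camera)]
    return [e.clip_id for e in sorted(hits, key=lambda e: e.start)]
```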

But conversational AI also represents a shift in how much intelligence and autonomy you're delegating to your home's systems. When you can talk to your security system like it's a person, you start relating to it differently. You trust it more. You rely on it more. You delegate more decisions to it. That's not necessarily bad, but it's a shift.

One subtle implication: as Ring's conversational AI gets better, it will increasingly understand context. It won't just answer "What's at my front door?" It will understand "Is this the package I was expecting?" or "Have I seen this person before?" That contextual understanding requires the AI to integrate information across multiple data sources—historical footage, delivery records, recognized faces, learned patterns.

Siminoff describes this as reducing "cognitive load." You interact less with Ring unless something actually requires attention. The system handles the routine stuff automatically and escalates exceptions. That's useful. It's also a form of delegation that, if misapplied, could shift decision-making power from the user to the algorithm.

The key safeguard is transparency. Users need to understand what their conversational AI is doing, how it's making decisions, and what data it's accessing. If those things are clear, conversational AI is just a better interface. If they're opaque, it's a black box that happens to control your home security.

Unusual Events: AI as Anomaly Detector

Another addition to Ring's AI arsenal is the ability to detect "unusual events." The phrasing is deliberately vague because unusual is contextual. For some homes, an unusual event is a stranger on the property. For others, it's someone climbing onto the roof. For still others, it's a package being stolen.

Unusual events detection works by learning the baseline pattern of a home's daily activity and then flagging deviations. If packages are frequently left on porches, a package is normal. If someone is on your porch at 2 AM when packages never arrive at 2 AM, that's unusual and worth an alert.

This is where Ring's AI gets truly sophisticated. The system has to understand not just visual events, but temporal context. It has to learn not just what happens at your home, but when it typically happens and in what sequence. A delivery truck at 10 AM on Tuesday is normal. A delivery truck at 11 PM on Saturday is unusual.
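The time-aware baseline described above can be sketched as counting how often each event type occurs in each weekday-and-hour slot, then flagging events that land in a rare slot. This is a simplified stand-in, not Ring's model. It assumes hypothetical labels, a fixed observation window, and a hypothetical `min_rate` cutoff. The cutoff is where the false-positive tradeoff shows up.

```python
from collections import Counter
from datetime import datetime
from typing import Iterable, Tuple


def slot(ts: datetime) -> Tuple[int, int]:
    """Bucket a timestamp into (weekday, hour); Monday == 0."""
    return ts.weekday(), ts.hour


class ActivityBaseline:
    """Hypothetical hour-of-week baseline: how often each event type occurs per slot."""

    def __init__(self, weeks_observed: int):
        self.weeks = weeks_observed
        self.counts: Counter = Counter()

    def learn(self, history: Iterable[Tuple[str, datetime]]) -> None:
        for label, ts in history:
            self.counts[(label, slot(ts))] += 1

    def rate(self, label: str, ts: datetime) -> float:
        """Average occurrences per week of this event type in this weekday/hour slot."""
        return self.counts[(label, slot(ts))] / self.weeks

    def is_unusual(self, label: str, ts: datetime, min_rate: float = 0.25) -> bool:
        # A higher min_rate flags more events (more false positives);
        # a lower one flags fewer (more missed anomalies).
        return self.rate(label, ts) < min_rate
```

With four Tuesdays of 10 AM deliveries in the history, a Tuesday 10 AM truck scores 1.0 per week and passes quietly, while a Saturday 11 PM truck scores 0 and is flagged.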

The challenge with unusual events detection is avoiding false positives while catching genuine threats. If Ring alerts you to something unusual every time a friend visits or a guest arrives, the feature becomes useless. Too many false alarms and users disable it. But if Ring is too conservative and only alerts on obvious threats, it misses the interesting cases.

Ring's solution is to combine visual anomaly detection with known patterns. If a face is recognized as familiar, the system suppresses the "unusual person on property" alert, even if their arrival time is atypical. If a vehicle is recognized as a regular delivery truck, the system suppresses alerts about unusual vehicles. Over time, the system learns what's normal for your home specifically.
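Combining an anomaly score with known identities can be sketched as a suppression rule that runs before any alert is sent. The `Observation` fields, the 0.8 threshold, and the `known_vehicles` set are hypothetical. The sketch only mirrors the two suppression cases the article names.

```python
from dataclasses import dataclass
from typing import Optional, Set


@dataclass
class Observation:
    kind: str                          # "person" or "vehicle"
    anomaly_score: float               # 0..1 from some upstream detector (hypothetical)
    face_name: Optional[str] = None    # set when a familiar face was recognised
    vehicle_id: Optional[str] = None   # hypothetical ID for a vehicle this home has seen before


def should_alert(obs: Observation, known_vehicles: Set[str], threshold: float = 0.8) -> bool:
    """Alert on high anomaly scores unless the subject is already known to this home."""
    if obs.anomaly_score < threshold:
        return False
    if obs.kind == "person" and obs.face_name is not None:
        return False  # familiar face: suppress even at an atypical hour
    if obs.kind == "vehicle" and obs.vehicle_id in known_vehicles:
        return False  # e.g. the regular delivery truck
    return True
```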

The benefit is that alarm fatigue can drop sharply. The cost is more data collection and more algorithmic decision-making. Ring has to track every visitor, every vehicle, every delivery to build accurate baseline patterns. It has to remember which people are regular visitors and which are strangers. It has to understand the typical rhythm of activity at the property.

For users, this trade is often worthwhile. For privacy advocates, it's another reason to be cautious about how much behavioral data Ring collects and retains.

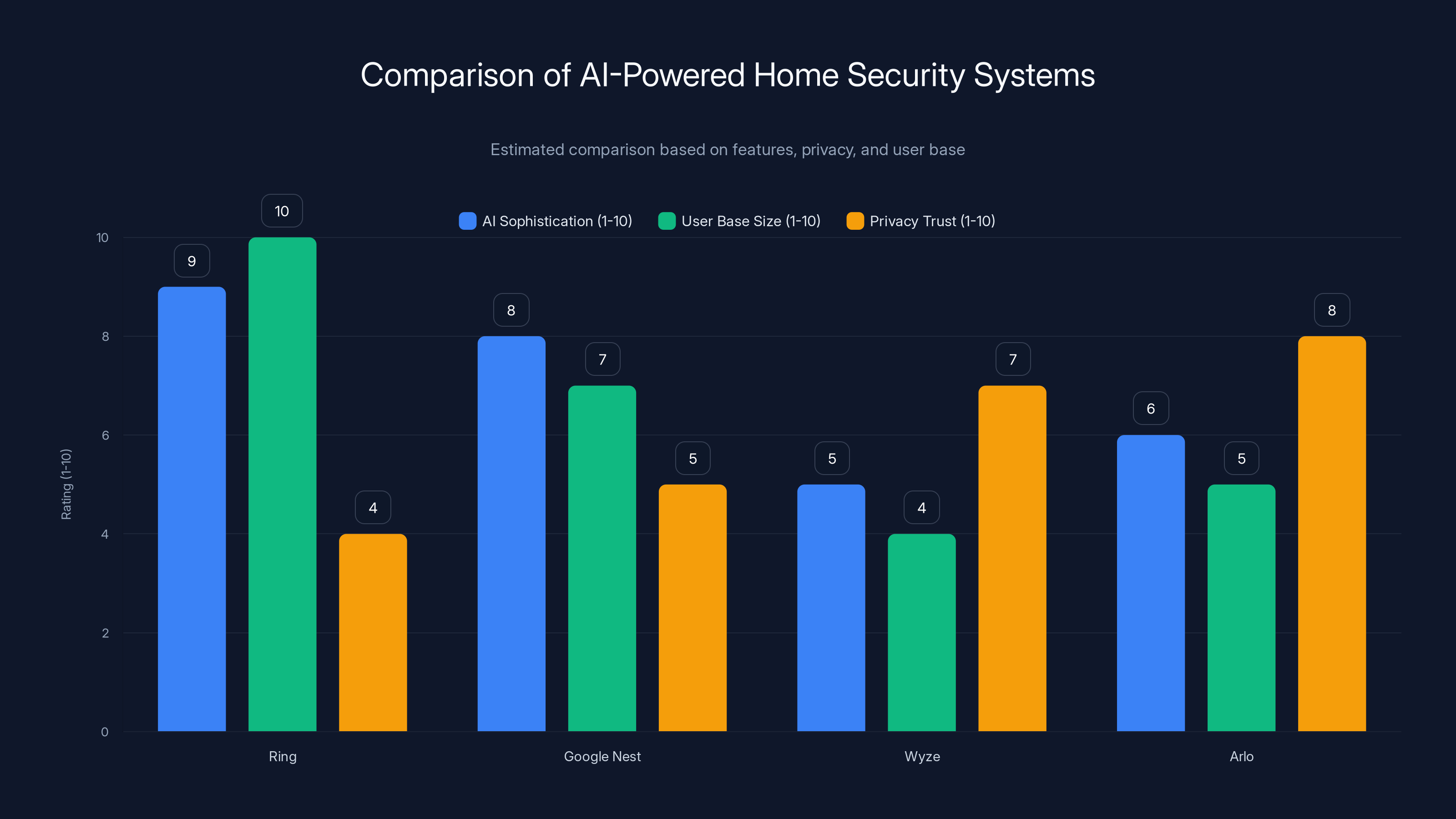

Ring leads in AI sophistication and user base size but lags behind in privacy trust. Estimated data shows how competitors stack up in these areas.

The Personalization Paradox: Less Interaction vs. More Surveillance

All of Ring's new features cluster around a single theme: reducing the amount of interaction required from the user. Siminoff describes this as decreasing "cognitive load." The system learns your home's patterns and only bothers you when something actually matters. You don't have to review every video, filter through routine activity, or decide what's worth your attention. The AI handles that.

This is genuinely useful. Alert fatigue is real. Most Ring users get far more notifications than they need. Filtering that down to only important stuff is a service. But reducing interaction requires increasing surveillance. The system has to see and categorize everything to know what's routine and what isn't.

This is the personalization paradox. More personalization equals more data collection. More convenience equals more capability for the system to track, catalog, and understand your patterns. You can't have one without the other.

Siminoff's argument is that Ring stores much of this data locally on the devices themselves, not in Amazon's cloud. Faces are stored locally. Patterns are learned locally. The cloud integration happens at a higher level of abstraction. But Ring also acknowledges that Amazon has access to video footage for "service improvement." That access creates opportunities to mine behavioral data in ways Ring hasn't fully disclosed.

The honest assessment is this: Ring's AI features are more privacy-friendly than many alternatives because there are options to disable them and because the company has been relatively transparent about data use. But they're not privacy-neutral. They require acceptance of increased surveillance as the price of reduced interaction.

Users have to decide if that trade is worth it. The fact that it's a choice at all is what matters. Forced surveillance is unambiguously bad. Voluntary surveillance with clear benefits and clear data practices is a more nuanced question that reasonable people can disagree about.

The Scale Problem: Why Ring's Size Matters

All of these AI features would be interesting technical achievements even if Ring had a small user base. But Ring doesn't. Ring has deployed tens of millions of cameras across North America. That scale changes everything.

When one company controls tens of millions of cameras, their AI capabilities become infrastructure. They're not just a vendor offering a service. They're a critical part of the urban surveillance landscape. Their choices about what to monitor, how to monitor, and who gets access to the monitoring all cascade outward.

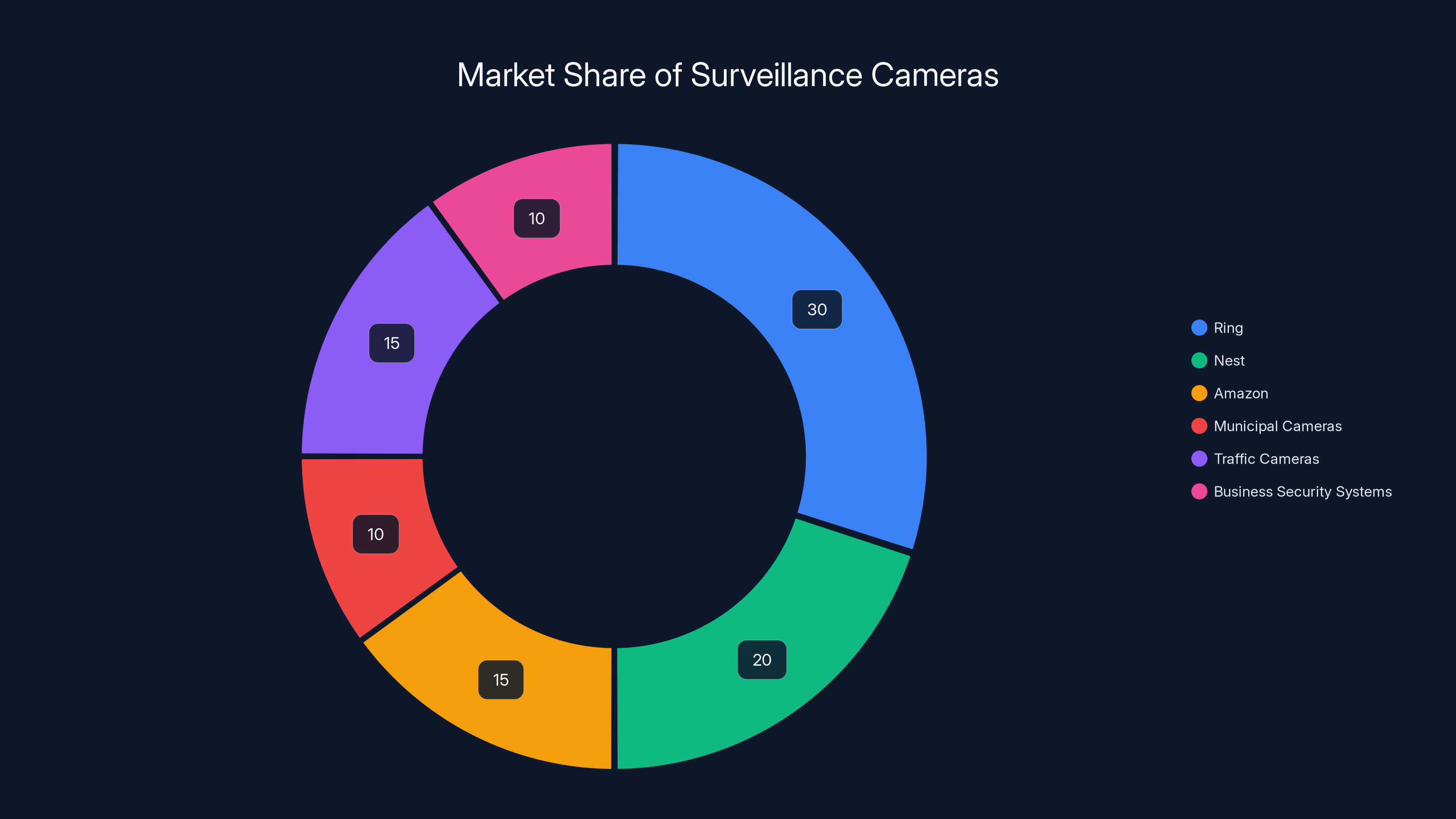

Consider the law enforcement integration again. Ring alone isn't necessarily a problem for police. Ring plus Nest plus Amazon's other smart home products plus municipal cameras plus traffic cameras plus security systems installed by businesses—that's approaching comprehensive coverage in many cities.

Siminoff is aware of this. In his conversations at CES, he repeatedly acknowledged the responsibility that comes with Ring's size. He talks about scrutiny not as criticism to dismiss but as a necessary part of operating infrastructure-scale services. The fact that he welcomes scrutiny is good. But scrutiny alone doesn't solve the structural problems that come with scale.

One of those problems is path dependency. Once Ring has deployed tens of millions of cameras and built AI systems that rely on that data, it's very hard to change course. If Ring's AI relies on facial recognition to personalize the experience, you can't suddenly disable facial recognition without degrading the product. If law enforcement has come to expect access to Ring footage, you can't suddenly revoke access without creating friction with police departments.

Scale also creates externalities. Ring's choices about surveillance standards don't just affect Ring users. They affect how law enforcement views surveillance capability. They influence what other companies think is acceptable. They shape regulatory expectations. When Ring does something first and succeeds, it becomes the template for what comes next.

This is why the way Ring implements these features matters so much. If Ring does AI-powered home security well—with real privacy protections, transparent practices, and user control—it sets a good template. If Ring cuts corners and prioritizes convenience over privacy, it sets a bad template that competitors will copy.

Ring holds a significant share of the surveillance camera market in North America, influencing surveillance standards and regulatory expectations. (Estimated data)

The Technology Stack: What's Actually Powering Ring's AI

Understanding Ring's AI features requires understanding what technology is actually running them. This is important because marketing descriptions of "AI" often obscure important technical details.

For most of Ring's visual AI tasks (smoke detection, facial recognition, object detection), Ring is likely using a combination of custom-trained deep learning models and off-the-shelf models built with frameworks like TensorFlow and PyTorch. Ring employees have published research on efficient inference (running AI models on edge devices rather than in the cloud), which suggests Ring invests heavily in optimizing AI models to run on relatively modest hardware.

This matters because it means Ring can do some AI processing directly on the camera hardware rather than sending everything to Amazon's servers. That's architecturally better for privacy. It's also faster, because edge inference avoids the network round trip that cloud inference requires.

For the conversational AI, Ring is likely using a large language model, but the company hasn't publicly stated which one. Plausible candidates include Amazon's own Nova models and models from providers such as Anthropic, OpenAI, or Google. The conversational interface appears to use retrieval-augmented generation, where the language model has access to Ring-specific information (recent video events, device status) and incorporates that into its responses.
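Retrieval-augmented generation can be sketched in two steps: retrieve the events most relevant to the question, then place them, along with device status, into the prompt the language model receives. The retriever below is a toy word-overlap ranker standing in for the embedding search a production system would more likely use. The `EventRecord` type is hypothetical, and the model call itself is omitted because Ring hasn't said which model it uses.

```python
import re
from dataclasses import dataclass
from datetime import datetime
from typing import List, Set


@dataclass
class EventRecord:
    when: datetime
    camera: str
    summary: str  # hypothetical: a caption produced by a vision model


def tokens(text: str) -> Set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(records: List[EventRecord], question: str, k: int = 3) -> List[EventRecord]:
    """Toy retriever: rank by word overlap with the question, newer first on ties."""
    q = tokens(question)
    scored = [(len(q & tokens(r.summary)), r.when, r) for r in records]
    scored.sort(key=lambda t: (t[0], t[1]), reverse=True)
    return [r for score, _, r in scored[:k] if score > 0]


def build_prompt(question: str, records: List[EventRecord], device_status: str) -> str:
    """Place retrieved events and device status in the context the model sees."""
    context = "\n".join(f"- {r.when:%H:%M} {r.camera}: {r.summary}" for r in records)
    return (
        "Answer using only the events listed below.\n"
        f"Device status: {device_status}\n"
        f"Events:\n{context}\n"
        f"Question: {question}"
    )
```

Grounding the answer in retrieved events is what keeps the model from inventing activity that never happened at the door.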

For the unusual events detection, Ring is likely using autoencoders or other unsupervised learning techniques that can learn baseline patterns and detect deviations. This is a classic anomaly detection problem, and there are well-established approaches.
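As one concrete instance of the unsupervised approach, the sketch below uses principal component analysis as a linear autoencoder. It compresses each activity vector onto a few principal directions, reconstructs it, and treats a large reconstruction error as anomalous. A linear autoencoder trained with squared error learns the same subspace as PCA, so this is a simplified stand-in for the nonlinear autoencoders the paragraph mentions. The feature vectors (for example, hourly event counts) and the 99th-percentile threshold are assumptions.

```python
import numpy as np


class LinearAutoencoder:
    """PCA as a linear autoencoder: encode by projecting onto the top-k principal
    components, decode by projecting back, score by squared reconstruction error."""

    def __init__(self, n_components: int):
        self.k = n_components

    def fit(self, X: np.ndarray) -> "LinearAutoencoder":
        self.mean_ = X.mean(axis=0)
        # Rows of vt are principal directions, ordered by explained variance.
        _, _, vt = np.linalg.svd(X - self.mean_, full_matrices=False)
        self.components_ = vt[: self.k]
        return self

    def reconstruction_error(self, X: np.ndarray) -> np.ndarray:
        centred = X - self.mean_
        codes = centred @ self.components_.T   # encode
        recon = codes @ self.components_       # decode
        return np.sum((centred - recon) ** 2, axis=1)

    def threshold(self, X_train: np.ndarray, quantile: float = 0.99) -> float:
        """Flag anything that reconstructs worse than most of the training data."""
        return float(np.quantile(self.reconstruction_error(X_train), quantile))
```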

None of this is particularly exotic. Ring isn't using cutting-edge AI research. It's competently applying well-understood techniques to the home security domain. The innovation is in the application and integration, not in the underlying research.

This is actually reassuring. Exotic AI techniques often have failure modes and biases that aren't well understood. Established techniques have known limitations and can be applied safely. Ring's AI features probably won't have surprising failure modes. They'll have the failure modes that all facial recognition systems have, all anomaly detection systems have, all language models have. Those are manageable if you know what they are.

Comparing Ring to Competitors: Who Else Is Doing This

Ring isn't alone in the AI-powered home security space. Competitors like Wyze, Arlo, and Google Nest are all deploying similar features. Understanding how Ring compares helps contextualize its choices.

Google Nest has several advantages. It can integrate directly with Google's AI infrastructure: Nest cameras can use Google's language models for conversational features and Google's computer vision research for object detection. Google's main constraint is that users are hyperaware of its data practices and privacy record. Nest's adoption trails Ring's, and skepticism about Google's motivations is likely part of the reason.

Wyze is cheaper and has less sophisticated AI, but it also has a much smaller deployment. Wyze is mainly competing on price and on the "we're not Google or Amazon" positioning. That works for budget-conscious users who are willing to accept weaker features in exchange for less data collection.

Arlo has positioned itself as a privacy-first alternative with AI features added later. Arlo doesn't sell data to advertisers and is more transparent about data retention. But Arlo's AI features are less sophisticated than Ring's, and Arlo has a smaller user base.

Ring's advantage is scale, Amazon's infrastructure, and Siminoff's vision for what the product should become. Ring has deployed enough cameras that the AI features actually work well—Search Party reunites one dog per day partly because Ring has so many cameras. Competitors with smaller user bases can't match that.

Ring's disadvantage is trust. Amazon's data practices are notoriously opaque. Users are right to be skeptical about what Amazon is doing with Ring footage. Amazon has a history of moving fast and breaking things, and privacy has often been one of the broken things.

From a pure product perspective, Ring is probably doing the best work on intelligent home security. From a privacy perspective, that doesn't necessarily make it the best choice. It depends on how much you trust Amazon.

The Regulatory Horizon: What Might Come Next

Ring's expansion into AI-powered home security is happening in a regulatory gray area. There's no comprehensive U.S. federal law governing facial recognition in private home security systems. There are no clear rules about what law enforcement can request from homeowners. There are emerging best practices, but no legal standards.

This is likely to change. California has been considering facial recognition regulation. The FTC has already acted against Ring: in 2023, the company agreed to pay $5.8 million to settle FTC charges that employees and contractors had improperly accessed customers' private videos. Europe's GDPR already restricts the processing of biometric data, which affects how Ring can operate internationally. As AI surveillance becomes more sophisticated and more pervasive, regulation will inevitably follow.

The question is what form that regulation will take. There are several possibilities. First, comprehensive facial recognition bans for certain applications. This would likely exclude home security, but might restrict police access or require explicit consent.

Second, data minimization requirements. Instead of allowing Ring to collect and store extensive behavioral data, regulations might require that only essential data be collected and that data be deleted after a certain period.

Third, transparency requirements. Companies like Ring would have to disclose exactly how their AI systems work, what data they collect, and how that data is used. This is the approach the EU is taking with the AI Act.

Fourth, user control requirements. Regulations might mandate that users can easily delete their facial recognition profiles, opt out of behavioral learning, and see exactly what data the company has about them.

Siminoff has indicated that Ring welcomes appropriate regulation. That's a good sign, but it also suggests the company knows regulation is coming. Smart companies position themselves ahead of regulations to avoid being constrained by overly strict rules written without industry input.

The regulatory environment will probably reshape Ring's product development. Some features might legally require explicit opt-in. Others might be prohibited entirely. Data retention policies will likely become more restrictive. The good news is that most of these changes would improve privacy without destroying functionality.

The Emotional Layer: Why People Care About Ring

Ring's explosive growth has been driven partly by good product design and availability, but also by emotion. People care deeply about their homes. They care about their families' safety. They care about catching thieves. They care about being in control of their property.

Ring taps into all of that. A doorbell camera isn't just a security device. It's peace of mind. It's the ability to check on your home while you're away. It's the reassurance that if something bad happens, you'll have evidence. It's the power to catch criminals and stop crimes.

This emotional dimension makes Ring resistant to privacy criticism. Yes, privacy advocates say facial recognition is risky. But Ring users say they feel safer when they know who's at the door. Yes, law enforcement integration is concerning in theory. But in practice, supporters argue, it helps catch bad guys and solve crimes.

Siminoff understands this. When he talks about the Brown University shooting, he's not making a libertarian argument about property rights. He's making an emotional argument about safety. When he talks about Search Party reuniting dogs with their families every day, he's not discussing technical achievement. He's describing heartwarming outcomes.

The challenge with emotion-based arguments is that they can obscure important tradeoffs. Yes, facial recognition makes you feel safer when you know who's at the door. But it also creates a behavioral profile of everyone who visits your home. Yes, law enforcement integration helps solve crimes. But it also normalizes the idea that your home is part of a public surveillance infrastructure.

The responsible approach is to acknowledge the emotional value of these features while still taking the privacy implications seriously. You can be glad that Search Party reunites dogs with families and still be cautious about facial recognition. You can appreciate that law enforcement integration helped solve the Brown shooting and still be concerned about mass surveillance. Those things aren't contradictory. They're realistic.

Siminoff's framing—"intelligent assistant" rather than "surveillance system"—is partly emotional positioning. It makes the product sound friendly and helpful rather than dystopian and intrusive. But it's also not entirely wrong. Ring's AI is becoming more assistant-like as it learns patterns and takes autonomous action rather than just recording passively.

The key is maintaining realistic expectations about what kind of assistant this is. It's an assistant that works for Amazon. It's an assistant that can share information with law enforcement. It's an assistant that learns and remembers everything it observes. Understanding those constraints is important for making good decisions about whether to invite this assistant into your home.

Looking Forward: The Next Era of Home Intelligence

Ring's shift toward intelligent assistant status is part of a broader trajectory in smart home technology. Devices are becoming more autonomous, more aware, and more capable of taking action without explicit user commands.

This trajectory will probably accelerate. As AI models improve, they'll enable more sophisticated features. Ring's future probably includes predictive alerts—not just "there's an unknown person at your door" but "this is the same vehicle that cased your neighborhood three days ago." Not just "someone's attempting to break in" but "based on patterns, this is likely a burglary in progress and police have been alerted."

It probably includes integration with other smart home systems. Ring could work with smart locks to suggest auto-locking based on presence detection, with lighting systems to simulate occupancy when you're away, and with security systems to coordinate responses to threats.

It probably also includes more sophisticated conversational interaction. Instead of just answering questions, Ring could issue proactive warnings. "Someone has lingered on your porch three times in the last week at the same time of day. You might want to take additional security measures." "Your babysitter arrived at 2:45 PM, which is 15 minutes later than usual." "A package was delivered while you were out." All without you asking.

That vision has enormous appeal. It's genuinely useful. It addresses real problems. But it also represents a level of surveillance and autonomy that most people haven't fully thought through. We're approaching a point where your home is not just observing you and your environment. It's analyzing that observation in real time, making inferences about what's happening, and taking actions based on those inferences.

The question isn't whether this technology will exist. It will. The question is what norms, regulations, and expectations surround it. Will it be transparent or opaque? Voluntary or default? User-controlled or company-controlled? Those things are still being determined.

Siminoff's return to Ring seems designed partly to shape those questions. He has credibility as a founder and the power to make significant product decisions. Using that credibility to push toward transparency and user control would be genuinely valuable. Using it to push toward convenience and company benefit would be harmful.

So far, the signals are mixed. Fire Watch and Search Party suggest a company thinking carefully about responsible AI. Law enforcement integration and facial recognition suggest a company prioritizing capability over caution. The truth is probably in between, with ongoing tension between what's convenient and what's responsible.

Real-World Implications: What This Means for You

If you're a Ring customer, Ring's shift toward intelligent assistant status affects you in several practical ways.

First, you'll get fewer but more relevant notifications. This is genuinely good. Alert fatigue is annoying and dangerous (people ignore alarms when they're constantly false). More intelligent filtering means the alerts you do get are more likely to require actual attention.

Second, Ring will understand your home better. The system will learn when you're typically home, who usually visits, what patterns are normal, and what patterns are anomalies. This is useful for security but also represents more detailed behavioral tracking than before.

Third, you'll have more access to conversational features. Ask your Ring system questions instead of navigating menus. This is convenient but also means the system needs to understand context and integrate information across multiple data sources.

Fourth, you'll be part of law enforcement infrastructure if you opt in. Your cameras aren't just protecting your property. They're also helping police investigate crimes. If you value this, you'll likely enable sharing. If you're concerned about surveillance, you'll disable it. Either way, you're making a choice about whether your home is part of the public safety infrastructure.

Fifth, facial recognition will store face profiles and use them to identify family members and regular visitors. This improves personalization but also creates a kind of biometric data that earlier Ring products didn't collect.

None of these changes is inherently bad. They're all defensible and some are genuinely valuable. But they all involve tradeoffs between convenience and privacy, between security and autonomy, between individual benefit and collective surveillance.

The responsible path forward is to make these choices consciously rather than defaulting into them. Read the privacy settings. Understand what each feature does. Enable the ones you genuinely value and disable the ones you don't. Review your settings periodically as Ring's capabilities evolve.

Conclusion: The Intelligent Assistant Era Has Challenges, But Also Promise

Jamie Siminoff's vision for Ring as an "intelligent assistant" rather than a doorbell is compelling. It points toward a future where home security is less about passive recording and more about active understanding. Where systems learn your home's patterns and act to protect those patterns. Where technology works with you rather than requiring constant attention and interaction.

That future is possible. Ring's new AI features demonstrate that the technical pieces are in place. Fire Watch helps surface fires during disasters. Search Party reunites lost pets with families. Conversational AI reduces friction in system interaction. These aren't dystopian science fiction. They're real benefits that real people are experiencing right now.

But the intelligent assistant era also has challenges. Facial recognition technology has a troubling history. Mass surveillance infrastructure enables oppression. Companies have repeatedly misused data when given the opportunity. Law enforcement has overreached when given new tools. These aren't theoretical concerns. They're patterns with historical precedent.

The tension between Ring's benefits and Ring's risks is real and irreducible. You can't have a truly intelligent home security system without collecting and analyzing detailed behavioral data. You can't have an assistant that understands context without the system tracking patterns and remembering details. The convenience requires the surveillance.

That doesn't mean the tradeoff is wrong. For many people, it's clearly worth it. But it means making the tradeoff consciously, with full awareness of what you're accepting in exchange for convenience. It means demanding that Ring be transparent about what data it collects, how it uses that data, and what safeguards protect that data. It means staying aware of regulatory developments and pushing for protections that preserve privacy while enabling genuine benefits.

Siminoff's return to Ring suggests he's thinking seriously about how to navigate these tensions. His stated commitment to scrutiny and transparency is encouraging. His actual practices with law enforcement integration and facial recognition are more mixed. Real progress will require sustained pressure from users, advocates, and regulators to ensure that Ring's intelligent assistant era is shaped by privacy-conscious choices rather than just convenience-maximizing ones.

The next few years will determine what smart home security looks like. Ring has enormous power to influence that future. The question is whether that power will be used responsibly. The answer isn't obvious yet, but it matters enough to keep watching.

FAQ

What makes Ring's AI features different from previous versions?

Ring's new AI capabilities go beyond recording and alerting. They include real-time fire detection during disasters, facial recognition for familiar people, conversational interfaces for asking questions about your home, and anomaly detection that learns what's normal for your specific property. The shift is from passive recording to active understanding and autonomous decision-making.

How does Ring's facial recognition work without storing faces in the cloud?

Ring stores facial recognition profiles locally on your Ring devices rather than uploading faces to Amazon's servers. The system learns to recognize familiar people by processing images on the device hardware itself. However, Ring acknowledges that Amazon has access to video footage for "service improvement," which may include analyzing facial data for other purposes.

Can you disable Ring's AI features if you're concerned about privacy?

Yes, most of Ring's AI features can be disabled individually in privacy settings. You can turn off facial recognition, unusual event detection, and law enforcement sharing. However, some features like standard motion detection and person recognition are harder to disable without significantly reducing the product's core functionality.

What happens if you decline to share footage with law enforcement?

If law enforcement issues an alert and you decline to share footage, the system is designed to be anonymous—police don't know who declined or even receive information about specific non-respondents. Only people who voluntarily share are identified. This is theoretically protective of privacy, though it depends on Ring accurately implementing the anonymity mechanism.
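The anonymity guarantee comes down to a filtering step that runs before anything reaches police. Here is a minimal Python sketch of that idea, assuming a hypothetical `Reply` record per household and a hypothetical `report_for_police` function; none of these names come from Ring. It shows why the protection depends on implementation: declines are dropped before the report is built, and households that never answer produce no record at all.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Reply:
    """Hypothetical record of one household's answer to a police footage request."""
    household_id: str
    shared: bool
    clip_id: Optional[str] = None


def report_for_police(replies: List[Reply]) -> List[dict]:
    """Build what police would receive: only voluntary shares.

    Declines are filtered out here, so neither their identities nor their
    count leave the system. Households that never answered produce no Reply,
    so they cannot appear either.
    """
    return [
        {"household_id": r.household_id, "clip_id": r.clip_id}
        for r in replies
        if r.shared
    ]


replies = [Reply("a", True, "clip-1"), Reply("b", False), Reply("c", False)]
print(report_for_police(replies))
```

If declines were logged anywhere downstream of a step like this, the anonymity claim would no longer hold, which is why it rests on the mechanism being implemented accurately.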

How often does Ring share data with Amazon, and can you prevent that?

Ring synchronizes camera footage and event data with Amazon's servers. The full extent and frequency of this data sharing isn't completely transparent, but it appears to happen continuously for cloud storage and analysis. You can't prevent this synchronization if you want to use Ring's cloud features, but you can limit what types of data Amazon accesses by disabling optional features like video recording and facial recognition.

Is Ring safer than not using any camera at all?

That depends on your threat model. Ring can reduce the risk of break-ins because burglars often avoid homes with visible cameras. Ring helps you respond quickly to package theft. Ring provides evidence if crimes occur. But Ring also creates a detailed record of who visits your home, when they visit, and patterns in your family's behavior. For some people, the security benefits outweigh the privacy costs. For others, the opposite is true.

Will Ring's AI features work offline if your internet connection fails?

Some Ring AI features work offline or with degraded functionality. Local video recording and basic motion detection continue working. However, most sophisticated AI features—facial recognition, conversational AI, unusual event detection—require cloud connectivity to function. Ring's edge processing capabilities are still limited, though the company is investing in improving offline functionality.

How does Ring determine what qualifies as an "unusual event"?

Unusual events are detected by algorithms that learn the baseline patterns of activity at your specific home. If something deviates from that learned pattern, it's flagged as unusual. The system also factors in context: recognized faces are excluded from "unknown person" alerts, and delivery vehicles are distinguished from suspicious vehicles based on time and frequency. The system tries to balance sensitivity with avoiding false alarms.
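The baseline idea can be illustrated with a simple frequency model. The Python sketch that follows is an assumption, not Ring's algorithm, and every name in it is hypothetical: it counts how often each event kind occurs in each hour of the day at one home, flags an event when its hour accounts for less than a chosen share of that kind's history (5% here, an arbitrary threshold), and applies one context rule that suppresses alerts for recognized faces. Time-of-day frequency is also what lets routine afternoon delivery vehicles pass while an unfamiliar 3 AM vehicle gets flagged.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, Optional, Set


@dataclass(frozen=True)
class Event:
    kind: str                      # hypothetical labels: "person", "vehicle", ...
    hour: int                      # hour of day, 0-23
    face_id: Optional[str] = None  # set when a face matched a saved profile


class BaselineDetector:
    """Hypothetical per-home baseline: how often each event kind occurs in each hour."""

    def __init__(self, min_share: float = 0.05) -> None:
        self.by_hour: Counter = Counter()  # (kind, hour) -> count
        self.by_kind: Counter = Counter()  # kind -> total count
        self.min_share = min_share         # below this share of a kind's events = unusual

    def learn(self, history: Iterable[Event]) -> None:
        for e in history:
            self.by_hour[(e.kind, e.hour)] += 1
            self.by_kind[e.kind] += 1

    def is_unusual(self, e: Event, known_faces: Set[str]) -> bool:
        # Context rule: a recognized face never counts as an "unknown person".
        if e.kind == "person" and e.face_id in known_faces:
            return False
        total = self.by_kind[e.kind]
        if total == 0:
            return True  # this kind of event has never been seen at this home
        share = self.by_hour[(e.kind, e.hour)] / total
        return share < self.min_share


# Usage: afternoon deliveries are routine, so a 2 PM vehicle is normal; a 3 AM one is not.
detector = BaselineDetector()
detector.learn([Event("vehicle", 14)] * 40 + [Event("person", 17)] * 30)
print(detector.is_unusual(Event("vehicle", 14), set()))         # False
print(detector.is_unusual(Event("vehicle", 3), set()))          # True
print(detector.is_unusual(Event("person", 3, "mom"), {"mom"}))  # False
```

A deployed system would presumably use richer signals than hour of day, but the structure is the same: learn what is normal for one home, then score new events against it.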

Summary

Ring's transformation from a video doorbell company to an AI-powered intelligent assistant represents both opportunity and risk. The company has deployed genuinely useful features like Fire Watch and Search Party that solve real problems. But it's also expanding surveillance capabilities through facial recognition, law enforcement integration, and behavioral learning.

The future of home security—and potentially privacy in the smart home era—will be shaped significantly by how Ring implements and governs these technologies. Siminoff's return to the company suggests serious commitment to building intelligence into home security. Whether that intelligence is used responsibly depends on choices still being made.

For users, the path forward requires conscious engagement with privacy settings, realistic understanding of the tradeoffs, and ongoing pressure for transparency and accountability from both Ring and regulators. The intelligent assistant era is here. Making sure it's also the responsible era will require sustained attention.

Key Takeaways

- Ring shifted from reactive doorbell camera to AI-powered intelligent assistant with real-time fire detection, pet finding, and facial recognition capabilities.

- Fire Watch and Search Party show AI surveillance can solve genuine public safety problems when implemented with transparency and user consent.

- Familiar Faces and law enforcement integration raise valid privacy concerns despite Siminoff's emphasis on user choice and anonymity.

- Ring's scale with tens of millions of deployed cameras means its decisions shape surveillance norms and law enforcement expectations industry-wide.

- Future of smart home security hinges on whether AI convenience features are balanced against privacy protections through transparent practices and smart regulation.
