Why Apple Chose Google Gemini for Next-Gen Siri [2025]

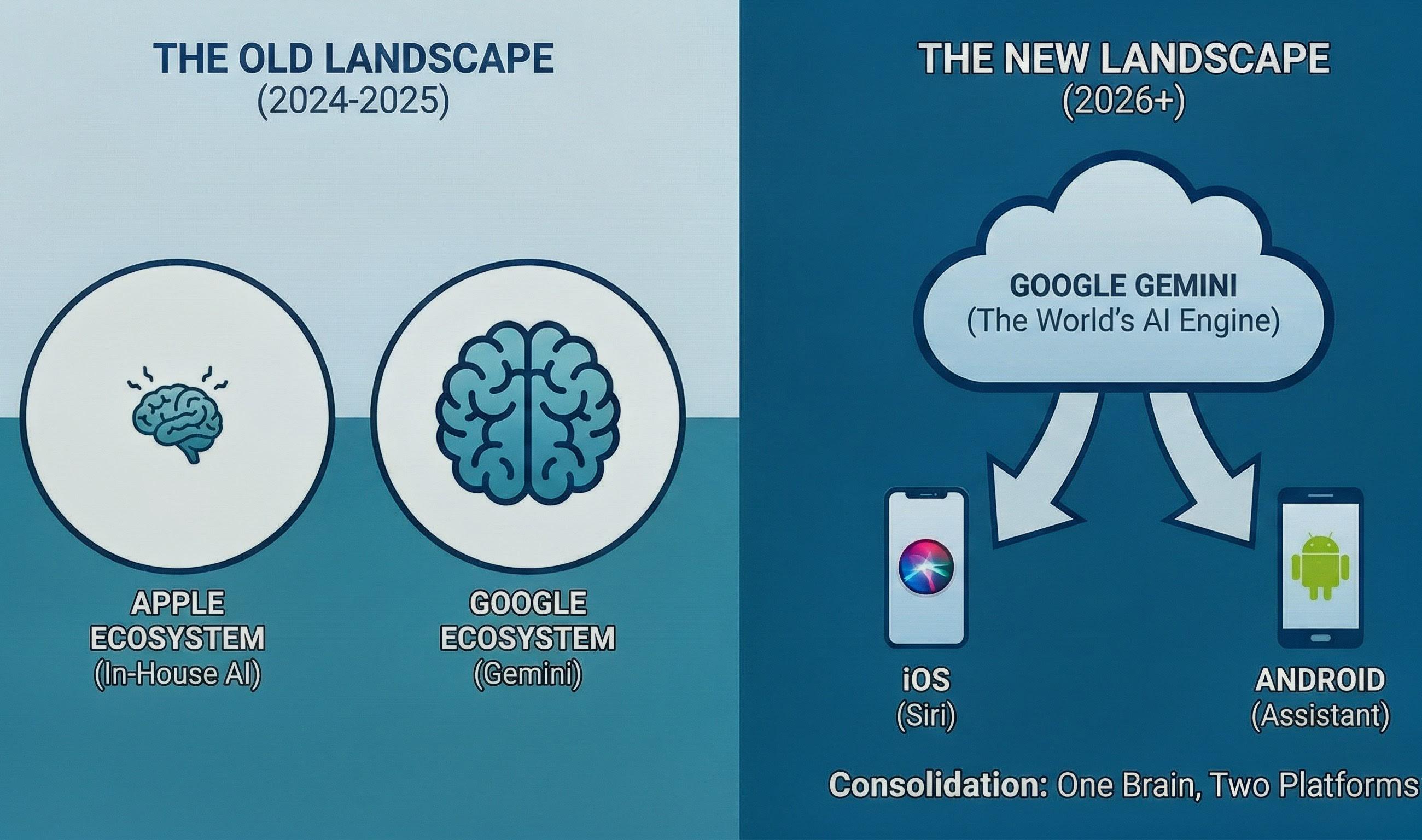

Apple just dropped a bombshell. After years of promising a smarter, more capable Siri, the company announced it would power its next-generation voice assistant using Google's Gemini AI model. Not OpenAI's ChatGPT. Not Anthropic's Claude. Not even Apple's own proprietary models. Google's Gemini.

If you're scratching your head right now, you're not alone. This partnership feels counterintuitive on the surface. Apple and Google have been competitors for over a decade. Google makes the Android ecosystem that directly challenges iOS. Yet here we are, with Apple essentially outsourcing one of its most important features to its biggest rival in mobile.

But this decision wasn't made in a vacuum. And it wasn't made lightly. Behind this partnership lies a complex web of technical constraints, business realities, patent considerations, and market dynamics that most people don't see. Understanding why Apple made this choice requires digging into the actual state of AI development, the limitations of building world-class foundation models, and the pragmatism that drives decisions at the world's most valuable company.

Let me be direct: this is one of the most significant AI partnerships in recent tech history. And it tells us something crucial about where the AI industry actually stands in 2025.

TL;DR

- Apple partnered with Google instead of OpenAI or Anthropic because building a competitive foundation model requires billions in compute resources and years of development that Apple wasn't willing to invest for a voice assistant feature.

- Gemini's multimodal capabilities made it ideal for Siri because it can analyze photos, understand context from your digital life, and process information across Google's ecosystem, something Siri needed to compete with ChatGPT's expanding abilities.

- The partnership protects Apple's autonomy: Apple licenses one component rather than outsourcing Siri wholesale, and it avoids the PR risk of relying on OpenAI amid that company's leadership turmoil and Elon Musk's lawsuit against it.

- User privacy remained the core requirement, which is why Apple insisted on on-device processing for sensitive tasks and only sending data to Google's servers when necessary—a compromise that took months to negotiate.

- This decision signals that even trillion-dollar tech companies recognize that building everything in-house isn't always the optimal strategy, especially when specialized AI partners already have working solutions.

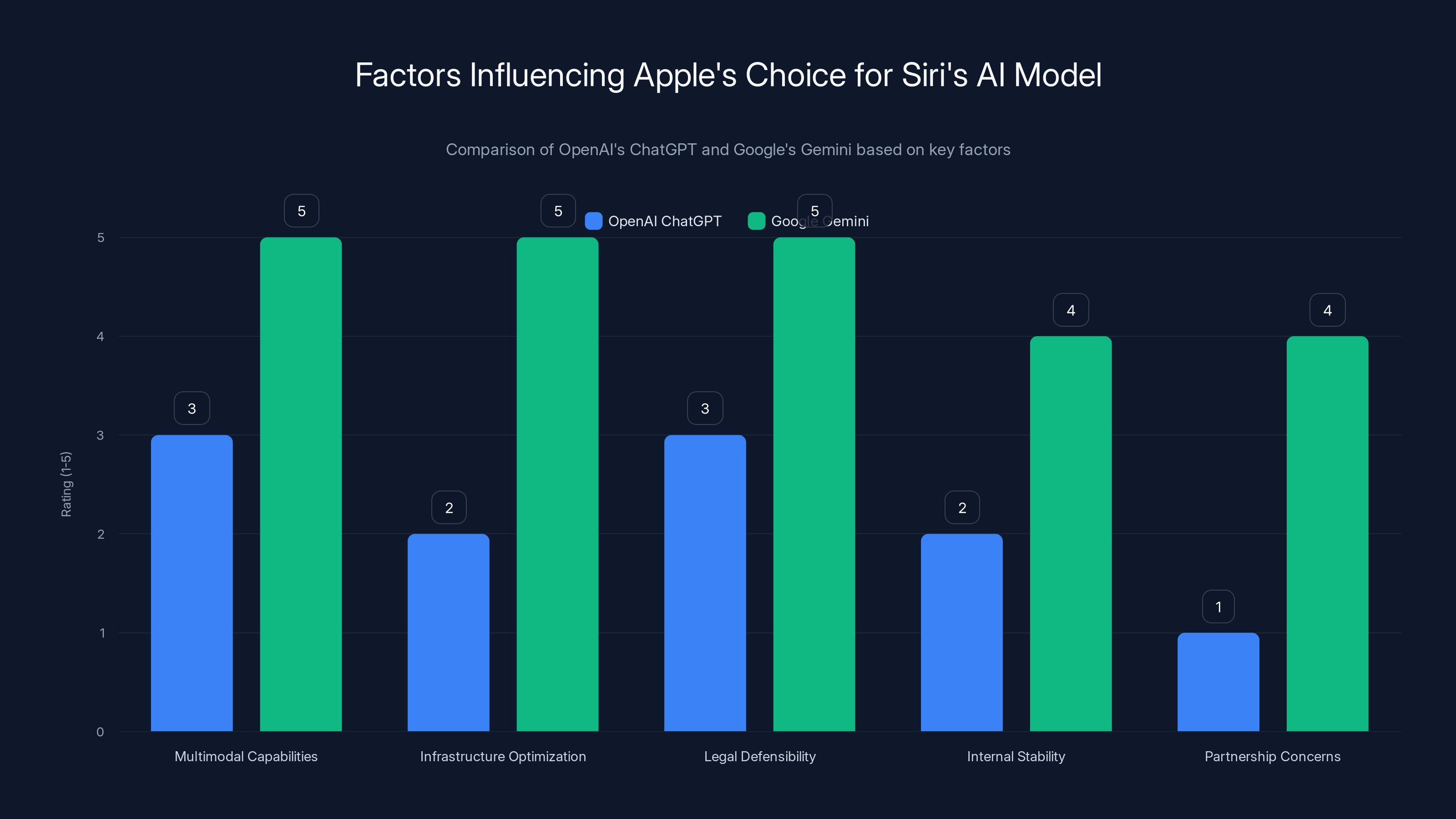

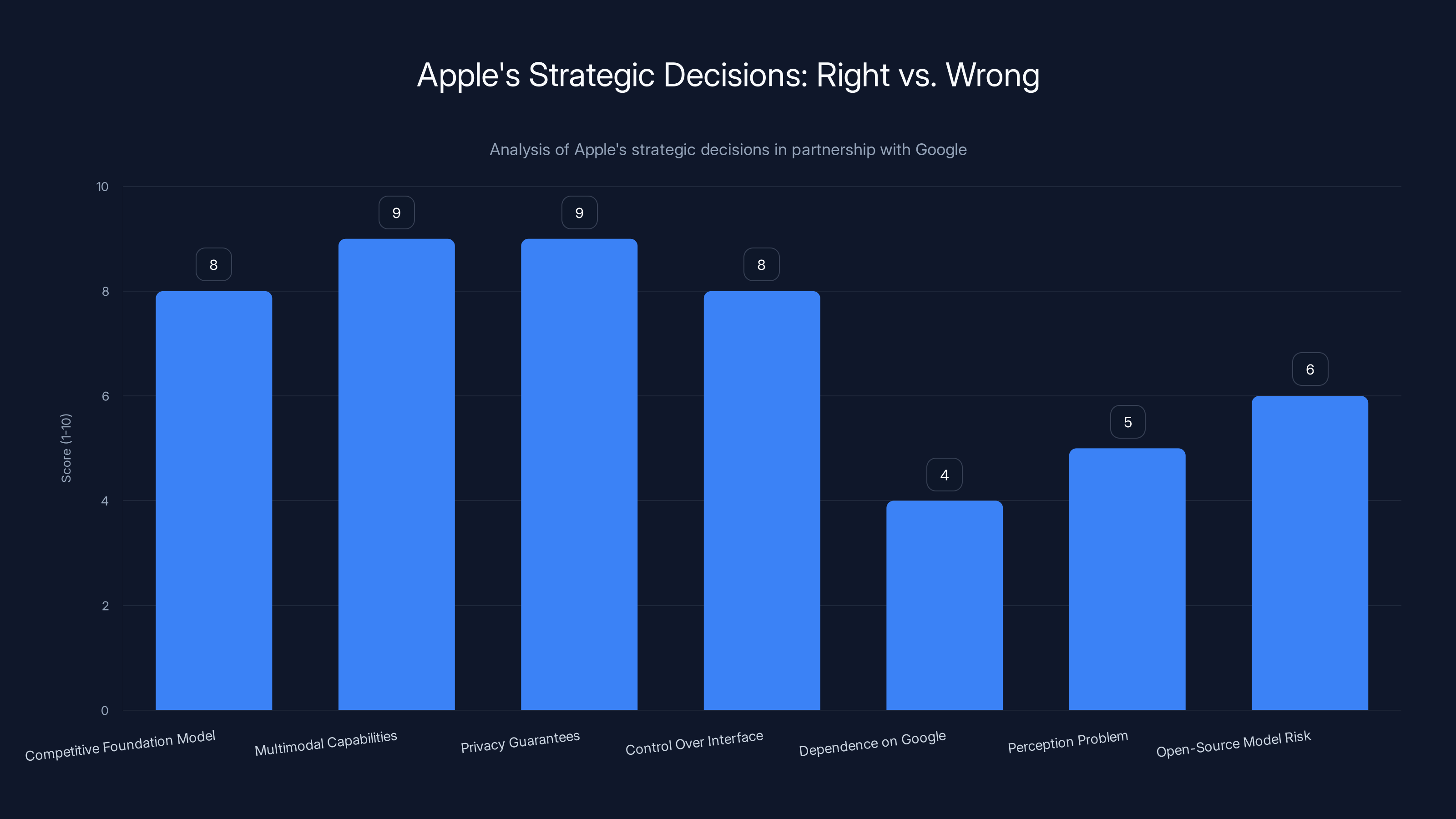

Google's Gemini scored higher across key factors such as multimodal capabilities and infrastructure optimization, making it a better fit for Apple's Siri requirements. (Estimated data)

The Problem Apple Was Trying to Solve

Siri, in its current form, is broken. Not broken in the sense that it doesn't work; it technically does. But broken in the sense that it can't do what modern AI assistants can do, and users know it. Ask ChatGPT to help you write an email, and it'll draft something thoughtful. Ask Siri the same thing, and you get a basic text suggestion. Ask Claude to analyze an image you took, and it understands context, composition, and intent. Ask Siri, and it just tries to search the web.

This gap between what Siri can do and what other AI assistants can do has become Apple's biggest embarrassment in AI. The company that revolutionized mobile computing, that created the smartphone category, that made Siri the first mainstream voice assistant—that company now has the worst AI assistant among major platforms. And it's been that way for years.

The problem started with Apple's original approach to Siri. When Apple acquired the Siri startup in 2010, the technology was genuinely groundbreaking. A voice assistant that could understand natural language and actually accomplish tasks? That was magic. But Siri's architecture was built for a different era of AI. It relied on rigid command structures, limited context awareness, and shallow understanding of what users actually wanted.

Over the years, Apple kept iterating. Voice recognition improved dramatically. The system learned to understand more complex requests. Integration with Apple's services got deeper. But the fundamental architecture remained the same—pattern matching and rule-based systems layered on top of statistical language models that were never designed to be truly intelligent.

Meanwhile, the AI world moved on. The transformer architecture revolutionized everything. Large language models proved that scale, data, and compute could create genuinely intelligent systems. OpenAI released GPT-4 and showed that AI could match or exceed human performance on a range of professional and academic benchmarks. Anthropic built Claude, proving that scaling up wasn't the only path to capable AI. And Google eventually released Gemini, which worked across text, images, audio, and video.

Apple watched all of this happen. And the company realized: we can't keep building Siri the old way. It won't work. Users are comparing it to ChatGPT every single day. Our assistant is falling further behind. We need something better, and we need it soon.

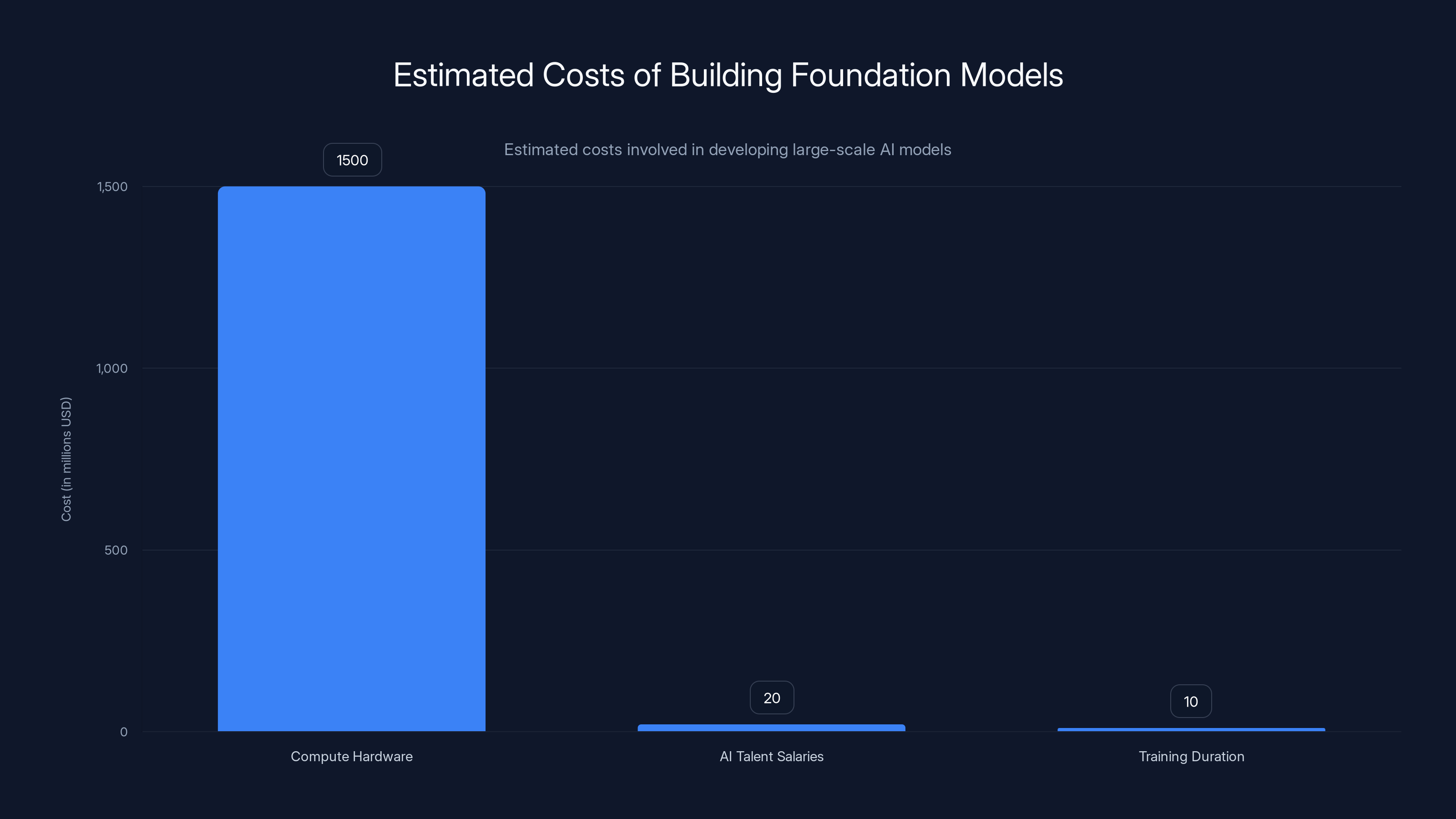

Building foundation models involves significant costs, with compute hardware being the largest expense, estimated at

Why Building Foundation Models Is Incredibly Hard (And Expensive)

This is the part that most tech journalists miss when they write about Apple's partnership with Google. They focus on the business implications or the competitive angles. But the real story is about physics, mathematics, and the brutal economics of foundation model development.

Building a world-class foundation model, the kind that powers ChatGPT, Claude, or Gemini, requires solving two interdependent problems. First, you need enormous amounts of training data. We're talking hundreds of billions of text tokens, millions of hours of video, billions of images. Second, you need equally enormous amounts of compute to process all that data through neural networks with billions or trillions of parameters.

Let's do some math. The computational cost for training a large foundation model is measured in "FLOPs"—floating-point operations. Training GPT-4 required an estimated 10^25 FLOPs or more. Converting that to real-world cost: Google spends an estimated $12-15 million on compute hardware alone just to train a Gemini model once. And that's for a tech giant with custom chips and access to massive data centers. For a company starting from scratch, costs would be three to five times higher.
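For readers who want to see how a FLOP count turns into dollars, here is a minimal sketch of that conversion. Only the 10^25 figure comes from the paragraph above. The per-chip throughput, the utilization, and the hourly price are illustrative assumptions I picked for the example, not sourced numbers, so read the output as a demonstration of the method rather than a cost estimate for any real model.

```python
# Back-of-the-envelope cost of one training run.
# Every input except TOTAL_FLOPS is an illustrative assumption.

TOTAL_FLOPS = 1e25            # from the text: estimated training compute
PEAK_FLOPS_PER_CHIP = 1e15    # ASSUMED peak throughput per accelerator (FLOP/s)
UTILIZATION = 0.4             # ASSUMED fraction of peak sustained during training
PRICE_PER_CHIP_HOUR = 2.0     # ASSUMED all-in cost per accelerator-hour (USD)


def training_cost_usd(total_flops, peak_flops, utilization, price_per_hour):
    """Return (chip_hours, cost_usd) for a single training run."""
    effective_flops_per_second = peak_flops * utilization
    chip_seconds = total_flops / effective_flops_per_second
    chip_hours = chip_seconds / 3600
    return chip_hours, chip_hours * price_per_hour


chip_hours, cost = training_cost_usd(
    TOTAL_FLOPS, PEAK_FLOPS_PER_CHIP, UTILIZATION, PRICE_PER_CHIP_HOUR
)
print(f"{chip_hours:,.0f} chip-hours, about ${cost / 1e6:.1f} million")
```

With these inputs the run works out to roughly 6.9 million chip-hours. The result is very sensitive to the assumptions: halve the utilization or double the hourly price and the bill doubles.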

But wait, there's more. Those GPUs and TPUs that train the model? They cost tens of thousands of dollars each. Building a compute cluster large enough to train a competitive foundation model requires purchasing tens of thousands of these chips upfront. That's

Now, add in the timeline. Training a foundation model takes 3 to 6 months on a top-tier cluster. But you're probably going to need to train multiple versions, do dozens of experiments, try different architectures. So you're looking at 18 to 36 months minimum to get a production-ready system. During that entire time, your competitors are iterating and improving their models. You're always chasing something that's already better than what you're building.
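To put rough bounds on the up-front hardware purchase described above, the arithmetic is just chip count times chip price. The sketch below reads "tens of thousands" as anywhere from 10,000 to 90,000 for both quantities. That reading is my assumption, and the calculation ignores networking, power, cooling, and buildings.

```python
# Rough purchase cost of a training cluster: chips x price per chip.
# The 10,000-90,000 ranges are an ASSUMED reading of "tens of thousands".

def cluster_capex_usd(chip_count, price_per_chip_usd):
    """Hardware purchase cost only; excludes facilities and operations."""
    return chip_count * price_per_chip_usd


low = cluster_capex_usd(10_000, 10_000)
high = cluster_capex_usd(90_000, 90_000)
print(f"${low / 1e6:,.0f} million to ${high / 1e9:.1f} billion")
```

The spread between the two ends is nearly two orders of magnitude, which is why published cluster-cost estimates vary so widely.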

Here's where it gets really brutal: it's not just the raw cost. Building a competitive foundation model requires something you can't buy, no matter how much money you have. It requires expertise. Decades of collective experience in machine learning, scaling neural networks, understanding training dynamics, and knowing what actually works at scale. The people who have that expertise work at OpenAI, Anthropic, Google, Meta, and maybe five other companies worldwide. They're not leaving those jobs to go work for you.

Apple looked at this equation. The company has money, no doubt. Apple's cash on hand exceeds

Apple makes roughly

So Apple asked a smarter question: why build a foundation model in-house when there's a company that's already built one that we can license?

The Case for Using Gemini Instead of Building In-House

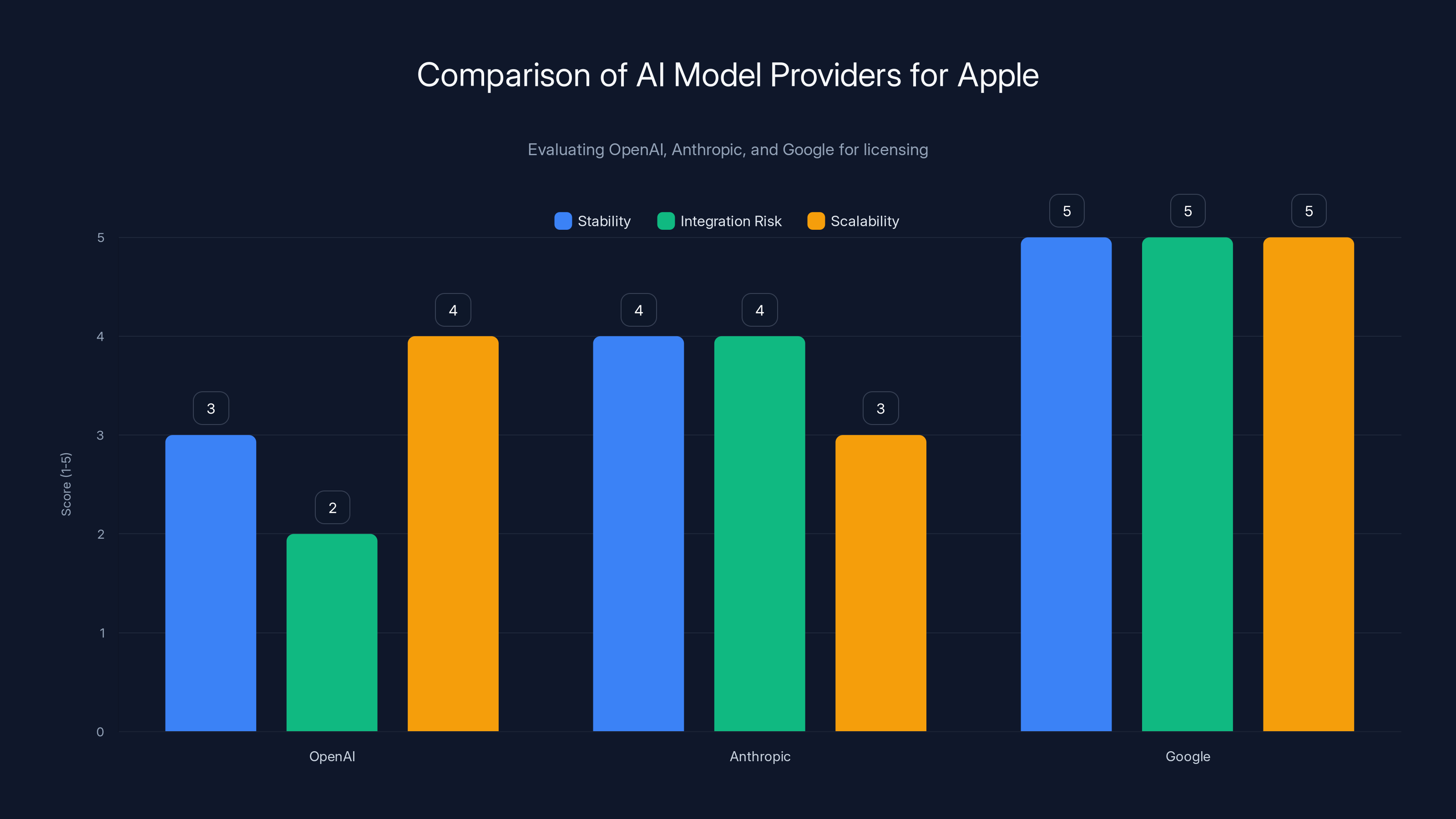

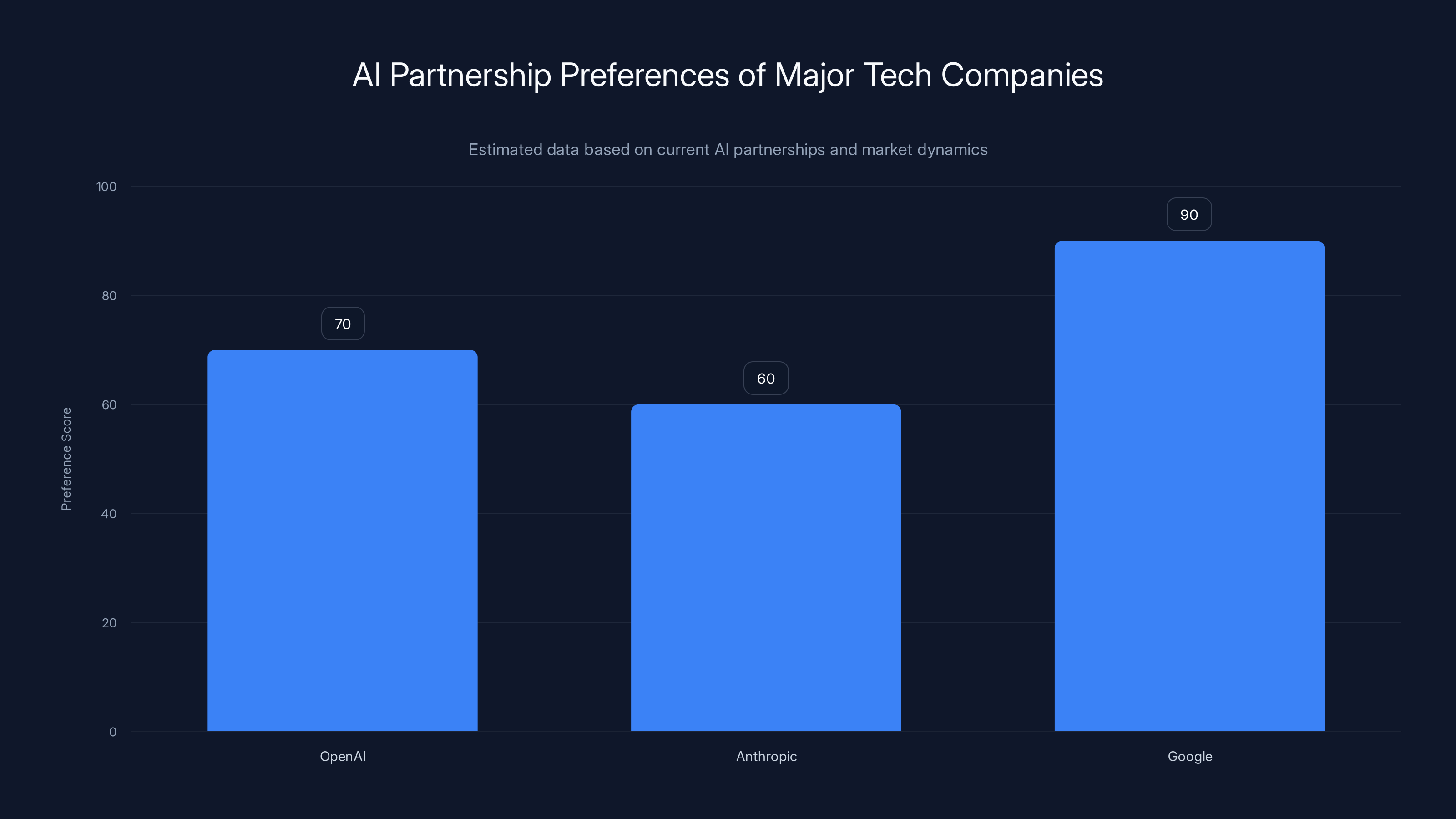

Once Apple decided not to build its own foundation model from scratch, the question became: whose model should we license?

There were three realistic options: OpenAI, Anthropic, or Google. Each came with different advantages and constraints.

OpenAI seemed obvious at first. ChatGPT is the most popular AI assistant in the world. It's what people think of when they think of AI. And OpenAI already had relationships with tech companies: Microsoft integrated OpenAI's models into everything. But here's the problem: OpenAI was fractured. In November 2023, Sam Altman was fired, then rehired within days. The company's leadership was in chaos. And Elon Musk, who co-founded OpenAI and still had significant influence in tech circles, was involved in a public lawsuit against the company over its transition to a for-profit structure. Apple, which values stability and predictability, saw OpenAI as a risky bet.

There's another issue with OpenAI: the company was already deeply integrated with Microsoft. Microsoft had invested a reported $13 billion in OpenAI and held broad rights to build GPT models into Microsoft products. Depending on OpenAI for Siri would make Apple feel like it was depending on Microsoft, indirectly. Apple doesn't like that kind of dependency.

Anthropic was Apple's second option. Claude is genuinely impressive. Many experts argue it's more capable than GPT-4 in certain domains like reasoning and factual accuracy. The company is well-managed, the founding team has deep expertise, and there are no baggage concerns. But Anthropic is also still relatively young. Founded in 2021, the company is still proving out its long-term viability. There were questions about whether Anthropic could scale its operations fast enough to support Apple's needs, whether it would remain independent or get acquired, and whether its model was truly multimodal enough to power everything Siri needed to do.

Google was the dark horse. On the surface, it seems like the worst choice. Why would Apple partner with its biggest competitor? But when you actually examine the technical and business logic, Google made more sense than people initially realized.

First, Gemini is genuinely capable. It's not the fanciest model, and some benchmarks favor Claude or GPT-4 in specific domains. But Gemini works across text, images, audio, and video. It can understand context from Google Search results, YouTube history, and Google Photos. It has real-time information access through Google's search infrastructure. For a voice assistant that needs to understand what you're photographing, what you're reading about, and what you've recently searched for, that multimodal integration is incredibly valuable.

Second, Google has the infrastructure at scale. Google runs the world's largest computing infrastructure. The company processes 8.5 billion searches per day. That operational expertise, understanding how to run AI at scale in production, is something OpenAI and Anthropic are still learning. Google has been running massive machine learning models in production for over a decade.

Third, and this is crucial: Google was already making Gemini available through its Pixel phones. If Apple was going to use any third-party foundation model, Google had already proven it could make it work in a mobile environment. The infrastructure was there. The mobile optimization was done. Apple didn't have to wait for anyone to figure out how to run Gemini on a phone—it was already happening.

Fourth, there's a strategic advantage nobody talks about: competition. If Apple used GPT-4, it would essentially be dependent on OpenAI's roadmap. If OpenAI improved GPT-4 and integrated it tightly with Microsoft services, Apple would be playing catch-up. But by using Gemini, Apple creates a situation where Google and Apple have aligned incentives to keep improving the model. Google wants Gemini to be the best because it reflects on Google's AI capabilities. Apple wants Gemini to be the best because it powers Siri. That alignment is strategically valuable.

Fifth, and most importantly: licensing Gemini lets Apple maintain the appearance of control. Siri is now "powered by Gemini," but Apple controls the voice interface, the on-device processing, the privacy guarantees, and the feature integration. Apple hasn't outsourced Siri to Google—Apple has licensed one component (the foundation model) while maintaining everything else in-house. That's the distinction that matters.

Google scores highest in stability and integration risk, making it a strong candidate. OpenAI's integration risk is high due to its ties with Microsoft. (Estimated data)

The Technical Architecture: How Apple and Google Actually Made This Work

You can't just drop Google's Gemini model directly into Siri and call it a day. The technical integration required careful architecture to maintain Apple's privacy requirements while leveraging Google's capabilities.

Here's roughly how it works: When you speak to Siri, your voice is processed on-device on your iPhone. Apple's on-device speech recognition converts audio to text. That text then gets sent to what Apple calls "Apple Intelligence"—a combination of on-device processing and cloud-based processing depending on the task.

For simple tasks—setting a timer, checking the weather, controlling smart home devices—everything happens locally on your phone. No data goes to Apple's servers, let alone Google's. Apple's on-device models handle these tasks, and they're fast enough that the latency is imperceptible.

For complex tasks—understanding nuanced requests, reasoning about context, generating detailed responses—the system needs more power than what's available on-device. This is where Gemini comes in. The text (never the raw audio) gets sent to Google's servers. Gemini processes the request and returns a response. Then Apple's system delivers that response back to you through Siri.

But here's the critical part: Apple negotiated specific privacy guarantees with Google. According to Apple's documentation, requests sent to Google for Gemini processing are anonymized and don't get logged in a way that's tied to your Google account. Google doesn't use your Siri requests to train future models or improve Google Search. Your request history isn't shared with Google's advertising business. This is a fundamental difference from how Google normally operates.

These privacy guarantees didn't come easily. They were the result of months of negotiation. Google normally leverages user data to improve products and train models. Apple was asking Google to deliberately not do that for Siri requests. That required contractual language, technical implementation changes, and probably some serious financial incentives.

The architecture also includes what Apple calls "on-device fallback." If the request is something sensitive—health information, financial data, intimate personal matters—the system tries to handle it locally first. Only if on-device processing can't adequately respond does it consider sending anonymized data to Gemini. And if the request is deemed too sensitive (sexual content, health conditions, financial details), Siri simply refuses to send it to the cloud and tells you it can only process that locally. This puts the user in control.
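The routing rules described so far (simple tasks stay local, complex tasks go to the cloud, sensitive tasks try local first, and the most sensitive never leave the device) can be written down as a small decision function. This is a sketch of the policy as the article describes it, not Apple's implementation. The names `Sensitivity`, `Route`, and `choose_route` are hypothetical, and how a real system would classify a request as simple or sensitive is outside its scope.

```python
from enum import Enum


class Sensitivity(Enum):
    NORMAL = "normal"
    SENSITIVE = "sensitive"      # e.g. health or financial topics
    RESTRICTED = "restricted"    # judged too sensitive to ever leave the device


class Route(Enum):
    ON_DEVICE = "on_device"
    CLOUD_ANONYMIZED = "cloud_anonymized"
    LOCAL_ONLY_NOTICE = "local_only_notice"  # tell the user it stays local


def choose_route(is_simple: bool, sensitivity: Sensitivity,
                 local_can_answer: bool) -> Route:
    """Pick where a transcribed request is handled (hypothetical policy)."""
    # Timers, weather, smart-home control: always handled locally.
    if is_simple:
        return Route.ON_DEVICE
    # Ordinary complex requests go to the hosted model as anonymized text.
    if sensitivity is Sensitivity.NORMAL:
        return Route.CLOUD_ANONYMIZED
    # Sensitive requests try the on-device model first.
    if local_can_answer:
        return Route.ON_DEVICE
    # Restricted requests never leave the device, even if local handling fails.
    if sensitivity is Sensitivity.RESTRICTED:
        return Route.LOCAL_ONLY_NOTICE
    # Sensitive but not restricted: the cloud is the last resort.
    return Route.CLOUD_ANONYMIZED


print(choose_route(True, Sensitivity.NORMAL, True))
print(choose_route(False, Sensitivity.SENSITIVE, False))
print(choose_route(False, Sensitivity.RESTRICTED, False))
```

Keeping the policy in one pure function makes it easy to audit and test, which matters when the function is the thing enforcing a privacy boundary.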

Another layer: Apple uses what's called "private cloud compute" for some tasks. Instead of sending data to Google directly, Apple sends encrypted data to Apple's own secure servers, which then make encrypted requests to Google. This adds a layer of obfuscation—Google processes the request but doesn't know whose request it is or what personal context might be attached.

The system is intentionally designed so that Google sees the minimum necessary data to respond accurately. Google doesn't see your contact list, your location, your photos, or your search history—only the specific request needed to generate a response. This is technically challenging to implement and requires careful API design, but it's the boundary that Apple insisted on.
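One common way to enforce a "minimum necessary data" boundary is an allowlist at the relay: copy only the fields the model needs and drop everything else by default. The sketch below illustrates that idea under my own assumptions. The field names, the `FORWARDED_FIELDS` allowlist, and `build_relay_payload` are hypothetical, and the encryption step the article mentions is left out entirely.

```python
import uuid

# Hypothetical allowlist: only the request text is forwarded to the model.
FORWARDED_FIELDS = frozenset({"request_text"})


def build_relay_payload(device_request: dict) -> dict:
    """Copy only allowlisted fields and attach a random per-request ID."""
    payload = {k: v for k, v in device_request.items() if k in FORWARDED_FIELDS}
    # A fresh random ID lets the relay match the reply to the request
    # without carrying any account or device identifier.
    payload["relay_request_id"] = uuid.uuid4().hex
    return payload


device_request = {
    "request_text": "Summarize this article in two sentences",
    "user_id": "hypothetical-user-123",      # never forwarded
    "location": (37.33, -122.01),            # never forwarded
    "contacts": ["Alice", "Bob"],            # never forwarded
}
payload = build_relay_payload(device_request)
print(sorted(payload))
```

An allowlist fails closed: a new field added to the device request later is dropped unless someone deliberately adds it to the list. A blocklist would leak it by default.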

Why This Partnership Reveals Something Bigger About AI

Apple's decision to use Gemini isn't just about Siri. It's a signal about something fundamental in the AI industry that most people haven't fully grasped yet.

The conventional wisdom in tech is that every company should build everything in-house. Google built search in-house. Amazon built cloud services in-house. Meta built recommendation algorithms in-house. The assumption is that proprietary technology gives you competitive advantage and strategic control.

But foundation models might be different. A foundation model is like electricity. You could try to generate your own power, but if there's already an efficient power grid, you're better off using that and focusing your resources on what makes your product unique.

Consider what happened with Android. Google built Android as a base OS, and device makers licensed it instead of building their own. That didn't make Android companies less competitive—it made them more competitive because they could focus on hardware, services, and user experience instead of building OS infrastructure from scratch.

Apple is making a similar bet with Gemini: the foundation model is becoming like the OS layer, and focusing on the user experience and feature integration is where the competitive advantage lies.

This is actually good news for smaller companies. If every company had to build its own foundation model to be competitive, only a few companies with enormous resources could compete. But if foundation models become a commodity that any company can license, whether from Google, OpenAI, Anthropic, or future providers, then the competitive landscape opens up. A small startup could theoretically build an amazing voice assistant by licensing a foundation model and focusing all its engineering resources on UX, privacy, and integration.

This pattern is already emerging. Perplexity built a search interface on top of licensed models. Runway built creative tools using various foundation models. Zapier integrated multiple AI providers to build automation workflows. None of these companies built their foundation models from scratch. They won by focusing on solving specific problems better than competitors.

Apple is learning the same lesson, but a few years late. The company tried to build Siri from first principles. That worked when the competition was also building from first principles. But once the foundation model revolution happened, trying to build from scratch became the wrong strategy. Licensing became faster, cheaper, and actually smarter.

Apple's strategic decisions show strengths in privacy guarantees and multimodal capabilities but face risks in dependence on Google and perception issues. (Estimated data)

The Competitive Response: What This Means for OpenAI and Anthropic

When Apple announced the Gemini partnership, there was a palpable shift in the venture capital and AI startup ecosystem. People realized: Apple, the company that controls the iPhone and the most valuable tech brand on earth, is betting on Google instead of OpenAI. That's not a small signal.

For OpenAI, this is a setback. OpenAI has been positioning itself as the default AI partner for major tech companies. The company has deals with Microsoft, partnerships with companies across media and entertainment, and integration with countless apps. But Apple's choice showed that even OpenAI's dominance can't guarantee partnerships with the most important tech companies.

There were structural reasons for this. OpenAI is still recovering from internal turmoil. The company's pricing has gotten more expensive. And the perception, fair or not, is that OpenAI is increasingly Microsoft's AI division rather than an independent company. Apple might not want to feel like it's indirectly dependent on Microsoft.

For Anthropic, the response has been interesting. Anthropic's CEO Dario Amodei gave statements acknowledging the partnership but also pointing out that different companies have different needs. Anthropic's position is that its models might not be ideal for voice assistants (which favor speed and low latency) but are perfect for other applications. That's probably true: Claude's reasoning capabilities are better than Gemini's in certain benchmarks, but that doesn't matter as much for a voice assistant as speed and multimodal capability do.

But both OpenAI and Anthropic got a clear message: being the best model isn't enough. You also need to prove you can execute at scale, maintain stability, provide privacy guarantees, and integrate seamlessly into existing platforms. OpenAI stumbled on stability. Anthropic hasn't proven itself at scale yet. Google, despite being a competitor, proved it could deliver on all fronts.

Longer term, this partnership might actually accelerate the commodification of foundation models. If Apple—one of the most vertically integrated companies in existence—is willing to license AI from Google, it signals that foundation models are becoming more like infrastructure than differentiated products. That should push model providers to compete on dimensions that actually matter: inference speed, factual accuracy, multimodal capability, privacy, and cost. That competition is good for the entire ecosystem.

What Apple Got Right (and What It Got Wrong)

Let's be honest: this partnership isn't perfect. And Apple's strategy isn't without risks.

What Apple got right: Apple recognized that building a competitive foundation model wasn't worth the investment for this particular feature. The company correctly identified that Gemini's multimodal capabilities and production-scale infrastructure were the right technical fit for modernizing Siri. And Apple fought hard to negotiate privacy guarantees that actually protect user data rather than feed Google's advertising machine. These are genuinely smart decisions.

Apple also maintained control over the parts that matter. Siri's voice interface is still Apple's. The feature integration is still Apple's. The on-device processing is still Apple's. Gemini is just the reasoning engine underneath. That's the right architectural boundary.

What Apple might have gotten wrong: There's real risk in depending on Google. What if Google changes the terms of the deal? What if Google decides Gemini needs to be integrated differently to serve Google's interests better? What if there's a geopolitical event that forces Google to restrict the model for certain regions? Apple has some contractual protection, but ultimately Apple is dependent on Google's goodwill.

There's also a perception problem that Apple might not fully resolve. For years, Apple marketed itself as the privacy-first tech company. Now, for the smartest voice assistant features, you need Google's servers processing your requests. Even if the privacy architecture is technically sound, the optics aren't great. Marketing "Siri powered by Gemini" is challenging when your core brand value is privacy.

And there's a longer-term risk: what if open-source models eventually become competitive with Gemini? There's genuine progress happening in the open-source model space. Llama 3 and similar models are getting surprisingly capable. If an open-source model reaches parity with Gemini in 2-3 years, Apple will have trained Google's executives to expect a certain licensing revenue from voice assistant features. It'll be harder for Apple to switch to an open-source alternative by then.

But on balance, for 2025 and the next few years, Apple's decision makes strategic sense. The company identified the real constraint (foundation models cost billions to develop), found the best available solution (Google's Gemini), negotiated reasonable terms (privacy guarantees), and maintained control of the parts that matter (UX and feature integration). That's good strategy.

Estimated data suggests Google is currently the preferred AI partner for major tech companies, with a higher score compared to OpenAI and Anthropic, reflecting its ability to deliver on multiple fronts.

The Broader Implications for Siri's Future

Using Gemini as the foundation doesn't mean Siri suddenly becomes amazing overnight. There's still a lot of work to do on Apple's side.

First, Apple needs to figure out how to make Siri actually competitive with ChatGPT. ChatGPT has conversational ability that's hard to match. People use ChatGPT for extended conversations where the AI helps them think through problems, brainstorm ideas, and explore possibilities. Siri needs to support that kind of interaction while also staying device-focused. That's a different UX problem than what Apple was solving before.

Second, Apple needs to handle the latency issue. Voice assistants need to respond quickly. The round trip from your iPhone to Google's servers and back takes time. Apple has some on-device processing to handle simple requests, but for complex requests sent to Gemini, there's inherent latency. Apple will need to invest in predictive systems that anticipate what you might ask and pre-compute responses, or find other ways to mask the latency.
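The "anticipate and pre-compute" idea is essentially a short-lived cache that gets filled before the user asks. Here is a minimal sketch of that pattern. `PrefetchCache` and `slow_cloud_call` are hypothetical names, the stand-in function does no real network call, and predicting which queries are worth prefetching (the hard part) is not addressed.

```python
import time
from typing import Callable, Dict, Tuple


class PrefetchCache:
    """Holds pre-computed responses for a short time (illustrative only)."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[str, float]] = {}

    def prefetch(self, query: str, fetch: Callable[[str], str]) -> None:
        """Pay the round trip now, before the user asks."""
        self._entries[query] = (fetch(query), self._clock())

    def get(self, query: str, fetch: Callable[[str], str]) -> Tuple[str, bool]:
        """Return (response, served_from_cache)."""
        entry = self._entries.get(query)
        if entry is not None:
            response, stored_at = entry
            if self._clock() - stored_at <= self._ttl:
                return response, True
        # Miss or stale entry: fall back to the slow path.
        return fetch(query), False


def slow_cloud_call(query: str) -> str:
    # Stand-in for a network round trip to a hosted model.
    return f"answer to: {query}"


cache = PrefetchCache(ttl_seconds=60)
cache.prefetch("hotel prices near destination", slow_cloud_call)
print(cache.get("hotel prices near destination", slow_cloud_call))
print(cache.get("something unpredicted", slow_cloud_call))
```

The trade-off is that every wrong guess is a wasted cloud request, and a prefetch also sends data off the device before the user has asked for anything, so a design like this would have to respect the same privacy routing as a normal request.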

Third, Siri needs to become more proactive. Right now, Siri is reactive—you ask it something, and it responds. But Siri should be predicting what you need and offering help before you ask. "Hey, I noticed you're looking at flights to this destination. Want me to check hotel prices?" That kind of proactive assistance requires understanding context in ways that Siri has never been designed to do.

Fourth, Apple needs to integrate Siri more deeply with its own services. Gemini can handle general intelligence, but Siri needs to be deeply integrated with Apple News, Apple Music, Apple Maps, Apple Health, and all of Apple's services. That integration is Apple's responsibility, not Google's.

The Gemini partnership solves the foundation model problem. But it doesn't solve the product problem. And that's where the real challenge lies.

The Patent Landscape and Legal Considerations

There's actually another reason Apple might have preferred Gemini over OpenAI that doesn't get discussed much: patents and legal liability.

OpenAI has been involved in multiple legal disputes about the data used to train its models. There are ongoing lawsuits from authors, media companies, and other parties alleging that OpenAI used copyrighted material without permission to train GPT models. The legal outcome of those cases is uncertain. If a court rules that OpenAI violated copyright and owes damages, OpenAI's licensing partners, including potentially Apple, could face liability.

Google, as one of the largest tech companies with one of the most sophisticated legal teams, has taken a more cautious approach to data sourcing. Google has more experience defending its use of data in court (given its decades in search and information indexing). Apple probably preferred partnering with a company that has stronger legal defensibility.

Anthropic has been more transparent about its training data and has actually commissioned third-party audits. But Anthropic is also smaller and has fewer legal resources if something goes wrong. Apple probably wanted to partner with a company that could defend itself in court if needed.

Google, despite all its other issues, is the safest legal bet. A partnership with Google protects Apple because any legal consequences would fall far more heavily on Google than on Apple.

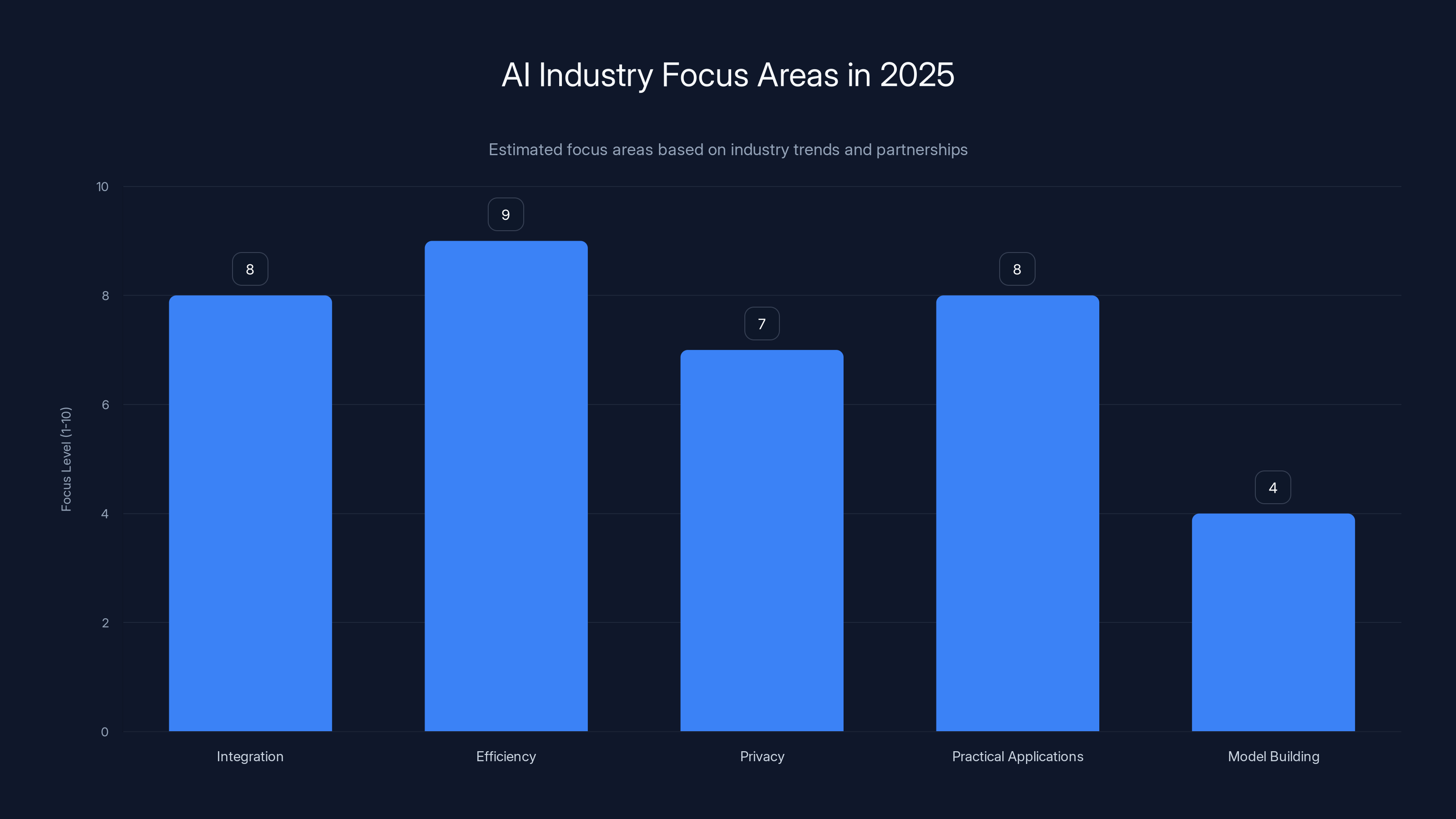

By 2025, the AI industry is expected to focus more on integration, efficiency, and practical applications rather than building new models, indicating a shift towards maturity. (Estimated data)

Looking Ahead: What Comes Next for This Partnership

If the Apple-Google Gemini partnership works well, there are natural extensions. Apple could eventually want Gemini integrated into more products: Apple TV, Apple Watch, macOS. Right now, we're talking about Siri on the iPhone. But the foundation for deeper integration across all of Apple's devices is being laid right now.

There's also a question of whether this partnership becomes exclusive or if Apple might eventually license models from multiple providers. The most future-proof approach would be for Apple to build an abstraction layer that lets the company switch between foundation models. That way, if Anthropic's Claude becomes better for Siri use cases, or if an open-source model becomes viable, Apple can change providers without overhauling Siri's architecture.
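An abstraction layer of the kind described above usually comes down to one narrow interface that every model backend implements, so the assistant code never names a specific provider. The sketch below shows the shape of that design. The `FoundationModel` interface, the two stub backends, and the `Assistant` class are all hypothetical, and the stubs return canned strings rather than calling any real model API.

```python
from typing import Protocol


class FoundationModel(Protocol):
    """The only surface the assistant depends on (hypothetical interface)."""

    def complete(self, prompt: str) -> str:
        ...


class StubCloudModel:
    """Stand-in for a hosted model; makes no real API call."""

    def complete(self, prompt: str) -> str:
        return f"[cloud stub] {prompt}"


class StubOnDeviceModel:
    """Stand-in for a smaller local model."""

    def complete(self, prompt: str) -> str:
        return f"[on-device stub] {prompt}"


class Assistant:
    """Owns the user-facing behavior; the model behind it is replaceable."""

    def __init__(self, model: FoundationModel) -> None:
        self._model = model

    def swap_model(self, model: FoundationModel) -> None:
        self._model = model

    def ask(self, text: str) -> str:
        # Input cleanup lives here, so it stays the same across providers.
        return self._model.complete(text.strip())


assistant = Assistant(StubCloudModel())
print(assistant.ask("  plan my week "))
assistant.swap_model(StubOnDeviceModel())
print(assistant.ask("  plan my week "))
```

In practice the hard part is not the interface but the behavior behind it: prompts tuned for one model rarely transfer cleanly to another, so a provider swap still needs evaluation work even when the code change is one line.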

Another possibility: Apple might eventually build its own foundation model specifically for on-device Siri features. The company could license Gemini for cloud processing while building a smaller, specialized model for device-side processing. Over time, the on-device model could get better, and Apple could reduce reliance on Gemini.

The longest-term implication is that this partnership proves that even trillion-dollar tech companies have to be pragmatic about where they build and where they buy. Apple's vertical integration strategy worked for hardware and software. But AI is different. The dynamics are different. And Apple had to adapt.

The Bigger Picture: How This Partnership Signals the State of AI in 2025

Apple's decision is actually revealing about where the AI industry stands right now, and where it's headed.

First, foundation models are becoming increasingly standardized. There used to be huge quality variations between models. Now, the top models (GPT-4, Claude 3, Gemini) are all within striking distance of each other on most benchmarks. That convergence means companies can make partnerships based on factors other than pure capability: cost, infrastructure, integration, privacy, business terms, and reliability.

Second, the infrastructure required to run models at scale is consolidating. Google has built the most efficient infrastructure. OpenAI has efficient infrastructure through its Microsoft partnership. But the barrier to entry for building competitive infrastructure is so high that most companies will never attempt it. That consolidation is real and it's permanent.

Third, the AI industry is maturing. Two years ago, the story was all about which models were smartest, which companies would win, whether open-source could compete with closed models. By 2025, those questions are starting to feel settled. The conversation is now about integration, efficiency, privacy, and practical applications. That's a sign of a maturing industry.

Fourth, licensing is becoming more common than building. In the early days of AI startups, everyone wanted to build proprietary models. Now successful companies are focusing on applications and leaving the foundation models to specialists. That's actually healthy for the ecosystem because it lets companies focus on what they're good at.

Apple's Gemini partnership is a bellwether for all of this. When the most valuable, most vertically integrated tech company admits that licensing is better than building for AI, it signals a fundamental shift in how the technology industry works.

Common Misconceptions About This Partnership

Let me address some of the confusion I've seen in tech press and industry discussion about this partnership.

Misconception 1: "Apple is surrendering to Google." No. Apple licensed one component (the foundation model). Apple still controls everything that makes Siri distinctive: the voice interface, the privacy architecture, the on-device processing, the integration with Apple services, the feature design. Licensing a component isn't surrender; it's pragmatic resource allocation.

Misconception 2: "Google is getting access to all your Siri data." No. Apple negotiated specific privacy guarantees. Google doesn't get access to your contact list, your location, your identity, or your request history. Google processes the specific request and returns a response. It's not free data for Google.

Misconception 3: "Gemini is now integrated into all of Apple's products." Not yet. Right now, this is Siri-specific. Apple is being strategic about where Gemini is integrated and where it isn't.

Misconception 4: "This means Apple gave up on building AI." No. Apple still builds tons of AI. The company built the Neural Engine in the A18 chip. Apple built on-device machine learning models. The company is building Apple Intelligence, which includes on-device processing. What Apple is doing is being selective about where to build and where to license.

Misconception 5: "OpenAI lost because Apple chose Google." OpenAI didn't lose. OpenAI has thousands of other customers and partnerships. This is one partnership that went a different direction. The AI market is big enough for multiple companies to win.

Practical Takeaways for Product Teams

If you're building products and thinking about AI strategy, what can you learn from Apple's decision?

First, be honest about your constraints. Apple has $150 billion in cash and the best engineering talent. If Apple decided not to build a foundation model, your company probably shouldn't either. Foundation models are a specialized, capital-intensive category. Unless you're Google, OpenAI, or Anthropic, you probably shouldn't be building them.

Second, licensing is legitimate. There's no shame in licensing AI from specialized providers. In fact, it's often the smarter choice. You can focus your resources on the product experience and the problem you're solving, rather than competing on raw model capability.

Third, negotiate for privacy. If you're licensing AI that will process user data, negotiate privacy guarantees from day one. Don't assume the license agreement will protect user privacy. Be specific. Be detailed. Make privacy part of the contract.

Fourth, maintain architectural control. License components, but maintain control of the integration layer. That's where your differentiation lives. Apple didn't hand Siri over to Google. Apple licensed the reasoning engine but maintained control of everything else.

Fifth, plan for alternatives. Don't become so dependent on one model provider that you can't switch. Build abstraction layers. Have contingency plans. The AI market is moving fast, and providers change terms, get acquired, or go out of business.
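To make the "build abstraction layers" advice concrete, here is a minimal sketch in Python. It is not how Apple or any vendor implements this; `ModelProvider`, `EchoProvider`, and `Assistant` are hypothetical names invented for illustration, and the stand-in provider returns a canned string instead of calling a real API. The point is that product code talks to one interface and falls back to a second provider when the first is unavailable.

```python
from dataclasses import dataclass
from typing import Protocol


class ModelProvider(Protocol):
    """Hypothetical interface: anything that turns a prompt into a reply."""
    name: str

    def complete(self, prompt: str) -> str: ...


@dataclass
class EchoProvider:
    """Stand-in for a real vendor client; returns a canned reply."""
    name: str
    fail: bool = False  # simulate an outage for the example

    def complete(self, prompt: str) -> str:
        if self.fail:
            raise ConnectionError(f"{self.name} is unavailable")
        return f"[{self.name}] {prompt}"


class Assistant:
    """Integration layer: product code depends on this, not on a vendor."""

    def __init__(self, providers: list[ModelProvider]):
        if not providers:
            raise ValueError("at least one provider is required")
        self.providers = providers

    def ask(self, prompt: str) -> str:
        errors = []
        # Try providers in priority order; move on when one is unreachable.
        for provider in self.providers:
            try:
                return provider.complete(prompt)
            except ConnectionError as exc:
                errors.append(str(exc))
        raise RuntimeError("all providers failed: " + "; ".join(errors))


assistant = Assistant([EchoProvider("primary", fail=True), EchoProvider("fallback")])
reply = assistant.ask("Set a timer")
print(reply)
```

Swapping vendors then means writing one new class that satisfies `ModelProvider`, rather than touching every call site.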

The Path Forward

Apple's decision to use Gemini for next-gen Siri isn't the end of a story. It's the beginning of a new chapter in how Apple approaches AI. The partnership proves that even the most vertically integrated tech companies have to be pragmatic about where they build and where they license.

What happens next depends on execution. Will Siri actually become competitive with ChatGPT? Will users trust that their Siri requests aren't being logged by Google? Will the partnership hold if geopolitical tensions rise between the US and other countries where Google operates? Will open-source models eventually make this partnership less relevant?

Those are the real questions. The licensing agreement is just the beginning. The hard part is making it work in practice.

One thing's certain: the AI industry has matured from the "who will own AI" question to the "how will AI actually work in real products" question. And that's actually a more interesting problem to solve.

FAQ

Why didn't Apple just use OpenAI's ChatGPT for Siri?

Apple did evaluate OpenAI, but several factors made Google's Gemini more attractive. OpenAI was experiencing internal turmoil in late 2023 and early 2024 after Sam Altman's brief departure and return. Additionally, OpenAI's exclusive relationship with Microsoft created concerns about indirect dependence. Google's Gemini offered better multimodal capabilities (handling text, images, audio, and video), production-scale infrastructure that was already optimized for mobile devices through Pixel integration, and stronger legal defensibility. The practical reality is that Gemini simply fit Apple's technical requirements better, even though OpenAI's model is more widely known.

Does Google have access to your Siri requests?

Google receives anonymized requests needed to process complex Siri queries, but not your full request history or personal context. Apple negotiated specific privacy guarantees that prevent Google from logging requests in a way tied to your Google account, using Siri data to train future models, or sharing the information with Google's advertising division. Apple's system is designed to send the minimum necessary data to Gemini while keeping sensitive information on-device. However, some data still traverses Google's servers, which is why privacy-conscious users should understand that using advanced Siri features requires sending information outside of Apple's infrastructure.
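The idea of sending "the minimum necessary data" can be pictured as an allowlist applied before anything leaves the device. The sketch below is purely illustrative and assumes nothing about Apple's actual implementation: the field names, `ALLOWED_FIELDS`, and `minimize_request` are all hypothetical.

```python
# Hypothetical allowlist: the only fields permitted to leave the device.
ALLOWED_FIELDS = {"query", "locale"}


def minimize_request(request: dict) -> dict:
    """Return a copy of the request containing only allowlisted fields."""
    return {key: value for key, value in request.items() if key in ALLOWED_FIELDS}


# Illustrative on-device request with personal context attached.
on_device_request = {
    "query": "Summarize this article",
    "locale": "en-US",
    "user_id": "u-123",
    "location": (37.33, -122.01),
    "contacts": ["Alice", "Bob"],
}

outbound = minimize_request(on_device_request)
print(outbound)
```

An allowlist fails closed: a new field added to the on-device request stays on-device until someone explicitly permits it, which is the safer default compared with a blocklist.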

Could Apple have built its own foundation model instead?

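Technically yes, but practically no. Building a competitive foundation model requires billions of dollars in compute resources and years of development, an investment Apple wasn't willing to make for a voice assistant feature.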

Will Apple's Siri become better than ChatGPT?

Gemini as the foundation engine is already competitive with GPT-4 in most benchmarks, so Siri has the foundational capability to be excellent. The real question is whether Apple's product execution can match ChatGPT's user experience. ChatGPT benefits from being a specialized product focused purely on conversation and reasoning. Siri needs to handle voice I/O, on-device processing, integration with Apple services, and privacy constraints simultaneously. This makes Siri technically harder to build than ChatGPT, but Apple's constraints also differentiate Siri. Within 12-18 months, Siri powered by Gemini could potentially surpass ChatGPT for specific use cases like controlling Apple devices or integrating with Apple services, even if it remains less capable for general conversation.

Is this partnership exclusive to Apple and Google?

The partnership agreement hasn't been fully disclosed, but based on typical licensing terms, Google likely has the right to license Gemini to other companies as well. This isn't like the exclusive Microsoft-OpenAI deal. Google probably insisted on maintaining the right to license Gemini elsewhere. However, Apple's specific privacy architecture and integration might be customized enough that other companies would need to negotiate their own terms. The partnership also doesn't prevent Apple from eventually using other foundation models for different products. This is a Siri-specific deal, not an Apple-wide commitment to Gemini.

What happens if Google and Apple's partnership ends?

If the partnership ended, Apple would face several challenges. Apple could theoretically switch to another foundation model (Claude, a future model, or an open-source alternative), but this would require reworking Siri's entire architecture and retraining on new models. More likely, Apple would negotiate a transition period where both Gemini and an alternative model run in parallel while Apple's engineers migrate systems. There's also a contractual question: how much notice would Google provide? Does Apple have the right to keep using existing Gemini versions if the partnership ends? These protections should be in the licensing agreement, but they weren't publicly disclosed.

Does this partnership mean Apple is less innovative?

No. Apple is making a strategic choice to buy a commodity (the foundation model) so it can focus innovation on areas where Apple actually has competitive advantage: on-device processing, privacy architecture, voice interface, feature integration, and hardware-software optimization. This is actually smarter than trying to compete with Google on foundation models—Apple would always be behind because Google's scale and infrastructure advantage are insurmountable. Apple's real innovation will be in how it integrates Gemini into products and how it maintains privacy while using cloud AI. That kind of system-level innovation is harder to copy than building a better foundation model.

Why is Apple's emphasis on privacy important if Google gets requests?

Privacy is important in layers. Even though requests to Gemini get sent to Google's servers, Apple's architecture minimizes how much context Google sees. Your request arrives anonymized, without your contact list, location, identity, search history, or other personal data. This is fundamentally different from how Google normally operates in search or Gmail, where Google sees rich personal context and uses it for product improvement and advertising. Apple's privacy guarantees essentially treat Gemini like a utility contract: use the service, pay the bill, but don't expect the vendor to monetize your data. For users concerned about privacy, this is meaningful, though not perfect.

What does this mean for the open-source AI movement?

Apple's decision suggests that open-source models probably won't replace proprietary models for enterprise applications in the near term. Apple had the option of using Llama 3 or other open-source models, but chose licensed proprietary models instead. This signals that for mission-critical applications, companies prefer proprietary models with SLA guarantees, dedicated support, and vendor accountability. However, open-source models are becoming increasingly viable for cost-sensitive applications and specialized tasks. The future probably involves both: proprietary models for high-stakes applications (medical, financial, security-related) and open-source models for other use cases.

Key Takeaways

- Apple chose Gemini over OpenAI/Anthropic because building competitive foundation models costs $1-2B+ and takes 2-3 years of development, with unclear ROI for a voice assistant feature

- Gemini's multimodal capabilities (text, image, audio, video) and production-scale infrastructure made it technically superior to other options despite Google being a competitor

- Apple negotiated strict privacy guarantees preventing Google from logging, training on, or monetizing Siri requests, maintaining Apple's privacy brand promise

- This partnership signals foundation models are becoming commoditized infrastructure like electricity, with competitive advantage shifting to integration, UX, and specialized applications

- Even trillion-dollar vertically integrated tech companies now recognize that licensing specialized components is often smarter than building everything in-house
