Apple's Siri Relaunch Delayed: What Went Wrong and What's Next [2025]

When Apple first promised a completely reimagined Siri, everyone paid attention. This wasn't going to be the same voice assistant that had become synonymous with misunderstanding commands and providing irrelevant results. The new Siri was supposed to be smarter, faster, and actually useful for the tasks people genuinely wanted their phones to handle. It was supposed to be everything the old Siri wasn't.

Then 2024 happened. Apple announced delays. And now, in 2025, we're learning the hard truth: the new Siri isn't coming in one big update. It's coming piecemeal, rolled out across multiple iOS updates over several months. For a company that prides itself on delivering polished, finished products, this is significant.

But here's what really matters. Understanding what went wrong with Siri's relaunch tells us something crucial about the current state of AI development, the gaps between what we promise and what we can actually deliver, and why Apple—a company with virtually unlimited resources—is struggling to launch a voice assistant. That's the story worth exploring.

TL;DR

- Apple's redesigned Siri is hitting major software problems including sluggish performance, delayed task completion, and query processing failures discovered during testing, as reported by Bloomberg.

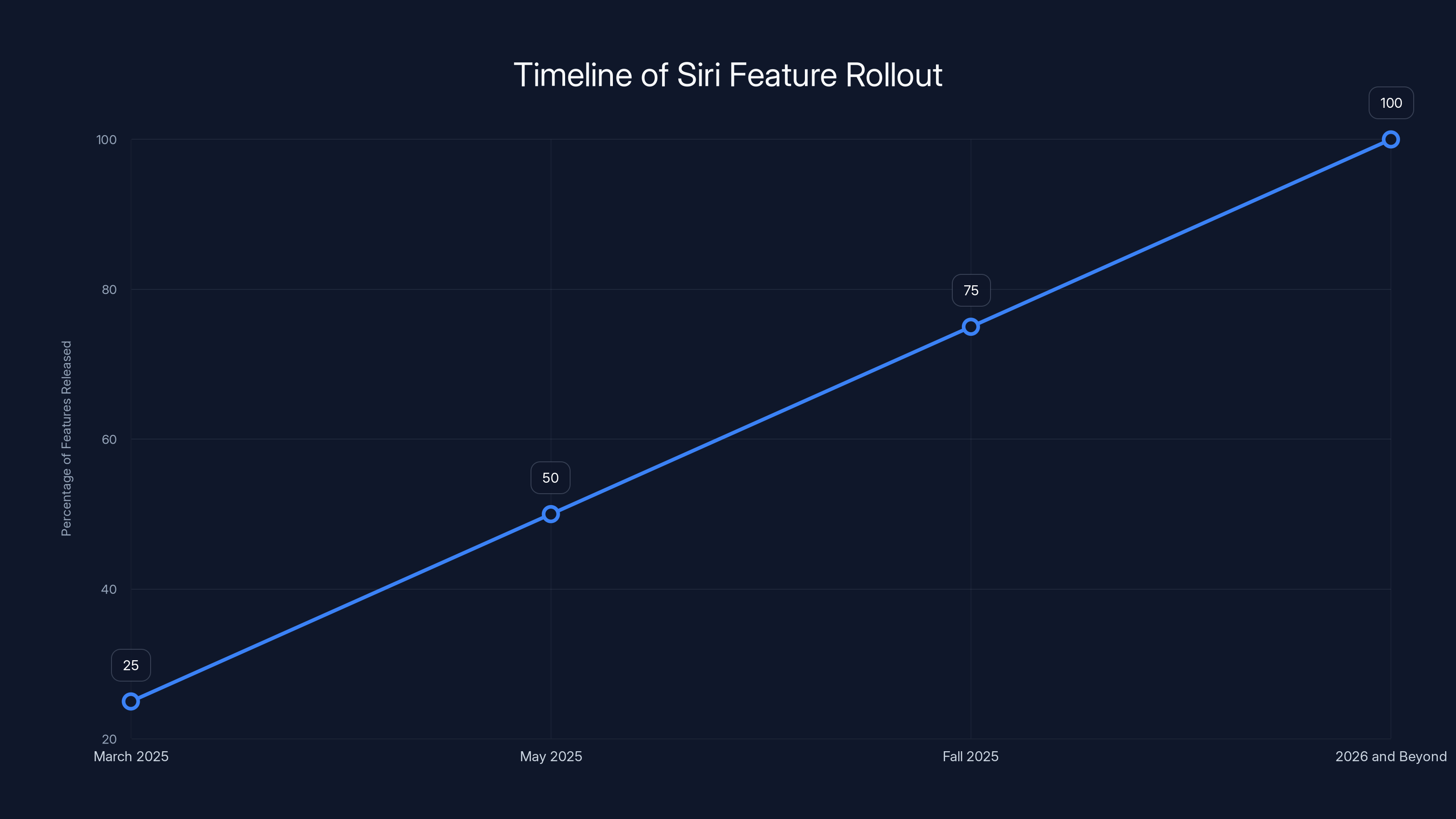

- The rollout is now fragmented with capabilities spread across iOS 18.4 (March), iOS 18.5 (May), and iOS 19 later in 2025 instead of a single launch, according to Engadget.

- Google's Gemini models power the new Siri, marking a significant shift in Apple's approach to AI infrastructure, as detailed by Campus Technology.

- The redesign promises real functionality like finding specific photos, editing images, managing contacts, and sending email summaries—not just voice recognition, as noted by CNET.

- The timeline has slipped from a single 2025 launch to an open-ended rollout: initial features may arrive in March 2025, but complete functionality has been pushed back indefinitely, as reported by Business Standard.

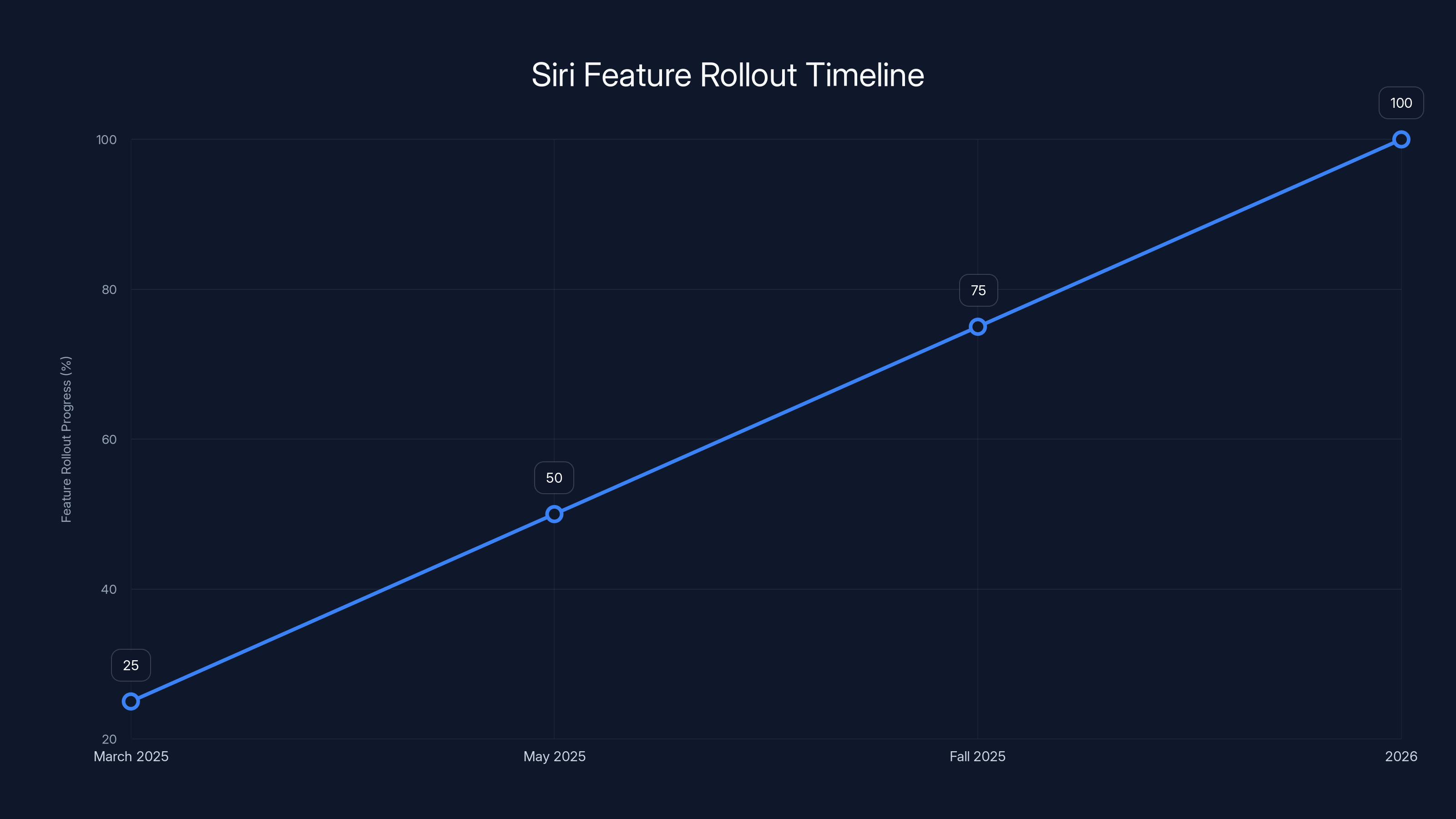

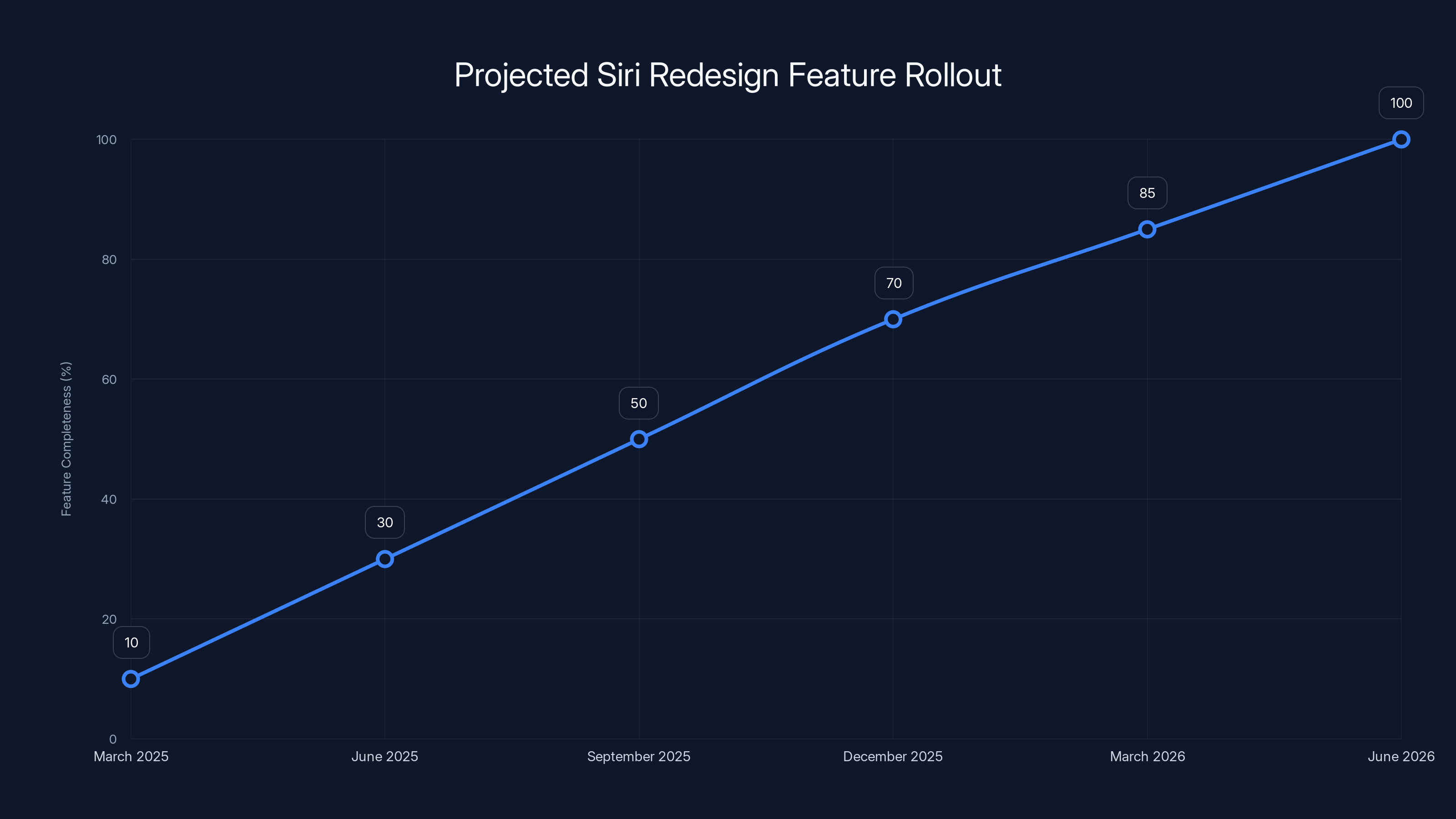

Apple plans a phased rollout of Siri features, starting with iOS 18.4 in March 2025 and continuing through 2026. Estimated data.

What Apple Originally Promised: The Vision That Started It All

Back in 2024, Apple made grand announcements about Siri. The company unveiled a voice assistant that would finally bridge the gap between what users wanted and what AI could realistically deliver. For years, Siri had been the punchline—people openly mocked it while using Google Assistant or Alexa instead. Apple needed to fix this reputation problem, and they committed to doing it right.

The original promise was substantial. The new Siri would understand context across your device, access your personal information intelligently, and actually perform complex tasks without forcing you through multiple steps. Need to find a photo from your Hawaii trip where you wore the blue dress? Tell Siri. Need to extract contact information from an email and add it to a contact card? Done. Need to edit a photo, or send a summary of your notes by email? Siri handles it.

These weren't flashy features designed for demo videos. They were genuinely useful capabilities that would integrate AI into the actual workflows people have every day. If they worked properly, Siri could transform from a novelty into something people actually relied on.

Apple announced the redesign would hit devices in 2025. The timeline seemed reasonable. The company had been working on this for years. They had the engineering talent. They had the resources. They had the motivation. What could go wrong?

Everything.

The Testing Phase Revealed Critical Problems

Here's where the story takes a turn into uncomfortable territory. Apple's testing of the new Siri revealed fundamental problems that nobody wants to talk about when launching an AI product. The software was slow. Not just a little slow: slow enough that engineers inside Apple questioned whether shipping it would damage Apple's reputation even further.

When you ask Siri to do something, you expect a response in seconds. Three seconds is probably the upper limit before it feels unresponsive. Users interpret delays as failure, as a sign that the system didn't understand what they asked. But the new Siri was taking too long to process queries, completing tasks sluggishly, or, in some cases, not processing requests at all, as reported by Bloomberg.

This creates a specific problem for Apple. The company can't ship a voice assistant that's noticeably slower than the competition. They also can't ship one that misses requests frequently. Both of those issues would compound the existing perception that Siri is unreliable. Rather than fixing the brand's reputation, a buggy launch would cement the idea that Siri is fundamentally broken.

What makes this more complicated is that these aren't simple bugs. These are architectural issues. The performance problems suggest the new Siri's AI models aren't optimized for mobile devices, or the integration between Apple's infrastructure and the AI reasoning layer isn't efficient, or the on-device components are bottlenecking the server-side processing. None of these are quick fixes. These are problems that require rethinking the entire technical approach.

Engineers internally questioned whether Apple would need to push back the launch by months, potentially sliding it into 2026. That's a long delay, and holding back the entire redesign until everything was ready wasn't palatable either. Apple needs to show progress on AI. They need to demonstrate that they're serious about this technology and that they're investing in it. Canceling the Siri redesign outright would be admitting that the entire project was a failure.

So Apple made a different choice. Instead of delaying everything, they'd fragment the rollout.

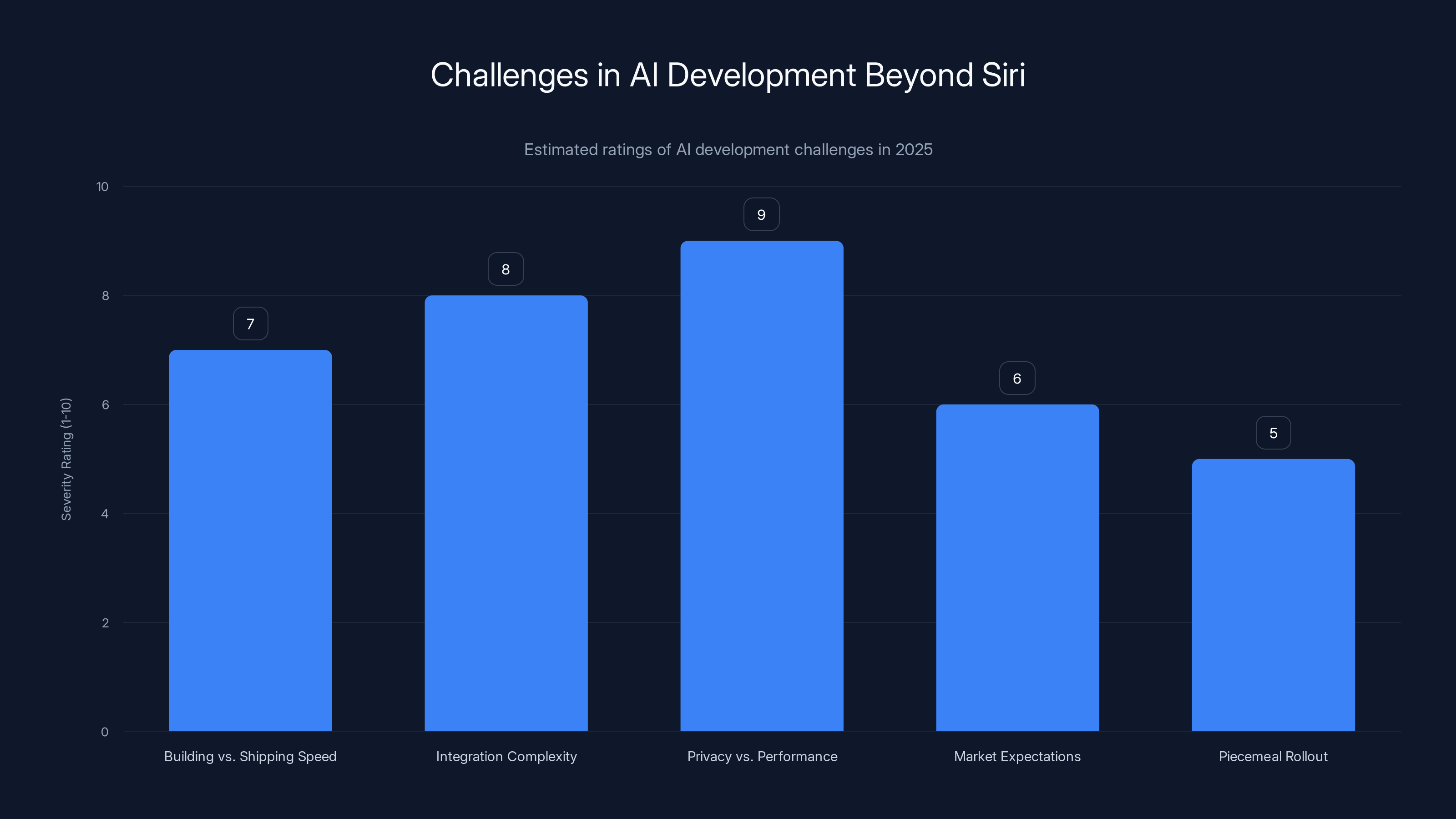

AI development faces significant challenges, with privacy vs. performance and integration complexity rated as the most severe. (Estimated data)

The Pivot to Piecemeal Rollout Strategy

Rather than launching the complete redesigned Siri in one update, Apple decided to distribute the features across multiple iOS releases. Some capabilities would arrive in iOS 18.4 (expected in March 2025). Others would come in iOS 18.5 (May). Additional functionality would roll out with iOS 19 later in the year. Even more features might arrive after that, as reported by Engadget.

This is a significant shift in Apple's approach. The company normally launches major features in big, coordinated updates. They announce them at WWDC in June. They arrive with the next iOS version in September. Users get a complete feature set. Apple gets maximum marketing impact from a single, finished product.

But the piecemeal approach offers something important: it gives Apple time to fix issues incrementally. They can roll out the most stable features first—the ones they're confident will work reliably. They can gather feedback from millions of users. They can identify problems that testing didn't catch. They can fix those problems in subsequent releases.
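To make the incremental idea concrete, here is a minimal Python sketch of a version-gated feature table, the kind of mechanism a staged rollout can rest on. It is not Apple's implementation: the feature names, minimum versions, and `stable` flags are hypothetical, loosely following the speculative feature tiers discussed in this article, and `enabled_features` is an illustrative helper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feature:
    name: str
    min_version: tuple[int, int]  # (major, minor) OS version that may ship it
    stable: bool                  # only features that passed testing are enabled


# Hypothetical rollout table: names, versions, and flags are illustrative
# assumptions, not Apple's actual release plan.
FEATURES = [
    Feature("photo_search", (18, 4), stable=True),
    Feature("contact_update", (18, 4), stable=True),
    Feature("email_summary", (18, 5), stable=True),
    Feature("cross_app_automation", (19, 0), stable=False),
]


def parse_version(version: str) -> tuple[int, int]:
    # "18.4.1" -> (18, 4); "19" -> (19, 0)
    parts = version.split(".")
    minor = int(parts[1]) if len(parts) > 1 else 0
    return (int(parts[0]), minor)


def enabled_features(os_version: str) -> list[str]:
    # A feature turns on only when the OS is new enough AND it is marked
    # stable; unstable features stay dark until a later release flips the flag.
    v = parse_version(os_version)
    return [f.name for f in FEATURES if f.stable and v >= f.min_version]
```

With this table, `enabled_features("18.4")` returns only the photo-search and contact features, `"18.5"` adds email summaries, and the cross-app feature stays off even on `"19.0"` until its flag is flipped. That separation is what lets a team keep shipping the stable pieces while an unstable one is still being fixed.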

It's also a more honest approach, even if it doesn't look good from a marketing perspective. Rather than pretending everything is ready when it isn't, Apple is admitting that the redesign is complex and that they'll need time to get it right. Some people will see this as disappointing. Others will see it as prudent.

The timeline now looks something like this:

- iOS 18.4 (March 2025): Initial Siri capabilities begin rolling out

- iOS 18.5 (May 2025): Additional features arrive

- iOS 19 (fall 2025): More substantial feature additions

- Beyond: Ongoing rollout of remaining capabilities throughout 2026 and beyond

This is different from the original promise of "2025." It's also different from the revised promise of March 2025 specifically. Now it's March 2025 plus everything after that.

Google Gemini: The AI Engine Powering New Siri

One of the most interesting revelations about the new Siri is that Apple is using Google's Gemini AI models as the foundation. This is huge. Apple built its reputation partly on the idea that it develops its own technology. It makes its own chips. It builds its own software frameworks. And historically, it would never rely on a competitor for core functionality, as noted by Bloomberg.

But this decision makes sense. Building best-in-class AI models requires massive amounts of computing power, training data, and AI expertise. It requires staying on the cutting edge of research. OpenAI has this with ChatGPT. Google has this with Gemini. Meta has this with Llama. Apple... was building toward it but needed something now.

So Apple made a pragmatic choice. Use Google's Gemini as the reasoning engine. Keep the on-device processing, the privacy layers, and the Apple-specific integrations in Apple's hands. Let Google handle the hard part of understanding complex language and reasoning through tasks.

This approach solves a real problem. The performance issues discovered during testing might partly be about having the right AI model. The new Siri needs to understand context, reason about tasks, and generate appropriate actions. That requires a powerful language model. Gemini has proven itself in those areas. It's fast. It's relatively reliable. It understands instructions well.

Apple announced this partnership in January 2025, saying: "After careful evaluation, Apple determined that Google's AI technology provides the most capable foundation for Apple Foundation Models and is excited about the innovative new experiences it will unlock for Apple users," as reported by Mexico Business News.

Translate that from corporate speak: We tried building this ourselves. It didn't work as well as we needed. Google's solution is better.

The Gemini integration also explains some of the delays. Integrating an external AI service into Apple's ecosystem isn't trivial. You need to handle API calls, manage authentication, ensure privacy (Apple needs to promise that your queries aren't going to Google unnecessarily), handle offline functionality, and optimize for mobile devices. All of that takes time.

Why Performance Matters More Than Features

When Apple's developers discovered performance problems during testing, the company faced a choice. Ship something that works but feels slow. Or delay shipping until it's fast. For a voice assistant, this choice is critical.

Here's why: speed is part of the user experience equation with voice interfaces. When you speak to a device, you're in a specific mental state. You're waiting for a response. You've committed to the interaction. If the response takes too long, your brain interprets it as the device not understanding you. Your confidence drops. You start repeating the request. You switch to typing or using your hands.

This is different from regular app performance, where being slow by a second or two might just be annoying. With voice interfaces, sluggishness is a deal-breaker. Users expect immediate acknowledgment that their request was heard, even if the processing itself continues in the background.
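The "acknowledge first, then process under a time limit" pattern can be sketched with Python's `asyncio`. This is a hypothetical illustration, not Siri's architecture: the three-second budget is the rough estimate mentioned earlier, and `handle_request`, the `process` callable, and the fallback reply are names made up for this example.

```python
import asyncio
from typing import Awaitable, Callable

# Assumption: a ~3-second budget, echoing the rough limit discussed earlier.
LATENCY_BUDGET_S = 3.0
FALLBACK_REPLY = "Sorry, that's taking longer than expected."


async def handle_request(
    query: str,
    process: Callable[[str], Awaitable[str]],
    budget: float = LATENCY_BUDGET_S,
    ack: Callable[[str], None] = print,
) -> str:
    # Acknowledge right away so the user knows the request was heard.
    ack(f"Working on: {query}")
    try:
        # Run the (possibly slow) model call under a hard time budget.
        return await asyncio.wait_for(process(query), timeout=budget)
    except asyncio.TimeoutError:
        # wait_for has already cancelled the slow call; reply instead of hanging.
        return FALLBACK_REPLY


if __name__ == "__main__":
    async def fake_model(q: str) -> str:
        await asyncio.sleep(0.1)  # stand-in for model latency
        return f"result for {q!r}"

    print(asyncio.run(handle_request("Hawaii photos, blue dress", fake_model)))
```

The design choice is to bound the wait rather than the work: `asyncio.wait_for` cancels the model call once the budget runs out, so the user gets an honest fallback instead of silence.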

The original Siri had this problem. It would take time to process requests, and users interpreted that as failure. Apple's Siri could never quite shake the reputation of being slow and unreliable, even though much of the problem was expectations rather than actual performance.

The redesigned Siri can't repeat those mistakes. If users perceive it as slow, they won't use it. They'll switch to Google Assistant on Android devices. They'll use ChatGPT on their phones. They'll just ask Alexa on their smart speakers. And Siri goes back to being a punchline.

So performance optimization became the critical blocker. You can fix feature bugs in a point release. You can't fix perception. That requires shipping something that genuinely feels responsive and fast.

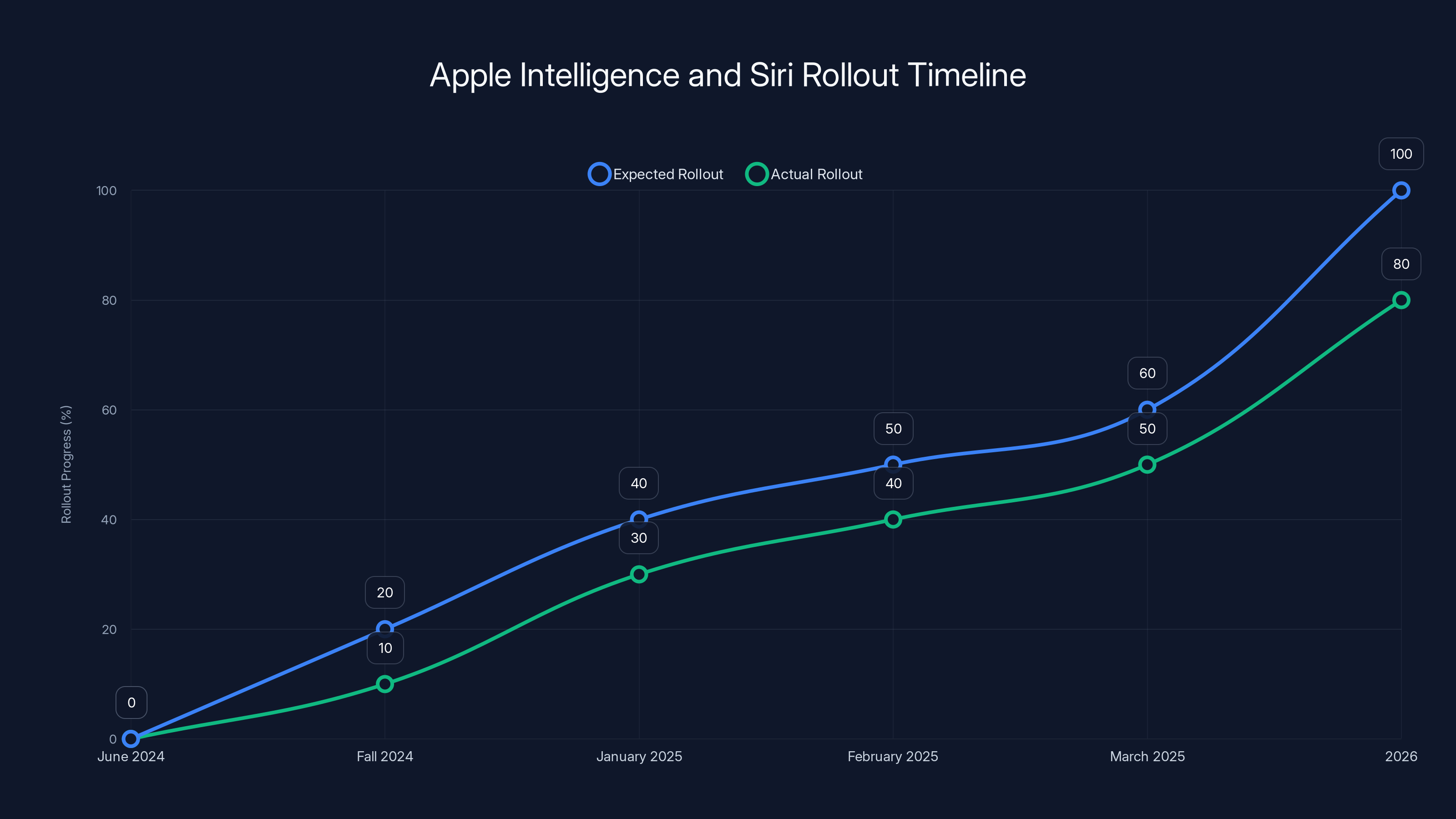

The timeline shows a significant delay in the rollout of Apple Intelligence and Siri features, with full functionality now expected in 2026. Estimated data based on announcements and reports.

The Original 2024 Announcement: Apple Intelligence and Siri

Back at WWDC 2024, Apple introduced the concept of "Apple Intelligence"—a broad umbrella for AI features integrated directly into iOS, iPadOS, and macOS. Siri's redesign was supposed to be a centerpiece of Apple Intelligence. The company showed demos where Siri would understand complex, multi-step requests and handle them intelligently.

The demos looked good. They looked polished. They looked ready. But demos are always polished. The real work starts when you try to make those demos work on millions of different devices, with millions of different usage patterns, with millions of different types of requests in different contexts.

That's where things broke down.

Apple Intelligence also includes other features: writing tools that help refine emails and messages, photo search that actually understands what's in images, notification summarization, and other capabilities. Some of those features have already shipped or are shipping on schedule. But Siri—the marquee feature—is the one that's struggling.

This is telling. Apple Intelligence as a brand is supposed to represent AI done right. AI that respects privacy. AI that's integrated thoughtfully. AI that's actually useful. But if the flagship feature—Siri—ships buggy or slow, the entire brand promise becomes questionable.

Timeline Expectations vs. Reality: From 2025 to 2026

Let's trace the timeline because it reveals how much slippage has actually happened.

2024, WWDC (June): Apple announces Apple Intelligence and redesigned Siri. Sets expectations for 2025 rollout.

Fall 2024: Apple announces delay. Says features will arrive in 2025 but not immediately. Introduces phased rollout concept.

January 2025: Apple confirms Google Gemini partnership. Announces more delays are likely, as reported by CNET.

February 2025 (current moment): Reports emerge that initial Siri features might not arrive until March, with full functionality pushed into 2026 and beyond, as noted by Bloomberg.

This is a significant slip. Going from "2025" to "2026 and beyond" for full functionality is effectively a delay of a year or more. That's substantial for a feature that's supposed to be a flagship component of Apple Intelligence.

What's notable is that Apple is being relatively transparent about this. They're not pretending everything is on track. They're not hyping features that aren't ready. They're admitting that this is harder than expected and that it will take longer than hoped.

That's actually more credible than the alternative, even if it's less satisfying for users waiting for the redesigned Siri.

What Gets Delayed First? The Hierarchy of Siri Features

When Apple rolls out Siri capabilities piecemeal, which features arrive first and which get pushed back? That's an important question because it tells us what Apple considers most stable and most important.

Based on available information, the initial rollout will likely focus on features that have the clearest use cases and the most straightforward implementations:

Photo finding and image search: Users ask Siri to find photos by describing what they want to find. This involves image recognition (which Apple has already proven works well in Photos) combined with natural language understanding. If Google's Gemini is good at understanding "find my photos from Hawaii where I'm wearing the blue dress," this should work.

Contact management: Adding information to contact cards. Extracting phone numbers or emails from messages and adding them to contacts. This is straightforward data manipulation once Siri understands what information to extract.

Photo editing: Basic edits like cropping or adjusting filters. These are deterministic—Siri either performs the edit or doesn't. No ambiguity about whether it worked.

Email composition and summaries: Writing email summaries based on notes or conversations. Sending those summaries as emails. This involves text generation and some formatting, but it's relatively straightforward.

Features that will likely arrive later:

Cross-app task automation: Complex workflows that involve multiple apps. Integrating with third-party services. These require more testing and more potential points of failure.

Contextual understanding: Understanding what you're doing and proactively offering help. This requires deeper integration and more AI reasoning.

Real-time processing: Handling requests in-flight as you're using other apps. This requires deeper system integration.

Apple's approach makes sense: ship the features that work well first. Build confidence with users. Then add complexity as you gain more confidence in the system.

Estimated data suggests that Siri's redesign will gradually roll out, reaching full feature completeness by mid-2026. Estimated data.

Comparing Siri to Competitors: Why This Matters

Siri doesn't exist in a vacuum. Users compare it to Google Assistant, Alexa, and increasingly to ChatGPT and Claude. Understanding how the redesigned Siri stacks up matters for expectations.

Google Assistant has been doing complex voice commands for years. Understanding context. Integrating with Google services. The integration is deep because Google owns Android. This is where Google has the advantage—they control the entire stack, so integration is easier.

Alexa is primarily a smart home assistant. It controls lights, locks, thermostats. It handles shopping lists and reminders. It's good at specific use cases but less generalized than Siri is trying to be.

ChatGPT and Claude via mobile apps are more general-purpose. They can reason about complex problems. They can help with creative tasks. But they're not integrated into the OS. You have to explicitly launch them and type (or voice record) requests.

The redesigned Siri is trying to be something different: a system-wide voice interface that understands your personal context (your photos, your contacts, your emails) while also reasoning about complex requests using AI. That's a harder problem than any of those competitors face, because it requires integration across so many parts of the system.

It's also why the delays matter more for Apple than for competitors. If Google Assistant is slow, you use Alexa instead. If Alexa doesn't support something, you use Assistant. If ChatGPT isn't available on your phone, you use Claude. But Siri is built into iOS itself. If it's slow or unreliable, you can't swap it out for a different built-in assistant; you're stuck with it. So Apple has to get it right in a way competitors don't.

The Developer Perspective: What This Means for the AI Ecosystem

For developers building AI products, Apple's Siri delays tell an important story about the challenge of integrating AI into real products. In tech, there's often a gap between what's theoretically possible and what's practically shippable.

OpenAI can release ChatGPT and let it operate with minimal constraints because it's a standalone product that users choose to open. Users tolerate some latency. If the model makes mistakes, they try again. There are minimal consequences.

Apple can't operate with that same tolerance. Siri is an OS-level service. It needs to be fast. It needs to be reliable. It needs to respect privacy. It needs to integrate seamlessly with the rest of the system. Those constraints are much tighter than what OpenAI deals with.

What this tells us is that integrating state-of-the-art AI into production systems is genuinely hard, even for the best-resourced companies in the world. Apple has virtually unlimited funding. They have world-class engineers. They own the entire stack. And even they're struggling to ship something that meets their standards.

For other companies building AI products, this is important context. If Apple can't ship perfect Siri integration on the first try, what does that say about everyone else? It suggests that shipping AI features quickly requires accepting lower standards or narrower scope. You can't do everything perfectly. You have to prioritize.

Privacy Implications: How Local Processing Affects Speed

One hidden factor in Siri's performance issues might be Apple's privacy commitments. Apple promises that personal information stays on your device when possible. Photos don't get sent to Apple's servers to identify them. Contacts don't get processed remotely. Your information stays private.

But on-device processing has trade-offs. It's generally slower than cloud processing because mobile devices are less powerful than servers. It also constrains what's possible—not everything can run efficiently on a phone.

Apple's approach with Siri seems to be: keep personal data on the device as much as possible, send only the request to Google's Gemini for reasoning, then return results to the device. But that architecture requires careful design. You can't send your entire photo library to Gemini. You can't send all your contacts. You have to be selective about what information you share, even with a trusted partner like Google.
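A minimal sketch of that data-minimization idea, under loudly stated assumptions: the `Contact` record, the payload shape, and both helper functions are hypothetical, and nothing here reflects how Apple actually communicates with Gemini. The point is the split: the remote model sees the request plus the smallest context it needs, while full personal records are resolved back on the device.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Contact:
    # Hypothetical on-device record; in this sketch only `name` may leave the device.
    name: str
    phone: str
    email: str
    notes: str


def minimal_payload(request: str, contacts: list[Contact]) -> dict:
    """Build what would be sent to a remote model: the request plus the
    names of the contacts it mentions, and nothing else."""
    text = request.lower()
    mentioned = [c.name for c in contacts if c.name.lower() in text]
    return {"request": request, "context": {"contacts": mentioned}}


def resolve_locally(names: list[str], contacts: list[Contact]) -> list[Contact]:
    """Map names returned by the remote step back to full records on device."""
    by_name = {c.name: c for c in contacts}
    return [by_name[n] for n in names if n in by_name]


if __name__ == "__main__":
    book = [
        Contact("Ana Ruiz", "555-0100", "ana@example.com", "allergic to nuts"),
        Contact("Ben Cho", "555-0101", "ben@example.com", ""),
    ]
    payload = minimal_payload("Text Ana Ruiz that I'm running late", book)
    print(payload)  # only the request text and "Ana Ruiz" appear
    print(resolve_locally(payload["context"]["contacts"], book)[0].phone)
```

Here only the mentioned name leaves the device; phone numbers, email addresses, and notes never enter the payload, and `resolve_locally` reattaches them after the remote step returns. Every such filtering step is also a place where latency and complexity can creep in.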

That architectural constraint might be contributing to performance issues. Every safeguard you add to protect privacy adds complexity. Every check you need to perform to ensure you're not exposing too much data adds latency. It's possible that some of Siri's slowness comes from doing privacy right rather than doing reasoning wrong.

That's actually a good problem to have. It means the delay is partly about Apple choosing privacy over speed. But it doesn't change the fact that users will perceive it as slowness.

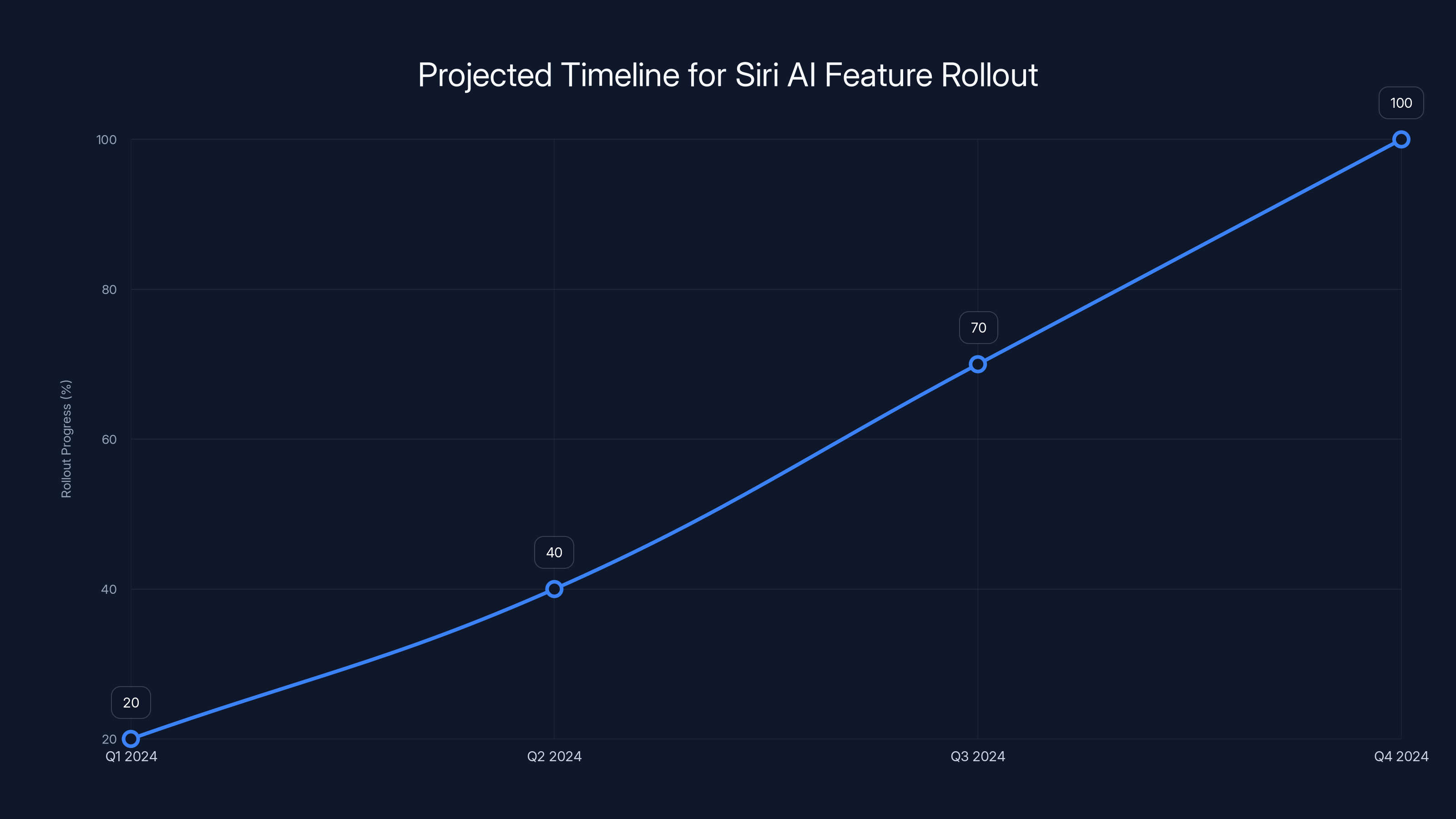

Apple's incremental rollout strategy for Siri AI features is projected to continue into 2026. Estimated data based on strategic insights.

What Gets Built First in AI Assistants: Priority Hierarchies

When building an AI assistant, teams have to choose what gets built first. This reveals priorities. For Siri, the choices were:

Reasoning about tasks: Making sure Siri understands what you're asking and can break it into steps.

Accessing personal data: Having permission to read your photos, contacts, emails, etc., and using that context to improve responses.

Performing actions: Actually doing things like sending emails, adding contacts, editing photos.

Speed and reliability: Making sure it works fast and doesn't fail unpredictably.

The delays suggest that Apple got the first three working before properly optimizing for the fourth. They can build the reasoning. They can access the data. They can perform the actions. But making it all work together smoothly and quickly proved harder than expected.

This is a common pattern in AI development. It's easier to build something that works than to build something that works well. The last 20% of work—optimization, edge case handling, performance tuning—takes 80% of the effort.

When Will Each Feature Actually Arrive?

Based on the reported timeline, here's what we might expect:

March 2025 (iOS 18.4): Initial Siri features. Likely photo search, basic contact management, simple writing assistance. Nothing too complex.

May 2025 (iOS 18.5): Additional capabilities. Possibly email summaries, more complex photo editing, expanded cross-app support.

September 2025 (iOS 19): Broader feature set. Apple would use WWDC 2025 (June) to announce iOS 19 features, and new Siri capabilities would probably be highlighted.

Late 2025 and beyond: Ongoing feature expansion. Some capabilities might not arrive until 2026.

But here's the thing about timelines—they're optimistic by nature. The features Apple thinks will arrive in March might slip to May. May features might arrive in September. And late 2025 features might arrive in 2026.

The smart bet is to add 2-4 months to any announced timeline for AI features in 2025.

The Broader Context: AI Delays Across the Industry

Apple's Siri delays aren't unique. Throughout 2024 and 2025, AI features across the industry have been delayed:

Google Gemini: Launched with fanfare, then rolled back some features due to inaccuracies. Being re-released more carefully, as reported by CNBC.

OpenAI o1: An advanced reasoning model announced in fall 2024, first released as a limited preview and noticeably slower to respond than conventional chat models because it reasons before answering.

Meta's Llama models: Open-sourced and available, but companies struggle to deploy them at scale due to compute requirements and optimization challenges.

Microsoft Copilot: Broadly available but often slower than expected, with reliability issues in some scenarios.

The pattern is clear: AI features that look good in demos often struggle when deployed to millions of users in production. There's a meaningful gap between "works in testing" and "works at scale in production."

Apple's delays are notable because Apple is one of the companies best positioned to implement AI correctly. If they're struggling, everyone else probably is too.

Estimated data shows a gradual rollout of Siri features, starting in March 2025 with iOS 18.4 and continuing into 2026 and beyond. This piecemeal strategy allows Apple to incrementally address issues and gather user feedback.

Market Expectations vs. Reality: The Credibility Problem

Here's what matters most: Apple made promises. Users got excited. Now Apple is admitting those promises were too optimistic. That creates a credibility problem.

For a company like Apple, credibility is everything. Users expect that when Apple announces something, it will ship as described. When that doesn't happen, it affects trust. Users start wondering: if Siri is delayed, what else might be? Are Apple's other AI features also not ready? Is Apple Intelligence as a whole overblown?

The piecemeal rollout approach tries to address this by delivering something rather than nothing. Users get some new Siri capabilities in March instead of waiting until everything is perfect in 2026. That builds trust. "Apple shipped something," even if not everything.

But it also sets a new expectation: Apple Intelligence features will arrive in phases over many months. That's different from the traditional Apple model where features arrive complete. It signals that this is harder than Apple thought, and probably harder than users realize.

For the AI industry broadly, this has implications. If even Apple—with unlimited resources and decades of OS experience—struggles to ship polished AI features, how long should we expect other companies to take? When should we believe their timelines?

The smart approach is skepticism tempered with understanding. Yes, be skeptical of AI feature announcements. But understand that the difficulty is real, not hype.

What's at Stake for Apple: The Bigger Picture

Siri's redesign matters for Apple in ways that go beyond voice assistants. Here's why:

Competitive positioning: Apple is trying to prove that it can compete with Google and OpenAI in AI. If Siri lags behind competitors, that narrative fails.

Premium positioning: Apple charges premium prices partly because their software is polished. If Siri ships unpolished or sluggish, that premium positioning gets questioned.

Integration story: Apple's whole strategy is about integration across devices and services. If Siri can't be reliably integrated across iOS, iPadOS, and macOS, that weakens the whole ecosystem story.

Developer confidence: Developers choosing whether to build Apple apps or Android apps are watching this. If Apple's own AI integration is mediocre, why would developers invest in Apple's platform?

User retention: Users comparing iPhones to Android phones or considering switching to another ecosystem are watching this. If Siri doesn't improve significantly, one of Apple's key differentiators is still missing.

So the stakes are high. It's not just about shipping a voice assistant. It's about whether Apple can compete in the AI era and whether the iPhone remains the platform developers and users want.

Technical Debt and AI: A Case Study in Complexity

Part of why Siri's redesign is taking longer than expected comes down to technical debt and the complexity of integrating AI into a massive, existing codebase.

Siri isn't a new app. It's been around since 2011. Over more than a decade, it has accumulated a large codebase, complex dependencies, integrations with every part of iOS, and maintenance from different teams. Redesigning Siri means reimplementing those integrations with new AI foundations.

Adding Google Gemini to the mix adds another layer of complexity. You can't just bolt Gemini on top of existing Siri code. You need to integrate it deeply, handle API communication, manage fallbacks when the API isn't available, ensure privacy at every step, and optimize for mobile devices.
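Managing fallbacks usually means degrading gracefully rather than failing outright. Here is a minimal Python sketch, assuming hypothetical `remote` and `local` callables (a capable cloud model and a smaller on-device handler) and a made-up `RemoteUnavailable` exception; it illustrates the general pattern, not Apple's code.

```python
from typing import Callable


class RemoteUnavailable(Exception):
    """Hypothetical error a remote client raises when the service can't be reached."""


def answer(
    query: str,
    remote: Callable[[str], str],
    local: Callable[[str], str],
) -> tuple[str, str]:
    # Try the more capable remote model first. If it is unreachable,
    # fall back to the on-device handler and report which path answered.
    try:
        return ("remote", remote(query))
    except (RemoteUnavailable, TimeoutError, ConnectionError):
        return ("local", local(query))
```

Returning which path answered (`"remote"` or `"local"`) lets the caller adjust the response, for example by telling the user that a fuller answer needs a connection. Each such branch is one more behavior that has to be tested across devices and network conditions.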

That integration work is what's probably taking the most time. Not building the AI—Google did that. Not building the features—Apple can do that. But integrating an external service into a mature, complex system is harder than people realize.

This is a lesson that applies across software development: the cost of integration often exceeds the cost of building the thing being integrated. Apple is probably discovering this the hard way.

Lessons Learned: What the Siri Delays Teach Us

If we step back from the Siri story, several lessons emerge:

First: Performance matters more for AI assistants than feature completeness. Users would rather have fewer features that work well than more features that work sluggishly. Apple understood this and was willing to delay shipping rather than ship something slow.

Second: Integration is the hard part. Building AI models is getting easier (thanks to open-source frameworks and cloud services). Integrating those models into real products used by millions of people is still very hard.

Third: Outside partnerships are viable for core features. Apple's use of Google Gemini signals that even the best companies benefit from leveraging others' strengths rather than building everything from scratch.

Fourth: Transparency about delays builds trust more than hyped-up promises. By admitting delays and being honest about challenges, Apple maintains credibility even when they're not hitting original targets.

Fifth: AI features take longer than expected. If this is true for Apple with unlimited resources, it's true for everyone. Expect delays. Plan accordingly.

The Competitive Advantage Question: Is Siri Worth the Wait?

From a competitive perspective, the question is: will the redesigned Siri be good enough to justify all this effort?

Google Assistant is already quite capable. Alexa dominates smart home control. ChatGPT is available on every platform. For Siri to be worth the wait, it needs to offer something meaningfully better than these alternatives.

The theoretical advantage is integration with iOS. Siri can do things that external apps can't because it has OS-level access. But that advantage only matters if Siri actually uses that access well and performs noticeably better than alternatives.

If Siri ships and it's roughly as good as Google Assistant but a few months later, the delays won't have been worth it. Apple will have wasted engineering effort for incremental improvement.

But if Siri ships with genuinely useful features that take advantage of iOS integration—features that Google Assistant can't do as well—then the delays were justified. The redesigned Siri becomes a real differentiator.

Everything depends on execution. And execution is exactly what these delays suggest might be uncertain.

What Users Should Expect: Setting Realistic Expectations

If you're an iPhone user waiting for the redesigned Siri, here's what to realistically expect:

Timeline: Initial features in March 2025, with more arriving throughout the year and into 2026. The "complete" redesign probably won't be finished in 2025. It might not be finished in 2026.

Quality: The features that do ship should be polished and relatively fast. Apple wouldn't ship them otherwise. But there will probably be edge cases and unexpected interactions that require fixes in subsequent updates.

Scope: The first batch of features will be relatively limited and focused on scenarios that work well. More ambitious features come later as Apple builds confidence.

Reliability: Don't expect 100% reliability from day one. Expect occasional failures or misunderstandings. That's normal for voice assistants, even good ones.

Privacy: Apple's privacy approach should hold up. Your personal data should mostly stay on your device, with only the queries that need cloud processing leaving it. But verify this yourself by checking what permissions Siri requires.

Competition: Google Assistant will probably still be capable and might have better integration with Google services. ChatGPT will probably still be more capable at reasoning. Alexa will probably still be better at smart home control. Siri will be better at iOS integration and accessing your personal information.

The redesigned Siri probably won't be "the best" at everything. But it should be noticeably better than the current Siri and competitive with alternatives for iOS-specific tasks.

The AI Development Lessons Beyond Siri

The Siri story has implications that go way beyond voice assistants. It reveals truths about AI development in 2025:

Building AI is fast. Shipping AI is slow. OpenAI can train GPT-4 in months. But shipping it to billions of devices while maintaining privacy, performance, and security takes much longer.

Integration complexity increases with scale. The larger the install base, the more edge cases you need to handle, the more privacy constraints you need to respect, and the more performance optimization you need to do.

Privacy and performance trade off. You can make things faster by sending data to servers. You can make things private by keeping data local. You can't easily maximize both.

Market expectations are hard to manage. Once you announce an AI feature, users expect it to be transformational. When it ships with limitations or delays, disappointment follows. Under-promising and over-delivering is harder in the AI era because transformation is already the baseline expectation.

Piecemeal rollout is becoming the norm. Instead of big launches, expect features to trickle out over time. This allows companies to iterate based on real-world feedback but frustrates users expecting complete features.

Looking Forward: What's Next for Siri and Apple Intelligence

Assuming Apple gets Siri shipped and working well, what comes next?

The logical next phase is deeper integration. Siri becomes more predictive. It proactively suggests actions based on context. It learns your preferences and patterns. It integrates more deeply with third-party apps, not just Apple's built-in apps.

That's where the real differentiation comes in. Any company can build a voice interface that processes queries. Apple's advantage is having such deep integration with iOS that Siri can be predictively helpful across the entire system.

But getting there requires solving the current problems first. Performance needs to be solid. Reliability needs to be high. Privacy needs to be maintained. Features need to actually work. Once those foundations are solid, Apple can build more ambitious capabilities.

The timeline for that? Probably another 18-24 months. Which means the fully realized vision of Apple Intelligence probably doesn't arrive until 2026 or 2027.

That's further out than anyone wants to wait. But given what we're learning about AI development, it's probably realistic.

FAQ

What is Apple Intelligence and how does Siri fit into it?

Apple Intelligence is Apple's umbrella brand for AI features integrated across iOS, iPadOS, and macOS. The redesigned Siri is positioned as the centerpiece of Apple Intelligence, serving as a natural language interface for accessing AI-powered features. Siri would understand complex requests, access your personal information, and perform tasks across the system using AI reasoning provided by Google's Gemini models combined with Apple's on-device processing.

Why is the new Siri being delayed?

The new Siri is being delayed because testing revealed significant problems including sluggish performance, slow task completion, and query processing failures. Rather than shipping a voice assistant that feels slow or unreliable (which would damage Apple's reputation further), Apple chose to delay the rollout and implement fixes. The company discovered that integrating external AI models into iOS while maintaining privacy and performance standards is more complex than initially anticipated, as detailed by Bloomberg.

What does piecemeal rollout mean for Siri's release schedule?

Instead of launching the complete redesigned Siri at once, Apple is distributing features across multiple iOS updates. Initial capabilities will arrive in iOS 18.4 (March 2025), more features in iOS 18.5 (May 2025), and additional functionality with iOS 19 (fall 2025), with potentially more features rolling out throughout 2026. This approach allows Apple to ship stable features first, gather user feedback, identify issues, and fix problems in subsequent releases rather than delaying everything until perfect.

Why is Apple using Google Gemini instead of building its own AI?

Apple determined that Google's Gemini models provide better reasoning capabilities and language understanding than Apple could deliver on its own timeline. Building best-in-class AI models requires massive computing resources, specialized AI expertise, and continuous research investments. By using Gemini for the reasoning layer while keeping on-device processing and privacy controls in Apple's hands, Apple can deliver better AI features faster. This represents a pragmatic choice prioritizing product quality over complete in-house development, as noted by Campus Technology.

What features are arriving first in the Siri redesign?

The initial rollout will likely focus on features with straightforward implementations, including photo search and discovery, contact management and information extraction, basic photo editing, and email composition and summaries. More complex features involving cross-app automation, contextual understanding, and advanced task workflows will arrive in later updates as Apple gains confidence in the system's reliability and performance.

How does the redesigned Siri compare to competitors like Google Assistant and ChatGPT?

The redesigned Siri aims to differentiate itself from competitors by combining system-level iOS integration with advanced AI reasoning. Unlike ChatGPT, which is a separate app, or Google Assistant, which is built around Android, Siri will have deep access to your personal data (photos, contacts, emails) while using Gemini for complex reasoning tasks. However, Google Assistant is already quite capable, Alexa dominates smart home control, and ChatGPT offers superior general reasoning. Siri's advantage lies specifically in iOS integration, not overall capability.

When will the complete redesigned Siri actually be available?

Based on current reports, initial Siri features should arrive in March 2025, with additional capabilities arriving throughout 2025 and into 2026. A fully "complete" redesign with all promised features is unlikely before late 2025, and may not land until 2026. Apple's timelines for AI features have proven optimistic in the past, so users would be prudent to add 2-4 months to any announced date.

What does this delay mean for Apple's credibility?

Apple's announcement and subsequent delays have created a credibility challenge. Users were promised an AI-powered Siri in 2025, then learned about delays, and now understand it's a piecemeal rollout spread over many months. However, Apple's transparency about challenges and willingness to prioritize quality over original timelines can rebuild credibility if the shipped features actually work well. The key is whether the eventual product justifies the wait and matches what users expected.

Conclusion: The Road Ahead for Apple and AI

Apple's Siri relaunch delays tell a story that extends far beyond one voice assistant. They reveal the genuine difficulty of shipping polished AI features at scale, even for the world's most capable technology companies. When a company with unlimited resources, world-class engineers, and decades of OS development experience needs to delay and fragment a major feature rollout, it sends a clear message: AI integration is harder than the hype suggests.

But this story also contains hope. Apple is being honest about challenges rather than pretending everything is on track. They're partnering strategically with Google to access better AI rather than insisting on building everything themselves. They're choosing quality over speed, which is exactly the right priority for a company built on premium positioning.

The piecemeal rollout approach, while less satisfying than a complete feature launch, actually makes sense from a product perspective. It lets Apple ship something real and gather feedback from millions of users. It lets them fix problems discovered in the real world. It lets them iterate toward something genuinely useful rather than shipping something ambitious that doesn't work well.

For users, the lesson is to manage expectations. The redesigned Siri will arrive in pieces over months, not all at once. Some features will work better than others. Performance will probably be acceptable but not blazingly fast. Reliability will improve over time as Apple fixes edge cases.

But most importantly, Apple is actually making progress. The delays are frustrating, but they represent Apple choosing the right course even when it's not the popular one. Ship quality over hype. Deliver incrementally rather than over-promising. Use partnerships when they make sense. Prioritize privacy and performance.

Those are exactly the right principles for building AI products that last. The Siri relaunch will eventually deliver something useful. Whether it justifies the wait will depend on execution. But Apple's willingness to delay rather than ship something mediocre suggests it understands what's at stake.

In the end, that matters more than hitting arbitrary deadlines. The AI features that succeed in 2025 and beyond won't be the ones that shipped first. They'll be the ones that actually work well when millions of people use them. Apple's delays are frustrating, but they might be exactly what's needed for Siri to finally become good.

Key Takeaways

- Apple's redesigned Siri faces significant performance issues, including sluggish processing and failed query completion, that forced a shift from a single 2025 launch to a piecemeal 2025-2026 rollout, as detailed by Bloomberg.

- Rather than shipping a slow voice assistant, Apple chose to distribute features incrementally across iOS 18.4, 18.5, and iOS 19 to prioritize quality over speed, as reported by Engadget.

- Google's Gemini AI models now power Siri's reasoning engine, showing Apple needed external partnership to deliver competitive AI capabilities, as noted by Campus Technology.

- Initial rollout focuses on simpler features like photo search and contact management, with complex cross-app automation arriving later as confidence builds, as outlined by Bloomberg.

- The Siri delays reflect a broader industry pattern in which AI features announced with enthusiasm take significantly longer to ship in polished form at production scale, as reported by CNBC.

