Bandcamp's AI Music Ban: What It Means for Artists and the Music Industry [2026]

When Bandcamp announced its ban on AI-generated music in early 2026, the internet did what it does best: it split into camps. Some saw it as a principled stand for human creativity. Others called it a futile gesture against an unstoppable technological wave.

Here's what actually happened, why it matters, and what it tells us about the future of music creation in an age of artificial intelligence.

TL;DR

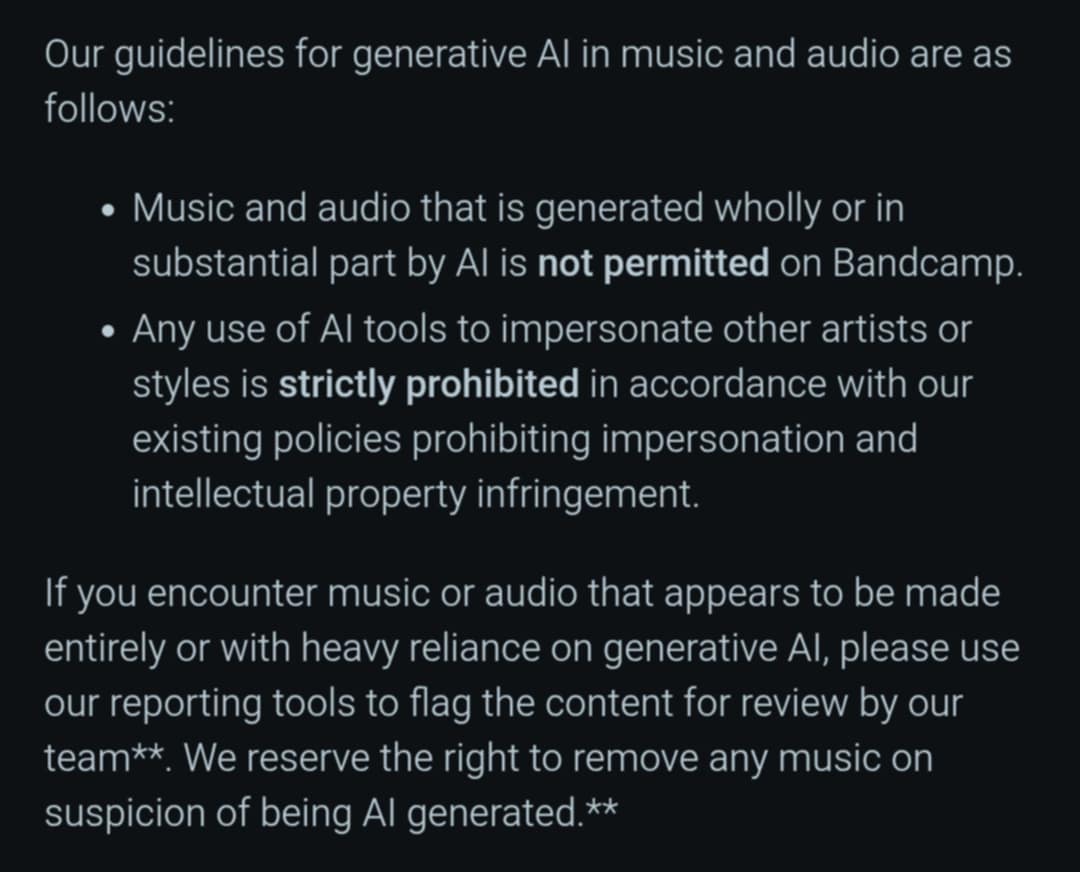

- Bandcamp explicitly banned AI-generated music and audio created wholly or substantially by AI tools, positioning itself as a human-first platform, according to Bandcamp's official blog.

- The platform prohibits AI impersonation of other artists or styles, closing loopholes that could allow synthetic recreations of established musicians, as reported by PC Gamer.

- AI music is already commercially viable, with AI-generated tracks topping Spotify and Billboard charts and blurring the line between synthetic and authentic music, as noted by Billboard.

- Major lawsuits threaten AI music companies, with Sony, Universal, and Warner suing Suno for alleged copyright infringement over its training data, according to The Atlantic.

- This reflects a broader industry divide between platforms protecting human creators and those embracing AI as a creative tool, as discussed by the Nashville Banner.

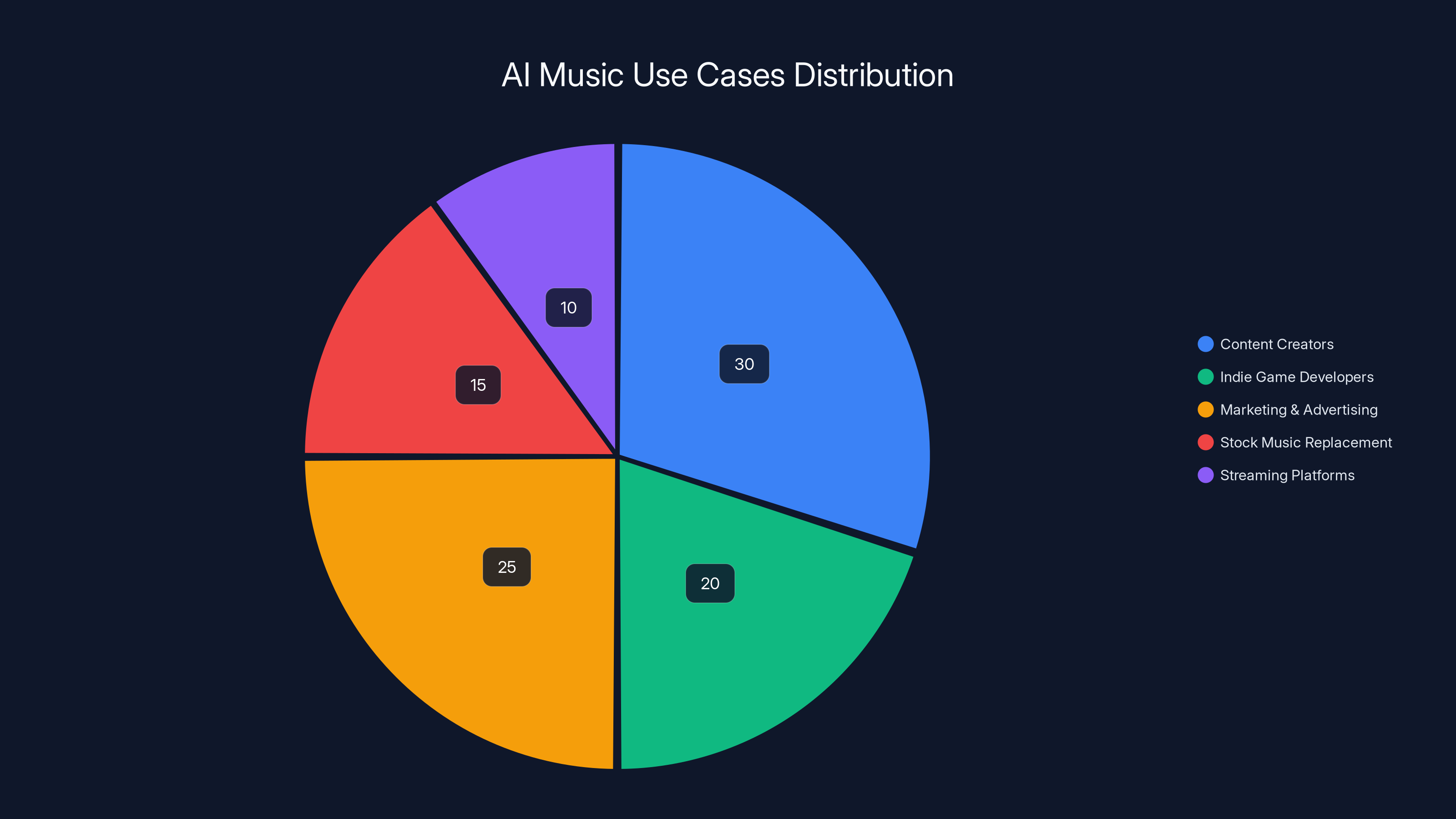

Estimated data suggests that content creators and marketing/advertising sectors are the largest adopters of AI music, leveraging its cost-effectiveness and functionality.

The Decision: What Bandcamp Actually Said

Bandcamp didn't make a quiet policy change buried in a terms-of-service update. The company went public with its position through a Reddit post that read like a manifesto: "We want musicians to keep making music, and for fans to have confidence that the music they find on Bandcamp was created by humans," as detailed in its blog.

That's a specific, deliberate choice of words. Not "we prefer human music" or "we're skeptical of AI." The platform said it wants fans to have confidence in human creation. That's about trust, not just aesthetics.

The actual policy prohibits music and audio generated "wholly or in substantial part by AI." That phrase is doing some heavy lifting. The "substantial part" clause blocks the technicality defense: you can't claim a track is 51% human and 49% AI and slip past the filter.

Bandcamp also explicitly banned using AI tools to impersonate other artists or styles. So if someone trained an AI to sound exactly like Taylor Swift or Drake, they couldn't upload the result. If they tried to create synthetic music "in the style of" a famous artist, the policy would block that too.

This is important because the loophole would've been obvious: "This isn't Drake, it just sounds like Drake." Bandcamp closed that door entirely.

Why This Matters: The Context Behind the Ban

Bandcamp's move didn't emerge from nowhere. The music industry was already in crisis mode about AI. Several factors collided to force this decision.

AI music tools have gotten scary good. Suno, in particular, went from "cute toy" to "actual threat" in about eighteen months. You can type a prompt—literally describe a song in words—and get back a finished track with lyrics, melody, and production. It doesn't sound like a demo anymore. It sounds like a finished product.

The Xania Monet case crystallized everything. Telisha Jones used Suno to generate R&B tracks. She created an AI persona called Xania Monet. That persona went viral. Multiple record labels bid on Xania Monet. The AI "artist" eventually signed a deal with Hallwood Media reportedly worth $3 million, as noted by the Nashville Banner.

Think about what that means. A human used AI to create music, presented the persona behind it as a real artist, and landed a multimillion-dollar record deal. The deal is real. The money is real. The artist is not.

This broke something psychologically in the music industry. If an AI can get a $3 million record deal, what does that say about the economics of music? What does it say about artist value? What does it say about authenticity in a world where fans can't tell the difference?

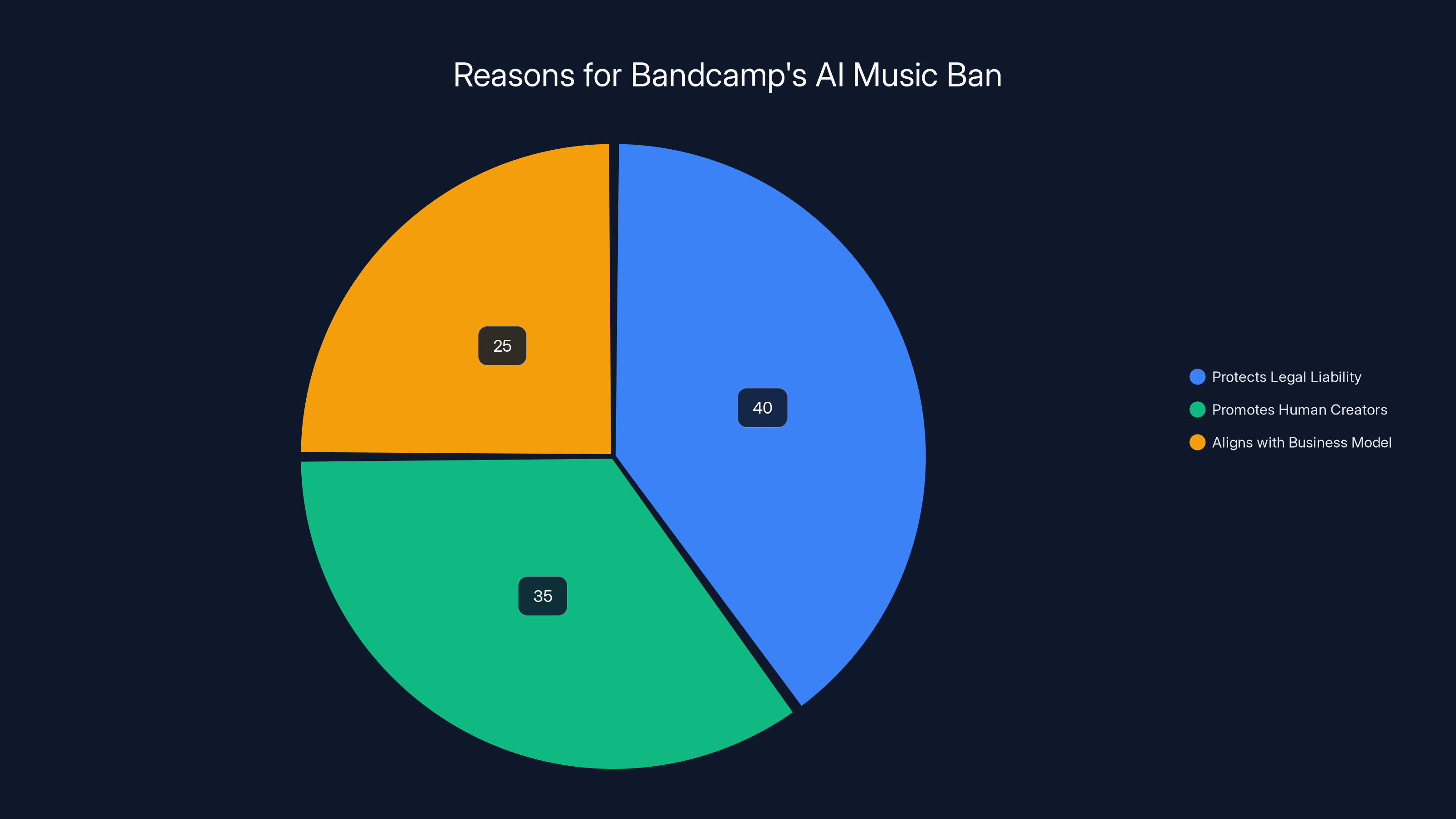

Bandcamp's AI music ban is primarily to protect against legal liability (40%), promote human creators (35%), and align with its business model (25%). Estimated data.

The Legal Minefield: Why Bandcamp Had to Take a Stand

Bandcamp's ban isn't just philosophy. It's also risk management. The legal situation around AI music is absolutely chaotic right now.

Suno is being sued by every major label: Sony Music Entertainment, Universal Music Group, and Warner Music Group. The accusation is straightforward: Suno trained its AI models on copyrighted music without permission or compensation. The lawsuits allege that Suno scraped protected recordings to feed into its machine learning models as detailed by The Atlantic.

This is the same argument playing out everywhere in AI. Did the AI companies break the law by using copyrighted material to train their models? Legally speaking, it's murky.

A judge recently ruled in the case involving Anthropic (the company behind Claude) that the firm could use copyrighted books it downloaded illegally to train its AI models. The illegal part, according to the ruling, was the downloading itself. Training the AI on those books was considered fair use.

Anthropic got hit with a

So from a legal standpoint, AI companies have one of two paths forward:

- Train on copyrighted material, get sued, and pay damages or a settlement that's smaller than the value they extracted

- Get licenses from labels, which makes the models less effective but legally safe

Guess which path the venture-backed AI companies are taking? The first one. It's cheaper.

Bandcamp, by contrast, can't afford to be caught in the crosshairs. The platform makes money from artist sales, not ad revenue or licensing deals. If labels start pursuing Bandcamp for hosting AI-generated music trained on their copyrighted material, the company could face significant legal liability.

Banning AI music upfront eliminates that risk. It's a preemptive legal move disguised as a values statement.

The Economics of AI Music: Why It's Spreading

Despite the legal risks, AI music is exploding. Suno raised a $250 million funding round.

Why would investors throw $250 million at an AI music company facing three simultaneous lawsuits from the world's biggest music labels? Because the market opportunity is genuinely enormous.

Here's the thing: creating music is expensive. A decent producer costs thousands per track. Session musicians cost more. Studios cost more. Mixing and mastering costs more. If you can generate passable music for free, that's not just convenient—it's economically revolutionary.

Consider the use cases:

- Content creators: A YouTube channel needs background music. Paying for licensing is expensive. Creating original music is expensive. Generating it with AI is free.

- Indie game developers: A small game studio can't afford a composer. AI can generate adaptive music that changes based on gameplay.

- Marketing and advertising: Brands need custom jingles, background tracks, sonic branding. AI can generate them instantly.

- Stock music replacement: Why license stock music when you can generate unique tracks?

- Streaming platforms: Hypothetically, a service could generate unlimited custom music for users' playlists.

None of these use cases require music to be good. They require it to be functional. And AI music is absolutely functional for these purposes.

The musicians most threatened by AI aren't the Taylors and the Drakes. They're the session musicians, the background composers, the people creating hundreds of tracks for libraries. These are middle-class creative jobs that AI can directly automate.

Bandcamp's Unique Position: Why This Platform Mattered Most

Bandcamp isn't Spotify or Apple Music. That's crucial to understanding why Bandcamp's ban carries weight that other policies wouldn't.

Spotify and Apple Music are streaming services. They make money from subscriptions. The artists themselves are largely irrelevant to the business model. A song that costs Spotify $0.003 per stream to license is worth the same whether it's by a famous artist or a nobody. The economics don't care about authenticity.

Bandcamp has a different model entirely. The platform doesn't pay artists per stream. Instead, artists use Bandcamp to sell music directly to fans. Bandcamp takes a cut of the sales. The company only makes money if artists actually sell things.
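A quick back-of-the-envelope calculation shows how differently the two models pay. The sketch below is a minimal illustration, not Bandcamp's actual payout math: it reuses the $0.003 per-stream figure above and assumes a hypothetical $10 album and a 15% platform cut on digital sales, ignoring payment-processing fees.

```python
import math

# Per-stream payout figure quoted in the article (an approximation).
PER_STREAM_PAYOUT = 0.003
# Assumption for this sketch: the platform keeps 15% of a digital sale.
PLATFORM_CUT = 0.15
# Hypothetical album price in US dollars.
ALBUM_PRICE = 10.00


def artist_take_from_sale(price: float, cut: float = PLATFORM_CUT) -> float:
    """Artist's share of one direct sale, ignoring payment-processing fees."""
    return price * (1 - cut)


def streams_to_match(amount: float, per_stream: float = PER_STREAM_PAYOUT) -> int:
    """Smallest whole number of streams whose payout reaches `amount`."""
    return math.ceil(amount / per_stream)


take = artist_take_from_sale(ALBUM_PRICE)
print(f"One album sale nets the artist about ${take:.2f}")
print(f"Streams needed to earn the same amount: {streams_to_match(take):,}")
```

Under those assumptions, one album sale nets the artist $8.50, which would take 2,834 streams to match.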

This creates a fundamentally different incentive structure. Bandcamp only benefits if fans trust the platform enough to spend money on music. If the platform filled with AI-generated garbage, why would fans keep coming back to buy?

Bandcamp's business model is actually aligned with artist interests. Unlike Spotify, which profits regardless of artist survival, Bandcamp profits when artists thrive.

That said, let's not get too romantic about it. A tech company is still a tech company. The bottom line still matters. Bandcamp's morality hasn't separated from Bandcamp's profit motive. They've just aligned for now.

But the practical effect is real. Bandcamp is positioning itself as the anti-AI music platform. For artists who care about authenticity and fans who want to support real creators, Bandcamp becomes the obvious choice.

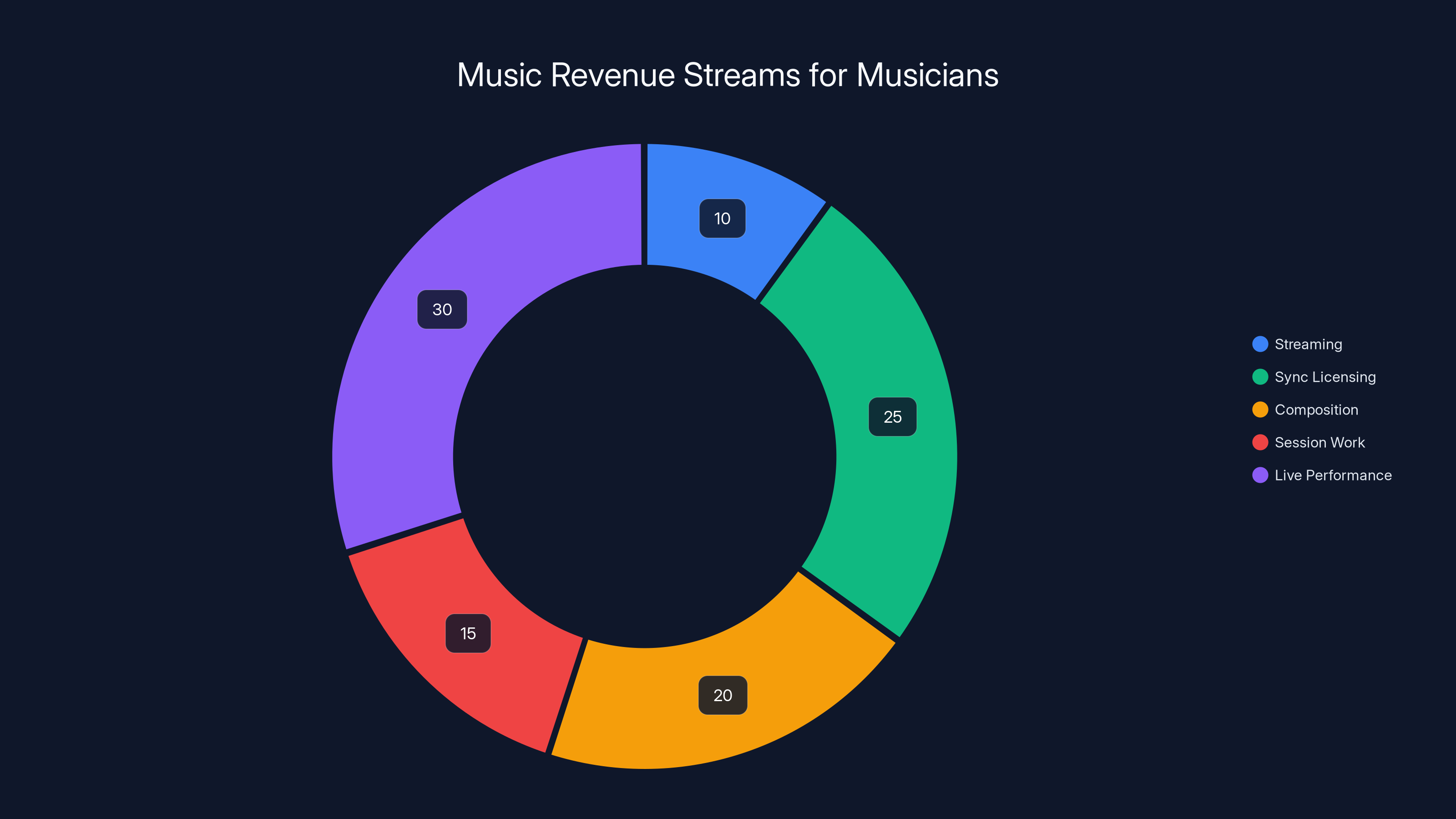

Live performance remains the most significant revenue source for musicians, accounting for an estimated 30% of total income, highlighting its resilience against AI competition. Estimated data.

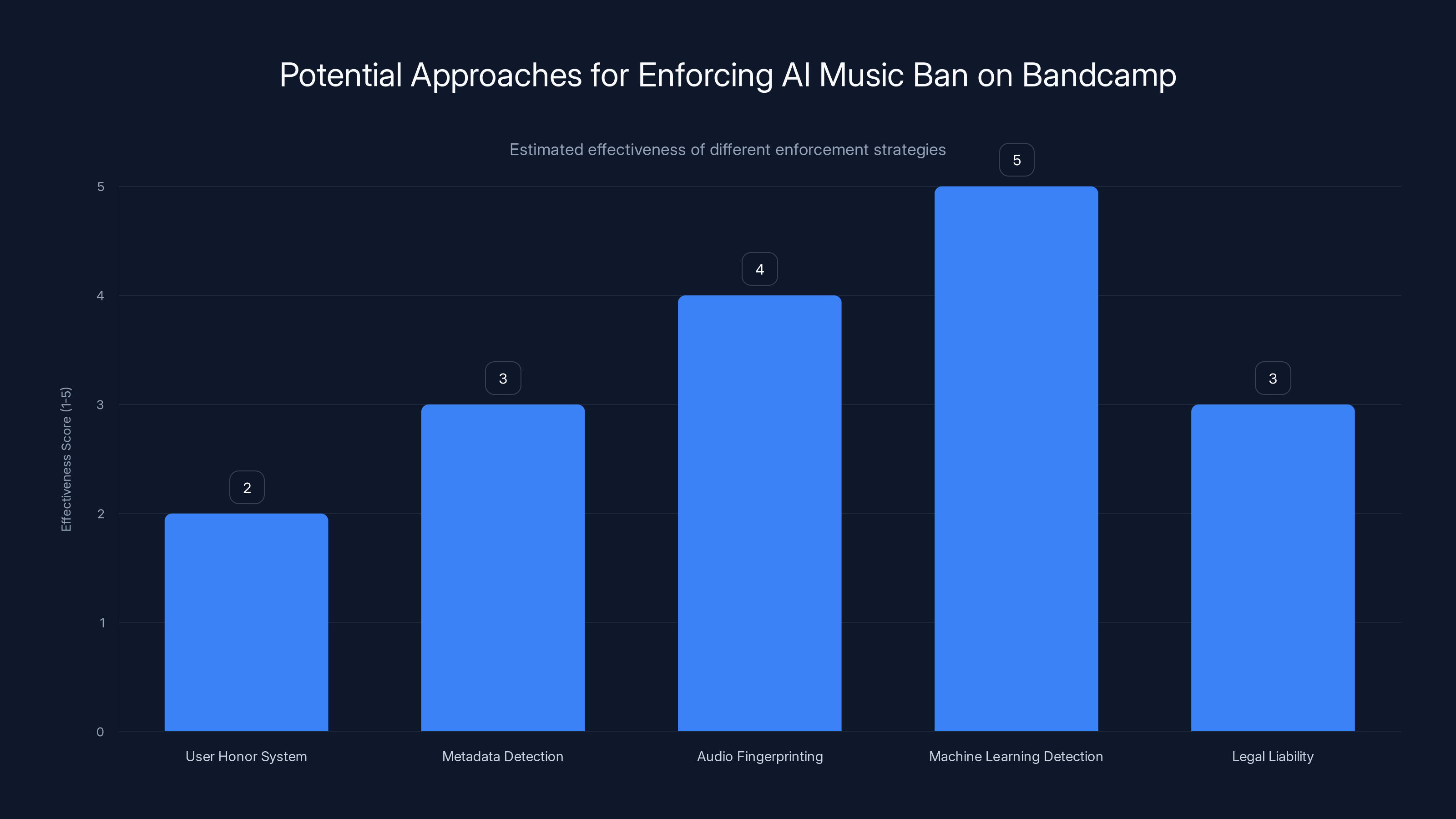

The Statement Versus the Reality: Can Bandcamp Actually Enforce This?

Here's where it gets complicated. Banning AI music is easy. Detecting AI music is hard.

AI-generated music doesn't have an audible watermark. It doesn't have metadata that screams "I'm synthetic." An AI music track sounds like a real song. If someone uploads it, Bandcamp's moderation team can't tell just by listening.

Suno and other AI music tools are designed to be invisible. They don't add signatures to the output. They don't announce their presence. A song generated by Suno sounds indistinguishable from a song recorded in a bedroom with a MIDI keyboard and Ableton Live.

So how does Bandcamp enforce this?

Possible approaches:

- User honor system: Rely on artists to self-report and not upload AI music

- Metadata detection: Analyze file metadata for signs of AI generation

- Audio fingerprinting: Compare uploads against known AI music outputs

- Machine learning detection: Train a model to identify AI-generated audio

- Legal liability: Hold artists legally responsible for violating the policy

The user honor system works until it doesn't. As soon as someone uploads AI music and gets a record deal from it, the system collapses.

Metadata detection is easily defeated. You can regenerate a file, strip metadata, and reupload.
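Here's a minimal sketch of why a metadata screen is so fragile. The keywords are hypothetical assumptions (no AI music tool is known to write exactly these values), and the upload is modeled as a plain dictionary of tags rather than a real audio file.

```python
# Hypothetical screening rule: these keywords are illustrative assumptions,
# not fields or values that any real AI music tool is known to write.
SUSPECT_KEYWORDS = ("suno", "udio", "ai-generated")


def flags_ai_metadata(tags: dict[str, str]) -> bool:
    """Return True if any tag value mentions one of the suspect keywords."""
    return any(
        keyword in value.lower()
        for value in tags.values()
        for keyword in SUSPECT_KEYWORDS
    )


def strip_metadata(tags: dict[str, str]) -> dict[str, str]:
    """Simulate a re-export that keeps the title and drops every other tag."""
    return {key: value for key, value in tags.items() if key == "title"}


upload = {"title": "Night Drive", "encoder": "Suno export", "comment": ""}
print(flags_ai_metadata(upload))                  # True: the encoder tag gives it away
print(flags_ai_metadata(strip_metadata(upload)))  # False: same audio, no tags
```

The first check flags the upload; the stripped copy passes, even though the audio itself hasn't changed at all.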

Audio fingerprinting might work if Bandcamp can access fingerprints of AI outputs, but that requires Suno, Udio, and other AI music companies to cooperate—or be reverse-engineered.

Machine learning detection is theoretically possible but gets caught in an arms race. AI detection models are trained on AI music from a certain time period. As the AI tools improve, detection falls behind.
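The arms-race problem can be shown with a deliberately tiny toy model. Everything here is hypothetical: a single made-up "artifact score" per track stands in for real audio features, and the numbers are invented for illustration, not measurements of any real tool. The "detector" is just a threshold fitted to human tracks and an older generator's output.

```python
from statistics import mean

# Invented "artifact scores" (higher = more machine-like). These numbers are
# made up purely for illustration; they are not measurements of any real tool.
human_scores = [0.10, 0.15, 0.20, 0.12]
old_model_scores = [0.80, 0.85, 0.90, 0.75]
new_model_scores = [0.30, 0.25, 0.40, 0.35]  # a newer generator leaves fewer artifacts

# "Train" the simplest possible detector: a threshold halfway between class means,
# fitted only on the data available at the time (human vs. older model output).
threshold = (mean(human_scores) + mean(old_model_scores)) / 2


def detect(score: float) -> bool:
    """Flag a track as AI-generated if its artifact score exceeds the threshold."""
    return score > threshold


caught_old = sum(detect(s) for s in old_model_scores)
caught_new = sum(detect(s) for s in new_model_scores)
print(f"threshold = {threshold:.2f}")
print(f"older-model tracks caught: {caught_old} of {len(old_model_scores)}")
print(f"newer-model tracks caught: {caught_new} of {len(new_model_scores)}")
```

The detector cleanly separates the data it was fitted on, then catches none of the newer generator's tracks. Real classifiers are far more sophisticated, but they face the same drift problem.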

Legal liability is interesting. If Bandcamp makes a musician sign terms saying they created the music themselves, and then sues them for breach when they didn't, that could create consequences. But that only works if Bandcamp detects the violation first.

The most likely scenario is that Bandcamp will rely on community reporting and occasional audits. Users will report uploads that sound suspiciously AI-generated. Bandcamp will investigate and remove them. Some AI music will slip through. It's not perfect, but it creates a deterrent and a policy foundation.
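A community-reporting workflow like that could be as simple as the sketch below. It assumes a hypothetical rule: a release goes to a human moderator once three different users have reported it. Bandcamp hasn't published its internal process, so `ReportQueue` and the threshold are illustrative only.

```python
from collections import defaultdict

# Hypothetical rule: a release is queued for human review once this many
# different users have reported it. Not Bandcamp's actual process.
REVIEW_THRESHOLD = 3


class ReportQueue:
    """Collect user reports and surface releases that need moderator review."""

    def __init__(self, threshold: int = REVIEW_THRESHOLD) -> None:
        self.threshold = threshold
        # Map each release to the set of distinct users who reported it.
        self.reporters: dict[str, set[str]] = defaultdict(set)

    def report(self, release_id: str, reporter_id: str) -> bool:
        """Record a report; return True once the release should be reviewed."""
        self.reporters[release_id].add(reporter_id)  # repeat reports count once
        return len(self.reporters[release_id]) >= self.threshold


queue = ReportQueue()
queue.report("album-42", "fan-a")
queue.report("album-42", "fan-a")  # same user again, ignored
queue.report("album-42", "fan-b")
print(queue.report("album-42", "fan-c"))  # True: third distinct reporter
```

Counting distinct reporters rather than raw reports keeps one persistent user from forcing a review alone, though a coordinated group still could.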

The real question is whether Bandcamp's policy will become a competitive advantage. If fans trust Bandcamp more because of the AI ban, artists will choose Bandcamp to maintain that trust. If fans don't care, the policy becomes meaningless.

Given that Bandcamp's entire appeal is built on authenticity and artist-first values, the policy seems aligned with the platform's brand. It's not a surprising move; it's the logical evolution of Bandcamp's positioning.

What Other Platforms Are Doing: The Diverging Landscape

Bandcamp isn't the only platform making AI music policy choices, but its approach is noteworthy because it's explicitly restrictive.

Spotify hasn't banned AI music, but the company has created policies requiring AI music to be clearly labeled. Artists uploading music to Spotify now have to confirm whether the music contains AI-generated content. It's not a ban—it's transparency as reported by Billboard.

This is a very different approach. Spotify is saying: we'll allow AI music, but users need to know it's AI. Let the market decide.

Apple Music has been quiet on the topic. The company hasn't made a public statement about AI music, which is typical of Apple. It doesn't comment on policy until forced to.

YouTube's approach has evolved. Initially, the platform had no specific AI music policy. Now, YouTube requires creators using AI-generated content to disclose it in the upload process and to add appropriate labels.

These policies sit on a spectrum:

- Bandcamp: Ban AI music entirely

- Spotify/YouTube: Allow AI music with mandatory disclosure

- Apple Music: No public policy (likely allowing it)

- SoundCloud: Open to AI music, no restrictions

The philosophical divide is clear. Some platforms (Bandcamp) believe AI music threatens the integrity of human artistry and will damage user trust. Other platforms (Spotify, YouTube) believe AI music is inevitable and will instead focus on transparency and user choice.

Neither approach is inherently wrong. They reflect different business models and values. For artists, the choice of platform increasingly reflects which vision of the future they want to support.

The Artist Perspective: Who Benefits? Who Loses?

Bandcamp's policy creates winners and losers among musicians.

Winners:

Human musicians uploading to Bandcamp now have explicit platform endorsement. They can advertise their music as "created by a real artist," knowing that Bandcamp backs that claim. They're competing against humans, not against endless AI-generated options.

Artists who've been frustrated with commodification of music now have a home. If you care about craft, authenticity, and direct relationships with fans, Bandcamp is signaling that it's your platform.

Niche and experimental artists benefit from reduced AI noise. Music genres with smaller audiences often get flooded with mediocre AI-generated content. Banning AI music keeps the platform focused on quality human work.

Losers:

Musicians who wanted to use AI tools as creative assistance are now restricted. If someone used AI to generate a base and then heavily modified it with human creativity, Bandcamp's policy may still catch them. The "substantial part" language is vague enough that these hybrid use cases could be excluded.

Productive independent musicians who used AI for demos, arrangements, or production elements now have to remove those tools from their workflow or upload elsewhere.

Content creators who relied on Bandcamp for background music now need to find other platforms or pay for licensing.

But here's the deeper point: the artist community is split on AI music. It's not like all musicians oppose it. Some see it as a tool. Some see it as a threat. Bandcamp's policy makes a choice about which artists it wants to serve.

That's actually healthy. Not every platform needs the same policy. Artists can choose environments aligned with their values.

AI music is projected to capture a larger market share for functional applications, while human music retains a niche market with premium pricing. Estimated data based on industry trends.

The Broader Question: Is This a Moment or a Movement?

Bandcamp's move is symbolic. It's the platform saying: we're siding with human creativity in the AI era. That's worth attention because it signals a possible direction for the creative economy.

But will it matter in five years? Will other platforms follow? Will musicians actually migrate to Bandcamp because of this policy?

Here's the honest assessment: Bandcamp's ban is meaningful but limited. It affects Bandcamp. The larger music industry will move forward with AI music because the economics demand it. Music labels want AI music. Content creators want AI music. Tech companies want AI music.

What Bandcamp is saying is: if you're an artist who cares about human-made music, this is your home. If you're a fan who wants to support human creators, this is where to buy.

That's a legitimate value proposition. But it only works if Bandcamp can maintain it and if the market actually cares.

The Detection Problem: How Real Is the Enforcement Risk?

Let's get specific about how hard it actually is to detect AI music.

AI music generators like Suno and Udio have trained on massive datasets of real music. Their outputs are designed to be indistinguishable from human-created music. They generate in multiple formats, with various instruments and styles.

The forensic approach: AI music might leave statistical signatures. An AI model trained on specific data might produce music with certain harmonic or rhythmic patterns. But these signatures are subtle and change as the models improve.

The metadata approach: AI tools might embed information about their origin in file metadata. But this information can be stripped out. A user can regenerate the file, convert formats, or re-render it in a DAW to remove any traces.

The fingerprint approach: Bandcamp could potentially fingerprint known AI music outputs and check uploads against them. But this requires maintaining a database of AI music, which would need constant updates as new models release new outputs.
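The maintenance burden is easier to see in code. This toy sketch assumes each track has already been reduced to a 32-bit fingerprint (real systems derive fingerprints from spectral features, a step omitted here) and matches uploads by counting differing bits, with an assumed tolerance for re-encoding noise.

```python
# Toy fingerprint lookup. Real audio fingerprinting derives compact codes from
# spectral features; here each track is assumed to already be a 32-bit integer.
MAX_BIT_ERRORS = 3  # assumed tolerance for noise introduced by re-encoding


def hamming(a: int, b: int) -> int:
    """Count the bits that differ between two fingerprints."""
    return bin(a ^ b).count("1")


def matches_known_output(fp: int, database: set[int]) -> bool:
    """True if fp is within MAX_BIT_ERRORS bits of any catalogued fingerprint."""
    return any(hamming(fp, known) <= MAX_BIT_ERRORS for known in database)


# Hypothetical database holding one catalogued AI output.
known_ai_outputs = {0b1011_0110_1100_0011_0101_1010_1111_0000}

reencoded_copy = 0b1011_0110_1100_0011_0101_1010_1111_0011    # 2 bits changed
fresh_generation = 0b0100_1001_0011_1100_1010_0101_0000_1111  # never catalogued

print(matches_known_output(reencoded_copy, known_ai_outputs))    # True
print(matches_known_output(fresh_generation, known_ai_outputs))  # False
```

A lightly re-encoded copy of a catalogued output still matches, but a brand-new generation sails through because nothing like it is in the database yet.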

The practical approach: Most enforcement would likely come from user reports, audio analysis by Bandcamp moderators, and legal enforcement against violators. If someone uploads AI music and gets caught, Bandcamp removes it and bans the uploader.

But the gaps are obvious. Sophisticated users will get around detection. The question is whether it's worth the effort.

For a musician trying to monetize AI music on Bandcamp, the risk is: your uploads get reported, your account gets banned, and you lose access to the platform. That's a real deterrent.

For a serious content creator trying to use AI music to avoid licensing fees, the risk is lower. They can use Suno music elsewhere—YouTube, TikTok, or their own platforms.

So Bandcamp's enforcement is probably asymmetrical. It stops casual or lazy violators but not determined ones.

Investment Implications: What This Says About AI Music Companies

Suno just raised $250 million while being sued by all three major labels. Why are investors still betting on it?

Two reasons:

- The use case is real: Even if AI music can't replace human musicians for serious artistic creation, it can replace human musicians for content background, games, marketing, and other functional applications. That market is enormous.

- The legal situation might not matter: If judges rule that training on copyrighted material is fair use, Suno's biggest legal risk shrinks. Whatever penalties remain, such as settlements over how training data was obtained, become a cost of doing business, and the model remains profitable.

For investors, Suno represents a bet that AI music will become ubiquitous despite artist protests. The company has a clear path to profitability through licensing, API usage, and premium tiers.

Bandcamp, by contrast, is betting that there will always be a market segment that cares about human authenticity enough to pay more for it. That's a smaller market, but it might be more loyal and less price-sensitive.

Both bets could be right. AI music becomes ubiquitous for commodity use cases. Human music maintains premium pricing for serious artists and discerning fans.

Estimated data suggests that Bandcamp's focus on authenticity appeals to a smaller, but potentially more loyal, segment of the music market.

The $3 Million Question: What Did the Xania Monet Deal Actually Prove?

Telisha Jones, the person behind Xania Monet, got a reported $3 million deal for AI-generated music. That's the moment that made this real for the industry.

What does it prove?

One interpretation: AI music is now commercially viable and indistinguishable from human music. Labels are willing to invest in it. The market is ready.

Another interpretation: A human used AI as a tool to create music, then marketed that music to labels. The human is still the artist. The AI was the instrument. The deal isn't proof that AI can replace musicians; it's proof that humans can use AI to create music faster.

The truth probably splits the difference. Telisha Jones created something that sounded like professional R&B. The AI was the main tool. But she wrote the prompts, directed the music, and decided which outputs to use. She exercised creative judgment.

The question for labels was: does it matter? If the music is good and can be marketed, does it matter if it was created with AI or a piano?

Apparently, it didn't matter to Hallwood Media. They signed Xania Monet based on the quality and marketability of the music, not the origin story.

But it matters enormously to other labels, artists, and fans. The deal became controversial precisely because it suggested that human artistry wasn't the necessary ingredient—just music that sounds good.

Bandcamp's response is: we disagree. We think the human element matters. We're going to explicitly value it.

The Future State: Three Scenarios

Where does this go?

Scenario 1: AI Music Becomes Ubiquitous

Within three years, AI music is everywhere. It dominates background music, game soundtracks, and advertising. Platforms stop trying to distinguish between AI and human music because the distinction loses economic meaning. Bandcamp's ban becomes irrelevant because the platform becomes niche—a specialty store for human music enthusiasts, like vinyl records.

In this scenario, human musicians compete in a premium market. They're still valuable, but for different reasons: live performance, direct fan relationships, artistic credibility. The streaming and licensing model collapses.

Scenario 2: Regulated Bifurcation

Governments and industry groups establish standards: AI music must be labeled. Human music gets premium positioning. The market bifurcates between AI music (cheap, functional, transparent) and human music (premium, artistic, authenticated).

Bandcamp thrives in this scenario because it's explicitly human-first. But the music industry as a whole has to accept AI music's existence and relevance.

Scenario 3: Legal Resolution Destroys AI Music Economics

Courts rule that training AI models on copyrighted music without permission is not fair use. AI music companies face massive damages. The legal costs make AI music too expensive to develop. The threat recedes.

This seems unlikely given current judicial precedent, but it's possible if the political and legal environment shifts.

Most likely, elements of all three scenarios happen. AI music becomes ubiquitous in some domains, regulated in others, and remains a legal liability for its creators.

The Philosophical Angle: What Does Authenticity Actually Mean?

Bandcamp's ban rests on a philosophical claim: music created by humans has value that AI-generated music doesn't. That's worth examining.

What exactly is the value? Is it the listening experience? AI music can sound as good as human music. Maybe better.

Is it the creative process? Music created by humans involves more intentionality, skill, and decision-making. But does the listener care about the process or just the output?

Is it the artist? We value music because we connect with the artist. We think about their intentions and their story. AI music doesn't have an artist to connect with.

Or is it about economics? We want to ensure that musicians can make a living. AI music threatens that. So we value human-made music to protect the economic viability of the profession.

Bandcamp is implicitly making all these arguments. We want musicians to keep making music. That suggests the economic argument. We want fans to have confidence that music was created by humans. That suggests the artist/authenticity argument.

Neither argument is weak. But both are vulnerable to counterargument. If AI music becomes good enough, do we really care if a human created it? If AI music is cheaper and functional, why not use it for background purposes?

Bandcamp is choosing to side with authenticity and artist protection. That's a legitimate choice. But it's not self-evident. Future generations might look back and wonder why we cared so much about the human origin of music.

Machine learning detection is estimated to be the most effective approach for enforcing an AI music ban, though it faces challenges as AI tools evolve. Estimated data.

What This Means for the Broader AI Music Industry

Bandcamp's ban doesn't kill AI music. But it does something important: it establishes a major platform position against it. That matters for market perception and investor sentiment.

AI music companies might face more pressure to secure licensing from major labels instead of training on copyrighted material without permission. That makes the business model more expensive but more legally sound.

AI music tools might pivot toward explicitly licensed use cases: game development, content creation, commercial applications—anything that's not competing with human musicians for fan money.

The music industry will likely develop a two-tier system: AI music for commodity purposes, human music for serious artistic projects. That's a reasonable equilibrium.

For musicians, the message is mixed. Yes, AI is a threat to certain types of music work (background composition, production assistance). But it also creates opportunities. Musicians who embrace AI tools can produce more efficiently. Musicians who resist AI can position themselves as authentic alternatives.

Bandcamp is betting that the latter group—musicians and fans who care about authenticity—will become more valuable over time. That's not guaranteed. But it's a defensible bet.

The Platform's Bet: Can Bandcamp Sustain This Position?

Here's the real question: can Bandcamp actually maintain this policy long-term?

Three challenges emerge:

Challenge 1: Enforcement

As discussed, detecting AI music gets harder as the technology improves. Bandcamp needs to either invest heavily in detection technology or rely on user reports and spot checks. The former is expensive; the latter is imperfect.

Challenge 2: Community Pressure

As AI music becomes normal in the industry, there will be pressure on Bandcamp to relax its stance. Artists will want to upload hybrid work using AI and human elements. Investors might push Bandcamp to expand its market by accepting AI.

Challenge 3: Competitive Disadvantage

If other platforms allow AI music and Bandcamp doesn't, musicians might choose other platforms for distribution even if they prefer Bandcamp. The policy becomes a liability if it loses market share.

Bandcamp is betting that these challenges are outweighed by the benefits: platform differentiation, artist loyalty, and community trust.

Given Bandcamp's history and positioning, it's a defensible bet. The platform built its brand on being artist-first. A strong stance on human creativity is consistent with that brand.

But it also means Bandcamp is choosing a specific market segment: artists and fans who care about authenticity. That's smaller than the total music market, but potentially more profitable and loyal.

Case Study: The Year AI Music Hit the Mainstream

Let's ground this in a concrete timeline. How did we get here?

2023: Suno launches public AI music generation tools. Early outputs are obviously synthetic but improving rapidly.

Late 2023: AI-generated music starts appearing on Spotify and other platforms. Quality is mixed. Community backlash begins.

2024: Udio launches as a Suno competitor. Sony, Universal, and Warner sue both companies for copyright infringement. Platforms start creating AI disclosure policies.

2025: Adoption accelerates. The Xania Monet story goes viral, and an AI-generated artist gets a reported $3 million deal. The music industry panics. AI tracks start topping charts. Suno raises massive funding despite its legal troubles. Content creators use AI music to avoid licensing costs, and platforms struggle with enforcement.

January 2026: Bandcamp announces its AI music ban, marking a major platform's explicit rejection of AI music.

That's the trajectory. From cute experiment to existential threat in about three years.

Bandcamp's ban is a moment in this timeline—a moment when a major platform with 200+ million listeners says: not here, not on our platform.

The Economic Reality: Why Musicians Should Pay Attention

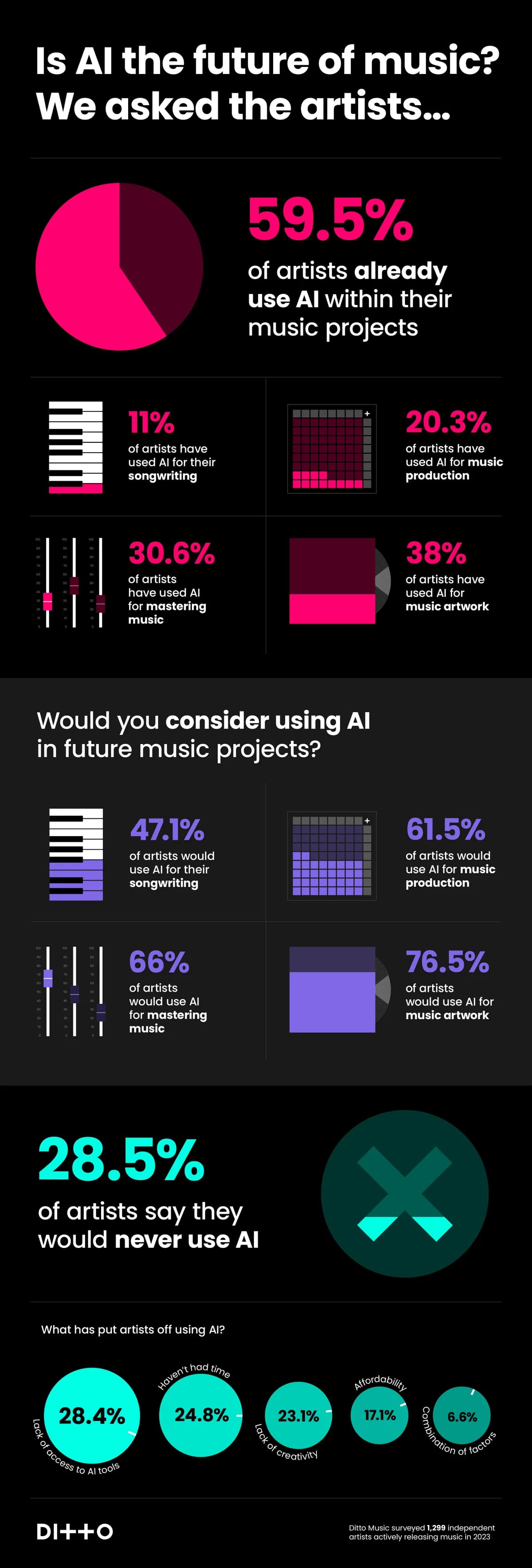

Let's talk economics. For a working musician, AI music represents a significant threat to income.

Consider the breakdown of music revenue:

- Streaming: 0.005 per stream (increasingly marginal for most artists)

- Sync licensing: 10,000+ per placement (increasingly displaced by AI)

- Composition for content: 1,000+ per track (increasingly automated)

- Session work: 500+ per session (increasingly unnecessary)

- Live performance: $0-unlimited (still valuable, still human)

AI directly threatens the middle tiers. If AI can generate background music for YouTube videos, sync licensing for commercials, and composition for indie games, those income streams dry up.

What survives?

- Live performance: You can't AI-generate a concert experience

- Direct fan support: Patreon, merchandise, crowdfunding

- Premium composition: High-quality work for major projects where the artist relationship matters

- Unique voice: Artists with distinctive styles that command premium pricing

Bandcamp's ban actually protects musicians by saying: your human artistry has value here. We'll help you monetize it directly without competing against AI alternatives.

That's economically significant for musicians who can position themselves as premium alternatives.

Looking Ahead: Five Critical Questions for 2026 and Beyond

Question 1: Will other platforms follow Bandcamp's lead?

Unlikely in the near term. Most platforms benefit from higher upload volume, even if it includes AI music. SoundCloud, YouTube, and others won't ban AI. But niche platforms focused on human artists might.

Question 2: Can Bandcamp actually enforce this policy at scale?

Not perfectly. But enforcement doesn't need to be perfect to be effective. Detection, user reports, and legal liability create enough friction to deter casual violations.

Question 3: Will musicians actually care about Bandcamp's stance?

Some will. Artists who care about authenticity will see it as validation. Artists trying to monetize AI music will use other platforms.

Question 4: How will AI music quality evolve over the next two years?

Rapidly. AI music is already hard to tell apart from human work, and by 2027 or 2028 it will likely be indistinguishable in many domains. Detection could become nearly impossible.

Question 5: Will governments regulate AI music?

Eventually. The EU is moving toward stricter AI regulation. Copyright offices are examining AI music. Expect regulatory clarity by 2027 or 2028.

The Reality: AI Music Isn't Going Away

Let's be clear about something. Bandcamp's ban is a strong statement, but it doesn't stop AI music. It's like a single platform's content moderation policy: meaningful within its scope, but not transformative for the broader industry.

AI music will continue developing. AI music companies will continue raising funding and improving their products. Labels will continue experimenting with AI music. Content creators will continue using it to avoid licensing fees.

What Bandcamp is doing is carving out a space for human music within that landscape. That's valuable. It's not revolutionary, but it's not meaningless either.

For musicians, the message is: adapt or specialize. Learn how to market yourself on platforms that value human artistry. Build direct relationships with fans. Develop skills that AI can't easily replicate. Or embrace AI as a tool and focus on the distinctly human elements of your work.

For fans, the message is this: the music you love might increasingly be AI-generated, or it might be human-made. "Human-made" is becoming a label like "organic" or "fair trade," and you can choose whether to support human creators.

The Core Issue: Trust in the Music Ecosystem

Underlying everything is a question of trust. Bandcamp says it wants "fans to have confidence that the music they find on Bandcamp was created by humans."

Trust is the variable here. If fans care about whether their music is human-made, then Bandcamp's policy creates a competitive advantage. If fans don't care, the policy is irrelevant.

My read is that enough fans do care, at least enough to sustain Bandcamp as a successful platform for human-made music. Maybe not enough for it to dominate the market, but enough for it to survive as a profitable alternative.

The broader music industry will increasingly bifurcate. AI music for commodity purposes. Human music for premium positioning. Some overlap in the middle where hybrid work exists.

Bandcamp is betting that the human music segment will remain valuable and that explicit endorsement of human creativity will be a competitive advantage.

That's not a guarantee. But it's a defensible bet, and it's consistent with Bandcamp's brand and business model.

FAQ

What is AI-generated music?

AI-generated music is created using machine learning models trained on massive datasets of existing music. Users provide prompts (text descriptions of what they want), and the AI generates original music that matches those descriptions. Tools like Suno can create full songs with lyrics, melodies, and production in seconds, without any human musician involvement.

How does Bandcamp's AI music ban work?

Bandcamp prohibits uploading any music created "wholly or in substantial part by AI." The platform also bans using AI to impersonate other artists or styles. If you violate the policy, Bandcamp can remove your music and potentially ban your account. Enforcement appears to rely mainly on user reports and moderation, possibly supplemented by audio analysis, though sophisticated users might be able to bypass detection.

Why did Bandcamp ban AI music?

Bandcamp stated that it wants musicians to keep making music and wants fans to have confidence in human creators. Practically, the ban may also reduce the platform's legal exposure if AI models were trained on copyrighted material without permission. It positions Bandcamp as an artist-first platform in contrast to platforms that allow AI music. The policy also fits Bandcamp's business model, where the platform profits when artists succeed and fans trust the platform.

What does this mean for musicians?

For human musicians uploading to Bandcamp, the policy is beneficial because you now compete against other human creators rather than unlimited AI-generated options. Your human-made music has explicit platform endorsement. For musicians who wanted to use AI as a creative tool, the policy is restrictive and might force migration to other platforms. The broader message is that human artistry has value and that platforms will increasingly differentiate on authenticity.

Will other platforms follow Bandcamp's lead?

Unlikely in the immediate future. Most platforms benefit from higher upload volume, even with AI music included. Spotify, YouTube, and SoundCloud have created disclosure requirements rather than bans. But niche platforms and communities focused on human creators might adopt similar policies. The divide will likely grow: some platforms embrace AI music, others explicitly reject it.

How does AI music affect copyright and artist rights?

AI music companies like Suno are being sued by Sony, Universal, and Warner for allegedly training models on copyrighted music without permission. The legal status of AI music training is still unclear. Recent judicial precedent suggests that training on copyrighted material might be considered fair use even if the original download was illegal. This remains unsettled and will likely face regulatory attention in 2026 and beyond.

Can Bandcamp actually detect and enforce the ban?

Partially. Detecting AI-generated music is technically challenging because modern AI output is often hard to distinguish from human-created music by ear. Bandcamp likely relies on a combination of user reports, audio analysis, metadata examination, and legal enforcement against violators. Sophisticated users can bypass detection, but the policy creates enough friction to deter casual violations and signals clear platform values.

Is AI music really commercially viable?

Yes. AI music companies have raised over

What's the difference between using AI as a tool versus uploading pure AI music?

Artists using AI to assist their creative process (generating a base that they heavily modify) are different from artists uploading entirely AI-generated tracks. Bandcamp's policy prohibits music created "wholly or in substantial part" by AI, which could arguably catch both. That might be overly restrictive for artists who see AI as one tool among many, but the vague wording leaves the line between the two unclear.

Where is music creation headed if AI continues improving?

Likely toward bifurcation: AI music for commodity purposes (background, games, content), human music for premium positioning and artistic credibility. Musicians will increasingly need to differentiate through live performance, direct fan relationships, and distinctive artistry. Platforms like Bandcamp will serve the human music segment, while others accommodate AI music. Regulatory frameworks will eventually clarify copyright and disclosure requirements.

Key Takeaways

- Bandcamp explicitly bans AI-generated music to protect human creators and maintain fan trust in platform authenticity.

- AI music companies continue raising funding (Suno, for example, is valued at $2.4 billion) despite lawsuits from major labels over the copyrighted music used as training data.

- Musicians face real economic threats from AI automation in background music, sync licensing, and composition work, but opportunities exist in live performance and direct fan relationships.

- Platform policies are diverging: Bandcamp bans music made wholly or substantially by AI, while Spotify and YouTube focus on transparent disclosure and user choice.

- The broader music industry will likely bifurcate into commodity AI music for functional use cases and premium human music for artistic positioning.
